[车牌字符识别](https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_rec_infer.tar.gz) | 车牌检测:3.9M

车牌字符识别: 12M | 60 | | 车辆属性 | 7.31ms | [车辆属性](https://bj.bcebos.com/v1/paddledet/models/pipeline/vehicle_attribute_model.zip) | 7.2M | 61 | | 车道线检测 | 47ms | [车道线模型](https://bj.bcebos.com/v1/paddledet/models/pipeline/pp_lite_stdc2_bdd100k.zip) | 47M | 62 | 63 | 下载模型后,解压至`./output_inference`文件夹。 64 | 65 | 在配置文件中,模型路径默认为模型的下载路径,如果用户不修改,则在推理时会自动下载对应的模型。 66 | 67 | **注意:** 68 | 69 | - 检测跟踪模型精度为公开数据集BDD100K-MOT和UA-DETRAC整合后的联合数据集PPVehicle的结果,具体参照[ppvehicle](../../../../configs/ppvehicle) 70 | - 预测速度为T4下,开启TensorRT FP16的效果, 模型预测速度包含数据预处理、模型预测、后处理全流程 71 | 72 | ## 配置文件说明 73 | 74 | PP-Vehicle相关配置位于```deploy/pipeline/config/infer_cfg_ppvehicle.yml```中,存放模型路径,完成不同功能需要设置不同的任务类型 75 | 76 | 功能及任务类型对应表单如下: 77 | 78 | | 输入类型 | 功能 | 任务类型 | 配置项 | 79 | |-------|-------|----------|-----| 80 | | 图片 | 属性识别 | 目标检测 属性识别 | DET ATTR | 81 | | 单镜头视频 | 属性识别 | 多目标跟踪 属性识别 | MOT ATTR | 82 | | 单镜头视频 | 车牌识别 | 多目标跟踪 车牌识别 | MOT VEHICLEPLATE | 83 | 84 | 例如基于视频输入的属性识别,任务类型包含多目标跟踪和属性识别,具体配置如下: 85 | 86 | ``` 87 | crop_thresh: 0.5 88 | visual: True 89 | warmup_frame: 50 90 | 91 | MOT: 92 | model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip 93 | tracker_config: deploy/pipeline/config/tracker_config.yml 94 | batch_size: 1 95 | enable: True 96 | 97 | VEHICLE_ATTR: 98 | model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/vehicle_attribute_model.zip 99 | batch_size: 8 100 | color_threshold: 0.5 101 | type_threshold: 0.5 102 | enable: True 103 | ``` 104 | 105 | **注意:** 106 | 107 | - 如果用户需要实现不同任务,可以在配置文件对应enable选项设置为True。 108 | - 如果用户仅需要修改模型文件路径,可以在命令行中--config后面紧跟着 `-o MOT.model_dir=ppyoloe/` 进行修改即可,也可以手动修改配置文件中的相应模型路径,详细说明参考下方参数说明文档。 109 | 110 | 111 | ## 预测部署 112 | 113 | 1. 直接使用默认配置或者examples中配置文件,或者直接在`infer_cfg_ppvehicle.yml`中修改配置: 114 | ``` 115 | # 例:车辆检测,指定配置文件路径和测试图片 116 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml --image_file=test_image.jpg --device=gpu 117 | 118 | # 例:车辆车牌识别,指定配置文件路径和测试视频 119 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/examples/infer_cfg_vehicle_plate.yml --video_file=test_video.mp4 --device=gpu 120 | ``` 121 | 122 | 2. 使用命令行进行功能开启,或者模型路径修改: 123 | ``` 124 | # 例:车辆跟踪,指定配置文件路径和测试视频,命令行中开启MOT模型并修改模型路径,命令行中指定的模型路径优先级高于配置文件 125 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml -o MOT.enable=True MOT.model_dir=ppyoloe_infer/ --video_file=test_video.mp4 --device=gpu 126 | 127 | # 例:车辆违章分析,指定配置文件和测试视频,命令行中指定违停区域设置、违停时间判断。 128 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/examples/infer_cfg_illegal_parking.yml \ 129 | --video_file=../car_test.mov \ 130 | --device=gpu \ 131 | --draw_center_traj \ 132 | --illegal_parking_time=3 \ 133 | --region_type=custom \ 134 | --region_polygon 600 300 1300 300 1300 800 600 800 135 | 136 | ``` 137 | 138 | ### 在线视频流 139 | 140 | 在线视频流解码功能基于opencv的capture函数,支持rtsp、rtmp格式。 141 | 142 | - rtsp拉流预测 143 | 144 | 对rtsp拉流的支持,使用--rtsp RTSP [RTSP ...]参数指定一路或者多路rtsp视频流,如果是多路地址中间用空格隔开。(或者video_file后面的视频地址直接更换为rtsp流地址),示例如下: 145 | ``` 146 | # 例:车辆属性识别,单路视频流 147 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/examples/infer_cfg_vehicle_attr.yml -o visual=False --rtsp rtsp://[YOUR_RTSP_SITE] --device=gpu 148 | 149 | # 例:车辆属性识别,多路视频流 150 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/examples/infer_cfg_vehicle_attr.yml -o visual=False --rtsp rtsp://[YOUR_RTSP_SITE1] rtsp://[YOUR_RTSP_SITE2] --device=gpu 151 | ``` 152 | 153 | - 视频结果推流rtsp 154 | 155 | 预测结果进行rtsp推流,使用--pushurl rtsp:[IP] 推流到IP地址端,PC端可以使用[VLC播放器](https://vlc.onl/)打开网络流进行播放,播放地址为 `rtsp:[IP]/videoname`。其中`videoname`是预测的视频文件名,如果视频来源是本地摄像头则`videoname`默认为`output`. 156 | ``` 157 | # 例:车辆属性识别,单路视频流,该示例播放地址为 rtsp://[YOUR_SERVER_IP]:8554/test_video 158 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/examples/infer_cfg_vehicle_attr.yml -o visual=False --video_file=test_video.mp4 --device=gpu --pushurl rtsp://[YOUR_SERVER_IP]:8554 159 | ``` 160 | 注: 161 | 1. rtsp推流服务基于 [rtsp-simple-server](https://github.com/aler9/rtsp-simple-server), 如使用推流功能请先开启该服务. 162 | 使用方法很简单,以linux平台为例:1)下载对应平台release包;2)解压后在命令行执行命令 `./rtsp-simple-server`即可,成功后进入服务开启状态就可以接收视频流了。 163 | 2. rtsp推流如果模型处理速度跟不上会出现很明显的卡顿现象,建议跟踪模型使用ppyoloe_s版本,即修改配置中跟踪模型mot_ppyoloe_l_36e_pipeline.zip替换为mot_ppyoloe_s_36e_pipeline.zip。 164 | 165 | ### Jetson部署说明 166 | 167 | 由于Jetson平台算力相比服务器有较大差距,有如下使用建议: 168 | 169 | 1. 模型选择轻量级版本,我们最新提供了轻量级[PP-YOLOE-Plus Tiny模型](../../../../configs/ppvehicle/README.md),该模型在Jetson AGX上可以实现4路视频流20fps实时跟踪。 170 | 2. 如果需进一步提升速度,建议开启跟踪跳帧功能,推荐使用2或者3: `skip_frame_num: 3`,该功能当前默认关闭。 171 | 172 | 上述修改可以直接修改配置文件(推荐),也可以在命令行中修改(字段较长,不推荐)。 173 | 174 | PP-YOLOE-Plus Tiny模型在AGX平台不同功能开启时的速度如下:(测试视频跟踪车辆为1个) 175 | 176 | | 功能 | 平均每帧耗时(ms) | 运行帧率(fps) | 177 | |:----------|:----------|:----------| 178 | | 跟踪 | 13 | 77 | 179 | | 属性识别 | 20.2 | 49.4 | 180 | | 车牌识别 | - | - | 181 | 182 | 183 | ### 参数说明 184 | 185 | | 参数 | 是否必须|含义 | 186 | |-------|-------|----------| 187 | | --config | Yes | 配置文件路径 | 188 | | -o | Option | 覆盖配置文件中对应的配置 | 189 | | --image_file | Option | 需要预测的图片 | 190 | | --image_dir | Option | 要预测的图片文件夹路径 | 191 | | --video_file | Option | 需要预测的视频,或者rtsp流地址 | 192 | | --rtsp | Option | rtsp视频流地址,支持一路或者多路同时输入 | 193 | | --camera_id | Option | 用来预测的摄像头ID,默认为-1(表示不使用摄像头预测,可设置为:0 - (摄像头数目-1) ),预测过程中在可视化界面按`q`退出输出预测结果到:output/output.mp4| 194 | | --device | Option | 运行时的设备,可选择`CPU/GPU/XPU`,默认为`CPU`| 195 | | --pushurl | Option| 对预测结果视频进行推流的地址,以rtsp://开头,该选项优先级高于视频结果本地存储,打开时不再另外存储本地预测结果视频, 默认为空,表示没有开启| 196 | | --output_dir | Option|可视化结果保存的根目录,默认为output/| 197 | | --run_mode | Option |使用GPU时,默认为paddle, 可选(paddle/trt_fp32/trt_fp16/trt_int8)| 198 | | --enable_mkldnn | Option | CPU预测中是否开启MKLDNN加速,默认为False | 199 | | --cpu_threads | Option| 设置cpu线程数,默认为1 | 200 | | --trt_calib_mode | Option| TensorRT是否使用校准功能,默认为False。使用TensorRT的int8功能时,需设置为True,使用PaddleSlim量化后的模型时需要设置为False | 201 | | --do_entrance_counting | Option | 是否统计出入口流量,默认为False | 202 | | --draw_center_traj | Option | 是否绘制跟踪轨迹,默认为False | 203 | | --region_type | Option | 'horizontal'(默认值)、'vertical':表示流量统计方向选择;'custom':表示设置车辆禁停区域 | 204 | | --region_polygon | Option | 设置禁停区域多边形多点的坐标,无默认值 | 205 | | --illegal_parking_time | Option | 设置禁停时间阈值,单位秒(s),-1(默认值)表示不做检查 | 206 | 207 | ## 方案介绍 208 | 209 | PP-Vehicle 整体方案如下图所示: 210 | 211 |

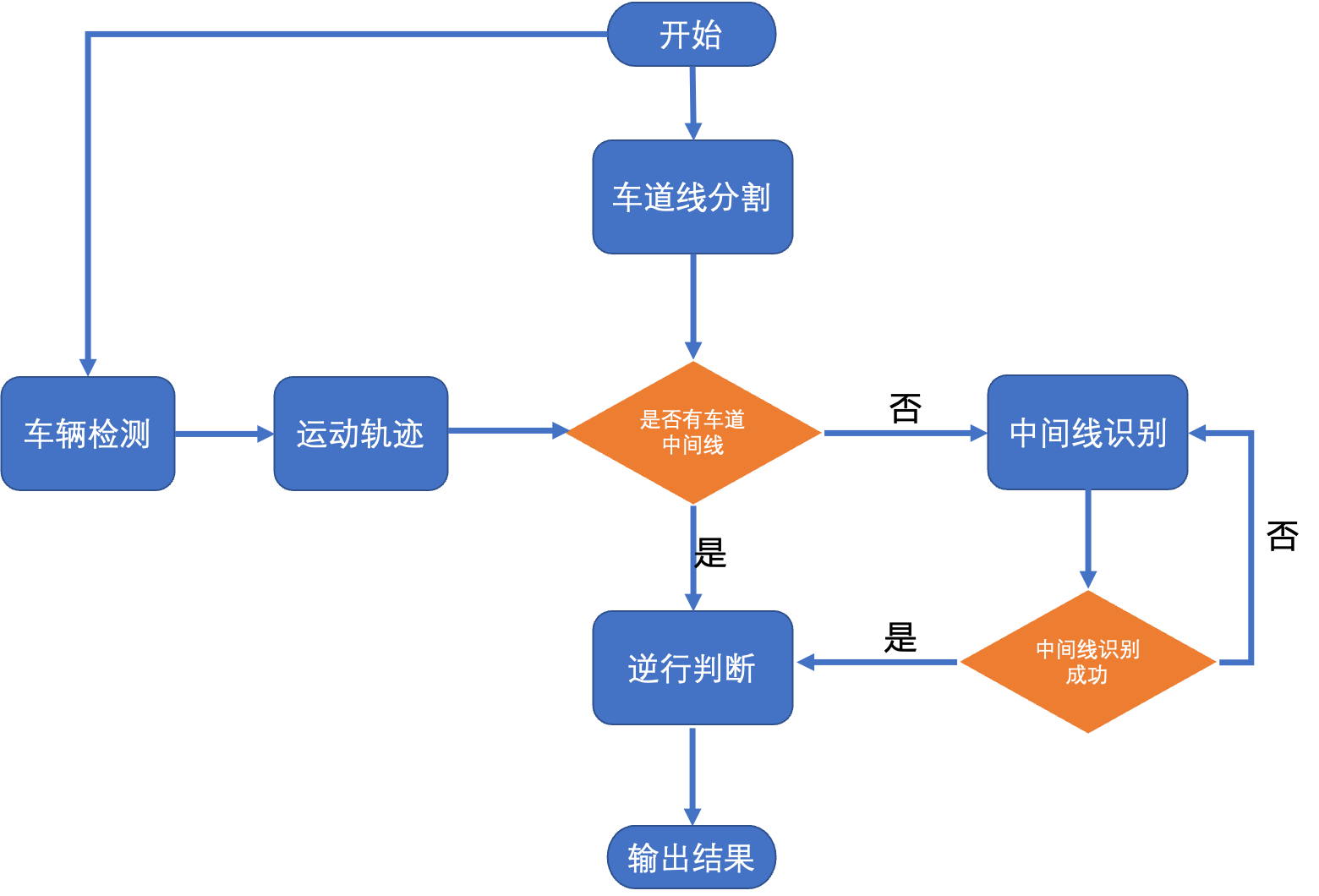

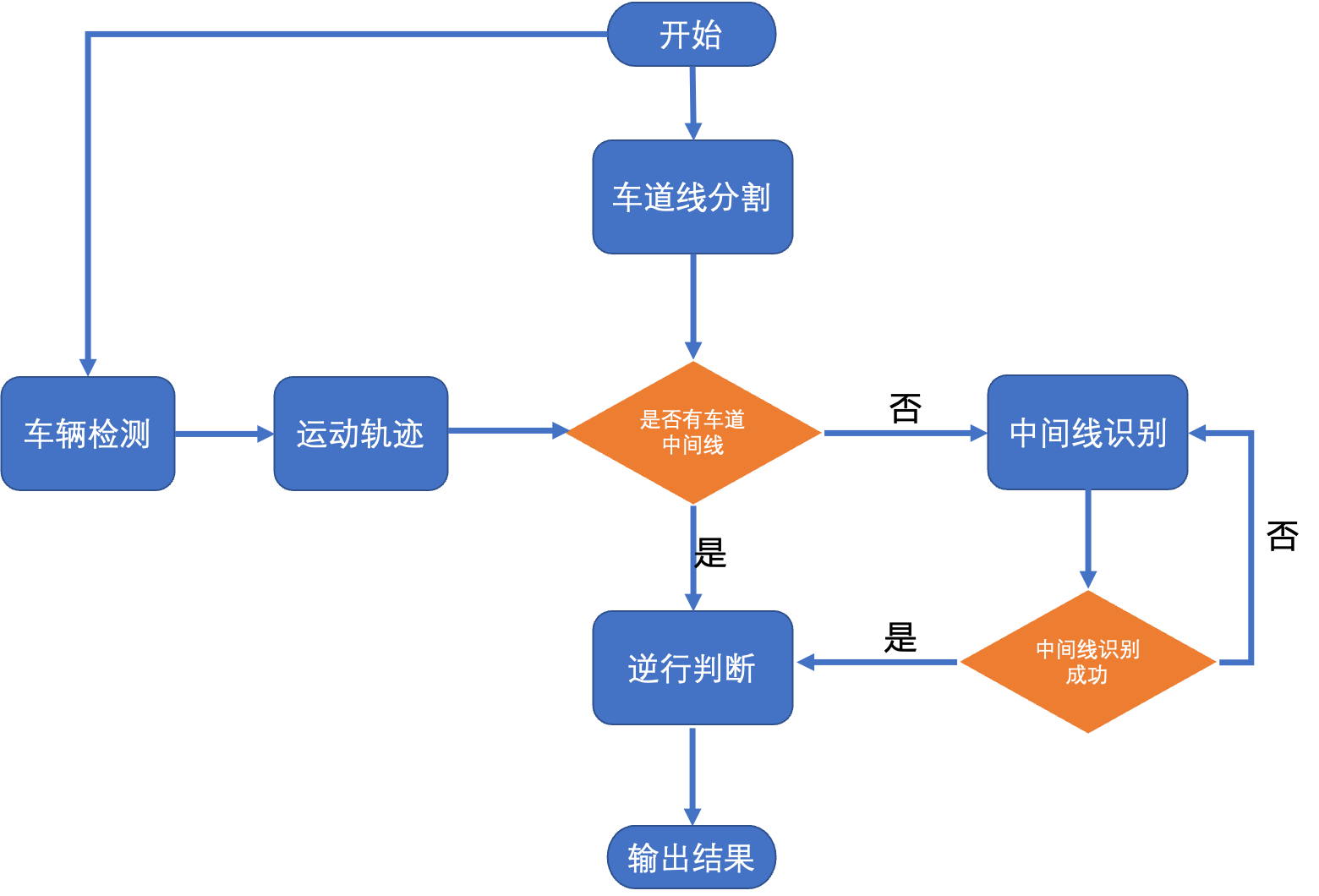

212 |  213 |

213 |

214 |

215 |

216 | ### 车辆检测

217 | - 采用PP-YOLOE L 作为目标检测模型

218 | - 详细文档参考[PP-YOLOE](../../../../configs/ppyoloe/)和[检测跟踪文档](ppvehicle_mot.md)

219 |

220 | ### 车辆跟踪

221 | - 采用SDE方案完成车辆跟踪

222 | - 检测模型使用PP-YOLOE L(高精度)和S(轻量级)

223 | - 跟踪模块采用OC-SORT方案

224 | - 详细文档参考[OC-SORT](../../../../configs/mot/ocsort)和[检测跟踪文档](ppvehicle_mot.md)

225 |

226 | ### 属性识别

227 | - 使用PaddleClas提供的特色模型PP-LCNet,实现对车辆颜色及车型属性的识别。

228 | - 详细文档参考[属性识别](ppvehicle_attribute.md)

229 |

230 | ### 车牌识别

231 | - 使用PaddleOCR特色模型ch_PP-OCRv3_det+ch_PP-OCRv3_rec模型,识别车牌号码

232 | - 详细文档参考[车牌识别](ppvehicle_plate.md)

233 |

234 | ### 违章停车识别

235 | - 车辆跟踪模型使用高精度模型PP-YOLOE L,根据车辆的跟踪轨迹以及指定的违停区域判断是否违章停车,如果存在则展示违章停车车牌号。

236 | - 详细文档参考[违章停车识别](ppvehicle_illegal_parking.md)

237 |

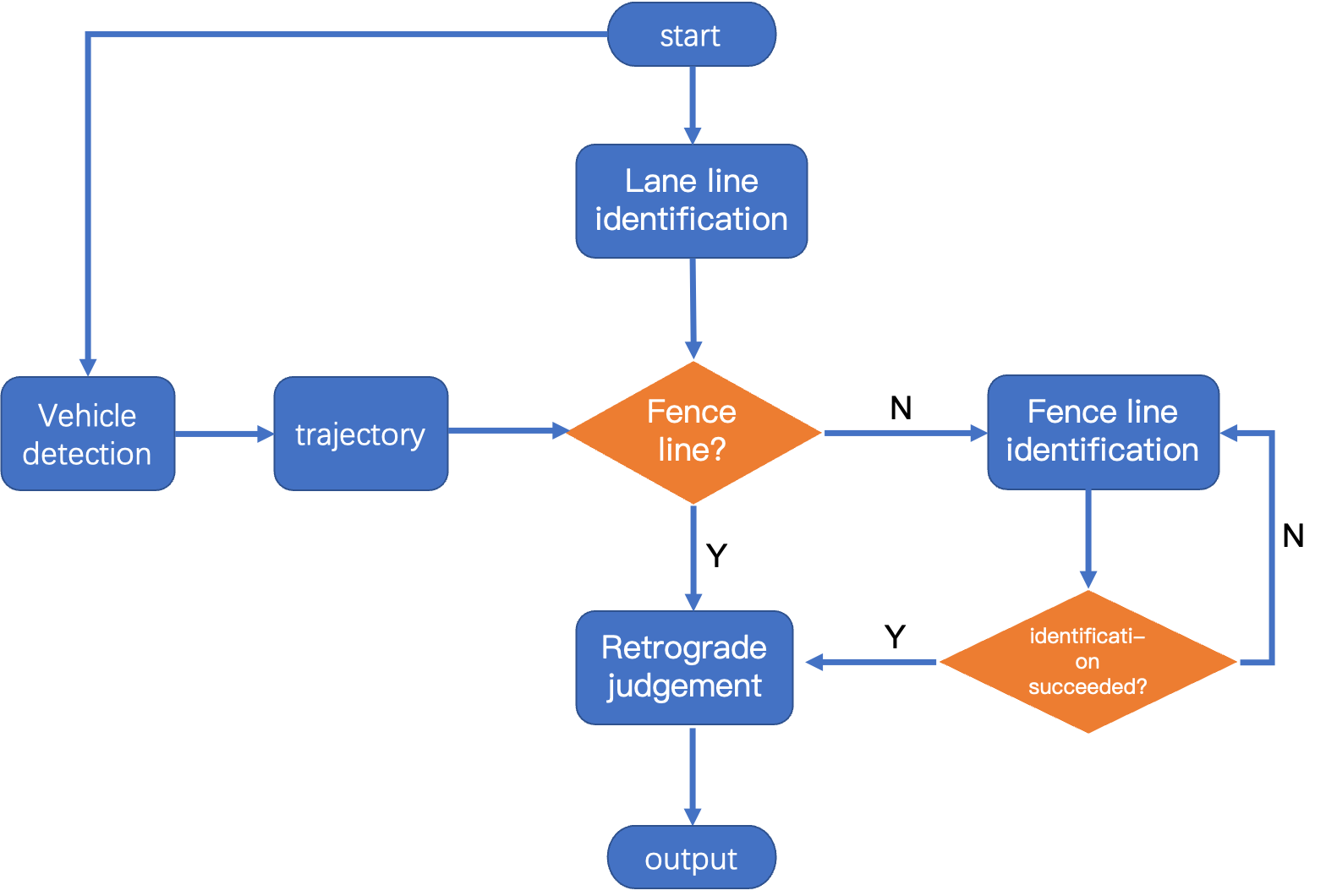

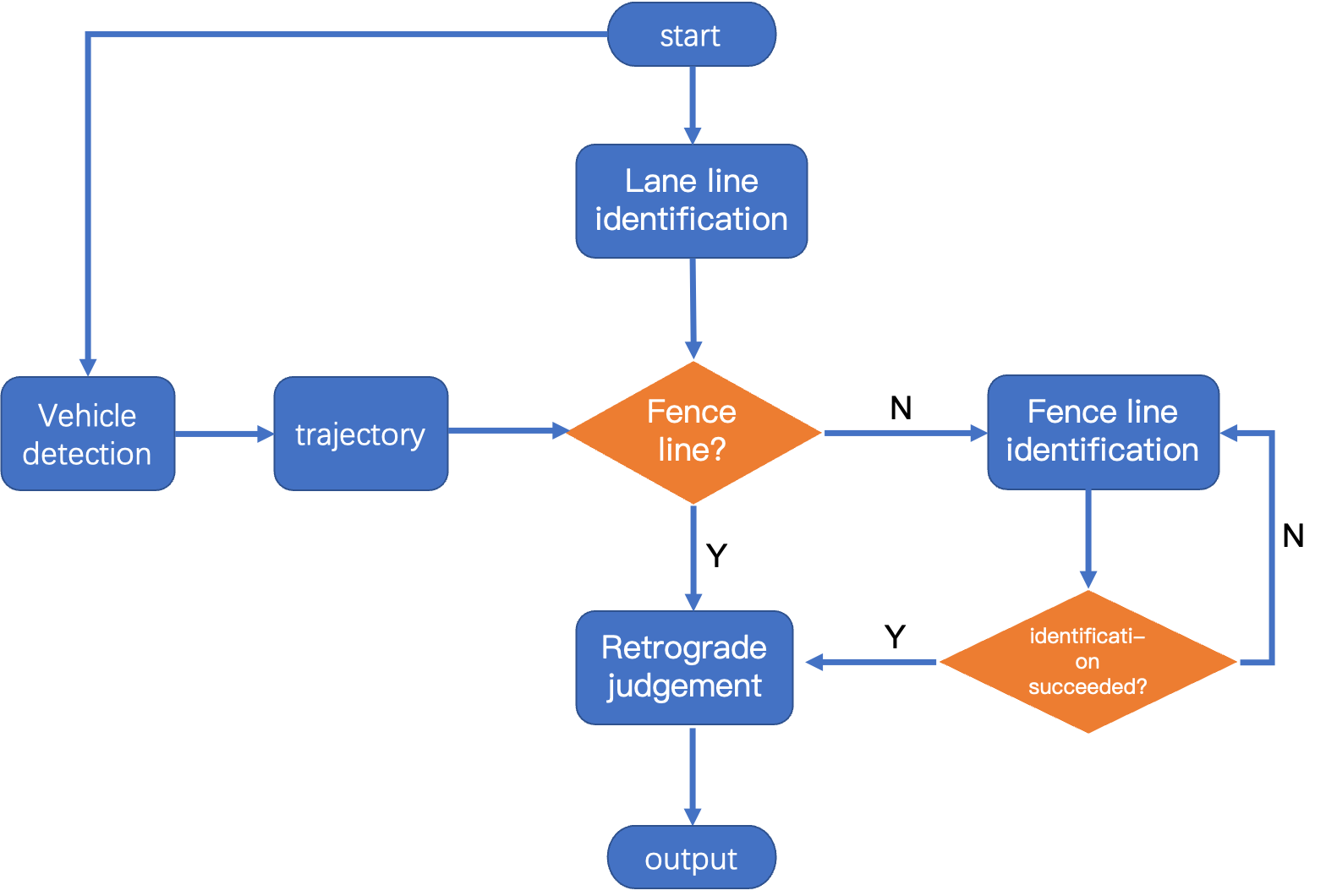

238 | ### 违法分析-逆行

239 | - 违法分析-逆行,通过使用高精度分割模型PP-Seg,对车道线进行分割拟合,然后与车辆轨迹组合判断车辆行驶方向是否与道路方向一致。

240 | - 详细文档参考[违法分析-逆行](ppvehicle_retrograde.md)

241 |

242 | ### 违法分析-压线

243 | - 违法分析-逆行,通过使用高精度分割模型PP-Seg,对车道线进行分割拟合,然后与车辆区域是否覆盖实线区域,进行压线判断。

244 | - 详细文档参考[违法分析-压线](ppvehicle_press.md)

245 |

--------------------------------------------------------------------------------

/docs/tutorials/pphuman_attribute.md:

--------------------------------------------------------------------------------

1 | [English](pphuman_attribute_en.md) | 简体中文

2 |

3 | # PP-Human属性识别模块

4 |

5 | 行人属性识别在智慧社区,工业巡检,交通监控等方向都具有广泛应用,PP-Human中集成了属性识别模块,属性包含性别、年龄、帽子、眼镜、上衣下衣款式等。我们提供了预训练模型,用户可以直接下载使用。

6 |

7 | | 任务 | 算法 | 精度 | 预测速度(ms) |下载链接 |

8 | |:---------------------|:---------:|:------:|:------:| :---------------------------------------------------------------------------------: |

9 | | 行人检测/跟踪 | PP-YOLOE | mAP: 56.3  213 |

213 | MOTA: 72.0 | 检测: 16.2ms

跟踪:22.3ms |[下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_pipeline.zip) | 10 | | 行人属性高精度模型 | PP-HGNet_small | mA: 95.4 | 单人 1.54ms | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/PPHGNet_small_person_attribute_954_infer.zip) | 11 | | 行人属性轻量级模型 | PP-LCNet_x1_0 | mA: 94.5 | 单人 0.54ms | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/PPLCNet_x1_0_person_attribute_945_infer.zip) | 12 | | 行人属性精度与速度均衡模型 | PP-HGNet_tiny | mA: 95.2 | 单人 1.14ms | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/PPHGNet_tiny_person_attribute_952_infer.zip) | 13 | 14 | 15 | 1. 检测/跟踪模型精度为[MOT17](https://motchallenge.net/),[CrowdHuman](http://www.crowdhuman.org/),[HIEVE](http://humaninevents.org/)和部分业务数据融合训练测试得到。 16 | 2. 行人属性分析精度为[PA100k](https://github.com/xh-liu/HydraPlus-Net#pa-100k-dataset),[RAPv2](http://www.rapdataset.com/rapv2.html),[PETA](http://mmlab.ie.cuhk.edu.hk/projects/PETA.html)和部分业务数据融合训练测试得到 17 | 3. 预测速度为V100 机器上使用TensorRT FP16时的速度, 该处测速速度为模型预测速度 18 | 4. 属性模型应用依赖跟踪模型结果,请在[跟踪模型页面](./pphuman_mot.md)下载跟踪模型,依自身需求选择高精或轻量级下载。 19 | 5. 模型下载后解压放置在PaddleDetection/output_inference/目录下。 20 | 21 | ## 使用方法 22 | 23 | 1. 从上表链接中下载模型并解压到```PaddleDetection/output_inference```路径下,并修改配置文件中模型路径,也可默认自动下载模型。设置```deploy/pipeline/config/infer_cfg_pphuman.yml```中`ATTR`的enable: True 24 | 25 | `infer_cfg_pphuman.yml`中配置项说明: 26 | ``` 27 | ATTR: #模块名称 28 | model_dir: output_inference/PPLCNet_x1_0_person_attribute_945_infer/ #模型路径 29 | batch_size: 8 #推理最大batchsize 30 | enable: False #功能是否开启 31 | ``` 32 | 33 | 2. 图片输入时,启动命令如下(更多命令参数说明,请参考[快速开始-参数说明](./PPHuman_QUICK_STARTED.md#41-参数说明))。 34 | ```python 35 | #单张图片 36 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 37 | --image_file=test_image.jpg \ 38 | --device=gpu \ 39 | 40 | #图片文件夹 41 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 42 | --image_dir=images/ \ 43 | --device=gpu \ 44 | 45 | ``` 46 | 3. 视频输入时,启动命令如下 47 | ```python 48 | #单个视频文件 49 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 50 | --video_file=test_video.mp4 \ 51 | --device=gpu \ 52 | 53 | #视频文件夹 54 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 55 | --video_dir=test_videos/ \ 56 | --device=gpu \ 57 | ``` 58 | 59 | 4. 若修改模型路径,有以下两种方式: 60 | 61 | - 方法一:```./deploy/pipeline/config/infer_cfg_pphuman.yml```下可以配置不同模型路径,属性识别模型修改ATTR字段下配置 62 | - 方法二:命令行中--config后面紧跟着增加`-o ATTR.model_dir`修改模型路径: 63 | ```python 64 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml 65 | -o ATTR.model_dir=output_inference/PPLCNet_x1_0_person_attribute_945_infer/\ 66 | --video_file=test_video.mp4 \ 67 | --device=gpu 68 | ``` 69 | 70 | 测试效果如下: 71 | 72 |

73 |  74 |

74 |

75 |

76 | 数据来源及版权归属:天覆科技,感谢提供并开源实际场景数据,仅限学术研究使用

77 |

78 | ## 方案说明

79 |

80 | 1. 目标检测/多目标跟踪获取图片/视频输入中的行人检测框,模型方案为PP-YOLOE,详细文档参考[PP-YOLOE](../../../configs/ppyoloe/README_cn.md)

81 | 2. 通过行人检测框的坐标在输入图像中截取每个行人

82 | 3. 使用属性识别分析每个行人对应属性,属性类型与PA100k数据集相同,具体属性列表如下:

83 | ```

84 | - 性别:男、女

85 | - 年龄:小于18、18-60、大于60

86 | - 朝向:朝前、朝后、侧面

87 | - 配饰:眼镜、帽子、无

88 | - 正面持物:是、否

89 | - 包:双肩包、单肩包、手提包

90 | - 上衣风格:带条纹、带logo、带格子、拼接风格

91 | - 下装风格:带条纹、带图案

92 | - 短袖上衣:是、否

93 | - 长袖上衣:是、否

94 | - 长外套:是、否

95 | - 长裤:是、否

96 | - 短裤:是、否

97 | - 短裙&裙子:是、否

98 | - 穿靴:是、否

99 | ```

100 |

101 | 4. 属性识别模型方案为[StrongBaseline](https://arxiv.org/pdf/2107.03576.pdf),模型结构更改为基于PP-HGNet、PP-LCNet的多分类网络结构,引入Weighted BCE loss提升模型效果。

102 |

103 | ## 参考文献

104 | ```

105 | @article{jia2020rethinking,

106 | title={Rethinking of pedestrian attribute recognition: Realistic datasets with efficient method},

107 | author={Jia, Jian and Huang, Houjing and Yang, Wenjie and Chen, Xiaotang and Huang, Kaiqi},

108 | journal={arXiv preprint arXiv:2005.11909},

109 | year={2020}

110 | }

111 | ```

112 |

--------------------------------------------------------------------------------

/docs/tutorials/pphuman_attribute_en.md:

--------------------------------------------------------------------------------

1 | English | [简体中文](pphuman_attribute.md)

2 |

3 | # Attribute Recognition Modules of PP-Human

4 |

5 | Pedestrian attribute recognition has been widely used in the intelligent community, industrial, and transportation monitoring. Many attribute recognition modules have been gathered in PP-Human, including gender, age, hats, eyes, clothing and up to 26 attributes in total. Also, the pre-trained models are offered here and users can download and use them directly.

6 |

7 | | Task | Algorithm | Precision | Inference Speed(ms) | Download Link |

8 | |:---------------------|:---------:|:------:|:------:| :---------------------------------------------------------------------------------: |

9 | | High-Precision Model | PP-HGNet_small | mA: 95.4 | per person 1.54ms | [Download](https://bj.bcebos.com/v1/paddledet/models/pipeline/PPHGNet_small_person_attribute_954_infer.tar) |

10 | | Fast Model | PP-LCNet_x1_0 | mA: 94.5 | per person 0.54ms | [Download](https://bj.bcebos.com/v1/paddledet/models/pipeline/PPLCNet_x1_0_person_attribute_945_infer.tar) |

11 | | Balanced Model | PP-HGNet_tiny | mA: 95.2 | per person 1.14ms | [Download](https://bj.bcebos.com/v1/paddledet/models/pipeline/PPHGNet_tiny_person_attribute_952_infer.tar) |

12 |

13 | 1. The precision of pedestiran attribute analysis is obtained by training and testing on the dataset consist of [PA100k](https://github.com/xh-liu/HydraPlus-Net#pa-100k-dataset),[RAPv2](http://www.rapdataset.com/rapv2.html),[PETA](http://mmlab.ie.cuhk.edu.hk/projects/PETA.html) and some business data.

14 | 2. The inference speed is V100, the speed of using TensorRT FP16.

15 | 3. This model of Attribute is based on the result of tracking, please download tracking model in the [Page of Mot](./pphuman_mot_en.md). The High precision and Faster model are both available.

16 | 4. You should place the model unziped in the directory of `PaddleDetection/output_inference/`.

17 |

18 | ## Instruction

19 |

20 | 1. Download the model from the link in the above table, and unzip it to```./output_inference```, and set the "enable: True" in ATTR of infer_cfg_pphuman.yml

21 |

22 | The meaning of configs of `infer_cfg_pphuman.yml`:

23 | ```

24 | ATTR: #module name

25 | model_dir: output_inference/PPLCNet_x1_0_person_attribute_945_infer/ #model path

26 | batch_size: 8 #maxmum batchsize when inference

27 | enable: False #whether to enable this model

28 | ```

29 |

30 | 2. When inputting the image, run the command as follows (please refer to [QUICK_STARTED-Parameters](./PPHuman_QUICK_STARTED.md#41-参数说明) for more details):

31 | ```python

32 | #single image

33 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \

34 | --image_file=test_image.jpg \

35 | --device=gpu \

36 |

37 | #image directory

38 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \

39 | --image_dir=images/ \

40 | --device=gpu \

41 |

42 | ```

43 | 3. When inputting the video, run the command as follows:

44 | ```python

45 | #a single video file

46 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \

47 | --video_file=test_video.mp4 \

48 | --device=gpu \

49 |

50 | #directory of videos

51 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \

52 | --video_dir=test_videos/ \

53 | --device=gpu \

54 | ```

55 | 4. If you want to change the model path, there are two methods:

56 |

57 | - The first: In ```./deploy/pipeline/config/infer_cfg_pphuman.yml``` you can configurate different model paths. In attribute recognition models, you can modify the configuration in the field of ATTR.

58 | - The second: Add `-o ATTR.model_dir` in the command line following the --config to change the model path:

59 | ```python

60 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \

61 | -o ATTR.model_dir=output_inference/PPLCNet_x1_0_person_attribute_945_infer/\

62 | --video_file=test_video.mp4 \

63 | --device=gpu

64 | ```

65 |

66 | The test result is:

67 |

68 |  74 |

74 |

69 |  70 |

70 |

71 |

72 | Data Source and Copyright:Skyinfor Technology. Thanks for the provision of actual scenario data, which are only used for academic research here.

73 |

74 | ## Introduction to the Solution

75 |

76 | 1. The PP-YOLOE model is used to handle detection boxs of input images/videos from object detection/ multi-object tracking. For details, please refer to the document [PP-YOLOE](../../../configs/ppyoloe).

77 | 2. Capture every pedestrian in the input images with the help of coordiantes of detection boxes.

78 | 3. Analyze the listed labels of pedestirans through attribute recognition. They are the same as those in the PA100k dataset. The label list is as follows:

79 | ```

80 | - Gender

81 | - Age: Less than 18; 18-60; Over 60

82 | - Orientation: Front; Back; Side

83 | - Accessories: Glasses; Hat; None

84 | - HoldObjectsInFront: Yes; No

85 | - Bag: BackPack; ShoulderBag; HandBag

86 | - TopStyle: UpperStride; UpperLogo; UpperPlaid; UpperSplice

87 | - BottomStyle: LowerStripe; LowerPattern

88 | - ShortSleeve: Yes; No

89 | - LongSleeve: Yes; No

90 | - LongCoat: Yes; No

91 | - Trousers: Yes; No

92 | - Shorts: Yes; No

93 | - Skirt&Dress: Yes; No

94 | - Boots: Yes; No

95 | ```

96 |

97 | 4. The model adopted in the attribute recognition is [StrongBaseline](https://arxiv.org/pdf/2107.03576.pdf), where the structure is the multi-class network structure based on PP-HGNet、PP-LCNet, and Weighted BCE loss is introduced for effect optimization.

98 |

99 | ## Reference

100 | ```

101 | @article{jia2020rethinking,

102 | title={Rethinking of pedestrian attribute recognition: Realistic datasets with efficient method},

103 | author={Jia, Jian and Huang, Houjing and Yang, Wenjie and Chen, Xiaotang and Huang, Kaiqi},

104 | journal={arXiv preprint arXiv:2005.11909},

105 | year={2020}

106 | }

107 | ```

108 |

--------------------------------------------------------------------------------

/docs/tutorials/pphuman_mot.md:

--------------------------------------------------------------------------------

1 | [English](pphuman_mot_en.md) | 简体中文

2 |

3 | # PP-Human检测跟踪模块

4 |

5 | 行人检测与跟踪在智慧社区,工业巡检,交通监控等方向都具有广泛应用,PP-Human中集成了检测跟踪模块,是关键点检测、属性行为识别等任务的基础。我们提供了预训练模型,用户可以直接下载使用。

6 |

7 | | 任务 | 算法 | 精度 | 预测速度(ms) |下载链接 |

8 | |:---------------------|:---------:|:------:|:------:| :---------------------------------------------------------------------------------: |

9 | | 行人检测/跟踪 | PP-YOLOE-l | mAP: 57.8  70 |

70 | MOTA: 82.2 | 检测: 25.1ms

跟踪:31.8ms | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_pipeline.zip) | 10 | | 行人检测/跟踪 | PP-YOLOE-s | mAP: 53.2

MOTA: 73.9 | 检测: 16.2ms

跟踪:21.0ms | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_s_36e_pipeline.zip) | 11 | 12 | 1. 检测/跟踪模型精度为[COCO-Person](http://cocodataset.org/), [CrowdHuman](http://www.crowdhuman.org/), [HIEVE](http://humaninevents.org/) 和部分业务数据融合训练测试得到,验证集为业务数据 13 | 2. 预测速度为T4 机器上使用TensorRT FP16时的速度, 速度包含数据预处理、模型预测、后处理全流程 14 | 15 | ## 使用方法 16 | 17 | 1. 从上表链接中下载模型并解压到```./output_inference```路径下,并修改配置文件中模型路径。默认为自动下载模型,无需做改动。 18 | 2. 图片输入时,是纯检测任务,启动命令如下 19 | ```python 20 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 21 | --image_file=test_image.jpg \ 22 | --device=gpu 23 | ``` 24 | 3. 视频输入时,是跟踪任务,注意首先设置infer_cfg_pphuman.yml中的MOT配置的`enable=True`,如果希望跳帧加速检测跟踪流程,可以设置`skip_frame_num: 2`,建议跳帧帧数最大不超过3: 25 | ``` 26 | MOT: 27 | model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_pipeline.zip 28 | tracker_config: deploy/pipeline/config/tracker_config.yml 29 | batch_size: 1 30 | skip_frame_num: 2 31 | enable: True 32 | ``` 33 | 然后启动命令如下 34 | ```python 35 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 36 | --video_file=test_video.mp4 \ 37 | --device=gpu 38 | ``` 39 | 4. 若修改模型路径,有以下两种方式: 40 | 41 | - ```./deploy/pipeline/config/infer_cfg_pphuman.yml```下可以配置不同模型路径,检测和跟踪模型分别对应`DET`和`MOT`字段,修改对应字段下的路径为实际期望的路径即可。 42 | - 命令行中--config后面紧跟着增加`-o MOT.model_dir`修改模型路径: 43 | ```python 44 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 45 | -o MOT.model_dir=ppyoloe/\ 46 | --video_file=test_video.mp4 \ 47 | --device=gpu \ 48 | --region_type=horizontal \ 49 | --do_entrance_counting \ 50 | --draw_center_traj 51 | 52 | ``` 53 | **注意:** 54 | - `--do_entrance_counting`表示是否统计出入口流量,不设置即默认为False。 55 | - `--draw_center_traj`表示是否绘制跟踪轨迹,不设置即默认为False。注意绘制跟踪轨迹的测试视频最好是静止摄像头拍摄的。 56 | - `--region_type`表示流量计数的区域,当设置`--do_entrance_counting`时可选择`horizontal`或者`vertical`,默认是`horizontal`,表示以视频图片的中心水平线为出入口,同一物体框的中心点在相邻两秒内分别在区域中心水平线的两侧,即完成计数加一。 57 | 58 | 测试效果如下: 59 | 60 |

61 |  62 |

62 |

63 |

64 | 数据来源及版权归属:天覆科技,感谢提供并开源实际场景数据,仅限学术研究使用

65 |

66 | 5. 区域闯入判断和计数

67 |

68 | 注意首先设置infer_cfg_pphuman.yml中的MOT配置的enable=True,然后启动命令如下

69 | ```python

70 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \

71 | --video_file=test_video.mp4 \

72 | --device=gpu \

73 | --draw_center_traj \

74 | --do_break_in_counting \

75 | --region_type=custom \

76 | --region_polygon 200 200 400 200 300 400 100 400

77 | ```

78 | **注意:**

79 | - 区域闯入的测试视频必须是静止摄像头拍摄的,镜头不能抖动或移动。

80 | - `--do_break_in_counting`表示是否进行区域出入后计数,不设置即默认为False。

81 | - `--region_type`表示流量计数的区域,当设置`--do_break_in_counting`时仅可选择`custom`,默认是`custom`,表示以用户自定义区域为出入口,同一物体框的下边界中点坐标在相邻两秒内从区域外到区域内,即完成计数加一。

82 | - `--region_polygon`表示用户自定义区域的多边形的点坐标序列,每两个为一对点坐标(x,y),**按顺时针顺序**连成一个**封闭区域**,至少需要3对点也即6个整数,默认值是`[]`,需要用户自行设置点坐标,如是四边形区域,坐标顺序是`左上、右上、右下、左下`。用户可以运行[此段代码](../../tools/get_video_info.py)获取所测视频的分辨率帧数,以及可以自定义画出自己想要的多边形区域的可视化并自己调整。

83 | 自定义多边形区域的可视化代码运行如下:

84 | ```python

85 | python get_video_info.py --video_file=demo.mp4 --region_polygon 200 200 400 200 300 400 100 400

86 | ```

87 | 快速画出想要的区域的小技巧:先任意取点得到图片,用画图工具打开,鼠标放到想要的区域点上会显示出坐标,记录下来并取整,作为这段可视化代码的region_polygon参数,并再次运行可视化,微调点坐标参数直至满意。

88 |

89 |

90 | 测试效果如下:

91 |

92 |  62 |

62 |

93 |  94 |

94 |

95 |

96 | ## 方案说明

97 |

98 | 1. 使用目标检测/多目标跟踪技术来获取图片/视频输入中的行人检测框,检测模型方案为PP-YOLOE,详细文档参考[PP-YOLOE](../../../../configs/ppyoloe)。

99 | 2. 多目标跟踪模型方案采用[ByteTrack](https://arxiv.org/pdf/2110.06864.pdf)和[OC-SORT](https://arxiv.org/pdf/2203.14360.pdf),采用PP-YOLOE替换原文的YOLOX作为检测器,采用BYTETracker和OCSORTTracker作为跟踪器,详细文档参考[ByteTrack](../../../../configs/mot/bytetrack)和[OC-SORT](../../../../configs/mot/ocsort)。

100 |

101 | ## 参考文献

102 | ```

103 | @article{zhang2021bytetrack,

104 | title={ByteTrack: Multi-Object Tracking by Associating Every Detection Box},

105 | author={Zhang, Yifu and Sun, Peize and Jiang, Yi and Yu, Dongdong and Yuan, Zehuan and Luo, Ping and Liu, Wenyu and Wang, Xinggang},

106 | journal={arXiv preprint arXiv:2110.06864},

107 | year={2021}

108 | }

109 |

110 | @article{cao2022observation,

111 | title={Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking},

112 | author={Cao, Jinkun and Weng, Xinshuo and Khirodkar, Rawal and Pang, Jiangmiao and Kitani, Kris},

113 | journal={arXiv preprint arXiv:2203.14360},

114 | year={2022}

115 | }

116 | ```

117 |

--------------------------------------------------------------------------------

/docs/tutorials/pphuman_mot_en.md:

--------------------------------------------------------------------------------

1 | English | [简体中文](pphuman_mot.md)

2 |

3 | # Detection and Tracking Module of PP-Human

4 |

5 | Pedestrian detection and tracking is widely used in the intelligent community, industrial inspection, transportation monitoring and so on. PP-Human has the detection and tracking module, which is fundamental to keypoint detection, attribute action recognition, etc. Users enjoy easy access to pretrained models here.

6 |

7 | | Task | Algorithm | Precision | Inference Speed(ms) | Download Link |

8 | |:---------------------|:---------:|:------:|:------:| :---------------------------------------------------------------------------------: |

9 | | Pedestrian Detection/ Tracking | PP-YOLOE-l | mAP: 57.8  94 |

94 | MOTA: 82.2 | Detection: 25.1ms

Tracking:31.8ms | [Download](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_pipeline.zip) | 10 | | Pedestrian Detection/ Tracking | PP-YOLOE-s | mAP: 53.2

MOTA: 73.9 | Detection: 16.2ms

Tracking:21.0ms | [Download](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_s_36e_pipeline.zip) | 11 | 12 | 1. The precision of the pedestrian detection/ tracking model is obtained by trainning and testing on [COCO-Person](http://cocodataset.org/), [CrowdHuman](http://www.crowdhuman.org/), [HIEVE](http://humaninevents.org/) and some business data. 13 | 2. The inference speed is the speed of using TensorRT FP16 on T4, the total number of data pre-training, model inference, and post-processing. 14 | 15 | ## How to Use 16 | 17 | 1. Download models from the links of the above table and unizp them to ```./output_inference```. 18 | 2. When use the image as input, it's a detection task, the start command is as follows: 19 | ```python 20 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 21 | --image_file=test_image.jpg \ 22 | --device=gpu 23 | ``` 24 | 3. When use the video as input, it's a tracking task, first you should set the "enable: True" in MOT of infer_cfg_pphuman.yml. If you want skip some frames speed up the detection and tracking process, you can set `skip_frame_num: 2`, it is recommended that the maximum number of skip_frame_num should not exceed 3: 25 | ``` 26 | MOT: 27 | model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_pipeline.zip 28 | tracker_config: deploy/pipeline/config/tracker_config.yml 29 | batch_size: 1 30 | skip_frame_num: 2 31 | enable: True 32 | ``` 33 | and then the start command is as follows: 34 | ```python 35 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 36 | --video_file=test_video.mp4 \ 37 | --device=gpu 38 | ``` 39 | 4. There are two ways to modify the model path: 40 | 41 | - In `./deploy/pipeline/config/infer_cfg_pphuman.yml`, you can configurate different model paths,which is proper only if you match keypoint models and action recognition models with the fields of `DET` and `MOT` respectively, and modify the corresponding path of each field into the expected path. 42 | - Add `-o MOT.model_dir` in the command line following the --config to change the model path: 43 | 44 | ```python 45 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \ 46 | -o MOT.model_dir=ppyoloe/\ 47 | --video_file=test_video.mp4 \ 48 | --device=gpu \ 49 | --region_type=horizontal \ 50 | --do_entrance_counting \ 51 | --draw_center_traj 52 | 53 | ``` 54 | **Note:** 55 | 56 | - `--do_entrance_counting` is whether to calculate flow at the gateway, and the default setting is False. 57 | - `--draw_center_traj` means whether to draw the track, and the default setting is False. It's worth noting that the test video of track drawing should be filmed by the still camera. 58 | - `--region_type` means the region type of flow counting. When set `--do_entrance_counting`, you can select from `horizontal` or `vertical`, the default setting is `horizontal`, means that the central horizontal line of the video picture is used as the entrance and exit, and when the central point of the same object box is on both sides of the central horizontal line of the area in two adjacent seconds, the counting plus one is completed. 59 | 60 | The test result is: 61 | 62 |

63 |  64 |

64 |

65 |

66 | Data source and copyright owner:Skyinfor Technology. Thanks for the provision of actual scenario data, which are only used for academic research here.

67 |

68 | 5. Break in and counting

69 |

70 | Please set the "enable: True" in MOT of infer_cfg_pphuman.yml at first, and then the start command is as follows:

71 | ```python

72 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml \

73 | --video_file=test_video.mp4 \

74 | --device=gpu \

75 | --draw_center_traj \

76 | --do_break_in_counting \

77 | --region_type=custom \

78 | --region_polygon 200 200 400 200 300 400 100 400

79 | ```

80 |

81 | **Note:**

82 | - `--do_break_in_counting` is whether to calculate flow when break in the user-defined region, and the default setting is False.

83 | - `--region_type` means the region type of flow counting. When set `--do_break_in_counting`, only `custom` can be selected, and the default is `custom`, which means that the user-defined region is used as the entrance and exit, and when the midpoint coords of the bottom boundary of the same object moves from outside to inside the region within two adjacent seconds, the counting plus one is completed.

84 | - `--region_polygon` means the point coords sequence of the polygon in the user-defined region. Every two integers are a pair of point coords (x,y), which are connected into a closed area in clockwise order. At least 3 pairs of points, that is, 6 integers, are required. The default value is `[]`, and the user needs to set the point coords by himself. Users can run this [code](../../tools/get_video_info.py) to obtain the resolution and frame number of the measured video, and can customize the visualization of drawing the polygon area they want and adjust it by themselves.

85 | The visualization code of the custom polygon region runs as follows:

86 | ```python

87 | python get_video_info.py --video_file=demo.mp4 --region_polygon 200 200 400 200 300 400 100 400

88 | ```

89 |

90 | The test result is:

91 |

92 |  64 |

64 |

93 |  94 |

94 |

95 |

96 |

97 | ## Introduction to the Solution

98 |

99 | 1. Get the pedestrian detection box of the image/ video input through object detection and multi-object tracking. The detection model is PP-YOLOE, please refer to [PP-YOLOE](../../../../configs/ppyoloe) for details.

100 |

101 | 2. The multi-object tracking solution is based on [ByteTrack](https://arxiv.org/pdf/2110.06864.pdf) and [OC-SORT](https://arxiv.org/pdf/2203.14360.pdf), and replace the original YOLOX with PP-YOLOE as the detector,and BYTETracker or OC-SORT Tracker as the tracker, please refer to [ByteTrack](../../../../configs/mot/bytetrack) and [OC-SORT](../../../../configs/mot/ocsort).

102 |

103 | ## Reference

104 | ```

105 | @article{zhang2021bytetrack,

106 | title={ByteTrack: Multi-Object Tracking by Associating Every Detection Box},

107 | author={Zhang, Yifu and Sun, Peize and Jiang, Yi and Yu, Dongdong and Yuan, Zehuan and Luo, Ping and Liu, Wenyu and Wang, Xinggang},

108 | journal={arXiv preprint arXiv:2110.06864},

109 | year={2021}

110 | }

111 | ```

112 |

--------------------------------------------------------------------------------

/docs/tutorials/pphuman_mtmct.md:

--------------------------------------------------------------------------------

1 | [English](pphuman_mtmct_en.md) | 简体中文

2 |

3 | # PP-Human跨镜头跟踪模块

4 |

5 | 跨镜头跟踪任务,是在单镜头跟踪的基础上,实现不同摄像头中人员的身份匹配关联。在安放、智慧零售等方向有较多的应用。

6 | PP-Human跨镜头跟踪模块主要目的在于提供一套简洁、高效的跨镜跟踪Pipeline,REID模型完全基于开源数据集训练。

7 |

8 | ## 使用方法

9 |

10 | 1. 下载模型 [行人跟踪](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_pipeline.zip)和[REID模型](https://bj.bcebos.com/v1/paddledet/models/pipeline/reid_model.zip) 并解压到```./output_inference```路径下,修改配置文件中模型路径。也可简单起见直接用默认配置,自动下载模型。 MOT模型请参考[mot说明](./pphuman_mot.md)文件下载。

11 |

12 | 2. 跨镜头跟踪模式下,要求输入的多个视频放在同一目录下,同时开启infer_cfg_pphuman.yml 中的REID选择中的enable=True, 命令如下:

13 | ```python

14 | python3 deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml --video_dir=[your_video_file_directory] --device=gpu

15 | ```

16 |

17 | 3. 相关配置在`./deploy/pipeline/config/infer_cfg_pphuman.yml`文件中修改:

18 |

19 | ```python

20 | python3 deploy/pipeline/pipeline.py

21 | --config deploy/pipeline/config/infer_cfg_pphuman.yml -o REID.model_dir=reid_best/

22 | --video_dir=[your_video_file_directory]

23 | --device=gpu

24 | ```

25 |

26 | ## 方案说明

27 |

28 | 跨镜头跟踪模块,主要由跨镜头跟踪Pipeline及REID模型两部分组成。

29 | 1. 跨镜头跟踪Pipeline

30 |

31 | ```

32 |

33 | 单镜头跟踪[id+bbox]

34 | │

35 | 根据bbox截取原图中目标——│

36 | │ │

37 | REID模型 质量评估(遮挡、完整度、亮度等)

38 | │ │

39 | [feature] [quality]

40 | │ │

41 | datacollector—————│

42 | │

43 | 特征排序、筛选

44 | │

45 | 多视频各id相似度计算

46 | │

47 | id聚类、重新分配id

48 | ```

49 |

50 | 2. 模型方案为[reid-strong-baseline](https://github.com/michuanhaohao/reid-strong-baseline), Backbone为ResNet50, 主要特色为模型结构简单。

51 | 本跨镜跟踪中所用REID模型在上述基础上,整合多个开源数据集并压缩模型特征到128维以提升泛化性能。大幅提升了在实际应用中的泛化效果。

52 |

53 | ### 其他建议

54 | - 提供的REID模型基于开源数据集训练得到,建议加入自有数据,训练更加强有力的REID模型,将非常明显提升跨镜跟踪效果。

55 | - 质量评估部分基于简单逻辑+OpenCV实现,效果有限,如果有条件建议针对性训练质量判断模型。

56 |

57 |

58 | ### 示例效果

59 |

60 | - camera 1:

61 |  94 |

94 |

62 |  63 |

63 |

64 |

65 | - camera 2:

66 |  63 |

63 |

67 |  68 |

68 |

69 |

70 |

71 | ## 参考文献

72 | ```

73 | @InProceedings{Luo_2019_CVPR_Workshops,

74 | author = {Luo, Hao and Gu, Youzhi and Liao, Xingyu and Lai, Shenqi and Jiang, Wei},

75 | title = {Bag of Tricks and a Strong Baseline for Deep Person Re-Identification},

76 | booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},

77 | month = {June},

78 | year = {2019}

79 | }

80 |

81 | @ARTICLE{Luo_2019_Strong_TMM,

82 | author={H. {Luo} and W. {Jiang} and Y. {Gu} and F. {Liu} and X. {Liao} and S. {Lai} and J. {Gu}},

83 | journal={IEEE Transactions on Multimedia},

84 | title={A Strong Baseline and Batch Normalization Neck for Deep Person Re-identification},

85 | year={2019},

86 | pages={1-1},

87 | doi={10.1109/TMM.2019.2958756},

88 | ISSN={1941-0077},

89 | }

90 | ```

91 |

--------------------------------------------------------------------------------

/docs/tutorials/pphuman_mtmct_en.md:

--------------------------------------------------------------------------------

1 | English | [简体中文](pphuman_mtmct.md)

2 |

3 | # Multi-Target Multi-Camera Tracking Module of PP-Human

4 |

5 | Multi-target multi-camera tracking, or MTMCT, matches the identity of a person in different cameras based on the single-camera tracking. MTMCT is usually applied to the security system and the smart retailing.

6 | The MTMCT module of PP-Human aims to provide a multi-target multi-camera pipleline which is simple, and efficient.

7 |

8 | ## How to Use

9 |

10 | 1. Download [REID model](https://bj.bcebos.com/v1/paddledet/models/pipeline/reid_model.zip) and unzip it to ```./output_inference```. For the MOT model, please refer to [mot description](./pphuman_mot.md).

11 |

12 | 2. In the MTMCT mode, input videos are required to be put in the same directory. set the REID "enable: True" in the infer_cfg_pphuman.yml. The command line is:

13 | ```python

14 | python3 deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_pphuman.yml --video_dir=[your_video_file_directory] --device=gpu

15 | ```

16 |

17 | 3. Configuration can be modified in `./deploy/pipeline/config/infer_cfg_pphuman.yml`.

18 |

19 | ```python

20 | python3 deploy/pipeline/pipeline.py

21 | --config deploy/pipeline/config/infer_cfg_pphuman.yml -o REID.model_dir=reid_best/

22 | --video_dir=[your_video_file_directory]

23 | --device=gpu

24 | ```

25 |

26 | ## Intorduction to the Solution

27 |

28 | MTMCT module consists of the multi-target multi-camera tracking pipeline and the REID model.

29 |

30 | 1. Multi-Target Multi-Camera Tracking Pipeline

31 |

32 | ```

33 |

34 | single-camera tracking[id+bbox]

35 | │

36 | capture the target in the original image according to bbox——│

37 | │ │

38 | REID model quality assessment (covered or not, complete or not, brightness, etc.)

39 | │ │

40 | [feature] [quality]

41 | │ │

42 | datacollector—————│

43 | │

44 | sort out and filter features

45 | │

46 | calculate the similarity of IDs in the videos

47 | │

48 | make the IDs cluster together and rearrange them

49 | ```

50 |

51 | 2. The model solution is [reid-strong-baseline](https://github.com/michuanhaohao/reid-strong-baseline), with ResNet50 as the backbone.

52 |

53 | Under the above circumstances, the REID model used in MTMCT integrates open-source datasets and compresses model features to 128-dimensional features to optimize the generalization. In this way, the actual generalization result becomes much better.

54 |

55 | ### Other Suggestions

56 |

57 | - The provided REID model is obtained from open-source dataset training. It is recommended to add your own data to get a more powerful REID model, notably improving the MTMCT effect.

58 | - The quality assessment is based on simple logic +OpenCV, whose effect is limited. If possible, it is advisable to conduct specific training on the quality assessment model.

59 |

60 |

61 | ### Example

62 |

63 | - camera 1:

64 |  68 |

68 |

65 |  66 |

66 |

67 |

68 | - camera 2:

69 |  66 |

66 |

70 |  71 |

71 |

72 |

73 |

74 | ## Reference

75 | ```

76 | @InProceedings{Luo_2019_CVPR_Workshops,

77 | author = {Luo, Hao and Gu, Youzhi and Liao, Xingyu and Lai, Shenqi and Jiang, Wei},

78 | title = {Bag of Tricks and a Strong Baseline for Deep Person Re-Identification},

79 | booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},

80 | month = {June},

81 | year = {2019}

82 | }

83 |

84 | @ARTICLE{Luo_2019_Strong_TMM,

85 | author={H. {Luo} and W. {Jiang} and Y. {Gu} and F. {Liu} and X. {Liao} and S. {Lai} and J. {Gu}},

86 | journal={IEEE Transactions on Multimedia},

87 | title={A Strong Baseline and Batch Normalization Neck for Deep Person Re-identification},

88 | year={2019},

89 | pages={1-1},

90 | doi={10.1109/TMM.2019.2958756},

91 | ISSN={1941-0077},

92 | }

93 | ```

94 |

--------------------------------------------------------------------------------

/docs/tutorials/ppvehicle_attribute.md:

--------------------------------------------------------------------------------

1 | [English](ppvehicle_attribute_en.md) | 简体中文

2 |

3 | # PP-Vehicle属性识别模块

4 |

5 | 车辆属性识别在智慧城市,智慧交通等方向具有广泛应用。在PP-Vehicle中,集成了车辆属性识别模块,可识别车辆颜色及车型属性的识别。

6 |

7 | | 任务 | 算法 | 精度 | 预测速度 | 下载链接|

8 | |-----------|------|-----------|----------|---------------|

9 | | 车辆检测/跟踪 | PP-YOLOE | mAP 63.9 | 38.67ms | [预测部署模型](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip) |

10 | | 车辆属性识别 | PPLCNet | 90.81 | 7.31 ms | [预测部署模型](https://bj.bcebos.com/v1/paddledet/models/pipeline/vehicle_attribute_model.zip) |

11 |

12 |

13 | 注意:

14 | 1. 属性模型预测速度是基于NVIDIA T4, 开启TensorRT FP16得到。模型预测速度包含数据预处理、模型预测、后处理部分。

15 | 2. 关于PP-LCNet的介绍可以参考[PP-LCNet](https://github.com/PaddlePaddle/PaddleClas/blob/release/2.4/docs/zh_CN/models/PP-LCNet.md)介绍,相关论文可以查阅[PP-LCNet paper](https://arxiv.org/abs/2109.15099)。

16 | 3. 属性模型的训练和精度测试均基于[VeRi数据集](https://www.v7labs.com/open-datasets/veri-dataset)。

17 |

18 |

19 | - 当前提供的预训练模型支持识别10种车辆颜色及9种车型,同VeRi数据集,具体如下:

20 |

21 | ```yaml

22 | # 车辆颜色

23 | - "yellow"

24 | - "orange"

25 | - "green"

26 | - "gray"

27 | - "red"

28 | - "blue"

29 | - "white"

30 | - "golden"

31 | - "brown"

32 | - "black"

33 |

34 | # 车型

35 | - "sedan"

36 | - "suv"

37 | - "van"

38 | - "hatchback"

39 | - "mpv"

40 | - "pickup"

41 | - "bus"

42 | - "truck"

43 | - "estate"

44 | ```

45 |

46 | ## 使用方法

47 |

48 | ### 配置项说明

49 |

50 | [配置文件](../../config/infer_cfg_ppvehicle.yml)中与属性相关的参数如下:

51 | ```

52 | VEHICLE_ATTR:

53 | model_dir: output_inference/vehicle_attribute_infer/ # 车辆属性模型调用路径

54 | batch_size: 8 # 模型预测时的batch_size大小

55 | color_threshold: 0.5 # 颜色属性阈值,需要置信度达到此阈值才会确定具体颜色,否则为'Unknown‘

56 | type_threshold: 0.5 # 车型属性阈值,需要置信度达到此阈值才会确定具体属性,否则为'Unknown‘

57 | enable: False # 是否开启该功能

58 | ```

59 |

60 | ### 使用命令

61 |

62 | 1. 从模型库下载`车辆检测/跟踪`, `车辆属性识别`两个预测部署模型并解压到`./output_inference`路径下;默认会自动下载模型,如果手动下载,需要修改模型文件夹为模型存放路径。

63 | 2. 修改配置文件中`VEHICLE_ATTR`项的`enable: True`,以启用该功能。

64 | 3. 图片输入时,启动命令如下(更多命令参数说明,请参考[快速开始-参数说明](./PPVehicle_QUICK_STARTED.md)):

65 |

66 | ```bash

67 | # 预测单张图片文件

68 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

69 | --image_file=test_image.jpg \

70 | --device=gpu

71 |

72 | # 预测包含一张或多张图片的文件夹

73 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

74 | --image_dir=images/ \

75 | --device=gpu

76 | ```

77 |

78 | 4. 视频输入时,启动命令如下:

79 |

80 | ```bash

81 | #预测单个视频文件

82 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

83 | --video_file=test_video.mp4 \

84 | --device=gpu

85 |

86 | #预测包含一个或多个视频的文件夹

87 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

88 | --video_dir=test_videos/ \

89 | --device=gpu

90 | ```

91 |

92 | 5. 若修改模型路径,有以下两种方式:

93 |

94 | - 方法一:`./deploy/pipeline/config/infer_cfg_ppvehicle.yml`下可以配置不同模型路径,属性识别模型修改`VEHICLE_ATTR`字段下配置

95 | - 方法二:直接在命令行中增加`-o`,以覆盖配置文件中的默认模型路径:

96 |

97 | ```bash

98 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

99 | --video_file=test_video.mp4 \

100 | --device=gpu \

101 | -o VEHICLE_ATTR.model_dir=output_inference/vehicle_attribute_infer

102 | ```

103 |

104 | 测试效果如下:

105 |

106 |  71 |

71 |

107 |  108 |

108 |

109 |

110 | ## 方案说明

111 | 车辆属性识别模型使用了[PaddleClas](https://github.com/PaddlePaddle/PaddleClas) 的超轻量图像分类方案(PULC,Practical Ultra Lightweight image Classification)。关于该模型的数据准备、训练、测试等详细内容,请见[PULC 车辆属性识别模型](https://github.com/PaddlePaddle/PaddleClas/blob/release/2.4/docs/zh_CN/PULC/PULC_vehicle_attribute.md).

112 |

113 | 车辆属性识别模型选用了轻量级、高精度的PPLCNet。并在该模型的基础上,进一步使用了以下优化方案:

114 |

115 | - 使用SSLD预训练模型,在不改变推理速度的前提下,精度可以提升约0.5个百分点

116 | - 融合EDA数据增强策略,精度可以再提升0.52个百分点

117 | - 使用SKL-UGI知识蒸馏, 精度可以继续提升0.23个百分点

118 |

--------------------------------------------------------------------------------

/docs/tutorials/ppvehicle_attribute_en.md:

--------------------------------------------------------------------------------

1 | English | [简体中文](ppvehicle_attribute.md)

2 |

3 | # Attribute Recognition Module of PP-Vehicle

4 |

5 | Vehicle attribute recognition is widely used in smart cities, smart transportation and other scenarios. In PP-Vehicle, a vehicle attribute recognition module is integrated, which can identify vehicle color and model.

6 |

7 | | Task | Algorithm | Precision | Inference Speed | Download |

8 | |-----------|------|-----------|----------|---------------------|

9 | | Vehicle Detection/Tracking | PP-YOLOE | mAP 63.9 | 38.67ms | [Inference and Deployment Model](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip) |

10 | | Vehicle Attribute Recognition | PPLCNet | 90.81 | 7.31 ms | [Inference and Deployment Model](https://bj.bcebos.com/v1/paddledet/models/pipeline/vehicle_attribute_model.zip) |

11 |

12 |

13 | Note:

14 | 1. The inference speed of the attribute model is obtained from the test on NVIDIA T4, with TensorRT FP16. The time includes data pre-process, model inference and post-process.

15 | 2. For introductions, please refer to [PP-LCNet Series](https://github.com/PaddlePaddle/PaddleClas/blob/release/2.4/docs/en/models/PP-LCNet_en.md). Related paper is available on PP-LCNet paper

16 | 3. The training and test phase of vehicle attribute recognition model are both obtained from [VeRi dataset](https://www.v7labs.com/open-datasets/veri-dataset).

17 |

18 |

19 | - The provided pre-trained model supports 10 colors and 9 models, which is the same with VeRi dataset. The details are as follows:

20 |

21 | ```yaml

22 | # Vehicle Colors

23 | - "yellow"

24 | - "orange"

25 | - "green"

26 | - "gray"

27 | - "red"

28 | - "blue"

29 | - "white"

30 | - "golden"

31 | - "brown"

32 | - "black"

33 |

34 | # Vehicle Models

35 | - "sedan"

36 | - "suv"

37 | - "van"

38 | - "hatchback"

39 | - "mpv"

40 | - "pickup"

41 | - "bus"

42 | - "truck"

43 | - "estate"

44 | ```

45 |

46 | ## Instructions

47 |

48 | ### Description of Configuration

49 |

50 | Parameters related to vehicle attribute recognition in the [config file](../../config/infer_cfg_ppvehicle.yml) are as follows:

51 |

52 | ```yaml

53 | VEHICLE_ATTR:

54 | model_dir: output_inference/vehicle_attribute_infer/ # Path of the model

55 | batch_size: 8 # The size of the inference batch

56 | color_threshold: 0.5 # Threshold of color. Confidence is required to reach this threshold to determine the specific attribute, otherwise it will be 'Unknown‘.

57 | type_threshold: 0.5 # Threshold of vehicle model. Confidence is required to reach this threshold to determine the specific attribute, otherwise it will be 'Unknown‘.

58 | enable: False # Whether to enable this function

59 | ```

60 |

61 | ### How to Use

62 | 1. Download models `Vehicle Detection/Tracking` and `Vehicle Attribute Recognition` from the links in `Model Zoo` and unzip them to ```./output_inference```. The models are automatically downloaded by default. If you download them manually, you need to modify the `model_dir` as the model storage path to use this function.

63 |

64 | 2. Set the "enable: True" of `VEHICLE_ATTR` in infer_cfg_ppvehicle.yml.

65 |

66 | 3. For image input, please run these commands. (Description of more parameters, please refer to [QUICK_STARTED - Parameter_Description](./PPVehicle_QUICK_STARTED.md).

67 |

68 | ```bash

69 | # For single image

70 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

71 | --image_file=test_image.jpg \

72 | --device=gpu

73 |

74 | # For folder contains one or multiple images

75 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

76 | --image_dir=images/ \

77 | --device=gpu

78 | ```

79 |

80 | 4. For video input, please run these commands.

81 |

82 | ```bash

83 | # For single video

84 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

85 | --video_file=test_video.mp4 \

86 | --device=gpu

87 |

88 | # For folder contains one or multiple videos

89 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

90 | --video_dir=test_videos/ \

91 | --device=gpu

92 | ```

93 |

94 | 5. There are two ways to modify the model path:

95 |

96 | - Method 1:Set paths of each model in `./deploy/pipeline/config/infer_cfg_ppvehicle.yml`. For vehicle attribute recognition, the path should be modified under the `VEHICLE_ATTR` field.

97 | - Method 2: Directly add `-o` in command line to override the default model path in the configuration file:

98 |

99 | ```bash

100 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \

101 | --video_file=test_video.mp4 \

102 | --device=gpu \

103 | -o VEHICLE_ATTR.model_dir=output_inference/vehicle_attribute_infer

104 | ```

105 |

106 | The result is shown as follow:

107 |

108 |  108 |

108 |

109 |  110 |

110 |

111 |

112 |

113 | ### Features to the Solution

114 |

115 | The vehicle attribute recognition model adopts PULC, Practical Ultra Lightweight image Classification from [PaddleClas](https://github.com/PaddlePaddle/PaddleClas). For details on data preparation, training, and testing of the model, please refer to [PULC Recognition Model of Vehicle Attribute](https://github.com/PaddlePaddle/PaddleClas/blob/release/2.4/docs/en/PULC/PULC_vehicle_attribute_en.md).

116 |

117 | The vehicle attribute recognition model adopts the lightweight and high-precision PPLCNet. And on top of PPLCNet, our model optimized via::

118 |

119 | - Improved about 0.5 percentage points accuracy by using the SSLD pre-trained model without changing the inference speed.

120 | - Improved 0.52 percentage points accuracy further by integrating EDA data augmentation strategy.

121 | - Improved 0.23 percentage points accuracy by using SKL-UGI knowledge distillation.

122 |

--------------------------------------------------------------------------------

/docs/tutorials/ppvehicle_illegal_parking.md:

--------------------------------------------------------------------------------

1 |

2 | # PP-Vehicle违章停车识别模块

3 |

4 | 禁停区域违章停车识别在车辆应用场景中有着非常广泛的应用,借助AI的力量可以减轻人力投入,精准快速识别出违停车辆并进一步采取如广播驱离行为。PP-Vehicle中基于车辆跟踪模型、车牌检测模型和车牌识别模型实现了违章停车识别功能,具体模型信息如下:

5 |

6 | | 任务 | 算法 | 精度 | 预测速度(ms) |预测模型下载链接 |

7 | |:---------------------|:---------:|:------:|:------:| :---------------------------------------------------------------------------------: |

8 | | 车辆检测/跟踪 | PP-YOLOE-l | mAP: 63.9 | - |[下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip) |

9 | | 车牌检测模型 | ch_PP-OCRv3_det | hmean: 0.979 | - | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_det_infer.tar.gz) |

10 | | 车牌识别模型 | ch_PP-OCRv3_rec | acc: 0.773 | - | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_rec_infer.tar.gz) |

11 |

12 | 1. 跟踪模型使用PPVehicle数据集(整合了BDD100K-MOT和UA-DETRAC),是将BDD100K-MOT中的car, truck, bus, van和UA-DETRAC中的car, bus, van都合并为1类vehicle(1)后的数据集。

13 | 2. 车牌检测、识别模型使用PP-OCRv3模型在CCPD2019、CCPD2020混合车牌数据集上fine-tune得到。

14 |

15 | ## 使用方法

16 |

17 | 1. 用户可从上表链接中下载模型并解压到```PaddleDetection/output_inference```路径下,并修改配置文件中模型路径,也可默认自动下载模型。在```deploy/pipeline/config/examples/infer_cfg_illegal_parking.yml```中可手动设置三个模型的模型路径。

18 |

19 | `infer_cfg_illegal_parking.yml`中配置项说明:

20 | ```

21 | MOT: # 跟踪模块

22 | model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip # 跟踪模型路径

23 | tracker_config: deploy/pipeline/config/tracker_config.yml # 跟踪配置文件路径

24 | batch_size: 1 # 跟踪batch size

25 | enable: True # 是否开启跟踪功能

26 |

27 | VEHICLE_PLATE: # 车牌识别模块

28 | det_model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_det_infer.tar.gz # 车牌检测模型路径

29 | det_limit_side_len: 480 # 检测模型单边输入尺寸

30 | det_limit_type: "max" # 检测模型输入尺寸长短边选择,"max"表示长边

31 | rec_model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_rec_infer.tar.gz # 车牌识别模型路径

32 | rec_image_shape: [3, 48, 320] # 车牌识别模型输入尺寸

33 | rec_batch_num: 6 # 车牌识别batch size

34 | word_dict_path: deploy/pipeline/ppvehicle/rec_word_dict.txt # OCR模型查询字典

35 | enable: True # 是否开启车牌识别功能

36 | ```

37 |

38 | 2. 输入视频,启动命令如下

39 | ```python

40 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/examples/infer_cfg_illegal_parking.yml \

41 | --video_file=test_video.mp4 \

42 | --device=gpu \

43 | --draw_center_traj \

44 | --illegal_parking_time=5 \

45 | --region_type=custom \

46 | --region_polygon 100 1000 1000 1000 900 1700 0 1700

47 | ```

48 |

49 | 参数说明如下:

50 | - config:配置文件路径;

51 | - video_file:测试视频路径;

52 | - device:推理设备配置;

53 | - draw_center_traj:画出车辆中心运动轨迹;

54 | - illegal_parking_time:非法停车时间,单位为秒;

55 | - region_type:非法停车区域类型,custom表示自定义;

56 | - region_polygon:自定义非法停车多边形,至少为3个点。

57 |

58 | **注意:**

59 | - 违章停车的测试视频必须是静止摄像头拍摄的,镜头不能抖动或移动。

60 | - 判断车辆是否在违停区域内是**以车辆的中心点**作为参考,车辆擦边而过等场景不算作违章停车。

61 | - `--region_polygon`表示用户自定义区域的多边形的点坐标序列,每两个为一对点坐标(x,y),**按顺时针顺序**连成一个**封闭区域**,至少需要3对点也即6个整数,默认值是`[]`,需要用户自行设置点坐标,如是四边形区域,坐标顺序是`左上、右上、右下、左下`。用户可以运行[此段代码](../../tools/get_video_info.py)获取所测视频的分辨率帧数,以及可以自定义画出自己想要的多边形区域的可视化并自己调整。

62 | 自定义多边形区域的可视化代码运行如下:

63 | ```python

64 | python get_video_info.py --video_file=demo.mp4 --region_polygon 200 200 400 200 300 400 100 400

65 | ```

66 | 快速画出想要的区域的小技巧:先任意取点得到图片,用画图工具打开,鼠标放到想要的区域点上会显示出坐标,记录下来并取整,作为这段可视化代码的region_polygon参数,并再次运行可视化,微调点坐标参数直至满意。

67 |

68 |

69 | 3. 若修改模型路径,有以下两种方式:

70 |

71 | - 方法一:```./deploy/pipeline/config/examples/infer_cfg_illegal_parking.yml```下可以配置不同模型路径;

72 | - 方法二:命令行中--config配置项后面增加`-o VEHICLE_PLATE.det_model_dir=[YOUR_DETMODEL_PATH] VEHICLE_PLATE.rec_model_dir=[YOUR_RECMODEL_PATH]`修改模型路径。

73 |

74 |

75 | 测试效果如下:

76 |

77 |  110 |

110 |

78 |  79 |

79 |

80 |

81 | 可视化视频中左上角num后面的数值表示当前帧中车辆的数目;Total count表示画面中出现的车辆的总数,包括出现又消失的车辆。

82 |

83 | ## 方案说明

84 |

85 | 1. 目标检测/多目标跟踪获取图片/视频输入中的车辆检测框,模型方案为PP-YOLOE,详细文档参考[PP-YOLOE](../../../configs/ppyoloe/README_cn.md)

86 | 2. 基于跟踪算法获取每辆车的轨迹,如果车辆中心在违停区域内且在指定时间内未发生移动,则视为违章停车;

87 | 3. 使用车牌识别模型得到违章停车车牌并可视化。

88 |

89 | ## 参考资料

90 |

91 | 1. PaddeDetection特色检测模型[PP-YOLOE](../../../../configs/ppyoloe)。

92 | 2. Paddle字符识别模型库[PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR)。

93 |

--------------------------------------------------------------------------------

/docs/tutorials/ppvehicle_illegal_parking_en.md:

--------------------------------------------------------------------------------

1 |

2 | # PP-Vehicle Illegal Parking Recognition Module

3 |

4 | Illegal parking recognition in no-parking areas has a very wide range of applications in vehicle application scenarios. With the help of AI, human input can be reduced, and illegally parked vehicles can be accurately and quickly identified, and further behaviors such as broadcasting to expel the vehicles can be performed. Based on the vehicle tracking model, license plate detection model and license plate recognition model, the PP-Vehicle realizes the illegal parking recognition function. The specific model information is as follows:

5 |

6 | | Task | Algorithm | Precision | Inference Speed(ms) |Inference Model Download Link |

7 | |:---------------------|:---------:|:------:|:------:| :---------------------------------------------------------------------------------: |

8 | | Vehicle Tracking | PP-YOLOE-l | mAP: 63.9 | - |[Link](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip) |

9 | | Plate Detection | ch_PP-OCRv3_det | hmean: 0.979 | - | [Link](https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_det_infer.tar.gz) |

10 | | Plate Recognition | ch_PP-OCRv3_rec | acc: 0.773 | - | [Link](https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_rec_infer.tar.gz) |

11 |

12 | 1. The tracking model uses the PPVehicle dataset (integrating BDD100K-MOT and UA-DETRAC), which combines car, truck, bus, van in BDD100K-MOT and car, bus, and van in UA-DETRAC into one class which named vehicle (1).

13 | 2. The license plate detection and recognition model is fine-tuned on the CCPD2019 and CCPD2020 using the PP-OCRv3 model.

14 |

15 | ## Instructions

16 |

17 | 1. Users can download the model from the link in the table above and unzip it to the ``PaddleDetection/output_inference``` path, and modify the model path in the configuration file, or download the model automatically by default. The model paths for the three models can be manually set in ``deploy/pipeline/config/examples/infer_cfg_illegal_parking.yml```.

18 |

19 | Description of configuration items in `infer_cfg_illegal_parking.yml`:

20 | ```

21 | MOT: # Tracking Module

22 | model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip # Path of Tracking Model

23 | tracker_config: deploy/pipeline/config/tracker_config.yml # Config Path of Tracking

24 | batch_size: 1 # Tracking batch size

25 | enable: True # Whether to Enable Tracking Function

26 |

27 | VEHICLE_PLATE: # Plate Recognition Module

28 | det_model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_det_infer.tar.gz # Path of Plate Detection Model

29 | det_limit_side_len: 480 # Single Side Size of Detection Model

30 | det_limit_type: "max" # Detection model Input Size Selection of Long and Short Sides, "max" Represents the Long Side

31 | rec_model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_rec_infer.tar.gz # Path of Plate Recognition Model

32 | rec_image_shape: [3, 48, 320] # The Input Size of Plate Recognition Model

33 | rec_batch_num: 6 # Plate Recognition batch size

34 | word_dict_path: deploy/pipeline/ppvehicle/rec_word_dict.txt # OCR Model Look-up Table

35 | enable: True # Whether to Enable Plate Recognition Function

36 | ```

37 |

38 | 2. Input video, the command is as follows:

39 | ```python

40 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/examples/infer_cfg_illegal_parking.yml \

41 | --video_file=test_video.mp4 \

42 | --device=gpu \

43 | --draw_center_traj \

44 | --illegal_parking_time=5 \

45 | --region_type=custom \

46 | --region_polygon 100 1000 1000 1000 900 1700 0 1700

47 |

48 | The parameter description:

49 | - config: config path;

50 | - video_file: video path to be tested;

51 | - device: device to infe;

52 | - draw_center_traj: draw the trajectory of the center of the vehicle;

53 | - illegal_parking_time: illegal parking time, in seconds;

54 | - region_type: illegal parking region type, 'custom' means the region is customized;

55 | - region_polygon: customized illegal parking region which includes three points at least.

56 |

57 | 3. Methods to modify the path of model:

58 |

59 | - Method 1: Configure different model paths in ```./deploy/pipeline/config/examples/infer_cfg_illegal_parking.yml``` file;

60 | - Method2: In the command line, add `-o VEHICLE_PLATE.det_model_dir=[YOUR_DETMODEL_PATH] VEHICLE_PLATE.rec_model_dir=[YOUR_RECMODEL_PATH]` after the --config configuration item to modify the model path.

61 |

62 |

63 | Test Result:

64 |

65 |  79 |

79 |

66 |  67 |

67 |

68 |

69 |

70 | ## Method Description

71 |

72 | 1. Target multi-target tracking obtains the vehicle detection frame in the picture/video input. The model scheme is PP-YOLOE. For detailed documentation, refer to [PP-YOLOE](../../../configs/ppyoloe/README_cn. md)

73 | 2. Obtain the trajectory of each vehicle based on the tracking algorithm. If the center of the vehicle is in the illegal parking area and does not move within the specified time, it is considered illegal parking;

74 | 3. Use the license plate recognition model to get the illegal parking license plate and visualize it.

75 |

76 |

77 | ## References

78 |

79 | 1. Detection Model in PaddeDetection:[PP-YOLOE](../../../../configs/ppyoloe).

80 | 2. Character Recognition Model Library in Paddle: [PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR).

81 |

--------------------------------------------------------------------------------

/docs/tutorials/ppvehicle_mot.md:

--------------------------------------------------------------------------------

1 | [English](ppvehicle_mot_en.md) | 简体中文

2 |

3 | # PP-Vehicle车辆跟踪模块

4 |

5 | 【应用介绍】

6 | 车辆检测与跟踪在交通监控、自动驾驶等方向都具有广泛应用,PP-Vehicle中集成了检测跟踪模块,是车牌检测、车辆属性识别等任务的基础。我们提供了预训练模型,用户可以直接下载使用。

7 |

8 | 【模型下载】

9 | | 任务 | 算法 | 精度 | 预测速度(ms) |下载链接 |

10 | |:---------------------|:---------:|:------:|:------:| :---------------------------------------------------------------------------------: |

11 | | 车辆检测/跟踪 | PP-YOLOE-l | mAP: 63.9  67 |

67 | MOTA: 50.1 | 检测: 25.1ms

跟踪:31.8ms | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip) | 12 | | 车辆检测/跟踪 | PP-YOLOE-s | mAP: 61.3

MOTA: 46.8 | 检测: 16.2ms

跟踪:21.0ms | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_s_36e_ppvehicle.zip) | 13 | 14 | 1. 检测/跟踪模型精度为PPVehicle数据集训练得到,整合了BDD100K-MOT和UA-DETRAC,是将BDD100K-MOT中的`car, truck, bus, van`和UA-DETRAC中的`car, bus, van`都合并为1类`vehicle(1)`后的数据集,检测精度mAP是PPVehicle的验证集上测得,跟踪精度MOTA是在BDD100K-MOT的验证集上测得(`car, truck, bus, van`合并为1类`vehicle`)。训练具体流程请参照[ppvehicle](../../../../configs/ppvehicle)。 15 | 2. 预测速度为T4 机器上使用TensorRT FP16时的速度, 速度包含数据预处理、模型预测、后处理全流程。 16 | 17 | ## 使用方法 18 | 19 | 【配置项说明】 20 | 21 | 配置文件中与属性相关的参数如下: 22 | ``` 23 | DET: 24 | model_dir: output_inference/mot_ppyoloe_l_36e_ppvehicle/ # 车辆检测模型调用路径 25 | batch_size: 1 # 模型预测时的batch_size大小 26 | 27 | MOT: 28 | model_dir: output_inference/mot_ppyoloe_l_36e_ppvehicle/ # 车辆跟踪模型调用路径 29 | tracker_config: deploy/pipeline/config/tracker_config.yml 30 | batch_size: 1 # 模型预测时的batch_size大小, 跟踪任务只能设置为1 31 | skip_frame_num: -1 # 跳帧预测的帧数,-1表示不进行跳帧,建议跳帧帧数最大不超过3 32 | enable: False # 是否开启该功能,使用跟踪前必须确保设置为True 33 | ``` 34 | 35 | 【使用命令】 36 | 1. 从上表链接中下载模型并解压到```./output_inference```路径下,并修改配置文件中模型路径。默认为自动下载模型,无需做改动。 37 | 2. 图片输入时,是纯检测任务,启动命令如下 38 | ```python 39 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \ 40 | --image_file=test_image.jpg \ 41 | --device=gpu 42 | ``` 43 | 3. 视频输入时,是跟踪任务,注意首先设置infer_cfg_ppvehicle.yml中的MOT配置的`enable=True`,如果希望跳帧加速检测跟踪流程,可以设置`skip_frame_num: 2`,建议跳帧帧数最大不超过3: 44 | ``` 45 | MOT: 46 | model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip 47 | tracker_config: deploy/pipeline/config/tracker_config.yml 48 | batch_size: 1 49 | skip_frame_num: 2 50 | enable: True 51 | ``` 52 | ```python 53 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \ 54 | --video_file=test_video.mp4 \ 55 | --device=gpu 56 | ``` 57 | 4. 若修改模型路径,有以下两种方式: 58 | - 方法一:```./deploy/pipeline/config/infer_cfg_ppvehicle.yml```下可以配置不同模型路径,检测和跟踪模型分别对应`DET`和`MOT`字段,修改对应字段下的路径为实际期望的路径即可。 59 | - 方法二:命令行中--config配置项后面增加`-o MOT.model_dir=[YOUR_DETMODEL_PATH]`修改模型路径。 60 | ```python 61 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \ 62 | --video_file=test_video.mp4 \ 63 | --device=gpu \ 64 | --region_type=horizontal \ 65 | --do_entrance_counting \ 66 | --draw_center_traj \ 67 | -o MOT.model_dir=ppyoloe/ 68 | 69 | ``` 70 | **注意:** 71 | - `--do_entrance_counting`表示是否统计出入口流量,不设置即默认为False。 72 | - `--draw_center_traj`表示是否绘制跟踪轨迹,不设置即默认为False。注意绘制跟踪轨迹的测试视频最好是静止摄像头拍摄的。 73 | - `--region_type`表示流量计数的区域,当设置`--do_entrance_counting`时可选择`horizontal`或者`vertical`,默认是`horizontal`,表示以视频图片的中心水平线为出入口,同一物体框的中心点在相邻两秒内分别在区域中心水平线的两侧,即完成计数加一。 74 | 75 | 76 | 5. 区域闯入判断和计数 77 | 78 | 注意首先设置infer_cfg_ppvehicle.yml中的MOT配置的enable=True,然后启动命令如下 79 | ```python 80 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \ 81 | --video_file=test_video.mp4 \ 82 | --device=gpu \ 83 | --draw_center_traj \ 84 | --do_break_in_counting \ 85 | --region_type=custom \ 86 | --region_polygon 200 200 400 200 300 400 100 400 87 | ``` 88 | **注意:** 89 | - 区域闯入的测试视频必须是静止摄像头拍摄的,镜头不能抖动或移动。 90 | - `--do_break_in_counting`表示是否进行区域出入后计数,不设置即默认为False。 91 | - `--region_type`表示流量计数的区域,当设置`--do_break_in_counting`时仅可选择`custom`,默认是`custom`,表示以用户自定义区域为出入口,同一物体框的下边界中点坐标在相邻两秒内从区域外到区域内,即完成计数加一。 92 | - `--region_polygon`表示用户自定义区域的多边形的点坐标序列,每两个为一对点坐标(x,y),**按顺时针顺序**连成一个**封闭区域**,至少需要3对点也即6个整数,默认值是`[]`,需要用户自行设置点坐标,如是四边形区域,坐标顺序是`左上、右上、右下、左下`。用户可以运行[此段代码](../../tools/get_video_info.py)获取所测视频的分辨率帧数,以及可以自定义画出自己想要的多边形区域的可视化并自己调整。 93 | 自定义多边形区域的可视化代码运行如下: 94 | ```python 95 | python get_video_info.py --video_file=demo.mp4 --region_polygon 200 200 400 200 300 400 100 400 96 | ``` 97 | 快速画出想要的区域的小技巧:先任意取点得到图片,用画图工具打开,鼠标放到想要的区域点上会显示出坐标,记录下来并取整,作为这段可视化代码的region_polygon参数,并再次运行可视化,微调点坐标参数直至满意。 98 | 99 | 100 | 【效果展示】 101 | 102 |

103 |  104 |

104 |

105 |

106 | ## 方案说明

107 |

108 | 【实现方案及特色】

109 | 1. 使用目标检测/多目标跟踪技术来获取图片/视频输入中的车辆检测框,检测模型方案为PP-YOLOE,详细文档参考[PP-YOLOE](../../../../configs/ppyoloe)和[ppvehicle](../../../../configs/ppvehicle)。

110 | 2. 多目标跟踪模型方案采用[OC-SORT](https://arxiv.org/pdf/2203.14360.pdf),采用PP-YOLOE替换原文的YOLOX作为检测器,采用OCSORTTracker作为跟踪器,详细文档参考[OC-SORT](../../../../configs/mot/ocsort)。

111 |

112 | ## 参考文献

113 | ```

114 | @article{cao2022observation,

115 | title={Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking},

116 | author={Cao, Jinkun and Weng, Xinshuo and Khirodkar, Rawal and Pang, Jiangmiao and Kitani, Kris},

117 | journal={arXiv preprint arXiv:2203.14360},

118 | year={2022}

119 | }

120 | ```

121 |

--------------------------------------------------------------------------------

/docs/tutorials/ppvehicle_mot_en.md:

--------------------------------------------------------------------------------

1 | English | [简体中文](ppvehicle_mot.md)

2 |

3 | # PP-Vehicle Vehicle Tracking Module

4 |

5 | 【Application Introduction】

6 |

7 | Vehicle detection and tracking are widely used in traffic monitoring and autonomous driving. The detection and tracking module is integrated in PP-Vehicle, providing a solid foundation for tasks including license plate detection and vehicle attribute recognition. We provide pre-trained models that can be directly used by developers.

8 |

9 | 【Model Download】

10 |

11 | | Task | Algorithm | Accuracy | Inference speed(ms) | Download Link |

12 | | -------------------------- | ---------- | ----------------------- | ------------------------------------ | ------------------------------------------------------------------------------------------ |

13 | | Vehicle Detection/Tracking | PP-YOLOE-l | mAP: 63.9 104 |

104 | MOTA: 50.1 | Detection: 25.1ms

Tracking:31.8ms | [Link](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip) | 14 | | Vehicle Detection/Tracking | PP-YOLOE-s | mAP: 61.3

MOTA: 46.8 | Detection: 16.2ms

Tracking:21.0ms | [Link](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_s_36e_ppvehicle.zip) | 15 | 16 | 1. The detection/tracking model uses the PPVehicle dataset ( which integrates BDD100K-MOT and UA-DETRAC). The dataset merged car, truck, bus, van from BDD100K-MOT and car, bus, van from UA-DETRAC all into 1 class vehicle(1). The detection accuracy mAP was tested on the test set of PPVehicle, and the tracking accuracy MOTA was obtained on the test set of BDD100K-MOT (`car, truck, bus, van` were combined into 1 class `vehicle`). For more details about the training procedure, please refer to [ppvehicle](../../../../configs/ppvehicle). 17 | 2. Inference speed is obtained at T4 with TensorRT FP16 enabled, which includes data pre-processing, model inference and post-processing. 18 | 19 | ## How To Use 20 | 21 | 【Config】 22 | 23 | The parameters associated with the attributes in the configuration file are as follows. 24 | 25 | ``` 26 | DET: 27 | model_dir: output_inference/mot_ppyoloe_l_36e_ppvehicle/ # Vehicle detection model path 28 | batch_size: 1 # Batch_size size for model inference 29 | 30 | MOT: 31 | model_dir: output_inference/mot_ppyoloe_l_36e_ppvehicle/ # Vehicle tracking model path 32 | tracker_config: deploy/pipeline/config/tracker_config.yml 33 | batch_size: 1 # Batch_size size for model inference, 1 only for tracking task. 34 | skip_frame_num: -1 # Number of frames to skip, -1 means no skipping, the maximum skipped frames are recommended to be 3 35 | enable: False # Whether or not to enable this function, please make sure it is set to True before tracking 36 | ``` 37 | 38 | 【Usage】 39 | 40 | 1. Download the model from the link in the table above and unzip it to ``. /output_inference`` and change the model path in the configuration file. The default is to download the model automatically, no changes are needed. 41 | 42 | 2. The image input will start a pure detection task, and the start command is as follows 43 | 44 | ```python 45 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \ 46 | --image_file=test_image.jpg \ 47 | --device=gpu 48 | ``` 49 | 50 | 3. Video input will start a tracking task. Please set `enable=True` for the MOT configuration in infer_cfg_ppvehicle.yml. If skip frames are needed for faster detection and tracking, it is recommended to set `skip_frame_num: 2` the maximum should not exceed 3. 51 | 52 | ``` 53 | MOT: 54 | model_dir: https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip 55 | tracker_config: deploy/pipeline/config/tracker_config.yml 56 | batch_size: 1 57 | skip_frame_num: 2 58 | enable: True 59 | ``` 60 | 61 | ```python 62 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \ 63 | --video_file=test_video.mp4 \ 64 | --device=gpu 65 | ``` 66 | 67 | 4. There are two ways to modify the model path 68 | 69 | - Config different model path in```./deploy/pipeline/config/infer_cfg_ppvehicle.yml```. The detection and tracking models correspond to the `DET` and `MOT` fields respectively. Modify the path under the corresponding field to the actual path. 70 | 71 | - **[Recommand]** Add`-o MOT.model_dir=[YOUR_DETMODEL_PATH]` after the config in the command line to modify model path 72 | 73 | ```python 74 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \ 75 | --video_file=test_video.mp4 \ 76 | --device=gpu \ 77 | --region_type=horizontal \ 78 | --do_entrance_counting \ 79 | --draw_center_traj \ 80 | -o MOT.model_dir=ppyoloe/ 81 | ``` 82 | 83 | **Note:** 84 | 85 | - `--do_entrance_counting` : Whether to count entrance/exit traffic flows, the default is False 86 | 87 | - `--draw_center_traj` : Whether to draw center trajectory, the default is False. Its input video is preferably taken from a still camera 88 | 89 | - `--region_type` : The region for traffic counting. When setting `--do_entrance_counting`, there are two options: `horizontal` or `vertical`. The default is `horizontal`, which means the center horizontal line of the video picture is the entrance and exit. When the center point of the same object frame is on both sides of the centre horizontal line of the region in two adjacent seconds, i.e. the count adds 1. 90 | 5. Regional break-in and counting 91 | 92 | Please set the MOT config: enable=True in `infer_cfg_ppvehicle.yml` before running the starting command: 93 | 94 | ``` 95 | python deploy/pipeline/pipeline.py --config deploy/pipeline/config/infer_cfg_ppvehicle.yml \ 96 | --video_file=test_video.mp4 \ 97 | --device=gpu \ 98 | --draw_center_traj \ 99 | --do_break_in_counting \ 100 | --region_type=custom \ 101 | --region_polygon 200 200 400 200 300 400 100 400 102 | ``` 103 | 104 | **Note:** 105 | 106 | - Test video of area break-ins must be taken from a still camera, with no shaky or moving footage. 107 | 108 | - `--do_break_in_counting`Indicates whether or not to count the entrance and exit of the area. The default is False. 109 | 110 | - `--region_type` indicates the region for traffic counting, when setting `--do_break_in_counting` only `custom` can be selected, and the default is `custom`. It means that the customized region is used as the entry and exit. When the coordinates of the lower boundary midpoint of the same object frame goes to the inside of the region within two adjacent seconds, i.e. the count adds one. 111 | 112 | - `--region_polygon` indicates a sequence of point coordinates for a polygon in a customized region. Every two are a pair of point coordinates (x,y). **In clockwise order** they are connected into a **closed region**, at least 3 pairs of points are needed (or 6 integers). The default value is `[]`. Developers need to set the point coordinates manually. If it is a quadrilateral region, the coordinate order is `top left, top right , bottom right, bottom left`. Developers can run [this code](... /... /tools/get_video_info.py) to get the resolution frames of the predicted video. It also supports customizing and adjusting the visualisation of the polygon area. 113 | The code for the visualisation of the customized polygon area runs as follows. 114 | 115 | ```python 116 | python get_video_info.py --video_file=demo.mp4 --region_polygon 200 200 400 200 300 400 100 400 117 | ``` 118 | 119 | A quick tip for drawing customized area: first take any point to get the picture, open it with the drawing tool, mouse over the area point and the coordinates will be displayed, record it and round it up, use it as a region_polygon parameter for this visualisation code and run the visualisation again, and fine-tune the point coordinates parameter. 120 | 121 | 【Showcase】 122 | 123 |

124 |  125 |

125 |

126 |

127 | ## Solution

128 |

129 | 【Solution and feature】

130 |

131 | - PP-YOLOE is adopted for vehicle detection frame of object detection, multi-object tracking in the picture/video input. For details, please refer to [PP-YOLOE](... /... /... /configs/ppyoloe/README_cn.md) and [PPVehicle](../../../../configs/ppvehicle)

132 | - [OC-SORT](https://arxiv.org/pdf/2203.14360.pdf) is adopted as multi-object tracking model. PP-YOLOE replaced YOLOX as detector, and OCSORTTracker is the tracker. For more details, please refer to [OC-SORT](../../../../configs/mot/ocsort)

133 |

134 | ## Reference

135 |

136 | ```

137 | @article{cao2022observation,

138 | title={Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking},

139 | author={Cao, Jinkun and Weng, Xinshuo and Khirodkar, Rawal and Pang, Jiangmiao and Kitani, Kris},

140 | journal={arXiv preprint arXiv:2203.14360},

141 | year={2022}

142 | }

143 | ```

144 |

--------------------------------------------------------------------------------

/docs/tutorials/ppvehicle_plate.md:

--------------------------------------------------------------------------------

1 | [English](ppvehicle_plate_en.md) | 简体中文

2 |

3 | # PP-Vehicle车牌识别模块

4 |

5 | 车牌识别,在车辆应用场景中有着非常广泛的应用,起到车辆身份识别的作用,比如车辆出入口自动闸机。PP-Vehicle中提供了车辆的跟踪及其车牌识别的功能,并提供模型下载:

6 |

7 | | 任务 | 算法 | 精度 | 预测速度(ms) |预测模型下载链接 |

8 | |:---------------------|:---------:|:------:|:------:| :---------------------------------------------------------------------------------: |

9 | | 车辆检测/跟踪 | PP-YOLOE-l | mAP: 63.9 | - |[下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/mot_ppyoloe_l_36e_ppvehicle.zip) |

10 | | 车牌检测模型 | ch_PP-OCRv3_det | hmean: 0.979 | - | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_det_infer.tar.gz) |

11 | | 车牌识别模型 | ch_PP-OCRv3_rec | acc: 0.773 | - | [下载链接](https://bj.bcebos.com/v1/paddledet/models/pipeline/ch_PP-OCRv3_rec_infer.tar.gz) |

12 | 1. 跟踪模型使用PPVehicle数据集(整合了BDD100K-MOT和UA-DETRAC),是将BDD100K-MOT中的car, truck, bus, van和UA-DETRAC中的car, bus, van都合并为1类vehicle(1)后的数据集。

13 | 2. 车牌检测、识别模型使用PP-OCRv3模型在CCPD2019、CCPD2020混合车牌数据集上fine-tune得到。

14 |

15 | ## 使用方法

16 |

17 | 1. 从上表链接中下载模型并解压到```PaddleDetection/output_inference```路径下,并修改配置文件中模型路径,也可默认自动下载模型。设置```deploy/pipeline/config/infer_cfg_ppvehicle.yml```中`VEHICLE_PLATE`的enable: True

18 |

19 | `infer_cfg_ppvehicle.yml`中配置项说明:

20 | ```

21 | VEHICLE_PLATE: #模块名称

22 | det_model_dir: output_inference/ch_PP-OCRv3_det_infer/ #车牌检测模型路径

23 | det_limit_side_len: 480 #检测模型单边输入尺寸

24 | det_limit_type: "max" #检测模型输入尺寸长短边选择,"max"表示长边

25 | rec_model_dir: output_inference/ch_PP-OCRv3_rec_infer/ #车牌识别模型路径

26 | rec_image_shape: [3, 48, 320] #车牌识别模型输入尺寸

27 | rec_batch_num: 6 #车牌识别batchsize