├── .editorconfig
├── .gitignore
├── CODE_OF_CONDUCT.md
├── LICENSE
├── README.md
├── SECURITY.md
├── SUPPORT.md
├── configs
│   ├── captioning
│   │   └── m4c_textcaps
│   │       ├── tap_base_pretrain.yml
│   │       └── tap_refine.yml
│   └── vqa
│       ├── m4c_stvqa
│       │   ├── tap_base_pretrain.yml
│       │   └── tap_refine.yml
│       └── m4c_textvqa
│           ├── tap_base_pretrain.yml
│           └── tap_refine.yml
├── data
│   └── README.md
├── projects
│   ├── M4C_Captioner
│   │   └── scripts
│   │       └── textcaps_eval.py
│   ├── TAP_Caption
│   │   └── README.md
│   └── TAP_QA
│       └── README.md
├── pythia
│   ├── common
│   │   ├── __init__.py
│   │   ├── batch_collator.py
│   │   ├── constants.py
│   │   ├── dataset_loader.py
│   │   ├── defaults
│   │   │   ├── __init__.py
│   │   │   └── configs
│   │   │       ├── base.yml
│   │   │       └── datasets
│   │   │           ├── captioning
│   │   │           │   ├── coco.yml
│   │   │           │   ├── m4c_textcaps.yml
│   │   │           │   └── m4c_textcaps_ocr100.yml
│   │   │           ├── dialog
│   │   │           │   └── visual_dialog.yml
│   │   │           └── vqa
│   │   │               ├── clevr.yml
│   │   │               ├── m4c_ocrvqa.yml
│   │   │               ├── m4c_stvqa.yml
│   │   │               ├── m4c_stvqa_ocr100.yml
│   │   │               ├── m4c_textvqa.yml
│   │   │               ├── m4c_textvqa_ocr100.yml
│   │   │               ├── textvqa.yml
│   │   │               ├── visual_genome.yml
│   │   │               ├── vizwiz.yml
│   │   │               └── vqa2.yml
│   │   ├── meter.py
│   │   ├── registry.py
│   │   ├── report.py
│   │   ├── sample.py
│   │   └── test_reporter.py
│   ├── datasets
│   │   ├── __init__.py
│   │   ├── base_dataset.py
│   │   ├── base_dataset_builder.py
│   │   ├── captioning
│   │   │   ├── __init__.py
│   │   │   ├── coco
│   │   │   │   ├── __init__.py
│   │   │   │   ├── builder.py
│   │   │   │   └── dataset.py
│   │   │   └── m4c_textcaps
│   │   │       ├── __init__.py
│   │   │       ├── builder.py
│   │   │       └── dataset.py
│   │   ├── concat_dataset.py
│   │   ├── dialog
│   │   │   ├── __init__.py
│   │   │   ├── original.py
│   │   │   └── visual_dialog
│   │   │       ├── config.yml
│   │   │       └── scripts
│   │   │           ├── build_imdb.py
│   │   │           └── extract_vocabulary.py
│   │   ├── feature_readers.py
│   │   ├── features_dataset.py
│   │   ├── image_database.py
│   │   ├── multi_dataset.py
│   │   ├── processors.py
│   │   ├── samplers.py
│   │   ├── scene_graph_database.py
│   │   └── vqa
│   │       ├── __init__.py
│   │       ├── clevr
│   │       │   ├── __init__.py
│   │       │   ├── builder.py
│   │       │   └── dataset.py
│   │       ├── m4c_ocrvqa
│   │       │   ├── __init__.py
│   │       │   ├── builder.py
│   │       │   └── dataset.py
│   │       ├── m4c_stvqa
│   │       │   ├── __init__.py
│   │       │   ├── builder.py
│   │       │   └── dataset.py
│   │       ├── m4c_textvqa
│   │       │   ├── __init__.py
│   │       │   ├── builder.py
│   │       │   └── dataset.py
│   │       ├── textvqa
│   │       │   ├── __init__.py
│   │       │   ├── builder.py
│   │       │   └── dataset.py
│   │       ├── visual_genome
│   │       │   ├── builder.py
│   │       │   └── dataset.py
│   │       ├── vizwiz
│   │       │   ├── __init__.py
│   │       │   ├── builder.py
│   │       │   └── dataset.py
│   │       └── vqa2
│   │           ├── __init__.py
│   │           ├── builder.py
│   │           ├── dataset.py
│   │           ├── ocr_builder.py
│   │           └── ocr_dataset.py
│   ├── models
│   │   ├── __init__.py
│   │   ├── ban.py
│   │   ├── base_model.py
│   │   ├── butd.py
│   │   ├── cnn_lstm.py
│   │   ├── lorra.py
│   │   ├── m4c.py
│   │   ├── m4c_captioner.py
│   │   ├── pythia.py
│   │   ├── tap.py
│   │   ├── top_down_bottom_up.py
│   │   └── visdial_multi_modal.py
│   ├── modules
│   │   ├── __init__.py
│   │   ├── attention.py
│   │   ├── decoders.py
│   │   ├── embeddings.py
│   │   ├── encoders.py
│   │   ├── layers.py
│   │   ├── losses.py
│   │   └── metrics.py
│   ├── trainers
│   │   ├── __init__.py
│   │   └── base_trainer.py
│   └── utils
│       ├── __init__.py
│       ├── build_utils.py
│       ├── checkpoint.py
│       ├── configuration.py
│       ├── dataset_utils.py
│       ├── distributed_utils.py
│       ├── early_stopping.py
│       ├── flags.py
│       ├── general.py
│       ├── logger.py
│       ├── m4c_evaluators.py
│       ├── objects_to_byte_tensor.py
│       ├── phoc
│       │   ├── __init__.py
│       │   ├── build_phoc.py
│       │   └── src
│       │       └── cphoc.c
│       ├── process_answers.py
│       ├── text_utils.py
│       ├── timer.py
│       └── vocab.py
├── requirements.txt
├── setup.py
└── tools
    └── run.py

/.editorconfig:

--------------------------------------------------------------------------------

1 | root = true

2 |

3 | [*.py]

4 | charset = utf-8

5 | trim_trailing_whitespace = true

6 | end_of_line = lf

7 | insert_final_newline = true

8 | indent_style = space

9 | indent_size = 4

10 |

11 | [*.md]

12 | trim_trailing_whitespace = false

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | *.log

2 | *.err

3 | *.pyc

4 | *.swp

5 | .idea/*

6 | **/__pycache__/*

7 | **/output/*

8 | data/.DS_Store

9 | docs/build

10 | results/*

11 | build

12 | dist

13 | boards/*

14 | *.egg-info/

15 | checkpoint

16 | *.pth

17 | *.ckpt

18 | *_cache

19 | .cache

20 | save

21 | .eggs

22 | eggs/

23 | *.egg

24 | .DS_Store

25 | .vscode/*

26 | *.so

27 | *-checkpoint.ipynb

28 |

--------------------------------------------------------------------------------

/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

1 | # Microsoft Open Source Code of Conduct

2 |

3 | This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

4 |

5 | Resources:

6 |

7 | - [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/)

8 | - [Microsoft Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/)

9 | - Contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with questions or concerns

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) Microsoft Corporation.

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # TAP: Text-Aware Pre-training

2 | [TAP: Text-Aware Pre-training for Text-VQA and Text-Caption](https://arxiv.org/pdf/2012.04638.pdf)

3 |

4 | by [Zhengyuan Yang](https://zyang-ur.github.io/), [Yijuan Lu](https://scholar.google.com/citations?user=cpkrT44AAAAJ&hl=en), [Jianfeng Wang](https://scholar.google.com/citations?user=vJWEw_8AAAAJ&hl=en), [Xi Yin](https://xiyinmsu.github.io/), [Dinei Florencio](https://www.microsoft.com/en-us/research/people/dinei/), [Lijuan Wang](https://www.microsoft.com/en-us/research/people/lijuanw/), [Cha Zhang](https://www.microsoft.com/en-us/research/people/chazhang/), [Lei Zhang](https://www.microsoft.com/en-us/research/people/leizhang/), and [Jiebo Luo](http://cs.rochester.edu/u/jluo)

5 |

6 | IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021, Oral

7 |

8 |

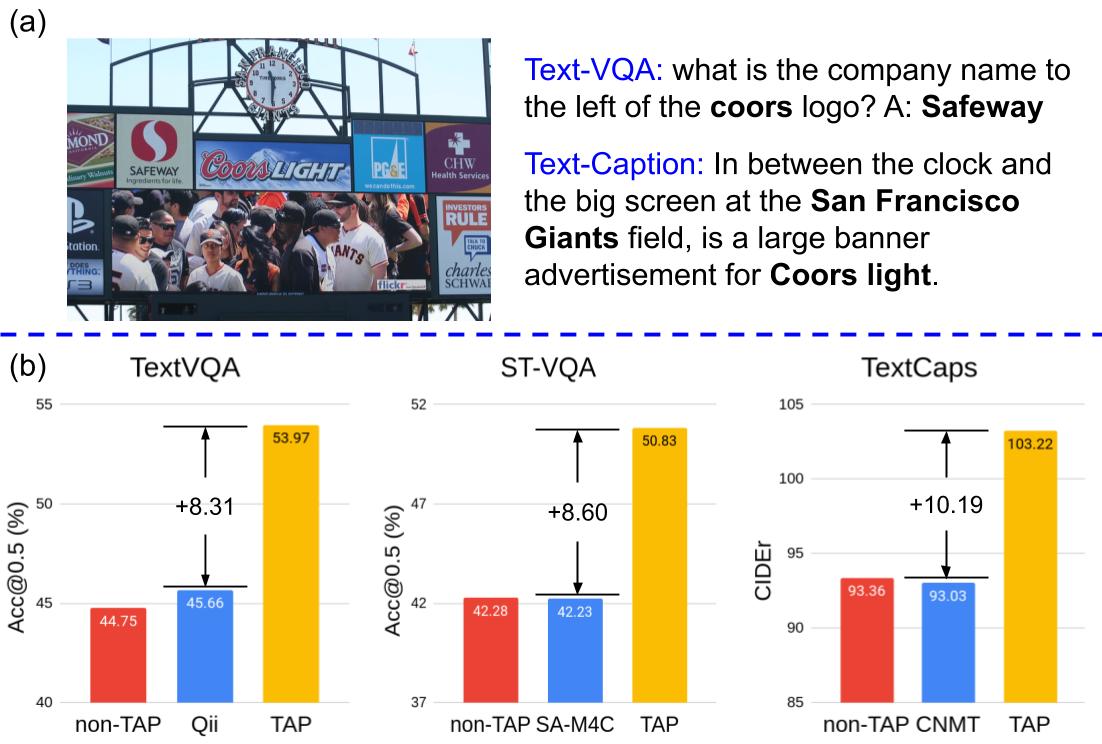

9 | ### Introduction

10 | We propose Text-Aware Pre-training (TAP) for Text-VQA and Text-Caption tasks.

11 | For more details, please refer to our

12 | [paper](https://arxiv.org/pdf/2012.04638.pdf).

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 | ### Citation

21 |

22 | @inproceedings{yang2021tap,

23 | title={TAP: Text-Aware Pre-training for Text-VQA and Text-Caption},

24 | author={Yang, Zhengyuan and Lu, Yijuan and Wang, Jianfeng and Yin, Xi and Florencio, Dinei and Wang, Lijuan and Zhang, Cha and Zhang, Lei and Luo, Jiebo},

25 | booktitle={CVPR},

26 | year={2021}

27 | }

28 |

29 | ### Prerequisites

30 | * Python 3.6

31 | * PyTorch 1.4.0

32 | * Other dependencies are listed in ``requirements.txt``. Alternatively, install them with

33 |

34 | ```

35 | python setup.py develop

36 | ```

37 |

38 | ## Installation

39 |

40 | 1. Clone the repository

41 |

42 | ```

43 | git clone https://github.com/microsoft/TAP.git

44 | cd TAP

45 | python setup.py develop

46 | ```

47 |

48 | 2. Data

49 |

50 | * Please refer to the Readme in the ``data`` folder.

51 |

52 |

53 | ### Training

54 | 3. Train the model. Run the code from the main folder.

55 | Use the ``--pretrain`` flag to enable pre-training mode; otherwise, the main QA/Captioning losses are used to optimize the model. Example yml files are in the ``configs`` folder. Detailed configs are in [released models](https://github.com/microsoft/TAP/tree/main/data).

56 |

57 | Pre-training:

58 | ```

59 | python -m torch.distributed.launch --nproc_per_node $num_gpu tools/run.py --pretrain --tasks vqa --datasets $dataset --model $model --seed $seed --config configs/vqa/$dataset/"$pretrain_yml".yml --save_dir save/$pretrain_savedir training_parameters.distributed True

60 |

61 | # for example

62 | python -m torch.distributed.launch --nproc_per_node 4 tools/run.py --pretrain --tasks vqa --datasets m4c_textvqa --model m4c_split --seed 13 --config configs/vqa/m4c_textvqa/tap_base_pretrain.yml --save_dir save/m4c_split_pretrain_test training_parameters.distributed True

63 | ```

64 |

65 | Fine-tuning:

66 | ```

67 | python -m torch.distributed.launch --nproc_per_node $num_gpu tools/run.py --tasks vqa --datasets $dataset --model $model --seed $seed --config configs/vqa/$dataset/"$refine_yml".yml --save_dir save/$refine_savedir --resume_file save/$pretrain_savedir/$savename/best.ckpt training_parameters.distributed True

68 |

69 | # for example

70 | python -m torch.distributed.launch --nproc_per_node 4 tools/run.py --tasks vqa --datasets m4c_textvqa --model m4c_split --seed 13 --config configs/vqa/m4c_textvqa/tap_refine.yml --save_dir save/m4c_split_refine_test --resume_file save/pretrained/textvqa_tap_base_pretrain.ckpt training_parameters.distributed True

71 | ```

72 |

73 | 4. Evaluate the model. Run the code from the main folder.

74 | Select the val or test set with ``--run_type``.

75 |

76 | ```

77 | python -m torch.distributed.launch --nproc_per_node $num_gpu tools/run.py --tasks vqa --datasets $dataset --model $model --config configs/vqa/$dataset/"$refine_yml".yml --save_dir save/$refine_savedir --run_type val --resume_file save/$refine_savedir/$savename/best.ckpt training_parameters.distributed True

78 |

79 | # for example

80 | python -m torch.distributed.launch --nproc_per_node 4 tools/run.py --tasks vqa --datasets m4c_textvqa --model m4c_split --config configs/vqa/m4c_textvqa/tap_refine.yml --save_dir save/m4c_split_refine_test --run_type val --resume_file save/finetuned/textvqa_tap_base_best.ckpt training_parameters.distributed True

81 | ```

82 |

83 | 5. Captioning evaluation.

84 | ```

85 | python projects/M4C_Captioner/scripts/textcaps_eval.py --set val --pred_file YOUR_VAL_PREDICTION_FILE

86 | ```
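The launch commands in steps 3 and 4 differ only in a few flags. As a minimal sketch, the argument list can be assembled in Python as shown below. The helper name `build_launch_cmd` and its parameters are hypothetical and not part of this repository; the sketch only mirrors the flags that appear in the commands above and does not launch anything.

```python
from typing import List, Optional


def build_launch_cmd(
    num_gpu: int,
    dataset: str,
    model: str,
    config: str,
    save_dir: str,
    seed: Optional[int] = None,
    pretrain: bool = False,
    run_type: Optional[str] = None,
    resume_file: Optional[str] = None,
) -> List[str]:
    """Assemble the argv list for tools/run.py (hypothetical helper)."""
    cmd = [
        "python", "-m", "torch.distributed.launch",
        "--nproc_per_node", str(num_gpu),
        "tools/run.py",
    ]
    if pretrain:
        # Pre-training mode; without it the main QA/Captioning losses are used.
        cmd.append("--pretrain")
    cmd += ["--tasks", "vqa", "--datasets", dataset, "--model", model]
    if seed is not None:
        cmd += ["--seed", str(seed)]
    cmd += ["--config", config, "--save_dir", save_dir]
    if run_type is not None:
        cmd += ["--run_type", run_type]
    if resume_file is not None:
        cmd += ["--resume_file", resume_file]
    # Config override passed as a trailing key/value pair, as in the README.
    cmd += ["training_parameters.distributed", "True"]
    return cmd


pretrain_cmd = build_launch_cmd(
    num_gpu=4,
    dataset="m4c_textvqa",
    model="m4c_split",
    config="configs/vqa/m4c_textvqa/tap_base_pretrain.yml",
    save_dir="save/m4c_split_pretrain_test",
    seed=13,
    pretrain=True,
)
print(" ".join(pretrain_cmd))
```

The resulting list can be passed to `subprocess.run` from the main folder; building a list instead of a shell string avoids quoting issues with paths.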

87 |

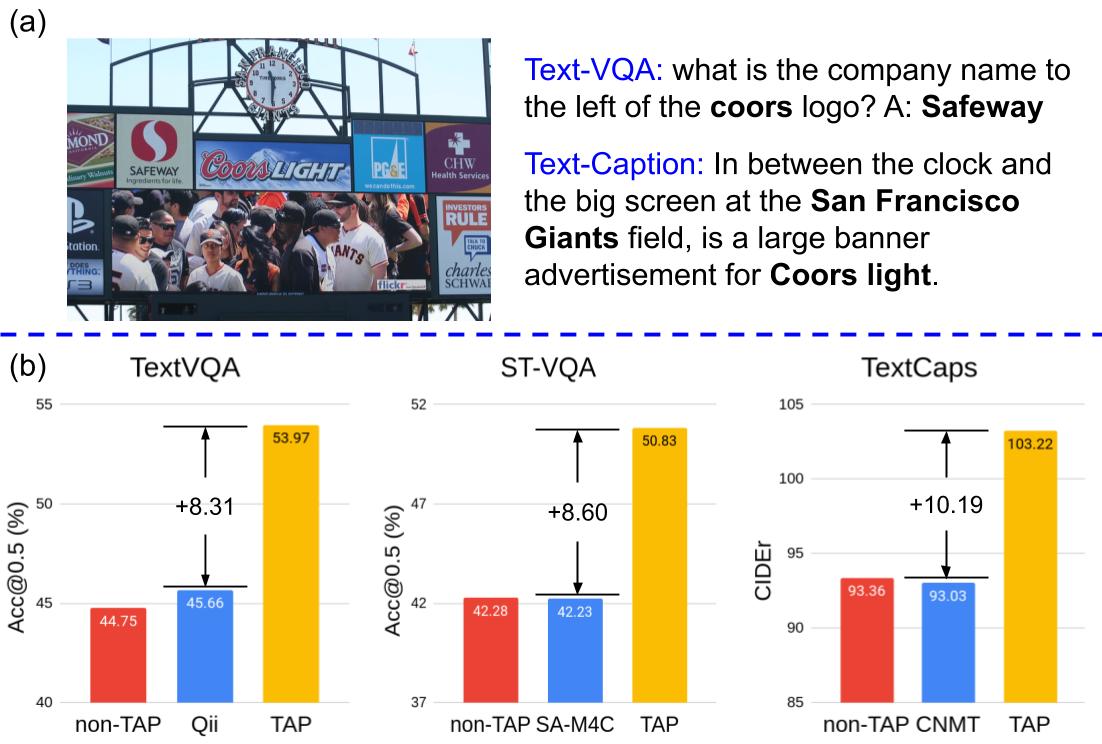

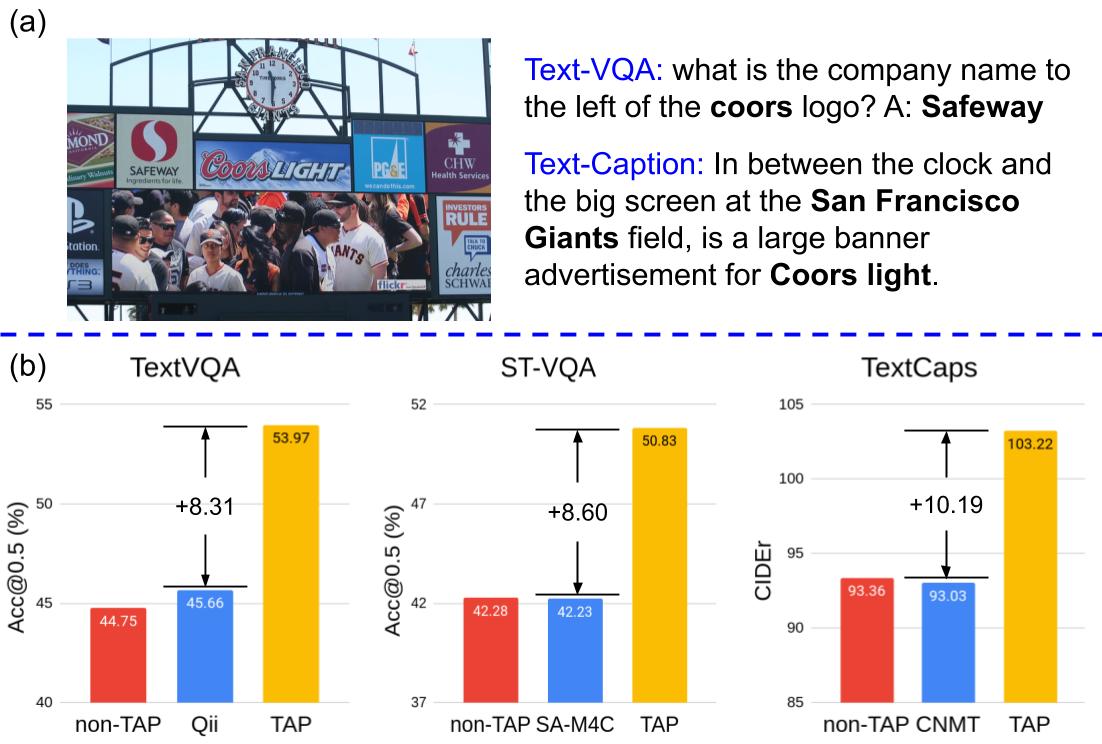

88 | ## Performance and Pre-trained Models

89 | Please check the detailed experiment settings in our [paper](https://arxiv.org/pdf/2012.04638.pdf).

90 |

91 | [Model checkpoints (~17G)](https://tapvqacaption.blob.core.windows.net/data/save).

92 |

93 | ```

94 | path/to/azcopy copy https://tapvqacaption.blob.core.windows.net/data/save /save --recursive

95 | ```

96 |

97 | Please refer to the Readme in the ``data`` folder for detailed instructions on downloading with azcopy.

98 |

99 |

100 |

101 | | Text-VQA | TAP | TAP** (with extra data) |

102 | |---|---|---|

108 | | TextVQA | 49.91 | 54.71 |

113 | | STVQA | 45.29 | 50.83 |

122 |

123 | | Text-Captioning | TAP | TAP** (with extra data) |

124 | |---|---|---|

130 | | TextCaps | 105.05 | 109.16 |

133 |

134 |

135 |

136 |

137 | ### Credits

138 | This project is built on the following repository:

139 | * [MMF: A multimodal framework for vision and language research](https://github.com/facebookresearch/mmf/).

--------------------------------------------------------------------------------

/SECURITY.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## Security

4 |

5 | Microsoft takes the security of our software products and services seriously, which includes all source code repositories managed through our GitHub organizations, which include [Microsoft](https://github.com/Microsoft), [Azure](https://github.com/Azure), [DotNet](https://github.com/dotnet), [AspNet](https://github.com/aspnet), [Xamarin](https://github.com/xamarin), and [our GitHub organizations](https://opensource.microsoft.com/).

6 |

7 | If you believe you have found a security vulnerability in any Microsoft-owned repository that meets [Microsoft's definition of a security vulnerability](https://docs.microsoft.com/en-us/previous-versions/tn-archive/cc751383(v=technet.10)), please report it to us as described below.

8 |

9 | ## Reporting Security Issues

10 |

11 | **Please do not report security vulnerabilities through public GitHub issues.**

12 |

13 | Instead, please report them to the Microsoft Security Response Center (MSRC) at [https://msrc.microsoft.com/create-report](https://msrc.microsoft.com/create-report).

14 |

15 | If you prefer to submit without logging in, send email to [secure@microsoft.com](mailto:secure@microsoft.com). If possible, encrypt your message with our PGP key; please download it from the [Microsoft Security Response Center PGP Key page](https://www.microsoft.com/en-us/msrc/pgp-key-msrc).

16 |

17 | You should receive a response within 24 hours. If for some reason you do not, please follow up via email to ensure we received your original message. Additional information can be found at [microsoft.com/msrc](https://www.microsoft.com/msrc).

18 |

19 | Please include the requested information listed below (as much as you can provide) to help us better understand the nature and scope of the possible issue:

20 |

21 | * Type of issue (e.g. buffer overflow, SQL injection, cross-site scripting, etc.)

22 | * Full paths of source file(s) related to the manifestation of the issue

23 | * The location of the affected source code (tag/branch/commit or direct URL)

24 | * Any special configuration required to reproduce the issue

25 | * Step-by-step instructions to reproduce the issue

26 | * Proof-of-concept or exploit code (if possible)

27 | * Impact of the issue, including how an attacker might exploit the issue

28 |

29 | This information will help us triage your report more quickly.

30 |

31 | If you are reporting for a bug bounty, more complete reports can contribute to a higher bounty award. Please visit our [Microsoft Bug Bounty Program](https://microsoft.com/msrc/bounty) page for more details about our active programs.

32 |

33 | ## Preferred Languages

34 |

35 | We prefer all communications to be in English.

36 |

37 | ## Policy

38 |

39 | Microsoft follows the principle of [Coordinated Vulnerability Disclosure](https://www.microsoft.com/en-us/msrc/cvd).

40 |

41 |

--------------------------------------------------------------------------------

/SUPPORT.md:

--------------------------------------------------------------------------------

1 | # TODO: The maintainer of this repo has not yet edited this file

2 |

3 | **REPO OWNER**: Do you want Customer Service & Support (CSS) support for this product/project?

4 |

5 | - **No CSS support:** Fill out this template with information about how to file issues and get help.

6 | - **Yes CSS support:** Fill out an intake form at [aka.ms/spot](https://aka.ms/spot). CSS will work with/help you to determine next steps. More details also available at [aka.ms/onboardsupport](https://aka.ms/onboardsupport).

7 | - **Not sure?** Fill out a SPOT intake as though the answer were "Yes". CSS will help you decide.

8 |

9 | *Then remove this first heading from this SUPPORT.MD file before publishing your repo.*

10 |

11 | # Support

12 |

13 | ## How to file issues and get help

14 |

15 | This project uses GitHub Issues to track bugs and feature requests. Please search the existing

16 | issues before filing new issues to avoid duplicates. For new issues, file your bug or

17 | feature request as a new Issue.

18 |

19 | For help and questions about using this project, please **REPO MAINTAINER: INSERT INSTRUCTIONS HERE

20 | FOR HOW TO ENGAGE REPO OWNERS OR COMMUNITY FOR HELP. COULD BE A STACK OVERFLOW TAG OR OTHER

21 | CHANNEL. WHERE WILL YOU HELP PEOPLE?**.

22 |

23 | ## Microsoft Support Policy

24 |

25 | Support for this **PROJECT or PRODUCT** is limited to the resources listed above.

--------------------------------------------------------------------------------

/configs/captioning/m4c_textcaps/tap_base_pretrain.yml:

--------------------------------------------------------------------------------

1 | includes:

2 | - common/defaults/configs/datasets/captioning/m4c_textcaps_ocr100.yml

3 | # Use soft copy

4 | dataset_attributes:

5 | m4c_textcaps:

6 | image_features:

7 | train:

8 | - feat_resx/train,ocr_feat_resx/textvqa_conf/train_images

9 | val:

10 | - feat_resx/train,ocr_feat_resx/textvqa_conf/train_images

11 | test:

12 | - feat_resx/test,ocr_feat_resx/textvqa_conf/test_images

13 | imdb_files:

14 | train:

15 | - imdb/m4c_textcaps/imdb_train.npy

16 | val:

17 | - imdb/m4c_textcaps/imdb_val_filtered_by_image_id.npy # only one sample per image_id

18 | test:

19 | - imdb/m4c_textcaps/imdb_test_filtered_by_image_id.npy # only one sample per image_id

20 | processors:

21 | text_processor:

22 | type: bert_tokenizer

23 | params:

24 | # max_length: 1

25 | max_length: 20

26 | answer_processor:

27 | type: m4c_caption

28 | params:

29 | vocab_file: m4c_captioner_vocabs/textcaps/vocab_textcap_threshold_10.txt

30 | preprocessor:

31 | type: simple_word

32 | params: {}

33 | context_preprocessor:

34 | type: simple_word

35 | params: {}

36 | max_length: 100

37 | max_copy_steps: 30

38 | num_answers: 1

39 | copy_processor:

40 | type: copy

41 | params:

42 | obj_max_length: 100

43 | max_length: 100

44 | phoc_processor:

45 | type: phoc

46 | params:

47 | max_length: 100

48 | model_attributes:

49 | m4c_captioner:

50 | lr_scale_frcn: 0.1

51 | lr_scale_text_bert: 0.1

52 | lr_scale_mmt: 1.0 # no scaling

53 | text_bert_init_from_bert_base: true

54 | text_bert:

55 | num_hidden_layers: 3

56 | obj:

57 | mmt_in_dim: 2048

58 | dropout_prob: 0.1

59 | ocr:

60 | mmt_in_dim: 3052 # 300 (FastText) + 604 (PHOC) + 2048 (Faster R-CNN) + 100 (all zeros; legacy)

61 | dropout_prob: 0.1

62 | mmt:

63 | hidden_size: 768

64 | num_hidden_layers: 4

65 | classifier:

66 | type: linear

67 | ocr_max_num: 100

68 | ocr_ptr_net:

69 | hidden_size: 768

70 | query_key_size: 768

71 | params: {}

72 | model_data_dir: ../data

73 | metrics:

74 | - type: maskpred_accuracy

75 | losses:

76 | - type: pretrainonly_m4c_decoding_bce_with_mask

77 | remove_unk_in_pred: true

78 | optimizer_attributes:

79 | params:

80 | eps: 1.0e-08

81 | lr: 1e-4

82 | weight_decay: 0

83 | type: Adam

84 | training_parameters:

85 | clip_norm_mode: all

86 | clip_gradients: true

87 | max_grad_l2_norm: 0.25

88 | lr_scheduler: true

89 | lr_steps:

90 | - 10000

91 | - 11000

92 | lr_ratio: 0.1

93 | use_warmup: true

94 | warmup_factor: 0.2

95 | warmup_iterations: 1000

96 | max_iterations: 12000

97 | batch_size: 128

98 | num_workers: 8

99 | task_size_proportional_sampling: true

100 | monitored_metric: m4c_textcaps/maskpred_accuracy

101 | metric_minimize: false

102 |

--------------------------------------------------------------------------------
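The `training_parameters` block of the config above combines a warmup phase with step decay. The sketch below shows how the learning rate could evolve under those settings, assuming linear interpolation from `warmup_factor` to 1.0 during warmup and one multiplication by `lr_ratio` per milestone in `lr_steps`. The function name `lr_multiplier` is hypothetical, and the exact boundary handling in the repository's trainer may differ.

```python
from bisect import bisect_right

# Values copied from the config above.
training_parameters = {
    "use_warmup": True,
    "warmup_factor": 0.2,
    "warmup_iterations": 1000,
    "lr_steps": [10000, 11000],
    "lr_ratio": 0.1,
}
BASE_LR = 1e-4  # optimizer_attributes.params.lr


def lr_multiplier(iteration, tp=training_parameters):
    """Multiplier applied to the base learning rate (assumed schedule)."""
    if tp["use_warmup"] and iteration <= tp["warmup_iterations"]:
        # Linear ramp from warmup_factor (iteration 0) up to 1.0.
        alpha = iteration / tp["warmup_iterations"]
        return tp["warmup_factor"] * (1.0 - alpha) + alpha
    # After warmup: multiply by lr_ratio once per milestone already reached.
    milestones_passed = bisect_right(tp["lr_steps"], iteration)
    return tp["lr_ratio"] ** milestones_passed


for it in (0, 500, 1000, 9999, 10000, 11000):
    print(it, BASE_LR * lr_multiplier(it))
```

Under these assumptions the rate starts at 20% of the base value, reaches the full `1e-4` at iteration 1000, and is cut by a factor of 10 at each of the two milestones.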

/configs/captioning/m4c_textcaps/tap_refine.yml:

--------------------------------------------------------------------------------

1 | includes:

2 | - common/defaults/configs/datasets/captioning/m4c_textcaps_ocr100.yml

3 | # Use soft copy

4 | dataset_attributes:

5 | m4c_textcaps:

6 | image_features:

7 | train:

8 | - feat_resx/train,ocr_feat_resx/textvqa_conf/train_images

9 | val:

10 | - feat_resx/train,ocr_feat_resx/textvqa_conf/train_images

11 | test:

12 | - feat_resx/test,ocr_feat_resx/textvqa_conf/test_images

13 | imdb_files:

14 | train:

15 | - imdb/m4c_textcaps/imdb_train.npy

16 | val:

17 | - imdb/m4c_textcaps/imdb_val_filtered_by_image_id.npy # only one sample per image_id

18 | test:

19 | - imdb/m4c_textcaps/imdb_test_filtered_by_image_id.npy # only one sample per image_id

20 | processors:

21 | text_processor:

22 | type: bert_tokenizer

23 | params:

24 | # max_length: 1

25 | max_length: 20

26 | answer_processor:

27 | type: m4c_caption

28 | params:

29 | vocab_file: m4c_captioner_vocabs/textcaps/vocab_textcap_threshold_10.txt

30 | preprocessor:

31 | type: simple_word

32 | params: {}

33 | context_preprocessor:

34 | type: simple_word

35 | params: {}

36 | max_length: 100

37 | max_copy_steps: 30

38 | num_answers: 1

39 | copy_processor:

40 | type: copy

41 | params:

42 | obj_max_length: 100

43 | max_length: 100

44 | phoc_processor:

45 | type: phoc

46 | params:

47 | max_length: 100

48 | model_attributes:

49 | m4c_captioner:

50 | lr_scale_frcn: 0.1

51 | lr_scale_text_bert: 0.1

52 | lr_scale_mmt: 1.0 # no scaling

53 | text_bert_init_from_bert_base: true

54 | text_bert:

55 | num_hidden_layers: 3

56 | obj:

57 | mmt_in_dim: 2048

58 | dropout_prob: 0.1

59 | ocr:

60 | mmt_in_dim: 3052 # 300 (FastText) + 604 (PHOC) + 2048 (Faster R-CNN) + 100 (all zeros; legacy)

61 | dropout_prob: 0.1

62 | mmt:

63 | hidden_size: 768

64 | num_hidden_layers: 4

65 | classifier:

66 | type: linear

67 | ocr_max_num: 100

68 | ocr_ptr_net:

69 | hidden_size: 768

70 | query_key_size: 768

71 | params: {}

72 | model_data_dir: ../data

73 | metrics:

74 | - type: textcaps_bleu4

75 | losses:

76 | - type: m4c_decoding_bce_with_mask

77 | remove_unk_in_pred: true

78 | optimizer_attributes:

79 | params:

80 | eps: 1.0e-08

81 | lr: 1e-4

82 | weight_decay: 0

83 | type: Adam

84 | training_parameters:

85 | clip_norm_mode: all

86 | clip_gradients: true

87 | max_grad_l2_norm: 0.25

88 | lr_scheduler: true

89 | lr_steps:

90 | - 10000

91 | - 11000

92 | lr_ratio: 0.1

93 | use_warmup: true

94 | warmup_factor: 0.2

95 | warmup_iterations: 1000

96 | max_iterations: 12000

97 | batch_size: 128

98 | num_workers: 8

99 | task_size_proportional_sampling: true

100 | monitored_metric: m4c_textcaps/textcaps_bleu4

101 | metric_minimize: false

102 |

--------------------------------------------------------------------------------
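The two TextCaps configs above are nearly identical. A small sketch makes the differences explicit by comparing excerpts of the two files. The excerpts are flattened by hand for illustration (they ignore the YAML nesting), and `diff_settings` is a hypothetical helper, not a function from this repository.

```python
# Hand-copied excerpts from tap_base_pretrain.yml and tap_refine.yml.
pretrain = {
    "metrics": ["maskpred_accuracy"],
    "losses": ["pretrainonly_m4c_decoding_bce_with_mask"],
    "monitored_metric": "m4c_textcaps/maskpred_accuracy",
    "max_iterations": 12000,
    "batch_size": 128,
}
refine = {
    "metrics": ["textcaps_bleu4"],
    "losses": ["m4c_decoding_bce_with_mask"],
    "monitored_metric": "m4c_textcaps/textcaps_bleu4",
    "max_iterations": 12000,
    "batch_size": 128,
}


def diff_settings(a, b):
    """Return {key: (value_in_a, value_in_b)} for keys whose values differ."""
    keys = sorted(set(a) | set(b))
    return {k: (a.get(k), b.get(k)) for k in keys if a.get(k) != b.get(k)}


for key, (before, after) in diff_settings(pretrain, refine).items():
    print(f"{key}: {before} -> {after}")
```

In these excerpts only the metric, the loss, and the monitored metric change between the pre-training and fine-tuning stages; the optimizer and schedule settings are shared.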

/configs/vqa/m4c_stvqa/tap_base_pretrain.yml:

--------------------------------------------------------------------------------

1 | includes:

2 | - common/defaults/configs/datasets/vqa/m4c_stvqa_ocr100.yml

3 | # Use soft copy

4 | dataset_attributes:

5 | m4c_textvqa:

6 | image_features:

7 | train:

8 | - feat_resx/stvqa/train,ocr_feat_resx/stvqa_conf

9 | val:

10 | - feat_resx/stvqa/train,ocr_feat_resx/stvqa_conf

11 | test:

12 | - feat_resx/stvqa/test_task3,ocr_feat_resx/stvqa_conf/test_task3

13 | imdb_files:

14 | train:

15 | - original_dl/ST-VQA/m4c_stvqa/imdb_subtrain.npy

16 | val:

17 | - original_dl/ST-VQA/m4c_stvqa/imdb_subval.npy

18 | test:

19 | - original_dl/ST-VQA/m4c_stvqa/imdb_test_task3.npy

20 | processors:

21 | text_processor:

22 | type: bert_tokenizer

23 | params:

24 | max_length: 20

25 | context_processor:

26 | params:

27 | max_length: 100

28 | answer_processor:

29 | type: m4c_answer

30 | params:

31 | vocab_file: m4c_vocabs/stvqa/fixed_answer_vocab_stvqa_5k.txt

32 | preprocessor:

33 | type: simple_word

34 | params: {}

35 | context_preprocessor:

36 | type: simple_word

37 | params: {}

38 | max_length: 100

39 | max_copy_steps: 12

40 | num_answers: 10

41 | copy_processor:

42 | type: copy

43 | params:

44 | obj_max_length: 100

45 | max_length: 100

46 | phoc_processor:

47 | type: phoc

48 | params:

49 | max_length: 100

50 | model_attributes:

51 | m4c_split:

52 | lr_scale_frcn: 0.1

53 | lr_scale_text_bert: 0.1

54 | lr_scale_mmt: 1.0 # no scaling

55 | text_bert_init_from_bert_base: true

56 | text_bert:

57 | num_hidden_layers: 3

58 | obj:

59 | mmt_in_dim: 2048

60 | dropout_prob: 0.1

61 | ocr:

62 | mmt_in_dim: 3052 # 300 (FastText) + 604 (PHOC) + 2048 (Faster R-CNN) + 100 (all zeros; legacy)

63 | dropout_prob: 0.1

64 | mmt:

65 | hidden_size: 768

66 | num_hidden_layers: 4

67 | classifier:

68 | type: linear

69 | ocr_max_num: 100

70 | ocr_ptr_net:

71 | hidden_size: 768

72 | query_key_size: 768

73 | params: {}

74 | model_data_dir: ../data

75 | metrics:

76 | - type: maskpred_accuracy

77 | losses:

78 | - type: pretrainonly_m4c_decoding_bce_with_mask

79 | optimizer_attributes:

80 | params:

81 | eps: 1.0e-08

82 | lr: 1e-4

83 | weight_decay: 0

84 | type: Adam

85 | training_parameters:

86 | clip_norm_mode: all

87 | clip_gradients: true

88 | max_grad_l2_norm: 0.25

89 | lr_scheduler: true

90 | lr_steps:

91 | - 14000

92 | - 19000

93 | lr_ratio: 0.1

94 | use_warmup: true

95 | warmup_factor: 0.2

96 | warmup_iterations: 1000

97 | max_iterations: 24000

98 | batch_size: 128

99 | num_workers: 8

100 | task_size_proportional_sampling: true

101 | monitored_metric: m4c_stvqa/maskpred_accuracy

102 | metric_minimize: false

--------------------------------------------------------------------------------
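The comment next to `ocr.mmt_in_dim` in the config above breaks the value 3052 into four parts. The following check simply re-adds those parts; the dictionary keys are labels chosen here for readability and are not identifiers from the code base.

```python
# Component sizes as listed in the config comment for ocr.mmt_in_dim.
ocr_feature_dims = {
    "fasttext": 300,
    "phoc": 604,
    "faster_rcnn": 2048,
    "legacy_zero_padding": 100,  # "all zeros; legacy" in the comment
}

mmt_in_dim = sum(ocr_feature_dims.values())
print(mmt_in_dim)
assert mmt_in_dim == 3052, "OCR feature parts must add up to ocr.mmt_in_dim"
```

A check like this is a cheap guard when changing one of the OCR feature extractors, since a mismatch between the concatenated feature size and `mmt_in_dim` would otherwise only surface as a shape error at run time.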

/configs/vqa/m4c_stvqa/tap_refine.yml:

--------------------------------------------------------------------------------

1 | includes:

2 | - common/defaults/configs/datasets/vqa/m4c_stvqa_ocr100.yml

3 | # Use soft copy

4 | dataset_attributes:

5 | m4c_textvqa:

6 | image_features:

7 | train:

8 | - feat_resx/stvqa/train,ocr_feat_resx/stvqa_conf

9 | val:

10 | - feat_resx/stvqa/train,ocr_feat_resx/stvqa_conf

11 | test:

12 | - feat_resx/stvqa/test_task3,ocr_feat_resx/stvqa_conf/test_task3

13 | imdb_files:

14 | train:

15 | - original_dl/ST-VQA/m4c_stvqa/imdb_subtrain.npy

16 | val:

17 | - original_dl/ST-VQA/m4c_stvqa/imdb_subval.npy

18 | test:

19 | - original_dl/ST-VQA/m4c_stvqa/imdb_test_task3.npy

20 | processors:

21 | text_processor:

22 | type: bert_tokenizer

23 | params:

24 | max_length: 20

25 | context_processor:

26 | params:

27 | max_length: 100

28 | answer_processor:

29 | type: m4c_answer

30 | params:

31 | vocab_file: m4c_vocabs/stvqa/fixed_answer_vocab_stvqa_5k.txt

32 | preprocessor:

33 | type: simple_word

34 | params: {}

35 | context_preprocessor:

36 | type: simple_word

37 | params: {}

38 | max_length: 100

39 | max_copy_steps: 12

40 | num_answers: 10

41 | copy_processor:

42 | type: copy

43 | params:

44 | obj_max_length: 100

45 | max_length: 100

46 | phoc_processor:

47 | type: phoc

48 | params:

49 | max_length: 100

50 | model_attributes:

51 | m4c_split:

52 | lr_scale_frcn: 0.1

53 | lr_scale_text_bert: 0.1

54 | lr_scale_mmt: 1.0 # no scaling

55 | text_bert_init_from_bert_base: true

56 | text_bert:

57 | num_hidden_layers: 3

58 | obj:

59 | mmt_in_dim: 2048

60 | dropout_prob: 0.1

61 | ocr:

62 | mmt_in_dim: 3052 # 300 (FastText) + 604 (PHOC) + 2048 (Faster R-CNN) + 100 (all zeros; legacy)

63 | dropout_prob: 0.1

64 | mmt:

65 | hidden_size: 768

66 | num_hidden_layers: 4

67 | classifier:

68 | type: linear

69 | ocr_max_num: 100

70 | ocr_ptr_net:

71 | hidden_size: 768

72 | query_key_size: 768

73 | params: {}

74 | model_data_dir: ../data

75 | metrics:

76 | - type: stvqa_accuracy

77 | - type: stvqa_anls

78 | losses:

79 | - type: m4c_decoding_bce_with_mask

80 | optimizer_attributes:

81 | params:

82 | eps: 1.0e-08

83 | lr: 1e-4

84 | weight_decay: 0

85 | type: Adam

86 | training_parameters:

87 | clip_norm_mode: all

88 | clip_gradients: true

89 | max_grad_l2_norm: 0.25

90 | lr_scheduler: true

91 | lr_steps:

92 | - 14000

93 | - 19000

94 | lr_ratio: 0.1

95 | use_warmup: true

96 | warmup_factor: 0.2

97 | warmup_iterations: 1000

98 | max_iterations: 24000

99 | batch_size: 128

100 | num_workers: 8

101 | task_size_proportional_sampling: true

102 | monitored_metric: m4c_stvqa/stvqa_accuracy

103 | metric_minimize: false

--------------------------------------------------------------------------------
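Each `image_features` entry in the configs above packs two paths into one comma-separated string. Judging by the directory names, the first part appears to point at object features and the second at OCR features, but that reading is an assumption. The sketch below only shows how such an entry can be split; `split_feature_entry` is a hypothetical helper.

```python
def split_feature_entry(entry):
    """Split a comma-separated image_features entry into its paths."""
    return [part.strip() for part in entry.split(",") if part.strip()]


# Entries copied from the ST-VQA config above.
train_entry = "feat_resx/stvqa/train,ocr_feat_resx/stvqa_conf"
test_entry = "feat_resx/stvqa/test_task3,ocr_feat_resx/stvqa_conf/test_task3"

for entry in (train_entry, test_entry):
    paths = split_feature_entry(entry)
    print(paths)
```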

/configs/vqa/m4c_textvqa/tap_base_pretrain.yml:

--------------------------------------------------------------------------------

1 | includes:

2 | - common/defaults/configs/datasets/vqa/m4c_textvqa_ocr100.yml

3 | # Use soft copy

4 | dataset_attributes:

5 | m4c_textvqa:

6 | image_features:

7 | train:

8 | - feat_resx/train,ocr_feat_resx/textvqa_conf/train_images

9 | val:

10 | - feat_resx/train,ocr_feat_resx/textvqa_conf/train_images

11 | test:

12 | - feat_resx/test,ocr_feat_resx/textvqa_conf/test_images

13 | imdb_files:

14 | train:

15 | - imdb/m4c_textvqa/imdb_train_ocr_en.npy

16 | val:

17 | - imdb/m4c_textvqa/imdb_val_ocr_en.npy

18 | test:

19 | - imdb/m4c_textvqa/imdb_test_ocr_en.npy

20 | processors:

21 | text_processor:

22 | type: bert_tokenizer

23 | params:

24 | max_length: 20

25 | context_processor:

26 | params:

27 | max_length: 100

28 | answer_processor:

29 | type: m4c_answer

30 | params:

31 | vocab_file: m4c_vocabs/textvqa/fixed_answer_vocab_textvqa_5k.txt

32 | preprocessor:

33 | type: simple_word

34 | params: {}

35 | context_preprocessor:

36 | type: simple_word

37 | params: {}

38 | max_length: 100

39 | max_copy_steps: 12

40 | num_answers: 10

41 | copy_processor:

42 | type: copy

43 | params:

44 | obj_max_length: 100

45 | max_length: 100

46 | phoc_processor:

47 | type: phoc

48 | params:

49 | max_length: 100

50 | model_attributes:

51 | m4c_split:

52 | lr_scale_frcn: 0.1

53 | lr_scale_text_bert: 0.1

54 | lr_scale_mmt: 1.0 # no scaling

55 | text_bert_init_from_bert_base: true

56 | text_bert:

57 | num_hidden_layers: 3

58 | obj:

59 | mmt_in_dim: 2048

60 | dropout_prob: 0.1

61 | ocr:

62 | mmt_in_dim: 3052 # 300 (FastText) + 604 (PHOC) + 2048 (Faster R-CNN) + 100 (all zeros; legacy)

63 | dropout_prob: 0.1

64 | mmt:

65 | hidden_size: 768

66 | num_hidden_layers: 4

67 | classifier:

68 | type: linear

69 | ocr_max_num: 100

70 | ocr_ptr_net:

71 | hidden_size: 768

72 | query_key_size: 768

73 | params: {}

74 | model_data_dir: ../data

75 | metrics:

76 | - type: maskpred_accuracy

77 | losses:

78 | - type: pretrainonly_m4c_decoding_bce_with_mask

79 | optimizer_attributes:

80 | params:

81 | eps: 1.0e-08

82 | lr: 1e-4

83 | weight_decay: 0

84 | type: Adam

85 | training_parameters:

86 | clip_norm_mode: all

87 | clip_gradients: true

88 | max_grad_l2_norm: 0.25

89 | lr_scheduler: true

90 | lr_steps:

91 | - 14000

92 | - 19000

93 | lr_ratio: 0.1

94 | use_warmup: true

95 | warmup_factor: 0.2

96 | warmup_iterations: 1000

97 | max_iterations: 24000

98 | batch_size: 128

99 | num_workers: 8

100 | task_size_proportional_sampling: true

101 | monitored_metric: m4c_textvqa/maskpred_accuracy

102 | metric_minimize: false

--------------------------------------------------------------------------------
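The config above enables gradient clipping with `max_grad_l2_norm: 0.25` and `clip_norm_mode: all`. As a rough illustration in plain Python, the sketch below assumes that `all` means a single L2 norm computed over every parameter's gradient, with all gradients rescaled by the same factor when that norm exceeds the limit. The function `clip_by_global_l2_norm` is hypothetical; the repository's trainer is not shown here and may implement this differently.

```python
import math


def clip_by_global_l2_norm(grads, max_norm, eps=1e-6):
    """Rescale gradients so their joint L2 norm does not exceed max_norm.

    grads: list of per-parameter gradients, each a list of floats.
    Returns (clipped_grads, norm_before_clipping).
    """
    total_norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    scale = max_norm / (total_norm + eps)
    if scale >= 1.0:
        # Norm is already within the limit: leave gradients untouched.
        return [list(grad) for grad in grads], total_norm
    return [[g * scale for g in grad] for grad in grads], total_norm


MAX_GRAD_L2_NORM = 0.25  # training_parameters.max_grad_l2_norm

grads = [[3.0, 0.0], [0.0, 4.0]]  # joint L2 norm is 5.0
clipped, norm_before = clip_by_global_l2_norm(grads, MAX_GRAD_L2_NORM)
norm_after = math.sqrt(sum(g * g for grad in clipped for g in grad))
print(norm_before, norm_after)
```

Because one scale factor is shared by all parameters, the direction of the overall update is preserved and only its length is capped.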

/configs/vqa/m4c_textvqa/tap_refine.yml:

--------------------------------------------------------------------------------

1 | includes:

2 | - common/defaults/configs/datasets/vqa/m4c_textvqa_ocr100.yml

3 | # Use soft copy

4 | dataset_attributes:

5 | m4c_textvqa:

6 | image_features:

7 | train:

8 | - feat_resx/train,ocr_feat_resx/textvqa_conf/train_images

9 | val:

10 | - feat_resx/train,ocr_feat_resx/textvqa_conf/train_images

11 | test:

12 | - feat_resx/test,ocr_feat_resx/textvqa_conf/test_images

13 | imdb_files:

14 | train:

15 | - imdb/m4c_textvqa/imdb_train_ocr_en.npy

16 | val:

17 | - imdb/m4c_textvqa/imdb_val_ocr_en.npy

18 | test:

19 | - imdb/m4c_textvqa/imdb_test_ocr_en.npy

20 | processors:

21 | text_processor:

22 | type: bert_tokenizer

23 | params:

24 | max_length: 20

25 | context_processor:

26 | params:

27 | max_length: 100

28 | answer_processor:

29 | type: m4c_answer

30 | params:

31 | vocab_file: m4c_vocabs/textvqa/fixed_answer_vocab_textvqa_5k.txt

32 | preprocessor:

33 | type: simple_word

34 | params: {}

35 | context_preprocessor:

36 | type: simple_word

37 | params: {}

38 | max_length: 100

39 | max_copy_steps: 12

40 | num_answers: 10

41 | copy_processor:

42 | type: copy

43 | params:

44 | obj_max_length: 100

45 | max_length: 100

46 | phoc_processor:

47 | type: phoc

48 | params:

49 | max_length: 100

50 | model_attributes:

51 | m4c_split:

52 | lr_scale_frcn: 0.1

53 | lr_scale_text_bert: 0.1

54 | lr_scale_mmt: 1.0 # no scaling

55 | text_bert_init_from_bert_base: true

56 | text_bert:

57 | num_hidden_layers: 3

58 | obj:

59 | mmt_in_dim: 2048

60 | dropout_prob: 0.1

61 | ocr:

62 | mmt_in_dim: 3052 # 300 (FastText) + 604 (PHOC) + 2048 (Faster R-CNN) + 100 (all zeros; legacy)

63 | dropout_prob: 0.1

64 | mmt:

65 | hidden_size: 768

66 | num_hidden_layers: 4

67 | classifier:

68 | type: linear

69 | ocr_max_num: 100

70 | ocr_ptr_net:

71 | hidden_size: 768

72 | query_key_size: 768

73 | params: {}

74 | model_data_dir: ../data

75 | metrics:

76 | - type: textvqa_accuracy

77 | losses:

78 | - type: m4c_decoding_bce_with_mask

79 | optimizer_attributes:

80 | params:

81 | eps: 1.0e-08

82 | lr: 1e-4

83 | weight_decay: 0

84 | type: Adam

85 | training_parameters:

86 | clip_norm_mode: all

87 | clip_gradients: true

88 | max_grad_l2_norm: 0.25

89 | lr_scheduler: true

90 | lr_steps:

91 | - 14000

92 | - 19000

93 | lr_ratio: 0.1

94 | use_warmup: true

95 | warmup_factor: 0.2

96 | warmup_iterations: 1000

97 | max_iterations: 24000

98 | batch_size: 128

99 | num_workers: 8

100 | task_size_proportional_sampling: true

101 | monitored_metric: m4c_textvqa/textvqa_accuracy

102 | metric_minimize: false
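
The `training_parameters` block above combines learning-rate warmup (`warmup_factor`, `warmup_iterations`) with step decay (`lr_steps`, `lr_ratio`). The sketch below shows one way such settings could translate into a per-iteration multiplier on the base learning rate of `1e-4`. The helper name `lr_multiplier`, the linear warmup ramp, and the "multiply by `lr_ratio` once per passed step" rule are assumptions made for illustration; they are not confirmed against the trainer code.

```python
from bisect import bisect_right

# Values copied from the training_parameters block above.
CFG = {
    "use_warmup": True,
    "warmup_factor": 0.2,
    "warmup_iterations": 1000,
    "lr_steps": [14000, 19000],
    "lr_ratio": 0.1,
}


def lr_multiplier(iteration, cfg=CFG):
    """Hypothetical helper: factor applied to the base lr at `iteration`."""
    if cfg["use_warmup"] and iteration <= cfg["warmup_iterations"]:
        # Assumed: linear ramp from warmup_factor up to 1.0.
        alpha = iteration / cfg["warmup_iterations"]
        return cfg["warmup_factor"] * (1.0 - alpha) + alpha
    # Assumed: multiply by lr_ratio once for every lr_step already reached.
    return cfg["lr_ratio"] ** bisect_right(cfg["lr_steps"], iteration)


for it in (0, 500, 1000, 13999, 14000, 19000):
    print(it, lr_multiplier(it))
```

Under these assumptions the multiplier starts at `warmup_factor`, reaches 1.0 at iteration 1000, and is scaled by `lr_ratio` at iterations 14000 and 19000.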

--------------------------------------------------------------------------------

/data/README.md:

--------------------------------------------------------------------------------

1 | ## Data Organization

2 | We recommend downloading the data with the AzCopy commands below.

3 | The AzCopy executable can be downloaded [here](https://docs.microsoft.com/en-us/azure/storage/common/storage-use-azcopy-v10#download-azcopy).

4 | Move the ``GoogleCC`` folder under ``data`` to match the default paths.

5 |

6 | [TextVQA/Caps/STVQA Data (~62G)](https://tapvqacaption.blob.core.windows.net/data/data).

7 |

8 | [OCR-CC Data (Huge, ~1.3T)](https://tapvqacaption.blob.core.windows.net/data/GoogleCC).

9 |

10 | [Model checkpoints (~17G)](https://tapvqacaption.blob.core.windows.net/data/save).

11 |

12 | A subset of OCR-CC with around 400K samples is available in the imdb file ``data/imdb/cc/imdb_train_ocr_subset.npy``. Training on the subset is faster, at the cost of a small drop in performance compared with the full set ``data/imdb/cc/imdb_train_ocr.npy``.

13 |

14 | ```

15 | path/to/azcopy copy <source-url> <destination> --recursive

16 |

17 | # for example, downloading TextVQA/Caps/STVQA Data

18 | path/to/azcopy copy https://tapvqacaption.blob.core.windows.net/data/data /data --recursive

19 |

20 | # for example, downloading OCR-CC Data

21 | path/to/azcopy copy https://tapvqacaption.blob.core.windows.net/data/GoogleCC /data/GoogleCC --recursive

22 |

23 | # for example, downloading model checkpoints

24 | path/to/azcopy copy https://tapvqacaption.blob.core.windows.net/data/save /save --recursive

25 | ```

26 |
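
The three download commands share one pattern: a folder under the blob container URL, a local destination, and `--recursive`. As a small sketch, the same commands can be assembled programmatically. The helper name `azcopy_command` is hypothetical, and `path/to/azcopy` remains a placeholder for wherever the executable was unpacked.

```python
import shlex

# Container URL taken from the links above.
BASE_URL = "https://tapvqacaption.blob.core.windows.net/data"


def azcopy_command(remote_folder, local_dir, azcopy="path/to/azcopy"):
    """Hypothetical helper: argument list for one recursive download."""
    return [azcopy, "copy", f"{BASE_URL}/{remote_folder}", local_dir, "--recursive"]


# (remote folder, local destination) pairs matching the examples above.
downloads = [("data", "/data"), ("GoogleCC", "/data/GoogleCC"), ("save", "/save")]

for remote, local in downloads:
    print(shlex.join(azcopy_command(remote, local)))
```

Each argument list could be handed to `subprocess.run` once the placeholder path is replaced with a real one.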

--------------------------------------------------------------------------------

/projects/M4C_Captioner/scripts/textcaps_eval.py:

--------------------------------------------------------------------------------

1 | import sys

2 | import json

3 | import numpy as np

4 | import os

5 |

6 | sys.path.append(

7 | os.path.join(os.path.dirname(__file__), '../../../pythia/scripts/coco/')

8 | )

9 | import coco_caption_eval # NoQA

10 |

11 |

12 | def print_metrics(res_metrics):

13 | print(res_metrics)

14 | keys = ['Bleu_1', 'Bleu_2', 'Bleu_3', 'Bleu_4', 'METEOR', 'ROUGE_L', 'SPICE', 'CIDEr']

15 | print('\n\n**********\nFinal model performance:\n**********')

16 | for k in keys:

17 | print(k, ': %.1f' % (res_metrics[k] * 100))

18 |

19 |

20 | if __name__ == '__main__':

21 | import argparse

22 | parser = argparse.ArgumentParser()

23 | parser.add_argument('--pred_file', type=str, required=True)

24 | parser.add_argument('--set', type=str, default='val')

25 | args = parser.parse_args()

26 |

27 | if args.set not in ['train', 'val']:

28 | raise Exception(

29 | 'this script only supports TextCaps train and val set. '

30 | 'Please use the EvalAI server for test set evaluation'

31 | )

32 |

33 | with open(args.pred_file) as f:

34 | preds = json.load(f)

35 | imdb_file = os.path.join(

36 | os.path.dirname(__file__),

37 | '../../../data/imdb/m4c_textcaps/imdb_{}.npy'.format(args.set)

38 | )

39 | imdb = np.load(imdb_file, allow_pickle=True)

40 | imdb = imdb[1:]

41 |

42 | gts = [

43 | {'image_id': info['image_id'], 'caption': info['caption_str']}

44 | for info in imdb

45 | ]

46 | preds = [

47 | {'image_id': p['image_id'], 'caption': p['caption']}

48 | for p in preds

49 | ]

50 | imgids = list(set(g['image_id'] for g in gts))

51 |

52 | metrics = coco_caption_eval.calculate_metrics(

53 | imgids, {'annotations': gts}, {'annotations': preds}

54 | )

55 |

56 | print_metrics(metrics)

57 |
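
The script above reads `--pred_file` as a JSON list and keeps only the `image_id` and `caption` fields of each entry. The sketch below writes and re-reads a file in that shape. The image ids, captions, and the extra `score` field are made up for illustration, and `normalize_predictions` is a hypothetical name for the list comprehension used in the script.

```python
import json
import os
import tempfile

# Hypothetical predictions; ids and captions are invented for this example.
predictions = [
    {"image_id": "img_0001", "caption": "a red stop sign on a street corner", "score": 0.9},
    {"image_id": "img_0002", "caption": "a bottle labeled cola on a table"},
]


def normalize_predictions(preds):
    """Keep only the two fields the evaluation script reads."""
    return [{"image_id": p["image_id"], "caption": p["caption"]} for p in preds]


with tempfile.TemporaryDirectory() as tmp:
    pred_file = os.path.join(tmp, "preds.json")
    with open(pred_file, "w") as f:
        json.dump(predictions, f)
    with open(pred_file) as f:
        loaded = normalize_predictions(json.load(f))

print(loaded)
```

A file like this would be passed as `--pred_file`, together with `--set val` (the default) or `--set train`.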

--------------------------------------------------------------------------------

/projects/TAP_Caption/README.md:

--------------------------------------------------------------------------------

1 | TAP

2 |

--------------------------------------------------------------------------------

/projects/TAP_QA/README.md:

--------------------------------------------------------------------------------

1 | TAP

2 |

--------------------------------------------------------------------------------

/pythia/common/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Facebook, Inc. and its affiliates.

2 |

--------------------------------------------------------------------------------

/pythia/common/batch_collator.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Facebook, Inc. and its affiliates.

2 | from pythia.common.sample import SampleList

3 | import random

4 | import torch

5 |

6 | def combine_seq(texta, textlena, textmaska, textb, textlenb, textmaskb, texta_maxlen=None):

7 | if textmaska is None: textmaska = torch.ones(texta.shape).long()*-1

8 | if textmaskb is None: textmaskb = torch.ones(textb.shape).long()*-1

9 | textb[0] = 102  # overwrite the first token of textb in place with [SEP] (id 102 in the bert-base-uncased vocab)

10 | cmb_text, cmb_textmask = torch.cat([texta,textb],0), torch.cat([textmaska,textmaskb],0)

11 | cmb_textlen = textlena + textlenb

12 | return cmb_text, cmb_textlen, cmb_textmask

13 |

14 | def combine_seq_pollute(batch):

15 | batch_size = len(batch)

16 | for ii in range(batch_size):

17 | assert(batch_size!=0)

18 | if batch_size!=1:

19 | pollute = random.choice([i for i in range(batch_size) if i!=ii])

20 | else:

21 | pollute = ii

22 | qidx, ocridx, objidx = ii, ii, ii

23 | if 'langtag_pollute' in batch[ii]:

24 | if int(batch[ii].langtag_pollute)==1: qidx = pollute

25 | if 'ocrtag_pollute' in batch[ii]:

26 | if int(batch[ii].ocrtag_pollute)==1: ocridx = pollute

27 | if 'objtag_pollute' in batch[ii]:

28 | if int(batch[ii].objtag_pollute)==1: objidx = pollute

29 | qocr_text, qocr_text_len, qocr_text_mask_label = combine_seq(\

30 | batch[qidx].text, batch[qidx].text_len, batch[qidx].text_mask_label, \

31 | batch[ocridx].ocr_text, batch[ocridx].ocr_text_len, batch[ocridx].ocrtext_mask_label, texta_maxlen=batch[qidx].text.shape[0])

32 |

33 | batch[ii].cmb_text, batch[ii].cmb_text_len, batch[ii].cmb_text_mask_label = combine_seq(\

34 | qocr_text, qocr_text_len, qocr_text_mask_label, \

35 | batch[objidx].obj_text, batch[objidx].obj_text_len, batch[objidx].objtext_mask_label, texta_maxlen=qocr_text.shape[0])

36 | return batch

37 |

38 | class BatchCollator:

39 | # TODO: Think more if there is a better way to do this

40 | _IDENTICAL_VALUE_KEYS = ["dataset_type", "dataset_name"]

41 |

42 | def __call__(self, batch):

43 | batch = combine_seq_pollute(batch)

44 | sample_list = SampleList(batch)

45 | for key in self._IDENTICAL_VALUE_KEYS:

46 | sample_list[key + "_"] = sample_list[key]

47 | sample_list[key] = sample_list[key][0]

48 |

49 | return sample_list
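
The index selection inside `combine_seq_pollute` can be isolated from the tensor handling. The sketch below reproduces only that logic in plain Python: pick a different sample from the batch, then decide per modality whether to read from it. The function names `pick_pollute_index` and `source_indices` are hypothetical, and the pollute flags are passed as plain integers rather than read from sample attributes.

```python
import random


def pick_pollute_index(batch_size, ii, rng=random):
    """Index of another sample in the batch, or ii when the batch has one sample."""
    assert batch_size != 0
    if batch_size == 1:
        return ii
    return rng.choice([i for i in range(batch_size) if i != ii])


def source_indices(ii, pollute, langtag_pollute=0, ocrtag_pollute=0, objtag_pollute=0):
    """Which sample the question, OCR and object text are taken from for sample ii."""
    qidx = pollute if langtag_pollute == 1 else ii
    ocridx = pollute if ocrtag_pollute == 1 else ii
    objidx = pollute if objtag_pollute == 1 else ii
    return qidx, ocridx, objidx


pollute = pick_pollute_index(batch_size=4, ii=2)
print(source_indices(2, pollute, ocrtag_pollute=1))
```

With `ocrtag_pollute=1`, only the OCR text comes from the other sample, while the question and object text still come from sample 2.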

--------------------------------------------------------------------------------

/pythia/common/constants.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Facebook, Inc. and its affiliates.

2 | import os

3 |

4 |

5 | imdb_version = 1

6 | FASTTEXT_WIKI_URL = (

7 | "https://dl.fbaipublicfiles.com/pythia/pretrained_models/fasttext/wiki.en.bin"

8 | )

9 |

10 | CLEVR_DOWNLOAD_URL = (

11 | "https://dl.fbaipublicfiles.com/clevr/CLEVR_v1.0.zip"

12 | )

13 |

14 | VISUAL_GENOME_CONSTS = {

15 | "imdb_url": "https://dl.fbaipublicfiles.com/pythia/data/imdb/visual_genome.tar.gz",

16 | "features_url": "https://dl.fbaipublicfiles.com/pythia/features/visual_genome.tar.gz",

17 | "synset_file": "vg_synsets.txt",

18 | "vocabs": "https://dl.fbaipublicfiles.com/pythia/data/vocab.tar.gz"

19 | }

20 |

21 | VISUAL_DIALOG_CONSTS = {

22 | "imdb_url": {

23 | "train": "https://www.dropbox.com/s/ix8keeudqrd8hn8/visdial_1.0_train.zip?dl=1",

24 | "val": "https://www.dropbox.com/s/ibs3a0zhw74zisc/visdial_1.0_val.zip?dl=1",

25 | "test": "https://www.dropbox.com/s/ibs3a0zhw74zisc/visdial_1.0_test.zip?dl=1"

26 | },

27 | "features_url": {

28 | "visual_dialog": "https://dl.fbaipublicfiles.com/pythia/features/visual_dialog.tar.gz",

29 | "coco": "https://dl.fbaipublicfiles.com/pythia/features/coco.tar.gz"

30 | },

31 | "vocabs": "https://dl.fbaipublicfiles.com/pythia/data/vocab.tar.gz"

32 | }

33 |

34 | DOWNLOAD_CHUNK_SIZE = 1024 * 1024

35 |

--------------------------------------------------------------------------------

/pythia/common/dataset_loader.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Facebook, Inc. and its affiliates.

2 | import os

3 |

4 | import yaml

5 | from torch.utils.data import DataLoader

6 |

7 | from pythia.common.batch_collator import BatchCollator

8 | from pythia.common.test_reporter import TestReporter

9 | from pythia.datasets.multi_dataset import MultiDataset

10 | from pythia.datasets.samplers import DistributedSampler

11 | from pythia.utils.general import get_batch_size

12 |

13 |

14 | class DatasetLoader:

15 | def __init__(self, config):

16 | self.config = config

17 |

18 | def load_datasets(self):

19 | self.train_dataset = MultiDataset("train")

20 | self.val_dataset = MultiDataset("val")

21 | self.test_dataset = MultiDataset("test")

22 |

23 | self.train_dataset.load(**self.config)

24 | self.val_dataset.load(**self.config)

25 | self.test_dataset.load(**self.config)

26 |

27 | if self.train_dataset.num_datasets == 1:

28 | self.train_loader = self.train_dataset.first_loader

29 | self.val_loader = self.val_dataset.first_loader

30 | self.test_loader = self.test_dataset.first_loader

31 | else:

32 | self.train_loader = self.train_dataset

33 | self.val_loader = self.val_dataset

34 | self.test_loader = self.test_dataset

35 |

36 | self.mapping = {

37 | "train": self.train_dataset,

38 | "val": self.val_dataset,

39 | "test": self.test_dataset,

40 | }

41 |

42 | self.test_reporter = None

43 | self.should_not_log = self.config.training_parameters.should_not_log

44 |

45 | @property

46 | def dataset_config(self):

47 | return self._dataset_config

48 |

49 | @dataset_config.setter

50 | def dataset_config(self, config):

51 | self._dataset_config = config

52 |

53 | def get_config(self):

54 | return self._dataset_config

55 |

56 | def get_test_reporter(self, dataset_type):

57 | dataset = getattr(self, "{}_dataset".format(dataset_type))

58 | return TestReporter(dataset)

59 |

60 | def update_registry_for_model(self, config):

61 | self.train_dataset.update_registry_for_model(config)

62 | self.val_dataset.update_registry_for_model(config)

63 | self.test_dataset.update_registry_for_model(config)

64 |

65 | def clean_config(self, config):

66 | self.train_dataset.clean_config(config)

67 | self.val_dataset.clean_config(config)

68 | self.test_dataset.clean_config(config)

69 |

70 | def prepare_batch(self, batch, *args, **kwargs):

71 | return self.mapping[batch.dataset_type].prepare_batch(batch)

72 |

73 | def verbose_dump(self, report, *args, **kwargs):

74 | if self.config.training_parameters.verbose_dump:

75 | dataset_type = report.dataset_type

76 | self.mapping[dataset_type].verbose_dump(report, *args, **kwargs)

77 |

78 | def seed_sampler(self, dataset_type, seed):

79 | dataset = getattr(self, "{}_dataset".format(dataset_type))

80 | dataset.seed_sampler(seed)

81 |

--------------------------------------------------------------------------------

/pythia/common/defaults/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/microsoft/TAP/352891f93c75ac5d6b9ba141bbe831477dcdd807/pythia/common/defaults/__init__.py

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/base.yml:

--------------------------------------------------------------------------------

1 | # Configuration for training

2 | training_parameters:

3 | # Name of the trainer class used to define the training/evaluation loop

4 | trainer: 'base_trainer'

5 | # Name of the experiment, will be used while saving checkpoints

6 | # and generating reports

7 | experiment_name: run

8 | # Maximum number of iterations the training will run

9 | max_iterations: 22000

10 | # Maximum epochs in case you don't want to use iterations

11 | # Can be mixed with max iterations, so it will stop whichever is

12 | # completed first. Default: null means epochs won't be used

13 | max_epochs: null

14 | # After `log_interval` iterations, current iteration's training loss and

15 | # metrics will be reported. This will also report validation

16 | # loss and metrics on a single batch from validation set

17 | # to provide an estimate on validation side

18 | log_interval: 100

19 | # After `snapshot_interval` iterations, pythia will make a snapshot

20 | # which will involve creating a checkpoint for current training scenarios

21 | # This will also evaluate validation metrics on whole validation set

22 | # TODO: Change this to checkpoint_interval and create a new

23 | # `validation_interval` for evaluating on validation set

24 | snapshot_interval: 1000

25 | # Whether gradients should be clipped

26 | clip_gradients: false

27 | # Mode for clip norm

28 | clip_norm_mode: all

29 | # Device to be used, if cuda then GPUs will be used

30 | device: cuda

31 | # Seed to be used for training. -1 means random seed.

32 | # Either pass fixed through your config or command line arguments

33 | seed: null

34 | # Size of each batch. If distributed or data_parallel

35 | # is used, this will be divided equally among GPUs

36 | batch_size: 512

37 | # Number of workers to be used in dataloaders

38 | num_workers: 4

39 |

40 | # Whether to use early stopping, (Default: false)

41 | should_early_stop: false

42 | # Patience for early stopping

43 | patience: 4000

44 | # Metric to be monitored for early stopping

45 | # loss will monitor combined loss from all of the tasks

46 | # Usually, it will be of the form `dataset_metric`

47 | # for e.g. vqa2_vqa_accuracy

48 | monitored_metric: total_loss

49 | # Whether the monitored metric should be minimized for early stopping

50 | # or not, for e.g. you would want to minimize loss but maximize accuracy

51 | metric_minimize: true

52 |

53 | # Should a lr scheduler be used

54 | lr_scheduler: false

55 | # Steps for LR scheduler, will be an array of iteration count

56 | # when lr should be decreased

57 | lr_steps: []

58 | # Ratio for each lr step

59 | lr_ratio: 0.1

60 |

61 | # Should use warmup for lr

62 | use_warmup: false

63 | # Warmup factor for learning rate warmup

64 | warmup_factor: 0.2

65 | # Iteration until which warmup should be done

66 | warmup_iterations: 1000

67 |

68 | # Type of run, train+inference by default means both training and inference

69 | # (test) stage will be run, if run_type contains 'val',

70 | # inference will be run on val set also.

71 | run_type: train+inference

72 | # Level of logging, only logs which are >= to current level will be logged

73 | logger_level: info

74 | # Whether to use distributed training, mutually exclusive with respect

75 | # to `data_parallel` flag

76 | distributed: false

77 | # Local rank of the GPU device

78 | local_rank: null

79 |

80 | # Whether to use data parallel, mutually exclusive with respect to

81 | # `distributed` flag

82 | data_parallel: false

83 | # Whether JSON files for evalai evaluation should be generated

84 | evalai_inference: false

85 | # Use to load specific modules from checkpoint to your model,

86 | # this is helpful in finetuning. for e.g. you can specify

87 | # text_embeddings: text_embedding_pythia

88 | # for loading `text_embedding` module of your model

89 | # from `text_embedding_pythia`

90 | pretrained_mapping: {}

91 | # Whether the above mentioned pretrained mapping should be loaded or not

92 | load_pretrained: false

93 |

94 | # Directory for saving checkpoints and other metadata

95 | save_dir: "./save"

96 | # Directory for saving logs

97 | log_dir: "./logs"

98 | # Whether Pythia should log or not, Default: False, which means

99 | # pythia will log by default

100 | should_not_log: false

101 |

102 | # If verbose dump is active, pythia will dump dataset, model specific

103 | # information which can be useful in debugging

104 | verbose_dump: false

105 | # If resume is true, pythia will try to automatically load the

106 | # last checkpoint with the same parameters from save_dir

107 | resume: false

108 | # `resume_file` can be used to load a specific checkpoint from a file

109 | resume_file: null

110 | # Whether to pin memory in dataloader

111 | pin_memory: false

112 |

113 | # Use in multi-tasking, when you want to sample tasks proportional to their sizes

114 | dataset_size_proportional_sampling: true

115 |

116 | # Attributes for model, default configuration files for various models

117 | # included in pythia can be found under configs directory in root folder

118 | model_attributes: {}

119 |

120 | # Attributes for datasets. Separate configuration

121 | # for different datasets included in pythia are included in dataset folder

122 | # which can be mixed and matched to train multiple datasets together

123 | # An example for mixing all vqa datasets is present under vqa folder

124 | dataset_attributes: {}

125 |

126 | # Defines which datasets from the above tasks you want to train on

127 | datasets: []

128 |

129 | # Defines which model you want to train on

130 | model: null

131 |

132 | # Attributes for optimizer, examples can be found in models' configs in

133 | # configs folder

134 | optimizer_attributes: {}

135 |
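
Experiment configs such as `tap_refine.yml` set only some keys and leave the rest at the defaults above. The sketch below illustrates that layering with a recursive dictionary merge. The `merge_config` function is a hypothetical stand-in written for this example, not the configuration loader used by pythia, and the dictionaries hold only a handful of keys copied from the two files.

```python
import copy


def merge_config(base, override):
    """Hypothetical recursive merge: `override` wins, nested dicts are merged."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# A few defaults from base.yml.
base = {
    "training_parameters": {
        "max_iterations": 22000,
        "batch_size": 512,
        "num_workers": 4,
        "lr_scheduler": False,
        "log_interval": 100,
    }
}

# The corresponding overrides from tap_refine.yml.
experiment = {
    "training_parameters": {
        "max_iterations": 24000,
        "batch_size": 128,
        "num_workers": 8,
        "lr_scheduler": True,
    }
}

config = merge_config(base, experiment)
print(config["training_parameters"])
```

Keys that the experiment config does not mention, such as `log_interval`, keep their default values.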

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/captioning/coco.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | coco:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | image_features:

7 | train:

8 | - coco/detectron_fix_100/fc6/train_val_2014

9 | val:

10 | - coco/detectron_fix_100/fc6/train_val_2014

11 | test:

12 | - coco/detectron_fix_100/fc6/train_val_2014

13 | imdb_files:

14 | train:

15 | - imdb/coco_captions/imdb_karpathy_train.npy

16 | val:

17 | - imdb/coco_captions/imdb_karpathy_val.npy

18 | test:

19 | - imdb/coco_captions/imdb_karpathy_test.npy

20 | features_max_len: 100

21 | processors:

22 | text_processor:

23 | type: vocab

24 | params:

25 | max_length: 52

26 | vocab:

27 | type: intersected

28 | embedding_name: glove.6B.300d

29 | vocab_file: vocabs/vocabulary_captioning_thresh5.txt

30 | preprocessor:

31 | type: simple_sentence

32 | params: {}

33 | caption_processor:

34 | type: caption

35 | params:

36 | vocab:

37 | type: intersected

38 | embedding_name: glove.6B.300d

39 | vocab_file: vocabs/vocabulary_captioning_thresh5.txt

40 | min_captions_per_img: 5

41 | return_info: false

42 | # Return OCR information

43 | use_ocr: false

44 | # Return spatial information of OCR tokens if present

45 | use_ocr_info: false

46 | training_parameters:

47 | monitored_metric: coco/caption_bleu4

48 | metric_minimize: false

49 |

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/captioning/m4c_textcaps.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | m4c_textcaps:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | features_max_len: 100

7 | processors:

8 | context_processor:

9 | type: fasttext

10 | params:

11 | max_length: 50

12 | model_file: .vector_cache/wiki.en.bin

13 | ocr_token_processor:

14 | type: simple_word

15 | params: {}

16 | bbox_processor:

17 | type: bbox

18 | params:

19 | max_length: 50

20 | return_info: true

21 | use_ocr: true

22 | use_ocr_info: true

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/captioning/m4c_textcaps_ocr100.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | m4c_textcaps:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | features_max_len: 100

7 | processors:

8 | context_processor:

9 | type: fasttext

10 | params:

11 | max_length: 100

12 | model_file: .vector_cache/wiki.en.bin

13 | ocr_token_processor:

14 | type: simple_word

15 | params: {}

16 | bbox_processor:

17 | type: bbox

18 | params:

19 | max_length: 100

20 | return_info: true

21 | use_ocr: true

22 | use_ocr_info: true

23 |

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/dialog/visual_dialog.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | visual_genome:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | image_features:

7 | train:

8 | - coco/detectron_fix_100/fc6/train_val_2014,coco/resnet152/train_val_2014

9 | val:

10 | - visual_dialog/detectron_fix_100/fc6/val2018,visual_dialog/resnet152/

11 | test:

12 | - visual_dialog/detectron_fix_100/fc6/test2018,visual_dialog/resnet152/

13 | imdb_files:

14 | train:

15 | - imdb/visual_dialog/visdial_1.0_train.json

16 | val:

17 | - imdb/visual_dialog/visdial_1.0_val.json

18 | test:

19 | - imdb/visual_dialog/visdial_1.0_test.json

20 | features_max_len: 100

21 | processors:

22 | text_processor:

23 | type: vocab

24 | params:

25 | max_length: 14

26 | vocab:

27 | type: intersected

28 | embedding_name: glove.6B.300d

29 | vocab_file: vocabs/vocabulary_100k.txt

30 | preprocessor:

31 | type: simple_sentence

32 | params: {}

33 | answer_processor:

34 | type: vqa_answer

35 | params:

36 | num_answers: 1

37 | vocab_file: vocabs/answers_vqa.txt

38 | preprocessor:

39 | type: simple_word

40 | params: {}

41 | discriminative_answer_processor:

42 | type: vocab

43 | params:

44 | max_length: 1

45 | vocab:

46 | type: random

47 | vocab_file: vocabs/vocabulary_100k.txt

48 | vg_answer_preprocessor:

49 | type: simple_word

50 | params: {}

51 | history_processor:

52 | type: vocab

53 | params:

54 | max_length: 100

55 | vocab:

56 | type: intersected

57 | embedding_name: glove.6B.300d

58 | vocab_file: vocabs/vocabulary_100k.txt

59 | preprocessor:

60 | type: simple_sentence

61 | params: {}

62 | bbox_processor:

63 | type: bbox

64 | params:

65 | max_length: 50

66 | return_history: true

67 | # Means you have to rank 100 candidate answers

68 | discriminative:

69 | enabled: true

70 | # Only return answer indices, otherwise it will return

71 | # glove embeddings

72 | return_indices: true

73 | no_unk: false

74 | # Return OCR information

75 | use_ocr: false

76 | # Return spatial information of OCR tokens if present

77 | use_ocr_info: false

78 | training_parameters:

79 | monitored_metric: visual_dialog/r@1

80 | metric_minimize: false

81 |

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/clevr.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | clevr:

3 | data_root_dir: ../data

4 | data_folder: CLEVR_v1.0

5 | build_attributes:

6 | min_count: 1

7 | split_regex: " "

8 | keep:

9 | - ";"

10 | - ","

11 | remove:

12 | - "?"

13 | - "."

14 | processors:

15 | text_processor:

16 | type: vocab

17 | params:

18 | max_length: 10

19 | vocab:

20 | type: random

21 | vocab_file: vocabs/clevr_question_vocab.txt

22 | preprocessor:

23 | type: simple_sentence

24 | params: {}

25 | answer_processor:

26 | type: multi_hot_answer_from_vocab

27 | params:

28 | num_answers: 1

29 | # Vocab file is relative to [data_root_dir]/[data_folder]

30 | vocab_file: vocabs/clevr_answer_vocab.txt

31 | preprocessor:

32 | type: simple_word

33 | params: {}

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/m4c_ocrvqa.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | m4c_ocrvqa:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | features_max_len: 100

7 | processors:

8 | context_processor:

9 | type: fasttext

10 | params:

11 | max_length: 50

12 | model_file: .vector_cache/wiki.en.bin

13 | ocr_token_processor:

14 | type: simple_word

15 | params: {}

16 | bbox_processor:

17 | type: bbox

18 | params:

19 | max_length: 50

20 | return_info: true

21 | use_ocr: true

22 | use_ocr_info: true

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/m4c_stvqa.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | m4c_stvqa:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | features_max_len: 100

7 | processors:

8 | context_processor:

9 | type: fasttext

10 | params:

11 | max_length: 50

12 | model_file: .vector_cache/wiki.en.bin

13 | ocr_token_processor:

14 | type: simple_word

15 | params: {}

16 | bbox_processor:

17 | type: bbox

18 | params:

19 | max_length: 50

20 | return_info: true

21 | use_ocr: true

22 | use_ocr_info: true

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/m4c_stvqa_ocr100.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | m4c_stvqa:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | features_max_len: 100

7 | processors:

8 | context_processor:

9 | type: fasttext

10 | params:

11 | max_length: 100

12 | model_file: .vector_cache/wiki.en.bin

13 | ocr_token_processor:

14 | type: simple_word

15 | params: {}

16 | bbox_processor:

17 | type: bbox

18 | params:

19 | max_length: 100

20 | return_info: true

21 | use_ocr: true

22 | use_ocr_info: true

23 |

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/m4c_textvqa.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | m4c_textvqa:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | features_max_len: 100

7 | processors:

8 | context_processor:

9 | type: fasttext

10 | params:

11 | max_length: 50

12 | model_file: .vector_cache/wiki.en.bin

13 | ocr_token_processor:

14 | type: simple_word

15 | params: {}

16 | bbox_processor:

17 | type: bbox

18 | params:

19 | max_length: 50

20 | return_info: true

21 | use_ocr: true

22 | use_ocr_info: true

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/m4c_textvqa_ocr100.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | m4c_textvqa:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | features_max_len: 100

7 | processors:

8 | context_processor:

9 | type: fasttext

10 | params:

11 | max_length: 100

12 | model_file: .vector_cache/wiki.en.bin

13 | ocr_token_processor:

14 | type: simple_word

15 | params: {}

16 | bbox_processor:

17 | type: bbox

18 | params:

19 | max_length: 100

20 | return_info: true

21 | use_ocr: true

22 | use_ocr_info: true

23 |

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/textvqa.yml:

--------------------------------------------------------------------------------

1 | dataset_attributes:

2 | textvqa:

3 | data_root_dir: ../data

4 | image_depth_first: false

5 | fast_read: false

6 | image_features:

7 | train:

8 | - open_images/detectron_fix_100/fc6/train,open_images/resnet152/train

9 | val:

10 | - open_images/detectron_fix_100/fc6/train,open_images/resnet152/train

11 | test:

12 | - open_images/detectron_fix_100/fc6/test,open_images/resnet152/test

13 | imdb_files:

14 | train:

15 | - imdb/textvqa_0.5/imdb_textvqa_train.npy

16 | val:

17 | - imdb/textvqa_0.5/imdb_textvqa_val.npy

18 | test:

19 | - imdb/textvqa_0.5/imdb_textvqa_test.npy

20 | features_max_len: 137

21 | processors:

22 | text_processor:

23 | type: vocab

24 | params:

25 | max_length: 14

26 | vocab:

27 | type: intersected

28 | embedding_name: glove.6B.300d

29 | vocab_file: vocabs/vocabulary_100k.txt

30 | preprocessor:

31 | type: simple_sentence

32 | params: {}

33 | answer_processor:

34 | type: vqa_answer

35 | params:

36 | vocab_file: vocabs/answers_textvqa_8k.txt

37 | preprocessor:

38 | type: simple_word

39 | params: {}

40 | num_answers: 10

41 | context_processor:

42 | type: fasttext

43 | params:

44 | max_length: 50

45 | model_file: .vector_cache/wiki.en.bin

46 | ocr_token_processor:

47 | type: simple_word

48 | params: {}

49 | bbox_processor:

50 | type: bbox

51 | params:

52 | max_length: 50

53 | return_info: true

54 | # Return OCR information

55 | use_ocr: true

56 | # Return spatial information of OCR tokens if present

57 | use_ocr_info: false

58 | training_parameters:

59 | monitored_metric: textvqa/vqa_accuracy

60 | metric_minimize: false

61 |

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/visual_genome.yml:

--------------------------------------------------------------------------------

dataset_attributes:
  visual_genome:
    data_root_dir: ../data
    image_depth_first: false
    fast_read: false
    image_features:
      train:
      - visual_genome/detectron_fix_100/fc6/,visual_genome/resnet152/
      val:
      - visual_genome/detectron_fix_100/fc6/,visual_genome/resnet152/
      test:
      - visual_genome/detectron_fix_100/fc6/,visual_genome/resnet152/
    imdb_files:
      train:
      - imdb/visual_genome/vg_question_answers.jsonl
      val:
      - imdb/visual_genome/vg_question_answers_placeholder.jsonl
      test:
      - imdb/visual_genome/vg_question_answers_placeholder.jsonl
    scene_graph_files:
      train:
      - imdb/visual_genome/vg_scene_graphs.jsonl
      val:
      - imdb/visual_genome/vg_scene_graphs_placeholder.jsonl
      test:
      - imdb/visual_genome/vg_scene_graphs_placeholder.jsonl
    features_max_len: 100
    processors:
      text_processor:
        type: vocab
        params:
          max_length: 14
          vocab:
            type: intersected
            embedding_name: glove.6B.300d
            vocab_file: vocabs/vocabulary_100k.txt
          preprocessor:
            type: simple_sentence
            params: {}
      answer_processor:
        type: vqa_answer
        params:
          num_answers: 1
          vocab_file: vocabs/answers_vqa.txt
          preprocessor:
            type: simple_word
            params: {}
      vg_answer_preprocessor:
        type: simple_word
        params: {}
      attribute_processor:
        type: vocab
        params:
          max_length: 2
          vocab:
            type: random
            vocab_file: vocabs/vocabulary_100k.txt
      name_processor:
        type: vocab
        params:
          max_length: 1
          vocab:
            type: random
            vocab_file: vocabs/vocabulary_100k.txt
      predicate_processor:
        type: vocab
        params:
          max_length: 2
          vocab:
            type: random
            vocab_file: vocabs/vocabulary_100k.txt
      synset_processor:
        type: vocab
        params:
          max_length: 1
          vocab:
            type: random
            vocab_file: vocabs/vg_synsets.txt
      bbox_processor:
        type: bbox
        params:
          max_length: 50
    return_scene_graph: true
    return_objects: true
    return_relationships: true
    return_info: true
    no_unk: false
    # Return OCR information
    use_ocr: false
    # Return spatial information of OCR tokens if present
    use_ocr_info: false
training_parameters:
  monitored_metric: visual_genome/vqa_accuracy
  metric_minimize: false

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/vizwiz.yml:

--------------------------------------------------------------------------------

dataset_attributes:
  vizwiz:
    data_root_dir: ../data
    image_depth_first: false
    fast_read: false
    image_features:
      train:
      - vizwiz/detectron_fix_100/fc6/train,vizwiz/resnet152/train
      val:
      - vizwiz/detectron_fix_100/fc6/val,vizwiz/resnet152/val
      test:
      - vizwiz/detectron_fix_100/fc6/test,vizwiz/resnet152/test
    imdb_files:
      train:
      - imdb/vizwiz/imdb_vizwiz_train.npy
      val:
      - imdb/vizwiz/imdb_vizwiz_val.npy
      test:
      - imdb/vizwiz/imdb_vizwiz_test.npy
    features_max_len: 100
    processors:
      text_processor:
        type: vocab
        params:
          max_length: 14
          vocab:
            type: intersected
            embedding_name: glove.6B.300d
            vocab_file: vocabs/vocabulary_100k.txt
          preprocessor:
            type: simple_sentence
            params: {}
      answer_processor:
        type: vqa_answer
        params:
          vocab_file: vocabs/answers_vizwiz_7k.txt
          preprocessor:
            type: simple_word
            params: {}
          num_answers: 10
      context_processor:
        type: fasttext
        params:
          max_length: 50
          model_file: .vector_cache/wiki.en.bin
      ocr_token_processor:
        type: simple_word
        params: {}
      bbox_processor:
        type: bbox
        params:
          max_length: 50
    return_info: true
    # Return OCR information
    use_ocr: false
    # Return spatial information of OCR tokens if present
    use_ocr_info: false
training_parameters:
  monitored_metric: vizwiz/vqa_accuracy
  metric_minimize: false

--------------------------------------------------------------------------------

/pythia/common/defaults/configs/datasets/vqa/vqa2.yml:

--------------------------------------------------------------------------------

dataset_attributes:
  vqa2:
    data_root_dir: ../data
    image_depth_first: false
    fast_read: false
    image_features: