├── .ci

├── azure-pipelines.yml

└── vars

│ └── ai-utilities.yml

├── .dependabot

└── config.yml

├── .developer

├── pycharm_code_style.xml

└── pycharm_inspections.xml

├── .github

└── workflows

│ └── pythonpublish.yml

├── .gitignore

├── .gitmodules

├── .pycrunch-config.yaml

├── .pylintrc

├── CODE_OF_CONDUCT.md

├── LICENSE

├── README.md

├── SECURITY.md

├── _config.yml

├── azure_utils

├── __init__.py

├── azureml_tools

│ ├── __init__.py

│ ├── config.py

│ ├── experiment.py

│ ├── resource_group.py

│ ├── storage.py

│ ├── subscription.py

│ └── workspace.py

├── configuration

│ ├── __init__.py

│ ├── configuration_ui.py

│ ├── configuration_validation.py

│ ├── notebook_config.py

│ ├── project.yml

│ └── project_configuration.py

├── dev_ops

│ ├── __init__.py

│ └── testing_utilities.py

├── logger

│ ├── README.md

│ ├── __init__.py

│ ├── ai_logger.py

│ ├── blob_storage.py

│ ├── key_vault.py

│ ├── storageutils.py

│ └── tests

│ │ ├── __init__.py

│ │ └── statsCollectionTest.py

├── machine_learning

│ ├── __init__.py

│ ├── contexts

│ │ ├── __init__.py

│ │ ├── model_management_context.py

│ │ ├── realtime_score_context.py

│ │ └── workspace_contexts.py

│ ├── datasets

│ │ ├── __init__.py

│ │ └── stack_overflow_data.py

│ ├── deep

│ │ ├── __init__.py

│ │ └── create_deep_model.py

│ ├── duplicate_model.py

│ ├── factories

│ │ ├── __init__.py

│ │ └── realtime_factory.py

│ ├── item_selector.py

│ ├── label_rank.py

│ ├── realtime

│ │ ├── __init__.py

│ │ ├── image.py

│ │ └── kubernetes.py

│ ├── register_datastores.py

│ ├── templates

│ │ └── webtest.json

│ ├── train_local.py

│ ├── training_arg_parsers.py

│ └── utils.py

├── notebook_widgets

│ ├── __init__.py

│ ├── notebook_configuration_widget.py

│ └── workspace_widget.py

├── rts_estimator.py

├── samples

│ ├── __init__.py

│ └── deep_rts_samples.py

└── utilities.py

├── docs

├── Configuration_ReadMe.md

├── DEVELOPMENT_README.md

├── app_insights_1.png

├── app_insights_availability.png

├── app_insights_perf.png

├── app_insights_perf_dash.png

├── conda_ui.png

├── images

│ └── pycharm_import_code_style.png

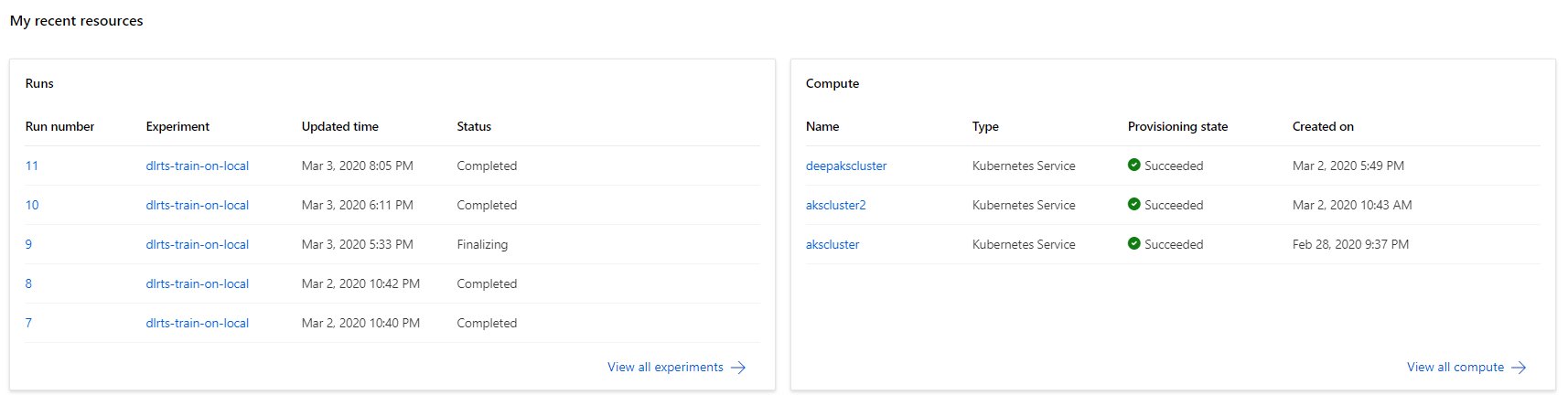

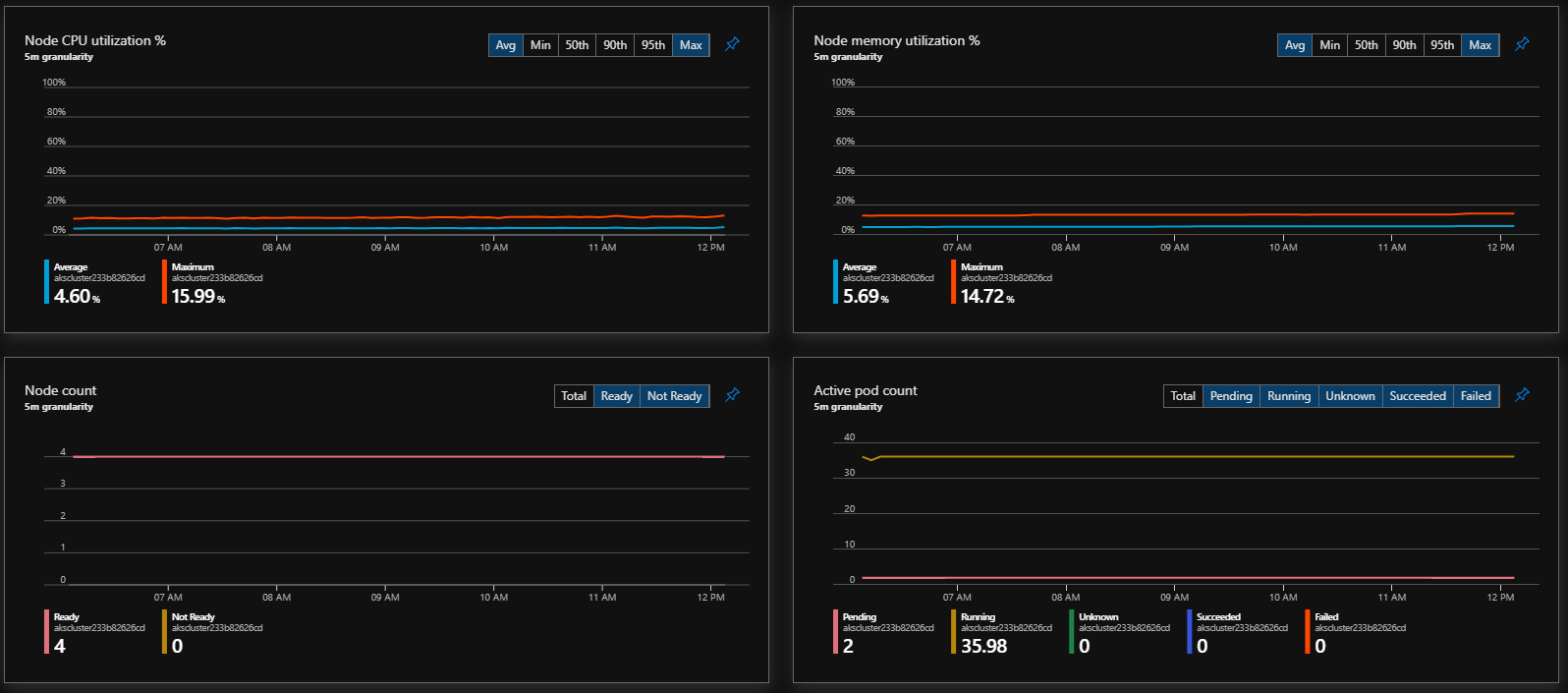

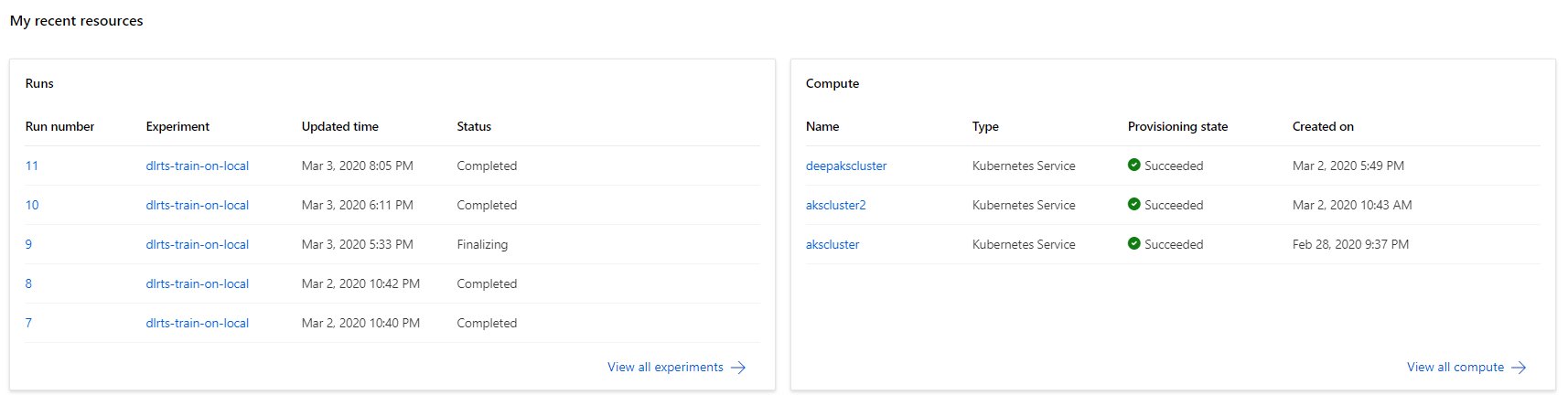

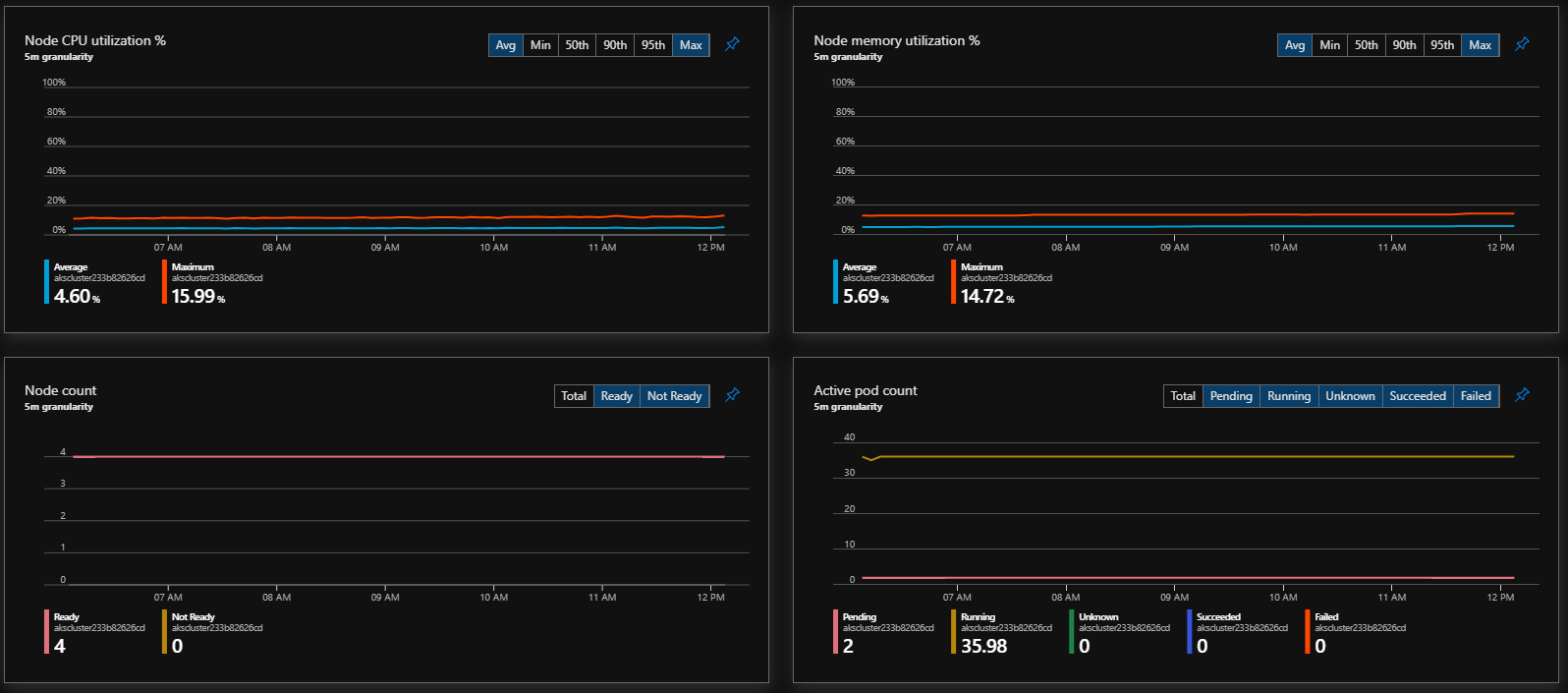

├── kubernetes.png

├── studio.png

└── tkinter_ui.png

├── environment.yml

├── environment_r.yml

├── notebooks

├── AzureMachineLearningConfig.ipynb

├── R

│ └── get_or_create_workspace.r

├── __init__.py

├── ai-deep-realtime-score.ipynb

├── ai-deep-realtime-score_no_files.ipynb

├── ai-ml-realtime-score.ipynb

└── exampleconfiguration.ipynb

├── project_sample.yml

├── pytest.ini

├── sample_workspace_conf.yml

├── scripts

├── add_ssh_ip.py

├── add_webtest.sh

├── create_deep_model.py

├── create_model.py

├── deploy_app_insights_k8s.sh

├── deploy_function.sh

├── version.sh

├── webtest.json

└── webtest.parameters.json

├── setup.py

├── source

└── score.py

├── tests

├── __init__.py

├── configuration

│ ├── __init__.py

│ ├── test_config.py

│ └── test_validation.py

├── conftest.py

├── machine_learning

│ ├── __init__.py

│ ├── contexts

│ │ ├── __init__.py

│ │ ├── test_fpga_deploy.py

│ │ ├── test_realtime_contexts.py

│ │ ├── test_realtime_contexts_integration.py

│ │ └── test_realtime_contexts_mock.py

│ ├── script

│ │ └── create_model.py

│ ├── test_deep_rts_samples.py

│ ├── test_realtime.py

│ ├── test_register_datastores.py

│ └── test_utils.py

├── mocks

│ ├── __init__.py

│ └── azureml

│ │ ├── __init__.py

│ │ └── azureml_mocks.py

└── test_notebooks.py

└── workspace_conf.yml

/.ci/azure-pipelines.yml:

--------------------------------------------------------------------------------

1 | # AI Utilities Sample

2 | #

3 | # A GitHub Service Connection must also be created with the name "AIArchitecturesAndPractices-GitHub"

4 | # https://docs.microsoft.com/en-us/azure/devops/pipelines/process/demands?view=azure-devops&tabs=yaml

5 | #

6 | # An Agent_Name variable must be created in the Azure DevOps UI.

7 | # https://docs.microsoft.com/en-us/azure/devops/pipelines/process/variables?view=azure-devops&tabs=yaml%2Cbatch#secret-variables

8 | #

9 | # This must point to an Agent Pool with a self-hosted Linux VM that has Docker installed.

10 | # https://docs.microsoft.com/en-us/azure/devops/pipelines/agents/v2-linux?view=azure-devops

11 |

12 | resources:

13 | repositories:

14 | - repository: aitemplates

15 | type: github

16 | name: microsoft/AI

17 | endpoint: AIArchitecturesAndPractices-GitHub

18 |

19 | trigger:

20 | batch: true

21 | branches:

22 | include:

23 | - master

24 |

25 | pr:

26 | autoCancel: true

27 | branches:

28 | include:

29 | - master

30 |

31 | variables:

32 | - template: ./vars/ai-utilities.yml

33 |

34 | stages:

35 | - template: .ci/stages/deploy_notebooks_stages_v5.yml@aitemplates

36 | parameters:

37 | Agent: $(Agent_Name)

38 | jobDisplayName: ai-utilities

39 | TridentWorkloadTypeShort: ${{ variables.TridentWorkloadTypeShort }}

40 | DeployLocation: ${{ variables.DeployLocation }}

41 | ProjectLocation: ${{ variables.ProjectLocation }}

42 | conda: ${{ variables.conda }}

43 | post_cleanup: false

44 |

45 | multi_region_1: false

46 | multi_region_2: false

47 |

48 | flighting_release: false

49 | flighting_preview: false

50 | flighting_master: false

51 |

52 | sql_server_name: $(sql_server_name)

53 | sql_database_name: $(sql_database_name)

54 | sql_username: $(sql_username)

55 | sql_password: $(sql_password)

56 |

57 | container_name: $(container_name)

58 | account_name: $(account_name)

59 | account_key: $(account_key)

60 | datastore_rg: $(datastore_rg)

61 |

--------------------------------------------------------------------------------

/.ci/vars/ai-utilities.yml:

--------------------------------------------------------------------------------

1 | variables:

2 | TridentWorkloadTypeShort: aiutilities

3 | DeployLocation: eastus

4 | ProjectLocation: "."

5 | conda: ai-utilities

6 |

--------------------------------------------------------------------------------

/.dependabot/config.yml:

--------------------------------------------------------------------------------

1 | version: 1

2 | update_configs:

3 | - package_manager: "python"

4 | directory: "/"

5 | update_schedule: "live"

6 | allowed_updates:

7 | - match:

8 | # Only includes indirect (aka transient/sub-dependencies) for

9 | # supported package managers: ruby:bundler, python, php:composer, rust:cargo

10 | update_type: "all"

11 |

--------------------------------------------------------------------------------

/.developer/pycharm_code_style.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

--------------------------------------------------------------------------------

/.developer/pycharm_inspections.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

16 |

17 |

18 |

24 |

25 |

26 |

27 |

--------------------------------------------------------------------------------

/.github/workflows/pythonpublish.yml:

--------------------------------------------------------------------------------

1 | name: Upload Python Package

2 |

3 | on:

4 | release:

5 | types: [created]

6 |

7 | jobs:

8 | deploy:

9 | runs-on: ubuntu-latest

10 | steps:

11 | - uses: actions/checkout@v2

12 | - name: Set up Python

13 | uses: actions/setup-python@v1

14 | with:

15 | python-version: '3.x'

16 | - name: Install dependencies

17 | run: |

18 | python -m pip install --upgrade pip

19 | pip install setuptools wheel twine

20 | - name: Build and publish

21 | env:

22 | TWINE_USERNAME: ${{ secrets.PYPI_USERNAME }}

23 | TWINE_PASSWORD: ${{ secrets.PYPI_PASSWORD }}

24 | run: |

25 | python setup.py sdist bdist_wheel

26 | twine upload dist/*

27 | - name: Update version for next release

28 | run: |

29 | var=$(sed -ne "s/version=['\"]\([^'\"]*\)['\"] *,.*/\1/p" ./setup.py)

30 | IFS='.' read -r -a array <<<"$var"

31 |

32 | major="${array[1]}"

33 | minor="${array[2]}"

34 |

35 | if [ "${array[2]}" -eq 9 ]; then

36 | echo $major

37 | major=$((major + 1)); minor=0

38 | echo $major

39 | else

40 | minor=$((minor + 1))

41 | fi

42 |

43 | version=0.$major.$minor

44 |

45 | sed -i "s/version=['\"]\([^'\"]*\)['\"] *,.*/version=\"$version\",/" ./setup.py

46 |

--------------------------------------------------------------------------------
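The "Update version for next release" step above extracts the `version=` string from `setup.py`, splits it on dots, and increments one component. The same rule can be sketched in Python. This is a minimal sketch, assuming a three-part `0.major.minor` version and assuming the last component rolls over to 0 after 9; `bump_version` and `bump_setup_text` are hypothetical helpers, not part of the repository.

```python
import re

# Matches version="..." or version='...' as it would appear in setup.py.
VERSION_RE = re.compile(r"version=['\"]([^'\"]*)['\"]")


def bump_version(version: str) -> str:
    """Return the next 0.major.minor version string (hypothetical helper)."""
    _, major, minor = (int(part) for part in version.split("."))
    if minor == 9:
        # Assumption: roll the last component over and bump the middle one.
        major, minor = major + 1, 0
    else:
        minor += 1
    return f"0.{major}.{minor}"


def bump_setup_text(setup_text: str) -> str:
    """Rewrite the first version= entry found in the given setup.py text."""
    match = VERSION_RE.search(setup_text)
    if match is None:
        raise ValueError("no version= entry found")
    new_version = bump_version(match.group(1))
    return VERSION_RE.sub(f'version="{new_version}"', setup_text, count=1)
```

Keeping the rule in a small pure function like this makes it easy to unit test before wiring it into a release workflow.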

/.gitignore:

--------------------------------------------------------------------------------

1 | workspace_conf.yml

2 | project.yml

3 | data_folder

4 | model.pkl

5 | test-timing-output.xml

6 | *project.yml

7 | *.output_ipynb

8 | *lynx*jpg

9 |

10 | *env.yml

11 | my_env.yml

12 | lgbmenv.yml

13 |

14 | # Byte-compiled / optimized / DLL files

15 | __pycache__/

16 | *.py[cod]

17 | *$py.class

18 |

19 | # Jetbrains

20 | .idea

21 |

22 | # AzureML

23 | .azureml

24 |

25 | # C extensions

26 | *.so

27 |

28 | # Distribution / packaging

29 | .Python

30 | build/

31 | develop-eggs/

32 | dist/

33 | downloads/

34 | eggs/

35 | .eggs/

36 | lib/

37 | lib64/

38 | parts/

39 | sdist/

40 | var/

41 | wheels/

42 | *.egg-info/

43 | .installed.cfg

44 | *.egg

45 | MANIFEST

46 |

47 | # PyInstaller

48 | # Usually these files are written by a python script from a template

49 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

50 | *.manifest

51 | *.spec

52 |

53 | # Installer logs

54 | pip-log.txt

55 | pip-delete-this-directory.txt

56 |

57 | # Unit test / coverage reports

58 | htmlcov/

59 | .tox/

60 | .coverage

61 | .coverage.*

62 | .cache

63 | nosetests.xml

64 | coverage.xml

65 | *.cover

66 | .hypothesis/

67 | .pytest_cache/

68 |

69 | # Translations

70 | *.mo

71 | *.pot

72 |

73 | # Django stuff:

74 | *.log

75 | local_settings.py

76 | db.sqlite3

77 |

78 | # Flask stuff:

79 | instance/

80 | .webassets-cache

81 |

82 | # Scrapy stuff:

83 | .scrapy

84 |

85 | # Sphinx documentation

86 | docs/_build/

87 |

88 | # PyBuilder

89 | target/

90 |

91 | # Jupyter Notebook

92 | .ipynb_checkpoints

93 |

94 | # pyenv

95 | .python-version

96 |

97 | # celery beat schedule file

98 | celerybeat-schedule

99 |

100 | # SageMath parsed files

101 | *.sage.py

102 |

103 | # Environments

104 | .env

105 | .venv

106 | env/

107 | venv/

108 | ENV/

109 | env.bak/

110 | venv.bak/

111 |

112 | # Spyder project settings

113 | .spyderproject

114 | .spyproject

115 |

116 | # Rope project settings

117 | .ropeproject

118 |

119 | # mkdocs documentation

120 | /site

121 |

122 | # mypy

123 | .mypy_cache/

124 |

125 | config/__pycache__/

126 | /.vscode/

127 | /aml_config/

128 |

--------------------------------------------------------------------------------

/.gitmodules:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/microsoft/ai-utilities/b6c097dddff00ae4f7321da52653d9d6d8a94884/.gitmodules

--------------------------------------------------------------------------------

/.pycrunch-config.yaml:

--------------------------------------------------------------------------------

1 | engine:

2 | runtime: pytest

3 | # maximum number of concurrent test runners

4 | cpu-cores: 2

5 |

--------------------------------------------------------------------------------

/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

1 | # Microsoft Open Source Code of Conduct

2 |

3 | This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

4 |

5 | Resources:

6 |

7 | - [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/)

8 | - [Microsoft Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/)

9 | - Contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with questions or concerns

10 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) Microsoft Corporation.

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | [](https://notebooks.azure.com/import/gh/microsoft/AI-Utilities)

2 |

3 |

4 | # AI Utilities Sample Project

5 | This project is a sample showcasing how to create a common repository for utilities that can be used by other AI projects.

6 |

7 | # Working with Project

8 | ## Build Project

9 |

10 | ```bash

11 | pip install -e .\AI-Utilities\

12 | ```

13 |

14 | ## Run Unit Tests with Conda

15 | ```bash

16 | conda env create -f environment.yml

17 | conda activate ai-utilities

18 | python -m ipykernel install --prefix=[anaconda-root-dir]\envs\ai-utilities --name ai-utilities

19 | pytest tests

20 | ```

21 |

22 | Example Anaconda Root Dir

23 | C:\Users\dcibo\Anaconda3

24 |

25 | # Contributing

26 |

27 | This project welcomes contributions and suggestions. Most contributions require you to agree to a

28 | Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us

29 | the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

30 |

31 | When you submit a pull request, a CLA bot will automatically determine whether you need to provide

32 | a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions

33 | provided by the bot. You will only need to do this once across all repos using our CLA.

34 |

35 | This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

36 | For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or

37 | contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.

38 |

--------------------------------------------------------------------------------

/SECURITY.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## Security

4 |

5 | Microsoft takes the security of our software products and services seriously, which includes all source code repositories managed through our GitHub organizations, which include [Microsoft](https://github.com/Microsoft), [Azure](https://github.com/Azure), [DotNet](https://github.com/dotnet), [AspNet](https://github.com/aspnet), [Xamarin](https://github.com/xamarin), and [our GitHub organizations](https://opensource.microsoft.com/).

6 |

7 | If you believe you have found a security vulnerability in any Microsoft-owned repository that meets [Microsoft's definition of a security vulnerability](https://docs.microsoft.com/en-us/previous-versions/tn-archive/cc751383(v=technet.10)), please report it to us as described below.

8 |

9 | ## Reporting Security Issues

10 |

11 | **Please do not report security vulnerabilities through public GitHub issues.**

12 |

13 | Instead, please report them to the Microsoft Security Response Center (MSRC) at [https://msrc.microsoft.com/create-report](https://msrc.microsoft.com/create-report).

14 |

15 | If you prefer to submit without logging in, send email to [secure@microsoft.com](mailto:secure@microsoft.com). If possible, encrypt your message with our PGP key; please download it from the [Microsoft Security Response Center PGP Key page](https://www.microsoft.com/en-us/msrc/pgp-key-msrc).

16 |

17 | You should receive a response within 24 hours. If for some reason you do not, please follow up via email to ensure we received your original message. Additional information can be found at [microsoft.com/msrc](https://www.microsoft.com/msrc).

18 |

19 | Please include the requested information listed below (as much as you can provide) to help us better understand the nature and scope of the possible issue:

20 |

21 | * Type of issue (e.g. buffer overflow, SQL injection, cross-site scripting, etc.)

22 | * Full paths of source file(s) related to the manifestation of the issue

23 | * The location of the affected source code (tag/branch/commit or direct URL)

24 | * Any special configuration required to reproduce the issue

25 | * Step-by-step instructions to reproduce the issue

26 | * Proof-of-concept or exploit code (if possible)

27 | * Impact of the issue, including how an attacker might exploit the issue

28 |

29 | This information will help us triage your report more quickly.

30 |

31 | If you are reporting for a bug bounty, more complete reports can contribute to a higher bounty award. Please visit our [Microsoft Bug Bounty Program](https://microsoft.com/msrc/bounty) page for more details about our active programs.

32 |

33 | ## Preferred Languages

34 |

35 | We prefer all communications to be in English.

36 |

37 | ## Policy

38 |

39 | Microsoft follows the principle of [Coordinated Vulnerability Disclosure](https://www.microsoft.com/en-us/msrc/cvd).

40 |

41 |

42 |

--------------------------------------------------------------------------------

/_config.yml:

--------------------------------------------------------------------------------

1 | theme: jekyll-theme-hacker

--------------------------------------------------------------------------------

/azure_utils/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | azure_utils - __init__.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 | import os

8 |

9 | directory = os.path.dirname(os.path.realpath(__file__))

10 | notebook_directory = directory.replace("azure_utils", "notebooks")

11 |

--------------------------------------------------------------------------------
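`notebook_directory` above is derived with `str.replace`, which rewrites every occurrence of `azure_utils` in the path, not only the final component. A minimal sketch of the difference, assuming `notebooks` sits next to the `azure_utils` package as in the tree above; `sibling_notebook_directory` and the example path are hypothetical.

```python
from pathlib import PurePosixPath


def sibling_notebook_directory(package_dir: str) -> str:
    """Swap only the last path component for "notebooks" (hypothetical helper)."""
    return str(PurePosixPath(package_dir).with_name("notebooks"))


# Hypothetical checkout whose parent folder also contains "azure_utils".
package_dir = "/home/user/azure_utils_checkout/azure_utils"

replaced = package_dir.replace("azure_utils", "notebooks")
sibling = sibling_notebook_directory(package_dir)

print(replaced)  # /home/user/notebooks_checkout/notebooks
print(sibling)   # /home/user/azure_utils_checkout/notebooks
```

`PurePosixPath` is used here only so the example prints the same result on every platform; real code would more likely use `pathlib.Path`.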

/azure_utils/azureml_tools/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - azureml_tools/__init__.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 |

--------------------------------------------------------------------------------

/azure_utils/azureml_tools/config.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - azureml_tools/config.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 |

8 | import ast

9 | import logging

10 |

11 | from dotenv import dotenv_values, find_dotenv, set_key

12 |

13 | defaults = {

14 | "CLUSTER_NAME": "gpucluster24rv3",

15 | "CLUSTER_VM_SIZE": "Standard_NC24rs_v3",

16 | "CLUSTER_MIN_NODES": 0,

17 | "CLUSTER_MAX_NODES": 2,

18 | "WORKSPACE": "workspace",

19 | "RESOURCE_GROUP": "amlccrg",

20 | "REGION": "eastus",

21 | "DATASTORE_NAME": "datastore",

22 | "CONTAINER_NAME": "container",

23 | "ACCOUNT_NAME": "premiumstorage",

24 | "SUBSCRIPTION_ID": None,

25 | }

26 |

27 |

28 | def load_config(dot_env_path: str = find_dotenv(raise_error_if_not_found=True)):

29 | """ Load the variables from the .env file

30 | Returns:

31 | .env variables(dict)

32 | """

33 | logger = logging.getLogger(__name__)

34 | logger.info(f"Found config in {dot_env_path}")

35 | return dotenv_values(dot_env_path)

36 |

37 |

38 | def _convert(value):

39 | try:

40 | return ast.literal_eval(value)

41 | except (ValueError, SyntaxError):

42 | return value

43 |

44 |

45 | class AzureMLConfig:

46 | """Creates AzureMLConfig object

47 |

48 | Stores all the configuration options and syncs them with the .env file

49 | """

50 |

51 | _reserved = ("_dot_env_path",)

52 |

53 | def __init__(self):

54 | self._dot_env_path = find_dotenv(raise_error_if_not_found=True)

55 |

56 | for key, value in load_config(dot_env_path=self._dot_env_path).items():

57 | self.__dict__[key] = _convert(value)

58 |

59 | for key, value in defaults.items():

60 | if key not in self.__dict__:

61 | setattr(self, key, value)

62 |

63 | def __setattr__(self, name, value):

64 | if name not in self._reserved:

65 | if not isinstance(value, str):

66 | value = str(value)

67 | set_key(self._dot_env_path, name, value)

68 | self.__dict__[name] = value

69 |

70 |

71 | experiment_config = AzureMLConfig()

72 |

--------------------------------------------------------------------------------
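`AzureMLConfig` reads every `.env` value as a string and passes it through `_convert`, which tries `ast.literal_eval` and falls back to the raw string. The following stand-alone sketch shows that conversion rule on a few sample values. The `raw` dictionary is made up for illustration and `convert` only mirrors `_convert` above; neither `dotenv` nor a real `.env` file is involved.

```python
import ast


def convert(value):
    """Parse a .env string as a Python literal, else return it unchanged."""
    try:
        return ast.literal_eval(value)
    except (ValueError, SyntaxError):
        # Bare words such as "eastus" are not literals, so keep the string.
        return value


# Illustrative values in the shape dotenv_values() returns (all strings).
raw = {"CLUSTER_MAX_NODES": "2", "REGION": "eastus", "SUBSCRIPTION_ID": "None"}
parsed = {key: convert(value) for key, value in raw.items()}

print(parsed)
# {'CLUSTER_MAX_NODES': 2, 'REGION': 'eastus', 'SUBSCRIPTION_ID': None}
```

The fallback matters because most configuration values (region names, cluster names) are bare words that `ast.literal_eval` rejects.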

/azure_utils/azureml_tools/resource_group.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - resource_group.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 |

8 | import logging

9 |

10 | from azure.common.credentials import get_cli_profile

11 | from azure.mgmt.resource import ResourceManagementClient

12 | from azure.mgmt.resource.resources.models import ResourceGroup

13 |

14 |

15 | def _get_resource_group_client(profile_credentials, subscription_id):

16 | return ResourceManagementClient(profile_credentials, subscription_id)

17 |

18 |

19 | def resource_group_exists(resource_group_name, resource_group_client=None):

20 | """

21 |

22 | :param resource_group_name:

23 | :param resource_group_client:

24 | :return:

25 | """

26 | return resource_group_client.resource_groups.check_existence(resource_group_name)

27 |

28 |

29 | class ResourceGroupException(Exception):

30 | """Exception raised when a resource group cannot be created or verified"""

31 |

32 | pass

33 |

34 |

35 | def create_resource_group(

36 | profile_credentials, subscription_id: str, location: str, resource_group_name: str

37 | ) -> ResourceGroup:

38 | """Creates resource group if it doesn't exist

39 |

40 | Args:

41 | profile_credentials : credentials from Azure login

42 | subscription_id (str): subscription you wish to use

43 | location (str): location you wish the resource group to be created in

44 | resource_group_name (str): the name of the resource group to fetch or create

45 |

46 | Raises:

47 | ResourceGroupException: Exception if the resource group could not be created

48 |

49 | Returns:

50 | ResourceGroup: an Azure resource group object

51 |

52 | Examples:

53 | >>> profile = get_cli_profile()

54 | >>> profile.set_active_subscription("YOUR-SUBSCRIPTION")

55 | >>> cred, subscription_id, _ = profile.get_login_credentials()

56 | >>> rg = create_resource_group(cred, subscription_id, "eastus", "testrg2")

57 | """

58 | logger = logging.getLogger(__name__)

59 | resource_group_client = _get_resource_group_client(

60 | profile_credentials, subscription_id

61 | )

62 | if resource_group_exists(

63 | resource_group_name, resource_group_client=resource_group_client

64 | ):

65 | logger.debug(f"Found resource group {resource_group_name}")

66 | resource_group = resource_group_client.resource_groups.get(resource_group_name)

67 | else:

68 | logger.debug(f"Creating resource group {resource_group_name} in {location}")

69 | resource_group_params = {"location": location}

70 | resource_group = resource_group_client.resource_groups.create_or_update(

71 | resource_group_name, resource_group_params

72 | )

73 |

74 | if "Succeeded" not in resource_group.properties.provisioning_state:

75 | raise ResourceGroupException(

76 | f"Resource group not created successfully | State {resource_group.properties.provisioning_state}"

77 | )

78 |

79 | return resource_group

80 |

--------------------------------------------------------------------------------

/azure_utils/azureml_tools/storage.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - storage.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 | from typing import Any, Tuple

8 |

9 | from azure.mgmt.storage import StorageManagementClient

10 | from azure.mgmt.storage.models import Kind, Sku, SkuName, StorageAccountCreateParameters

11 |

12 | from azure_utils.azureml_tools.resource_group import create_resource_group

13 |

14 |

15 | class StorageAccountCreateFailure(Exception):

16 | """Storage Account Create Failure Exception"""

17 |

18 | pass

19 |

20 |

21 | def create_premium_storage(

22 | profile_credentials: object,

23 | subscription_id: str,

24 | location: str,

25 | resource_group_name: str,

26 | storage_name: str,

27 | ) -> Tuple[Any, dict]:

28 | """Create premium blob storage

29 |

30 | Args:

31 | profile_credentials : credentials from Azure login (see example below for details)

32 | subscription_id (str): subscription you wish to use

33 | location (str): location you wish the storage to be created in

34 | resource_group_name (str): the name of the resource group you want the storage to be created under

35 | storage_name (str): the name of the storage account

36 |

37 | Raises:

38 | StorageAccountCreateFailure: if the storage account was not provisioned successfully

39 |

40 | Returns:

41 | Tuple[Any, dict]: the storage account object and a dict mapping key names to key values

42 |

43 | Example:

44 | >>> from azure.common.credentials import get_cli_profile

45 | >>> profile = get_cli_profile()

46 | >>> profile.set_active_subscription("YOUR-ACCOUNT")

47 | >>> cred, subscription_id, _ = profile.get_login_credentials()

48 | >>> storage_account, storage_keys = create_premium_storage(cred, subscription_id, "eastus", "testrg", "teststr")

49 | """

50 | storage_client = StorageManagementClient(profile_credentials, subscription_id)

51 | create_resource_group(

52 | profile_credentials, subscription_id, location, resource_group_name

53 | )

54 | if not storage_client.storage_accounts.check_name_availability(

55 | storage_name

56 | ).name_available:

57 | storage_account = storage_client.storage_accounts.get_properties(

58 | resource_group_name, storage_name

59 | )

60 | else:

61 | storage_async_operation = storage_client.storage_accounts.create(

62 | resource_group_name,

63 | storage_name,

64 | StorageAccountCreateParameters(

65 | sku=Sku(name=SkuName.premium_lrs),

66 | kind=Kind.block_blob_storage,

67 | location=location,

68 | ),

69 | )

70 | storage_account = storage_async_operation.result()

71 |

72 | if "Succeeded" not in storage_account.provisioning_state:

73 | raise StorageAccountCreateFailure(

74 | f"Storage account not created successfully | State {storage_account.provisioning_state}"

75 | )

76 |

77 | storage_keys = storage_client.storage_accounts.list_keys(

78 | resource_group_name, storage_name

79 | )

80 | storage_keys = {v.key_name: v.value for v in storage_keys.keys}

81 |

82 | return storage_account, storage_keys

83 |

--------------------------------------------------------------------------------

/azure_utils/azureml_tools/subscription.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - subscription.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 | import logging

8 | import subprocess

9 | import sys

10 |

11 | # noinspection PyProtectedMember

12 | from azure.cli.core._profile import Profile

13 | from azure.common.credentials import get_cli_profile

14 | from azure.mgmt.resource import SubscriptionClient

15 | from knack.util import CLIError

16 | from prompt_toolkit import prompt

17 | from tabulate import tabulate

18 | from toolz import pipe

19 |

20 | _GREEN = "\033[0;32m"

21 | _BOLD = "\033[;1m"

22 |

23 |

24 | def run_az_cli_login() -> None:

25 | """

26 | Run az login in shell

27 | """

28 | process = subprocess.Popen(

29 | ["az", "login"], stdout=subprocess.PIPE, stderr=subprocess.STDOUT

30 | )

31 | for c in iter(lambda: process.stdout.read(1), b""):

32 | sys.stdout.write(_GREEN + _BOLD + c.decode(sys.stdout.encoding))

33 |

34 |

35 | def list_subscriptions(profile: Profile = None) -> list:

36 | """Lists the subscriptions associated with the profile

37 |

38 | If you don't supply a profile it will try to get the profile from your Azure CLI login

39 |

40 | Args:

41 | profile (azure.cli.core._profile.Profile, optional): Profile you wish to use. Defaults to None.

42 |

43 | Returns:

44 | list: list of subscriptions

45 | """

46 | if profile is None:

47 | profile = subscription_profile()

48 | cred, _, _ = profile.get_login_credentials()

49 | sub_client = SubscriptionClient(cred)

50 | return [

51 | {"Index": i, "Name": sub.display_name, "id": sub.subscription_id}

52 | for i, sub in enumerate(sub_client.subscriptions.list())

53 | ]

54 |

55 |

56 | def subscription_profile() -> Profile:

57 | """Return the Azure CLI profile

58 |

59 | Returns:

60 | azure.cli.core._profile.Profile: Azure profile

61 | """

62 | logger = logging.getLogger(__name__)

63 | try:

64 | return get_cli_profile()

65 | except CLIError:

66 | logger.info("Not logged in, running az login")

67 | run_az_cli_login()

68 | return get_cli_profile()

69 |

70 |

71 | def _prompt_sub_id_selection(profile: Profile) -> str:

72 | sub_list = list_subscriptions(profile=profile)

73 | pipe(sub_list, tabulate, print)

74 | prompt_result = prompt("Please type in index of subscription you want to use: ")

75 | selected_sub = sub_list[int(prompt_result)]

76 | print(

77 | f"You selected index {prompt_result} sub id {selected_sub['id']} name {selected_sub['Name']}"

78 | )

79 | return selected_sub["id"]

80 |

81 |

82 | def select_subscription(profile: Profile = None, sub_name_or_id: str = None) -> Profile:

83 | """Sets active subscription

84 |

85 | If you don't supply a profile it will try to get the profile from your Azure CLI login

86 | If you don't supply a subscription name or id it will list ones from your account and ask you to select one

87 |

88 | Args:

89 | profile (azure.cli.core._profile.Profile, optional): Profile you wish to use. Defaults to None.

90 | sub_name_or_id (str, optional): The subscription name or id to use. Defaults to None.

91 |

92 | Returns:

93 | azure.cli.core._profile.Profile: Azure profile

94 |

95 | Example:

96 | >>> profile = select_subscription()

97 | """

98 | if profile is None:

99 | profile = subscription_profile()

100 |

101 | if sub_name_or_id is None:

102 | sub_name_or_id = _prompt_sub_id_selection(profile)

103 |

104 | profile.set_active_subscription(sub_name_or_id)

105 | return profile

106 |

--------------------------------------------------------------------------------

/azure_utils/azureml_tools/workspace.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - workspace

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 |

8 | import logging

9 | import os

10 | from pathlib import Path

11 |

12 | import azureml

13 | from azureml.core.authentication import (

14 | AuthenticationException,

15 | AzureCliAuthentication,

16 | InteractiveLoginAuthentication,

17 | ServicePrincipalAuthentication,

18 | AbstractAuthentication,

19 | )

20 | from azureml.core import Workspace

21 |

22 | _DEFAULT_AML_PATH = "aml_config/azml_config.json"

23 |

24 |

25 | def _get_auth() -> AbstractAuthentication:

26 | """Returns authentication to Azure Machine Learning workspace."""

27 | logger = logging.getLogger(__name__)

28 | if os.environ.get("AML_SP_PASSWORD", None):

29 | logger.debug("Trying to authenticate with Service Principal")

30 | aml_sp_password = os.environ.get("AML_SP_PASSWORD")

31 | aml_sp_tenant_id = os.environ.get("AML_SP_TENNANT_ID")

32 | aml_sp_username = os.environ.get("AML_SP_USERNAME")

33 | auth = ServicePrincipalAuthentication(

34 | aml_sp_tenant_id, aml_sp_username, aml_sp_password

35 | )

36 | else:

37 | logger.debug("Trying to authenticate with CLI Authentication")

38 | try:

39 | auth = AzureCliAuthentication()

40 | auth.get_authentication_header()

41 | except AuthenticationException:

42 | logger.debug("Trying to authenticate with Interactive login")

43 | auth = InteractiveLoginAuthentication()

44 |

45 | return auth

46 |

47 |

48 | def create_workspace(

49 | workspace_name: str,

50 | resource_group: str,

51 | subscription_id: str,

52 | workspace_region: str,

53 | filename: str = "azml_config.json",

54 | ) -> Workspace:

55 | """Creates Azure Machine Learning workspace."""

56 | logger = logging.getLogger(__name__)

57 | auth = _get_auth()

58 |

59 | # noinspection PyTypeChecker

60 | ws = azureml.core.Workspace.create(

61 | name=workspace_name,

62 | subscription_id=subscription_id,

63 | resource_group=resource_group,

64 | location=workspace_region,

65 | exist_ok=True,

66 | auth=auth,

67 | )

68 |

69 | logger.info(ws.get_details())

70 | ws.write_config(file_name=filename)

71 | return ws

72 |

73 |

74 | def load_workspace(path: str) -> Workspace:

75 | """Loads Azure Machine Learning workspace from a config file."""

76 | auth = _get_auth()

77 | # noinspection PyTypeChecker

78 | workspace = azureml.core.Workspace.from_config(auth=auth, path=path)

79 | logger = logging.getLogger(__name__)

80 | logger.info(

81 | "\n".join(

82 | [

83 | "Workspace name: " + str(workspace.name),

84 | "Azure region: " + str(workspace.location),

85 | "Subscription id: " + str(workspace.subscription_id),

86 | "Resource group: " + str(workspace.resource_group),

87 | ]

88 | )

89 | )

90 | return workspace

91 |

92 |

93 | def workspace_for_user(

94 | workspace_name: str,

95 | resource_group: str,

96 | subscription_id: str,

97 | workspace_region: str,

98 | config_path: str = _DEFAULT_AML_PATH,

99 | ) -> Workspace:

100 | """Returns Azure Machine Learning workspace."""

101 | if os.path.isfile(config_path):

102 | return load_workspace(config_path)

103 |

104 | path_obj = Path(config_path)

105 | filename = path_obj.name

106 | return create_workspace(

107 | workspace_name,

108 | resource_group,

109 | subscription_id=subscription_id,

110 | workspace_region=workspace_region,

111 | filename=filename,

112 | )

113 |

--------------------------------------------------------------------------------

/azure_utils/configuration/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - configuration/__init__.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 |

--------------------------------------------------------------------------------

/azure_utils/configuration/configuration_ui.py:

--------------------------------------------------------------------------------

1 | """

2 | - configuration_ui.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 |

8 | from tkinter import Button, END, Frame, Label, TRUE, Text, messagebox

9 |

10 | from azure_utils.configuration.configuration_validation import (

11 | Validation,

12 | ValidationResult,

13 | )

14 | from azure_utils.configuration.project_configuration import ProjectConfiguration

15 |

16 |

17 | class SettingsUpdate(Frame):

18 | """

19 | UI Wrapper for project configuration settings.

20 |

21 | Provide a configuration file as described in configuration.ProjectConfiguration.

22 |

23 | A UI is built using a grid where each row consists of:

24 | setting_description | Text control to show and accept values

25 |

26 | Final row of the grid has a save and cancel button.

27 |

28 | Save updates the configuration file with any settings put on the UI.

29 | """

30 |

31 | def __init__(self, project_configuration, master):

32 | Frame.__init__(self, master=master)

33 |

34 | # self.configuration = Instance of ProjectConfiguration and master

35 | # self.master_win = Instance of Tk application.

36 | # self.settings = Will be a dictionary where

37 | # key = Setting name

38 | # value = Text control

39 |

40 | self.configuration = project_configuration

41 | self.master_win = master

42 | self.settings = {}

43 |

44 | # Set up validator

45 | self.validator = Validation()

46 |

47 | # Set up some window options

48 |

49 | self.master_win.title(self.configuration.project_name())

50 | self.master_win.resizable(width=TRUE, height=TRUE)

51 | self.master_win.configure(padx=10, pady=10)

52 |

53 | # Populate the grid first with settings followed by the two buttons (cancel/save)

54 | current_row = 0

55 | for setting in self.configuration.get_settings():

56 |

57 | if not isinstance(setting, dict):

58 | print("Found setting does not match pattern...")

59 | continue

60 |

61 | # Only can be one key as they are singletons with a list

62 | # of values

63 | if len(setting.keys()) == 1:

64 | for setting_name in setting.keys():

65 | details = setting[setting_name]

66 | description = details[0][ProjectConfiguration.setting_description]

67 | value = details[1][ProjectConfiguration.setting_value]

68 |

69 | lbl = Label(self.master_win, text=description)

70 | lbl.grid(row=current_row, column=0, columnspan=1, sticky="nwse")

71 | txt = Text(self.master_win, height=1, width=40, wrap="none")

72 | txt.grid(

73 | row=current_row, column=1, columnspan=2, sticky="nwse", pady=10

74 | )

75 | txt.insert(END, value)

76 |

77 | self.settings[setting_name] = txt

78 | current_row += 1

79 |

80 | # Add in the save/cancel buttons

81 | save_button = Button(self.master_win, text="Save", command=self.save_setting)

82 | save_button.grid(row=current_row, column=1, columnspan=1, sticky="nwse")

83 | close_button = Button(self.master_win, text="Cancel", command=self.cancel)

84 | close_button.grid(row=current_row, column=2, columnspan=1, sticky="nwse")

85 |

86 | def cancel(self):

87 | """

88 | Cancel clicked, just close the window.

89 | """

90 | self.master_win.destroy()

91 |

92 | def save_setting(self):

93 | """

94 | Save clicked

95 | - For each row, collect the setting name and user input.

96 | - Clean user input

97 | - Set values for all settings

98 | - Save configuration

99 | - Close window

100 | """

101 | validate_responses = self.prompt_field_validation()

102 | field_responses = []

103 |

104 | for setting in self.settings:

105 | user_entered = self.settings[setting].get("1.0", END)

106 | user_entered = user_entered.strip().replace("\n", "")

107 |

108 | # Validate it

109 | if validate_responses:

110 | res = self.validator.validate_input(setting, user_entered)

111 | field_responses.append(res)

112 | Validation.dump_validation_result(res)

113 | else:

114 | print("Updating {} with '{}'".format(setting, user_entered))

115 |

116 | self.configuration.set_value(setting, user_entered)

117 |

118 | if self.validate_responses(field_responses):

119 | print("Writing out new configuration options...")

120 | self.configuration.save_configuration()

121 | self.cancel()

122 |

123 | @staticmethod

124 | def validate_responses(validation_responses) -> bool:

125 | """

126 | Determine if there are any failures or warnings. If so, give the user the

127 | option of staying on the screen to fix them.

128 |

129 | :param validation_responses: Response to validate

130 | :return: `bool` validation outcome

131 | """

132 |

133 | if validation_responses:

134 | failed = [

135 | x for x in validation_responses if x.status == ValidationResult.failure

136 | ]

137 | warn = [

138 | x for x in validation_responses if x.status == ValidationResult.warning

139 | ]

140 |

141 | error_count, message = SettingsUpdate.get_failed_message(failed)

142 | error_count, message = SettingsUpdate.get_warning_message(

143 | warn, error_count, message

144 | )

145 |

146 | return SettingsUpdate.print_if_errors(error_count, message)

147 | return True

148 |

149 | @staticmethod

150 | def print_if_errors(error_count, message):

151 | """

152 |

153 | :param error_count:

154 | :param message:

155 | :return:

156 | """

157 | if error_count > 0:

158 | user_prefix = "The following fields either failed validation or produced a warning :\n\n"

159 | user_postfix = "Click Yes to continue with these validation issues or No to correct them."

160 | return messagebox.askyesno(

161 | "Validate Errors", "{}{}{}".format(user_prefix, message, user_postfix)

162 | )

163 | return True

164 |

165 | @staticmethod

166 | def get_warning_message(warn, error_count=0, message=""):

167 | """

168 |

169 | :param warn:

170 | :param error_count:

171 | :param message:

172 | :return:

173 | """

174 | if warn:

175 | message += "WARNINGS:\n"

176 | for resp in warn:

177 | if resp.reason != Validation.FIELD_NOT_RECOGNIZED:

178 | error_count += 1

179 | message += " {}:\n{}\n\n".format(resp.type, resp.reason)

180 | message += "\n"

181 | return error_count, message

182 |

183 | @staticmethod

184 | def get_failed_message(failed, error_count=0, message=""):

185 | """

186 |

187 | :param failed:

188 | :param error_count:

189 | :param message:

190 | :return:

191 | """

192 | if failed:

193 | message += "ERRORS:\n"

194 | for resp in failed:

195 | error_count += 1

196 | message += " {}\n".format(resp.type)

197 | message += "\n"

198 | return error_count, message

199 |

200 | def prompt_field_validation(self) -> bool:

201 | """

202 | Prompt the user on whether to validate the fields

203 |

204 | :return: `bool` based on user's response

205 | """

206 | valid_fields = "\n"

207 | for setting in self.settings:

208 | if self.validator.is_field_valid(setting):

209 | valid_fields += "{}\n".format(setting)

210 |

211 | user_prefix = "The following fields can be validated :\n\n"

212 | user_postfix = "\nValidation will add several seconds to the save, would you like to validate these settings?"

213 |

214 | return messagebox.askyesno(

215 | "Validate Inputs", "{}{}{}".format(user_prefix, valid_fields, user_postfix)

216 | )

217 |

--------------------------------------------------------------------------------

/azure_utils/configuration/notebook_config.py:

--------------------------------------------------------------------------------

1 | """

2 | - notebook_config.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 |

7 | Import the needed functionality

8 | - tkinter :

9 | Python GUI library

10 | - configuration.ProjectConfiguration

11 | Configuration object that reads/writes to the configuration settings YAML file.

12 | - configuration_ui.SettingsUpdate

13 | tkinter based UI that dynamically loads any appropriate configuration file

14 | and displays it to the user to alter the settings.

15 |

16 |

17 | If you wish to run this file locally, uncomment the section below and then run

18 | the Python script directly from this directory. This will utilize the

19 | project.yml file as the configuration file under test.

20 |

21 | LOCAL_ONLY

22 | import os

23 | import sys

24 | if __name__ == "__main__":

25 | current = os.getcwd()

26 | az_utils = os.path.split(current)

27 | while not az_utils[0].endswith("AI-Utilities"):

28 | az_utils = os.path.split(az_utils[0])

29 |

30 | if az_utils[0] not in sys.path:

31 | sys.path.append(az_utils[0])

32 | """

33 |

34 | from tkinter import Tk

35 |

36 | from azure_utils.configuration.configuration_ui import SettingsUpdate

37 | from azure_utils.configuration.project_configuration import ProjectConfiguration

38 |

39 | project_configuration_file = "project.yml"

40 | train_py_default = "train.py"

41 | score_py_default = "score.py"

42 |

43 |

44 | def get_or_configure_settings(configuration_yaml: str = project_configuration_file):

45 | """

46 | Only configure the settings if the subscription ID has not been provided yet.

47 | This will help with automation in which the configuration file is provided.

48 |

49 | :param configuration_yaml: Location of configuration yaml

50 | """

51 | settings_object = get_settings(configuration_yaml)

52 | sub_id = settings_object.get_value("subscription_id")

53 |

54 | if sub_id == "<>":

55 | configure_settings(configuration_yaml)

56 |

57 | return get_settings(configuration_yaml)

58 |

59 |

60 | def configure_settings(configuration_yaml: str = project_configuration_file):

61 | """

62 | Launch a tkinter UI to configure the project settings in the provided

63 | configuration_yaml file. If a file is not provided, the default ./project.yml

64 | file will be created for the caller.

65 |

66 | configuration_yaml -> Disk location of the configuration file to modify.

67 |

68 | ProjectConfiguration will open an existing YAML file or create a new one. It is

69 | suggested that your project simply create a simple configuration file containing

70 | all of your settings so that the user simply needs to modify it with the UI.

71 |

72 | In this instance, we assume that the default configuration file is called project.yml.

73 | This will be used if the user passes nothing else in.

74 |

75 | :param configuration_yaml: Location of configuration yaml

76 | """

77 | project_configuration = ProjectConfiguration(configuration_yaml)

78 |

79 | # Finally, create a Tk window and pass that along with the configuration object

80 | # to the SettingsObject class for modification.

81 |

82 | window = Tk()

83 | app = SettingsUpdate(project_configuration, window)

84 | app.mainloop()

85 |

86 |

87 | def get_settings(

88 | configuration_yaml: str = project_configuration_file,

89 | ) -> ProjectConfiguration:

90 | """

91 | Acquire the project settings from the provided configuration_yaml file.

92 | If a file is not provided, the default ./project.yml will be created and

93 | an empty set of settings will be returned to the user.

94 |

95 | configuration_yaml -> Disk location of the configuration file to modify.

96 |

97 | ProjectConfiguration will open an existing YAML file or create a new one. It is

98 | suggested that your project simply create a simple configuration file containing

99 | all of your settings so that the user simply needs to modify it with the UI.

100 |

101 | In this instance, we assume that the default configuration file is called project.yml.

102 | This will be used if the user passes nothing else in.

103 |

104 | :param configuration_yaml: Project configuration yml

105 | :return: loaded ProjectConfiguration object

106 | """

107 | return ProjectConfiguration(configuration_yaml)

108 |

109 |

110 | if __name__ == "__main__":

111 | configure_settings()

112 |

--------------------------------------------------------------------------------

/azure_utils/configuration/project.yml:

--------------------------------------------------------------------------------

1 | project_name: AI Default Project

2 | settings:

3 | - subscription_id:

4 | - description: Azure Subscription Id

5 | - value: abcd1234

6 | - resource_group:

7 | - description: Azure Resource Group Name

8 | - value: <>

9 | - workspace_name:

10 | - description: Azure ML Workspace Name

11 | - value: <>

12 | - workspace_region:

13 | - description: Azure ML Workspace Region

14 | - value: <>

15 | - image_name:

16 | - description: Docker Container Image Name

17 | - value: <>

18 | - aks_service_name:

19 | - description: AKS Service Name

20 | - value: <>

21 | - aks_name:

22 | - description: AKS Cluster Name

23 | - value: <>

24 | - storage_account:

25 | - description: Azure Storage Account

26 | - value: my_account-name*

27 |

--------------------------------------------------------------------------------

/azure_utils/dev_ops/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - __init__.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 |

--------------------------------------------------------------------------------

/azure_utils/dev_ops/testing_utilities.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - testing_utilities.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 | import json

8 | import os

9 | import re

10 | import sys

11 |

12 | import nbformat

13 | import papermill as pm

14 | from junit_xml import TestCase, TestSuite, to_xml_report_file

15 | from nbconvert import MarkdownExporter, RSTExporter

16 |

17 | notebook_output_ext = ".output_ipynb"

18 |

19 |

20 | def run_notebook(

21 | input_notebook,

22 | add_nunit_attachment,

23 | parameters=None,

24 | kernel_name="ai-architecture-template",

25 | root=".",

26 | ):

27 | """

28 | Used to run a notebook in the correct directory.

29 |

30 | Parameters

31 | ----------

32 | :param input_notebook: Name of Notebook to Test

33 | :param add_nunit_attachment:

34 | :param parameters:

35 | :param kernel_name: Jupyter Kernel

36 | :param root:

37 | """

38 |

39 | output_notebook = input_notebook.replace(".ipynb", notebook_output_ext)

40 | try:

41 | results = pm.execute_notebook(

42 | os.path.join(root, input_notebook),

43 | os.path.join(root, output_notebook),

44 | parameters=parameters,

45 | kernel_name=kernel_name,

46 | )

47 |

48 | for cell in results.cells:

49 | if cell.cell_type == "code":

50 | assert not cell.metadata.papermill.exception, "Error in Python Notebook"

51 | finally:

52 | with open(os.path.join(root, output_notebook)) as json_file:

53 | data = json.load(json_file)

54 | jupyter_output = nbformat.reads(

55 | json.dumps(data), as_version=nbformat.NO_CONVERT

56 | )

57 |

58 | export_md(

59 | jupyter_output,

60 | output_notebook,

61 | add_nunit_attachment,

62 | file_ext=".txt",

63 | root=root,

64 | )

65 |

66 | regex = r"Deployed (.*) with name (.*). Took (.*) seconds."

67 |

68 | with open(os.path.join(root, output_notebook)) as file:

69 | data = file.read()

70 |

71 | test_cases = []

72 | for group in re.findall(regex, data):

73 | test_cases.append(

74 | TestCase(

75 | name=group[0] + " creation",

76 | classname=input_notebook,

77 | elapsed_sec=float(group[2]),

78 | status="Success",

79 | )

80 | )

81 |

82 | test_suite = TestSuite("my test suite", test_cases)

83 |

84 | with open("test-timing-output.xml", "w") as test_file:

85 | to_xml_report_file(test_file, [test_suite], prettyprint=False)

86 |

87 |

88 | def export_notebook(

89 | exporter, jupyter_output, output_notebook, add_nunit_attachment, file_ext, root="."

90 | ):

91 | """

92 | Export Jupyter Output to File

93 |

94 | :param exporter:

95 | :param jupyter_output:

96 | :param output_notebook:

97 | :param add_nunit_attachment:

98 | :param file_ext:

99 | :param root:

100 | """

101 | (body, _) = exporter.from_notebook_node(jupyter_output)

102 | path = os.path.join(root, output_notebook.replace(notebook_output_ext, file_ext))

103 | with open(path, "w") as text_file:

104 | sys.stderr.write(body)

105 | text_file.write(body)

106 |

107 | if add_nunit_attachment is not None:

108 | add_nunit_attachment(path, output_notebook)

109 |

110 |

111 | def export_md(

112 | jupyter_output, output_notebook, add_nunit_attachment, file_ext=".md", root="."

113 | ):

114 | """

115 | Export Jupyter Output to Markdown File

116 |

117 | :param jupyter_output:

118 | :param output_notebook:

119 | :param add_nunit_attachment:

120 | :param file_ext:

121 | :param root:

122 | """

123 | markdown_exporter = MarkdownExporter()

124 | export_notebook(

125 | markdown_exporter,

126 | jupyter_output,

127 | output_notebook,

128 | add_nunit_attachment,

129 | file_ext,

130 | root=root,

131 | )

132 |

133 |

134 | def export_rst(

135 | jupyter_output, output_notebook, add_nunit_attachment, file_ext=".rst", root="."

136 | ):

137 | """

138 | Export Jupyter Output to RST File

139 |

140 | :param jupyter_output:

141 | :param output_notebook:

142 | :param add_nunit_attachment:

143 | :param file_ext:

144 | :param root:

145 | """

146 | rst_exporter = RSTExporter()

147 | export_notebook(

148 | rst_exporter,

149 | jupyter_output,

150 | output_notebook,

151 | add_nunit_attachment,

152 | file_ext,

153 | root=root,

154 | )

155 |

--------------------------------------------------------------------------------

/azure_utils/logger/README.md:

--------------------------------------------------------------------------------

1 | # Data Tracker

2 | Dan Grecoe - A Microsoft Employee

3 |

4 | When running Python projects through Azure Dev Ops (https://dev.azure.com) there is a need to collect certain statistics

5 | such as deployment time, or to pass out information related to a deployment as the agent the build runs on will be torn

6 | down once the build is complete.

7 |

8 | Of course, there are several options for doing so and this repository contains one option.

9 |

10 | The code in this repository enables saving these data to an Azure Storage account for consumption at a later time.

11 |

12 | Descriptions of the class and how it performs can be found in the MetricsUtils/hpStatisticsCollection.py file.

13 |

14 | An example on how to use the code for various tasks can be found in the statsCollectionTest.py file.

15 |

16 | ## Pre-requisites

17 | To use this example, you must pip install the following into your environment:

18 | - azure-cli-core

19 | - azure-storage-blob

20 |

21 | These are normally installed with the azml libraries; if the imports fail, install them explicitly.

22 |

23 | ## Use in a notebook with AZML

24 | First you need to include the following

25 |

26 | ```

27 | from MetricsUtils.hpStatisticsCollection import statisticsCollector, CollectionEntry

28 | from MetricsUtils.storageutils import storageConnection

29 | ```

30 |

31 | This gives you access to the code. This assumes that you have installed either as a submodule or manually, the files in

32 | a folder called MetricsUtils in the same directory as the notebooks themselves.

33 |

34 | ### First notebook

35 | In the first notebook, you can certainly make use of the tracker to collect stats before the workspace is created, for

36 | example:

37 |

38 | ```

39 | statisticsCollector.startTask(CollectionEntry.AML_WORKSPACE_CREATION)

40 |

41 | ws = Workspace.create(

42 | name=workspace_name,

43 | subscription_id=subscription_id,

44 | resource_group=resource_group,

45 | location=workspace_region,

46 | create_resource_group=True,

47 | auth=get_auth(env_path),

48 | exist_ok=True,

49 | )

50 |

51 | statisticsCollector.endTask(CollectionEntry.AML_WORKSPACE_CREATION)

52 | ```
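The start/end pattern above can be sketched without any Azure dependency. The following is a minimal stand-in, not the repository's collector class: the name `TaskTimer` and its methods are hypothetical, and the sketch only illustrates recording a start time and storing the elapsed milliseconds once the task ends.

```python
import json
import time


class TaskTimer:
    """Hypothetical stand-in that mimics the start/end timing pattern."""

    def __init__(self):
        self._running = {}  # task name -> start time (monotonic seconds)
        self.metrics = {}   # task name -> elapsed milliseconds

    def start_task(self, name):
        self._running[name] = time.monotonic()

    def end_task(self, name):
        # Only record a metric if the task was started first.
        started = self._running.pop(name, None)
        if started is not None:
            self.metrics[name] = (time.monotonic() - started) * 1000

    def to_json(self):
        return json.dumps(self.metrics)


timer = TaskTimer()
timer.start_task("amlworkspace")
time.sleep(0.01)  # placeholder for the real work, e.g. Workspace.create(...)
timer.end_task("amlworkspace")
timer.end_task("never_started")  # ignored: no start time was recorded
print(timer.to_json())
```

Ending a task that was never started is silently ignored here, which keeps a failed or skipped step from producing a misleading timing entry.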

53 |

54 | In fact, you are going to need to create this workspace to get the storage account name. So, in that first notebook, you

55 | will likely want to save off the storage connection string into the environment or .env file.

56 |

57 | The storage account name can be found with this code:

58 | ```

59 | stgAcctName = ws.get_details()['storageAccount'].split('/')[-1]

60 | ```
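As a sketch of what that expression does: the `storageAccount` entry is assumed here to hold a full Azure resource ID, and splitting on `/` keeps only the last segment. The ID below is made up for illustration and `details` stands in for the dictionary returned by `ws.get_details()`.

```python
# Made-up resource ID, for illustration only.
details = {
    "storageAccount": (
        "/subscriptions/00000000-0000-0000-0000-000000000000"
        "/resourceGroups/example-rg/providers/Microsoft.Storage"
        "/storageAccounts/examplestorage"
    )
}

# The account name is the final path segment of the resource ID.
stgAcctName = details["storageAccount"].split("/")[-1]
print(stgAcctName)
```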

61 |

62 | Once you have the storage account name, you save the statistics to storage using the following at or near the bottom of

63 | your notebook. If you believe there may be failures along the way, you can perform the upload multiple times, it will

64 | just overwrite what is there.

65 |

66 | Also note that this assumes the user is logged in to the same subscription as the storage account.

67 | ```

68 | storageConnString = storageConnection.getConnectionStringWithAzCredentials(resource_group, stgAcctName)

69 | statisticsCollector.uploadContent(storageConnString)

70 | ```

71 |

72 | ### Follow on notebooks

73 | The difference in a follow up notebook is that settings have likely already been saved. Since we have the storage

74 | account name now in the environment, we just need to pull the information from storage into the tracking class such as:

75 |

76 | ```

77 | storageConnString = storageConnection.getConnectionStringWithAzCredentials(resource_group, stgAcctName)

78 | statisticsCollector.hydrateFromStorage(storageConnString)

79 | ```

80 |

81 | Then continue to use the object as you did in the first notebook, being sure to call the uploadContent() method to save

82 | whatever changes you want to storage.

83 |

84 | # Contributing

85 |

86 | This project welcomes contributions and suggestions. Most contributions require you to agree to a

87 | Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us

88 | the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

89 |

90 | When you submit a pull request, a CLA bot will automatically determine whether you need to provide

91 | a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions

92 | provided by the bot. You will only need to do this once across all repos using our CLA.

93 |

94 | This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

95 | For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or

96 | contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.

97 |

--------------------------------------------------------------------------------

/azure_utils/logger/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - logger/__init__.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 | """

7 |

--------------------------------------------------------------------------------

/azure_utils/logger/ai_logger.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - logger/ai_logger.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 |

7 | Enumeration used in the statisticsCollector class to track individual tasks. These enums can be used by

8 | both the producer (IPYNB path) and consumer (E2E path).

9 |

10 | The statisticsCollector requires the enum for tracking calls

11 | startTask()

12 | endTask()

13 | addEntry()

14 | getEntry()

15 | """

16 |

17 | import json

18 | from datetime import datetime

19 | from enum import Enum

20 |

21 | from azure_utils.logger.blob_storage import BlobStorageAccount

22 | from azure_utils.logger.storageutils import StorageConnection

23 |

24 |

25 | class CollectionEntry(Enum):

26 | """ Deploy Steps Enums"""

27 |

28 | AKS_CLUSTER_CREATION = "akscreate"

29 | AML_COMPUTE_CREATION = "amlcompute"

30 | AML_WORKSPACE_CREATION = "amlworkspace"

31 |

32 |

33 | # Class used for keeping track of tasks during the execution of a path. Data can be archived and retrieved to/from

34 | # Azure Storage.

35 | #

36 | # Tasks can be started and completed with an internal timer keeping track of the MS it takes to run

37 | # startTask()

38 | # endTask()

39 | #

40 | # Entries can also be added with any other data point the user would require

41 | # addEntry()

42 | #

43 | # Regardless of how an entry was put in the collection, it can be retrieved

44 | # getEntry()

45 | #

46 | # Working with the collection itself

47 | # getCollection() -> Retrieves the internal collection as JSON

48 | # uploadContent() -> Uploads to the provided storage account in pre-defined container/blob

49 | # hydrateFromStorage() -> Resets the internal collection to the data found in the provided storage account from

50 | # the pre-defined container/blob.

51 |

52 |

53 | class StatisticsCollector:

54 | """ Statistics Collector """

55 |

56 | __metrics__ = {}

57 | __running_tasks__ = {}

58 | __statscontainer__ = "pathmetrics"

59 | __statsblob__ = "statistics.json"

60 |

61 | # No need to create an instance, all methods are static.

62 |

63 | def __init__(self, path_name: str):

64 | self.path_name = path_name

65 |

66 | @staticmethod

67 | def start_task(collection_entry):

68 | """

69 | Starts a task using one of the enumerators and records a start time of the task. If using this,

70 | the entry is not put into the __metrics__ collection until end_task() is called.

71 |

72 | :param collection_entry: an instance of a CollectionEntry enum

73 | """

74 | StatisticsCollector.__running_tasks__[

75 | collection_entry.value

76 | ] = datetime.utcnow()

77 |

78 | @staticmethod

79 | def end_task(collection_entry):

80 | """

81 | Ends a task using one of the enumerators. If the start time was previously recorded using

82 | start_task() an entry for the specific enumeration is added to the __metrics__ collection that

83 | will be used to upload data to Azure Storage.

84 |

85 | :param collection_entry: an instance of a CollectionEntry enum

86 | """

87 | if collection_entry.value in StatisticsCollector.__running_tasks__.keys():

88 | time_diff = (

89 | datetime.utcnow()

90 | - StatisticsCollector.__running_tasks__[collection_entry.value]

91 | )

92 | ms_delta = time_diff.total_seconds() * 1000

93 | StatisticsCollector.__metrics__[collection_entry.value] = ms_delta

94 |

95 | @staticmethod

96 | def add_entry(collection_entry, data_point):

97 | """

98 | Single call to add an entry to the __metrics__ collection. This would be used when you want to run

99 | the timers in the external code directly.

100 |

101 | This is used to set manual task times or any other valid data point.

102 |

103 |

104 | :param collection_entry: an instance of a CollectionEntry enum

105 | :param data_point: Any valid python data type (string, int, etc)

106 | """

107 | StatisticsCollector.__metrics__[collection_entry.value] = data_point

108 |

109 | """

110 | Retrieve an entry in the internal collection.

111 |

112 | Parameters:

113 | collectionEntry - an instance of a CollectionEntry enum

114 |

115 | Returns:

116 | The data in the collection or None if the entry is not present.

117 | """

118 |

119 | @staticmethod

120 | def get_entry(collection_entry):

121 | """

122 |

123 | :param collection_entry:

124 | :return:

125 | """

126 | return_data_point = None

127 | if collection_entry.value in StatisticsCollector.__metrics__.keys():

128 | return_data_point = StatisticsCollector.__metrics__[collection_entry.value]

129 | return return_data_point

130 |

131 | """

132 | Returns the __metrics__ collection as a JSON string.

133 |

134 | Parameters:

135 | None

136 |

137 | Returns:

138 | String representation of the collection in JSON

139 | """

140 |

141 | @staticmethod

142 | def get_collection():

143 | """

144 |

145 | :return:

146 | """

147 | return json.dumps(StatisticsCollector.__metrics__)

148 |

149 | """

150 | Uploads the JSON string representation of the __metrics__ collection to the specified

151 | storage account.

152 |

153 | Parameters:

154 | connection_string - A complete connection string to an Azure Storage account

155 |

156 | Returns:

157 | Nothing

158 | """

159 |

160 | @staticmethod

161 | def upload_content(connection_string):

162 | """

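163 | Upload the JSON representation of the __metrics__ collection, creating the container if needed.

164 | :param connection_string: a complete connection string to an Azure Storage account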

165 | """

166 | containers, storage_account = StatisticsCollector._get_containers(

167 | connection_string

168 | )

169 | if StatisticsCollector.__statscontainer__ not in containers:

170 | storage_account.create_container(StatisticsCollector.__statscontainer__)

171 | storage_account.upload_blob(

172 | StatisticsCollector.__statscontainer__,

173 | StatisticsCollector.__statsblob__,

174 | StatisticsCollector.get_collection(),

175 | )

176 |

177 | """

178 | Download the content from blob storage as a string representation of the JSON. This can be used for collecting

179 | and pushing downstream to whomever is interested. This call does not affect the internal collection.

180 |

181 | Parameters:

182 | connection_string - A complete connection string to an Azure Storage account

183 |

184 | Returns:

185 | The uploaded collection that was pushed to storage or None if not present.

186 |

187 | """

188 |

189 | @staticmethod

190 | def retrieve_content(connection_string):

191 | """

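192 | Download the stored collection without changing the in-memory __metrics__ collection.

193 | :param connection_string: a complete connection string to an Azure Storage account

194 | :return: the stored JSON content, or None if the statistics container does not exist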

195 | """

196 | return_content = None

197 | containers, storage_account = StatisticsCollector._get_containers(

198 | connection_string

199 | )

200 | if StatisticsCollector.__statscontainer__ in containers:

201 | return_content = storage_account.download_blob(

202 | StatisticsCollector.__statscontainer__,

203 | StatisticsCollector.__statsblob__,

204 | )

205 | return return_content

206 |

207 | @staticmethod

208 | def _get_containers(connection_string):

209 | connection_object = StorageConnection(connection_string)

210 | storage_account = BlobStorageAccount(connection_object)

211 | # noinspection PyUnresolvedReferences

212 | containers = storage_account.getContainers()

213 | return containers, storage_account

214 |

215 | """

216 | Retrieves the content in storage and hydrates the __metrics__ dictionary, dropping any existing information.

217 |

218 | Useful between IPYNB runs/stages in DevOps.

219 |

220 | Parameters:

221 | connection_string - A complete connection string to an Azure Storage account

222 |

223 | Returns:

224 | Nothing

225 | """

226 |

227 | @staticmethod

228 | def hydrate_from_storage(connection_string):

229 | """

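230 | Replace the __metrics__ collection with the content held in storage, if any.

231 | :param connection_string: a complete connection string to an Azure Storage account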

232 | """

233 | return_content = StatisticsCollector.retrieve_content(connection_string)

234 | if return_content is not None:

235 | StatisticsCollector.__metrics__ = json.loads(return_content)

236 | else:

237 | print("There was no data in storage")

238 |
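The timing and JSON round-trip logic above can be tried without an Azure Storage account. The following is a minimal sketch, not the library's implementation: `InMemoryCollector`, its `hydrate` method, and the members of this `CollectionEntry` enum are hypothetical stand-ins, and `start_task` is assumed to record a UTC start time, since only `end_task` is visible here.

```python
import json
from datetime import datetime, timezone
from enum import Enum


class CollectionEntry(Enum):
    """Hypothetical stand-in for the CollectionEntry enum used by the collector."""

    TRAINING_TIME = "training_time"
    MODEL_NAME = "model_name"
    UNUSED = "unused"


class InMemoryCollector:
    """Illustrative mirror of the timer and JSON logic, with no storage calls."""

    running_tasks = {}
    metrics = {}

    @staticmethod
    def start_task(entry):
        # Assumption: the start time is stored as a datetime keyed by the enum value.
        InMemoryCollector.running_tasks[entry.value] = datetime.now(timezone.utc)

    @staticmethod
    def end_task(entry):
        # Record the elapsed time in milliseconds, but only for a task that was started.
        started = InMemoryCollector.running_tasks.get(entry.value)
        if started is not None:
            elapsed = datetime.now(timezone.utc) - started
            InMemoryCollector.metrics[entry.value] = elapsed.total_seconds() * 1000

    @staticmethod
    def add_entry(entry, data_point):
        InMemoryCollector.metrics[entry.value] = data_point

    @staticmethod
    def get_entry(entry):
        # dict.get returns None for a missing key, matching get_entry above.
        return InMemoryCollector.metrics.get(entry.value)

    @staticmethod
    def get_collection():
        return json.dumps(InMemoryCollector.metrics)

    @staticmethod
    def hydrate(serialized):
        # Replaces the whole collection, dropping any existing entries.
        InMemoryCollector.metrics = json.loads(serialized)


InMemoryCollector.start_task(CollectionEntry.TRAINING_TIME)
InMemoryCollector.end_task(CollectionEntry.TRAINING_TIME)
InMemoryCollector.add_entry(CollectionEntry.MODEL_NAME, "demo")

snapshot = InMemoryCollector.get_collection()  # what upload_content would send
InMemoryCollector.metrics = {}                 # simulate a new notebook stage
InMemoryCollector.hydrate(snapshot)            # what hydrate_from_storage would load
```

Because the collection is keyed by the enum's `value` rather than the enum member, it serializes to JSON directly and survives the round trip between stages unchanged.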

--------------------------------------------------------------------------------

/azure_utils/logger/blob_storage.py:

--------------------------------------------------------------------------------

1 | """

2 | AI-Utilities - azure_utils/logger/blob_storage.py

3 |

4 | Copyright (c) Microsoft Corporation. All rights reserved.

5 | Licensed under the MIT License.

6 |

7 | Class that performs work against an Azure Storage account with containers and blobs.

8 |

9 | To use, this library must be installed:

10 |

11 | pip install azure-storage-blob

12 | """

13 |

14 | from datetime import datetime, timedelta

15 |

16 | from azure.storage.blob import BlobPermissions, BlockBlobService, PublicAccess

17 |

18 |

19 | class BlobStorageAccount:

20 | """

21 | Wrapper around an Azure Storage account, constructed from a logger.storageutils.StorageConnection instance

22 | """

23 |

24 | def __init__(self, storage_connection):

25 | self.connection = storage_connection

26 | self.service = BlockBlobService(

27 | self.connection.AccountName, self.connection.AccountKey

28 | )

29 |

30 | # Creates a new storage container in the Azure Storage account

31 |

32 | def create_container(self, container_name):

33 | """

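34 | Create a new container in the storage account and set its ACL to public blob access.

35 | :param container_name: name of the container to create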

36 | """

37 | if self.connection and self.service:

38 | self.service.create_container(container_name)

39 | self.service.set_container_acl(

40 | container_name, public_access=PublicAccess.Blob

41 | )

42 |

43 | # Retrieve a blob SAS token on a specific blob

44 |

45 | def get_blob_sas_token(self, container_name, blob_name):

46 | """

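47 | Generate a read-only SAS token for a single blob, valid for one hour.

48 | :param container_name: name of the container that holds the blob

49 | :param blob_name: name of the blob to grant read access to

50 | :return: the SAS token, or None if no token was generated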

51 | """

52 | return_token = None

53 | if self.connection and self.service:

54 | # noinspection PyUnresolvedReferences,PyTypeChecker

55 | return_token = self.service.generate_blob_shared_access_signature(

56 | container_name,

57 | blob_name,

58 | BlobPermissions.READ,

59 | datetime.utcnow() + timedelta(hours=1),

60 | )

61 |