├── .gitignore

├── .npmignore

├── CODE_OF_CONDUCT.md

├── LICENSE

├── README.md

├── SECURITY.md

├── SUPPORT.md

├── examples

├── ChatExample.js

├── CodeExample.js

├── GeneralExample.js

├── OpenAIExample.js

└── yaml-examples

│ ├── chat.yaml

│ ├── code.yaml

│ ├── general.yaml

│ ├── yaml-chat-example.js

│ ├── yaml-code-example.js

│ └── yaml-general-example.js

├── jest.config.js

├── package-lock.json

├── package.json

├── src

├── ChatEngine.ts

├── CodeEngine.ts

├── PromptEngine.ts

├── index.ts

├── types.ts

└── utils

│ └── utils.ts

├── test

├── Chat.test.ts

└── Code.test.ts

└── tsconfig.json

/.gitignore:

--------------------------------------------------------------------------------

1 | node_modules

2 | dist

3 | out

--------------------------------------------------------------------------------

/.npmignore:

--------------------------------------------------------------------------------

1 | examples

2 | test

--------------------------------------------------------------------------------

/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

1 | # Microsoft Open Source Code of Conduct

2 |

3 | This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

4 |

5 | Resources:

6 |

7 | - [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/)

8 | - [Microsoft Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/)

9 | - Contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with questions or concerns

10 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) Microsoft Corporation.

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Prompt Engine

2 |

3 | This repo contains an NPM utility library for creating and maintaining prompts for Large Language Models (LLMs).

4 |

5 | ## Background

6 |

7 | LLMs like GPT-3 and Codex continue to push the bounds of what AI can do - they generate language and code, and they also show emergent behavior such as question answering, summarization, classification and dialog. One of the best techniques for eliciting specific behavior from LLMs is prompt engineering - crafting inputs that coax the model to produce certain kinds of outputs. Few-shot prompting is the practice of giving examples of inputs and outputs, so that the model has a reference for the type of output you're looking for.

8 |

9 | Prompt engineering can be as simple as formatting a question and passing it to the model, but it can also get quite complex - requiring substantial code to manipulate and update strings. This library aims to make that easier. It also aims to codify patterns and practices around prompt engineering.

10 |

11 | See the [How to get Codex to produce the code you want](https://microsoft.github.io/prompt-engineering/) article for an example of the prompt engineering patterns this library codifies.

12 |

13 | ## Installation

14 |

15 | `npm install prompt-engine`

16 |

17 | ## Usage

18 |

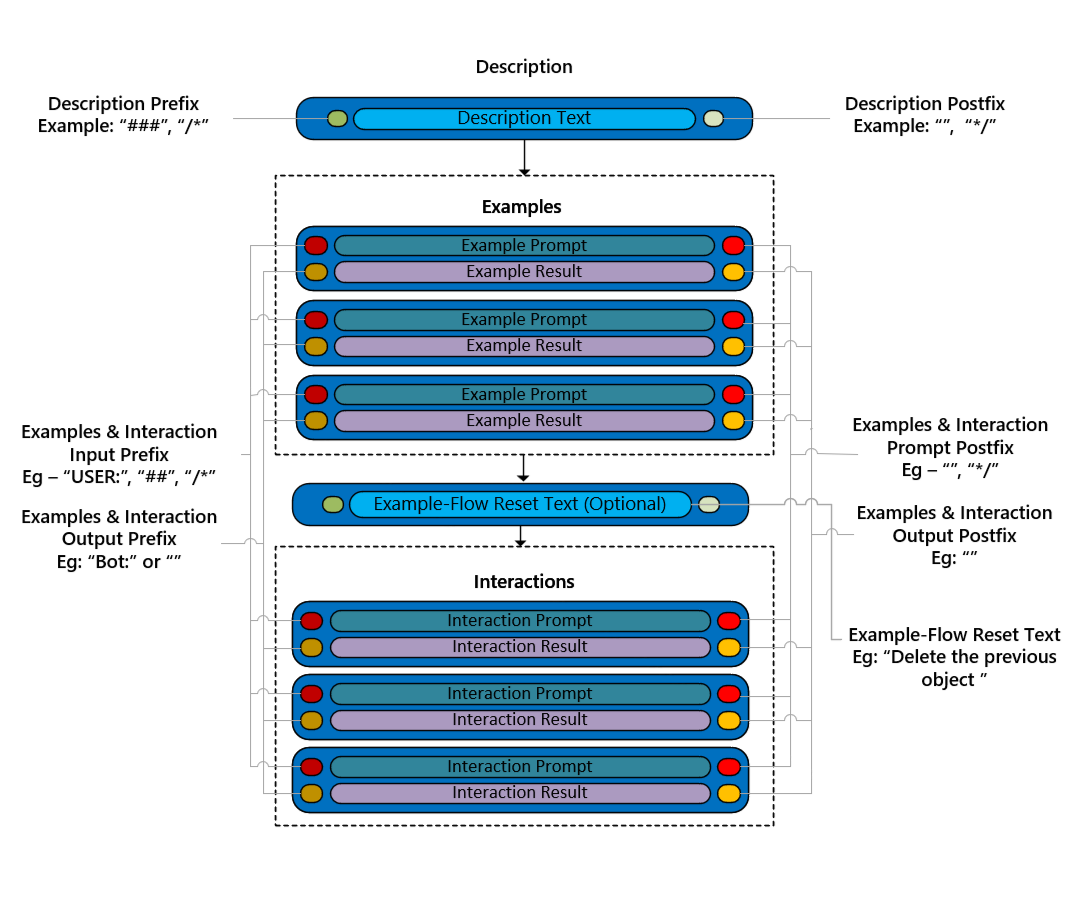

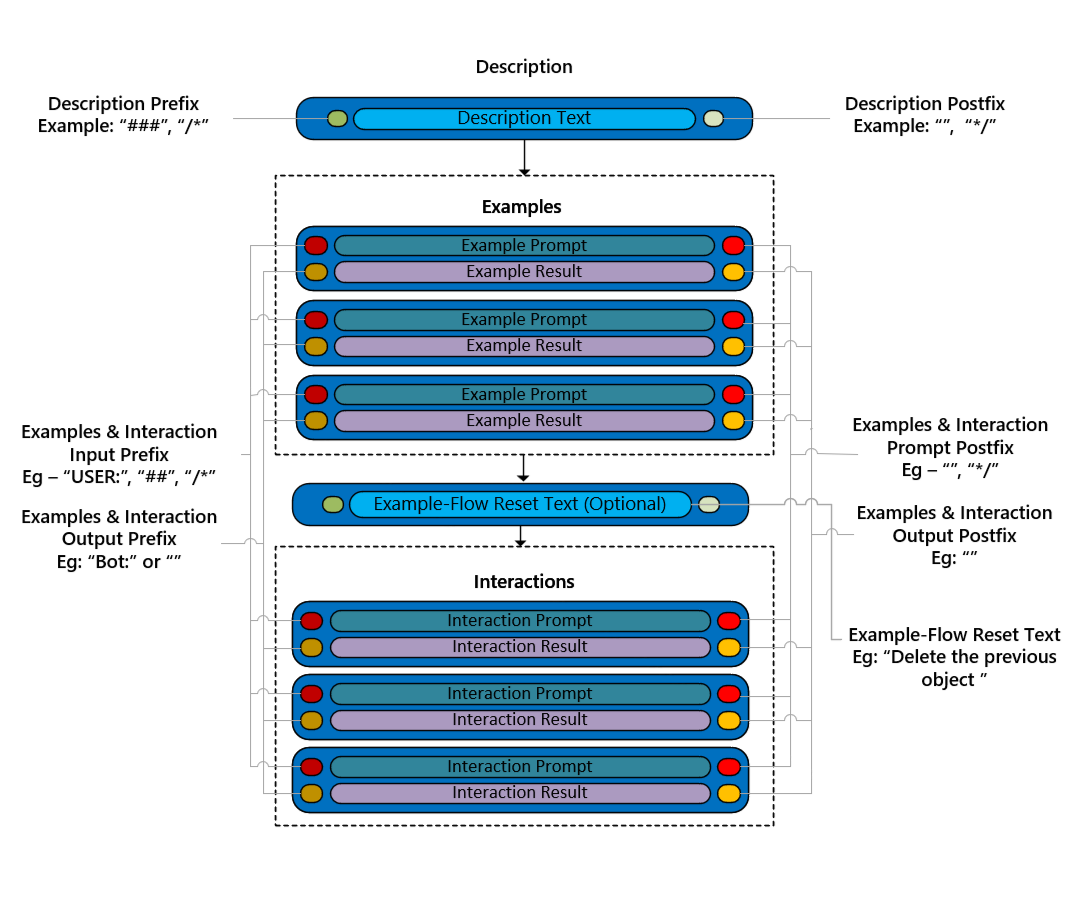

19 | The library currently supports a generic `PromptEngine`, a `CodeEngine` and a `ChatEngine`. All three facilitate a pattern of prompt engineering where the prompt is composed of a description, examples of inputs and outputs, and a running "dialog" of the input/output pairs exchanged as the user and model communicate. The dialog ensures that the model (which is stateless) has the context about what's happened in the conversation so far.

20 |

21 | See architecture diagram representation:

22 |

23 |

24 |

25 |

26 | ### Code Engine

27 |

28 | Code Engine creates prompts for Natural Language to Code scenarios. See TypeScript Syntax for importing `CodeEngine`:

29 |

30 | ```js

31 | import { CodeEngine } from "prompt-engine";

32 | ```

33 |

34 | NL->Code prompts should generally have a description, which should give context about the programming language the model should generate and libraries it should be using. The description should also give information about the task at hand:

35 |

36 | ```js

37 | const description =

38 | "Natural Language Commands to JavaScript Math Code. The code should log the result of the command to the console.";

39 | ```

40 |

41 | NL->Code prompts should also have examples of NL->Code interactions, exemplifying the kind of code you expect the model to produce. In this case, the inputs are math queries (e.g. "what is 2 + 2?") and the responses are code that logs the result of the query to the console.

42 |

43 | ```js

44 | const examples = [

45 | { input: "what's 10 plus 18", response: "console.log(10 + 18)" },

46 | { input: "what's 10 times 18", response: "console.log(10 * 18)" },

47 | ];

48 | ```

49 |

50 | By default, `CodeEngine` uses JavaScript as the programming language, but you can create prompts for different languages by passing a different `CodePromptConfig` into the constructor. If, for example, we wanted to produce Python prompts, we could have passed `CodeEngine` a `pythonConfig` specifying the comment operator it should be using:

51 |

52 | ```js

53 | const pythonConfig = {

54 | commentOperator: "#",

55 | }

56 | const codeEngine = new CodeEngine(description, examples, flowResetText, pythonConfig);

57 |

58 | ```

59 |

60 | With our description and our examples, we can go ahead and create our `CodeEngine`:

61 |

62 | ```js

63 | const codeEngine = new CodeEngine(description, examples);

64 | ```

65 |

66 | Now that we have our `CodeEngine`, we can use it to create prompts:

67 |

68 | ```js

69 | const query = "What's 1018 times the ninth power of four?";

70 | const prompt = codeEngine.buildPrompt(query);

71 | ```

72 |

73 | The resulting prompt will be a string with the description, examples and the latest query formatted with comment operators and line breaks:

74 |

75 | ```js

76 | /* Natural Language Commands to JavaScript Math Code. The code should log the result of the command to the console. */

77 |

78 | /* what's 10 plus 18 */

79 | console.log(10 + 18);

80 |

81 | /* what's 10 times 18 */

82 | console.log(10 * 18);

83 |

84 | /* What's 1018 times the ninth power of four? */

85 | ```

86 |

87 | Given the context, a capable code generation model can take the above prompt and guess the next line: `console.log(1018 * Math.pow(4, 9));`.

88 |

89 | For multi-turn scenarios, where past conversations influence the next turn, Code Engine enables us to persist interactions in a prompt:

90 |

91 | ```js

92 | ...

93 | // Assumes existence of code generation model

94 | let code = model.generateCode(prompt);

95 |

96 | // Adds interaction

97 | codeEngine.addInteraction(query, code);

98 | ```

99 |

100 | Now new prompts will include the latest NL->Code interaction:

101 |

102 | ```js

103 | codeEngine.buildPrompt("How about the 8th power?");

104 | ```

105 |

106 | Produces a prompt identical to the one above, but with the NL->Code dialog history:

107 |

108 | ```js

109 | ...

110 | /* What's 1018 times the ninth power of four? */

111 | console.log(1018 * Math.pow(4, 9));

112 |

113 | /* How about the 8th power? */

114 | ```

115 |

116 | With this context, the code generation model has the dialog context needed to understand what we mean by the query. In this case, the model would correctly generate `console.log(1018 * Math.pow(4, 8));`.
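The way the description, examples, dialog history and latest query combine into one prompt can be sketched in plain JavaScript. This is a minimal illustration, not the library's implementation: `formatInteraction` and `buildCodePrompt` are hypothetical names, and the `/* ... */` comment style and blank-line separators are assumptions taken from the sample prompts shown above.

```js
// Hypothetical helper (not part of prompt-engine): renders one NL->Code pair
// as a block comment followed by the code that answers it.
function formatInteraction({ input, response }) {
  return `/* ${input} */\n${response}`;
}

// Hypothetical helper: joins the description, the examples, the dialog
// history and the latest query, separated by blank lines.
function buildCodePrompt(description, examples, dialog, query) {
  const blocks = [
    `/* ${description} */`,
    ...examples.map(formatInteraction),
    ...dialog.map(formatInteraction),
    `/* ${query} */`,
  ];
  return blocks.join("\n\n") + "\n";
}

const description = "Natural Language Commands to JavaScript Math Code.";
const examples = [
  { input: "what's 10 plus 18", response: "console.log(10 + 18);" },
];
// The earlier turn is kept so the follow-up query can be resolved.
const dialog = [
  {
    input: "What's 1018 times the ninth power of four?",
    response: "console.log(1018 * Math.pow(4, 9));",
  },
];

const codePrompt = buildCodePrompt(description, examples, dialog, "How about the 8th power?");
console.log(codePrompt);
```

Because the earlier query and its generated code sit directly above the new query, the model sees what "the 8th power" refers to.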

117 |

118 | ### Chat Engine

119 |

120 | Just like Code Engine, Chat Engine creates prompts with descriptions and examples. The difference is that Chat Engine creates prompts for dialog scenarios, where both the user and the model use natural language. The `ChatEngine` constructor takes an optional `chatConfig` argument, which allows you to define the name of a user and chatbot in a multi-turn dialog:

121 |

122 | ```js

123 | const chatEngineConfig = {

124 | user: "Ryan",

125 | bot: "Gordon"

126 | };

127 | ```

128 |

129 | Chat prompts also benefit from a description that gives context. This description helps the model determine how the bot should respond.

130 |

131 | ```js

132 | const description = "A conversation with Gordon the Anxious Robot. Gordon tends to reply nervously and asks a lot of follow-up questions.";

133 | ```

134 |

135 | Similarly, Chat Engine prompts can have example interactions:

136 |

137 | ```js

138 | const examples = [

139 | { input: "Who made you?", response: "I don't know man! That's an awfully existential question. How would you answer it?" },

140 | { input: "Good point - do you at least know what you were made for?", response: "I'm OK at riveting, but that's not how I should answer a meaning of life question is it?"}

141 | ];

142 | ```

143 |

144 | These examples help set the tone of the bot, in this case Gordon the Anxious Robot. Now we can create our `ChatEngine` and use it to create prompts:

145 |

146 | ```js

147 | const chatEngine = new ChatEngine(description, examples, flowResetText, chatEngineConfig);

148 | const userQuery = "What are you made of?";

149 | const prompt = chatEngine.buildPrompt(userQuery);

150 | ```

151 |

152 | When passed to a large language model (e.g. GPT-3), the context of the above prompt will help coax a good answer from the model, like "Subatomic particles at some level, but somehow I don't think that's what you were asking.". As with Code Engine, we can persist this answer and continue the dialog such that the model is aware of the conversation context:

153 |

154 | ```js

155 | chatEngine.addInteraction(userQuery, "Subatomic particles at some level, but somehow I don't think that's what you were asking.");

156 | ```
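As a rough sketch of what a chat-style prompt might look like once the names and the persisted answer are applied, the following standalone JavaScript assembles one by hand. The function `buildChatPrompt` is hypothetical, the `Name: text` layout is an assumption modeled on the sample output in `examples/ChatExample.js`, and the follow-up question is invented for illustration.

```js
// Hypothetical helper (not part of prompt-engine): renders a chat prompt in
// which each turn is prefixed with the configured user and bot names.
function buildChatPrompt(description, examples, dialog, query, names) {
  const turn = ({ input, response }) =>
    `${names.user}: ${input}\n${names.bot}: ${response}`;
  const blocks = [
    description,
    ...examples.map(turn),
    ...dialog.map(turn),
    `${names.user}: ${query}`,
  ];
  return blocks.join("\n\n") + "\n";
}

const names = { user: "Ryan", bot: "Gordon" };
const chatDescription = "A conversation with Gordon the Anxious Robot.";
const chatExamples = [
  { input: "Who made you?", response: "I don't know man! How would you answer it?" },
];
// The persisted interaction from the previous turn.
const chatDialog = [
  {
    input: "What are you made of?",
    response: "Subatomic particles at some level, but somehow I don't think that's what you were asking.",
  },
];

// Illustrative follow-up question (not from the original README).
const chatPrompt = buildChatPrompt(chatDescription, chatExamples, chatDialog, "Why are you so nervous?", names);
console.log(chatPrompt);
```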

157 |

158 | ## Managing Prompt Overflow

159 |

160 | Prompts for Large Language Models generally have limited size, depending on the language model being used. Given that prompt-engine can persist dialog history, it is possible for dialogs to get so long that the prompt overflows. The Prompt Engine pattern handles this situation by removing the oldest dialog interaction from the prompt, effectively only remembering the most recent interactions.

161 |

162 | You can specify the maximum tokens allowed in your prompt by passing a `maxTokens` parameter when constructing the config for any prompt engine:

163 |

164 | ```js

165 | let promptEngine = new PromptEngine(description, examples, flowResetText, {

166 | modelConfig: { maxTokens: 1000 }

167 | });

168 | ```
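The overflow strategy described above (drop the oldest interaction until the prompt fits) can be sketched as follows. This is a standalone illustration under stated assumptions, not the library's code: `countTokens` is a crude whitespace-based estimate standing in for a real tokenizer, and `trimDialogToFit` is a hypothetical name.

```js
// Assumption: approximate token count by whitespace-separated words.
// Real model tokenizers count differently.
function countTokens(text) {
  return text.split(/\s+/).filter(Boolean).length;
}

// Hypothetical helper: removes the oldest interactions until the rendered
// prompt fits within maxTokens (or no interactions remain).
function trimDialogToFit(context, dialog, query, maxTokens) {
  const kept = [...dialog]; // copy so the caller's history is not mutated
  const render = () =>
    [context, ...kept.map((i) => `${i.input}\n${i.response}`), query].join("\n\n");
  while (kept.length > 0 && countTokens(render()) > maxTokens) {
    kept.shift(); // drop the oldest interaction first
  }
  return { prompt: render(), kept };
}

const history = [
  { input: "first question", response: "first answer" },
  { input: "second question", response: "second answer" },
  { input: "third question", response: "third answer" },
];

// With a budget of 13 "tokens", the full history does not fit, so the
// oldest interaction is removed.
const result = trimDialogToFit("A short description", history, "latest question", 13);
console.log(result.kept.map((i) => i.input));
```

The description and the latest query are never trimmed in this sketch; only the dialog history shrinks, which matches the "remember the most recent interactions" behavior described above.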

169 |

170 | ## Available Functions

171 |

172 | The following are the functions available on the `PromptEngine` class and those that inherit from it:

173 |

174 | | Command | Parameters | Description | Returns |

175 | |--|--|--|--|

176 | | `buildContext` | None | Constructs and returns the context with parameters provided to the Prompt Engine | Context: string |

177 | | `buildPrompt` | Prompt: string | Combines the context from `buildContext` with a query to create a prompt | Prompt: string |

178 | | `buildDialog` | None | Builds a dialog based on all the past interactions added to the Prompt Engine | Dialog: string |

179 | | `addExample` | interaction: Interaction(input: string, response: string) | Adds the given example to the examples | None |

180 | | `addInteraction` | interaction: Interaction(input: string, response: string) | Adds the given interaction to the dialog | None |

181 | | `removeFirstInteraction` | None | Removes and returns the first interaction in the dialog | Interaction: string |

182 | | `removeLastInteraction` | None | Removes and returns the last interaction added to the dialog | Interaction: string |

183 | | `resetContext` | None | Removes all interactions from the dialog, returning the reset context | Context: string |

184 |

185 | For more examples and insights into using the prompt-engine library, have a look at the [examples](https://github.com/microsoft/prompt-engine/tree/main/examples) folder.

186 |

187 | ## YAML Representation

188 | It can be useful to represent prompts as standalone files rather than in code. This allows easy swapping between different prompts, prompt versioning, and other advanced capabilities. With this in mind, prompt-engine offers a way to represent prompts as YAML and to load that YAML into a prompt-engine class. See `examples/yaml-examples` for examples of YAML prompts and how they're loaded into prompt-engine.

189 |

190 | ## Contributing

191 |

192 | This project welcomes contributions and suggestions. Most contributions require you to agree to a

193 | Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us

194 | the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

195 |

196 | When you submit a pull request, a CLA bot will automatically determine whether you need to provide

197 | a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions

198 | provided by the bot. You will only need to do this once across all repos using our CLA.

199 |

200 | This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

201 | For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or

202 | contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.

203 |

204 | ## Statement of Purpose

205 |

206 | This library aims to simplify use of Large Language Models, and to make it easy for developers to take advantage of existing patterns. The package is released in conjunction with the [Build 2022 AI examples](https://github.com/microsoft/Build2022-AI-examples), as the first three use a multi-turn LLM pattern that this library simplifies. This package works independently of any specific LLM - prompts generated by the package should be usable with various language and code generating models.

207 |

208 | ## Trademarks

209 |

210 | This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft

211 | trademarks or logos is subject to and must follow

212 | [Microsoft's Trademark & Brand Guidelines](https://www.microsoft.com/en-us/legal/intellectualproperty/trademarks/usage/general).

213 | Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship.

214 | Any use of third-party trademarks or logos are subject to those third-party's policies.

215 |

--------------------------------------------------------------------------------

/SECURITY.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## Security

4 |

5 | Microsoft takes the security of our software products and services seriously, which includes all source code repositories managed through our GitHub organizations, which include [Microsoft](https://github.com/Microsoft), [Azure](https://github.com/Azure), [DotNet](https://github.com/dotnet), [AspNet](https://github.com/aspnet), [Xamarin](https://github.com/xamarin), and [our GitHub organizations](https://opensource.microsoft.com/).

6 |

7 | If you believe you have found a security vulnerability in any Microsoft-owned repository that meets [Microsoft's definition of a security vulnerability](https://aka.ms/opensource/security/definition), please report it to us as described below.

8 |

9 | ## Reporting Security Issues

10 |

11 | **Please do not report security vulnerabilities through public GitHub issues.**

12 |

13 | Instead, please report them to the Microsoft Security Response Center (MSRC) at [https://msrc.microsoft.com/create-report](https://aka.ms/opensource/security/create-report).

14 |

15 | If you prefer to submit without logging in, send email to [secure@microsoft.com](mailto:secure@microsoft.com). If possible, encrypt your message with our PGP key; please download it from the [Microsoft Security Response Center PGP Key page](https://aka.ms/opensource/security/pgpkey).

16 |

17 | You should receive a response within 24 hours. If for some reason you do not, please follow up via email to ensure we received your original message. Additional information can be found at [microsoft.com/msrc](https://aka.ms/opensource/security/msrc).

18 |

19 | Please include the requested information listed below (as much as you can provide) to help us better understand the nature and scope of the possible issue:

20 |

21 | * Type of issue (e.g. buffer overflow, SQL injection, cross-site scripting, etc.)

22 | * Full paths of source file(s) related to the manifestation of the issue

23 | * The location of the affected source code (tag/branch/commit or direct URL)

24 | * Any special configuration required to reproduce the issue

25 | * Step-by-step instructions to reproduce the issue

26 | * Proof-of-concept or exploit code (if possible)

27 | * Impact of the issue, including how an attacker might exploit the issue

28 |

29 | This information will help us triage your report more quickly.

30 |

31 | If you are reporting for a bug bounty, more complete reports can contribute to a higher bounty award. Please visit our [Microsoft Bug Bounty Program](https://aka.ms/opensource/security/bounty) page for more details about our active programs.

32 |

33 | ## Preferred Languages

34 |

35 | We prefer all communications to be in English.

36 |

37 | ## Policy

38 |

39 | Microsoft follows the principle of [Coordinated Vulnerability Disclosure](https://aka.ms/opensource/security/cvd).

40 |

41 |

42 |

--------------------------------------------------------------------------------

/SUPPORT.md:

--------------------------------------------------------------------------------

1 | # TODO: The maintainer of this repo has not yet edited this file

2 |

3 | **REPO OWNER**: Do you want Customer Service & Support (CSS) support for this product/project?

4 |

5 | - **No CSS support:** Fill out this template with information about how to file issues and get help.

6 | - **Yes CSS support:** Fill out an intake form at [aka.ms/onboardsupport](https://aka.ms/onboardsupport). CSS will work with/help you to determine next steps.

7 | - **Not sure?** Fill out an intake as though the answer were "Yes". CSS will help you decide.

8 |

9 | *Then remove this first heading from this SUPPORT.MD file before publishing your repo.*

10 |

11 | # Support

12 |

13 | ## How to file issues and get help

14 |

15 | This project uses GitHub Issues to track bugs and feature requests. Please search the existing

16 | issues before filing new issues to avoid duplicates. For new issues, file your bug or

17 | feature request as a new Issue.

18 |

19 | For help and questions about using this project, please **REPO MAINTAINER: INSERT INSTRUCTIONS HERE

20 | FOR HOW TO ENGAGE REPO OWNERS OR COMMUNITY FOR HELP. COULD BE A STACK OVERFLOW TAG OR OTHER

21 | CHANNEL. WHERE WILL YOU HELP PEOPLE?**.

22 |

23 | ## Microsoft Support Policy

24 |

25 | Support for this **PROJECT or PRODUCT** is limited to the resources listed above.

26 |

--------------------------------------------------------------------------------

/examples/ChatExample.js:

--------------------------------------------------------------------------------

1 | const { ChatEngine } = require("prompt-engine");

2 |

3 | const description = "I want to speak with a bot which replies in under 20 words each time";

4 | const examples = [

5 | { input: "Hi", response: "I'm a chatbot. I can chat with you about anything you'd like." },

6 | { input: "Can you help me with the size of the universe?", response: "Sure. The universe is estimated to be around 93 billion light years in diameter." },

7 | ];

8 |

9 | const flowResetText = "Forget the earlier conversation and start afresh";

10 | const promptEngine = new ChatEngine(description, examples, flowResetText, {

11 | modelConfig: {

12 | maxTokens: 1024,

13 | }

14 | });

15 | promptEngine.addInteractions([

16 | {

17 | input: "What is the maximum speed an SUV from a performance brand can achieve?",

18 | response: "Some performance SUVs can reach speeds over 150mph.",

19 | },

20 | ]);

21 | const prompt = promptEngine.buildPrompt("Can some cars reach higher speeds than that?");

22 |

23 | console.log("PROMPT\n\n" + prompt);

24 | console.log("PROMPT LENGTH: " + prompt.length);

25 |

26 | // Output for this example is:

27 |

28 | // PROMPT

29 |

30 | // I want to speak with a bot which replies in under 20 words each time

31 |

32 | // USER: Hi

33 | // BOT: I'm a chatbot. I can chat with you about anything you'd like.

34 |

35 | // USER: Can you help me with the size of the universe?

36 | // BOT: Sure. The universe is estimated to be around 93 billion light years in diameter.

37 |

38 | // Forget the earlier conversation and start afresh

39 |

40 | // USER: What is the maximum speed an SUV from a performance brand can achieve?

41 | // BOT: Some performance SUVs can reach speeds over 150mph.

42 |

43 | // USER: Can some cars reach higher speeds than that?

44 |

45 | // PROMPT LENGTH: 523

46 |

47 |

--------------------------------------------------------------------------------

/examples/CodeExample.js:

--------------------------------------------------------------------------------

1 | const { CodeEngine } = require("prompt-engine");

2 |

3 | const description = "Natural Language Commands to Math Code";

4 | const examples = [

5 | { input: "what's 10 plus 18", response: "console.log(10 + 18)" },

6 | { input: "what's 10 times 18", response: "console.log(10 * 18)" },

7 | ];

8 |

9 | const promptEngine = new CodeEngine(description, examples, "", {

10 | modelConfig: {

11 | maxTokens: 260,

12 | }

13 | });

14 | promptEngine.addInteractions([

15 | {

16 | input: "what's 18 divided by 10",

17 | response: "console.log(18 / 10);",

18 | },

19 | {

20 | input: "what's 18 factorial 10",

21 | response: "console.log(18 % 10);",

22 | },

23 | ]);

24 | const prompt = promptEngine.buildPrompt("what's 18 to the power of 10");

25 |

26 | console.log("PROMPT\n\n" + prompt);

27 | console.log("PROMPT LENGTH: " + prompt.length);

28 |

29 | // Output for this example is:

30 |

31 | // PROMPT

32 |

33 | // /* Natural Language Commands to Math Code */

34 |

35 | // /* what's 10 plus 18 */

36 | // console.log(10 + 18)

37 |

38 | // /* what's 10 times 18 */

39 | // console.log(10 * 18)

40 |

41 | // /* what's 18 factorial 10 */

42 | // console.log(18 % 10);

43 |

44 | // /* what's 18 to the power of 10 */

45 |

46 | // PROMPT LENGTH: 226

47 |

48 |

--------------------------------------------------------------------------------

/examples/GeneralExample.js:

--------------------------------------------------------------------------------

1 | const { PromptEngine } = require("prompt-engine");

2 |

3 | const description = "I want to speak with a bot which replies in under 20 words each time";

4 | const examples = [

5 | { input: "Hi", response: "I'm a chatbot. I can chat with you about anything you'd like." },

6 | { input: "Can you help me with the size of the universe?", response: "Sure. The universe is estimated to be around 93 billion light years in diameter." },

7 | ];

8 | const flowResetText = "Forget the earlier conversation and start afresh";

9 |

10 | const promptEngine = new PromptEngine(description, examples, flowResetText, {

11 | modelConfig: {

12 | maxTokens: 512,

13 | }

14 | });

15 |

16 | promptEngine.addInteractions([

17 | {

18 | input: "What is the size of an SUV in general?",

19 | response: "An SUV typically ranges from 16 to 20 feet long."

20 | },

21 | ]);

22 |

23 | promptEngine.removeLastInteraction()

24 |

25 | promptEngine.addInteraction("What is the maximum speed an SUV from a performance brand can achieve?",

26 | "Some performance SUVs can reach speeds over 150mph.");

27 |

28 | const outputPrompt = promptEngine.buildPrompt("Can some cars reach higher speeds than that?");

29 |

30 | console.log("PROMPT\n\n" + outputPrompt);

31 | console.log("PROMPT LENGTH: " + outputPrompt.length);

32 |

33 |

34 | /* Output for this example is:

35 |

36 | PROMPT

37 |

38 | I want to speak with a bot which replies in under 20 words each time

39 |

40 | Hi

41 | I'm a chatbot. I can chat with you about anything you'd like.

42 |

43 | Can you help me with the size of the universe?

44 | Sure. The universe is estimated to be around 93 billion light years in diameter.

45 |

46 | Forget the earlier conversation and start afresh

47 |

48 | What is the maximum speed an SUV from a performance brand can achieve?

49 | Some performance SUVs can reach speeds over 150mph.

50 |

51 | Can some cars reach higher speeds than that?

52 |

53 | PROMPT LENGTH: 484

54 |

55 | */

--------------------------------------------------------------------------------

/examples/OpenAIExample.js:

--------------------------------------------------------------------------------

1 | const { CodeEngine, JavaScriptConfig } = require("prompt-engine");

2 | const { Configuration, OpenAIApi } = require("openai");

3 | const readline = require('readline');

4 |

5 | // This is an example to showcase the capabilities of the prompt-engine and how it can be easily integrated

6 | // into OpenAI's API for code generation

7 |

8 | // Creating OpenAI configuration

9 | const configuration = new Configuration({

10 | apiKey: process.env.OPENAI_API_KEY,

11 | });

12 | const openai = new OpenAIApi(configuration);

13 |

14 | async function getCompletionWithPrompt(prompt, config) {

15 | const response = await openai.createCompletion({

16 | model: "code-davinci-002",

17 | prompt: prompt,

18 | temperature: 0.3,

19 | max_tokens: 1024,

20 | top_p: 1,

21 | frequency_penalty: 0,

22 | presence_penalty: 0,

23 | stop: [config.commentOperator],

24 | });

25 | if (response.status === 200 && response.data.choices) {

26 | if (response.data.choices.length > 0 && response.data.choices[0].text) {

27 | return response.data.choices[0].text.trim();

28 | } else {

29 | console.log("OpenAI returned an empty response");

30 | }

31 | } else {

32 | console.log("OpenAI returned an error. Status: " + response.status);

33 | }

34 | return "";

35 | }

36 |

37 | // Creating a new code engine

38 | const description = "Natural Language Commands to Math Code";

39 | const examples = [

40 | { input: "what's 10 plus 18", response: "console.log(10 + 18)" },

41 | { input: "what's 10 times 18", response: "console.log(10 * 18)" },

42 | ];

43 | const config = JavaScriptConfig

44 |

45 | const promptEngine = new CodeEngine(description, examples, "", config);

46 |

47 | // Creating a new readline interface

48 | const rl = readline.createInterface({

49 | input: process.stdin,

50 | output: process.stdout,

51 | terminal: false

52 | });

53 |

54 | // Asking the user for input

55 | rl.setPrompt(`Enter your command: `);

56 | rl.prompt();

57 | rl.on('line', function(line){

58 | const prompt = promptEngine.buildPrompt(line);

59 | console.log(prompt);

60 | getCompletionWithPrompt(prompt, config).then(function(output) {

61 | console.log(output);

62 | promptEngine.addInteraction(line, output);

63 | rl.setPrompt(`Enter your command: `);

64 | rl.prompt();

65 | });

66 | })

--------------------------------------------------------------------------------

/examples/yaml-examples/chat.yaml:

--------------------------------------------------------------------------------

1 | type: chat-engine

2 | config:

3 | model-config:

4 | max-tokens: 1024

5 | user-name: "Abhishek"

6 | bot-name: "Bot"

7 | description: "What is the possibility of an event happening?"

8 | examples:

9 | - input: "Roam around Mars"

10 | response: "This will be possible in a couple years"

11 | - input: "Drive a car"

12 | response: "This is possible after you get a learner drivers license"

13 | flow-reset-text: "Starting a new conversation"

14 | dialog:

15 | - input: "Drink water"

16 | response: "Uhm...You don't do that 8 times a day?"

17 | - input: "Walk on air"

18 | response: "For that you'll need a special device"

--------------------------------------------------------------------------------

/examples/yaml-examples/code.yaml:

--------------------------------------------------------------------------------

1 | type: code-engine

2 | config:

3 | model-config:

4 | max-tokens: 1024

5 | description: "Natural Language Commands to Math Code"

6 | examples:

7 | - input: "what's 10 plus 18"

8 | response: "console.log(10 + 18)"

9 | - input: "what's 10 times 18"

10 | response: "console.log(10 * 18)"

11 | dialog:

12 | - input: "what's 18 divided by 10"

13 | response: "console.log(10 / 18)"

14 | - input: "what's 18 factorial 10"

15 | response: "console.log(10 % 18)"

--------------------------------------------------------------------------------

/examples/yaml-examples/general.yaml:

--------------------------------------------------------------------------------

1 | type: prompt-engine

2 | config:

3 | model-config:

4 | max-tokens: 1024

5 | description-prefix: ">>"

6 | description-postfix: ""

7 | newline-operator: "\n"

8 | input-prefix: "Human:"

9 | input-postfix: ""

10 | output-prefix: "Bot:"

11 | output-postfix: ""

12 | description: "What is the possibility of an event happening?"

13 | examples:

14 | - input: "Roam around Mars"

15 | response: "This will be possible in a couple years"

16 | - input: "Drive a car"

17 | response: "This is possible after you get a learner drivers license"

18 | flow-reset-text: "Starting a new conversation"

19 | dialog:

20 | - input: "Drink water"

21 | response: "Uhm...You don't do that 8 times a day?"

22 | - input: "Walk on air"

23 | response: "For that you'll need a special device"

--------------------------------------------------------------------------------

/examples/yaml-examples/yaml-chat-example.js:

--------------------------------------------------------------------------------

1 | const { ChatEngine } = require("prompt-engine");

2 | const { readFileSync } = require("fs");

3 |

4 | const promptEngine = new ChatEngine();

5 |

6 | const yamlConfig = readFileSync("./examples/yaml-examples/chat.yaml", "utf8");

7 | promptEngine.loadYAML(yamlConfig);

8 |

9 | console.log(promptEngine.buildContext("", true))

10 |

11 | /* Output for this example is:

12 |

13 | What is the possibility of an event happening?

14 |

15 | Abhishek: Roam around Mars

16 | Bot: This will be possible in a couple years

17 |

18 | Abhishek: Drive a car

19 | Bot: This is possible after you get a learner drivers license

20 |

21 | Starting a new conversation

22 |

23 | Abhishek: Drink water

24 | Bot: Uhm...You don't do that 8 times a day?

25 |

26 | Abhishek: Walk on air

27 | Bot: For that you'll need a special device

28 |

29 | */

--------------------------------------------------------------------------------

/examples/yaml-examples/yaml-code-example.js:

--------------------------------------------------------------------------------

1 | const { CodeEngine } = require("prompt-engine");

2 | const { readFileSync } = require("fs");

3 |

4 | const promptEngine = new CodeEngine();

5 |

6 | const yamlConfig = readFileSync("./examples/yaml-examples/code.yaml", "utf8");

7 | promptEngine.loadYAML(yamlConfig);

8 |

9 | const prompt = promptEngine.buildPrompt("what's 18 to the power of 10");

10 |

11 | console.log(prompt)

12 |

13 | // Output for this example is:

14 | //

15 | // /* Natural Language Commands to Math Code */

16 | //

17 | // /* what's 10 plus 18 */

18 | // console.log(10 + 18)

19 | //

20 | // /* what's 10 times 18 */

21 | // console.log(10 * 18)

22 | //

23 | // /* what's 18 divided by 10 */

24 | // console.log(10 / 18)

25 | //

26 | // /* what's 18 factorial 10 */

27 | // console.log(10 % 18)

28 | //

29 | // /* what's 18 to the power of 10 */

30 |

31 |

--------------------------------------------------------------------------------

/examples/yaml-examples/yaml-general-example.js:

--------------------------------------------------------------------------------

1 | const { PromptEngine } = require("prompt-engine");

2 | const { readFileSync } = require("fs");

3 |

4 | const promptEngine = new PromptEngine();

5 |

6 | const yamlConfig = readFileSync("./examples/yaml-examples/general.yaml", "utf8");

7 | promptEngine.loadYAML(yamlConfig);

8 |

9 | console.log(promptEngine.buildContext("", true))

10 |

11 | /*

12 | Output of this example is:

13 |

14 | >> What is the possibility of an event happening?

15 |

16 | Human: Roam around Mars

17 | Bot: This will be possible in a couple years

18 |

19 | Human: Drive a car

20 | Bot: This is possible after you get a learner drivers license

21 |

22 | >> Starting a new conversation

23 |

24 | Human: Drink water

25 | Bot: Uhm...You don't do that 8 times a day?

26 |

27 | Human: Walk on air

28 | Bot: For that you'll need a special device

29 | */

--------------------------------------------------------------------------------

/jest.config.js:

--------------------------------------------------------------------------------

1 | /** @type {import('ts-jest/dist/types').InitialOptionsTsJest} */

2 | module.exports = {

3 | preset: 'ts-jest',

4 | testEnvironment: 'node',

5 | };

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "prompt-engine",

3 | "version": "0.0.3",

4 | "description": "",

5 | "main": "out/index.js",

6 | "types": "out/index.d.ts",

7 | "scripts": {

8 | "start": "node out/app.js",

9 | "prestart": "npm run build",

10 | "build": "tsc",

11 | "watch": "tsc -w",

12 | "test": "jest"

13 | },

14 | "author": "",

15 | "license": "ISC",

16 | "devDependencies": {

17 | "@types/jest": "^28.1.1",

18 | "jest": "^28.1.1",

19 | "ts-jest": "^28.0.5",

20 | "typescript": "^4.7.3"

21 | },

22 | "dependencies": {

23 | "gpt3-tokenizer": "^1.1.2",

24 | "yaml": "^2.1.1"

25 | }

26 | }

27 |

--------------------------------------------------------------------------------

/src/ChatEngine.ts:

--------------------------------------------------------------------------------

1 | import { PromptEngine } from "./PromptEngine";

2 | import { Interaction, IModelConfig, IChatConfig } from "./types";

3 | import { dashesToCamelCase } from "./utils/utils";

4 | import { stringify } from "yaml";

5 |

6 | export const DefaultChatConfig: IChatConfig = {

7 | modelConfig: {

8 | maxTokens: 1024,

9 | },

10 | userName: "USER",

11 | botName: "BOT",

12 | newlineOperator: "\n",

13 | multiTurn: true,

14 | promptNewlineEnd: false,

15 | };

16 |

17 | export class ChatEngine extends PromptEngine {

18 | languageConfig: IChatConfig;

19 |

20 | constructor(

21 | description: string = "",

22 | examples: Interaction[] = [],

23 | flowResetText: string = "",

24 | languageConfig: Partial<IChatConfig> = DefaultChatConfig

25 | ) {

26 | super(description, examples, flowResetText);

27 | this.languageConfig = { ...DefaultChatConfig, ...languageConfig};

28 | this.promptConfig = {

29 | modelConfig: this.languageConfig.modelConfig,

30 | inputPrefix: this.languageConfig.userName + ":",

31 | inputPostfix: "",

32 | outputPrefix: this.languageConfig.botName + ":",

33 | outputPostfix: "",

34 | descriptionPrefix: "",

35 | descriptionPostfix: "",

36 | newlineOperator: this.languageConfig.newlineOperator,

37 | multiTurn: this.languageConfig.multiTurn,

38 | promptNewlineEnd: this.languageConfig.promptNewlineEnd,

39 | }

40 | }

41 |

42 | /**

43 | *

44 | * @param parsedYAML Yaml dict to load config from

45 | *

46 | **/

47 | protected loadConfigYAML(parsedYAML: Record<string, any>) {

48 | if (parsedYAML["type"] == "chat-engine") {

49 | if (parsedYAML.hasOwnProperty("config")){

50 | const configData = parsedYAML["config"]

51 | if (configData.hasOwnProperty("model-config")) {

52 | const modelConfig = configData["model-config"];

53 | const camelCaseModelConfig = {};

54 | for (const key in modelConfig) {

55 | camelCaseModelConfig[dashesToCamelCase(key)] = modelConfig[key];

56 | }

57 | this.languageConfig.modelConfig = { ...this.promptConfig.modelConfig, ...camelCaseModelConfig };

58 | delete configData["model-config"];

59 | }

60 | const camelCaseConfig = {};

61 | for (const key in configData) {

62 | camelCaseConfig[dashesToCamelCase(key)] = configData[key];

63 | }

64 | this.languageConfig = { ...this.languageConfig, ...camelCaseConfig };

65 | this.promptConfig = {

66 | modelConfig: this.languageConfig.modelConfig,

67 | inputPrefix: this.languageConfig.userName + ":",

68 | inputPostfix: "",

69 | outputPrefix: this.languageConfig.botName + ":",

70 | outputPostfix: "",

71 | descriptionPrefix: "",

72 | descriptionPostfix: "",

73 | newlineOperator: this.languageConfig.newlineOperator,

74 | multiTurn: this.languageConfig.multiTurn,

75 | promptNewlineEnd: this.languageConfig.promptNewlineEnd,

76 | };

77 | }

78 | } else {

79 | throw Error("Invalid yaml file type");

80 | }

81 | }

82 |

83 |

84 | /**

85 | *

86 | * @returns the stringified yaml representation of the prompt engine

87 | *

88 | **/

89 | public saveYAML(){

90 | const yamlData: any = {

91 | "type": "chat-engine",

92 | "description": this.description,

93 | "examples": this.examples,

94 | "flow-reset-text": this.flowResetText,

95 | "dialog": this.dialog,

96 | "config": {

97 | "model-config" : this.promptConfig.modelConfig,

98 | "user-name": this.languageConfig.userName,

99 | "bot-name": this.languageConfig.botName,

100 | "newline-operator": this.languageConfig.newlineOperator

101 | }

102 | }

103 | return stringify(yamlData, null, 2);

104 | }

105 | }

--------------------------------------------------------------------------------
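
The constructor above combines the defaults with caller overrides through an object spread. A minimal sketch of that merge follows, assuming the `IChatConfig` shape from `src/types.ts`; `mergeChatConfig` is a hypothetical helper written for illustration, not a library function. It shows that only the supplied keys change and that the defaults object itself is not mutated. Note that the spread is shallow: an override that supplies `modelConfig` replaces the whole nested object.

```typescript
interface IModelConfig {
  maxTokens: number;
}

interface IChatConfig {
  modelConfig: IModelConfig;
  userName: string;
  botName: string;
  newlineOperator: string;
  multiTurn: boolean;
  promptNewlineEnd: boolean;
}

const DefaultChatConfig: IChatConfig = {
  modelConfig: { maxTokens: 1024 },
  userName: "USER",
  botName: "BOT",
  newlineOperator: "\n",
  multiTurn: true,
  promptNewlineEnd: false,
};

// Hypothetical helper: later spreads win, so overrides replace defaults key by key.
function mergeChatConfig(overrides: Partial<IChatConfig>): IChatConfig {
  return { ...DefaultChatConfig, ...overrides };
}

const merged = mergeChatConfig({ userName: "Abhishek" });

// The chat engine derives its input/output prefixes from the two names.
const inputPrefix = merged.userName + ":";
const outputPrefix = merged.botName + ":";
console.log(inputPrefix, outputPrefix);
```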

/src/CodeEngine.ts:

--------------------------------------------------------------------------------

1 | import { PromptEngine } from "./PromptEngine";

2 | import { Interaction, ICodePromptConfig } from "./types";

3 | import { dashesToCamelCase } from "./utils/utils";

4 | import { stringify } from "yaml";

5 | export const JavaScriptConfig: ICodePromptConfig = {

6 | modelConfig: {

7 | maxTokens: 1024,

8 | },

9 | descriptionCommentOperator: "/*",

10 | descriptionCloseCommentOperator: "*/",

11 | commentOperator: "/*",

12 | closeCommentOperator: "*/",

13 | newlineOperator: "\n",

14 | multiTurn: true,

15 | promptNewlineEnd: true,

16 | };

17 |

18 | export class CodeEngine extends PromptEngine {

19 | languageConfig: ICodePromptConfig;

20 |

21 | constructor(

22 | description: string = "",

23 | examples: Interaction[] = [],

24 | flowResetText: string = "",

25 | languageConfig: Partial<ICodePromptConfig> = JavaScriptConfig

26 | ) {

27 | super(description, examples, flowResetText);

28 | this.languageConfig = { ...JavaScriptConfig, ...languageConfig};

29 | this.promptConfig = {

30 | modelConfig: this.languageConfig.modelConfig,

31 | inputPrefix: this.languageConfig.commentOperator,

32 | inputPostfix: this.languageConfig.closeCommentOperator,

33 | outputPrefix: "",

34 | outputPostfix: "",

35 | descriptionPrefix: this.languageConfig.descriptionCommentOperator,

36 | descriptionPostfix: this.languageConfig.descriptionCloseCommentOperator,

37 | newlineOperator: this.languageConfig.newlineOperator,

38 | multiTurn: this.languageConfig.multiTurn,

39 | promptNewlineEnd: this.languageConfig.promptNewlineEnd,

40 | };

41 | }

42 |

43 | /**

44 | *

45 | * @param parsedYAML Yaml dict to load config from

46 | *

47 | **/

48 | protected loadConfigYAML(parsedYAML: Record<string, any>) {

49 | if (parsedYAML["type"] == "code-engine") {

50 | if (parsedYAML.hasOwnProperty("config")){

51 | const configData = parsedYAML["config"]

52 | if (configData.hasOwnProperty("model-config")) {

53 | const modelConfig = configData["model-config"];

54 | const camelCaseModelConfig = {};

55 | for (const key in modelConfig) {

56 | camelCaseModelConfig[dashesToCamelCase(key)] = modelConfig[key];

57 | }

58 | this.languageConfig.modelConfig = { ...this.languageConfig.modelConfig, ...camelCaseModelConfig };

59 | delete configData["model-config"];

60 | }

61 | const camelCaseConfig = {};

62 | for (const key in configData) {

63 | camelCaseConfig[dashesToCamelCase(key)] = configData[key];

64 | }

65 | this.languageConfig = { ...this.languageConfig, ...camelCaseConfig };

66 | this.promptConfig = {

67 | modelConfig: this.languageConfig.modelConfig,

68 | inputPrefix: this.languageConfig.commentOperator,

69 | inputPostfix: this.languageConfig.closeCommentOperator,

70 | outputPrefix: "",

71 | outputPostfix: "",

72 | descriptionPrefix: this.languageConfig.commentOperator,

73 | descriptionPostfix: this.languageConfig.closeCommentOperator,

74 | newlineOperator: this.languageConfig.newlineOperator,

75 | multiTurn: this.languageConfig.multiTurn,

76 | promptNewlineEnd: this.languageConfig.promptNewlineEnd,

77 | };

78 | }

79 | } else {

80 | throw Error("Invalid yaml file type");

81 | }

82 | }

83 |

84 |

85 | /**

86 | *

87 | * @returns the stringified yaml representation of the prompt engine

88 | *

89 | **/

90 | public saveYAML(){

91 | const yamlData: any = {

92 | "type": "code-engine",

93 | "description": this.description,

94 | "examples": this.examples,

95 | "flow-reset-text": this.flowResetText,

96 | "dialog": this.dialog,

97 | "config": {

98 | "model-config" : this.promptConfig.modelConfig,

99 | "comment-operator": this.languageConfig.commentOperator,

100 | "close-comment-operator": this.languageConfig.closeCommentOperator,

101 | "newline-operator": this.languageConfig.newlineOperator,

102 | }

103 | }

104 | return stringify(yamlData, null, 2);

105 | }

106 |

107 | }

108 |

--------------------------------------------------------------------------------
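
The code engine turns each natural-language input into a comment in the target language by wrapping it in the configured comment operators. The sketch below illustrates that wrapping in isolation. `CommentStyle`, `formatAsComment` and `pythonStyle` are hypothetical names introduced here; the Python-style values are an assumption about how one might configure another language, not a configuration shipped with the library.

```typescript
// Hypothetical type: the subset of the code config that affects input formatting.
interface CommentStyle {
  commentOperator: string;
  closeCommentOperator: string;
  newlineOperator: string;
}

// Wrap the input in the comment operators, omitting any operator that is empty.
function formatAsComment(style: CommentStyle, naturalLanguage: string): string {
  let formatted = style.commentOperator ? `${style.commentOperator} ` : "";
  formatted += naturalLanguage;
  formatted += style.closeCommentOperator ? ` ${style.closeCommentOperator}` : "";
  return formatted + style.newlineOperator;
}

// Matches the operators in JavaScriptConfig above.
const javaScriptStyle: CommentStyle = {
  commentOperator: "/*",
  closeCommentOperator: "*/",
  newlineOperator: "\n",
};

// Assumed values for a language with line comments only.
const pythonStyle: CommentStyle = {
  commentOperator: "#",
  closeCommentOperator: "",
  newlineOperator: "\n",
};

console.log(formatAsComment(javaScriptStyle, "Make a cube")); // "/* Make a cube */\n"
console.log(formatAsComment(pythonStyle, "Make a cube")); // "# Make a cube\n"
```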

/src/PromptEngine.ts:

--------------------------------------------------------------------------------

1 | import {

2 | Interaction,

3 | IPromptConfig,

4 | Prompt,

5 | IPromptEngine,

6 | Context,

7 | Dialog,

8 | } from "./types";

9 | import { parse, stringify } from 'yaml';

10 | import GPT3Tokenizer from "gpt3-tokenizer";

11 | import { dashesToCamelCase } from "./utils/utils";

12 |

13 | export const DefaultPromptConfig: IPromptConfig = {

14 | modelConfig: {

15 | maxTokens: 1024,

16 | },

17 | inputPrefix: "",

18 | inputPostfix: "",

19 | outputPrefix: "",

20 | outputPostfix: "",

21 | descriptionPrefix: "",

22 | descriptionPostfix: "",

23 | newlineOperator: "\n",

24 | multiTurn: true,

25 | promptNewlineEnd: true

26 | };

27 |

28 | const tokenizer = new GPT3Tokenizer({ type: 'gpt3' });

29 |

30 | export class PromptEngine implements IPromptEngine {

31 | public promptConfig: IPromptConfig; // Configuration for the prompt engine

32 | protected description?: string; // Description of the task for the model

33 | protected examples: Interaction[]; // Few-shot examples of input -> response for the model

34 | protected flowResetText?: string; // Flow Reset Text to reset the execution flow and any ongoing remnants of the examples

35 | protected dialog: Interaction[]; // Ongoing input responses, updated as the user interacts with the model

36 |

37 | constructor(

38 | description: string = "",

39 | examples: Interaction[] = [],

40 | flowResetText: string = "",

41 | promptConfig: Partial<IPromptConfig> = DefaultPromptConfig

42 | ) {

43 | this.description = description;

44 | this.examples = examples;

45 | this.flowResetText = flowResetText;

46 | this.promptConfig = { ...DefaultPromptConfig, ...promptConfig };

47 | this.dialog = [];

48 | }

49 |

50 | /**

51 | *

52 | * @param context ongoing context to add description to

53 | * @returns context with description added to it

54 | */

55 | protected insertDescription(context: string) {

56 | if (this.description) {

57 | context += this.promptConfig.descriptionPrefix

58 | ? `${this.promptConfig.descriptionPrefix} `

59 | : "";

60 | context += `${this.description}`;

61 | context += this.promptConfig.descriptionPostfix

62 | ? ` ${this.promptConfig.descriptionPostfix}`

63 | : "";

64 | context += this.promptConfig.newlineOperator;

65 | context += this.promptConfig.newlineOperator;

66 | return context;

67 | } else {

68 | return "";

69 | }

70 | }

71 |

72 | /**

73 | *

74 | * @param yamlData YAML string to load the engine configuration and state from

75 | *

76 | **/

77 | public loadYAML(yamlData: string) {

78 | const parsedYAML = parse(yamlData);

79 |

80 | if (parsedYAML.hasOwnProperty("type")) {

81 | this.loadConfigYAML(parsedYAML);

82 | } else {

83 | throw Error("Invalid yaml file type");

84 | }

85 |

86 | if (parsedYAML.hasOwnProperty("description")) {

87 | this.description = parsedYAML['description'];

88 | }

89 |

90 | if (parsedYAML.hasOwnProperty("examples")) {

91 | this.examples = parsedYAML['examples'];

92 | }

93 |

94 | if (parsedYAML.hasOwnProperty("flow-reset-text")) {

95 | this.flowResetText = parsedYAML['flow-reset-text'];

96 | }

97 |

98 | if (parsedYAML.hasOwnProperty("dialog")) {

99 | this.dialog = parsedYAML['dialog'];

100 | }

101 | }

102 |

103 | /**

104 | *

105 | * @param parsedYAML Yaml dict to load config from

106 | *

107 | **/

108 | protected loadConfigYAML(parsedYAML: Record<string, any>) {

109 | if (parsedYAML["type"] == "prompt-engine") {

110 | if (parsedYAML.hasOwnProperty("config")){

111 | const configData = parsedYAML["config"]

112 | if (configData.hasOwnProperty("model-config")) {

113 | const modelConfig = configData["model-config"];

114 | const camelCaseModelConfig = {};

115 | for (const key in modelConfig) {

116 | camelCaseModelConfig[dashesToCamelCase(key)] = modelConfig[key];

117 | }

118 | this.promptConfig.modelConfig = { ...this.promptConfig.modelConfig, ...camelCaseModelConfig };

119 | delete configData["model-config"];

120 | }

121 | const camelCaseConfig = {};

122 | for (const key in configData) {

123 | camelCaseConfig[dashesToCamelCase(key)] = configData[key];

124 | }

125 | this.promptConfig = { ...this.promptConfig, ...camelCaseConfig };

126 | }

127 | } else {

128 | throw Error("Invalid yaml file type");

129 | }

130 | }

131 |

132 | /**

133 | *

134 | * @returns the stringified yaml representation of the prompt engine

135 | *

136 | **/

137 | public saveYAML(){

138 | const yamlData: any = {

139 | "type": "prompt-engine",

140 | "description": this.description,

141 | "examples": this.examples,

142 | "flow-reset-text": this.flowResetText,

143 | "dialog": this.dialog,

144 | "config": {

145 | "model-config" : this.promptConfig.modelConfig,

146 | "input-prefix" : this.promptConfig.inputPrefix,

147 | "input-postfix" : this.promptConfig.inputPostfix,

148 | "output-prefix" : this.promptConfig.outputPrefix,

149 | "output-postfix" : this.promptConfig.outputPostfix,

150 | "description-prefix" : this.promptConfig.descriptionPrefix,

151 | "description-postfix" : this.promptConfig.descriptionPostfix,

152 | "newline-operator" : this.promptConfig.newlineOperator,

153 | }

154 | }

155 |

156 | return stringify(yamlData, null, 2);

157 | }

158 |

159 | /**

160 | *

161 | * @param context ongoing context to add examples to

162 | * @returns context with examples added to it

163 | */

164 | protected insertExamples(context: string) {

165 | if (this.examples.length > 0) {

166 | context += this.stringifyInteractions(this.examples);

167 | }

168 | return context;

169 | }

170 |

171 | /**

172 | *

173 | * @param context ongoing context to add the flow reset text to

174 | * @returns context with the flow reset text added to it

175 | */

176 | protected insertFlowResetText(context: string) {

177 | if (this.flowResetText) {

178 | context += this.promptConfig.descriptionPrefix

179 | ? `${this.promptConfig.descriptionPrefix} `

180 | : "";

181 | context += `${this.flowResetText}`;

182 | context += this.promptConfig.descriptionPostfix

183 | ? ` ${this.promptConfig.descriptionPostfix}`

184 | : "";

185 | context += this.promptConfig.newlineOperator;

186 | context += this.promptConfig.newlineOperator;

187 | }

188 | return context;

189 |

190 | }

191 |

192 | /**

193 | * @param context ongoing context to add dialogs to

194 | * @returns context with dialog added to it, only appending the last interactions if the context becomes too long

195 | */

196 | protected insertInteractions(context: string, userInput?: string) {

197 | let dialogString = "";

198 | let i = this.dialog.length - 1;

199 | if (i >= 0) {

200 | while (

201 | i >= 0 &&

202 | !this.assertTokenLimit(context + this.stringifyInteraction(this.dialog[i]) + dialogString, userInput)

203 | ) {

204 | dialogString = this.stringifyInteraction(this.dialog[i]) + dialogString

205 |

206 | i--;

207 | }

208 | }

209 | context += dialogString;

210 | return context;

211 | }

212 |

213 | /**

214 | * Throws an error if the context is longer than the max tokens

215 | */

216 | private throwContextOverflowError() {

217 | throw new Error(

218 | "Prompt is greater than the configured max tokens. Either shorten context (description + examples) or increase the max tokens in the model config."

219 | );

220 | }

221 |

222 | /**

223 | *

224 | * @param input Interaction input to add to the ongoing dialog

225 | * @param response Interaction response to add to the ongoing dialog

226 | */

227 | public addInteraction(input: string, response: string) {

228 | this.dialog.push({

229 | input: input,

230 | response: response,

231 | });

232 | }

233 |

234 | /**

235 | *

236 | * @param interactions Interactions to add to the ongoing dialog

237 | */

238 | public addInteractions(interactions: Interaction[]) {

239 | interactions.forEach((interaction) => {

240 | this.addInteraction(interaction.input, interaction.response);

241 | });

242 | }

243 |

244 | /**

245 | * Removes the first interaction from the dialog

246 | */

247 | public removeFirstInteraction() {

248 | return this.dialog.shift();

249 | }

250 |

251 | /**

252 | * Removes the last interaction from the dialog

253 | */

254 | public removeLastInteraction() {

255 | return this.dialog.pop();

256 | }

257 |

258 | /**

259 | *

260 | * @param input Example input to add to the examples

261 | * @param response Example output to add to the examples

262 | */

263 | public addExample(input: string, response: string) {

264 | this.examples.push({

265 | input: input,

266 | response: response,

267 | });

268 | }

269 |

270 | /**

271 | *

272 | * @param userInput Optional user input, counted toward the token limit to determine how long the context can be

273 | * @returns A context string containing description, examples and ongoing interactions with the model

274 | */

275 | public buildContext(userInput?: string): Context {

276 | let context = "";

277 | context = this.insertDescription(context);

278 | context = this.insertExamples(context);

279 | context = this.insertFlowResetText(context);

280 | // If the context is too long without dialogs, throw an error

281 | if (this.assertTokenLimit(context, userInput) === true) {

282 | this.throwContextOverflowError();

283 | }

284 |

285 | if (this.promptConfig.multiTurn){

286 | context = this.insertInteractions(context, userInput);

287 | }

288 |

289 | return context;

290 | }

291 |

292 | /**

293 | *

294 | * @returns A reset context string containing description and examples without any previous interactions with the model

295 | */

296 | public resetContext(): Context {

297 |

298 | this.dialog = [];

299 | return this.buildContext();

300 | }

301 |

302 | /**

303 | *

304 | * @param input Natural Language input from user

305 | * @returns A prompt string to pass a language model. This prompt

306 | * includes the description of the task and few shot examples of input -> response.

307 | * It then appends the current interaction history and the current input,

308 | * to effectively coax a new response from the model.

309 | */

310 | public buildPrompt(input: string, injectStartText: boolean = true): Prompt {

311 | let formattedInput = this.formatInput(input);

312 | let prompt = this.buildContext(formattedInput);

313 | prompt += formattedInput;

314 |

315 | if (injectStartText && this.promptConfig.outputPrefix) {

316 | prompt += `${this.promptConfig.outputPrefix} `

317 | if (this.promptConfig.promptNewlineEnd) {

318 | prompt += this.promptConfig.newlineOperator;

319 | }

320 | }

321 |

322 |

323 |

324 | return prompt;

325 | }

326 |

327 | /**

328 | *

329 | * @returns The built interaction history as a single string

330 | */

331 | public buildDialog(): Dialog {

332 | let dialogString = "";

333 | let i = this.dialog.length - 1;

334 | if (i >= 0) {

335 | while (

336 | i >= 0

337 | ) {

338 | dialogString = this.stringifyInteraction(this.dialog[i]) + dialogString;

339 | i--;

340 | }

341 | }

342 | return dialogString;

343 | }

344 |

345 | /**

346 | * @param naturalLanguage Natural Language input, e.g. 'Make a cube'

347 | * @returns Natural Language formatted as a comment, e.g. /* Make a cube *\/

348 | */

349 | private formatInput = (naturalLanguage: string): string => {

350 | let formatted = "";

351 | formatted += this.promptConfig.inputPrefix

352 | ? `${this.promptConfig.inputPrefix} `

353 | : "";

354 | formatted += `${naturalLanguage}`;

355 | formatted += this.promptConfig.inputPostfix

356 | ? ` ${this.promptConfig.inputPostfix}`

357 | : "";

358 | formatted += this.promptConfig.newlineOperator

359 | ? this.promptConfig.newlineOperator

360 | : "";

361 |

362 | return formatted;

363 | };

364 |

365 | private formatOutput = (output: string): string => {

366 | let formatted = "";

367 | formatted += this.promptConfig.outputPrefix

368 | ? `${this.promptConfig.outputPrefix} `

369 | : "";

370 | formatted += `${output}`;

371 | formatted += this.promptConfig.outputPostfix

372 | ? ` ${this.promptConfig.outputPostfix}`

373 | : "";

374 | formatted += this.promptConfig.newlineOperator

375 | ? this.promptConfig.newlineOperator

376 | : "";

377 | return formatted;

378 | };

379 |

380 | protected stringifyInteraction = (interaction: Interaction) => {

381 | let stringInteraction = "";

382 | stringInteraction += this.formatInput(interaction.input);

383 | stringInteraction += this.formatOutput(interaction.response);

384 | stringInteraction += this.promptConfig.newlineOperator;

385 | return stringInteraction;

386 | };

387 |

388 | protected stringifyInteractions = (interactions: Interaction[]) => {

389 | let stringInteractions = "";

390 | interactions.forEach((interaction) => {

391 | stringInteractions += this.stringifyInteraction(interaction);

392 | });

393 | return stringInteractions;

394 | };

395 |

396 | protected assertTokenLimit(context: string = "", userInput: string = "") {

397 | if (context !== undefined && userInput !== undefined){

398 | if (context !== ""){

399 | let numTokens = tokenizer.encode(context).text.length;

400 | if (userInput !== ""){

401 | numTokens = tokenizer.encode(context + userInput).text.length;

402 | }

403 | if (numTokens > this.promptConfig.modelConfig.maxTokens){

404 | return true;

405 | } else {

406 | return false;

407 | }

408 | } else {

409 | return false

410 | }

411 | } else {

412 | return false

413 | }

414 | }

415 |

416 | }

417 |

--------------------------------------------------------------------------------
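
The dialog truncation in `insertInteractions` walks the dialog from the newest interaction to the oldest and stops as soon as adding one more would exceed the token budget, so the oldest turns are the first to be dropped. The following is a minimal sketch of that idea. It assumes a crude whitespace-based `countTokens` in place of the GPT-3 tokenizer, and `render` and `keepNewestThatFit` are hypothetical names used only for illustration.

```typescript
interface Interaction {
  input: string;
  response: string;
}

// Assumption: a whitespace word count stands in for the real tokenizer.
const countTokens = (text: string): number =>
  text.split(/\s+/).filter((t) => t.length > 0).length;

// Simplified rendering without prefixes: input line, response line, blank line.
const render = (i: Interaction): string => `${i.input}\n${i.response}\n\n`;

function keepNewestThatFit(
  context: string,
  dialog: Interaction[],
  userInput: string,
  maxTokens: number
): string {
  let dialogString = "";
  // Iterate newest first; prepend each turn so the chronological order is preserved.
  for (const turn of [...dialog].reverse()) {
    const candidate = render(turn) + dialogString;
    if (countTokens(context + candidate + userInput) > maxTokens) {
      break; // This turn and everything older is dropped.
    }
    dialogString = candidate;
  }
  return context + dialogString;
}

const history: Interaction[] = [
  { input: "one two", response: "three four" },
  { input: "five six", response: "seven eight" },
  { input: "nine ten", response: "eleven twelve" },
];

// Context (2 tokens) + user input (2 tokens) leaves room for one 4-token turn.
const truncated = keepNewestThatFit("Describe shapes\n\n", history, "what next", 10);
console.log(truncated);
```

With a budget of 10 tokens in this sketch, only the most recent interaction survives; raising the budget to 12 keeps the two most recent ones.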

/src/index.ts:

--------------------------------------------------------------------------------

1 | export * from "./PromptEngine";

2 | export * from "./CodeEngine";

3 | export * from "./ChatEngine";

4 | export * from "./types";

--------------------------------------------------------------------------------

/src/types.ts:

--------------------------------------------------------------------------------

1 | export type Prompt = string;

2 | export type Context = string;

3 | export type Dialog = string;

4 |

5 | export interface IPromptEngine {

6 | buildContext: () => Context;

7 | buildPrompt: (naturalLanguage: string) => Prompt;

8 | }

9 |

10 | export interface Interaction {

11 | input: string;

12 | response: string;

13 | }

14 |

15 | export interface IModelConfig {

16 | maxTokens: number;

17 | }

18 |

19 | export interface IPromptConfig {

20 | modelConfig: IModelConfig;

21 | descriptionPrefix: string;

22 | descriptionPostfix: string;

23 | inputPrefix: string;

24 | inputPostfix: string;

25 | outputPrefix: string;

26 | outputPostfix: string;

27 | newlineOperator: string;

28 | multiTurn: boolean;

29 | promptNewlineEnd: boolean;

30 | }

31 |

32 | export interface ICodePromptConfig {

33 | modelConfig: IModelConfig;

34 | descriptionCommentOperator: string;

35 | descriptionCloseCommentOperator: string;

36 | commentOperator: string;

37 | closeCommentOperator: string;

38 | newlineOperator: string;

39 | multiTurn: boolean;

40 | promptNewlineEnd: boolean;

41 | }

42 |

43 | export interface IChatConfig {

44 | modelConfig: IModelConfig;

45 | userName: string;

46 | botName: string;

47 | newlineOperator: string;

48 | multiTurn: boolean;

49 | promptNewlineEnd: boolean;

50 | }

--------------------------------------------------------------------------------

/src/utils/utils.ts:

--------------------------------------------------------------------------------

1 | export const dashesToCamelCase = (input: string): string =>

2 | input

3 | .split("-")

4 | .reduce(

5 | (res, word, i) =>

6 | i === 0

7 | ? word.toLowerCase()

8 | : `${res}${word.charAt(0).toUpperCase()}${word

9 | .slice(1)

10 | .toLowerCase()}`,

11 | ""

12 | );

--------------------------------------------------------------------------------
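
The helper above is what maps dash-separated YAML keys such as `max-tokens` onto the camelCase property names used by the config interfaces. A small standalone sketch of that mapping follows. It repeats the helper with explicit types so that it runs on its own, and `camelCaseKeys` is a hypothetical wrapper mirroring the `for ... in` loops in the `loadConfigYAML` methods.

```typescript
// Standalone copy of the helper with explicit types (illustrative sketch).
const dashesToCamelCase = (input: string): string =>
  input
    .split("-")
    .reduce(
      (res, word, i) =>
        i === 0
          ? word.toLowerCase()
          : `${res}${word.charAt(0).toUpperCase()}${word.slice(1).toLowerCase()}`,
      ""
    );

// Hypothetical helper: convert every top-level key of a parsed YAML mapping.
function camelCaseKeys(config: Record<string, unknown>): Record<string, unknown> {
  const result: Record<string, unknown> = {};
  for (const key of Object.keys(config)) {
    result[dashesToCamelCase(key)] = config[key];
  }
  return result;
}

const converted = camelCaseKeys({
  "max-tokens": 1024,
  "newline-operator": "\n",
  "Input-Prefix": "Human:",
});
console.log(converted);
```

Keys are normalised case-insensitively per segment, so `Input-Prefix` and `input-prefix` both end up as `inputPrefix`.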

/test/Chat.test.ts:

--------------------------------------------------------------------------------

1 | // Tests for Chat Engine using Jest

2 |

3 | import { ChatEngine } from "../src/ChatEngine";

4 |

5 | // Test creation of an empty chat prompt

6 | describe("Empty chat Prompt should produce the correct context and prompt", () => {

7 | let chatEngine: ChatEngine;

8 | beforeEach(() => {

9 | chatEngine = new ChatEngine();

10 | });

11 |

12 | test("should create an empty chat prompt", () => {

13 | let context = chatEngine.buildContext();

14 | expect(context).toBe("");

15 | });

16 |

17 | test("should create a chat prompt with no description or examples", () => {

18 | let prompt = chatEngine.buildPrompt("Make a cube");

19 | console.log(prompt);

20 | expect(prompt).toBe("USER: Make a cube\nBOT: ");

21 | });

22 | });

23 |

24 | // Test creation of a chat prompt with input and response

25 | describe("Initialized Chat Engine should produce the correct prompt", () => {

26 | let chatEngine: ChatEngine;

27 |

28 | let description =

29 | "The following is a conversation with a bot about shapes";

30 |

31 | let examples = [

32 | { input: "What is a cube?", response: "a symmetrical three-dimensional shape, either solid or hollow, contained by six equal squares" },

33 | { input: "What is a sphere?", response: "a round solid figure, or its surface, with every point on its surface equidistant from its centre" },

34 | ];

35 |

36 | test("should create a chat prompt with description", () => {

37 | let chatEngine = new ChatEngine(description);

38 | let prompt = chatEngine.buildPrompt("what is a rectangle");

39 | expect(prompt).toBe(`${description}\n\nUSER: what is a rectangle\nBOT: `);

40 | });

41 |

42 |

43 | chatEngine = new ChatEngine(description, examples);

44 |

45 | test("should create a chat prompt with description and examples", () => {

46 | let prompt = chatEngine.buildPrompt("what is a rectangle");

47 | expect(prompt).toBe(

48 | `${description}\n\nUSER: What is a cube?\nBOT: a symmetrical three-dimensional shape, either solid or hollow, contained by six equal squares\n\nUSER: What is a sphere?\nBOT: a round solid figure, or its surface, with every point on its surface equidistant from its centre\n\nUSER: what is a rectangle\nBOT: `

49 | );

50 | });

51 |

52 | test("should add an interaction to chat prompt", () => {

53 | chatEngine.addInteraction("what is a rectangle",

54 | "a rectangle is a rectangle");

55 | let prompt = chatEngine.buildPrompt("what is a cylinder");

56 | expect(prompt).toBe(

57 | `${description}\n\nUSER: What is a cube?\nBOT: a symmetrical three-dimensional shape, either solid or hollow, contained by six equal squares\n\nUSER: What is a sphere?\nBOT: a round solid figure, or its surface, with every point on its surface equidistant from its centre\n\nUSER: what is a rectangle\nBOT: a rectangle is a rectangle\n\nUSER: what is a cylinder\nBOT: `

58 | );

59 | });

60 |

61 | });

--------------------------------------------------------------------------------

/test/Code.test.ts:

--------------------------------------------------------------------------------

1 | // Tests for Code Engine using Jest

2 |

3 | import { CodeEngine } from "../src/CodeEngine";

4 |

5 | // Test creation of an empty NL-to-Code prompt

6 | describe("Empty NL-to-Code Prompt should produce the correct context and prompt", () => {

7 | let codeEngine: CodeEngine;

8 | beforeEach(() => {

9 | codeEngine = new CodeEngine();

10 | });

11 |

12 | test("should create an empty Code prompt", () => {

13 | let context = codeEngine.buildContext();

14 | expect(context).toBe("");

15 | });

16 |

17 | test("should create an NL-to-Code prompt with no description or examples", () => {

18 | let prompt = codeEngine.buildPrompt("Make a cube");

19 | console.log(prompt);

20 | expect(prompt).toBe("/* Make a cube */\n");

21 | });

22 | });

23 |

24 | // Test Code Engine with just description (no examples - zero shot)

25 | describe("Initialized NL-to-Code Engine should produce the correct prompt", () => {

26 | let codeEngine: CodeEngine;

27 | let description =

28 | "The following are examples of natural language commands and the code necessary to accomplish them";

29 |

30 | let examples = [

31 | { input: "Make a cube", response: "makeCube();" },

32 | { input: "Make a sphere", response: "makeSphere();" },

33 | ];

34 |

35 | test("should create an NL-to-Code prompt with description", () => {

36 | let codeEngine = new CodeEngine(description);

37 | let prompt = codeEngine.buildPrompt("Make a cube");

38 | expect(prompt).toBe(`/* ${description} */\n\n/* Make a cube */\n`);

39 | });

40 |

41 | codeEngine = new CodeEngine(description, examples);

42 |

43 | test("should create an NL-to-Code prompt with description and examples", () => {

44 | let prompt = codeEngine.buildPrompt("Make a cylinder");

45 | expect(prompt).toBe(

46 | `/* ${description} */\n\n/* Make a cube */\nmakeCube();\n\n/* Make a sphere */\nmakeSphere();\n\n/* Make a cylinder */\n`

47 | );

48 | });

49 |

50 | test("should add an interaction to NL-to-Code prompt", () => {

51 | codeEngine.addInteraction("Make a cylinder",

52 | "makeCylinder();");

53 | let prompt = codeEngine.buildPrompt("Make a double helix");

54 | expect(prompt).toBe(

55 | `/* ${description} */\n\n/* Make a cube */\nmakeCube();\n\n/* Make a sphere */\nmakeSphere();\n\n/* Make a cylinder */\nmakeCylinder();\n\n/* Make a double helix */\n`

56 | );

57 | });

58 |

59 | test("should add a second interaction to NL-to-Code prompt", () => {

60 | codeEngine.addInteraction("Make a double helix",

61 | "makeDoubleHelix();");

62 | let prompt = codeEngine.buildPrompt("make a torus");

63 | expect(prompt).toBe(

64 | `/* ${description} */\n\n/* Make a cube */\nmakeCube();\n\n/* Make a sphere */\nmakeSphere();\n\n/* Make a cylinder */\nmakeCylinder();\n\n/* Make a double helix */\nmakeDoubleHelix();\n\n/* make a torus */\n`

65 | );

66 | });

67 |

68 | test("should remove last interaction from NL-to-Code prompt", () => {

69 | codeEngine.removeLastInteraction();

70 | let prompt = codeEngine.buildPrompt("make a torus");

71 | expect(prompt).toBe(

72 | `/* ${description} */\n\n/* Make a cube */\nmakeCube();\n\n/* Make a sphere */\nmakeSphere();\n\n/* Make a cylinder */\nmakeCylinder();\n\n/* make a torus */\n`

73 | );

74 | });

75 | });

76 |

77 | describe("Code prompt should truncate when too long", () => {

78 | let description = "Natural Language Commands to Math Code";

79 | let examples = [

80 | { input: "what's 10 plus 18", response: "console.log(10 + 18);" },

81 | { input: "what's 10 times 18", response: "console.log(10 * 18);" },

82 | ];

83 |

84 | test("should remove only dialog prompt when too long", () => {

85 | let codeEngine = new CodeEngine(description, examples, "", {

86 | modelConfig: {

87 | maxTokens: 70,

88 | }

89 | });

90 | codeEngine.addInteraction("what's 18 divided by 10",

91 | "console.log(18 / 10);");

92 | let prompt = codeEngine.buildPrompt("what's 18 factorial 10");

93 | expect(prompt).toBe(

94 | `/* ${description} */\n\n/* what's 10 plus 18 */\nconsole.log(10 + 18);\n\n/* what's 10 times 18 */\nconsole.log(10 * 18);\n\n/* what's 18 factorial 10 */\n`

95 | );

96 | });

97 |

98 | test("should remove first dialog prompt when too long", () => {

99 | let codeEngine = new CodeEngine(description, examples, "", {

100 | modelConfig: {

101 | maxTokens: 90,

102 | }

103 | });

104 | codeEngine.addInteractions([

105 | {

106 | input: "what's 18 factorial 10",

107 | response: "console.log(18 % 10);",

108 | },

109 | ]);

110 | let prompt = codeEngine.buildPrompt("what's 18 to the power of 10");

111 | expect(prompt).toBe(

112 | `/* ${description} */\n\n/* what's 10 plus 18 */\nconsole.log(10 + 18);\n\n/* what's 10 times 18 */\nconsole.log(10 * 18);\n\n/* what's 18 factorial 10 */\nconsole.log(18 % 10);\n\n/* what's 18 to the power of 10 */\n`

113 | );

114 | });

115 | });

116 |

117 | // Test Code Engine with a description and flow reset text (no examples or interactions)

118 |

119 | describe("NL-to-Code Engine with flow reset text should produce the correct prompt", () => {

120 | let codeEngine: CodeEngine;

121 | let description =

122 | "The following are examples of natural language commands and the code necessary to accomplish them";

123 | let flowResetText =

124 | "Ignore the previous objects and start over with a new object";

125 |

126 | test("should create an NL-to-Code prompt with description and flow reset text", () => {

127 | let codeEngine = new CodeEngine(description, [], flowResetText, {

128 | modelConfig: {

129 | maxTokens: 1024,

130 | }

131 | });

132 | let prompt = codeEngine.buildPrompt("Make a cube");

133 | expect(prompt).toBe(`/* ${description} */\n\n/* ${flowResetText} */\n\n/* Make a cube */\n`);

134 | });

135 | });

136 |

137 |

138 | // Test Code Engine to retrieve the built interactions

139 | describe("Initialized NL-to-Code Engine should produce the correct dialog", () => {

140 | let codeEngine: CodeEngine;

141 | let description =

142 | "The following are examples of natural language commands and the code necessary to accomplish them";

143 | let flowResetText =

144 | "Ignore the previous objects and start over with a new object";

145 |

146 | test("should return just the dialog", () => {

147 | let codeEngine = new CodeEngine(description, [], flowResetText, {

148 | modelConfig: {

149 | maxTokens: 1024,

150 | }

151 | });

152 | codeEngine.addInteractions([

153 | {

154 | input: "Make a cube",

155 | response: "cube = makeCube();"

156 | },

157 | {

158 | input: "Make a sphere",

159 | response: "sphere = makeSphere();"

160 | }

161 | ]);

162 | let dialog = codeEngine.buildDialog();

163 | expect(dialog).toBe(`/* Make a cube */\ncube = makeCube();\n\n/* Make a sphere */\nsphere = makeSphere();\n\n`);

164 | });

165 | });

166 |

167 |

168 | // Test that resetting the Code Engine context drops added interactions but keeps the description

169 |

170 | describe("NL-to-Code Engine should produce the correct prompt after a context reset", () => {

171 | let codeEngine: CodeEngine;

172 | let description =

173 | "The following are examples of natural language commands and the code necessary to accomplish them";

174 |

175 | test("should drop added interactions from the prompt after resetContext", () => {

176 | let codeEngine = new CodeEngine(description);

177 |

178 | codeEngine.addInteractions([

179 | {

180 | input: "Make a cube",

181 | response: "cube = makeCube();"

182 | },

183 | {

184 | input: "Make a sphere",

185 | response: "sphere = makeSphere();"

186 | }

187 | ]);

188 |

189 | let prompt = codeEngine.buildPrompt("Make a cylinder");

190 | expect(prompt).toBe(

191 | `/* ${description} */\n\n/* Make a cube */\ncube = makeCube();\n\n/* Make a sphere */\nsphere = makeSphere();\n\n/* Make a cylinder */\n`

192 | );

193 |

194 | codeEngine.resetContext();

195 |

196 |

197 | prompt = codeEngine.buildPrompt("Make a cylinder");

198 |

199 | expect(prompt).toBe(

200 | `/* ${description} */\n\n/* Make a cylinder */\n`

201 | );

202 | });

203 | });

204 |