“Everyone has a plan 'till they get punched in the mouth.”

- **Transparency** - Easily drill down and see how metrics are calculated at the customer level
- **Open source & Private** - The entire app is open source and easy to self-host
- **Extensible & Hackable** - Have a weird edge case you need to exclude? Update your data model with a little SQL.
- **Push first** - Metrics can be pushed to Slack, Google Sheets, and email, so you don't need to check yet another dashboard


### There is a [free hosted version here](https://pulse.trypaper.io/?ref=github)

## Self-Hosted

* Docker - See [docker-compose.yml](docker-compose.yml)
* Render - See [render.yaml](render.yaml) as a guide (remove the custom domain from it first)
* GCP - TODO
* AWS - TODO

35 | ## Google Sheets

36 |

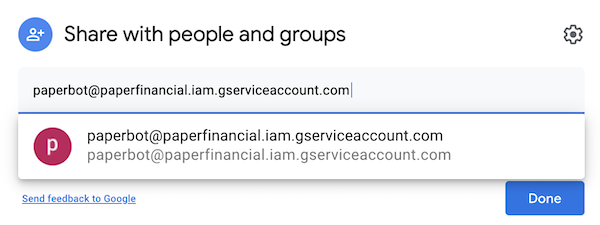

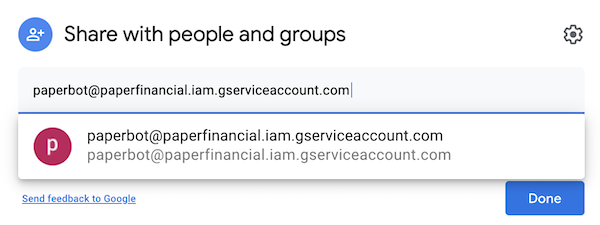

37 | 1. Go to the Sheet you want to connect to Pulse

38 | 2. Click "Share" in the top right

39 | 3. Share the sheet with paperbot@paperfinancial.iam.gserviceaccount.com

40 | 4. Add the Spreadsheet ID (long ID in the Sheet URL) and sheet name to https://pulse.trypaper.io/settings

41 |

42 |

43 |

44 |

45 |
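The Spreadsheet ID asked for in step 4 is the path segment that follows `/spreadsheets/d/` in a Google Sheets URL. A minimal Python sketch for pulling it out of a pasted URL; the function name `spreadsheet_id_from_url` is hypothetical and not part of Pulse.

```python
import re
from typing import Optional
from urllib.parse import urlparse

# Google Sheets URLs look like:
# https://docs.google.com/spreadsheets/d/<SPREADSHEET_ID>/edit#gid=<SHEET_GID>
_SHEET_ID_RE = re.compile(r"/spreadsheets/d/([a-zA-Z0-9_-]+)")


def spreadsheet_id_from_url(url: str) -> Optional[str]:
    """Return the spreadsheet ID embedded in a Google Sheets URL, or None if absent."""
    path = urlparse(url).path  # drops the query string and the #gid fragment
    match = _SHEET_ID_RE.search(path)
    return match.group(1) if match else None


if __name__ == "__main__":
    example = "https://docs.google.com/spreadsheets/d/1AbC-dEf_123/edit#gid=0"
    print(spreadsheet_id_from_url(example))  # 1AbC-dEf_123
```

Note that the sheet (tab) name is not part of the URL; only its numeric `gid` is, so the name still has to be entered separately.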

--------------------------------------------------------------------------------

/frontend/src/store.js:

--------------------------------------------------------------------------------

// import axios from 'axios';

export const store = {
  state: {
    showDebugStuffNow: false,
    isLoggedIn: false,
    checkedLogin: false,
    gotUserData: false,
    gotDbt: false,
    hideSidebar: false,
    gotMetrics: false,
    gotEvents: false,
    metricData: {},
    events: {data: []},
    user: {
      ok: false,
      oauth: false,
      hasStripe: false,
      settings: {}
    },
    slackCode: false,
    // settings: {
    //   notifications: {
    //     alerts: {
    //       slack: true,
    //       email: false,
    //     },
    //     weekly: {
    //       slack: true,
    //       email: true,
    //     },
    //     monthly: {
    //       slack: true,
    //       email: true,
    //     },
    //   }
    // },
    dbt: {},
    jobStatuses: {},
    analysis: {
      uuid: false,
      mode: 'search',
      code: 'select *\nfrom customers as c',
      results: {
        rows: [],
        cols: [],
      },
      viz: {
        type: 'grid',
        encoding: {
          "x": {"field": false, "type": "ordinal"},
          "y": {"field": false, "type": "quantitative"},
        }
      }
    },
    userData: {
      savedFunders: []
    },
    msg: {
      show: false,
      primary: '',
      secondary: '',
      icon: '',
      type: '',
      time: 8000,
    }
  }
}

--------------------------------------------------------------------------------

/backend/.gitignore:

--------------------------------------------------------------------------------

.DS_Store

# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
.hypothesis/
.pytest_cache/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
target/

# Jupyter Notebook
.ipynb_checkpoints

# pyenv
.python-version

# celery beat schedule file
celerybeat-schedule

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/

--------------------------------------------------------------------------------

/frontend/package.json:

--------------------------------------------------------------------------------

{
  "name": "paper",
  "version": "0.2.0",
  "private": true,
  "license": "BUSL-1.1",
  "scripts": {
    "serve": "vue-cli-service serve",
    "build": "vue-cli-service build",
    "lint": "vue-cli-service lint"
  },
  "dependencies": {
    "@headlessui/vue": "^1.2.0",
    "@heroicons/vue": "^1.0.1",
    "@magic-ext/oauth": "^0.7.0",
    "@tailwindcss/aspect-ratio": "^0.2.0",
    "@tailwindcss/forms": "^0.2.1",
    "@tailwindcss/typography": "^0.4.0",
    "axios": "^0.21.0",
    "core-js": "^3.6.5",
    "gridjs": "^5.0.0",
    "magic-sdk": "^4.2.1",
    "mitt": "^2.1.0",
    "mousetrap": "^1.6.5",
    "postcss": "^8.2.6",
    "ssf": "^0.11.2",
    "tailwindcss": "^2.1.0",
    "typed.js": "^2.0.11",
    "vega": "^5.20.2",
    "vega-embed": "^6.18.1",
    "vega-lite": "^5.1.0",
    "vue": "^3.0.5",
    "vue-currency-input": "2.0.0-rc.1",
    "vue-router": "4",
    "vue3-popper": "^1.2.0"
  },
  "devDependencies": {
    "@vue/cli-plugin-babel": "~4.5.0",
    "@vue/cli-plugin-eslint": "~4.5.0",
    "@vue/cli-plugin-router": "~4.5.0",
    "@vue/cli-service": "~4.5.0",
    "@vue/compiler-sfc": "^3.0.5",
    "autoprefixer": "^9.0.0",
    "babel-eslint": "^10.1.0",
    "eslint": "^6.7.2",
    "eslint-plugin-vue": "^6.2.2"
  },
  "eslintConfig": {
    "root": true,
    "env": {
      "node": true
    },
    "extends": [
      "plugin:vue/essential",
      "eslint:recommended"
    ],
    "parserOptions": {
      "parser": "babel-eslint"
    },
    "rules": {
      "no-unused-vars": "off",
      "vue/valid-v-model": "off"
    }
  },
  "browserslist": [
    "> 1%",
    "last 2 versions",
    "not dead"
  ]
}

--------------------------------------------------------------------------------

/backend/pints/modeling.py:

--------------------------------------------------------------------------------

import os
import subprocess
import json
import yaml

from logger import logger

def getDbt():
    with open(r'./dbt/models/stripe/models.yml') as file:
        d = yaml.safe_load(file)
    logger.info(f'getDbt: {d}')
    return d

def getCols(sql, cols):
    # Attach the display format declared in sql['selected'] (matched by alias) to each column
    cols2 = []
    for col in cols:
        colFormat = [s for s in sql['selected'] if s['alias'] == col]
        if colFormat:
            colFormat = colFormat[0].get('format', False)
        else:
            colFormat = False
        col2 = {
            'name': col,
            'format': colFormat
        }
        cols2.append(col2)
    return cols2

def runDbt(teamId):
    logger.info(f"run_dbt user: {teamId}")
    # Expose the target schema and team ID to run_dbt.sh via environment variables
    os.environ['PAPER_DBT_SCHEMA'] = f"team_{teamId}"
    os.environ['PAPER_DBT_TEAM_ID'] = f"{teamId}"
    session = subprocess.Popen(['./dbt/run_dbt.sh'],
                               stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    stdout, stderr = session.communicate()
    logger.info(f'run_dbt stdout: {stdout}')
    dbtLogs = []
    dbtErrors = []
    if stderr:
        logger.error(f'run_dbt stderr: {stderr}')
    for line in stdout.strip().splitlines():
        # Parse each JSON log line separately so one bad line doesn't drop the rest
        try:
            j = json.loads(line)
            if j['levelname'] == 'ERROR':
                logger.error(f"run_dbt error: {j['message']}")
                dbtErrors.append(j)
            if 'OK created table' in j['message']:
                # Row count sits between "SELECT" and the next ANSI escape code
                count = int(j['message'].split('SELECT')[1].split('\x1b')[0])
                table = j['extra']['unique_id'].split('.')[-1]
                logger.info(f'run_dbt created {table}: {count} rows')
                j['table'] = table
                j['count'] = count
                dbtLogs.append(j)
        except Exception as e:
            logger.error(f'run_dbt log parse error: {e}')
    return dbtLogs, dbtErrors
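Parsing the dbt log output can be factored into a pure function that is easy to unit-test without running dbt. A minimal sketch, assuming (as `runDbt` does) that `run_dbt.sh` prints one JSON object per line with `levelname`, `message` and `extra.unique_id` keys; `parse_dbt_json_logs` is a hypothetical helper name, and the regex for the row count is an assumption about the message text rather than a documented dbt format.

```python
import json
import re
from typing import List, Optional, Tuple

# Assumed message shape: "... OK created table model ... SELECT 42 ..." where ANSI
# color codes may wrap the number; we only capture the digits after "SELECT".
_SELECT_COUNT_RE = re.compile(r"SELECT\s+(\d+)")


def parse_dbt_json_logs(stdout: str) -> Tuple[List[dict], List[dict]]:
    """Split dbt JSON log lines into (created-table entries, error entries).

    Lines that are not JSON objects are skipped one at a time instead of
    aborting the whole parse. Decode bytes from Popen with stdout.decode() first.
    """
    logs, errors = [], []
    for line in stdout.strip().splitlines():
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # e.g. a plain-text banner line
        if not isinstance(entry, dict):
            continue
        message = entry.get("message", "")
        if entry.get("levelname") == "ERROR":
            errors.append(entry)
        if "OK created table" in message:
            match = _SELECT_COUNT_RE.search(message)
            unique_id = (entry.get("extra") or {}).get("unique_id", "")
            entry["table"] = unique_id.split(".")[-1]
            count: Optional[int] = int(match.group(1)) if match else None
            entry["count"] = count
            logs.append(entry)
    return logs, errors
```

Returning `None` for a missing count (instead of raising) keeps one odd message from hiding the tables that did build.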

--------------------------------------------------------------------------------

/backend/Dockerfile:

--------------------------------------------------------------------------------

FROM python:3.9

# Set up the Chrome PPA
RUN wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | apt-key add -
RUN echo "deb http://dl.google.com/linux/chrome/deb/ stable main" >> /etc/apt/sources.list.d/google.list

# Update the package list and install Chrome's shared-library dependencies
RUN apt-get update -y
RUN apt-get install -y libglib2.0-0 \
    libnss3 \
    libgconf-2-4 \
    libfontconfig1
ENV CHROME_VERSION 90.0.4430.212
# => was getting ERROR [ 6/18] RUN apt-get update -y && apt-get install -y google-chrome-stable=91.0.4472.164-1
# upgraded to 92.0.4515.107, might need to downgrade to 91.0.4472.164 if something breaks in Slack
# RUN apt-get update -y && apt-get install -y google-chrome-stable=$CHROME_VERSION-1
# RUN apt-get install -y google-chrome-stable=$CHROME_VERSION-1

# Check available versions here: https://www.ubuntuupdates.org/package/google_chrome/stable/main/base/google-chrome-stable
# ARG CHROME_VERSION="81.0.4044.113-1"
# Install the pinned Chrome build directly from Google's package pool
RUN wget --no-verbose -O /tmp/chrome.deb https://dl.google.com/linux/chrome/deb/pool/main/g/google-chrome-stable/google-chrome-stable_${CHROME_VERSION}-1_amd64.deb \
  && apt install -y /tmp/chrome.deb \
  && rm /tmp/chrome.deb

# Set up Chromedriver environment variables (major version must match CHROME_VERSION)
ENV CHROMEDRIVER_VERSION 90.0.4430.24
ENV CHROMEDRIVER_DIR /chromedriver
RUN mkdir $CHROMEDRIVER_DIR

# Download and install Chromedriver
RUN wget -q --continue -P $CHROMEDRIVER_DIR "http://chromedriver.storage.googleapis.com/$CHROMEDRIVER_VERSION/chromedriver_linux64.zip"
RUN unzip $CHROMEDRIVER_DIR/chromedriver* -d $CHROMEDRIVER_DIR

# Put Chromedriver into the PATH
ENV PATH $CHROMEDRIVER_DIR:$PATH
# Patch the launcher so Chrome can run as root inside the container (adds --no-sandbox)
RUN sed -i 's|exec -a "$0" "$HERE/chrome" "$@"|exec -a "$0" "$HERE/chrome" "$@" --no-sandbox --user-data-dir |g' /opt/google/chrome/google-chrome

COPY requirements.txt /app/
RUN pip3 --default-timeout=600 install -r /app/requirements.txt

EXPOSE 22
EXPOSE 5000/tcp

ENV PORT 5000

COPY app.py /app/
COPY logger.py /app/
ADD pints /app/pints
ADD metrics /app/metrics
ADD static /app/static
ADD dbt /app/dbt

# WORKERS is not set in this image; fall back to 1 worker if it is not provided at runtime
CMD cd app && exec gunicorn -t 900 --bind :$PORT --workers ${WORKERS:-1} --threads 1 --preload app:app
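ChromeDriver uses the same version numbering as Chrome, and a ChromeDriver release targets the Chrome release with the same major version (both pins above are 90). Since the comments show Chrome being bumped between 90, 91 and 92, a startup sanity check can catch a mismatched pair early. A minimal Python sketch, assuming the `CHROME_VERSION` and `CHROMEDRIVER_VERSION` values set via `ENV` above are visible to the app; all function names here are hypothetical.

```python
import os


def major_version(version: str) -> int:
    """Return the major component of a dotted version string like '90.0.4430.212'."""
    return int(version.strip().split(".")[0])


def chromedriver_matches_chrome(chrome_version: str, chromedriver_version: str) -> bool:
    """True when Chrome and ChromeDriver share a major version."""
    return major_version(chrome_version) == major_version(chromedriver_version)


def check_container_versions() -> bool:
    # Both variables come from ENV lines in the Dockerfile; KeyError if either is missing.
    return chromedriver_matches_chrome(
        os.environ["CHROME_VERSION"], os.environ["CHROMEDRIVER_VERSION"]
    )


if __name__ == "__main__":
    print(chromedriver_matches_chrome("90.0.4430.212", "90.0.4430.24"))  # True
    print(chromedriver_matches_chrome("92.0.4515.107", "90.0.4430.24"))  # False
```

Comparing only the major component is deliberate: the build and patch numbers of a matching Chrome/ChromeDriver pair routinely differ, as they do in the pins above.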

--------------------------------------------------------------------------------

/frontend/src/views/Slack2.vue:

--------------------------------------------------------------------------------
