**Kubernetes Guide**

**Building Highly Available (HA) Clusters with kubeadm. Source: [Kubernetes.io](https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/)**
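
A highly available control plane built with kubeadm runs etcd on several nodes, and etcd only accepts writes while a majority (quorum) of its members is reachable. The minimal sketch below works through that arithmetic. It assumes the stacked-etcd topology, where each control plane node hosts one etcd member, so the etcd member count equals the number of control plane nodes; the function names are illustrative only.

```python
"""Sketch: etcd quorum arithmetic for sizing an HA control plane.

Assumes a stacked-etcd topology, where each control plane node also runs
one etcd member, so the etcd cluster size equals the control plane size.
"""


def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` members."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    return members // 2 + 1


def failure_tolerance(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} members: quorum={quorum(n)}, tolerates {failure_tolerance(n)} failure(s)")
```

Even member counts raise the quorum without adding fault tolerance (four members tolerate one failure, the same as three), which is why odd sizes such as three or five are the usual choice.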

### Developer Resources
[Back to the Top](#table-of-contents)

- [Kubernetes Certifications](https://kubernetes.io/training/)

- [Getting started with Kubernetes on AWS](https://aws.amazon.com/kubernetes/)

- [Kubernetes on Microsoft Azure](https://azure.microsoft.com/en-us/topic/what-is-kubernetes/)

- [Intro to Azure Kubernetes Service](https://docs.microsoft.com/en-us/azure/aks/kubernetes-dashboard)

- [Getting started with Google Cloud](https://cloud.google.com/learn/what-is-kubernetes)

- [Azure Red Hat OpenShift](https://azure.microsoft.com/en-us/services/openshift/)

- [Getting started with Kubernetes on Red Hat](https://www.redhat.com/en/topics/containers/what-is-kubernetes)

- [Getting started with Kubernetes on IBM](https://www.ibm.com/cloud/learn/kubernetes)

- [Red Hat OpenShift on IBM Cloud](https://www.ibm.com/cloud/openshift)

- [Kubernetes Contributors](https://www.kubernetes.dev/)

- [Kubernetes Tutorials from Pulumi](https://www.pulumi.com/docs/tutorials/kubernetes/)

- [Enable OpenShift Virtualization on Red Hat OpenShift](https://developers.redhat.com/blog/2020/08/28/enable-openshift-virtualization-on-red-hat-openshift/)

- [YAML basics in Kubernetes](https://developer.ibm.com/technologies/containers/tutorials/yaml-basics-and-usage-in-kubernetes/)

- [Elastic Cloud on Kubernetes](https://www.elastic.co/elastic-cloud-kubernetes)

- [Docker and Kubernetes](https://www.docker.com/products/kubernetes)

- [Running Apache Spark on Kubernetes](http://spark.apache.org/docs/latest/running-on-kubernetes.html)

- [Kubernetes Across VMware vRealize Automation](https://blogs.vmware.com/management/2019/06/kubernetes-across-vmware-cloud-automation-services.html)

- [VMware Tanzu Kubernetes Grid](https://tanzu.vmware.com/kubernetes-grid)

- [All the Ways VMware Tanzu Works with AWS](https://tanzu.vmware.com/content/blog/all-the-ways-vmware-tanzutm-works-with-aws)

- [Using Ansible in a Cloud-Native Kubernetes Environment](https://www.ansible.com/blog/how-useful-is-ansible-in-a-cloud-native-kubernetes-environment)

- [Managing Kubernetes (K8s) objects with Ansible](https://docs.ansible.com/ansible/latest/collections/community/kubernetes/k8s_module.html)

- [Setting up a Kubernetes cluster using Vagrant and Ansible](https://kubernetes.io/blog/2019/03/15/kubernetes-setup-using-ansible-and-vagrant/)

- [Running MongoDB with Kubernetes](https://www.mongodb.com/kubernetes)

- [Kubernetes Fluentd](https://docs.fluentd.org/v/0.12/articles/kubernetes-fluentd)

- [Understanding the new GitLab Kubernetes Agent](https://about.gitlab.com/blog/2020/09/22/introducing-the-gitlab-kubernetes-agent/)

- [Introducing Local Process with Kubernetes for Visual Studio 2019](https://devblogs.microsoft.com/visualstudio/introducing-local-process-with-kubernetes-for-visual-studio%E2%80%AF2019/)

- [Kubernetes Playground by Katacoda](https://www.katacoda.com/courses/kubernetes/playground)

### Kubernetes Courses & Certifications
[Back to the Top](#table-of-contents)

- [Kubernetes Training & Certifications](https://kubernetes.io/training/)

- [Top Kubernetes Courses Online | Coursera](https://www.coursera.org/courses?query=kubernetes)

- [Top Kubernetes Courses Online | Udemy](https://www.udemy.com/topic/kubernetes/)

- [Kubernetes Courses - IBM Developer](https://developer.ibm.com/components/kubernetes/courses/)

- [Introduction to Kubernetes Courses | edX](https://www.edx.org/course/introduction-to-kubernetes)

- [VMware Tanzu Education](https://tanzu.vmware.com/education)

- [KubeAcademy from VMware](https://kube.academy/)

- [Online Kubernetes Course: Beginners Guide to Kubernetes | Pluralsight](https://www.pluralsight.com/courses/getting-started-kubernetes)

- [Getting Started with Google Kubernetes Engine | Pluralsight](https://www.pluralsight.com/courses/getting-started-google-kubernetes-engine-8)

- [Scalable Microservices with Kubernetes course from Udacity](https://www.udacity.com/course/scalable-microservices-with-kubernetes--ud615)

### Kubernetes Books
[Back to the Top](#table-of-contents)

- [Kubernetes for Full-Stack Developers by Digital Ocean](https://assets.digitalocean.com/books/kubernetes-for-full-stack-developers.pdf)

- [Kubernetes Patterns - Red Hat](https://www.redhat.com/cms/managed-files/cm-oreilly-kubernetes-patterns-ebook-f19824-201910-en.pdf)

- [The Ultimate Guide to Kubernetes Deployments with Octopus](https://i.octopus.com/books/kubernetes-book.pdf)

- [Learning Kubernetes (PDF)](https://riptutorial.com/Download/kubernetes.pdf)

- [Certified Kubernetes Administrator (CKA) Study Guide: In-Depth Guidance and Practice](https://www.amazon.com/Certified-Kubernetes-Administrator-Study-Depth/dp/1098107225/ref=sr_1_29?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-29)

- [Quick Start Kubernetes by Nigel Poulton (2022)](https://www.amazon.com/Quick-Start-Kubernetes-Nigel-Poulton-ebook/dp/B08T21NW4Z/ref=sr_1_18?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-18)

- [The Kubernetes Book by Nigel Poulton (2022)](https://www.amazon.com/Kubernetes-Book-Version-November-2018-ebook/dp/B072TS9ZQZ/ref=sr_1_4?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-4)

- [Kubernetes: Up and Running: Dive into the Future of Infrastructure](https://www.amazon.com/Kubernetes-Running-Dive-Future-Infrastructure/dp/1492046531/ref=sr_1_5?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-5)

- [Kubernetes and Docker - An Enterprise Guide: Effectively containerize applications, integrate enterprise systems, and scale applications in your enterprise](https://www.amazon.com/Kubernetes-Docker-Effectively-containerize-applications/dp/183921340X/ref=sr_1_24?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-24)

- [Kubernetes in Action](https://www.amazon.com/Kubernetes-Action-Marko-Luksa/dp/1617293725/ref=sr_1_7?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-7)

- [Kubernetes – An Enterprise Guide: Effectively containerize applications, integrate enterprise systems, and scale](https://www.amazon.com/Kubernetes-Enterprise-Effectively-containerize-applications/dp/1803230037/ref=sr_1_6?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-6)

- [Production Kubernetes: Building Successful Application Platforms](https://www.amazon.com/Production-Kubernetes-Successful-Application-Platforms/dp/1492092304/ref=sr_1_8?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-8)

- [The Kubernetes Bible: The definitive guide to deploying and managing Kubernetes across major cloud platforms](https://www.amazon.com/Kubernetes-Bible-definitive-deploying-platforms/dp/1838827692/ref=sr_1_16?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-16)

- [Networking and Kubernetes: A Layered Approach](https://www.amazon.com/Networking-Kubernetes-Approach-James-Strong/dp/1492081655/ref=sr_1_12?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-12)

- [Kubernetes Best Practices: Blueprints for Building Successful Applications on Kubernetes](https://www.amazon.com/Kubernetes-Best-Practices-Blueprints-Applications/dp/1492056472/ref=sr_1_19?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-19)

- [Kubernetes Security and Observability: A Holistic Approach to Securing Containers and Cloud Native Apps](https://www.amazon.com/Kubernetes-Security-Observability-Containers-Applications/dp/1098107101/ref=sr_1_26?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-26)

- [Hands-on Kubernetes on Azure: Use Azure Kubernetes Service to automate management, scaling, and deployment of containerized apps](https://www.amazon.com/Hands-Kubernetes-Azure-containerized-applications-ebook/dp/B095H26VFY/ref=sr_1_11?crid=15963283P4C0V&keywords=kubernetes&qid=1653935057&s=books&sprefix=kubernetes%2Cstripbooks%2C174&sr=1-11)

### YouTube Tutorials
[Back to the Top](#table-of-contents)

- [YouTube tutorial](https://www.youtube.com/watch?v=kGrpLKNi4ZI)
- [YouTube tutorial](https://www.youtube.com/watch?v=Oe_mhDtb22M)
- [YouTube tutorial](https://www.youtube.com/watch?v=vxtq_pJp7_A)
- [YouTube tutorial](https://www.youtube.com/watch?v=S8eX0MxfnB4)
- [YouTube tutorial](https://www.youtube.com/watch?v=d6WC5n9G_sM)
- [YouTube tutorial](https://www.youtube.com/watch?v=VnvRFRk_51k)
- [YouTube tutorial](https://www.youtube.com/watch?v=wXuSqFJVNQA)
- [YouTube tutorial](https://www.youtube.com/watch?v=kTp5xUtcalw)
- [YouTube tutorial](https://www.youtube.com/watch?v=PziYflu8cB8)
- [YouTube tutorial](https://www.youtube.com/watch?v=n-fAf2mte6M)

### Red Hat CodeReady Containers (CRC) on WSL

[Back to the Top](#table-of-contents)

[Red Hat CodeReady Containers (CRC)](https://developers.redhat.com/content-gateway/rest/mirror/pub/openshift-v4/clients/crc/2.9.0) is a tool that provides a minimal, preconfigured OpenShift 4 cluster on a laptop or desktop machine for development and testing. CRC runs this cluster inside a virtual machine (VM).

* **odo (OpenShift Do)**: a CLI tool that lets developers manage application components on OpenShift Container Platform.


**Podman**

**Buildah**

**Rancher Desktop**

**Rancher Desktop Kubernetes Settings**

**Rancher Desktop Architecture Overview**

**Enable the WSL 2 base engine in Docker Desktop**


**Installing Red Hat OpenShift Data Science**

**Opening Red Hat OpenShift Data Science**

**JupyterHub on Red Hat OpenShift Data Science**

**Exploring Tools on Red Hat OpenShift Data Science**

**Setting up JupyterHub Notebook Server**

**Creating a new Python 3 Notebook**

**Python 3 JupyterHub Notebook**

**JupyterHub Notebook Sample Demo**

**OpenShift Project Models**

**How OpenShift integrates with JupyterHub using Python - Source-to-Image (S2I)**


**Podman Desktop**

**Podman**

**Buildah**


## ML Learning Resources

[Kubernetes for Machine Learning on Platform9](https://platform9.com/blog/kubernetes-for-machine-learning/)

[Introducing Amazon SageMaker Operators for Kubernetes](https://aws.amazon.com/blogs/machine-learning/introducing-amazon-sagemaker-operators-for-kubernetes/)

[Deploying machine learning models on Kubernetes with Google Cloud](https://cloud.google.com/community/tutorials/kubernetes-ml-ops)

[Create and attach Azure Kubernetes Service with Azure Machine Learning](https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-attach-kubernetes)

[Kubernetes for MLOps: Scaling Enterprise Machine Learning, Deep Learning, AI with HPE](https://www.hpe.com/us/en/resources/solutions/kubernetes-mlops.html)

[Machine Learning by Stanford University from Coursera](https://www.coursera.org/learn/machine-learning)

[Machine Learning Courses Online from Coursera](https://www.coursera.org/courses?query=machine%20learning&)

[Machine Learning Courses Online from Udemy](https://www.udemy.com/topic/machine-learning/)

[Learn Machine Learning with Online Courses and Classes from edX](https://www.edx.org/learn/machine-learning)

## ML frameworks & applications

[TensorFlow](https://www.tensorflow.org) is an end-to-end open source platform for machine learning. It has a comprehensive, flexible ecosystem of tools, libraries, and community resources that lets researchers push the state of the art in ML and lets developers easily build and deploy ML-powered applications.

[Tensorman](https://github.com/pop-os/tensorman) is a utility developed by [System76](https://system76.com) for easy management of TensorFlow containers. Tensorman runs TensorFlow in an isolated environment, separate from the rest of the system. Because this environment operates independently of the base system, you can use any version of TensorFlow on any version of a Linux distribution that supports the Docker runtime.

[Keras](https://keras.io) is a high-level neural networks API, written in Python and capable of running on top of TensorFlow, Microsoft Cognitive Toolkit (CNTK), Theano, or PlaidML. It was developed with a focus on enabling fast experimentation. An R interface to Keras is also available.

[PyTorch](https://pytorch.org) is an open source deep learning framework that provides GPU-accelerated tensor computation and automatic differentiation for building and training neural networks. It was originally developed by Facebook's AI Research lab (FAIR).

[Amazon SageMaker](https://aws.amazon.com/sagemaker/) is a fully managed service that gives every developer and data scientist the ability to build, train, and deploy machine learning (ML) models quickly. SageMaker removes the heavy lifting from each step of the machine learning process to make it easier to develop high-quality models.

[Azure Databricks](https://azure.microsoft.com/en-us/services/databricks/) is a fast, collaborative Apache Spark-based big data analytics service designed for data science and data engineering. With Azure Databricks you can set up an Apache Spark environment in minutes, autoscale, and collaborate on shared projects in an interactive workspace. Azure Databricks supports Python, Scala, R, Java, and SQL, as well as data science frameworks and libraries including TensorFlow, PyTorch, and scikit-learn.

[Microsoft Cognitive Toolkit (CNTK)](https://docs.microsoft.com/en-us/cognitive-toolkit/) is an open source toolkit for commercial-grade distributed deep learning. It describes neural networks as a series of computational steps via a directed graph. CNTK allows the user to easily realize and combine popular model types such as feed-forward DNNs, convolutional neural networks (CNNs), and recurrent neural networks (RNNs/LSTMs). CNTK implements stochastic gradient descent (SGD, error backpropagation) learning with automatic differentiation and parallelization across multiple GPUs and servers.
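
To make "a directed graph of computational steps" trained by SGD with automatic differentiation more concrete, here is a toy, scalar-only sketch of reverse-mode autodiff in pure Python. It is not CNTK's API: the `Value` class and `train` function are hypothetical names, and the example fits `y = w * x + b` on a few noise-free points.

```python
"""Toy reverse-mode automatic differentiation plus SGD (illustration only).

Not CNTK's API: `Value` is a hypothetical, scalar-only node that records a
directed graph of operations and backpropagates gradients through it.
"""


class Value:
    def __init__(self, data, parents=(), local_grads=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents          # upstream nodes in the graph
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def __sub__(self, other):
        return self + (other * -1.0)

    def backward(self):
        # Topologically order the graph, then apply the chain rule in reverse.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad


def train(points, lr=0.05, epochs=200):
    """Fit y = w * x + b with plain stochastic gradient descent."""
    w, b = Value(0.0), Value(0.0)
    for _ in range(epochs):
        for x, y in points:
            w.grad = b.grad = 0.0      # reset gradients before each step
            err = w * x + b - y
            loss = err * err           # squared error builds a small graph
            loss.backward()
            w.data -= lr * w.grad      # SGD update
            b.data -= lr * b.grad
    return w.data, b.data


if __name__ == "__main__":
    data = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)]
    print(train(data))  # should approach (2.0, 1.0)
```

Real frameworks work on tensors rather than scalars and fuse many nodes into optimized kernels, but the record-then-backpropagate structure is the same idea.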

[Apache Airflow](https://airflow.apache.org) is an open source workflow management platform, created by the community, for programmatically authoring, scheduling, and monitoring workflows. Airflow has a modular architecture and uses a message queue to orchestrate an arbitrary number of workers, so it can scale out by adding more workers.
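
Airflow workflows are directed acyclic graphs (DAGs) of tasks, and a task runs only after its upstream tasks have finished. The sketch below illustrates that ordering rule with the standard library's `graphlib` (Python 3.9+); it is not Airflow's API, and the task names are hypothetical.

```python
"""Sketch: running a workflow as a DAG of tasks (not Airflow's API).

Task names are hypothetical; graphlib requires Python 3.9+.
"""
from graphlib import TopologicalSorter


def run_workflow(dependencies, actions):
    """Run each task after all of its upstream tasks; return execution order.

    dependencies: maps task -> set of upstream tasks it depends on.
    actions: maps task -> zero-argument callable that performs the work.
    """
    executed = []
    for task in TopologicalSorter(dependencies).static_order():
        actions[task]()
        executed.append(task)
    return executed


if __name__ == "__main__":
    deps = {
        "extract": set(),
        "transform": {"extract"},
        "validate": {"extract"},
        "load": {"transform", "validate"},
    }
    log = []
    acts = {name: (lambda n=name: log.append(n)) for name in deps}
    print(run_workflow(deps, acts))
```

`static_order()` raises `graphlib.CycleError` if the dependencies contain a cycle, which mirrors why Airflow requires workflows to be acyclic.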

[Open Neural Network Exchange (ONNX)](https://github.com/onnx) is an open ecosystem that empowers AI developers to choose the right tools as their project evolves. ONNX provides an open source format for AI models, both deep learning and traditional ML. It defines an extensible computation graph model, as well as definitions of built-in operators and standard data types.
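
The idea of "a computation graph model plus built-in operators" can be illustrated with a toy evaluator: each node names an operator type and its input and output values, and a registry maps operator types to implementations. The dict-based graph format below is hypothetical and far simpler than the real ONNX protobuf schema; only the operator names `Add`, `Mul`, and `Relu` are borrowed from ONNX. It assumes nodes are already listed in dependency order.

```python
"""Toy evaluator for an ONNX-style computation graph (illustration only).

The dict-based graph format is hypothetical and much simpler than the real
ONNX protobuf schema; only the idea is shared: nodes reference a registered
operator type and name their input and output values.
"""

# Registry of built-in operators, keyed by operator type name (scalars only).
OPERATORS = {
    "Add": lambda a, b: a + b,
    "Mul": lambda a, b: a * b,
    "Relu": lambda x: max(0.0, x),
}


def run_graph(nodes, feeds):
    """Evaluate nodes in order; `feeds` supplies the graph inputs by name."""
    values = dict(feeds)
    for node in nodes:
        op = OPERATORS[node["op_type"]]
        args = [values[name] for name in node["inputs"]]
        values[node["output"]] = op(*args)
    return values


if __name__ == "__main__":
    graph = [  # computes relu(x * w + b)
        {"op_type": "Mul", "inputs": ["x", "w"], "output": "xw"},
        {"op_type": "Add", "inputs": ["xw", "b"], "output": "z"},
        {"op_type": "Relu", "inputs": ["z"], "output": "y"},
    ]
    print(run_graph(graph, {"x": 2.0, "w": -3.0, "b": 1.0})["y"])  # 0.0
```

Because the graph is plain data, any runtime that implements the same operators can execute it, which is the portability argument behind a shared model format.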

[Apache MXNet](https://mxnet.apache.org/) is a deep learning framework designed for both efficiency and flexibility. It allows you to mix symbolic and imperative programming to maximize efficiency and productivity. At its core, MXNet contains a dynamic dependency scheduler that automatically parallelizes both symbolic and imperative operations on the fly. A graph optimization layer on top of that makes symbolic execution fast and memory efficient. MXNet is portable and lightweight, scaling effectively to multiple GPUs and multiple machines. It supports Python, R, Julia, Scala, Go, JavaScript, and more.
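
A dependency scheduler runs each operation as soon as all of its inputs are ready, so operations that do not depend on each other can run in parallel. The toy sketch below shows that scheduling rule with `graphlib.TopologicalSorter` and a thread pool from the standard library. It is not MXNet's engine, and the operation names are hypothetical.

```python
"""Toy dependency scheduler: run independent operations in parallel.

Illustrative only; this is not MXNet's engine. Operation names are
hypothetical. Requires Python 3.9+ for graphlib.
"""
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def run_parallel(dependencies, ops, max_workers=4):
    """Execute ops as soon as all of their dependencies have finished.

    dependencies: maps op name -> set of op names whose results it needs.
    ops: maps op name -> callable taking a dict of finished results.
    Returns a dict of op name -> result.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on cycles
    results, running = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            for name in sorter.get_ready():
                # Snapshot of results; every dependency of `name` is in it.
                running[pool.submit(ops[name], dict(results))] = name
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                name = running.pop(future)
                results[name] = future.result()
                sorter.done(name)  # may unlock downstream ops
    return results


if __name__ == "__main__":
    deps = {"a": set(), "b": set(), "c": {"a", "b"}}
    ops = {
        "a": lambda r: 2,
        "b": lambda r: 3,
        "c": lambda r: r["a"] * r["b"],  # runs only after a and b finish
    }
    print(run_parallel(deps, ops)["c"])  # 6
```

Here `a` and `b` are submitted together because neither depends on the other, while `c` waits until both have been marked done.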

[AutoGluon](https://autogluon.mxnet.io/index.html) is a deep learning toolkit that automates machine learning tasks, enabling you to easily achieve strong predictive performance in your applications. With just a few lines of code, you can train and deploy high-accuracy deep learning models on tabular, image, and text data.

[Anaconda](https://www.anaconda.com/) is a popular data science platform for machine learning and deep learning that enables users to develop, train, and deploy models.

[PlaidML](https://github.com/plaidml/plaidml) is an advanced and portable tensor compiler for enabling deep learning on laptops, embedded devices, or other devices where the available computing hardware is not well supported or the available software stack contains unpalatable license restrictions.

[OpenCV](https://opencv.org) is a highly optimized library focused on real-time computer vision applications. Its C++, Python, and Java interfaces support Linux, macOS, Windows, iOS, and Android.

[Scikit-Learn](https://scikit-learn.org/stable/index.html) is a Python module for machine learning built on top of SciPy, NumPy, and matplotlib, making it easier to apply robust and simple implementations of many popular machine learning algorithms.
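
scikit-learn estimators share a simple convention: `fit(X, y)` learns from data and returns the estimator itself, and `predict(X)` returns one prediction per row. The sketch below mimics that convention with a from-scratch k-nearest-neighbours classifier using only the standard library. `TinyKNN` is a hypothetical class for illustration, not scikit-learn's `KNeighborsClassifier`.

```python
"""Minimal k-nearest-neighbours classifier in scikit-learn's fit/predict style.

Written from scratch with the standard library for illustration; it is not
scikit-learn's KNeighborsClassifier, only a sketch of the same convention.
"""
import math
from collections import Counter


class TinyKNN:
    def __init__(self, n_neighbors=3):
        self.n_neighbors = n_neighbors

    def fit(self, X, y):
        # Lazy learner: "training" just stores the data. Returning self lets
        # calls be chained, as with scikit-learn estimators.
        self.X_, self.y_ = [list(row) for row in X], list(y)
        return self

    def predict(self, X):
        return [self._predict_one(row) for row in X]

    def _predict_one(self, row):
        # Rank training points by Euclidean distance; vote among the k nearest.
        ranked = sorted(zip(self.X_, self.y_), key=lambda p: math.dist(p[0], row))
        votes = Counter(label for _, label in ranked[: self.n_neighbors])
        return votes.most_common(1)[0][0]


if __name__ == "__main__":
    X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
    y = ["a", "a", "a", "b", "b", "b"]
    print(TinyKNN(n_neighbors=3).fit(X, y).predict([[0.5, 0.5], [5.5, 5.5]]))  # ['a', 'b']
```

Keeping to the same `fit`/`predict` shape is what lets scikit-learn swap estimators inside pipelines and cross-validation utilities without changing the surrounding code.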

[Weka](https://www.cs.waikato.ac.nz/ml/weka/) is open source machine learning software that can be accessed through a graphical user interface, standard terminal applications, or a Java API. It is widely used for teaching, research, and industrial applications, contains a plethora of built-in tools for standard machine learning tasks, and additionally gives transparent access to well-known toolboxes such as scikit-learn, R, and Deeplearning4j.

[Caffe](https://github.com/BVLC/caffe) is a deep learning framework made with expression, speed, and modularity in mind. It is developed by Berkeley AI Research (BAIR)/The Berkeley Vision and Learning Center (BVLC) and community contributors.

[Theano](https://github.com/Theano/Theano) is a Python library that allows you to define, optimize, and evaluate mathematical expressions involving multi-dimensional arrays efficiently, with tight integration with NumPy.

[nGraph](https://github.com/NervanaSystems/ngraph) is an open source C++ library, compiler, and runtime for deep learning. The nGraph Compiler aims to accelerate the development of AI workloads using any deep learning framework and their deployment to a variety of hardware targets. It aims to give AI developers freedom, performance, and ease of use.

1749 | [NVIDIA cuDNN](https://developer.nvidia.com/cudnn) is a GPU-accelerated library of primitives for [deep neural networks](https://developer.nvidia.com/deep-learning). cuDNN provides highly tuned implementations for standard routines such as forward and backward convolution, pooling, normalization, and activation layers. cuDNN accelerates widely used deep learning frameworks, including [Caffe2](https://caffe2.ai/), [Chainer](https://chainer.org/), [Keras](https://keras.io/), [MATLAB](https://www.mathworks.com/solutions/deep-learning.html), [MxNet](https://mxnet.incubator.apache.org/), [PyTorch](https://pytorch.org/), and [TensorFlow](https://www.tensorflow.org/).

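To make the forward convolution and pooling routines mentioned above concrete, here is a minimal pure-Python sketch of a single-channel, unpadded 2D convolution (computed as cross-correlation, which is what deep learning frameworks usually call convolution) followed by non-overlapping max pooling. It only illustrates the arithmetic that cuDNN implements in highly tuned GPU kernels; it does not call cuDNN, and the function names `conv2d_forward` and `max_pool2d` are hypothetical. For example, a 2x2 all-ones kernel slid over a 4x4 input yields a 3x3 map of window sums.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_forward(image: Matrix, kernel: Matrix, stride: int = 1) -> Matrix:
    """Valid (no padding) 2D cross-correlation of one channel with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the window under it.
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i * stride + di][j * stride + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def max_pool2d(feature_map: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling: window size equals stride; ragged edges are dropped."""
    out: Matrix = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out
```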
[Jupyter Notebook](https://jupyter.org/) is an open-source web application that allows you to create and share documents that contain live code, equations, visualizations, and narrative text. Jupyter is used widely in industries that do data cleaning and transformation, numerical simulation, statistical modeling, data visualization, data science, and machine learning.

[Apache Spark](https://spark.apache.org/) is a unified analytics engine for large-scale data processing. It provides high-level APIs in Scala, Java, Python, and R, and an optimized engine that supports general computation graphs for data analysis. It also supports a rich set of higher-level tools including Spark SQL for SQL and DataFrames, MLlib for machine learning, GraphX for graph processing, and Structured Streaming for stream processing.

[Apache Spark Connector for SQL Server and Azure SQL](https://github.com/microsoft/sql-spark-connector) is a high-performance connector that enables you to use transactional data in big data analytics and persist results for ad hoc queries or reporting. The connector allows you to use any SQL database, on-premises or in the cloud, as an input data source or output data sink for Spark jobs.

[Apache PredictionIO](https://predictionio.apache.org/) is an open source machine learning framework for developers, data scientists, and end users. It supports event collection, deployment of algorithms, evaluation, and querying of predictive results via REST APIs. It is based on scalable open source services such as Hadoop, HBase (and other databases), Elasticsearch, and Spark, and implements what is called a Lambda Architecture.

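In a Lambda Architecture, a batch layer periodically recomputes a view from the complete master dataset, a speed layer keeps an incremental view of events that arrived since the last batch run, and queries merge the two. The sketch below shows that merge for simple per-item event counts. It is a conceptual illustration only: the `LambdaCounter` class and its methods are hypothetical and are not part of PredictionIO's API, and a real deployment would run each layer on separate systems (for example, Spark for batch processing).

```python
from collections import Counter
from typing import List


class LambdaCounter:
    """Toy Lambda Architecture that serves per-item event counts."""

    def __init__(self) -> None:
        self.master_log: List[str] = []          # append-only master dataset
        self.batch_view: Counter = Counter()     # recomputed from the full log
        self.realtime_view: Counter = Counter()  # events since the last batch run

    def ingest(self, item: str) -> None:
        # Every event is appended to the master log and applied to the speed layer.
        self.master_log.append(item)
        self.realtime_view[item] += 1

    def run_batch(self) -> None:
        # Batch layer: recompute the view from scratch over the whole log. The
        # speed layer's events are now covered by it, so that view is reset.
        self.batch_view = Counter(self.master_log)
        self.realtime_view = Counter()

    def query(self, item: str) -> int:
        # Serving layer: merge the (possibly stale) batch view with the speed view.
        return self.batch_view[item] + self.realtime_view[item]
```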
[Cluster Manager for Apache Kafka (CMAK)](https://github.com/yahoo/CMAK) is a tool for managing [Apache Kafka](https://kafka.apache.org/) clusters.

[BigDL](https://bigdl-project.github.io/) is a distributed deep learning library for Apache Spark. With BigDL, users can write their deep learning applications as standard Spark programs, which can directly run on top of existing Spark or Hadoop clusters.

[Koalas](https://pypi.org/project/koalas/) is a project that makes data scientists more productive when interacting with big data by implementing the pandas DataFrame API on top of Apache Spark.

[MLflow](https://mlflow.org/) is an open source platform to manage the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry. MLflow currently offers four components:

**[MLflow Tracking](https://mlflow.org/docs/latest/tracking.html)**: Record and query experiments: code, data, config, and results.

**[MLflow Projects](https://mlflow.org/docs/latest/projects.html)**: Package data science code in a format to reproduce runs on any platform.

**[MLflow Models](https://mlflow.org/docs/latest/models.html)**: Deploy machine learning models in diverse serving environments.

**[Model Registry](https://mlflow.org/docs/latest/model-registry.html)**: Store, annotate, discover, and manage models in a central repository.

[Eclipse Deeplearning4J (DL4J)](https://deeplearning4j.konduit.ai/) is a set of projects intended to support all the needs of a JVM-based (Scala, Kotlin, Clojure, and Groovy) deep learning application. This covers everything from loading and preprocessing raw data, wherever it is stored and in whatever format, to building and tuning a wide variety of simple and complex deep learning networks.

[Numba](https://github.com/numba/numba) is an open source, NumPy-aware optimizing compiler for Python sponsored by Anaconda, Inc. It uses the LLVM compiler project to generate machine code from Python syntax. Numba can compile a large subset of numerically-focused Python, including many NumPy functions. Additionally, Numba has support for automatic parallelization of loops, generation of GPU-accelerated code, and creation of ufuncs and C callbacks.

[Chainer](https://chainer.org/) is a Python-based deep learning framework aiming at flexibility. It provides automatic differentiation APIs based on the define-by-run approach (dynamic computational graphs) as well as object-oriented high-level APIs to build and train neural networks. It also supports CUDA/cuDNN using [CuPy](https://github.com/cupy/cupy) for high performance training and inference.

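Chainer's define-by-run approach means the computational graph is recorded while the forward computation executes, rather than being declared ahead of time. The minimal reverse-mode automatic differentiation sketch below illustrates that idea for scalars; the `Var` class is hypothetical and far simpler than Chainer's own `Variable`, with no arrays, no GPU support, and no CuPy.

```python
import math
from typing import List, Tuple


class Var:
    """Scalar value that records how it was computed (define-by-run)."""

    def __init__(self, value: float, parents: Tuple[Tuple["Var", float], ...] = ()) -> None:
        self.value = value
        # Each parent is paired with the local derivative d(self)/d(parent).
        self.parents = parents
        self.grad = 0.0

    def __add__(self, other: "Var") -> "Var":
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other: "Var") -> "Var":
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def tanh(self) -> "Var":
        t = math.tanh(self.value)
        return Var(t, ((self, 1.0 - t * t),))

    def backward(self) -> None:
        # Order the graph recorded during the forward pass so each node comes
        # after its parents, then apply the chain rule from the output backwards.
        order: List["Var"] = []
        seen = set()

        def visit(node: "Var") -> None:
            if node not in seen:
                seen.add(node)
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local_grad in node.parents:
                parent.grad += local_grad * node.grad


# The graph exists only because these lines ran, so ordinary Python control
# flow (ifs, loops) could change its shape from one call to the next.
x, y = Var(3.0), Var(2.0)
z = x * y + x
z.backward()
print(x.grad, y.grad)  # dz/dx = y + 1 = 3.0, dz/dy = x = 3.0
```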
[cuML](https://github.com/rapidsai/cuml) is a suite of libraries that implement machine learning algorithms and mathematical primitive functions that share compatible APIs with other RAPIDS projects. cuML enables data scientists, researchers, and software engineers to run traditional tabular ML tasks on GPUs without going into the details of CUDA programming. In most cases, cuML's Python API matches the API from scikit-learn.

# Networking

[Back to the Top](https://github.com/mikeroyal/kubernetes-Guide/blob/main/README.md#table-of-contents)



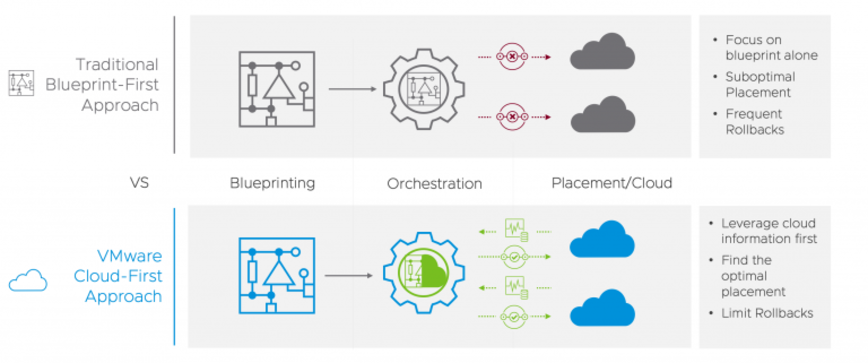

**VMware Cloud First Approach. Source: [VMware](https://www.vmware.com/products/telco-cloud-automation.html).**

**VMware Telco Cloud Automation Components. Source: [VMware](https://www.vmware.com/products/telco-cloud-automation.html).**

## Telco Learning Resources

[HPE (Hewlett Packard Enterprise) Telco Blueprints overview](https://techhub.hpe.com/eginfolib/servers/docs/Telco/Blueprints/infocenter/index.html#GUID-9906A227-C1FB-4FD5-A3C3-F3B72EC81CAB.html)

[Network Functions Virtualization Infrastructure (NFVI) by Cisco](https://www.cisco.com/c/en/us/solutions/service-provider/network-functions-virtualization-nfv-infrastructure/index.html)

[Introduction to vCloud NFV Telco Edge from VMware](https://docs.vmware.com/en/VMware-vCloud-NFV-OpenStack-Edition/3.1/vloud-nfv-edge-reference-arch-31/GUID-744C45F1-A8D5-4523-9E5E-EAF6336EE3A0.html)

[VMware Telco Cloud Automation (TCA) Architecture Overview](https://docs.vmware.com/en/VMware-Telco-Cloud-Platform-5G-Edition/1.0/telco-cloud-platform-5G-edition-reference-architecture/GUID-C19566B3-F42D-4351-BA55-DE70D55FB0DD.html)

[5G Telco Cloud from VMware](https://telco.vmware.com/)

[Maturing OpenStack Together To Solve Telco Needs from Red Hat](https://www.redhat.com/cms/managed-files/4.Nokia%20CloudBand%20&%20Red%20Hat%20-%20Maturing%20Openstack%20together%20to%20solve%20Telco%20needs%20Ehud%20Malik,%20Senior%20PLM,%20Nokia%20CloudBand.pdf)

[Red Hat telco ecosystem program](https://connect.redhat.com/en/programs/telco-ecosystem)

[OpenStack for Telcos by Canonical](https://ubuntu.com/blog/openstack-for-telcos-by-canonical)

[Open source NFV platform for 5G from Ubuntu](https://ubuntu.com/telco)

[Understanding 5G Technology from Verizon](https://www.verizon.com/5g/)

[Verizon and Unity partner to enable 5G & MEC gaming and enterprise applications](https://www.verizon.com/about/news/verizon-unity-partner-5g-mec-gaming-enterprise)

[Understanding 5G Technology from Intel](https://www.intel.com/content/www/us/en/wireless-network/what-is-5g.html)

[Understanding 5G Technology from Qualcomm](https://www.qualcomm.com/invention/5g/what-is-5g)

[Telco Acceleration with Xilinx](https://www.xilinx.com/applications/wired-wireless/telco.html)

[VIMs on OSM Public Wiki](https://osm.etsi.org/wikipub/index.php/VIMs)

[Amazon EC2 Overview and Networking Introduction for Telecom Companies](https://docs.aws.amazon.com/whitepapers/latest/ec2-networking-for-telecom/ec2-networking-for-telecom.pdf)

[Citrix Certified Associate – Networking (CCA-N)](http://training.citrix.com/cms/index.php/certification/networking/)

[Citrix Certified Professional – Virtualization (CCP-V)](https://www.globalknowledge.com/us-en/training/certification-prep/brands/citrix/section/virtualization/citrix-certified-professional-virtualization-ccp-v/)

[CCNP Routing and Switching](https://learningnetwork.cisco.com/s/ccnp-enterprise)

[Certified Information Security Manager (CISM)](https://www.isaca.org/credentialing/cism)

[Wireshark Certified Network Analyst (WCNA)](https://www.wiresharktraining.com/certification.html)

[Juniper Networks Certification Program Enterprise (JNCP)](https://www.juniper.net/us/en/training/certification/)

[Cloud Native Computing Foundation Training and Certification Program](https://www.cncf.io/certification/training/)

## Tools

[OpenStack](https://www.openstack.org/) is an open source cloud platform, deployed as infrastructure-as-a-service (IaaS), that orchestrates data center operations on bare metal, private cloud hardware, public cloud resources, or both (hybrid/multi-cloud architecture). OpenStack makes advanced use of virtualization and SDN to optimize network traffic and handles the core cloud-computing services of compute, networking, storage, identity, and images.

[StarlingX](https://www.starlingx.io/) is a complete cloud infrastructure software stack for the edge, used by the most demanding applications in industrial IoT, telecom, video delivery, and other ultra-low-latency use cases.

[Airship](https://www.airshipit.org/) is a collection of open source tools for automating cloud provisioning and management. Airship provides a declarative framework for defining and managing the life cycle of open infrastructure tools and the underlying hardware.

[Network functions virtualization (NFV)](https://www.vmware.com/topics/glossary/content/network-functions-virtualization-nfv) is the replacement of network appliance hardware with virtual machines. The virtual machines use a hypervisor to run networking software and processes such as routing and load balancing. NFV allows for the separation of communication services from dedicated hardware, such as routers and firewalls. This separation means network operations can provide new services dynamically and without installing new hardware. Deploying network components with NFV can take hours instead of the months often required by traditional networking solutions.

[Software Defined Networking (SDN)](https://www.vmware.com/topics/glossary/content/software-defined-networking) is an approach to networking that uses software-based controllers or application programming interfaces (APIs) to communicate with underlying hardware infrastructure and direct traffic on a network. This model differs from that of traditional networks, which use dedicated hardware devices (routers and switches) to control network traffic.

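The split between a software controller and the forwarding hardware can be sketched with a priority-ordered match-action table. In this minimal Python model, a hypothetical `Controller` pushes rules to each switch's `FlowTable`, and the switches only look packets up against those rules. The highest-priority-match and table-miss behavior loosely mirrors OpenFlow-style flow tables, but the classes, field names, and action strings are assumptions for illustration, not any controller's real API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FlowRule:
    priority: int
    match: Dict[str, str]  # header field -> required value; absent fields are wildcards
    action: str            # e.g. "output:2" or "drop"


@dataclass
class FlowTable:
    """Data plane: a switch only matches packets against installed rules."""
    rules: List[FlowRule] = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        self.rules.append(rule)
        # Highest priority first; sort() is stable, so ties keep install order.
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def lookup(self, packet: Dict[str, str]) -> str:
        for rule in self.rules:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.action
        return "controller"  # table miss: hand the packet to the controller


class Controller:
    """Control plane: decides policy centrally and programs switches via an API."""

    def __init__(self) -> None:
        self.switches: Dict[str, FlowTable] = {}

    def register(self, name: str) -> FlowTable:
        self.switches[name] = FlowTable()
        return self.switches[name]

    def block_host(self, ip: str) -> None:
        # One policy decision is pushed to every registered switch.
        for table in self.switches.values():
            table.install(FlowRule(priority=100, match={"src_ip": ip}, action="drop"))
```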
[Virtualized Infrastructure Manager (VIM)](https://www.cisco.com/c/en/us/td/docs/net_mgmt/network_function_virtualization_Infrastructure/3_2_2/install_guide/Cisco_VIM_Install_Guide_3_2_2/Cisco_VIM_Install_Guide_3_2_2_chapter_00.html) improves service delivery and reduces costs through high-performance lifecycle management. It manages the full lifecycle of the software and hardware comprising your NFV infrastructure (NFVI) and maintains a live inventory and allocation plan of both physical and virtual resources.

[Management and Orchestration (MANO)](https://www.etsi.org/technologies/open-source-mano) is an ETSI-hosted initiative to develop an Open Source NFV Management and Orchestration (MANO) software stack aligned with ETSI NFV. Two of the key components of the ETSI NFV architectural framework are the NFV Orchestrator and the VNF Manager, known as NFV MANO.

[Magma](https://www.magmacore.org/) is an open source software platform that gives network operators an open, flexible, and extendable mobile core network solution. Its mission is to connect the world to a faster network by enabling service providers to build cost-effective and extensible carrier-grade networks. Magma is agnostic to both the 3GPP generation (2G, 3G, 4G, or 5G networks) and the access network (cellular or Wi-Fi). It can flexibly support a radio access network with minimal development and deployment effort.

[OpenRAN](https://open-ran.org/) is an intelligent Radio Access Network (RAN) integrated on general-purpose platforms with open interfaces between software-defined functions. The Open RAN ecosystem enables enormous flexibility and interoperability, with complete openness to multi-vendor deployments.

[Open vSwitch (OVS)](https://www.openvswitch.org/) is an open source, production-quality, multilayer virtual switch licensed under the Apache 2.0 license. It is designed to enable massive network automation through programmatic extension, while still supporting standard management interfaces and protocols (NetFlow, sFlow, IPFIX, RSPAN, CLI, LACP, 802.1ag).

[Edge](https://www.ibm.com/cloud/what-is-edge-computing) is a distributed computing framework that brings enterprise applications closer to data sources such as IoT devices or local edge servers. This proximity to data at its source can deliver strong business benefits, including faster insights, improved response times, and better bandwidth availability.

[Multi-access edge computing (MEC)](https://www.etsi.org/technologies/multi-access-edge-computing) is standardized by an Industry Specification Group (ISG) within ETSI that aims to create a standardized, open environment allowing the efficient and seamless integration of applications from vendors, service providers, and third parties across multi-vendor Multi-access Edge Computing platforms.

[Virtualized network functions (VNFs)](https://www.juniper.net/documentation/en_US/cso4.1/topics/concept/nsd-vnf-overview.html) are software applications used in a Network Functions Virtualization (NFV) implementation that have well-defined interfaces and provide one or more component networking functions in a defined way. For example, a security VNF provides Network Address Translation (NAT) and firewall component functions.

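To show why such functions virtualize well, the security VNF example above (NAT plus firewall) can be sketched as plain packet-processing software. This minimal Python model performs source NAT with port translation and a default-deny inbound firewall that admits traffic only on public ports already opened by an outbound flow. The `SecurityVNF` and `Packet` names, the addresses, and the starting port 40000 are hypothetical; a production VNF would typically process raw packets at line rate and expire idle mappings.

```python
from dataclasses import dataclass
from itertools import count
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class SecurityVNF:
    """Toy VNF chaining two component functions: source NAT and a firewall."""

    def __init__(self, public_ip: str, first_port: int = 40000) -> None:
        self.public_ip = public_ip
        self._ports = count(first_port)  # next free public port
        # (private ip, private port) -> public port, plus the reverse mapping.
        self._out: Dict[Tuple[str, int], int] = {}
        self._in: Dict[int, Tuple[str, int]] = {}

    def outbound(self, pkt: Packet) -> Packet:
        # NAT: rewrite the private source to the shared public address and a mapped port.
        key = (pkt.src_ip, pkt.src_port)
        if key not in self._out:
            port = next(self._ports)
            self._out[key] = port
            self._in[port] = key
        return Packet(self.public_ip, self._out[key], pkt.dst_ip, pkt.dst_port)

    def inbound(self, pkt: Packet) -> Optional[Packet]:
        # Firewall: default-deny; only traffic to an already-mapped public port passes.
        if pkt.dst_ip != self.public_ip or pkt.dst_port not in self._in:
            return None  # dropped
        private_ip, private_port = self._in[pkt.dst_port]
        return Packet(pkt.src_ip, pkt.src_port, private_ip, private_port)
```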
[Cloud-Native Network Functions (CNFs)](https://www.cncf.io/announcements/2020/11/18/cloud-native-network-functions-conformance-launched-by-cncf/) are network functions designed and implemented to run inside containers. CNFs inherit all the cloud native architectural and operational principles, including Kubernetes (K8s) lifecycle management, agility, resilience, and observability.

[Physical Network Function (PNF)](https://www.mpirical.com/glossary/pnf-physical-network-function) is a physical network node that has not undergone virtualization. Both PNFs and VNFs (Virtualized Network Functions) can be used to form an overall Network Service.

[Network functions virtualization infrastructure (NFVI)](https://docs.vmware.com/en/VMware-vCloud-NFV/2.0/vmware-vcloud-nfv-reference-architecture-20/GUID-FBEA6C6B-54D8-4A37-87B1-D825F9E0DBC7.html) is the foundation of the overall NFV architecture. It provides the physical compute, storage, and networking hardware that hosts the VNFs. Each NFVI block can be thought of as an NFVI node, and many nodes can be deployed and controlled across geographically distributed sites.

# Open Source Security

[Back to the Top](https://github.com/mikeroyal/Kubernetes-Guide/blob/main/README.md#table-of-contents)

[Open Source Security Foundation (OpenSSF)](https://openssf.org/) is a cross-industry collaboration that brings together leaders to improve the security of open source software by building a broader community, targeted initiatives, and best practices. The OpenSSF brings together open source security initiatives under one foundation to accelerate work through cross-industry support. It incorporates the Core Infrastructure Initiative and the Open Source Security Coalition, and includes working groups that address vulnerability disclosures, security tooling, and more.

## Security Standards, Frameworks and Benchmarks

2180 |

2181 | [STIGs Benchmarks - Security Technical Implementation Guides](https://public.cyber.mil/stigs/)

2182 |

2183 | [CIS Benchmarks - CIS Center for Internet Security](https://www.cisecurity.org/cis-benchmarks/)

2184 |

2185 | [CIS Top 18 Critical Security Controls](https://www.cisecurity.org/controls/cis-controls-list)

2186 |

2187 | [OSSTMM (Open Source Security Testing Methodology Manual) PDF](https://github.com/mikeroyal/Open-Source-Security-Guide/files/8834704/osstmm.en.2.1.pdf)

2188 |

2189 | [NIST Technical Guide to Information Security Testing and Assessment (PDF)](https://github.com/mikeroyal/Open-Source-Security-Guide/files/8834705/nistspecialpublication800-115.pdf)

2190 |

2191 | [NIST - Current FIPS](https://www.nist.gov/itl/current-fips)

2192 |

2193 | [ISO Standards Catalogue](https://www.iso.org/standards.html)

2194 |

2195 | [Common Criteria for Information Technology Security Evaluation (CC)](https://www.commoncriteriaportal.org/cc/) is an international standard (ISO / IEC 15408) for computer security. It allows an objective evaluation to validate that a particular product satisfies a defined set of security requirements.

2196 |

[ISO 22301](https://www.iso.org/en/contents/data/standard/07/51/75106.html) is the international standard that provides a best-practice framework for implementing an optimised BCMS (business continuity management system).

[ISO 27001](https://www.iso.org/isoiec-27001-information-security.html) is the international standard that describes the requirements for an ISMS (information security management system). The framework is designed to help organizations manage their security practices in one place, consistently and cost-effectively.

[ISO 27701](https://www.iso.org/en/contents/data/standard/07/16/71670.html) specifies the requirements for a PIMS (privacy information management system) based on the requirements of ISO 27001, extending them with a set of privacy-specific requirements, control objectives, and controls. Companies that have implemented ISO 27001 can use ISO 27701 to extend their security efforts to cover privacy management.

[EU GDPR (General Data Protection Regulation)](https://gdpr.eu/) is a privacy and data protection law that supersedes existing national data protection laws across the EU, bringing uniformity by introducing just one main data protection law for companies/organizations to comply with.

[CCPA (California Consumer Privacy Act)](https://www.oag.ca.gov/privacy/ccpa) is a data privacy law that took effect on January 1, 2020 in the State of California. It applies to businesses that collect California residents’ personal information, and its privacy requirements are similar to those of the EU’s GDPR (General Data Protection Regulation).

[Payment Card Industry (PCI) Data Security Standard (DSS)](https://docs.microsoft.com/en-us/microsoft-365/compliance/offering-pci-dss) is a global information security standard designed to prevent fraud through increased control of credit card data.

[SOC 2](https://www.aicpa.org/interestareas/frc/assuranceadvisoryservices/aicpasoc2report.html) is an auditing procedure that ensures your service providers securely manage your data to protect the interests of your company/organization and the privacy of their clients.

[NIST CSF](https://www.nist.gov/national-security-standards) is a voluntary framework primarily intended for critical infrastructure organizations to manage and mitigate cybersecurity risk based on existing best practices.

## Security Tools

[AppArmor](https://www.apparmor.net/) is an effective and easy-to-use Linux application security system. AppArmor proactively protects the operating system and applications from external or internal threats, even zero-day attacks, by enforcing good behavior and preventing both known and unknown application flaws from being exploited. AppArmor supplements the traditional Unix discretionary access control (DAC) model by providing mandatory access control (MAC). It has been included in the mainline Linux kernel since version 2.6.36 and its development has been supported by Canonical since 2009.

[SELinux](https://github.com/SELinuxProject/selinux) is a security enhancement to Linux that gives users and administrators finer-grained control over access. Access can be constrained by which users and applications may access which resources, such as files. Standard Linux access controls, such as file modes (`-rwxr-xr-x`), can be modified by the user and by the applications the user runs. Conversely, SELinux access controls are determined by a policy loaded on the system, which cannot be changed by careless users or misbehaving applications.

[Control Groups (cgroups)](https://www.redhat.com/sysadmin/cgroups-part-one) is a Linux kernel feature that allows you to allocate resources such as CPU time, system memory, network bandwidth, or any combination of these resources for user-defined groups of tasks (processes) running on a system.

[EarlyOOM](https://github.com/rfjakob/earlyoom) is a daemon for Linux that enables users to more quickly recover and regain control over their system in low-memory situations with heavy swap usage.

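A simplified Go sketch of the decision such a daemon makes is below. It assumes the behaviour described in EarlyOOM's documentation: act only when both available memory and free swap fall below a percentage (10% by default), sending SIGTERM first and SIGKILL once both fall below half that value. It parses `/proc/meminfo`-style text and only chooses the signal. Picking the victim (EarlyOOM targets the process with the highest `oom_score`) is not shown, treating a swapless system as 0% swap free is an assumption of this sketch, and the function names are hypothetical rather than EarlyOOM's code.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// memInfo holds the /proc/meminfo fields the check needs, in KiB.
type memInfo struct {
	MemTotal, MemAvailable, SwapTotal, SwapFree int64
}

// parseMemInfo reads lines such as "MemAvailable:  123456 kB".
func parseMemInfo(text string) (memInfo, error) {
	var m memInfo
	fields := map[string]*int64{
		"MemTotal": &m.MemTotal, "MemAvailable": &m.MemAvailable,
		"SwapTotal": &m.SwapTotal, "SwapFree": &m.SwapFree,
	}
	sc := bufio.NewScanner(strings.NewReader(text))
	for sc.Scan() {
		parts := strings.Fields(sc.Text())
		if len(parts) < 2 {
			continue
		}
		if dst, ok := fields[strings.TrimSuffix(parts[0], ":")]; ok {
			v, err := strconv.ParseInt(parts[1], 10, 64)
			if err != nil {
				return m, err
			}
			*dst = v
		}
	}
	return m, sc.Err()
}

// action returns "none", "SIGTERM" or "SIGKILL". Both memory and swap must be
// below the threshold; the kill threshold is half of termPct.
func action(m memInfo, termPct float64) string {
	if m.MemTotal <= 0 {
		return "none" // cannot compute a percentage
	}
	memPct := 100 * float64(m.MemAvailable) / float64(m.MemTotal)
	swapPct := 0.0 // no swap configured: treated as 0% free
	if m.SwapTotal > 0 {
		swapPct = 100 * float64(m.SwapFree) / float64(m.SwapTotal)
	}
	switch {
	case memPct < termPct/2 && swapPct < termPct/2:
		return "SIGKILL"
	case memPct < termPct && swapPct < termPct:
		return "SIGTERM"
	default:
		return "none"
	}
}

func main() {
	sample := `MemTotal:       8000000 kB
MemAvailable:    600000 kB
SwapTotal:      2000000 kB
SwapFree:        100000 kB`
	m, err := parseMemInfo(sample)
	if err != nil {
		panic(err)
	}
	// 7.5% memory and 5% swap free: both below 10%, but not both below 5%.
	fmt.Println(action(m, 10)) // SIGTERM
}
```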
[Libgcrypt](https://www.gnupg.org/related_software/libgcrypt/) is a general-purpose cryptographic library originally based on code from GnuPG.

[Pi-hole](https://pi-hole.net/) is a [DNS sinkhole](https://en.wikipedia.org/wiki/DNS_Sinkhole), intended for use on a private network, that protects your devices from unwanted content without installing any client-side software. It is designed for embedded devices with network capability, such as the Raspberry Pi, but it can also be used on other machines running Linux and in cloud deployments.

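The core idea of a DNS sinkhole can be sketched as a lookup: if a queried name is on a blocklist, the resolver answers locally with an unroutable address (`0.0.0.0` here) instead of forwarding the query upstream. In the Go sketch below, treating subdomains of a blocked name as blocked is a design choice of the sketch, not necessarily how Pi-hole matches domains. The `upstream` function is a hypothetical stand-in for a real recursive resolver, and `192.0.2.10` is a documentation address.

```go
package main

import (
	"fmt"
	"strings"
)

// sinkholeAddr is the address returned for blocked names.
const sinkholeAddr = "0.0.0.0"

// isBlocked reports whether name, or any parent domain of it, is in the
// blocklist. Names are compared case-insensitively without a trailing dot.
func isBlocked(name string, blocklist map[string]bool) bool {
	name = strings.TrimSuffix(strings.ToLower(name), ".")
	for name != "" {
		if blocklist[name] {
			return true
		}
		// Strip the left-most label: "ads.example.com" -> "example.com".
		i := strings.IndexByte(name, '.')
		if i < 0 {
			break
		}
		name = name[i+1:]
	}
	return false
}

// resolve answers blocked names locally and otherwise defers to upstream.
func resolve(name string, blocklist map[string]bool, upstream func(string) string) string {
	if isBlocked(name, blocklist) {
		return sinkholeAddr
	}
	return upstream(name)
}

func main() {
	blocklist := map[string]bool{"ads.example.com": true}
	fakeUpstream := func(string) string { return "192.0.2.10" } // hypothetical answer
	fmt.Println(resolve("tracker.ads.example.com.", blocklist, fakeUpstream)) // 0.0.0.0
	fmt.Println(resolve("www.example.com", blocklist, fakeUpstream))          // 192.0.2.10
}
```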
[Aircrack-ng](https://www.aircrack-ng.org/) is a network software suite consisting of a detector, packet sniffer, WEP and WPA/WPA2-PSK cracker and analysis tool for 802.11 wireless LANs. It works with any wireless network interface controller whose driver supports raw monitoring mode and can sniff 802.11a, 802.11b and 802.11g traffic.

[Acra](https://cossacklabs.com/acra) is a single database security suite with 9 strong security controls: application-level encryption, searchable encryption, data masking, data tokenization, secure authentication, data leakage prevention, database request firewall, cryptographically signed audit logging, and security events automation. It is designed to cover the most important data security requirements with SQL and NoSQL databases and distributed apps in a fast, convenient, and reliable way.

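Application-level encryption means the application encrypts a field before it reaches the database, so the database only ever stores ciphertext. A minimal Go sketch of that idea with AES-256-GCM from the standard library is below. It is a generic illustration, not Acra's own container format or API. The row identifier bound as associated data is a hypothetical choice, and a real deployment would load the key from a key management system rather than generate it in process.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"errors"
	"fmt"
)

// encryptField seals plaintext with AES-GCM and prepends the random nonce,
// producing a single blob suitable for storing in a database column.
func encryptField(key, plaintext, associatedData []byte) ([]byte, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return nil, err
	}
	// Seal appends the ciphertext and authentication tag after the nonce.
	return gcm.Seal(nonce, nonce, plaintext, associatedData), nil
}

// decryptField reverses encryptField; it fails if the blob or the
// associated data (e.g. the row ID) was tampered with.
func decryptField(key, blob, associatedData []byte) ([]byte, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return nil, err
	}
	if len(blob) < gcm.NonceSize() {
		return nil, errors.New("ciphertext too short")
	}
	nonce, ciphertext := blob[:gcm.NonceSize()], blob[gcm.NonceSize():]
	return gcm.Open(nil, nonce, ciphertext, associatedData)
}

func main() {
	key := make([]byte, 32) // AES-256; in practice fetched from a KMS
	if _, err := rand.Read(key); err != nil {
		panic(err)
	}
	rowID := []byte("customers:42") // hypothetical row identifier
	blob, err := encryptField(key, []byte("4111 1111 1111 1111"), rowID)
	if err != nil {
		panic(err)
	}
	plain, err := decryptField(key, blob, rowID)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(plain)) // 4111 1111 1111 1111
}
```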
[Netdata](https://github.com/netdata/netdata) provides high-fidelity infrastructure monitoring and troubleshooting. Its real-time monitoring Agent collects thousands of metrics from systems, hardware, containers, and applications with zero configuration. It runs permanently on all your physical/virtual servers, containers, cloud deployments, and edge/IoT devices, and is safe to install on your systems mid-incident without any preparation.

[Trivy](https://aquasecurity.github.io/trivy/) is a comprehensive security scanner for vulnerabilities in container images, file systems, and Git repositories, as well as for configuration issues and hard-coded secrets.

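To illustrate the hard-coded secret part of that scanning, here is a minimal regex-based detector in Go. The two rules are assumptions chosen for illustration: the shape of an AWS access key ID (`AKIA` followed by 16 uppercase letters or digits) and a PEM private-key header. Trivy ships its own, much larger rule set, and this is not its implementation.

```go
package main

import (
	"bufio"
	"fmt"
	"regexp"
	"strings"
)

// rule pairs a human-readable ID with the pattern it looks for.
type rule struct {
	ID      string
	Pattern *regexp.Regexp
}

// Illustrative rules only; real scanners use many more, plus allow-lists.
var rules = []rule{
	{"aws-access-key-id", regexp.MustCompile(`\b(AKIA[0-9A-Z]{16})\b`)},
	{"private-key", regexp.MustCompile(`-----BEGIN [A-Z ]*PRIVATE KEY-----`)},
}

// Finding records which rule matched on which 1-based line.
type Finding struct {
	RuleID string
	Line   int
}

// scan checks each line of content against every rule.
func scan(content string) []Finding {
	var findings []Finding
	sc := bufio.NewScanner(strings.NewReader(content))
	for line := 1; sc.Scan(); line++ {
		for _, r := range rules {
			if r.Pattern.MatchString(sc.Text()) {
				findings = append(findings, Finding{r.ID, line})
			}
		}
	}
	return findings
}

func main() {
	// AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
	src := "region = \"us-east-1\"\naws_key = \"AKIAIOSFODNN7EXAMPLE\"\n"
	for _, f := range scan(src) {
		fmt.Printf("%s at line %d\n", f.RuleID, f.Line) // aws-access-key-id at line 2
	}
}
```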
[Lynis](https://cisofy.com/lynis/) is a security auditing tool for Linux, macOS, and UNIX-based systems. It assists with compliance testing (HIPAA, ISO 27001, PCI DSS) and system hardening. It is agentless, and installation is optional.

[OWASP Nettacker](https://github.com/OWASP/Nettacker) is a project created to automate information gathering and vulnerability scanning, and to generate reports for networks covering services, bugs, vulnerabilities, misconfigurations, and other information. It uses TCP SYN, ACK, ICMP, and many other protocols to detect and bypass firewall/IDS/IPS devices.

[Terrascan](https://runterrascan.io/) is a static code analyzer for Infrastructure as Code (IaC) that helps mitigate risk before cloud native infrastructure is provisioned.

[Sliver](https://github.com/BishopFox/sliver) is an open source, cross-platform adversary emulation/red team framework that organizations of all sizes can use to perform security testing. Sliver's implants support C2 over Mutual TLS (mTLS), WireGuard, HTTP(S), and DNS, and are dynamically compiled with per-binary asymmetric encryption keys.

[Attack Surface Analyzer](https://github.com/microsoft/AttackSurfaceAnalyzer) is an open source security tool developed by [Microsoft](https://github.com/microsoft/) that analyzes the attack surface of a target system and reports on potential security vulnerabilities introduced by software installation or system misconfiguration.

[Intel Owl](https://intelowl.readthedocs.io/) is an Open Source Intelligence (OSINT) solution for getting threat intelligence data about a specific file, IP address, or domain from a single API at scale. It integrates a number of analyzers available online and many cutting-edge malware analysis tools.

[Deepfence ThreatMapper](https://deepfence.io/) is a runtime tool that hunts for vulnerabilities in your cloud native production platforms (Linux, K8s, AWS Fargate, and more) and ranks these vulnerabilities based on their risk of exploit.

[Dockle](https://containers.goodwith.tech/) is a container image linter for security that helps you build best-practice Docker images.

[RustScan](https://github.com/RustScan/RustScan) is a modern port scanner.

[gosec](https://github.com/securego/gosec) is a Go security checker that inspects source code for security problems by scanning the Go AST (abstract syntax tree).

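The AST-based approach can be illustrated with Go's standard `go/parser` and `go/ast` packages. The sketch below parses a source file and reports calls such as `md5.Sum(...)` or `sha1.New()`, similar in spirit to gosec's weak-cryptography findings. To keep it short it matches on the package identifier only (an assumption), whereas a real checker resolves imports and types, and the `weak` table is hypothetical.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// weak lists package.function pairs treated as weak cryptography in this sketch.
var weak = map[string]map[string]bool{
	"md5":  {"New": true, "Sum": true},
	"sha1": {"New": true, "Sum": true},
}

// findWeakCrypto parses Go source and returns "file:line: pkg.Func" for each
// matching call expression.
func findWeakCrypto(filename, src string) ([]string, error) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, filename, src, 0)
	if err != nil {
		return nil, err
	}
	var issues []string
	ast.Inspect(file, func(n ast.Node) bool {
		call, ok := n.(*ast.CallExpr)
		if !ok {
			return true
		}
		sel, ok := call.Fun.(*ast.SelectorExpr)
		if !ok {
			return true
		}
		// Indexing a missing package yields a nil map, which reads as false.
		if pkg, ok := sel.X.(*ast.Ident); ok && weak[pkg.Name][sel.Sel.Name] {
			pos := fset.Position(call.Pos())
			issues = append(issues, fmt.Sprintf("%s:%d: %s.%s", pos.Filename, pos.Line, pkg.Name, sel.Sel.Name))
		}
		return true
	})
	return issues, nil
}

func main() {
	src := `package demo

import "crypto/md5"

func hash(b []byte) [16]byte {
	return md5.Sum(b)
}
`
	issues, err := findWeakCrypto("demo.go", src)
	if err != nil {
		panic(err)
	}
	for _, i := range issues {
		fmt.Println(i) // demo.go:6: md5.Sum
	}
}
```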
[Prowler](https://github.com/prowler-cloud/prowler) is an open source security tool for AWS security best-practice assessments, audits, incident response, continuous monitoring, hardening, and forensics readiness. It contains more than 240 controls covering CIS, PCI-DSS, ISO 27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custom security frameworks.

[Burp Suite](https://portswigger.net/burp) is PortSwigger's leading range of web application security testing tools.

[KernelCI](https://foundation.kernelci.org/) is a community-based open source distributed test automation system focused on upstream kernel development. The primary goal of KernelCI is to use an open testing philosophy to ensure the quality, stability and long-term maintenance of the Linux kernel.

[Continuous Kernel Integration project](https://github.com/cki-project) helps find bugs in kernel patches before they are committed to an upstream kernel tree. The project is run by a team of kernel developers, kernel testers, and automation engineers.

[eBPF](https://ebpf.io) is a revolutionary technology that can run sandboxed programs in the Linux kernel without changing kernel source code or loading kernel modules. By making the Linux kernel programmable, eBPF lets infrastructure software leverage existing layers, making them more intelligent and feature-rich without adding further layers of complexity to the system.

[Cilium](https://cilium.io/) uses eBPF to accelerate getting data in and out of L7 proxies such as Envoy, enabling efficient visibility into API protocols like HTTP, gRPC, and Kafka.

[Hubble](https://github.com/cilium/hubble) provides network, service, and security observability for Kubernetes using eBPF.

[Istio](https://istio.io/) is an open platform to connect, manage, and secure microservices. Istio's control plane provides an abstraction layer over the underlying cluster management platform, such as Kubernetes and Mesos.

[Certgen](https://github.com/cilium/certgen) is a convenience tool to generate and store certificates for Hubble Relay mTLS.

[Scapy](https://scapy.net/) is a Python-based interactive packet manipulation program & library.

[syzkaller](https://github.com/google/syzkaller) is an unsupervised, coverage-guided kernel fuzzer.

[SchedViz](https://github.com/google/schedviz) is a tool for gathering and visualizing kernel scheduling traces on Linux machines.

[oss-fuzz](https://google.github.io/oss-fuzz/) aims to make common open source software more secure and stable by combining modern fuzzing techniques with scalable, distributed execution.

[OSSEC](https://www.ossec.net/) is a free, open-source host-based intrusion detection system. It performs log analysis, integrity checking, Windows registry monitoring, rootkit detection, time-based alerting, and active response.
