├── __init__.py
├── roboenvs
│   ├── __init__.py
│   ├── roboschool_hockey.xml
│   ├── roboschool_pong.xml
│   ├── joint_hockey.py
│   └── joint_pong.py
├── mpi_utils.py
├── .gitignore
├── README.md
├── recorder.py
├── cnn_policy.py
├── dynamics.py
├── vec_env.py
├── auxiliary_tasks.py
├── utils.py
├── rollouts.py
├── run.py
├── cppo_agent.py
└── wrappers.py

/__init__.py:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/roboenvs/__init__.py:

--------------------------------------------------------------------------------

import gym
from gym.envs.registration import register

from .joint_pong import DiscretizeActionWrapper, MultiDiscreteToUsual

register(
    id='RoboschoolPong-v2',
    entry_point='.joint_pong:RoboschoolPongJoint',
    max_episode_steps=10000,
    tags={"pg_complexity": 20 * 1000000},
)

register(
    id='RoboschoolHockey-v1',
    entry_point='.joint_hockey:RoboschoolHockeyJoint',
    max_episode_steps=1000,
    tags={"pg_complexity": 20 * 1000000},
)


def make_robopong():
    return gym.make("RoboschoolPong-v2")


def make_robohockey():
    return gym.make("RoboschoolHockey-v1")

--------------------------------------------------------------------------------

/mpi_utils.py:

--------------------------------------------------------------------------------

import numpy as np
import tensorflow as tf
from mpi4py import MPI


class MpiAdamOptimizer(tf.train.AdamOptimizer):
    """Adam optimizer that averages gradients across mpi processes."""

    def __init__(self, comm, **kwargs):
        self.comm = comm
        tf.train.AdamOptimizer.__init__(self, **kwargs)

    def compute_gradients(self, loss, var_list, **kwargs):
        grads_and_vars = tf.train.AdamOptimizer.compute_gradients(self, loss, var_list, **kwargs)
        grads_and_vars = [(g, v) for g, v in grads_and_vars if g is not None]
        flat_grad = tf.concat([tf.reshape(g, (-1,)) for g, v in grads_and_vars], axis=0)
        shapes = [v.shape.as_list() for g, v in grads_and_vars]
        sizes = [int(np.prod(s)) for s in shapes]

        _task_id, num_tasks = self.comm.Get_rank(), self.comm.Get_size()
        buf = np.zeros(sum(sizes), np.float32)

        def _collect_grads(flat_grad):
            self.comm.Allreduce(flat_grad, buf, op=MPI.SUM)
            np.divide(buf, float(num_tasks), out=buf)
            return buf

        avg_flat_grad = tf.py_func(_collect_grads, [flat_grad], tf.float32)
        avg_flat_grad.set_shape(flat_grad.shape)
        avg_grads = tf.split(avg_flat_grad, sizes, axis=0)
        avg_grads_and_vars = [(tf.reshape(g, v.shape), v)
                              for g, (_, v) in zip(avg_grads, grads_and_vars)]

        return avg_grads_and_vars

--------------------------------------------------------------------------------
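`MpiAdamOptimizer.compute_gradients` first concatenates every gradient into one flat vector, so that a single Allreduce can sum it across ranks. It then divides the sum by the number of ranks and splits the result back into per-variable tensors. The NumPy sketch below mimics that round trip in a single process. It is not part of the repository: the helper names are hypothetical, and the Allreduce is simulated by summing over a list of per-worker gradients instead of calling mpi4py.

```python
import numpy as np


def flatten_grads(grads):
    """Concatenate a list of gradient arrays into one 1-D float32 vector."""
    return np.concatenate([np.asarray(g, dtype=np.float32).reshape(-1) for g in grads])


def unflatten_grads(flat, shapes):
    """Split a flat vector back into arrays with the given shapes."""
    sizes = [int(np.prod(s)) for s in shapes]
    # np.split takes cut points rather than sizes, so convert with a cumulative sum.
    pieces = np.split(flat, np.cumsum(sizes)[:-1])
    return [p.reshape(s) for p, s in zip(pieces, shapes)]


def average_across_workers(per_worker_grads):
    """Simulate Allreduce(op=SUM) followed by division by the worker count.

    per_worker_grads: one list of gradient arrays per (hypothetical) worker,
    all with matching shapes.
    """
    flats = [flatten_grads(g) for g in per_worker_grads]
    total = np.sum(flats, axis=0)  # what MPI.SUM would leave on every rank
    avg = total / float(len(flats))
    shapes = [np.shape(g) for g in per_worker_grads[0]]
    return unflatten_grads(avg, shapes)
```

One difference from the TensorFlow code: `tf.split` accepts the list of sizes directly, while `np.split` expects cut indices. That is why the sketch takes a cumulative sum.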

/roboenvs/roboschool_hockey.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/roboenvs/roboschool_pong.xml:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

# Created by .ignore support plugin (hsz.mobi)
### Python template
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
.hypothesis/
.pytest_cache/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
target/

# Jupyter Notebook
.ipynb_checkpoints

# pyenv
.python-version

# celery beat schedule file
celerybeat-schedule

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/

.idea/

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ## Large-Scale Study of Curiosity-Driven Learning ##

2 | #### [[Project Website]](https://pathak22.github.io/large-scale-curiosity/) [[Demo Video]](https://youtu.be/l1FqtAHfJLI)

3 |

4 | [Yuri Burda*](https://sites.google.com/site/yburda/), [Harri Edwards*](https://github.com/harri-edwards/), [Deepak Pathak*](https://people.eecs.berkeley.edu/~pathak/),

[Amos Storkey](http://homepages.inf.ed.ac.uk/amos/), [Trevor Darrell](https://people.eecs.berkeley.edu/~trevor/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/)

5 | (* alphabetical ordering, equal contribution)

6 |

7 | University of California, Berkeley

8 | OpenAI

9 | University of Edinburgh

10 |

11 |

12 |  13 |

14 |

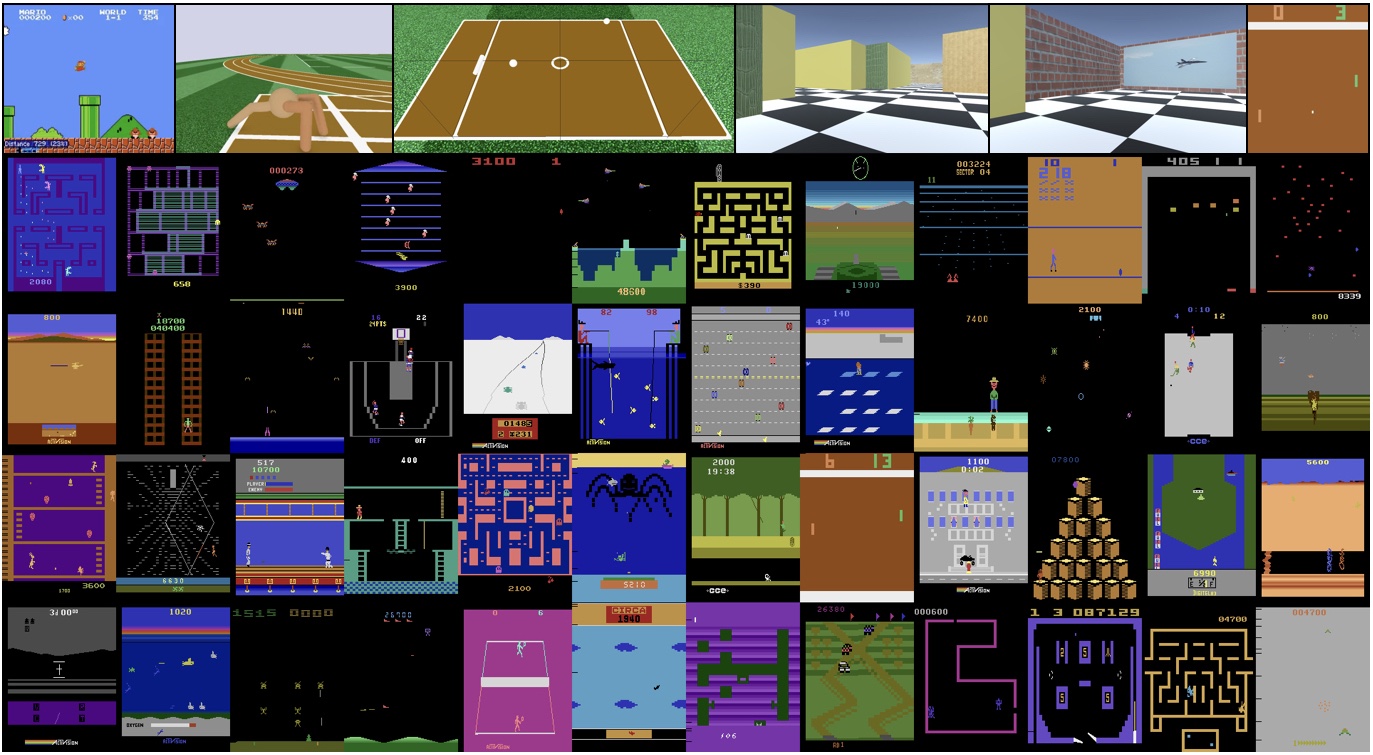

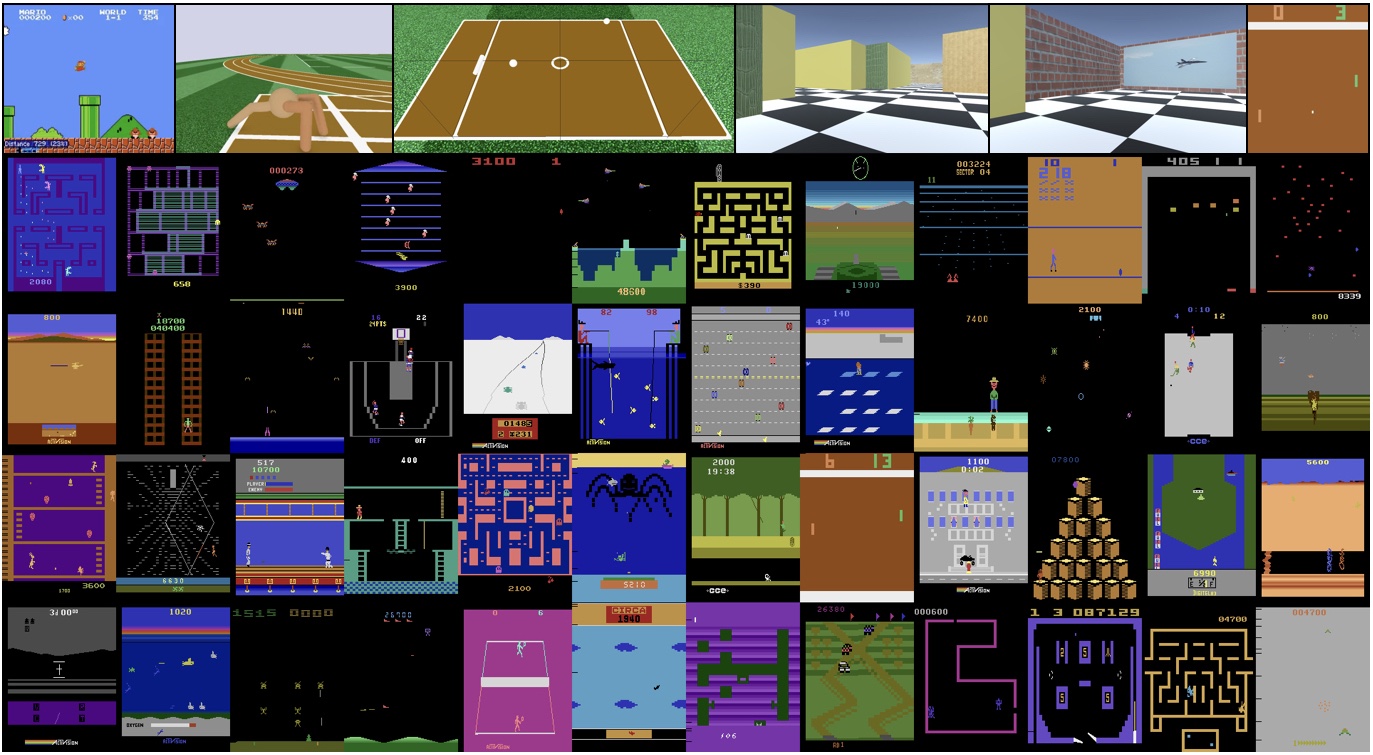

15 | This is a TensorFlow based implementation for our [paper on large-scale study of curiosity-driven learning](https://pathak22.github.io/large-scale-curiosity/) across

16 | 54 environments. Curiosity is a type of intrinsic reward function which uses prediction error as reward signal. In this paper, We perform the first large-scale study of purely curiosity-driven learning, i.e. without any extrinsic rewards, across 54 standard benchmark environments. We further investigate the effect of using different feature spaces for computing prediction error and show that random features are sufficient for many popular RL game benchmarks, but learned features appear to generalize better (e.g. to novel game levels in Super Mario Bros.). If you find this work useful in your research, please cite:

17 |

18 | @inproceedings{largeScaleCuriosity2018,

19 | Author = {Burda, Yuri and Edwards, Harri and

20 | Pathak, Deepak and Storkey, Amos and

21 | Darrell, Trevor and Efros, Alexei A.},

22 | Title = {Large-Scale Study of Curiosity-Driven Learning},

23 | Booktitle = {arXiv:1808.04355},

24 | Year = {2018}

25 | }

26 |

27 | ### Installation and Usage

28 | The following command should train a pure exploration agent on Breakout with default experiment parameters.

29 | ```bash

30 | python run.py

31 | ```

32 | To use more than one gpu/machine, use MPI (e.g. `mpiexec -n 8 python run.py` should use 1024 parallel environments to collect experience instead of the default 128 on an 8 gpu machine).

33 |

34 | ### Other helpful pointers

35 | - [Paper](https://pathak22.github.io/large-scale-curiosity/resources/largeScaleCuriosity2018.pdf)

36 | - [Project Website](https://pathak22.github.io/large-scale-curiosity/)

37 | - [Demo Video](https://youtu.be/l1FqtAHfJLI)

38 |

--------------------------------------------------------------------------------
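The README describes curiosity as an intrinsic reward built from prediction error. In dynamics.py, `Dynamics.get_loss` measures that error as the squared difference between predicted and actual next-step features, averaged over the feature axis. This leaves one value per environment and timestep. A minimal NumPy sketch of that reduction follows. It assumes the predicted and target features are already available as arrays of shape [n_envs, n_steps, feat_dim], and the function name is hypothetical.

```python
import numpy as np


def prediction_error_reward(predicted_next_features, next_features):
    """Per-timestep curiosity signal: mean squared error over the feature axis.

    Both inputs have shape [n_envs, n_steps, feat_dim]; the result has
    shape [n_envs, n_steps], one value per environment step.
    """
    predicted = np.asarray(predicted_next_features, dtype=np.float64)
    target = np.asarray(next_features, dtype=np.float64)
    return np.mean((predicted - target) ** 2, axis=-1)
```

Steps where the dynamics model predicts poorly receive a larger value. Under this reading, those poorly predicted steps are what a purely curiosity-driven agent is pushed toward.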

/recorder.py:

--------------------------------------------------------------------------------

import os
import pickle

from baselines import logger
from mpi4py import MPI


class Recorder(object):
    def __init__(self, nenvs, nlumps):
        self.nenvs = nenvs
        self.nlumps = nlumps
        self.nenvs_per_lump = nenvs // nlumps
        self.acs = [[] for _ in range(nenvs)]
        self.int_rews = [[] for _ in range(nenvs)]
        self.ext_rews = [[] for _ in range(nenvs)]
        self.ep_infos = [{} for _ in range(nenvs)]
        self.filenames = [self.get_filename(i) for i in range(nenvs)]
        if MPI.COMM_WORLD.Get_rank() == 0:
            logger.info("episode recordings saved to ", self.filenames[0])

    def record(self, timestep, lump, acs, infos, int_rew, ext_rew, news):
        for out_index in range(self.nenvs_per_lump):
            in_index = out_index + lump * self.nenvs_per_lump
            if timestep == 0:
                self.acs[in_index].append(acs[out_index])
            else:
                if self.is_first_episode_step(in_index):
                    try:
                        self.ep_infos[in_index]['random_state'] = infos[out_index]['random_state']
                    except:
                        pass

                self.int_rews[in_index].append(int_rew[out_index])
                self.ext_rews[in_index].append(ext_rew[out_index])

                if news[out_index]:
                    self.ep_infos[in_index]['ret'] = infos[out_index]['episode']['r']
                    self.ep_infos[in_index]['len'] = infos[out_index]['episode']['l']
                    self.dump_episode(in_index)

                self.acs[in_index].append(acs[out_index])

    def dump_episode(self, i):
        episode = {'acs': self.acs[i],
                   'int_rew': self.int_rews[i],
                   'info': self.ep_infos[i]}
        filename = self.filenames[i]
        if self.episode_worth_saving(i):
            with open(filename, 'ab') as f:
                pickle.dump(episode, f, protocol=-1)
        self.acs[i].clear()
        self.int_rews[i].clear()
        self.ext_rews[i].clear()
        self.ep_infos[i].clear()

    def episode_worth_saving(self, i):
        return (i == 0 and MPI.COMM_WORLD.Get_rank() == 0)

    def is_first_episode_step(self, i):
        return len(self.int_rews[i]) == 0

    def get_filename(self, i):
        filename = os.path.join(logger.get_dir(), 'env{}_{}.pk'.format(MPI.COMM_WORLD.Get_rank(), i))
        return filename

--------------------------------------------------------------------------------
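`Recorder.record` is called once per lump with arrays that cover only that lump's environments. It converts each local position `out_index` into a global environment index using `in_index = out_index + lump * nenvs_per_lump`. The sketch below isolates that arithmetic. The function names are hypothetical, and the divisibility check is an added assumption. `Recorder` itself uses floor division, so any remainder environments would simply never be recorded.

```python
def global_env_index(out_index, lump, nenvs_per_lump):
    """Map a position within one lump to an index across all environments."""
    return out_index + lump * nenvs_per_lump


def lump_env_indices(lump, nenvs, nlumps):
    """All global environment indices handled by one lump."""
    if nenvs % nlumps != 0:
        # Added assumption: require an even split instead of silently dropping envs.
        raise ValueError("nenvs must be divisible by nlumps")
    nenvs_per_lump = nenvs // nlumps
    return [global_env_index(i, lump, nenvs_per_lump) for i in range(nenvs_per_lump)]
```

With 8 environments split into 2 lumps, lump 0 owns environments 0 to 3 and lump 1 owns 4 to 7.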

/cnn_policy.py:

--------------------------------------------------------------------------------

import tensorflow as tf
from baselines.common.distributions import make_pdtype

from utils import getsess, small_convnet, activ, fc, flatten_two_dims, unflatten_first_dim


class CnnPolicy(object):
    def __init__(self, ob_space, ac_space, hidsize,
                 ob_mean, ob_std, feat_dim, layernormalize, nl, scope="policy"):
        if layernormalize:
            print("Warning: policy is operating on top of layer-normed features. It might slow down the training.")
        self.layernormalize = layernormalize
        self.nl = nl
        self.ob_mean = ob_mean
        self.ob_std = ob_std
        with tf.variable_scope(scope):
            self.ob_space = ob_space
            self.ac_space = ac_space
            self.ac_pdtype = make_pdtype(ac_space)
            self.ph_ob = tf.placeholder(dtype=tf.int32,
                                        shape=(None, None) + ob_space.shape, name='ob')
            self.ph_ac = self.ac_pdtype.sample_placeholder([None, None], name='ac')
            self.pd = self.vpred = None
            self.hidsize = hidsize
            self.feat_dim = feat_dim
            self.scope = scope
            pdparamsize = self.ac_pdtype.param_shape()[0]

            sh = tf.shape(self.ph_ob)
            x = flatten_two_dims(self.ph_ob)
            self.flat_features = self.get_features(x, reuse=False)
            self.features = unflatten_first_dim(self.flat_features, sh)

            with tf.variable_scope(scope, reuse=False):
                x = fc(self.flat_features, units=hidsize, activation=activ)
                x = fc(x, units=hidsize, activation=activ)
                pdparam = fc(x, name='pd', units=pdparamsize, activation=None)
                vpred = fc(x, name='value_function_output', units=1, activation=None)
            pdparam = unflatten_first_dim(pdparam, sh)
            self.vpred = unflatten_first_dim(vpred, sh)[:, :, 0]
            self.pd = pd = self.ac_pdtype.pdfromflat(pdparam)
            self.a_samp = pd.sample()
            self.entropy = pd.entropy()
            self.nlp_samp = pd.neglogp(self.a_samp)

    def get_features(self, x, reuse):
        x_has_timesteps = (x.get_shape().ndims == 5)
        if x_has_timesteps:
            sh = tf.shape(x)
            x = flatten_two_dims(x)

        with tf.variable_scope(self.scope + "_features", reuse=reuse):
            x = (tf.to_float(x) - self.ob_mean) / self.ob_std
            x = small_convnet(x, nl=self.nl, feat_dim=self.feat_dim, last_nl=None, layernormalize=self.layernormalize)

        if x_has_timesteps:
            x = unflatten_first_dim(x, sh)
        return x

    def get_ac_value_nlp(self, ob):
        a, vpred, nlp = \
            getsess().run([self.a_samp, self.vpred, self.nlp_samp],
                          feed_dict={self.ph_ob: ob[:, None]})
        return a[:, 0], vpred[:, 0], nlp[:, 0]

--------------------------------------------------------------------------------
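`CnnPolicy` receives observations shaped [batch, time, ...], but its convolutional network expects a single batch axis. It therefore merges the two leading axes with `flatten_two_dims` and later restores them with `unflatten_first_dim`. Those helpers are defined in utils.py, outside this excerpt. The NumPy analogs below assume they are plain reshapes of the leading axes, and the `_np` names are hypothetical.

```python
import numpy as np


def flatten_two_dims_np(x):
    """Merge the leading [batch, time] axes: [B, T, ...] -> [B*T, ...]."""
    return x.reshape((-1,) + x.shape[2:])


def unflatten_first_dim_np(x, batch_time_shape):
    """Restore [B*T, ...] -> [B, T, ...] using the original leading shape."""
    b, t = batch_time_shape[0], batch_time_shape[1]
    return x.reshape((b, t) + x.shape[1:])
```

The trailing axes of the restored array come from the network output, not from the input. That is how an image batch of shape [B, T, H, W, C] comes back as features of shape [B, T, feat_dim], or as value predictions of shape [B, T, 1] before the `[:, :, 0]` slice.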

/dynamics.py:

--------------------------------------------------------------------------------

import numpy as np
import tensorflow as tf

from auxiliary_tasks import JustPixels
from utils import small_convnet, flatten_two_dims, unflatten_first_dim, getsess, unet


class Dynamics(object):
    def __init__(self, auxiliary_task, predict_from_pixels, feat_dim=None, scope='dynamics'):
        self.scope = scope
        self.auxiliary_task = auxiliary_task
        self.hidsize = self.auxiliary_task.hidsize
        self.feat_dim = feat_dim
        self.obs = self.auxiliary_task.obs
        self.last_ob = self.auxiliary_task.last_ob
        self.ac = self.auxiliary_task.ac
        self.ac_space = self.auxiliary_task.ac_space
        self.ob_mean = self.auxiliary_task.ob_mean
        self.ob_std = self.auxiliary_task.ob_std
        if predict_from_pixels:
            self.features = self.get_features(self.obs, reuse=False)
        else:
            self.features = tf.stop_gradient(self.auxiliary_task.features)

        self.out_features = self.auxiliary_task.next_features

        with tf.variable_scope(self.scope + "_loss"):
            self.loss = self.get_loss()

    def get_features(self, x, reuse):
        nl = tf.nn.leaky_relu
        x_has_timesteps = (x.get_shape().ndims == 5)
        if x_has_timesteps:
            sh = tf.shape(x)
            x = flatten_two_dims(x)
        with tf.variable_scope(self.scope + "_features", reuse=reuse):
            x = (tf.to_float(x) - self.ob_mean) / self.ob_std
            x = small_convnet(x, nl=nl, feat_dim=self.feat_dim, last_nl=nl, layernormalize=False)
        if x_has_timesteps:
            x = unflatten_first_dim(x, sh)
        return x

    def get_loss(self):
        ac = tf.one_hot(self.ac, self.ac_space.n, axis=2)
        sh = tf.shape(ac)
        ac = flatten_two_dims(ac)

        def add_ac(x):
            return tf.concat([x, ac], axis=-1)

        with tf.variable_scope(self.scope):
            x = flatten_two_dims(self.features)
            x = tf.layers.dense(add_ac(x), self.hidsize, activation=tf.nn.leaky_relu)

            def residual(x):
                res = tf.layers.dense(add_ac(x), self.hidsize, activation=tf.nn.leaky_relu)
                res = tf.layers.dense(add_ac(res), self.hidsize, activation=None)
                return x + res

            for _ in range(4):
                x = residual(x)
            n_out_features = self.out_features.get_shape()[-1].value
            x = tf.layers.dense(add_ac(x), n_out_features, activation=None)
            x = unflatten_first_dim(x, sh)
        return tf.reduce_mean((x - tf.stop_gradient(self.out_features)) ** 2, -1)

    def calculate_loss(self, ob, last_ob, acs):
        n_chunks = 8
        n = ob.shape[0]
        chunk_size = n // n_chunks
        assert n % n_chunks == 0
        sli = lambda i: slice(i * chunk_size, (i + 1) * chunk_size)
        return np.concatenate([getsess().run(self.loss,
                                             {self.obs: ob[sli(i)], self.last_ob: last_ob[sli(i)],
                                              self.ac: acs[sli(i)]}) for i in range(n_chunks)], 0)


class UNet(Dynamics):
    def __init__(self, auxiliary_task, predict_from_pixels, feat_dim=None, scope='pixel_dynamics'):
        assert isinstance(auxiliary_task, JustPixels)
        assert not predict_from_pixels, "predict from pixels must be False, it's set up to predict from features that are normalized pixels."
        super(UNet, self).__init__(auxiliary_task=auxiliary_task,
                                   predict_from_pixels=predict_from_pixels,
                                   feat_dim=feat_dim,
                                   scope=scope)

    def get_features(self, x, reuse):
        raise NotImplementedError

    def get_loss(self):
        nl = tf.nn.leaky_relu
        ac = tf.one_hot(self.ac, self.ac_space.n, axis=2)
        sh = tf.shape(ac)
        ac = flatten_two_dims(ac)
        ac_four_dim = tf.expand_dims(tf.expand_dims(ac, 1), 1)

        def add_ac(x):
            if x.get_shape().ndims == 2:
                return tf.concat([x, ac], axis=-1)
            elif x.get_shape().ndims == 4:
                sh = tf.shape(x)
                return tf.concat(
                    [x, ac_four_dim + tf.zeros([sh[0], sh[1], sh[2], ac_four_dim.get_shape()[3].value], tf.float32)],
                    axis=-1)

        with tf.variable_scope(self.scope):
            x = flatten_two_dims(self.features)
            x = unet(x, nl=nl, feat_dim=self.feat_dim, cond=add_ac)
            x = unflatten_first_dim(x, sh)
        self.prediction_pixels = x * self.ob_std + self.ob_mean
        return tf.reduce_mean((x - tf.stop_gradient(self.out_features)) ** 2, [2, 3, 4])

--------------------------------------------------------------------------------
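In the 4-D branch of `add_ac` in `UNet.get_loss`, the U-Net is conditioned on the action in three steps:

1. Expand the one-hot action to shape [N, 1, 1, n_actions].
2. Add a zero tensor of shape [N, H, W, n_actions], so broadcasting copies the action to every spatial position.
3. Concatenate the result onto the feature map's channels.

The NumPy sketch below reproduces that trick on plain arrays. The function name is hypothetical, and actions are assumed to be integer indices.

```python
import numpy as np


def concat_action_to_feature_map(x, actions, n_actions):
    """Append a one-hot action to every spatial position of a feature map.

    x: float array [N, H, W, C]; actions: int array [N].
    Returns an array of shape [N, H, W, C + n_actions].
    """
    one_hot = np.eye(n_actions, dtype=x.dtype)[actions]  # [N, n_actions]
    ac_four_dim = one_hot[:, None, None, :]               # [N, 1, 1, n_actions]
    # Adding zeros of the target shape broadcasts the action over H and W,
    # mirroring the tf.zeros trick in UNet.get_loss.
    tiled = ac_four_dim + np.zeros(x.shape[:3] + (n_actions,), dtype=x.dtype)
    return np.concatenate([x, tiled], axis=-1)
```

Because the action appears at every pixel, each convolution in the U-Net can see it regardless of its receptive field.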

/vec_env.py:

--------------------------------------------------------------------------------

"""
An interface for asynchronous vectorized environments.
"""

import ctypes
from abc import ABC, abstractmethod
from multiprocessing import Pipe, Array, Process

import gym
import numpy as np
from baselines import logger

_NP_TO_CT = {np.float32: ctypes.c_float,
             np.int32: ctypes.c_int32,
             np.int8: ctypes.c_int8,
             np.uint8: ctypes.c_char,
             np.bool_: ctypes.c_bool}  # s.dtype.type is np.bool_ for boolean spaces; the np.bool alias never matched
_CT_TO_NP = {v: k for k, v in _NP_TO_CT.items()}


class CloudpickleWrapper(object):
    """
    Uses cloudpickle to serialize contents (otherwise multiprocessing tries to use pickle)
    """

    def __init__(self, x):
        self.x = x

    def __getstate__(self):
        import cloudpickle
        return cloudpickle.dumps(self.x)

    def __setstate__(self, ob):
        import pickle
        self.x = pickle.loads(ob)


class VecEnv(ABC):
    """
    An abstract asynchronous, vectorized environment.
    """

    def __init__(self, num_envs, observation_space, action_space):
        self.num_envs = num_envs
        self.observation_space = observation_space
        self.action_space = action_space

    @abstractmethod
    def reset(self):
        """
        Reset all the environments and return an array of
        observations, or a tuple of observation arrays.

        If step_async is still doing work, that work will
        be cancelled and step_wait() should not be called
        until step_async() is invoked again.
        """
        pass

    @abstractmethod
    def step_async(self, actions):
        """
        Tell all the environments to start taking a step
        with the given actions.
        Call step_wait() to get the results of the step.

        You should not call this if a step_async run is
        already pending.
        """
        pass

    @abstractmethod
    def step_wait(self):
        """
        Wait for the step taken with step_async().

        Returns (obs, rews, dones, infos):
         - obs: an array of observations, or a tuple of
                arrays of observations.
         - rews: an array of rewards
         - dones: an array of "episode done" booleans
         - infos: a sequence of info objects
        """
        pass

    @abstractmethod
    def close(self):
        """
        Clean up the environments' resources.
        """
        pass

    def step(self, actions):
        self.step_async(actions)
        return self.step_wait()

    def render(self):
        logger.warn('Render not defined for %s' % self)


class ShmemVecEnv(VecEnv):
    """
    An AsyncEnv that uses multiprocessing to run multiple
    environments in parallel.
    """

    def __init__(self, env_fns, spaces=None):
        """
        If you don't specify observation_space, we'll have to create a dummy
        environment to get it.
        """
        if spaces:
            observation_space, action_space = spaces
        else:
            logger.log('Creating dummy env object to get spaces')
            with logger.scoped_configure(format_strs=[]):
                dummy = env_fns[0]()
                observation_space, action_space = dummy.observation_space, dummy.action_space
                dummy.close()
                del dummy
        VecEnv.__init__(self, len(env_fns), observation_space, action_space)

        obs_spaces = observation_space.spaces if isinstance(self.observation_space, gym.spaces.Tuple) else (
            self.observation_space,)
        self.obs_bufs = [tuple(Array(_NP_TO_CT[s.dtype.type], int(np.prod(s.shape))) for s in obs_spaces) for _ in
                         env_fns]
        self.obs_shapes = [s.shape for s in obs_spaces]
        self.obs_dtypes = [s.dtype for s in obs_spaces]

        self.parent_pipes = []
        self.procs = []
        for env_fn, obs_buf in zip(env_fns, self.obs_bufs):
            wrapped_fn = CloudpickleWrapper(env_fn)
            parent_pipe, child_pipe = Pipe()
            proc = Process(target=_subproc_worker,
                           args=(child_pipe, parent_pipe, wrapped_fn, obs_buf, self.obs_shapes))
            proc.daemon = True
            self.procs.append(proc)
            self.parent_pipes.append(parent_pipe)
            proc.start()
            child_pipe.close()
        self.waiting_step = False

    def reset(self):
        if self.waiting_step:
            logger.warn('Called reset() while waiting for the step to complete')
            self.step_wait()
        for pipe in self.parent_pipes:
            pipe.send(('reset', None))
        return self._decode_obses([pipe.recv() for pipe in self.parent_pipes])

    def step_async(self, actions):
        assert len(actions) == len(self.parent_pipes)
        for pipe, act in zip(self.parent_pipes, actions):
            pipe.send(('step', act))
        self.waiting_step = True  # lets reset()/close() drain a pending step

    def step_wait(self):
        outs = [pipe.recv() for pipe in self.parent_pipes]
        self.waiting_step = False
        obs, rews, dones, infos = zip(*outs)
        return self._decode_obses(obs), np.array(rews), np.array(dones), infos

    def close(self):
        if self.waiting_step:
            self.step_wait()
        for pipe in self.parent_pipes:
            pipe.send(('close', None))
        for pipe in self.parent_pipes:
            pipe.recv()
            pipe.close()
        for proc in self.procs:
            proc.join()

    def _decode_obses(self, obs):
        """
        Turn the observation responses into a single numpy
        array, possibly via shared memory.
        """
        obs = []
        for i, shape in enumerate(self.obs_shapes):
            bufs = [b[i] for b in self.obs_bufs]
            o = [np.frombuffer(b.get_obj(), dtype=self.obs_dtypes[i]).reshape(shape) for b in bufs]
            obs.append(np.array(o))
        return tuple(obs) if len(obs) > 1 else obs[0]


def _subproc_worker(pipe, parent_pipe, env_fn_wrapper, obs_buf, obs_shape):
    """
    Control a single environment instance using IPC and
    shared memory.

    If obs_buf is not None, it is a shared-memory buffer
    for communicating observations.
    """

    def _write_obs(obs):
        if not isinstance(obs, tuple):
            obs = (obs,)
        for o, b, s in zip(obs, obs_buf, obs_shape):
            dst = b.get_obj()
            dst_np = np.frombuffer(dst, dtype=_CT_TO_NP[dst._type_]).reshape(s)  # pylint: disable=W0212
            np.copyto(dst_np, o)

    env = env_fn_wrapper.x()
    parent_pipe.close()
    try:
        while True:
            cmd, data = pipe.recv()
            if cmd == 'reset':
                pipe.send(_write_obs(env.reset()))
            elif cmd == 'step':
                obs, reward, done, info = env.step(data)
                if done:
                    obs = env.reset()
                pipe.send((_write_obs(obs), reward, done, info))
            elif cmd == 'close':
                pipe.send(None)
                break
            else:
                raise RuntimeError('Got unrecognized cmd %s' % cmd)
    finally:
        env.close()

--------------------------------------------------------------------------------
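`ShmemVecEnv` keeps observations out of the pipes. Each worker copies its observation into a shared-memory ctypes array and sends only a small message. The parent then views the same memory with `np.frombuffer` and copies it into a regular array.

The sketch below shows that write/read round trip in a single process for a float32 observation. It is a simplification, not the repository's code:

- There are no subprocesses.
- It uses `multiprocessing.RawArray` (no lock) instead of `Array(...).get_obj()`.
- The ctypes type is fixed to `c_float` rather than looked up in `_NP_TO_CT`.
- The helper names are hypothetical.

```python
import ctypes
from multiprocessing import RawArray

import numpy as np


def make_obs_buffer(shape):
    """Allocate a shared-memory float32 buffer large enough for one observation."""
    # RawArray returns the ctypes array directly; the repository's Array(...)
    # wraps it with a lock and exposes it through get_obj().
    return RawArray(ctypes.c_float, int(np.prod(shape)))


def write_obs(buf, obs, shape):
    """Worker side: copy an observation into shared memory instead of pickling it."""
    dst = np.frombuffer(buf, dtype=np.float32).reshape(shape)
    np.copyto(dst, obs)


def read_obs(buf, shape):
    """Parent side: view the shared memory as an array, then copy it out."""
    view = np.frombuffer(buf, dtype=np.float32).reshape(shape)
    return np.array(view)  # copy, so later writes to the buffer do not change the result
```

The final copy matters. `_decode_obses` likewise wraps the views in `np.array`, so the batch it returns is not overwritten when workers write the next step's observations.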

/auxiliary_tasks.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 |

3 | from utils import small_convnet, fc, activ, flatten_two_dims, unflatten_first_dim, small_deconvnet

4 |

5 |

6 | class FeatureExtractor(object):

7 | def __init__(self, policy, features_shared_with_policy, feat_dim=None, layernormalize=None,

8 | scope='feature_extractor'):

9 | self.scope = scope

10 | self.features_shared_with_policy = features_shared_with_policy

11 | self.feat_dim = feat_dim

12 | self.layernormalize = layernormalize

13 | self.policy = policy

14 | self.hidsize = policy.hidsize

15 | self.ob_space = policy.ob_space

16 | self.ac_space = policy.ac_space

17 | self.obs = self.policy.ph_ob

18 | self.ob_mean = self.policy.ob_mean

19 | self.ob_std = self.policy.ob_std

20 | with tf.variable_scope(scope):

21 | self.last_ob = tf.placeholder(dtype=tf.int32,

22 | shape=(None, 1) + self.ob_space.shape, name='last_ob')

23 | self.next_ob = tf.concat([self.obs[:, 1:], self.last_ob], 1)

24 |

25 | if features_shared_with_policy:

26 | self.features = self.policy.features

27 | self.last_features = self.policy.get_features(self.last_ob, reuse=True)

28 | else:

29 | self.features = self.get_features(self.obs, reuse=False)

30 | self.last_features = self.get_features(self.last_ob, reuse=True)

31 | self.next_features = tf.concat([self.features[:, 1:], self.last_features], 1)

32 |

33 | self.ac = self.policy.ph_ac

34 | self.scope = scope

35 |

36 | self.loss = self.get_loss()

37 |

38 | def get_features(self, x, reuse):

39 | nl = tf.nn.leaky_relu

40 | x_has_timesteps = (x.get_shape().ndims == 5)

41 | if x_has_timesteps:

42 | sh = tf.shape(x)

43 | x = flatten_two_dims(x)

44 | with tf.variable_scope(self.scope + "_features", reuse=reuse):

45 | x = (tf.to_float(x) - self.ob_mean) / self.ob_std

46 | x = small_convnet(x, nl=nl, feat_dim=self.feat_dim, last_nl=None, layernormalize=self.layernormalize)

47 | if x_has_timesteps:

48 | x = unflatten_first_dim(x, sh)

49 | return x

50 |

51 | def get_loss(self):

52 | return tf.zeros((), dtype=tf.float32)

53 |

54 |

55 | class InverseDynamics(FeatureExtractor):

56 | def __init__(self, policy, features_shared_with_policy, feat_dim=None, layernormalize=None):

57 | super(InverseDynamics, self).__init__(scope="inverse_dynamics", policy=policy,

58 | features_shared_with_policy=features_shared_with_policy,

59 | feat_dim=feat_dim, layernormalize=layernormalize)

60 |

61 | def get_loss(self):

62 | with tf.variable_scope(self.scope):

63 | x = tf.concat([self.features, self.next_features], 2)

64 | sh = tf.shape(x)

65 | x = flatten_two_dims(x)

66 | x = fc(x, units=self.policy.hidsize, activation=activ)

67 | x = fc(x, units=self.ac_space.n, activation=None)

68 | param = unflatten_first_dim(x, sh)

69 | idfpd = self.policy.ac_pdtype.pdfromflat(param)

70 | return idfpd.neglogp(self.ac)

71 |

72 |

73 | class VAE(FeatureExtractor):

74 | def __init__(self, policy, features_shared_with_policy, feat_dim=None, layernormalize=False, spherical_obs=False):

75 | assert not layernormalize, "VAE features should already have reasonable size, no need to layer normalize them"

76 | self.spherical_obs = spherical_obs

77 | super(VAE, self).__init__(scope="vae", policy=policy,

78 | features_shared_with_policy=features_shared_with_policy,

79 | feat_dim=feat_dim, layernormalize=False)

80 | self.features = tf.split(self.features, 2, -1)[0] # use mean only for features exposed to the dynamics

81 | self.next_features = tf.split(self.next_features, 2, -1)[0]

82 |

83 | def get_features(self, x, reuse):

84 | nl = tf.nn.leaky_relu

85 | x_has_timesteps = (x.get_shape().ndims == 5)

86 | if x_has_timesteps:

87 | sh = tf.shape(x)

88 | x = flatten_two_dims(x)

89 | with tf.variable_scope(self.scope + "_features", reuse=reuse):

90 | x = (tf.to_float(x) - self.ob_mean) / self.ob_std

91 | x = small_convnet(x, nl=nl, feat_dim=2 * self.feat_dim, last_nl=None, layernormalize=False)

92 | if x_has_timesteps:

93 | x = unflatten_first_dim(x, sh)

94 | return x

95 |

96 | def get_loss(self):

97 | with tf.variable_scope(self.scope):

98 | posterior_mean, posterior_scale = tf.split(self.features, 2, -1)

99 | posterior_scale = tf.nn.softplus(posterior_scale)

100 | posterior_distribution = tf.distributions.Normal(loc=posterior_mean, scale=posterior_scale)

101 |

102 | sh = tf.shape(posterior_mean)

103 | prior = tf.distributions.Normal(loc=tf.zeros(sh), scale=tf.ones(sh))

104 |

105 | posterior_kl = tf.distributions.kl_divergence(posterior_distribution, prior)

106 |

107 | posterior_kl = tf.reduce_sum(posterior_kl, [-1])

108 | assert posterior_kl.get_shape().ndims == 2

109 |

110 | posterior_sample = posterior_distribution.sample()

111 | reconstruction_distribution = self.decoder(posterior_sample)

112 | norm_obs = self.add_noise_and_normalize(self.obs)

113 | reconstruction_likelihood = reconstruction_distribution.log_prob(norm_obs)

114 | assert reconstruction_likelihood.get_shape().as_list()[2:] == [84, 84, 4]

115 | reconstruction_likelihood = tf.reduce_sum(reconstruction_likelihood, [2, 3, 4])

116 |

117 | likelihood_lower_bound = reconstruction_likelihood - posterior_kl

118 | return - likelihood_lower_bound

119 |

120 | def add_noise_and_normalize(self, x):

121 | x = tf.to_float(x) + tf.random_uniform(shape=tf.shape(x), minval=0., maxval=1.)

122 | x = (x - self.ob_mean) / self.ob_std

123 | return x

124 |

125 | def decoder(self, z):

126 | nl = tf.nn.leaky_relu

127 | z_has_timesteps = (z.get_shape().ndims == 3)

128 | if z_has_timesteps:

129 | sh = tf.shape(z)

130 | z = flatten_two_dims(z)

131 | with tf.variable_scope(self.scope + "decoder"):

132 | z = small_deconvnet(z, nl=nl, ch=4 if self.spherical_obs else 8, positional_bias=True)

133 | if z_has_timesteps:

134 | z = unflatten_first_dim(z, sh)

135 | if self.spherical_obs:

136 | scale = tf.get_variable(name="scale", shape=(), dtype=tf.float32,

137 | initializer=tf.ones_initializer())

138 | scale = tf.maximum(scale, -4.)

139 | scale = tf.nn.softplus(scale)

140 | scale = scale * tf.ones_like(z)

141 | else:

142 | z, scale = tf.split(z, 2, -1)

143 | scale = tf.nn.softplus(scale)

144 | # scale = tf.Print(scale, [scale])

145 | return tf.distributions.Normal(loc=z, scale=scale)

146 |

147 |

148 | class JustPixels(FeatureExtractor):

149 | def __init__(self, policy, features_shared_with_policy, feat_dim=None, layernormalize=None,

150 | scope='just_pixels'):

151 | assert not layernormalize

152 | assert not features_shared_with_policy

153 | super(JustPixels, self).__init__(scope=scope, policy=policy,

154 | features_shared_with_policy=False,

155 | feat_dim=None, layernormalize=None)

156 |

157 | def get_features(self, x, reuse):

158 | with tf.variable_scope(self.scope + "_features", reuse=reuse):

159 | x = (tf.to_float(x) - self.ob_mean) / self.ob_std

160 | return x

161 |

162 | def get_loss(self):

163 | return tf.zeros((), dtype=tf.float32)

164 |
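The VAE decoder above asserts an `[84, 84, 4]` output. That shape comes from the spatial arithmetic in `small_deconvnet`, which is defined in utils.py below. The same file's `unet` hard-codes the inverse padding. The plain-Python sketch below reproduces those sizes. It assumes TensorFlow's documented `'same'` padding rules: a strided convolution outputs `ceil(size / stride)`, and a transposed convolution outputs `size * stride`. The helper names are hypothetical and do not appear in the repository.

```python
import math

def conv_same(size: int, stride: int) -> int:
    # 'same'-padded strided convolution: output is ceil(size / stride)
    return math.ceil(size / stride)

def deconv_same(size: int, stride: int) -> int:
    # 'same'-padded transposed convolution: output is size * stride
    return size * stride

def decoder_spatial_sizes(start=8, strides=(2, 2, 3), crop=6):
    # Mirrors small_deconvnet: 8x8 seed, three transposed convs, then z[:, 6:-6, 6:-6]
    sizes = [start]
    for s in strides:
        sizes.append(deconv_same(sizes[-1], s))
    sizes.append(sizes[-1] - 2 * crop)
    return sizes

def unet_encoder_sizes(obs=84, pad=6, strides=(3, 2, 2)):
    # Mirrors unet's encoder: pad 6 pixels per side, then three 'same' convs
    sizes = [obs + 2 * pad]
    for s in strides:
        sizes.append(conv_same(sizes[-1], s))
    return sizes

print(decoder_spatial_sizes())  # [8, 16, 32, 96, 84]
print(unet_encoder_sizes())     # [96, 32, 16, 8]
```

The decoder overshoots to 96x96 and crops 6 pixels from each side to get back to 84x84. The U-Net's encoder pads by the same 6 pixels before downsampling, so its skip connections line up at 32, 16 and 8 pixels.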

--------------------------------------------------------------------------------

/utils.py:

--------------------------------------------------------------------------------

1 | import multiprocessing

2 | import os

3 | import platform

4 | from functools import partial

5 |

6 | import numpy as np

7 | import tensorflow as tf

8 | from baselines.common.tf_util import normc_initializer

9 | from mpi4py import MPI

10 |

11 |

12 | def bcast_tf_vars_from_root(sess, vars):

13 | """

14 | Send the root node's parameters to every worker.

15 |

16 | Arguments:

17 | sess: the TensorFlow session.

18 | vars: all parameter variables including optimizer's

19 | """

20 | rank = MPI.COMM_WORLD.Get_rank()

21 | for var in vars:

22 | if rank == 0:

23 | MPI.COMM_WORLD.bcast(sess.run(var))

24 | else:

25 | sess.run(tf.assign(var, MPI.COMM_WORLD.bcast(None)))

26 |

27 |

28 | def get_mean_and_std(array):

29 | comm = MPI.COMM_WORLD

30 | task_id, num_tasks = comm.Get_rank(), comm.Get_size()

31 | local_mean = np.array(np.mean(array))

32 | sum_of_means = np.zeros((), dtype=np.float32)

33 | comm.Allreduce(local_mean, sum_of_means, op=MPI.SUM)

34 | mean = sum_of_means / num_tasks

35 |

36 | n_array = array - mean

37 | sqs = n_array ** 2

38 | local_mean = np.array(np.mean(sqs))

39 | sum_of_means = np.zeros((), dtype=np.float32)

40 | comm.Allreduce(local_mean, sum_of_means, op=MPI.SUM)

41 | var = sum_of_means / num_tasks

42 | std = var ** 0.5

43 | return mean, std

44 |

45 |

46 | def guess_available_gpus(n_gpus=None):

47 | if n_gpus is not None:

48 | return list(range(n_gpus))

49 | if 'CUDA_VISIBLE_DEVICES' in os.environ:

50 | cuda_visible_devices = os.environ['CUDA_VISIBLE_DEVICES']

51 | cuda_visible_devices = cuda_visible_devices.split(',')

52 | return [int(n) for n in cuda_visible_devices]

53 | nvidia_dir = '/proc/driver/nvidia/gpus/'

54 | if os.path.exists(nvidia_dir):

55 | n_gpus = len(os.listdir(nvidia_dir))

56 | return list(range(n_gpus))

57 | raise Exception("Couldn't guess the available gpus on this machine")

58 |

59 |

60 | def setup_mpi_gpus():

61 | """

62 | Set CUDA_VISIBLE_DEVICES using MPI.

63 | """

64 | available_gpus = guess_available_gpus()

65 |

66 | node_id = platform.node()

67 | nodes_ordered_by_rank = MPI.COMM_WORLD.allgather(node_id)

68 | processes_outranked_on_this_node = [n for n in nodes_ordered_by_rank[:MPI.COMM_WORLD.Get_rank()] if n == node_id]

69 | local_rank = len(processes_outranked_on_this_node)

70 | os.environ['CUDA_VISIBLE_DEVICES'] = str(available_gpus[local_rank])

71 |

72 |

73 | def guess_available_cpus():

74 | return int(multiprocessing.cpu_count())

75 |

76 |

77 | def setup_tensorflow_session():

78 | num_cpu = guess_available_cpus()

79 |

80 | tf_config = tf.ConfigProto(

81 | inter_op_parallelism_threads=num_cpu,

82 | intra_op_parallelism_threads=num_cpu

83 | )

84 | return tf.Session(config=tf_config)

85 |

86 |

87 | def random_agent_ob_mean_std(env, nsteps=10000):

88 | ob = np.asarray(env.reset())

89 | if MPI.COMM_WORLD.Get_rank() == 0:

90 | obs = [ob]

91 | for _ in range(nsteps):

92 | ac = env.action_space.sample()

93 | ob, _, done, _ = env.step(ac)

94 | if done:

95 | ob = env.reset()

96 | obs.append(np.asarray(ob))

97 | mean = np.mean(obs, 0).astype(np.float32)

98 | std = np.std(obs, 0).mean().astype(np.float32)

99 | else:

100 | mean = np.empty(shape=ob.shape, dtype=np.float32)

101 | std = np.empty(shape=(), dtype=np.float32)

102 | MPI.COMM_WORLD.Bcast(mean, root=0)

103 | MPI.COMM_WORLD.Bcast(std, root=0)

104 | return mean, std

105 |

106 |

107 | def layernorm(x):

108 | m, v = tf.nn.moments(x, -1, keep_dims=True)

109 | return (x - m) / (tf.sqrt(v) + 1e-8)

110 |

111 |

112 | getsess = tf.get_default_session

113 |

114 | fc = partial(tf.layers.dense, kernel_initializer=normc_initializer(1.))

115 | activ = tf.nn.relu

116 |

117 |

118 | def flatten_two_dims(x):

119 | return tf.reshape(x, [-1] + x.get_shape().as_list()[2:])

120 |

121 |

122 | def unflatten_first_dim(x, sh):

123 | return tf.reshape(x, [sh[0], sh[1]] + x.get_shape().as_list()[1:])

124 |

125 |

126 | def add_pos_bias(x):

127 | with tf.variable_scope(name_or_scope=None, default_name="pos_bias"):

128 | b = tf.get_variable(name="pos_bias", shape=[1] + x.get_shape().as_list()[1:], dtype=tf.float32,

129 | initializer=tf.zeros_initializer())

130 | return x + b

131 |

132 |

133 | def small_convnet(x, nl, feat_dim, last_nl, layernormalize, batchnorm=False):

134 | bn = tf.layers.batch_normalization if batchnorm else lambda x: x

135 | x = bn(tf.layers.conv2d(x, filters=32, kernel_size=8, strides=(4, 4), activation=nl))

136 | x = bn(tf.layers.conv2d(x, filters=64, kernel_size=4, strides=(2, 2), activation=nl))

137 | x = bn(tf.layers.conv2d(x, filters=64, kernel_size=3, strides=(1, 1), activation=nl))

138 | x = tf.reshape(x, (-1, np.prod(x.get_shape().as_list()[1:])))

139 | x = bn(fc(x, units=feat_dim, activation=None))

140 | if last_nl is not None:

141 | x = last_nl(x)

142 | if layernormalize:

143 | x = layernorm(x)

144 | return x

145 |

146 |

147 | def small_deconvnet(z, nl, ch, positional_bias):

148 | sh = (8, 8, 64)

149 | z = fc(z, np.prod(sh), activation=nl)

150 | z = tf.reshape(z, (-1, *sh))

151 | z = tf.layers.conv2d_transpose(z, 128, kernel_size=4, strides=(2, 2), activation=nl, padding='same')

152 | assert z.get_shape().as_list()[1:3] == [16, 16]

153 | z = tf.layers.conv2d_transpose(z, 64, kernel_size=8, strides=(2, 2), activation=nl, padding='same')

154 | assert z.get_shape().as_list()[1:3] == [32, 32]

155 | z = tf.layers.conv2d_transpose(z, ch, kernel_size=8, strides=(3, 3), activation=None, padding='same')

156 | assert z.get_shape().as_list()[1:3] == [96, 96]

157 | z = z[:, 6:-6, 6:-6]

158 | assert z.get_shape().as_list()[1:3] == [84, 84]

159 | if positional_bias:

160 | z = add_pos_bias(z)

161 | return z

162 |

163 |

164 | def unet(x, nl, feat_dim, cond, batchnorm=False):

165 | bn = tf.layers.batch_normalization if batchnorm else lambda x: x

166 | layers = []

167 | x = tf.pad(x, [[0, 0], [6, 6], [6, 6], [0, 0]])

168 | x = bn(tf.layers.conv2d(cond(x), filters=32, kernel_size=8, strides=(3, 3), activation=nl, padding='same'))

169 | assert x.get_shape().as_list()[1:3] == [32, 32]

170 | layers.append(x)

171 | x = bn(tf.layers.conv2d(cond(x), filters=64, kernel_size=8, strides=(2, 2), activation=nl, padding='same'))

172 | layers.append(x)

173 | assert x.get_shape().as_list()[1:3] == [16, 16]

174 | x = bn(tf.layers.conv2d(cond(x), filters=64, kernel_size=4, strides=(2, 2), activation=nl, padding='same'))

175 | layers.append(x)

176 | assert x.get_shape().as_list()[1:3] == [8, 8]

177 |

178 | x = tf.reshape(x, (-1, np.prod(x.get_shape().as_list()[1:])))

179 | x = fc(cond(x), units=feat_dim, activation=nl)

180 |

181 | def residual(x):

182 | res = bn(tf.layers.dense(cond(x), feat_dim, activation=tf.nn.leaky_relu))

183 | res = tf.layers.dense(cond(res), feat_dim, activation=None)

184 | return x + res

185 |

186 | for _ in range(4):

187 | x = residual(x)

188 |

189 | sh = (8, 8, 64)

190 | x = fc(cond(x), np.prod(sh), activation=nl)

191 | x = tf.reshape(x, (-1, *sh))

192 | x += layers.pop()

193 | x = bn(tf.layers.conv2d_transpose(cond(x), 64, kernel_size=4, strides=(2, 2), activation=nl, padding='same'))

194 | assert x.get_shape().as_list()[1:3] == [16, 16]

195 | x += layers.pop()

196 | x = bn(tf.layers.conv2d_transpose(cond(x), 32, kernel_size=8, strides=(2, 2), activation=nl, padding='same'))

197 | assert x.get_shape().as_list()[1:3] == [32, 32]

198 | x += layers.pop()

199 | x = tf.layers.conv2d_transpose(cond(x), 4, kernel_size=8, strides=(3, 3), activation=None, padding='same')

200 | assert x.get_shape().as_list()[1:3] == [96, 96]

201 | x = x[:, 6:-6, 6:-6]

202 | assert x.get_shape().as_list()[1:3] == [84, 84]

203 | assert layers == []

204 | return x

205 |

206 |

207 | def tile_images(array, n_cols=None, max_images=None, div=1):

208 | if max_images is not None:

209 | array = array[:max_images]

210 | if len(array.shape) == 4 and array.shape[3] == 1:

211 | array = array[:, :, :, 0]

212 | assert len(array.shape) in [3, 4], "wrong number of dimensions - shape {}".format(array.shape)

213 | if len(array.shape) == 4:

214 | assert array.shape[3] == 3, "wrong number of channels- shape {}".format(array.shape)

215 | if n_cols is None:

216 | n_cols = max(int(np.sqrt(array.shape[0])) // div * div, div)

217 | n_rows = int(np.ceil(float(array.shape[0]) / n_cols))

218 |

219 | def cell(i, j):

220 | ind = i * n_cols + j

221 | return array[ind] if ind < array.shape[0] else np.zeros_like(array[0])

222 |

223 | def row(i):

224 | return np.concatenate([cell(i, j) for j in range(n_cols)], axis=1)

225 |

226 | return np.concatenate([row(i) for i in range(n_rows)], axis=0)

227 |

228 |
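`get_mean_and_std` sums per-process means with `Allreduce` and divides by the number of processes. The result is therefore the exact global mean and (population) standard deviation only when every process holds the same number of elements. The MPI-free sketch below emulates the two reductions over a list of shards and compares the result with NumPy on the concatenated data. It is an illustration, not the repository's code.

```python
import numpy as np

def pooled_mean_std(shards):
    # Emulates the two Allreduce(SUM) calls in get_mean_and_std:
    # each "process" contributes a local mean, and the sum is divided by the process count.
    num_tasks = len(shards)
    mean = sum(np.mean(s) for s in shards) / num_tasks
    var = sum(np.mean((s - mean) ** 2) for s in shards) / num_tasks
    return mean, var ** 0.5

rng = np.random.default_rng(0)

# Equal-sized shards: matches the statistics of the concatenated array
equal = [rng.normal(size=1000) for _ in range(4)]
m, s = pooled_mean_std(equal)
full = np.concatenate(equal)
print(np.isclose(m, full.mean()), np.isclose(s, full.std()))  # True True

# Unequal shards: the unweighted average of means is biased toward the small shard
unequal = [rng.normal(loc=0.0, size=10), rng.normal(loc=5.0, size=1000)]
m_u, _ = pooled_mean_std(unequal)
print(abs(m_u - np.concatenate(unequal).mean()) > 1.0)  # True
```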

--------------------------------------------------------------------------------

/rollouts.py:

--------------------------------------------------------------------------------

1 | from collections import deque, defaultdict

2 |

3 | import numpy as np

4 | from mpi4py import MPI

5 |

6 | from recorder import Recorder

7 |

8 |

9 | class Rollout(object):

10 | def __init__(self, ob_space, ac_space, nenvs, nsteps_per_seg, nsegs_per_env, nlumps, envs, policy,

11 | int_rew_coeff, ext_rew_coeff, record_rollouts, dynamics):

12 | self.nenvs = nenvs

13 | self.nsteps_per_seg = nsteps_per_seg

14 | self.nsegs_per_env = nsegs_per_env

15 | self.nsteps = self.nsteps_per_seg * self.nsegs_per_env

16 | self.ob_space = ob_space

17 | self.ac_space = ac_space

18 | self.nlumps = nlumps

19 | self.lump_stride = nenvs // self.nlumps

20 | self.envs = envs

21 | self.policy = policy

22 | self.dynamics = dynamics

23 |

24 | self.reward_fun = lambda ext_rew, int_rew: ext_rew_coeff * np.clip(ext_rew, -1., 1.) + int_rew_coeff * int_rew

25 |

26 | self.buf_vpreds = np.empty((nenvs, self.nsteps), np.float32)

27 | self.buf_nlps = np.empty((nenvs, self.nsteps), np.float32)

28 | self.buf_rews = np.empty((nenvs, self.nsteps), np.float32)

29 | self.buf_ext_rews = np.empty((nenvs, self.nsteps), np.float32)

30 | self.buf_acs = np.empty((nenvs, self.nsteps, *self.ac_space.shape), self.ac_space.dtype)

31 | self.buf_obs = np.empty((nenvs, self.nsteps, *self.ob_space.shape), self.ob_space.dtype)

32 | self.buf_obs_last = np.empty((nenvs, self.nsegs_per_env, *self.ob_space.shape), np.float32)

33 |

34 | self.buf_news = np.zeros((nenvs, self.nsteps), np.float32)

35 | self.buf_new_last = self.buf_news[:, 0, ...].copy()

36 | self.buf_vpred_last = self.buf_vpreds[:, 0, ...].copy()

37 |

38 | self.env_results = [None] * self.nlumps

39 | # self.prev_feat = [None for _ in range(self.nlumps)]

40 | # self.prev_acs = [None for _ in range(self.nlumps)]

41 | self.int_rew = np.zeros((nenvs,), np.float32)

42 |

43 | self.recorder = Recorder(nenvs=self.nenvs, nlumps=self.nlumps) if record_rollouts else None

44 | self.statlists = defaultdict(lambda: deque([], maxlen=100))

45 | self.stats = defaultdict(float)

46 | self.best_ext_ret = None

47 | self.all_visited_rooms = []

48 | self.all_scores = []

49 |

50 | self.step_count = 0

51 |

52 | def collect_rollout(self):

53 | self.ep_infos_new = []

54 | for t in range(self.nsteps):

55 | self.rollout_step()

56 | self.calculate_reward()

57 | self.update_info()

58 |

59 | def calculate_reward(self):

60 | int_rew = self.dynamics.calculate_loss(ob=self.buf_obs,

61 | last_ob=self.buf_obs_last,

62 | acs=self.buf_acs)

63 | self.buf_rews[:] = self.reward_fun(int_rew=int_rew, ext_rew=self.buf_ext_rews)

64 |

65 | def rollout_step(self):

66 | t = self.step_count % self.nsteps

67 | s = t % self.nsteps_per_seg

68 | for l in range(self.nlumps):

69 | obs, prevrews, news, infos = self.env_get(l)

70 | # if t > 0:

71 | # prev_feat = self.prev_feat[l]

72 | # prev_acs = self.prev_acs[l]

73 | for info in infos:

74 | epinfo = info.get('episode', {})

75 | mzepinfo = info.get('mz_episode', {})

76 | retroepinfo = info.get('retro_episode', {})

77 | epinfo.update(mzepinfo)

78 | epinfo.update(retroepinfo)

79 | if epinfo:

80 | if "n_states_visited" in info:

81 | epinfo["n_states_visited"] = info["n_states_visited"]

82 | epinfo["states_visited"] = info["states_visited"]

83 | self.ep_infos_new.append((self.step_count, epinfo))

84 |

85 | sli = slice(l * self.lump_stride, (l + 1) * self.lump_stride)

86 |

87 | acs, vpreds, nlps = self.policy.get_ac_value_nlp(obs)

88 | self.env_step(l, acs)

89 |

90 | # self.prev_feat[l] = dyn_feat

91 | # self.prev_acs[l] = acs

92 | self.buf_obs[sli, t] = obs

93 | self.buf_news[sli, t] = news

94 | self.buf_vpreds[sli, t] = vpreds

95 | self.buf_nlps[sli, t] = nlps

96 | self.buf_acs[sli, t] = acs

97 | if t > 0:

98 | self.buf_ext_rews[sli, t - 1] = prevrews

99 | # if t > 0:

100 | # dyn_logp = self.policy.call_reward(prev_feat, pol_feat, prev_acs)

101 | #

102 | # int_rew = dyn_logp.reshape(-1, )

103 | #

104 | # self.int_rew[sli] = int_rew

105 | # self.buf_rews[sli, t - 1] = self.reward_fun(ext_rew=prevrews, int_rew=int_rew)

106 | if self.recorder is not None:

107 | self.recorder.record(timestep=self.step_count, lump=l, acs=acs, infos=infos, int_rew=self.int_rew[sli],

108 | ext_rew=prevrews, news=news)

109 | self.step_count += 1

110 | if s == self.nsteps_per_seg - 1:

111 | for l in range(self.nlumps):

112 | sli = slice(l * self.lump_stride, (l + 1) * self.lump_stride)

113 | nextobs, ext_rews, nextnews, _ = self.env_get(l)

114 | self.buf_obs_last[sli, t // self.nsteps_per_seg] = nextobs

115 | if t == self.nsteps - 1:

116 | self.buf_new_last[sli] = nextnews

117 | self.buf_ext_rews[sli, t] = ext_rews

118 | _, self.buf_vpred_last[sli], _ = self.policy.get_ac_value_nlp(nextobs)

119 | # dyn_logp = self.policy.call_reward(self.prev_feat[l], last_pol_feat, prev_acs)

120 | # dyn_logp = dyn_logp.reshape(-1, )

121 | # int_rew = dyn_logp

122 | #

123 | # self.int_rew[sli] = int_rew

124 | # self.buf_rews[sli, t] = self.reward_fun(ext_rew=ext_rews, int_rew=int_rew)

125 |

126 | def update_info(self):

127 | all_ep_infos = MPI.COMM_WORLD.allgather(self.ep_infos_new)

128 | all_ep_infos = sorted(sum(all_ep_infos, []), key=lambda x: x[0])

129 | if all_ep_infos:

130 | all_ep_infos = [i_[1] for i_ in all_ep_infos] # remove the step_count

131 | keys_ = all_ep_infos[0].keys()

132 | all_ep_infos = {k: [i[k] for i in all_ep_infos] for k in keys_}

133 |

134 | self.statlists['eprew'].extend(all_ep_infos['r'])

135 | self.stats['eprew_recent'] = np.mean(all_ep_infos['r'])

136 | self.statlists['eplen'].extend(all_ep_infos['l'])

137 | self.stats['epcount'] += len(all_ep_infos['l'])

138 | self.stats['tcount'] += sum(all_ep_infos['l'])

139 | if 'visited_rooms' in keys_:

140 | # Montezuma specific logging.

141 | self.stats['visited_rooms'] = sorted(list(set.union(*all_ep_infos['visited_rooms'])))

142 | self.stats['pos_count'] = np.mean(all_ep_infos['pos_count'])

143 | self.all_visited_rooms.extend(self.stats['visited_rooms'])

144 | self.all_scores.extend(all_ep_infos["r"])

145 | self.all_scores = sorted(list(set(self.all_scores)))

146 | self.all_visited_rooms = sorted(list(set(self.all_visited_rooms)))

147 | if MPI.COMM_WORLD.Get_rank() == 0:

148 | print("All visited rooms")

149 | print(self.all_visited_rooms)

150 | print("All scores")

151 | print(self.all_scores)

152 | if 'levels' in keys_:

153 | # Retro logging

154 | temp = sorted(list(set.union(*all_ep_infos['levels'])))

155 | self.all_visited_rooms.extend(temp)

156 | self.all_visited_rooms = sorted(list(set(self.all_visited_rooms)))

157 | if MPI.COMM_WORLD.Get_rank() == 0:

158 | print("All visited levels")

159 | print(self.all_visited_rooms)

160 |

161 | current_max = np.max(all_ep_infos['r'])

162 | else:

163 | current_max = None

164 | self.ep_infos_new = []

165 |

166 | if current_max is not None:

167 | if (self.best_ext_ret is None) or (current_max > self.best_ext_ret):

168 | self.best_ext_ret = current_max

169 | self.current_max = current_max

170 |

171 | def env_step(self, l, acs):

172 | self.envs[l].step_async(acs)

173 | self.env_results[l] = None

174 |

175 | def env_get(self, l):

176 | if self.step_count == 0:

177 | ob = self.envs[l].reset()

178 | out = self.env_results[l] = (ob, None, np.ones(self.lump_stride, bool), {})

179 | else:

180 | if self.env_results[l] is None:

181 | out = self.env_results[l] = self.envs[l].step_wait()

182 | else:

183 | out = self.env_results[l]

184 | return out

185 |
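`Rollout` splits its `nenvs` environments into `nlumps` groups. Each group writes into rows `slice(l * lump_stride, (l + 1) * lump_stride)` of the `(nenvs, nsteps)` buffers. `reward_fun` clips only the extrinsic term to [-1, 1] before weighting it. The standalone sketch below reproduces both pieces. The helper names and coefficient values are illustrative assumptions, not the run.py defaults.

```python
import numpy as np

def make_reward_fun(ext_rew_coeff, int_rew_coeff):
    # Same formula as Rollout.reward_fun: the extrinsic reward is clipped to [-1, 1],
    # the intrinsic reward is used as-is
    return lambda ext_rew, int_rew: ext_rew_coeff * np.clip(ext_rew, -1., 1.) + int_rew_coeff * int_rew

def lump_slices(nenvs, nlumps):
    # Each lump owns a contiguous block of env rows in the (nenvs, nsteps) buffers.
    # This sketch insists on nenvs % nlumps == 0; Rollout itself just uses nenvs // nlumps.
    assert nenvs % nlumps == 0
    stride = nenvs // nlumps
    return [slice(l * stride, (l + 1) * stride) for l in range(nlumps)]

# Illustrative coefficients (run.py defaults to ext_coeff=0., int_coeff=1.)
reward_fun = make_reward_fun(ext_rew_coeff=0.5, int_rew_coeff=1.0)
ext = np.array([[3.0, -2.0, 0.5]])
intr = np.array([[0.1, 0.2, 0.3]])
rews = reward_fun(ext_rew=ext, int_rew=intr)  # 0.5 * clip(ext) + intr

buf = np.zeros((8, 4), np.float32)  # (nenvs, nsteps)
for l, sli in enumerate(lump_slices(nenvs=8, nlumps=2)):
    buf[sli, 0] = l  # lump l writes only its own rows at timestep t = 0
print(rews)
print(buf[:, 0])
```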

--------------------------------------------------------------------------------

/run.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | try:

3 | from OpenGL import GLU

4 | except Exception:

5 | print("no OpenGL.GLU")

6 | import functools

7 | import os.path as osp

8 | from functools import partial

9 |

10 | import gym

11 | import tensorflow as tf

12 | from baselines import logger

13 | from baselines.bench import Monitor

14 | from baselines.common.atari_wrappers import NoopResetEnv, FrameStack

15 | from mpi4py import MPI

16 |

17 | from auxiliary_tasks import FeatureExtractor, InverseDynamics, VAE, JustPixels

18 | from cnn_policy import CnnPolicy

19 | from cppo_agent import PpoOptimizer

20 | from dynamics import Dynamics, UNet

21 | from utils import random_agent_ob_mean_std

22 | from wrappers import MontezumaInfoWrapper, make_mario_env, make_robo_pong, make_robo_hockey, \

23 | make_multi_pong, AddRandomStateToInfo, MaxAndSkipEnv, ProcessFrame84, ExtraTimeLimit

24 |

25 |

26 | def start_experiment(**args):

27 | make_env = partial(make_env_all_params, add_monitor=True, args=args)

28 |

29 | trainer = Trainer(make_env=make_env,

30 | num_timesteps=args['num_timesteps'], hps=args,

31 | envs_per_process=args['envs_per_process'])

32 | log, tf_sess = get_experiment_environment(**args)

33 | with log, tf_sess:

34 | logdir = logger.get_dir()

35 | print("results will be saved to ", logdir)

36 | trainer.train()

37 |

38 |

39 | class Trainer(object):

40 | def __init__(self, make_env, hps, num_timesteps, envs_per_process):

41 | self.make_env = make_env

42 | self.hps = hps

43 | self.envs_per_process = envs_per_process

44 | self.num_timesteps = num_timesteps

45 | self._set_env_vars()

46 |

47 | self.policy = CnnPolicy(

48 | scope='pol',

49 | ob_space=self.ob_space,

50 | ac_space=self.ac_space,

51 | hidsize=512,

52 | feat_dim=512,

53 | ob_mean=self.ob_mean,

54 | ob_std=self.ob_std,

55 | layernormalize=False,

56 | nl=tf.nn.leaky_relu)

57 |

58 | self.feature_extractor = {"none": FeatureExtractor,

59 | "idf": InverseDynamics,

60 | "vaesph": partial(VAE, spherical_obs=True),

61 | "vaenonsph": partial(VAE, spherical_obs=False),

62 | "pix2pix": JustPixels}[hps['feat_learning']]

63 | self.feature_extractor = self.feature_extractor(policy=self.policy,

64 | features_shared_with_policy=False,

65 | feat_dim=512,

66 | layernormalize=hps['layernorm'])

67 |

68 | self.dynamics = Dynamics if hps['feat_learning'] != 'pix2pix' else UNet

69 | self.dynamics = self.dynamics(auxiliary_task=self.feature_extractor,

70 | predict_from_pixels=hps['dyn_from_pixels'],

71 | feat_dim=512)

72 |

73 | self.agent = PpoOptimizer(

74 | scope='ppo',

75 | ob_space=self.ob_space,

76 | ac_space=self.ac_space,

77 | stochpol=self.policy,

78 | use_news=hps['use_news'],

79 | gamma=hps['gamma'],

80 | lam=hps["lambda"],

81 | nepochs=hps['nepochs'],

82 | nminibatches=hps['nminibatches'],

83 | lr=hps['lr'],

84 | cliprange=0.1,

85 | nsteps_per_seg=hps['nsteps_per_seg'],

86 | nsegs_per_env=hps['nsegs_per_env'],

87 | ent_coef=hps['ent_coeff'],

88 | normrew=hps['norm_rew'],

89 | normadv=hps['norm_adv'],

90 | ext_coeff=hps['ext_coeff'],

91 | int_coeff=hps['int_coeff'],

92 | dynamics=self.dynamics

93 | )

94 |

95 | self.agent.to_report['aux'] = tf.reduce_mean(self.feature_extractor.loss)

96 | self.agent.total_loss += self.agent.to_report['aux']

97 | self.agent.to_report['dyn_loss'] = tf.reduce_mean(self.dynamics.loss)

98 | self.agent.total_loss += self.agent.to_report['dyn_loss']

99 | self.agent.to_report['feat_var'] = tf.reduce_mean(tf.nn.moments(self.feature_extractor.features, [0, 1])[1])

100 |

101 | def _set_env_vars(self):

102 | env = self.make_env(0, add_monitor=False)

103 | self.ob_space, self.ac_space = env.observation_space, env.action_space

104 | self.ob_mean, self.ob_std = random_agent_ob_mean_std(env)

105 | del env

106 | self.envs = [functools.partial(self.make_env, i) for i in range(self.envs_per_process)]

107 |

108 | def train(self):

109 | self.agent.start_interaction(self.envs, nlump=self.hps['nlumps'], dynamics=self.dynamics)

110 | while True:

111 | info = self.agent.step()

112 | if info['update']:

113 | logger.logkvs(info['update'])

114 | logger.dumpkvs()

115 | if self.agent.rollout.stats['tcount'] > self.num_timesteps:

116 | break

117 |

118 | self.agent.stop_interaction()

119 |

120 |

121 | def make_env_all_params(rank, add_monitor, args):

122 | if args["env_kind"] == 'atari':

123 | env = gym.make(args['env'])

124 | assert 'NoFrameskip' in env.spec.id

125 | env = NoopResetEnv(env, noop_max=args['noop_max'])

126 | env = MaxAndSkipEnv(env, skip=4)

127 | env = ProcessFrame84(env, crop=False)

128 | env = FrameStack(env, 4)

129 | env = ExtraTimeLimit(env, args['max_episode_steps'])

130 | if 'Montezuma' in args['env']:

131 | env = MontezumaInfoWrapper(env)

132 | env = AddRandomStateToInfo(env)

133 | elif args["env_kind"] == 'mario':

134 | env = make_mario_env()

135 | elif args["env_kind"] == "retro_multi":

136 | env = make_multi_pong()

137 | elif args["env_kind"] == 'robopong':

138 | if args["env"] == "pong":

139 | env = make_robo_pong()

140 | elif args["env"] == "hockey":

141 | env = make_robo_hockey()

142 |

143 | if add_monitor:

144 | env = Monitor(env, osp.join(logger.get_dir(), '%.2i' % rank))

145 | return env

146 |

147 |

148 | def get_experiment_environment(**args):

149 | from utils import setup_mpi_gpus, setup_tensorflow_session

150 | from baselines.common import set_global_seeds

151 | from gym.utils.seeding import hash_seed

152 | process_seed = args["seed"] + 1000 * MPI.COMM_WORLD.Get_rank()

153 | process_seed = hash_seed(process_seed, max_bytes=4)

154 | set_global_seeds(process_seed)

155 | setup_mpi_gpus()

156 |

157 | logger_context = logger.scoped_configure(dir=None,

158 | format_strs=['stdout', 'log',

159 | 'csv'] if MPI.COMM_WORLD.Get_rank() == 0 else ['log'])

160 | tf_context = setup_tensorflow_session()

161 | return logger_context, tf_context

162 |

163 |

164 | def add_environments_params(parser):

165 | parser.add_argument('--env', help='environment ID', default='BreakoutNoFrameskip-v4',

166 | type=str)

167 | parser.add_argument('--max-episode-steps', help='maximum number of timesteps for episode', default=4500, type=int)

168 | parser.add_argument('--env_kind', type=str, default="atari")

169 | parser.add_argument('--noop_max', type=int, default=30)

170 |

171 |

172 | def add_optimization_params(parser):

173 | parser.add_argument('--lambda', type=float, default=0.95)

174 | parser.add_argument('--gamma', type=float, default=0.99)

175 | parser.add_argument('--nminibatches', type=int, default=8)

176 | parser.add_argument('--norm_adv', type=int, default=1)

177 | parser.add_argument('--norm_rew', type=int, default=1)

178 | parser.add_argument('--lr', type=float, default=1e-4)

179 | parser.add_argument('--ent_coeff', type=float, default=0.001)

180 | parser.add_argument('--nepochs', type=int, default=3)

181 | parser.add_argument('--num_timesteps', type=int, default=int(1e8))

182 |

183 |

184 | def add_rollout_params(parser):

185 | parser.add_argument('--nsteps_per_seg', type=int, default=128)

186 | parser.add_argument('--nsegs_per_env', type=int, default=1)

187 | parser.add_argument('--envs_per_process', type=int, default=128)

188 | parser.add_argument('--nlumps', type=int, default=1)

189 |

190 |

191 | if __name__ == '__main__':

192 | import argparse

193 |

194 | parser = argparse.ArgumentParser(formatter_class=argparse.ArgumentDefaultsHelpFormatter)

195 | add_environments_params(parser)

196 | add_optimization_params(parser)

197 | add_rollout_params(parser)

198 |

199 | parser.add_argument('--exp_name', type=str, default='')

200 | parser.add_argument('--seed', help='RNG seed', type=int, default=0)

201 | parser.add_argument('--dyn_from_pixels', type=int, default=0)

202 | parser.add_argument('--use_news', type=int, default=0)

203 | parser.add_argument('--ext_coeff', type=float, default=0.)

204 | parser.add_argument('--int_coeff', type=float, default=1.)

205 | parser.add_argument('--layernorm', type=int, default=0)

206 | parser.add_argument('--feat_learning', type=str, default="none",

207 | choices=["none", "idf", "vaesph", "vaenonsph", "pix2pix"])

208 |

209 | args = parser.parse_args()

210 |

211 | start_experiment(**args.__dict__)

212 |
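`make_env_all_params` reads `args['max_episode_steps']` even though the flag is declared as `--max-episode-steps`. This works because `argparse` derives the destination name from the long option and replaces dashes with underscores. As a result, dashed and underscored flags end up side by side in `args.__dict__`. The stdlib-only sketch below checks this with a trimmed, hypothetical copy of `add_environments_params`.

```python
import argparse

def add_environments_params(parser):
    # Trimmed copy of the run.py helper: one dashed flag, one underscored flag
    parser.add_argument('--max-episode-steps', default=4500, type=int)
    parser.add_argument('--env_kind', type=str, default="atari")

parser = argparse.ArgumentParser()
add_environments_params(parser)
args = vars(parser.parse_args(['--max-episode-steps', '27000', '--env_kind', 'mario']))
print(args)  # {'max_episode_steps': 27000, 'env_kind': 'mario'}
```

`vars(namespace)` returns the same dictionary as `args.__dict__`, which `start_experiment(**args.__dict__)` unpacks.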

--------------------------------------------------------------------------------

/roboenvs/joint_hockey.py:

--------------------------------------------------------------------------------

1 | # Make a basic version of hockey, run it with random agent.

2 |

3 | import os

4 | import sys

5 |

6 | import gym

7 | import gym.spaces

8 | import gym.utils

9 | import gym.utils.seeding

10 | import numpy as np

11 | import roboschool

12 | from roboschool.scene_abstract import Scene, cpp_household

13 |

14 |

15 | class HockeyScene(Scene):

16 | # multiplayer = False

17 | # players_count = 1

18 | VIDEO_W = 84

19 | VIDEO_H = 84

20 | TIMEOUT = 300

21 |

22 | def __init__(self):

23 | Scene.__init__(self, gravity=9.8, timestep=0.0165 / 4, frame_skip=8)

24 | self.score_left = 0

25 | self.score_right = 0

26 |

27 | def actor_introduce(self, robot):

28 | i = robot.player_n - 1

29 |

30 | def episode_restart(self):

31 | Scene.episode_restart(self)

32 | if self.score_right + self.score_left > 0:

33 | sys.stdout.write("%i:%i " % (self.score_left, self.score_right))

34 | sys.stdout.flush()

35 | self.mjcf = self.cpp_world.load_mjcf(os.path.join(os.path.dirname(__file__), "roboschool_hockey.xml"))

36 | dump = 0

37 | for r in self.mjcf:

38 | if dump: print("ROBOT '%s'" % r.root_part.name)

39 | for part in r.parts:

40 | if dump: print("\tPART '%s'" % part.name)

41 | # if part.name==self.robot_name:

42 | for j in r.joints:

43 | if j.name == "p0x": self.p0x = j

44 | if j.name == "p0y": self.p0y = j

45 | if j.name == "p1x": self.p1x = j

46 | if j.name == "p1y": self.p1y = j

47 | if j.name == "ballx": self.ballx = j

48 | if j.name == "bally": self.bally = j

49 | self.ballx.set_motor_torque(0.0)

50 | self.bally.set_motor_torque(0.0)

51 | for r in self.mjcf:

52 | r.query_position()

53 | fpose = cpp_household.Pose()

54 | fpose.set_xyz(0, 0, -0.04)

55 | self.field = self.cpp_world.load_thingy(

56 | os.path.join(os.path.dirname(roboschool.__file__), "models_outdoor/stadium/pong1.obj"),

57 | fpose, 1.0, 0, 0xFFFFFF, True)

58 | self.camera = self.cpp_world.new_camera_free_float(self.VIDEO_W, self.VIDEO_H, "video_camera")

59 | self.camera_itertia = 0

60 | self.frame = 0

61 | self.jstate_for_frame = -1

62 | self.score_left = 0

63 | self.score_right = 0

64 | self.bounce_n = 0

65 | self.restart_from_center(self.np_random.randint(2) == 0)

66 |

67 | def restart_from_center(self, leftwards):

68 | self.ballx.reset_current_position(0, 0)

69 | self.bally.reset_current_position(0, 0)

70 | self.timeout = self.TIMEOUT

71 | self.timeout_dir = (-1 if leftwards else +1)

72 | # self.bounce_n = 0

73 | self.ball_x, ball_vx = self.ballx.current_position()

74 | self.ball_y, ball_vy = self.bally.current_position()

75 |

76 | def global_step(self):

77 | self.frame += 1

78 |

79 | # if not self.multiplayer:

80 | # # Trainer

81 | # self.p1x.set_servo_target( self.trainer_x, 0.02, 0.02, 4 )

82 | # self.p1y.set_servo_target( self.trainer_y, 0.02, 0.02, 4 )

83 |

84 | Scene.global_step(self)

85 |

86 | self.ball_x, ball_vx = self.ballx.current_position()

87 | self.ball_y, ball_vy = self.bally.current_position()

88 |

89 | if np.abs(self.ball_y) > 1.0 and self.ball_y * ball_vy > 0:

90 | self.bally.reset_current_position(self.ball_y, -ball_vy)

91 |

92 | if ball_vx * self.timeout_dir < 0:

93 | # if self.timeout_dir < 0:

94 | # self.score_left += 0.00*np.abs(ball_vx) # hint for early learning: hit the ball!

95 | # else:

96 | # self.score_right += 0.00*np.abs(ball_vx)

97 | self.timeout_dir *= -1

98 | self.timeout = self.TIMEOUT

99 | self.bounce_n += 1

100 |

101 | def global_state(self):

102 | if self.frame == self.jstate_for_frame:

103 | return self.jstate

104 | self.jstate_for_frame = self.frame

105 | j = np.array(

106 | [j.current_relative_position() for j in [self.p0x, self.p0y, self.p1x, self.p1y, self.ballx, self.bally]]

107 | ).flatten()

108 | self.jstate = np.concatenate([j, [(self.timeout - self.TIMEOUT) / self.TIMEOUT]])

109 | return self.jstate

110 |

111 | def HUD(self, a, s):

112 | self.cpp_world.test_window_history_advance()

113 | self.cpp_world.test_window_observations(s.tolist())

114 | self.cpp_world.test_window_actions(a[:2].tolist())

115 | s = "%04i TIMEOUT%3i %0.2f:%0.2f" % (

116 | self.frame, self.timeout, self.score_left, self.score_right

117 | )

118 |

119 | def camera_adjust(self):

120 | "The first three arguments set the camera position; the last three set the point the camera looks at."

121 | self.camera.move_and_look_at(0.1, -0.1, 1.9, 0.1, 0.2, 0)

122 |

123 |

124 | class RoboschoolHockeyJoint(gym.Env):

125 | VIDEO_W = 84

126 | VIDEO_H = 84

127 |

128 | def __init__(self):

129 | self.player_n = 0

130 | self.scene = None

131 | action_dim = 4

132 | # obs_dim = 13

133 | high = np.ones([action_dim])

134 | self.action_space = gym.spaces.Box(-high, high)

135 | self.observation_space = gym.spaces.Box(low=0, high=255,

136 | shape=(self.VIDEO_W, self.VIDEO_H, 3), dtype=np.uint8)

137 | self._seed()

138 |

139 | def create_single_player_scene(self):

140 | self.player_n = 0

141 | s = HockeyScene()

142 | s.np_random = self.np_random

143 | return s

144 |

145 | def _seed(self, seed=None):

146 | self.np_random, seed = gym.utils.seeding.np_random(seed)

147 | return [seed]

148 |

149 | def reset(self):

150 | if self.scene is None:

151 | self.scene = self.create_single_player_scene()

152 | self.scene.episode_restart()

153 | s = self.calc_state()

154 | self.score_reported = 0

155 | obs = self.render("rgb_array")

156 | return obs

157 |

158 | def calc_state(self):

159 | j = self.scene.global_state()

160 | if self.player_n == 1:

161 | # [ 0,1, 2,3, 4, 5, 6,7, 8,9,10,11,12]

162 | # [p0x,v,p0y,v, p1x,v,p1y,v, bx,v,by,v, T]

163 | signflip = np.array([-1, -1, 1, 1, -1, -1, 1, 1, -1, -1, 1, 1, 1])

164 | reorder = np.array([4, 5, 6, 7, 0, 1, 2, 3, 8, 9, 10, 11, 12])

165 | j = (j * signflip)[reorder]

166 | return j

167 |

168 | def apply_action(self, a0, a1):

169 | assert (np.isfinite(a0).all())

170 | assert (np.isfinite(a1).all())

171 | a0 = np.clip(a0, -1, +1)

172 | a1 = np.clip(a1, -1, +1)

173 | self.scene.p0x.set_target_speed(3 * float(a0[0]), 0.05, 7)

174 | self.scene.p0y.set_target_speed(3 * float(a0[1]), 0.05, 7)

175 | self.scene.p1x.set_target_speed(-3 * float(a1[0]), 0.05, 7)

176 | self.scene.p1y.set_target_speed(3 * float(a1[1]), 0.05, 7)

177 |

178 |     def step(self, a):
179 |         a0 = a[:2]  # player 0: (x, y) speed targets
180 |         a1 = a[2:]  # player 1: (x, y) speed targets
181 |         self.apply_action(a0, a1)
182 |         self.scene.global_step()
183 |         state = self.calc_state()
184 |         self.scene.HUD(a, state)
185 |         new_score = self.scene.bounce_n
186 |         # new_score = int(new_score)
187 |         self.rewards = new_score - self.score_reported  # reward: increase in scene.bounce_n since the previous step
188 |         self.score_reported = new_score
189 |         if (self.scene.score_left > 10) or (self.scene.score_right > 10):
190 |             done = True
191 |         else:
192 |             done = False
193 |         obs = self.render("rgb_array")
194 |         return obs, self.rewards, done, {}
195 |
196 |     def render(self, mode):
197 |         if mode == "human":
198 |             return self.scene.cpp_world.test_window()
199 |         elif mode == "rgb_array":
200 |             self.scene.camera_adjust()
201 |             rgb, _, _, _, _ = self.scene.camera.render(False, False,
202 |                                                        False)  # render_depth, render_labeling, print_timing
203 |             rendered_rgb = np.frombuffer(rgb, dtype=np.uint8).reshape((self.VIDEO_H, self.VIDEO_W, 3)).copy()
204 |             return rendered_rgb
205 |         else:
206 |             raise ValueError("unsupported render mode: %r" % mode)
207 |
208 |
209 | class MultiDiscreteToUsual(gym.ActionWrapper):
210 |     def __init__(self, env):
211 |         gym.ActionWrapper.__init__(self, env)
212 |
213 |         self._inp_act_size = self.env.action_space.nvec
214 |         self.action_space = gym.spaces.Discrete(np.prod(self._inp_act_size))
215 |
216 |     def action(self, a):
217 |         vec = np.zeros(dtype=np.int8, shape=self._inp_act_size.shape)
218 |         for i, n in enumerate(self._inp_act_size):
219 |             vec[i] = a % n  # mixed-radix decode, least-significant digit first
220 |             a //= n  # integer division; "/=" would turn a into a float in Python 3
221 |         return vec
222 |
223 |
224 | class DiscretizeActionWrapper(gym.ActionWrapper):
225 |     def __init__(self, env=None, nsamples=11):
226 |         super().__init__(env)
227 |         assert isinstance(env.action_space, gym.spaces.Box)
228 |         self._dist_to_cont = []
229 |         for low, high in zip(env.action_space.low, env.action_space.high):
230 |             self._dist_to_cont.append(np.linspace(low, high, nsamples))
231 |         temp = [nsamples for _ in self._dist_to_cont]
232 |         self.action_space = gym.spaces.MultiDiscrete(temp)
233 |
234 |     def action(self, action):
235 |         assert len(action) == len(self._dist_to_cont)
236 |         return np.array([m[a] for a, m in zip(action, self._dist_to_cont)], dtype=np.float32)
237 |
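The two action wrappers in this file compose: `DiscretizeActionWrapper` replaces each continuous `Box` dimension with `nsamples` evenly spaced values (a `MultiDiscrete` space), and `MultiDiscreteToUsual` collapses a `MultiDiscrete` space into one `Discrete` index by mixed-radix decoding. The sketch below reproduces that arithmetic with plain NumPy so it can be checked without Roboschool or gym. The helper names `decode_flat_action`, `encode_multi_discrete` and `indices_to_continuous` are hypothetical, and the stacking order (discretize first, then flatten) is an assumption inferred from the wrappers' input and output spaces, not something this file states.

```python
import numpy as np


def decode_flat_action(a, nvec):
    """Flat Discrete index -> MultiDiscrete bin vector (mixed radix, least-significant digit first).

    Same loop shape as MultiDiscreteToUsual.action; `a` must stay an integer,
    so the update uses floor division.
    """
    vec = np.zeros(len(nvec), dtype=np.int64)
    for i, n in enumerate(nvec):
        vec[i] = a % n
        a //= n
    return vec


def encode_multi_discrete(vec, nvec):
    """Inverse of decode_flat_action, via Horner's rule from the most significant digit."""
    a = 0
    for v, n in zip(reversed(vec), reversed(nvec)):
        a = a * n + int(v)
    return a


def indices_to_continuous(vec, low, high, nsamples=11):
    """Bin indices -> continuous action, one np.linspace grid per Box dimension
    (the same lookup DiscretizeActionWrapper.action performs)."""
    grids = [np.linspace(lo, hi, nsamples) for lo, hi in zip(low, high)]
    return np.array([grid[i] for i, grid in zip(vec, grids)], dtype=np.float32)


if __name__ == "__main__":
    nvec = [11, 11, 11, 11]  # assumed: 4 hockey action dims with the default nsamples=11
    bins = decode_flat_action(115, nvec)
    print(bins, encode_multi_discrete(bins, nvec))
    print(indices_to_continuous(bins, [-1.0] * 4, [1.0] * 4))
```

With `action_dim = 4` and the default `nsamples=11`, the flattened space has 11**4 = 14641 actions; flat index 115 decodes to bins [5, 10, 0, 0], which map to the continuous action [0.0, 1.0, -1.0, -1.0].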

--------------------------------------------------------------------------------

/roboenvs/joint_pong.py:

--------------------------------------------------------------------------------

1 | # Make a basic version of pong, run it with random agent.
2 |
3 | import os
4 | import sys
5 |
6 | import gym
7 | import gym.spaces
8 | import gym.utils
9 | import gym.utils.seeding
10 | import numpy as np
11 | import roboschool
12 | from roboschool.scene_abstract import Scene, cpp_household
13 |
14 |
15 | class PongScene(Scene):
16 |     VIDEO_W = 84
17 |     VIDEO_H = 84
18 |     TIMEOUT = 300
19 |
20 |     def __init__(self):
21 |         Scene.__init__(self, gravity=9.8, timestep=0.0165 / 4, frame_skip=8)
22 |         self.score_left = 0
23 |         self.score_right = 0
24 |
25 |     def actor_introduce(self, robot):
26 |         i = robot.player_n - 1
27 |
28 |     def episode_restart(self):
29 |         Scene.episode_restart(self)
30 |         if self.score_right + self.score_left > 0:
31 |             sys.stdout.write("%i:%i " % (self.score_left, self.score_right))
32 |             sys.stdout.flush()
33 |         self.mjcf = self.cpp_world.load_mjcf(os.path.join(os.path.dirname(__file__), "roboschool_pong.xml"))
34 |         dump = 0
35 |         for r in self.mjcf:
36 |             if dump: print("ROBOT '%s'" % r.root_part.name)
37 |             for part in r.parts:
38 |                 if dump: print("\tPART '%s'" % part.name)
39 |                 # if part.name==self.robot_name:
40 |             for j in r.joints:
41 |                 if j.name == "p0x": self.p0x = j
42 |                 if j.name == "p0y": self.p0y = j
43 |                 if j.name == "p1x": self.p1x = j
44 |                 if j.name == "p1y": self.p1y = j
45 |                 if j.name == "ballx": self.ballx = j
46 |                 if j.name == "bally": self.bally = j
47 |         self.ballx.set_motor_torque(0.0)
48 |         self.bally.set_motor_torque(0.0)
49 |         for r in self.mjcf:
50 |             r.query_position()
51 |         fpose = cpp_household.Pose()
52 |         fpose.set_xyz(0, 0, -0.04)
53 |         self.field = self.cpp_world.load_thingy(
54 |             os.path.join(os.path.dirname(roboschool.__file__), "models_outdoor/stadium/pong1.obj"),
55 |             fpose, 1.0, 0, 0xFFFFFF, True)
56 |         self.camera = self.cpp_world.new_camera_free_float(self.VIDEO_W, self.VIDEO_H, "video_camera")
57 |         self.camera_itertia = 0
58 |         self.frame = 0
59 |         self.jstate_for_frame = -1
60 |         self.score_left = 0
61 |         self.score_right = 0
62 |         self.bounce_n = 0
63 |         self.restart_from_center(self.np_random.randint(2) == 0)
64 |
65 |     def restart_from_center(self, leftwards):
66 |         self.ballx.reset_current_position(0, self.np_random.uniform(low=2.0, high=2.5) * (-1 if leftwards else +1))
67 |         self.bally.reset_current_position(self.np_random.uniform(low=-0.9, high=+0.9),
68 |                                           self.np_random.uniform(low=-2, high=+2))
69 |         self.timeout = self.TIMEOUT
70 |         self.timeout_dir = (-1 if leftwards else +1)
71 |         # self.bounce_n = 0
72 |         self.trainer_x = self.np_random.uniform(low=-0.9, high=+0.9)
73 |         self.trainer_y = self.np_random.uniform(low=-0.9, high=+0.9)
74 |
75 |         self.ball_x, ball_vx = self.ballx.current_position()
76 |         self.ball_y, ball_vy = self.bally.current_position()
77 |
78 |     def global_step(self):
79 |         self.frame += 1
80 |
81 |         Scene.global_step(self)
82 |
83 |         self.ball_x, ball_vx = self.ballx.current_position()
84 |         self.ball_y, ball_vy = self.bally.current_position()
85 |
86 |         if np.abs(self.ball_y) > 1.0 and self.ball_y * ball_vy > 0:
87 |             self.bally.reset_current_position(self.ball_y, -ball_vy)
88 |
89 |         if ball_vx * self.timeout_dir < 0:
90 |             # if self.timeout_dir < 0:
91 |             #     self.score_left += 0.00*np.abs(ball_vx)  # hint for early learning: hit the ball!
92 |             # else: