
--------------------------------------------------------------------------------

/src/jump_reward_inference.egg-info/PKG-INFO:

--------------------------------------------------------------------------------

1 | Metadata-Version: 2.1

2 | Name: jump-reward-inference

3 | Version: 0.0.8

4 | Summary: A package for fast real-time music joint rhythmic parameters tracking including beats, downbeats, tempo and meter using the BeatNet AI, a super compact 1D state space and the jump back reward technique

5 | Home-page: https://github.com/mjhydri/1D-StateSpace

6 | Author: Mojtaba Heydari

7 | Author-email: mheydari@ur.rochester.edu

8 | Keywords: Beat tracking,Downbeat tracking,meter detection,tempo tracking,1D state space,jump reward technique,efficient state space,

9 | License-File: LICENSE

10 | Requires-Dist: numpy

11 | Requires-Dist: Cython

12 | Requires-Dist: librosa>=0.8.0

13 | Requires-Dist: numba==0.54.1

14 | Requires-Dist: mido>=1.2.6

15 | Requires-Dist: pytest

16 | Requires-Dist: torch

17 | Requires-Dist: Matplotlib

18 | Requires-Dist: BeatNet>=0.0.4

19 | Requires-Dist: madmom

20 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2021 Mojtaba Heydari

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/src/jump_reward_inference/joint_tracker.py:

--------------------------------------------------------------------------------

1 | # Author: Mojtaba Heydari

2 |

3 |

4 | """

5 | This is the user script of the causal deterministic jump-back reward inference model on the proposed 1D state space.

6 |

7 | The model first transforms the waveform into the spectral domain and then feeds the resulting features into one of the pre-trained BeatNet models to obtain beat/downbeat activations.

8 | Finally, the activations are used in a jump-reward inference model to infer beats, downbeats, tempo, and meter.

9 |

10 | System input: raw audio waveform

11 |

12 | System output: a NumPy array whose columns are beat times (in seconds), beat types (1 = downbeat, 2 = beat), local tempo, and local meter, respectively; shape (num_beats, 4).

13 |

14 |

15 | References:

16 |

17 | M. Heydari, M. McCallum, A. Ehmann and Z. Duan, "A NOVEL 1D STATE SPACE FOR EFFICIENT MUSIC RHYTHMIC ANALYSIS", In Proc. IEEE Int. Conf. Acoust. Speech

18 | Signal Process. (ICASSP), 2022. #(Submitted)

19 |

20 | M. Heydari, F. Cwitkowitz, and Z. Duan, “BeatNet: CRNN and particle filtering for online joint beat down-beat and meter tracking,” in Proc. of the 22nd Intl.

21 | Conf. on Music Information Retrieval (ISMIR), 2021.

22 |

23 | """

24 | from jump_reward_inference.inference import inference_1D

25 | from BeatNet.BeatNet import BeatNet

26 |

27 |

28 | class joint_inference():

29 | def __init__(self, model, plot=False):

30 | self.activation_estimator = BeatNet(model)

31 | self.estimator = inference_1D(beats_per_bar=[], fps=50, plot=plot)

32 |

33 | def process(self, audio_path):

34 | preds = self.activation_estimator.activation_extractor_online(

35 | audio_path) # extracting the activations using the BeatNet neural network

36 | output = self.estimator.process(

37 | preds) # inferring online joint beat, downbeat, tempo and meter using the 1D state space and jump-back reward technique

38 | return output

39 |

40 |

--------------------------------------------------------------------------------

/src/jump_reward_inference/state_space_1D.py:

--------------------------------------------------------------------------------

1 | # Author: Mojtaba Heydari

2 |

3 |

4 | import numpy as np

5 |

6 |

7 | class state_space_1D:

8 | '''

9 | This class creates 1D state spaces for different music rhythmic hierarchies.

10 |

11 | M. Heydari, M. McCallum, A. Ehmann and Z. Duan, "A NOVEL 1D STATE SPACE FOR EFFICIENT MUSIC RHYTHMIC ANALYSIS", In Proc. IEEE Int. Conf. Acoust. Speech

12 | Signal Process. (ICASSP), 2022. #(Submitted)

13 | '''

14 |

15 | def __init__(self, min_interval, max_interval):

16 | self.min_interval = int(np.round(min_interval))

17 | self.max_interval = int(np.round(max_interval))

18 | self.first_states = np.array([0])

19 | self.last_states = np.array([self.max_interval-1])

20 | self.num_states = self.max_interval

21 | self.state_intervals = np.array([self.max_interval] * self.max_interval)

22 | self.state_positions = np.linspace(0, 1, self.num_states, endpoint=False)

23 |

24 |

25 | class beat_state_space_1D(state_space_1D):

26 |

27 | def __init__(self, alpha=0.01, tempo=None, fps=None, min_interval=None, max_interval=None):

28 | super().__init__(min_interval, max_interval)

29 | self.jump_weights = np.concatenate((np.zeros(self.min_interval), np.array([alpha] * (self.max_interval - self.min_interval)),))

30 | if tempo and fps:

31 | self.jump_weights[round(60. * fps / tempo) - 1] = 1 - alpha # index j corresponds to a beat interval of j + 1 frames

32 |

33 |

34 | class downbeat_state_space_1D(state_space_1D):

35 |

36 | def __init__(self, alpha=0.1, meter=None, min_beats_per_bar=None, max_beats_per_bar=None):

37 | super().__init__(min_beats_per_bar, max_beats_per_bar)

38 | self.jump_weights = np.concatenate((np.zeros(self.min_interval-1), np.array([alpha] * (self.max_interval - self.min_interval+1)),))

39 | if meter:

40 | self.jump_weights[meter[0] - 1] = 1 - alpha # index j corresponds to a bar of j + 1 beats

41 | pass

42 |

43 |
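As a rough illustration of how `beat_state_space_1D` lays out its jump weights, the sketch below rebuilds the vector with plain NumPy. `initial_beat_weights` is a hypothetical helper, not part of the package, and it assumes the convention that weight index `j` stands for an interval of `j + 1` frames, which is how `inference.py` converts the weights back to tempo (`fps * 60 / (argmax + 1)`). The 55 to 215 BPM range and 50 fps are the defaults found in `inference.py` and `joint_tracker.py`.

```python
import numpy as np


def initial_beat_weights(min_interval, max_interval, alpha=0.01, tempo=None, fps=None):
    """Hypothetical re-creation of the beat jump-weight vector.

    The first min_interval states get weight 0 (no jump back allowed);
    the remaining states start at alpha. An optional tempo prior is placed at
    index interval - 1 (assumed convention: index j <-> interval of j + 1 frames).
    """
    weights = np.concatenate((np.zeros(min_interval),
                              np.full(max_interval - min_interval, alpha)))
    if tempo and fps:
        weights[round(60.0 * fps / tempo) - 1] = 1 - alpha
    return weights


fps = 50
min_interval = round(60.0 * fps / 215)  # fastest tempo, 215 BPM -> 14 frames
max_interval = round(60.0 * fps / 55)   # slowest tempo, 55 BPM -> 55 frames
w = initial_beat_weights(min_interval, max_interval, tempo=100, fps=fps)

print(len(w), int(np.argmax(w)))                  # 55 29
print(round(fps * 60 / (int(np.argmax(w)) + 1)))  # 100
```

Reading the tempo back through the same `argmax + 1` rule recovers the 100 BPM prior, which is the property the index convention is meant to guarantee.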

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | """

2 | Created on 10-29-21 by Mojtaba Heydari

3 |

4 | """

5 |

6 |

7 | # Local imports

8 | # None.

9 |

10 | # Third party imports

11 | # None.

12 |

13 | # Python standard library imports

14 | import setuptools

15 | from setuptools import find_packages

16 | import distutils.cmd

17 |

18 |

19 | # Required packages

20 | REQUIRED_PACKAGES = [

21 | 'numpy',

22 | 'Cython',

23 | 'librosa>=0.8.0',

24 | 'numba==0.54.1',

25 | 'mido>=1.2.6',

26 | 'pytest',

27 | #'pyaudio',

28 | ##'pyfftw',

29 | 'torch',

30 | 'Matplotlib',

31 | 'BeatNet>=0.0.4',

32 | 'madmom',

33 | ]

34 |

35 |

36 | class MakeReqsCommand(distutils.cmd.Command):

37 | """A custom command to export requirements to a requirements.txt file."""

38 |

39 | description = 'Export requirements to a requirements.txt file.'

40 | user_options = []

41 |

42 | def initialize_options(self):

43 | """Set default values for options."""

44 | pass

45 |

46 | def finalize_options(self):

47 | """Post-process options."""

48 | pass

49 |

50 | def run(self):

51 | """Run command."""

52 | with open('./requirements.txt', 'w') as f:

53 | for req in REQUIRED_PACKAGES:

54 | f.write(req)

55 | f.write('\n')

56 |

57 |

58 |

59 | setuptools.setup(

60 | cmdclass={

61 | 'make_reqs': MakeReqsCommand

62 | },

63 |

64 | # Package details

65 | name="jump_reward_inference",

66 | version="0.0.8",

67 | package_dir={"": "src"},

68 | packages=find_packages(where="src"),

69 | # packages=find_packages(),

70 | include_package_data=True,

71 | install_requires=REQUIRED_PACKAGES,

72 |

73 | # Metadata to display on PyPI

74 | author="Mojtaba Heydari",

75 | author_email="mheydari@ur.rochester.edu",

76 | description="A package for fast real-time music joint rhythmic parameters tracking including beats, downbeats, tempo and meter using the BeatNet AI, a super compact 1D state space and the jump back reward technique",

77 | keywords="Beat tracking, Downbeat tracking, meter detection, tempo tracking, 1D state space, jump reward technique, efficient state space, ",

78 | url="https://github.com/mjhydri/1D-StateSpace"

79 |

80 |

81 | # CLI - not developed yet

82 | #entry_points = {

83 | # 'console_scripts': ['beatnet=beatnet.cli:main']

84 | #}

85 | )

86 |

--------------------------------------------------------------------------------


/README.md:

--------------------------------------------------------------------------------

1 | # A Novel 1D State Space for Efficient Music Rhythmic Analysis

2 |

3 | An implementation of the probabilistic jump-reward semi-Markov inference model for music rhythmic analysis, leveraging the proposed 1D state space.

4 |

5 | [PyPI](https://pypi.org/project/jump-reward-inference/)

6 | [![CC BY 4.0][cc-by-shield]][cc-by]

7 | [Downloads](https://pepy.tech/project/jump-reward-inference)

8 | [arXiv](https://arxiv.org/abs/2111.00704)

9 |

10 | [cc-by]: http://creativecommons.org/licenses/by/4.0/

11 | [cc-by-image]: https://i.creativecommons.org/l/by/4.0/88x31.png

12 | [cc-by-shield]: https://img.shields.io/badge/License-CC%20BY%204.0-lightgrey.svg

13 |

14 | [Papers with Code: online beat tracking on GTZAN](https://paperswithcode.com/sota/online-beat-tracking-on-gtzan?p=a-novel-1d-state-space-for-efficient-music)

15 |

16 | This repository contains the source code and demo videos of a joint music rhythmic analyzer that uses the 1D state space and jump-back reward technique proposed at ICASSP 2022. The implementation tracks music beats, downbeats, tempo, and meter jointly and in a causal fashion.

17 |

18 | The model first transforms the waveform into the spectral domain and then feeds the resulting features into one of the pre-trained BeatNet models to obtain beat/downbeat activations.

19 | Finally, the activations are used in a jump-reward inference model to infer beats, downbeats, tempo, and meter.

20 |

21 |

22 | System Input:

23 | -------------

24 | Raw audio waveform

25 |

26 | System Output:

27 | --------------

28 | A NumPy array of shape (num_beats, 4) whose columns are, respectively, beat times (in seconds), beat types (1 = downbeat, 2 = beat), local tempo, and local meter.
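A minimal sketch of unpacking the output array, assuming the column layout written by `inference_1D.process` (time in seconds, type 1 for downbeats and 2 for other beats, local tempo, local meter). The helper name `split_beats` and the example values are hypothetical and are not real system results.

```python
import numpy as np


def split_beats(output):
    """Split a (num_beats, 4) output array into named columns (hypothetical helper)."""
    output = np.asarray(output, dtype=float)
    times = output[:, 0]               # event times in seconds
    is_downbeat = output[:, 1] == 1    # 1 = downbeat, 2 = other beat
    return {
        "beats": times,
        "downbeats": times[is_downbeat],
        "tempo": output[:, 2],         # local tempo in BPM
        "meter": output[:, 3].astype(int),  # local beats per bar
    }


# Made-up output for illustration only.
example = np.array([
    [4.50, 1, 120, 4],
    [5.00, 2, 120, 4],
    [5.50, 2, 120, 4],
    [6.00, 2, 120, 4],
    [6.50, 1, 120, 4],
])
parts = split_beats(example)
print(parts["downbeats"])  # [4.5 6.5]
```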

29 |

30 | Installation Command:

31 | ---------------------

32 | Approach #1: Installing the package from PyPI:

33 | ```

34 | pip install jump-reward-inference

35 | ```

36 |

37 | Approach #2: Installing directly from the Git repository:

38 | ```

39 | pip install git+https://github.com/mjhydri/1D-StateSpace

40 | ```

41 | * Note that with either approach, all dependencies and required packages are installed automatically except PyAudio, which cannot be installed that way. PyAudio is a Python binding for PortAudio that handles audio streaming.

42 |

43 | If PyAudio is not installed on your machine, download an appropriate wheel for your machine from *[here](https://www.lfd.uci.edu/~gohlke/pythonlibs/)*. Then navigate to the file location in a command line and use the following command to install the wheel file locally:

44 | ```

45 | pip install <PyAudio wheel file name>

46 | ```

47 | Usage Example:

48 | --------------

49 | ```

50 | from jump_reward_inference.joint_tracker import joint_inference

51 |

52 |

53 | estimator = joint_inference(1, plot=True)

54 |

55 | output = estimator.process("path/to/music_file")

56 | ```

57 |

58 | Video Tutorial:

59 | ------------

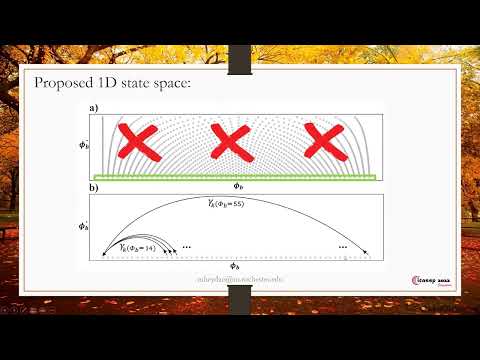

60 | 1: In this tutorial, we explain the proposed 1D state space and the mechanism of the jump-back reward technique.

61 |

62 | [Video tutorial](https://youtu.be/LMdJdrLGEXo)
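The deterministic motion of the 1D state space can be sketched in a few lines of NumPy. This is a simplified illustration, not the package's code: the hypothetical `step` function moves all probability mass one state forward per frame, except the mass in states with a jump-back weight, which returns to state 0 (the beat state). With a single full weight at index 3, a beat recurs every 4 frames, mirroring the `argmax + 1` interval rule used in `inference.py`.

```python
import numpy as np


def step(belief, jump_weights):
    """One causal transition of a 1D state space (simplified sketch).

    Mass in state j jumps back to state 0 with weight jump_weights[j];
    the rest moves forward to state j + 1 (np.roll wraps the last state to 0).
    """
    jumped = np.sum(belief * jump_weights)           # mass returning to the beat state
    moved = np.roll(belief * (1 - jump_weights), 1)  # mass advancing one state
    moved[0] += jumped
    return moved


num_states = 8
weights = np.zeros(num_states)
weights[3] = 1.0  # hypothetical: always jump back from state 3

belief = np.zeros(num_states)
belief[0] = 1.0   # start on a beat

beat_frames = []
for frame in range(1, 13):
    belief = step(belief, weights)
    if np.argmax(belief) == 0:  # mass is back in the beat state
        beat_frames.append(frame)

print(beat_frames)  # [4, 8, 12]
```

At 50 fps, the same rule would turn a peak at index `j` into a local tempo of `round(50 * 60 / (j + 1))` BPM.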

63 |

64 | Video Demos:

65 | ------------

66 |

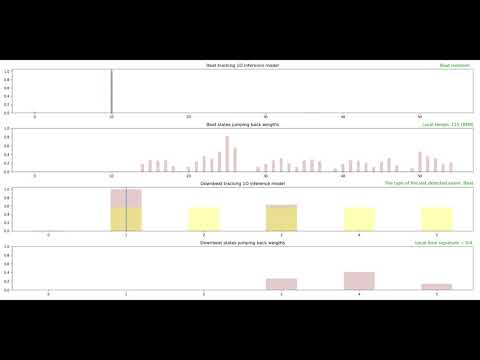

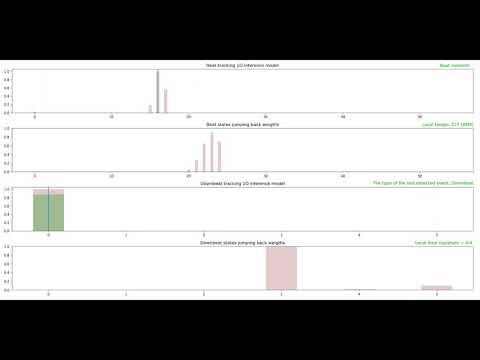

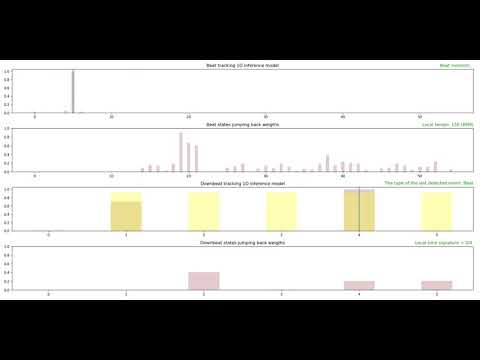

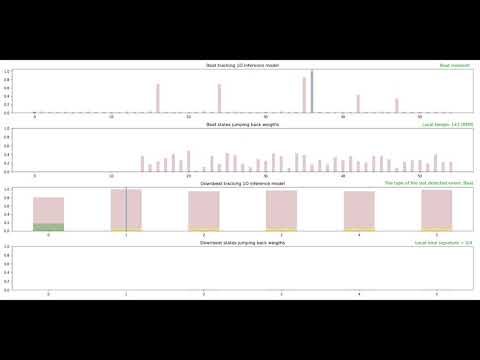

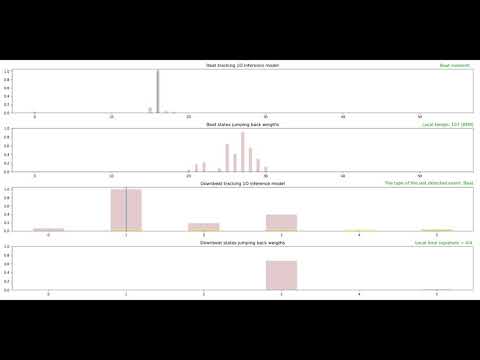

67 | This section demonstrates the system performance for several music genres. Each demo comprises four plots that are described as follows:

68 |

69 | * The first plot: 1D state space for music beat and tempo tracking. Each bar represents the posterior probability of the corresponding state at each time frame.

70 | * The second plot: The jump-back reward vector for the corresponding beat states.

71 | * The third plot: 1D state space for music downbeat and meter tracking.

72 | * The fourth plot: The jump-back reward vector for the corresponding downbeat states.
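The tempo and meter shown in the plots are read directly from the jump-back weight vectors. The sketch below reproduces the two argmax rules found in `inference.py` (`round(fps * 60 / (argmax + 1))` for tempo and `argmax + 1` for meter); the function name and the example weight vectors are hypothetical, and 50 fps is assumed because that is the frame rate `joint_tracker.py` passes to `inference_1D`.

```python
import numpy as np


def read_tempo_and_meter(beat_weights, down_weights, fps=50):
    """Read local tempo (BPM) and meter from jump-back weight vectors.

    The beat weight at index j stands for an interval of j + 1 frames,
    and the downbeat weight at index j stands for a bar of j + 1 beats.
    """
    beat_interval = int(np.argmax(beat_weights)) + 1  # frames per beat
    tempo = round(fps * 60 / beat_interval)           # beats per minute
    meter = int(np.argmax(down_weights)) + 1          # beats per bar
    return tempo, meter


# Hypothetical weights: peak at index 24 (25 frames per beat at 50 fps)
beat_w = np.zeros(55)
beat_w[24] = 0.9
down_w = np.array([0.0, 0.05, 0.1, 0.8])  # peak at index 3 -> 4 beats per bar

print(read_tempo_and_meter(beat_w, down_w))  # (120, 4)
```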

73 |

74 |

75 |

76 | 1: Music Genre: Pop

77 |

78 | [Demo: Pop](https://youtu.be/YXGzvLe6bSQ)

79 |

80 |

81 |

82 | 2: Music Genre: Country

83 |

84 | [Demo: Country](https://youtu.be/-9Lwirn6YAI)

85 |

86 |

87 |

88 | 3: Music Genre: Reggae

89 |

90 | [Demo: Reggae](https://youtu.be/VnDBmXWemPI)

91 |

92 |

93 |

94 | 4: Music Genre: Blues

95 |

96 | [Demo: Blues](https://youtu.be/CcUe3P0Y9BM)

97 |

98 |

99 |

100 | 5: Music Genre: Classical

101 |

102 | [Demo: Classical](https://youtu.be/fl2ErbGrbyo)

103 |

104 |

105 | Demos Discussion:

106 | -----------------

107 | 1- As the demo videos suggest, the system infers multiple music rhythmic parameters, including beat, downbeat, tempo, and meter, jointly and in an online fashion using very compact 1D state spaces and the jump-back reward technique. The system handles a variety of music genres. However, the task is relatively more straightforward for some genres, such as pop and country, due to their rich percussive content, strong attacks, and simpler rhythmic structures. In contrast, it is more challenging for genres with a weak percussive profile, longer attack times, and more complex rhythmic structures, such as classical music.

108 |

109 | 2- Since both the neural network and the inference model are designed for online/real-time applications, causality constraints apply and future data is not accessible. As a result, the jump-back weights are weak initially and become stronger over time.

110 |

111 | 3- Because inferring higher hierarchies, i.e., downbeat and meter, requires a longer listening time, downbeat results within the first few seconds are less confident than those of lower hierarchies, i.e., beat and tempo. However, they become accurate once a bar period has been observed.

112 |

113 | Acknowledgement

114 | ---------------

115 | Many thanks to the Pandora/SiriusXM Inc. research team for granting permission to publish the project's source code. The [Librosa](https://github.com/librosa/librosa) and [Madmom](https://github.com/CPJKU/madmom) libraries are used to load the raw audio and to extract the input features, respectively. Many thanks for their great work. This work has been partially supported by the National Science Foundation grant 1846184.

116 |

117 | *[arXiv 2111.00704](https://arxiv.org/abs/2111.00704)*

118 |

119 | References:

120 | -----------

121 | M. Heydari, M. McCallum, A. Ehmann and Z. Duan, "A Novel 1D State Space for Efficient Music Rhythmic Analysis", In Proc. IEEE Int. Conf. Acoust. Speech

122 | Signal Process. (ICASSP), 2022.

123 |

124 | M. Heydari, F. Cwitkowitz, and Z. Duan, “BeatNet: CRNN and particle filtering for online joint beat down-beat and meter tracking,” in Proc. of the 22nd Intl.

125 | Conf. on Music Information Retrieval (ISMIR), 2021.

126 |

127 | M. Heydari and Z. Duan, “Don’t Look Back: An online beat tracking method using RNN and enhanced particle filtering,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), 2021.

128 |

--------------------------------------------------------------------------------

/src/jump_reward_inference/inference.py:

--------------------------------------------------------------------------------

1 | # Author: Mojtaba Heydari

2 |

3 |

4 | import numpy as np

5 | import matplotlib.pyplot as plt

6 | from jump_reward_inference.state_space_1D import beat_state_space_1D, downbeat_state_space_1D

7 |

8 |

9 | class BDObservationModel:

10 | """

11 | Observation model for beat and downbeat tracking with particle filtering.

12 |

13 | Based on the first character of observation_lambda, each (down-)beat period is split into (down-)beat states:

14 | "B" stands for the border model, which classifies a 1/(observation lambda) fraction of the states as downbeat states and

15 | the rest as beat states (if it is used for the downbeat tracking state space), or the same fraction of states

16 | as beat states and the rest as non-beat states (if it is used for the beat tracking state space).

17 | "N" model assigns a constant number of the beginning states as downbeat states and the rest as beat states,

18 | or the beginning states as beat states and the rest as non-beat states.

19 | "G" model is a smooth Gaussian transition (soft border) between downbeat/beat or beat/non-beat states.

20 |

21 |

22 | Parameters

23 | ----------

24 | state_space : 1D state space instance

25 | beat_state_space_1D or downbeat_state_space_1D instance.

26 | observation_lambda : str

27 | Model type ("B", "N" or "G") followed by its numeric parameter, e.g. "B56".

28 | References:

29 | ----------

30 |

31 | M. Heydari and Z. Duan, “Don’t Look Back: An online beat tracking method using RNN and enhanced

32 | particle filtering,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), 2021.

33 |

34 | M. Heydari, F. Cwitkowitz, and Z. Duan, “BeatNet: CRNN and particle filtering for online joint beat down-beat

35 | and meter tracking,” in Proc. of the 22nd Intl. Conf. on Music Information Retrieval (ISMIR), 2021.

36 |

37 |

38 |

39 |

40 |

41 |

42 | """

43 |

44 | def __init__(self, state_space, observation_lambda):

45 |

46 | if observation_lambda[0] == 'B': # Observation model for the rigid boundaries

47 | observation_lambda = int(observation_lambda[1:])

48 | # compute observation pointers

49 | # always point to the non-beat densities

50 | pointers = np.zeros(state_space.num_states, dtype=np.uint32)

51 | # unless they are in the beat range of the state space

52 | border = 1. / observation_lambda

53 | pointers[state_space.state_positions % 1 < border] = 1

54 | # the downbeat (i.e. the first beat range) points to density column 2

55 | pointers[state_space.state_positions < border] = 2

56 | # store the pointers on the observation model

57 | self.pointers = pointers

58 |

59 | elif observation_lambda[0] == 'N': # Observation model for the fixed number of states as beats boundaries

60 | observation_lambda = int(observation_lambda[1:])

61 | # computing observation pointers

62 | # always point to the non-beat densities

63 | pointers = np.zeros(state_space.num_states, dtype=np.uint32)

64 | # unless they are in the beat range of the state space

65 | for i in range(observation_lambda):

66 | border = np.asarray(state_space.first_states) + i

67 | pointers[border[1:]] = 1

68 | # the downbeat (i.e. the first beat range) points to density column 2

69 | pointers[border[0]] = 2

70 | # store the pointers on the observation model

71 | self.pointers = pointers

72 |

73 | elif observation_lambda[0] == 'G': # Observation model for gaussian boundaries

74 | observation_lambda = float(observation_lambda[1:])

75 | pointers = np.zeros((state_space.num_beats + 1, state_space.num_states))

76 | for i in range(state_space.num_beats + 1):

77 | pointers[i] = gaussian(state_space.state_positions, i, observation_lambda)

78 | pointers[0] = pointers[0] + pointers[-1]

79 | pointers[1] = np.sum(pointers[1:-1], axis=0)

80 | pointers = pointers[:2]

81 | self.pointers = pointers

82 |

83 |

84 | def gaussian(x, mu, sig):

85 | return np.exp(-np.power((x - mu) / sig, 2.) / 2) # /(np.sqrt(2.*np.pi)*sig)

86 |

87 | # Beat state space observations

88 | def densities(observations, observation_model, state_model):

89 | new_obs = np.zeros(state_model.num_states, float)

90 | if len(np.shape(observation_model.pointers)) != 2: # For the border and fixed-number observation models

91 | new_obs[np.argwhere(

92 | observation_model.pointers == 2)] = observations

93 | new_obs[np.argwhere(

94 | observation_model.pointers == 0)] = 0.03

95 | elif len(np.shape(observation_model.pointers)) == 2: # For Gaussian observation distribution

96 | new_obs = observation_model.pointers[

97 | 0] * observations

98 | new_obs[new_obs < 0.005] = 0.03

99 | return new_obs

100 |

101 |

102 | # Downbeat state space observations

103 | def densities2(observations, observation_model, state_model):

104 | new_obs = np.zeros(state_model.num_states, float)

105 | if len(np.shape(observation_model.pointers)) != 2: # For the border and fixed-number observation models

106 | new_obs[np.argwhere(

107 | observation_model.pointers == 2)] = observations[1]

108 | new_obs[np.argwhere(

109 | observation_model.pointers == 0)] = 0.00002

110 | elif len(np.shape(observation_model.pointers)) == 2: # For Gaussian observation distribution

111 | new_obs = observation_model.pointers[0] * observations

112 | new_obs[new_obs < 0.005] = 0.03

113 | # return the observations

114 | return new_obs

115 |

116 |

117 | # def densities_down(observations, beats_per_bar):

118 | # new_obs = np.zeros(beats_per_bar, float)

119 | # new_obs[0] = observations[1] # downbeat

120 | # new_obs[1:] = observations[0] # beat

121 | # return new_obs

122 |

123 |

124 | class inference_1D:

125 | '''

126 | Running the jump-reward inference for the 1D state spaces over the given activation functions to infer music rhythmic information jointly.

127 |

128 | Parameters

129 | ----------

130 | activations : numpy array, shape (num_frames, 2), passed to process()

131 | Activation function with probabilities corresponding to beats

132 | and downbeats given in the first and second column, respectively.

133 |

134 | Returns

135 | ----------

136 | beats, downbeats, local tempo, local meter : numpy array, shape (num_beats, 4)

137 | Columns: beat time in seconds, beat type ("1" = downbeat, "2" = beat), local tempo (BPM) and local meter.

138 |

139 | References:

140 | ----------

141 |

142 | '''

143 | MIN_BPM = 55.

144 | MAX_BPM = 215.

145 | LAMBDA_B = 0.01 # beat transition lambda

146 | Lambda_D = 0.01 # downbeat transition lambda

147 | OBSERVATION_LAMBDA = "B56"

148 | fps = 50

149 | T = 1 / fps

150 | MIN_BEAT_PER_BAR = 1

151 | MAX_BEAT_PER_BAR = 4

152 | OFFSET = 4 # Time in seconds after which the inference model starts to work (can be zero)

153 | IG_THRESHOLD = 0.4 # Information Gate threshold

154 |

155 | def __init__(self, beats_per_bar=[], min_bpm=MIN_BPM, max_bpm=MAX_BPM, offset=OFFSET,

156 | min_bpb=MIN_BEAT_PER_BAR, max_bpb=MAX_BEAT_PER_BAR, ig_threshold=IG_THRESHOLD,

157 | lambda_b=LAMBDA_B, lambda_d=Lambda_D, observation_lambda=OBSERVATION_LAMBDA,

158 | fps=fps, plot=False, **kwargs): # fps defaults to the class-level value (50); None would crash the interval computation

159 | self.beats_per_bar = beats_per_bar

160 | self.fps = fps

161 | self.observation_lambda = observation_lambda

162 | self.offset = offset

163 | self.ig_threshold = ig_threshold

164 | self.plot = plot

165 | beats_per_bar = np.array(beats_per_bar, ndmin=1)

166 |

167 | # convert timing information to construct a beat state space

168 | min_interval = round(60. * fps / max_bpm)

169 | max_interval = round(60. * fps / min_bpm)

170 |

171 | # State spaces and observation models initialization

172 | self.st = beat_state_space_1D(alpha=lambda_b, tempo=None, fps=None, min_interval=min_interval,

173 | max_interval=max_interval, ) # beat tracking state space

174 | self.st2 = downbeat_state_space_1D(alpha=lambda_d, meter=self.beats_per_bar, min_beats_per_bar=min_bpb,

175 | max_beats_per_bar=max_bpb) # downbeat tracking state space

176 | self.om = BDObservationModel(self.st, observation_lambda) # beat tracking observation model

177 | self.om2 = BDObservationModel(self.st2, "B60") # downbeat tracking observation model

178 | pass

179 |

180 | def process(self, activations):

181 | T = 1 / self.fps

182 | font = {'color': 'green', 'weight': 'normal', 'size': 12}

183 | counter = 0

184 | if self.plot:

185 | fig = plt.figure(figsize=(1800 / 96, 900 / 96), dpi=96)

186 | subplot1 = fig.add_subplot(411)

187 | subplot2 = fig.add_subplot(412)

188 | subplot3 = fig.add_subplot(413)

189 | subplot4 = fig.add_subplot(414)

190 | subplot1.title.set_text('Beat tracking inference diagram')

191 | subplot2.title.set_text('Beat states jumping back weights')

192 | subplot3.title.set_text('Downbeat tracking inference diagram')

193 | subplot4.title.set_text('Downbeat states jumping back weights')

194 | fig.tight_layout()

195 |

196 | # output vector initialization for beat, downbeat, tempo and meter

197 | output = np.zeros((1, 4), dtype=float)

198 | # Beat and downbeat belief state initialization

199 | beat_distribution = np.ones(self.st.num_states) * 0.8

200 | beat_distribution[5] = 1 # this is just a flag to show the initial transitions

201 | down_distribution = np.ones(self.st2.num_states) * 0.8

202 | # local tempo and meter initialization

203 | local_tempo = 0

204 | meter = 0

205 |

206 | activations = activations[int(self.offset / T):]

207 | both_activations = activations.copy()

208 | activations = np.max(activations, axis=1)

209 | activations[activations < self.ig_threshold] = 0.03

210 |

211 | for i in range(len(activations)): # loop through all frames to infer beats/downbeats

212 | counter += 1

213 | # beat detection

214 |

215 | # beat transition (motion)

216 | local_beat = ''

217 | if np.max(self.st.jump_weights) > 1:

218 | self.st.jump_weights = 0.7 * self.st.jump_weights / np.max(self.st.jump_weights)

219 | b_weight = self.st.jump_weights.copy()

220 | beat_jump_rewards1 = -beat_distribution * b_weight # calculating the transition rewards

221 | b_weight[b_weight < 0.7] = 0 # Thresholding the jump backs

222 | beat_distribution1 = sum(beat_distribution * b_weight) # jump back

223 | beat_distribution2 = np.roll((beat_distribution * (1 - b_weight)), 1) # move forward

224 | beat_distribution2[0] += beat_distribution1

225 | beat_distribution = beat_distribution2

226 |

227 | # Beat correction

228 | if activations[i] > self.ig_threshold: # beat correction is done only when there is a meaningful activation

229 | obs = densities(activations[i], self.om, self.st)

230 | beat_distribution_old = beat_distribution.copy()

231 | beat_distribution = beat_distribution_old * obs

232 | if np.min(beat_distribution) < 1e-5: # normalize beat distribution if its minimum is below a threshold

233 | beat_distribution = 0.8 * beat_distribution / np.max(beat_distribution)

234 | beat_max = np.argmax(beat_distribution)

235 | beat_jump_rewards2 = beat_distribution - beat_distribution_old # beat correction rewards calculation

236 | beat_jump_rewards = beat_jump_rewards2

237 | beat_jump_rewards[:self.st.min_interval - 1] = 0

238 | if np.max(-beat_jump_rewards) != 0:

239 | beat_jump_rewards = -4 * beat_jump_rewards / np.max(-beat_jump_rewards)

240 | self.st.jump_weights += beat_jump_rewards

241 | local_tempo = round(self.fps * 60 / (np.argmax(self.st.jump_weights) + 1))

242 | else:

243 | beat_jump_rewards1[:self.st.min_interval - 1] = 0

244 | self.st.jump_weights += 2 * beat_jump_rewards1

245 | self.st.jump_weights[:self.st.min_interval - 1] = 0

246 | beat_max = np.argmax(beat_distribution)

247 |

248 | # downbeat detection

249 | if (beat_max < (

250 | int(.07 / T)) + 1) and (counter * T + self.offset) - output[-1][

251 | 0] > .45 * T * self.st.min_interval: # here the local tempo (:np.argmax(self.st.jump_weights)+1): can be used as the criteria rather than the minimum tempo

252 | local_beat = 'NoooOOoooW!'

253 |

254 | # downbeat transition (motion)

255 | if np.max(self.st2.jump_weights) > 1:

256 | self.st2.jump_weights = 0.2 * self.st2.jump_weights / np.max(self.st2.jump_weights)

257 | d_weight = self.st2.jump_weights.copy()

258 | down_jump_rewards1 = - down_distribution * d_weight

259 | d_weight[d_weight < 0.2] = 0

260 | down_distribution1 = sum(down_distribution * d_weight) # jump back

261 | down_distribution2 = np.roll((down_distribution * (1 - d_weight)), 1) # move forward

262 | down_distribution2[0] += down_distribution1

263 | down_distribution = down_distribution2

264 |

265 | # Downbeat correction

266 | if both_activations[i][1] > 0.00002:

267 | obs2 = densities2(both_activations[i], self.om2, self.st2)

268 | down_distribution_old = down_distribution.copy()

269 | down_distribution = down_distribution_old * obs2

270 | if np.min(down_distribution) < 1e-5: # normalize downbeat distribution if its minimum is below a threshold

271 | down_distribution = 0.8 * down_distribution/np.max(down_distribution)

272 | down_max = np.argmax(down_distribution)

273 | down_jump_rewards2 = down_distribution - down_distribution_old # downbeat correction rewards calculation

274 | down_jump_rewards = down_jump_rewards2

275 | down_jump_rewards[:self.st2.max_interval - 1] = 0

276 | if np.max(-down_jump_rewards) != 0:

277 | down_jump_rewards = -0.3 * down_jump_rewards / np.max(-down_jump_rewards)

278 | self.st2.jump_weights = self.st2.jump_weights + down_jump_rewards

279 | meter = np.argmax(self.st2.jump_weights) + 1

280 | else:

281 | down_jump_rewards1[:self.st2.min_interval - 1] = 0

282 | self.st2.jump_weights += 2 * down_jump_rewards1

283 | self.st2.jump_weights[:self.st2.min_interval - 1] = 0

284 | down_max = np.argmax(down_distribution)

285 |

286 | # Beat vs Downbeat mark off

287 | if down_max == self.st2.first_states[0]:

288 | output = np.append(output, [[counter * T + self.offset, 1, local_tempo, meter]], axis=0)

289 | last_detected = "Downbeat"

290 | else:

291 | output = np.append(output, [[counter * T + self.offset, 2, local_tempo, meter]], axis=0)

292 | last_detected = "Beat"

293 | # Downbeat probability mass and weights

294 | if self.plot:

295 | subplot3.cla()

296 | subplot4.cla()

297 | down_distribution = down_distribution / np.max(down_distribution)

298 | subplot3.bar(np.arange(self.st2.num_states), down_distribution, color='maroon', width=0.4,

299 | alpha=0.2)

300 | subplot3.bar(0, both_activations[i][1], color='green', width=0.4, alpha=0.3)

301 | # subplot3.bar(np.arange(1, self.st2.num_states), both_activations[i][0], color='yellow', width=0.4, alpha=0.3)

302 | subplot4.bar(np.arange(self.st2.num_states), self.st2.jump_weights, color='maroon', width=0.4,

303 | alpha=0.2)

304 | subplot4.set_ylim([0, 1])

305 | subplot3.title.set_text('Downbeat tracking 1D inference model')

306 | subplot4.title.set_text('Downbeat states jumping back weights')

307 | subplot4.text(1, -0.26, f'The type of the last detected event: {last_detected}',

308 | horizontalalignment='right', verticalalignment='center', transform=subplot2.transAxes,

309 | fontdict=font)

310 | subplot4.text(1, -1.63, f'Local time signature = {meter}/4 ', horizontalalignment='right',

311 | verticalalignment='center', transform=subplot2.transAxes, fontdict=font)

312 | position2 = down_max

313 | subplot3.axvline(x=position2)

314 |

315 | # Beat probability mass and weights

316 | if self.plot: # activates this when you want to plot the performance

317 | if counter % 1 == 0: # Choosing how often to plot

318 | print(counter)

319 | subplot1.cla()

320 | subplot2.cla()

321 | beat_distribution = beat_distribution / np.max(beat_distribution)

322 | subplot1.bar(np.arange(self.st.num_states), beat_distribution, color='maroon', width=0.4, alpha=0.2)

323 | subplot1.bar(0, activations[i], color='green', width=0.4, alpha=0.3)

324 | # subplot2.bar(np.arange(self.st.num_states), np.concatenate((np.zeros(self.st.min_interval),self.st.jump_weights)), color='maroon', width=0.4, alpha=0.2)

325 | subplot2.bar(np.arange(self.st.num_states), self.st.jump_weights, color='maroon', width=0.4,

326 | alpha=0.2)

327 | subplot2.set_ylim([0, 1])

328 | subplot1.title.set_text('Beat tracking 1D inference model')

329 | subplot2.title.set_text("Beat states jumping back weights")

330 | subplot1.text(1, 2.48, f'Beat moment: {local_beat} ', horizontalalignment='right',

331 | verticalalignment='top', transform=subplot2.transAxes, fontdict=font)

332 | subplot2.text(1, 1.12, f'Local tempo: {local_tempo} (BPM)', horizontalalignment='right',

333 | verticalalignment='top', transform=subplot2.transAxes, fontdict=font)

334 | position = beat_max

335 | subplot1.axvline(x=position)

336 | plt.pause(0.05)

337 | subplot1.clear()

338 |

339 | return output[1:]

340 |
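For readers following the main loop of `inference_1D.process`, the sketch below condenses one beat-tracking frame into standalone NumPy: the "B" border observation model, the motion step with thresholded jump-back weights, and the correction step guarded by the information-gate threshold. It is a simplified, hypothetical reimplementation (`border_pointers` and `beat_step` are not package functions); it omits the reward updates to the jump weights and the downbeat state space, and the constants (0.4 gate, 0.03 floor, 0.7 jump threshold) are copied from the defaults in `inference.py`. In the package, the activation fed to the correction is the maximum of the beat and downbeat columns after thresholding.

```python
import numpy as np


def border_pointers(num_states, observation_lambda):
    """Sketch of the "B" (border) observation pointers.

    States whose normalized position is below 1 / observation_lambda point to
    the beat density (2); every other state points to the non-beat density (0).
    """
    positions = np.linspace(0, 1, num_states, endpoint=False)
    pointers = np.zeros(num_states, dtype=np.uint32)
    pointers[positions < 1.0 / observation_lambda] = 2
    return pointers


def beat_step(belief, jump_weights, activation, pointers,
              ig_threshold=0.4, floor=0.03, jump_threshold=0.7):
    """One simplified motion + correction step for the beat state space."""
    # Motion: only weights at or above jump_threshold trigger a jump back.
    w = np.where(jump_weights < jump_threshold, 0.0, jump_weights)
    jumped = np.sum(belief * w)            # mass returning to state 0
    belief = np.roll(belief * (1 - w), 1)  # remaining mass moves forward
    belief[0] += jumped
    # Correction: applied only when the activation passes the information gate.
    if activation > ig_threshold:
        obs = np.zeros_like(belief)
        obs[pointers == 2] = activation    # beat states scaled by the activation
        obs[pointers == 0] = floor         # non-beat states scaled by a small floor
        belief = belief * obs
        if belief.min() < 1e-5:            # rescale to avoid underflow
            belief = 0.8 * belief / belief.max()
    return belief


num_states = 55
pointers = border_pointers(num_states, 56)  # "B56": only state 0 is a beat state
weights = np.zeros(num_states)
weights[24] = 0.9                           # hypothetical learned interval: 25 frames

belief = np.ones(num_states) * 0.8
belief = beat_step(belief, weights, activation=0.9, pointers=pointers)
print(int(np.argmax(belief)))  # 0
```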

--------------------------------------------------------------------------------