├── .gitignore

├── .travis.yml

├── HOWTO_RELEASE.md

├── LICENSE

├── MANIFEST.in

├── Makefile

├── README.md

├── conda_recipes

├── README.md

├── build_all.sh

├── flann

│ ├── .binstar.yml

│ ├── build.sh

│ └── meta.yaml

├── megaman

│ ├── .binstar.yml

│ ├── build.sh

│ ├── meta.yaml

│ └── run_test.sh

├── pyamg

│ ├── .binstar.yml

│ ├── build.sh

│ ├── meta.yaml

│ └── run_test.sh

└── pyflann

│ ├── .binstar.yml

│ ├── build.sh

│ └── meta.yaml

├── doc

├── .gitignore

├── Makefile

├── conf.py

├── embedding

│ ├── API.rst

│ ├── index.rst

│ ├── isomap.rst

│ ├── locally_linear.rst

│ ├── ltsa.rst

│ └── spectral_embedding.rst

├── geometry

│ ├── API.rst

│ ├── geometry.rst

│ └── index.rst

├── images

│ ├── circle_to_ellipse_embedding.png

│ ├── index.rst

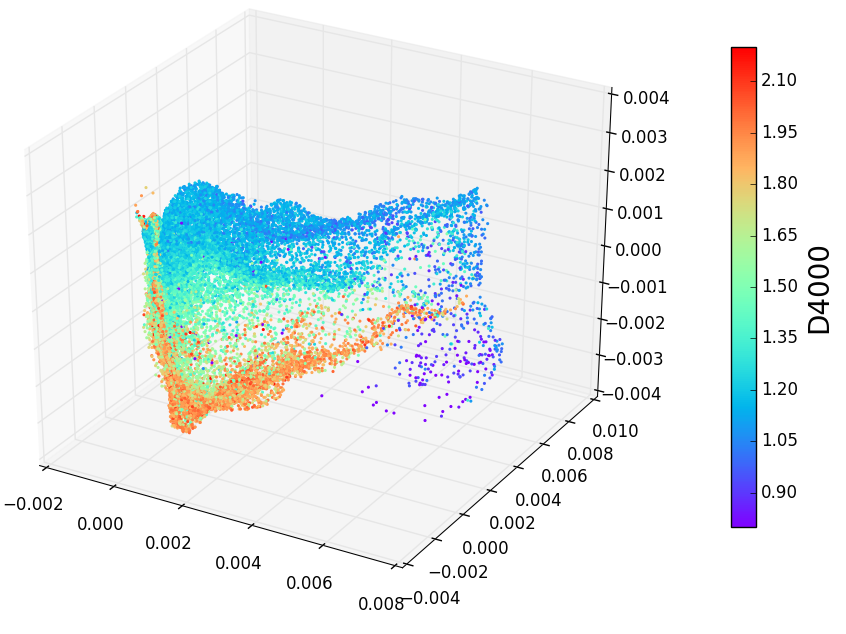

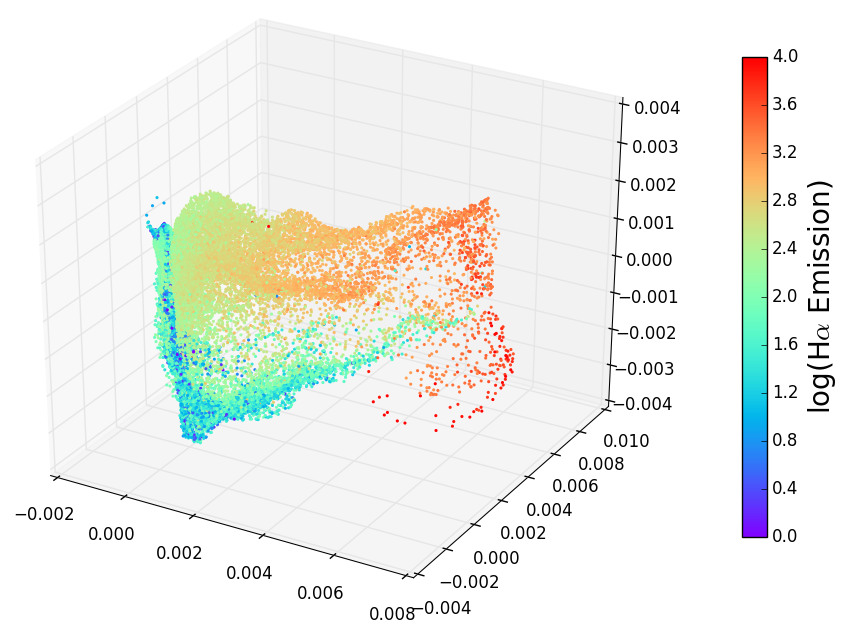

│ ├── spectra_D4000.png

│ ├── spectra_Halpha.png

│ ├── spectra_Halpha.rst

│ ├── word2vec.rst

│ └── word2vec_rmetric_plot_no_digits.png

├── index.rst

├── installation.rst

├── sphinxext

│ └── numpy_ext

│ │ ├── __init__.py

│ │ ├── astropyautosummary.py

│ │ ├── autodoc_enhancements.py

│ │ ├── automodapi.py

│ │ ├── automodsumm.py

│ │ ├── changelog_links.py

│ │ ├── comment_eater.py

│ │ ├── compiler_unparse.py

│ │ ├── docscrape.py

│ │ ├── docscrape_sphinx.py

│ │ ├── doctest.py

│ │ ├── edit_on_github.py

│ │ ├── numpydoc.py

│ │ ├── phantom_import.py

│ │ ├── smart_resolver.py

│ │ ├── tocdepthfix.py

│ │ ├── traitsdoc.py

│ │ ├── utils.py

│ │ └── viewcode.py

└── utils

│ ├── API.rst

│ └── index.rst

├── examples

├── example.py

├── examples_index.ipynb

├── manifold_intro.ipynb

├── megaman_install_usage_colab.ipynb

├── megaman_tutorial.ipynb

├── megaman_tutorial.py

├── rad_est_utils.py

├── radius_estimation_tutorial.ipynb

├── tutorial_data_plot.png

├── tutorial_embeddings.png

├── tutorial_isomap_plot.png

└── tutorial_spectral_plot.png

├── megaman

├── __check_build

│ ├── __init__.py

│ ├── _check_build.pyx

│ └── setup.py

├── __init__.py

├── datasets

│ ├── __init__.py

│ ├── datasets.py

│ └── megaman.png

├── embedding

│ ├── __init__.py

│ ├── base.py

│ ├── isomap.py

│ ├── locally_linear.py

│ ├── ltsa.py

│ ├── spectral_embedding.py

│ └── tests

│ │ ├── __init__.py

│ │ ├── test_base.py

│ │ ├── test_embeddings.py

│ │ ├── test_isomap.py

│ │ ├── test_lle.py

│ │ ├── test_ltsa.py

│ │ └── test_spectral_embedding.py

├── geometry

│ ├── __init__.py

│ ├── adjacency.py

│ ├── affinity.py

│ ├── complete_adjacency_matrix.py

│ ├── cyflann

│ │ ├── __init__.py

│ │ ├── cyflann_index.cc

│ │ ├── cyflann_index.h

│ │ ├── index.pxd

│ │ ├── index.pyx

│ │ └── setup.py

│ ├── geometry.py

│ ├── laplacian.py

│ ├── rmetric.py

│ ├── tests

│ │ ├── __init__.py

│ │ ├── test_adjacency.py

│ │ ├── test_affinity.py

│ │ ├── test_complete_adjacency_matrix.py

│ │ ├── test_geometry.py

│ │ ├── test_laplacian.m

│ │ ├── test_laplacian.py

│ │ ├── test_rmetric.py

│ │ └── testmegaman_laplacian_rad0_2_lam1_5_n200.mat

│ └── utils.py

├── plotter

│ ├── __init__.py

│ ├── covar_plotter3.py

│ ├── plotter.py

│ ├── scatter_3d.py

│ └── utils.py

├── relaxation

│ ├── __init__.py

│ ├── optimizer.py

│ ├── precomputed.py

│ ├── riemannian_relaxation.py

│ ├── tests

│ │ ├── __init__.py

│ │ ├── eps_halfdome.mat

│ │ ├── rloss_halfdome.mat

│ │ ├── test_precomputed_S.py

│ │ ├── test_precomputed_Y.py

│ │ ├── test_regression_test.py

│ │ ├── test_relaxation_keywords.py

│ │ ├── test_tracing_var.py

│ │ └── utils.py

│ ├── trace_variable.py

│ └── utils.py

├── setup.py

└── utils

│ ├── __init__.py

│ ├── analyze_dimension_and_radius.py

│ ├── covar_plotter.py

│ ├── eigendecomp.py

│ ├── estimate_radius.py

│ ├── k_means_clustering.py

│ ├── large_sparse_functions.py

│ ├── nystrom_extension.py

│ ├── spectral_clustering.py

│ ├── testing.py

│ ├── tests

│ ├── __init__.py

│ ├── test_analyze_dimension_and_radius.py

│ ├── test_eigendecomp.py

│ ├── test_estimate_radius.py

│ ├── test_nystrom.py

│ ├── test_spectral_clustering.py

│ ├── test_testing.py

│ └── test_validation.py

│ └── validation.py

├── setup.py

└── tools

└── cythonize.py

/.gitignore:

--------------------------------------------------------------------------------

1 | *~

2 | *.pyc

3 | junk*

4 | *.cxx

5 | *.c

6 | cythonize.dat

7 |

8 | cover

9 |

10 | MANIFEST

11 |

12 | # Byte-compiled / optimized / DLL files

13 | __pycache__/

14 | *.py[cod]

15 | *$py.class

16 |

17 | # C extensions

18 | *.so

19 |

20 | # Distribution / packaging

21 | .Python

22 | env/

23 | build/

24 | develop-eggs/

25 | dist/

26 | downloads/

27 | eggs/

28 | .eggs/

29 | lib/

30 | lib64/

31 | parts/

32 | sdist/

33 | var/

34 | *.egg-info/

35 | .installed.cfg

36 | *.egg

37 |

38 | # PyInstaller

39 | # Usually these files are written by a python script from a template

40 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

41 | *.manifest

42 | *.spec

43 |

44 | # Installer logs

45 | pip-log.txt

46 | pip-delete-this-directory.txt

47 |

48 | # Unit test / coverage reports

49 | htmlcov/

50 | .tox/

51 | .coverage

52 | .coverage.*

53 | .cache

54 | nosetests.xml

55 | coverage.xml

56 | *,cover

57 | .hypothesis/

58 |

59 | # Translations

60 | *.mo

61 | *.pot

62 |

63 | # Django stuff:

64 | *.log

65 | local_settings.py

66 |

67 | # Flask instance folder

68 | instance/

69 |

70 | # Sphinx documentation

71 | docs/_build/

72 |

73 | # PyBuilder

74 | target/

75 |

76 | # IPython Notebook

77 | .ipynb_checkpoints

78 | Untitled*.ipynb

79 |

80 | # pyenv

81 | .python-version

82 |

83 | # macos DS_Store

84 | .DS_Store

85 | **/*/.DS_Store

86 |

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | language: python

2 |

3 | # sudo false implies containerized builds

4 | sudo: false

5 |

6 | python:

7 | - 2.7

8 | - 3.4

9 | - 3.5

10 |

11 | env:

12 | global:

13 | # Directory where tests are run from

14 | - TEST_DIR=/tmp/megaman

15 | - CONDA_CHANNEL="conda-forge"

16 | - CONDA_DEPS="pip nose coverage cython scikit-learn flann h5py"

17 | - PIP_DEPS="coveralls"

18 | matrix:

19 | - EXTRA_DEPS="pyflann pyamg"

20 | - EXTRA_DEPS=""

21 |

22 | before_install:

23 | - export MINICONDA=$HOME/miniconda

24 | - export PATH="$MINICONDA/bin:$PATH"

25 | - hash -r

26 | - wget http://repo.continuum.io/miniconda/Miniconda-latest-Linux-x86_64.sh -O miniconda.sh

27 | - bash miniconda.sh -b -f -p $MINICONDA

28 | - conda config --set always_yes yes

29 | - conda update conda

30 | - conda info -a

31 | - conda create -n testenv python=$TRAVIS_PYTHON_VERSION

32 | - source activate testenv

33 | - conda install -c $CONDA_CHANNEL $CONDA_DEPS $EXTRA_DEPS

34 | - travis_retry pip install $PIP_DEPS

35 |

36 | install:

37 | - python setup.py install

38 |

39 | script:

40 | - mkdir -p $TEST_DIR

41 | - cd $TEST_DIR && nosetests -v --with-coverage --cover-package=megaman megaman

42 |

43 | after_success:

44 | - coveralls

45 |

--------------------------------------------------------------------------------

/HOWTO_RELEASE.md:

--------------------------------------------------------------------------------

1 | # How to Release

2 |

3 | Here's a quick step-by-step for cutting a new release of megaman.

4 |

5 | ## Pre-release

6 |

7 | 1. update version in ``megaman/__init__.py`` to, e.g. "0.1"

8 |

9 | 2. update version in **two places** in ``doc/conf.py`` to the same

10 |

11 | 3. create a release tag; e.g.

12 | ```

13 | $ git tag -a v0.1 -m 'version 0.1 release'

14 | ```

15 |

16 | 4. push the commits and tag to github

17 |

18 | 5. confirm that CI tests pass on github

19 |

20 | 6. under "tags" on github, update the release notes

21 |

22 |

23 | ## Publishing the Release

24 |

25 | 1. push the new release to PyPI (requires jakevdp's permissions)

26 | ```

27 | $ python setup.py sdist upload

28 | ```

29 |

30 | 2. change directories to ``doc`` and build the documentation:

31 | ```

32 | $ cd doc/

33 | $ make html # build documentation

34 | $ make publish # publish to github pages

35 | ```

36 |

37 | 3. Publish the conda build:

38 | submit a PR to http://github.com/conda-forge/megaman-feedstock

39 | updating recipe/meta.yaml with the appropriate version. Once merged,

40 | then the conda install command will point to the new version.

41 |

42 | ## Post-release

43 |

44 | 1. update version in ``megaman/__init__.py`` to next version; e.g. '0.2.dev0'

45 |

46 | 2. update version in ``doc/conf.py`` to the same (in two places)

47 |

48 | 3. push changes to github

49 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Copyright (c) 2016

2 | All rights reserved.

3 |

4 | Redistribution and use in source and binary forms, with or without

5 | modification, are permitted provided that the following conditions are met:

6 |

7 | * Redistributions of source code must retain the above copyright notice, this

8 | list of conditions and the following disclaimer.

9 |

10 | * Redistributions in binary form must reproduce the above copyright notice,

11 | this list of conditions and the following disclaimer in the documentation

12 | and/or other materials provided with the distribution.

13 |

14 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

15 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

16 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

17 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

18 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

19 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

20 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

21 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

22 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

23 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

24 |

25 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include *.md

2 | include *.py

3 | recursive-include megaman *.py *.pyx *.pxd *.cc *.h *.mat *.png

4 | recursive-include doc *

5 | recursive-include tools *.py

6 | recursive-include examples *.py *.ipynb

7 | include Makefile

8 | include LICENSE

9 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | CURRENT_DIR = $(shell pwd)

2 | TEST_DIR = /tmp/megaman

3 | PKG = megaman

4 |

5 | install:

6 | python setup.py install

7 |

8 | clean:

9 | rm -r build/

10 |

11 | test-dir:

12 | mkdir -p $(TEST_DIR)

13 |

14 | test: test-dir install

15 | cd $(TEST_DIR) && nosetests $(PKG)

16 |

17 | doctest: test-dir install

18 | cd $(TEST_DIR) && nosetests --with-doctest $(PKG)

19 |

20 | test-coverage: test-dir install

21 | cd $(TEST_DIR) && nosetests --with-coverage --cover-package=$(PKG) $(PKG)

22 |

23 | test-coverage-html: test-dir install

24 | cd $(TEST_DIR) && nosetests --with-coverage --cover-html --cover-package=$(PKG) $(PKG)

25 | rsync -r $(TEST_DIR)/cover $(CURRENT_DIR)/

26 | echo "open ./cover/index.html with a web browser to see coverage report"

27 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # megaman: Manifold Learning for Millions of Points

2 |

3 |

4 |

5 | [](https://anaconda.org/conda-forge/megaman)

6 | [](https://travis-ci.org/mmp2/megaman)

7 | [](https://pypi.python.org/pypi/megaman)

8 | [](https://github.com/mmp2/megaman/blob/master/LICENSE)

9 |

10 | ``megaman`` is a scalable manifold learning package implemented in

11 | python. It has a front-end API designed to be familiar

12 | to [scikit-learn](http://scikit-learn.org/) but harnesses

13 | the C++ Fast Library for Approximate Nearest Neighbors (FLANN)

14 | and the Sparse Symmetric Positive Definite (SSPD) solver

15 | Locally Optimal Block Precodition Gradient (LOBPCG) method

16 | to scale manifold learning algorithms to large data sets.

17 | On a personal computer megaman can embed 1 million data points

18 | with hundreds of dimensions in 10 minutes.

19 | megaman is designed for researchers and as such caches intermediary

20 | steps and indices to allow for fast re-computation with new parameters.

21 |

22 | Package documentation can be found at http://mmp2.github.io/megaman/

23 |

24 | If you use our software please cite the following JMLR paper:

25 |

26 | McQueen, Meila, VanderPlas, & Zhang, "Megaman: Scalable Manifold Learning in Python",

27 | Journal of Machine Learning Research, Vol 17 no. 14, 2016.

28 | http://jmlr.org/papers/v17/16-109.html

29 |

30 | You can also find our arXiv paper at http://arxiv.org/abs/1603.02763

31 |

32 | ## Examples

33 |

34 | - [Tutorial Notebook]( https://github.com/mmp2/megaman/blob/master/examples/megaman_tutorial.ipynb)

35 |

36 | ## Installation and Examples in Google Colab

37 |

38 | Below it's a tutorial to install megaman on Google Colab, through Conda environment.

39 |

40 | It also provides tutorial of using megaman to build spectral embedding on uniform swiss roll dataset.

41 |

42 | - [Install & Example script]( https://colab.research.google.com/drive/1ms22YK3TvrIx0gji6UZqG0zoSNRCWtXj?usp=sharing)

43 | - [You can download the Jupyter Notebook version here]( https://github.com/mmp2/megaman/blob/master/examples/megaman_install_usage_colab.ipynb)

44 |

45 | ## ~~Installation with Conda~~

46 |

47 |

59 |

60 | Due to the change of API,

61 | `$ conda install -c conda-forge megaman`

62 | is no longer supported.

63 | We are currently working on fixing the bug.

64 |

65 | Please see the full install instructions below to build `megaman` from source.

66 |

67 | ## Installation from source

68 |

69 | To install megaman from source requires the following:

70 |

71 | - [python](http://python.org) tested with versions 2.7, 3.5 and 3.6

72 | - [numpy](http://numpy.org) version 1.8 or higher

73 | - [scipy](http://scipy.org) version 0.16.0 or higher

74 | - [scikit-learn](http://scikit-learn.org)

75 | - [FLANN](http://www.cs.ubc.ca/research/flann/)

76 | - [pyflann](http://www.cs.ubc.ca/research/flann/) which offers another method of computing distance matrices (this is bundled with the FLANN source code)

77 | - [cython](http://cython.org/)

78 | - a C++ compiler such as ``gcc``/``g++``

79 |

80 | Optional requirements include

81 |

82 | - [pyamg](http://pyamg.org/), which allows for faster decompositions of large matrices

83 | - [nose](https://nose.readthedocs.org/) for running the unit tests

84 | - [h5py](http://www.h5py.org) for reading testing .mat files

85 | - [plotly](https://plot.ly) an graphing library for interactive plot

86 |

87 |

88 | These requirements can be installed on Linux and MacOSX using the following conda command:

89 |

90 | ```shell

91 | $ conda create -n manifold_env python=3.5 -y

92 | # can also use python=2.7 or python=3.6

93 |

94 | $ source activate manifold_env

95 | $ conda install --channel=conda-forge -y pip nose coverage cython numpy scipy \

96 | scikit-learn pyflann pyamg h5py plotly

97 | ```

98 |

99 | Clone this repository and `cd` into source repository

100 |

101 | ```shell

102 | $ cd /tmp/

103 | $ git clone https://github.com/mmp2/megaman.git

104 | $ cd megaman

105 | ```

106 |

107 | Finally, within the source repository, run this command to install the ``megaman`` package itself:

108 | ```shell

109 | $ python setup.py install

110 | ```

111 |

112 | ## Unit Tests

113 | megaman uses ``nose`` for unit tests. With ``nose`` installed, type

114 | ```

115 | $ make test

116 | ```

117 | to run the unit tests. ``megaman`` is tested on Python versions 2.7, 3.4, and 3.5.

118 |

119 | ## Authors

120 | - [James McQueen](http://www.stat.washington.edu/people/jmcq/)

121 | - [Marina Meila](http://www.stat.washington.edu/mmp/)

122 | - [Zhongyue Zhang](https://github.com/Jerryzcn)

123 | - [Jake VanderPlas](http://www.vanderplas.com)

124 | - [Yu-Chia Chen](https://github.com/yuchaz)

125 |

126 | ## Other Contributors

127 |

128 | - Xiao Wang: lazy rmetric, Nystrom Extension

129 | - [Hangliang Ren (Harry)](https://github.com/Harryahh): Installation tutorials, Spectral Embedding

130 |

131 | ## Future Work

132 |

133 | See this issues list for what we have planned for upcoming releases:

134 |

135 | [Future Work](https://github.com/mmp2/megaman/issues/47)

136 |

--------------------------------------------------------------------------------

/conda_recipes/README.md:

--------------------------------------------------------------------------------

1 | # Conda recipes

2 |

3 | This directory contains conda build recipes for megaman and its dependencies.

4 | For more information see the

5 | [Conda Build documentation](http://conda.pydata.org/docs/build_tutorials/pkgs2.html)

6 |

--------------------------------------------------------------------------------

/conda_recipes/build_all.sh:

--------------------------------------------------------------------------------

1 | conda config --set anaconda_upload yes

2 | conda build flann

3 | conda build --py all pyflann

4 | conda build --python 2.7 --python 3.4 --python 3.5 --numpy 1.9 --numpy 1.10 pyamg

5 | conda build --python 2.7 --python 3.4 --python 3.5 --numpy 1.10 megaman

6 |

--------------------------------------------------------------------------------

/conda_recipes/flann/.binstar.yml:

--------------------------------------------------------------------------------

1 | package: flann

2 | platform:

3 | - osx-64

4 | - osx-32

5 | - linux-64

6 | - linux-32

7 | script:

8 | - conda build .

9 | build_targets:

10 | - conda

11 |

--------------------------------------------------------------------------------

/conda_recipes/flann/build.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # cannot build flann from within the source directory

4 | mkdir build

5 | cd build

6 |

7 | # On OSX, we need to ensure we're using conda's gcc/g++

8 | if [[ `uname` == Darwin ]]; then

9 | export CC=gcc

10 | export CXX=g++

11 | fi

12 |

13 | cmake .. -DCMAKE_INSTALL_PREFIX=$PREFIX -DBUILD_MATLAB_BINDINGS:BOOL=OFF -DBUILD_PYTHON_BINDINGS:BOOL=OFF -DBUILD_EXAMPLES:BOOL=OFF

14 |

15 | make -j$CPU_COUNT install

16 |

--------------------------------------------------------------------------------

/conda_recipes/flann/meta.yaml:

--------------------------------------------------------------------------------

1 | package:

2 | name: flann

3 | version: "1.8.5dev"

4 |

5 | source:

6 | git_url: https://github.com/mariusmuja/flann.git

7 | git_tag: b8a442fd98f8ce32ae3465bfd3427b5cbc36f6a5

8 |

9 | build:

10 | number: 2

11 | string: {{PKG_BUILDNUM}}_g{{GIT_FULL_HASH[:7]}}

12 |

13 | requirements:

14 | build:

15 | - gcc 4.8* # [osx]

16 | - hdf5

17 | - cmake

18 | run:

19 | - libgcc 4.8* #[osx]

20 | - hdf5

21 |

22 | about:

23 | home: http://www.cs.ubc.ca/research/flann/

24 | license: BSD

25 | license_file: COPYING

26 |

--------------------------------------------------------------------------------

/conda_recipes/megaman/.binstar.yml:

--------------------------------------------------------------------------------

1 | package: megaman

2 | platform:

3 | - osx-64

4 | - osx-32

5 | - linux-64

6 | - linux-32

7 | script:

8 | - conda build .

9 | build_targets:

10 | - conda

11 |

--------------------------------------------------------------------------------

/conda_recipes/megaman/build.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # On OSX, we need to ensure we're using conda's gcc/g++

4 | if [[ `uname` == Darwin ]]; then

5 | export CC=gcc

6 | export CXX=g++

7 | fi

8 |

9 | $PYTHON setup.py install

10 |

--------------------------------------------------------------------------------

/conda_recipes/megaman/meta.yaml:

--------------------------------------------------------------------------------

1 | package:

2 | name: megaman

3 | version: 0.1.1

4 |

5 | source:

6 | git_url: https://github.com/mmp2/megaman.git

7 | git_tag: v0.1.1

8 |

9 | build:

10 | number: 2

11 | string: np{{CONDA_NPY}}py{{CONDA_PY}}_{{PKG_BUILDNUM}}

12 |

13 | requirements:

14 | build:

15 | - python >=2.7,<3|>=3.4,{{PY_VER}}*

16 | - numpy {{NPY_VER}}*

17 | - cython

18 | - flann

19 | - gcc 4.8* # [osx]

20 | run:

21 | - python {{PY_VER}}*

22 | - numpy {{NPY_VER}}*

23 | - scipy >=0.16

24 | - scikit-learn >=0.17

25 | - pyamg

26 | - pyflann

27 | - libgcc 4.8* # [osx]

28 |

29 | test:

30 | requires:

31 | - nose

32 | imports:

33 | - megaman

34 | - megaman.geometry

35 | - megaman.embedding

36 | - megaman.utils

37 |

38 | about:

39 | home: http://mmp2.github.io/megaman

40 | license: BSD

41 | license_file: LICENSE

42 |

--------------------------------------------------------------------------------

/conda_recipes/megaman/run_test.sh:

--------------------------------------------------------------------------------

1 | nosetests -v megaman

2 |

--------------------------------------------------------------------------------

/conda_recipes/pyamg/.binstar.yml:

--------------------------------------------------------------------------------

1 | package: pyamg

2 | platform:

3 | - osx-64

4 | - osx-32

5 | - linux-64

6 | - linux-32

7 | script:

8 | - conda build .

9 | build_targets:

10 | - conda

11 |

--------------------------------------------------------------------------------

/conda_recipes/pyamg/build.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # On OSX, we need to ensure we're using conda's gcc/g++

4 | if [[ `uname` == Darwin ]]; then

5 | export CC=gcc

6 | export CXX=g++

7 | fi

8 |

9 | $PYTHON setup.py install

10 |

--------------------------------------------------------------------------------

/conda_recipes/pyamg/meta.yaml:

--------------------------------------------------------------------------------

1 | package:

2 | name: pyamg

3 | version: "3.0.2"

4 |

5 | source:

6 | git_url: https://github.com/pyamg/pyamg.git

7 | git_tag: v3.0.2

8 |

9 | build:

10 | number: 2

11 | string: np{{CONDA_NPY}}py{{CONDA_PY}}_{{PKG_BUILDNUM}}

12 |

13 | requirements:

14 | build:

15 | - python >=2.7,<3|>=3.4,{{PY_VER}}*

16 | - numpy {{NPY_VER}}*

17 | - scipy

18 | - nose

19 | - zlib # [linux]

20 | - gcc 4.8* # [osx]

21 | run:

22 | - python {{PY_VER}}*

23 | - numpy {{NPY_VER}}*

24 | - scipy

25 | - zlib # [linux]

26 |

27 | test:

28 | requires:

29 | - nose

30 | imports:

31 | - pyamg

32 |

33 | about:

34 | home: http://www.pyamg.org/

35 | license: MIT

36 | license_file: LICENSE.txt

37 |

--------------------------------------------------------------------------------

/conda_recipes/pyamg/run_test.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | if [[ `uname` == Darwin ]] && [ $PY_VER == "2.7" ]; then

4 | echo "skipping tests; see https://github.com/pyamg/pyamg/issues/165"

5 | else

6 | nosetests -v pyamg

7 | fi

8 |

--------------------------------------------------------------------------------

/conda_recipes/pyflann/.binstar.yml:

--------------------------------------------------------------------------------

1 | package: pyflann

2 | platform:

3 | - osx-64

4 | - osx-32

5 | - linux-64

6 | - linux-32

7 | script:

8 | - conda build .

9 | build_targets:

10 | - conda

11 |

--------------------------------------------------------------------------------

/conda_recipes/pyflann/build.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | cd src/python

4 | cmake . -DLIBRARY_OUTPUT_PATH=$PREFIX/lib -DFLANN_VERSION="$PKG_VERSION"

5 | $PYTHON setup.py install

6 |

--------------------------------------------------------------------------------

/conda_recipes/pyflann/meta.yaml:

--------------------------------------------------------------------------------

1 | package:

2 | name: pyflann

3 | version: "1.8.5dev"

4 |

5 | source:

6 | git_url: https://github.com/mariusmuja/flann.git

7 | git_tag: b8a442fd98f8ce32ae3465bfd3427b5cbc36f6a5

8 |

9 | build:

10 | number: 2

11 | string: py{{CONDA_PY}}_{{PKG_BUILDNUM}}_g{{GIT_FULL_HASH[:7]}}

12 |

13 | requirements:

14 | build:

15 | - python {{PY_VER}}*

16 | - setuptools

17 | - flann 1.8.5dev

18 | - cmake

19 | run:

20 | - python {{PY_VER}}*

21 | - flann 1.8.5dev

22 | - numpy

23 |

24 | test:

25 | imports:

26 | - pyflann

27 |

28 | about:

29 | home: http://www.cs.ubc.ca/research/flann/

30 | license: BSD

31 | license_file: COPYING

32 |

--------------------------------------------------------------------------------

/doc/.gitignore:

--------------------------------------------------------------------------------

1 | _build

2 |

--------------------------------------------------------------------------------

/doc/embedding/API.rst:

--------------------------------------------------------------------------------

1 | .. _embedding_API:

2 |

3 | .. testsetup:: *

4 |

5 | from megaman.embedding import *

6 |

7 | API Documentation

8 | =================

9 |

10 | .. automodule:: megaman.embedding.spectral_embedding

11 | :members:

12 |

13 | .. automodule:: megaman.embedding.isomap

14 | :members:

15 |

16 | .. automodule:: megaman.embedding.locally_linear

17 | :members:

18 |

19 | .. automodule:: megaman.embedding.ltsa

20 | :members:

21 |

--------------------------------------------------------------------------------

/doc/embedding/index.rst:

--------------------------------------------------------------------------------

1 | .. _embedding:

2 |

3 | ***************************************************

4 | Tools for Embedding (``megaman.embedding``)

5 | ***************************************************

6 |

7 | This module contains tools for nonlinear embedding data sets.

8 | These tools include Isomap, Spectral Embedding & Diffusion

9 | Maps, Local Tangent Space Alignment, and Locally Linear

10 | Embedding

11 |

12 | .. toctree::

13 | :maxdepth: 2

14 |

15 | isomap.rst

16 | locally_linear.rst

17 | ltsa.rst

18 | spectral_embedding.rst

19 | API

20 |

--------------------------------------------------------------------------------

/doc/embedding/isomap.rst:

--------------------------------------------------------------------------------

1 | .. _isomap:

2 |

3 | Isomap

4 | ======

5 |

6 | Isomap is one of the embeddings implemented in the megaman package.

7 | Isomap uses Multidimensional Scaling (MDS) to preserve pairwsise

8 | graph shortest distance computed using a sparse neighborhood graph.

9 |

10 | For more information see:

11 |

12 | * Tenenbaum, J.B.; De Silva, V.; & Langford, J.C.

13 | A global geometric framework for nonlinear dimensionality reduction.

14 | Science 290 (5500)

15 |

16 | :class:'~megaman.embedding.Isomap'

17 | This class is used to interface with isomap embedding function.

18 | Like all embedding functions in megaman it operates using a

19 | Geometry object. The Isomap class allows you to optionally

20 | pass an exiting Geometry object, otherwise it creates one.

21 |

22 | API of Isomap

23 | -------------

24 |

25 | The Isomap model, along with all the other models in megaman, have an API

26 | designed to follow in the same vein of

27 | `scikit-learn `_ API.

28 |

29 | Consequentially, the Isomap class functions as follows

30 |

31 | 1. At class instantiation `.Isomap()` parameters are passed. See API

32 | documementation for more information. An existing Geometry object

33 | can be passed to `.Isomap()`.

34 | 2. The `.fit()` method creates a Geometry object if one was not

35 | already passed and then calculates th embedding.

36 | The number of components and eigen solver can also be passed to the

37 | `.fit()` function. Since Isomap caches important quantities

38 | (like the graph distance matrix) which do not change by selecting

39 | different eigen solvers and embeding dimension these can be passed

40 | and a new embedding computed without re-computing existing quantities.

41 | the `.fit()` function does not return anything but it does create

42 | the attribute `self.embedding_` only one `self.embedding_` exists

43 | at a given time. If a new embedding is computed the old one is overwritten.

44 | 3. The `.fit_transform()` function calls the `fit()` function and returns

45 | the embedding. It does not allow for changing parameters.

46 |

47 | See the API documentation for further information.

48 |

49 | Example Usage

50 | -------------

51 |

52 | Here is an example using the function on a random data set::

53 |

54 | import numpy as np

55 | from megaman.geometry import Geometry

56 | from megaman.embedding import Isomap

57 |

58 | X = np.random.randn(100, 10)

59 | radius = 5

60 | adjacency_method = 'cyflann'

61 | adjacency_kwds = {'radius':radius} # ignore distances above this radius

62 |

63 | geom = Geometry(adjacency_method=adjacency_method, adjacency_kwds=adjacency_kwds)

64 |

65 | isomap = Isomap(n_components=n_components, eigen_solver='arpack', geom=geom)

66 | embed_isomap = isomap.fit_transform(X)

67 |

--------------------------------------------------------------------------------

/doc/embedding/locally_linear.rst:

--------------------------------------------------------------------------------

1 | .. _locally_linear:

2 |

3 | Locally Linear Embedding

4 | ========================

5 |

6 | Locally linear embedding is one of the methods implemented in the megaman package.

7 | Locally Linear Embedding uses reconstruction weights estiamted on the original

8 | data set to produce an embedding that preserved the original reconstruction

9 | weights.

10 |

11 | For more information see:

12 |

13 | * Roweis, S. & Saul, L. Nonlinear dimensionality reduction

14 | by locally linear embedding. Science 290:2323 (2000).

15 |

16 | :class:'~megaman.embedding.LocallyLinearEmbedding'

17 | This class is used to interface with locally linear embedding function.

18 | Like all embedding functions in megaman it operates using a

19 | Geometry object. The Locally Linear class allows you to optionally

20 | pass an exiting Geometry object, otherwise it creates one.

21 |

22 |

23 | API of Locally Linear Embedding

24 | -------------------------------

25 |

26 | The Locally Linear model, along with all the other models in megaman, have an API

27 | designed to follow in the same vein of

28 | `scikit-learn `_ API.

29 |

30 | Consequentially, the Locally Linear class functions as follows

31 |

32 | 1. At class instantiation `.LocallyLinear()` parameters are passed. See API

33 | documementation for more information. An existing Geometry object

34 | can be passed to `.LocallyLinear()`.

35 | 2. The `.fit()` method creates a Geometry object if one was not

36 | already passed and then calculates th embedding.

37 | The number of components and eigen solver can also be passed to the

38 | `.fit()` function. WARNING: NOT COMPLETED

39 | Since LocallyLinear caches important quantities

40 | (like the barycenter weight matrix) which do not change by selecting

41 | different eigen solvers and embeding dimension these can be passed

42 | and a new embedding computed without re-computing existing quantities.

43 | the `.fit()` function does not return anything but it does create

44 | the attribute `self.embedding_` only one `self.embedding_` exists

45 | at a given time. If a new embedding is computed the old one is overwritten.

46 | 3. The `.fit_transform()` function calls the `fit()` function and returns

47 | the embedding. It does not allow for changing parameters.

48 |

49 | See the API documentation for further information.

50 |

51 | Example Usage

52 | -------------

53 |

54 | Here is an example using the function on a random data set::

55 |

56 | import numpy as np

57 | from megaman.geometry import Geometry

58 | from megaman.embedding import (Isomap, LocallyLinearEmbedding, LTSA, SpectralEmbedding)

59 |

60 | X = np.random.randn(100, 10)

61 | radius = 5

62 | adjacency_method = 'cyflann'

63 | adjacency_kwds = {'radius':radius} # ignore distances above this radius

64 |

65 | geom = Geometry(adjacency_method=adjacency_method, adjacency_kwds=adjacency_kwds)

66 | lle = LocallyLinearEmbedding(n_components=n_components, eigen_solver='arpack', geom=geom)

67 | embed_lle = lle.fit_transform(X)

68 |

--------------------------------------------------------------------------------

/doc/embedding/ltsa.rst:

--------------------------------------------------------------------------------

1 | .. _ltsa:

2 |

3 | Local Tangent Space Alignment

4 | =============================

5 |

6 | Local Tangent Space Alignment is one of the methods implemented in the megaman package.

7 | Local Tangent Space Alighment uses independent estimates of the local tangent

8 | space at each point and then uses a global alignment procedure with a

9 | unit-scale condition to create a single embedding from each local tangent

10 | space.

11 |

12 | For more information see:

13 |

14 | * Zhang, Z. & Zha, H. Principal manifolds and nonlinear

15 | dimensionality reduction via tangent space alignment.

16 | Journal of Shanghai Univ. 8:406 (2004)

17 |

18 | :class:'~megaman.embedding.LTSA'

19 | This class is used to interface with local tangent space

20 | alignment embedding function.

21 | Like all embedding functions in megaman it operates using a

22 | Geometry object. The Locally Linear class allows you to optionally

23 | pass an exiting Geometry object, otherwise it creates one.

24 |

25 |

26 | API of Local Tangent Space Alignment

27 | ------------------------------------

28 |

29 | The Locally Tangent Space Alignment model, along with all the other models in megaman,

30 | have an API designed to follow in the same vein of

31 | `scikit-learn `_ API.

32 |

33 | Consequentially, the LTSA class functions as follows

34 |

35 | 1. At class instantiation `.LTSA()` parameters are passed. See API

36 | documementation for more information. An existing Geometry object

37 | can be passed to `.LTSA()`.

38 | 2. The `.fit()` method creates a Geometry object if one was not

39 | already passed and then calculates th embedding.

40 | The eigen solver can also be passed to the

41 | `.fit()` function. WARNING: NOT COMPLETED

42 | Since LTSA caches important quantities

43 | (like the local tangent spaces) which do not change by selecting

44 | different eigen solvers and this can be passed

45 | and a new embedding computed without re-computing existing quantities.

46 | the `.fit()` function does not return anything but it does create

47 | the attribute `self.embedding_` only one `self.embedding_` exists

48 | at a given time. If a new embedding is computed the old one is overwritten.

49 | 3. The `.fit_transform()` function calls the `fit()` function and returns

50 | the embedding. It does not allow for changing parameters.

51 |

52 | See the API documentation for further information.

53 |

54 | Example Usage

55 | -------------

56 |

57 | Here is an example using the function on a random data set::

58 |

59 | import numpy as np

60 | from megaman.geometry import Geometry

61 | from megaman.embedding import (Isomap, LocallyLinearEmbedding, LTSA, SpectralEmbedding)

62 |

63 | X = np.random.randn(100, 10)

64 | radius = 5

65 | adjacency_method = 'cyflann'

66 | adjacency_kwds = {'radius':radius} # ignore distances above this radius

67 |

68 | geom = Geometry(adjacency_method=adjacency_method, adjacency_kwds=adjacency_kwds)

69 |

70 | ltsa =LTSA(n_components=n_components, eigen_solver='arpack', geom=geom)

71 | embed_ltsa = ltsa.fit_transform(X)

72 |

--------------------------------------------------------------------------------

/doc/embedding/spectral_embedding.rst:

--------------------------------------------------------------------------------

1 | .. _spectral_embedding:

2 |

3 | Spectral Embedding

4 | ==================

5 |

6 | Spectral Embedding is on of the methods implemented in the megaman package.

7 | Spectral embedding (and diffusion maps) uses the spectrum (eigen vectors

8 | and eigen values) of a graph Laplacian estimated from the data set. There

9 | are a number of different graph Laplacians that can be used.

10 |

11 | For more information see:

12 |

13 | * A Tutorial on Spectral Clustering, 2007

14 | Ulrike von Luxburg

15 | http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.165.9323

16 |

17 | :class:'~megaman.embedding.SpectralEmbedding'

18 | This class is used to interface with spectral embedding function.

19 | Like all embedding functions in megaman it operates using a

20 | Geometry object. The Isomap class allows you to optionally

21 | pass an exiting Geometry object, otherwise it creates one.

22 |

23 | API of Spectral Embedding

24 | -------------------------

25 |

26 | The Spectral Embedding model, along with all the other models in megaman,

27 | have an API designed to follow in the same vein of

28 | `scikit-learn `_ API.

29 |

30 | Consequentially, the LTSA class functions as follows

31 |

32 | 1. At class instantiation `.SpectralEmbedding()` parameters are passed. See API

33 | documementation for more information. An existing Geometry object

34 | can be passed to `.SpectralEmbedding()`. Here is also where

35 | you have the option to use diffusion maps.

36 | 2. The `.fit()` method creates a Geometry object if one was not

37 | already passed and then calculates th embedding.

38 | The eigen solver can also be passed to the

39 | `.fit()` function. WARNING: NOT COMPLETED

40 | Since Geometry caches important quantities

41 | (like the graph Laplacian) which do not change by selecting

42 | different eigen solvers and this can be passed

43 | and a new embedding computed without re-computing existing quantities.

44 | the `.fit()` function does not return anything but it does create

45 | the attribute `self.embedding_` only one `self.embedding_` exists

46 | at a given time. If a new embedding is computed the old one is overwritten.

47 | 3. The `.fit_transform()` function calls the `fit()` function and returns

48 | the embedding. It does not allow for changing parameters.

49 |

50 | See the API documentation for further information.

51 |

52 | Example Usage

53 | -------------

54 |

55 | Here is an example using the function on a random data set::

56 |

57 | import numpy as np

58 | from megaman.geometry import Geometry

59 | from megaman.embedding import SpectralEmbedding

60 |

61 | X = np.random.randn(100, 10)

62 | radius = 5

63 | adjacency_method = 'cyflann'

64 | adjacency_kwds = {'radius':radius} # ignore distances above this radius

65 | affinity_method = 'gaussian'

66 | affinity_kwds = {'radius':radius} # A = exp(-||x - y||/radius^2)

67 | laplacian_method = 'geometric'

68 | laplacian_kwds = {'scaling_epps':radius} # scaling ensures convergence to Laplace-Beltrami operator

69 |

70 | geom = Geometry(adjacency_method=adjacency_method, adjacency_kwds=adjacency_kwds,

71 | affinity_method=affinity_method, affinity_kwds=affinity_kwds,

72 | laplacian_method=laplacian_method, laplacian_kwds=laplacian_kwds)

73 |

74 | spectral = SpectralEmbedding(n_components=n_components, eigen_solver='arpack',

75 | geom=geom)

76 | embed_spectral = spectral.fit_transform(X)

--------------------------------------------------------------------------------

/doc/geometry/API.rst:

--------------------------------------------------------------------------------

1 | .. _geometry_API:

2 |

3 | .. testsetup:: *

4 |

5 | from megaman.geometry import *

6 |

7 | API Documentation

8 | =================

9 |

10 | .. automodule:: megaman.geometry.geometry

11 | :members:

12 |

13 | .. automodule:: megaman.geometry.rmetric

14 | :members:

15 |

--------------------------------------------------------------------------------

/doc/geometry/geometry.rst:

--------------------------------------------------------------------------------

1 | .. _geom:

2 |

3 | Geometry

4 | ========

5 |

6 | One of the fundamental objectives of manifold learning is to understand

7 | the geometry of the data. As such the primary class of this package

8 | is the geometry class:

9 |

10 | :class:'~megaman.geometry.Geometry'

11 | This class is used as the interface to compute various quantities

12 | on the original data set including: pairwise distance graphs,

13 | affinity matrices, and laplacian matrices. It also caches these

14 | quantities and allows for fast re-computation with new parameters.

15 |

16 | API of Geometry

17 | ---------------

18 |

19 | The Geometry class is used to interface with functions that compute various

20 | geometric quantities with respect to the original data set. This is the object

21 | that is passed (or computed) within each embedding function. It is how

22 | megaman caches important quantities allowing for fast re-computation with

23 | various new parameters. Beyond instantiation, the Geometry class offers

24 | three types of functions: compute, set & delete that work with the four

25 | primary data matrices: (raw) data, adjacency matrix, affinity matrix,

26 | and Laplacian Matrix.

27 |

28 | 1. Class instantiation : during class instantiation you input the parameters

29 | concerning the original data matrix such as the distance calculation method,

30 | neighborhood and affinity radius, laplacian type. Each of the three

31 | computed matrices (adjacency, affinity, laplacian) have their

32 | own keyword dictionaries which permit these methods to easily be extended.

33 | 2. `set_[some]_matrix` : these functions allow you to assign a matrix of data

34 | to the geometry object. In particular these are used to fit the geometry

35 | to your input data (which may be of the form data_matrix, adjacency_matrix,

36 | or affinity_matrix). You can also set a Laplacian matrix.

37 | 3. `compute_[some]_matrix` : these functions are designed to compute the

38 | selected matrix (e.g. adjacency). Additional keyword arguments can be

39 | passed which override the ones passed at instantiation. NB: this method

40 | will always re-compute a matrix.

41 | 4. Geometry Attributes. Other than the parameters passed at instantiation each

42 | matrix that is computed is stored as an attribute e.g. geom.adjacency_matrix,

43 | geom.adjacency_matrix, geom.laplacian_matrix. Raw data is stored as geom.X.

44 | If you want to query for these matrices without recomputing you should use

45 | these attributes e.g. my_affinity = geom.affinity_matrix.

46 | 5. `delete_[some]_matrix` : if you are working with large data sets and choose

47 | an algorithm (e.g. Isomap or Spectral Embedding) that do not require the

48 | original data_matrix, these methods can be used to clear memory.

49 |

50 | See the API documentation for further information.

51 |

52 | Example Usage

53 | -------------

54 |

55 | Here is an example using the function on a random data set::

56 |

57 | import numpy as np

58 | from megaman.geometry import Geometry

59 |

60 | X = np.random.randn(100, 10)

61 | radius = 5

62 | adjacency_method = 'cyflann'

63 | adjacency_kwds = {'radius':radius} # ignore distances above this radius

64 | affinity_method = 'gaussian'

65 | affinity_kwds = {'radius':radius} # A = exp(-||x - y||/radius^2)

66 | laplacian_method = 'geometric'

67 | laplacian_kwds = {'scaling_epps':radius} # scaling ensures convergence to Laplace-Beltrami operator

68 |

69 | geom = Geometry(adjacency_method=adjacency_method, adjacency_kwds=adjacency_kwds,

70 | affinity_method=affinity_method, affinity_kwds=affinity_kwds,

71 | laplacian_method=laplacian_method, laplacian_kwds=laplacian_kwds)

--------------------------------------------------------------------------------

/doc/geometry/index.rst:

--------------------------------------------------------------------------------

1 | .. _geometry:

2 |

3 | ***************************************************

4 | Tools for Geometric Analysis (``megaman.geometry``)

5 | ***************************************************

6 |

7 | This module contains tools for analyzing inherent geometry of a data set.

8 | These tools include pairwise distance calculation, as well as affinity and

9 | Laplacian construction (e.g. :class:`~megaman.geometry.Geometry`).

10 |

11 | .. toctree::

12 | :maxdepth: 2

13 |

14 | geometry.rst

15 | API

16 |

--------------------------------------------------------------------------------

/doc/images/circle_to_ellipse_embedding.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mmp2/megaman/249a7d725de1f99ea7f6ba169a5a89468fc423ec/doc/images/circle_to_ellipse_embedding.png

--------------------------------------------------------------------------------

/doc/images/index.rst:

--------------------------------------------------------------------------------

1 | .. _images:

2 |

3 | *********************

4 | Figures from Megaman

5 | *********************

6 |

7 | This section contains some experimental results from using the

8 | megaman package.

9 |

10 | .. toctree::

11 | :maxdepth: 2

12 |

13 | spectra_Halpha.rst

14 | word2vec.rst

--------------------------------------------------------------------------------

/doc/images/spectra_D4000.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mmp2/megaman/249a7d725de1f99ea7f6ba169a5a89468fc423ec/doc/images/spectra_D4000.png

--------------------------------------------------------------------------------

/doc/images/spectra_Halpha.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mmp2/megaman/249a7d725de1f99ea7f6ba169a5a89468fc423ec/doc/images/spectra_Halpha.png

--------------------------------------------------------------------------------

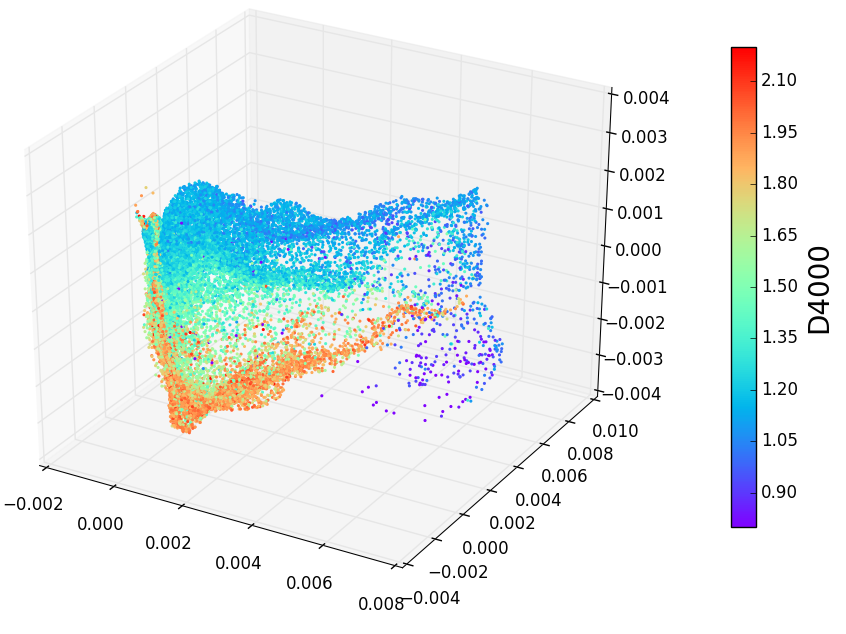

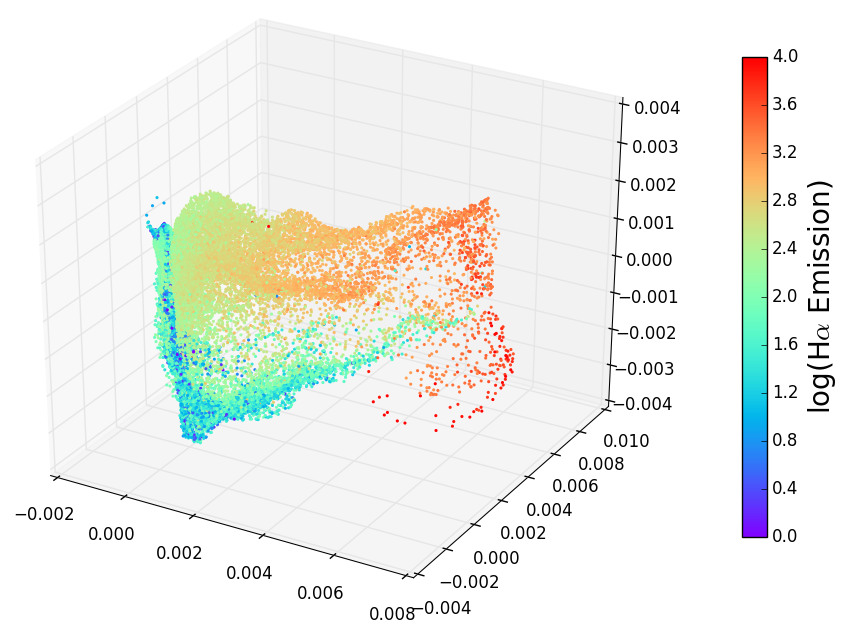

/doc/images/spectra_Halpha.rst:

--------------------------------------------------------------------------------

1 | .. _spectrum_Halpha:

2 |

3 | Spectrum Halpha Plot

4 | ====================

5 |

6 | .. figure:: spectra_Halpha.png

7 | :scale: 50 %

8 | :alt: spectrm Halpha

9 |

10 | A three-dimensional embedding of the main sample of galaxy spectra

11 | from the Sloan Digital Sky Survey (approximately 675,000 spectra

12 | observed in 3750 dimensions). Colors in the above figure indicate

13 | the strength of Hydrogen alpha emission, a very nonlinear feature

14 | which requires dozens of dimensions to be captured in a linear embedding.

--------------------------------------------------------------------------------

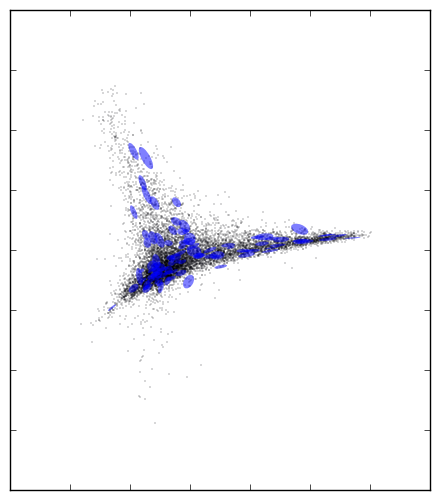

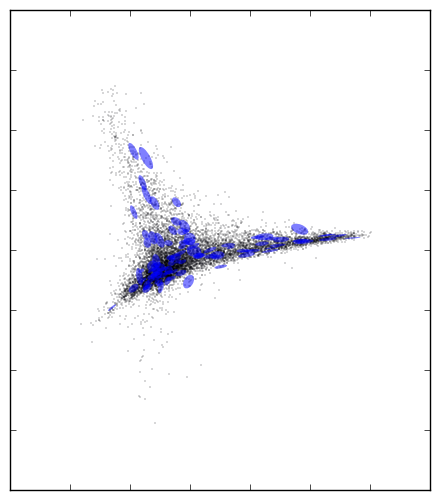

/doc/images/word2vec.rst:

--------------------------------------------------------------------------------

1 | .. _word2vec:

2 |

3 | Word2Vec Plot

4 | ====================

5 |

6 | .. figure:: word2vec_rmetric_plot_no_digits.png

7 | :scale: 50 %

8 | :alt: word2vec embedding with R. metric

9 |

10 | 3,000,000 words and phrases mapped by word2vec using Google News into 300

11 | dimensions. The data was then embedded into 2 dimensions using Spectral

12 | Embedding. The plot shows a sample of 10,000 points displaying the overall

13 | shape of the embedding as well as the estimated "stretch"

14 | (i.e. dual push-forward Riemannian metric) at various locations in the embedding.

--------------------------------------------------------------------------------

/doc/images/word2vec_rmetric_plot_no_digits.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mmp2/megaman/249a7d725de1f99ea7f6ba169a5a89468fc423ec/doc/images/word2vec_rmetric_plot_no_digits.png

--------------------------------------------------------------------------------

/doc/index.rst:

--------------------------------------------------------------------------------

1 | .. image:: images/spectra_Halpha.png

2 | :height: 238 px

3 | :width: 318 px

4 | :align: left

5 | :target: /megaman/images/spectra_Halpha

6 | .. image:: images/word2vec_rmetric_plot_no_digits.png

7 | :height: 250 px

8 | :width: 220 px

9 | :align: right

10 | :target: /megaman/images/word2vec

11 |

12 |

13 | megaman: Manifold Learning for Millions of Points

14 | =================================================

15 |

16 | megaman is a scalable manifold learning package implemented in

17 | python. It has a front-end API designed to be familiar

18 | to `scikit-learn `_ but harnesses

19 | the C++ Fast Library for Approximate Nearest Neighbors (FLANN)

20 | and the Sparse Symmetric Positive Definite (SSPD) solver

21 | Locally Optimal Block Precodition Gradient (LOBPCG) method

22 | to scale manifold learning algorithms to large data sets.

23 | It is designed for researchers and as such caches intermediary

24 | steps and indices to allow for fast re-computation with new parameters.

25 |

26 | For issues & contributions, see the source

27 | `repository on github `_.

28 |

29 | For example notebooks see the

30 | `index on github `_.

31 |

32 | You can also read our

33 | `arXiv paper `_.

34 |

35 | Documentation

36 | =============

37 |

38 | .. toctree::

39 | :maxdepth: 2

40 |

41 | installation

42 | geometry/index

43 | embedding/index

44 | utils/index

45 | images/index

46 |

47 |

48 | Indices and tables

49 | ==================

50 |

51 | * :ref:`genindex`

52 | * :ref:`modindex`

53 | * :ref:`search`

54 |

--------------------------------------------------------------------------------

/doc/installation.rst:

--------------------------------------------------------------------------------

1 | Installation

2 | ============

3 |

4 | Though ``megaman`` has a fair number of compiled dependencies, it is

5 | straightforward to install using the cross-platform conda_ package manager.

6 |

7 | Installation with Conda

8 | -----------------------

9 |

10 | To install ``megaman`` and all its dependencies using conda_, run::

11 |

12 | $ conda install megaman --channel=conda-forge

13 |

14 | Currently builds are available for OSX and Linux, on Python 2.7, 3.4, and 3.5.

15 | For other operating systems, see the full install instructions below.

16 |

17 | Installation from Source

18 | ------------------------

19 |

20 | To install ``megaman`` from source requires the following:

21 |

22 | - python_: tested with versions 2.7, 3.4, and 3.5

23 | - numpy_: version 1.8 or higher

24 | - scipy_: version 0.16.0 or higher

25 | - scikit-learn_: version 0.16.0 or higher

26 | - FLANN_: version 1.8 or higher

27 | - cython_: version 0.23 or higher

28 | - a C++ compiler such as ``gcc``/``g++`` (we recommend version 4.8.*)

29 |

30 | Optional requirements include:

31 |

32 | - pyamg_, which provides fast decompositions of large sparse matrices

33 | - pyflann_, which offers an alternative FLANN interface for computing distance matrices (this is bundled with the FLANN source code)

34 | - nose_ for running the unit tests

35 |

36 | These requirements can be installed on Linux and MacOSX using the following conda command::

37 |

38 | $ conda install --channel=jakevdp pip nose coverage gcc cython numpy scipy scikit-learn pyflann pyamg

39 |

40 | Finally, within the source repository, run this command to install the ``megaman`` package itself::

41 |

42 | $ python setup.py install

43 |

44 | Unit Tests

45 | ----------

46 | ``megaman`` uses nose_ for unit tests. To run the unit tests once ``nose`` is installed, type in the source directory::

47 |

48 | $ make test

49 |

50 | or, outside the source directory once ``megaman`` is installed::

51 |

52 | $ nosetests megaman

53 |

54 | ``megaman`` is tested on Python versions 2.7, 3.4, and 3.5.

55 |

56 | .. _conda: http://conda.pydata.org/miniconda.html

57 | .. _python: http://python.org

58 | .. _numpy: http://numpy.org

59 | .. _scipy: http://scipy.org

60 | .. _scikit-learn: http://scikit-learn.org

61 | .. _FLANN: http://www.cs.ubc.ca/research/flann/

62 | .. _pyamg: http://pyamg.org/

63 | .. _pyflann: http://www.cs.ubc.ca/research/flann/

64 | .. _nose: https://nose.readthedocs.org/

65 | .. _cython: http://cython.org/

66 |

--------------------------------------------------------------------------------

/doc/sphinxext/numpy_ext/__init__.py:

--------------------------------------------------------------------------------

1 | from __future__ import division, absolute_import, print_function

2 |

3 | from .numpydoc import setup

4 |

--------------------------------------------------------------------------------

/doc/sphinxext/numpy_ext/astropyautosummary.py:

--------------------------------------------------------------------------------

1 | # Licensed under a 3-clause BSD style license - see LICENSE.rst

2 | """

3 | This sphinx extension builds off of `sphinx.ext.autosummary` to

4 | clean up some issues it presents in the Astropy docs.

5 |

6 | The main issue this fixes is the summary tables getting cut off before the

7 | end of the sentence in some cases.

8 |

9 | Note: Sphinx 1.2 appears to have fixed the the main issues in the stock

10 | autosummary extension that are addressed by this extension. So use of this

11 | extension with newer versions of Sphinx is deprecated.

12 | """

13 |

14 | import re

15 |

16 | from distutils.version import LooseVersion

17 |

18 | import sphinx

19 |

20 | from sphinx.ext.autosummary import Autosummary

21 |

22 | from ...utils import deprecated

23 |

24 | # used in AstropyAutosummary.get_items

25 | _itemsummrex = re.compile(r'^([A-Z].*?\.(?:\s|$))')

26 |

27 |

28 | @deprecated('1.0', message='AstropyAutosummary is only needed when used '

29 | 'with Sphinx versions less than 1.2')

30 | class AstropyAutosummary(Autosummary):

31 | def get_items(self, names):

32 | """Try to import the given names, and return a list of

33 | ``[(name, signature, summary_string, real_name), ...]``.

34 | """

35 | from sphinx.ext.autosummary import (get_import_prefixes_from_env,

36 | import_by_name, get_documenter, mangle_signature)

37 |

38 | env = self.state.document.settings.env

39 |

40 | prefixes = get_import_prefixes_from_env(env)

41 |

42 | items = []

43 |

44 | max_item_chars = 50

45 |

46 | for name in names:

47 | display_name = name

48 | if name.startswith('~'):

49 | name = name[1:]

50 | display_name = name.split('.')[-1]

51 |

52 | try:

53 | import_by_name_values = import_by_name(name, prefixes=prefixes)

54 | except ImportError:

55 | self.warn('[astropyautosummary] failed to import %s' % name)

56 | items.append((name, '', '', name))

57 | continue

58 |

59 | # to accommodate Sphinx v1.2.2 and v1.2.3

60 | if len(import_by_name_values) == 3:

61 | real_name, obj, parent = import_by_name_values

62 | elif len(import_by_name_values) == 4:

63 | real_name, obj, parent, module_name = import_by_name_values

64 |

65 | # NB. using real_name here is important, since Documenters

66 | # handle module prefixes slightly differently

67 | documenter = get_documenter(obj, parent)(self, real_name)

68 | if not documenter.parse_name():

69 | self.warn('[astropyautosummary] failed to parse name %s' % real_name)

70 | items.append((display_name, '', '', real_name))

71 | continue

72 | if not documenter.import_object():

73 | self.warn('[astropyautosummary] failed to import object %s' % real_name)

74 | items.append((display_name, '', '', real_name))

75 | continue

76 |

77 | # -- Grab the signature

78 |

79 | sig = documenter.format_signature()

80 | if not sig:

81 | sig = ''

82 | else:

83 | max_chars = max(10, max_item_chars - len(display_name))

84 | sig = mangle_signature(sig, max_chars=max_chars)

85 | sig = sig.replace('*', r'\*')

86 |

87 | # -- Grab the summary

88 |

89 | doc = list(documenter.process_doc(documenter.get_doc()))

90 |

91 | while doc and not doc[0].strip():

92 | doc.pop(0)

93 | m = _itemsummrex.search(" ".join(doc).strip())

94 | if m:

95 | summary = m.group(1).strip()

96 | elif doc:

97 | summary = doc[0].strip()

98 | else:

99 | summary = ''

100 |

101 | items.append((display_name, sig, summary, real_name))

102 |

103 | return items

104 |

105 |

106 | def setup(app):

107 | # need autosummary, of course

108 | app.setup_extension('sphinx.ext.autosummary')

109 |

110 | # Don't make the replacement if Sphinx is at least 1.2

111 | if LooseVersion(sphinx.__version__) < LooseVersion('1.2.0'):

112 | # this replaces the default autosummary with the astropy one

113 | app.add_directive('autosummary', AstropyAutosummary)

114 |

--------------------------------------------------------------------------------

/doc/sphinxext/numpy_ext/autodoc_enhancements.py:

--------------------------------------------------------------------------------

1 | """

2 | Miscellaneous enhancements to help autodoc along.

3 | """

4 |

5 |

6 | # See

7 | # https://github.com/astropy/astropy-helpers/issues/116#issuecomment-71254836

8 | # for further background on this.

9 | def type_object_attrgetter(obj, attr, *defargs):

10 | """

11 | This implements an improved attrgetter for type objects (i.e. classes)

12 | that can handle class attributes that are implemented as properties on

13 | a metaclass.

14 |

15 | Normally `getattr` on a class with a `property` (say, "foo"), would return

16 | the `property` object itself. However, if the class has a metaclass which

17 | *also* defines a `property` named "foo", ``getattr(cls, 'foo')`` will find

18 | the "foo" property on the metaclass and resolve it. For the purposes of

19 | autodoc we just want to document the "foo" property defined on the class,

20 | not on the metaclass.

21 |

22 | For example::

23 |

24 | >>> class Meta(type):

25 | ... @property

26 | ... def foo(cls):

27 | ... return 'foo'

28 | ...

29 | >>> class MyClass(metaclass=Meta):

30 | ... @property

31 | ... def foo(self):

32 | ... \"\"\"Docstring for MyClass.foo property.\"\"\"

33 | ... return 'myfoo'

34 | ...

35 | >>> getattr(MyClass, 'foo')

36 | 'foo'

37 | >>> type_object_attrgetter(MyClass, 'foo')

38 |

39 | >>> type_object_attrgetter(MyClass, 'foo').__doc__

40 | 'Docstring for MyClass.foo property.'

41 |

42 | The last line of the example shows the desired behavior for the purposes

43 | of autodoc.

44 | """

45 |

46 | for base in obj.__mro__:

47 | if attr in base.__dict__:

48 | if isinstance(base.__dict__[attr], property):

49 | # Note, this should only be used for properties--for any other

50 | # type of descriptor (classmethod, for example) this can mess

51 | # up existing expectations of what getattr(cls, ...) returns

52 | return base.__dict__[attr]

53 | break

54 |

55 | return getattr(obj, attr, *defargs)

56 |

57 |

58 | def setup(app):

59 | app.add_autodoc_attrgetter(type, type_object_attrgetter)

60 |

--------------------------------------------------------------------------------

/doc/sphinxext/numpy_ext/changelog_links.py:

--------------------------------------------------------------------------------

1 | # Licensed under a 3-clause BSD style license - see LICENSE.rst

2 | """

3 | This sphinx extension makes the issue numbers in the changelog into links to

4 | GitHub issues.

5 | """

6 |

7 | from __future__ import print_function

8 |

9 | import re

10 |

11 | from docutils.nodes import Text, reference

12 |

13 | BLOCK_PATTERN = re.compile('\[#.+\]', flags=re.DOTALL)

14 | ISSUE_PATTERN = re.compile('#[0-9]+')

15 |

16 |

17 | def process_changelog_links(app, doctree, docname):

18 | for rex in app.changelog_links_rexes:

19 | if rex.match(docname):

20 | break

21 | else:

22 | # if the doc doesn't match any of the changelog regexes, don't process

23 | return

24 |

25 | app.info('[changelog_links] Adding changelog links to "{0}"'.format(docname))

26 |

27 | for item in doctree.traverse():

28 |

29 | if not isinstance(item, Text):

30 | continue

31 |

32 | # We build a new list of items to replace the current item. If

33 | # a link is found, we need to use a 'reference' item.

34 | children = []

35 |

36 | # First cycle through blocks of issues (delimited by []) then

37 | # iterate inside each one to find the individual issues.

38 | prev_block_end = 0

39 | for block in BLOCK_PATTERN.finditer(item):

40 | block_start, block_end = block.start(), block.end()

41 | children.append(Text(item[prev_block_end:block_start]))

42 | block = item[block_start:block_end]

43 | prev_end = 0

44 | for m in ISSUE_PATTERN.finditer(block):

45 | start, end = m.start(), m.end()

46 | children.append(Text(block[prev_end:start]))

47 | issue_number = block[start:end]

48 | refuri = app.config.github_issues_url + issue_number[1:]

49 | children.append(reference(text=issue_number,

50 | name=issue_number,

51 | refuri=refuri))

52 | prev_end = end

53 |

54 | prev_block_end = block_end

55 |

56 | # If no issues were found, this adds the whole item,

57 | # otherwise it adds the remaining text.

58 | children.append(Text(block[prev_end:block_end]))

59 |

60 | # If no blocks were found, this adds the whole item, otherwise

61 | # it adds the remaining text.

62 | children.append(Text(item[prev_block_end:]))

63 |

64 | # Replace item by the new list of items we have generated,

65 | # which may contain links.

66 | item.parent.replace(item, children)

67 |

68 |

69 | def setup_patterns_rexes(app):

70 | app.changelog_links_rexes = [re.compile(pat) for pat in

71 | app.config.changelog_links_docpattern]

72 |

73 |

74 | def setup(app):

75 | app.connect('doctree-resolved', process_changelog_links)

76 | app.connect('builder-inited', setup_patterns_rexes)

77 | app.add_config_value('github_issues_url', None, True)

78 | app.add_config_value('changelog_links_docpattern', ['.*changelog.*', 'whatsnew/.*'], True)

79 |

--------------------------------------------------------------------------------

/doc/sphinxext/numpy_ext/comment_eater.py:

--------------------------------------------------------------------------------

1 | from __future__ import division, absolute_import, print_function

2 |

3 | import sys

4 | if sys.version_info[0] >= 3:

5 | from io import StringIO

6 | else:

7 | from io import StringIO

8 |

9 | import compiler

10 | import inspect

11 | import textwrap

12 | import tokenize

13 |

14 | from .compiler_unparse import unparse

15 |

16 |

17 | class Comment(object):

18 | """ A comment block.

19 | """

20 | is_comment = True

21 | def __init__(self, start_lineno, end_lineno, text):

22 | # int : The first line number in the block. 1-indexed.

23 | self.start_lineno = start_lineno

24 | # int : The last line number. Inclusive!

25 | self.end_lineno = end_lineno

26 | # str : The text block including '#' character but not any leading spaces.

27 | self.text = text

28 |

29 | def add(self, string, start, end, line):

30 | """ Add a new comment line.

31 | """

32 | self.start_lineno = min(self.start_lineno, start[0])

33 | self.end_lineno = max(self.end_lineno, end[0])

34 | self.text += string

35 |

36 | def __repr__(self):

37 | return '%s(%r, %r, %r)' % (self.__class__.__name__, self.start_lineno,

38 | self.end_lineno, self.text)

39 |

40 |

41 | class NonComment(object):

42 | """ A non-comment block of code.

43 | """

44 | is_comment = False

45 | def __init__(self, start_lineno, end_lineno):

46 | self.start_lineno = start_lineno

47 | self.end_lineno = end_lineno

48 |

49 | def add(self, string, start, end, line):

50 | """ Add lines to the block.

51 | """

52 | if string.strip():

53 | # Only add if not entirely whitespace.

54 | self.start_lineno = min(self.start_lineno, start[0])

55 | self.end_lineno = max(self.end_lineno, end[0])

56 |

57 | def __repr__(self):

58 | return '%s(%r, %r)' % (self.__class__.__name__, self.start_lineno,

59 | self.end_lineno)

60 |

61 |

62 | class CommentBlocker(object):

63 | """ Pull out contiguous comment blocks.

64 | """

65 | def __init__(self):

66 | # Start with a dummy.

67 | self.current_block = NonComment(0, 0)

68 |

69 | # All of the blocks seen so far.

70 | self.blocks = []

71 |

72 | # The index mapping lines of code to their associated comment blocks.

73 | self.index = {}

74 |

75 | def process_file(self, file):

76 | """ Process a file object.

77 | """

78 | if sys.version_info[0] >= 3:

79 | nxt = file.__next__

80 | else:

81 | nxt = file.next

82 | for token in tokenize.generate_tokens(nxt):

83 | self.process_token(*token)

84 | self.make_index()

85 |

86 | def process_token(self, kind, string, start, end, line):

87 | """ Process a single token.

88 | """

89 | if self.current_block.is_comment:

90 | if kind == tokenize.COMMENT:

91 | self.current_block.add(string, start, end, line)

92 | else:

93 | self.new_noncomment(start[0], end[0])

94 | else:

95 | if kind == tokenize.COMMENT:

96 | self.new_comment(string, start, end, line)

97 | else:

98 | self.current_block.add(string, start, end, line)

99 |

100 | def new_noncomment(self, start_lineno, end_lineno):

101 | """ We are transitioning from a noncomment to a comment.

102 | """

103 | block = NonComment(start_lineno, end_lineno)

104 | self.blocks.append(block)

105 | self.current_block = block

106 |

107 | def new_comment(self, string, start, end, line):

108 | """ Possibly add a new comment.

109 |

110 | Only adds a new comment if this comment is the only thing on the line.

111 | Otherwise, it extends the noncomment block.

112 | """

113 | prefix = line[:start[1]]

114 | if prefix.strip():

115 | # Oops! Trailing comment, not a comment block.

116 | self.current_block.add(string, start, end, line)

117 | else:

118 | # A comment block.

119 | block = Comment(start[0], end[0], string)

120 | self.blocks.append(block)

121 | self.current_block = block

122 |

123 | def make_index(self):

124 | """ Make the index mapping lines of actual code to their associated

125 | prefix comments.

126 | """

127 | for prev, block in zip(self.blocks[:-1], self.blocks[1:]):

128 | if not block.is_comment:

129 | self.index[block.start_lineno] = prev

130 |

131 | def search_for_comment(self, lineno, default=None):

132 | """ Find the comment block just before the given line number.

133 |

134 | Returns None (or the specified default) if there is no such block.

135 | """

136 | if not self.index:

137 | self.make_index()

138 | block = self.index.get(lineno, None)

139 | text = getattr(block, 'text', default)

140 | return text

141 |

142 |

143 | def strip_comment_marker(text):

144 | """ Strip # markers at the front of a block of comment text.

145 | """

146 | lines = []

147 | for line in text.splitlines():

148 | lines.append(line.lstrip('#'))

149 | text = textwrap.dedent('\n'.join(lines))

150 | return text

151 |

152 |

153 | def get_class_traits(klass):

154 | """ Yield all of the documentation for trait definitions on a class object.

155 | """

156 | # FIXME: gracefully handle errors here or in the caller?

157 | source = inspect.getsource(klass)

158 | cb = CommentBlocker()

159 | cb.process_file(StringIO(source))

160 | mod_ast = compiler.parse(source)

161 | class_ast = mod_ast.node.nodes[0]

162 | for node in class_ast.code.nodes:

163 | # FIXME: handle other kinds of assignments?

164 | if isinstance(node, compiler.ast.Assign):

165 | name = node.nodes[0].name

166 | rhs = unparse(node.expr).strip()

167 | doc = strip_comment_marker(cb.search_for_comment(node.lineno, default=''))

168 | yield name, rhs, doc

169 |

170 |

--------------------------------------------------------------------------------

/doc/sphinxext/numpy_ext/doctest.py:

--------------------------------------------------------------------------------

1 | # Licensed under a 3-clause BSD style license - see LICENSE.rst

2 | """

3 | This is a set of three directives that allow us to insert metadata

4 | about doctests into the .rst files so the testing framework knows

5 | which tests to skip.

6 |

7 | This is quite different from the doctest extension in Sphinx itself,

8 | which actually does something. For astropy, all of the testing is

9 | centrally managed from py.test and Sphinx is not used for running

10 | tests.

11 | """

12 | import re

13 | from docutils.nodes import literal_block

14 | from sphinx.util.compat import Directive

15 |

16 |

17 | class DoctestSkipDirective(Directive):

18 | has_content = True

19 |

20 | def run(self):