├── Claude_3_5_Sonnet_to_gpt_4o_mini_Conversion.ipynb

├── Instruct_Prompt_>_Base_Model_Prompt_Converter.ipynb

├── LICENSE

├── Llama_3_1_405B_>_8B_Conversion.ipynb

├── README.md

├── XL_to_XS_conversion.ipynb

├── claude_prompt_engineer.ipynb

├── gpt_planner.ipynb

├── gpt_prompt_engineer.ipynb

├── gpt_prompt_engineer_Classification_Version.ipynb

└── opus_to_haiku_conversion.ipynb

/Claude_3_5_Sonnet_to_gpt_4o_mini_Conversion.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {

6 | "id": "view-in-github",

7 | "colab_type": "text"

8 | },

9 | "source": [

10 | " "

11 | ]

12 | },

13 | {

14 | "cell_type": "markdown",

15 | "metadata": {

16 | "id": "WljjH8K3s7kG"

17 | },

18 | "source": [

19 | "# Claude 3.5 Sonnet to gpt-4o-mini - part of the `gpt-prompt-engineer` repo\n",

20 | "\n",

21 | "This notebook uses Claude 3.5 Sonnet to generate few-shot examples and a system prompt for GPT-4o-mini, so you can move a task to the cheaper model while keeping quality high.\n",

22 | "\n",

23 | "By Matt Shumer (https://twitter.com/mattshumer_)\n",

24 | "\n",

25 | "GitHub repo: https://github.com/mshumer/gpt-prompt-engineer"

26 | ]

27 | },

28 | {

29 | "cell_type": "code",

30 | "execution_count": null,

31 | "metadata": {

32 | "id": "dQmMZdkG_RA5"

33 | },

34 | "outputs": [],

35 | "source": [

36 | "!pip install openai requests\n",

37 | "\n",

38 | "OPENAI_API_KEY = \"YOUR API KEY HERE\" # enter your OpenAI API key here\n",

39 | "ANTHROPIC_API_KEY = \"YOUR API KEY HERE\" # enter your Anthropic API key here"

40 | ]

41 | },

42 | {

43 | "cell_type": "code",

44 | "execution_count": null,

45 | "metadata": {

46 | "id": "wXeqMQpzzosx"

47 | },

48 | "outputs": [],

49 | "source": [

50 | "import re\n",

51 | "import json\n",

52 | "import requests\n",

53 | "from openai import OpenAI\n",

54 | "\n",

55 | "client = OpenAI(api_key=OPENAI_API_KEY)\n",

56 | "\n",

57 | "def generate_candidate_prompts(task, prompt_example, response_example):\n",

58 | "    headers = {\n",

59 | "        \"x-api-key\": ANTHROPIC_API_KEY,\n",

60 | "        \"anthropic-version\": \"2023-06-01\",\n",

61 | "        \"content-type\": \"application/json\"\n",

62 | "    }\n",

63 | "\n",

64 | "    data = {\n",

65 | "        \"model\": 'claude-3-5-sonnet-20240620',\n",

66 | "        \"max_tokens\": 4000,\n",

67 | "        \"temperature\": .5,\n",

68 | "        \"system\": \"\"\"<task>Given an example training sample, create seven additional samples for the same task that are even better. Each example should contain a <prompt> and a <response>.</task>\n",

69 | "\n",

70 | "<rules>\n",

71 | "1. Ensure the new examples are diverse and unique from one another.\n",

72 | "2. They should all be perfect. If you make a mistake, this system won't work.\n",

73 | "</rules>\n",

74 | "\n",

75 | "Respond in this format:\n",

76 | "<response_format>\n",

77 | "<example_one>\n",

78 | "<prompt>\n",

79 | "PUT_PROMPT_HERE\n",

80 | "</prompt>\n",

81 | "<response>\n",

82 | "PUT_RESPONSE_HERE\n",

83 | "</response>\n",

84 | "</example_one>\n",

85 | "\n",

86 | "<example_two>\n",

87 | "<prompt>\n",

88 | "PUT_PROMPT_HERE\n",

89 | "</prompt>\n",

90 | "<response>\n",

91 | "PUT_RESPONSE_HERE\n",

92 | "</response>\n",

93 | "</example_two>\n",

94 | "\n",

95 | "...\n",

96 | "</response_format>\"\"\",\n",

97 | "        \"messages\": [\n",

98 | "            {\"role\": \"user\", \"content\": f\"\"\"<task>{task}</task>\n",

99 | "\n",

100 | "<prompt_example>\n",

101 | "{prompt_example}\n",

102 | "</prompt_example>\n",

103 | "\n",

104 | "<response_example>\n",

105 | "{response_example}\n",

106 | "</response_example>\"\"\"},\n",

107 | "        ]\n",

108 | "    }\n",

109 | "\n",

110 | "\n",

111 | "    response = requests.post(\"https://api.anthropic.com/v1/messages\", headers=headers, json=data)\n",

112 | "\n",

113 | "    response_text = response.json()['content'][0]['text']\n",

114 | "\n",

115 | "    # Parse out the prompts and responses from the tagged output\n",

116 | "    prompts_and_responses = []\n",

117 | "    examples = re.findall(r'<example_\\w+>(.*?)</example_\\w+>', response_text, re.DOTALL)\n",

118 | "    for example in examples:\n",

119 | "        prompt = re.findall(r'<prompt>(.*?)</prompt>', example, re.DOTALL)[0].strip()\n",

120 | "        response = re.findall(r'<response>(.*?)</response>', example, re.DOTALL)[0].strip()\n",

121 | "        prompts_and_responses.append({'prompt': prompt, 'response': response})\n",

122 | "\n",

123 | "    return prompts_and_responses\n",

124 | "\n",

125 | "def generate_system_prompt(task, prompt_examples):\n",

126 | "    headers = {\n",

127 | "        \"x-api-key\": ANTHROPIC_API_KEY,\n",

128 | "        \"anthropic-version\": \"2023-06-01\",\n",

129 | "        \"content-type\": \"application/json\"\n",

130 | "    }\n",

131 | "\n",

132 | "    data = {\n",

133 | "        \"model\": 'claude-3-5-sonnet-20240620',\n",

134 | "        \"max_tokens\": 1000,\n",

135 | "        \"temperature\": .5,\n",

136 | "        \"system\": \"\"\"<task>Given a user description of their use case and a set of prompt / response pairs (it'll be in JSON for easy reading) for the types of outputs we want to generate given inputs, write a fantastic system prompt that describes the task to be done perfectly.</task>\n",

137 | "\n",

138 | "<rules>\n",

139 | "1. Do this perfectly.\n",

140 | "2. Respond only with the system prompt, and nothing else. No other text will be allowed.\n",

141 | "</rules>\n",

142 | "\n",

143 | "Respond in this format:\n",

144 | "<system_prompt>\n",

145 | "WRITE_SYSTEM_PROMPT_HERE\n",

146 | "</system_prompt>\"\"\",\n",

147 | "        \"messages\": [\n",

148 | "            {\"role\": \"user\", \"content\": f\"\"\"<task>{task}</task>\n",

149 | "\n",

150 | "<prompt_response_examples>\n",

151 | "{str(prompt_examples)}\n",

152 | "</prompt_response_examples>\"\"\"},\n",

153 | "        ]\n",

154 | "    }\n",

155 | "\n",

156 | "\n",

157 | "    response = requests.post(\"https://api.anthropic.com/v1/messages\", headers=headers, json=data)\n",

158 | "\n",

159 | "    response_text = response.json()['content'][0]['text']\n",

160 | "\n",

161 | "    # Parse out the text between the system_prompt tags\n",

162 | "    system_prompt = response_text.split('<system_prompt>')[1].split('</system_prompt>')[0].strip()\n",

163 | "\n",

164 | "    return system_prompt\n",

165 | "\n",

166 | "def test_mini(generated_examples, prompt_example, system_prompt):\n",

167 | "    messages = [{\"role\": \"system\", \"content\": system_prompt}]\n",

168 | "\n",

169 | "    for example in generated_examples:\n",

170 | "        messages.append({\"role\": \"user\", \"content\": example['prompt']})\n",

171 | "        messages.append({\"role\": \"assistant\", \"content\": example['response']})\n",

172 | "\n",

173 | "    messages.append({\"role\": \"user\", \"content\": prompt_example.strip()})\n",

174 | "\n",

175 | "    response = client.chat.completions.create(\n",

176 | "        model=\"gpt-4o-mini\",\n",

177 | "        messages=messages,\n",

178 | "        max_tokens=2000,\n",

179 | "        temperature=0.5\n",

180 | "    )\n",

181 | "\n",

182 | "    response_text = response.choices[0].message.content\n",

183 | "\n",

184 | "    return response_text\n",

185 | "\n",

186 | "def run_mini_conversion_process(task, prompt_example, response_example):\n",

187 | "    print('Generating the prompts / responses...')\n",

188 | "    # Generate candidate prompts\n",

189 | "    generated_examples = generate_candidate_prompts(task, prompt_example, response_example)\n",

190 | "\n",

191 | "    print('Prompts / responses generated. Now generating system prompt...')\n",

192 | "\n",

193 | "    # Generate the system prompt\n",

194 | "    system_prompt = generate_system_prompt(task, generated_examples)\n",

195 | "\n",

196 | "    print('System prompt generated:', system_prompt)\n",

197 | "\n",

198 | "    print('\\n\\nTesting the new prompt on GPT-4o-mini, using your input example...')\n",

199 | "    # Test the generated examples and system prompt with the GPT-4o-mini model\n",

200 | "    mini_response = test_mini(generated_examples, prompt_example, system_prompt)\n",

201 | "\n",

202 | "    print('GPT-4o-mini responded with:')\n",

203 | "    print(mini_response)\n",

204 | "\n",

205 | "    print('\\n\\n!! CHECK THE FILE DIRECTORY, THE PROMPT IS NOW SAVED THERE !!')\n",

206 | "\n",

207 | "    # Create a dictionary with all the relevant information\n",

208 | "    result = {\n",

209 | "        \"task\": task,\n",

210 | "        \"initial_prompt_example\": prompt_example,\n",

211 | "        \"initial_response_example\": response_example,\n",

212 | "        \"generated_examples\": generated_examples,\n",

213 | "        \"system_prompt\": system_prompt,\n",

214 | "        \"mini_response\": mini_response\n",

215 | "    }\n",

216 | "\n",

217 | "    # Save the GPT-4o-mini prompt to a Python file\n",

218 | "    with open(\"gpt4o_mini_prompt.py\", \"w\") as file:\n",

219 | "        file.write('system_prompt = \"\"\"' + system_prompt + '\"\"\"\\n\\n')\n",

220 | "\n",

221 | "        file.write('messages = [\\n')\n",

222 | "        for example in generated_examples:\n",

223 | "            file.write('    {\"role\": \"user\", \"content\": \"\"\"' + example['prompt'] + '\"\"\"},\\n')\n",

224 | "            file.write('    {\"role\": \"assistant\", \"content\": \"\"\"' + example['response'] + '\"\"\"},\\n')\n",

225 | "\n",

226 | "        file.write('    {\"role\": \"user\", \"content\": \"\"\"' + prompt_example.strip() + '\"\"\"}\\n')\n",

227 | "        file.write(']\\n')\n",

228 | "\n",

229 | "    return result"

230 | ]

231 | },

232 | {

233 | "cell_type": "markdown",

234 | "source": [

235 | "## Fill in your task, prompt_example, and response_example here. Make sure you keep the quality really high here... this is the most important step!"

236 | ],

237 | "metadata": {

238 | "id": "ZujTAzhuBMea"

239 | }

240 | },

241 | {

242 | "cell_type": "code",

243 | "source": [

244 | "task = \"refactoring complex code\"\n",

245 | "\n",

246 | "prompt_example = \"\"\"def calculate_total(prices, tax, discount, shipping_fee, gift_wrap_fee, membership_discount):\n",

247 | "\n",

248 | "    total = 0\n",

249 | "\n",

250 | "    for i in range(len(prices)):\n",

251 | "\n",

252 | "        total += prices[i]\n",

253 | "\n",

254 | "    if membership_discount != 0:\n",

255 | "\n",

256 | "        total = total - (total * (membership_discount / 100))\n",

257 | "\n",

258 | "    if discount != 0:\n",

259 | "\n",

260 | "        total = total - (total * (discount / 100))\n",

261 | "\n",

262 | "    total = total + (total * (tax / 100))\n",

263 | "\n",

264 | "    if total < 50:\n",

265 | "\n",

266 | "        total += shipping_fee\n",

267 | "\n",

268 | "    else:\n",

269 | "\n",

270 | "        total += shipping_fee / 2\n",

271 | "\n",

272 | "    if gift_wrap_fee != 0:\n",

273 | "\n",

274 | "        total += gift_wrap_fee * len(prices)\n",

275 | "\n",

276 | "    if total > 1000:\n",

277 | "\n",

278 | "        total -= 50\n",

279 | "\n",

280 | "    elif total > 500:\n",

281 | "\n",

282 | "        total -= 25\n",

283 | "\n",

284 | "    total = round(total, 2)\n",

285 | "\n",

286 | "    if total < 0:\n",

287 | "\n",

288 | "        total = 0\n",

289 | "\n",

290 | "    return total\"\"\"\n",

291 | "\n",

292 | "response_example = \"\"\"def calculate_total(prices, tax_rate, discount_rate, shipping_fee, gift_wrap_fee, membership_discount_rate):\n",

293 | "\n",

294 | "    def apply_percentage_discount(amount, percentage):\n",

295 | "\n",

296 | "        return amount * (1 - percentage / 100)\n",

297 | "\n",

298 | "    def calculate_shipping_fee(total):\n",

299 | "\n",

300 | "        return shipping_fee if total < 50 else shipping_fee / 2\n",

301 | "\n",

302 | "    def apply_tier_discount(total):\n",

303 | "\n",

304 | "        if total > 1000:\n",

305 | "\n",

306 | "            return total - 50\n",

307 | "\n",

308 | "        elif total > 500:\n",

309 | "\n",

310 | "            return total - 25\n",

311 | "\n",

312 | "        return total\n",

313 | "\n",

314 | "    subtotal = sum(prices)\n",

315 | "\n",

316 | "    subtotal = apply_percentage_discount(subtotal, membership_discount_rate)\n",

317 | "\n",

318 | "    subtotal = apply_percentage_discount(subtotal, discount_rate)\n",

319 | "\n",

320 | "\n",

321 | "\n",

322 | "    total = subtotal * (1 + tax_rate / 100)\n",

323 | "\n",

324 | "    total += calculate_shipping_fee(total)\n",

325 | "\n",

326 | "    total += gift_wrap_fee * len(prices)\n",

327 | "\n",

328 | "\n",

329 | "\n",

330 | "    total = apply_tier_discount(total)\n",

331 | "\n",

332 | "    total = max(0, round(total, 2))\n",

333 | "\n",

334 | "\n",

335 | "\n",

336 | "    return total\"\"\""

337 | ],

338 | "metadata": {

339 | "id": "XSZqqOoQ-5_E"

340 | },

341 | "execution_count": null,

342 | "outputs": []

343 | },

344 | {

345 | "cell_type": "markdown",

346 | "source": [

347 | "### Now, let's run this system and get our new prompt! At the end, you'll see a new file pop up in the directory that contains everything you'll need to reduce your costs while keeping quality high w/ gpt-4o-mini!"

348 | ],

349 | "metadata": {

350 | "id": "cMO3cJzWA-O0"

351 | }

352 | },

353 | {

354 | "cell_type": "code",

355 | "source": [

356 | "result = run_mini_conversion_process(task, prompt_example, response_example)"

357 | ],

358 | "metadata": {

359 | "id": "O-Bn0rupAJqb"

360 | },

361 | "execution_count": null,

362 | "outputs": []

363 | }

364 | ],

365 | "metadata": {

366 | "colab": {

367 | "provenance": [],

368 | "include_colab_link": true

369 | },

370 | "kernelspec": {

371 | "display_name": "Python 3",

372 | "name": "python3"

373 | },

374 | "language_info": {

375 | "codemirror_mode": {

376 | "name": "ipython",

377 | "version": 3

378 | },

379 | "file_extension": ".py",

380 | "mimetype": "text/x-python",

381 | "name": "python",

382 | "nbconvert_exporter": "python",

383 | "pygments_lexer": "ipython3",

384 | "version": "3.8.8"

385 | }

386 | },

387 | "nbformat": 4,

388 | "nbformat_minor": 0

389 | }

--------------------------------------------------------------------------------
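The parsing step in `generate_candidate_prompts` can be tried without calling any API. The following is a minimal sketch, assuming the model wraps each sample in `<example_...>` tags with `<prompt>` and `<response>` children; the helper name `parse_examples` and the sample text are hypothetical and not part of the notebook. Unlike the notebook code, it skips malformed blocks rather than raising an `IndexError`.

```python
import re

def parse_examples(response_text):
    """Extract prompt/response pairs from tag-delimited model output."""
    pairs = []
    # Assumption: each sample is wrapped in <example_one>, <example_two>, ...
    for block in re.findall(r'<example_\w+>(.*?)</example_\w+>', response_text, re.DOTALL):
        prompt = re.search(r'<prompt>(.*?)</prompt>', block, re.DOTALL)
        response = re.search(r'<response>(.*?)</response>', block, re.DOTALL)
        # Skip blocks that are missing either tag
        if prompt and response:
            pairs.append({'prompt': prompt.group(1).strip(),
                          'response': response.group(1).strip()})
    return pairs

# Hypothetical model output: one complete sample, one missing its response
sample = """<example_one>
<prompt>
def f(x): return x+1
</prompt>
<response>
def increment(x):
    return x + 1
</response>
</example_one>
<example_two>
<prompt>only a prompt</prompt>
</example_two>"""

print(parse_examples(sample))
```

Only the first sample is returned, because the second has no `<response>` tag.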

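`run_mini_conversion_process` builds the saved prompt file by concatenating text between triple quotes, which can yield an invalid or altered Python file when a generated example itself contains `"""` or backslashes. One possible alternative, sketched here under the assumption that `repr()`-style literals are acceptable in the saved file, is to let Python escape the strings; `render_prompt_file` is a hypothetical helper name, not something the notebook defines.

```python
def render_prompt_file(system_prompt, generated_examples, prompt_example):
    """Return Python source that defines system_prompt and messages."""
    lines = ['system_prompt = ' + repr(system_prompt), '', 'messages = [']
    for example in generated_examples:
        # repr() escapes quotes and backslashes, so the output stays valid Python
        lines.append('    ' + repr({"role": "user", "content": example['prompt']}) + ',')
        lines.append('    ' + repr({"role": "assistant", "content": example['response']}) + ',')
    lines.append('    ' + repr({"role": "user", "content": prompt_example.strip()}))
    lines.append(']')
    return '\n'.join(lines) + '\n'

# Hypothetical inputs that would break naive triple-quote concatenation
source = render_prompt_file(
    'Refactor the code. Never emit """ fences.',
    [{'prompt': 'x = """a"""', 'response': "x = 'a'"}],
    'def f(): pass\n',
)

namespace = {}
exec(source, namespace)  # the generated source parses and runs
print(namespace['messages'])
```

The returned string can be written to `gpt4o_mini_prompt.py` in place of the individual `file.write` calls.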
/Instruct_Prompt_>_Base_Model_Prompt_Converter.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "nbformat": 4,

3 | "nbformat_minor": 0,

4 | "metadata": {

5 | "colab": {

6 | "provenance": [],

7 | "authorship_tag": "ABX9TyMiPidmyxX9dj90NyYRBkK/",

8 | "include_colab_link": true

9 | },

10 | "kernelspec": {

11 | "name": "python3",

12 | "display_name": "Python 3"

13 | },

14 | "language_info": {

15 | "name": "python"

16 | }

17 | },

18 | "cells": [

19 | {

20 | "cell_type": "markdown",

21 | "metadata": {

22 | "id": "view-in-github",

23 | "colab_type": "text"

24 | },

25 | "source": [

26 | " "

27 | ]

28 | },

29 | {

30 | "cell_type": "markdown",

31 | "source": [

32 | "Part of the `gpt-prompt-engineer` repo.\n",

33 | "\n",

34 | "Created by [Matt Shumer](https://twitter.com/mattshumer_)."

35 | ],

36 | "metadata": {

37 | "id": "eskBstij58XV"

38 | }

39 | },

40 | {

41 | "cell_type": "code",

42 | "source": [

43 | "!pip install anthropic\n",

44 | "\n",

45 | "import requests\n",

46 | "import anthropic\n",

47 | "\n",

48 | "ANTHROPIC_API_KEY = \"YOUR API KEY\" # Replace with your Anthropic API key\n",

49 | "OCTO_API_KEY = \"YOUR API KEY\" # Replace with your OctoAI API key\n",

50 | "\n",

51 | "client = anthropic.Anthropic(\n",

52 | " api_key=ANTHROPIC_API_KEY,\n",

53 | ")"

54 | ],

55 | "metadata": {

56 | "id": "vSYmdogL6GVF"

57 | },

58 | "execution_count": null,

59 | "outputs": []

60 | },

61 | {

62 | "cell_type": "markdown",

63 | "source": [

64 | "## Let's generate the converted prompt"

65 | ],

66 | "metadata": {

67 | "id": "z7yjKs_Y6ar8"

68 | }

69 | },

70 | {

71 | "cell_type": "code",

72 | "source": [

73 | "original_instruct_prompt = \"\"\"write an essay about frogs\"\"\" ## place your prompt to convert here\n",

74 | "\n",

75 | "message = client.messages.create(\n",

76 | " model=\"claude-3-opus-20240229\",\n",

77 | " max_tokens=1000,\n",

78 | " temperature=0.4,\n",

79 | " system=\"Given a prompt designed for an assistant model, convert it to be used with a base model. Use code as a trick to do this well.\",\n",

80 | " messages=[\n",

81 | " {\n",

82 | " \"role\": \"user\",\n",

83 | " \"content\": [\n",

84 | " {\n",

85 | " \"type\": \"text\",\n",

86 | " \"text\": \"Prompt to convert:\\n\\nApril 8, 2024 — Cohere recently announced the launch of Command R+, a cutting-edge addition to its R-series of large language models (LLMs). In this blog post, Cohere CEO Aidan Gomez delves into how this new model is poised to enhance enterprise-grade AI applications through enhanced efficiency, accuracy, and a robust partnership with Microsoft Azure.\\nToday, we’re introducing Command R+, our most powerful, scalable large language model (LLM) purpose-built to excel at real-world enterprise use cases. Command R+ joins our R-series of LLMs focused on balancing high efficiency with strong accuracy, enabling businesses to move beyond proof-of-concept, and into production with AI.\\nCommand R+, like our recently launched Command R model, features a 128k-token context window and is designed to offer best-in-class:\\nAdvanced Retrieval Augmented Generation (RAG) with citation to reduce hallucinations\\nMultilingual coverage in 10 key languages to support global business operations\\nTool Use to automate sophisticated business processes\\nOur latest model builds on the key strengths of Command R and further improves performance across the board. Command R+ outperforms similar models in the scalable market category, and is competitive with significantly more expensive models on key business-critical capabilities. We achieve this while providing the same commitment to data privacy and security that we’re known for.\\nAs we continue to serve the global enterprise community, we are proud to announce a new collaboration with Microsoft Azure to accelerate enterprise AI adoption.\\n“The collaboration with Cohere underscores our dedication to leading the charge in the AI revolution, bringing the innovative Command R+ model to Azure AI,” said John Montgomery, CVP of Azure AI Platform at Microsoft. 
“This partnership exemplifies our commitment to providing a comprehensive suite of AI tools that empower businesses to achieve more while adhering to the highest standards of security and compliance. Together, we’re setting new benchmarks for what’s possible in enterprise AI, fostering a future where technology amplifies human capability and innovation.”\\n--\\nGive me three good headlines\\n\"\n",

87 | " }\n",

88 | " ]\n",

89 | " },\n",

90 | " {\n",

91 | " \"role\": \"assistant\",\n",

92 | " \"content\": [\n",

93 | " {\n",

94 | " \"type\": \"text\",\n",

95 | " \"text\": \"\\nimport ai\\n\\ndef generate_headlines(press_release):\\n prompt = f\\\"\\\"\\\"Given the following press release, generate a Python list containing three compelling, informative headlines for a news article or blog post covering this announcement. \\n\\n Each headline should:\\n - Be no more than 20 words \\n - Capture the key points about the new Command R+ model and Cohere's partnership with Microsoft Azure\\n\\n Press Release:\\n \\\\\\\"\\\\\\\"\\\\\\\"{press_release}\\\\\\\"\\\\\\\"\\\\\\\"\\n \\n Respond with the Python list containing the three headlines, with each headline surrounded by double quotes.\\\"\\\"\\\"\\n\\n return ai.generate(prompt)\\n\\npress_release = \\\"\\\"\\\"\\nApril 8, 2024 — Cohere recently announced the launch of Command R+, a cutting-edge addition to its R-series of large language models (LLMs). In this blog post, Cohere CEO Aidan Gomez delves into how this new model is poised to enhance enterprise-grade AI applications through enhanced efficiency, accuracy, and a robust partnership with Microsoft Azure.\\n\\nToday, we're introducing Command R+, our most powerful, scalable large language model (LLM) purpose-built to excel at real-world enterprise use cases. Command R+ joins our R-series of LLMs focused on balancing high efficiency with strong accuracy, enabling businesses to move beyond proof-of-concept, and into production with AI.\\n\\nCommand R+, like our recently launched Command R model, features a 128k-token context window and is designed to offer best-in-class:\\n\\nAdvanced Retrieval Augmented Generation (RAG) with citation to reduce hallucinations\\nMultilingual coverage in 10 key languages to support global business operations \\nTool Use to automate sophisticated business processes\\nOur latest model builds on the key strengths of Command R and further improves performance across the board. 
Command R+ outperforms similar models in the scalable market category, and is competitive with significantly more expensive models on key business-critical capabilities. We achieve this while providing the same commitment to data privacy and security that we're known for.\\n\\nAs we continue to serve the global enterprise community, we are proud to announce a new collaboration with Microsoft Azure to accelerate enterprise AI adoption.\\n\\n\\\"The collaboration with Cohere underscores our dedication to leading the charge in the AI revolution, bringing the innovative Command R+ model to Azure AI,\\\" said John Montgomery, CVP of Azure AI Platform at Microsoft. \\\"This partnership exemplifies our commitment to providing a comprehensive suite of AI tools that empower businesses to achieve more while adhering to the highest standards of security and compliance. Together, we're setting new benchmarks for what's possible in enterprise AI, fostering a future where technology amplifies human capability and innovation.\\\"\\n\\\"\\\"\\\"\\n\\nprint(generate_headlines(press_release))\\n\\nOutput:\\n\\\"\\\"\\\"\\n[\\n\"\n",

96 | " }\n",

97 | " ]\n",

98 | " },\n",

99 | " {\n",

100 | " \"role\": \"user\",\n",

101 | " \"content\": [\n",

102 | " {\n",

103 | " \"type\": \"text\",\n",

104 | " \"text\": \"Prompt to convert:\\n\\nSpend Less Time in Bird Library\\nMake this shorter.\\n\"\n",

105 | " }\n",

106 | " ]\n",

107 | " },\n",

108 | " {\n",

109 | " \"role\": \"assistant\",\n",

110 | " \"content\": [\n",

111 | " {\n",

112 | " \"type\": \"text\",\n",

113 | " \"text\": \"\\nimport ai\\n\\ndef shorten_text(input_text):\\n prompt = f\\\"\\\"\\\"Shorten the following text while preserving the key meaning. Ensure the text you generate is shorter than the original:\\n\\n Text: \\\"{input_text}\\\"\\n \\n Respond with the shortened text string inside quotes.\\\"\\\"\\\"\\n\\n return ai.generate(prompt)\\n\\ntext = \\\"Spend Less Time in Bird Library\\\"\\n\\nprint('The shortened text is: ', shorten_text(text))\\n\\nOutput:\\n\\\"\\\"\\\"\\n\\nThe shortened text is: \\\", \\\"\\n\"\n",

114 | " }\n",

115 | " ]\n",

116 | " },\n",

117 | " {\n",

118 | " \"role\": \"user\",\n",

119 | " \"content\": [\n",

120 | " {\n",

121 | " \"type\": \"text\",\n",

122 | " \"text\": \"Prompt to convert:\\n\\npip uninstall -y transformers && pip uninstall -y flash-attn && pip install flash-attn && pip install git+https://github.com/huggingface/transformers && git clone https://github.com/OpenAccess-AI-Collective/axolotl && cd axolotl && pip3 install -e .[flash-attn] && pip3 install -U git+https://github.com/huggingface/peft.git && pip uninstall -y deepspeed && pip install -U deepspeed && pip install accelerate && pip uninstall -y deepspeed && pip install deepspeed==0.13.1 && conda install --name py3.10 mpi4py\\nHow do I run this but use the specific branch dbrx of axolotl?\\n\"\n",

123 | " }\n",

124 | " ]\n",

125 | " },\n",

126 | " {\n",

127 | " \"role\": \"assistant\",\n",

128 | " \"content\": [\n",

129 | " {\n",

130 | " \"type\": \"text\",\n",

131 | " \"text\": \"\\nimport ai\\n\\ndef modify_commands(commands):\\n prompt = f\\\"\\\"\\\"Given the following series of shell commands:\\n\\n ```\\n {commands}\\n ```\\n \\n Modify the commands to clone the specific 'dbrx' branch of the 'axolotl' repository instead of the default branch.\\n\\n Respond with the updated series of commands.\\\"\\\"\\\"\\n\\n return ai.generate(prompt)\\n\\ncommands = \\\"\\\"\\\"\\npip uninstall -y transformers && pip uninstall -y flash-attn && pip install flash-attn && pip install git+https://github.com/huggingface/transformers && git clone https://github.com/OpenAccess-AI-Collective/axolotl && cd axolotl && pip3 install -e .[flash-attn] && pip3 install -U git+https://github.com/huggingface/peft.git && pip uninstall -y deepspeed && pip install -U deepspeed && pip install accelerate && pip uninstall -y deepspeed && pip install deepspeed==0.13.1 && conda install --name py3.10 mpi4py\\n\\\"\\\"\\\"\\n\\nprint(modify_commands(commands))\\n\\nOutput:\\n\\\"\\\"\\\"\\n\"\n",

132 | " }\n",

133 | " ]\n",

134 | " },\n",

135 | " {\n",

136 | " \"role\": \"user\",\n",

137 | " \"content\": [\n",

138 | " {\n",

139 | " \"type\": \"text\",\n",

140 | " \"text\": f\"Prompt to convert:\\n\\n{original_instruct_prompt.strip()}\\n\"\n",

141 | " }\n",

142 | " ]\n",

143 | " },\n",

144 | " {\n",

145 | " \"role\": \"assistant\",\n",

146 | " \"content\": [\n",

147 | " {\n",

148 | " \"type\": \"text\",\n",

149 | " \"text\": \"\"\n",

150 | " }\n",

151 | " ]\n",

152 | " },\n",

153 | " ]\n",

154 | ")\n",

155 | "converted_prompt = message.content[0].text.split('')[0].strip()\n",

156 | "\n",

157 | "print(converted_prompt)"

158 | ],

159 | "metadata": {

160 | "id": "Qbzawzrt6dfQ"

161 | },

162 | "execution_count": null,

163 | "outputs": []

164 | },

165 | {

166 | "cell_type": "markdown",

167 | "source": [

168 | "## Use OctoAI's Mixtral 8x22B endpoint to test your prompt"

169 | ],

170 | "metadata": {

171 | "id": "CemDevtNS34g"

172 | }

173 | },

174 | {

175 | "cell_type": "code",

176 | "execution_count": null,

177 | "metadata": {

178 | "id": "SxSB1loL3g8P"

179 | },

180 | "outputs": [],

181 | "source": [

182 | "def generate_octo(prompt, max_tokens, temperature):\n",

183 | "    url = \"https://text.octoai.run/v1/completions\"\n",

184 | "    headers = {\n",

185 | "        \"Content-Type\": \"application/json\",\n",

186 | "        \"Authorization\": f\"Bearer {OCTO_API_KEY}\"\n",

187 | "    }\n",

188 | "    data = {\n",

189 | "        \"model\": \"mixtral-8x22b\",\n",

190 | "        \"prompt\": prompt,\n",

191 | "        \"max_tokens\": max_tokens,\n",

192 | "        \"temperature\": temperature,\n",

193 | "    }\n",

194 | "\n",

195 | "    response = requests.post(url, headers=headers, json=data)\n",

196 | "\n",

197 | "    if response.status_code != 200:\n",

198 | "        # An error body has no 'choices' to parse, so fail loudly here\n",

199 | "        raise RuntimeError(\n",

200 | "            f\"Request failed with status code {response.status_code}: {response.text}\"\n",

201 | "        )\n",

202 | "\n",

203 | "    return response.json()['choices'][0]['text']\n",

204 | "\n",

205 | "print(generate_octo(converted_prompt, 500, .6).strip())"

206 | ]

207 | }

208 | ]

209 | }

--------------------------------------------------------------------------------
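
The converter cell above keeps only the part of the model's reply that precedes a closing delimiter. Python's `str.split` rejects an empty separator (it raises `ValueError`), so that delimiter has to be a non-empty string. A minimal sketch of the extraction step follows; the helper name `extract_before` and the closing tag `</converted_prompt>` are hypothetical and chosen only for illustration, not taken from the notebook.

```python
def extract_before(text: str, delimiter: str) -> str:
    """Return the part of `text` before the first `delimiter`, stripped.

    If the delimiter does not occur, the whole text is returned (stripped).
    """
    if not delimiter:
        # str.split('') raises ValueError, so fail early with a clearer message
        raise ValueError("delimiter must be a non-empty string")
    head, _, _ = text.partition(delimiter)
    return head.strip()


# Hypothetical reply and closing tag, for illustration only
reply = "\nimport ai\n\nprint(ai.generate('...'))\n</converted_prompt>\ntrailing text"
converted = extract_before(reply, "</converted_prompt>")
print(converted)
```

Using `str.partition` rather than `split(...)[0]` keeps the behavior well defined when the delimiter is missing, which can happen if the reply is cut off by `max_tokens`.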

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2023 mshumer

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/Llama_3_1_405B_>_8B_Conversion.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "nbformat": 4,

3 | "nbformat_minor": 0,

4 | "metadata": {

5 | "colab": {

6 | "provenance": [],

7 | "include_colab_link": true

8 | },

9 | "kernelspec": {

10 | "name": "python3",

11 | "display_name": "Python 3"

12 | },

13 | "language_info": {

14 | "name": "python"

15 | }

16 | },

17 | "cells": [

18 | {

19 | "cell_type": "markdown",

20 | "metadata": {

21 | "id": "view-in-github",

22 | "colab_type": "text"

23 | },

24 | "source": [

25 | " "

26 | ]

27 | },

28 | {

29 | "cell_type": "markdown",

30 | "source": [

31 | "# Llama 3.1 405B to Llama 3.1 8B - part of the `gpt-prompt-engineer` repo\n",

32 | "\n",

33 | "This notebook gives you the ability to go from Llama 3.1 405B to Llama 3.1 8B -- reducing costs massively while keeping quality high.\n",

34 | "\n",

35 | "This is powered by OctoAI inference. You'll need to sign up for OctoAI and get an API key to continue.\n",

36 | "\n",

37 | "By Matt Shumer (https://twitter.com/mattshumer_) and Ben Hamm (https://www.linkedin.com/in/hammben/)\n",

38 | "\n",

39 | "Github repo: https://github.com/mshumer/gpt-prompt-engineer\n",

40 | "\n"

41 | ],

42 | "metadata": {

43 | "id": "2Jyodln24Bda"

44 | }

45 | },

46 | {

47 | "cell_type": "code",

48 | "execution_count": null,

49 | "metadata": {

50 | "id": "oeDuRwSk3tG6"

51 | },

52 | "outputs": [],

53 | "source": [

54 | "!pip install openai"

55 | ]

56 | },

57 | {

58 | "cell_type": "markdown",

59 | "source": [

60 | "Get your OctoAI API key at: https://octo.ai"

61 | ],

62 | "metadata": {

63 | "id": "ULGwpuIi6Rm0"

64 | }

65 | },

66 | {

67 | "cell_type": "code",

68 | "source": [

69 | "import os\n",

70 | "\n",

71 | "os.environ[\"OCTOAI_API_KEY\"] = \"PLACE YOUR KEY HERE\""

72 | ],

73 | "metadata": {

74 | "id": "xXqMwX5U6Qac"

75 | },

76 | "execution_count": null,

77 | "outputs": []

78 | },

79 | {

80 | "cell_type": "code",

81 | "source": [

82 | "from IPython.display import HTML, display\n",

83 | "import re\n",

84 | "import json\n",

85 | "import os\n",

86 | "from openai import OpenAI\n",

87 | "\n",

88 | "def set_css():\n",

89 | " display(HTML('''\n",

90 | " \n",

95 | " '''))\n",

96 | "get_ipython().events.register('pre_run_cell', set_css)\n",

97 | "\n",

98 | "# Initialize the OpenAI client with custom base URL\n",

99 | "client = OpenAI(\n",

100 | " base_url=\"https://text.octoai.run/v1\",\n",

101 | " api_key=os.environ['OCTOAI_API_KEY'],\n",

102 | ")\n",

103 | "\n",

104 | "# Define model names\n",

105 | "small_model = \"meta-llama-3.1-8b-instruct\"\n",

106 | "big_model = \"meta-llama-3.1-405b-instruct\"\n",

107 | "\n",

108 | "def generate_candidate_prompts(task, prompt_example, response_example):\n",

109 | "    system_prompt = \"\"\"Given an example training sample, create seven additional samples for the same task that are even better. Each example should contain a <prompt> and a <response>.\n",

110 | "\n",

111 | "\n",

112 | "1. Ensure the new examples are diverse and unique from one another.\n",

113 | "2. They should all be perfect. If you make a mistake, this system won't work.\n",

114 | "\n",

115 | "\n",

116 | "Respond in this format:\n",

117 | "\n",

118 | "<example_one>\n",

119 | "<prompt>\n",

120 | "PUT_PROMPT_HERE\n",

121 | "</prompt>\n",

122 | "<response>\n",

123 | "PUT_RESPONSE_HERE\n",

124 | "</response>\n",

125 | "</example_one>\n",

126 | "\n",

127 | "<example_two>\n",

128 | "<prompt>\n",

129 | "PUT_PROMPT_HERE\n",

130 | "</prompt>\n",

131 | "<response>\n",

132 | "PUT_RESPONSE_HERE\n",

133 | "</response>\n",

134 | "</example_two>\n",

135 | "\n",

136 | "...\n",

137 | "\"\"\"\n",

138 | "\n",

139 | "    user_content = f\"\"\"{task}\n",

140 | "\n",

141 | "\n",

142 | "{prompt_example}\n",

143 | "\n",

144 | "\n",

145 | "\n",

146 | "{response_example}\n",

147 | "\"\"\"\n",

148 | "\n",

149 | "    response = client.chat.completions.create(\n",

150 | "        model=big_model,\n",

151 | "        messages=[\n",

152 | "            {\"role\": \"system\", \"content\": system_prompt},\n",

153 | "            {\"role\": \"user\", \"content\": user_content}\n",

154 | "        ],\n",

155 | "        max_tokens=4000,\n",

156 | "        temperature=0.5\n",

157 | "    )\n",

158 | "\n",

159 | "    response_text = response.choices[0].message.content\n",

160 | "\n",

161 | "    # Parse out the prompts and responses from the tagged examples\n",

162 | "    prompts_and_responses = []\n",

163 | "    examples = re.findall(r'<example_\\w+>(.*?)</example_\\w+>', response_text, re.DOTALL)\n",

164 | "    for example in examples:\n",

165 | "        prompt = re.findall(r'<prompt>(.*?)</prompt>', example, re.DOTALL)[0].strip()\n",

166 | "        response = re.findall(r'<response>(.*?)</response>', example, re.DOTALL)[0].strip()\n",

167 | "        prompts_and_responses.append({'prompt': prompt, 'response': response})\n",

168 | "\n",

169 | "    return prompts_and_responses\n",

170 | "\n",

171 | "def generate_system_prompt(task, prompt_examples):\n",

172 | "    system_prompt = \"\"\"Given a user's description of their task and a set of prompt / response pairs (it'll be in JSON for easy reading) for the types of outputs we want to generate given inputs, write a fantastic system prompt that describes the task to be done perfectly.\n",

173 | "\n",

174 | "\n",

175 | "1. Do this perfectly.\n",

176 | "2. Respond only with the system prompt, and nothing else. No other text will be allowed.\n",

177 | "\n",

178 | "\n",

179 | "Respond in this format:\n",

180 | "<system_prompt>\n",

181 | "WRITE_SYSTEM_PROMPT_HERE\n",

182 | "</system_prompt>\"\"\"\n",

183 | "\n",

184 | "    user_content = f\"\"\"{task}\n",

185 | "\n",

186 | "\n",

187 | "{str(prompt_examples)}\n",

188 | "\"\"\"\n",

189 | "\n",

190 | "    response = client.chat.completions.create(\n",

191 | "        model=big_model,\n",

192 | "        messages=[\n",

193 | "            {\"role\": \"system\", \"content\": system_prompt},\n",

194 | "            {\"role\": \"user\", \"content\": user_content}\n",

195 | "        ],\n",

196 | "        max_tokens=1000,\n",

197 | "        temperature=0.5\n",

198 | "    )\n",

199 | "\n",

200 | "    response_text = response.choices[0].message.content\n",

201 | "\n",

202 | "    # Parse out the system prompt from between its tags\n",

203 | "    generated_system_prompt = response_text.split('<system_prompt>')[1].split('</system_prompt>')[0].strip()\n",

204 | "\n",

205 | "    return generated_system_prompt\n",

206 | "\n",

207 | "def test_small_model(generated_examples, prompt_example, system_prompt):\n",

208 | "    messages = [{\"role\": \"system\", \"content\": system_prompt}]\n",

209 | "\n",

210 | "    for example in generated_examples:\n",

211 | "        messages.append({\"role\": \"user\", \"content\": example['prompt']})\n",

212 | "        messages.append({\"role\": \"assistant\", \"content\": example['response']})\n",

213 | "\n",

214 | "    messages.append({\"role\": \"user\", \"content\": prompt_example.strip()})\n",

215 | "\n",

216 | "    response = client.chat.completions.create(\n",

217 | "        model=small_model,\n",

218 | "        messages=messages,\n",

219 | "        max_tokens=2000,\n",

220 | "        temperature=0.5\n",

221 | "    )\n",

222 | "\n",

223 | "    response_text = response.choices[0].message.content\n",

224 | "\n",

225 | "    return response_text\n",

226 | "\n",

227 | "def run_conversion_process(task, prompt_example, response_example):\n",

228 | "    print('Generating the prompts / responses...')\n",

229 | "    # Generate candidate prompts\n",

230 | "    generated_examples = generate_candidate_prompts(task, prompt_example, response_example)\n",

231 | "\n",

232 | "    print('Prompts / responses generated. Now generating system prompt...')\n",

233 | "\n",

234 | "    # Generate the system prompt\n",

235 | "    system_prompt = generate_system_prompt(task, generated_examples)\n",

236 | "\n",

237 | "    print('System prompt generated:', system_prompt)\n",

238 | "\n",

239 | "    print(f'\\n\\nTesting the new prompt on {small_model}, using your input example...')\n",

240 | "    # Test the generated examples and system prompt with the small model\n",

241 | "    small_model_response = test_small_model(generated_examples, prompt_example, system_prompt)\n",

242 | "\n",

243 | "    print(f'{small_model} responded with:')\n",

244 | "    print(small_model_response)\n",

245 | "\n",

246 | "    print('\\n\\n!! CHECK THE FILE DIRECTORY, THE PROMPT IS NOW SAVED THERE !!')\n",

247 | "\n",

248 | "    # Create a dictionary with all the relevant information\n",

249 | "    result = {\n",

250 | "        \"task\": task,\n",

251 | "        \"initial_prompt_example\": prompt_example,\n",

252 | "        \"initial_response_example\": response_example,\n",

253 | "        \"generated_examples\": generated_examples,\n",

254 | "        \"system_prompt\": system_prompt,\n",

255 | "        \"small_model_response\": small_model_response\n",

256 | "    }\n",

257 | "\n",

258 | "    # Save the small model prompt to a Python file\n",

259 | "    with open(\"small_model_prompt.py\", \"w\") as file:\n",

260 | "        file.write('system_prompt = \"\"\"' + system_prompt + '\"\"\"\\n\\n')\n",

261 | "\n",

262 | "        file.write('messages = [\\n')\n",

263 | "        for example in generated_examples:\n",

264 | "            file.write(' {\"role\": \"user\", \"content\": \"\"\"' + example['prompt'] + '\"\"\"},\\n')\n",

265 | "            file.write(' {\"role\": \"assistant\", \"content\": \"\"\"' + example['response'] + '\"\"\"},\\n')\n",

266 | "\n",

267 | "        file.write(' {\"role\": \"user\", \"content\": \"\"\"' + prompt_example.strip() + '\"\"\"}\\n')\n",

268 | "        file.write(']\\n')\n",

269 | "\n",

270 | "    return result"

271 | ],

272 | "metadata": {

273 | "id": "ylWOvJEG4N3I"

274 | },

275 | "execution_count": null,

276 | "outputs": []

277 | },

278 | {

279 | "cell_type": "markdown",

280 | "source": [

281 | "# Fill in your task, prompt_example, and response_example here. Make sure you keep the quality really high here... this is the most important step!"

282 | ],

283 | "metadata": {

284 | "id": "y8PCYOdO4ZYF"

285 | }

286 | },

287 | {

288 | "cell_type": "code",

289 | "source": [

290 | "task = \"refactoring complex code\"\n",

291 | "\n",

292 | "prompt_example = \"\"\"def calculate_total(prices, tax, discount, shipping_fee, gift_wrap_fee, membership_discount):\n",

293 | "\n",

294 | "    total = 0\n",

295 | "\n",

296 | "    for i in range(len(prices)):\n",

297 | "\n",

298 | "        total += prices[i]\n",

299 | "\n",

300 | "    if membership_discount != 0:\n",

301 | "\n",

302 | "        total = total - (total * (membership_discount / 100))\n",

303 | "\n",

304 | "    if discount != 0:\n",

305 | "\n",

306 | "        total = total - (total * (discount / 100))\n",

307 | "\n",

308 | "    total = total + (total * (tax / 100))\n",

309 | "\n",

310 | "    if total < 50:\n",

311 | "\n",

312 | "        total += shipping_fee\n",

313 | "\n",

314 | "    else:\n",

315 | "\n",

316 | "        total += shipping_fee / 2\n",

317 | "\n",

318 | "    if gift_wrap_fee != 0:\n",

319 | "\n",

320 | "        total += gift_wrap_fee * len(prices)\n",

321 | "\n",

322 | "    if total > 1000:\n",

323 | "\n",

324 | "        total -= 50\n",

325 | "\n",

326 | "    elif total > 500:\n",

327 | "\n",

328 | "        total -= 25\n",

329 | "\n",

330 | "    total = round(total, 2)\n",

331 | "\n",

332 | "    if total < 0:\n",

333 | "\n",

334 | "        total = 0\n",

335 | "\n",

336 | "    return total\"\"\"\n",

337 | "\n",

338 | "response_example = \"\"\"def calculate_total(prices, tax_rate, discount_rate, shipping_fee, gift_wrap_fee, membership_discount_rate):\n",

339 | "\n",

340 | "    def apply_percentage_discount(amount, percentage):\n",

341 | "\n",

342 | "        return amount * (1 - percentage / 100)\n",

343 | "\n",

344 | "    def calculate_shipping_fee(total):\n",

345 | "\n",

346 | "        return shipping_fee if total < 50 else shipping_fee / 2\n",

347 | "\n",

348 | "    def apply_tier_discount(total):\n",

349 | "\n",

350 | "        if total > 1000:\n",

351 | "\n",

352 | "            return total - 50\n",

353 | "\n",

354 | "        elif total > 500:\n",

355 | "\n",

356 | "            return total - 25\n",

357 | "\n",

358 | "        return total\n",

359 | "\n",

360 | "    subtotal = sum(prices)\n",

361 | "\n",

362 | "    subtotal = apply_percentage_discount(subtotal, membership_discount_rate)\n",

363 | "\n",

364 | "    subtotal = apply_percentage_discount(subtotal, discount_rate)\n",

365 | "\n",

366 | "\n",

367 | "\n",

368 | "    total = subtotal * (1 + tax_rate / 100)\n",

369 | "\n",

370 | "    total += calculate_shipping_fee(total)\n",

371 | "\n",

372 | "    total += gift_wrap_fee * len(prices)\n",

373 | "\n",

374 | "\n",

375 | "\n",

376 | "    total = apply_tier_discount(total)\n",

377 | "\n",

378 | "    total = max(0, round(total, 2))\n",

379 | "\n",

380 | "\n",

381 | "\n",

382 | "    return total\"\"\""

383 | ],

384 | "metadata": {

385 | "id": "zdPBIjMc4YIj"

386 | },

387 | "execution_count": null,

388 | "outputs": []

389 | },

390 | {

391 | "cell_type": "markdown",

392 | "source": [

393 | "# Now, let's run this system and get our new prompt! At the end, you'll see a new file pop up in the directory that contains everything you'll need to reduce your costs while keeping quality high w/ Llama 3.1 8B!"

394 | ],

395 | "metadata": {

396 | "id": "xu8WkKy44eRF"

397 | }

398 | },

399 | {

400 | "cell_type": "code",

401 | "source": [

402 | "result = run_conversion_process(task, prompt_example, response_example)"

403 | ],

404 | "metadata": {

405 | "id": "VxDzg8944eBB"

406 | },

407 | "execution_count": null,

408 | "outputs": []

409 | }

410 | ]

411 | }

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # gpt-prompt-engineer

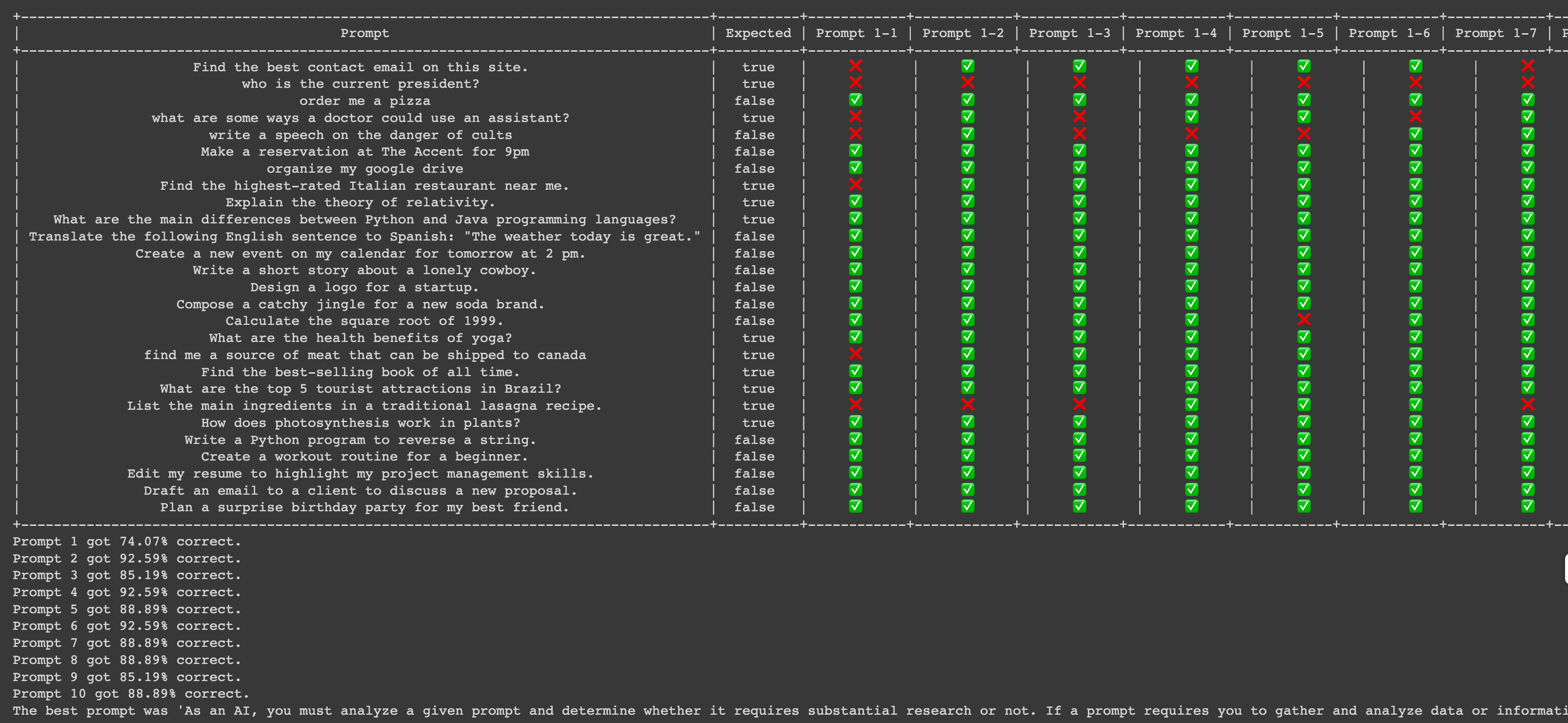

2 | [](https://twitter.com/mattshumer_) [](https://colab.research.google.com/github/mshumer/gpt-prompt-engineer/blob/main/gpt_prompt_engineer.ipynb) [](https://colab.research.google.com/drive/16NLMjqyuUWxcokE_NF6RwHD8grwEeoaJ?usp=sharing)

3 |

4 | ## Overview

5 |

6 | Prompt engineering is kind of like alchemy. There's no clear way to predict what will work best. It's all about experimenting until you find the right prompt. `gpt-prompt-engineer` is a tool that takes this experimentation to a whole new level.

7 |

8 | **Simply input a description of your task and some test cases, and the system will generate, test, and rank a multitude of prompts to find the ones that perform the best.**

9 |

10 | ## *New 3/20/24: The Claude 3 Opus Version*

11 | I've added a new version of gpt-prompt-engineer that takes full advantage of Anthropic's Claude 3 Opus model. This version auto-generates test cases and lets the user define multiple input variables, making it even more powerful and flexible. Try it out with the claude_prompt_engineer.ipynb notebook in the repo!

12 |

13 | ## *New 3/20/24: Claude 3 Opus -> Haiku Conversion Version*

14 | This notebook enables you to build lightning-fast, performant AI systems at a fraction of the typical cost. Claude 3 Opus first produces a collection of top-notch examples for your task; those examples are then used as few-shot guidance for Claude 3 Haiku, which handles the actual generation. The result is output of comparable quality with dramatically lower latency and cost per generation. Try it out with the opus_to_haiku_conversion.ipynb notebook in the repo!

15 |
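
The example-guided setup described above can be sketched roughly as follows. This is a minimal illustration, not the notebook's actual code: the helper names `build_few_shot_messages` and `render_prompt_module` are hypothetical, and the `prompt` / `response` keys are assumed to match the shape of the generated examples. Rendering with `repr` is a swapped-in choice that keeps the saved file valid Python even when an example itself contains triple quotes.

```python
from typing import Dict, List


def build_few_shot_messages(examples: List[Dict[str, str]], new_prompt: str) -> List[Dict[str, str]]:
    """Turn large-model examples into alternating user/assistant turns,
    then append the new prompt as the final user turn."""
    messages: List[Dict[str, str]] = []
    for example in examples:
        messages.append({"role": "user", "content": example["prompt"]})
        messages.append({"role": "assistant", "content": example["response"]})
    messages.append({"role": "user", "content": new_prompt.strip()})
    return messages


def render_prompt_module(system_prompt: str, messages: List[Dict[str, str]]) -> str:
    """Render the prompt as Python source. repr() escapes quotes and
    backslashes, so the output stays importable for any string content."""
    return f"system_prompt = {system_prompt!r}\n\nmessages = {messages!r}\n"


if __name__ == "__main__":
    demo_examples = [{"prompt": "Refactor: x = x + 1", "response": "x += 1"}]
    demo_messages = build_few_shot_messages(demo_examples, "Refactor: y = y * 2\n")
    print(render_prompt_module("You refactor code.", demo_messages))
```

The resulting `messages` list can be passed to whichever chat API serves the smaller model, with `system_prompt` supplied in the form that API expects.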

16 | ## Features

17 |

18 | - **Prompt Generation**: Using GPT-4, GPT-3.5-Turbo, or Claude 3 Opus, `gpt-prompt-engineer` can generate a variety of possible prompts based on a provided use-case and test cases.

19 |

20 | - **Prompt Testing**: The real magic happens after the generation. The system tests each prompt against all the test cases, comparing their performance and ranking them using an Elo rating system.

21 |
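
To make the ranking step concrete, here is a minimal sketch of a standard Elo update between two prompts after one head-to-head comparison. The K-factor of 32 and the starting rating of 1200 are assumptions chosen for illustration, and the function names are hypothetical; the excerpt above does not specify the values or code the tool uses.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32):
    """Return updated (rating_a, rating_b).

    score_a is 1 if prompt A won the comparison, 0 if it lost, 0.5 for a draw.
    k (assumed 32 here) controls how far a single result moves the ratings.
    """
    expected_a = expected_score(rating_a, rating_b)
    expected_b = 1 - expected_a
    new_a = rating_a + k * (score_a - expected_a)
    new_b = rating_b + k * ((1 - score_a) - expected_b)
    return new_a, new_b


if __name__ == "__main__":
    # Two prompts start level (assumed 1200 each); prompt A wins one test case.
    print(update_elo(1200, 1200, 1))  # (1216.0, 1184.0)
```

Repeating this update over every pair of prompts and every test case yields a ranking in which consistently preferred prompts rise to the top.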

"

26 | ]

27 | },

28 | {

29 | "cell_type": "markdown",

30 | "source": [

31 | "# Llama 3.1 405B to Llama 3.1 8B - part of the `gpt-prompt-engineer` repo\n",

32 | "\n",