├── dataset
│   ├── __init__.py
│   ├── __pycache__
│   │   ├── scaler.cpython-39.pyc
│   │   ├── __init__.cpython-39.pyc
│   │   ├── preprocess.cpython-39.pyc
│   │   ├── quaternion.cpython-39.pyc
│   │   └── dance_dataset.cpython-39.pyc
│   ├── preprocess.py
│   ├── quaternion.py
│   ├── masks.py
│   ├── scaler.py
│   └── dance_dataset.py
├── data
│   ├── audio_extraction
│   │   ├── __init__.py
│   │   ├── __pycache__
│   │   │   ├── __init__.cpython-39.pyc
│   │   │   ├── both_features.cpython-39.pyc
│   │   │   ├── jukebox_features.cpython-39.pyc
│   │   │   └── baseline_features.cpython-39.pyc
│   │   ├── jukebox_features.py
│   │   └── baseline_features.py
│   ├── download_dataset.sh
│   ├── splits
│   │   ├── crossmodal_test.txt
│   │   ├── ignore_list.txt
│   │   └── crossmodal_train.txt
│   ├── filter_split_data.py
│   ├── create_dataset.py
│   └── slice.py
├── figs
│   └── model_00.png
├── .idea
│   ├── misc.xml
│   ├── .gitignore
│   ├── inspectionProfiles
│   │   └── profiles_settings.xml
│   ├── modules.xml
│   └── DGSDP-main.iml
├── train.py
├── eval
│   ├── eval_pfc.py
│   ├── beat_align_score.py
│   ├── features
│   │   ├── kinetic.py
│   │   ├── utils.py
│   │   ├── manual_new.py
│   │   └── manual.py
│   └── metrics_diversity.py
├── model
│   ├── utils.py
│   ├── adan.py
│   ├── rotary_embedding_torch.py
│   ├── style_CLIP.py
│   ├── model.py
│   └── diffusion.py
├── args.py
├── README.md
├── test.py
├── vis.py
└── DGSDP.py

/dataset/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/data/audio_extraction/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/figs/model_00.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/figs/model_00.png

--------------------------------------------------------------------------------

/dataset/__pycache__/scaler.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/dataset/__pycache__/scaler.cpython-39.pyc

--------------------------------------------------------------------------------

/dataset/__pycache__/__init__.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/dataset/__pycache__/__init__.cpython-39.pyc

--------------------------------------------------------------------------------

/dataset/__pycache__/preprocess.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/dataset/__pycache__/preprocess.cpython-39.pyc

--------------------------------------------------------------------------------

/dataset/__pycache__/quaternion.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/dataset/__pycache__/quaternion.cpython-39.pyc

--------------------------------------------------------------------------------

/dataset/__pycache__/dance_dataset.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/dataset/__pycache__/dance_dataset.cpython-39.pyc

--------------------------------------------------------------------------------

/data/audio_extraction/__pycache__/__init__.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/data/audio_extraction/__pycache__/__init__.cpython-39.pyc

--------------------------------------------------------------------------------

/data/audio_extraction/__pycache__/both_features.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/data/audio_extraction/__pycache__/both_features.cpython-39.pyc

--------------------------------------------------------------------------------

/data/audio_extraction/__pycache__/jukebox_features.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/data/audio_extraction/__pycache__/jukebox_features.cpython-39.pyc

--------------------------------------------------------------------------------

/data/audio_extraction/__pycache__/baseline_features.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/mucunzhuzhu/DGSDP/HEAD/data/audio_extraction/__pycache__/baseline_features.cpython-39.pyc

--------------------------------------------------------------------------------

/.idea/misc.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

--------------------------------------------------------------------------------

/.idea/.gitignore:

--------------------------------------------------------------------------------

1 | # Default ignored files

2 | /shelf/

3 | /workspace.xml

4 | # Editor-based HTTP Client requests

5 | /httpRequests/

6 | # Datasource local storage ignored files

7 | /dataSources/

8 | /dataSources.local.xml

9 |

--------------------------------------------------------------------------------

/.idea/inspectionProfiles/profiles_settings.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/train.py:

--------------------------------------------------------------------------------

from args import parse_train_opt
from DGSDP import DGSDP


def train(opt):
    model = DGSDP(opt.feature_type)
    model.train_loop(opt)


if __name__ == "__main__":
    opt = parse_train_opt()
    train(opt)

--------------------------------------------------------------------------------

/.idea/modules.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

--------------------------------------------------------------------------------

/data/download_dataset.sh:

--------------------------------------------------------------------------------

wget --load-cookies /tmp/cookies.txt "https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1RzqSbSnbMEwLUagV0GThfpm9JJXePGkV' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\1\n/p')&id=1RzqSbSnbMEwLUagV0GThfpm9JJXePGkV" -O edge_aistpp.zip && rm -rf /tmp/cookies.txt
unzip edge_aistpp.zip

--------------------------------------------------------------------------------

/.idea/DGSDP-main.iml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

--------------------------------------------------------------------------------

/data/splits/crossmodal_test.txt:

--------------------------------------------------------------------------------

1 | gLH_sBM_cAll_d17_mLH4_ch02

2 | gLH_sBM_cAll_d18_mLH4_ch02

3 | gKR_sBM_cAll_d30_mKR2_ch02

4 | gKR_sBM_cAll_d28_mKR2_ch02

5 | gBR_sBM_cAll_d04_mBR0_ch02

6 | gBR_sBM_cAll_d05_mBR0_ch02

7 | gLO_sBM_cAll_d13_mLO2_ch02

8 | gLO_sBM_cAll_d15_mLO2_ch02

9 | gJB_sBM_cAll_d08_mJB5_ch02

10 | gJB_sBM_cAll_d09_mJB5_ch02

11 | gWA_sBM_cAll_d26_mWA0_ch02

12 | gWA_sBM_cAll_d25_mWA0_ch02

13 | gJS_sBM_cAll_d03_mJS3_ch02

14 | gJS_sBM_cAll_d01_mJS3_ch02

15 | gMH_sBM_cAll_d24_mMH3_ch02

16 | gMH_sBM_cAll_d22_mMH3_ch02

17 | gHO_sBM_cAll_d20_mHO5_ch02

18 | gHO_sBM_cAll_d21_mHO5_ch02

19 | gPO_sBM_cAll_d10_mPO1_ch02

20 | gPO_sBM_cAll_d11_mPO1_ch02

--------------------------------------------------------------------------------

/data/audio_extraction/jukebox_features.py:

--------------------------------------------------------------------------------

import os
from functools import partial
from pathlib import Path

import jukemirlib
import numpy as np
from tqdm import tqdm

FPS = 30
LAYER = 66


def extract(fpath, skip_completed=True, dest_dir="aist_juke_feats"):
    """Extract Jukebox features from layer LAYER, downsampled to FPS frames per second.

    Returns (features, save_path), or None if save_path already exists and
    skip_completed is True. Saving is left to the caller.
    """
    os.makedirs(dest_dir, exist_ok=True)
    audio_name = Path(fpath).stem
    save_path = os.path.join(dest_dir, audio_name + ".npy")

    if os.path.exists(save_path) and skip_completed:
        return None

    audio = jukemirlib.load_audio(fpath)
    reps = jukemirlib.extract(audio, layers=[LAYER], downsample_target_rate=FPS)

    return reps[LAYER], save_path


def extract_folder(src, dest):
    fpaths = sorted(Path(src).glob("*"))
    extract_ = partial(extract, skip_completed=False, dest_dir=dest)
    for fpath in tqdm(fpaths):
        result = extract_(fpath)
        if result is None:  # already extracted (only possible with skip_completed=True)
            continue
        rep, path = result
        np.save(path, rep)


if __name__ == "__main__":
    import argparse

    parser = argparse.ArgumentParser()

    parser.add_argument("--src", help="source path to AIST++ audio files")
    parser.add_argument("--dest", help="dest path to audio features")

    args = parser.parse_args()

    extract_folder(args.src, args.dest)

--------------------------------------------------------------------------------
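`extract_folder` passes `skip_completed=False`, so every run recomputes every file. If resuming an interrupted extraction is wanted, the existence check that `extract` already implements can be moved into the loop, as in this sketch. `extract_folder_resumable` and `feature_fn` are hypothetical names, not part of the repository; `feature_fn` stands in for the `jukemirlib` calls so the example runs without the Jukebox model.

```python
import os
import tempfile
from pathlib import Path

import numpy as np


def extract_folder_resumable(src, dest, feature_fn):
    """Hypothetical resumable variant of extract_folder (not in the repository).

    feature_fn(path) -> np.ndarray stands in for the Jukebox extraction.
    Returns the names of the files that were actually processed.
    """
    os.makedirs(dest, exist_ok=True)
    processed = []
    for fpath in sorted(Path(src).glob("*")):
        save_path = os.path.join(dest, fpath.stem + ".npy")
        if os.path.exists(save_path):
            continue  # output already on disk: skip it, as skip_completed=True would
        np.save(save_path, feature_fn(fpath))
        processed.append(fpath.name)
    return processed


def demo():
    """Run the helper twice on two placeholder files and return both results."""
    with tempfile.TemporaryDirectory() as tmp:
        src, dest = Path(tmp, "wavs"), Path(tmp, "feats")
        src.mkdir()
        for name in ["a.wav", "b.wav"]:
            (src / name).write_bytes(b"")  # empty placeholder files

        def dummy_features(path):
            # 5 s slice at 30 FPS -> 150 frames; the feature width here is arbitrary
            return np.zeros((150, 8))

        first = extract_folder_resumable(src, dest, dummy_features)
        second = extract_folder_resumable(src, dest, dummy_features)
    return first, second


if __name__ == "__main__":
    print(demo())  # (['a.wav', 'b.wav'], [])
```

The second pass processes nothing because both `.npy` outputs already exist.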

/data/splits/ignore_list.txt:

--------------------------------------------------------------------------------

1 | gBR_sFM_cAll_d05_mBR5_ch14

2 | gBR_sFM_cAll_d06_mBR5_ch19

3 | gBR_sFM_cAll_d04_mBR4_ch07

4 | gBR_sFM_cAll_d05_mBR4_ch13

5 | gBR_sBM_cAll_d04_mBR0_ch08

6 | gBR_sBM_cAll_d04_mBR0_ch07

7 | gBR_sBM_cAll_d04_mBR0_ch10

8 | gBR_sBM_cAll_d05_mBR0_ch07

9 | gJB_sBM_cAll_d07_mJB2_ch06

10 | gJB_sBM_cAll_d07_mJB3_ch01

11 | gJB_sBM_cAll_d07_mJB3_ch05

12 | gJB_sBM_cAll_d08_mJB0_ch07

13 | gJB_sBM_cAll_d08_mJB0_ch09

14 | gJB_sBM_cAll_d08_mJB1_ch09

15 | gJB_sFM_cAll_d08_mJB3_ch11

16 | gJB_sBM_cAll_d08_mJB5_ch07

17 | gJB_sBM_cAll_d09_mJB2_ch07

18 | gJB_sBM_cAll_d09_mJB3_ch07

19 | gJB_sBM_cAll_d09_mJB4_ch06

20 | gJB_sBM_cAll_d09_mJB4_ch07

21 | gJB_sBM_cAll_d09_mJB4_ch09

22 | gJB_sBM_cAll_d09_mJB5_ch09

23 | gJS_sFM_cAll_d01_mJS0_ch01

24 | gJS_sFM_cAll_d01_mJS1_ch02

25 | gJS_sFM_cAll_d02_mJS0_ch08

26 | gJS_sFM_cAll_d03_mJS0_ch01

27 | gJS_sBM_cAll_d03_mJS3_ch10

28 | gKR_sBM_cAll_d30_mKR5_ch02

29 | gKR_sBM_cAll_d30_mKR5_ch01

30 | gHO_sFM_cAll_d20_mHO5_ch13

31 | gWA_sBM_cAll_d27_mWA4_ch02

32 | gWA_sBM_cAll_d27_mWA4_ch08

33 | gWA_sFM_cAll_d26_mWA2_ch10

34 | gWA_sBM_cAll_d25_mWA3_ch04

35 | gWA_sFM_cAll_d27_mWA1_ch16

36 | gWA_sBM_cAll_d25_mWA1_ch04

37 | gWA_sBM_cAll_d27_mWA5_ch01

38 | gWA_sBM_cAll_d27_mWA5_ch08

39 | gWA_sBM_cAll_d27_mWA3_ch01

40 | gWA_sBM_cAll_d27_mWA2_ch08

41 | gWA_sBM_cAll_d26_mWA1_ch01

42 | gWA_sBM_cAll_d26_mWA0_ch09

43 | gWA_sBM_cAll_d27_mWA2_ch01

44 | gWA_sBM_cAll_d25_mWA2_ch04

45 | gWA_sBM_cAll_d25_mWA2_ch03

46 |

47 |

--------------------------------------------------------------------------------

/data/filter_split_data.py:

--------------------------------------------------------------------------------

import os
import pickle
import shutil
from pathlib import Path


def fileToList(f):
    # read a split file into a list of stripped, non-empty lines
    with open(f, "r") as fh:
        out = [x.strip() for x in fh.readlines()]
    return [x for x in out if len(x)]


filter_list = set(fileToList("splits/ignore_list.txt"))
train_list = set(fileToList("splits/crossmodal_train.txt"))
test_list = set(fileToList("splits/crossmodal_test.txt"))


def split_data(dataset_path):
    # train - test split
    for split_list, split_name in zip([train_list, test_list], ["train", "test"]):
        Path(f"{split_name}/motions").mkdir(parents=True, exist_ok=True)
        Path(f"{split_name}/wavs").mkdir(parents=True, exist_ok=True)
        for sequence in split_list:
            if sequence in filter_list:
                continue
            motion = f"{dataset_path}/motions/{sequence}.pkl"
            wav = f"{dataset_path}/wavs/{sequence}.wav"
            assert os.path.isfile(motion)
            assert os.path.isfile(wav)
            with open(motion, "rb") as fh:
                motion_data = pickle.load(fh)
            trans = motion_data["smpl_trans"]
            pose = motion_data["smpl_poses"]
            scale = motion_data["smpl_scaling"]
            out_data = {"pos": trans, "q": pose, "scale": scale}
            with open(f"{split_name}/motions/{sequence}.pkl", "wb") as fh:
                pickle.dump(out_data, fh)
            shutil.copyfile(wav, f"{split_name}/wavs/{sequence}.wav")

--------------------------------------------------------------------------------
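`split_data` exports a sequence only if it is in the train or test list and not in `ignore_list.txt`. The sketch below restates that filter and adds a parser for the sequence names used in the split files. The field names (g = genre, s = situation, c = camera, d = dancer, m = music, ch = choreography) follow the AIST Dance Database naming convention as we understand it; `parse_name` and `kept_sequences` are hypothetical helpers, not part of the repository.

```python
import re

# AIST++ sequence name, e.g. gBR_sBM_cAll_d04_mBR0_ch02
NAME_RE = re.compile(
    r"^g(?P<genre>[A-Z]{2})_s(?P<situation>[A-Z]{2})_c(?P<camera>[A-Za-z0-9]+)"
    r"_d(?P<dancer>\d+)_m(?P<music>[A-Z]{2}\d+)_ch(?P<choreo>\d+)$"
)


def parse_name(name):
    """Split a sequence name into its fields (hypothetical helper)."""
    m = NAME_RE.match(name)
    if m is None:
        raise ValueError(f"not an AIST++ sequence name: {name!r}")
    return m.groupdict()


def kept_sequences(split, ignore):
    """Sequences split_data would export: in the split list but not ignored.

    Sorted for a stable order; split_data itself iterates over a set.
    """
    return sorted(set(split) - set(ignore))


if __name__ == "__main__":
    print(parse_name("gBR_sBM_cAll_d04_mBR0_ch02"))
    test_split = ["gBR_sBM_cAll_d04_mBR0_ch02", "gBR_sBM_cAll_d05_mBR0_ch02"]
    ignore = ["gBR_sBM_cAll_d05_mBR0_ch02"]  # illustrative, not the real ignore list
    print(kept_sequences(test_split, ignore))  # ['gBR_sBM_cAll_d04_mBR0_ch02']
```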

/data/create_dataset.py:

--------------------------------------------------------------------------------

import argparse
import os
from pathlib import Path

from audio_extraction.baseline_features import \
    extract_folder as baseline_extract
from audio_extraction.jukebox_features import extract_folder as jukebox_extract
from filter_split_data import *
from slice import *


def create_dataset(opt):
    # split the data according to the splits files
    print("Creating train / test split")
    split_data(opt.dataset_folder)
    # slice motions/music into sliding windows to create training dataset;
    # slice_aistpp writes its output to <dir>_sliced_<stride>
    print("Slicing train data")
    slice_aistpp("train/motions", "train/wavs", opt.stride, opt.length)
    print("Slicing test data")
    slice_aistpp("test/motions", "test/wavs", opt.stride, opt.length)
    train_wavs = f"train/wavs_sliced_{opt.stride}"
    test_wavs = f"test/wavs_sliced_{opt.stride}"
    # process dataset to extract audio features
    if opt.extract_baseline:
        print("Extracting baseline features")
        baseline_extract(train_wavs, "train/baseline_feats")
        baseline_extract(test_wavs, "test/baseline_feats")
    if opt.extract_jukebox:
        print("Extracting jukebox features")
        jukebox_extract(train_wavs, "train/jukebox_feats")
        jukebox_extract(test_wavs, "test/jukebox_feats")


def parse_opt():
    parser = argparse.ArgumentParser()
    parser.add_argument("--stride", type=float, default=0.5, help="slice stride in seconds")
    parser.add_argument("--length", type=float, default=5.0, help="slice length in seconds")
    parser.add_argument(
        "--dataset_folder",
        type=str,
        default="edge_aistpp",
        help="folder containing motions and music",
    )
    parser.add_argument("--extract-baseline", action="store_true")
    parser.add_argument("--extract-jukebox", action="store_true")
    opt = parser.parse_args()
    return opt


if __name__ == "__main__":
    opt = parse_opt()
    create_dataset(opt)

--------------------------------------------------------------------------------

/dataset/preprocess.py:

--------------------------------------------------------------------------------

import glob
import os
import re
from pathlib import Path

import torch

from .scaler import MinMaxScaler


def increment_path(path, exist_ok=False, sep="", mkdir=False):
    # Increment file or directory path, i.e. runs/exp --> runs/exp{sep}2, runs/exp{sep}3, ... etc.
    path = Path(path)  # os-agnostic
    if path.exists() and not exist_ok:
        suffix = path.suffix
        path = path.with_suffix("")
        dirs = glob.glob(f"{path}{sep}*")  # similar paths
        matches = [re.search(rf"%s{sep}(\d+)" % path.stem, d) for d in dirs]
        i = [int(m.groups()[0]) for m in matches if m]  # indices
        n = max(i) + 1 if i else 2  # increment number
        path = Path(f"{path}{sep}{n}{suffix}")  # update path
    dir = path if path.suffix == "" else path.parent  # directory
    if not dir.exists() and mkdir:
        dir.mkdir(parents=True, exist_ok=True)  # make directory
    return path


class Normalizer:
    def __init__(self, data):
        # fit a per-channel min-max scaler onto [-1, 1] over all batch and time steps
        flat = data.reshape(-1, data.shape[-1])
        self.scaler = MinMaxScaler((-1, 1), clip=True)
        self.scaler.fit(flat)

    def normalize(self, x):
        # note: MinMaxScaler.transform works in place, so the caller's tensor may be modified
        batch, seq, ch = x.shape
        x = x.reshape(-1, ch)
        return self.scaler.transform(x).reshape((batch, seq, ch))

    def unnormalize(self, x):
        batch, seq, ch = x.shape
        x = x.reshape(-1, ch)
        x = torch.clip(x, -1, 1)  # clip to force compatibility
        return self.scaler.inverse_transform(x).reshape((batch, seq, ch))


def vectorize_many(data):
    # given a list of batch x seqlen x (joints x) channels tensors,
    # flatten each to batch x seqlen x -1 and concatenate along the last axis
    batch_size = data[0].shape[0]
    seq_len = data[0].shape[1]

    out = [x.reshape(batch_size, seq_len, -1).contiguous() for x in data]

    global_pose_vec_gt = torch.cat(out, dim=2)
    return global_pose_vec_gt

--------------------------------------------------------------------------------
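The numbering rule of `increment_path` (an existing `runs/exp` yields `runs/exp2`, then `runs/exp3`) can be tried in isolation with the sketch below. `next_run_dir` is a hypothetical, simplified re-implementation written for illustration: it handles only suffix-less directories and matches numbers against the directory name rather than searching the full path string as the original regex does.

```python
import re
import tempfile
from pathlib import Path


def next_run_dir(path, sep=""):
    """Hypothetical, simplified take on increment_path(path, sep=sep, mkdir=True) for
    directories: use `path` if it is free, otherwise path{sep}N with N one larger than
    the highest existing number (starting at 2); create and return the directory."""
    path = Path(path)
    if path.exists():
        pattern = re.compile(rf"^{re.escape(path.name)}{re.escape(sep)}(\d+)$")
        nums = []
        for sibling in path.parent.iterdir():
            m = pattern.match(sibling.name)
            if m:
                nums.append(int(m.group(1)))
        path = path.with_name(f"{path.name}{sep}{max(nums) + 1 if nums else 2}")
    path.mkdir(parents=True, exist_ok=True)
    return path


def demo():
    # three calls with the same base path inside a throwaway directory
    with tempfile.TemporaryDirectory() as tmp:
        return [next_run_dir(Path(tmp, "runs", "exp")).name for _ in range(3)]


if __name__ == "__main__":
    print(demo())  # ['exp', 'exp2', 'exp3']
```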

/dataset/quaternion.py:

--------------------------------------------------------------------------------

import torch
from pytorch3d.transforms import (axis_angle_to_matrix, matrix_to_axis_angle,
                                  matrix_to_quaternion, matrix_to_rotation_6d,
                                  quaternion_to_matrix, rotation_6d_to_matrix)


def quat_to_6v(q):
    # quaternion (..., 4) -> 6D rotation representation (..., 6)
    assert q.shape[-1] == 4
    mat = quaternion_to_matrix(q)
    mat = matrix_to_rotation_6d(mat)
    return mat


def quat_from_6v(q):
    # 6D rotation representation (..., 6) -> quaternion (..., 4)
    assert q.shape[-1] == 6
    mat = rotation_6d_to_matrix(q)
    quat = matrix_to_quaternion(mat)
    return quat


def ax_to_6v(q):
    # axis-angle (..., 3) -> 6D rotation representation (..., 6)
    assert q.shape[-1] == 3
    mat = axis_angle_to_matrix(q)
    mat = matrix_to_rotation_6d(mat)
    return mat


def ax_from_6v(q):
    # 6D rotation representation (..., 6) -> axis-angle (..., 3)
    assert q.shape[-1] == 6
    mat = rotation_6d_to_matrix(q)
    ax = matrix_to_axis_angle(mat)
    return ax


def quat_slerp(x, y, a):  # merged = quat_slerp(left, right, slerp_weight)
    """
    Performs spherical linear interpolation (SLERP) between x and y, with proportion a

    :param x: quaternion tensor (N, S, J, 4)
    :param y: quaternion tensor (N, S, J, 4)
    :param a: interpolation weight, broadcastable to (N, S, J), e.g. shape (1, S, 1)
    :return: tensor of interpolation results
    """
    dot = torch.sum(x * y, dim=-1)

    # q and -q encode the same rotation: flip y where needed to take the shorter arc
    neg = dot < 0.0
    dot[neg] = -dot[neg]
    y = y.clone()  # do not modify the caller's tensor
    y[neg] = -y[neg]

    a = torch.zeros_like(x[..., 0]) + a

    amount0 = torch.zeros_like(a)
    amount1 = torch.zeros_like(a)

    # nearly parallel quaternions: sin(omega) ~ 0, so fall back to linear interpolation
    linear = (1.0 - dot) < 0.01
    omegas = torch.arccos(dot[~linear])
    sinoms = torch.sin(omegas)

    amount0[linear] = 1.0 - a[linear]
    amount0[~linear] = torch.sin((1.0 - a[~linear]) * omegas) / sinoms

    amount1[linear] = a[linear]
    amount1[~linear] = torch.sin(a[~linear] * omegas) / sinoms

    # reshape
    amount0 = amount0[..., None]
    amount1 = amount1[..., None]

    res = amount0 * x + amount1 * y

    return res

--------------------------------------------------------------------------------
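The rule `quat_slerp` applies per joint can be checked on a single pair of quaternions with this NumPy sketch: flip `y` when the dot product is negative so the shorter arc is taken, and fall back to linear blending when the quaternions are nearly parallel (the same 0.01 tolerance). It assumes unit quaternions in (w, x, y, z) order, the scalar-first convention PyTorch3D uses; `slerp` is a hypothetical name, and unlike the original it does not operate on batched tensors.

```python
import numpy as np


def slerp(x, y, a, linear_tol=0.01):
    """Spherically interpolate unit quaternions x -> y by weight a in [0, 1]."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    dot = float(np.dot(x, y))
    if dot < 0.0:  # q and -q are the same rotation; take the shorter arc
        y, dot = -y, -dot
    if 1.0 - dot < linear_tol:  # nearly parallel: sin(omega) ~ 0, blend linearly
        w0, w1 = 1.0 - a, a
    else:
        omega = np.arccos(dot)
        w0 = np.sin((1.0 - a) * omega) / np.sin(omega)
        w1 = np.sin(a * omega) / np.sin(omega)
    return w0 * x + w1 * y


if __name__ == "__main__":
    q_id = np.array([1.0, 0.0, 0.0, 0.0])  # identity rotation
    q_z90 = np.array([np.cos(np.pi / 4), 0.0, 0.0, np.sin(np.pi / 4)])  # 90 deg about z
    print(slerp(q_id, q_z90, 0.5))  # approx [0.9239, 0, 0, 0.3827]: 45 deg about z
```

Halfway between the identity and a 90 degree turn about z is a 45 degree turn about z, i.e. (cos 22.5°, 0, 0, sin 22.5°).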

/eval/eval_pfc.py:

--------------------------------------------------------------------------------

import argparse
import glob
import os
import pickle
import random

import numpy as np
from tqdm import tqdm


def calc_physical_score(dir):
    scores = []
    names = []
    accelerations = []
    up_dir = 2  # z is up
    flat_dirs = [i for i in range(3) if i != up_dir]
    DT = 1 / 30

    it = glob.glob(os.path.join(dir, "*.pkl"))
    if len(it) > 1000:
        it = random.sample(it, 1000)
    for pkl in tqdm(it):
        with open(pkl, "rb") as fh:
            info = pickle.load(fh)
        joint3d = info["full_pose"]
        joint3d = joint3d.reshape(-1, 24, 3)
        root_v = (joint3d[1:, 0, :] - joint3d[:-1, 0, :]) / DT
        root_a = (root_v[1:] - root_v[:-1]) / DT
        # clamp the up-direction of root acceleration (only upward acceleration counts)
        root_a[:, up_dir] = np.maximum(root_a[:, up_dir], 0)
        # l2 norm
        root_a = np.linalg.norm(root_a, axis=-1)
        scaling = root_a.max()
        root_a /= scaling

        foot_idx = [7, 10, 8, 11]  # left ankle, left toes, right ankle, right toes
        feet = joint3d[:, foot_idx]
        foot_v = np.linalg.norm(
            feet[2:, :, flat_dirs] - feet[1:-1, :, flat_dirs], axis=-1
        )  # (S-2, 4) horizontal per-frame displacement
        foot_mins = np.zeros((len(foot_v), 2))
        foot_mins[:, 0] = np.minimum(foot_v[:, 0], foot_v[:, 1])
        foot_mins[:, 1] = np.minimum(foot_v[:, 2], foot_v[:, 3])

        foot_loss = (
            foot_mins[:, 0] * foot_mins[:, 1] * root_a
        )  # min leftv * min rightv * root_a (S-2,)
        foot_loss = foot_loss.mean()
        scores.append(foot_loss)
        names.append(pkl)
        accelerations.append(foot_mins[:, 0].mean())

    out = np.mean(scores) * 10000
    print(f"{dir} has a mean PFC of {out}")


def parse_eval_opt():
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--motion_path",
        type=str,
        default="eval/motions",
        help="Where to load saved motions",
    )
    opt = parser.parse_args()
    return opt


if __name__ == "__main__":
    opt = parse_eval_opt()
    calc_physical_score(opt.motion_path)

--------------------------------------------------------------------------------
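Per sequence, `calc_physical_score` multiplies, frame by frame, the slower horizontal displacement of the left foot (ankle or toes, joints 7 and 10) by the slower one of the right foot (joints 8 and 11), weights that by the root acceleration normalized to its maximum (upward component only), and averages. The sketch below restates that loop body for one `(S, 24, 3)` array so it can be tried on synthetic motion; `pfc_single` and `demo` are hypothetical names, and the script's final `* 10000` scaling, applied to the mean over files, is left out.

```python
import numpy as np


def pfc_single(joint3d, up_dir=2, dt=1 / 30):
    """Per-sequence foot-contact term, restating the loop body of calc_physical_score
    for one (S, 24, 3) array of joint positions (hypothetical helper name).
    Returns NaN if the root never accelerates (the max used for normalization is 0)."""
    flat_dirs = [i for i in range(3) if i != up_dir]
    root_v = (joint3d[1:, 0] - joint3d[:-1, 0]) / dt
    root_a = (root_v[1:] - root_v[:-1]) / dt  # (S-2, 3)
    root_a[:, up_dir] = np.maximum(root_a[:, up_dir], 0)  # ignore downward acceleration
    root_a = np.linalg.norm(root_a, axis=-1)
    root_a = root_a / root_a.max()  # normalize to [0, 1]

    feet = joint3d[:, [7, 10, 8, 11]]  # left ankle, left toes, right ankle, right toes
    # horizontal per-frame displacement of each foot joint, (S-2, 4)
    foot_v = np.linalg.norm(feet[2:, :, flat_dirs] - feet[1:-1, :, flat_dirs], axis=-1)
    left = np.minimum(foot_v[:, 0], foot_v[:, 1])
    right = np.minimum(foot_v[:, 2], foot_v[:, 3])
    return float(np.mean(left * right * root_a))


def demo(n_frames=60):
    t = np.arange(n_frames) / 30
    joints = np.zeros((n_frames, 24, 3))
    joints[:, 0, 0] = 0.5 * t ** 2  # root accelerates at 1 unit/s^2 along x
    planted = pfc_single(joints)  # feet never move -> term is 0
    sliding = joints.copy()
    sliding[:, [7, 8, 10, 11], 0] = np.arange(n_frames)[:, None] * 0.01  # feet slide 0.01/frame
    return planted, pfc_single(sliding)


if __name__ == "__main__":
    print(demo())
```

Planted feet score zero however much the root accelerates, while feet that all slide 0.01 per frame under constant root acceleration give 0.01 × 0.01 = 1e-4.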

/dataset/masks.py:

--------------------------------------------------------------------------------

import torch

smpl_joints = [
    "root",  # 0
    "lhip",
    "rhip",
    "belly",  # 1 2 3
    "lknee",
    "rknee",
    "spine",  # 4 5 6
    "lankle",
    "rankle",
    "chest",  # 7 8 9
    "ltoes",
    "rtoes",
    "neck",  # 10 11 12
    "linshoulder",
    "rinshoulder",  # 13 14
    "head",
    "lshoulder",
    "rshoulder",  # 15 16 17
    "lelbow",
    "relbow",  # 18 19
    "lwrist",
    "rwrist",  # 20 21
    "lhand",
    "rhand",  # 22 23
]


def joint_indices_to_channel_indices(indices):
    # channels 0-2 hold the root trajectory; joint i occupies channels 3+3i .. 3+3i+2
    out = []
    for index in indices:
        out += list(range(3 + 3 * index, 3 + 3 * index + 3))
    return out


def get_first_last_mask(posq_batch, start_width=1, end_width=1):
    # an array in batch x seq_len x channels
    # return a mask that is ones in the first start_width and last end_width rows in the sequence direction
    mask = torch.zeros_like(posq_batch)
    mask[..., :start_width, :] = 1
    mask[..., -end_width:, :] = 1
    return mask


def get_first_mask(posq_batch, start_width=1):
    # an array in batch x seq_len x channels
    # return a mask that is ones in the first start_width rows in the sequence direction
    mask = torch.zeros_like(posq_batch)
    mask[..., :start_width, :] = 1
    return mask


def get_middle_mask(posq_batch, start=0, end=-1):
    # an array in batch x seq_len x channels
    # return a mask that is ones in rows start:end in the sequence direction
    # (end is exclusive, so the default end=-1 excludes the last row)
    mask = torch.zeros_like(posq_batch)
    mask[..., start:end, :] = 1
    return mask


def lowerbody_mask(posq_batch):
    # an array in batch x seq_len x channels
    # return a mask that is ones on the root trajectory and lower-body joint channels in every frame
    mask = torch.zeros_like(posq_batch)
    lowerbody_indices = [0, 1, 2, 4, 5, 7, 8, 10, 11]
    root_traj_indices = [0, 1, 2]
    lowerbody_indices = (
        joint_indices_to_channel_indices(lowerbody_indices) + root_traj_indices
    )  # plus root traj
    mask[..., :, lowerbody_indices] = 1
    return mask


def upperbody_mask(posq_batch):
    # an array in batch x seq_len x channels
    # return a mask that is ones on the root trajectory and upper-body joint channels in every frame
    mask = torch.zeros_like(posq_batch)
    upperbody_indices = [0, 3, 6, 9, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]
    root_traj_indices = [0, 1, 2]
    upperbody_indices = (
        joint_indices_to_channel_indices(upperbody_indices) + root_traj_indices
    )  # plus root traj
    mask[..., :, upperbody_indices] = 1
    return mask

--------------------------------------------------------------------------------
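The mask helpers assume a pose vector laid out as 3 root-trajectory channels followed by 3 channels per SMPL joint (`3 + 3 * index`). This dependency-free sketch lists the channel sets that `lowerbody_mask` and `upperbody_mask` select under that layout; the 75-channel width (3 + 24 × 3) is inferred from the indexing, not stated in the repository.

```python
def joint_indices_to_channel_indices(indices):
    # same mapping as dataset/masks.py: joint i -> channels 3+3i, 3+3i+1, 3+3i+2
    out = []
    for index in indices:
        out += list(range(3 + 3 * index, 3 + 3 * index + 3))
    return out


LOWER_JOINTS = [0, 1, 2, 4, 5, 7, 8, 10, 11]  # as in lowerbody_mask
UPPER_JOINTS = [0, 3, 6, 9, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]  # as in upperbody_mask
ROOT_TRAJ = [0, 1, 2]
N_CHANNELS = 3 + 24 * 3  # inferred from the indexing, not stated in the repository

lower = set(joint_indices_to_channel_indices(LOWER_JOINTS) + ROOT_TRAJ)
upper = set(joint_indices_to_channel_indices(UPPER_JOINTS) + ROOT_TRAJ)

if __name__ == "__main__":
    # sizes of each mask, their overlap, and their union
    print(len(lower), len(upper), len(lower & upper), len(lower | upper))  # 30 51 6 75
```

Under this layout the two masks together cover every channel and overlap only on the root trajectory and the root joint's own channels.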

/dataset/scaler.py:

--------------------------------------------------------------------------------

import torch


def _handle_zeros_in_scale(scale, copy=True, constant_mask=None):
    # scale holds the per-feature data range (a tensor)
    if constant_mask is None:
        # Detect near constant values to avoid dividing by a very small
        # value that could lead to surprising results and numerical
        # stability issues.
        constant_mask = scale < 10 * torch.finfo(scale.dtype).eps

    if copy:
        # New array to avoid side-effects
        scale = scale.clone()
    scale[constant_mask] = 1.0
    return scale


class MinMaxScaler:
    _parameter_constraints: dict = {
        "feature_range": [tuple],
        "copy": ["boolean"],
        "clip": ["boolean"],
    }

    def __init__(self, feature_range=(0, 1), *, copy=True, clip=False):
        self.feature_range = feature_range
        self.copy = copy
        self.clip = clip

    def _reset(self):
        """Reset internal data-dependent state of the scaler, if necessary.
        __init__ parameters are not touched.
        """
        # Checking one attribute is enough, because they are all set together
        # in partial_fit
        if hasattr(self, "scale_"):
            del self.scale_
            del self.min_
            del self.n_samples_seen_
            del self.data_min_
            del self.data_max_
            del self.data_range_

    def fit(self, X):
        # Reset internal state before fitting
        self._reset()
        return self.partial_fit(X)

    def partial_fit(self, X):
        # note: despite the name, this recomputes the statistics from X alone
        feature_range = self.feature_range
        if feature_range[0] >= feature_range[1]:
            raise ValueError(
                "Minimum of desired feature range must be smaller than maximum. Got %s."
                % str(feature_range)
            )

        data_min = torch.min(X, dim=0)[0]
        data_max = torch.max(X, dim=0)[0]

        self.n_samples_seen_ = X.shape[0]

        data_range = data_max - data_min
        self.scale_ = (feature_range[1] - feature_range[0]) / _handle_zeros_in_scale(
            data_range, copy=True
        )
        self.min_ = feature_range[0] - data_min * self.scale_
        self.data_min_ = data_min
        self.data_max_ = data_max
        self.data_range_ = data_range
        return self

    def transform(self, X):
        # in place: X * scale_ + min_, optionally clipped to feature_range
        X *= self.scale_.to(X.device)
        X += self.min_.to(X.device)
        if self.clip:
            torch.clip(X, self.feature_range[0], self.feature_range[1], out=X)
        return X

    def inverse_transform(self, X):
        # in place; uses the last X.shape[1] features, so a trailing subset can be inverted
        X -= self.min_[-X.shape[1] :].to(X.device)
        X /= self.scale_[-X.shape[1] :].to(X.device)
        return X

--------------------------------------------------------------------------------
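`MinMaxScaler` maps each feature linearly from its fitted range [data_min, data_max] onto `feature_range` = (lo, hi): scale = (hi - lo) / (data_max - data_min), min = lo - data_min * scale, transform(X) = X * scale + min, and inverse(X) = (X - min) / scale. The NumPy sketch below reproduces that arithmetic, including the constant-feature guard from `_handle_zeros_in_scale`; the function names are hypothetical, and unlike the PyTorch class these functions return new arrays instead of modifying their input in place.

```python
import numpy as np


def fit_minmax(X, feature_range=(-1.0, 1.0)):
    """Return (scale, min_) so that X * scale + min_ maps each column onto feature_range."""
    X = np.asarray(X, dtype=float)
    lo, hi = feature_range
    data_min, data_max = X.min(axis=0), X.max(axis=0)
    data_range = data_max - data_min
    # constant columns: treat the range as 1 instead of dividing by ~0
    data_range = np.where(data_range < 10 * np.finfo(X.dtype).eps, 1.0, data_range)
    scale = (hi - lo) / data_range
    return scale, lo - data_min * scale


def transform(X, scale, min_, feature_range=(-1.0, 1.0), clip=True):
    out = np.asarray(X, dtype=float) * scale + min_  # new array; X is left untouched
    return np.clip(out, *feature_range) if clip else out


def inverse_transform(Z, scale, min_):
    return (np.asarray(Z, dtype=float) - min_) / scale


if __name__ == "__main__":
    X = np.array([[0.0, 5.0, 2.0], [10.0, 5.0, 4.0], [5.0, 5.0, 3.0]])  # column 1 is constant
    scale, min_ = fit_minmax(X)
    Z = transform(X, scale, min_)
    print(Z)  # columns 0 and 2 span [-1, 1]; the constant column maps to -1
    print(np.allclose(inverse_transform(Z, scale, min_), X))  # True
```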

/data/slice.py:

--------------------------------------------------------------------------------

import glob
import os
import pickle

import librosa as lr
import numpy as np
import soundfile as sf
from tqdm import tqdm


def slice_audio(audio_file, stride, length, out_dir):
    # stride, length in seconds; audio is kept at its native sample rate
    audio, sr = lr.load(audio_file, sr=None)
    file_name = os.path.splitext(os.path.basename(audio_file))[0]
    start_idx = 0
    idx = 0
    window = int(length * sr)
    stride_step = int(stride * sr)
    while start_idx <= len(audio) - window:
        audio_slice = audio[start_idx : start_idx + window]
        sf.write(f"{out_dir}/{file_name}_slice{idx}.wav", audio_slice, sr)
        start_idx += stride_step
        idx += 1
    return idx


def slice_motion(motion_file, stride, length, num_slices, out_dir):
    with open(motion_file, "rb") as fh:
        motion = pickle.load(fh)
    pos, q = motion["pos"], motion["q"]
    scale = motion["scale"][0]
    file_name = os.path.splitext(os.path.basename(motion_file))[0]
    # normalize root position
    pos /= scale
    start_idx = 0
    # the motion is assumed to be sampled at 60 FPS
    window = int(length * 60)
    stride_step = int(stride * 60)
    slice_count = 0
    # slice until done or until matching audio slices
    while start_idx <= len(pos) - window and slice_count < num_slices:
        pos_slice, q_slice = (
            pos[start_idx : start_idx + window],
            q[start_idx : start_idx + window],
        )
        out = {"pos": pos_slice, "q": q_slice}
        with open(f"{out_dir}/{file_name}_slice{slice_count}.pkl", "wb") as fh:
            pickle.dump(out, fh)
        start_idx += stride_step
        slice_count += 1
    return slice_count


def slice_aistpp(motion_dir, wav_dir, stride=0.5, length=5):
    wavs = sorted(glob.glob(f"{wav_dir}/*.wav"))
    motions = sorted(glob.glob(f"{motion_dir}/*.pkl"))
    # outputs go to <dir>_sliced_<stride>, e.g. train/wavs_sliced_0.5
    wav_out = wav_dir + "_sliced_" + str(stride)
    motion_out = motion_dir + "_sliced_" + str(stride)
    os.makedirs(wav_out, exist_ok=True)
    os.makedirs(motion_out, exist_ok=True)
    assert len(wavs) == len(motions)
    for wav, motion in tqdm(zip(wavs, motions)):
        # make sure name is matching
        m_name = os.path.splitext(os.path.basename(motion))[0]
        w_name = os.path.splitext(os.path.basename(wav))[0]
        assert m_name == w_name, str((motion, wav))
        audio_slices = slice_audio(wav, stride, length, wav_out)
        motion_slices = slice_motion(motion, stride, length, audio_slices, motion_out)
        # make sure the slices line up
        assert audio_slices == motion_slices, str(
            (wav, motion, audio_slices, motion_slices)
        )


def slice_audio_folder(wav_dir, stride=0.5, length=5):
    wavs = sorted(glob.glob(f"{wav_dir}/*.wav"))
    wav_out = wav_dir + "_sliced"
    os.makedirs(wav_out, exist_ok=True)
    for wav in tqdm(wavs):
        slice_audio(wav, stride, length, wav_out)

--------------------------------------------------------------------------------
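`slice_audio` starts a window at every multiple of the stride while the window still fits, so a clip of N samples yields floor((N - window) / step) + 1 slices, or 0 if it is shorter than one window. `slice_motion` applies the same rule to the 60 FPS motion, capped at the audio count, and `slice_aistpp` asserts that the two counts agree. The sketch below checks that arithmetic against a literal transcription of the loop; the helper names and the 48 kHz example rate are illustrative only.

```python
def num_slices(n_items, rate, stride=0.5, length=5.0):
    """Closed-form count of the windows produced by the while-loop in slice_audio / slice_motion."""
    window = int(length * rate)
    step = int(stride * rate)
    if n_items < window:
        return 0
    return (n_items - window) // step + 1


def loop_count(n_items, rate, stride=0.5, length=5.0):
    # literal transcription of the loop, for cross-checking the closed form
    window, step = int(length * rate), int(stride * rate)
    start, count = 0, 0
    while start <= n_items - window:
        start += step
        count += 1
    return count


if __name__ == "__main__":
    # a 20 s clip: audio at an example 48 kHz rate vs. motion at 60 FPS
    print(num_slices(20 * 48000, 48000), num_slices(20 * 60, 60))  # 31 31
    # one motion frame short: the motion yields 30 slices, so the alignment assert would fire
    print(num_slices(20 * 60 - 1, 60))  # 30
```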

/model/utils.py:

--------------------------------------------------------------------------------

1 | import math

2 |

3 | import numpy as np

4 | import torch

5 | from einops import rearrange, reduce, repeat

6 | from einops.layers.torch import Rearrange

7 | from torch import nn

8 |

9 |

10 | # absolute positional embedding used for vanilla transformer sequential data

11 | class PositionalEncoding(nn.Module):

12 | def __init__(self, d_model, dropout=0.1, max_len=500, batch_first=False):

13 | super().__init__()

14 | self.batch_first = batch_first

15 |

16 | self.dropout = nn.Dropout(p=dropout)

17 |

18 | pe = torch.zeros(max_len, d_model)

19 | position = torch.arange(0, max_len).unsqueeze(1)

20 | div_term = torch.exp(torch.arange(0, d_model, 2) * (-np.log(10000.0) / d_model))

21 | pe[:, 0::2] = torch.sin(position * div_term)

22 | pe[:, 1::2] = torch.cos(position * div_term)

23 | pe = pe.unsqueeze(0).transpose(0, 1)

24 |

25 | self.register_buffer("pe", pe)

26 |

27 | def forward(self, x):

28 | if self.batch_first:

29 | x = x + self.pe.permute(1, 0, 2)[:, : x.shape[1], :]

30 | else:

31 | x = x + self.pe[: x.shape[0], :]

32 | return self.dropout(x)

33 |

34 |

35 | # very similar positional embedding used for diffusion timesteps

36 | class SinusoidalPosEmb(nn.Module):

37 | def __init__(self, dim):

38 | super().__init__()

39 | self.dim = dim

40 |

41 | def forward(self, x):

42 | device = x.device

43 | half_dim = self.dim // 2

44 | emb = math.log(10000) / (half_dim - 1)

45 | emb = torch.exp(torch.arange(half_dim, device=device) * -emb)

46 | emb = x[:, None] * emb[None, :]

47 | emb = torch.cat((emb.sin(), emb.cos()), dim=-1)

48 | return emb

49 |

50 |

51 | # dropout mask

52 | def prob_mask_like(shape, prob, device):

53 | if prob == 1:

54 | return torch.ones(shape, device=device, dtype=torch.bool)

55 | elif prob == 0:

56 | return torch.zeros(shape, device=device, dtype=torch.bool)

57 | else:

58 | return torch.zeros(shape, device=device).float().uniform_(0, 1) < prob

59 |

60 |

61 | def extract(a, t, x_shape):

62 | b, *_ = t.shape

63 | out = a.gather(-1, t)

64 | return out.reshape(b, *((1,) * (len(x_shape) - 1)))

65 |

66 | def make_beta_schedule(

67 | schedule, n_timestep, linear_start=1e-4, linear_end=2e-2, cosine_s=8e-3

68 | ):

69 | if schedule == "linear":

70 | betas = (

71 | torch.linspace(

72 | linear_start ** 0.5, linear_end ** 0.5, n_timestep, dtype=torch.float64

73 | )

74 | ** 2

75 | )

76 |

77 | elif schedule == "cosine":

78 | timesteps = (

79 | torch.arange(n_timestep + 1, dtype=torch.float64) / n_timestep + cosine_s

80 | )

81 | alphas = timesteps / (1 + cosine_s) * np.pi / 2

82 | alphas = torch.cos(alphas).pow(2)

83 | alphas = alphas / alphas[0]

84 | betas = 1 - alphas[1:] / alphas[:-1]

85 | betas = torch.clamp(betas, min=0, max=0.999)

86 |

87 | elif schedule == "sqrt_linear":

88 | betas = torch.linspace(

89 | linear_start, linear_end, n_timestep, dtype=torch.float64

90 | )

91 | elif schedule == "sqrt":

92 | betas = (

93 | torch.linspace(linear_start, linear_end, n_timestep, dtype=torch.float64)

94 | ** 0.5

95 | )

96 | else:

97 | raise ValueError(f"schedule '{schedule}' unknown.")

98 | return betas.numpy()

99 |

--------------------------------------------------------------------------------
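`make_beta_schedule` and `extract` are typically used together. The betas give the cumulative products `alpha_bar_t`, and `extract` gathers one coefficient per batch element and reshapes it so that it broadcasts over the sample. The NumPy sketch below shows that pattern with the standard DDPM forward process `x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise`. `linear_betas`, `extract_np`, and `q_sample` are hypothetical names, not functions from this repository, and the array shapes are illustrative:

```python
import numpy as np


def linear_betas(n_timestep, linear_start=1e-4, linear_end=2e-2):
    # Same formula as the "linear" branch of make_beta_schedule:
    # interpolate linearly in sqrt(beta) space, then square.
    return np.linspace(linear_start ** 0.5, linear_end ** 0.5, n_timestep, dtype=np.float64) ** 2


def extract_np(a, t, x_shape):
    # NumPy analogue of `extract`: pick a[t] per batch element and reshape to
    # (batch, 1, 1, ...) so it broadcasts against an array of shape x_shape.
    out = a[t]
    return out.reshape(t.shape[0], *((1,) * (len(x_shape) - 1)))


def q_sample(x0, t, noise, alphas_cumprod):
    # Forward (noising) process of a standard DDPM.
    sqrt_ab = extract_np(np.sqrt(alphas_cumprod), t, x0.shape)
    sqrt_one_minus_ab = extract_np(np.sqrt(1.0 - alphas_cumprod), t, x0.shape)
    return sqrt_ab * x0 + sqrt_one_minus_ab * noise


betas = linear_betas(1000)
alphas_cumprod = np.cumprod(1.0 - betas)

rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 5, 3))  # (batch, frames, features), illustrative shape
noise = rng.standard_normal(x0.shape)
t = np.array([0, 10, 500, 999])      # one timestep per batch element
xt = q_sample(x0, t, noise, alphas_cumprod)
print(xt.shape)  # (4, 5, 3)
```

Interpolating in sqrt-beta space, as the `"linear"` branch does, keeps intermediate betas smaller than a plain linear ramp between the same endpoints, so `alpha_bar_t` decays more slowly early in the chain.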

/data/audio_extraction/baseline_features.py:

--------------------------------------------------------------------------------

1 | import os

2 | from functools import partial

3 | from pathlib import Path

4 |

5 | import librosa

6 | import librosa as lr

7 | import numpy as np

8 | from tqdm import tqdm

9 |

10 | FPS = 30

11 | HOP_LENGTH = 512

12 | SR = FPS * HOP_LENGTH

13 | EPS = 1e-6

14 |

15 |

16 | def _get_tempo(audio_name):

17 | """Get tempo (BPM) for a music by parsing music name."""

18 |

19 |

20 | # names are underscore-separated (e.g. gBR_sBM_cAll_d04_mBR0_ch01); the 5th field is the music ID

21 | audio_name = audio_name.split("_")[4]

22 |

23 | assert len(audio_name) == 4

24 | if audio_name[0:3] in [

25 | "mBR",

26 | "mPO",

27 | "mLO",

28 | "mMH",

29 | "mLH",

30 | "mWA",

31 | "mKR",

32 | "mJS",

33 | "mJB",

34 | ]:

35 | return int(audio_name[3]) * 10 + 80

36 | elif audio_name[0:3] == "mHO":

37 | return int(audio_name[3]) * 5 + 110

38 | else:

39 | assert False, audio_name

40 |

41 |

42 | def extract(fpath, skip_completed=True, dest_dir="aist_baseline_feats"):

43 | os.makedirs(dest_dir, exist_ok=True)

44 | audio_name = Path(fpath).stem

45 | save_path = os.path.join(dest_dir, audio_name + ".npy")

46 |

47 | if os.path.exists(save_path) and skip_completed:

48 | return

49 |

50 | data, _ = librosa.load(fpath, sr=SR)

51 |

52 | envelope = librosa.onset.onset_strength(y=data, sr=SR)

53 | mfcc = librosa.feature.mfcc(y=data, sr=SR, n_mfcc=20).T

54 | chroma = librosa.feature.chroma_cens(

55 | y=data, sr=SR, hop_length=HOP_LENGTH, n_chroma=12

56 | ).T

57 |

58 | peak_idxs = librosa.onset.onset_detect(

59 | onset_envelope=envelope.flatten(), sr=SR, hop_length=HOP_LENGTH

60 | )

61 | peak_onehot = np.zeros_like(envelope, dtype=np.float32)

62 | peak_onehot[peak_idxs] = 1.0 # (seq_len,)

63 |

64 | try:

65 | start_bpm = _get_tempo(audio_name)

66 | except Exception:

67 | # name does not follow the AIST++ pattern: estimate the tempo from the audio

68 | start_bpm = lr.beat.tempo(y=lr.load(fpath)[0])[0]

69 |

70 | tempo, beat_idxs = librosa.beat.beat_track(

71 | onset_envelope=envelope,

72 | sr=SR,

73 | hop_length=HOP_LENGTH,

74 | start_bpm=start_bpm,

75 | tightness=100,

76 | )

77 | beat_onehot = np.zeros_like(envelope, dtype=np.float32)

78 | beat_onehot[beat_idxs] = 1.0 # (seq_len,)

79 |

80 | audio_feature = np.concatenate(

81 | [envelope[:, None], mfcc, chroma, peak_onehot[:, None], beat_onehot[:, None]],

82 | axis=-1,

83 | ) # envelope:1, mfcc:20, chroma:12, peak_onehot:1, beat_onehot:1

84 |

85 | # chop to ensure exact shape

86 | audio_feature = audio_feature[:5 * FPS]

87 | assert (audio_feature.shape[0] - 5 * FPS) == 0, f"expected output to be ~5s, but was {audio_feature.shape[0] / FPS}"

88 |

89 | #np.save(save_path, audio_feature)

90 | return audio_feature, save_path

91 |

92 |

93 | def extract_folder(src, dest):

94 | fpaths = Path(src).glob("*")

95 | fpaths = sorted(list(fpaths))

96 | extract_ = partial(extract, skip_completed=False, dest_dir=dest)

97 | for fpath in tqdm(fpaths):

98 | rep, path = extract_(fpath)

99 | np.save(path, rep)

100 |

101 |

102 | if __name__ == "__main__":

103 | import argparse

104 |

105 | parser = argparse.ArgumentParser()

106 |

107 | parser.add_argument("--src", help="source path to AIST++ audio files")

108 | parser.add_argument("--dest", help="dest path to audio features")

109 |

110 | args = parser.parse_args()

111 |

112 | extract_folder(args.src, args.dest)

113 |

114 |

--------------------------------------------------------------------------------
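Downstream code indexes this feature by column number. For example, `get_mb2` in `eval/beat_align_score.py` reads column 34. It therefore helps to spell out the layout implied by the `np.concatenate` call. The sketch below also restates the `_get_tempo` lookup, raising a `ValueError` in place of the bare `assert`. `FEATURE_SLICES` and `tempo_from_name` are hypothetical names, and the example file names only illustrate the AIST++ naming pattern:

```python
# Column layout of the 35-dim feature assembled by `extract` (np.concatenate order).
FEATURE_SLICES = {
    "envelope": slice(0, 1),
    "mfcc": slice(1, 21),
    "chroma": slice(21, 33),
    "peak_onehot": slice(33, 34),
    "beat_onehot": slice(34, 35),
}
FEATURE_DIM = max(s.stop for s in FEATURE_SLICES.values())  # 35

# Genres whose music IDs map to 80 + 10 * digit BPM in _get_tempo.
_TEN_BPM_GENRES = {"mBR", "mPO", "mLO", "mMH", "mLH", "mWA", "mKR", "mJS", "mJB"}


def tempo_from_name(audio_name: str) -> int:
    """Restatement of _get_tempo for AIST++-style underscore-separated names."""
    music_id = audio_name.split("_")[4]  # e.g. "mBR0"
    genre, digit = music_id[:3], int(music_id[3])
    if genre in _TEN_BPM_GENRES:
        return digit * 10 + 80
    if genre == "mHO":
        return digit * 5 + 110
    raise ValueError(f"unrecognized music id: {music_id}")


print(FEATURE_DIM, FEATURE_SLICES["beat_onehot"].start)  # 35 34
print(tempo_from_name("gBR_sBM_cAll_d04_mBR0_ch01"))     # 80
```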

/model/adan.py:

--------------------------------------------------------------------------------

1 | import math

2 |

3 | import torch

4 | from torch.optim import Optimizer

5 |

6 |

7 | def exists(val):

8 | return val is not None

9 |

10 |

11 | class Adan(Optimizer):

12 | def __init__(

13 | self,

14 | params,

15 | lr=1e-3,

16 | betas=(0.02, 0.08, 0.01),

17 | eps=1e-8,

18 | weight_decay=0,

19 | restart_cond: callable = None,

20 | ):

21 | assert len(betas) == 3

22 |

23 | defaults = dict(

24 | lr=lr,

25 | betas=betas,

26 | eps=eps,

27 | weight_decay=weight_decay,

28 | restart_cond=restart_cond,

29 | )

30 |

31 | super().__init__(params, defaults)

32 |

33 | def step(self, closure=None):

34 | loss = None

35 |

36 | if exists(closure):

37 | loss = closure()

38 |

39 | for group in self.param_groups:

40 |

41 | lr = group["lr"]

42 | beta1, beta2, beta3 = group["betas"]

43 | weight_decay = group["weight_decay"]

44 | eps = group["eps"]

45 | restart_cond = group["restart_cond"]

46 |

47 | for p in group["params"]:

48 | if not exists(p.grad):

49 | continue

50 |

51 | data, grad = p.data, p.grad.data

52 | assert not grad.is_sparse

53 |

54 | state = self.state[p]

55 |

56 | if len(state) == 0:

57 | state["step"] = 0

58 | state["prev_grad"] = torch.zeros_like(grad)

59 | state["m"] = torch.zeros_like(grad)

60 | state["v"] = torch.zeros_like(grad)

61 | state["n"] = torch.zeros_like(grad)

62 |

63 | step, m, v, n, prev_grad = (

64 | state["step"],

65 | state["m"],

66 | state["v"],

67 | state["n"],

68 | state["prev_grad"],

69 | )

70 |

71 | if step > 0:

72 | prev_grad = state["prev_grad"]

73 |

74 | # main algorithm

75 |

76 | m.mul_(1 - beta1).add_(grad, alpha=beta1)

77 |

78 | grad_diff = grad - prev_grad

79 |

80 | v.mul_(1 - beta2).add_(grad_diff, alpha=beta2)

81 |

82 | next_n = (grad + (1 - beta2) * grad_diff) ** 2

83 |

84 | n.mul_(1 - beta3).add_(next_n, alpha=beta3)

85 |

86 | # bias correction terms

87 |

88 | step += 1

89 |

90 | correct_m, correct_v, correct_n = map(

91 | lambda n: 1 / (1 - (1 - n) ** step), (beta1, beta2, beta3)

92 | )

93 |

94 | # gradient step

95 |

96 | def grad_step_(data, m, v, n):

97 | weighted_step_size = lr / (n * correct_n).sqrt().add_(eps)

98 |

99 | denom = 1 + weight_decay * lr

100 |

101 | data.addcmul_(

102 | weighted_step_size,

103 | (m * correct_m + (1 - beta2) * v * correct_v),

104 | value=-1.0,

105 | ).div_(denom)

106 |

107 | grad_step_(data, m, v, n)

108 |

109 | # restart condition

110 |

111 | if exists(restart_cond) and restart_cond(state):

112 | m.data.copy_(grad)

113 | v.zero_()

114 | n.data.copy_(grad ** 2)

115 |

116 | grad_step_(data, m, v, n)

117 |

118 | # set new incremented step

119 |

120 | prev_grad.copy_(grad)

121 | state["step"] = step

122 |

123 | return loss

124 |

125 |

126 |

--------------------------------------------------------------------------------
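To make the bias-corrected update concrete, here is a scalar, pure-Python restatement of the `Adan.step` loop above. It omits the optional `restart_cond` branch, and `adan_step` is a hypothetical helper for illustration only. As in the class, each beta weights the newest term (`m = (1 - beta1) * m + beta1 * grad`), so the defaults `(0.02, 0.08, 0.01)` correspond to Adam-style decay rates of `(0.98, 0.92, 0.99)`:

```python
import math


def adan_step(x, grad, state, lr=1e-3, betas=(0.02, 0.08, 0.01), eps=1e-8, weight_decay=0.0):
    """One Adan update for a scalar parameter, mirroring Adan.step (no restart)."""
    b1, b2, b3 = betas
    prev_grad = state.get("prev_grad", 0.0)
    diff = grad - prev_grad
    # Moving averages of the gradient, gradient difference, and squared lookahead term.
    state["m"] = state.get("m", 0.0) * (1 - b1) + b1 * grad
    state["v"] = state.get("v", 0.0) * (1 - b2) + b2 * diff
    state["n"] = state.get("n", 0.0) * (1 - b3) + b3 * (grad + (1 - b2) * diff) ** 2
    step = state.get("step", 0) + 1
    # Bias corrections, written with the same (1 - beta) convention as the class.
    c_m, c_v, c_n = (1 / (1 - (1 - b) ** step) for b in (b1, b2, b3))
    step_size = lr / (math.sqrt(state["n"] * c_n) + eps)
    update = state["m"] * c_m + (1 - b2) * state["v"] * c_v
    x = (x - step_size * update) / (1 + weight_decay * lr)  # decoupled weight decay
    state["prev_grad"] = grad
    state["step"] = step
    return x


state = {}
x = adan_step(1.0, grad=0.5, state=state)
print(round(x, 6))  # 0.999
```

On the first step `prev_grad` is zero and the bias corrections cancel the beta weights, so the update reduces to about `lr * sign(grad)` regardless of the gradient's magnitude.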

/args.py:

--------------------------------------------------------------------------------

1 | import argparse

2 |

3 |

4 | def parse_train_opt():

5 | parser = argparse.ArgumentParser()

6 | parser.add_argument("--project", default="runs/train", help="project/name")

7 | parser.add_argument("--exp_name", default="exp", help="save to project/name")

8 | parser.add_argument("--data_path", type=str, default="/data/zhuying/PAMD-main/data/", help="raw data path")

9 | parser.add_argument(

10 | "--processed_data_dir",

11 | type=str,

12 | default="data/dataset_backups/",

13 | help="Dataset backup path",

14 | )

15 | parser.add_argument(

16 | "--render_dir", type=str, default="renders/", help="Sample render path"

17 | )

18 |

19 | parser.add_argument("--feature_type", type=str, default="jukebox")

20 | parser.add_argument(

21 | "--wandb_pj_name", type=str, default="EDGE", help="project name"

22 | )

23 | parser.add_argument("--batch_size", type=int, default=64, help="batch size")

24 | parser.add_argument("--epochs", type=int, default=2000)

25 | parser.add_argument(

26 | "--force_reload", action="store_true", help="force reloads the datasets"

27 | )

28 | parser.add_argument(

29 | "--no_cache", action="store_true", help="don't reuse / cache loaded dataset"

30 | )

31 | parser.add_argument(

32 | "--save_interval",

33 | type=int,

34 | default=100,

35 | help="Log the model every save_interval epochs",

36 | )

37 | parser.add_argument("--ema_interval", type=int, default=1, help="ema every x steps")

38 | parser.add_argument(

39 | "--checkpoint", type=str, default="", help="trained checkpoint path (optional)"

40 | )

41 | opt = parser.parse_args()

42 | return opt

43 |

44 |

45 | def parse_test_opt():

46 | parser = argparse.ArgumentParser()

47 | parser.add_argument("--data_path", type=str, default="/data/zhuying/PAMD-main/data/", help="raw data path")

48 | parser.add_argument(

49 | "--force_reload", action="store_true", help="force reloads the datasets"

50 | )

51 | parser.add_argument(

52 | "--edit_render_dir", type=str, default="renders_edit", help="Sample render path"

53 | )

54 | parser.add_argument("--feature_type", type=str, default="jukebox")

55 | parser.add_argument("--out_length", type=float, default=30, help="max. length of output, in seconds")

56 | parser.add_argument(

57 | "--processed_data_dir",

58 | type=str,

59 | default="data/dataset_backups/",

60 | help="Dataset backup path",

61 | )

62 | parser.add_argument(

63 | "--render_dir", type=str, default="renders/", help="Sample render path"

64 | )

65 | parser.add_argument(

66 | "--checkpoint", type=str, default="checkpoint.pt", help="checkpoint"

67 | )

68 | parser.add_argument(

69 | "--music_dir",

70 | type=str,

71 | default="data/test/wavs",

72 | help="folder containing input music",

73 | )

74 | parser.add_argument(

75 | "--save_motions", action="store_true", help="Saves the motions for evaluation"

76 | )

77 | parser.add_argument(

78 | "--motion_save_dir",

79 | type=str,

80 | default="eval/motions",

81 | help="Where to save the motions",

82 | )

83 | parser.add_argument(

84 | "--cache_features",

85 | action="store_true",

86 | help="Save the jukebox features for later reuse",

87 | )

88 | parser.add_argument(

89 | "--no_render",

90 | action="store_true",

91 | help="Don't render the video",

92 | )

93 | parser.add_argument(

94 | "--use_cached_features",

95 | action="store_true",

96 | help="Use precomputed features instead of music folder",

97 | )

98 | parser.add_argument(

99 | "--feature_cache_dir",

100 | type=str,

101 | default="cached_features/",

102 | help="Where to save/load the features",

103 | )

104 | opt = parser.parse_args()

105 | return opt

106 |

--------------------------------------------------------------------------------

/eval/beat_align_score.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import pickle

3 | from features.kinetic import extract_kinetic_features

4 | from features.manual_new import extract_manual_features

5 | from scipy import linalg

6 | import json

7 | # kinetic, manual

8 | import os

9 | from scipy.ndimage import gaussian_filter as G

10 | from scipy.signal import argrelextrema

11 | import glob

12 | import matplotlib.pyplot as plt

13 | import random

14 |

15 | from tqdm import tqdm

16 |

17 | music_root = './test_wavs_sliced/baseline_feats'

18 |

19 | def get_mb(key, length=None):

20 | path = os.path.join(music_root, key)

21 | with open(path) as f:

22 | # print(path)

23 | sample_dict = json.loads(f.read())

24 | if length is not None:

25 | beats = np.array(sample_dict['music_array'])[:, 53][:length]

26 | else:

27 | beats = np.array(sample_dict['music_array'])[:, 53]

28 |

29 | beats = beats.astype(bool)

30 | beat_axis = np.arange(len(beats))

31 | beat_axis = beat_axis[beats]

32 |

33 | # fig, ax = plt.subplots()

34 | # ax.set_xticks(beat_axis, minor=True)

35 | # # ax.set_xticks([0.3, 0.55, 0.7], minor=True)

36 | # ax.xaxis.grid(color='deeppink', linestyle='--', linewidth=1.5, which='minor')

37 | # ax.xaxis.grid(True, which='minor')

38 |

39 | # print(len(beats))

40 | return beat_axis

41 |

42 |

43 | def get_mb2(key, length=None):

44 | path = os.path.join(music_root, key)

45 | if length is not None:

46 | beats = np.load(path)[:, 34][:length]

47 | else:

48 | beats = np.load(path)[:, 34]

49 | beats = beats.astype(bool)

50 | beat_axis = np.arange(len(beats))

51 | beat_axis = beat_axis[beats]

52 |

53 | return beat_axis

54 |

55 |

56 | def calc_db(keypoints, name=''):

57 | keypoints = np.array(keypoints).reshape(-1, 24, 3)

58 | kinetic_vel = np.mean(np.sqrt(np.sum((keypoints[1:] - keypoints[:-1]) ** 2, axis=2)), axis=1)

59 | kinetic_vel = G(kinetic_vel, 5)

60 | motion_beats = argrelextrema(kinetic_vel, np.less)

61 | return motion_beats, len(kinetic_vel)

62 |

63 |

64 | def BA(music_beats, motion_beats):

65 | ba = 0

66 | for bb in music_beats:

67 | ba += np.exp(-np.min((motion_beats[0] - bb) ** 2) / 2 / 9)

68 | return (ba / len(music_beats))

69 |

70 |

71 | def calc_ba_score(root):

72 | ba_scores = []

73 |

74 | it = glob.glob(os.path.join(root, "*.pkl"))

75 | if len(it) > 1000:

76 | it = random.sample(it, 1000)

77 | for pkl in tqdm(it):

78 | print('pkl',pkl)

79 | info = pickle.load(open(pkl, "rb"))

80 | joint3d = info["full_pose"]

81 |

82 | joint3d = joint3d.reshape(-1,72)

83 |

84 | # for pkl in os.listdir(root):

85 | # # print(pkl)

86 | # if os.path.isdir(os.path.join(root, pkl)):

87 | # continue

88 | # joint3d = np.load(os.path.join(root, pkl), allow_pickle=True).item()['pred_position'][:,

89 | # :] # shape:(length+1,72)

90 |

91 | dance_beats, length = calc_db(joint3d, pkl)

92 | print('length',length)

93 | pkl= os.path.basename(pkl)

94 | stem_parts = pkl.split('.')[0].split('_')

95 | assert len(stem_parts) >= 8, pkl

96 | # fields 1-6 name the feature subfolder; fields 1-7 name the per-slice .npy file

97 | pkl_split = '_'.join(stem_parts[1:7])

98 | pkl_split2 = '_'.join(stem_parts[1:8])

101 | # music_beats = get_mb(pkl_split + '.json', length)

102 | music_beats = get_mb2(pkl_split +'/'+pkl_split2 +'.npy', length)

103 | ba_scores.append(BA(music_beats, dance_beats))

104 |

105 | return np.mean(ba_scores)

106 |

107 |

108 | if __name__ == '__main__':

109 | pred_root = 'eval/motions'

110 | print(calc_ba_score(pred_root))

111 |

--------------------------------------------------------------------------------
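The score computed by `calc_db` and `BA` can be reproduced on synthetic data. The minimal NumPy sketch below skips the Gaussian smoothing so that it runs without SciPy. `local_minima`, `motion_beats`, and `beat_align` are hypothetical names. Kinematic beats are strict local minima of the mean joint speed. Each music beat is scored by a Gaussian of its distance to the nearest kinematic beat, with sigma = 3 frames to match the `/ 2 / 9` term:

```python
import numpy as np


def local_minima(x):
    """Indices of strict local minima; endpoints are never reported.

    Matches scipy.signal.argrelextrema(x, np.less) with its defaults, except that
    a plain array is returned instead of a one-element tuple.
    """
    x = np.asarray(x)
    return np.where((x[1:-1] < x[:-2]) & (x[1:-1] < x[2:]))[0] + 1


def motion_beats(keypoints):
    """keypoints: (frames, joints, 3) -> indices where the mean joint speed dips."""
    speed = np.linalg.norm(keypoints[1:] - keypoints[:-1], axis=2).mean(axis=1)
    # calc_db additionally smooths `speed` with gaussian_filter(speed, 5); omitted here.
    return local_minima(speed)


def beat_align(music_beats, dance_beats, sigma=3.0):
    """Mean over music beats of exp(-d^2 / (2 sigma^2)), d = distance to nearest dance beat."""
    dance_beats = np.asarray(dance_beats)
    scores = [np.exp(-np.min((dance_beats - b) ** 2) / (2 * sigma**2)) for b in music_beats]
    return float(np.mean(scores))


# One joint whose per-frame speed dips at velocity index 2.
traj = np.zeros((6, 1, 3))
traj[:, 0, 0] = [0.0, 1.0, 1.5, 1.6, 2.6, 4.6]
print(motion_beats(traj))              # [2]
print(beat_align([10, 40], [10, 40]))  # 1.0
```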

/model/rotary_embedding_torch.py:

--------------------------------------------------------------------------------

1 | from inspect import isfunction

2 | from math import log, pi

3 |

4 | import torch

5 | from einops import rearrange, repeat

6 | from torch import einsum, nn

7 |

8 | # helper functions

9 |

10 |

11 | def exists(val):

12 | return val is not None

13 |

14 |

15 | def broadcat(tensors, dim=-1):

16 | num_tensors = len(tensors)

17 | shape_lens = set(list(map(lambda t: len(t.shape), tensors)))

18 | assert len(shape_lens) == 1, "tensors must all have the same number of dimensions"

19 | shape_len = list(shape_lens)[0]

20 |

21 | dim = (dim + shape_len) if dim < 0 else dim

22 | dims = list(zip(*map(lambda t: list(t.shape), tensors)))

23 |

24 | expandable_dims = [(i, val) for i, val in enumerate(dims) if i != dim]

25 | assert all(

26 | [*map(lambda t: len(set(t[1])) <= 2, expandable_dims)]

27 | ), "invalid dimensions for broadcastable concatenation"

28 | max_dims = list(map(lambda t: (t[0], max(t[1])), expandable_dims))

29 | expanded_dims = list(map(lambda t: (t[0], (t[1],) * num_tensors), max_dims))

30 | expanded_dims.insert(dim, (dim, dims[dim]))

31 | expandable_shapes = list(zip(*map(lambda t: t[1], expanded_dims)))

32 | tensors = list(map(lambda t: t[0].expand(*t[1]), zip(tensors, expandable_shapes)))

33 | return torch.cat(tensors, dim=dim)

34 |

35 |

36 | # rotary embedding helper functions

37 |

38 |

39 | def rotate_half(x):

40 | x = rearrange(x, "... (d r) -> ... d r", r=2)

41 | x1, x2 = x.unbind(dim=-1)

42 | x = torch.stack((-x2, x1), dim=-1)

43 | return rearrange(x, "... d r -> ... (d r)")

44 |

45 |

46 | def apply_rotary_emb(freqs, t, start_index=0):

47 | freqs = freqs.to(t)

48 | rot_dim = freqs.shape[-1]

49 | end_index = start_index + rot_dim

50 | assert (

51 | rot_dim <= t.shape[-1]

52 | ), f"feature dimension {t.shape[-1]} is not of sufficient size to rotate in all the positions {rot_dim}"

53 | t_left, t, t_right = (

54 | t[..., :start_index],

55 | t[..., start_index:end_index],

56 | t[..., end_index:],

57 | )

58 | t = (t * freqs.cos()) + (rotate_half(t) * freqs.sin())

59 | return torch.cat((t_left, t, t_right), dim=-1)

60 |

61 |

62 | # learned rotation helpers

63 |

64 |

65 | def apply_learned_rotations(rotations, t, start_index=0, freq_ranges=None):

66 | if exists(freq_ranges):

67 | rotations = einsum("..., f -> ... f", rotations, freq_ranges)

68 | rotations = rearrange(rotations, "... r f -> ... (r f)")

69 |

70 | rotations = repeat(rotations, "... n -> ... (n r)", r=2)

71 | return apply_rotary_emb(rotations, t, start_index=start_index)

72 |

73 |

74 | # classes

75 |

76 |

77 | class RotaryEmbedding(nn.Module):

78 | def __init__(

79 | self,

80 | dim,

81 | custom_freqs=None,

82 | freqs_for="lang",

83 | theta=10000,

84 | max_freq=10,

85 | num_freqs=1,

86 | learned_freq=False,

87 | ):

88 | super().__init__()

89 | if exists(custom_freqs):

90 | freqs = custom_freqs

91 | elif freqs_for == "lang":

92 | freqs = 1.0 / (

93 | theta ** (torch.arange(0, dim, 2)[: (dim // 2)].float() / dim)

94 | )

95 | elif freqs_for == "pixel":

96 | freqs = torch.linspace(1.0, max_freq / 2, dim // 2) * pi

97 | elif freqs_for == "constant":

98 | freqs = torch.ones(num_freqs).float()

99 | else:

100 | raise ValueError(f"unknown modality {freqs_for}")

101 |

102 | self.cache = dict()

103 |

104 | if learned_freq:

105 | self.freqs = nn.Parameter(freqs)

106 | else:

107 | self.register_buffer("freqs", freqs)

108 |

109 | def rotate_queries_or_keys(self, t, seq_dim=-2):

110 | device = t.device

111 | seq_len = t.shape[seq_dim]

112 | freqs = self.forward(

113 | lambda: torch.arange(seq_len, device=device), cache_key=seq_len

114 | )

115 | return apply_rotary_emb(freqs, t)

116 |

117 | def forward(self, t, cache_key=None):

118 | if exists(cache_key) and cache_key in self.cache:

119 | return self.cache[cache_key]

120 |

121 | if isfunction(t):

122 | t = t()

123 |

124 | freqs = self.freqs

125 |

126 | freqs = torch.einsum("..., f -> ... f", t.type(freqs.dtype), freqs)

127 | freqs = repeat(freqs, "... n -> ... (n r)", r=2)

128 |

129 | if exists(cache_key):

130 | self.cache[cache_key] = freqs

131 |

132 | return freqs

133 |

--------------------------------------------------------------------------------
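Rotary embeddings rotate each adjacent feature pair by an angle proportional to the token position, so a query-key dot product depends only on the two tokens' relative offset. The NumPy sketch below uses the same pairing convention as `rotate_half` and `RotaryEmbedding.forward` (interleaved pairs, each frequency repeated twice) with the `freqs_for="lang"` frequencies. `apply_rotary` and `score` are hypothetical names, not this module's API:

```python
import numpy as np


def rotate_half(x):
    """Map each adjacent feature pair (a, b) to (-b, a), like rotate_half above."""
    pairs = x.reshape(*x.shape[:-1], -1, 2)
    rotated = np.stack((-pairs[..., 1], pairs[..., 0]), axis=-1)
    return rotated.reshape(x.shape)


def apply_rotary(x, positions, theta=10000.0):
    """x: (seq, dim) with even dim; positions: (seq,) integer positions."""
    dim = x.shape[-1]
    inv_freq = 1.0 / theta ** (np.arange(0, dim, 2) / dim)    # freqs_for="lang"
    angles = np.outer(positions.astype(np.float64), inv_freq)  # (seq, dim // 2)
    angles = np.repeat(angles, 2, axis=-1)                     # "... n -> ... (n r)", r=2
    return x * np.cos(angles) + rotate_half(x) * np.sin(angles)


rng = np.random.default_rng(0)
q, k = rng.standard_normal((2, 1, 8))  # one query and one key, dim = 8


def score(m, n):
    # Attention logit between the query at position m and the key at position n.
    return float(np.sum(apply_rotary(q, np.array([m])) * apply_rotary(k, np.array([n]))))


print(np.isclose(score(5, 2), score(13, 10)))  # True: only the offset m - n matters
```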

/eval/features/kinetic.py:

--------------------------------------------------------------------------------

1 | # BSD License

2 |

3 | # For fairmotion software

4 |

5 | # Copyright (c) Facebook, Inc. and its affiliates. All rights reserved.

6 | # Modified by Ruilong Li

7 |

8 | # Redistribution and use in source and binary forms, with or without modification,

9 | # are permitted provided that the following conditions are met:

10 |

11 | # * Redistributions of source code must retain the above copyright notice, this

12 | # list of conditions and the following disclaimer.

13 |

14 | # * Redistributions in binary form must reproduce the above copyright notice,

15 | # this list of conditions and the following disclaimer in the documentation

16 | # and/or other materials provided with the distribution.

17 |

18 | # * Neither the name Facebook nor the names of its contributors may be used to

19 | # endorse or promote products derived from this software without specific

20 | # prior written permission.

21 |

22 | # THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND

23 | # ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED

24 | # WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

25 | # DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR

26 | # ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES

27 | # (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES;

28 | # LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON

29 | # ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

30 | # (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS

31 | # SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

32 | import numpy as np

33 | from . import utils as feat_utils

34 |

35 |

36 | def extract_kinetic_features(positions):

37 | assert len(positions.shape) == 3 # (seq_len, n_joints, 3)

38 | features = KineticFeatures(positions)

39 | kinetic_feature_vector = []

40 | for i in range(positions.shape[1]):

41 | feature_vector = np.hstack(

42 | [

43 | features.average_kinetic_energy_horizontal(i),

44 | features.average_kinetic_energy_vertical(i),

45 | features.average_energy_expenditure(i),

46 | ]

47 | )

48 | kinetic_feature_vector.extend(feature_vector)

49 | kinetic_feature_vector = np.array(kinetic_feature_vector, dtype=np.float32)

50 | return kinetic_feature_vector

51 |

52 |

53 | class KineticFeatures:

54 | def __init__(

55 | self, positions, frame_time=1./60, up_vec="y", sliding_window=2

56 | ):

57 | self.positions = positions

58 | self.frame_time = frame_time

59 | self.up_vec = up_vec

60 | self.sliding_window = sliding_window

61 |

62 | def average_kinetic_energy(self, joint):

63 | average_kinetic_energy = 0

64 | for i in range(1, len(self.positions)):

65 | average_velocity = feat_utils.calc_average_velocity(

66 | self.positions, i, joint, self.sliding_window, self.frame_time

67 | )

68 | average_kinetic_energy += average_velocity ** 2

69 | average_kinetic_energy = average_kinetic_energy / (

70 | len(self.positions) - 1.0

71 | )

72 | return average_kinetic_energy

73 |

74 | def average_kinetic_energy_horizontal(self, joint):

75 | val = 0

76 | for i in range(1, len(self.positions)):

77 | average_velocity = feat_utils.calc_average_velocity_horizontal(

78 | self.positions,

79 | i,

80 | joint,

81 | self.sliding_window,

82 | self.frame_time,

83 | self.up_vec,

84 | )

85 | val += average_velocity ** 2

86 | val = val / (len(self.positions) - 1.0)

87 | return val

88 |

89 | def average_kinetic_energy_vertical(self, joint):

90 | val = 0

91 | for i in range(1, len(self.positions)):

92 | average_velocity = feat_utils.calc_average_velocity_vertical(

93 | self.positions,

94 | i,

95 | joint,

96 | self.sliding_window,

97 | self.frame_time,

98 | self.up_vec,

99 | )

100 | val += average_velocity ** 2

101 | val = val / (len(self.positions) - 1.0)

102 | return val

103 |

104 | def average_energy_expenditure(self, joint):

105 | val = 0.0

106 | for i in range(1, len(self.positions)):

107 | val += feat_utils.calc_average_acceleration(

108 | self.positions, i, joint, self.sliding_window, self.frame_time

109 | )

110 | val = val / (len(self.positions) - 1.0)

111 | return val

112 |

--------------------------------------------------------------------------------
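Each joint contributes three numbers to the kinetic feature vector: mean squared horizontal speed, mean squared vertical speed, and an average acceleration term, each averaged over the `len(positions) - 1` frame transitions. The sketch below covers the first two. Because `feat_utils` is not part of this excerpt, it makes a simplifying assumption: velocity is a plain one-frame finite difference rather than a sliding-window average. `kinetic_energy_split` is a hypothetical name:

```python
import numpy as np


def kinetic_energy_split(positions, joint, frame_time=1.0 / 60, up_axis=1):
    """Mean squared horizontal and vertical speed of one joint.

    Simplified: one-frame finite differences instead of feat_utils' sliding-window
    average velocity. up_axis=1 corresponds to up_vec="y".
    """
    vel = (positions[1:, joint] - positions[:-1, joint]) / frame_time  # (frames - 1, 3)
    vertical = vel[:, up_axis]
    horizontal = np.delete(vel, up_axis, axis=1)
    ke_h = float(np.mean(np.sum(horizontal**2, axis=1)))
    ke_v = float(np.mean(vertical**2))
    return ke_h, ke_v


# A joint moving 0.01 units per frame along x at 60 fps: speed 0.6, no vertical motion.
pos = np.zeros((10, 1, 3))
pos[:, 0, 0] = 0.01 * np.arange(10)
ke_h, ke_v = kinetic_energy_split(pos, joint=0)
print(round(ke_h, 6), ke_v)  # 0.36 0.0
```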

/README.md:

--------------------------------------------------------------------------------

1 | ## Controllable Dance Generation with Style-Guided Motion Diffusion

2 | **[Controllable Dance Generation with Style-Guided Motion Diffusion](https://arxiv.org/abs/2406.07871)**, [Arxiv](https://arxiv.org/abs/2406.07871)

3 | **[Flexible Music-Conditioned Dance Generation with Style Description Prompts](https://arxiv.org/abs/2406.07871v1)**

4 |

5 | ### Demo Videos of Dance Generation

6 |

7 |

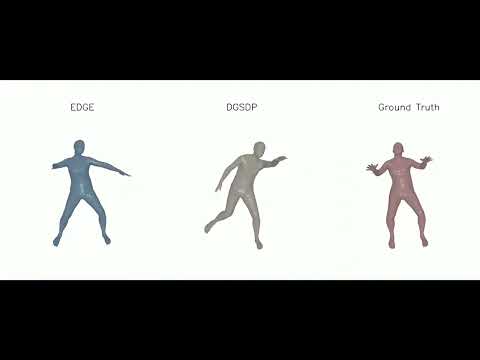

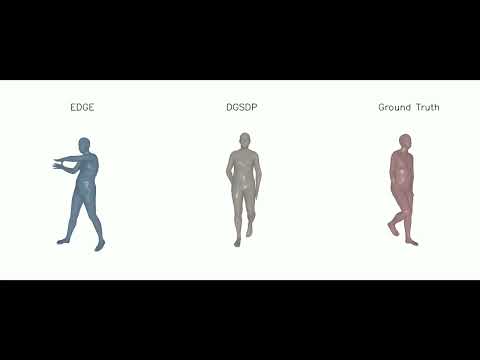

15 |

16 | **Demo Videos of Dance Generation: (a) Dance Generation; (b) Long Dance Generation**

17 |

18 | *Abstract: Dance plays an important role as an artistic form and expression in human culture, yet the creation of dance remains a challenging task. Most dance generation methods rely solely on music, seldom taking into consideration intrinsic attributes such as music style or genre. In this work, we introduce Flexible Dance Generation with Style Description Prompts (DGSDP), a diffusion-based framework suitable for diverse dance generation tasks that fully leverages the semantics of music style. The core component of this framework is Music-Conditioned Style-Aware Diffusion (MCSAD), which comprises a Transformer-based network and a music Style Modulation module. MCSAD seamlessly integrates music conditions and style description prompts into the dance generation framework, ensuring that generated dances are consistent with the music content and style. To enable flexible dance generation and accommodate different tasks, a spatial-temporal masking strategy is applied in the backward diffusion process. The proposed framework generates realistic dance sequences that are accurately aligned with music for a variety of tasks, such as long-term generation, dance in-betweening, and dance inpainting. We hope that this work can inspire dance generation and creation, with promising applications in entertainment, art, and education.*

19 |

20 |

21 |

22 |

23 | ## Requirements

24 | * We follow the environment configuration of [EDGE](https://github.com/Stanford-TML/EDGE)

25 |

26 | ## Checkpoint

27 | * Download the saved model checkpoint from [Google Drive](https://drive.google.com/file/d/1qYEY45m3paDEXfBqpBWGCYaqvZS-Z60k/view?usp=drive_link).

28 |

29 | ## Dataset Download

30 | Download and process the AIST++ dataset (wavs and motion only) using:

31 | ```.bash

32 | cd data

33 | bash download_dataset.sh

34 | python create_dataset.py --extract-baseline --extract-jukebox

35 | ```

36 | This will process the dataset to match the settings used in the paper. The data processing will take ~24 hrs and ~50 GB to precompute all the Jukebox features for the dataset.

37 |

38 | ## Training

39 | Run model/style_CLIP.py to generate semantic features.

40 | ```.bash

41 | cd model

42 | python style_CLIP.py

43 | ```

44 | Then, run the training script, e.g.

45 | ```.bash

46 | cd ../

47 | accelerate launch train.py --batch_size 128 --epochs 2000 --feature_type jukebox --learning_rate 0.0002

48 | ```

49 | to train the model with the settings from the paper. The training will log progress to `wandb` and intermittently produce sample outputs to visualize learning.

50 |

51 | ## Testing and Evaluation

52 | Download the long music from [Google Drive](https://drive.google.com/file/d/1d2sqwQfW3f4XcNyYx3oWXdDQphrhfokj/view?usp=drive_link).

53 |

54 | Evaluate your model's outputs with the Beat Align Score, PFC, FID, and Diversity metrics reported in the paper:

55 | 1. Generate ~1k samples, saving the joint positions with the `--save_motions` argument

56 | 2. Run the evaluation script

57 | ```.bash

58 | python test.py --music_dir custom_music/ --save_motions

59 | python eval/beat_align_score.py

60 | python eval/eval_pfc.py

61 | python eval/metrics_diversity.py

62 | ```

63 |

64 | ## Citation

65 | ```

66 | @misc{wang2025controllabledancegenerationstyleguided,

67 | title={Controllable Dance Generation with Style-Guided Motion Diffusion},

68 | author={Hongsong Wang and Ying Zhu and Xin Geng and Liang Wang},

69 | year={2025},

70 | eprint={2406.07871},

71 | archivePrefix={arXiv},

72 | primaryClass={cs.CV},

73 | url={https://arxiv.org/abs/2406.07871},

74 | }

75 |

76 | @article{wang2024flexible,

77 | title={Flexible Music-Conditioned Dance Generation with Style Description Prompts},

78 | author={Wang, Hongsong and Zhu, Yin and Geng, Xin},

79 | journal={arXiv preprint arXiv:2406.07871},

80 | year={2024}

81 | }

82 | ```

83 |

--------------------------------------------------------------------------------

/model/style_CLIP.py:

--------------------------------------------------------------------------------

1 | # from transformers import BertTokenizer, BertModel

2 | import os

3 | import pickle

4 | from pathlib import Path

5 |

6 | from transformers import AutoModel,AutoTokenizer

7 | import torch

8 |

9 | import clip

10 | import random

11 |

12 | device = "cuda" if torch.cuda.is_available() else "cpu"

13 |

14 | clip_model, clip_preprocess = clip.load("ViT-B/32", device=device, jit=False) # Must set jit=False for training

15 | clip_model.eval()

16 | for p in clip_model.parameters():

17 | p.requires_grad = False

18 |

19 |

20 |

21 | # Input text

22 | text=[]

23 | text.append("Breakdance, also known as B-boying or breaking, is an energetic and acrobatic style of dance that originated in the streets of New York City. It combines intricate footwork, power moves, freezes, and energetic spins on the floor. Breakdancers showcase their strength, agility, and creativity through explosive movements and impressive stunts. This dynamic dance style has become a global phenomenon, inspiring dancers worldwide to push their physical limits and express themselves through the art of breaking.")

24 | text.append("House dance is a vibrant and soulful style that originated in the underground clubs of Chicago and New York City. It combines elements of disco, funk, and hip-hop, creating a unique fusion of footwork, fluid movements, and intricate rhythms. House dancers often freestyle to electronic music, allowing their bodies to flow with the infectious beats. With its emphasis on individual expression and improvisation, house dance embodies the spirit of freedom and community within the dance culture.")

25 | text.append("Jazz ballet, also known as contemporary jazz, is a fusion of classical ballet technique and the expressive nature of jazz dance. It combines the grace and precision of ballet with the rhythmic and syncopated movements of jazz. Jazz ballet dancers exhibit a strong sense of musicality, emphasizing body isolations, turns, jumps, and extensions. This versatile dance style allows for both structured choreography and personal interpretation, making it a popular choice for dancers seeking a balance between technique and creative expression.")

26 | text.append("Street Jazz is a dance form that combines elements of street dance and jazz dance. It embodies the characteristics of street culture and urban vibes, emphasizing freedom, individuality, and a sense of rhythm. The dance steps of Street Jazz include fluid body movements, sharp footwork, and dynamic spins. Dancers express themselves freely on stage, showcasing their personality and sense of style through dance. Street Jazz is suitable for individuals who enjoy dancing, have a passion for street culture, and seek to express their individuality.")

27 | text.append("Krump is an expressive and aggressive street dance style that originated in the neighborhoods of Los Angeles. It is characterized by its intense and energetic movements, including chest pops, stomps, arm swings, and exaggerated facial expressions. Krump dancers use their bodies as a form of personal expression, channeling their emotions and energy into powerful and raw performances. This urban dance form serves as an outlet for self-expression and has evolved into a community-driven movement promoting positivity and self-empowerment.")

28 | text.append("Los Angeles-style hip-hop dance, also known as LA-style, is a fusion of various street dance styles that emerged from the urban communities of Los Angeles. It combines elements of popping, locking, breaking, and freestyle, creating a dynamic and diverse dance form. LA-style dancers showcase their unique styles and personalities through intricate body isolations, fluid movements, and sharp hits. This dance style embodies the vibrant and eclectic culture of Los Angeles and has influenced the global hip-hop dance scene.")

29 | text.append("Locking is a funk-based dance style that originated in the 1970s. It is characterized by its distinctive moves known as locks, which involve freezing in certain positions and then quickly transitioning to the next. Locking combines funky footwork, energetic arm gestures, and exaggerated body movements, creating a playful and entertaining dance form. Lockers often incorporate humor and showmanship into their performances, making it a popular style for entertainment purposes.")

30 | text.append("Middle Hip-Hop is a dance form that combines various elements of street dance, including popping and locking, with the rhythms and beats of hip-hop music. It requires a great deal of rhythm, coordination, and control. Middle Hip-Hop features energetic and dynamic movements, such as intricate footwork, isolations, and body waves. Dancers express themselves through their movements, delivering a powerful and engaging performance that captivates audiences. Middle Hip-Hop provides a great way for individuals to enhance their overall fitness, build confidence, and express their creativity.")

31 | text.append("Popular dance encompasses a wide range of contemporary dance styles that are widely enjoyed and accessible to the general public. It includes various genres such as commercial dance, music video choreography, and social dances like line dancing or party dances. Popular dancers often adapt and fuse different dance styles, creating visually appealing routines that resonate with a broad audience. This dance category reflects the ever-evolving trends and influences in popular culture.")

32 | text.append("Waacking, also known as punking or whacking, is a dance style that originated in the LGBTQ+ clubs of 1970s Los Angeles. It is characterized by its fluid arm movements, dramatic poses, and expressive storytelling through dance. Waacking requires precision, musicality, and personality to convey emotions and narratives. Dancers often use props like fans or scarves to enhance their performances. This dance form celebrates individuality, self-expression, and the liberation of one's true self.")

33 |

34 | style=[]

35 | style.append("mBR")

36 | style.append("mHO")

37 | style.append("mJB")

38 | style.append("mJS")

39 | style.append("mKR")

40 | style.append("mLH")

41 | style.append("mLO")

42 | style.append("mMH")

43 | style.append("mPO")

44 | style.append("mWA")

45 |

46 | features ={}

47 |

48 | for i in range(len(text)):

49 | t = clip.tokenize([text[i]], truncate=True).cuda()

50 | feature = clip_model.encode_text(t).cpu().float().reshape(-1)

51 |

52 | # print(features)

53 | print(feature.shape) # (1,768)

54 | features.update({style[i]:feature})

55 |

56 | save_path = "../data/style_clip"

57 | Path(save_path).mkdir(parents=True, exist_ok=True)

58 | outname = style[i] +'.pkl'

59 | pickle.dump(

60 | {

61 | style[i]:feature

62 | },

63 | open(os.path.join(save_path, outname), "wb"),

64 | )

65 |

66 |

67 |

68 | style_features = []

69 | for i in range(len(style)):

70 | path = '../data/style_clip/' + style[i] + '.pkl'

71 | with open(path, 'rb') as f:

72 | data = pickle.load(f)

73 | print(data[style[i]].shape)

74 | style_features.append(data[style[i]])

75 |

--------------------------------------------------------------------------------
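The script above writes one pickle per genre code, each holding a `{code: feature}` dict. As a hedged sketch of how those files might be consumed downstream (not the repository's own loader), the following stacks them into a matrix, compares genres by cosine similarity, and maps an AIST++-style filename to its music-genre code. `load_style_matrix`, `cosine_similarity_matrix`, and `style_code_from_name` are hypothetical helpers; the filename layout `g<genre>_s.._c.._d.._m<genre><id>_ch..` is an assumption based on AIST++ naming, and random 512-d vectors stand in for real CLIP features so the sketch runs without CLIP or a GPU.

```python
import os
import pickle
import tempfile

import numpy as np

# Genre codes in the same order as the `style` list above.
STYLES = ["mBR", "mHO", "mJB", "mJS", "mKR", "mLH", "mLO", "mMH", "mPO", "mWA"]


# Hypothetical helper (not from the repo): stack the per-style pickles.
def load_style_matrix(save_dir, styles=STYLES):
    """Return a (num_styles, dim) float32 array, one row per style code.

    Each file is assumed to hold {code: 1-D feature}, as written above;
    np.asarray also accepts CPU torch tensors.
    """
    rows = []
    for code in styles:
        with open(os.path.join(save_dir, code + ".pkl"), "rb") as f:
            data = pickle.load(f)
        rows.append(np.asarray(data[code], dtype=np.float32).reshape(-1))
    return np.stack(rows)


# Hypothetical helper: pairwise cosine similarity between rows.
def cosine_similarity_matrix(features):
    normed = features / np.linalg.norm(features, axis=1, keepdims=True)
    return normed @ normed.T


# Hypothetical helper, assuming AIST++-style names such as
# "gBR_sBM_cAll_d04_mBR0_ch01": the music field is "m" + genre + id.
def style_code_from_name(filename):
    for field in os.path.basename(filename).split("_"):
        if field.startswith("m") and len(field) >= 3:
            return field[:3]
    raise ValueError(f"no music-genre field in {filename!r}")


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    with tempfile.TemporaryDirectory() as tmp:
        # Random 512-d vectors stand in for real CLIP text features.
        for code in STYLES:
            with open(os.path.join(tmp, code + ".pkl"), "wb") as f:
                pickle.dump({code: rng.standard_normal(512).astype(np.float32)}, f)
        feats = load_style_matrix(tmp)
        sims = cosine_similarity_matrix(feats)
        print(feats.shape, sims.shape)  # (10, 512) (10, 10)
    print(style_code_from_name("gBR_sBM_cAll_d04_mBR0_ch01.pkl"))  # mBR
```

Normalizing each row before the dot product makes the comparison depend only on direction, which matches how CLIP embeddings are usually compared; with real features, the off-diagonal entries indicate which genre descriptions the text encoder places close together.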

/test.py:

--------------------------------------------------------------------------------

import glob
import os
import pickle
from functools import cmp_to_key
from pathlib import Path
from tempfile import TemporaryDirectory
import random

import jukemirlib
import numpy as np
import torch
from tqdm import tqdm

from args import parse_test_opt
from data.slice import slice_audio
from DGSDP import DGSDP
from data.audio_extraction.baseline_features import extract as baseline_extract
from data.audio_extraction.jukebox_features import extract as juke_extract

# sort filenames that look like songname_slice{number}.ext
key_func = lambda x: int(os.path.splitext(x)[0].split("_")[-1].split("slice")[-1])