32 |

47 | );

48 | }

49 | }

50 |

51 | export default ProjectsListContainer;

--------------------------------------------------------------------------------

/src/tar.js:

--------------------------------------------------------------------------------

1 | var Q = require('q');

2 | var spawn = require('child_process').spawn;

3 | var baseTar = require('tar-fs')

4 |

5 | var tar = {};

6 |

7 | tar.createFromStream = function(path, stream) {

8 | var extract = baseTar.extract(path, {strict: false});

9 | stream.pipe(extract);

10 |

11 | return Q.Promise(function(resolve, reject){

12 | stream.on('error', function(err){

13 | reject(err);

14 | })

15 |

16 | extract.on('finish', function() {

17 | resolve();

18 | });

19 | });

20 | }

21 |

22 | /**

23 | * Creates a tarball at path with the contents

24 | * of the sourceDirectory.

25 | *

26 | * After the tarball is created, the returned

27 | * promise is resolved.

28 | *

29 | * @return promise

30 | */

31 | tar.create = function(path, sourceDirectory, buildLogger, buildOptions){

32 | var deferred = Q.defer();

33 | var tar = spawn('tar', ['-C', sourceDirectory, '-czvf', path, '.']);

34 |

35 | buildLogger.info('[%s] Creating tarball', buildOptions.buildId);

36 |

37 | tar.stderr.on('data', function(data) {

38 | buildLogger.error('[%s] Error creating tarball', buildOptions.buildId);

39 | deferred.reject(data)

40 | });

41 |

42 | tar.stdout.on('data', function(data) {

43 | buildLogger.info('[%s] %s', buildOptions.buildId, data.toString());

44 | });

45 |

46 | tar.on('close', function(code) {

47 | if (code === 0) {

48 | buildLogger.info('[%s] Tarball created', buildOptions.buildId);

49 | deferred.resolve()

50 | } else {

51 | buildLogger.error('[%s] Error creating tarball', buildOptions.buildId);

52 | deferred.reject(new Error("Unable to tar -- exit code " + code))

53 | }

54 | });

55 |

56 | return deferred.promise;

57 | };

58 |

59 | module.exports = tar;

60 |

--------------------------------------------------------------------------------

/src/hooks.js:

--------------------------------------------------------------------------------

1 | var Q = require('q');

2 | var _ = require('lodash');

3 | var hooks = {};

4 |

5 | /**

6 | * Utility function that runs the hook

7 | * in a container and resolves / rejects

8 | * based on the hook's exit code.

9 | */

10 | function promisifyHook(dockerClient, buildId, command, logger) {

11 | var deferred = Q.defer();

12 |

13 | dockerClient.run(buildId, command.split(' '), process.stdout, function (err, data, container) {

14 | if (err) {

15 | deferred.reject(err);

16 | } else if (data.StatusCode === 0) {

17 | deferred.resolve();

18 | } else {

19 | logger.error(data);

20 | deferred.reject(command + ' failed, exited with status code ' + data.StatusCode);

21 | }

22 | });

23 |

24 | return deferred.promise;

25 | }

26 |

27 | /**

28 | * Run all hooks for a specified event on a container

29 | * built from the buildId, ie. my_node_app:master

30 | *

31 | * If any of the hooks fails, the returning promise

32 | * will be rejected.

33 | *

34 | * @return promise

35 | */

36 | hooks.run = function(event, buildId, project, dockerClient, logger) {

37 | var deferred = Q.defer();

38 | var hooks = project[event];

39 | var promises = [];

40 |

41 | if (_.isArray(hooks)) {

42 | logger.info('[%s] Running %s hooks', buildId, event);

43 |

44 | _.each(hooks, function(command) {

45 | logger.info('[%s] Running %s hook "%s"', buildId, event, command);

46 |

47 | promises.push(promisifyHook(dockerClient, buildId, command, logger));

48 | });

49 |

50 | return Q.all(promises);

51 | } else {

52 | logger.info('[%s] No %s hooks to run', buildId, event);

53 | deferred.resolve();

54 | }

55 |

56 | return deferred.promise;

57 | };

58 |

59 | module.exports = hooks;

60 |
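
The "reject if any hook fails" behaviour of hooks.run can be sketched without Q or Docker. This is a minimal illustration using native promises, not the project's implementation: `runHook`, `runHooks` and the `exitCodes` map are hypothetical stand-ins for `promisifyHook`, `hooks.run` and the status code returned by `dockerClient.run`.

```javascript
// Hypothetical stand-in for running a hook in a container: the exit
// code is looked up in a map instead of coming from Docker.
function runHook(command, exitCodes) {
  return new Promise((resolve, reject) => {
    const code = exitCodes[command] || 0;

    if (code === 0) {
      resolve(command);
    } else {
      reject(new Error(command + ' failed, exited with status code ' + code));
    }
  });
}

// Runs every hook; the returned promise rejects as soon as one hook fails.
// A missing (non-array) hook list resolves immediately with an empty list.
function runHooks(commands, exitCodes) {
  if (!Array.isArray(commands)) {
    return Promise.resolve([]);
  }

  return Promise.all(commands.map((command) => runHook(command, exitCodes)));
}
```

As in the original, all hooks are started before any result is known, so one failing hook rejects the combined promise but does not stop the others from running.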

--------------------------------------------------------------------------------

/src/utils.js:

--------------------------------------------------------------------------------

1 | var url = require('url');

2 | var _ = require('lodash');

3 | var config = require('./config');

4 | var utils = {};

5 |

6 | /**

7 | * Gets a roger path

8 | *

9 | * @param {string} to

10 | * @return {string}

11 | */

12 | utils.path = function(to) {

13 | return config.get('paths.' + to);

14 | }

15 |

16 | /**

17 | * Throw a simple exception with the

18 | * given name and message.

19 | *

20 | * @param {string} name

21 | * @param {string} message

22 | * @throws {Error}

23 | */

24 | utils.throw = function(name, message){

25 | var e = new Error(message)

26 | e.name = name

27 |

28 | throw e

29 | }

30 |

31 | /**

32 | * Utility function that takes an

33 | * object and recursively loops

34 | * through it replacing all "sensitive"

35 | * information with *****.

36 | *

37 | * This is done to print out objects

38 | * without exposing passwords and so

39 | * on.

40 | */

41 | utils.obfuscate = function(object) {

42 | object = _.clone(object);

43 | var stopWords = ['password', 'github', 'github-token', 'token', 'access-key', 'secret']

44 |

45 | _.each(object, function(value, key){

46 | if (typeof value === 'object') {

47 | object[key] = utils.obfuscate(value);

48 | }

49 |

50 | if (_.isString(value)) {

51 | object[key] = utils.obfuscateString(value);

52 |

53 | if (_.contains(stopWords, key)) {

54 | object[key] = '*****'

55 | }

56 | }

57 | })

58 |

59 | return object;

60 | };

61 |

62 | /**

63 | * Takes a string and removes all sensitive

64 | * values from it.

65 | *

66 | * For example, if the string is a URL, it

67 | * will remove the auth, if present.

68 | */

69 | utils.obfuscateString = function(string) {

70 | var parts = url.parse(string);

71 |

72 | if (parts.host && parts.auth) {

73 | parts.auth = null;

74 |

75 | return url.format(parts);

76 | }

77 |

78 | return string;

79 | }

80 |

81 | module.exports = utils;

82 |
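
The obfuscation rules above can be tried out in isolation. The sketch below is an assumption-laden rewrite, not the module itself: it uses the WHATWG `URL` class instead of `url.parse`, drops the lodash dependency, and the names `stripAuth`, `obfuscate` and `SENSITIVE_KEYS` are illustrative. Unlike the original it builds a new object rather than mutating a shallow clone.

```javascript
// Keys whose string values are masked entirely (same list as stopWords above).
const SENSITIVE_KEYS = ['password', 'github', 'github-token', 'token', 'access-key', 'secret'];

// Strips credentials from a URL-like string; anything that does not
// parse as an absolute URL is returned unchanged.
function stripAuth(value) {
  let parsed;

  try {
    parsed = new URL(value);
  } catch (err) {
    return value;
  }

  if (!parsed.username && !parsed.password) {
    return value;
  }

  parsed.username = '';
  parsed.password = '';

  return parsed.toString();
}

// Recursively copies the input, masking sensitive keys and
// removing credentials from URL strings along the way.
function obfuscate(input) {
  const output = Array.isArray(input) ? [] : {};

  for (const [key, value] of Object.entries(input)) {
    if (value !== null && typeof value === 'object') {
      output[key] = obfuscate(value);
    } else if (typeof value === 'string') {
      output[key] = SENSITIVE_KEYS.includes(key) ? '*****' : stripAuth(value);
    } else {
      output[key] = value;
    }
  }

  return output;
}
```

Strings stored under a sensitive key are replaced wholesale; every other string only loses its `user:password@` part, if it has one.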

--------------------------------------------------------------------------------

/src/client/webpack.config.js:

--------------------------------------------------------------------------------

1 | var webpack = require('webpack');

2 | var path = require('path');

3 | var ExtractTextPlugin = require('extract-text-webpack-plugin');

4 | var mergeWebpackConfig = require('webpack-config-merger');

5 |

6 | // Default configurations

7 | var config = {

8 | entry: [

9 | './app.jsx', // App entry point

10 | './index.html',

11 | './styles/App.scss'

12 | ],

13 | output: {

14 | path: path.join(__dirname, 'dist'),

15 | filename: 'bundle.js'

16 | },

17 | resolve: {

18 | extensions: ['', '.js', '.jsx']

19 | },

20 | module: {

21 | loaders: [{

22 | test: /\.jsx?$/,

23 | exclude: /(node_modules|bower_components)/,

24 | loaders: ['babel']

25 | },

26 | {

27 | test: /\.html$/,

28 | loader: "file?name=[name].[ext]"

29 | },

30 | {

31 | test: /\.scss$/,

32 | loader: ExtractTextPlugin.extract(

33 | 'css?sourceMap!' +

34 | 'sass?sourceMap'

35 | )

36 | }]

37 | },

38 | plugins: [

39 | new webpack.NoErrorsPlugin(),

40 | new ExtractTextPlugin('app.css')

41 | ]

42 | };

43 |

44 | // Development specific configurations

45 | var devConfig = {

46 | entry: [

47 | 'webpack-dev-server/client?http://0.0.0.0:8080', // WebpackDevServer host and port

48 | 'webpack/hot/only-dev-server'

49 | ],

50 | devtool: process.env.WEBPACK_DEVTOOL || 'source-map',

51 | module: {

52 | loaders: [{

53 | test: /\.jsx?$/,

54 | exclude: /(node_modules|bower_components)/,

55 | loaders: ['react-hot', 'babel']

56 | }]

57 | },

58 | devServer: {

59 | contentBase: "./dist",

60 | noInfo: true, // --no-info option

61 | hot: true,

62 | inline: true

63 | },

64 | plugins: [

65 | new webpack.HotModuleReplacementPlugin(),

66 | ]

67 | };

68 |

69 | var isDev = process.env.NODE_ENV !== 'production';

70 | if(isDev) {

71 | mergeWebpackConfig(config, devConfig);

72 | }

73 |

74 | module.exports = config;

--------------------------------------------------------------------------------

/src/notifications.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 | var config = require('./config');

3 | var router = require('./router');

4 | var notifications = {};

5 |

6 | /**

7 | * Trigger notifications for a specific project.

8 | *

9 | * @param project

10 | * @param branch

11 | * @param options {buildId, uuid, logger}

12 | */

13 | notifications.trigger = function(project, branch, options){

14 | options.logger.info('[%s] Sending notifications for %s', options.buildId, options.uuid);

15 | var comments = ['[' + options.project.name + ':' + branch + '] BUILD PASSED'];

16 | var buildLink = router.generate('build-link', {build: options.uuid, projectName: project.name}, true);

17 | var status = {

18 | state: 'success',

19 | target_url: buildLink,

20 | description: 'The build succeeded!'

21 | };

22 |

23 | if (options.result instanceof Error) {

24 | comments = ['[' + options.project.name + ':' + branch + '] BUILD FAILED: ' + options.result.message];

25 | status.state = 'failure';

26 | status.description = 'The build failed!';

27 | }

28 |

29 | comments.push("You can check the build output at " + buildLink);

30 |

31 | _.each(config.get('notifications'), function(val, key){

32 | if (val['global'] === 'true') {

33 | project.notify.push(key);

34 | }

35 | });

36 |

37 | if (_.isArray(project.notify)) {

38 | _.each(project.notify, function(handler){

39 | options.branch = branch;

40 | options.comments = _.clone(comments);

41 | options.status = status;

42 |

43 | var notificationOptions = (project.notify && project.notify[handler]) || config.get('notifications.' + handler);

44 |

45 | try {

46 | require('./notifications/' + handler)(project, _.clone(options), notificationOptions);

47 | } catch(err) {

48 | options.logger.info('[%s] Error with notifying %s: %s', options.buildId, handler, err.toString());

49 | }

50 | });

51 | }

52 | };

53 |

54 | module.exports = notifications;

55 |

--------------------------------------------------------------------------------

/src/storage.js:

--------------------------------------------------------------------------------

1 | var dispatcher = require('./dispatcher');

2 | var config = require('./config');

3 | var adapter = require('./storage/' + config.get('app.storage'));

4 |

5 | /**

6 | * The storage object is simply

7 | * responsible for proxying a

8 | * storage adapter (ie. mysql)

9 | * and defining the interface

10 | * that the adapter needs to

11 | * implement.

12 | *

13 | * If you wish to implement your own

14 | * storage solution, you will only

15 | * need to implement the methods

16 | * exported here.

17 | *

18 | * For an example implementation,

19 | * see storage/file.js, our super-dummy

20 | * file-based storage system.

21 | *

22 | * @type {Object}

23 | */

24 | module.exports = {

25 | /**

26 | * Saves build information.

27 | */

28 | saveBuild: function(id, tag, project, branch, status) {

29 | return adapter.saveBuild(id, tag, project, branch, status).then(function(result) {

30 | dispatcher.emit('storage-updated');

31 |

32 | return result;

33 | });

34 | },

35 | /**

36 | * Returns all builds of a project,

37 | * DESC sorted.

38 | */

39 | getBuilds: function(limit) {

40 | return adapter.getBuilds(limit);

41 | },

42 | /**

43 | * Returns all started jobs.

44 | */

45 | getStartedBuilds: function() {

46 | return this.getBuildsByStatus(['started']);

47 | },

48 | /**

49 | * Returns all jobs that are either started

50 | * or queued.

51 | */

52 | getPendingBuilds: function() {

53 | return this.getBuildsByStatus(['started', 'queued']);

54 | },

55 | /**

56 | * Returns a list of builds in the given

57 | * statuses.

58 | *

59 | * @param {list} statuses

60 | * @return {list}

61 | */

62 | getBuildsByStatus: function(statuses) {

63 | return adapter.getBuildsByStatus(statuses);

64 | },

65 | /**

66 | * Returns all projects,

67 | * DESC sorted by latest build.

68 | */

69 | getProjects: function(limit) {

70 | return adapter.getProjects(limit);

71 | },

72 | /**

73 | * Returns a particular build.

74 | */

75 | getBuild: function(id) {

76 | return adapter.getBuild(id);

77 | }

78 | }

79 |

--------------------------------------------------------------------------------

/src/client/components/buildOutput.jsx:

--------------------------------------------------------------------------------

1 | 'use strict';

2 |

3 | import React from 'react';

4 |

5 | class BuildOutput extends React.Component {

6 | constructor(...props) {

7 | super(...props);

8 | this.state = { autoUpdate: true };

9 | this._numberOfTries = 0;

10 | this._lastScrollHeight = 0;

11 | this.stopTimer = this.stopTimer.bind(this);

12 | }

13 |

14 | componentDidMount() {

15 | this.updateBuildOutput();

16 | }

17 |

18 | componentWillReceiveProps(props) {

19 | this.updateBuildOutput();

20 | }

21 |

22 | getBuildOutputWindow(){

23 | let buildOutputFrame = this.refs.buildOutputFrame.getDOMNode();

24 | return buildOutputFrame.contentWindow;

25 | }

26 |

27 | scrollLogView(){

28 | try{

29 | let _win = this.getBuildOutputWindow();

30 | let scrollHeight = _win.document.body.scrollHeight;

31 |

32 | if(scrollHeight === this._lastScrollHeight ){

33 | this._numberOfTries++;

34 | } else {

35 | this._numberOfTries = 0;

36 | this._lastScrollHeight = scrollHeight;

37 | }

38 |

39 | if( this._numberOfTries > 100 && this.props.buildStatus !== 'started' ){

40 | this.stopTimer();

41 | return;

42 | }

43 |

44 | if(this.state.autoUpdate){

45 | _win.scrollTo(0, scrollHeight);

46 | }

47 | } catch(e){}

48 | }

49 |

50 | updateBuildOutput(){

51 | this.stopTimer();

52 | this._numberOfTries = 0;

53 |

54 | let runTimer = () => {

55 | this.raf = requestAnimationFrame(this.scrollLogView.bind(this));

56 | this.tmr = setTimeout( runTimer, 1);

57 | };

58 |

59 | runTimer();

60 | }

61 |

62 | stopTimer(){

63 | clearTimeout(this.tmr);

64 | cancelAnimationFrame(this.raf);

65 | }

66 |

67 | toggleAutoUpdate(){

68 | this.setState({

69 | autoUpdate: !this.state.autoUpdate

70 | });

71 |

72 | if(this.state.autoUpdate){

73 | this.updateBuildOutput();

74 | }

75 | }

76 |

77 | render() {

78 | return Projects

33 |

34 |

35 |

36 |

37 | {

38 | _.filter(this.props.projects,(p)=>{

39 | let name = Utils.getProjectShortName(p.alias);

40 | return new RegExp(this.state.searchText, 'i').test(name);

41 | }).map((project) =>{

42 | return

43 | })

44 | }

45 |

46 |

79 |

85 |

86 |

;

87 | }

88 | }

89 |

90 | export default BuildOutput;

91 |

--------------------------------------------------------------------------------

/src/git.js:

--------------------------------------------------------------------------------

1 | var Q = require('q');

2 | var spawn = require('child_process').spawn;

3 | var utils = require("./utils");

4 | var Git = require("nodegit");

5 | var git = {};

6 |

7 | /**

8 | * Get a nodegit commit object from a reference name

9 | * @param {string} path - The path to the Git repository

10 | * @param {string} name - The name of the reference to get

11 | * @return {promise} - A promise that resolves to the commit

12 | */

13 | git.getCommit = function(path, name) {

14 | return Git.Repository.open(path)

15 | .then(function(repo) {

16 | // Look up the branch or tag by its name

17 | return Git.Reference.dwim(repo, name)

18 | .then(function(ref) {

19 | // Convert tags to commit objects

20 | return ref.peel(Git.Object.TYPE.COMMIT);

21 | })

22 | .then(function(ref) {

23 | // Get the commit object from the repo

24 | return Git.Commit.lookup(repo, ref.id());

25 | });

26 | });

27 | }

28 |

29 | /**

30 | * Check the type of the specified reference name

31 | * @param {string} path - The path to the Git repository

32 | * @param {string} name - The name of the reference to check

33 | * @return {promise} - A promise that resolves to the result,

34 | * either 'tag', 'branch', or 'commit'

35 | */

36 | git.getRefType = function(path, name) {

37 | return Git.Repository.open(path)

38 | .then(function(repo) {

39 | return Git.Reference.dwim(repo, name)

40 | .then(function(ref) {

41 | if (ref.isTag()) {

42 | return 'tag';

43 | }

44 | if (ref.isBranch()) {

45 | return 'branch';

46 | }

47 | return 'commit';

48 | });

49 | });

50 | }

51 |

52 | /**

53 | * Clones the repository at the specified path,

54 | * only fetching a specific branch -- which is

55 | * the one we want to build.

56 | *

57 | * @return promise

58 | */

59 | git.clone = function(repo, path, branch, logger) {

60 | logger.info('Cloning %s:%s in %s', utils.obfuscateString(repo), branch, path);

61 | var deferred = Q.defer();

62 | var clone = spawn('git', ['clone', '-b', branch, '--single-branch', '--depth', '1', repo, path]);

63 |

64 | clone.stdout.on('data', function (data) {

65 | logger.info('git clone %s: %s', utils.obfuscateString(repo), data.toString());

66 | });

67 |

68 | /**

69 | * Git gotcha, the output of a 'git clone'

70 | * is sent to stderr rather than stdout

71 | *

72 | * @see http://git.661346.n2.nabble.com/Bugreport-Git-responds-with-stderr-instead-of-stdout-td4959280.html

73 | */

74 | clone.stderr.on('data', function (err) {

75 | logger.info('git clone %s: %s', utils.obfuscateString(repo), err.toString());

76 | });

77 |

78 | clone.on('close', function (code) {

79 | if (code === 0) {

80 | logger.info('git clone %s: finished cloning', utils.obfuscateString(repo));

81 | deferred.resolve();

82 | } else {

83 | deferred.reject('child process exited with status ' + code);

84 | }

85 | });

86 |

87 | return deferred.promise;

88 | }

89 |

90 | module.exports = git;

91 |

--------------------------------------------------------------------------------

/src/client/app.jsx:

--------------------------------------------------------------------------------

1 | import React from 'react';

2 | import _ from 'lodash';

3 | import Router from 'react-router';

4 | import {Route, RouteHandler} from 'react-router';

5 | import Header from './components/header';

6 | import ProjectsListContainer from './components/projectsListContainer';

7 | import ProjectDetails from './components/projectDetails';

8 | import store from './stores/store';

9 | import actions from './actions/actions';

10 | import Utils from './utils/utils';

11 |

12 | export class ProjectDetailsView extends React.Component {

13 | constructor(...props) {

14 | super(...props);

15 | }

16 |

17 | render() {

18 | let getProjectDetails = () => {

19 | return _.find(this.props.projects, (project)=>{

20 | return Utils.getProjectShortName(project.name) === this.props.params.project;

21 | }) || {}

22 | };

23 | let buildId = this.props.params.buildId;

24 |

25 | return

68 |

80 | );

81 | }

82 | }

83 |

84 | App.contextTypes = {

85 | router: React.PropTypes.func.isRequired

86 | };

87 |

88 | let routes = (

89 |

70 |

79 |

71 |

78 |

72 |

77 |

74 |

76 |

53 |

87 | );

88 | }

89 | }

90 |

91 | export default ProjectDetails;

92 |

--------------------------------------------------------------------------------

/src/storage/file.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 | var moment = require('moment');

3 | var yaml = require('js-yaml');

4 | var fs = require('fs');

5 | var url = require('url');

6 | var Q = require('q');

7 |

8 | /**

9 | * This is the simplest storage system

10 | * available in roger.

11 | *

12 | * It keeps a record of all builds in memory

13 | * and flushes them to disk every time a build

14 | * is saved.

15 | *

16 | * It is also quite naive: not having

17 | * a powerful engine to rely on, it saves

18 | * everything in a single list of builds -- so,

19 | * for example, when you query for projects

20 | * it loops all builds and extracts the

21 | * projects.

22 | *

23 | * This storage is implemented to simply get

24 | * started with Roger, but you should consider

25 | * a more persistent (and serious :-P) engine

26 | * for production installations.

27 | *

28 | * @type {Object}

29 | */

30 | var file = {};

31 | var data = {builds: {}};

32 |

33 | /**

34 | * Initializing the DB.

35 | */

36 | try {

37 | data = yaml.safeLoad(fs.readFileSync('/db/data.yml'));

38 | } catch (err) {

39 | console.error(err);

40 | }

41 |

42 | /**

43 | * Flush changes to disk.

44 | */

45 | function flush() {

46 | try {

47 | fs.mkdirSync('/db');

48 | } catch(err) {

49 | if ( err.code !== 'EEXIST' ) {

50 | throw err;

51 | }

52 | }

53 |

54 | fs.writeFileSync('/db/data.yml', yaml.safeDump(data));

55 | }

56 |

57 | file.saveBuild = function(id, tag, project, branch, status) {

58 | data.builds[id] = {

59 | branch: branch,

60 | project: project,

61 | status: status,

62 | id: id,

63 | tag: tag,

64 | 'created_at': data.builds[id] ? data.builds[id]['created_at'] : moment().format(),

65 | 'updated_at': moment().format()

66 | };

67 |

68 | flush();

69 |

70 | return Q.Promise(function(resolve) {

71 | resolve();

72 | });

73 | };

74 |

75 | file.getBuilds = function(limit) {

76 | return Q.Promise(function(resolve) {

77 | limit = limit || 10;

78 |

79 | var builds = _.sortBy(data.builds, function(build) {

80 | return - moment(build['updated_at']).unix();

81 | }).slice(0, limit);

82 |

83 | resolve(builds);

84 | });

85 | };

86 |

87 | file.getBuildsByStatus = function(statuses) {

88 | return Q.Promise(function(resolve) {

89 | var builds = _.filter(data.builds, function(build) {

90 | return _.contains(statuses, build.status);

91 | });

92 |

93 | resolve(builds);

94 | });

95 | };

96 |

97 | file.getProjects = function(limit) {

98 | return Q.Promise(function(resolve) {

99 | limit = limit || 10;

100 | var projects = [];

101 |

102 | _.each(_.sortBy(data.builds, function(build) {

103 | return - moment(build['updated_at']).unix();

104 | }), function(build) {

105 | var u = url.parse(build.project);

106 | var alias = u.pathname;

107 | var parts = alias.split('__');

108 |

109 | if (parts.length === 2) {

110 | alias = parts[1] + ' (' + parts[0].substr(1) + ')';

111 | }

112 |

113 | projects.push({

114 | name: build.project,

115 | alias: alias,

116 | 'latest_build': build

117 | });

118 | });

119 |

120 | resolve(_.uniq(projects, 'name').slice(0, limit));

121 | });

122 | };

123 |

124 | file.getBuild = function(id) {

125 | return Q.Promise(function(resolve, reject) {

126 | var build = _.where(data.builds, {id: id})[0];

127 |

128 | if (build) {

129 | resolve(build);

130 | return;

131 | }

132 |

133 | reject();

134 | });

135 | };

136 |

137 | module.exports = file;

138 |
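
How getProjects derives projects from the flat list of builds can be shown with plain JavaScript. The sketch below is illustrative only: `latestProjects` is a hypothetical name, it replaces lodash and moment with built-ins, and it omits the alias handling. It assumes `updated_at` holds an ISO 8601 timestamp, which is what `moment().format()` produces.

```javascript
// Returns one entry per project, newest build first, capped at `limit`.
function latestProjects(builds, limit = 10) {
  // Sort all builds by last update, most recent first.
  const sorted = Object.values(builds).sort(
    (a, b) => Date.parse(b.updated_at) - Date.parse(a.updated_at)
  );

  const seen = new Set();
  const projects = [];

  for (const build of sorted) {
    // The first build seen for a project is its latest one; skip the rest.
    if (seen.has(build.project)) {
      continue;
    }

    seen.add(build.project);
    projects.push({name: build.project, latest_build: build});
  }

  return projects.slice(0, limit);
}
```

Because every call walks and sorts the full build list, the cost grows with the total number of builds, which is the limitation the header comment of this file warns about.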

--------------------------------------------------------------------------------

/src/publisher/s3.js:

--------------------------------------------------------------------------------

1 | var Q = require('q');

2 | var AWS = require('aws-sdk');

3 | var dir = require('node-dir');

4 | var path = require('path');

5 | var _ = require('lodash');

6 | var docker = require('./../docker');

7 |

8 | /**

9 | * Upload a single file to S3.

10 | *

11 | * @return readable stream

12 | */

13 | function uploadToS3(options) {

14 | var s3 = require('s3');

15 |

16 | var client = s3.createClient({

17 | s3Options: {

18 | accessKeyId: options.key,

19 | secretAccessKey: options.secret,

20 | },

21 | });

22 |

23 | var params = {

24 | localFile: options.path,

25 |

26 | s3Params: {

27 | Bucket: options.bucket,

28 | Key: options.name || options.path,

29 | },

30 | };

31 | var uploader = client.uploadFile(params);

32 |

33 | return uploader;

34 | }

35 |

36 | /**

37 | * Uploads a whole directory to S3,

38 | * traversing its contents.

39 | *

40 | * @return promise

41 | */

42 | function uploadDirectoryToS3(options) {

43 | var uploads = [];

44 |

45 | return Q.Promise(function(resolve, reject){

46 | dir.files(options.dir, function(err, files){

47 | if (err) {

48 | return reject(err);

49 | }

50 |

51 | options.logger.info("[%s] Files to be uploaded to S3: \n%s", options.buildId, files.join("\n"));

52 |

53 | var count = 0;

54 |

55 | files.forEach(function(file){

56 | var f = path.relative(options.dir, file);

57 | options.path = file;

58 | options.name = path.join(options.bucketPath || '', f);

59 | options.logger.info("[%s] Uploading %s in s3://%s/%s", options.buildId, file, options.bucket, options.name);

60 |

61 | uploads.push(Q.Promise(function(resolve, reject){

62 | count++;

63 | var upload = uploadToS3(options);

64 |

65 | upload.on('end', function(data) {

66 | count--;

67 | options.logger.info("[%s] %d remaining files to upload from %s", options.buildId, count, options.copy);

68 | resolve();

69 | });

70 |

71 | upload.on('error', function(err){

72 | reject(err);

73 | })

74 | }));

75 | })

76 |

77 | return Q.all(uploads).then(function(){

78 | resolve();

79 | }).catch(function(err){

80 | reject(err)

81 | });

82 | })

83 | });

84 | }

85 |

86 | /**

87 | * Uploads to S3 after copying the desired stuff from

88 | * docker to the host machine.

89 | */

90 | module.exports = function(dockerClient, buildId, project, logger, options){

91 | return Q.Promise(function(resolve, reject){

92 | var command = options.command || "sleep 1";

93 | console.info('Started publishing to S3');

94 | console.info(buildId);

95 | dockerClient.run(buildId, command.split(' '), process.stdout, function (err, data, container) {

96 | if (err) {

97 | reject(err);

98 | } else if (data.StatusCode === 0) {

99 | var artifactPath = path.join('/', 'tmp', buildId, 'publish', (new Date().getTime()).toString());

100 |

101 | docker.copy(container, options.copy, artifactPath).then(function(){

102 | logger.info("[%s] Copied %s outside of the container, in %s, preparing to upload it to S3", buildId, options.copy, artifactPath);

103 | var o = _.clone(options);

104 | o.buildId = buildId;

105 | o.dir = path.join(artifactPath, options.copy);

106 | o.logger = logger;

107 |

108 | uploadDirectoryToS3(o).then(function(){

109 | logger.info("[%s] All assets from %s uploaded to S3", buildId, options.copy);

110 | resolve()

111 | }).catch(function(err){

112 | reject(err)

113 | })

114 | }).catch(function(err){

115 | reject(err);

116 | });

117 | } else {

118 | reject(command + ' failed, exited with status code ' + data.StatusCode);

119 | }

120 | });

121 | });

122 | };

123 |

--------------------------------------------------------------------------------

/src/github.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 | var Q = require('q');

3 | var api = require("github");

4 | var config = require('./config');

5 | var logger = require('./logger');

6 | var github = {};

7 |

8 | /**

9 | * Retrieves a single pull

10 | * request.

11 | */

12 | function getPullRequest(token, user, repo, number) {

13 | var deferred = Q.defer();

14 | var client = createClient(token);

15 |

16 | client.pullRequests.get({

17 | owner: user,

18 | repo: repo,

19 | number: number

20 | }, function(err, pr){

21 | if (err) {

22 | deferred.reject(err);

23 | return;

24 | }

25 |

26 | deferred.resolve(pr)

27 | })

28 |

29 | return deferred.promise;

30 | }

31 |

32 | /**

33 | * Create status

34 | *

35 | * @param options {owner, repo, sha, state, comment, logger}

36 | */

37 | github.createStatus = function(options) {

38 | var client = createClient(options.token);

39 |

40 | client.repos.createStatus({

41 | owner: options.user,

42 | repo: options.repo,

43 | sha: options.sha,

44 | state: options.status.state,

45 | target_url: options.status.target_url,

46 | description: options.status.description,

47 | context: "continuous-integration/roger",

48 | }, function(err, res){

49 | if (err) {

50 | options.logger.error(err);

51 | return;

52 | }

53 | options.logger.info('[%s] Created github status for build %s', options.buildId, options.uuid);

54 | return;

55 | });

56 | };

57 |

58 | /**

59 | * Creates an authenticated client for

60 | * the github API.

61 | */

62 | function createClient(token) {

63 | var client = new api({version: '3.0.0'});

64 |

65 | client.authenticate({

66 | type: "oauth",

67 | token: token

68 | });

69 |

70 | return client;

71 | }

72 |

73 | /**

74 | * Extract the projects referred

75 | * by this hook.

76 | *

77 | * The project will be matched with the

78 | * repository URL in its configuration

79 | * (ie from: github/me/project)

80 | */

81 | github.getProjectsFromHook = function(payload) {

82 | var projects = [];

83 |

84 | _.each(config.get('projects'), function(project){

85 | if (payload.repository && payload.repository.full_name && project.from.match(payload.repository.full_name)) {

86 | projects.push(project);

87 | }

88 | });

89 |

90 | return projects;

91 | }

92 |

93 | /**

94 | * Retrieves build information from

95 | * a github hook.

96 | *

97 | * All you need to know is which repo /

98 | * branch this hook refers to.

99 | */

100 | github.getBuildInfoFromHook = function(req) {

101 | var deferred = Q.defer();

102 | var payload = req.body;

103 | var repo = payload.repository && payload.repository.html_url

104 | var info = {repo: repo}

105 | var githubToken = config.get('auth.github')

106 |

107 | if (repo) {

108 | if (req.headers['x-github-event'] === 'push') {

109 | // Remove leading /refs/*/ from branch or tag name

110 | info.branch = payload.ref.replace(/^refs\/[^\/]+\//, '');

111 | deferred.resolve(info);

112 | } else if (req.headers['x-github-event'] === 'create' && payload.ref_type === 'tag') {

113 | info.branch = payload.ref

114 | deferred.resolve(info);

115 | } else if (req.headers['x-github-event'] === 'issue_comment' && githubToken && payload.issue.pull_request && payload.comment.body === 'build please!') {

116 | var user = payload.repository.owner.login;

117 | var repo = payload.repository.name;

118 | var nr = payload.issue.pull_request.url.split('/').pop();

119 |

120 | getPullRequest(githubToken, user, repo, nr).then(function(pr){

121 | info.branch = pr.head.ref;

122 | deferred.resolve(info);

123 | }).catch(function(err){

124 | logger.error('Error while retrieving PR from github ("%s")', err.message);

125 | deferred.reject(err);

126 | });

127 | } else {

128 | deferred.reject('Could not obtain build info from the hook payload');

129 | }

130 | } else {

131 | deferred.reject('No project specified');

132 | }

133 |

134 | return deferred.promise;

135 | };

136 |

137 | module.exports = github;

138 |
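
The synchronous part of getBuildInfoFromHook, mapping an event and payload to a branch or tag name, can be exercised on its own. This is a small sketch under stated assumptions: `branchFromHook` is a hypothetical helper, and the `issue_comment` case is left out because it needs a call to the GitHub API.

```javascript
// Returns the branch or tag name a hook refers to, or null when the
// event carries no usable ref.
function branchFromHook(event, payload) {
  if (event === 'push') {
    // Remove the leading refs/<type>/ prefix, keeping any further slashes.
    return payload.ref.replace(/^refs\/[^\/]+\//, '');
  }

  if (event === 'create' && payload.ref_type === 'tag') {
    // "create" events already carry the bare tag name.
    return payload.ref;
  }

  return null;
}
```

The regular expression removes only the first two path segments, so a branch such as `feature/login` survives intact.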

--------------------------------------------------------------------------------

/src/routes.js:

--------------------------------------------------------------------------------

1 | var bodyParser = require('body-parser')

2 | var _ = require('lodash');

3 | var fs = require('fs');

4 | var express = require('express');

5 | var path = require('path');

6 | var uuid = require('node-uuid');

7 | var growingFile = require('growing-file');

8 | var config = require('./config');

9 | var storage = require('./storage');

10 | var logger = require('./logger');

11 | var builder = require('./builder');

12 | var utils = require('./utils');

13 | var github = require('./github');

14 | var storage = require('./storage');

15 | var router = require('./router');

16 | var auth = require('./auth');

17 | var routes = {};

18 |

19 | /**

20 | * Schedules a build of the given repo and branch.

21 | */

22 | routes.build = function(req, res, next) {

23 | var repo = req.query.repo || req.body.repo

24 | var branch = req.query.branch || req.body.branch || "master"

25 | var options = req.query.options || req.body.options || {}

26 |

27 | if (!repo) {

28 | res.status(400).body = {message: "You must specify a 'repo' parameter"};

29 | return next();

30 | }

31 |

32 | builder.schedule(repo, branch, uuid.v4(), options)

33 |

34 | res.status(202).body = {message: "build scheduled"};

35 | next();

36 | }

37 |

38 | /**

39 | * List all builds

40 | */

41 | routes.builds = function(req, res, next) {

42 | storage.getBuilds(req.query.limit).then(function(builds){

43 | res.status(200).body = {builds: builds};

44 | next();

45 | })

46 | }

47 |

48 | /**

49 | * List all projects

50 | */

51 | routes.projects = function(req, res, next) {

52 | storage.getProjects(req.query.limit).then(function(projects){

53 | res.status(200).body = {projects: projects};

54 | next();

55 | })

56 | }

57 |

58 |

59 | /**

60 | * Single build's details

61 | */

62 | routes.singleBuild = function(req, res, next) {

63 | storage.getBuild(req.params.build).then(function(build){

64 | res.status(200).body = {build: build}

65 | next()

66 | }).catch(function(){

67 | res.status(404);

68 | next();

69 | })

70 | };

71 |

72 | /**

73 | * Shows the progressive status of the build

74 | * by keeping an eye on its log file.

75 | */

76 | routes.buildLog = function(req, res, next) {

77 | var logFile = path.join(utils.path('logs'), req.params.build + '.log');

78 |

79 | if (fs.existsSync(logFile)) {

80 | res.writeHead(200, {'Content-Type': 'text/plain'});

81 |

82 | growingFile.open(logFile, {timeout: 60000, interval: 1000}).pipe(res);

83 | return;

84 | }

85 |

86 | res.status(404);

87 | next();

88 | };

89 |

90 |

91 | /**

92 | * List configuration

93 | */

94 | routes.config = function(req, res, next) {

95 | res.status(200).body = config.get();

96 | next();

97 | };

98 |

99 | /**

100 | * Trigger a build from a github hook.

101 | *

102 | * Github will hit this URL and we will

103 | * extract from the hook the information

104 | * needed to schedule a build.

105 | */

106 | routes.buildFromGithubHook = function(req, res) {

107 | github.getBuildInfoFromHook(req).then(function(info){

108 | builder.schedule(info.repo, info.branch || "master", uuid.v4(), null, true);

109 |

110 | res.status(202).send({message: "builds triggered", info: info});

111 | return;

112 | }).catch(function(err){

113 | res.status(400).send({error: 'unable to get build infos from this hook'});

114 | logger.error(err)

115 | });

116 | };

117 |

118 | /**

119 | * Middleware that lets you specify

120 | * branches via colon in the URL

121 | * ie. redis:master.

122 | *

123 | * If you don't want to specify a branch

124 | * simply omit it:

125 | *

126 | * /api/projects/redis/build --> will build master

127 | *

128 | * else use a colon:

129 | *

130 | * /api/projects/redis:my-branch/build --> will build my-branch

131 | */

132 | function repoMiddleware(req, res, next, repo){

133 | var parts = repo.split(':');

134 |

135 | if (parts.length === 2) {

136 | req.params.repo = parts[0];

137 | req.params.branch = parts[1];

138 | }

139 |

140 | next();

141 | };

142 |

143 | /**

144 | * Hides sensitive values from the

145 | * response.body.

146 | */

147 | function obfuscateMiddleware(req, res, next) {

148 | res.body = utils.obfuscate(res.body);

149 | next();

150 | }

151 |

152 |

153 | /**

154 | * 404 page: checks whether the response

155 | * body is set, if not it assumes no one

156 | * could handle this request.

157 | */

158 | function notFoundMiddleware(req, res, next){

159 | if (!res.body) {

160 | return res.status(404).send({

161 | error: 'The page requested exists in your dreams',

162 | code: 404

163 | });

164 | }

165 |

166 | next();

167 | }

168 |

169 | /**

170 | * Registers routes and middlewares

171 | * on the app.

172 | */

173 | routes.bind = function(app) {

174 | app.param('repo', repoMiddleware);

175 | app.use(bodyParser.json());

176 |

177 | app.get(router.generate('config'), auth.authorize, routes.config);

178 | app.get(router.generate('builds'), auth.authorize, routes.builds);

179 | app.get(router.generate('projects'), auth.authorize, routes.projects);

180 | app.get(router.generate('build'), auth.authorize, routes.singleBuild);

181 | app.get(router.generate('build-log'), auth.authorize, routes.buildLog);

182 | app.post(router.generate('build-project'), auth.authorize, routes.build);

183 | app.post(router.generate('github-hooks'), routes.buildFromGithubHook);

184 | app.get(router.generate('build-project'), auth.authorize, routes.build);

185 |

186 | app.use('/', express.static(path.join(__dirname, 'client/dist')));

187 | app.use(notFoundMiddleware);

188 | app.use(obfuscateMiddleware);

189 |

190 | /**

191 | * This middleware is actually used

192 | * to send the response to the

193 | * client.

194 | *

195 | * Since we want to perform some

196 | * transformations to the response,

197 | * like obfuscating it, we cannot

198 | * call res.send(...) directly in

199 | * the controllers.

200 | */

201 | app.use(function(req, res){

202 | res.send(res.body);

203 | })

204 | };

205 |

206 | module.exports = routes;

207 |

--------------------------------------------------------------------------------

/src/docker.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 | var Docker = require('dockerode');

3 | var Q = require('q');

4 | var fs = require('fs');

5 | var config = require('./config');

6 | var tar = require('./tar');

7 | var docker = {client: {}};

8 | var path = require('path');

9 |

10 | if (config.get('docker.client.host') === '__gateway__') {

11 | config.config.docker.client.host = require('netroute').getGateway()

12 | }

13 |

14 | docker.client = new Docker(config.get('docker.client'));

15 |

16 | function extractAndRepackage(project, imageId, builderId, buildId, buildLogger, dockerOptions, uuid) {

17 | return Q.Promise(function(resolve, reject) {

18 | delete dockerOptions.dockerfile;

19 | var extractPath = project.build.extract;

20 | extractPath += (extractPath[extractPath.length - 1] === '/') ? '.' : '/.';

21 | buildLogger.info('Boldly extracting produced stuff from: ', extractPath);

22 |

23 | docker.client.createContainer({Image: builderId, name: uuid, Cmd: ['/bin/sh']}, function (err, container) {

24 | if (err) {

25 | reject(err);

26 | return;

27 | }

28 |

29 | container.getArchive({path: extractPath}, function(error, data) {

30 | var failed = false;

31 | if (error) {

32 | reject(error);

33 | return;

34 | }

35 |

36 | function fail(error) {

37 | if (!failed) {

38 | failed = true;

39 | container.remove(function() {

40 | reject(error);

41 | });

42 | }

43 | }

44 |

45 | var srcPath = path.join(config.get('paths.tars'), project.name + '_' + uuid + '.tar');

46 | var destination = fs.createWriteStream(srcPath);

47 | data.on('data', function() {

48 | process.stdout.write('•');

49 | });

50 |

51 | data.on('end', function() {

52 | process.stdout.write('\n');

53 | });

54 |

55 | data.on('error', fail);

56 | destination.on('error', fail);

57 |

58 | destination.on('finish', function() {

59 | container.remove(function() {

60 | docker.buildImage(project, srcPath, imageId, buildId, buildLogger, dockerOptions, uuid).then(resolve).catch(reject);

61 | });

62 | });

63 |

64 | data.pipe(destination);

65 | });

66 | });

67 | });

68 | }

69 |

70 | docker.pullImage = function pullImage(buildId, from, imageId, dockerOptions, buildLogger) {

71 | return Q.promise(function(resolve, reject) {

72 | let opts = {

73 | authconfig: docker.getAuth(buildId, buildLogger)

74 | };

75 |

76 | let tag = imageId + ((dockerOptions.dockerfile) ? '-builder' : '');

77 |

78 | if (from === "scratch") {

79 | return resolve();

80 | }

81 |

82 | buildLogger.info('[%s] pull image %s', buildId, from);

83 |

84 | docker.client.pull(from, opts, function (err, stream) {

85 | if (err) {

86 | return reject(err)

87 | }

88 |

89 | stream.on('data', function(out) {

90 | let result = {};

91 |

92 | try {

93 | result = JSON.parse(out.toString('utf-8'));

94 | } catch (err) {

95 | buildLogger.error('[%s] %s', tag, err);

96 | }

97 |

98 | if (result.error) {

99 | buildLogger.error('[%s] %s', tag, result.error);

100 | reject(result.error);

101 | return;

102 | }

103 |

104 | if (result.progress) {

105 | result.status = result.status + ' ' + result.progress;

106 | }

107 |

108 | buildLogger.info('[%s] %s', tag, result.stream || result.status);

109 | });

110 |

111 |

112 | stream.on('end', function(err) {

113 | if (err) {

114 | return reject(err)

115 | }

116 |

117 | resolve()

118 | })

119 | })

120 | })

121 |

122 | };

123 |

124 | docker.extractFromImageName = function extractFromImageName(dockerFile) {

125 | return Q.promise(function (resolve, reject) {

126 | let from;

127 |

128 | fs.readFile(dockerFile, function (err, data) {

129 | if (err) {

130 | return reject(err)

131 | }

132 |

133 | data.toString().split('\n').forEach(function (line) {

134 | let match = line.match(/^FROM +(.*)$/);

135 | if (match) {

136 | from = match[1]

137 | }

138 | });

139 |

140 | if (!from) {

141 | return reject('FROM keyword not found.');

142 | }

143 |

144 | resolve(from);

145 | });

146 |

147 | });

148 | };

149 |

150 | /**

151 | * Builds a docker image.

152 | *

153 | * @return promise

154 | */

155 | docker.buildImage = function(project, tarPath, imageId, buildId, buildLogger, dockerOptions, uuid) {

156 | return Q.promise(function(resolve, reject) {

157 | dockerOptions = dockerOptions || {};

158 | var tag = imageId + ((dockerOptions.dockerfile) ? '-builder' : '');

159 | var realBuildId = buildId;

160 |

161 | docker.client.buildImage(tarPath, _.extend({t: tag}, dockerOptions), function(err, response) {

162 | if (err) {

163 | reject(err);

164 | return;

165 | }

166 |

167 | buildLogger.info('[%s] Build is in progress...', tag);

168 |

169 | response.on('data', function(out) {

170 | var result = {};

171 |

172 | try {

173 | result = JSON.parse(out.toString('utf-8'));

174 | } catch (err) {

175 | buildLogger.error('[%s] %s', tag, err);

176 | }

177 |

178 | if (result.error) {

179 | buildLogger.error('[%s] %s', tag, result.error);

180 | reject(result.error);

181 | return;

182 | }

183 |

184 | if (result.progress) {

185 | result.status = result.status + ' ' + result.progress;

186 | }

187 |

188 | buildLogger.info('[%s] %s', tag, result.stream || result.status);

189 | if (result.stream && result.stream.indexOf('Successfully built ') == 0) {

190 | realBuildId = result.stream.split('Successfully built ')[1].replace('\n', '');

191 | }

192 | });

193 |

194 | response.on('end', function() {

195 | if (dockerOptions.dockerfile) {

196 | extractAndRepackage(project, imageId, tag, buildId, buildLogger, dockerOptions, uuid).then(resolve).catch(function(err){

197 | reject(err); // propagate the failure so the build is marked as failed instead of hanging

198 | });

199 | return;

200 | }

201 |

202 | resolve(realBuildId);

203 | });

204 | });

205 | });

206 | };

207 |

208 | /**

209 | * Tags "branch" off the latest imageId.

210 | *

211 | * @return promise

212 | */

213 | docker.tag = function(imageId, buildId, branch) {

214 | var deferred = Q.defer();

215 | var image = docker.client.getImage(imageId + ':' + branch);

216 |

217 | image.tag({repo: imageId, tag: branch}, function() {

218 | deferred.resolve(docker.client.getImage(imageId));

219 | });

220 |

221 | return deferred.promise;

222 | };

223 |

224 | /**

225 | * Retrieves the authconfig needed

226 | * in order to push this image.

227 | *

228 | * This method is mainly here if you

229 | * have builds that need to be pushed

230 | * to the dockerhub.

231 | *

232 | * @see http://stackoverflow.com/questions/24814714/docker-remote-api-pull-from-docker-hub-private-registry

233 | */

234 | docker.getAuth = function(buildId, buildLogger) {

235 | let options = config.get('auth.registry', {}, {});

236 |

237 | if (Object.keys(options).length > 0) {

238 | buildLogger.info('[%s] Image should be pushed to the DockerHub @ hub.docker.com', buildId);

239 |

240 | options.serveraddress = options.address || '127.0.0.1';

241 |

242 | if (!options || !options.username || !options.password) {

243 | buildLogger.error('It seems that the build "%s" should be pushed to a private registry', buildId);

244 | buildLogger.error('but you forgot to add your credentials in the config file');

245 | buildLogger.error();

246 | buildLogger.error('Please specify:');

247 | buildLogger.error(' - username');

248 | buildLogger.error(' - password');

249 | buildLogger.error();

250 | buildLogger.error('See https://github.com/namshi/roger#configuration');

251 |

252 | throw new Error('Fatality! MUAHAHUAAHUAH!');

253 | }

254 | }

255 |

256 | return options;

257 | };

258 |

259 | /**

260 | * Pushes an image to a registry.

261 | *

262 | * @return promise

263 | */

264 | docker.push = function(image, buildId, uuid, branch, registry, buildLogger) {

265 | var deferred = Q.defer();

266 |

267 | image.push({tag: branch, force: true}, function(err, data) {

268 | var somethingWentWrong = false;

269 |

270 | if (err) {

271 | deferred.reject(err);

272 | } else {

273 | data.on('error', function(err) {

274 | deferred.reject(err);

275 | });

276 |

277 | data.on('data', function(out) {

278 | var message = {};

279 |

280 | try {

281 | message = JSON.parse(out.toString('utf-8'));

282 | } catch (err) {

283 | buildLogger.error("[%s] %s", buildId, err);

284 | }

285 |

286 |

287 | if (message.error) {

288 | deferred.reject(message);

289 | somethingWentWrong = true;

290 | }

291 |

292 | buildLogger.info('[%s] %s', buildId, message.status || message.error);

293 | });

294 |

295 | data.on('end', function() {

296 | if (!somethingWentWrong) {

297 | buildLogger.info('[%s] Pushed image of build %s to the registry at http://%s', buildId, uuid, registry);

298 | deferred.resolve();

299 | }

300 | });

301 | }

302 | }, docker.getAuth(buildId, buildLogger));

303 |

304 | return deferred.promise;

305 | };

306 |

307 | /**

308 | * Copies stuff from the container to

309 | * the host machine.

310 | */

311 | docker.copy = function(container, containerPath, hostPath) {

312 | return Q.Promise(function(resolve, reject) {

313 | container.export(function(err, data) {

314 | if (err) {

315 | reject(err);

316 | return;

317 | }

318 |

319 | return tar.createFromStream(hostPath, data).then(function() {

320 | resolve();

321 | }).catch(reject);

322 | });

323 | });

324 | };

325 |

326 | module.exports = docker;

327 |

--------------------------------------------------------------------------------

/src/builder.js:

--------------------------------------------------------------------------------

1 | var docker = require('./docker');

2 | var config = require('./config');

3 | var storage = require('./storage');

4 | var logger = require('./logger');

5 | var utils = require('./utils');

6 | var publisher = require('./publisher');

7 | var hooks = require('./hooks');

8 | var notifications = require('./notifications');

9 | var fs = require('fs');

10 | var p = require('path');

11 | var moment = require('moment');

12 | var git = require('./git');

13 | var matching = require('./matching');

14 | var tar = require('./tar');

15 | var url = require('url');

16 | var _ = require('lodash');

17 | var yaml = require('js-yaml');

18 |

19 | /**

20 | * Returns a logger for a build,

21 | * which is gonna extend the base

22 | * logger by writing also on the

23 | * filesystem.

24 | */

25 | function getBuildLogger(uuid) {

26 | var logFile = p.join(utils.path('logs'), uuid + '.log');

27 | var buildLogger = new logger.Logger;

28 | buildLogger.add(logger.transports.File, { filename: logFile, json: false });

29 | buildLogger.add(logger.transports.Console, {timestamp: true});

30 |

31 | return buildLogger;

32 | }

33 |

34 | /**

35 | * This is the main builder object,

36 | * responsible for scheduling builds,

37 | * talk to docker, send notifications

38 | * etc etc.

39 | *

40 | * @type {Object}

41 | */

42 | var builder = {};

43 |

44 | /**

45 | * Schedules builds for a repo.

46 | *

47 | * It will clone the repo and read the build.yml

48 | * file, then trigger as many builds as we find

49 | * in the configured build.yml.

50 | *

51 | * @param {string} repo

52 | * @param {string} gitBranch

53 | * @param {string} uuid

54 | * @param {object} dockerOptions

55 | * @param {boolean} checkBranch - Enable branch checking by setting to true

56 | * @return {void}

57 | */

58 | builder.schedule = function(repo, gitBranch, uuid, dockerOptions, checkBranch = false) {

59 | var path = p.join(utils.path('sources'), uuid);

60 | var branch = gitBranch;

61 | var builds = [];

62 | var cloneUrl = repo;

63 | dockerOptions = dockerOptions || {};

64 |

65 | if (branch === 'master') {

66 | branch = 'latest';

67 | }

68 |

69 | var githubToken = config.get('auth.github');

70 | if (githubToken) {

71 | var uri = url.parse(repo);

72 | uri.auth = githubToken;

73 | cloneUrl = uri.format(uri);

74 | }

75 |

76 | console.log('cloning: cloneUrl->' + cloneUrl + ', path->' + path + ', gitBranch->' + gitBranch);

77 | git.clone(cloneUrl, path, gitBranch, logger).then(function() {

78 | try {

79 | return yaml.safeLoad(fs.readFileSync(p.join(path, 'build.yml'), 'utf8'));

80 | } catch(err) {

81 | logger.error(err.toString(), err.stack);

82 | return {};

83 | }

84 | }).then(async function(buildConfig) {

85 | const { settings, ...projects } = buildConfig;

86 |

87 | /**

88 | * In case no projects are defined, let's build

89 | * the smallest possible configuration for the current

90 | * build: we will take the repo name and build this

91 | * project, ie github.com/antirez/redis will build

92 | * under the name "redis".

93 | */

94 | if (Object.keys(projects).length === 0) {

95 | projects[cloneUrl.split('/').pop()] = {};

96 | }

97 |

98 | // Check branch name matches rules

99 | const matchedBranch = !checkBranch || await matching.checkNameRules(settings, gitBranch, path);

100 | if (!matchedBranch) {

101 | logger.info('The branch name didn\'t match the defined rules');

102 | return builds;

103 | }

104 |

105 | _.each(projects, function(project, name) {

106 | project.id = repo + '__' + name;

107 | project.name = name;

108 | project.repo = repo;

109 | project.homepage = repo;

110 | project['github-token'] = githubToken;

111 | project.registry = project.registry || config.get('app.defaultRegistry') || '127.0.0.1:5000';

112 |

113 | console.log('project ' + name + ': ', utils.obfuscate(project));

114 | if (!!project.build) {

115 | dockerOptions.dockerfile = project.build.dockerfile;

116 | }

117 |

118 | builds.push(builder.build(project, uuid + '-' + project.name, path, gitBranch, branch, dockerOptions));

119 | });

120 |

121 | return builds;

122 | }).catch(function(err) {

123 | logger.error(err.toString(), err.stack);

124 | }).done();

125 | };

126 |

127 | /**

128 | * Builds a project.

129 | *

130 | * @param {object} project

131 | * @param {string} uuid

132 | * @param {string} path

133 | * @param {string} gitBranch

134 | * @param {string} branch

135 | * @param {object} dockerOptions

136 | * @return {Promise}

137 | */

138 | builder.build = function(project, uuid, path, gitBranch, branch, dockerOptions) {

139 | var buildLogger = getBuildLogger(uuid);

140 | var tarPath = p.join(utils.path('tars'), uuid + '.tar');

141 | var imageId = project.registry + '/' + project.name;

142 | var buildId = imageId + ':' + branch;

143 | var author = 'unknown@unknown.com';

144 | var now = moment();

145 | var sha = '';

146 |

147 | storage.saveBuild(uuid, buildId, project.id, branch, 'queued').then(function() {

148 | return builder.hasCapacity();

149 | }).then(function() {

150 | return storage.saveBuild(uuid, buildId, project.id, branch, 'started');

151 | }).then(function() {

152 | return git.getCommit(path, gitBranch);

153 | }).then(function(commit) {

154 | author = commit.author().email();

155 | sha = commit.sha();

156 |

157 | return builder.addRevFile(gitBranch, path, commit, project, buildLogger, {buildId: buildId});

158 | }).then(function() {

159 | var dockerfilePath = path;

160 |

161 | if (project.dockerfilePath) {

162 | dockerfilePath = p.join(path, project.dockerfilePath);

163 | }

164 |

165 | return tar.create(tarPath, dockerfilePath + '/', buildLogger, {buildId: buildId});

166 | }).then(function() {

167 | var dockerfilePath = path;

168 |

169 | if (project.dockerfilePath) {

170 | dockerfilePath = p.join(path, project.dockerfilePath);

171 | }

172 | var dockerFile = dockerfilePath + '/Dockerfile';

173 | buildLogger.info('docker file path %s ', dockerFile);

174 |

175 | return docker.extractFromImageName(dockerFile);

176 | }).then(function(from) {

177 | return docker.pullImage(buildId, from, imageId, dockerOptions, buildLogger);

178 | }).then(function() {

179 | buildLogger.info('[%s] Created tarball for %s', buildId, uuid);

180 |

181 | return docker.buildImage(project, tarPath, imageId + ':' + branch, buildId, buildLogger, dockerOptions, uuid);

182 | }).then(function(realBuildId) {

183 | buildLogger.info('[%s] %s built successfully as imageId: %s', buildId, uuid, realBuildId);

184 | buildLogger.info('[%s] Tagging %s as imageId: %s', buildId, uuid, realBuildId);

185 |

186 | return docker.tag(imageId, buildId, branch);

187 | }).then(function(image) {

188 | return publisher.publish(docker.client, buildId, project, buildLogger).then(function() {

189 | return image;

190 | });

191 | }).then(function(image) {

192 | buildLogger.info('[%s] Running after-build hooks for %s', buildId, uuid);

193 |

194 | return hooks.run('after-build', buildId, project, docker.client, buildLogger).then(function() {

195 | return image;

196 | });

197 | }).then(function(image) {

198 | buildLogger.info('[%s] Ran after-build hooks for %s', buildId, uuid);

199 | buildLogger.info('[%s] Pushing %s to %s', buildId, uuid, project.registry);

200 |

201 | return docker.push(image, buildId, uuid, branch, project.registry, buildLogger);

202 | }).then(function() {

203 | return storage.saveBuild(uuid, buildId, project.id, branch, 'passed');

204 | }).then(function() {

205 | buildLogger.info('[%s] Finished build %s in %s #SWAG', buildId, uuid, moment(now).fromNow(true));

206 |

207 | return true;

208 | }).catch(function(err) {

209 | if (err.name === 'NO_CAPACITY_LEFT') {

210 | buildLogger.info('[%s] Too many builds running concurrently, queueing this one...', buildId);

211 |

212 | setTimeout(function() {

213 | builder.build(project, uuid, path, gitBranch, branch, dockerOptions);

214 | }, config.get('builds.retry-after') * 1000);

215 | } else {

216 | return builder.markBuildAsFailed(err, uuid, buildId, project, branch, buildLogger);

217 | }

218 | }).then(function(result) {

219 | if (result) {

220 | notifications.trigger(project, branch, {author: author, project: project, result: result, logger: buildLogger, uuid: uuid, buildId: buildId, sha: sha});

221 | }

222 | }).catch(function(err) {

223 | buildLogger.error('[%s] Error sending notifications for %s ("%s")', buildId, uuid, err.message || err.error, err.stack);

224 | }).done();

225 | };

226 |

227 | /**

228 | * Checks whether we are running too many parallel

229 | * builds.

230 | *

231 | * @return {Promise}

232 | */

233 | builder.hasCapacity = function() {

234 | return storage.getStartedBuilds().then(function(builds) {

235 | var maxConcurrentBuilds = config.get('builds.concurrent');

236 |

237 | if (maxConcurrentBuilds && builds.length >= maxConcurrentBuilds) {

238 | utils.throw('NO_CAPACITY_LEFT');

239 | }

240 | });

241 | };

242 |

243 | /**

244 | * Adds a revfile at the build path

245 | * with information about the latest

246 | * commit.

247 | */

248 | builder.addRevFile = function(gitBranch, path, commit, project, buildLogger, options) {

249 | var parts = [path];

250 |

251 | if (project.dockerfilePath) {

252 | parts.push(project.dockerfilePath);

253 | }

254 |

255 | if (project.revfile) {

256 | parts.push(project.revfile);

257 | }

258 |

259 | var revFilePath = p.join(parts.join('/'), 'rev.txt');

260 |

261 | buildLogger.info('[%s] Going to create revfile in %s', options.buildId, revFilePath);

262 | fs.appendFileSync(revFilePath, 'Version: ' + gitBranch);

263 | fs.appendFileSync(revFilePath, '\nDate: ' + commit.date());

264 | fs.appendFileSync(revFilePath, '\nAuthor: ' + commit.author());

265 | fs.appendFileSync(revFilePath, '\nSha: ' + commit.sha());

266 | fs.appendFileSync(revFilePath, '\n');

267 | fs.appendFileSync(revFilePath, '\nCommit message:');

268 | fs.appendFileSync(revFilePath, '\n');

269 | fs.appendFileSync(revFilePath, '\n ' + commit.message());

270 | buildLogger.info('[%s] Created revfile in %s', options.buildId, revFilePath);

271 | };

272 |

273 | /**

274 | * Marks the given build as failed.

275 | *

276 | * @param {Error} err

277 | * @param {string} uuid

278 | * @param {string} buildId

279 | * @param {Object} project

280 | * @param {string} branch

281 | * @param {Object} buildLogger

282 | * @return {Error}

283 | */

284 | builder.markBuildAsFailed = function(err, uuid, buildId, project, branch, buildLogger) {

285 | var message = err.message || err.error || err;

286 |

287 | return storage.saveBuild(uuid, buildId, project.id, branch, 'failed').then(function() {

288 | buildLogger.error('[%s] BUILD %s FAILED! ("%s") #YOLO', buildId, uuid, message, err.stack);

289 |

290 | return new Error(message);

291 | });

292 | };

293 |

294 | /**

295 | * Upon booting, look for builds that didn't finish

296 | * before the last shutdown, then mark them as failed.

297 | */

298 | logger.info('Looking for pending builds...');

299 | storage.getPendingBuilds().then(function(builds) {

300 | builds.forEach(function(staleBuild) {

301 | logger.info('Build %s marked as failed, was pending upon restart of the server', staleBuild.id);

302 | storage.saveBuild(staleBuild.id, staleBuild.tag, staleBuild.project, staleBuild.branch, 'failed');

303 | });

304 | });

305 |

306 | module.exports = builder;

307 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | # Roger

4 |

5 | > A continuous integration and build server for docker containers

6 |

7 | Roger is a simple yet powerful build

8 | server for docker containers: you will

9 | only need to specify your configuration

10 | and it will build your projects every time

11 | you schedule a build or, for example,

12 | open a pull request on github.

13 |

14 | It is easy to deploy and comes with

15 | built-in integration with platforms

16 | like Github or the Docker Registry,

17 | which means that you can build your

18 | private repositories and push them to

19 | the Docker Hub or your own private

20 | registry out of the box.

21 |

22 |

23 |

24 | Ready to hack?

25 |

26 | * [installation](#installation)

27 | * [configuration](#configuration-reference)

28 | * [configuring a project](#project-configuration)

29 | * [configuring the server](#server-configuration)

30 | * [configuring auth](#configuring-auth)

31 | * [build hooks](#build-hooks)

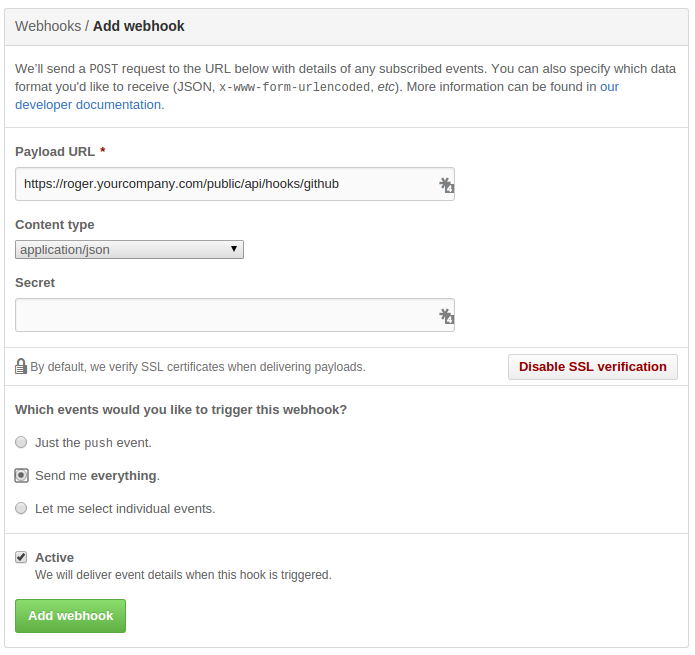

32 | * [github](#github)

33 | * [notification](#notifications)

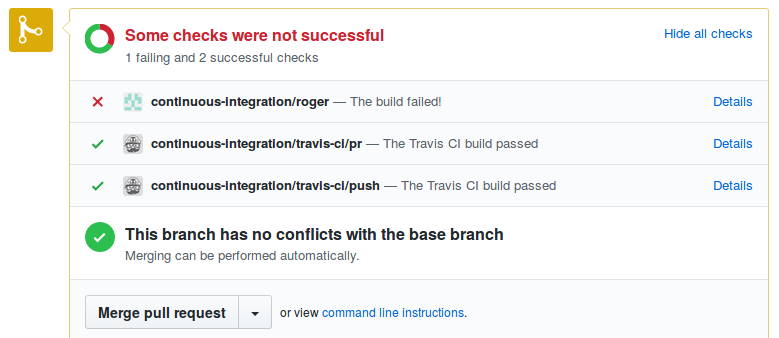

34 | * [Github status](#github-statuses)

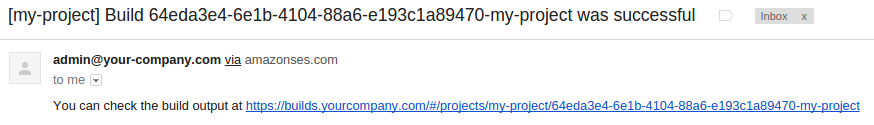

35 | * [email (Amazon SES)](#email-through-amazon-ses)

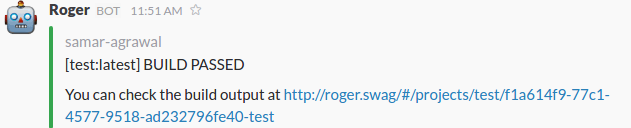

36 | * [slack (Builds Channel)](#notification-on-slack)

37 | * [publishing](#publishing-artifacts)

38 | * [s3](#s3)

39 | * [use different images for building and running your app](#use-different-images-for-building-and-running-your-app)

40 | * [hooks](#hooks)

41 | * [after-build](#after-build)

42 | * [Slim your images](#use-different-images-for-building-and-running)

43 | * [APIs](#apis)

44 | * [get all projects](#listing-all-projects)

45 | * [get all builds](#listing-all-builds)

46 | * [get a build](#getting-a-build)

47 | * [start a build](#triggering-builds)

48 | * [roger in production](#in-production)

49 | * [why roger?](#why-did-you-build-this)

50 | * [contributing](#contributing)

51 | * [tests](#tests)

52 |

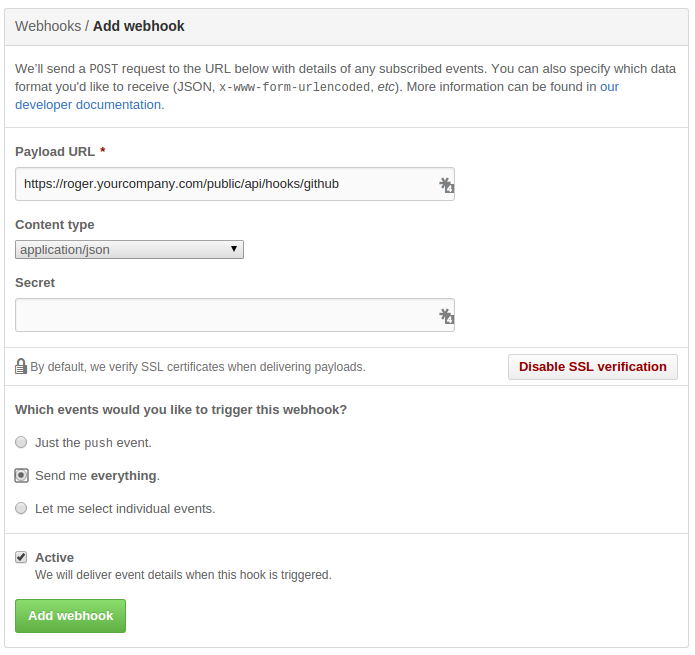

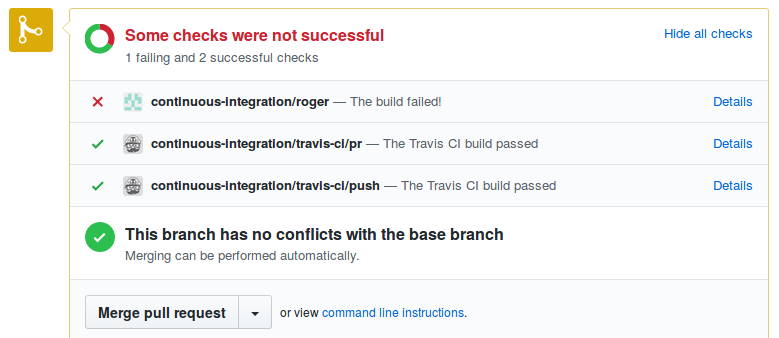

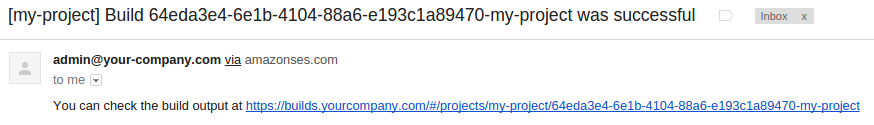

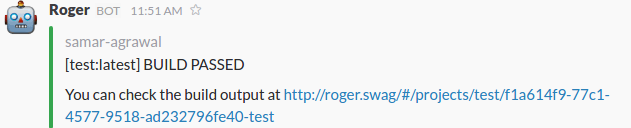

53 | ## Installation

54 |

55 | Create a `config.yml` file for roger:

56 |

57 | ``` yaml

58 | auth:

59 | dockerhub: # these credentials are only useful if you need to push to the dockerhub

60 | username: user # your username on the dockerhub

61 | email: someone@gmail.com # your...well, you get it

62 | password: YOUR_DOCKERHUB_PASSWORD

63 | github: YOUR_GITHUB_TOKEN # General token to be used to authenticate to clone any project or comment on PRs (https://github.com/settings/tokens/new)

64 | ```

65 |

66 | and run roger:

67 |

68 | ```

69 | docker run -ti -p 8080:8080 \

70 | -v /tmp/logs:/tmp/roger-builds/logs \

71 | -v $(pwd)/db:/db \

72 | -v /path/to/your/config.yml:/config.yml \

73 | -v /var/run/docker.sock:/tmp/docker.sock \

74 | namshi/roger

75 | ```

76 |

77 | If roger starts correctly, you should see

78 | something like:

79 |

80 | ```

81 | 2015-01-27T17:52:50.827Z - info: using config: {...}}

82 | Roger running on port 8080

83 | ```

84 |

85 | and you can open the web interface up

86 | on your [localhost](http://localhost:8080).

87 |

88 | Now, time for our first build: pick a project of yours,

89 | on github, and add a `build.yml` file in the root of the

90 | repo:

91 |

92 | ``` yaml

93 | redis: # this is the name of your project

94 | registry: registry.company.com # your private registry, ie. 127.0.0.1:5000

95 | ```

96 |

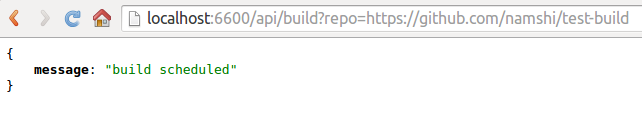

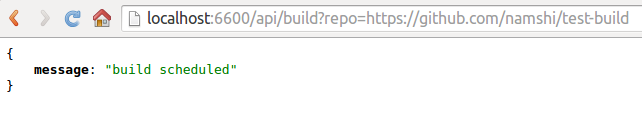

97 | then visit `http://localhost:8080/api/build?repo=URL_OF_YOUR_REPO`

98 | (ie. `localhost:8080/api/build?repo=https://github.com/namshi/test-build`)

99 | and you should receive a confirmation that the build has been

100 | scheduled:

101 |

102 |

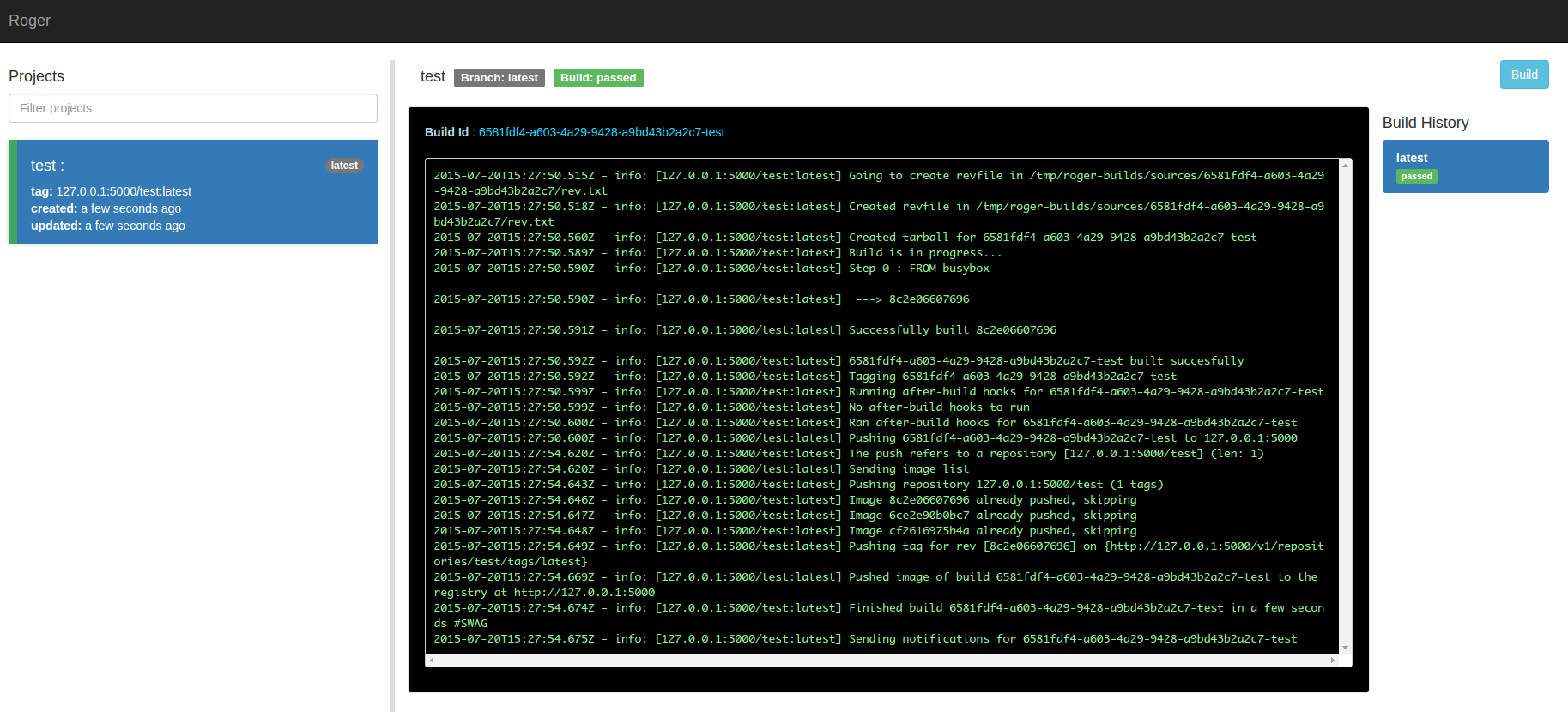

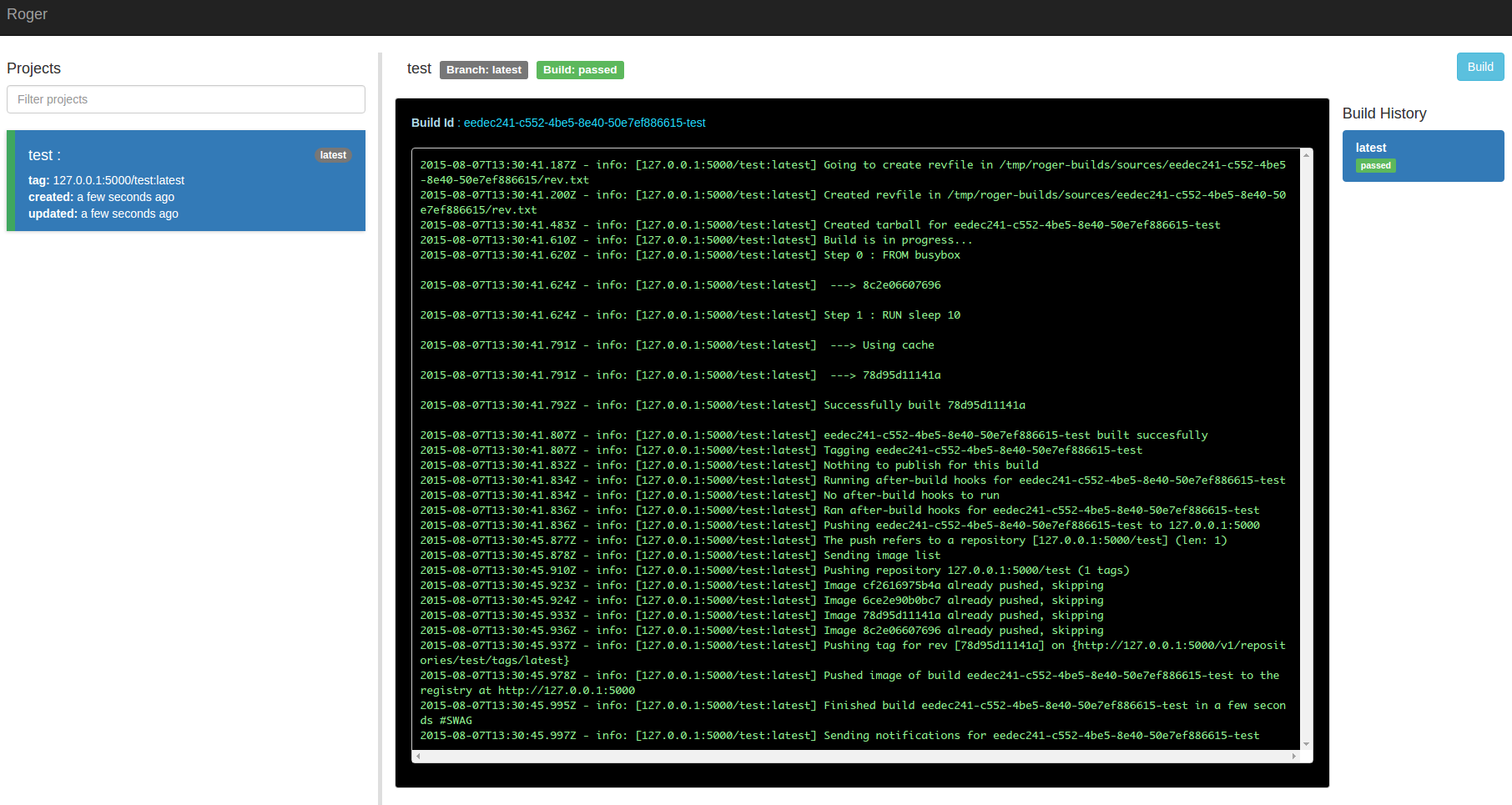

103 |
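
The same request can also be composed programmatically. This is a minimal sketch, assuming Roger listens on `localhost:8080` as above; `buildTriggerUrl` is a hypothetical helper (not part of Roger), and the optional `branch` query parameter is the one read in `src/routes.js`, where it defaults to `master`:

```javascript
// Hypothetical helper (not part of Roger): composes the URL that schedules a build.
function buildTriggerUrl(baseUrl, repo, branch) {
  const url = new URL('/api/build', baseUrl);
  // URLSearchParams percent-encodes the repo URL so it survives as a single parameter.
  url.searchParams.set('repo', repo);
  if (branch) {
    url.searchParams.set('branch', branch);
  }
  return url.toString();
}

console.log(buildTriggerUrl('http://localhost:8080', 'https://github.com/namshi/test-build'));
```

Encoding the repo URL this way avoids surprises when it contains characters such as `&` or `#`.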

104 | Now open the web interface, your docker build is running!

105 |

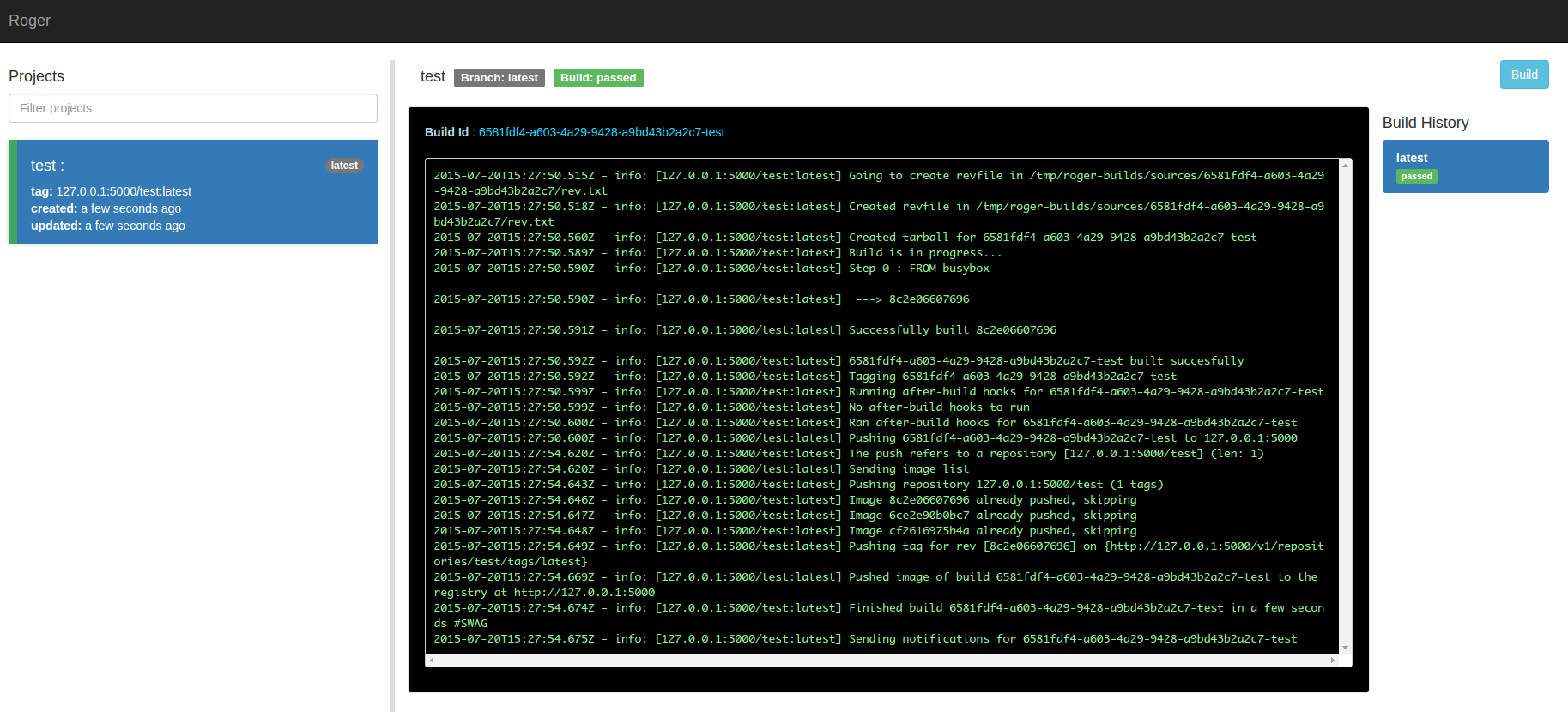

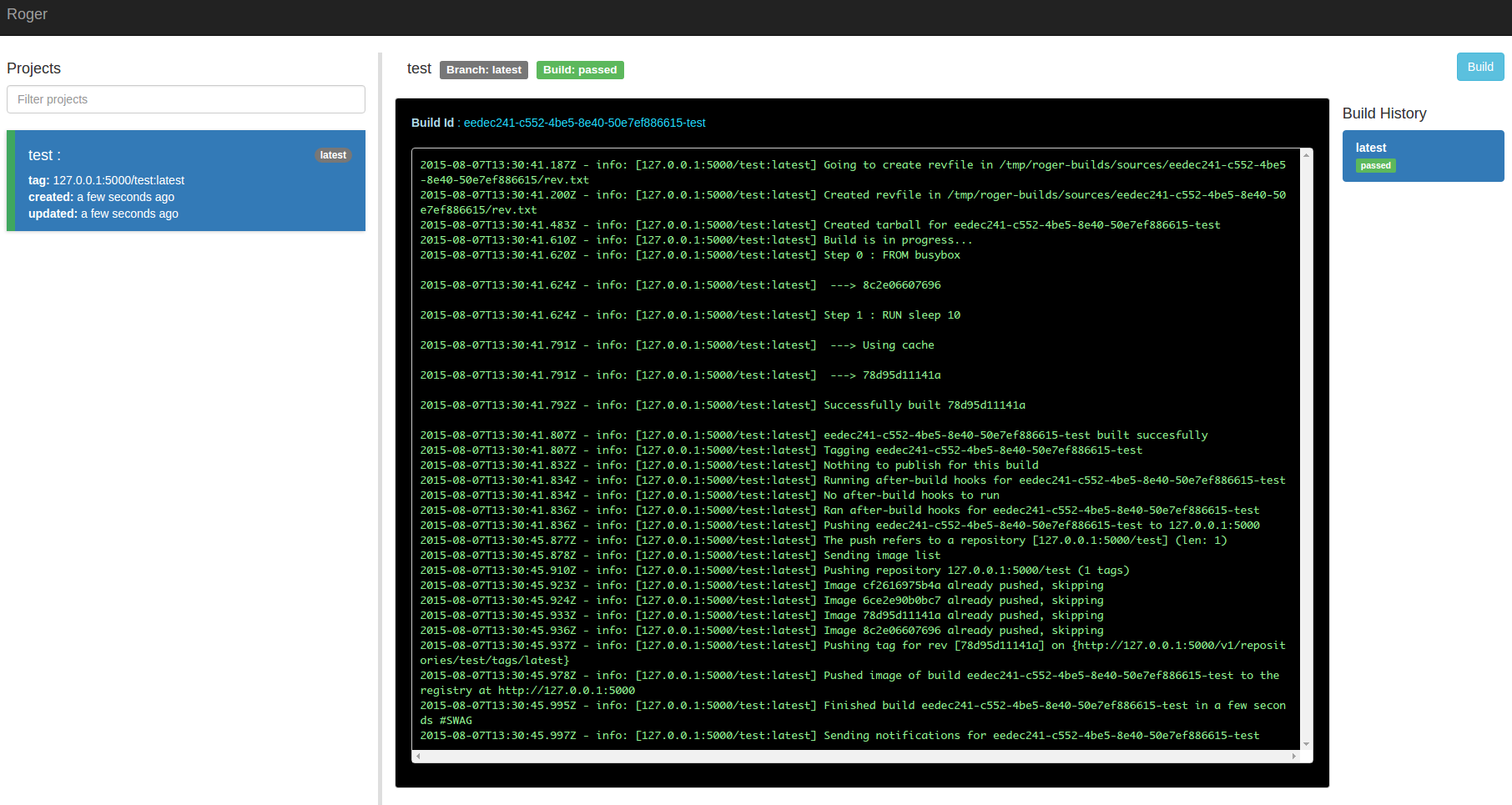

106 |

107 |

108 | > Protip: if you do a docker-compose up in the root

109 | > of roger, the dev environment for roger, including

110 | > a local registry, starts on its own: you might want to

111 | > use this if you are playing with Roger for the first

112 | > time and you don't have a registry available at

113 | > registry.company.com

114 |

115 | ## Configuration reference

116 |

117 | ### Project configuration

118 |

119 | In your repos, you can specify a few different

120 | configuration options, for example:

121 |

122 | ``` yaml

123 | redis: # name of the project, will be the name of the image as well

124 | registry: 127.0.0.1:5001 # url of the registry to which we're gonna push

125 | ```
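
How these options turn into an image reference can be sketched as follows. This mirrors the naming logic visible in `src/builder.js` (registry fallback, `master` mapped to the `latest` tag); `imageReference` is a hypothetical helper written for illustration, and its `defaultRegistry` argument stands in for the `app.defaultRegistry` server setting:

```javascript
// Hypothetical helper mirroring the naming logic in src/builder.js.
function imageReference(name, project, gitBranch, defaultRegistry) {
  // Fall back to the server-wide default, then to a local registry.
  const registry = project.registry || defaultRegistry || '127.0.0.1:5000';
  // Builds of master are tagged as "latest".
  const tag = gitBranch === 'master' ? 'latest' : gitBranch;
  return registry + '/' + name + ':' + tag;
}

console.log(imageReference('redis', { registry: '127.0.0.1:5001' }, 'master'));
// prints "127.0.0.1:5001/redis:latest"
```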

126 |

127 | Want to push to the dockerhub?

128 |

129 | ``` yaml

130 | redis: # if you don't specify the registry, we'll assume you want to push to a local registry at 127.0.0.1:5000

131 | registry: dockerhub

132 | ```

133 |

134 | Want to publish assets to S3? Run tests? Here's a full overview of what roger

135 | can do with your project:

136 |

137 | ``` yaml

138 | redis:

139 | dockerfilePath: some/subdir # location of the dockerfile, omit this if it's in the root of the repo

140 | registry: 127.0.0.1:5001

141 | revfile: somedir # means roger will create a rev.txt file with information about the build at this path

142 | after-build: # hooks to execute after an image is built, before pushing it to the registry, ie. tests

143 | - ls -la

144 | - npm test

145 | notify:

146 | - github

147 | - emailSes

148 | - slack

149 | publish:

150 | -

151 | to: s3

152 | copy: /src/build/public/ # this is the path inside the container

153 | bucket: my-bucket # name of the s3 bucket

154 | bucketPath: initial-path # the initial path, ie. s3://my-bucket/initial-path

155 | command: gulp build # optional: a command to run right before publishing (you might wanna build stuff here)

156 | ```

157 |

158 | Want to build 2 projects from the same repo?

159 |

160 | ``` yaml

161 | redis:

162 |   dockerfilePath: src

163 | redis-server:

164 |   dockerfilePath: server/src

165 | ```

166 |

167 | #### Branch and tag filtering

168 |

169 | Want to build only from specific branches or tags? Builds started automatically by a webhook (see [build hooks](#build-hooks) below) can be filtered based on the name of the branch or tag sent by the webhook. Webhooks that don't match the rules will not cause a build to be scheduled.

170 |

171 | Matching rules can be configured with one or more of the following properties:

172 |

173 | - **branches**: An array of branch names that should be built

174 | - **patterns**: An array of JavaScript-compatible regular expressions for matching branch and tag names to be built

175 | - **tags**: A boolean allowing all tags to be built when set to `true`

176 |

177 | The following example allows the branches `develop` and `master` to be built, as well as any branch or tag matching the regular expression `^v\d+\.\d+\.\d+$`, which matches a version number of the form `v1.0.0`.

178 |

179 | ```yaml

180 | redis:

181 | registry: dockerhub

182 |

183 | settings:

184 | matching:

185 | branches:

186 | - master

187 | - develop

188 | patterns:

189 | - ^v\d+\.\d+\.\d+$

190 | ```

191 |

192 | The following example allows the `master` branch, plus all tags, to be built:

193 |

194 | ```yaml

195 | redis:

196 | registry: dockerhub

197 |

198 | settings:

199 | matching:

200 | branches:

201 | - master

202 | tags: true

203 | ```

204 |

205 | Note that filtering only applies to webhook builds, and only when the `matching` options are configured. Builds scheduled using the web UI or the `api/build` endpoint are not affected by these filters.

206 |
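The matching rules described above can be sketched as a small predicate. This is an illustration under stated assumptions, not Roger's actual implementation: the function name `shouldBuild` and the `{ type, name }` shape of the webhook ref are hypothetical, and the sketch assumes a ref is built as soon as any one rule accepts it.

```javascript
// Hypothetical sketch (not Roger's code): decides whether a webhook ref
// should schedule a build, given the `matching` settings of a project.
// `ref` is assumed to look like { type: 'branch' | 'tag', name: string }.
function shouldBuild(ref, matching) {
  // No `matching` options configured: nothing is filtered.
  if (!matching) {
    return true;
  }

  const { branches = [], patterns = [], tags = false } = matching;

  // `tags: true` allows every tag.
  if (ref.type === 'tag' && tags === true) {
    return true;
  }

  // `branches` lists exact branch names.
  if (ref.type === 'branch' && branches.includes(ref.name)) {
    return true;
  }

  // `patterns` are regular expressions tested against branch and tag names.
  return patterns.some((pattern) => new RegExp(pattern).test(ref.name));
}

const matching = {
  branches: ['master', 'develop'],
  patterns: ['^v\\d+\\.\\d+\\.\\d+$'],
};

console.log(shouldBuild({ type: 'branch', name: 'develop' }, matching));
console.log(shouldBuild({ type: 'tag', name: 'v1.0.0' }, matching));
console.log(shouldBuild({ type: 'branch', name: 'feature/login' }, matching));
```

With the first example configuration, `develop` and the tag `v1.0.0` are accepted, while `feature/login` is rejected because it is neither a listed branch nor a match for the version pattern.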

207 | ### Server configuration

208 |

209 | Roger will read a `/config.yml` file that you

210 | need to mount in the container:

211 |

212 | ``` yaml

213 | app: # generic settings

214 |   url: 'https://builds.yourcompany.com' # optional, just used for logging

215 |   auth: ~ # authentication turned off by default, see next paragraph

216 |   defaultRegistry: registry.company.com # the default registry to use, ie. 127.0.0.1:5000

217 | builds:

218 |   concurrent: 5 # max number of builds to run in parallel, use ~ to disable

219 |   retry-after: 30 # interval, in seconds, for Roger to check whether it can start queued builds

220 | auth: # authentication on various providers

221 |   dockerhub: # these credentials are only useful if you need to push to the dockerhub

222 |     username: odino # your username on the dockerhub

223 |     email: alessandro.nadalin@gmail.com # your...well, you get it

224 |     password: YOUR_DOCKERHUB_PASSWORD

225 |   github: YOUR_SECRET_TOKEN # General token to be used to authenticate to clone any project (https://github.com/settings/tokens/new)

226 | notifications: # configs to notify of build failures / successes

227 |   github: # this will create a github status

228 |     token: '{{ auth.github }}' # config values can reference other values

229 |   emailSes: # sends an email through amazon SES

230 |     accessKey: 1234

231 |     secret: 5678

232 |     region: eu-west-1

233 |     to:

234 |       - john.doe@gmail.com # a list of people who will be notified

235 |       - committer # this is a special value that references the email of the commit author

236 |     from: builds@company.com # sender email (needs to be verified on SES: http://docs.aws.amazon.com/ses/latest/DeveloperGuide/verify-email-addresses.html)

237 | docker: