├── .gitignore

├── LICENSE

├── README.md

├── __init__.py

├── configs

└── config.ini

├── evaluation

├── __init__.py

├── evaluate_el.py

├── evaluate_inference.py

└── evaluate_types.py

├── models

├── __init__.py

├── base.py

├── batch_normalizer.py

└── figer_model

│ ├── __init__.py

│ ├── coherence_model.py

│ ├── coldStart.py

│ ├── context_encoder.py

│ ├── el_model.py

│ ├── entity_posterior.py

│ ├── joint_context.py

│ ├── labeling_model.py

│ ├── loss_optim.py

│ └── wiki_desc.py

├── neuralel.py

├── neuralel_jsonl.py

├── neuralel_tadir.py

├── overview.png

├── readers

├── Mention.py

├── __init__.py

├── config.py

├── crosswikis_test.py

├── inference_reader.py

├── test_reader.py

├── textanno_test_reader.py

├── utils.py

└── vocabloader.py

└── requirements.txt

/.gitignore:

--------------------------------------------------------------------------------

1 | logs/

2 | deprecated/

3 | test.py

4 | readers/crosswikis_test.py

5 |

6 | # Byte-compiled / optimized / DLL files

7 | __pycache__/

8 | *.py[cod]

9 | *$py.class

10 |

11 | # C extensions

12 | *.so

13 |

14 | # Distribution / packaging

15 | .Python

16 | env/

17 | build/

18 | develop-eggs/

19 | dist/

20 | downloads/

21 | eggs/

22 | .eggs/

23 | lib/

24 | lib64/

25 | parts/

26 | sdist/

27 | var/

28 | *.egg-info/

29 | .installed.cfg

30 | *.egg

31 |

32 | # PyInstaller

33 | # Usually these files are written by a python script from a template

34 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

35 | *.manifest

36 | *.spec

37 |

38 | # Installer logs

39 | pip-log.txt

40 | pip-delete-this-directory.txt

41 |

42 | # Unit test / coverage reports

43 | htmlcov/

44 | .tox/

45 | .coverage

46 | .coverage.*

47 | .cache

48 | nosetests.xml

49 | coverage.xml

50 | *,cover

51 | .hypothesis/

52 |

53 | # Translations

54 | *.mo

55 | *.pot

56 |

57 | # Django stuff:

58 | *.log

59 | local_settings.py

60 |

61 | # Flask stuff:

62 | instance/

63 | .webassets-cache

64 |

65 | # Scrapy stuff:

66 | .scrapy

67 |

68 | # Sphinx documentation

69 | docs/_build/

70 |

71 | # PyBuilder

72 | target/

73 |

74 | # IPython Notebook

75 | .ipynb_checkpoints

76 |

77 | # pyenv

78 | .python-version

79 |

80 | # celery beat schedule file

81 | celerybeat-schedule

82 |

83 | # dotenv

84 | .env

85 |

86 | # virtualenv

87 | venv/

88 | ENV/

89 |

90 | # Spyder project settings

91 | .spyderproject

92 |

93 | # Rope project settings

94 | .ropeproject

95 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | Neural Entity Linking

2 | =====================

3 | Code for paper

4 | "[Entity Linking via Joint Encoding of Types, Descriptions, and Context](http://cogcomp.org/page/publication_view/817)", EMNLP '17

5 |

6 |  7 |

8 | ## Abstract

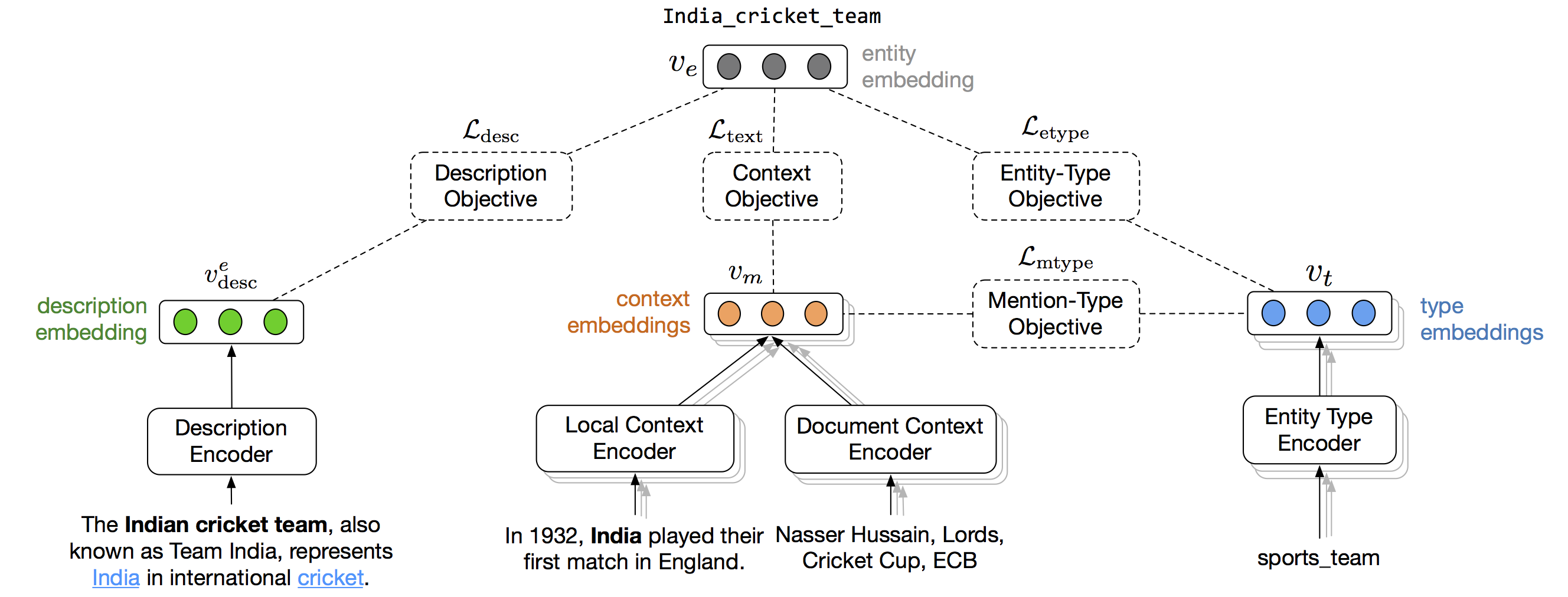

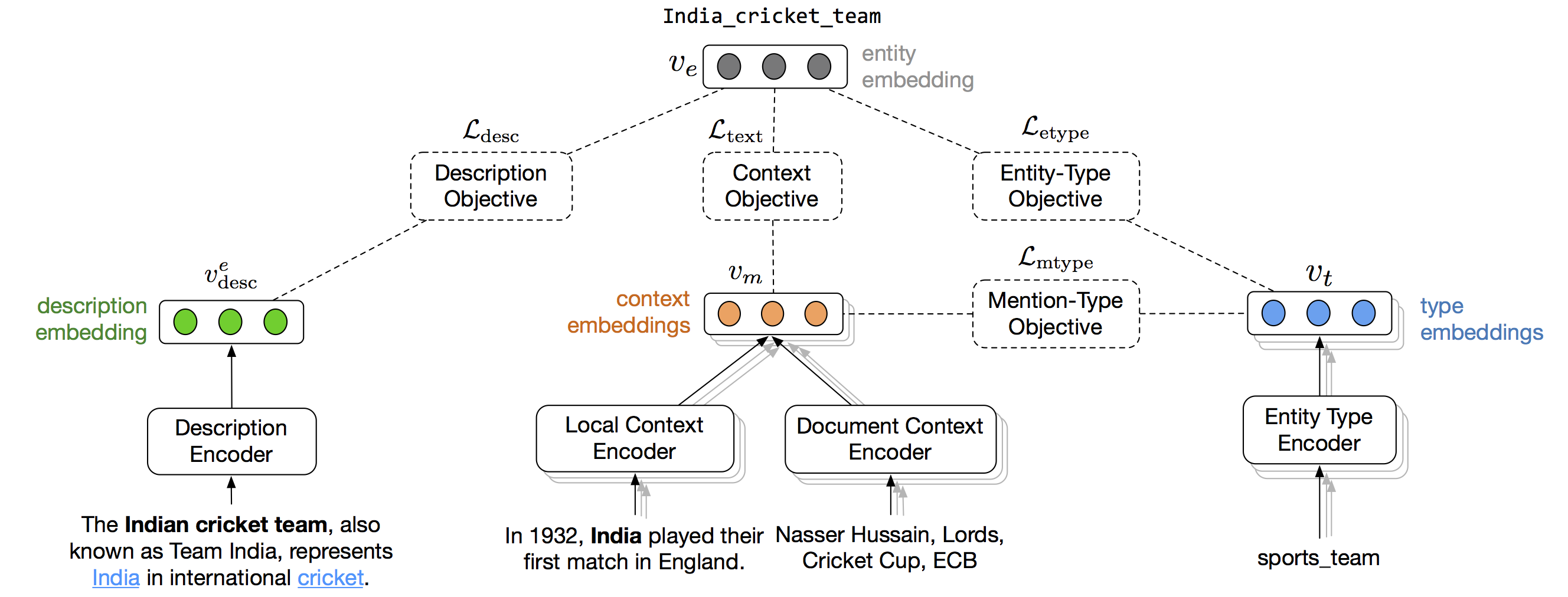

9 | For accurate entity linking, we need to capture the various information aspects of an entity, such as its description in a KB, contexts in which it is mentioned, and structured knowledge. Further, a linking system should work on texts from different domains without requiring domain-specific training data or hand-engineered features.

10 | In this work we present a neural, modular entity linking system that learns a unified dense representation for each entity using multiple sources of information, such as its description, contexts around its mentions, and fine-grained types. We show that the resulting entity linking system is effective at combining these sources, and performs competitively, sometimes out-performing current state-of-art-systems across datasets, without requiring any domain-specific training data or hand-engineered features. We also show that our model can effectively "embed" entities that are new to the KB, and is able to link its mentions accurately.

11 |

12 | ### Requirements

13 | * Python 3.4

14 | * Tensorflow 0.11 / 0.12

15 | * numpy

16 | * [CogComp-NLPy](https://github.com/CogComp/cogcomp-nlpy)

17 | * [Resources](https://drive.google.com/open?id=0Bz-t37BfgoTuSEtXOTI1SEF3VnM) - Pretrained models, vectors, etc.

18 |

19 | ### How to run inference

20 | 1. Clone the [code repository](https://github.com/nitishgupta/neural-el/)

21 | 1. Download the [resources folder](https://drive.google.com/open?id=0Bz-t37BfgoTuSEtXOTI1SEF3VnM).

22 | 2. In `config/config.ini` set the correct path to the resources folder you just downloaded

23 | 3. Run using:

24 | ```

25 | python3 neuralel.py --config=configs/config.ini --model_path=PATH_TO_MODEL_IN_RESOURCES --mode=inference

26 | ```

27 | The file `sampletest.txt` in the resources folder contains the text to be entity-linked. Currently we only support linking for a single document. Make sure the text in `sampletest.txt` is a single doc in a single line.

28 |

29 | ### Installing cogcomp-nlpy

30 | [CogComp-NLPy](https://github.com/CogComp/cogcomp-nlpy) is needed to detect named-entity mentions using NER. To install:

31 | ```

32 | pip install cython

33 | pip install ccg_nlpy

34 | ```

35 |

36 | ### Installing Tensorflow (CPU Version)

37 | To install tensorflow 0.12:

38 | ```

39 | export TF_BINARY_URL=https://storage.googleapis.com/tensorflow/linux/cpu/tensorflow-0.12.1-cp34-cp34m-linux_x86_64.whl

40 | (Regular) pip install --upgrade $TF_BINARY_URL

41 | (Conda) pip install --ignore-installed --upgrade $TF_BINARY_URL

42 | ```

43 |

--------------------------------------------------------------------------------

/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/nitishgupta/neural-el/8c7c278acefa66238a75e805511ff26a567fd4e0/__init__.py

--------------------------------------------------------------------------------

/configs/config.ini:

--------------------------------------------------------------------------------

1 | [DEFAULT]

2 | resources_dir: /shared/bronte/ngupta19/neural-el_resources

3 |

4 | vocab_dir: ${resources_dir}/vocab

5 |

6 | word_vocab_pkl:${vocab_dir}/word_vocab.pkl

7 | kwnwid_vocab_pkl:${vocab_dir}/knwn_wid_vocab.pkl

8 | label_vocab_pkl:${vocab_dir}/label_vocab.pkl

9 | cohstringG9_vocab_pkl:${vocab_dir}/cohstringG9_vocab.pkl

10 | widWiktitle_pkl:${vocab_dir}/wid2Wikititle.pkl

11 |

12 | # One CWIKIs PATH NEEDED

13 | crosswikis_pruned_pkl: ${resources_dir}/crosswikis.pruned.pkl

14 |

15 |

16 | glove_pkl: ${resources_dir}/glove.pkl

17 | glove_word_vocab_pkl:${vocab_dir}/glove_word_vocab.pkl

18 |

19 | # Should be removed

20 | test_file: ${resources_dir}/sampletest.txt

21 |

--------------------------------------------------------------------------------

/evaluation/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/nitishgupta/neural-el/8c7c278acefa66238a75e805511ff26a567fd4e0/evaluation/__init__.py

--------------------------------------------------------------------------------

/evaluation/evaluate_el.py:

--------------------------------------------------------------------------------

1 | import os

2 | import sys

3 | import numpy as np

4 |

5 | coarsetypes = set(["location", "person", "organization", "event"])

6 | coarseTypeIds = set([1,5,10,25])

7 |

8 |

9 | def computeMaxPriorContextJointEntities(

10 | WIDS_list, wikiTitles_list, condProbs_list, contextProbs_list,

11 | condContextJointProbs_list, verbose):

12 |

13 | assert (len(wikiTitles_list) == len(condProbs_list) ==

14 | len(contextProbs_list) == len(condContextJointProbs_list))

15 | numMens = len(wikiTitles_list)

16 | numWithCorrectInCand = 0

17 | accCond = 0

18 | accCont = 0

19 | accJoint = 0

20 |

21 | # [[(trueWT, maxPrWT, maxContWT, maxJWT), (trueWID, maxPrWID, maxContWID, maxJWID)]]

22 | evaluationWikiTitles = []

23 |

24 | sortedContextWTs = []

25 |

26 | for (WIDS, wTs, cProbs, contProbs, jointProbs) in zip(WIDS_list,

27 | wikiTitles_list,

28 | condProbs_list,

29 | contextProbs_list,

30 | condContextJointProbs_list):

31 | if wTs[0] == "":

32 | evaluationWikiTitles.append([tuple([""]*4), tuple([""]*4)])

33 |

34 | else:

35 | numWithCorrectInCand += 1

36 | trueWID = WIDS[0]

37 | trueEntity = wTs[0]

38 | tCondProb = cProbs[0]

39 | tContProb = contProbs[0]

40 | tJointProb = jointProbs[0]

41 |

42 | maxCondEntity_idx = np.argmax(cProbs)

43 | maxCondWID = WIDS[maxCondEntity_idx]

44 | maxCondEntity = wTs[maxCondEntity_idx]

45 | maxCondProb = cProbs[maxCondEntity_idx]

46 | if trueEntity == maxCondEntity and maxCondProb!=0.0:

47 | accCond+= 1

48 |

49 | maxContEntity_idx = np.argmax(contProbs)

50 | maxContWID = WIDS[maxContEntity_idx]

51 | maxContEntity = wTs[maxContEntity_idx]

52 | maxContProb = contProbs[maxContEntity_idx]

53 | if maxContEntity == trueEntity and maxContProb!=0.0:

54 | accCont+= 1

55 |

56 | contProbs_sortIdxs = np.argsort(contProbs).tolist()[::-1]

57 | sortContWTs = [wTs[i] for i in contProbs_sortIdxs]

58 | sortedContextWTs.append(sortContWTs)

59 |

60 | maxJointEntity_idx = np.argmax(jointProbs)

61 | maxJointWID = WIDS[maxJointEntity_idx]

62 | maxJointEntity = wTs[maxJointEntity_idx]

63 | maxJointProb = jointProbs[maxJointEntity_idx]

64 | maxJointCprob = cProbs[maxJointEntity_idx]

65 | maxJointContP = contProbs[maxJointEntity_idx]

66 | if maxJointEntity == trueEntity and maxJointProb!=0:

67 | accJoint+= 1

68 |

69 | predWTs = (trueEntity, maxCondEntity, maxContEntity, maxJointEntity)

70 | predWIDs = (trueWID, maxCondWID, maxContWID, maxJointWID)

71 | evaluationWikiTitles.append([predWTs, predWIDs])

72 |

73 | if verbose:

74 | print("True: {} c:{:.3f} cont:{:.3f} J:{:.3f}".format(

75 | trueEntity, tCondProb, tContProb, tJointProb))

76 | print("Pred: {} c:{:.3f} cont:{:.3f} J:{:.3f}".format(

77 | maxJointEntity, maxJointCprob, maxJointContP, maxJointProb))

78 | print("maxPrior: {} p:{:.3f} maxCont:{} p:{:.3f}".format(

79 | maxCondEntity, maxCondProb, maxContEntity, maxContProb))

80 | #AllMentionsProcessed

81 | if numWithCorrectInCand != 0:

82 | accCond = accCond/float(numWithCorrectInCand)

83 | accCont = accCont/float(numWithCorrectInCand)

84 | accJoint = accJoint/float(numWithCorrectInCand)

85 | else:

86 | accCond = 0.0

87 | accCont = 0.0

88 | accJoint = 0.0

89 |

90 | print("Total Mentions : {} In Knwn Mentions : {}".format(

91 | numMens, numWithCorrectInCand))

92 | print("Priors Accuracy: {:.3f} Context Accuracy: {:.3f} Joint Accuracy: {:.3f}".format(

93 | (accCond), accCont, accJoint))

94 |

95 | assert len(evaluationWikiTitles) == numMens

96 |

97 | return (evaluationWikiTitles, sortedContextWTs)

98 |

99 |

100 | def convertWidIdxs2WikiTitlesAndWIDs(widIdxs_list, idx2knwid, wid2WikiTitle):

101 | wikiTitles_list = []

102 | WIDS_list = []

103 | for widIdxs in widIdxs_list:

104 | wids = [idx2knwid[wididx] for wididx in widIdxs]

105 | wikititles = [wid2WikiTitle[idx2knwid[wididx]] for wididx in widIdxs]

106 | WIDS_list.append(wids)

107 | wikiTitles_list.append(wikititles)

108 |

109 | return (WIDS_list, wikiTitles_list)

110 |

111 |

112 | def _normalizeProbList(probList):

113 | norm_probList = []

114 | for probs in probList:

115 | s = sum(probs)

116 | if s != 0.0:

117 | n_p = [p/s for p in probs]

118 | norm_probList.append(n_p)

119 | else:

120 | norm_probList.append(probs)

121 | return norm_probList

122 |

123 |

124 | def computeFinalEntityProbs(condProbs_list, contextProbs_list, alpha=0.5):

125 | condContextJointProbs_list = []

126 | condProbs_list = _normalizeProbList(condProbs_list)

127 | contextProbs_list = _normalizeProbList(contextProbs_list)

128 |

129 | for (cprobs, contprobs) in zip(condProbs_list, contextProbs_list):

130 | #condcontextprobs = [(alpha*x + (1-alpha)*y) for (x,y) in zip(cprobs, contprobs)]

131 | condcontextprobs = [(x + y - x*y) for (x,y) in zip(cprobs, contprobs)]

132 | sum_condcontextprobs = sum(condcontextprobs)

133 | if sum_condcontextprobs != 0.0:

134 | condcontextprobs = [float(x)/sum_condcontextprobs for x in condcontextprobs]

135 | condContextJointProbs_list.append(condcontextprobs)

136 | return condContextJointProbs_list

137 |

138 |

139 | def computeFinalEntityScores(condProbs_list, contextProbs_list, alpha=0.5):

140 | condContextJointProbs_list = []

141 | condProbs_list = _normalizeProbList(condProbs_list)

142 | #contextProbs_list = _normalizeProbList(contextProbs_list)

143 |

144 | for (cprobs, contprobs) in zip(condProbs_list, contextProbs_list):

145 | condcontextprobs = [(alpha*x + (1-alpha)*y) for (x,y) in zip(cprobs, contprobs)]

146 | sum_condcontextprobs = sum(condcontextprobs)

147 | if sum_condcontextprobs != 0.0:

148 | condcontextprobs = [float(x)/sum_condcontextprobs for x in condcontextprobs]

149 | condContextJointProbs_list.append(condcontextprobs)

150 | return condContextJointProbs_list

151 |

152 |

153 | ##############################################################################

154 |

155 | def evaluateEL(condProbs_list, widIdxs_list, contextProbs_list,

156 | idx2knwid, wid2WikiTitle, verbose=False):

157 | ''' Prior entity prob, True and candidate entity WIDs, Predicted ent. prob.

158 | using context for each of te 30 candidates. First element in the candidates is

159 | the true entity.

160 | Args:

161 | For each mention:

162 | condProbs_list: List of prior probs for 30 candidates.

163 | widIdxs_list: List of candidate widIdxs probs for 30 candidates.

164 | contextProbss_list: List of candidate prob. using context

165 | idx2knwid: Map for widIdx -> WID

166 | wid2WikiTitle: Map from WID -> WikiTitle

167 | wid2TypeLabels: Map from WID -> List of Types

168 | '''

169 | print("Evaluating E-Linking ... ")

170 | (WIDS_list, wikiTitles_list) = convertWidIdxs2WikiTitlesAndWIDs(

171 | widIdxs_list, idx2knwid, wid2WikiTitle)

172 |

173 | alpha = 0.5

174 | #for alpha in alpha_range:

175 | print("Alpha : {}".format(alpha))

176 | jointProbs_list = computeFinalEntityProbs(

177 | condProbs_list, contextProbs_list, alpha=alpha)

178 |

179 | # evaluationWikiTitles:

180 | # For each mention [(trWT, maxPWT, maxCWT, maxJWT), (trWID, ...)]

181 | (evaluationWikiTitles,

182 | sortedContextWTs) = computeMaxPriorContextJointEntities(

183 | WIDS_list, wikiTitles_list, condProbs_list, contextProbs_list,

184 | jointProbs_list, verbose)

185 |

186 |

187 | '''

188 | condContextJointScores_list = computeFinalEntityScores(

189 | condProbs_list, contextProbs_list, alpha=alpha)

190 |

191 | evaluationWikiTitles = computeMaxPriorContextJointEntities(

192 | WIDS_list, wikiTitles_list, condProbs_list, contextProbs_list,

193 | condContextJointScores_list, verbose)

194 | '''

195 |

196 | return (jointProbs_list, evaluationWikiTitles, sortedContextWTs)

197 |

198 | ##############################################################################

199 |

200 |

201 | def f1(p,r):

202 | if p == 0.0 and r == 0.0:

203 | return 0.0

204 | return (float(2*p*r))/(p + r)

205 |

206 |

207 | def strict_pred(true_label_batch, pred_score_batch):

208 | ''' Calculates strict precision/recall/f1 given truth and predicted scores

209 | args

210 | true_label_batch: Binary Numpy matrix of [num_instances, num_labels]

211 | pred_score_batch: Real [0,1] numpy matrix of [num_instances, num_labels]

212 |

213 | return:

214 | correct_preds: Number of correct strict preds

215 | precision : correct_preds / num_instances

216 | '''

217 | (true_labels, pred_labels) = types_convert_mat_to_sets(

218 | true_label_batch, pred_score_batch)

219 |

220 | num_instanes = len(true_labels)

221 | correct_preds = 0

222 | for i in range(0, num_instanes):

223 | if true_labels[i] == pred_labels[i]:

224 | correct_preds += 1

225 | #endfor

226 | precision = recall = float(correct_preds)/num_instanes

227 |

228 | return correct_preds, precision

229 |

230 |

231 | def correct_context_prediction(entity_posterior_scores, batch_size):

232 | bool_array = np.equal(np.argmax(entity_posterior_scores, axis=1),

233 | [0]*batch_size)

234 | correct_preds = np.sum(bool_array)

235 | return correct_preds

236 |

--------------------------------------------------------------------------------

/evaluation/evaluate_inference.py:

--------------------------------------------------------------------------------

1 | import os

2 | import sys

3 | import numpy as np

4 |

5 |

6 | def computeMaxPriorContextJointEntities(

7 | WIDS_list, wikiTitles_list, condProbs_list, contextProbs_list,

8 | condContextJointProbs_list, verbose):

9 |

10 | assert (len(wikiTitles_list) == len(condProbs_list) ==

11 | len(contextProbs_list) == len(condContextJointProbs_list))

12 | numMens = len(wikiTitles_list)

13 |

14 | evaluationWikiTitles = []

15 | sortedContextWTs = []

16 | for (WIDS, wTs,

17 | cProbs, contProbs, jointProbs) in zip(WIDS_list,

18 | wikiTitles_list,

19 | condProbs_list,

20 | contextProbs_list,

21 | condContextJointProbs_list):

22 | # if wTs[0] == "":

23 | # evaluationWikiTitles.append([tuple([""]*3),

24 | # tuple([""]*3)])

25 | # else:

26 | maxCondEntity_idx = np.argmax(cProbs)

27 | maxCondWID = WIDS[maxCondEntity_idx]

28 | maxCondEntity = wTs[maxCondEntity_idx]

29 | maxCondProb = cProbs[maxCondEntity_idx]

30 |

31 | maxContEntity_idx = np.argmax(contProbs)

32 | maxContWID = WIDS[maxContEntity_idx]

33 | maxContEntity = wTs[maxContEntity_idx]

34 | maxContProb = contProbs[maxContEntity_idx]

35 |

36 | contProbs_sortIdxs = np.argsort(contProbs).tolist()[::-1]

37 | sortContWTs = [wTs[i] for i in contProbs_sortIdxs]

38 | sortedContextWTs.append(sortContWTs)

39 |

40 | maxJointEntity_idx = np.argmax(jointProbs)

41 | maxJointWID = WIDS[maxJointEntity_idx]

42 | maxJointEntity = wTs[maxJointEntity_idx]

43 | maxJointProb = jointProbs[maxJointEntity_idx]

44 | maxJointCprob = cProbs[maxJointEntity_idx]

45 | maxJointContP = contProbs[maxJointEntity_idx]

46 |

47 | predWTs = (maxCondEntity, maxContEntity, maxJointEntity)

48 | predWIDs = (maxCondWID, maxContWID, maxJointWID)

49 | predProbs = (maxCondProb, maxContProb, maxJointProb)

50 | evaluationWikiTitles.append([predWTs, predWIDs, predProbs])

51 |

52 | assert len(evaluationWikiTitles) == numMens

53 |

54 | return (evaluationWikiTitles, sortedContextWTs)

55 |

56 | def convertWidIdxs2WikiTitlesAndWIDs(widIdxs_list, idx2knwid, wid2WikiTitle):

57 | wikiTitles_list = []

58 | WIDS_list = []

59 | for widIdxs in widIdxs_list:

60 | wids = [idx2knwid[wididx] for wididx in widIdxs]

61 | wikititles = [wid2WikiTitle[idx2knwid[wididx]] for wididx in widIdxs]

62 | WIDS_list.append(wids)

63 | wikiTitles_list.append(wikititles)

64 |

65 | return (WIDS_list, wikiTitles_list)

66 |

67 | def _normalizeProbList(probList):

68 | norm_probList = []

69 | for probs in probList:

70 | s = sum(probs)

71 | if s != 0.0:

72 | n_p = [p/s for p in probs]

73 | norm_probList.append(n_p)

74 | else:

75 | norm_probList.append(probs)

76 | return norm_probList

77 |

78 |

79 | def computeFinalEntityProbs(condProbs_list, contextProbs_list):

80 | condContextJointProbs_list = []

81 | condProbs_list = _normalizeProbList(condProbs_list)

82 | contextProbs_list = _normalizeProbList(contextProbs_list)

83 |

84 | for (cprobs, contprobs) in zip(condProbs_list, contextProbs_list):

85 | condcontextprobs = [(x + y - x*y) for (x,y) in zip(cprobs, contprobs)]

86 | sum_condcontextprobs = sum(condcontextprobs)

87 | if sum_condcontextprobs != 0.0:

88 | condcontextprobs = [float(x)/sum_condcontextprobs for x in condcontextprobs]

89 | condContextJointProbs_list.append(condcontextprobs)

90 | return condContextJointProbs_list

91 |

92 |

93 | def computeFinalEntityScores(condProbs_list, contextProbs_list, alpha=0.5):

94 | condContextJointProbs_list = []

95 | condProbs_list = _normalizeProbList(condProbs_list)

96 | #contextProbs_list = _normalizeProbList(contextProbs_list)

97 |

98 | for (cprobs, contprobs) in zip(condProbs_list, contextProbs_list):

99 | condcontextprobs = [(alpha*x + (1-alpha)*y) for (x,y) in zip(cprobs, contprobs)]

100 | sum_condcontextprobs = sum(condcontextprobs)

101 | if sum_condcontextprobs != 0.0:

102 | condcontextprobs = [float(x)/sum_condcontextprobs for x in condcontextprobs]

103 | condContextJointProbs_list.append(condcontextprobs)

104 | return condContextJointProbs_list

105 |

106 |

107 | #############################################################################

108 |

109 |

110 | def evaluateEL(condProbs_list, widIdxs_list, contextProbs_list,

111 | idx2knwid, wid2WikiTitle, verbose=False):

112 | ''' Prior entity prob, True and candidate entity WIDs, Predicted ent. prob.

113 | using context for each of te 30 candidates. First element in the candidates

114 | is the true entity.

115 | Args:

116 | For each mention:

117 | condProbs_list: List of prior probs for 30 candidates.

118 | widIdxs_list: List of candidate widIdxs probs for 30 candidates.

119 | contextProbss_list: List of candidate prob. using context

120 | idx2knwid: Map for widIdx -> WID

121 | wid2WikiTitle: Map from WID -> WikiTitle

122 | '''

123 | # print("Evaluating E-Linking ... ")

124 | (WIDS_list, wikiTitles_list) = convertWidIdxs2WikiTitlesAndWIDs(

125 | widIdxs_list, idx2knwid, wid2WikiTitle)

126 |

127 | jointProbs_list = computeFinalEntityProbs(condProbs_list,

128 | contextProbs_list)

129 |

130 | (evaluationWikiTitles,

131 | sortedContextWTs) = computeMaxPriorContextJointEntities(

132 | WIDS_list, wikiTitles_list, condProbs_list, contextProbs_list,

133 | jointProbs_list, verbose)

134 |

135 | return (jointProbs_list, evaluationWikiTitles, sortedContextWTs)

136 |

137 | ##############################################################################

138 |

139 |

140 | def f1(p,r):

141 | if p == 0.0 and r == 0.0:

142 | return 0.0

143 | return (float(2*p*r))/(p + r)

144 |

145 |

146 | def strict_pred(true_label_batch, pred_score_batch):

147 | ''' Calculates strict precision/recall/f1 given truth and predicted scores

148 | args

149 | true_label_batch: Binary Numpy matrix of [num_instances, num_labels]

150 | pred_score_batch: Real [0,1] numpy matrix of [num_instances, num_labels]

151 |

152 | return:

153 | correct_preds: Number of correct strict preds

154 | precision : correct_preds / num_instances

155 | '''

156 | (true_labels, pred_labels) = types_convert_mat_to_sets(

157 | true_label_batch, pred_score_batch)

158 |

159 | num_instanes = len(true_labels)

160 | correct_preds = 0

161 | for i in range(0, num_instanes):

162 | if true_labels[i] == pred_labels[i]:

163 | correct_preds += 1

164 | #endfor

165 | precision = recall = float(correct_preds)/num_instanes

166 |

167 | return correct_preds, precision

168 |

169 |

170 | def correct_context_prediction(entity_posterior_scores, batch_size):

171 | bool_array = np.equal(np.argmax(entity_posterior_scores, axis=1),

172 | [0]*batch_size)

173 | correct_preds = np.sum(bool_array)

174 | return correct_preds

175 |

--------------------------------------------------------------------------------

/evaluation/evaluate_types.py:

--------------------------------------------------------------------------------

1 | import os

2 | import sys

3 | import numpy as np

4 |

5 | coarsetypes = set(["location", "person", "organization", "event"])

6 | coarseTypeIds = set([1,5,10,25])

7 |

8 | def _convertTypeMatToTypeSets(typesscore_mat, idx2label, threshold):

9 | ''' Gets true labels and pred scores in numpy matrix and converts to list

10 | args

11 | true_label_batch: Binary Numpy matrix of [num_instances, num_labels]

12 | pred_score_batch: Real [0,1] numpy matrix of [num_instances, num_labels]

13 |

14 | return:

15 | true_labels: List of list of true label (indices) for batch of instances

16 | pred_labels : List of list of pred label (indices) for batch of instances

17 | (threshold = 0.5)

18 | '''

19 | labels = []

20 | for i in typesscore_mat:

21 | # i in array of label_vals for i-th example

22 | labels_i = []

23 | max_idx = -1

24 | max_val = -1

25 | for (label_idx, val) in enumerate(i):

26 | if val >= threshold:

27 | labels_i.append(idx2label[label_idx])

28 | if val > max_val:

29 | max_idx = label_idx

30 | max_val = val

31 | if len(labels_i) == 0:

32 | labels_i.append(idx2label[max_idx])

33 | labels.append(set(labels_i))

34 |

35 | '''

36 | assert 0.0 < threshold <= 1.0

37 | boolmat = typesscore_mat >= threshold

38 | boollist = boolmat.tolist()

39 | num_instanes = len(boollist)

40 | labels = []

41 | for i in range(0, num_instanes):

42 | labels_i = [idx2label[i] for i, x in enumerate(boollist[i]) if x]

43 | labels.append(set(labels_i))

44 | ##

45 | '''

46 | return labels

47 |

48 | def convertTypesScoreMatLists_TypeSets(typeScoreMat_list, idx2label, threshold):

49 | '''

50 | Take list of type scores numpy mat (per batch) as ouput from Tensorflow.

51 | Convert into list of type sets for each mention based on the thresold

52 |

53 | Return:

54 | typeSets_list: Size=num_instances. Each instance is set of type labels for mention

55 | '''

56 |

57 | typeSets_list = []

58 | for typeScoreMat in typeScoreMat_list:

59 | typeLabels_list = _convertTypeMatToTypeSets(typeScoreMat,

60 | idx2label, threshold)

61 | typeSets_list.extend(typeLabels_list)

62 | return typeSets_list

63 |

64 |

65 | def typesPredictionStats(pred_labels, true_labels):

66 | '''

67 | args

68 | true_label_batch: Binary Numpy matrix of [num_instances, num_labels]

69 | pred_score_batch: Real [0,1] numpy matrix of [num_instances, num_labels]

70 | '''

71 |

72 | # t_hat \interesect t

73 | t_intersect = 0

74 | t_hat_count = 0

75 | t_count = 0

76 | t_t_hat_exact = 0

77 | loose_macro_p = 0.0

78 | loose_macro_r = 0.0

79 | num_instances = len(true_labels)

80 | for i in range(0, num_instances):

81 | intersect = len(true_labels[i].intersection(pred_labels[i]))

82 | t_h_c = len(pred_labels[i])

83 | t_c = len(true_labels[i])

84 | t_intersect += intersect

85 | t_hat_count += t_h_c

86 | t_count += t_c

87 | exact = 1 if (true_labels[i] == pred_labels[i]) else 0

88 | t_t_hat_exact += exact

89 | if len(pred_labels[i]) > 0:

90 | loose_macro_p += intersect / float(t_h_c)

91 | if len(true_labels[i]) > 0:

92 | loose_macro_r += intersect / float(t_c)

93 |

94 | return (t_intersect, t_t_hat_exact, t_hat_count, t_count,

95 | loose_macro_p, loose_macro_r)

96 |

97 | def typesEvaluationMetrics(pred_TypeSetsList, true_TypeSetsList):

98 | num_instances = len(true_TypeSetsList)

99 | (t_i, t_th_exact, t_h_c, t_c, l_m_p, l_m_r) = typesPredictionStats(

100 | pred_labels=pred_TypeSetsList, true_labels=true_TypeSetsList)

101 | strict = float(t_th_exact)/float(num_instances)

102 | loose_macro_p = l_m_p / float(num_instances)

103 | loose_macro_r = l_m_r / float(num_instances)

104 | loose_macro_f = f1(loose_macro_p, loose_macro_r)

105 | if t_h_c > 0:

106 | loose_micro_p = float(t_i)/float(t_h_c)

107 | else:

108 | loose_micro_p = 0

109 | if t_c > 0:

110 | loose_micro_r = float(t_i)/float(t_c)

111 | else:

112 | loose_micro_r = 0

113 | loose_micro_f = f1(loose_micro_p, loose_micro_r)

114 |

115 | return (strict, loose_macro_p, loose_macro_r, loose_macro_f, loose_micro_p,

116 | loose_micro_r, loose_micro_f)

117 |

118 |

119 | def performTypingEvaluation(predLabelScoresnumpymat_list, idx2label):

120 | '''

121 | Args: List of numpy mat, one for ech batch, for true and pred type scores

122 | trueLabelScoresnumpymat_list: List of score matrices output by tensorflow

123 | predLabelScoresnumpymat_list: List of score matrices output by tensorflow

124 | '''

125 | pred_TypeSetsList = convertTypesScoreMatLists_TypeSets(

126 | typeScoreMat_list=predLabelScoresnumpymat_list, idx2label=idx2label,

127 | threshold=0.75)

128 |

129 | return pred_TypeSetsList

130 |

131 |

132 | def evaluate(predLabelScoresnumpymat_list, idx2label):

133 | # print("Evaluating Typing ... ")

134 | pred_TypeSetsList = convertTypesScoreMatLists_TypeSets(

135 | typeScoreMat_list=predLabelScoresnumpymat_list, idx2label=idx2label,

136 | threshold=0.75)

137 |

138 | return pred_TypeSetsList

139 |

140 |

141 | def f1(p,r):

142 | if p == 0.0 and r == 0.0:

143 | return 0.0

144 | return (float(2*p*r))/(p + r)

145 |

146 |

147 | def strict_pred(true_label_batch, pred_score_batch):

148 | ''' Calculates strict precision/recall/f1 given truth and predicted scores

149 | args

150 | true_label_batch: Binary Numpy matrix of [num_instances, num_labels]

151 | pred_score_batch: Real [0,1] numpy matrix of [num_instances, num_labels]

152 |

153 | return:

154 | correct_preds: Number of correct strict preds

155 | precision : correct_preds / num_instances

156 | '''

157 | (true_labels, pred_labels) = types_convert_mat_to_sets(

158 | true_label_batch, pred_score_batch)

159 |

160 | num_instanes = len(true_labels)

161 | correct_preds = 0

162 | for i in range(0, num_instanes):

163 | if true_labels[i] == pred_labels[i]:

164 | correct_preds += 1

165 | #endfor

166 | precision = recall = float(correct_preds)/num_instanes

167 |

168 | return correct_preds, precision

169 |

170 | def correct_context_prediction(entity_posterior_scores, batch_size):

171 | bool_array = np.equal(np.argmax(entity_posterior_scores, axis=1),

172 | [0]*batch_size)

173 | correct_preds = np.sum(bool_array)

174 | return correct_preds

175 |

--------------------------------------------------------------------------------

/models/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/nitishgupta/neural-el/8c7c278acefa66238a75e805511ff26a567fd4e0/models/__init__.py

--------------------------------------------------------------------------------

/models/base.py:

--------------------------------------------------------------------------------

1 | import os

2 | from glob import glob

3 | import tensorflow as tf

4 |

5 | class Model(object):

6 | """Abstract object representing an Reader model."""

7 | def __init__(self):

8 | pass

9 |

10 | # def get_model_dir(self):

11 | # model_dir = self.dataset

12 | # for attr in self._attrs:

13 | # if hasattr(self, attr):

14 | # model_dir += "/%s=%s" % (attr, getattr(self, attr))

15 | # return model_dir

16 |

17 | def get_model_dir(self, attrs=None):

18 | model_dir = self.dataset

19 | if attrs == None:

20 | attrs = self._attrs

21 | for attr in attrs:

22 | if hasattr(self, attr):

23 | model_dir += "/%s=%s" % (attr, getattr(self, attr))

24 | return model_dir

25 |

26 | def get_log_dir(self, root_log_dir, attrs=None):

27 | model_dir = self.get_model_dir(attrs=attrs)

28 | log_dir = os.path.join(root_log_dir, model_dir)

29 | if not os.path.exists(log_dir):

30 | os.makedirs(log_dir)

31 | return log_dir

32 |

33 | def save(self, saver, checkpoint_dir, attrs=None, global_step=None):

34 | print(" [*] Saving checkpoints...")

35 | model_name = type(self).__name__

36 | model_dir = self.get_model_dir(attrs=attrs)

37 |

38 | checkpoint_dir = os.path.join(checkpoint_dir, model_dir)

39 | if not os.path.exists(checkpoint_dir):

40 | os.makedirs(checkpoint_dir)

41 | saver.save(self.sess, os.path.join(checkpoint_dir, model_name),

42 | global_step=global_step)

43 | print(" [*] Saving done...")

44 |

45 | def initialize(self, log_dir="./logs"):

46 | self.merged_sum = tf.merge_all_summaries()

47 | self.writer = tf.train.SummaryWriter(log_dir, self.sess.graph_def)

48 |

49 | tf.initialize_all_variables().run()

50 | self.load(self.checkpoint_dir)

51 |

52 | start_iter = self.step.eval()

53 |

54 | def load(self, saver, checkpoint_dir, attrs=None):

55 | print(" [*] Loading checkpoints...")

56 | model_dir = self.get_model_dir(attrs=attrs)

57 | # /checkpointdir/attrs=values/

58 | checkpoint_dir = os.path.join(checkpoint_dir, model_dir)

59 | print(" [#] Checkpoint Dir : {}".format(checkpoint_dir))

60 | ckpt = tf.train.get_checkpoint_state(checkpoint_dir)

61 | if ckpt and ckpt.model_checkpoint_path:

62 | ckpt_name = os.path.basename(ckpt.model_checkpoint_path)

63 | print("ckpt_name: {}".format(ckpt_name))

64 | saver.restore(self.sess, os.path.join(checkpoint_dir, ckpt_name))

65 | print(" [*] Load SUCCESS")

66 | return True

67 | else:

68 | print(" [!] Load failed...")

69 | return False

70 |

71 | def loadCKPTPath(self, saver, ckptPath=None):

72 | assert ckptPath != None

73 | print(" [#] CKPT Path : {}".format(ckptPath))

74 | if os.path.exists(ckptPath):

75 | saver.restore(self.sess, ckptPath)

76 | print(" [*] Load SUCCESS")

77 | return True

78 | else:

79 | print(" [*] CKPT Path doesn't exist")

80 | return False

81 |

82 | def loadSpecificCKPT(self, saver, checkpoint_dir, ckptName=None, attrs=None):

83 | assert ckptName != None

84 | model_dir = self.get_model_dir(attrs=attrs)

85 | checkpoint_dir = os.path.join(checkpoint_dir, model_dir)

86 | checkpoint_path = os.path.join(checkpoint_dir, ckptName)

87 | print(" [#] CKPT Path : {}".format(checkpoint_path))

88 | if os.path.exists(checkpoint_path):

89 | saver.restore(self.sess, checkpoint_path)

90 |

91 | print(" [*] Load SUCCESS")

92 | return True

93 | else:

94 | print(" [*] CKPT Path doesn't exist")

95 | return False

96 |

97 |

98 |

99 | def collect_scope(self, scope_name, graph=None, var_type=tf.GraphKeys.VARIABLES):

100 | if graph == None:

101 | graph = tf.get_default_graph()

102 |

103 | var_list = graph.get_collection(var_type, scope=scope_name)

104 |

105 | assert_str = "No variable exists with name_scope '{}'".format(scope_name)

106 | assert len(var_list) != 0, assert_str

107 |

108 | return var_list

109 |

110 | def get_scope_var_name_set(self, var_name):

111 | clean_var_num = var_name.split(":")[0]

112 | scopes_names = clean_var_num.split("/")

113 | return set(scopes_names)

114 |

115 |

116 | def scope_vars_list(self, scope_name, var_list):

117 | scope_var_list = []

118 | for var in var_list:

119 | scope_var_name = self.get_scope_var_name_set(var.name)

120 | if scope_name in scope_var_name:

121 | scope_var_list.append(var)

122 | return scope_var_list

123 |

--------------------------------------------------------------------------------

/models/batch_normalizer.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | #from tensorflow.python import control_flow_ops

3 | from tensorflow.python.ops import control_flow_ops

4 |

5 | class BatchNorm():

6 | def __init__(self,

7 | input,

8 | training,

9 | decay=0.95,

10 | epsilon=1e-4,

11 | name='bn',

12 | reuse_vars=False):

13 |

14 | self.decay = decay

15 | self.epsilon = epsilon

16 | self.batchnorm(input, training, name, reuse_vars)

17 |

18 | def batchnorm(self, input, training, name, reuse_vars):

19 | with tf.variable_scope(name, reuse=reuse_vars) as bn:

20 | rank = len(input.get_shape().as_list())

21 | in_dim = input.get_shape().as_list()[-1]

22 |

23 | if rank == 2:

24 | self.axes = [0]

25 | elif rank == 4:

26 | self.axes = [0, 1, 2]

27 | else:

28 | raise ValueError('Input tensor must have rank 2 or 4.')

29 |

30 | self.offset = tf.get_variable(

31 | 'offset',

32 | shape=[in_dim],

33 | initializer=tf.constant_initializer(0.0))

34 |

35 | self.scale = tf.get_variable(

36 | 'scale',

37 | shape=[in_dim],

38 | initializer=tf.constant_initializer(1.0))

39 |

40 | self.ema = tf.train.ExponentialMovingAverage(decay=self.decay)

41 |

42 | self.output = tf.cond(training,

43 | lambda: self.get_normalizer(input, True),

44 | lambda: self.get_normalizer(input, False))

45 |

46 | def get_normalizer(self, input, train_flag):

47 | if train_flag:

48 | self.mean, self.variance = tf.nn.moments(input, self.axes)

49 | # Fixes numerical instability if variance ~= 0, and it goes negative

50 | v = tf.nn.relu(self.variance)

51 | ema_apply_op = self.ema.apply([self.mean, self.variance])

52 | with tf.control_dependencies([ema_apply_op]):

53 | self.output_training = tf.nn.batch_normalization(

54 | input, self.mean, v, self.offset, self.scale,

55 | self.epsilon, 'normalizer_train'),

56 | return self.output_training

57 | else:

58 | self.output_test = tf.nn.batch_normalization(

59 | input, self.ema.average(self.mean),

60 | self.ema.average(self.variance), self.offset, self.scale,

61 | self.epsilon, 'normalizer_test')

62 | return self.output_test

63 |

64 | def get_batch_moments(self):

65 | return self.mean, self.variance

66 |

67 | def get_ema_moments(self):

68 | return self.ema.average(self.mean), self.ema.average(self.variance)

69 |

70 | def get_offset_scale(self):

71 | return self.offset, self.scale

72 |

--------------------------------------------------------------------------------

/models/figer_model/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/nitishgupta/neural-el/8c7c278acefa66238a75e805511ff26a567fd4e0/models/figer_model/__init__.py

--------------------------------------------------------------------------------

/models/figer_model/coherence_model.py:

--------------------------------------------------------------------------------

1 | import time

2 | import numpy as np

3 | import tensorflow as tf

4 |

5 | from models.base import Model

6 |

7 | class CoherenceModel(Model):

8 | '''

9 | Input is sparse tensor of mention strings in mention's document.

10 | Pass through feed forward and get a coherence representation

11 | (keep same as context_encoded_dim)

12 | '''

13 |

14 | def __init__(self, num_layers, batch_size, input_size,

15 | coherence_indices, coherence_values, coherence_matshape,

16 | context_encoded_dim, scope_name, device,

17 | dropout_keep_prob=1.0):

18 |

19 | # Num of layers in the encoder and decoder network

20 | self.num_layers = num_layers

21 | self.input_size = input_size

22 | self.context_encoded_dim = context_encoded_dim

23 | self.dropout_keep_prob = dropout_keep_prob

24 | self.batch_size = batch_size

25 |

26 | with tf.variable_scope(scope_name) as s, tf.device(device) as d:

27 | coherence_inp_tensor = tf.SparseTensor(coherence_indices,

28 | coherence_values,

29 | coherence_matshape)

30 |

31 | # Feed-forward Net for coherence_representation

32 | # Layer 1

33 | self.trans_weights = tf.get_variable(

34 | name="coherence_layer_0",

35 | shape=[self.input_size, self.context_encoded_dim],

36 | initializer=tf.random_normal_initializer(

37 | mean=0.0,

38 | stddev=1.0/(100.0)))

39 |

40 | # [B, context_encoded_dim]

41 | coherence_encoded = tf.sparse_tensor_dense_matmul(

42 | coherence_inp_tensor, self.trans_weights)

43 | coherence_encoded = tf.nn.relu(coherence_encoded)

44 |

45 | # Hidden Layers. NumLayers >= 2

46 | self.hidden_layers = []

47 | for i in range(1, self.num_layers):

48 | weight_matrix = tf.get_variable(

49 | name="coherence_layer_"+str(i),

50 | shape=[self.context_encoded_dim, self.context_encoded_dim],

51 | initializer=tf.random_normal_initializer(

52 | mean=0.0,

53 | stddev=1.0/(100.0)))

54 | self.hidden_layers.append(weight_matrix)

55 |

56 | for i in range(1, self.num_layers):

57 | coherence_encoded = tf.nn.dropout(

58 | coherence_encoded, keep_prob=self.dropout_keep_prob)

59 | coherence_encoded = tf.matmul(coherence_encoded,

60 | self.hidden_layers[i-1])

61 | coherence_encoded = tf.nn.relu(coherence_encoded)

62 |

63 | self.coherence_encoded = tf.nn.dropout(

64 | coherence_encoded, keep_prob=self.dropout_keep_prob)

65 |

--------------------------------------------------------------------------------

/models/figer_model/coldStart.py:

--------------------------------------------------------------------------------

1 | import os

2 | import sys

3 | import tensorflow as tf

4 | import numpy as np

5 |

6 | import readers.utils as utils

7 | from evaluation import evaluate

8 | from evaluation import evaluate_el

9 | from evaluation import evaluate_types

10 | from models.base import Model

11 | from models.figer_model.context_encoder import ContextEncoderModel

12 | from models.figer_model.coherence_model import CoherenceModel

13 | from models.figer_model.wiki_desc import WikiDescModel

14 | from models.figer_model.joint_context import JointContextModel

15 | from models.figer_model.labeling_model import LabelingModel

16 | from models.figer_model.entity_posterior import EntityPosterior

17 | from models.figer_model.loss_optim import LossOptim

18 |

19 |

20 | class ColdStart(object):

21 | def __init__(self, figermodel):

22 | print("###### ENTERED THE COLD WORLD OF THE UNKNOWN ##############")

23 | # Object of the WikiELModel Class

24 | self.fm = figermodel

25 | self.coldDir = self.fm.reader.coldDir

26 | coldWid2DescVecs_pkl = os.path.join(self.coldDir, "coldwid2descvecs.pkl")

27 | self.coldWid2DescVecs = utils.load(coldWid2DescVecs_pkl)

28 | self.num_cold_entities = self.fm.reader.num_cold_entities

29 | self.batch_size = self.fm.batch_size

30 | (self.coldwid2idx,

31 | self.idx2coldwid) = (self.fm.reader.coldwid2idx, self.fm.reader.idx2coldwid)

32 |

33 | def _makeDescLossGraph(self):

34 | with tf.variable_scope("cold") as s:

35 | with tf.device(self.fm.device_placements['gpu']) as d:

36 | tf.set_random_seed(1)

37 |

38 | self.coldEnEmbsToAssign = tf.placeholder(

39 | tf.float32, [self.num_cold_entities, 200], name="coldEmbsAssignment")

40 |

41 | self.coldEnEmbs = tf.get_variable(

42 | name="cold_entity_embeddings",

43 | shape=[self.num_cold_entities, 200],

44 | initializer=tf.random_normal_initializer(mean=-0.25,

45 | stddev=1.0/(100.0)))

46 |

47 | self.assignColdEmbs = self.coldEnEmbs.assign(self.coldEnEmbsToAssign)

48 |

49 | self.trueColdEnIds = tf.placeholder(

50 | tf.int32, [self.batch_size], name="true_entities_idxs")

51 |

52 | # Should be a list of zeros

53 | self.softTrueIdxs = tf.placeholder(

54 | tf.int32, [self.batch_size], name="softmaxTrueEnsIdxs")

55 |

56 | # [B, D]

57 | self.trueColdEmb = tf.nn.embedding_lookup(

58 | self.coldEnEmbs, self.trueColdEnIds)

59 | # [B, 1, D]

60 | self.trueColdEmb_exp = tf.expand_dims(

61 | input=self.trueColdEmb, dim=1)

62 |

63 | self.label_scores = tf.matmul(self.trueColdEmb,

64 | self.fm.labeling_model.label_weights)

65 |

66 | self.labeling_losses = tf.nn.sigmoid_cross_entropy_with_logits(

67 | logits=self.label_scores,

68 | targets=self.fm.labels_batch,

69 | name="labeling_loss")

70 |

71 | self.labelingLoss = tf.reduce_sum(

72 | self.labeling_losses) / tf.to_float(self.batch_size)

73 |

74 | # [B, D]

75 | self.descEncoded = self.fm.wikidescmodel.desc_encoded

76 |

77 | ## Maximize sigmoid of dot-prod between true emb. and desc encoding

78 | descLosses = -tf.sigmoid(tf.reduce_sum(tf.mul(self.trueColdEmb, self.descEncoded), 1))

79 | self.descLoss = tf.reduce_sum(descLosses)/tf.to_float(self.batch_size)

80 |

81 |

82 | # L-2 Norm Loss

83 | self.trueEmbNormLoss = tf.reduce_sum(

84 | tf.square(self.trueColdEmb))/(tf.to_float(self.batch_size))

85 |

86 |

87 | ''' Concat trueColdEmb_exp to negKnownEmbs so that 0 is the true entity.

88 | Dotprod this emb matrix with descEncoded to get scores and apply softmax

89 | '''

90 |

91 | self.trcoldvars = self.fm.scope_vars_list(scope_name="cold",

92 | var_list=tf.trainable_variables())

93 |

94 | print("Vars in Training")

95 | for var in self.trcoldvars:

96 | print(var.name)

97 |

98 |

99 | self.optimizer = tf.train.AdamOptimizer(

100 | learning_rate=self.fm.learning_rate,

101 | name='AdamCold_')

102 |

103 | self.total_labeling_loss = self.labelingLoss + self.trueEmbNormLoss

104 | self.label_gvs = self.optimizer.compute_gradients(

105 | loss=self.total_labeling_loss, var_list=self.trcoldvars)

106 | self.labeling_optim_op = self.optimizer.apply_gradients(self.label_gvs)

107 |

108 | self.total_loss = self.labelingLoss + 100*self.descLoss + self.trueEmbNormLoss

109 | self.comb_gvs = self.optimizer.compute_gradients(

110 | loss=self.total_loss, var_list=self.trcoldvars)

111 | self.combined_optim_op = self.optimizer.apply_gradients(self.comb_gvs)

112 |

113 |

114 | self.allcoldvars = self.fm.scope_vars_list(scope_name="cold",

115 | var_list=tf.all_variables())

116 |

117 | print("All Vars in Cold")

118 | for var in self.allcoldvars:

119 | print(var.name)

120 |

121 | print("Loaded and graph made")

122 | ### GRAPH COMPLETE ###

123 |

124 | #############################################################################

125 | def _trainColdEmbFromTypes(self, epochsToTrain=5):

126 | print("Training Cold Entity Embeddings from Typing Info")

127 |

128 | epochsDone = self.fm.reader.val_epochs

129 |

130 | while self.fm.reader.val_epochs < epochsToTrain:

131 | (left_batch, left_lengths,

132 | right_batch, right_lengths,

133 | wids_batch,

134 | labels_batch, coherence_batch,

135 | wid_idxs_batch, wid_cprobs_batch) = self.fm.reader._next_padded_batch(data_type=1)

136 |

137 | trueColdWidIdxsBatch = []

138 | trueColdWidDescWordVecBatch = []

139 | for wid in wids_batch:

140 | trueColdWidIdxsBatch.append(self.coldwid2idx[wid])

141 | trueColdWidDescWordVecBatch.append(self.coldWid2DescVecs[wid])

142 |

143 | feed_dict = {self.trueColdEnIds: trueColdWidIdxsBatch,

144 | self.fm.labels_batch: labels_batch}

145 |

146 | fetch_tensor = [self.labelingLoss, self.trueEmbNormLoss]

147 |

148 | (fetches, _) = self.fm.sess.run([fetch_tensor,

149 | self.labeling_optim_op],

150 | feed_dict=feed_dict)

151 |

152 | labelingLoss = fetches[0]

153 | trueEmbNormLoss = fetches[1]

154 |

155 | print("LL : {} NormLoss : {}".format(labelingLoss, trueEmbNormLoss))

156 |

157 | newedone = self.fm.reader.val_epochs

158 | if newedone > epochsDone:

159 | print("Epochs : {}".format(newedone))

160 | epochsDone = newedone

161 |

162 | #############################################################################

163 | def _trainColdEmbFromTypesAndDesc(self, epochsToTrain=5):

164 | print("Training Cold Entity Embeddings from Typing Info")

165 |

166 | epochsDone = self.fm.reader.val_epochs

167 |

168 | while self.fm.reader.val_epochs < epochsToTrain:

169 | (left_batch, left_lengths,

170 | right_batch, right_lengths,

171 | wids_batch,

172 | labels_batch, coherence_batch,

173 | wid_idxs_batch, wid_cprobs_batch) = self.fm.reader._next_padded_batch(data_type=1)

174 |

175 | trueColdWidIdxsBatch = []

176 | trueColdWidDescWordVecBatch = []

177 | for wid in wids_batch:

178 | trueColdWidIdxsBatch.append(self.coldwid2idx[wid])

179 | trueColdWidDescWordVecBatch.append(self.coldWid2DescVecs[wid])

180 |

181 | feed_dict = {self.fm.wikidesc_batch: trueColdWidDescWordVecBatch,

182 | self.trueColdEnIds: trueColdWidIdxsBatch,

183 | self.fm.labels_batch: labels_batch}

184 |

185 | fetch_tensor = [self.labelingLoss, self.descLoss, self.trueEmbNormLoss]

186 |

187 | (fetches,_) = self.fm.sess.run([fetch_tensor,

188 | self.combined_optim_op],

189 | feed_dict=feed_dict)

190 |

191 | labelingLoss = fetches[0]

192 | descLoss = fetches[1]

193 | normLoss = fetches[2]

194 |

195 | print("L : {} D : {} NormLoss : {}".format(labelingLoss, descLoss, normLoss))

196 |

197 | newedone = self.fm.reader.val_epochs

198 | if newedone > epochsDone:

199 | print("Epochs : {}".format(newedone))

200 | epochsDone = newedone

201 |

202 | #############################################################################

203 |

204 | def runEval(self):

205 | print("Running Evaluations")

206 | self.fm.reader.reset_validation()

207 | correct = 0

208 | total = 0

209 | totnew = 0

210 | correctnew = 0

211 | while self.fm.reader.val_epochs < 1:

212 | (left_batch, left_lengths,

213 | right_batch, right_lengths,

214 | wids_batch,

215 | labels_batch, coherence_batch,

216 | wid_idxs_batch,

217 | wid_cprobs_batch) = self.fm.reader._next_padded_batch(data_type=1)

218 |

219 | trueColdWidIdxsBatch = []

220 |

221 | for wid in wids_batch:

222 | trueColdWidIdxsBatch.append(self.coldwid2idx[wid])

223 |

224 | feed_dict = {self.fm.sampled_entity_ids: wid_idxs_batch,

225 | self.fm.left_context_embeddings: left_batch,

226 | self.fm.right_context_embeddings: right_batch,

227 | self.fm.left_lengths: left_lengths,

228 | self.fm.right_lengths: right_lengths,

229 | self.fm.coherence_indices: coherence_batch[0],

230 | self.fm.coherence_values: coherence_batch[1],

231 | self.fm.coherence_matshape: coherence_batch[2],

232 | self.trueColdEnIds: trueColdWidIdxsBatch}

233 |

234 | fetch_tensor = [self.trueColdEmb,

235 | self.fm.joint_context_encoded,

236 | self.fm.posterior_model.sampled_entity_embeddings,

237 | self.fm.posterior_model.entity_scores]

238 |

239 | fetched_vals = self.fm.sess.run(fetch_tensor, feed_dict=feed_dict)

240 | [trueColdEmbs, # [B, D]

241 | context_encoded, # [B, D]

242 | neg_entity_embeddings, # [B, N, D]

243 | neg_entity_scores] = fetched_vals # [B, N]

244 |

245 | # [B]

246 | trueColdWidScores = np.sum(trueColdEmbs*context_encoded, axis=1)

247 | entity_scores = neg_entity_scores

248 | entity_scores[:,0] = trueColdWidScores

249 | context_entity_scores = np.exp(entity_scores)/np.sum(np.exp(entity_scores))

250 |

251 | maxIdxs = np.argmax(context_entity_scores, axis=1)

252 | for i in range(0, self.batch_size):

253 | total += 1

254 | if maxIdxs[i] == 0:

255 | correct += 1

256 |

257 | scores_withpriors = context_entity_scores + wid_cprobs_batch

258 |

259 | maxIdxs = np.argmax(scores_withpriors, axis=1)

260 | for i in range(0, self.batch_size):

261 | totnew += 1

262 | if maxIdxs[i] == 0:

263 | correctnew += 1

264 |

265 | print("Context T : {} C : {}".format(total, correct))

266 | print("WPriors T : {} C : {}".format(totnew, correctnew))

267 |

268 | ##############################################################################

269 |

270 | def typeBasedColdEmbExp(self, ckptName="FigerModel-20001"):

271 | ''' Train cold embeddings using wiki desc loss

272 | '''

273 | saver = tf.train.Saver(var_list=tf.all_variables())

274 |

275 | print("Loading Model ... ")

276 | if ckptName == None:

277 | print("Given CKPT Name")

278 | sys.exit()

279 | else:

280 | load_status = self.fm.loadSpecificCKPT(

281 | saver=saver, checkpoint_dir=self.fm.checkpoint_dir,

282 | ckptName=ckptName, attrs=self.fm._attrs)

283 | if not load_status:

284 | print("No model to load. Exiting")

285 | sys.exit(0)

286 |

287 | self._makeDescLossGraph()

288 | self.fm.sess.run(tf.initialize_variables(self.allcoldvars))

289 | self._trainColdEmbFromTypes(epochsToTrain=5)

290 |

291 | self.runEval()

292 |

293 | ##############################################################################

294 |

295 | def typeAndWikiDescBasedColdEmbExp(self, ckptName="FigerModel-20001"):

296 | ''' Train cold embeddings using wiki desc loss

297 | '''

298 | saver = tf.train.Saver(var_list=tf.all_variables())

299 |

300 | print("Loading Model ... ")

301 | if ckptName == None:

302 | print("Given CKPT Name")

303 | sys.exit()

304 | else:

305 | load_status = self.fm.loadSpecificCKPT(

306 | saver=saver, checkpoint_dir=self.fm.checkpoint_dir,

307 | ckptName=ckptName, attrs=self.fm._attrs)

308 | if not load_status:

309 | print("No model to load. Exiting")

310 | sys.exit(0)

311 |

312 | self._makeDescLossGraph()

313 | self.fm.sess.run(tf.initialize_variables(self.allcoldvars))

314 | self._trainColdEmbFromTypesAndDesc(epochsToTrain=5)

315 |

316 | self.runEval()

317 |

318 | # EVALUATION FOR COLD START WHEN INITIALIZING COLD EMB FROM WIKI DESC ENCODING

319 | def wikiDescColdEmbExp(self, ckptName="FigerModel-20001"):

320 | ''' Assign cold entity embeddings as wiki desc encoding

321 | '''

322 | assert self.batch_size == 1

323 | print("Loaded Cold Start Class. ")

324 | print("Size of cold entities : {}".format(len(self.coldWid2DescVecs)))

325 |

326 | saver = tf.train.Saver(var_list=tf.all_variables(), max_to_keep=5)

327 |

328 | print("Loading Model ... ")

329 | if ckptName == None:

330 | print("Given CKPT Name")

331 | sys.exit()

332 | else:

333 | load_status = self.fm.loadSpecificCKPT(

334 | saver=saver, checkpoint_dir=self.fm.checkpoint_dir,

335 | ckptName=ckptName, attrs=self.fm._attrs)

336 | if not load_status:

337 | print("No model to load. Exiting")

338 | sys.exit(0)

339 |

340 | iter_done = self.fm.global_step.eval()

341 | print("[#] Model loaded with iterations done: %d" % iter_done)

342 |

343 | self._makeDescLossGraph()

344 | self.fm.sess.run(tf.initialize_variables(self.allcoldvars))

345 |

346 | # Fill with encoded desc. in order of idx2coldwid

347 | print("Getting Encoded Description Vectors")

348 | descEncodedMatrix = []

349 | for idx in range(0, len(self.idx2coldwid)):

350 | wid = self.idx2coldwid[idx]

351 | desc_vec = self.coldWid2DescVecs[wid]

352 | feed_dict = {self.fm.wikidesc_batch: [desc_vec]}

353 | desc_encoded = self.fm.sess.run(self.fm.wikidescmodel.desc_encoded,

354 | feed_dict=feed_dict)

355 | descEncodedMatrix.append(desc_encoded[0])

356 |

357 | print("Initialization Experiment")

358 | self.runEval()

359 |

360 | print("Assigning Cold Embeddings from Wiki Desc Encoder ...")

361 | self.fm.sess.run(self.assignColdEmbs,

362 | feed_dict={self.coldEnEmbsToAssign:descEncodedMatrix})

363 |

364 | print("After assigning based on Wiki Encoder")

365 | self.runEval()

366 |

367 | ##############################################################################

368 |

--------------------------------------------------------------------------------

/models/figer_model/context_encoder.py:

--------------------------------------------------------------------------------

1 | import time

2 | import tensorflow as tf

3 | import numpy as np

4 |

5 | from models.base import Model

6 |

7 | class ContextEncoderModel(Model):

8 | """Run Forward and Backward LSTM and concatenate last outputs to get

9 | context representation"""

10 |

11 | def __init__(self, num_layers, batch_size, lstm_size,

12 | left_embed_batch, left_lengths, right_embed_batch, right_lengths,

13 | context_encoded_dim, scope_name, device, dropout_keep_prob=1.0):

14 |

15 | self.num_layers = num_layers # Num of layers in the encoder and decoder network

16 | self.num_lstm_layers = 1

17 |

18 | # Left / Right Context Dim.

19 | # Context Representation Dim : 2*lstm_size

20 | self.lstm_size = lstm_size

21 | self.dropout_keep_prob = dropout_keep_prob

22 | self.batch_size = batch_size

23 | self.context_encoded_dim = context_encoded_dim

24 | self.left_context_embeddings = left_embed_batch

25 | self.right_context_embeddings = right_embed_batch

26 |

27 | with tf.variable_scope(scope_name) as sc, tf.device(device) as d:

28 | with tf.variable_scope("left_encoder") as s:

29 | l_encoder_cell = tf.nn.rnn_cell.BasicLSTMCell(

30 | self.lstm_size, state_is_tuple=True)

31 |

32 | l_dropout_cell = tf.nn.rnn_cell.DropoutWrapper(

33 | cell=l_encoder_cell,

34 | input_keep_prob=self.dropout_keep_prob,

35 | output_keep_prob=self.dropout_keep_prob)

36 |

37 | self.left_encoder = tf.nn.rnn_cell.MultiRNNCell(

38 | [l_dropout_cell] * self.num_lstm_layers, state_is_tuple=True)

39 |

40 | self.left_outputs, self.left_states = tf.nn.dynamic_rnn(

41 | cell=self.left_encoder, inputs=self.left_context_embeddings,

42 | sequence_length=left_lengths, dtype=tf.float32)

43 |

44 | with tf.variable_scope("right_encoder") as s:

45 | r_encoder_cell = tf.nn.rnn_cell.BasicLSTMCell(

46 | self.lstm_size, state_is_tuple=True)

47 |

48 | r_dropout_cell = tf.nn.rnn_cell.DropoutWrapper(

49 | cell=r_encoder_cell,

50 | input_keep_prob=self.dropout_keep_prob,

51 | output_keep_prob=self.dropout_keep_prob)

52 |

53 | self.right_encoder = tf.nn.rnn_cell.MultiRNNCell(

54 | [r_dropout_cell] * self.num_lstm_layers, state_is_tuple=True)

55 |

56 | self.right_outputs, self.right_states = tf.nn.dynamic_rnn(

57 | cell=self.right_encoder,

58 | inputs=self.right_context_embeddings,

59 | sequence_length=right_lengths, dtype=tf.float32)

60 |

61 | # Left Context Encoded

62 | # [B, LSTM_DIM]

63 | self.left_last_output = self.get_last_output(

64 | outputs=self.left_outputs, lengths=left_lengths,

65 | name="left_context_encoded")

66 |

67 | # Right Context Encoded

68 | # [B, LSTM_DIM]

69 | self.right_last_output = self.get_last_output(

70 | outputs=self.right_outputs, lengths=right_lengths,

71 | name="right_context_encoded")

72 |

73 | # Context Encoded Vector

74 | self.context_lstm_encoded = tf.concat(

75 | 1, [self.left_last_output, self.right_last_output],

76 | name='context_lstm_encoded')

77 |

78 | # Linear Transformation to get context_encoded_dim

79 | # Layer 1

80 | self.trans_weights = tf.get_variable(

81 | name="context_trans_weights",

82 | shape=[2*self.lstm_size, self.context_encoded_dim],

83 | initializer=tf.random_normal_initializer(

84 | mean=0.0,

85 | stddev=1.0/(100.0)))

86 |

87 | # [B, context_encoded_dim]

88 | context_encoded = tf.matmul(self.context_lstm_encoded,

89 | self.trans_weights)

90 | context_encoded = tf.nn.relu(context_encoded)

91 |

92 | self.hidden_layers = []

93 | for i in range(1, self.num_layers):

94 | weight_matrix = tf.get_variable(

95 | name="context_hlayer_"+str(i),

96 | shape=[self.context_encoded_dim, self.context_encoded_dim],

97 | initializer=tf.random_normal_initializer(

98 | mean=0.0,

99 | stddev=1.0/(100.0)))

100 | self.hidden_layers.append(weight_matrix)

101 |

102 | for i in range(1, self.num_layers):

103 | context_encoded = tf.nn.dropout(

104 | context_encoded, keep_prob=self.dropout_keep_prob)

105 | context_encoded = tf.matmul(context_encoded,

106 | self.hidden_layers[i-1])

107 | context_encoded = tf.nn.relu(context_encoded)

108 |

109 | self.context_encoded = tf.nn.dropout(

110 | context_encoded, keep_prob=self.dropout_keep_prob)

111 |

112 | def get_last_output(self, outputs, lengths, name):

113 | reverse_output = tf.reverse_sequence(input=outputs,

114 | seq_lengths=tf.to_int64(lengths),

115 | seq_dim=1,

116 | batch_dim=0)

117 | en_last_output = tf.slice(input_=reverse_output,

118 | begin=[0,0,0],

119 | size=[self.batch_size, 1, -1])

120 | # [batch_size, h_dim]

121 | encoder_last_output = tf.reshape(en_last_output,

122 | shape=[self.batch_size, -1],

123 | name=name)

124 |

125 | return encoder_last_output

126 |

--------------------------------------------------------------------------------

/models/figer_model/entity_posterior.py:

--------------------------------------------------------------------------------

1 | import time