├── .devcontainer
│   ├── Dockerfile
│   └── devcontainer.json
├── .github
│   └── workflows
│       └── deploy.yml
├── .gitignore
├── LICENSE
├── Makefile
├── README.md
├── small-rust-tutorial
│   ├── .gitignore
│   ├── book.toml
│   └── src
│       ├── .chapter_9.md
│       ├── SUMMARY.md
│       ├── chapter_1.md
│       ├── chapter_2.md
│       ├── chapter_3.md
│       ├── chapter_4.md
│       ├── chapter_5.md
│       ├── chapter_6.md
│       ├── chapter_7.md
│       ├── chapter_8.md
│       ├── chapter_9.md
│       ├── faq.md
│       ├── guest_lectures.md
│       ├── learn_rust_tips.md
│       ├── preface.md
│       ├── projects.md
│       └── sustainability.md
└── week5
    ├── mplambda.py
    └── processbench.py

/.devcontainer/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM mcr.microsoft.com/devcontainers/rust:0-1-bullseye

2 |

3 | # Include lld linker to improve build times either by using environment variable

4 | # RUSTFLAGS="-C link-arg=-fuse-ld=lld" or with Cargo's configuration file (i.e see .cargo/config.toml).

5 | RUN apt-get update && export DEBIAN_FRONTEND=noninteractive \

6 | && apt-get -y install clang lld \

7 | && apt-get autoremove -y && apt-get clean -y

8 |

--------------------------------------------------------------------------------

/.devcontainer/devcontainer.json:

--------------------------------------------------------------------------------

1 | // For format details, see https://aka.ms/devcontainer.json. For config options, see the README at:

2 | // https://github.com/microsoft/vscode-dev-containers/tree/v0.245.2/containers/rust

3 | {

4 | "name": "Rust",

5 | "build": {

6 | "dockerfile": "Dockerfile",

7 | "args": {

8 | // Use the VARIANT arg to pick a Debian OS version: buster, bullseye

9 | // Use bullseye when on local on arm64/Apple Silicon.

10 | "VARIANT": "buster"

11 | }

12 | },

13 | "runArgs": [

14 | "--cap-add=SYS_PTRACE",

15 | "--security-opt",

16 | "seccomp=unconfined"

17 | ],

18 |

19 | // Configure tool-specific properties.

20 | "customizations": {

21 | // Configure properties specific to VS Code.

22 | "vscode": {

23 | // Set *default* container specific settings.json values on container create.

24 | "settings": {

25 | "lldb.executable": "/usr/bin/lldb",

26 | // VS Code doesn't watch files under ./target

27 | "files.watcherExclude": {

28 | "**/target/**": true

29 | },

30 | "rust-analyzer.checkOnSave.command": "clippy"

31 | },

32 |

33 | // Add the IDs of extensions you want installed when the container is created.

34 | "extensions": [

35 | "rust-lang.rust-analyzer",

36 | "GitHub.copilot-nightly",

37 | "GitHub.copilot-labs",

38 | "ms-vscode.makefile-tools"

39 | ]

40 | }

41 | },

42 |

43 | // Use 'forwardPorts' to make a list of ports inside the container available locally.

44 | // "forwardPorts": [],

45 |

46 | // Use 'postCreateCommand' to run commands after the container is created.

47 | "postCreateCommand": "./setup.sh",

48 |

49 | // Comment out to connect as root instead. More info: https://aka.ms/vscode-remote/containers/non-root.

50 | "remoteUser": "vscode"

51 | }

52 |

--------------------------------------------------------------------------------

/.github/workflows/deploy.yml:

--------------------------------------------------------------------------------

1 | name: CI

2 |

3 | on:

4 | push:

5 | branches: [ main ]

6 | pull_request:

7 | branches: [ main ]

8 |

9 | jobs:

10 | build:

11 | name: Build, Test and Deploy

12 | runs-on: ubuntu-latest

13 | steps:

14 | - uses: actions/checkout@v1

15 | - uses: actions-rs/toolchain@v1

16 | with:

17 | toolchain: stable

18 | - run: (test -x $HOME/.cargo/bin/mdbook || cargo install --vers "^0.4" mdbook)

19 | - run: make deploy

20 |

21 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Generated by Cargo

2 | # will have compiled files and executables

3 | /target/

4 |

5 | # Remove Cargo.lock from gitignore if creating an executable, leave it for libraries

6 | # More information here https://doc.rust-lang.org/cargo/guide/cargo-toml-vs-cargo-lock.html

7 | Cargo.lock

8 |

9 | # These are backup files generated by rustfmt

10 | **/*.rs.bk

11 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Creative Commons Legal Code

2 |

3 | Attribution-NonCommercial 4.0 International

4 |

5 | CREATIVE COMMONS CORPORATION IS NOT A LAW FIRM AND DOES NOT PROVIDE

6 | LEGAL SERVICES. DISTRIBUTION OF THIS DOCUMENT DOES NOT CREATE AN

7 | ATTORNEY-CLIENT RELATIONSHIP. CREATIVE COMMONS PROVIDES THIS

8 | INFORMATION ON AN "AS-IS" BASIS. CREATIVE COMMONS MAKES NO WARRANTIES

9 | REGARDING THE USE OF THIS DOCUMENT OR THE INFORMATION OR WORKS

10 | PROVIDED HEREUNDER, AND DISCLAIMS LIABILITY FOR DAMAGES RESULTING FROM

11 | THE USE OF THIS DOCUMENT OR THE INFORMATION OR WORKS PROVIDED

12 | HEREUNDER.

13 |

14 | By exercising the Licensed Rights (defined below), You accept and agree to be bound by the terms and conditions of this Creative Commons Attribution-NonCommercial 4.0 International Public License ("Public License"). To the extent this Public License may be interpreted as a contract, You are granted the Licensed Rights in consideration of Your acceptance of these terms and conditions, and the Licensor grants You such rights in consideration of benefits the Licensor receives from making the Licensed Material available under these terms and conditions.

15 |

16 | Section 1 – Definitions.

17 |

18 | Adapted Material means material subject to Copyright and Similar Rights that is derived from or based upon the Licensed Material and in which the Licensed Material is translated, altered, arranged, transformed, or otherwise modified in a manner requiring permission under the Copyright and Similar Rights held by the Licensor. For purposes of this Public License, where the Licensed Material is a musical work, performance, or sound recording, Adapted Material is always produced where the Licensed Material is synched in timed relation with a moving image.

19 | Adapter's License means the license You apply to Your Copyright and Similar Rights in Your contributions to Adapted Material in accordance with the terms and conditions of this Public License.

20 | Copyright and Similar Rights means copyright and/or similar rights closely related to copyright including, without limitation, performance, broadcast, sound recording, and Sui Generis Database Rights, without regard to how the rights are labeled or categorized. For purposes of this Public License, the rights specified in Section 2(b)(1)-(2) are not Copyright and Similar Rights.

21 | Effective Technological Measures means those measures that, in the absence of proper authority, may not be circumvented under laws fulfilling obligations under Article 11 of the WIPO Copyright Treaty adopted on December 20, 1996, and/or similar international agreements.

22 | Exceptions and Limitations means fair use, fair dealing, and/or any other exception or limitation to Copyright and Similar Rights that applies to Your use of the Licensed Material.

23 | Licensed Material means the artistic or literary work, database, or other material to which the Licensor applied this Public License.

24 | Licensed Rights means the rights granted to You subject to the terms and conditions of this Public License, which are limited to all Copyright and Similar Rights that apply to Your use of the Licensed Material and that the Licensor has authority to license.

25 | Licensor means the individual(s) or entity(ies) granting rights under this Public License.

26 | NonCommercial means not primarily intended for or directed towards commercial advantage or monetary compensation. For purposes of this Public License, the exchange of the Licensed Material for other material subject to Copyright and Similar Rights by digital file-sharing or similar means is NonCommercial provided there is no payment of monetary compensation in connection with the exchange.

27 | Share means to provide material to the public by any means or process that requires permission under the Licensed Rights, such as reproduction, public display, public performance, distribution, dissemination, communication, or importation, and to make material available to the public including in ways that members of the public may access the material from a place and at a time individually chosen by them.

28 | Sui Generis Database Rights means rights other than copyright resulting from Directive 96/9/EC of the European Parliament and of the Council of 11 March 1996 on the legal protection of databases, as amended and/or succeeded, as well as other essentially equivalent rights anywhere in the world.

29 | You means the individual or entity exercising the Licensed Rights under this Public License. Your has a corresponding meaning.

30 | Section 2 – Scope.

31 |

32 | License grant.

33 | Subject to the terms and conditions of this Public License, the Licensor hereby grants You a worldwide, royalty-free, non-sublicensable, non-exclusive, irrevocable license to exercise the Licensed Rights in the Licensed Material to:

34 | reproduce and Share the Licensed Material, in whole or in part, for NonCommercial purposes only; and

35 | produce, reproduce, and Share Adapted Material for NonCommercial purposes only.

36 | Exceptions and Limitations. For the avoidance of doubt, where Exceptions and Limitations apply to Your use, this Public License does not apply, and You do not need to comply with its terms and conditions.

37 | Term. The term of this Public License is specified in Section 6(a).

38 | Media and formats; technical modifications allowed. The Licensor authorizes You to exercise the Licensed Rights in all media and formats whether now known or hereafter created, and to make technical modifications necessary to do so. The Licensor waives and/or agrees not to assert any right or authority to forbid You from making technical modifications necessary to exercise the Licensed Rights, including technical modifications necessary to circumvent Effective Technological Measures. For purposes of this Public License, simply making modifications authorized by this Section 2(a)(4) never produces Adapted Material.

39 | Downstream recipients.

40 | Offer from the Licensor – Licensed Material. Every recipient of the Licensed Material automatically receives an offer from the Licensor to exercise the Licensed Rights under the terms and conditions of this Public License.

41 | No downstream restrictions. You may not offer or impose any additional or different terms or conditions on, or apply any Effective Technological Measures to, the Licensed Material if doing so restricts exercise of the Licensed Rights by any recipient of the Licensed Material.

42 | No endorsement. Nothing in this Public License constitutes or may be construed as permission to assert or imply that You are, or that Your use of the Licensed Material is, connected with, or sponsored, endorsed, or granted official status by, the Licensor or others designated to receive attribution as provided in Section 3(a)(1)(A)(i).

43 | Other rights.

44 |

45 | Moral rights, such as the right of integrity, are not licensed under this Public License, nor are publicity, privacy, and/or other similar personality rights; however, to the extent possible, the Licensor waives and/or agrees not to assert any such rights held by the Licensor to the limited extent necessary to allow You to exercise the Licensed Rights, but not otherwise.

46 | Patent and trademark rights are not licensed under this Public License.

47 | To the extent possible, the Licensor waives any right to collect royalties from You for the exercise of the Licensed Rights, whether directly or through a collecting society under any voluntary or waivable statutory or compulsory licensing scheme. In all other cases the Licensor expressly reserves any right to collect such royalties, including when the Licensed Material is used other than for NonCommercial purposes.

48 | Section 3 – License Conditions.

49 |

50 | Your exercise of the Licensed Rights is expressly made subject to the following conditions.

51 |

52 | Attribution.

53 |

54 | If You Share the Licensed Material (including in modified form), You must:

55 |

56 | retain the following if it is supplied by the Licensor with the Licensed Material:

57 | identification of the creator(s) of the Licensed Material and any others designated to receive attribution, in any reasonable manner requested by the Licensor (including by pseudonym if designated);

58 | a copyright notice;

59 | a notice that refers to this Public License;

60 | a notice that refers to the disclaimer of warranties;

61 | a URI or hyperlink to the Licensed Material to the extent reasonably practicable;

62 | indicate if You modified the Licensed Material and retain an indication of any previous modifications; and

63 | indicate the Licensed Material is licensed under this Public License, and include the text of, or the URI or hyperlink to, this Public License.

64 | You may satisfy the conditions in Section 3(a)(1) in any reasonable manner based on the medium, means, and context in which You Share the Licensed Material. For example, it may be reasonable to satisfy the conditions by providing a URI or hyperlink to a resource that includes the required information.

65 | If requested by the Licensor, You must remove any of the information required by Section 3(a)(1)(A) to the extent reasonably practicable.

66 | If You Share Adapted Material You produce, the Adapter's License You apply must not prevent recipients of the Adapted Material from complying with this Public License.

67 | Section 4 – Sui Generis Database Rights.

68 |

69 | Where the Licensed Rights include Sui Generis Database Rights that apply to Your use of the Licensed Material:

70 |

71 | for the avoidance of doubt, Section 2(a)(1) grants You the right to extract, reuse, reproduce, and Share all or a substantial portion of the contents of the database for NonCommercial purposes only;

72 | if You include all or a substantial portion of the database contents in a database in which You have Sui Generis Database Rights, then the database in which You have Sui Generis Database Rights (but not its individual contents) is Adapted Material; and

73 | You must comply with the conditions in Section 3(a) if You Share all or a substantial portion of the contents of the database.

74 | For the avoidance of doubt, this Section 4 supplements and does not replace Your obligations under this Public License where the Licensed Rights include other Copyright and Similar Rights.

75 | Section 5 – Disclaimer of Warranties and Limitation of Liability.

76 |

77 | Unless otherwise separately undertaken by the Licensor, to the extent possible, the Licensor offers the Licensed Material as-is and as-available, and makes no representations or warranties of any kind concerning the Licensed Material, whether express, implied, statutory, or other. This includes, without limitation, warranties of title, merchantability, fitness for a particular purpose, non-infringement, absence of latent or other defects, accuracy, or the presence or absence of errors, whether or not known or discoverable. Where disclaimers of warranties are not allowed in full or in part, this disclaimer may not apply to You.

78 | To the extent possible, in no event will the Licensor be liable to You on any legal theory (including, without limitation, negligence) or otherwise for any direct, special, indirect, incidental, consequential, punitive, exemplary, or other losses, costs, expenses, or damages arising out of this Public License or use of the Licensed Material, even if the Licensor has been advised of the possibility of such losses, costs, expenses, or damages. Where a limitation of liability is not allowed in full or in part, this limitation may not apply to You.

79 | The disclaimer of warranties and limitation of liability provided above shall be interpreted in a manner that, to the extent possible, most closely approximates an absolute disclaimer and waiver of all liability.

80 | Section 6 – Term and Termination.

81 |

82 | This Public License applies for the term of the Copyright and Similar Rights licensed here. However, if You fail to comply with this Public License, then Your rights under this Public License terminate automatically.

83 | Where Your right to use the Licensed Material has terminated under Section 6(a), it reinstates:

84 |

85 | automatically as of the date the violation is cured, provided it is cured within 30 days of Your discovery of the violation; or

86 | upon express reinstatement by the Licensor.

87 | For the avoidance of doubt, this Section 6(b) does not affect any right the Licensor may have to seek remedies for Your violations of this Public License.

88 | For the avoidance of doubt, the Licensor may also offer the Licensed Material under separate terms or conditions or stop distributing the Licensed Material at any time; however, doing so will not terminate this Public License.

89 | Sections 1, 5, 6, 7, and 8 survive termination of this Public License.

90 | Section 7 – Other Terms and Conditions.

91 |

92 | The Licensor shall not be bound by any additional or different terms or conditions communicated by You unless expressly agreed.

93 | Any arrangements, understandings, or agreements regarding the Licensed Material not stated herein are separate from and independent of the terms and conditions of this Public License.

94 | Section 8 – Interpretation.

95 |

96 | For the avoidance of doubt, this Public License does not, and shall not be interpreted to, reduce, limit, restrict, or impose conditions on any use of the Licensed Material that could lawfully be made without permission under this Public License.

97 | To the extent possible, if any provision of this Public License is deemed unenforceable, it shall be automatically reformed to the minimum extent necessary to make it enforceable. If the provision cannot be reformed, it shall be severed from this Public License without affecting the enforceability of the remaining terms and conditions.

98 | No term or condition of this Public License will be waived and no failure to comply consented to unless expressly agreed to by the Licensor.

99 | Nothing in this Public License constitutes or may be interpreted as a limitation upon, or waiver of, any privileges and immunities that apply to the Licensor or You, including from the legal processes of any jurisdiction or authority.

100 | Creative Commons is not a party to its public licenses. Notwithstanding, Creative Commons may elect to apply one of its public licenses to material it publishes and in those instances will be considered the “Licensor.” The text of the Creative Commons public licenses is dedicated to the public domain under the CC0 Public Domain Dedication. Except for the limited purpose of indicating that material is shared under a Creative Commons public license or as otherwise permitted by the Creative Commons policies published at creativecommons.org/policies, Creative Commons does not authorize the use of the trademark “Creative Commons” or any other trademark or logo of Creative Commons without its prior written consent including, without limitation, in connection with any unauthorized modifications to any of its public licenses or any other arrangements, understandings, or agreements concerning use of licensed material. For the avoidance of doubt, this paragraph does not form part of the public licenses.

101 |

102 | Creative Commons may be contacted at creativecommons.org.

103 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | install:

2 | cargo install mdbook

3 |

4 | build:

5 | mdbook build small-rust-tutorial

6 |

7 | serve:

8 | mdbook serve -p 8000 -n 127.0.0.1 small-rust-tutorial

9 |

10 | format:

11 | cargo fmt --quiet

12 |

13 | lint:

14 | cargo clippy --quiet

15 |

16 | test:

17 | cargo test --quiet

18 |

19 | linkcheck:

20 | mdbook test -L small-rust-tutorial

21 |

22 | run:

23 | cargo run

24 |

25 | release:

26 | cargo build --release

27 |

28 | deploy:

29 | #if git is not configured, configure it

30 | if [ -z "$(git config --global user.email)" ]; then git config --global user.email "noah.gift@gmail.com" &&\

31 | git config --global user.name "Noah Gift"; fi

32 |

33 | #install mdbook if not installed

34 | if [ ! -x "$(command -v mdbook)" ]; then cargo install mdbook; fi

35 | @echo "====> deploying to github"

36 | # if worktree exists, remove it: git worktree remove --force /tmp/book

37 | # otherwise add it: git worktree add /tmp/book gh-pages

38 | if [ -d /tmp/book ]; then git worktree remove --force /tmp/book; fi

39 | git worktree add -f /tmp/book gh-pages

40 | mdbook build small-rust-tutorial

41 | rm -rf /tmp/book/*

42 | cp -rp small-rust-tutorial/book/* /tmp/book/

43 | cd /tmp/book && \

44 | git add -A && \

45 | git commit -m "deployed on $(shell date) by ${USER}" && \

46 | git push origin gh-pages

47 | git update-ref -d refs/heads/gh-pages

48 | git push --force

49 |

50 | all: format lint test run

51 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # A Brief Rust Tutorial

2 | A brief tutorial on Rust for the O'Reilly book Enterprise MLOps, as well as for the Duke Cloud Computing for Data course.

3 | website here: https://nogibjj.github.io/rust-tutorial/ *(btw, yes, I made this image using Rust)*

4 |

5 |

6 |

--------------------------------------------------------------------------------

/small-rust-tutorial/.gitignore:

--------------------------------------------------------------------------------

1 | book

2 |

--------------------------------------------------------------------------------

/small-rust-tutorial/book.toml:

--------------------------------------------------------------------------------

1 | [book]

2 | authors = ["Noah Gift"]

3 | language = "en"

4 | multilingual = false

5 | src = "src"

6 | title = "Small Rust Tutorial For MLOps"

7 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/.chapter_9.md:

--------------------------------------------------------------------------------

1 | # Chapter 9-Serverless

2 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/SUMMARY.md:

--------------------------------------------------------------------------------

1 | # Summary

2 |

3 | - [Learning Rust Tips](./learn_rust_tips.md)

4 | - [Preface](./preface.md)

5 | - [Chapter 1-Getting Started with Rust](./chapter_1.md)

6 | - [Chapter 2-Getting Started with Cloud Computing](./chapter_2.md)

7 | - [Chapter 3-Week 3-Virtualization and Containers](./chapter_3.md)

8 | - [Chapter 4-Week 4-Containerized-Rust](./chapter_4.md)

9 | - [Chapter 5-Week 5-Distributed Computing and Concurrency with Rust](./chapter_5.md)

10 | - [Chapter 6-Week6-Distributed Computing](./chapter_6.md)

11 | - [Chapter 7-Week7-Serverless](./chapter_7.md)

12 | - [Chapter 8-Week8-Serverless](./chapter_8.md)

13 | - [Chapter 9-AI Assisted Coding & Big Data Systems](./chapter_9.md)

14 | - [Frequently Asked Questions](./faq.md)

15 | - [Projects](./projects.md)

16 | - [Guest Lecturers](./guest_lectures.md)

17 | - [Sustainability](./sustainability.md)

--------------------------------------------------------------------------------

/small-rust-tutorial/src/chapter_1.md:

--------------------------------------------------------------------------------

1 | # Chapter 1-Week 1 (Getting Started With Rust for Cloud, Data and MLOps)

2 |

3 | *(btw, yes, I made this image using Rust)*

4 |

5 |

6 | ## Demo Video of building a Command-Line Tool in Rust

7 |

8 |

9 |

10 | * [Link to: Assimilate-Python-For-Rust live demo](https://www.youtube.com/watch?v=wl77SW57odA&t=972s)

11 |

12 | ## Graduate Cloud Computing for Data w/ Rust first approach

13 |

14 | * Heuristic: Rust if you can, Python if you must

15 | * Refer to these resources when needed:

16 | * [Online Book Cloud Computing for Data](https://paiml.com/docs/home/books/cloud-computing-for-data/)

17 | * [Developing on AWS with C#](https://d1.awsstatic.com/developer-center/Developing-on-AWS-with-CSharp.pdf)

18 | * [Syllabus](https://noahgift.github.io/cloud-data-analysis-at-scale/syllabus)

19 | * [Project](https://noahgift.github.io/cloud-data-analysis-at-scale/projects)

20 | * [New Rust Guide](https://nogibjj.github.io/rust-tutorial/)

21 | * [GitHub Template Rust New Projects](https://github.com/noahgift/rust-new-project-template)

22 | * [Rust MLOps Template](https://github.com/nogibjj/rust-mlops-template)

23 | * [Building Cloud Computing Solutions at Scale Specialization](https://www.coursera.org/specializations/building-cloud-computing-solutions-at-scale) **You should refer to this guide often, and this Rust tutorial supplements it**

24 |

25 | ### Key Goals in Semester

26 |

27 | * ~1,500 Rust projects = 100 Students * 15 Weeks

28 | * Build resume-worthy projects

29 | * Projects should be runnable with minimal instructions as command-line tools or microservices deployed to the cloud

30 |

31 | ### How to Accomplish Goals

32 |

33 | #### Two different demo channels

34 |

35 | * Weekly Learning Demo: Projects can take 10-60 minutes on average to complete (Text only explanation, screencast optional). You must show the code via the link and explain it via `README.md`.

36 | * Weekly Project Progress Demo: Demo via screencast, required. The demo should be 3-7 minutes.

37 |

38 | #### Two Different Portfolio Styles

39 |

40 | ##### Weekly Learning Repo Spec

41 |

42 | * The weekly learning repo should mimic this style: [https://github.com/nogibjj/rust-mlops-template](https://github.com/nogibjj/rust-mlops-template), in which many tiny projects are built automatically by the `Makefile`: [https://github.com/nogibjj/rust-mlops-template/blob/main/Makefile](https://github.com/nogibjj/rust-mlops-template/blob/main/Makefile)

43 |

44 | ##### Big Projects Repo Spec

45 |

46 | Each "big" project should have a dedicated repo for it; a good example is the following repo: [https://github.com/noahgift/rdedupe](https://github.com/noahgift/rdedupe). Please also follow these additional guidelines:

47 |

48 | * Each repo needs a well-written README.md with an architectural diagram

49 | * Each repo needs a GitHub release (see example here: [https://github.com/rust-lang/mdBook/releases](https://github.com/rust-lang/mdBook/releases)) where a person can run your binary.

50 | * Each repo needs a containerized version of your project that users can either build themselves or `docker pull` from a public container registry like Docker Hub: [Docker Hub](https://hub.docker.com)

51 | * I would encourage advanced students to build a library for one of your projects and submit it to crates.io: [https://crates.io](https://crates.io) if it benefits the Rust community (Don't publish junk)

52 | * Each repo needs to publish a benchmark showing performance. Advanced students may want to consider benchmarking your Rust project against a Python project

53 | * You should default toward building command-line tools with Clap: [https://crates.io/crates/clap](https://crates.io/crates/clap) and web applications with Actix: [https://crates.io/crates/actix](https://crates.io/crates/actix), unless you have a compelling reason to switch to a new framework.

54 | * Your repo should include continuous integration steps: test, format, lint, publish (deploy as a binary or deploy as a Microservice).

55 | * Microservices should include logging; see rust-mlops-template for example.

56 | * A good starting point is this Rust new project template: [https://github.com/noahgift/rust-new-project-template](https://github.com/noahgift/rust-new-project-template)

57 | * Each project should include a reproducible GitHub .devcontainer workflow; see rust-mlops-template for example.

58 |

59 | ### Structure Each Week

60 |

61 | * 3:30-4:45 - Teach

62 | * 4:45-5:00 - Break

63 | * 5:00-6:00 - Teach

64 |

65 | #### Projects

66 |

67 | ##### Team Final Project (Team Size: 3-4): Rust MLOps Microservice

68 |

69 | * Build an end-to-end MLOps solution that invokes a model in a cloud platform using only Rust technology (i.e., pure Rust code). Examples could include a PyTorch model, a Hugging Face model, or any model packaged with a microservice. (see the guide above about specs)

70 |

71 | ##### Individual Project #1: Rust CLI

72 |

73 | * Build a useful command-line tool in data engineering or machine learning engineering. (see the guide above about specs)

74 |

75 | ##### Individual Project #2: Kubernetes (or similar) Microservice in Rust

76 |

77 | * Build a functional web microservice in data engineering or machine learning engineering. (see the guide above about specs)

78 |

79 | ##### Individual Project #3: Interact with Big Data in Rust

80 |

81 | * Build a functional web microservice or CLI in data engineering or machine learning engineering that uses a large data platform. (see the guide above about specs)

82 |

83 | ##### Individual Project #4: Serverless Data Engineering Pipeline with Rust

84 |

85 | * Build a useful, serverless application in Rust. (see the guide above about specs) Also see [https://noahgift.github.io/cloud-data-analysis-at-scale/projects#project-4](https://noahgift.github.io/cloud-data-analysis-at-scale/projects#project-4).

86 |

87 | ##### Optional Advanced Individual Projects

88 |

89 | For advanced students, feel free to substitute one of the projects for these domains:

90 |

91 | * WebAssembly Rust: Follow the above guidelines, but port your deploy target to Rust WebAssembly. For example, you could run a Hugging Face model in the browser.

92 |

93 | * Build an MLOps platform in Rust that could be a commercial solution (just a prototype)

94 |

95 | * Build a Rust Game that uses MLOps and runs in the cloud

96 |

97 | * (Or something else that challenges you)

98 |

99 | ### Onboarding Day 1

100 |

101 | * GitHub Codespaces with Copilot

102 | * AWS Learner Labs

103 | * Azure Free Credits

104 | * More TBD (AWS Credits, etc.)

105 |

106 | ### Getting Started with GitHub Codespaces for Rust

107 |

108 | #### rust-new-project-template

109 |

110 | All Rust projects can follow this pattern:

111 |

112 | 1. Create a new repo using Rust New Project Template: [https://github.com/noahgift/rust-new-project-template](https://github.com/noahgift/rust-new-project-template)

113 | 2. Create a new Codespace and use it

114 | 3. Use `main.rs` to handle the CLI and `lib.rs` to handle the logic, and import `clap` in `Cargo.toml` as shown in this project.

115 | 4. Use `cargo init --name hello`, or whatever name you want to give your project.

116 | 5. Put your "ideas" in as comments in Rust to seed GitHub Copilot, e.g., `// build an add function`

117 | 6. Run `make format`, i.e., `cargo fmt --quiet`

118 | 7. Run `make lint` i.e. `cargo clippy --quiet`

119 | 8. Run project: `cargo run -- --help`

120 | 9. Push your changes so that GitHub Actions can run the `format` check, the `lint` check, and other actions such as binary deploy.
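
The comment-seeding step in the list above can be sketched as follows. The comment acts as the prompt, and the function under it is the kind of completion you would then review and edit. The name `add` and the `i32` signature are assumptions chosen for illustration; they are not taken from the template repo.

```rust
// build an add function
// (The comment above is the seed. The function below is a hypothetical
// completion; `add` and `i32` are illustrative choices only.)
pub fn add(a: i32, b: i32) -> i32 {
    a + b
}

#[cfg(test)]
mod tests {
    use super::*;

    // A small test keeps a suggested completion honest.
    #[test]
    fn adds_two_numbers() {
        assert_eq!(add(2, 3), 5);
    }
}
```

Placed in `lib.rs`, this would be exercised by `make test`, assuming the project's Makefile wraps `cargo test` as the one in this repo does.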

121 |

122 |

123 | This is an emerging pattern that is well suited to systems programming in Rust.

124 |

125 |

126 |

127 | Repo example here: [https://github.com/nogibjj/hello-rust](https://github.com/nogibjj/hello-rust)

128 |

129 | #### Reproduce

130 | A good starting point for a new Rust project

131 |

132 | To run: `cargo run -- marco --name "Marco"`

133 | Be careful to use the NAME of the project in the `Cargo.toml` to call `lib.rs` as in:

134 |

135 | ```

136 | [package]

137 | name = "hello"

138 | ```

139 |

140 | For example, see the name `hello` invoked alongside `marco_polo`, which is in `lib.rs`.

141 |

142 | `lib.rs` code:

143 |

144 | ```rust

145 | /* A Marco Polo game. */

146 |

147 | /* Accepts a string with a name.

148 | If the name is "Marco", returns "Polo".

149 | If the name is "any other value", it returns "Marco".

150 | */

151 | pub fn marco_polo(name: &str) -> String {

152 | if name == "Marco" {

153 | "Polo".to_string()

154 | } else {

155 | "Marco".to_string()

156 | }

157 | }

158 | ```
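Because the logic lives in `lib.rs`, it can be checked without going through the CLI. The following is a minimal sketch of such a check; the function body is repeated so the block compiles on its own, and the assertions in `main` are illustrative additions, not part of the template.

```rust
/// Copy of `marco_polo` from `lib.rs`, repeated so this sketch compiles alone.
pub fn marco_polo(name: &str) -> String {
    if name == "Marco" {
        "Polo".to_string()
    } else {
        "Marco".to_string()
    }
}

fn main() {
    // The comparison is exact and case-sensitive: only "Marco" yields "Polo".
    assert_eq!(marco_polo("Marco"), "Polo");
    assert_eq!(marco_polo("marco"), "Marco");
    assert_eq!(marco_polo(""), "Marco");
    println!("all checks passed");
}
```

In a real project these assertions would more likely sit in a `#[test]` function and run with `cargo test`.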

159 |

160 |

161 | `main.rs` code:

162 |

163 | ```rust

164 | fn main() {

165 | let args = Cli::parse();

166 | match args.command {

167 | Some(Commands::Marco { name }) => {

168 | println!("{}", hello::marco_polo(&name));

169 | }

170 | None => println!("No command was used"),

171 | }

172 | }

173 | ```
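The `main.rs` excerpt above relies on a `Cli` struct and a `Commands` enum that `clap` derives and that are not shown here. To make the dispatch easier to follow, here is a rough std-only sketch of the same flow. The `Commands` enum, `parse`, and `run` below are hypothetical stand-ins written for this illustration; the real project uses `clap`, which also handles help text, errors, and short flags.

```rust
/// Hypothetical stand-in for the clap-derived `Commands` enum used by `main.rs`.
#[derive(Debug, PartialEq)]
enum Commands {
    Marco { name: String },
}

/// Same logic as `marco_polo` in `lib.rs`, repeated to keep the sketch standalone.
fn marco_polo(name: &str) -> String {
    if name == "Marco" {
        "Polo".to_string()
    } else {
        "Marco".to_string()
    }
}

/// Hand-rolled parser for `marco --name <NAME>`; anything else yields `None`.
fn parse(args: &[&str]) -> Option<Commands> {
    match args {
        ["marco", "--name", name] => Some(Commands::Marco {
            name: name.to_string(),
        }),
        _ => None,
    }
}

/// Mirrors the `match args.command` dispatch in `main.rs`, returning the text to print.
fn run(args: &[&str]) -> String {
    match parse(args) {
        Some(Commands::Marco { name }) => marco_polo(&name),
        None => "No command was used".to_string(),
    }
}

fn main() {
    // Skip argv[0], which is the program name.
    let owned: Vec<String> = std::env::args().skip(1).collect();
    let args: Vec<&str> = owned.iter().map(String::as_str).collect();
    println!("{}", run(&args));
}
```

Keeping `run` separate from `main` means the dispatch can be tested with plain slices instead of real process arguments.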

174 |

175 |

176 | ##### References

177 |

178 | * [Cargo Book](https://doc.rust-lang.org/cargo/)

179 | * [The Rust Programming Language Official Tutorial](https://doc.rust-lang.org/book/)

180 | * [Comprehensive Rust Google Tutorial](https://google.github.io/comprehensive-rust/)

181 | * [rust-cli-template](https://github.com/kbknapp/rust-cli-template)

182 | * [Command-Line Rust](https://www.oreilly.com/library/view/command-line-rust/9781098109424/)

183 | * [Switching to Rust from Python (Live Rough Draft Series)](https://learning.oreilly.com/videos/switching-to-rust/01252023VIDEOPAIML/)

184 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/chapter_2.md:

--------------------------------------------------------------------------------

1 | # Chapter 2-Week 2 (Up and Running with Cloud Computing)

2 |

3 | Goal: Up and running with Cloud Computing technology

4 |

5 | ## Part 1: Getting Started with Cloud Computing Foundations

6 |

7 | * [Review Foundations of AWS Cloud Computing Slides](https://docs.google.com/presentation/d/1lOuZsW7SQstJyBeavXTHwBco4OIoQRkI/edit#slide=id.p1)

8 |

9 |

10 | ### High Level Summary

11 |

12 | * Three ways to interact with AWS: Console, Terminal and SDK (Rust, C#, Python, etc)

13 |

14 | #### Demo

15 |

16 | * Demo console, cli, sdk

17 |

18 | [Setup Rust in AWS Cloud 9 Direct Link](https://www.youtube.com/watch?v=R8JnZ4sY4ks)

19 |

20 |

21 |

22 |

23 | #### Related videos

24 |

25 | * [Install Rust Cloud9](https://learning.oreilly.com/videos/52-weeks-of/080232022VIDEOPAIML/080232022VIDEOPAIML-c1_s17/)

26 | * [Learn AWS CloudShell](https://learning.oreilly.com/videos/52-weeks-of/080232022VIDEOPAIML/080232022VIDEOPAIML-c1_s1/)

27 | * [Powershell EC2 AWS CloudShell](https://learning.oreilly.com/videos/52-weeks-of/080232022VIDEOPAIML/080232022VIDEOPAIML-c1_s2/)

28 |

29 | ## Part 2: Developing Effective Technical Communication

30 |

31 | * Remote work isn't going away; the ability to work async is critical to success

32 | * Some tips on [Effective Technical Communication](https://paiml.com/docs/home/books/cloud-computing-for-data/chapter01-getting-started/#effective-async-technical-discussions)

33 |

34 | ### High Level Summary

35 |

36 | If someone cannot reproduce what you did, why would they hire you???

37 |

38 | * Build 100% reproducible code: **If not automated it is broken**

39 | * Automatically tested via GitHub

40 | * Automatically linted via GitHub

41 | * Automatically formatted (check for compliance) via GitHub

42 | * Automatically deployed via GitHub (packages, containers, Microservice)

43 | * Automatically interactive (people can extend) with GitHub [.devcontainers](https://docs.github.com/en/codespaces/setting-up-your-project-for-codespaces/adding-a-dev-container-configuration/introduction-to-dev-containers)

44 | * Incredible `README.md` that shows clearly what you are doing and an architectural diagram.

45 | * Optional video demo 3-7 minutes (that shows what you did)

46 | * Include portfolio

47 | * Consider using `rust` [mdbook](https://rust-lang.github.io/mdBook/) (what I built this tutorial in) for an extra-special touch.

48 |

49 | ## Part 3: Using AWS Cloud and Azure Cloud with SDK

50 |

51 | ### Demo

52 |

53 | #### AWS Lambda Rust Marco Polo

54 |

55 | * [AWS Lambda Build and Deploy with Rust-Direct Link](https://www.youtube.com/watch?v=jUTiHUTfGYo)

56 |

57 |

58 |

59 | * [Source for Marco Polo Rust Lambda](https://github.com/nogibjj/rust-mlops-template/tree/main/marco-polo-lambda)

60 |

61 | `main.rs` [direct link](https://github.com/nogibjj/rust-mlops-template/blob/main/marco-polo-lambda/src/main.rs).

62 |

63 | ```rust

64 | use lambda_runtime::{run, service_fn, Error, LambdaEvent};

65 | use serde::{Deserialize, Serialize};

66 |

67 | #[derive(Deserialize)]

68 | struct Request {

69 | name: String,

70 | }

71 |

72 | #[derive(Serialize)]

73 | struct Response {

74 | req_id: String,

75 | msg: String,

76 | }

77 |

78 | async fn function_handler(event: LambdaEvent<Request>) -> Result<Response, Error> {

79 | // Extract some useful info from the request

80 | let name = event.payload.name;

81 | let logic = match name.as_str() {

82 | "Marco" => "Polo",

83 | _ => "Who?",

84 | };

85 |

86 | // Prepare the response

87 | let resp = Response {

88 | req_id: event.context.request_id,

89 | msg: format!("{} says {}", name, logic),

90 | };

91 |

92 | // Return `Response` (it will be serialized to JSON automatically by the runtime)

93 | Ok(resp)

94 | }

95 |

96 | #[tokio::main]

97 | async fn main() -> Result<(), Error> {

98 | tracing_subscriber::fmt()

99 | .with_max_level(tracing::Level::INFO)

100 | // disable printing the name of the module in every log line.

101 | .with_target(false)

102 | // disabling time is handy because CloudWatch will add the ingestion time.

103 | .without_time()

104 | .init();

105 |

106 | run(service_fn(function_handler)).await

107 | }

108 |

109 | ```
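The branching inside `function_handler` can be tried without the Lambda runtime. This is a minimal sketch, assuming a hypothetical helper named `reply` that is not in the original project; it reproduces only the message-building step and leaves out `LambdaEvent`, `serde`, and the request id.

```rust
/// Hypothetical helper: the message-building part of `function_handler`.
fn reply(name: &str) -> String {
    // Only the exact name "Marco" gets "Polo"; every other name gets "Who?".
    let logic = match name {
        "Marco" => "Polo",
        _ => "Who?",
    };
    format!("{} says {}", name, logic)
}

fn main() {
    println!("{}", reply("Marco"));
    println!("{}", reply("Noah"));
}
```

Pulling the pure logic into a function like this is one way to unit test a handler before building and deploying it.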

110 |

111 | `Cargo.toml` [direct link](https://github.com/nogibjj/rust-mlops-template/blob/main/marco-polo-lambda/Cargo.toml)

112 |

113 | ```toml

114 | [package]

115 | name = "marco-polo-lambda"

116 | version = "0.1.0"

117 | edition = "2021"

118 |

119 | # Starting in Rust 1.62 you can use `cargo add` to add dependencies

120 | # to your project.

121 | #

122 | # If you're using an older Rust version,

123 | # download cargo-edit(https://github.com/killercup/cargo-edit#installation)

124 | # to install the `add` subcommand.

125 | #

126 | # Running `cargo add DEPENDENCY_NAME` will

127 | # add the latest version of a dependency to the list,

128 | # and it will keep the alphabetic ordering for you.

129 |

130 | [dependencies]

131 |

132 | lambda_runtime = "0.7"

133 | serde = "1.0.136"

134 | tokio = { version = "1", features = ["macros"] }

135 | tracing = { version = "0.1", features = ["log"] }

136 | tracing-subscriber = { version = "0.3", default-features = false, features = ["fmt"] }

137 | ```

138 |

139 | ##### Steps to run

140 |

141 | * `make format` to format code

142 | * `make lint` to lint

143 | * `make release-arm` to build for arm which is: `cargo lambda build --release --arm64`

144 | * `make deploy` which is: `cargo lambda deploy`

145 |

146 | ```Working demo

147 | (.venv) @noahgift ➜ /workspaces/rust-mlops-template/marco-polo-lambda (main) $ make invoke

148 | cargo lambda invoke --remote \

149 | --data-ascii '{"name": "Marco"}' \

150 | --output-format json \

151 | marco-polo-lambda

152 | {

153 | "msg": "Marco says Polo",

154 | "req_id": "abc67e2b-a3aa-47fa-98fb-d07eb627577e"

155 | }

156 | ```

157 |

158 | #### AWS S3 Account Summarizer with Rust

159 |

160 | * [Source code for AWS S3 Summarizer](https://github.com/nogibjj/rust-mlops-template/tree/main/awsmetas3)

161 |

162 | `lib.rs` [direct link]

163 | ```rust

164 | //Information about the AWS S3 service

165 | use aws_config::meta::region::RegionProviderChain;

166 | use aws_sdk_s3::{Client, Error};

167 |

168 | // Create a new AWS S3 client

169 | pub async fn client() -> Result<Client, Error> {

170 | let region_provider = RegionProviderChain::first_try(None)

171 | .or_default_provider()

172 | .or_else("us-east-1");

173 | let shared_config = aws_config::from_env().region(region_provider).load().await;

174 | let client = Client::new(&shared_config);

175 | Ok(client)

176 | }

177 |

178 | /* return a list of all buckets in an AWS S3 account

179 | */

180 |

181 | pub async fn list_buckets(client: &Client) -> Result<Vec<String>, Error> {

182 | //create vector to store bucket names

183 | let mut bucket_names: Vec<String> = Vec::new();

184 | let resp = client.list_buckets().send().await?;

185 | let buckets = resp.buckets().unwrap_or_default();

186 | //store bucket names in vector

187 | for bucket in buckets {

188 | bucket_names.push(bucket.name().unwrap().to_string());

189 | }

190 | Ok(bucket_names)

191 | }

192 |

193 | // Get the size of an AWS S3 bucket by summing all the objects in the bucket

194 | // return the size in bytes

195 | async fn bucket_size(client: &Client, bucket: &str) -> Result<i64, Error> {

196 | let resp = client.list_objects_v2().bucket(bucket).send().await?;

197 | let contents = resp.contents().unwrap_or_default();

198 | //store in a vector

199 | let mut sizes: Vec<i64> = Vec::new();

200 | for object in contents {

201 | sizes.push(object.size());

202 | }

203 | let total_size: i64 = sizes.iter().sum();

204 | println!("Total size of bucket {} is {} bytes", bucket, total_size);

205 | Ok(total_size)

206 | }

207 |

208 | /* Use list_buckets to get a list of all buckets in an AWS S3 account

209 | return a vector of all bucket sizes.

210 | If there is an error continue to the next bucket only print if verbose is true

211 | Return the vector

212 | */

213 | pub async fn list_bucket_sizes(client: &Client, verbose: Option<bool>) -> Result<Vec<i64>, Error> {

214 | let verbose = verbose.unwrap_or(false);

215 | let buckets = list_buckets(client).await.unwrap();

216 | let mut bucket_sizes: Vec<i64> = Vec::new();

217 | for bucket in buckets {

218 | match bucket_size(client, &bucket).await {

219 | Ok(size) => bucket_sizes.push(size),

220 | Err(e) => {

221 | if verbose {

222 | println!("Error: {}", e);

223 | }

224 | }

225 | }

226 | }

227 | Ok(bucket_sizes)

228 | }

229 | ```
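The "skip failed buckets, report only when verbose" behaviour of `list_bucket_sizes` can be illustrated without AWS credentials. In this sketch, `collect_sizes` is a hypothetical helper and `String` stands in for the SDK error type; both are assumptions made for illustration only.

```rust
/// Keeps the successful sizes and skips failures, reporting them only when `verbose` is set.
/// `String` is a stand-in for the SDK error type.
fn collect_sizes(results: Vec<Result<i64, String>>, verbose: bool) -> Vec<i64> {
    let mut sizes = Vec::new();
    for result in results {
        match result {
            Ok(size) => sizes.push(size),
            Err(e) => {
                if verbose {
                    eprintln!("Error: {}", e);
                }
            }
        }
    }
    sizes
}

fn main() {
    // Two buckets measured successfully, one failed (for example, access denied).
    let results = vec![Ok(1_500), Err("access denied".to_string()), Ok(2_500)];
    let sizes = collect_sizes(results, true);
    let total: i64 = sizes.iter().sum();
    println!("{} buckets measured, {} bytes total", sizes.len(), total);
}
```

One consequence of this design is that the reported total silently excludes any bucket that could not be read, so running with the verbose flag is useful when the number looks too small.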

230 |

231 |

232 | `main.rs` [direct link]

233 |

234 | ```rust

235 | /*A Command-line tool to Interrogate AWS S3.

236 | Determines information about AWS S3 buckets and objects.

237 | */

238 | use clap::Parser;

239 | use humansize::{format_size, DECIMAL};

240 |

241 | #[derive(Parser)]

242 | //add extended help

243 | #[clap(

244 | version = "1.0",

245 | author = "Noah Gift",

246 | about = "Finds out information about AWS S3",

247 | after_help = "Example: awsmetas3 account-size"

248 | )]

249 | struct Cli {

250 | #[clap(subcommand)]

251 | command: Option<Commands>,

252 | }

253 |

254 | #[derive(Parser)]

255 | enum Commands {

256 | Buckets {},

257 | AccountSize {

258 | #[clap(short, long)]

259 | verbose: Option<bool>,

260 | },

261 | }

262 |

263 | #[tokio::main]

264 | async fn main() {

265 | let args = Cli::parse();

266 | let client = awsmetas3::client().await.unwrap();

267 | match args.command {

268 | Some(Commands::Buckets {}) => {

269 | let buckets = awsmetas3::list_buckets(&client).await.unwrap();

270 | //print count of buckets

271 | println!("Found {} buckets", buckets.len());

272 | println!("Buckets: {:?}", buckets);

273 | }

274 | /*print total size of all buckets in human readable format

275 | Use list_bucket_sizes to get a list of all buckets in an AWS S3 account

276 | */

277 | Some(Commands::AccountSize { verbose }) => {

278 | let bucket_sizes = awsmetas3::list_bucket_sizes(&client, verbose)

279 | .await

280 | .unwrap();

281 | let total_size: i64 = bucket_sizes.iter().sum();

282 | println!(

283 | "Total size of all buckets is {}",

284 | format_size(total_size as u64, DECIMAL)

285 | );

286 | }

287 | None => println!("No command specified"),

288 | }

289 | }

290 | ```

291 |

292 | `Cargo.toml` [direct link](https://github.com/nogibjj/rust-mlops-template/blob/main/awsmetas3/Cargo.toml)

293 |

294 | ```toml

295 | [package]

296 | name = "awsmetas3"

297 | version = "0.1.0"

298 | edition = "2021"

299 |

300 | # See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html

301 |

302 | [dependencies]

303 | aws-config = "0.52.0"

304 | aws-sdk-s3 = "0.22.0"

305 | tokio = { version = "1", features = ["full"] }

306 | clap = {version="4.0.32", features=["derive"]}

307 | humansize = "2.0.0"

308 | ```

309 |

310 | #### Related videos

311 |

312 | * [Install Rust Cloud9](https://learning.oreilly.com/videos/52-weeks-of/080232022VIDEOPAIML/080232022VIDEOPAIML-c1_s17/)

313 | * [Build Aws Rust S3 Size Calculator](https://learning.oreilly.com/videos/52-weeks-of/080232022VIDEOPAIML/080232022VIDEOPAIML-c1_s18/)

314 |

315 | ### References

316 |

317 | * [Developing on AWS with C# Free PDF O'Reilly book](https://d1.awsstatic.com/developer-center/Developing-on-AWS-with-CSharp.pdf)

318 | * [52 Weeks of AWS-The Complete Series](https://learning.oreilly.com/videos/52-weeks-of/080232022VIDEOPAIML/)

319 | * [Microsoft Azure Fundamentals (AZ-900) Certification](https://learning.oreilly.com/videos/microsoft-azure-fundamentals/27702422VIDEOPAIML/)

320 | * [A Graduate Level Three to Five Week Bootcamp on AWS. Go from ZERO to FIVE Certifications.](https://github.com/noahgift/aws-bootcamp)

321 | * [Duke Coursera Cloud Computing Foundations](https://www.coursera.org/learn/cloud-computing-foundations-duke?specialization=building-cloud-computing-solutions-at-scale)

--------------------------------------------------------------------------------

/small-rust-tutorial/src/chapter_3.md:

--------------------------------------------------------------------------------

1 | # Chapter 3 - Week 3: Virtualization and Containers

2 |

3 | * Refer to [Coursera Material on Virtualization](https://www.coursera.org/learn/cloud-virtualization-containers-api-duke?specialization=building-cloud-computing-solutions-at-scale)

4 |

5 | * [AWS Container Slides](https://docs.google.com/presentation/d/1uBlq4CMeQSffU3wwyU0xRrSR7buud20t/edit#slide=id.p1)

6 |

7 |

8 | ### Continuous Delivery of Rust Actix to ECR and AWS App Runner

9 |

10 | * [Code](https://github.com/nogibjj/rust-mlops-template/blob/main/README.md#containerized-actix-continuous-delivery-to-aws-app-runner)

11 |

12 | * [Live stream walkthrough direct link](https://www.youtube.com/watch?v=Im72N3or2FE)

13 |

14 |

15 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/chapter_4.md:

--------------------------------------------------------------------------------

1 | # Chapter 4 - Week 4: Containerized Rust

2 |

3 | * Refer to [Web Applications and Command-Line Tools for Data Engineering](https://www.coursera.org/learn/web-app-command-line-tools-for-data-engineering-duke) with a specific focus on Week 3: Python (and .NET) Microservices and Week 4: Python Packaging and Command Line Tools and the lessons focused on containerization.

4 |

5 | ## Building A Tiny Rust Container for a Command-Line Tool

6 |

7 | ## Containerized Actix Microservice

8 |

9 | * [Containerized Actix Microservice GitHub Project](https://github.com/noahgift/rust-mlops-template/tree/main/webdocker)

10 |

11 | `Dockerfile`

12 |

13 | ```bash

14 | FROM rust:latest as builder

15 | ENV APP webdocker

16 | WORKDIR /usr/src/$APP

17 | COPY . .

18 | RUN cargo install --path .

19 |

20 | FROM debian:buster-slim

21 | RUN apt-get update && rm -rf /var/lib/apt/lists/*

22 | COPY --from=builder /usr/local/cargo/bin/$APP /usr/local/bin/$APP

23 | #export this actix web service to port 8080 and 0.0.0.0

24 | EXPOSE 8080

25 | CMD ["webdocker"]

26 | ```

27 |

28 | `Cargo.toml`

29 |

30 | ```toml

31 | [package]

32 | name = "webdocker"

33 | version = "0.1.0"

34 | edition = "2021"

35 |

36 | # See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html

37 |

38 | [dependencies]

39 | actix-web = "4"

40 | rand = "0.8"

41 | ```

42 |

43 | `lib.rs`

44 |

45 | ```rust

46 | /*A library that returns back random fruit */

47 |

48 | use rand::Rng;

49 |

50 | //create a const array of 10 fruits

51 | pub const FRUITS: [&str; 10] = [

52 | "Apple",

53 | "Banana",

54 | "Orange",

55 | "Pineapple",

56 | "Strawberry",

57 | "Watermelon",

58 | "Grapes",

59 | "Mango",

60 | "Papaya",

61 | "Kiwi",

62 | ];

63 |

64 | //create a function that returns a random fruit

65 | pub fn random_fruit() -> &'static str {

66 | let mut rng = rand::thread_rng();

67 | let random_index = rng.gen_range(0..FRUITS.len());

68 | FRUITS[random_index]

69 | }

70 | ```

71 |

72 | `main.rs`

73 |

74 | ```rust

75 | /*An actix Microservice that has multiple routes:

76 | A. / that returns a hello world

77 | B. /fruit that returns a random fruit

78 | C. /health that returns a 200 status code

79 | D. /version that returns the version of the service

80 | */

81 |

82 | use actix_web::{get, App, HttpResponse, HttpServer, Responder};

83 | //import the random fruit function from the lib.rs file

84 | use webdocker::random_fruit;

85 |

86 | //create a function that returns a hello world

87 | #[get("/")]

88 | async fn hello() -> impl Responder {

89 | HttpResponse::Ok().body("Hello World Random Fruit!")

90 | }

91 |

92 | //create a function that returns a random fruit

93 | #[get("/fruit")]

94 | async fn fruit() -> impl Responder {

95 | let fruit = random_fruit(); //pick once so the logged fruit matches the response

96 | println!("Random Fruit: {}", fruit);

97 | HttpResponse::Ok().body(fruit)

98 | }

99 |

100 | //create a function that returns a 200 status code

101 | #[get("/health")]

102 | async fn health() -> impl Responder {

103 | HttpResponse::Ok()

104 | }

105 |

106 | //create a function that returns the version of the service

107 | #[get("/version")]

108 | async fn version() -> impl Responder {

109 | //print the version of the service

110 | println!("Version: {}", env!("CARGO_PKG_VERSION"));

111 | HttpResponse::Ok().body(env!("CARGO_PKG_VERSION"))

112 | }

113 |

114 | #[actix_web::main]

115 | async fn main() -> std::io::Result<()> {

116 | //add a print message to the console that the service is running

117 | println!("Running the service");

118 | HttpServer::new(|| {

119 | App::new()

120 | .service(hello)

121 | .service(fruit)

122 | .service(health)

123 | .service(version)

124 | })

125 | .bind("0.0.0.0:8080")?

126 | .run()

127 | .await

128 | }

129 | ```

130 |

131 | Deployed to AWS App Runner via ECR

132 |

133 |

134 |

135 | 1. cd into `webdocker`

136 | 2. build and run container (can do via `Makefile`) or

137 |

138 | `docker build -t fruit .`

139 | `docker run -it --rm -p 8080:8080 fruit`

140 |

141 | 3. push to ECR

142 | 4. Tell AWS App Runner to autodeploy

143 |

144 | * [Direct link to video](https://www.youtube.com/watch?v=I3cEQ_7aD1A)

145 |

146 |

147 |

148 |

149 |

150 | ## Related Demos

151 |

152 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/chapter_5.md:

--------------------------------------------------------------------------------

1 | # Chapter 5 - Week 5: Distributed Computing and Concurrency (True Threads with Rust)

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/chapter_6.md:

--------------------------------------------------------------------------------

1 | # Chapter 6 - Week 6: Distributed Computing

2 |

3 |

4 | * [Challenges and Opportunities in Distributed Computing](https://paiml.com/docs/home/books/cloud-computing-for-data/chapter04-distributed-computing/)

5 | * Cover [GPU in Rust Examples] via PyTorch bindings for Rust + Stable Diffusion.

6 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/chapter_7.md:

--------------------------------------------------------------------------------

1 | # Chapter 7 - Week 7: Serverless

2 |

3 |

4 | ## AWS Lambda with Rust

5 |

6 | * [Slides AWS Lambda](https://docs.google.com/presentation/d/1lAa88cZrYjrC1cnj-rwgiintsK9HO16R/edit?usp=sharing&ouid=114367115509726512575&rtpof=true&sd=true)

7 |

8 | * [Link YouTube Video Rust Lambda](https://www.youtube.com/watch?v=jUTiHUTfGYo)

9 |

10 |

11 |

12 | * Fast inference and low memory

13 |

14 |

15 |

16 |

17 |

18 |

19 | ### Part 2: Guest Maxime DAVID

20 |

21 | [Maxime DAVID](https://www.linkedin.com/in/maxday/)

22 |

23 | * [Slides on Rust for AWS Lambda](https://docs.google.com/presentation/d/1eoFYJWrD6oTnRP8n5gKkhRP6HvTPpCPKESUWDJpmZ1c/edit#slide=id.g213a871c055_0_0)

--------------------------------------------------------------------------------

/small-rust-tutorial/src/chapter_8.md:

--------------------------------------------------------------------------------

1 | # Chapter 8-More Serverless

2 |

3 | ## Hour 1: Rust with Azure Functions

4 |

5 | * Guest Lecture [Alfredo Deza](https://www.linkedin.com/in/alfredodeza/)

6 | * [Deploy Rust on Azure Functions](https://learning.oreilly.com/videos/deploy-rust-on/27965683VIDEOPAIML/)

7 |

8 |

9 | ## Hour 2: Step Functions with Rust

10 |

11 | Marco, Polo Rust Step Function

12 |

13 | Code here: https://github.com/nogibjj/rust-mlops-template/blob/main/step-functions-rust/README.md

14 |

15 | * create new marco polo lambda

16 | `cargo lambda new rust-marco`

17 |

18 | Then build, deploy and invoke: `make release` `make deploy` and `make invoke`:

19 |

20 | ```bash

21 | (.venv) @noahgift ➜ /workspaces/rust-mlops-template/step-functions-rust/rust-marco (main) $ make invoke

22 | cargo lambda invoke --remote \

23 | --data-ascii '{"name": "Marco"}' \

24 | --output-format json \

25 | rust-marco

26 | {

27 | "payload": "Polo",

28 | "req_id": "20de1794-1055-4731-9488-7c9217ad195d"

29 | }

30 | ```

31 |

32 |

33 | * create new rust polo lambda

34 | `cargo lambda new rust-polo`

35 |

36 |

37 |

38 |

39 |

40 | [Walkthrough](https://www.youtube.com/watch?v=2UktR8XSCE0)

41 |

42 |

43 |

44 | ## GCP Cloud Functions

45 |

46 | * [Using Gcp Cloud Functions](https://learning.oreilly.com/videos/google-professional-cloud/03032022VIDEOPAIML/03032022VIDEOPAIML-c1_s12/)

--------------------------------------------------------------------------------

/small-rust-tutorial/src/chapter_9.md:

--------------------------------------------------------------------------------

1 | # Chapter 9: AI Pair Assisted Programming and Big Data Storage

2 |

3 | ## Mini-Lecture A: Using GitHub Copilot CLI

4 |

5 | * [github-copilot-cli](https://www.npmjs.com/package/@githubnext/github-copilot-cli)

6 |

7 | tldr: these are the commands

8 |

9 | ```bash

10 | #!/usr/bin/env bash

11 |

12 | #some setup stuff for the dev environment

13 | #install nodejs

14 | curl -fsSL https://deb.nodesource.com/setup_19.x | sudo -E bash - &&\

15 | sudo apt-get install -y nodejs

16 |

17 | #install GitHub Copilot CLI

18 | npm install -g @githubnext/github-copilot-cli

19 |

20 | #authenticate with GitHub Copilot

21 | github-copilot-cli auth

22 |

23 | #Upgrade

24 | #npm install -g @githubnext/github-copilot-cli

25 | ```

26 |

27 | * [Watch Copilot Video Link](https://youtube.com/live/F67rjOjEQE4)

28 |

29 |

30 |

31 | Suggestion: Try out commands via `??` and put them into a `cmd.sh` so you can save both prompt and command

32 | Here is an example of a `cmd.sh`

33 |

34 | ```bash

35 | ### GitHub Copilot Commands

36 | ## Prompt: ?? find all large rust target folders and get size and location

37 | ## CMD: find . -name "target" -type d -exec du -sh {} \;

38 |

39 | ## Prompt: ?? delete all rust build directories to save space

40 | ## CMD: find . -name "target" -type d -exec rm -rf {} \;

41 | ```
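For comparison, the first prompt above (locating Rust `target` folders) can also be approximated in Rust itself with only the standard library. This is a rough sketch, not something from the lecture: `find_target_dirs` is a hypothetical function, it only lists directories rather than measuring or deleting them, and unlike `find` it does not descend into a `target` directory once it has found one.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// Recursively collects directories named `target` under `root`.
/// Does not descend into a `target` directory once found.
fn find_target_dirs(root: &Path) -> io::Result<Vec<PathBuf>> {
    let mut found = Vec::new();
    for entry in fs::read_dir(root)? {
        let entry = entry?;
        // `DirEntry::file_type` does not follow symlinks, so linked directories are skipped.
        if !entry.file_type()?.is_dir() {
            continue;
        }
        let path = entry.path();
        if entry.file_name() == "target" {
            found.push(path);
        } else {
            found.extend(find_target_dirs(&path)?);
        }
    }
    Ok(found)
}

fn main() -> io::Result<()> {
    // List every `target` directory below the current directory.
    for dir in find_target_dirs(Path::new("."))? {
        println!("{}", dir.display());
    }
    Ok(())
}
```

Listing before deleting is a deliberate choice here: reviewing the paths first is a cheap safeguard before running anything like `rm -rf`.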

42 |

43 | ## Mini-Lecture B: Building Chat Bot with OpenAI while Using ChatGPT4 as an AI Pair Programming Assistant

44 |

45 |

46 | In this innovative project, we demonstrate the power of AI-driven pair programming tools, such as ChatGPT-4, OpenAI, and GitHub Copilot, by creating a fully-functional chatbot using the Rust programming language. The chatbot connects to the OpenAI API to provide intelligent, dynamic responses to user input. Throughout the development process, ChatGPT-4 assists with code refactoring and optimization, resulting in a high-quality, production-ready chatbot. This project showcases the remarkable capabilities of AI in augmenting human programming skills and improving code quality.

47 |

48 | Here's the output of the tree command, displaying the project structure:

49 | ```bash

50 | .

51 | ├── Cargo.lock

52 | ├── Cargo.toml

53 | ├── src

54 | │ ├── chatbot.rs

55 | │ ├── lib.rs

56 | │ └── main.rs

57 | └── tests

58 | └── test.rs

59 |

60 | ```

61 |

62 | This project consists of the following main components:

63 |

64 | - `Cargo.lock` and `Cargo.toml`: Rust's package manager files containing dependencies and metadata.

65 | - `src`: The source code directory containing the main application and library files.

66 | - `chatbot.rs`: The file containing the chatbot logic, including functions for user input, API calls, and AI response handling.

67 | - `lib.rs`: The file exposing the chatbot module and its contents, as well as the chat loop function.

68 | - `main.rs`: The main entry point of the application, initializing the environment and invoking the chat loop function.

69 | - `tests`: The directory containing test files.

70 | - `test.rs`: The file with test cases for the chatbot functions.

71 |

72 |

73 | * [Watch Live Coding a Chatbot with Rust and OpenAI](https://www.youtube.com/live/rBhMUAlC9TM?feature=share)

74 |

75 |

76 | * [Code here](https://github.com/nogibjj/assimilate-openai/tree/main/chatbot)

77 |

78 | `main.rs`

79 | ```rust

80 | use chatbot::chatbot::run_chat_loop;

81 | use reqwest::Client;

82 |

83 | use std::env;

84 |

85 | #[tokio::main]

86 | async fn main() -> Result<(), reqwest::Error> {

87 | let client = Client::new();

88 |

89 | // Use env variable OPENAI_API_KEY

90 | let api_key = env::var("OPENAI_API_KEY").expect("OPENAI_API_KEY must be set");

91 | let url = "https://api.openai.com/v1/completions";

92 |

93 | run_chat_loop(&client, &api_key, url).await?;

94 |

95 | Ok(())

96 | }

97 | ```

98 |

99 | `lib.rs`

100 | ```rust

101 | pub mod chatbot;

102 | ```

103 |

104 | `chatbot.rs`

105 | ```rust

106 | use reqwest::{header, Client};

107 | use serde_json::json;

108 | use serde_json::Value;

109 | use std::io;

110 | use std::io::Write;

111 |

112 | pub async fn run_chat_loop(

113 | client: &Client,

114 | api_key: &str,

115 | url: &str,

116 | ) -> Result<(), reqwest::Error> {

117 | let mut conversation = String::from("The following is a conversation with an AI assistant. The assistant is helpful, creative, clever, and very friendly.\n");

118 |

119 | loop {

120 | print!("Human: ");

121 | io::stdout().flush().unwrap();

122 |

123 | let user_input = read_user_input();

124 |

125 | if user_input.to_lowercase() == "quit" || user_input.to_lowercase() == "exit" {

126 | break;

127 | }

128 |

129 | conversation.push_str("Human: ");

130 | conversation.push_str(&user_input);

131 | conversation.push_str("\nAI: ");

132 |

133 | let json = json!({

134 | "model": "text-davinci-003",

135 | "prompt": conversation,

136 | "temperature": 0.9,

137 | "max_tokens": 150,

138 | "top_p": 1.0,

139 | "frequency_penalty": 0.0,

140 | "presence_penalty": 0.6,

141 | "stop": [" Human:", " AI:"]

142 | });

143 |

144 | let body = call_api(client, api_key, url, json).await?;

145 | let ai_response = get_ai_response(&body);

146 |

147 | println!("AI: {}", ai_response);

148 |

149 | conversation.push_str(ai_response);

150 | conversation.push('\n');

151 | }

152 |

153 | Ok(())

154 | }

155 |

156 | pub async fn call_api(

157 | client: &Client,

158 | api_key: &str,

159 | url: &str,

160 | json: serde_json::Value,

161 | ) -> Result<Value, reqwest::Error> {

162 | let response = client

163 | .post(url)

164 | .header(header::AUTHORIZATION, format!("Bearer {}", api_key))

165 | .header(header::CONTENT_TYPE, "application/json")

166 | .json(&json)

167 | .send()

168 | .await?;

169 |

170 | let body: Value = response.json().await?;

171 | Ok(body)

172 | }

173 |

174 | pub fn get_ai_response(body: &Value) -> &str {

175 | body["choices"][0]["text"].as_str().unwrap().trim()

176 | }

177 |

178 | pub fn read_user_input() -> String {

179 | let mut user_input = String::new();

180 | io::stdin().read_line(&mut user_input).unwrap();

181 | user_input.trim().to_string()

182 | }

183 |

184 | ```
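The chat loop keeps context by appending every exchange to one growing prompt string. A minimal std-only sketch of that bookkeeping follows; `is_exit_command` and `push_turn` are hypothetical helper names introduced for this illustration, and the extra `trim` is an assumption added for safety (the original relies on `read_user_input` having trimmed already).

```rust
/// Returns true when the user asked to leave the chat loop.
fn is_exit_command(input: &str) -> bool {
    let lowered = input.trim().to_lowercase();
    lowered == "quit" || lowered == "exit"
}

/// Appends one human/AI exchange to the running prompt, in the same
/// "Human: ...\nAI: ...\n" layout that `run_chat_loop` builds up.
fn push_turn(conversation: &mut String, user_input: &str, ai_response: &str) {
    conversation.push_str("Human: ");
    conversation.push_str(user_input);
    conversation.push_str("\nAI: ");
    conversation.push_str(ai_response);
    conversation.push('\n');
}

fn main() {
    let mut conversation =
        String::from("The following is a conversation with an AI assistant.\n");
    push_turn(&mut conversation, "Hello", "Hi there!");
    print!("{}", conversation);
    println!("exit requested: {}", is_exit_command("QUIT"));
}
```

Since the whole conversation is resent on every request, the prompt grows with each turn; a longer-running bot would likely need to truncate or summarize older turns.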

185 |

186 | `tests/test.rs`

187 | ```rust

188 | use chatbot::chatbot::get_ai_response;

189 | use serde_json::json;

190 |

191 | #[test]

192 | fn test_get_ai_response() {

193 | let body = json!({

194 | "choices": [

195 | {

196 | "text": " Hello! How can I help you today?\n"

197 | }

198 | ]

199 | });

200 |

201 | let response = get_ai_response(&body);

202 | assert_eq!(response, "Hello! How can I help you today?");

203 | }

204 | ```

205 |

206 | ## Mini-Lecture C: Using Azure Databricks

207 |

208 |

209 | * [Follow Tutorials on Azure Databricks](https://learn.microsoft.com/en-us/azure/databricks/)

210 |

211 |

212 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/faq.md:

--------------------------------------------------------------------------------

1 | # FAQ

2 |

3 | ## There are too many resources; what should I use?

4 |

5 | The primary resource for this course is [Building Cloud Computing Solutions at Scale Specialization](https://www.coursera.org/specializations/building-cloud-computing-solutions-at-scale), and it extensively covers the most important concepts. You can work through this course as you attend class.

6 |

7 | The secondary resource is this "live" JIT (Just in Time) [book on Rust](https://nogibjj.github.io/rust-tutorial/). In the real world, you will be overwhelmed with information, and the best approach to using this information is to develop a heuristic. A good heuristic to master software engineering is building projects daily and making many mistakes weekly. When you are stuck, refer to a resource. Doing is much better than reading.

8 |

9 | ## What can I do to get the most out of this class?

10 |

11 | Try to code almost every single day for at least one hour. Use [GitHub Copilot](https://github.com/features/copilot) + continuous integration tools (Format/Lint/Compile/Test/Run) to understand how to build and deploy working software. The more cycles of building code while using these tools, the better you will get at real-world software engineering. There is no substitute for daily practice; you must code every day or almost every day.

12 |

13 | It would be best if you also coded using the toolchain of modern tools that build quality software since jobs in the real world do care about code quality. Suppose you are not using automated quality control tools like formatting and linting locally and in the build system automatically to deploy binaries or microservices. In that case, you won't learn how to engage as a professional software engineer fully.

14 |

15 |

16 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/guest_lectures.md:

--------------------------------------------------------------------------------

1 | # Guest Lectures

2 |

3 | ## Derek Wales

4 |

5 | * Title: Product Manager Dell

6 | * Topic: Virtualization

7 | * Date: 02/01/2023

8 | * [https://www.linkedin.com/in/derek-wales/](https://www.linkedin.com/in/derek-wales/)

9 |

10 | ### Resources:

11 |

12 | * [Slides on Virtualization](https://docs.google.com/presentation/d/1Y0sD-RQkeGQy3BwHOKOVj9KzQ4KqtlgA/edit?usp=share_link&ouid=114367115509726512575&rtpof=true&sd=true)

13 | * [Docker Walkthrough Scripts](https://docs.google.com/document/d/1uY227Fq1fZeQtyqc1x5Ecjym2Jx4Wa8M/edit?usp=sharing&ouid=114367115509726512575&rtpof=true&sd=true)

14 |

15 |

16 | ## Thomas Dohmke

17 |

18 | * Title: CEO GitHub

19 | * Topic: GitHub Copilot

20 | * Date: 03/22/2023

21 | * LinkedIn: [https://www.linkedin.com/in/ashtom](https://www.linkedin.com/in/ashtom)

22 | * [Key Talk YouTube Video-Open-Source Values](https://www.youtube.com/watch?v=cdbsg1iIoQ4)

23 |

24 | ## Maxime David

25 |

26 | * Title: Software Engineering @DataDog

27 | * Topic: Rust for AWS Lambda

28 | * Date: 3/01

29 | * GitHub: [https://github.com/maxday](https://github.com/maxday)

30 | * YouTube: [https://www.youtube.com/@maxday_coding](https://www.youtube.com/@maxday_coding)

31 | * [Key Talk YouTube Video-Live Stream Discussion Using Rust](https://youtube.com/live/IZclXw4c3Vc)

32 | * [Podcast](https://podcast.paiml.com/episodes/maxime-david-serverless-with-rust)

33 | * [Enterprise MLOps Interviews](https://learning.oreilly.com/videos/enterprise-mlops-interviews/08012022VIDEOPAIML/)

34 | * [Slides on Rust for AWS Lambda](https://docs.google.com/presentation/d/1eoFYJWrD6oTnRP8n5gKkhRP6HvTPpCPKESUWDJpmZ1c/edit#slide=id.g213a871c055_0_0)

35 |

36 |

37 |

38 |

39 | ## Alfredo Deza

40 |

41 | * Title: Author, Olympian, Adjunct Professor at Duke, Senior Cloud Advocate @Microsoft

42 | * Topic: Rust with ONNX, Azure, OpenAI, Binary Deploy via GitHub Actions

43 | * Date: Week of 3/22-24, at 5pm

44 | * LinkedIn: [https://www.linkedin.com/in/alfredodeza/](https://www.linkedin.com/in/alfredodeza/)

45 |

46 | ## Ken Youens-Clark

47 |

48 | * Title: O'Reilly Author of Command-Line Rust

49 | * [Buy Book-Command-Line Rust](https://www.amazon.com/Command-Line-Rust-Project-Based-Primer-Writing/dp/1098109430)

50 | * Date: Feb. 15th

51 | * Additional Links:

52 | * [clap_v4 branch of book code](https://github.com/kyclark/command-line-rust/tree/clap_v4)

53 | * [clap_v4 derive pattern of book code](https://github.com/kyclark/command-line-rust/tree/clap_v4_derive)

54 |

55 | ## Denny Lee

56 |

57 | * Sr. Staff Developer Advocate @Databricks

58 | * [LinkedIn](https://www.linkedin.com/in/dennyglee/)

59 | * Date: April 5th @4pm

60 |

61 | ## Matthew Powers

62 |

63 | * Developer Advocate @Databricks

64 | * [LinkedIn](https://www.linkedin.com/in/matthew-powers-cfa-6246525/)

65 | * Date: April 5th @5pm

66 |

67 | ## Brian Tarbox

68 |

69 | * LinkedIn: [https://www.linkedin.com/in/briantarbox/](https://www.linkedin.com/in/briantarbox/)

70 | * Date: TBD

71 | * AWS Lambda for Alexa Guru

--------------------------------------------------------------------------------

/small-rust-tutorial/src/learn_rust_tips.md:

--------------------------------------------------------------------------------

1 | ## Tips on Learning Rust

2 |

3 | Note: you can learn Rust for MLOps by taking this Duke Coursera course: [DevOps, DataOps, MLOps](https://www.coursera.org/learn/devops-dataops-mlops-duke)

4 |

5 | ### Leveling Up with Rust via GitHub Copilot

6 |

7 | Use the GitHub ecosystem to "LEVEL UP" to a more powerful language: Rust.

8 |

9 | * [Direct link to Video](https://www.youtube.com/watch?v=U_qyJZfiMLo)

10 | * [Direct link to Repo in video](https://github.com/nogibjj/hello-rust-template-example)

11 |

12 |

13 |

14 | ### Teaching MLOps at Scale (GitHub Universe 2022)

15 |

16 | * [MLOps in Education](https://github.com/readme/guides/mlops-education)

17 |

18 |

19 |

--------------------------------------------------------------------------------

/small-rust-tutorial/src/preface.md:

--------------------------------------------------------------------------------

1 | # Preface

2 |

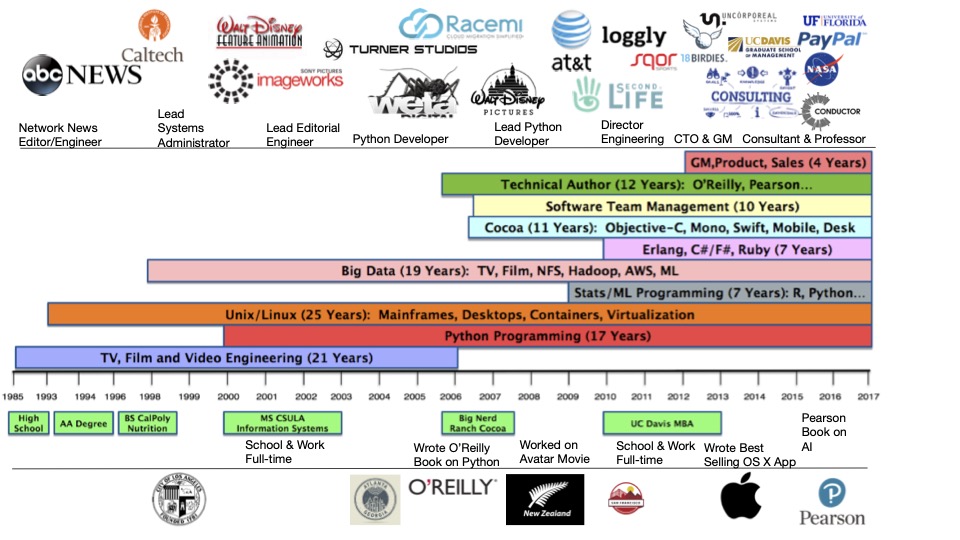

3 | This book is for [Cloud Computing for Data](https://datascience.duke.edu/academics/electives/#721) at Duke University in 2023 by [Noah Gift](https://noahgift.com/). What currently keeps me busy is working as an [Executive in Residence at the Duke MIDS (Data Science)](https://www.coursera.org/instructor/noahgift) and Duke AI Product Management programs, and as a consultant and author in Cloud Computing, Big Data, DevOps, and MLOps. The following visual resume gives a good idea of what I have worked on in my career. I am a Rust Fanatic.

4 |

5 |

6 |

7 | ### Related Duke Coursera Content

8 |

9 | * You can find many related Coursera Courses at [https://www.coursera.org/instructor/noahgift](https://www.coursera.org/instructor/noahgift)

10 |

11 | 🔭 I'm currently working on, or have just finished, the following:

12 |

13 | ##### MLOps (Specialization: 4 Courses)

14 | ###### Publisher: Coursera + Duke

15 | ###### Release Date: 1/1/2023

16 |

17 | * [DevOps, DataOps, MLOps](https://www.coursera.org/learn/devops-dataops-mlops-duke)

18 | * [MLOps Platforms: Amazon SageMaker and Azure ML](https://www.coursera.org/learn/mlops-aws-azure-duke)

19 | * [Open Source Platforms for MLOps](https://www.coursera.org/learn/open-source-mlops-platforms-duke)

20 | * [Python Essentials for MLOps](https://www.coursera.org/learn/python-essentials-mlops-duke)

21 |

22 | ##### Foundations of Data Engineering (Specialization: 4 Courses)

23 | ###### Publisher: Coursera + Duke

24 | ###### Release Date: 2/1/2022

25 |

26 |

27 | * [Python, Bash, and SQL Essentials for Data Engineering Specialization](https://www.coursera.org/specializations/python-bash-sql-data-engineering-duke)

28 | * [Course1: Python and Pandas for Data Engineering](https://www.coursera.org/learn/python-and-pandas-for-data-engineering-duke?specialization=python-bash-sql-data-engineering-duke)

29 | * [Course2: Linux and Bash for Data Engineering](https://www.coursera.org/learn/linux-and-bash-for-data-engineering-duke?specialization=python-bash-sql-data-engineering-duke)

30 | * [Github Repos for Projects in Course](https://github.com/noahgift/cloud-data-analysis-at-scale#github-repos-for-projects-in-course)

31 | * [Course3: Scripting with Python and SQL for Data Engineering](https://www.coursera.org/learn/scripting-with-python-sql-for-data-engineering-duke?specialization=python-bash-sql-data-engineering-duke)

32 | * [Course4: Web Development and Command-Line Tools in Python for Data Engineering](https://www.coursera.org/learn/web-app-command-line-tools-for-data-engineering-duke?specialization=python-bash-sql-data-engineering-duke)

33 |

34 | ##### AWS Certified Solutions Architect Professional exam (SAP-C01)

35 | ###### Publisher: LinkedIn Learning

36 | ###### Release Date: January 2021

37 |

38 | * [AWS Certified Solutions Architect - Professional (SAP-C01) Cert Prep: 1 Design for Organizational Complexity](https://www.linkedin.com/learning/aws-certified-solutions-architect-professional-sap-c01-cert-prep-1-design-for-organizational-complexity/design-for-organizational-complexity?autoplay=true)

39 | * [AWS Certified Solutions Architect - Professional (SAP-C01) Cert Prep: 2 Design for New Solutions](https://www.linkedin.com/learning/aws-certified-solutions-architect-professional-sap-c01-cert-prep-2-design-for-new-solutions/introduction-to-domain-2?autoplay=true)

40 | * [AWS Certified Solutions Architect - Professional (SAP-C01) Cert Prep: 3 Migration Planning](https://www.linkedin.com/learning/aws-certified-solutions-architect-professional-sap-c01-cert-prep-3-migration-planning/select-an-appropriate-server-migration-mechanism?autoplay=true)

41 |

42 |

43 | ##### AWS w/ C#

44 | ###### Publisher: O'Reilly

45 |

46 | ###### Release Date: 2022 (re:Invent 2022 Target)

47 |

48 | Working with O'Reilly and AWS to write a book on building solutions on AWS with C#/.NET 6.

49 |

50 | * [Developing on AWS with C#](https://www.amazon.com/Developing-AWS-Comprehensive-Solutions-Platform/dp/1492095877)

51 |

52 |

53 | ##### Practical MLOps

54 | ###### Publisher: O'Reilly