├── .codecov.yml

├── .flake8

├── .github

│   └── workflows

│       └── ci.yml

├── .gitignore

├── .pre-commit-config.yaml

├── CHANGELOG.md

├── CODE_OF_CONDUCT.md

├── CONTRIBUTING.md

├── LICENSE

├── README.md

├── justfile

├── pyproject.toml

├── src

│   └── deadlink

│       ├── __about__.py

│       ├── __init__.py

│       ├── _check.py

│       ├── _cli.py

│       ├── _main.py

│       └── _replace_redirects.py

├── tests

│   ├── test_check.py

│   ├── test_cli.py

│   └── test_replace_redirects.py

└── tox.ini

/.codecov.yml:

--------------------------------------------------------------------------------

1 | comment: no

2 |

--------------------------------------------------------------------------------

/.flake8:

--------------------------------------------------------------------------------

1 | [flake8]

2 | ignore = E203, E266, E501, W503

3 | max-line-length = 80

4 | max-complexity = 18

5 | select = B,C,E,F,W,T4,B9

6 |

--------------------------------------------------------------------------------

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | name: ci

2 |

3 | on:

4 |   push:

5 |     branches:

6 |       - main

7 |   pull_request:

8 |     branches:

9 |       - main

10 |

11 | jobs:

12 |   lint:

13 |     runs-on: ubuntu-latest

14 |     steps:

15 |       - name: Check out repo

16 |         uses: actions/checkout@v2

17 |       - name: Set up Python

18 |         uses: actions/setup-python@v2

19 |       - name: Run pre-commit

20 |         uses: pre-commit/action@v2.0.3

21 |

22 |   build:

23 |     runs-on: ubuntu-latest

24 |     strategy:

25 |       matrix:

26 |         python-version: ["3.7", "3.8", "3.9", "3.10"]

27 |     steps:

28 |       - uses: actions/setup-python@v2

29 |         with:

30 |           python-version: ${{ matrix.python-version }}

31 |       - uses: actions/checkout@v2

32 |       - name: Test with tox

33 |         run: |

34 |           pip install tox

35 |           tox -- --cov deadlink --cov-report xml --cov-report term

36 |       - name: Submit to codecov

37 |         uses: codecov/codecov-action@v1

38 |         if: ${{ matrix.python-version == '3.9' }}

39 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 | *.swp

3 | *.prof

4 | MANIFEST

5 | dist/

6 | build/

7 | .coverage

8 | .cache/

9 | *.egg-info/

10 | .pytest_cache/

11 | .tox/

12 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | repos:

2 |   - repo: https://github.com/PyCQA/isort

3 |     rev: 5.10.1

4 |     hooks:

5 |       - id: isort

6 |

7 |   - repo: https://github.com/psf/black

8 |     rev: 22.6.0

9 |     hooks:

10 |       - id: black

11 |         language_version: python3

12 |

13 |   - repo: https://github.com/PyCQA/flake8

14 |     rev: 4.0.1

15 |     hooks:

16 |       - id: flake8

17 |

--------------------------------------------------------------------------------

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 | # Changelog

2 |

3 | All notable changes to this project will be documented in this file.

4 |

5 | ## [0.3.1] - 2021-07-13

6 |

7 | ### Changed

8 | - intercept more httpx errors

9 |

10 | ## [0.3.0] - 2021-07-08

11 |

12 | ### Changed

13 | - renamed deadlink-fix to deadlink-replace-redirects

14 | - only follow permanent redirects (301)

15 | - removed `__version__`

16 |

17 | ### Fixed

18 | - respect the allowed/ignored URL patterns in the config

19 |

20 | ## [0.2.6] - 2021-07-08

21 |

22 | ### Added

23 | - --allow-files/--ignore-files command line options

24 | - pre-commit hook

25 |

26 | ### Fixed

27 | - don't modify hidden files/directories

28 |

--------------------------------------------------------------------------------

/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

1 | # deadlink Code of Conduct

2 |

3 | ## Our Pledge

4 |

5 | In the interest of fostering an open and welcoming environment, we as

6 | contributors and maintainers pledge to making participation in our project and

7 | our community a harassment-free experience for everyone, regardless of age, body

8 | size, disability, ethnicity, sex characteristics, gender identity and expression,

9 | level of experience, education, socio-economic status, nationality, personal

10 | appearance, race, religion, or sexual identity and orientation.

11 |

12 | ## Our Standards

13 |

14 | Examples of behavior that contributes to creating a positive environment

15 | include:

16 |

17 | * Using welcoming and inclusive language

18 | * Being respectful of differing viewpoints and experiences

19 | * Gracefully accepting constructive criticism

20 | * Focusing on what is best for the community

21 | * Showing empathy towards other community members

22 |

23 | Examples of unacceptable behavior by participants include:

24 |

25 | * The use of sexualized language or imagery and unwelcome sexual attention or

26 | advances

27 | * Trolling, insulting/derogatory comments, and personal or political attacks

28 | * Public or private harassment

29 | * Publishing others' private information, such as a physical or electronic

30 | address, without explicit permission

31 | * Other conduct which could reasonably be considered inappropriate in a

32 | professional setting

33 |

34 | ## Our Responsibilities

35 |

36 | Project maintainers are responsible for clarifying the standards of acceptable

37 | behavior and are expected to take appropriate and fair corrective action in

38 | response to any instances of unacceptable behavior.

39 |

40 | Project maintainers have the right and responsibility to remove, edit, or

41 | reject comments, commits, code, wiki edits, issues, and other contributions

42 | that are not aligned to this Code of Conduct, or to ban temporarily or

43 | permanently any contributor for other behaviors that they deem inappropriate,

44 | threatening, offensive, or harmful.

45 |

46 | ## Scope

47 |

48 | This Code of Conduct applies within all project spaces, and it also applies when

49 | an individual is representing the project or its community in public spaces.

50 | Examples of representing a project or community include using an official

51 | project e-mail address, posting via an official social media account, or acting

52 | as an appointed representative at an online or offline event. Representation of

53 | a project may be further defined and clarified by project maintainers.

54 |

55 | ## Enforcement

56 |

57 | Instances of abusive, harassing, or otherwise unacceptable behavior may be

58 | reported by contacting the project team at [INSERT EMAIL ADDRESS]. All

59 | complaints will be reviewed and investigated and will result in a response that

60 | is deemed necessary and appropriate to the circumstances. The project team is

61 | obligated to maintain confidentiality with regard to the reporter of an incident.

62 | Further details of specific enforcement policies may be posted separately.

63 |

64 | Project maintainers who do not follow or enforce the Code of Conduct in good

65 | faith may face temporary or permanent repercussions as determined by other

66 | members of the project's leadership.

67 |

68 | ## Attribution

69 |

70 | This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,

71 | available at https://www.contributor-covenant.org/version/1/4/code-of-conduct/

72 |

73 | [homepage]: https://www.contributor-covenant.org

74 |

75 | For answers to common questions about this code of conduct, see

76 | https://www.contributor-covenant.org/faq/

77 |

78 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # deadlink contributing guidelines

2 |

3 | The deadlink community appreciates your contributions via issues and

4 | pull requests. Note that the [code of conduct](CODE_OF_CONDUCT.md)

5 | applies to all interactions with the deadlink project, including

6 | issues and pull requests.

7 |

8 | When submitting pull requests, please follow the style guidelines of

9 | the project, ensure that your code is tested and documented, and write

10 | good commit messages, e.g., following [these

11 | guidelines](https://chris.beams.io/posts/git-commit/).

12 |

13 | By submitting a pull request, you are licensing your code under the

14 | project [license](LICENSE) and affirming that you either own copyright

15 | (automatic for most individuals) or are authorized to distribute under

16 | the project license (e.g., in case your employer retains copyright on

17 | your work).

18 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | The MIT License (MIT)

2 |

3 | Copyright (c) 2021-2022 Nico Schlömer

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | [](https://pypi.org/project/deadlink/)

6 | [](https://pypi.org/project/deadlink/)

7 | [](https://github.com/nschloe/deadlink/)

8 | [](https://pepy.tech/project/deadlink)

9 |

10 |

11 |

12 | [](https://github.com/nschloe/deadlink/actions?query=workflow%3Aci)

13 | [](https://app.codecov.io/gh/nschloe/deadlink)

14 | [](https://lgtm.com/projects/g/nschloe/deadlink)

15 | [](https://github.com/psf/black)

16 |

17 | Parses text files for HTTP URLs and checks if they are still valid. Install with

18 |

19 | ```

20 | pip install deadlink

21 | ```

22 |

23 | and use as

24 |

25 |

26 |

27 |

28 | ```sh

29 | deadlink check README.md # or multiple files/directories

30 | # or deadlink c README.md

31 | ```

32 |

33 | To explicitly allow or ignore certain URLs, use

34 |

35 | ```

36 | deadlink check README.md -a http: -i stackoverflow.com github

37 | ```

38 |

39 | This only considers URLs containing `http:` and _not_ containing `stackoverflow.com` or

40 | `github`. You can also place allow and ignore lists in the config file

41 | `~/.config/deadlink/config.toml`, e.g.,

42 |

43 | ```toml

44 | allow_urls = [

45 | "https:"

46 | ]

47 | ignore_urls = [

48 | "stackoverflow.com",

49 | "math.stackexchange.com",

50 | "discord.gg",

51 | "doi.org"

52 | ]

53 | ignore_files = [

54 | ".svg"

55 | ]

56 | ```

57 |

58 | See

59 |

60 | ```

61 | deadlink check -h

62 | ```

63 |

64 | for all options.

65 | Use

66 |

67 | ```sh

68 | deadlink replace-redirects paths-or-files

69 | # or deadlink rr paths-or-files

70 | ```

71 |

72 | to replace redirects in the given files. The same filters as for `deadlink check` apply.

73 |

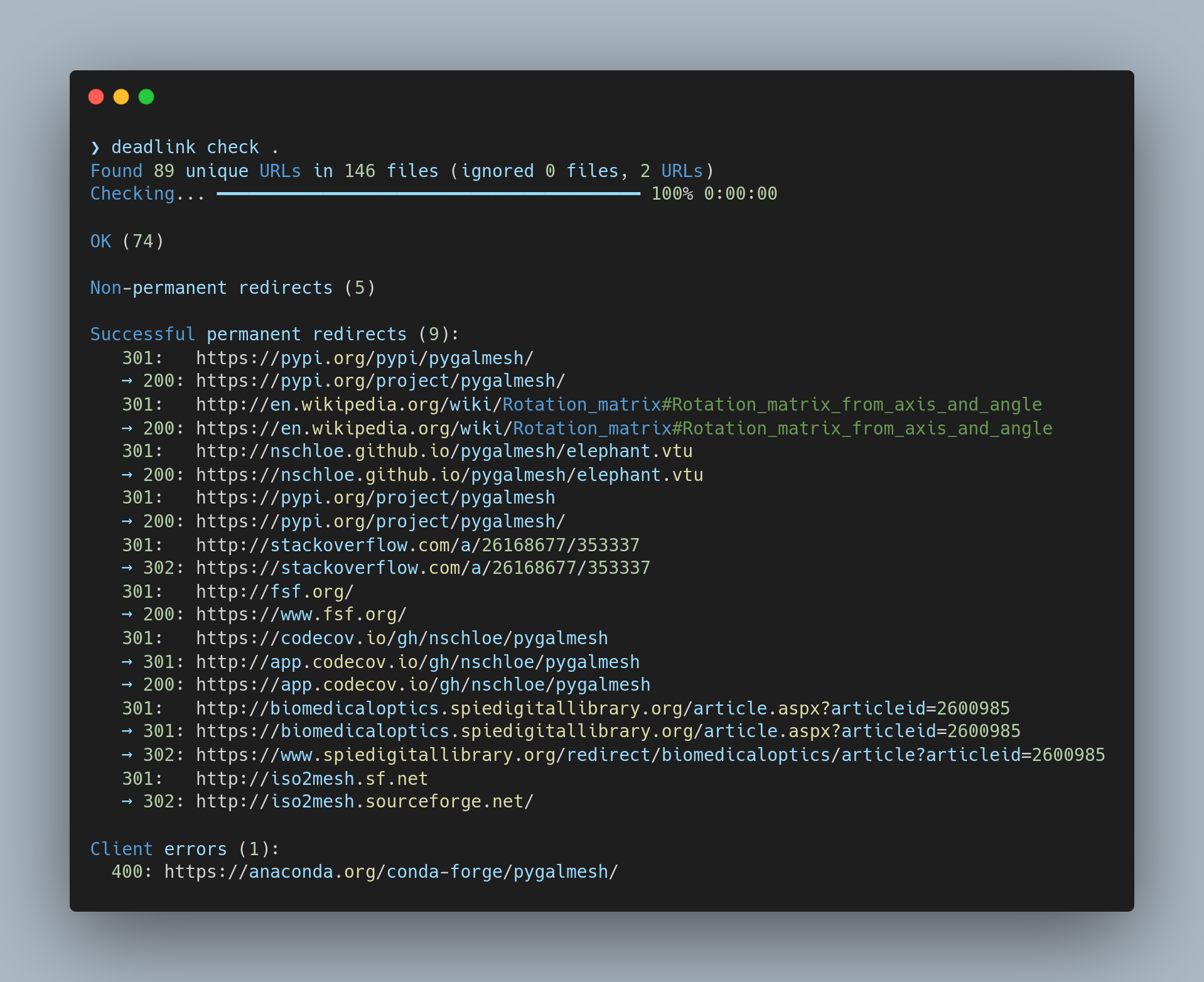

74 | Example output:

75 |

76 |

77 |

78 |

79 |

80 | #### Similar projects:

81 |

82 | - [awesome_bot](https://github.com/dkhamsing/awesome_bot)

83 | - [brök](https://github.com/smallhadroncollider/brok)

84 | - [link-verifier](https://github.com/bmuschko/link-verifier)

85 |

--------------------------------------------------------------------------------
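The allow/ignore filtering described in the README can be sketched as follows. This is a minimal illustration modelled on `is_allowed` in `src/deadlink/_main.py`; the helper name `passes_filters` and the sample URLs are assumptions made for this example and are not part of the package.

```python
import re


def passes_filters(item, allow_set, ignore_set):
    # Hypothetical helper: an item is kept only if every allow pattern
    # matches it and no ignore pattern matches it.
    if any(re.search(a, item) is None for a in allow_set):
        return False
    return all(re.search(i, item) is None for i in ignore_set)


# Sample URLs invented for this sketch.
urls = [
    "http://example.com/a",
    "https://example.com/b",
    "http://stackoverflow.com/q/1",
    "http://github.com/nschloe",
]

# Mirrors: deadlink check README.md -a http: -i stackoverflow.com github
kept = [
    u for u in urls if passes_filters(u, {"http:"}, {"stackoverflow.com", "github"})
]
print(kept)
```

Because the patterns are handed to `re.search`, they behave as regular expressions rather than plain substrings, so a `.` in a pattern such as `stackoverflow.com` matches any character.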

/justfile:

--------------------------------------------------------------------------------

1 | # version := `python3 -c "from src.deadlink.__about__ import __version__; print(__version__)"`

2 | version := `python3 -c 'exec(open("./src/deadlink/__about__.py").read()); print(__version__)'`

3 |

4 | default:

5 |     @echo "\"just publish\"?"

6 |

7 | publish:

8 |     @if [ "$(git rev-parse --abbrev-ref HEAD)" != "main" ]; then exit 1; fi

9 |     gh release create "v{{version}}"

10 |     flit publish

11 |

12 | clean:

13 |     @find . | grep -E "(__pycache__|\.pyc|\.pyo$)" | xargs rm -rf

14 |     @rm -rf src/*.egg-info/ build/ dist/ .tox/

15 |

16 | format:

17 |     isort .

18 |     black .

19 |     blacken-docs README.md

20 |

21 | lint:

22 |     black --check .

23 |     flake8 .

24 |

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [build-system]

2 | requires = ["setuptools>=61"]

3 | build-backend = "setuptools.build_meta"

4 |

5 | [tool.isort]

6 | profile = "black"

7 |

8 | [project]

9 | name = "deadlink"

10 | authors = [{name = "Nico Schlömer", email = "nico.schloemer@gmail.com"}]

11 | description = "Check and fix URLs in text files"

12 | readme = "README.md"

13 | license = {file = "LICENSE"}

14 | classifiers = [

15 | "Development Status :: 4 - Beta",

16 | "License :: OSI Approved :: MIT License",

17 | "Operating System :: OS Independent",

18 | "Programming Language :: Python",

19 | "Programming Language :: Python :: 3",

20 | "Programming Language :: Python :: 3.7",

21 | "Programming Language :: Python :: 3.8",

22 | "Programming Language :: Python :: 3.9",

23 | "Programming Language :: Python :: 3.10",

24 | "Topic :: Utilities",

25 | ]

26 | dynamic = ["version"]

27 | requires-python = ">=3.7"

28 | dependencies = [

29 | "appdirs",

30 | "httpx >= 0.20.0",

31 | "rich",

32 | "toml",

33 | ]

34 |

35 | [tool.setuptools.dynamic]

36 | version = {attr = "deadlink.__about__.__version__"}

37 |

38 | [project.urls]

39 | Code = "https://github.com/nschloe/deadlink"

40 | Issues = "https://github.com/nschloe/deadlink/issues"

41 | Funding = "https://github.com/sponsors/nschloe"

42 |

43 | [project.scripts]

44 | deadlink = "deadlink:cli"

45 |

--------------------------------------------------------------------------------

/src/deadlink/__about__.py:

--------------------------------------------------------------------------------

1 | __version__ = "0.5.0"

2 |

--------------------------------------------------------------------------------

/src/deadlink/__init__.py:

--------------------------------------------------------------------------------

1 | from .__about__ import __version__

2 | from ._cli import cli

3 | from ._main import categorize_urls

4 |

5 | __all__ = ["categorize_urls", "cli", "__version__"]

6 |

--------------------------------------------------------------------------------

/src/deadlink/_check.py:

--------------------------------------------------------------------------------

1 | from ._main import (

2 | categorize_urls,

3 | find_files,

4 | find_urls,

5 | is_allowed,

6 | plural,

7 | print_to_screen,

8 | read_config,

9 | )

10 |

11 |

12 | def check(args) -> int:

13 | # get non-hidden files in non-hidden directories

14 | files = find_files(args.paths)

15 |

16 | d = read_config()

17 |

18 | # filter files by allow-ignore-lists

19 | allow_patterns = set() if args.allow_files is None else set(args.allow_files)

20 | if "allow_files" in d:

21 | allow_patterns = allow_patterns.union(set(d["allow_files"]))

22 | ignore_patterns = set() if args.ignore_files is None else set(args.ignore_files)

23 | if "ignore_files" in d:

24 | ignore_patterns = ignore_patterns.union(set(d["ignore_files"]))

25 |

26 | num_files_before = len(files)

27 | files = set(

28 | filter(lambda item: is_allowed(item, allow_patterns, ignore_patterns), files)

29 | )

30 | num_ignored_files = num_files_before - len(files)

31 |

32 | allow_patterns = set() if args.allow_urls is None else set(args.allow_urls)

33 | if "allow_urls" in d:

34 | allow_patterns = allow_patterns.union(set(d["allow_urls"]))

35 | ignore_patterns = set() if args.ignore_urls is None else set(args.ignore_urls)

36 | if "ignore_urls" in d:

37 | ignore_patterns = ignore_patterns.union(set(d["ignore_urls"]))

38 |

39 | urls = find_urls(files)

40 |

41 | num_urls_before = len(urls)

42 | urls = set(

43 | filter(lambda item: is_allowed(item, allow_patterns, ignore_patterns), urls)

44 | )

45 | num_ignored_urls = num_urls_before - len(urls)

46 |

47 | urls_str = plural(len(urls), "unique URL")

48 | files_str = plural(len(files), "file")

49 | ifiles_str = plural(num_ignored_files, "file")

50 | iurls_str = plural(num_ignored_urls, "URL")

51 | print(f"Found {urls_str} in {files_str} (ignored {ifiles_str}, {iurls_str})")

52 |

53 | d = categorize_urls(

54 | urls,

55 | args.timeout,

56 | args.max_connections,

57 | args.max_keepalive_connections,

58 | )

59 |

60 | print_to_screen(d)

61 | has_errors = any(

62 | len(d[key]) > 0

63 | for key in ["Client errors", "Server errors", "Timeouts", "Other errors"]

64 | )

65 | return 1 if has_errors else 0

66 |

--------------------------------------------------------------------------------
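How `check` combines command-line patterns with those from the config file can be shown in isolation. The sketch below assumes a hypothetical helper `merge_patterns` (the package inlines this logic instead) and a plain dict standing in for the result of `read_config()`.

```python
def merge_patterns(cli_values, config, key):
    # Hypothetical helper: start from the CLI values (None when the
    # option was not given), then add any patterns listed under `key`
    # in the config dict.
    patterns = set() if cli_values is None else set(cli_values)
    return patterns | set(config.get(key, []))


# Stand-in for the dict returned by read_config(); values are made up.
config = {"ignore_urls": ["stackoverflow.com", "doi.org"]}

ignore_urls = merge_patterns(["github"], config, "ignore_urls")
allow_urls = merge_patterns(None, config, "allow_urls")
print(sorted(ignore_urls), sorted(allow_urls))
```

The two sources are unioned, so a pattern given on the command line adds to the config file rather than overriding it.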

/src/deadlink/_cli.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | from sys import version_info

3 |

4 | from .__about__ import __version__

5 | from ._check import check

6 | from ._replace_redirects import replace_redirects

7 |

8 |

9 | def get_version_text(prog):

10 | copyright = "Copyright (c) 2021-2022 Nico Schlömer "

11 | python_version = f"{version_info.major}.{version_info.minor}.{version_info.micro}"

12 | return "\n".join([f"{prog} {__version__} [Python {python_version}]", copyright])

13 |

14 |

15 | def cli(argv=None):

16 | parser = argparse.ArgumentParser(

17 | description="Find or replace dead links in text files."

18 | )

19 |

20 | parser.add_argument(

21 | "--version",

22 | "-v",

23 | action="version",

24 | version=get_version_text(parser.prog),

25 | help="display version information",

26 | )

27 |

28 | subparsers = parser.add_subparsers(

29 | title="subcommands",

30 | required=True,

31 | # Keep the next line around until the fix in

32 | # (2021-07-23) can be considered

33 | # "distributed enough".

34 | dest="func",

35 | )

36 |

37 | subparser_check = subparsers.add_parser(

38 | "check", help="Check for dead links", aliases=["c"]

39 | )

40 | _cli_check(subparser_check)

41 | subparser_check.set_defaults(func=check)

42 |

43 | subparser_rr = subparsers.add_parser(

44 | "replace-redirects", help="Replaces permanent redirects", aliases=["rr"]

45 | )

46 | _cli_replace_redirects(subparser_rr)

47 | subparser_rr.set_defaults(func=replace_redirects)

48 |

49 | args = parser.parse_args(argv)

50 | return args.func(args)

51 |

52 |

53 | def _cli_check(parser):

54 | parser.add_argument("paths", type=str, nargs="+", help="files or paths to check")

55 | parser.add_argument(

56 | "-t",

57 | "--timeout",

58 | type=float,

59 | default=10.0,

60 | help="connection timeout in seconds (default: 10)",

61 | )

62 | parser.add_argument(

63 | "-c",

64 | "--max-connections",

65 | type=int,

66 | default=100,

67 | help="maximum number of allowable connections (default: 100)",

68 | )

69 | parser.add_argument(

70 | "-k",

71 | "--max-keepalive-connections",

72 | type=int,

73 | default=10,

74 | help="number of allowable keep-alive connections (default: 10)",

75 | )

76 | parser.add_argument(

77 | "-a",

78 | "--allow-urls",

79 | type=str,

80 | nargs="+",

81 | help="only consider URLs containing these strings (e.g., http:)",

82 | )

83 | parser.add_argument(

84 | "-i",

85 | "--ignore-urls",

86 | type=str,

87 | nargs="+",

88 | help="ignore URLs containing these strings (e.g., github.com)",

89 | )

90 | parser.add_argument(

91 | "-af",

92 | "--allow-files",

93 | type=str,

94 | nargs="+",

95 | help="only consider file names containing these strings (e.g., .txt)",

96 | )

97 | parser.add_argument(

98 | "-if",

99 | "--ignore-files",

100 | type=str,

101 | nargs="+",

102 | help="ignore file names containing these strings (e.g., .svg)",

103 | )

104 |

105 |

106 | def _cli_replace_redirects(parser):

107 | parser.add_argument("paths", type=str, nargs="+", help="files or paths to check")

108 | parser.add_argument(

109 | "-t",

110 | "--timeout",

111 | type=float,

112 | default=10.0,

113 | help="connection timeout in seconds (default: 10)",

114 | )

115 | parser.add_argument(

116 | "-c",

117 | "--max-connections",

118 | type=int,

119 | default=100,

120 | help="maximum number of allowable connections (default: 100)",

121 | )

122 | parser.add_argument(

123 | "-k",

124 | "--max-keepalive-connections",

125 | type=int,

126 | default=10,

127 | help="number of allowable keep-alive connections (default: 10)",

128 | )

129 | parser.add_argument(

130 | "-i",

131 | "--ignore-urls",

132 | type=str,

133 | nargs="+",

134 | help="ignore URLs containing these strings (e.g., github.com)",

135 | )

136 | parser.add_argument(

137 | "-a",

138 | "--allow-urls",

139 | type=str,

140 | nargs="+",

141 | help="only consider URLs containing these strings (e.g., http:)",

142 | )

143 | parser.add_argument(

144 | "-af",

145 | "--allow-files",

146 | type=str,

147 | nargs="+",

148 | help="only consider file names containing these strings (e.g., .txt)",

149 | )

150 | parser.add_argument(

151 | "-if",

152 | "--ignore-files",

153 | type=str,

154 | nargs="+",

155 | help="ignore file names containing these strings (e.g., .svg)",

156 | )

157 | parser.add_argument(

158 | "-y",

159 | "--yes",

160 | default=False,

161 | action="store_true",

162 | help="automatic yes to prompt; useful for non-interactive runs (default: false)",

163 | )

164 |

--------------------------------------------------------------------------------

/src/deadlink/_main.py:

--------------------------------------------------------------------------------

1 | from __future__ import annotations

2 |

3 | import asyncio

4 | import re

5 | import ssl

6 | from collections import namedtuple

7 | from pathlib import Path

8 | from typing import Callable

9 | from urllib.parse import urlsplit, urlunsplit

10 |

11 | import appdirs

12 | import httpx

13 | import toml

14 | from rich.console import Console

15 | from rich.progress import track

16 |

17 | # https://regexr.com/3e6m0

18 | # make all groups non-capturing with ?:

19 | url_regex = re.compile(

20 | r"http(?:s)?:\/\/.(?:www\.)?[-a-zA-Z0-9@:%._\+~#=]{2,256}\.[a-z]{2,6}\b(?:[-a-zA-Z0-9@:%_\+.~#?&/=]*)"

21 | )

22 |

23 | Info = namedtuple("Info", ["status_code", "url"])

24 |

25 |

26 | def _get_urls_from_file(path):

27 | try:

28 | with open(path) as f:

29 | content = f.read()

30 | except UnicodeDecodeError:

31 | return []

32 | return url_regex.findall(content)

33 |

34 |

35 | async def _get_return_code(

36 | url: str,

37 | client,

38 | timeout: float,

39 | follow_codes: list[int],

40 | max_num_redirects: int = 10,

41 | is_allowed: Callable | None = None,

42 | ):

43 | k = 0

44 | seq = []

45 | while True:

46 | if is_allowed is not None and not is_allowed(url):

47 | seq.append(Info(None, url))

48 | break

49 |

50 | # Pretend to be a browser.

51 | # If we don't do this, sometimes we'll get a 403 where browsers don't (e.g.,

52 | # JSTOR).

53 | headers = {

54 | "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.182 Safari/537.36"

55 | }

56 |

57 | try:

58 | r = await client.head(

59 | url, follow_redirects=False, timeout=timeout, headers=headers

60 | )

61 | except httpx.TimeoutException:

62 | seq.append(Info(901, url))

63 | break

64 | except httpx.HTTPError:

65 | seq.append(Info(902, url))

66 | break

67 | except ssl.SSLCertVerificationError:

68 | seq.append(Info(903, url))

69 | break

70 | except Exception:

71 | # Intercept all other errors

72 | seq.append(Info(900, url))

73 | break

74 |

75 | seq.append(Info(r.status_code, url))

76 |

77 | if (

78 | k >= max_num_redirects

79 | or r.status_code not in follow_codes

80 | or "Location" not in r.headers

81 | ):

82 | break

83 |

84 | # Handle redirect

85 | loc = r.headers["Location"]

86 | url_split = urlsplit(url)

87 |

88 | # create loc split that can be overridden

89 | loc_split = urlsplit(loc)

90 | loc_split = [

91 | loc_split.scheme,

92 | loc_split.netloc,

93 | loc_split.path,

94 | loc_split.query,

95 | loc_split.fragment,

96 | ]

97 |

98 | # handle relative redirects

99 | if loc_split[0] == "":

100 | loc_split[0] = url_split.scheme

101 | if loc_split[1] == "":

102 | loc_split[1] = url_split.netloc

103 |

104 | # The URL fragment (the part after a #-sign, if there is one) is not

105 | # contained in the redirect location because it's not sent to the server in the

106 | # first place. Append it manually.

107 | if url_split.fragment != "" and loc_split[4] == "":

108 | loc_split[4] = url_split.fragment

109 |

110 | url = urlunsplit(loc_split)

111 |

112 | k += 1

113 |

114 | return seq

115 |

116 |

117 | async def _get_all_return_codes(

118 | urls,

119 | timeout: float,

120 | max_connections: int,

121 | max_keepalive_connections: int,

122 | follow_codes: list[int],

123 | is_allowed: Callable | None = None,

124 | ):

125 | # return await asyncio.gather(*map(_get_return_code, urls))

126 | ret = []

127 | limits = httpx.Limits(

128 | max_keepalive_connections=max_keepalive_connections,

129 | max_connections=max_connections,

130 | )

131 | async with httpx.AsyncClient(limits=limits) as client:

132 | tasks = map(

133 | lambda x: _get_return_code(

134 | x, client, timeout, follow_codes=follow_codes, is_allowed=is_allowed

135 | ),

136 | urls,

137 | )

138 | for task in track(

139 | asyncio.as_completed(tasks), description="Checking...", total=len(urls)

140 | ):

141 | ret.append(await task)

142 |

143 | return ret

144 |

145 |

146 | def find_non_hidden_files(root):

147 | root = Path(root)

148 | if root.is_file():

149 | if not root.name.startswith("."):

150 | yield str(root)

151 | else:

152 | for path in root.glob("*"):

153 | if not path.name.startswith("."):

154 | if path.is_file():

155 | yield str(path)

156 | else:

157 | yield from find_non_hidden_files(path)

158 |

159 |

160 | def find_files(paths: list[str]):

161 | return [filepath for path in paths for filepath in find_non_hidden_files(path)]

162 |

163 |

164 | def find_urls(files):

165 | return set(url for f in files for url in _get_urls_from_file(f))

166 |

167 |

168 | def replace_in_string(content: str, replacements: dict[str, str]):

169 | # register where to replace what

170 | repl = [

171 | (m.span(0), replacements[m.group(0)])

172 | for m in url_regex.finditer(content)

173 | if m.group(0) in replacements

174 | ]

175 |

176 | k0 = 0

177 | out = []

178 | for k in range(len(repl)):

179 | span, string = repl[k]

180 | out.append(content[k0 : span[0]])

181 | out.append(string)

182 | k0 = span[1]

183 | # and the rest

184 | out.append(content[k0:])

185 | return "".join(out)

186 |

187 |

188 | def replace_in_file(p, redirects: dict[str, str]):

189 | # read

190 | try:

191 | with open(p) as f:

192 | content = f.read()

193 | except UnicodeDecodeError:

194 | return

195 | # replace

196 | new_content = replace_in_string(content, redirects)

197 | # rewrite

198 | if new_content != content:

199 | with open(p, "w") as f:

200 | f.write(new_content)

201 |

202 |

203 | def read_config():

204 | # check if there is a config file with more allowed/ignored domains

205 | config_file = Path(appdirs.user_config_dir()) / "deadlink" / "config.toml"

206 | try:

207 | with open(config_file) as f:

208 | out = toml.load(f)

209 | except FileNotFoundError:

210 | out = {}

211 |

212 | return out

213 |

214 |

215 | def is_allowed(item, allow_set: set[str], ignore_set: set[str]) -> bool:

216 | is_allowed = True

217 |

218 | if is_allowed:

219 | for a in allow_set:

220 | if re.search(a, item) is None:

221 | is_allowed = False

222 | break

223 |

224 | if is_allowed:

225 | for i in ignore_set:

226 | if re.search(i, item) is not None:

227 | is_allowed = False

228 | break

229 |

230 | return is_allowed

231 |

232 |

233 | def categorize_urls(

234 | urls: set[str],

235 | timeout: float = 10.0,

236 | max_connections: int = 100,

237 | max_keepalive_connections: int = 10,

238 | is_allowed: Callable | None = None,

239 | ):

240 | # only follow permanent redirects

241 | follow_codes = [

242 | 301, # Moved Permanently

243 | 308, # Permanent Redirect

244 | ]

245 | r = asyncio.run(

246 | _get_all_return_codes(

247 | urls,

248 | timeout,

249 | max_connections,

250 | max_keepalive_connections,

251 | follow_codes,

252 | is_allowed,

253 | )

254 | )

255 |

256 | # sort results into dictionary

257 | d = {

258 | "OK": [],

259 | "Successful permanent redirects": [],

260 | "Failing permanent redirects": [],

261 | "Non-permanent redirects": [],

262 | "Client errors": [],

263 | "Server errors": [],

264 | "Timeouts": [],

265 | "Other errors": [],

266 | "Other HTTP errors": [],

267 | "SSL certificate errors": [],

268 | "Ignored": [],

269 | }

270 | for item in r:

271 | status_code = item[0].status_code

272 | if status_code is None:

273 | d["Ignored"].append(item)

274 | elif 200 <= status_code < 300:

275 | d["OK"].append(item)

276 | elif 300 <= status_code < 400:

277 | if status_code in [301, 308]:

278 | if 200 <= item[-1].status_code < 400:

279 | d["Successful permanent redirects"].append(item)

280 | else:

281 | d["Failing permanent redirects"].append(item)

282 | else:

283 | d["Non-permanent redirects"].append(item)

284 | elif 400 <= status_code < 500:

285 | d["Client errors"].append(item)

286 | elif 500 <= status_code < 600:

287 | d["Server errors"].append(item)

288 | elif status_code == 900:

289 | d["Other errors"].append(item)

290 | elif status_code == 901:

291 | d["Timeouts"].append(item)

292 | elif status_code == 902:

293 | d["Other HTTP errors"].append(item)

294 | elif status_code == 903:

295 | d["SSL certificate errors"].append(item)

296 | else:

297 | raise RuntimeError(f"Unknown status code {status_code}")

298 |

299 | return d

300 |

301 |

302 | def print_to_screen(d):

303 | # sort by status code

304 | for key, value in d.items():

305 | d[key] = sorted(value, key=lambda x: x[0].status_code or 0)

306 |

307 | console = Console()

308 |

309 | key = "OK"

310 | if key in d and len(d[key]) > 0:

311 | print()

312 | num = len(d[key])

313 | console.print(f"{key} ({num})", style="green", highlight=False)

314 |

315 | key = "Non-permanent redirects"

316 | if key in d and len(d[key]) > 0:

317 | print()

318 | num = len(d[key])

319 | console.print(f"{key} ({num})", style="green", highlight=False)

320 |

321 | key = "Ignored"

322 | if key in d and len(d[key]) > 0:

323 | print()

324 | num = len(d[key])

325 | console.print(f"{key} ({num})", style="white", highlight=False)

326 |

327 | keycol = [

328 | ("Successful permanent redirects", "yellow"),

329 | ("Failing permanent redirects", "red"),

330 | ]

331 | for key, base_color in keycol:

332 | if key not in d or len(d[key]) == 0:

333 | continue

334 | print()

335 | console.print(f"{key} ({len(d[key])}):", style=base_color, highlight=False)

336 | for seq in d[key]:

337 | for k, item in enumerate(seq):

338 | if item.status_code is None or 300 <= item.status_code < 400:

339 | color = "yellow"

340 | elif item.status_code < 300:

341 | color = "green"

342 | else:

343 | color = "red"

344 |

345 | sc = "xxx" if item.status_code is None else item.status_code

346 | if k == 0:

347 | console.print(f" [dim]{sc}[/]: {item.url}", style=color)

348 | else:

349 | console.print(f" → [dim]{sc}[/]: {item.url}", style=color)

350 |

351 | for key in [

352 | "Client errors",

353 | "Server errors",

354 | "Timeouts",

355 | "Other errors",

356 | "Other HTTP errors",

357 | "SSL certificate errors",

358 | ]:

359 | if key not in d or len(d[key]) == 0:

360 | continue

361 | print()

362 | console.print(f"{key} ({len(d[key])}):", style="red", highlight=False)

363 | for item in d[key]:

364 | url = item[0].url

365 | status_code = item[0].status_code

366 | if status_code < 900:

367 | console.print(f" [dim]{status_code}[/]: {url}", style="red")

368 | else:

369 | console.print(f" {url}", style="red")

370 |

371 |

372 | def plural(number: int, noun: str) -> str:

373 | out = str(number) + " " + noun

374 | if number != 1:

375 | out += "s"

376 | return out

377 |

--------------------------------------------------------------------------------

/src/deadlink/_replace_redirects.py:

--------------------------------------------------------------------------------

1 | import random

2 | from pathlib import Path

3 |

4 | from ._main import (

5 | categorize_urls,

6 | find_files,

7 | find_urls,

8 | is_allowed,

9 | plural,

10 | print_to_screen,

11 | read_config,

12 | replace_in_file,

13 | )

14 |

15 |

16 | def replace_redirects(args):

17 | # get non-hidden files in non-hidden directories

18 | files = find_files(args.paths)

19 |

20 | d = read_config()

21 |

22 | # filter files by allow-ignore-lists

23 | allow_patterns = set() if args.allow_files is None else set(args.allow_files)

24 | if "allow_files" in d:

25 | allow_patterns = allow_patterns.union(set(d["allow_files"]))

26 | ignore_patterns = set() if args.ignore_files is None else set(args.ignore_files)

27 | if "ignore_files" in d:

28 | ignore_patterns = ignore_patterns.union(set(d["ignore_files"]))

29 |

30 | num_files_before = len(files)

31 | files = set(

32 | filter(lambda item: is_allowed(item, allow_patterns, ignore_patterns), files)

33 | )

34 | num_ignored_files = num_files_before - len(files)

35 |

36 | allow_patterns = set() if args.allow_urls is None else set(args.allow_urls)

37 | if "allow_urls" in d:

38 | allow_patterns = allow_patterns.union(set(d["allow_urls"]))

39 | ignore_patterns = set() if args.ignore_urls is None else set(args.ignore_urls)

40 | if "ignore_urls" in d:

41 | ignore_patterns = ignore_patterns.union(set(d["ignore_urls"]))

42 |

43 | urls = find_urls(files)

44 |

45 | # Shuffle the URLs. In case there's rate limiting (e.g., on github.com),

46 | # a different subset of URLs gets checked on every run.

47 | urls = list(urls)

48 | random.shuffle(urls)

49 |

50 | urls_str = plural(len(urls), "unique URL")

51 | files_str = plural(len(files), "file")

52 | ifiles_str = plural(num_ignored_files, "file")

53 | print(f"Found {urls_str} in {files_str} (ignored {ifiles_str})")

54 |

55 | d = categorize_urls(

56 | urls,

57 | args.timeout,

58 | args.max_connections,

59 | args.max_keepalive_connections,

60 | lambda url: is_allowed(url, allow_patterns, ignore_patterns),

61 | )

62 |

63 | # only consider successful permanent redirects

64 | redirects = d["Successful permanent redirects"]

65 |

66 | if len(redirects) == 0:

67 | print("No redirects found.")

68 | return 0

69 |

70 | print_to_screen({"Successful permanent redirects": redirects})

71 | rdr = "redirect" if len(redirects) == 1 else "redirects"

72 | print(f"\nReplace those {len(redirects)} {rdr}? [y/N] ", end="")

73 |

74 | if args.yes:

75 | print("Auto yes.")

76 | else:

77 | choice = input().lower()

78 | if choice not in ["y", "yes"]:

79 | print("Abort.")

80 | return 1

81 |

82 | # map each original URL to the final URL of its redirect chain

83 | replace = {r[0].url: r[-1].url for r in redirects}

84 |

85 | for path in args.paths:

86 | path = Path(path)

87 | if path.is_dir():

88 | for p in path.rglob("*"):

89 | if p.is_file():

90 | replace_in_file(p, replace)

91 | else:

92 | assert path.is_file()

93 | replace_in_file(path, replace)

94 |

95 | return 0

96 |

--------------------------------------------------------------------------------

/tests/test_check.py:

--------------------------------------------------------------------------------

1 | import pytest

2 |

3 | import deadlink

4 |

5 |

6 | @pytest.mark.parametrize(

7 | "url,category",

8 | [

9 | ("https://httpstat.us/200", "OK"),

10 | ("https://httpstat.us/404", "Client errors"),

11 | ("https://this-doesnt-exist.doesit", "Other HTTP errors"),

12 | ("https://httpstat.us/301", "Successful permanent redirects"),

13 | ("https://httpstat.us/302", "Non-permanent redirects"),

14 | ("https://httpstat.us/308", "Successful permanent redirects"),

15 | ("https://httpstat.us/500", "Server errors"),

16 | ("https://httpstat.us/200?sleep=99999", "Timeouts"),

17 | ("https://httpstat.us/XYZ", "Ignored"),

18 | ],

19 | )

20 | def test_check(url, category):

21 | print(url)

22 | out = deadlink.categorize_urls(

23 | {url}, timeout=1.0, is_allowed=lambda url: "XYZ" not in url

24 | )

25 | print(out)

26 | assert len(out[category]) == 1

27 | deadlink._main.print_to_screen(out)

28 |

29 |

30 | def test_preserve_fragment():

31 | url = "http://www.numpy.org/devdocs/dev/development_workflow.html#writing-the-commit-message"

32 | url2 = "https://numpy.org/devdocs/dev/development_workflow.html#writing-the-commit-message"

33 | out = deadlink.categorize_urls({url})

34 | assert out["Successful permanent redirects"][0][-1].url == url2

35 |

36 |

37 | @pytest.mark.skip("URL doesn't exist anymore")

38 | def test_relative_redirect():

39 | url = "http://numpy-discussion.10968.n7.nabble.com/NEP-31-Context-local-and-global-overrides-of-the-NumPy-API-tp47452p47472.html"

40 | url2 = "http://numpy-discussion.10968.n7.nabble.com/NEP-31-Context-local-and-global-overrides-of-the-NumPy-API-td47452.html#a47472"

41 | out = deadlink.categorize_urls({url})

42 | assert out["Successful permanent redirects"][0][-1].url == url2

43 |

--------------------------------------------------------------------------------

/tests/test_cli.py:

--------------------------------------------------------------------------------

1 | import pathlib

2 |

3 | import deadlink

4 |

5 |

6 | def test_cli():

7 | this_dir = pathlib.Path(__file__).resolve().parent

8 | files = str((this_dir / ".." / "README.md").resolve())

9 | deadlink.cli(["check", files, "-a", "http", "-i", "xyz"])

10 |

--------------------------------------------------------------------------------

/tests/test_replace_redirects.py:

--------------------------------------------------------------------------------

1 | import tempfile

2 | from pathlib import Path

3 |

4 | import pytest

5 |

6 | import deadlink

7 |

8 |

9 | def test_replace():

10 | content = (

11 | "some text\n"

12 | + "http://example.com\n"

13 | + "http://example.com/path\n"

14 | + "more text"

15 | )

16 | d = {

17 | "http://example.com/path": "http://example.com/path/more",

18 | "http://example.com": "http://example.com/home",

19 | }

20 | new_content = deadlink._main.replace_in_string(content, d)

21 |

22 | ref = (

23 | "some text\n"

24 | + "http://example.com/home\n"

25 | + "http://example.com/path/more\n"

26 | + "more text"

27 | )

28 | assert ref == new_content

29 |

30 |

31 | @pytest.mark.parametrize(

32 | "content, ref",

33 | [

34 | (

35 | "some text\nhttps://httpstat.us/301\nmore text",

36 | "some text\nhttps://httpstat.us\nmore text",

37 | ),

38 | # For some reason, http://www.google.com doesn't get redirected

39 | # https://stackoverflow.com/q/68303464/353337

40 | # (

41 | # "http://www.google.com",

42 | # "https://www.google.com"

43 | # )

44 | ],

45 | )

46 | def test_fix_cli(content, ref):

47 |

48 | with tempfile.TemporaryDirectory() as tmpdir:

49 | tmpdir = Path(tmpdir)

50 | infile = tmpdir / "in.txt"

51 | with open(infile, "w") as f:

52 | f.write(content)

53 |

54 | deadlink.cli(["replace-redirects", str(infile), "--yes"])

55 |

56 | with open(infile) as f:

57 | out = f.read()

58 |

59 | assert out == ref

60 |

--------------------------------------------------------------------------------

/tox.ini:

--------------------------------------------------------------------------------

1 | [tox]

2 | envlist = py3

3 | isolated_build = True

4 |

5 | [testenv]

6 | deps =

7 | pytest

8 | pytest-cov

9 | pytest-codeblocks

10 | extras = all

11 | commands =

12 | pytest {posargs} --codeblocks

13 |

--------------------------------------------------------------------------------