# Plex Prerolls

A script to automate management of Plex pre-rolls.

---

## Installation and Usage

### Run Script Directly

With the introduction of webhook ingestion and auto-generation of prerolls, running this application as a standalone
Python script is no longer recommended. Please use the [Docker container](#run-as-docker-container) instead.

### Run as Docker Container

#### Requirements

- Docker

#### Docker Compose

Complete the provided `docker-compose.yml` file and run:

```sh
docker-compose up -d
# or, with Docker Compose V2: docker compose up -d
```

#### Docker CLI

```sh
docker run -d \
  --name=plex_prerolls \
  -p 8283:8283 \
  -e PUID=1000 \
  -e PGID=1000 \
  -e TZ=Etc/UTC \
  -v /path/to/config:/config \
  -v /path/to/logs:/logs \
  -v /path/to/preroll/files:/files \
  -v /path/to/auto-generated/rolls/temp:/renders \
  -v /path/to/auto-generated/rolls/parent:/auto_rolls \
  --restart unless-stopped \
  nwithan8/plex_prerolls:latest
```

#### Paths and Environment Variables

| Path          | Description                                                                                                                |
|---------------|----------------------------------------------------------------------------------------------------------------------------|
| `/config`     | Path to the config directory (`config.yaml` should be in this directory)                                                   |
| `/logs`       | Path to the log directory (`Plex Prerolls.log` will be in this directory)                                                  |
| `/files`      | Path to the root directory of all preroll files (for the [Path Globbing](#path-globbing) feature)                          |
| `/auto_rolls` | Path to the root directory where all [auto-generated preroll files](#auto-generation) will be stored                       |
| `/renders`    | Path where [auto-generated prerolls](#auto-generation) and associated assets will be temporarily stored during generation |

| Environment Variable | Description                           |
|----------------------|---------------------------------------|
| `PUID`               | UID of the user to run as             |
| `PGID`               | GID of the user to run as             |
| `TZ`                 | Timezone to use for the cron schedule |

---

## Schedule Rules

Any entry whose schedule covers the current date/time when the application runs will be added to the preroll list.

You can define as many schedules as you want, in the following categories (order does not matter):

1. **always**: Items listed here will always be included (appended) in the preroll list.
   - If you have a large set of prerolls, you can provide all paths and use `count` to randomly select a smaller
     subset of the list to use on each run.

2. **date_range**: Schedule based on a specific date/time range (including [wildcards](#date-range-section-scheduling))

3. **weekly**: Schedule based on a specific week of the year

4. **monthly**: Schedule based on a specific month of the year
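
As a rough illustration of what `count` does, the Bash sketch below draws a random subset from a list of paths without
reusing any input line. It is not the application's implementation: `pick_random` is a hypothetical helper, and it
assumes GNU coreutils `shuf` is available.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the `count` option: choose COUNT paths at random.
# pick_random COUNT PATH...
pick_random() {
  local count=$1
  shift
  # shuf -n prints at most COUNT randomly chosen input lines, each used at most once
  printf '%s\n' "$@" | shuf -n "$count"
}

# e.g. an `always` list of three paths with `count: 2`
pick_random 2 /remote/path/1.mp4 /remote/path/2.mp4 /remote/path/3.mp4
```

Each run may print a different pair of paths; if `count` exceeds the number of paths, `shuf` simply returns all of them.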

### Advanced Scheduling

#### Weight

All schedule entries accept an optional `weight` value that adjusts an entry's emphasis relative to others by adding its
listed paths to the list multiple times. Since Plex selects a random preroll from the list of paths, a path that appears
multiple times is more likely to be selected than a path that appears only once. This allows you to combine, e.g., a
`date_range` entry with an `always` entry, but place more weight/emphasis on the `date_range` entry.

```yaml
date_range:
  enabled: true
  ranges:
    - start_date: 2020-01-01 # Jan 1st, 2020
      end_date: 2020-01-02 # Jan 2nd, 2020
      paths:
        - /path/to/video.mp4
        - /path/to/another/video.mp4
      weight: 2 # Add these paths to the list twice (a greater share of prerolls, so more likely to be selected)
```
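
To see why repetition changes the odds, here is a minimal Bash sketch (not the application's code) that expands each
entry's paths `weight` times and joins the result with commas. The `add_weighted` helper and the `PREROLLS` array are
hypothetical names, and the comma-joined output assumes that Plex picks one entry at random from a comma-separated
preroll list.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: expand schedule entries by their `weight` before Plex picks one at random.
PREROLLS=()

# add_weighted WEIGHT PATH... appends the given paths to PREROLLS, WEIGHT times over
add_weighted() {
  local weight=$1 i
  shift
  for (( i = 0; i < weight; i++ )); do
    PREROLLS+=("$@")
  done
}

add_weighted 1 /path/to/always.mp4                            # an `always` entry, treated here as weight 1
add_weighted 2 /path/to/video.mp4 /path/to/another/video.mp4  # the `date_range` entry above, weight: 2

# Join into one comma-separated string (the subshell keeps the IFS change local)
(IFS=,; printf '%s\n' "${PREROLLS[*]}")
```

With these inputs, each `date_range` path makes up 2 of the 5 list entries, versus 1 of 5 for the `always` path.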

#### Disable Always

Any schedule entry (except those in the `always` section) can disable the inclusion of the `always` section by setting
`disable_always` to `true`. This is useful if, for example, you want a specific `date_range` entry for one holiday to
exclude the `always` section, while other holidays still include it.

```yaml
date_range:
  enabled: true
  ranges:
    - start_date: 2020-01-01 # Jan 1st, 2020
      end_date: 2020-01-02 # Jan 2nd, 2020
      paths:
        - /path/to/video.mp4
        - /path/to/another/video.mp4
      disable_always: true # Disable the inclusion of the `always` section when this entry is active
```

#### Path Globbing

**NOTE**: This feature will only work if you are running the Docker container on the same machine as your Plex server.

Instead of listing each individual preroll file, you can use glob (wildcard) patterns to match multiple files in a
specific directory.

The application will search your local filesystem for all files that match the pattern(s) and automatically translate
them to Plex-compatible remote paths.

##### Setup

Enable the feature under the `path_globbing` section of each schedule.

Each entry in `pairs` consists of a local path (`root_path`) and the corresponding remote path (`plex_path`).
The `patterns` list contains glob patterns that will be searched for in the `root_path` directory and translated to
their equivalent paths under `plex_path`.

You can provide multiple `pairs` to match multiple local-remote directory pairs, each with its own glob patterns.

```yaml
path_globbing:
  enabled: true
  pairs:
    - root_path: /files # The root folder to use for globbing
      plex_path: /path/to/prerolls/in/plex # The path to use for the Plex server
      patterns:
        - "local/path/to/prerolls/*.mp4" # The pattern to look for in the root_path
        - "local/path/to/prerolls/*.mkv" # The pattern to look for in the root_path
    - root_path: /other/files
      plex_path: /path/to/other/prerolls/in/plex
      patterns:
        - "local/path/to/prerolls/*.mp4"
        - "local/path/to/prerolls/*.mkv"
```

For example, if your prerolls are located at `/mnt/user/media/prerolls` on your file system and Plex sees them at
`/media/prerolls`, you would set `root_path` to `/mnt/user/media/prerolls` and `plex_path` to `/media/prerolls`.
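
The translation amounts to expanding each pattern under `root_path` and swapping that prefix for `plex_path`. Below is
a minimal Bash sketch of the idea, not the application's implementation; `glob_to_plex` is a hypothetical helper, the
`holiday` subdirectory is an illustrative name, and `**` support assumes Bash 4 or later with `globstar` enabled.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of path_globbing: expand patterns locally, print Plex-side paths.
# glob_to_plex ROOT_PATH PLEX_PATH PATTERN...
glob_to_plex() {
  local root_path=${1%/} plex_path=${2%/} pattern file
  shift 2
  # globstar: "**" spans nested directories; nullglob: unmatched patterns expand to nothing.
  # Note: these shopt settings persist in the calling shell after the function returns.
  shopt -s globstar nullglob
  for pattern in "$@"; do
    # Quote the root but leave the pattern unquoted so the shell expands it
    # (patterns containing spaces are not handled by this sketch)
    for file in "$root_path"/$pattern; do
      [[ -f $file ]] || continue                      # skip directories
      printf '%s\n' "$plex_path${file#"$root_path"}"  # replace the local root with the Plex root
    done
  done
}

# With the example directories above, /mnt/user/media/prerolls/intro.mp4 would print as /media/prerolls/intro.mp4
glob_to_plex /mnt/user/media/prerolls /media/prerolls "*.mp4" "holiday/**/*.mkv"
```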

If you are using the Docker container, you can mount the preroll directory at any location in the container
(recommended: `/files`) and set `root_path` accordingly. Although you can define multiple roots, it is recommended to
use a single all-encompassing root folder and rely on more detailed glob patterns to match files in specific
subdirectories.

If you are using the Unraid version of this container, the "Files Path" path is mapped to `/files` by default; you
should set `root_path` to `/files` and `plex_path` to the same directory as seen by Plex.

##### Usage

In any schedule section, you can use the `path_globbing` key to specify glob patterns for matching files.

```yaml
always:
  enabled: true
  paths:
    - /remote/path/1.mp4
    - /remote/path/2.mp4
    - /remote/path/3.mp4
  path_globbing:
    enabled: true
    pairs:
      - root_path: /files
        plex_path: /path/to/prerolls/in/plex
        patterns:
          - "*.mp4"
```

The above example will match all `.mp4` files in the `root_path` directory and append them to the list of prerolls.

If you have organized your prerolls into subdirectories, you can target specific subdirectories, or use `**` to match
files in all nested subdirectories.

```yaml
always:
  enabled: true
  paths:
    - /remote/path/1.mp4
    - /remote/path/2.mp4
    - /remote/path/3.mp4
  path_globbing:
    enabled: true
    pairs:
      - root_path: /files
        plex_path: /path/to/prerolls/in/plex
        patterns:
          - "subdir1/*.mp4"
          - "subdir2/*.mp4"
          - "subdir3/**/*.mp4"
```

You can use both `paths` and `path_globbing` in the same section, allowing you to mix and match specific files with
glob patterns. Please note that `paths` entries must be fully qualified **remote** paths (as seen by Plex), while
`patterns` entries in `path_globbing` are relative to the **local** `root_path` directory.

#### Date Range Section Scheduling

`date_range` entries can accept both dates (`yyyy-mm-dd`) and datetimes (`yyyy-mm-dd hh:mm:ss`, 24-hour time).

`date_range` entries can also accept wildcards (`x`) for any of the date/time fields. This can be useful for scheduling
recurring events, such as annual events, "first-of-the-month" events, or even hourly events.

```yaml
date_range:
  enabled: true
  ranges:
    # Each entry requires start_date, end_date, and paths values
    - start_date: 2020-01-01 # Jan 1st, 2020
      end_date: 2020-01-02 # Jan 2nd, 2020
      paths:
        - /path/to/video.mp4
        - /path/to/another/video.mp4
    - start_date: xxxx-07-04 # Every year on July 4th
      end_date: xxxx-07-04 # Every year on July 4th
      paths:
        - /path/to/video.mp4
        - /path/to/another/video.mp4
    - name: "My Schedule" # Optional name for logging purposes
      start_date: xxxx-xx-02 # The 2nd of every month
      end_date: xxxx-xx-03 # The 3rd of every month
      paths:
        - /path/to/video.mp4
        - /path/to/another/video.mp4
    - start_date: xxxx-xx-xx 08:00:00 # Every day at 8am
      end_date: xxxx-xx-xx 09:30:00 # Every day at 9:30am
      paths:
        - /path/to/video.mp4
        - /path/to/another/video.mp4
```
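
To make the wildcard semantics concrete, the Bash sketch below fills each `x` in a bound with the character at the same
position in the current timestamp and then compares the results. This is an assumption about how matching works, not
the application's code: `fill_wildcards` and `in_range` are hypothetical helpers, and treating a date-only `end_date` as
lasting through 23:59:59 is also an assumption. Ranges that wrap around a boundary (e.g. `xxxx-12-31` to `xxxx-01-01`)
are not handled by this sketch.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of date_range wildcard matching (not the application's implementation).

# fill_wildcards BOUND NOW: replace each "x" in BOUND with the character at the same position in NOW
fill_wildcards() {
  local bound=$1 now=$2 out="" i
  for (( i = 0; i < ${#bound}; i++ )); do
    if [[ ${bound:i:1} == x ]]; then
      out+=${now:i:1}
    else
      out+=${bound:i:1}
    fi
  done
  printf '%s\n' "$out"
}

# in_range START END NOW: succeed if NOW ("yyyy-mm-dd hh:mm:ss") falls within [START, END]
in_range() {
  local start=$1 end=$2 now=$3
  # Assumption: a date-only start begins at midnight and a date-only end lasts the whole day
  [[ ${#start} -eq 10 ]] && start+=" 00:00:00"
  [[ ${#end} -eq 10 ]] && end+=" 23:59:59"
  start=$(fill_wildcards "$start" "$now")
  end=$(fill_wildcards "$end" "$now")
  # Drop separators so the fixed-width timestamps compare as base-10 integers
  local s=${start//[!0-9]/} e=${end//[!0-9]/} n=${now//[!0-9]/}
  (( 10#$n >= 10#$s && 10#$n <= 10#$e ))
}

now=$(date '+%Y-%m-%d %H:%M:%S')
in_range "xxxx-07-04" "xxxx-07-04" "$now" && echo "July 4th entry is active"
in_range "xxxx-xx-xx 08:00:00" "xxxx-xx-xx 09:30:00" "$now" && echo "8:00-9:30 entry is active"
```

Under this model, a time-based entry such as the 8:00 to 9:30 window only takes effect if a run happens inside that
window.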

You should [adjust your cron schedule](#scheduling-script) to run the script more frequently if you use this feature.

`date_range` entries also accept an optional `name` value that can be used to identify the schedule in the logs.

---

## Advanced Configuration

### Auto-Generation

**NOTE**: This feature will only work if you are running the Docker container on the same machine as your Plex server.

**NOTE**: This feature relies on Plex webhooks, which require a Plex Pass subscription.

Plex Prerolls can automatically generate prerolls, store the generated files in a dedicated directory (the `/auto_rolls`
path), and include them in the list of prerolls.

#### "Recently Added Media" Pre-Rolls

The application can generate trailer-like prerolls for each new media item added to your library (keeping a rolling
total, 10 items by default).

This is done by receiving a webhook from Plex when new media is added, retrieving a trailer and soundtrack (via YouTube)
as well as the poster and metadata for the media item, and generating a preroll from these assets.

280 |

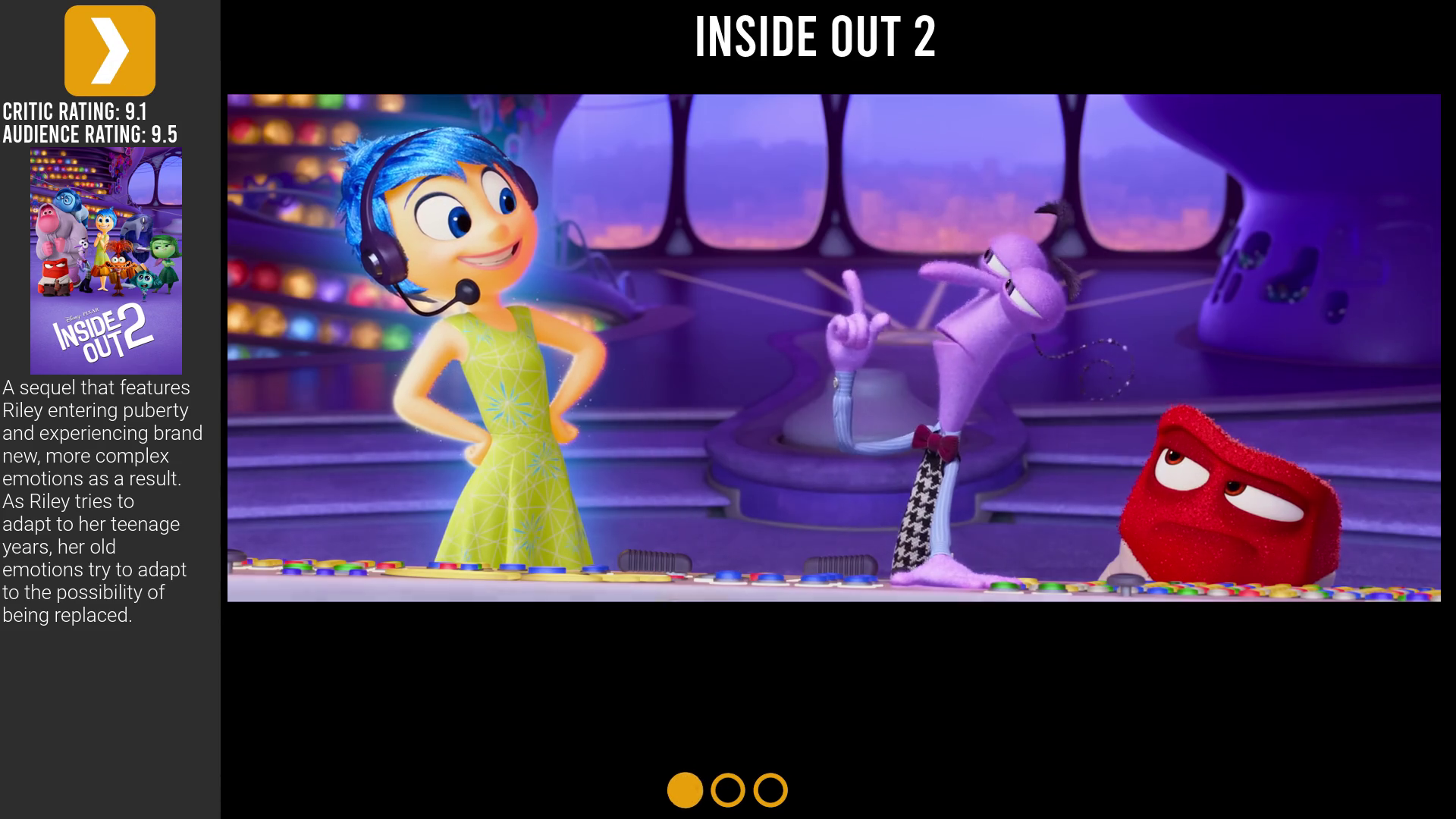

281 | Example of a generated preroll:

> :warning: This feature requires [extracting cookies for YouTube](https://github.com/yt-dlp/yt-dlp/wiki/FAQ#how-do-i-pass-cookies-to-yt-dlp) and storing them in a file called `yt_dlp_cookies.txt` alongside your `config.yaml` file.

##### Setup

[Set up a Plex webhook](https://support.plex.tv/articles/115002267687-webhooks/) that points to the application's
`/recently-added` endpoint (e.g. `http://localhost:8283/recently-added`).

Because this feature requires Plex Prerolls and Plex Media Server to run on the same host machine, it is highly
recommended to use internal networking (local IP addresses) rather than publicly exposing Plex Prerolls to the Internet.

---

## Shout out to places to get Pre-Roll

-
