├── LICENSE

├── PPO_practice.ipynb

├── README.md

├── code

├── LICENSE

├── README.md

├── benchmarks

│ ├── README.md

│ ├── benchmark_gpt_dummy.py

│ ├── benchmark_gpt_dummy.sh

│ └── benchmark_opt_lora_dummy.py

├── chatgpt

│ ├── __init__.py

│ ├── __pycache__

│ │ └── __init__.cpython-310.pyc

│ ├── dataset

│ │ ├── __init__.py

│ │ ├── __pycache__

│ │ │ ├── __init__.cpython-310.pyc

│ │ │ ├── reward_dataset.cpython-310.pyc

│ │ │ └── utils.cpython-310.pyc

│ │ ├── reward_dataset.py

│ │ └── utils.py

│ ├── experience_maker

│ │ ├── __init__.py

│ │ ├── __pycache__

│ │ │ ├── __init__.cpython-310.pyc

│ │ │ ├── base.cpython-310.pyc

│ │ │ └── naive.cpython-310.pyc

│ │ ├── base.py

│ │ └── naive.py

│ ├── models

│ │ ├── __init__.py

│ │ ├── __pycache__

│ │ │ ├── __init__.cpython-310.pyc

│ │ │ ├── generation.cpython-310.pyc

│ │ │ ├── generation_utils.cpython-310.pyc

│ │ │ ├── lora.cpython-310.pyc

│ │ │ ├── loss.cpython-310.pyc

│ │ │ └── utils.cpython-310.pyc

│ │ ├── base

│ │ │ ├── __init__.py

│ │ │ ├── __pycache__

│ │ │ │ ├── __init__.cpython-310.pyc

│ │ │ │ ├── actor.cpython-310.pyc

│ │ │ │ ├── critic.cpython-310.pyc

│ │ │ │ └── reward_model.cpython-310.pyc

│ │ │ ├── actor.py

│ │ │ ├── critic.py

│ │ │ └── reward_model.py

│ │ ├── bloom

│ │ │ ├── __init__.py

│ │ │ ├── __pycache__

│ │ │ │ ├── __init__.cpython-310.pyc

│ │ │ │ ├── bloom_actor.cpython-310.pyc

│ │ │ │ ├── bloom_critic.cpython-310.pyc

│ │ │ │ └── bloom_rm.cpython-310.pyc

│ │ │ ├── bloom_actor.py

│ │ │ ├── bloom_critic.py

│ │ │ └── bloom_rm.py

│ │ ├── generation.py

│ │ ├── generation_utils.py

│ │ ├── gpt

│ │ │ ├── __init__.py

│ │ │ ├── __pycache__

│ │ │ │ ├── __init__.cpython-310.pyc

│ │ │ │ ├── gpt_actor.cpython-310.pyc

│ │ │ │ ├── gpt_critic.cpython-310.pyc

│ │ │ │ └── gpt_rm.cpython-310.pyc

│ │ │ ├── gpt_actor.py

│ │ │ ├── gpt_critic.py

│ │ │ └── gpt_rm.py

│ │ ├── lora.py

│ │ ├── loss.py

│ │ ├── opt

│ │ │ ├── __init__.py

│ │ │ ├── __pycache__

│ │ │ │ ├── __init__.cpython-310.pyc

│ │ │ │ ├── opt_actor.cpython-310.pyc

│ │ │ │ ├── opt_critic.cpython-310.pyc

│ │ │ │ └── opt_rm.cpython-310.pyc

│ │ │ ├── opt_actor.py

│ │ │ ├── opt_critic.py

│ │ │ └── opt_rm.py

│ │ └── utils.py

│ ├── replay_buffer

│ │ ├── __init__.py

│ │ ├── __pycache__

│ │ │ ├── __init__.cpython-310.pyc

│ │ │ ├── base.cpython-310.pyc

│ │ │ ├── naive.cpython-310.pyc

│ │ │ └── utils.cpython-310.pyc

│ │ ├── base.py

│ │ ├── naive.py

│ │ └── utils.py

│ └── trainer

│ │ ├── __init__.py

│ │ ├── __pycache__

│ │ ├── __init__.cpython-310.pyc

│ │ ├── base.cpython-310.pyc

│ │ ├── ppo.cpython-310.pyc

│ │ ├── rm.cpython-310.pyc

│ │ └── utils.cpython-310.pyc

│ │ ├── base.py

│ │ ├── callbacks

│ │ ├── __init__.py

│ │ ├── __pycache__

│ │ │ ├── __init__.cpython-310.pyc

│ │ │ ├── base.cpython-310.pyc

│ │ │ ├── performance_evaluator.cpython-310.pyc

│ │ │ └── save_checkpoint.cpython-310.pyc

│ │ ├── base.py

│ │ ├── performance_evaluator.py

│ │ └── save_checkpoint.py

│ │ ├── ppo.py

│ │ ├── rm.py

│ │ ├── strategies

│ │ ├── __init__.py

│ │ ├── __pycache__

│ │ │ ├── __init__.cpython-310.pyc

│ │ │ ├── base.cpython-310.pyc

│ │ │ ├── colossalai.cpython-310.pyc

│ │ │ ├── ddp.cpython-310.pyc

│ │ │ ├── naive.cpython-310.pyc

│ │ │ └── sampler.cpython-310.pyc

│ │ ├── base.py

│ │ ├── colossalai.py

│ │ ├── ddp.py

│ │ ├── naive.py

│ │ └── sampler.py

│ │ └── utils.py

├── examples

│ ├── README.md

│ ├── inference.py

│ ├── requirements.txt

│ ├── test_ci.sh

│ ├── train_dummy.py

│ ├── train_dummy.sh

│ ├── train_prompts.py

│ ├── train_prompts.sh

│ ├── train_reward_model.py

│ └── train_rm.sh

├── pytest.ini

├── requirements-test.txt

├── requirements.txt

├── setup.py

├── tests

│ ├── __init__.py

│ ├── test_checkpoint.py

│ └── test_data.py

├── utils.py

└── version.txt

├── data

├── stage1. domain_adaptive_pretraining

│ ├── BTS.csv

│ ├── domain_adaptive_kuksundo_pretrain.jsonl

│ ├── domain_adaptive_pretrain_ive.jsonl

│ ├── 국선도.csv

│ ├── 아이브.csv

│ └── 템플릿.csv

├── stage1. domain_instruction_tuning

│ ├── ive_instruction_test.jsonl

│ ├── ive_instruction_train.jsonl

│ ├── kuksundo_instruction_test.jsonl

│ └── kuksundo_instruction_train.jsonl

├── stage2. RM

│ ├── ive_test_rm.jsonl

│ ├── ive_train_rm.jsonl

│ ├── kuksundo_test_rm.jsonl

│ └── kuksundo_train_rm.jsonl

└── stage3. PPO

│ ├── ive_test_ppo.jsonl

│ ├── ive_train_ppo.jsonl

│ ├── kuksundo_test_ppo.jsonl

│ └── kuksundo_train_ppo.jsonl

├── mygpt_실습.ipynb

└── requirements.txt

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2023 oglee815

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # update

2 | - 2024-6-13: 데이터 생성과 학습 노트북 통합 -> 하나의 colab 노트북으로 통일

3 | - 2023-10-24: 라인 넘버 추가 및 코드 정리

4 |

5 | # mygpt-lecture

6 | 본 자료는 '나만의 데이터로 만드는 MyGPT 강의' 관련 자료입니다.

7 |

8 | 아래 자료를 참고하여 만들었습니다.

9 | https://github.com/airobotlab/KoChatGPT

10 |

11 | # 전체 목차

12 |  13 |

14 | # 실습 진행 순서

15 |

13 |

14 | # 실습 진행 순서

15 |  16 |

17 | # 학습코드

18 | [my_gpt실습.ipynb](https://colab.research.google.com/github/oglee815/mygpt-lecture/blob/main/mygpt_실습.ipynb)

19 |

20 | # 데이터 생성 코드

21 | [my_gpt실습.ipynb](https://colab.research.google.com/github/oglee815/mygpt-lecture/blob/main/mygpt_실습.ipynb)

22 | - ChatGPT API를 통해 데이터 자동 생성

23 | -

16 |

17 | # 학습코드

18 | [my_gpt실습.ipynb](https://colab.research.google.com/github/oglee815/mygpt-lecture/blob/main/mygpt_실습.ipynb)

19 |

20 | # 데이터 생성 코드

21 | [my_gpt실습.ipynb](https://colab.research.google.com/github/oglee815/mygpt-lecture/blob/main/mygpt_실습.ipynb)

22 | - ChatGPT API를 통해 데이터 자동 생성

23 | -  24 |

25 | # PPO 강화학습 연습 코드(Lunar Lander2)

26 | - [ppo_practice.ipynb](https://colab.research.google.com/github/oglee815/mygpt-lecture/blob/main/PPO_practice.ipynb)

27 | -

24 |

25 | # PPO 강화학습 연습 코드(Lunar Lander2)

26 | - [ppo_practice.ipynb](https://colab.research.google.com/github/oglee815/mygpt-lecture/blob/main/PPO_practice.ipynb)

27 | -  28 |

29 | # 학습 결과 예시

30 | - SKT-KoGPT2와 나무 위키의 '아이브' 카테고리 데이터를 기반으로 ChatGPT의 Stage 1, 2, 3를 학습 한 뒤, Stage 1의 SFT와 결과 비교

31 | -

28 |

29 | # 학습 결과 예시

30 | - SKT-KoGPT2와 나무 위키의 '아이브' 카테고리 데이터를 기반으로 ChatGPT의 Stage 1, 2, 3를 학습 한 뒤, Stage 1의 SFT와 결과 비교

31 | -  32 | - KL Penalty 덕분인지 의외로 동일한 Output을 내놓는 경우가 많음

33 |

34 | # 자료 관련 문의

35 | - 이현제, oglee815@gmail.com

36 | - h8.lee@samsung.com

37 |

--------------------------------------------------------------------------------

/code/README.md:

--------------------------------------------------------------------------------

1 | # RLHF - Colossal-AI

2 |

3 | ## Table of Contents

4 |

5 | - [What is RLHF - Colossal-AI?](#intro)

6 | - [How to Install?](#install)

7 | - [The Plan](#the-plan)

8 | - [How can you partcipate in open source?](#invitation-to-open-source-contribution)

9 | ---

10 | ## Intro

11 | Implementation of RLHF (Reinforcement Learning with Human Feedback) powered by Colossal-AI. It supports distributed training and offloading, which can fit extremly large models. More details can be found in the [blog](https://www.hpc-ai.tech/blog/colossal-ai-chatgpt).

12 |

13 |

32 | - KL Penalty 덕분인지 의외로 동일한 Output을 내놓는 경우가 많음

33 |

34 | # 자료 관련 문의

35 | - 이현제, oglee815@gmail.com

36 | - h8.lee@samsung.com

37 |

--------------------------------------------------------------------------------

/code/README.md:

--------------------------------------------------------------------------------

1 | # RLHF - Colossal-AI

2 |

3 | ## Table of Contents

4 |

5 | - [What is RLHF - Colossal-AI?](#intro)

6 | - [How to Install?](#install)

7 | - [The Plan](#the-plan)

8 | - [How can you partcipate in open source?](#invitation-to-open-source-contribution)

9 | ---

10 | ## Intro

11 | Implementation of RLHF (Reinforcement Learning with Human Feedback) powered by Colossal-AI. It supports distributed training and offloading, which can fit extremly large models. More details can be found in the [blog](https://www.hpc-ai.tech/blog/colossal-ai-chatgpt).

12 |

13 |

14 |  15 |

15 |

16 |

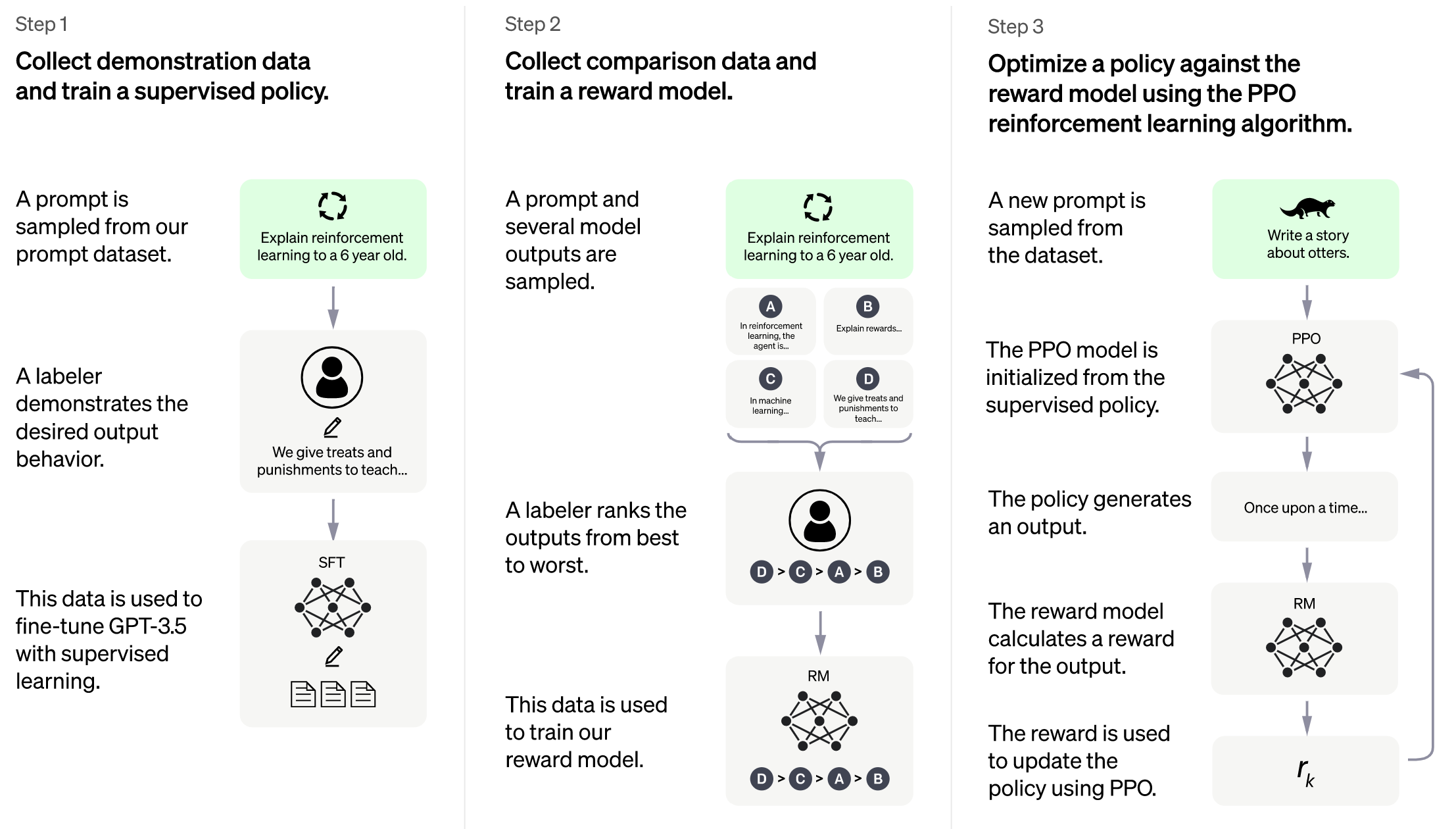

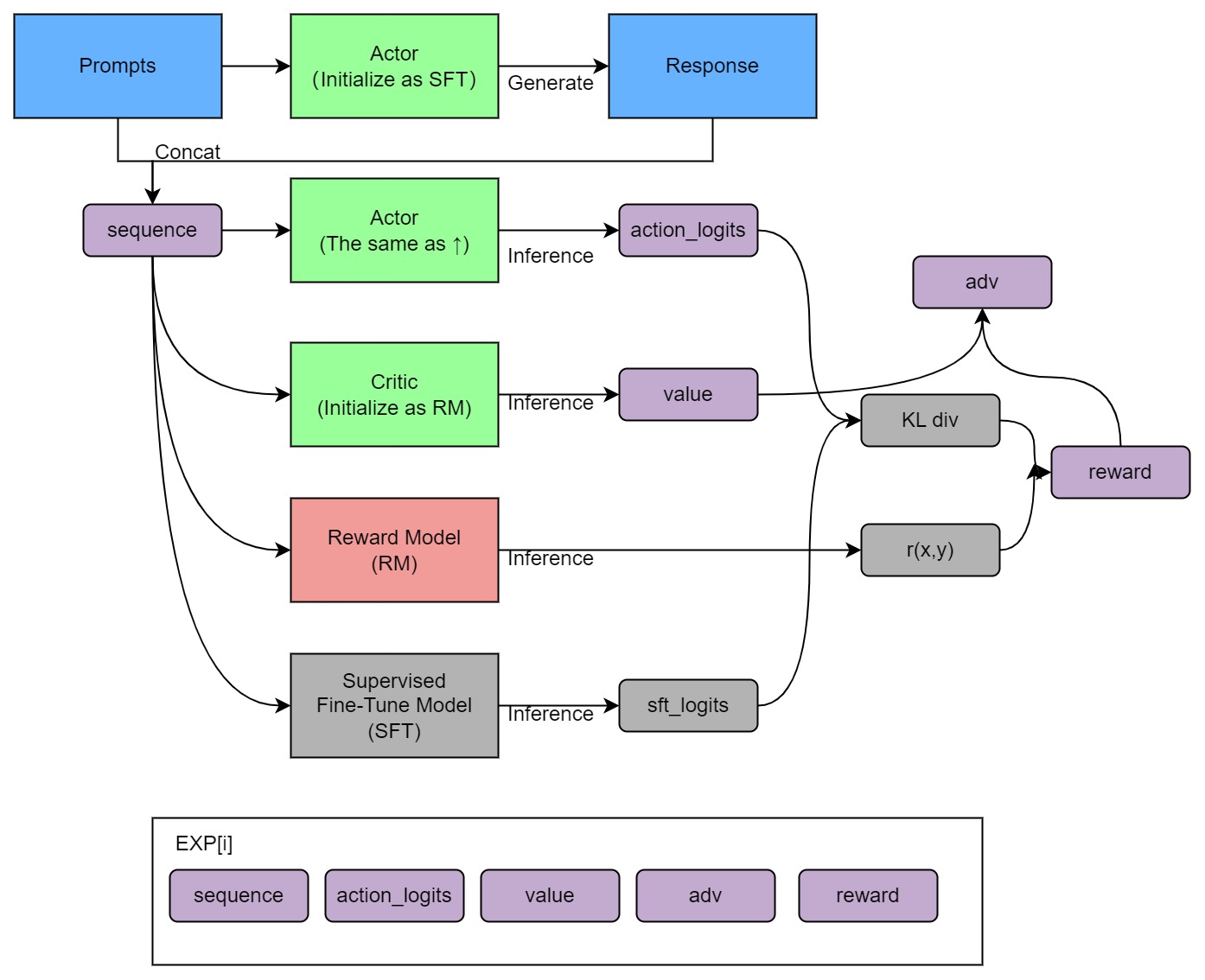

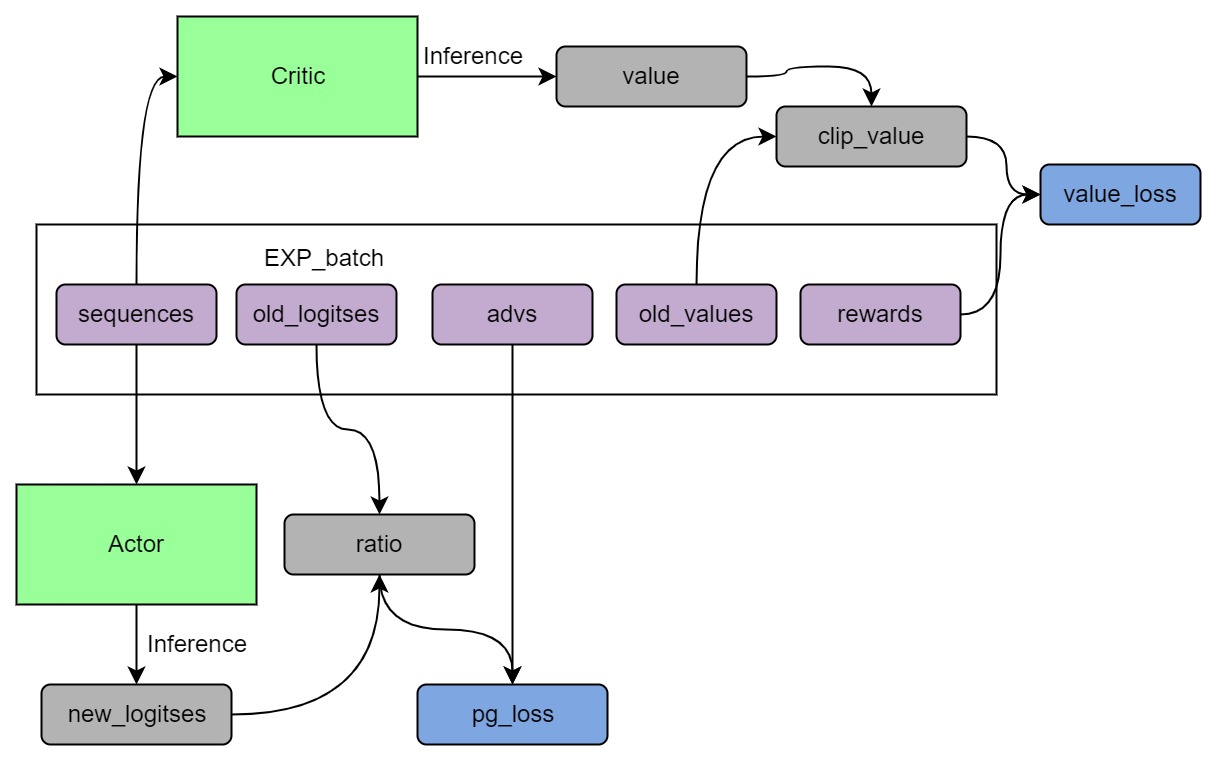

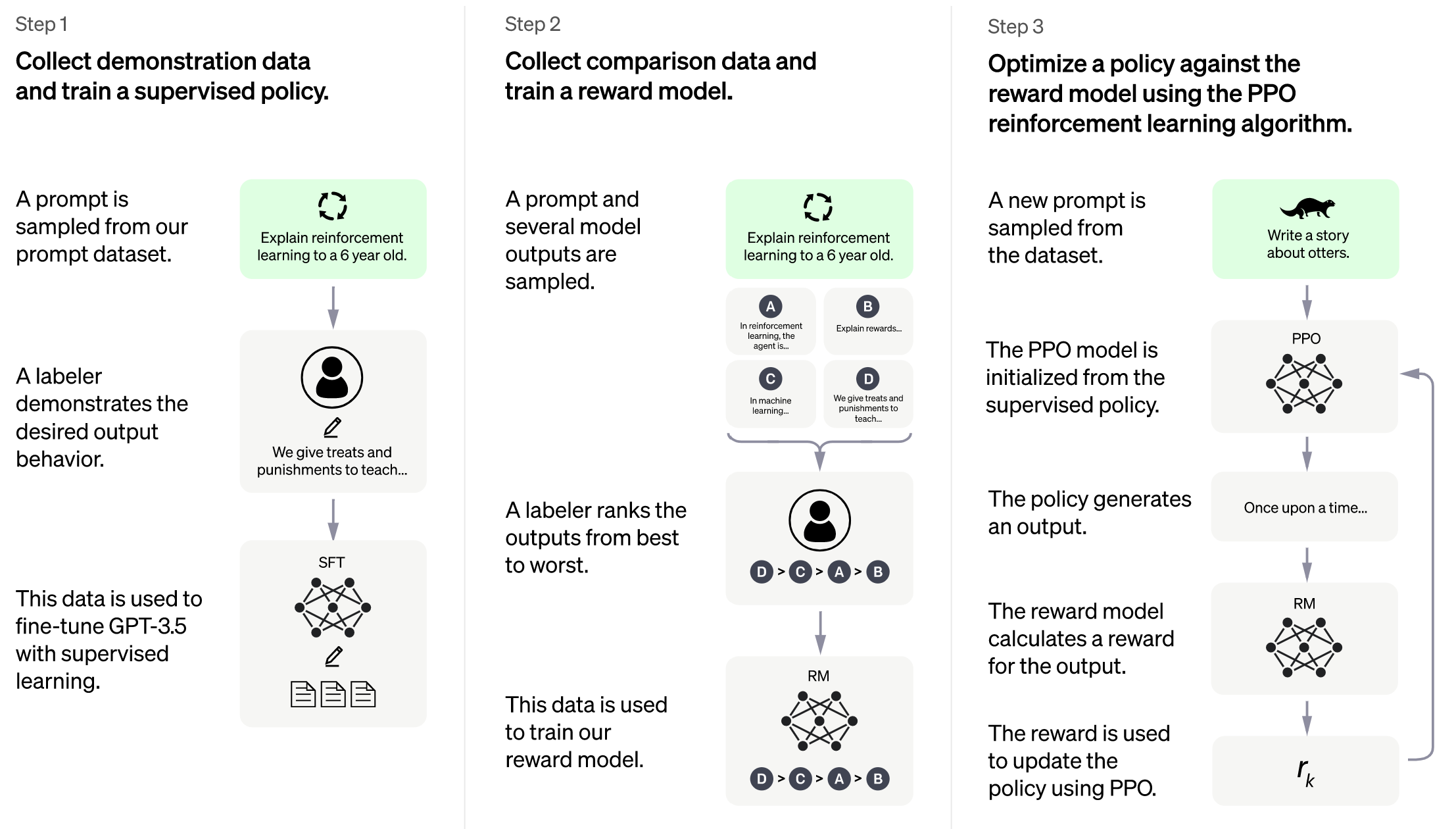

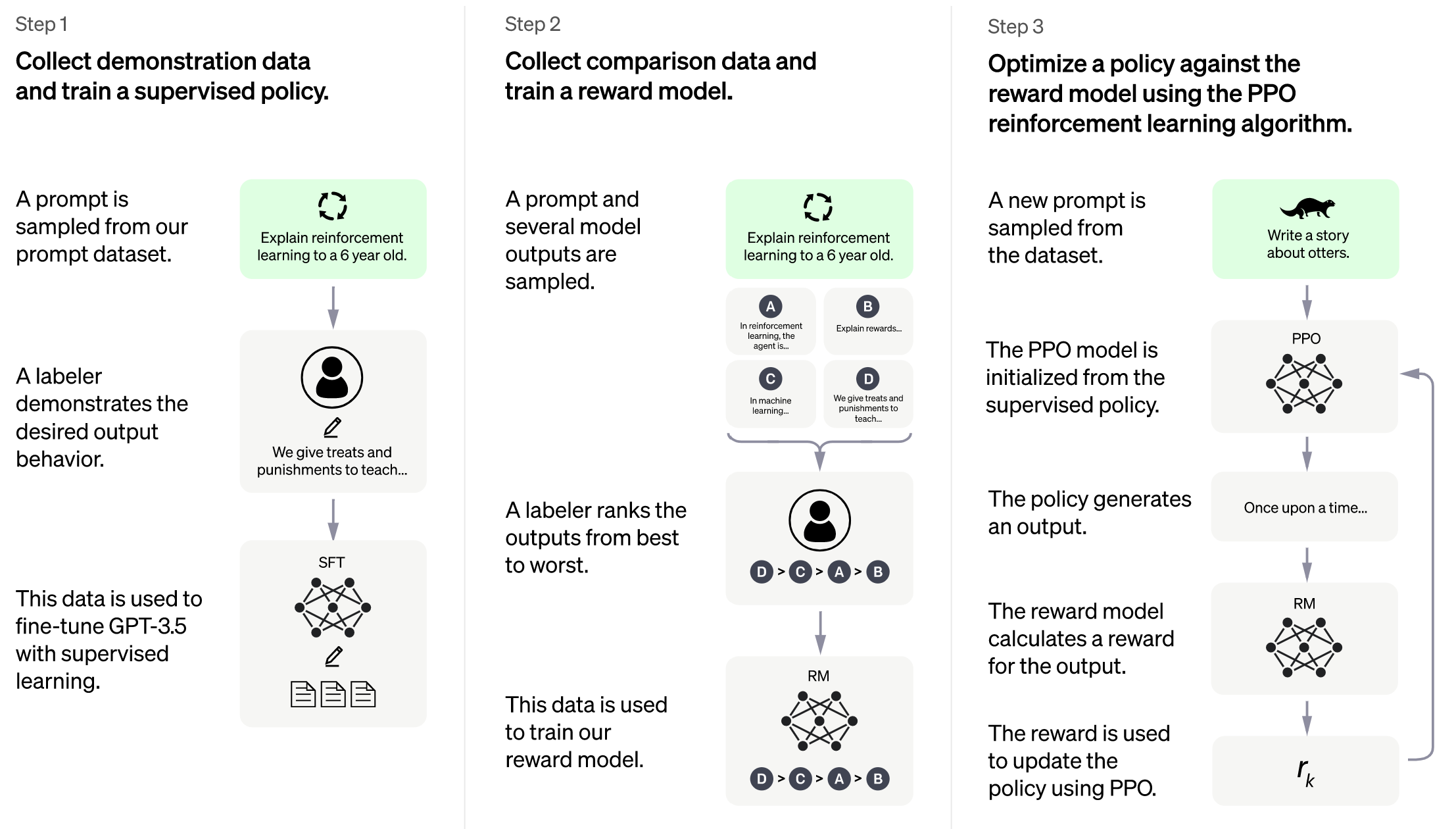

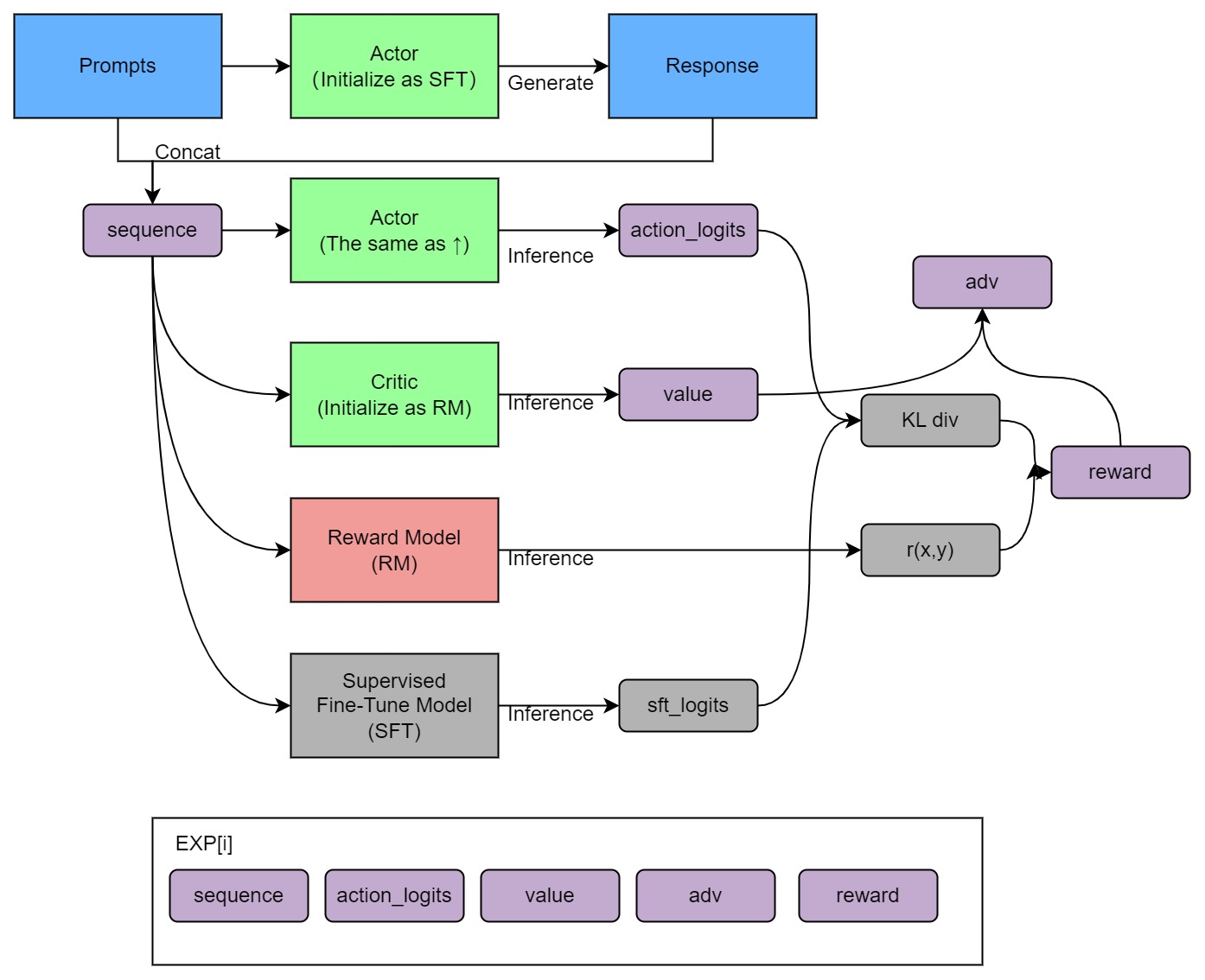

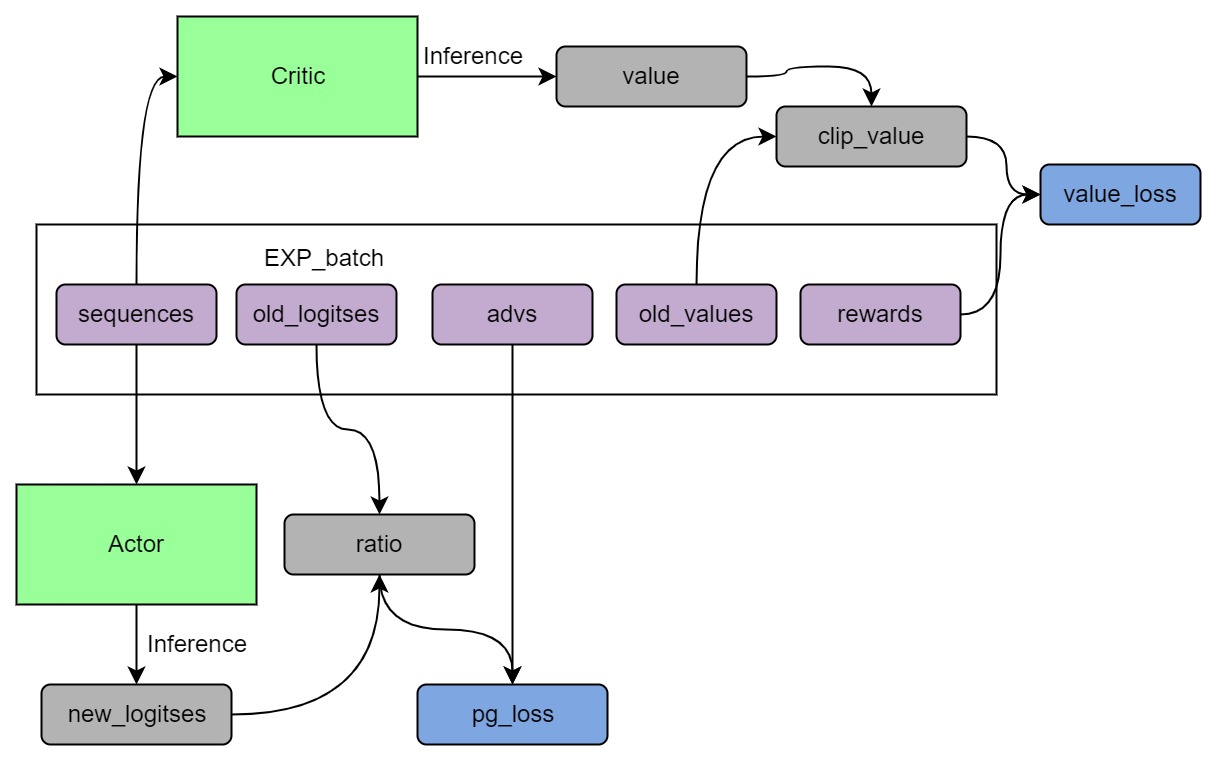

17 | ## Training process (step 3)

18 |

19 |  20 |

20 |

21 |

22 |  23 |

23 |

24 |

25 |

26 | ## Install

27 | ```shell

28 | pip install .

29 | ```

30 |

31 | ## Usage

32 |

33 | The main entrypoint is `Trainer`. We only support PPO trainer now. We support many training strategies:

34 |

35 | - NaiveStrategy: simplest strategy. Train on single GPU.

36 | - DDPStrategy: use `torch.nn.parallel.DistributedDataParallel`. Train on multi GPUs.

37 | - ColossalAIStrategy: use Gemini and Zero of ColossalAI. It eliminates model duplication on each GPU and supports offload. It's very useful when training large models on multi GPUs.

38 |

39 | Simplest usage:

40 |

41 | ```python

42 | from chatgpt.trainer import PPOTrainer

43 | from chatgpt.trainer.strategies import ColossalAIStrategy

44 | from chatgpt.models.gpt import GPTActor, GPTCritic

45 | from chatgpt.models.base import RewardModel

46 | from copy import deepcopy

47 | from colossalai.nn.optimizer import HybridAdam

48 |

49 | strategy = ColossalAIStrategy()

50 |

51 | with strategy.model_init_context():

52 | # init your model here

53 | # load pretrained gpt2

54 | actor = GPTActor(pretrained='gpt2')

55 | critic = GPTCritic()

56 | initial_model = deepcopy(actor).cuda()

57 | reward_model = RewardModel(deepcopy(critic.model), deepcopy(critic.value_head)).cuda()

58 |

59 | actor_optim = HybridAdam(actor.parameters(), lr=5e-6)

60 | critic_optim = HybridAdam(critic.parameters(), lr=5e-6)

61 |

62 | # prepare models and optimizers

63 | (actor, actor_optim), (critic, critic_optim), reward_model, initial_model = strategy.prepare(

64 | (actor, actor_optim), (critic, critic_optim), reward_model, initial_model)

65 |

66 | # load saved model checkpoint after preparing

67 | strategy.load_model(actor, 'actor_checkpoint.pt', strict=False)

68 | # load saved optimizer checkpoint after preparing

69 | strategy.load_optimizer(actor_optim, 'actor_optim_checkpoint.pt')

70 |

71 | trainer = PPOTrainer(strategy,

72 | actor,

73 | critic,

74 | reward_model,

75 | initial_model,

76 | actor_optim,

77 | critic_optim,

78 | ...)

79 |

80 | trainer.fit(dataset, ...)

81 |

82 | # save model checkpoint after fitting on only rank0

83 | strategy.save_model(actor, 'actor_checkpoint.pt', only_rank0=True)

84 | # save optimizer checkpoint on all ranks

85 | strategy.save_optimizer(actor_optim, 'actor_optim_checkpoint.pt', only_rank0=False)

86 | ```

87 |

88 | For more details, see `examples/`.

89 |

90 | We also support training reward model with true-world data. See `examples/train_reward_model.py`.

91 |

92 | ## FAQ

93 |

94 | ### How to save/load checkpoint

95 |

96 | To load pretrained model, you can simply use huggingface pretrained models:

97 |

98 | ```python

99 | # load OPT-350m pretrained model

100 | actor = OPTActor(pretrained='facebook/opt-350m')

101 | ```

102 |

103 | To save model checkpoint:

104 |

105 | ```python

106 | # save model checkpoint on only rank0

107 | strategy.save_model(actor, 'actor_checkpoint.pt', only_rank0=True)

108 | ```

109 |

110 | This function must be called after `strategy.prepare()`.

111 |

112 | For DDP strategy, model weights are replicated on all ranks. And for ColossalAI strategy, model weights may be sharded, but all-gather will be applied before returning state dict. You can set `only_rank0=True` for both of them, which only saves checkpoint on rank0, to save disk space usage. The checkpoint is float32.

113 |

114 | To save optimizer checkpoint:

115 |

116 | ```python

117 | # save optimizer checkpoint on all ranks

118 | strategy.save_optimizer(actor_optim, 'actor_optim_checkpoint.pt', only_rank0=False)

119 | ```

120 |

121 | For DDP strategy, optimizer states are replicated on all ranks. You can set `only_rank0=True`. But for ColossalAI strategy, optimizer states are sharded over all ranks, and no all-gather will be applied. So for ColossalAI strategy, you can only set `only_rank0=False`. That is to say, each rank will save a cehckpoint. When loading, each rank should load the corresponding part.

122 |

123 | Note that different stategy may have different shapes of optimizer checkpoint.

124 |

125 | To load model checkpoint:

126 |

127 | ```python

128 | # load saved model checkpoint after preparing

129 | strategy.load_model(actor, 'actor_checkpoint.pt', strict=False)

130 | ```

131 |

132 | To load optimizer checkpoint:

133 |

134 | ```python

135 | # load saved optimizer checkpoint after preparing

136 | strategy.load_optimizer(actor_optim, 'actor_optim_checkpoint.pt')

137 | ```

138 |

139 | ## The Plan

140 |

141 | - [x] implement PPO fine-tuning

142 | - [x] implement training reward model

143 | - [x] support LoRA

144 | - [x] support inference

145 | - [ ] open source the reward model weight

146 | - [ ] support llama from [facebook](https://github.com/facebookresearch/llama)

147 | - [ ] support BoN(best of N sample)

148 | - [ ] implement PPO-ptx fine-tuning

149 | - [ ] integrate with Ray

150 | - [ ] support more RL paradigms, like Implicit Language Q-Learning (ILQL),

151 | - [ ] support chain of throught by [langchain](https://github.com/hwchase17/langchain)

152 |

153 | ### Real-time progress

154 | You will find our progress in github project broad

155 |

156 | [Open ChatGPT](https://github.com/orgs/hpcaitech/projects/17/views/1)

157 |

158 | ## Invitation to open-source contribution

159 | Referring to the successful attempts of [BLOOM](https://bigscience.huggingface.co/) and [Stable Diffusion](https://en.wikipedia.org/wiki/Stable_Diffusion), any and all developers and partners with computing powers, datasets, models are welcome to join and build the Colossal-AI community, making efforts towards the era of big AI models from the starting point of replicating ChatGPT!

160 |

161 | You may contact us or participate in the following ways:

162 | 1. [Leaving a Star ⭐](https://github.com/hpcaitech/ColossalAI/stargazers) to show your like and support. Thanks!

163 | 2. Posting an [issue](https://github.com/hpcaitech/ColossalAI/issues/new/choose), or submitting a PR on GitHub follow the guideline in [Contributing](https://github.com/hpcaitech/ColossalAI/blob/main/CONTRIBUTING.md).

164 | 3. Join the Colossal-AI community on

165 | [Slack](https://join.slack.com/t/colossalaiworkspace/shared_invite/zt-z7b26eeb-CBp7jouvu~r0~lcFzX832w),

166 | and [WeChat(微信)](https://raw.githubusercontent.com/hpcaitech/public_assets/main/colossalai/img/WeChat.png "qrcode") to share your ideas.

167 | 4. Send your official proposal to email contact@hpcaitech.com

168 |

169 | Thanks so much to all of our amazing contributors!

170 |

171 | ## Quick Preview

172 |

173 |  174 |

174 |

175 |

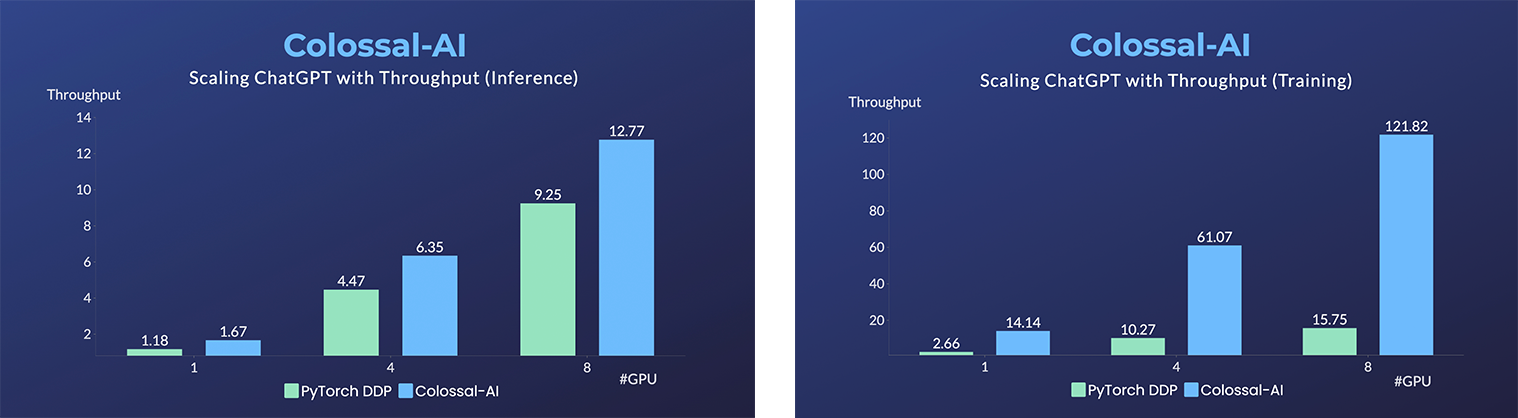

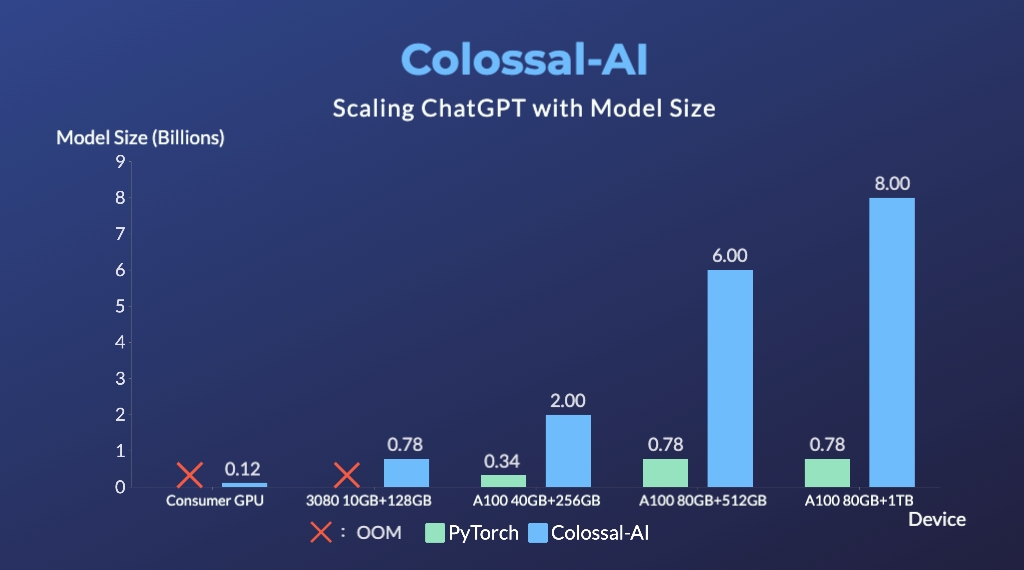

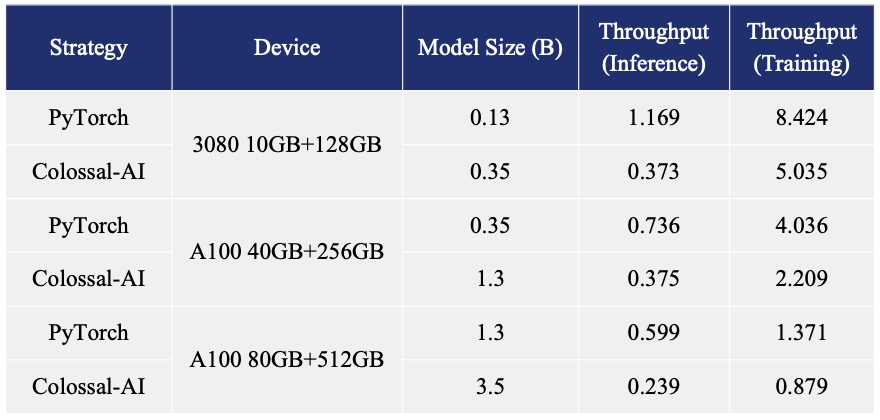

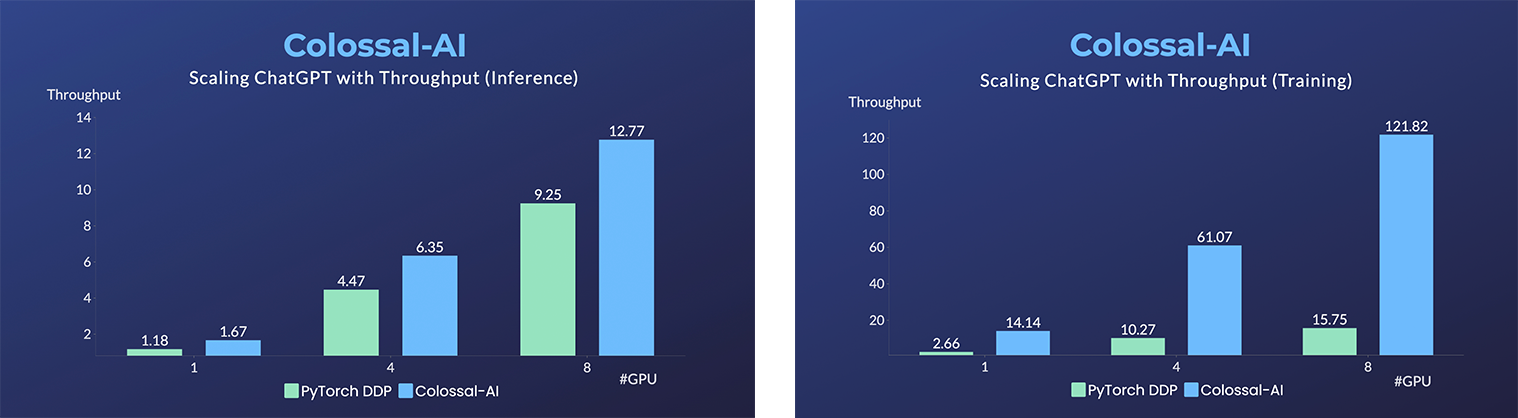

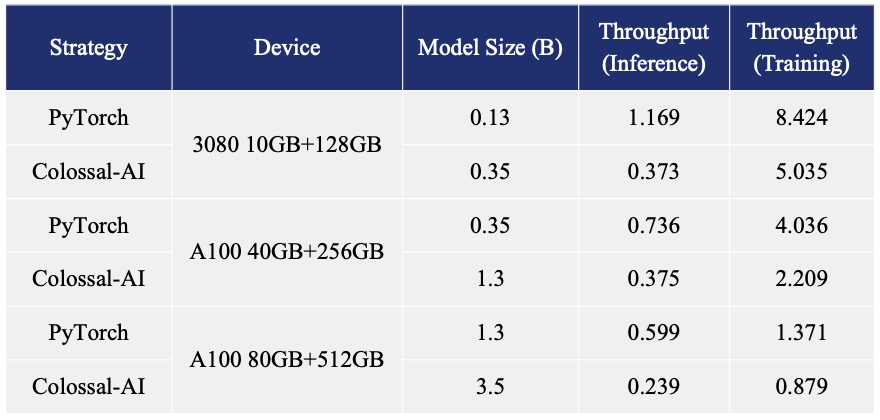

176 | - Up to 7.73 times faster for single server training and 1.42 times faster for single-GPU inference

177 |

178 |

179 |  180 |

180 |

181 |

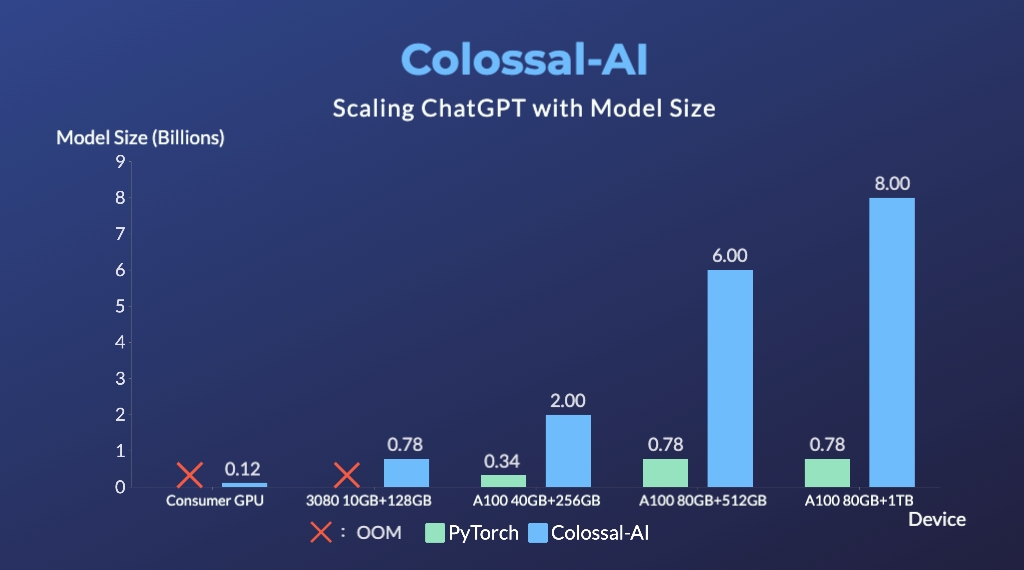

182 | - Up to 10.3x growth in model capacity on one GPU

183 | - A mini demo training process requires only 1.62GB of GPU memory (any consumer-grade GPU)

184 |

185 |

186 |  187 |

187 |

188 |

189 | - Increase the capacity of the fine-tuning model by up to 3.7 times on a single GPU

190 | - Keep in a sufficiently high running speed

191 |

192 | ## Citations

193 |

194 | ```bibtex

195 | @article{Hu2021LoRALA,

196 | title = {LoRA: Low-Rank Adaptation of Large Language Models},

197 | author = {Edward J. Hu and Yelong Shen and Phillip Wallis and Zeyuan Allen-Zhu and Yuanzhi Li and Shean Wang and Weizhu Chen},

198 | journal = {ArXiv},

199 | year = {2021},

200 | volume = {abs/2106.09685}

201 | }

202 |

203 | @article{ouyang2022training,

204 | title={Training language models to follow instructions with human feedback},

205 | author={Ouyang, Long and Wu, Jeff and Jiang, Xu and Almeida, Diogo and Wainwright, Carroll L and Mishkin, Pamela and Zhang, Chong and Agarwal, Sandhini and Slama, Katarina and Ray, Alex and others},

206 | journal={arXiv preprint arXiv:2203.02155},

207 | year={2022}

208 | }

209 | ```

210 |

--------------------------------------------------------------------------------

/code/benchmarks/README.md:

--------------------------------------------------------------------------------

1 | # Benchmarks

2 |

3 | ## Benchmark GPT on dummy prompt data

4 |

5 | We provide various GPT models (string in parentheses is the corresponding model name used in this script):

6 |

7 | - GPT2-S (s)

8 | - GPT2-M (m)

9 | - GPT2-L (l)

10 | - GPT2-XL (xl)

11 | - GPT2-4B (4b)

12 | - GPT2-6B (6b)

13 | - GPT2-8B (8b)

14 | - GPT2-10B (10b)

15 | - GPT2-12B (12b)

16 | - GPT2-15B (15b)

17 | - GPT2-18B (18b)

18 | - GPT2-20B (20b)

19 | - GPT2-24B (24b)

20 | - GPT2-28B (28b)

21 | - GPT2-32B (32b)

22 | - GPT2-36B (36b)

23 | - GPT2-40B (40b)

24 | - GPT3 (175b)

25 |

26 | We also provide various training strategies:

27 |

28 | - ddp: torch DDP

29 | - colossalai_gemini: ColossalAI GeminiDDP with `placement_policy="cuda"`, like zero3

30 | - colossalai_gemini_cpu: ColossalAI GeminiDDP with `placement_policy="cpu"`, like zero3-offload

31 | - colossalai_zero2: ColossalAI zero2

32 | - colossalai_zero2_cpu: ColossalAI zero2-offload

33 | - colossalai_zero1: ColossalAI zero1

34 | - colossalai_zero1_cpu: ColossalAI zero1-offload

35 |

36 | We only support `torchrun` to launch now. E.g.

37 |

38 | ```shell

39 | # run GPT2-S on single-node single-GPU with min batch size

40 | torchrun --standalone --nproc_per_node 1 benchmark_gpt_dummy.py --model s --strategy ddp --experience_batch_size 1 --train_batch_size 1

41 | # run GPT2-XL on single-node 4-GPU

42 | torchrun --standalone --nproc_per_node 4 benchmark_gpt_dummy.py --model xl --strategy colossalai_zero2

43 | # run GPT3 on 8-node 8-GPU

44 | torchrun --nnodes 8 --nproc_per_node 8 \

45 | --rdzv_id=$JOB_ID --rdzv_backend=c10d --rdzv_endpoint=$HOST_NODE_ADDR \

46 | benchmark_gpt_dummy.py --model 175b --strategy colossalai_gemini

47 | ```

48 |

49 | > ⚠ Batch sizes in CLI args and outputed throughput/TFLOPS are all values of per GPU.

50 |

51 | In this benchmark, we assume the model architectures/sizes of actor and critic are the same for simplicity. But in practice, to reduce training cost, we may use a smaller critic.

52 |

53 | We also provide a simple shell script to run a set of benchmarks. But it only supports benchmark on single node. However, it's easy to run on multi-nodes by modifying launch command in this script.

54 |

55 | Usage:

56 |

57 | ```shell

58 | # run for GPUS=(1 2 4 8) x strategy=("ddp" "colossalai_zero2" "colossalai_gemini" "colossalai_zero2_cpu" "colossalai_gemini_cpu") x model=("s" "m" "l" "xl" "2b" "4b" "6b" "8b" "10b") x batch_size=(1 2 4 8 16 32 64 128 256)

59 | ./benchmark_gpt_dummy.sh

60 | # run for GPUS=2 x strategy=("ddp" "colossalai_zero2" "colossalai_gemini" "colossalai_zero2_cpu" "colossalai_gemini_cpu") x model=("s" "m" "l" "xl" "2b" "4b" "6b" "8b" "10b") x batch_size=(1 2 4 8 16 32 64 128 256)

61 | ./benchmark_gpt_dummy.sh 2

62 | # run for GPUS=2 x strategy=ddp x model=("s" "m" "l" "xl" "2b" "4b" "6b" "8b" "10b") x batch_size=(1 2 4 8 16 32 64 128 256)

63 | ./benchmark_gpt_dummy.sh 2 ddp

64 | # run for GPUS=2 x strategy=ddp x model=l x batch_size=(1 2 4 8 16 32 64 128 256)

65 | ./benchmark_gpt_dummy.sh 2 ddp l

66 | ```

67 |

68 | ## Benchmark OPT with LoRA on dummy prompt data

69 |

70 | We provide various OPT models (string in parentheses is the corresponding model name used in this script):

71 |

72 | - OPT-125M (125m)

73 | - OPT-350M (350m)

74 | - OPT-700M (700m)

75 | - OPT-1.3B (1.3b)

76 | - OPT-2.7B (2.7b)

77 | - OPT-3.5B (3.5b)

78 | - OPT-5.5B (5.5b)

79 | - OPT-6.7B (6.7b)

80 | - OPT-10B (10b)

81 | - OPT-13B (13b)

82 |

83 | We only support `torchrun` to launch now. E.g.

84 |

85 | ```shell

86 | # run OPT-125M with no lora (lora_rank=0) on single-node single-GPU with min batch size

87 | torchrun --standalone --nproc_per_node 1 benchmark_opt_lora_dummy.py --model 125m --strategy ddp --experience_batch_size 1 --train_batch_size 1 --lora_rank 0

88 | # run OPT-350M with lora_rank=4 on single-node 4-GPU

89 | torchrun --standalone --nproc_per_node 4 benchmark_opt_lora_dummy.py --model 350m --strategy colossalai_zero2 --lora_rank 4

90 | ```

91 |

92 | > ⚠ Batch sizes in CLI args and outputed throughput/TFLOPS are all values of per GPU.

93 |

94 | In this benchmark, we assume the model architectures/sizes of actor and critic are the same for simplicity. But in practice, to reduce training cost, we may use a smaller critic.

95 |

--------------------------------------------------------------------------------

/code/benchmarks/benchmark_gpt_dummy.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | from copy import deepcopy

3 |

4 | import torch

5 | import torch.distributed as dist

6 | import torch.nn as nn

7 | from chatgpt.models.base import RewardModel

8 | from chatgpt.models.gpt import GPTActor, GPTCritic

9 | from chatgpt.trainer import PPOTrainer

10 | from chatgpt.trainer.callbacks import PerformanceEvaluator

11 | from chatgpt.trainer.strategies import ColossalAIStrategy, DDPStrategy, Strategy

12 | from torch.optim import Adam

13 | from transformers.models.gpt2.configuration_gpt2 import GPT2Config

14 | from transformers.models.gpt2.tokenization_gpt2 import GPT2Tokenizer

15 |

16 | from colossalai.nn.optimizer import HybridAdam

17 |

18 |

19 | def get_model_numel(model: nn.Module, strategy: Strategy) -> int:

20 | numel = sum(p.numel() for p in model.parameters())

21 | if isinstance(strategy, ColossalAIStrategy) and strategy.stage == 3 and strategy.shard_init:

22 | numel *= dist.get_world_size()

23 | return numel

24 |

25 |

26 | def preprocess_batch(samples) -> dict:

27 | input_ids = torch.stack(samples)

28 | attention_mask = torch.ones_like(input_ids, dtype=torch.long)

29 | return {'input_ids': input_ids, 'attention_mask': attention_mask}

30 |

31 |

32 | def print_rank_0(*args, **kwargs) -> None:

33 | if dist.get_rank() == 0:

34 | print(*args, **kwargs)

35 |

36 |

37 | def print_model_numel(model_dict: dict) -> None:

38 | B = 1024**3

39 | M = 1024**2

40 | K = 1024

41 | outputs = ''

42 | for name, numel in model_dict.items():

43 | outputs += f'{name}: '

44 | if numel >= B:

45 | outputs += f'{numel / B:.2f} B\n'

46 | elif numel >= M:

47 | outputs += f'{numel / M:.2f} M\n'

48 | elif numel >= K:

49 | outputs += f'{numel / K:.2f} K\n'

50 | else:

51 | outputs += f'{numel}\n'

52 | print_rank_0(outputs)

53 |

54 |

55 | def get_gpt_config(model_name: str) -> GPT2Config:

56 | model_map = {

57 | 's': GPT2Config(),

58 | 'm': GPT2Config(n_embd=1024, n_layer=24, n_head=16),

59 | 'l': GPT2Config(n_embd=1280, n_layer=36, n_head=20),

60 | 'xl': GPT2Config(n_embd=1600, n_layer=48, n_head=25),

61 | '2b': GPT2Config(n_embd=2048, n_layer=40, n_head=16),

62 | '4b': GPT2Config(n_embd=2304, n_layer=64, n_head=16),

63 | '6b': GPT2Config(n_embd=4096, n_layer=30, n_head=16),

64 | '8b': GPT2Config(n_embd=4096, n_layer=40, n_head=16),

65 | '10b': GPT2Config(n_embd=4096, n_layer=50, n_head=16),

66 | '12b': GPT2Config(n_embd=4096, n_layer=60, n_head=16),

67 | '15b': GPT2Config(n_embd=4096, n_layer=78, n_head=16),

68 | '18b': GPT2Config(n_embd=4096, n_layer=90, n_head=16),

69 | '20b': GPT2Config(n_embd=8192, n_layer=25, n_head=16),

70 | '24b': GPT2Config(n_embd=8192, n_layer=30, n_head=16),

71 | '28b': GPT2Config(n_embd=8192, n_layer=35, n_head=16),

72 | '32b': GPT2Config(n_embd=8192, n_layer=40, n_head=16),

73 | '36b': GPT2Config(n_embd=8192, n_layer=45, n_head=16),

74 | '40b': GPT2Config(n_embd=8192, n_layer=50, n_head=16),

75 | '175b': GPT2Config(n_positions=2048, n_embd=12288, n_layer=96, n_head=96),

76 | }

77 | try:

78 | return model_map[model_name]

79 | except KeyError:

80 | raise ValueError(f'Unknown model "{model_name}"')

81 |

82 |

83 | def main(args):

84 | if args.strategy == 'ddp':

85 | strategy = DDPStrategy()

86 | elif args.strategy == 'colossalai_gemini':

87 | strategy = ColossalAIStrategy(stage=3, placement_policy='cuda', initial_scale=2**5)

88 | elif args.strategy == 'colossalai_gemini_cpu':

89 | strategy = ColossalAIStrategy(stage=3, placement_policy='cpu', initial_scale=2**5)

90 | elif args.strategy == 'colossalai_zero2':

91 | strategy = ColossalAIStrategy(stage=2, placement_policy='cuda')

92 | elif args.strategy == 'colossalai_zero2_cpu':

93 | strategy = ColossalAIStrategy(stage=2, placement_policy='cpu')

94 | elif args.strategy == 'colossalai_zero1':

95 | strategy = ColossalAIStrategy(stage=1, placement_policy='cuda')

96 | elif args.strategy == 'colossalai_zero1_cpu':

97 | strategy = ColossalAIStrategy(stage=1, placement_policy='cpu')

98 | else:

99 | raise ValueError(f'Unsupported strategy "{args.strategy}"')

100 |

101 | model_config = get_gpt_config(args.model)

102 |

103 | with strategy.model_init_context():

104 | actor = GPTActor(config=model_config).cuda()

105 | critic = GPTCritic(config=model_config).cuda()

106 |

107 | initial_model = deepcopy(actor).cuda()

108 | reward_model = RewardModel(deepcopy(critic.model), deepcopy(critic.value_head)).cuda()

109 |

110 | actor_numel = get_model_numel(actor, strategy)

111 | critic_numel = get_model_numel(critic, strategy)

112 | initial_model_numel = get_model_numel(initial_model, strategy)

113 | reward_model_numel = get_model_numel(reward_model, strategy)

114 | print_model_numel({

115 | 'Actor': actor_numel,

116 | 'Critic': critic_numel,

117 | 'Initial model': initial_model_numel,

118 | 'Reward model': reward_model_numel

119 | })

120 | performance_evaluator = PerformanceEvaluator(actor_numel,

121 | critic_numel,

122 | initial_model_numel,

123 | reward_model_numel,

124 | enable_grad_checkpoint=False,

125 | ignore_episodes=1)

126 |

127 | if args.strategy.startswith('colossalai'):

128 | actor_optim = HybridAdam(actor.parameters(), lr=5e-6)

129 | critic_optim = HybridAdam(critic.parameters(), lr=5e-6)

130 | else:

131 | actor_optim = Adam(actor.parameters(), lr=5e-6)

132 | critic_optim = Adam(critic.parameters(), lr=5e-6)

133 |

134 | tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

135 | tokenizer.pad_token = tokenizer.eos_token

136 |

137 | (actor, actor_optim), (critic, critic_optim), reward_model, initial_model = strategy.prepare(

138 | (actor, actor_optim), (critic, critic_optim), reward_model, initial_model)

139 |

140 | trainer = PPOTrainer(strategy,

141 | actor,

142 | critic,

143 | reward_model,

144 | initial_model,

145 | actor_optim,

146 | critic_optim,

147 | max_epochs=args.max_epochs,

148 | train_batch_size=args.train_batch_size,

149 | experience_batch_size=args.experience_batch_size,

150 | tokenizer=preprocess_batch,

151 | max_length=512,

152 | do_sample=True,

153 | temperature=1.0,

154 | top_k=50,

155 | pad_token_id=tokenizer.pad_token_id,

156 | eos_token_id=tokenizer.eos_token_id,

157 | callbacks=[performance_evaluator])

158 |

159 | random_prompts = torch.randint(tokenizer.vocab_size, (1000, 400), device=torch.cuda.current_device())

160 | trainer.fit(random_prompts,

161 | num_episodes=args.num_episodes,

162 | max_timesteps=args.max_timesteps,

163 | update_timesteps=args.update_timesteps)

164 |

165 | print_rank_0(f'Peak CUDA mem: {torch.cuda.max_memory_allocated()/1024**3:.2f} GB')

166 |

167 |

168 | if __name__ == '__main__':

169 | parser = argparse.ArgumentParser()

170 | parser.add_argument('--model', default='s')

171 | parser.add_argument('--strategy',

172 | choices=[

173 | 'ddp', 'colossalai_gemini', 'colossalai_gemini_cpu', 'colossalai_zero2',

174 | 'colossalai_zero2_cpu', 'colossalai_zero1', 'colossalai_zero1_cpu'

175 | ],

176 | default='ddp')

177 | parser.add_argument('--num_episodes', type=int, default=3)

178 | parser.add_argument('--max_timesteps', type=int, default=8)

179 | parser.add_argument('--update_timesteps', type=int, default=8)

180 | parser.add_argument('--max_epochs', type=int, default=3)

181 | parser.add_argument('--train_batch_size', type=int, default=8)

182 | parser.add_argument('--experience_batch_size', type=int, default=8)

183 | args = parser.parse_args()

184 | main(args)

185 |

--------------------------------------------------------------------------------

/code/benchmarks/benchmark_gpt_dummy.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | # Usage: $0

3 | set -xu

4 |

5 | BASE=$(realpath $(dirname $0))

6 |

7 |

8 | PY_SCRIPT=${BASE}/benchmark_gpt_dummy.py

9 | export OMP_NUM_THREADS=8

10 |

11 | function tune_batch_size() {

12 | # we found when experience batch size is equal to train batch size

13 | # peak CUDA memory usage of making experience phase is less than or equal to that of training phase

14 | # thus, experience batch size can be larger than or equal to train batch size

15 | for bs in 1 2 4 8 16 32 64 128 256; do

16 | torchrun --standalone --nproc_per_node $1 $PY_SCRIPT --model $2 --strategy $3 --experience_batch_size $bs --train_batch_size $bs || return 1

17 | done

18 | }

19 |

20 | if [ $# -eq 0 ]; then

21 | num_gpus=(1 2 4 8)

22 | else

23 | num_gpus=($1)

24 | fi

25 |

26 | if [ $# -le 1 ]; then

27 | strategies=("ddp" "colossalai_zero2" "colossalai_gemini" "colossalai_zero2_cpu" "colossalai_gemini_cpu")

28 | else

29 | strategies=($2)

30 | fi

31 |

32 | if [ $# -le 2 ]; then

33 | models=("s" "m" "l" "xl" "2b" "4b" "6b" "8b" "10b")

34 | else

35 | models=($3)

36 | fi

37 |

38 |

39 | for num_gpu in ${num_gpus[@]}; do

40 | for strategy in ${strategies[@]}; do

41 | for model in ${models[@]}; do

42 | tune_batch_size $num_gpu $model $strategy || break

43 | done

44 | done

45 | done

46 |

--------------------------------------------------------------------------------

/code/benchmarks/benchmark_opt_lora_dummy.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | from copy import deepcopy

3 |

4 | import torch

5 | import torch.distributed as dist

6 | import torch.nn as nn

7 | from chatgpt.models.base import RewardModel

8 | from chatgpt.models.opt import OPTActor, OPTCritic

9 | from chatgpt.trainer import PPOTrainer

10 | from chatgpt.trainer.callbacks import PerformanceEvaluator

11 | from chatgpt.trainer.strategies import ColossalAIStrategy, DDPStrategy, Strategy

12 | from torch.optim import Adam

13 | from transformers import AutoTokenizer

14 | from transformers.models.opt.configuration_opt import OPTConfig

15 |

16 | from colossalai.nn.optimizer import HybridAdam

17 |

18 |

19 | def get_model_numel(model: nn.Module, strategy: Strategy) -> int:

20 | numel = sum(p.numel() for p in model.parameters())

21 | if isinstance(strategy, ColossalAIStrategy) and strategy.stage == 3 and strategy.shard_init:

22 | numel *= dist.get_world_size()

23 | return numel

24 |

25 |

26 | def preprocess_batch(samples) -> dict:

27 | input_ids = torch.stack(samples)

28 | attention_mask = torch.ones_like(input_ids, dtype=torch.long)

29 | return {'input_ids': input_ids, 'attention_mask': attention_mask}

30 |

31 |

32 | def print_rank_0(*args, **kwargs) -> None:

33 | if dist.get_rank() == 0:

34 | print(*args, **kwargs)

35 |

36 |

37 | def print_model_numel(model_dict: dict) -> None:

38 | B = 1024**3

39 | M = 1024**2

40 | K = 1024

41 | outputs = ''

42 | for name, numel in model_dict.items():

43 | outputs += f'{name}: '

44 | if numel >= B:

45 | outputs += f'{numel / B:.2f} B\n'

46 | elif numel >= M:

47 | outputs += f'{numel / M:.2f} M\n'

48 | elif numel >= K:

49 | outputs += f'{numel / K:.2f} K\n'

50 | else:

51 | outputs += f'{numel}\n'

52 | print_rank_0(outputs)

53 |

54 |

55 | def get_gpt_config(model_name: str) -> OPTConfig:

56 | model_map = {

57 | '125m': OPTConfig.from_pretrained('facebook/opt-125m'),

58 | '350m': OPTConfig(hidden_size=1024, ffn_dim=4096, num_hidden_layers=24, num_attention_heads=16),

59 | '700m': OPTConfig(hidden_size=1280, ffn_dim=5120, num_hidden_layers=36, num_attention_heads=20),

60 | '1.3b': OPTConfig.from_pretrained('facebook/opt-1.3b'),

61 | '2.7b': OPTConfig.from_pretrained('facebook/opt-2.7b'),

62 | '3.5b': OPTConfig(hidden_size=3072, ffn_dim=12288, num_hidden_layers=32, num_attention_heads=32),

63 | '5.5b': OPTConfig(hidden_size=3840, ffn_dim=15360, num_hidden_layers=32, num_attention_heads=32),

64 | '6.7b': OPTConfig.from_pretrained('facebook/opt-6.7b'),

65 | '10b': OPTConfig(hidden_size=5120, ffn_dim=20480, num_hidden_layers=32, num_attention_heads=32),

66 | '13b': OPTConfig.from_pretrained('facebook/opt-13b'),

67 | }

68 | try:

69 | return model_map[model_name]

70 | except KeyError:

71 | raise ValueError(f'Unknown model "{model_name}"')

72 |

73 |

74 | def main(args):

75 | if args.strategy == 'ddp':

76 | strategy = DDPStrategy()

77 | elif args.strategy == 'colossalai_gemini':

78 | strategy = ColossalAIStrategy(stage=3, placement_policy='cuda', initial_scale=2**5)

79 | elif args.strategy == 'colossalai_gemini_cpu':

80 | strategy = ColossalAIStrategy(stage=3, placement_policy='cpu', initial_scale=2**5)

81 | elif args.strategy == 'colossalai_zero2':

82 | strategy = ColossalAIStrategy(stage=2, placement_policy='cuda')

83 | elif args.strategy == 'colossalai_zero2_cpu':

84 | strategy = ColossalAIStrategy(stage=2, placement_policy='cpu')

85 | elif args.strategy == 'colossalai_zero1':

86 | strategy = ColossalAIStrategy(stage=1, placement_policy='cuda')

87 | elif args.strategy == 'colossalai_zero1_cpu':

88 | strategy = ColossalAIStrategy(stage=1, placement_policy='cpu')

89 | else:

90 | raise ValueError(f'Unsupported strategy "{args.strategy}"')

91 |

92 | torch.cuda.set_per_process_memory_fraction(args.cuda_mem_frac)

93 |

94 | model_config = get_gpt_config(args.model)

95 |

96 | with strategy.model_init_context():

97 | actor = OPTActor(config=model_config, lora_rank=args.lora_rank).cuda()

98 | critic = OPTCritic(config=model_config, lora_rank=args.lora_rank).cuda()

99 |

100 | initial_model = deepcopy(actor).cuda()

101 | reward_model = RewardModel(deepcopy(critic.model), deepcopy(critic.value_head)).cuda()

102 |

103 | actor_numel = get_model_numel(actor, strategy)

104 | critic_numel = get_model_numel(critic, strategy)

105 | initial_model_numel = get_model_numel(initial_model, strategy)

106 | reward_model_numel = get_model_numel(reward_model, strategy)

107 | print_model_numel({

108 | 'Actor': actor_numel,

109 | 'Critic': critic_numel,

110 | 'Initial model': initial_model_numel,

111 | 'Reward model': reward_model_numel

112 | })

113 | performance_evaluator = PerformanceEvaluator(actor_numel,

114 | critic_numel,

115 | initial_model_numel,

116 | reward_model_numel,

117 | enable_grad_checkpoint=False,

118 | ignore_episodes=1)

119 |

120 | if args.strategy.startswith('colossalai'):

121 | actor_optim = HybridAdam(actor.parameters(), lr=5e-6)

122 | critic_optim = HybridAdam(critic.parameters(), lr=5e-6)

123 | else:

124 | actor_optim = Adam(actor.parameters(), lr=5e-6)

125 | critic_optim = Adam(critic.parameters(), lr=5e-6)

126 |

127 | tokenizer = AutoTokenizer.from_pretrained('facebook/opt-350m')

128 | tokenizer.pad_token = tokenizer.eos_token

129 |

130 | (actor, actor_optim), (critic, critic_optim), reward_model, initial_model = strategy.prepare(

131 | (actor, actor_optim), (critic, critic_optim), reward_model, initial_model)

132 |

133 | trainer = PPOTrainer(strategy,

134 | actor,

135 | critic,

136 | reward_model,

137 | initial_model,

138 | actor_optim,

139 | critic_optim,

140 | max_epochs=args.max_epochs,

141 | train_batch_size=args.train_batch_size,

142 | experience_batch_size=args.experience_batch_size,

143 | tokenizer=preprocess_batch,

144 | max_length=512,

145 | do_sample=True,

146 | temperature=1.0,

147 | top_k=50,

148 | pad_token_id=tokenizer.pad_token_id,

149 | eos_token_id=tokenizer.eos_token_id,

150 | callbacks=[performance_evaluator])

151 |

152 | random_prompts = torch.randint(tokenizer.vocab_size, (1000, 400), device=torch.cuda.current_device())

153 | trainer.fit(random_prompts,

154 | num_episodes=args.num_episodes,

155 | max_timesteps=args.max_timesteps,

156 | update_timesteps=args.update_timesteps)

157 |

158 | print_rank_0(f'Peak CUDA mem: {torch.cuda.max_memory_allocated()/1024**3:.2f} GB')

159 |

160 |

161 | if __name__ == '__main__':

162 | parser = argparse.ArgumentParser()

163 | parser.add_argument('--model', default='125m')

164 | parser.add_argument('--strategy',

165 | choices=[

166 | 'ddp', 'colossalai_gemini', 'colossalai_gemini_cpu', 'colossalai_zero2',

167 | 'colossalai_zero2_cpu', 'colossalai_zero1', 'colossalai_zero1_cpu'

168 | ],

169 | default='ddp')

170 | parser.add_argument('--num_episodes', type=int, default=3)

171 | parser.add_argument('--max_timesteps', type=int, default=8)

172 | parser.add_argument('--update_timesteps', type=int, default=8)

173 | parser.add_argument('--max_epochs', type=int, default=3)

174 | parser.add_argument('--train_batch_size', type=int, default=8)

175 | parser.add_argument('--experience_batch_size', type=int, default=8)

176 | parser.add_argument('--lora_rank', type=int, default=4)

177 | parser.add_argument('--cuda_mem_frac', type=float, default=1.0)

178 | args = parser.parse_args()

179 | main(args)

180 |

--------------------------------------------------------------------------------

/code/chatgpt/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/__init__.py

--------------------------------------------------------------------------------

/code/chatgpt/__pycache__/__init__.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/__pycache__/__init__.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/dataset/__init__.py:

--------------------------------------------------------------------------------

1 | from .reward_dataset import RewardDataset

2 | from .utils import is_rank_0

3 |

4 | __all__ = ['RewardDataset', 'is_rank_0']

5 |

--------------------------------------------------------------------------------

/code/chatgpt/dataset/__pycache__/__init__.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/dataset/__pycache__/__init__.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/dataset/__pycache__/reward_dataset.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/dataset/__pycache__/reward_dataset.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/dataset/__pycache__/utils.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/dataset/__pycache__/utils.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/dataset/reward_dataset.py:

--------------------------------------------------------------------------------

1 | from typing import Callable

2 |

3 | from torch.utils.data import Dataset

4 | from tqdm import tqdm

5 |

6 | from .utils import is_rank_0

7 |

8 |

9 | class RewardDataset(Dataset):

10 | """

11 | Dataset for reward model

12 |

13 | Args:

14 | dataset: dataset for reward model

15 | tokenizer: tokenizer for reward model

16 | max_length: max length of input

17 | """

18 |

19 | def __init__(self, dataset, tokenizer: Callable, max_length: int) -> None:

20 | super().__init__()

21 | self.chosen = []

22 | self.reject = []

23 | for data in tqdm(dataset, disable=not is_rank_0()):

24 | prompt = data['prompt']

25 |

26 | chosen = prompt + data['chosen'] + tokenizer.eos_token #"<|endoftext|>"

27 | chosen_token = tokenizer(chosen,

28 | max_length=max_length,

29 | padding="max_length",

30 | truncation=True,

31 | return_tensors="pt")

32 | self.chosen.append({

33 | "input_ids": chosen_token['input_ids'],

34 | "attention_mask": chosen_token['attention_mask']

35 | })

36 |

37 | reject = prompt + data['rejected'] + tokenizer.eos_token

38 | reject_token = tokenizer(reject,

39 | max_length=max_length,

40 | padding="max_length",

41 | truncation=True,

42 | return_tensors="pt")

43 | self.reject.append({

44 | "input_ids": reject_token['input_ids'],

45 | "attention_mask": reject_token['attention_mask']

46 | })

47 |

48 | def __len__(self):

49 | length = len(self.chosen)

50 | return length

51 |

52 | def __getitem__(self, idx):

53 | return self.chosen[idx]["input_ids"], self.chosen[idx]["attention_mask"], self.reject[idx]["input_ids"], self.reject[idx]["attention_mask"]

54 |

--------------------------------------------------------------------------------

/code/chatgpt/dataset/utils.py:

--------------------------------------------------------------------------------

1 | import torch.distributed as dist

2 |

3 |

4 | def is_rank_0() -> bool:

5 | return not dist.is_initialized() or dist.get_rank() == 0

6 |

--------------------------------------------------------------------------------

/code/chatgpt/experience_maker/__init__.py:

--------------------------------------------------------------------------------

1 | from .base import Experience, ExperienceMaker

2 | from .naive import NaiveExperienceMaker

3 |

4 | __all__ = ['Experience', 'ExperienceMaker', 'NaiveExperienceMaker']

5 |

--------------------------------------------------------------------------------

/code/chatgpt/experience_maker/__pycache__/__init__.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/experience_maker/__pycache__/__init__.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/experience_maker/__pycache__/base.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/experience_maker/__pycache__/base.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/experience_maker/__pycache__/naive.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/experience_maker/__pycache__/naive.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/experience_maker/base.py:

--------------------------------------------------------------------------------

1 | from abc import ABC, abstractmethod

2 | from dataclasses import dataclass

3 | from typing import Optional

4 |

5 | import torch

6 | import torch.nn as nn

7 | from chatgpt.models.base import Actor

8 |

9 |

10 | @dataclass

11 | class Experience:

12 | """Experience is a batch of data.

13 | These data should have the the sequence length and number of actions.

14 | Left padding for sequences is applied.

15 |

16 | Shapes of each tensor:

17 | sequences: (B, S)

18 | action_log_probs: (B, A)

19 | values: (B)

20 | reward: (B)

21 | advatanges: (B)

22 | attention_mask: (B, S)

23 | action_mask: (B, A)

24 |

25 | "A" is the number of actions.

26 | """

27 | sequences: torch.Tensor

28 | action_log_probs: torch.Tensor

29 | values: torch.Tensor

30 | reward: torch.Tensor

31 | advantages: torch.Tensor

32 | attention_mask: Optional[torch.LongTensor]

33 | action_mask: Optional[torch.BoolTensor]

34 |

35 | @torch.no_grad()

36 | def to_device(self, device: torch.device) -> None:

37 | self.sequences = self.sequences.to(device)

38 | self.action_log_probs = self.action_log_probs.to(device)

39 | self.values = self.values.to(device)

40 | self.reward = self.reward.to(device)

41 | self.advantages = self.advantages.to(device)

42 | if self.attention_mask is not None:

43 | self.attention_mask = self.attention_mask.to(device)

44 | if self.action_mask is not None:

45 | self.action_mask = self.action_mask.to(device)

46 |

47 | def pin_memory(self):

48 | self.sequences = self.sequences.pin_memory()

49 | self.action_log_probs = self.action_log_probs.pin_memory()

50 | self.values = self.values.pin_memory()

51 | self.reward = self.reward.pin_memory()

52 | self.advantages = self.advantages.pin_memory()

53 | if self.attention_mask is not None:

54 | self.attention_mask = self.attention_mask.pin_memory()

55 | if self.action_mask is not None:

56 | self.action_mask = self.action_mask.pin_memory()

57 | return self

58 |

59 |

60 | class ExperienceMaker(ABC):

61 |

62 | def __init__(self,

63 | actor: Actor,

64 | critic: nn.Module,

65 | reward_model: nn.Module,

66 | initial_model: Actor,

67 | kl_coef: float = 0.1) -> None:

68 | super().__init__()

69 | self.actor = actor

70 | self.critic = critic

71 | self.reward_model = reward_model

72 | self.initial_model = initial_model

73 | self.kl_coef = kl_coef

74 |

75 | @abstractmethod

76 | def make_experience(self, input_ids: torch.Tensor, **generate_kwargs) -> Experience:

77 | pass

78 |

--------------------------------------------------------------------------------

/code/chatgpt/experience_maker/naive.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from chatgpt.models.utils import compute_reward, normalize

3 |

4 | from .base import Experience, ExperienceMaker

5 |

6 |

7 | class NaiveExperienceMaker(ExperienceMaker):

8 | """

9 | Naive experience maker.

10 | """

11 |

12 | @torch.no_grad()

13 | def make_experience(self, input_ids: torch.Tensor, **generate_kwargs) -> Experience:

14 | self.actor.eval()

15 | self.critic.eval()

16 | self.initial_model.eval()

17 | self.reward_model.eval()

18 |

19 | sequences, attention_mask, action_mask = self.actor.generate(input_ids,

20 | return_action_mask=True,

21 | **generate_kwargs)

22 | num_actions = action_mask.size(1)

23 |

24 | action_log_probs = self.actor(sequences, num_actions, attention_mask)

25 | base_action_log_probs = self.initial_model(sequences, num_actions, attention_mask)

26 | value = self.critic(sequences, action_mask, attention_mask)

27 | r = self.reward_model(sequences, attention_mask)

28 |

29 | reward = compute_reward(r, self.kl_coef, action_log_probs, base_action_log_probs, action_mask=action_mask)

30 |

31 | advantage = reward - value

32 | # TODO(ver217): maybe normalize adv

33 | if advantage.ndim == 1:

34 | advantage = advantage.unsqueeze(-1)

35 |

36 | return Experience(sequences, action_log_probs, value, reward, advantage, attention_mask, action_mask)

37 |

--------------------------------------------------------------------------------

/code/chatgpt/models/__init__.py:

--------------------------------------------------------------------------------

1 | from .base import Actor, Critic, RewardModel

2 | from .loss import PairWiseLoss, PolicyLoss, PPOPtxActorLoss, ValueLoss

3 |

4 | __all__ = ['Actor', 'Critic', 'RewardModel', 'PolicyLoss', 'ValueLoss', 'PPOPtxActorLoss', 'PairWiseLoss']

5 |

--------------------------------------------------------------------------------

/code/chatgpt/models/__pycache__/__init__.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/__pycache__/__init__.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/__pycache__/generation.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/__pycache__/generation.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/__pycache__/generation_utils.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/__pycache__/generation_utils.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/__pycache__/lora.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/__pycache__/lora.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/__pycache__/loss.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/__pycache__/loss.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/__pycache__/utils.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/__pycache__/utils.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/base/__init__.py:

--------------------------------------------------------------------------------

1 | from .actor import Actor

2 | from .critic import Critic

3 | from .reward_model import RewardModel

4 |

5 | __all__ = ['Actor', 'Critic', 'RewardModel']

6 |

--------------------------------------------------------------------------------

/code/chatgpt/models/base/__pycache__/__init__.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/base/__pycache__/__init__.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/base/__pycache__/actor.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/base/__pycache__/actor.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/base/__pycache__/critic.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/base/__pycache__/critic.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/base/__pycache__/reward_model.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/base/__pycache__/reward_model.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/base/actor.py:

--------------------------------------------------------------------------------

1 | from typing import Optional, Tuple, Union

2 |

3 | import torch

4 | import torch.nn as nn

5 | import torch.nn.functional as F

6 |

7 | from ..generation import generate

8 | from ..lora import LoRAModule

9 | from ..utils import log_probs_from_logits

10 |

11 |

12 | class Actor(LoRAModule):

13 | """

14 | Actor model base class.

15 |

16 | Args:

17 | model (nn.Module): Actor Model.

18 | lora_rank (int): LoRA rank.

19 | lora_train_bias (str): LoRA bias training mode.

20 | """

21 |

22 | def __init__(self, model: nn.Module, lora_rank: int = 0, lora_train_bias: str = 'none') -> None:

23 | super().__init__(lora_rank=lora_rank, lora_train_bias=lora_train_bias)

24 | self.model = model

25 | self.convert_to_lora()

26 |

27 | @torch.no_grad()

28 | def generate(

29 | self,

30 | input_ids: torch.Tensor,

31 | return_action_mask: bool = True,

32 | **kwargs

33 | ) -> Union[Tuple[torch.LongTensor, torch.LongTensor], Tuple[torch.LongTensor, torch.LongTensor, torch.BoolTensor]]:

34 | sequences = generate(self.model, input_ids, **kwargs)

35 | attention_mask = None

36 | pad_token_id = kwargs.get('pad_token_id', None)

37 | if pad_token_id is not None:

38 | attention_mask = sequences.not_equal(pad_token_id).to(dtype=torch.long, device=sequences.device)

39 | if not return_action_mask:

40 | return sequences, attention_mask, None

41 | input_len = input_ids.size(1)

42 | eos_token_id = kwargs.get('eos_token_id', None)

43 | if eos_token_id is None:

44 | action_mask = torch.ones_like(sequences, dtype=torch.bool)

45 | else:

46 | # left padding may be applied, only mask action

47 | action_mask = (sequences[:, input_len:] == eos_token_id).cumsum(dim=-1) == 0

48 | action_mask = F.pad(action_mask, (1 + input_len, -1), value=True) # include eos token and input

49 | action_mask[:, :input_len] = False

50 | action_mask = action_mask[:, 1:]

51 | return sequences, attention_mask, action_mask[:, -(sequences.size(1) - input_len):]

52 |

53 | def forward(self,

54 | sequences: torch.LongTensor,

55 | num_actions: int,

56 | attention_mask: Optional[torch.Tensor] = None) -> torch.Tensor:

57 | """Returns action log probs

58 | """

59 | output = self.model(sequences, attention_mask=attention_mask)

60 | logits = output['logits']

61 | log_probs = log_probs_from_logits(logits[:, :-1, :], sequences[:, 1:])

62 | return log_probs[:, -num_actions:]

63 |

--------------------------------------------------------------------------------

/code/chatgpt/models/base/critic.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import torch

4 | import torch.nn as nn

5 |

6 | from ..lora import LoRAModule

7 | from ..utils import masked_mean

8 |

9 |

10 | class Critic(LoRAModule):

11 | """

12 | Critic model base class.

13 |

14 | Args:

15 | model (nn.Module): Critic model.

16 | value_head (nn.Module): Value head to get value.

17 | lora_rank (int): LoRA rank.

18 | lora_train_bias (str): LoRA bias training mode.

19 | """

20 |

21 | def __init__(

22 | self,

23 | model: nn.Module,

24 | value_head: nn.Module,

25 | lora_rank: int = 0,

26 | lora_train_bias: str = 'none',

27 | use_action_mask: bool = False,

28 | ) -> None:

29 |

30 | super().__init__(lora_rank=lora_rank, lora_train_bias=lora_train_bias)

31 | self.model = model

32 | self.value_head = value_head

33 | self.use_action_mask = use_action_mask

34 | self.convert_to_lora()

35 |

36 | def forward(self,

37 | sequences: torch.LongTensor,

38 | action_mask: Optional[torch.Tensor] = None,

39 | attention_mask: Optional[torch.Tensor] = None) -> torch.Tensor:

40 | outputs = self.model(sequences, attention_mask=attention_mask)

41 | last_hidden_states = outputs['last_hidden_state']

42 |

43 | values = self.value_head(last_hidden_states).squeeze(-1)

44 |

45 | if action_mask is not None and self.use_action_mask:

46 | num_actions = action_mask.size(1)

47 | prompt_mask = attention_mask[:, :-num_actions]

48 | values = values[:, :-num_actions]

49 | value = masked_mean(values, prompt_mask, dim=1)

50 | return value

51 |

52 | values = values[:, :-1]

53 | value = values.mean(dim=1)

54 | return value

55 |

--------------------------------------------------------------------------------

/code/chatgpt/models/base/reward_model.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import torch

4 | import torch.nn as nn

5 |

6 | from ..lora import LoRAModule

7 |

8 |

9 | class RewardModel(LoRAModule):

10 | """

11 | Reward model base class.

12 |

13 | Args:

14 | model (nn.Module): Reward model.

15 | value_head (nn.Module): Value head to get reward score.

16 | lora_rank (int): LoRA rank.

17 | lora_train_bias (str): LoRA bias training mode.

18 | """

19 |

20 | def __init__(self,

21 | model: nn.Module,

22 | value_head: Optional[nn.Module] = None,

23 | lora_rank: int = 0,

24 | lora_train_bias: str = 'none') -> None:

25 | super().__init__(lora_rank=lora_rank, lora_train_bias=lora_train_bias)

26 | self.model = model

27 | self.convert_to_lora()

28 |

29 | if value_head is not None:

30 | if value_head.out_features != 1:

31 | raise ValueError("The value head of reward model's output dim should be 1!")

32 | self.value_head = value_head

33 | else:

34 | self.value_head = nn.Linear(model.config.n_embd, 1)

35 |

36 | def forward(self, sequences: torch.LongTensor, attention_mask: Optional[torch.Tensor] = None) -> torch.Tensor:

37 | outputs = self.model(sequences, attention_mask=attention_mask)

38 | last_hidden_states = outputs['last_hidden_state']

39 | values = self.value_head(last_hidden_states)[:, :-1]

40 | value = values.mean(dim=1).squeeze(1) # ensure shape is (B)

41 | return value

42 |

--------------------------------------------------------------------------------

/code/chatgpt/models/bloom/__init__.py:

--------------------------------------------------------------------------------

1 | from .bloom_actor import BLOOMActor

2 | from .bloom_critic import BLOOMCritic

3 | from .bloom_rm import BLOOMRM

4 |

5 | __all__ = ['BLOOMActor', 'BLOOMCritic', 'BLOOMRM']

6 |

--------------------------------------------------------------------------------

/code/chatgpt/models/bloom/__pycache__/__init__.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/bloom/__pycache__/__init__.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/bloom/__pycache__/bloom_actor.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/bloom/__pycache__/bloom_actor.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/bloom/__pycache__/bloom_critic.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/bloom/__pycache__/bloom_critic.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/bloom/__pycache__/bloom_rm.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/oglee815/mygpt-lecture/ed6a506cd9605f10b5fec52e840f16f3cb46ab98/code/chatgpt/models/bloom/__pycache__/bloom_rm.cpython-310.pyc

--------------------------------------------------------------------------------

/code/chatgpt/models/bloom/bloom_actor.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import torch

4 | from transformers import BloomConfig, BloomForCausalLM, BloomModel

5 |

6 | from ..base import Actor

7 |

8 |

9 | class BLOOMActor(Actor):

10 | """

11 | BLOOM Actor model.

12 |

13 | Args:

14 | pretrained (str): Pretrained model name or path.

15 | config (BloomConfig): Model config.

16 | checkpoint (bool): Enable gradient checkpointing.

17 | lora_rank (int): LoRA rank.

18 | lora_train_bias (str): LoRA bias training mode.

19 | """

20 |

21 | def __init__(self,

22 | pretrained: str = None,

23 | config: Optional[BloomConfig] = None,

24 | checkpoint: bool = False,

25 | lora_rank: int = 0,

26 | lora_train_bias: str = 'none') -> None:

27 | if pretrained is not None:

28 | model = BloomForCausalLM.from_pretrained(pretrained)

29 | elif config is not None:

30 | model = BloomForCausalLM(config)

31 | else:

32 | model = BloomForCausalLM(BloomConfig())

33 | if checkpoint:

34 | model.gradient_checkpointing_enable()

35 | super().__init__(model, lora_rank, lora_train_bias)

36 |

--------------------------------------------------------------------------------

/code/chatgpt/models/bloom/bloom_critic.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import torch

4 | import torch.nn as nn

5 | from transformers import BloomConfig, BloomForCausalLM, BloomModel

6 |

7 | from ..base import Critic

8 |

9 |

10 | class BLOOMCritic(Critic):

11 | """

12 | BLOOM Critic model.

13 |

14 | Args:

15 | pretrained (str): Pretrained model name or path.

16 | config (BloomConfig): Model config.

17 | checkpoint (bool): Enable gradient checkpointing.

18 | lora_rank (int): LoRA rank.

19 | lora_train_bias (str): LoRA bias training mode.

20 | """

21 |

22 | def __init__(self,

23 | pretrained: str = None,

24 | config: Optional[BloomConfig] = None,

25 | checkpoint: bool = False,

26 | lora_rank: int = 0,

27 | lora_train_bias: str = 'none',

28 | **kwargs) -> None:

29 | if pretrained is not None:

30 | model = BloomModel.from_pretrained(pretrained)

31 | elif config is not None:

32 | model = BloomModel(config)

33 | else:

34 | model = BloomModel(BloomConfig())

35 | if checkpoint:

36 | model.gradient_checkpointing_enable()

37 | value_head = nn.Linear(model.config.hidden_size, 1)

38 | super().__init__(model, value_head, lora_rank, lora_train_bias, **kwargs)

39 |

--------------------------------------------------------------------------------

/code/chatgpt/models/bloom/bloom_rm.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import torch.nn as nn

4 | from transformers import BloomConfig, BloomForCausalLM, BloomModel

5 |

6 | from ..base import RewardModel

7 |

8 |

9 | class BLOOMRM(RewardModel):

10 | """

11 | BLOOM Reward model.

12 |

13 | Args:

14 | pretrained (str): Pretrained model name or path.

15 | config (BloomConfig): Model config.

16 | checkpoint (bool): Enable gradient checkpointing.

17 | lora_rank (int): LoRA rank.

18 | lora_train_bias (str): LoRA bias training mode.

19 | """

20 |

21 | def __init__(self,

22 | pretrained: str = None,

23 | config: Optional[BloomConfig] = None,

24 | checkpoint: bool = False,

25 | lora_rank: int = 0,

26 | lora_train_bias: str = 'none') -> None:

27 | if pretrained is not None:

28 | model = BloomModel.from_pretrained(pretrained)

29 | elif config is not None:

30 | model = BloomModel(config)

31 | else:

32 | model = BloomModel(BloomConfig())

33 | if checkpoint:

34 | model.gradient_checkpointing_enable()

35 | value_head = nn.Linear(model.config.hidden_size, 1)

36 | super().__init__(model, value_head, lora_rank, lora_train_bias)

37 |

--------------------------------------------------------------------------------

/code/chatgpt/models/generation.py:

--------------------------------------------------------------------------------

1 | from typing import Any, Callable, Optional

2 |

3 | import torch

4 | import torch.distributed as dist

5 | import torch.nn as nn

6 |

7 | try:

8 | from transformers.generation_logits_process import (

9 | LogitsProcessorList,

10 | TemperatureLogitsWarper,

11 | TopKLogitsWarper,

12 | TopPLogitsWarper,

13 | )

14 | except ImportError:

15 | from transformers.generation import LogitsProcessorList, TemperatureLogitsWarper, TopKLogitsWarper, TopPLogitsWarper

16 |

17 |

18 | def prepare_logits_processor(top_k: Optional[int] = None,

19 | top_p: Optional[float] = None,

20 | temperature: Optional[float] = None) -> LogitsProcessorList:

21 | processor_list = LogitsProcessorList()

22 | if temperature is not None and temperature != 1.0:

23 | processor_list.append(TemperatureLogitsWarper(temperature))

24 | if top_k is not None and top_k != 0:

25 | processor_list.append(TopKLogitsWarper(top_k))

26 | if top_p is not None and top_p < 1.0:

27 | processor_list.append(TopPLogitsWarper(top_p))

28 | return processor_list

29 |

30 |

31 | def _is_sequence_finished(unfinished_sequences: torch.Tensor) -> bool:

32 | if dist.is_initialized() and dist.get_world_size() > 1:

33 | # consider DP

34 | unfinished_sequences = unfinished_sequences.clone()

35 | dist.all_reduce(unfinished_sequences)

36 | return unfinished_sequences.max() == 0

37 |

38 |

39 | def sample(model: nn.Module,

40 | input_ids: torch.Tensor,

41 | max_length: int,

42 | early_stopping: bool = False,

43 | eos_token_id: Optional[int] = None,

44 | pad_token_id: Optional[int] = None,

45 | top_k: Optional[int] = None,

46 | top_p: Optional[float] = None,

47 | temperature: Optional[float] = None,

48 | prepare_inputs_fn: Optional[Callable[[torch.Tensor, Any], dict]] = None,

49 | update_model_kwargs_fn: Optional[Callable[[dict, Any], dict]] = None,

50 | **model_kwargs) -> torch.Tensor:

51 | if input_ids.size(1) >= max_length:

52 | return input_ids

53 |

54 | logits_processor = prepare_logits_processor(top_k, top_p, temperature)

55 | unfinished_sequences = input_ids.new(input_ids.shape[0]).fill_(1)

56 |

57 | for _ in range(input_ids.size(1), max_length):

58 | model_inputs = prepare_inputs_fn(input_ids, **model_kwargs) if prepare_inputs_fn is not None else {

59 | 'input_ids': input_ids

60 | }

61 | outputs = model(**model_inputs)

62 |

63 | next_token_logits = outputs['logits'][:, -1, :]

64 | # pre-process distribution

65 | next_token_logits = logits_processor(input_ids, next_token_logits)

66 | # sample

67 | probs = torch.softmax(next_token_logits, dim=-1, dtype=torch.float)

68 | next_tokens = torch.multinomial(probs, num_samples=1).squeeze(1)

69 |

70 | # finished sentences should have their next token be a padding token

71 | if eos_token_id is not None:

72 | if pad_token_id is None:

73 | raise ValueError("If `eos_token_id` is defined, make sure that `pad_token_id` is defined.")

74 | next_tokens = next_tokens * unfinished_sequences + pad_token_id * (1 - unfinished_sequences)

75 |

76 | # update generated ids, model inputs for next step

77 | input_ids = torch.cat([input_ids, next_tokens[:, None]], dim=-1)

78 | if update_model_kwargs_fn is not None:

79 | model_kwargs = update_model_kwargs_fn(outputs, **model_kwargs)

80 |

81 | # if eos_token was found in one sentence, set sentence to finished

82 | if eos_token_id is not None:

83 | unfinished_sequences = unfinished_sequences.mul((next_tokens != eos_token_id).long())

84 |

85 | # stop when each sentence is finished if early_stopping=True

86 | if early_stopping and _is_sequence_finished(unfinished_sequences):

87 | break

88 |

89 | return input_ids

90 |

91 |

92 | def generate(model: nn.Module,

93 | input_ids: torch.Tensor,

94 | max_length: int,

95 | num_beams: int = 1,

96 | do_sample: bool = True,

97 | early_stopping: bool = False,

98 | eos_token_id: Optional[int] = None,

99 | pad_token_id: Optional[int] = None,

100 | top_k: Optional[int] = None,

101 | top_p: Optional[float] = None,

102 | temperature: Optional[float] = None,

103 | prepare_inputs_fn: Optional[Callable[[torch.Tensor, Any], dict]] = None,

104 | update_model_kwargs_fn: Optional[Callable[[dict, Any], dict]] = None,

105 | **model_kwargs) -> torch.Tensor:

106 | """Generate token sequence. The returned sequence is input_ids + generated_tokens.

107 |

108 | Args:

109 | model (nn.Module): model

110 | input_ids (torch.Tensor): input sequence

111 | max_length (int): max length of the returned sequence

112 | num_beams (int, optional): number of beams. Defaults to 1.

113 | do_sample (bool, optional): whether to do sample. Defaults to True.

114 | early_stopping (bool, optional): if True, the sequence length may be smaller than max_length due to finding eos. Defaults to False.

115 | eos_token_id (Optional[int], optional): end of sequence token id. Defaults to None.