├── project
│   ├── plugins.sbt
│   ├── build.properties
│   ├── Versions.scala
│   └── Dependencies.scala
├── .gitignore
├── images
│   ├── app_stream_flow.gif
│   ├── template-overview.png
│   ├── consumer_stream_creation_slow.gif
│   └── producer_stream_creation_slow.gif
├── src
│   ├── main
│   │   ├── scala
│   │   │   └── com
│   │   │       └── omearac
│   │   │           ├── http
│   │   │           │   ├── HttpService.scala
│   │   │           │   └── routes
│   │   │           │       ├── ProducerCommands.scala
│   │   │           │       └── ConsumerCommands.scala
│   │   │           ├── shared
│   │   │           │   ├── AkkaStreams.scala
│   │   │           │   ├── EventSourcing.scala
│   │   │           │   ├── Messages.scala
│   │   │           │   └── JsonMessageConversion.scala
│   │   │           ├── producers
│   │   │           │   ├── ProducerStream.scala
│   │   │           │   ├── EventProducer.scala
│   │   │           │   ├── DataProducer.scala
│   │   │           │   └── ProducerStreamManager.scala
│   │   │           ├── settings
│   │   │           │   └── Settings.scala
│   │   │           ├── consumers
│   │   │           │   ├── EventConsumer.scala
│   │   │           │   ├── DataConsumer.scala
│   │   │           │   ├── ConsumerStream.scala
│   │   │           │   └── ConsumerStreamManager.scala
│   │   │           └── Main.scala
│   │   └── resources
│   │       ├── logback.xml
│   │       └── application.conf
│   └── test
│       ├── resources
│       │   ├── logback-test.xml
│       │   └── application.conf
│       └── scala
│           └── akka
│               ├── SettingsSpec.scala
│               ├── kafka
│               │   ├── ProducerStreamManagerSpec.scala
│               │   ├── DataProducerSpec.scala
│               │   ├── ProducerStreamSpec.scala
│               │   ├── EventProducerSpec.scala
│               │   ├── DataConsumerSpec.scala
│               │   ├── EventConsumerSpec.scala
│               │   ├── ConsumerStreamManagerSpec.scala
│               │   └── ConsumerStreamSpec.scala
│               └── HTTPInterfaceSpec.scala
├── LICENSE
└── README.md

/project/plugins.sbt:

--------------------------------------------------------------------------------

logLevel := Level.Warn

--------------------------------------------------------------------------------

/project/build.properties:

--------------------------------------------------------------------------------

sbt.version = 0.13.8

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

#sbt
project/project
project/target
target

#IntelliJ
.idea
.idea_modules

--------------------------------------------------------------------------------

/images/app_stream_flow.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/omearac/reactive-kafka-microservice-template/HEAD/images/app_stream_flow.gif

--------------------------------------------------------------------------------

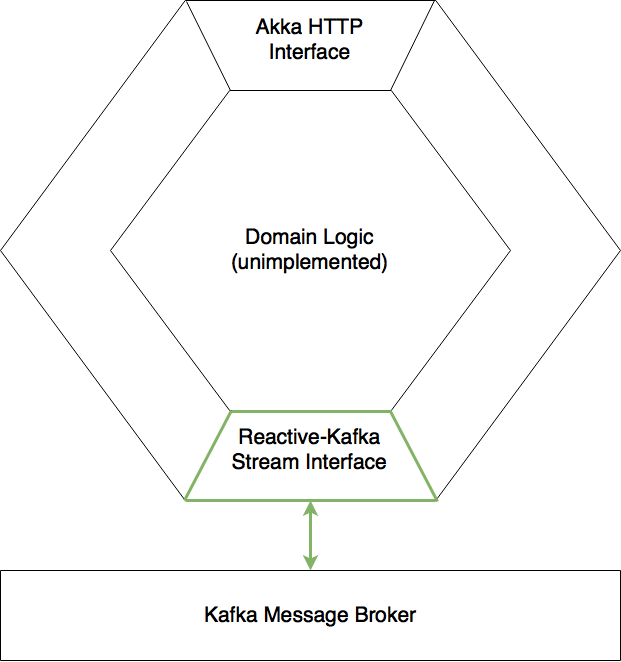

/images/template-overview.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/omearac/reactive-kafka-microservice-template/HEAD/images/template-overview.png

--------------------------------------------------------------------------------

/images/consumer_stream_creation_slow.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/omearac/reactive-kafka-microservice-template/HEAD/images/consumer_stream_creation_slow.gif

--------------------------------------------------------------------------------

/images/producer_stream_creation_slow.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/omearac/reactive-kafka-microservice-template/HEAD/images/producer_stream_creation_slow.gif

--------------------------------------------------------------------------------

/project/Versions.scala:

--------------------------------------------------------------------------------

object Versions {

  val akka = "2.4.16"

  val akka_http = "10.0.1"

  val akka_kafka = "0.13"

  val logback = "1.1.3"

  val log4j_over_slf4j = "1.7.12"

  val io_spray = "1.3.2"

  val play_json = "2.4.0-M2"

  val scalatest = "3.0.1"
}

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/http/HttpService.scala:

--------------------------------------------------------------------------------

package com.omearac.http

import akka.http.scaladsl.server.Directives._
import com.omearac.http.routes.{ConsumerCommands, ProducerCommands}


trait HttpService extends ConsumerCommands with ProducerCommands {
  //Joining the Http Routes
  def routes = producerHttpCommands ~ dataConsumerHttpCommands ~ eventConsumerHttpCommands
}

--------------------------------------------------------------------------------

/src/main/resources/logback.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 | [%highlight(%-5level)] %cyan(%logger{5}): %msg %n

4 |

5 |

6 |

7 |

8 |

9 |

--------------------------------------------------------------------------------

/src/test/resources/logback-test.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 | [%highlight(%-5level)] %cyan(%logger{5}): %msg %n

4 |

5 |

6 |

7 |

8 |

9 |

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/shared/AkkaStreams.scala:

--------------------------------------------------------------------------------

package com.omearac.shared

import akka.actor.ActorSystem
import akka.stream.ActorMaterializer

/**
 * This trait contains the components required to materialize and run Akka Streams
 */

trait AkkaStreams {
  implicit val system: ActorSystem
  implicit def executionContext = system.dispatcher
  implicit def materializer = ActorMaterializer()
}

--------------------------------------------------------------------------------

/project/Dependencies.scala:

--------------------------------------------------------------------------------

import sbt._

object Dependencies {
  val kafka = "com.typesafe.akka" %% "akka-stream-kafka" % Versions.akka_kafka

  val logback = "ch.qos.logback" % "logback-classic" % Versions.logback

  val log4j_over_slf4j = "org.slf4j" % "log4j-over-slf4j" % Versions.log4j_over_slf4j

  val akka_slf4j = "com.typesafe.akka" %% "akka-slf4j" % Versions.akka

  val scalatest = "org.scalatest" %% "scalatest" % Versions.scalatest % "test"

  val akka_testkit = "com.typesafe.akka" %% "akka-testkit" % Versions.akka % "test"

  val play_json = "com.typesafe.play" % "play-json_2.11" % Versions.play_json

  val akka_http_core = "com.typesafe.akka" %% "akka-http-core" % Versions.akka_http

  val akka_http_testkit = "com.typesafe.akka" %% "akka-http-testkit" % Versions.akka_http

  val io_spray = "io.spray" %% "spray-json" % Versions.io_spray
}

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/shared/EventSourcing.scala:

--------------------------------------------------------------------------------

package com.omearac.shared

import java.util.Date

import akka.actor.{ActorRef, ActorSystem}
import akka.serialization._
import com.omearac.shared.EventMessages.EventMessage
import com.omearac.shared.KafkaMessages.ExampleAppEvent

/**
 * This trait converts EventMessages to ExampleAppEvents and defines the method for publishing them to the local
 * Akka Event Bus. The conversion occurs since we eventually publish the ExampleAppEvents to Kafka via a stream once
 * they're picked up from the local bus.
 */

trait EventSourcing {
  implicit val system: ActorSystem
  val dateFormat = new java.text.SimpleDateFormat("dd:MM:yy:HH:mm:ss.SSS")
  //A def rather than a lazy val, so that every call yields a fresh timestamp instead of one cached on first use
  def timetag = dateFormat.format(new Date(System.currentTimeMillis()))
  def self: ActorRef


  def publishLocalEvent(msg: EventMessage) : Unit = {
    val exampleAppEvent = ExampleAppEvent(timetag, Serialization.serializedActorPath(self), msg.toString)
    system.eventStream.publish(exampleAppEvent)
  }
}

--------------------------------------------------------------------------------
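
A `timetag`-style member is meant to stamp each published event, so it matters whether it is declared as a `lazy val` or a `def`. The following is a minimal sketch of the difference, not code from this repository: `TimetagSketch`, `FakeClock`, `CachedTag`, and `FreshTag` are hypothetical names, and a counter stands in for the system clock so that the behaviour is deterministic.

```scala
object TimetagSketch {
  // Hypothetical stand-in for the system clock: each call returns the next tick.
  final class FakeClock {
    private var tick = 0L
    def now(): Long = { tick += 1; tick }
  }

  // A lazy val is evaluated on first access and the result is cached afterwards.
  class CachedTag(clock: FakeClock) {
    lazy val timetag: Long = clock.now()
  }

  // A def is re-evaluated on every access.
  class FreshTag(clock: FakeClock) {
    def timetag: Long = clock.now()
  }
}
```

Reading `timetag` twice on a `CachedTag` gives the same value both times, while a `FreshTag` advances the clock on each read.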

/src/main/scala/com/omearac/shared/Messages.scala:

--------------------------------------------------------------------------------

package com.omearac.shared

import akka.stream.scaladsl.SourceQueueWithComplete

/**
 * EventMessages are those which are emitted throughout the application and KafkaMessages are those which
 * are converted to/from JSON to be published/consumed to/from Kafka.
 * The EventMessages are converted to ExampleAppEvents when they are published.
 */

object EventMessages {
  abstract class EventMessage
  case class ActivatedConsumerStream(kafkaTopic: String) extends EventMessage
  case class TerminatedConsumerStream(kafkaTopic: String) extends EventMessage
  case class ActivatedProducerStream[msgType](producerStream: SourceQueueWithComplete[msgType], kafkaTopic: String) extends EventMessage
  case class MessagesPublished(numberOfMessages: Int) extends EventMessage
  case class FailedMessageConversion(kafkaTopic: String, msg: String, msgType: String) extends EventMessage
}

object KafkaMessages {
  case class KafkaMessage(time: String, subject: String, item: Int)
  case class ExampleAppEvent(time: String, senderID: String, eventType: String)
}

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/http/routes/ProducerCommands.scala:

--------------------------------------------------------------------------------

package com.omearac.http.routes

import akka.actor.ActorRef
import akka.event.LoggingAdapter
import akka.http.scaladsl.model.StatusCodes
import akka.http.scaladsl.server.Directives._
import akka.http.scaladsl.server.Route
import akka.pattern.ask
import akka.util.Timeout
import com.omearac.producers.DataProducer.PublishMessages
import com.omearac.shared.EventMessages.MessagesPublished

import scala.concurrent.duration._


/**
 * This trait defines the HTTP API for telling the DataProducer to publish data messages to Kafka via the Stream
 */

trait ProducerCommands {
  def log: LoggingAdapter
  def dataProducer: ActorRef

  val producerHttpCommands: Route = pathPrefix("data_producer"){
    implicit val timeout = Timeout(10 seconds)
    path("produce" / IntNumber) {
      {numOfMessagesToProduce =>
        get {
          onSuccess(dataProducer ? PublishMessages(numOfMessagesToProduce)) {
            case MessagesPublished(numberOfMessages) => complete(StatusCodes.OK, numberOfMessages + " messages Produced as Ordered, Boss!")
            case _ => complete(StatusCodes.InternalServerError)
          }
        }
      }
    }
  }
}

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/producers/ProducerStream.scala:

--------------------------------------------------------------------------------

package com.omearac.producers

import akka.actor.{ActorRef, ActorSystem}
import akka.kafka.ProducerSettings
import akka.kafka.scaladsl.Producer
import akka.stream.OverflowStrategy
import akka.stream.scaladsl.{Flow, Source}
import com.omearac.shared.JsonMessageConversion.Conversion
import com.omearac.shared.{AkkaStreams, EventSourcing}
import org.apache.kafka.clients.producer.ProducerRecord
import org.apache.kafka.common.serialization.{ByteArraySerializer, StringSerializer}

/**
 * This trait defines the functions for creating the producer stream components.
 */

trait ProducerStream extends AkkaStreams with EventSourcing {
  implicit val system: ActorSystem
  def self: ActorRef

  def createStreamSource[msgType] = {
    Source.queue[msgType](Int.MaxValue, OverflowStrategy.backpressure)
  }

  def createStreamSink(producerProperties: Map[String, String]) = {
    val kafkaMBAddress = producerProperties("bootstrap-servers")
    val producerSettings = ProducerSettings(system, new ByteArraySerializer, new StringSerializer).withBootstrapServers(kafkaMBAddress)

    Producer.plainSink(producerSettings)
  }

  def createStreamFlow[msgType: Conversion](producerProperties: Map[String, String]) = {
    val numberOfPartitions = producerProperties("num.partitions").toInt - 1
    val topicToPublish = producerProperties("publish-topic")
    val rand = new scala.util.Random
    val range = 0 to numberOfPartitions

    Flow[msgType].map { msg =>
      val partition = range(rand.nextInt(range.length))
      val stringJSONMessage = Conversion[msgType].convertToJson(msg)
      new ProducerRecord[Array[Byte], String](topicToPublish, partition, null, stringJSONMessage)
    }
  }
}

--------------------------------------------------------------------------------
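
The flow in `ProducerStream` assigns each record to a randomly chosen partition index between 0 and `num.partitions - 1`. A minimal sketch of just that selection step, without Kafka or Akka, might look as follows. `PartitionSketch` and `pickPartition` are hypothetical names introduced for illustration, and the sketch assumes the partition count is a positive integer.

```scala
import scala.util.Random

object PartitionSketch {
  // Picks a partition index uniformly from 0 until numPartitions.
  // This selects from the same set of indices as taking a random element
  // of the range `0 to numPartitions - 1`.
  def pickPartition(numPartitions: Int, rand: Random): Int = {
    require(numPartitions > 0, "numPartitions must be positive")
    rand.nextInt(numPartitions)
  }
}
```

With five partitions, every call returns a value from 0 to 4; with a single partition, the result is always 0.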

/src/test/scala/akka/SettingsSpec.scala:

--------------------------------------------------------------------------------

package akka

import akka.actor.ActorSystem
import com.omearac.settings.Settings
import org.scalatest._


class SettingsSpec extends WordSpecLike with Matchers {
  val system = ActorSystem("SettingsSpec")
  val settings = Settings(system)

  "Consumer Settings" must {
    "read in correct values from config" in {
      settings.Http.host should ===("0.0.0.0")
      settings.KafkaConsumers.numberOfConsumers should ===(2)
      val consumerSettings = settings.KafkaConsumers.KafkaConsumerInfo

      val consumerA = consumerSettings("KafkaMessage")
      consumerA("bootstrap-servers") should ===("localhost:9092")
      consumerA("subscription-topic") should ===("TempChannel1")
      consumerA("groupId") should ===("group1")

      val consumerB = consumerSettings("ExampleAppEvent")
      consumerB("bootstrap-servers") should ===("localhost:9092")
      consumerB("subscription-topic") should ===("TempChannel2")
      consumerB("groupId") should ===("group2")
    }
  }

  "Producer Settings" must {
    "read in correct values from config" in {
      settings.KafkaProducers.numberOfProducers should ===(2)
      val producerSettings = settings.KafkaProducers.KafkaProducerInfo

      val producerA = producerSettings("KafkaMessage")
      producerA("bootstrap-servers") should ===("localhost:9092")
      producerA("publish-topic") should ===("TempChannel1")
      producerA("num.partitions") should ===("5")

      val producerB = producerSettings("ExampleAppEvent")
      producerB("bootstrap-servers") should ===("localhost:9092")
      producerB("publish-topic") should ===("TempChannel2")
      producerB("num.partitions") should ===("5")
    }
  }
}

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/producers/EventProducer.scala:

--------------------------------------------------------------------------------

package com.omearac.producers

import akka.actor.{Actor, Props}
import akka.event.Logging
import akka.stream.scaladsl.SourceQueueWithComplete
import com.omearac.shared.EventMessages.ActivatedProducerStream
import com.omearac.shared.EventSourcing
import com.omearac.shared.KafkaMessages.ExampleAppEvent


/**
 * This actor receives local app events called "ExampleAppEvent"s, which are initially published to the
 * "internal" Akka Event Bus to which this actor is subscribed. It then publishes the event messages to the Kafka
 * topic called AppEventChannel. The idea is that another microservice subscribed to
 * the AppEventChannel topic can then react to the events this microservice emits.
 */

object EventProducer {

  def props: Props = Props(new EventProducer)
}

class EventProducer extends Actor with EventSourcing {

  import context._

  implicit val system = context.system
  val log = Logging(system, this.getClass.getName)

  var producerStream: SourceQueueWithComplete[Any] = null
  val subscribedMessageTypes = Seq(classOf[ExampleAppEvent])

  override def preStart(): Unit = {
    super.preStart()
    subscribedMessageTypes.foreach(system.eventStream.subscribe(self, _))
  }

  override def postStop(): Unit = {
    subscribedMessageTypes.foreach(system.eventStream.unsubscribe(self, _))
    super.postStop()
  }

  def receive: Receive = {
    case ActivatedProducerStream(streamRef, _) =>
      producerStream = streamRef
      become(publishEvent)

    case msg: ExampleAppEvent => if (producerStream == null) self ! msg else producerStream.offer(msg)
    case other => log.error("EventProducer got the unknown message while in idle: " + other)
  }

  def publishEvent: Receive = {
    case msg: ExampleAppEvent => producerStream.offer(msg)
    case other => log.error("EventProducer got the unknown message while producing: " + other)
  }
}

--------------------------------------------------------------------------------

/src/test/resources/application.conf:

--------------------------------------------------------------------------------

http {
  host = "0.0.0.0"
  port = "8080"
}

akka {
  loggers = ["akka.testkit.TestEventListener"]
  #loggers = ["akka.event.slf4j.Slf4jLogger"]
  loglevel = "DEBUG"
  logging-filter = "akka.event.DefaultLoggingFilter"

  kafka {
    consumer {
      num-consumers = "2"
      c1 {
        bootstrap-servers = "localhost:9092"
        groupId = "group1"
        subscription-topic = "TempChannel1"
        message-type = "KafkaMessage"
        poll-interval = 50ms
        poll-timeout = 50ms
        stop-timeout = 30s
        close-timeout = 20s
        commit-timeout = 15s
        wakeup-timeout = 10s
        use-dispatcher = "akka.kafka.default-dispatcher"
        kafka-clients {
          enable.auto.commit = false
        }
      }

      c2 {
        bootstrap-servers = "localhost:9092"
        groupId = "group2"
        subscription-topic = "TempChannel2"
        message-type = "ExampleAppEvent"
        poll-interval = 50ms
        poll-timeout = 50ms
        stop-timeout = 30s
        close-timeout = 20s
        commit-timeout = 15s
        wakeup-timeout = 10s
        use-dispatcher = "akka.kafka.default-dispatcher"
        kafka-clients {
          enable.auto.commit = false
        }
      }
    }

    producer {
      num-producers = "2"

      p1 {
        bootstrap-servers = "localhost:9092"
        publish-topic = "TempChannel1"
        message-type = "KafkaMessage"
        parallelism = 100
        close-timeout = 60s
        use-dispatcher = "akka.kafka.default-dispatcher"

        request.required.acks = "1"
        num.partitions = "5"
      }

      p2 {
        bootstrap-servers = "localhost:9092"
        message-type = "ExampleAppEvent"
        publish-topic = "TempChannel2"
        parallelism = 100
        close-timeout = 60s
        use-dispatcher = "akka.kafka.default-dispatcher"
        request.required.acks = "1"
        num.partitions = "5"
      }
    }
  }
}

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/producers/DataProducer.scala:

--------------------------------------------------------------------------------

package com.omearac.producers

import akka.actor._
import akka.event.Logging
import akka.stream.scaladsl.SourceQueueWithComplete
import com.omearac.producers.DataProducer.PublishMessages
import com.omearac.shared.EventMessages.{ActivatedProducerStream, MessagesPublished}
import com.omearac.shared.EventSourcing
import com.omearac.shared.KafkaMessages.KafkaMessage

/**
 * This actor publishes 'KafkaMessage's to the Kafka topic TestDataChannel. The idea is that another microservice subscribed to
 * the TestDataChannel topic can then react to the data messages this microservice emits.
 * This actor gets the stream connection reference from the ProducerStreamManager so that, when it receives a
 * PublishMessages command message from the HTTP interface, it creates KafkaMessages and offers/sends them to the stream.
 */

object DataProducer {

  //Command Messages
  case class PublishMessages(numberOfMessages: Int)

  def props: Props = Props(new DataProducer)

}

class DataProducer extends Actor with EventSourcing {

  import context._

  implicit val system = context.system
  val log = Logging(system, this.getClass.getName)

  var producerStream: SourceQueueWithComplete[Any] = null

  def receive: Receive = {
    case ActivatedProducerStream(streamRef, kafkaTopic) =>
      producerStream = streamRef
      become(publishData)
    //No stream yet: re-queue the command with forward so the original sender still receives the reply later
    case msg: PublishMessages => if (producerStream == null) self forward msg
    case other => log.error("DataProducer got the unknown message while in idle: " + other)
  }

  def publishData: Receive = {
    case PublishMessages(numberOfMessages) =>
      for (i <- 1 to numberOfMessages) {
        val myPublishableMessage = KafkaMessage(timetag, " send me to kafka, yo!", i)
        producerStream.offer(myPublishableMessage)
      }

      //Tell the akka-http front end that messages were sent
      sender() ! MessagesPublished(numberOfMessages)
      publishLocalEvent(MessagesPublished(numberOfMessages))
    case other => log.error("DataProducer got the unknown message while producing: " + other)
  }
}

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/http/routes/ConsumerCommands.scala:

--------------------------------------------------------------------------------

package com.omearac.http.routes

import akka.actor.ActorRef
import akka.event.LoggingAdapter
import akka.http.scaladsl.model.StatusCodes
import akka.http.scaladsl.server.Directives._
import akka.http.scaladsl.server._
import akka.pattern.ask
import akka.util.Timeout
import com.omearac.consumers.DataConsumer.{ConsumerActorReply, ManuallyInitializeStream, ManuallyTerminateStream}

import scala.concurrent.duration._

/**
 * This trait defines the HTTP API for starting and stopping the Data and Event Consumer Streams
 */

trait ConsumerCommands {
  def dataConsumer: ActorRef

  def eventConsumer: ActorRef

  def log: LoggingAdapter

  val dataConsumerHttpCommands: Route = pathPrefix("data_consumer") {
    implicit val timeout = Timeout(10 seconds)
    path("stop") {
      get {
        onSuccess(dataConsumer ? ManuallyTerminateStream) {
          case m: ConsumerActorReply => log.info(m.message); complete(StatusCodes.OK, m.message)
          case _ => complete(StatusCodes.InternalServerError)
        }
      }
    } ~
      path("start") {
        get {
          onSuccess(dataConsumer ? ManuallyInitializeStream) {
            case m: ConsumerActorReply => log.info(m.message); complete(StatusCodes.OK, m.message)
            case _ => complete(StatusCodes.InternalServerError)
          }
        }
      }
  }

  val eventConsumerHttpCommands: Route = pathPrefix("event_consumer") {
    implicit val timeout = Timeout(10 seconds)
    path("stop") {
      get {
        onSuccess(eventConsumer ? ManuallyTerminateStream) {
          case m: ConsumerActorReply => log.info(m.message); complete(StatusCodes.OK, m.message)
          case _ => complete(StatusCodes.InternalServerError)
        }
      }
    } ~
      path("start") {
        get {
          onSuccess(eventConsumer ? ManuallyInitializeStream) {
            case m: ConsumerActorReply => log.info(m.message); complete(StatusCodes.OK, m.message)
            case _ => complete(StatusCodes.InternalServerError)
          }
        }
      }
  }

}

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/settings/Settings.scala:

--------------------------------------------------------------------------------

package com.omearac.settings

import akka.actor.ActorSystem

/**
 * In this class we read in the application.conf configuration file to get the various consumer/producer settings
 * as well as the akka-http host
 */

class Settings(system:ActorSystem) {
  object Http {
    val host = system.settings.config.getString("http.host")
  }
  object KafkaProducers {
    val numberOfProducers = system.settings.config.getInt("akka.kafka.producer.num-producers")

    //TODO: We only have one bootstrap server (kafka broker) at the moment so we get one IP below
    val KafkaProducerInfo: Map[String, Map[String,String]] = (for (i <- 1 to numberOfProducers) yield {
      val kafkaMessageType = system.settings.config.getString(s"akka.kafka.producer.p$i.message-type")
      val kafkaMessageBrokerIP = system.settings.config.getString(s"akka.kafka.producer.p$i.bootstrap-servers")
      val kafkaTopic = system.settings.config.getString(s"akka.kafka.producer.p$i.publish-topic")
      val numberOfPartitions = system.settings.config.getString(s"akka.kafka.producer.p$i.num.partitions")
      kafkaMessageType -> Map("bootstrap-servers" -> kafkaMessageBrokerIP, "publish-topic" -> kafkaTopic, "num.partitions" -> numberOfPartitions)
    }).toMap
  }

  object KafkaConsumers {
    val numberOfConsumers = system.settings.config.getInt("akka.kafka.consumer.num-consumers")

    //TODO: We only have one bootstrap server (kafka broker) at the moment so we get one IP below
    val KafkaConsumerInfo: Map[String, Map[String,String]] = (for (i <- 1 to numberOfConsumers) yield {
      val kafkaMessageType = system.settings.config.getString(s"akka.kafka.consumer.c$i.message-type")
      val kafkaMessageBrokerIP = system.settings.config.getString(s"akka.kafka.consumer.c$i.bootstrap-servers")
      val kafkaTopic = system.settings.config.getString(s"akka.kafka.consumer.c$i.subscription-topic")
      val groupId = system.settings.config.getString(s"akka.kafka.consumer.c$i.groupId")
      kafkaMessageType -> Map("bootstrap-servers" -> kafkaMessageBrokerIP, "subscription-topic" -> kafkaTopic, "groupId" -> groupId)
    }).toMap
  }
}

object Settings {
  def apply(system: ActorSystem) = new Settings(system)
}

--------------------------------------------------------------------------------
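
`Settings` builds one property map per producer and keys it by the configured message type. The same shape can be sketched with plain collections. In the sketch below a flat `Map[String, String]` stands in for the Typesafe Config object, an assumption made only to keep the example dependency-free, and `SettingsSketch` and `producerInfo` are hypothetical names.

```scala
object SettingsSketch {
  // Builds messageType -> properties for producers p1..pN from a flat key/value map.
  // The flat map is a stand-in for the real configuration object (assumption).
  def producerInfo(config: Map[String, String]): Map[String, Map[String, String]] = {
    val prefix = "akka.kafka.producer"
    val numberOfProducers = config(s"$prefix.num-producers").toInt
    (for (i <- 1 to numberOfProducers) yield {
      val base = s"$prefix.p$i"
      config(s"$base.message-type") -> Map(
        "bootstrap-servers" -> config(s"$base.bootstrap-servers"),
        "publish-topic" -> config(s"$base.publish-topic"),
        "num.partitions" -> config(s"$base.num.partitions")
      )
    }).toMap
  }
}
```

Keying the outer map by message type is what lets a caller look up the properties for `"KafkaMessage"` or `"ExampleAppEvent"` directly. One consequence of this design is that two producers configured with the same message type would overwrite each other in the resulting map.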

/src/main/scala/com/omearac/producers/ProducerStreamManager.scala:

--------------------------------------------------------------------------------

package com.omearac.producers

import akka.actor._
import com.omearac.producers.ProducerStreamManager.InitializeProducerStream
import com.omearac.settings.Settings
import com.omearac.shared.EventMessages.ActivatedProducerStream
import com.omearac.shared.JsonMessageConversion.Conversion
import com.omearac.shared.KafkaMessages.{ExampleAppEvent, KafkaMessage}

/**
 * This actor is responsible for creating and terminating the publishing akka-kafka streams.
 * Upon receiving an InitializeProducerStream message with a corresponding message type
 * (KafkaMessage or ExampleAppEvent) and producer source actor reference, this manager initializes the stream,
 * sends an ActivatedProducerStream message to the source actor and finally publishes a local event to the
 * Akka Event Bus.
 */

object ProducerStreamManager {

  //CommandMessage
  case class InitializeProducerStream(producerActorRef: ActorRef, msgType: Any)

  def props: Props = Props(new ProducerStreamManager)
}

class ProducerStreamManager extends Actor with ProducerStream {
  implicit val system = context.system

  //Get Kafka Producer Config Settings
  val settings = Settings(system).KafkaProducers

  //Edit this receive method with any new Streamed message types
  def receive: Receive = {
    case InitializeProducerStream(producerActorRef, KafkaMessage) => {

      //Get producer properties
      val producerProperties = settings.KafkaProducerInfo("KafkaMessage")
      startProducerStream[KafkaMessage](producerActorRef, producerProperties)
    }
    case InitializeProducerStream(producerActorRef, ExampleAppEvent) => {

      //Get producer properties
      val producerProperties = settings.KafkaProducerInfo("ExampleAppEvent")
      startProducerStream[ExampleAppEvent](producerActorRef, producerProperties)
    }
    case other => println(s"Producer Stream Manager got unknown message: $other")
  }


  def startProducerStream[msgType: Conversion](producerActorSource: ActorRef, producerProperties: Map[String, String]) = {
    val streamSource = createStreamSource[msgType]
    val streamFlow = createStreamFlow[msgType](producerProperties)
    val streamSink = createStreamSink(producerProperties)
    val producerStream = streamSource.via(streamFlow).to(streamSink).run()

    //Send the completed stream reference to the actor who wants to publish to it
    val kafkaTopic = producerProperties("publish-topic")
    producerActorSource ! ActivatedProducerStream(producerStream, kafkaTopic)
    publishLocalEvent(ActivatedProducerStream(producerStream, kafkaTopic))
  }
}

--------------------------------------------------------------------------------

/src/test/scala/akka/kafka/ProducerStreamManagerSpec.scala:

--------------------------------------------------------------------------------

1 | package akka.kafka

2 |

3 | import akka.actor.ActorSystem

4 | import akka.stream.scaladsl.SourceQueueWithComplete

5 | import akka.testkit.{DefaultTimeout, ImplicitSender, TestActorRef, TestKit, TestProbe}

6 | import com.omearac.producers.ProducerStreamManager

7 | import com.omearac.producers.ProducerStreamManager.InitializeProducerStream

8 | import com.omearac.shared.AkkaStreams

9 | import com.omearac.shared.EventMessages.ActivatedProducerStream

10 | import com.omearac.shared.KafkaMessages.{ExampleAppEvent, KafkaMessage}

11 | import org.scalatest.{BeforeAndAfterAll, Matchers, WordSpecLike}

12 |

13 |

14 | class ProducerStreamManagerSpec extends TestKit(ActorSystem("ProducerStreamManagerSpec"))

15 | with DefaultTimeout with ImplicitSender

16 | with WordSpecLike with Matchers with BeforeAndAfterAll

17 | with AkkaStreams {

18 |

19 | val testProducerStreamManager = TestActorRef(new ProducerStreamManager)

20 | val producerStreamManagerActor = testProducerStreamManager.underlyingActor

21 |

22 | //Create an test event listener for the local message bus

23 | val testEventListener = TestProbe()

24 | system.eventStream.subscribe(testEventListener.ref, classOf[ExampleAppEvent])

25 |

26 | override def afterAll: Unit = {

27 | shutdown()

28 | }

29 |

30 |

31 | "Sending InitializeProducerStream(self, KafkaMessage) to ProducerStreamManager" should {

32 | "initialize the stream for that particular message type, return ActivatedProducerStream(streaRef, \"TempChannel1\") and produce local event " in {

33 | testProducerStreamManager ! InitializeProducerStream(self, KafkaMessage)

34 | Thread.sleep(500)

35 | var streamRef: SourceQueueWithComplete[Any] = null

36 | expectMsgPF() {

37 | case ActivatedProducerStream(sr, kt) => if (kt == "TempChannel1") {

38 | streamRef = sr; ()

39 | } else fail()

40 | }

41 |

42 | Thread.sleep(500)

43 | val resultMessage = ActivatedProducerStream(streamRef, "TempChannel1")

44 | testEventListener.expectMsgPF() {

45 | case ExampleAppEvent(_, _, m) => if (m == resultMessage.toString) () else fail()

46 | }

47 | }

48 | }

49 |

50 | "Sending InitializeProducerStream(self, ExampleAppEvent) to ProducerStreamManager" should {

51 | "initialize the stream for that particular message type, return ActivatedProducerStream(streamRef, \"TempChannel2\") and produce local event " in {

52 | testProducerStreamManager ! InitializeProducerStream(self, ExampleAppEvent)

53 | Thread.sleep(500)

54 | var streamRef: SourceQueueWithComplete[Any] = null

55 | expectMsgPF() {

56 | case ActivatedProducerStream(sr, kt) => if (kt == "TempChannel2") {

57 | streamRef = sr; ()

58 | } else fail()

59 | }

60 |

61 | Thread.sleep(500)

62 | val resultMessage = ActivatedProducerStream(streamRef, "TempChannel2")

63 | testEventListener.expectMsgPF() {

64 | case ExampleAppEvent(_, _, m) => if (m == resultMessage.toString) () else fail()

65 | }

66 | }

67 | }

68 | }

69 |

--------------------------------------------------------------------------------

/src/test/scala/akka/kafka/DataProducerSpec.scala:

--------------------------------------------------------------------------------

1 | package akka.kafka

2 |

3 | import akka.Done

4 | import akka.actor.ActorSystem

5 | import akka.stream.QueueOfferResult

6 | import akka.stream.QueueOfferResult.Enqueued

7 | import akka.stream.scaladsl.SourceQueueWithComplete

8 | import akka.testkit.{DefaultTimeout, EventFilter, ImplicitSender, TestActorRef, TestKit, TestProbe}

9 | import com.omearac.producers.DataProducer

10 | import com.omearac.producers.DataProducer.PublishMessages

11 | import com.omearac.shared.AkkaStreams

12 | import com.omearac.shared.EventMessages.{ActivatedProducerStream, MessagesPublished}

13 | import com.omearac.shared.KafkaMessages.ExampleAppEvent

14 | import com.typesafe.config.ConfigFactory

15 | import org.scalatest.{BeforeAndAfterAll, Matchers, WordSpecLike}

16 |

17 | import scala.concurrent.Future

18 |

19 |

20 | class DataProducerSpec extends TestKit(ActorSystem("DataProducerSpec", ConfigFactory.parseString(

21 | """

22 | akka.loggers = ["akka.testkit.TestEventListener"] """)))

23 | with DefaultTimeout with ImplicitSender

24 | with WordSpecLike with Matchers with BeforeAndAfterAll

25 | with AkkaStreams {

26 |

27 | val testProducer = TestActorRef(new DataProducer)

28 | val producerActor = testProducer.underlyingActor

29 |

30 | val mockProducerStream: SourceQueueWithComplete[Any] = new SourceQueueWithComplete[Any] {

31 | override def complete(): Unit = println("complete")

32 |

33 | override def fail(ex: Throwable): Unit = println("fail")

34 |

35 | override def offer(elem: Any): Future[QueueOfferResult] = Future {

36 | Enqueued

37 | }

38 |

39 | override def watchCompletion(): Future[Done] = Future {

40 | Done

41 | }

42 | }

43 |

44 | override def afterAll: Unit = {

45 | shutdown()

46 | }

47 |

48 | //Create a test event listener for the local message bus

49 | val testEventListener = TestProbe()

50 | system.eventStream.subscribe(testEventListener.ref, classOf[ExampleAppEvent])

51 |

52 |

53 | "Sending ActivatedProducerStream to DataProducer in receive state" should {

54 | "save the stream ref and change state to producing " in {

55 | testProducer ! ActivatedProducerStream(mockProducerStream, "TestTopic")

56 | Thread.sleep(500)

57 | producerActor.producerStream should be(mockProducerStream)

58 | EventFilter.error(message = "DataProducer got the unknown message while producing: testMessage", occurrences = 1) intercept {

59 | testProducer ! "testMessage"

60 | }

61 | }

62 | }

63 |

64 | "Sending PublishMessages(number: Int) to DataProducer in publishData state" should {

65 | "return MessagesPublished(number: Int) and publish the local event " in {

66 | val producing = producerActor.publishData

67 | producerActor.context.become(producing)

68 | producerActor.producerStream = mockProducerStream

69 | val resultMessage = MessagesPublished(5)

70 | testProducer ! PublishMessages(5)

71 | expectMsg(resultMessage)

72 | testEventListener.expectMsgPF() {

73 | case ExampleAppEvent(_, _, m) => if (m == resultMessage.toString) () else fail()

74 | }

75 | }

76 | }

77 | }

78 |

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/consumers/EventConsumer.scala:

--------------------------------------------------------------------------------

1 | package com.omearac.consumers

2 |

3 | import akka.actor.{Actor, ActorRef, Props}

4 | import akka.event.Logging

5 | import com.omearac.consumers.ConsumerStreamManager.{InitializeConsumerStream, TerminateConsumerStream}

6 | import com.omearac.consumers.DataConsumer.{ConsumerActorReply, ManuallyInitializeStream, ManuallyTerminateStream}

7 | import com.omearac.settings.Settings

8 | import com.omearac.shared.EventMessages.ActivatedConsumerStream

9 | import com.omearac.shared.EventSourcing

10 | import com.omearac.shared.KafkaMessages.ExampleAppEvent

11 |

12 | import scala.collection.mutable.ArrayBuffer

13 |

14 |

15 | /**

16 | * This actor serves as a Sink for the kafka stream that is created by the ConsumerStreamManager.

17 | * The corresponding stream converts the json from the kafka topic AppEventChannel to the message type ExampleAppEvent.

18 | * Once this actor receives a batch of such messages, it prints them out.

19 | *

20 | * This actor can be started and stopped manually from the HTTP interface, and in doing so, changes between receiving

21 | * states.

22 | */

23 |

24 | object EventConsumer {

25 |

26 | def props: Props = Props(new EventConsumer)

27 | }

28 |

29 | class EventConsumer extends Actor with EventSourcing {

30 | implicit val system = context.system

31 | val log = Logging(system, this.getClass.getName)

32 |

33 | //Once stream is started by manager, we save the actor ref of the manager

34 | var consumerStreamManager: ActorRef = null

35 |

36 | //Get Kafka Topic

37 | val kafkaTopic = Settings(system).KafkaConsumers.KafkaConsumerInfo("ExampleAppEvent")("subscription-topic")

38 |

39 | def receive: Receive = {

40 | case InitializeConsumerStream(_, ExampleAppEvent) =>

41 | consumerStreamManager ! InitializeConsumerStream(self, ExampleAppEvent)

42 |

43 | case ActivatedConsumerStream(_) => consumerStreamManager = sender()

44 |

45 | case "STREAM_INIT" =>

46 | sender() ! "OK"

47 | println("Event Consumer entered consuming state!")

48 | context.become(consumingEvents)

49 |

50 | case ManuallyTerminateStream => sender() ! ConsumerActorReply("Event Consumer Stream Already Stopped")

51 |

52 | case ManuallyInitializeStream =>

53 | consumerStreamManager ! InitializeConsumerStream(self, ExampleAppEvent)

54 | sender() ! ConsumerActorReply("Event Consumer Stream Started")

55 |

56 | case other => log.error("Event Consumer got unknown message while in idle: " + other)

57 | }

58 |

59 | def consumingEvents: Receive = {

60 | case ActivatedConsumerStream(_) => consumerStreamManager = sender()

61 |

62 | case consumerMessageBatch: ArrayBuffer[_] =>

63 | sender() ! "OK"

64 | consumerMessageBatch.foreach(println)

65 |

66 | case "STREAM_DONE" =>

67 | context.become(receive)

68 |

69 | case ManuallyInitializeStream => sender() ! ConsumerActorReply("Event Consumer Already Started")

70 |

71 | case ManuallyTerminateStream =>

72 | consumerStreamManager ! TerminateConsumerStream(kafkaTopic)

73 | sender() ! ConsumerActorReply("Event Consumer Stream Stopped")

74 |

75 | case other => log.error("Event Consumer got unknown message while in consuming: " + other)

76 | }

77 | }

78 |

79 |

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/consumers/DataConsumer.scala:

--------------------------------------------------------------------------------

1 | package com.omearac.consumers

2 |

3 | import akka.actor._

4 | import akka.event.Logging

5 | import com.omearac.consumers.ConsumerStreamManager.{InitializeConsumerStream, TerminateConsumerStream}

6 | import com.omearac.consumers.DataConsumer.{ConsumerActorReply, ManuallyInitializeStream, ManuallyTerminateStream}

7 | import com.omearac.settings.Settings

8 | import com.omearac.shared.EventMessages.ActivatedConsumerStream

9 | import com.omearac.shared.EventSourcing

10 | import com.omearac.shared.KafkaMessages.KafkaMessage

11 |

12 | import scala.collection.mutable.ArrayBuffer

13 |

14 |

15 | /**

16 | * This actor serves as a Sink for the kafka stream that is created by the ConsumerStreamManager.

17 | * The corresponding stream converts the json from the kafka topic TestDataChannel to the message type KafkaMessage.

18 | * Once this actor receives a batch of such messages, it prints them out.

19 | *

20 | * This actor can be started and stopped manually from the HTTP interface, and in doing so, changes between receiving

21 | * states.

22 | */

23 |

24 | object DataConsumer {

25 |

26 | //Command Messages

27 | case object ManuallyInitializeStream

28 |

29 | case object ManuallyTerminateStream

30 |

31 | //Document Messages

32 | case class ConsumerActorReply(message: String)

33 |

34 | def props: Props = Props(new DataConsumer)

35 | }

36 |

37 | class DataConsumer extends Actor with EventSourcing {

38 | implicit val system = context.system

39 | val log = Logging(system, this.getClass.getName)

40 |

41 | //Once stream is started by manager, we save the actor ref of the manager

42 | var consumerStreamManager: ActorRef = null

43 |

44 | //Get Kafka Topic

45 | val kafkaTopic = Settings(system).KafkaConsumers.KafkaConsumerInfo("KafkaMessage")("subscription-topic")

46 |

47 | def receive: Receive = {

48 |

49 | case ActivatedConsumerStream(_) => consumerStreamManager = sender()

50 |

51 | case "STREAM_INIT" =>

52 | sender() ! "OK"

53 | println("Data Consumer entered consuming state!")

54 | context.become(consumingData)

55 |

56 | case ManuallyTerminateStream => sender() ! ConsumerActorReply("Data Consumer Stream Already Stopped")

57 |

58 | case ManuallyInitializeStream =>

59 | consumerStreamManager ! InitializeConsumerStream(self, KafkaMessage)

60 | sender() ! ConsumerActorReply("Data Consumer Stream Started")

61 |

62 | case other => log.error("Data Consumer got unknown message while in idle: " + other)

63 | }

64 |

65 | def consumingData: Receive = {

66 | case ActivatedConsumerStream(_) => consumerStreamManager = sender()

67 |

68 | case consumerMessageBatch: ArrayBuffer[_] =>

69 | sender() ! "OK"

70 | consumerMessageBatch.foreach(println)

71 |

72 | case "STREAM_DONE" =>

73 | context.become(receive)

74 |

75 | case ManuallyInitializeStream => sender() ! ConsumerActorReply("Data Consumer Already Started")

76 |

77 | case ManuallyTerminateStream =>

78 | consumerStreamManager ! TerminateConsumerStream(kafkaTopic)

79 | sender() ! ConsumerActorReply("Data Consumer Stream Stopped")

80 |

81 | case other => log.error("Data Consumer got unknown message while in consuming: " + other)

82 | }

83 | }

84 |

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/Main.scala:

--------------------------------------------------------------------------------

1 | package com.omearac

2 |

3 | import akka.actor.{ActorSystem, Props}

4 | import akka.event.Logging

5 | import akka.http.scaladsl.Http

6 | import akka.http.scaladsl.Http.ServerBinding

7 | import com.omearac.consumers.ConsumerStreamManager.InitializeConsumerStream

8 | import com.omearac.consumers.{ConsumerStreamManager, DataConsumer, EventConsumer}

9 | import com.omearac.http.HttpService

10 | import com.omearac.producers.ProducerStreamManager.InitializeProducerStream

11 | import com.omearac.producers.{DataProducer, EventProducer, ProducerStreamManager}

12 | import com.omearac.settings.Settings

13 | import com.omearac.shared.AkkaStreams

14 | import com.omearac.shared.KafkaMessages.{ExampleAppEvent, KafkaMessage}

15 |

16 | import scala.concurrent.{Await, Future}

17 | import scala.concurrent.duration._

18 | import scala.io.StdIn

19 |

20 | /**

21 | * This starts the Reactive Kafka Microservice Template

22 | */

23 |

24 | object Main extends App with HttpService with AkkaStreams {

25 | implicit val system = ActorSystem("akka-reactive-kafka-app")

26 | val log = Logging(system, this.getClass.getName)

27 |

28 | //Start the akka-http server and listen for http requests

29 | val akkaHttpServer = startAkkaHTTPServer()

30 |

31 | //Create the Producer Stream Manager and Consumer Stream Manager

32 | val producerStreamManager = system.actorOf(Props(new ProducerStreamManager), "producerStreamManager")

33 | val consumerStreamManager = system.actorOf(Props(new ConsumerStreamManager), "consumerStreamManager")

34 |

35 | //Create actor to publish event messages to kafka stream.

36 | val eventProducer = system.actorOf(EventProducer.props, "eventProducer")

37 | producerStreamManager ! InitializeProducerStream(eventProducer, ExampleAppEvent)

38 |

39 | //Create actor to consume event messages from kafka stream.

40 | val eventConsumer = system.actorOf(EventConsumer.props, "eventConsumer")

41 | consumerStreamManager ! InitializeConsumerStream(eventConsumer, ExampleAppEvent)

42 |

43 | //Create actor to publish data messages to kafka stream.

44 | val dataProducer = system.actorOf(DataProducer.props, "dataProducer")

45 | producerStreamManager ! InitializeProducerStream(dataProducer, KafkaMessage)

46 |

47 | //Create actor to consume data messages from kafka stream.

48 | val dataConsumer = system.actorOf(DataConsumer.props, "dataConsumer")

49 | consumerStreamManager ! InitializeConsumerStream(dataConsumer, KafkaMessage)

50 |

51 | //Shutdown

52 | shutdownApplication()

53 |

54 | private def startAkkaHTTPServer(): Future[ServerBinding] = {

55 | val settings = Settings(system).Http

56 | val host = settings.host

57 |

58 | println("Specify the TCP port you want to host the HTTP server at (e.g. 8001, 8080, etc.).\nHit Return when finished:")

59 | val portNum = StdIn.readInt()

60 |

61 | println(s"Waiting for http requests at http://$host:$portNum/")

62 | Http().bindAndHandle(routes, host, portNum)

63 | }

64 |

65 | private def shutdownApplication(): Unit = {

66 | scala.sys.addShutdownHook({

67 | println("Terminating the Application...")

68 | akkaHttpServer.flatMap(_.unbind())

69 | system.terminate()

70 | Await.result(system.whenTerminated, 30 seconds)

71 | println("Application Terminated")

72 | })

73 | }

74 | }

75 |

76 |

77 |

78 |

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/shared/JsonMessageConversion.scala:

--------------------------------------------------------------------------------

1 | package com.omearac.shared

2 |

3 | import akka.util.Timeout

4 | import com.omearac.shared.EventMessages.FailedMessageConversion

5 | import com.omearac.shared.KafkaMessages.{ExampleAppEvent, KafkaMessage}

6 | import play.api.libs.json.Json

7 | import spray.json._

8 |

9 | import scala.concurrent.duration._

10 |

11 |

12 | /**

13 | * Here we define a typeclass which converts case class messages to/from JSON.

14 | * Currently, we can convert KafkaMessage and ExampleAppEvent messages to/from JSON.

15 | * Any additional case class types need to have conversion methods defined here.

16 | */

17 |

18 |

19 | object JsonMessageConversion {

20 | implicit val resolveTimeout = Timeout(3 seconds)

21 |

22 | trait Conversion[T] {

23 | def convertFromJson(msg: String): Either[FailedMessageConversion, T]

24 | def convertToJson(msg: T): String

25 | }

26 |

27 | //Here is where we create implicit objects for each Message Type you wish to convert to/from JSON

28 | object Conversion extends DefaultJsonProtocol {

29 |

30 | implicit object KafkaMessageConversions extends Conversion[KafkaMessage] {

31 | implicit val json3 = jsonFormat3(KafkaMessage)

32 |

33 | /**

34 | * Converts the JSON string from the CommittableMessage to KafkaMessage case class

35 | * @param msg is the json string to be converted to KafkaMessage case class

36 | * @return Right(KafkaMessage) on success, or Left(FailedMessageConversion) if the conversion fails

37 | */

38 | def convertFromJson(msg: String): Either[FailedMessageConversion, KafkaMessage] = {

39 | try {

40 | Right(msg.parseJson.convertTo[KafkaMessage])

41 | }

42 | catch {

43 | case e: Exception => Left(FailedMessageConversion("kafkaTopic", msg, "to: KafkaMessage"))

44 | }

45 | }

46 | def convertToJson(msg: KafkaMessage) = {

47 | implicit val writes = Json.writes[KafkaMessage]

48 | Json.toJson(msg).toString

49 | }

50 | }

51 |

52 | implicit object ExampleAppEventConversion extends Conversion[ExampleAppEvent] {

53 | implicit val json3 = jsonFormat3(ExampleAppEvent)

54 |

55 | /**

56 | * Converts the JSON string from the CommittableMessage to ExampleAppEvent case class

57 | * @param msg is the json string to be converted to ExampleAppEvent case class

58 | * @return Right(ExampleAppEvent) on success, or Left(FailedMessageConversion) if the conversion fails

59 | */

60 | def convertFromJson(msg: String): Either[FailedMessageConversion, ExampleAppEvent] = {

61 | try {

62 | Right(msg.parseJson.convertTo[ExampleAppEvent])

63 | }

64 | catch {

65 | case e: Exception => Left(FailedMessageConversion("kafkaTopic", msg, "to: ExampleAppEvent"))

66 | }

67 | }

68 | def convertToJson(msg: ExampleAppEvent) = {

69 | implicit val writes = Json.writes[ExampleAppEvent]

70 | Json.toJson(msg).toString

71 | }

72 | }

73 |

74 | //Adding some sweet sweet syntactic sugar

75 | def apply[T: Conversion] : Conversion[T] = implicitly

76 | }

77 | }

78 |

79 |

80 |
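Usage note (not part of the repository): the typeclass above can be extended to a new message type by adding one more implicit object. The following is a minimal, self-contained sketch of that pattern only. `Ping`, `PingConversion`, `FailedConversion` and `ConversionSketch` are hypothetical names introduced for illustration, and a hand-written regex stands in for the spray-json/play-json calls used in the real file so that the example has no library dependencies.

```scala
import scala.util.Try

object ConversionSketch extends App {
  // Hypothetical stand-in for com.omearac.shared.EventMessages.FailedMessageConversion
  final case class FailedConversion(kafkaTopic: String, msg: String, msgType: String)

  // Same shape as the Conversion[T] typeclass in JsonMessageConversion
  trait Conversion[T] {
    def convertFromJson(msg: String): Either[FailedConversion, T]
    def convertToJson(msg: T): String
  }

  // Hypothetical message type, used only in this sketch
  final case class Ping(id: Int)

  object Conversion {
    // One implicit instance per message type, as in the original object
    implicit object PingConversion extends Conversion[Ping] {
      // Assumption: the payload is exactly {"id":<integer>}; a real instance would use a JSON library
      private val Pattern = """\{"id":(-?\d+)\}""".r

      def convertFromJson(msg: String): Either[FailedConversion, Ping] = msg match {
        case Pattern(id) if Try(id.toInt).isSuccess => Right(Ping(id.toInt))
        case _ => Left(FailedConversion("kafkaTopic", msg, "to: Ping"))
      }

      def convertToJson(msg: Ping): String = s"""{"id":${msg.id}}"""
    }

    // Summoner: Conversion[Ping] resolves to the implicit instance above
    def apply[T: Conversion]: Conversion[T] = implicitly[Conversion[T]]
  }

  val json = Conversion[Ping].convertToJson(Ping(7))
  println(json)
  println(Conversion[Ping].convertFromJson(json))
  println(Conversion[Ping].convertFromJson("not json"))
}
```

Because the instances live in the companion object of `Conversion`, callers do not need an extra import for implicit resolution; `Conversion[Ping]` is enough.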

--------------------------------------------------------------------------------

/src/test/scala/akka/kafka/ProducerStreamSpec.scala:

--------------------------------------------------------------------------------

1 | package akka.kafka

2 |

3 | import akka.actor.ActorSystem

4 | import akka.stream.scaladsl.{Sink, Source}

5 | import akka.testkit.{DefaultTimeout, ImplicitSender, TestKit, TestProbe}

6 | import com.omearac.consumers.ConsumerStream

7 | import com.omearac.producers.ProducerStream

8 | import com.omearac.settings.Settings

9 | import com.omearac.shared.JsonMessageConversion.Conversion

10 | import com.omearac.shared.KafkaMessages.{ExampleAppEvent, KafkaMessage}

11 | import org.apache.kafka.clients.producer.ProducerRecord

12 | import org.scalatest.{BeforeAndAfterAll, Matchers, WordSpecLike}

13 |

14 |

15 | class ProducerStreamSpec extends TestKit(ActorSystem("ProducerStreamSpec"))

16 | with DefaultTimeout with ImplicitSender

17 | with WordSpecLike with Matchers with BeforeAndAfterAll

18 | with ConsumerStream with ProducerStream {

19 |

20 | val settings = Settings(system).KafkaProducers

21 | val probe = TestProbe()

22 |

23 | override def afterAll: Unit = {

24 | shutdown()

25 | }

26 |

27 | "Sending KafkaMessages to the KafkaMessage producerStream" should {

28 | "be converted to JSON and obtained by the Stream Sink " in {

29 |

30 | //Creating Producer Stream Components for publishing KafkaMessages

31 | val producerProps = settings.KafkaProducerInfo("KafkaMessage")

32 | val numOfMessages = 50

33 | val kafkaMsgs = for { i <- 0 to numOfMessages} yield KafkaMessage("sometime", "somestuff", i)

34 | val producerSource= Source(kafkaMsgs)

35 | val producerFlow = createStreamFlow[KafkaMessage](producerProps)

36 | val producerSink = Sink.actorRef(probe.ref, "complete")

37 |

38 | val jsonKafkaMsgs = for { msg <- kafkaMsgs} yield Conversion[KafkaMessage].convertToJson(msg)

39 |

40 | producerSource.via(producerFlow).runWith(producerSink)

41 | for (i <- 0 to jsonKafkaMsgs.length) {

42 | probe.expectMsgPF(){

43 | case m: ProducerRecord[_,_] => if (jsonKafkaMsgs.contains(m.value())) () else fail()

44 | case "complete" => ()

45 | }

46 | }

47 | }

48 | }

49 |

50 | "Sending ExampleAppEvent messages to the EventMessage producerStream" should {

51 | "be converted to JSON and obtained by the Stream Sink " in {

52 |

53 | //Creating Producer Stream Components for publishing ExampleAppEvent messages

54 | val producerProps = settings.KafkaProducerInfo("ExampleAppEvent")

55 | val numOfMessages = 50

56 | val eventMsgs = for { i <- 0 to numOfMessages} yield ExampleAppEvent("sometime", "senderID", s"Event number $i occurred")

57 |

58 | val producerSource= Source(eventMsgs)

59 | val producerFlow = createStreamFlow[ExampleAppEvent](producerProps)

60 | val producerSink = Sink.actorRef(probe.ref, "complete")

61 |

62 | val jsonAppEventMsgs = for{ msg <- eventMsgs} yield Conversion[ExampleAppEvent].convertToJson(msg)

63 | producerSource.via(producerFlow).runWith(producerSink)

64 | for (i <- 0 to jsonAppEventMsgs.length){

65 | probe.expectMsgPF(){

66 | case m: ProducerRecord[_,_] => if (jsonAppEventMsgs.contains(m.value())) () else fail()

67 | case "complete" => ()

68 | }

69 | }

70 | }

71 | }

72 | }

73 |

--------------------------------------------------------------------------------

/src/test/scala/akka/kafka/EventProducerSpec.scala:

--------------------------------------------------------------------------------

1 | package akka.kafka

2 |

3 | import java.util.Date

4 |

5 | import akka.Done

6 | import akka.actor.ActorSystem

7 | import akka.serialization.Serialization

8 | import akka.stream.QueueOfferResult

9 | import akka.stream.QueueOfferResult.Enqueued

10 | import akka.stream.scaladsl.SourceQueueWithComplete

11 | import akka.testkit.{DefaultTimeout, EventFilter, ImplicitSender, TestActorRef, TestKit, TestProbe}

12 | import com.omearac.producers.EventProducer

13 | import com.omearac.shared.AkkaStreams

14 | import com.omearac.shared.EventMessages.{ActivatedProducerStream, MessagesPublished}

15 | import com.omearac.shared.KafkaMessages.ExampleAppEvent

16 | import com.typesafe.config.ConfigFactory

17 | import org.scalatest.{BeforeAndAfterAll, Matchers, WordSpecLike}

18 |

19 | import scala.concurrent.Future

20 |

21 |

22 | class EventProducerSpec extends TestKit(ActorSystem("EventProducerSpec",ConfigFactory.parseString("""

23 | akka.loggers = ["akka.testkit.TestEventListener"] """)))

24 | with DefaultTimeout with ImplicitSender

25 | with WordSpecLike with Matchers with BeforeAndAfterAll

26 | with AkkaStreams {

27 |

28 | val testProducer = TestActorRef(new EventProducer)

29 | val producerActor = testProducer.underlyingActor

30 | val mockProducerStream: SourceQueueWithComplete[Any] = new SourceQueueWithComplete[Any] {

31 | override def complete(): Unit = println("complete")

32 |

33 | override def fail(ex: Throwable): Unit = println("fail")

34 |

35 | override def offer(elem: Any): Future[QueueOfferResult] = Future{Enqueued}

36 |

37 | override def watchCompletion(): Future[Done] = Future{Done}

38 | }

39 |

40 | override def afterAll: Unit = {

41 | shutdown()

42 | }

43 |

44 | //Create a test event listener for the local message bus

45 | val testEventListener = TestProbe()

46 | system.eventStream.subscribe(testEventListener.ref, classOf[ExampleAppEvent])

47 |

48 |

49 | "Sending ActivatedProducerStream to EventProducer in receive state" should {

50 | "save the stream ref and change state to producing " in {

51 | testProducer ! ActivatedProducerStream(mockProducerStream, "TestTopic")

52 | Thread.sleep(500)

53 | producerActor.producerStream should be(mockProducerStream)

54 | EventFilter.error(message = "EventProducer got the unknown message while producing: testMessage", occurrences = 1) intercept {

55 | testProducer ! "testMessage"

56 | }

57 | }

58 | }

59 |

60 | "Sending ExampleAppEvent to system bus while EventProducer is in publishEvent state" should {

61 | "offer the ExampleAppEvent to the stream " in {

62 | val producingState = producerActor.publishEvent

63 | producerActor.context.become(producingState)

64 | producerActor.producerStream = mockProducerStream

65 | val dateFormat = new java.text.SimpleDateFormat("dd:MM:yy:HH:mm:ss.SSS")

66 | lazy val timetag = dateFormat.format(new Date(System.currentTimeMillis()))

67 | val eventMsg = MessagesPublished(5)

68 | val testMessage = ExampleAppEvent(timetag,Serialization.serializedActorPath(self),eventMsg.toString)

69 | system.eventStream.publish(testMessage)

70 | testEventListener.expectMsgPF(){

71 | case ExampleAppEvent(_,_,m) => if (m == eventMsg.toString) () else fail()

72 | }

73 | }

74 | }

75 | }

76 |

--------------------------------------------------------------------------------

/src/test/scala/akka/HTTPInterfaceSpec.scala:

--------------------------------------------------------------------------------

1 | package akka

2 |

3 | import akka.event.Logging

4 | import akka.http.scaladsl.testkit.ScalatestRouteTest

5 | import akka.stream.QueueOfferResult

6 | import akka.stream.QueueOfferResult.Enqueued

7 | import akka.stream.scaladsl.SourceQueueWithComplete

8 | import akka.testkit.{TestActorRef, TestProbe}

9 | import com.omearac.consumers.{DataConsumer, EventConsumer}

10 | import com.omearac.http.routes.{ConsumerCommands, ProducerCommands}

11 | import com.omearac.producers.DataProducer

12 | import org.scalatest.{Matchers, WordSpec}

13 |

14 | import scala.concurrent.Future

15 |

16 |

17 | class HTTPInterfaceSpec extends WordSpec

18 | with Matchers with ScalatestRouteTest

19 | with ConsumerCommands with ProducerCommands {

20 |

21 | val log = Logging(system, this.getClass.getName)

22 |

23 | //Mocks for DataConsumer Tests

24 | val dataConsumer = TestActorRef(new DataConsumer)

25 | val manager = TestProbe()

26 | dataConsumer.underlyingActor.consumerStreamManager = manager.ref

27 |

28 | //Mocks for EventConsumer Tests

29 | val eventConsumer = TestActorRef(new EventConsumer)

30 | eventConsumer.underlyingActor.consumerStreamManager = manager.ref

31 |

32 | //Mocks for DataProducer Tests

33 | val dataProducer = TestActorRef(new DataProducer)

34 | val mockProducerStream: SourceQueueWithComplete[Any] = new SourceQueueWithComplete[Any] {

35 | override def complete(): Unit = println("complete")

36 |

37 | override def fail(ex: Throwable): Unit = println("fail")

38 |

39 | override def offer(elem: Any): Future[QueueOfferResult] = Future{Enqueued}

40 |

41 | override def watchCompletion(): Future[Done] = Future{Done}

42 | }

43 |

44 |

45 | "The HTTP interface to control the DataConsumerStream" should {

46 | "return an Already Stopped message for GET requests to /data_consumer/stop" in {

47 | Get("/data_consumer/stop") ~> dataConsumerHttpCommands ~> check {

48 | responseAs[String] shouldEqual "Data Consumer Stream Already Stopped"

49 | }

50 | }

51 |

52 | "return a Stream Started response for GET requests to /data_consumer/start" in {

53 | Get("/data_consumer/start") ~> dataConsumerHttpCommands ~> check {

54 | responseAs[String] shouldEqual "Data Consumer Stream Started"

55 | }

56 | }

57 | }

58 |

59 | "The HTTP interface to control the EventConsumerStream" should {

60 | "return an Already Stopped message for GET requests to /event_consumer/stop" in {

61 | Get("/event_consumer/stop") ~> eventConsumerHttpCommands ~> check {

62 | responseAs[String] shouldEqual "Event Consumer Stream Already Stopped"

63 | }

64 | }

65 |

66 | "return a Stream Started response for GET requests to /event_consumer/start" in {

67 | Get("/event_consumer/start") ~> eventConsumerHttpCommands ~> check {

68 | responseAs[String] shouldEqual "Event Consumer Stream Started"

69 | }

70 | }

71 | }

72 |

73 | "The HTTP interface to tell the DataProducer Actor to publish messages to Kafka" should {

74 | "return a Messages Produced message for GET requests to /data_producer/produce/10" in {

75 | dataProducer.underlyingActor.producerStream = mockProducerStream

76 | val producing = dataProducer.underlyingActor.publishData

77 | dataProducer.underlyingActor.context.become(producing)

78 |

79 | Get("/data_producer/produce/10") ~> producerHttpCommands ~> check {

80 | responseAs[String] shouldEqual "10 messages Produced as Ordered, Boss!"

81 | }

82 | }

83 | }

84 | }

85 |

86 |

--------------------------------------------------------------------------------

/src/test/scala/akka/kafka/DataConsumerSpec.scala:

--------------------------------------------------------------------------------

1 | package akka.kafka

2 |

3 | import akka.actor.{Actor, ActorSystem, Props}

4 | import akka.testkit.{DefaultTimeout, ImplicitSender, TestActorRef, TestKit}

5 | import com.omearac.consumers.ConsumerStreamManager.{InitializeConsumerStream, TerminateConsumerStream}

6 | import com.omearac.consumers.DataConsumer

7 | import com.omearac.consumers.DataConsumer.{ConsumerActorReply, ManuallyInitializeStream, ManuallyTerminateStream}

8 | import org.scalatest.{BeforeAndAfterAll, Matchers, WordSpecLike}

9 |

10 | import scala.collection.mutable.ArrayBuffer

11 |

12 |

13 | class DataConsumerSpec extends TestKit(ActorSystem("DataConsumerSpec"))

14 | with DefaultTimeout with ImplicitSender

15 | with WordSpecLike with Matchers with BeforeAndAfterAll {

16 |

17 | //Creating the Actors

18 | val testConsumer = TestActorRef(new DataConsumer)

19 | val mockStreamAndManager = system.actorOf(Props(new MockStreamAndManager), "mockStreamAndManager")

20 |

21 | override def afterAll: Unit = {

22 | shutdown()

23 | }

24 |

25 | class MockStreamAndManager extends Actor {

26 | val receive: Receive = {

27 | case InitializeConsumerStream(_, _) => testConsumer ! "STREAM_INIT"

28 | case TerminateConsumerStream(_) => testConsumer ! "STREAM_DONE"

29 | }

30 | }

31 |

32 |

33 | "Sending ManuallyTerminateStream to DataConsumer in receive state" should {

34 | "return a Stream Already Stopped reply " in {

35 | testConsumer ! ManuallyTerminateStream

36 | expectMsg(ConsumerActorReply("Data Consumer Stream Already Stopped"))

37 | }

38 | }

39 |

40 | "Sending ManuallyInitializeStream to DataConsumer in receive state" should {

41 | "forward the message to the ConsumerStreamManager and change state to consuming" in {

42 | testConsumer.underlyingActor.consumerStreamManager = mockStreamAndManager

43 | testConsumer ! ManuallyInitializeStream

44 | expectMsg(ConsumerActorReply("Data Consumer Stream Started"))

45 | //Now check for state change

46 | Thread.sleep(750)

47 | testConsumer ! ManuallyInitializeStream

48 | expectMsg(ConsumerActorReply("Data Consumer Already Started"))

49 | }

50 | }

51 |

52 | "Sending STREAM_DONE to DataConsumer while in consuming state" should {

53 | "change state to idle state" in {

54 | val consuming = testConsumer.underlyingActor.consumingData

55 | testConsumer.underlyingActor.context.become(consuming)

56 | testConsumer ! "STREAM_DONE"

57 | //Now check for state change

58 | Thread.sleep(750)

59 | testConsumer ! ManuallyTerminateStream

60 | expectMsg(ConsumerActorReply("Data Consumer Stream Already Stopped"))

61 | }

62 | }

63 | "Sending ManuallyTerminateStream to DataConsumer while in consuming state" should {

64 | "forward the message to the ConsumerStreamManager and then upon reply, change state to idle" in {

65 | val consuming = testConsumer.underlyingActor.consumingData

66 | testConsumer.underlyingActor.context.become(consuming)

67 | testConsumer ! ManuallyTerminateStream

68 | expectMsg(ConsumerActorReply("Data Consumer Stream Stopped"))

69 | //Now check for state change

70 | Thread.sleep(750)

71 | testConsumer ! ManuallyTerminateStream

72 | expectMsg(ConsumerActorReply("Data Consumer Stream Already Stopped"))

73 | }

74 | }

75 |

76 | "Sending ConsumerMessageBatch message" should {

77 | "reply OK" in {

78 | val msgBatch: ArrayBuffer[String] = ArrayBuffer("test1")

79 | val consuming = testConsumer.underlyingActor.consumingData

80 | testConsumer.underlyingActor.context.become(consuming)

81 | testConsumer.underlyingActor.consumerStreamManager = mockStreamAndManager

82 | testConsumer ! msgBatch

83 | expectMsg("OK")

84 | }

85 | }

86 | }

87 |

--------------------------------------------------------------------------------

/src/test/scala/akka/kafka/EventConsumerSpec.scala:

--------------------------------------------------------------------------------

1 | package akka.kafka

2 |

3 | import akka.actor.{Actor, ActorSystem, Props}

4 | import akka.testkit.{DefaultTimeout, ImplicitSender, TestActorRef, TestKit}

5 | import com.omearac.consumers.ConsumerStreamManager.{InitializeConsumerStream, TerminateConsumerStream}

6 | import com.omearac.consumers.DataConsumer.{ConsumerActorReply, ManuallyInitializeStream, ManuallyTerminateStream}

7 | import com.omearac.consumers.EventConsumer

8 | import org.scalatest.{BeforeAndAfterAll, Matchers, WordSpecLike}

9 |

10 | import scala.collection.mutable.ArrayBuffer

11 |

12 |

13 | class EventConsumerSpec extends TestKit(ActorSystem("EventConsumerSpec"))

14 | with DefaultTimeout with ImplicitSender

15 | with WordSpecLike with Matchers with BeforeAndAfterAll {

16 |

17 | //Creating the Actors

18 | val testConsumer = TestActorRef(new EventConsumer)

19 | val mockStreamAndManager = system.actorOf(Props(new MockStreamAndManager), "mockStreamAndManager")

20 |

21 | override def afterAll: Unit = {

22 | shutdown()

23 | }

24 |

25 | class MockStreamAndManager extends Actor {

26 | val receive: Receive = {

27 | case InitializeConsumerStream(_, _) => testConsumer ! "STREAM_INIT"

28 | case TerminateConsumerStream(_) => testConsumer ! "STREAM_DONE"

29 | }

30 | }

31 |

32 |

33 | "Sending ManuallyTerminateStream to EventConsumer in receive state" should {

34 | "return a Stream Already Stopped reply" in {

35 | testConsumer ! ManuallyTerminateStream

36 | expectMsg(ConsumerActorReply("Event Consumer Stream Already Stopped"))

37 | }

38 | }

39 |

40 | "Sending ManuallyInitializeStream to EventConsumer in receive state" should {

41 | "forward the message to the ConsumerStreamManager and change state to consuming" in {

42 | testConsumer.underlyingActor.consumerStreamManager = mockStreamAndManager

43 | testConsumer ! ManuallyInitializeStream

44 | expectMsg(ConsumerActorReply("Event Consumer Stream Started"))

45 | //Now check for state change

46 | Thread.sleep(750)

47 | testConsumer ! ManuallyInitializeStream

48 | expectMsg(ConsumerActorReply("Event Consumer Already Started"))

49 | }

50 | }

51 |

52 | "Sending STREAM_DONE to EventConsumer while in consuming state" should {

53 | "change state to idle state" in {

54 | val consuming = testConsumer.underlyingActor.consumingEvents

55 | testConsumer.underlyingActor.context.become(consuming)

56 | testConsumer ! "STREAM_DONE"

57 | //Now check for state change

58 | Thread.sleep(750)

59 | testConsumer ! ManuallyTerminateStream

60 | expectMsg(ConsumerActorReply("Event Consumer Stream Already Stopped"))

61 | }

62 | }

63 | "Sending ManuallyTerminateStream to EventConsumer while in consuming state" should {

64 | "forward the message to the ConsumerStreamManager and then upon reply, change state to idle" in {

65 | val consuming = testConsumer.underlyingActor.consumingEvents

66 | testConsumer.underlyingActor.context.become(consuming)

67 | testConsumer ! ManuallyTerminateStream

68 | expectMsg(ConsumerActorReply("Event Consumer Stream Stopped"))

69 | //Now check for state change

70 | Thread.sleep(750)

71 | testConsumer ! ManuallyTerminateStream

72 | expectMsg(ConsumerActorReply("Event Consumer Stream Already Stopped"))

73 | }

74 | }

75 |

76 | "Sending ConsumerMessageBatch message" should {

77 | "reply OK" in {

78 | val msgBatch: ArrayBuffer[String] = ArrayBuffer("test1")

79 | val consuming = testConsumer.underlyingActor.consumingEvents

80 | testConsumer.underlyingActor.context.become(consuming)

81 | testConsumer.underlyingActor.consumerStreamManager = mockStreamAndManager

82 | testConsumer ! msgBatch

83 | expectMsg("OK")

84 | }

85 | }

86 | }

87 |

--------------------------------------------------------------------------------

/src/main/scala/com/omearac/consumers/ConsumerStream.scala:

--------------------------------------------------------------------------------

1 | package com.omearac.consumers

2 |

3 | import akka.actor.{ActorRef, ActorSystem}

4 | import akka.kafka.ConsumerMessage.CommittableOffsetBatch

5 | import akka.kafka.scaladsl.Consumer

6 | import akka.kafka.{ConsumerMessage, ConsumerSettings, Subscriptions}

7 | import akka.stream.scaladsl.{Flow, Sink}

8 | import com.omearac.shared.EventMessages.FailedMessageConversion

9 | import com.omearac.shared.JsonMessageConversion.Conversion

10 | import com.omearac.shared.{AkkaStreams, EventSourcing}

11 | import org.apache.kafka.clients.consumer.ConsumerConfig

12 | import org.apache.kafka.common.serialization.{ByteArrayDeserializer, StringDeserializer}

13 |

14 | import scala.collection.mutable.ArrayBuffer

15 | import scala.concurrent.Future

16 |

17 | /**

18 | * This trait defines the functions for creating the consumer stream components as well

19 | * as the functions which are used in the stream Flow.

20 | */

21 |

22 | trait ConsumerStream extends AkkaStreams with EventSourcing {

23 | implicit val system: ActorSystem

24 | def self: ActorRef

25 |

26 |

27 | def createStreamSink(consumerActorSink: ActorRef) = {

28 | Sink.actorRefWithAck(consumerActorSink, "STREAM_INIT", "OK", "STREAM_DONE")

29 | }

30 |

31 | def createStreamSource(consumerProperties: Map[String,String]) = {

32 | val kafkaMBAddress = consumerProperties("bootstrap-servers")

33 | val groupID = consumerProperties("groupId")

34 | val topicSubscription = consumerProperties("subscription-topic")

35 | val consumerSettings = ConsumerSettings(system, new ByteArrayDeserializer, new StringDeserializer)

36 | .withBootstrapServers(kafkaMBAddress)

37 | .withGroupId(groupID)

38 | .withProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest")

39 |

40 | Consumer.committableSource(consumerSettings, Subscriptions.topics(topicSubscription))

41 | }

42 |

43 | def createStreamFlow[msgType: Conversion] = {

44 | Flow[ConsumerMessage.CommittableMessage[Array[Byte], String]]

45 | .map(msg => (msg.committableOffset, Conversion[msgType].convertFromJson(msg.record.value)))

46 | //Publish the conversion error event messages returned from the JSONConversion

47 | .map (tuple => publishConversionErrors[msgType](tuple))