├── .gitignore

├── LANGS.md

├── README.md

├── book.json

├── en

├── 10changelog.md

├── 21_environment_preparation.md

├── 22.md

├── 23.md

├── 24.md

├── 310_portal.md

├── 311_heartbeat_server.md

├── 312_judge.md

├── 313_links.md

├── 314_alarm.md

├── 315_task.md

├── 316_gateway.md

├── 317_nodata.md

├── 318_aggregator.md

├── 319_agent-updater.md

├── 31environment_preparation.md

├── 32_agent.md

├── 33transfer.md

├── 34graph.md

├── 35_query.md

├── 36_dashboard.md

├── 37_email_and_message_sending_interface.md

├── 38_sender.md

├── 39_webqianduan.md

├── 3yuanmaanzhuang.md

├── 410_mongodb_monitoring.md

├── 411_memcache_monitoring.md

├── 412_rabbitmq_monitoring.md

├── 413_switch_monitoring.md

├── 414_monitoring_windows_platform.md

├── 415_haproxy_monitoring.md

├── 416_dockercontainer_monitoring_practice.md

├── 417_nginx_monitoring.md

├── 418_jmx_monitoring.md

├── 419_hardware_monitoring.md

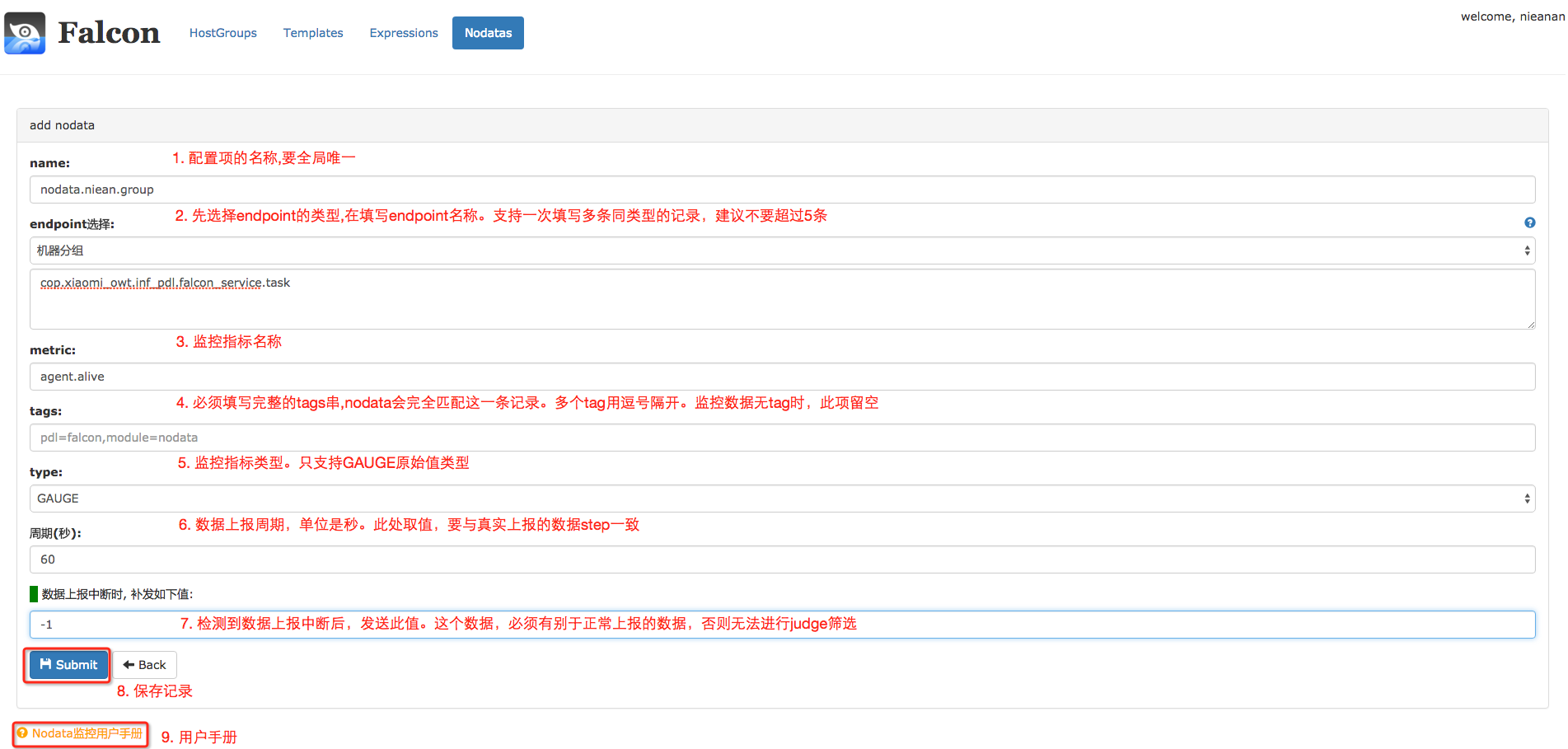

├── 42_nodata_configuration.md

├── 43_cluster_aggregation.md

├── 44_alarm_function_description.md

├── 45_user-defined_and_push_data_to_open-falcon.md

├── 46_historical_data_inquiry.md

├── 47_introduction.md

├── 48_mysql_monitor_practice.md

├── 49_redis_monitor.md

├── 4user_manual.md

├── 51_data_model.md

├── 52_about_data_collection.md

├── 53_plugin_mechanism.md

├── 54_tag_and_hostgroup.md

├── 5data_model.md

├── 61_deployment.md

├── 62_self-monitoring_practice.md

├── 6practical_experience.md

├── 71_community_contribution.md

├── 72_modify_the_drawing_curve_precision.md

├── 73_modifying_the_network_card_flux_unit.md

├── 74_supporting_grnfana_view_show.md

├── 7secondary_development.md

├── 8api.md

├── 91_about_collection.md

├── 91about_collection_md.md

├── 92_about_alert.md

├── 93_about_drawing.md

├── 94_linux_common_monitor_control_index.md

├── 95_qq_group_q&a.md

├── README.md

├── SUMMARY.md

├── chapter1.md

├── community.md

├── contributionlistbox.md

├── erjinzhi.md

└── kuai_su_ru_men.md

├── en_0_2

├── GLOSSARY.md

├── README.md

├── SUMMARY.md

├── api

│ └── README.md

├── authors.md

├── changelog

│ └── README.md

├── contributing.md

├── dev

│ ├── README.md

│ ├── change_graph_rra.md

│ ├── change_net_unit.md

│ ├── community_resource.md

│ └── support_grafana.md

├── distributed_install

│ ├── README.md

│ ├── agent-updater.md

│ ├── agent.md

│ ├── aggregator.md

│ ├── alarm.md

│ ├── api.md

│ ├── gateway.md

│ ├── graph.md

│ ├── hbs.md

│ ├── judge.md

│ ├── mail-sms.md

│ ├── nodata.md

│ ├── prepare.md

│ ├── task.md

│ └── transfer.md

├── donate.md

├── faq

│ ├── README.md

│ ├── alarm.md

│ ├── collect.md

│ ├── graph.md

│ ├── linux-metrics.md

│ └── qq.md

├── image

│ ├── OpenFalcon_wechat.jpg

│ ├── func_aggregator_1.png

│ ├── func_aggregator_2.png

│ ├── func_aggregator_3.png

│ ├── func_aggregator_4.png

│ ├── func_aggregator_5.png

│ ├── func_getting_started_1.png

│ ├── func_getting_started_10.png

│ ├── func_getting_started_11.png

│ ├── func_getting_started_12.png

│ ├── func_getting_started_2.png

│ ├── func_getting_started_3.png

│ ├── func_getting_started_4.png

│ ├── func_getting_started_5.png

│ ├── func_getting_started_6.png

│ ├── func_getting_started_7.png

│ ├── func_getting_started_8.png

│ ├── func_getting_started_9.png

│ ├── func_intro_1.png

│ ├── func_intro_2.png

│ ├── func_intro_3.png

│ ├── func_intro_4.png

│ ├── func_intro_5.png

│ ├── func_intro_6.png

│ ├── func_intro_7.png

│ ├── func_intro_8.png

│ ├── func_nodata_1.png

│ ├── func_nodata_2.png

│ ├── func_nodata_3.png

│ ├── linkedsee_1.png

│ ├── linkedsee_2.png

│ ├── linkedsee_3.png

│ ├── linkedsee_4.png

│ ├── linkedsee_5.png

│ ├── practice_graph-scaling_io01.png

│ ├── practice_graph-scaling_io02.png

│ ├── practice_graph-scaling_io03.png

│ ├── practice_graph-scaling_quantity.png

│ ├── practice_graph-scaling_rrd.png

│ └── practice_graph-scaling_stats.png

├── intro

│ └── README.md

├── philosophy

│ ├── README.md

│ ├── data-collect.md

│ ├── data-model.md

│ ├── plugin.md

│ └── tags-and-hostgroup.md

├── practice

│ ├── README.md

│ ├── deploy.md

│ ├── graph-scaling.md

│ └── monitor.md

├── quick_install

│ ├── README.md

│ ├── backend.md

│ ├── frontend.md

│ ├── prepare.md

│ └── upgrade.md

├── styles

│ └── website.css

└── usage

│ ├── MongoDB.md

│ ├── README.md

│ ├── aggregator.md

│ ├── data-push.md

│ ├── docker.md

│ ├── du-proc.md

│ ├── esxi.md

│ ├── flume.md

│ ├── func.md

│ ├── getting-started.md

│ ├── haproxy.md

│ ├── hwcheck.md

│ ├── jmx.md

│ ├── lvs.md

│ ├── memcache.md

│ ├── mesos.md

│ ├── mymon.md

│ ├── ngx_metric.md

│ ├── nodata.md

│ ├── proc-port-monitor.md

│ ├── query.md

│ ├── rabbitmq.md

│ ├── redis.md

│ ├── solr.md

│ ├── switch.md

│ ├── urlooker.md

│ ├── vsphere-esxi.md

│ ├── vsphere.md

│ └── win.md

├── plugins.txt

├── zh

├── GLOSSARY.md

├── README.md

├── SUMMARY.md

├── api

│ └── README.md

├── authors.md

├── changelog

│ └── README.md

├── contributing.md

├── dev

│ ├── README.md

│ ├── change_graph_rra.md

│ ├── change_net_unit.md

│ ├── community_resource.md

│ └── support_grafana.md

├── donate.md

├── faq

│ ├── README.md

│ ├── alarm.md

│ ├── collect.md

│ ├── graph.md

│ ├── linux-metrics.md

│ └── qq.md

├── image

│ └── OpenFalcon_wechat.jpg

├── images

│ └── practice

│ │ └── deploy.png

├── install_from_src

│ ├── README.md

│ ├── agent-updater.md

│ ├── agent.md

│ ├── aggregator.md

│ ├── alarm.md

│ ├── dashboard.md

│ ├── fe.md

│ ├── gateway.md

│ ├── graph.md

│ ├── hbs.md

│ ├── judge.md

│ ├── links.md

│ ├── mail-sms.md

│ ├── nodata.md

│ ├── portal.md

│ ├── prepare.md

│ ├── query.md

│ ├── sender.md

│ ├── task.md

│ └── transfer.md

├── intro

│ └── README.md

├── philosophy

│ ├── README.md

│ ├── data-collect.md

│ ├── data-model.md

│ ├── plugin.md

│ └── tags-and-hostgroup.md

├── practice

│ ├── README.md

│ ├── deploy.md

│ └── monitor.md

├── quick_install

│ ├── README.md

│ ├── graph_components.md

│ ├── judge_components.md

│ ├── links.md

│ └── prepare.md

└── usage

│ ├── MongoDB.md

│ ├── README.md

│ ├── aggregator.md

│ ├── data-push.md

│ ├── docker.md

│ ├── esxi.md

│ ├── func.md

│ ├── getting-started.md

│ ├── haproxy.md

│ ├── hwcheck.md

│ ├── jmx.md

│ ├── lvs.md

│ ├── memcache.md

│ ├── mesos.md

│ ├── mymon.md

│ ├── ngx_metric.md

│ ├── nodata.md

│ ├── proc-port-monitor.md

│ ├── query.md

│ ├── rabbitmq.md

│ ├── redis.md

│ ├── solr.md

│ ├── switch.md

│ ├── urlooker.md

│ ├── vsphere.md

│ └── win.md

└── zh_0_2

├── GLOSSARY.md

├── README.md

├── SUMMARY.md

├── api

└── README.md

├── authors.md

├── changelog

└── README.md

├── contributing.md

├── dev

├── README.md

├── change_graph_rra.md

├── change_net_unit.md

├── community_resource.md

└── support_grafana.md

├── distributed_install

├── README.md

├── agent-updater.md

├── agent.md

├── aggregator.md

├── alarm.md

├── api.md

├── gateway.md

├── graph.md

├── hbs.md

├── judge.md

├── mail-sms.md

├── nodata.md

├── prepare.md

├── task.md

└── transfer.md

├── donate.md

├── faq

├── README.md

├── alarm.md

├── collect.md

├── graph.md

├── linux-metrics.md

└── qq.md

├── image

├── OpenFalcon_wechat.jpg

├── func_aggregator_1.png

├── func_aggregator_2.png

├── func_aggregator_3.png

├── func_aggregator_4.png

├── func_aggregator_5.png

├── func_getting_started_1.png

├── func_getting_started_10.png

├── func_getting_started_11.png

├── func_getting_started_12.png

├── func_getting_started_2.png

├── func_getting_started_3.png

├── func_getting_started_4.png

├── func_getting_started_5.png

├── func_getting_started_6.png

├── func_getting_started_7.png

├── func_getting_started_8.png

├── func_getting_started_9.png

├── func_intro_1.png

├── func_intro_2.png

├── func_intro_3.png

├── func_intro_4.png

├── func_intro_5.png

├── func_intro_6.png

├── func_intro_7.png

├── func_intro_8.png

├── func_nodata_1.png

├── func_nodata_2.png

├── func_nodata_3.png

├── linkedsee_1.png

├── linkedsee_2.png

├── linkedsee_3.png

├── linkedsee_4.png

├── linkedsee_5.png

├── practice_graph-scaling_io01.png

├── practice_graph-scaling_io02.png

├── practice_graph-scaling_io03.png

├── practice_graph-scaling_quantity.png

├── practice_graph-scaling_rrd.png

└── practice_graph-scaling_stats.png

├── intro

└── README.md

├── philosophy

├── README.md

├── data-collect.md

├── data-model.md

├── plugin.md

└── tags-and-hostgroup.md

├── practice

├── README.md

├── deploy.md

├── graph-scaling.md

└── monitor.md

├── quick_install

├── README.md

├── backend.md

├── frontend.md

├── prepare.md

└── upgrade.md

├── styles

└── website.css

└── usage

├── MongoDB.md

├── README.md

├── aggregator.md

├── data-push.md

├── docker.md

├── du-proc.md

├── esxi.md

├── fault-recovery.md

├── flume.md

├── func.md

├── getting-started.md

├── haproxy.md

├── hwcheck.md

├── jmx.md

├── lvs.md

├── memcache.md

├── mesos.md

├── mymon.md

├── ngx_metric.md

├── nodata.md

├── proc-port-monitor.md

├── prometheus-exporter.md

├── query.md

├── rabbitmq.md

├── redis.md

├── solr.md

├── switch.md

├── urlooker.md

├── vsphere-esxi.md

├── vsphere.md

└── win.md

/.gitignore:

--------------------------------------------------------------------------------

1 | # Compiled Object files, Static and Dynamic libs (Shared Objects)

2 | *.o

3 | *.a

4 | *.so

5 |

6 | # Folders

7 | _obj

8 | _test

9 |

10 | # Architecture specific extensions/prefixes

11 | *.[568vq]

12 | [568vq].out

13 |

14 | *.cgo1.go

15 | *.cgo2.c

16 | _cgo_defun.c

17 | _cgo_gotypes.go

18 | _cgo_export.*

19 |

20 | _testmain.go

21 |

22 | *.exe

23 | *.test

24 | *.prof

25 |

26 | *.swp

27 | *.swo

28 | *.log

29 | .idea

30 | .DS_Store

31 | _book

32 | node_modules

33 | package-lock.json

34 |

--------------------------------------------------------------------------------

/LANGS.md:

--------------------------------------------------------------------------------

1 | * [简体中文 v0.2 - 最新版本](zh_0_2)

2 | * [English v0.2 - latest version](en_0_2)

3 | * [ ](en)

4 | * [ ](zh)

5 |

--------------------------------------------------------------------------------

/en/21_environment_preparation.md:

--------------------------------------------------------------------------------

1 |

2 | ##Environment Preparation

3 |

4 | ###Install Redis

5 |

6 | ```

7 | yum install -y redis

8 | ```

9 | ###Install MySQL

10 | ```

11 | yum install -y mysql-server

12 |

13 | ```

14 |

15 | ###create work directory

16 |

17 | ```

18 | export HOME=/home/work

19 | export WORKSPACE=$HOME/open-falcon

20 | mkdir -p $WORKSPACE

21 | cd $WORKSPACE

22 | ```

23 |

24 | ###Initialize the MySQL table structure

25 |

26 | ```

27 | # All components of open-falcon can start without the root account. It is recommended that common accounts be used for installation to increase security. Here we use a common account work to install and deploy all components.

28 | # However, the root account is required when yum is used to install some dependent lib databases.

29 | export HOME=/home/work

30 | export WORKSPACE=$HOME/open-falcon

31 | mkdir -p $WORKSPACE

32 | cd $WORKSPACE

33 |

34 | git clone https://github.com/open-falcon/scripts.git

35 | cd ./scripts/

36 | mysql -h localhost -u root -p < db_schema/graph-db-schema.sql

37 | mysql -h localhost -u root -p < db_schema/dashboard-db-schema.sql

38 |

39 | mysql -h localhost -u root -p < db_schema/portal-db-schema.sql

40 | mysql -h localhost -u root -p < db_schema/links-db-schema.sql

41 | mysql -h localhost -u root -p < db_schema/uic-db-schema.sql

42 |

43 | ```

44 |

45 | ##Download compiled component

46 |

47 | We have compiled relevant component into binary version to make it easier to use. The binaries can only run on 64 bit Linux

48 |

49 | Domestic users please click here to quickly download the compiled binary version.

50 | ```

51 | DOWNLOAD="https://github.com/open-falcon/of-release/releases/download/v0.1.0/open-falcon-v0.1.0.tar.gz"

52 | cd $WORKSPACE

53 |

54 | mkdir ./tmp

55 | wget $DOWNLOAD -O open-falcon-latest.tar.gz

56 | #uncompress

57 | tar -zxf open-falcon-latest.tar.gz -C ./tmp/

58 |

59 | for x in `find ./tmp/ -name "*.tar.gz"`; do

60 | app=`echo $x|cut -d '-' -f2`;

61 | mkdir -p $app;

62 | tar -zxf $x -C $app;

63 | done

64 |

65 | rm -rf ./tmp && rm -f open-falcon-latest.tar.gz

66 | ```

67 |

68 | ##Changelog

69 |

70 | http://book.open-falcon.com/zh/changelog/README.html

71 |

72 |

73 |

74 |

75 |

76 |

--------------------------------------------------------------------------------

/en/24.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | The following two steps should be processed to open alarm merging function:

4 |

5 | ##Adjust the configuration of alarm

6 |

7 | ```

8 | cd $WORKSPACE/alarm/

9 |

10 | 1. Adjust the content of highQueues configuration in cfg.json into

11 | [

12 | "event:p0",

13 | "event:p1"

14 | ]

15 | 2. Adjust the content of lowQueues configuration in cfg.json into

16 | [

17 | "event:p2",

18 | "event:p3",

19 | "event:p4",

20 | "event:p5",

21 | "event:p6"

22 | ]

23 |

24 | Instruction:

25 | - In Open-Falcon, alarm is categorized into P0, P1... P6, the priority of alarm decreases successfully.

26 | - For alarm of high priority, Open-Falcon will ensure it is sent preferentially.

27 | - Alarm merging function only applies to alarms of low priority, because alarms of high priority are normally very important, which highly need real-time response, and we do not suggest for alarm combination.

28 | - Therefore, it will not be combined in highQueues configuratio

29 |

30 | ```

31 |

32 | ##Links components installation

33 |

34 | The function of links components: when multiple alarms merger into one alarm, the http link addresses with details of alarms will be attached in the message for users to check the details.

35 |

36 | ###install dependency

37 |

38 | ```

39 | # yum install -y python-virtualenv

40 | $ cd $WORKSPACE/links/

41 | $ virtualenv ./env

42 | $ ./env/bin/pip install -r pip_requirements.txt

43 | ```

44 | ###init database and config

45 |

46 | ```

47 | - database schema: https://github.com/open-falcon/scripts/blob/master/db_schema/links-db-schema.sql

48 | - database config: ./frame/config.py

49 | - initialize the data for Links.Of course,you can reference the part of Links in connecting with environment preparing

50 |

51 | ```

52 | ###start

53 |

54 | ```

55 | $ cd $WORKSPACE/links/

56 | $ ./control start

57 | --> goto http://127.0.0.1:5090

58 |

59 | $ ./control tail

60 | --> tail log

61 | ```

62 |

63 |

64 |

--------------------------------------------------------------------------------

/en/316_gateway.md:

--------------------------------------------------------------------------------

1 | ##Gateway

2 |

3 | **If you haven't come across the machine room partitioning problem, please ignore the module directly.**

4 |

5 | If you have already come across the machine room partitioning problem and are eager to resolve the monitoring data backhaul problem occurred when machine room partitioning, please use this component. More information is here.

6 |

7 |

--------------------------------------------------------------------------------

/en/319_agent-updater.md:

--------------------------------------------------------------------------------

1 | ##Agent-updater

2 |

3 | It is required to deploy "falcon-agent" for each machine. If there are just a small number of machines in the company, it is OK to install manually with tools such as pssh, ansible and fabric. But if there is a large number of machines in the company, installing, upgrading and rollbacking "falcon-agent" manually can be a nightmare.

4 |

5 | A tool named "agent-updater" is developed, which can be used to manage "falcon-agent". "agent-updater" also has an agent: "ops-updater", which can be regarded as a super agent and used to manage the agents of other agents. It is recommended to install ops-updater together when setting the machine up. Usually, ops-upgrader doesn't need upgrades.

6 |

7 | Please refer to https://github.com/open-falcon/ops-updater for details.

8 |

9 | If you want to learn how to use the Go language to write a complete project, you can study "agent-updater". I have even recorded a Video course to demonstrate how to develop it step by step. Tutorial link:

10 |

11 | * http://www.jikexueyuan.com/course/1336.html

12 | * http://www.jikexueyuan.com/course/1357.html

13 | * http://www.jikexueyuan.com/course/1462.html

14 | * http://www.jikexueyuan.com/course/1490.html

15 |

--------------------------------------------------------------------------------

/en/37_email_and_message_sending_interface.md:

--------------------------------------------------------------------------------

1 | ##Email and Message Sending Interface

2 |

3 | This component has no code and each company needs to provide code by itself.

4 |

5 | The monitoring system needs to send alarm emails or messages after alarm events occur. Each company may have its own email server and sending method and its own message channel and sending method. To adapt to different companies, falcon sets up a specification for the access scheme where each company needs to provide message and email sending http interfaces.

6 |

7 | Short message sending http interface:

8 |

9 | ```

10 | method: post

11 | params:

12 | - content: Short message content

13 | - tos: Multiple mobile phone numbers separated by commas

14 |

15 | ```

16 | Email sending http interface:

17 |

18 | ```

19 | method: post

20 | params:

21 | - content:Email content

22 | - subject: Email subject

23 | - tos: Multiple email addresses separated by commas

24 |

25 | ```

26 |

27 |

--------------------------------------------------------------------------------

/en/3yuanmaanzhuang.md:

--------------------------------------------------------------------------------

1 | #

2 |

3 |

--------------------------------------------------------------------------------

/en/411_memcache_monitoring.md:

--------------------------------------------------------------------------------

1 | ##Memcache monitoring

2 |

3 | We have introduced the usual monitoring data source in section of Data Collection. As a monitoring frame, open-falcon can collect monitoring index data in any system and it just need to organize the monitoring data to the normative format of open-falcon.

4 |

5 | The data of Memcache can be collected by collecting script memcached-monitor.

6 |

7 | ##Operating principle

8 |

9 | Memcached-monitor is a cron, and the collecting script is run per minute.

10 | ```Memcached-monitor```.py can automatically detect the port of Memcached, and connect to Memcached instance to collect some monitoring index, for example get_hit_ratio, usage and so on, then assembling to the normative format of open-falcon to post to the host falcon-agent. Falcon-agent provides a http interface, and as for the using method, please refer to the instances in Data Collection.

11 |

12 | For example, we have 1000 machines deployed Memcached instance, and we can deploy 1000 crons for the 1000 machines, i.e. it is one-to-one corresponded to the Memcached instance.

13 |

14 | Notice, script ```memcached-monitor.py``` automatically finds the Memcached port by ````ps -ef |grep memcached|grep -v grep |sed -n 's/.* *-p *\([0-9]\{1,5\}\).*/\1/p```. If port is not designated by –p when Memcached initiates, the self-finding would be failed, and at this time, the script needs to be modified and designate the very port.

15 |

--------------------------------------------------------------------------------

/en/412_rabbitmq_monitoring.md:

--------------------------------------------------------------------------------

1 | ##RabbitMQ monitoring

2 |

3 | We have introduced the usual monitoring data source in section Data Collection. As a monitoring frame, open-falcon can collect monitoring index data in any system and it just need to organize the monitoring data to the normative format of open-falcon

4 |

5 | The data of RMQ can be collected by script rabbitmq-monitor.

6 |

7 | ##Operating principle

8 |

9 | rabbitmq-monitor is a cron, and the script ```rabbitmq-monitor.py``` is run every minute, wherein RMQ username and password and so on are deployed. The script connects to the RMQ instance and collect some monitoring index such as messages_ready, messages_total, deliver_rate, publish_rate and so on, and then assemble to the normative format of open-falcon to post to the host falcon-agent.

10 |

11 | Falcon-agent provides a http interface, and as for the using method, please refer to the instances in Data Collection.

12 |

13 | For example, we deployed 5 RMQ instance, and a cron can be run in every RMQ machine, i.e. it is one-to-one corresponded to the Memcached instance.

14 |

--------------------------------------------------------------------------------

/en/414_monitoring_windows_platform.md:

--------------------------------------------------------------------------------

1 | ##monitoring windows platform

2 |

3 | We have introduced the usual monitoring data source in section of Data Collection. As a monitoring frame, open-falcon can collect monitoring index data in any system and it just need to organize the monitoring data to the normative format of open-falcon.

4 |

5 | The running index of switch collection: we can write a python to collect each item of running index of switch by SNMP protocol, including memory usage, CPU usage, disk usage, network traffic, etc.

6 |

7 | We can collect the monitoring index of windows host directly by windows_collect script.

8 |

9 | ###Usage

10 | * Modify the configuration parameter at the head of the script according to the real deployment condition.

11 | * Change the mysql into utf8 in graph to support Chinese. This step is important because windows mac may be Chinese.

12 | * Test: python windows_collect.py

13 | * Run windows plan mission and complete

14 |

15 | ###The environment tested:

16 | * windows 10

17 | * windows 7

18 | * windows server 2012

19 | ________________________________________

20 | Otherwise you can use the golang version windows agent: https://github.com/LeonZYang/agent

21 |

22 |

--------------------------------------------------------------------------------

/en/415_haproxy_monitoring.md:

--------------------------------------------------------------------------------

1 | ##HAProxy monitoring

2 |

3 | We have introduced the usual monitoring data source in section of Data Collection. As a monitoring frame, open-falcon can collect monitoring index data in any system and it just need to organize the monitoring data to the normative format of open-falcon.

4 |

5 | The data collection of HAProxy can be done by haproxymon.

6 |

7 | ##Operating principle

8 |

9 | Haproxymon is a cron and the ```haproxymon.py``` is run every minute. Haproxymon collects the haproxy basic state information by the stats socket interface of haproxy, such as qcur、scur、rate etc., and then assemble to the normative format of open-falcon to post to the host falcon-agent.

10 |

11 | Falcon-agent provides a http interface, and as for the using method, please refer to the instances in Data Collection.

12 |

--------------------------------------------------------------------------------

/en/416_dockercontainer_monitoring_practice.md:

--------------------------------------------------------------------------------

1 | ##Dockercontainer monitoring practice

2 |

3 | We have introduced the usual monitoring data source in section of Data Collection. As a monitoring frame, open-falcon can collect monitoring index data in any system and it just need to organize the monitoring data to the normative format of open-falcon.

4 |

5 | The data collection of docker container can be done by micadvisor_open.

6 |

7 | ##Operating principle

8 |

9 | Micadvisor-open is the docker container resources monitoring plug-in based on open-falcon, which monitors the CPU, memory, diskio and net io etc. and collects the data and reports to open-falcon.

10 |

11 | ##The index collected

12 |

13 | | Counters | Notes |

14 | | -- | -- |

15 | | cpu.busy | Cpu usage percent |

16 | | cpu.user | Cpu usage percent in user mode |

17 | | cpu.system | Cpu usage percent in kernel mode |

18 | | cpu.core.busy | Every cpu usage percent |

19 | | mem.memused.percent | Memory usage percent |

20 | | mem.memused | Memory usage original value |

21 | | mem.memtotal | Total memory |

22 | | mem.memused.hot | Memory heat usage percent |

23 | | disk.io.read_bytes | Disk io read bytes |

24 | | disk.io.write_bytes | Disk io write bytes |

25 | | net.if.in.bytes | Net io in bytes |

26 | | net.if.in.packets | Net io in packets |

27 | | net.if.in.errors | Net io in errors |

28 | | net.if.in.dropped | Net io in dropped |

29 | | net.if.out.bytes | Net io out bytes |

30 | | net.if.out.packets | Net io out packets |

31 | | net.if.out.errors | Net io out errors |

32 | | net.if.out.dropped | Net io out dropped |

33 |

34 | ##Contributors

35 | mengzhuo: QQ:296142139; MAIL:mengzhuo@xiaomi.com

36 |

37 | ##Supplement

38 | * another lib bank of docker metric collection:https://github.com/projecteru/eru-metric

39 |

40 |

--------------------------------------------------------------------------------

/en/417_nginx_monitoring.md:

--------------------------------------------------------------------------------

1 | ##Nginx monitoring

2 |

3 | We have introduced the usual monitoring data source in section of Data Collection. As a monitoring frame, open-falcon can collect monitoring index data in any system and it just need to organize the monitoring data to the normative format of open-falcon.

4 |

5 | The data collection of Nginx can be done by ngx_metric.

6 |

7 | ##Operating principle

8 |

9 | ngx_metric makes the real-time analysis of nginx request using the ```log by lua ```function of lua - nginx - the module , and stores the intermediate results with the help of ```NGX. shared. DICT```, and finally takes out the intermediate results to calculate, format and output through external python scripts. The falcon can be directly pushed to the falcon agent according to the output results falcon format.

10 |

11 | ##Help

12 |

13 | For more detail please refer to: ngx metric

--------------------------------------------------------------------------------

/en/418_jmx_monitoring.md:

--------------------------------------------------------------------------------

1 | # 4.18 JMX monitoring

2 |

3 |

--------------------------------------------------------------------------------

/en/44_alarm_function_description.md:

--------------------------------------------------------------------------------

1 | ##Alarm function description

2 |

3 | While configuring alarm strategies, open-falcon supports multiple alarm trigger function, for example, all(#3) diff(#10), etc., the numbers after # indicates that it is a newest historic point. For instance, #3 means the newest 3 points.

4 |

5 | ```

6 | all(#3): send alarm when all three newest points reach the threshold value

7 | max(#3): send alarm when the maximum value of all three newest points reaches the threshold value

8 | min(#3): send alarm when the minimum value of all three newest points reaches the threshold value

9 | sum(#3): send alarm when the sum of all three newest points reaches the threshold value

10 | avg(#3): send alarm when the average value of all three newest points reaches the threshold value

11 | diff(#3): the newest point pushed (minuend) minus 3 newest points (3 subtrahends) equals 3 numbers, if one of them reaches the threshold value, then alarm will be sent

12 | pdiff(#3): the newest point pushed minus 3 newest points equals 3 numbers, and divide the 3 numbers respectively by the 3 newest points (3 subtrahends) equals 3 values, if one of them reaches the threshold value, then alarm will be sent

13 | ```

14 |

15 | The most commonly used function is ```all```, for instance cpu.idle ```all(#3) < 5```, means alarm will be sent when the value of cpu.idle is less than 5% for 3 consecutive times.

16 |

17 | It is not so easy to understand diff and pdiff. The design of diff and pdiff is to solve the problem of alarm for the sudden increase and decrease of flow. If you still cannot understand it, then you can only read the original codes: https://github.com/open-falcon/judge/blob/master/store/func.go

18 |

19 |

--------------------------------------------------------------------------------

/en/46_historical_data_inquiry.md:

--------------------------------------------------------------------------------

1 | ##Historical data inquiry

2 |

3 | Any data pushed in open-falcon can be inquired through API provided by query component afterwards.

4 |

5 | ##Inquire historical data

6 |

7 | Use the port HTTP POST /graph/history to inquire the historical data of certain period in the past. The port cannot be used to inquire the two newly submitted data points. One python example is as follows:

8 |

9 | ```

10 | #-*- coding:utf8 -*-

11 |

12 | import requests

13 | import time

14 | import json

15 |

16 | end = int(time.time()) # starting time

17 | start = end - 3600 # ending time (In example,the data is queried an hour ago)

18 |

19 | d = {

20 | "start": start,

21 | "end": end,

22 | "cf": "AVERAGE",

23 | "endpoint_counters": [

24 | {

25 | "endpoint": "host1",

26 | "counter": "cpu.idle",

27 | },

28 | {

29 | "endpoint": "host1",

30 | "counter": "load.1min",

31 | },

32 | ],

33 | }

34 |

35 | query_api = "http://127.0.0.1:9966/graph/history"

36 | r = requests.post(query_api, data=json.dumps(d))

37 | print r.text

38 | ```

39 | Among which,

40 |

41 | 1.start: the start time point of the historical data need to be inquired (as the form of UNIX time)

42 |

43 | 2.end: the end time point of the historical data need to be inquired (as the form of UNIX time)

44 |

45 | 3.cf: appointed sample mode, can choose from: AVERAGE, MAX, and MIN

46 |

47 | 4.endpoint_counters: arrays, among which each element is a key value composed of endpoint and counter, among which counter is comprised by metric/sorted(tags), if there is no tags then it is metric by itself.

48 |

49 | 5.query_api: the monitor address of query module + api

50 |

51 | ##Inquire newly submitted data

52 |

53 | Use the port HTTP POST /graph/last to inquire a newly submitted data point. A bash example is as follows

54 |

55 |

--------------------------------------------------------------------------------

/en/48_mysql_monitor_practice.md:

--------------------------------------------------------------------------------

1 | ##MySQL monitoring practice

2 |

3 | In the part of Data Collection, we introduced common monitor data source. Open-falcon as a monitor frame can collect monitor index data of any system. The only thing is to change monitor data into the standard format of open-falcon.

4 |

5 | The data collection of MySQL can be managed through mymon.

6 |

7 | ##Operating principle

8 |

9 | Mymon is a cron. It runs once every minute. In the configuration file, the database link address is configured. Mymon is connected to the database to collect some monitor indexes such as global status, global variables, slave status, etc., and then packed into a standard format data for open-falcon and posted to falcon-agent of this machine. falcon-agent provides a http port, and you can refer to the examples in Data Collection for its application method.

10 |

11 | For instance, we have 1000 machines which are configured with MySQL instance. We can deploy 1000 cron in the 1000 machines, i.e.: one-to-one correspondence with instance in database.

12 |

13 | ##Supplement

14 |

15 | Remote monitor mysql instance If you want to collect mysql instance indexes in hostB through mymon of hostA, you can do it like this: "set the endpoint in the configuration file of mymon in hostA as the machine name of hostB, meanwhile, set mysql instance in hostB as the configuration item of [mysql]". When checking mysql indexes and adding strategies to mysql indexes, we need to find corresponding indexes for the machine name of hostB.

16 |

17 |

18 |

--------------------------------------------------------------------------------

/en/49_redis_monitor.md:

--------------------------------------------------------------------------------

1 | # 4.9 Redis monitoring

2 |

3 | In the part of Data Collection, we introduced common monitor data source. Open-falcon as a monitor frame can collect monitor index data of any system. The only thing is to change monitor data into the standard format of open-falcon.

4 |

5 | The data collection of redis can be managed through collecting script redis-monitor or redismon.

6 |

7 | ##Operating principle

8 |

9 | redis-monitor is a cron. It runs a collecting script redis-monitor.py every minute, and the address of redis service is configured. redis-monitor is connected to redis instance to collect some monitor indexessuch as connected_clients, used_memory, etc., and then packed into a standard format data for open-falcon and posted to falcon-agent of this machine. falcon-agent provides a http port, and you can refer to the examples in Data Collection for its application method.

10 |

11 | For instance, we have 1000 machines which are configured with Redis instance. We can deploy 1000 cron in the 1000 machines, i.e.: one-to-one correspondence with Redis isntance.

--------------------------------------------------------------------------------

/en/4user_manual.md:

--------------------------------------------------------------------------------

1 | ##The User`s Manual for open-falcon

2 |

3 |

4 |

5 |

--------------------------------------------------------------------------------

/en/51_data_model.md:

--------------------------------------------------------------------------------

1 | # 5.1 Data Model

2 |

3 | Data model

4 | Open-Falcon uses the data format similar to OpenTSDB: metric, endpoint, plus multi-group key value tags. There are two examples:

5 | ```

6 | {

7 | metric: load.1min,

8 | endpoint: open-Falcon-host,

9 | tags: srv=Falcon,idc=aws-sgp,group=az1,

10 | value: 1.5,

11 | timestamp: `date +%s`,

12 | counterType: GAUGE,

13 | step: 60

14 | }

15 | {

16 | metric: net.port.listen,

17 | endpoint: open-Falcon-host,

18 | tags: port=3306,

19 | value: 1,

20 | timestamp: `date +%s`,

21 | counterType: GAUGE,

22 | step: 60

23 | }

24 | ```

25 | Among them, metric is the name of monitoring metrics, endpoint is the monitoring entity, tags is the attribute tag of monitoring data, counterType is the data type defined by Open-Falcon (the values are GAUGE, COUNTER), step is the reported period of monitoring data, value and timestamp is the valid monitoring data.

--------------------------------------------------------------------------------

/en/54_tag_and_hostgroup.md:

--------------------------------------------------------------------------------

1 | # 5.4 Tag and HostGroup

2 |

3 |

--------------------------------------------------------------------------------

/en/5data_model.md:

--------------------------------------------------------------------------------

1 | # 5.Data Model

2 |

3 |

--------------------------------------------------------------------------------

/en/61_deployment.md:

--------------------------------------------------------------------------------

1 | # 6.1 Deployment

2 |

3 |

--------------------------------------------------------------------------------

/en/6practical_experience.md:

--------------------------------------------------------------------------------

1 | # 6.Practical Experience

2 |

3 |

--------------------------------------------------------------------------------

/en/71_community_contribution.md:

--------------------------------------------------------------------------------

1 | # 7.1 Community contribution

2 |

3 | ##Business monitoring

4 | * Windows Agent

5 | * MySQL Monitor

6 | * Redis Monitor

7 | * RPC Monitor

8 | * Switch Monitor

9 | * Falcon-Agent downtime monitoring

10 | * memcached

11 | * Docker monitoring Lib

12 | * mesos monitoring

13 | * Winodws/Linux automatically report the property

14 | * Nginx monitoring: enterprise-level monitoring standard

15 | * JMX monitoring: monitoring plug-in based on open-falcon

16 | * [Baidu-RPC Monitor](https://github.com/solrex/brpc-open-falcon)

17 | * [Elasticsearch Monitor](https://github.com/solrex/es-open-falcon)

18 | * [Redis Monitor (Multiple Instance)](https://github.com/solrex/redis-open-falcon)

19 | * [SSDB Monitor](https://github.com/solrex/ssdb-open-falcon)

20 |

21 | ##Script

22 | * Summarize the plugin script of each cpu core

23 | * Summarize the plugin script the process wastes

24 | * The monitoring script and service aiming at falcon

25 | * Windows metrics collector

26 | * Monitoring script of Dell server hardware state

27 | ##SDK

28 | * Node.js perfcounter

29 | * Golang perfcounter

30 | ##Else

31 | * SMS sender

32 | * Mail sender

33 | * Open-Falcon Ansible Playbook

34 | * Open-Falcon Docker

35 | ##The text

36 | * OpenFalcon communication: a PPT suitable for generalizing OpenFalcon in the company

37 | * OpenFalcon @ SACC-2015

38 | * The whole brain process of OpenFalcon writing: the weigh and compromise during the development process

39 | * OpenFalcon oprating and recording the screen showing for 10 minutes more

40 | * 7 means when MIUI open-source monitoring system OpenFalcon faced with high concurrency

41 |

42 | ##The meeting

43 | **Kylin meeting technology salon-Open-Falcon v0.1.0 published:**

44 |

45 | * Lai Wei——Open-Falcon new version character analysis and planning

46 | * Xie Danbo——the falling and subliming of Open-Falcon in Meituan

47 | * Ou Yaowei——the revolution and evolution of enterprise-level monitoring platform

48 |

--------------------------------------------------------------------------------

/en/74_supporting_grnfana_view_show.md:

--------------------------------------------------------------------------------

1 | # 7.4 Supporting Grnfana view show

2 |

3 | ##Supporting Grnfana view show

4 |

5 | Compared to the Dashboard created by Open-Falcon, Grafana can self-define chart flexibly and control the permission, assign label as well as query aiming at Dashboard, and the show option is more diverse. The teaching help you do well in the show of Open-Falcon.

6 |

7 | ##Before start

8 |

9 | Open-Falcon and Grafana don’t support each other at present, so you need the following PR

10 |

11 | * Grafana PR#3787 ( v2.6 supported)

12 | * Query PR#5(combined to the latest query code, please inspect if you are using the latest version)

13 | *

14 | For more details please refer to Youku

15 |

16 | ##Set Datasource

17 |

18 | When you get the abovementioned PR Grafana SC, install it as the official teaching, and compile it as follows:

19 | 1.Compile the front code go run build.go build

20 |

21 | 2.Comile the back code grunt

22 |

23 | 3.Execute grafana-server

24 |

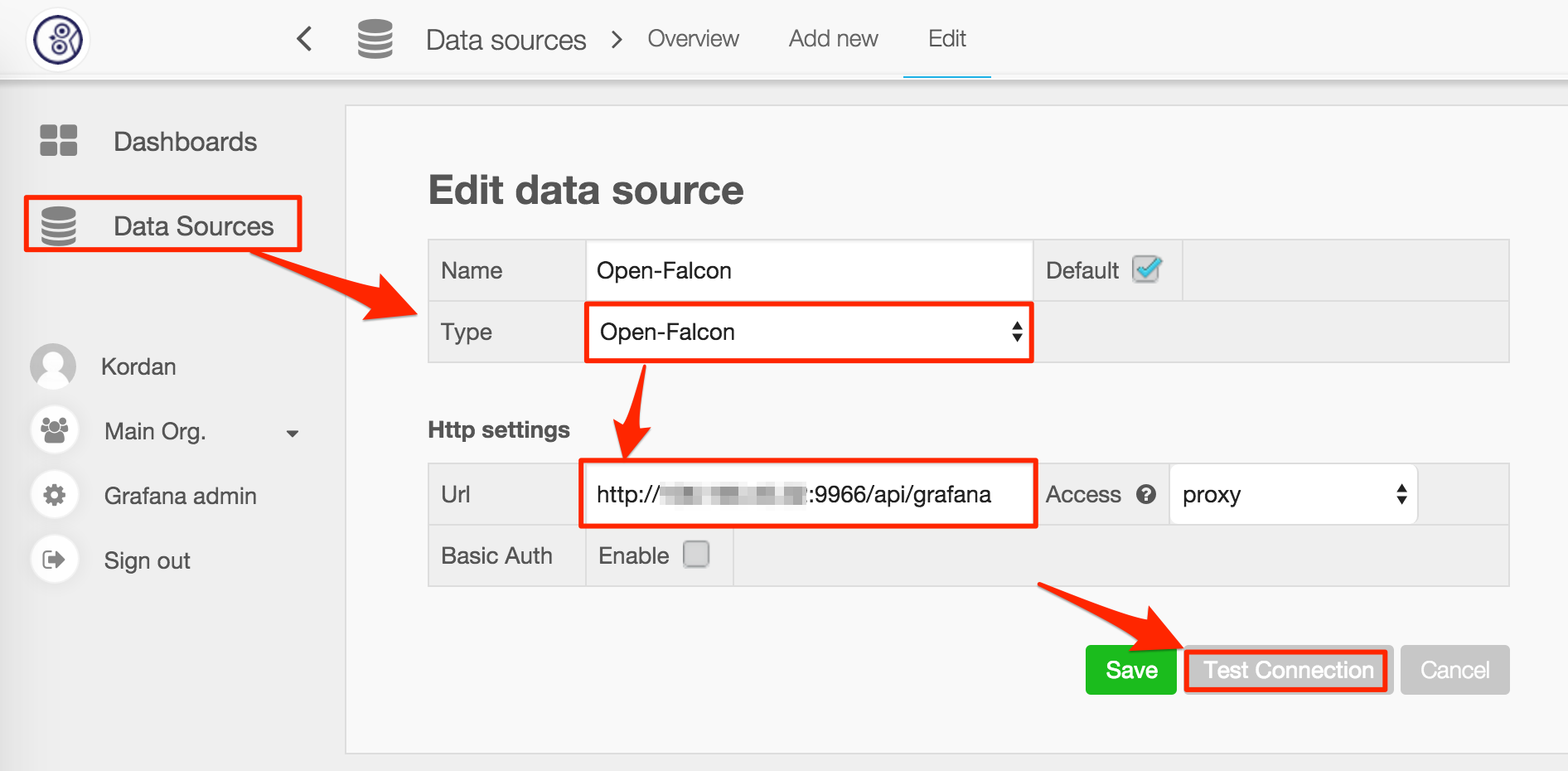

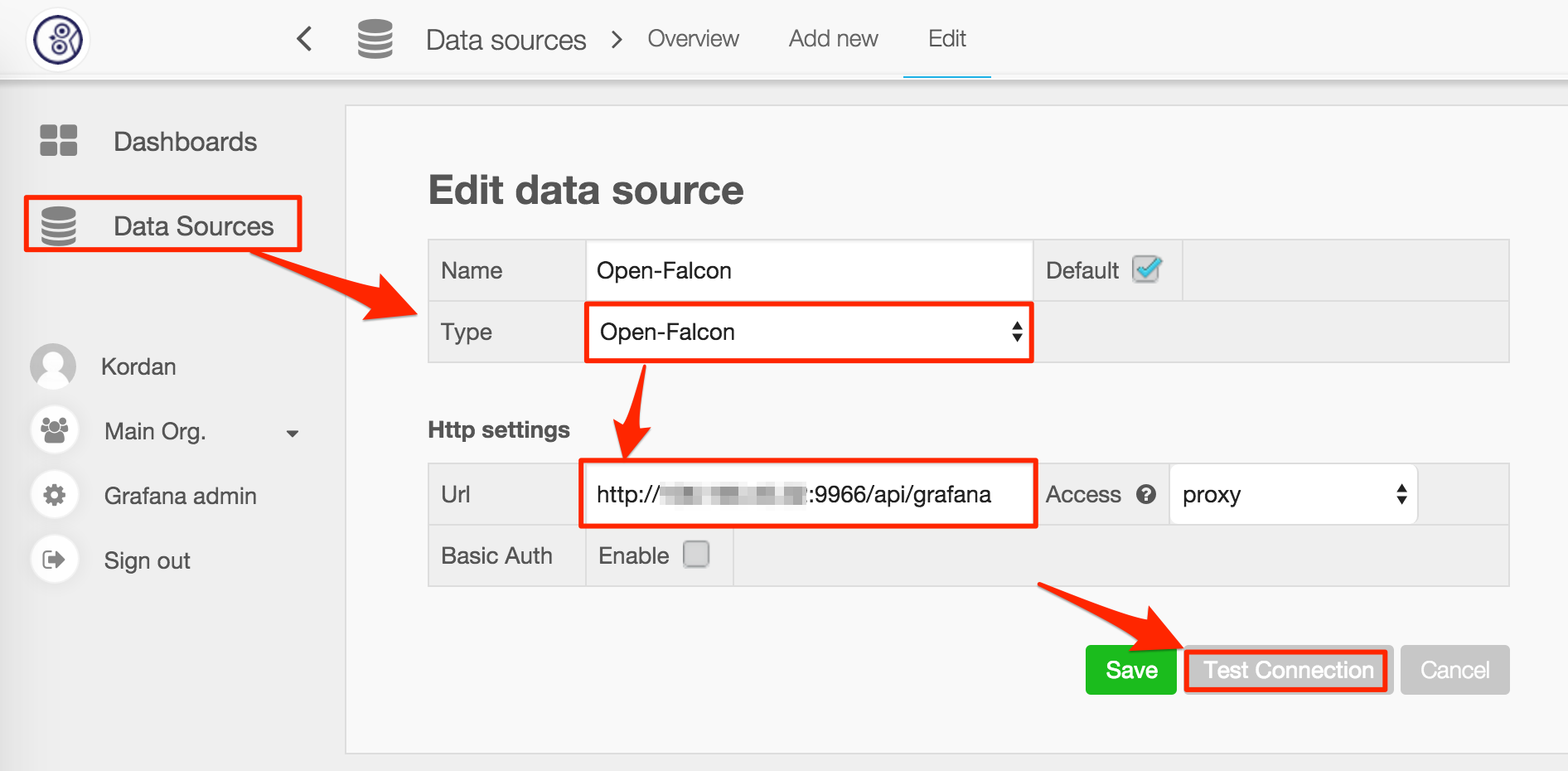

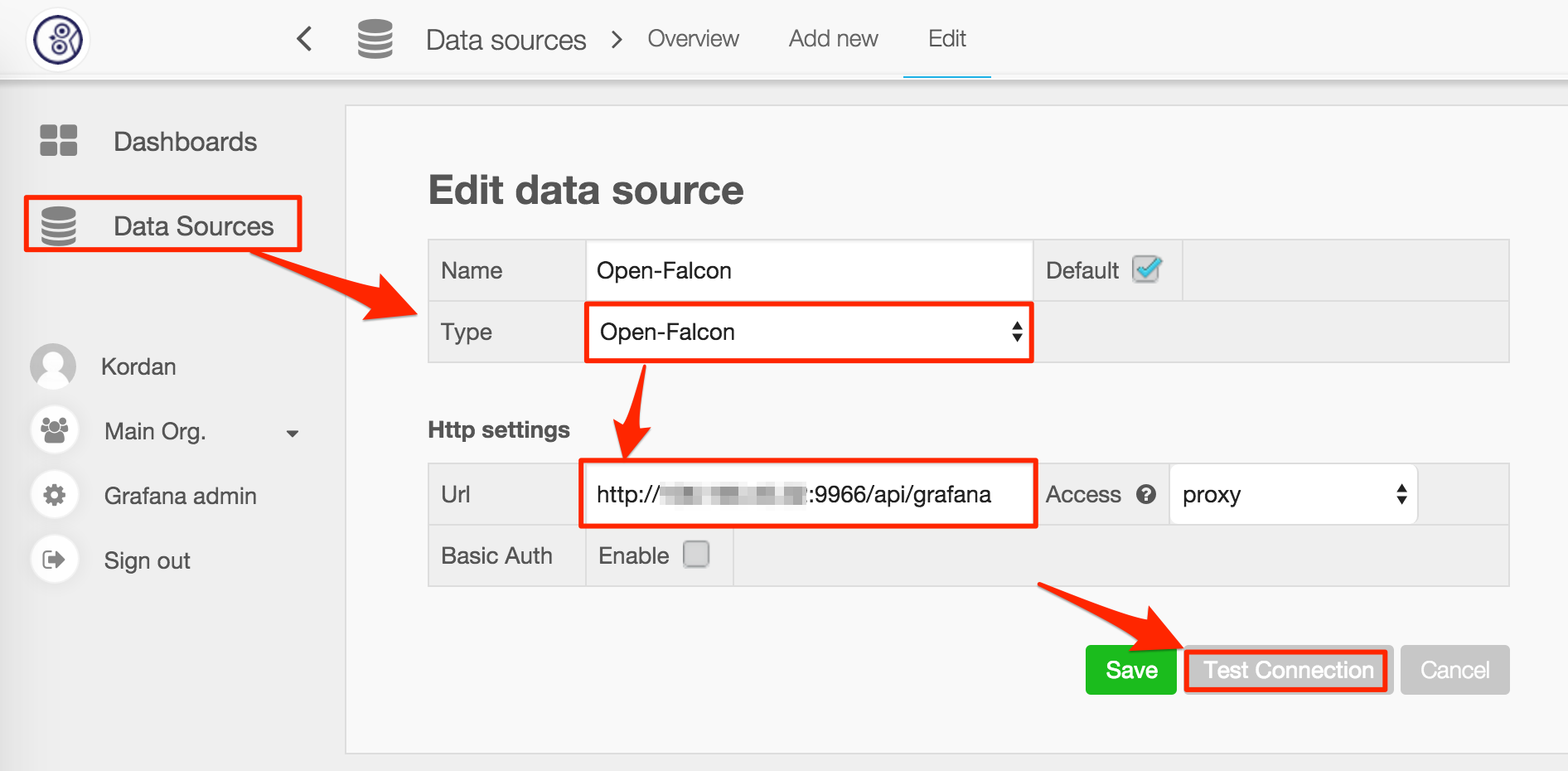

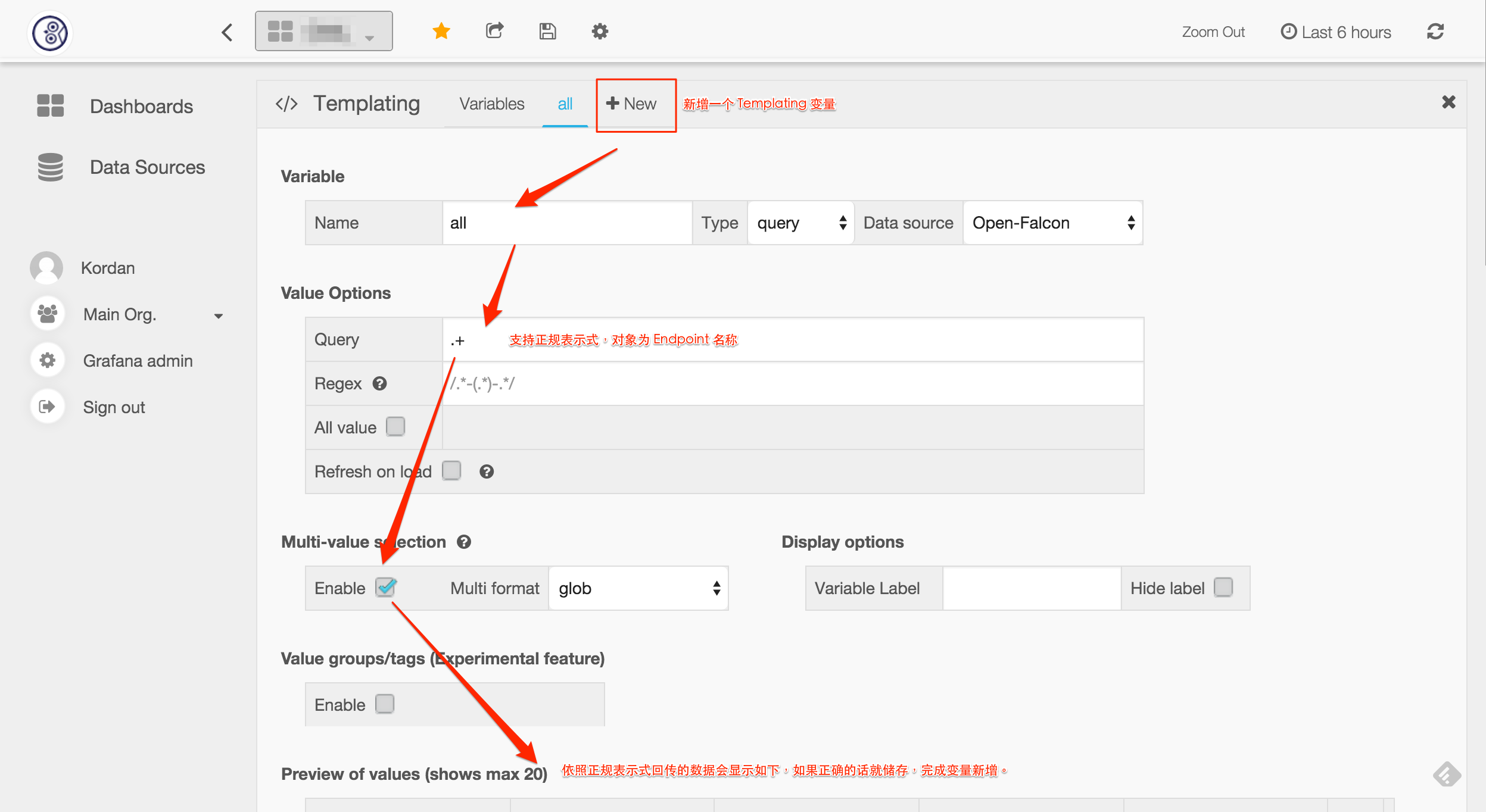

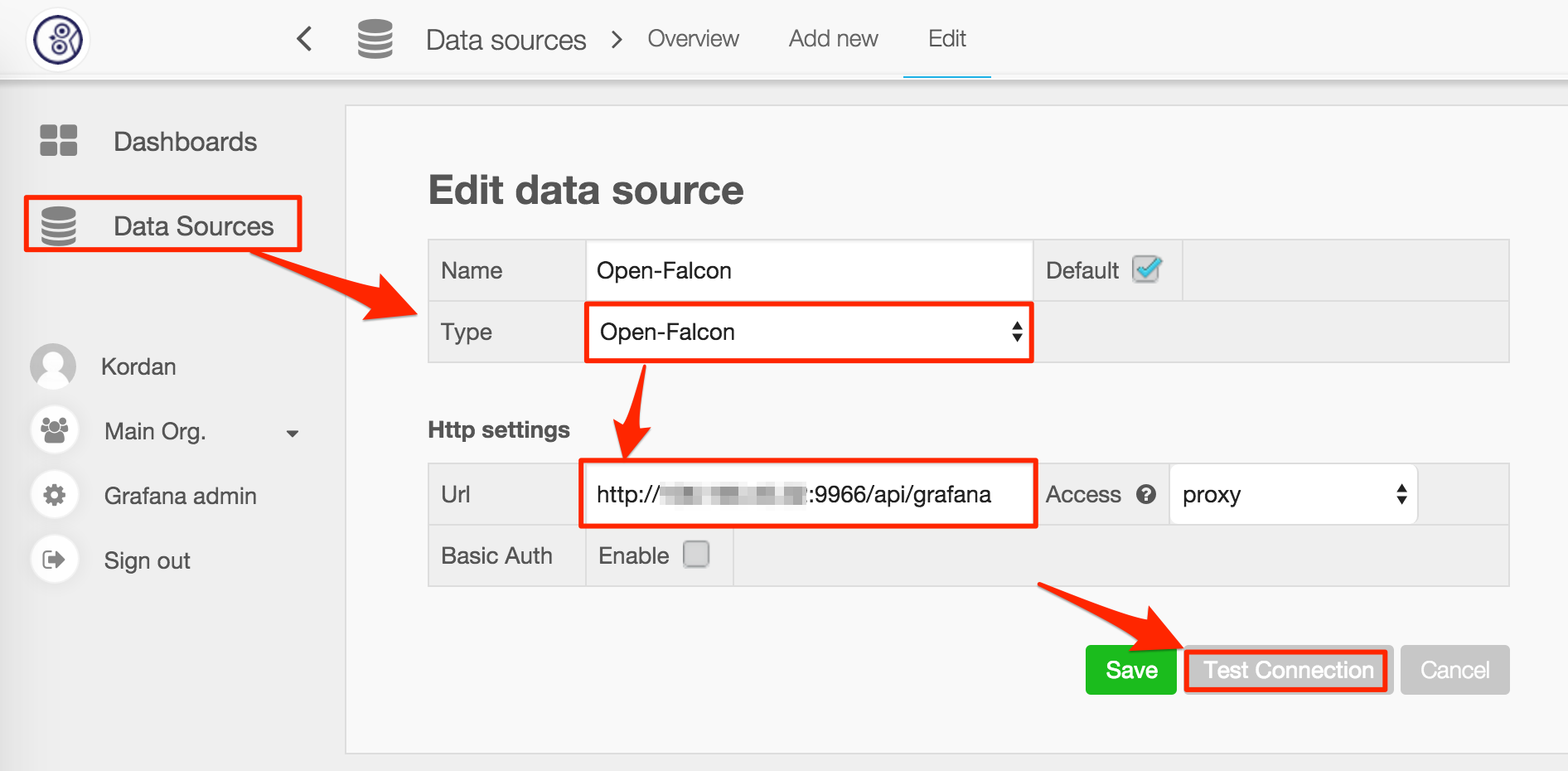

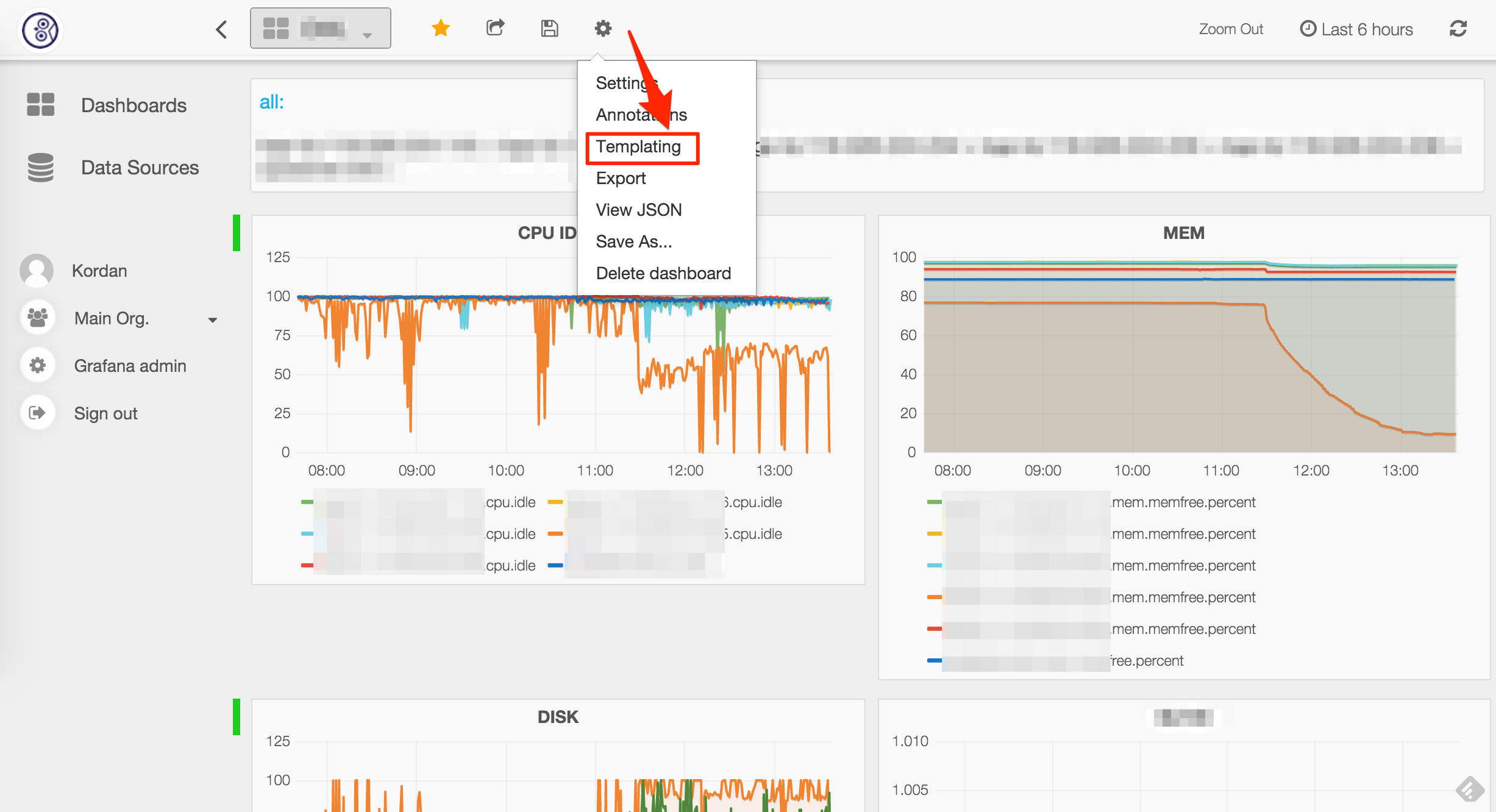

25 | After initiating Grafana, add new Open-Falcon Datasource as the following picture. Notice that the URL we use here is the newly added API in falcon-query.

26 |

27 | ##picture

28 |

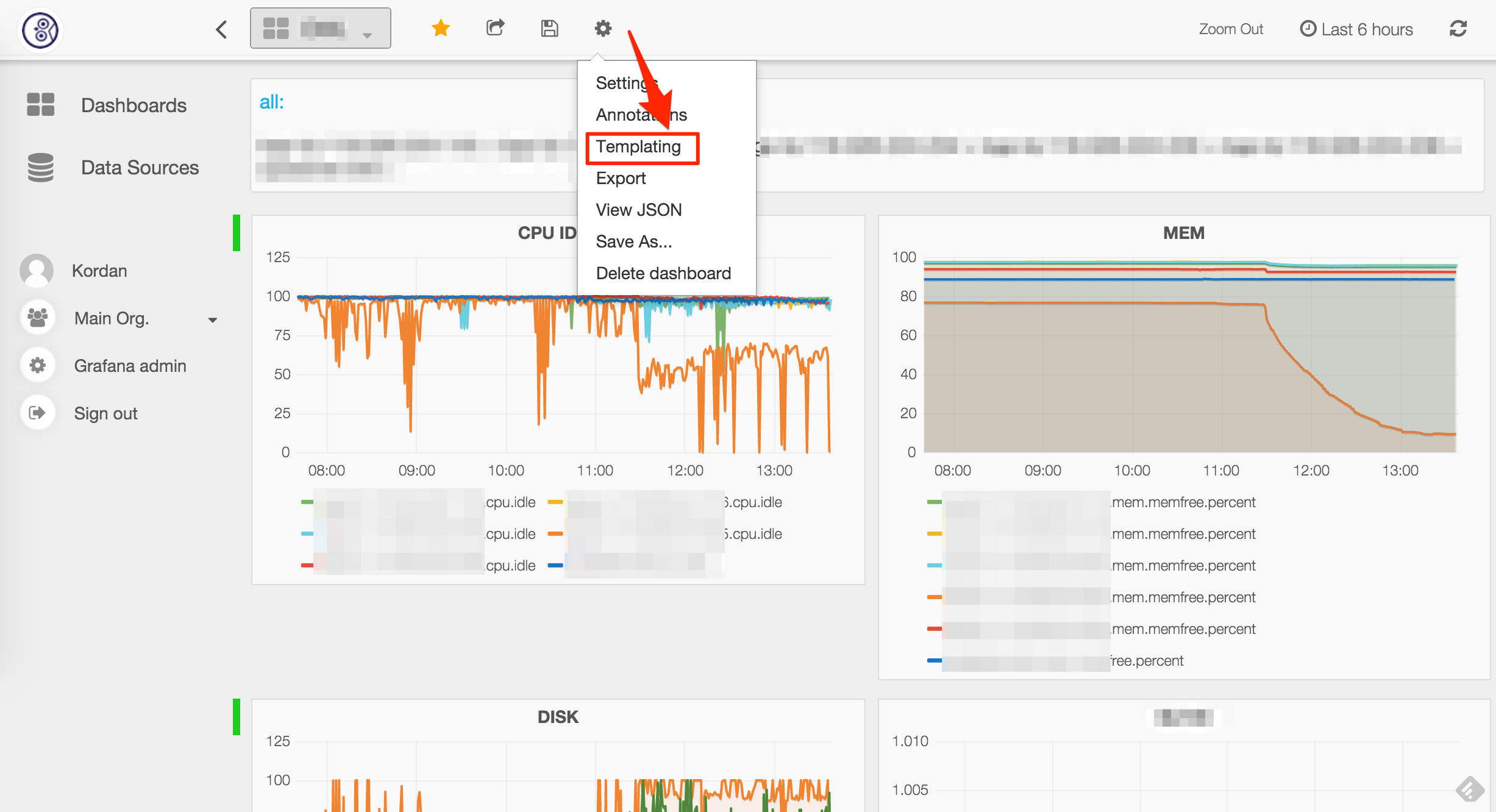

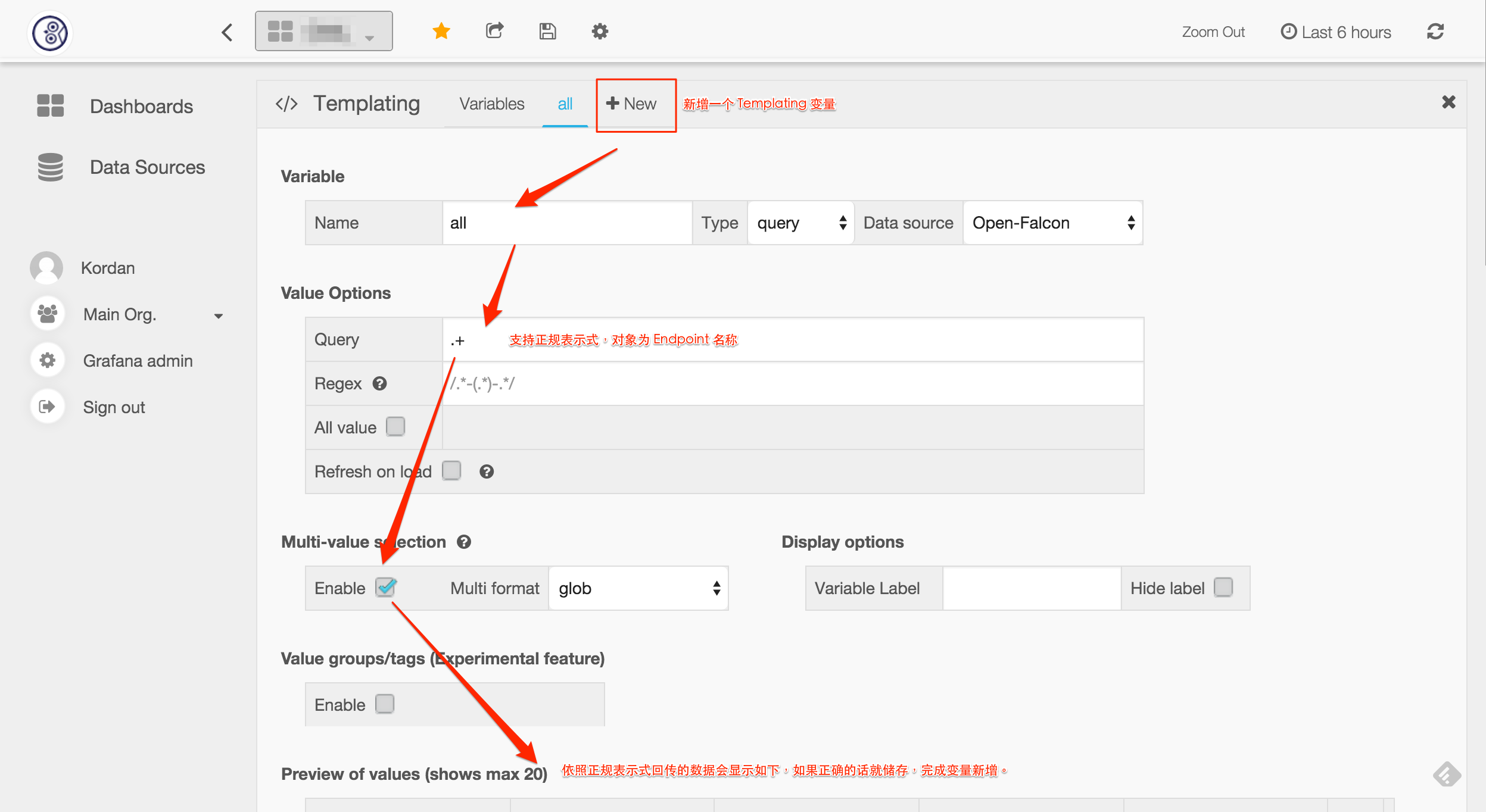

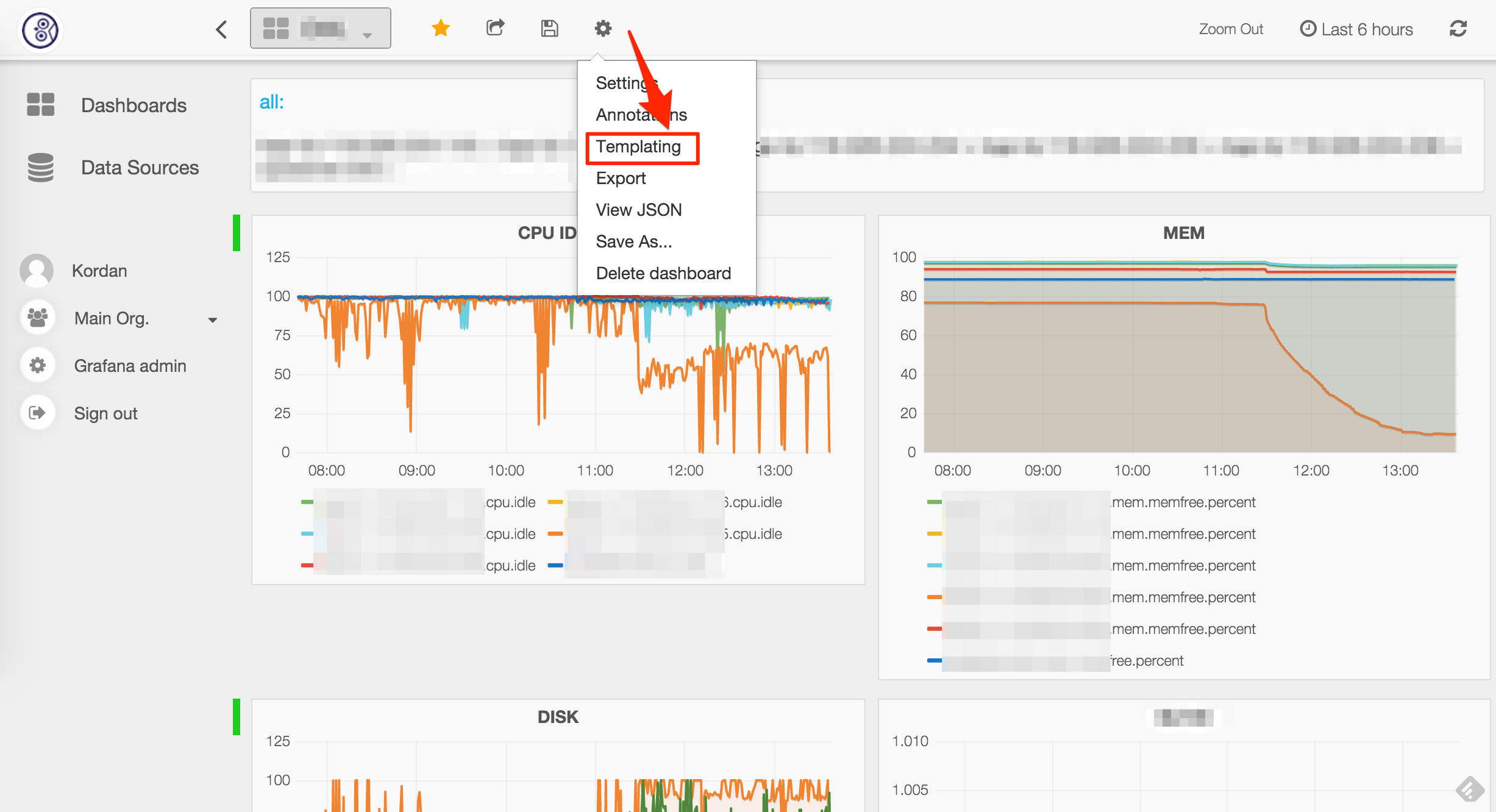

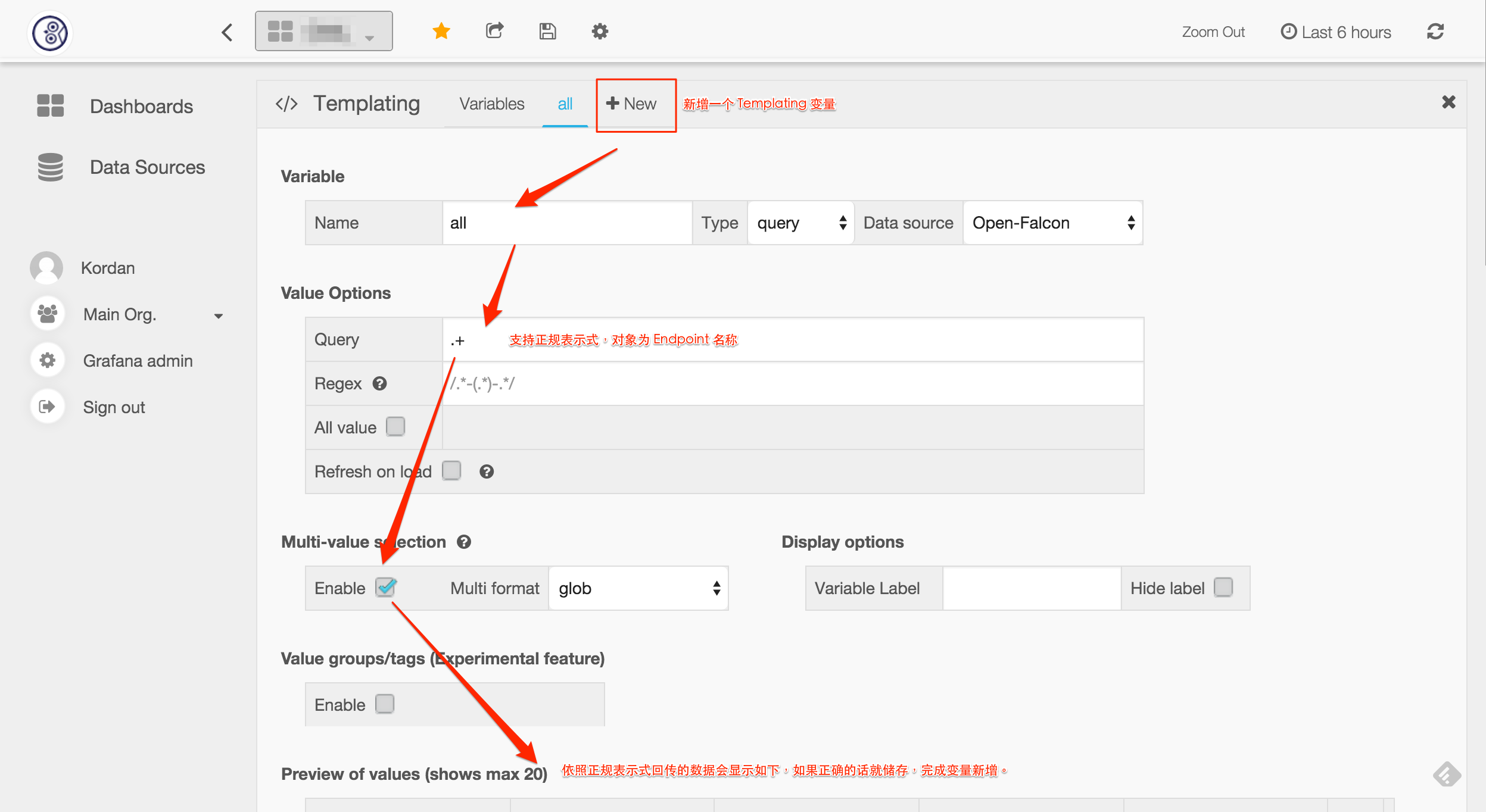

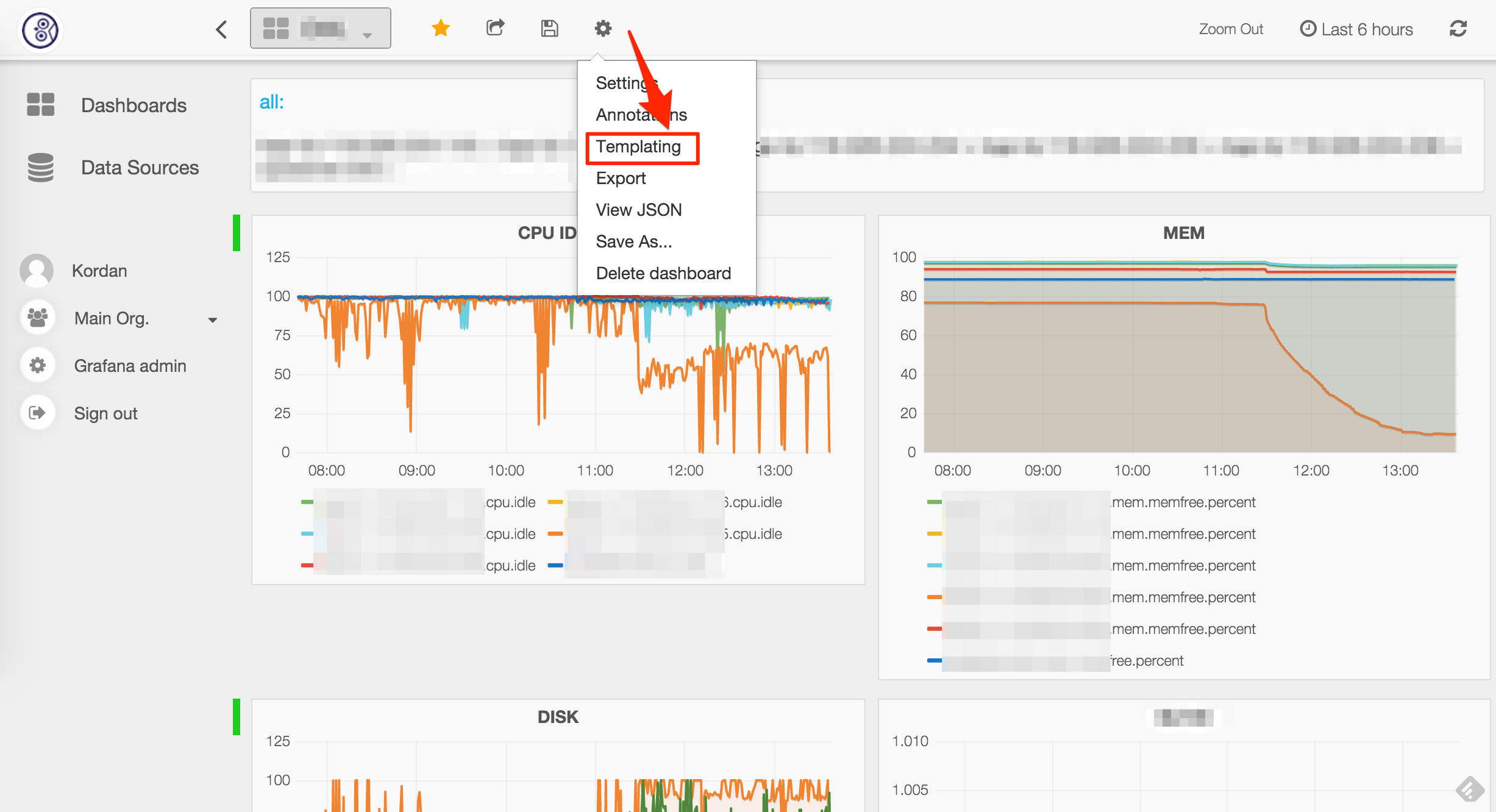

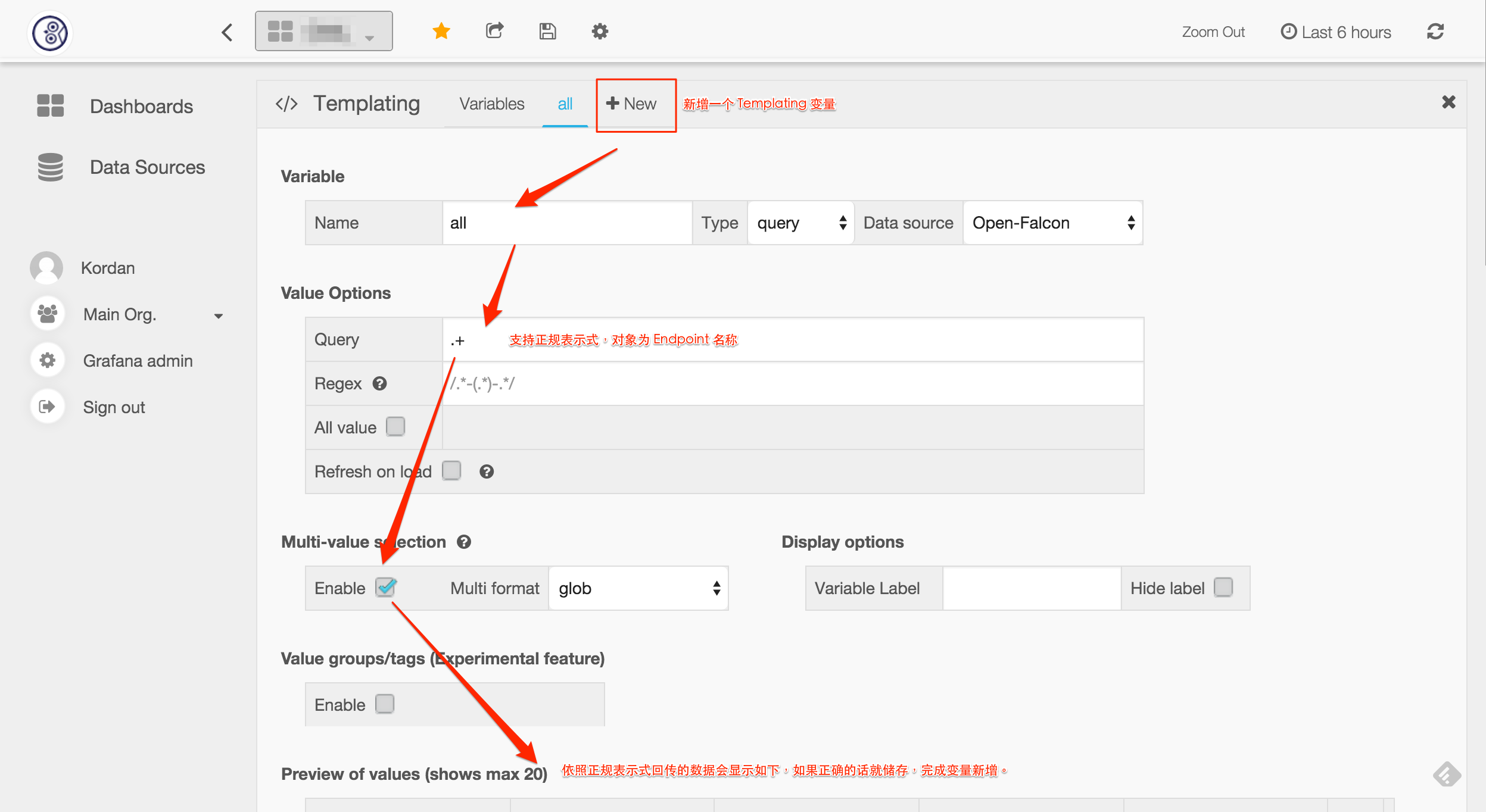

29 | ##Newly added Templating variable

30 |

31 | It’s unrealistic to add new monitoring items to the chart one by one when there are already more than one hundred machines in Open-Falcon, so Grafana provides a variable of Templating so that we can dynamically choose the machine we want to pay attention to.

32 |

33 | 1.Set to click Templating

34 |

35 | 2.Newly add Templating variable

36 |

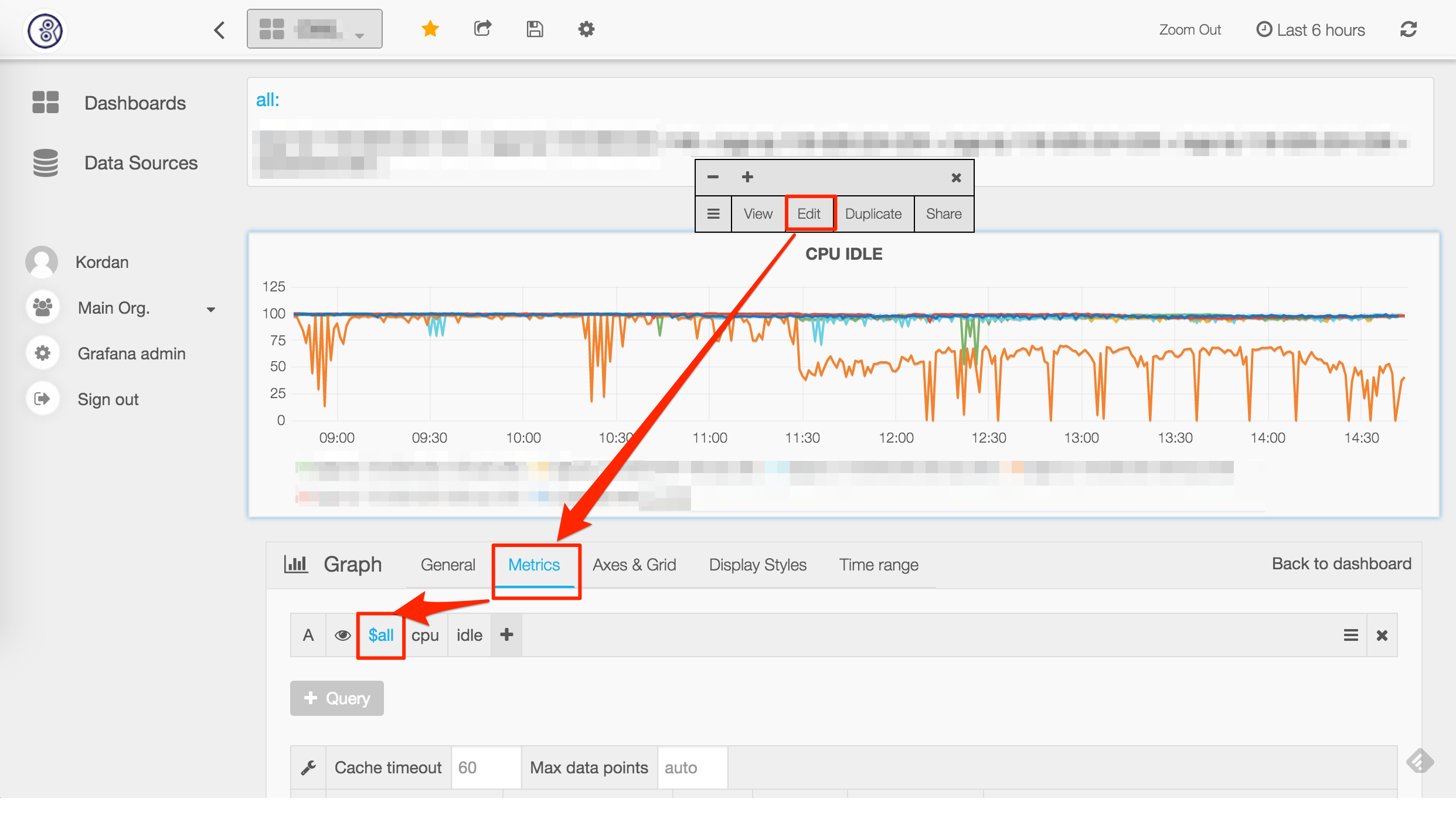

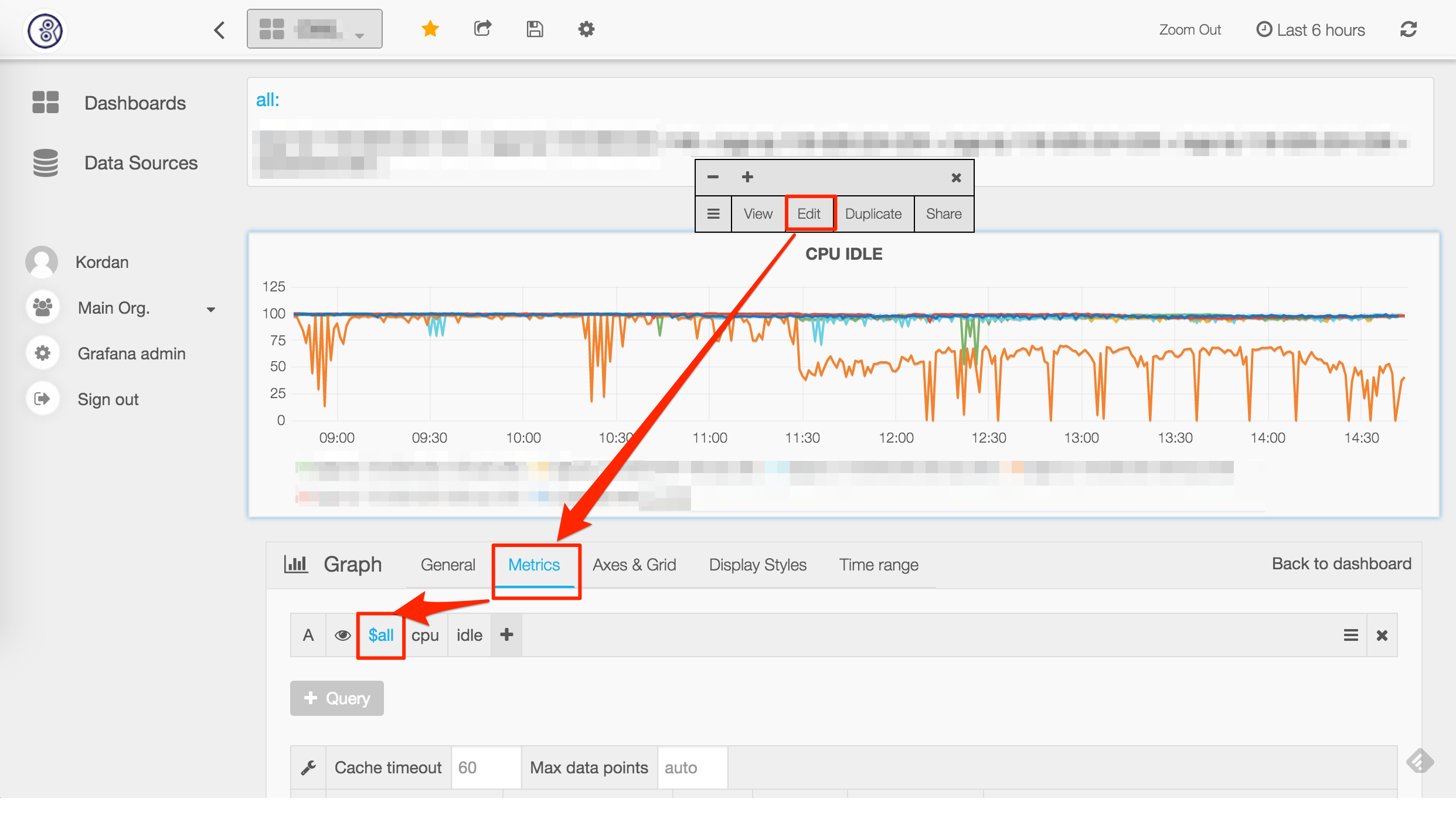

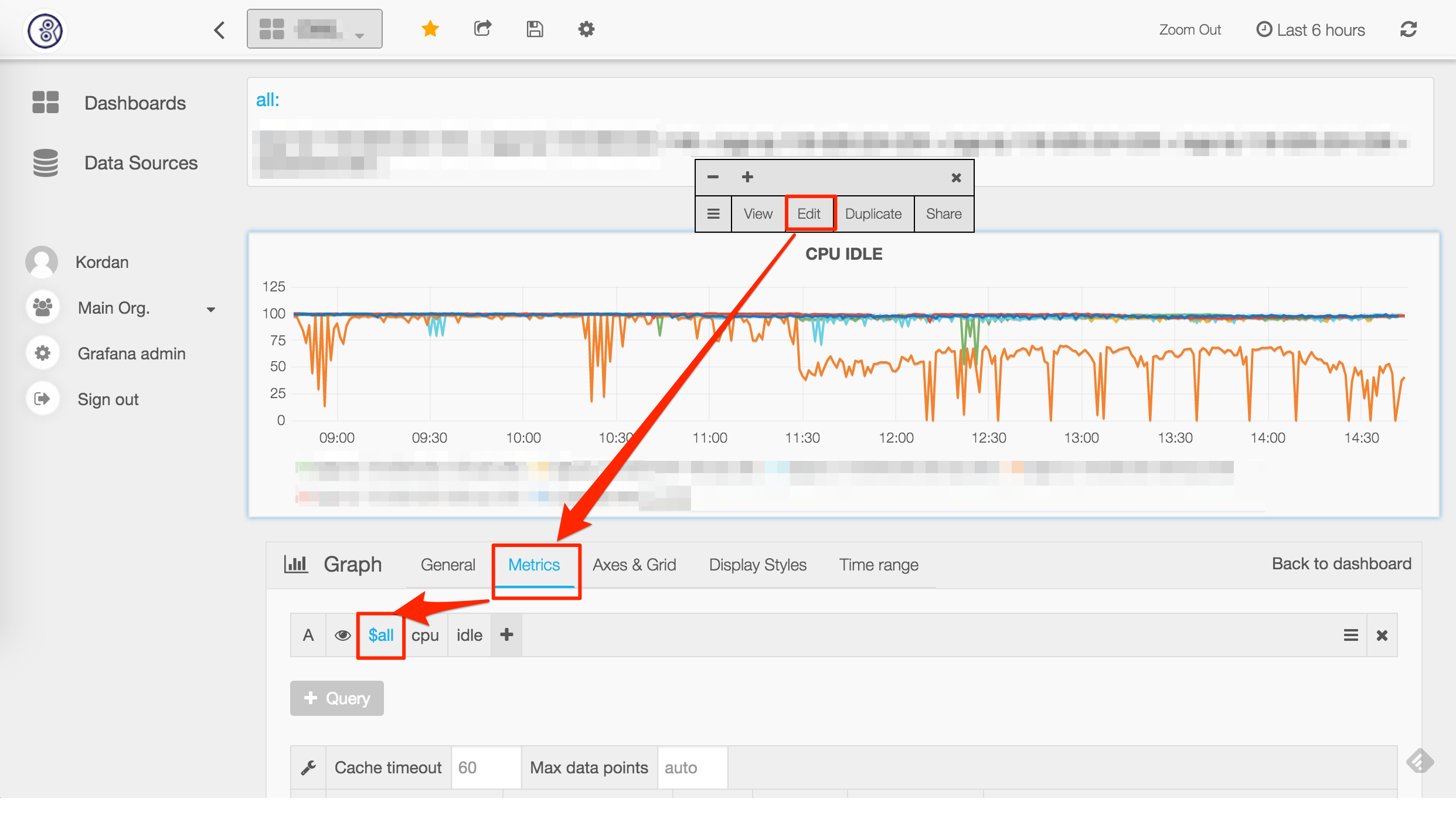

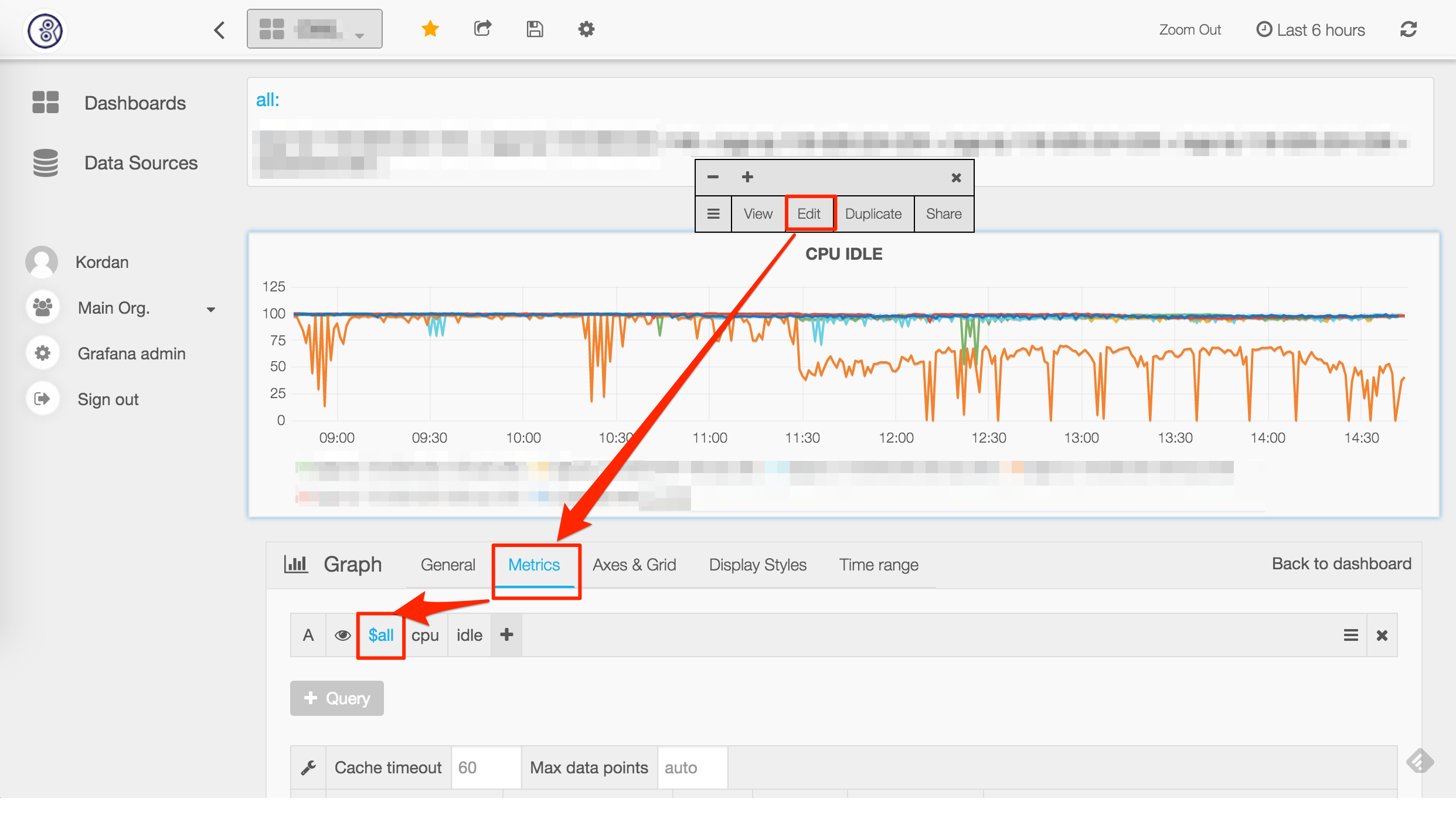

37 | ##Newly added chart

38 |

39 | As for Templating, we can replace Endpoint name with it and choose the monitoring item we focus on to finish the adding of chart.

40 |

41 | ##picture

--------------------------------------------------------------------------------

/en/7secondary_development.md:

--------------------------------------------------------------------------------

1 | # 7.Secondary Development

2 |

3 | ##The construction of go development environment:

4 | ```

5 | cd ~

6 | wget http://dinp.qiniudn.com/go1.4.1.linux-amd64.tar.gz

7 | tar zxf go1.4.1.linux-amd64.tar.gz

8 | mkdir -p workspace/src

9 | echo "" >> .bashrcecho 'export GOROOT=$HOME/go' >> .bashrcecho 'export GOPATH=$HOME/workspace' >> .bashrcecho 'export PATH=$GOROOT/bin:$GOPATH/bin:$PATH' >> .bashrcecho "" >> .bashrc

10 | source .bashrc

11 | ```

12 | ##clone code

13 | ```

14 | cd $GOPATH/src

15 | mkdir github.comcd github.com

16 | git clone --recursive https://github.com/XiaoMi/open-falcon.git

17 | ```

18 | ##compiling an element(taking agent as an example)

19 | ```

20 | cd $GOPATH/src/github.com/open-falcon/agent

21 | go get ./...

22 | ./control build

23 | ```

24 | ##User defined modification filing strategy

25 | Modify open-falcon/graph/rrdtool/rrdtool.go

26 |

27 | ##picture

28 |

29 | Compile element graph again, and replace the original binary

30 |

31 | Eliminate all the original rrd files(under /home/work/data/6070/ by default)

32 |

33 | ##Plugin mechanism

34 | 1.Find a git to store all the plugins of company

35 |

36 | 2.Download the repo plugin to the local by calling the /plugin/update interface of agent

37 |

38 | 3.Deploy which hosts can execute which plugins in portal

39 |

40 | 4.The naming way of plugin: $step_xx.yy, which needs the execute permission to save to the each directory by classification

41 |

42 | 5.Print the collected data to stdout

43 |

44 | 6.You may modify the agent and download the plugin.tar.gz from a http address at fixed period if you find the git way inconvenient

--------------------------------------------------------------------------------

/en/8api.md:

--------------------------------------------------------------------------------

1 | # 8.API

2 |

3 | ##open-falcon api

4 |

5 |

6 |

--------------------------------------------------------------------------------

/en/91_about_collection.md:

--------------------------------------------------------------------------------

1 | # 9.1 About collection

2 |

3 |

4 | Open-Falcon data collection, including drawing data collection and alert data collection. We will introduce how to verify whether the data collection in two links is normal or not below.

5 |

6 | ##How to verify whether the drawing data collection is normal

7 |

8 | The data link is: ```agent->transfer->graph->query->dashboard.``` There is a http interface of graph to verify the link ```agent->transfer->graph.``` For example, the http port of graph is 6071, and we may access the verification in this way:

9 | ```

10 | # $endpointand$counter are variables

11 | curl http://127.0.0.1:6071/history/$endpoint/$counter

12 | # If the data reported are without tags, the access method is as follows:

13 | curl http://127.0.0.1:6071/history/host01/agent.alive

14 | # If the data reported are with tags, the access method is as follows, wherein the tags are module=graph,project=falcon

15 | curl http://127.0.0.1:6071/history/host01/qps/module=graph,project=falcon

16 | ```

17 | If null value is returned by the said interface, it means that agent doesn’t report data or there is an error in transfer service.

18 |

19 | ##How to verify whether the alert data collection is normal

20 |

21 | The data link is: ```agent->transfer->judge``` . There is a http interface of judge to verify the link ```agent->transfer->judge```. For example, the http port of judge is 6081, and we may access the verification in this way:

22 | ```

23 | curl http://127.0.0.1:6081/history/$endpoint/$counter

24 | # $endpointare $counterare variables, for example:

25 | curl http://127.0.0.1:6081/history/host01/cpu.idle

26 | # counter=$metric/sorted($tags)# If the data reported are with tags, the access method is as follows, for example:

27 | curl http://127.0.0.1:6081/history/host01/qps/module=judge,project=falcon

28 | ```

29 | If null value is returned by the said interface, it means that agent doesn’t report data or there is an error in transfer service.

--------------------------------------------------------------------------------

/en/91about_collection_md.md:

--------------------------------------------------------------------------------

1 | # 9.FAQ

2 |

3 | ##The most common questions

4 |

5 |

--------------------------------------------------------------------------------

/en/92_about_alert.md:

--------------------------------------------------------------------------------

1 | # 9.2 About alert

2 |

3 | ##Problems about alert

4 | ###After the strategy is deployed, there has been no alert. How to troubleshoot the problem?

5 |

6 | 1.Troubleshoot the log of sender、alarm、judge、hbs、agent、transfer

7 |

8 | 2.Access the http page of alarm by browser to see if there is unrecovered alert. If there is, the alert is generated and isn’t sent out. The interfaces of e-mail and message may go wrong and the deployed api in sender needs to be inspected.

9 |

10 | 3.Open the debug of agent to see if the push data is normal.

11 |

12 | 4.Inspect the agent configuration to see if the address of heartbeat(hbs) and transfer is correctly deployed and enabled.

13 |

14 | 5.Inspect the transfer configuration to see if the address of judge is correctly deployed.

15 |

16 | 6.Judge provides a http interface to debug and inspect if some data is correctly pushed up, for example, the cpu.idle data of qd-open-falcon-judge01.hd may be checked in this way:

17 | ```curl 127.0.0.1:6081/history/qd-open-falcon-judge01.hd/cpu.idle```

18 |

19 | 7.Inspect if the time of server is synchronized, we may use ntp or chrony :

20 |

21 | **The said 127.0.0.1:6081 refers to the http port of judge.**

22 |

23 | 1.Inspect whether the hbs address judge deployed is correct

24 |

25 | 2.Inspect whether the database address hbs deployed is correct

26 |

27 | 3.Inspect whether the deployed strategy template in portal is deployed with the alert receiver

28 |

29 | 4.Inspect whether the deployed strategy template in portal is bound to some HostGroup and the aim machine is just in the HostGroup

30 |

31 | 5.Go to UIC and inspect whether it added itself in the alert receiver group

32 |

33 | 6.Go to UIC and inspect whether the contact information of itself is correct

34 |

35 | ###Creat a HostGroup in Portal page and report the error when adding machine to HostGroup

36 |

37 | 1.Inspect whether agent deployed the heartbeat address correctly and enabled

38 |

39 | 2.Inspect hbs log

40 |

41 | 3.Inspect the database address hbs deployed is correct

42 |

43 | 4.Inspect the deploy hosts of hbs is deployed to sync. Hbs will write the host table only when it is blank, and we may add machine on the page only when there is data in the host table

44 |

--------------------------------------------------------------------------------

/en/README.md:

--------------------------------------------------------------------------------

1 | # Welcome to Open-Falcon community

2 |

3 | With everyone's enthusiastic support and help,Open-Falcon is developing at full speed . Now:

4 |

5 | 1. During half a year, we have getted more than 1300 stars on github.

6 |

7 | 2. We received dozens of pull-requests,much 100 problems and the Community College has more than 2000 members.

8 |

9 | 3. We received lots of plugins from members who support us all the time.This plugins for example:MySQL,redis,RabbitMQ,windows-agent,switch-agent,nginx-stats.They also received many documents.

10 |

11 | 4. In different degrees,Hundreds of Internet Company use Open-Falcon,such as: 美团、金山云、快网、宜信、七牛、又拍云、赶集、滴滴、金山办公、爱奇艺、一点资讯、快牙、开心网、借贷宝、百度、迅雷等等

12 |

13 |

14 | NOTICE:

15 |

16 | 1. QQ群已全部满员,请添加我的微信 `laiweivic` ,注明个人介绍和来源自open-falcon,我会拉你进入交流群组;

17 | 2. 我们更推荐您升级到[夜莺监控](https://github.com/ccfos/nightingale): 关于open-falcon和夜莺的对比介绍,请参考阅读[云原生监控的十个特点和趋势](https://mp.weixin.qq.com/s?__biz=MzkzNjI5OTM5Nw==&mid=2247483738&idx=1&sn=e8bdbb974a2cd003c1abcc2b5405dd18&chksm=c2a19fb0f5d616a63185cd79277a79a6b80118ef2185890d0683d2bb20451bd9303c78d083c5#rd);

18 |

19 |

20 | 请大家优先在 github 上提交 [issue](https://github.com/open-falcon/falcon-plus/issues), 方便问题沉淀,github issue 会最高优先级解决。

21 | - QQ五群:42607978 (已满员)

22 | - QQ四群:697503992 (已满员)

23 | - QQ一群:373249123 (已满员)

24 | - QQ二群:516088946 (已满员)

25 | - QQ三群:469342415 (已满员)

26 |

27 | # Acknowledgement

28 |

29 | This english document is translated and maintained by [宋立岭](https://github.com/songliling),thanks a lot.

30 |

--------------------------------------------------------------------------------

/en/community.md:

--------------------------------------------------------------------------------

1 | # welcome to Open-Falcon community

2 |

3 |

4 | With everyone's enthusiastic support and help,Open-Falcon is developing at full speed . Now:

5 |

6 | 1. During half a year, we have getted more than 1300 stars on github.

7 |

8 | 2. We received dozens of pull-requests,much 100 problems and the Community College has more than 2000 members.

9 |

10 | 3. We received lots of plugins from members who support us all the time.This plugins for example:MySQL,redis,RabbitMQ,windows-agent,switch-agent,nginx-stats.They also received many documents.

11 |

12 | 4. In different degrees,Hundreds of Internet Company use Open-Falcon,such as: 美团、金山云、快网、宜信、七牛、又拍云、赶集、滴滴、金山办公、爱奇艺、一点资讯、快牙、开心网、借贷宝、百度、迅雷等等

13 |

14 |

15 |

16 | * QQ gruop:373249123(FULL)

17 | * QQ 2th group:516088946(FULL)

18 | * QQ 3rd group:469342415(JOIN US)

19 |

20 |

21 |

22 |

23 |

--------------------------------------------------------------------------------

/en/contributionlistbox.md:

--------------------------------------------------------------------------------

1 | ##Authors' contributions

2 |

3 | * laiwei 来炜没睡醒@微博 / hellolaiwei@微信

4 | * 秦晓辉 UlricQin@微博 微信公众号:sa-dev,语音答疑

5 | * yubo x80386@微信

6 | * niean niean_sail@微信

7 | * 小米运维部

8 |

9 |

--------------------------------------------------------------------------------

/en/erjinzhi.md:

--------------------------------------------------------------------------------

1 | The Open-Falcon can be divided into two parts: mapping component and alarm component. These two parts can work independently.

2 |

3 | * Installation of mapping component is to manage collecting, gathering, storing, archiving, sampling, querying and displaying of data (Dashboard/Screen). It can work independently as a storage presentation solution of time-series data.

4 | * Installation of alarm component is to manage alarm policy configuration (portal), alarm judge (judge), alarm handling (alarm/sender), user group management (uic), etc. It can work independently.

5 | * If you are proficient in docker and want to quickly set up and experience Open-Falcon, please refer to Install Open-Falcon with Docker Mirror.

6 |

7 |

--------------------------------------------------------------------------------

/en_0_2/GLOSSARY.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

--------------------------------------------------------------------------------

/en_0_2/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | [OpenFalcon](http://open-falcon.com) is an expandable open source monitor resolution with high availability at enterprise-level.

4 |

5 | With the warm support and help of everyone, OpenFalcon has become one of the most popular monitor systems in China.

6 |

7 | Now:

8 | - Thousands of stars, hundreds of forks, hundreds of pull-requests received at [github](https://github.com/open-falcon/falcon-plus);

9 | - More than 6000 community users;

10 | - Used by more than 200 companies in varying degrees, including companies in Mainland China, Singapore and Taiwan;

11 | - Dozens of plugin support contributed by the community, like MySQL, Redis, Windows, switch, LVS, Mongodb, Memcache, docker, mesos、URL monitor etc.

12 |

13 | -----

14 | **Acknowledgements**

15 |

16 | - OpenFalcon was initially started by Xiaomi and we would also like to acknowledge contributions by engineers from [these companies](./contributing.html) and [these individual developers](./contributing.html).

17 | - The OpenFalcon logo and website were contributed by Cepave Design Team.

18 | - [Wei Lai](https://github.com/laiwei) is the founder of OpenFalcon software and community.

19 | - The [english doc](http://book.open-falcon.com/en/index.html) is translated by [Liling Song](https://github.com/songliling).

20 |

21 | NOTICE:

22 |

23 | 1. QQ群已全部满员,请添加我的微信 `laiweivic` ,注明个人介绍和来源自open-falcon,我会拉你进入交流群组;

24 | 2. 我们更推荐您升级到[夜莺监控](https://github.com/ccfos/nightingale): 关于open-falcon和夜莺的对比介绍,请参考阅读[云原生监控的十个特点和趋势](https://mp.weixin.qq.com/s?__biz=MzkzNjI5OTM5Nw==&mid=2247483738&idx=1&sn=e8bdbb974a2cd003c1abcc2b5405dd18&chksm=c2a19fb0f5d616a63185cd79277a79a6b80118ef2185890d0683d2bb20451bd9303c78d083c5#rd);

25 |

26 |

27 | 请大家优先在 github 上提交 [issue](https://github.com/open-falcon/falcon-plus/issues), 方便问题沉淀,github issue 会最高优先级解决。

28 | - QQ五群:42607978 (已满员)

29 | - QQ四群:697503992 (已满员)

30 | - QQ一群:373249123 (已满员)

31 | - QQ二群:516088946 (已满员)

32 | - QQ三群:469342415 (已满员)

33 |

34 |  35 |

--------------------------------------------------------------------------------

/en_0_2/api/README.md:

--------------------------------------------------------------------------------

1 | # open-falcon api

2 | - [api v0.2](http://open-falcon.com/falcon-plus/)

3 |

--------------------------------------------------------------------------------

/en_0_2/authors.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ##Authors' contributions

4 |

5 | * laiwei 来炜没睡醒@微博 / hellolaiwei@微信

6 | * 秦晓辉 UlricQin@微博 微信公众号:sa-dev,语音答疑

7 | * yubo x80386@微信

8 | * niean niean_sail@微信

9 | * 小米运维部

--------------------------------------------------------------------------------

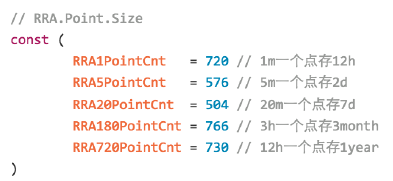

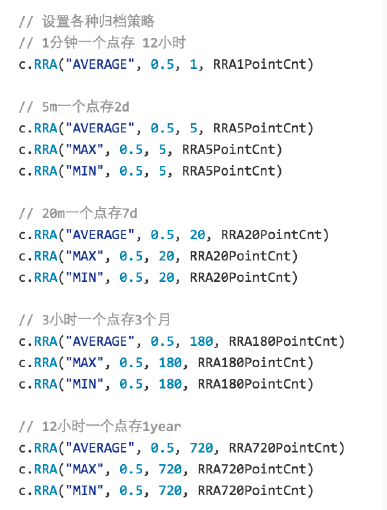

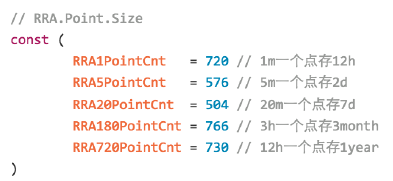

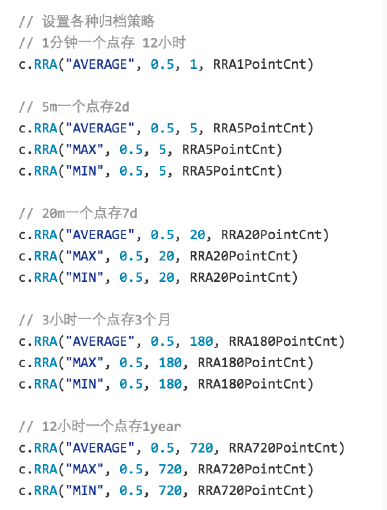

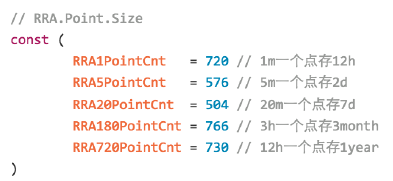

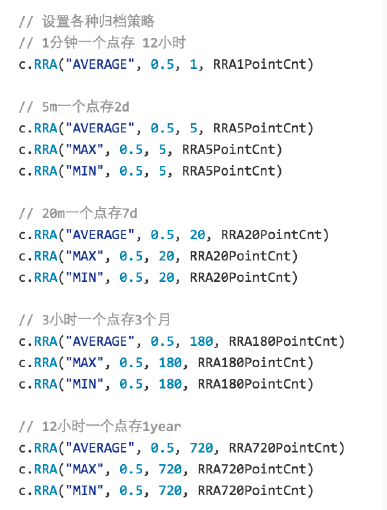

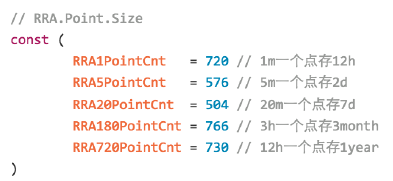

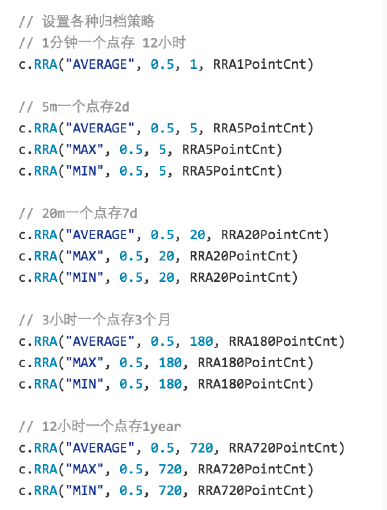

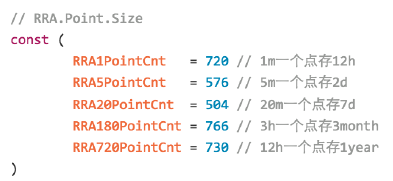

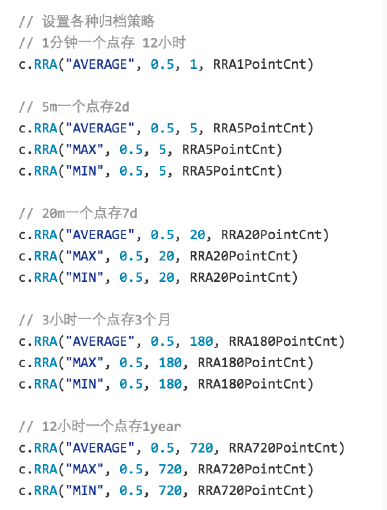

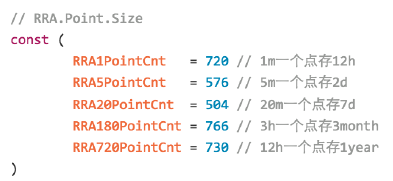

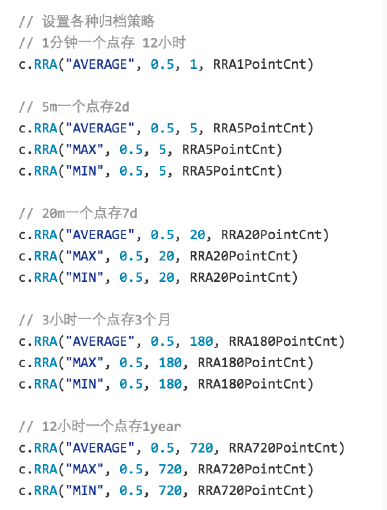

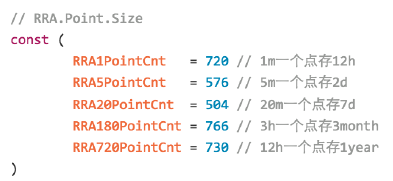

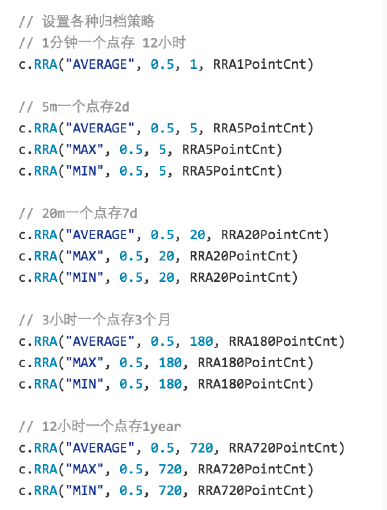

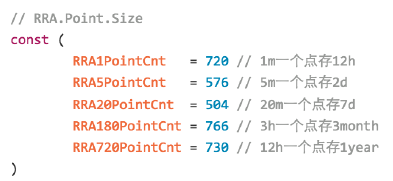

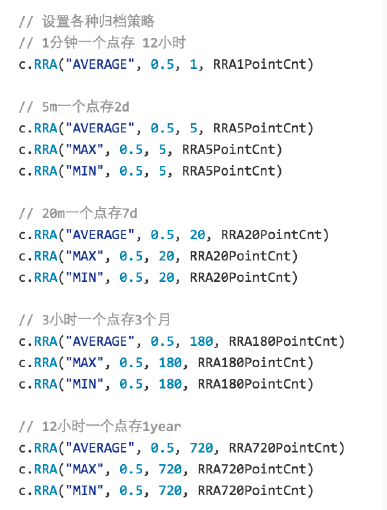

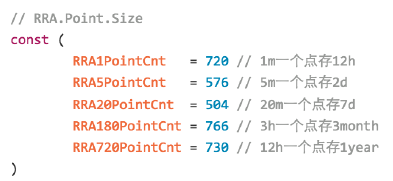

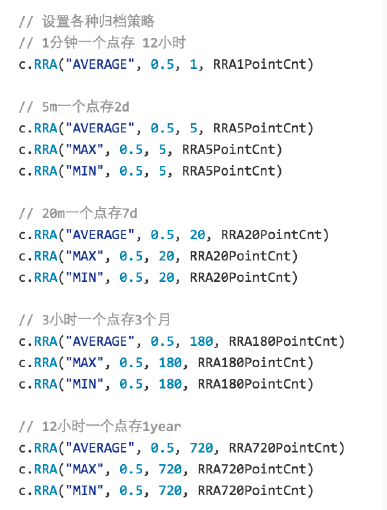

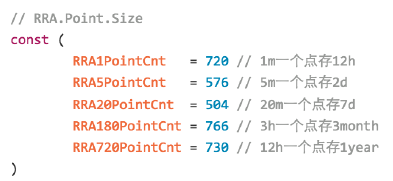

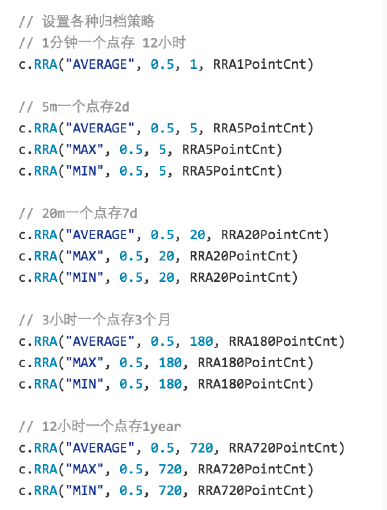

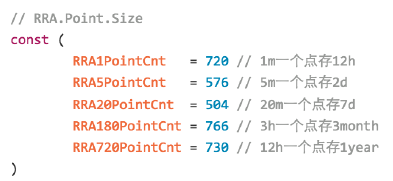

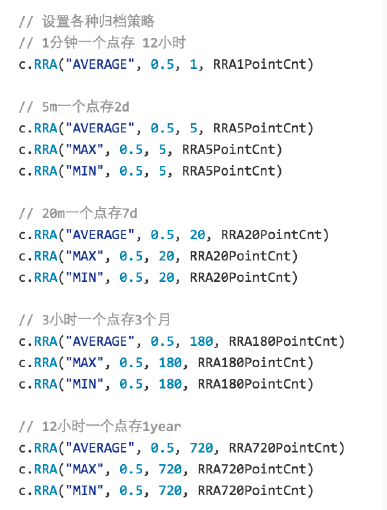

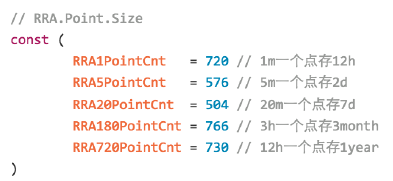

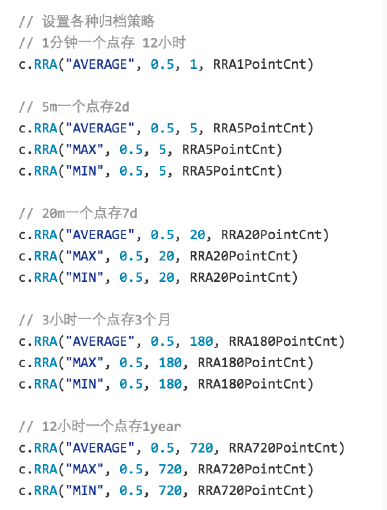

/en_0_2/dev/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | # Environment Preparation

4 |

5 | Please refer to [Environment Preparation](../quick_install/prepare.md)

6 | # Changing Custom Archiving Strategy

7 | Change open-falcon/graph/rrdtool/rrdtool.go

8 |

9 |

10 |

11 |

12 | Recompile Graph module and substitute the existing binary for a newer one

13 |

14 | Delete all previous RRD files (saved at "/home/work/data/6070/" by default)

15 |

16 | # Plugin Mechanism

17 | 1. Find a git that can store all the plugins of our company

18 | 2. Pull the Repo pulgin to local system by calling the /plugin/update port of Agent

19 | 3. Set which machine can execute which plugin in Portal

20 | 4. Plugins are named in form of "$step_xx.yy" and stored with executable permission in each directory of Repo by category

21 | 5. Print collected data to Stdout

22 | 6. Modify Agent if you find the git method inconvenient, just download zip files "plugin.tar.gz" from certain http address regularly

23 |

24 |

--------------------------------------------------------------------------------

/en_0_2/dev/support_grafana.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## Supporting Grafana View

4 |

5 | Compared with builtin Dashboard of Open-Falcon, Grafana has flexible custom diagram. It can also set access control, add label and query information for Dashboard. Display setting of diagram is more various than before.

6 |

7 | This tutorial will help you with the look of Open-Falcon!

8 |

9 | ### Installation and Instruction

10 |

11 | Please refer to [grafana open-falcon](https://github.com/open-falcon/grafana-openfalcon-datasource)

12 |

13 |

14 | ### Acknowledges

15 | - the contribution of fastweb @kordan @masato25 and etc.

16 |

--------------------------------------------------------------------------------

/en_0_2/distributed_install/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | # Summary

4 |

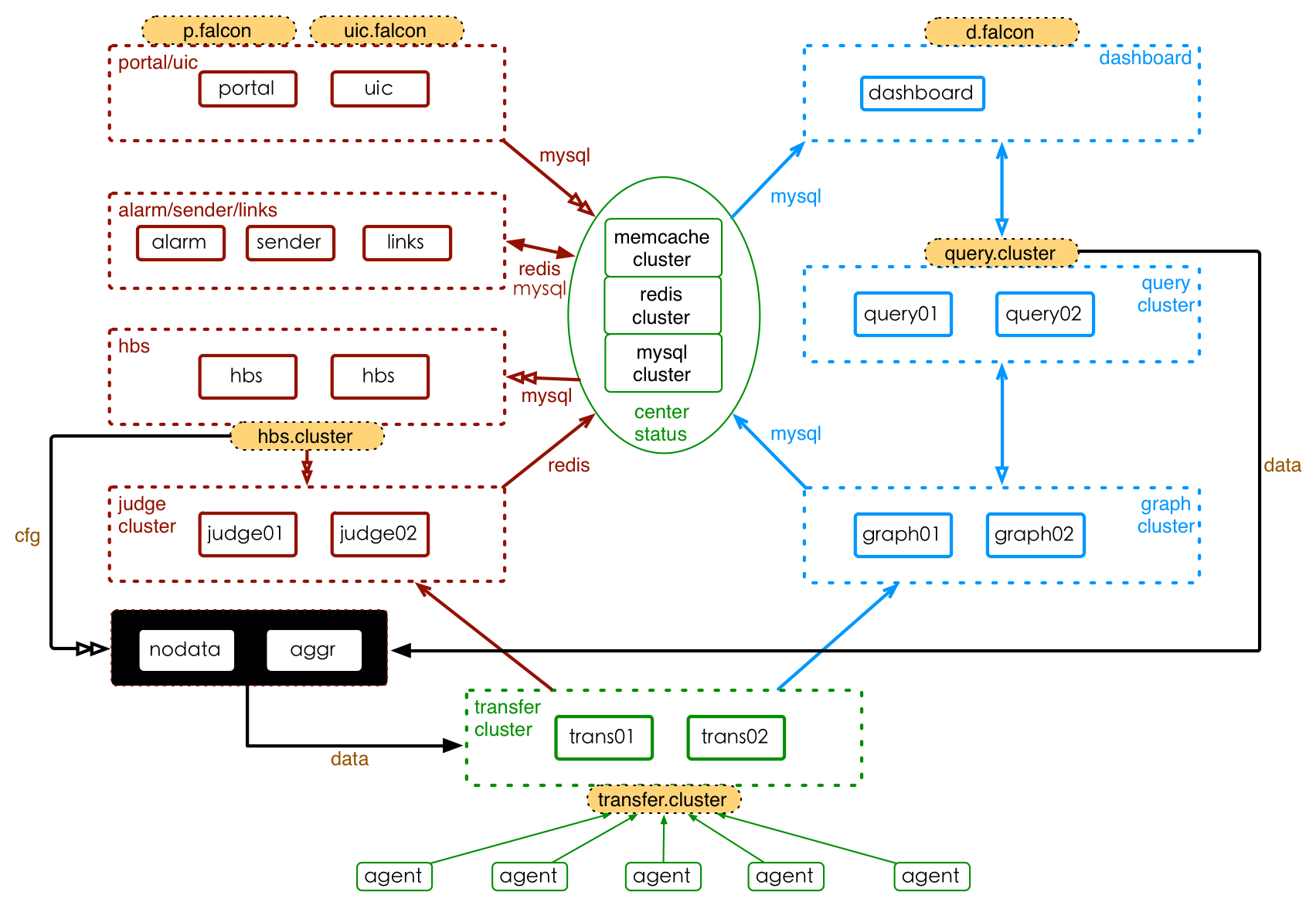

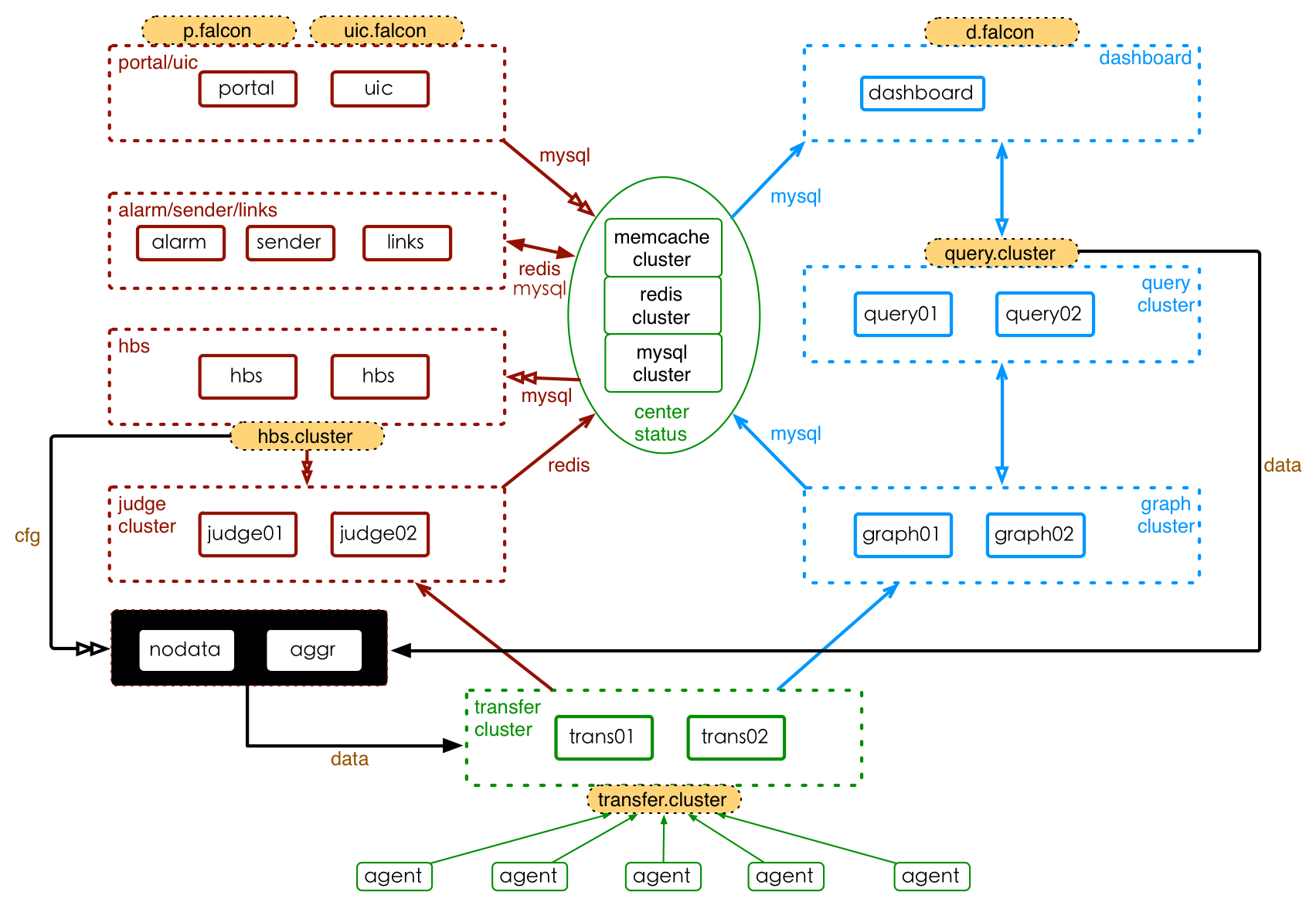

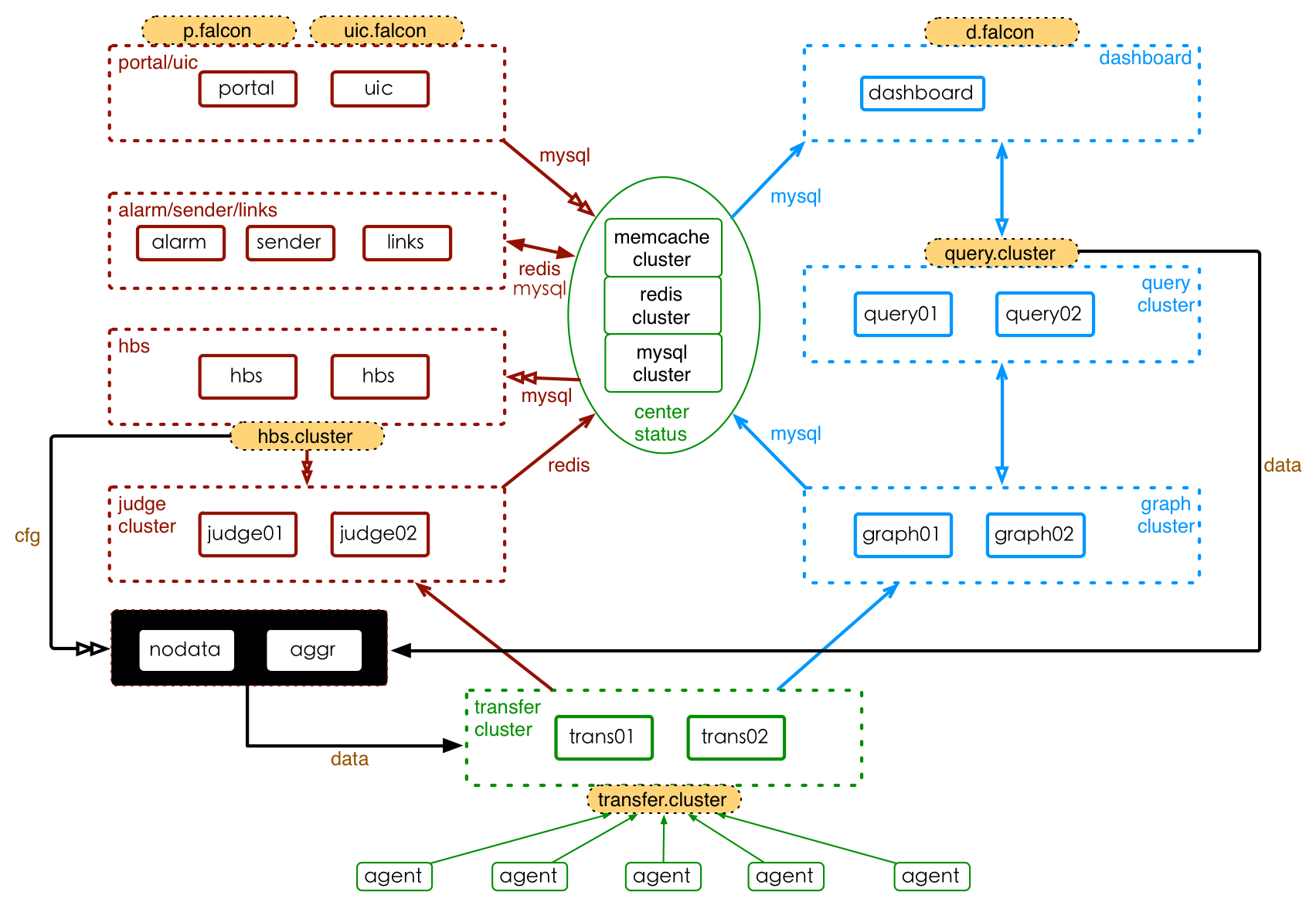

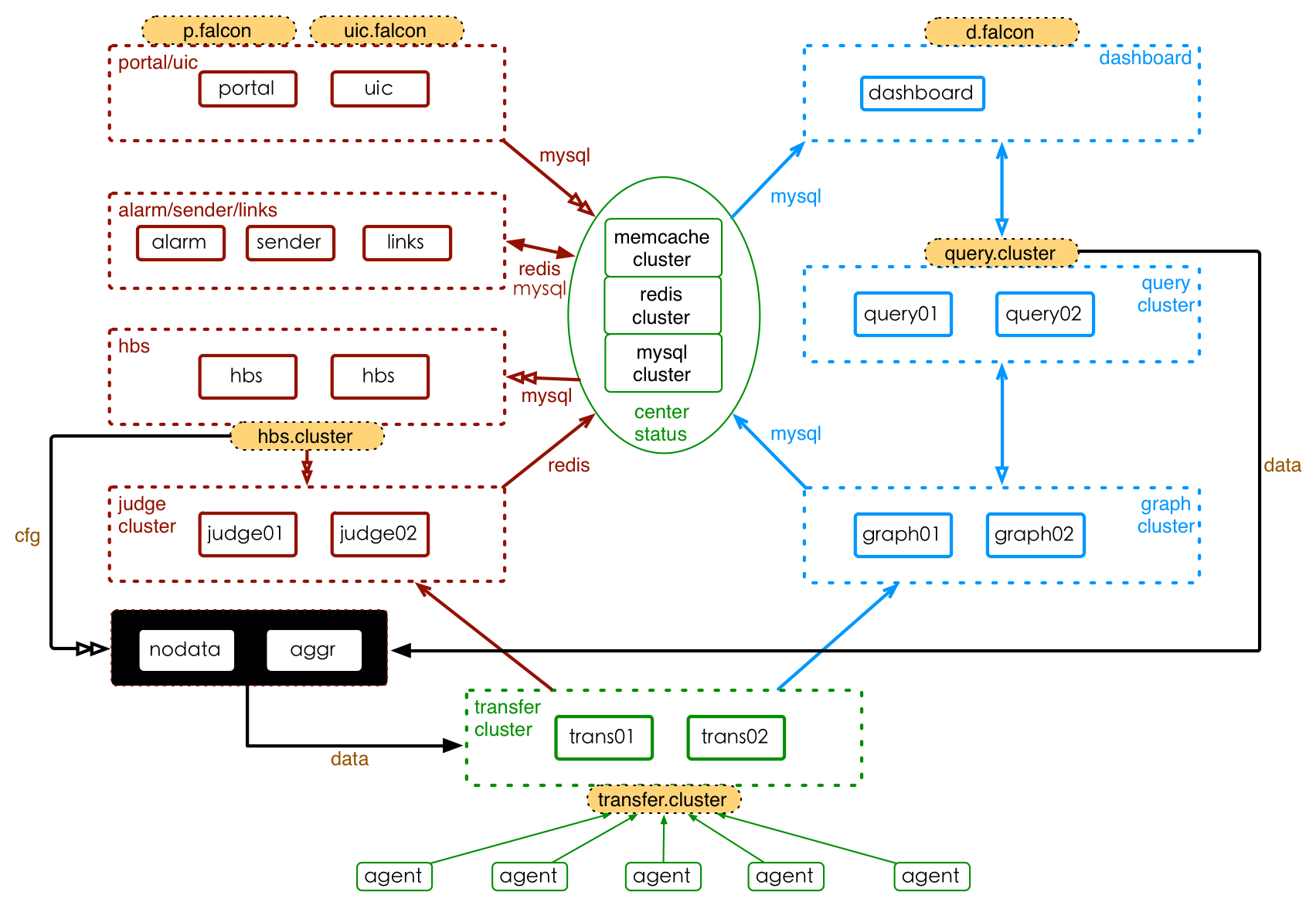

5 | Open-Falcon is a large distributed system with more than 10 modules. They can be divided by function into basic module, graph link module and alarm link module. The architecture of its installation and deployment is shown in the picture below.

6 |

7 |

8 |

9 | ## Quick Installation on One Macine

10 |

11 | Please refer to [quick_install](../quick_install/README.md)

12 |

13 | ## Open-Falcon Installation in Docker Format

14 |

15 | Refer to:

16 | - https://github.com/open-falcon/falcon-plus/blob/master/docker/README.md

17 | - https://github.com/open-falcon/dashboard/blob/master/README.md

18 |

19 | ## Distributed Installation on Several Machines

20 |

21 | This chapter is about distributed installation on several machines. Please follow the steps to install every module.

22 |

23 | ## Video Tutorial of Installation

24 |

25 | 《[The analysis of Deployment and Architecture of Open-Falcon](http://www.jikexueyuan.com/course/1651.html)》

26 |

27 |

--------------------------------------------------------------------------------

/en_0_2/distributed_install/agent-updater.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | # Agent-updater

4 |

5 | Falcon-agent needs to be deployed in every machine. If the quantity of company's machine is relatively small, it does not matter that you install falcon-agent manually using tools like pssh, ansible and fabric. But when the quantity increases, it will become a nightmare that you finish all the installation, update and rolling back manually.

6 |

7 | I personally developed a tool called Agent-updater for Falcon-agent management. Agent-updater also has a agent called ops-updater, which can be considered as a super agent that manage the agent of other agents. Ops-updater is recommended during installing. Usually, it does not require an update.

8 |

9 | For more information, please visit: https://github.com/open-falcon/ops-updater

10 |

11 | If you want to learn how to write a full project using Go language, you can also study agent-updater. I even recorded a video tutorial to show you how it is developed. The links are down below:

12 |

13 | - http://www.jikexueyuan.com/course/1336.html

14 | - http://www.jikexueyuan.com/course/1357.html

15 | - http://www.jikexueyuan.com/course/1462.html

16 | - http://www.jikexueyuan.com/course/1490.html

17 |

18 |

--------------------------------------------------------------------------------

/en_0_2/distributed_install/aggregator.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | # Aggregator

4 |

5 | Cluster aggregation module aggregates the value of one specified index of all machines in one cluster, providing a monitoring experience with cluster perspective.

6 |

7 |

8 | ## Service Deployment

9 | Service deployment includes configuration changes, starting the service, testing the service, stopping the service etc. Before this, you need to unzip installation package to deployment directory of the service.

10 |

11 | ```

12 | # Change the configuration (the meaning of each setting is as follow)

13 | mv cfg.example.json cfg.json

14 | vim cfg.json

15 |

16 | # Start the service

17 | ./open-falcon start aggregator

18 |

19 | # Check the log

20 | ./open-falcon monitor aggregator

21 |

22 | # Stop the service

23 | ./open-falcon stop aggregator

24 |

25 | ```

26 |

27 |

28 | ## Configuraion Informaion

29 | The configuration file is "./cfg.json" and there will be an example configuration file "cfg.example.json" in each installation package by default. The meaning of each setting is as follows

30 |

31 | ```

32 | {

33 | "debug": true,

34 | "http": {

35 | "enabled": true,

36 | "listen": "0.0.0.0:6055"

37 | },

38 | "database": {

39 | "addr": "root:@tcp(127.0.0.1:3306)/falcon_portal?loc=Local&parseTime=true",

40 | "idle": 10,

41 | "ids": [1, -1],

42 | "interval": 55

43 | },

44 | "api": {

45 | "connect_timeout": 500,

46 | "request_timeout": 2000,

47 | "plus_api": "http://127.0.0.1:8080", #address where falcon-plus api module is running

48 | "plus_api_token": "default-token-used-in-server-side", #token used in mutual authentication with falcon-plus api module

49 | "push_api": "http://127.0.0.1:1988/v1/push" #http port of push's data provided by Agent

50 | }

51 | }

52 |

53 |

54 | ```

55 |

--------------------------------------------------------------------------------

/en_0_2/distributed_install/gateway.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | # Gateway

4 |

5 | **If no problem comes up in room partition, please omit this component**。

6 |

7 | If there is something wrong with room partition and you need an urgent solution to the problem of data return in room partition, please use this component. For more information please visit [Here](https://github.com/open-falcon/falcon-plus/blob/master/modules/gateway/README.md).

--------------------------------------------------------------------------------

/en_0_2/distributed_install/prepare.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## Environment Preparation

4 | Please refer to [Environment Preparation](../quick_install/prepare.md)

5 |

--------------------------------------------------------------------------------

/en_0_2/donate.md:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/donate.md

--------------------------------------------------------------------------------

/en_0_2/faq/collect.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | # FAQ about Data Collection

4 | Open-Falcon data collection includes [Graph Data] collection and [Alarm Data] collection. Here is how to check if the data collection works properly in these two links.

5 |

6 |

7 | ### How to check if [Graph Data] collection works properly?

8 | The data link is `agent->transfer->graph->query->dashboard`. There is a http port in Graph that can check the link `agent->transfer->graph`. For example, if the http port in Graph is 6071, then you can check by visiting:

9 |

10 | ```bash

11 | # $endpoint and $counter are variables

12 | curl http://127.0.0.1:6071/history/$endpoint/$counter

13 |

14 | # If the data are sent without tags, then you should visit

15 | curl http://127.0.0.1:6071/history/host01/agent.alive

16 |

17 | # If the data are sent with tags, then you should visit

18 | curl http://127.0.0.1:6071/history/host01/qps/module=graph,project=falcon

19 | "module=graph" and "project=falcon" are tags

20 | ```

21 | If those ports return void, that means Agent does not send data or an error occurs in Transfer.

22 |

23 |

24 | ### How to check if [Alarm Data] collection works properly?

25 |

26 | The data link is `agent->transfer->judge`. There is an http port in Judge that can check the link `agent->transfer->judge`. For example, if the http port in Judge is 6081, then you can check by visiting:

27 |

28 | ```bash

29 | curl http://127.0.0.1:6081/history/$endpoint/$counter

30 |

31 | # $endpoint and $counter are variables

32 | curl http://127.0.0.1:6081/history/host01/cpu.idle

33 |

34 | # counter=$metric/sorted($tags)

35 | # If the data are sent with tags, then you should visit

36 | curl http://127.0.0.1:6081/history/host01/qps/module=judge,project=falcon

37 | ```

38 | If those ports return void, that means Agent did not send data or an error occurs in Transfer.

39 |

40 |

--------------------------------------------------------------------------------

/en_0_2/image/OpenFalcon_wechat.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/OpenFalcon_wechat.jpg

--------------------------------------------------------------------------------

/en_0_2/image/func_aggregator_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_aggregator_1.png

--------------------------------------------------------------------------------

/en_0_2/image/func_aggregator_2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_aggregator_2.png

--------------------------------------------------------------------------------

/en_0_2/image/func_aggregator_3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_aggregator_3.png

--------------------------------------------------------------------------------

/en_0_2/image/func_aggregator_4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_aggregator_4.png

--------------------------------------------------------------------------------

/en_0_2/image/func_aggregator_5.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_aggregator_5.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_1.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_10.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_10.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_11.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_11.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_12.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_12.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_2.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_3.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_4.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_5.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_5.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_6.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_6.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_7.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_7.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_8.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_8.png

--------------------------------------------------------------------------------

/en_0_2/image/func_getting_started_9.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_getting_started_9.png

--------------------------------------------------------------------------------

/en_0_2/image/func_intro_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_intro_1.png

--------------------------------------------------------------------------------

/en_0_2/image/func_intro_2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/open-falcon/book/f5ac09ca0c92d3c3d77aee4767aaab80edad1bbf/en_0_2/image/func_intro_2.png

--------------------------------------------------------------------------------

/en_0_2/image/func_intro_3.png:

--------------------------------------------------------------------------------