├── .gitignore

├── CONTRIBUTING.md

├── LICENSE

├── MANIFEST.in

├── README.md

├── README_PyPI.md

├── images

├── demo.gif

├── imgur_app.png

├── logo.png

└── reddit_app.png

├── pyproject.toml

├── requirements.txt

├── setup.cfg

└── src

└── saveddit

├── __init__.py

├── _version.py

├── configuration.py

├── multireddit_downloader.py

├── multireddit_downloader_config.py

├── saveddit.py

├── search_config.py

├── search_subreddits.py

├── submission_downloader.py

├── subreddit_downloader.py

├── subreddit_downloader_config.py

├── user_downloader.py

└── user_downloader_config.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 | .DS_Store

6 |

7 | # C extensions

8 | *.so

9 |

10 | # Distribution / packaging

11 | .Python

12 | build/

13 | develop-eggs/

14 | dist/

15 | downloads/

16 | eggs/

17 | .eggs/

18 | lib/

19 | lib64/

20 | parts/

21 | sdist/

22 | var/

23 | wheels/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 |

30 | # PyInstaller

31 | # Usually these files are written by a python script from a template

32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .nox/

44 | .coverage

45 | .coverage.*

46 | .cache

47 | nosetests.xml

48 | coverage.xml

49 | *.cover

50 | *.py,cover

51 | .hypothesis/

52 | .pytest_cache/

53 | cover/

54 |

55 | # Translations

56 | *.mo

57 | *.pot

58 |

59 | # Django stuff:

60 | *.log

61 | local_settings.py

62 | db.sqlite3

63 | db.sqlite3-journal

64 |

65 | # Flask stuff:

66 | instance/

67 | .webassets-cache

68 |

69 | # Scrapy stuff:

70 | .scrapy

71 |

72 | # Sphinx documentation

73 | docs/_build/

74 |

75 | # PyBuilder

76 | .pybuilder/

77 | target/

78 |

79 | # Jupyter Notebook

80 | .ipynb_checkpoints

81 |

82 | # IPython

83 | profile_default/

84 | ipython_config.py

85 |

86 | # pyenv

87 | # For a library or package, you might want to ignore these files since the code is

88 | # intended to run in multiple environments; otherwise, check them in:

89 | # .python-version

90 |

91 | # pipenv

92 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

93 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

94 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

95 | # install all needed dependencies.

96 | #Pipfile.lock

97 |

98 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

99 | __pypackages__/

100 |

101 | # Celery stuff

102 | celerybeat-schedule

103 | celerybeat.pid

104 |

105 | # SageMath parsed files

106 | *.sage.py

107 |

108 | # Environments

109 | .env

110 | .venv

111 | env/

112 | venv/

113 | ENV/

114 | env.bak/

115 | venv.bak/

116 |

117 | # Spyder project settings

118 | .spyderproject

119 | .spyproject

120 |

121 | # Rope project settings

122 | .ropeproject

123 |

124 | # mkdocs documentation

125 | /site

126 |

127 | # mypy

128 | .mypy_cache/

129 | .dmypy.json

130 | dmypy.json

131 |

132 | # Pyre type checker

133 | .pyre/

134 |

135 | # pytype static type analyzer

136 | .pytype/

137 |

138 | # Cython debug symbols

139 | cython_debug/

140 |

141 | # Configuration file

142 | **/user_config.yaml

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing

2 | Contributions are welcomed. Open a pull-request or an issue.

3 |

4 | ## Code of conduct

5 | This project adheres to the [Open Code of Conduct][code-of-conduct]. By participating, you are expected to honor this code.

6 |

7 | [code-of-conduct]: https://github.com/spotify/code-of-conduct/blob/master/code-of-conduct.md

8 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2021 Pranav

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include images/*

2 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |  3 |

3 |

4 |

5 |

6 |

7 |  8 |

9 |

10 |

8 |

9 |

10 |  11 |

12 |

11 |

12 |

13 |

14 | `saveddit` is a bulk media downloader for reddit

15 |

16 | ```console

17 | pip3 install saveddit

18 | ```

19 |

20 | ## Setting up authorization

21 |

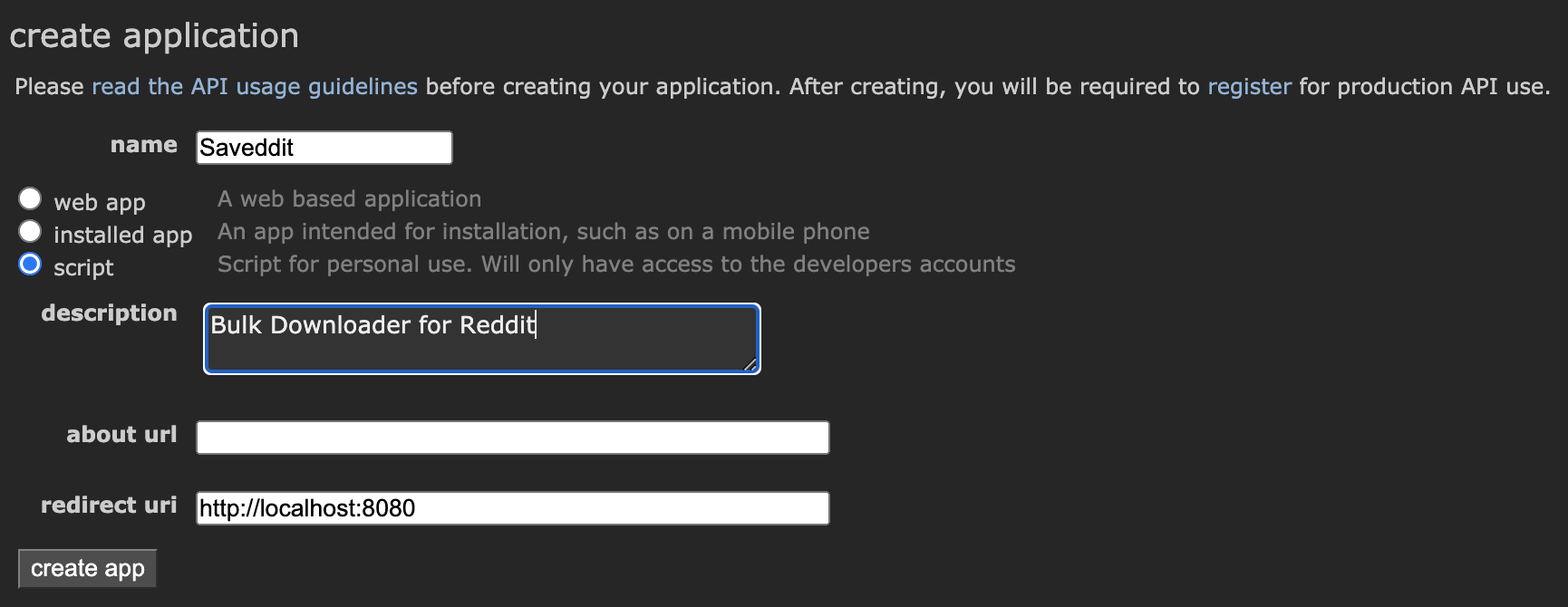

22 | * [Register an application with Reddit](https://ssl.reddit.com/prefs/apps/)

23 | - Write down your client ID and secret from the app

24 | - More about Reddit API access [here](https://ssl.reddit.com/wiki/api)

25 | - Wiki page about Reddit OAuth2 applications [here](https://github.com/reddit-archive/reddit/wiki/OAuth2)

26 |

27 |

28 |  29 |

29 |

30 |

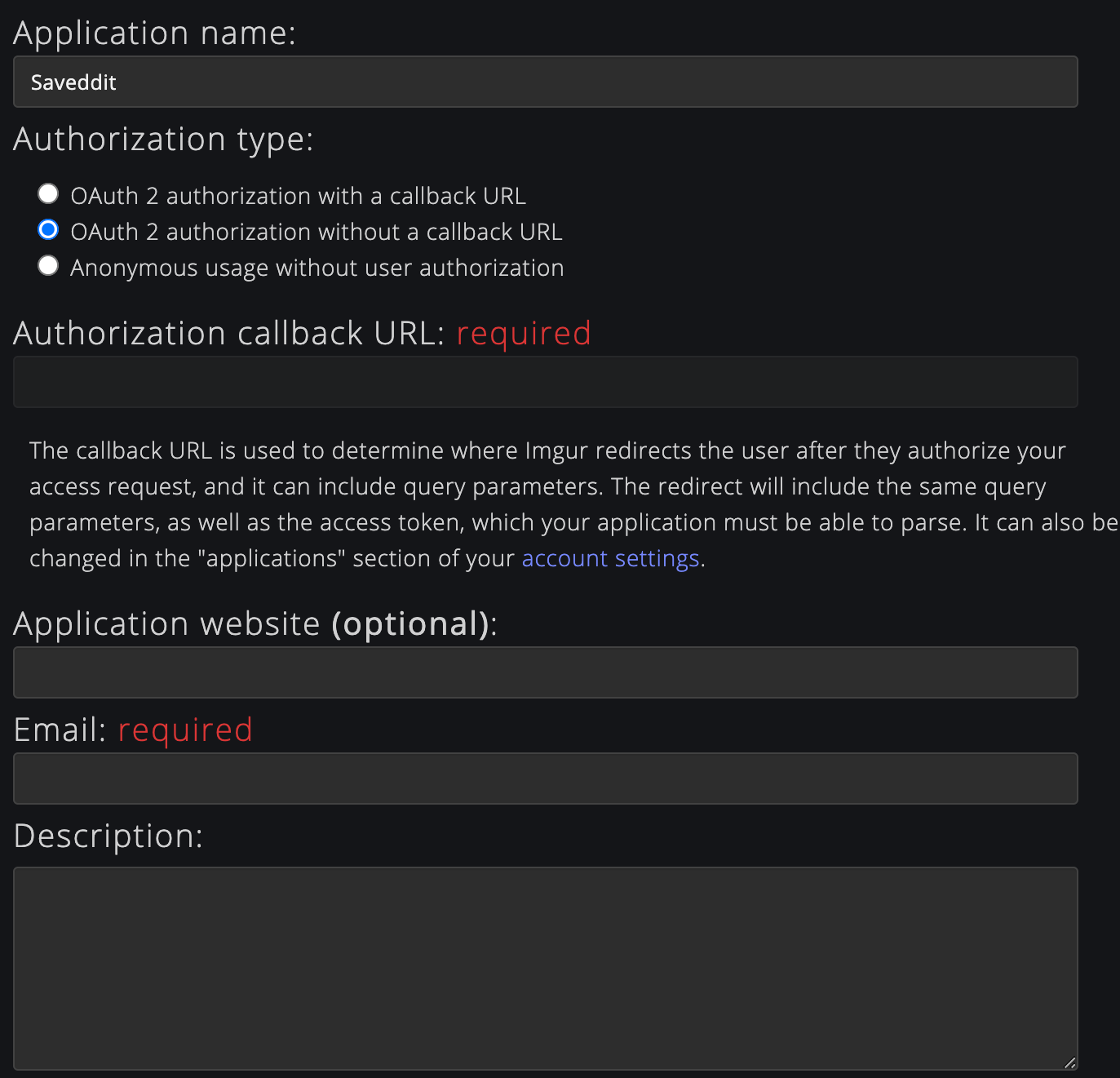

31 | * [Register an application with Imgur](https://api.imgur.com/oauth2/addclient)

32 | - Write down the Imgur client ID from the app

33 |

34 |

35 |  36 |

36 |

37 |

38 | These registrations will authorize you to use the Reddit and Imgur APIs to download publicly available information.

39 |

40 | ## User configuration

41 |

42 | The first time you run `saveddit`, you will see something like this:

43 |

44 | ```console

45 | foo@bar:~$ saveddit

46 | Retrieving configuration from ~/.saveddit/user_config.yaml file

47 | No configuration file found.

48 | Creating one. Would you like to edit it now?

49 | > Choose Y for yes and N for no

50 | ```

51 |

52 | Once you choose 'yes', the program will request you to enter these credentials:

53 | - Your imgur client ID

54 | - Your reddit client ID

55 | - Your reddit client secret

56 | - Your reddit username

57 |

58 | In case you choose 'no', the program will create a file which you can edit later, this is how to edit it:

59 |

60 | * Open the generated `~/.saveddit/user_config.yaml`

61 | * Update the client IDs and secrets from the previous step

62 | * If you plan on using the `user` API, add your reddit username as well

63 |

64 | ```yaml

65 | imgur_client_id: ''

66 | reddit_client_id: ''

67 | reddit_client_secret: ''

68 | reddit_username: ''

69 | ```

70 |

71 | ## Download from Subreddit

72 |

73 | ```console

74 | foo@bar:~$ saveddit subreddit -h

75 | Retrieving configuration from /Users/pranav/.saveddit/user_config.yaml file

76 |

77 | usage: saveddit subreddit [-h] [-f categories [categories ...]] [-l post_limit] [--skip-comments] [--skip-meta] [--skip-videos] -o output_path subreddits [subreddits ...]

78 |

79 | positional arguments:

80 | subreddits Names of subreddits to download, e.g., AskReddit

81 |

82 | optional arguments:

83 | -h, --help show this help message and exit

84 | -f categories [categories ...]

85 | Categories of posts to download (default: ['hot', 'new', 'rising', 'controversial', 'top', 'gilded'])

86 | -l post_limit Limit the number of submissions downloaded in each category (default: None, i.e., all submissions)

87 | --skip-comments When true, saveddit will not save comments to a comments.json file

88 | --skip-meta When true, saveddit will not save meta to a submission.json file on submissions

89 | --skip-videos When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)

90 | --all-comments When true, saveddit will download all the comments in a post instead of just downloading the top ones.)

91 | -o output_path Directory where saveddit will save downloaded content

92 | ```

93 |

94 | ```console

95 | foo@bar:~$ saveddit subreddit pics -f hot -l 5 -o ~/Desktop

96 | ```

97 |

98 | ```console

99 | foo@bar:~$ tree -L 4 ~/Desktop/www.reddit.com

100 | /Users/pranav/Desktop/www.reddit.com

101 | └── r

102 | └── pics

103 | └── hot

104 | ├── 000_Prince_Philip_Duke_of_Edinburgh_...

105 | ├── 001_Day_10_of_Nobody_Noticing_the_Ap...

106 | ├── 002_First_edited_picture

107 | ├── 003_Reorganized_a_few_months_ago_and...

108 | └── 004_Van_Gogh_inspired_rainy_street_I...

109 | ```

110 |

111 | You can download from multiple subreddits and use multiple filters:

112 |

113 | ```console

114 | foo@bar:~$ saveddit subreddit funny AskReddit -f hot top new rising -l 5 -o ~/Downloads/Reddit/.

115 | ```

116 |

117 | The downloads from each subreddit to go to a separate folder like so:

118 |

119 | ```console

120 | foo@bar:~$ tree -L 3 ~/Downloads/Reddit/www.reddit.com

121 | /Users/pranav/Downloads/Reddit/www.reddit.com

122 | └── r

123 | ├── AskReddit

124 | │ ├── hot

125 | │ ├── new

126 | │ ├── rising

127 | │ └── top

128 | └── funny

129 | ├── hot

130 | ├── new

131 | ├── rising

132 | └── top

133 | ```

134 |

135 | ## Download from anonymous Multireddit

136 |

137 | To download from an anonymous multireddit, use the `multireddit` option and pass a number of subreddit names

138 |

139 | ```console

140 | foo@bar:~$ saveddit multireddit -h

141 | usage: saveddit multireddit [-h] [-f categories [categories ...]] [-l post_limit] [--skip-comments] [--skip-meta] [--skip-videos] -o output_path subreddits [subreddits ...]

142 |

143 | positional arguments:

144 | subreddits Names of subreddits to download, e.g., aww, pics. The downloads will be stored in /www.reddit.com/m/aww+pics/.

145 |

146 | optional arguments:

147 | -h, --help show this help message and exit

148 | -f categories [categories ...]

149 | Categories of posts to download (default: ['hot', 'new', 'random_rising', 'rising', 'controversial', 'top', 'gilded'])

150 | -l post_limit Limit the number of submissions downloaded in each category (default: None, i.e., all submissions)

151 | --skip-comments When true, saveddit will not save comments to a comments.json file

152 | --skip-meta When true, saveddit will not save meta to a submission.json file on submissions

153 | --skip-videos When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)

154 | -o output_path Directory where saveddit will save downloaded content

155 | ```

156 |

157 | ```console

158 | foo@bar:~$ saveddit multireddit EarthPorn NaturePics -f hot -l 5 -o ~/Desktop

159 | ```

160 |

161 | Anonymous multireddits are saved in `www.reddit.com/m///` like so:

162 |

163 | ```console

164 | tree -L 4 ~/Desktop/www.reddit.com

165 | /Users/pranav/Desktop/www.reddit.com

166 | └── m

167 | └── EarthPorn+NaturePics

168 | └── hot

169 | ├── 000_Banning_State_Park_Minnesota_OC_...

170 | ├── 001_Misty_forest_in_the_mountains_of...

171 | ├── 002_One_of_the_highlights_of_my_last...

172 | ├── 003__OC_Japan_Kyoto_Garden_of_the_Go...

173 | └── 004_Sunset_at_Mt_Rainier_National_Pa...

174 | ```

175 |

176 | ## Download from User's page

177 |

178 | ```console

179 | foo@bar:~$ saveddit user -h

180 | usage: saveddit user [-h] users [users ...] {saved,gilded,submitted,multireddits,upvoted,comments} ...

181 |

182 | positional arguments:

183 | users Names of users to download, e.g., Poem_for_your_sprog

184 | {saved,gilded,submitted,multireddits,upvoted,comments}

185 |

186 | optional arguments:

187 | -h, --help show this help message and exit

188 | ```

189 |

190 | Here's a usage example for downloading all comments made by `Poem_for_your_sprog`

191 |

192 | ```console

193 | foo@bar:~$ saveddit user "Poem_for_your_sprog" comments -s top -l 5 -o ~/Desktop

194 | ```

195 |

196 | Here's another example for downloading `kemitche`'s multireddits:

197 |

198 | ```console

199 | foo@bar:~$ saveddit user kemitche multireddits -n reddit -f hot -l 5 -o ~/Desktop

200 | ```

201 |

202 | User-specific content is downloaded to `www.reddit.com/u//...` like so:

203 |

204 | ```console

205 | foo@bar:~$ tree ~/Desktop/www.reddit.com

206 | /Users/pranav/Desktop/www.reddit.com

207 | └── u

208 | ├── Poem_for_your_sprog

209 | │ ├── comments

210 | │ │ └── top

211 | │ │ ├── 000_Comment_my_name_is_Cow_and_wen_its_ni....json

212 | │ │ ├── 001_Comment_It_stopped_at_six_and_life....json

213 | │ │ ├── 002_Comment__Perhaps_I_could_listen_to_podca....json

214 | │ │ ├── 003_Comment__I_don_t_have_regret_for_the_thi....json

215 | │ │ └── 004_Comment__So_throw_off_the_chains_of_oppr....json

216 | │ └── user.json

217 | └── kemitche

218 | ├── m

219 | │ └── reddit

220 | │ └── hot

221 | │ ├── 000_When_posting_to_my_u_channel_NSF...

222 | │ │ ├── comments.json

223 | │ │ └── submission.json

224 | │ ├── 001_How_to_remove_popular_near_you

225 | │ │ ├── comments.json

226 | │ │ └── submission.json

227 | │ ├── 002__IOS_2021_13_0_Reddit_is_just_su...

228 | │ │ ├── comments.json

229 | │ │ └── submission.json

230 | │ ├── 003_The_Approve_User_button_should_n...

231 | │ │ ├── comments.json

232 | │ │ └── submission.json

233 | │ └── 004_non_moderators_unable_to_view_su...

234 | │ ├── comments.json

235 | │ └── submission.json

236 | └── user.json

237 | ```

238 |

239 | ## Search and Download

240 |

241 | `saveddit` support searching subreddits and downloading search results

242 |

243 | ```console

244 | foo@bar:~$ saveddit search -h

245 | usage: saveddit search [-h] -q query [-s sort] [-t time_filter] [--include-nsfw] [--skip-comments] [--skip-meta] [--skip-videos] -o output_path subreddits [subreddits ...]

246 |

247 | positional arguments:

248 | subreddits Names of subreddits to search, e.g., all, aww, pics

249 |

250 | optional arguments:

251 | -h, --help show this help message and exit

252 | -q query Search query string

253 | -s sort Sort to apply on search (default: relevance, choices: [relevance, hot, top, new, comments])

254 | -t time_filter Time filter to apply on search (default: all, choices: [all, day, hour, month, week, year])

255 | --include-nsfw When true, saveddit will include NSFW results in search

256 | --skip-comments When true, saveddit will not save comments to a comments.json file

257 | --skip-meta When true, saveddit will not save meta to a submission.json file on submissions

258 | --skip-videos When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)

259 | -o output_path Directory where saveddit will save downloaded content

260 | ```

261 |

262 | e.g.,

263 |

264 | ```console

265 | foo@bar:~$ saveddit search soccer -q "Chelsea" -o ~/Desktop

266 | ```

267 |

268 | The downloaded search results are stored in `www.reddit.com/q////.`

269 |

270 | ```console

271 | foo@bar:~$ tree -L 4 ~/Desktop/www.reddit.com/q

272 | /Users/pranav/Desktop/www.reddit.com/q

273 | └── Chelsea

274 | └── soccer

275 | └── relevance

276 | ├── 000__Official_Results_for_UEFA_Champ...

277 | ├── 001_Porto_0_1_Chelsea_Mason_Mount_32...

278 | ├── 002_Crystal_Palace_0_2_Chelsea_Chris...

279 | ├── 003_Post_Match_Thread_Chelsea_2_5_We...

280 | ├── 004_Match_Thread_Porto_vs_Chelsea_UE...

281 | ├── 005_Crystal_Palace_1_4_Chelsea_Chris...

282 | ├── 006_Porto_0_2_Chelsea_Ben_Chilwell_8...

283 | ├── 007_Post_Match_Thread_Porto_0_2_Chel...

284 | ├── 008_UCL_Quaterfinalists_are_Bayern_D...

285 | ├── 009__MD_Mino_Raiola_and_Haaland_s_fa...

286 | ├── 010_Chelsea_2_5_West_Brom_Callum_Rob...

287 | ├── 011_Chelsea_1_2_West_Brom_Matheus_Pe...

288 | ├── 012__Bild_Sport_via_Sport_Witness_Ch...

289 | ├── 013_Match_Thread_Chelsea_vs_West_Bro...

290 | ├── 014_Chelsea_1_3_West_Brom_Callum_Rob...

291 | ├── 015_Match_Thread_Chelsea_vs_Atletico...

292 | ├── 016_Stefan_Savi�\207_Atlético_Madrid_str...

293 | ├── 017_Chelsea_1_0_West_Brom_Christian_...

294 | └── 018_Alvaro_Morata_I_ve_never_had_dep...

295 | ```

296 |

297 | ## Supported Links:

298 |

299 | * Direct links to images or videos, e.g., `.png`, `.jpg`, `.mp4`, `.gif` etc.

300 | * Reddit galleries `reddit.com/gallery/...`

301 | * Reddit videos `v.redd.it/...`

302 | * Gfycat links `gfycat.com/...`

303 | * Redgif links `redgifs.com/...`

304 | * Imgur images `imgur.com/...`

305 | * Imgur albums `imgur.com/a/...` and `imgur.com/gallery/...`

306 | * Youtube links `youtube.com/...` and `yout.be/...`

307 | * These [sites](https://ytdl-org.github.io/youtube-dl/supportedsites.html) supported by `youtube-dl`

308 | * Self posts

309 | * For all other cases, `saveddit` will simply fetch the HTML of the URL

310 |

311 | ## Contributing

312 | Contributions are welcome, have a look at the [CONTRIBUTING.md](CONTRIBUTING.md) document for more information.

313 |

314 | ## License

315 | The project is available under the [MIT](https://opensource.org/licenses/MIT) license.

316 |

--------------------------------------------------------------------------------

/README_PyPI.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | `saveddit` is a bulk media downloader for reddit

4 |

5 | ```console

6 | pip3 install saveddit

7 | ```

8 |

9 | ## Setting up authorization

10 |

11 | * [Register an application with Reddit](https://ssl.reddit.com/prefs/apps/)

12 | - Write down your client ID and secret from the app

13 | - More about Reddit API access [here](https://ssl.reddit.com/wiki/api)

14 | - Wiki page about Reddit OAuth2 applications [here](https://github.com/reddit-archive/reddit/wiki/OAuth2)

15 |

16 |

17 |

18 | * [Register an application with Imgur](https://api.imgur.com/oauth2/addclient)

19 | - Write down the Imgur client ID from the app

20 |

21 |

22 |

23 | These registrations will authorize you to use the Reddit and Imgur APIs to download publicly available information.

24 |

25 | ## User configuration

26 |

27 | The first time you run `saveddit`, you will see something like this:

28 |

29 | ```console

30 | foo@bar:~$ saveddit

31 | Retrieving configuration from ~/.saveddit/user_config.yaml file

32 | No configuration file found.

33 | Creating one. Would you like to edit it now?

34 | > Choose Y for yes and N for no

35 | ```

36 |

37 | Once you choose 'yes', the program will request you to enter these credentials:

38 | - Your imgur client ID

39 | - Your reddit client ID

40 | - Your reddit client secret

41 | - Your reddit username

42 |

43 | In case you choose 'no', the program will create a file which you can edit later, this is how to edit it:

44 |

45 | * Open the generated `~/.saveddit/user_config.yaml`

46 | * Update the client IDs and secrets from the previous step

47 | * If you plan on using the `user` API, add your reddit username as well

48 |

49 | ```yaml

50 | imgur_client_id: ''

51 | reddit_client_id: ''

52 | reddit_client_secret: ''

53 | reddit_username: ''

54 | ```

55 |

56 | ## Download from Subreddit

57 |

58 | ```console

59 | foo@bar:~$ saveddit subreddit -h

60 | Retrieving configuration from /Users/pranav/.saveddit/user_config.yaml file

61 |

62 | usage: saveddit subreddit [-h] [-f categories [categories ...]] [-l post_limit] [--skip-comments] [--skip-meta] [--skip-videos] -o output_path subreddits [subreddits ...]

63 |

64 | positional arguments:

65 | subreddits Names of subreddits to download, e.g., AskReddit

66 |

67 | optional arguments:

68 | -h, --help show this help message and exit

69 | -f categories [categories ...]

70 | Categories of posts to download (default: ['hot', 'new', 'rising', 'controversial', 'top', 'gilded'])

71 | -l post_limit Limit the number of submissions downloaded in each category (default: None, i.e., all submissions)

72 | --skip-comments When true, saveddit will not save comments to a comments.json file

73 | --skip-meta When true, saveddit will not save meta to a submission.json file on submissions

74 | --skip-videos When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)

75 | --all-comments When true, saveddit will download all the comments in a post instead of just downloading the top ones.)

76 | -o output_path Directory where saveddit will save downloaded content

77 | ```

78 |

79 | ```console

80 | foo@bar:~$ saveddit subreddit pics -f hot -l 5 -o ~/Desktop

81 | ```

82 |

83 | ```console

84 | foo@bar:~$ tree -L 4 ~/Desktop/www.reddit.com

85 | /Users/pranav/Desktop/www.reddit.com

86 | └── r

87 | └── pics

88 | └── hot

89 | ├── 000_Prince_Philip_Duke_of_Edinburgh_...

90 | ├── 001_Day_10_of_Nobody_Noticing_the_Ap...

91 | ├── 002_First_edited_picture

92 | ├── 003_Reorganized_a_few_months_ago_and...

93 | └── 004_Van_Gogh_inspired_rainy_street_I...

94 | ```

95 |

96 | You can download from multiple subreddits and use multiple filters:

97 |

98 | ```console

99 | foo@bar:~$ saveddit subreddit funny AskReddit -f hot top new rising -l 5 -o ~/Downloads/Reddit/.

100 | ```

101 |

102 | The downloads from each subreddit to go to a separate folder like so:

103 |

104 | ```console

105 | foo@bar:~$ tree -L 3 ~/Downloads/Reddit/www.reddit.com

106 | /Users/pranav/Downloads/Reddit/www.reddit.com

107 | └── r

108 | ├── AskReddit

109 | │ ├── hot

110 | │ ├── new

111 | │ ├── rising

112 | │ └── top

113 | └── funny

114 | ├── hot

115 | ├── new

116 | ├── rising

117 | └── top

118 | ```

119 |

120 | ## Download from anonymous Multireddit

121 |

122 | To download from an anonymous multireddit, use the `multireddit` option and pass a number of subreddit names

123 |

124 | ```console

125 | foo@bar:~$ saveddit multireddit -h

126 | usage: saveddit multireddit [-h] [-f categories [categories ...]] [-l post_limit] [--skip-comments] [--skip-meta] [--skip-videos] -o output_path subreddits [subreddits ...]

127 |

128 | positional arguments:

129 | subreddits Names of subreddits to download, e.g., aww, pics. The downloads will be stored in /www.reddit.com/m/aww+pics/.

130 |

131 | optional arguments:

132 | -h, --help show this help message and exit

133 | -f categories [categories ...]

134 | Categories of posts to download (default: ['hot', 'new', 'random_rising', 'rising', 'controversial', 'top', 'gilded'])

135 | -l post_limit Limit the number of submissions downloaded in each category (default: None, i.e., all submissions)

136 | --skip-comments When true, saveddit will not save comments to a comments.json file

137 | --skip-meta When true, saveddit will not save meta to a submission.json file on submissions

138 | --skip-videos When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)

139 | -o output_path Directory where saveddit will save downloaded content

140 | ```

141 |

142 | ```console

143 | foo@bar:~$ saveddit multireddit EarthPorn NaturePics -f hot -l 5 -o ~/Desktop

144 | ```

145 |

146 | Anonymous multireddits are saved in `www.reddit.com/m///` like so:

147 |

148 | ```console

149 | tree -L 4 ~/Desktop/www.reddit.com

150 | /Users/pranav/Desktop/www.reddit.com

151 | └── m

152 | └── EarthPorn+NaturePics

153 | └── hot

154 | ├── 000_Banning_State_Park_Minnesota_OC_...

155 | ├── 001_Misty_forest_in_the_mountains_of...

156 | ├── 002_One_of_the_highlights_of_my_last...

157 | ├── 003__OC_Japan_Kyoto_Garden_of_the_Go...

158 | └── 004_Sunset_at_Mt_Rainier_National_Pa...

159 | ```

160 |

161 | ## Download from User's page

162 |

163 | ```console

164 | foo@bar:~$ saveddit user -h

165 | usage: saveddit user [-h] users [users ...] {saved,gilded,submitted,multireddits,upvoted,comments} ...

166 |

167 | positional arguments:

168 | users Names of users to download, e.g., Poem_for_your_sprog

169 | {saved,gilded,submitted,multireddits,upvoted,comments}

170 |

171 | optional arguments:

172 | -h, --help show this help message and exit

173 | ```

174 |

175 | Here's a usage example for downloading all comments made by `Poem_for_your_sprog`

176 |

177 | ```console

178 | foo@bar:~$ saveddit user "Poem_for_your_sprog" comments -s top -l 5 -o ~/Desktop

179 | ```

180 |

181 | Here's another example for downloading `kemitche`'s multireddits:

182 |

183 | ```console

184 | foo@bar:~$ saveddit user kemitche multireddits -n reddit -f hot -l 5 -o ~/Desktop

185 | ```

186 |

187 | User-specific content is downloaded to `www.reddit.com/u//...` like so:

188 |

189 | ```console

190 | foo@bar:~$ tree ~/Desktop/www.reddit.com

191 | /Users/pranav/Desktop/www.reddit.com

192 | └── u

193 | ├── Poem_for_your_sprog

194 | │ ├── comments

195 | │ │ └── top

196 | │ │ ├── 000_Comment_my_name_is_Cow_and_wen_its_ni....json

197 | │ │ ├── 001_Comment_It_stopped_at_six_and_life....json

198 | │ │ ├── 002_Comment__Perhaps_I_could_listen_to_podca....json

199 | │ │ ├── 003_Comment__I_don_t_have_regret_for_the_thi....json

200 | │ │ └── 004_Comment__So_throw_off_the_chains_of_oppr....json

201 | │ └── user.json

202 | └── kemitche

203 | ├── m

204 | │ └── reddit

205 | │ └── hot

206 | │ ├── 000_When_posting_to_my_u_channel_NSF...

207 | │ │ ├── comments.json

208 | │ │ └── submission.json

209 | │ ├── 001_How_to_remove_popular_near_you

210 | │ │ ├── comments.json

211 | │ │ └── submission.json

212 | │ ├── 002__IOS_2021_13_0_Reddit_is_just_su...

213 | │ │ ├── comments.json

214 | │ │ └── submission.json

215 | │ ├── 003_The_Approve_User_button_should_n...

216 | │ │ ├── comments.json

217 | │ │ └── submission.json

218 | │ └── 004_non_moderators_unable_to_view_su...

219 | │ ├── comments.json

220 | │ └── submission.json

221 | └── user.json

222 | ```

223 |

224 | ## Search and Download

225 |

226 | `saveddit` support searching subreddits and downloading search results

227 |

228 | ```console

229 | foo@bar:~$ saveddit search -h

230 | usage: saveddit search [-h] -q query [-s sort] [-t time_filter] [--include-nsfw] [--skip-comments] [--skip-meta] [--skip-videos] -o output_path subreddits [subreddits ...]

231 |

232 | positional arguments:

233 | subreddits Names of subreddits to search, e.g., all, aww, pics

234 |

235 | optional arguments:

236 | -h, --help show this help message and exit

237 | -q query Search query string

238 | -s sort Sort to apply on search (default: relevance, choices: [relevance, hot, top, new, comments])

239 | -t time_filter Time filter to apply on search (default: all, choices: [all, day, hour, month, week, year])

240 | --include-nsfw When true, saveddit will include NSFW results in search

241 | --skip-comments When true, saveddit will not save comments to a comments.json file

242 | --skip-meta When true, saveddit will not save meta to a submission.json file on submissions

243 | --skip-videos When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)

244 | -o output_path Directory where saveddit will save downloaded content

245 | ```

246 |

247 | e.g.,

248 |

249 | ```console

250 | foo@bar:~$ saveddit search soccer -q "Chelsea" -o ~/Desktop

251 | ```

252 |

253 | The downloaded search results are stored in `www.reddit.com/q////.`

254 |

255 | ```console

256 | foo@bar:~$ tree -L 4 ~/Desktop/www.reddit.com/q

257 | /Users/pranav/Desktop/www.reddit.com/q

258 | └── Chelsea

259 | └── soccer

260 | └── relevance

261 | ├── 000__Official_Results_for_UEFA_Champ...

262 | ├── 001_Porto_0_1_Chelsea_Mason_Mount_32...

263 | ├── 002_Crystal_Palace_0_2_Chelsea_Chris...

264 | ├── 003_Post_Match_Thread_Chelsea_2_5_We...

265 | ├── 004_Match_Thread_Porto_vs_Chelsea_UE...

266 | ├── 005_Crystal_Palace_1_4_Chelsea_Chris...

267 | ├── 006_Porto_0_2_Chelsea_Ben_Chilwell_8...

268 | ├── 007_Post_Match_Thread_Porto_0_2_Chel...

269 | ├── 008_UCL_Quaterfinalists_are_Bayern_D...

270 | ├── 009__MD_Mino_Raiola_and_Haaland_s_fa...

271 | ├── 010_Chelsea_2_5_West_Brom_Callum_Rob...

272 | ├── 011_Chelsea_1_2_West_Brom_Matheus_Pe...

273 | ├── 012__Bild_Sport_via_Sport_Witness_Ch...

274 | ├── 013_Match_Thread_Chelsea_vs_West_Bro...

275 | ├── 014_Chelsea_1_3_West_Brom_Callum_Rob...

276 | ├── 015_Match_Thread_Chelsea_vs_Atletico...

277 | ├── 016_Stefan_Savi�\207_Atlético_Madrid_str...

278 | ├── 017_Chelsea_1_0_West_Brom_Christian_...

279 | └── 018_Alvaro_Morata_I_ve_never_had_dep...

280 | ```

281 |

282 | ## Supported Links:

283 |

284 | * Direct links to images or videos, e.g., `.png`, `.jpg`, `.mp4`, `.gif` etc.

285 | * Reddit galleries `reddit.com/gallery/...`

286 | * Reddit videos `v.redd.it/...`

287 | * Gfycat links `gfycat.com/...`

288 | * Redgif links `redgifs.com/...`

289 | * Imgur images `imgur.com/...`

290 | * Imgur albums `imgur.com/a/...` and `imgur.com/gallery/...`

291 | * Youtube links `youtube.com/...` and `yout.be/...`

292 | * These [sites](https://ytdl-org.github.io/youtube-dl/supportedsites.html) supported by `youtube-dl`

293 | * Self posts

294 | * For all other cases, `saveddit` will simply fetch the HTML of the URL

295 |

296 | ## Contributing

297 | Contributions are welcome, have a look at the [CONTRIBUTING.md](CONTRIBUTING.md) document for more information.

298 |

299 | ## License

300 | The project is available under the [MIT](https://opensource.org/licenses/MIT) license.

301 |

--------------------------------------------------------------------------------

/images/demo.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/p-ranav/saveddit/f4aa0749eec1020bb9927c6dd7fd5059a6d989af/images/demo.gif

--------------------------------------------------------------------------------

/images/imgur_app.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/p-ranav/saveddit/f4aa0749eec1020bb9927c6dd7fd5059a6d989af/images/imgur_app.png

--------------------------------------------------------------------------------

/images/logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/p-ranav/saveddit/f4aa0749eec1020bb9927c6dd7fd5059a6d989af/images/logo.png

--------------------------------------------------------------------------------

/images/reddit_app.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/p-ranav/saveddit/f4aa0749eec1020bb9927c6dd7fd5059a6d989af/images/reddit_app.png

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [build-system]

2 | requires = [

3 | "setuptools>=42",

4 | "wheel"

5 | ]

6 | build-backend = "setuptools.build_meta"

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | colorama==0.4.4

2 | coloredlogs==15.0

3 | verboselogs==1.7

4 | praw==7.2.0

5 | tqdm==4.60.0

6 | ffmpeg_python==0.2.0

7 | youtube_dl==2021.4.7

8 | requests==2.25.1

9 | beautifulsoup4==4.9.3

10 | PyYAML==5.4.1

11 |

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | # replace with your username:

3 | name = saveddit

4 | version = 2.2.1

5 | author = Pranav Srinivas Kumar

6 | author_email = pranav.srinivas.kumar@gmail.com

7 | description = Bulk Downloader for Reddit

8 | long_description = file: README_PyPI.md

9 | long_description_content_type = text/markdown

10 | url = https://github.com/p-ranav/saveddit

11 | project_urls =

12 | Bug Tracker = https://github.com/p-ranav/saveddit/issues

13 | classifiers =

14 | Programming Language :: Python :: 3

15 | License :: OSI Approved :: MIT License

16 | Operating System :: OS Independent

17 |

18 | [options]

19 | package_dir =

20 | = src

21 | packages = find:

22 | python_requires = >=3.8

23 | install_requires =

24 | praw

25 | verboselogs

26 | requests

27 | colorama

28 | coloredlogs

29 | youtube_dl

30 | tqdm

31 | ffmpeg_python

32 | beautifulsoup4

33 | PyYAML

34 |

35 | [options.packages.find]

36 | where = src

37 |

38 | [options.entry_points]

39 | console_scripts =

40 | saveddit = saveddit.saveddit:main

--------------------------------------------------------------------------------

/src/saveddit/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/p-ranav/saveddit/f4aa0749eec1020bb9927c6dd7fd5059a6d989af/src/saveddit/__init__.py

--------------------------------------------------------------------------------

/src/saveddit/_version.py:

--------------------------------------------------------------------------------

1 | __version__ = "2.2.1"

--------------------------------------------------------------------------------

/src/saveddit/configuration.py:

--------------------------------------------------------------------------------

1 | import os

2 | from typing import Union

3 | import yaml

4 | import pathlib

5 | import colorama

6 | import sys

7 |

8 |

9 | class ConfigurationLoader:

10 | PURPLE = colorama.Fore.MAGENTA

11 | WHITE = colorama.Style.RESET_ALL

12 | RED = colorama.Fore.RED

13 |

14 | @staticmethod

15 | def load(path):

16 | """

17 | Loads Saveddit configuration from a configuration file.

18 | If ifle is not found, create one and exit.

19 |

20 | Arguments:

21 | path: path to user_config.yaml file

22 |

23 | Returns:

24 | A Python dictionary with Saveddit configuration info

25 | """

26 |

27 | def _create_config(_path):

28 | _STD_CONFIG = {

29 | "reddit_client_id": "",

30 | "reddit_client_secret": "",

31 | "reddit_username": "",

32 | "imgur_client_id": "",

33 | }

34 | with open(_path, "x") as _f:

35 | yaml.dump(_STD_CONFIG, _f)

36 | sys.exit(0)

37 |

38 | # Explicitly converting path to POSIX-like path (to avoid '\\' hell)

39 | print(

40 | "{notice}Retrieving configuration from {path} file{white}".format(

41 | path=path,

42 | notice=ConfigurationLoader.PURPLE,

43 | white=ConfigurationLoader.WHITE,

44 | )

45 | )

46 | path = pathlib.Path(path).absolute().as_posix()

47 |

48 | # Check if file exists. If not, create one and fill it with std config template

49 | if not os.path.exists(path):

50 | print(

51 | "{red}No configuration file found.\nCreating one. Would you like to edit it now?\n > Choose {purple}Y{red} for yes and {purple}N{red} for no.{white}".format(

52 | red=ConfigurationLoader.RED,

53 | path=path,

54 | white=ConfigurationLoader.WHITE,

55 | purple=ConfigurationLoader.PURPLE,

56 | )

57 | )

58 | getchoice = str(input("> "))

59 | if getchoice == "Y":

60 | reddit_client = str(input("Reddit Client ID: "))

61 | reddit_client_sec = str(input("Reddit Client Secret: "))

62 | reddit_user = str(input("Reddit Username: "))

63 | imgur_client = str(input("Imgur Client ID: "))

64 | STD_CONFIG = {

65 | "reddit_client_id": "{}".format(reddit_client),

66 | "reddit_client_secret": "{}".format(reddit_client_sec),

67 | "reddit_username": "{}".format(reddit_user),

68 | "imgur_client_id": "{}".format(imgur_client),

69 | }

70 | with open(path, "x") as f:

71 | yaml.dump(STD_CONFIG, f)

72 | sys.exit(0)

73 | elif getchoice == "N":

74 | print(

75 | "{red}Alright.\nPlease edit {path} with valid credentials.\nExiting{white}".format(

76 | red=ConfigurationLoader.RED,

77 | path=path,

78 | white=ConfigurationLoader.WHITE,

79 | )

80 | )

81 | _create_config(path)

82 | else:

83 | print("Invalid choice.")

84 | exit()

85 |

86 | with open(path, "r") as _f:

87 | return yaml.safe_load(_f.read())

88 |

--------------------------------------------------------------------------------

/src/saveddit/multireddit_downloader.py:

--------------------------------------------------------------------------------

1 | import coloredlogs

2 | from colorama import Fore, Style

3 | from datetime import datetime, timezone

4 | import logging

5 | import verboselogs

6 | import getpass

7 | import json

8 | import os

9 | import praw

10 | from pprint import pprint

11 | import re

12 | from saveddit.submission_downloader import SubmissionDownloader

13 | from saveddit.subreddit_downloader import SubredditDownloader

14 | from saveddit.multireddit_downloader_config import MultiredditDownloaderConfig

15 | import sys

16 | from tqdm import tqdm

17 |

18 | class MultiredditDownloader:

19 | config = SubredditDownloader.config

20 | REDDIT_CLIENT_ID = config['reddit_client_id']

21 | REDDIT_CLIENT_SECRET = config['reddit_client_secret']

22 | IMGUR_CLIENT_ID = config['imgur_client_id']

23 |

24 | def __init__(self, multireddit_names):

25 | self.logger = verboselogs.VerboseLogger(__name__)

26 | level_styles = {

27 | 'critical': {'bold': True, 'color': 'red'},

28 | 'debug': {'color': 'green'},

29 | 'error': {'color': 'red'},

30 | 'info': {'color': 'white'},

31 | 'notice': {'color': 'magenta'},

32 | 'spam': {'color': 'white', 'faint': True},

33 | 'success': {'bold': True, 'color': 'green'},

34 | 'verbose': {'color': 'blue'},

35 | 'warning': {'color': 'yellow'}

36 | }

37 | coloredlogs.install(level='SPAM', logger=self.logger,

38 | fmt='%(message)s', level_styles=level_styles)

39 |

40 | self.reddit = praw.Reddit(

41 | client_id=MultiredditDownloader.REDDIT_CLIENT_ID,

42 | client_secret=MultiredditDownloader.REDDIT_CLIENT_SECRET,

43 | user_agent="saveddit (by /u/p_ranav)"

44 | )

45 |

46 | self.multireddit_name = "+".join(multireddit_names)

47 | self.multireddit = self.reddit.subreddit(self.multireddit_name)

48 |

49 | def download(self, output_path, categories=MultiredditDownloaderConfig.DEFAULT_CATEGORIES, post_limit=MultiredditDownloaderConfig.DEFAULT_POST_LIMIT, skip_videos=False, skip_meta=False, skip_comments=False, comment_limit=0):

50 | '''

51 | categories: List of categories within the multireddit to download (see MultiredditDownloaderConfig.DEFAULT_CATEGORIES)

52 | post_limit: Number of posts to download (default: None, i.e., all posts)

53 | comment_limit: Number of comment levels to download from submission (default: `0`, i.e., only top-level comments)

54 | - to get all comments, set comment_limit to `None`

55 | '''

56 |

57 | multireddit_dir_name = self.multireddit_name

58 | if len(multireddit_dir_name) > 64:

59 | multireddit_dir_name = multireddit_dir_name[0:63]

60 | multireddit_dir_name += "..."

61 |

62 | root_dir = os.path.join(os.path.join(os.path.join(

63 | output_path, "www.reddit.com"), "m"), multireddit_dir_name)

64 | categories = categories

65 |

66 | for c in categories:

67 | self.logger.notice("Downloading from /m/" +

68 | self.multireddit_name + "/" + c + "/")

69 | category_dir = os.path.join(root_dir, c)

70 | if not os.path.exists(category_dir):

71 | os.makedirs(category_dir)

72 | category_function = getattr(self.multireddit, c)

73 |

74 | for i, submission in enumerate(category_function(limit=post_limit)):

75 | SubmissionDownloader(submission, i, self.logger, category_dir,

76 | skip_videos, skip_meta, skip_comments, comment_limit,

77 | {'imgur_client_id': MultiredditDownloader.IMGUR_CLIENT_ID})

78 |

--------------------------------------------------------------------------------

/src/saveddit/multireddit_downloader_config.py:

--------------------------------------------------------------------------------

1 | class MultiredditDownloaderConfig:

2 | DEFAULT_CATEGORIES = ["hot", "new", "random_rising", "rising",

3 | "controversial", "top", "gilded"]

4 | DEFAULT_POST_LIMIT = None

--------------------------------------------------------------------------------

/src/saveddit/saveddit.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import sys

3 | from saveddit.multireddit_downloader_config import MultiredditDownloaderConfig

4 | from saveddit.search_config import SearchConfig

5 | from saveddit.subreddit_downloader_config import SubredditDownloaderConfig

6 | from saveddit.user_downloader_config import UserDownloaderConfig

7 | from saveddit._version import __version__

8 |

9 |

10 | def asciiart():

11 | return r''' .___ .___.__ __

12 | ___________ ___ __ ____ __| _/__| _/|__|/ |_

13 | / ___/\__ \\ \/ // __ \ / __ |/ __ | | \ __\

14 | \___ \ / __ \\ /\ ___// /_/ / /_/ | | || |

15 | /____ >(____ /\_/ \___ >____ \____ | |__||__|

16 | \/ \/ \/ \/ \/

17 |

18 | Downloader for Reddit

19 | version : ''' + __version__ + '''

20 | URL : https://github.com/p-ranav/saveddit

21 | '''

22 |

23 |

24 | def check_positive(value):

25 | ivalue = int(value)

26 | if ivalue <= 0:

27 | raise argparse.ArgumentTypeError(

28 | "%s is an invalid positive int value" % value)

29 | return ivalue

30 |

31 | class UniqueAppendAction(argparse.Action):

32 | '''

33 | Class used to discard duplicates in list arguments

34 | https://stackoverflow.com/questions/9376670/python-argparse-force-a-list-item-to-be-unique

35 | '''

36 | def __call__(self, parser, namespace, values, option_string=None):

37 | unique_values = set(values)

38 | setattr(namespace, self.dest, unique_values)

39 |

40 | def main():

41 | argv = sys.argv[1:]

42 |

43 | parser = argparse.ArgumentParser(prog="saveddit")

44 | parser.add_argument('-v', '--version', action='version', version='%(prog)s ' + __version__)

45 |

46 | subparsers = parser.add_subparsers(dest="subparser_name")

47 |

48 | subreddit_parser = subparsers.add_parser('subreddit')

49 | subreddit_parser.add_argument('subreddits',

50 | metavar='subreddits',

51 | nargs='+',

52 | action=UniqueAppendAction,

53 | help='Names of subreddits to download, e.g., AskReddit')

54 | subreddit_parser.add_argument('-f',

55 | metavar='categories',

56 | default=SubredditDownloaderConfig.DEFAULT_CATEGORIES,

57 | nargs='+',

58 | action=UniqueAppendAction,

59 | help='Categories of posts to download (default: %(default)s)')

60 | subreddit_parser.add_argument('-l',

61 | default=SubredditDownloaderConfig.DEFAULT_POST_LIMIT,

62 | metavar='post_limit',

63 | type=check_positive,

64 | help='Limit the number of submissions downloaded in each category (default: %(default)s, i.e., all submissions)')

65 | subreddit_parser.add_argument('--skip-comments',

66 | default=False,

67 | action='store_true',

68 | help='When true, saveddit will not save comments to a comments.json file')

69 | subreddit_parser.add_argument('--skip-meta',

70 | default=False,

71 | action='store_true',

72 | help='When true, saveddit will not save meta to a submission.json file on submissions')

73 | subreddit_parser.add_argument('--skip-videos',

74 | default=False,

75 | action='store_true',

76 | help='When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)')

77 | subreddit_parser.add_argument('--all-comments',

78 | default=False,

79 | action='store_true',

80 | help='When true, saveddit will download all the comments in a post instead of just the top ones.')

81 | subreddit_parser.add_argument('-o',

82 | required=True,

83 | type=str,

84 | metavar='output_path',

85 | help='Directory where saveddit will save downloaded content'

86 | )

87 |

88 | multireddit_parser = subparsers.add_parser('multireddit')

89 | multireddit_parser.add_argument('subreddits',

90 | metavar='subreddits',

91 | nargs='+',

92 | action=UniqueAppendAction,

93 | help='Names of subreddits to download, e.g., aww, pics. The downloads will be stored in /www.reddit.com/m/aww+pics/.')

94 | multireddit_parser.add_argument('-f',

95 | metavar='categories',

96 | default=MultiredditDownloaderConfig.DEFAULT_CATEGORIES,

97 | nargs='+',

98 | action=UniqueAppendAction,

99 | help='Categories of posts to download (default: %(default)s)')

100 | multireddit_parser.add_argument('-l',

101 | default=MultiredditDownloaderConfig.DEFAULT_POST_LIMIT,

102 | metavar='post_limit',

103 | type=check_positive,

104 | help='Limit the number of submissions downloaded in each category (default: %(default)s, i.e., all submissions)')

105 | multireddit_parser.add_argument('--skip-comments',

106 | default=False,

107 | action='store_true',

108 | help='When true, saveddit will not save comments to a comments.json file')

109 | multireddit_parser.add_argument('--skip-meta',

110 | default=False,

111 | action='store_true',

112 | help='When true, saveddit will not save meta to a submission.json file on submissions')

113 | multireddit_parser.add_argument('--skip-videos',

114 | default=False,

115 | action='store_true',

116 | help='When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)')

117 | multireddit_parser.add_argument('-o',

118 | required=True,

119 | type=str,

120 | metavar='output_path',

121 | help='Directory where saveddit will save downloaded content'

122 | )

123 |

124 | search_parser = subparsers.add_parser('search')

125 | search_parser.add_argument('subreddits',

126 | metavar='subreddits',

127 | nargs='+',

128 | action=UniqueAppendAction,

129 | help='Names of subreddits to search, e.g., all, aww, pics')

130 | search_parser.add_argument('-q',

131 | metavar='query',

132 | required=True,

133 | help='Search query string')

134 | search_parser.add_argument('-s',

135 | metavar='sort',

136 | default=SearchConfig.DEFAULT_SORT,

137 | choices=SearchConfig.DEFAULT_SORT_CATEGORIES,

138 | help='Sort to apply on search (default: %(default)s, choices: [%(choices)s])')

139 | search_parser.add_argument('-t',

140 | metavar='time_filter',

141 | default=SearchConfig.DEFAULT_TIME_FILTER,

142 | choices=SearchConfig.DEFAULT_TIME_FILTER_CATEGORIES,

143 | help='Time filter to apply on search (default: %(default)s, choices: [%(choices)s])')

144 | search_parser.add_argument('--include-nsfw',

145 | default=False,

146 | action='store_true',

147 | help='When true, saveddit will include NSFW results in search')

148 | search_parser.add_argument('--skip-comments',

149 | default=False,

150 | action='store_true',

151 | help='When true, saveddit will not save comments to a comments.json file')

152 | search_parser.add_argument('--skip-meta',

153 | default=False,

154 | action='store_true',

155 | help='When true, saveddit will not save meta to a submission.json file on submissions')

156 | search_parser.add_argument('--skip-videos',

157 | default=False,

158 | action='store_true',

159 | help='When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)')

160 | search_parser.add_argument('-o',

161 | required=True,

162 | type=str,

163 | metavar='output_path',

164 | help='Directory where saveddit will save downloaded content'

165 | )

166 |

167 | user_parser = subparsers.add_parser('user')

168 | user_parser.add_argument('users',

169 | metavar='users',

170 | nargs='+',

171 | help='Names of users to download, e.g., Poem_for_your_sprog')

172 |

173 |

174 | user_subparsers = user_parser.add_subparsers(dest="user_subparser_name")

175 | user_subparsers.required = True

176 |

177 | # user.saved subparser

178 | saved_parser = user_subparsers.add_parser('saved')

179 | saved_parser.add_argument('--skip-meta',

180 | default=False,

181 | action='store_true',

182 | help='When true, saveddit will not save meta to a submission.json file on submissions')

183 | saved_parser.add_argument('--skip-comments',

184 | default=False,

185 | action='store_true',

186 | help='When true, saveddit will not save comments to a comments.json file')

187 | saved_parser.add_argument('--skip-videos',

188 | default=False,

189 | action='store_true',

190 | help='When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)')

191 | saved_parser.add_argument('-l',

192 | default=UserDownloaderConfig.DEFAULT_POST_LIMIT,

193 | metavar='post_limit',

194 | type=check_positive,

195 | help='Limit the number of saved submissions downloaded (default: %(default)s, i.e., all submissions)')

196 | saved_parser.add_argument('-o',

197 | required=True,

198 | type=str,

199 | metavar='output_path',

200 | help='Directory where saveddit will save downloaded content'

201 | )

202 |

203 | # user.gilded subparser

204 | gilded_parser = user_subparsers.add_parser('gilded')

205 | gilded_parser.add_argument('--skip-meta',

206 | default=False,

207 | action='store_true',

208 | help='When true, saveddit will not save meta to a submission.json file on submissions')

209 | gilded_parser.add_argument('--skip-comments',

210 | default=False,

211 | action='store_true',

212 | help='When true, saveddit will not save comments to a comments.json file')

213 | gilded_parser.add_argument('--skip-videos',

214 | default=False,

215 | action='store_true',

216 | help='When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)')

217 | gilded_parser.add_argument('-l',

218 | default=UserDownloaderConfig.DEFAULT_POST_LIMIT,

219 | metavar='post_limit',

220 | type=check_positive,

221 | help='Limit the number of saved submissions downloaded (default: %(default)s, i.e., all submissions)')

222 | gilded_parser.add_argument('-o',

223 | required=True,

224 | type=str,

225 | metavar='output_path',

226 | help='Directory where saveddit will save downloaded content'

227 | )

228 |

229 | # user.submitted subparser

230 | submitted_parser = user_subparsers.add_parser('submitted')

231 | submitted_parser.add_argument('-s',

232 | metavar='sort',

233 | default=UserDownloaderConfig.DEFAULT_SORT,

234 | choices=UserDownloaderConfig.DEFAULT_SORT_OPTIONS,

235 | help='Download submissions sorted by this option (default: %(default)s, choices: [%(choices)s])')

236 | submitted_parser.add_argument('--skip-comments',

237 | default=False,

238 | action='store_true',

239 | help='When true, saveddit will not save comments to a comments.json file for the submissions')

240 | submitted_parser.add_argument('--skip-meta',

241 | default=False,

242 | action='store_true',

243 | help='When true, saveddit will not save meta to a submission.json file on submissions')

244 | submitted_parser.add_argument('--skip-videos',

245 | default=False,

246 | action='store_true',

247 | help='When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)')

248 | submitted_parser.add_argument('-l',

249 | default=UserDownloaderConfig.DEFAULT_POST_LIMIT,

250 | metavar='post_limit',

251 | type=check_positive,

252 | help='Limit the number of submissions downloaded (default: %(default)s, i.e., all submissions)')

253 | submitted_parser.add_argument('-o',

254 | required=True,

255 | type=str,

256 | metavar='output_path',

257 | help='Directory where saveddit will save downloaded posts'

258 | )

259 |

260 | # user.multireddits subparser

261 | submitted_parser = user_subparsers.add_parser('multireddits')

262 | submitted_parser.add_argument('-n',

263 | metavar='names',

264 | default=None,

265 | nargs='+',

266 | action=UniqueAppendAction,

267 | help='Names of specific multireddits to download (default: %(default)s, i.e., all multireddits for this user)')

268 | submitted_parser.add_argument('-f',

269 | metavar='categories',

270 | default=UserDownloaderConfig.DEFAULT_CATEGORIES,

271 | nargs='+',

272 | action=UniqueAppendAction,

273 | help='Categories of posts to download (default: %(default)s)')

274 | submitted_parser.add_argument('--skip-comments',

275 | default=False,

276 | action='store_true',

277 | help='When true, saveddit will not save comments to a comments.json file for the submissions')

278 | submitted_parser.add_argument('--skip-meta',

279 | default=False,

280 | action='store_true',

281 | help='When true, saveddit will not save meta to a submission.json file on submissions')

282 | submitted_parser.add_argument('--skip-videos',

283 | default=False,

284 | action='store_true',

285 | help='When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)')

286 | submitted_parser.add_argument('-l',

287 | default=UserDownloaderConfig.DEFAULT_POST_LIMIT,

288 | metavar='post_limit',

289 | type=check_positive,

290 | help='Limit the number of submissions downloaded (default: %(default)s, i.e., all submissions)')

291 | submitted_parser.add_argument('-o',

292 | required=True,

293 | type=str,

294 | metavar='output_path',

295 | help='Directory where saveddit will save downloaded posts'

296 | )

297 |

298 | # user.upvoted subparser

299 | upvoted_parser = user_subparsers.add_parser('upvoted')

300 | upvoted_parser.add_argument('--skip-comments',

301 | default=False,

302 | action='store_true',

303 | help='When true, saveddit will not save comments to a comments.json file for the upvoted submissions')

304 | upvoted_parser.add_argument('--skip-meta',

305 | default=False,

306 | action='store_true',

307 | help='When true, saveddit will not save meta to a submission.json file on upvoted submissions')

308 | upvoted_parser.add_argument('--skip-videos',

309 | default=False,

310 | action='store_true',

311 | help='When true, saveddit will not download videos (e.g., gfycat, redgifs, youtube, v.redd.it links)')

312 | upvoted_parser.add_argument('-l',

313 | default=UserDownloaderConfig.DEFAULT_POST_LIMIT,

314 | metavar='post_limit',

315 | type=check_positive,

316 | help='Limit the number of submissions downloaded (default: %(default)s, i.e., all submissions)')

317 | upvoted_parser.add_argument('-o',

318 | required=True,

319 | type=str,

320 | metavar='output_path',

321 | help='Directory where saveddit will save downloaded posts'

322 | )

323 |

324 | # user.comments subparser

325 | comments_parser = user_subparsers.add_parser('comments')

326 | comments_parser.add_argument('-s',

327 | metavar='sort',

328 | default=UserDownloaderConfig.DEFAULT_SORT,

329 | choices=UserDownloaderConfig.DEFAULT_SORT_OPTIONS,

330 | help='Download comments sorted by this option (default: %(default)s, choices: [%(choices)s])')

331 | comments_parser.add_argument('-l',

332 | default=UserDownloaderConfig.DEFAULT_COMMENT_LIMIT,

333 | metavar='post_limit',

334 | type=check_positive,

335 | help='Limit the number of comments downloaded (default: %(default)s, i.e., all comments)')

336 | comments_parser.add_argument('-o',

337 | required=True,

338 | type=str,

339 | metavar='output_path',

340 | help='Directory where saveddit will save downloaded comments'

341 | )

342 |

343 | args = parser.parse_args(argv)

344 | print(asciiart())

345 |

346 | if args.subparser_name == "subreddit":

347 | from saveddit.subreddit_downloader import SubredditDownloader

348 | for subreddit in args.subreddits:

349 | downloader = SubredditDownloader(subreddit)

350 | downloader.download(args.o,

351 | download_all_comments=args.all_comments, categories=args.f, post_limit=args.l, skip_videos=args.skip_videos, skip_meta=args.skip_meta, skip_comments=args.skip_comments)

352 | elif args.subparser_name == "multireddit":

353 | from saveddit.multireddit_downloader import MultiredditDownloader

354 | downloader = MultiredditDownloader(args.subreddits)

355 | downloader.download(args.o,

356 | categories=args.f, post_limit=args.l, skip_videos=args.skip_videos, skip_meta=args.skip_meta, skip_comments=args.skip_comments)

357 | elif args.subparser_name == "search":

358 | from saveddit.search_subreddits import SearchSubreddits

359 | downloader = SearchSubreddits(args.subreddits)

360 | downloader.download(args)

361 | elif args.subparser_name == "user":

362 | from saveddit.user_downloader import UserDownloader

363 | downloader = UserDownloader()

364 | downloader.download_user_meta(args)

365 | if args.user_subparser_name == "comments":

366 | downloader.download_comments(args)

367 | elif args.user_subparser_name == "multireddits":

368 | downloader.download_multireddits(args)

369 | elif args.user_subparser_name == "submitted":

370 | downloader.download_submitted(args)

371 | elif args.user_subparser_name == "saved":

372 | downloader.download_saved(args)

373 | elif args.user_subparser_name == "upvoted":

374 | downloader.download_upvoted(args)

375 | elif args.user_subparser_name == "gilded":

376 | downloader.download_gilded(args)

377 | else:

378 | parser.print_help()

379 |

380 | if __name__ == "__main__":

381 | main()

382 |

--------------------------------------------------------------------------------

/src/saveddit/search_config.py:

--------------------------------------------------------------------------------

1 | class SearchConfig:

2 | DEFAULT_SORT = "relevance"

3 | DEFAULT_SORT_CATEGORIES = ["relevance", "hot", "top", "new", "comments"]

4 | DEFAULT_SYNTAX = "lucene"

5 | DEFAULT_SYNTAX_CATEGORIES = ["cloud search", "lucene", "plain"]

6 | DEFAULT_TIME_FILTER = "all"

7 | DEFAULT_TIME_FILTER_CATEGORIES = ["all", "day", "hour", "month", "week", "year"]

--------------------------------------------------------------------------------

/src/saveddit/search_subreddits.py:

--------------------------------------------------------------------------------

1 | import coloredlogs

2 | from colorama import Fore, Style

3 | from datetime import datetime, timezone

4 | import logging

5 | import verboselogs

6 | import getpass

7 | import json

8 | import os

9 | import praw

10 | from pprint import pprint

11 | import re

12 | from saveddit.submission_downloader import SubmissionDownloader

13 | from saveddit.subreddit_downloader import SubredditDownloader

14 | from saveddit.search_config import SearchConfig

15 | import sys

16 | from tqdm import tqdm

17 |

18 | class SearchSubreddits:

19 | config = SubredditDownloader.config

20 | REDDIT_CLIENT_ID = config['reddit_client_id']

21 | REDDIT_CLIENT_SECRET = config['reddit_client_secret']

22 | IMGUR_CLIENT_ID = config['imgur_client_id']

23 |

24 | REDDIT_USERNAME = None

25 | try:

26 | REDDIT_USERNAME = config['reddit_username']

27 | except Exception as e:

28 | pass

29 |

30 | REDDIT_PASSWORD = None

31 | if REDDIT_USERNAME:

32 | if sys.stdin.isatty():

33 | print("Username: " + REDDIT_USERNAME)

34 | REDDIT_PASSWORD = getpass.getpass("Password: ")

35 | else:

36 | # echo "foobar" > password

37 | # saveddit user .... < password

38 | REDDIT_PASSWORD = sys.stdin.readline().rstrip()

39 |

40 | def __init__(self, subreddit_names):

41 | self.logger = verboselogs.VerboseLogger(__name__)

42 | level_styles = {

43 | 'critical': {'bold': True, 'color': 'red'},

44 | 'debug': {'color': 'green'},

45 | 'error': {'color': 'red'},

46 | 'info': {'color': 'white'},

47 | 'notice': {'color': 'magenta'},

48 | 'spam': {'color': 'white', 'faint': True},

49 | 'success': {'bold': True, 'color': 'green'},

50 | 'verbose': {'color': 'blue'},

51 | 'warning': {'color': 'yellow'}

52 | }

53 | coloredlogs.install(level='SPAM', logger=self.logger,

54 | fmt='%(message)s', level_styles=level_styles)

55 |

56 | if not SearchSubreddits.REDDIT_USERNAME:

57 | self.logger.error("`reddit_username` in user_config.yaml is empty")

58 | self.logger.error("If you plan on using the user API of saveddit, then add your username to user_config.yaml")

59 | print("Exiting now")

60 | exit()

61 | else:

62 | if not len(SearchSubreddits.REDDIT_PASSWORD):

63 | if sys.stdin.isatty():

64 | print("Username: " + REDDIT_USERNAME)

65 | REDDIT_PASSWORD = getpass.getpass("Password: ")

66 | else:

67 | # echo "foobar" > password

68 | # saveddit user .... < password

69 | REDDIT_PASSWORD = sys.stdin.readline().rstrip()

70 |

71 | self.reddit = praw.Reddit(

72 | client_id=SearchSubreddits.REDDIT_CLIENT_ID,

73 | client_secret=SearchSubreddits.REDDIT_CLIENT_SECRET,

74 | user_agent="saveddit (by /u/p_ranav)"

75 | )

76 |

77 | self.multireddit_name = "+".join(subreddit_names)

78 | self.subreddit = self.reddit.subreddit(self.multireddit_name)

79 |

80 | def download(self, args):

81 | output_path = args.o

82 | query = args.q

83 | sort = args.s

84 | syntax = SearchConfig.DEFAULT_SYNTAX

85 | time_filter = args.t

86 | include_nsfw = args.include_nsfw

87 | skip_comments = args.skip_comments

88 | skip_videos = args.skip_videos

89 | skip_meta = args.skip_meta

90 | comment_limit = 0 # top-level comments ONLY

91 |

92 | self.logger.verbose("Searching '" + query + "' in " + self.multireddit_name + ", sorted by " + sort)

93 | if include_nsfw:

94 | self.logger.spam(" * Including NSFW results")

95 |

96 | search_dir = os.path.join(os.path.join(os.path.join(os.path.join(os.path.join(

97 | output_path, "www.reddit.com"), "q"), query), self.multireddit_name), sort)

98 |

99 | if not os.path.exists(search_dir):

100 | os.makedirs(search_dir)

101 |

102 | search_results = None

103 | if include_nsfw:

104 | search_params = {"include_over_18": "on"}

105 | search_results = self.subreddit.search(query, sort, syntax, time_filter, params=search_params)

106 | else:

107 | search_results = self.subreddit.search(query, sort, syntax, time_filter)

108 |

109 | results_found = False

110 | for i, submission in enumerate(search_results):

111 | if not results_found:

112 | results_found = True

113 | SubmissionDownloader(submission, i, self.logger, search_dir,

114 | skip_videos, skip_meta, skip_comments, comment_limit,

115 | {'imgur_client_id': SubredditDownloader.IMGUR_CLIENT_ID})

116 |

117 | if not results_found:

118 | self.logger.spam(" * No results found")

--------------------------------------------------------------------------------

/src/saveddit/submission_downloader.py:

--------------------------------------------------------------------------------

1 | from bs4 import BeautifulSoup

2 | import coloredlogs

3 | from colorama import Fore

4 | import contextlib

5 | import logging

6 | import verboselogs

7 | from datetime import datetime

8 | import os

9 | from io import StringIO

10 | import json

11 | import mimetypes

12 | import ffmpeg

13 | import praw

14 | from pprint import pprint

15 | import re

16 | import requests

17 | from tqdm import tqdm

18 | import urllib.request

19 | import youtube_dl

20 | import os

21 |

22 |

23 | class SubmissionDownloader:

24 | def __init__(self, submission, submission_index, logger, output_dir, skip_videos, skip_meta, skip_comments, comment_limit, config):

25 | self.IMGUR_CLIENT_ID = config["imgur_client_id"]

26 |

27 | self.logger = logger

28 | i = submission_index

29 | prefix_str = '#' + str(i).zfill(3) + ' '

30 | self.indent_1 = ' ' * len(prefix_str) + "* "

31 | self.indent_2 = ' ' * len(self.indent_1) + "- "

32 |

33 | has_url = getattr(submission, "url", None)

34 | if has_url:

35 | title = submission.title

36 | self.logger.verbose(prefix_str + '"' + title + '"')

37 | title = re.sub(r'\W+', '_', title)

38 |

39 | # Truncate title

40 | if len(title) > 32:

41 | title = title[0:32]

42 | if os.name == "nt":

43 | pass

44 | else:

45 | title += "..."

46 |

47 | # Prepare directory for the submission

48 | post_dir = str(i).zfill(3) + "_" + title.replace(" ", "_")

49 | submission_dir = os.path.join(output_dir, post_dir)

50 | if not os.path.exists(submission_dir):

51 | os.makedirs(submission_dir)

52 | elif os.path.exists(submission_dir):

53 | print("File exists, Skipping it.")

54 | return

55 |

56 | self.logger.spam(

57 | self.indent_1 + "Processing `" + submission.url + "`")

58 |

59 | success = False

60 |

61 | should_create_files_dir = True

62 | if skip_comments and skip_meta:

63 | should_create_files_dir = False

64 |

65 | def create_files_dir(submission_dir):

66 | if should_create_files_dir:

67 | files_dir = os.path.join(submission_dir, "files")

68 | if not os.path.exists(files_dir):

69 | os.makedirs(files_dir)

70 | return files_dir

71 | else:

72 | return submission_dir

73 |

74 | if self.is_direct_link_to_content(submission.url, [".png", ".jpg", ".jpeg", ".gif"]):

75 | files_dir = create_files_dir(submission_dir)

76 |

77 | filename = submission.url.split("/")[-1]

78 | self.logger.spam(

79 | self.indent_1 + "This is a direct link to a " + filename.split(".")[-1] + " file")

80 | save_path = os.path.join(files_dir, filename)

81 | self.download_direct_link(submission, save_path)

82 | success = True

83 | elif self.is_direct_link_to_content(submission.url, [".mp4"]):

84 | filename = submission.url.split("/")[-1]

85 | self.logger.spam(

86 | self.indent_1 + "This is a direct link to a " + filename.split(".")[-1] + " file")

87 | if not skip_videos:

88 | files_dir = create_files_dir(submission_dir)

89 | save_path = os.path.join(files_dir, filename)

90 | self.download_direct_link(submission, save_path)

91 | success = True

92 | else:

93 | self.logger.spam(self.indent_1 + "Skipping download of video content")

94 | success = True

95 | elif self.is_reddit_gallery(submission.url):

96 | files_dir = create_files_dir(submission_dir)

97 |

98 | self.logger.spam(

99 | self.indent_1 + "This is a reddit gallery")

100 | self.download_reddit_gallery(submission, files_dir, skip_videos)

101 | success = True

102 | elif self.is_reddit_video(submission.url):

103 | self.logger.spam(

104 | self.indent_1 + "This is a reddit video")

105 |

106 | if not skip_videos:

107 | files_dir = create_files_dir(submission_dir)

108 | self.download_reddit_video(submission, files_dir)

109 | success = True

110 | else:

111 | self.logger.spam(self.indent_1 + "Skipping download of video content")

112 | success = True

113 | elif self.is_gfycat_link(submission.url) or self.is_redgifs_link(submission.url):

114 | if self.is_gfycat_link(submission.url):

115 | self.logger.spam(

116 | self.indent_1 + "This is a gfycat link")

117 | else:

118 | self.logger.spam(

119 | self.indent_1 + "This is a redgif link")

120 |

121 | if not skip_videos:

122 | files_dir = create_files_dir(submission_dir)

123 | self.download_gfycat_or_redgif(submission, files_dir)

124 | success = True

125 | else:

126 | self.logger.spam(self.indent_1 + "Skipping download of video content")

127 | success = True

128 | elif self.is_imgur_album(submission.url):

129 | files_dir = create_files_dir(submission_dir)

130 |

131 | self.logger.spam(

132 | self.indent_1 + "This is an imgur album")

133 | self.download_imgur_album(submission, files_dir)

134 | success = True

135 | elif self.is_imgur_image(submission.url):

136 | files_dir = create_files_dir(submission_dir)

137 |

138 | self.logger.spam(

139 | self.indent_1 + "This is an imgur image or video")

140 | self.download_imgur_image(submission, files_dir)

141 | success = True

142 | elif self.is_self_post(submission):

143 | self.logger.spam(self.indent_1 + "This is a self-post")

144 | success = True

145 | elif (not skip_videos) and (self.is_youtube_link(submission.url) or self.is_supported_by_youtubedl(submission.url)):

146 | if self.is_youtube_link(submission.url):

147 | self.logger.spam(

148 | self.indent_1 + "This is a youtube link")

149 | else:

150 | self.logger.spam(

151 | self.indent_1 + "This link is supported by a youtube-dl extractor")

152 |

153 | if not skip_videos:

154 | files_dir = create_files_dir(submission_dir)

155 | self.download_youtube_video(submission.url, files_dir)

156 | success = True

157 | else:

158 | self.logger.spam(self.indent_1 + "Skipping download of video content")

159 | success = True

160 | else:

161 | success = True

162 |

163 | # Download submission meta

164 | if not skip_meta:

165 | self.logger.spam(self.indent_1 + "Saving submission.json")

166 | self.download_submission_meta(submission, submission_dir)

167 | else:

168 | self.logger.spam(

169 | self.indent_1 + "Skipping submissions meta")

170 |

171 | # Downlaod comments if requested

172 | if not skip_comments:

173 | if comment_limit == None:

174 | self.logger.spam(

175 | self.indent_1 + "Saving all comments to comments.json")

176 | else:

177 | self.logger.spam(

178 | self.indent_1 + "Saving top-level comments to comments.json")

179 | self.download_comments(

180 | submission, submission_dir, comment_limit)

181 | else:

182 | self.logger.spam(

183 | self.indent_1 + "Skipping comments")

184 |

185 | if success:

186 | self.logger.spam(

187 | self.indent_1 + "Saved to " + submission_dir + "\n")

188 | else:

189 | self.logger.warning(

190 | self.indent_1 + "Failed to download from link " + submission.url + "\n"

191 | )

192 |

193 | def print_formatted_error(self, e):

194 | for line in str(e).split("\n"):

195 | self.logger.error(self.indent_2 + line)

196 |

197 | def is_direct_link_to_content(self, url, supported_file_formats):

198 | url_leaf = url.split("/")[-1]

199 | return any([i in url_leaf for i in supported_file_formats]) and ".gifv" not in url_leaf

200 |

201 | def download_direct_link(self, submission, output_path):

202 | try:

203 | urllib.request.urlretrieve(submission.url, output_path)

204 | except Exception as e: