├── .gitignore

├── README.md

├── assets

├── 3dunet_arch.png

├── Decision Making in Applying Deep Learning - Page 1.svg

├── IOU_segmentation.png

├── RCNN_arch.png

├── alexnet_arch.png

├── chestxray8_arch.png

├── deepmask_arch.PNG

├── fast_rcnn_arch.png

├── faster_rcnn_arch.png

├── fcn_arch.png

├── fpn_arch.png

├── fpn_arch2.png

├── fpn_arch3.png

├── images

│ ├── 2d3d_deep3dbox.png

│ ├── 2d3d_deep3dbox_1.png

│ ├── 2d3d_deep3dbox_2.png

│ ├── 2d3d_deep3dbox_code.png

│ ├── 2d3d_deep3dbox_equivalency.png

│ ├── 2d3d_fqnet_1.png

│ ├── 2d3d_fqnet_2.png

│ ├── 2d3d_shift_rcnn_1.png

│ ├── 2d3d_shift_rcnn_2.png

│ ├── cam_conv.jpg

│ ├── disnet.png

│ ├── foresee.png

│ ├── pseudo_lidar.png

│ └── tesla_release_note_10.11.2.png

├── lstm_arch.png

├── lstm_calc.png

├── mask_rcnn_arch.png

├── mask_rcnn_arch2.png

├── multipath_arch.png

├── overfeat_bb_regressor.png

├── overfeat_efficient_sliding_window.png

├── overfeat_shift_and_stich.png

├── papers

│ ├── Devils_in_BatchNorm_yuxin_wu.pdf

│ ├── bosch_traffic_lights.pdf

│ └── schumann2018.pdf

├── polygon_rnn_arch.png

├── review_mlp_vs_transformers

│ ├── illustrated_transformers_vs_mlp.docx

│ └── illustration_mlp_vs_transformers.ipynb

├── segmentation_tasks_example.png

├── sharpmask_arch.png

├── sharpmask_head_arch.png

├── sharpmask_refinement_arch.png

├── ssd_arch.png

├── unet_arch.png

├── vgg_arch.png

├── vnet_arch.png

├── yolo9000_wordtree.png

├── yolo_arch.png

├── yolo_arch2.png

├── yolo_diagram.png

└── yolo_loss.png

├── chrono

├── 2019H1.md

├── 2019H2.md

└── ipynb

│ └── visualization_paper_reading_2019.ipynb

├── code_notes

├── _template.md

├── old_tf_notes

│ └── tf_learning_notes.md

├── openpilot.md

├── pitfalls.md

├── setup_log.md

└── simple_faster_rcnn.md

├── learning_filters

└── README.md

├── learning_pnc

├── crash_course.md

└── pnc_notes.md

├── learning_slam

├── slam_14.md

└── slam_ref.md

├── openai_orgchart

├── README.md

├── openai_orgchart.ipynb

├── openai_orgchart.txt

├── resource_allocation.png

├── skill_diversity_of_addtional_contributors.png

├── skill_diversity_of_all_contributors.png

├── top_contributors in Additional contributions.png

├── top_contributors in Long context.png

├── top_contributors.png

├── top_contributors_in_Deployment.png

├── top_contributors_in_Evaluation_analysis.png

├── top_contributors_in_Pretraining.png

├── top_contributors_in_Reinforcement_Learning_Alignment.png

├── top_contributors_in_Vision.png

└── word_cloud.png

├── paper_notes

├── 2dod_calib.md

├── 3d_gck.md

├── 3d_lanenet+.md

├── 3d_lanenet.md

├── 3d_rcnn.md

├── 3d_shapenets.md

├── 3ddl_cvpr2017.md

├── 3dod_review.md

├── 3dssd.md

├── 6d_vnet.md

├── AUNet_panoptic.md

├── M2Det.md

├── MixMatch.md

├── _template.md

├── acnet.md

├── adaptive_nms.md

├── adriver_i.md

├── afdet.md

├── aft.md

├── agg_loss.md

├── alphago.md

├── am3d.md

├── amodal_completion.md

├── anchor_detr.md

├── ap_mr.md

├── apollo_car_parts.md

├── apollo_em_planner.md

├── apollocar3d.md

├── argoverse.md

├── association_lstm.md

├── associative_embedding.md

├── astyx_dataset.md

├── astyx_radar_camera_fusion.md

├── atss.md

├── autoaugment.md

├── av20.md

├── avod.md

├── avp_slam.md

├── avp_slam_late_fusion.md

├── ba_sfm_learner.md

├── bag_of_freebies_object_detection.md

├── bag_of_tricks_classification.md

├── banet.md

├── batchnorm_pruning.md

├── bayes_od.md

├── bayesian_segnet.md

├── bayesian_yolov3.md

├── bc_sac.md

├── bev_feat_stitching.md

├── bev_od_ipm.md

├── bev_seg.md

├── bevdepth.md

├── bevdet.md

├── bevdet4d.md

├── beverse.md

├── bevformer.md

├── bevfusion.md

├── bevnet_sdca.md

├── birdgan.md

├── blendmask.md

├── bn_ffn_bn.md

├── bosch_traffic_lights.md

├── boxinst.md

├── boxy.md

├── bs3d.md

├── c3dpo.md

├── caddn.md

├── calib_modern_nn.md

├── cam2bev.md

├── cam_conv.md

├── cap.md

├── casgeo.md

├── cbam.md

├── cbgs.md

├── cbn.md

├── cc.md

├── center3d.md

├── centerfusion.md

├── centermask.md

├── centernet.md

├── centernet2.md

├── centerpoint.md

├── centertrack.md

├── centroid_voting.md

├── channel_pruning_megvii.md

├── chauffeurnet.md

├── class_balanced_loss.md

├── classical_keypoints.md

├── cluster_vo.md

├── cnn_seg.md

├── codex.md

├── complex_yolo.md

├── condinst.md

├── confluence.md

├── consistent_video_depth.md

├── contfuse.md

├── coord_conv.md

├── corenet.md

├── corner_case_multisensor.md

├── corner_case_vision_arxiv.md

├── cornernet.md

├── crf_net.md

├── crowd_det.md

├── crowdhuman.md

├── csp_pedestrian.md

├── cube_slam.md

├── cubifae_3d.md

├── cvt.md

├── d3vo.md

├── d4lcn.md

├── da_3det.md

├── dagmapper.md

├── darts.md

├── ddmp.md

├── deep3dbox.md

├── deep_active_learning_lidar.md

├── deep_boundary_extractor.md

├── deep_depth_completion_rgbd.md

├── deep_double_descent.md

├── deep_fusion_review.md

├── deep_lane_association.md

├── deep_manta.md

├── deep_optics.md

├── deep_radar_detector.md

├── deep_road_mapper.md

├── deep_signals.md

├── deep_sort.md

├── deep_structured_crosswalk.md

├── deeplidar.md

├── deepmot.md

├── deepv2d.md

├── defcn.md

├── deformable_detr.md

├── dekr.md

├── delving_bev.md

├── dense_tnt.md

├── densebox.md

├── densepose.md

├── depth_coeff.md

├── depth_from_one_line.md

├── depth_hints.md

├── detect_track.md

├── detr.md

├── detr3d.md

├── df_vo.md

├── disnet.md

├── distance_estimation_pose_radar.md

├── distant_object_radar.md

├── dl_regression_calib.md

├── dorn.md

├── dota.md

├── double_anchor.md

├── double_descent.md

├── drive_dreamer.md

├── drive_gan.md

├── drive_wm.md

├── drivegpt4.md

├── drivevlm.md

├── drl_flappy.md

├── drl_vessel_centerline.md

├── dsnt.md

├── dsp.md

├── dtp.md

├── e2e_lmd.md

├── e2e_review_hongyang.md

├── edge_aware_depth_normal.md

├── edgeconv.md

├── efficientdet.md

├── efficientnet.md

├── egonet.md

├── eudm.md

├── extreme_clicking.md

├── extremenet.md

├── faf.md

├── fairmot.md

├── fbnet.md

├── fcos.md

├── fcos3d.md

├── feature_metric.md

├── federated_learning_comm.md

├── fiery.md

├── fishing_net.md

├── fishyscape.md

├── fixres.md

├── flamingo.md

├── focal_loss.md

├── foresee_mono3dod.md

├── foveabox.md

├── fqnet.md

├── frozen_depth.md

├── frustum_pointnet.md

├── fsaf_detection.md

├── fsm.md

├── gac.md

├── gaia_1.md

├── gato.md

├── gaussian_yolov3.md

├── gen_lanenet.md

├── genie.md

├── geometric_pretraining.md

├── geonet.md

├── gfocal.md

├── gfocalv2.md

├── ghostnet.md

├── giou.md

├── glnet.md

├── gpp.md

├── gpt4.md

├── gpt4v_robotics.md

├── gradnorm.md

├── graph_spectrum.md

├── groupnorm.md

├── gs3d.md

├── guided_backprop.md

├── gupnet.md

├── h3d.md

├── hdmapnet.md

├── hevi.md

├── home.md

├── how_hard_can_it_be.md

├── hran.md

├── hugging_gpt.md

├── human_centric_annotation.md

├── insta_yolo.md

├── instance_mot_seg.md

├── instructgpt.md

├── intentnet.md

├── iou_net.md

├── joint_learned_bptp.md

├── kalman_filter.md

├── keep_hd_maps_updated_bmw.md

├── kinematic_mono3d.md

├── kitti.md

├── kitti_lane.md

├── kl_loss.md

├── km3d_net.md

├── kp2d.md

├── kp3d.md

├── lanenet.md

├── lasernet.md

├── lasernet_kl.md

├── layer_compensated_pruning.md

├── learn_depth_and_motion.md

├── learning_correspondence.md

├── learning_ood_conf.md

├── learning_to_look_around_objects.md

├── learnk.md

├── lego.md

├── lego_loam.md

├── legr.md

├── lidar_rcnn.md

├── lidar_sim.md

├── lifelong_feature_mapping_google.md

├── lift_splat_shoot.md

├── lighthead_rcnn.md

├── lingo_1.md

├── lingo_2.md

├── llm_brain.md

├── llm_vision_intel.md

├── locomotion_next_token_pred.md

├── long_term_feat_bank.md

├── lottery_ticket_hypothesis.md

├── lstr.md

├── m2bev.md

├── m2i.md

├── m3d_rpn.md

├── mae.md

├── manydepth.md

├── maptr.md

├── marc.md

├── mb_net.md

├── mebow.md

├── meinst.md

├── mfs.md

├── mgail_ad.md

├── mile.md

├── misc.md

├── mixup.md

├── mlf.md

├── mmf.md

├── mnasnet.md

├── mobilenets.md

├── mobilenets_v2.md

├── mobilenets_v3.md

├── moc.md

├── moco.md

├── monet3d.md

├── mono3d++.md

├── mono3d.md

├── mono3d_fisheye.md

├── mono_3d_tracking.md

├── mono_3dod_2d3d_constraints.md

├── mono_uncertainty.md

├── monodepth.md

├── monodepth2.md

├── monodis.md

├── monodle.md

├── monodr.md

├── monoef.md

├── monoflex.md

├── monogrnet.md

├── monogrnet_russian.md

├── monolayout.md

├── monoloco.md

├── monopair.md

├── monopsr.md

├── monoresmatch.md

├── monoscene.md

├── mot_and_sot.md

├── motionnet.md

├── movi_3d.md

├── mp3.md

├── mpdm.md

├── mpdm2.md

├── mpv_nets.md

├── mtcnn.md

├── multi_object_mono_slam.md

├── multigrid_training.md

├── multinet_raquel.md

├── multipath++.md

├── multipath.md

├── multipath_uber.md

├── mv3d.md

├── mvcnn.md

├── mvf.md

├── mvp.md

├── mvra.md

├── nas_fpn.md

├── nature_dqn_paper.md

├── neat.md

├── network_slimming.md

├── ng_ransac.md

├── nmp.md

├── non_local_net.md

├── nuplan.md

├── nuscenes.md

├── obj_dist_iccv2019.md

├── obj_motion_net.md

├── object_detection_region_decomposition.md

├── objects_without_bboxes.md

├── occ3d.md

├── occlusion_net.md

├── occupancy_networks.md

├── oft.md

├── onenet.md

├── openoccupancy.md

├── opportunities_foundation_models.md

├── out_of_data.md

├── owod.md

├── packnet.md

├── packnet_sg.md

├── palm_e.md

├── panet.md

├── panoptic_bev.md

├── panoptic_fpn.md

├── panoptic_segmentation.md

├── parametric_cont_conv.md

├── patchnet.md

├── patdnn.md

├── pdq.md

├── perceiver.md

├── perceiver_io.md

├── perceiving_humans.md

├── persformer.md

├── petr.md

├── petrv2.md

├── peudo_lidar_e2d.md

├── pie.md

├── pillar_motion.md

├── pillar_od.md

├── pix2seq.md

├── pix2seq_v2.md

├── pixels_to_graphs.md

├── pixor++.md

├── pixor.md

├── pnpnet.md

├── point_cnn.md

├── point_painting.md

├── point_pillars.md

├── point_rcnn.md

├── pointnet++.md

├── pointnet.md

├── pointrend.md

├── pointtrack++.md

├── pointtrack.md

├── polarmask.md

├── polymapper.md

├── posenet.md

├── powernorm.md

├── pp_yolo.md

├── ppgeo.md

├── prevention_dataset.md

├── prompt_craft.md

├── pruning_filters.md

├── psdet.md

├── pseudo_lidar++.md

├── pseudo_lidar.md

├── pseudo_lidar_e2e.md

├── pseudo_lidar_v3.md

├── pss.md

├── pwc_net.md

├── pyroccnet.md

├── pyva.md

├── qcnet.md

├── quo_vadis_i3d.md

├── r2_nms.md

├── radar_3d_od_fcn.md

├── radar_camera_qcom.md

├── radar_detection_pointnet.md

├── radar_fft_qcom.md

├── radar_point_semantic_seg.md

├── radar_target_detection_tsinghua.md

├── rarnet.md

├── realtime_panoptic.md

├── recurrent_retinanet.md

├── recurrent_ssd.md

├── refined_mpl.md

├── reid_surround_fisheye.md

├── rep_loss.md

├── repvgg.md

├── resnest.md

├── resnext.md

├── rethinking_pretraining.md

├── retina_face.md

├── retina_unet.md

├── retnet.md

├── review_descriptors.md

├── review_mono_3dod.md

├── rfcn.md

├── rib_centerline_philips.md

├── road_slam.md

├── road_tracer.md

├── robovqa.md

├── roi10d.md

├── roi_transformer.md

├── rolo.md

├── ror.md

├── rpt.md

├── rrpn_radar.md

├── rt1.md

├── rt2.md

├── rtm3d.md

├── rvnet.md

├── rwkv.md

├── s3dot.md

├── saycan.md

├── sc_sfm_learner.md

├── scaled_yolov4.md

├── sdflabel.md

├── self_mono_sf.md

├── semilocal_3d_lanenet.md

├── sfm_learner.md

├── sgdepth.md

├── shift_rcnn.md

├── simmim.md

├── simtrack.md

├── sknet.md

├── sku110k.md

├── slimmable_networks.md

├── slowfast.md

├── smoke.md

├── smwa.md

├── social_lstm.md

├── solo.md

├── solov2.md

├── sort.md

├── sparse_hd_maps.md

├── sparse_rcnn.md

├── sparse_to_dense.md

├── spatial_embedding.md

├── specialized_cyclists.md

├── speednet.md

├── ss3d.md

├── stitcher.md

├── stn.md

├── struct2depth.md

├── stsu.md

├── subpixel_conv.md

├── super.md

├── superpoint.md

├── surfel_gan.md

├── surroundocc.md

├── swahr.md

├── task_grouping.md

├── taskonomy.md

├── tensormask.md

├── tfl_exploting_map_korea.md

├── tfl_lidar_map_building_brazil.md

├── tfl_mapping_google.md

├── tfl_robust_japan.md

├── tfl_stanford.md

├── ti_mmwave_radar_webinar.md

├── tidybot.md

├── tlnet.md

├── tnt.md

├── to_learn_or_not.md

├── tot.md

├── towards_safe_ad.md

├── towards_safe_ad2.md

├── towards_safe_ad_calib.md

├── tpvformer.md

├── tracktor.md

├── trafficpredict.md

├── train_in_germany.md

├── transformer.md

├── transformers_are_rnns.md

├── translating_images_to_maps.md

├── trianflow.md

├── tsinghua_daimler_cyclists.md

├── tsl_frequency.md

├── tsm.md

├── tsp.md

├── twsm_net.md

├── umap.md

├── uncertainty_bdl.md

├── uncertainty_multitask.md

├── understanding_apr.md

├── uniad.md

├── unisim.md

├── universal_slimmable.md

├── unsuperpoint.md

├── ur3d.md

├── vectormapnet.md

├── ved.md

├── vehicle_centric_velocity_net.md

├── velocity_net.md

├── vg_nms.md

├── video_ldm.md

├── videogpt.md

├── vip3d.md

├── virtual_normal.md

├── vision_llm.md

├── vit.md

├── vo_monodepth.md

├── vol_vs_mvcnn.md

├── voxformer.md

├── voxnet.md

├── voxposer.md

├── vpgnet.md

├── vpn.md

├── vpt.md

├── vslam_for_ad.md

├── wayformer.md

├── waymo_dataset.md

├── what_monodepth_see.md

├── widerperson.md

├── world_dreamer.md

├── wysiwyg.md

├── yolact.md

├── yolof.md

├── yolov3.md

├── yolov4.md

└── yolov5.md

├── start

├── first_cnn_papers.md

└── first_cnn_papers_notes.md

├── talk_notes

├── andrej.md

├── cvpr_2021

│ ├── assets

│ │ ├── 8cam_setup.jpg

│ │ ├── cover.jpg

│ │ ├── data_auto_labeling.jpg

│ │ ├── depth_velocity_with_vision_1.jpg

│ │ ├── depth_velocity_with_vision_2.jpg

│ │ ├── depth_velocity_with_vision_3.jpg

│ │ ├── large_clean_diverse_data.jpg

│ │ ├── release_and_validation.jpg

│ │ ├── tesla_dataset.jpg

│ │ ├── tesla_no_radar.jpg

│ │ ├── traffic_control_warning_pmm.jpg

│ │ └── trainig_cluster.jpg

│ └── cvpr_2021.md

├── scaledml_2020

│ ├── assets

│ │ ├── bevnet.jpg

│ │ ├── env.jpg

│ │ ├── evaluation.jpg

│ │ ├── operation_vacation.jpg

│ │ ├── pedestrian_aeb.jpg

│ │ ├── stop1.jpg

│ │ ├── stop10.jpg

│ │ ├── stop11.jpg

│ │ ├── stop12.jpg

│ │ ├── stop13.jpg

│ │ ├── stop2.jpg

│ │ ├── stop3.jpg

│ │ ├── stop4.jpg

│ │ ├── stop5.jpg

│ │ ├── stop6.jpg

│ │ ├── stop7.jpg

│ │ ├── stop8.jpg

│ │ ├── stop9.jpg

│ │ ├── stop_overview.jpg

│ │ └── vidar.jpg

│ └── scaledml_2020.md

└── state_of_gpt_2023

│ ├── media

│ ├── image001.jpg

│ ├── image002.jpg

│ ├── image003.jpg

│ ├── image004.jpg

│ ├── image005.jpg

│ ├── image006.jpg

│ ├── image007.jpg

│ ├── image008.jpg

│ ├── image009.jpg

│ ├── image010.jpg

│ ├── image011.jpg

│ ├── image012.jpg

│ ├── image013.jpg

│ ├── image014.jpg

│ ├── image015.jpg

│ ├── image016.jpg

│ ├── image017.jpg

│ ├── image018.jpg

│ ├── image019.jpg

│ ├── image020.jpg

│ ├── image021.jpg

│ ├── image022.jpg

│ ├── image023.jpg

│ ├── image024.jpg

│ ├── image025.jpg

│ ├── image026.jpg

│ ├── image027.jpg

│ ├── image028.jpg

│ ├── image029.jpg

│ ├── image030.jpg

│ ├── image031.jpg

│ ├── image032.jpg

│ ├── image033.jpg

│ ├── image034.jpg

│ ├── image035.jpg

│ ├── image036.jpg

│ ├── image037.jpg

│ └── image038.jpg

│ └── state_of_gpt_2023.md

├── topics

├── topic_3d_lld.md

├── topic_bev_segmentation.md

├── topic_cls_reg.md

├── topic_crowd_detection.md

├── topic_detr.md

├── topic_occupancy_network.md

├── topic_single_stage_instance_segmentation.md

├── topic_transformers_bev.md

└── topic_vlm.md

└── trusty.md

/.gitignore:

--------------------------------------------------------------------------------

1 | # ignore numpy data file

2 | *.npy

3 | # ignore Desktop Service Store in Mac OSX

4 | .DS_Store

5 | */.DS_Store

6 | *.html

7 | # ignore ipynb checkpoints

8 | .ipynb_checkpoints

9 | # ignore ide

10 | .idea

11 |

--------------------------------------------------------------------------------

/assets/3dunet_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/3dunet_arch.png

--------------------------------------------------------------------------------

/assets/IOU_segmentation.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/IOU_segmentation.png

--------------------------------------------------------------------------------

/assets/RCNN_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/RCNN_arch.png

--------------------------------------------------------------------------------

/assets/alexnet_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/alexnet_arch.png

--------------------------------------------------------------------------------

/assets/chestxray8_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/chestxray8_arch.png

--------------------------------------------------------------------------------

/assets/deepmask_arch.PNG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/deepmask_arch.PNG

--------------------------------------------------------------------------------

/assets/fast_rcnn_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/fast_rcnn_arch.png

--------------------------------------------------------------------------------

/assets/faster_rcnn_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/faster_rcnn_arch.png

--------------------------------------------------------------------------------

/assets/fcn_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/fcn_arch.png

--------------------------------------------------------------------------------

/assets/fpn_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/fpn_arch.png

--------------------------------------------------------------------------------

/assets/fpn_arch2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/fpn_arch2.png

--------------------------------------------------------------------------------

/assets/fpn_arch3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/fpn_arch3.png

--------------------------------------------------------------------------------

/assets/images/2d3d_deep3dbox.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/2d3d_deep3dbox.png

--------------------------------------------------------------------------------

/assets/images/2d3d_deep3dbox_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/2d3d_deep3dbox_1.png

--------------------------------------------------------------------------------

/assets/images/2d3d_deep3dbox_2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/2d3d_deep3dbox_2.png

--------------------------------------------------------------------------------

/assets/images/2d3d_deep3dbox_code.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/2d3d_deep3dbox_code.png

--------------------------------------------------------------------------------

/assets/images/2d3d_deep3dbox_equivalency.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/2d3d_deep3dbox_equivalency.png

--------------------------------------------------------------------------------

/assets/images/2d3d_fqnet_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/2d3d_fqnet_1.png

--------------------------------------------------------------------------------

/assets/images/2d3d_fqnet_2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/2d3d_fqnet_2.png

--------------------------------------------------------------------------------

/assets/images/2d3d_shift_rcnn_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/2d3d_shift_rcnn_1.png

--------------------------------------------------------------------------------

/assets/images/2d3d_shift_rcnn_2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/2d3d_shift_rcnn_2.png

--------------------------------------------------------------------------------

/assets/images/cam_conv.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/cam_conv.jpg

--------------------------------------------------------------------------------

/assets/images/disnet.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/disnet.png

--------------------------------------------------------------------------------

/assets/images/foresee.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/foresee.png

--------------------------------------------------------------------------------

/assets/images/pseudo_lidar.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/pseudo_lidar.png

--------------------------------------------------------------------------------

/assets/images/tesla_release_note_10.11.2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/images/tesla_release_note_10.11.2.png

--------------------------------------------------------------------------------

/assets/lstm_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/lstm_arch.png

--------------------------------------------------------------------------------

/assets/lstm_calc.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/lstm_calc.png

--------------------------------------------------------------------------------

/assets/mask_rcnn_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/mask_rcnn_arch.png

--------------------------------------------------------------------------------

/assets/mask_rcnn_arch2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/mask_rcnn_arch2.png

--------------------------------------------------------------------------------

/assets/multipath_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/multipath_arch.png

--------------------------------------------------------------------------------

/assets/overfeat_bb_regressor.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/overfeat_bb_regressor.png

--------------------------------------------------------------------------------

/assets/overfeat_efficient_sliding_window.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/overfeat_efficient_sliding_window.png

--------------------------------------------------------------------------------

/assets/overfeat_shift_and_stich.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/overfeat_shift_and_stich.png

--------------------------------------------------------------------------------

/assets/papers/Devils_in_BatchNorm_yuxin_wu.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/papers/Devils_in_BatchNorm_yuxin_wu.pdf

--------------------------------------------------------------------------------

/assets/papers/bosch_traffic_lights.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/papers/bosch_traffic_lights.pdf

--------------------------------------------------------------------------------

/assets/papers/schumann2018.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/papers/schumann2018.pdf

--------------------------------------------------------------------------------

/assets/polygon_rnn_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/polygon_rnn_arch.png

--------------------------------------------------------------------------------

/assets/review_mlp_vs_transformers/illustrated_transformers_vs_mlp.docx:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/review_mlp_vs_transformers/illustrated_transformers_vs_mlp.docx

--------------------------------------------------------------------------------

/assets/segmentation_tasks_example.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/segmentation_tasks_example.png

--------------------------------------------------------------------------------

/assets/sharpmask_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/sharpmask_arch.png

--------------------------------------------------------------------------------

/assets/sharpmask_head_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/sharpmask_head_arch.png

--------------------------------------------------------------------------------

/assets/sharpmask_refinement_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/sharpmask_refinement_arch.png

--------------------------------------------------------------------------------

/assets/ssd_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/ssd_arch.png

--------------------------------------------------------------------------------

/assets/unet_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/unet_arch.png

--------------------------------------------------------------------------------

/assets/vgg_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/vgg_arch.png

--------------------------------------------------------------------------------

/assets/vnet_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/vnet_arch.png

--------------------------------------------------------------------------------

/assets/yolo9000_wordtree.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/yolo9000_wordtree.png

--------------------------------------------------------------------------------

/assets/yolo_arch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/yolo_arch.png

--------------------------------------------------------------------------------

/assets/yolo_arch2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/yolo_arch2.png

--------------------------------------------------------------------------------

/assets/yolo_diagram.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/yolo_diagram.png

--------------------------------------------------------------------------------

/assets/yolo_loss.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/assets/yolo_loss.png

--------------------------------------------------------------------------------

/code_notes/_template.md:

--------------------------------------------------------------------------------

1 | # [Paper Title](link_to_paper)

2 |

3 | _December 2019_

4 |

5 | tl;dr: Summary of the main idea.

6 |

7 | #### Overall impression

8 | Describe the overall impression of the paper.

9 |

10 | #### Key ideas

11 | - Summaries of the key ideas

12 |

13 | #### Technical details

14 | - Summary of technical details

15 |

16 | #### Notes

17 | - Questions and notes on how to improve/revise the current work

18 |

19 |

--------------------------------------------------------------------------------

/code_notes/pitfalls.md:

--------------------------------------------------------------------------------

1 | # Pitfalls in Python and its libraries

2 |

3 | ## Python

4 | - Python passes by object. This is quite different from pass by reference and pass by value, as explained in the blog [here](https://robertheaton.com/2014/02/09/pythons-pass-by-object-reference-as-explained-by-philip-k-dick/) and [here](https://jeffknupp.com/blog/2012/11/13/is-python-callbyvalue-or-callbyreference-neither/).

5 | - pay attention to the trailing `,`.

6 | ```

7 | >>> a = 1

8 | >>> a

9 | 1

10 | >>> a = 1,

11 | >>> a

12 | (1,)

13 | ```

14 |

--------------------------------------------------------------------------------

/code_notes/simple_faster_rcnn.md:

--------------------------------------------------------------------------------

1 | # [simple-faster-rcnn-pytorch](https://github.com/chenyuntc/simple-faster-rcnn-pytorch/) (2.1k star)

2 |

3 | _January 2020_

4 |

5 | tl;dr: Summary of the main idea.

6 |

7 | #### Overall impression

8 | Describe the overall impression of the paper.

9 |

10 | #### Key ideas

11 | - Summaries of the key ideas

12 |

13 | #### Technical details

14 | - Summary of technical details

15 |

16 | #### Notes

17 | - [从编程实现角度学习Faster R-CNN(附极简实现)](https://zhuanlan.zhihu.com/p/32404424)

18 |

19 |

--------------------------------------------------------------------------------

/learning_slam/slam_14.md:

--------------------------------------------------------------------------------

1 | # [视觉SLAM14讲 (14 Lectures on Visual SLAM)](https://github.com/gaoxiang12/slambook2)

2 |

3 | _January 2020_

4 |

5 | #### Chapter 1

6 | - Questions and notes on how to improve/revise the current work

7 |

8 |

--------------------------------------------------------------------------------

/openai_orgchart/resource_allocation.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/resource_allocation.png

--------------------------------------------------------------------------------

/openai_orgchart/skill_diversity_of_addtional_contributors.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/skill_diversity_of_addtional_contributors.png

--------------------------------------------------------------------------------

/openai_orgchart/skill_diversity_of_all_contributors.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/skill_diversity_of_all_contributors.png

--------------------------------------------------------------------------------

/openai_orgchart/top_contributors in Additional contributions.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/top_contributors in Additional contributions.png

--------------------------------------------------------------------------------

/openai_orgchart/top_contributors in Long context.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/top_contributors in Long context.png

--------------------------------------------------------------------------------

/openai_orgchart/top_contributors.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/top_contributors.png

--------------------------------------------------------------------------------

/openai_orgchart/top_contributors_in_Deployment.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/top_contributors_in_Deployment.png

--------------------------------------------------------------------------------

/openai_orgchart/top_contributors_in_Evaluation_analysis.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/top_contributors_in_Evaluation_analysis.png

--------------------------------------------------------------------------------

/openai_orgchart/top_contributors_in_Pretraining.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/top_contributors_in_Pretraining.png

--------------------------------------------------------------------------------

/openai_orgchart/top_contributors_in_Reinforcement_Learning_Alignment.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/top_contributors_in_Reinforcement_Learning_Alignment.png

--------------------------------------------------------------------------------

/openai_orgchart/top_contributors_in_Vision.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/top_contributors_in_Vision.png

--------------------------------------------------------------------------------

/openai_orgchart/word_cloud.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/patrick-llgc/Learning-Deep-Learning/76920bd450ab0e07d5163374ff93537bb4327b0e/openai_orgchart/word_cloud.png

--------------------------------------------------------------------------------

/paper_notes/2dod_calib.md:

--------------------------------------------------------------------------------

1 | # [Calibrating Uncertainties in Object Localization Task](https://arxiv.org/abs/1811.11210)

2 |

3 | _November 2019_

4 |

5 | tl;dr: Proof of concept by applying Uncertainty calibration to object detector.

6 |

7 | #### Overall impression

8 | For a more theoretical treatment refer to [accurate uncertainty via calibrated regression](dl_regression_calib.md). A more detailed application is [can we trust you](towards_safe_ad_calib.md).

9 |

10 | #### Key ideas

11 | - Validating uncertainty estimates: plot regressed aleatoric uncertainty $\sigma_i^2$ and $(b_i - \bar{b_i})^2$

12 | - To find 90% confidence interval, the upper and lower bounds are given by $\hat{P^{-1}}(r \pm 90/2)$, where $r = \hat{P(x)}$ and $\hat{P}$ is the P after calibration.

13 |

14 | #### Technical details

15 | - Summary of technical details

16 |

17 | #### Notes

18 | - Questions and notes on how to improve/revise the current work

19 |

20 |

--------------------------------------------------------------------------------

/paper_notes/3d_gck.md:

--------------------------------------------------------------------------------

1 | # [3D-GCK: Single-Shot 3D Detection of Vehicles from Monocular RGB Images via Geometrically Constrained Keypoints in Real-Time](https://arxiv.org/abs/2006.13084)

2 |

3 | _August 2020_

4 |

5 | tl;dr: Annotate and predict an 8DoF polyline for 3D perception.

6 |

7 | #### Overall impression

8 | The paper proposed a way to annotate and regress a 3D bbox, in the form of a 8 DoF polyline. This is very similar but different from [Bounding Shapes](bounding_shapes.md).

9 |

10 | This is one of the series of papers from Daimler.

11 |

12 | - [MergeBox](mb_net.md)

13 | - [Bounding Shapes](bounding_shapes.md)

14 | - [3D Geometrically constraint keypoints](3d_gck.md)

15 |

16 |

17 | #### Key ideas

18 | - Bounding shape is one 4-point and 8DoF polyline.

19 |

20 | - Distance is calculated with IPM.

21 |

22 | #### Technical details

23 | - Summary of technical details

24 |

25 | #### Notes

26 | - Series production cars: mass production cars

27 |

28 |

--------------------------------------------------------------------------------

/paper_notes/3d_lanenet+.md:

--------------------------------------------------------------------------------

1 | # [3D-LaneNet+: Anchor Free Lane Detection using a Semi-Local Representation](https://arxiv.org/abs/2011.01535)

2 |

3 | _November 2020_

4 |

5 | tl;dr: This is a shrunk down version of [Semilocal 3D LaneNet](semilocal_3d_lanenet.md). By design, the semi-local tile based approach is anchor free.

6 |

7 | #### Overall impression

8 | Describe the overall impression of the paper.

9 |

10 | #### Key ideas

11 | - Summaries of the key ideas

12 |

13 | #### Technical details

14 | - Summary of technical details

15 |

16 | #### Notes

17 | - Questions and notes on how to improve/revise the current work

18 |

19 |

--------------------------------------------------------------------------------

/paper_notes/3ddl_cvpr2017.md:

--------------------------------------------------------------------------------

1 | # [3D Deep Learning Tutorial at CVPR 2017](https://www.youtube.com/watch?v=8CenT_4HWyY)

2 |

3 | _Mar 2019_

4 |

5 | tl;dr: CVPR Tutorial on 3d deep learning.

6 |

7 | #### Overall impression

8 | - 3D representations:

9 | - rasterized: multiview 2d, 3d voxelized

10 | - geometric: point cloud, mesh, primitive-based CAD

--------------------------------------------------------------------------------

/paper_notes/3dod_review.md:

--------------------------------------------------------------------------------

1 | # [A Survey on 3D Object Detection Methods for Autonomous Driving Applications](http://wrap.warwick.ac.uk/114314/1/WRAP-survey-3D-object-detection-methods-autonomous-driving-applications-Arnold-2019.pdf)

2 |

3 | _October 2019_

4 |

5 | tl;dr: Summary of 3DOD methods based on monocular images, lidars and sensor fusion methods of the two.

6 |

7 | #### Overall impression

8 | The review is updated as of 2018. However there have been a lot of progress of mono 3DOD in 2019. I shall write a review of mono 3DOD soon.

9 |

10 | #### Key ideas

11 | > - The main drawback of monocular methods is the lack of depth cues, which limits detection and localization accuracy, especially for far and occluded objects.

12 |

13 | > - Most mono 3DOD methods have shifted towards a learned paradigm for RPN and second stage of of 3D model matching and reprojection to obtain 3D Box.

14 |

15 | #### Technical details

16 | - Mono

17 | - [mono3D](mono3d.md)

18 | - 3DVP

19 | - subCNN

20 | - [deepMANTA](deep_manta.md)

21 | - [deep3DBox](deep3dbox.md)

22 | - 360 panorama

23 | - Lidar

24 | - projection

25 | - VeloFCN

26 | - [Complex YOLO](complex_yolo.md)

27 | - Towards Safe (variational dropout)

28 | - BirdNet (lidar point cloud normalization)

29 | - Volumetric

30 | - 3DFCN

31 | - Vote3Deep

32 | - point net

33 | - VoxelNet

34 |

35 | - Sensor Fusion

36 | - [MV3D](mv3d.md)

37 | - [AVOD](avod.md)

38 | - [Frustum PointNet](frustum_pointnet.md)

39 |

40 | #### Notes

41 | - camera and lidar calibration with odometry

42 |

--------------------------------------------------------------------------------

/paper_notes/6d_vnet.md:

--------------------------------------------------------------------------------

1 | # [6D-VNet: End-to-end 6DoF Vehicle Pose Estimation from Monocular RGB Images](http://openaccess.thecvf.com/content_CVPRW_2019/papers/Autonomous%20Driving/Wu_6D-VNet_End-to-End_6-DoF_Vehicle_Pose_Estimation_From_Monocular_RGB_Images_CVPRW_2019_paper.pdf)

2 |

3 | _January 2020_

4 |

5 | tl;dr: Directly regress 3D distance and quaternion direction from RoIPooled features.

6 |

7 | #### Overall impression

8 | This is an extension of mask RCNN, by extending the mask head to regress fine-grained vehicle model (such as Audi Q5), quaternion and distance.

9 |

10 | #### Key ideas

11 | - Previous methods usually estimate depth via two step process: 1) regress bbox and direction 2) postprocess to estimate 3D translation via projective distance estimation. --> this requires bbox and orientation to be estimated correctly.

12 | - Robotics usually requires strict estimation of orientation but translation can be relaxed. However AD require accurate estimation of translation.

13 | - the features for regreessing fine-class and orientation (quaternion) is also concate with the translational branch to predict translation.

14 | - The target of translation is also preprocessed to essentially regress z directly. This can also be used to predict the 3D projection.

15 | $$

16 | x = (u - c_x) z / f_x \\

17 | y = (u - c_y) z / f_y

18 | $$

19 |

20 | #### Technical details

21 | - Summary of technical details

22 |

23 | #### Notes

24 | - Postprocessing DL output may suffer from error accumulation. If we work the postprocessing into label preprocessing, this could not be a problem anymore. Of course, keeping both will add to redundancy.

25 | - [github code](https://github.com/stevenwudi/6DVNET)

26 |

27 |

--------------------------------------------------------------------------------

/paper_notes/M2Det.md:

--------------------------------------------------------------------------------

1 | # [M2Det: A Single-Shot Object Detector based on Multi-Level Feature Pyramid Network](https://arxiv.org/abs/1811.04533)

2 |

3 | @YuShen1116

4 |

5 | _May 2019_

6 |

7 | #### tl;dr

8 | A new method to produce feature map for object detection.

9 |

10 | #### Overall impression

11 | Describe the overall impression of the paper.

12 | Previous methods of building feature pyramid(SSD, FPN, STDN) still have some limitations because

13 | their pyramids are built on classification backbone. This paper states a new method of generating

14 | feature pyramid, and integrated into SSD architecture.

15 | As a result, they achieves AP of 41.0 at speed of 11.8 FPS with single-scale inference strategy on

16 | MS-COCO dataset.

17 |

18 | #### Key ideas

19 | - Use Feature Fusion Modules(add figure later) to fuse the shallow and deep features(such as conv4_3 and conv5_3 of VGG)

20 | from backbone.

21 | - stack several Thinned U-shape Module and Feature Fusion Module together,

22 | to generate feature maps in different scale(from shallow to deep).

23 | - Use a scale-wise feature aggregation module to generate a multi-level feature pyramid from above features.

24 | - Apply detection layer on this pyramid.

25 |

26 |

27 | #### Notes

28 | - Not very easy to train, it costs 3 - more than 10+ days to train the whole pipeline.

29 | - I think this idea is interesting because it states that the features from classification backbone is not good enough

30 | for object detection. Modifying the features for specific task could be a good direction to try.

31 | - M2: multi-level, multi-scale features

32 |

--------------------------------------------------------------------------------

/paper_notes/_template.md:

--------------------------------------------------------------------------------

1 | # [Paper Title](link_to_paper)

2 |

3 | _November 2024_

4 |

5 | tl;dr: Summarize the the main idea of the paper with one sentence.

6 |

7 | #### Overall impression

8 | Describe the overall impression of the paper. In a multi-paragraph format, this is a high-level overview of this paper, including its main contribution, advantages compared with previous methods. Also this would include drawbacks of he paper and point out future directions.

9 |

10 | #### Key ideas

11 | - Summaries of the key ideas, each formulating a bullet point. Finer grained details would belisted in nested bullet points. The main aspects to consider include, but not limited to Model architecture, data, eval.

12 |

13 | #### Technical details

14 | - Summary of technical details, such as important training details, or bugs of previous benchmarks.

15 |

16 | #### Notes

17 | - Questions and notes on how to improve/revise the current work

18 |

19 |

--------------------------------------------------------------------------------

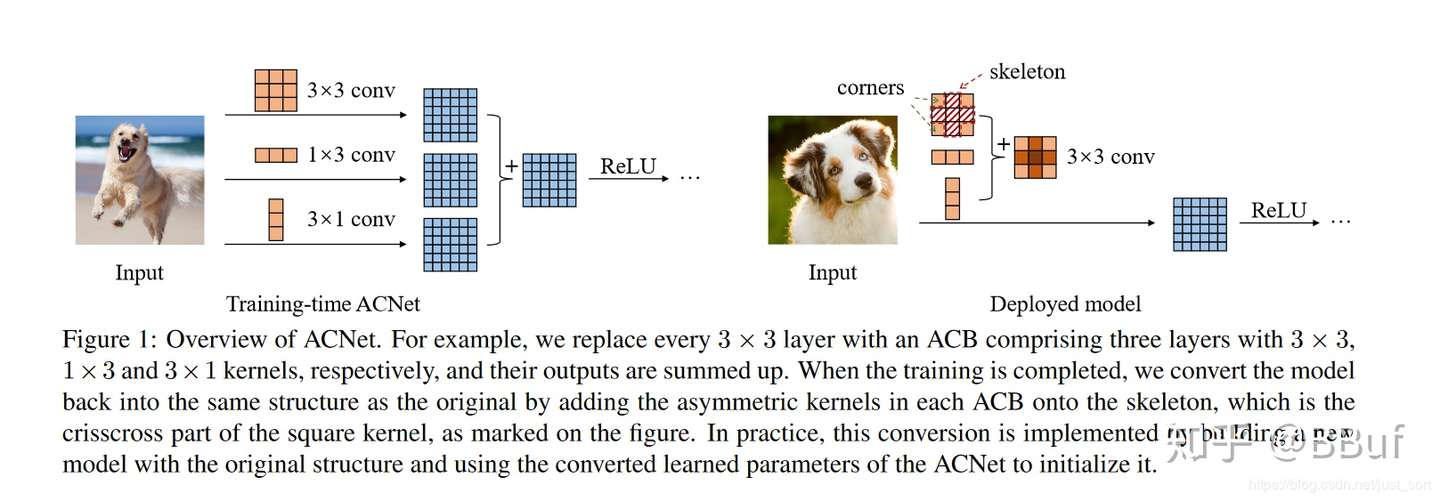

/paper_notes/acnet.md:

--------------------------------------------------------------------------------

1 | # [ACNet: Strengthening the Kernel Skeletons for Powerful CNN via Asymmetric Convolution Blocks](https://arxiv.org/abs/1908.03930)

2 |

3 | _January 2021_

4 |

5 | tl;dr: Train with 3x3, 3x1 and 1x3, but deploy with fused 3x3.

6 |

7 | #### Overall impression

8 | This paper take the idea of BN fusion during inference to a new level, by fusing conv kernels. It has **no additional hyperparameters during training, and no additional parameters during inference, thanks to the fact that additivity holds for convolution.**

9 |

10 | It directly inspired [RepVGG](repvgg.md), a follow-up work by the same authors.

11 |

12 | #### Key ideas

13 | - Asymmetric convolution block (ACB)

14 | - During training, replace every 3x3 by 3 parallel branches, 3x3, 3x1 and 1x3.

15 | - During inference, merge the 3 branches into 1, through BN fusion and branch fusion.

16 |

17 |

18 | - ACNet strengthens the skeleton

19 | - Skeletons are more important than corners. Removing corners causes less harm than skeletons.

20 | - ACNet aggravates this imbalance

21 | - Adding ACB to edges cannot diminish the importance of other parts. Skeleton is still very important.

22 |

23 |

24 | #### Technical details

25 | - Breaking large kernels into asymmetric convolutional kernels can save computation and increase receptive field cheaply.

26 | - ACNet can enhance the robustness toward rotational distortions. Train upright, and infer on rotated images. --> but the improvement in robustness is quite marginal.

27 |

28 | #### Notes

29 | - [Review on Zhihu](https://zhuanlan.zhihu.com/p/131282789)

30 |

31 |

--------------------------------------------------------------------------------

/paper_notes/astyx_dataset.md:

--------------------------------------------------------------------------------

1 | # [Astyx dataset: Automotive Radar Dataset for Deep Learning Based 3D Object Detection](https://www.astyx.com/fileadmin/redakteur/dokumente/Automotive_Radar_Dataset_for_Deep_learning_Based_3D_Object_Detection.PDF)

2 |

3 | _January 2020_

4 |

5 | tl;dr: Dataset with radar data from proprietary high resolution radar design.

6 |

7 | #### Overall impression

8 | Active learning scheme based on uncertainty sampling using estimated scores as approximation.

9 |

10 | #### Key ideas

11 | - Radar+camera sees more clearly than lidar+camera, for far away objects and for pedestrians. --> However even with radar, the recall is only ~0.5. Too low for real-world application.

12 |

13 | #### Technical details

14 | - Cross sensor calibration has two steps: camera lidar 2D-3D with checkerboard, and radar lidar 3D-3D relative pose estimation.

15 | - Annotation has "invisible" objects as well associated via temporal reference, but invisible in camera and lidar.

16 |

17 | #### Notes

18 | - [Dataset](https://www.astyx.com/development/astyx-hires2019-dataset.html)

19 | - [Estimation of height](https://sci-hub.tw/10.1109/RADAR.2019.8835831) in this dataset

--------------------------------------------------------------------------------

/paper_notes/astyx_radar_camera_fusion.md:

--------------------------------------------------------------------------------

1 | # [Astyx camera radar: Deep Learning Based 3D Object Detection for Automotive Radar and Camera](https://www.astyx.net/fileadmin/redakteur/dokumente/Deep_Learning_Based_3D_Object_Detection_for_Automotive_Radar_and_Camera.PDF)

2 |

3 | _January 2020_

4 |

5 | tl;dr: Camera + radar fusion based on AVOD.

6 |

7 | #### Overall impression

8 |

9 |

10 | #### Key ideas

11 | The architecture is largely based on [AVOD](avod.md). It converts radar into height and intensity maps and uses the pseudo image and camera image for region proposal.

12 |

13 | #### Technical details

14 | - Bbox encoding has 10 dim (4 pts + 2 z-values) in the original AVOD paper. However this paper said it used 14 dim.

15 | - Radar+camera does not detect perpendicular cars well. However it detects cars that align with the direction of the ego car much better.

16 |

17 |

18 |

--------------------------------------------------------------------------------

/paper_notes/ba_sfm_learner.md:

--------------------------------------------------------------------------------

1 | # [Self-Supervised Learning of Depth and Ego-motion with Differentiable Bundle Adjustment](https://arxiv.org/abs/1909.13163)

2 |

3 | _July 2020_

4 |

5 | tl;dr: Introduce [BA-Net](banet.md) into [SfM learner](sfm_learner.md).

6 |

7 | #### Overall impression

8 | The paper looks to be SOTA compared to the papers it cites. However the performance looks on par with [monodepth2](monodepth2.md). The performance is actually not as good as BA-Net on KITTI.

9 |

10 | The paper spent too much texts on explaining the existing work of [BA-Net](banet.md). The main innovation of the paper seems to be using poseNet to provide a good initial guess of the camera pose.

11 |

12 | #### Key ideas

13 | - Summaries of the key ideas

14 |

15 | #### Technical details

16 | - Summary of technical details

17 |

18 | #### Notes

19 | - Questions and notes on how to improve/revise the current work

20 |

21 |

--------------------------------------------------------------------------------

/paper_notes/batchnorm_pruning.md:

--------------------------------------------------------------------------------

1 | # [BatchNorm Pruning: Rethinking the Smaller-Norm-Less-Informative Assumption in Channel Pruning of Convolution Layers](https://arxiv.org/abs/1802.00124)

2 |

3 | _May 2020_

4 |

5 | tl;dr: Similar idea to [Network Slimming](network_slimming.md) but with more details.

6 |

7 | #### Overall impression

8 | Two questions to answer:

9 |

10 | - Can we set wt < thresh to zero. If so, under what constraints?

11 | - Can we set a global thresh to diff layers?

12 |

13 | Many previous works are norm-based pruning, which do not have solid theoretical foundation. One cannot assign different weights to the Lasso regularization to diff layers, as we can perform model reparameterization to reduce Lasso loss. In addition, in the presence of BN, any linear scaling of W will not change results.

14 |

15 |

16 | #### Key ideas

17 | - This paper (together with concurrent work of [Network Slimming](network_slimming.md)) focuses on sparsifying the gamma value in BN layer.

18 | - gamma works on top of normalized random variable and thus comparable across layers.

19 | - The impact of gamma is independent across diff layers.

20 | - A regularization term based on L1 of gamma is introduced, but scaled by a per layer factor $\lambda$. The global weight of the regularization term is $\rho$.

21 | - ISTA (Iterative Shrinkage-Thresholding Algorithm) is better than gradient descent.

22 |

23 | #### Technical details

24 | - Summary of technical details

25 |

26 | #### Notes

27 | - Questions and notes on how to improve/revise the current work

28 |

29 |

--------------------------------------------------------------------------------

/paper_notes/bev_od_ipm.md:

--------------------------------------------------------------------------------

1 | # [BEV-IPM: Deep Learning based Vehicle Position and Orientation Estimation via Inverse Perspective Mapping Image](https://ieeexplore.ieee.org/abstract/document/8814050)

2 |

3 | _October 2019_

4 |

5 | tl;dr: IPM of the pitch/role corrected camera image, and then perform 2DOD on the IPM image.

6 |

7 | #### Overall impression

8 | The paper performs 2DOD on IPM'ed image. This seems quite hard but obviously doable. The GT on BEV image seems to come from 3D GT, but the paper did not go to details about it.

9 |

10 | The detection distance is only up to ~50 meters. Beyond 50 m, it is hard to reliably detect distance and position. --> Maybe vehicle yaw are not important for cars beyond 50 meters after all?

11 |

12 | #### Key ideas

13 | - Motion cancellation using IMU (motion due to wind disturbance or fluctuation of road surface)

14 | - IPM assumptions:

15 | - road is flat

16 | - mounting position of the camera is stationary --> motion cancellation helps this.

17 | - the vehicle to be detected is on the ground

18 | - 2DOD oriented bbox detection based on YOLOv3.

19 |

20 | #### Technical details

21 | - KITTI does not label bbox smaller than 25 pixels, which translates to 60 meters according to fx=fy=721 of KITTI's intrinsics.

22 |

23 | #### Notes

24 | - [youtube demo](https://www.youtube.com/watch?v=2zvS87d1png&feature=youtu.be) the results look reasonably good, but how about occluded cases?

25 |

26 |

--------------------------------------------------------------------------------

/paper_notes/bev_seg.md:

--------------------------------------------------------------------------------

1 | # [BEV-Seg: Bird’s Eye View Semantic Segmentation Using Geometry and Semantic Point Cloud](https://arxiv.org/abs/2006.11436)

2 |

3 | _June 2020_

4 |

5 | tl;dr: Detached model to perform domain adaptation sim2real.

6 |

7 | #### Overall impression

8 | Two stage model to bridge domain gap. This is very similar to [GenLaneNet](gen_lanenet.md) for 3D LLD prediction. The idea of using semantic segmentation to bridge the sim2real gap is explored in many BEV semantic segmentation tasks such as [BEV-Seg](bev_seg.md), [CAM2BEV](cam2bev.md), [VPN](vpn.md).

9 |

10 | The first stage model already extracted away domain-dependent features and thus the second stage model can be used as is.

11 |

12 | The GT of BEV segmentation is difficult to collect in most domains. The simulated segmentation GT can be obtained in abundance with simulator such as CARLA.

13 |

14 |

15 |

16 | #### Key ideas

17 | - **View transformation**: pixel-wise depth prediction

18 | - The first stage generates the pseudo-lidar point cloud, and render it in BEV.

19 | - This is incomplete and may have many void pixels.

20 | - Always choosing the point of lower height.

21 | - The second stage converts the BEV view of pseudo-lidar point cloud to BEV segmentation.

22 | - Fills in the void pixels

23 | - Smooth already predicted segmentation

24 | - During inference, only finetune first stage. Use second stage as is.

25 |

26 |

27 | #### Technical details

28 | - Summary of technical details

29 |

30 | #### Notes

31 | - [talk at CVPR 2020](https://youtu.be/WRH7N_GxgjE?t=1554)

32 |

33 |

--------------------------------------------------------------------------------

/paper_notes/birdgan.md:

--------------------------------------------------------------------------------

1 | # [BirdGAN: Learning 2D to 3D Lifting for Object Detection in 3D for Autonomous Vehicles](https://arxiv.org/abs/1904.08494)

2 |

3 | _October 2019_

4 |

5 | tl;dr: Learn to map 2D perspective image to BEV with GAN.

6 |

7 | #### Overall impression

8 | The performance of BirdGAN on 3D object detection has the SOTA. The AP_3D @ IoU=0.7 is ~60 for easy and ~40 for hard. This is much better than the ~10 for [ForeSeE](foresee_mono3dod.md)

9 |

10 | One major drawback is the limited forward distance BirdGAN can handle. In the clipping case, the frontal depth is only about 10 to 15 meters.

11 |

12 | Personally I feel GAN related architecture not reliable for production. The closest to production research so far is still [pseudo-lidar++](pseudo_lidar++.md).

13 |

14 | #### Key ideas

15 | - Train a GAN to translate 2D perspective image to BEV.

16 | - Use the generated BEV to perform sensor fusion in AVOD and MV3D.

17 | - Clipping further away points in lidar helps training and generates better performance --> while this also severely limited the application of the idea.

18 |

19 | #### Technical details

20 | - Summary of technical details

21 |

22 | #### Notes

23 | - Maybe the 3D AP is not what matters most in autonomous driving. Predicting closeby objects better at the cost of distant objects is not optimal for autonomous driving.

--------------------------------------------------------------------------------

/paper_notes/bs3d.md:

--------------------------------------------------------------------------------

1 | # [BS3D: Beyond Bounding Boxes: Using Bounding Shapes for Real-Time 3D Vehicle Detection from Monocular RGB Images](https://ieeexplore.ieee.org/abstract/document/8814036/)

2 |

3 | _August 2020_

4 |

5 | tl;dr: Annotate and predict a 6DoF bounding shape for 3D perception.

6 |

7 | #### Overall impression

8 | The paper proposed a way to annotate and regress a 3D bbox, in the form of a 8 DoF polyline.

9 |

10 | This is one of the series of papers from Daimler.

11 |

12 | - [MergeBox](mb_net.md)

13 | - [Bounding Shapes](bounding_shapes.md)

14 | - [3D Geometrically constraint keypoints](3d_gck.md)

15 |

16 | #### Key ideas

17 | - Bounding shape is one 4-point and 8DoF polyline.

18 |

19 |

20 |

21 | #### Technical details

22 | - The paper normalizes dimension and 3D location to that y = 0. When real depth is recovered (via lidar, radar or stereo), the monocular perception

23 | - An object is of [Manhattan properties](https://openaccess.thecvf.com/content_cvpr_2017/papers/Gao_Exploiting_Symmetry_andor_CVPR_2017_paper.pdf) if 3 orthogonal axes can be inferred, such as cars, buses, motorbikes, trains, etc.

24 |

25 | #### Notes

26 | - 57% of all American drivers do not use turn signals when changing the lane. ([source](https://www.insurancejournal.com/news/national/2006/03/15/66496.htm))

27 |

28 |

--------------------------------------------------------------------------------

/paper_notes/c3dpo.md:

--------------------------------------------------------------------------------

1 | # [C3DPO: Canonical 3D Pose Networks for Non-Rigid Structure From Motion](https://arxiv.org/abs/1909.02533)

2 |

3 | _December 2019_

4 |

5 | tl;dr: Infer 3D pose for non-rigid objects by introducing DL to non-rigid structure-from-motion (NR-SFM).

6 |

7 | #### Overall impression

8 | C3DPO transforms closed-formed matrix decomposition problem into a DL-based parameter estimation problem. This method is faster and also can embody prior info that is not apparent in the linear model.

9 |

10 | A challenge in NR-SFM is the ambiguity of internal object deformation (or pose in this paper, non-rigid motion) and viewpoint changes (rigid motion). C3DPO introduces a canonicalization network to encourage the consistent decomposition.

11 |

12 |

13 | #### Key ideas

14 | - The main takeaway from this work: Work as many constraints as possible into loss. Use any mathematical cycle-consistency to constrain learning.

15 | - Use deep learning to supplement maths, not to replace math.

16 |

17 | #### Technical details

18 | - Summary of technical details

19 |

20 | #### Notes

21 | - Questions and notes on how to improve/revise the current work

22 |

23 |

--------------------------------------------------------------------------------

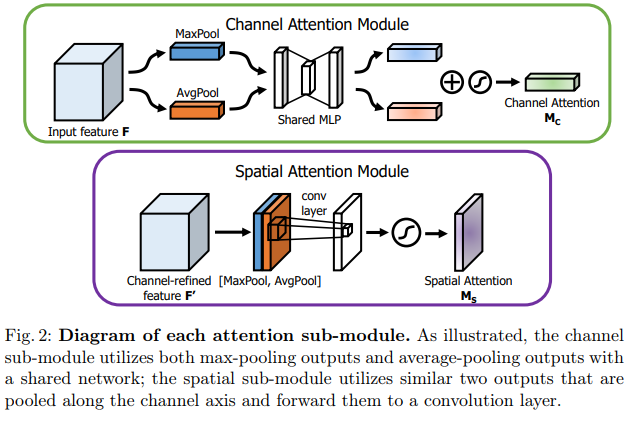

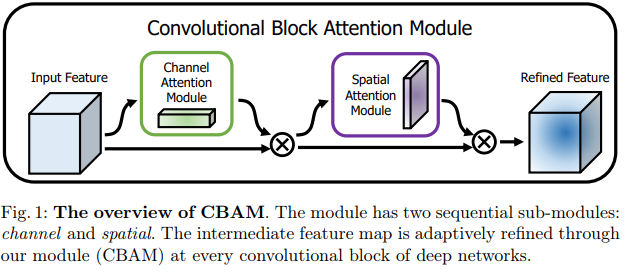

/paper_notes/cbam.md:

--------------------------------------------------------------------------------

1 | # [CBAM: Convolutional Block Attention Module](https://arxiv.org/abs/1807.06521)

2 |

3 | _May 2020_

4 |

5 | tl;dr: Improvement over SENet.

6 |

7 | #### Overall impression

8 | Channel attention module is very much like SENet but more concise. Spatial attention module concatenates mean pooling and max pooling across channels and blends them together.

9 |

10 | Each attention is then used sequentially with each feature map.

11 |

12 |

13 |

14 |

15 | The Spatial attention module is modified in [Yolov4](yolov4.md) to a point wise operation.

16 |

17 | #### Key ideas

18 | - Summaries of the key ideas

19 |

20 | #### Technical details

21 | - Summary of technical details

22 |

23 | #### Notes

24 | - Questions and notes on how to improve/revise the current work

25 |

26 |

--------------------------------------------------------------------------------

/paper_notes/cbgs.md:

--------------------------------------------------------------------------------

1 | # [CBGS: Class-balanced Grouping and Sampling for Point Cloud 3D Object Detection](https://arxiv.org/abs/1908.09492)

2 |

3 | _July 2020_

4 |

5 | tl;dr: Class rebalance of minority helps in object detection for nuscenes dataset.

6 |

7 | #### Overall impression

8 | The class balanced sampling and class-grouped heads are useful to handle imbalanced object detection.

9 |

10 | #### Key ideas

11 | - **DS sampling**:

12 | - increases sample density of rare classes to avoid gradient vanishing

13 | - count instances and samples (frames). Resample so that samples for each class is on the same order of magnitude.

14 | - **Class balanced grouping**: each group has a separate head.

15 | - Classes of similar shapes or sizes should be grouped.

16 | - Instance numbers of diff groups should be balanced properly.

17 | - Supergroups:

18 | - cars (majority classes)

19 | - truck, construction vehicle

20 | - bus, trailer

21 | - barrier

22 | - motorcycle, bicycle

23 | - pedestrian, traffic cone

24 | - Fit ground plane and plant GT back in.

25 | - Bag of tricks

26 | - Accumulate 10 frames (0.5 seconds) to form a dense lidar BEV

27 | - AdamW + [One cycle policy](https://sgugger.github.io/the-1cycle-policy.html)

28 |

29 | #### Technical details

30 | - Regress vx and vy. If bicycle speed is above a certain thresh, then it is with rider.

31 |

32 | #### Notes

33 | - Questions and notes on how to improve/revise the current work

34 |

35 |

--------------------------------------------------------------------------------

/paper_notes/cbn.md:

--------------------------------------------------------------------------------

1 | # [CBN: Cross-Iteration Batch Normalization](https://arxiv.org/abs/2002.05712)

2 |

3 | _May 2020_

4 |

5 | tl;dr: Improve batch normalization when minibatch size is small.

6 |

7 | #### Overall impression

8 | Similar to [GroupNorm](groupnorm.md) in improving performance when batch size is small. It accumulates stats over mini-batches. However, as weights are changing in each iteration, the statistics collected under those weights may become inaccurate under the new weight. A naive average will be wrong. Fortunately, weights change gradually. In Cross-Iteration Batch Normalization (CBM), it estimates those statistics from k previous iterations with the adjustment below.

9 |

10 |

11 |

12 | #### Key ideas

13 | - Summaries of the key ideas

14 |

15 | #### Technical details

16 | - Summary of technical details

17 |

18 | #### Notes

19 | - Questions and notes on how to improve/revise the current work

20 |

21 |

--------------------------------------------------------------------------------

/paper_notes/centermask.md:

--------------------------------------------------------------------------------

1 | # [CenterMask: Single Shot Instance Segmentation With Point Representation](https://arxiv.org/abs/2004.04446)

2 |

3 | _April 2020_

4 |

5 | tl;dr: Summary of the main idea.

6 |

7 | #### Overall impression

8 | [CenterMask](centermask.md) works almost in exactly the same way as [BlendMask](blendmask.md). See [my review on Medium](https://towardsdatascience.com/single-stage-instance-segmentation-a-review-1eeb66e0cc49).

9 |

10 | - [CenterMask](centermask.md) uses 1 prototype mask (named global saliency map) explicitly.

11 | - [CenterMask](centermask.md)'s name comes from the fact that it uses [CenterNet](centernet.md) as the backbone, while [CenterMask](centermask.md) uses the similar anchor-free one-stage [FCOS](fcos.md) as backbone.

12 |

13 |

14 | #### Key ideas

15 | - Summaries of the key ideas

16 |

17 | #### Technical details

18 | - Summary of technical details

19 |

20 | #### Notes

21 | - Questions and notes on how to improve/revise the current work

22 |

23 |

--------------------------------------------------------------------------------

/paper_notes/centroid_voting.md:

--------------------------------------------------------------------------------