├── .gitignore

├── README.md

├── custom_retriever

├── __init__.py

├── bm25_retriever.py

├── build_embedding_cache.py

├── ensemble_rerank_retriever.py

├── ensemble_retriever.py

├── query_rewrite_ensemble_retriever.py

└── vector_store_retriever.py

├── data

├── corpus_openai_embedding.npy

├── demo.py

├── doc_qa_dataset.csv

├── doc_qa_dataset.json

├── doc_qa_test.json

├── doc_qa_test_demo.json

├── paul_graham_essay.txt

├── pg_eval_dataset.json

├── queries_openai_embedding.npy

├── query_rewrite.json

└── query_rewrite_openai_embedding.npy

├── docs

├── RAG框架中的Rerank算法评估.md

├── RAG框架中的Retrieve算法评估.md

└── RAG框架中的召回算法可视化分析及提升方法.md

├── embedding_finetune

├── embedding_fine_tuning.ipynb

├── test.txt

└── train.txt

├── evaluation

├── __init__.py

├── evaluation_bge-base-embedding_2024-01-05 12:30:06.csv

├── evaluation_bge-base-sft-embedding_2024-01-05 17:30:54.csv

├── evaluation_bge-large-embedding_2024-01-05 12:14:56.csv

├── evaluation_bge-large-sft-embedding_2024-01-05 17:10:41.csv

├── evaluation_bge-m3-embedding_2024-02-02 23:33:19.csv

├── evaluation_bm25_2023-12-26 12:55:48.csv

├── evaluation_ensemble_2023-12-26 22:20:24.csv

├── evaluation_exp.py

├── evaluation_jina-base-zh-embedding_2024-02-02 23:09:30.csv

├── evaluation_openai-embedding_2023-12-26 17:14:02.csv

├── evaluation_rerank-bge-base_2023-12-29 19:16:40.csv

├── evaluation_rerank-bge-large_2023-12-29 15:35:11.csv

├── evaluation_rerank-cohere_2023-12-26 23:01:01.csv

└── metric_statistics.py

├── late_chunking

├── jina_late_chunking.ipynb

├── jina_zh_late_chunking.ipynb

├── late_chunk_embeddings.py

├── late_chunking_exp.py

├── late_chunking_gradio_server.py

└── my_late_chunking_exp.ipynb

├── preprocess

├── __init__.py

├── add_corpus.py

├── data_transfer.py

├── get_text_id_mapping.py

└── query_rewrite.py

├── requirements.txt

├── services

├── __init__.py

├── data_analysis.py

├── embedding_server.py

├── llama_index_demo.ipynb

├── search_result_analysis.xlsx

└── server_gradio.py

└── utils

├── __init__.py

└── rerank.py

/.gitignore:

--------------------------------------------------------------------------------

1 | .ipynb_checkpoints

2 | .idea

3 | evaluation/*.html

4 | */__pycache__

5 | evaluation/tmp*

6 | late_chunking/.env

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | 本项目是针对RAG中的Retrieve阶段的召回技术及算法效果所做评估实验。使用主体框架为`LlamaIndex`,版本为0.9.21.

2 |

3 | Retrieve Method:

4 |

5 | - BM25 Retriever

6 | - Embedding Retriever(OpenAI, BGE, BGE-Finetune)

7 | - Ensemble Retriever

8 | - Ensemble Retriever + Cohere Rerank

9 | - Ensemble Retriever + BGE-BASE Rerank

10 | - Ensemble Retriever + BGE-LARGE Rerank

11 |

12 | 参考文章(也可查看`docs`文件夹):

13 |

14 | 1. [NLP(八十二)RAG框架中的Retrieve算法评估](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486199&idx=1&sn=f24175b05bdf5bc6dd42efed4d5acae8&chksm=fcb9b367cbce3a711fabd1a56bb5b9d803aba2f42964b4e1f9a4dc6e2174f0952ddb9e1d4c55&token=1977141018&lang=zh_CN#rd)

15 | 2. [NLP(八十三)RAG框架中的Rerank算法评估](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486225&idx=1&sn=235eb787e2034f24554d8e997dbb4718&chksm=fcb9b281cbce3b9761342ebadbe001747ce2e74d84340f78b0e12c4d4c6aed7a7817f246c845&token=1977141018&lang=zh_CN#rd)

16 | 3. [NLP(八十四)RAG框架中的召回算法可视化分析及提升方法](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486264&idx=1&sn=afa31ecc8b23724154a08090ccfab213&chksm=fcb9b2a8cbce3bbeb6daaee6308c10f097c32d304f076c3061718e669fd366c8aec9e6cf379d&token=823710334&lang=zh_CN#rd)

17 | 4. [NLP(八十六)RAG框架Retrieve阶段的Embedding模型微调](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486333&idx=1&sn=29d00d472647bc5d6e336bec22c88139&chksm=fcb9b2edcbce3bfb42ea149d96fb1296b10a79a60db7ad2da01b85ab223394191205426bc025&token=1376257911&lang=zh_CN#rd)

18 | 5. [NLP(一百零一)Embedding模型微调实践](https://mp.weixin.qq.com/s/lJ3Mycjw1G99T08r8c7dSQ)

19 | 6. [NLP(一百零二)ReRank模型微调实践](https://mp.weixin.qq.com/s/RiPYANTyEgFtIIFHaKq3Rg)

20 |

21 | ## 数据

22 |

23 | 参考`data/doc_qa_test.json`文件,格式以LlamaIndex框架为标准。

24 |

25 | ## 评估结果

26 |

27 | BM25 Retriever Evaluation:

28 |

29 | | retrievers | hit_rate | mrr | cost_time |

30 | |-----------------|--------------------|--------------------|--------------------|

31 | | bm25_top_1_eval | 0.7975077881619937 | 0.7975077881619937 | 461.2770080566406 |

32 | | bm25_top_2_eval | 0.8535825545171339 | 0.8255451713395638 | 510.3020668029785 |

33 | | bm25_top_3_eval | 0.9003115264797508 | 0.8411214953271028 | 570.6708431243896 |

34 | | bm25_top_4_eval | 0.9158878504672897 | 0.8450155763239875 | 420.72606086730957 |

35 | | bm25_top_5_eval | 0.940809968847352 | 0.8500000000000001 | 388.5960578918457 |

36 |

37 | Embedding Retriever Evaluation:

38 |

39 | | retrievers | hit_rate | mrr | cost_time |

40 | |----------------------|--------------------|--------------------|--------------------|

41 | | embedding_top_1_eval | 0.6074766355140186 | 0.6074766355140186 | 67.68369674682617 |

42 | | embedding_top_2_eval | 0.6978193146417445 | 0.6526479750778816 | 60.84489822387695 |

43 | | embedding_top_3_eval | 0.7320872274143302 | 0.6640706126687436 | 59.905052185058594 |

44 | | embedding_top_4_eval | 0.778816199376947 | 0.6757528556593978 | 63.54880332946777 |

45 | | embedding_top_5_eval | 0.794392523364486 | 0.6788681204569056 | 67.79217720031738 |

46 |

47 | Ensemble Retriever Evaluation:

48 |

49 | | retrievers | hit_rate | mrr | cost_time |

50 | |---------------------|--------------------|--------------------|--------------------|

51 | | ensemble_top_1_eval | 0.7009345794392523 | 0.7009345794392523 | 1072.7379322052002 |

52 | | ensemble_top_2_eval | 0.8535825545171339 | 0.7741433021806854 | 1088.8781547546387 |

53 | | ensemble_top_3_eval | 0.8940809968847352 | 0.7928348909657321 | 980.7949066162109 |

54 | | ensemble_top_4_eval | 0.9190031152647975 | 0.8016614745586708 | 935.1701736450195 |

55 | | ensemble_top_5_eval | 0.9376947040498442 | 0.8078920041536861 | 868.2990074157715 |

56 |

57 | Ensemble Retriever + Rerank Evaluation:

58 |

59 | | retrievers | hit_rate | mrr | cost_time |

60 | |----------------------------|--------------------|--------------------|-------------------|

61 | | ensemble_rerank_top_1_eval | 0.8348909657320872 | 0.8348909657320872 | 2140632.404088974 |

62 | | ensemble_rerank_top_2_eval | 0.9034267912772586 | 0.8785046728971962 | 2157657.287120819 |

63 | | ensemble_rerank_top_3_eval | 0.9345794392523364 | 0.9008307372793353 | 2200800.935983658 |

64 | | ensemble_rerank_top_4_eval | 0.9470404984423676 | 0.9078400830737278 | 2150398.734807968 |

65 | | ensemble_rerank_top_5_eval | 0.9657320872274143 | 0.9098650051921081 | 2149122.938156128 |

66 |

67 |

68 |

69 |

70 |

71 | ## 不同Rerank算法之间的比较

72 |

73 | bge-rerank-base:

74 |

75 | | retrievers | hit_rate | mrr |

76 | |-------------------------------------|----------|--------|

77 | | ensemble_bge_base_rerank_top_1_eval | 0.8255 | 0.8255 |

78 | | ensemble_bge_base_rerank_top_2_eval | 0.8785 | 0.8489 |

79 | | ensemble_bge_base_rerank_top_3_eval | 0.9346 | 0.8686 |

80 | | ensemble_bge_base_rerank_top_4_eval | 0.947 | 0.872 |

81 | | ensemble_bge_base_rerank_top_5_eval | 0.9564 | 0.8693 |

82 |

83 | bge-rerank-large:

84 |

85 | | retrievers | hit_rate | mrr |

86 | |--------------------------------------|----------|--------|

87 | | ensemble_bge_large_rerank_top_1_eval | 0.8224 | 0.8224 |

88 | | ensemble_bge_large_rerank_top_2_eval | 0.8847 | 0.8364 |

89 | | ensemble_bge_large_rerank_top_3_eval | 0.9377 | 0.8572 |

90 | | ensemble_bge_large_rerank_top_4_eval | 0.9502 | 0.8564 |

91 | | ensemble_bge_large_rerank_top_5_eval | 0.9626 | 0.8537 |

92 |

93 | ft-bge-rerank-base:

94 |

95 | | retrievers | hit_rate | mrr |

96 | |----------------------------------------|----------|----------|

97 | | ensemble_ft_bge_base_rerank_top_1_eval | 0.8474 | 0.8474 |

98 | | ensemble_ft_bge_base_rerank_top_2_eval | 0.9003 | 0.8816 |

99 | | ensemble_ft_bge_base_rerank_top_3_eval | 0.9408 | 0.9102 |

100 | | ensemble_ft_bge_base_rerank_top_4_eval | 0.9533 | 0.9180 |

101 | | ensemble_ft_bge_base_rerank_top_5_eval | 0.9657 | 0.9240 |

102 |

103 |

104 | ft-bge-rerank-large:

105 |

106 | | retrievers | hit_rate | mrr |

107 | |-----------------------------------------|----------|---------|

108 | | ensemble_ft_bge_large_rerank_top_1_eval | 0.8474 | 0.8474 |

109 | | ensemble_ft_bge_large_rerank_top_2_eval | 0.9003 | 0.8769 |

110 | | ensemble_ft_bge_large_rerank_top_3_eval | 0.9439 | 0.9024 |

111 | | ensemble_ft_bge_large_rerank_top_4_eval | 0.9564 | 0.9029 |

112 | | ensemble_ft_bge_large_rerank_top_5_eval | 0.9688 | 0.9028 |

113 |

114 |

115 |

116 |

117 | ## 不同Embedding模型之间的比较

118 |

119 | jina-base-zh-embedding:

120 |

121 | | retrievers | hit_rate | mrr | cost_time |

122 | |----------------------|--------------------|--------------------|--------------------|

123 | | embedding_top_1_eval | 0.5389408099688473 | 0.5389408099688473 | 34.9421501159668 |

124 | | embedding_top_2_eval | 0.6448598130841121 | 0.5919003115264797 | 35.04490852355957 |

125 | | embedding_top_3_eval | 0.7165109034267912 | 0.6157840083073729 | 40.548086166381836 |

126 | | embedding_top_4_eval | 0.7476635514018691 | 0.6235721703011423 | 41.40806198120117 |

127 | | embedding_top_5_eval | 0.7694704049844237 | 0.6279335410176532 | 43.450117111206055 |

128 |

129 | bge-base-embedding:

130 |

131 | | retrievers | hit_rate | mrr | cost_time |

132 | |----------------------|--------------------|--------------------|--------------------|

133 | | embedding_top_1_eval | 0.6043613707165109 | 0.6043613707165109 | 40.014028549194336 |

134 | | embedding_top_2_eval | 0.7071651090342679 | 0.6557632398753894 | 38.26403617858887 |

135 | | embedding_top_3_eval | 0.7538940809968847 | 0.6713395638629284 | 39.404869079589844 |

136 | | embedding_top_4_eval | 0.7912772585669782 | 0.6806853582554517 | 43.24913024902344 |

137 | | embedding_top_5_eval | 0.8099688473520249 | 0.684423676012461 | 53.58481407165527 |

138 |

139 | bge-large-embedding:

140 |

141 | | retrievers | hit_rate | mrr | cost_time |

142 | |----------------------|--------------------|--------------------|--------------------|

143 | | embedding_top_1_eval | 0.5919003115264797 | 0.5919003115264797 | 50.39501190185547 |

144 | | embedding_top_2_eval | 0.7133956386292835 | 0.6526479750778816 | 52.02889442443848 |

145 | | embedding_top_3_eval | 0.7725856697819314 | 0.6723779854620976 | 51.7120361328125 |

146 | | embedding_top_4_eval | 0.794392523364486 | 0.6778296988577361 | 51.872968673706055 |

147 | | embedding_top_5_eval | 0.822429906542056 | 0.6834371754932502 | 56.67304992675781 |

148 |

149 | bge-m3-embedding:

150 |

151 | | retrievers | hit_rate | mrr | cost_time |

152 | |----------------------|--------------------|--------------------|--------------------|

153 | | embedding_top_1_eval | 0.6822429906542056 | 0.6822429906542056 | 43.41626167297363 |

154 | | embedding_top_2_eval | 0.778816199376947 | 0.7305295950155763 | 44.278860092163086 |

155 | | embedding_top_3_eval | 0.8193146417445483 | 0.7440290758047767 | 45.64094543457031 |

156 | | embedding_top_4_eval | 0.8504672897196262 | 0.7518172377985461 | 46.158790588378906 |

157 | | embedding_top_5_eval | 0.8722741433021807 | 0.7561786085150571 | 50.23527145385742 |

158 |

159 | bce-embedding:

160 |

161 | | retrievers | hit_rate | mrr | cost_time |

162 | |----------------------|--------------------|--------------------|--------------------|

163 | | embedding_top_1_eval | 0.5794392523364486 | 0.5794392523364486 | 42.510032653808594 |

164 | | embedding_top_2_eval | 0.6853582554517134 | 0.632398753894081 | 42.72007942199707 |

165 | | embedding_top_3_eval | 0.7227414330218068 | 0.6448598130841121 | 41.066884994506836 |

166 | | embedding_top_4_eval | 0.7507788161993769 | 0.6518691588785047 | 43.18714141845703 |

167 | | embedding_top_5_eval | 0.7663551401869159 | 0.6549844236760125 | 44.08693313598633 |

168 |

169 | bge-base-embedding-finetune:

170 |

171 | | retrievers | hit_rate | mrr | cost_time |

172 | |----------------------|--------------------|--------------------|--------------------|

173 | | embedding_top_1_eval | 0.7289719626168224 | 0.7289719626168224 | 48.82097244262695 |

174 | | embedding_top_2_eval | 0.8598130841121495 | 0.794392523364486 | 42.237043380737305 |

175 | | embedding_top_3_eval | 0.9003115264797508 | 0.8078920041536863 | 42.33193397521973 |

176 | | embedding_top_4_eval | 0.9065420560747663 | 0.8094496365524404 | 45.35722732543945 |

177 | | embedding_top_5_eval | 0.9158878504672897 | 0.811318795430945 | 50.804853439331055 |

178 |

179 | bge-large-embedding-finetune:

180 |

181 | | retrievers | hit_rate | mrr | cost_time |

182 | |----------------------|--------------------|--------------------|--------------------|

183 | | embedding_top_1_eval | 0.7570093457943925 | 0.7570093457943925 | 47.14798927307129 |

184 | | embedding_top_2_eval | 0.881619937694704 | 0.8193146417445483 | 44.70491409301758 |

185 | | embedding_top_3_eval | 0.9190031152647975 | 0.8317757009345794 | 46.12398147583008 |

186 | | embedding_top_4_eval | 0.9376947040498442 | 0.8364485981308412 | 49.448251724243164 |

187 | | embedding_top_5_eval | 0.9376947040498442 | 0.8364485981308412 | 57.805776596069336 |

188 |

189 |

190 |

191 |

192 |

193 | ## 可视化分析

194 |

195 |

196 |

197 | | 检索类型 | 优点 | 缺点 |

198 | |----------|------|------|

199 | | 向量检索 (Embedding) | 1. 语义理解更强。

2. 能有效处理模糊或间接的查询。

3. 对自然语言的多样性适应性强。

4. 能识别不同词汇的相同意义。 | 1. 计算和存储成本高。

2. 索引时间较长。

3. 高度依赖训练数据的质量和数量。

4. 结果解释性较差。 |

200 | | 关键词检索 (BM25) | 1. 检索速度快。

2. 实现简单,资源需求低。

3. 结果易于理解,可解释性强。

4. 对精确查询表现良好。 | 1. 对复杂语义理解有限。

2. 对查询变化敏感,灵活性差。

3. 难以处理同义词和多义词。

4. 需要用户准确使用关键词。 |

201 |

202 | - `query`: "NEDO"的全称是什么?

203 |

204 |

205 |

206 | 在这个例子中,Embedding召回结果优于BM25,BM25召回结果虽然在top_3结果中存在,但排名第三,排在首位的是不相关的文本,而Embedding由于文本相似度的优势,将正确结果放在了首位。

207 |

208 | - `query`: 日本半导体产品的主要应用领域是什么?

209 |

210 |

211 |

212 | 在这个例子中,BM25召回结果优于Embedding。

213 |

214 | - `query`: 《美日半导体协议》对日本半导体市场有何影响?

215 |

216 |

217 |

218 | 在这个例子中,正确文本在BM25算法召回结果中排名第二,在Embedding算法中排第三,混合搜索排名第一,这里体现了混合搜索的优越性。

219 |

220 | - `query`: 80年代日本电子产业的辉煌表现在哪些方面?

221 |

222 |

223 |

224 | 在这个例子中,不管是BM25, Embedding,还是Ensemble,都没能将正确文本排在第一位,而经过Rerank以后,正确文本排在第一位,这里体现了Rerank算法的优势。

225 |

226 | ## 改进方案

227 |

228 | 1. Query Rewrite

229 |

230 | - 原始query: 半导体制造设备市场美、日、荷各占多少份额?

231 | - 改写后query:美国、日本和荷兰在半导体制造设备市场的份额分别是多少?

232 |

233 | 改写后的query在BM25和Embedding的top 3召回结果中都能找到。该query对应的正确文本为:

234 |

235 | > 全球半导体设备制造领域,美国、日本和荷兰控制着全球370亿美元半导体制造设备市场的90%以上。其中,美国的半导体制造设备(SME)产业占全球产量的近50%,日本约占30%,荷兰约占17%%。更具体地,以光刻机为例,EUV光刻工序其实有众多日本厂商的参与,如东京电子生产的EUV涂覆显影设备,占据100%的市场份额,Lasertec Corp.也是全球唯一的测试机制造商。另外还有EUV光刻胶,据南大光电在3月发布的相关报告中披露,全球仅有日本厂商研发出了EUV光刻胶。

236 |

237 | 从中我们可以看到,在改写后的query中,美国、日本、荷兰这三个词发挥了重要作用,因此,**query改写对于含有缩写的query有一定的召回效果改善**。

238 |

239 | 2. HyDE

240 |

241 | HyDE(全称Hypothetical Document Embeddings)是RAG中的一种技术,它基于一个假设:相较于直接查询,通过大语言模型 (LLM) 生成的答案在嵌入空间中可能更为接近。HyDE 首先响应查询生成一个假设性文档(答案),然后将其嵌入,从而提高搜索的效果。

242 |

243 | 比如:

244 |

245 | - 原始query: 美日半导体协议是由哪两部门签署的?

246 | - 加上回答后的query: 美日半导体协议是由哪两部门签署的?美日半导体协议是由美国商务部和日本经济产业省签署的。

247 |

248 | 加上回答后的query使用BM25算法可以找回正确文本,且排名第一位,而Embedding算法仍无法召回。

249 |

250 | 正确文本为:

251 |

252 | > 1985年6月,美国半导体产业贸易保护的调子开始升高。美国半导体工业协会向国会递交一份正式的“301条款”文本,要求美国政府制止日本公司的倾销行为。民意调查显示,68%的美国人认为日本是美国最大的威胁。在舆论的引导和半导体工业协会的推动下,美国政府将信息产业定为可以动用国家安全借口进行保护的新兴战略产业,半导体产业成为美日贸易战的焦点。1985年10月,美国商务部出面指控日本公司倾销256K和1M内存。一年后,日本通产省被迫与美国商务部签署第一次《美日半导体协议》。

253 |

254 | 从中可以看出,大模型的回答是正确的,美国商务部这个关键词发挥了重要作用,因此,HyDE对于特定的query有召回效果提升。

255 |

256 |

257 | ## Late Chunking探索

258 |

259 | 1. 中文Late-Chunking例子: late_chunking/jina_zh_late_chunking.ipynb

260 | 2. 使用Gradio实现中文Late-Chunking服务: late_chunking/late_chunking_gradio_server.py

261 | 3. 在RAG过程中,使用Late-Chunking提升召回效果,保证回复质量: late_chunking/my_late_chunking_exp.ipynb

--------------------------------------------------------------------------------

/custom_retriever/__init__.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # @place: Pudong, Shanghai

3 | # @file: __init__.py.py

4 | # @time: 2023/12/25 17:42

5 |

--------------------------------------------------------------------------------

/custom_retriever/bm25_retriever.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # @place: Pudong, Shanghai

3 | # @file: bm25_retriever.py

4 | # @time: 2023/12/25 17:42

5 | from typing import List

6 |

7 | from elasticsearch import Elasticsearch

8 | from llama_index.schema import TextNode

9 | from llama_index import QueryBundle

10 | from llama_index.schema import NodeWithScore

11 | from llama_index.retrievers import BaseRetriever

12 | from llama_index.indices.query.schema import QueryType

13 |

14 | from preprocess.get_text_id_mapping import text_node_id_mapping

15 |

16 |

17 | class CustomBM25Retriever(BaseRetriever):

18 | """Custom retriever for elasticsearch with bm25"""

19 | def __init__(self, top_k) -> None:

20 | """Init params."""

21 | super().__init__()

22 | self.es_client = Elasticsearch("http://localhost:9200")

23 | self.top_k = top_k

24 |

25 | def _retrieve(self, query: QueryType) -> List[NodeWithScore]:

26 | if isinstance(query, str):

27 | query = QueryBundle(query)

28 | else:

29 | query = query

30 |

31 | result = []

32 | # 查询数据(全文搜索)

33 | dsl = {

34 | 'query': {

35 | 'match': {

36 | 'content': query.query_str

37 | }

38 | },

39 | "size": self.top_k

40 | }

41 | search_result = self.es_client.search(index='docs', body=dsl)

42 | if search_result['hits']['hits']:

43 | for record in search_result['hits']['hits']:

44 | text = record['_source']['content']

45 | node_with_score = NodeWithScore(node=TextNode(text=text,

46 | id_=text_node_id_mapping[text]),

47 | score=record['_score'])

48 | result.append(node_with_score)

49 |

50 | return result

51 |

52 |

53 | if __name__ == '__main__':

54 | from pprint import pprint

55 | custom_bm25_retriever = CustomBM25Retriever(top_k=3)

56 | query = "美日半导体协议是由哪两部门签署的?美日半导体协议是由美国商务部和日本经济产业省签署的。"

57 | t_result = custom_bm25_retriever.retrieve(str_or_query_bundle=query)

58 | pprint(t_result)

59 |

--------------------------------------------------------------------------------

/custom_retriever/build_embedding_cache.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # @place: Pudong, Shanghai

3 | # @file: build_embedding_cache.py

4 | # @time: 2023/12/26 12:57

5 | import os

6 | import time

7 | import math

8 | import json

9 | import random

10 | import requests

11 | import numpy as np

12 | from retry import retry

13 | from tqdm import tqdm

14 |

15 |

16 | class EmbeddingCache(object):

17 | def __init__(self):

18 | pass

19 |

20 | @staticmethod

21 | @retry(exceptions=Exception, tries=3, max_delay=20)

22 | def get_openai_embedding(req_text: str):

23 | time.sleep(random.random() / 2)

24 | url = "https://api.openai.com/v1/embeddings"

25 | headers = {'Content-Type': 'application/json', "Authorization": f"Bearer {os.getenv('OPENAI_API_KEY')}"}

26 | payload = json.dumps({"model": "text-embedding-ada-002", "input": req_text})

27 | new_req = requests.request("POST", url, headers=headers, data=payload)

28 | return new_req.json()['data'][0]['embedding']

29 |

30 | @staticmethod

31 | @retry(exceptions=Exception, tries=3, max_delay=20)

32 | def get_bge_embedding(req_text: str):

33 | url = "http://localhost:50073/embedding"

34 | headers = {'Content-Type': 'application/json'}

35 | payload = json.dumps({"text": req_text})

36 | new_req = requests.request("POST", url, headers=headers, data=payload)

37 | return new_req.json()['embedding']

38 |

39 | @staticmethod

40 | @retry(exceptions=Exception, tries=3, max_delay=20)

41 | def get_jina_embedding(req_text: str):

42 | time.sleep(random.random() / 2)

43 | url = 'https://api.jina.ai/v1/embeddings'

44 | headers = {

45 | 'Content-Type': 'application/json',

46 | 'Authorization': f'Bearer {os.getenv("JINA_API_KEY")}'

47 | }

48 | data = {

49 | 'input': [req_text],

50 | 'model': 'jina-embeddings-v2-base-zh'

51 | }

52 | response = requests.post(url, headers=headers, json=data)

53 | embedding = response.json()["data"][0]["embedding"]

54 | embedding_norm = math.sqrt(sum([i**2 for i in embedding]))

55 | return [i/embedding_norm for i in embedding]

56 |

57 | def build_with_context(self, context_type: str):

58 | with open("../data/doc_qa_test.json", "r", encoding="utf-8") as f:

59 | content = json.loads(f.read())

60 | queries = list(content[context_type].values())

61 | query_num = len(queries)

62 | embedding_data = np.empty(shape=[query_num, 768])

63 | for i in tqdm(range(query_num), desc="generate embedding"):

64 | embedding_data[i] = self.get_bge_embedding(queries[i])

65 | np.save(f"../data/{context_type}_bce_embedding.npy", embedding_data)

66 |

67 | def build(self):

68 | self.build_with_context("queries")

69 | self.build_with_context("corpus")

70 |

71 | @staticmethod

72 | def load(query_write=False):

73 | current_dir = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))

74 | queries_embedding_data = np.load(os.path.join(current_dir, "data/queries_jina_base_zh_embedding.npy"))

75 | corpus_embedding_data = np.load(os.path.join(current_dir, "data/corpus_jina_base_zh_late_chunking_embedding.npy"))

76 | query_embedding_dict = {}

77 | with open(os.path.join(current_dir, "data/doc_qa_test.json"), "r", encoding="utf-8") as f:

78 | content = json.loads(f.read())

79 | queries = list(content["queries"].values())

80 | corpus = list(content["corpus"].values())

81 | for i in range(len(queries)):

82 | query_embedding_dict[queries[i]] = queries_embedding_data[i].tolist()

83 | if query_write:

84 | rewrite_queries_embedding_data = np.load(os.path.join(current_dir, "data/query_rewrite_openai_embedding.npy"))

85 | with open("../data/query_rewrite.json", "r", encoding="utf-8") as f:

86 | rewrite_content = json.loads(f.read())

87 |

88 | rewrite_queries_list = []

89 | for original_query, rewrite_queries in rewrite_content.items():

90 | rewrite_queries_list.extend(rewrite_queries)

91 | for i in range(len(rewrite_queries_list)):

92 | query_embedding_dict[rewrite_queries_list[i]] = rewrite_queries_embedding_data[i].tolist()

93 | return query_embedding_dict, corpus_embedding_data, corpus

94 |

95 |

96 | if __name__ == '__main__':

97 | EmbeddingCache().build()

98 |

--------------------------------------------------------------------------------

/custom_retriever/ensemble_rerank_retriever.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # @place: Pudong, Shanghai

3 | # @file: ensemble_rerank_retriever.py

4 | # @time: 2023/12/26 19:18

5 | from typing import List

6 |

7 | from llama_index.schema import TextNode

8 | from llama_index.schema import NodeWithScore

9 | from llama_index.retrievers import BaseRetriever

10 | from llama_index.indices.query.schema import QueryBundle, QueryType

11 |

12 | from preprocess.get_text_id_mapping import text_node_id_mapping

13 | from custom_retriever.bm25_retriever import CustomBM25Retriever

14 | from custom_retriever.vector_store_retriever import VectorSearchRetriever

15 | from utils.rerank import bge_rerank_result

16 |

17 |

18 | class EnsembleRerankRetriever(BaseRetriever):

19 | def __init__(self, top_k, faiss_index):

20 | super().__init__()

21 | self.faiss_index = faiss_index

22 | self.top_k = top_k

23 | self.embedding_retriever = VectorSearchRetriever(top_k=self.top_k, faiss_index=faiss_index)

24 |

25 | def _retrieve(self, query: QueryType) -> List[NodeWithScore]:

26 | if isinstance(query, str):

27 | query = QueryBundle(query)

28 | else:

29 | query = query

30 | # print(query.query_str)

31 | bm25_search_nodes = CustomBM25Retriever(top_k=self.top_k).retrieve(query)

32 | embedding_search_nodes = self.embedding_retriever.retrieve(query)

33 | bm25_docs = [node.text for node in bm25_search_nodes]

34 | embedding_docs = [node.text for node in embedding_search_nodes]

35 | # remove duplicate document

36 | all_documents = set()

37 | for doc_list in [bm25_docs, embedding_docs]:

38 | for doc in doc_list:

39 | all_documents.add(doc)

40 | doc_lists = list(all_documents)

41 | rerank_doc_lists = bge_rerank_result(query.query_str, doc_lists, top_n=self.top_k)

42 | result = []

43 | for sorted_doc in rerank_doc_lists:

44 | text, score = sorted_doc

45 | node_with_score = NodeWithScore(node=TextNode(text=text,

46 | id_=text_node_id_mapping[text]),

47 | score=score)

48 | result.append(node_with_score)

49 |

50 | return result

51 |

52 |

53 | if __name__ == '__main__':

54 | from faiss import IndexFlatIP

55 |

56 | faiss_index = IndexFlatIP(1536)

57 | ensemble_retriever = EnsembleRerankRetriever(top_k=2, faiss_index=faiss_index)

58 | t_result = ensemble_retriever.retrieve(str_or_query_bundle="索尼1953年引入的技术专利是什么?")

59 | print(t_result)

60 | faiss_index.reset()

61 |

--------------------------------------------------------------------------------

/custom_retriever/ensemble_retriever.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # @place: Pudong, Shanghai

3 | # @file: ensemble_retriever.py

4 | # @time: 2023/12/26 18:50

5 | from typing import List

6 | from operator import itemgetter

7 |

8 | from llama_index.schema import TextNode

9 | from llama_index.schema import NodeWithScore

10 | from llama_index.retrievers import BaseRetriever

11 | from llama_index.indices.query.schema import QueryType

12 |

13 | from preprocess.get_text_id_mapping import text_node_id_mapping

14 | from custom_retriever.bm25_retriever import CustomBM25Retriever

15 | from custom_retriever.vector_store_retriever import VectorSearchRetriever

16 |

17 |

18 | class EnsembleRetriever(BaseRetriever):

19 | def __init__(self, top_k, faiss_index, weights):

20 | super().__init__()

21 | self.weights = weights

22 | self.c: int = 60

23 | self.faiss_index = faiss_index

24 | self.top_k = top_k

25 | self.embedding_retriever = VectorSearchRetriever(top_k=self.top_k, faiss_index=faiss_index)

26 |

27 | def _retrieve(self, query: QueryType) -> List[NodeWithScore]:

28 | bm25_search_nodes = CustomBM25Retriever(top_k=self.top_k).retrieve(query)

29 | embedding_search_nodes = self.embedding_retriever.retrieve(query)

30 | bm25_docs = [node.text for node in bm25_search_nodes]

31 | embedding_docs = [node.text for node in embedding_search_nodes]

32 | doc_lists = [bm25_docs, embedding_docs]

33 |

34 | # Create a union of all unique documents in the input doc_lists

35 | all_documents = set()

36 | for doc_list in doc_lists:

37 | for doc in doc_list:

38 | all_documents.add(doc)

39 |

40 | # Initialize the RRF score dictionary for each document

41 | rrf_score_dic = {doc: 0.0 for doc in all_documents}

42 |

43 | # Calculate RRF scores for each document

44 | for doc_list, weight in zip(doc_lists, self.weights):

45 | for rank, doc in enumerate(doc_list, start=1):

46 | rrf_score = weight * (1 / (rank + self.c))

47 | rrf_score_dic[doc] += rrf_score

48 |

49 | # Sort documents by their RRF scores in descending order

50 | sorted_documents = sorted(rrf_score_dic.items(), key=itemgetter(1), reverse=True)

51 | result = []

52 | for sorted_doc in sorted_documents[:self.top_k]:

53 | text, score = sorted_doc

54 | node_with_score = NodeWithScore(node=TextNode(text=text,

55 | id_=text_node_id_mapping[text]),

56 | score=score)

57 | result.append(node_with_score)

58 |

59 | return result

60 |

61 |

62 | if __name__ == '__main__':

63 | from faiss import IndexFlatIP

64 |

65 | faiss_index = IndexFlatIP(1536)

66 | query = "日本半导体发展史的三个时期是什么?日本半导体发展史可以分为以下三个时期:1. 初期发展(1950年代至1970年代):在这一时期,日本半导体行业主要依赖于进口技术和设备。日本政府积极推动半导体产业的发展,设立了研究机构和实验室,并提供财政支持。日本企业开始生产晶体管和集成电路,逐渐取得了技术突破和市场份额的增长。2. 高速增长(1980年代至1990年代):在这一时期,日本半导体行业迅速崛起,成为全球"

67 | query = "美日半导体协议是由哪两部门签署的?美日半导体协议是由美国商务部和日本经济产业省签署的。"

68 | query = "日美半导体协议要求美国芯片在日本市场份额是多少?根据日美半导体协议,要求美国芯片在日本市场的份额为20%。"

69 | query = "尼康和佳能的光刻机在哪个市场占优势?尼康和佳能都是知名的相机制造商,但在光刻机市场上,尼康占据着主导地位。尼康是全球最大的光刻机制造商之一,其光刻机产品广泛应用于半导体行业,尤其在高端光刻机市场上"

70 | ensemble_retriever = EnsembleRetriever(top_k=3, faiss_index=faiss_index, weights=[0.5, 0.5])

71 | t_result = ensemble_retriever.retrieve(str_or_query_bundle=query)

72 | print(t_result)

73 | faiss_index.reset()

74 |

--------------------------------------------------------------------------------

/custom_retriever/query_rewrite_ensemble_retriever.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # @place: Pudong, Shanghai

3 | # @file: query_rewrite_ensemble_retriever.py

4 | # @time: 2023/12/28 13:49

5 | # -*- coding: utf-8 -*-

6 | # @place: Pudong, Shanghai

7 | # @file: ensemble_retriever.py

8 | # @time: 2023/12/26 18:50

9 | import json

10 | from typing import List

11 | from operator import itemgetter

12 |

13 | from llama_index.schema import TextNode

14 | from llama_index.schema import NodeWithScore

15 | from llama_index.retrievers import BaseRetriever

16 | from llama_index.indices.query.schema import QueryType

17 |

18 | from preprocess.get_text_id_mapping import text_node_id_mapping

19 | from custom_retriever.bm25_retriever import CustomBM25Retriever

20 | from custom_retriever.vector_store_retriever import VectorSearchRetriever

21 |

22 |

23 | class QueryRewriteEnsembleRetriever(BaseRetriever):

24 | def __init__(self, top_k, faiss_index):

25 | super().__init__()

26 | self.c: int = 60

27 | self.faiss_index = faiss_index

28 | self.top_k = top_k

29 | self.embedding_retriever = VectorSearchRetriever(top_k=self.top_k, faiss_index=faiss_index, query_rewrite=True)

30 | with open('../data/query_rewrite.json', 'r') as f:

31 | self.query_write_dict = json.loads(f.read())

32 |

33 | def _retrieve(self, query: QueryType) -> List[NodeWithScore]:

34 | doc_lists = []

35 | bm25_search_nodes = CustomBM25Retriever(top_k=self.top_k).retrieve(query.query_str)

36 | doc_lists.append([node.text for node in bm25_search_nodes])

37 | embedding_search_nodes = self.embedding_retriever.retrieve(query.query_str)

38 | doc_lists.append([node.text for node in embedding_search_nodes])

39 | # check: need query rewrite

40 | if len(set([_.id_ for _ in bm25_search_nodes]) & set([_.id_ for _ in embedding_search_nodes])) == 0:

41 | print(query.query_str)

42 | for search_query in self.query_write_dict[query.query_str]:

43 | bm25_search_nodes = CustomBM25Retriever(top_k=self.top_k).retrieve(search_query)

44 | doc_lists.append([node.text for node in bm25_search_nodes])

45 | embedding_search_nodes = self.embedding_retriever.retrieve(search_query)

46 | doc_lists.append([node.text for node in embedding_search_nodes])

47 |

48 | # Create a union of all unique documents in the input doc_lists

49 | all_documents = set()

50 | for doc_list in doc_lists:

51 | for doc in doc_list:

52 | all_documents.add(doc)

53 | # print(all_documents)

54 |

55 | # Initialize the RRF score dictionary for each document

56 | rrf_score_dic = {doc: 0.0 for doc in all_documents}

57 |

58 | # Calculate RRF scores for each document

59 | for doc_list, weight in zip(doc_lists, [1/len(doc_lists)] * len(doc_lists)):

60 | for rank, doc in enumerate(doc_list, start=1):

61 | rrf_score = weight * (1 / (rank + self.c))

62 | rrf_score_dic[doc] += rrf_score

63 |

64 | # Sort documents by their RRF scores in descending order

65 | sorted_documents = sorted(rrf_score_dic.items(), key=itemgetter(1), reverse=True)

66 | result = []

67 | for sorted_doc in sorted_documents[:self.top_k]:

68 | text, score = sorted_doc

69 | node_with_score = NodeWithScore(node=TextNode(text=text,

70 | id_=text_node_id_mapping[text]),

71 | score=score)

72 | result.append(node_with_score)

73 |

74 | return result

75 |

76 |

77 | if __name__ == '__main__':

78 | from faiss import IndexFlatIP

79 | from pprint import pprint

80 | faiss_index = IndexFlatIP(1536)

81 | ensemble_retriever = QueryRewriteEnsembleRetriever(top_k=3, faiss_index=faiss_index)

82 | query = "半导体制造设备市场美、日、荷各占多少份额?"

83 | t_result = ensemble_retriever.retrieve(str_or_query_bundle=query)

84 | pprint(t_result)

85 | faiss_index.reset()

86 |

--------------------------------------------------------------------------------

/custom_retriever/vector_store_retriever.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # @place: Pudong, Shanghai

3 | # @file: vector_store_retriever.py

4 | # @time: 2023/12/25 17:43

5 | from typing import List

6 |

7 | import numpy as np

8 | from llama_index.schema import TextNode

9 | from llama_index import QueryBundle

10 | from llama_index.schema import NodeWithScore

11 | from llama_index.retrievers import BaseRetriever

12 | from llama_index.indices.query.schema import QueryType

13 |

14 | from preprocess.get_text_id_mapping import text_node_id_mapping

15 | from custom_retriever.build_embedding_cache import EmbeddingCache

16 |

17 |

18 | class VectorSearchRetriever(BaseRetriever):

19 | def __init__(self, top_k, faiss_index, query_rewrite=False) -> None:

20 | super().__init__()

21 | self.top_k = top_k

22 | self.faiss_index = faiss_index

23 | self.queries_embedding_dict, self.corpus_embedding, self.corpus = EmbeddingCache().load(query_write=query_rewrite)

24 | # add vector

25 | self.faiss_index.add(self.corpus_embedding)

26 |

27 | def _retrieve(self, query: QueryType) -> List[NodeWithScore]:

28 | if isinstance(query, str):

29 | query = QueryBundle(query)

30 | else:

31 | query = query

32 |

33 | result = []

34 | # vector search

35 | if query.query_str in self.queries_embedding_dict:

36 | query_embedding = self.queries_embedding_dict[query.query_str]

37 | else:

38 | query_embedding = EmbeddingCache().get_openai_embedding(req_text=query.query_str)

39 | distances, doc_indices = self.faiss_index.search(np.array([query_embedding]), self.top_k)

40 |

41 | for i, sent_index in enumerate(doc_indices.tolist()[0]):

42 | text = self.corpus[sent_index]

43 | node_with_score = NodeWithScore(node=TextNode(text=text, id_=text_node_id_mapping[text]),

44 | score=distances.tolist()[0][i])

45 | result.append(node_with_score)

46 |

47 | return result

48 |

49 |

50 | if __name__ == '__main__':

51 | from pprint import pprint

52 | from faiss import IndexFlatIP

53 | faiss_index = IndexFlatIP(1536)

54 | vector_search_retriever = VectorSearchRetriever(top_k=3, faiss_index=faiss_index)

55 | query = "美日半导体协议是由哪两部门签署的?美日半导体协议是由美国商务部和日本经济产业省签署的。"

56 | t_result = vector_search_retriever.retrieve(str_or_query_bundle=query)

57 | pprint(t_result)

58 | faiss_index.reset()

59 |

--------------------------------------------------------------------------------

/data/corpus_openai_embedding.npy:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/percent4/embedding_rerank_retrieval/f6a0ee5d388b20807e9f07c81c69cf963ea2d463/data/corpus_openai_embedding.npy

--------------------------------------------------------------------------------

/data/demo.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # @place: Pudong, Shanghai

3 | # @contact: lianmingjie@shanda.com

4 | # @file: demo.py

5 | # @time: 2023/12/26 11:15

6 | import json

7 | with open("doc_qa_test.json", "r") as f:

8 | content = json.loads(f.read())

9 |

10 | new_content = {}

11 | n = 5

12 | for k, v in content.items():

13 | if k in ["queries", "relevant_docs"]:

14 | new_content[k] = {}

15 | for key in list(v.keys())[:n]:

16 | new_content[k][key] = v[key]

17 | else:

18 | new_content[k] = v

19 |

20 | with open("doc_qa_test_demo.json", "w") as f:

21 | f.write(json.dumps(new_content, indent=4, ensure_ascii=False))

22 |

--------------------------------------------------------------------------------

/data/queries_openai_embedding.npy:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/percent4/embedding_rerank_retrieval/f6a0ee5d388b20807e9f07c81c69cf963ea2d463/data/queries_openai_embedding.npy

--------------------------------------------------------------------------------

/data/query_rewrite_openai_embedding.npy:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/percent4/embedding_rerank_retrieval/f6a0ee5d388b20807e9f07c81c69cf963ea2d463/data/query_rewrite_openai_embedding.npy

--------------------------------------------------------------------------------

/docs/RAG框架中的Rerank算法评估.md:

--------------------------------------------------------------------------------

1 | > 本文将详细介绍RAG框架中的两种Rerank模型的评估实验:bge-reranker和Cohere Rerank。

2 |

3 | 在文章[NLP(八十二)RAG框架中的Retrieve算法评估](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486199&idx=1&sn=f24175b05bdf5bc6dd42efed4d5acae8&chksm=fcb9b367cbce3a711fabd1a56bb5b9d803aba2f42964b4e1f9a4dc6e2174f0952ddb9e1d4c55&token=1977141018&lang=zh_CN#rd)中,我们在评估Retrieve算法的时候,发现在Ensemble Search阶段之后加入Rerank算法能有效提升检索效果,其中top_3的Hit Rate指标增加约4%。

4 |

5 | 因此,本文将深入Rerank算法对比,主要对比bge-reranker和Cohere Rerank两种算法,分析它们对于提升检索效果的作用。

6 |

7 | ## 为什么需要重排序?

8 |

9 | **混合检索**通过融合多种检索技术的优势,能够提升检索的召回效果。然而,这种方法在应用不同的检索模式时,必须对结果进行整合和标准化处理。标准化是指将数据调整到一致的标准范围或格式,以便于更有效地进行比较、分析和处理。在完成这些步骤后,这些数据将整合并提供给大型模型进行处理。为了实现这一过程,我们需要引入一个评分系统,即`重排序模型(Rerank Model)`,它有助于进一步优化和精炼检索结果。

10 |

11 | `Rerank模型`通过对候选文档列表进行重新排序,以提高其与用户查询语义的匹配度,从而优化排序结果。该模型的核心在于评估用户问题与每个候选文档之间的关联程度,并基于这种相关性给文档排序,使得与用户问题更为相关的文档排在更前的位置。这种模型的实现通常涉及计算相关性分数,然后按照这些分数从高到低排列文档。市场上已有一些流行的重排序模型,例如 **Cohere rerank**、**bge-reranker** 等,它们在不同的应用场景中表现出了优异的性能。

12 |

13 |

14 |

15 | ## BGE-Reranker模型

16 |

17 | **Cohere Rerank**模型目前闭源,对外提供API,普通账号提供免费使用额度,生产环境最好使用付费服务,因此,本文不再过多介绍,关于这块的文章可参考其官网博客:[https://txt.cohere.com/rerank/](https://txt.cohere.com/rerank/) .

18 |

19 | **bge-reranker**是`BAAI`(北京智源人工智能研究院)发布的系列模型之一,包括Embedding、Rerank系列模型等。`bge-reranker`模型在HuggingFace上开源,有`base`、`large`两个版本模型。

20 |

21 | 借助`FlagEmbedding`,我们以BAAI/bge-reranker-base模型为例,使用FastAPI封装成HTTP服务,Python代码如下:

22 |

23 | ```python

24 | # !/usr/bin/env python

25 | # encoding: utf-8

26 | import uvicorn

27 | from fastapi import FastAPI

28 | from pydantic import BaseModel

29 | from operator import itemgetter

30 | from FlagEmbedding import FlagReranker

31 |

32 |

33 | app = FastAPI()

34 |

35 | reranker = FlagReranker('/data_2/models/bge-reranker-base/models--BAAI--bge-reranker-base/blobs', use_fp16=True)

36 |

37 |

38 | class QuerySuite(BaseModel):

39 | query: str

40 | passages: list[str]

41 | top_k: int = 1

42 |

43 |

44 | @app.post('/bge_base_rerank')

45 | def rerank(query_suite: QuerySuite):

46 | scores = reranker.compute_score([[query_suite.query, passage] for passage in query_suite.passages])

47 | if isinstance(scores, list):

48 | similarity_dict = {passage: scores[i] for i, passage in enumerate(query_suite.passages)}

49 | else:

50 | similarity_dict = {passage: scores for i, passage in enumerate(query_suite.passages)}

51 | sorted_similarity_dict = sorted(similarity_dict.items(), key=itemgetter(1), reverse=True)

52 | result = {}

53 | for j in range(query_suite.top_k):

54 | result[sorted_similarity_dict[j][0]] = sorted_similarity_dict[j][1]

55 | return result

56 |

57 |

58 | if __name__ == '__main__':

59 | uvicorn.run(app, host='0.0.0.0', port=50072)

60 | ```

61 |

62 | 计算"上海天气"与"北京美食"、"上海气候"的Rerank相关性分数,请求如下:

63 |

64 | ```bash

65 | curl --location 'http://localhost:50072/bge_base_rerank' \

66 | --header 'Content-Type: application/json' \

67 | --data '{

68 | "query": "上海天气",

69 | "passages": ["北京美食", "上海气候"],

70 | "top_k": 2

71 | }'

72 | ```

73 |

74 | 输出如下:

75 |

76 | ```json

77 | {

78 | "上海气候": 6.24609375,

79 | "北京美食": -7.29296875

80 | }

81 | ```

82 |

83 | ## 评估实验

84 |

85 | 我们使用[NLP(八十二)RAG框架中的Retrieve算法评估](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486199&idx=1&sn=f24175b05bdf5bc6dd42efed4d5acae8&chksm=fcb9b367cbce3a711fabd1a56bb5b9d803aba2f42964b4e1f9a4dc6e2174f0952ddb9e1d4c55&token=1977141018&lang=zh_CN#rd)中的数据集和评估代码,在ensemble search阶段之后加入BGE-Reranker服务API调用。

86 |

87 | 其中,`bge-reranker-base`的评估结果如下:

88 |

89 | | retrievers | hit_rate | mrr |

90 | |-------------------------------------|----------|--------|

91 | | ensemble_bge_base_rerank_top_1_eval | 0.8255 | 0.8255 |

92 | | ensemble_bge_base_rerank_top_2_eval | 0.8785 | 0.8489 |

93 | | ensemble_bge_base_rerank_top_3_eval | 0.9346 | 0.8686 |

94 | | ensemble_bge_base_rerank_top_4_eval | 0.947 | 0.872 |

95 | | ensemble_bge_base_rerank_top_5_eval | 0.9564 | 0.8693 |

96 |

97 | `bge-reranker-large`的评估结果如下:

98 |

99 | | retrievers | hit_rate | mrr |

100 | |--------------------------------------|----------|--------|

101 | | ensemble_bge_large_rerank_top_1_eval | 0.8224 | 0.8224 |

102 | | ensemble_bge_large_rerank_top_2_eval | 0.8847 | 0.8364 |

103 | | ensemble_bge_large_rerank_top_3_eval | 0.9377 | 0.8572 |

104 | | ensemble_bge_large_rerank_top_4_eval | 0.9502 | 0.8564 |

105 | | ensemble_bge_large_rerank_top_5_eval | 0.9626 | 0.8537 |

106 |

107 | 以Ensemble Search为baseline,分别对三种Rerank模型进行Hit Rate指标统计,柱状图如下:

108 |

109 |

110 |

111 | 从上述的统计图中可以得到如下结论:

112 |

113 | - 在Ensemble Search阶段后加入Rerank模型会有检索效果提升

114 | - 就检索效果而言,Rerank模型的结果为:Cohere > bge-rerank-large > bge-rerank-base,但效果相差不大

115 |

116 |

117 | ## 总结

118 |

119 | 本文详细介绍了RAG框架中的两种Rerank模型的评估实验:bge-reranker和Cohere Rerank,算是在之前Retrieve算法评估实验上的延续工作,后续将会有更多工作持续更新。

120 |

121 | 本文的所有过程及指标结果已开源至Github,网址为:[https://github.com/percent4/embedding_rerank_retrieval](https://github.com/percent4/embedding_rerank_retrieval) .

122 |

--------------------------------------------------------------------------------

/docs/RAG框架中的Retrieve算法评估.md:

--------------------------------------------------------------------------------

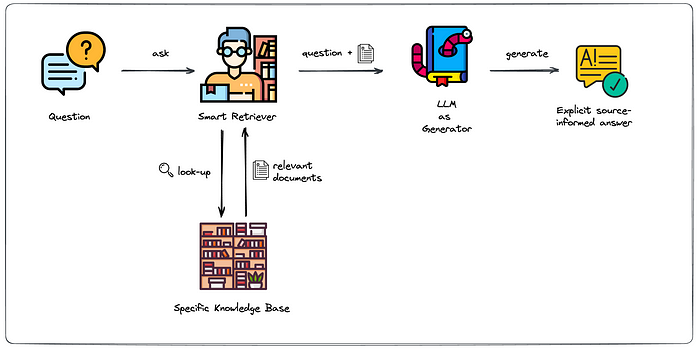

1 | > 本文将详细介绍RAG框架中的各种Retrieve算法,比如BM25, Embedding Search, Ensemble Search, Rerank等的评估实验过程与结果。本文是目前除了LlamaIndex官方网站例子之外为数不多的介绍Retrieve算法评估实验的文章。

2 |

3 | ## 什么是RAG中的Retrieve?

4 |

5 | `RAG`即Retrieval Augmented Generation的简称,是现阶段增强使用LLM的常见方式之一,其一般步骤为:

6 |

7 | 1. 文档划分(Document Split)

8 | 2. 向量嵌入(Embedding)

9 | 3. 文档获取(Retrieve)

10 | 4. Prompt工程(Prompt Engineering)

11 | 5. 大模型问答(LLM)

12 |

13 | 大致的流程图参考如下:

14 |

15 |

16 |

17 | 通常来说,可将`RAG`划分为召回(**Retrieve**)阶段和答案生成(**Answer Generate**)阶段,而效果优化也从这方面入手。针对召回阶段,文档获取是其中重要的步骤,决定了注入大模型的知识背景,常见的召回算法如下:

18 |

19 | - **BM25(又称Keyword Search)**: 使用BM24算法找回相关文档,一般对于特定领域关键词效果较好,比如人名,结构名等;

20 | - **Embedding Search**: 使用Embedding模型将query和corpus进行文本嵌入,使用向量相似度进行文本匹配,可解决BM25算法的相似关键词召回效果差的问题,该过程一般会使用向量数据库(Vector Database);

21 | - **Ensemble Search**: 融合BM25算法和Embedding Search的结果,使用RFF算法进行重排序,一般会比单独的召回算法效果好;

22 | - **Rerank**: 上述的召回算法一般属于粗召回阶段,更看重性能;Rerank是对粗召回阶段的结果,再与query进行文本匹配,属于Rerank(又称为重排、精排)阶段,更看重效果;

23 |

24 | 综合上述Retrieve算法的框架示意图如下:

25 |

26 |

27 |

28 | 上述的Retrieve算法更有优劣,一般会选择合适的场景进行使用或考虑综合几种算法进行使用。那么,它们的效果具体如何呢?

29 |

30 |

31 | ## Retrieve算法评估

32 |

33 | 那么,如何对Retrieve算法进行具体效果评估呢?

34 |

35 | 本文将通过构造自有数据集进行测试,分别对上述四种Retrieve算法进行实验,采用`Hit Rate`和`MRR`指标进行评估。

36 |

37 | 在**LlamaIndex**官方Retrieve Evaluation中,提供了对Retrieve算法的评估示例,具体细节可参考如下:

38 |

39 | [https://blog.llamaindex.ai/boosting-rag-picking-the-best-embedding-reranker-models-42d079022e83](https://blog.llamaindex.ai/boosting-rag-picking-the-best-embedding-reranker-models-42d079022e83)

40 |

41 | 这是现在网上较为权威的Retrieve Evaluation实验,本文将参考LlamaIndex的做法,给出更为详细的评估实验过程与结果。

42 |

43 | Retrieve Evaluation实验的步骤如下:

44 |

45 | 1. `文档划分`:寻找合适数据集,进行文档划分;

46 | 2. `问题生成`:对划分后的文档,使用LLM对文档内容生成问题;

47 | 3. `召回文本`:对生成的每个问题,采用不同的Retrieve算法,得到召回结果;

48 | 4. `指标评估`:使用`Hit Rate`和`MRR`指标进行评估

49 |

50 | 步骤是清晰的,那么,我们来看下评估指标:`Hit Rate`和`MRR`。

51 |

52 | `Hit Rate`即命中率,一般指的是我们预期的召回文本(真实值)在召回结果的前k个文本中会出现,也就是Recall@k时,能得到预期文本。一般,`Hit Rate`越高,就说明召回算法效果越好。

53 |

54 | `MRR`即Mean Reciprocal Rank,是一种常见的评估检索效果的指标。MRR 是衡量系统在一系列查询中返回相关文档或信息的平均排名的逆数的平均值。例如,如果一个系统对第一个查询的正确答案排在第二位,对第二个查询的正确答案排在第一位,则 MRR 为 (1/2 + 1/1) / 2。

55 |

56 | 在LlamaIndex中,这两个指标的对应类分别为`HitRate`和`MRR`,源代码如下:

57 |

58 | ```python

59 | class HitRate(BaseRetrievalMetric):

60 | """Hit rate metric."""

61 |

62 | metric_name: str = "hit_rate"

63 |

64 | def compute(

65 | self,

66 | query: Optional[str] = None,

67 | expected_ids: Optional[List[str]] = None,

68 | retrieved_ids: Optional[List[str]] = None,

69 | expected_texts: Optional[List[str]] = None,

70 | retrieved_texts: Optional[List[str]] = None,

71 | **kwargs: Any,

72 | ) -> RetrievalMetricResult:

73 | """Compute metric."""

74 | if retrieved_ids is None or expected_ids is None:

75 | raise ValueError("Retrieved ids and expected ids must be provided")

76 | is_hit = any(id in expected_ids for id in retrieved_ids)

77 | return RetrievalMetricResult(

78 | score=1.0 if is_hit else 0.0,

79 | )

80 |

81 |

82 | class MRR(BaseRetrievalMetric):

83 | """MRR metric."""

84 |

85 | metric_name: str = "mrr"

86 |

87 | def compute(

88 | self,

89 | query: Optional[str] = None,

90 | expected_ids: Optional[List[str]] = None,

91 | retrieved_ids: Optional[List[str]] = None,

92 | expected_texts: Optional[List[str]] = None,

93 | retrieved_texts: Optional[List[str]] = None,

94 | **kwargs: Any,

95 | ) -> RetrievalMetricResult:

96 | """Compute metric."""

97 | if retrieved_ids is None or expected_ids is None:

98 | raise ValueError("Retrieved ids and expected ids must be provided")

99 | for i, id in enumerate(retrieved_ids):

100 | if id in expected_ids:

101 | return RetrievalMetricResult(

102 | score=1.0 / (i + 1),

103 | )

104 | return RetrievalMetricResult(

105 | score=0.0,

106 | )

107 | ```

108 |

109 | ## 数据集构造

110 |

111 | 在文章[NLP(六十一)使用Baichuan-13B-Chat模型构建智能文档](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247485425&idx=1&sn=bd85ddfce82d77ceec5a66cb96835400&chksm=fcb9be61cbce37773109f9703c2b6c4256d5037c8bf4497dfb9ad0f296ce0ee4065255954c1c&token=1977141018&lang=zh_CN#rd)笔者介绍了如何使用RAG框架来实现智能文档问答。

112 |

113 | 以这个项目为基础,笔者采集了日本半导体行业相关的网络文章及其他文档,进行文档划分,导入至ElastricSearch,并使用OpenAI Embedding获取文本嵌入向量。语料库一共为433个文档片段(Chunk),其中321个与日本半导体行业相关(不妨称之为`领域文档`)。

114 |

115 | 还差query数据集。这点是从LlamaIndex官方示例中获取的灵感:**使用大模型生成query**!

116 |

117 | 针对上述321个领域文档,使用GPT-4模型生成一个与文本内容相关的问题,即query,Python代码如下:

118 |

119 | ```python

120 | # -*- coding: utf-8 -*-

121 | # @place: Pudong, Shanghai

122 | # @file: data_transfer.py

123 | # @time: 2023/12/25 17:51

124 | import pandas as pd

125 | from llama_index.llms import OpenAI

126 | from llama_index.schema import TextNode

127 | from llama_index.evaluation import generate_question_context_pairs

128 | import random

129 | random.seed(42)

130 |

131 | llm = OpenAI(model="gpt-4", max_retries=5)

132 |

133 | # Prompt to generate questions

134 | qa_generate_prompt_tmpl = """\

135 | Context information is below.

136 |

137 | ---------------------

138 | {context_str}

139 | ---------------------

140 |

141 | Given the context information and not prior knowledge.

142 | generate only questions based on the below query.

143 |

144 | You are a university professor. Your task is to set {num_questions_per_chunk} questions for the upcoming Chinese quiz.

145 | Questions throughout the test should be diverse. Questions should not contain options or start with Q1/Q2.

146 | Questions must be written in Chinese. The expression must be concise and clear.

147 | It should not exceed 15 Chinese characters. Words such as "这", "那", "根据", "依据" and other punctuation marks

148 | should not be used. Abbreviations may be used for titles and professional terms.

149 | """

150 |

151 | nodes = []

152 | data_df = pd.read_csv("../data/doc_qa_dataset.csv", encoding="utf-8")

153 | for i, row in data_df.iterrows():

154 | if len(row["content"]) > 80 and i > 96:

155 | node = TextNode(text=row["content"])

156 | node.id_ = f"node_{i + 1}"

157 | nodes.append(node)

158 |

159 |

160 | doc_qa_dataset = generate_question_context_pairs(

161 | nodes, llm=llm, num_questions_per_chunk=1, qa_generate_prompt_tmpl=qa_generate_prompt_tmpl

162 | )

163 |

164 | doc_qa_dataset.save_json("../data/doc_qa_dataset.json")

165 | ```

166 |

167 | 原始数据`doc_qa_dataset.csv`是笔者从Kibana中的Discover中导出的,使用llama-index模块和GPT-4模型,以合适的Prompt,对每个领域文档生成一个问题,并保存为doc_qa_dataset.json,这就是我们进行Retrieve Evaluation的数据格式,其中包括queries, corpus, relevant_docs, mode四个字段。

168 |

169 | 我们来查看第一个文档及生成的答案,如下:

170 |

171 | ```json

172 | {

173 | "queries": {

174 | "7813f025-333d-494f-bc14-a51b2d57721b": "日本半导体产业的现状和影响因素是什么?",

175 | ...

176 | },

177 | "corpus": {

178 | "node_98": "日本半导体产业在上世纪80年代到达顶峰后就在缓慢退步,但若简单认为日本半导体产业失败了,就是严重误解,今天日本半导体产业仍有非常有竞争力的企业和产品。客观认识日本半导体产业的成败及其背后的原因,对正在大力发展半导体产业的中国,有非常强的参考价值。",

179 | ...

180 | },

181 | "relevant_docs": {

182 | "7813f025-333d-494f-bc14-a51b2d57721b": [

183 | "node_98"

184 | ],

185 | ...

186 | },

187 | "mode": "text"

188 | }

189 | ```

190 |

191 |

192 | ## Retrieve算法评估

193 |

194 | 我们需要评估的Retrieve算法为BM25, Embedding Search, Ensemble Search, Ensemble + Rerank,下面将分别就Retriever实现方式、指标评估实验对每种Retrieve算法进行详细介绍。

195 |

196 | ### BM25

197 |

198 | BM25的储存采用ElasticSearch,即直接使用ES内置的BM25算法。笔者在llama-index对BaseRetriever进行定制化开发(这也是我们实现自己想法的一种常规方法),对其简单封装,Python代码如下:

199 |

200 | ```python

201 | # -*- coding: utf-8 -*-

202 | # @place: Pudong, Shanghai

203 | # @file: bm25_retriever.py

204 | # @time: 2023/12/25 17:42

205 | from typing import List

206 |

207 | from elasticsearch import Elasticsearch

208 | from llama_index.schema import TextNode

209 | from llama_index import QueryBundle

210 | from llama_index.schema import NodeWithScore

211 | from llama_index.retrievers import BaseRetriever

212 | from llama_index.indices.query.schema import QueryType

213 |

214 | from preprocess.get_text_id_mapping import text_node_id_mapping

215 |

216 |

217 | class CustomBM25Retriever(BaseRetriever):

218 | """Custom retriever for elasticsearch with bm25"""

219 | def __init__(self, top_k) -> None:

220 | """Init params."""

221 | super().__init__()

222 | self.es_client = Elasticsearch([{'host': 'localhost', 'port': 9200}])

223 | self.top_k = top_k

224 |

225 | def _retrieve(self, query: QueryType) -> List[NodeWithScore]:

226 | if isinstance(query, str):

227 | query = QueryBundle(query)

228 | else:

229 | query = query

230 |

231 | result = []

232 | # 查询数据(全文搜索)

233 | dsl = {

234 | 'query': {

235 | 'match': {

236 | 'content': query.query_str

237 | }

238 | },

239 | "size": self.top_k

240 | }

241 | search_result = self.es_client.search(index='docs', body=dsl)

242 | if search_result['hits']['hits']:

243 | for record in search_result['hits']['hits']:

244 | text = record['_source']['content']

245 | node_with_score = NodeWithScore(node=TextNode(text=text,

246 | id_=text_node_id_mapping[text]),

247 | score=record['_score'])

248 | result.append(node_with_score)

249 |

250 | return result

251 | ```

252 |

253 | 之后,对top_k结果进行指标评估,Python代码如下:

254 |

255 | ```python

256 | # -*- coding: utf-8 -*-

257 | # @place: Pudong, Shanghai

258 | # @file: evaluation_exp.py

259 | # @time: 2023/12/25 20:01

260 | import asyncio

261 | import time

262 |

263 | import pandas as pd

264 | from datetime import datetime

265 | from faiss import IndexFlatIP

266 | from llama_index.evaluation import RetrieverEvaluator

267 | from llama_index.finetuning.embeddings.common import EmbeddingQAFinetuneDataset

268 |

269 | from custom_retriever.bm25_retriever import CustomBM25Retriever

270 | from custom_retriever.vector_store_retriever import VectorSearchRetriever

271 | from custom_retriever.ensemble_retriever import EnsembleRetriever

272 | from custom_retriever.ensemble_rerank_retriever import EnsembleRerankRetriever

273 | from custom_retriever.query_rewrite_ensemble_retriever import QueryRewriteEnsembleRetriever

274 |

275 |

276 | # Display results from evaluate.

277 | def display_results(name_list, eval_results_list):

278 | pd.set_option('display.precision', 4)

279 | columns = {"retrievers": [], "hit_rate": [], "mrr": []}

280 | for name, eval_results in zip(name_list, eval_results_list):

281 | metric_dicts = []

282 | for eval_result in eval_results:

283 | metric_dict = eval_result.metric_vals_dict

284 | metric_dicts.append(metric_dict)

285 |

286 | full_df = pd.DataFrame(metric_dicts)

287 |

288 | hit_rate = full_df["hit_rate"].mean()

289 | mrr = full_df["mrr"].mean()

290 |

291 | columns["retrievers"].append(name)

292 | columns["hit_rate"].append(hit_rate)

293 | columns["mrr"].append(mrr)

294 |

295 | metric_df = pd.DataFrame(columns)

296 |

297 | return metric_df

298 |

299 |

300 | doc_qa_dataset = EmbeddingQAFinetuneDataset.from_json("../data/doc_qa_test.json")

301 | metrics = ["mrr", "hit_rate"]

302 | # bm25 retrieve

303 | evaluation_name_list = []

304 | evaluation_result_list = []

305 | cost_time_list = []

306 | for top_k in [1, 2, 3, 4, 5]:

307 | start_time = time.time()

308 | bm25_retriever = CustomBM25Retriever(top_k=top_k)

309 | bm25_retriever_evaluator = RetrieverEvaluator.from_metric_names(metrics, retriever=bm25_retriever)

310 | bm25_eval_results = asyncio.run(bm25_retriever_evaluator.aevaluate_dataset(doc_qa_dataset))

311 | evaluation_name_list.append(f"bm25_top_{top_k}_eval")

312 | evaluation_result_list.append(bm25_eval_results)

313 | cost_time_list.append((time.time() - start_time) * 1000)

314 |

315 | print("done for bm25 evaluation!")

316 | df = display_results(evaluation_name_list, evaluation_result_list)

317 | df['cost_time'] = cost_time_list

318 | print(df.head())

319 | df.to_csv(f"evaluation_bm25_{datetime.now().strftime('%Y-%m-%d %H:%M:%S')}.csv", encoding="utf-8", index=False)

320 | ```

321 |

322 | BM25算法的实验结果如下:

323 |

324 | | retrievers | hit_rate | mrr | cost_time |

325 | |-----------------|----------|--------|-----------|

326 | | bm25_top_1_eval | 0.7975 | 0.7975 | 461.277 |

327 | | bm25_top_2_eval | 0.8536 | 0.8255 | 510.3021 |

328 | | bm25_top_3_eval | 0.9003 | 0.8411 | 570.6708 |

329 | | bm25_top_4_eval | 0.9159 | 0.845 | 420.7261 |

330 | | bm25_top_5_eval | 0.9408 | 0.85 | 388.5961 |

331 |

332 | ### Embedding Search

333 |

334 | BM25算法的实现是简单的。Embedding Search的较为复杂些,首先需要对queries和corpus进行文本嵌入,这里的Embedding模型使用Openai的text-embedding-ada-002,向量维度为1536,并将结果存入numpy数据结构中,保存为npy文件,方便后续加载和重复使用。

335 |

336 | 为了避免使用过重的向量数据集,本实验采用内存向量数据集: **faiss**。使用faiss加载向量,index类型选用IndexFlatIP,并进行向量相似度搜索。

337 |

338 | Embedding Search也需要定制化开发Retriever及指标评估,这里不再赘述,具体实验可参考文章末尾的Github项目地址。

339 |

340 | Embedding Search的实验结果如下:

341 |

342 | | retrievers | hit_rate | mrr | cost_time |

343 | |----------------------|----------|--------|-----------|

344 | | embedding_top_1_eval | 0.6075 | 0.6075 | 67.6837 |

345 | | embedding_top_2_eval | 0.6978 | 0.6526 | 60.8449 |

346 | | embedding_top_3_eval | 0.7321 | 0.6641 | 59.9051 |

347 | | embedding_top_4_eval | 0.7788 | 0.6758 | 63.5488 |

348 | | embedding_top_5_eval | 0.7944 | 0.6789 | 67.7922 |

349 |

350 | > 注意: 这里的召回时间花费比BM25还要少,完全得益于我们已经存储好了文本向量,并使用faiss进行加载、查询。

351 |

352 | ### Ensemble Search

353 |

354 | Ensemble Search融合BM25算法和Embedding Search算法,针对两种算法召回的top_k个文档,使用RRF算法进行重新排序,再获取top_k个文档。RRF算法是经典且优秀的集成排序算法,这里不再展开介绍,后续专门写文章介绍。

355 |

356 | Ensemble Search的实验结果如下:

357 |

358 | | retrievers | hit_rate | mrr | cost_time |

359 | |---------------------|----------|--------|-----------|

360 | | ensemble_top_1_eval | 0.7009 | 0.7009 | 1072.7379 |

361 | | ensemble_top_2_eval | 0.8536 | 0.7741 | 1088.8782 |

362 | | ensemble_top_3_eval | 0.8941 | 0.7928 | 980.7949 |

363 | | ensemble_top_4_eval | 0.919 | 0.8017 | 935.1702 |

364 | | ensemble_top_5_eval | 0.9377 | 0.8079 | 868.299 |

365 |

366 | ### Ensemble + Rerank

367 |

368 | 如果还想在Ensemble Search的基础上再进行效果优化,可考虑加入Rerank算法。常见的Rerank模型有Cohere(API调用),BGE-Rerank(开源模型)等。本文使用Cohere Rerank API.

369 |

370 | Ensemble + Rerank的实验结果如下:

371 |

372 | | retrievers | hit_rate | mrr | cost_time |

373 | |----------------------------|----------|--------|--------------|

374 | | ensemble_rerank_top_1_eval | 0.8349 | 0.8349 | 2140632.4041 |

375 | | ensemble_rerank_top_2_eval | 0.9034 | 0.8785 | 2157657.2871 |

376 | | ensemble_rerank_top_3_eval | 0.9346 | 0.9008 | 2200800.936 |

377 | | ensemble_rerank_top_4_eval | 0.947 | 0.9078 | 2150398.7348 |

378 | | ensemble_rerank_top_5_eval | 0.9657 | 0.9099 | 2149122.9382 |

379 |

380 | ## 指标可视化及分析

381 |

382 | ### 指标可视化

383 |

384 | 上述的评估结果不够直观,我们使用Plotly模块绘制指标的条形图,结果如下:

385 |

386 |

387 |

388 |

389 |

390 | ### 指标分析

391 |

392 | 我们对上述统计图进行指标分析,可得到结论如下:

393 |

394 | - 对于每种Retrieve算法,随着k的增加,top_k的Hit Rate指标和MRR指标都有所增加,即检索效果变好,这是显而易见的结论;

395 | - 就总体检索效果而言,Ensemble + Rerank > Ensemble > 单独的Retrieve

396 | - 本项目中就单独的Retrieve算法而言,BM25的检索效果比Embedding Search好(可能与生成的问答来源于文档有关),但这不是普遍结论,两种算法更有合适的场景

397 | - 加入Rerank后,检索效果可获得一定的提升,以top_3评估结果来说,ensemble的Hit Rate为0.8941,加入Rerank后为0.9346,提升约4%

398 |

399 | ## 总结

400 |

401 | 本文详细介绍了RAG框架,并结合自有数据集对各种Retrieve算法进行评估。笔者通过亲身实验和编写Retriever代码,深入了解了RAG框架中的经典之作LlamaIndex,同时,本文也是难得的介绍RAG框架Retrieve阶段评估实验的文章。

402 |

403 | 本文的所有过程及指标结果已开源至Github,网址为:[https://github.com/percent4/embedding_rerank_retrieval](https://github.com/percent4/embedding_rerank_retrieval) .

404 |

405 | 后续,笔者将在此项目基础上,验证各种优化RAG框架Retrieve效果的手段,比如Query Rewrite, Query Transform, HyDE等,这将是一个获益无穷的项目啊!

406 |

407 | ## 参考文献

408 |

409 | 1. Retrieve Evaluation官网文章:https://blog.llamaindex.ai/boosting-rag-picking-the-best-embedding-reranker-models-42d079022e83

410 | 2. Retrieve Evaluation Colab上的代码:https://colab.research.google.com/drive/1TxDVA__uimVPOJiMEQgP5fwHiqgKqm4-?usp=sharing

411 | 3. LlamaIndex官网:https://docs.llamaindex.ai/en/stable/index.html

412 | 4. RetrieverEvaluator in LlamaIndex: https://docs.llamaindex.ai/en/stable/module_guides/evaluating/usage_pattern_retrieval.html

413 | 5. NLP(六十一)使用Baichuan-13B-Chat模型构建智能文档: https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247485425&idx=1&sn=bd85ddfce82d77ceec5a66cb96835400&chksm=fcb9be61cbce37773109f9703c2b6c4256d5037c8bf4497dfb9ad0f296ce0ee4065255954c1c&token=1977141018&lang=zh_CN#rd

414 | 6. NLP(六十九)智能文档助手升级: https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247485609&idx=1&sn=f8337b4822b1cdf95a586af6097ef288&chksm=fcb9b139cbce382f735e4c119ade8084067cde0482910c72767f36a29e7291385cbe6dfbd6a9&payreadticket=HBB91zkl4I6dXpw0Q4OcOF8ECZz0pS9kOGHJqycwrN7jFWHyUOCBe7sWFWytD7_9wo_NzcM#rd

415 | 7. NLP(八十一)智能文档问答助手项目改进: https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486103&idx=1&sn=caa204eda0760bab69b7e40abff8e696&chksm=fcb9b307cbce3a1108d305ec44281e3446241e90e9c17d62dd0b6eaa48cba5e20d31f0129584&token=1977141018&lang=zh_CN#rd

416 |

--------------------------------------------------------------------------------

/docs/RAG框架中的召回算法可视化分析及提升方法.md:

--------------------------------------------------------------------------------

1 | > 本文将会对笔者之前在RAG框架中的Retrieve算法的不同召回手段进行可视化分析,并介绍RAG框架中的优化方法。

2 |

3 | 在文章[NLP(八十二)RAG框架中的Retrieve算法评估](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486199&idx=1&sn=f24175b05bdf5bc6dd42efed4d5acae8&chksm=fcb9b367cbce3a711fabd1a56bb5b9d803aba2f42964b4e1f9a4dc6e2174f0952ddb9e1d4c55&token=823710334&lang=zh_CN#rd)中笔者介绍了RAG框架中不同的Retrieve算法(包括BM25, Embedding, Ensemble, Ensemble+Rerank)的评估实验,并给出了详细的数据集与评测过程、评估结果。

4 |

5 | 在文章[NLP(八十三)RAG框架中的Rerank算法评估](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486225&idx=1&sn=235eb787e2034f24554d8e997dbb4718&chksm=fcb9b281cbce3b9761342ebadbe001747ce2e74d84340f78b0e12c4d4c6aed7a7817f246c845&token=823710334&lang=zh_CN#rd)中笔者进一步介绍了Rerank算法在RAG框架中的作用,并对不同的Rerank算法进行了评估。

6 |

7 | **以上两篇文章是笔者对RAG框架的深入探索,文章获得了读者的一致好评。**

8 |

9 | 本文将会继续深入RAG框架的探索,内容如下:

10 |

11 | - Retrieve算法的可视化分析:使用Gradio模块搭建可视化页面用于展示不同召回算法的结果。

12 | - BM25, Embedding, Ensemble, Ensemble + Rerank召回分析:结合具体事例,给出不同召回手段的结果,比较它们的优劣。

13 | - RAG框架中的提升方法:主要介绍Query Rewirte, HyDE.

14 |

15 | ## Retrieve算法的可视化分析

16 |

17 | 为了对Retrieve算法的召回结果进行分析,笔者使用Gradio模块来开发前端页面以支持召回结果的可视化分析。

18 |

19 | Python代码如下:

20 |

21 | ```python

22 | # -*- coding: utf-8 -*-

23 | from random import shuffle

24 | import gradio as gr

25 | import pandas as pd

26 |

27 | from faiss import IndexFlatIP

28 | from llama_index.evaluation.retrieval.metrics import HitRate, MRR

29 |

30 | from custom_retriever.bm25_retriever import CustomBM25Retriever

31 | from custom_retriever.vector_store_retriever import VectorSearchRetriever

32 | from custom_retriever.ensemble_retriever import EnsembleRetriever

33 | from custom_retriever.ensemble_rerank_retriever import EnsembleRerankRetriever

34 | from preprocess.get_text_id_mapping import queries, query_relevant_docs

35 | from preprocess.query_rewrite import generate_queries, llm

36 |

37 | retrieve_methods = ["bm25", "embedding", "ensemble", "ensemble + bge-rerank-large", "query_rewrite + ensemble"]

38 |

39 |

40 | def get_metric(search_query, search_result):

41 | hit_rate = HitRate().compute(query=search_query,

42 | expected_ids=query_relevant_docs[search_query],

43 | retrieved_ids=[_.id_ for _ in search_result])

44 | mrr = MRR().compute(query=search_query,

45 | expected_ids=query_relevant_docs[search_query],

46 | retrieved_ids=[_.id_ for _ in search_result])

47 | return [hit_rate.score, mrr.score]

48 |

49 |

50 | def get_retrieve_result(retriever_list, retrieve_top_k, retrieve_query):

51 | columns = {"metric_&_top_k": ["Hit Rate", "MRR"] + [f"top_{k + 1}" for k in range(retrieve_top_k)]}

52 | if "bm25" in retriever_list:

53 | bm25_retriever = CustomBM25Retriever(top_k=retrieve_top_k)

54 | search_result = bm25_retriever.retrieve(retrieve_query)

55 | columns["bm25"] = []

56 | columns["bm25"].extend(get_metric(retrieve_query, search_result))

57 | for i, node in enumerate(search_result, start=1):

58 | columns["bm25"].append(node.text)

59 | if "embedding" in retriever_list:

60 | faiss_index = IndexFlatIP(1536)

61 | vector_search_retriever = VectorSearchRetriever(top_k=retrieve_top_k, faiss_index=faiss_index)

62 | search_result = vector_search_retriever.retrieve(str_or_query_bundle=retrieve_query)

63 | columns["embedding"] = []

64 | columns["embedding"].extend(get_metric(retrieve_query, search_result))

65 | for i in range(retrieve_top_k):

66 | columns["embedding"].append(search_result[i].text)

67 | faiss_index.reset()

68 | if "ensemble" in retriever_list:

69 | faiss_index = IndexFlatIP(1536)

70 | ensemble_retriever = EnsembleRetriever(top_k=retrieve_top_k, faiss_index=faiss_index, weights=[0.5, 0.5])

71 | search_result = ensemble_retriever.retrieve(str_or_query_bundle=retrieve_query)

72 | columns["ensemble"] = []

73 | columns["ensemble"].extend(get_metric(retrieve_query, search_result))

74 | for i in range(retrieve_top_k):

75 | columns["ensemble"].append(search_result[i].text)

76 | faiss_index.reset()

77 | if "ensemble + bge-rerank-large" in retriever_list:

78 | faiss_index = IndexFlatIP(1536)

79 | ensemble_retriever = EnsembleRerankRetriever(top_k=retrieve_top_k, faiss_index=faiss_index)

80 | search_result = ensemble_retriever.retrieve(str_or_query_bundle=retrieve_query)

81 | columns["ensemble + bge-rerank-large"] = []

82 | columns["ensemble + bge-rerank-large"].extend(get_metric(retrieve_query, search_result))

83 | for i in range(retrieve_top_k):

84 | columns["ensemble + bge-rerank-large"].append(search_result[i].text)

85 | faiss_index.reset()

86 | if "query_rewrite + ensemble" in retriever_list:

87 | queries = generate_queries(llm, retrieve_query, num_queries=1)

88 | print(f"original query: {retrieve_query}\n"

89 | f"rewrite query: {queries}")

90 | faiss_index = IndexFlatIP(1536)

91 | ensemble_retriever = EnsembleRetriever(top_k=retrieve_top_k, faiss_index=faiss_index, weights=[0.5, 0.5])

92 | search_result = ensemble_retriever.retrieve(str_or_query_bundle=queries[0])

93 | columns["query_rewrite + ensemble"] = []

94 | columns["query_rewrite + ensemble"].extend(get_metric(retrieve_query, search_result))

95 | for i in range(retrieve_top_k):

96 | columns["query_rewrite + ensemble"].append(search_result[i].text)

97 | faiss_index.reset()

98 | retrieve_df = pd.DataFrame(columns)

99 | return retrieve_df

100 |

101 |

102 | with gr.Blocks() as demo:

103 | retrievers = gr.CheckboxGroup(choices=retrieve_methods,

104 | type="value",

105 | label="Retrieve Methods")

106 | top_k = gr.Dropdown(list(range(1, 6)), label="top_k", value=3)

107 | shuffle(queries)

108 | query = gr.Dropdown(queries, label="query", value=queries[0])

109 | # 设置输出组件

110 | result_table = gr.DataFrame(label='Result', wrap=True)

111 | theme = gr.themes.Base()

112 | # 设置按钮

113 | submit = gr.Button("Submit")

114 | submit.click(fn=get_retrieve_result, inputs=[retrievers, top_k, query], outputs=result_table)

115 |

116 |

117 | demo.launch()

118 | ```

119 |

120 | 该页面可以选择召回算法,top_k参数,以及query,返回召回算法的指标及top_k召回文本,如下图:

121 |

122 |

123 |

124 | 有了这个页面,我们可以很方便地对召回结果进行分析。为了有更全面的分析,我们再使用Python脚本,对测试query不同召回算法(BM25, Embedding, Ensemble)的top_3召回结果及指标进行记录。

125 |

126 | 当然,我们还筛选出badcase,用来帮助我们更好地对召回算法进行分析。所谓badcase,指的是query的top_3召回指标在BM25, Embedding, Ensemble算法上都为0。badcase如下:

127 |

128 | |query|

129 | |---|

130 | |日美半导体协议对日本半导体产业有何影响?|

131 | |请列举三个美国的科技公司。|

132 | |日本半导体发展史的三个时期是什么?|

133 | |日美半导体协议要求美国芯片在日本市场份额是多少?|

134 | |日本在全球半导体市场的份额是多少?|

135 | |日本半导体设备在国内市场的占比是多少?|

136 | |日本企业在全球半导体产业的地位如何?|

137 | |美日半导体协议的主要内容是什么?|

138 | |尼康和佳能的光刻机在哪个市场占优势?|

139 | |美日半导体协议是由哪两部门签署的?|

140 | |日本在全球半导体材料行业的地位如何?|

141 | |日本半导体业的衰落原因是什么?|

142 | |日本半导体业的兴衰原因是什么?|

143 | |日本半导体企业如何保持竞争力?|

144 | |日本半导体产业在哪些方面仍有影响力?|

145 | |半导体制造设备市场美、日、荷各占多少份额?|

146 | |80年代日本半导体企业的问题是什么?|

147 | |尼康在哪种技术上失去了优势?|

148 |

149 | ## 不同召回算法实例分析

150 |

151 | 在文章[NLP(八十二)RAG框架中的Retrieve算法评估](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486199&idx=1&sn=f24175b05bdf5bc6dd42efed4d5acae8&chksm=fcb9b367cbce3a711fabd1a56bb5b9d803aba2f42964b4e1f9a4dc6e2174f0952ddb9e1d4c55&token=823710334&lang=zh_CN#rd)中,在评估实验中,对于单个的Retrieve算法,BM25表现要优于Embedding。但事实上,两者各有优劣。

152 |

153 | | 检索类型 | 优点 | 缺点 |

154 | |----------|------|------|

155 | | 向量检索 (Embedding) | 1. 语义理解更强。

2. 能有效处理模糊或间接的查询。

3. 对自然语言的多样性适应性强。

4. 能识别不同词汇的相同意义。 | 1. 计算和存储成本高。

2. 索引时间较长。

3. 高度依赖训练数据的质量和数量。

4. 结果解释性较差。 |

156 | | 关键词检索 (BM25) | 1. 检索速度快。

2. 实现简单,资源需求低。

3. 结果易于理解,可解释性强。

4. 对精确查询表现良好。 | 1. 对复杂语义理解有限。

2. 对查询变化敏感,灵活性差。

3. 难以处理同义词和多义词。

4. 需要用户准确使用关键词。 |

157 |

158 |

159 | `向量检索`(Embedding)的优势:

160 |

161 | - 复杂语义的文本查找(基于文本相似度)

162 | - 相近语义理解(如老鼠/捕鼠器/奶酪,谷歌/必应/搜索引擎)

163 | - 多语言理解(跨语言理解,如输入中文匹配英文)

164 | - 多模态理解(支持文本、图像、音视频等的相似匹配)

165 | - 容错性(处理拼写错误、模糊的描述)

166 |

167 | 虽然向量检索在以上情景中具有明显优势,但有某些情况效果不佳。比如:

168 |

169 | - 搜索一个人或物体的名字(例如,伊隆·马斯克,iPhone 15)

170 | - 搜索缩写词或短语(例如,RAG,RLHF)

171 | - 搜索 ID(例如,gpt-3.5-turbo,titan-xlarge-v1.01)

172 |

173 | 而上面这些的缺点恰恰都是传统关键词搜索的优势所在,传统`关键词搜索`(BM25)擅长:

174 |

175 | - 精确匹配(如产品名称、姓名、产品编号)

176 |

177 | - 少量字符的匹配(通过少量字符进行向量检索时效果非常不好,但很多用户恰恰习惯只输入几个关键词)

178 | - 倾向低频词汇的匹配(低频词汇往往承载了语言中的重要意义,比如“你想跟我去喝咖啡吗?”这句话中的分词,“喝”“咖啡”会比“你”“吗”在句子中承载更重要的含义)

179 |

180 | 基于`向量检索`和`关键词搜索`更有优劣,因此才需要`混合搜索`(Ensemble)。而在`混合搜索`的基础上,需要对多路召回结果进行`精排`(即`Rerank`),重新调整召回文本的顺序。

181 |

182 | 为了更好地理解上述召回算法的优缺点,下面结合具体的实例来进行阐述。

183 |

184 | - `query`: "NEDO"的全称是什么?

185 |

186 |

187 |

188 | 在这个例子中,Embedding召回结果优于BM25,BM25召回结果虽然在top_3结果中存在,但排名第三,排在首位的是不相关的文本,而Embedding由于文本相似度的优势,将正确结果放在了首位。

189 |

190 | - `query`: 日本半导体产品的主要应用领域是什么?

191 |

192 |

193 |

194 | 在这个例子中,BM25召回结果优于Embedding。

195 |

196 | - `query`: 《美日半导体协议》对日本半导体市场有何影响?

197 |

198 |

199 |

200 | 在这个例子中,正确文本在BM25算法召回结果中排名第二,在Embedding算法中排第三,混合搜索排名第一,这里体现了混合搜索的优越性。

201 |

202 | - `query`: 80年代日本电子产业的辉煌表现在哪些方面?

203 |

204 |

205 |

206 | 在这个例子中,不管是BM25, Embedding,还是Ensemble,都没能将正确文本排在第一位,而经过Rerank以后,正确文本排在第一位,这里体现了Rerank算法的优势。

207 |

208 | ## RAG中的提升方法

209 |

210 | 经过上述Retrieve算法的对比,我们对不同的Retrieve算法有了深入的了解。然而,Retrieve算法并不能帮助我们解决所有问题,比如上述的badcase,就是用各种Retrieve算法都无法找回的。

211 |

212 | 那么,还有其它优化手段吗?在RAG框架中,还存在一系列的优化手段,这在`Langchain`和`Llmma-Index`中都给出了各种优化手段。本文将尝试两种优化手段:Query Rewrite和HyDE.

213 |

214 | ### Query Rewrite

215 |

216 | 业界对于Query Rewrite,有着相当完善且复杂的流程,因为它对于后续的召回结果有直接影响。本文借助大模型对query进行直接改写,看看是否有召回效果提升。

217 |

218 | 比如:

219 |

220 | - 原始query: 半导体制造设备市场美、日、荷各占多少份额?

221 | - 改写后query:美国、日本和荷兰在半导体制造设备市场的份额分别是多少?

222 |

223 | 改写后的query在BM25和Embedding的top 3召回结果中都能找到。该query对应的正确文本为:

224 |

225 | > 全球半导体设备制造领域,美国、日本和荷兰控制着全球370亿美元半导体制造设备市场的90%以上。其中,美国的半导体制造设备(SME)产业占全球产量的近50%,日本约占30%,荷兰约占17%%。更具体地,以光刻机为例,EUV光刻工序其实有众多日本厂商的参与,如东京电子生产的EUV涂覆显影设备,占据100%的市场份额,Lasertec Corp.也是全球唯一的测试机制造商。另外还有EUV光刻胶,据南大光电在3月发布的相关报告中披露,全球仅有日本厂商研发出了EUV光刻胶。

226 |

227 | 从中我们可以看到,在改写后的query中,美国、日本、荷兰这三个词发挥了重要作用,因此,**query改写对于含有缩写的query有一定的召回效果改善**。

228 |

229 | ### HyDE

230 |

231 | HyDE(全称Hypothetical Document Embeddings)是RAG中的一种技术,它基于一个假设:相较于直接查询,通过大语言模型 (LLM) 生成的答案在嵌入空间中可能更为接近。HyDE 首先响应查询生成一个假设性文档(答案),然后将其嵌入,从而提高搜索的效果。

232 |

233 | 比如:

234 |

235 | - 原始query: 美日半导体协议是由哪两部门签署的?

236 | - 加上回答后的query: 美日半导体协议是由哪两部门签署的?美日半导体协议是由美国商务部和日本经济产业省签署的。

237 |

238 | 加上回答后的query使用BM25算法可以找回正确文本,且排名第一位,而Embedding算法仍无法召回。

239 |

240 | 正确文本为:

241 |

242 | > 1985年6月,美国半导体产业贸易保护的调子开始升高。美国半导体工业协会向国会递交一份正式的“301条款”文本,要求美国政府制止日本公司的倾销行为。民意调查显示,68%的美国人认为日本是美国最大的威胁。在舆论的引导和半导体工业协会的推动下,美国政府将信息产业定为可以动用国家安全借口进行保护的新兴战略产业,半导体产业成为美日贸易战的焦点。1985年10月,美国商务部出面指控日本公司倾销256K和1M内存。一年后,日本通产省被迫与美国商务部签署第一次《美日半导体协议》。

243 |

244 | 从中可以看出,大模型的回答是正确的,美国商务部这个关键词发挥了重要作用,因此,HyDE对于特定的query有召回效果提升。

245 |

246 | ## 总结

247 |

248 | 本文结合具体的例子,对于不同的Retrieve算法的效果优劣有了比较清晰的认识,事实上,这也是笔者一直在NLP领域努力的一个方向:简单而深刻。

249 |

250 | 同时,还介绍了两种RAG框架中的优化方法,或许可以在工作中有应用价值。后续笔者将继续关注RAG框架,欢迎大家关注。

251 |

252 | 本文代码及数据已开源至Github: [https://github.com/percent4/embedding_rerank_retrieval](https://github.com/percent4/embedding_rerank_retrieval)。

253 |

254 | ## 参考文献

255 |

256 | 1. [NLP(八十二)RAG框架中的Retrieve算法评估](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486199&idx=1&sn=f24175b05bdf5bc6dd42efed4d5acae8&chksm=fcb9b367cbce3a711fabd1a56bb5b9d803aba2f42964b4e1f9a4dc6e2174f0952ddb9e1d4c55&token=823710334&lang=zh_CN#rd)

257 | 2. [NLP(八十三)RAG框架中的Rerank算法评估](https://mp.weixin.qq.com/s?__biz=MzU2NTYyMDk5MQ==&mid=2247486225&idx=1&sn=235eb787e2034f24554d8e997dbb4718&chksm=fcb9b281cbce3b9761342ebadbe001747ce2e74d84340f78b0e12c4d4c6aed7a7817f246c845&token=823710334&lang=zh_CN#rd)

258 | 3. 引入混合检索(Hybrid Search)和重排序(Rerank)改进 RAG 系统召回效果: [https://mp.weixin.qq.com/s/_Rmafm7tI3JWMNqoqFX_FQ](https://mp.weixin.qq.com/s/_Rmafm7tI3JWMNqoqFX_FQ)

--------------------------------------------------------------------------------

/embedding_finetune/embedding_fine_tuning.ipynb: