├── .gitignore
├── LICENSE
├── README.md
├── blueprints
│   ├── multi_provider
│   │   ├── README.md
│   │   ├── anthropic
│   │   │   ├── __init__.py
│   │   │   ├── agent.py
│   │   │   └── utils.py
│   │   ├── bedrock
│   │   │   ├── __init__.py
│   │   │   ├── agent.py
│   │   │   └── utils.py
│   │   ├── core
│   │   │   ├── __init__.py
│   │   │   └── base.py
│   │   ├── gemini
│   │   │   ├── __init__.py
│   │   │   ├── agent.py
│   │   │   └── utils.py
│   │   ├── openai
│   │   │   ├── __init__.py
│   │   │   ├── agent.py
│   │   │   └── utils.py
│   │   ├── requirements.txt
│   │   └── tests
│   │       ├── README.md
│   │       ├── __init__.py
│   │       ├── test_anthropic.py
│   │       ├── test_bedrock.py
│   │       ├── test_gemini.py
│   │       └── test_openai.py
│   └── single_provider
│       ├── anthropic
│       │   ├── README.md
│       │   ├── agent.py
│       │   └── test.py
│       ├── bedrock
│       │   ├── README.md
│       │   ├── __init__.py
│       │   ├── agent.py
│       │   ├── test.py
│       │   └── utils.py
│       ├── gemini
│       │   ├── README.md
│       │   ├── agent.py
│       │   ├── requirements.txt
│       │   └── test.py
│       └── openai
│           ├── README.md
│           ├── agent.py
│           └── test.py
├── examples
│   ├── agentic-rag
│   │   ├── audio-retriever
│   │   │   ├── agent.py
│   │   │   ├── requirements.txt
│   │   │   └── table.py
│   │   ├── customer-reviews
│   │   │   ├── agent.py
│   │   │   ├── requirements.txt
│   │   │   └── table.py
│   │   ├── financial-pdf-reports
│   │   │   ├── agent.py
│   │   │   ├── requirements.txt
│   │   │   └── table.py
│   │   ├── image-analyzer
│   │   │   ├── agent.py
│   │   │   ├── requirements.txt
│   │   │   └── table.py
│   │   ├── memory-retriever
│   │   │   ├── agent.py
│   │   │   └── requirements.txt
│   │   ├── slack-agent
│   │   │   ├── .env-template
│   │   │   ├── README.md
│   │   │   ├── agent.py
│   │   │   ├── requirements.txt
│   │   │   └── tools.py
│   │   └── video-summarizer
│   │       ├── agent.py
│   │       ├── requirements.txt
│   │       └── table.py
│   ├── getting-started
│   │   └── pixelagent_basics_tutorial.py
│   ├── memory
│   │   ├── README.md
│   │   ├── anthropic
│   │   │   ├── basic.py
│   │   │   ├── requirements.txt
│   │   │   └── semantic-memory-search.py
│   │   └── openai
│   │       ├── basic.py
│   │       ├── requirements.txt
│   │       └── semantic-memory.py
│   ├── planning
│   │   ├── README.md
│   │   ├── anthropic
│   │   │   ├── react.py
│   │   │   └── requirements.txt
│   │   └── openai
│   │       ├── react.py
│   │       └── requirements.txt
│   ├── reflection
│   │   ├── anthropic
│   │   │   ├── reflection.py
│   │   │   └── requirements.txt
│   │   ├── openai
│   │   │   ├── reflection.py
│   │   │   └── requirements.txt
│   │   └── reflection.md
│   ├── tool-calling
│   │   ├── anthropic
│   │   │   ├── finance.py
│   │   │   └── requirements.txt
│   │   ├── bedrock
│   │   │   ├── finance.py
│   │   │   └── requirements.txt
│   │   └── openai
│   │       ├── finance.py
│   │       └── requirements.txt
│   └── vision
│       ├── image-analyzer.py
│       └── requirements.txt
├── pixelagent
│   ├── __init__.py
│   ├── anthropic
│   │   ├── __init__.py
│   │   ├── agent.py
│   │   └── utils.py
│   ├── bedrock
│   │   ├── README.md
│   │   ├── __init__.py
│   │   ├── agent.py
│   │   └── utils.py
│   ├── core
│   │   ├── __init__.py
│   │   └── base.py
│   ├── gemini
│   │   ├── __init__.py
│   │   ├── agent.py
│   │   └── utils.py
│   └── openai
│       ├── __init__.py
│       ├── agent.py
│       └── utils.py
├── poetry.lock
├── poetry.toml
├── pyproject.toml
├── scripts
│   └── release.sh
└── tests
    ├── README.md
    ├── conftest.py
    ├── pytest.ini
    ├── test.py
    ├── test_anthropic.py
    ├── test_bedrock.py
    ├── test_gemini.py
    └── test_openai.py

/.gitignore:

--------------------------------------------------------------------------------

1 | .venv

2 | .vscode

3 | dist

4 | **/__pycache__

5 | *.egg-info

6 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

30 |

31 | ---

32 | # Pixelagent: An Agent Engineering Blueprint

33 |

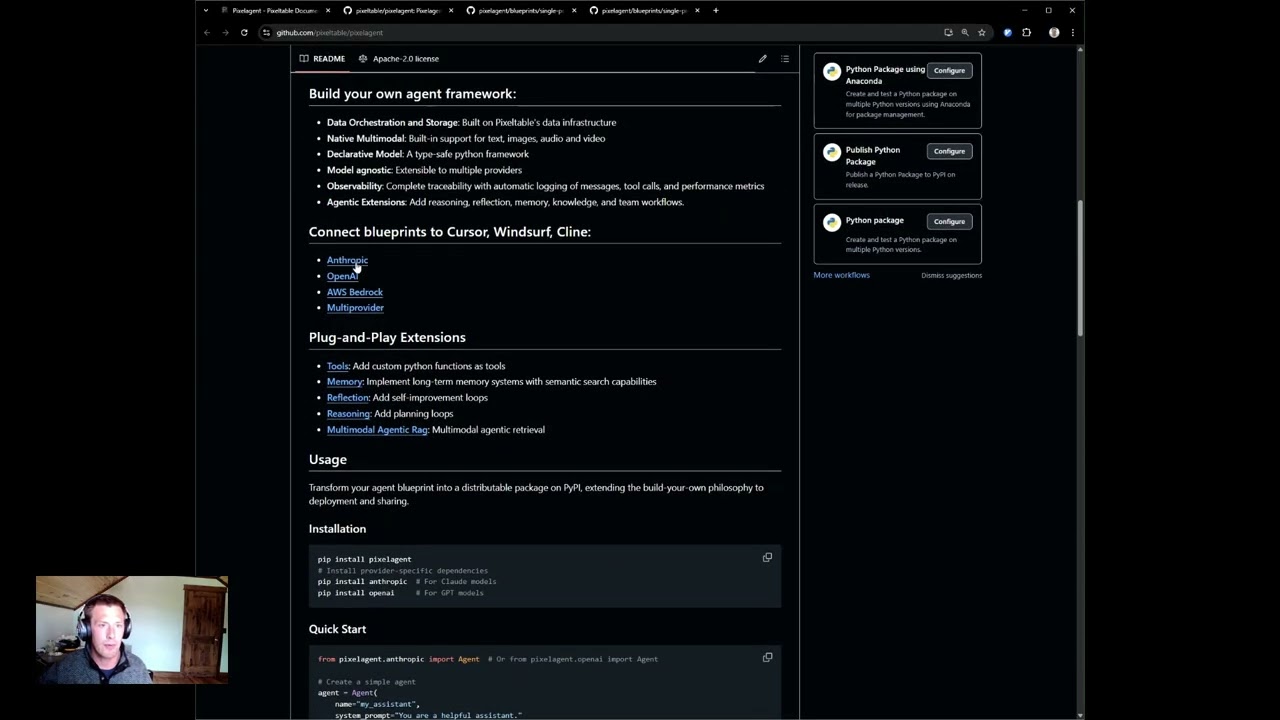

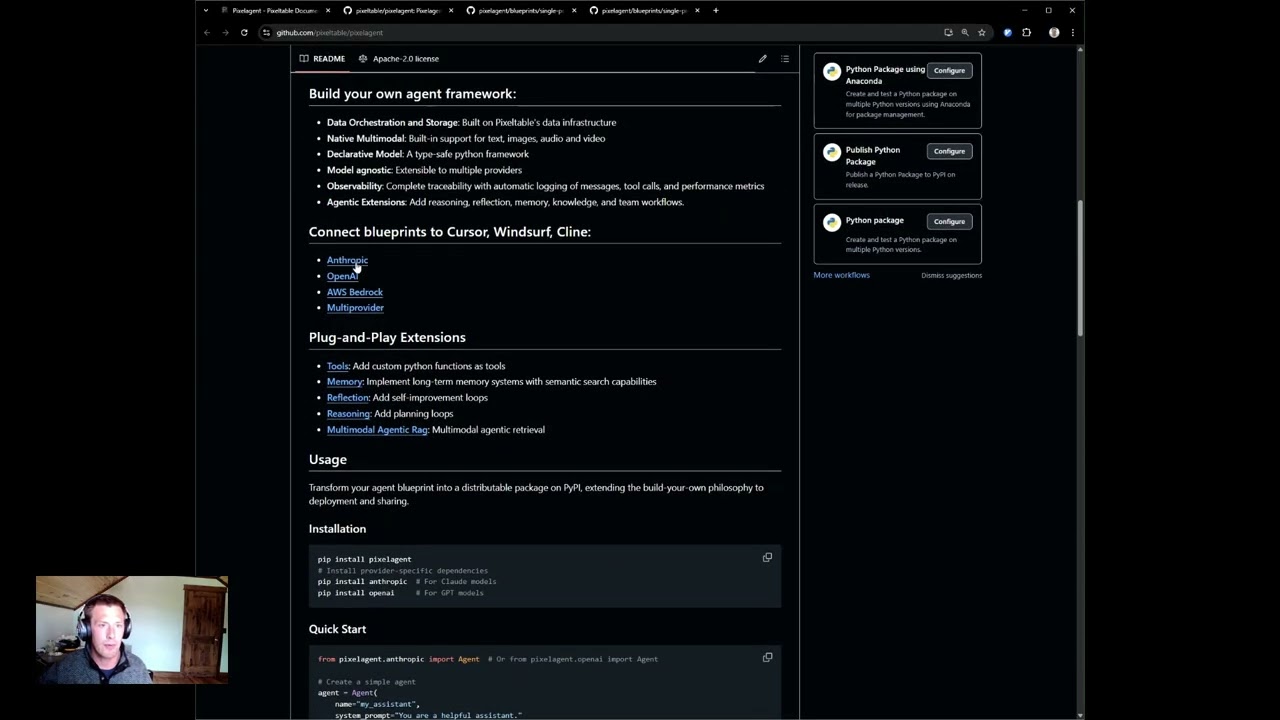

34 | We see agents as the intersection of an LLM, storage, and orchestration. [Pixeltable](https://github.com/pixeltable/pixeltable) unifies these into a single declarative framework, so engineers can build custom agentic applications with build-your-own functionality for memory, tool-calling, and more.

35 |

36 |

37 | ## Build your own agent framework:

38 |

39 | - **Data Orchestration and Storage**: Built on Pixeltable's data infrastructure

40 | - **Native Multimodal**: Built-in support for text, images, audio and video

41 | - **Declarative Model**: A type-safe Python framework

42 | - **Model agnostic**: Extensible to multiple providers

43 | - **Observability**: Complete traceability with automatic logging of messages, tool calls, and performance metrics

44 | - **Agentic Extensions**: Add reasoning, reflection, memory, knowledge, and team workflows.

45 |

46 | ## Connect blueprints to Cursor, Windsurf, Cline:

47 |

48 | - **[Anthropic](https://github.com/pixeltable/pixelagent/blob/main/blueprints/single_provider/anthropic/README.md)**

49 | - **[OpenAI](https://github.com/pixeltable/pixelagent/blob/main/blueprints/single_provider/openai/README.md)**

50 | - **[AWS Bedrock](https://github.com/pixeltable/pixelagent/blob/main/blueprints/single_provider/bedrock/README.md)**

51 | - **[Multiprovider](https://github.com/pixeltable/pixelagent/tree/main/blueprints/multi_provider/README.md)**

52 |

53 | ## Plug-and-Play Extensions

54 |

55 | - **[Tools](examples/tool-calling)**: Add custom Python functions as tools

56 | - **[Memory](examples/memory)**: Implement long-term memory systems with semantic search capabilities

57 | - **[Reflection](examples/reflection)**: Add self-improvement loops

58 | - **[Reasoning](examples/planning)**: Add planning loops

59 | - **[Multimodal Agentic RAG](examples/agentic-rag)**: Multimodal agentic retrieval

60 |

61 | ## Usage

62 |

63 | Transform your agent blueprint into a distributable package on PyPI, extending the build-your-own philosophy to deployment and sharing.

64 |

65 | ### Installation

66 |

67 | ```bash

68 | pip install pixelagent

69 | # Install provider-specific dependencies

70 | pip install anthropic # For Claude models

71 | pip install openai # For GPT models

72 | ```

73 |

74 | ### Quick Start

75 |

76 | ```python

77 | from pixelagent.anthropic import Agent # Or from pixelagent.openai import Agent

78 |

79 | # Create a simple agent

80 | agent = Agent(

81 | name="my_assistant",

82 | system_prompt="You are a helpful assistant."

83 | )

84 |

85 | # Chat with your agent

86 | response = agent.chat("Hello, who are you?")

87 | print(response)

88 | ```

89 |

90 | ### Adding Tools

91 |

92 | ```python

93 | import pixeltable as pxt

94 | from pixelagent.anthropic import Agent

95 | import yfinance as yf

96 |

97 | # Define a tool as a UDF

98 | @pxt.udf

99 | def stock_price(ticker: str) -> dict:

100 | """Get stock information for a ticker symbol"""

101 | stock = yf.Ticker(ticker)

102 | return stock.info

103 |

104 | # Create agent with tool

105 | agent = Agent(

106 | name="financial_assistant",

107 | system_prompt="You are a financial analyst assistant.",

108 | tools=pxt.tools(stock_price)

109 | )

110 |

111 | # Use tool calling

112 | result = agent.tool_call("What's the current price of NVDA?")

113 | print(result)

114 | ```

115 |

116 | ### State management

117 |

118 | ```python

119 | import pixeltable as pxt

120 | from pixelagent.anthropic import Agent

121 | # Agent memory is automatically persisted in tables

122 | memory = pxt.get_table("my_assistant.memory")

123 | conversations = memory.collect()

124 |

125 | # Access tool call history

126 | tools_log = pxt.get_table("financial_assistant.tools")

127 | tool_history = tools_log.collect()

128 |

129 | # Customizable memory: limit context to the latest messages

130 | conversational_agent = Agent(

131 | name="conversation_agent",

132 | system_prompt="Focus on remembering the conversation",

133 | n_latest_messages=14

134 | )

135 | ```

136 |
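The `n_latest_messages` setting bounds how much history is fed back to the model. The sketch below imitates that windowing without Pixeltable, following the shape of the blueprint's `get_recent_memory` query (keep earlier messages, order newest first, apply an optional limit). It assumes messages are plain dicts with a `timestamp` key; the `recent_memory` helper name is hypothetical and not part of the package.

```python
from datetime import datetime, timedelta
from typing import Optional


def recent_memory(
    memory: list[dict],
    current_timestamp: datetime,
    n_latest_messages: Optional[int] = 10,
) -> list[dict]:
    """Return earlier messages, newest first, optionally capped at n_latest_messages."""
    # Keep only messages recorded before the current turn
    earlier = [m for m in memory if m["timestamp"] < current_timestamp]
    # Newest first, mirroring order_by(timestamp, asc=False)
    earlier.sort(key=lambda m: m["timestamp"], reverse=True)
    # None means unlimited history
    if n_latest_messages is not None:
        earlier = earlier[:n_latest_messages]
    return [{"role": m["role"], "content": m["content"]} for m in earlier]


start = datetime(2025, 1, 1)
memory = [
    {
        "role": "user" if i % 2 == 0 else "assistant",
        "content": f"message {i}",
        "timestamp": start + timedelta(minutes=i),
    }
    for i in range(6)
]
now = start + timedelta(minutes=10)

window = recent_memory(memory, now, n_latest_messages=2)
everything = recent_memory(memory, now, n_latest_messages=None)
print([m["content"] for m in window])
print(len(everything))
```

With a limit of 2, only the two most recent messages are returned; passing `None` returns the full history.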

137 | ### Custom Agentic Strategies

138 | ```python

139 |

140 | # ReAct pattern for step-by-step reasoning and planning

141 | import re

142 | from datetime import datetime

143 | from pixelagent.openai import Agent

144 | import pixeltable as pxt

145 |

146 | # Define a tool

147 | @pxt.udf

148 | def stock_info(ticker: str) -> dict:

149 | """Get stock information for analysis"""

150 | import yfinance as yf

151 | stock = yf.Ticker(ticker)

152 | return stock.info

153 |

154 | # ReAct system prompt with structured reasoning pattern

155 | REACT_PROMPT = """

156 | Today is {date}

157 |

158 | IMPORTANT: You have {max_steps} maximum steps. You are on step {step}.

159 |

160 | Follow this EXACT step-by-step reasoning and action pattern:

161 |

162 | 1. THOUGHT: Think about what information you need to answer the question.

163 | 2. ACTION: Either use a tool OR write "FINAL" if you're ready to give your final answer.

164 |

165 | Available tools:

166 | {tools}

167 |

168 | Always structure your response with these exact headings:

169 |

170 | THOUGHT: [your reasoning]

171 | ACTION: [tool_name] OR simply write "FINAL"

172 | """

173 |

174 | # Helper function to extract sections from responses

175 | def extract_section(text, section_name):

176 | pattern = rf'{section_name}:?\s*(.*?)(?=\n\s*(?:THOUGHT|ACTION):|$)'

177 | match = re.search(pattern, text, re.DOTALL | re.IGNORECASE)

178 | return match.group(1).strip() if match else ""

179 |

180 | # Execute ReAct planning loop

181 | def run_react_loop(question, max_steps=5):

182 | step = 1

183 | while step <= max_steps:

184 | # Dynamic system prompt with current step

185 | react_system_prompt = REACT_PROMPT.format(

186 | date=datetime.now().strftime("%Y-%m-%d"),

187 | tools=["stock_info"],

188 | step=step,

189 | max_steps=max_steps,

190 | )

191 |

192 | # Agent with updated system prompt

193 | agent = Agent(

194 | name="financial_planner",

195 | system_prompt=react_system_prompt,

196 | reset=False, # Maintain memory between steps

197 | )

198 |

199 | # Get agent's response for current step

200 | response = agent.chat(question)

201 |

202 | # Extract action to determine next step

203 | action = extract_section(response, "ACTION")

204 |

205 | # Check if agent is ready for final answer

206 | if "FINAL" in action.upper():

207 | break

208 |

209 | # Call tool if needed

210 | if "stock_info" in action.lower():

211 | tool_agent = Agent(

212 | name="financial_planner",

213 | tools=pxt.tools(stock_info)

214 | )

215 | tool_agent.tool_call(question)

216 |

217 | step += 1

218 |

219 | # Generate final recommendation

220 | return Agent(name="financial_planner").chat(question)

221 |

222 | # Run the planning loop

223 | recommendation = run_react_loop("Create an investment recommendation for AAPL")

224 | ```

225 |
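One detail of the ReAct example worth checking is how `extract_section` behaves when the model's reasoning happens to contain the word "action". The sketch below copies the parser from the example and compares it with a stricter variant. `extract_section_strict` is a hypothetical helper, not part of the package: it assumes each heading starts its own line and is followed by a colon.

```python
import re


def extract_section(text: str, section_name: str) -> str:
    """Parser as written in the ReAct example."""
    pattern = rf'{section_name}:?\s*(.*?)(?=\n\s*(?:THOUGHT|ACTION):|$)'
    match = re.search(pattern, text, re.DOTALL | re.IGNORECASE)
    return match.group(1).strip() if match else ""


def extract_section_strict(text: str, section_name: str) -> str:
    """Hypothetical variant: the heading must start a line and end with a colon."""
    pattern = (
        rf'^[ \t]*{re.escape(section_name)}:\s*(.*?)'
        rf'(?=\n\s*(?:THOUGHT|ACTION):|\Z)'
    )
    match = re.search(pattern, text, re.DOTALL | re.IGNORECASE | re.MULTILINE)
    return match.group(1).strip() if match else ""


reply = "THOUGHT: The best action is to look up AAPL.\nACTION: stock_info"

# The original parser matches the first case-insensitive "action" it sees,
# which here is inside the THOUGHT text rather than the ACTION heading.
loose = extract_section(reply, "ACTION")
strict = extract_section_strict(reply, "ACTION")
print(repr(loose))
print(repr(strict))
```

Anchoring the heading to the start of a line is one way to keep the loop from misreading the model's reasoning as its chosen action; whether that is needed depends on how closely the model follows the prompt's format.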

226 | Check out our [tutorials](examples/) for more examples including reflection loops, planning patterns, and multi-provider implementations.

227 |

228 | ## Tutorials and Examples

229 |

230 | - **Basics**: Check out [Getting Started](examples/getting-started/pixelagent_basics_tutorial.py) for a step-by-step introduction to core concepts

231 | - **Advanced Patterns**: Explore [Reflection](examples/reflection/anthropic/reflection.py) and [Planning](examples/planning/anthropic/react.py) for more complex agent architectures

232 | - **Specialized Directories**: Browse our example directories for deeper implementations of specific techniques

233 |

234 |

235 | Ready to start building? Dive into the blueprints, tweak them to your needs, and let Pixeltable handle the AI data infrastructure while you focus on innovation!

236 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/anthropic/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Anthropic agent module for multi-provider blueprints.

3 |

4 | This module provides an Agent class for interacting with Anthropic's Claude models.

5 | """

6 |

7 | from .agent import Agent

8 | from .utils import create_messages

9 |

10 | __all__ = ["Agent", "create_messages"]

11 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/anthropic/agent.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import pixeltable as pxt

4 | import pixeltable.functions as pxtf

5 |

6 | from ..core.base import BaseAgent

7 |

8 | from .utils import create_messages

9 |

10 | try:

11 | from pixeltable.functions.anthropic import invoke_tools, messages

12 | except ImportError:

13 | raise ImportError("anthropic not found; run `pip install anthropic`")

14 |

15 |

16 | class Agent(BaseAgent):

17 | """

18 | Anthropic-specific implementation of the BaseAgent.

19 |

20 | This agent uses Anthropic's Claude API for generating responses and handling tools.

21 | It inherits common functionality from BaseAgent including:

22 | - Table setup and management

23 | - Memory persistence

24 | - Base chat and tool call implementations

25 |

26 | The agent supports both limited and unlimited conversation history through

27 | the n_latest_messages parameter.

28 | """

29 |

30 | def __init__(

31 | self,

32 | name: str,

33 | system_prompt: str,

34 | model: str = "claude-3-5-sonnet-latest",

35 | n_latest_messages: Optional[int] = 10,

36 | tools: Optional[pxt.tools] = None,

37 | reset: bool = False,

38 | chat_kwargs: Optional[dict] = None,

39 | tool_kwargs: Optional[dict] = None,

40 | ):

41 | # Initialize the base agent with all common parameters

42 | super().__init__(

43 | name=name,

44 | system_prompt=system_prompt,

45 | model=model,

46 | n_latest_messages=n_latest_messages, # None for unlimited history

47 | tools=tools,

48 | reset=reset,

49 | chat_kwargs=chat_kwargs,

50 | tool_kwargs=tool_kwargs,

51 | )

52 |

53 | def _setup_chat_pipeline(self):

54 | """

55 | Configure the chat completion pipeline using Pixeltable's computed columns.

56 | This method implements the abstract method from BaseAgent.

57 |

58 | The pipeline consists of 4 steps:

59 | 1. Retrieve recent messages from memory

60 | 2. Format messages for Claude

61 | 3. Get completion from Anthropic

62 | 4. Extract the response text

63 |

64 | Note: The pipeline automatically handles memory limits based on n_latest_messages.

65 | When set to None, it maintains unlimited conversation history.

66 | """

67 |

68 | # Step 1: Define a query to get recent messages

69 | @pxt.query

70 | def get_recent_memory(current_timestamp: pxt.Timestamp) -> list[dict]:

71 | """

72 | Get recent messages from memory, respecting n_latest_messages limit if set.

73 | Messages are ordered by timestamp (newest first).

74 | Returns all messages if n_latest_messages is None.

75 | """

76 | query = (

77 | self.memory.where(self.memory.timestamp < current_timestamp)

78 | .order_by(self.memory.timestamp, asc=False)

79 | .select(role=self.memory.role, content=self.memory.content)

80 | )

81 | if self.n_latest_messages is not None:

82 | query = query.limit(self.n_latest_messages)

83 | return query

84 |

85 | # Step 2: Add computed columns to process the conversation

86 | # First, get the conversation history

87 | self.agent.add_computed_column(

88 | memory_context=get_recent_memory(self.agent.timestamp), if_exists="ignore"

89 | )

90 |

91 | # Format messages for Claude (simpler than OpenAI as system prompt is passed separately)

92 | self.agent.add_computed_column(

93 | messages=create_messages(

94 | self.agent.memory_context,

95 | self.agent.user_message,

96 | self.agent.image,

97 | ),

98 | if_exists="ignore",

99 | )

100 |

101 | # Get Claude's API response (note: system prompt passed directly to messages())

102 | self.agent.add_computed_column(

103 | response=messages(

104 | messages=self.agent.messages,

105 | model=self.model,

106 | system=self.system_prompt, # Claude handles system prompt differently

107 | **self.chat_kwargs,

108 | ),

109 | if_exists="ignore",

110 | )

111 |

112 | # Extract the final response text from Claude's specific response format

113 | self.agent.add_computed_column(

114 | agent_response=self.agent.response.content[0].text, if_exists="ignore"

115 | )

116 |

117 | def _setup_tools_pipeline(self):

118 | """

119 | Configure the tool execution pipeline using Pixeltable's computed columns.

120 | This method implements the abstract method from BaseAgent.

121 |

122 | The pipeline has 4 stages:

123 | 1. Get initial response from Claude with potential tool calls

124 | 2. Execute any requested tools

125 | 3. Format tool results for follow-up

126 | 4. Get final response incorporating tool outputs

127 |

128 | Note: Claude's tool calling format differs slightly from OpenAI's,

129 | but the overall flow remains the same thanks to BaseAgent abstraction.

130 | """

131 | # Stage 1: Get initial response with potential tool calls

132 | self.tools_table.add_computed_column(

133 | initial_response=messages(

134 | model=self.model,

135 | system=self.system_prompt, # Include system prompt for consistent behavior

136 | messages=[{"role": "user", "content": self.tools_table.tool_prompt}],

137 | tools=self.tools, # Pass available tools to Claude

138 | **self.tool_kwargs,

139 | ),

140 | if_exists="ignore",

141 | )

142 |

143 | # Stage 2: Execute any tools that Claude requested

144 | self.tools_table.add_computed_column(

145 | tool_output=invoke_tools(self.tools, self.tools_table.initial_response),

146 | if_exists="ignore",

147 | )

148 |

149 | # Stage 3: Format tool results for follow-up

150 | self.tools_table.add_computed_column(

151 | tool_response_prompt=pxtf.string.format(

152 | "{0}: {1}", self.tools_table.tool_prompt, self.tools_table.tool_output

153 | ),

154 | if_exists="ignore",

155 | )

156 |

157 | # Stage 4: Get final response incorporating tool results

158 | self.tools_table.add_computed_column(

159 | final_response=messages(

160 | model=self.model,

161 | system=self.system_prompt,

162 | messages=[

163 | {"role": "user", "content": self.tools_table.tool_response_prompt}

164 | ],

165 | **self.tool_kwargs,

166 | ),

167 | if_exists="ignore",

168 | )

169 |

170 | # Extract the final response text from Claude's format

171 | self.tools_table.add_computed_column(

172 | tool_answer=self.tools_table.final_response.content[0].text,

173 | if_exists="ignore",

174 | )

175 |
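The four stages described in `_setup_tools_pipeline` can be followed more easily with plain function calls standing in for computed columns. This is only an illustrative sketch: `run_tool_pipeline`, `fake_model`, the canned `stock_price` tool, and the response dict shape are all hypothetical, and no Pixeltable or Anthropic call is made. Only the `"{0}: {1}"` template is taken from the blueprint.

```python
from typing import Any, Callable


def run_tool_pipeline(
    tool_prompt: str,
    tools: dict[str, Callable[..., Any]],
    model: Callable[[str, list[str]], dict],
) -> str:
    """Mirror the four stages with ordinary calls instead of computed columns."""
    # Stage 1: initial response, which may request tool calls
    initial_response = model(tool_prompt, sorted(tools))
    # Stage 2: execute any tools the model requested
    tool_output = {
        call["name"]: tools[call["name"]](**call["args"])
        for call in initial_response.get("tool_calls", [])
    }
    # Stage 3: same "{0}: {1}" template used for tool_response_prompt
    tool_response_prompt = "{0}: {1}".format(tool_prompt, tool_output)
    # Stage 4: final response, with no tools offered this time
    final_response = model(tool_response_prompt, [])
    return final_response["text"]


def fake_model(prompt: str, tool_names: list[str]) -> dict:
    """Stand-in for the LLM: requests a tool when one is offered, otherwise answers."""
    if tool_names:
        return {
            "text": "",
            "tool_calls": [{"name": tool_names[0], "args": {"ticker": "NVDA"}}],
        }
    return {"text": f"Answer based on -> {prompt}"}


def stock_price(ticker: str) -> dict:
    """Hypothetical tool returning canned data instead of calling a market API."""
    return {"ticker": ticker, "price": 100.0}


answer = run_tool_pipeline(
    "What is the price of NVDA?", {"stock_price": stock_price}, fake_model
)
print(answer)
```

As in the blueprint, the second model call sees only the original prompt joined with the tool output, not the conversation history.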

--------------------------------------------------------------------------------

/blueprints/multi_provider/anthropic/utils.py:

--------------------------------------------------------------------------------

1 | import base64

2 | import io

3 | from typing import Optional

4 |

5 | import PIL.Image

6 | import pixeltable as pxt

7 |

8 |

9 | @pxt.udf

10 | def create_messages(

11 | memory_context: list[dict],

12 | current_message: str,

13 | image: Optional[PIL.Image.Image] = None,

14 | ) -> list[dict]:

15 | """

16 | Create a formatted message list for Anthropic Claude models.

17 |

18 | This function formats the conversation history and current message

19 | into the structure expected by Anthropic Claude models.

20 |

21 | Args:

22 | memory_context: List of previous messages from memory

23 | current_message: The current user message

24 | image: Optional image to include with the message

25 |

26 | Returns:

27 | List of formatted messages for Anthropic Claude

28 | """

29 | # Create a copy to avoid modifying the original

30 | messages = memory_context.copy()

31 |

32 | # For text-only messages

33 | if not image:

34 | messages.append({"role": "user", "content": current_message})

35 | return messages

36 |

37 | # Convert image to base64

38 | bytes_arr = io.BytesIO()

39 | image.save(bytes_arr, format="JPEG")

40 | b64_bytes = base64.b64encode(bytes_arr.getvalue())

41 | b64_encoded_image = b64_bytes.decode("utf-8")

42 |

43 | # Create content blocks with text and image

44 | content_blocks = [

45 | {"type": "text", "text": current_message},

46 | {

47 | "type": "image",

48 | "source": {

49 | "type": "base64",

50 | "media_type": "image/jpeg",

51 | "data": b64_encoded_image,

52 | },

53 | },

54 | ]

55 |

56 | messages.append({"role": "user", "content": content_blocks})

57 |

58 | return messages

59 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/bedrock/__init__.py:

--------------------------------------------------------------------------------

1 | from .agent import Agent

2 |

3 | __all__ = ["Agent"]

4 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/bedrock/agent.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import pixeltable as pxt

4 | import pixeltable.functions as pxtf

5 |

6 | from ..core.base import BaseAgent

7 |

8 | from .utils import create_messages

9 |

10 | try:

11 | from pixeltable.functions.bedrock import converse, invoke_tools

12 | except ImportError:

13 | raise ImportError("boto3 not found; run `pip install boto3`")

14 |

15 |

16 | class Agent(BaseAgent):

17 | """

18 | AWS Bedrock-specific implementation of the BaseAgent.

19 |

20 | This agent uses the AWS Bedrock Converse API for generating responses and handling tools.

21 | It inherits common functionality from BaseAgent including:

22 | - Table setup and management

23 | - Memory persistence

24 | - Base chat and tool call implementations

25 |

26 | The agent supports both limited and unlimited conversation history through

27 | the n_latest_messages parameter for regular chat, while tool calls use only

28 | the current message without conversation history.

29 | """

30 |

31 | def __init__(

32 | self,

33 | name: str,

34 | system_prompt: str,

35 | model: str = "amazon.nova-pro-v1:0",

36 | n_latest_messages: Optional[int] = 10,

37 | tools: Optional[pxt.tools] = None,

38 | reset: bool = False,

39 | chat_kwargs: Optional[dict] = None,

40 | tool_kwargs: Optional[dict] = None,

41 | ):

42 | # Initialize the base agent with all common parameters

43 | super().__init__(

44 | name=name,

45 | system_prompt=system_prompt,

46 | model=model,

47 | n_latest_messages=n_latest_messages, # None for unlimited history

48 | tools=tools,

49 | reset=reset,

50 | chat_kwargs=chat_kwargs,

51 | tool_kwargs=tool_kwargs,

52 | )

53 |

54 | def _setup_chat_pipeline(self):

55 | """

56 | Configure the chat completion pipeline using Pixeltable's computed columns.

57 | This method implements the abstract method from BaseAgent.

58 |

59 | The pipeline consists of 4 steps:

60 | 1. Retrieve recent messages from memory

61 | 2. Format messages for Bedrock

62 | 3. Get completion from the Bedrock Converse API

63 | 4. Extract the response text

64 |

65 | Note: The pipeline automatically handles memory limits based on n_latest_messages.

66 | When set to None, it maintains unlimited conversation history.

67 | """

68 |

69 | # Step 1: Define a query to get recent messages

70 | @pxt.query

71 | def get_recent_memory(current_timestamp: pxt.Timestamp) -> list[dict]:

72 | """

73 | Get recent messages from memory, respecting n_latest_messages limit if set.

74 | Messages are ordered by timestamp (newest first).

75 | Returns all messages if n_latest_messages is None.

76 | """

77 | query = (

78 | self.memory.where(self.memory.timestamp < current_timestamp)

79 | .order_by(self.memory.timestamp, asc=False)

80 | .select(role=self.memory.role, content=self.memory.content)

81 | )

82 | if self.n_latest_messages is not None:

83 | query = query.limit(self.n_latest_messages)

84 | return query

85 |

86 | # Step 2: Add computed columns to process the conversation

87 | # First, get the conversation history

88 | self.agent.add_computed_column(

89 | memory_context=get_recent_memory(self.agent.timestamp), if_exists="ignore"

90 | )

91 |

92 | # Format messages for Bedrock (the system prompt is passed separately via `system`)

93 | self.agent.add_computed_column(

94 | messages=create_messages(

95 | self.agent.memory_context,

96 | self.agent.user_message,

97 | self.agent.image,

98 | ),

99 | if_exists="ignore",

100 | )

101 |

102 | # Get Bedrock Claude's API response

103 | self.agent.add_computed_column(

104 | response=converse(

105 | messages=self.agent.messages,

106 | model_id=self.model,

107 | system=[{"text": self.system_prompt}],

108 | **self.chat_kwargs,

109 | ),

110 | if_exists="ignore",

111 | )

112 |

113 | # Extract the final response text from Bedrock Claude's specific response format

114 | self.agent.add_computed_column(

115 | agent_response=self.agent.response.output.message.content[0].text,

116 | if_exists="ignore"

117 | )

118 |

119 | def _setup_tools_pipeline(self):

120 | """

121 | Configure the tool execution pipeline using Pixeltable's computed columns.

122 | This method implements the abstract method from BaseAgent.

123 |

124 | The pipeline has 4 stages:

125 | 1. Get initial response from Bedrock Claude with potential tool calls

126 | 2. Execute any requested tools

127 | 3. Format tool results for follow-up

128 | 4. Get final response incorporating tool outputs

129 |

130 | Note: For tool calls, we only use the current message without conversation history

131 | to ensure tool execution is based solely on the current request.

132 | """

133 | # Stage 1: Get initial response with potential tool calls

134 | # Note: We only use the current tool prompt without memory context

135 | self.tools_table.add_computed_column(

136 | initial_response=converse(

137 | model_id=self.model,

138 | system=[{"text": self.system_prompt}],

139 | messages=[{"role": "user", "content": [{"text": self.tools_table.tool_prompt}]}],

140 | tool_config=self.tools, # Pass available tools to Bedrock Claude

141 | **self.tool_kwargs,

142 | ),

143 | if_exists="ignore",

144 | )

145 |

146 | # Stage 2: Execute any tools that Bedrock Claude requested

147 | self.tools_table.add_computed_column(

148 | tool_output=invoke_tools(self.tools, self.tools_table.initial_response),

149 | if_exists="ignore",

150 | )

151 |

152 | # Stage 3: Format tool results for follow-up

153 | self.tools_table.add_computed_column(

154 | tool_response_prompt=pxtf.string.format(

155 | "{0}: {1}", self.tools_table.tool_prompt, self.tools_table.tool_output

156 | ),

157 | if_exists="ignore",

158 | )

159 |

160 | # Stage 4: Get final response incorporating tool results

161 | # Again, we only use the current tool response without memory context

162 | self.tools_table.add_computed_column(

163 | final_response=converse(

164 | model_id=self.model,

165 | system=[{"text": self.system_prompt}],

166 | messages=[

167 | {"role": "user", "content": [{"text": self.tools_table.tool_response_prompt}]}

168 | ],

169 | **self.tool_kwargs,

170 | ),

171 | if_exists="ignore",

172 | )

173 |

174 | # Extract the final response text from Bedrock Claude's format

175 | self.tools_table.add_computed_column(

176 | tool_answer=self.tools_table.final_response.output.message.content[0].text,

177 | if_exists="ignore",

178 | )

179 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/bedrock/utils.py:

--------------------------------------------------------------------------------

1 | import base64

2 | import io

3 | from typing import Optional

4 |

5 | import PIL.Image

6 | import pixeltable as pxt

7 |

8 |

9 | @pxt.udf

10 | def create_messages(

11 | memory_context: list[dict],

12 | current_message: str,

13 | image: Optional[PIL.Image.Image] = None,

14 | ) -> list[dict]:

15 | """

16 | Create a formatted message list for Bedrock Claude models.

17 |

18 | This function formats the conversation history and current message

19 | into the structure expected by Bedrock Claude models.

20 |

21 | Args:

22 | memory_context: List of previous messages from memory

23 | current_message: The current user message

24 | image: Optional image to include with the message

25 |

26 | Returns:

27 | List of formatted messages for Bedrock Claude

28 | """

29 | # Build a new list in Bedrock's format, leaving memory_context unmodified

30 | messages = []

31 |

32 | # Get messages in oldest-first order

33 | reversed_memory = list(reversed(memory_context))

34 |

35 | # Ensure the conversation starts with a user message

36 | # If the first message is from the assistant, skip it

37 | start_idx = 0

38 | if reversed_memory and reversed_memory[0]["role"] == "assistant":

39 | start_idx = 1

40 |

41 | # Format previous messages for Bedrock

42 | for msg in reversed_memory[start_idx:]:

43 | # Convert string content to the required list format

44 | if isinstance(msg["content"], str):

45 | messages.append({

46 | "role": msg["role"],

47 | "content": [{"text": msg["content"]}]

48 | })

49 | else:

50 | # If it's already in the correct format, keep it as is

51 | messages.append(msg)

52 |

53 | # For text-only messages

54 | if not image:

55 | messages.append({"role": "user", "content": [{"text": current_message}]})

56 | return messages

57 |

58 | # Convert image to base64

59 | bytes_arr = io.BytesIO()

60 | image.save(bytes_arr, format="JPEG")

61 | b64_bytes = base64.b64encode(bytes_arr.getvalue())

62 | b64_encoded_image = b64_bytes.decode("utf-8")

63 |

64 | # Create content blocks with text and image

65 | content_blocks = [

66 | {"type": "text", "text": current_message},

67 | {

68 | "type": "image",

69 | "source": {

70 | "type": "base64",

71 | "media_type": "image/jpeg",

72 | "data": b64_encoded_image,

73 | },

74 | },

75 | ]

76 |

77 | messages.append({"role": "user", "content": content_blocks})

78 |

79 | return messages

80 |
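The history handling in `create_messages` above (reverse the newest-first memory, drop a leading assistant turn, wrap string content in a list of text blocks) can be exercised on its own. The sketch below restates just that part as a plain function; `normalize_history` is a hypothetical name, and the sample messages are made up for illustration.

```python
def normalize_history(memory_context: list[dict]) -> list[dict]:
    """Reorder newest-first memory to oldest-first and wrap text in content blocks."""
    # Memory arrives newest first; the conversation must be sent oldest first
    ordered = list(reversed(memory_context))
    # Drop a leading assistant turn so the conversation opens with the user
    if ordered and ordered[0]["role"] == "assistant":
        ordered = ordered[1:]
    formatted = []
    for msg in ordered:
        content = msg["content"]
        # Plain strings become a one-element list of text blocks
        if isinstance(content, str):
            content = [{"text": content}]
        formatted.append({"role": msg["role"], "content": content})
    return formatted


newest_first = [
    {"role": "assistant", "content": "It is sunny."},
    {"role": "user", "content": "What is the weather?"},
    {"role": "assistant", "content": "Hello!"},
]
history = normalize_history(newest_first)
for message in history:
    print(message)
```

Here the oldest entry is an assistant message, so it is skipped and the resulting history starts with the user's question.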

--------------------------------------------------------------------------------

/blueprints/multi_provider/core/__init__.py:

--------------------------------------------------------------------------------

1 | from .base import BaseAgent

2 |

3 | __all__ = ["BaseAgent"]

4 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/gemini/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Google Gemini agent module for multi-provider blueprints.

3 |

4 | This module provides an Agent class for interacting with Google Gemini models.

5 | """

6 |

7 | from .agent import Agent

8 | from .utils import create_content

9 |

10 | __all__ = ["Agent", "create_content"]

11 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/gemini/agent.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import pixeltable as pxt

4 | import pixeltable.functions as pxtf

5 |

6 | from ..core.base import BaseAgent

7 |

8 | from .utils import create_content

9 |

10 | try:

11 | from pixeltable.functions.gemini import generate_content, invoke_tools

12 | except ImportError:

13 | raise ImportError("google.genai not found; run `pip install google-genai`")

14 |

15 |

16 | class Agent(BaseAgent):

17 | """

18 | Google Gemini-specific implementation of the BaseAgent.

19 |

20 | This agent uses Google Gemini's generate_content API for generating responses and handling tools.

21 | It inherits common functionality from BaseAgent including:

22 | - Table setup and management

23 | - Memory persistence

24 | - Base chat and tool call implementations

25 |

26 | The agent supports both limited and unlimited conversation history through

27 | the n_latest_messages parameter.

28 | """

29 |

30 | def __init__(

31 | self,

32 | name: str,

33 | system_prompt: str,

34 | model: str = "gemini-2.0-flash",

35 | n_latest_messages: Optional[int] = 10,

36 | tools: Optional[pxt.tools] = None,

37 | reset: bool = False,

38 | chat_kwargs: Optional[dict] = None,

39 | tool_kwargs: Optional[dict] = None,

40 | ):

41 | # Initialize the base agent with all common parameters

42 | super().__init__(

43 | name=name,

44 | system_prompt=system_prompt,

45 | model=model,

46 | n_latest_messages=n_latest_messages, # None for unlimited history

47 | tools=tools,

48 | reset=reset,

49 | chat_kwargs=chat_kwargs,

50 | tool_kwargs=tool_kwargs,

51 | )

52 |

53 | def _setup_chat_pipeline(self):

54 | """

55 | Configure the chat completion pipeline using Pixeltable's computed columns.

56 | This method implements the abstract method from BaseAgent.

57 |

58 | The pipeline consists of 4 steps:

59 | 1. Retrieve recent messages from memory

60 | 2. Format content for Gemini

61 | 3. Get completion from Google Gemini

62 | 4. Extract the response text

63 |

64 | Note: The pipeline automatically handles memory limits based on n_latest_messages.

65 | When set to None, it maintains unlimited conversation history.

66 | """

67 |

68 | # Step 1: Define a query to get recent messages

69 | @pxt.query

70 | def get_recent_memory(current_timestamp: pxt.Timestamp) -> list[dict]:

71 | """

72 | Get recent messages from memory, respecting n_latest_messages limit if set.

73 | Messages are ordered by timestamp (newest first).

74 | Returns all messages if n_latest_messages is None.

75 | """

76 | query = (

77 | self.memory.where(self.memory.timestamp < current_timestamp)

78 | .order_by(self.memory.timestamp, asc=False)

79 | .select(role=self.memory.role, content=self.memory.content)

80 | )

81 | if self.n_latest_messages is not None:

82 | query = query.limit(self.n_latest_messages)

83 | return query

84 |

85 | # Step 2: Add computed columns to process the conversation

86 | # First, get the conversation history

87 | self.agent.add_computed_column(

88 | memory_context=get_recent_memory(self.agent.timestamp), if_exists="ignore"

89 | )

90 |

91 | # Format content for Gemini (text-based format with conversation context)

92 | self.agent.add_computed_column(

93 | prompt=create_content(

94 | self.agent.system_prompt,

95 | self.agent.memory_context,

96 | self.agent.user_message,

97 | self.agent.image,

98 | ),

99 | if_exists="ignore",

100 | )

101 |

102 | # Get Gemini's API response (note: contents parameter instead of messages)

103 | self.agent.add_computed_column(

104 | response=generate_content(

105 | contents=self.agent.prompt,

106 | model=self.model,

107 | **self.chat_kwargs,

108 | ),

109 | if_exists="ignore",

110 | )

111 |

112 | # Extract the final response text from Gemini's specific response format

113 | self.agent.add_computed_column(

114 | agent_response=self.agent.response['candidates'][0]['content']['parts'][0]['text'],

115 | if_exists="ignore"

116 | )

117 |

118 | def _setup_tools_pipeline(self):

119 | """

120 | Configure the tool execution pipeline using Pixeltable's computed columns.

121 | This method implements the abstract method from BaseAgent.

122 |

123 | The pipeline has 4 stages:

124 | 1. Get initial response from Gemini with potential tool calls

125 | 2. Execute any requested tools

126 | 3. Format tool results for follow-up

127 | 4. Get final response incorporating tool outputs

128 |

129 | Note: Gemini's tool calling format uses the same structure as other providers

130 | thanks to BaseAgent abstraction.

131 | """

132 | # Stage 1: Get initial response with potential tool calls

133 | self.tools_table.add_computed_column(

134 | initial_response=generate_content(

135 | contents=self.tools_table.tool_prompt,

136 | model=self.model,

137 | tools=self.tools, # Pass available tools to Gemini

138 | **self.tool_kwargs,

139 | ),

140 | if_exists="ignore",

141 | )

142 |

143 | # Stage 2: Execute any tools that Gemini requested

144 | self.tools_table.add_computed_column(

145 | tool_output=invoke_tools(self.tools, self.tools_table.initial_response),

146 | if_exists="ignore",

147 | )

148 |

149 | # Stage 3: Format tool results for follow-up

150 | self.tools_table.add_computed_column(

151 | tool_response_prompt=pxtf.string.format(

152 | "{0}: {1}", self.tools_table.tool_prompt, self.tools_table.tool_output

153 | ),

154 | if_exists="ignore",

155 | )

156 |

157 | # Stage 4: Get final response incorporating tool results

158 | self.tools_table.add_computed_column(

159 | final_response=generate_content(

160 | contents=self.tools_table.tool_response_prompt,

161 | model=self.model,

162 | **self.tool_kwargs,

163 | ),

164 | if_exists="ignore",

165 | )

166 |

167 | # Extract the final response text from Gemini's format

168 | self.tools_table.add_computed_column(

169 | tool_answer=self.tools_table.final_response['candidates'][0]['content']['parts'][0]['text'],

170 | if_exists="ignore",

171 | )

172 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/gemini/utils.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import PIL.Image

4 | import pixeltable as pxt

5 |

6 |

7 | @pxt.udf

8 | def create_content(

9 |     system_prompt: str,

10 |     memory_context: list[dict],

11 |     current_message: str,

12 |     image: Optional[PIL.Image.Image] = None,

13 | ) -> str:

14 |     # Note: `image` is accepted but not used here; the prompt built below is text-only.

15 |     # Build the conversation context as a text string

16 |     context = f"System: {system_prompt}\n\n"

17 |

18 |     # Add memory context

19 |     for msg in memory_context:

20 |         context += f"{msg['role'].title()}: {msg['content']}\n"

21 |

22 |     # Add current message

23 |     context += f"User: {current_message}\n"

24 |     context += "Assistant: "

25 |

26 |     return context

27 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/openai/__init__.py:

--------------------------------------------------------------------------------

1 | from .agent import Agent

2 |

3 | __all__ = ["Agent"]

4 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/openai/agent.py:

--------------------------------------------------------------------------------

1 | from typing import Optional

2 |

3 | import pixeltable as pxt

4 | import pixeltable.functions as pxtf

5 |

6 | from ..core.base import BaseAgent

7 |

8 | from .utils import create_messages

9 |

10 | try:

11 | from pixeltable.functions.openai import chat_completions, invoke_tools

12 | except ImportError:

13 | raise ImportError("openai not found; run `pip install openai`")

14 |

15 |

16 | class Agent(BaseAgent):

17 | """

18 | OpenAI-specific implementation of the BaseAgent.

19 |

20 | This agent uses OpenAI's chat completion API for generating responses and handling tools.

21 | It inherits common functionality from BaseAgent including:

22 | - Table setup and management

23 | - Memory persistence

24 | - Base chat and tool call implementations

25 | """

26 |

27 | def __init__(

28 | self,

29 | name: str,

30 | system_prompt: str,

31 | model: str = "gpt-4o-mini",

32 | n_latest_messages: Optional[int] = 10,

33 | tools: Optional[pxt.tools] = None,

34 | reset: bool = False,

35 | chat_kwargs: Optional[dict] = None,

36 | tool_kwargs: Optional[dict] = None,

37 | ):

38 | # Initialize the base agent with all common parameters

39 | super().__init__(

40 | name=name,

41 | system_prompt=system_prompt,

42 | model=model,

43 | n_latest_messages=n_latest_messages, # None for unlimited history

44 | tools=tools,

45 | reset=reset,

46 | chat_kwargs=chat_kwargs,

47 | tool_kwargs=tool_kwargs,

48 | )

49 |

50 | def _setup_chat_pipeline(self):

51 | """

52 | Configure the chat completion pipeline using Pixeltable's computed columns.

53 | This method implements the abstract method from BaseAgent.

54 |

55 | The pipeline consists of 4 steps:

56 | 1. Retrieve recent messages from memory

57 | 2. Format messages with system prompt

58 | 3. Get completion from OpenAI

59 | 4. Extract the response text

60 | """

61 |

62 | # Step 1: Define a query to get recent messages

63 | @pxt.query

64 | def get_recent_memory(current_timestamp: pxt.Timestamp) -> list[dict]:

65 | """

66 | Get recent messages from memory, respecting n_latest_messages limit if set.

67 | Messages are ordered by timestamp (newest first).

68 | """

69 | query = (

70 | self.memory.where(self.memory.timestamp < current_timestamp)

71 | .order_by(self.memory.timestamp, asc=False)

72 | .select(role=self.memory.role, content=self.memory.content)

73 | )

74 | if self.n_latest_messages is not None:

75 | query = query.limit(self.n_latest_messages)

76 | return query

77 |

78 | # Step 2: Add computed columns to process the conversation

79 | # First, get the conversation history

80 | self.agent.add_computed_column(

81 | memory_context=get_recent_memory(self.agent.timestamp),

82 | if_exists="ignore",

83 | )

84 |

85 | # Format messages for OpenAI with system prompt

86 | self.agent.add_computed_column(

87 | prompt=create_messages(

88 | self.agent.system_prompt,

89 | self.agent.memory_context,

90 | self.agent.user_message,

91 | self.agent.image,

92 | ),

93 | if_exists="ignore",

94 | )

95 |

96 | # Get OpenAI's API response

97 | self.agent.add_computed_column(

98 | response=chat_completions(

99 | messages=self.agent.prompt, model=self.model, **self.chat_kwargs

100 | ),

101 | if_exists="ignore",

102 | )

103 |

104 | # Extract the final response text

105 | self.agent.add_computed_column(

106 | agent_response=self.agent.response.choices[0].message.content,

107 | if_exists="ignore",

108 | )

109 |

110 | def _setup_tools_pipeline(self):

111 | """

112 | Configure the tool execution pipeline using Pixeltable's computed columns.

113 | This method implements the abstract method from BaseAgent.

114 |

115 | The pipeline has 4 stages:

116 | 1. Get initial response from OpenAI with potential tool calls

117 | 2. Execute any requested tools

118 | 3. Format tool results for follow-up

119 | 4. Get final response incorporating tool outputs

120 | """

121 | # Stage 1: Get initial response with potential tool calls

122 | self.tools_table.add_computed_column(

123 | initial_response=chat_completions(

124 | model=self.model,

125 | messages=[{"role": "user", "content": self.tools_table.tool_prompt}],

126 | tools=self.tools, # Pass available tools to OpenAI

127 | **self.tool_kwargs,

128 | ),

129 | if_exists="ignore",

130 | )

131 |

132 | # Stage 2: Execute any tools that OpenAI requested

133 | self.tools_table.add_computed_column(

134 | tool_output=invoke_tools(self.tools, self.tools_table.initial_response),

135 | if_exists="ignore",

136 | )

137 |

138 | # Stage 3: Format tool results for follow-up

139 | self.tools_table.add_computed_column(

140 | tool_response_prompt=pxtf.string.format(

141 | "{0}: {1}", self.tools_table.tool_prompt, self.tools_table.tool_output

142 | ),

143 | if_exists="ignore",

144 | )

145 |

146 | # Stage 4: Get final response incorporating tool results

147 | self.tools_table.add_computed_column(

148 | final_response=chat_completions(

149 | model=self.model,

150 | messages=[

151 | {"role": "user", "content": self.tools_table.tool_response_prompt},

152 | ],

153 | **self.tool_kwargs,

154 | ),

155 | if_exists="ignore",

156 | )

157 |

158 | # Extract the final response text

159 | self.tools_table.add_computed_column(

160 | tool_answer=self.tools_table.final_response.choices[0].message.content,

161 | if_exists="ignore",

162 | )

163 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/openai/utils.py:

--------------------------------------------------------------------------------

1 | import base64

2 | import io

3 | from typing import Optional

4 |

5 | import PIL.Image

6 | import pixeltable as pxt

7 |

8 |

9 | @pxt.udf

10 | def create_messages(

11 |     system_prompt: str,

12 |     memory_context: list[dict],

13 |     current_message: str,

14 |     image: Optional[PIL.Image.Image] = None,

15 | ) -> list[dict]:

16 |

17 |     messages = [{"role": "system", "content": system_prompt}]

18 |     messages.extend(memory_context.copy())

19 |

20 |     if not image:

21 |         messages.append({"role": "user", "content": current_message})

22 |         return messages

23 |

24 |     # Encode the image as base64 JPEG

25 |     bytes_arr = io.BytesIO()

26 |     image.save(bytes_arr, format="jpeg")

27 |     b64_bytes = base64.b64encode(bytes_arr.getvalue())

28 |     b64_encoded_image = b64_bytes.decode("utf-8")

29 |

30 |     # Create content blocks with text and image

31 |     content_blocks = [

32 |         {"type": "text", "text": current_message},

33 |         {

34 |             "type": "image_url",

35 |             "image_url": {"url": f"data:image/jpeg;base64,{b64_encoded_image}"},

36 |         },

37 |     ]

38 |

39 |     messages.append({"role": "user", "content": content_blocks})

40 |     return messages

41 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/requirements.txt:

--------------------------------------------------------------------------------

1 | pixeltable

2 | openai

3 | anthropic

4 | boto3

5 | google-genai

6 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/tests/README.md:

--------------------------------------------------------------------------------

1 | # Multi-Provider Agent Tests

2 |

3 | This directory contains test scripts for the multi-provider agent blueprints. Each test demonstrates how to create and use agents powered by different LLM providers.

4 |

5 | ## Available Tests

6 |

7 | - `test_anthropic.py`: Tests the Anthropic Claude agent with chat and tool calling

8 | - `test_openai.py`: Tests the OpenAI GPT agent with chat and tool calling

9 | - `test_bedrock.py`: Tests the AWS Bedrock agent with chat and tool calling

10 |

11 | ## Running the Tests

12 |

13 | ### Running Individual Tests

14 |

15 | To run a specific test, navigate to the `blueprints/multi_provider` directory and run:

16 |

17 | ```bash

18 | python -m tests.test_anthropic

19 | ```

20 |

21 | Or for other providers:

22 |

23 | ```bash

24 | python -m tests.test_openai

25 | python -m tests.test_bedrock

26 | ```

27 |

28 | ### Running All Tests

29 |

30 | To run all tests in sequence, use the provided runner script:

31 |

32 | ```bash

33 | python -m tests.run_all_tests

34 | ```

35 |

36 | You can also specify which tests to run:

37 |

38 | ```bash

39 | python -m tests.run_all_tests test_anthropic test_openai

40 | ```

41 |

42 | ## Test Features

43 |

44 | Each test demonstrates:

45 |

46 | 1. **Conversational Memory**: The agent remembers previous interactions within the conversation

47 | 2. **Tool Calling**: The agent can use tools to retrieve external information

48 | 3. **Memory Persistence**: The agent maintains memory across different interaction types

49 |

50 | ## Provider-Specific Notes

51 |

52 | ### Anthropic Claude

53 |

54 | - Uses the `claude-3-5-sonnet-latest` model by default

55 | - Demonstrates a weather tool

56 |

57 | ### OpenAI

58 |

59 | - Uses the `gpt-4o-mini` model by default

60 | - Demonstrates a weather tool

61 |

62 | ### AWS Bedrock

63 |

64 | - Uses the `amazon.nova-pro-v1:0` model by default

65 | - Demonstrates a stock price tool

66 | - Requires AWS credentials to be configured

67 |

68 | ## Import Pattern

69 |

70 | The tests demonstrate a simple import pattern:

71 |

72 | ```python

73 | # Import the agent classes with aliases

74 | from blueprints.multi_provider.ant.agent import Agent as AnthropicAgent

75 | from blueprints.multi_provider.oai.agent import Agent as OpenAIAgent

76 | from blueprints.multi_provider.bedrock.agent import Agent as BedrockAgent

77 | ```

78 |

79 | This pattern makes it clear which provider's agent is being used while maintaining a consistent interface.
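
Because every provider's agent exposes the same `chat` and `tool_call` methods, one helper can drive any of them. The sketch below mirrors the flow of the test scripts (introduce a name, ask for it, make a tool call, ask again). `SupportsAgentInterface`, `StubAgent`, and `run_conversation` are hypothetical names introduced for illustration only; the stub stands in for a real agent so the example runs without API keys.

```python
from typing import Protocol


class SupportsAgentInterface(Protocol):
    """The two methods the test scripts call on every provider's agent."""

    def chat(self, message: str) -> str: ...

    def tool_call(self, prompt: str) -> str: ...


class StubAgent:
    """Hypothetical stand-in for a real agent; it only counts the calls it receives."""

    def __init__(self) -> None:
        self.history: list[str] = []

    def chat(self, message: str) -> str:
        self.history.append(message)
        return f"(chat reply #{len(self.history)})"

    def tool_call(self, prompt: str) -> str:
        self.history.append(prompt)
        return f"(tool reply #{len(self.history)})"


def run_conversation(
    agent: SupportsAgentInterface, user_name: str, tool_prompt: str
) -> list[str]:
    """Run the same four turns the test scripts use: chat, chat, tool call, chat."""
    return [
        agent.chat(f"Hello, my name is {user_name}."),
        agent.chat("What's my name?"),
        agent.tool_call(tool_prompt),
        agent.chat("Do you still remember my name?"),
    ]


replies = run_conversation(StubAgent(), "Alice", "What's the weather in San Francisco?")
print(replies)
```

Swapping `StubAgent()` for an `AnthropicAgent`, `OpenAIAgent`, or `BedrockAgent` instance should leave `run_conversation` unchanged, which is the point of the shared interface.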

80 |

81 | ### For Application Code

82 |

83 | When using these agents in your application code, you can use the same import pattern:

84 |

85 | ```python

86 | from blueprints.multi_provider.anthropic.agent import Agent as AnthropicAgent

87 | from blueprints.multi_provider.openai.agent import Agent as OpenAIAgent

88 | from blueprints.multi_provider.bedrock.agent import Agent as BedrockAgent

89 | ```

90 |

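91 | The alias makes it clear which provider you're using. Note that the provider directories (`anthropic`, `openai`) share their names with the underlying Python packages, so import them through the full `blueprints.multi_provider` path, as shown above, rather than as top-level modules.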

92 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/tests/__init__.py:

--------------------------------------------------------------------------------

1 | """

2 | Test package for multi_provider blueprints.

3 |

4 | This package contains test scripts for the multi-provider agent blueprints,

5 | demonstrating chat and tool calling functionality for different LLM providers.

6 | """

7 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/tests/test_anthropic.py:

--------------------------------------------------------------------------------

1 | import sys

2 | from pathlib import Path

3 |

4 | root_dir = Path(__file__).resolve().parents[3]

5 | sys.path.insert(0, str(root_dir))

6 |

7 | from blueprints.multi_provider.anthropic.agent import Agent as AnthropicAgent

8 |

9 |

10 | import pixeltable as pxt

11 |

12 | @pxt.udf

13 | def weather(city: str) -> str:

14 | """

15 | Returns the weather in a given city.

16 | """

17 | return f"The weather in {city} is sunny with a high of 75°F."

18 |

19 |

20 | def main():

21 | # Create an Anthropic agent with memory

22 | agent = AnthropicAgent(

23 | name="anthropic_test",

24 | system_prompt="You are a helpful assistant that can answer questions and use tools.",

25 | model="claude-3-5-sonnet-latest", # Use Claude 3.5 Sonnet

26 | n_latest_messages=10, # Keep last 10 messages in context

27 | tools=pxt.tools(weather), # Register the weather tool

28 | reset=True, # Reset the agent's memory for testing

29 | )

30 |

31 | print("\n=== Testing Conversational Memory ===\n")

32 |

33 | # First conversation turn

34 | user_message = "Hello, my name is Alice."

35 | print(f"User: {user_message}")

36 | response = agent.chat(user_message)

37 | print(f"Agent: {response}\n")

38 |

39 | # Second conversation turn - the agent should remember the user's name

40 | user_message = "What's my name?"

41 | print(f"User: {user_message}")

42 | response = agent.chat(user_message)

43 | print(f"Agent: {response}\n")

44 |

45 | print("\n=== Testing Tool Calling ===\n")

46 |

47 | # Tool call

48 | user_message = "What's the weather in San Francisco?"

49 | print(f"User: {user_message}")

50 | response = agent.tool_call(user_message)

51 | print(f"Agent: {response}\n")

52 |

53 | # Another tool call

54 | user_message = "How about the weather in New York?"

55 | print(f"User: {user_message}")

56 | response = agent.tool_call(user_message)

57 | print(f"Agent: {response}\n")

58 |

59 | print("\n=== Testing Memory After Tool Calls ===\n")

60 |

61 | # Regular chat after tool calls - should still remember the user's name

62 | user_message = "Do you still remember my name?"

63 | print(f"User: {user_message}")

64 | response = agent.chat(user_message)

65 | print(f"Agent: {response}\n")

66 |

67 |

68 | if __name__ == "__main__":

69 | main()

70 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/tests/test_bedrock.py:

--------------------------------------------------------------------------------

1 | import sys

2 | from pathlib import Path

3 |

4 | root_dir = Path(__file__).resolve().parents[3]

5 | sys.path.insert(0, str(root_dir))

6 |

7 | from blueprints.multi_provider.bedrock.agent import Agent as BedrockAgent

8 |

9 |

10 | import pixeltable as pxt

11 |

12 | @pxt.udf

13 | def stock_price(ticker: str) -> float:

14 | """Get the current stock price for a given ticker symbol."""

15 | # This is a mock implementation for testing

16 | prices = {

17 | "AAPL": 175.34,

18 | "MSFT": 325.89,

19 | "GOOGL": 142.56,

20 | "AMZN": 178.23,

21 | "NVDA": 131.17,

22 | }

23 | return prices.get(ticker.upper(), 0.0)

24 |

25 |

26 | def main():

27 | # Create a Bedrock agent with memory

28 | agent = BedrockAgent(

29 | name="bedrock_test",

30 | system_prompt="You are a helpful assistant that can answer questions and use tools.",

31 | model="amazon.nova-pro-v1:0", # Use the Amazon Nova Pro model

32 | n_latest_messages=10, # Keep last 10 messages in context

33 | tools=pxt.tools(stock_price), # Register the stock_price tool

34 | reset=True, # Reset the agent's memory for testing

35 | )

36 |

37 | print("\n=== Testing Conversational Memory ===\n")

38 |

39 | # First conversation turn

40 | user_message = "Hello, my name is Charlie."

41 | print(f"User: {user_message}")

42 | response = agent.chat(user_message)

43 | print(f"Agent: {response}\n")

44 |

45 | # Second conversation turn - the agent should remember the user's name

46 | user_message = "What's my name?"

47 | print(f"User: {user_message}")

48 | response = agent.chat(user_message)

49 | print(f"Agent: {response}\n")

50 |

51 | print("\n=== Testing Tool Calling ===\n")

52 |

53 | # Tool call

54 | user_message = "What is the stock price of NVDA today?"

55 | print(f"User: {user_message}")

56 | response = agent.tool_call(user_message)

57 | print(f"Agent: {response}\n")

58 |

59 | # Another tool call

60 | user_message = "What about AAPL?"

61 | print(f"User: {user_message}")

62 | response = agent.tool_call(user_message)

63 | print(f"Agent: {response}\n")

64 |

65 | print("\n=== Testing Memory After Tool Calls ===\n")

66 |

67 | # Regular chat after tool calls - should still remember the user's name

68 | user_message = "Do you still remember my name?"

69 | print(f"User: {user_message}")

70 | response = agent.chat(user_message)

71 | print(f"Agent: {response}\n")

72 |

73 |

74 | if __name__ == "__main__":

75 | main()

76 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/tests/test_gemini.py:

--------------------------------------------------------------------------------

1 | import os

2 | import pytest

3 |

4 | import pixeltable as pxt

5 | from ..gemini import Agent

6 |

7 |

8 | @pxt.udf

9 | def weather(city: str) -> str:

10 | """

11 | Returns the weather in a given city.

12 | """

13 | return f"The weather in {city} is sunny."

14 |

15 |

16 | @pytest.mark.skipif(

17 | not os.getenv("GEMINI_API_KEY"), reason="GEMINI_API_KEY not set"

18 | )

19 | def test_gemini_agent_chat():

20 | """Test basic chat functionality with Gemini agent."""

21 | agent = Agent(

22 | name="test_gemini_agent",

23 | system_prompt="You're a helpful assistant.",

24 | reset=True,

25 | )

26 |

27 | # Test basic chat

28 | response = agent.chat("Hi, how are you?")

29 | assert isinstance(response, str)

30 | assert len(response) > 0

31 |

32 | # Test memory functionality

33 | response2 = agent.chat("What was my last question?")

34 | assert isinstance(response2, str)

35 | assert len(response2) > 0

36 |

37 |

38 | @pytest.mark.skipif(

39 | not os.getenv("GEMINI_API_KEY"), reason="GEMINI_API_KEY not set"

40 | )

41 | def test_gemini_agent_tools():

42 | """Test tool calling functionality with Gemini agent."""

43 | agent = Agent(

44 | name="test_gemini_tools",

45 | system_prompt="You're my assistant.",

46 | tools=pxt.tools(weather),

47 | reset=True,

48 | )

49 |

50 | # Test tool call

51 | response = agent.tool_call("Get weather in San Francisco")

52 | assert isinstance(response, str)

53 | assert "San Francisco" in response or "sunny" in response

54 |

55 |

56 | @pytest.mark.skipif(

57 | not os.getenv("GEMINI_API_KEY"), reason="GEMINI_API_KEY not set"

58 | )

59 | def test_gemini_agent_unlimited_memory():

60 | """Test unlimited memory functionality."""

61 | agent = Agent(

62 | name="test_gemini_unlimited",

63 | system_prompt="You're my assistant.",

64 | n_latest_messages=None, # Unlimited memory

65 | reset=True,

66 | )

67 |

68 | # Test basic functionality with unlimited memory

69 | response = agent.chat("Remember this number: 42")

70 | assert isinstance(response, str)

71 | assert len(response) > 0

72 |

--------------------------------------------------------------------------------

/blueprints/multi_provider/tests/test_openai.py:

--------------------------------------------------------------------------------

1 | import sys

2 | from pathlib import Path

3 |

4 | root_dir = Path(__file__).resolve().parents[3]

5 | sys.path.insert(0, str(root_dir))

6 |

7 | from blueprints.multi_provider.openai.agent import Agent as OpenAIAgent

8 |

9 |

10 | import pixeltable as pxt

11 |

12 | @pxt.udf

13 | def weather(city: str) -> str:

14 | """

15 | Returns the weather in a given city.

16 | """

17 | return f"The weather in {city} is sunny with a high of 75°F."

18 |

19 |

20 | def main():

21 | # Create an OpenAI agent with memory

22 | agent = OpenAIAgent(

23 | name="openai_test",

24 | system_prompt="You are a helpful assistant that can answer questions and use tools.",

25 | model="gpt-4o-mini", # Use GPT-4o Mini

26 | n_latest_messages=10, # Keep last 10 messages in context

27 | tools=pxt.tools(weather), # Register the weather tool

28 | reset=True, # Reset the agent's memory for testing

29 | )

30 |

31 | print("\n=== Testing Conversational Memory ===\n")

32 |

33 | # First conversation turn

34 | user_message = "Hello, my name is Bob."

35 | print(f"User: {user_message}")

36 | response = agent.chat(user_message)

37 | print(f"Agent: {response}\n")

38 |

39 | # Second conversation turn - the agent should remember the user's name

40 | user_message = "What's my name?"

41 | print(f"User: {user_message}")

42 | response = agent.chat(user_message)

43 | print(f"Agent: {response}\n")

44 |

45 | print("\n=== Testing Tool Calling ===\n")

46 |

47 | # Tool call

48 | user_message = "What's the weather in Seattle?"

49 | print(f"User: {user_message}")

50 | response = agent.tool_call(user_message)

51 | print(f"Agent: {response}\n")

52 |

53 | # Another tool call

54 | user_message = "How about the weather in Chicago?"

55 | print(f"User: {user_message}")

56 | response = agent.tool_call(user_message)

57 | print(f"Agent: {response}\n")

58 |

59 | print("\n=== Testing Memory After Tool Calls ===\n")

60 |

61 | # Regular chat after tool calls - should still remember the user's name

62 | user_message = "Do you still remember my name?"

63 | print(f"User: {user_message}")

64 | response = agent.chat(user_message)

65 | print(f"Agent: {response}\n")

66 |

67 |

68 | if __name__ == "__main__":

69 | main()

70 |

--------------------------------------------------------------------------------

/blueprints/single_provider/anthropic/test.py:

--------------------------------------------------------------------------------

1 | import pixeltable as pxt

2 | from agent import Agent

3 |

4 |

5 | @pxt.udf

6 | def weather(city: str) -> str:

7 | """

8 | Returns the weather in a given city.

9 | """

10 | return f"The weather in {city} is sunny."

11 |

12 |

13 | # Create an agent

14 | agent = Agent(

15 | name="anthropic_agent",

16 | system_prompt="You’re my assistant.",

17 | tools=pxt.tools(weather),

18 | reset=True,

19 | )

20 |

21 | # Persistent chat and memory

22 | print(agent.chat("Hi, how are you?"))

23 | print(agent.chat("What was my last question?"))

24 |

25 | # Tool call

26 | print(agent.tool_call("Get weather in San Francisco"))

27 |

--------------------------------------------------------------------------------

/blueprints/single_provider/bedrock/README.md:

--------------------------------------------------------------------------------

1 | # AWS Bedrock Agent Blueprint

2 |

3 | This blueprint demonstrates how to create a conversational AI agent powered by AWS Bedrock models using Pixeltable for persistent memory, storage, orchestration, and tool execution.

4 |

5 | ## Features

6 |

7 | - **Persistent Memory**: Maintains conversation history in a structured database

8 | - **Unlimited Memory Support**: Option to maintain complete conversation history without limits

9 | - **Tool Execution**: Supports function calling with AWS Bedrock models

10 | - **Image Support**: Can process and respond to images

11 | - **Configurable Context Window**: Control how many previous messages are included in the context

12 | - **Modular Architecture**: Built on a flexible BaseAgent design for maintainability and extensibility

13 |

14 | ## Prerequisites

15 |

16 | - Python 3.8+

17 | - Pixeltable (`pip install pixeltable`)

18 | - AWS Boto3 (`pip install boto3`)

19 | - AWS credentials configured (via AWS CLI, environment variables, or IAM role)

20 |

21 | ## AWS Credentials Setup

22 |

23 | Before using this blueprint, ensure you have:

24 |

25 | 1. An AWS account with access to AWS Bedrock

26 | 2. Proper IAM permissions to use Bedrock models

27 | 3. AWS credentials configured using one of these methods:

28 |    - AWS CLI: Run `aws configure`

29 |    - Environment variables: Set `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, and `AWS_REGION`

30 |    - IAM role (if running on AWS infrastructure)

31 |

32 | ## Quick Start

33 |

34 | ```python

35 | from blueprints.single_provider.bedrock.agent import Agent

36 |

37 | # Create a simple chat agent

38 | agent = Agent(

39 |     name="my_bedrock_agent",

40 |     system_prompt="You are a helpful assistant.",

41 |     model="amazon.nova-pro-v1:0",  # You can use other Bedrock models like "anthropic.claude-3-sonnet-20240229-v1:0"

42 |     n_latest_messages=10,  # Number of recent messages to include in context (set to None for unlimited)

43 |     reset=True  # Start with a fresh conversation history

44 | )

45 |

46 | # Chat with the agent

47 | response = agent.chat("Hello, who are you?")

48 | print(response)

49 |

50 | # Chat with an image

51 | from PIL import Image

52 | img = Image.open("path/to/image.jpg")

53 | response = agent.chat("What's in this image?", image=img)

54 | print(response)

55 | ```

56 |

57 | ## Tool Execution Example

58 |

59 | ```python

60 | import pixeltable as pxt

61 |

62 | from blueprints.single_provider.bedrock.agent import Agent

63 |

64 |

65 | # Define the tool as a Pixeltable UDF. The type hints and docstring

66 | # describe the tool to the model.

67 | @pxt.udf

68 | def get_weather(location: str) -> str:

69 |     """Get the current weather for a location, e.g. San Francisco, CA."""

70 |     # In a real application, this would call a weather API

71 |     return f"It's sunny and 72°F in {location}"

72 |

73 |

74 | # Wrap the UDF so it can be passed to the agent

75 | weather_tools = pxt.tools(get_weather)

76 |

77 | # Create agent with tools

78 | agent = Agent(

79 |     name="weather_assistant",

80 |     system_prompt="You are a helpful weather assistant.",

81 |     model="amazon.nova-pro-v1:0",

82 |     tools=weather_tools,

83 |     reset=True,

84 | )

85 |

86 | # Use tool calling

87 | response = agent.tool_call("What's the weather like in Seattle?")

88 | print(response)

103 | ```

104 |

105 | ## Available Bedrock Models

106 |

107 | This blueprint works with various AWS Bedrock models, including:

108 |

109 | - `amazon.nova-pro-v1:0` (default)

110 | - `anthropic.claude-3-sonnet-20240229-v1:0`

111 | - `anthropic.claude-3-opus-20240229-v1:0`

112 | - `anthropic.claude-3-haiku-20240307-v1:0`

113 | - `meta.llama3-70b-instruct-v1:0`

114 | - `meta.llama3-8b-instruct-v1:0`

115 |

116 | Note that different models have different capabilities and pricing. Refer to the [AWS Bedrock documentation](https://docs.aws.amazon.com/bedrock/latest/userguide/what-is-bedrock.html) for more details.

117 |

118 | ## Advanced Configuration

119 |

120 | You can pass additional parameters to the Bedrock API using the `chat_kwargs` and `tool_kwargs` parameters:

121 |

122 | ```python
123 | agent = Agent(
124 |     name="advanced_agent",
125 |     system_prompt="You are a helpful assistant.",
126 |     model="amazon.nova-pro-v1:0",
127 |     chat_kwargs={
128 |         "temperature": 0.7,
129 |         "max_tokens": 1000
130 |     },
131 |     tool_kwargs={
132 |         "temperature": 0.2  # Lower temperature for more deterministic tool calls
133 |     }
134 | )
135 | ```

136 |

137 | ## Unlimited Memory Support

138 |

139 | You can create an agent with unlimited conversation history by setting `n_latest_messages=None`:

140 |

141 | ```python
142 | # Create an agent with unlimited memory
143 | agent = Agent(
144 |     name="memory_agent",
145 |     system_prompt="You are a helpful assistant with perfect memory.",
146 |     model="amazon.nova-pro-v1:0",
147 |     n_latest_messages=None,  # No limit on conversation history
148 |     reset=True
149 | )
150 |
151 | # The agent will remember all previous interactions
152 | response1 = agent.chat("My name is Alice.")
153 | response2 = agent.chat("What's my name?")  # Agent will remember
154 | response3 = agent.chat("Do you remember my name?")  # Agent will still remember
155 | ```

156 |

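157 | This is useful for applications that need long-running context. Because the full history accompanies every request, token usage and latency grow with the length of the conversation, and a very long conversation can exceed the model's context window.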
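
As a rough illustration of what `n_latest_messages` controls, the sketch below picks the history that would accompany the next request. It is plain Python rather than the blueprint's Pixeltable query, and `select_history` is a hypothetical helper that does not exist in the blueprint.

```python
from typing import Optional


def select_history(messages: list[dict], n_latest_messages: Optional[int]) -> list[dict]:
    """Return the messages that would be sent along with the next request."""
    if n_latest_messages is None:
        # No limit: the whole conversation is included.
        return list(messages)
    if n_latest_messages <= 0:
        # Guard against messages[-0:], which would return everything.
        return []
    # Keep only the most recent n messages, oldest first.
    return messages[-n_latest_messages:]


history = [
    {"role": "user", "content": "My name is Alice."},
    {"role": "assistant", "content": "Nice to meet you, Alice."},
    {"role": "user", "content": "What's my name?"},
]

print(len(select_history(history, None)))  # every message is kept
print(len(select_history(history, 2)))     # only the two most recent
```

With a window of 2, the message that introduced the name has already dropped out of the context, which is the situation `n_latest_messages=None` avoids.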

158 |

159 | ## Architecture

160 |

161 | This blueprint uses a modular architecture with:

162 |

163 | 1. **BaseAgent**: An abstract base class that handles common functionality like:
164 |    - Table setup and management
165 |    - Memory persistence
166 |    - Core chat and tool call implementations
167 |
168 | 2. **Agent**: The Bedrock-specific implementation that inherits from BaseAgent and implements:
169 |    - Bedrock-specific message formatting
170 |    - Bedrock API integration
171 |    - Tool calling for Bedrock models

172 |

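173 | Because the provider-specific logic lives only in `Agent`, the table setup and memory handling in `BaseAgent` can be maintained independently and reused if you add another provider.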
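
The split between the two classes can be pictured with a minimal sketch. The method names `_format_messages` and `_call_model`, the in-memory list, and the `EchoAgent` subclass are all assumptions made for illustration; the blueprint's real classes are backed by Pixeltable tables and may be organized differently.

```python
from abc import ABC, abstractmethod


class BaseAgent(ABC):
    """Provider-agnostic plumbing: memory and the chat entry point."""

    def __init__(self, name: str, system_prompt: str):
        self.name = name
        self.system_prompt = system_prompt
        self.memory: list[dict] = []  # stand-in for the persisted memory table

    def chat(self, message: str) -> str:
        self.memory.append({"role": "user", "content": message})
        reply = self._call_model(self._format_messages(self.memory))
        self.memory.append({"role": "assistant", "content": reply})
        return reply

    @abstractmethod
    def _format_messages(self, rows: list[dict]) -> list[dict]:
        """Convert stored rows into the provider's message format."""

    @abstractmethod
    def _call_model(self, messages: list[dict]) -> str:
        """Send the formatted messages to the provider and return its reply."""


class EchoAgent(BaseAgent):
    """Toy provider used only to show where provider-specific code goes."""

    def _format_messages(self, rows: list[dict]) -> list[dict]:
        return [{"role": r["role"], "content": [{"text": r["content"]}]} for r in rows]

    def _call_model(self, messages: list[dict]) -> str:
        # A real subclass would call the provider API here.
        return messages[-1]["content"][0]["text"].upper()


agent = EchoAgent(name="demo", system_prompt="You are a helpful assistant.")
print(agent.chat("hello"))  # prints "HELLO"
```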

174 |

175 | ## How It Works

176 |

177 | The agent uses Pixeltable to create and manage three tables:

178 |

179 | 1. **memory**: Stores all conversation history with timestamps

180 | 2. **agent**: Manages chat interactions and responses

181 | 3. **tools**: (Optional) Handles tool execution and responses

182 |

183 | When you send a message to the agent, it:

184 |

185 | 1. Stores your message in the memory table

186 | 2. Triggers a pipeline that retrieves recent conversation history

187 | 3. Formats the messages for the Bedrock API (with proper content structure)

188 | 4. Gets a response from the Bedrock model

189 | 5. Stores the response in memory

190 | 6. Returns the response to you
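
The six steps above can be sketched in plain Python. This is not the blueprint's implementation: a list stands in for the memory table, `fake_model` stands in for the Bedrock call, the default window of 10 messages is arbitrary, and all function names are hypothetical. The one detail taken from Bedrock is the message shape, where each message's `content` is a list of blocks such as `{"text": ...}`.

```python
from datetime import datetime, timezone

memory: list[dict] = []  # stand-in for the Pixeltable memory table


def store(role: str, content: str) -> None:
    """Append a message to memory with a timestamp."""
    memory.append(
        {"role": role, "content": content, "timestamp": datetime.now(timezone.utc)}
    )


def to_bedrock_messages(rows: list[dict]) -> list[dict]:
    """Wrap each message's text in a list of content blocks."""
    return [{"role": r["role"], "content": [{"text": r["content"]}]} for r in rows]


def fake_model(messages: list[dict]) -> str:
    """Placeholder for the Bedrock call; echoes the latest user text."""
    return f"You said: {messages[-1]['content'][0]['text']}"


def chat(user_message: str, n_latest_messages: int = 10) -> str:
    store("user", user_message)             # 1. store the message
    recent = memory[-n_latest_messages:]    # 2. retrieve recent history
    messages = to_bedrock_messages(recent)  # 3. format for the API
    reply = fake_model(messages)            # 4. get a response
    store("assistant", reply)               # 5. store the response
    return reply                            # 6. return it


print(chat("Hello"))  # prints "You said: Hello"
```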

191 |

192 | Tool execution follows a similar pattern but uses a specialized pipeline:

193 | 1. The user's prompt is sent to Bedrock with available tools

194 | 2. Bedrock decides which tools to call and with what parameters

195 | 3. The tools are executed and their results are returned

196 | 4. The results are sent back to Bedrock for a final response

197 | 5. The response is stored in memory and returned to the user
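
A minimal sketch of this loop, under the same caveats: `fake_model` is a scripted stand-in for Bedrock that always requests `get_weather` for Seattle, and the `TOOLS` registry and `tool_call` function are hypothetical names, not the blueprint's code.

```python
from typing import Callable, Optional


def get_weather(location: str) -> str:
    return f"It's sunny and 72°F in {location}"


# Hypothetical registry mapping tool names to Python callables.
TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def fake_model(prompt: str, tool_results: Optional[dict] = None) -> dict:
    """Stand-in for Bedrock: first requests a tool, then answers from its result."""
    if tool_results is None:
        return {"tool_calls": [{"name": "get_weather", "args": {"location": "Seattle"}}]}
    return {"text": f"Here is what I found: {tool_results['get_weather']}"}


def tool_call(prompt: str, memory: list[dict]) -> str:
    # Steps 1-2: send the prompt and let the model choose tools and arguments.
    request = fake_model(prompt)
    # Step 3: execute each requested tool.
    results = {
        call["name"]: TOOLS[call["name"]](**call["args"])
        for call in request["tool_calls"]
    }
    # Step 4: send the results back for a final answer.
    answer = fake_model(prompt, tool_results=results)["text"]
    # Step 5: store the exchange and return the answer.
    memory.append({"role": "user", "content": prompt})
    memory.append({"role": "assistant", "content": answer})
    return answer


memory: list[dict] = []
print(tool_call("What's the weather like in Seattle?", memory))
```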

198 |

--------------------------------------------------------------------------------

/blueprints/single_provider/bedrock/__init__.py:

--------------------------------------------------------------------------------

1 | from .agent import Agent

2 |

3 | __all__ = ["Agent"]

4 |

--------------------------------------------------------------------------------

/blueprints/single_provider/bedrock/test.py:

--------------------------------------------------------------------------------

1 | """

2 | Test script for the AWS Bedrock Agent Blueprint.

3 |

4 | This script demonstrates how to create and use a Bedrock-powered agent

5 | with both basic chat functionality and tool execution.

6 |

7 | Prerequisites:

8 | - AWS credentials configured (via AWS CLI, environment variables, or IAM role)

9 | - Access to AWS Bedrock models

10 | """

11 |

12 | import pixeltable as pxt

13 | from blueprints.single_provider.bedrock.agent import Agent

14 |

15 |

16 | @pxt.udf
17 | def stock_price(ticker: str) -> float:
18 |     """Get the current stock price for a given ticker symbol."""
19 |     # This is a mock implementation for testing
20 |     prices = {
21 |         "AAPL": 175.34,
22 |         "MSFT": 325.89,
23 |         "GOOGL": 142.56,
24 |         "AMZN": 178.23,
25 |         "NVDA": 131.17,
26 |     }
27 |     return prices.get(ticker.upper(), 0.0)
28 |
29 |
30 | def main():
31 |     # Create a Bedrock agent with memory
32 |     agent = Agent(
33 |         name="bedrock_test",
34 |         system_prompt="You are a helpful assistant that can answer questions and use tools.",
35 |         model="amazon.nova-pro-v1:0",  # Use the Amazon Nova Pro model
36 |         n_latest_messages=None,  # Unlimited memory to ensure all messages are included
37 |         tools=pxt.tools(stock_price),  # Register the stock_price tool
38 |         reset=True,  # Reset the agent's memory for testing
39 |     )
40 |
41 |     print("\n=== Testing Conversational Memory ===\n")