├── .gitignore
├── .github
│   ├── .gitignore
│   ├── ISSUE_TEMPLATE
│   │   └── bug_report.md
│   └── workflows
│       ├── check.yaml
│       └── pkgdown.yaml
├── tests
│   ├── testthat.R
│   └── testthat
│       ├── test-scDblFinder.R
│       ├── test-recoverDoublets.R
│       ├── test-findDoubletClusters.R
│       └── test-computeDoubletDensity.R
├── .Rbuildignore
├── inst
│   ├── docs
│   │   └── scDblFinder_comparison.png
│   ├── extdata
│   │   └── example_fragments.tsv.gz
│   ├── CITATION
│   └── NEWS
├── _pkgdown.yml
├── Dockerfile
├── man
│   ├── propHomotypic.Rd
│   ├── TFIDF.Rd
│   ├── getExpectedDoublets.Rd
│   ├── plotThresholds.Rd
│   ├── mockDoubletSCE.Rd
│   ├── getCellPairs.Rd
│   ├── addDoublets.Rd
│   ├── selFeatures.Rd
│   ├── clusterStickiness.Rd
│   ├── cxds2.Rd
│   ├── plotDoubletMap.Rd
│   ├── doubletPairwiseEnrichment.Rd
│   ├── amulet.Rd
│   ├── createDoublets.Rd
│   ├── fastcluster.Rd
│   ├── aggregateFeatures.Rd
│   ├── clamulet.Rd
│   ├── directDblClassification.Rd
│   ├── amuletFromCounts.Rd
│   ├── doubletThresholding.Rd
│   ├── getArtificialDoublets.Rd
│   ├── getFragmentOverlaps.Rd
│   ├── recoverDoublets.Rd
│   ├── computeDoubletDensity.Rd
│   ├── findDoubletClusters.Rd
│   └── scDblFinder.Rd
├── DESCRIPTION
├── R
│   ├── clustering.R
│   ├── atac_processing.R
│   ├── plotting.R
│   ├── enrichment.R
│   ├── recoverDoublets.R
│   ├── getFragmentOverlaps.R
│   ├── computeDoubletDensity.R
│   ├── findDoubletClusters.R
│   └── doubletThresholding.R
├── vignettes
│   ├── recoverDoublets.Rmd
│   ├── introduction.Rmd
│   ├── computeDoubletDensity.Rmd
│   ├── findDoubletClusters.Rmd
│   └── scATAC.Rmd
├── NAMESPACE
└── README.md

/.gitignore:

--------------------------------------------------------------------------------

1 | docs

2 |

--------------------------------------------------------------------------------

/.github/.gitignore:

--------------------------------------------------------------------------------

1 | *.html

2 |

--------------------------------------------------------------------------------

/tests/testthat.R:

--------------------------------------------------------------------------------

1 | library(testthat)

2 | library(scDblFinder)

3 | test_check("scDblFinder")

4 |

--------------------------------------------------------------------------------

/.Rbuildignore:

--------------------------------------------------------------------------------

1 | ^\.github$

2 | ^.*\.Rproj$

3 | ^\.Rproj\.user$

4 | ^_pkgdown\.yml$

5 | ^docs$

6 | ^pkgdown$

7 |

--------------------------------------------------------------------------------

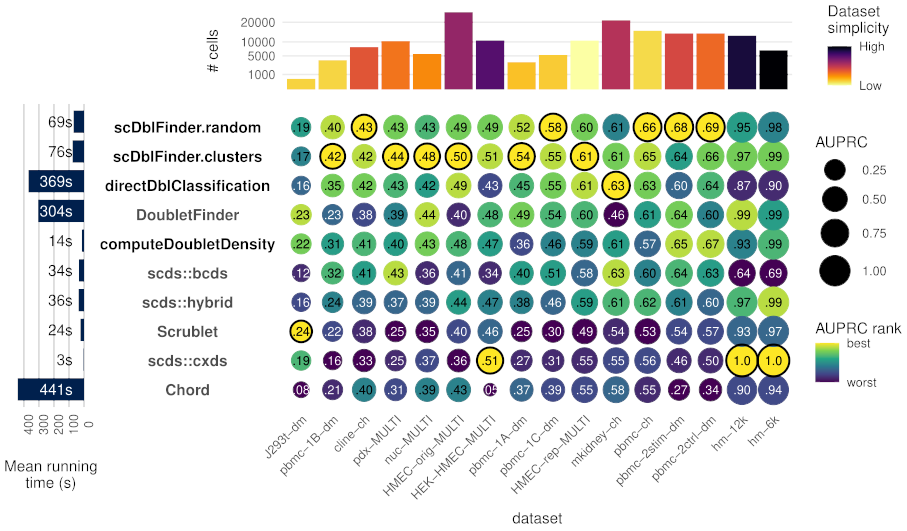

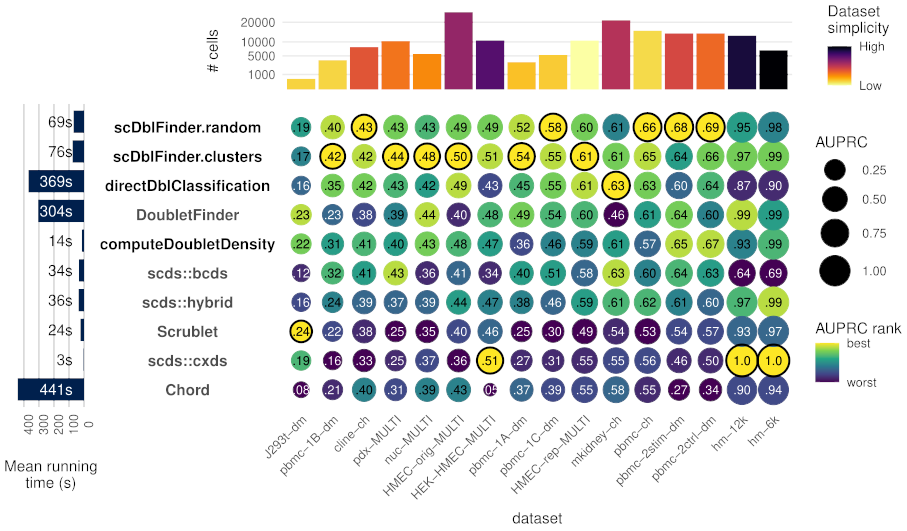

/inst/docs/scDblFinder_comparison.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/plger/scDblFinder/HEAD/inst/docs/scDblFinder_comparison.png

--------------------------------------------------------------------------------

/inst/extdata/example_fragments.tsv.gz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/plger/scDblFinder/HEAD/inst/extdata/example_fragments.tsv.gz

--------------------------------------------------------------------------------

/_pkgdown.yml:

--------------------------------------------------------------------------------

1 | url: https://plger.github.io/scDblFinder/

2 | template:

3 |   bootstrap: 5

4 |   bootswatch: cyborg

5 |   theme: arrow-dark

6 |

7 |

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM bioconductor/bioconductor_docker:devel

2 |

3 | MAINTAINER pl.germain@gmail.com

4 |

5 | WORKDIR /home/build/package

6 |

7 | COPY . /home/build/package

8 |

9 | ENV R_REMOTES_NO_ERRORS_FROM_WARNINGS=true

10 |

11 | RUN Rscript -e "BiocManager::install('ensembldb'); BiocManager::install('Rtsne')"

12 | RUN Rscript -e "devtools::install('.', dependencies=TRUE, repos = BiocManager::repositories(), build_vignettes = TRUE)"

13 |

--------------------------------------------------------------------------------

/inst/CITATION:

--------------------------------------------------------------------------------

1 | bibentry(bibtype = "Article",

2 | title = "Doublet identification in single-cell sequencing data using scDblFinder",

3 | author = c(person("Pierre-Luc", "Germain"),

4 | person("Aaron", "Lun"),

5 | person("Carlos", "Garcia Meixide"),

6 | person("Will", "Macnair"),

7 | person("Mark D.", "Robinson")),

8 | year = 2022,

9 | journal = "f1000research",

10 | doi = "10.12688/f1000research.73600.2"

11 | )

12 |

--------------------------------------------------------------------------------

/man/propHomotypic.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/misc.R

3 | \name{propHomotypic}

4 | \alias{propHomotypic}

5 | \title{propHomotypic}

6 | \usage{

7 | propHomotypic(clusters)

8 | }

9 | \arguments{

10 | \item{clusters}{A vector of cluster labels}

11 | }

12 | \value{

13 | A numeric value between 0 and 1.

14 | }

15 | \description{

16 | Computes the proportion of pairs expected to be made of elements from the

17 | same cluster.

18 | }

19 | \examples{

20 | clusters <- sample(LETTERS[1:5], 100, replace=TRUE)

21 | propHomotypic(clusters)

22 | }

23 |

--------------------------------------------------------------------------------
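
The description of `propHomotypic` above can be illustrated with a minimal sketch. It assumes that the two members of a pair are drawn independently and with replacement, so the probability that both come from the same cluster is the sum of the squared cluster frequencies. The helper name `prop_homotypic_sketch` is hypothetical, and this is not necessarily how the package computes the value.

```r
# Hypothetical helper (not the package's function): proportion of random
# cell pairs whose two members come from the same cluster, assuming
# independent draws with replacement.
prop_homotypic_sketch <- function(clusters) {
  # relative abundance of each cluster
  p <- as.numeric(table(clusters)) / length(clusters)
  # probability that two independent draws fall in the same cluster
  sum(p^2)
}

set.seed(1)
clusters <- sample(LETTERS[1:5], 100, replace = TRUE)
prop_homotypic_sketch(clusters)

# With two equally sized clusters, half of the random pairs are homotypic
prop_homotypic_sketch(rep(c("A", "B"), each = 50))  # 0.5
```

The result is always between 0 and 1, in line with the documented return value, and it reaches 1 when all cells share a single label.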

/man/TFIDF.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/atac_processing.R

3 | \name{TFIDF}

4 | \alias{TFIDF}

5 | \title{TFIDF}

6 | \usage{

7 | TFIDF(x, sf = 10000)

8 | }

9 | \arguments{

10 | \item{x}{The matrix of occurrences}

11 |

12 | \item{sf}{Scaling factor}

13 | }

14 | \value{

15 | An array of the same dimensions as `x`

16 | }

17 | \description{

18 | The Term Frequency - Inverse Document Frequency (TF-IDF) normalization, as

19 | implemented in Stuart & Butler et al. 2019.

20 | }

21 | \examples{

22 | m <- matrix(rpois(500,1),nrow=50)

23 | m <- TFIDF(m)

24 | }

25 |

--------------------------------------------------------------------------------
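
To make the TF-IDF normalization described above more concrete, here is a minimal base-R sketch of one common formulation, `log(1 + TF * IDF * sf)`, with features in rows and cells in columns. The helper name `tfidf_sketch`, the orientation of the matrix, and the guards against empty rows or columns are assumptions made for illustration. Whether the package's `TFIDF` uses exactly this variant is not confirmed by the documentation.

```r
# Hypothetical sketch of a TF-IDF normalization (features x cells matrix).
tfidf_sketch <- function(x, sf = 10000) {
  x <- as.matrix(x)
  col_tot <- colSums(x)        # total counts per cell (column)
  row_tot <- rowSums(x)        # total counts per feature (row)
  col_tot[col_tot == 0] <- 1   # guard: avoid division by zero for empty cells
  row_tot[row_tot == 0] <- 1   # guard: avoid division by zero for empty features
  tf  <- sweep(x, 2, col_tot, "/")  # term frequency: fraction of each cell's counts
  idf <- ncol(x) / row_tot          # inverse document frequency, one value per feature
  # idf has one entry per row, so it is recycled down each column
  log1p(tf * idf * sf)
}

set.seed(1)
m <- matrix(rpois(500, 1), nrow = 50)
out <- tfidf_sketch(m)
dim(out)
```

As in the documented return value, the output has the same dimensions as the input, and zero counts stay at zero.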

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Describe the bug**

11 | A clear and concise description of what the bug is.

12 |

13 | **MRE -- Minimal example to reproduce the bug**

14 | Steps to reproduce the behavior, possibly with a dataset if it's dataset-specific. If possible, try to reproduce the error with a single sample and/or without multithreading.

15 |

16 | **Traceback**

17 | If the issue triggers an R error (rather than, say, unexpected results), please provide the output of `traceback()` right after the error occurs.

18 |

19 | **Session info**

20 | Provide the output of `sessionInfo()`.

21 |

22 | Before posting your issue, please ensure that it is reproducible with a recent Bioconductor and `scDblFinder` version.

23 |

--------------------------------------------------------------------------------

/man/getExpectedDoublets.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/misc.R

3 | \name{getExpectedDoublets}

4 | \alias{getExpectedDoublets}

5 | \title{getExpectedDoublets}

6 | \usage{

7 | getExpectedDoublets(x, dbr = NULL, only.heterotypic = TRUE, dbr.per1k = 0.008)

8 | }

9 | \arguments{

10 | \item{x}{A vector of cluster labels for each cell}

11 |

12 | \item{dbr}{The expected doublet rate.}

13 |

14 | \item{only.heterotypic}{Logical; whether to return expectations only for

15 | heterotypic doublets}

16 |

17 | \item{dbr.per1k}{The expected proportion of doublets per 1000 cells.}

18 | }

19 | \value{

20 | The expected number of doublets of each combination of clusters

21 | }

22 | \description{

23 | getExpectedDoublets

24 | }

25 | \examples{

26 | # random cluster labels

27 | cl <- sample(head(LETTERS,4), size=2000, prob=c(.4,.2,.2,.2), replace=TRUE)

28 | getExpectedDoublets(cl)

29 | }

30 |

--------------------------------------------------------------------------------
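
The following sketch shows one way the expected number of doublets per cluster combination could be derived from cluster labels. It assumes that the doublet rate defaults to `dbr.per1k` scaled by the number of cells (in thousands), and that doublets form by random pairing, so that each combination is expected in proportion to the product of the cluster frequencies. The helper name `expected_doublets_sketch` and the exact scaling are assumptions, not the package's confirmed implementation.

```r
# Hypothetical sketch: expected doublets per combination of clusters
# under random pairing of cells.
expected_doublets_sketch <- function(x, dbr = NULL, only.heterotypic = TRUE,
                                     dbr.per1k = 0.008) {
  n <- length(x)
  # assumption: the doublet rate grows linearly with the number of cells
  if (is.null(dbr)) dbr <- dbr.per1k * n / 1000
  tab <- table(x)
  p <- setNames(as.numeric(tab) / n, names(tab))  # cluster frequencies
  pairs <- outer(p, p)                             # joint probabilities
  # keep each unordered combination once (optionally with same-cluster pairs)
  idx <- which(upper.tri(pairs, diag = !only.heterotypic), arr.ind = TRUE)
  # a heterotypic combination can arise in two orders, hence the factor 2
  prob <- pairs[idx] * ifelse(idx[, 1] == idx[, 2], 1, 2)
  names(prob) <- paste(names(p)[idx[, 1]], names(p)[idx[, 2]], sep = "+")
  n * dbr * prob
}

cl <- rep(c("A", "B"), each = 1000)
expected_doublets_sketch(cl)                            # A+B: 16
expected_doublets_sketch(cl, only.heterotypic = FALSE)  # A+A: 8, A+B: 16, B+B: 8
```

With 2000 cells the assumed default rate is 0.016, i.e. 32 expected doublets in total, half of which are heterotypic when the two clusters are equally abundant.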

/man/plotThresholds.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/plotting.R

3 | \name{plotThresholds}

4 | \alias{plotThresholds}

5 | \title{plotThresholds}

6 | \usage{

7 | plotThresholds(d, ths = (0:100)/100, dbr = NULL, dbr.sd = NULL, do.plot = TRUE)

8 | }

9 | \arguments{

10 | \item{d}{A data.frame of cell properties, with each row representing a cell,

11 | as produced by `scDblFinder(..., returnType="table")`.}

12 |

13 | \item{ths}{A vector of thresholds between 0 and 1 at which to plot values.}

14 |

15 | \item{dbr}{The expected (mean) doublet rate.}

16 |

17 | \item{dbr.sd}{The standard deviation of the doublet rate, representing the

18 | uncertainty in the estimate.}

19 |

20 | \item{do.plot}{Logical; whether to plot the data (otherwise will return the

21 | underlying data.frame).}

22 | }

23 | \value{

24 | A ggplot, or a data.frame if `do.plot==FALSE`.

25 | }

26 | \description{

27 | Plots scores used for thresholding.

28 | }

29 |

--------------------------------------------------------------------------------

/man/mockDoubletSCE.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/misc.R

3 | \name{mockDoubletSCE}

4 | \alias{mockDoubletSCE}

5 | \title{mockDoubletSCE}

6 | \usage{

7 | mockDoubletSCE(

8 | ncells = c(200, 300),

9 | ngenes = 200,

10 | mus = NULL,

11 | dbl.rate = 0.1,

12 | only.heterotypic = TRUE

13 | )

14 | }

15 | \arguments{

16 | \item{ncells}{A positive integer vector indicating the number of cells per

17 | cluster (min 2 clusters)}

18 |

19 | \item{ngenes}{The number of genes to simulate. Ignored if `mus` is given.}

20 |

21 | \item{mus}{A list of cluster averages.}

22 |

23 | \item{dbl.rate}{The doublet rate}

24 |

25 | \item{only.heterotypic}{Whether to create only heterotypic doublets}

26 | }

27 | \value{

28 | A SingleCellExperiment object, with the colData columns `type`

29 | indicating whether the cell is a singlet or doublet, and `cluster`

30 | indicating from which cluster (or cluster combination) it was simulated.

31 | }

32 | \description{

33 | Creates a mock random single-cell experiment object with doublets

34 | }

35 | \examples{

36 | sce <- mockDoubletSCE()

37 | }

38 |

--------------------------------------------------------------------------------

/man/getCellPairs.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/getArtificialDoublets.R

3 | \name{getCellPairs}

4 | \alias{getCellPairs}

5 | \title{getCellPairs}

6 | \usage{

7 | getCellPairs(

8 | clusters,

9 | n = 1000,

10 | ls = NULL,

11 | q = c(0.1, 0.9),

12 | selMode = "proportional",

13 | soft.min = 5

14 | )

15 | }

16 | \arguments{

17 | \item{clusters}{A vector of cluster labels for each cell, or a list containing

18 | metacells and graph}

19 |

20 | \item{n}{The number of cell pairs to obtain}

21 |

22 | \item{ls}{Optional library sizes}

23 |

24 | \item{q}{Library size quantiles between which to include cells (ignored if

25 | `ls` is NULL)}

26 |

27 | \item{selMode}{How to decide the number of pairs of each kind to produce.

28 | Either 'proportional' (default, proportional to the abundance of the

29 | underlying clusters), 'uniform' or 'sqrt'.}

30 |

31 | \item{soft.min}{Minimum number of pairs of a given type.}

32 | }

33 | \value{

34 | A data.frame with the columns

35 | }

36 | \description{

37 | Given a vector of cluster labels, returns pairs of cross-cluster cells

38 | }

39 | \examples{

40 | # create random labels

41 | x <- sample(head(LETTERS), 100, replace=TRUE)

42 | getCellPairs(x, n=6)

43 | }

44 |

--------------------------------------------------------------------------------

/.github/workflows/check.yaml:

--------------------------------------------------------------------------------

1 | on:
2 |   push:
3 |   pull_request:
4 |     branches:
5 |       - devel
6 |     paths-ignore:
7 |       - 'README.md'
8 |
9 | name: R-CMD-check
10 |
11 | jobs:
12 |   R-CMD-check:
13 |     runs-on: ubuntu-latest
14 |     container: plger/scdblfinder:latest
15 |
16 |     steps:
17 |       - name: Check out repo
18 |         uses: actions/checkout@v2
19 |
20 |       - name: Install latest BiocCheck
21 |         run: BiocManager::install(c("BiocCheck"))
22 |         shell: Rscript {0}
23 |
24 |       - name: Check
25 |         env:
26 |           _R_CHECK_CRAN_INCOMING_REMOTE_: false
27 |         run: rcmdcheck::rcmdcheck(args = "--no-manual", error_on = "error", check_dir = "check")
28 |         shell: Rscript {0}
29 |
30 |       - name: BiocCheck
31 |         run: BiocCheck::BiocCheck(".")
32 |         shell: Rscript {0}
33 |
34 |       - name: Upload check results
35 |         if: failure()
36 |         uses: actions/upload-artifact@master
37 |         with:
38 |           name: ${{ runner.os }}-r${{ matrix.config.r }}-results
39 |           path: check
40 |
41 |       - name: Show testthat output
42 |         if: always()
43 |         run: find check -name 'testthat.Rout*' -exec cat '{}' \; || true
44 |         shell: bash

45 |

--------------------------------------------------------------------------------

/man/addDoublets.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/getArtificialDoublets.R

3 | \name{addDoublets}

4 | \alias{addDoublets}

5 | \title{addDoublets}

6 | \usage{

7 | addDoublets(

8 | x,

9 | clusters,

10 | dbr = (0.01 * ncol(x)/1000),

11 | only.heterotypic = TRUE,

12 | adjustSize = FALSE,

13 | prefix = "doublet.",

14 | ...

15 | )

16 | }

17 | \arguments{

18 | \item{x}{A count matrix of singlets, or a

19 | \code{\link[SummarizedExperiment]{SummarizedExperiment-class}}}

20 |

21 | \item{clusters}{A vector of cluster labels for each column of `x`}

22 |

23 | \item{dbr}{The doublet rate}

24 |

25 | \item{only.heterotypic}{Whether to add only heterotypic doublets.}

26 |

27 | \item{adjustSize}{Whether to adjust the library sizes of the doublets.}

28 |

29 | \item{prefix}{Prefix for the colnames generated.}

30 |

31 | \item{...}{Any further arguments to \code{\link{createDoublets}}.}

32 | }

33 | \value{

34 | A `SingleCellExperiment` with the colData columns `cluster` and

35 | `type` (indicating whether the cell is a singlet or doublet).

36 | }

37 | \description{

38 | Adds artificial doublets to an existing dataset

39 | }

40 | \examples{

41 | sce <- mockDoubletSCE(dbl.rate=0)

42 | sce <- addDoublets(sce, clusters=sce$cluster)

43 | }

44 |

--------------------------------------------------------------------------------

/man/selFeatures.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/misc.R

3 | \name{selFeatures}

4 | \alias{selFeatures}

5 | \title{selFeatures}

6 | \usage{

7 | selFeatures(

8 | sce,

9 | clusters = NULL,

10 | nfeatures = 1000,

11 | propMarkers = 0,

12 | FDR.max = 0.05

13 | )

14 | }

15 | \arguments{

16 | \item{sce}{A \code{\link[SummarizedExperiment]{SummarizedExperiment-class}},

17 | \code{\link[SingleCellExperiment]{SingleCellExperiment-class}} with a

18 | 'counts' assay.}

19 |

20 | \item{clusters}{Optional cluster assignments. Should be a vector of

21 | labels for each cell.}

22 |

23 | \item{nfeatures}{The number of features to select.}

24 |

25 | \item{propMarkers}{The proportion of features to select from markers (rather

26 | than on the basis of high expression). Ignored if `clusters` isn't given.}

27 |

28 | \item{FDR.max}{The maximum marker binom FDR to be included in the selection.

29 | (see \code{\link[scran]{findMarkers}}).}

30 | }

31 | \value{

32 | A vector of feature (i.e. row) names.

33 | }

34 | \description{

35 | Selects features based on cluster-wise expression or marker detection, or a

36 | combination.

37 | }

38 | \examples{

39 | sce <- mockDoubletSCE()

40 | selFeatures(sce, clusters=sce$cluster, nfeatures=5)

41 | }

42 |

--------------------------------------------------------------------------------

/man/clusterStickiness.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/enrichment.R

3 | \name{clusterStickiness}

4 | \alias{clusterStickiness}

5 | \title{clusterStickiness}

6 | \usage{

7 | clusterStickiness(

8 | x,

9 | type = c("quasibinomial", "nbinom", "binomial", "poisson"),

10 | inclDiff = NULL,

11 | verbose = TRUE

12 | )

13 | }

14 | \arguments{

15 | \item{x}{A table of doublet statistics, or a SingleCellExperiment on which

16 | \link{scDblFinder} was run using the cluster-based approach.}

17 |

18 | \item{type}{The type of test to use (quasibinomial recommended).}

19 |

20 | \item{inclDiff}{Logical; whether to include the difficulty in the model. If

21 | NULL, will be used only if there is a significant trend with the enrichment.}

22 |

23 | \item{verbose}{Logical; whether to print additional running information.}

24 | }

25 | \value{

26 | A table of test results for each cluster.

27 | }

28 | \description{

29 | Tests for enrichment of doublets created from each cluster (i.e. cluster's

30 | stickiness). Only applicable with >=4 clusters.

31 | Note that when applied to a multisample object, this function assumes that

32 | the cluster labels match across samples.

33 | }

34 | \examples{

35 | sce <- mockDoubletSCE(rep(200,5), dbl.rate=0.2)

36 | sce <- scDblFinder(sce, clusters=TRUE, artificialDoublets=500)

37 | clusterStickiness(sce)

38 | }

39 |

--------------------------------------------------------------------------------

/man/cxds2.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/misc.R

3 | \name{cxds2}

4 | \alias{cxds2}

5 | \title{cxds2}

6 | \usage{

7 | cxds2(x, whichDbls = c(), ntop = 500, binThresh = NULL)

8 | }

9 | \arguments{

10 | \item{x}{A matrix of counts, or a `SingleCellExperiment` containing a

11 | 'counts' assay}

12 |

13 | \item{whichDbls}{The columns of `x` which are known doublets.}

14 |

15 | \item{ntop}{The number of top features to keep.}

16 |

17 | \item{binThresh}{The count threshold to be considered expressed.}

18 | }

19 | \value{

20 | A cxds score or, if `x` is a `SingleCellExperiment`, `x` with an

21 | added `cxds_score` colData column.

22 | }

23 | \description{

24 | Calculates a coexpression-based doublet score using the method developed by

25 | \href{https://doi.org/10.1093/bioinformatics/btz698}{Bais and Kostka 2020}.

26 | This is the original implementation from the

27 | \href{https://www.bioconductor.org/packages/release/bioc/html/scds.html}{scds}

28 | package, but enabling scores to be calculated for all cells while the gene

29 | coexpression is based only on a subset (i.e. excluding known/artificial

30 | doublets) and making it robust to low sparsity.

31 | }

32 | \examples{

33 | sce <- mockDoubletSCE()

34 | sce <- cxds2(sce)

35 | # which is equivalent to

36 | # sce$cxds_score <- cxds2(counts(sce))

37 | }

38 | \references{

39 | \url{https://doi.org/10.1093/bioinformatics/btz698}

40 | }

41 |

--------------------------------------------------------------------------------

/man/plotDoubletMap.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/plotting.R

3 | \name{plotDoubletMap}

4 | \alias{plotDoubletMap}

5 | \title{plotDoubletMap}

6 | \usage{

7 | plotDoubletMap(

8 | sce,

9 | colorBy = "enrichment",

10 | labelBy = "observed",

11 | addSizes = TRUE,

12 | col = NULL,

13 | column_title = "Clusters",

14 | row_title = "Clusters",

15 | column_title_side = "bottom",

16 | na_col = "white",

17 | ...

18 | )

19 | }

20 | \arguments{

21 | \item{sce}{A SingleCellExperiment object on which `scDblFinder` has been run

22 | with the cluster-based approach.}

23 |

24 | \item{colorBy}{Determines the color mapping. Either "enrichment" (for

25 | log2-enrichment over expectation) or any column of

26 | `metadata(sce)$scDblFinder.stats`}

27 |

28 | \item{labelBy}{Determines the cell labels. Either "enrichment" (for

29 | log2-enrichment over expectation) or any column of

30 | `metadata(sce)$scDblFinder.stats`}

31 |

32 | \item{addSizes}{Logical; whether to add the sizes of clusters to labels}

33 |

34 | \item{col}{The color scale to use (passed to `ComplexHeatmap::Heatmap`)}

35 |

36 | \item{column_title}{passed to `ComplexHeatmap::Heatmap`}

37 |

38 | \item{row_title}{passed to `ComplexHeatmap::Heatmap`}

39 |

40 | \item{column_title_side}{passed to `ComplexHeatmap::Heatmap`}

41 |

42 | \item{na_col}{color for NA cells}

43 |

44 | \item{...}{passed to `ComplexHeatmap::Heatmap`}

45 | }

46 | \value{

47 | a Heatmap object

48 | }

49 | \description{

50 | Plots a heatmap of observed versus expected doublets.

51 | Requires the `ComplexHeatmap` package.

52 | }

53 |

--------------------------------------------------------------------------------

/man/doubletPairwiseEnrichment.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/enrichment.R

3 | \name{doubletPairwiseEnrichment}

4 | \alias{doubletPairwiseEnrichment}

5 | \title{doubletPairwiseEnrichment}

6 | \usage{

7 | doubletPairwiseEnrichment(

8 | x,

9 | lower.tail = FALSE,

10 | sampleWise = FALSE,

11 | type = c("poisson", "binomial", "nbinom", "chisq"),

12 | inclDiff = TRUE,

13 | verbose = TRUE

14 | )

15 | }

16 | \arguments{

17 | \item{x}{A table of doublet statistics, or a SingleCellExperiment on which

18 | scDblFinder was run using the cluster-based approach.}

19 |

20 | \item{lower.tail}{Logical; defaults to FALSE to test enrichment (instead of

21 | depletion).}

22 |

23 | \item{sampleWise}{Logical; whether to perform tests sample-wise in multi-sample

24 | datasets. If FALSE (default), will aggregate counts before testing.}

25 |

26 | \item{type}{Type of test to use.}

27 |

28 | \item{inclDiff}{Logical; whether to regress out any effect of the

29 | identification difficulty in calculating expected counts}

30 |

31 | \item{verbose}{Logical; whether to output any warnings/notes}

32 | }

33 | \value{

34 | A table of significances for each combination.

35 | }

36 | \description{

37 | Calculates enrichment in any type of doublet (i.e. specific combination of

38 | clusters) over random expectation.

39 | Note that when applied to a multisample object, this function assumes that

40 | the cluster labels match across samples.

41 | }

42 | \examples{

43 | sce <- mockDoubletSCE()

44 | sce <- scDblFinder(sce, clusters=TRUE, artificialDoublets=500)

45 | doubletPairwiseEnrichment(sce)

46 | }

47 |

--------------------------------------------------------------------------------

/man/amulet.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/atac.R

3 | \name{amulet}

4 | \alias{amulet}

5 | \title{amulet}

6 | \usage{

7 | amulet(x, ...)

8 | }

9 | \arguments{

10 | \item{x}{The path to a fragments file, or a GRanges object containing the

11 | fragments (with the `name` column containing the barcode, and the `score`

12 | column containing the count).}

13 |

14 | \item{...}{Any argument to \code{\link{getFragmentOverlaps}}.}

15 | }

16 | \value{

17 | A data.frame including, for each barcode, the number of sites covered by

18 | more than two reads, the number of reads, and p- and q-values (low values

19 | indicative of doublets).

20 | }

21 | \description{

22 | A reimplementation of the Amulet detection method for single-cell ATACseq (Thibodeau, Eroglu, et al., Genome Biology 2021). The rationale is

23 | that cells with unexpectedly many loci covered by more than two reads are

24 | more likely to be doublets.

25 | }

26 | \details{

27 | When used on normal (or compressed) fragment files, this

28 | implementation is relatively fast (except for reading in the data) but it

29 | has a large memory footprint since the overlaps are performed in memory. It

30 | is therefore recommended to compress the fragment files using bgzip and index

31 | them with Tabix; in this case each chromosome will be read and processed

32 | separately, leading to a considerably lower memory footprint. See the

33 | underlying \code{\link{getFragmentOverlaps}} for details.

34 | }

35 | \examples{

36 | # here we use a dummy fragment file for example:

37 | fragfile <- system.file( "extdata", "example_fragments.tsv.gz",

38 | package="scDblFinder" )

39 | res <- amulet(fragfile)

40 |

41 | }

42 |

--------------------------------------------------------------------------------
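
The rationale given for `amulet` (counting, per barcode, the loci covered by more than two reads) can be sketched in base R with a sweep over fragment starts and ends. The helper `count_overcovered_sketch`, the column names `chr`, `start`, `end` and `barcode`, and the treatment of fragments as closed intervals are all assumptions made for this illustration. The sketch only produces the counts and does not compute the p- and q-values that the real function returns.

```r
# Hypothetical sketch: per-barcode number of loci covered by more than
# two fragments. `frags` is assumed to have columns chr, start, end, barcode.
count_overcovered_sketch <- function(frags) {
  by_cell <- split(frags, frags$barcode)
  vapply(by_cell, function(f) {
    total <- 0L
    for (ch in split(f, f$chr)) {
      # sweep line: +1 where a fragment starts, -1 where it ends
      pos   <- c(ch$start, ch$end)
      delta <- c(rep(1L, nrow(ch)), rep(-1L, nrow(ch)))
      # at identical positions, process starts before ends (closed intervals)
      o <- order(pos, -delta)
      depth <- cumsum(delta[o])
      # a new over-covered locus begins each time the depth rises from 2 to 3
      total <- total + sum(depth == 3L & delta[o] == 1L)
    }
    total
  }, integer(1))
}

frags <- data.frame(
  chr     = "chr1",
  start   = c(100, 120, 140, 500, 100, 300),
  end     = c(200, 220, 240, 600, 200, 400),
  barcode = c("cellA", "cellA", "cellA", "cellA", "cellB", "cellB")
)
count_overcovered_sketch(frags)  # cellA: 1, cellB: 0
```

In this toy input, three fragments of `cellA` overlap between positions 140 and 200, giving one over-covered locus, while `cellB` has none. Barcodes with unexpectedly many such loci are the ones the method considers likely doublets.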

/.github/workflows/pkgdown.yaml:

--------------------------------------------------------------------------------

1 | # Workflow derived from https://github.com/r-lib/actions/tree/v2/examples
2 | # Need help debugging build failures? Start at https://github.com/r-lib/actions#where-to-find-help
3 | on:
4 |   push:
5 |     branches: [main, master, devel]
6 |     paths-ignore:
7 |       - 'README.md'
8 |       - '.github/**'
9 |       - 'R/**'
10 |       - 'tests/**'
11 |   pull_request:
12 |     branches: [main, master, devel]
13 |   release:
14 |     types: [published]
15 |   workflow_dispatch:
16 |
17 | name: pkgdown
18 |
19 | jobs:
20 |   pkgdown:
21 |     runs-on: ubuntu-latest
22 |     container: plger/scdblfinder:latest
23 |     # Only restrict concurrency for non-PR jobs
24 |     concurrency:
25 |       group: pkgdown-${{ github.event_name != 'pull_request' || github.run_id }}
26 |     env:
27 |       GITHUB_PAT: ${{ secrets.GITHUB_TOKEN }}
28 |     permissions:
29 |       contents: write
30 |     steps:
31 |       - uses: actions/checkout@v4
32 |
33 |       - name: Install rsync 📚
34 |         run: apt-get update && apt-get install -y rsync
35 |
36 |       - uses: r-lib/actions/setup-pandoc@v2
37 |
38 |       #- uses: r-lib/actions/setup-r@v2
39 |       #  with:
40 |       #    use-public-rspm: true
41 |
42 |       - uses: r-lib/actions/setup-r-dependencies@v2
43 |         with:
44 |           extra-packages: any::pkgdown, local::.
45 |           needs: website
46 |
47 |       - name: Build site
48 |         run: pkgdown::build_site_github_pages(new_process = FALSE, install = FALSE)
49 |         shell: Rscript {0}
50 |
51 |       - name: Deploy to GitHub pages 🚀
52 |         if: github.event_name != 'pull_request'
53 |         uses: JamesIves/github-pages-deploy-action@v4.4.1
54 |         with:
55 |           clean: false
56 |           branch: gh-pages
57 |           folder: docs

58 |

--------------------------------------------------------------------------------

/man/createDoublets.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/getArtificialDoublets.R

3 | \name{createDoublets}

4 | \alias{createDoublets}

5 | \title{createDoublets}

6 | \usage{

7 | createDoublets(

8 | x,

9 | dbl.idx,

10 | clusters = NULL,

11 | resamp = 0.5,

12 | halfSize = 0.5,

13 | adjustSize = FALSE,

14 | prefix = "dbl."

15 | )

16 | }

17 | \arguments{

18 | \item{x}{A count matrix of real cells}

19 |

20 | \item{dbl.idx}{A matrix or data.frame with pairs of cell indexes stored in

21 | the first two columns.}

22 |

23 | \item{clusters}{An optional vector of cluster labels (for each column of `x`)}

24 |

25 | \item{resamp}{Logical; whether to resample the doublets using the Poisson

26 | distribution. Alternatively, if a proportion between 0 and 1, the proportion

27 | of doublets to resample.}

28 |

29 | \item{halfSize}{Logical; whether to halve the library size of doublets

30 | (instead of just summing up the cells). Alternatively, a number between 0

31 | and 1 can be given, determining the proportion of the doublets for which

32 | to perform the size adjustment. Ignored if not resampling.}

33 |

34 | \item{adjustSize}{Logical; whether to adjust the size of the doublets using

35 | the median sizes per cluster of the originating cells. Requires `clusters` to

36 | be given. Alternatively to a logical value, a number between 0 and 1 can be

37 | given, determining the proportion of the doublets for which to perform the

38 | size adjustment.}

39 |

40 | \item{prefix}{Prefix for the colnames generated.}

41 | }

42 | \value{

43 | A matrix of artificial doublets.

44 | }

45 | \description{

46 | Creates artificial doublet cells by combining given pairs of cells

47 | }

48 | \examples{

49 | sce <- mockDoubletSCE()

50 | idx <- getCellPairs(sce$cluster, n=200)

51 | art.dbls <- createDoublets(sce, idx)

52 | }

53 |

--------------------------------------------------------------------------------
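
The core operation documented for `createDoublets`, combining given pairs of cells, can be sketched as summing the count profiles of the two cells in each pair. The helper `create_doublets_sketch` is hypothetical and deliberately leaves out the documented `resamp`, `halfSize` and `adjustSize` options. It illustrates the plain-summation case only and is not the package's implementation.

```r
# Hypothetical sketch: build artificial doublets by summing the counts of
# the two cells in each pair (no resampling or size adjustment).
create_doublets_sketch <- function(x, dbl.idx, prefix = "dbl.") {
  # columns of x are cells; the first two columns of dbl.idx are cell indexes
  d <- x[, dbl.idx[, 1], drop = FALSE] + x[, dbl.idx[, 2], drop = FALSE]
  colnames(d) <- paste0(prefix, seq_len(ncol(d)))
  d
}

set.seed(42)
counts <- matrix(rpois(40, 2), nrow = 5,
                 dimnames = list(paste0("g", 1:5), paste0("c", 1:8)))
pairs <- cbind(c(1, 2), c(5, 8))   # pair cell 1 with cell 5, cell 2 with cell 8
dbls <- create_doublets_sketch(counts, pairs)
dim(dbls)
```

Each artificial doublet then has a library size equal to the sum of its two parent cells, which is the situation the documented `halfSize` and `adjustSize` arguments are meant to adjust.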

/DESCRIPTION:

--------------------------------------------------------------------------------

1 | Package: scDblFinder

2 | Type: Package

3 | Title: scDblFinder

4 | Version: 1.25.0

5 | Authors@R: c(
6 |     person("Pierre-Luc", "Germain", email="pierre-luc.germain@hest.ethz.ch", role=c("cre","aut"), comment=c(ORCID="0000-0003-3418-4218")),
7 |     person("Aaron", "Lun", email="infinite.monkeys.with.keyboards@gmail.com", role="ctb"))
8 | URL: https://github.com/plger/scDblFinder,
9 |     https://plger.github.io/scDblFinder/
10 | BugReports: https://github.com/plger/scDblFinder/issues
11 | Description:
12 |     The scDblFinder package gathers various methods for the detection and
13 |     handling of doublets/multiplets in single-cell sequencing data (i.e.
14 |     multiple cells captured within the same droplet or reaction volume). It
15 |     includes methods formerly found in the scran package, the new fast
16 |     and comprehensive scDblFinder method, and a reimplementation of the
17 |     Amulet detection method for single-cell ATAC-seq.
18 | License: GPL-3 + file LICENSE
19 | Depends:
20 |     R (>= 4.0),
21 |     SingleCellExperiment
22 | Imports:
23 |     igraph,
24 |     Matrix,
25 |     BiocGenerics,
26 |     BiocParallel,
27 |     BiocNeighbors,
28 |     BiocSingular,
29 |     S4Vectors,
30 |     SummarizedExperiment,
31 |     scran,
32 |     scater,
33 |     scuttle,
34 |     bluster,
35 |     methods,
36 |     DelayedArray,
37 |     xgboost,
38 |     stats,
39 |     utils,
40 |     MASS,
41 |     IRanges,
42 |     GenomicRanges,
43 |     GenomeInfoDb,
44 |     Rsamtools,
45 |     rtracklayer
46 | Suggests:
47 |     BiocStyle,
48 |     knitr,
49 |     rmarkdown,
50 |     testthat,
51 |     scRNAseq,
52 |     circlize,
53 |     ComplexHeatmap,
54 |     ggplot2,
55 |     dplyr,
56 |     viridisLite,
57 |     mbkmeans

58 | VignetteBuilder: knitr

59 | Encoding: UTF-8

60 | RoxygenNote: 7.3.2

61 | biocViews: Preprocessing, SingleCell, RNASeq, ATACSeq

62 |

--------------------------------------------------------------------------------

/man/fastcluster.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/clustering.R

3 | \name{fastcluster}

4 | \alias{fastcluster}

5 | \title{fastcluster}

6 | \usage{

7 | fastcluster(

8 | x,

9 | k = NULL,

10 | rdname = "PCA",

11 | nstart = 3,

12 | iter.max = 50,

13 | ndims = NULL,

14 | nfeatures = 1000,

15 | verbose = TRUE,

16 | returnType = c("clusters", "preclusters", "metacells", "graph"),

17 | ...

18 | )

19 | }

20 | \arguments{

21 | \item{x}{An object of class SCE}

22 |

23 | \item{k}{The number of k-means clusters to use in the primary step (should

24 | be much higher than the number of expected clusters). Defaults to 1/10th of

25 | the number of cells with a maximum of 2500.}

26 |

27 | \item{rdname}{The name of the dimensionality reduction to use.}

28 |

29 | \item{nstart}{Number of starts for k-means clustering}

30 |

31 | \item{iter.max}{Number of iterations for k-means clustering}

32 |

33 | \item{ndims}{Number of dimensions to use}

34 |

35 | \item{nfeatures}{Number of features to use (ignored if `rdname` is given and

36 | the corresponding dimensional reduction exists in `x`)}

37 |

38 | \item{verbose}{Logical; whether to output progress messages}

39 |

40 | \item{returnType}{See return.}

41 |

42 | \item{...}{Arguments passed to `scater::runPCA` (e.g. BPPARAM or BSPARAM) if

43 | `x` does not have `rdname`.}

44 | }

45 | \value{

46 | By default, a vector of cluster labels. If

47 | `returnType='preclusters'`, returns the k-means pre-clusters. If

48 | `returnType='metacells'`, returns the metacells aggregated by pre-clusters

49 | and the corresponding cell indexes. If `returnType='graph'`, returns the

50 | graph of (meta-)cells and the corresponding cell indexes.

51 | }

52 | \description{

53 | Performs a fast two-step clustering: first clusters using k-means with a very

54 | large k, then uses Louvain clustering of the k cluster averages and reports

55 | back the cluster labels.

56 | }

57 | \examples{

58 | sce <- mockDoubletSCE()

59 | sce$cluster <- fastcluster(sce)

60 |

61 | }

62 |
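
The two-step idea described above (over-cluster with k-means, average each pre-cluster into a meta-cell, cluster the meta-cells, then map labels back to cells) can be sketched in base R. This is only an illustration under stated assumptions: the toy matrix and variable names are made up, and `hclust()`/`cutree()` stand in for the Louvain step so that the sketch needs no extra packages. It is not the package's implementation.

```r
# Base-R sketch of two-step clustering; hclust() is a stand-in for Louvain.
set.seed(1)
# Toy reduced-dimension matrix: 300 cells x 2 dims, three well-separated groups
x <- rbind(matrix(rnorm(200, mean = 0,  sd = 0.3), ncol = 2),
           matrix(rnorm(200, mean = 5,  sd = 0.3), ncol = 2),
           matrix(rnorm(200, mean = 10, sd = 0.3), ncol = 2))
# Step 1: over-cluster with k-means (k much larger than the 3 expected groups)
pre <- kmeans(x, centers = 30, iter.max = 50, nstart = 3)$cluster
# Meta-cells: per-pre-cluster averages (sum, then divide by pre-cluster size)
meta <- rowsum(x, pre)
meta <- meta / as.integer(table(pre)[rownames(meta)])
# Step 2: cluster the meta-cells (stand-in for graph-based clustering)
metaCl <- cutree(hclust(dist(meta)), k = 3)
# Report the meta-cell labels back to individual cells via their pre-cluster
cellCl <- unname(metaCl[as.character(pre)])
table(cellCl)
```

Clustering 30 meta-cells instead of 300 cells is what makes the second step cheap; the same trade-off motivates using a large `k` in the first step.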

--------------------------------------------------------------------------------

/man/aggregateFeatures.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/atac_processing.R

3 | \name{aggregateFeatures}

4 | \alias{aggregateFeatures}

5 | \title{aggregateFeatures}

6 | \usage{

7 | aggregateFeatures(

8 | x,

9 | dims.use = seq(2L, 12L),

10 | k = 1000,

11 | num_init = 3,

12 | use.mbk = NULL,

13 | use.subset = 20000,

14 | minCount = 1L,

15 | norm.fn = TFIDF,

16 | twoPass = FALSE,

17 | ...

18 | )

19 | }

20 | \arguments{

21 | \item{x}{An integer/numeric (sparse) matrix, or a `SingleCellExperiment`

22 | including a `counts` assay.}

23 |

24 | \item{dims.use}{The PCA dimensions to use for clustering rows.}

25 |

26 | \item{k}{The approximate number of meta-features desired}

27 |

28 | \item{num_init}{The number of initializations used for k-means clustering.}

29 |

30 | \item{use.mbk}{Logical; whether to use minibatch k-means (see

31 | \code{\link[mbkmeans]{mbkmeans}}). If NULL, the minibatch approach will be

32 | used if there are more than 30000 features.}

33 |

34 | \item{use.subset}{How many cells (columns) to use to cluster the features.}

35 |

36 | \item{minCount}{The minimum number of counts for a region to be included.}

37 |

38 | \item{norm.fn}{The normalization function to use on the un-clustered data (a

39 | function taking a count matrix as a single argument and returning a matrix

40 | of the same dimensions). \link{TFIDF} by default.}

41 |

42 | \item{twoPass}{Logical; whether to perform the procedure twice, so in the

43 | second round cells are aggregated based on the meta-features of the first

44 | round, before re-clustering the features. Ignored if the dataset has fewer

45 | than `use.subset` cells.}

46 |

47 | \item{...}{Passed to \code{\link[mbkmeans]{mbkmeans}}. Can for instance be

48 | used to pass the `BPPARAM` argument for multithreading.}

49 | }

50 | \value{

51 | An aggregated version of `x` (either an array or a

52 | `SingleCellExperiment`, depending on the input). If `x` is a

53 | `SingleCellExperiment`, the feature clusters will also be stored in

54 | `metadata(x)$featureGroups`

55 | }

56 | \description{

57 | Aggregates similar features (rows).

58 | }

59 |

--------------------------------------------------------------------------------

/man/clamulet.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/atac.R

3 | \name{clamulet}

4 | \alias{clamulet}

5 | \title{clamulet}

6 | \usage{

7 | clamulet(

8 | x,

9 | artificialDoublets = NULL,

10 | iter = 2,

11 | k = NULL,

12 | minCount = 0.001,

13 | maxN = 500,

14 | nfeatures = 25,

15 | max_depth = 5,

16 | threshold = 0.75,

17 | returnAll = FALSE,

18 | verbose = TRUE,

19 | ...

20 | )

21 | }

22 | \arguments{

23 | \item{x}{The path to a fragment file (see \code{\link{getFragmentOverlaps}}

24 | for performance/memory-related guidelines)}

25 |

26 | \item{artificialDoublets}{The number of artificial doublets to generate}

27 |

28 | \item{iter}{The number of learning iterations (should be 1 to)}

29 |

30 | \item{k}{The number(s) of nearest neighbors at which to gather statistics}

31 |

32 | \item{minCount}{The minimum number of cells in which a locus is detected to

33 | be considered. If lower than 1, it is interpreted as a fraction of the

34 | number of cells.}

35 |

36 | \item{maxN}{The maximum number of regions per cell to consider to establish

37 | windows for meta-features}

38 |

39 | \item{nfeatures}{The number of meta-features to consider}

40 |

41 | \item{max_depth}{The maximum tree depth}

42 |

43 | \item{threshold}{The score threshold used during iterations}

44 |

45 | \item{returnAll}{Logical; whether to return data also for artificial doublets}

46 |

47 | \item{verbose}{Logical; whether to print progress information}

48 |

49 | \item{...}{Arguments passed to \code{\link{getFragmentOverlaps}}}

50 | }

51 | \value{

52 | A data.frame

53 | }

54 | \description{

55 | Classification-powered Amulet-like method

56 | }

57 | \details{

58 | `clamulet` operates similarly to the `scDblFinder` method, but generates

59 | doublets by operating on the fragment coverages. This has the advantage that

60 | the number of loci covered by more than two reads can be computed for

61 | artificial doublets, enabling the use of this feature (along with the

62 | kNN-based ones) in a classification scheme. It however has the disadvantage

63 | of being rather slow and memory hungry, and appears to be outperformed by a

64 | simple p-value combination of the two methods (see vignette).

65 | }

66 |

--------------------------------------------------------------------------------

/man/directDblClassification.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/misc.R

3 | \name{directDblClassification}

4 | \alias{directDblClassification}

5 | \title{directClassification}

6 | \usage{

7 | directDblClassification(

8 | sce,

9 | dbr = NULL,

10 | processing = "default",

11 | iter = 2,

12 | dims = 20,

13 | nrounds = 0.25,

14 | max_depth = 6,

15 | ...

16 | )

17 | }

18 | \arguments{

19 | \item{sce}{A \code{\link[SummarizedExperiment]{SummarizedExperiment-class}},

20 | \code{\link[SingleCellExperiment]{SingleCellExperiment-class}}, or array of

21 | counts.}

22 |

23 | \item{dbr}{The expected doublet rate. By default this is assumed to be 1\%

24 | per thousand cells captured (so 4\% among 4000 cells), which is

25 | appropriate for 10x datasets. Corrections for homotypic doublets will be

26 | performed on the given rate.}

27 |

28 | \item{processing}{Counts (real and artificial) processing. Either

29 | 'default' (normal \code{scater}-based normalization and PCA), "rawPCA" (PCA

30 | without normalization), "rawFeatures" (no normalization/dimensional

31 | reduction), "normFeatures" (uses normalized features, without PCA) or a

32 | custom function with (at least) arguments `e` (the matrix of counts) and

33 | `dims` (the desired number of dimensions), returning a named matrix with

34 | cells as rows and components as columns.}

35 |

36 | \item{iter}{A positive integer indicating the number of scoring iterations.

37 | At each iteration, real cells that would be called as doublets are excluded

38 | from the training, and new scores are calculated.}

39 |

40 | \item{dims}{The number of dimensions used.}

41 |

42 | \item{nrounds}{Maximum rounds of boosting. If NULL, will be determined

43 | through cross-validation.}

44 |

45 | \item{max_depth}{Maximum depths of each tree.}

46 |

47 | \item{...}{Any doublet generation or pre-processing argument passed to

48 | `scDblFinder`.}

49 | }

50 | \value{

51 | A \code{\link[SummarizedExperiment]{SummarizedExperiment-class}}

52 | with the additional `colData` column `directDoubletScore`.

53 | }

54 | \description{

55 | Trains a classifier directly on the expression matrix to distinguish

56 | artificial doublets from real cells.

57 | }

58 | \examples{

59 | sce <- directDblClassification(mockDoubletSCE(), artificialDoublets=1)

60 | boxplot(sce$directDoubletScore~sce$type)

61 | }

62 |
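
The default doublet-rate rule quoted in the `dbr` argument above (a fixed percentage per thousand captured cells) is simple arithmetic. The sketch below uses a hypothetical helper, `expectedDbr`, which is not a package function; it only shows how the expected rate scales with the number of cells.

```r
# Hypothetical helper (not part of the package): expected doublet rate
# as a fixed proportion per 1000 captured cells.
expectedDbr <- function(ncells, dbr.per1k = 0.01) {
  dbr.per1k * ncells / 1000
}

expectedDbr(4000)                     # 1% per 1000 cells
expectedDbr(4000, dbr.per1k = 0.008)  # the 0.8% default shown for doubletThresholding
```

Note that this linear rule grows without bound, so for very large captures the value should be read as a rough expectation rather than a calibrated rate.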

--------------------------------------------------------------------------------

/man/amuletFromCounts.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/atac.R

3 | \name{amuletFromCounts}

4 | \alias{amuletFromCounts}

5 | \title{amuletFromCounts}

6 | \usage{

7 | amuletFromCounts(x, maxWidth = 500L, exclude = c("chrM", "M", "Mt"))

8 | }

9 | \arguments{

10 | \item{x}{A `SingleCellExperiment` object, or a matrix of counts with cells

11 | as columns. If the rows represent peaks, it is recommended to limit their

12 | width (see details).}

13 |

14 | \item{maxWidth}{the maximum width for a feature to be included. This is

15 | ignored unless `x` is a `SingleCellExperiment` with `rowRanges`.}

16 |

17 | \item{exclude}{an optional `GRanges` of regions to be excluded. This is

18 | ignored unless `x` is a `SingleCellExperiment` with `rowRanges`.}

19 | }

20 | \value{

21 | If `x` is a `SingleCellExperiment`, returns the object with an

22 | additional `amuletFromCounts.q` colData column. Otherwise returns a vector of

23 | the amulet doublet q-values for each cell.

24 | }

25 | \description{

26 | A reimplementation of the Amulet doublet detection method for single-cell

27 | ATACseq (Thibodeau, Eroglu, et al., Genome Biology 2021), based on tile/peak

28 | counts. Note that this is only a fast approximation to the original Amulet

29 | method, and *performs considerably worse*; for an equivalent implementation,

30 | see \code{\link{amulet}}.

31 | }

32 | \details{

33 | The rationale for the amulet method is that a single diploid cell should not

34 | have more than two reads covering a single genomic location, and the method

35 | looks for cells enriched with sites covered by more than two reads.

36 | If the method is applied to a peak-level count matrix, however, larger peaks

37 | can contain multiple reads even though no single nucleotide is

38 | covered more than once. Therefore, in such cases we recommend limiting the

39 | width of the peaks used for this analysis, ideally to at most twice the upper

40 | bound of the fragment size. For example, with a mean fragment size of 250bp

41 | and standard deviation of 125bp, peaks larger than 500bp are very likely to

42 | contain non-overlapping fragments, and should therefore be excluded using the

43 | `maxWidth` argument.

44 | }

45 | \examples{

46 | x <- mockDoubletSCE()

47 | x <- amuletFromCounts(x)

48 | table(call=x$amuletFromCounts.q<0.05, truth=x$type)

49 | }

50 | \seealso{

51 | \code{\link{amulet}}

52 | }

53 |
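
The rationale in the details above (drop wide peaks, then look for cells with many sites covered by more than two reads) can be illustrated with a small base-R sketch. The peak widths, the count matrix and the variable names are invented for illustration, and the per-cell tally shown here is only the raw ingredient of the method, not the q-value that `amuletFromCounts` reports.

```r
# Toy illustration of the width filter and the ">2 reads" tally.
set.seed(42)
widths <- c(200, 350, 480, 520, 900, 1500)   # hypothetical peak widths (bp)
counts <- matrix(rpois(6 * 4, lambda = 1), nrow = 6,
                 dimnames = list(paste0("peak", 1:6), paste0("cell", 1:4)))
maxWidth <- 500L
# Keep only peaks narrow enough that >2 reads likely means true overlap
keep <- widths <= maxWidth
# Per cell: number of retained peaks with more than two reads
nAbove2 <- colSums(counts[keep, , drop = FALSE] > 2)
nAbove2
```

With the widths above, only the first three peaks pass the 500bp cutoff, which mirrors the mean 250bp / standard deviation 125bp example in the details.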

--------------------------------------------------------------------------------

/inst/NEWS:

--------------------------------------------------------------------------------

1 | Changes in version 1.23.1 (2025-07-17)

2 | + fixed compatibility issues with xgboost version>=3

3 |

4 | Changes in version 1.19.9 (2025-01-07)

5 | + fixed the default dbr.per1k value in the top-level function

6 | + slight memory improvements (gc and not coercing DelayedArray before sample split)

7 |

8 | Changes in version 1.19.6 (2024-09-19)

9 | + added a dbr.per1k parameter to set doublet rates per thousands of cells, updated the default from 1 to 0.8\%

10 | + fixed some issues stemming from the cxds score in some corner cases (absence of inverse correlation between genes)

11 | + updated documentation

12 |

13 | Changes in version 1.13.14 (2023-06-19)

14 | + reduced the default minimum number of artificial doublets to improve call robustness in very small datasets.

15 |

16 | Changes in version 1.13.10 (2023-03-23)

17 | + fixed serializing error in multithreading large single samples

18 | + computed thresholds now reported in metadata

19 |

20 | Changes in version 1.13.7 (2023-01-09)

21 | + added possibility to provide the genes/features to use, updated docs

22 |

23 | Changes in version 1.13.4 (2022-11-21)

24 | + fixed bug in samples reporting in split mode (doesn't affect doublets scores)

25 |

26 | Changes in version 1.13.3 (2022-11-20)

27 | + updated default parameters according to https://arxiv.org/abs/2211.00772

28 |

29 | Changes in version 1.13.2 (2022-11-11)

30 | + added two-pass mode for feature aggregation

31 |

32 | Changes in version 1.9.11 (2022-04-16)

33 | + fixed larger kNN size

34 |

35 | Changes in version 1.9.9 (2022-04-09)

36 | + improved amulet reimplementation

37 | + added clamulet and scATAC vignette

38 |

39 | Changes in version 1.9.1 (2021-11-02)

40 | + added reimplementation of the amulet method for scATAC-seq

41 |

42 | Changes in version 1.7.3 (2021-07-26)

43 | + scDblFinder now includes both cluster-based and random modes for artificial doublet generation

44 | + thresholding has been streamlined

45 | + default parameters have been optimized using benchmark datasets

46 | + added the `directDblClassification` method

47 |

48 | Changes in version 1.5.11 (2021-01-19)

49 | + scDblFinder now provides doublet enrichment tests

50 | + doublet generation and default parameters have been further optimized

51 |

52 | Changes in version 1.3.25 (2020-10-26)

53 | + scDblFinder has important improvements on speed, robustness and accuracy

54 | + in addition to doublet calls, scDblFinder reports the putative origin (combination of clusters) of doublets

55 |

56 | Changes in version 1.3.19 (2020-08-06)

57 | + scDblFinder now hosts the doublet detection methods formerly part of `scran`

58 |

--------------------------------------------------------------------------------

/man/doubletThresholding.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/doubletThresholding.R

3 | \name{doubletThresholding}

4 | \alias{doubletThresholding}

5 | \title{doubletThresholding}

6 | \usage{

7 | doubletThresholding(

8 | d,

9 | dbr = NULL,

10 | dbr.sd = NULL,

11 | dbr.per1k = 0.008,

12 | stringency = 0.5,

13 | p = 0.1,

14 | method = c("auto", "optim", "dbr", "griffiths"),

15 | perSample = TRUE,

16 | returnType = c("threshold", "call")

17 | )

18 | }

19 | \arguments{

20 | \item{d}{A data.frame of cell properties, with each row representing a cell, as

21 | produced by `scDblFinder(..., returnType="table")`, or minimally containing a `score`

22 | column.}

23 |

24 | \item{dbr}{The expected (mean) doublet rate. If `d` contains a `cluster` column, the

25 | doublet rate will be adjusted for homotypic doublets.}

26 |

27 | \item{dbr.sd}{The standard deviation of the doublet rate, representing the

28 | uncertainty in the estimate. Ignored if `method!="optim"`.}

29 |

30 | \item{dbr.per1k}{The expected proportion of doublets per 1000 cells.}

31 |

32 | \item{stringency}{A numeric value >0 and <1 which controls the relative weight of false

33 | positives (i.e. real cells) and false negatives (artificial doublets) in setting the

34 | threshold. A value of 0.5 gives equal weight to both; a higher value (e.g. 0.7) gives

35 | higher weight to the false positives, and a lower to artificial doublets. Ignored if

36 | `method!="optim"`.}

37 |

38 | \item{p}{The p-value threshold determining the deviation in doublet score.}

39 |

40 | \item{method}{The thresholding method to use, either 'auto' (default, automatic

41 | selection depending on the available fields), 'optim' (optimization of

42 | misclassification rate and deviation from expected doublet rate), 'dbr' (strictly

43 | based on the expected doublet rate), or 'griffiths' (cluster-wise number of

44 | median absolute deviation in doublet score).}

45 |

46 | \item{perSample}{Logical; whether to perform thresholding individually for each sample.}

47 |

48 | \item{returnType}{The type of value to return, either doublet calls (`call`) or

49 | thresholds (`threshold`).}

50 | }

51 | \value{

52 | A vector of doublet calls if `returnType=="call"`, or a threshold (or vector

53 | of thresholds) if `returnType=="threshold"`.

54 | }

55 | \description{

56 | Sets the doublet scores threshold; typically called by

57 | \code{\link[scDblFinder]{scDblFinder}}.

58 | }

59 | \examples{

60 | sce <- mockDoubletSCE()

61 | d <- scDblFinder(sce, verbose=FALSE, returnType="table")

62 | th <- doubletThresholding(d, dbr=0.05)

63 | th

64 |

65 | }

66 |
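
One simple way to read the 'dbr' method described above (a threshold based strictly on the expected doublet rate) is as a score quantile. The sketch below is an assumption-laden illustration with made-up scores, not the package's code; in particular, it ignores the homotypic adjustment and the per-sample handling mentioned in the arguments.

```r
# Toy sketch: call the top `dbr` fraction of scores as doublets.
set.seed(7)
score <- c(runif(95, 0, 0.3), runif(5, 0.8, 1))  # 95 low and 5 high toy scores
dbr <- 0.05
# Threshold at the (1 - dbr) quantile of the scores
th <- as.numeric(quantile(score, probs = 1 - dbr))
call <- ifelse(score > th, "doublet", "singlet")
table(call)
```

The 'optim' method differs in that it also weighs misclassification of artificial doublets, which is why `stringency` and `dbr.sd` only apply there.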

--------------------------------------------------------------------------------

/tests/testthat/test-scDblFinder.R:

--------------------------------------------------------------------------------

1 | sce <- mockDoubletSCE(ncells=c(100,200,150,100), ngenes=250)

2 | sce$fastcluster <- fastcluster(sce, nfeatures=100, verbose=FALSE)

3 | sce$sample <- sample(LETTERS[1:2], ncol(sce), replace=TRUE)

4 |

5 | test_that("fastcluster works as expected",{

6 | expect_equal(sum(is.na(sce$fastcluster)),0)

7 | expect_gt(sum(apply(table(sce$cluster, sce$fastcluster),1,max)[1:4])/

8 | sum(sce$type=="singlet"), 0.8)

9 | x <- fastcluster(sce, nfeatures=100, k=3, verbose=FALSE, returnType="preclusters")

10 | expect_equal(sum(is.na(x)),0)

11 | expect_gt(sum(apply(table(sce$cluster, x),1,max)[1:3])/

12 | sum(sce$type=="singlet"), 0.8)

13 |

14 | })

15 |

16 | sce <- scDblFinder(sce, clusters="fastcluster", samples="sample",

17 | artificialDoublets=250, dbr=0.1, verbose=FALSE)

18 |

19 | test_that("scDblFinder works as expected", {

20 | expect_equal(sum(is.na(sce$scDblFinder.score)),0)

21 | expect(min(sce$scDblFinder.score)>=0 & max(sce$scDblFinder.score)<=1,

22 | failure_message="scDblFinder.score not within 0-1")

23 | expect_gt(sum(sce$type==sce$scDblFinder.class)/ncol(sce), 0.8)

24 | sce <- scDblFinder(sce, samples="sample", artificialDoublets=250,

25 | dbr=0.1, verbose=FALSE)

26 | expect_equal(sum(is.na(sce$scDblFinder.score)),0)

27 | expect(min(sce$scDblFinder.score)>=0 & max(sce$scDblFinder.score)<=1,

28 | failure_message="scDblFinder.score not within 0-1")

29 | expect_gt(sum(sce$type==sce$scDblFinder.class)/ncol(sce), 0.8)

30 | })

31 |

32 | test_that("feature aggregation works as expected", {

33 | sce2 <- aggregateFeatures(sce, k=20)

34 | expect_equal(nrow(sce2),20)

35 | expect_equal(sum(is.na(counts(sce2)) | is.infinite(counts(sce2))), 0)

36 | sce2 <- scDblFinder( sce2, clusters="fastcluster", processing="normFeatures",

37 | artificialDoublets=250, dbr=0.1, verbose=FALSE)

38 | expect_equal(sum(is.na(sce2$scDblFinder.score)),0)

39 | expect_gt(sum(sce2$type==sce2$scDblFinder.class)/ncol(sce2), 0.8)

40 | })

41 |

42 | test_that("doublet enrichment works as expected", {

43 | cs <- clusterStickiness(sce)$FDR

44 | expect_equal(sum(is.na(cs)),0)

45 | })

46 |

47 |

48 | test_that("amulet works as expected", {

49 | fragfile <- system.file("extdata","example_fragments.tsv.gz",

50 | package="scDblFinder")

51 | res <- amulet(fragfile)

52 | expect_equal(res$nFrags, c(878,2401,2325,1882,1355))

53 | expect_equal(sum(res$nAbove2<=1), 4)

54 | expect_equal(res["barcode5","nAbove2"], 6)

55 | expect_lt(res["barcode5","p.value"], 0.01)

56 | })

--------------------------------------------------------------------------------

/man/getArtificialDoublets.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/getArtificialDoublets.R

3 | \name{getArtificialDoublets}

4 | \alias{getArtificialDoublets}

5 | \title{getArtificialDoublets}

6 | \usage{

7 | getArtificialDoublets(

8 | x,

9 | n = 3000,

10 | clusters = NULL,

11 | resamp = 0.25,

12 | halfSize = 0.25,

13 | adjustSize = 0.25,

14 | propRandom = 0.1,

15 | selMode = c("proportional", "uniform", "sqrt"),

16 | n.meta.cells = 2,

17 | meta.triplets = TRUE,

18 | trim.q = c(0.05, 0.95)

19 | )

20 | }

21 | \arguments{

22 | \item{x}{A count matrix, with features as rows and cells as columns.}

23 |

24 | \item{n}{The approximate number of doublets to generate (default 3000).}

25 |

26 | \item{clusters}{The optional clusters labels to use to build cross-cluster

27 | doublets.}

28 |

29 | \item{resamp}{Logical; whether to resample the doublets using the poisson

30 | distribution. Alternatively, if a proportion between 0 and 1, the proportion

31 | of doublets to resample.}

32 |

33 | \item{halfSize}{Logical; whether to halve the library size of doublets

34 | (instead of just summing up the cells). Alternatively, a number between 0

35 | and 1 can be given, determining the proportion of the doublets for which

36 | to perform the size adjustment.}

37 |

38 | \item{adjustSize}{Logical; whether to adjust the size of the doublets using

39 | the ratio between each cluster's median library size. Alternatively, a number

40 | between 0 and 1 can be given, determining the proportion of the doublets for

41 | which to perform the size adjustment.}

42 |

43 | \item{propRandom}{The proportion of the created doublets that are fully

44 | random (default 0.1); the rest will be doublets created across clusters.

45 | Ignored if `clusters` is NULL.}

46 |

47 | \item{selMode}{The cell pair selection mode for inter-cluster doublet

48 | generation, either 'uniform' (same number of doublets for each combination),

49 | 'proportional' (proportion expected from the clusters' prevalences), or

50 | 'sqrt' (roughly the square root of the expected proportion).}

51 |

52 | \item{n.meta.cells}{The number of meta-cells per cluster to create. If given,

53 | additional doublets will be created from cluster meta-cells. Ignored if

54 | `clusters` is missing.}

55 |

56 | \item{meta.triplets}{Logical; whether to create triplets from meta cells.

57 | Ignored if `clusters` is missing.}

58 |

59 | \item{trim.q}{A vector of two values between 0 and 1}

60 | }

61 | \value{

62 | A list with two elements: `counts` (the count matrix of

63 | the artificial doublets) and `origins` (the clusters from which each

64 | artificial doublet originated; NULL if `clusters` is not given).

65 | }

66 | \description{

67 | Create expression profiles of random artificial doublets.

68 | }

69 | \examples{

70 | m <- t(sapply( seq(from=0, to=5, length.out=50),

71 | FUN=function(x) rpois(30,x) ) )

72 | doublets <- getArtificialDoublets(m, 30)

73 |

74 | }

75 |
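
The core of "random artificial doublets" as described above is summing the counts of randomly paired cells. The base-R sketch below reuses the toy matrix from the example and shows only that step; the pairing scheme and variable names are assumptions, and the resampling, size adjustment and cluster-aware selection documented in the arguments are deliberately left out.

```r
# Minimal sketch: artificial doublets as sums of random cell pairs.
set.seed(123)
m <- t(sapply(seq(from = 0, to = 5, length.out = 50),
              FUN = function(x) rpois(30, x)))   # 50 features x 30 cells
n <- 10                                          # number of doublets to create
# Each row of `pairs` holds the column indices of two distinct cells
pairs <- t(replicate(n, sample(ncol(m), 2)))
# A doublet's profile is the feature-wise sum of its two parent cells
doublets <- m[, pairs[, 1]] + m[, pairs[, 2]]
colnames(doublets) <- paste0("dbl", seq_len(n))
dim(doublets)
```

Because counts are simply added, each artificial doublet has the combined library size of its parents, which is the situation the `halfSize` and `adjustSize` arguments are meant to temper.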

--------------------------------------------------------------------------------

/tests/testthat/test-recoverDoublets.R:

--------------------------------------------------------------------------------

1 | # This tests the recoverDoublets function.

2 | # library(scDblFinder); library(testthat); source("test-recoverDoublets.R")

3 |

4 | set.seed(99000077)

5 | ngenes <- 100

6 | mu1 <- 2^rexp(ngenes) * 5

7 | mu2 <- 2^rnorm(ngenes) * 5

8 |

9 | counts.1 <- matrix(rpois(ngenes*100, mu1), nrow=ngenes)

10 | counts.2 <- matrix(rpois(ngenes*100, mu2), nrow=ngenes)

11 | counts.m <- matrix(rpois(ngenes*20, mu1+mu2), nrow=ngenes)

12 |

13 | counts <- cbind(counts.1, counts.2, counts.m)

14 | clusters <- rep(1:3, c(ncol(counts.1), ncol(counts.2), ncol(counts.m)))

15 |

16 | library(SingleCellExperiment)

17 | sce <- SingleCellExperiment(list(counts=counts))

18 | sce <- scuttle::logNormCounts(sce)

19 |

20 | set.seed(99000007)

21 | test_that("recoverDoublets works as expected", {

22 | known.doublets <- clusters==3 & rbinom(length(clusters), 1, 0.5)==0

23 | ref <- recoverDoublets(sce, known.doublets, samples=c(1, 1, 1))

24 |

25 | expect_true(min(ref$proportion[ref$predicted]) >= max(ref$proportion[!ref$predicted & !ref$known]))

26 | expect_false(any(ref$predicted & ref$known))

27 | expect_true(sum(ref$predicted) <= metadata(ref)$intra)

28 |

29 | # Responds to 'k'.

30 | alt <- recoverDoublets(sce, known.doublets, samples=c(1, 1, 1), k=20)

31 | expect_false(identical(ref, alt))

32 |

33 | # Responds to 'samples'

34 | alt <- recoverDoublets(sce, known.doublets, samples=c(1, 2, 3))

35 | expect_false(identical(ref, alt))

36 |

37 | # subset.row has the intended effect

38 | sub <- recoverDoublets(assay(sce), known.doublets, samples=c(1, 1, 1), subset.row=1:50)

39 | alt <- recoverDoublets(assay(sce)[1:50,], known.doublets, samples=c(1, 1, 1))

40 | expect_identical(sub, alt)

41 | })

42 |

43 | set.seed(99000008)

44 | test_that("recoverDoublets gives the correct results on the toy example", {

45 | known.doublets <- clusters==3 & 1:2==1 # alternating doublets.

46 | ref <- recoverDoublets(sce, known.doublets, samples=c(1, 1), k=10)

47 | expect_identical(clusters==3, ref$known | ref$predicted)

48 |

49 | expect_true(min(ref$proportion[ref$predicted]) >= max(ref$proportion[!ref$predicted & !ref$known]))

50 | })

51 |

52 | set.seed(99000008)

53 | test_that("recoverDoublets works for other inputs", {

54 | known.doublets <- clusters==3 & rbinom(length(clusters), 1, 0.5)==0

55 | ref <- recoverDoublets(logcounts(sce), known.doublets, samples=c(1, 1, 1))

56 | alt <- recoverDoublets(sce, known.doublets, samples=c(1, 1, 1))

57 | expect_identical(ref, alt)

58 |

59 | # Works for transposition

60 | alt <- recoverDoublets(t(logcounts(sce)), known.doublets, samples=c(1, 1, 1), transposed=TRUE)

61 | expect_identical(ref, alt)

62 |

63 | # Works by stuffing values in reduced dims.

64 | reducedDim(sce, "pretend") <- t(logcounts(sce))

65 | alt <- recoverDoublets(sce, known.doublets, samples=c(1, 1, 1), use.dimred="pretend")

66 | expect_identical(ref, alt)

67 | })

68 |

--------------------------------------------------------------------------------

/man/getFragmentOverlaps.Rd:

--------------------------------------------------------------------------------

1 | % Generated by roxygen2: do not edit by hand

2 | % Please edit documentation in R/getFragmentOverlaps.R

3 | \name{getFragmentOverlaps}

4 | \alias{getFragmentOverlaps}

5 | \title{getFragmentOverlaps}

6 | \usage{

7 | getFragmentOverlaps(

8 | x,

9 | barcodes = NULL,

10 | regionsToExclude = GRanges(c("M", "chrM", "MT", "X", "Y", "chrX", "chrY"), IRanges(1L,

11 | width = 10^8)),

12 | minFrags = 500L,

13 | uniqueFrags = TRUE,

14 | maxFragSize = 1000L,

15 | removeHighOverlapSites = TRUE,

16 | fullInMemory = FALSE,

17 | BPPARAM = NULL,

18 | verbose = TRUE,

19 | ret = c("stats", "loci", "coverages")

20 | )

21 | }

22 | \arguments{

23 | \item{x}{The path to a fragments file, or a GRanges object containing the

24 | fragments (with the `name` column containing the barcode, and optionally

25 | the `score` column containing the count).}

26 |

27 | \item{barcodes}{Optional character vector of cell barcodes to consider}

28 |

29 | \item{regionsToExclude}{A GRanges of regions to exclude. As per the original

30 | Amulet method, we recommend excluding repeats, as well as sex and

31 | mitochondrial chromosomes. (Note that the end coordinate does not need to

32 | be exact when excluding entire chromosomes, but must be greater than or equal to the

33 | chromosome length.)}

34 |

35 | \item{minFrags}{Minimum number of fragments for a barcode to be

36 | considered. If `uniqueFrags=TRUE`, this is the minimum number of unique

37 | fragments. Ignored if `barcodes` is given.}

38 |

39 | \item{uniqueFrags}{Logical; whether to use only unique fragments.}

40 |

41 | \item{maxFragSize}{Integer indicating the maximum fragment size to consider}

42 |

43 | \item{removeHighOverlapSites}{Logical; whether to remove sites that have

44 | more than two reads in unexpectedly many cells.}

45 |

46 | \item{fullInMemory}{Logical; whether to process all chromosomes together.

47 | This will speed up the process but at the cost of a very high memory

48 | consumption (as all fragments will be loaded in memory). This is anyway the

49 | default mode when `x` is not Tabix-indexed.}

50 |

51 | \item{BPPARAM}{A `BiocParallel` parameter object for multithreading. Note

52 | that multithreading will increase the memory usage.}

53 |

54 | \item{verbose}{Logical; whether to print progress messages.}

55 |

56 | \item{ret}{What to return, either barcode 'stats' (default), 'loci', or

57 | 'coverages'.}

58 | }

59 | \value{

60 | A data.frame with counts and overlap statistics for each barcode.

61 | }

62 | \description{

63 | Count the number of overlapping fragments.

64 | }

65 | \details{

66 | When used on normal (or compressed) fragment files, this

67 | implementation is relatively fast (except for reading in the data) but it

68 | has a large memory footprint since the overlaps are performed in memory. It

69 | is therefore recommended to compress the fragment files using bgzip and index

70 | them with Tabix; in this case each chromosome will be read and processed

71 | separately, leading to a considerably lower memory footprint.

72 | }

73 |

--------------------------------------------------------------------------------

/R/clustering.R:

--------------------------------------------------------------------------------

1 | #' fastcluster

2 | #'

3 | #' Performs a fast two-step clustering: first clusters using k-means with a very

4 | #' large k, then uses Louvain clustering of the k cluster averages and reports

5 | #' back the cluster labels.

6 | #'

7 | #' @param x An object of class SCE

8 | #' @param k The number of k-means clusters to use in the primary step (should

9 | #' be much higher than the number of expected clusters). Defaults to 1/10th of

10 | #' the number of cells with a maximum of 2500.

11 | #' @param rdname The name of the dimensionality reduction to use.

12 | #' @param nstart Number of starts for k-means clustering

13 | #' @param iter.max Number of iterations for k-means clustering

14 | #' @param ndims Number of dimensions to use

15 | #' @param nfeatures Number of features to use (ignored if `rdname` is given and

16 | #' the corresponding dimensional reduction exists in `x`)

17 | #' @param verbose Logical; whether to output progress messages

18 | #' @param returnType See return.

19 | #' @param ... Arguments passed to `scater::runPCA` (e.g. BPPARAM or BSPARAM) if

20 | #' `x` does not have `rdname`.

21 | #'

22 | #' @return By default, a vector of cluster labels. If

23 | #' `returnType='preclusters'`, returns the k-means pre-clusters. If

24 | #' `returnType='metacells'`, returns the metacells aggregated by pre-clusters

25 | #' and the corresponding cell indexes. If `returnType='graph'`, returns the

26 | #' graph of (meta-)cells and the corresponding cell indexes.

27 | #'

28 | #' @importFrom igraph cluster_louvain membership

29 | #' @importFrom scran buildKNNGraph

30 | #' @importFrom stats kmeans

31 | #'

32 | #' @examples

33 | #' sce <- mockDoubletSCE()

34 | #' sce$cluster <- fastcluster(sce)

35 | #'

36 | #' @export

37 | #' @importFrom bluster makeKNNGraph

38 | #' @importFrom igraph membership cluster_louvain

39 | #' @importFrom DelayedArray rowsum

40 | fastcluster <- function( x, k=NULL, rdname="PCA", nstart=3, iter.max=50,

41 | ndims=NULL, nfeatures=1000, verbose=TRUE,

42 | returnType=c("clusters","preclusters","metacells",

43 | "graph"), ...){

44 | returnType <- match.arg(returnType)

45 | x <- .getDR(x, ndims=ndims, nfeatures=nfeatures, rdname=rdname,

46 | verbose=verbose, ...)

47 | if(is.null(k)) k <- min(2500, floor(nrow(x)/10))

48 | if((returnType != "clusters" || nrow(x)>1000) && nrow(x)>k){

49 | if(verbose) message("Building meta-cells")

50 | k <- kmeans(x, k, iter.max=iter.max, nstart=nstart)$cluster

51 | if(returnType=="preclusters") return(k)

52 | x <- rowsum(x, k)

53 | x <- x/as.integer(table(k)[rownames(x)])

54 | if(returnType=="metacells") return(list(meta=x,idx=k))

55 | }else{

56 | k <- seq_len(nrow(x))

57 | }

58 | if(verbose) message("Building KNN graph and clustering")

59 | x <- makeKNNGraph(as.matrix(x), k=min(max(2,floor(sqrt(length(unique(k))))-1),10))

60 | if(returnType=="graph") return(list(k=k, graph=x))

61 | cl <- membership(cluster_louvain(x))

62 | cl[k]

63 | }

64 |

65 | #' @importFrom scater runPCA

66 | #' @importFrom scuttle logNormCounts librarySizeFactors computeLibraryFactors

67 | #' @importFrom BiocSingular IrlbaParam

68 | #' @import SingleCellExperiment

69 | .prepSCE <- function(sce, ndims=30, nfeatures=1000, ...){

70 | if(!("logcounts" %in% assayNames(sce))){

71 | if(is.null(librarySizeFactors(sce)))

72 | sce <- computeLibraryFactors(sce)

73 | ls <- librarySizeFactors(sce)

74 | if(any(is.na(ls) | ls==0))

75 | stop("Some of the size factors are invalid. Consider removing ",

76 | "cells with size factors of zero, or filling in the ",

77 | "`logcounts` assay yourself.")

78 | sce <- logNormCounts(sce)

79 | }

80 | if(!("PCA" %in% reducedDimNames(sce))){

81 | sce <- runPCA(sce, ncomponents=ifelse(is.null(ndims),30,ndims),

82 | ntop=min(nfeatures,nrow(sce)),

83 | BSPARAM=IrlbaParam(), ...)

84 | }

85 | sce

86 | }

87 |

88 | .getDR <- function(x, ndims=30, nfeatures=1000, rdname="PCA", verbose=TRUE, ...){

89 | if(!(rdname %in% reducedDimNames(x))){

90 | if(verbose) message("Reduced dimension not found - running PCA...")

91 | x <- .prepSCE(x, ndims=ndims, nfeatures=nfeatures, ...)

92 | }

93 | x <- reducedDim(x, rdname)

94 | if(is.null(ndims)) ndims <- 20

95 | x[,seq_len(min(ncol(x),as.integer(ndims)))]

96 | }

97 |

98 | .getMetaGraph <- function(x, clusters, BPPARAM=SerialParam()){

99 | x <- rowsum(x, clusters)

100 | x <- x/as.integer(table(clusters)[rownames(x)])

101 | makeKNNGraph(x, k=min(max(2,floor(sqrt(length(unique(clusters))))-1),10),

102 | BPPARAM=BPPARAM)

103 | }

104 |

--------------------------------------------------------------------------------

/vignettes/recoverDoublets.Rmd:

--------------------------------------------------------------------------------

1 | ---

2 | title: Recovering intra-sample doublets

3 | package: scDblFinder

4 | author:

5 | - name: Aaron Lun

6 | email: infinite.monkeys.with.keyboards@gmail.com

7 | date: "`r Sys.Date()`"

8 | output:

9 | BiocStyle::html_document

10 | vignette: |

11 | %\VignetteIndexEntry{5_recoverDoublets}

12 | %\VignetteEngine{knitr::rmarkdown}

13 | %\VignetteEncoding{UTF-8}

14 | ---

15 |

16 | # tl;dr

17 |

18 | See the relevant section of the [OSCA book](https://osca.bioconductor.org/doublet-detection.html#doublet-detection-in-multiplexed-experiments) for an example of the `recoverDoublets()` function in action on real data.

19 | A toy example is also provided in `?recoverDoublets`.

20 |

21 | # Mathematical background

22 |

23 | Consider any two cell states $C_1$ and $C_2$ forming a doublet population $D_{12}$.

24 | We will focus on the relative frequency of inter-sample to intra-sample doublets in $D_{12}$.

25 | Given a vector $\vec p_X$ containing the proportion of cells from each sample in state $X$, and assuming that doublets form randomly between pairs of samples, the expected proportion of intra-sample doublets in $D_{12}$ is $\vec p_{C_1} \cdot \vec p_{C_2}$.

26 | Subtracting this from 1 gives us the expected proportion of inter-sample doublets $q_{D_{12}}$.

27 | Similarly, the expected proportion of inter-sample doublets in $C_1$ is just $q_{C_1} =1 - \| \vec p_{C_1} \|_2^2$.
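
As a small numerical illustration of the expressions above, the following sketch evaluates them for made-up proportion vectors. The values of `p_C1` and `p_C2` are hypothetical and chosen only for the example; nothing here is taken from the package itself.

```r
# Hypothetical proportions of cells from each of three samples in two states.
p_C1 <- c(0.5, 0.3, 0.2)
p_C2 <- c(0.2, 0.2, 0.6)

# Expected proportion of intra-sample doublets in D_12 (dot product),
# and its complement, the expected proportion of inter-sample doublets.
intra_D12 <- sum(p_C1 * p_C2)
q_D12 <- 1 - intra_D12

# Expected proportion of inter-sample doublets within each single state.
q_C1 <- 1 - sum(p_C1^2)
q_C2 <- 1 - sum(p_C2^2)

c(q_D12 = q_D12, q_C1 = q_C1, q_C2 = q_C2)
```

With these particular values, $q_{D_{12}}$ exceeds both $q_{C_1}$ and $q_{C_2}$, although this need not hold for arbitrary proportion vectors.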

28 |

29 | Now, let's consider the observed proportion of events $r_X$ in each state $X$ that are known doublets.

30 | We have $r_{D_{12}} = q_{D_{12}}$ as there are no other events in $D_{12}$ beyond actual doublets.

31 | On the other hand, we expect that $r_{C_1} \ll q_{C_1}$ due to the presence of a large majority of non-doublet cells in $C_1$ (same for $C_2$).

32 | If we assume that $q_{D_{12}} \ge q_{C_1}$ and $q_{D_{12}} \ge q_{C_2}$, the observed proportion $r_{D_{12}}$ should be larger than both $r_{C_1}$ and $r_{C_2}$.

33 | (The last assumption is not always true but the $\ll$ should give us enough wiggle room to be robust to violations.)

34 |

35 |

44 |

45 | The above reasoning motivates the use of the proportion of known doublet neighbors as a "doublet score" to identify events that are most likely to be themselves doublets.

46 | `recoverDoublets()` computes the proportion of known doublet neighbors for each cell by performing a $k$-nearest neighbor search against all other cells in the dataset.

47 | It is then straightforward to calculate the proportion of neighboring cells that are marked as known doublets, representing our estimate of $r_X$ for each cell.
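
The idea of a neighbor-based score can be sketched in base R as follows. This is a brute-force illustration on simulated data, not the implementation used by `recoverDoublets()`; the names `coords`, `known` and `prop_known`, the choice of `k`, and the simulated values are all assumptions made for the example.

```r
set.seed(1)
n <- 200
k <- 10

# Hypothetical low-dimensional coordinates (one row per cell) and a
# hypothetical logical vector marking the known doublets.
coords <- matrix(rnorm(n * 2), ncol = 2)
known <- rep(c(TRUE, FALSE), c(20, 180))

# Brute-force pairwise distances; each cell is excluded from its own neighbors.
d <- as.matrix(dist(coords))
diag(d) <- Inf

# For each cell, the proportion of its k nearest neighbors that are known doublets.
prop_known <- vapply(seq_len(n), function(i) {
    nn <- order(d[i, ])[seq_len(k)]
    mean(known[nn])
}, numeric(1))

summary(prop_known)
```

The full distance matrix is only practical for small datasets; a dedicated nearest-neighbor search would be needed at realistic scales.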

48 |

49 | # Obtaining explicit calls

50 |

51 | While the proportions are informative, there comes a time when we need to convert these into explicit doublet calls.

52 | This is achieved with $\vec S$, the vector of the proportion of cells from each sample across the entire dataset (i.e., `samples`).

53 | We assume that all cell states contributing to doublet states have proportion vectors equal to $\vec S$, such that the expected proportion of doublets that occur between cells from the same sample is $\| \vec S\|_2^2$.

54 | We then solve

55 |

56 | $$

57 | \frac{N_{intra}}{N_{intra} + N_{inter}} = \| \vec S\|_2^2

58 | $$

59 |