├── models

├── __init__.py

├── gradcam.py

├── experimental.py

├── yolo_v5_object_detector.py

├── yolo.py

└── common.py

├── utils

├── __init__.py

├── activations.py

├── downloads.py

├── autoanchor.py

├── loss.py

├── augmentations.py

├── metrics.py

├── torch_utils.py

├── plots.py

└── general.py

├── images

├── bus.jpg

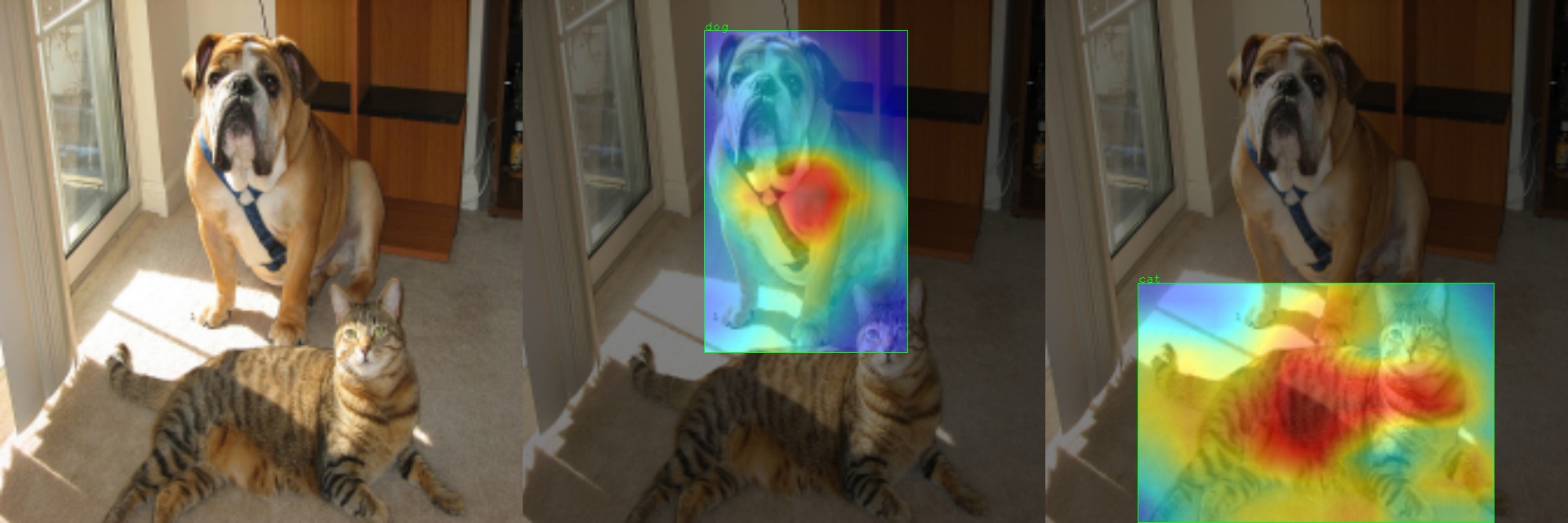

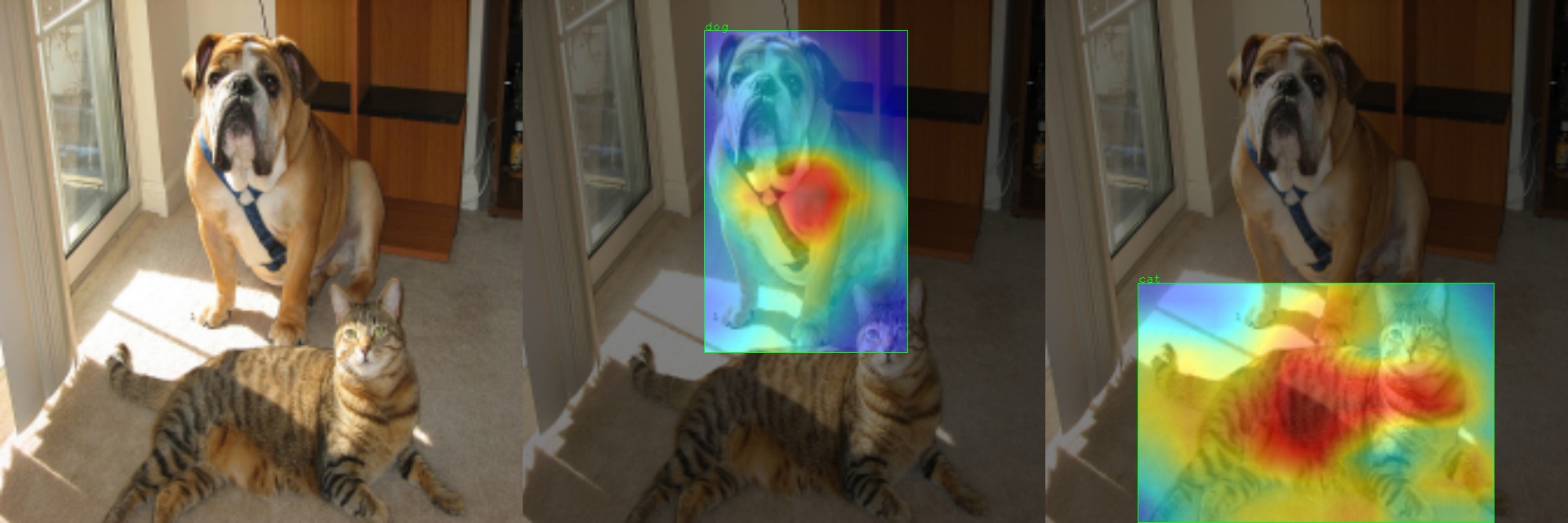

├── dog.jpg

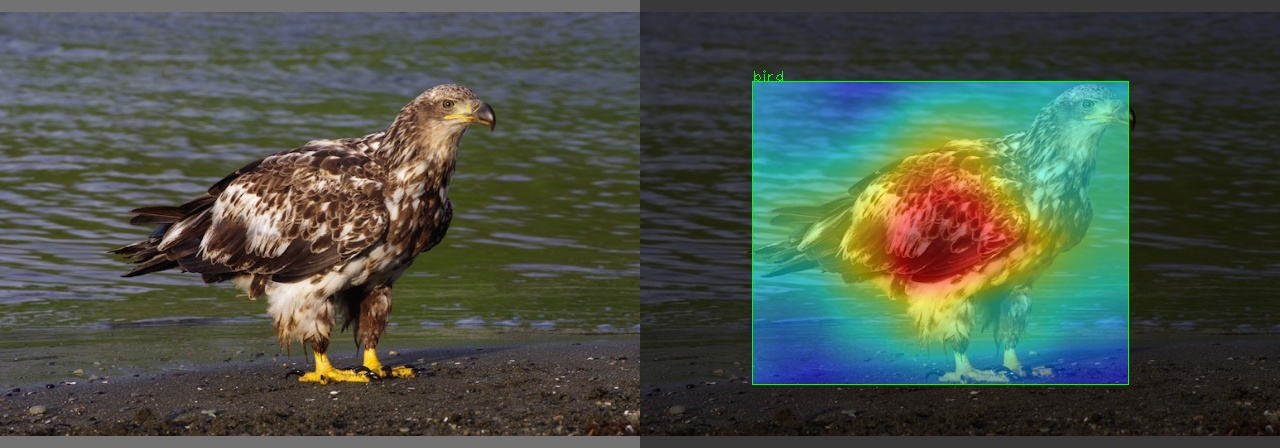

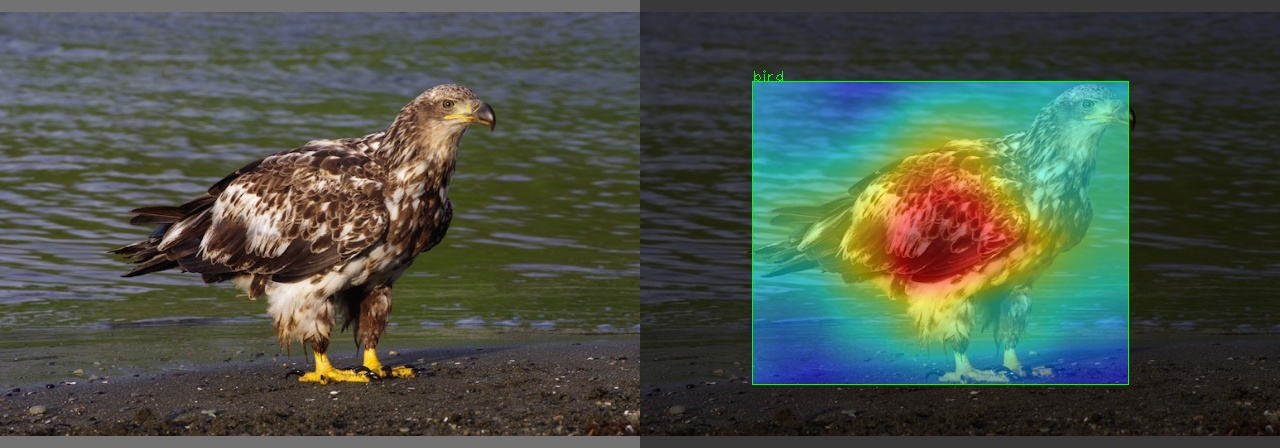

├── eagle.jpg

└── cat-dog.jpg

├── outputs

├── bus-res.jpg

├── dog-res.jpg

├── eagle-res.jpg

└── cat-dog-res.jpg

├── requirements.txt

├── LICENSE

├── README.md

├── .gitignore

└── main.py

/models/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/utils/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/images/bus.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/pooya-mohammadi/yolov5-gradcam/HEAD/images/bus.jpg

--------------------------------------------------------------------------------

/images/dog.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/pooya-mohammadi/yolov5-gradcam/HEAD/images/dog.jpg

--------------------------------------------------------------------------------

/images/eagle.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/pooya-mohammadi/yolov5-gradcam/HEAD/images/eagle.jpg

--------------------------------------------------------------------------------

/images/cat-dog.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/pooya-mohammadi/yolov5-gradcam/HEAD/images/cat-dog.jpg

--------------------------------------------------------------------------------

/outputs/bus-res.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/pooya-mohammadi/yolov5-gradcam/HEAD/outputs/bus-res.jpg

--------------------------------------------------------------------------------

/outputs/dog-res.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/pooya-mohammadi/yolov5-gradcam/HEAD/outputs/dog-res.jpg

--------------------------------------------------------------------------------

/outputs/eagle-res.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/pooya-mohammadi/yolov5-gradcam/HEAD/outputs/eagle-res.jpg

--------------------------------------------------------------------------------

/outputs/cat-dog-res.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/pooya-mohammadi/yolov5-gradcam/HEAD/outputs/cat-dog-res.jpg

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | # pip install -r requirements.txt

2 |

3 | # Base ----------------------------------------

4 | matplotlib>=3.2.2

5 | numpy>=1.18.5

6 | opencv-python>=4.5.4

7 | Pillow>=7.1.2

8 | PyYAML>=5.3.1

9 | requests>=2.23.0

10 | scipy>=1.4.1

11 | torch>=1.7.0,<1.11.0

12 | torchvision>=0.8.1

13 | tqdm>=4.41.0

14 | # Logging -------------------------------------

15 | tensorboard>=2.4.1

16 | # wandb

17 |

18 | # Plotting ------------------------------------

19 | pandas>=1.1.4

20 | seaborn>=0.11.0

21 |

22 | # loading model ---------------------------------------

23 | deep_utils>=0.8.5

24 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2021 Pooya Mohammadi Kazaj

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # YOLO-V5 GRADCAM

2 |

3 | I have long wanted to know which parts of an object an object-detection model pays the most attention to. I searched for a Grad-CAM implementation for YOLOv5 but did not find one.

4 | Here is my implementation of Grad-CAM for YOLOv5. The model-loading code comes from the main yolov5 repository, and the Grad-CAM computation is based on the gradcam_plus_plus-pytorch repository.

5 | Please follow my GitHub account and star ⭐ the project if this functionality benefits your research or projects.

6 |

7 | ## Update:

8 | The repo works with yolov5 v6.1.

9 |

10 |

11 | ## Installation

12 | `pip install -r requirements.txt`

13 |

14 | ## Infer

15 | `python main.py --model-path yolov5s.pt --img-path images/cat-dog.jpg --output-dir outputs`

16 |

17 | **NOTE**: If you don't have any weights and just want to test, don't change the model-path argument. The yolov5s model will be automatically downloaded thanks to the download function from yolov5.

18 |

19 | **NOTE**: For more input arguments, check out the main.py or run the following command:

20 |

21 | ```python main.py -h```

22 |

23 | ### Custom Name

24 | When using a custom model, you may also want to pass your custom class names, which can be done as below:

25 | ```

26 | python main.py --model-path custom-model-path.pt --img-path img-path.jpg --output-dir outputs --names obj1,obj2,obj3

27 | ```

28 | ## Examples

29 | [Open in Colab](https://colab.research.google.com/github/pooya-mohammadi/yolov5-gradcam/blob/master/main.ipynb)

30 |

35 | ## Note

36 | I checked the code, but I couldn't find an explanation for why the truck's heatmap does not show anything. Please inform me or create a pull request if you find the reason.

37 |

38 | This problem is solved in version 6.1

39 |

40 | ## To Do

41 | 1. Add GradCam++

42 | 2. Add ScoreCam

43 | 3. Add the functionality to the deep_utils library

44 |

45 | ## References

46 | 1. https://github.com/1Konny/gradcam_plus_plus-pytorch

47 | 2. https://github.com/ultralytics/yolov5

48 | 3. https://github.com/pooya-mohammadi/deep_utils

49 |

50 | ## Citation

51 |

52 | Please cite **yolov5-gradcam** if it helps your research. You can use the following BibTeX entry:

53 | ```

54 | @misc{deep_utils,

55 | title = {yolov5-gradcam},

56 | author = {Mohammadi Kazaj, Pooya},

57 | howpublished = {\url{github.com/pooya-mohammadi/yolov5-gradcam}},

58 | year = {2021}

59 | }

60 | ```

61 |

--------------------------------------------------------------------------------
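The `--names` flag described in the README takes a single comma-separated string, which `main.py` turns into a list with `args.names.strip().split(",")`. The following is a minimal sketch of that parsing in isolation; the `parse_names` helper is hypothetical and not part of the repository.

```python
import argparse


def parse_names(argv):
    """Hypothetical helper: parse --names the same way main.py does."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--names', type=str, default=None)
    args = parser.parse_args(argv)
    # None means "no custom names were given"
    return None if args.names is None else args.names.strip().split(',')


print(parse_names(['--names', 'obj1,obj2,obj3']))
print(parse_names([]))
```

Only the ends of the whole string are stripped, so a value such as `obj1, obj2` would keep the leading space in the second name. Passing the names without spaces, as in the README example, avoids this.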

/models/gradcam.py:

--------------------------------------------------------------------------------

1 | import time

2 | import torch

3 | import torch.nn.functional as F

4 |

5 |

6 | def find_yolo_layer(model, layer_name):

7 | """Find yolov5 layer to calculate GradCAM and GradCAM++

8 |

9 | Args:

10 | model: yolov5 model.

11 | layer_name (str): the name of layer with its hierarchical information.

12 |

13 | Return:

14 | target_layer: found layer

15 | """

16 | hierarchy = layer_name.split('_')

17 | target_layer = model.model._modules[hierarchy[0]]

18 |

19 | for h in hierarchy[1:]:

20 | target_layer = target_layer._modules[h]

21 | return target_layer

22 |

23 |

24 | class YOLOV5GradCAM:

25 |

26 | def __init__(self, model, layer_name, img_size=(640, 640)):

27 | self.model = model

28 | self.gradients = dict()

29 | self.activations = dict()

30 |

31 | def backward_hook(module, grad_input, grad_output):

32 | self.gradients['value'] = grad_output[0]

33 | return None

34 |

35 | def forward_hook(module, input, output):

36 | self.activations['value'] = output

37 | return None

38 |

39 | target_layer = find_yolo_layer(self.model, layer_name)

40 | target_layer.register_forward_hook(forward_hook)

41 | target_layer.register_backward_hook(backward_hook)

42 |

43 | device = 'cuda' if next(self.model.model.parameters()).is_cuda else 'cpu'

44 | self.model(torch.zeros(1, 3, *img_size, device=device))

45 | print('[INFO] saliency_map size :', self.activations['value'].shape[2:])

46 |

47 | def forward(self, input_img, class_idx=True):

48 | """

49 | Args:

50 | input_img: input image with shape of (1, 3, H, W)

51 | Return:

52 | mask: saliency map of the same spatial dimension with input

53 | logit: model output

54 | preds: The object predictions

55 | """

56 | saliency_maps = []

57 | b, c, h, w = input_img.size()

58 | tic = time.time()

59 | preds, logits = self.model(input_img)

60 | print("[INFO] model-forward took: ", round(time.time() - tic, 4), 'seconds')

61 | for logit, cls, cls_name in zip(logits[0], preds[1][0], preds[2][0]):

62 | if class_idx:

63 | score = logit[cls]

64 | else:

65 | score = logit.max()

66 | self.model.zero_grad()

67 | tic = time.time()

68 | score.backward(retain_graph=True)

69 | print(f"[INFO] {cls_name}, model-backward took: ", round(time.time() - tic, 4), 'seconds')

70 | gradients = self.gradients['value']

71 | activations = self.activations['value']

72 | b, k, u, v = gradients.size()

73 | alpha = gradients.view(b, k, -1).mean(2)

74 | weights = alpha.view(b, k, 1, 1)

75 | saliency_map = (weights * activations).sum(1, keepdim=True)

76 | saliency_map = F.relu(saliency_map)

77 | saliency_map = F.interpolate(saliency_map, size=(h, w), mode='bilinear', align_corners=False) # F.upsample is deprecated

78 | saliency_map_min, saliency_map_max = saliency_map.min(), saliency_map.max()

79 | saliency_map = (saliency_map - saliency_map_min).div(saliency_map_max - saliency_map_min).data

80 | saliency_maps.append(saliency_map)

81 | return saliency_maps, logits, preds

82 |

83 | def __call__(self, input_img):

84 | return self.forward(input_img)

85 |

--------------------------------------------------------------------------------
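The core arithmetic of `YOLOV5GradCAM.forward` is: average each channel's gradient over the spatial grid, use those averages as channel weights for the activations, apply ReLU, and min-max scale the result. The sketch below reproduces only that arithmetic with nested lists standing in for tensors, so it runs without PyTorch. The function name `grad_cam` and the guard for a constant map are assumptions of this sketch, and the bilinear upsampling step is omitted.

```python
def grad_cam(activations, gradients):
    """Grad-CAM arithmetic for one image.

    activations, gradients: nested lists shaped [K][U][V]
    (K channels on a U x V grid), standing in for tensors.
    Returns a [U][V] map scaled to [0, 1].
    """
    k = len(activations)
    u = len(activations[0])
    v = len(activations[0][0])

    # alpha_k: spatial mean of the gradient of each channel
    alphas = [sum(sum(row) for row in gradients[c]) / (u * v) for c in range(k)]

    # Weighted sum over channels, followed by ReLU
    cam = [[max(0.0, sum(alphas[c] * activations[c][i][j] for c in range(k)))
            for j in range(v)] for i in range(u)]

    # Min-max scaling; returning zeros for a constant map is an
    # addition of this sketch, not something the repository does
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    if hi == lo:
        return [[0.0] * v for _ in range(u)]
    return [[(x - lo) / (hi - lo) for x in row] for row in cam]


acts = [[[1.0, 2.0], [3.0, 4.0]],        # channel 0
        [[4.0, 3.0], [2.0, 1.0]]]        # channel 1
grads = [[[1.0, 1.0], [1.0, 1.0]],       # spatial mean  1.0
         [[-1.0, -1.0], [-1.0, -1.0]]]   # spatial mean -1.0
print(grad_cam(acts, grads))
```

In this toy input the two channels get weights 1 and -1, so the map highlights the cells where channel 0 exceeds channel 1 and ReLU zeroes out the rest.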

/utils/activations.py:

--------------------------------------------------------------------------------

1 | # YOLOv5 🚀 by Ultralytics, GPL-3.0 license

2 | """

3 | Activation functions

4 | """

5 |

6 | import torch

7 | import torch.nn as nn

8 | import torch.nn.functional as F

9 |

10 |

11 | # SiLU https://arxiv.org/pdf/1606.08415.pdf ----------------------------------------------------------------------------

12 | class SiLU(nn.Module): # export-friendly version of nn.SiLU()

13 | @staticmethod

14 | def forward(x):

15 | return x * torch.sigmoid(x)

16 |

17 |

18 | class Hardswish(nn.Module): # export-friendly version of nn.Hardswish()

19 | @staticmethod

20 | def forward(x):

21 | # return x * F.hardsigmoid(x) # for torchscript and CoreML

22 | return x * F.hardtanh(x + 3, 0., 6.) / 6. # for torchscript, CoreML and ONNX

23 |

24 |

25 | # Mish https://github.com/digantamisra98/Mish --------------------------------------------------------------------------

26 | class Mish(nn.Module):

27 | @staticmethod

28 | def forward(x):

29 | return x * F.softplus(x).tanh()

30 |

31 |

32 | class MemoryEfficientMish(nn.Module):

33 | class F(torch.autograd.Function):

34 | @staticmethod

35 | def forward(ctx, x):

36 | ctx.save_for_backward(x)

37 | return x.mul(torch.tanh(F.softplus(x))) # x * tanh(ln(1 + exp(x)))

38 |

39 | @staticmethod

40 | def backward(ctx, grad_output):

41 | x = ctx.saved_tensors[0]

42 | sx = torch.sigmoid(x)

43 | fx = F.softplus(x).tanh()

44 | return grad_output * (fx + x * sx * (1 - fx * fx))

45 |

46 | def forward(self, x):

47 | return self.F.apply(x)

48 |

49 |

50 | # FReLU https://arxiv.org/abs/2007.11824 -------------------------------------------------------------------------------

51 | class FReLU(nn.Module):

52 | def __init__(self, c1, k=3): # ch_in, kernel

53 | super().__init__()

54 | self.conv = nn.Conv2d(c1, c1, k, 1, 1, groups=c1, bias=False)

55 | self.bn = nn.BatchNorm2d(c1)

56 |

57 | def forward(self, x):

58 | return torch.max(x, self.bn(self.conv(x)))

59 |

60 |

61 | # ACON https://arxiv.org/pdf/2009.04759.pdf ----------------------------------------------------------------------------

62 | class AconC(nn.Module):

63 | r""" ACON activation (activate or not).

64 | AconC: (p1*x-p2*x) * sigmoid(beta*(p1*x-p2*x)) + p2*x, beta is a learnable parameter

65 | according to "Activate or Not: Learning Customized Activation" .

66 | """

67 |

68 | def __init__(self, c1):

69 | super().__init__()

70 | self.p1 = nn.Parameter(torch.randn(1, c1, 1, 1))

71 | self.p2 = nn.Parameter(torch.randn(1, c1, 1, 1))

72 | self.beta = nn.Parameter(torch.ones(1, c1, 1, 1))

73 |

74 | def forward(self, x):

75 | dpx = (self.p1 - self.p2) * x

76 | return dpx * torch.sigmoid(self.beta * dpx) + self.p2 * x

77 |

78 |

79 | class MetaAconC(nn.Module):

80 | r""" ACON activation (activate or not).

81 | MetaAconC: (p1*x-p2*x) * sigmoid(beta*(p1*x-p2*x)) + p2*x, beta is generated by a small network

82 | according to "Activate or Not: Learning Customized Activation" .

83 | """

84 |

85 | def __init__(self, c1, k=1, s=1, r=16): # ch_in, kernel, stride, r

86 | super().__init__()

87 | c2 = max(r, c1 // r)

88 | self.p1 = nn.Parameter(torch.randn(1, c1, 1, 1))

89 | self.p2 = nn.Parameter(torch.randn(1, c1, 1, 1))

90 | self.fc1 = nn.Conv2d(c1, c2, k, s, bias=True)

91 | self.fc2 = nn.Conv2d(c2, c1, k, s, bias=True)

92 | # self.bn1 = nn.BatchNorm2d(c2)

93 | # self.bn2 = nn.BatchNorm2d(c1)

94 |

95 | def forward(self, x):

96 | y = x.mean(dim=2, keepdims=True).mean(dim=3, keepdims=True)

97 | # batch-size 1 bug/instabilities https://github.com/ultralytics/yolov5/issues/2891

98 | # beta = torch.sigmoid(self.bn2(self.fc2(self.bn1(self.fc1(y))))) # bug/unstable

99 | beta = torch.sigmoid(self.fc2(self.fc1(y))) # bug patch BN layers removed

100 | dpx = (self.p1 - self.p2) * x

101 | return dpx * torch.sigmoid(beta * dpx) + self.p2 * x

102 |

--------------------------------------------------------------------------------
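To make the formulas in `utils/activations.py` concrete, here is a scalar sketch of SiLU, Hardswish, and Mish using only the standard library. It follows the expressions in the comments and code above (`x * sigmoid(x)`, `x * hardtanh(x + 3, 0, 6) / 6`, `x * tanh(ln(1 + exp(x)))`); the lowercase function names are illustrative and do not exist in the repository.

```python
import math


def silu(x):
    # x * sigmoid(x)
    return x / (1.0 + math.exp(-x))


def hardswish(x):
    # x * hardtanh(x + 3, 0, 6) / 6: a piecewise-linear stand-in for sigmoid
    return x * min(max(x + 3.0, 0.0), 6.0) / 6.0


def mish(x):
    # x * tanh(softplus(x)), with softplus(x) = ln(1 + exp(x))
    return x * math.tanh(math.log1p(math.exp(x)))


for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f'{x:5.1f}  silu={silu(x):.4f}  hardswish={hardswish(x):.4f}  mish={mish(x):.4f}')
```

Hardswish is exactly zero for inputs at or below -3 and equals the input at or above 3, whereas SiLU and Mish only approach those limits smoothly.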

/models/experimental.py:

--------------------------------------------------------------------------------

1 | # YOLOv5 🚀 by Ultralytics, GPL-3.0 license

2 | """

3 | Experimental modules

4 | """

5 | import math

6 |

7 | import numpy as np

8 | import torch

9 | import torch.nn as nn

10 |

11 | from models.common import Conv

12 | from utils.downloads import attempt_download

13 |

14 |

15 | class Sum(nn.Module):

16 | # Weighted sum of 2 or more layers https://arxiv.org/abs/1911.09070

17 | def __init__(self, n, weight=False): # n: number of inputs

18 | super().__init__()

19 | self.weight = weight # apply weights boolean

20 | self.iter = range(n - 1) # iter object

21 | if weight:

22 | self.w = nn.Parameter(-torch.arange(1.0, n) / 2, requires_grad=True) # layer weights

23 |

24 | def forward(self, x):

25 | y = x[0] # no weight

26 | if self.weight:

27 | w = torch.sigmoid(self.w) * 2

28 | for i in self.iter:

29 | y = y + x[i + 1] * w[i]

30 | else:

31 | for i in self.iter:

32 | y = y + x[i + 1]

33 | return y

34 |

35 |

36 | class MixConv2d(nn.Module):

37 | # Mixed Depth-wise Conv https://arxiv.org/abs/1907.09595

38 | def __init__(self, c1, c2, k=(1, 3), s=1, equal_ch=True): # ch_in, ch_out, kernel, stride, ch_strategy

39 | super().__init__()

40 | n = len(k) # number of convolutions

41 | if equal_ch: # equal c_ per group

42 | i = torch.linspace(0, n - 1E-6, c2).floor() # c2 indices

43 | c_ = [(i == g).sum() for g in range(n)] # intermediate channels

44 | else: # equal weight.numel() per group

45 | b = [c2] + [0] * n

46 | a = np.eye(n + 1, n, k=-1)

47 | a -= np.roll(a, 1, axis=1)

48 | a *= np.array(k) ** 2

49 | a[0] = 1

50 | c_ = np.linalg.lstsq(a, b, rcond=None)[0].round() # solve for equal weight indices, ax = b

51 |

52 | self.m = nn.ModuleList([

53 | nn.Conv2d(c1, int(c_), k, s, k // 2, groups=math.gcd(c1, int(c_)), bias=False) for k, c_ in zip(k, c_)])

54 | self.bn = nn.BatchNorm2d(c2)

55 | self.act = nn.SiLU()

56 |

57 | def forward(self, x):

58 | return self.act(self.bn(torch.cat([m(x) for m in self.m], 1)))

59 |

60 |

61 | class Ensemble(nn.ModuleList):

62 | # Ensemble of models

63 | def __init__(self):

64 | super().__init__()

65 |

66 | def forward(self, x, augment=False, profile=False, visualize=False):

67 | y = [module(x, augment, profile, visualize)[0] for module in self]

68 | # y = torch.stack(y).max(0)[0] # max ensemble

69 | # y = torch.stack(y).mean(0) # mean ensemble

70 | y = torch.cat(y, 1) # nms ensemble

71 | return y, None # inference, train output

72 |

73 |

74 | def attempt_load(weights, device=None, inplace=True, fuse=True):

75 | from models.yolo import Detect, Model

76 |

77 | # Loads an ensemble of models weights=[a,b,c] or a single model weights=[a] or weights=a

78 | model = Ensemble()

79 | for w in weights if isinstance(weights, list) else [weights]:

80 | ckpt = torch.load(attempt_download(w), map_location=device)

81 | ckpt = (ckpt.get('ema') or ckpt['model']).float() # FP32 model

82 | model.append(ckpt.fuse().eval() if fuse else ckpt.eval()) # fused or un-fused model in eval mode

83 |

84 | # Compatibility updates

85 | for m in model.modules():

86 | t = type(m)

87 | if t in (nn.Hardswish, nn.LeakyReLU, nn.ReLU, nn.ReLU6, nn.SiLU, Detect, Model):

88 | m.inplace = inplace # torch 1.7.0 compatibility

89 | if t is Detect and not isinstance(m.anchor_grid, list):

90 | delattr(m, 'anchor_grid')

91 | setattr(m, 'anchor_grid', [torch.zeros(1)] * m.nl)

92 | elif t is Conv:

93 | m._non_persistent_buffers_set = set() # torch 1.6.0 compatibility

94 | elif t is nn.Upsample and not hasattr(m, 'recompute_scale_factor'):

95 | m.recompute_scale_factor = None # torch 1.11.0 compatibility

96 |

97 | if len(model) == 1:

98 | return model[-1] # return model

99 | print(f'Ensemble created with {weights}\n')

100 | for k in 'names', 'nc', 'yaml':

101 | setattr(model, k, getattr(model[0], k))

102 | model.stride = model[torch.argmax(torch.tensor([m.stride.max() for m in model])).int()].stride # max stride

103 | assert all(model[0].nc == m.nc for m in model), f'Models have different class counts: {[m.nc for m in model]}'

104 | return model # return ensemble

--------------------------------------------------------------------------------
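The `equal_ch` branch of `MixConv2d` decides how many output channels each kernel size receives by flooring `torch.linspace(0, n - 1E-6, c2)` and counting how often each group index occurs. The sketch below mirrors that idea in plain Python; `equal_channel_split` is a hypothetical name, and it uses 64-bit floats rather than PyTorch's default 32-bit, so it is an illustration rather than a bit-exact replica.

```python
def equal_channel_split(c2, n):
    """Channels per branch when c2 outputs are split across n kernel sizes."""
    if c2 == 1:
        groups = [0]
    else:
        # Evenly spaced points from 0 to just under n, floored to a group index
        step = (n - 1e-6) / (c2 - 1)
        groups = [int(i * step) for i in range(c2)]
    return [groups.count(g) for g in range(n)]


print(equal_channel_split(8, 2))
print(equal_channel_split(10, 3))
```

The small `1e-6` offset keeps the last point below `n`, so the final channel falls into group `n - 1` instead of a non-existent group `n`.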

/.gitignore:

--------------------------------------------------------------------------------

1 | # Repo-specific GitIgnore ----------------------------------------------------------------------------------------------

2 | *.jpeg

3 | *.png

4 | *.bmp

5 | *.tif

6 | *.tiff

7 | *.heic

8 | *.JPG

9 | *.JPEG

10 | *.PNG

11 | *.BMP

12 | *.TIF

13 | *.TIFF

14 | *.HEIC

15 | *.mp4

16 | *.mov

17 | *.MOV

18 | *.avi

19 | *.data

20 | *.json

21 | *.cfg

22 | !cfg/yolov3*.cfg

23 |

24 | storage.googleapis.com

25 | runs/*

26 | data/*

27 | !data/hyps/*

28 | !data/images/zidane.jpg

29 | !data/images/bus.jpg

30 | !data/*.sh

31 |

32 | results*.csv

33 |

34 | # Datasets -------------------------------------------------------------------------------------------------------------

35 | coco/

36 | coco128/

37 | VOC/

38 |

39 | # MATLAB GitIgnore -----------------------------------------------------------------------------------------------------

40 | *.m~

41 | *.mat

42 | !targets*.mat

43 |

44 | # Neural Network weights -----------------------------------------------------------------------------------------------

45 | *.weights

46 | *.pt

47 | *.pb

48 | *.onnx

49 | *.mlmodel

50 | *.torchscript

51 | *.tflite

52 | *.h5

53 | *_saved_model/

54 | *_web_model/

55 | darknet53.conv.74

56 | yolov3-tiny.conv.15

57 |

58 | # GitHub Python GitIgnore ----------------------------------------------------------------------------------------------

59 | # Byte-compiled / optimized / DLL files

60 | __pycache__/

61 | *.py[cod]

62 | *$py.class

63 |

64 | # C extensions

65 | *.so

66 |

67 | # Distribution / packaging

68 | .Python

69 | env/

70 | build/

71 | develop-eggs/

72 | dist/

73 | downloads/

74 | eggs/

75 | .eggs/

76 | lib/

77 | lib64/

78 | parts/

79 | sdist/

80 | var/

81 | wheels/

82 | *.egg-info/

83 | /wandb/

84 | .installed.cfg

85 | *.egg

86 |

87 |

88 | # PyInstaller

89 | # Usually these files are written by a python script from a template

90 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

91 | *.manifest

92 | *.spec

93 |

94 | # Installer logs

95 | pip-log.txt

96 | pip-delete-this-directory.txt

97 |

98 | # Unit test / coverage reports

99 | htmlcov/

100 | .tox/

101 | .coverage

102 | .coverage.*

103 | .cache

104 | nosetests.xml

105 | coverage.xml

106 | *.cover

107 | .hypothesis/

108 |

109 | # Translations

110 | *.mo

111 | *.pot

112 |

113 | # Django stuff:

114 | *.log

115 | local_settings.py

116 |

117 | # Flask stuff:

118 | instance/

119 | .webassets-cache

120 |

121 | # Scrapy stuff:

122 | .scrapy

123 |

124 | # Sphinx documentation

125 | docs/_build/

126 |

127 | # PyBuilder

128 | target/

129 |

130 | # Jupyter Notebook

131 | .ipynb_checkpoints

132 |

133 | # pyenv

134 | .python-version

135 |

136 | # celery beat schedule file

137 | celerybeat-schedule

138 |

139 | # SageMath parsed files

140 | *.sage.py

141 |

142 | # dotenv

143 | .env

144 |

145 | # virtualenv

146 | .venv*

147 | venv*/

148 | ENV*/

149 |

150 | # Spyder project settings

151 | .spyderproject

152 | .spyproject

153 |

154 | # Rope project settings

155 | .ropeproject

156 |

157 | # mkdocs documentation

158 | /site

159 |

160 | # mypy

161 | .mypy_cache/

162 |

163 |

164 | # https://github.com/github/gitignore/blob/master/Global/macOS.gitignore -----------------------------------------------

165 |

166 | # General

167 | .DS_Store

168 | .AppleDouble

169 | .LSOverride

170 |

171 | # Icon must end with two \r

172 | Icon

173 | Icon?

174 |

175 | # Thumbnails

176 | ._*

177 |

178 | # Files that might appear in the root of a volume

179 | .DocumentRevisions-V100

180 | .fseventsd

181 | .Spotlight-V100

182 | .TemporaryItems

183 | .Trashes

184 | .VolumeIcon.icns

185 | .com.apple.timemachine.donotpresent

186 |

187 | # Directories potentially created on remote AFP share

188 | .AppleDB

189 | .AppleDesktop

190 | Network Trash Folder

191 | Temporary Items

192 | .apdisk

193 |

194 |

195 | # https://github.com/github/gitignore/blob/master/Global/JetBrains.gitignore

196 | # Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio and WebStorm

197 | # Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839

198 |

199 | # User-specific stuff:

200 | .idea/*

201 | .idea/**/workspace.xml

202 | .idea/**/tasks.xml

203 | .idea/dictionaries

204 | .html # Bokeh Plots

205 | .pg # TensorFlow Frozen Graphs

206 | .avi # videos

207 |

208 | # Sensitive or high-churn files:

209 | .idea/**/dataSources/

210 | .idea/**/dataSources.ids

211 | .idea/**/dataSources.local.xml

212 | .idea/**/sqlDataSources.xml

213 | .idea/**/dynamic.xml

214 | .idea/**/uiDesigner.xml

215 |

216 | # Gradle:

217 | .idea/**/gradle.xml

218 | .idea/**/libraries

219 |

220 | # CMake

221 | cmake-build-debug/

222 | cmake-build-release/

223 |

224 | # Mongo Explorer plugin:

225 | .idea/**/mongoSettings.xml

226 |

227 | ## File-based project format:

228 | *.iws

229 |

230 | ## Plugin-specific files:

231 |

232 | # IntelliJ

233 | out/

234 |

235 | # mpeltonen/sbt-idea plugin

236 | .idea_modules/

237 |

238 | # JIRA plugin

239 | atlassian-ide-plugin.xml

240 |

241 | # Cursive Clojure plugin

242 | .idea/replstate.xml

243 |

244 | # Crashlytics plugin (for Android Studio and IntelliJ)

245 | com_crashlytics_export_strings.xml

246 | crashlytics.properties

247 | crashlytics-build.properties

248 | fabric.properties

249 |

--------------------------------------------------------------------------------

/main.py:

--------------------------------------------------------------------------------

1 | import os

2 | import time

3 | import argparse

4 | import numpy as np

5 | from models.gradcam import YOLOV5GradCAM

6 | from models.yolo_v5_object_detector import YOLOV5TorchObjectDetector

7 | import cv2

8 | from deep_utils import Box, split_extension

9 |

10 | # Arguments

11 | parser = argparse.ArgumentParser()

12 | parser.add_argument('--model-path', type=str, default="yolov5s.pt", help='Path to the model')

13 | parser.add_argument('--img-path', type=str, default='images/', help='input image path')

14 | parser.add_argument('--output-dir', type=str, default='outputs', help='output dir')

15 | parser.add_argument('--img-size', type=int, default=640, help="input image size")

16 | parser.add_argument('--target-layer', type=str, default='model_23_cv3_act',

17 | help='The hierarchical address of the layer to which gradcam will be applied;'

18 | ' the names should be separated by underscores')

19 | parser.add_argument('--method', type=str, default='gradcam', help='saliency method; only gradcam is currently implemented')

20 | parser.add_argument('--device', type=str, default='cpu', help='cuda or cpu')

21 | parser.add_argument('--names', type=str, default=None,

22 | help='The class names. Defaults to None, which uses the COCO classes. Provide custom names as follows: object1,object2,object3')

23 |

24 | args = parser.parse_args()

25 |

26 |

27 | def get_res_img(bbox, mask, res_img):

28 | mask = mask.squeeze(0).mul(255).add_(0.5).clamp_(0, 255).permute(1, 2, 0).detach().cpu().numpy().astype(

29 | np.uint8)

30 | heatmap = cv2.applyColorMap(mask, cv2.COLORMAP_JET)

31 | n_heatmat = (Box.fill_outer_box(heatmap, bbox) / 255).astype(np.float32)

32 | res_img = res_img / 255

33 | res_img = cv2.add(res_img, n_heatmat)

34 | res_img = (res_img / res_img.max())

35 | return res_img, n_heatmat

36 |

37 |

38 | def put_text_box(bbox, cls_name, res_img):

39 | x1, y1, x2, y2 = bbox

40 | # this is a bug in cv2. It does not put box on a converted image from torch unless it's buffered and read again!

41 | cv2.imwrite('temp.jpg', (res_img * 255).astype(np.uint8))

42 | res_img = cv2.imread('temp.jpg')

43 | res_img = Box.put_box(res_img, bbox)

44 | res_img = Box.put_text(res_img, cls_name, (x1, y1))

45 | return res_img

46 |

47 |

48 | def concat_images(images):

49 | h, w = images[0].shape[:2] # image arrays are (height, width, channels)

50 | canvas_h = h

51 | canvas_w = w * len(images)

52 | base_img = np.zeros((canvas_h, canvas_w, 3), dtype=np.uint8)

53 | for i, img in enumerate(images):

54 | base_img[:, w * i:w * (i + 1), ...] = img

55 | return base_img

56 |

57 |

58 | def main(img_path):

59 | device = args.device

60 | input_size = (args.img_size, args.img_size)

61 | img = cv2.imread(img_path)

62 | print('[INFO] Loading the model')

63 | model = YOLOV5TorchObjectDetector(args.model_path, device, img_size=input_size,

64 | names=None if args.names is None else args.names.strip().split(","))

65 | torch_img = model.preprocessing(img[..., ::-1])

66 | if args.method == 'gradcam':

67 | saliency_method = YOLOV5GradCAM(model=model, layer_name=args.target_layer, img_size=input_size)

68 | tic = time.time()

69 | masks, logits, [boxes, _, class_names, _] = saliency_method(torch_img)

70 | print("total time:", round(time.time() - tic, 4))

71 | result = torch_img.squeeze(0).mul(255).add_(0.5).clamp_(0, 255).permute(1, 2, 0).detach().cpu().numpy()

72 | result = result[..., ::-1] # convert to bgr

73 | images = [result]

74 | for i, mask in enumerate(masks):

75 | res_img = result.copy()

76 | bbox, cls_name = boxes[0][i], class_names[0][i]

77 | res_img, heat_map = get_res_img(bbox, mask, res_img)

78 | res_img = put_text_box(bbox, cls_name, res_img)

79 | images.append(res_img)

80 | final_image = concat_images(images)

81 | img_name = split_extension(os.path.split(img_path)[-1], suffix='-res')

82 | output_path = f'{args.output_dir}/{img_name}'

83 | os.makedirs(args.output_dir, exist_ok=True)

84 | print(f'[INFO] Saving the final image at {output_path}')

85 | cv2.imwrite(output_path, final_image)

86 |

87 |

88 | def folder_main(folder_path):

89 | device = args.device

90 | input_size = (args.img_size, args.img_size)

91 | print('[INFO] Loading the model')

92 | model = YOLOV5TorchObjectDetector(args.model_path, device, img_size=input_size,

93 | names=None if args.names is None else args.names.strip().split(","))

94 | for item in os.listdir(folder_path):

95 | img_path = os.path.join(folder_path, item)

96 | img = cv2.imread(img_path)

97 | torch_img = model.preprocessing(img[..., ::-1])

98 | if args.method == 'gradcam':

99 | saliency_method = YOLOV5GradCAM(model=model, layer_name=args.target_layer, img_size=input_size)

100 | tic = time.time()

101 | masks, logits, [boxes, _, class_names, _] = saliency_method(torch_img)

102 | print("total time:", round(time.time() - tic, 4))

103 | result = torch_img.squeeze(0).mul(255).add_(0.5).clamp_(0, 255).permute(1, 2, 0).detach().cpu().numpy()

104 | result = result[..., ::-1] # convert to bgr

105 | images = [result]

106 | for i, mask in enumerate(masks):

107 | res_img = result.copy()

108 | bbox, cls_name = boxes[0][i], class_names[0][i]

109 | res_img, heat_map = get_res_img(bbox, mask, res_img)

110 | res_img = put_text_box(bbox, cls_name, res_img)

111 | images.append(res_img)

112 | final_image = concat_images(images)

113 | img_name = split_extension(os.path.split(img_path)[-1], suffix='-res')

114 | output_path = f'{args.output_dir}/{img_name}'

115 | os.makedirs(args.output_dir, exist_ok=True)

116 | print(f'[INFO] Saving the final image at {output_path}')

117 | cv2.imwrite(output_path, final_image)

118 |

119 |

120 | if __name__ == '__main__':

121 | if os.path.isdir(args.img_path):

122 | folder_main(args.img_path)

123 | else:

124 | main(args.img_path)

125 |

--------------------------------------------------------------------------------
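The `--target-layer` value (default `model_23_cv3_act`) is an underscore-separated path that `find_yolo_layer` in `models/gradcam.py` splits and follows through the nested `_modules` dictionaries. A minimal sketch of that lookup, using plain dictionaries as a hypothetical and heavily trimmed stand-in for a loaded model:

```python
def find_layer(modules, layer_name):
    """Walk a nested mapping using an underscore-separated path."""
    target = modules
    for key in layer_name.split('_'):
        target = target[key]
    return target


# Hypothetical stand-in for the nested _modules dictionaries of a model
toy_model = {
    'model': {
        '23': {'cv3': {'conv': 'Conv2d', 'act': 'SiLU'}},
        '24': {'m': 'Detect'},
    }
}

print(find_layer(toy_model, 'model_23_cv3_act'))  # SiLU
```

Because the path is split on every underscore, a submodule whose own name contains an underscore cannot be addressed with this scheme.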

/utils/downloads.py:

--------------------------------------------------------------------------------

1 | # YOLOv5 🚀 by Ultralytics, GPL-3.0 license

2 | """

3 | Download utils

4 | """

5 |

6 | import os

7 | import platform

8 | import subprocess

9 | import time

10 | import urllib.parse

11 | from pathlib import Path

12 | from zipfile import ZipFile

13 |

14 | import requests

15 | import torch

16 |

17 |

18 | def gsutil_getsize(url=''):

19 | # gs://bucket/file size https://cloud.google.com/storage/docs/gsutil/commands/du

20 | s = subprocess.check_output(f'gsutil du {url}', shell=True).decode('utf-8')

21 | return int(s.split(' ')[0]) if len(s) else 0 # bytes; int() avoids calling eval() on command output

22 |

23 |

24 | def safe_download(file, url, url2=None, min_bytes=1E0, error_msg=''):

25 | # Attempts to download file from url or url2, checks and removes incomplete downloads < min_bytes

26 | file = Path(file)

27 | assert_msg = f"Downloaded file '{file}' does not exist or size is < min_bytes={min_bytes}"

28 | try: # url1

29 | print(f'Downloading {url} to {file}...')

30 | torch.hub.download_url_to_file(url, str(file))

31 | assert file.exists() and file.stat().st_size > min_bytes, assert_msg # check

32 | except Exception as e: # url2

33 | file.unlink(missing_ok=True) # remove partial downloads

34 | print(f'ERROR: {e}\nRe-attempting {url2 or url} to {file}...')

35 | os.system(f"curl -L '{url2 or url}' -o '{file}' --retry 3 -C -") # curl download, retry and resume on fail

36 | finally:

37 | if not file.exists() or file.stat().st_size < min_bytes: # check

38 | file.unlink(missing_ok=True) # remove partial downloads

39 | print(f"ERROR: {assert_msg}\n{error_msg}")

40 | print('')

41 |

42 |

43 | def attempt_download(file, repo='ultralytics/yolov5'): # from utils.downloads import *; attempt_download()

44 | # Attempt file download if does not exist

45 | file = Path(str(file).strip().replace("'", ''))

46 |

47 | if not file.exists():

48 | # URL specified

49 | name = Path(urllib.parse.unquote(str(file))).name # decode '%2F' to '/' etc.

50 | if str(file).startswith(('http:/', 'https:/')): # download

51 | url = str(file).replace(':/', '://') # Pathlib turns :// -> :/

52 | name = name.split('?')[0] # parse authentication https://url.com/file.txt?auth...

53 | safe_download(file=name, url=url, min_bytes=1E5)

54 | return name

55 |

56 | # GitHub assets

57 | file.parent.mkdir(parents=True, exist_ok=True) # make parent dir (if required)

58 | try:

59 | response = requests.get(f'https://api.github.com/repos/{repo}/releases/latest').json() # github api

60 | assets = [x['name'] for x in response['assets']] # release assets, i.e. ['yolov5s.pt', 'yolov5m.pt', ...]

61 | tag = response['tag_name'] # i.e. 'v1.0'

62 | except Exception: # fallback plan

63 | assets = ['yolov5n.pt', 'yolov5s.pt', 'yolov5m.pt', 'yolov5l.pt', 'yolov5x.pt',

64 | 'yolov5n6.pt', 'yolov5s6.pt', 'yolov5m6.pt', 'yolov5l6.pt', 'yolov5x6.pt']

65 | try:

66 | tag = subprocess.check_output('git tag', shell=True, stderr=subprocess.STDOUT).decode().split()[-1]

67 | except Exception:

68 | tag = 'v6.0' # current release

69 |

70 | if name in assets:

71 | safe_download(file,

72 | url=f'https://github.com/{repo}/releases/download/{tag}/{name}',

73 | # url2=f'https://storage.googleapis.com/{repo}/ckpt/{name}', # backup url (optional)

74 | min_bytes=1E5,

75 | error_msg=f'{file} missing, try downloading from https://github.com/{repo}/releases/')

76 |

77 | return str(file)

78 |

79 |

80 | def gdrive_download(id='16TiPfZj7htmTyhntwcZyEEAejOUxuT6m', file='tmp.zip'):

81 | # Downloads a file from Google Drive. from yolov5.utils.downloads import *; gdrive_download()

82 | t = time.time()

83 | file = Path(file)

84 | cookie = Path('cookie') # gdrive cookie

85 | print(f'Downloading https://drive.google.com/uc?export=download&id={id} as {file}... ', end='')

86 | file.unlink(missing_ok=True) # remove existing file

87 | cookie.unlink(missing_ok=True) # remove existing cookie

88 |

89 | # Attempt file download

90 | out = "NUL" if platform.system() == "Windows" else "/dev/null"

91 | os.system(f'curl -c ./cookie -s -L "drive.google.com/uc?export=download&id={id}" > {out}')

92 | if os.path.exists('cookie'): # large file

93 | s = f'curl -Lb ./cookie "drive.google.com/uc?export=download&confirm={get_token()}&id={id}" -o {file}'

94 | else: # small file

95 | s = f'curl -s -L -o {file} "drive.google.com/uc?export=download&id={id}"'

96 | r = os.system(s) # execute, capture return

97 | cookie.unlink(missing_ok=True) # remove existing cookie

98 |

99 | # Error check

100 | if r != 0:

101 | file.unlink(missing_ok=True) # remove partial

102 | print('Download error ') # raise Exception('Download error')

103 | return r

104 |

105 | # Unzip if archive

106 | if file.suffix == '.zip':

107 | print('unzipping... ', end='')

108 | ZipFile(file).extractall(path=file.parent) # unzip

109 | file.unlink() # remove zip

110 |

111 | print(f'Done ({time.time() - t:.1f}s)')

112 | return r

113 |

114 |

115 | def get_token(cookie="./cookie"):

116 | with open(cookie) as f:

117 | for line in f:

118 | if "download" in line:

119 | return line.split()[-1]

120 | return ""

121 |

122 | # Google utils: https://cloud.google.com/storage/docs/reference/libraries ----------------------------------------------

123 | #

124 | #

125 | # def upload_blob(bucket_name, source_file_name, destination_blob_name):

126 | # # Uploads a file to a bucket

127 | # # https://cloud.google.com/storage/docs/uploading-objects#storage-upload-object-python

128 | #

129 | # storage_client = storage.Client()

130 | # bucket = storage_client.get_bucket(bucket_name)

131 | # blob = bucket.blob(destination_blob_name)

132 | #

133 | # blob.upload_from_filename(source_file_name)

134 | #

135 | # print('File {} uploaded to {}.'.format(

136 | # source_file_name,

137 | # destination_blob_name))

138 | #

139 | #

140 | # def download_blob(bucket_name, source_blob_name, destination_file_name):

141 | # # Downloads a blob from a bucket

142 | # storage_client = storage.Client()

143 | # bucket = storage_client.get_bucket(bucket_name)

144 | # blob = bucket.blob(source_blob_name)

145 | #

146 | # blob.download_to_filename(destination_file_name)

147 | #

148 | # print('Blob {} downloaded to {}.'.format(

149 | # source_blob_name,

150 | # destination_file_name))

151 |
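The try-primary-then-fallback flow of `safe_download()` above can be exercised offline. The following is a minimal sketch, not the repo's implementation: `download_with_fallback`, `broken_fetch` and `working_fetch` are hypothetical names introduced for illustration, and the fetchers write local bytes instead of calling `torch.hub` or `curl`.

```python
import tempfile
from pathlib import Path


def download_with_fallback(file, fetchers, min_bytes=1):
    """Run fetchers in order; return True once one leaves a file of at least min_bytes."""
    file = Path(file)
    for fetch in fetchers:  # hypothetical callables that write to `file`
        try:
            fetch(file)
            if file.exists() and file.stat().st_size >= min_bytes:
                return True
        except Exception as e:
            print(f'ERROR: {e}')
        file.unlink(missing_ok=True)  # remove partial or undersized download
    return False


def broken_fetch(file):
    file.write_bytes(b'x')  # simulates a truncated download
    raise ConnectionError('primary source unavailable')


def working_fetch(file):
    file.write_bytes(b'0' * 1024)  # simulates a complete download


with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / 'weights.pt'
    ok = download_with_fallback(target, [broken_fetch, working_fetch], min_bytes=100)
    size = target.stat().st_size
    print(ok, size)
```

Passing the fetchers in as callables is a design choice made here so the size check and cleanup logic can be tested without network access.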

--------------------------------------------------------------------------------

/utils/autoanchor.py:

--------------------------------------------------------------------------------

1 | # YOLOv5 🚀 by Ultralytics, GPL-3.0 license

2 | """

3 | Auto-anchor utils

4 | """

5 |

6 | import random

7 |

8 | import numpy as np

9 | import torch

10 | import yaml

11 | from tqdm import tqdm

12 |

13 | from utils.general import colorstr

14 |

15 |

16 | def check_anchor_order(m):

17 | # Check anchor order against stride order for YOLOv5 Detect() module m, and correct if necessary

18 | a = m.anchors.prod(-1).view(-1) # anchor area

19 | da = a[-1] - a[0] # delta a

20 | ds = m.stride[-1] - m.stride[0] # delta s

21 | if da.sign() != ds.sign(): # anchor order differs from stride order

22 | print('Reversing anchor order')

23 | m.anchors[:] = m.anchors.flip(0)

24 |

25 |

26 | def check_anchors(dataset, model, thr=4.0, imgsz=640):

27 | # Check anchor fit to data, recompute if necessary

28 | prefix = colorstr('autoanchor: ')

29 | print(f'\n{prefix}Analyzing anchors... ', end='')

30 | m = model.module.model[-1] if hasattr(model, 'module') else model.model[-1] # Detect()

31 | shapes = imgsz * dataset.shapes / dataset.shapes.max(1, keepdims=True)

32 | scale = np.random.uniform(0.9, 1.1, size=(shapes.shape[0], 1)) # augment scale

33 | wh = torch.tensor(np.concatenate([l[:, 3:5] * s for s, l in zip(shapes * scale, dataset.labels)])).float() # wh

34 |

35 | def metric(k): # compute metric

36 | r = wh[:, None] / k[None]

37 | x = torch.min(r, 1. / r).min(2)[0] # ratio metric

38 | best = x.max(1)[0] # best_x

39 | aat = (x > 1. / thr).float().sum(1).mean() # anchors above threshold

40 | bpr = (best > 1. / thr).float().mean() # best possible recall

41 | return bpr, aat

42 |

43 | anchors = m.anchors.clone() * m.stride.to(m.anchors.device).view(-1, 1, 1) # current anchors

44 | bpr, aat = metric(anchors.cpu().view(-1, 2))

45 | print(f'anchors/target = {aat:.2f}, Best Possible Recall (BPR) = {bpr:.4f}', end='')

46 | if bpr < 0.98: # threshold to recompute

47 | print('. Attempting to improve anchors, please wait...')

48 | na = m.anchors.numel() // 2 # number of anchors

49 | try:

50 | anchors = kmean_anchors(dataset, n=na, img_size=imgsz, thr=thr, gen=1000, verbose=False)

51 | except Exception as e:

52 | print(f'{prefix}ERROR: {e}')

53 | new_bpr = metric(anchors)[0]

54 | if new_bpr > bpr: # replace anchors

55 | anchors = torch.tensor(anchors, device=m.anchors.device).type_as(m.anchors)

56 | m.anchors[:] = anchors.clone().view_as(m.anchors) / m.stride.to(m.anchors.device).view(-1, 1, 1) # loss

57 | check_anchor_order(m)

58 | print(f'{prefix}New anchors saved to model. Update model *.yaml to use these anchors in the future.')

59 | else:

60 | print(f'{prefix}Original anchors better than new anchors. Proceeding with original anchors.')

61 | print('') # newline

62 |

63 |

64 | def kmean_anchors(dataset='./data/coco128.yaml', n=9, img_size=640, thr=4.0, gen=1000, verbose=True):

65 | """ Creates kmeans-evolved anchors from training dataset

66 |

67 | Arguments:

68 | dataset: path to data.yaml, or a loaded dataset

69 | n: number of anchors

70 | img_size: image size used for training

71 | thr: anchor-label wh ratio threshold hyperparameter hyp['anchor_t'] used for training, default=4.0

72 | gen: generations to evolve anchors using genetic algorithm

73 | verbose: print all results

74 |

75 | Return:

76 | k: kmeans evolved anchors

77 |

78 | Usage:

79 | from utils.autoanchor import *; _ = kmean_anchors()

80 | """

81 | from scipy.cluster.vq import kmeans

82 |

83 | thr = 1. / thr

84 | prefix = colorstr('autoanchor: ')

85 |

86 | def metric(k, wh): # compute metrics

87 | r = wh[:, None] / k[None]

88 | x = torch.min(r, 1. / r).min(2)[0] # ratio metric

89 | # x = wh_iou(wh, torch.tensor(k)) # iou metric

90 | return x, x.max(1)[0] # x, best_x

91 |

92 | def anchor_fitness(k): # mutation fitness

93 | _, best = metric(torch.tensor(k, dtype=torch.float32), wh)

94 | return (best * (best > thr).float()).mean() # fitness

95 |

96 | def print_results(k):

97 | k = k[np.argsort(k.prod(1))] # sort small to large

98 | x, best = metric(k, wh0)

99 | bpr, aat = (best > thr).float().mean(), (x > thr).float().mean() * n # best possible recall, anch > thr

100 | print(f'{prefix}thr={thr:.2f}: {bpr:.4f} best possible recall, {aat:.2f} anchors past thr')

101 | print(f'{prefix}n={n}, img_size={img_size}, metric_all={x.mean():.3f}/{best.mean():.3f}-mean/best, '

102 | f'past_thr={x[x > thr].mean():.3f}-mean: ', end='')

103 | for i, x in enumerate(k):

104 | print('%i,%i' % (round(x[0]), round(x[1])), end=', ' if i < len(k) - 1 else '\n') # use in *.cfg

105 | return k

106 |

107 | if isinstance(dataset, str): # *.yaml file

108 | with open(dataset, errors='ignore') as f:

109 | data_dict = yaml.safe_load(f) # model dict

110 | from utils.datasets import LoadImagesAndLabels

111 | dataset = LoadImagesAndLabels(data_dict['train'], augment=True, rect=True)

112 |

113 | # Get label wh

114 | shapes = img_size * dataset.shapes / dataset.shapes.max(1, keepdims=True)

115 | wh0 = np.concatenate([l[:, 3:5] * s for s, l in zip(shapes, dataset.labels)]) # wh

116 |

117 | # Filter

118 | i = (wh0 < 3.0).any(1).sum()

119 | if i:

120 | print(f'{prefix}WARNING: Extremely small objects found. {i} of {len(wh0)} labels are < 3 pixels in size.')

121 | wh = wh0[(wh0 >= 2.0).any(1)] # filter >= 2 pixels

122 | # wh = wh * (np.random.rand(wh.shape[0], 1) * 0.9 + 0.1) # multiply by random scale 0-1

123 |

124 | # Kmeans calculation

125 | print(f'{prefix}Running kmeans for {n} anchors on {len(wh)} points...')

126 | s = wh.std(0) # sigmas for whitening

127 | k, dist = kmeans(wh / s, n, iter=30) # points, mean distance

128 | assert len(k) == n, f'{prefix}ERROR: scipy.cluster.vq.kmeans requested {n} points but returned only {len(k)}'

129 | k *= s

130 | wh = torch.tensor(wh, dtype=torch.float32) # filtered

131 | wh0 = torch.tensor(wh0, dtype=torch.float32) # unfiltered

132 | k = print_results(k)

133 |

134 | # Plot

135 | # k, d = [None] * 20, [None] * 20

136 | # for i in tqdm(range(1, 21)):

137 | # k[i-1], d[i-1] = kmeans(wh / s, i) # points, mean distance

138 | # fig, ax = plt.subplots(1, 2, figsize=(14, 7), tight_layout=True)

139 | # ax = ax.ravel()

140 | # ax[0].plot(np.arange(1, 21), np.array(d) ** 2, marker='.')

141 | # fig, ax = plt.subplots(1, 2, figsize=(14, 7)) # plot wh

142 | # ax[0].hist(wh[wh[:, 0]<100, 0],400)

143 | # ax[1].hist(wh[wh[:, 1]<100, 1],400)

144 | # fig.savefig('wh.png', dpi=200)

145 |

146 | # Evolve

147 | npr = np.random

148 | f, sh, mp, s = anchor_fitness(k), k.shape, 0.9, 0.1 # fitness, anchor array shape, mutation prob, sigma

149 | pbar = tqdm(range(gen), desc=f'{prefix}Evolving anchors with Genetic Algorithm:') # progress bar

150 | for _ in pbar:

151 | v = np.ones(sh)

152 | while (v == 1).all(): # mutate until a change occurs (prevent duplicates)

153 | v = ((npr.random(sh) < mp) * random.random() * npr.randn(*sh) * s + 1).clip(0.3, 3.0)

154 | kg = (k.copy() * v).clip(min=2.0)

155 | fg = anchor_fitness(kg)

156 | if fg > f:

157 | f, k = fg, kg.copy()

158 | pbar.desc = f'{prefix}Evolving anchors with Genetic Algorithm: fitness = {f:.4f}'

159 | if verbose:

160 | print_results(k)

161 |

162 | return print_results(k)

163 |
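The ratio metric used by `check_anchors()` and `kmean_anchors()` above may be easier to follow on a handful of numbers. This is a pure-Python sketch under stated assumptions: `best_possible_recall` is a hypothetical helper, and the label sizes and anchors are made-up values. The repo itself computes the same quantity with broadcast `torch` operations.

```python
def best_possible_recall(wh, anchors, thr=4.0):
    """Fraction of labels whose best anchor lies within a factor `thr` in both width and height."""
    best = []
    for w, h in wh:
        scores = []
        for aw, ah in anchors:
            rw, rh = w / aw, h / ah
            # min(r, 1/r) over both dimensions: 1.0 is a perfect fit, values near 0 a poor one
            scores.append(min(rw, 1 / rw, rh, 1 / rh))
        best.append(max(scores))  # best-matching anchor for this label
    return sum(b > 1 / thr for b in best) / len(best)


labels_wh = [(10, 10), (100, 100), (400, 20)]  # illustrative label sizes in pixels
anchors = [(10, 13), (116, 90)]                # illustrative anchors in pixels
bpr = best_possible_recall(labels_wh, anchors)
print(round(bpr, 4))
```

With these values the elongated 400x20 label has no anchor within the 4x ratio, so two of three labels are matchable. `check_anchors()` triggers recomputation when this value drops below 0.98.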

--------------------------------------------------------------------------------

/models/yolo_v5_object_detector.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from deep_utils.utils.box_utils.boxes import Box

3 | import torch

4 | from models.experimental import attempt_load

5 | from utils.general import xywh2xyxy

6 | from utils.datasets import letterbox

7 | import cv2

8 | import time

9 | import torchvision

10 | import torch.nn as nn

11 | from utils.metrics import box_iou

12 |

13 |

14 | class YOLOV5TorchObjectDetector(nn.Module):

15 | def __init__(self,

16 | model_weight,

17 | device,

18 | img_size,

19 | names=None,

20 | mode='eval',

21 | confidence=0.4,

22 | iou_thresh=0.45,

23 | agnostic_nms=False):

24 | super(YOLOV5TorchObjectDetector, self).__init__()

25 | self.device = device

26 | self.model = None

27 | self.img_size = img_size

28 | self.mode = mode

29 | self.confidence = confidence

30 | self.iou_thresh = iou_thresh

31 | self.agnostic = agnostic_nms

32 | self.model = attempt_load(model_weight, device=device)

33 | print("[INFO] Model is loaded")

34 | self.model.requires_grad_(True)

35 | self.model.to(device)

36 | if self.mode == 'train':

37 | self.model.train()

38 | else:

39 | self.model.eval()

40 | # fetch the names

41 | if names is None:

42 | print('[INFO] fetching names from coco file')

43 | self.names = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat',

44 | 'traffic light',

45 | 'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep',

46 | 'cow',

47 | 'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase',

48 | 'frisbee',

49 | 'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard',

50 | 'surfboard',

51 | 'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana',

52 | 'apple',

53 | 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair',

54 | 'couch',

55 | 'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote',

56 | 'keyboard', 'cell phone',

57 | 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors',

58 | 'teddy bear',

59 | 'hair drier', 'toothbrush']

60 | else:

61 | self.names = names

62 |

63 | # preventing cold start

64 | img = torch.zeros((1, 3, *self.img_size), device=device)

65 | self.model(img)

66 |

67 | @staticmethod

68 | def non_max_suppression(prediction, logits, conf_thres=0.6, iou_thres=0.45, classes=None, agnostic=False,

69 | multi_label=False, labels=(), max_det=300):

70 | """Runs Non-Maximum Suppression (NMS) on inference and logits results

71 |

72 | Returns:

73 | list of detections, one (n,6) tensor per image [xyxy, conf, cls], and pruned input logits (n, number-classes)

74 | """

75 |

76 | nc = prediction.shape[2] - 5 # number of classes

77 | xc = prediction[..., 4] > conf_thres # candidates

78 |

79 | # Checks

80 | assert 0 <= conf_thres <= 1, f'Invalid Confidence threshold {conf_thres}, valid values are between 0.0 and 1.0'

81 | assert 0 <= iou_thres <= 1, f'Invalid IoU {iou_thres}, valid values are between 0.0 and 1.0'

82 |

83 | # Settings

84 | min_wh, max_wh = 2, 4096 # (pixels) minimum and maximum box width and height

85 | max_nms = 30000 # maximum number of boxes into torchvision.ops.nms()

86 | time_limit = 10.0 # seconds to quit after

87 | redundant = True # require redundant detections

88 | multi_label &= nc > 1 # multiple labels per box (adds 0.5ms/img)

89 | merge = False # use merge-NMS

90 |

91 | t = time.time()

92 | output = [torch.zeros((0, 6), device=prediction.device)] * prediction.shape[0]

93 | logits_output = [torch.zeros((0, nc), device=logits.device)] * logits.shape[0]

94 | for xi, (x, log_) in enumerate(zip(prediction, logits)): # image index, image inference

95 | # Apply constraints

96 | # x[((x[..., 2:4] < min_wh) | (x[..., 2:4] > max_wh)).any(1), 4] = 0 # width-height

97 | x = x[xc[xi]] # confidence

98 | log_ = log_[xc[xi]]

99 | # Cat apriori labels if autolabelling

100 | if labels and len(labels[xi]):

101 | l = labels[xi]

102 | v = torch.zeros((len(l), nc + 5), device=x.device)

103 | v[:, :4] = l[:, 1:5] # box

104 | v[:, 4] = 1.0 # conf

105 | v[range(len(l)), l[:, 0].long() + 5] = 1.0 # cls

106 | x = torch.cat((x, v), 0)

107 |

108 | # If none remain process next image

109 | if not x.shape[0]:

110 | continue

111 |

112 | # Compute conf

113 | x[:, 5:] *= x[:, 4:5] # conf = obj_conf * cls_conf

114 | # log_ *= x[:, 4:5]

115 | # Box (center x, center y, width, height) to (x1, y1, x2, y2)

116 | box = xywh2xyxy(x[:, :4])

117 |

118 | # Detections matrix nx6 (xyxy, conf, cls)

119 | if multi_label:

120 | i, j = (x[:, 5:] > conf_thres).nonzero(as_tuple=False).T

121 | x = torch.cat((box[i], x[i, j + 5, None], j[:, None].float()), 1)

122 | else: # best class only

123 | conf, j = x[:, 5:].max(1, keepdim=True)

124 | # log_ = x[:, 5:]

125 | x = torch.cat((box, conf, j.float()), 1)[conf.view(-1) > conf_thres]

126 | log_ = log_[conf.view(-1) > conf_thres]

127 | # Filter by class

128 | if classes is not None:

129 | x = x[(x[:, 5:6] == torch.tensor(classes, device=x.device)).any(1)]

130 |

131 | # Check shape

132 | n = x.shape[0] # number of boxes

133 | if not n: # no boxes

134 | continue

135 | elif n > max_nms: # excess boxes

136 | x = x[x[:, 4].argsort(descending=True)[:max_nms]] # sort by confidence

137 |

138 | # Batched NMS

139 | c = x[:, 5:6] * (0 if agnostic else max_wh) # classes

140 | boxes, scores = x[:, :4] + c, x[:, 4] # boxes (offset by class), scores

141 | i = torchvision.ops.nms(boxes, scores, iou_thres) # NMS

142 | if i.shape[0] > max_det: # limit detections

143 | i = i[:max_det]

144 | if merge and (1 < n < 3E3): # Merge NMS (boxes merged using weighted mean)

145 | # update boxes as boxes(i,4) = weights(i,n) * boxes(n,4)

146 | iou = box_iou(boxes[i], boxes) > iou_thres # iou matrix

147 | weights = iou * scores[None] # box weights

148 | x[i, :4] = torch.mm(weights, x[:, :4]).float() / weights.sum(1, keepdim=True) # merged boxes

149 | if redundant:

150 | i = i[iou.sum(1) > 1] # require redundancy

151 |

152 | output[xi] = x[i]

153 | logits_output[xi] = log_[i]

154 | assert log_[i].shape[0] == x[i].shape[0]

155 | if (time.time() - t) > time_limit:

156 | print(f'WARNING: NMS time limit {time_limit}s exceeded')

157 | break # time limit exceeded

158 |

159 | return output, logits_output

160 |

161 | @staticmethod

162 | def yolo_resize(img, new_shape=(640, 640), color=(114, 114, 114), auto=True, scaleFill=False, scaleup=True):

163 |

164 | return letterbox(img, new_shape=new_shape, color=color, auto=auto, scaleFill=scaleFill, scaleup=scaleup)

165 |

166 | def forward(self, img):

167 | prediction, logits, _ = self.model(img, augment=False)

168 | prediction, logits = self.non_max_suppression(prediction, logits, self.confidence, self.iou_thresh,

169 | classes=None,

170 | agnostic=self.agnostic)

171 | self.boxes, self.class_names, self.classes, self.confidences = [[[] for _ in range(img.shape[0])] for _ in

172 | range(4)]

173 | for i, det in enumerate(prediction): # detections per image

174 | if len(det):

175 | for *xyxy, conf, cls in det:

176 | bbox = Box.box2box(xyxy,

177 | in_source=Box.BoxSource.Torch,

178 | to_source=Box.BoxSource.Numpy,

179 | return_int=True)

180 | self.boxes[i].append(bbox)

181 | self.confidences[i].append(round(conf.item(), 2))

182 | cls = int(cls.item())

183 | self.classes[i].append(cls)

184 | if self.names is not None:

185 | self.class_names[i].append(self.names[cls])

186 | else:

187 | self.class_names[i].append(cls)

188 | return [self.boxes, self.classes, self.class_names, self.confidences], logits

189 |

190 | def preprocessing(self, img):

191 | if len(img.shape) != 4:

192 | img = np.expand_dims(img, axis=0)

193 | im0 = img.astype(np.uint8)

194 | img = np.array([self.yolo_resize(im, new_shape=self.img_size)[0] for im in im0])

195 | img = img.transpose((0, 3, 1, 2))

196 | img = np.ascontiguousarray(img)

197 | img = torch.from_numpy(img).to(self.device)

198 | img = img / 255.0

199 | return img

200 |

201 |

202 | if __name__ == '__main__':

203 | model_path = 'runs/train/cart-detection/weights/best.pt'

204 | img_path = './16_4322071600_101_0_4160379257.jpg'

205 | model = YOLOV5TorchObjectDetector(model_path, 'cpu', img_size=(640, 640)).to('cpu')

206 | img = np.expand_dims(cv2.imread(img_path)[..., ::-1], axis=0)

207 | img = model.preprocessing(img)

208 | a = model(img)

209 | print(model._modules)

210 |
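The "Batched NMS" step in `non_max_suppression()` above shifts each box by `class * max_wh` so that boxes of different classes can never overlap. The sketch below illustrates that trick with a plain greedy NMS written in pure Python. `iou`, `greedy_nms` and `class_aware_nms` are hypothetical helpers, not part of the repo, and the boxes are made-up values. The repo delegates the suppression itself to `torchvision.ops.nms`.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def greedy_nms(boxes, scores, iou_thres):
    """Keep boxes in descending score order, dropping any with IoU > iou_thres to a kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep


def class_aware_nms(boxes, scores, classes, iou_thres=0.45, agnostic=False, max_wh=4096):
    """Offset every box by class * max_wh so a single NMS pass never mixes classes."""
    shifted = [[v + (0 if agnostic else c * max_wh) for v in box]
               for box, c in zip(boxes, classes)]
    return greedy_nms(shifted, scores, iou_thres)


boxes = [[10, 10, 110, 110], [12, 12, 112, 112], [300, 300, 400, 400]]
scores = [0.9, 0.8, 0.7]
classes = [0, 1, 0]
per_class = class_aware_nms(boxes, scores, classes)
agnostic = class_aware_nms(boxes, scores, classes, agnostic=True)
print(per_class, agnostic)
```

The first two boxes overlap heavily but carry different classes, so both survive the per-class pass and only the higher-scoring one survives the class-agnostic pass.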

--------------------------------------------------------------------------------

/utils/loss.py:

--------------------------------------------------------------------------------

1 | # YOLOv5 🚀 by Ultralytics, GPL-3.0 license

2 | """

3 | Loss functions

4 | """

5 |

6 | import torch

7 | import torch.nn as nn

8 |

9 | from utils.metrics import bbox_iou

10 | from utils.torch_utils import is_parallel

11 |

12 |

13 | def smooth_BCE(eps=0.1): # https://github.com/ultralytics/yolov3/issues/238#issuecomment-598028441

14 | # return positive, negative label smoothing BCE targets

15 | return 1.0 - 0.5 * eps, 0.5 * eps

16 |

17 |

18 | class BCEBlurWithLogitsLoss(nn.Module):

19 | # BCEWithLogitsLoss() with reduced missing label effects.

20 | def __init__(self, alpha=0.05):

21 | super(BCEBlurWithLogitsLoss, self).__init__()

22 | self.loss_fcn = nn.BCEWithLogitsLoss(reduction='none') # must be nn.BCEWithLogitsLoss()

23 | self.alpha = alpha

24 |

25 | def forward(self, pred, true):

26 | loss = self.loss_fcn(pred, true)

27 | pred = torch.sigmoid(pred) # prob from logits

28 | dx = pred - true # reduce only missing label effects

29 | # dx = (pred - true).abs() # reduce missing label and false label effects

30 | alpha_factor = 1 - torch.exp((dx - 1) / (self.alpha + 1e-4))

31 | loss *= alpha_factor

32 | return loss.mean()

33 |

34 |

35 | class FocalLoss(nn.Module):

36 | # Wraps focal loss around existing loss_fcn(), i.e. criteria = FocalLoss(nn.BCEWithLogitsLoss(), gamma=1.5)

37 | def __init__(self, loss_fcn, gamma=1.5, alpha=0.25):

38 | super(FocalLoss, self).__init__()

39 | self.loss_fcn = loss_fcn # must be nn.BCEWithLogitsLoss()

40 | self.gamma = gamma

41 | self.alpha = alpha

42 | self.reduction = loss_fcn.reduction

43 | self.loss_fcn.reduction = 'none' # required to apply FL to each element

44 |

45 | def forward(self, pred, true):

46 | loss = self.loss_fcn(pred, true)

47 | # p_t = torch.exp(-loss)

48 | # loss *= self.alpha * (1.000001 - p_t) ** self.gamma # non-zero power for gradient stability

49 |

50 | # TF implementation https://github.com/tensorflow/addons/blob/v0.7.1/tensorflow_addons/losses/focal_loss.py

51 | pred_prob = torch.sigmoid(pred) # prob from logits

52 | p_t = true * pred_prob + (1 - true) * (1 - pred_prob)

53 | alpha_factor = true * self.alpha + (1 - true) * (1 - self.alpha)

54 | modulating_factor = (1.0 - p_t) ** self.gamma

55 | loss *= alpha_factor * modulating_factor

56 |

57 | if self.reduction == 'mean':

58 | return loss.mean()

59 | elif self.reduction == 'sum':

60 | return loss.sum()

61 | else: # 'none'

62 | return loss

63 |

64 |

65 | class QFocalLoss(nn.Module):

66 | # Wraps Quality focal loss around existing loss_fcn(), i.e. criteria = QFocalLoss(nn.BCEWithLogitsLoss(), gamma=1.5)

67 | def __init__(self, loss_fcn, gamma=1.5, alpha=0.25):

68 | super(QFocalLoss, self).__init__()

69 | self.loss_fcn = loss_fcn # must be nn.BCEWithLogitsLoss()

70 | self.gamma = gamma

71 | self.alpha = alpha

72 | self.reduction = loss_fcn.reduction

73 | self.loss_fcn.reduction = 'none' # required to apply FL to each element

74 |

75 | def forward(self, pred, true):

76 | loss = self.loss_fcn(pred, true)

77 |

78 | pred_prob = torch.sigmoid(pred) # prob from logits

79 | alpha_factor = true * self.alpha + (1 - true) * (1 - self.alpha)

80 | modulating_factor = torch.abs(true - pred_prob) ** self.gamma

81 | loss *= alpha_factor * modulating_factor

82 |

83 | if self.reduction == 'mean':

84 | return loss.mean()

85 | elif self.reduction == 'sum':

86 | return loss.sum()

87 | else: # 'none'

88 | return loss

89 |

90 |

91 | class ComputeLoss:

92 | # Compute losses

93 | def __init__(self, model, autobalance=False):

94 | self.sort_obj_iou = False

95 | device = next(model.parameters()).device # get model device

96 | h = model.hyp # hyperparameters

97 |

98 | # Define criteria

99 | BCEcls = nn.BCEWithLogitsLoss(pos_weight=torch.tensor([h['cls_pw']], device=device))

100 | BCEobj = nn.BCEWithLogitsLoss(pos_weight=torch.tensor([h['obj_pw']], device=device))

101 |

102 | # Class label smoothing https://arxiv.org/pdf/1902.04103.pdf eqn 3

103 | self.cp, self.cn = smooth_BCE(eps=h.get('label_smoothing', 0.0)) # positive, negative BCE targets

104 |

105 | # Focal loss

106 | g = h['fl_gamma'] # focal loss gamma

107 | if g > 0:

108 | BCEcls, BCEobj = FocalLoss(BCEcls, g), FocalLoss(BCEobj, g)

109 |

110 | det = model.module.model[-1] if is_parallel(model) else model.model[-1] # Detect() module

111 | self.balance = {3: [4.0, 1.0, 0.4]}.get(det.nl, [4.0, 1.0, 0.25, 0.06, .02]) # P3-P7

112 | self.ssi = list(det.stride).index(16) if autobalance else 0 # stride 16 index

113 | self.BCEcls, self.BCEobj, self.gr, self.hyp, self.autobalance = BCEcls, BCEobj, 1.0, h, autobalance

114 | for k in 'na', 'nc', 'nl', 'anchors':

115 | setattr(self, k, getattr(det, k))

116 |

117 | def __call__(self, p, targets): # predictions, targets

118 | device = targets.device

119 | lcls, lbox, lobj = torch.zeros(1, device=device), torch.zeros(1, device=device), torch.zeros(1, device=device)

120 | tcls, tbox, indices, anchors = self.build_targets(p, targets) # targets

121 |

122 | # Losses

123 | for i, pi in enumerate(p): # layer index, layer predictions

124 | b, a, gj, gi = indices[i] # image, anchor, gridy, gridx

125 | tobj = torch.zeros_like(pi[..., 0], device=device) # target obj

126 |

127 | n = b.shape[0] # number of targets

128 | if n:

129 | ps = pi[b, a, gj, gi] # prediction subset corresponding to targets

130 |

131 | # Regression

132 | pxy = ps[:, :2].sigmoid() * 2. - 0.5

133 | pwh = (ps[:, 2:4].sigmoid() * 2) ** 2 * anchors[i]

134 | pbox = torch.cat((pxy, pwh), 1) # predicted box

135 | iou = bbox_iou(pbox.T, tbox[i], x1y1x2y2=False, CIoU=True) # iou(prediction, target)

136 | lbox += (1.0 - iou).mean() # iou loss

137 |

138 | # Objectness

139 | score_iou = iou.detach().clamp(0).type(tobj.dtype)

140 | if self.sort_obj_iou:

141 | sort_id = torch.argsort(score_iou)

142 | b, a, gj, gi, score_iou = b[sort_id], a[sort_id], gj[sort_id], gi[sort_id], score_iou[sort_id]

143 | tobj[b, a, gj, gi] = (1.0 - self.gr) + self.gr * score_iou # iou ratio

144 |

145 | # Classification

146 | if self.nc > 1: # cls loss (only if multiple classes)

147 | t = torch.full_like(ps[:, 5:], self.cn, device=device) # targets

148 | t[range(n), tcls[i]] = self.cp

149 | lcls += self.BCEcls(ps[:, 5:], t) # BCE

150 |

151 | # Append targets to text file

152 | # with open('targets.txt', 'a') as file:

153 | # [file.write('%11.5g ' * 4 % tuple(x) + '\n') for x in torch.cat((txy[i], twh[i]), 1)]

154 |

155 | obji = self.BCEobj(pi[..., 4], tobj)

156 | lobj += obji * self.balance[i] # obj loss

157 | if self.autobalance:

158 | self.balance[i] = self.balance[i] * 0.9999 + 0.0001 / obji.detach().item()

159 |

160 | if self.autobalance:

161 | self.balance = [x / self.balance[self.ssi] for x in self.balance]

162 | lbox *= self.hyp['box']

163 | lobj *= self.hyp['obj']

164 | lcls *= self.hyp['cls']

165 | bs = tobj.shape[0] # batch size

166 |

167 | return (lbox + lobj + lcls) * bs, torch.cat((lbox, lobj, lcls)).detach()

168 |

169 | def build_targets(self, p, targets):

170 | # Build targets for compute_loss(), input targets(image,class,x,y,w,h)

171 | na, nt = self.na, targets.shape[0] # number of anchors, targets

172 | tcls, tbox, indices, anch = [], [], [], []

173 | gain = torch.ones(7, device=targets.device) # normalized to gridspace gain

174 | ai = torch.arange(na, device=targets.device).float().view(na, 1).repeat(1, nt) # same as .repeat_interleave(nt)

175 | targets = torch.cat((targets.repeat(na, 1, 1), ai[:, :, None]), 2) # append anchor indices

176 |

177 | g = 0.5 # bias

178 | off = torch.tensor([[0, 0],

179 | [1, 0], [0, 1], [-1, 0], [0, -1], # j,k,l,m

180 | # [1, 1], [1, -1], [-1, 1], [-1, -1], # jk,jm,lk,lm

181 | ], device=targets.device).float() * g # offsets

182 |

183 | for i in range(self.nl):

184 | anchors = self.anchors[i]

185 | gain[2:6] = torch.tensor(p[i].shape)[[3, 2, 3, 2]] # xyxy gain

186 |

187 | # Match targets to anchors

188 | t = targets * gain

189 | if nt:

190 | # Matches

191 | r = t[:, :, 4:6] / anchors[:, None] # wh ratio

192 | j = torch.max(r, 1. / r).max(2)[0] < self.hyp['anchor_t'] # compare

193 | # j = wh_iou(anchors, t[:, 4:6]) > model.hyp['iou_t'] # iou(3,n)=wh_iou(anchors(3,2), gwh(n,2))

194 | t = t[j] # filter

195 |

196 | # Offsets

197 | gxy = t[:, 2:4] # grid xy

198 | gxi = gain[[2, 3]] - gxy # inverse

199 | j, k = ((gxy % 1. < g) & (gxy > 1.)).T

200 | l, m = ((gxi % 1. < g) & (gxi > 1.)).T

201 | j = torch.stack((torch.ones_like(j), j, k, l, m))

202 | t = t.repeat((5, 1, 1))[j]

203 | offsets = (torch.zeros_like(gxy)[None] + off[:, None])[j]

204 | else:

205 | t = targets[0]

206 | offsets = 0

207 |

208 | # Define

209 | b, c = t[:, :2].long().T # image, class

210 | gxy = t[:, 2:4] # grid xy

211 | gwh = t[:, 4:6] # grid wh

212 | gij = (gxy - offsets).long()

213 | gi, gj = gij.T # grid xy indices

214 |

215 | # Append

216 | a = t[:, 6].long() # anchor indices

217 | indices.append((b, a, gj.clamp_(0, gain[3] - 1), gi.clamp_(0, gain[2] - 1))) # image, anchor, grid indices

218 | tbox.append(torch.cat((gxy - gij, gwh), 1)) # box

219 | anch.append(anchors[a]) # anchors

220 | tcls.append(c) # class

221 |

222 | return tcls, tbox, indices, anch

223 |
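To make the weighting in `FocalLoss.forward()` and the targets from `smooth_BCE()` concrete, here is a scalar sketch in pure Python. It assumes a single logit and a single target. `bce_with_logits` and `focal_bce` are hypothetical stand-ins for `nn.BCEWithLogitsLoss(reduction='none')` and the wrapped loss, and the example logits are made up.

```python
import math


def smooth_bce(eps=0.1):
    """Positive and negative BCE targets after label smoothing."""
    return 1.0 - 0.5 * eps, 0.5 * eps


def bce_with_logits(x, t):
    """Numerically stable binary cross-entropy for one logit x and target t."""
    return max(x, 0.0) - x * t + math.log1p(math.exp(-abs(x)))


def focal_bce(x, t, gamma=1.5, alpha=0.25):
    """BCE scaled by the alpha factor and the (1 - p_t) ** gamma modulating factor."""
    p = 1.0 / (1.0 + math.exp(-x))                    # probability from logit
    p_t = t * p + (1 - t) * (1 - p)                   # probability assigned to the true class
    alpha_factor = t * alpha + (1 - t) * (1 - alpha)
    return bce_with_logits(x, t) * alpha_factor * (1.0 - p_t) ** gamma


cp, cn = smooth_bce(0.1)
easy = focal_bce(4.0, 1.0)    # confident and correct prediction
hard = focal_bce(-4.0, 1.0)   # confident and wrong prediction
print(cp, cn, easy, hard)
```

The modulating factor shrinks the loss of the well-classified example far more than that of the misclassified one, which is the purpose of the `fl_gamma` hyperparameter read in `ComputeLoss.__init__()`.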

--------------------------------------------------------------------------------

/utils/augmentations.py:

--------------------------------------------------------------------------------

1 | # YOLOv5 🚀 by Ultralytics, GPL-3.0 license

2 | """

3 | Image augmentation functions

4 | """

5 |

6 | import logging

7 | import math

8 | import random

9 |

10 | import cv2

11 | import numpy as np

12 |

13 | from utils.general import colorstr, segment2box, resample_segments, check_version

14 | from utils.metrics import bbox_ioa

15 |

16 |

17 | class Albumentations:

18 | # YOLOv5 Albumentations class (optional, only used if package is installed)

19 | def __init__(self):

20 | self.transform = None

21 | try:

22 | import albumentations as A

23 | check_version(A.__version__, '1.0.3') # version requirement

24 |

25 | self.transform = A.Compose([

26 | A.Blur(p=0.01),

27 | A.MedianBlur(p=0.01),

28 | A.ToGray(p=0.01),

29 | A.CLAHE(p=0.01),

30 | A.RandomBrightnessContrast(p=0.0),

31 | A.RandomGamma(p=0.0),

32 | A.ImageCompression(quality_lower=75, p=0.0)],

33 | bbox_params=A.BboxParams(format='yolo', label_fields=['class_labels']))

34 |

35 | logging.info(colorstr('albumentations: ') + ', '.join(f'{x}' for x in self.transform.transforms if x.p))

36 | except ImportError: # package not installed, skip

37 | pass

38 | except Exception as e:

39 | logging.info(colorstr('albumentations: ') + f'{e}')

40 |

41 | def __call__(self, im, labels, p=1.0):

42 | if self.transform and random.random() < p:

43 | new = self.transform(image=im, bboxes=labels[:, 1:], class_labels=labels[:, 0]) # transformed

44 | im, labels = new['image'], np.array([[c, *b] for c, b in zip(new['class_labels'], new['bboxes'])])

45 | return im, labels

46 |

47 |

48 | def augment_hsv(im, hgain=0.5, sgain=0.5, vgain=0.5):

49 | # HSV color-space augmentation

50 | if hgain or sgain or vgain:

51 | r = np.random.uniform(-1, 1, 3) * [hgain, sgain, vgain] + 1 # random gains

52 | hue, sat, val = cv2.split(cv2.cvtColor(im, cv2.COLOR_BGR2HSV))

53 | dtype = im.dtype # uint8

54 |

55 | x = np.arange(0, 256, dtype=r.dtype)

56 | lut_hue = ((x * r[0]) % 180).astype(dtype)

57 | lut_sat = np.clip(x * r[1], 0, 255).astype(dtype)

58 | lut_val = np.clip(x * r[2], 0, 255).astype(dtype)

59 |

60 | im_hsv = cv2.merge((cv2.LUT(hue, lut_hue), cv2.LUT(sat, lut_sat), cv2.LUT(val, lut_val)))

61 | cv2.cvtColor(im_hsv, cv2.COLOR_HSV2BGR, dst=im) # no return needed

62 |

63 |

64 | def hist_equalize(im, clahe=True, bgr=False):

65 | # Equalize histogram on RGB (or BGR if bgr=True) image 'im' with im.shape(n,m,3) and range 0-255

66 | yuv = cv2.cvtColor(im, cv2.COLOR_BGR2YUV if bgr else cv2.COLOR_RGB2YUV)

67 | if clahe:

68 | c = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8))

69 | yuv[:, :, 0] = c.apply(yuv[:, :, 0])

70 | else:

71 | yuv[:, :, 0] = cv2.equalizeHist(yuv[:, :, 0]) # equalize Y channel histogram

72 | return cv2.cvtColor(yuv, cv2.COLOR_YUV2BGR if bgr else cv2.COLOR_YUV2RGB) # convert YUV image back to RGB (or BGR)

73 |

74 |

75 | def replicate(im, labels):

76 | # Replicate the smallest half of the boxes (pixels and labels) at random locations

77 | h, w = im.shape[:2]

78 | boxes = labels[:, 1:].astype(int)

79 | x1, y1, x2, y2 = boxes.T

80 | s = ((x2 - x1) + (y2 - y1)) / 2 # side length (pixels)

81 | for i in s.argsort()[:round(s.size * 0.5)]: # smallest indices

82 | x1b, y1b, x2b, y2b = boxes[i]

83 | bh, bw = y2b - y1b, x2b - x1b

84 | yc, xc = int(random.uniform(0, h - bh)), int(random.uniform(0, w - bw)) # offset y, x

85 | x1a, y1a, x2a, y2a = [xc, yc, xc + bw, yc + bh]

86 | im[y1a:y2a, x1a:x2a] = im[y1b:y2b, x1b:x2b] # im[ymin:ymax, xmin:xmax]

87 | labels = np.append(labels, [[labels[i, 0], x1a, y1a, x2a, y2a]], axis=0)

88 |

89 | return im, labels

90 |

91 |

92 | def letterbox(im, new_shape=(640, 640), color=(114, 114, 114), auto=True, scaleFill=False, scaleup=True, stride=32):

93 | # Resize and pad image while meeting stride-multiple constraints

94 | shape = im.shape[:2] # current shape [height, width]

95 | if isinstance(new_shape, int):

96 | new_shape = (new_shape, new_shape)

97 |

98 | # Scale ratio (new / old)

99 | r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

100 | if not scaleup: # only scale down, do not scale up (for better val mAP)

101 | r = min(r, 1.0)

102 |

103 | # Compute padding

104 | ratio = r, r # width, height ratios

105 | new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))

106 | dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1] # wh padding

107 | if auto: # minimum rectangle

108 | dw, dh = np.mod(dw, stride), np.mod(dh, stride) # wh padding

109 | elif scaleFill: # stretch

110 | dw, dh = 0.0, 0.0

111 | new_unpad = (new_shape[1], new_shape[0])

112 | ratio = new_shape[1] / shape[1], new_shape[0] / shape[0] # width, height ratios

113 |

114 | dw /= 2 # divide padding into 2 sides

115 | dh /= 2

116 |

117 | if shape[::-1] != new_unpad: # resize

118 | im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)

119 | top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))

120 | left, right = int(round(dw - 0.1)), int(round(dw + 0.1))

121 | im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color) # add border

122 | return im, ratio, (dw, dh)

123 |

124 |

125 | def random_perspective(im, targets=(), segments=(), degrees=10, translate=.1, scale=.1, shear=10, perspective=0.0,

126 | border=(0, 0)):

127 | # torchvision.transforms.RandomAffine(degrees=(-10, 10), translate=(.1, .1), scale=(.9, 1.1), shear=(-10, 10))

128 | # targets = [cls, xyxy]

129 |

130 | height = im.shape[0] + border[0] * 2 # shape(h,w,c)

131 | width = im.shape[1] + border[1] * 2

132 |

133 | # Center

134 | C = np.eye(3)

135 | C[0, 2] = -im.shape[1] / 2 # x translation (pixels)

136 | C[1, 2] = -im.shape[0] / 2 # y translation (pixels)

137 |

138 | # Perspective

139 | P = np.eye(3)

140 | P[2, 0] = random.uniform(-perspective, perspective) # x perspective (about y)

141 | P[2, 1] = random.uniform(-perspective, perspective) # y perspective (about x)

142 |

143 | # Rotation and Scale

144 | R = np.eye(3)

145 | a = random.uniform(-degrees, degrees)

146 | # a += random.choice([-180, -90, 0, 90]) # add 90deg rotations to small rotations

147 | s = random.uniform(1 - scale, 1 + scale)

148 | # s = 2 ** random.uniform(-scale, scale)

149 | R[:2] = cv2.getRotationMatrix2D(angle=a, center=(0, 0), scale=s)

150 |

151 | # Shear

152 | S = np.eye(3)

153 | S[0, 1] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # x shear (deg)

154 | S[1, 0] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # y shear (deg)

155 |

156 | # Translation

157 | T = np.eye(3)

158 | T[0, 2] = random.uniform(0.5 - translate, 0.5 + translate) * width # x translation (pixels)

159 | T[1, 2] = random.uniform(0.5 - translate, 0.5 + translate) * height # y translation (pixels)

160 |

161 | # Combined transformation matrix

162 | M = T @ S @ R @ P @ C # order of operations (right to left) is IMPORTANT

163 | if (border[0] != 0) or (border[1] != 0) or (M != np.eye(3)).any(): # image changed

164 | if perspective:

165 | im = cv2.warpPerspective(im, M, dsize=(width, height), borderValue=(114, 114, 114))

166 | else: # affine

167 | im = cv2.warpAffine(im, M[:2], dsize=(width, height), borderValue=(114, 114, 114))