├── .havenignore

├── src

├── __init__.py

├── datasets

│ ├── utils.py

│ ├── color_matching.py

│ ├── extract_baselines.py

│ ├── preprocess_Waymo.py

│ ├── __init__.py

│ └── waymo_od.py

├── renderer

│ ├── metrics.py

│ └── losses.py

├── pointLF

│ ├── feature_mapping.py

│ ├── pointLF_helper.py

│ ├── ptlf_vis.py

│ ├── light_field_renderer.py

│ ├── layer.py

│ ├── pointcloud_encoding

│ │ ├── simpleview.py

│ │ └── pointnet_features.py

│ ├── scene_point_lightfield.py

│ ├── attention_modules.py

│ └── icp

│ │ └── pts_registration.py

├── scenes

│ ├── nodes.py

│ ├── raysampler

│ │ ├── frustum_helpers.py

│ │ └── rayintersection.py

│ └── init_detection.py

├── utils_dist.py

└── utils.py

├── scripts

├── vis.gif

└── tst_waymo.py

├── exp_configs

├── __init__.py

└── pointLF_exps.py

├── LICENSE

├── .gitignore

├── README.md

└── trainval.py

/.havenignore:

--------------------------------------------------------------------------------

1 | .tmp

--------------------------------------------------------------------------------

/src/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/scripts/vis.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princeton-computational-imaging/neural-point-light-fields/HEAD/scripts/vis.gif

--------------------------------------------------------------------------------

/exp_configs/__init__.py:

--------------------------------------------------------------------------------

1 | from . import pointLF_exps

2 |

3 | EXP_GROUPS = {}

4 | EXP_GROUPS.update(pointLF_exps.EXP_GROUPS)

5 |

--------------------------------------------------------------------------------

/src/datasets/utils.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 |

3 | def invert_transformation(rot, t):

4 | t = np.matmul(-rot.T, t)

5 | inv_translation = np.concatenate([rot.T, t[:, None]], axis=1)

6 | return np.concatenate([inv_translation, np.array([[0., 0., 0., 1.]])])

7 |

8 | def roty_matrix(roty):

9 | c = np.cos(roty)

10 | s = np.sin(roty)

11 | return np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])

12 |

13 |

14 | def rotz_matrix(roty):

15 | c = np.cos(roty)

16 | s = np.sin(roty)

17 | return np.array([[c, -s, 0], [s, c, 0], [0, 0, 1]])

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2022 princeton-computational-imaging

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/src/renderer/metrics.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 | import torch.nn as nn

4 | from ..scenes import NeuralScene

5 | from pytorch3d.renderer.implicit.utils import RayBundle

6 |

7 |

8 | def calc_mse(x: torch.Tensor, y: torch.Tensor, **kwargs):

9 | return torch.mean((x - y) ** 2)

10 |

11 |

12 | def calc_psnr(x: torch.Tensor, y: torch.Tensor, **kwargs):

13 | mse = calc_mse(x, y)

14 | psnr = -10.0 * torch.log10(mse)

15 | return psnr

16 |

17 |

18 | def calc_latent_dist(xycfn, scene, reg, **kwargs):

19 | trainable_latent_nodes_id = scene.getSceneIdByTypeId(list(scene.nodes['scene_object'].keys()))

20 |

21 | # Remove "non"-nodes from the rays

22 | latent_nodess_id = xycfn[..., 4].unique().tolist()

23 | try:

24 | latent_nodess_id.remove(-1)

25 | except:

26 | pass

27 |

28 | # Just include nodes that have latent arrays

29 | # TODO: Do that for each class separatly

30 | latent_nodess_id = set(trainable_latent_nodes_id) & set(latent_nodess_id)

31 |

32 | if len(latent_nodess_id) != 0:

33 | latent_codes = torch.stack([scene.getNodeBySceneId(i).latent for i in latent_nodess_id])

34 | latent_dist = torch.sum(reg * torch.norm(latent_codes, dim=-1))

35 | else:

36 | latent_dist = torch.tensor(0.)

37 |

38 | return latent_dist

39 |

40 |

41 | def get_rgb_gt(rgb: torch.Tensor, scene: NeuralScene, xycfn: torch.Tensor):

42 | xycf = xycfn[..., -1, :4].reshape(len(rgb), -1)

43 | rgb_gt = torch.zeros_like(rgb)

44 |

45 | # TODO: Make more efficient by not retriving image for each pixel,

46 | # but storing all gt_images in a single tensor on the cpu

47 | # TODO: During test time just get all images avilable

48 | for f in xycf[:, 3].unique():

49 | if f == -1:

50 | continue

51 | frame = scene.frames[int(f)]

52 | for c in xycf[:, 2].unique():

53 | cf_mask = torch.all(xycf[:, 2:] == torch.tensor([c, f], device=xycf.device), dim=1)

54 | xy = xycf[cf_mask, :2].cpu()

55 |

56 | c_id = scene.getNodeBySceneId(int(c)).type_idx

57 | gt_img = frame.images[c_id]

58 | gt_px = torch.from_numpy(gt_img[xy[:, 1], xy[:, 0]]).to(device=rgb.device, dtype=rgb.dtype)

59 | rgb_gt[cf_mask] = gt_px

60 |

61 | return rgb_gt

--------------------------------------------------------------------------------

/src/datasets/color_matching.py:

--------------------------------------------------------------------------------

1 | # https://stackoverflow.com/questions/56918877/color-match-in-images

2 | import numpy as np

3 | import cv2

4 | from skimage.io import imread, imsave

5 | from skimage import exposure

6 | from skimage.exposure import match_histograms

7 |

8 | import matplotlib.pyplot as plt

9 |

10 |

11 | # https://www.pyimagesearch.com/2014/06/30/super-fast-color-transfer-images/

12 | def color_transfer(source, target):

13 | source = cv2.normalize(source, None, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)

14 | target = cv2.normalize(target, None, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)

15 | source = cv2.cvtColor(source, cv2.COLOR_RGB2BGR)

16 | target = cv2.cvtColor(target, cv2.COLOR_RGB2BGR)

17 |

18 | # convert the images from the RGB to L*ab* color space, being

19 | # sure to utilizing the floating point data type (note: OpenCV

20 | # expects floats to be 32-bit, so use that instead of 64-bit)

21 | source = cv2.cvtColor(source, cv2.COLOR_BGR2LAB).astype("float32")

22 | target = cv2.cvtColor(target, cv2.COLOR_BGR2LAB).astype("float32")

23 |

24 | # compute color statistics for the source and target images

25 | (lMeanSrc, lStdSrc, aMeanSrc, aStdSrc, bMeanSrc, bStdSrc) = image_stats(source)

26 | (lMeanTar, lStdTar, aMeanTar, aStdTar, bMeanTar, bStdTar) = image_stats(target)

27 | # subtract the means from the target image

28 | (l, a, b) = cv2.split(target)

29 | l -= lMeanTar

30 | # a -= aMeanTar

31 | # b -= bMeanTar

32 | # scale by the standard deviations

33 | l = (lStdTar / lStdSrc) * l

34 | # a = (aStdTar / aStdSrc) * a

35 | # b = (bStdTar / bStdSrc) * b

36 | # add in the source mean

37 | l += lMeanSrc

38 | # a += aMeanSrc

39 | # b += bMeanSrc

40 | # clip the pixel intensities to [0, 255] if they fall outside

41 | # this range

42 | l = np.clip(l, 0, 255)

43 | a = np.clip(a, 0, 255)

44 | b = np.clip(b, 0, 255)

45 | # merge the channels together and convert back to the RGB color

46 | # space, being sure to utilize the 8-bit unsigned integer data

47 | # type

48 | transfer = cv2.merge([l, a, b])

49 | transfer = cv2.cvtColor(transfer.astype("uint8"), cv2.COLOR_LAB2BGR)

50 |

51 | # return the color transferred image

52 | transfer = cv2.cvtColor(transfer, cv2.COLOR_BGR2RGB).astype("float32") / 255.

53 | return transfer

54 |

55 |

56 | def image_stats(image):

57 | # compute the mean and standard deviation of each channel

58 | (l, a, b) = cv2.split(image)

59 | (lMean, lStd) = (l.mean(), l.std())

60 | (aMean, aStd) = (a.mean(), a.std())

61 | (bMean, bStd) = (b.mean(), b.std())

62 | # return the color statistics

63 | return (lMean, lStd, aMean, aStd, bMean, bStd)

64 |

65 |

66 | def histogram_matching(source, target):

67 | matched = match_histograms(target, source, multichannel=False)

68 | return matched

--------------------------------------------------------------------------------

/src/pointLF/feature_mapping.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | import torch.nn.functional as F

4 | import numpy as np

5 |

6 |

7 | # Positional Encoding

8 | class PositionalEncoding(nn.Module):

9 | def __init__(self, multires, input_dims=3, include_input=True, log_sampling=True):

10 |

11 | super().__init__()

12 | self.embed_fns = []

13 | self.out_dims = 0

14 |

15 | if include_input:

16 | self.embed_fns.append(lambda x: x)

17 | self.out_dims += input_dims

18 |

19 | max_freq = multires - 1

20 | N_freqs = multires

21 |

22 | if log_sampling:

23 | freq_bands = 2.0 ** torch.linspace(0.0, max_freq, steps=N_freqs)

24 | else:

25 | freq_bands = torch.linspace(2.0 ** 0.0, 2.0 ** max_freq, steps=N_freqs)

26 |

27 | for freq in freq_bands:

28 | for periodic_fn in [torch.sin, torch.cos]:

29 | self.embed_fns.append(

30 | lambda x, periodic_fn=periodic_fn, freq=freq: periodic_fn(x * freq)

31 | )

32 | self.out_dims += input_dims

33 |

34 | def forward(self, x: torch.Tensor):

35 | return torch.cat([fn(x) for fn in self.embed_fns], -1)

36 |

37 |

38 | # Positional Encoding Old (section 5.1)

39 | class Embedder:

40 | def __init__(self, **kwargs):

41 | self.kwargs = kwargs

42 | self.create_embedding_fn()

43 |

44 | def create_embedding_fn(self):

45 | embed_fns = []

46 | d = self.kwargs["input_dims"]

47 | out_dim = 0

48 | if self.kwargs["include_input"]:

49 | embed_fns.append(lambda x: x)

50 | out_dim += d

51 |

52 | max_freq = self.kwargs["max_freq_log2"]

53 | N_freqs = self.kwargs["num_freqs"]

54 |

55 | if self.kwargs["log_sampling"]:

56 | freq_bands = 2.0 ** torch.linspace(0.0, max_freq, steps=N_freqs)

57 | else:

58 | freq_bands = torch.linspace(2.0 ** 0.0, 2.0 ** max_freq, steps=N_freqs)

59 |

60 | for freq in freq_bands:

61 | for p_fn in self.kwargs["periodic_fns"]:

62 | embed_fns.append(lambda x, p_fn=p_fn, freq=freq: p_fn(x * freq))

63 | out_dim += d

64 |

65 | self.embed_fns = embed_fns

66 | self.out_dim = out_dim

67 |

68 | def embed(self, inputs):

69 | return torch.cat([fn(inputs) for fn in self.embed_fns], -1)

70 |

71 |

72 | def get_embedder(multires, i=0, input_dims=3):

73 | if i == -1:

74 | return nn.Identity(), input_dims

75 |

76 | embed_kwargs = {

77 | "include_input": True,

78 | "input_dims": input_dims,

79 | "max_freq_log2": multires - 1,

80 | "num_freqs": multires,

81 | "log_sampling": True,

82 | "periodic_fns": [torch.sin, torch.cos],

83 | }

84 |

85 | embedder_obj = Embedder(**embed_kwargs)

86 | embed = lambda x, eo=embedder_obj: eo.embed(x)

87 | return embed, embedder_obj.out_dim

88 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | '# Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 | .tmp/*

6 | .tmp

7 | data/*

8 | job_config.py

9 | *.png

10 | /pretrained_models/

11 | conda-spec-file-julian.txt

12 | example_weights/*

13 | # C extensions

14 | *.so

15 | results/*

16 | .results/

17 | # Distribution / packaging

18 | .Python

19 | build/

20 | develop-eggs/

21 | dist/

22 | downloads/

23 | eggs/

24 | .eggs/

25 | lib/

26 | lib64/

27 | parts/

28 | sdist/

29 | var/

30 | wheels/

31 | pip-wheel-metadata/

32 | share/python-wheels/

33 | *.egg-info/

34 | .installed.cfg

35 | *.egg

36 | MANIFEST

37 |

38 | # PyInstaller

39 | # Usually these files are written by a python script from a template

40 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

41 | *.manifest

42 | *.spec

43 |

44 | # Installer logs

45 | pip-log.txt

46 | pip-delete-this-directory.txt

47 |

48 | # Unit test / coverage reports

49 | htmlcov/

50 | .tox/

51 | .nox/

52 | .coverage

53 | .coverage.*

54 | .cache

55 | nosetests.xml

56 | coverage.xml

57 | *.cover

58 | *.py,cover

59 | .hypothesis/

60 | .pytest_cache/

61 |

62 | # Translations

63 | *.mo

64 | *.pot

65 |

66 | # Django stuff:

67 | *.log

68 | local_settings.py

69 | db.sqlite3

70 | db.sqlite3-journal

71 |

72 | # Flask stuff:

73 | instance/

74 | .webassets-cache

75 |

76 | # Scrapy stuff:

77 | .scrapy

78 |

79 | # Sphinx documentation

80 | docs/_build/

81 |

82 | # PyBuilder

83 | target/

84 |

85 | # Jupyter Notebook

86 | .ipynb_checkpoints

87 |

88 | # IPython

89 | profile_default/

90 | ipython_config.py

91 |

92 | # pyenv

93 | .python-version

94 |

95 | # pipenv

96 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

97 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

98 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

99 | # install all needed dependencies.

100 | #Pipfile.lock

101 |

102 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

103 | __pypackages__/

104 |

105 | # Celery stuff

106 | celerybeat-schedule

107 | celerybeat.pid

108 |

109 | # SageMath parsed files

110 | *.sage.py

111 |

112 | # Environments

113 | .env

114 | .venv

115 | env/

116 | venv/

117 | ENV/

118 | env.bak/

119 | venv.bak/

120 |

121 | # Spyder project settings

122 | .spyderproject

123 | .spyproject

124 |

125 | # Rope project settings

126 | .ropeproject

127 |

128 | # mkdocs documentation

129 | /site

130 |

131 | # mypy

132 | .mypy_cache/

133 | .dmypy.json

134 | dmypy.json

135 |

136 | # Pyre type checker

137 | .pyre/

138 |

139 | *.pyc

140 | *#*

141 | *.pyx

142 | *.record

143 | *.tar.gz

144 | *.tar

145 | *.swp

146 | *.npy

147 | *.ckpt*

148 | *.idea*

149 | axcana.egg-info

150 | .vscode

151 | .idea

152 | *.blg

153 | *.gz

154 | *.avi

155 | main.log

156 | main.aux

157 | main.bbl

158 | main.brf

159 | main.pdf

160 | *.log

161 | *.aux

162 | *.bbl

163 | *.brf

164 | mainSup.pdf

165 | egrebuttal.pdf

166 | .tmp/*

167 | /results/

168 | /results/

169 |

170 | /model_library/

171 | old_model/

172 | /.tmp/

173 | /src/datasets/eos_dataset_src/

174 |

--------------------------------------------------------------------------------

/src/pointLF/pointLF_helper.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | import torch.nn.functional as F

4 |

5 | def pre_scale_MV(x, translation=-1.4):

6 | batchsize = x.size()[0]

7 | scaled_x = x

8 |

9 | for k in range(batchsize):

10 | for i in range(3):

11 | max = scaled_x[k, ..., i].max()

12 | min = scaled_x[k, ..., i].sort()[0][10]

13 | ax_size = max - min

14 | scaled_x[k, ..., i] -= min

15 | scaled_x[k, ..., i] *= 2 / ax_size

16 | scaled_x[k, ..., i] -= 1.

17 |

18 | scaled_x = torch.minimum(scaled_x, torch.tensor([1.], device='cuda'))

19 | scaled_x = torch.maximum(scaled_x, torch.tensor([-1.], device='cuda'))

20 |

21 | # scaled_x[:, 0] = torch.tensor([-1.0, -1.0, -1.0], device=scaled_x.device)

22 | # scaled_x[:, 1] = torch.tensor([1.0, -1.0, -1.0], device=scaled_x.device)

23 | # scaled_x[:, 2] = torch.tensor([-1.0, 1.0, -1.0], device=scaled_x.device)

24 | # scaled_x[:, 3] = torch.tensor([1.0, 1.0, -1.0], device=scaled_x.device)

25 | # scaled_x[:, 4] = torch.tensor([-1.0, -1.0, 1.0], device=scaled_x.device)

26 | # scaled_x[:, 5] = torch.tensor([-1.0, 1.0, 1.0], device=scaled_x.device)

27 | # scaled_x[:, 6] = torch.tensor([1.0, -1.0, 1.0], device=scaled_x.device)

28 | # scaled_x[:, 7] = torch.tensor([1.0, 1.0, 1.0], device=scaled_x.device)

29 |

30 | scaled_x *= 1 / -translation

31 |

32 | # scaled_plt = scaled_x[0].cpu().detach().numpy()

33 | # fig3d = plt.figure()

34 | # ax3d = fig3d.gca(projection='3d')

35 | # ax3d.scatter(scaled_plt[:, 0], scaled_plt[:, 1], scaled_plt[:, 2], c='blue')

36 |

37 | return scaled_x

38 |

39 |

40 | def select_Mv_feat(feature_maps, scaled_pts, closest_mask, batchsize, k_closest, feature_extractor, img_resolution=128,

41 | feature_resolution=16):

42 | feat2img_f = img_resolution // feature_resolution

43 |

44 | # n_feat_maps, batchsize, n_features, feat_heigth, feat_width = feature_maps.shape

45 | n_batch, maps_per_batch, n_features, feat_heigth, feat_width = feature_maps.shape

46 | n_feat_maps = maps_per_batch * n_batch

47 | feature_maps = feature_maps.reshape(n_feat_maps, n_features, feat_heigth, feat_width)

48 |

49 | # Only retrive pts_feat for relevant points

50 | masked_scaled_pts = [sc_x[mask] for (sc_x, mask) in zip(scaled_pts, closest_mask)]

51 | masked_scaled_pts = torch.stack(masked_scaled_pts).view(n_batch, -1, 3)

52 |

53 | # Get coordinates in the feautre maps for each point

54 | coordinates, coord_x, coord_y, depth = feature_extractor._get_img_coord(masked_scaled_pts, resolution=img_resolution)

55 |

56 | # Adjust for downscaled feature maps

57 | coord_x = torch.round(coord_x.view(n_feat_maps, -1, k_closest) / feat2img_f).to(torch.long)

58 | coord_x = torch.minimum(coord_x, torch.tensor([feat_heigth - 1], device=coord_x.device))

59 | coord_y = torch.round(coord_y.view(n_feat_maps, -1, k_closest) / feat2img_f).to(torch.long)

60 | coord_y = torch.minimum(coord_y, torch.tensor([feat_width - 1], device=coord_x.device))

61 |

62 | # depth = depth.view(n_batch, maps_per_batch, -1, k_closest)

63 |

64 | # Extract features for each ray and k closest points

65 | feature_maps = feature_maps.permute(0, 2, 3, 1)

66 | pts_feat = torch.stack([feature_maps[i][tuple([coord_x[i], coord_y[i]])] for i in range(n_feat_maps)])

67 | pts_feat = pts_feat.reshape(n_batch, maps_per_batch, -1, k_closest, n_features)

68 | pts_feat = pts_feat.permute(0, 2, 3, 1, 4)

69 | # Sum all pts_feat from all feature maps

70 | # pts_feat = pts_feat.sum(dim=1)

71 | # pts_feat = torch.max(pts_feat, dim=1)[0]

72 |

73 | return pts_feat

74 |

75 |

76 | def lin_weighting(z, distance, projected, my=0.9):

77 |

78 |

79 | inv_pt_ray_dist = torch.div(1, distance)

80 | pt_ray_dist_weights = inv_pt_ray_dist / torch.norm(inv_pt_ray_dist, dim=-1)[..., None]

81 |

82 | inv_proj_dist = torch.div(1, projected)

83 | pt_proj_dist_weights = inv_proj_dist / torch.norm(inv_proj_dist, dim=-1)[..., None]

84 |

85 | z = z * (my * pt_ray_dist_weights + (1-my) * pt_proj_dist_weights)[..., None, None]

86 |

87 | return torch.sum(z, dim=2)

--------------------------------------------------------------------------------

/scripts/tst_waymo.py:

--------------------------------------------------------------------------------

1 | import os

2 | import numpy as np

3 |

4 | import matplotlib.pyplot as plt

5 | import matplotlib.patches as patches

6 | from PIL import Image

7 | import tensorflow as tf

8 | from waymo_open_dataset.utils import frame_utils

9 | from waymo_open_dataset import dataset_pb2 as open_dataset

10 | from NeuralSceneGraph.data_loader.load_waymo_od import load_waymo_od_data

11 |

12 |

13 | tf.compat.v1.enable_eager_execution()

14 |

15 | # Plot every i_plt image

16 | i_plt = 1

17 |

18 | start = 50

19 | end = 60

20 |

21 | frames_path = '/home/julian/Desktop/waymo_open/segment-9985243312780923024_3049_720_3069_720_with_camera_labels.tfrecord'

22 | basedir = '/home/julian/Desktop/waymo_open'

23 | img_dir = '/home/julian/Desktop/waymo_open/tst_01'

24 |

25 | cam_ls = ['front', 'front_left', 'front_right']

26 |

27 | records = []

28 | dir_list = os.listdir(basedir)

29 | dir_list.sort()

30 | for f in dir_list:

31 | if 'record' in f:

32 | records.append(os.path.join(basedir, f))

33 |

34 |

35 |

36 | images = load_waymo_od_data(frames_path, selected_frames=[start, end])[0]

37 | for i_record, tf_record in enumerate(records):

38 | dataset = tf.data.TFRecordDataset(tf_record, compression_type='')

39 | print(tf_record)

40 |

41 | for i, data in enumerate(dataset):

42 | if not i % i_plt:

43 | frame = open_dataset.Frame()

44 | frame.ParseFromString(bytearray(data.numpy()))

45 |

46 | for index, camera_image in enumerate(frame.images):

47 | if camera_image.name in [1, 2, 3]:

48 | img_arr = np.array(tf.image.decode_jpeg(camera_image.image))

49 | # plt.imshow(img_arr, cmap=None)

50 |

51 | cam_dir = os.path.join(img_dir, cam_ls[camera_image.name-1])

52 | im_name = 'img_' + str(i_record) + '_' + str(i) + '.jpg'

53 | im = Image.fromarray(img_arr)

54 | im.save(os.path.join(cam_dir, im_name))

55 |

56 | # frames = []

57 | # max_frames=10

58 | # i_plt = 100

59 | #

60 | # for i, data in enumerate(dataset):

61 | # if not i % i_plt:

62 | # frame = open_dataset.Frame()

63 | # frame.ParseFromString(bytearray(data.numpy()))

64 | #

65 | # for index, camera_image in enumerate(frame.images):

66 | # if camera_image.name in [1, 2, 3]:

67 | # plt.imshow(tf.image.decode_jpeg(camera_image.image), cmap=None)

68 |

69 | # layout = [3, 3, index+1]

70 | # ax = plt.subplot(*layout)

71 | #

72 | # plt.imshow(tf.image.decode_jpeg(camera_image.image), cmap=None)

73 | # plt.title(open_dataset.CameraName.Name.Name(camera_image.name))

74 | # plt.grid(False)

75 | # plt.axis('off')

76 |

77 | # frames.append(frame)

78 | # if i >= max_frames-1:

79 | # break

80 |

81 | # frame = frames[0]

82 |

83 | (range_images, camera_projections, range_image_top_pose) = (

84 | frame_utils.parse_range_image_and_camera_projection(frame))

85 |

86 | print(frame.context)

87 |

88 | def show_camera_image(camera_image, camera_labels, layout, cmap=None):

89 | """Show a camera image and the given camera labels."""

90 |

91 | ax = plt.subplot(*layout)

92 |

93 | # Draw the camera labels.

94 | for camera_labels in frame.camera_labels:

95 | # Ignore camera labels that do not correspond to this camera.

96 | if camera_labels.name != camera_image.name:

97 | continue

98 |

99 | # Iterate over the individual labels.

100 | for label in camera_labels.labels:

101 | # Draw the object bounding box.

102 | ax.add_patch(patches.Rectangle(

103 | xy=(label.box.center_x - 0.5 * label.box.length,

104 | label.box.center_y - 0.5 * label.box.width),

105 | width=label.box.length,

106 | height=label.box.width,

107 | linewidth=1,

108 | edgecolor='red',

109 | facecolor='none'))

110 |

111 | # Show the camera image.

112 | plt.imshow(tf.image.decode_jpeg(camera_image.image), cmap=cmap)

113 | plt.title(open_dataset.CameraName.Name.Name(camera_image.name))

114 | plt.grid(False)

115 | plt.axis('off')

116 |

117 | plt.figure(figsize=(25, 20))

118 |

119 | for index, image in enumerate(frame.images):

120 | show_camera_image(image, frame.camera_labels, [3, 3, index+1])

121 |

122 |

123 | a = 0

--------------------------------------------------------------------------------

/src/pointLF/ptlf_vis.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import numpy as np

3 | import matplotlib.pyplot as plt

4 | import open3d as o3d

5 | from sklearn.manifold import TSNE

6 |

7 |

8 | def plt_pts_selected_2D(output_dict, axis):

9 | selected_points = [pts[mask] for pts, mask in

10 | zip(output_dict['points_in'], output_dict['closest_mask_in'])]

11 |

12 | for l, cf in enumerate(output_dict['samples']):

13 | plt_pts = selected_points[l].reshape(-1, 3)

14 | all_pts = output_dict['points_in'][l]

15 |

16 | plt.figure()

17 | plt.scatter(all_pts[:, axis[0]], all_pts[:, axis[1]])

18 | plt.scatter(plt_pts[:, axis[0]], plt_pts[:, axis[1]])

19 |

20 | if 'points_scaled' in output_dict:

21 | selected_points = [pts[mask] for pts, mask in

22 | zip(output_dict['points_scaled'], output_dict['closest_mask_in'])]

23 | for l, cf in enumerate(output_dict['samples']):

24 | plt_pts = selected_points[l].reshape(-1, 3)

25 | all_pts = output_dict['points_scaled'][l]

26 |

27 | plt.figure()

28 | plt.scatter(all_pts[:, axis[0]], all_pts[:, axis[1]])

29 | plt.scatter(plt_pts[:, axis[0]], plt_pts[:, axis[1]])

30 |

31 | def plt_BEV_pts_selected(output_dict):

32 | plt_pts_selected_2D(output_dict, (0,1))

33 |

34 | def plt_SIDE_pts_selected(output_dict):

35 | plt_pts_selected_2D(output_dict, (0, 2))

36 |

37 | def plt_FRONT_pts_selected(output_dict):

38 | plt_pts_selected_2D(output_dict, (1, 2))

39 |

40 | def visualize_output(output_dict, selected_only=False, scaled=False, n_plt_rays=None):

41 | if scaled:

42 | pts_in = output_dict['points_scaled']

43 | else:

44 | pts_in = output_dict['points_in']

45 |

46 | masks_in = output_dict['closest_mask_in']

47 |

48 | if 'sum_mv_point_features' in output_dict:

49 | feat_per_point = output_dict['sum_mv_point_features'].squeeze()

50 | if len(feat_per_point.shape) == 4:

51 | n_batch, n_rays, n_closest_pts, feat_dim = feat_per_point.shape

52 | else:

53 | n_batch = 1

54 | n_rays, n_closest_pts, feat_dim = feat_per_point.shape

55 |

56 | for i, (pts, mask, feat) in enumerate(zip(pts_in, masks_in, feat_per_point)):

57 |

58 | # Get feature embedding for visualization

59 | feat = feat.reshape(-1, feat_dim)

60 | feat_embedded = TSNE(n_components=3).fit_transform(feat)

61 |

62 | # Transform embedded space to RGB

63 | feat_embedded = feat_embedded - feat_embedded.min(axis=0)

64 | color = feat_embedded / feat_embedded.max(axis=0)

65 |

66 | if n_plt_rays is not None:

67 | ray_ids = np.random.choice(len(mask), n_plt_rays)

68 | mask = mask[ray_ids]

69 | color = color.reshape(n_rays, n_closest_pts, 3)

70 | color = color[ray_ids].reshape(-1, 3)

71 |

72 | pts_close = pts[mask]

73 | pcd_close = get_pcd_vis(pts_close, color_vector=color)

74 | if selected_only:

75 | o3d.visualization.draw_geometries([pcd_close])

76 | else:

77 | pcd = get_pcd_vis(pts)

78 | o3d.visualization.draw_geometries([pcd, pcd_close])

79 | else:

80 | for i, (pts, mask) in enumerate(zip(pts_in, masks_in)):

81 | pts_close = pts[mask]

82 | pcd_close = get_pcd_vis(pts, uniform_color=[1., 0.7, 0.])

83 |

84 | if selected_only:

85 | o3d.visualization.draw_geometries([pcd_close])

86 | else:

87 | pcd = get_pcd_vis(pts)

88 | o3d.visualization.draw_geometries([pcd, pcd_close])

89 |

90 | def get_pcd_vis(pts, uniform_color=None, color_vector=None):

91 | pts = pts.reshape(-1,3)

92 | pcd = o3d.geometry.PointCloud()

93 | pcd.points = o3d.utility.Vector3dVector(pts)

94 | if uniform_color is not None:

95 | # pcd.paint_uniform_color([1., 0.7, 0.])

96 | pcd.paint_uniform_color(uniform_color)

97 | if color_vector is not None:

98 | assert len(pts) == len(color_vector)

99 | pcd.colors = o3d.utility.Vector3dVector(color_vector)

100 |

101 | return pcd

102 |

103 |

104 |

105 | # pts_in_name = ".tmp/points_in_{}.ply".format(i)

106 | # o3d.io.write_point_cloud(pts_in_name, pcd)

107 | # pcd = o3d.io.read_point_cloud(pts_in_name)

--------------------------------------------------------------------------------

/src/scenes/nodes.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 | import torch.nn as nn

4 | from pytorch3d.renderer import PerspectiveCameras

5 | from src.pointLF.point_light_field import PointLightField

6 | from pytorch3d.transforms.rotation_conversions import euler_angles_to_matrix

7 |

8 | DEVICE = "cpu"

9 | TRAINCAM = False

10 | TRAINOBJSZ = False

11 | TRAINOBJ = False

12 |

13 |

14 | class Intrinsics:

15 | def __init__(

16 | self,

17 | H,

18 | W,

19 | focal,

20 | P=None

21 | ):

22 | self.H = int(H)

23 | self.W = int(W)

24 | if np.size(focal) == 1:

25 | self.f_x = nn.Parameter(

26 | torch.tensor(focal, device=DEVICE, requires_grad=TRAINCAM)

27 | )

28 | self.f_y = nn.Parameter(

29 | torch.tensor(focal, device=DEVICE, requires_grad=TRAINCAM)

30 | )

31 | else:

32 | self.f_x = nn.Parameter(

33 | torch.tensor(focal[0], device=DEVICE, requires_grad=TRAINCAM)

34 | )

35 | self.f_y = nn.Parameter(

36 | torch.tensor(focal[1], device=DEVICE, requires_grad=TRAINCAM)

37 | )

38 |

39 | self.P = P

40 |

41 |

42 | class NeuralCamera(PerspectiveCameras):

43 | def __init__(self, h, w, f, intrinsics=None, name=None, type=None, P=None):

44 | # TODO: Cleanup intrinsics and h, w, f

45 | # TODO: Add P matrix for projection

46 | self.H = h

47 | self.W = w

48 | if intrinsics is None:

49 | self.intrinsics = Intrinsics(h, w, f, P)

50 | else:

51 | self.intrinsics = intrinsics

52 |

53 | # Add opengl2cam rotation

54 | opengl2cam = euler_angles_to_matrix(

55 | torch.tensor([np.pi, np.pi, 0.0], device=DEVICE), "ZYX"

56 | )

57 | if type == 'waymo':

58 | waymo_rot = euler_angles_to_matrix(torch.tensor([np.pi, 0., 0.],

59 | device=DEVICE), 'ZYX')

60 |

61 | opengl2cam = torch.matmul(waymo_rot, opengl2cam)

62 |

63 | # Simplified version under the assumption of square pixels

64 | # [self.intrinsics.f_x, self.intrinsics.f_y]

65 | PerspectiveCameras.__init__(

66 | self,

67 | focal_length=torch.tensor([[self.intrinsics.f_x, self.intrinsics.f_y]]),

68 | principal_point=torch.tensor(

69 | [[self.intrinsics.W / 2, self.intrinsics.H / 2]]

70 | ),

71 | R=opengl2cam,

72 | image_size=torch.tensor([[self.intrinsics.W, self.intrinsics.H]]),

73 | )

74 | self.name = name

75 |

76 |

77 | class Lidar:

78 | def __init__(self, Tr_li2cam=None, name=None):

79 | # TODO: Add all relevant params to scene init

80 | self.sensor_type = 'lidar'

81 | self.li2cam = Tr_li2cam

82 |

83 | self.name = name

84 |

85 |

86 | class ObjectClass:

87 | def __init__(self, name):

88 | self.static = False

89 | self.name = name

90 |

91 |

92 | class SceneObject:

93 | def __init__(self, length, height, width, object_class_node):

94 | self.static = False

95 | self.object_class_type_idx = object_class_node.type_idx

96 | self.object_class_name = object_class_node.name

97 | self.length = length

98 | self.height = height

99 | self.width = width

100 | self.box_size = torch.tensor([self.length, self.height, self.width])

101 |

102 |

103 | class Background:

104 | def __init__(self, transformation=None, near=0.5, far=100.0, lightfield_config={}):

105 | self.static = True

106 | global_transformation = np.eye(4)

107 |

108 | if transformation is not None:

109 | transformation = np.squeeze(transformation)

110 | if transformation.shape == (3, 3):

111 | global_transformation[:3, :3] = transformation

112 | elif transformation.shape == (3):

113 | global_transformation[:3, 3] = transformation

114 | elif transformation.shape == (3, 4):

115 | global_transformation[:3, :] = transformation

116 | elif transformation.shape == (4, 4):

117 | global_transformation = transformation

118 | else:

119 | print(

120 | "Ignoring wolrd transformation, not of shape [3, 3], [3, 4], [3, 1] or [4, 4], but",

121 | transformation.shape,

122 | )

123 |

124 | self.transformation = torch.from_numpy(global_transformation)

125 | self.near = torch.tensor(near)

126 | self.far = torch.tensor(far)

--------------------------------------------------------------------------------

/src/utils_dist.py:

--------------------------------------------------------------------------------

1 | import torch.distributed as dist

2 | import torch

3 | from torch.utils.data import DataLoader, DistributedSampler

4 | from operator import itemgetter

5 |

6 |

7 | class DatasetFromSampler(torch.utils.data.Dataset):

8 | """Dataset to create indexes from `Sampler`.

9 | Args:

10 | sampler: PyTorch sampler

11 | """

12 |

13 | def __init__(self, sampler):

14 | """Initialisation for DatasetFromSampler."""

15 | self.sampler = sampler

16 | self.sampler_list = None

17 |

18 | def __getitem__(self, index: int):

19 | """Gets element of the dataset.

20 | Args:

21 | index: index of the element in the dataset

22 | Returns:

23 | Single element by index

24 | """

25 | if self.sampler_list is None:

26 | self.sampler_list = list(self.sampler)

27 | return self.sampler_list[index]

28 |

29 | def __len__(self) -> int:

30 | """

31 | Returns:

32 | int: length of the dataset

33 | """

34 | return len(self.sampler)

35 |

36 |

37 | class DistributedSamplerWrapper(DistributedSampler):

38 | """

39 | Wrapper over `Sampler` for distributed training.

40 | Allows you to use any sampler in distributed mode.

41 | It is especially useful in conjunction with

42 | `torch.nn.parallel.DistributedDataParallel`. In such case, each

43 | process can pass a DistributedSamplerWrapper instance as a DataLoader

44 | sampler, and load a subset of subsampled data of the original dataset

45 | that is exclusive to it.

46 | .. note::

47 | Sampler is assumed to be of constant size.

48 | """

49 |

50 | def __init__(

51 | self,

52 | sampler,

53 | num_replicas=None,

54 | rank=None,

55 | shuffle: bool = True,

56 | ):

57 | """

58 | Args:

59 | sampler: Sampler used for subsampling

60 | num_replicas (int, optional): Number of processes participating in

61 | distributed training

62 | rank (int, optional): Rank of the current process

63 | within ``num_replicas``

64 | shuffle (bool, optional): If true (default),

65 | sampler will shuffle the indices

66 | """

67 | super(DistributedSamplerWrapper, self).__init__(

68 | DatasetFromSampler(sampler),

69 | num_replicas=num_replicas,

70 | rank=rank,

71 | shuffle=shuffle,

72 | )

73 | self.sampler = sampler

74 |

75 | def __iter__(self):

76 | """@TODO: Docs. Contribution is welcome."""

77 | self.dataset = DatasetFromSampler(self.sampler)

78 | indexes_of_indexes = super().__iter__()

79 | subsampler_indexes = self.dataset

80 | return iter(itemgetter(*indexes_of_indexes)(subsampler_indexes))

81 |

82 |

83 | def setup_for_distributed(is_master):

84 | """

85 | This function disables printing when not in master process

86 | """

87 | import builtins as __builtin__

88 |

89 | builtin_print = __builtin__.print

90 |

91 | def print(*args, **kwargs):

92 | force = kwargs.pop("force", False)

93 | if is_master or force:

94 | builtin_print(*args, **kwargs)

95 |

96 | __builtin__.print = print

97 |

98 |

99 | def is_dist_avail_and_initialized():

100 | if not dist.is_available():

101 | return False

102 | if not dist.is_initialized():

103 | return False

104 | return True

105 |

106 |

107 | def get_world_size():

108 | if not is_dist_avail_and_initialized():

109 | return 1

110 | return dist.get_world_size()

111 |

112 |

113 | def get_rank():

114 | if not is_dist_avail_and_initialized():

115 | return 0

116 | return dist.get_rank()

117 |

118 |

119 | def is_main_process():

120 | return get_rank() == 0

121 |

122 |

123 | def save_on_master(*args, **kwargs):

124 | if is_main_process():

125 | torch.save(*args, **kwargs)

126 |

127 |

128 | import os, torch

129 |

130 |

131 | def init_distributed_mode(args):

132 | if "RANK" in os.environ and "WORLD_SIZE" in os.environ:

133 | args.rank = int(os.environ["RANK"])

134 | args.world_size = int(os.environ["WORLD_SIZE"])

135 | args.gpu = int(os.environ["LOCAL_RANK"])

136 | elif "SLURM_PROCID" in os.environ:

137 | args.rank = int(os.environ["SLURM_PROCID"])

138 | args.gpu = args.rank % torch.cuda.device_count()

139 | else:

140 | print("Not using distributed mode")

141 | args.distributed = False

142 | return

143 |

144 | args.distributed = True

145 |

146 | torch.cuda.set_device(args.gpu)

147 | args.dist_backend = "nccl"

148 | print(

149 | "| distributed init (rank {}): {}".format(args.rank, args.dist_url), flush=True

150 | )

151 | torch.distributed.init_process_group(

152 | backend=args.dist_backend,

153 | init_method=args.dist_url,

154 | world_size=args.world_size,

155 | rank=args.rank,

156 | )

157 | torch.distributed.barrier()

158 | setup_for_distributed(args.rank == 0)

159 |

--------------------------------------------------------------------------------

/src/pointLF/light_field_renderer.py:

--------------------------------------------------------------------------------

1 | from typing import Callable, Tuple, List

2 |

3 | import torch

4 | import torch.nn as nn

5 | import torch.nn.functional as F

6 | import numpy

7 | from pytorch3d.renderer import RayBundle

8 | from ..scenes import NeuralScene

9 |

10 |

11 | class LightFieldRenderer(nn.Module):

12 |

13 | def __init__(self, light_field_module, chunksize: int, cam_centered: bool = False):

14 | super(LightFieldRenderer, self).__init__()

15 |

16 | self.light_field_module = light_field_module

17 | self.chunksize = chunksize

18 | if cam_centered:

19 | self.rotate2cam = True

20 | else:

21 | self.rotate2cam = False

22 |

23 | # def forward(self, frame_idx: int, camera_idx: int, scene: NeuralScene, volumetric_function: Callable, chunk_idx: int, **kwargs):

24 | def forward(self, input_dict, scene, **kwargs):

25 |

26 | ##############

27 | # TODO: Clean Up and move somewhere else

28 | ray_bundle = input_dict['ray_bundle']

29 | device = ray_bundle.origins.device

30 | pts = input_dict['pts']

31 | ray_dirs_select = input_dict['ray_dirs_select']

32 | closest_point_mask = input_dict['closest_point_mask']

33 |

34 | cf_ls = [list(pts_k[0].keys()) for pts_k in pts]

35 | import numpy as np

36 | unique_cf = np.unique(np.concatenate([np.array(cf) for cf in cf_ls]), axis=0)

37 |

38 | pts_to_unpack = {

39 | 0: 'pt_cloud_select',

40 | 1: 'closest_point_dist',

41 | 2: 'closest_point_azimuth',

42 | 3: 'closest_point_pitch',

43 |

44 | }

45 |

46 | if len(unique_cf) != len(cf_ls):

47 | new_cf = [tuple(list(cf[0]) + [j]) for j, cf in enumerate(cf_ls)]

48 |

49 | output_dict = {

50 | new_cf[j]:

51 | v

52 | for j, pt in enumerate(pts) for k, v in pt[4].items()

53 | }

54 |

55 | closest_point_mask = {new_cf[j]: v for j, pt in enumerate(closest_point_mask) for k, v in pt.items()}

56 | ray_dirs_select = {new_cf[j]: v.to(device) for j, pt in enumerate(ray_dirs_select) for k, v in pt.items()}

57 | pts = {

58 | n: {

59 | new_cf[j]:

60 | v.to(device)

61 | for j, pt in enumerate(pts) for k, v in pt[i].items()

62 | }

63 | for i, n in pts_to_unpack.items()

64 | }

65 | pts.update({'output_dict': output_dict})

66 |

67 | a = 0

68 |

69 | else:

70 | output_dict = {

71 | k:

72 | v

73 | for pt in pts for k, v in pt[4].items()

74 | }

75 |

76 | closest_point_mask = {k: v for pt in closest_point_mask for k, v in pt.items()}

77 | ray_dirs_select = {k: v.to(device) for pt in ray_dirs_select for k, v in pt.items()}

78 | pts = {

79 | n: {

80 | k:

81 | v.to(device)

82 | for pt in pts for k, v in pt[i].items()

83 | }

84 | for i, n in pts_to_unpack.items()

85 | }

86 | pts.update({'output_dict': output_dict})

87 | ##################

88 |

89 | images, output_dict = self.light_field_module(

90 | ray_bundle=input_dict['ray_bundle'],

91 | scene=scene,

92 | closest_point_mask=closest_point_mask,

93 | pt_cloud_select=pts['pt_cloud_select'],

94 | closest_point_dist=pts['closest_point_dist'],

95 | closest_point_azimuth=pts['closest_point_azimuth'],

96 | closest_point_pitch=pts['closest_point_pitch'],

97 | output_dict=pts['output_dict'],

98 | ray_dirs_select=ray_dirs_select,

99 | rotate2cam=self.rotate2cam,

100 | **kwargs

101 | )

102 |

103 | if scene.tonemapping:

104 | rgb = torch.zeros_like(images)

105 | tone_mapping_ls = [scene.frames[cf[0][1]].load_tone_mapping(cf[0][0]) for cf in cf_ls]

106 | for i in range(len(images)):

107 | rgb[i] = self.tone_map(images[i], tone_mapping_ls[i])

108 |

109 | else:

110 | rgb = images

111 |

112 | output_dict.update(

113 | {

114 | 'rgb': rgb.view(-1, 3),

115 | 'ray_bundle': ray_bundle._replace(xys=ray_bundle.xys[..., None, :])

116 | }

117 | )

118 |

119 | return output_dict

120 |

121 | def tone_map(self, x, tone_mapping_params):

122 |

123 | x = (tone_mapping_params['contrast'] * (x - 0.5) + \

124 | 0.5 + \

125 | tone_mapping_params['brightness']) * \

126 | torch.cat(list(tone_mapping_params['wht_pt'].values()))

127 | x = self.leaky_clamping(x, gamma=tone_mapping_params['gamma'])

128 |

129 | return x

130 |

131 | def leaky_clamping(self, x, gamma, alpha=0.01):

132 | x[x < 0] = x[x < 0] * alpha

133 | x[x > 1] = (-alpha / x[x > 1]) + alpha + 1.

134 | x[(x > 0.) & (x < 1.)] = x[(x > 0.) & (x < 1.)] ** gamma

135 | return x

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

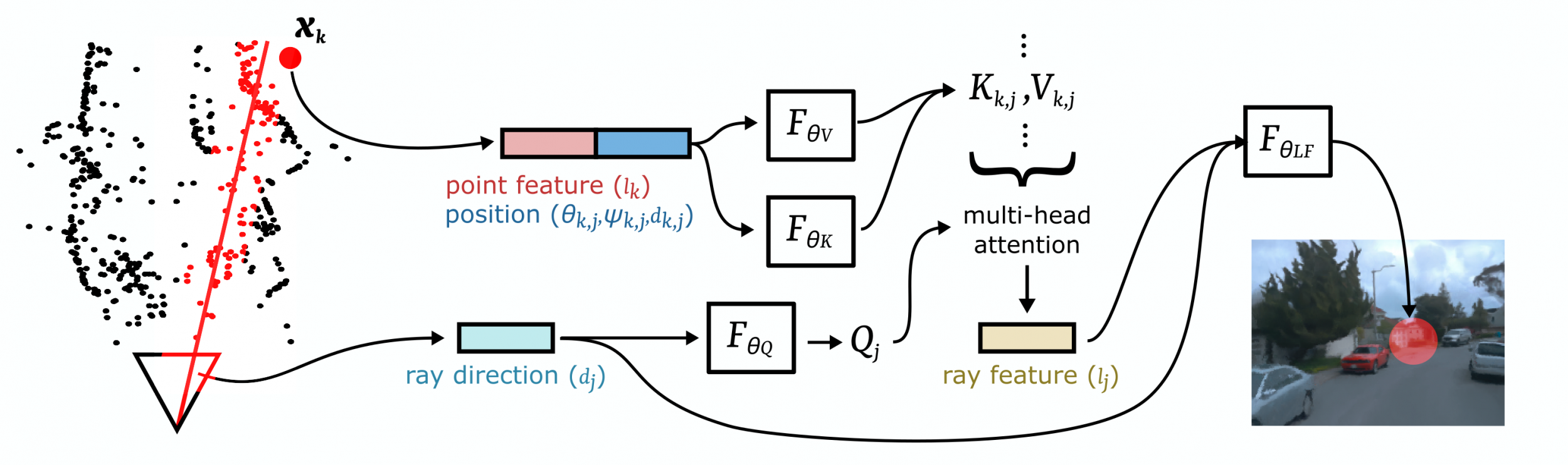

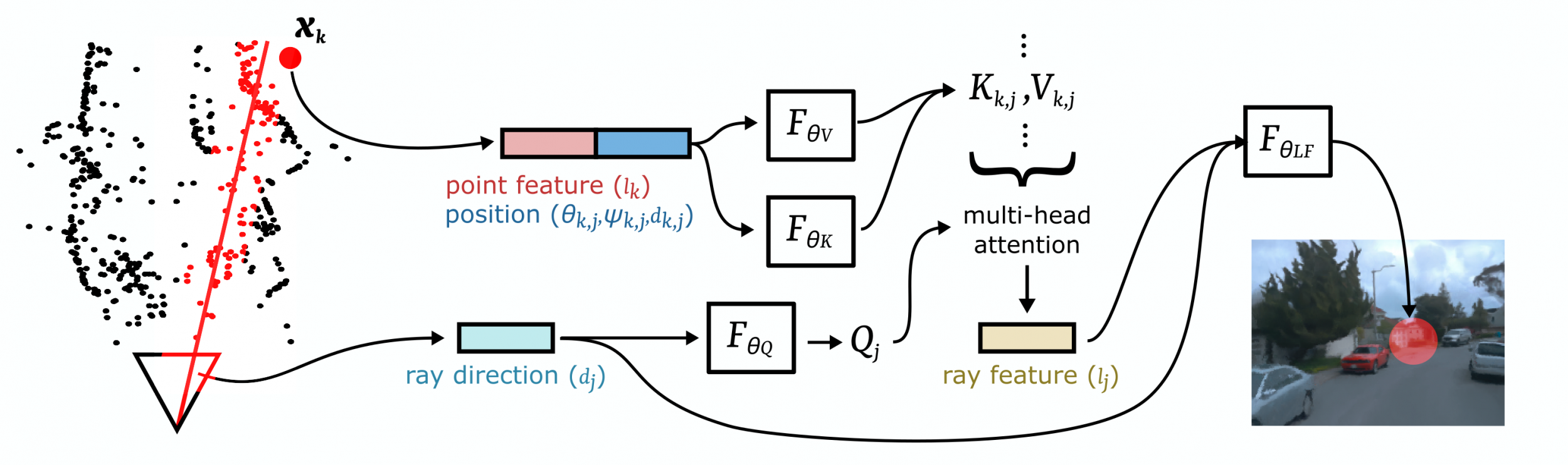

1 | # Neural Point Light Fields (CVPR 2022)

2 |

3 |  4 |

5 | ### [Project Page](https://light.princeton.edu/publication/neural-point-light-fields)

6 | #### Julian Ost, Issam Laradji, Alejandro Newell, Yuval Bahat, Felix Heide

7 |

8 |

9 | Neural Point Light Fields represent scenes with a light field living on a sparse point cloud. As neural volumetric

10 | rendering methods require dense sampling of the underlying functional scene representation, at hundreds of samples

11 | along with a ray cast through the volume, they are fundamentally limited to small scenes with the same objects

12 | projected to hundreds of training views. Promoting sparse point clouds to neural implicit light fields allows us to

13 | represent large scenes effectively with only a single implicit sampling operation per ray.

14 |

15 | These point light fields are a function of the ray direction, and local point feature neighborhood, allowing us to

16 | interpolate the light field conditioned training images without dense object coverage and parallax. We assess the

17 | proposed method for novel view synthesis on large driving scenarios, where we synthesize realistic unseen views that

18 | existing implicit approaches fail to represent. We validate that Neural Point Light Fields make it possible to predict

19 | videos along unseen trajectories previously only feasible to generate by explicitly modeling the scene.

20 |

21 | ---

22 |

23 | ### Data Preparation

24 | #### Waymo

25 |

26 | 1. Download the compressed data of the Waymo Open Dataset:

27 | [Waymo Validation Tar Files](https://console.cloud.google.com/storage/browser/waymo_open_dataset_v_1_3_1/validation?pageState=(%22StorageObjectListTable%22:(%22f%22:%22%255B%255D%22))&prefix=&forceOnObjectsSortingFiltering=false)

28 |

29 | 2. To run the code as used in the paper, store as follows: `./data/validation/validation_xxxx/segment-xxxxxx`

30 |

31 | 3. Neural Point Light Fields is well tested on the segments mentioned in the [Supplementary](https://light.princeton.edu/wp-content/uploads/2022/04/NeuralPointLightFields-Supplementary.pdf) and shown in the experiment group `pointLF_waymo_server`.

32 |

33 |

34 | 4. Preprocess the data running: `./src/datasets/preprocess_Waymo.py -d "./data/validation/validation_xxxx/segment-xxxxxx" --no_data`

35 |

36 | ---

37 |

38 | ### Requirements

39 |

40 | Environment setup

41 | ```

42 | conda create -n NeuralPointLF python=3.7

43 | conda activate NeuralPointLF

44 | ```

45 | Install required packages

46 | ```

47 | conda install -c pytorch -c conda-forge pytorch=1.7.1 torchvision=0.8.2 cudatoolkit=11.0

48 | conda install -c fvcore -c iopath -c conda-forge fvcore iopath

49 | conda install -c bottler nvidiacub

50 | conda install jupyterlab

51 | pip install scikit-image matplotlib imageio plotly opencv-python

52 | conda install pytorch3d -c pytorch3d

53 | conda install -c open3d-admin -c conda-forge open3d

54 | ```

55 | Add large-scale ML toolkit

56 | ```

57 | pip install --upgrade git+https://github.com/haven-ai/haven-ai

58 | ```

59 |

60 | ---

61 | ### Training and Validation

62 | In the first iteration of a scene, the point clouds will be preprocessed and stored, which might take some time.

63 | If you want to train on unmerged point cloud data set `merge_pcd=False` in the config file.

64 |

65 | Train one specific scene from the Waymo Open data set:

66 | ```

67 | python trainval.py -e pointLF_waymo_server -sb -d ./data/waymo/validation --epoch_size

68 | 100 --num_workers=

69 | ```

70 |

71 | Reconstruct the training path (`--render_only=True`)

72 | ```

73 | python trainval.py -e pointLF_waymo_server -sb -d ./data/waymo/validation --epoch_size

74 | 100 --num_workers= --render_only=True

75 | ```

76 |

77 | Argument Descriptions:

78 | ```

79 | -e [Experiment group to run like 'mushrooms' (the rest of the experiment groups are in exp_configs/sps_exps.py)]

80 | -sb [Directory where the experiments are saved]

81 | -r [Flag for whether to reset the experiments]

82 | -d [Directory where the datasets are aved]

83 | ```

84 |

85 | **_Disclaimer_**: The codebase is optimized to run on larger GPU servers with a lot of free CPU memory.

86 | To test on local and low memory, choose `pointLF_waymo_local` instead of `pointLF_waymo_server`.

87 | Adjustments of batch size, chunk size and number of rays will have an effect on necessary resources.

88 |

89 | ---

90 | ### Visualization of Results

91 |

92 | Follow these steps to visualize plots. Open `results.ipynb`, run the first cell to get a dashboard like in the gif below, click on the "plots" tab, then click on "Display plots". Parameters of the plots can be adjusted in the dashboard for custom visualizations.

93 |

94 |

4 |

5 | ### [Project Page](https://light.princeton.edu/publication/neural-point-light-fields)

6 | #### Julian Ost, Issam Laradji, Alejandro Newell, Yuval Bahat, Felix Heide

7 |

8 |

9 | Neural Point Light Fields represent scenes with a light field living on a sparse point cloud. As neural volumetric

10 | rendering methods require dense sampling of the underlying functional scene representation, at hundreds of samples

11 | along with a ray cast through the volume, they are fundamentally limited to small scenes with the same objects

12 | projected to hundreds of training views. Promoting sparse point clouds to neural implicit light fields allows us to

13 | represent large scenes effectively with only a single implicit sampling operation per ray.

14 |

15 | These point light fields are a function of the ray direction, and local point feature neighborhood, allowing us to

16 | interpolate the light field conditioned training images without dense object coverage and parallax. We assess the

17 | proposed method for novel view synthesis on large driving scenarios, where we synthesize realistic unseen views that

18 | existing implicit approaches fail to represent. We validate that Neural Point Light Fields make it possible to predict

19 | videos along unseen trajectories previously only feasible to generate by explicitly modeling the scene.

20 |

21 | ---

22 |

23 | ### Data Preparation

24 | #### Waymo

25 |

26 | 1. Download the compressed data of the Waymo Open Dataset:

27 | [Waymo Validation Tar Files](https://console.cloud.google.com/storage/browser/waymo_open_dataset_v_1_3_1/validation?pageState=(%22StorageObjectListTable%22:(%22f%22:%22%255B%255D%22))&prefix=&forceOnObjectsSortingFiltering=false)

28 |

29 | 2. To run the code as used in the paper, store as follows: `./data/validation/validation_xxxx/segment-xxxxxx`

30 |

31 | 3. Neural Point Light Fields is well tested on the segments mentioned in the [Supplementary](https://light.princeton.edu/wp-content/uploads/2022/04/NeuralPointLightFields-Supplementary.pdf) and shown in the experiment group `pointLF_waymo_server`.

32 |

33 |

34 | 4. Preprocess the data running: `./src/datasets/preprocess_Waymo.py -d "./data/validation/validation_xxxx/segment-xxxxxx" --no_data`

35 |

36 | ---

37 |

38 | ### Requirements

39 |

40 | Environment setup

41 | ```

42 | conda create -n NeuralPointLF python=3.7

43 | conda activate NeuralPointLF

44 | ```

45 | Install required packages

46 | ```

47 | conda install -c pytorch -c conda-forge pytorch=1.7.1 torchvision=0.8.2 cudatoolkit=11.0

48 | conda install -c fvcore -c iopath -c conda-forge fvcore iopath

49 | conda install -c bottler nvidiacub

50 | conda install jupyterlab

51 | pip install scikit-image matplotlib imageio plotly opencv-python

52 | conda install pytorch3d -c pytorch3d

53 | conda install -c open3d-admin -c conda-forge open3d

54 | ```

55 | Add large-scale ML toolkit

56 | ```

57 | pip install --upgrade git+https://github.com/haven-ai/haven-ai

58 | ```

59 |

60 | ---

61 | ### Training and Validation

62 | In the first iteration of a scene, the point clouds will be preprocessed and stored, which might take some time.

63 | If you want to train on unmerged point cloud data set `merge_pcd=False` in the config file.

64 |

65 | Train one specific scene from the Waymo Open data set:

66 | ```

67 | python trainval.py -e pointLF_waymo_server -sb -d ./data/waymo/validation --epoch_size

68 | 100 --num_workers=

69 | ```

70 |

71 | Reconstruct the training path (`--render_only=True`)

72 | ```

73 | python trainval.py -e pointLF_waymo_server -sb -d ./data/waymo/validation --epoch_size

74 | 100 --num_workers= --render_only=True

75 | ```

76 |

77 | Argument Descriptions:

78 | ```

79 | -e [Experiment group to run like 'mushrooms' (the rest of the experiment groups are in exp_configs/sps_exps.py)]

80 | -sb [Directory where the experiments are saved]

81 | -r [Flag for whether to reset the experiments]

82 | -d [Directory where the datasets are aved]

83 | ```

84 |

85 | **_Disclaimer_**: The codebase is optimized to run on larger GPU servers with a lot of free CPU memory.

86 | To test on local and low memory, choose `pointLF_waymo_local` instead of `pointLF_waymo_server`.

87 | Adjustments of batch size, chunk size and number of rays will have an effect on necessary resources.

88 |

89 | ---

90 | ### Visualization of Results

91 |

92 | Follow these steps to visualize plots. Open `results.ipynb`, run the first cell to get a dashboard like in the gif below, click on the "plots" tab, then click on "Display plots". Parameters of the plots can be adjusted in the dashboard for custom visualizations.

93 |

94 |

95 |  96 |

96 |

97 |

98 | ---

99 | #### Citation

100 | ```

101 | @InProceedings{ost2022pointlightfields,

102 | title = {Neural Point Light Fields},

103 | author = {Ost, Julian and Laradji, Issam and Newell, Alejandro and Bahat, Yuval and Heide, Felix},

104 | journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

105 | year = {2022}

106 | }

107 | ```

108 |

109 |

110 |

--------------------------------------------------------------------------------

/src/datasets/extract_baselines.py:

--------------------------------------------------------------------------------

1 | import os

2 | import numpy as np

3 | import open3d as o3d

4 | import matplotlib.pyplot as plt

5 | from PIL import Image

6 |

7 |

8 | def extract_waymo_poses(dataset, scene_list, i_train, i_test, i_all):

9 | cam_names = ['FRONT', 'FRONT_LEFT', 'FRONT_RIGHT', 'SIDE_LEFT', 'SIDE_RIGHT']

10 | assert len(scene_list) == 1

11 | first_fr = scene_list[0]['first_frame']

12 | last_fr = scene_list[0]['last_frame']

13 |

14 | pose_file_n = "poses_{}_{}.npy".format(str(first_fr).zfill(4), str(last_fr).zfill(4))

15 | img_file_n = "imgs_{}_{}.npy".format(str(first_fr).zfill(4), str(last_fr).zfill(4))

16 | index_test_n= "index_test_{}_{}.npy".format(str(first_fr).zfill(4), str(last_fr).zfill(4))

17 | index_train_n = "index_train_{}_{}.npy".format(str(first_fr).zfill(4), str(last_fr).zfill(4))

18 | pt_depth_file_n = "pt_depth_{}_{}.npy".format(str(first_fr).zfill(4), str(last_fr).zfill(4))

19 | depth_img_file_n = "pt_depth_{}_{}.npy".format(str(first_fr).zfill(4), str(last_fr).zfill(4))

20 |

21 | segmemt_pth = ''

22 | for s in dataset.images[0].split('/')[1:-2]:

23 | segmemt_pth += '/' + s

24 |

25 | remove_side_views = True

26 |

27 | n_frames = len(dataset.poses_world) // 5

28 |

29 | if remove_side_views:

30 | n_imgs = n_frames * 3

31 | cam_names = cam_names[:3]

32 |

33 | else:

34 | n_imgs = n_frames * 5

35 |

36 | cam_pose = dataset.poses_world[:n_imgs]

37 | img_path = dataset.images[:n_imgs]

38 |

39 | cam_pose_openGL = cam_pose.dot(np.array([[-1., 0., 0., 0., ], [0., 1., 0., 0., ], [0., 0., -1., 0., ], [0., 0., 0., 1., ], ]))

40 |

41 | # Add H, W, focal

42 | hwf1 = [np.array([[dataset.H[c_name], dataset.W[c_name], dataset.focal[c_name], 1.]]).repeat(n_frames, axis=0) for c_name in cam_names]

43 | hwf1 = np.concatenate(hwf1)[:, :, None]

44 | cam_pose_openGL = np.concatenate([cam_pose_openGL, hwf1], axis=2)

45 |

46 | np.save(os.path.join(segmemt_pth, pose_file_n), cam_pose_openGL)

47 | np.save(os.path.join(segmemt_pth, img_file_n), img_path)

48 | np.save(os.path.join(segmemt_pth, index_test_n), i_test)

49 | np.save(os.path.join(segmemt_pth, index_train_n), i_train)

50 |

51 | xyz_pts = []

52 |

53 | # Extract depth points (and images)

54 | for i in range(n_imgs):

55 | fr = i % n_frames

56 | pts_i_veh = np.asarray(o3d.io.read_point_cloud(dataset.point_cloud_pth[fr]).points)

57 | pts_i_veh = np.concatenate([pts_i_veh, np.ones([len(pts_i_veh), 1])], axis=-1)

58 |

59 | cam2veh_i = dataset.poses[i]

60 | veh2cam_i = np.concatenate([cam2veh_i[:3, :3].T, np.matmul(cam2veh_i[:3, :3].T, -cam2veh_i[:3, 3])[:, None]], axis=-1)

61 |

62 | pts_i_cam = np.matmul(veh2cam_i, pts_i_veh.T).T

63 |

64 | focal_i = hwf1[i, 2]

65 | h_i = hwf1[i, 0]

66 | w_i = hwf1[i, 1]

67 |

68 | # x - W

69 | x = -focal_i * (pts_i_cam[:, 0] / pts_i_cam[:, 2])

70 | # y - H

71 | y = -focal_i * (pts_i_cam[:, 1] / pts_i_cam[:, 2])

72 |

73 | xyz = np.stack([x, y, pts_i_cam[:, 2]]).T

74 |

75 | visible_pts_map = (xyz[:, 2] > 0) & (np.abs(xyz[:, 0]) < w_i // 2) & (np.abs(xyz[:, 1]) < h_i // 2)

76 |

77 | xyz_visible = xyz[visible_pts_map]

78 |

79 | # xxx['coord'][:, 0] == W

80 | # xxx['coord'][:, 1] == H

81 | xyz_visible[:, 0] = np.maximum(np.minimum(xyz_visible[:, 0] + w_i // 2, w_i), 0)

82 | xyz_visible[:, 1] = np.maximum(np.minimum(xyz_visible[:, 1] + h_i // 2, h_i), 0)

83 |

84 | xyz_pts.append(

85 | {

86 | 'depth': xyz_visible[:, 2],

87 | 'coord': xyz_visible[:, :2],

88 | 'weight': np.ones_like(xyz_visible[:, 2])

89 | }

90 | )

91 |

92 | # ######### Debug Depth Outputs

93 | # if i == 102:

94 | # scale = 8

95 | #

96 | # h_scaled = h_i // scale

97 | # w_scaled = w_i // scale

98 | #

99 | # xyz_vis_scaled = xyz_visible / 8

100 | #

101 | # depth_img = np.zeros([int(h_scaled), int(w_scaled), 1])

102 | # depth_img[np.floor(xyz_vis_scaled[:, 1]).astype("int"), np.floor(xyz_vis_scaled[:, 0]).astype("int")] = xyz_visible[:, 2][:, None]

103 | # plt.figure()

104 | # plt.imshow(depth_img[..., 0], cmap="plasma")

105 | #

106 | # plt.figure()

107 | # img_i = Image.open(img_path[i])

108 | # img_i = img_i.resize((w_scaled, h_scaled))

109 | # plt.imshow(np.asarray(img_i))

110 | #

111 | # # pcd = o3d.geometry.PointCloud()

112 | # # pcd.points = o3d.utility.Vector3dVector(pts_i_cam)

113 |

114 | np.save(os.path.join(segmemt_pth, pt_depth_file_n), xyz_pts)

115 |

116 |

117 | # plt.scatter(cam_pose_openGL[:, 0, 3], cam_pose_openGL[:, 1, 3])

118 | # plt.axis('equal')

119 | #

120 | # for i in range(len(pos)):

121 | # p = cam_pose_openGL[i]

122 | # # p = pos[i].dot(np.array([[-1., 0., 0., 0., ],

123 | # # [0., 1., 0., 0., ],

124 | # # [0., 0., -1., 0., ],

125 | # # [0., 0., 0., 1., ], ]))

126 | # plt.arrow(p[0, 3], p[1, 3], p[0, 0], p[1, 0], color="red")

127 | # plt.arrow(p[0, 3], p[1, 3], p[0, 2], p[1, 2], color="black")

128 |

129 |

130 |

131 | def extract_depth_information(dataset, exp_dict):

132 | pass

133 |

134 |

135 | def extract_depth_image(dataset, exp_dict):

136 | pass

--------------------------------------------------------------------------------

/src/pointLF/layer.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | import torch.nn.functional as F

4 | from torch import Tensor

5 | import math

6 |

7 | class DenseLayer(nn.Linear):

8 | def __init__(self, in_dim: int, out_dim: int, activate: str = "relu", *args, **kwargs) -> None:

9 | if activate:

10 | self.activation = activate

11 | else:

12 | self.activation = 'linear'

13 | super().__init__(in_dim, out_dim, *args,)

14 |

15 | def reset_parameters(self) -> None:

16 | torch.nn.init.xavier_uniform_(self.weight, gain=torch.nn.init.calculate_gain(self.activation))

17 | if self.bias is not None:

18 | torch.nn.init.zeros_(self.bias)

19 |

20 | def forward(self, input: Tensor, **kwargs) -> Tensor:

21 | out = super(DenseLayer, self).forward(input)

22 | if self.activation == 'relu':

23 | out = F.relu(out)

24 |

25 | return out

26 |

27 |

28 | class EqualLinear(nn.Module):

29 | """Linear layer with equalized learning rate.

30 |

31 | During the forward pass the weights are scaled by the inverse of the He constant (i.e. sqrt(in_dim)) to

32 | prevent vanishing gradients and accelerate training. This constant only works for ReLU or LeakyReLU

33 | activation functions.

34 |

35 | Args:

36 | ----

37 | in_channel: int

38 | Input channels.

39 | out_channel: int

40 | Output channels.

41 | bias: bool

42 | Use bias term.

43 | bias_init: float

44 | Initial value for the bias.

45 | lr_mul: float

46 | Learning rate multiplier. By scaling weights and the bias we can proportionally scale the magnitude of

47 | the gradients, effectively increasing/decreasing the learning rate for this layer.

48 | activate: bool

49 | Apply leakyReLU activation.

50 |

51 | """

52 |

53 | def __init__(self, in_channel, out_channel, bias=True, bias_init=0, lr_mul=1, activate=False):

54 | super().__init__()

55 |

56 | self.weight = nn.Parameter(torch.randn(out_channel, in_channel).div_(lr_mul))

57 |

58 | if bias:

59 | self.bias = nn.Parameter(torch.zeros(out_channel).fill_(bias_init))

60 | else:

61 | self.bias = None

62 |

63 | self.activate = activate

64 | self.scale = (1 / math.sqrt(in_channel)) * lr_mul

65 | self.lr_mul = lr_mul

66 |

67 | def forward(self, input):

68 | if self.activate:

69 | out = F.linear(input, self.weight * self.scale)

70 | out = fused_leaky_relu(out, self.bias * self.lr_mul)

71 | else:

72 | out = F.linear(input, self.weight * self.scale, bias=self.bias * self.lr_mul)

73 | return out

74 |

75 | def __repr__(self):

76 | return f"{self.__class__.__name__}({self.weight.shape[1]}, {self.weight.shape[0]})"

77 |

78 |

79 | class FusedLeakyReLU(nn.Module):

80 | def __init__(self, channel, bias=True, negative_slope=0.2, scale=2 ** 0.5):

81 | super().__init__()

82 |

83 | if bias:

84 | self.bias = nn.Parameter(torch.zeros(channel))

85 |

86 | else:

87 | self.bias = None

88 |

89 | self.negative_slope = negative_slope

90 | self.scale = scale

91 |

92 | def forward(self, input):

93 | return fused_leaky_relu(input, self.bias, self.negative_slope, self.scale)

94 |

95 |

96 | def fused_leaky_relu(input, bias=None, negative_slope=0.2, scale=2 ** 0.5):

97 | if input.dtype == torch.float16:

98 | bias = bias.half()

99 |

100 | if bias is not None:

101 | rest_dim = [1] * (input.ndim - bias.ndim - 1)

102 | return F.leaky_relu(input + bias.view(1, bias.shape[0], *rest_dim), negative_slope=0.2) * scale

103 |

104 | else:

105 | return F.leaky_relu(input, negative_slope=0.2) * scale

106 |

107 |

108 | class ModulationLayer(nn.Module):

109 | def __init__(self, in_ch, out_ch, z_dim, demodulate=True, activate=True, bias=True, **kwargs):

110 | super(ModulationLayer, self).__init__()

111 | self.eps = 1e-8

112 |

113 | self.in_ch = in_ch

114 | self.out_ch = out_ch

115 | self.z_dim = z_dim

116 | self.demodulate = demodulate

117 |

118 | self.scale = 1 / math.sqrt(in_ch)

119 | self.weight = nn.Parameter(torch.randn(out_ch, in_ch))

120 | self.modulation = EqualLinear(z_dim, in_ch, bias_init=1, activate=False)

121 |

122 | if activate:

123 | # FusedLeakyReLU includes a bias term

124 | self.activate = FusedLeakyReLU(out_ch, bias=bias)

125 | elif bias:

126 | self.bias = nn.Parameter(torch.zeros(1, out_ch))

127 |

128 | def __repr__(self):

129 | return f'{self.__class__.__name__}({self.in_channel}, {self.out_channel}, z_dim={self.z_dim})'

130 |

131 |

132 | def forward(self, input, z):

133 | # feature modulation

134 | gamma = self.modulation(z) # B, in_ch

135 | input = input * gamma

136 |

137 | weight = self.weight * self.scale

138 |

139 | if self.demodulate:

140 | # weight is out_ch x in_ch

141 | # here we calculate the standard deviation per input channel

142 | demod = torch.rsqrt(weight.pow(2).sum([1]) + self.eps)

143 | weight = weight * demod.view(-1, 1)

144 |

145 | # also normalize inputs

146 | input_demod = torch.rsqrt(input.pow(2).sum([1]) + self.eps)

147 | input = input * input_demod.view(-1, 1)

148 |

149 | out = F.linear(input, weight)

150 |

151 | if hasattr(self, 'activate'):

152 | out = self.activate(out)

153 |

154 | if hasattr(self, 'bias'):

155 | out = out + self.bias

156 |

157 | return out

--------------------------------------------------------------------------------

/src/scenes/raysampler/frustum_helpers.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 | from pytorch3d.transforms import Rotate, Transform3d

4 | from src.scenes.frames import GraphEdge

5 |

6 |

7 | def get_frustum_world(camera, edge2camera, device='cpu'):

8 | cam_trafo = edge2camera.get_transformation_c2p().to(device=device)

9 | H = camera.intrinsics.H

10 | W = camera.intrinsics.W

11 | focal = camera.intrinsics.f_x

12 | sensor_corner = torch.tensor([[0., 0.], [0., H - 1], [W - 1, H - 1], [W - 1, 0.]], device=device)

13 | frustum_edges = torch.stack([(sensor_corner[:, 0] - W * .5) / focal, -(sensor_corner[:, 1] - H * .5) / focal,

14 | -torch.ones(size=(4,), device=device)], -1)

15 | frustum_edges /= torch.norm(frustum_edges, dim=-1)[:, None]

16 | frustum_edges = \

17 | Rotate(camera.R, device=device).compose(cam_trafo.translate(-edge2camera.translation)).transform_points(

18 | frustum_edges.reshape(1, -1, 3))[0]

19 | frustum_edges /= torch.norm(frustum_edges, dim=-1)[:, None]

20 |

21 | frustum_normals = torch.cross(frustum_edges, frustum_edges[[1, 2, 3, 0], :])

22 |

23 | frustum_normals /= torch.norm(frustum_normals, dim=-1)[:, None]

24 |

25 | return frustum_edges, frustum_normals

26 |

27 |

28 | def get_frustum(camera, edge2camera, edge2reference, device='cpu'):

29 | # Gets the edges and normals of a cameras frustum with respect to a reference system

30 | openGL2dataset = Rotate(camera.R, device=device)

31 |

32 | if type(edge2reference) == GraphEdge:

33 | ref2cam = torch.eye(4, device=device)[None]

34 |

35 | ref2cam[0, 3, :3] = edge2reference.translation - edge2camera.translation

36 | ref2cam[0, :3, :3] = edge2camera.getRotation_c2p().compose(edge2reference.getRotation_p2c()).get_matrix()[:, :3, :3]

37 | cam_trafo = Transform3d(matrix=ref2cam)

38 |

39 | else:

40 | wo2veh = edge2reference

41 | cam2wo = edge2camera.get_transformation_c2p().cpu().get_matrix()[0].T.detach().numpy()

42 |

43 | cam2veh = wo2veh.dot(cam2wo)

44 | cam2veh = Transform3d(matrix=torch.tensor(cam2veh.T, device=device, dtype=torch.float32))

45 | cam_trafo = cam2veh

46 | ref2cam = cam2veh.get_matrix()

47 |

48 | H = camera.intrinsics.H

49 | W = camera.intrinsics.W

50 | focal = camera.intrinsics.f_x.detach()

51 | sensor_corner = torch.tensor([[0., 0.], [0., H - 1], [W - 1, H - 1], [W - 1, 0.]], device=device)

52 | frustum_edges = torch.stack([(sensor_corner[:, 0] - W * .5) / focal, -(sensor_corner[:, 1] - H * .5) / focal,

53 | -torch.ones(size=(4,), device=device)], -1)

54 | frustum_edges /= torch.norm(frustum_edges, dim=-1)[:, None]

55 | frustum_edges = openGL2dataset.compose(cam_trafo.translate(-ref2cam[:, 3, :3])).transform_points(

56 | frustum_edges.reshape(1, -1, 3))[0]

57 | frustum_edges /= torch.norm(frustum_edges, dim=-1)[:, None]

58 | frustum_normals = torch.cross(frustum_edges, frustum_edges[[1, 2, 3, 0], :])

59 | frustum_normals /= torch.norm(frustum_normals, dim=-1)[:, None]

60 | return frustum_edges, frustum_normals

61 |

62 |

63 | def get_frustum_torch(camera, edge2camera, edge2reference, device='cpu'):

64 | # Gets the edges and normals of a cameras frustum with respect to a reference system

65 | openGL2dataset = Rotate(camera.R, device=device)

66 |

67 | if type(edge2reference) == GraphEdge:

68 | ref2cam = torch.eye(4, device=device)[None]

69 |

70 | ref2cam[0, 3, :3] = edge2reference.translation - edge2camera.translation

71 | ref2cam[0, :3, :3] = edge2camera.getRotation_c2p().compose(edge2reference.getRotation_p2c()).get_matrix()[:, :3,

72 | :3]

73 | cam_trafo = Transform3d(matrix=ref2cam)

74 |

75 | else:

76 | wo2veh = edge2reference

77 | cam2wo = edge2camera.get_transformation_c2p(device='cpu', requires_grad=False).get_matrix()[0].T

78 |

79 | cam2veh = torch.matmul(wo2veh, cam2wo)

80 | cam2veh = Transform3d(matrix=cam2veh.T)

81 | cam_trafo = cam2veh

82 | ref2cam = cam2veh.get_matrix()

83 |

84 | H = camera.intrinsics.H

85 | W = camera.intrinsics.W

86 | focal = camera.intrinsics.f_x.detach()

87 | sensor_corner = torch.tensor([[0., 0.], [0., H - 1], [W - 1, H - 1], [W - 1, 0.]], device=device)

88 | frustum_edges = torch.stack([(sensor_corner[:, 0] - W * .5) / focal, -(sensor_corner[:, 1] - H * .5) / focal,

89 | -torch.ones(size=(4,), device=device)], -1)

90 | frustum_edges /= torch.norm(frustum_edges, dim=-1)[:, None]

91 | frustum_edges = openGL2dataset.compose(cam_trafo.translate(-ref2cam[:, 3, :3])).transform_points(

92 | frustum_edges.reshape(1, -1, 3))[0]

93 | frustum_edges /= torch.norm(frustum_edges, dim=-1)[:, None]

94 | frustum_normals = torch.cross(frustum_edges, frustum_edges[[1, 2, 3, 0], :])

95 | frustum_normals /= torch.norm(frustum_normals, dim=-1)[:, None]

96 | return frustum_edges, frustum_normals

97 |

98 |

99 | # TODO: Convert to full numpy version

100 | def get_frustum_np(camera, edge2camera, edge2reference, device='cpu'):

101 | # Gets the edges and normals of a cameras frustum with respect to a reference system

102 |

103 | openGL2dataset = Rotate(camera.R, device=device)

104 |

105 | ref2cam = torch.eye(4, device=device)[None]

106 |

107 | ref2cam[0, 3, :3] = edge2reference.translation - edge2camera.translation

108 | ref2cam[0, :3, :3] = edge2camera.getRotation_c2p().compose(edge2reference.getRotation_p2c()).get_matrix()[:, :3, :3]

109 | cam_trafo = Transform3d(matrix=ref2cam)

110 |

111 | H = camera.intrinsics.H

112 | W = camera.intrinsics.W

113 | focal = camera.intrinsics.f_x

114 | sensor_corner = torch.tensor([[0., 0.], [0., H - 1], [W - 1, H - 1], [W - 1, 0.]], device=device)

115 | frustum_edges = torch.stack([(sensor_corner[:, 0] - W * .5) / focal, -(sensor_corner[:, 1] - H * .5) / focal,

116 | -torch.ones(size=(4,), device=device)], -1)

117 | frustum_edges /= torch.norm(frustum_edges, dim=-1)[:, None]

118 | frustum_edges = openGL2dataset.compose(cam_trafo.translate(-ref2cam[:, 3, :3])).transform_points(

119 | frustum_edges.reshape(1, -1, 3))[0]

120 | frustum_edges /= torch.norm(frustum_edges, dim=-1)[:, None]

121 | frustum_normals = torch.cross(frustum_edges, frustum_edges[[1, 2, 3, 0], :])

122 | frustum_normals /= torch.norm(frustum_normals, dim=-1)[:, None]

123 | return frustum_edges, frustum_normals

--------------------------------------------------------------------------------

/src/pointLF/pointcloud_encoding/simpleview.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | # from all_utils import DATASET_NUM_CLASS

4 | from .simpleview_utils import PCViews, Squeeze, BatchNormPoint

5 | import matplotlib.pyplot as plt

6 |

7 | from .resnet import _resnet, BasicBlock

8 |

9 |

10 | class MVModel(nn.Module):

11 | def __init__(self, task,

12 | # dataset,

13 | backbone,

14 | feat_size,

15 | resolution=128,

16 | upscale_feats = True,

17 | ):

18 | super().__init__()

19 | assert task == 'cls'

20 | self.task = task

21 | self.num_class = 10 # DATASET_NUM_CLASS[dataset]

22 | self.dropout_p = 0.5

23 | self.feat_size = feat_size

24 |

25 | self.resolution = resolution

26 | self.upscale_feats = False

27 |

28 | pc_views = PCViews()

29 | self.num_views = pc_views.num_views

30 | self._get_img = pc_views.get_img

31 | self._get_img_coord = pc_views.get_img_coord

32 |