├── .gitignore

├── LICENSE

├── README.md

├── _init_paths.py

├── cfgs

├── res101.yml

├── res101_ls.yml

├── res50.yml

└── vgg16.yml

├── demo.py

├── images

├── img1.jpg

├── img1_det.jpg

├── img1_det_res101.jpg

├── img2.jpg

├── img2_det.jpg

├── img2_det_res101.jpg

├── img3.jpg

├── img3_det.jpg

├── img3_det_res101.jpg

├── img4.jpg

├── img4_det.jpg

└── img4_det_res101.jpg

├── lib

├── Makefile

├── datasets

│ ├── VOCdevkit-matlab-wrapper

│ │ ├── get_voc_opts.m

│ │ ├── voc_eval.m

│ │ └── xVOCap.m

│ ├── __init__.py

│ ├── coco.py

│ ├── ds_utils.py

│ ├── factory.py

│ ├── imagenet.py

│ ├── imdb.py

│ ├── pascal_voc.py

│ ├── pascal_voc_rbg.py

│ ├── tools

│ │ └── mcg_munge.py

│ ├── vg.py

│ ├── vg_eval.py

│ └── voc_eval.py

├── make.sh

├── model

│ ├── __init__.py

│ ├── couplenet

│ │ ├── __init__.py

│ │ ├── couplenet.py

│ │ └── resnet_atrous.py

│ ├── faster_rcnn

│ │ ├── __init__.py

│ │ ├── faster_rcnn.py

│ │ ├── resnet.py

│ │ └── vgg16.py

│ ├── nms

│ │ ├── .gitignore

│ │ ├── __init__.py

│ │ ├── _ext

│ │ │ ├── __init__.py

│ │ │ └── nms

│ │ │ │ └── __init__.py

│ │ ├── build.py

│ │ ├── make.sh

│ │ ├── nms_cpu.py

│ │ ├── nms_gpu.py

│ │ ├── nms_kernel.cu

│ │ ├── nms_wrapper.py

│ │ └── src

│ │ │ ├── nms_cuda.c

│ │ │ ├── nms_cuda.h

│ │ │ ├── nms_cuda_kernel.cu

│ │ │ └── nms_cuda_kernel.h

│ ├── psroi_pooling

│ │ ├── __init__.py

│ │ ├── _ext

│ │ │ ├── __init__.py

│ │ │ └── psroi_pooling

│ │ │ │ └── __init__.py

│ │ ├── build.py

│ │ ├── functions

│ │ │ ├── __init__.py

│ │ │ └── psroi_pooling.py

│ │ ├── modules

│ │ │ ├── __init__.py

│ │ │ └── psroi_pool.py

│ │ └── src

│ │ │ ├── cuda

│ │ │ ├── psroi_pooling_kernel.cu

│ │ │ └── psroi_pooling_kernel.h

│ │ │ ├── psroi_pooling_cuda.c

│ │ │ └── psroi_pooling_cuda.h

│ ├── rfcn

│ │ ├── __init__.py

│ │ ├── resnet_atrous.py

│ │ └── rfcn.py

│ ├── roi_align

│ │ ├── __init__.py

│ │ ├── _ext

│ │ │ ├── __init__.py

│ │ │ └── roi_align

│ │ │ │ └── __init__.py

│ │ ├── build.py

│ │ ├── functions

│ │ │ ├── __init__.py

│ │ │ └── roi_align.py

│ │ ├── make.sh

│ │ ├── modules

│ │ │ ├── __init__.py

│ │ │ └── roi_align.py

│ │ └── src

│ │ │ ├── roi_align.c

│ │ │ ├── roi_align.h

│ │ │ ├── roi_align_cuda.c

│ │ │ ├── roi_align_cuda.h

│ │ │ ├── roi_align_kernel.cu

│ │ │ └── roi_align_kernel.h

│ ├── roi_crop

│ │ ├── __init__.py

│ │ ├── _ext

│ │ │ ├── __init__.py

│ │ │ ├── crop_resize

│ │ │ │ └── __init__.py

│ │ │ └── roi_crop

│ │ │ │ └── __init__.py

│ │ ├── build.py

│ │ ├── functions

│ │ │ ├── __init__.py

│ │ │ ├── crop_resize.py

│ │ │ ├── gridgen.py

│ │ │ └── roi_crop.py

│ │ ├── make.sh

│ │ ├── modules

│ │ │ ├── __init__.py

│ │ │ ├── gridgen.py

│ │ │ └── roi_crop.py

│ │ └── src

│ │ │ ├── roi_crop.c

│ │ │ ├── roi_crop.h

│ │ │ ├── roi_crop_cuda.c

│ │ │ ├── roi_crop_cuda.h

│ │ │ ├── roi_crop_cuda_kernel.cu

│ │ │ └── roi_crop_cuda_kernel.h

│ ├── roi_pooling

│ │ ├── __init__.py

│ │ ├── _ext

│ │ │ ├── __init__.py

│ │ │ └── roi_pooling

│ │ │ │ └── __init__.py

│ │ ├── build.py

│ │ ├── functions

│ │ │ ├── __init__.py

│ │ │ └── roi_pool.py

│ │ ├── modules

│ │ │ ├── __init__.py

│ │ │ └── roi_pool.py

│ │ └── src

│ │ │ ├── roi_pooling.c

│ │ │ ├── roi_pooling.h

│ │ │ ├── roi_pooling_cuda.c

│ │ │ ├── roi_pooling_cuda.h

│ │ │ ├── roi_pooling_kernel.cu

│ │ │ └── roi_pooling_kernel.h

│ ├── rpn

│ │ ├── __init__.py

│ │ ├── anchor_target_layer.py

│ │ ├── bbox_transform.py

│ │ ├── generate_anchors.py

│ │ ├── proposal_layer.py

│ │ ├── proposal_target_layer_cascade.py

│ │ └── rpn.py

│ └── utils

│ │ ├── .gitignore

│ │ ├── __init__.py

│ │ ├── bbox.c

│ │ ├── bbox.pyx

│ │ ├── blob.py

│ │ ├── config.py

│ │ ├── logger.py

│ │ └── net_utils.py

├── pycocotools

│ ├── UPSTREAM_REV

│ ├── __init__.py

│ ├── _mask.c

│ ├── _mask.pyx

│ ├── coco.py

│ ├── cocoeval.py

│ ├── license.txt

│ ├── mask.py

│ ├── maskApi.c

│ └── maskApi.h

├── roi_data_layer

│ ├── __init__.py

│ ├── minibatch.py

│ ├── roibatchLoader.py

│ └── roidb.py

└── setup.py

├── requirements.txt

├── test_net.py

└── trainval_net.py

/.gitignore:

--------------------------------------------------------------------------------

1 | data/*

2 | .idea/

3 | *.pyc

4 | *~

5 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2017 Jianwei Yang

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # A Pytorch Implementation of R-FCN/CoupleNet

2 |

3 | This repo has moved to [princewang1994/RFCN_CoupleNet.pytorch](https://github.com/princewang1994/RFCN_CoupleNet.pytorch), it will stop updating here.

4 |

5 | ## Introduction

6 |

7 | This project is an pytorch implement R-FCN and CoupleNet, large part code is reference from [jwyang/faster-rcnn.pytorch](https://github.com/jwyang/faster-rcnn.pytorch). The R-FCN structure is refer to [Caffe R-FCN](https://github.com/daijifeng001/R-FCN) and [Py-R-FCN](https://github.com/YuwenXiong/py-R-FCN)

8 |

9 | - For R-FCN, mAP@0.5 reached 73.2 in VOC2007 trainval dataset

10 | - For CoupleNet, mAP@0.5 reached 75.2 in VOC2007 trainval dataset

11 |

12 | ## R-FCN

13 |

14 | arXiv:1605.06409: [R-FCN: Object Detection via Region-based Fully Convolutional Networks](https://arxiv.org/abs/1605.06409)

15 |

16 |

17 |

18 | This repo has following modification compare to [jwyang/faster-rcnn.pytorch](https://github.com/jwyang/faster-rcnn.pytorch):

19 |

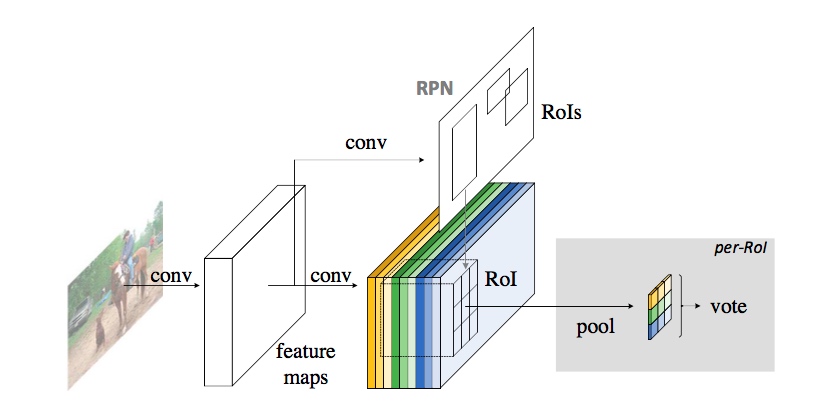

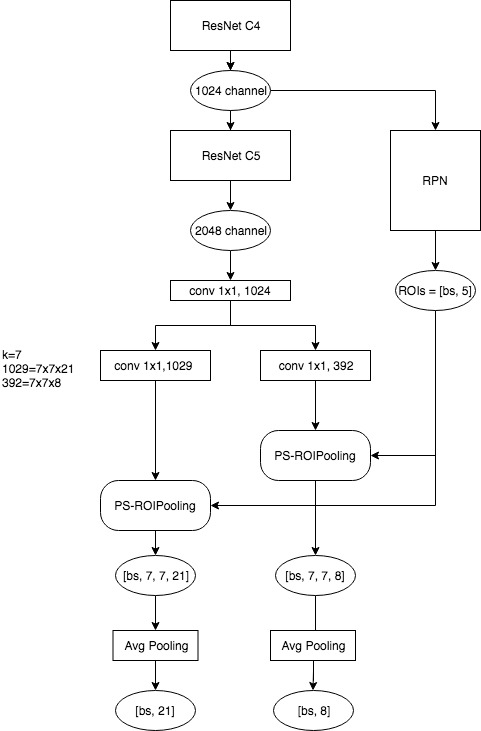

20 | - **R-FCN architecture**: We refered to the origin [Caffe version] of R-FCN, the main structure of R-FCN is show in following figure.

21 | - **PS-RoIPooling with CUDA** :(refer to the other pytorch implement R-FCN, pytorch_RFCN). I have modified it to fit multi-image training (not only batch-size=1 is supported)

22 | - **Implement multi-scale training:** As the original paper says, each image is randomly reized to differenct resolutions (400, 500, 600, 700, 800) when training, and during test time, we use fix input size(600). These make 1.2 mAP gain in our experiments.

23 | - **Implement OHEM:** in this repo, we implement Online Hard Example Mining(OHEM) method in the paper, set `OHEM: False` in `cfgs/res101.yml` for using OHEM. Unluckly, it cause a bit performance degration in my experiments

24 |

25 |

26 |

27 | ## CoupleNet

28 |

29 | arXiv:1708.02863:[CoupleNet: Coupling Global Structure with Local Parts for Object Detection](https://arxiv.org/abs/1708.02863)

30 |

31 |

32 |

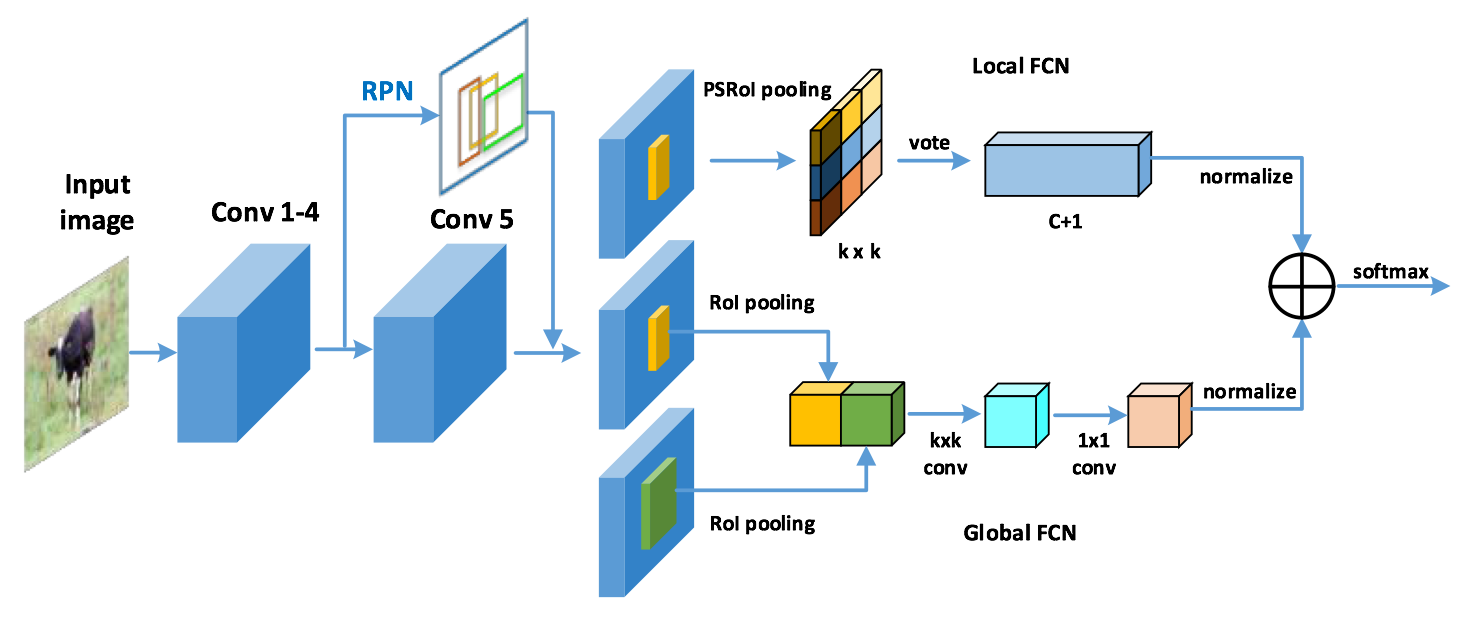

33 | - Making changes based on R-FCN

34 | - Implement local/global FCN in CoupleNet

35 |

36 | ## Tutorial

37 |

38 | * [R-FCN blog](http://blog.prince2015.club/2018/07/13/R-FCN/)

39 |

40 | ## Benchmarking

41 |

42 | We benchmark our code thoroughly on three datasets: pascal voc using two different architecture: R-FCN and CoupleNet. Results shows following:

43 |

44 | 1). PASCAL VOC 2007 (Train: 07_trainval - Test: 07_test, scale=400, 500, 600, 700, 800)

45 |

46 | model | #GPUs | batch size | lr | lr_decay | max_epoch | time/epoch | mem/GPU | mAP

47 | ---------|--------|-----|--------|-----|-----|-------|--------|-----

48 | [R-FCN](https://drive.google.com/file/d/1JMh0gguOozEEIRijQxkQnMKLTAp2_iu5/view?usp=sharing) | 1 | 2 | 4e-3 | 8 | 20 | 0.88 hr | 3000 MB | 73.8

49 | CouleNet | 1 | 2 | 4e-3 | 8 | 20 | 0.60 hr | 8900 MB | 75.2

50 |

51 | - Pretrained model for R-FCN(VOC2007) has released~, See `Test` part following

52 |

53 |

54 | ## Preparation

55 |

56 |

57 | First of all, clone the code

58 | ```

59 | $ git clone https://github.com/princewang1994/R-FCN.pytorch.git

60 | ```

61 |

62 | Then, create a folder:

63 | ```

64 | $ cd R-FCN.pytorch && mkdir data

65 | $ cd data

66 | $ ln -s $VOC_DEVKIT_ROOT .

67 | ```

68 |

69 | ### prerequisites

70 |

71 | * Python 3.6

72 | * Pytorch 0.3.0, **NOT suport 0.4.0 because of some errors**

73 | * CUDA 8.0 or higher

74 |

75 | ### Data Preparation

76 |

77 | * **PASCAL_VOC 07+12**: Please follow the instructions in [py-faster-rcnn](https://github.com/rbgirshick/py-faster-rcnn#beyond-the-demo-installation-for-training-and-testing-models) to prepare VOC datasets. Actually, you can refer to any others. After downloading the data, creat softlinks in the folder data/.

78 | * **Pretrained ResNet**: download from [here](https://drive.google.com/file/d/1I4Jmh2bU6BJVnwqfg5EDe8KGGdec2UE8/view?usp=sharing) and put it to `$RFCN_ROOT/data/pretrained_model/resnet101_caffe.pth`.

79 |

80 |

81 | ### Compilation

82 |

83 | As pointed out by [ruotianluo/pytorch-faster-rcnn](https://github.com/ruotianluo/pytorch-faster-rcnn), choose the right `-arch` in `make.sh` file, to compile the cuda code:

84 |

85 | | GPU model | Architecture |

86 | | ------------- | ------------- |

87 | | TitanX (Maxwell/Pascal) | sm_52 |

88 | | GTX 960M | sm_50 |

89 | | GTX 1080 (Ti) | sm_61 |

90 | | Grid K520 (AWS g2.2xlarge) | sm_30 |

91 | | Tesla K80 (AWS p2.xlarge) | sm_37 |

92 |

93 | More details about setting the architecture can be found [here](https://developer.nvidia.com/cuda-gpus) or [here](http://arnon.dk/matching-sm-architectures-arch-and-gencode-for-various-nvidia-cards/)

94 |

95 | Install all the python dependencies using pip:

96 | ```

97 | $ pip install -r requirements.txt

98 | ```

99 |

100 | Compile the cuda dependencies using following simple commands:

101 |

102 | ```

103 | $ cd lib

104 | $ sh make.sh

105 | ```

106 |

107 | It will compile all the modules you need, including NMS, ROI_Pooing, ROI_Align and ROI_Crop. The default version is compiled with Python 2.7, please compile by yourself if you are using a different python version.

108 |

109 | ## Train

110 |

111 | To train a R-FCN model with ResNet101 on pascal_voc, simply run:

112 | ```

113 | $ CUDA_VISIBLE_DEVICES=$GPU_ID python trainval_net.py \

114 | --arch rfcn \

115 | --dataset pascal_voc --net res101 \

116 | --bs $BATCH_SIZE --nw $WORKER_NUMBER \

117 | --lr $LEARNING_RATE --lr_decay_step $DECAY_STEP \

118 | --cuda

119 | ```

120 |

121 | - Set `--s` to identified differenct experiments.

122 | - For CoupleNet training, replace `--arch rfcn` with `--arch couplenet`, other arguments should be modified according to your machine. (e.g. larger learning rate for bigger batch-size)

123 | - Model are saved to `$RFCN_ROOT/save`

124 |

125 | ## Test

126 |

127 | If you want to evlauate the detection performance of a pre-trained model on pascal_voc test set, simply run

128 | ```

129 | $ python test_net.py --dataset pascal_voc --arch rfcn \

130 | --net res101 \

131 | --checksession $SESSION \

132 | --checkepoch $EPOCH \

133 | --checkpoint $CHECKPOINT \

134 | --cuda

135 | ```

136 | - Specify the specific model session(`--s` in training phase), chechepoch and checkpoint, e.g., SESSION=1, EPOCH=6, CHECKPOINT=5010.

137 |

138 | ### Pretrained Model

139 |

140 | - R-FCN VOC2007: [faster_rcnn_2_12_5010.pth](https://drive.google.com/file/d/1JMh0gguOozEEIRijQxkQnMKLTAp2_iu5/view?usp=sharing)

141 |

142 | Download from link above and put it to `save/rfcn/res101/pascal_voc/faster_rcnn_2_12_5010.pth`. Then you can set `$SESSiON=2, $EPOCH=12, $CHECKPOINT=5010` in test command. It'll got 73.2 mAP.

143 |

144 | ## Demo

145 |

146 | Below are some detection results:

147 |

148 |

149 |

150 |

2 | #include

3 | #include

4 |

5 |

6 | void ROIAlignForwardCpu(const float* bottom_data, const float spatial_scale, const int num_rois,

7 | const int height, const int width, const int channels,

8 | const int aligned_height, const int aligned_width, const float * bottom_rois,

9 | float* top_data);

10 |

11 | void ROIAlignBackwardCpu(const float* top_diff, const float spatial_scale, const int num_rois,

12 | const int height, const int width, const int channels,

13 | const int aligned_height, const int aligned_width, const float * bottom_rois,

14 | float* top_data);

15 |

16 | int roi_align_forward(int aligned_height, int aligned_width, float spatial_scale,

17 | THFloatTensor * features, THFloatTensor * rois, THFloatTensor * output)

18 | {

19 | //Grab the input tensor

20 | float * data_flat = THFloatTensor_data(features);

21 | float * rois_flat = THFloatTensor_data(rois);

22 |

23 | float * output_flat = THFloatTensor_data(output);

24 |

25 | // Number of ROIs

26 | int num_rois = THFloatTensor_size(rois, 0);

27 | int size_rois = THFloatTensor_size(rois, 1);

28 | if (size_rois != 5)

29 | {

30 | return 0;

31 | }

32 |

33 | // data height

34 | int data_height = THFloatTensor_size(features, 2);

35 | // data width

36 | int data_width = THFloatTensor_size(features, 3);

37 | // Number of channels

38 | int num_channels = THFloatTensor_size(features, 1);

39 |

40 | // do ROIAlignForward

41 | ROIAlignForwardCpu(data_flat, spatial_scale, num_rois, data_height, data_width, num_channels,

42 | aligned_height, aligned_width, rois_flat, output_flat);

43 |

44 | return 1;

45 | }

46 |

47 | int roi_align_backward(int aligned_height, int aligned_width, float spatial_scale,

48 | THFloatTensor * top_grad, THFloatTensor * rois, THFloatTensor * bottom_grad)

49 | {

50 | //Grab the input tensor

51 | float * top_grad_flat = THFloatTensor_data(top_grad);

52 | float * rois_flat = THFloatTensor_data(rois);

53 |

54 | float * bottom_grad_flat = THFloatTensor_data(bottom_grad);

55 |

56 | // Number of ROIs

57 | int num_rois = THFloatTensor_size(rois, 0);

58 | int size_rois = THFloatTensor_size(rois, 1);

59 | if (size_rois != 5)

60 | {

61 | return 0;

62 | }

63 |

64 | // batch size

65 | int batch_size = THFloatTensor_size(bottom_grad, 0);

66 | // data height

67 | int data_height = THFloatTensor_size(bottom_grad, 2);

68 | // data width

69 | int data_width = THFloatTensor_size(bottom_grad, 3);

70 | // Number of channels

71 | int num_channels = THFloatTensor_size(bottom_grad, 1);

72 |

73 | // do ROIAlignBackward

74 | ROIAlignBackwardCpu(top_grad_flat, spatial_scale, num_rois, data_height,

75 | data_width, num_channels, aligned_height, aligned_width, rois_flat, bottom_grad_flat);

76 |

77 | return 1;

78 | }

79 |

80 | void ROIAlignForwardCpu(const float* bottom_data, const float spatial_scale, const int num_rois,

81 | const int height, const int width, const int channels,

82 | const int aligned_height, const int aligned_width, const float * bottom_rois,

83 | float* top_data)

84 | {

85 | const int output_size = num_rois * aligned_height * aligned_width * channels;

86 |

87 | #pragma omp parallel for

88 | for (int idx = 0; idx < output_size; ++idx)

89 | {

90 | // (n, c, ph, pw) is an element in the aligned output

91 | int pw = idx % aligned_width;

92 | int ph = (idx / aligned_width) % aligned_height;

93 | int c = (idx / aligned_width / aligned_height) % channels;

94 | int n = idx / aligned_width / aligned_height / channels;

95 |

96 | float roi_batch_ind = bottom_rois[n * 5 + 0];

97 | float roi_start_w = bottom_rois[n * 5 + 1] * spatial_scale;

98 | float roi_start_h = bottom_rois[n * 5 + 2] * spatial_scale;

99 | float roi_end_w = bottom_rois[n * 5 + 3] * spatial_scale;

100 | float roi_end_h = bottom_rois[n * 5 + 4] * spatial_scale;

101 |

102 | // Force malformed ROI to be 1x1

103 | float roi_width = fmaxf(roi_end_w - roi_start_w + 1., 0.);

104 | float roi_height = fmaxf(roi_end_h - roi_start_h + 1., 0.);

105 | float bin_size_h = roi_height / (aligned_height - 1.);

106 | float bin_size_w = roi_width / (aligned_width - 1.);

107 |

108 | float h = (float)(ph) * bin_size_h + roi_start_h;

109 | float w = (float)(pw) * bin_size_w + roi_start_w;

110 |

111 | int hstart = fminf(floor(h), height - 2);

112 | int wstart = fminf(floor(w), width - 2);

113 |

114 | int img_start = roi_batch_ind * channels * height * width;

115 |

116 | // bilinear interpolation

117 | if (h < 0 || h >= height || w < 0 || w >= width)

118 | {

119 | top_data[idx] = 0.;

120 | }

121 | else

122 | {

123 | float h_ratio = h - (float)(hstart);

124 | float w_ratio = w - (float)(wstart);

125 | int upleft = img_start + (c * height + hstart) * width + wstart;

126 | int upright = upleft + 1;

127 | int downleft = upleft + width;

128 | int downright = downleft + 1;

129 |

130 | top_data[idx] = bottom_data[upleft] * (1. - h_ratio) * (1. - w_ratio)

131 | + bottom_data[upright] * (1. - h_ratio) * w_ratio

132 | + bottom_data[downleft] * h_ratio * (1. - w_ratio)

133 | + bottom_data[downright] * h_ratio * w_ratio;

134 | }

135 | }

136 | }

137 |

138 | void ROIAlignBackwardCpu(const float* top_diff, const float spatial_scale, const int num_rois,

139 | const int height, const int width, const int channels,

140 | const int aligned_height, const int aligned_width, const float * bottom_rois,

141 | float* bottom_diff)

142 | {

143 | const int output_size = num_rois * aligned_height * aligned_width * channels;

144 |

145 | #pragma omp parallel for

146 | for (int idx = 0; idx < output_size; ++idx)

147 | {

148 | // (n, c, ph, pw) is an element in the aligned output

149 | int pw = idx % aligned_width;

150 | int ph = (idx / aligned_width) % aligned_height;

151 | int c = (idx / aligned_width / aligned_height) % channels;

152 | int n = idx / aligned_width / aligned_height / channels;

153 |

154 | float roi_batch_ind = bottom_rois[n * 5 + 0];

155 | float roi_start_w = bottom_rois[n * 5 + 1] * spatial_scale;

156 | float roi_start_h = bottom_rois[n * 5 + 2] * spatial_scale;

157 | float roi_end_w = bottom_rois[n * 5 + 3] * spatial_scale;

158 | float roi_end_h = bottom_rois[n * 5 + 4] * spatial_scale;

159 |

160 | // Force malformed ROI to be 1x1

161 | float roi_width = fmaxf(roi_end_w - roi_start_w + 1., 0.);

162 | float roi_height = fmaxf(roi_end_h - roi_start_h + 1., 0.);

163 | float bin_size_h = roi_height / (aligned_height - 1.);

164 | float bin_size_w = roi_width / (aligned_width - 1.);

165 |

166 | float h = (float)(ph) * bin_size_h + roi_start_h;

167 | float w = (float)(pw) * bin_size_w + roi_start_w;

168 |

169 | int hstart = fminf(floor(h), height - 2);

170 | int wstart = fminf(floor(w), width - 2);

171 |

172 | int img_start = roi_batch_ind * channels * height * width;

173 |

174 | // bilinear interpolation

175 | if (h < 0 || h >= height || w < 0 || w >= width)

176 | {

177 | float h_ratio = h - (float)(hstart);

178 | float w_ratio = w - (float)(wstart);

179 | int upleft = img_start + (c * height + hstart) * width + wstart;

180 | int upright = upleft + 1;

181 | int downleft = upleft + width;

182 | int downright = downleft + 1;

183 |

184 | bottom_diff[upleft] += top_diff[idx] * (1. - h_ratio) * (1. - w_ratio);

185 | bottom_diff[upright] += top_diff[idx] * (1. - h_ratio) * w_ratio;

186 | bottom_diff[downleft] += top_diff[idx] * h_ratio * (1. - w_ratio);

187 | bottom_diff[downright] += top_diff[idx] * h_ratio * w_ratio;

188 | }

189 | }

190 | }

191 |

--------------------------------------------------------------------------------

/lib/model/roi_align/src/roi_align.h:

--------------------------------------------------------------------------------

1 | int roi_align_forward(int aligned_height, int aligned_width, float spatial_scale,

2 | THFloatTensor * features, THFloatTensor * rois, THFloatTensor * output);

3 |

4 | int roi_align_backward(int aligned_height, int aligned_width, float spatial_scale,

5 | THFloatTensor * top_grad, THFloatTensor * rois, THFloatTensor * bottom_grad);

6 |

--------------------------------------------------------------------------------

/lib/model/roi_align/src/roi_align_cuda.c:

--------------------------------------------------------------------------------

1 | #include

2 | #include

3 | #include "roi_align_kernel.h"

4 |

5 | extern THCState *state;

6 |

7 | int roi_align_forward_cuda(int aligned_height, int aligned_width, float spatial_scale,

8 | THCudaTensor * features, THCudaTensor * rois, THCudaTensor * output)

9 | {

10 | // Grab the input tensor

11 | float * data_flat = THCudaTensor_data(state, features);

12 | float * rois_flat = THCudaTensor_data(state, rois);

13 |

14 | float * output_flat = THCudaTensor_data(state, output);

15 |

16 | // Number of ROIs

17 | int num_rois = THCudaTensor_size(state, rois, 0);

18 | int size_rois = THCudaTensor_size(state, rois, 1);

19 | if (size_rois != 5)

20 | {

21 | return 0;

22 | }

23 |

24 | // data height

25 | int data_height = THCudaTensor_size(state, features, 2);

26 | // data width

27 | int data_width = THCudaTensor_size(state, features, 3);

28 | // Number of channels

29 | int num_channels = THCudaTensor_size(state, features, 1);

30 |

31 | cudaStream_t stream = THCState_getCurrentStream(state);

32 |

33 | ROIAlignForwardLaucher(

34 | data_flat, spatial_scale, num_rois, data_height,

35 | data_width, num_channels, aligned_height,

36 | aligned_width, rois_flat,

37 | output_flat, stream);

38 |

39 | return 1;

40 | }

41 |

42 | int roi_align_backward_cuda(int aligned_height, int aligned_width, float spatial_scale,

43 | THCudaTensor * top_grad, THCudaTensor * rois, THCudaTensor * bottom_grad)

44 | {

45 | // Grab the input tensor

46 | float * top_grad_flat = THCudaTensor_data(state, top_grad);

47 | float * rois_flat = THCudaTensor_data(state, rois);

48 |

49 | float * bottom_grad_flat = THCudaTensor_data(state, bottom_grad);

50 |

51 | // Number of ROIs

52 | int num_rois = THCudaTensor_size(state, rois, 0);

53 | int size_rois = THCudaTensor_size(state, rois, 1);

54 | if (size_rois != 5)

55 | {

56 | return 0;

57 | }

58 |

59 | // batch size

60 | int batch_size = THCudaTensor_size(state, bottom_grad, 0);

61 | // data height

62 | int data_height = THCudaTensor_size(state, bottom_grad, 2);

63 | // data width

64 | int data_width = THCudaTensor_size(state, bottom_grad, 3);

65 | // Number of channels

66 | int num_channels = THCudaTensor_size(state, bottom_grad, 1);

67 |

68 | cudaStream_t stream = THCState_getCurrentStream(state);

69 | ROIAlignBackwardLaucher(

70 | top_grad_flat, spatial_scale, batch_size, num_rois, data_height,

71 | data_width, num_channels, aligned_height,

72 | aligned_width, rois_flat,

73 | bottom_grad_flat, stream);

74 |

75 | return 1;

76 | }

77 |

--------------------------------------------------------------------------------

/lib/model/roi_align/src/roi_align_cuda.h:

--------------------------------------------------------------------------------

1 | int roi_align_forward_cuda(int aligned_height, int aligned_width, float spatial_scale,

2 | THCudaTensor * features, THCudaTensor * rois, THCudaTensor * output);

3 |

4 | int roi_align_backward_cuda(int aligned_height, int aligned_width, float spatial_scale,

5 | THCudaTensor * top_grad, THCudaTensor * rois, THCudaTensor * bottom_grad);

6 |

--------------------------------------------------------------------------------

/lib/model/roi_align/src/roi_align_kernel.cu:

--------------------------------------------------------------------------------

1 | #ifdef __cplusplus

2 | extern "C" {

3 | #endif

4 |

5 | #include

6 | #include

7 | #include

8 | #include "roi_align_kernel.h"

9 |

10 | #define CUDA_1D_KERNEL_LOOP(i, n) \

11 | for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n; \

12 | i += blockDim.x * gridDim.x)

13 |

14 |

15 | __global__ void ROIAlignForward(const int nthreads, const float* bottom_data, const float spatial_scale, const int height, const int width,

16 | const int channels, const int aligned_height, const int aligned_width, const float* bottom_rois, float* top_data) {

17 | CUDA_1D_KERNEL_LOOP(index, nthreads) {

18 | // (n, c, ph, pw) is an element in the aligned output

19 | // int n = index;

20 | // int pw = n % aligned_width;

21 | // n /= aligned_width;

22 | // int ph = n % aligned_height;

23 | // n /= aligned_height;

24 | // int c = n % channels;

25 | // n /= channels;

26 |

27 | int pw = index % aligned_width;

28 | int ph = (index / aligned_width) % aligned_height;

29 | int c = (index / aligned_width / aligned_height) % channels;

30 | int n = index / aligned_width / aligned_height / channels;

31 |

32 | // bottom_rois += n * 5;

33 | float roi_batch_ind = bottom_rois[n * 5 + 0];

34 | float roi_start_w = bottom_rois[n * 5 + 1] * spatial_scale;

35 | float roi_start_h = bottom_rois[n * 5 + 2] * spatial_scale;

36 | float roi_end_w = bottom_rois[n * 5 + 3] * spatial_scale;

37 | float roi_end_h = bottom_rois[n * 5 + 4] * spatial_scale;

38 |

39 | // Force malformed ROIs to be 1x1

40 | float roi_width = fmaxf(roi_end_w - roi_start_w + 1., 0.);

41 | float roi_height = fmaxf(roi_end_h - roi_start_h + 1., 0.);

42 | float bin_size_h = roi_height / (aligned_height - 1.);

43 | float bin_size_w = roi_width / (aligned_width - 1.);

44 |

45 | float h = (float)(ph) * bin_size_h + roi_start_h;

46 | float w = (float)(pw) * bin_size_w + roi_start_w;

47 |

48 | int hstart = fminf(floor(h), height - 2);

49 | int wstart = fminf(floor(w), width - 2);

50 |

51 | int img_start = roi_batch_ind * channels * height * width;

52 |

53 | // bilinear interpolation

54 | if (h < 0 || h >= height || w < 0 || w >= width) {

55 | top_data[index] = 0.;

56 | } else {

57 | float h_ratio = h - (float)(hstart);

58 | float w_ratio = w - (float)(wstart);

59 | int upleft = img_start + (c * height + hstart) * width + wstart;

60 | int upright = upleft + 1;

61 | int downleft = upleft + width;

62 | int downright = downleft + 1;

63 |

64 | top_data[index] = bottom_data[upleft] * (1. - h_ratio) * (1. - w_ratio)

65 | + bottom_data[upright] * (1. - h_ratio) * w_ratio

66 | + bottom_data[downleft] * h_ratio * (1. - w_ratio)

67 | + bottom_data[downright] * h_ratio * w_ratio;

68 | }

69 | }

70 | }

71 |

72 |

73 | int ROIAlignForwardLaucher(const float* bottom_data, const float spatial_scale, const int num_rois, const int height, const int width,

74 | const int channels, const int aligned_height, const int aligned_width, const float* bottom_rois, float* top_data, cudaStream_t stream) {

75 | const int kThreadsPerBlock = 1024;

76 | const int output_size = num_rois * aligned_height * aligned_width * channels;

77 | cudaError_t err;

78 |

79 |

80 | ROIAlignForward<<<(output_size + kThreadsPerBlock - 1) / kThreadsPerBlock, kThreadsPerBlock, 0, stream>>>(

81 | output_size, bottom_data, spatial_scale, height, width, channels,

82 | aligned_height, aligned_width, bottom_rois, top_data);

83 |

84 | err = cudaGetLastError();

85 | if(cudaSuccess != err) {

86 | fprintf( stderr, "cudaCheckError() failed : %s\n", cudaGetErrorString( err ) );

87 | exit( -1 );

88 | }

89 |

90 | return 1;

91 | }

92 |

93 |

94 | __global__ void ROIAlignBackward(const int nthreads, const float* top_diff, const float spatial_scale, const int height, const int width,

95 | const int channels, const int aligned_height, const int aligned_width, float* bottom_diff, const float* bottom_rois) {

96 | CUDA_1D_KERNEL_LOOP(index, nthreads) {

97 |

98 | // (n, c, ph, pw) is an element in the aligned output

99 | int pw = index % aligned_width;

100 | int ph = (index / aligned_width) % aligned_height;

101 | int c = (index / aligned_width / aligned_height) % channels;

102 | int n = index / aligned_width / aligned_height / channels;

103 |

104 | float roi_batch_ind = bottom_rois[n * 5 + 0];

105 | float roi_start_w = bottom_rois[n * 5 + 1] * spatial_scale;

106 | float roi_start_h = bottom_rois[n * 5 + 2] * spatial_scale;

107 | float roi_end_w = bottom_rois[n * 5 + 3] * spatial_scale;

108 | float roi_end_h = bottom_rois[n * 5 + 4] * spatial_scale;

109 | /* int roi_start_w = round(bottom_rois[1] * spatial_scale); */

110 | /* int roi_start_h = round(bottom_rois[2] * spatial_scale); */

111 | /* int roi_end_w = round(bottom_rois[3] * spatial_scale); */

112 | /* int roi_end_h = round(bottom_rois[4] * spatial_scale); */

113 |

114 | // Force malformed ROIs to be 1x1

115 | float roi_width = fmaxf(roi_end_w - roi_start_w + 1., 0.);

116 | float roi_height = fmaxf(roi_end_h - roi_start_h + 1., 0.);

117 | float bin_size_h = roi_height / (aligned_height - 1.);

118 | float bin_size_w = roi_width / (aligned_width - 1.);

119 |

120 | float h = (float)(ph) * bin_size_h + roi_start_h;

121 | float w = (float)(pw) * bin_size_w + roi_start_w;

122 |

123 | int hstart = fminf(floor(h), height - 2);

124 | int wstart = fminf(floor(w), width - 2);

125 |

126 | int img_start = roi_batch_ind * channels * height * width;

127 |

128 | // bilinear interpolation

129 | if (!(h < 0 || h >= height || w < 0 || w >= width)) {

130 | float h_ratio = h - (float)(hstart);

131 | float w_ratio = w - (float)(wstart);

132 | int upleft = img_start + (c * height + hstart) * width + wstart;

133 | int upright = upleft + 1;

134 | int downleft = upleft + width;

135 | int downright = downleft + 1;

136 |

137 | atomicAdd(bottom_diff + upleft, top_diff[index] * (1. - h_ratio) * (1 - w_ratio));

138 | atomicAdd(bottom_diff + upright, top_diff[index] * (1. - h_ratio) * w_ratio);

139 | atomicAdd(bottom_diff + downleft, top_diff[index] * h_ratio * (1 - w_ratio));

140 | atomicAdd(bottom_diff + downright, top_diff[index] * h_ratio * w_ratio);

141 | }

142 | }

143 | }

144 |

145 | int ROIAlignBackwardLaucher(const float* top_diff, const float spatial_scale, const int batch_size, const int num_rois, const int height, const int width,

146 | const int channels, const int aligned_height, const int aligned_width, const float* bottom_rois, float* bottom_diff, cudaStream_t stream) {

147 | const int kThreadsPerBlock = 1024;

148 | const int output_size = num_rois * aligned_height * aligned_width * channels;

149 | cudaError_t err;

150 |

151 | ROIAlignBackward<<<(output_size + kThreadsPerBlock - 1) / kThreadsPerBlock, kThreadsPerBlock, 0, stream>>>(

152 | output_size, top_diff, spatial_scale, height, width, channels,

153 | aligned_height, aligned_width, bottom_diff, bottom_rois);

154 |

155 | err = cudaGetLastError();

156 | if(cudaSuccess != err) {

157 | fprintf( stderr, "cudaCheckError() failed : %s\n", cudaGetErrorString( err ) );

158 | exit( -1 );

159 | }

160 |

161 | return 1;

162 | }

163 |

164 |

165 | #ifdef __cplusplus

166 | }

167 | #endif

168 |

--------------------------------------------------------------------------------

/lib/model/roi_align/src/roi_align_kernel.h:

--------------------------------------------------------------------------------

1 | #ifndef _ROI_ALIGN_KERNEL

2 | #define _ROI_ALIGN_KERNEL

3 |

4 | #ifdef __cplusplus

5 | extern "C" {

6 | #endif

7 |

8 | __global__ void ROIAlignForward(const int nthreads, const float* bottom_data,

9 | const float spatial_scale, const int height, const int width,

10 | const int channels, const int aligned_height, const int aligned_width,

11 | const float* bottom_rois, float* top_data);

12 |

13 | int ROIAlignForwardLaucher(

14 | const float* bottom_data, const float spatial_scale, const int num_rois, const int height,

15 | const int width, const int channels, const int aligned_height,

16 | const int aligned_width, const float* bottom_rois,

17 | float* top_data, cudaStream_t stream);

18 |

19 | __global__ void ROIAlignBackward(const int nthreads, const float* top_diff,

20 | const float spatial_scale, const int height, const int width,

21 | const int channels, const int aligned_height, const int aligned_width,

22 | float* bottom_diff, const float* bottom_rois);

23 |

24 | int ROIAlignBackwardLaucher(const float* top_diff, const float spatial_scale, const int batch_size, const int num_rois,

25 | const int height, const int width, const int channels, const int aligned_height,

26 | const int aligned_width, const float* bottom_rois,

27 | float* bottom_diff, cudaStream_t stream);

28 |

29 | #ifdef __cplusplus

30 | }

31 | #endif

32 |

33 | #endif

34 |

35 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princewang1994/R-FCN.pytorch/0c8da30bfd23e61f4c7fd1299626b9d82cf8a164/lib/model/roi_crop/__init__.py

--------------------------------------------------------------------------------

/lib/model/roi_crop/_ext/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princewang1994/R-FCN.pytorch/0c8da30bfd23e61f4c7fd1299626b9d82cf8a164/lib/model/roi_crop/_ext/__init__.py

--------------------------------------------------------------------------------

/lib/model/roi_crop/_ext/crop_resize/__init__.py:

--------------------------------------------------------------------------------

1 |

2 | from torch.utils.ffi import _wrap_function

3 | from ._crop_resize import lib as _lib, ffi as _ffi

4 |

5 | __all__ = []

6 | def _import_symbols(locals):

7 | for symbol in dir(_lib):

8 | fn = getattr(_lib, symbol)

9 | locals[symbol] = _wrap_function(fn, _ffi)

10 | __all__.append(symbol)

11 |

12 | _import_symbols(locals())

13 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/_ext/roi_crop/__init__.py:

--------------------------------------------------------------------------------

1 |

2 | from torch.utils.ffi import _wrap_function

3 | from ._roi_crop import lib as _lib, ffi as _ffi

4 |

5 | __all__ = []

6 | def _import_symbols(locals):

7 | for symbol in dir(_lib):

8 | fn = getattr(_lib, symbol)

9 | if callable(fn):

10 | locals[symbol] = _wrap_function(fn, _ffi)

11 | else:

12 | locals[symbol] = fn

13 | __all__.append(symbol)

14 |

15 | _import_symbols(locals())

16 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/build.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function

2 | import os

3 | import torch

4 | from torch.utils.ffi import create_extension

5 |

6 | #this_file = os.path.dirname(__file__)

7 |

8 | sources = ['src/roi_crop.c']

9 | headers = ['src/roi_crop.h']

10 | defines = []

11 | with_cuda = False

12 |

13 | if torch.cuda.is_available():

14 | print('Including CUDA code.')

15 | sources += ['src/roi_crop_cuda.c']

16 | headers += ['src/roi_crop_cuda.h']

17 | defines += [('WITH_CUDA', None)]

18 | with_cuda = True

19 |

20 | this_file = os.path.dirname(os.path.realpath(__file__))

21 | print(this_file)

22 | extra_objects = ['src/roi_crop_cuda_kernel.cu.o']

23 | extra_objects = [os.path.join(this_file, fname) for fname in extra_objects]

24 |

25 | ffi = create_extension(

26 | '_ext.roi_crop',

27 | headers=headers,

28 | sources=sources,

29 | define_macros=defines,

30 | relative_to=__file__,

31 | with_cuda=with_cuda,

32 | extra_objects=extra_objects

33 | )

34 |

35 | if __name__ == '__main__':

36 | ffi.build()

37 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/functions/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princewang1994/R-FCN.pytorch/0c8da30bfd23e61f4c7fd1299626b9d82cf8a164/lib/model/roi_crop/functions/__init__.py

--------------------------------------------------------------------------------

/lib/model/roi_crop/functions/crop_resize.py:

--------------------------------------------------------------------------------

1 | # functions/add.py

2 | import torch

3 | from torch.autograd import Function

4 | from .._ext import roi_crop

5 | from cffi import FFI

6 | ffi = FFI()

7 |

8 | class RoICropFunction(Function):

9 | def forward(self, input1, input2):

10 | self.input1 = input1

11 | self.input2 = input2

12 | self.device_c = ffi.new("int *")

13 | output = torch.zeros(input2.size()[0], input1.size()[1], input2.size()[1], input2.size()[2])

14 | #print('decice %d' % torch.cuda.current_device())

15 | if input1.is_cuda:

16 | self.device = torch.cuda.current_device()

17 | else:

18 | self.device = -1

19 | self.device_c[0] = self.device

20 | if not input1.is_cuda:

21 | roi_crop.BilinearSamplerBHWD_updateOutput(input1, input2, output)

22 | else:

23 | output = output.cuda(self.device)

24 | roi_crop.BilinearSamplerBHWD_updateOutput_cuda(input1, input2, output)

25 | return output

26 |

27 | def backward(self, grad_output):

28 | grad_input1 = torch.zeros(self.input1.size())

29 | grad_input2 = torch.zeros(self.input2.size())

30 | #print('backward decice %d' % self.device)

31 | if not grad_output.is_cuda:

32 | roi_crop.BilinearSamplerBHWD_updateGradInput(self.input1, self.input2, grad_input1, grad_input2, grad_output)

33 | else:

34 | grad_input1 = grad_input1.cuda(self.device)

35 | grad_input2 = grad_input2.cuda(self.device)

36 | roi_crop.BilinearSamplerBHWD_updateGradInput_cuda(self.input1, self.input2, grad_input1, grad_input2, grad_output)

37 | return grad_input1, grad_input2

38 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/functions/gridgen.py:

--------------------------------------------------------------------------------

1 | # functions/add.py

2 | import torch

3 | from torch.autograd import Function

4 | import numpy as np

5 |

6 |

7 | class AffineGridGenFunction(Function):

8 | def __init__(self, height, width,lr=1):

9 | super(AffineGridGenFunction, self).__init__()

10 | self.lr = lr

11 | self.height, self.width = height, width

12 | self.grid = np.zeros( [self.height, self.width, 3], dtype=np.float32)

13 | self.grid[:,:,0] = np.expand_dims(np.repeat(np.expand_dims(np.arange(-1, 1, 2.0/(self.height)), 0), repeats = self.width, axis = 0).T, 0)

14 | self.grid[:,:,1] = np.expand_dims(np.repeat(np.expand_dims(np.arange(-1, 1, 2.0/(self.width)), 0), repeats = self.height, axis = 0), 0)

15 | # self.grid[:,:,0] = np.expand_dims(np.repeat(np.expand_dims(np.arange(-1, 1, 2.0/(self.height - 1)), 0), repeats = self.width, axis = 0).T, 0)

16 | # self.grid[:,:,1] = np.expand_dims(np.repeat(np.expand_dims(np.arange(-1, 1, 2.0/(self.width - 1)), 0), repeats = self.height, axis = 0), 0)

17 | self.grid[:,:,2] = np.ones([self.height, width])

18 | self.grid = torch.from_numpy(self.grid.astype(np.float32))

19 | #print(self.grid)

20 |

21 | def forward(self, input1):

22 | self.input1 = input1

23 | output = input1.new(torch.Size([input1.size(0)]) + self.grid.size()).zero_()

24 | self.batchgrid = input1.new(torch.Size([input1.size(0)]) + self.grid.size()).zero_()

25 | for i in range(input1.size(0)):

26 | self.batchgrid[i] = self.grid.astype(self.batchgrid[i])

27 |

28 | # if input1.is_cuda:

29 | # self.batchgrid = self.batchgrid.cuda()

30 | # output = output.cuda()

31 |

32 | for i in range(input1.size(0)):

33 | output = torch.bmm(self.batchgrid.view(-1, self.height*self.width, 3), torch.transpose(input1, 1, 2)).view(-1, self.height, self.width, 2)

34 |

35 | return output

36 |

37 | def backward(self, grad_output):

38 |

39 | grad_input1 = self.input1.new(self.input1.size()).zero_()

40 |

41 | # if grad_output.is_cuda:

42 | # self.batchgrid = self.batchgrid.cuda()

43 | # grad_input1 = grad_input1.cuda()

44 |

45 | grad_input1 = torch.baddbmm(grad_input1, torch.transpose(grad_output.view(-1, self.height*self.width, 2), 1,2), self.batchgrid.view(-1, self.height*self.width, 3))

46 | return grad_input1

47 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/functions/roi_crop.py:

--------------------------------------------------------------------------------

1 | # functions/add.py

2 | import torch

3 | from torch.autograd import Function

4 | from .._ext import roi_crop

5 | import pdb

6 |

7 | class RoICropFunction(Function):

8 | def forward(self, input1, input2):

9 | self.input1 = input1.clone()

10 | self.input2 = input2.clone()

11 | output = input2.new(input2.size()[0], input1.size()[1], input2.size()[1], input2.size()[2]).zero_()

12 | assert output.get_device() == input1.get_device(), "output and input1 must on the same device"

13 | assert output.get_device() == input2.get_device(), "output and input2 must on the same device"

14 | roi_crop.BilinearSamplerBHWD_updateOutput_cuda(input1, input2, output)

15 | return output

16 |

17 | def backward(self, grad_output):

18 | grad_input1 = self.input1.new(self.input1.size()).zero_()

19 | grad_input2 = self.input2.new(self.input2.size()).zero_()

20 | roi_crop.BilinearSamplerBHWD_updateGradInput_cuda(self.input1, self.input2, grad_input1, grad_input2, grad_output)

21 | return grad_input1, grad_input2

22 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/make.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | CUDA_PATH=/usr/local/cuda/

4 |

5 | cd src

6 | echo "Compiling my_lib kernels by nvcc..."

7 | nvcc -c -o roi_crop_cuda_kernel.cu.o roi_crop_cuda_kernel.cu -x cu -Xcompiler -fPIC -arch=sm_52

8 |

9 | cd ../

10 | python build.py

11 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/modules/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princewang1994/R-FCN.pytorch/0c8da30bfd23e61f4c7fd1299626b9d82cf8a164/lib/model/roi_crop/modules/__init__.py

--------------------------------------------------------------------------------

/lib/model/roi_crop/modules/roi_crop.py:

--------------------------------------------------------------------------------

1 | from torch.nn.modules.module import Module

2 | from ..functions.roi_crop import RoICropFunction

3 |

4 | class _RoICrop(Module):

5 | def __init__(self, layout = 'BHWD'):

6 | super(_RoICrop, self).__init__()

7 | def forward(self, input1, input2):

8 | return RoICropFunction()(input1, input2)

9 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/src/roi_crop.h:

--------------------------------------------------------------------------------

1 | int BilinearSamplerBHWD_updateOutput(THFloatTensor *inputImages, THFloatTensor *grids, THFloatTensor *output);

2 |

3 | int BilinearSamplerBHWD_updateGradInput(THFloatTensor *inputImages, THFloatTensor *grids, THFloatTensor *gradInputImages,

4 | THFloatTensor *gradGrids, THFloatTensor *gradOutput);

5 |

6 |

7 |

8 | int BilinearSamplerBCHW_updateOutput(THFloatTensor *inputImages, THFloatTensor *grids, THFloatTensor *output);

9 |

10 | int BilinearSamplerBCHW_updateGradInput(THFloatTensor *inputImages, THFloatTensor *grids, THFloatTensor *gradInputImages,

11 | THFloatTensor *gradGrids, THFloatTensor *gradOutput);

12 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/src/roi_crop_cuda.c:

--------------------------------------------------------------------------------

1 | #include

2 | #include

3 | #include

4 | #include "roi_crop_cuda_kernel.h"

5 |

6 | #define real float

7 |

8 | // this symbol will be resolved automatically from PyTorch libs

9 | extern THCState *state;

10 |

11 | // Bilinear sampling is done in BHWD (coalescing is not obvious in BDHW)

12 | // we assume BHWD format in inputImages

13 | // we assume BHW(YX) format on grids

14 |

15 | int BilinearSamplerBHWD_updateOutput_cuda(THCudaTensor *inputImages, THCudaTensor *grids, THCudaTensor *output){

16 | // THCState *state = getCutorchState(L);

17 | // THCudaTensor *inputImages = (THCudaTensor *)luaT_checkudata(L, 2, "torch.CudaTensor");

18 | // THCudaTensor *grids = (THCudaTensor *)luaT_checkudata(L, 3, "torch.CudaTensor");

19 | // THCudaTensor *output = (THCudaTensor *)luaT_checkudata(L, 4, "torch.CudaTensor");

20 |

21 | int success = 0;

22 | success = BilinearSamplerBHWD_updateOutput_cuda_kernel(output->size[1],

23 | output->size[3],

24 | output->size[2],

25 | output->size[0],

26 | THCudaTensor_size(state, inputImages, 1),

27 | THCudaTensor_size(state, inputImages, 2),

28 | THCudaTensor_size(state, inputImages, 3),

29 | THCudaTensor_size(state, inputImages, 0),

30 | THCudaTensor_data(state, inputImages),

31 | THCudaTensor_stride(state, inputImages, 0),

32 | THCudaTensor_stride(state, inputImages, 1),

33 | THCudaTensor_stride(state, inputImages, 2),

34 | THCudaTensor_stride(state, inputImages, 3),

35 | THCudaTensor_data(state, grids),

36 | THCudaTensor_stride(state, grids, 0),

37 | THCudaTensor_stride(state, grids, 3),

38 | THCudaTensor_stride(state, grids, 1),

39 | THCudaTensor_stride(state, grids, 2),

40 | THCudaTensor_data(state, output),

41 | THCudaTensor_stride(state, output, 0),

42 | THCudaTensor_stride(state, output, 1),

43 | THCudaTensor_stride(state, output, 2),

44 | THCudaTensor_stride(state, output, 3),

45 | THCState_getCurrentStream(state));

46 |

47 | //check for errors

48 | if (!success) {

49 | THError("aborting");

50 | }

51 | return 1;

52 | }

53 |

54 | int BilinearSamplerBHWD_updateGradInput_cuda(THCudaTensor *inputImages, THCudaTensor *grids, THCudaTensor *gradInputImages,

55 | THCudaTensor *gradGrids, THCudaTensor *gradOutput)

56 | {

57 | // THCState *state = getCutorchState(L);

58 | // THCudaTensor *inputImages = (THCudaTensor *)luaT_checkudata(L, 2, "torch.CudaTensor");

59 | // THCudaTensor *grids = (THCudaTensor *)luaT_checkudata(L, 3, "torch.CudaTensor");

60 | // THCudaTensor *gradInputImages = (THCudaTensor *)luaT_checkudata(L, 4, "torch.CudaTensor");

61 | // THCudaTensor *gradGrids = (THCudaTensor *)luaT_checkudata(L, 5, "torch.CudaTensor");

62 | // THCudaTensor *gradOutput = (THCudaTensor *)luaT_checkudata(L, 6, "torch.CudaTensor");

63 |

64 | int success = 0;

65 | success = BilinearSamplerBHWD_updateGradInput_cuda_kernel(gradOutput->size[1],

66 | gradOutput->size[3],

67 | gradOutput->size[2],

68 | gradOutput->size[0],

69 | THCudaTensor_size(state, inputImages, 1),

70 | THCudaTensor_size(state, inputImages, 2),

71 | THCudaTensor_size(state, inputImages, 3),

72 | THCudaTensor_size(state, inputImages, 0),

73 | THCudaTensor_data(state, inputImages),

74 | THCudaTensor_stride(state, inputImages, 0),

75 | THCudaTensor_stride(state, inputImages, 1),

76 | THCudaTensor_stride(state, inputImages, 2),

77 | THCudaTensor_stride(state, inputImages, 3),

78 | THCudaTensor_data(state, grids),

79 | THCudaTensor_stride(state, grids, 0),

80 | THCudaTensor_stride(state, grids, 3),

81 | THCudaTensor_stride(state, grids, 1),

82 | THCudaTensor_stride(state, grids, 2),

83 | THCudaTensor_data(state, gradInputImages),

84 | THCudaTensor_stride(state, gradInputImages, 0),

85 | THCudaTensor_stride(state, gradInputImages, 1),

86 | THCudaTensor_stride(state, gradInputImages, 2),

87 | THCudaTensor_stride(state, gradInputImages, 3),

88 | THCudaTensor_data(state, gradGrids),

89 | THCudaTensor_stride(state, gradGrids, 0),

90 | THCudaTensor_stride(state, gradGrids, 3),

91 | THCudaTensor_stride(state, gradGrids, 1),

92 | THCudaTensor_stride(state, gradGrids, 2),

93 | THCudaTensor_data(state, gradOutput),

94 | THCudaTensor_stride(state, gradOutput, 0),

95 | THCudaTensor_stride(state, gradOutput, 1),

96 | THCudaTensor_stride(state, gradOutput, 2),

97 | THCudaTensor_stride(state, gradOutput, 3),

98 | THCState_getCurrentStream(state));

99 |

100 | //check for errors

101 | if (!success) {

102 | THError("aborting");

103 | }

104 | return 1;

105 | }

106 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/src/roi_crop_cuda.h:

--------------------------------------------------------------------------------

1 | // Bilinear sampling is done in BHWD (coalescing is not obvious in BDHW)

2 | // we assume BHWD format in inputImages

3 | // we assume BHW(YX) format on grids

4 |

5 | int BilinearSamplerBHWD_updateOutput_cuda(THCudaTensor *inputImages, THCudaTensor *grids, THCudaTensor *output);

6 |

7 | int BilinearSamplerBHWD_updateGradInput_cuda(THCudaTensor *inputImages, THCudaTensor *grids, THCudaTensor *gradInputImages,

8 | THCudaTensor *gradGrids, THCudaTensor *gradOutput);

9 |

--------------------------------------------------------------------------------

/lib/model/roi_crop/src/roi_crop_cuda_kernel.h:

--------------------------------------------------------------------------------

1 | #ifdef __cplusplus

2 | extern "C" {

3 | #endif

4 |

5 |

6 | int BilinearSamplerBHWD_updateOutput_cuda_kernel(/*output->size[3]*/int oc,

7 | /*output->size[2]*/int ow,

8 | /*output->size[1]*/int oh,

9 | /*output->size[0]*/int ob,

10 | /*THCudaTensor_size(state, inputImages, 3)*/int ic,

11 | /*THCudaTensor_size(state, inputImages, 1)*/int ih,

12 | /*THCudaTensor_size(state, inputImages, 2)*/int iw,

13 | /*THCudaTensor_size(state, inputImages, 0)*/int ib,

14 | /*THCudaTensor *inputImages*/float *inputImages, int isb, int isc, int ish, int isw,

15 | /*THCudaTensor *grids*/float *grids, int gsb, int gsc, int gsh, int gsw,

16 | /*THCudaTensor *output*/float *output, int osb, int osc, int osh, int osw,

17 | /*THCState_getCurrentStream(state)*/cudaStream_t stream);

18 |

19 | int BilinearSamplerBHWD_updateGradInput_cuda_kernel(/*gradOutput->size[3]*/int goc,

20 | /*gradOutput->size[2]*/int gow,

21 | /*gradOutput->size[1]*/int goh,

22 | /*gradOutput->size[0]*/int gob,

23 | /*THCudaTensor_size(state, inputImages, 3)*/int ic,

24 | /*THCudaTensor_size(state, inputImages, 1)*/int ih,

25 | /*THCudaTensor_size(state, inputImages, 2)*/int iw,

26 | /*THCudaTensor_size(state, inputImages, 0)*/int ib,

27 | /*THCudaTensor *inputImages*/float *inputImages, int isb, int isc, int ish, int isw,

28 | /*THCudaTensor *grids*/float *grids, int gsb, int gsc, int gsh, int gsw,

29 | /*THCudaTensor *gradInputImages*/float *gradInputImages, int gisb, int gisc, int gish, int gisw,

30 | /*THCudaTensor *gradGrids*/float *gradGrids, int ggsb, int ggsc, int ggsh, int ggsw,

31 | /*THCudaTensor *gradOutput*/float *gradOutput, int gosb, int gosc, int gosh, int gosw,

32 | /*THCState_getCurrentStream(state)*/cudaStream_t stream);

33 |

34 |

35 | #ifdef __cplusplus

36 | }

37 | #endif

38 |

--------------------------------------------------------------------------------

/lib/model/roi_pooling/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princewang1994/R-FCN.pytorch/0c8da30bfd23e61f4c7fd1299626b9d82cf8a164/lib/model/roi_pooling/__init__.py

--------------------------------------------------------------------------------

/lib/model/roi_pooling/_ext/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princewang1994/R-FCN.pytorch/0c8da30bfd23e61f4c7fd1299626b9d82cf8a164/lib/model/roi_pooling/_ext/__init__.py

--------------------------------------------------------------------------------

/lib/model/roi_pooling/_ext/roi_pooling/__init__.py:

--------------------------------------------------------------------------------

1 |

2 | from torch.utils.ffi import _wrap_function

3 | from ._roi_pooling import lib as _lib, ffi as _ffi

4 |

5 | __all__ = []

6 | def _import_symbols(locals):

7 | for symbol in dir(_lib):

8 | fn = getattr(_lib, symbol)

9 | if callable(fn):

10 | locals[symbol] = _wrap_function(fn, _ffi)

11 | else:

12 | locals[symbol] = fn

13 | __all__.append(symbol)

14 |

15 | _import_symbols(locals())

16 |

--------------------------------------------------------------------------------

/lib/model/roi_pooling/build.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function

2 | import os

3 | import torch

4 | from torch.utils.ffi import create_extension

5 |

6 |

7 | sources = ['src/roi_pooling.c']

8 | headers = ['src/roi_pooling.h']

9 | extra_objects = []

10 | defines = []

11 | with_cuda = False

12 |

13 | this_file = os.path.dirname(os.path.realpath(__file__))

14 | print(this_file)

15 |

16 | if torch.cuda.is_available():

17 | print('Including CUDA code.')

18 | sources += ['src/roi_pooling_cuda.c']

19 | headers += ['src/roi_pooling_cuda.h']

20 | defines += [('WITH_CUDA', None)]

21 | with_cuda = True

22 | extra_objects = ['src/roi_pooling.cu.o']

23 | extra_objects = [os.path.join(this_file, fname) for fname in extra_objects]

24 |

25 | ffi = create_extension(

26 | '_ext.roi_pooling',

27 | headers=headers,

28 | sources=sources,

29 | define_macros=defines,

30 | relative_to=__file__,

31 | with_cuda=with_cuda,

32 | extra_objects=extra_objects

33 | )

34 |

35 | if __name__ == '__main__':

36 | ffi.build()

37 |

--------------------------------------------------------------------------------

/lib/model/roi_pooling/functions/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princewang1994/R-FCN.pytorch/0c8da30bfd23e61f4c7fd1299626b9d82cf8a164/lib/model/roi_pooling/functions/__init__.py

--------------------------------------------------------------------------------

/lib/model/roi_pooling/functions/roi_pool.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from torch.autograd import Function

3 | from .._ext import roi_pooling

4 | import pdb

5 |

6 | class RoIPoolFunction(Function):

7 | def __init__(ctx, pooled_height, pooled_width, spatial_scale):

8 | ctx.pooled_width = pooled_width

9 | ctx.pooled_height = pooled_height

10 | ctx.spatial_scale = spatial_scale

11 | ctx.feature_size = None

12 |

13 | def forward(ctx, features, rois):

14 | ctx.feature_size = features.size()

15 | batch_size, num_channels, data_height, data_width = ctx.feature_size

16 | num_rois = rois.size(0)

17 | output = features.new(num_rois, num_channels, ctx.pooled_height, ctx.pooled_width).zero_()

18 | ctx.argmax = features.new(num_rois, num_channels, ctx.pooled_height, ctx.pooled_width).zero_().int()

19 | ctx.rois = rois

20 | if not features.is_cuda:

21 | _features = features.permute(0, 2, 3, 1)

22 | roi_pooling.roi_pooling_forward(ctx.pooled_height, ctx.pooled_width, ctx.spatial_scale,

23 | _features, rois, output)

24 | else:

25 | roi_pooling.roi_pooling_forward_cuda(ctx.pooled_height, ctx.pooled_width, ctx.spatial_scale,

26 | features, rois, output, ctx.argmax)

27 |

28 | return output

29 |

30 | def backward(ctx, grad_output):

31 | assert(ctx.feature_size is not None and grad_output.is_cuda)

32 | batch_size, num_channels, data_height, data_width = ctx.feature_size

33 | grad_input = grad_output.new(batch_size, num_channels, data_height, data_width).zero_()

34 |

35 | roi_pooling.roi_pooling_backward_cuda(ctx.pooled_height, ctx.pooled_width, ctx.spatial_scale,

36 | grad_output, ctx.rois, grad_input, ctx.argmax)

37 |

38 | return grad_input, None

39 |

--------------------------------------------------------------------------------

/lib/model/roi_pooling/modules/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princewang1994/R-FCN.pytorch/0c8da30bfd23e61f4c7fd1299626b9d82cf8a164/lib/model/roi_pooling/modules/__init__.py

--------------------------------------------------------------------------------

/lib/model/roi_pooling/modules/roi_pool.py:

--------------------------------------------------------------------------------

1 | from torch.nn.modules.module import Module

2 | from ..functions.roi_pool import RoIPoolFunction

3 |

4 |

5 | class _RoIPooling(Module):

6 | def __init__(self, pooled_height, pooled_width, spatial_scale):

7 | super(_RoIPooling, self).__init__()

8 |

9 | self.pooled_width = int(pooled_width)

10 | self.pooled_height = int(pooled_height)

11 | self.spatial_scale = float(spatial_scale)

12 |

13 | def forward(self, features, rois):

14 | return RoIPoolFunction(self.pooled_height, self.pooled_width, self.spatial_scale)(features, rois)

15 |

--------------------------------------------------------------------------------

/lib/model/roi_pooling/src/roi_pooling.c:

--------------------------------------------------------------------------------

1 | #include |

2 | #include

3 |

4 | int roi_pooling_forward(int pooled_height, int pooled_width, float spatial_scale,

5 | THFloatTensor * features, THFloatTensor * rois, THFloatTensor * output)

6 | {

7 | // Grab the input tensor

8 | float * data_flat = THFloatTensor_data(features);

9 | float * rois_flat = THFloatTensor_data(rois);

10 |

11 | float * output_flat = THFloatTensor_data(output);

12 |

13 | // Number of ROIs

14 | int num_rois = THFloatTensor_size(rois, 0);

15 | int size_rois = THFloatTensor_size(rois, 1);

16 | // batch size

17 | int batch_size = THFloatTensor_size(features, 0);

18 | if(batch_size != 1)

19 | {

20 | return 0;

21 | }

22 | // data height

23 | int data_height = THFloatTensor_size(features, 1);

24 | // data width

25 | int data_width = THFloatTensor_size(features, 2);

26 | // Number of channels

27 | int num_channels = THFloatTensor_size(features, 3);

28 |

29 | // Set all element of the output tensor to -inf.

30 | THFloatStorage_fill(THFloatTensor_storage(output), -1);

31 |

32 | // For each ROI R = [batch_index x1 y1 x2 y2]: max pool over R

33 | int index_roi = 0;

34 | int index_output = 0;

35 | int n;

36 | for (n = 0; n < num_rois; ++n)

37 | {

38 | int roi_batch_ind = rois_flat[index_roi + 0];

39 | int roi_start_w = round(rois_flat[index_roi + 1] * spatial_scale);

40 | int roi_start_h = round(rois_flat[index_roi + 2] * spatial_scale);

41 | int roi_end_w = round(rois_flat[index_roi + 3] * spatial_scale);

42 | int roi_end_h = round(rois_flat[index_roi + 4] * spatial_scale);

43 | // CHECK_GE(roi_batch_ind, 0);

44 | // CHECK_LT(roi_batch_ind, batch_size);

45 |

46 | int roi_height = fmaxf(roi_end_h - roi_start_h + 1, 1);

47 | int roi_width = fmaxf(roi_end_w - roi_start_w + 1, 1);

48 | float bin_size_h = (float)(roi_height) / (float)(pooled_height);

49 | float bin_size_w = (float)(roi_width) / (float)(pooled_width);

50 |

51 | int index_data = roi_batch_ind * data_height * data_width * num_channels;

52 | const int output_area = pooled_width * pooled_height;

53 |

54 | int c, ph, pw;

55 | for (ph = 0; ph < pooled_height; ++ph)

56 | {

57 | for (pw = 0; pw < pooled_width; ++pw)

58 | {

59 | int hstart = (floor((float)(ph) * bin_size_h));

60 | int wstart = (floor((float)(pw) * bin_size_w));

61 | int hend = (ceil((float)(ph + 1) * bin_size_h));

62 | int wend = (ceil((float)(pw + 1) * bin_size_w));

63 |

64 | hstart = fminf(fmaxf(hstart + roi_start_h, 0), data_height);

65 | hend = fminf(fmaxf(hend + roi_start_h, 0), data_height);

66 | wstart = fminf(fmaxf(wstart + roi_start_w, 0), data_width);

67 | wend = fminf(fmaxf(wend + roi_start_w, 0), data_width);

68 |

69 | const int pool_index = index_output + (ph * pooled_width + pw);

70 | int is_empty = (hend <= hstart) || (wend <= wstart);

71 | if (is_empty)

72 | {

73 | for (c = 0; c < num_channels * output_area; c += output_area)

74 | {

75 | output_flat[pool_index + c] = 0;

76 | }

77 | }

78 | else

79 | {

80 | int h, w, c;

81 | for (h = hstart; h < hend; ++h)

82 | {

83 | for (w = wstart; w < wend; ++w)

84 | {

85 | for (c = 0; c < num_channels; ++c)

86 | {

87 | const int index = (h * data_width + w) * num_channels + c;

88 | if (data_flat[index_data + index] > output_flat[pool_index + c * output_area])

89 | {

90 | output_flat[pool_index + c * output_area] = data_flat[index_data + index];

91 | }

92 | }

93 | }

94 | }

95 | }

96 | }

97 | }

98 |

99 | // Increment ROI index

100 | index_roi += size_rois;

101 | index_output += pooled_height * pooled_width * num_channels;

102 | }

103 | return 1;

104 | }

--------------------------------------------------------------------------------

/lib/model/roi_pooling/src/roi_pooling.h:

--------------------------------------------------------------------------------

1 | int roi_pooling_forward(int pooled_height, int pooled_width, float spatial_scale,

2 | THFloatTensor * features, THFloatTensor * rois, THFloatTensor * output);

--------------------------------------------------------------------------------

/lib/model/roi_pooling/src/roi_pooling_cuda.c:

--------------------------------------------------------------------------------

1 | #include

2 | #include

3 | #include "roi_pooling_kernel.h"

4 |

5 | extern THCState *state;

6 |

7 | int roi_pooling_forward_cuda(int pooled_height, int pooled_width, float spatial_scale,

8 | THCudaTensor * features, THCudaTensor * rois, THCudaTensor * output, THCudaIntTensor * argmax)

9 | {

10 | // Grab the input tensor

11 | float * data_flat = THCudaTensor_data(state, features);

12 | float * rois_flat = THCudaTensor_data(state, rois);

13 |

14 | float * output_flat = THCudaTensor_data(state, output);

15 | int * argmax_flat = THCudaIntTensor_data(state, argmax);

16 |

17 | // Number of ROIs

18 | int num_rois = THCudaTensor_size(state, rois, 0);

19 | int size_rois = THCudaTensor_size(state, rois, 1);

20 | if (size_rois != 5)

21 | {

22 | return 0;

23 | }

24 |

25 | // batch size

26 | // int batch_size = THCudaTensor_size(state, features, 0);

27 | // if (batch_size != 1)

28 | // {

29 | // return 0;

30 | // }

31 | // data height

32 | int data_height = THCudaTensor_size(state, features, 2);

33 | // data width

34 | int data_width = THCudaTensor_size(state, features, 3);

35 | // Number of channels

36 | int num_channels = THCudaTensor_size(state, features, 1);

37 |

38 | cudaStream_t stream = THCState_getCurrentStream(state);

39 |

40 | ROIPoolForwardLaucher(

41 | data_flat, spatial_scale, num_rois, data_height,

42 | data_width, num_channels, pooled_height,

43 | pooled_width, rois_flat,

44 | output_flat, argmax_flat, stream);

45 |

46 | return 1;

47 | }

48 |

49 | int roi_pooling_backward_cuda(int pooled_height, int pooled_width, float spatial_scale,

50 | THCudaTensor * top_grad, THCudaTensor * rois, THCudaTensor * bottom_grad, THCudaIntTensor * argmax)

51 | {

52 | // Grab the input tensor

53 | float * top_grad_flat = THCudaTensor_data(state, top_grad);

54 | float * rois_flat = THCudaTensor_data(state, rois);

55 |

56 | float * bottom_grad_flat = THCudaTensor_data(state, bottom_grad);

57 | int * argmax_flat = THCudaIntTensor_data(state, argmax);

58 |

59 | // Number of ROIs

60 | int num_rois = THCudaTensor_size(state, rois, 0);

61 | int size_rois = THCudaTensor_size(state, rois, 1);

62 | if (size_rois != 5)

63 | {

64 | return 0;

65 | }

66 |

67 | // batch size

68 | int batch_size = THCudaTensor_size(state, bottom_grad, 0);

69 | // if (batch_size != 1)

70 | // {

71 | // return 0;

72 | // }

73 | // data height

74 | int data_height = THCudaTensor_size(state, bottom_grad, 2);

75 | // data width

76 | int data_width = THCudaTensor_size(state, bottom_grad, 3);

77 | // Number of channels

78 | int num_channels = THCudaTensor_size(state, bottom_grad, 1);

79 |

80 | cudaStream_t stream = THCState_getCurrentStream(state);

81 | ROIPoolBackwardLaucher(

82 | top_grad_flat, spatial_scale, batch_size, num_rois, data_height,

83 | data_width, num_channels, pooled_height,

84 | pooled_width, rois_flat,

85 | bottom_grad_flat, argmax_flat, stream);

86 |

87 | return 1;

88 | }

89 |

--------------------------------------------------------------------------------

/lib/model/roi_pooling/src/roi_pooling_cuda.h:

--------------------------------------------------------------------------------

1 | int roi_pooling_forward_cuda(int pooled_height, int pooled_width, float spatial_scale,

2 | THCudaTensor * features, THCudaTensor * rois, THCudaTensor * output, THCudaIntTensor * argmax);

3 |

4 | int roi_pooling_backward_cuda(int pooled_height, int pooled_width, float spatial_scale,

5 | THCudaTensor * top_grad, THCudaTensor * rois, THCudaTensor * bottom_grad, THCudaIntTensor * argmax);

--------------------------------------------------------------------------------

/lib/model/roi_pooling/src/roi_pooling_kernel.h:

--------------------------------------------------------------------------------

1 | #ifndef _ROI_POOLING_KERNEL

2 | #define _ROI_POOLING_KERNEL

3 |

4 | #ifdef __cplusplus

5 | extern "C" {

6 | #endif

7 |

8 | int ROIPoolForwardLaucher(

9 | const float* bottom_data, const float spatial_scale, const int num_rois, const int height,

10 | const int width, const int channels, const int pooled_height,

11 | const int pooled_width, const float* bottom_rois,

12 | float* top_data, int* argmax_data, cudaStream_t stream);

13 |

14 |

15 | int ROIPoolBackwardLaucher(const float* top_diff, const float spatial_scale, const int batch_size, const int num_rois,

16 | const int height, const int width, const int channels, const int pooled_height,

17 | const int pooled_width, const float* bottom_rois,

18 | float* bottom_diff, const int* argmax_data, cudaStream_t stream);

19 |

20 | #ifdef __cplusplus

21 | }

22 | #endif

23 |

24 | #endif

25 |

26 |

--------------------------------------------------------------------------------

/lib/model/rpn/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/princewang1994/R-FCN.pytorch/0c8da30bfd23e61f4c7fd1299626b9d82cf8a164/lib/model/rpn/__init__.py

--------------------------------------------------------------------------------

/lib/model/rpn/generate_anchors.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function

2 | # --------------------------------------------------------

3 | # Faster R-CNN

4 | # Copyright (c) 2015 Microsoft

5 | # Licensed under The MIT License [see LICENSE for details]

6 | # Written by Ross Girshick and Sean Bell

7 | # --------------------------------------------------------

8 |

9 | import numpy as np

10 | import pdb

11 |

12 | # Verify that we compute the same anchors as Shaoqing's matlab implementation:

13 | #

14 | # >> load output/rpn_cachedir/faster_rcnn_VOC2007_ZF_stage1_rpn/anchors.mat

15 | # >> anchors

16 | #

17 | # anchors =

18 | #

19 | # -83 -39 100 56

20 | # -175 -87 192 104

21 | # -359 -183 376 200

22 | # -55 -55 72 72

23 | # -119 -119 136 136

24 | # -247 -247 264 264

25 | # -35 -79 52 96

26 | # -79 -167 96 184

27 | # -167 -343 184 360

28 |

29 | #array([[ -83., -39., 100., 56.],

30 | # [-175., -87., 192., 104.],

31 | # [-359., -183., 376., 200.],

32 | # [ -55., -55., 72., 72.],

33 | # [-119., -119., 136., 136.],

34 | # [-247., -247., 264., 264.],

35 | # [ -35., -79., 52., 96.],

36 | # [ -79., -167., 96., 184.],

37 | # [-167., -343., 184., 360.]])

38 |

39 | try:

40 | xrange # Python 2

41 | except NameError:

42 | xrange = range # Python 3

43 |

44 |

45 | def generate_anchors(base_size=16, ratios=[0.5, 1, 2],

46 | scales=2**np.arange(3, 6)):

47 | """

48 | Generate anchor (reference) windows by enumerating aspect ratios X

49 | scales wrt a reference (0, 0, 15, 15) window.

50 | """

51 |

52 | base_anchor = np.array([1, 1, base_size, base_size]) - 1

53 | ratio_anchors = _ratio_enum(base_anchor, ratios)

54 | anchors = np.vstack([_scale_enum(ratio_anchors[i, :], scales)

55 | for i in xrange(ratio_anchors.shape[0])])

56 | return anchors

57 |

58 | def _whctrs(anchor):

59 | """

60 | Return width, height, x center, and y center for an anchor (window).

61 | """

62 |

63 | w = anchor[2] - anchor[0] + 1

64 | h = anchor[3] - anchor[1] + 1

65 | x_ctr = anchor[0] + 0.5 * (w - 1)

66 | y_ctr = anchor[1] + 0.5 * (h - 1)

67 | return w, h, x_ctr, y_ctr

68 |

69 | def _mkanchors(ws, hs, x_ctr, y_ctr):

70 | """

71 | Given a vector of widths (ws) and heights (hs) around a center

72 | (x_ctr, y_ctr), output a set of anchors (windows).

73 | """

74 |

75 | ws = ws[:, np.newaxis]

76 | hs = hs[:, np.newaxis]

77 | anchors = np.hstack((x_ctr - 0.5 * (ws - 1),

78 | y_ctr - 0.5 * (hs - 1),

79 | x_ctr + 0.5 * (ws - 1),

80 | y_ctr + 0.5 * (hs - 1)))

81 | return anchors

82 |

83 | def _ratio_enum(anchor, ratios):

84 | """

85 | Enumerate a set of anchors for each aspect ratio wrt an anchor.

86 | """

87 |

88 | w, h, x_ctr, y_ctr = _whctrs(anchor)

89 | size = w * h

90 | size_ratios = size / ratios

91 | ws = np.round(np.sqrt(size_ratios))

92 | hs = np.round(ws * ratios)

93 | anchors = _mkanchors(ws, hs, x_ctr, y_ctr)

94 | return anchors

95 |

96 | def _scale_enum(anchor, scales):

97 | """

98 | Enumerate a set of anchors for each scale wrt an anchor.

99 | """

100 |

101 | w, h, x_ctr, y_ctr = _whctrs(anchor)

102 | ws = w * scales

103 | hs = h * scales

104 | anchors = _mkanchors(ws, hs, x_ctr, y_ctr)

105 | return anchors

106 |

107 | if __name__ == '__main__':

108 | import time

109 | t = time.time()

110 | a = generate_anchors()

111 | print(time.time() - t)

112 | print(a)