├── .gitignore

├── Makefile

├── README.md

├── SUMMARY.md

├── awesome-system-design.md

├── drafts

├── fintech-sd-work-in-progress.md

├── ideas.md

└── three-basic-topics.md

├── en

├── 11-three-programming-paradigms.md

├── 12-solid-design-principles.md

├── 120-designing-uber.md

├── 121-designing-facebook-photo-storage.md

├── 122-key-value-cache.md

├── 123-ios-architecture-patterns-revisited.md

├── 136-fraud-detection-with-semi-supervised-learning.md

├── 137-stream-and-batch-processing.md

├── 140-designing-a-recommendation-system.md

├── 145-introduction-to-architecture.md

├── 161-designing-stock-exchange.md

├── 162-designing-smart-notification-of-stock-price-changes.md

├── 166-designing-payment-webhook.md

├── 167-designing-paypal-money-transfer.md

├── 168-designing-a-metric-system.md

├── 169-how-to-write-solid-code.md

├── 174-designing-memcached.md

├── 177-designing-Airbnb-or-a-hotel-booking-system.md

├── 178-lyft-marketing-automation-symphony.md

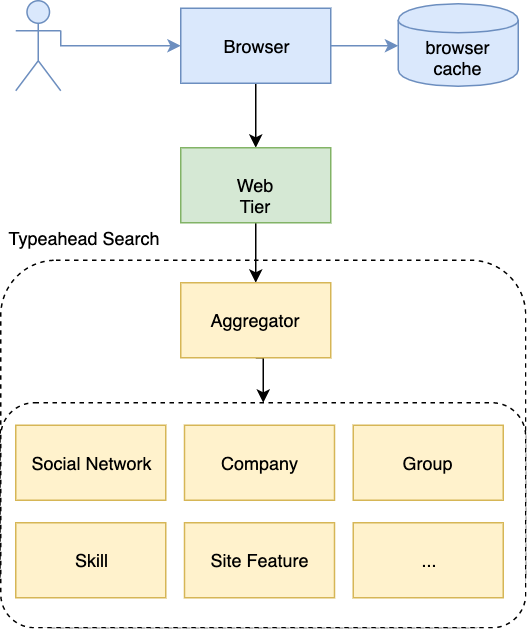

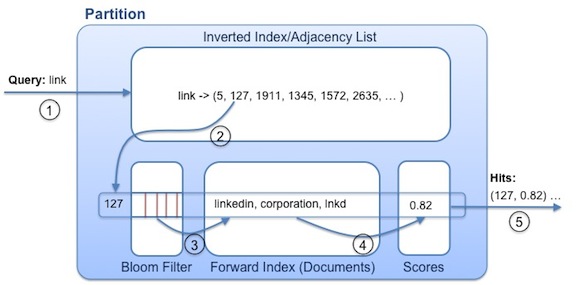

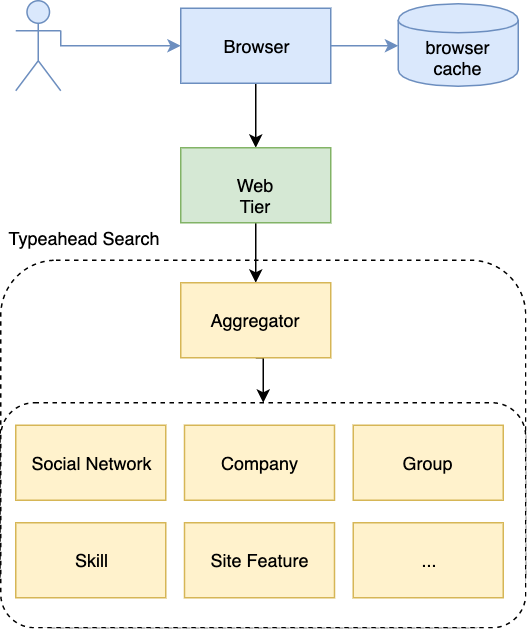

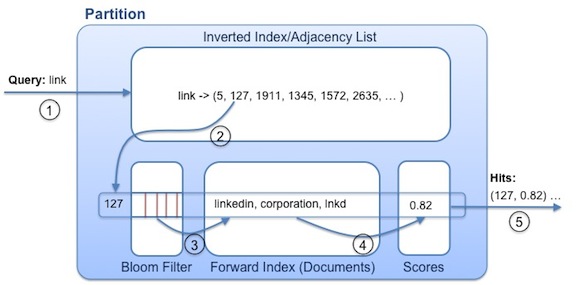

├── 179-designing-typeahead-search-or-autocomplete.md

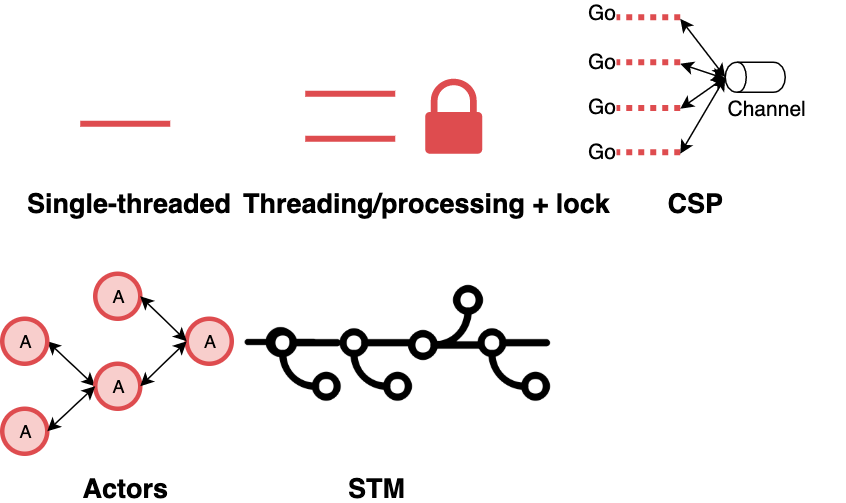

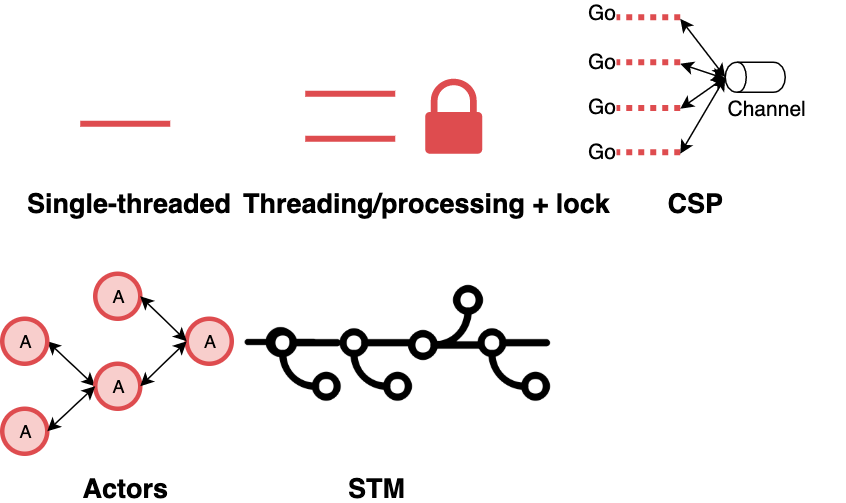

├── 181-concurrency-models.md

├── 182-designing-l7-load-balancer.md

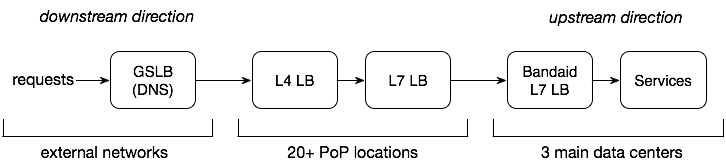

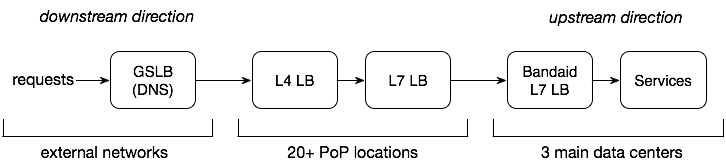

├── 2016-02-13-crack-the-system-design-interview.md

├── 2018-07-10-cloud-design-patterns.md

├── 2018-07-20-experience-deep-dive.md

├── 2018-07-21-data-partition-and-routing.md

├── 2018-07-22-b-tree-vs-b-plus-tree.md

├── 2018-07-23-load-balancer-types.md

├── 2018-07-24-replica-and-consistency.md

├── 2018-07-26-acid-vs-base.md

├── 38-how-to-stream-video-over-http.md

├── 41-how-to-scale-a-web-service.md

├── 43-how-to-design-robust-and-predictable-apis-with-idempotency.md

├── 45-how-to-design-netflix-view-state-service.md

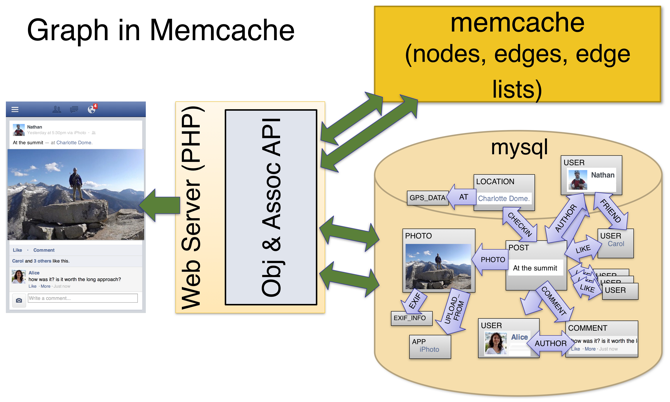

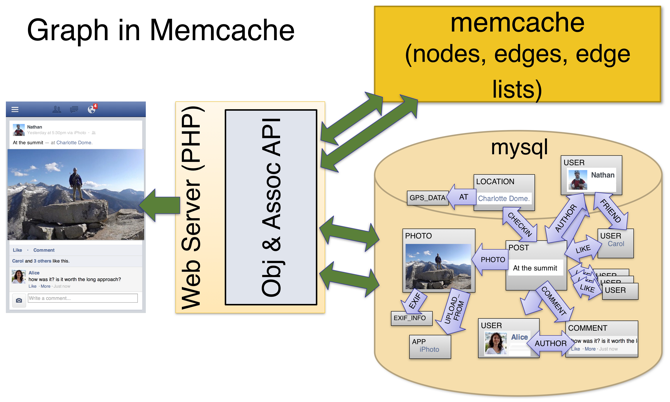

├── 49-facebook-tao.md

├── 61-what-is-apache-kafka.md

├── 63-soft-skills-interview.md

├── 66-public-api-choices.md

├── 68-bloom-filter.md

├── 69-skiplist.md

├── 78-four-kinds-of-no-sql.md

├── 80-relational-database.md

├── 83-lambda-architecture.md

├── 84-designing-a-url-shortener.md

├── 85-improving-availability-with-failover.md

└── 97-designing-a-kv-store-with-external-storage.md

└── zh-CN

├── 11-three-programming-paradigms.md

├── 12-solid-design-principles.md

├── 120-designing-uber.md

├── 121-designing-facebook-photo-storage.md

├── 122-key-value-cache.md

├── 123-ios-architecture-patterns-revisited.md

├── 136-fraud-detection-with-semi-supervised-learning.md

├── 137-stream-and-batch-processing.md

├── 140-designing-a-recommendation-system.md

├── 145-introduction-to-architecture.md

├── 161-designing-stock-exchange.md

├── 162-designing-smart-notification-of-stock-price-changes.md

├── 166-designing-payment-webhook.md

├── 167-designing-paypal-money-transfer.md

├── 168-designing-a-metric-system.md

├── 169-how-to-write-solid-code.md

├── 174-designing-memcached.md

├── 177-designing-Airbnb-or-a-hotel-booking-system.md

├── 178-lyft-marketing-automation-symphony.md

├── 179-designing-typeahead-search-or-autocomplete.md

├── 181-concurrency-models.md

├── 182-designing-l7-load-balancer.md

├── 2016-02-13-crack-the-system-design-interview.md

├── 2018-07-10-cloud-design-patterns.md

├── 2018-07-20-experience-deep-dive.md

├── 2018-07-21-data-partition-and-routing.md

├── 2018-07-22-b-tree-vs-b-plus-tree.md

├── 2018-07-23-load-balancer-types.md

├── 2018-07-24-replica-and-consistency.md

├── 2018-07-26-acid-vs-base.md

├── 38-how-to-stream-video-over-http.md

├── 41-how-to-scale-a-web-service.md

├── 43-how-to-design-robust-and-predictable-apis-with-idempotency.md

├── 45-how-to-design-netflix-view-state-service.md

├── 49-facebook-tao.md

├── 61-what-is-apache-kafka.md

├── 63-soft-skills-interview.md

├── 66-public-api-choices.md

├── 68-bloom-filter.md

├── 69-skiplist.md

├── 78-four-kinds-of-no-sql.md

├── 80-relational-database.md

├── 83-lambda-architecture.md

├── 84-designing-a-url-shortener.md

├── 85-improving-availability-with-failover.md

├── 97-designing-a-kv-store-with-external-storage.md

└── README.md

/.gitignore:

--------------------------------------------------------------------------------

1 | .idea/

2 | *.pdf

3 | _book/**

4 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | .PHONY: install

2 | install:

3 | nvm use v10 && npm install gitbook-cli -g

4 |

5 | .PHONY: dev

6 | dev:

7 | gitbook serve

8 |

9 | .PHONY: build

10 | build:

11 | gitbook pdf ./ ./_book/system-design-and-architecture-2nd-edition.pdf

12 | gitbook epub ./ ./_book/system-design-and-architecture-2nd-edition.epub

13 | gitbook mobi ./ ./_book/system-design-and-architecture-2nd-edition.mobi

14 |

--------------------------------------------------------------------------------

/SUMMARY.md:

--------------------------------------------------------------------------------

1 | # Summary

2 |

3 | * [Introduction](README.md)

4 |

5 | ### System Design in Practice

6 |

7 | * [Design Pinterest](./en/2016-02-13-crack-the-system-design-interview.md)

8 | * [Design Uber](./en/120-designing-uber.md)

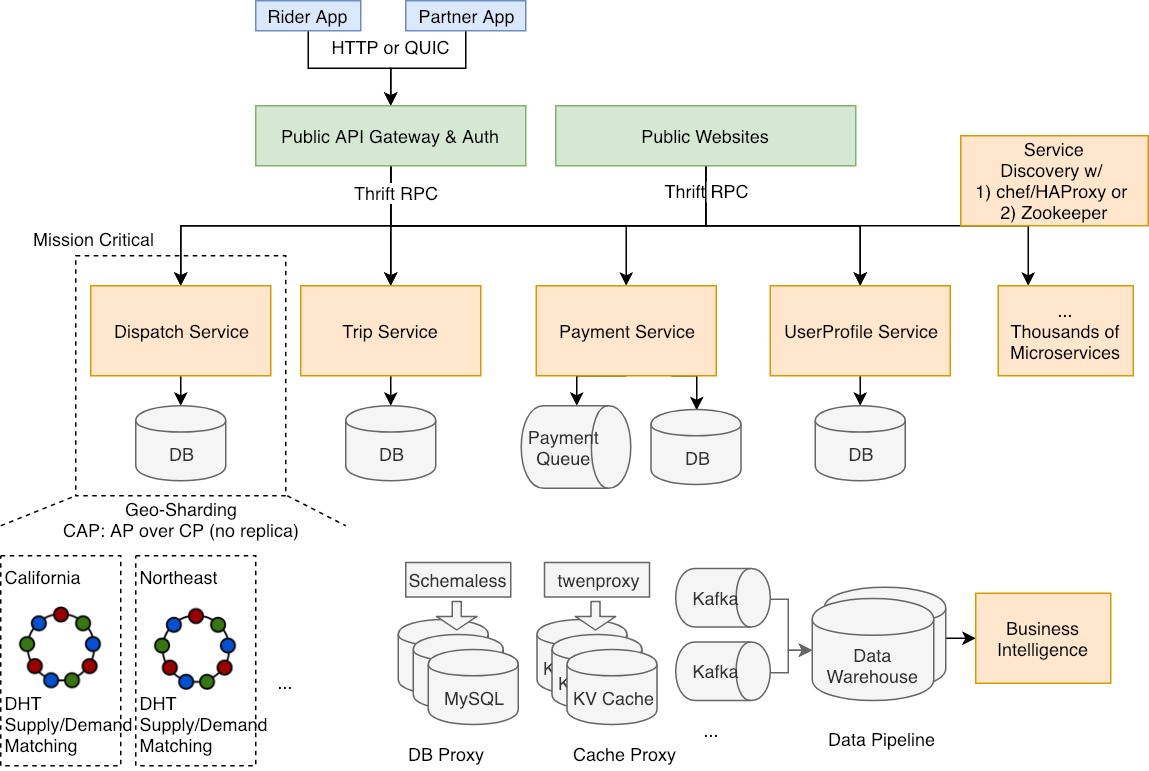

9 | * [Design Facebook Social Graph Store](./en/49-facebook-tao.md)

10 | * [Design Netflix Viewing Data](./en/45-how-to-design-netflix-view-state-service.md)

11 | * [Design idempotent APIs](./en/43-how-to-design-robust-and-predictable-apis-with-idempotency.md)

12 | * [Design video streaming over HTTP](./en/38-how-to-stream-video-over-http.md)

13 | * [What is Apache Kafka?](./en/61-what-is-apache-kafka.md)

14 | * [Design a URL shortener](./en/84-designing-a-url-shortener.md)

15 | * [Design a KV store with external storage](./en/97-designing-a-kv-store-with-external-storage.md)

16 | * [Design a distributed in-memory KV store or Memcached](./en/174-designing-memcached.md)

17 | * [Design Facebook photo storage](./en/121-designing-facebook-photo-storage.md)

18 | * [Design Stock Exchange](./en/161-designing-stock-exchange.md)

19 | * [Design Smart Notification of Stock Price Changes](./en/162-designing-smart-notification-of-stock-price-changes.md)

20 | * [Design Square Cash or PayPal Money Transfer System](./en/167-designing-paypal-money-transfer.md)

21 | * [Design payment webhook](./en/166-designing-payment-webhook.md)

22 | * [Design a metric system](./en/168-designing-a-metric-system.md)

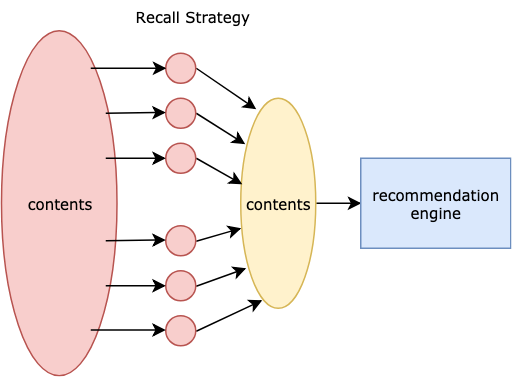

23 | * [Design a recommendation system](./en/140-designing-a-recommendation-system.md)

24 | * [Design Airbnb or a hotel booking system](./en/177-designing-Airbnb-or-a-hotel-booking-system.md)

25 | * [Lyft's Marketing Automation Platform -- Symphony](./en/178-lyft-marketing-automation-symphony.md)

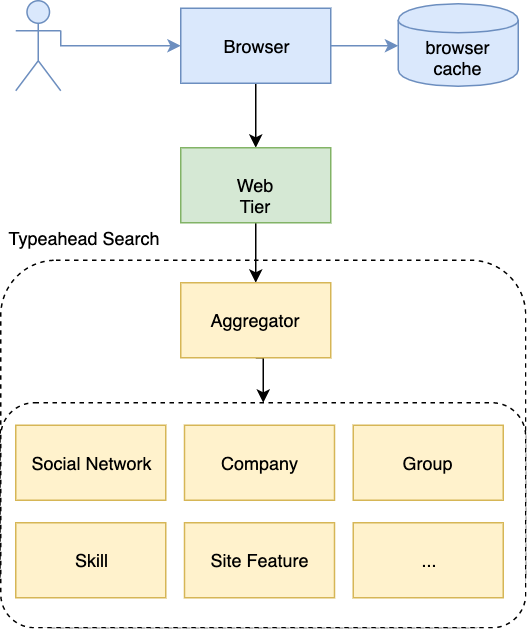

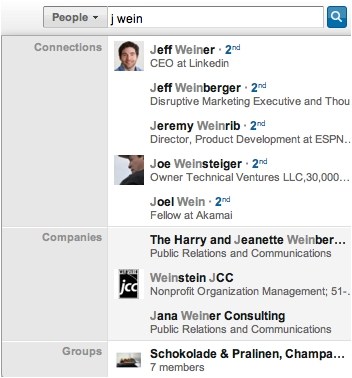

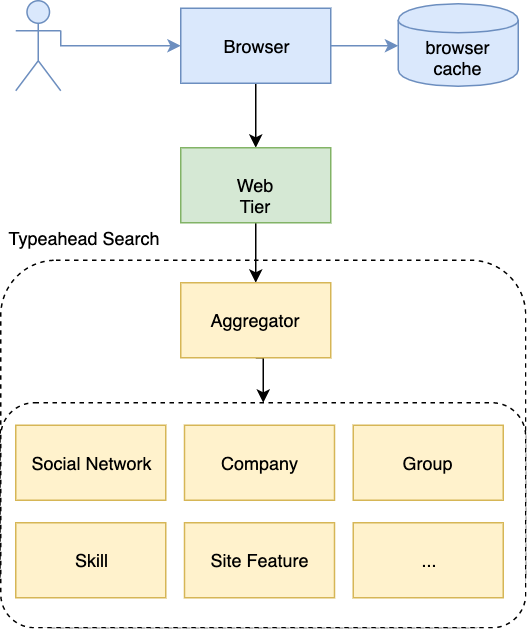

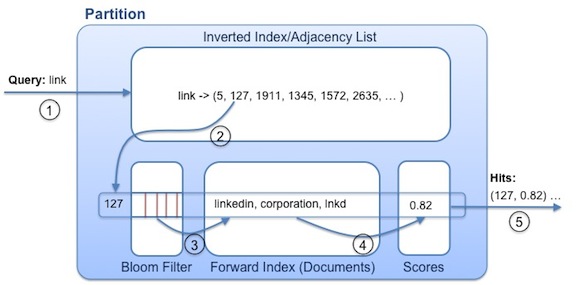

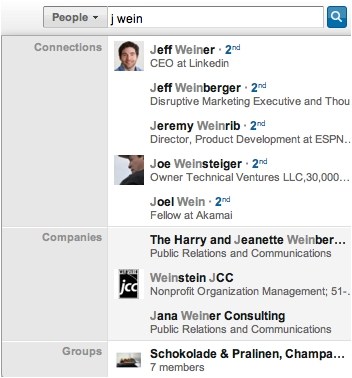

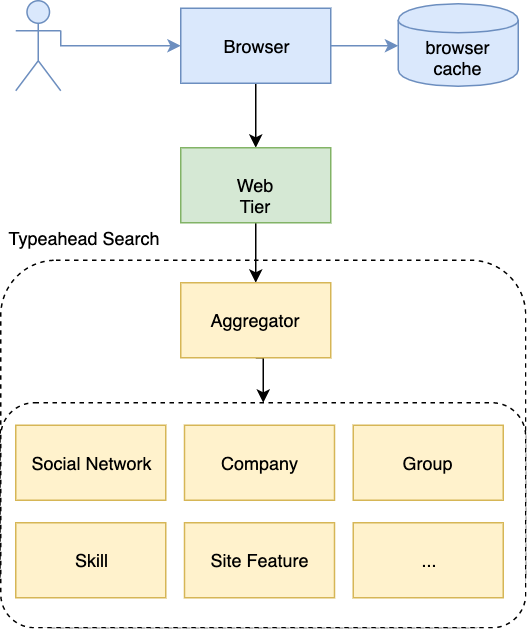

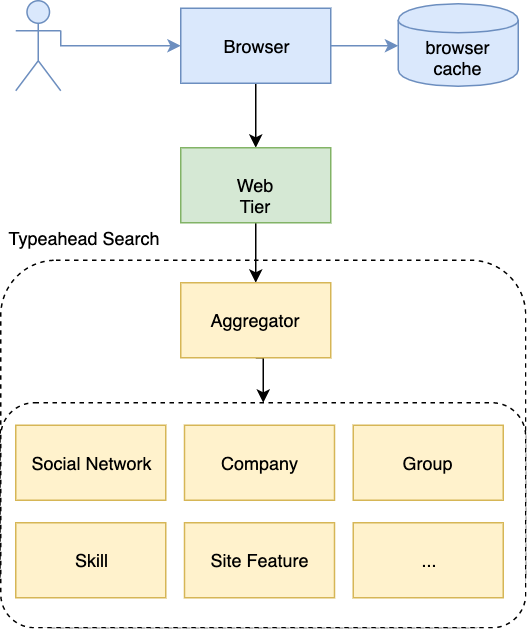

26 | * [Design typeahead search or autocomplete](./en/179-designing-typeahead-search-or-autocomplete.md)

27 | * [Design a Load Balancer or Dropbox Bandaid](./en/182-designing-l7-load-balancer.md)

28 | * [Fraud Detection with Semi-supervised Learning](./en/136-fraud-detection-with-semi-supervised-learning.md)

29 | * [Credit Card Processing System](./en/236-credit-card-processing-system.md)

30 | * [Design Online Judge or Leetcode](./en/243-designing-online-judge-or-leetcode.md)

31 | * [AuthN and AuthZ](./en/253-authn-authz-micro-services.md)

32 | * [AuthZ 2022](./en/277-enterprise-authorization-2022.md)

33 |

34 |

35 | ## System Design Theories

36 |

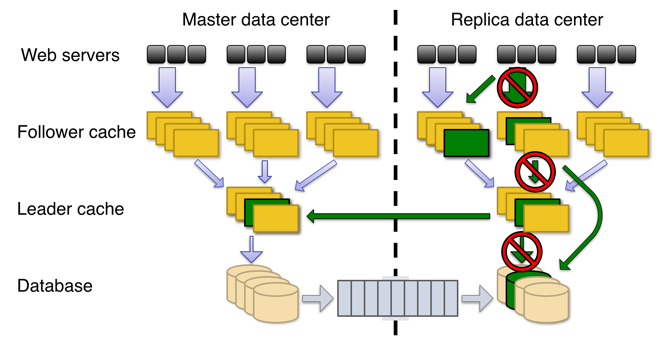

37 | * [Introduction to Architecture](./en/145-introduction-to-architecture.md)

38 | * [How to scale a web service?](./en/41-how-to-scale-a-web-service.md)

39 | * [ACID vs BASE](./en/2018-07-26-acid-vs-base.md)

40 | * [Data Partition and Routing](./en/2018-07-21-data-partition-and-routing.md)

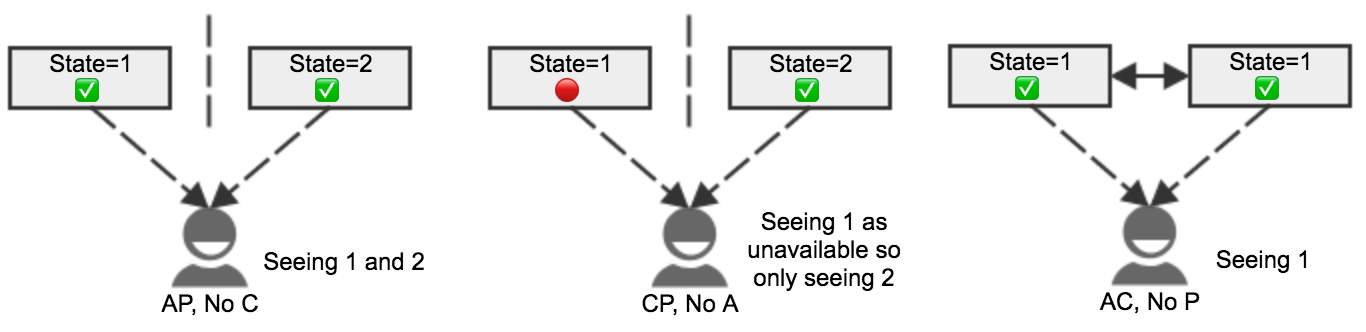

41 | * [Replica, Consistency, and CAP theorem](./en/2018-07-24-replica-and-consistency.md)

42 | * [Load Balancer Types](./en/2018-07-23-load-balancer-types.md)

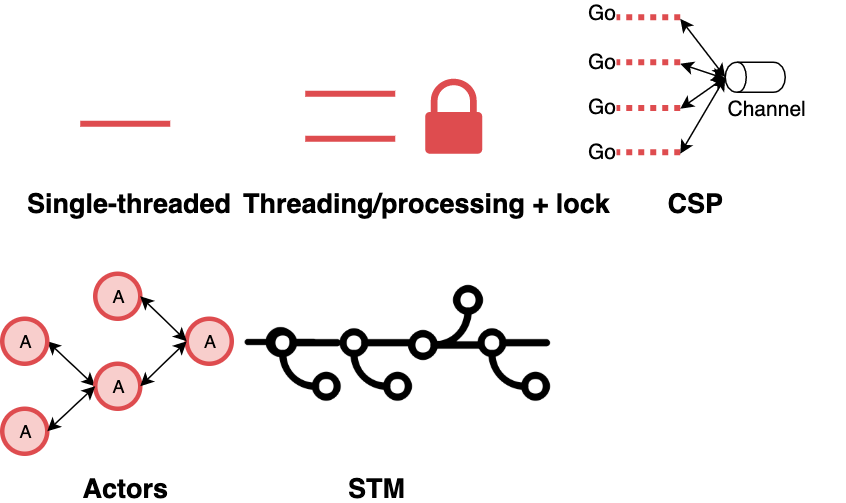

43 | * [Concurrency Model](./en/181-concurrency-models.md)

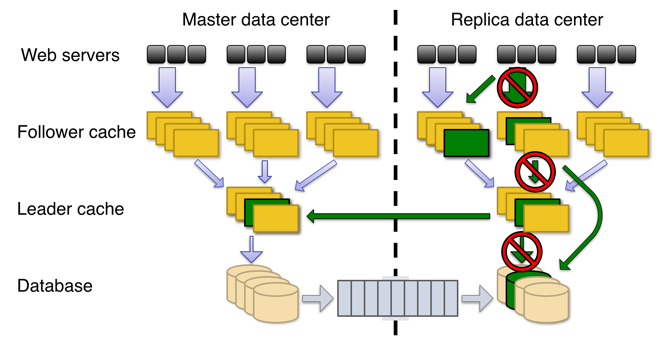

44 | * [Improving availability with failover](./en/85-improving-availability-with-failover.md)

45 | * [Bloom Filter](./en/68-bloom-filter.md)

46 | * [Skiplist](./en/69-skiplist.md)

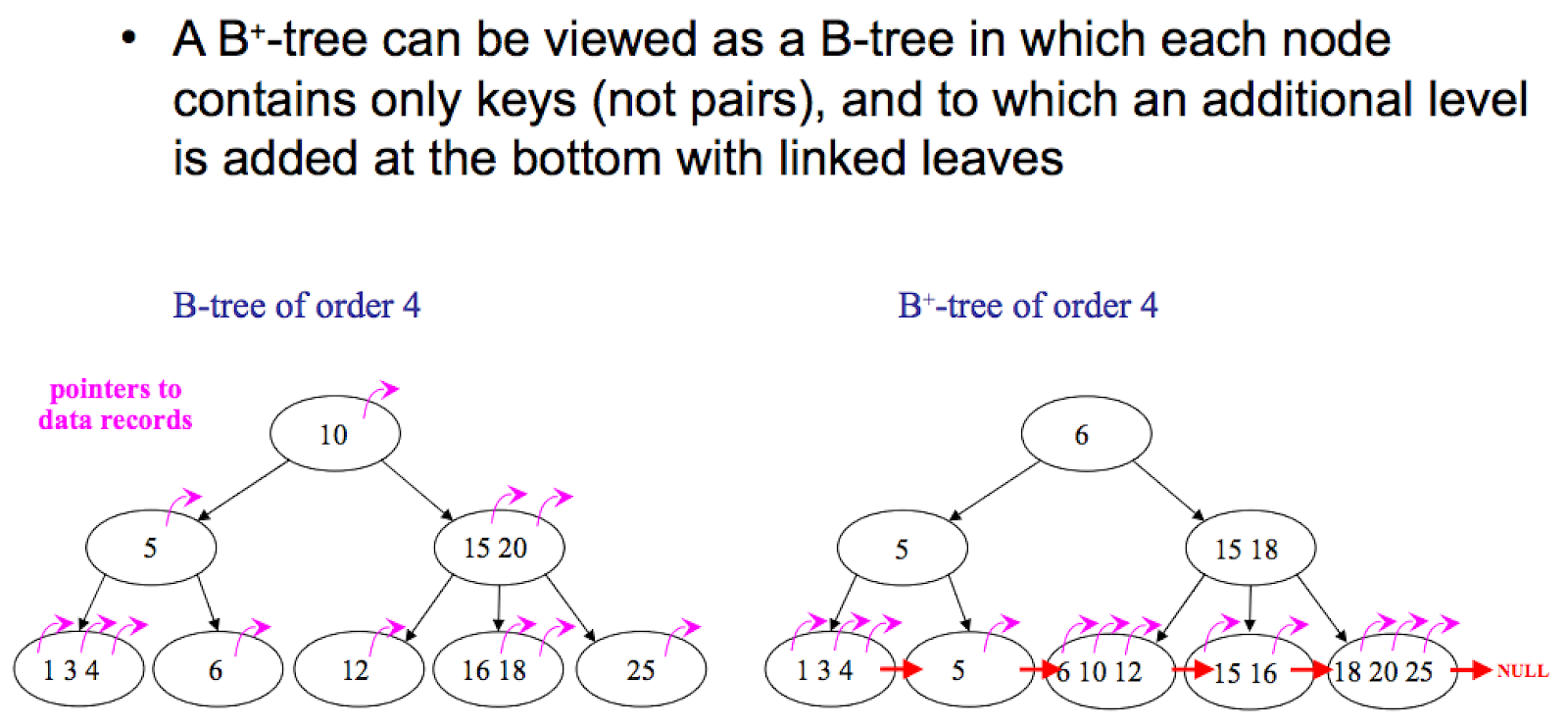

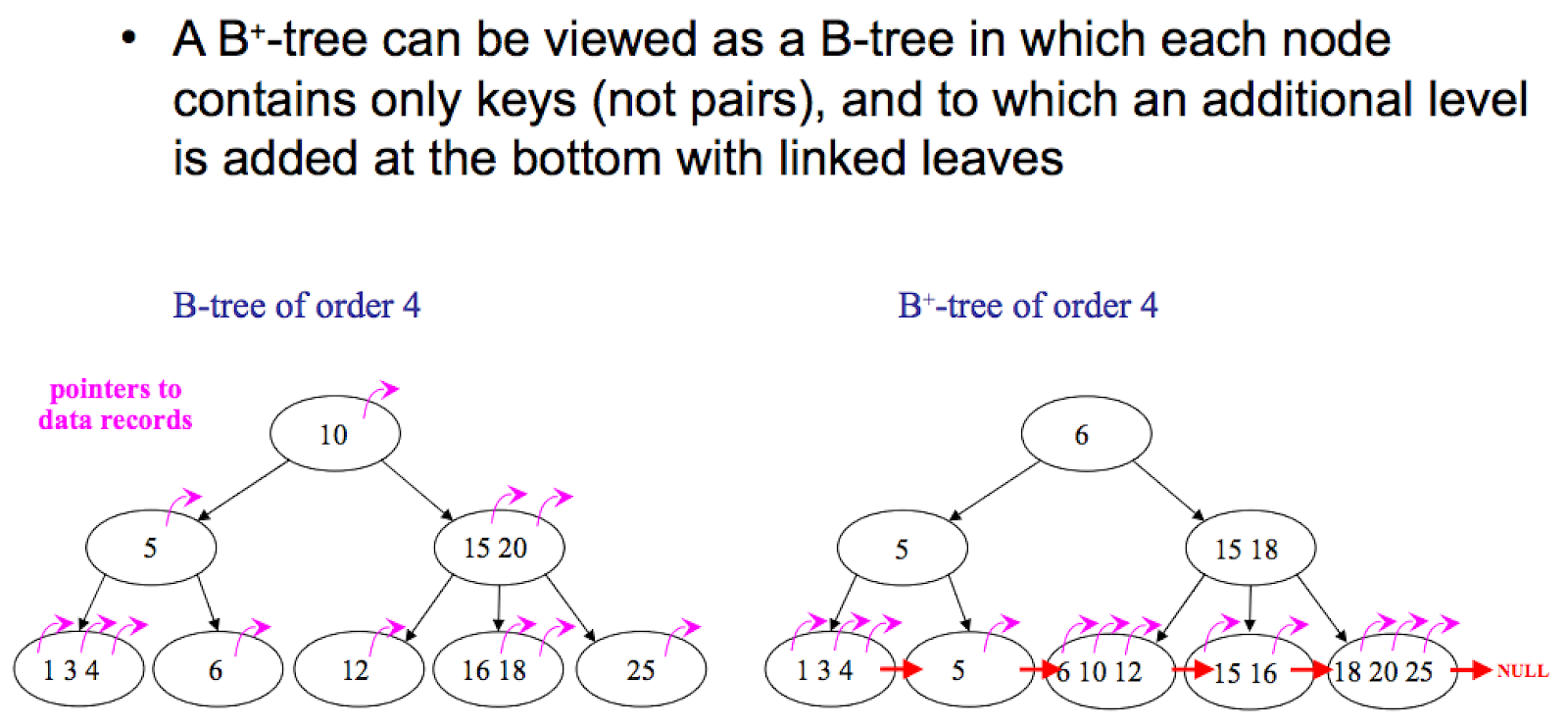

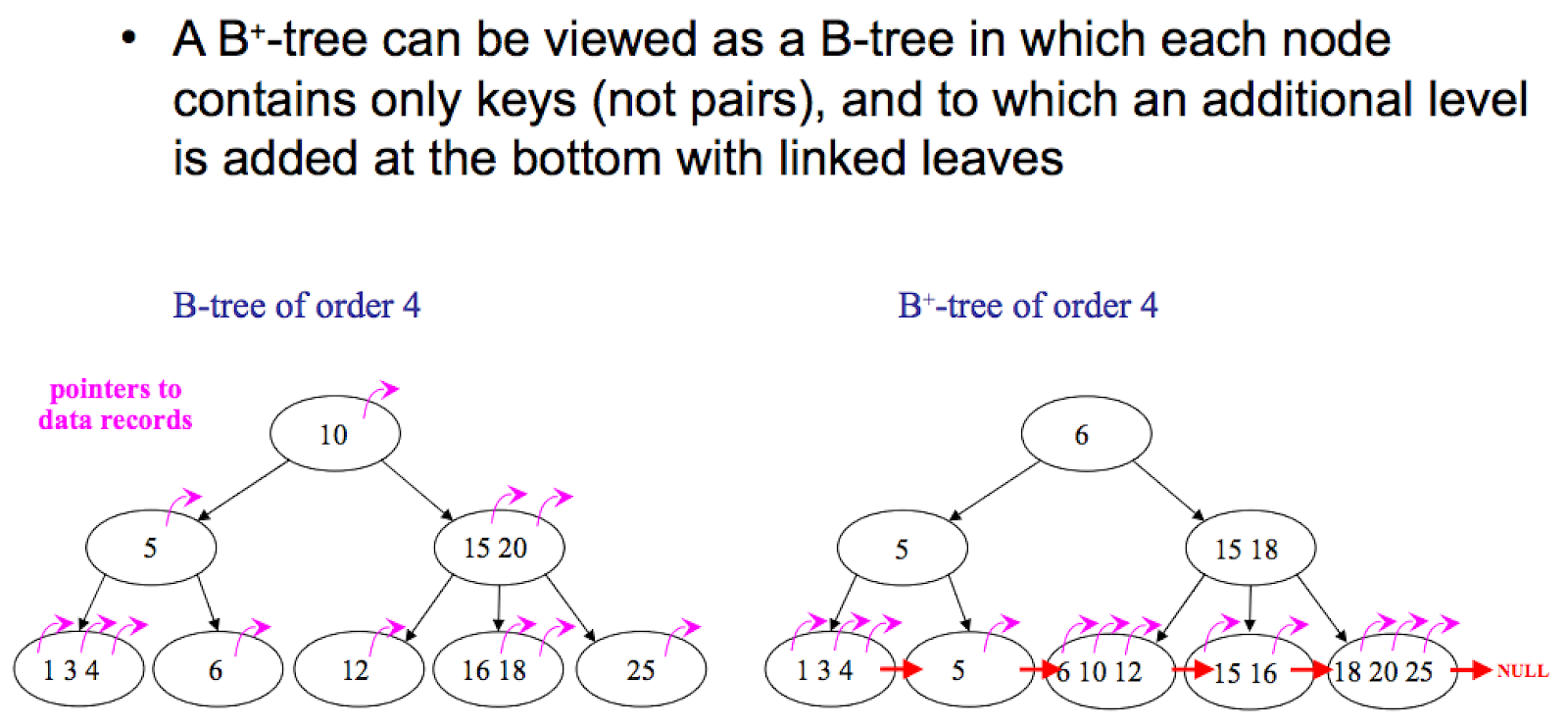

47 | * [B tree vs. B+ tree](./en/2018-07-22-b-tree-vs-b-plus-tree.md)

48 | * [Intro to Relational Database](./en/80-relational-database.md)

49 | * [4 Kinds of No-SQL](./en/78-four-kinds-of-no-sql.md)

50 | * [Key value cache](./en/122-key-value-cache.md)

51 | * [Stream and Batch Processing Frameworks](./en/137-stream-and-batch-processing.md)

52 | * [Cloud Design Patterns](./en/2018-07-10-cloud-design-patterns.md)

53 | * [Public API Choices](./en/66-public-api-choices.md)

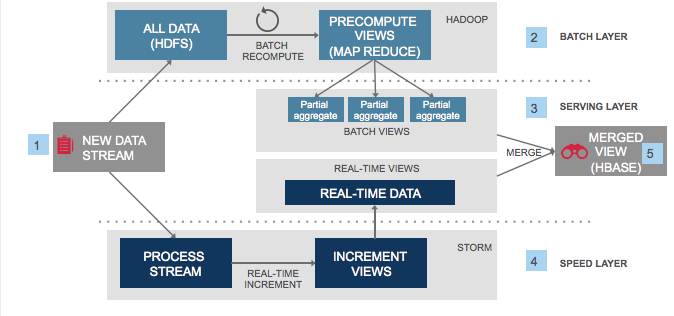

54 | * [Lambda Architecture](./en/83-lambda-architecture.md)

55 | * [iOS Architecture Patterns Revisited](./en/123-ios-architecture-patterns-revisited.md)

56 | * [What can we communicate in soft skills interview?](./en/63-soft-skills-interview.md)

57 | * [Experience Deep Dive](./en/2018-07-20-experience-deep-dive.md)

58 | * [3 Programming Paradigms](./en/11-three-programming-paradigms.md)

59 | * [SOLID Design Principles](./en/12-solid-design-principles.md)

60 |

61 | ## Effective Interview Prep

62 |

63 | * Introduction to software engineer interview

64 | * How to crack the coding interview, for real?

65 | * How to communicate in the interview?

66 | * Experience deep dive

67 | * Culture fit

68 | * Be a software engineer - a hero's journey

69 |

--------------------------------------------------------------------------------

/awesome-system-design.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: "Awesome System Design Resources"

4 | date: 2020-09-11 22:10

5 | language: en

6 | ---

7 |

8 | ## Tutorials and Blogs

9 |

10 | * https://github.com/puncsky/system-design-and-architecture

11 | * https://github.com/donnemartin/system-design-primer

12 | * http://highscalability.com/

13 | * https://www.educative.io/courses/grokking-the-system-design-interview

14 | * https://medium.com/netflix-techblog

15 | * https://engineering.fb.com/

16 | * https://medium.com/airbnb-engineering

17 | * https://medium.com/paypal-engineering

18 | * https://medium.com/imgur-engineering

19 |

20 | ## Books

21 |

22 | * The Practice of Cloud System Administration (Thomas A. Limoncelli)

23 | * Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems (Martin Kleppmann)

24 | * Database Internals (Alex Petrov)

25 | * Clean Architecture (Robert C. Martin)

26 | * Patterns of Enterprise Application Architecure (Martin Fowler)

27 | * NoSQL Distilled (Pramod J. Sadalage)

28 |

29 | Candidate List: https://docs.google.com/spreadsheets/d/11Rjv-SVXj4DN9l2qTZzM-oirMMK9wXTjbS8GFzBmR0s/edit#gid=2041570299

30 |

--------------------------------------------------------------------------------

/drafts/fintech-sd-work-in-progress.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: post

3 | title: "FinTech System Design"

4 | date: 2021-05-21 16:40

5 | comments: true

6 | categories: System Design

7 | language: en

8 | abstract: abstract

9 | references:

10 | - references

11 | ---

12 |

13 | @[toc]

14 |

15 | We will go through a series of building blocks of modern financial systems.

16 |

17 | * Centralized Finance

18 | * Deposit Account

19 | * ACH

20 | * Wire

21 | * Check

22 | * Credit Card

23 | * PCI DSS

24 | * Electronic Payment Methods

25 | * NFC

26 | * QR Codes (https://www.w3.org/Payments/WG/)

27 | * https://www.w3.org/2020/10/21-wpwg-minutes.html#emvco

28 | * EMVCo QR code specifications and use cases https://www.w3.org/2020/Talks/emvco-qr-20201021.pdf

29 | * China Union Pay QR code use cases http://www.w3.org/2020/Talks/unionpay-qr-20201021.pptx

30 | * Open Banking

31 | * Web Monetization

32 | * Real-time Payments (RTP) and bill pay

33 | * Web https://www.w3.org/Payments/WG/

34 | * Digital Wallet

35 | * Loan

36 | * Fraud & Risk

37 | * Stock Exchange

38 | * Bookkeeping

39 | * Security & Compliance

40 | * Decentralized Finance

41 | * Blockchain

42 | * Smart Contract

43 | * DApp

44 | * Digital Wallet

45 | * Cross-chain

46 | * Decentralized crypto exchanges

47 | * Case study

48 | * Chinese DC/EP

49 | * Facebook Novi and Intermittence

50 | * Stripe and Aggregators

51 | * Plaid

52 | * Fact.co One-click

53 | * Transaction Fee and Bargaining Power

54 | * Apple Pay

55 | * https://whimsical.com/apple-pay-SZBkbYi4YvzpiTLgJPPRSt

56 |

57 |

58 | # Centralized Finance

59 |

60 | # Decentralized Finance

61 |

62 | # Case Study

63 |

64 | ## Apple Pay

65 |

66 | ### Customer's Perspective

67 |

68 | Goal

69 |

70 | * faster and easier than cards and cash

71 | * retailing-first

72 | * privacy and security by design

73 |

74 |

75 |

76 | ### Developer's Perspective

77 |

--------------------------------------------------------------------------------

/drafts/ideas.md:

--------------------------------------------------------------------------------

1 | #### TODO

2 |

3 | * Designing instagram or newsfeed APIs

4 | * Designing Yelp / Finding nearest K POIs

5 | * Designing trending topics / top K exceptions in the system

6 | * Designing distributed web crawler

7 | * Designing i18n service

8 | * Designing ads bidding system

9 | * Designing a dropbox or a file-sharing system

10 | * Designing a calendar system

11 | * Designing an instant chat system / Facebook Messenger / WeChat

12 | * Designing a ticketing system or Ticketmaster

13 | * Designing a voice assistant or Siri

14 |

15 |

--------------------------------------------------------------------------------

/drafts/three-basic-topics.md:

--------------------------------------------------------------------------------

1 | ## Appendix: Three Basic Topics

2 |

3 | > Here are three basic topics that could be discussed during the interview.

4 |

5 | ### Communication

6 |

7 | How do different microservices interact with each other? -- communication protocols.

8 |

9 | Here is a simple comparison of those protocols.

10 |

11 | - UDP and TCP are both transport layer protocols. TCP is reliable and connection-based. UDP is connectionless and unreliable.

12 | - HTTP is in the application layer and is TCP-based since HTTP assumes a reliable transport.

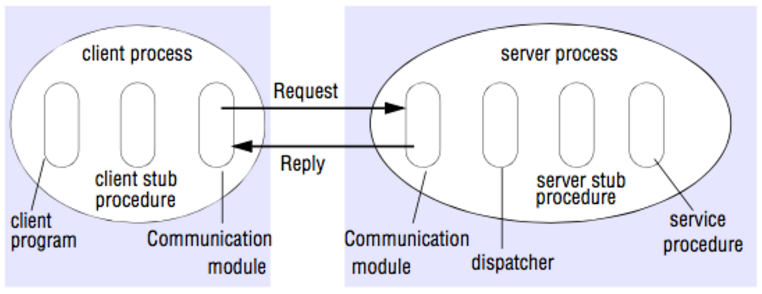

13 | - RPC, a session layer (or application layer in TCP/IP layer model) protocol, is an inter-process communication that allows a computer program to cause a subroutine or procedure to execute in another machine, like a function call.

14 |

15 | ##### Further discussions

16 |

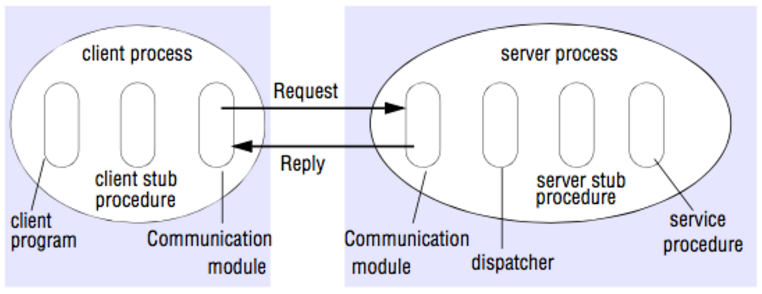

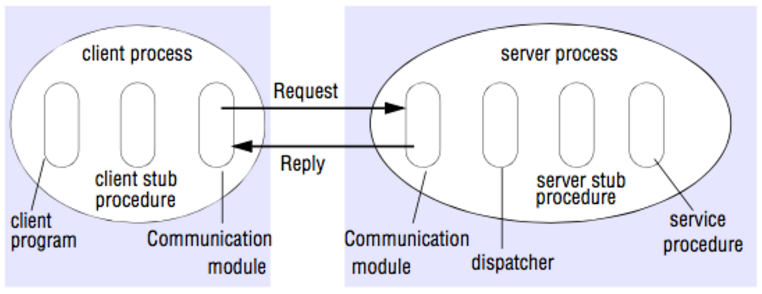

17 | Since RPC is super useful, some interviewers may ask how RPC works. The following picture is a brief answer.

18 |

19 |

20 |

21 |

22 | * Stub procedure: a local procedure that marshals the procedure identifier and the arguments into a request message, and then to send via its communication module to the server. When the reply message arrives, it unmarshals the results.

23 |

24 | We do not have to implement our own RPC protocols. There are off-the-shelf frameworks, like gRPC, Apache Thrift, and Apache Avro.

25 |

26 | - gRPC: a cross-platform open source high performance RPC framework developed by Google.

27 | - Apache Thrift: supports more languages, richer data structures: list, set, map, etc. that Protobuf does not support) Incomplete documentation and hard to find good examples.

28 | - User case: Hbase/Cassandra/Hypertable/Scrib/..

29 | - Apache Avro: Avro is heavily used in the hadoop ecosystem and based on dynamic schemas in Json. It features dynamic typing, untagged data, and no manually-assigned field IDs.

30 |

31 | Generally speaking, RPC is internally used by many tech companies for its great performance; however, it is rather hard to debug or may have compatibility issues in different environments. So for public APIs, we tend to use HTTP APIs, and are usually following the RESTful style.

32 |

33 | - REST (Representational state transfer of resources)

34 | - Best practice of HTTP API to interact with resources.

35 | - URL only decides the location. Headers (Accept and Content-Type, etc.) decide the representation. HTTP methods(GET/POST/PUT/DELETE) decide the state transfer.

36 | - minimize the coupling between client and server (a huge number of HTTP infras on various clients, data-marshalling).

37 | - stateless and scaling out.

38 | - service partitioning feasible.

39 | - used for public API.

40 |

41 | Learn more in [public API choices](66-public-api-choices.md).

42 |

43 | ### Storage

44 |

45 | #### Relational Database

46 |

47 | The relational database is the default choice for most use cases because of ACID (atomicity, consistency, isolation, and durability). One tricky thing is **consistency -- it means that any transaction will bring the database from one valid state to another, (different from the consistency in CAP** in the distributed system.

48 |

49 | ##### Schema Design and 3rd Normal Form (3NF)

50 |

51 | To reduce redundancy and improve consistency, people follow 3NF when designing database schemas:

52 |

53 | - 1NF: tabular, each row-column intersection contains only one value

54 | - 2NF: only the primary key determines all the attributes

55 | - 3NF: only the candidate keys determine all the attributes (and non-prime attributes do not depend on each other)

56 |

57 | ##### Db Proxy

58 |

59 | What if we want to eliminate single point of failure? What if the dataset is too large for one single machine to hold? For MySQL, the answer is to use a DB proxy to distribute data, [either by clustering or by sharding](http://dba.stackexchange.com/questions/8889/mysql-sharding-vs-mysql-cluster ).

60 |

61 | Clustering is a decentralized solution. Everything is automatic. Data is distributed, moved, rebalanced automatically. Nodes gossip with each other, (though it may cause group isolation).

62 |

63 | Sharding is a centralized solution. If we get rid of properties of clustering that we don't like, sharding is what we get. Data is distributed manually and does not move. Nodes are not aware of each other.

64 |

65 | #### NoSQL

66 |

67 | In a regular Internet service, the read write ratio is about 100:1 to 1000:1. However, when reading from a hard disk, a database join operation is time consuming, and 99% of the time is spent on disk seek. Not to mention a distributed join operation across networks.

68 |

69 | To optimize the read performance, **denormalization** is introduced by adding redundant data or by grouping data. These four categories of NoSQL are here to help.

70 |

71 | ##### Key-value Store

72 |

73 | The abstraction of a KV store is a giant hashtable/hashmap/dictionary.

74 |

75 | The main reason we want to use a key-value cache is to reduce latency for accessing active data. Achieve an O(1) read/write performance on a fast and expensive media (like memory or SSD), instead of a traditional O(logn) read/write on a slow and cheap media (typically hard drive).

76 |

77 | There are three major factors to consider when we design the cache.

78 |

79 | 1. Pattern: How to cache? is it read-through/write-through/write-around/write-back/cache-aside?

80 | 2. Placement: Where to place the cache? client side/distinct layer/server side?

81 | 3. Replacement: When to expire/replace the data? LRU/LFU/ARC?

82 |

83 | Out-of-box choices: Redis/Memcache? Redis supports data persistence while memcache does not. Riak, Berkeley DB, HamsterDB, Amazon Dynamo, Project Voldemort, etc.

84 |

85 | ##### Document Store

86 |

87 | The abstraction of a document store is like a KV store, but documents, like XML, JSON, BSON, and so on, are stored in the value part of the pair.

88 |

89 | The main reason we want to use a document store is for flexibility and performance. Flexibility is obtained by schemaless document, and performance is improved by breaking 3NF. Startup's business requirements are changing from time to time. Flexible schema empowers them to move fast.

90 |

91 | Out-of-box choices: MongoDB, CouchDB, Terrastore, OrientDB, RavenDB, etc.

92 |

93 | ##### Column-oriented Store

94 |

95 | The abstraction of a column-oriented store is like a giant nested map: ColumnFamily>.

96 |

97 | The main reason we want to use a column-oriented store is that it is distributed, highly-available, and optimized for write.

98 |

99 | Out-of-box choices: Cassandra, HBase, Hypertable, Amazon SimpleDB, etc.

100 |

101 | ##### Graph Database

102 |

103 | As the name indicates, this database's abstraction is a graph. It allows us to store entities and the relationships between them.

104 |

105 | If we use a relational database to store the graph, adding/removing relationships may involve schema changes and data movement, which is not the case when using a graph database. On the other hand, when we create tables in a relational database for the graph, we model based on the traversal we want; if the traversal changes, the data will have to change.

106 |

107 | Out-of-box choices: Neo4J, Infinitegraph, OrientDB, FlockDB, etc.

108 |

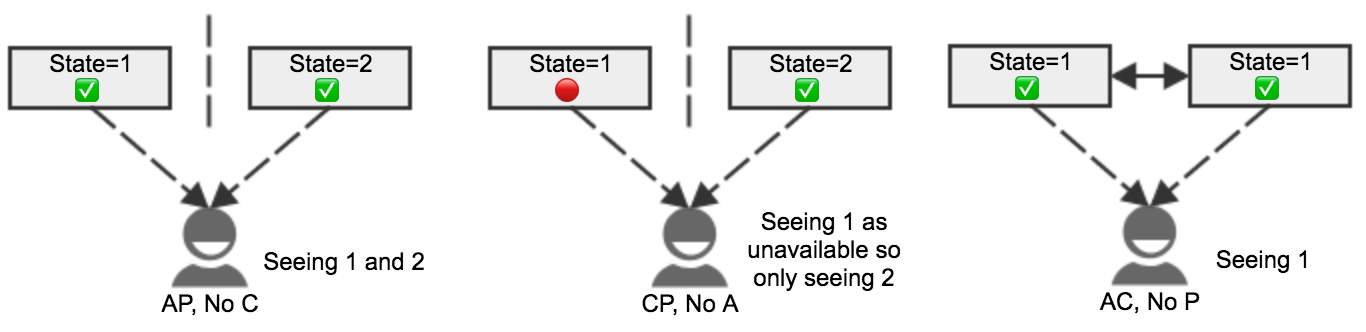

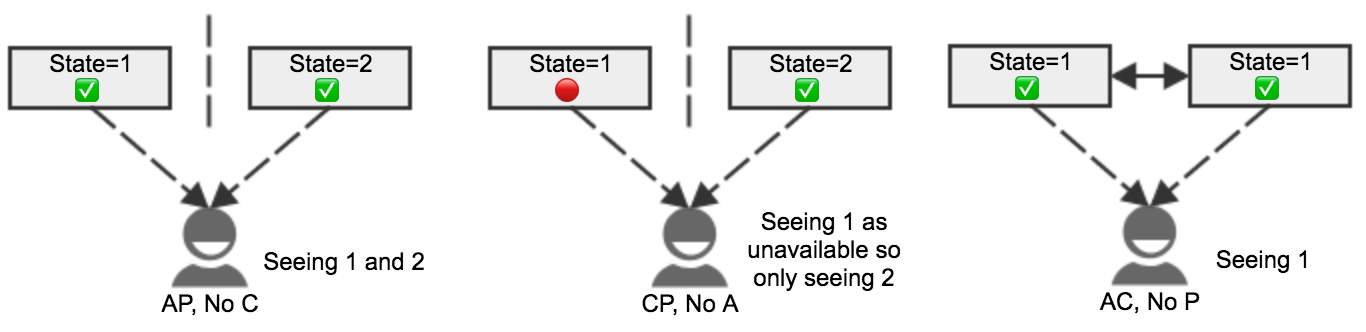

109 | ### CAP Theorem

110 |

111 | When we design a distributed system, **trading off among CAP (consistency, availability, and partition tolerance)** is almost the first thing we want to consider.

112 |

113 | - Consistency: all nodes see the same data at the same time

114 | - Availability: a guarantee that every request receives a response about whether it succeeded or failed

115 | - Partition tolerance: system continues to operate despite arbitrary message loss or failure of part of the system

116 |

117 | In a distributed context, the choice is between CP and AP. Unfortunately, CA is just a joke because a single point of failure is never a choice in the real distributed systems world.

118 |

119 | To ensure consistency, there are some popular protocols to consider: 2PC, eventual consistency (vector clock + RWN), Paxos, [In-Sync Replica](http://www.confluent.io/blog/hands-free-kafka-replication-a-lesson-in-operational-simplicity/), etc.

120 |

121 | To ensure availability, we can add replicas for the data. As to components of the whole system, people usually do [cold standby, warm standby, hot standby, and active-active](https://www.ibm.com/developerworks/community/blogs/RohitShetty/entry/high_availability_cold_warm_hot?lang=en) to handle the failover.

122 |

--------------------------------------------------------------------------------

/en/11-three-programming-paradigms.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 11-three-programming-paradigms

3 | id: 11-three-programming-paradigms

4 | title: "3 Programming Paradigms"

5 | date: 2018-08-12 02:31

6 | comments: true

7 | tags: [system design]

8 | description: "Structured programming is a discipline imposed upon the direct transfer of control. OO programming is a discipline imposed upon the indirect transfer of control. Functional programming is discipline imposed upon variable assignment."

9 | references:

10 | - https://www.amazon.com/Clean-Architecture-Craftsmans-Software-Structure/dp/0134494164

11 | ---

12 |

13 | Structured programming vs. OO programming vs. Functional programming

14 |

15 |

16 |

17 | 1. Structured programming is discipline imposed upon direct transfer of control.

18 | 1. Testability: software is like science: Science does not work by proving statements true, but rather by proving statements false. Structured programming forces us to recursively decompose a program into a set of small provable functions.

19 |

20 |

21 |

22 | 2. OO programming is discipline imposed upon indirect transfer of control.

23 | 1. Capsulation, inheritance, polymorphism(pointers to functions) are not unique to OO.

24 | 2. But OO makes polymorphism safe and convenient to use. And then enable the powerful ==plugin architecture== with dependency inversion

25 | 1. Source code dependencies and flow of control are typically the same. However, if we make them both depend on interfaces, dependency is inverted.

26 | 2. Interfaces empower independent deployability. e.g. when deploying Solidity smart contracts, importing and using interfaces consume much less gases than doing so for the entire implementation.

27 |

28 |

29 |

30 | 3. Functional programming: Immutability. is discipline imposed upon variable assignment.

31 | 1. Why important? All race conditions, deadlock conditions, and concurrent update problems are due to mutable variables.

32 | 2. ==Event sourcing== is a strategy wherein we store the transactions, but not the state. When state is required, we simply apply all the transactions from the beginning of time.

33 |

--------------------------------------------------------------------------------

/en/12-solid-design-principles.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 12-solid-design-principles

3 | id: 12-solid-design-principles

4 | title: "SOLID Design Principles"

5 | date: 2018-08-13 18:07

6 | comments: true

7 | tags: [system design]

8 | description: SOLID is an acronym of design principles that help software engineers write solid code. S is for single responsibility principle, O for open/closed principle, L for Liskov’s substitution principle, I for interface segregation principle and D for dependency inversion principle.

9 | references:

10 | - https://www.amazon.com/Clean-Architecture-Craftsmans-Software-Structure/dp/0134494164

11 | ---

12 |

13 | SOLID is an acronym of design principles that help software engineers write solid code within a project.

14 |

15 | 1. S - **Single Responsibility Principle**. A module should be responsible to one, and only one, actor. a module is just a cohesive set of functions and data structures.

16 |

17 |

18 | 2. O - **Open/Closed Principle**. A software artifact should be open for extension but closed for modification.

19 |

20 |

21 | 3. L - **Liskov’s Substitution Principle**. Simplify code with interface and implementation, generics, sub-classing, and duck-typing for inheritance.

22 |

23 |

24 | 4. I - **Interface Segregation Principle**. Segregate the monolithic interface into smaller ones to decouple modules.

25 |

26 |

27 | 5. D - **Dependency Inversion Principle**. The source code dependencies are inverted against the flow of control. most visible organizing principle in our architecture diagrams.

28 | 1. Things should be stable concrete, Or stale abstract, not ==concrete and volatile.==

29 | 2. So use ==abstract factory== to create volatile concrete objects (manage undesirable dependency.) 产生 interface 的 interface

30 | 3. DIP violations cannot be entirely removed. Most systems will contain at least one such concrete component — this component is often called main.

31 |

--------------------------------------------------------------------------------

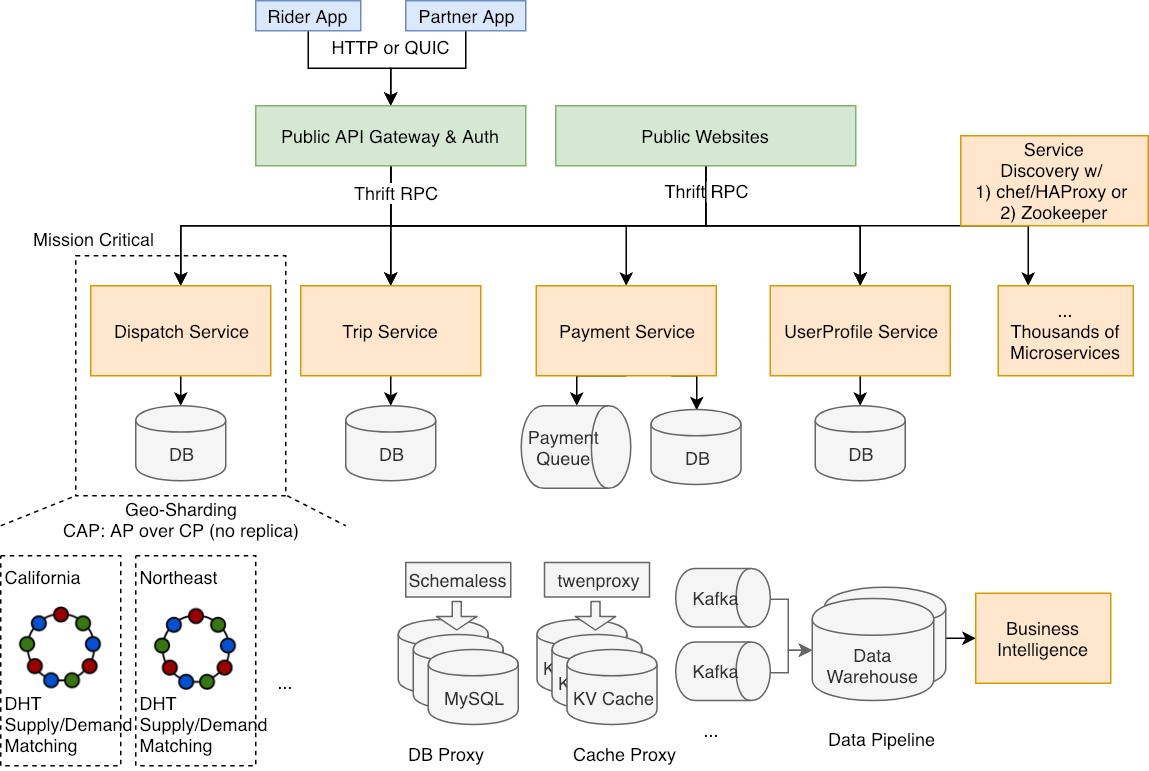

/en/120-designing-uber.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 120-designing-uber

3 | id: 120-designing-uber

4 | title: "Designing Uber"

5 | date: 2019-01-03 18:39

6 | comments: true

7 | tags: [system design]

8 | references:

9 | - https://medium.com/yalantis-mobile/uber-underlying-technologies-and-how-it-actually-works-526f55b37c6f

10 | - https://www.youtube.com/watch?t=116&v=vujVmugFsKc

11 | - http://www.infoq.com/news/2015/03/uber-realtime-market-platform

12 | - https://www.youtube.com/watch?v=kb-m2fasdDY&vl=en

13 | ---

14 |

15 | Disclaimer: All things below are collected from public sources or purely original. No Uber-confidential stuff here.

16 |

17 | ## Requirements

18 |

19 | * ride hailing service targeting the transportation markets around the world

20 | * realtime dispatch in massive scale

21 | * backend design

22 |

23 |

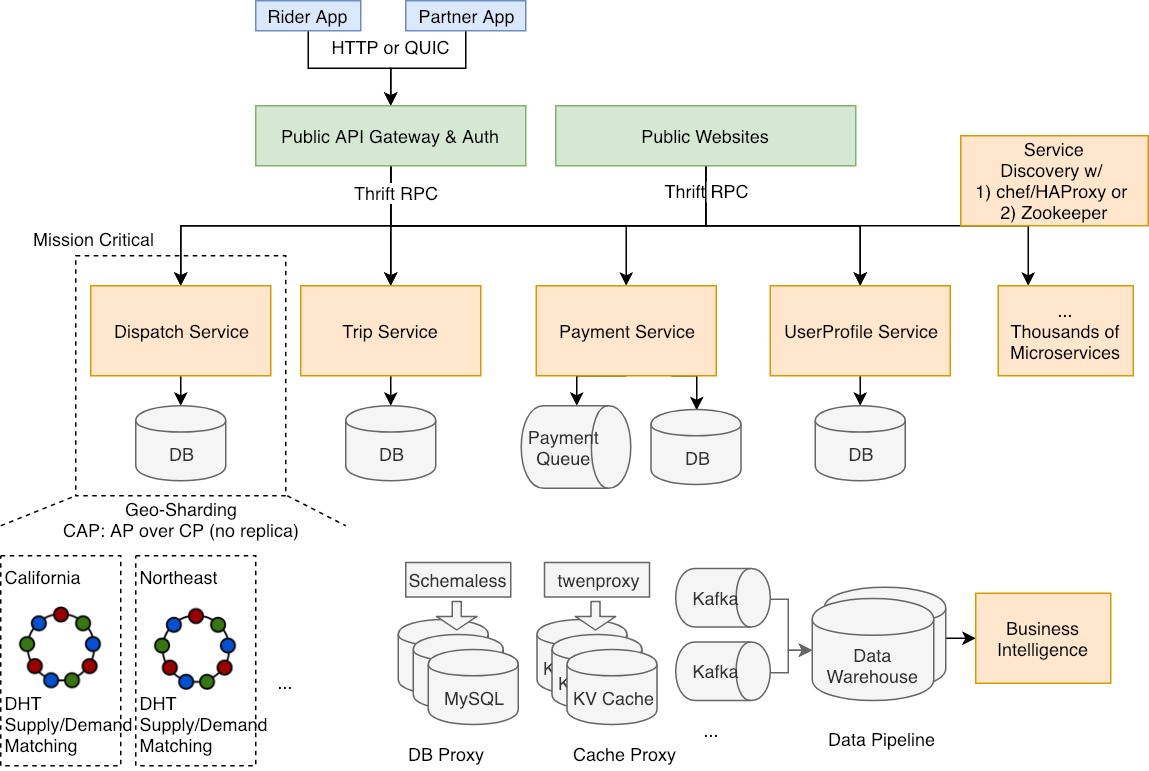

24 |

25 | ## Architecture

26 |

27 |

28 |

29 |

30 |

31 | ## Why micro services?

32 | ==Conway's law== says structures of software systems are copies of the organization structures.

33 |

34 | | | Monolithic ==Service== | Micro Services |

35 | |--- |--- |--- |

36 | | Productivity, when teams and codebases are small | ✅ High | ❌ Low |

37 | | ==Productivity, when teams and codebases are large== | ❌ Low | ✅ High (Conway's law) |

38 | | ==Requirements on Engineering Quality== | ❌ High (under-qualified devs break down the system easily) | ✅ Low (runtimes are segregated) |

39 | | Dependency Bump | ✅ Fast (centrally managed) | ❌ Slow |

40 | | Multi-tenancy support / Production-staging Segregation | ✅ Easy | ❌ Hard (each individual service has to either 1) build staging env connected to others in staging 2) Multi-tenancy support across the request contexts and data storage) |

41 | | Debuggability, assuming same modules, metrics, logs | ❌ Low | ✅ High (w/ distributed tracing) |

42 | | Latency | ✅ Low (local) | ❌ High (remote) |

43 | | DevOps Costs | ✅ Low (High on building tools) | ❌ High (capacity planning is hard) |

44 |

45 | Combining monolithic ==codebase== and micro services can bring benefits from both sides.

46 |

47 | ## Dispatch Service

48 |

49 | * consistent hashing sharded by geohash

50 | * data is transient, in memory, and thus there is no need to replicate. (CAP: AP over CP)

51 | * single-threaded or locked matching in a shard to prevent double dispatching

52 |

53 |

54 |

55 | ## Payment Service

56 |

57 | ==The key is to have an async design==, because payment systems usually have a very long latency for ACID transactions across multiple systems.

58 |

59 | * leverage event queues

60 | * payment gateway w/ Braintree, PayPal, Card.io, Alipay, etc.

61 | * logging intensively to track everything

62 | * [APIs with idempotency, exponential backoff, and random jitter](https://puncsky.com/notes/43-how-to-design-robust-and-predictable-apis-with-idempotency)

63 |

64 |

65 | ## UserProfile Service and Trip Service

66 |

67 | * low latency with caching

68 | * UserProfile Service has the challenge to serve users in increasing types (driver, rider, restaurant owner, eater, etc) and user schemas in different regions and countries.

69 |

70 | ## Push Notification Service

71 |

72 | * Apple Push Notifications Service (not quite reliable)

73 | * Google Cloud Messaging Service GCM (it can detect the deliverability) or

74 | * SMS service is usually more reliable

75 |

--------------------------------------------------------------------------------

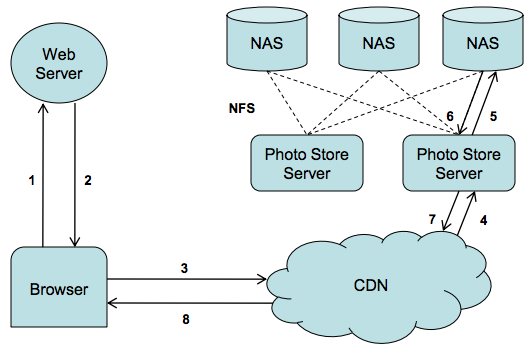

/en/121-designing-facebook-photo-storage.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 121-designing-facebook-photo-storage

3 | id: 121-designing-facebook-photo-storage

4 | title: "Designing Facebook photo storage"

5 | date: 2019-01-04 12:11

6 | comments: true

7 | tags: [system design]

8 | description: "Traditional NFS based design has metadata bottleneck: large metadata size limits the metadata hit ratio. Facebook photo storage eliminates the metadata by aggregating hundreds of thousands of images in a single haystack store file."

9 | references:

10 | - https://www.usenix.org/conference/osdi10/finding-needle-haystack-facebooks-photo-storage

11 | - https://www.facebook.com/notes/facebook-engineering/needle-in-a-haystack-efficient-storage-of-billions-of-photos/76191543919

12 | ---

13 |

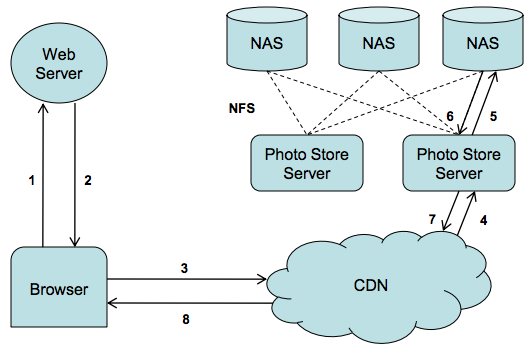

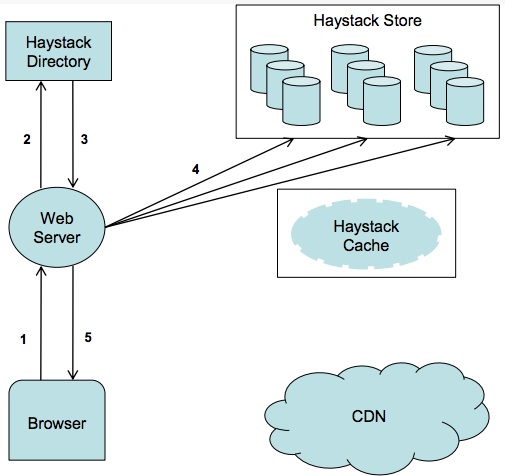

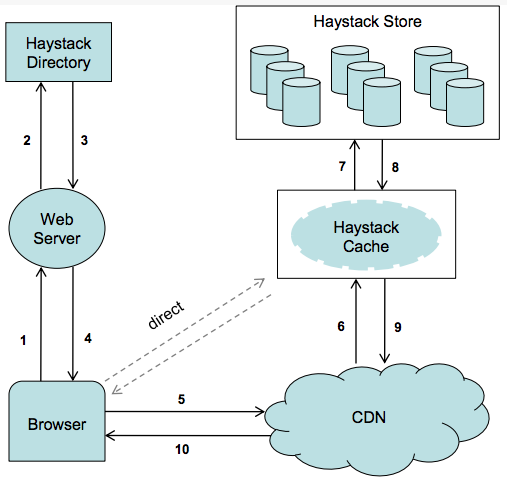

14 | ## Motivation & Assumptions

15 |

16 | * PB-level Blob storage

17 | * Traditional NFS based desgin (Each image stored as a file) has metadata bottleneck: large metadata size severely limits the metadata hit ratio.

18 | * Explain more about the metadata overhead

19 |

20 | > For the Photos application most of this metadata, such as permissions, is unused and thereby wastes storage capacity. Yet the more significant cost is that the file’s metadata must be read from disk into memory in order to find the file itself. While insignificant on a small scale, multiplied over billions of photos and petabytes of data, accessing metadata is the throughput bottleneck.

21 |

22 |

23 |

24 | ## Solution

25 |

26 | Eliminates the metadata overhead by aggregating hundreds of thousands of images in a single haystack store file.

27 |

28 |

29 |

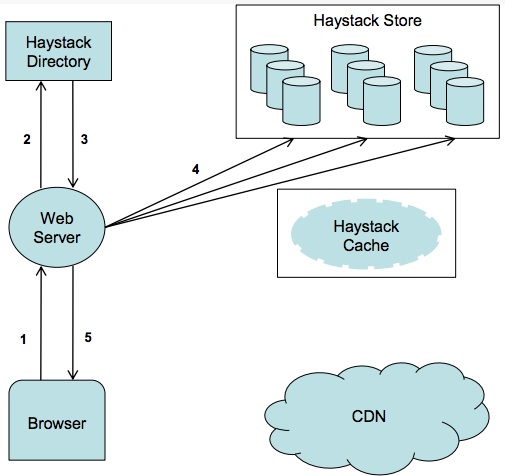

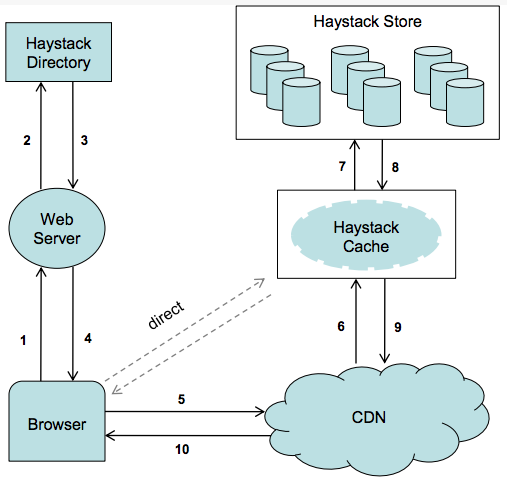

30 | ## Architecture

31 |

32 |

33 |

34 |

35 |

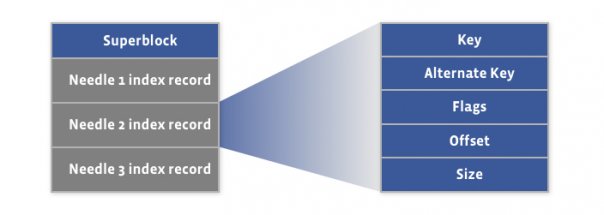

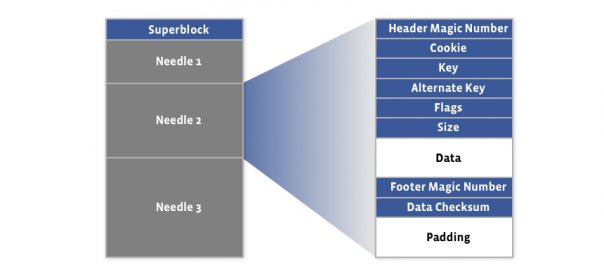

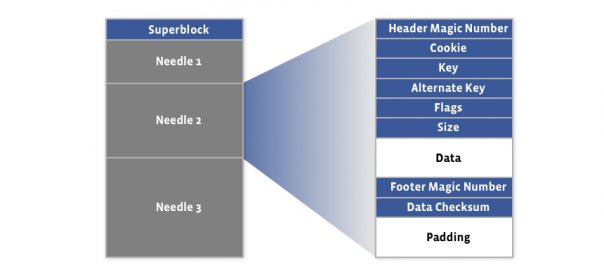

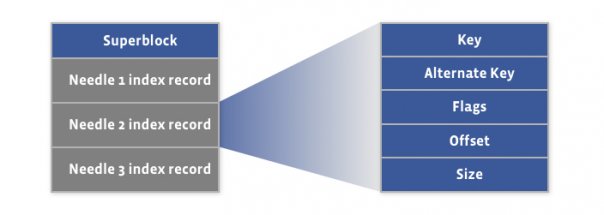

36 | ## Data Layout

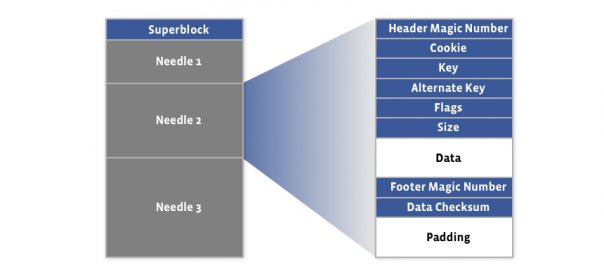

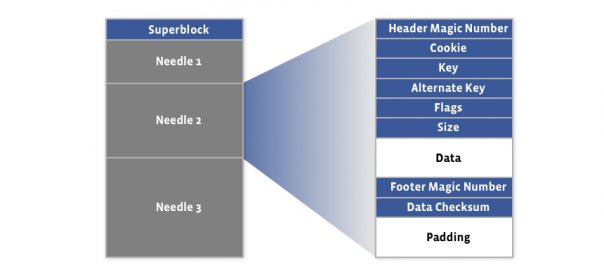

37 |

38 | index file (for quick memory load) + haystack store file containing needles.

39 |

40 | index file layout

41 |

42 |

43 |

44 |

45 |

46 |

47 | haystack store file

48 |

49 |

50 |

51 |

52 |

53 | ### CRUD Operations

54 |

55 | * Create: write to store file and then ==async== write index file, because index is not critical

56 | * Read: read(offset, key, alternate_key, cookie, data_size)

57 | * Update: Append only. If the app meets duplicate keys, then it can choose one with largest offset to update.

58 | * Delete: soft delete by marking the deleted bit in the flag field. Hard delete is executed by the compact operation.

59 |

60 |

61 |

62 | ## Usecases

63 |

64 | Upload

65 |

66 |

67 |

68 |

69 | Download

70 |

71 |

72 |

--------------------------------------------------------------------------------

/en/122-key-value-cache.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 122-key-value-cache

3 | id: 122-key-value-cache

4 | title: "Key value cache"

5 | date: 2019-01-06 23:24

6 | comments: true

7 | description: "The key-value cache is used to reduce the latency of data access. What are read-through, write-through, write-behind, write-back, write-behind, and cache-aside patterns?"

8 | tags: [system design]

9 | ---

10 |

11 | KV cache is like a giant hash map and used to reduce the latency of data access, typically by

12 |

13 | 1. Putting data from slow and cheap media to fast and expensive ones.

14 | 2. Indexing from tree-based data structures of `O(log n)` to hash-based ones of `O(1)` to read and write

15 |

16 |

17 | There are various cache policies like read-through/write-through(or write-back), and cache-aside. By and large, Internet services have a read to write ratio of 100:1 to 1000:1, so we usually optimize for read.

18 |

19 | In distributed systems, we choose those policies according to the business requirements and contexts, under the guidance of [CAP theorem](https://puncsky.com/notes/2018-07-24-replica-and-consistency).

20 |

21 |

22 |

23 | ## Regular Patterns

24 |

25 | * Read

26 | * Read-through: the clients read data from the database via the cache layer. The cache returns when the read hits the cache; otherwise, it fetches data from the database, caches it, and then return the vale.

27 | * Write

28 | * Write-through: clients write to the cache and the cache updates the database. The cache returns when it finishes the database write.

29 | * Write-behind / write-back: clients write to the cache, and the cache returns immediately. Behind the cache write, the cache asynchronously writes to the database.

30 | * Write-around: clients write to the database directly, around the cache.

31 |

32 |

33 |

34 | ## Cache-aside pattern

35 | When a cache does not support native read-through and write-through operations, and the resource demand is unpredictable, we use this cache-aside pattern.

36 |

37 | * Read: try to hit the cache. If not hit, read from the database and then update the cache.

38 | * Write: write to the database first and then ==delete the cache entry==. A common pitfall here is that [people mistakenly update the cache with the value, and double writes in a high concurrency environment will make the cache dirty](https://www.quora.com/Why-does-Facebook-use-delete-to-remove-the-key-value-pair-in-Memcached-instead-of-updating-the-Memcached-during-write-request-to-the-backend).

39 |

40 |

41 | ==There are still chances for dirty cache in this pattern.== It happens when these two cases are met in a racing condition:

42 |

43 | 1. read database and update cache

44 | 2. update database and delete cache

45 |

46 |

47 |

48 | ## Where to put the cache?

49 |

50 | * client-side

51 | * distinct layer

52 | * server-side

53 |

54 |

55 |

56 | ## What if data volume reaches the cache capacity? Use cache replacement policies

57 | * LRU(Least Recently Used): check time, and evict the most recently used entries and keep the most recently used ones.

58 | * LFU(Least Frequently Used): check frequency, and evict the most frequently used entries and keep the most frequently used ones.

59 | * ARC(Adaptive replacement cache): it has a better performance than LRU. It is achieved by keeping both the most frequently and frequently used entries, as well as a history for eviction. (Keeping MRU+MFU+eviction history.)

60 |

61 |

62 |

63 | ## Who are the King of the cache usage?

64 | [Facebook TAO](https://puncsky.com/notes/49-facebook-tao)

65 |

--------------------------------------------------------------------------------

/en/123-ios-architecture-patterns-revisited.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 123-ios-architecture-patterns-revisited

3 | id: 123-ios-architecture-patterns-revisited

4 | title: "iOS Architecture Patterns Revisited"

5 | date: 2019-01-10 02:26

6 | comments: true

7 | tags: [architecture, mobile, system design]

8 | description: "Architecture can directly impact costs per feature. Let's compare Tight-coupling MVC, Cocoa MVC, MVP, MVVM, and VIPER in three dimensions: balanced distribution of responsibility among feature actors, testability and ease of use and maintainability."

9 | references:

10 | - https://medium.com/ios-os-x-development/ios-architecture-patterns-ecba4c38de52

11 | ---

12 |

13 | ## Why bother with architecture?

14 |

15 | Answer: [for reducing human resources costs per feature](https://puncsky.com/notes/10-thinking-software-architecture-as-physical-buildings#ultimate-goal-saving-human-resources-costs-per-feature).

16 |

17 | Mobile developers evaluate the architecture in three dimensions.

18 |

19 | 1. Balanced distribution of responsibilities among feature actors.

20 | 2. Testability

21 | 3. Ease of use and maintainability

22 |

23 |

24 | | | Distribution of Responsibility | Testability | Ease of Use |

25 | | --- | --- | --- | --- |

26 | | Tight-coupling MVC | ❌ | ❌ | ✅ |

27 | | Cocoa MVC | ❌ VC are coupled | ❌ | ✅⭐ |

28 | | MVP | ✅ Separated View Lifecycle | ✅ | Fair: more code |

29 | | MVVM | ✅ | Fair: because of View's UIKit dependant | Fair |

30 | | VIPER | ✅⭐️ | ✅⭐️ | ❌ |

31 |

32 |

33 |

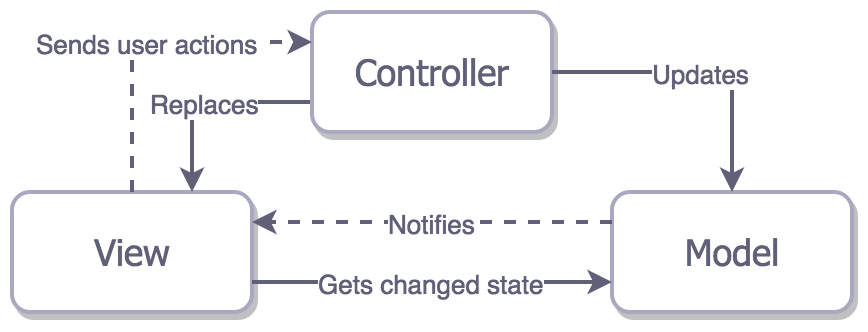

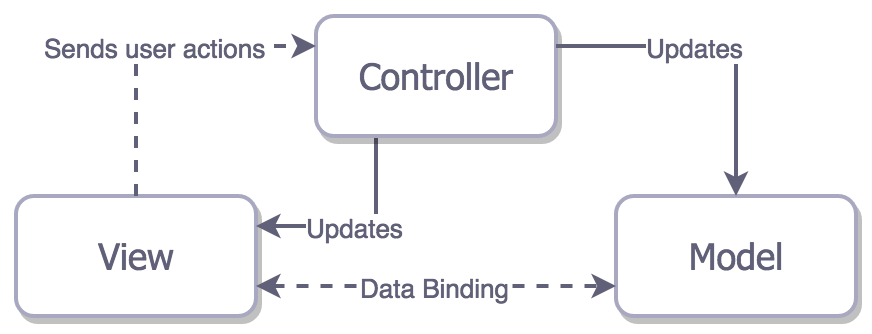

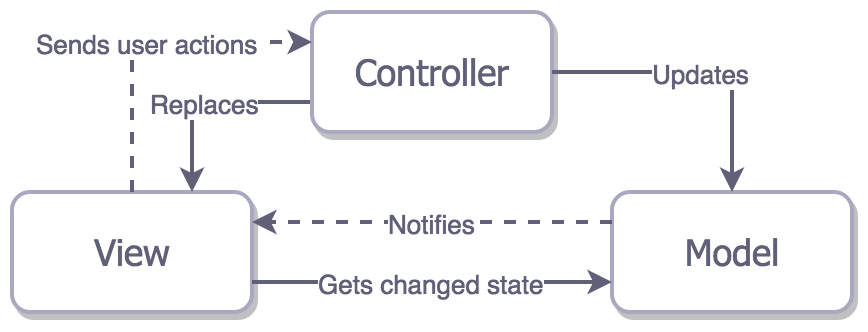

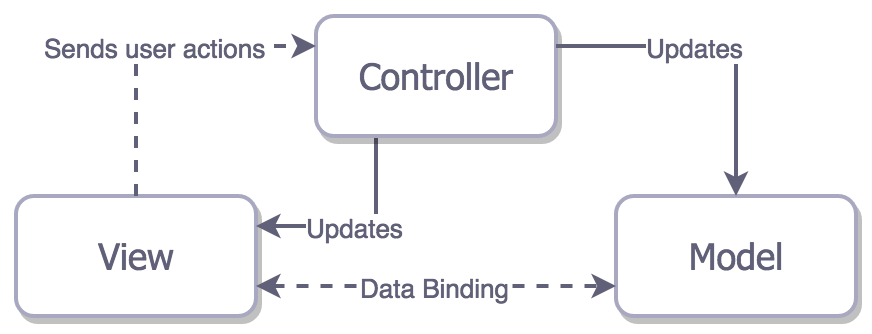

34 | ## Tight-coupling MVC

35 |

36 |

37 |

38 | For example, in a multi-page web application, page completely reloaded once you press on the link to navigate somewhere else. The problem is that the View is tightly coupled with both Controller and Model.

39 |

40 |

41 |

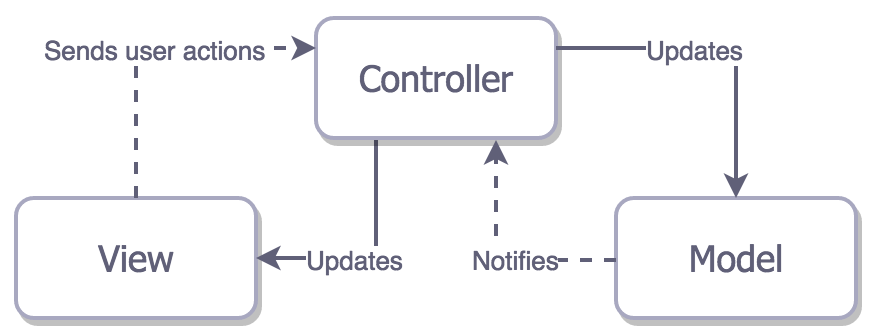

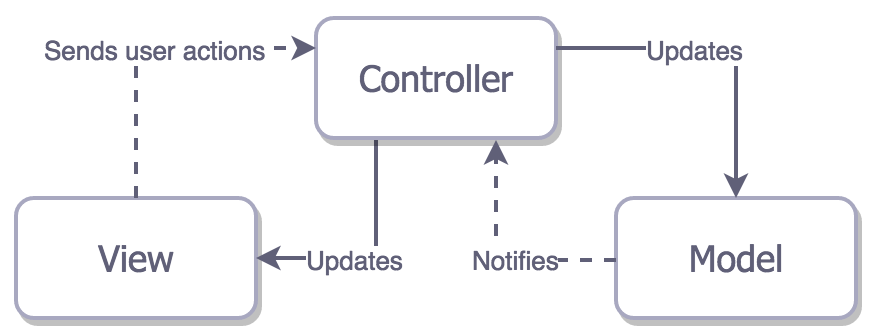

42 | ## Cocoa MVC

43 |

44 | Apple’s MVC, in theory, decouples View from Model via Controller.

45 |

46 |

47 |

48 |

49 | Apple’s MVC in reality encourages ==massive view controllers==. And the view controller ends up doing everything.

50 |

51 |

52 |

53 | It is hard to test coupled massive view controllers. However, Cocoa MVC is the best architectural pattern regarding the speed of the development.

54 |

55 |

56 |

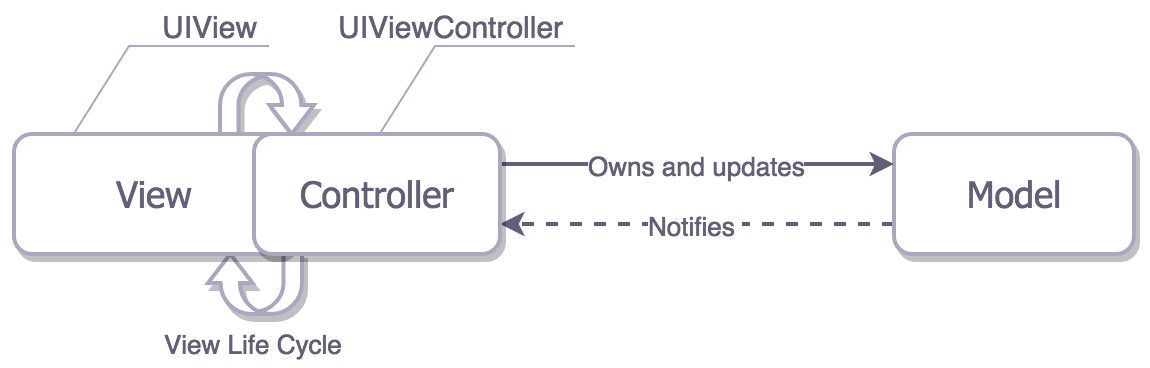

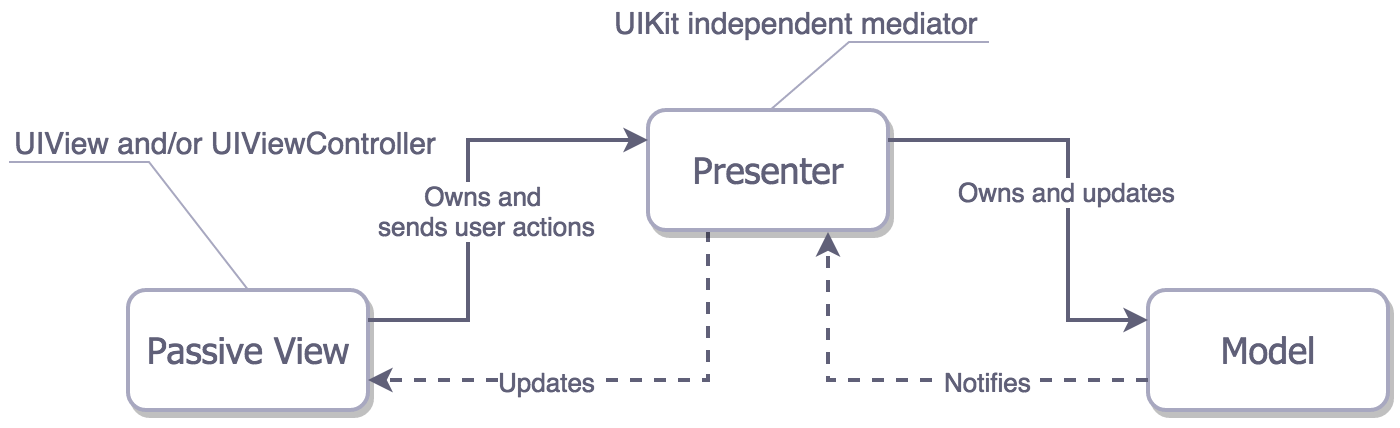

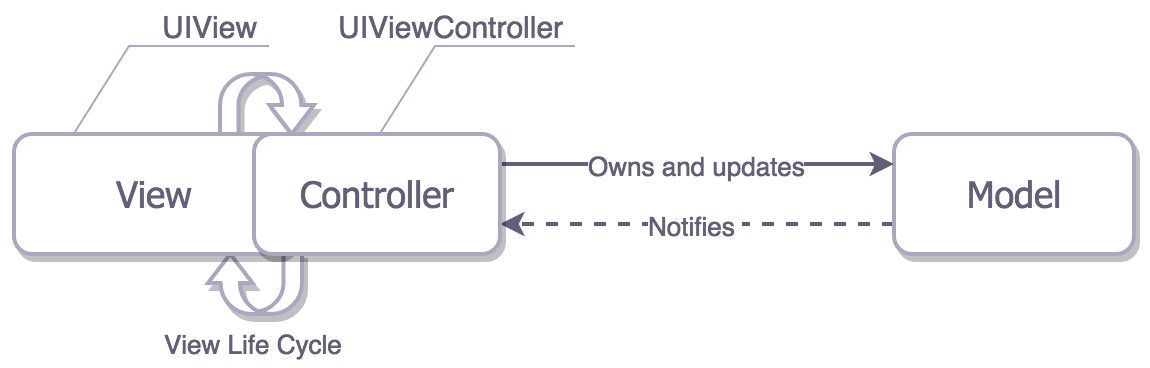

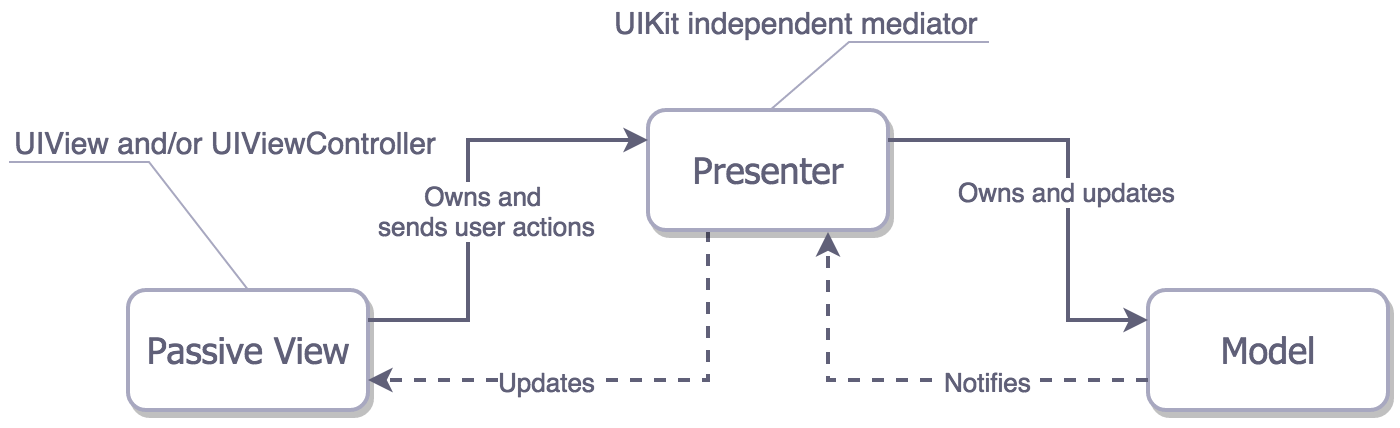

57 | ## MVP

58 |

59 | In an MVP, Presenter has nothing to do with the life cycle of the view controller, and the View can be mocked easily. We can say the UIViewController is actually the View.

60 |

61 |

62 |

63 |

64 | There is another kind of MVP: the one with data bindings. And as you can see, there is tight coupling between View and the other two.

65 |

66 |

67 |

68 |

69 |

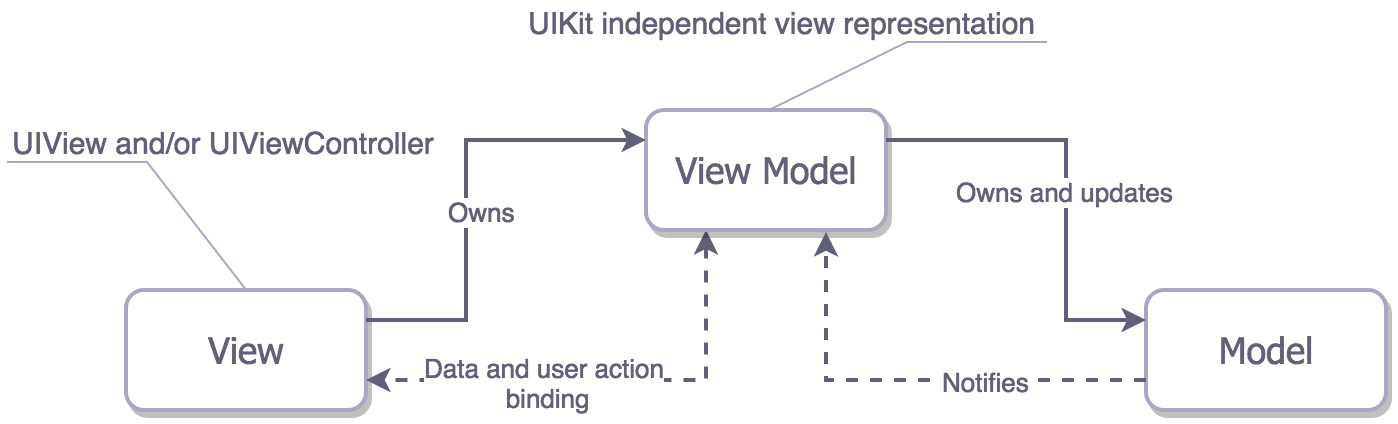

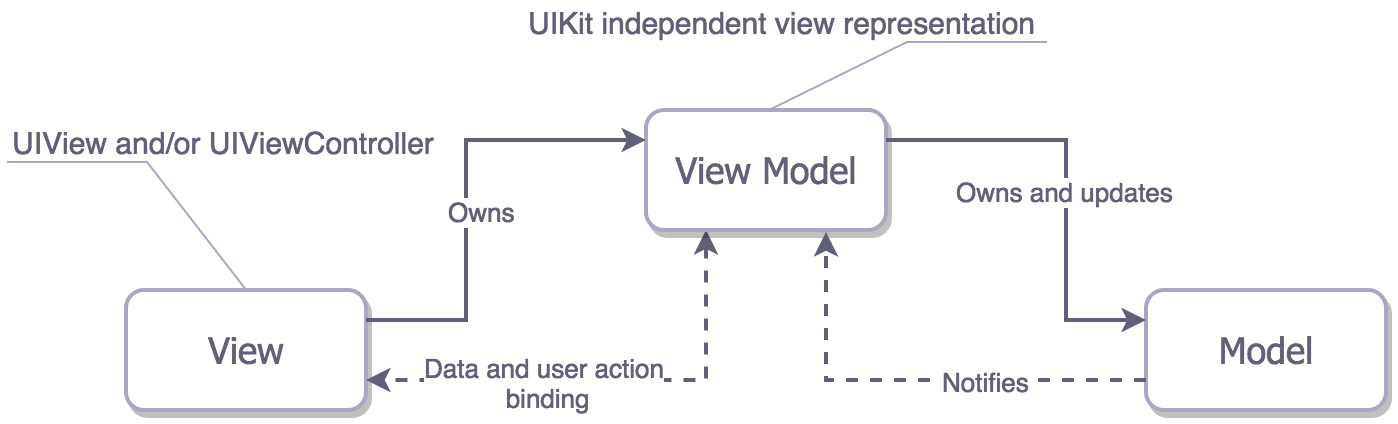

70 | ## MVVM

71 |

72 | It is similar to MVP but binding is between View and View Model.

73 |

74 |

75 |

76 |

77 |

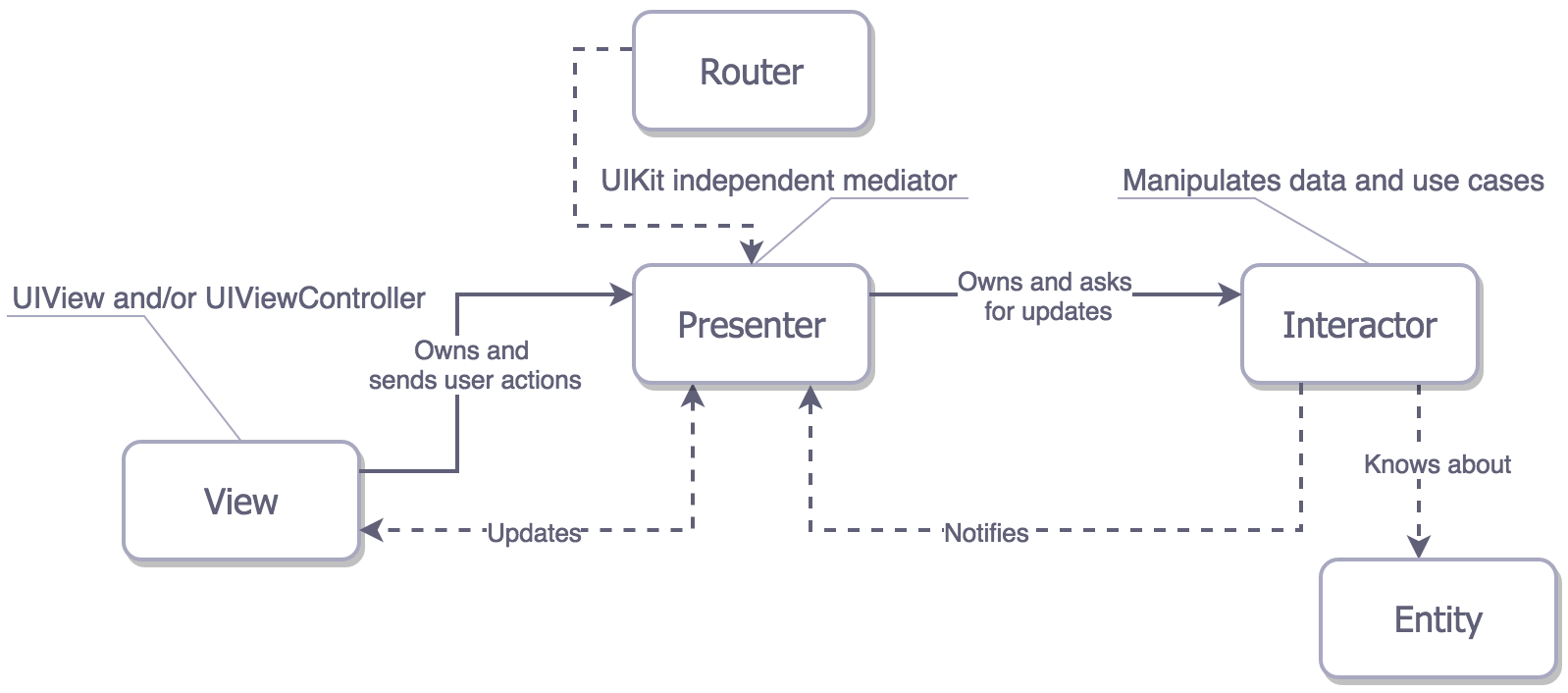

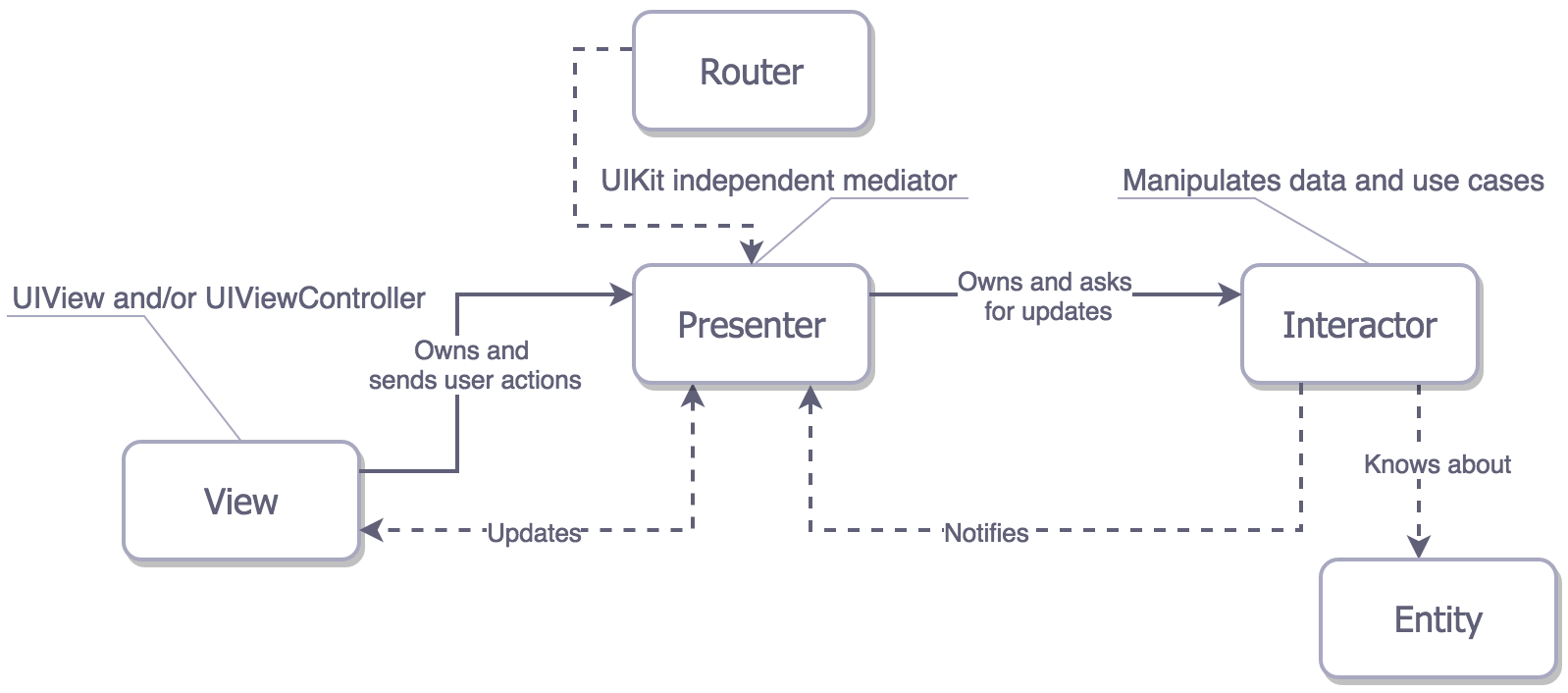

78 | ## VIPER

79 | There are five layers (VIPER View, Interactor, Presenter, Entity, and Routing) instead of three when compared to MV(X). This distributes responsibilities well but the maintainability is bad.

80 |

81 |

82 |

83 |

84 | When compared to MV(X), VIPER

85 |

86 | 1. Model logic is shifted to Interactor and Entities are left as dumb data structures.

87 | 2. ==UI related business logic is placed into Presenter, while the data altering capabilities are placed into Interactor==.

88 | 3. It introduces Router for the navigation responsibility.

89 |

--------------------------------------------------------------------------------

/en/136-fraud-detection-with-semi-supervised-learning.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 136-fraud-detection-with-semi-supervised-learning

3 | id: 136-fraud-detection-with-semi-supervised-learning

4 | title: "Fraud Detection with Semi-supervised Learning"

5 | date: 2019-02-13 23:57

6 | comments: true

7 | tags: [architecture, system design]

8 | description: Fraud Detection fights against account takeovers and Botnet attacks during login. Semi-supervised learning has better learning accuracy than unsupervised learning and less time and costs than supervised learning.

9 | references:

10 | - https://www.slideshare.net/Hadoop_Summit/semisupervised-learning-in-an-adversarial-environment

11 | image: https://web-dash-v2.onrender.com/api/og-tianpan-co?title=Fraud%20Detection%20with%20Semi-supervised%20Learning

12 |

13 | ---

14 |

15 | ## Clarify Requirements

16 |

17 | Calculate risk probability scores in realtime and make decisions along with a rule engine to prevent ATO (account takeovers) and Botnet attacks.

18 |

19 | Train clustering fatures with online and offline pipelines

20 |

21 | 1. Source from website logs, auth logs, user actions, transactions, high-risk accounts in watch list

22 | 2. track event data in kakfa topics

23 | 3. Process events and prepare clustering features

24 |

25 | Realtime scoring and rule-based decision

26 |

27 | 4. assess a risk score comprehensively for online services

28 |

29 | 5. Maintain flexibility with manually configuration in a rule engine

30 | 6. share, or use the insights in online services

31 |

32 | ATOs ranking from easy to hard to detect

33 |

34 | 1. from single IP

35 | 2. from IPs on the same device

36 | 3. from IPs across the world

37 | 4. from 100k IPs

38 | 5. attacks on specific accounts

39 | 6. phishing and malware

40 |

41 | Challenges

42 |

43 | * Manual feature selection

44 | * Feature evolution in adversarial environment

45 | * Scalability

46 | * No online DBSCAN

47 |

48 | ## **High-level Architecture**

49 |

50 |

51 |

52 | ## Core Components and Workflows

53 |

54 | Semi-supervised learning = unlabeled data + small amount of labeled data

55 |

56 | Why? better learning accuracy than unsupervised learning + less time and costs than supervised learning

57 |

58 | ### Training: To prepare clustering features in database

59 |

60 | - **Streaming Pipeline on Spark:**

61 | - Runs continuously in real-time.

62 | - Performs feature normalization and categorical transformation on the fly.

63 | - **Feature Normalization**: Scale your numeric features (e.g., age, income) so that they are between 0 and 1.

64 | - **Categorical Feature Transformation**: Apply one-hot encoding or another transformation to convert categorical features into a numeric format suitable for the machine learning model.

65 | - Uses **Spark MLlib’s K-means** to cluster streaming data into groups.

66 | - After running k-means and forming clusters, you might find that certain clusters have more instances of fraud.

67 | - Once you’ve labeled a cluster as fraudulent based on historical data or expert knowledge, you can use that cluster assignment during inference. Any new data point assigned to that fraudulent cluster can be flagged as suspicious.

68 | - **Hourly Cronjob Pipeline:**

69 | - Runs periodically every hour (batch processing).

70 | - Applies **thresholding** to identify anomalies based on results from the clustering model.

71 | - **Tunes parameters** of the **DBSCAN algorithm** to improve clustering and anomaly detection.

72 | - Uses **DBSCAN** from **scikit-learn** to find clusters and detect outliers in batch data.

73 | - DBSCAN, which can detect outliers, might identify clusters of regular transactions and separate them from **noise**, which could be unusual, potentially fraudulent transactions.

74 | - Transactions in the noisy or outlier regions (points that don’t belong to any dense cluster) can be flagged as suspicious.

75 | - After identifying a cluster as fraudulent, DBSCAN helps detect patterns of fraud even in irregularly shaped transaction distributions.

76 |

77 | ## Serving

78 |

79 | The serving layer is where the rubber meets the road - where we turn our machine learning models and business rules into actual fraud prevention decisions. Here's how it works:

80 |

81 | - Fraud Detection Scoring Service:

82 | - Takes real-time features extracted from incoming requests

83 | - Applies both clustering models (K-means from streaming and DBSCAN from batch)

84 | - Combines scores with streaming counters (like login attempts per IP)

85 | - Outputs a unified risk score between 0 and 1

86 | - Rule Engine:

87 | - Acts as the "brain" of the system

88 | - Combines ML scores with configurable business rules

89 | - Examples of rules:

90 | - If risk score > 0.8 AND user is accessing from new IP → require 2FA

91 | - If risk score > 0.9 AND account is high-value → block transaction

92 | - Rules are stored in a database and can be updated without code changes

93 | - Provides an admin portal for security teams to adjust rules

94 | - Integration with Other Services:

95 | - Exposes REST APIs for real-time scoring

96 | - Publishes results to streaming counters for monitoring

97 | - Feeds decisions back to the training pipeline to improve model accuracy

98 | - Observability:

99 | - Tracks key metrics like false positive/negative rates

100 | - Monitors model drift and feature distribution changes

101 | - Provides dashboards for security analysts to investigate patterns

102 | - Logs detailed information for post-incident analysis

103 |

104 |

--------------------------------------------------------------------------------

/en/137-stream-and-batch-processing.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 137-stream-and-batch-processing

3 | id: 137-stream-and-batch-processing

4 | title: "Stream and Batch Processing Frameworks"

5 | date: 2019-02-16 22:13

6 | comments: true

7 | tags: [system design]

8 | description: "Stream and Batch processing frameworks can process high throughput at low latency. Why is Flink gaining popularity? And how to make an architectural choice among Storm, Storm-trident, Spark, and Flink?"

9 | references:

10 | - https://storage.googleapis.com/pub-tools-public-publication-data/pdf/43864.pdf

11 | - https://cs.stanford.edu/~matei/papers/2018/sigmod_structured_streaming.pdf

12 | - https://spark.apache.org/docs/latest/structured-streaming-programming-guide.html

13 | - https://stackoverflow.com/questions/28502787/google-dataflow-vs-apache-storm

14 | ---

15 |

16 | ## Why such frameworks?

17 |

18 | * process high-throughput in low latency.

19 | * fault-tolerance in distributed systems.

20 | * generic abstraction to serve volatile business requirements.

21 | * for bounded data set (batch processing) and for unbounded data set (stream processing).

22 |

23 |

24 | ## Brief history of batch/stream processing

25 |

26 | 1. Hadoop and MapReduce. Google made batch processing as simple as MR `result = pairs.map((pair) => (morePairs)).reduce(somePairs => lessPairs)` in a distributed system.

27 | 2. Apache Storm and DAG Topology. MR doesn’t efficiently express iterative algorithms. Thus Nathan Marz abstracted stream processing into a graph of spouts and bolts.

28 | 3. Spark in-memory Computing. Reynold Xin said Spark sorted the same data **3X faster** using **10X fewer machines** compared to Hadoop.

29 | 4. Google Dataflow based on Millwheel and FlumeJava. Google supports both batch and streaming computing with the windowing API.

30 |

31 |

32 | ### Wait... But why is Flink gaining popularity?

33 |

34 | 1. its fast adoption of ==Google Dataflow==/Beam programming model.

35 | 2. its highly efficient implementation of Chandy-Lamport checkpointing.

36 |

37 |

38 |

39 | ## How?

40 |

41 | ### Architectural Choices

42 |

43 | To serve requirements above with commodity machines, the steaming framework use distributed systems in these architectures...

44 |

45 | * master-slave (centralized): apache storm with zookeeper, apache samza with YARN.

46 | * P2P (decentralized): apache s4.

47 |

48 |

49 | ### Features

50 |

51 | 1. DAG Topology for Iterative Processing. e.g. GraphX in Spark, topologies in Apache Storm, DataStream API in Flink.

52 | 2. Delivery Guarantees. How guaranteed to deliver data from nodes to nodes? at-least once / at-most once / exactly once.

53 | 3. Fault-tolerance. Using [cold/warm/hot standby, checkpointing, or active-active](https://tianpan.co/notes/85-improving-availability-with-failover).

54 | 4. Windowing API for unbounded data set. e.g. Stream Windows in Apache Flink. Spark Window Functions. Windowing in Apache Beam.

55 |

56 |

57 | ## Comparison

58 |

59 | | Framework | Storm | Storm-trident | Spark | Flink |

60 | | --------------------------- | ------------- | ------------- | ------------ | ------------ |

61 | | Model | native | micro-batch | micro-batch | native |

62 | | Guarentees | at-least-once | exactly-once | exactly-once | exactly-once |

63 | | Fault-tolerance | Record-Ack | record-ack | checkpoint | checkpoint |

64 | | Overhead of fault-tolerance | high | medium | medium | low |

65 | | latency | very-low | high | high | low |

66 | | throughput | low | medium | high | high |

67 |

--------------------------------------------------------------------------------

/en/145-introduction-to-architecture.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 145-introduction-to-architecture

3 | id: 145-introduction-to-architecture

4 | title: "Introduction to Architecture"

5 | date: 2019-05-11 15:52

6 | comments: true

7 | tags: [system design]

8 | description: Architecture serves the full lifecycle of the software system to make it easy to understand, develop, test, deploy and operate. The O’Reilly book Software Architecture Patterns gives a simple but effective introduction to five fundamental architectures.

9 | references:

10 | - https://puncsky.com/notes/10-thinking-software-architecture-as-physical-buildings

11 | - https://www.oreilly.com/library/view/software-architecture-patterns/9781491971437/ch01.html

12 | - http://www.ruanyifeng.com/blog/2016/09/software-architecture.html

13 | ---

14 |

15 | ## What is architecture?

16 |

17 | Architecture is the shape of the software system. Thinking it as a big picture of physical buildings.

18 |

19 | * paradigms are bricks.

20 | * design principles are rooms.

21 | * components are buildings.

22 |

23 | Together they serve a specific purpose like a hospital is for curing patients and a school is for educating students.

24 |

25 |

26 | ## Why do we need architecture?

27 |

28 | ### Behavior vs. Structure

29 |

30 | Every software system provides two different values to the stakeholders: behavior and structure. Software developers are responsible for ensuring that both those values remain high.

31 |

32 | ==Software architects are, by virtue of their job description, more focused on the structure of the system than on its features and functions.==

33 |

34 |

35 | ### Ultimate Goal - ==saving human resources costs per feature==

36 |

37 | Architecture serves the full lifecycle of the software system to make it easy to understand, develop, test, deploy, and operate.

38 | The goal is to minimize the human resources costs per business use-case.

39 |

40 |

41 |

42 | The O’Reilly book Software Architecture Patterns by Mark Richards is a simple but effective introduction to these five fundamental architectures.

43 |

44 |

45 |

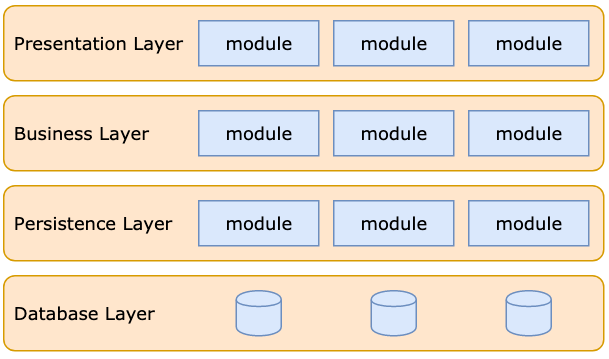

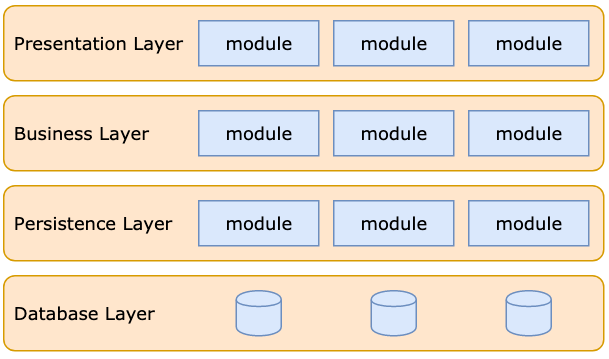

46 | ## 1. Layered Architecture

47 |

48 |

49 |

50 | The layered architecture is the most common in adoption, well-known among developers, and hence the de facto standard for applications. If you do not know what architecture to use, use it.

51 |

52 | [comment]: \<\> (https://www.draw.io/#G1ldM5O9Y62Upqg_t5rcTNHIRseP-7fqQT)

53 |

54 |

55 |

56 |

57 | Examples

58 |

59 | * TCP / IP Model: Application layer > transport layer > internet layer > network access layer

60 | * [Facebook TAO](https://puncsky.com/notes/49-facebook-tao): web layer > cache layer (follower + leader) > database layer

61 |

62 | Pros and Cons

63 |

64 | * Pros

65 | * ease of use

66 | * separation of responsibility

67 | * testability

68 | * Cons

69 | * monolithic

70 | * hard to adjust, extend or update. You have to make changes to all the layers.

71 |

72 |

73 |

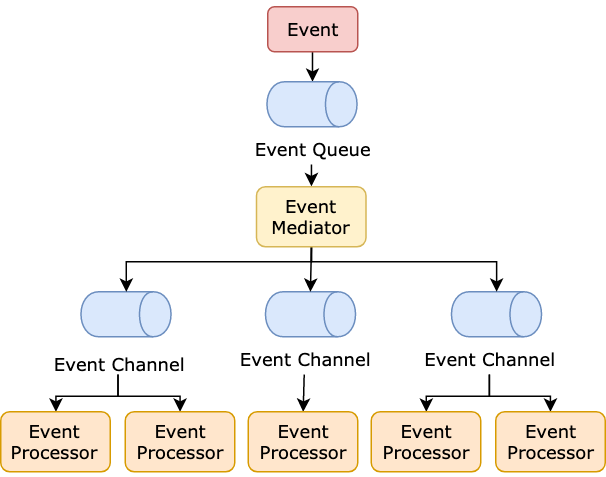

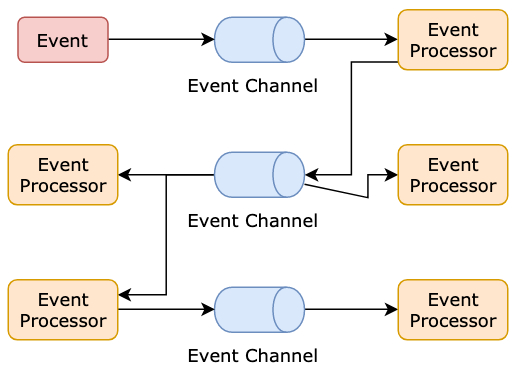

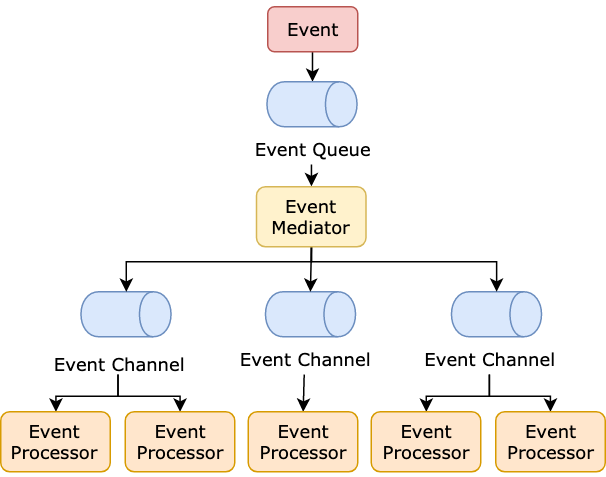

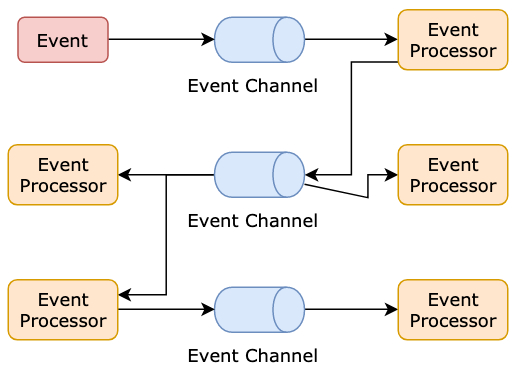

74 | ## 2. Event-Driven Architecture

75 |

76 |

77 |

78 | A state change will emit an event to the system. All the components communicate with each other through events.

79 |

80 |

81 |

82 |

83 | A simple project can combine the mediator, event queue, and channel. Then we get a simplified architecture:

84 |

85 |

86 |

87 |

88 | Examples

89 |

90 | * QT: Signals and Slots

91 | * Payment Infrastructure: Bank gateways usually have very high latencies, so they adopt async technologies in their architecture design.

92 |

93 |

94 |

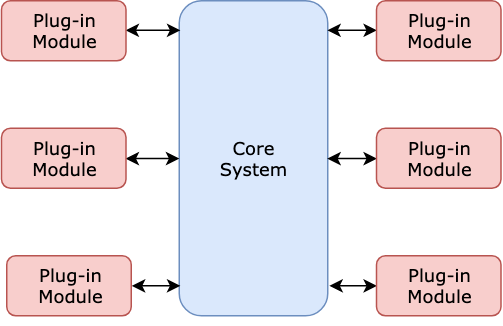

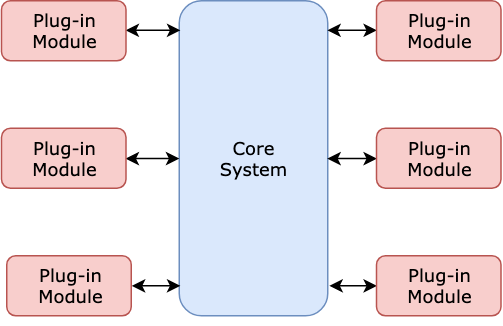

95 | ## 3. Micro-kernel Architecture (aka Plug-in Architecture)

96 |

97 |

98 |

99 | The software's responsibilities are divided into one "core" and multiple "plugins". The core contains the bare minimum functionality. Plugins are independent of each other and implement shared interfaces to achieve different goals.

100 |

101 |

102 |

103 |

104 | Examples

105 |

106 | * Visual Studio Code, Eclipse

107 | * MINIX operating system

108 |

109 |

110 |

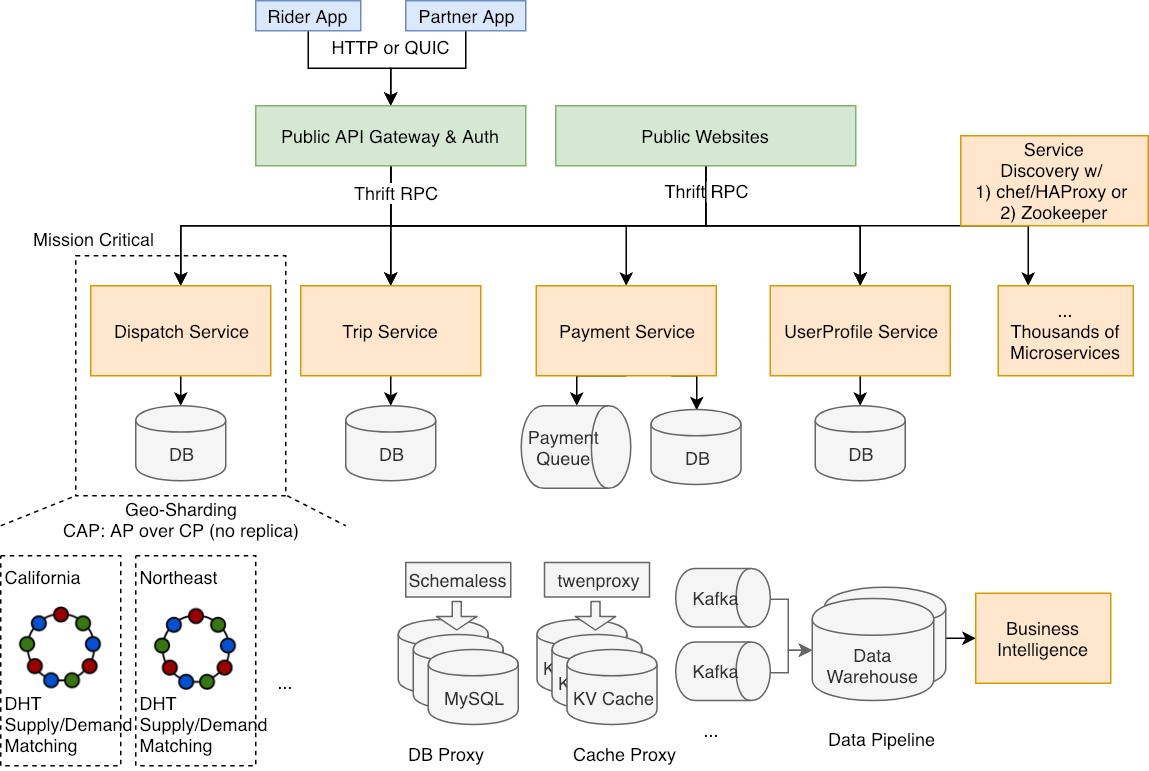

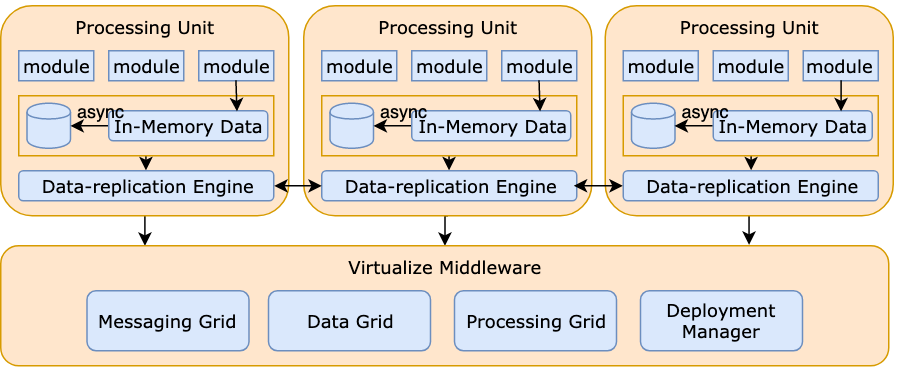

111 | ## 4. Microservices Architecture

112 |

113 |

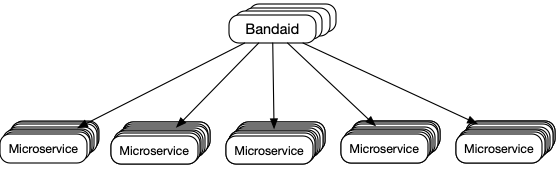

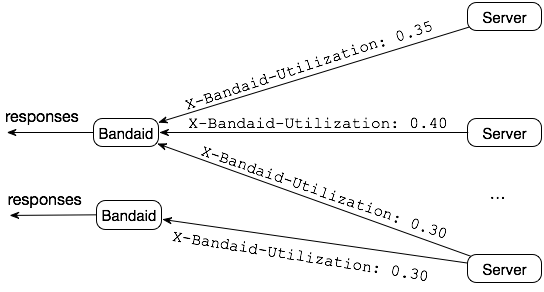

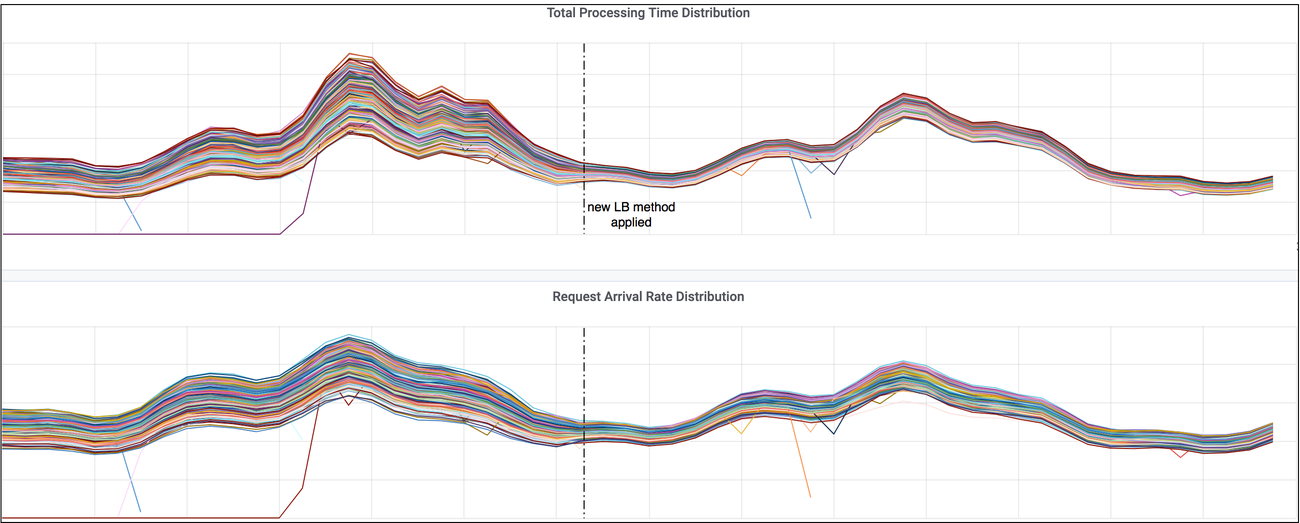

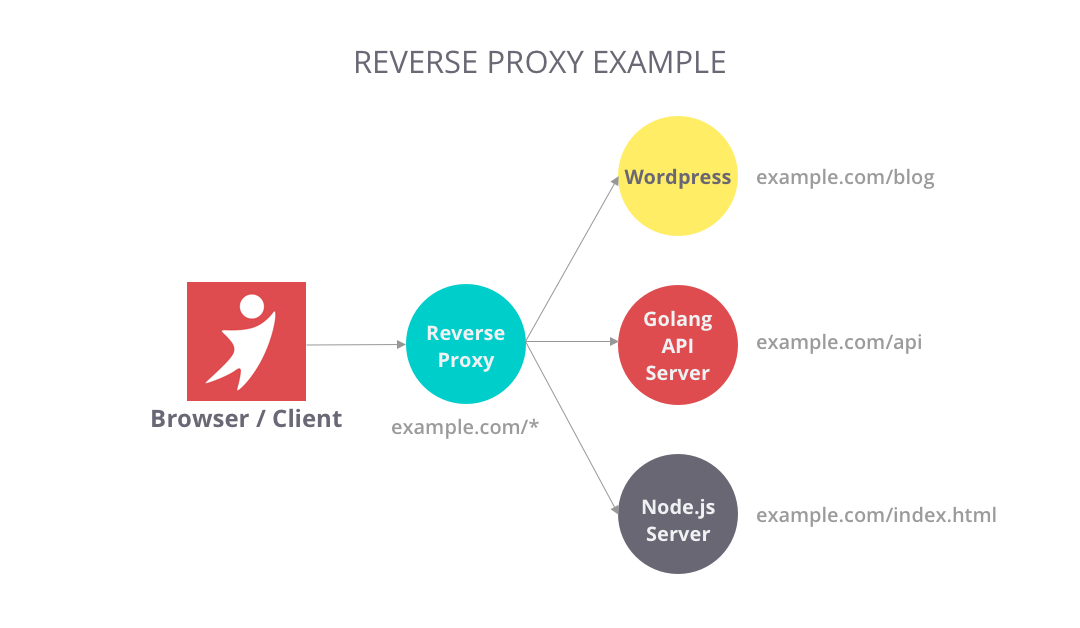

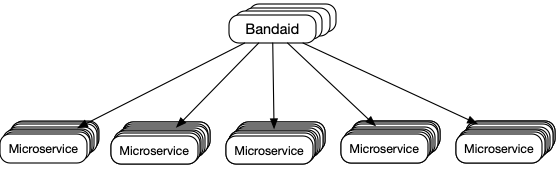

114 |

115 | A massive system is decoupled to multiple micro-services, each of which is a separately deployed unit, and they communicate with each other via [RPCs](/blog/2016-02-13-crack-the-system-design-interview#21-communication).

116 |

117 |

118 |

119 |

120 |

121 |

122 | Examples

123 |

124 | * Uber: See [designing Uber](https://puncsky.com/notes/120-designing-uber)

125 | * Smartly

126 |

127 |

128 |

129 |

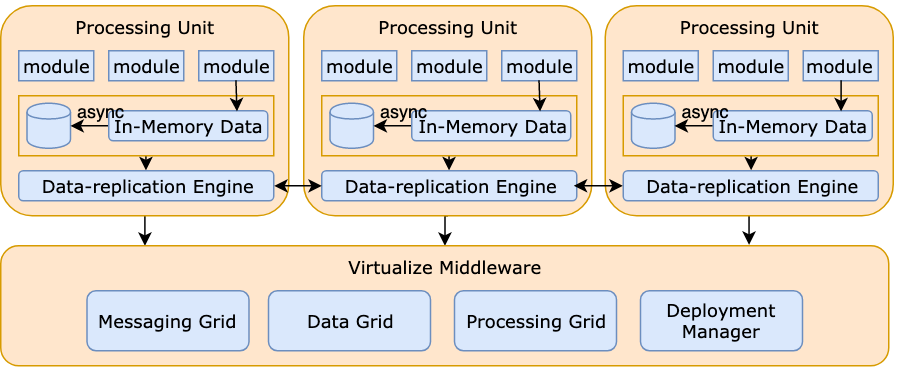

130 | ## 5. Space-based Architecture

131 |

132 |

133 |

134 | This pattern gets its name from "tuple space", which means “distributed shared memory". There is no database or synchronous database access, and thus no database bottleneck. All the processing units share the replicated application data in memory. These processing units can be started up and shut down elastically.

135 |

136 |

137 |

138 |

139 |

140 | Examples: See [Wikipedia](https://en.wikipedia.org/wiki/Tuple_space#Example_usage)

141 |

142 | - Mostly adopted among Java users: e.g., JavaSpaces

143 |

--------------------------------------------------------------------------------

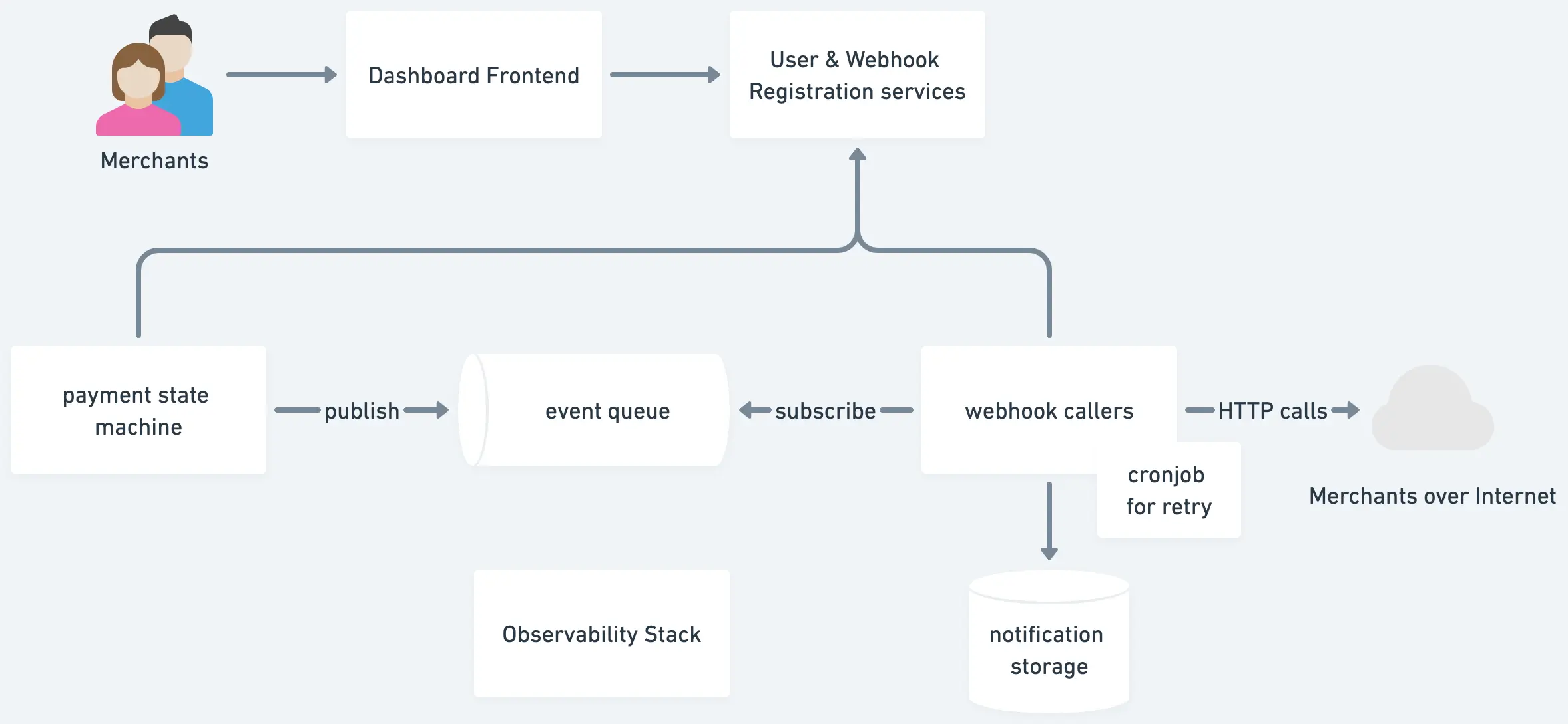

/en/166-designing-payment-webhook.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 166-designing-payment-webhook

3 | id: 166-designing-payment-webhook

4 | title: "Designing payment webhook"

5 | date: 2019-08-19 21:15

6 | updateDate: 2024-04-06 17:29

7 | comments: true

8 | tags: [system design]

9 | slides: false

10 | description: Design a webhook that notifies the merchant when the payment succeeds. We need to aggregate the metrics (e.g., success vs. failure) and display it on the dashboard.

11 | references:

12 | - https://commerce.coinbase.com/docs/#webhooks

13 | - https://bitworking.org/news/2017/03/prometheus

14 | - https://workos.com/blog/building-webhooks-into-your-application-guidelines-and-best-practices

15 |

16 | ---

17 |

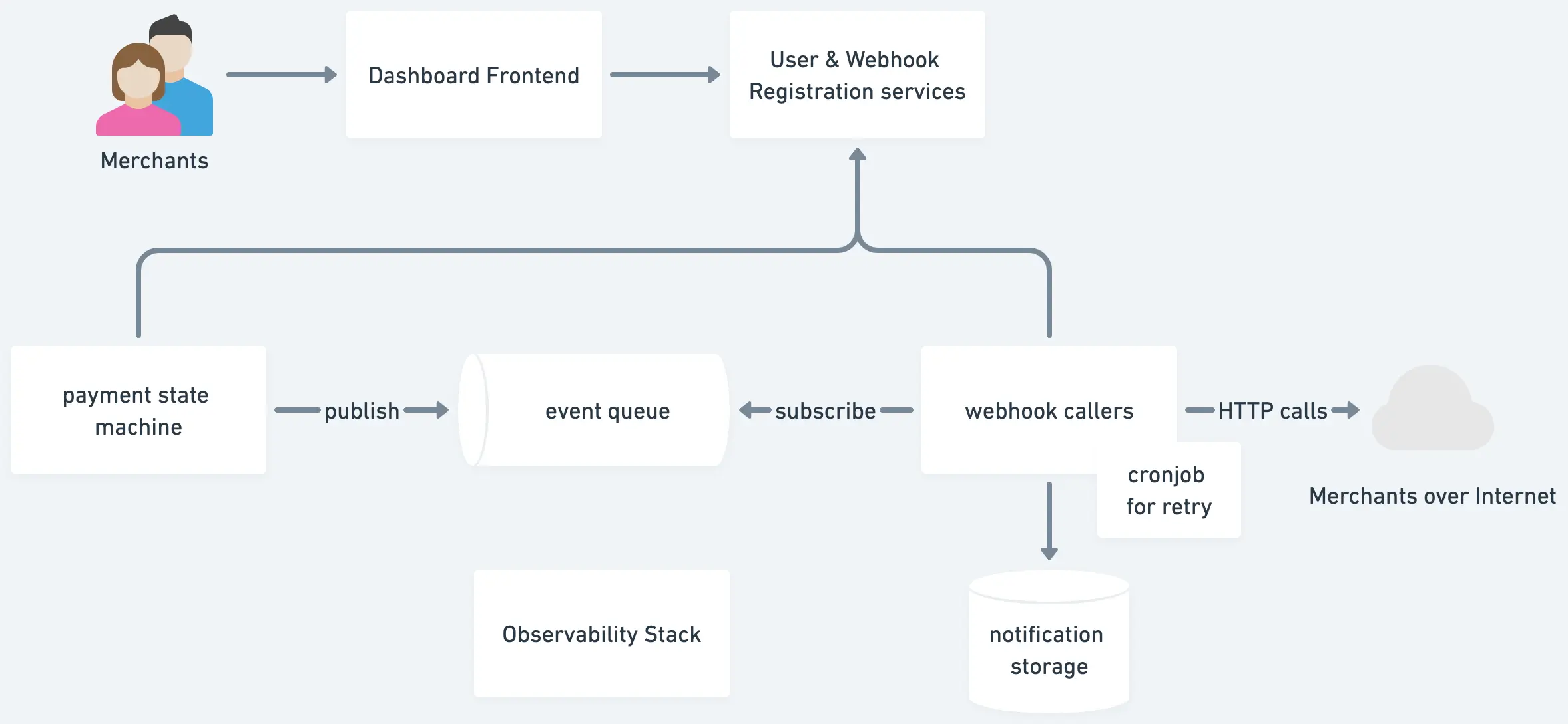

18 | ## 1. Clarifying Requirements

19 |

20 | 1. Webhook will call the merchant back once the payment succeeds.

21 | 1. Merchant developer registers webhook information with us.

22 | 2. Make a POST HTTP request to the webhooks reliably and securely.

23 | 2. High availability, error-handling, and failure-resilience.

24 | 1. Async design. Assuming that the servers of merchants are located across the world, and may have a very high latency like 15s.

25 | 2. At-least-once delivery. Idempotent key.

26 | 3. Order does not matter.

27 | 4. Robust & predictable retry and short-circuit.

28 | 3. Security, observability & scalability

29 | 1. Anti-spoofing.

30 | 2. Notify the merchant when their receivers are broken.

31 | 3. easy to extend and scale.

32 |

33 |

34 |

35 | ## 2. Sketch out the high-level design

36 |

37 | async design + retry + queuing + observability + security

38 |

39 |

40 |

41 | ## 3. Features and Components

42 |

43 | ### Core Features

44 |

45 | 1. Users go to dashboard frontend to register webhook information with us - like the URL to call, the scope of events they want to subscribe, and then get an API key from us.

46 | 2. When there is a new event, publish it into the queue and then get consumed by callers. Callers get the registration and make the HTTP call to external services.

47 |

48 | ### Webhook callers

49 |

50 | 1. Subscribe to the event queue for payment success events published by a payment state machine or other services.

51 |

52 | 2. Once callers accept an event, fetch webhook URI, secret, and settings from the user settings service. Prepare the request based on those settings. For security...

53 |

54 | * All webhooks from user settings must be in HTTPs

55 |

56 | * If the payload is huge, the prospect latency is high, and we wants to make sure the target reciever is alive, we can verify its existance with a ping carrying a challenge. e.g. [Dropbox verifies webhook endpoints](https://www.dropbox.com/developers/reference/webhooks#documentation) by sending a GET request with a “challenge” param (a random string) encoded in the URL, which your endpoint is required to echo back as a response.

57 | * All callback requests are with header `x-webhook-signature`. So that the receiver can authenticate the request.

58 | * For symetric signature, we can use HMAC/SHA256 signature. Its value is `HMAC(webhook secret, raw request payload);`. Telegram takes this.

59 | * For asymmetric signature, we can use RSA/SHA256 signature. Its value is `RSA(webhook private key, raw request payload);` Stripe takes this.

60 | * If it's sensitive information, we can also consider encryption for the payload instead of just signing.

61 |

62 | 3. Make an HTTP POST request to the external merchant's endpoints with event payload and security headers.

63 |

64 | ### API Definition

65 |

66 | ```json5

67 | // POST https://example.com/webhook/

68 | {

69 | "id": 1,

70 | "scheduled_for": "2017-01-31T20:50:02Z",

71 | "event": {

72 | "id": "24934862-d980-46cb-9402-43c81b0cdba6",

73 | "resource": "event",

74 | "type": "charge:created",

75 | "api_version": "2018-03-22",

76 | "created_at": "2017-01-31T20:49:02Z",

77 | "data": {

78 | "code": "66BEOV2A", // or order ID the user need to fulfill

79 | "name": "The Sovereign Individual",

80 | "description": "Mastering the Transition to the Information Age",

81 | "hosted_url": "https://commerce.coinbase.com/charges/66BEOV2A",

82 | "created_at": "2017-01-31T20:49:02Z",

83 | "expires_at": "2017-01-31T21:49:02Z",

84 | "metadata": {},

85 | "pricing_type": "CNY",

86 | "payments": [

87 | // ...

88 | ],

89 | "addresses": {

90 | // ...

91 | }

92 | }

93 | }

94 | }

95 | ```

96 |

97 | The merchant server should respond with a 200 HTTP status code to acknowledge receipt of a webhook.

98 |

99 | ### Error-handling

100 |

101 | If there is no acknowledgment of receipt, we will [retry with idempotency key and exponential backoff for up to three days. The maximum retry interval is 1 hour.](https://puncsky.com/notes/43-how-to-design-robust-and-predictable-apis-with-idempotency) If it's reaching a certain limit, short-circuit / mark it as broken. Sending out an Email to the merchant.

102 |

103 | ### Metrics

104 |

105 | The Webhook callers service emits statuses into the time-series DB for metrics.

106 |

107 | Using Statsd + Influx DB vs. Prometheus?

108 |

109 | * InfluxDB: Application pushes data to InfluxDB. It has a monolithic DB for metrics and indices.

110 | * Prometheus: Prometheus server pulls the metrics values from the running application periodically. It uses LevelDB for indices, but each metric is stored in its own file.

111 |

112 | Or use the expensive DataDog or other APM services if you have a generous budget.

113 |

--------------------------------------------------------------------------------

/en/169-how-to-write-solid-code.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 169-how-to-write-solid-code

3 | id: 169-how-to-write-solid-code

4 | title: "How to write solid code?"

5 | date: 2019-09-25 02:29

6 | comments: true

7 | tags: [system design]

8 | description: Empathy plays the most important role in writing solid code. Besides, you need to choose a sustainable architecture to decrease human resource costs in total as the project scales. Then, adopt patterns and best practices; avoid anti-patterns. Finally, refactor if necessary.

9 | ---

10 |

11 |

12 |

13 | 1. empathy / perspective-taking is the most important.

14 | 1. realize that code is written for human to read first and then for machines to execute.

15 | 2. software is so "soft" and there are many ways to achieve one thing. It's all about making the proper trade-offs to fulfill the requirements.

16 | 3. Invent and Simplify: Apple Pay RFID vs. Wechat Scan QR Code.

17 |

18 | 2. choose a sustainable architecture to reduce human resources costs per feature.

19 |

20 |

21 |

22 |

23 | 3. adopt patterns and best practices.

24 |

25 | 4. avoid anti-patterns

26 | * missing error-handling

27 | * callback hell = spaghetti code + unpredictable error handling

28 | * over-long inheritance chain

29 | * circular dependency

30 | * over-complicated code

31 | * nested tertiary operation

32 | * comment out unused code

33 | * missing i18n, especially RTL issues

34 | * don't repeat yourself

35 | * simple copy-and-paste

36 | * unreasonable comments

37 |

38 | 5. effective refactoring

39 | * semantic version

40 | * never introduce breaking change to non major versions

41 | * two legged change

42 |

--------------------------------------------------------------------------------

/en/174-designing-memcached.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 174-designing-memcached

3 | id: 174-designing-memcached

4 | title: "Designing Memcached or an in-memory KV store"

5 | date: 2019-10-03 22:04

6 | comments: true

7 | tags: [system design]

8 | description: Memcached = rich client + distributed servers + hash table + LRU. It features a simple server and pushes complexity to the client) and hence reliable and easy to deploy.

9 | references:

10 | - https://github.com/memcached/memcached/wiki/Overview

11 | - https://people.cs.uchicago.edu/~junchenj/34702/slides/34702-MemCache.pdf

12 | - https://en.wikipedia.org/wiki/Hash_table

13 | ---

14 |

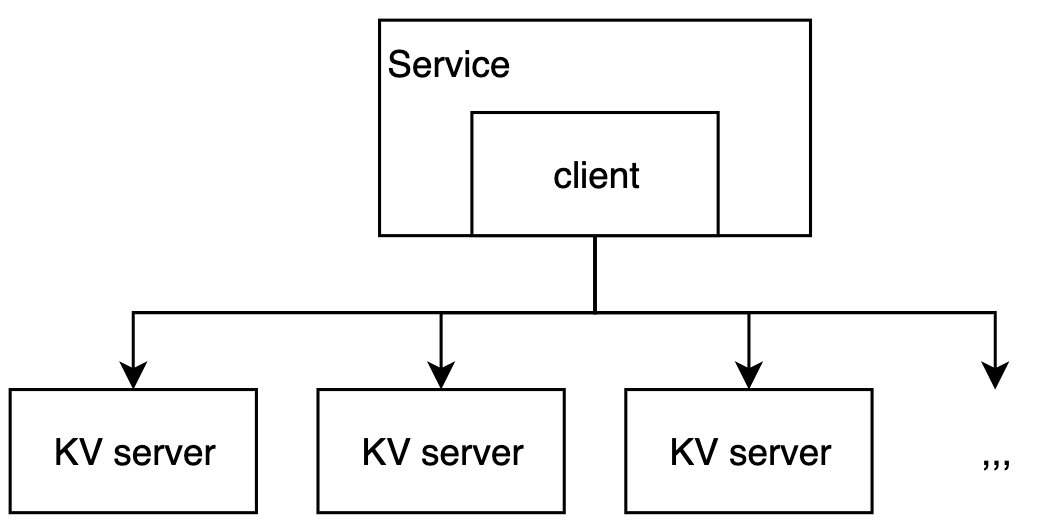

15 | ## Requirements

16 |

17 | 1. High-performance, distributed key-value store

18 | * Why distributed?

19 | * Answer: to hold a larger size of data

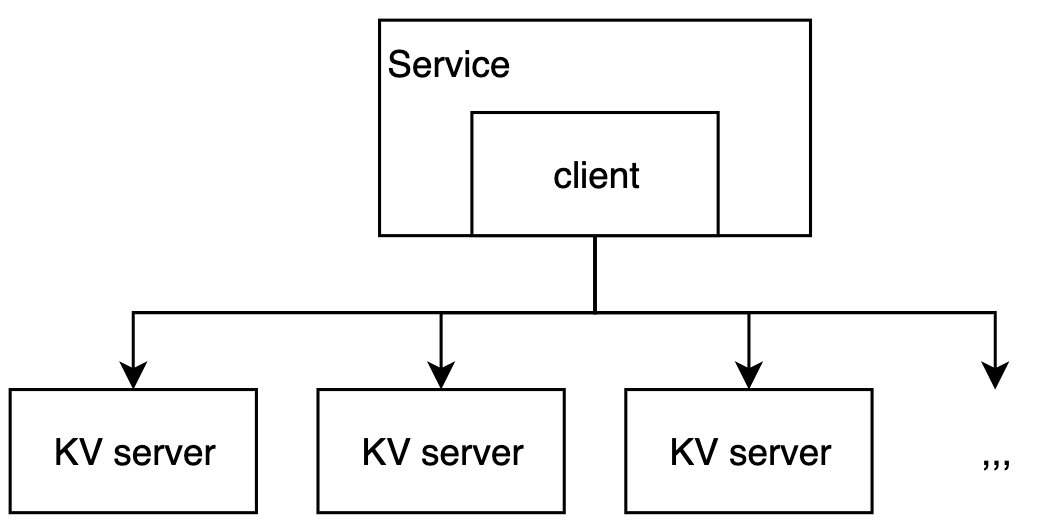

20 |  24 | 2. For in-memory storage of small data objects

25 | 3. Simple server (pushing complexity to the client) and hence reliable and easy to deploy

26 |

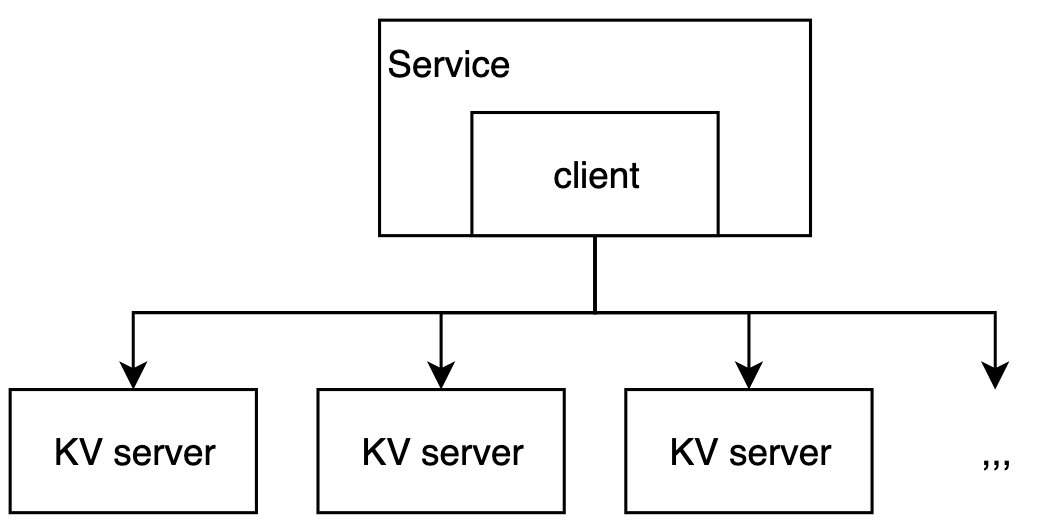

27 | ## Architecture

28 | Big Picture: Client-server

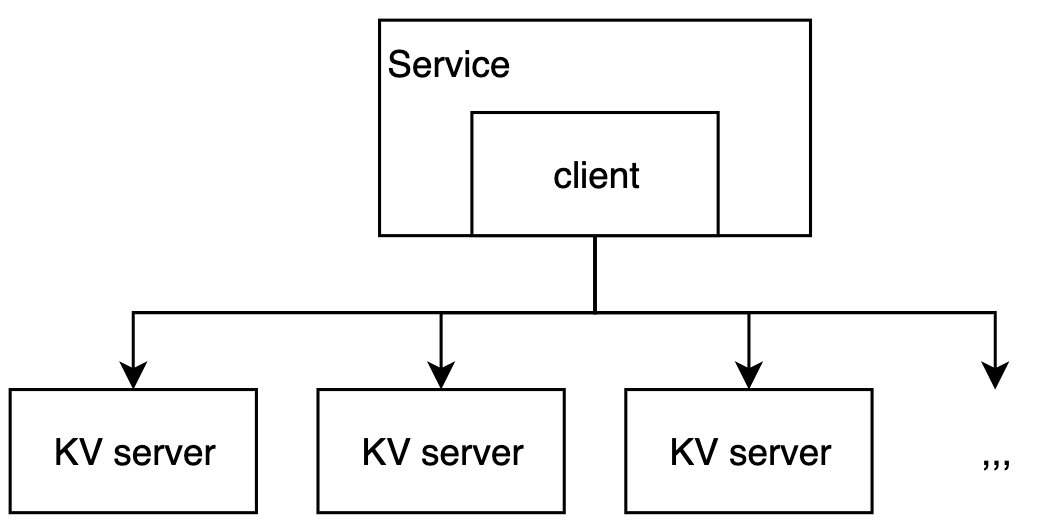

29 |

30 |

24 | 2. For in-memory storage of small data objects

25 | 3. Simple server (pushing complexity to the client) and hence reliable and easy to deploy

26 |

27 | ## Architecture

28 | Big Picture: Client-server

29 |

30 |  34 |

35 | * client

36 | * given a list of Memcached servers

37 | * chooses a server based on the key

38 | * server

39 | * store KVs into the internal hash table

40 | * LRU eviction

41 |

42 |

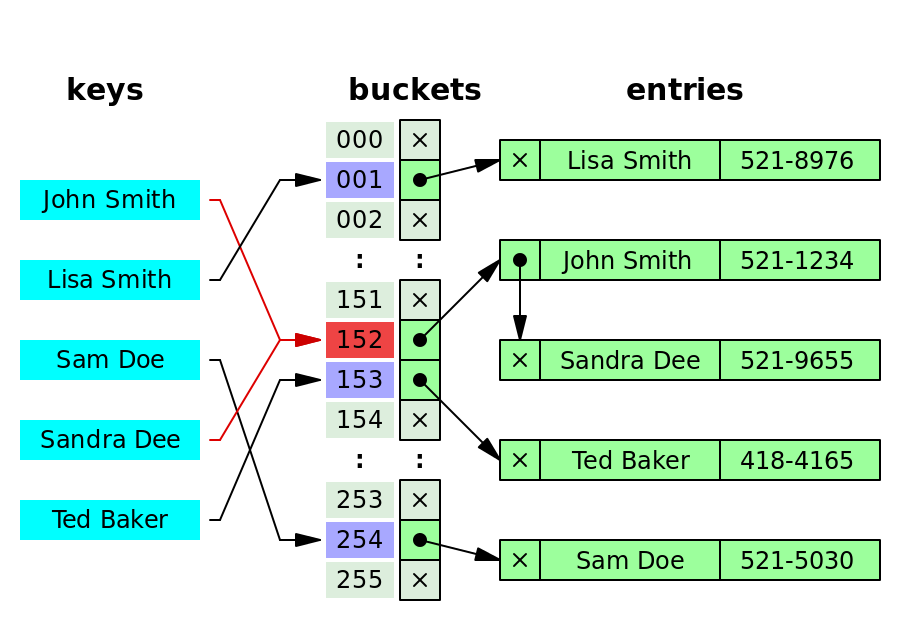

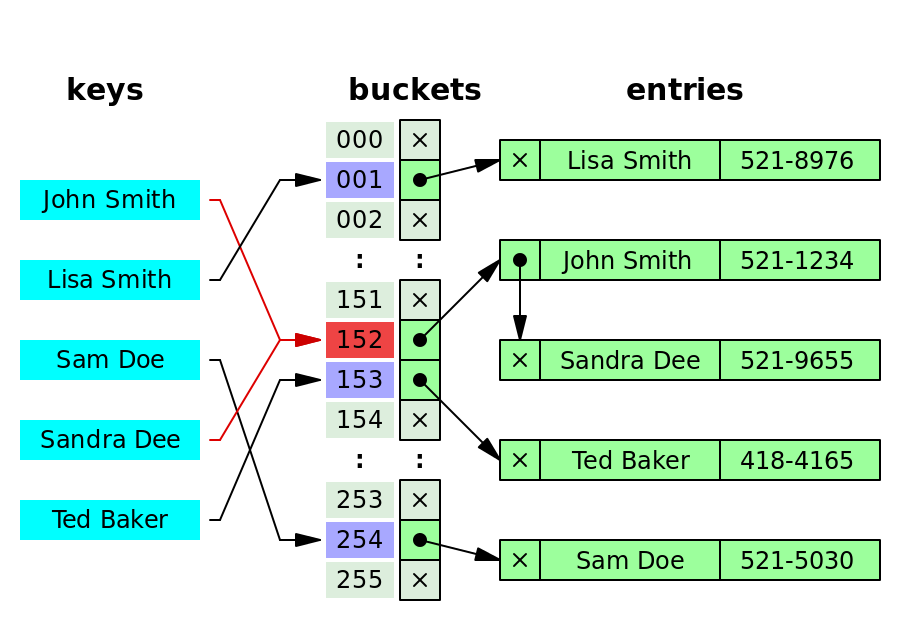

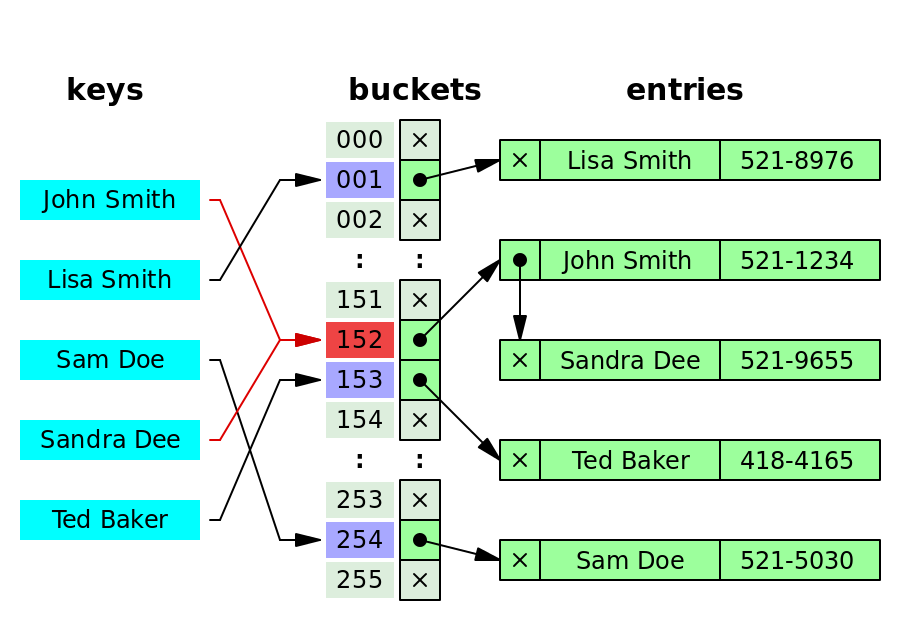

43 | The Key-value server consists of a fixed-size hash table + single-threaded handler + coarse locking

44 |

45 |

46 |

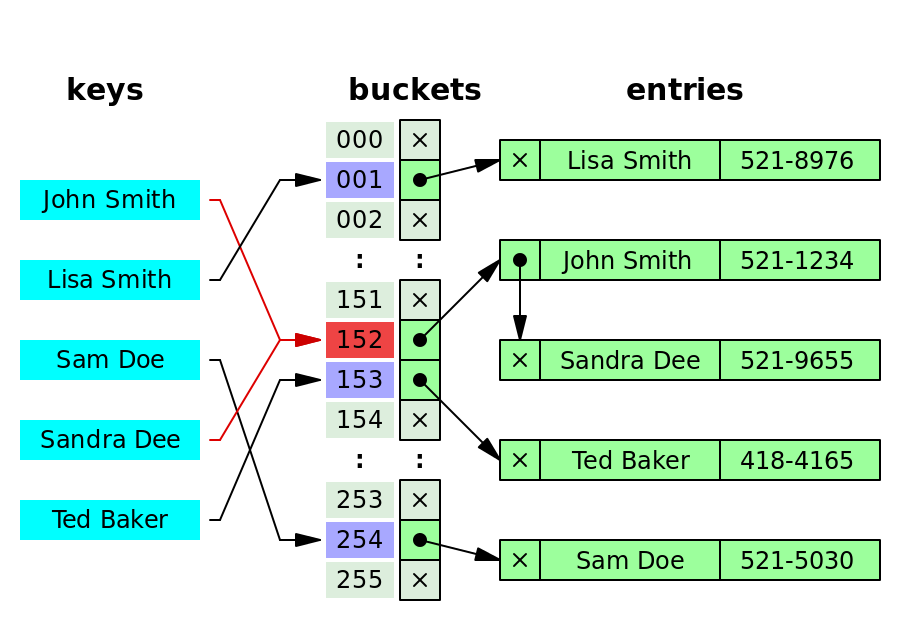

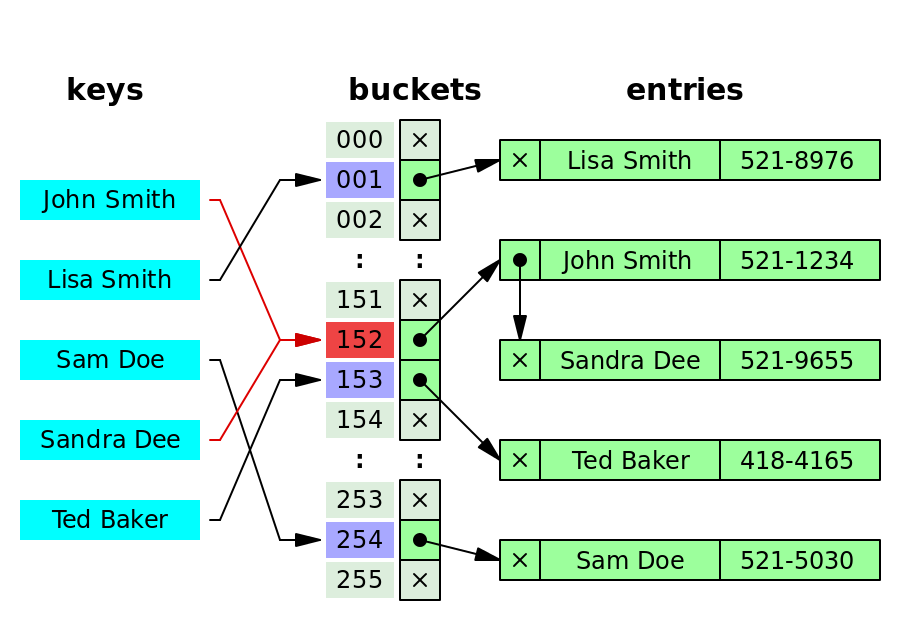

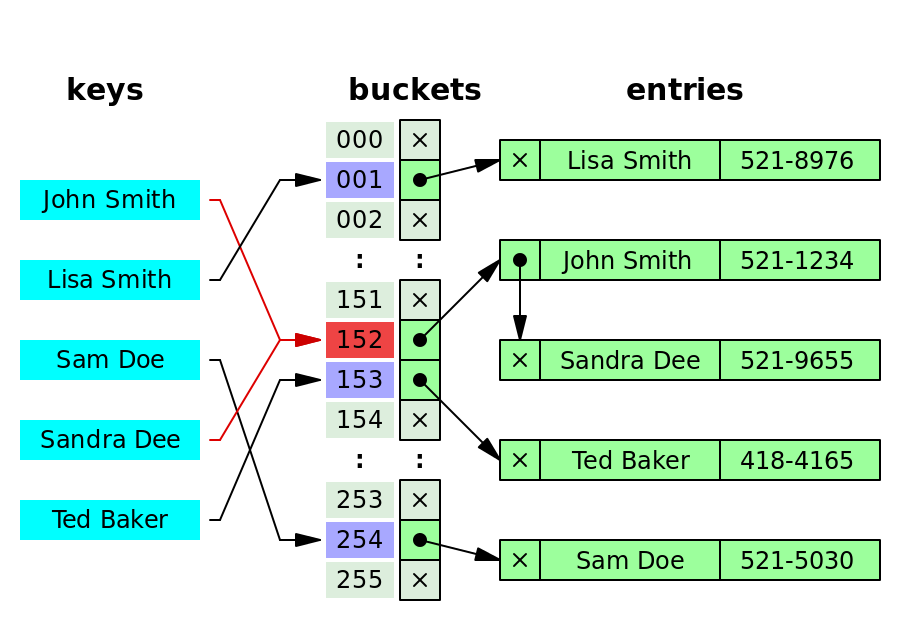

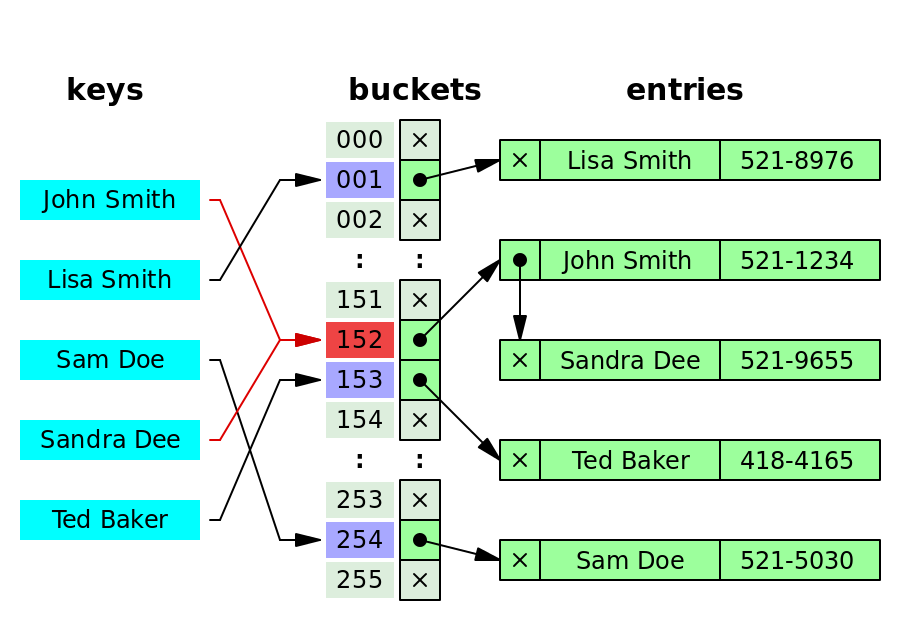

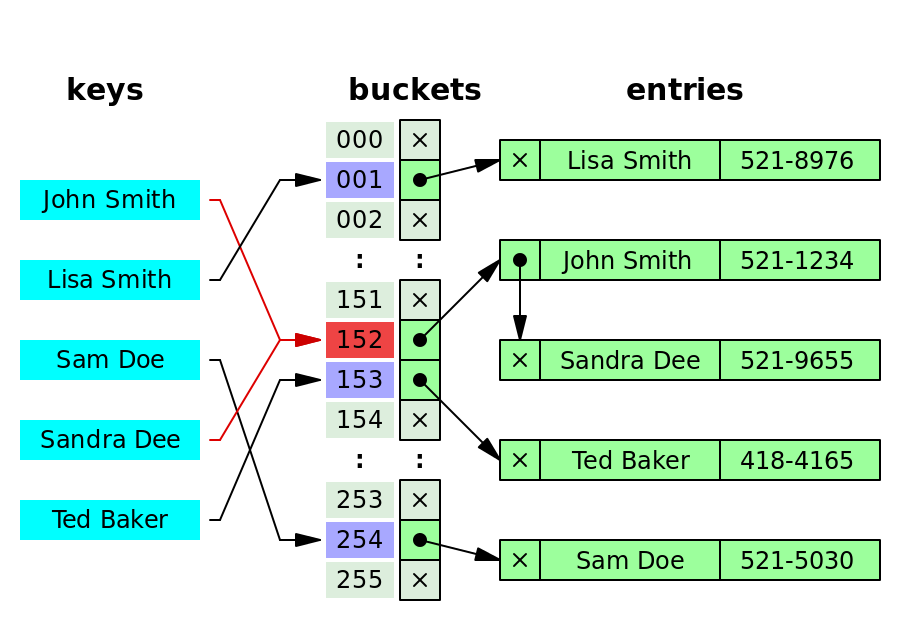

47 | How to handle collisions? Mostly three ways to resolve:

48 |

49 | 1. Separate chaining: the collided bucket chains a list of entries with the same index, and you can always append the newly collided key-value pair to the list.

50 | 2. open addressing: if there is a collision, go to the next index until finding an available bucket.

51 | 3. dynamic resizing: resize the hash table and allocate more spaces; hence, collisions will happen less frequently.

52 |

53 | ## How does the client determine which server to query?

54 |

55 | See [Data Partition and Routing](https://puncsky.com/notes/2018-07-21-data-partition-and-routing)

56 |

57 | ## How to use cache?

58 |

59 | See [Key value cache](https://puncsky.com/notes/122-key-value-cache)

60 |

61 | ## How to further optimize?

62 |

63 | See [How Facebook Scale its Social Graph Store? TAO](https://puncsky.com/notes/49-facebook-tao)

64 |

--------------------------------------------------------------------------------

/en/177-designing-Airbnb-or-a-hotel-booking-system.md:

--------------------------------------------------------------------------------

1 | ---

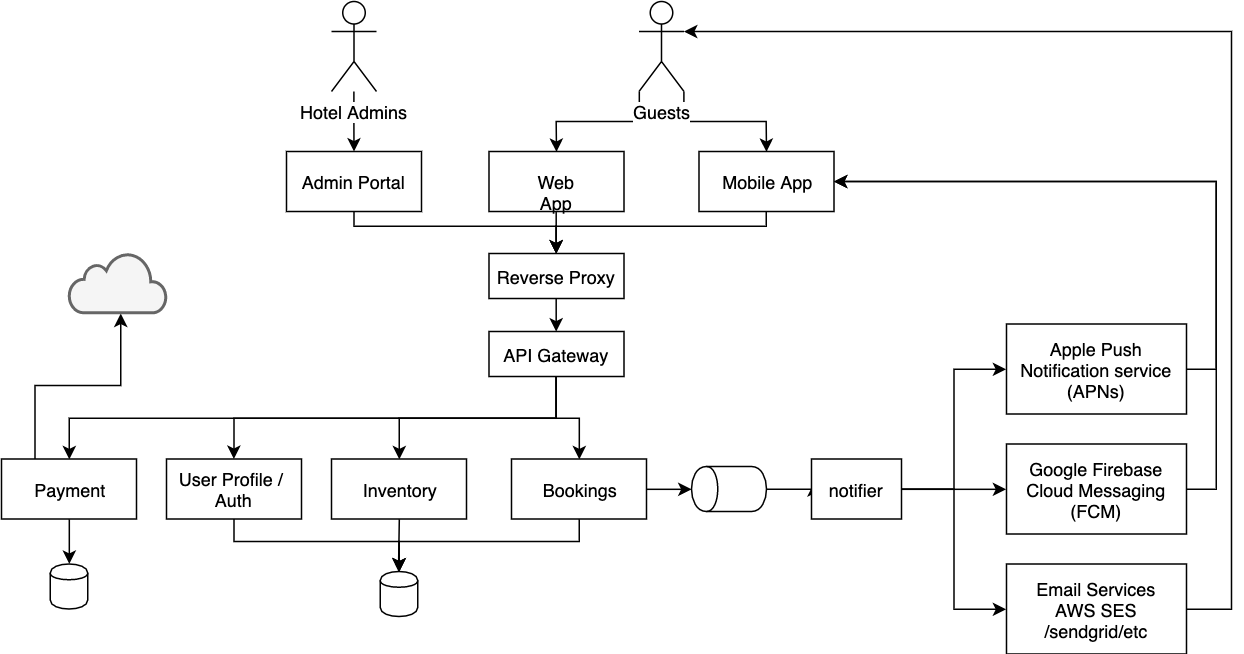

2 | slug: 177-designing-Airbnb-or-a-hotel-booking-system

3 | id: 177-designing-Airbnb-or-a-hotel-booking-system

4 | title: "Designing Airbnb or a hotel booking system"

5 | date: 2019-10-06 01:39

6 | comments: true

7 | slides: false

8 | tags: [system design]

9 | description: For guests and hosts, we store data with a relational database and build indexes to search by location, metadata, and availability. We can use external vendors for payment and remind the reservations with a priority queue.

10 | references:

11 | - https://www.vertabelo.com/blog/designing-a-data-model-for-a-hotel-room-booking-system/

12 | ---

13 |

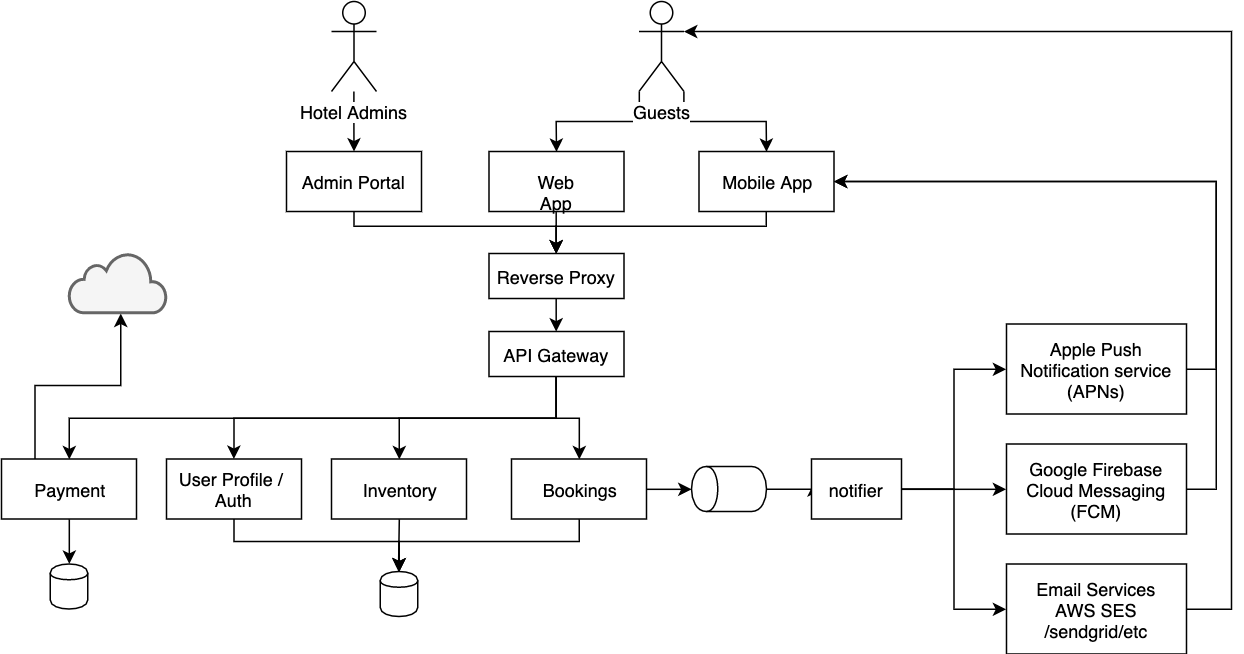

14 | ## Requirements

15 | * for guests

16 | * search rooms by locations, dates, number of rooms, and number of guests

17 | * get room details (like picture, name, review, address, etc.) and prices

18 | * pay and book room from inventory by date and room id

19 | * checkout as a guest

20 | * user is logged in already

21 | * notification via Email and mobile push notification

22 | * for hotel or rental administrators (suppliers/hosts)

23 | * administrators (receptionist/manager/rental owner): manage room inventory and help the guest to check-in and check out

24 | * housekeeper: clean up rooms routinely

25 |

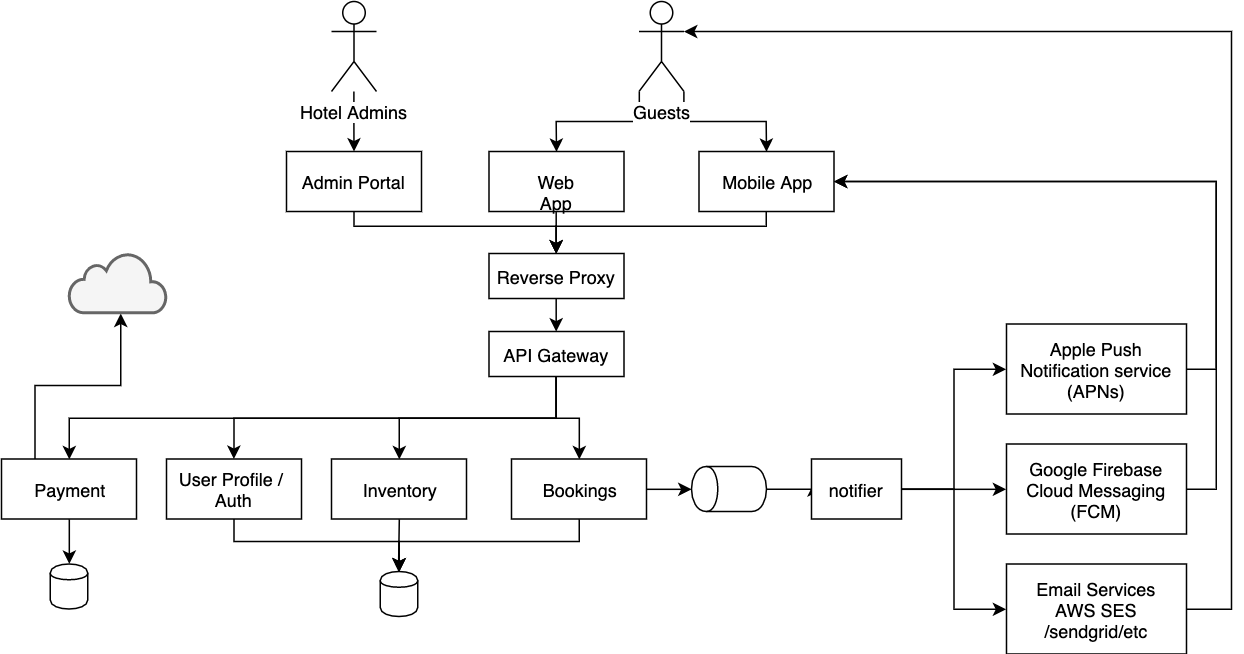

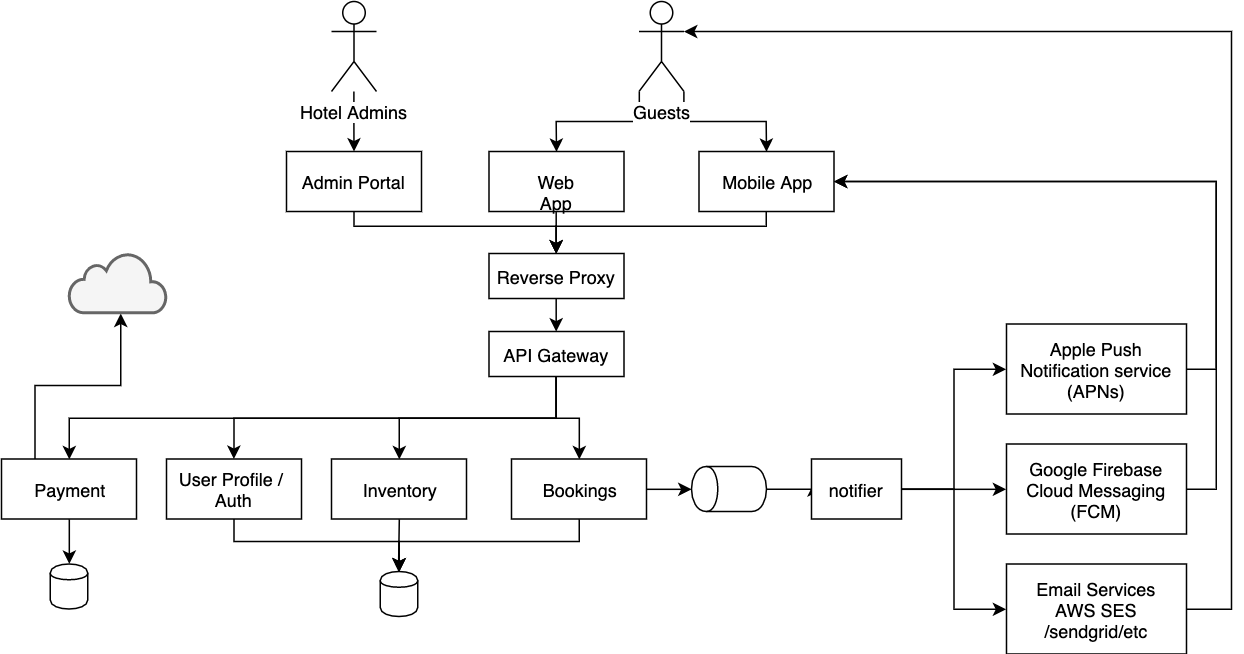

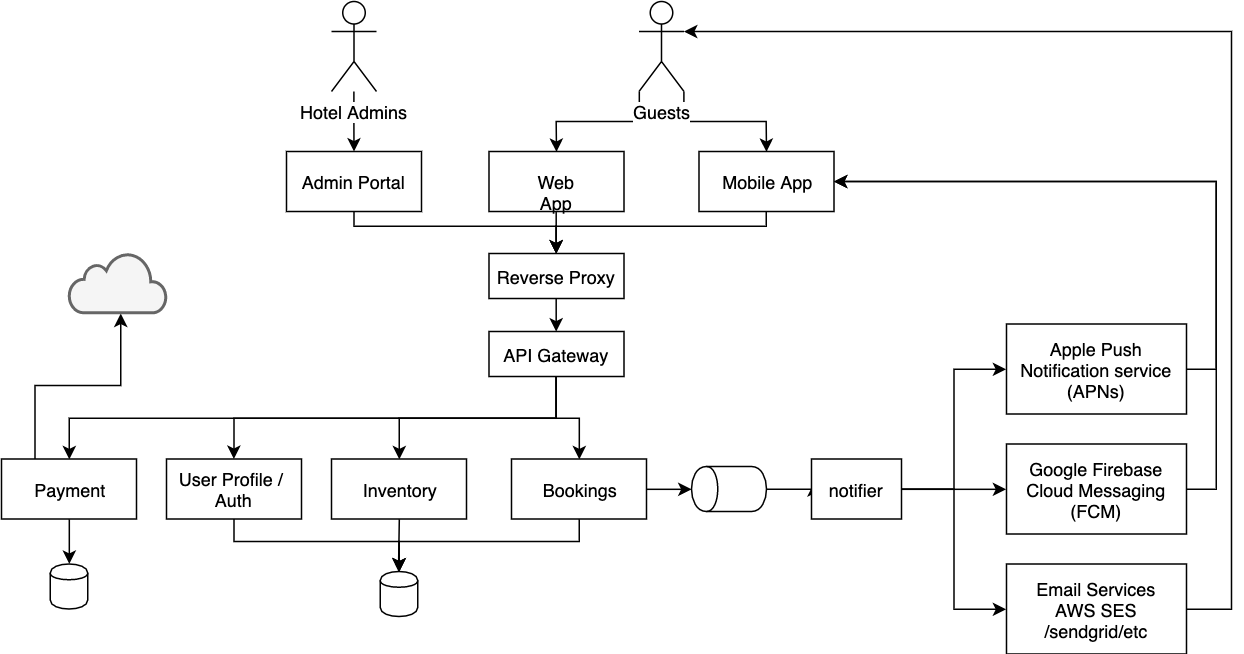

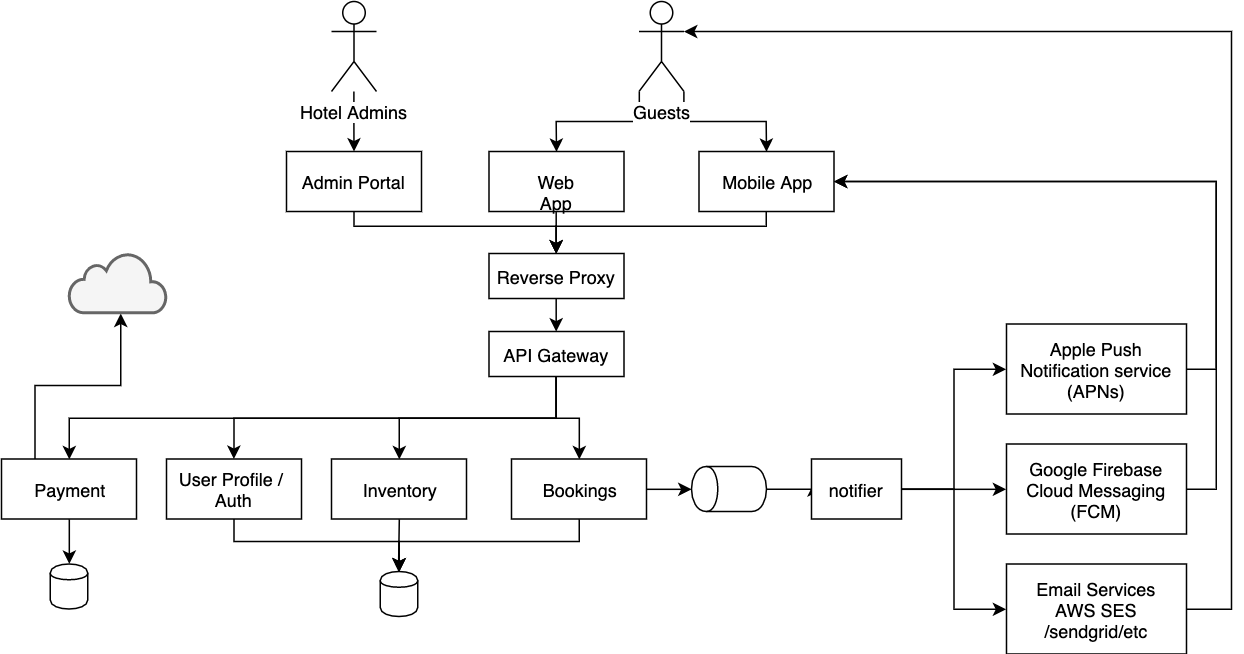

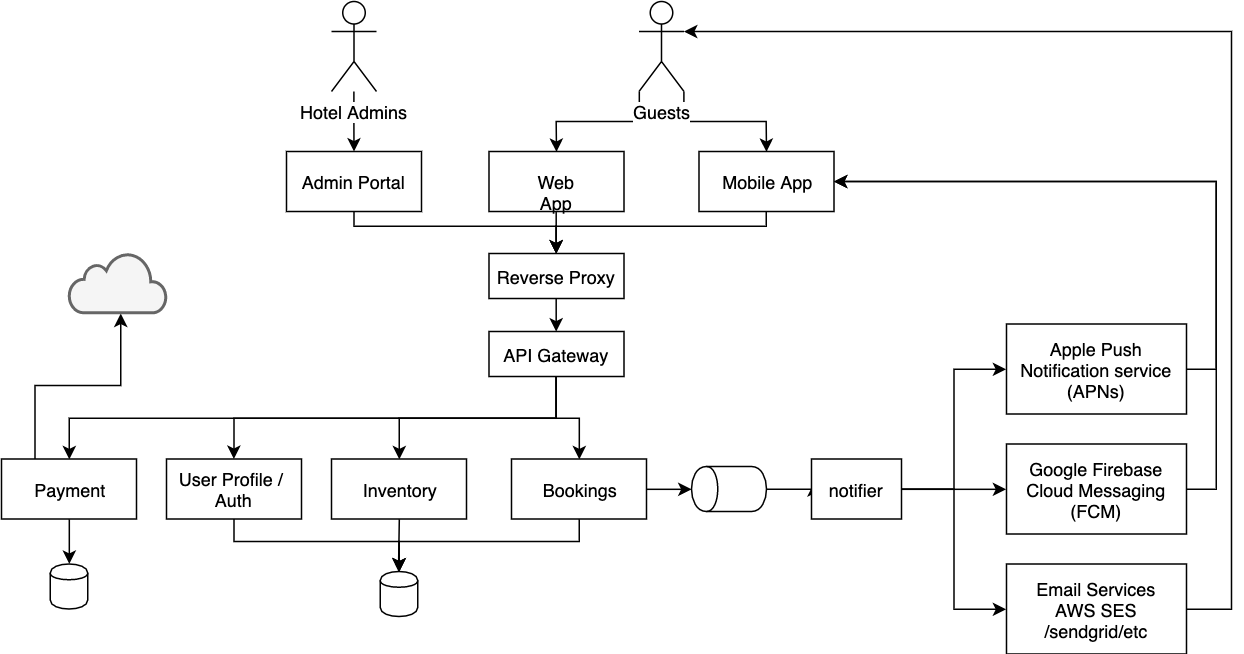

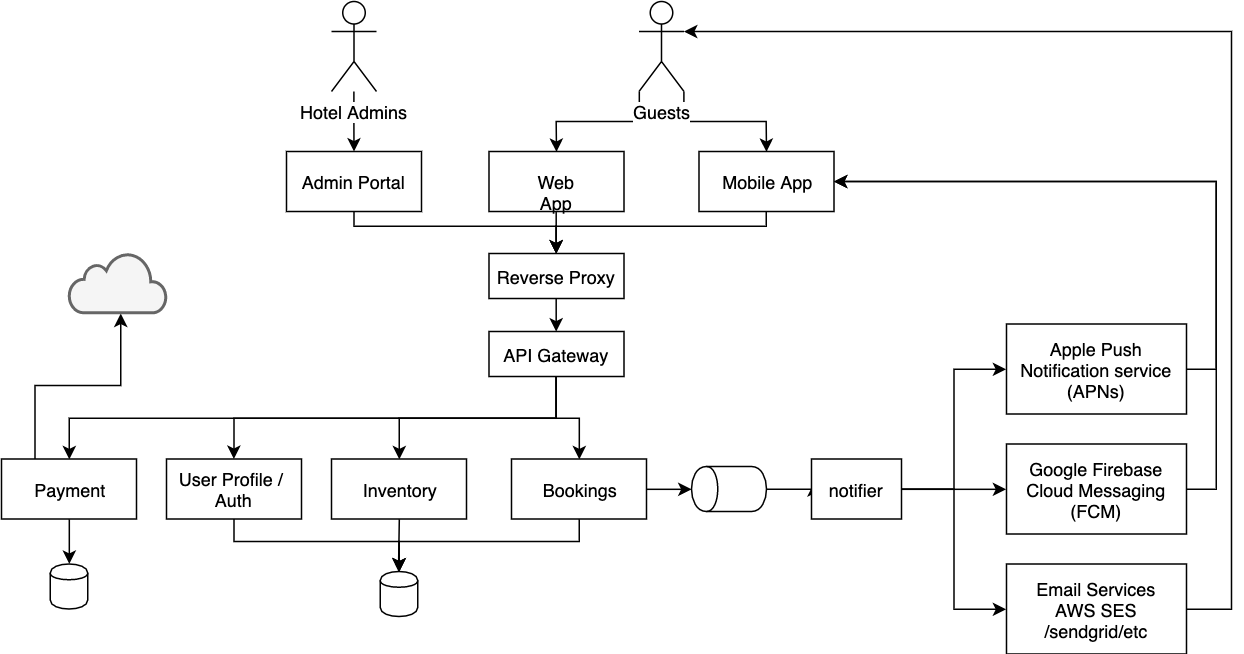

26 | ## Architecture

27 |

28 |

29 |

30 | ## Components

31 |

32 | ### Inventory \<\> Bookings \<\> Users (guests and hosts)

33 |

34 | Suppliers provide their room details in the inventory. And users can search, get, and reserve rooms accordingly. After reserving the room, the user's payment will change the `status` of the `reserved_room` as well. You could check the data model in [this post](https://www.vertabelo.com/blog/designing-a-data-model-for-a-hotel-room-booking-system/).

35 |

36 | ### How to find available rooms?

37 |

38 | * by location: geo-search with [spatial indexing](https://en.wikipedia.org/wiki/Spatial_database), e.g. geo-hash or quad-tree.

39 | * by room metadata: apply filters or search conditions when querying the database.

40 | * by date-in and date-out and availability. Two options:

41 | * option 1: for a given `room_id`, check all `occupied_room` today or later, transform the data structure to an array of occupation by days, and finally find available slots in the array. This process might be time-consuming, so we can build the availability index.

42 | * option 2: for a given `room_id`, always create an entry for an occupied day. Then it will be easier to query unavailable slots by dates.

43 |

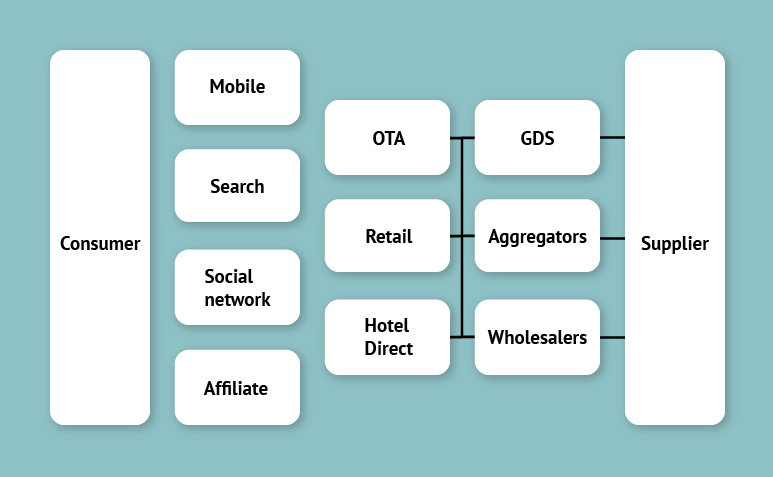

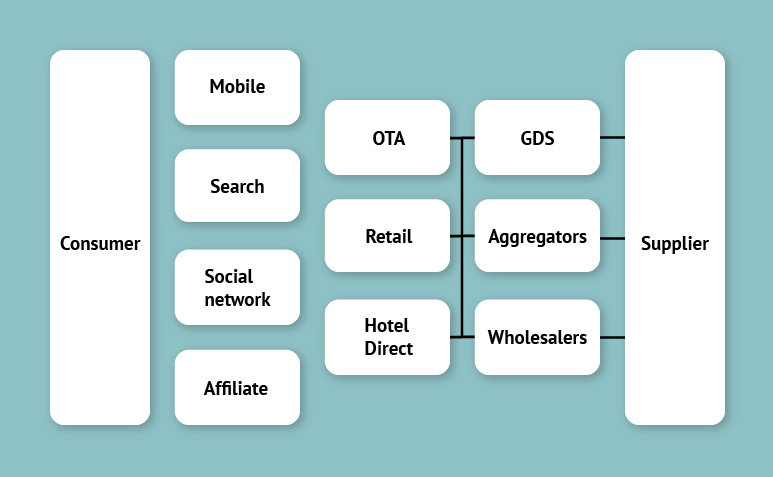

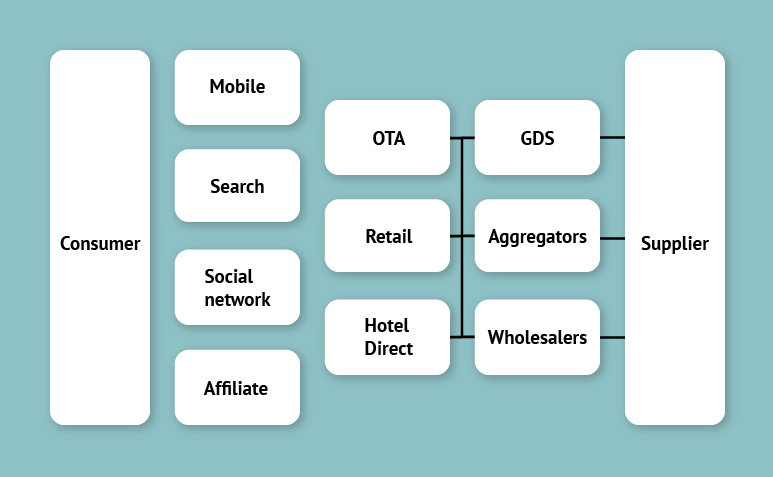

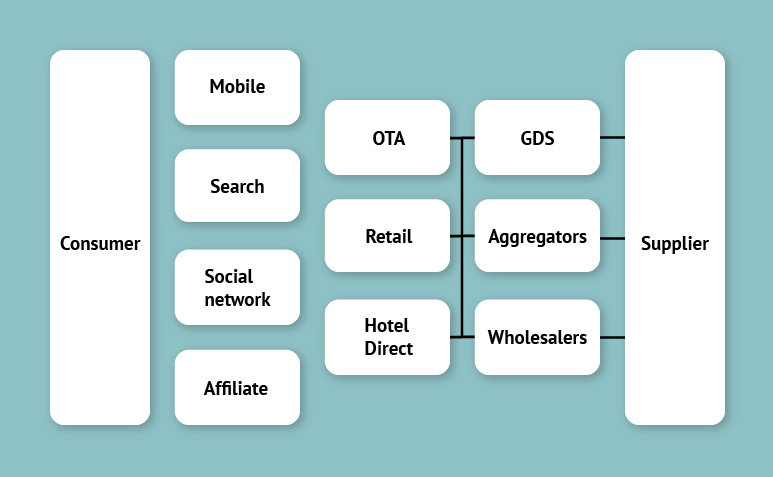

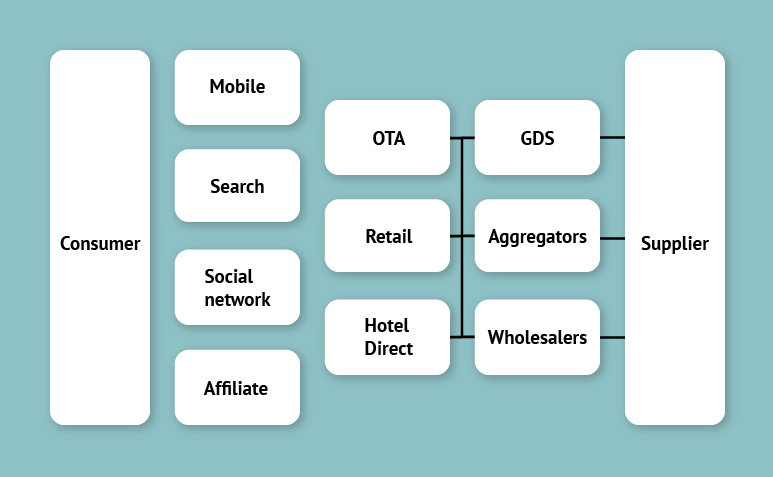

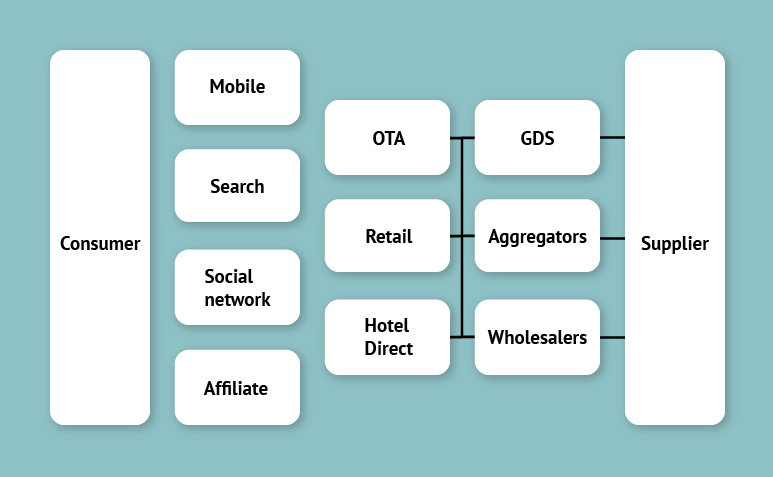

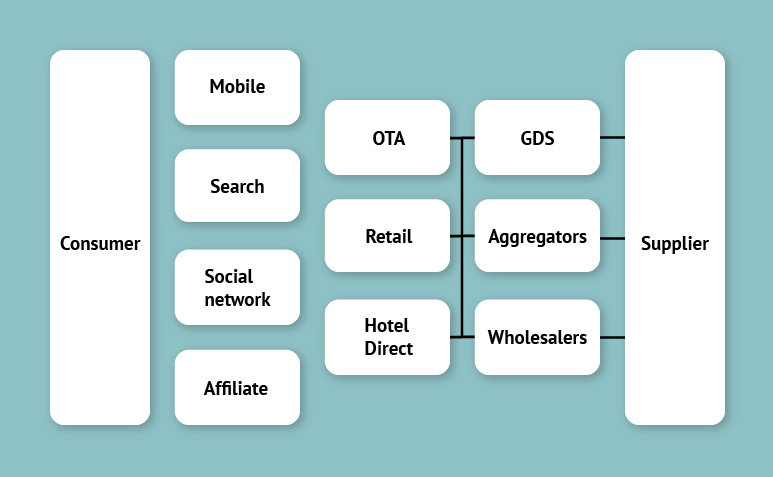

44 | ### For hotels, syncing data

45 |

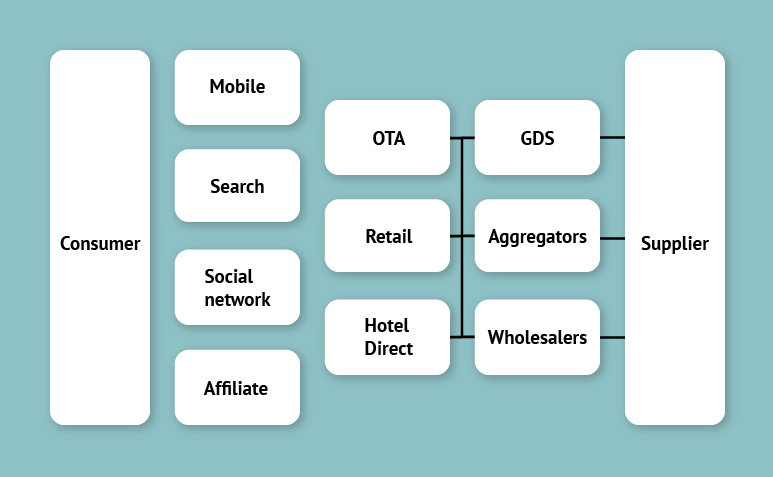

46 | If it is a hotel booking system, then it will probably publish to Booking Channels like GDS, Aggregators, and Wholesalers.

47 |

48 |

49 |

50 | To sync data across those places. We can

51 |

52 | 1. [retry with idempotency to improve the success rate of the external calls and ensure no duplicate orders](https://puncsky.com/notes/43-how-to-design-robust-and-predictable-apis-with-idempotency).

53 | 2. provide webhook callback APIs to external vendors to update status in the internal system.

54 |

55 | ### Payment & Bookkeeping

56 |

57 | Data model: [double-entry bookkeeping](https://puncsky.com/notes/167-designing-paypal-money-transfer#payment-service)

58 |

59 | To execute the payment, since we are calling the external payment gateway, like bank or Stripe, Braintree, etc. It is crucial to keep data in-sync across different places. [We need to sync data across the transaction table and external banks and vendors.](https://puncsky.com/#how-to-sync-across-the-transaction-table-and-external-banks-and-vendors)

60 |

61 | ### Notifier for reminders / alerts

62 |

63 | The notification system is essentially a delayer scheduler (priority queue + subscriber) plus API integrations.

64 |

65 | For example, a daily cronjob will query the database for notifications to be sent out today and put them into the priority queue by date. The subscriber will get the earliest ones from the priority queue and send out if reaching the expected timestamp. Otherwise, put the task back to the queue and sleep to make the CPU idle for other work, which can be interrupted if there are new alerts added for today.

66 |

--------------------------------------------------------------------------------

/en/178-lyft-marketing-automation-symphony.md:

--------------------------------------------------------------------------------

1 | ---

2 | slug: 178-lyft-marketing-automation-symphony

3 | id: 178-lyft-marketing-automation-symphony

4 | title: "Lyft's Marketing Automation Platform -- Symphony"

5 | date: 2019-10-09 23:30

6 | comments: true

7 | tags: [marketing, system design]

8 | slides: false

9 | description: "To achieve a higher ROI in advertising, Lyft launched a marketing automation platform, which consists of three main components: lifetime value forecaster, budget allocator, and bidders."

10 | references:

11 | - https://eng.lyft.com/lyft-marketing-automation-b43b7b7537cc

12 | ---

13 |

14 | ## Acquisition Efficiency Problem:How to achieve a better ROI in advertising?

15 |

16 | In details, Lyft's advertisements should meet requirements as below:

17 |

18 | 1. being able to manage region-specific ad campaigns

19 | 2. guided by data-driven growth: The growth must be scalable, measurable, and predictable

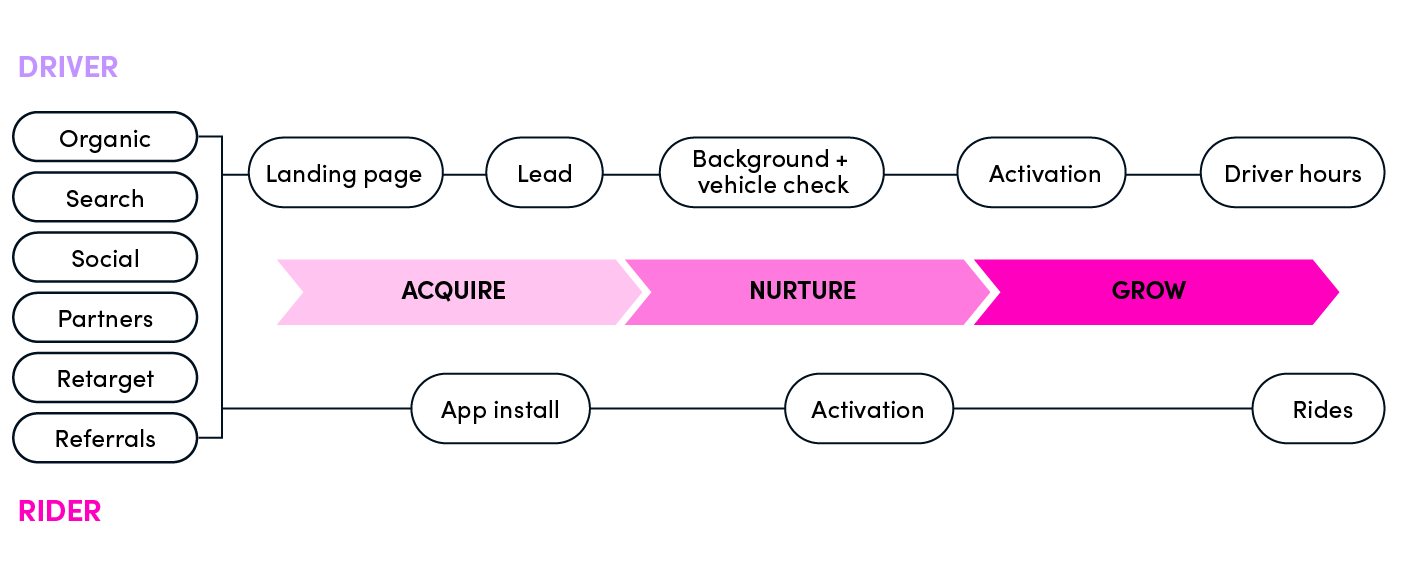

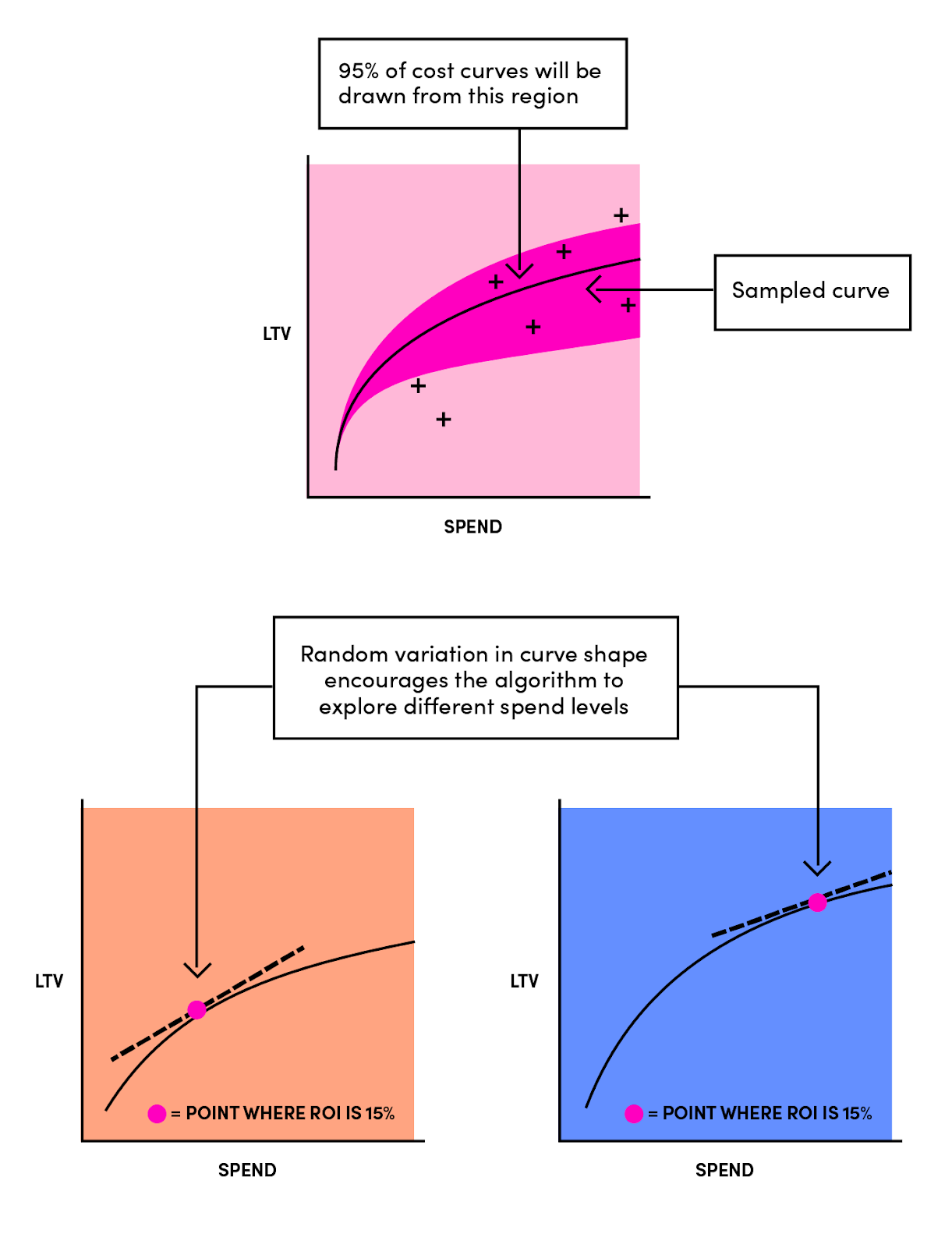

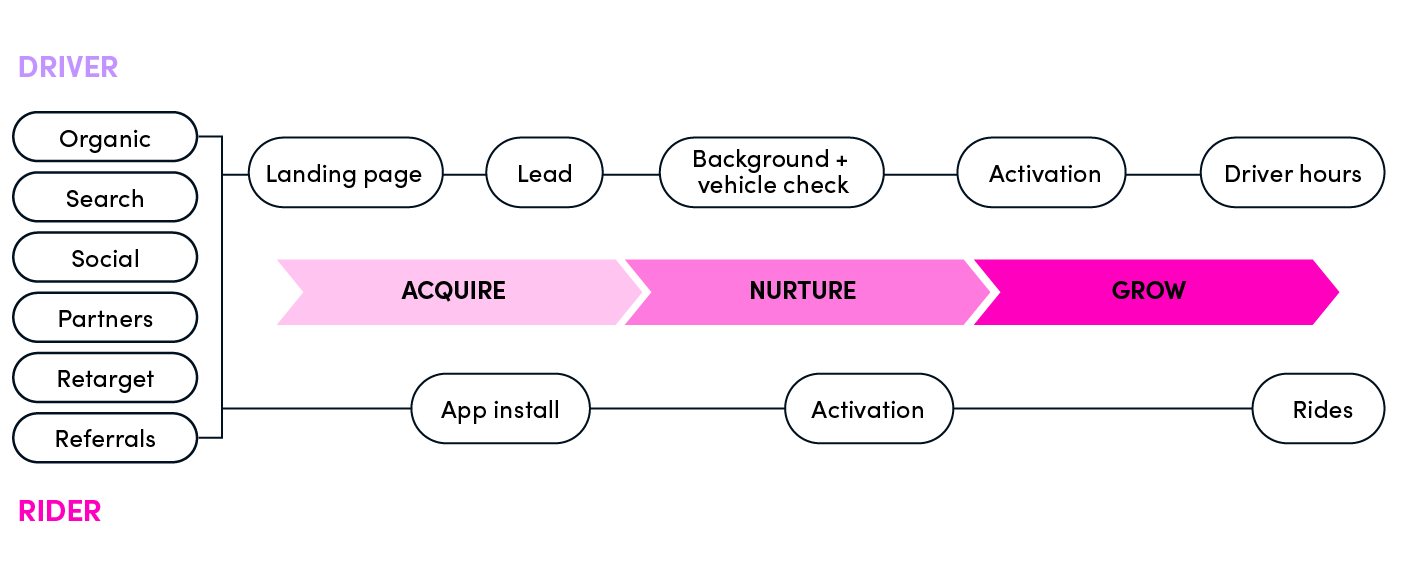

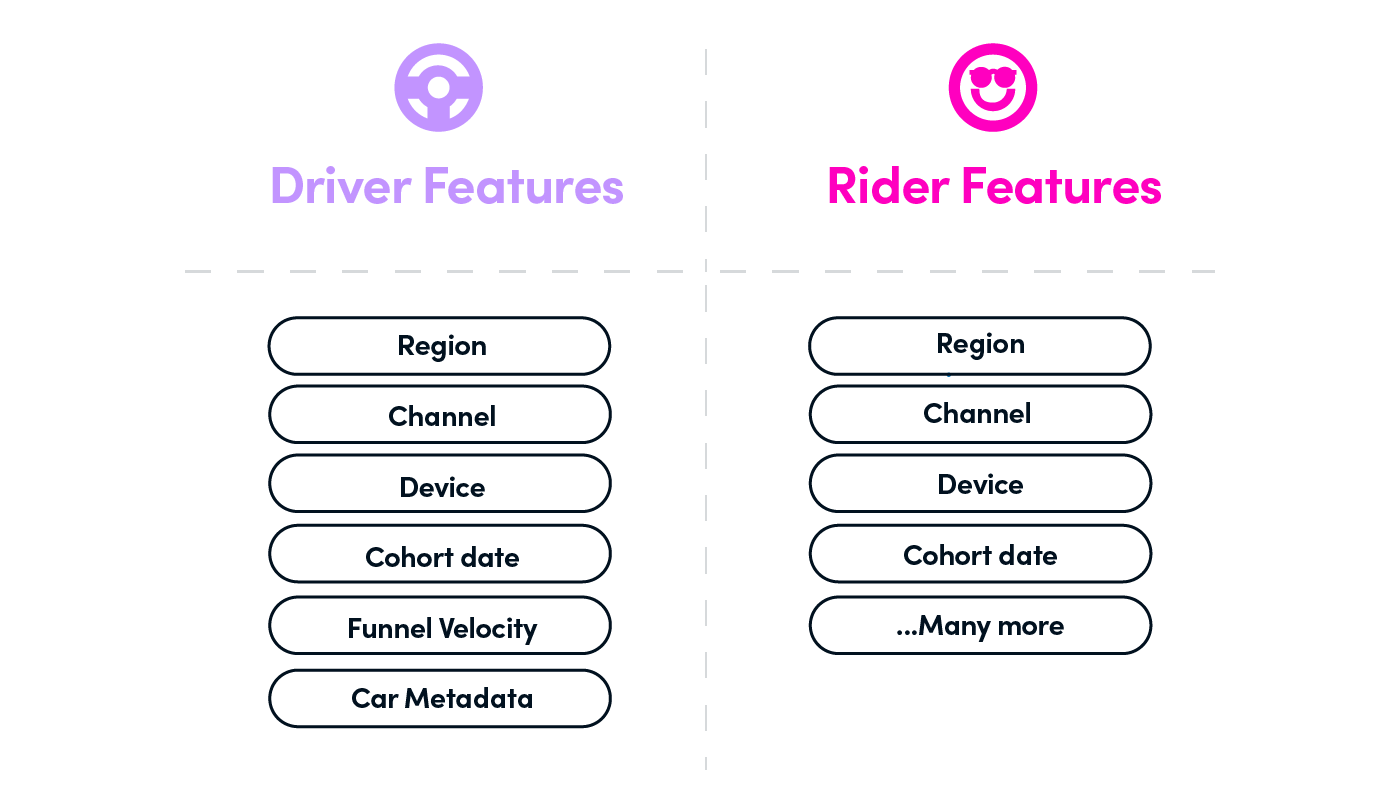

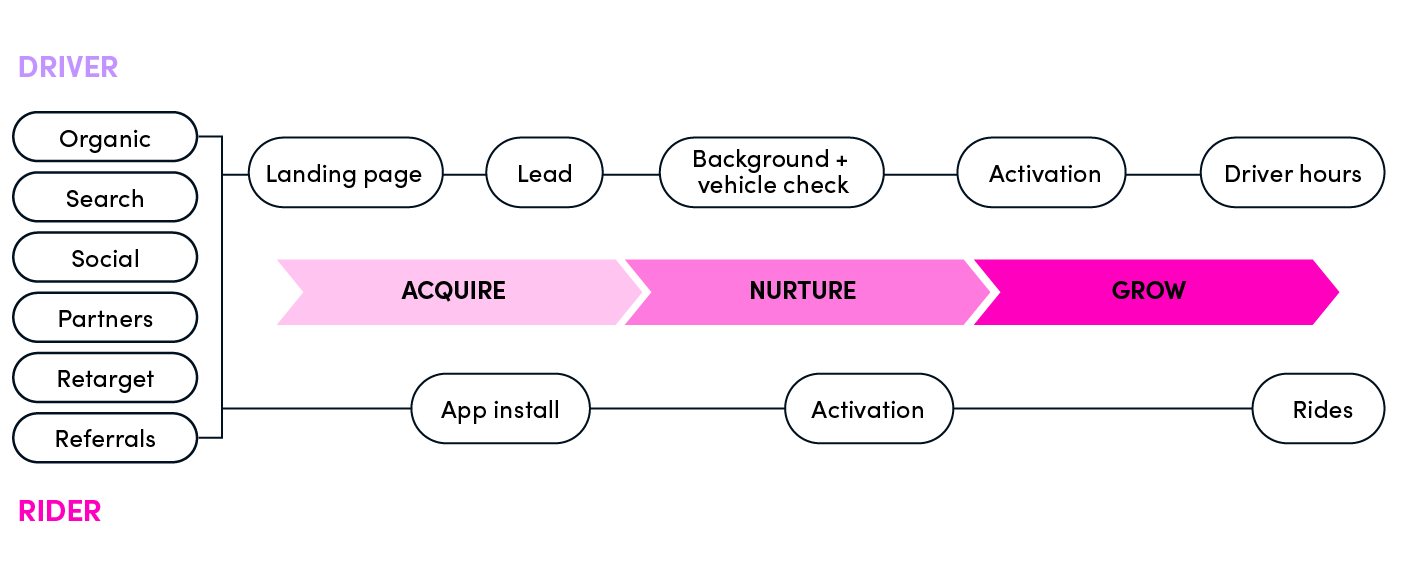

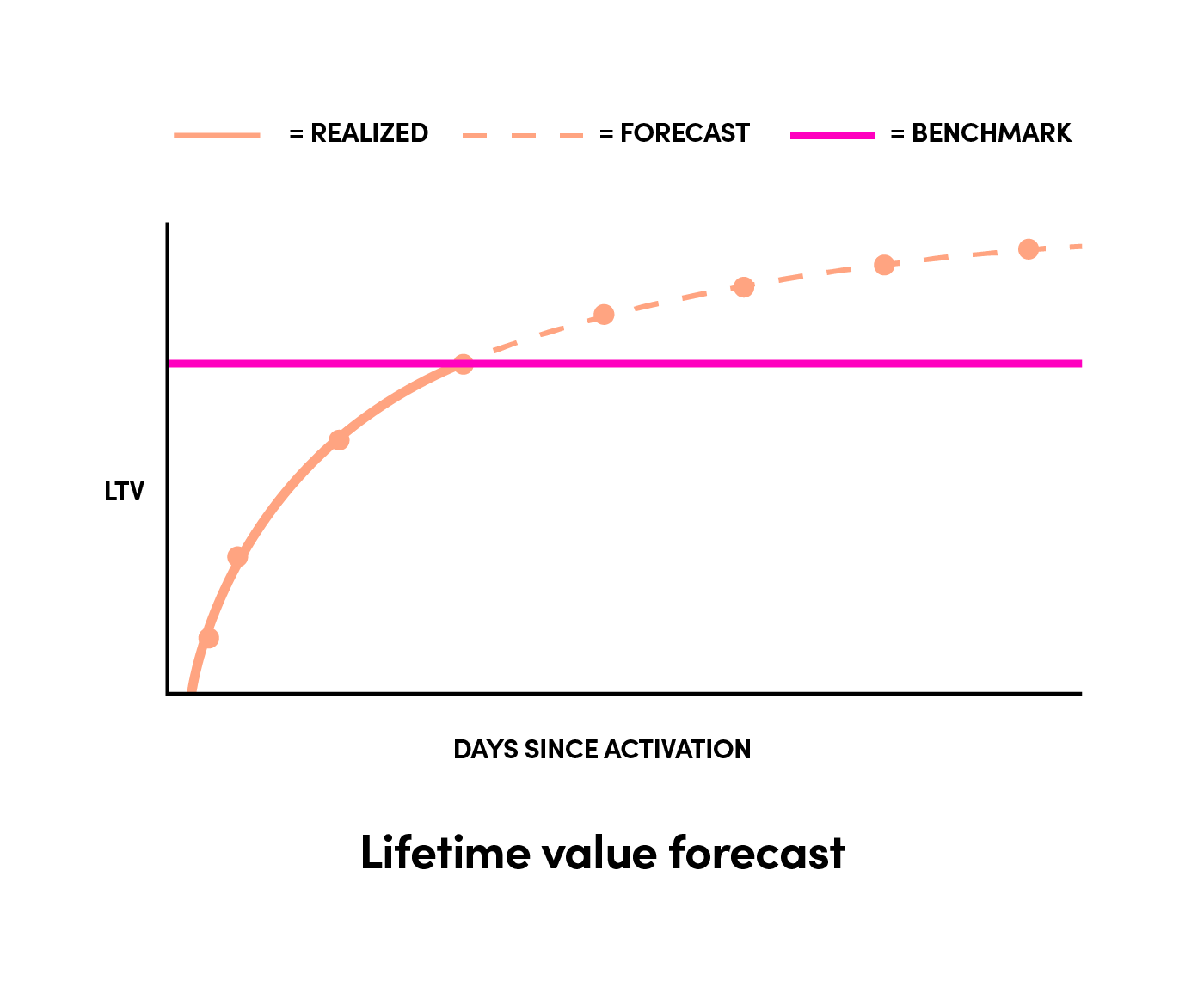

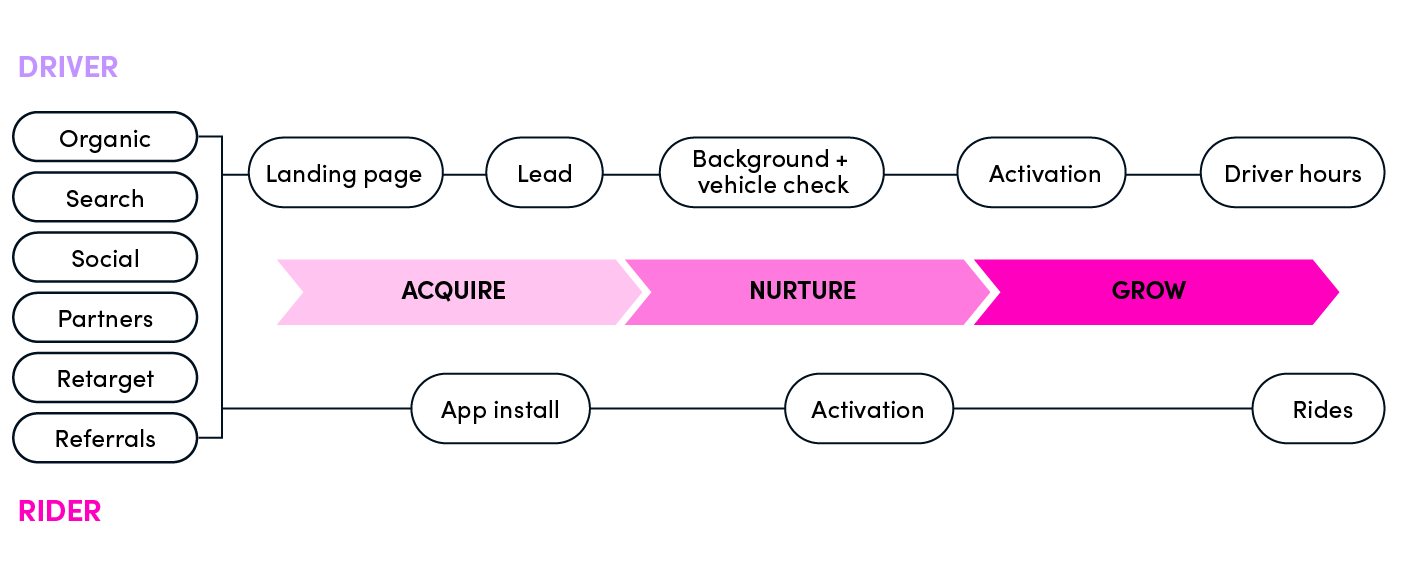

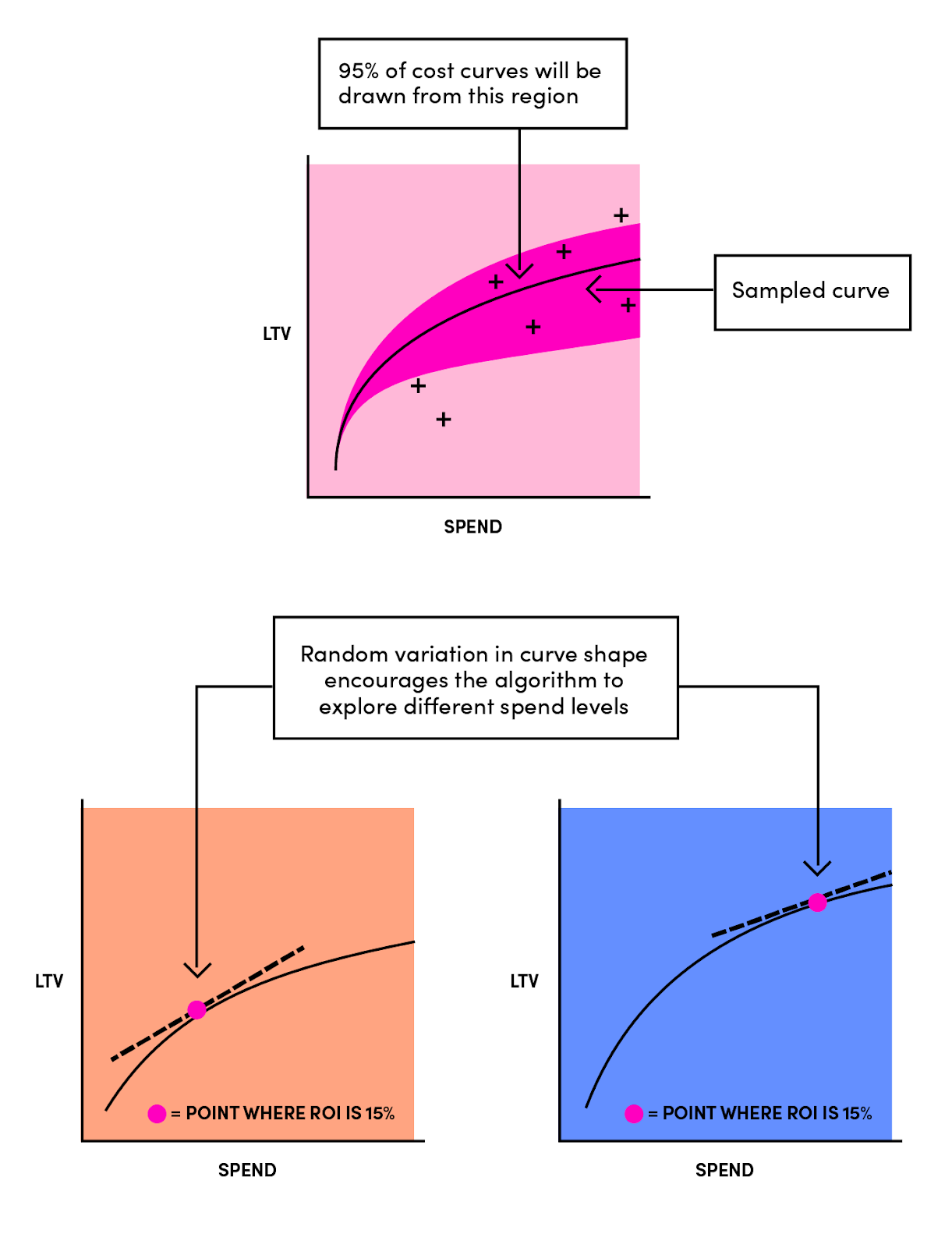

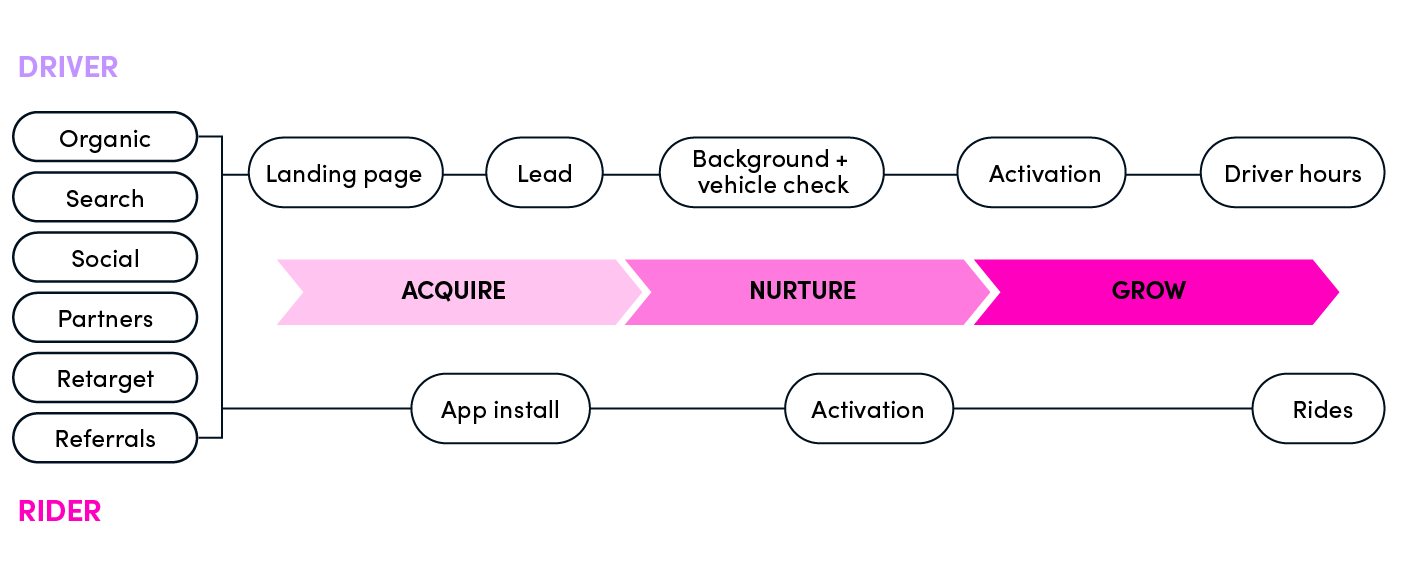

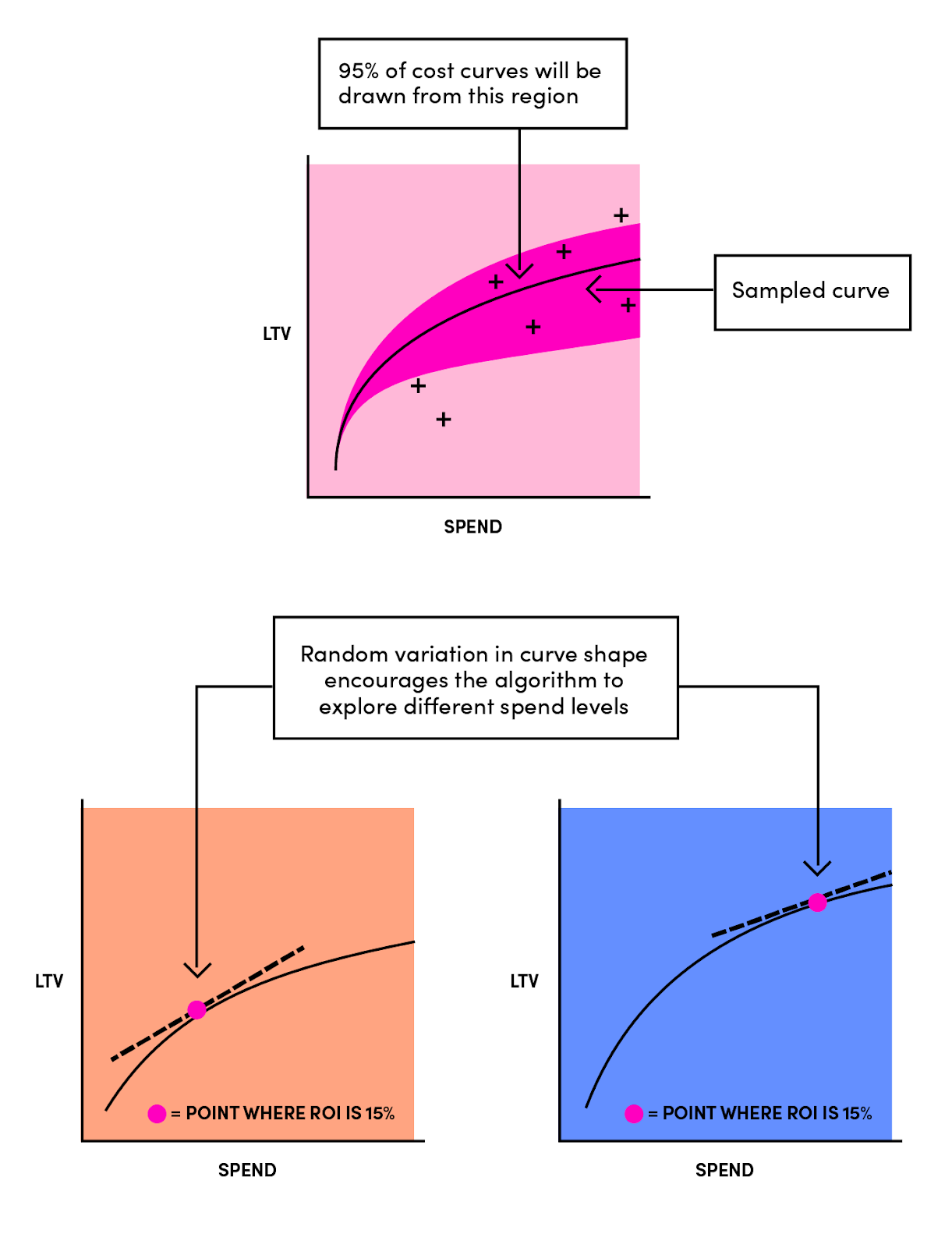

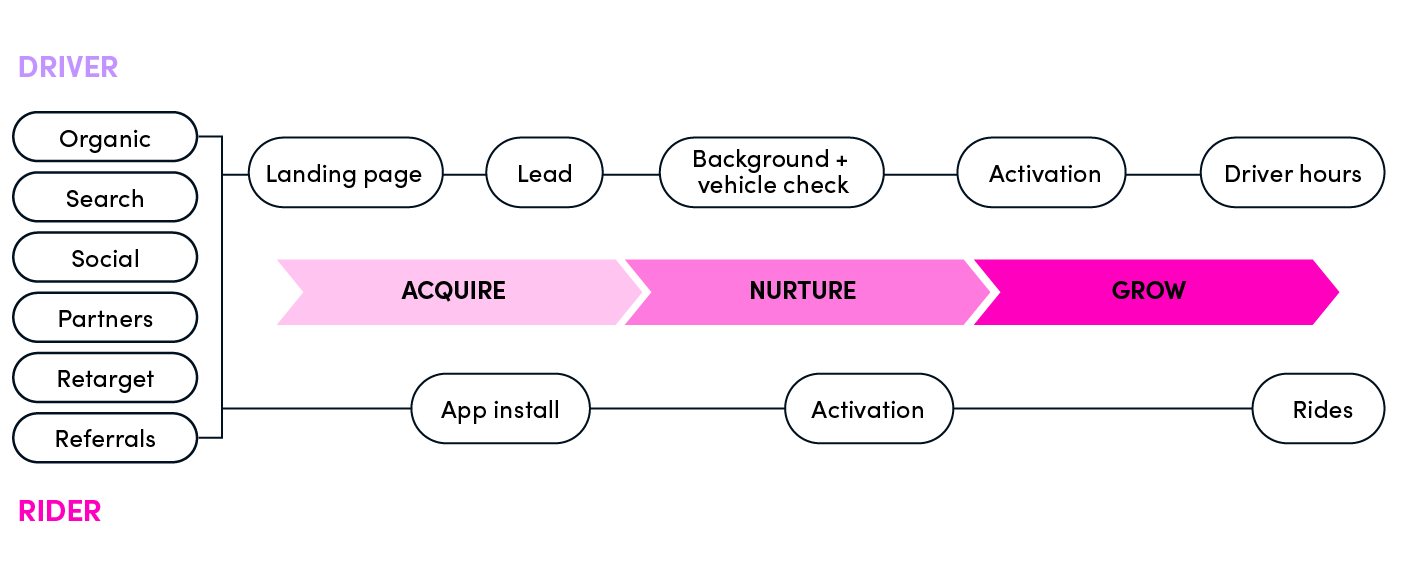

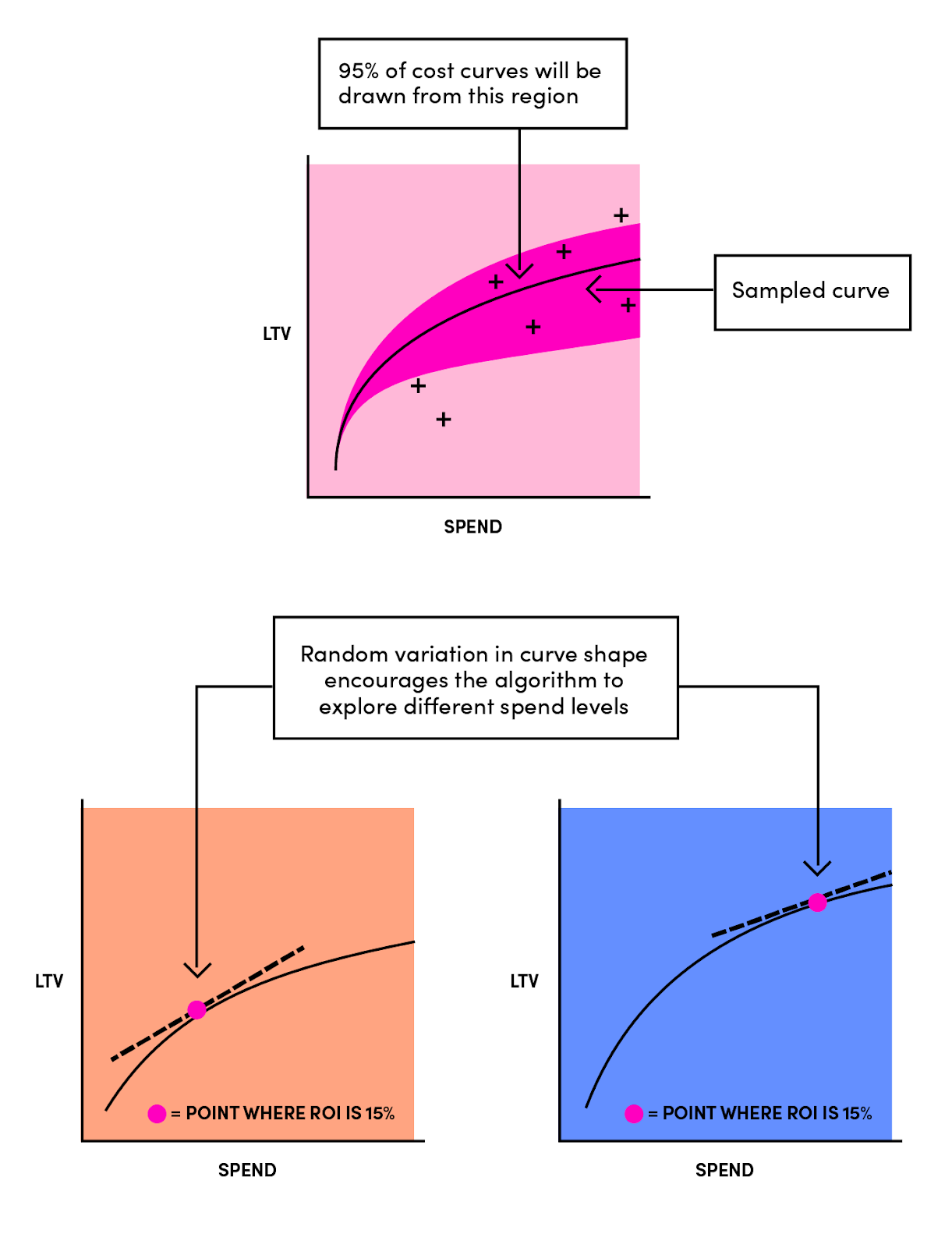

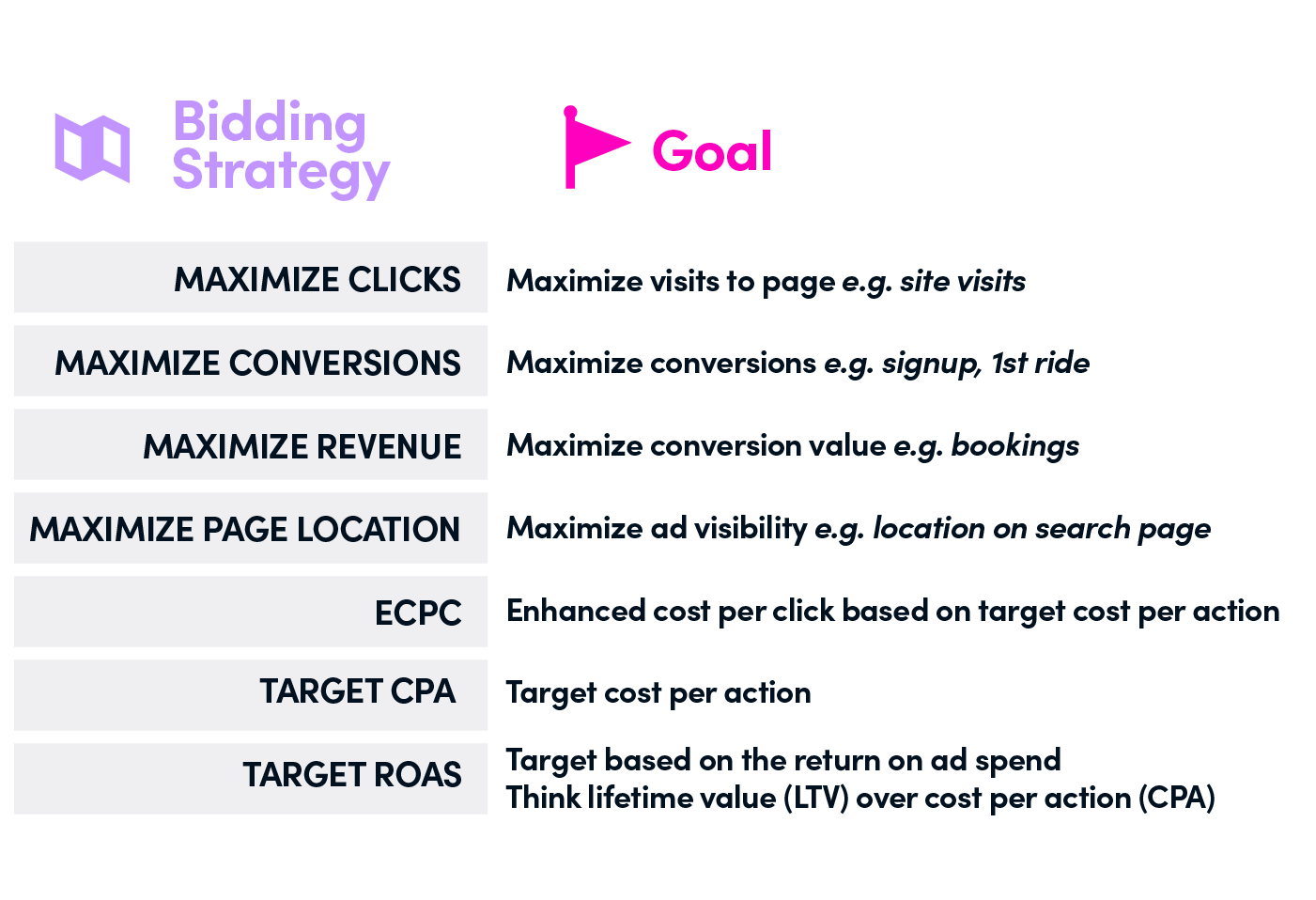

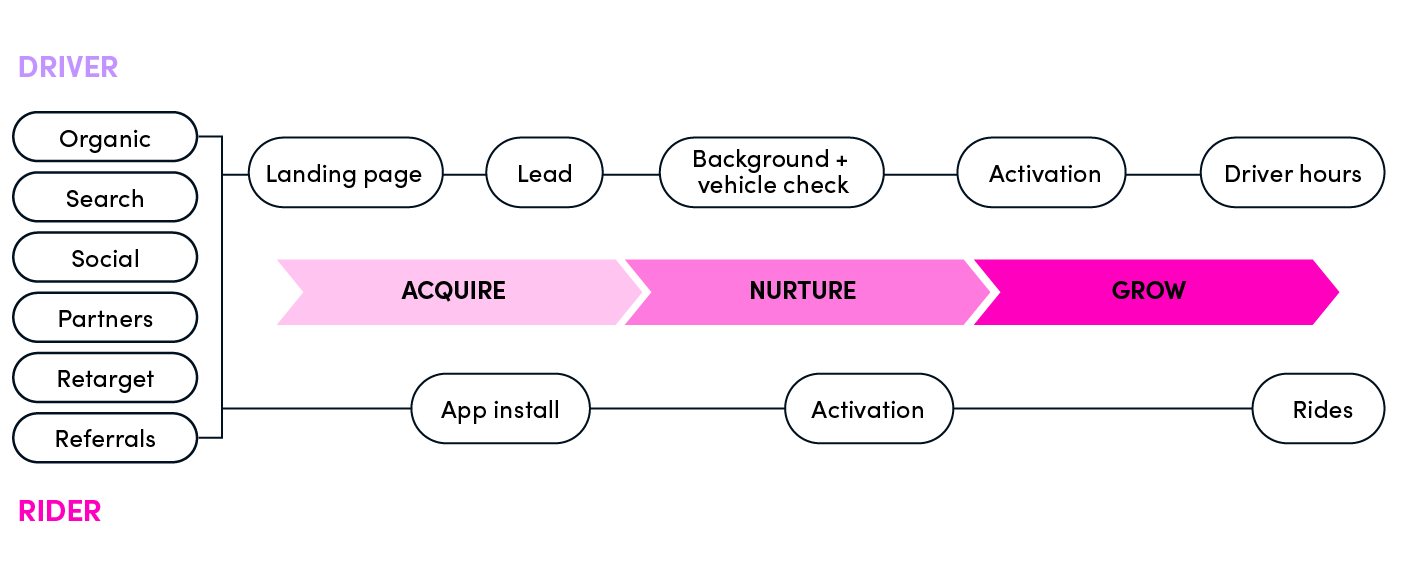

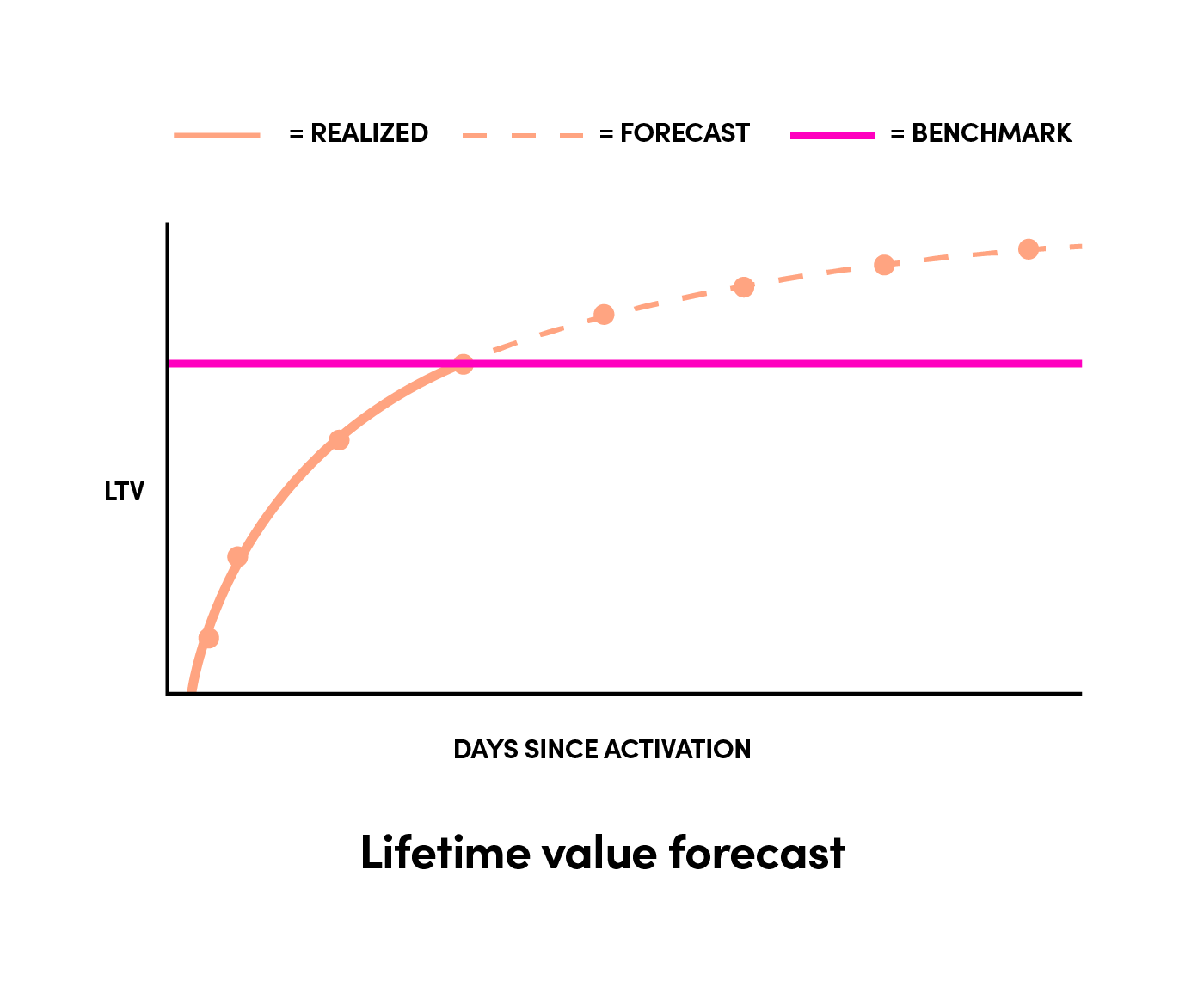

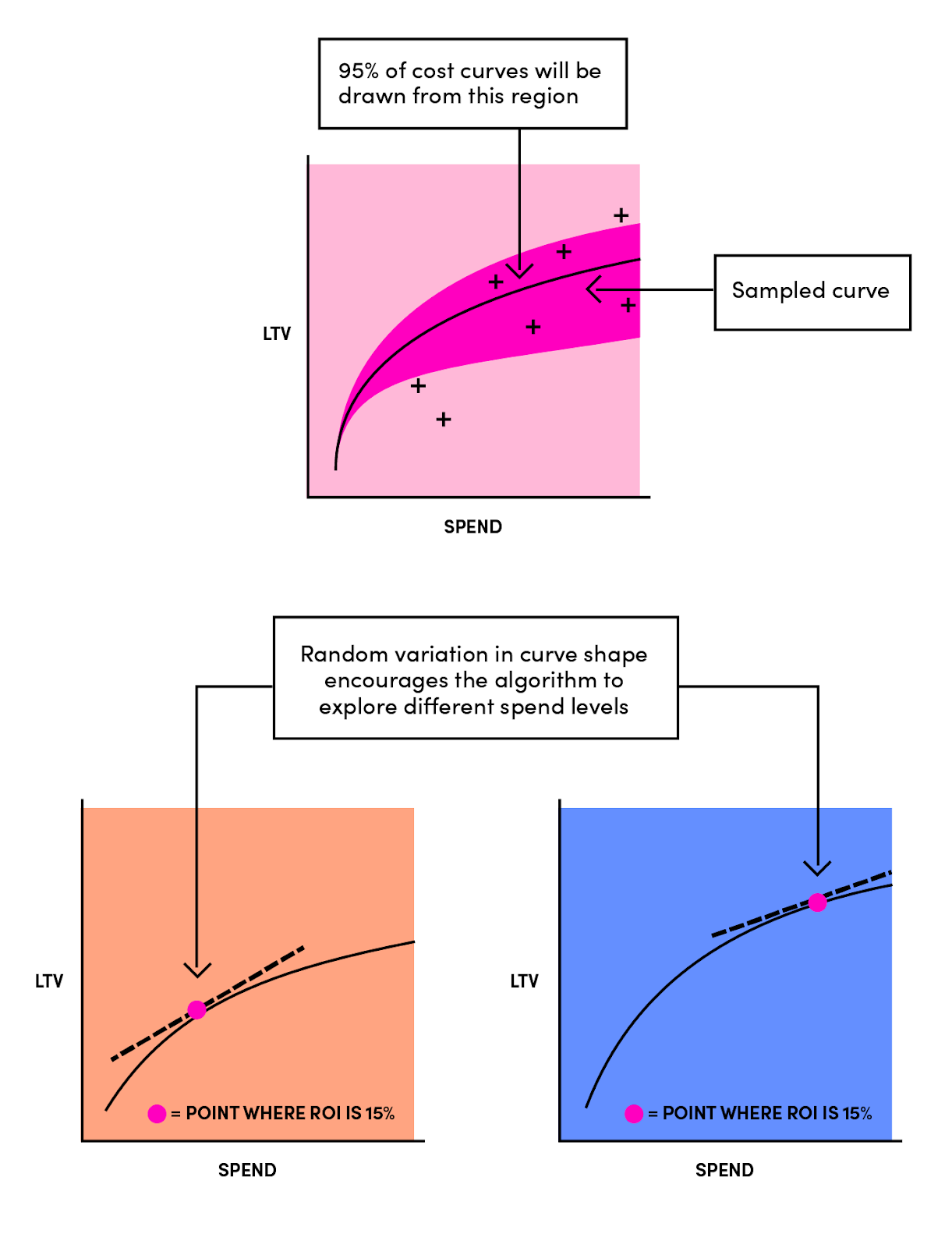

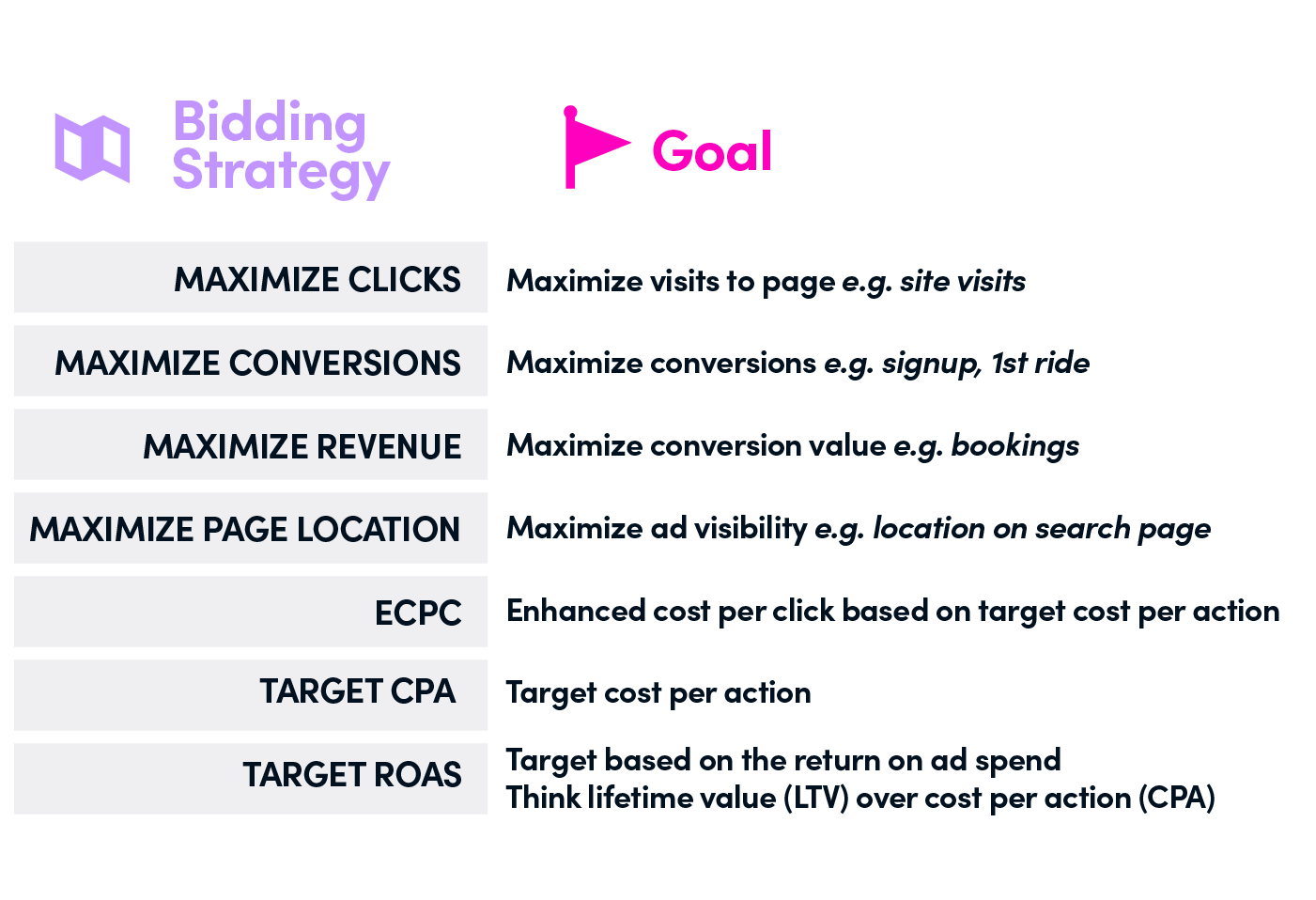

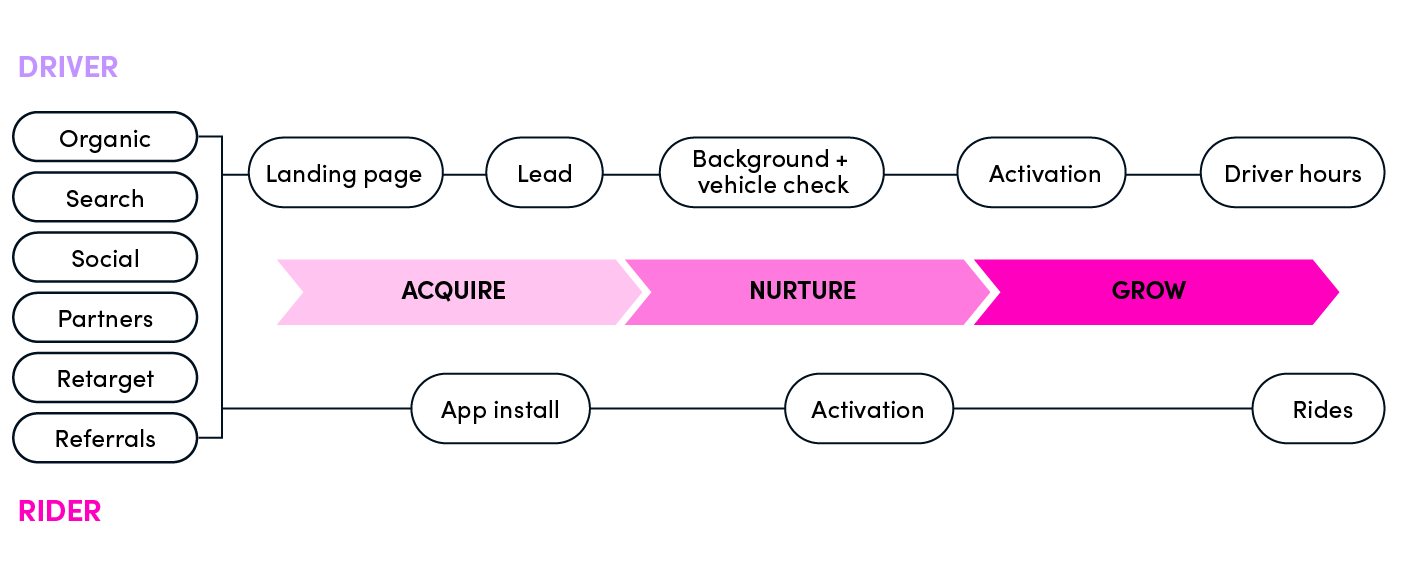

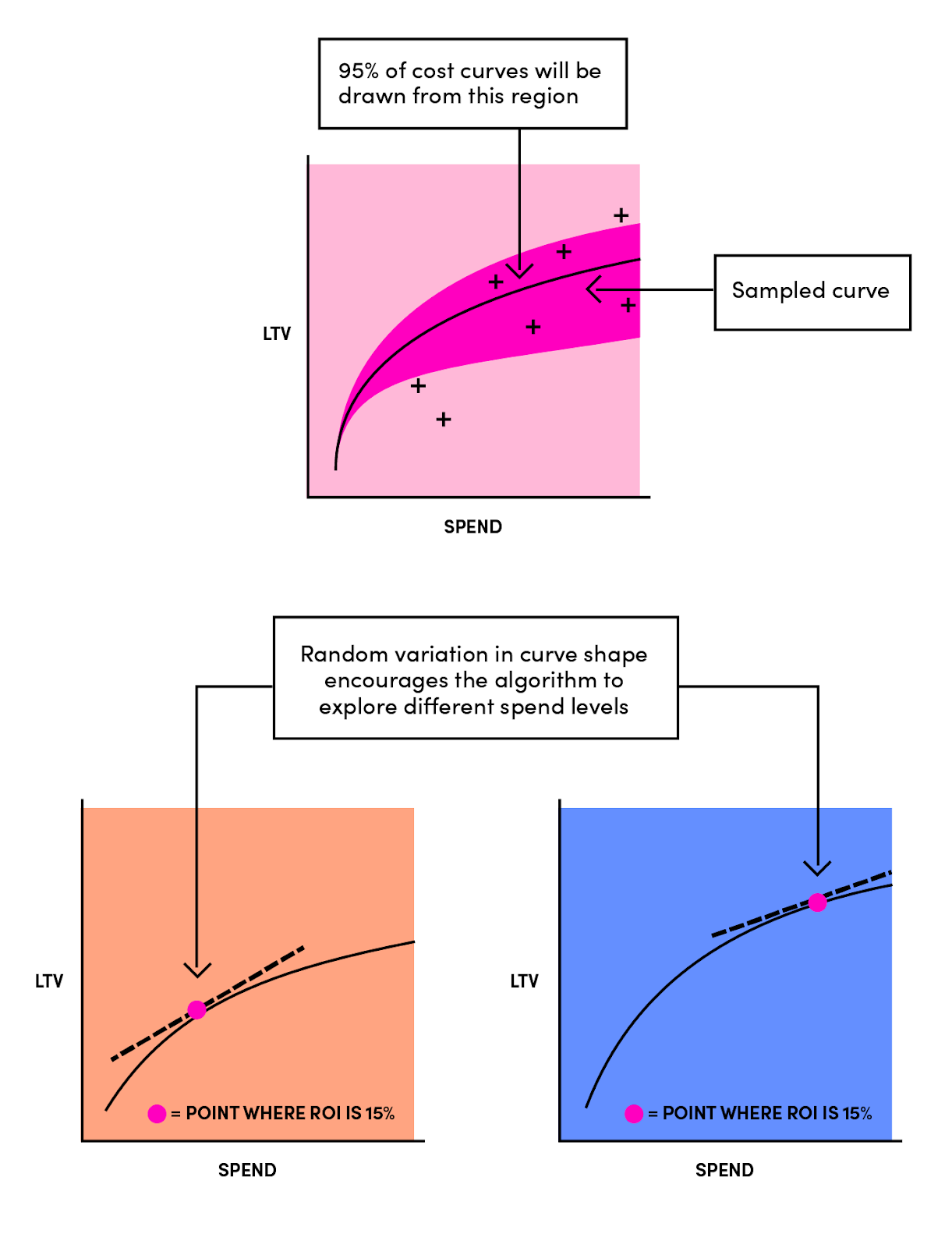

20 | 3. supporting Lyft's unique growth model as shown below

21 |

22 |

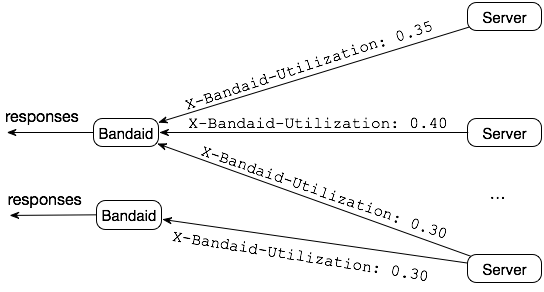

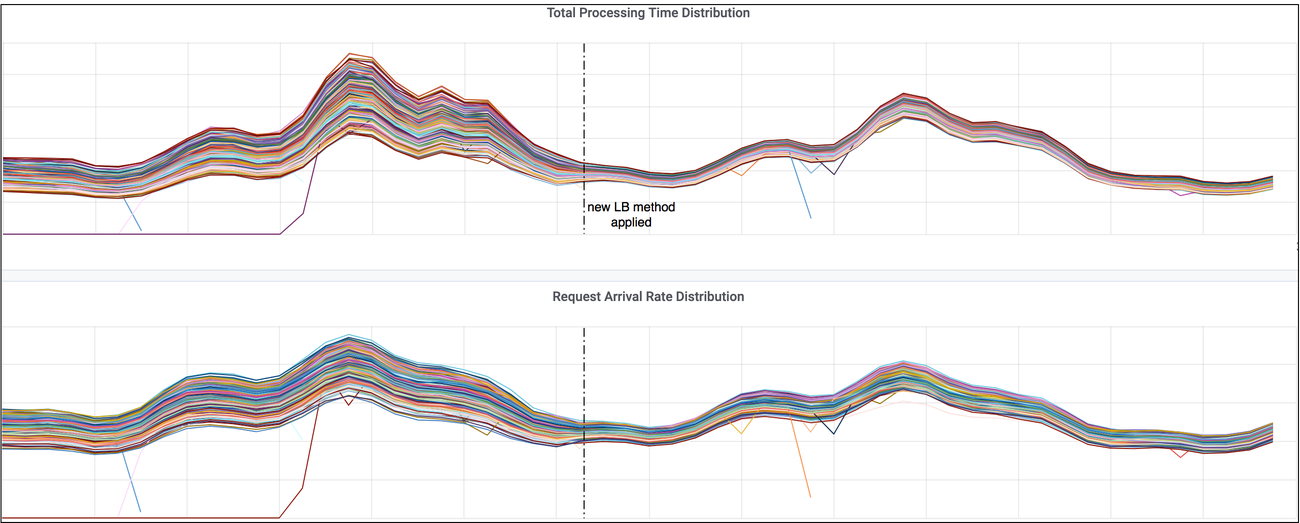

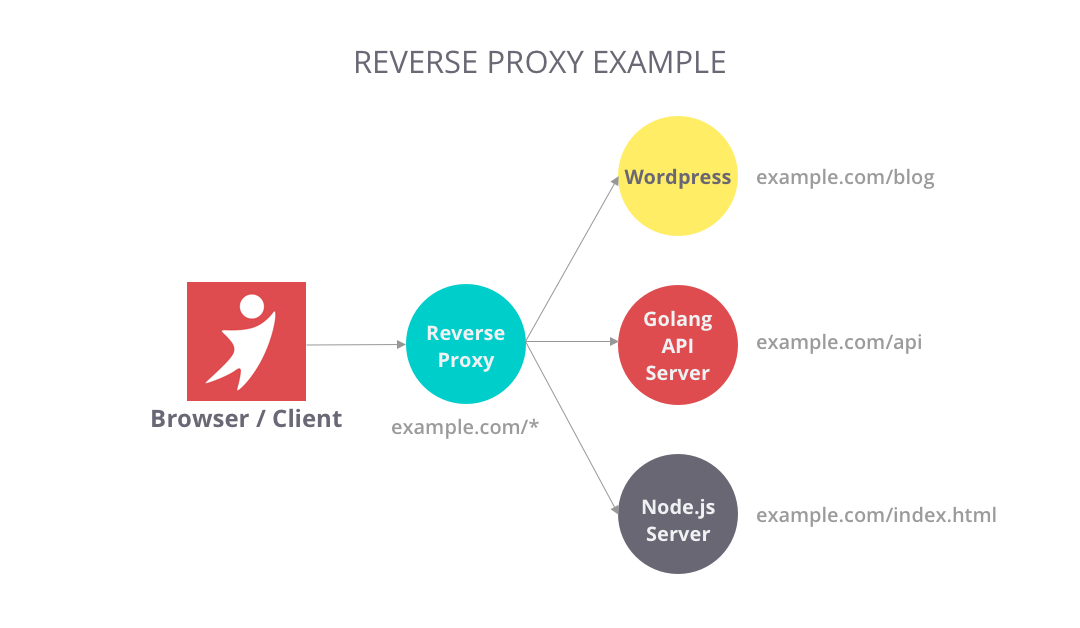

23 |

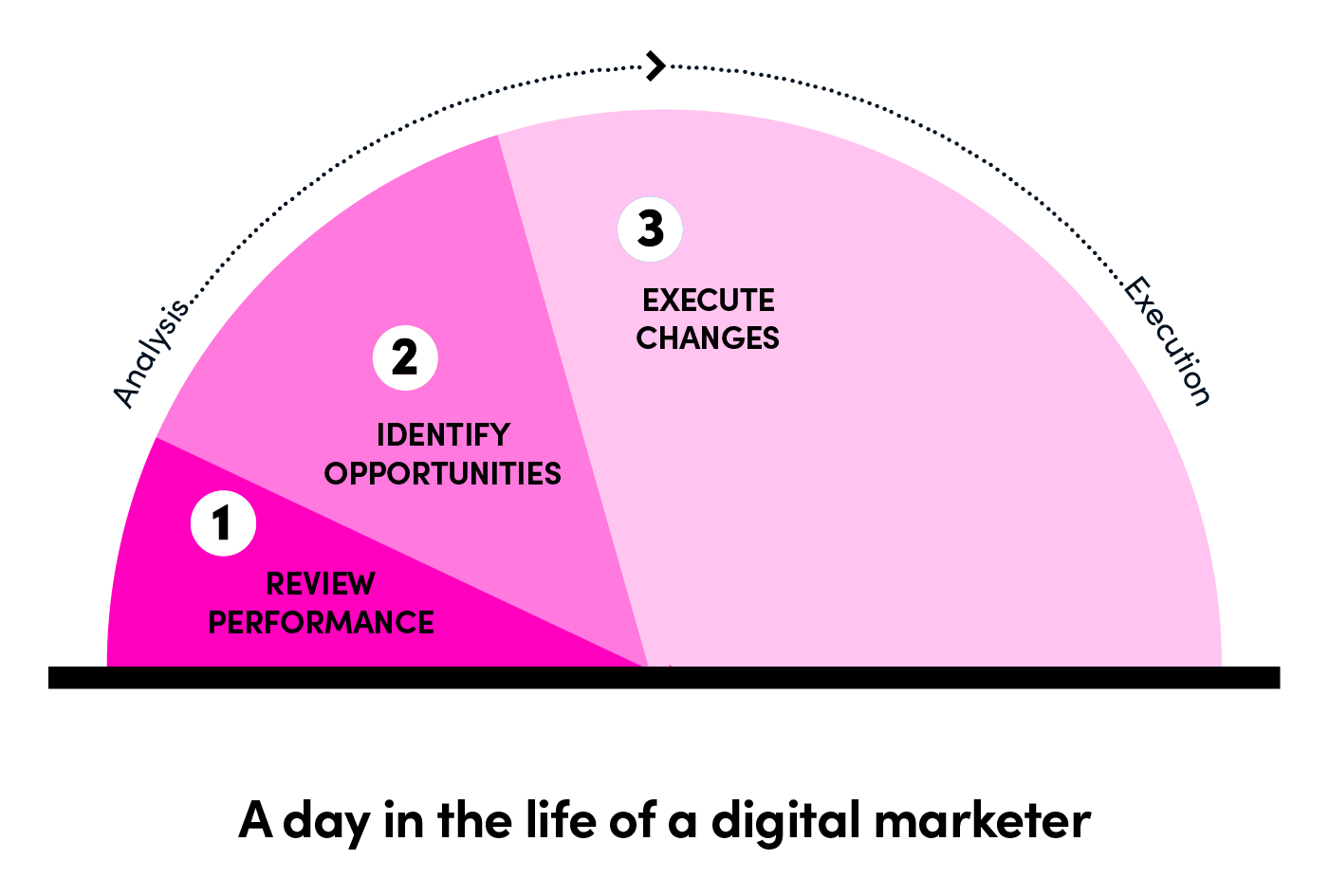

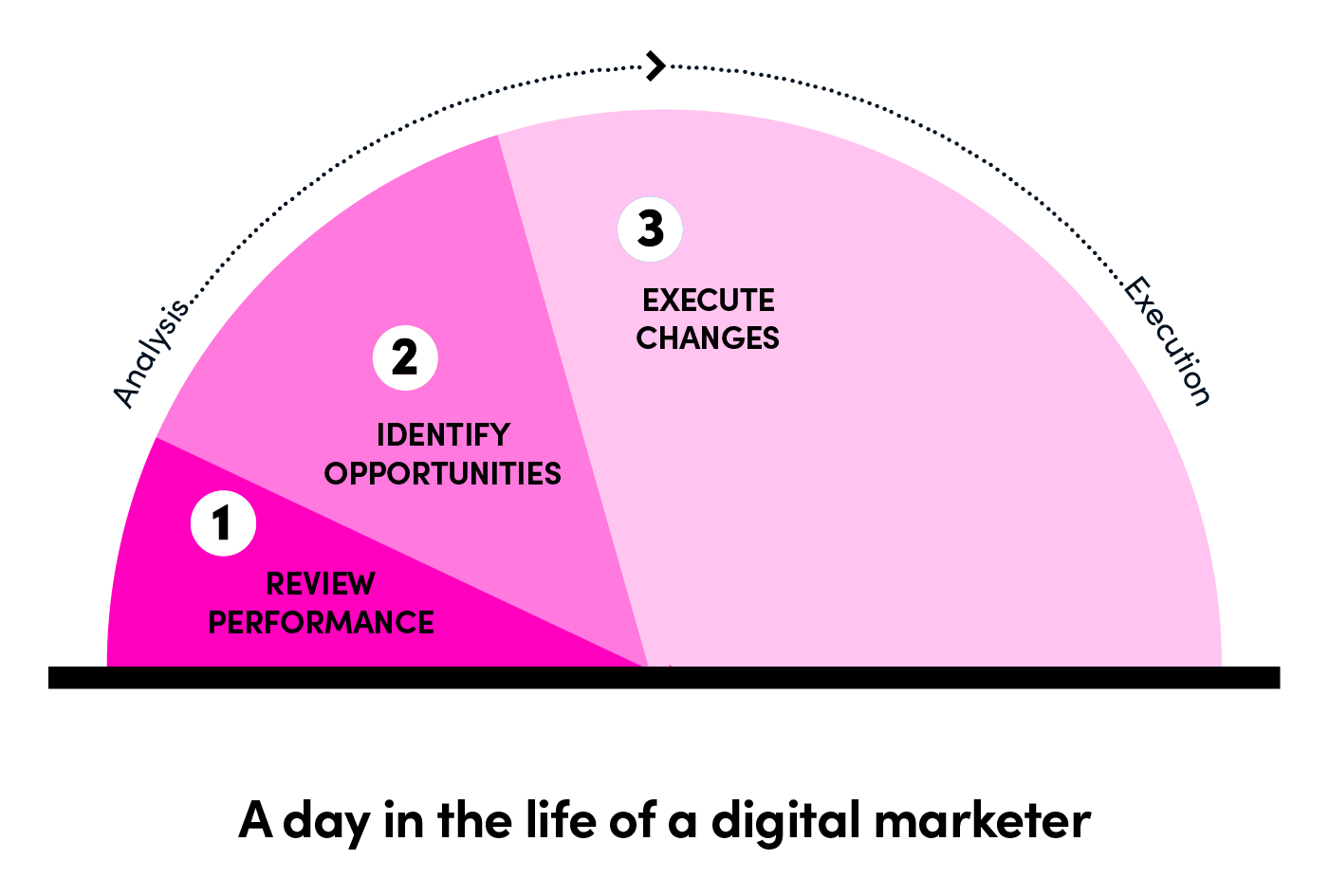

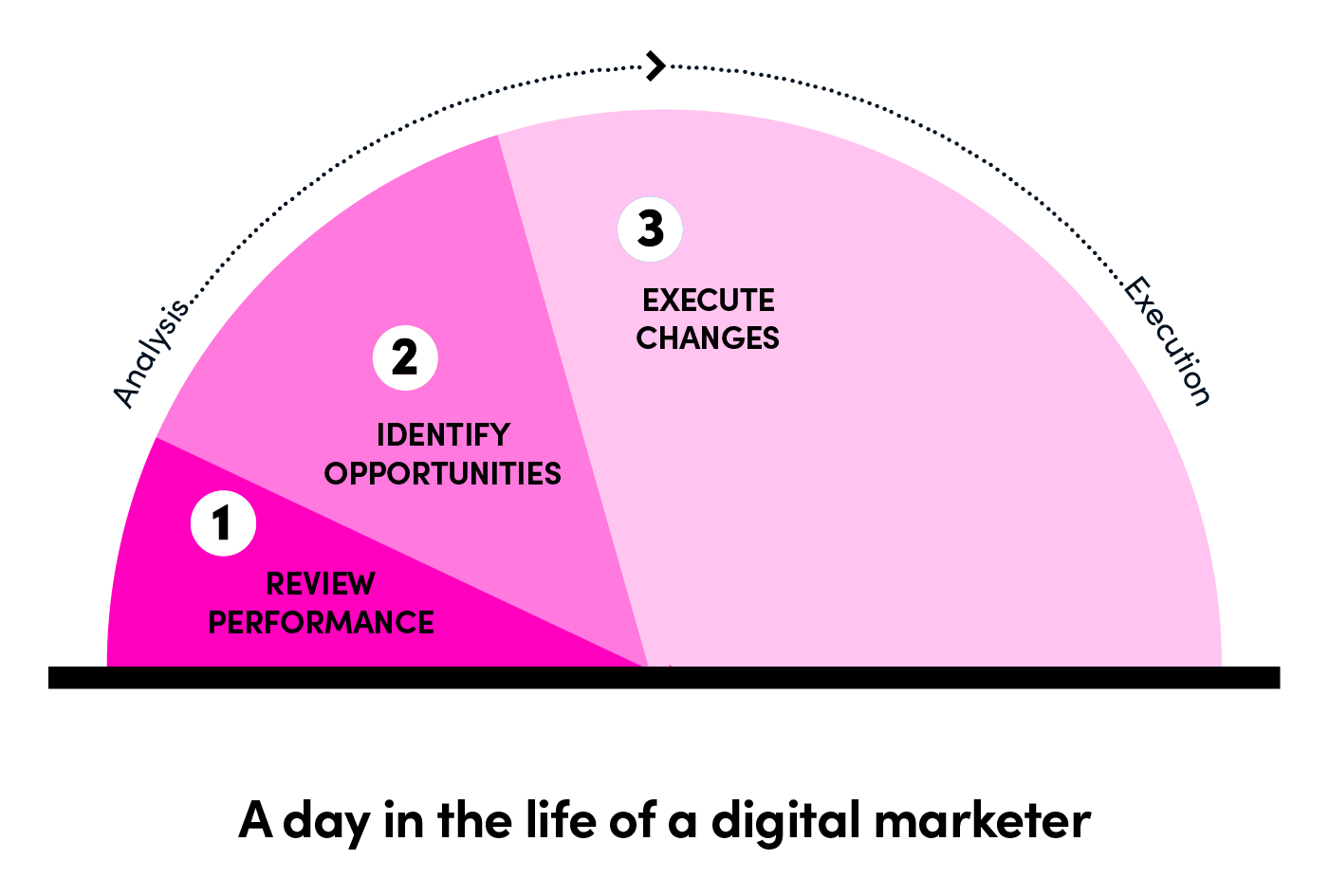

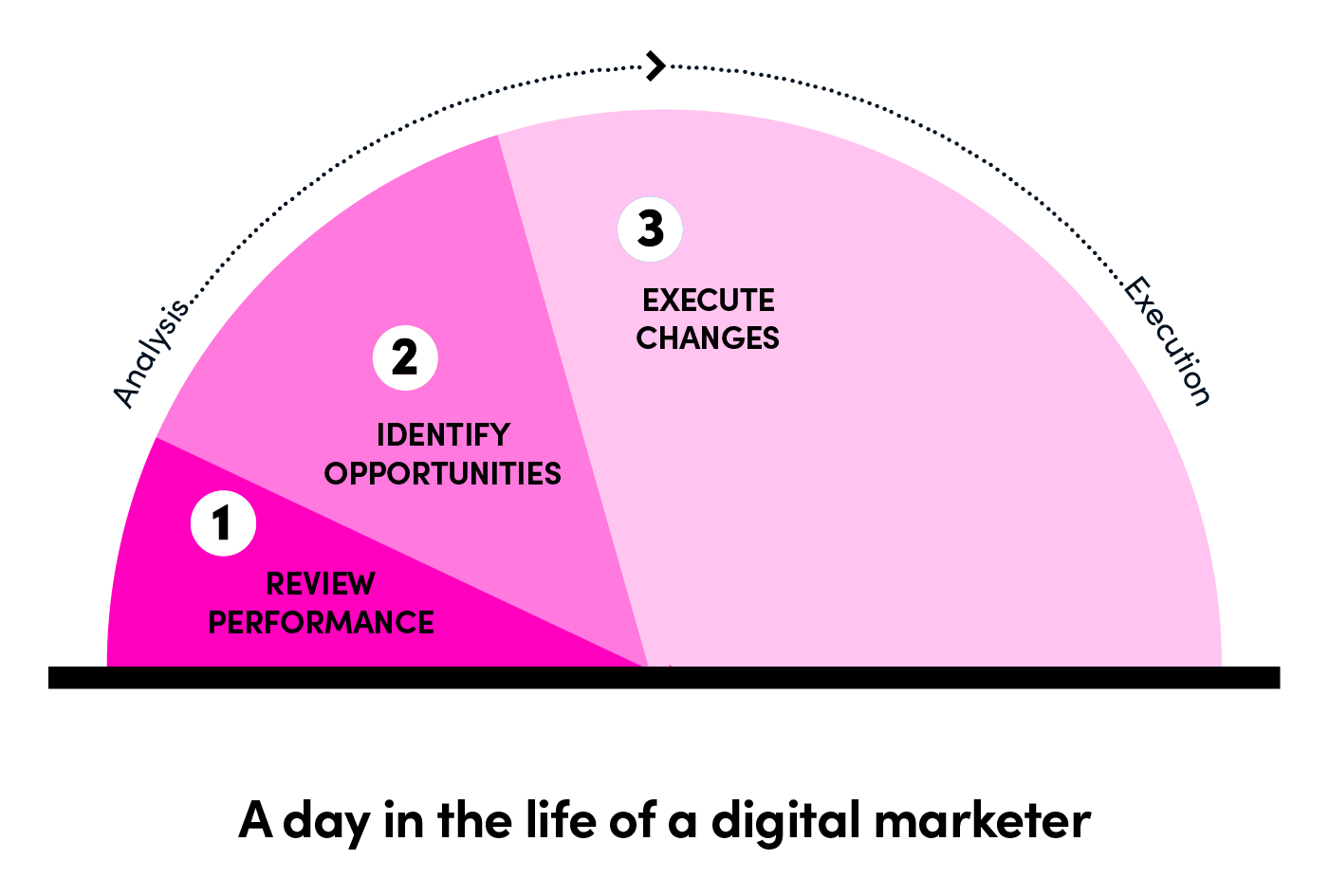

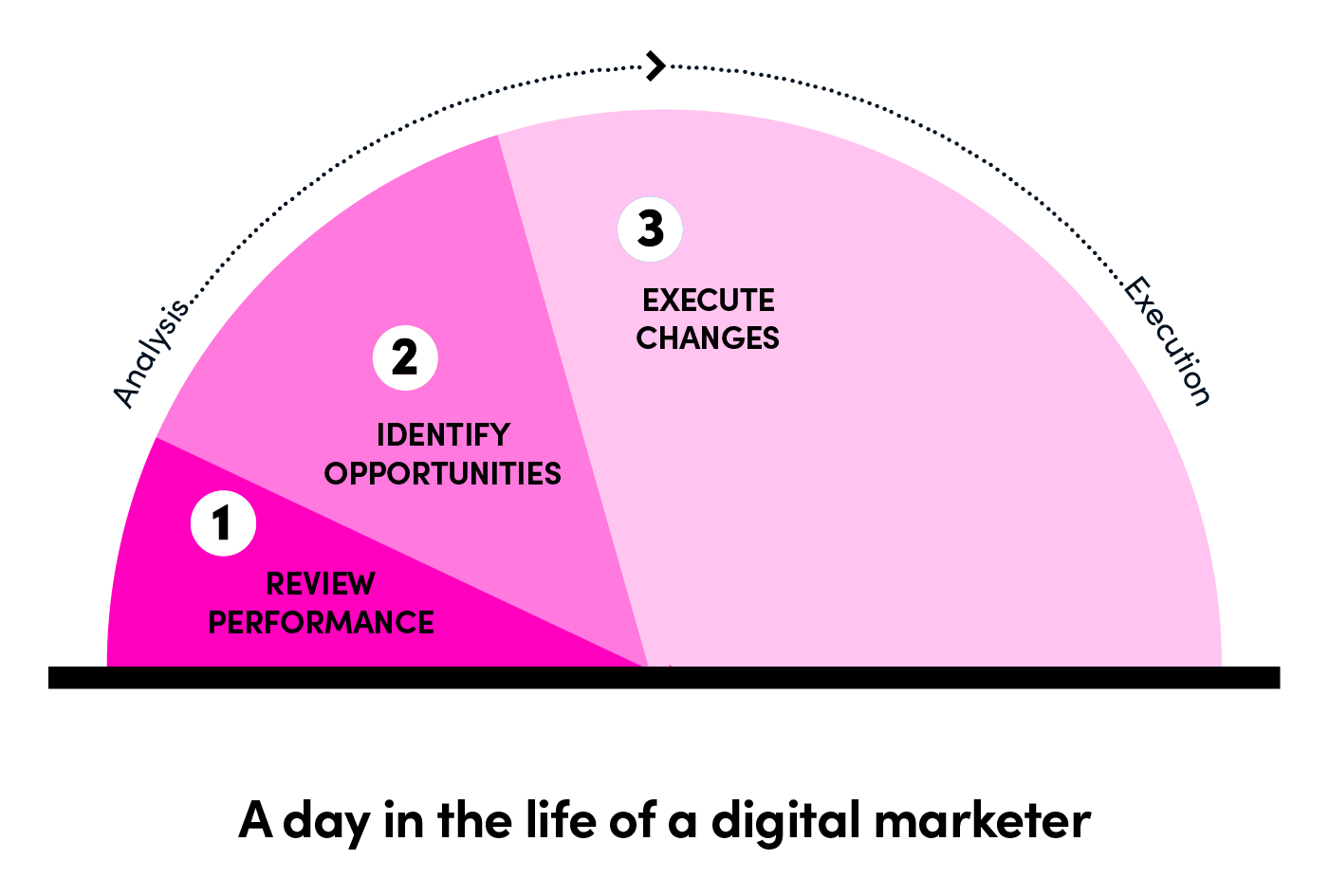

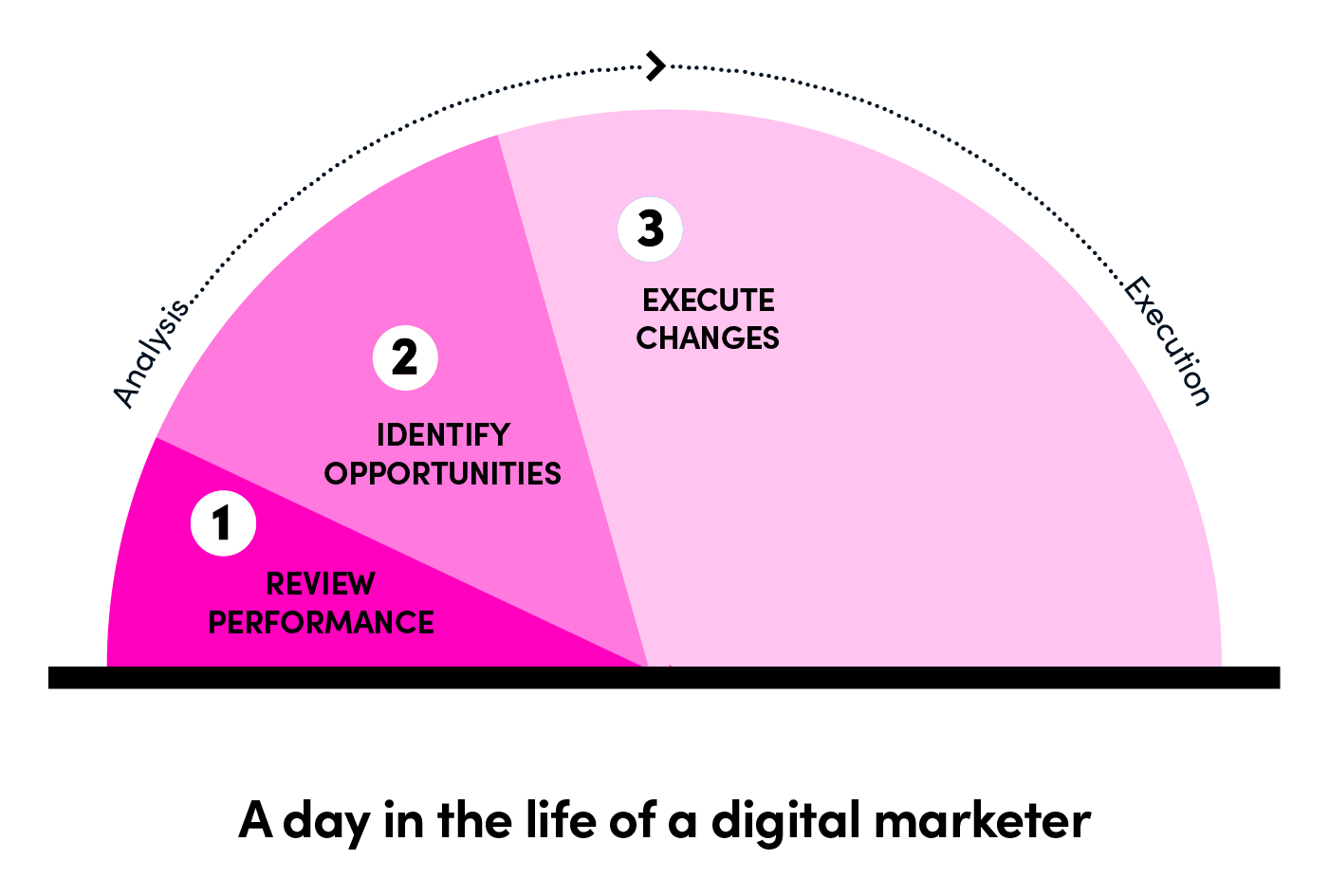

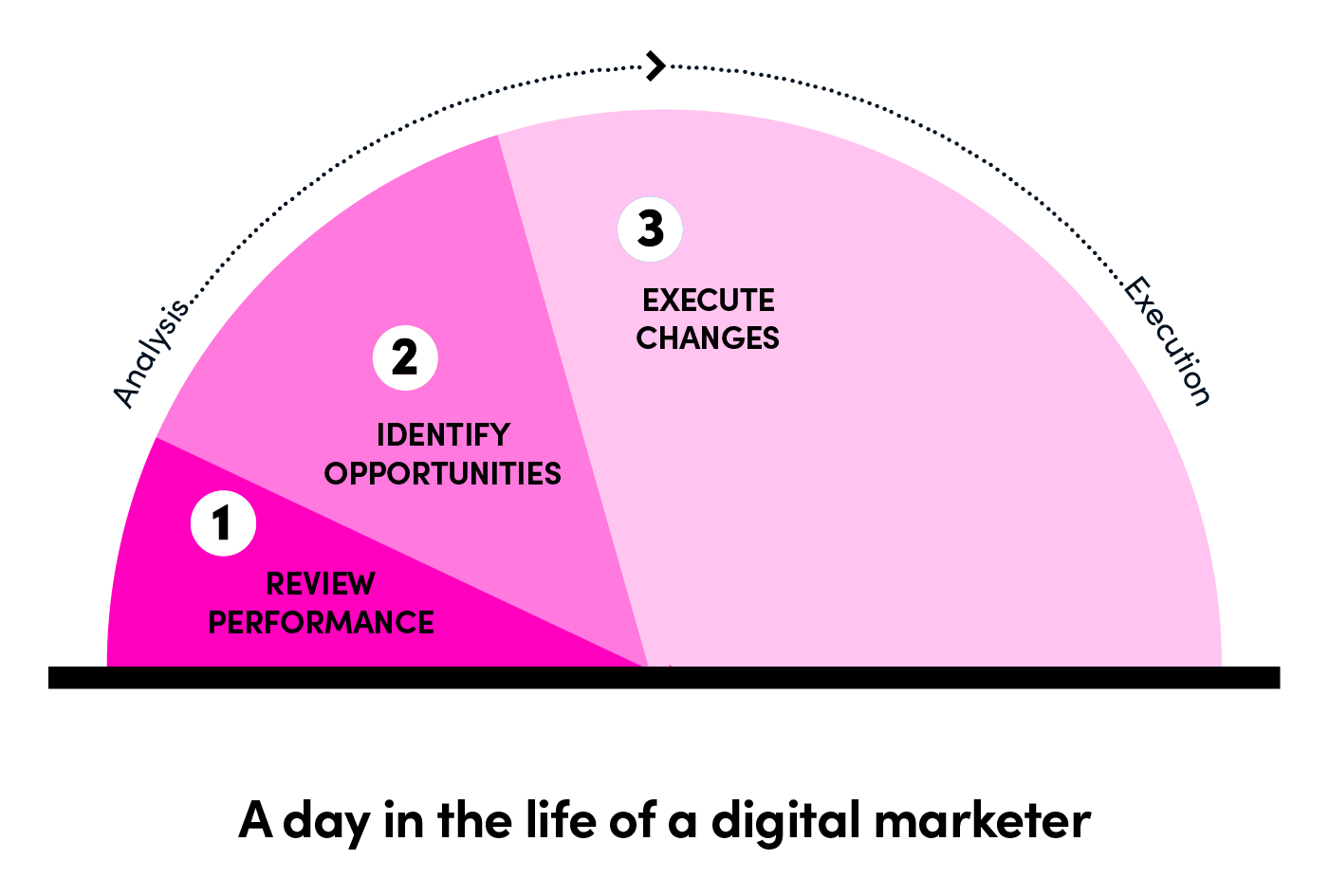

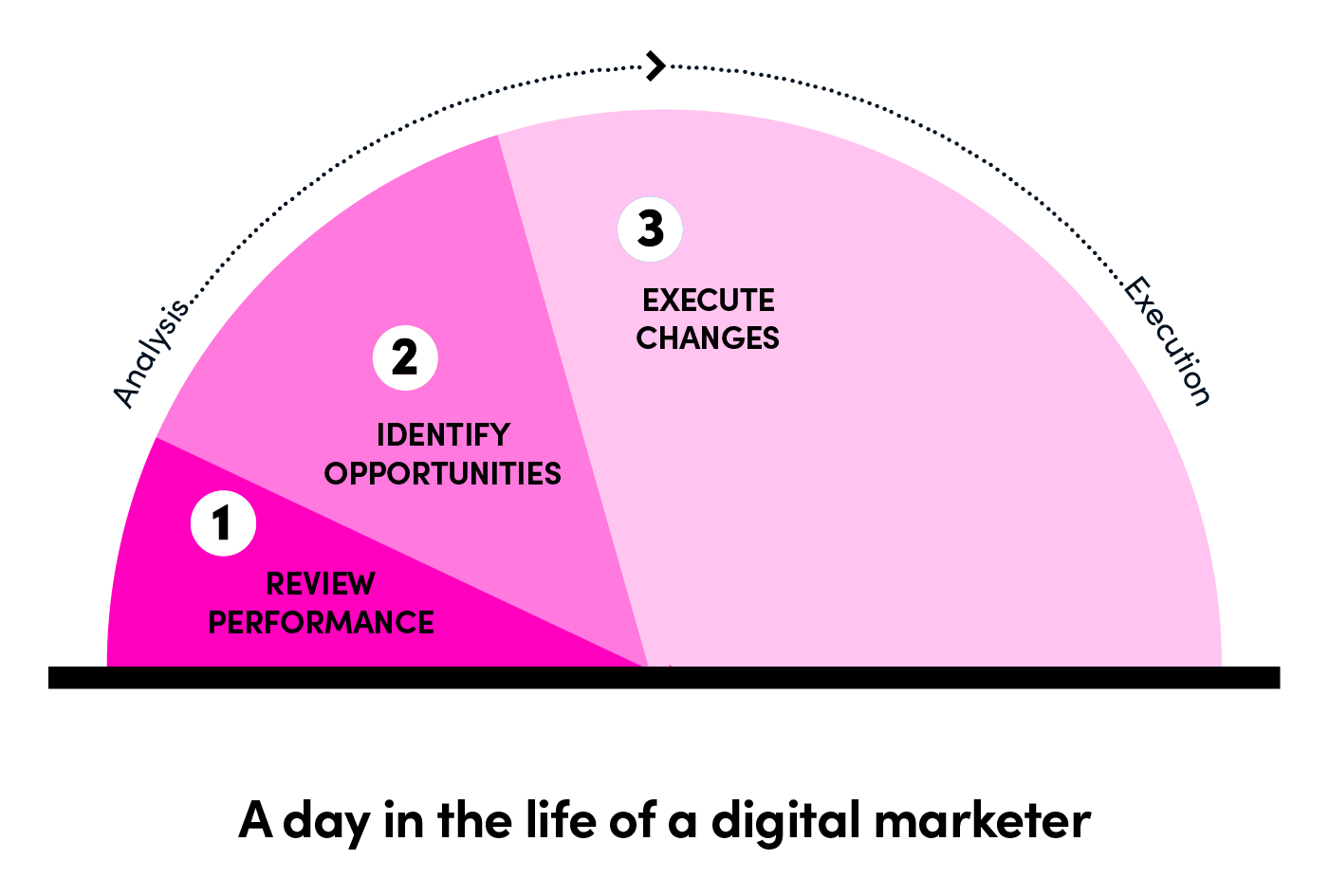

24 | However, the biggest challenge is to manage all the processes of cross-region marketing at scale, which include choosing bids, budgets, creatives, incentives, and audiences, running A/B tests, and so on. You can see what occupies a day in the life of a digital marketer:

25 |

26 |

27 |

28 | We can find out that *execution* occupies most of the time while *analysis*, thought as more important, takes much less time. A scaling strategy will enable marketers to concentrate on analysis and decision-making process instead of operational activities.

29 |

30 | ## Solution: Automation

31 |

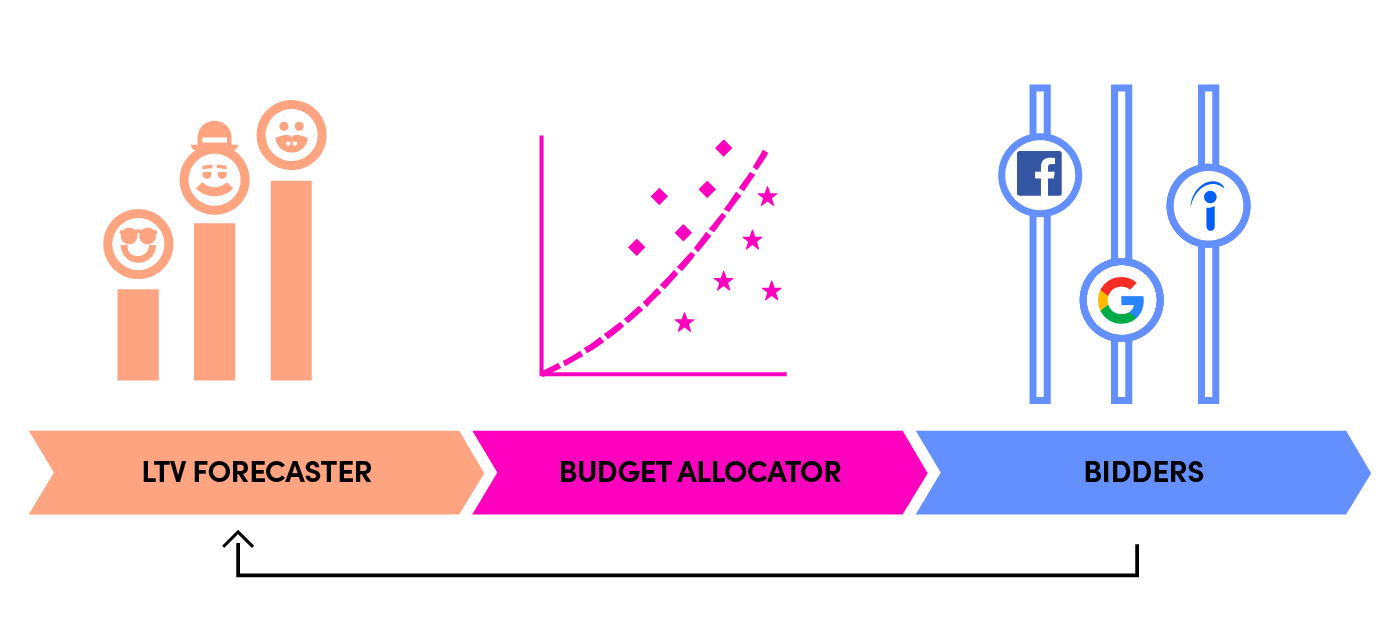

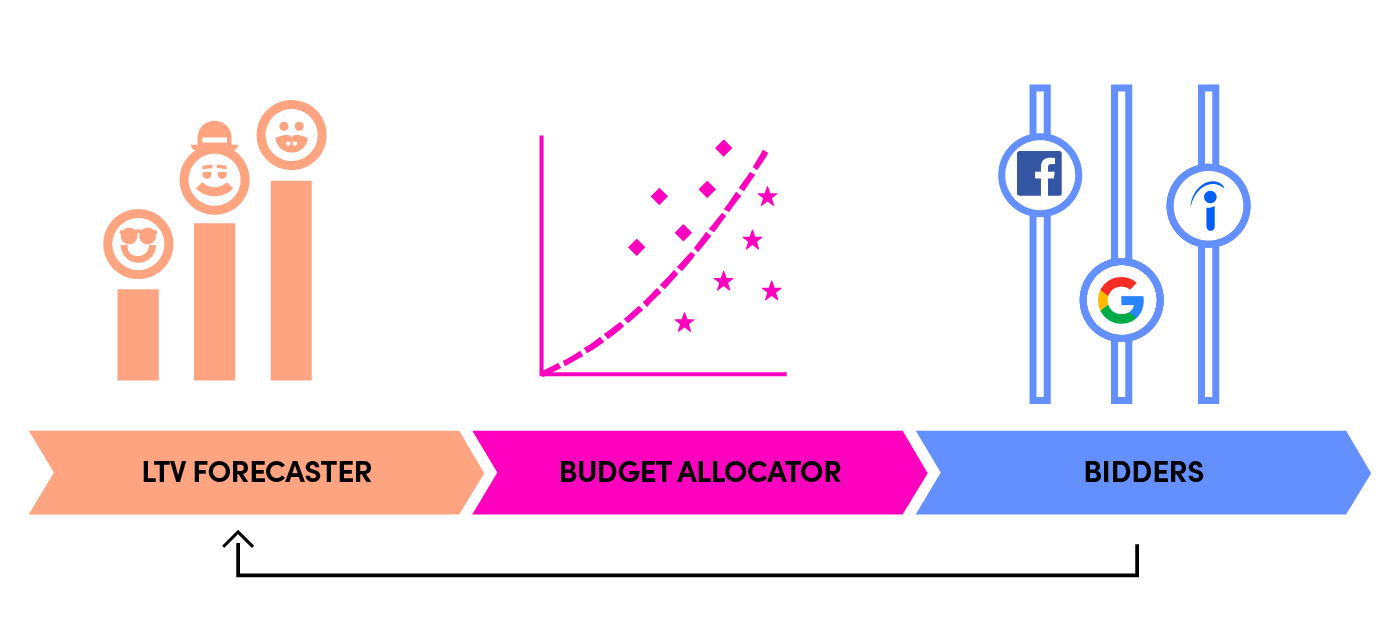

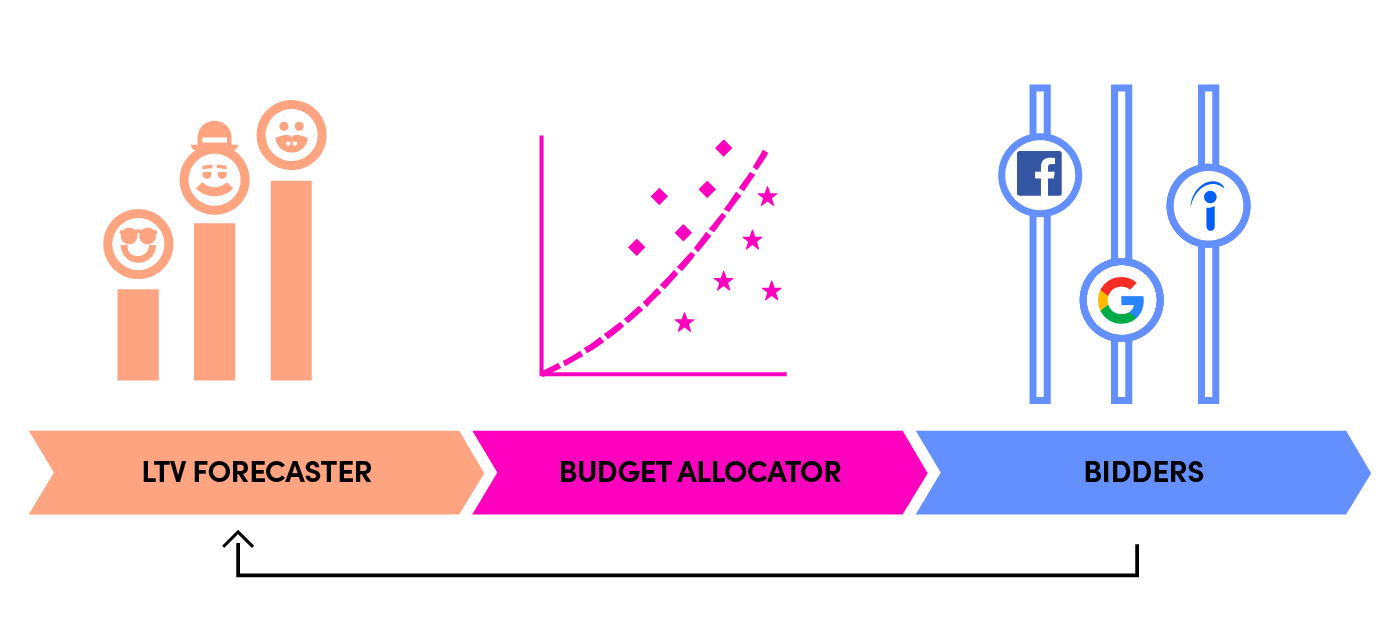

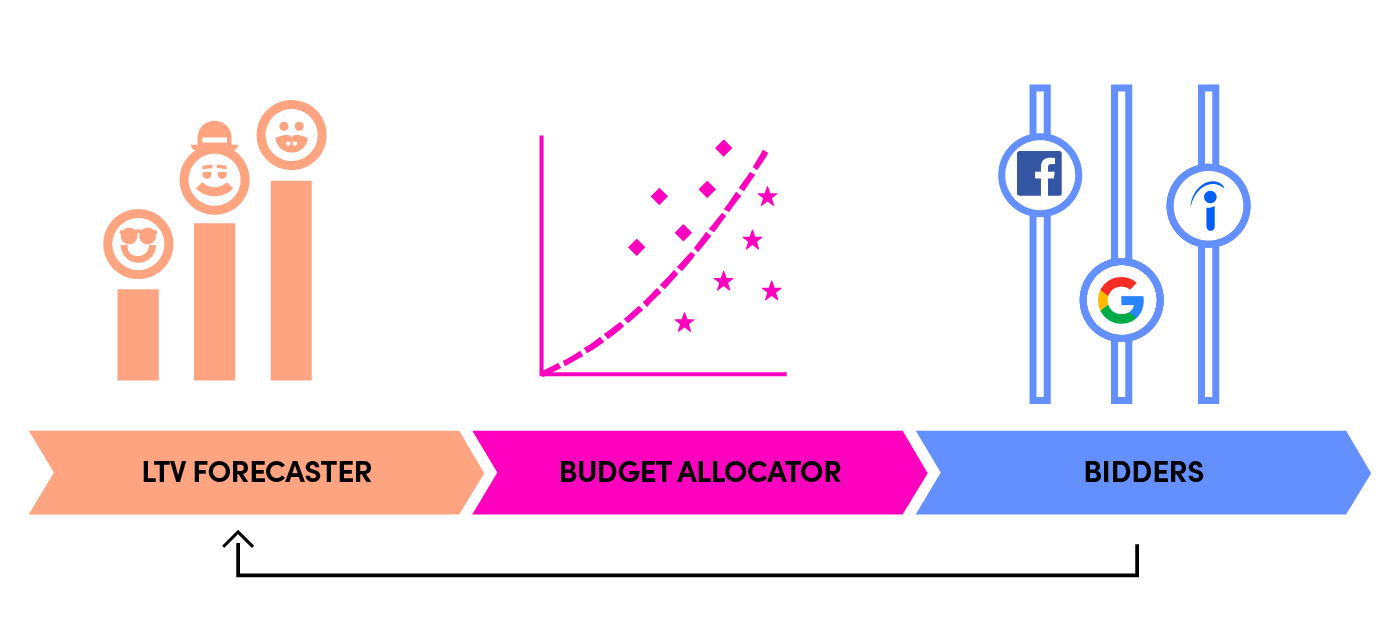

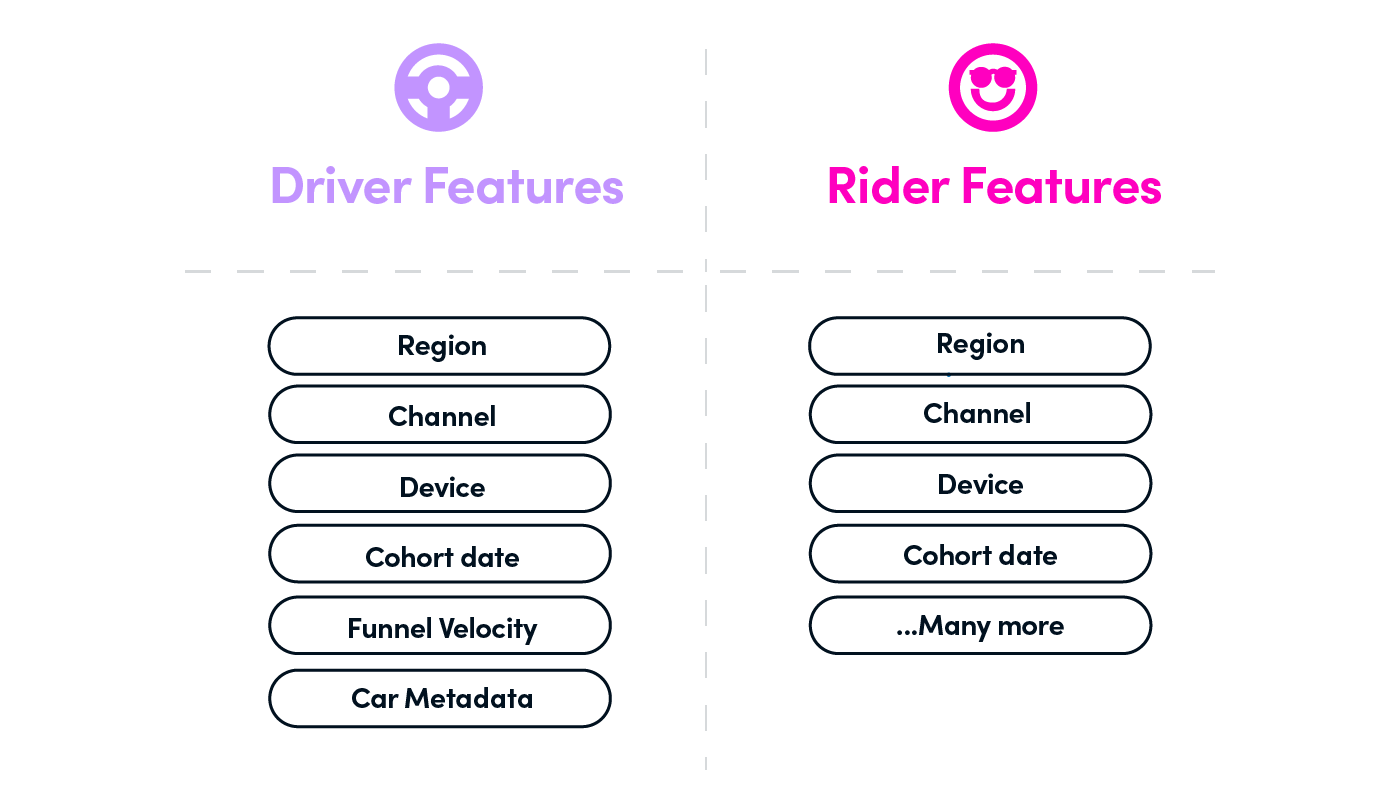

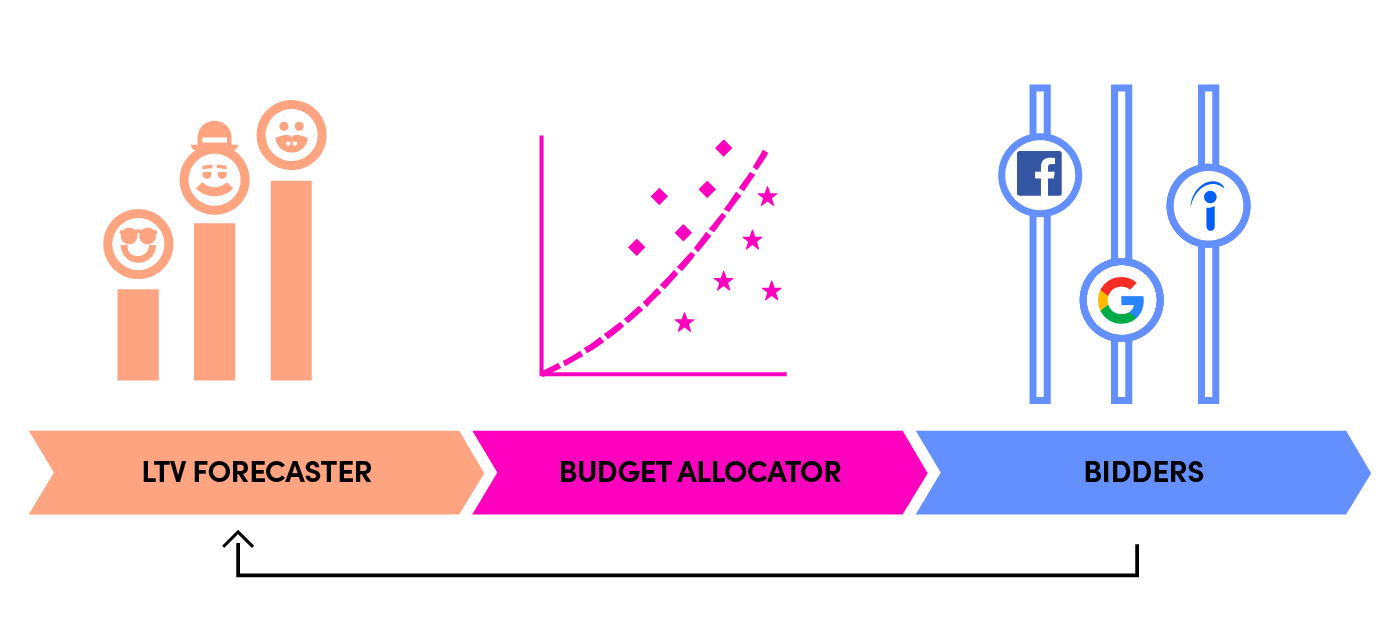

32 | To reduce costs and improve experimental efficiency, we need to

33 |

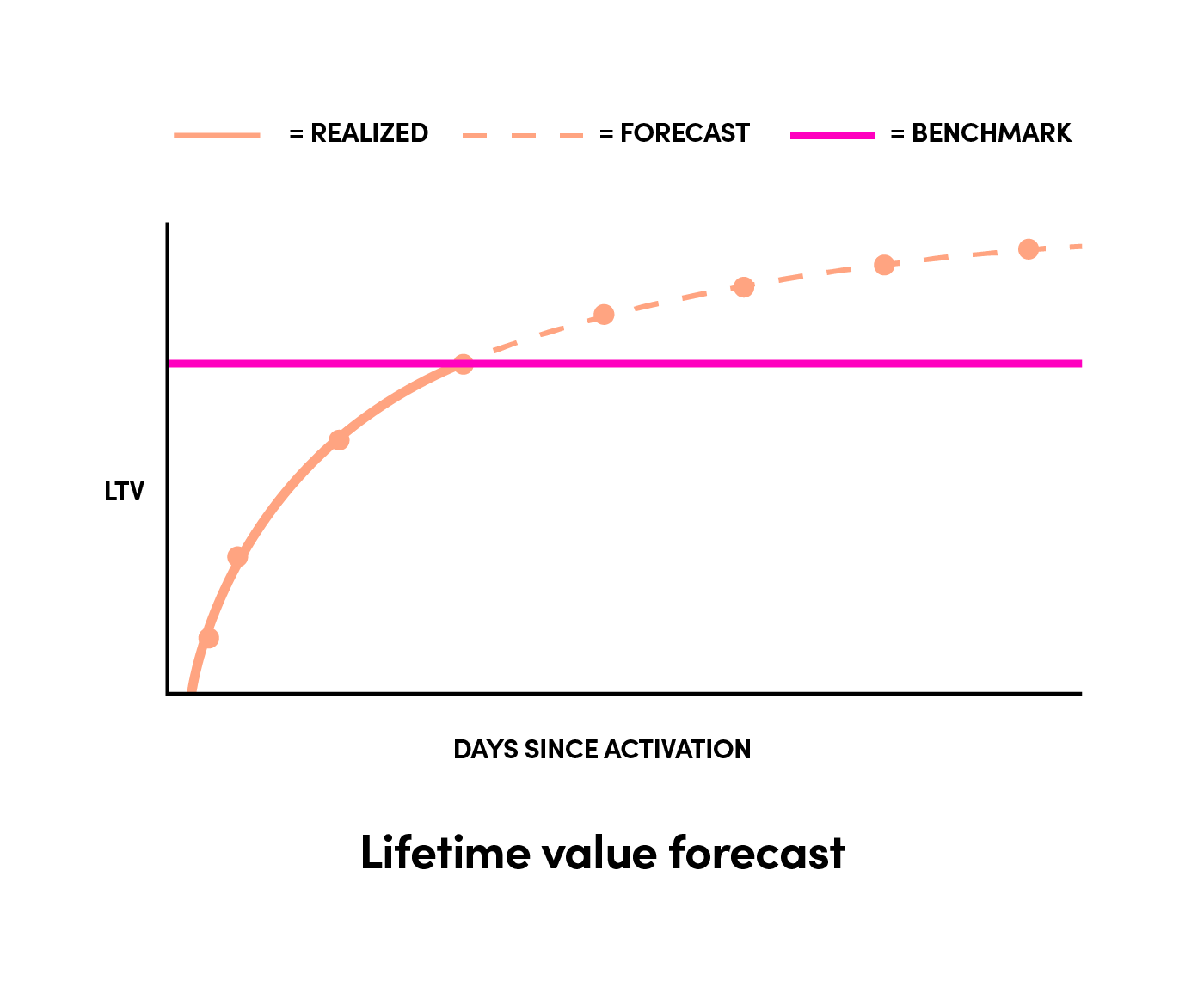

34 | 1. predict the likelihood of a new user to be interested in our product

35 | 2. evaluate effectively and allocate marketing budgets across channels

36 | 3. manage thousands of ad campaigns handily

37 |

38 | The marketing performance data flows into the reinforcement-learning system of Lyft: [Amundsen](https://guigu.io/blog/2018-12-03-making-progress-30-kilometers-per-day)

39 |

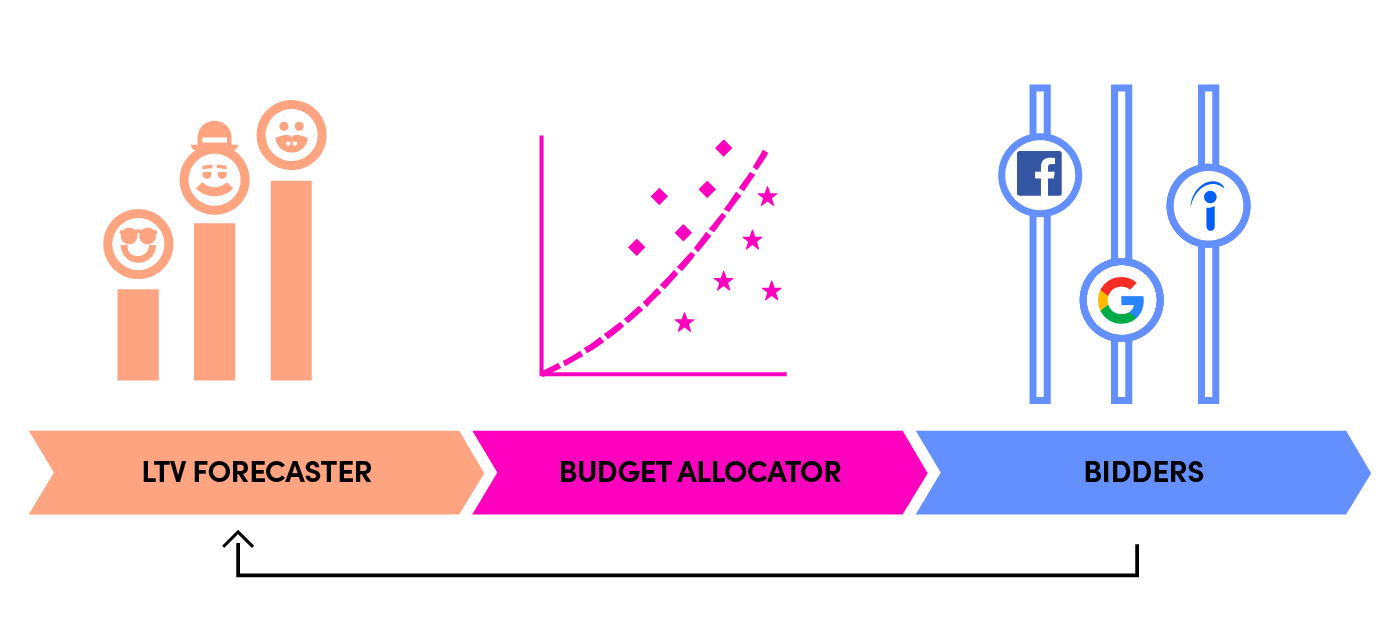

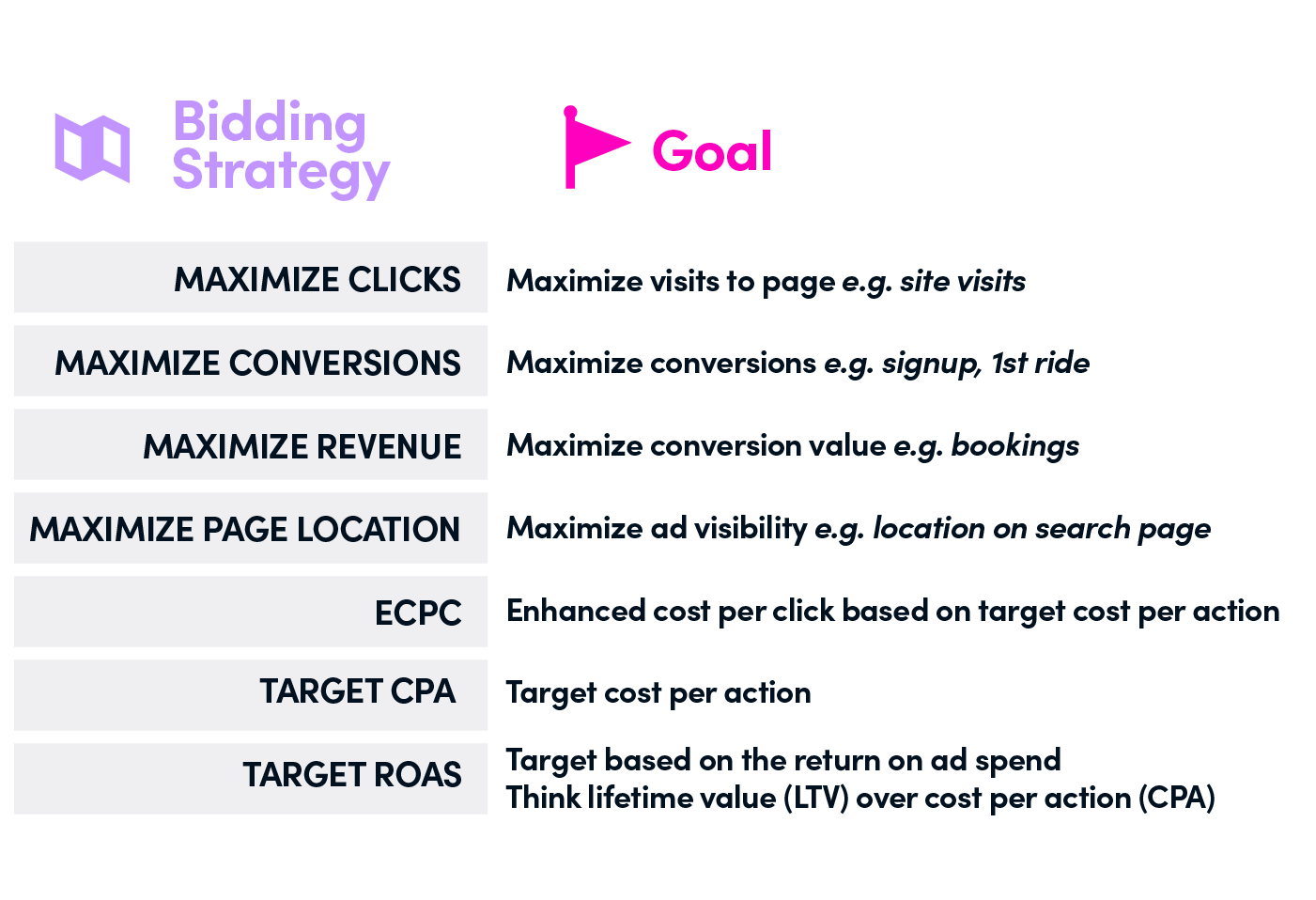

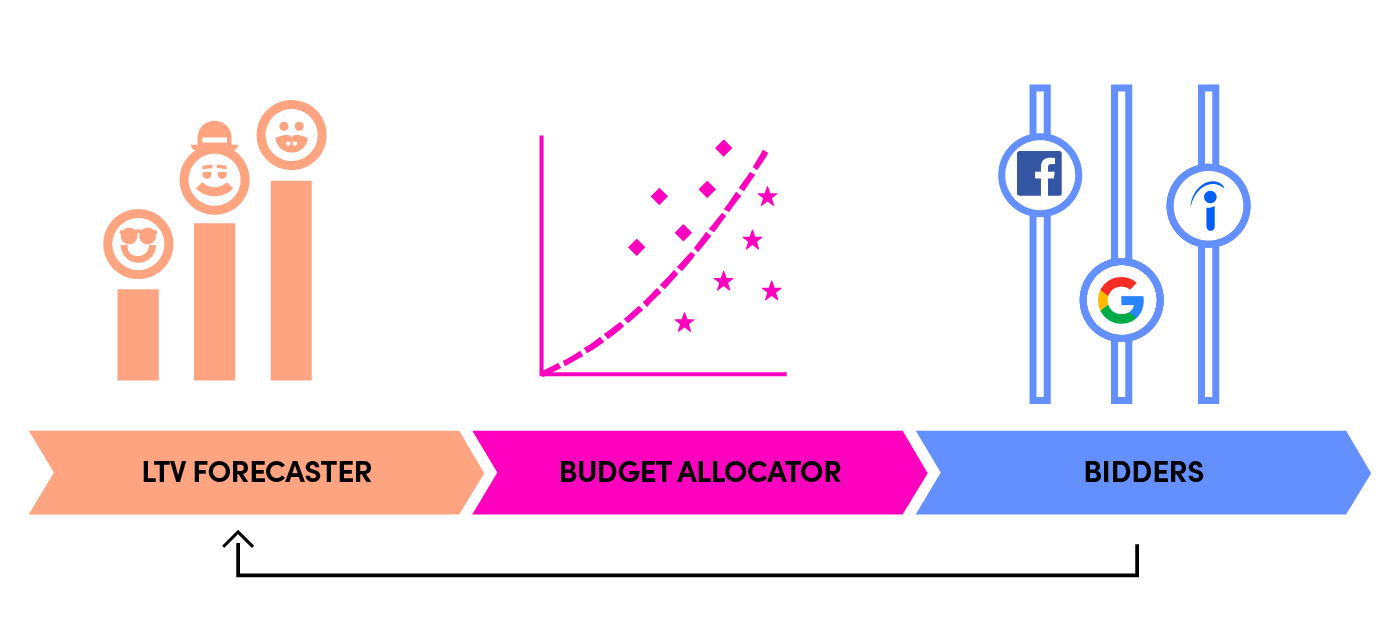

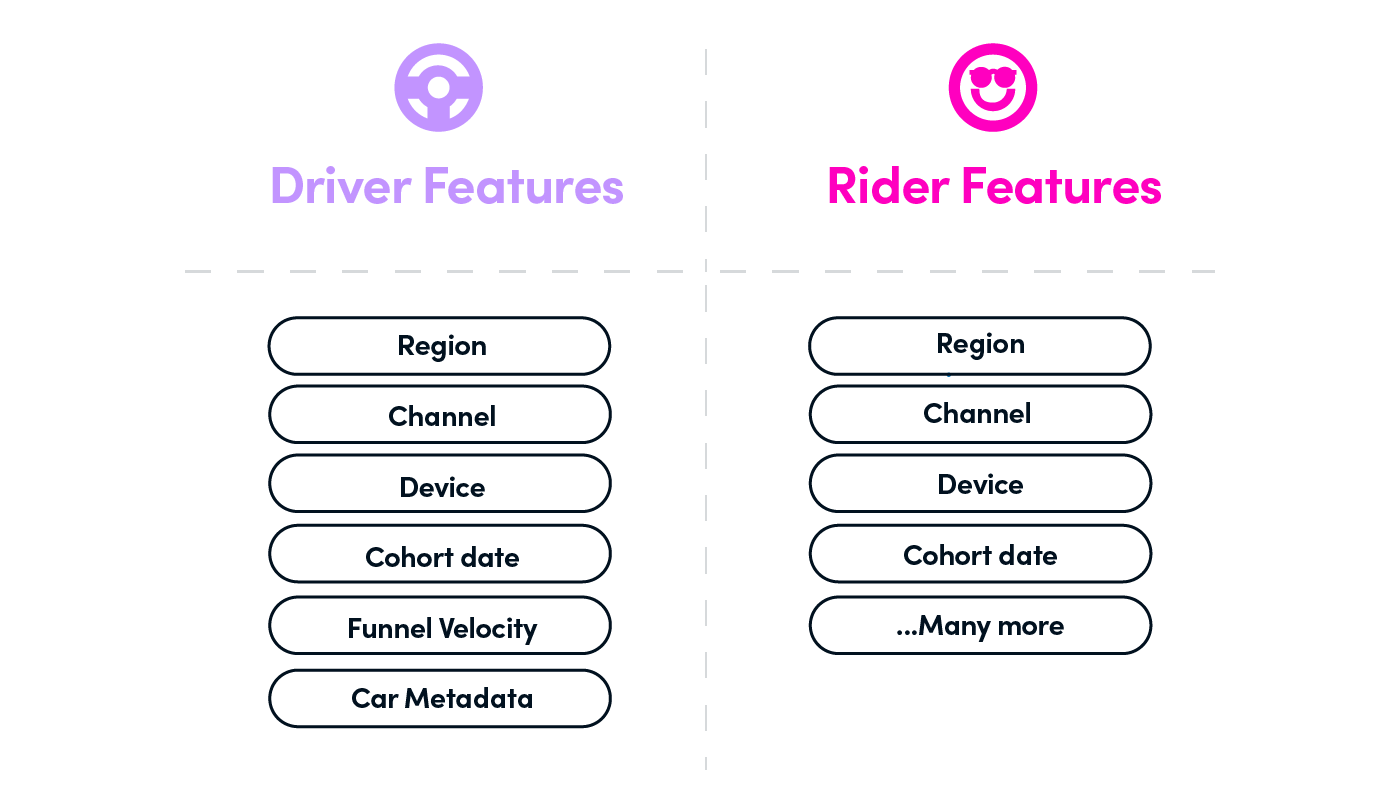

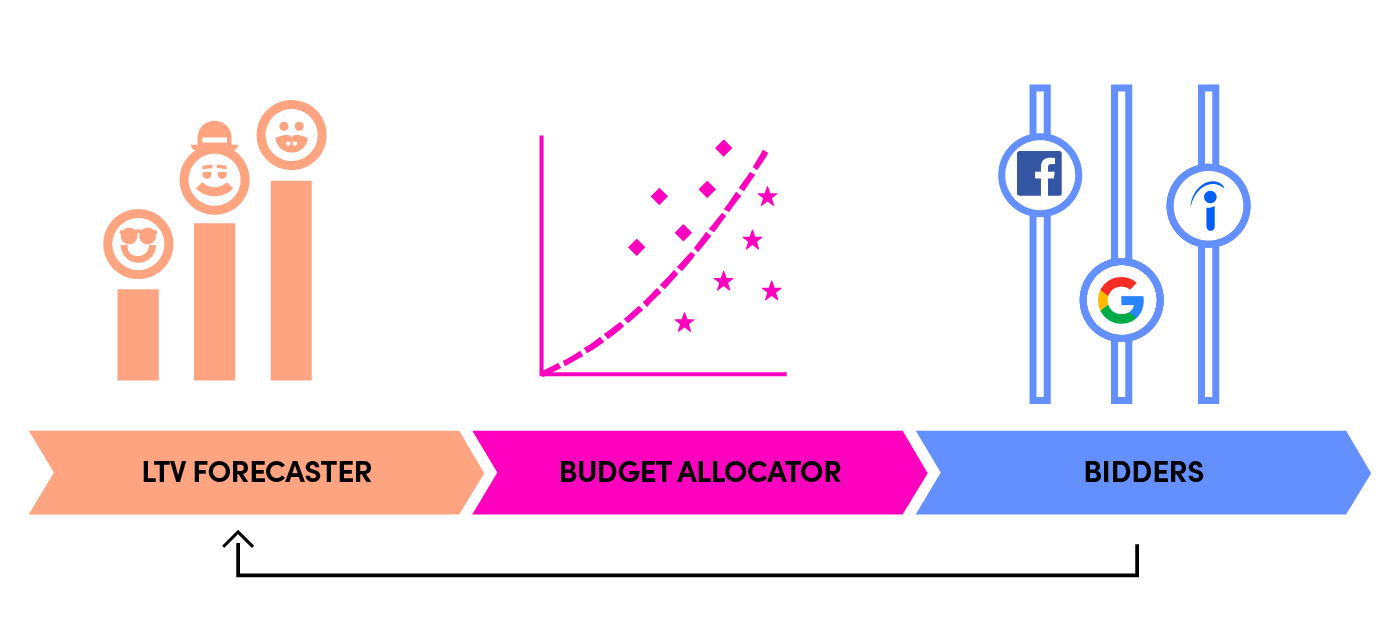

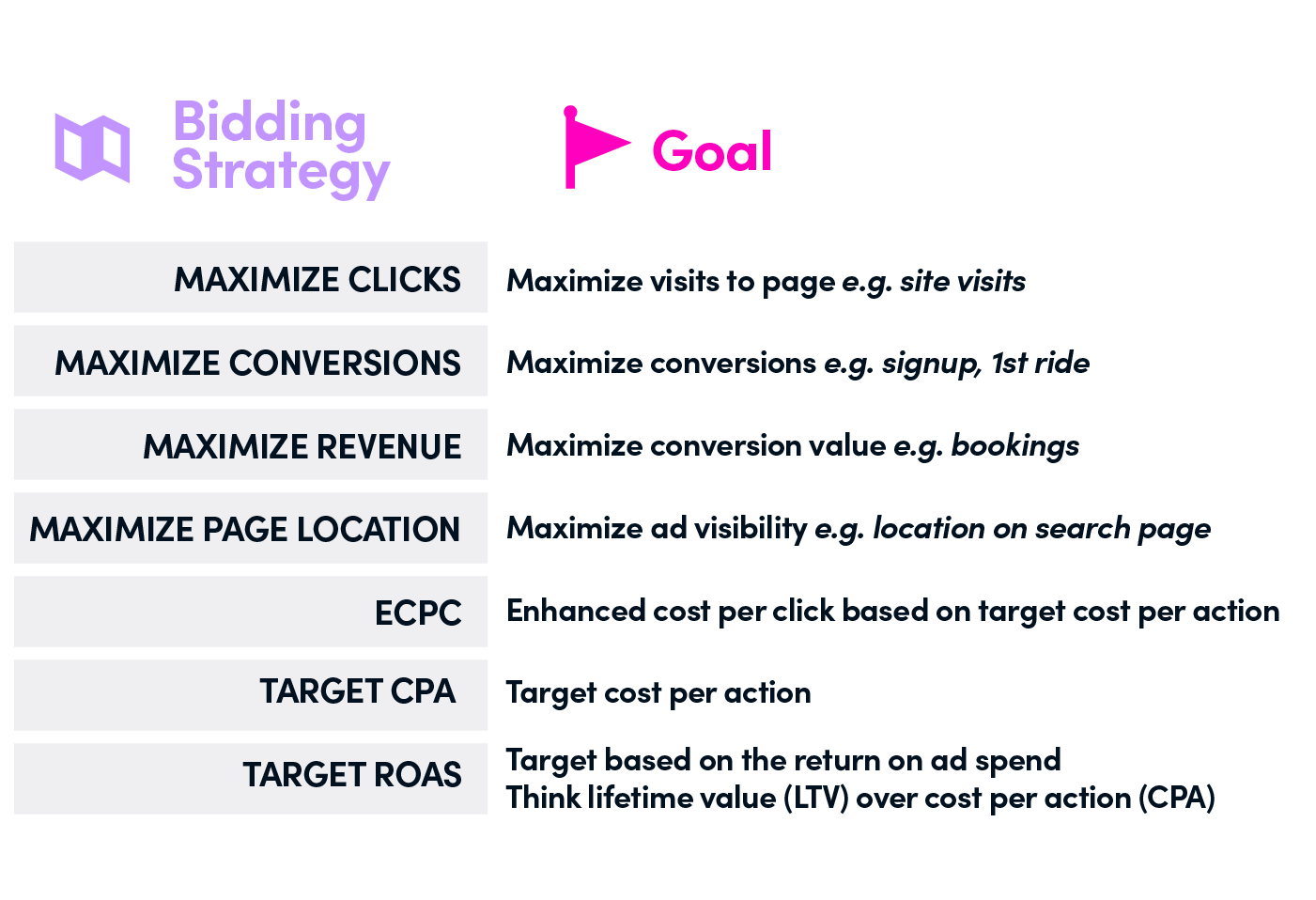

40 | The problems that need to be automated include:

41 |

42 | 1. updating bids across search keywords

43 | 2. turning off poor-performing creatives

44 | 3. changing referrals values by market

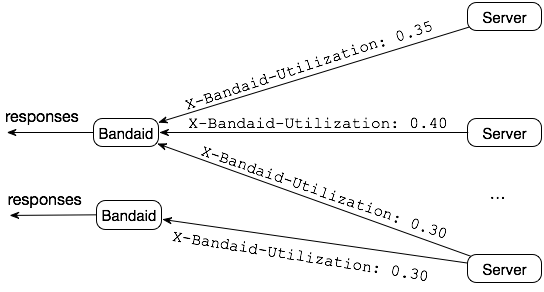

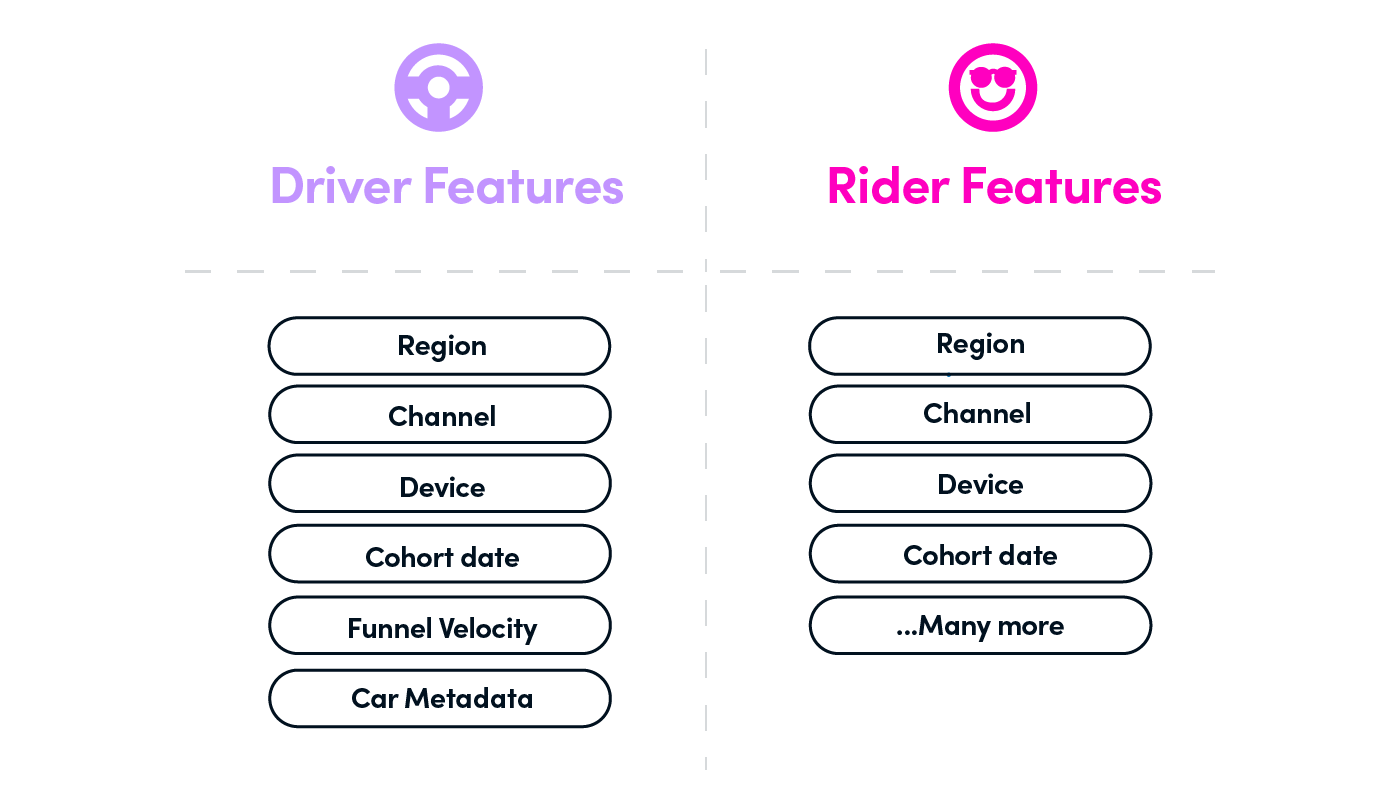

45 | 4. identifying high-value user segments