├── .gitignore

├── .vscode

├── launch.json

└── settings.json

├── CHANGELOG.md

├── Datasets

└── README.md

├── LICENSE

├── MANIFEST.in

├── Models

└── README.md

├── README.md

├── Tests

├── README.md

├── test_tensorflow_metrics.py

└── test_text_utils.py

├── Tutorials

├── 01_image_to_word

│ ├── README.md

│ ├── configs.py

│ ├── inferenceModel.py

│ ├── model.py

│ ├── requiremenets.txt

│ └── train.py

├── 02_captcha_to_text

│ ├── README.md

│ ├── configs.py

│ ├── inferenceModel.py

│ ├── model.py

│ └── train.py

├── 03_handwriting_recognition

│ ├── README.md

│ ├── configs.py

│ ├── inferenceModel.py

│ ├── model.py

│ └── train.py

├── 04_sentence_recognition

│ ├── README.md

│ ├── configs.py

│ ├── inferenceModel.py

│ ├── model.py

│ └── train.py

├── 05_sound_to_text

│ ├── README.md

│ ├── configs.py

│ ├── inferenceModel.py

│ ├── model.py

│ ├── train.py

│ └── train_no_limit.py

├── 06_pytorch_introduction

│ ├── README.md

│ ├── model.py

│ ├── requirements.txt

│ ├── test.py

│ └── train.py

├── 07_pytorch_wrapper

│ ├── README.md

│ ├── model.py

│ ├── requirements.txt

│ ├── test.py

│ └── train.py

├── 08_handwriting_recognition_torch

│ ├── README.md

│ ├── configs.py

│ ├── inferenceModel.py

│ ├── model.py

│ ├── requirements.txt

│ └── train_torch.py

├── 09_translation_transformer

│ ├── README.md

│ ├── configs.py

│ ├── download.py

│ ├── model.py

│ ├── requirements.txt

│ ├── test.py

│ └── train.py

├── 10_wav2vec2_torch

│ ├── configs.py

│ ├── requirements.txt

│ ├── test.py

│ ├── train.py

│ └── train_tf.py

├── 11_Yolov8

│ ├── README.md

│ ├── convert2onnx.py

│ ├── requirements.txt

│ ├── run_pretrained.py

│ ├── test_yolov8.py

│ └── train_yolov8.py

└── README.md

├── bin

├── read_parquet.py

└── setup.sh

├── mltu

├── __init__.py

├── annotations

│ ├── __init__.py

│ ├── audio.py

│ ├── detections.py

│ └── images.py

├── augmentors.py

├── configs.py

├── dataProvider.py

├── inferenceModel.py

├── preprocessors.py

├── tensorflow

│ ├── README.md

│ ├── __init__.py

│ ├── callbacks.py

│ ├── dataProvider.py

│ ├── layers.py

│ ├── losses.py

│ ├── metrics.py

│ ├── model_utils.py

│ ├── models

│ │ └── u2net.py

│ ├── requirements.txt

│ └── transformer

│ │ ├── __init__.py

│ │ ├── attention.py

│ │ ├── callbacks.py

│ │ ├── layers.py

│ │ └── utils.py

├── tokenizers.py

├── torch

│ ├── README.md

│ ├── __init__.py

│ ├── callbacks.py

│ ├── dataProvider.py

│ ├── handlers.py

│ ├── losses.py

│ ├── metrics.py

│ ├── model.py

│ ├── requirements.txt

│ └── yolo

│ │ ├── README.md

│ │ ├── __init__.py

│ │ ├── annotation.py

│ │ ├── detectors

│ │ ├── __init__.py

│ │ ├── detector.py

│ │ ├── onnx_detector.py

│ │ └── torch_detector.py

│ │ ├── loss.py

│ │ ├── metrics.py

│ │ ├── optimizer.py

│ │ ├── preprocessors.py

│ │ ├── pruning_utils.py

│ │ └── requirements.txt

├── transformers.py

└── utils

│ ├── __init__.py

│ └── text_utils.py

├── requirements.txt

└── setup.py

/.gitignore:

--------------------------------------------------------------------------------

1 | __pycache__

2 | *.egg-info

3 | *.pyc

4 | venv

5 |

6 | Datasets/*

7 | Models/*

8 | dist

9 |

10 | !*.md

11 |

12 | .idea

13 | .python-version

14 |

15 | test

16 | build

17 | yolov8*

18 | pyrightconfig.json

--------------------------------------------------------------------------------

/.vscode/launch.json:

--------------------------------------------------------------------------------

1 | {

2 | // Use IntelliSense to learn about possible attributes.

3 | // Hover to view descriptions of existing attributes.

4 | // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387

5 | "version": "0.2.0",

6 | "configurations": [

7 | {

8 | "name": "Python: Current File",

9 | "type": "python",

10 | "request": "launch",

11 | "program": "${file}",

12 | "console": "integratedTerminal",

13 | "justMyCode": false,

14 | "subProcess": true,

15 | }

16 | ]

17 | }

--------------------------------------------------------------------------------

/.vscode/settings.json:

--------------------------------------------------------------------------------

1 | {

2 | "python.analysis.typeCheckingMode": "off",

3 | "python.testing.unittestArgs": [

4 | "-v",

5 | "-s",

6 | "./Tests",

7 | "-p",

8 | "*test*.py"

9 | ],

10 | "python.testing.pytestEnabled": false,

11 | "python.testing.unittestEnabled": true

12 | }

--------------------------------------------------------------------------------

/Datasets/README.md:

--------------------------------------------------------------------------------

1 | # Empty repository to hold the datasets when running Tutorials

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2022 Rokas

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include requirements.txt

--------------------------------------------------------------------------------

/Models/README.md:

--------------------------------------------------------------------------------

1 | # Empty repository to hold the Models when running Tutorials

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # MLTU - Machine Learning Training Utilities

2 | Machine Learning Training Utilities for TensorFlow 2.* and PyTorch with Python 3

3 |

4 |  5 |

5 |

6 |

7 | # Installation:

8 | To use MLTU in your own project, you can install it from PyPI:

9 | ```bash

10 | pip install mltu

11 | ```

12 | When running tutorials, it's necessary to install mltu for a specific tutorial, for example:

13 | ```bash

14 | pip install mltu==0.1.3

15 | ```

16 | Each tutorial has its own requirements.txt file for a specific mltu version. As this project progress, the newest versions may have breaking changes, so it's recommended to use the same version as in the tutorial.

17 |

18 | # Tutorials and Examples can be found on [PyLessons.com](https://pylessons.com/mltu)

19 | 1. [Text Recognition With TensorFlow and CTC network](https://pylessons.com/ctc-text-recognition), code in ```Tutorials\01_image_to_word``` folder;

20 | 2. [TensorFlow OCR model for reading Captchas](https://pylessons.com/tensorflow-ocr-captcha), code in ```Tutorials\02_captcha_to_text``` folder;

21 | 3. [Handwriting words recognition with TensorFlow](https://pylessons.com/handwriting-recognition), code in ```Tutorials\03_handwriting_recognition``` folder;

22 | 4. [Handwritten sentence recognition with TensorFlow](https://pylessons.com/handwritten-sentence-recognition), code in ```Tutorials\04_sentence_recognition``` folder;

23 | 5. [Introduction to speech recognition with TensorFlow](https://pylessons.com/speech-recognition), code in ```Tutorials\05_speech_recognition``` folder;

24 | 6. [Introduction to PyTorch in a practical way](https://pylessons.com/pytorch-introduction), code in ```Tutorials\06_pytorch_introduction``` folder;

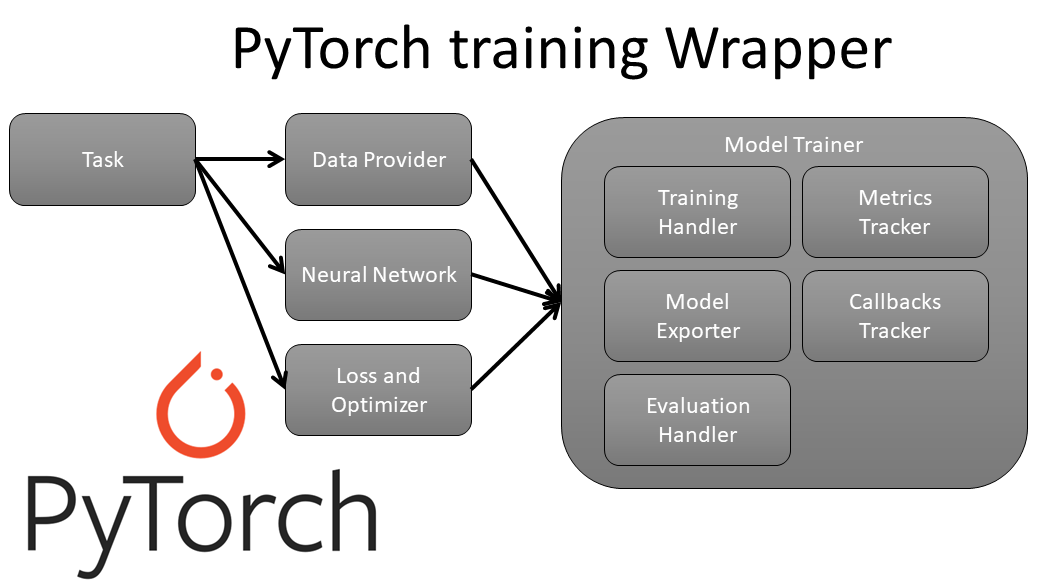

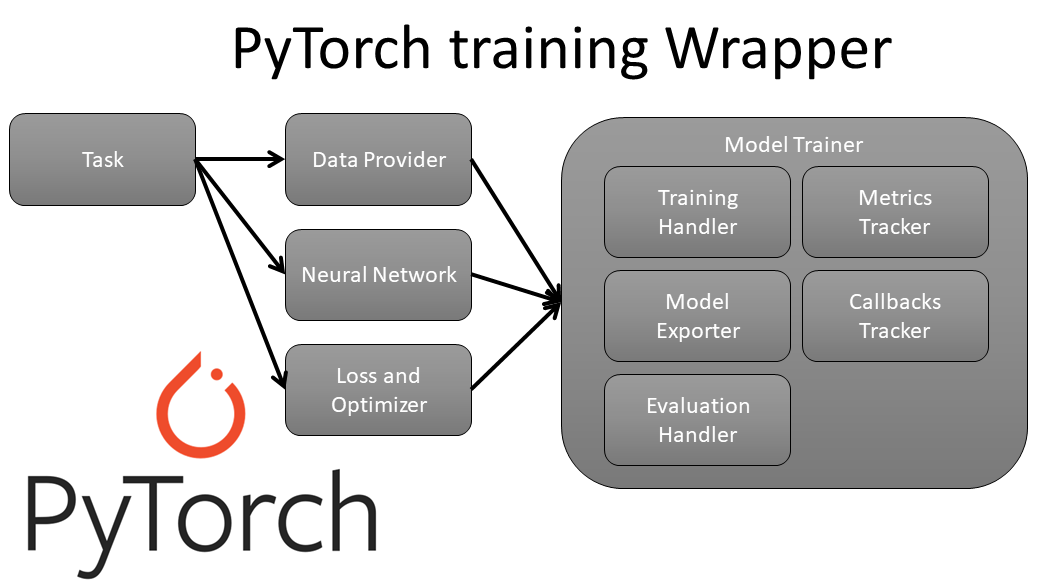

25 | 7. [Using custom wrapper to simplify PyTorch models training pipeline](https://pylessons.com/pytorch-introduction), code in ```Tutorials\07_pytorch_wrapper``` folder;

26 | 8. [Handwriting words recognition with PyTorch](https://pylessons.com/handwriting-recognition-pytorch), code in ```Tutorials\08_handwriting_recognition_torch``` folder;

27 | 9. [Transformer training with TensorFlow for Translation task](https://pylessons.com/transformers-training), code in ```Tutorials\09_translation_transformer``` folder;

28 | 10. [Speech Recognition in Python | finetune wav2vec2 model for a custom ASR model](https://youtu.be/h6ooEGzjkj0), code in ```Tutorials\10_wav2vec2_torch``` folder;

29 | 11. [YOLOv8: Real-Time Object Detection Simplified](https://youtu.be/vegL__weCxY), code in ```Tutorials\11_Yolov8``` folder;

30 | 12. [YOLOv8: Customizing Object Detector training](https://youtu.be/ysYiV1CbCyY), code in ```Tutorials\11_Yolov8\train_yolov8.py``` folder;

--------------------------------------------------------------------------------

/Tests/README.md:

--------------------------------------------------------------------------------

1 | # Repository for unit tests

--------------------------------------------------------------------------------

/Tests/test_tensorflow_metrics.py:

--------------------------------------------------------------------------------

1 | import unittest

2 | import numpy as np

3 | from mltu.tensorflow.metrics import CERMetric, WERMetric

4 |

5 | import numpy as np

6 | import tensorflow as tf

7 |

8 | class TestMetrics(unittest.TestCase):

9 |

10 | def to_embeddings(self, sentences, vocab):

11 | embeddings, max_len = [], 0

12 |

13 | for sentence in sentences:

14 | embedding = []

15 | for character in sentence:

16 | embedding.append(vocab.index(character))

17 | embeddings.append(embedding)

18 | max_len = max(max_len, len(embedding))

19 | return embeddings, max_len

20 |

21 | def setUp(self) -> None:

22 | true_words = ["Who are you", "I am a student", "I am a teacher", "Just different sentence length"]

23 | pred_words = ["Who are you", "I am a ztudent", "I am A reacher", "Just different length"]

24 |

25 | vocab = set()

26 | for sen in true_words + pred_words:

27 | for character in sen:

28 | vocab.add(character)

29 | self.vocab = "".join(vocab)

30 |

31 | sentence_true, max_len_true = self.to_embeddings(true_words, self.vocab)

32 | sentence_pred, max_len_pred = self.to_embeddings(pred_words, self.vocab)

33 |

34 | max_len = max(max_len_true, max_len_pred)

35 | padding_length = 64

36 |

37 | self.sen_true = [np.pad(sen, (0, max_len - len(sen)), "constant", constant_values=len(self.vocab)) for sen in sentence_true]

38 | self.sen_pred = [np.pad(sen, (0, padding_length - len(sen)), "constant", constant_values=-1) for sen in sentence_pred]

39 |

40 | def test_CERMetric(self):

41 | vocabulary = tf.constant(list(self.vocab))

42 | cer = CERMetric.get_cer(self.sen_true, self.sen_pred, vocabulary).numpy()

43 |

44 | self.assertTrue(np.array_equal(cer, np.array([0.0, 0.071428575, 0.14285715, 0.42857143], dtype=np.float32)))

45 |

46 | def test_WERMetric(self):

47 | vocabulary = tf.constant(list(self.vocab))

48 | wer = WERMetric.get_wer(self.sen_true, self.sen_pred, vocabulary).numpy()

49 |

50 | self.assertTrue(np.array_equal(wer, np.array([0., 0.25, 0.5, 0.33333334], dtype=np.float32)))

51 |

52 | if __name__ == "__main__":

53 | unittest.main()

--------------------------------------------------------------------------------

/Tests/test_text_utils.py:

--------------------------------------------------------------------------------

1 | import unittest

2 |

3 | from mltu.utils.text_utils import edit_distance, get_cer, get_wer

4 |

5 | class TestTextUtils(unittest.TestCase):

6 |

7 | def test_edit_distance(self):

8 | """ This unit test includes several test cases to cover different scenarios, including no errors,

9 | substitution errors, insertion errors, deletion errors, and a more complex case with multiple

10 | errors. It also includes a test case for empty input.

11 | """

12 | # Test simple case with no errors

13 | prediction_tokens = ["A", "B", "C"]

14 | reference_tokens = ["A", "B", "C"]

15 | self.assertEqual(edit_distance(prediction_tokens, reference_tokens), 0)

16 |

17 | # Test simple case with one substitution error

18 | prediction_tokens = ["A", "B", "D"]

19 | reference_tokens = ["A", "B", "C"]

20 | self.assertEqual(edit_distance(prediction_tokens, reference_tokens), 1)

21 |

22 | # Test simple case with one insertion error

23 | prediction_tokens = ["A", "B", "C"]

24 | reference_tokens = ["A", "B", "C", "D"]

25 | self.assertEqual(edit_distance(prediction_tokens, reference_tokens), 1)

26 |

27 | # Test simple case with one deletion error

28 | prediction_tokens = ["A", "B"]

29 | reference_tokens = ["A", "B", "C"]

30 | self.assertEqual(edit_distance(prediction_tokens, reference_tokens), 1)

31 |

32 | # Test more complex case with multiple errors

33 | prediction_tokens = ["A", "B", "C", "D", "E"]

34 | reference_tokens = ["A", "C", "B", "F", "E"]

35 | self.assertEqual(edit_distance(prediction_tokens, reference_tokens), 3)

36 |

37 | # Test empty input

38 | prediction_tokens = []

39 | reference_tokens = []

40 | self.assertEqual(edit_distance(prediction_tokens, reference_tokens), 0)

41 |

42 | def test_get_cer(self):

43 | # Test simple case with no errors

44 | preds = ["A B C"]

45 | target = ["A B C"]

46 | self.assertEqual(get_cer(preds, target), 0)

47 |

48 | # Test simple case with one character error

49 | preds = ["A B C"]

50 | target = ["A B D"]

51 | self.assertEqual(get_cer(preds, target), 1/5)

52 |

53 | # Test simple case with multiple character errors

54 | preds = ["A B C"]

55 | target = ["D E F"]

56 | self.assertEqual(get_cer(preds, target), 3/5)

57 |

58 | # Test empty input

59 | preds = []

60 | target = []

61 | self.assertEqual(get_cer(preds, target), 0)

62 |

63 | # Test simple case with different word lengths

64 | preds = ["ABC"]

65 | target = ["ABCDEFG"]

66 | self.assertEqual(get_cer(preds, target), 4/7)

67 |

68 | def test_get_wer(self):

69 | # Test simple case with no errors

70 | preds = "A B C"

71 | target = "A B C"

72 | self.assertEqual(get_wer(preds, target), 0)

73 |

74 | # Test simple case with one word error

75 | preds = "A B C"

76 | target = "A B D"

77 | self.assertEqual(get_wer(preds, target), 1/3)

78 |

79 | # Test simple case with multiple word errors

80 | preds = "A B C"

81 | target = "D E F"

82 | self.assertEqual(get_wer(preds, target), 1)

83 |

84 | # Test empty input

85 | preds = ""

86 | target = ""

87 | self.assertEqual(get_wer(preds, target), 0)

88 |

89 | # Test simple case with different sentence lengths

90 | preds = ["ABC"]

91 | target = ["ABC DEF"]

92 | self.assertEqual(get_wer(preds, target), 1)

93 |

94 |

95 | if __name__ == "__main__":

96 | unittest.main()

97 |

--------------------------------------------------------------------------------

/Tutorials/01_image_to_word/configs.py:

--------------------------------------------------------------------------------

1 | import os

2 | from datetime import datetime

3 |

4 | from mltu.configs import BaseModelConfigs

5 |

6 |

7 | class ModelConfigs(BaseModelConfigs):

8 | def __init__(self):

9 | super().__init__()

10 | self.model_path = os.path.join("Models/1_image_to_word", datetime.strftime(datetime.now(), "%Y%m%d%H%M"))

11 | self.vocab = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"

12 | self.height = 32

13 | self.width = 128

14 | self.max_text_length = 23

15 | self.batch_size = 1024

16 | self.learning_rate = 1e-4

17 | self.train_epochs = 100

18 | self.train_workers = 20

--------------------------------------------------------------------------------

/Tutorials/01_image_to_word/inferenceModel.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import typing

3 | import numpy as np

4 |

5 | from mltu.inferenceModel import OnnxInferenceModel

6 | from mltu.utils.text_utils import ctc_decoder, get_cer

7 |

8 | class ImageToWordModel(OnnxInferenceModel):

9 | def __init__(self, char_list: typing.Union[str, list], *args, **kwargs):

10 | super().__init__(*args, **kwargs)

11 | self.char_list = char_list

12 |

13 | def predict(self, image: np.ndarray):

14 | image = cv2.resize(image, self.input_shapes[0][1:3][::-1])

15 |

16 | image_pred = np.expand_dims(image, axis=0).astype(np.float32)

17 |

18 | preds = self.model.run(self.output_names, {self.input_names[0]: image_pred})[0]

19 |

20 | text = ctc_decoder(preds, self.char_list)[0]

21 |

22 | return text

23 |

24 |

25 | if __name__ == "__main__":

26 | import pandas as pd

27 | from tqdm import tqdm

28 | from mltu.configs import BaseModelConfigs

29 |

30 | configs = BaseModelConfigs.load("Models/1_image_to_word/202211270035/configs.yaml")

31 |

32 | model = ImageToWordModel(model_path=configs.model_path, char_list=configs.vocab)

33 |

34 | df = pd.read_csv("Models/1_image_to_word/202211270035/val.csv").dropna().values.tolist()

35 |

36 | accum_cer = []

37 | for image_path, label in tqdm(df[:20]):

38 | image = cv2.imread(image_path.replace("\\", "/"))

39 |

40 | try:

41 | prediction_text = model.predict(image)

42 |

43 | cer = get_cer(prediction_text, label)

44 | print(f"Image: {image_path}, Label: {label}, Prediction: {prediction_text}, CER: {cer}")

45 |

46 | # resize image by 3 times for visualization

47 | # image = cv2.resize(image, (image.shape[1] * 3, image.shape[0] * 3))

48 | # cv2.imshow(prediction_text, image)

49 | # cv2.waitKey(0)

50 | # cv2.destroyAllWindows()

51 | except:

52 | continue

53 |

54 | accum_cer.append(cer)

55 |

56 | print(f"Average CER: {np.average(accum_cer)}")

--------------------------------------------------------------------------------

/Tutorials/01_image_to_word/model.py:

--------------------------------------------------------------------------------

1 | from keras import layers

2 | from keras.models import Model

3 |

4 | from mltu.tensorflow.model_utils import residual_block

5 |

6 |

7 | def train_model(input_dim, output_dim, activation="leaky_relu", dropout=0.2):

8 |

9 | inputs = layers.Input(shape=input_dim, name="input")

10 |

11 | input = layers.Lambda(lambda x: x / 255)(inputs)

12 |

13 | x1 = residual_block(input, 16, activation=activation, skip_conv=True, strides=1, dropout=dropout)

14 |

15 | x2 = residual_block(x1, 16, activation=activation, skip_conv=True, strides=2, dropout=dropout)

16 | x3 = residual_block(x2, 16, activation=activation, skip_conv=False, strides=1, dropout=dropout)

17 |

18 | x4 = residual_block(x3, 32, activation=activation, skip_conv=True, strides=2, dropout=dropout)

19 | x5 = residual_block(x4, 32, activation=activation, skip_conv=False, strides=1, dropout=dropout)

20 |

21 | x6 = residual_block(x5, 64, activation=activation, skip_conv=True, strides=1, dropout=dropout)

22 | x7 = residual_block(x6, 64, activation=activation, skip_conv=False, strides=1, dropout=dropout)

23 |

24 | squeezed = layers.Reshape((x7.shape[-3] * x7.shape[-2], x7.shape[-1]))(x7)

25 |

26 | blstm = layers.Bidirectional(layers.LSTM(64, return_sequences=True))(squeezed)

27 |

28 | output = layers.Dense(output_dim + 1, activation="softmax", name="output")(blstm)

29 |

30 | model = Model(inputs=inputs, outputs=output)

31 | return model

--------------------------------------------------------------------------------

/Tutorials/01_image_to_word/requiremenets.txt:

--------------------------------------------------------------------------------

1 | mltu==0.1.3

--------------------------------------------------------------------------------

/Tutorials/01_image_to_word/train.py:

--------------------------------------------------------------------------------

1 | import os

2 | from tqdm import tqdm

3 | import tensorflow as tf

4 |

5 | try: [tf.config.experimental.set_memory_growth(gpu, True) for gpu in tf.config.experimental.list_physical_devices("GPU")]

6 | except: pass

7 |

8 | from keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau, TensorBoard

9 |

10 | from mltu.preprocessors import ImageReader

11 | from mltu.annotations.images import CVImage

12 | from mltu.transformers import ImageResizer, LabelIndexer, LabelPadding

13 | from mltu.tensorflow.dataProvider import DataProvider

14 | from mltu.tensorflow.losses import CTCloss

15 | from mltu.tensorflow.callbacks import Model2onnx, TrainLogger

16 | from mltu.tensorflow.metrics import CWERMetric

17 |

18 |

19 | from model import train_model

20 | from configs import ModelConfigs

21 |

22 | configs = ModelConfigs()

23 |

24 | data_path = "Datasets/90kDICT32px"

25 | val_annotation_path = data_path + "/annotation_val.txt"

26 | train_annotation_path = data_path + "/annotation_train.txt"

27 |

28 | # Read metadata file and parse it

29 | def read_annotation_file(annotation_path):

30 | dataset, vocab, max_len = [], set(), 0

31 | with open(annotation_path, "r") as f:

32 | for line in tqdm(f.readlines()):

33 | line = line.split()

34 | image_path = data_path + line[0][1:]

35 | label = line[0].split("_")[1]

36 | dataset.append([image_path, label])

37 | vocab.update(list(label))

38 | max_len = max(max_len, len(label))

39 | return dataset, sorted(vocab), max_len

40 |

41 | train_dataset, train_vocab, max_train_len = read_annotation_file(train_annotation_path)

42 | val_dataset, val_vocab, max_val_len = read_annotation_file(val_annotation_path)

43 |

44 | # Save vocab and maximum text length to configs

45 | configs.vocab = "".join(train_vocab)

46 | configs.max_text_length = max(max_train_len, max_val_len)

47 | configs.save()

48 |

49 | # Create training data provider

50 | train_data_provider = DataProvider(

51 | dataset=train_dataset,

52 | skip_validation=True,

53 | batch_size=configs.batch_size,

54 | data_preprocessors=[ImageReader(CVImage)],

55 | transformers=[

56 | ImageResizer(configs.width, configs.height),

57 | LabelIndexer(configs.vocab),

58 | LabelPadding(max_word_length=configs.max_text_length, padding_value=len(configs.vocab))

59 | ],

60 | )

61 |

62 | # Create validation data provider

63 | val_data_provider = DataProvider(

64 | dataset=val_dataset,

65 | skip_validation=True,

66 | batch_size=configs.batch_size,

67 | data_preprocessors=[ImageReader(CVImage)],

68 | transformers=[

69 | ImageResizer(configs.width, configs.height),

70 | LabelIndexer(configs.vocab),

71 | LabelPadding(max_word_length=configs.max_text_length, padding_value=len(configs.vocab))

72 | ],

73 | )

74 |

75 | model = train_model(

76 | input_dim = (configs.height, configs.width, 3),

77 | output_dim = len(configs.vocab),

78 | )

79 | # Compile the model and print summary

80 | model.compile(

81 | optimizer=tf.keras.optimizers.Adam(learning_rate=configs.learning_rate),

82 | loss=CTCloss(),

83 | metrics=[CWERMetric()],

84 | run_eagerly=False

85 | )

86 | model.summary(line_length=110)

87 |

88 | # Define path to save the model

89 | os.makedirs(configs.model_path, exist_ok=True)

90 |

91 | # Define callbacks

92 | earlystopper = EarlyStopping(monitor="val_CER", patience=10, verbose=1)

93 | checkpoint = ModelCheckpoint(f"{configs.model_path}/model.h5", monitor="val_CER", verbose=1, save_best_only=True, mode="min")

94 | trainLogger = TrainLogger(configs.model_path)

95 | tb_callback = TensorBoard(f"{configs.model_path}/logs", update_freq=1)

96 | reduceLROnPlat = ReduceLROnPlateau(monitor="val_CER", factor=0.9, min_delta=1e-10, patience=5, verbose=1, mode="auto")

97 | model2onnx = Model2onnx(f"{configs.model_path}/model.h5")

98 |

99 | # Train the model

100 | model.fit(

101 | train_data_provider,

102 | validation_data=val_data_provider,

103 | epochs=configs.train_epochs,

104 | callbacks=[earlystopper, checkpoint, trainLogger, reduceLROnPlat, tb_callback, model2onnx],

105 | workers=configs.train_workers

106 | )

107 |

108 | # Save training and validation datasets as csv files

109 | train_data_provider.to_csv(os.path.join(configs.model_path, "train.csv"))

110 | val_data_provider.to_csv(os.path.join(configs.model_path, "val.csv"))

--------------------------------------------------------------------------------

/Tutorials/02_captcha_to_text/configs.py:

--------------------------------------------------------------------------------

1 | import os

2 | from datetime import datetime

3 |

4 | from mltu.configs import BaseModelConfigs

5 |

6 |

7 | class ModelConfigs(BaseModelConfigs):

8 | def __init__(self):

9 | super().__init__()

10 | self.model_path = os.path.join("Models/02_captcha_to_text", datetime.strftime(datetime.now(), "%Y%m%d%H%M"))

11 | self.vocab = ""

12 | self.height = 50

13 | self.width = 200

14 | self.max_text_length = 0

15 | self.batch_size = 64

16 | self.learning_rate = 1e-3

17 | self.train_epochs = 1000

18 | self.train_workers = 20

--------------------------------------------------------------------------------

/Tutorials/02_captcha_to_text/inferenceModel.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import typing

3 | import numpy as np

4 |

5 | from mltu.inferenceModel import OnnxInferenceModel

6 | from mltu.utils.text_utils import ctc_decoder, get_cer

7 |

8 | class ImageToWordModel(OnnxInferenceModel):

9 | def __init__(self, char_list: typing.Union[str, list], *args, **kwargs):

10 | super().__init__(*args, **kwargs)

11 | self.char_list = char_list

12 |

13 | def predict(self, image: np.ndarray):

14 | image = cv2.resize(image, self.input_shapes[0][1:3][::-1])

15 |

16 | image_pred = np.expand_dims(image, axis=0).astype(np.float32)

17 |

18 | preds = self.model.run(self.output_names, {self.input_names[0]: image_pred})[0]

19 |

20 | text = ctc_decoder(preds, self.char_list)[0]

21 |

22 | return text

23 |

24 | if __name__ == "__main__":

25 | import pandas as pd

26 | from tqdm import tqdm

27 | from mltu.configs import BaseModelConfigs

28 |

29 | configs = BaseModelConfigs.load("Models/02_captcha_to_text/202212211205/configs.yaml")

30 |

31 | model = ImageToWordModel(model_path=configs.model_path, char_list=configs.vocab)

32 |

33 | df = pd.read_csv("Models/02_captcha_to_text/202212211205/val.csv").values.tolist()

34 |

35 | accum_cer = []

36 | for image_path, label in tqdm(df):

37 | image = cv2.imread(image_path.replace("\\", "/"))

38 |

39 | prediction_text = model.predict(image)

40 |

41 | cer = get_cer(prediction_text, label)

42 | print(f"Image: {image_path}, Label: {label}, Prediction: {prediction_text}, CER: {cer}")

43 |

44 | accum_cer.append(cer)

45 |

46 | print(f"Average CER: {np.average(accum_cer)}")

--------------------------------------------------------------------------------

/Tutorials/02_captcha_to_text/model.py:

--------------------------------------------------------------------------------

1 | from keras import layers

2 | from keras.models import Model

3 |

4 | from mltu.tensorflow.model_utils import residual_block

5 |

6 |

7 | def train_model(input_dim, output_dim, activation="leaky_relu", dropout=0.2):

8 |

9 | inputs = layers.Input(shape=input_dim, name="input")

10 |

11 | # normalize images here instead in preprocessing step

12 | input = layers.Lambda(lambda x: x / 255)(inputs)

13 |

14 | x1 = residual_block(input, 16, activation=activation, skip_conv=True, strides=1, dropout=dropout)

15 |

16 | x2 = residual_block(x1, 16, activation=activation, skip_conv=True, strides=2, dropout=dropout)

17 | x3 = residual_block(x2, 16, activation=activation, skip_conv=False, strides=1, dropout=dropout)

18 |

19 | x4 = residual_block(x3, 32, activation=activation, skip_conv=True, strides=2, dropout=dropout)

20 | x5 = residual_block(x4, 32, activation=activation, skip_conv=False, strides=1, dropout=dropout)

21 |

22 | x6 = residual_block(x5, 64, activation=activation, skip_conv=True, strides=2, dropout=dropout)

23 | x7 = residual_block(x6, 32, activation=activation, skip_conv=True, strides=1, dropout=dropout)

24 |

25 | x8 = residual_block(x7, 64, activation=activation, skip_conv=True, strides=2, dropout=dropout)

26 | x9 = residual_block(x8, 64, activation=activation, skip_conv=False, strides=1, dropout=dropout)

27 |

28 | squeezed = layers.Reshape((x9.shape[-3] * x9.shape[-2], x9.shape[-1]))(x9)

29 |

30 | blstm = layers.Bidirectional(layers.LSTM(128, return_sequences=True))(squeezed)

31 | blstm = layers.Dropout(dropout)(blstm)

32 |

33 | output = layers.Dense(output_dim + 1, activation="softmax", name="output")(blstm)

34 |

35 | model = Model(inputs=inputs, outputs=output)

36 | return model

37 |

--------------------------------------------------------------------------------

/Tutorials/02_captcha_to_text/train.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | try: [tf.config.experimental.set_memory_growth(gpu, True) for gpu in tf.config.experimental.list_physical_devices("GPU")]

3 | except: pass

4 |

5 | from keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau, TensorBoard

6 |

7 | from mltu.tensorflow.dataProvider import DataProvider

8 | from mltu.tensorflow.losses import CTCloss

9 | from mltu.tensorflow.callbacks import Model2onnx, TrainLogger

10 | from mltu.tensorflow.metrics import CWERMetric

11 |

12 | from mltu.preprocessors import ImageReader

13 | from mltu.transformers import ImageResizer, LabelIndexer, LabelPadding

14 | from mltu.augmentors import RandomBrightness, RandomRotate, RandomErodeDilate

15 | from mltu.annotations.images import CVImage

16 |

17 | from model import train_model

18 | from configs import ModelConfigs

19 |

20 | import os

21 | from urllib.request import urlopen

22 | from io import BytesIO

23 | from zipfile import ZipFile

24 |

25 |

26 | def download_and_unzip(url, extract_to="Datasets"):

27 | http_response = urlopen(url)

28 | zipfile = ZipFile(BytesIO(http_response.read()))

29 | zipfile.extractall(path=extract_to)

30 |

31 |

32 | if not os.path.exists(os.path.join("Datasets", "captcha_images_v2")):

33 | download_and_unzip("https://github.com/AakashKumarNain/CaptchaCracker/raw/master/captcha_images_v2.zip",

34 | extract_to="Datasets")

35 |

36 | # Create a list of all the images and labels in the dataset

37 | dataset, vocab, max_len = [], set(), 0

38 | captcha_path = os.path.join("Datasets", "captcha_images_v2")

39 | for file in os.listdir(captcha_path):

40 | file_path = os.path.join(captcha_path, file)

41 | label = os.path.splitext(file)[0] # Get the file name without the extension

42 | dataset.append([file_path, label])

43 | vocab.update(list(label))

44 | max_len = max(max_len, len(label))

45 |

46 | configs = ModelConfigs()

47 |

48 | # Save vocab and maximum text length to configs

49 | configs.vocab = "".join(vocab)

50 | configs.max_text_length = max_len

51 | configs.save()

52 |

53 | # Create a data provider for the dataset

54 | data_provider = DataProvider(

55 | dataset=dataset,

56 | skip_validation=True,

57 | batch_size=configs.batch_size,

58 | data_preprocessors=[ImageReader(CVImage)],

59 | transformers=[

60 | ImageResizer(configs.width, configs.height),

61 | LabelIndexer(configs.vocab),

62 | LabelPadding(max_word_length=configs.max_text_length, padding_value=len(configs.vocab))

63 | ],

64 | )

65 | # Split the dataset into training and validation sets

66 | train_data_provider, val_data_provider = data_provider.split(split = 0.9)

67 |

68 | # Augment training data with random brightness, rotation and erode/dilate

69 | train_data_provider.augmentors = [RandomBrightness(), RandomRotate(), RandomErodeDilate()]

70 |

71 | # Creating TensorFlow model architecture

72 | model = train_model(

73 | input_dim = (configs.height, configs.width, 3),

74 | output_dim = len(configs.vocab),

75 | )

76 |

77 | # Compile the model and print summary

78 | model.compile(

79 | optimizer=tf.keras.optimizers.Adam(learning_rate=configs.learning_rate),

80 | loss=CTCloss(),

81 | metrics=[CWERMetric(padding_token=len(configs.vocab))],

82 | run_eagerly=False

83 | )

84 | model.summary(line_length=110)

85 | # Define path to save the model

86 | os.makedirs(configs.model_path, exist_ok=True)

87 |

88 | # Define callbacks

89 | earlystopper = EarlyStopping(monitor="val_CER", patience=50, verbose=1, mode="min")

90 | checkpoint = ModelCheckpoint(f"{configs.model_path}/model.h5", monitor="val_CER", verbose=1, save_best_only=True, mode="min")

91 | trainLogger = TrainLogger(configs.model_path)

92 | tb_callback = TensorBoard(f"{configs.model_path}/logs", update_freq=1)

93 | reduceLROnPlat = ReduceLROnPlateau(monitor="val_CER", factor=0.9, min_delta=1e-10, patience=20, verbose=1, mode="min")

94 | model2onnx = Model2onnx(f"{configs.model_path}/model.h5")

95 |

96 | # Train the model

97 | model.fit(

98 | train_data_provider,

99 | validation_data=val_data_provider,

100 | epochs=configs.train_epochs,

101 | callbacks=[earlystopper, checkpoint, trainLogger, reduceLROnPlat, tb_callback, model2onnx],

102 | workers=configs.train_workers

103 | )

104 |

105 | # Save training and validation datasets as csv files

106 | train_data_provider.to_csv(os.path.join(configs.model_path, "train.csv"))

107 | val_data_provider.to_csv(os.path.join(configs.model_path, "val.csv"))

--------------------------------------------------------------------------------

/Tutorials/03_handwriting_recognition/configs.py:

--------------------------------------------------------------------------------

1 | import os

2 | from datetime import datetime

3 |

4 | from mltu.configs import BaseModelConfigs

5 |

6 | class ModelConfigs(BaseModelConfigs):

7 | def __init__(self):

8 | super().__init__()

9 | self.model_path = os.path.join("Models/03_handwriting_recognition", datetime.strftime(datetime.now(), "%Y%m%d%H%M"))

10 | self.vocab = ""

11 | self.height = 32

12 | self.width = 128

13 | self.max_text_length = 0

14 | self.batch_size = 16

15 | self.learning_rate = 0.0005

16 | self.train_epochs = 1000

17 | self.train_workers = 20

--------------------------------------------------------------------------------

/Tutorials/03_handwriting_recognition/inferenceModel.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import typing

3 | import numpy as np

4 |

5 | from mltu.inferenceModel import OnnxInferenceModel

6 | from mltu.utils.text_utils import ctc_decoder, get_cer

7 |

8 | class ImageToWordModel(OnnxInferenceModel):

9 | def __init__(self, char_list: typing.Union[str, list], *args, **kwargs):

10 | super().__init__(*args, **kwargs)

11 | self.char_list = char_list

12 |

13 | def predict(self, image: np.ndarray):

14 | image = cv2.resize(image, self.input_shapes[0][1:3][::-1])

15 |

16 | image_pred = np.expand_dims(image, axis=0).astype(np.float32)

17 |

18 | preds = self.model.run(self.output_names, {self.input_names[0]: image_pred})[0]

19 |

20 | text = ctc_decoder(preds, self.char_list)[0]

21 |

22 | return text

23 |

24 | if __name__ == "__main__":

25 | import pandas as pd

26 | from tqdm import tqdm

27 | from mltu.configs import BaseModelConfigs

28 |

29 | configs = BaseModelConfigs.load("Models/03_handwriting_recognition/202301111911/configs.yaml")

30 |

31 | model = ImageToWordModel(model_path=configs.model_path, char_list=configs.vocab)

32 |

33 | df = pd.read_csv("Models/03_handwriting_recognition/202301111911/val.csv").values.tolist()

34 |

35 | accum_cer = []

36 | for image_path, label in tqdm(df):

37 | image = cv2.imread(image_path.replace("\\", "/"))

38 |

39 | prediction_text = model.predict(image)

40 |

41 | cer = get_cer(prediction_text, label)

42 | print(f"Image: {image_path}, Label: {label}, Prediction: {prediction_text}, CER: {cer}")

43 |

44 | accum_cer.append(cer)

45 |

46 | # resize by 4x

47 | image = cv2.resize(image, (image.shape[1] * 4, image.shape[0] * 4))

48 | cv2.imshow("Image", image)

49 | cv2.waitKey(0)

50 | cv2.destroyAllWindows()

51 |

52 | print(f"Average CER: {np.average(accum_cer)}")

--------------------------------------------------------------------------------

/Tutorials/03_handwriting_recognition/model.py:

--------------------------------------------------------------------------------

1 | from keras import layers

2 | from keras.models import Model

3 |

4 | from mltu.tensorflow.model_utils import residual_block

5 |

6 |

7 | def train_model(input_dim, output_dim, activation="leaky_relu", dropout=0.2):

8 |

9 | inputs = layers.Input(shape=input_dim, name="input")

10 |

11 | # normalize images here instead in preprocessing step

12 | input = layers.Lambda(lambda x: x / 255)(inputs)

13 |

14 | x1 = residual_block(input, 16, activation=activation, skip_conv=True, strides=1, dropout=dropout)

15 |

16 | x2 = residual_block(x1, 16, activation=activation, skip_conv=True, strides=2, dropout=dropout)

17 | x3 = residual_block(x2, 16, activation=activation, skip_conv=False, strides=1, dropout=dropout)

18 |

19 | x4 = residual_block(x3, 32, activation=activation, skip_conv=True, strides=2, dropout=dropout)

20 | x5 = residual_block(x4, 32, activation=activation, skip_conv=False, strides=1, dropout=dropout)

21 |

22 | x6 = residual_block(x5, 64, activation=activation, skip_conv=True, strides=2, dropout=dropout)

23 | x7 = residual_block(x6, 64, activation=activation, skip_conv=True, strides=1, dropout=dropout)

24 |

25 | x8 = residual_block(x7, 64, activation=activation, skip_conv=False, strides=1, dropout=dropout)

26 | x9 = residual_block(x8, 64, activation=activation, skip_conv=False, strides=1, dropout=dropout)

27 |

28 | squeezed = layers.Reshape((x9.shape[-3] * x9.shape[-2], x9.shape[-1]))(x9)

29 |

30 | blstm = layers.Bidirectional(layers.LSTM(128, return_sequences=True))(squeezed)

31 | blstm = layers.Dropout(dropout)(blstm)

32 |

33 | output = layers.Dense(output_dim + 1, activation="softmax", name="output")(blstm)

34 |

35 | model = Model(inputs=inputs, outputs=output)

36 | return model

37 |

--------------------------------------------------------------------------------

/Tutorials/03_handwriting_recognition/train.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | try: [tf.config.experimental.set_memory_growth(gpu, True) for gpu in tf.config.experimental.list_physical_devices("GPU")]

3 | except: pass

4 |

5 | from keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau, TensorBoard

6 |

7 | from mltu.preprocessors import ImageReader

8 | from mltu.transformers import ImageResizer, LabelIndexer, LabelPadding, ImageShowCV2

9 | from mltu.augmentors import RandomBrightness, RandomRotate, RandomErodeDilate, RandomSharpen

10 | from mltu.annotations.images import CVImage

11 |

12 | from mltu.tensorflow.dataProvider import DataProvider

13 | from mltu.tensorflow.losses import CTCloss

14 | from mltu.tensorflow.callbacks import Model2onnx, TrainLogger

15 | from mltu.tensorflow.metrics import CWERMetric

16 |

17 | from model import train_model

18 | from configs import ModelConfigs

19 |

20 | import os

21 | import tarfile

22 | from tqdm import tqdm

23 | from urllib.request import urlopen

24 | from io import BytesIO

25 | from zipfile import ZipFile

26 |

27 |

28 | def download_and_unzip(url, extract_to="Datasets", chunk_size=1024*1024):

29 | http_response = urlopen(url)

30 |

31 | data = b""

32 | iterations = http_response.length // chunk_size + 1

33 | for _ in tqdm(range(iterations)):

34 | data += http_response.read(chunk_size)

35 |

36 | zipfile = ZipFile(BytesIO(data))

37 | zipfile.extractall(path=extract_to)

38 |

39 | dataset_path = os.path.join("Datasets", "IAM_Words")

40 | if not os.path.exists(dataset_path):

41 | download_and_unzip("https://git.io/J0fjL", extract_to="Datasets")

42 |

43 | file = tarfile.open(os.path.join(dataset_path, "words.tgz"))

44 | file.extractall(os.path.join(dataset_path, "words"))

45 |

46 | dataset, vocab, max_len = [], set(), 0

47 |

48 | # Preprocess the dataset by the specific IAM_Words dataset file structure

49 | words = open(os.path.join(dataset_path, "words.txt"), "r").readlines()

50 | for line in tqdm(words):

51 | if line.startswith("#"):

52 | continue

53 |

54 | line_split = line.split(" ")

55 | if line_split[1] == "err":

56 | continue

57 |

58 | folder1 = line_split[0][:3]

59 | folder2 = "-".join(line_split[0].split("-")[:2])

60 | file_name = line_split[0] + ".png"

61 | label = line_split[-1].rstrip("\n")

62 |

63 | rel_path = os.path.join(dataset_path, "words", folder1, folder2, file_name)

64 | if not os.path.exists(rel_path):

65 | print(f"File not found: {rel_path}")

66 | continue

67 |

68 | dataset.append([rel_path, label])

69 | vocab.update(list(label))

70 | max_len = max(max_len, len(label))

71 |

72 | # Create a ModelConfigs object to store model configurations

73 | configs = ModelConfigs()

74 |

75 | # Save vocab and maximum text length to configs

76 | configs.vocab = "".join(vocab)

77 | configs.max_text_length = max_len

78 | configs.save()

79 |

80 | # Create a data provider for the dataset

81 | data_provider = DataProvider(

82 | dataset=dataset,

83 | skip_validation=True,

84 | batch_size=configs.batch_size,

85 | data_preprocessors=[ImageReader(CVImage)],

86 | transformers=[

87 | ImageResizer(configs.width, configs.height, keep_aspect_ratio=False),

88 | LabelIndexer(configs.vocab),

89 | LabelPadding(max_word_length=configs.max_text_length, padding_value=len(configs.vocab)),

90 | ],

91 | )

92 |

93 | # Split the dataset into training and validation sets

94 | train_data_provider, val_data_provider = data_provider.split(split = 0.9)

95 |

96 | # Augment training data with random brightness, rotation and erode/dilate

97 | train_data_provider.augmentors = [

98 | RandomBrightness(),

99 | RandomErodeDilate(),

100 | RandomSharpen(),

101 | RandomRotate(angle=10),

102 | ]

103 |

104 | # Creating TensorFlow model architecture

105 | model = train_model(

106 | input_dim = (configs.height, configs.width, 3),

107 | output_dim = len(configs.vocab),

108 | )

109 |

110 | # Compile the model and print summary

111 | model.compile(

112 | optimizer=tf.keras.optimizers.Adam(learning_rate=configs.learning_rate),

113 | loss=CTCloss(),

114 | metrics=[CWERMetric(padding_token=len(configs.vocab))],

115 | )

116 | model.summary(line_length=110)

117 |

118 | # Define callbacks

119 | earlystopper = EarlyStopping(monitor="val_CER", patience=20, verbose=1)

120 | checkpoint = ModelCheckpoint(f"{configs.model_path}/model.h5", monitor="val_CER", verbose=1, save_best_only=True, mode="min")

121 | trainLogger = TrainLogger(configs.model_path)

122 | tb_callback = TensorBoard(f"{configs.model_path}/logs", update_freq=1)

123 | reduceLROnPlat = ReduceLROnPlateau(monitor="val_CER", factor=0.9, min_delta=1e-10, patience=10, verbose=1, mode="auto")

124 | model2onnx = Model2onnx(f"{configs.model_path}/model.h5")

125 |

126 | # Train the model

127 | model.fit(

128 | train_data_provider,

129 | validation_data=val_data_provider,

130 | epochs=configs.train_epochs,

131 | callbacks=[earlystopper, checkpoint, trainLogger, reduceLROnPlat, tb_callback, model2onnx],

132 | workers=configs.train_workers

133 | )

134 |

135 | # Save training and validation datasets as csv files

136 | train_data_provider.to_csv(os.path.join(configs.model_path, "train.csv"))

137 | val_data_provider.to_csv(os.path.join(configs.model_path, "val.csv"))

--------------------------------------------------------------------------------

/Tutorials/04_sentence_recognition/README.md:

--------------------------------------------------------------------------------

1 | # Handwritten sentence recognition with TensorFlow

2 | ## Unlock the power of handwritten sentence recognition with TensorFlow and CTC loss. From digitizing notes to transcribing historical documents and automating exam grading

3 |

4 |

5 | ## **Detailed tutorial**:

6 | ## [Handwritten sentence recognition with TensorFlow](https://pylessons.com/handwritten-sentence-recognition)

7 |

8 |

9 |  10 |

10 |

--------------------------------------------------------------------------------

/Tutorials/04_sentence_recognition/configs.py:

--------------------------------------------------------------------------------

1 | import os

2 | from datetime import datetime

3 |

4 | from mltu.configs import BaseModelConfigs

5 |

6 | class ModelConfigs(BaseModelConfigs):

7 | def __init__(self):

8 | super().__init__()

9 | self.model_path = os.path.join("Models/04_sentence_recognition", datetime.strftime(datetime.now(), "%Y%m%d%H%M"))

10 | self.vocab = ""

11 | self.height = 96

12 | self.width = 1408

13 | self.max_text_length = 0

14 | self.batch_size = 32

15 | self.learning_rate = 0.0005

16 | self.train_epochs = 1000

17 | self.train_workers = 20

--------------------------------------------------------------------------------

/Tutorials/04_sentence_recognition/inferenceModel.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import typing

3 | import numpy as np

4 |

5 | from mltu.inferenceModel import OnnxInferenceModel

6 | from mltu.utils.text_utils import ctc_decoder, get_cer, get_wer

7 | from mltu.transformers import ImageResizer

8 |

9 | class ImageToWordModel(OnnxInferenceModel):

10 | def __init__(self, char_list: typing.Union[str, list], *args, **kwargs):

11 | super().__init__(*args, **kwargs)

12 | self.char_list = char_list

13 |

14 | def predict(self, image: np.ndarray):

15 | image = ImageResizer.resize_maintaining_aspect_ratio(image, *self.input_shapes[0][1:3][::-1])

16 |

17 | image_pred = np.expand_dims(image, axis=0).astype(np.float32)

18 |

19 | preds = self.model.run(self.output_names, {self.input_names[0]: image_pred})[0]

20 |

21 | text = ctc_decoder(preds, self.char_list)[0]

22 |

23 | return text

24 |

25 | if __name__ == "__main__":

26 | import pandas as pd

27 | from tqdm import tqdm

28 | from mltu.configs import BaseModelConfigs

29 |

30 | configs = BaseModelConfigs.load("Models/04_sentence_recognition/202301131202/configs.yaml")

31 |

32 | model = ImageToWordModel(model_path=configs.model_path, char_list=configs.vocab)

33 |

34 | df = pd.read_csv("Models/04_sentence_recognition/202301131202/val.csv").values.tolist()

35 |

36 | accum_cer, accum_wer = [], []

37 | for image_path, label in tqdm(df):

38 | image = cv2.imread(image_path.replace("\\", "/"))

39 |

40 | prediction_text = model.predict(image)

41 |

42 | cer = get_cer(prediction_text, label)

43 | wer = get_wer(prediction_text, label)

44 | print("Image: ", image_path)

45 | print("Label:", label)

46 | print("Prediction: ", prediction_text)

47 | print(f"CER: {cer}; WER: {wer}")

48 |

49 | accum_cer.append(cer)

50 | accum_wer.append(wer)

51 |

52 | cv2.imshow(prediction_text, image)

53 | cv2.waitKey(0)

54 | cv2.destroyAllWindows()

55 |

56 | print(f"Average CER: {np.average(accum_cer)}, Average WER: {np.average(accum_wer)}")

--------------------------------------------------------------------------------

/Tutorials/04_sentence_recognition/model.py:

--------------------------------------------------------------------------------

1 | from keras import layers

2 | from keras.models import Model

3 |

4 | from mltu.tensorflow.model_utils import residual_block

5 |

6 |

7 | def train_model(input_dim, output_dim, activation="leaky_relu", dropout=0.2):

8 |

9 | inputs = layers.Input(shape=input_dim, name="input")

10 |

11 | # normalize images here instead in preprocessing step

12 | input = layers.Lambda(lambda x: x / 255)(inputs)

13 |

14 | x1 = residual_block(input, 32, activation=activation, skip_conv=True, strides=1, dropout=dropout)

15 |

16 | x2 = residual_block(x1, 32, activation=activation, skip_conv=True, strides=2, dropout=dropout)

17 | x3 = residual_block(x2, 32, activation=activation, skip_conv=False, strides=1, dropout=dropout)

18 |

19 | x4 = residual_block(x3, 64, activation=activation, skip_conv=True, strides=2, dropout=dropout)

20 | x5 = residual_block(x4, 64, activation=activation, skip_conv=False, strides=1, dropout=dropout)

21 |

22 | x6 = residual_block(x5, 128, activation=activation, skip_conv=True, strides=2, dropout=dropout)

23 | x7 = residual_block(x6, 128, activation=activation, skip_conv=True, strides=1, dropout=dropout)

24 |

25 | x8 = residual_block(x7, 128, activation=activation, skip_conv=True, strides=2, dropout=dropout)

26 | x9 = residual_block(x8, 128, activation=activation, skip_conv=False, strides=1, dropout=dropout)

27 |

28 | squeezed = layers.Reshape((x9.shape[-3] * x9.shape[-2], x9.shape[-1]))(x9)

29 |

30 | blstm = layers.Bidirectional(layers.LSTM(256, return_sequences=True))(squeezed)

31 | blstm = layers.Dropout(dropout)(blstm)

32 |

33 | blstm = layers.Bidirectional(layers.LSTM(64, return_sequences=True))(blstm)

34 | blstm = layers.Dropout(dropout)(blstm)

35 |

36 | output = layers.Dense(output_dim + 1, activation="softmax", name="output")(blstm)

37 |

38 | model = Model(inputs=inputs, outputs=output)

39 | return model

40 |

--------------------------------------------------------------------------------

/Tutorials/04_sentence_recognition/train.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | try: [tf.config.experimental.set_memory_growth(gpu, True) for gpu in tf.config.experimental.list_physical_devices("GPU")]

3 | except: pass

4 |

5 | from keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau, TensorBoard

6 |

7 | from mltu.preprocessors import ImageReader

8 | from mltu.transformers import ImageResizer, LabelIndexer, LabelPadding, ImageShowCV2

9 | from mltu.augmentors import RandomBrightness, RandomRotate, RandomErodeDilate, RandomSharpen

10 | from mltu.annotations.images import CVImage

11 |

12 | from mltu.tensorflow.dataProvider import DataProvider

13 | from mltu.tensorflow.losses import CTCloss

14 | from mltu.tensorflow.callbacks import Model2onnx, TrainLogger

15 | from mltu.tensorflow.metrics import CERMetric, WERMetric

16 |

17 | from model import train_model

18 | from configs import ModelConfigs

19 |

20 | import os

21 | from tqdm import tqdm

22 |

23 | # Must download and extract datasets manually from https://fki.tic.heia-fr.ch/databases/download-the-iam-handwriting-database to Datasets\IAM_Sentences

24 | sentences_txt_path = os.path.join("Datasets", "IAM_Sentences", "ascii", "sentences.txt")

25 | sentences_folder_path = os.path.join("Datasets", "IAM_Sentences", "sentences")

26 |

27 | dataset, vocab, max_len = [], set(), 0

28 | words = open(sentences_txt_path, "r").readlines()

29 | for line in tqdm(words):

30 | if line.startswith("#"):

31 | continue

32 |

33 | line_split = line.split(" ")

34 | if line_split[2] == "err":

35 | continue

36 |

37 | folder1 = line_split[0][:3]

38 | folder2 = "-".join(line_split[0].split("-")[:2])

39 | file_name = line_split[0] + ".png"

40 | label = line_split[-1].rstrip("\n")

41 |

42 | # replace "|" with " " in label

43 | label = label.replace("|", " ")

44 |

45 | rel_path = os.path.join(sentences_folder_path, folder1, folder2, file_name)

46 | if not os.path.exists(rel_path):

47 | print(f"File not found: {rel_path}")

48 | continue

49 |

50 | dataset.append([rel_path, label])

51 | vocab.update(list(label))

52 | max_len = max(max_len, len(label))

53 |

54 | # Create a ModelConfigs object to store model configurations

55 | configs = ModelConfigs()

56 |

57 | # Save vocab and maximum text length to configs

58 | configs.vocab = "".join(vocab)

59 | configs.max_text_length = max_len

60 | configs.save()

61 |

62 | # Create a data provider for the dataset

63 | data_provider = DataProvider(

64 | dataset=dataset,

65 | skip_validation=True,

66 | batch_size=configs.batch_size,

67 | data_preprocessors=[ImageReader(CVImage)],

68 | transformers=[

69 | ImageResizer(configs.width, configs.height, keep_aspect_ratio=True),

70 | LabelIndexer(configs.vocab),

71 | LabelPadding(max_word_length=configs.max_text_length, padding_value=len(configs.vocab)),

72 | ],

73 | )

74 |

75 | # Split the dataset into training and validation sets

76 | train_data_provider, val_data_provider = data_provider.split(split = 0.9)

77 |

78 | # Augment training data with random brightness, rotation and erode/dilate

79 | train_data_provider.augmentors = [

80 | RandomBrightness(),

81 | RandomErodeDilate(),

82 | RandomSharpen(),

83 | ]

84 |

85 | # Creating TensorFlow model architecture

86 | model = train_model(

87 | input_dim = (configs.height, configs.width, 3),

88 | output_dim = len(configs.vocab),

89 | )

90 |

91 | # Compile the model and print summary

92 | model.compile(

93 | optimizer=tf.keras.optimizers.Adam(learning_rate=configs.learning_rate),

94 | loss=CTCloss(),

95 | metrics=[

96 | CERMetric(vocabulary=configs.vocab),

97 | WERMetric(vocabulary=configs.vocab)

98 | ],

99 | run_eagerly=False

100 | )

101 | model.summary(line_length=110)

102 |

103 | # Define callbacks

104 | earlystopper = EarlyStopping(monitor="val_CER", patience=20, verbose=1, mode="min")

105 | checkpoint = ModelCheckpoint(f"{configs.model_path}/model.h5", monitor="val_CER", verbose=1, save_best_only=True, mode="min")

106 | trainLogger = TrainLogger(configs.model_path)

107 | tb_callback = TensorBoard(f"{configs.model_path}/logs", update_freq=1)

108 | reduceLROnPlat = ReduceLROnPlateau(monitor="val_CER", factor=0.9, min_delta=1e-10, patience=5, verbose=1, mode="auto")

109 | model2onnx = Model2onnx(f"{configs.model_path}/model.h5")

110 |

111 | # Train the model

112 | model.fit(

113 | train_data_provider,

114 | validation_data=val_data_provider,

115 | epochs=configs.train_epochs,

116 | callbacks=[earlystopper, checkpoint, trainLogger, reduceLROnPlat, tb_callback, model2onnx],

117 | workers=configs.train_workers

118 | )

119 |

120 | # Save training and validation datasets as csv files

121 | train_data_provider.to_csv(os.path.join(configs.model_path, "train.csv"))

122 | val_data_provider.to_csv(os.path.join(configs.model_path, "val.csv"))

--------------------------------------------------------------------------------

/Tutorials/05_sound_to_text/README.md:

--------------------------------------------------------------------------------

1 | # Introduction to speech recognition with TensorFlow

2 | ## Master the basics of speech recognition with TensorFlow: Learn how to build and train models, implement real-time audio recognition, and develop practical applications

3 |

4 |

5 | ## **Detailed tutorial**:

6 | ## [Introduction to speech recognition with TensorFlow](https://pylessons.com/speech-recognition)

7 |

8 |

9 |  10 |

10 |

--------------------------------------------------------------------------------

/Tutorials/05_sound_to_text/configs.py:

--------------------------------------------------------------------------------

1 | import os

2 | from datetime import datetime

3 |

4 | from mltu.configs import BaseModelConfigs

5 |

6 |

7 | class ModelConfigs(BaseModelConfigs):

8 | def __init__(self):

9 | super().__init__()

10 | self.model_path = os.path.join("Models/05_sound_to_text", datetime.strftime(datetime.now(), "%Y%m%d%H%M"))

11 | self.frame_length = 256

12 | self.frame_step = 160

13 | self.fft_length = 384

14 |

15 | self.vocab = "abcdefghijklmnopqrstuvwxyz'?! "

16 | self.input_shape = None

17 | self.max_text_length = None

18 | self.max_spectrogram_length = None

19 |

20 | self.batch_size = 8

21 | self.learning_rate = 0.0005

22 | self.train_epochs = 1000

23 | self.train_workers = 20

--------------------------------------------------------------------------------

/Tutorials/05_sound_to_text/inferenceModel.py:

--------------------------------------------------------------------------------

1 | import typing

2 | import numpy as np

3 |

4 | from mltu.inferenceModel import OnnxInferenceModel

5 | from mltu.preprocessors import WavReader

6 | from mltu.utils.text_utils import ctc_decoder, get_cer, get_wer

7 |

8 | class WavToTextModel(OnnxInferenceModel):

9 | def __init__(self, char_list: typing.Union[str, list], *args, **kwargs):

10 | super().__init__(*args, **kwargs)

11 | self.char_list = char_list

12 |

13 | def predict(self, data: np.ndarray):

14 | data_pred = np.expand_dims(data, axis=0)

15 |

16 | preds = self.model.run(self.output_names, {self.input_names[0]: data_pred})[0]

17 |

18 | text = ctc_decoder(preds, self.char_list)[0]

19 |

20 | return text

21 |

22 | if __name__ == "__main__":

23 | import pandas as pd

24 | from tqdm import tqdm

25 | from mltu.configs import BaseModelConfigs

26 |

27 | configs = BaseModelConfigs.load("Models/05_sound_to_text/202302051936/configs.yaml")

28 |

29 | model = WavToTextModel(model_path=configs.model_path, char_list=configs.vocab, force_cpu=False)

30 |

31 | df = pd.read_csv("Models/05_sound_to_text/202302051936/val.csv").values.tolist()

32 |

33 | accum_cer, accum_wer = [], []

34 | for wav_path, label in tqdm(df):

35 | wav_path = wav_path.replace("\\", "/")

36 | spectrogram = WavReader.get_spectrogram(wav_path, frame_length=configs.frame_length, frame_step=configs.frame_step, fft_length=configs.fft_length)

37 | WavReader.plot_raw_audio(wav_path, label)

38 |

39 | padded_spectrogram = np.pad(spectrogram, ((0, configs.max_spectrogram_length - spectrogram.shape[0]),(0,0)), mode="constant", constant_values=0)

40 |

41 | WavReader.plot_spectrogram(spectrogram, label)

42 |

43 | text = model.predict(padded_spectrogram)

44 |

45 | true_label = "".join([l for l in label.lower() if l in configs.vocab])

46 |

47 | cer = get_cer(text, true_label)

48 | wer = get_wer(text, true_label)

49 |

50 | accum_cer.append(cer)

51 | accum_wer.append(wer)

52 |

53 | print(f"Average CER: {np.average(accum_cer)}, Average WER: {np.average(accum_wer)}")

--------------------------------------------------------------------------------

/Tutorials/05_sound_to_text/model.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | from keras import layers

3 | from keras.models import Model

4 |

5 | from mltu.tensorflow.model_utils import residual_block, activation_layer

6 |

7 |

8 | def train_model(input_dim, output_dim, activation="leaky_relu", dropout=0.2):

9 |

10 | inputs = layers.Input(shape=input_dim, name="input", dtype=tf.float32)

11 |

12 | # expand dims to add channel dimension

13 | input = layers.Lambda(lambda x: tf.expand_dims(x, axis=-1))(inputs)

14 |

15 | # Convolution layer 1

16 | x = layers.Conv2D(filters=32, kernel_size=[11, 41], strides=[2, 2], padding="same", use_bias=False)(input)

17 | x = layers.BatchNormalization()(x)

18 | x = activation_layer(x, activation="leaky_relu")

19 |

20 | # Convolution layer 2

21 | x = layers.Conv2D(filters=32, kernel_size=[11, 21], strides=[1, 2], padding="same", use_bias=False)(x)

22 | x = layers.BatchNormalization()(x)

23 | x = activation_layer(x, activation="leaky_relu")

24 |

25 | # Reshape the resulted volume to feed the RNNs layers

26 | x = layers.Reshape((-1, x.shape[-2] * x.shape[-1]))(x)

27 |

28 | # RNN layers

29 | x = layers.Bidirectional(layers.LSTM(128, return_sequences=True))(x)

30 | x = layers.Dropout(dropout)(x)

31 |

32 | x = layers.Bidirectional(layers.LSTM(128, return_sequences=True))(x)

33 | x = layers.Dropout(dropout)(x)

34 |

35 | x = layers.Bidirectional(layers.LSTM(128, return_sequences=True))(x)

36 | x = layers.Dropout(dropout)(x)

37 |

38 | x = layers.Bidirectional(layers.LSTM(128, return_sequences=True))(x)

39 | x = layers.Dropout(dropout)(x)

40 |

41 | x = layers.Bidirectional(layers.LSTM(128, return_sequences=True))(x)

42 |

43 | # Dense layer

44 | x = layers.Dense(256)(x)

45 | x = activation_layer(x, activation="leaky_relu")

46 | x = layers.Dropout(dropout)(x)

47 |

48 | # Classification layer

49 | output = layers.Dense(output_dim + 1, activation="softmax", dtype=tf.float32)(x)

50 |

51 | model = Model(inputs=inputs, outputs=output)

52 | return model

--------------------------------------------------------------------------------

/Tutorials/05_sound_to_text/train.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | try: [tf.config.experimental.set_memory_growth(gpu, True) for gpu in tf.config.experimental.list_physical_devices("GPU")]

3 | except: pass

4 |

5 | import os

6 | import tarfile

7 | import pandas as pd

8 | from tqdm import tqdm

9 | from urllib.request import urlopen

10 | from io import BytesIO

11 |

12 | from keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau, TensorBoard

13 | from mltu.preprocessors import WavReader

14 |

15 | from mltu.tensorflow.dataProvider import DataProvider

16 | from mltu.transformers import LabelIndexer, LabelPadding, SpectrogramPadding

17 | from mltu.tensorflow.losses import CTCloss

18 | from mltu.tensorflow.callbacks import Model2onnx, TrainLogger

19 | from mltu.tensorflow.metrics import CERMetric, WERMetric

20 |

21 | from model import train_model

22 | from configs import ModelConfigs

23 |

24 |

25 | def download_and_unzip(url, extract_to="Datasets", chunk_size=1024*1024):

26 | http_response = urlopen(url)

27 |

28 | data = b""

29 | iterations = http_response.length // chunk_size + 1

30 | for _ in tqdm(range(iterations)):

31 | data += http_response.read(chunk_size)

32 |

33 | tarFile = tarfile.open(fileobj=BytesIO(data), mode="r|bz2")

34 | tarFile.extractall(path=extract_to)

35 | tarFile.close()

36 |

37 |

38 | dataset_path = os.path.join("Datasets", "LJSpeech-1.1")

39 | if not os.path.exists(dataset_path):

40 | download_and_unzip("https://data.keithito.com/data/speech/LJSpeech-1.1.tar.bz2", extract_to="Datasets")

41 |

42 | dataset_path = "Datasets/LJSpeech-1.1"

43 | metadata_path = dataset_path + "/metadata.csv"

44 | wavs_path = dataset_path + "/wavs/"

45 |

46 | # Read metadata file and parse it

47 | metadata_df = pd.read_csv(metadata_path, sep="|", header=None, quoting=3)

48 | metadata_df.columns = ["file_name", "transcription", "normalized_transcription"]

49 | metadata_df = metadata_df[["file_name", "normalized_transcription"]]

50 |

51 | # structure the dataset where each row is a list of [wav_file_path, sound transcription]

52 | dataset = [[f"Datasets/LJSpeech-1.1/wavs/{file}.wav", label.lower()] for file, label in metadata_df.values.tolist()]

53 |

54 | # Create a ModelConfigs object to store model configurations

55 | configs = ModelConfigs()

56 |

57 | max_text_length, max_spectrogram_length = 0, 0

58 | for file_path, label in tqdm(dataset):

59 | spectrogram = WavReader.get_spectrogram(file_path, frame_length=configs.frame_length, frame_step=configs.frame_step, fft_length=configs.fft_length)

60 | valid_label = [c for c in label if c in configs.vocab]

61 | max_text_length = max(max_text_length, len(valid_label))

62 | max_spectrogram_length = max(max_spectrogram_length, spectrogram.shape[0])

63 | configs.input_shape = [max_spectrogram_length, spectrogram.shape[1]]

64 |

65 | configs.max_spectrogram_length = max_spectrogram_length

66 | configs.max_text_length = max_text_length

67 | configs.save()

68 |

69 | # Create a data provider for the dataset

70 | data_provider = DataProvider(

71 | dataset=dataset,

72 | skip_validation=True,

73 | batch_size=configs.batch_size,

74 | data_preprocessors=[

75 | WavReader(frame_length=configs.frame_length, frame_step=configs.frame_step, fft_length=configs.fft_length),

76 | ],

77 | transformers=[

78 | SpectrogramPadding(max_spectrogram_length=configs.max_spectrogram_length, padding_value=0),

79 | LabelIndexer(configs.vocab),

80 | LabelPadding(max_word_length=configs.max_text_length, padding_value=len(configs.vocab)),

81 | ],

82 | )

83 |

84 | # Split the dataset into training and validation sets

85 | train_data_provider, val_data_provider = data_provider.split(split = 0.9)

86 |

87 | # Creating TensorFlow model architecture

88 | model = train_model(

89 | input_dim = configs.input_shape,

90 | output_dim = len(configs.vocab),

91 | dropout=0.5

92 | )

93 |

94 | # Compile the model and print summary

95 | model.compile(

96 | optimizer=tf.keras.optimizers.Adam(learning_rate=configs.learning_rate),

97 | loss=CTCloss(),

98 | metrics=[

99 | CERMetric(vocabulary=configs.vocab),

100 | WERMetric(vocabulary=configs.vocab)

101 | ],

102 | run_eagerly=False

103 | )

104 | model.summary(line_length=110)

105 |

106 | # Define callbacks

107 | earlystopper = EarlyStopping(monitor="val_CER", patience=20, verbose=1, mode="min")

108 | checkpoint = ModelCheckpoint(f"{configs.model_path}/model.h5", monitor="val_CER", verbose=1, save_best_only=True, mode="min")

109 | trainLogger = TrainLogger(configs.model_path)

110 | tb_callback = TensorBoard(f"{configs.model_path}/logs", update_freq=1)

111 | reduceLROnPlat = ReduceLROnPlateau(monitor="val_CER", factor=0.8, min_delta=1e-10, patience=5, verbose=1, mode="auto")

112 | model2onnx = Model2onnx(f"{configs.model_path}/model.h5")

113 |

114 | # Train the model

115 | model.fit(

116 | train_data_provider,

117 | validation_data=val_data_provider,

118 | epochs=configs.train_epochs,

119 | callbacks=[earlystopper, checkpoint, trainLogger, reduceLROnPlat, tb_callback, model2onnx],

120 | workers=configs.train_workers

121 | )

122 |

123 | # Save training and validation datasets as csv files

124 | train_data_provider.to_csv(os.path.join(configs.model_path, "train.csv"))

125 | val_data_provider.to_csv(os.path.join(configs.model_path, "val.csv"))

126 |

--------------------------------------------------------------------------------

/Tutorials/05_sound_to_text/train_no_limit.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | try: [tf.config.experimental.set_memory_growth(gpu, True) for gpu in tf.config.experimental.list_physical_devices("GPU")]

3 | except: pass

4 | tf.keras.mixed_precision.set_global_policy('mixed_float16') # mixed precission training for faster training time

5 |

6 | import os

7 | import tarfile

8 | import pandas as pd

9 | from tqdm import tqdm

10 | from urllib.request import urlopen

11 | from io import BytesIO

12 |

13 | from keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau, TensorBoard

14 | from mltu.preprocessors import WavReader

15 |

16 | from mltu.tensorflow.dataProvider import DataProvider

17 | from mltu.transformers import LabelIndexer, LabelPadding, SpectrogramPadding

18 | from mltu.tensorflow.losses import CTCloss

19 | from mltu.tensorflow.callbacks import Model2onnx, TrainLogger

20 | from mltu.tensorflow.metrics import CERMetric, WERMetric

21 |

22 | from model import train_model

23 | from configs import ModelConfigs

24 |

25 |

26 | def download_and_unzip(url, extract_to="Datasets", chunk_size=1024*1024):

27 | http_response = urlopen(url)

28 |

29 | data = b""

30 | iterations = http_response.length // chunk_size + 1

31 | for _ in tqdm(range(iterations)):

32 | data += http_response.read(chunk_size)

33 |

34 | tarFile = tarfile.open(fileobj=BytesIO(data), mode="r|bz2")

35 | tarFile.extractall(path=extract_to)

36 | tarFile.close()

37 |

38 |

39 | dataset_path = os.path.join("Datasets", "LJSpeech-1.1")

40 | if not os.path.exists(dataset_path):

41 | download_and_unzip("https://data.keithito.com/data/speech/LJSpeech-1.1.tar.bz2", extract_to="Datasets")

42 |

43 | dataset_path = "Datasets/LJSpeech-1.1"

44 | metadata_path = dataset_path + "/metadata.csv"

45 | wavs_path = dataset_path + "/wavs/"

46 |

47 | # Read metadata file and parse it

48 | metadata_df = pd.read_csv(metadata_path, sep="|", header=None, quoting=3)

49 | metadata_df.columns = ["file_name", "transcription", "normalized_transcription"]

50 | metadata_df = metadata_df[["file_name", "normalized_transcription"]]

51 |

52 | # structure the dataset where each row is a list of [wav_file_path, sound transcription]

53 | dataset = [[f"Datasets/LJSpeech-1.1/wavs/{file}.wav", label.lower()] for file, label in metadata_df.values.tolist()]

54 |

55 | # Create a ModelConfigs object to store model configurations

56 | configs = ModelConfigs()

57 | configs.save()

58 |

59 | # Create a data provider for the dataset

60 | data_provider = DataProvider(

61 | dataset=dataset,

62 | skip_validation=True,

63 | batch_size=configs.batch_size,

64 | data_preprocessors=[

65 | WavReader(frame_length=configs.frame_length, frame_step=configs.frame_step, fft_length=configs.fft_length),

66 | ],

67 | transformers=[

68 | LabelIndexer(configs.vocab),

69 | ],

70 | batch_postprocessors=[

71 | SpectrogramPadding(padding_value=0, use_on_batch=True),

72 | LabelPadding(padding_value=len(configs.vocab), use_on_batch=True),

73 | ],

74 | )

75 |

76 | # Split the dataset into training and validation sets

77 | train_data_provider, val_data_provider = data_provider.split(split = 0.9)

78 |

79 | # Creating TensorFlow model architecture

80 | model = train_model(

81 | input_dim = (None, 193),

82 | output_dim = len(configs.vocab),

83 | dropout=0.5

84 | )

85 |

86 | # Compile the model and print summary

87 | model.compile(

88 | optimizer=tf.keras.optimizers.Adam(learning_rate=configs.learning_rate),

89 | loss=CTCloss(),

90 | metrics=[

91 | CERMetric(vocabulary=configs.vocab),

92 | WERMetric(vocabulary=configs.vocab)

93 | ],

94 | run_eagerly=False

95 | )

96 | model.summary(line_length=110)

97 |