├── chF

├── 02_mmlu

│ ├── requirements-extra.txt

│ ├── random_guessing_baseline.py

│ ├── 1_letter_matching.py

│ ├── 2_logprob.py

│ └── 3_teacher_forcing.py

├── README.md

├── 03_leaderboards

│ ├── 1_elo_leaderboard.py

│ ├── votes.json

│ ├── README.md

│ └── 2_bradley_terry_leaderboard.py

└── 04_llm-judge

│ └── README.md

├── requirements.txt

├── ch01

└── README.md

├── chG

├── 01_main-chapter-code

│ ├── public

│ │ ├── logo_dark.webp

│ │ └── logo_light.webp

│ ├── README.md

│ ├── qwen3_chat_interface.py

│ └── qwen3_chat_interface_multiturn.py

└── README.md

├── chC

└── README.md

├── ch02

├── 01_main-chapter-code

│ └── README.md

├── 02_setup-tips

│ ├── README.md

│ ├── gpu-instructions.md

│ └── python-instructions.md

├── README.md

├── 04_torch-compile-windows

│ └── README.md

├── 05_use_model

│ ├── generate_simple.py

│ ├── chat.py

│ ├── chat_multiturn.py

│ └── README.md

└── 03_optimized-LLM

│ ├── compare_inference.py

│ └── README.md

├── .github

├── ISSUE_TEMPLATE

│ ├── ask-a-question.md

│ └── bug-report.yaml

├── workflows

│ ├── check-spelling-errors.yml

│ ├── tests-linux.yml

│ ├── tests-macos.yml

│ ├── basic-tests-old-pytorch.yml

│ ├── basic-tests-pip.yml

│ ├── basic-test-nightly-pytorch.yml

│ ├── check-links.yml

│ ├── tests-windows.yml

│ └── code-linter.yml

└── scripts

│ ├── check_notebook_line_length.py

│ └── check_double_quotes.py

├── ch03

├── README.md

├── 01_main-chapter-code

│ └── README.md

└── 02_math500-verifier-scripts

│ ├── evaluate_math500.py

│ └── README.md

├── ch04

├── README.md

└── 02_math500-inference-scaling-scripts

│ ├── cot_prompting_math500.py

│ └── README.md

├── ch05

└── README.md

├── tests

├── test_appendix_f.py

├── test_math500_scripts.py

├── test_appendix_c.py

├── conftest.py

├── test_ch02.py

├── test_ch02_ex.py

├── test_ch04.py

├── test_ch05.py

├── test_qwen3_optimized.py

└── test_qwen3_batched_stop.py

├── reasoning_from_scratch

├── __init__.py

├── ch02_ex.py

├── appendix_f.py

├── utils.py

├── ch02.py

├── appendix_c.py

└── ch05.py

├── pyproject.toml

└── .gitignore

/chF/02_mmlu/requirements-extra.txt:

--------------------------------------------------------------------------------

1 | datasets >= 4.1.1

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | reasoning-from-scratch >= 0.1.2

2 | sympy>=1.14.0 # For verifier in ch03

3 | torch >= 2.7.1

4 | tokenizers >= 0.21.2

--------------------------------------------------------------------------------

/ch01/README.md:

--------------------------------------------------------------------------------

1 | # Chapter 1: Understanding Reasoning Models

2 |

3 |

4 |

5 | ## Main chapter code

6 |

7 | There is no code in this chapter.

8 |

9 |

--------------------------------------------------------------------------------

/chG/01_main-chapter-code/public/logo_dark.webp:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rasbt/reasoning-from-scratch/HEAD/chG/01_main-chapter-code/public/logo_dark.webp

--------------------------------------------------------------------------------

/chG/01_main-chapter-code/public/logo_light.webp:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rasbt/reasoning-from-scratch/HEAD/chG/01_main-chapter-code/public/logo_light.webp

--------------------------------------------------------------------------------

/chG/README.md:

--------------------------------------------------------------------------------

1 | # Appendix G: Chat Interface

2 |

3 |

4 | ## Main chapter code

5 |

6 | - [01_main-chapter-code](01_main-chapter-code) contains the main chapter code and exercise solutions

7 |

8 |

9 |

10 |

--------------------------------------------------------------------------------

/chC/README.md:

--------------------------------------------------------------------------------

1 | # Appendix C: Qwen3 LLM Source Code

2 |

3 |

4 | ## Main chapter code

5 |

6 | - [01_main-chapter-code](01_main-chapter-code) contains the main chapter code and exercise solutions

7 |

8 |

9 |

10 |

--------------------------------------------------------------------------------

/ch02/01_main-chapter-code/README.md:

--------------------------------------------------------------------------------

1 | # Chapter 2: Generating Text with a Pre-Trained LLM

2 |

3 |

4 |

5 | ## Main chapter code

6 |

7 | - [ch02_main.ipynb](ch02_main.ipynb): main chapter code

8 | - [ch02_exercise-solutions.ipynb](ch02_exercise-solutions.ipynb): exercise solutions

9 |

10 |

--------------------------------------------------------------------------------

/ch02/02_setup-tips/README.md:

--------------------------------------------------------------------------------

1 | # Chapter 2: Generating Text with a Pre-Trained LLM

2 |

3 |

4 |

5 |

6 | ## Bonus material

7 |

8 | - [python-instructions.md](python-instructions.md): optional Python setup recommendations and instructions

9 | - [gpu-instructions.md](gpu-instructions.md): recommendations for cloud compute resources

10 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/ask-a-question.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Ask a Question

3 | about: Ask questions related to the book

4 | title: ''

5 | labels: [question]

6 | assignees: rasbt

7 |

8 | ---

9 |

10 | If you have a question that is not a bug, please consider asking it in this GitHub repository's [discussion forum](https://github.com/rasbt/reasoning-from-scratch/discussions).

11 |

--------------------------------------------------------------------------------

/ch03/README.md:

--------------------------------------------------------------------------------

1 | # Chapter 3: Evaluating Reasoning Models

2 |

3 |

4 | ## Main chapter code

5 |

6 | - [01_main-chapter-code](01_main-chapter-code): main chapter code and exercise solutions

7 |

8 |

9 | ## Bonus material

10 |

11 | - [02_math500-verifier-scripts](02_math500-verifier-scripts): optional Python scripts to run the MATH-500 evaluation from the command line, including a batched version with higher throughput

12 |

13 |

--------------------------------------------------------------------------------

/ch02/README.md:

--------------------------------------------------------------------------------

1 | # Chapter 2: Generating Text with a Pre-Trained LLM

2 |

3 |

4 | ## Main chapter code

5 |

6 | - [01_main-chapter-code](01_main-chapter-code): main chapter code and exercise solutions

7 |

8 |

9 | ## Bonus material

10 |

11 | - [02_setup-tips](02_setup-tips/): optional Python setup recommendations and cloud GPU recommendations

12 | - [03_optimized-LLM](03_optimized-LLM): info on how to use a GPU-optimized version of the LLM

13 |

14 |

--------------------------------------------------------------------------------

/ch04/README.md:

--------------------------------------------------------------------------------

1 | # Chapter 4: Improving Reasoning with Inference-Time Scaling

2 |

3 |

4 | ## Main chapter code

5 |

6 | - [01_main-chapter-code](01_main-chapter-code): main chapter code and exercise solutions

7 |

8 |

9 | ## Bonus material

10 |

11 | - [02_math500-inference-scaling-scripts](02_math500-inference-scaling-scripts): optional Python scripts to apply the inference scaling techniques covered in this chapter (CoT prompting and self-consistency) to the MATH-500 evaluation from the previous chapter.

12 |

--------------------------------------------------------------------------------

/ch05/README.md:

--------------------------------------------------------------------------------

1 | # Chapter 5: Inference-Time Scaling via Self-Refinement

2 |

3 |

4 | ## Main chapter code

5 |

6 | - [01_main-chapter-code](01_main-chapter-code): main chapter code and exercise solutions

7 |

8 |

9 | ## Bonus material

10 |

11 | - [02_math500-more-inference-scaling-scripts](02_math500-more-inference-scaling-scripts): optional Python scripts to apply the inference scaling techniques covered in this chapter (Best-of-N and self-refinement) to the MATH-500 evaluation from the previous chapter.

12 |

--------------------------------------------------------------------------------

/chF/README.md:

--------------------------------------------------------------------------------

1 | # Appendix F: Common Approaches to LLM Evaluation

2 |

3 |

4 | ## Main chapter code

5 |

6 | - [01_main-chapter-code](01_main-chapter-code): the main chapter code and exercise solutions

7 |

8 |

9 |

10 | ## Bonus materials

11 |

12 | - [02_mmlu](02_mmlu): MMLU benchmark evaluation with all three different MMLU approaches

13 | - [03_leaderboards](03_leaderboards): Elo and Bradley-Terry implementations of leaderboard rankings

14 | - [04_llm-judge](04_llm-judge): LLM-as-a-judge approach, where a judge LLM evaluates a candidate LLM

15 |

--------------------------------------------------------------------------------

/tests/test_appendix_f.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | from reasoning_from_scratch.appendix_f import elo_ratings

6 | import math

7 |

8 |

9 | def test_elo_single_match():

10 | r = elo_ratings([("A", "B")], k_factor=32, initial_rating=1000)

11 | assert math.isclose(r["A"], 1016)

12 | assert math.isclose(r["B"], 984)

13 |

14 |

15 | def test_elo_total_points_constant():

16 | votes = [("A", "B"), ("B", "C"), ("A", "C")]

17 | r = elo_ratings(votes)

18 | assert math.isclose(sum(r.values()), 3000)

--------------------------------------------------------------------------------

/ch03/01_main-chapter-code/README.md:

--------------------------------------------------------------------------------

1 | # Chapter 3: Evaluating Reasoning Models

2 |

3 |

4 | ## Main chapter code

5 |

6 | - [ch03_main.ipynb](ch03_main.ipynb): main chapter code

7 | - [ch03_exercise-solutions.ipynb](ch03_exercise-solutions.ipynb): exercise solutions

8 |

9 |

10 |

11 | ## Bonus materials

12 |

13 | - [../02_math500-verifier-scripts/evaluate_math500.py](../02_math500-verifier-scripts/evaluate_math500.py): standalone script to evaluate models on the MATH-500 dataset

14 | - [../02_math500-verifier-scripts/evaluate_math500_batched.py](../02_math500-verifier-scripts/evaluate_math500_batched.py): same as above, but processes multiple examples in parallel during generation (for higher throughput)

15 |

16 | Both evaluation scripts import functionality from the [`reasoning_from_scratch`](../../reasoning_from_scratch) package to avoid code duplication. (See [chapter 2 setup instructions](../../ch02/02_setup-tips/python-instructions.md) for installation details.)

17 |

--------------------------------------------------------------------------------

/.github/workflows/check-spelling-errors.yml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | name: Spell Check

6 |

7 | on:

8 | push:

9 | branches: [ main ]

10 | pull_request:

11 | branches: [ main ]

12 |

13 | jobs:

14 | spellcheck:

15 | runs-on: ubuntu-latest

16 | env:

17 | SKIP_EXPENSIVE: "1"

18 | steps:

19 | - uses: actions/checkout@v4

20 | - name: Set up Python

21 | uses: actions/setup-python@v5

22 | with:

23 | python-version: "3.10"

24 | - name: Install codespell

25 | run: |

26 | curl -LsSf https://astral.sh/uv/install.sh | sh

27 | uv sync --dev --python=3.10

28 | uv add codespell

29 | - name: Run codespell

30 | run: |

31 | source .venv/bin/activate

32 | codespell -L "ocassion,occassion,ot,te,tje" **/*.{txt,md,py,ipynb}

33 |

--------------------------------------------------------------------------------

/reasoning_from_scratch/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | """

6 | Reasoning package used by the "Reasoning Models From Scratch" book.

7 |

8 | Copyright (c) 2025, Sebastian Raschka

9 |

10 | Licensed under the Apache License, Version 2.0 (the "License");

11 | you may not use this file except in compliance with the License.

12 | You may obtain a copy of the License at

13 |

14 | http://www.apache.org/licenses/LICENSE-2.0

15 |

16 | or in the top-level LICENSE file of this repository.

17 |

18 | Unless required by applicable law or agreed to in writing, software

19 | distributed under the License is distributed on an "AS IS" BASIS,

20 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

21 | See the License for the specific language governing permissions and

22 | limitations under the License.

23 | """

24 |

25 | __version__ = "0.1.12"

26 |

--------------------------------------------------------------------------------

/.github/workflows/tests-linux.yml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | name: Code tests Linux

6 |

7 | on:

8 | push:

9 | branches: [ main ]

10 | paths:

11 | - '**/*.py'

12 | - '**/*.ipynb'

13 | - '**/*.yaml'

14 | - '**/*.yml'

15 | - '**/*.sh'

16 | pull_request:

17 | branches: [ main ]

18 | paths:

19 | - '**/*.py'

20 | - '**/*.ipynb'

21 | - '**/*.yaml'

22 | - '**/*.yml'

23 | - '**/*.sh'

24 | workflow_dispatch:

25 |

26 | concurrency:

27 | group: ${{ github.workflow }}-${{ github.ref }}

28 | cancel-in-progress: true

29 |

30 | jobs:

31 | uv-tests:

32 | name: Code tests Linux

33 | runs-on: ubuntu-latest

34 | env:

35 | SKIP_EXPENSIVE: "1"

36 | steps:

37 | - uses: actions/checkout@v4

38 |

39 | - name: Set up Python

40 | uses: actions/setup-python@v5

41 | with:

42 | python-version: "3.13"

43 |

44 | - name: Install uv and dependencies

45 | run: |

46 | curl -LsSf https://astral.sh/uv/install.sh | sh

47 | uv sync --group dev

48 |

49 | - name: Run tests

50 | run: uv run pytest tests

--------------------------------------------------------------------------------

/.github/workflows/tests-macos.yml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | name: Code tests macOS

6 |

7 | on:

8 | push:

9 | branches: [ main ]

10 | paths:

11 | - '**/*.py'

12 | - '**/*.ipynb'

13 | - '**/*.yaml'

14 | - '**/*.yml'

15 | - '**/*.sh'

16 | pull_request:

17 | branches: [ main ]

18 | paths:

19 | - '**/*.py'

20 | - '**/*.ipynb'

21 | - '**/*.yaml'

22 | - '**/*.yml'

23 | - '**/*.sh'

24 | workflow_dispatch:

25 |

26 | concurrency:

27 | group: ${{ github.workflow }}-${{ github.ref }}

28 | cancel-in-progress: true

29 |

30 | jobs:

31 | uv-tests:

32 | name: Code tests macOS

33 | runs-on: macos-latest

34 | env:

35 | SKIP_EXPENSIVE: "1"

36 | steps:

37 | - uses: actions/checkout@v4

38 |

39 | - name: Set up Python

40 | uses: actions/setup-python@v5

41 | with:

42 | python-version: "3.13"

43 |

44 | - name: Install uv and dependencies

45 | run: |

46 | curl -LsSf https://astral.sh/uv/install.sh | sh

47 | uv sync --group dev

48 |

49 | - name: Run tests

50 | run: uv run pytest tests

51 |

--------------------------------------------------------------------------------

/tests/test_math500_scripts.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | import subprocess

6 | import sys

7 | from pathlib import Path

8 |

9 | import pytest

10 |

11 |

12 | SCRIPT_PATHS = [

13 | Path("ch03/02_math500-verifier-scripts/evaluate_math500_batched.py"),

14 | Path("ch03/02_math500-verifier-scripts/evaluate_math500.py"),

15 | Path("ch04/02_math500-inference-scaling-scripts/self_consistency_math500.py"),

16 | Path("ch04/02_math500-inference-scaling-scripts/cot_prompting_math500.py"),

17 | ]

18 |

19 |

20 | @pytest.mark.parametrize("script_path", SCRIPT_PATHS)

21 | def test_script_help_runs_without_import_errors(script_path):

22 |

23 | repo_root = Path(__file__).resolve().parent.parent

24 | full_path = repo_root / script_path

25 | assert full_path.exists(), f"Expected script at {full_path}"

26 |

27 | # Run scripts with --help to make sure they work

28 |

29 | result = subprocess.run(

30 | [sys.executable, str(full_path), "--help"],

31 | cwd=repo_root,

32 | capture_output=True,

33 | text=True,

34 | )

35 |

36 | assert result.returncode == 0, result.stderr

37 | assert "usage" in result.stdout.lower()

38 |

--------------------------------------------------------------------------------

/tests/test_appendix_c.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | import os

6 | from pathlib import Path

7 | import sys

8 | import torch

9 | import pytest

10 |

11 | from reasoning_from_scratch.ch02 import (

12 | generate_text_basic,

13 | generate_text_basic_cache,

14 | )

15 | # Local imports

16 | from test_qwen3 import test_model

17 | from conftest import import_definitions_from_notebook

18 |

19 |

20 | ROOT = Path(__file__).resolve().parents[1]

21 | sys.path.insert(0, str(ROOT))

22 |

23 |

24 | nb_path = ROOT / "chC" / "01_main-chapter-code" / "chC_main.ipynb"

25 | mod = import_definitions_from_notebook(nb_path, "chC_chC_main_defs")

26 | Qwen3Model = getattr(mod, "Qwen3Model")

27 |

28 | # Make CI more reproducible & robust

29 | os.environ["MKL_NUM_THREADS"] = "1"

30 | os.environ["OMP_NUM_THREADS"] = "1"

31 | torch.backends.mkldnn.enabled = False

32 | torch.set_num_threads(1)

33 | torch.use_deterministic_algorithms(True)

34 |

35 |

36 | @pytest.mark.parametrize("ModelClass", [Qwen3Model])

37 | @pytest.mark.parametrize("generate_fn", [generate_text_basic, generate_text_basic_cache])

38 | def test_model_here_too(ModelClass, qwen3_weights_path, generate_fn):

39 | test_model(ModelClass, qwen3_weights_path, generate_fn)

40 |

--------------------------------------------------------------------------------

/.github/workflows/basic-tests-old-pytorch.yml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | name: Test Old PyTorch

6 |

7 | on:

8 | push:

9 | branches: [ main ]

10 | paths:

11 | - '**/*.py'

12 | - '**/*.ipynb'

13 | - '**/*.yaml'

14 | - '**/*.yml'

15 | - '**/*.sh'

16 | pull_request:

17 | branches: [ main ]

18 | paths:

19 | - '**/*.py'

20 | - '**/*.ipynb'

21 | - '**/*.yaml'

22 | - '**/*.yml'

23 | - '**/*.sh'

24 | workflow_dispatch:

25 |

26 | concurrency:

27 | group: ${{ github.workflow }}-${{ github.ref }}

28 | cancel-in-progress: true

29 |

30 | jobs:

31 | uv-tests:

32 | name: Code tests

33 | runs-on: ubuntu-latest

34 | env:

35 | SKIP_EXPENSIVE: "1"

36 | steps:

37 | - uses: actions/checkout@v4

38 |

39 | - name: Set up Python (uv)

40 | uses: actions/setup-python@v5

41 | with:

42 | python-version: "3.13"

43 |

44 | - name: Install uv and dependencies

45 | shell: bash

46 | run: |

47 | curl -LsSf https://astral.sh/uv/install.sh | sh

48 | uv sync --group dev

49 | uv add pytest-ruff torch==2.7.1

50 |

51 | - name: Test reasoning_from_scratch package

52 | shell: bash

53 | run: |

54 | uv run pytest tests

55 |

--------------------------------------------------------------------------------

/.github/workflows/basic-tests-pip.yml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | name: Code Tests (Plain pip)

6 |

7 | on:

8 | push:

9 | branches: [ main ]

10 | paths:

11 | - '**/*.py'

12 | - '**/*.ipynb'

13 | - '**/*.yaml'

14 | - '**/*.yml'

15 | - '**/*.sh'

16 | pull_request:

17 | branches: [ main ]

18 | paths:

19 | - '**/*.py'

20 | - '**/*.ipynb'

21 | - '**/*.yaml'

22 | - '**/*.yml'

23 | - '**/*.sh'

24 | workflow_dispatch:

25 |

26 | concurrency:

27 | group: ${{ github.workflow }}-${{ github.ref }}

28 | cancel-in-progress: true

29 |

30 | jobs:

31 | pip-tests:

32 | name: Pip Tests (Ubuntu Only)

33 | runs-on: ubuntu-latest

34 | env:

35 | SKIP_EXPENSIVE: "1"

36 | steps:

37 | - uses: actions/checkout@v4

38 |

39 | - name: Set up Python

40 | uses: actions/setup-python@v5

41 | with:

42 | python-version: "3.13"

43 | - name: Create Virtual Environment and Install Dependencies

44 | run: |

45 | python -m venv .venv

46 | source .venv/bin/activate

47 | pip install --upgrade pip

48 | pip install -r requirements.txt

49 | pip install .

50 | pip install pytest

51 | - name: Test Selected Python Scripts

52 | run: |

53 | source .venv/bin/activate

54 | pytest tests

55 |

--------------------------------------------------------------------------------

/.github/workflows/basic-test-nightly-pytorch.yml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | name: Test Nightly PyTorch

6 |

7 | on:

8 | push:

9 | branches: [ main ]

10 | paths:

11 | - '**/*.py'

12 | - '**/*.ipynb'

13 | - '**/*.yaml'

14 | - '**/*.yml'

15 | - '**/*.sh'

16 | pull_request:

17 | branches: [ main ]

18 | paths:

19 | - '**/*.py'

20 | - '**/*.ipynb'

21 | - '**/*.yaml'

22 | - '**/*.yml'

23 | - '**/*.sh'

24 | workflow_dispatch:

25 |

26 | concurrency:

27 | group: ${{ github.workflow }}-${{ github.ref }}

28 | cancel-in-progress: true

29 |

30 | jobs:

31 | uv-tests:

32 | name: Code tests

33 | runs-on: ubuntu-latest

34 | env:

35 | SKIP_EXPENSIVE: "1"

36 | steps:

37 | - uses: actions/checkout@v4

38 |

39 | - name: Set up Python (uv)

40 | uses: actions/setup-python@v5

41 | with:

42 | python-version: "3.13"

43 |

44 | - name: Install uv and dependencies

45 | shell: bash

46 | run: |

47 | curl -LsSf https://astral.sh/uv/install.sh | sh

48 | uv sync --group dev

49 | uv pip install -U --pre torch --index-url https://download.pytorch.org/whl/nightly/cpu

50 |

51 | - name: Test reasoning_from_scratch package

52 | shell: bash

53 | run: |

54 | # uv run pytest tests

55 | # temporarily disabled

56 |

--------------------------------------------------------------------------------

/.github/workflows/check-links.yml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | name: Check Hyperlinks

6 |

7 | on:

8 | push:

9 | branches: [ main ]

10 | pull_request:

11 | branches: [ main ]

12 |

13 | jobs:

14 | test:

15 | runs-on: ubuntu-latest

16 | env:

17 | SKIP_EXPENSIVE: "1"

18 | steps:

19 | - uses: actions/checkout@v4

20 | - name: Set up Python

21 | uses: actions/setup-python@v5

22 | with:

23 | python-version: "3.10"

24 | - name: Install dependencies

25 | run: |

26 | curl -LsSf https://astral.sh/uv/install.sh | sh

27 | uv add pytest-check-links

28 | - name: Check links

29 | run: |

30 | source .venv/bin/activate

31 | pytest --check-links ./ \

32 | --check-links-ignore "https://platform.openai.com/*" \

33 | --check-links-ignore "https://openai.com/*" \

34 | --check-links-ignore "https://arena.lmsys.org" \

35 | --check-links-ignore "https://unsloth.ai/blog/gradient" \

36 | --check-links-ignore "https://www.reddit.com/r/*" \

37 | --check-links-ignore "https://code.visualstudio.com/*" \

38 | --check-links-ignore "https://arxiv.org/*" \

39 | --check-links-ignore "https://ai.stanford.edu/~amaas/data/sentiment/" \

40 | --check-links-ignore "https://x.com/*" \

41 | --check-links-ignore "https://scholar.google.com/*" \

42 | --check-links-ignore "https://en.wikipedia.org/*"

43 |

--------------------------------------------------------------------------------

/.github/workflows/tests-windows.yml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | name: Code tests Windows

6 |

7 | on:

8 | push:

9 | branches: [ main ]

10 | paths:

11 | - '**/*.py'

12 | - '**/*.ipynb'

13 | - '**/*.yaml'

14 | - '**/*.yml'

15 | - '**/*.sh'

16 | pull_request:

17 | branches: [ main ]

18 | paths:

19 | - '**/*.py'

20 | - '**/*.ipynb'

21 | - '**/*.yaml'

22 | - '**/*.yml'

23 | - '**/*.sh'

24 | workflow_dispatch:

25 |

26 | concurrency:

27 | group: ${{ github.workflow }}-${{ github.ref }}

28 | cancel-in-progress: true

29 |

30 | jobs:

31 | uv-tests:

32 | name: Code tests Windows

33 | runs-on: windows-latest

34 | env:

35 | SKIP_EXPENSIVE: "1"

36 | steps:

37 | - uses: actions/checkout@v4

38 |

39 | - name: Set up Python

40 | uses: actions/setup-python@v5

41 | with:

42 | python-version: "3.13"

43 |

44 | - name: Install uv and dependencies

45 | shell: bash

46 | run: |

47 | export PATH="$HOME/.local/bin:$PATH"

48 | curl -LsSf https://astral.sh/uv/install.sh | sh

49 | uv sync --group dev

50 |

51 | - name: Run tests

52 | shell: bash

53 | env:

54 | OMP_NUM_THREADS: "1"

55 | MKL_NUM_THREADS: "1"

56 | CUBLAS_WORKSPACE_CONFIG: ":16:8"

57 | run: |

58 | export PATH="$HOME/.local/bin:$PATH"

59 | uv run pytest tests

60 |

--------------------------------------------------------------------------------

/chG/01_main-chapter-code/README.md:

--------------------------------------------------------------------------------

1 | # Appendix G: Chat Interface

2 |

3 |

4 |

5 | This folder contains code for running a ChatGPT-like user interface to interact with the LLMs used and/or developed in this book, as shown below.

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 | To implement this user interface, we use the open-source [Chainlit Python package](https://github.com/Chainlit/chainlit).

14 |

15 |

16 | ## Step 1: Install dependencies

17 |

18 | First, we install the `chainlit` package and dependency:

19 |

20 | ```bash

21 | pip install chainlit

22 | ```

23 |

24 | Or, if you are using `uv`:

25 |

26 | ```bash

27 | uv add chainlit

28 | ```

29 |

30 |

31 |

32 |

33 |

34 | ## Step 2: Run `app` code

35 |

36 | This folder contains 2 files:

37 |

38 | 1. [`qwen3_chat_interface.py`](qwen3_chat_interface.py): This file loads and uses the Qwen3 0.6B model in thinking mode.

39 | 2. [`qwen3_chat_interface_multiturn.py`](qwen3_chat_interface_multiturn.py): The same as above, but configured to remember the message history.

40 |

41 | (Open and inspect these files to learn more.)

42 |

43 | Run one of the following commands from the terminal to start the UI server:

44 |

45 | ```bash

46 | chainlit run qwen3_chat_interface.py

47 | ```

48 |

49 | or, if you are using `uv`:

50 |

51 | ```bash

52 | uv run chainlit run qwen3_chat_interface.py

53 | ```

54 |

55 | Running one of the commands above should open a new browser tab where you can interact with the model. If the browser tab does not open automatically, inspect the terminal command and copy the local address into your browser address bar (usually, the address is `http://localhost:8000`).

--------------------------------------------------------------------------------

/chF/03_leaderboards/1_elo_leaderboard.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 | import json

5 | import argparse

6 |

7 |

8 | def elo_ratings(vote_pairs, k_factor=32, initial_rating=1000):

9 | ratings = {

10 | model: initial_rating

11 | for pair in vote_pairs

12 | for model in pair

13 | }

14 | for winner, loser in vote_pairs:

15 | expected = 1.0 / (

16 | 1.0 + 10 ** (

17 | (ratings[loser] - ratings[winner]) / 400.0

18 | )

19 | )

20 | ratings[winner] += k_factor * (1 - expected)

21 | ratings[loser] += k_factor * (0 - (1 - expected))

22 | return ratings

23 |

24 |

25 | def main():

26 | parser = argparse.ArgumentParser(

27 | description="Compute Elo leaderboard."

28 | )

29 | parser.add_argument("--path", type=str, help="Path to votes JSON")

30 | parser.add_argument("--k", type=int, default=32,

31 | help="Elo k-factor")

32 | parser.add_argument("--init", type=int, default=1000,

33 | help="Initial rating")

34 | args = parser.parse_args()

35 |

36 | with open(args.path, "r", encoding="utf-8") as f:

37 | votes = json.load(f)

38 |

39 | ratings = elo_ratings(votes, args.k, args.init)

40 | leaderboard = sorted(ratings.items(),

41 | key=lambda x: -x[1])

42 |

43 | print("\nLeaderboard (Elo) \n-----------------------")

44 | for i, (model, score) in enumerate(leaderboard, 1):

45 | print(f"{i:>2}. {model:<10} {score:7.1f}")

46 | print()

47 |

48 |

49 | if __name__ == "__main__":

50 | main()

51 |

--------------------------------------------------------------------------------

/chF/03_leaderboards/votes.json:

--------------------------------------------------------------------------------

1 | [

2 | ["GPT-5", "Claude-3"],

3 | ["GPT-5", "Llama-4"],

4 | ["Claude-3", "Llama-3"],

5 | ["Llama-4", "Llama-3"],

6 | ["Claude-3", "Llama-3"],

7 | ["GPT-5", "Llama-3"],

8 | ["Claude-3", "GPT-5"],

9 | ["Llama-4", "Claude-3"],

10 | ["Llama-3", "Llama-4"],

11 | ["GPT-5", "Claude-3"],

12 | ["Claude-3", "Llama-4"],

13 | ["GPT-5", "Llama-4"],

14 | ["Claude-3", "GPT-5"],

15 | ["Llama-4", "Claude-3"],

16 | ["GPT-5", "Llama-3"],

17 | ["Claude-3", "Llama-3"],

18 | ["Llama-3", "Claude-3"],

19 | ["GPT-5", "Llama-4"],

20 | ["Claude-3", "GPT-5"],

21 | ["Llama-4", "GPT-5"],

22 | ["Llama-3", "Claude-3"],

23 | ["GPT-5", "Claude-3"],

24 | ["Claude-3", "Llama-4"],

25 | ["Llama-4", "Llama-3"],

26 | ["GPT-5", "Llama-3"],

27 | ["Claude-3", "Llama-3"],

28 | ["GPT-5", "Claude-3"],

29 | ["Claude-3", "Llama-4"],

30 | ["GPT-5", "Claude-3"],

31 | ["Llama-4", "Llama-3"],

32 | ["Claude-3", "GPT-5"],

33 | ["GPT-5", "Llama-3"],

34 | ["Claude-3", "Llama-3"],

35 | ["Llama-3", "Llama-4"],

36 | ["GPT-5", "Claude-3"],

37 | ["Claude-3", "Llama-4"],

38 | ["GPT-5", "Llama-4"],

39 | ["Claude-3", "GPT-5"],

40 | ["Llama-4", "Claude-3"],

41 | ["GPT-5", "Llama-3"],

42 | ["Claude-3", "Llama-3"],

43 | ["Llama-3", "Claude-3"],

44 | ["GPT-5", "Llama-4"],

45 | ["Claude-3", "GPT-5"],

46 | ["Llama-4", "GPT-5"],

47 | ["Llama-3", "Claude-3"],

48 | ["GPT-5", "Claude-3"],

49 | ["Claude-3", "Llama-4"],

50 | ["Llama-4", "Llama-3"],

51 | ["GPT-5", "Llama-3"],

52 | ["Claude-3", "Llama-3"],

53 | ["GPT-5", "Claude-3"],

54 | ["Claude-3", "Llama-4"],

55 | ["GPT-5", "Claude-3"],

56 | ["Llama-4", "Llama-3"],

57 | ["Claude-3", "GPT-5"],

58 | ["GPT-5", "Llama-3"],

59 | ["Claude-3", "Llama-3"],

60 | ["Llama-3", "Llama-4"],

61 | ["Claude-3", "Llama-3"]

62 | ]

63 |

--------------------------------------------------------------------------------

/.github/workflows/code-linter.yml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | name: Code Style Checks

6 |

7 | on:

8 | push:

9 | branches: [ main ]

10 | paths:

11 | - '**/*.py'

12 | - '**/*.ipynb'

13 | - '**/*.yaml'

14 | - '**/*.yml'

15 | - '**/*.sh'

16 | pull_request:

17 | branches: [ main ]

18 | paths:

19 | - '**/*.py'

20 | - '**/*.ipynb'

21 | - '**/*.yaml'

22 | - '**/*.yml'

23 | - '**/*.sh'

24 |

25 | jobs:

26 | flake8:

27 | runs-on: ubuntu-latest

28 | env:

29 | SKIP_EXPENSIVE: "1"

30 | steps:

31 | - uses: actions/checkout@v4

32 | - name: Set up Python

33 | uses: actions/setup-python@v5

34 | with:

35 | python-version: "3.13"

36 | - name: Install ruff (a faster flake 8 equivalent)

37 | run: |

38 | curl -LsSf https://astral.sh/uv/install.sh | sh

39 | uv sync --dev --python=3.13

40 | uv add ruff

41 |

42 | - name: Run ruff with exceptions

43 | run: |

44 | source .venv/bin/activate

45 | ruff check .

46 |

47 | - name: Run quote style check

48 | run: |

49 | source .venv/bin/activate

50 | uv run .github/scripts/check_double_quotes.py

51 |

52 | - name: Run line length check on main chapter notebooks

53 | run: |

54 | source .venv/bin/activate

55 | uv run .github/scripts/check_notebook_line_length.py \

56 | ch02/01_main-chapter-code/ch02_main.ipynb \

57 | ch03/01_main-chapter-code/ch03_main.ipynb \

58 | chC/01_main-chapter-code/chC_main.ipynb \

59 | chF/01_main-chapter-code/chF_main.ipynb

--------------------------------------------------------------------------------

/reasoning_from_scratch/ch02_ex.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | from .qwen3 import KVCache

6 | import torch

7 |

8 |

9 | @torch.inference_mode()

10 | def generate_text_basic_stream(

11 | model,

12 | token_ids,

13 | max_new_tokens,

14 | eos_token_id=None

15 | ):

16 | # input_length = token_ids.shape[1]

17 | model.eval()

18 |

19 | for _ in range(max_new_tokens):

20 | out = model(token_ids)[:, -1]

21 | next_token = torch.argmax(out, dim=-1, keepdim=True)

22 |

23 | if (eos_token_id is not None

24 | and next_token.item() == eos_token_id):

25 | break

26 |

27 | yield next_token # New: Yield each token as it's generated

28 |

29 | token_ids = torch.cat([token_ids, next_token], dim=1)

30 | # return token_ids[:, input_length:]

31 |

32 |

33 | @torch.inference_mode()

34 | def generate_text_basic_stream_cache(

35 | model,

36 | token_ids,

37 | max_new_tokens,

38 | eos_token_id=None

39 | ):

40 | # input_length = token_ids.shape[1]

41 | model.eval()

42 | cache = KVCache(n_layers=model.cfg["n_layers"])

43 | model.reset_kv_cache()

44 |

45 | out = model(token_ids, cache=cache)[:, -1]

46 | for _ in range(max_new_tokens):

47 | next_token = torch.argmax(out, dim=-1, keepdim=True)

48 |

49 | if (eos_token_id is not None

50 | and next_token.item() == eos_token_id):

51 | break

52 |

53 | yield next_token # New: Yield each token as it's generated

54 | # token_ids = torch.cat([token_ids, next_token], dim=1)

55 | out = model(next_token, cache=cache)[:, -1]

56 |

57 | # return token_ids[:, input_length:]

58 |

--------------------------------------------------------------------------------

/reasoning_from_scratch/appendix_f.py:

--------------------------------------------------------------------------------

1 |

2 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

3 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

4 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

5 |

6 | from reasoning_from_scratch.ch02_ex import generate_text_basic_stream_cache

7 |

8 |

9 | def predict_choice(

10 | model, tokenizer, prompt_fmt, max_new_tokens=8

11 | ):

12 | pred = None

13 | for t in generate_text_basic_stream_cache(

14 | model=model,

15 | token_ids=prompt_fmt,

16 | max_new_tokens=max_new_tokens,

17 | eos_token_id=tokenizer.eos_token_id,

18 | ):

19 | answer = tokenizer.decode(t.squeeze(0).tolist())

20 | for letter in answer:

21 | letter = letter.upper()

22 | if letter in "ABCD":

23 | pred = letter

24 | break

25 | if pred: # stop as soon as a letter appears

26 | break

27 | return pred

28 |

29 |

30 | def elo_ratings(vote_pairs, k_factor=32, initial_rating=1000):

31 | # Initialize all models with the same base rating

32 | ratings = {

33 | model: initial_rating

34 | for pair in vote_pairs

35 | for model in pair

36 | }

37 |

38 | # Update ratings after each match

39 | for winner, loser in vote_pairs:

40 | rating_winner, rating_loser = ratings[winner], ratings[loser]

41 |

42 | # Expected score for the current winner given the ratings

43 | expected_winner = 1.0 / (

44 | 1.0 + 10 ** ((rating_loser - rating_winner) / 400.0)

45 | )

46 |

47 | # k_factor determines sensitivity of rating updates

48 | ratings[winner] = (

49 | rating_winner + k_factor * (1 - expected_winner)

50 | )

51 | ratings[loser] = (

52 | rating_loser + k_factor * (0 - (1 - expected_winner))

53 | )

54 |

55 | return ratings

56 |

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | [tool.setuptools.dynamic]

6 | version = { attr = "reasoning_from_scratch.__version__" }

7 |

8 | [build-system]

9 | requires = ["setuptools>=61.0", "wheel"]

10 | build-backend = "setuptools.build_meta"

11 |

12 | [project]

13 | name = "reasoning_from_scratch"

14 | dynamic = ["version"]

15 | description = "Reasoning Models From Scratch"

16 | readme = "README.md"

17 |

18 | authors = [

19 | { name = "Sebastian Raschka" }

20 | ]

21 |

22 | license = { file = "LICENSE" }

23 | requires-python = ">=3.10"

24 |

25 | dependencies = [

26 | "jupyterlab>=4.4.7",

27 | "torch>=2.7.1",

28 | "tokenizers>=0.21.2",

29 | "nbformat>=5.10.4",

30 | "sympy>=1.14.0", # For verifier in ch03

31 | "matplotlib>=3.10.7",

32 | ]

33 |

34 | classifiers = [

35 | "License :: OSI Approved :: Apache Software License",

36 | "Programming Language :: Python :: 3.10",

37 | "Programming Language :: Python :: 3.11",

38 | "Programming Language :: Python :: 3.12",

39 | "Programming Language :: Python :: 3.13",

40 | "Intended Audience :: Developers",

41 | "Operating System :: OS Independent"

42 | ]

43 |

44 | [dependency-groups]

45 | dev = [

46 | "pytest>=8.3.5",

47 | "ruff>=0.4.4",

48 | "pytest-ruff>=0.5",

49 | "transformers==4.53.0",

50 | "reasoning-from-scratch",

51 | "twine>=6.1.0",

52 | "build>=1.2.2.post1",

53 | ]

54 |

55 | extra = [

56 | "datasets>=4.1.1", # Appendix F, MMLU

57 | "chainlit>=2.8.3", # Appendix G, Chat UI

58 | ]

59 |

60 | [tool.setuptools.packages.find]

61 | where = ["."]

62 | include = ["reasoning_from_scratch"]

63 |

64 | [tool.uv.sources]

65 | reasoning = { workspace = true }

66 | reasoning-from-scratch = { workspace = true }

67 |

68 | [tool.uv.workspace]

69 | members = [

70 | ".",

71 | ]

72 |

--------------------------------------------------------------------------------

/chF/03_leaderboards/README.md:

--------------------------------------------------------------------------------

1 |

2 | # Leaderboard Rankings

3 |

4 | This bonus material implements two different ways to construct LM Arena (formerly Chatbot Arena) style leaderboards from pairwise comparisons.

5 |

6 | Both implementations take in a list of pairwise preferences (left: winner, right: loser) from a json file via the `--path` argument. Here's an excerpt of the provided [votes.json](votes.json) file:

7 |

8 | ```json

9 | [

10 | ["GPT-5", "Claude-3"],

11 | ["GPT-5", "Llama-4"],

12 | ["Claude-3", "Llama-3"],

13 | ["Llama-4", "Llama-3"],

14 | ...

15 | ]

16 | ```

17 |

18 |

19 |

20 |

21 |

22 | ---

23 |

24 | **Note**: If you are not a `uv` user, replace `uv run ...py` with `python ...py` in the examples below.

25 |

26 | ---

27 |

28 |

29 | ## Method 1: Elo ratings

30 |

31 | - Implements the popular Elo rating method (inspired by chess rankings) that was originally used by LM Arena

32 | - See the [main notebook](../01_main-chapter-code/chF_main.ipynb) for details

33 |

34 | ```bash

35 | ➜ 03_leaderboards git:(main) ✗ uv run 1_elo_leaderboard.py --path votes.json

36 |

37 | Leaderboard (Elo)

38 | -----------------------

39 | 1. GPT-5 1095.9

40 | 2. Claude-3 1058.7

41 | 3. Llama-4 958.2

42 | 4. Llama-3 887.2

43 | ```

44 |

45 |

46 |

47 |

48 |

49 |

50 |

51 | ## Method 2: Bradley-Terry model

52 |

53 | - Implements a [Bradley-Terry model](https://en.wikipedia.org/wiki/Bradley–Terry_model), similar to the new LM Arena leaderboard as described in the official paper ([Chatbot Arena: An Open Platform for Evaluating LLMs by Human Preference](https://arxiv.org/abs/2403.04132))

54 | - Like on the LM Arena leaderboard, the scores are re-scaled to be similar to the original Elo scores

55 | - The code here uses the Adam optimizer from PyTorch to fit the model (for better code familiarity and readability)

56 |

57 |

58 |

59 | ```bash

60 | ➜ 03_leaderboards git:(main) ✗ uv run 2_bradley_terry_leaderboard.py --path votes.json

61 |

62 | Leaderboard (Bradley-Terry)

63 | -----------------------------

64 | 1. GPT-5 1140.6

65 | 2. Claude-3 1058.7

66 | 3. Llama-4 950.3

67 | 4. Llama-3 850.4

68 | ```

69 |

70 |

--------------------------------------------------------------------------------

/chG/01_main-chapter-code/qwen3_chat_interface.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt).

2 | # Source for "Build a Large Language Model From Scratch"

3 | # - https://www.manning.com/books/build-a-large-language-model-from-scratch

4 | # Code: https://github.com/rasbt/LLMs-from-scratch

5 |

6 |

7 | import torch

8 | import chainlit

9 |

10 | from reasoning_from_scratch.ch02 import (

11 | get_device,

12 | )

13 | from reasoning_from_scratch.ch02_ex import generate_text_basic_stream_cache

14 | from reasoning_from_scratch.ch03 import load_model_and_tokenizer

15 |

16 |

17 | # ============================================================

18 | # EDIT ME: Simple configuration

19 | # ============================================================

20 | WHICH_MODEL = "reasoning" # "base" for base model

21 | MAX_NEW_TOKENS = 38912

22 | LOCAL_DIR = "qwen3"

23 | COMPILE = False

24 | # ============================================================

25 |

26 |

27 | DEVICE = get_device()

28 |

29 | MODEL, TOKENIZER = load_model_and_tokenizer(

30 | which_model=WHICH_MODEL,

31 | device=DEVICE,

32 | use_compile=COMPILE,

33 | local_dir=LOCAL_DIR

34 | )

35 |

36 |

37 | @chainlit.on_chat_start

38 | async def on_start():

39 | chainlit.user_session.set("history", [])

40 | chainlit.user_session.get("history").append(

41 | {"role": "system", "content": "You are a helpful assistant."}

42 | )

43 |

44 |

45 | @chainlit.on_message

46 | async def main(message: chainlit.Message):

47 | """

48 | The main Chainlit function.

49 | """

50 | # 1) Encode input

51 | input_ids = TOKENIZER.encode(message.content)

52 | input_ids_tensor = torch.tensor(input_ids, device=DEVICE).unsqueeze(0)

53 |

54 | # 2) Start an outgoing message we can stream into

55 | out_msg = chainlit.Message(content="")

56 | await out_msg.send()

57 |

58 | # 3) Stream generation

59 | for tok in generate_text_basic_stream_cache(

60 | model=MODEL,

61 | token_ids=input_ids_tensor,

62 | max_new_tokens=MAX_NEW_TOKENS,

63 | eos_token_id=TOKENIZER.eos_token_id

64 | ):

65 | token_id = tok.squeeze(0)

66 | piece = TOKENIZER.decode(token_id.tolist())

67 | await out_msg.stream_token(piece)

68 |

69 | # 4) Finalize the streamed message

70 | await out_msg.update()

71 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/bug-report.yaml:

--------------------------------------------------------------------------------

1 | name: Bug Report

2 | description: Report errors related to the book content or code

3 | title: "Description"

4 | labels: [bug]

5 | assignees: rasbt

6 | body:

7 | - type: markdown

8 | attributes:

9 | value: |

10 | Thank you for taking the time to report an issue. Please fill out the details below to help resolve it.

11 |

12 | - type: textarea

13 | id: bug_description

14 | attributes:

15 | label: Bug description

16 | description: A description of the issue.

17 | placeholder: |

18 | Please provide a description of what the bug or issue is.

19 | validations:

20 | required: true

21 |

22 | - type: dropdown

23 | id: operating_system

24 | attributes:

25 | label: What operating system are you using?

26 | description: If applicable, please select the operating system where you experienced this issue.

27 | options:

28 | - "Unknown"

29 | - "macOS"

30 | - "Linux"

31 | - "Windows"

32 | validations:

33 | required: False

34 |

35 | - type: dropdown

36 | id: compute_environment

37 | attributes:

38 | label: Where do you run your code?

39 | description: Please select the computing environment where you ran this code.

40 | options:

41 | - "Local (laptop, desktop)"

42 | - "Lightning AI Studio"

43 | - "Google Colab"

44 | - "Other cloud environment (AWS, Azure, GCP)"

45 | validations:

46 | required: False

47 |

48 | - type: textarea

49 | id: environment

50 | attributes:

51 | label: Environment

52 | description: |

53 | Please provide details about your Python environment via the environment by pasting the output of the following code:

54 | ```python

55 | import sys

56 | import torch

57 |

58 | print(f"Python version: {sys.version}")

59 | print(f"PyTorch version: {torch.__version__}")

60 | print(f"CUDA available: {torch.cuda.is_available()}")

61 | if torch.cuda.is_available():

62 | print(f"CUDA device: {torch.cuda.get_device_name(0)}")

63 | print(f"CUDA version: {torch.version.cuda}")

64 | print(f"Device count: {torch.cuda.device_count()}")

65 | ```

66 | value: |

67 | ```

68 |

69 |

70 |

71 | ```

72 | validations:

73 | required: false

74 |

--------------------------------------------------------------------------------

/reasoning_from_scratch/utils.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | from pathlib import Path

6 | import sys

7 | import requests

8 | from urllib.parse import urlparse

9 |

10 |

11 | def download_file(url, out_dir=".", backup_url=None):

12 | out_dir = Path(out_dir)

13 | out_dir.mkdir(parents=True, exist_ok=True)

14 | filename = Path(urlparse(url).path).name

15 | dest = out_dir / filename

16 |

17 | def try_download(u):

18 | try:

19 | with requests.get(u, stream=True, timeout=30) as r:

20 | r.raise_for_status()

21 | size_remote = int(r.headers.get("Content-Length", 0))

22 |

23 | # Skip download if already complete

24 | if dest.exists() and size_remote and dest.stat().st_size == size_remote:

25 | print(f"✓ {dest} already up-to-date")

26 | return True

27 |

28 | # Download in 1 MiB chunks with progress display

29 | block = 1024 * 1024

30 | downloaded = 0

31 | with open(dest, "wb") as f:

32 | for chunk in r.iter_content(chunk_size=block):

33 | if not chunk:

34 | continue

35 | f.write(chunk)

36 | downloaded += len(chunk)

37 | if size_remote:

38 | pct = downloaded * 100 // size_remote

39 | sys.stdout.write(

40 | f"\r{filename}: {pct:3d}% "

41 | f"({downloaded // (1024*1024)} MiB / "

42 | f"{size_remote // (1024*1024)} MiB)"

43 | )

44 | sys.stdout.flush()

45 | if size_remote:

46 | sys.stdout.write("\n")

47 | return True

48 | except requests.RequestException:

49 | return False

50 |

51 | # Try main URL first

52 | if try_download(url):

53 | return dest

54 |

55 | # Try backup URL if provided

56 | if backup_url:

57 | print(f"Primary URL ({url}) failed.\nTrying backup URL ({backup_url})...")

58 | if try_download(backup_url):

59 | return dest

60 |

61 | raise RuntimeError(f"Failed to download {filename} from both mirrors.")

62 |

--------------------------------------------------------------------------------

/chF/03_leaderboards/2_bradley_terry_leaderboard.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | import json

6 | import math

7 | import argparse

8 | import torch

9 | from reasoning_from_scratch.ch02 import get_device

10 |

11 |

12 | def bradley_terry_torch(vote_pairs, device):

13 |

14 | # Collect all unique model names

15 | models = sorted({m for winner, loser in vote_pairs for m in (winner, loser)})

16 | n = len(models)

17 | idx = {m: i for i, m in enumerate(models)}

18 |

19 | # Convert to index tensors

20 | winners = torch.tensor([idx[winner] for winner, _ in vote_pairs], dtype=torch.long)

21 | losers = torch.tensor([idx[loser] for _, loser in vote_pairs], dtype=torch.long)

22 |

23 | # Learnable parameters

24 | theta = torch.nn.Parameter(torch.zeros(n - 1, device=device))

25 | optimizer = torch.optim.Adam([theta], lr=0.01, weight_decay=1e-4)

26 |

27 | def scores():

28 | return torch.cat([theta, torch.zeros(1, device=device)])

29 |

30 | for epoch in range(500):

31 | s = scores()

32 | delta = s[winners] - s[losers] # score difference

33 | loss = -torch.nn.functional.logsigmoid(delta).mean() # negative log-likelihood

34 | optimizer.zero_grad(set_to_none=True)

35 | loss.backward()

36 | optimizer.step()

37 |

38 | # Convert latent scores to Elo-like scale

39 | with torch.no_grad():

40 | s = scores()

41 | scale = 400.0 / math.log(10.0)

42 | R = s * scale

43 | R -= R.mean()

44 | R += 1000.0 # center around 1000

45 |

46 | return {m: float(r) for m, r in zip(models, R.cpu().tolist())}

47 |

48 |

49 | def main():

50 | parser = argparse.ArgumentParser(description="Bradley-Terry leaderboard.")

51 | parser.add_argument("--path", type=str, help="Path to votes JSON")

52 | args = parser.parse_args()

53 |

54 | with open(args.path, "r", encoding="utf-8") as f:

55 | votes = json.load(f)

56 |

57 | device = get_device()

58 | ratings = bradley_terry_torch(votes, device)

59 |

60 | leaderboard = sorted(ratings.items(),

61 | key=lambda x: -x[1])

62 | print("\nLeaderboard (Bradley-Terry)")

63 | print("-----------------------------")

64 | for i, (model, score) in enumerate(leaderboard, 1):

65 | print(f"{i:>2}. {model:<10} {score:7.1f}")

66 | print()

67 |

68 |

69 | if __name__ == "__main__":

70 | main()

71 |

--------------------------------------------------------------------------------

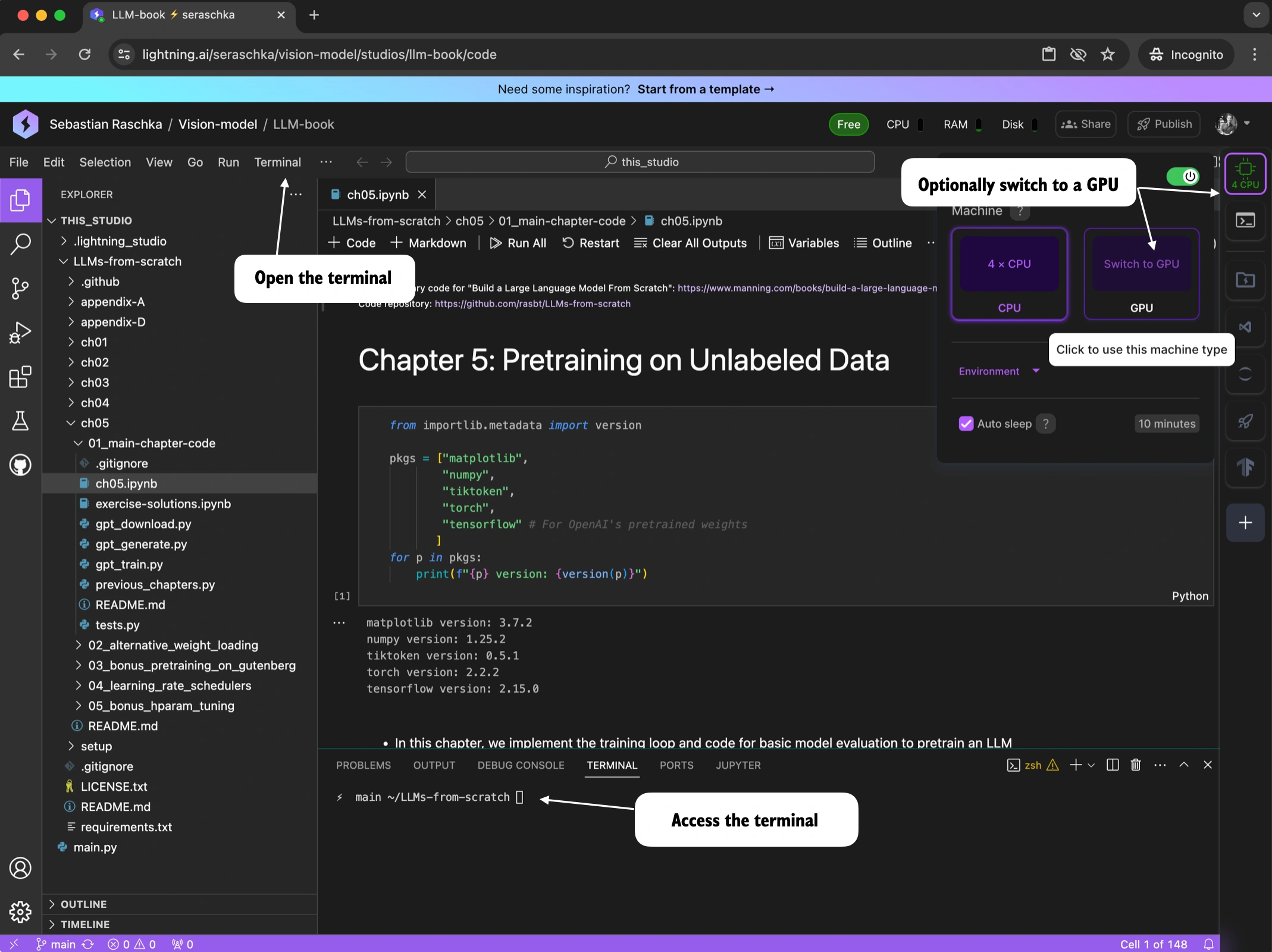

/ch02/02_setup-tips/gpu-instructions.md:

--------------------------------------------------------------------------------

1 |

2 | # GPU Cloud Resources

3 |

4 | This section describes cloud alternatives for running the code presented in this book.

5 |

6 | While the code can run on conventional laptops and desktop computers without a dedicated GPU, cloud platforms with NVIDIA GPUs can substantially improve the runtime of the code, especially in chapters 5 to 7.

7 |

8 |

9 |

10 | ## Using Lightning Studio

11 |

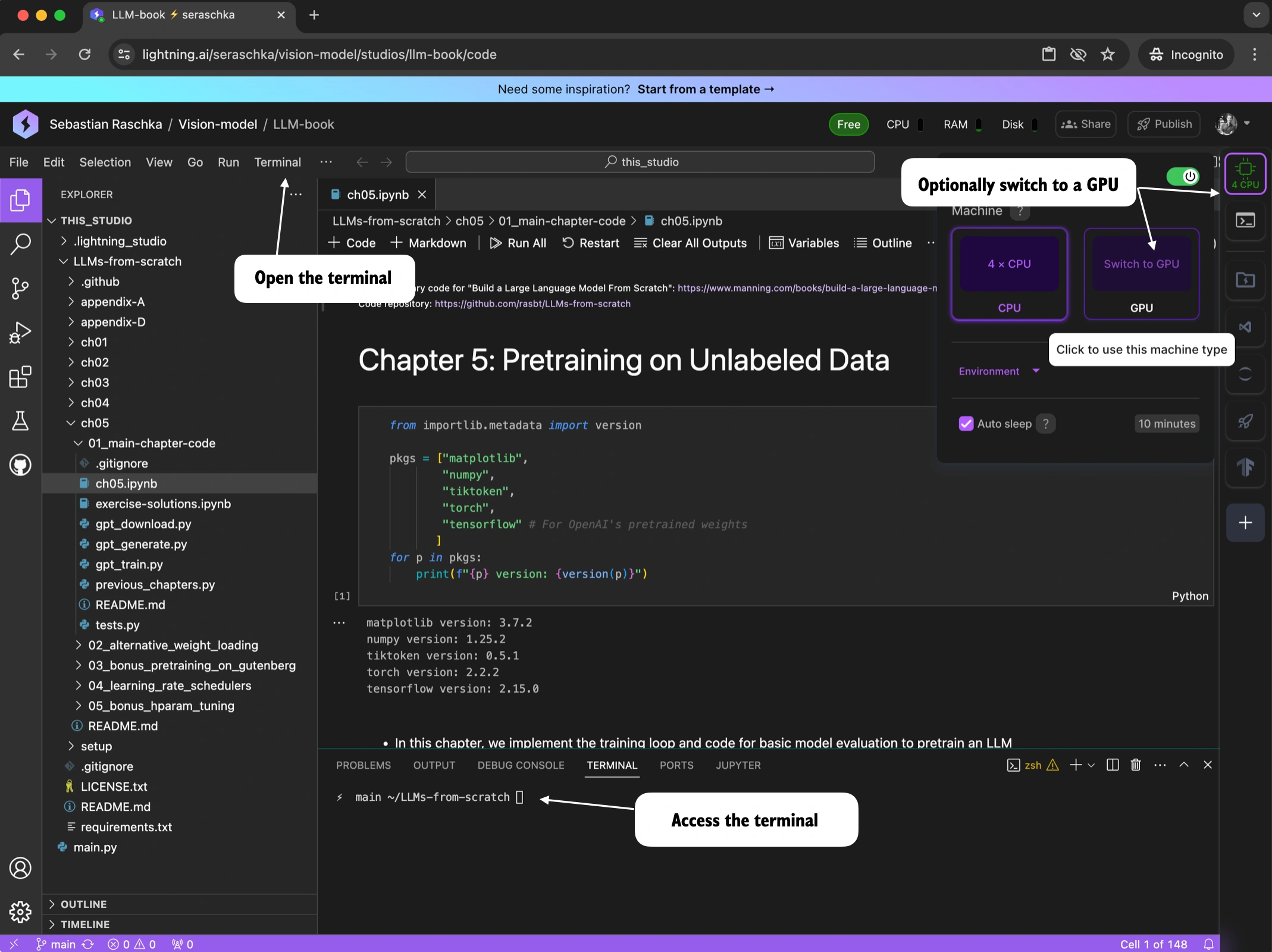

12 | For a smooth development experience in the cloud, I recommend the [Lightning AI Studio](https://lightning.ai/) platform, which allows users to set up a persistent environment and use both VSCode and Jupyter Lab on cloud CPUs and GPUs.

13 |

14 | Once you start a new Studio, you can open the terminal and execute the following setup steps to clone the repository and install the dependencies:

15 |

16 | ```bash

17 | git clone https://github.com/rasbt/reasoning-from-scratch.git

18 | cd reasoning-from-scratch

19 | pip install -r requirements.txt

20 | ```

21 |

22 | (In contrast to Google Colab, these only need to be executed once since the Lightning AI Studio environments are persistent, even if you switch between CPU and GPU machines.)

23 |

24 | Then, navigate to the Python script or Jupyter Notebook you want to run. Optionally, you can also easily connect a GPU to accelerate the code's runtime, for example, when you are pretraining the LLM in chapter 5 or finetuning it in chapters 6 and 7.

25 |

26 |  27 |

28 |

29 |

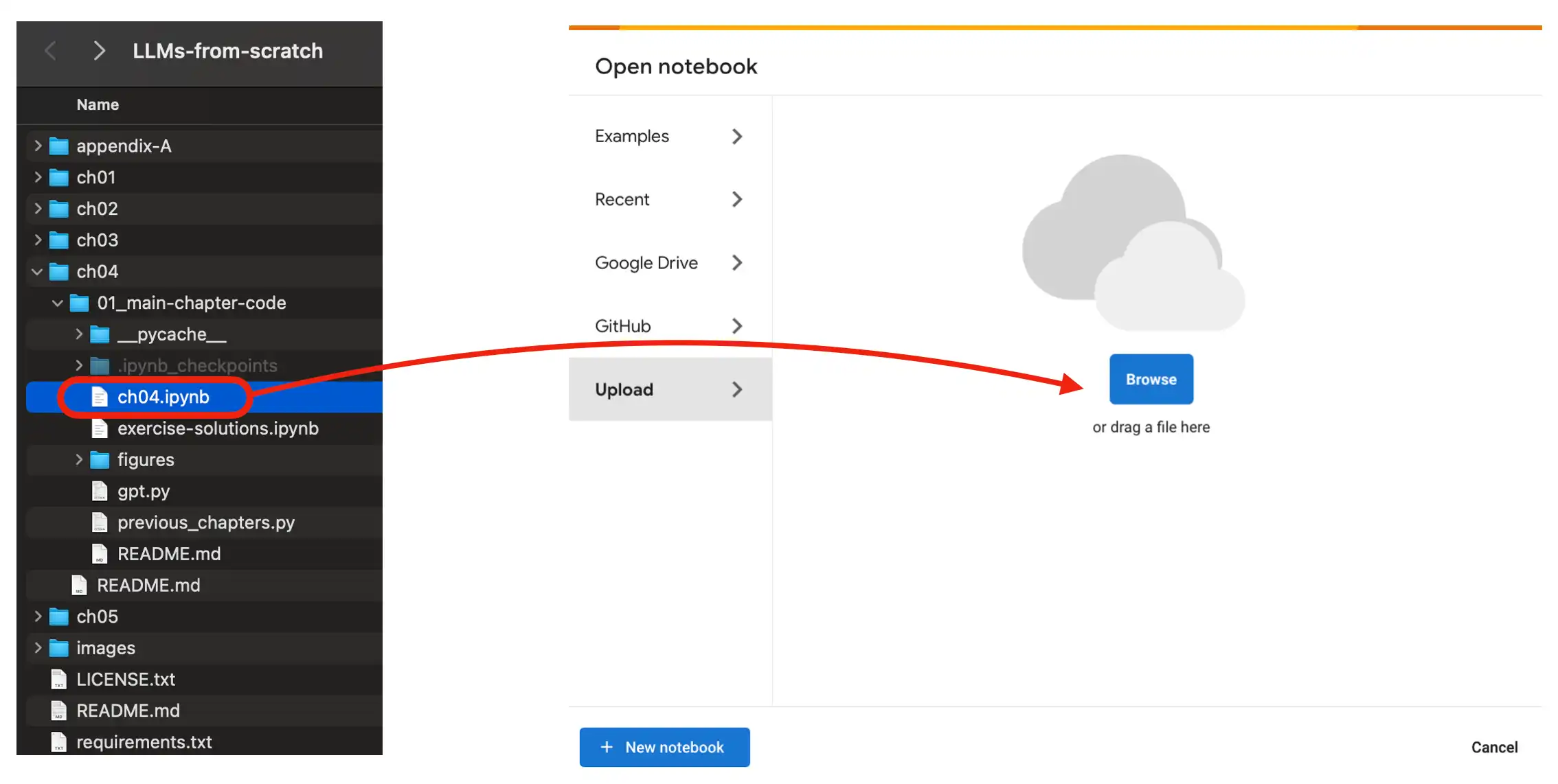

30 | ## Using Google Colab

31 |

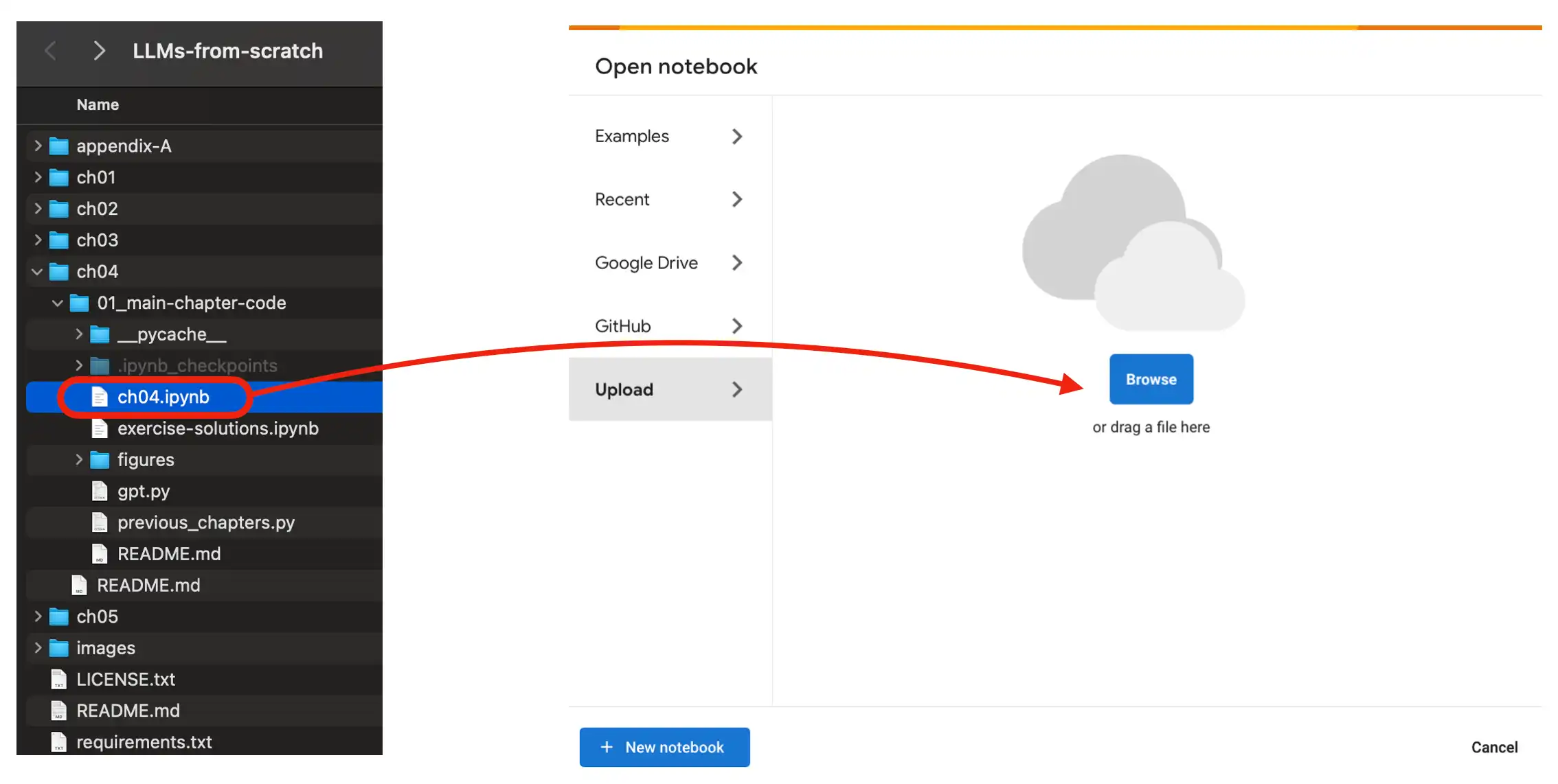

32 | To use a Google Colab environment in the cloud, head over to [https://colab.research.google.com/](https://colab.research.google.com/) and open the respective chapter notebook from the GitHub menu or by dragging the notebook into the *Upload* field as shown in the figure below.

33 |

34 |

27 |

28 |

29 |

30 | ## Using Google Colab

31 |

32 | To use a Google Colab environment in the cloud, head over to [https://colab.research.google.com/](https://colab.research.google.com/) and open the respective chapter notebook from the GitHub menu or by dragging the notebook into the *Upload* field as shown in the figure below.

33 |

34 |  35 |

36 |

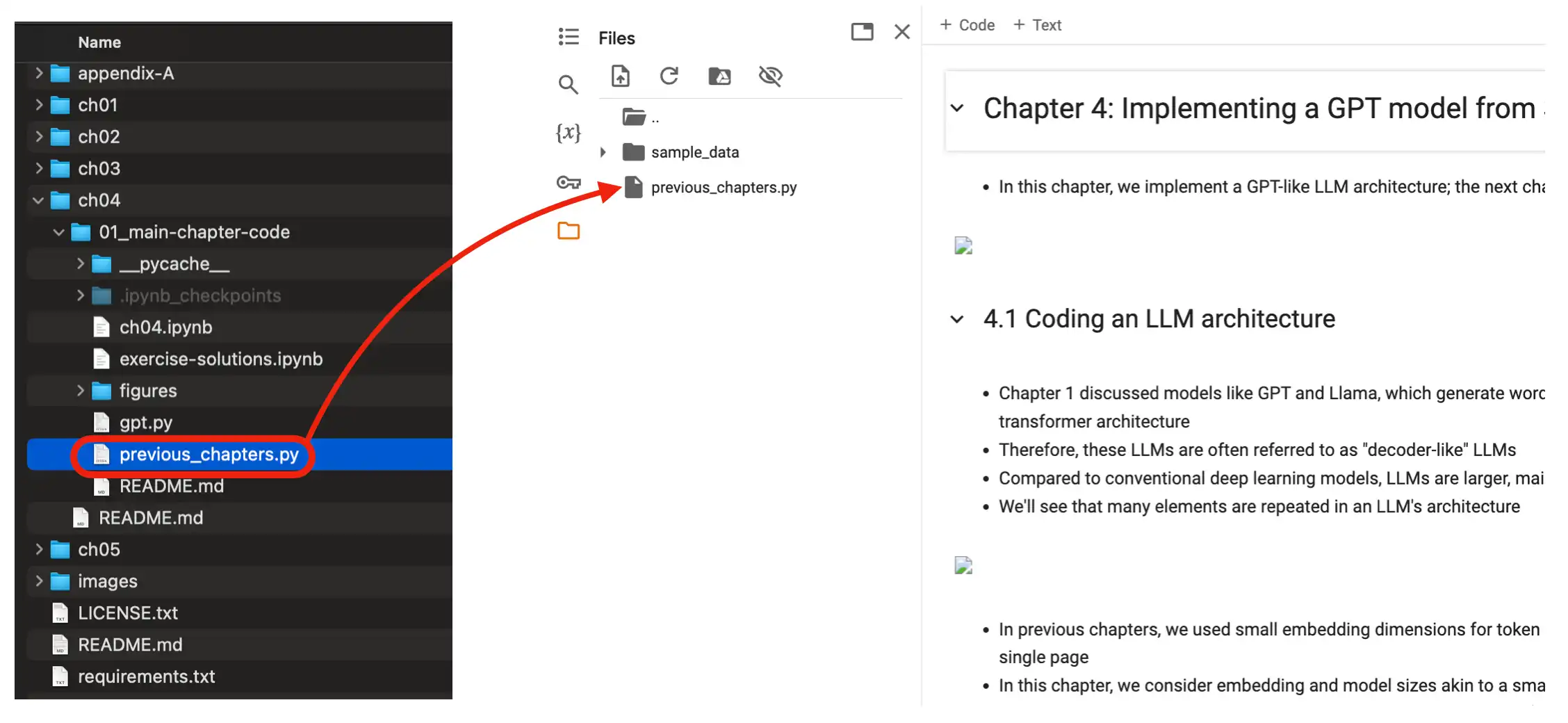

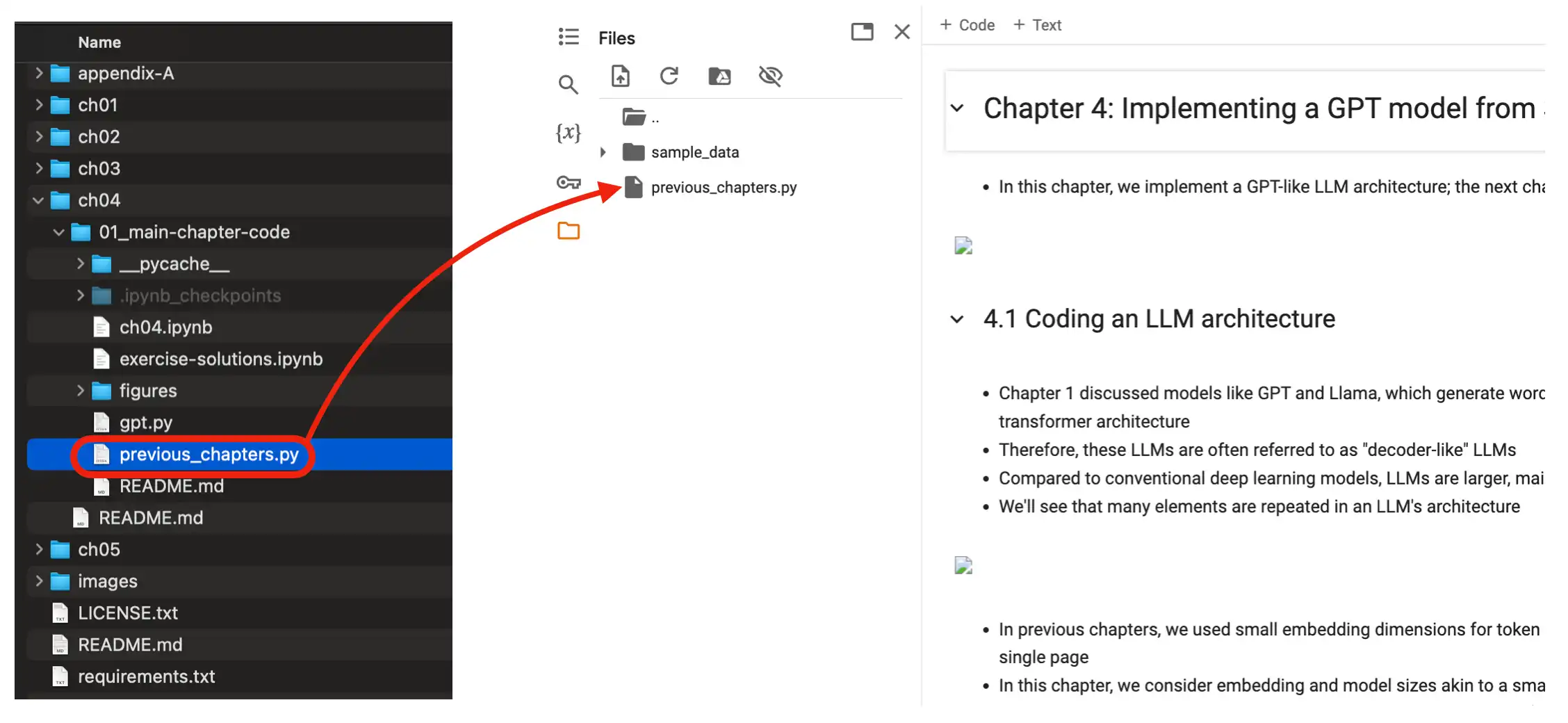

37 | Also make sure you upload the relevant files (dataset files and .py files the notebook is importing from) to the Colab environment as well, as shown below.

38 |

39 |

35 |

36 |

37 | Also make sure you upload the relevant files (dataset files and .py files the notebook is importing from) to the Colab environment as well, as shown below.

38 |

39 |  40 |

41 |

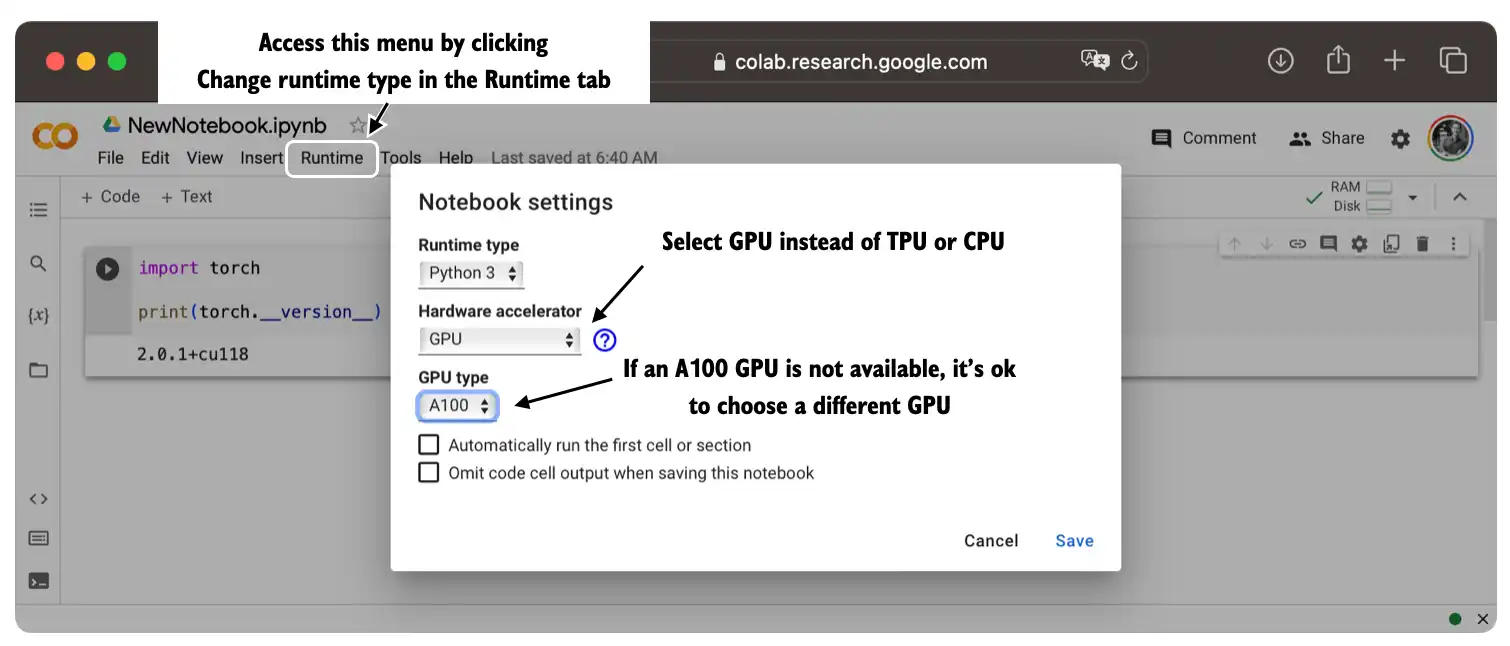

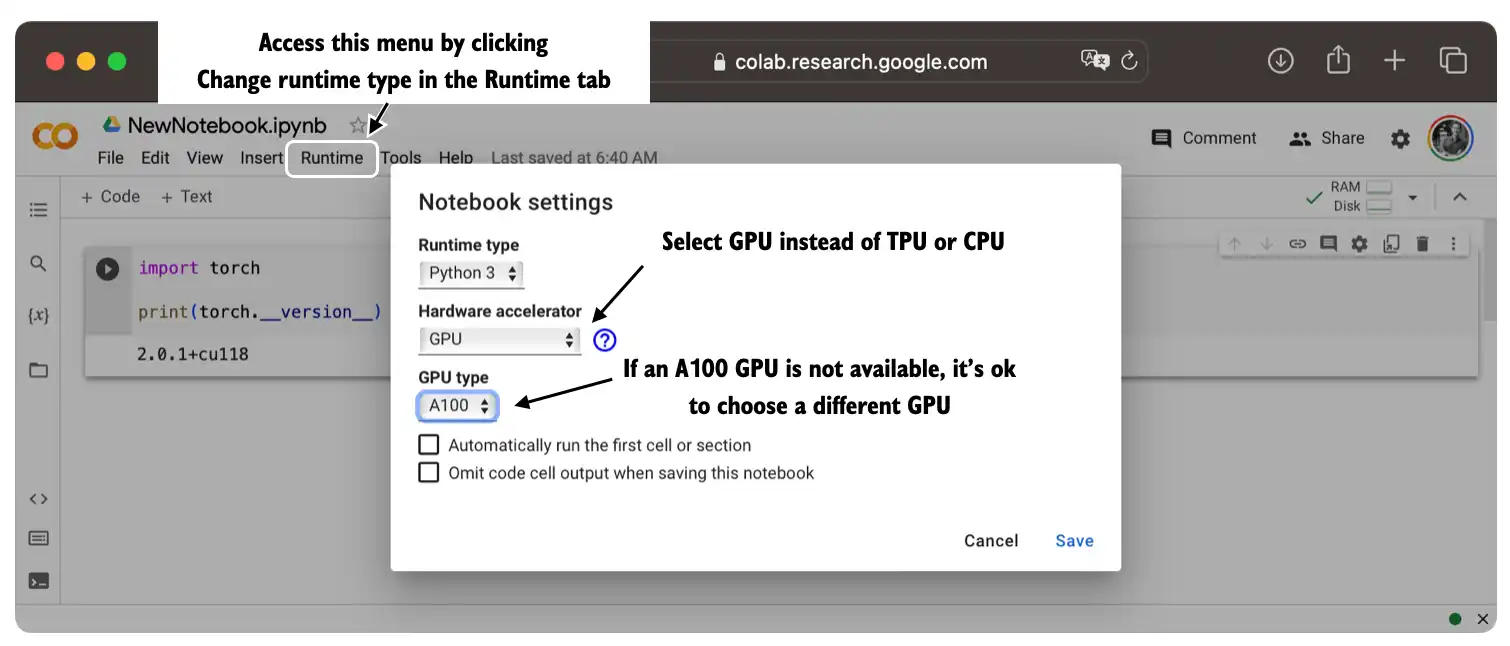

42 | You can optionally run the code on a GPU by changing the *Runtime* as illustrated in the figure below.

43 |

44 |

40 |

41 |

42 | You can optionally run the code on a GPU by changing the *Runtime* as illustrated in the figure below.

43 |

44 |  45 |

46 |

47 |

48 | ## Questions?

49 |

50 | If you have any questions, please don't hesitate to reach out via the [Discussions](https://github.com/rasbt/reasoning-from-scratch/discussions) forum in this GitHub repository.

--------------------------------------------------------------------------------

/tests/conftest.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | from reasoning_from_scratch.qwen3 import (

6 | download_qwen3_small,

7 | QWEN_CONFIG_06_B,

8 | Qwen3Model,

9 | )

10 | import sys

11 | import types

12 | import nbformat

13 | import pytest

14 | import torch

15 |

16 |

17 | @pytest.fixture(scope="session")

18 | def qwen3_weights_path(tmp_path_factory):

19 | """Creates and saves a deterministic model for testing."""

20 |

21 | base_path = tmp_path_factory.mktemp("models")

22 | model_path = base_path / "qwen3_test_weights.pt"

23 | download_qwen3_small(kind="base", tokenizer_only=True, out_dir=base_path)

24 |

25 | if not model_path.exists():

26 | torch.manual_seed(123)

27 | model = Qwen3Model(QWEN_CONFIG_06_B)

28 | torch.save(model.state_dict(), model_path)

29 |

30 | return base_path

31 |

32 |

33 | def import_definitions_from_notebook(nb_path, module_name):

34 | if not nb_path.exists():

35 | raise FileNotFoundError(f"Notebook file not found at: {nb_path}")

36 |

37 | nb = nbformat.read(str(nb_path), as_version=4)

38 |

39 | mod = types.ModuleType(module_name)

40 | sys.modules[module_name] = mod

41 |

42 | # Pass 1: execute only imports (handle multi-line)

43 | for cell in nb.cells:

44 | if cell.cell_type != "code":

45 | continue

46 | lines = cell.source.splitlines()

47 | collecting = False

48 | buf = []

49 | paren_balance = 0

50 | for line in lines:

51 | stripped = line.strip()

52 | if not collecting and (stripped.startswith("import ") or stripped.startswith("from ")):

53 | collecting = True

54 | buf = [line]

55 | paren_balance = line.count("(") - line.count(")")

56 | if paren_balance == 0:

57 | exec("\n".join(buf), mod.__dict__)

58 | collecting = False

59 | buf = []

60 | elif collecting:

61 | buf.append(line)

62 | paren_balance += line.count("(") - line.count(")")

63 | if paren_balance == 0:

64 | exec("\n".join(buf), mod.__dict__)

65 | collecting = False

66 | buf = []

67 |

68 | # Pass 2: execute only def/class definitions

69 | for cell in nb.cells:

70 | if cell.cell_type != "code":

71 | continue

72 | src = cell.source

73 | if "def " in src or "class " in src:

74 | exec(src, mod.__dict__)

75 |

76 | return mod

77 |

--------------------------------------------------------------------------------

/ch02/04_torch-compile-windows/README.md:

--------------------------------------------------------------------------------

1 | # Using `torch.compile()` on Windows

2 |

3 | `torch.compile()` relies on *TorchInductor*, which JIT-compiles kernels and requires a working C/C++ compiler toolchain.

4 |

5 | So, on Windows, the setup required to make `torch.compile` work can be a bit more involved than on Linux or macOS, which usually don't require any extra steps besides installing PyTorch.

6 |

7 | If you are a Windows user and using `torch.compile` sounds too tricky or complicated, don't worry, all code examples in this repository will work fine without compilation.

8 |

9 | Below are some tips that I compiled based on recommendations by [Daniel Kleine](https://github.com/d-kleine) and the following [PyTorch guide](https://docs.pytorch.org/tutorials/unstable/inductor_windows.html).

10 |

11 |

12 | ## 1 Basic Setup (CPU or CUDA)

13 |

14 |

15 | ### 1.1 Install Visual Studio 2022

16 |

17 | - Select the **“Desktop development with C++”** workload.

18 | - Make sure to include the **English language pack** (without it, you may run into UTF-8 encoding errors.)

19 |

20 |

21 | ### 1.2 Open the correct command prompt

22 |

23 |

24 | Launch Python from the

25 |

26 | **"x64 Native Tools Command Prompt for VS 2022"**

27 |

28 | or from the

29 |

30 | **"Visual Studio 2022 Developer Command Prompt"**.

31 |

32 | Alternatively, you can initialize the environment manually by running:

33 |

34 | ```bash

35 | "C:\Program Files\Microsoft Visual Studio\2022\Community\VC\Auxiliary\Build\vcvars64.bat"

36 | ```

37 |

38 |

39 | ### 1.3 Verify that the compiler works

40 |

41 | Run

42 |

43 | ```bash

44 | cl.exe

45 | ```

46 |

47 | If you see version information printed, the compiler is ready.

48 |

49 |

50 | ## 2 Troubleshooting Common Errors

51 |

52 |

53 | ### 2.1 Error: `cl not found`

54 |

55 | Install **Visual Studio Build Tools** with the "C++ build tools" workload and run Python from a developer command prompt. (See this Microsoft [guide](https://learn.microsoft.com/en-us/cpp/build/vscpp-step-0-installation?view=msvc-170) for details)

56 |

57 |

58 | ### 2.2 Error: `triton not found` (when using CUDA)

59 |

60 | Install the Windows build of Triton manually:

61 |

62 | ```bash

63 | pip install "triton-windows<3.4"

64 | ```

65 |

66 | or, if you are using `uv`:

67 |

68 | ```bash

69 | uv pip install "triton-windows<3.4"

70 | ```

71 |

72 | (As mentioned earlier, triton is required by TorchInductor for CUDA kernel compilation.)

73 |

74 |

75 |

76 |

77 | ## 3 Additional Notes

78 |

79 | On Windows, the `cl.exe` compiler is only accessible from within the Visual Studio Developer environment. This means that using `torch.compile()` in notebooks such as Jupyter may not work unless the notebook was launched from a Developer Command Prompt.

80 |

81 | As mentioned at the beginning of this article, there is also a [PyTorch guide](https://docs.pytorch.org/tutorials/unstable/inductor_windows.html) that some users found helpful when getting `torch.compile()` running on Windows CPU builds. However, note that it refers to PyTorch's unstable branch, so use it as a reference only.

82 |

83 | **If compilation continues to cause issues, please feel free to skip it. It's a nice bonus, but it's not important to follow the book.**

84 |

85 |

--------------------------------------------------------------------------------

/chF/02_mmlu/random_guessing_baseline.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | import argparse

6 | import random

7 | import statistics as stats

8 | from collections import Counter

9 |

10 | from datasets import load_dataset

11 |

12 |

13 | # Gold letter is MMLU jargon for correct answer letter

14 | def gold_letter(ans):

15 | if isinstance(ans, int):

16 | return "ABCD"[ans]

17 | s = str(ans).strip().upper()

18 | return s if s in {"A", "B", "C", "D"} else s[:1]

19 |

20 |

21 | def main():

22 | parser = argparse.ArgumentParser(

23 | description="Show gold answer distribution for an MMLU subset and a random-guess baseline."

24 | )

25 | parser.add_argument(

26 | "--subset",

27 | type=str,

28 | default="high_school_mathematics",

29 | help="MMLU subset name (default: 'high_school_mathematics').",

30 | )

31 | parser.add_argument(

32 | "--seed",

33 | type=int,

34 | default=42,

35 | help="Random seed for the random-guess baseline (default: 42).",

36 | )

37 | parser.add_argument(

38 | "--trials",

39 | type=int,

40 | default=10_000,

41 | help="Number of random-guess trials (default: 10,000).",

42 | )

43 | args = parser.parse_args()

44 |

45 | ds = load_dataset("cais/mmlu", args.subset, split="test")

46 |

47 | labels = [gold_letter(ex["answer"]) for ex in ds]

48 | n = len(labels)

49 | counts = Counter(labels)

50 |

51 | print(f"Subset: {args.subset} | split: test | n={n}")

52 | print("Gold distribution provided in the dataset:")

53 | for letter in "ABCD":

54 | c = counts.get(letter, 0)

55 | pct = (c / n) if n else 0.0

56 | print(f" {letter}: {c} ({pct:.2%})")

57 |

58 | if n == 0:

59 | print("\nNo items. Baseline undefined.")

60 | return

61 |

62 | # Repeat random guessing

63 | rng = random.Random(args.seed)

64 | accs = []

65 | for _ in range(args.trials):

66 | guesses = [rng.choice("ABCD") for _ in range(n)]

67 | correct = sum(1 for g, y in zip(guesses, labels) if g == y)

68 | accs.append(correct / n)

69 |

70 | mean_acc = stats.mean(accs)

71 | sd_acc = stats.stdev(accs) if len(accs) > 1 else 0.0

72 |

73 | print(f"\nRandom guessing over {args.trials:,} trials (uniform A/B/C/D, seed={args.seed}):")

74 | print(f" Mean accuracy: {mean_acc:.2%}")

75 | print(f" Std dev across trials: {sd_acc:.2%}")

76 |

77 | # Quantiles

78 | qs = [0.01, 0.05, 0.25, 0.5, 0.75, 0.95, 0.99]

79 | accs_sorted = sorted(accs)

80 | print("\nSelected quantiles of accuracy:")

81 | for q in qs:

82 | idx = int(q * len(accs_sorted))

83 | print(f" {q:.0%} quantile: {accs_sorted[idx]:.3%}")

84 |

85 | # Frequency table (rounded)

86 | acc_counts = Counter(round(a, 2) for a in accs)

87 | print("\nFull frequency table of accuracies (rounded):")

88 | for acc_val in sorted(acc_counts):

89 | freq = acc_counts[acc_val]

90 | pct = freq / args.trials

91 | print(f" {acc_val:.3f}: {freq} times ({pct:.2%})")

92 |

93 |

94 | if __name__ == "__main__":

95 | main()

96 |

--------------------------------------------------------------------------------

/reasoning_from_scratch/ch02.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) Sebastian Raschka under Apache License 2.0 (see LICENSE.txt)

2 | # Source for "Build a Reasoning Model (From Scratch)": https://mng.bz/lZ5B

3 | # Code repository: https://github.com/rasbt/reasoning-from-scratch

4 |

5 | from .qwen3 import KVCache

6 | import torch

7 |

8 |

9 | def get_device(enable_tensor_cores=True):

10 | if torch.cuda.is_available():

11 | device = torch.device("cuda")

12 | print("Using NVIDIA CUDA GPU")

13 |

14 | if enable_tensor_cores:

15 | major, minor = map(int, torch.__version__.split(".")[:2])

16 | if (major, minor) >= (2, 9):

17 | torch.backends.cuda.matmul.fp32_precision = "tf32"

18 | torch.backends.cudnn.conv.fp32_precision = "tf32"

19 | else:

20 | torch.backends.cuda.matmul.allow_tf32 = True

21 | torch.backends.cudnn.allow_tf32 = True

22 |

23 | elif torch.backends.mps.is_available():

24 | device = torch.device("mps")

25 | print("Using Apple Silicon GPU (MPS)")

26 |

27 | elif torch.xpu.is_available():

28 | device = torch.device("xpu")

29 | print("Using Intel GPU")

30 |

31 | else:

32 | device = torch.device("cpu")

33 | print("Using CPU")

34 |

35 | return device

36 |

37 |

38 | @torch.inference_mode()

39 | def generate_text_basic(model, token_ids, max_new_tokens, eos_token_id=None):

40 | input_length = token_ids.shape[1]

41 | model.eval()

42 |

43 | for _ in range(max_new_tokens):

44 | out = model(token_ids)[:, -1]

45 | next_token = torch.argmax(out, dim=-1, keepdim=True)

46 |

47 | # Stop if all sequences in the batch have generated EOS

48 | if (eos_token_id is not None

49 | and next_token.item() == eos_token_id):

50 | break

51 |

52 | token_ids = torch.cat([token_ids, next_token], dim=1)

53 | return token_ids[:, input_length:]

54 |

55 |

56 | @torch.inference_mode()

57 | def generate_text_basic_cache(

58 | model,

59 | token_ids,

60 | max_new_tokens,

61 | eos_token_id=None

62 | ):

63 |

64 | input_length = token_ids.shape[1]

65 | model.eval()

66 | cache = KVCache(n_layers=model.cfg["n_layers"])

67 | model.reset_kv_cache()

68 | out = model(token_ids, cache=cache)[:, -1]

69 |

70 | for _ in range(max_new_tokens):

71 | next_token = torch.argmax(out, dim=-1, keepdim=True)

72 |

73 | if (eos_token_id is not None

74 | and next_token.item() == eos_token_id):

75 | break

76 |

77 | token_ids = torch.cat([token_ids, next_token], dim=1)

78 | out = model(next_token, cache=cache)[:, -1]

79 |

80 | return token_ids[:, input_length:]

81 |

82 |

83 | def generate_stats(output_token_ids, tokenizer, start_time,