├── reports

└── images

│ ├── roc_all.png

│ ├── roc_curve.png

│ ├── varImprt.png

│ ├── roc_all_zoom.png

│ ├── roc_curve_zoom.png

│ ├── breastCancerWisconsinDataSet_MachineLearning_19_0.png

│ ├── breastCancerWisconsinDataSet_MachineLearning_22_1.png

│ ├── breastCancerWisconsinDataSet_MachineLearning_25_0.png

│ └── breastCancerWisconsinDataSet_MachineLearning_34_0.png

├── models

└── pickle_models

│ ├── model_nn.pkl

│ ├── model_rf.pkl

│ └── model_knn.pkl

├── src

├── r

│ ├── .Rprofile

│ ├── README.md

│ ├── r.Rproj

│ ├── packrat

│ │ ├── packrat.opts

│ │ ├── init.R

│ │ └── packrat.lock

│ ├── random_forest.R

│ ├── breastCancer.R

│ └── breast_cancer.Rmd

├── pyspark

│ ├── breast_cancer_neural_networks.scala

│ ├── breast_cancer_rdd.py

│ ├── breast_cancer_df.py

│ └── breast_cancer_zeppelin_notebook.json

└── python

│ ├── produce_model_metrics.py

│ ├── exploratory_analysis.py

│ ├── data_extraction.py

│ ├── neural_networks.py

│ ├── knn.py

│ ├── random_forest.py

│ ├── model_eval.py

│ └── helper_functions.py

├── notebooks

└── random_forest_files

│ ├── output_36_1.png

│ ├── output_50_0.png

│ ├── output_58_0.png

│ ├── output_65_0.png

│ └── output_67_0.png

├── dash_dashboard

├── dash_breast_cancer.css

├── README.md

├── global_vars.py

└── app.py

├── LICENSE.md

├── requirements.txt

├── .gitignore

└── README.md

/reports/images/roc_all.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/reports/images/roc_all.png

--------------------------------------------------------------------------------

/reports/images/roc_curve.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/reports/images/roc_curve.png

--------------------------------------------------------------------------------

/reports/images/varImprt.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/reports/images/varImprt.png

--------------------------------------------------------------------------------

/models/pickle_models/model_nn.pkl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/models/pickle_models/model_nn.pkl

--------------------------------------------------------------------------------

/models/pickle_models/model_rf.pkl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/models/pickle_models/model_rf.pkl

--------------------------------------------------------------------------------

/reports/images/roc_all_zoom.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/reports/images/roc_all_zoom.png

--------------------------------------------------------------------------------

/reports/images/roc_curve_zoom.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/reports/images/roc_curve_zoom.png

--------------------------------------------------------------------------------

/src/r/.Rprofile:

--------------------------------------------------------------------------------

1 | #### -- Packrat Autoloader (version 0.4.8-1) -- ####

2 | source("packrat/init.R")

3 | #### -- End Packrat Autoloader -- ####

4 |

--------------------------------------------------------------------------------

/models/pickle_models/model_knn.pkl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/models/pickle_models/model_knn.pkl

--------------------------------------------------------------------------------

/notebooks/random_forest_files/output_36_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/notebooks/random_forest_files/output_36_1.png

--------------------------------------------------------------------------------

/notebooks/random_forest_files/output_50_0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/notebooks/random_forest_files/output_50_0.png

--------------------------------------------------------------------------------

/notebooks/random_forest_files/output_58_0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/notebooks/random_forest_files/output_58_0.png

--------------------------------------------------------------------------------

/notebooks/random_forest_files/output_65_0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/notebooks/random_forest_files/output_65_0.png

--------------------------------------------------------------------------------

/notebooks/random_forest_files/output_67_0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/notebooks/random_forest_files/output_67_0.png

--------------------------------------------------------------------------------

/src/r/README.md:

--------------------------------------------------------------------------------

1 | # Machine Learning Techniques on Breast Cancer Wisconsin Data Set

2 |

3 | This serves as a sub-directory for the breast cancer project with all r related stuff. More info later

4 |

--------------------------------------------------------------------------------

/reports/images/breastCancerWisconsinDataSet_MachineLearning_19_0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/reports/images/breastCancerWisconsinDataSet_MachineLearning_19_0.png

--------------------------------------------------------------------------------

/reports/images/breastCancerWisconsinDataSet_MachineLearning_22_1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/reports/images/breastCancerWisconsinDataSet_MachineLearning_22_1.png

--------------------------------------------------------------------------------

/reports/images/breastCancerWisconsinDataSet_MachineLearning_25_0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/reports/images/breastCancerWisconsinDataSet_MachineLearning_25_0.png

--------------------------------------------------------------------------------

/reports/images/breastCancerWisconsinDataSet_MachineLearning_34_0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/raviolli77/machineLearning_breastCancer_Python/HEAD/reports/images/breastCancerWisconsinDataSet_MachineLearning_34_0.png

--------------------------------------------------------------------------------

/src/r/r.Rproj:

--------------------------------------------------------------------------------

1 | Version: 1.0

2 |

3 | RestoreWorkspace: Default

4 | SaveWorkspace: Default

5 | AlwaysSaveHistory: Default

6 |

7 | EnableCodeIndexing: Yes

8 | UseSpacesForTab: Yes

9 | NumSpacesForTab: 2

10 | Encoding: UTF-8

11 |

12 | RnwWeave: Sweave

13 | LaTeX: pdfLaTeX

14 |

--------------------------------------------------------------------------------

/src/r/packrat/packrat.opts:

--------------------------------------------------------------------------------

1 | auto.snapshot: TRUE

2 | use.cache: FALSE

3 | print.banner.on.startup: auto

4 | vcs.ignore.lib: TRUE

5 | vcs.ignore.src: FALSE

6 | external.packages:

7 | local.repos:

8 | load.external.packages.on.startup: TRUE

9 | ignored.packages:

10 | quiet.package.installation: TRUE

11 | snapshot.recommended.packages: FALSE

12 | snapshot.fields:

13 | Imports

14 | Depends

15 | LinkingTo

16 |

--------------------------------------------------------------------------------

/dash_dashboard/dash_breast_cancer.css:

--------------------------------------------------------------------------------

1 | .banner {

2 | height: 75px;

3 | margin: 0px -10px 10px;

4 | background-color: #00878d;

5 | border-radius: 2px;

6 | }

7 |

8 | .banner h2{

9 | color: white;

10 | padding-top: 10px;

11 | margin-left: 2%;

12 | display: inline-block;

13 | }

14 |

15 | table, td, th {

16 | border: 1px solid #ddd;

17 | text-align: left;

18 | }

19 |

20 | table {

21 | border-collapse: collapse;

22 | width: 100%;

23 | }

24 |

25 | th, td {

26 | padding: 15px;

27 | }

28 |

29 |

30 | h1, h2, h3, h4, h5, h6 {

31 | color: #24515d;

32 | font-family: font-family: "Courier New", Courier;

33 | }

34 |

35 | p {

36 | font-family: font-family: "Courier New", Courier;

37 | }

--------------------------------------------------------------------------------

/LICENSE.md:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2017 Inertia7

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

6 |

7 | The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

8 |

9 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

--------------------------------------------------------------------------------

/dash_dashboard/README.md:

--------------------------------------------------------------------------------

1 | # Dash Dashboard

2 |

3 | This document serves as a `readme` for the subdirectory containing the code for the interactive dashboard made available by [plotly](https://plot.ly/) called [Dash](https://plot.ly/products/dash/).

4 |

5 | To run the application run `app.py` and you're web browser will open the dashboard.

6 |

7 | ## Exploratory Analysis

8 |

9 | This section explores 3 variable interaction with a 3d scatter plot that showcases the relationship between the variables of your choice. Along with showcasing the distribution of the data using histograms seperating the diagnoses.

10 |

11 |  12 |

13 | ## Machine Learning

14 |

15 | This section showcases the machine learning section of the project.

16 | The metrics are as outlined:

17 |

18 | + ROC Curves

19 | + Interactive Confusion Matrix

20 | + Classification Report - outputs the `classification_report` function from `sklearn` in an html table.

21 |

22 |

12 |

13 | ## Machine Learning

14 |

15 | This section showcases the machine learning section of the project.

16 | The metrics are as outlined:

17 |

18 | + ROC Curves

19 | + Interactive Confusion Matrix

20 | + Classification Report - outputs the `classification_report` function from `sklearn` in an html table.

21 |

22 |  23 |

24 | Any questions or suggestions, please let me know!

25 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | appdirs==1.4.3

2 | appnope==0.1.0

3 | bleach==3.1.1

4 | certifi==2018.1.18

5 | chardet==3.0.4

6 | click==6.7

7 | cycler==0.10.0

8 | dash==0.19.0

9 | dash-core-components==0.16.0

10 | dash-html-components==0.8.0

11 | dash-renderer==0.11.1

12 | decorator==4.2.1

13 | entrypoints==0.2.2

14 | Flask==1.0

15 | Flask-Compress==1.4.0

16 | html5lib==0.999999999

17 | idna==2.6

18 | ipykernel==4.6.1

19 | ipython==6.0.0

20 | ipython-genutils==0.2.0

21 | ipywidgets==6.0.0

22 | itsdangerous==0.24

23 | jedi==0.10.2

24 | Jinja2==2.9.6

25 | jsonschema==2.6.0

26 | jupyter==1.0.0

27 | jupyter-client==5.2.3

28 | jupyter-console==5.2.0

29 | jupyter-core==4.4.0

30 | kiwisolver==1.0.1

31 | MarkupSafe==1.0

32 | matplotlib==2.2.2

33 | mistune==0.8.1

34 | nbconvert==5.1.1

35 | nbformat==4.4.0

36 | notebook==5.7.8

37 | numpy==1.14.5

38 | packaging==16.8

39 | pandas==0.23.3

40 | pandocfilters==1.4.1

41 | pexpect==4.2.1

42 | pickleshare==0.7.4

43 | plotly==3.1.0

44 | prometheus-client==0.3.0

45 | prompt-toolkit==1.0.14

46 | ptyprocess==0.5.1

47 | Pygments==2.2.0

48 | pyparsing==2.1.4

49 | python-dateutil==2.6.1

50 | pytz==2017.3

51 | pyzmq==17.1.0

52 | qtconsole==4.3.1

53 | requests==2.20.0

54 | retrying==1.3.3

55 | scikit-learn==0.19.2

56 | scipy==1.1.0

57 | seaborn==0.9.0

58 | Send2Trash==1.5.0

59 | simplegeneric==0.8.1

60 | six==1.11.0

61 | sklearn==0.0

62 | terminado==0.8.1

63 | terminaltables==3.1.0

64 | testpath==0.3

65 | tornado==4.5.1

66 | traitlets==4.3.2

67 | urllib3==1.25.3

68 | virtualenv==15.1.0

69 | wcwidth==0.1.7

70 | webencodings==0.5.1

71 | Werkzeug==0.15.3

72 | widgetsnbextension==2.0.0

73 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_store

2 |

3 | # Rstuff

4 | src/r/packrat/lib*/

5 | src/r/packrat/src/*

6 |

7 | rsconnect/

8 |

9 | # Byte-compiled / optimized / DLL files

10 | __pycache__/

11 | *.py[cod]

12 | *.pyc

13 | *$py.class

14 |

15 | # C extensions

16 | *.so

17 |

18 | # Icebox stuff

19 | ice_box/

20 |

21 | # Distribution / packaging

22 | .Python

23 | build/

24 | develop-eggs/

25 | dist/

26 | downloads/

27 | eggs/

28 | .eggs/

29 | lib/

30 | lib64/

31 | parts/

32 | sdist/

33 | var/

34 | wheels/

35 | *.egg-info/

36 | .installed.cfg

37 | *.egg

38 | MANIFEST

39 |

40 | # PyInstaller

41 | # Usually these files are written by a python script from a template

42 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

43 | *.manifest

44 | *.spec

45 |

46 | # Installer logs

47 | pip-log.txt

48 | pip-delete-this-directory.txt

49 |

50 | # Unit test / coverage reports

51 | htmlcov/

52 | .tox/

53 | .coverage

54 | .coverage.*

55 | .cache

56 | nosetests.xml

57 | coverage.xml

58 | *.cover

59 | .hypothesis/

60 |

61 | # Translations

62 | *.mo

63 | *.pot

64 |

65 | # Django stuff:

66 | *.log

67 | .static_storage/

68 | .media/

69 | local_settings.py

70 |

71 | # Flask stuff:

72 | instance/

73 | .webassets-cache

74 |

75 | # Scrapy stuff:

76 | .scrapy

77 |

78 | # Sphinx documentation

79 | docs/_build/

80 |

81 | # PyBuilder

82 | target/

83 |

84 | # Jupyter Notebook

85 | .ipynb_checkpoints

86 |

87 | # pyenv

88 | .python-version

89 |

90 | # celery beat schedule file

91 | celerybeat-schedule

92 |

93 | # SageMath parsed files

94 | *.sage.py

95 |

96 | # Environments

97 | .env

98 | .venv

99 | env/

100 | venv/

101 | ENV/

102 | env.bak/

103 | venv.bak/

104 |

105 | # Spyder project settings

106 | .spyderproject

107 | .spyproject

108 |

109 | # Rope project settings

110 | .ropeproject

111 |

112 | # mkdocs documentation

113 | /site

114 |

115 | # mypy

116 | .mypy_cache/

117 | # R Stuff

118 | .Rhistory

119 | .Rproj.user

120 |

--------------------------------------------------------------------------------

/src/pyspark/breast_cancer_neural_networks.scala:

--------------------------------------------------------------------------------

1 | // Load appropriate packages

2 | // Neural Networks

3 | // Compatible with Apache Zeppelin

4 | import org.apache.spark.ml.classification.MultilayerPerceptronClassifier

5 | import org.apache.spark.ml.evaluation.MulticlassClassificationEvaluator

6 | import org.apache.spark.ml.feature.MinMaxScaler

7 | import org.apache.spark.mllib.evaluation.BinaryClassificationMetrics

8 | import org.apache.spark.mllib.stat.{MultivariateStatisticalSummary, Statistics}

9 |

10 | // Read in file

11 | val data = spark.read.format("libsvm")

12 | .load("data/data.txt")

13 |

14 | data.collect()

15 |

16 | // Pre-processing

17 | val scaler = new MinMaxScaler()

18 | .setInputCol("features")

19 | .setOutputCol("scaledFeatures")

20 |

21 | val scalerModel = scaler.fit(data)

22 |

23 | val scaledData = scalerModel.transform(data)

24 | println(s"Features scaled to range: [${scaler.getMin}, ${scaler.getMax}]")

25 | scaledData.select("features", "scaledFeatures").show()

26 |

27 | // Changing RDD files variable names to get accurate predictions

28 | val newNames = Seq("label", "oldFeatures", "features")

29 | val data2 = scaledData.toDF(newNames: _*)

30 |

31 | val splits = data2.randomSplit(Array(0.7, 0.3), seed = 1234L)

32 | val trainingSet = splits(0)

33 | val testSet = splits(1)

34 |

35 | trainingSet.select("label", "features").show(25)

36 |

37 | // Neural Networks

38 | val layers = Array[Int](30, 5, 4, 2)

39 |

40 | // Train the Network

41 | val trainer = new MultilayerPerceptronClassifier()

42 | .setLayers(layers)

43 | .setBlockSize(128)

44 | .setSeed(1234L)

45 | .setMaxIter(100)

46 |

47 | val fitNN = trainer.fit(trainingSet)

48 |

49 | // Predict the Test set

50 | val results = fitNN.transform(testSet)

51 | val predictionAndLabelsNN = results.select("prediction", "label")

52 | val evaluator = new MulticlassClassificationEvaluator()

53 | .setMetricName("accuracy")

54 |

55 | println("Test error rate = " + (1 - evaluator.evaluate(predictionAndLabelsNN)))

56 |

57 | println("Test set accuracy = " + evaluator.evaluate(predictionAndLabelsNN))

--------------------------------------------------------------------------------

/src/python/produce_model_metrics.py:

--------------------------------------------------------------------------------

1 | import sys

2 | from sklearn.metrics import roc_curve

3 | from sklearn.metrics import auc

4 |

5 | # Function for All Models to produce Metrics ---------------------

6 |

7 | def produce_model_metrics(fit, test_set, test_class_set, estimator):

8 | """

9 | Purpose

10 | ----------

11 | Function that will return predictions and probability metrics for said

12 | predictions.

13 |

14 | Parameters

15 | ----------

16 | * fit: Fitted model containing the attribute feature_importances_

17 | * test_set: dataframe/array containing the test set values

18 | * test_class_set: array containing the target values for the test set

19 | * estimator: String represenation of appropriate model, can only contain the

20 | following: ['knn', 'rf', 'nn']

21 |

22 | Returns

23 | ----------

24 | Box plot graph for all numeric data in data frame

25 | """

26 | my_estimators = {

27 | 'rf': 'estimators_',

28 | 'nn': 'out_activation_',

29 | 'knn': '_fit_method'

30 | }

31 | try:

32 | # Captures whether first parameter is a model

33 | if not hasattr(fit, 'fit'):

34 | return print("'{0}' is not an instantiated model from scikit-learn".format(fit))

35 |

36 | # Captures whether the model has been trained

37 | if not vars(fit)[my_estimators[estimator]]:

38 | return print("Model does not appear to be trained.")

39 |

40 | except KeyError as e:

41 | raise KeyError("'{0}' does not correspond with the appropriate key inside the estimators dictionary. \

42 | Please refer to function to check `my_estimators` dictionary.".format(estimator))

43 |

44 |

45 | # Outputting predictions and prediction probability

46 | # for test set

47 | predictions = fit.predict(test_set)

48 | accuracy = fit.score(test_set, test_class_set)

49 | # We grab the second array from the output which corresponds to

50 | # to the predicted probabilites of positive classes

51 | # Ordered wrt fit.classes_ in our case [0, 1] where 1 is our positive class

52 | predictions_prob = fit.predict_proba(test_set)[:, 1]

53 | # ROC Curve stuff

54 | fpr, tpr, _ = roc_curve(test_class_set,

55 | predictions_prob,

56 | pos_label = 1)

57 | auc_fit = auc(fpr, tpr)

58 | return {'predictions': predictions,

59 | 'accuracy': accuracy,

60 | 'fpr': fpr,

61 | 'tpr': tpr,

62 | 'auc': auc_fit}

63 |

--------------------------------------------------------------------------------

/src/pyspark/breast_cancer_rdd.py:

--------------------------------------------------------------------------------

1 | # LOAD APPROPRIATE PACKAGE

2 | import numpy as np

3 | from pyspark.context import SparkContext

4 | from pyspark.mllib.util import MLUtils

5 | from pyspark.mllib.tree import DecisionTree, DecisionTreeModel

6 | from pyspark.mllib.tree import RandomForest, RandomForestModel

7 | from pyspark.mllib.evaluation import BinaryClassificationMetrics

8 |

9 | sc = SparkContext.getOrCreate()

10 | data = MLUtils.loadLibSVMFile(sc, 'data/dataLibSVM.txt')

11 | print(data)

12 | # NEXT LET'S CREATE THE APPROPRIATE TRAINING AND TEST SETS

13 | # WE'LL BE SETTING THEM AS 70-30, ALONG WITH SETTING A

14 | # RANDOM SEED GENERATOR TO MAKE MY RESULTS REPRODUCIBLE

15 |

16 | (trainingSet, testSet) = data.randomSplit([0.7, 0.3], seed = 7)

17 |

18 | ##################

19 | # DECISION TREES #

20 | ##################

21 |

22 | fitDT = DecisionTree.trainClassifier(trainingSet,

23 | numClasses=2,

24 | categoricalFeaturesInfo={},

25 | impurity='gini',

26 | maxDepth=3,

27 | maxBins=32)

28 |

29 | print(fitDT.toDebugString())

30 |

31 | predictionsDT = fitDT.predict(testSet.map(lambda x: x.features))

32 |

33 | labelsAndPredictionsDT = testSet.map(lambda lp: lp.label).zip(predictionsDT)

34 |

35 | # Test Error Rate Evaluations

36 |

37 | testErrDT = labelsAndPredictionsDT.filter(lambda (v, p): v != p).count() / float(testSet.count())

38 |

39 | print('Test Error = {0}'.format(testErrDT))

40 |

41 | # Instantiate metrics object

42 | metricsDT = BinaryClassificationMetrics(labelsAndPredictionsDT)

43 |

44 | # Area under ROC curve

45 | print("Area under ROC = {0}".format(metricsDT.areaUnderROC))

46 |

47 | #################

48 | # RANDOM FOREST #

49 | #################

50 |

51 | fitRF = RandomForest.trainClassifier(trainingSet,

52 | numClasses = 2,

53 | categoricalFeaturesInfo = {},

54 | numTrees = 500,

55 | featureSubsetStrategy="auto",

56 | impurity = 'gini', # USING GINI INDEX FOR OUR RANDOM FOREST MODEL

57 | maxDepth = 4,

58 | maxBins = 100)

59 |

60 | predictionsRF = fitRF.predict(testSet.map(lambda x: x.features))

61 |

62 | labelsAndPredictions = testSet.map(lambda lp: lp.label).zip(predictionsRF)

63 |

64 |

65 | testErr = labelsAndPredictions.filter(lambda (v, p): v != p).count() / float(testSet.count())

66 |

67 | print('Test Error = {0}'.format(testErr))

68 | print('Learned classification forest model:')

69 | print(fitRF.toDebugString())

70 |

71 | # Instantiate metrics object

72 | metricsRF = BinaryClassificationMetrics(labelsAndPredictions)

73 |

74 | # Area under ROC curve

75 | print("Area under ROC = {0}".format(metricsRF.areaUnderROC))

76 |

77 | ###################

78 | # NEURAL NETWORKS #

79 | ###################

80 |

81 | # See Scala Script

--------------------------------------------------------------------------------

/src/python/exploratory_analysis.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | #####################################################

4 | ## WISCONSIN BREAST CANCER MACHINE LEARNING ##

5 | #####################################################

6 | #

7 | # Project by Raul Eulogio

8 | #

9 | # Project found at: https://www.inertia7.com/projects/3

10 | # NOTE: Better in jupyter notebook format

11 |

12 | """

13 | Exploratory Analysis

14 | """

15 | import helper_functions as hf

16 | from data_extraction import breast_cancer

17 | import matplotlib.pyplot as plt

18 | import seaborn as sns

19 |

20 | print('''

21 | ########################################

22 | ## DATA FRAME SHAPE AND DTYPES ##

23 | ########################################

24 | ''')

25 |

26 | print("Here's the dimensions of our data frame:\n",

27 | breast_cancer.shape)

28 |

29 | print("Here's the data types of our columns:\n",

30 | breast_cancer.dtypes)

31 |

32 | print("Some more statistics for our data frame: \n",

33 | breast_cancer.describe())

34 |

35 | print('''

36 | ##########################################

37 | ## STATISTICS RELATING TO DX ##

38 | ##########################################

39 | ''')

40 |

41 | # Next let's use the helper function to show distribution

42 | # of our data frame

43 | hf.print_target_perc(breast_cancer, 'diagnosis')

44 | import pdb

45 | pdb.set_trace()

46 | # Scatterplot Matrix

47 | # Variables chosen from Random Forest modeling.

48 |

49 | cols = ['concave_points_worst', 'concavity_mean',

50 | 'perimeter_worst', 'radius_worst',

51 | 'area_worst', 'diagnosis']

52 |

53 | sns.pairplot(breast_cancer,

54 | x_vars = cols,

55 | y_vars = cols,

56 | hue = 'diagnosis',

57 | palette = ('Red', '#875FDB'),

58 | markers=["o", "D"])

59 |

60 | plt.title('Scatterplot Matrix')

61 | plt.show()

62 | plt.close()

63 |

64 | # Pearson Correlation Matrix

65 | corr = breast_cancer.corr(method = 'pearson') # Correlation Matrix

66 | f, ax = plt.subplots(figsize=(11, 9))

67 |

68 | # Generate a custom diverging colormap

69 | cmap = sns.diverging_palette(10, 275, as_cmap=True)

70 |

71 | # Draw the heatmap with the mask and correct aspect ratio

72 | sns.heatmap(corr,

73 | cmap=cmap,

74 | square=True,

75 | xticklabels=True,

76 | yticklabels=True,

77 | linewidths=.5,

78 | cbar_kws={"shrink": .5},

79 | ax=ax)

80 |

81 | plt.title("Pearson Correlation Matrix")

82 | plt.yticks(rotation = 0)

83 | plt.xticks(rotation = 270)

84 | plt.show()

85 | plt.close()

86 |

87 | # BoxPlot

88 | hf.plot_box_plot(breast_cancer, 'Pre-Processed', (-.05, 50))

89 |

90 | # Normalizing data

91 | breast_cancer_norm = hf.normalize_data_frame(breast_cancer)

92 |

93 | # Visuals relating to normalized data to show significant difference

94 | print('''

95 | #################################

96 | ## Transformed Data Statistics ##

97 | #################################

98 | ''')

99 |

100 | print(breast_cancer_norm.describe())

101 |

102 | hf.plot_box_plot(breast_cancer_norm, 'Transformed', (-.05, 1.05))

103 |

--------------------------------------------------------------------------------

/src/python/data_extraction.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | #####################################################

4 | ## WISCONSIN BREAST CANCER MACHINE LEARNING ##

5 | #####################################################

6 | #

7 | # Project by Raul Eulogio

8 | #

9 | # Project found at: https://www.inertia7.com/projects/3

10 | #

11 |

12 | # Import Packages -----------------------------------------------

13 | import numpy as np

14 | import pandas as pd

15 | from sklearn.preprocessing import MinMaxScaler

16 | from sklearn.model_selection import train_test_split

17 | from urllib.request import urlopen

18 |

19 | # Loading data ------------------------------

20 | UCI_data_URL = 'https://archive.ics.uci.edu/ml/machine-learning-databases\

21 | /breast-cancer-wisconsin/wdbc.data'

22 |

23 | names = ['id_number', 'diagnosis', 'radius_mean',

24 | 'texture_mean', 'perimeter_mean', 'area_mean',

25 | 'smoothness_mean', 'compactness_mean',

26 | 'concavity_mean','concave_points_mean',

27 | 'symmetry_mean', 'fractal_dimension_mean',

28 | 'radius_se', 'texture_se', 'perimeter_se',

29 | 'area_se', 'smoothness_se', 'compactness_se',

30 | 'concavity_se', 'concave_points_se',

31 | 'symmetry_se', 'fractal_dimension_se',

32 | 'radius_worst', 'texture_worst',

33 | 'perimeter_worst', 'area_worst',

34 | 'smoothness_worst', 'compactness_worst',

35 | 'concavity_worst', 'concave_points_worst',

36 | 'symmetry_worst', 'fractal_dimension_worst']

37 |

38 | dx = ['Malignant', 'Benign']

39 |

40 | breast_cancer = pd.read_csv(urlopen(UCI_data_URL), names=names)

41 |

42 | # Setting 'id_number' as our index

43 | breast_cancer.set_index(['id_number'], inplace = True)

44 |

45 | # Converted to binary to help later on with models and plots

46 | breast_cancer['diagnosis'] = breast_cancer['diagnosis'].map({'M':1, 'B':0})

47 |

48 | for col in breast_cancer:

49 | pd.to_numeric(col, errors='coerce')

50 |

51 | # For later use in CART models

52 | names_index = names[2:]

53 |

54 | # Create Training and Test Set ----------------------------------

55 | feature_space = breast_cancer.iloc[:,

56 | breast_cancer.columns != 'diagnosis']

57 | feature_class = breast_cancer.iloc[:,

58 | breast_cancer.columns == 'diagnosis']

59 |

60 |

61 | training_set, test_set, class_set, test_class_set = train_test_split(feature_space,

62 | feature_class,

63 | test_size = 0.20,

64 | random_state = 42)

65 |

66 | # Cleaning test sets to avoid future warning messages

67 | class_set = class_set.values.ravel()

68 | test_class_set = test_class_set.values.ravel()

69 |

70 | # Scaling dataframe

71 | scaler = MinMaxScaler()

72 |

73 | scaler.fit(training_set)

74 |

75 | training_set_scaled = scaler.fit_transform(training_set)

76 | test_set_scaled = scaler.transform(test_set)

77 |

--------------------------------------------------------------------------------

/src/python/neural_networks.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | #####################################################

4 | ## WISCONSIN BREAST CANCER MACHINE LEARNING ##

5 | #####################################################

6 | #

7 | # Project by Raul Eulogio

8 | #

9 | # Project found at: https://www.inertia7.com/projects/3

10 | #

11 |

12 | """

13 | Neural Networks Classification

14 | """

15 | # Import Packages -----------------------------------------------

16 | import sys, os

17 | import pandas as pd

18 | import helper_functions as hf

19 | from data_extraction import training_set_scaled, class_set

20 | from data_extraction import test_set_scaled, test_class_set

21 | from sklearn.neural_network import MLPClassifier

22 | from produce_model_metrics import produce_model_metrics

23 |

24 | # Fitting Neural Network ----------------------------------------

25 | # Fit model

26 | fit_nn = MLPClassifier(solver='lbfgs',

27 | hidden_layer_sizes = (12, ),

28 | activation='tanh',

29 | learning_rate_init=0.05,

30 | random_state=42)

31 |

32 | # Train model on training set

33 | fit_nn.fit(training_set_scaled,

34 | class_set)

35 |

36 | if __name__ == '__main__':

37 | # Print model parameters ------------------------------------

38 | print(fit_nn, '\n')

39 |

40 | # Initialize function for metrics ---------------------------

41 | fit_dict_nn = produce_model_metrics(fit_nn, test_set_scaled,

42 | test_class_set, 'nn')

43 | # Extract each piece from dictionary

44 | predictions_nn = fit_dict_nn['predictions']

45 | accuracy_nn = fit_dict_nn['accuracy']

46 | auc_nn = fit_dict_nn['auc']

47 |

48 |

49 | print("Hyperparameter Optimization:")

50 | print("chosen parameters: \n \

51 | {'hidden_layer_sizes': 12, \n \

52 | 'activation': 'tanh', \n \

53 | 'learning_rate_init': 0.05}")

54 | print("Note: Remove commented code to see this section \n")

55 |

56 | # from sklearn.model_selection import GridSearchCV

57 | # import time

58 | # start = time.time()

59 | # gs = GridSearchCV(fit_nn, cv = 10,

60 | # param_grid={

61 | # 'learning_rate_init': [0.05, 0.01, 0.005, 0.001],

62 | # 'hidden_layer_sizes': [4, 8, 12],

63 | # 'activation': ["relu", "identity", "tanh", "logistic"]})

64 | # gs.fit(training_set_scaled, class_set)

65 | # print(gs.best_params_)

66 | # end = time.time()

67 | # print(end - start)

68 |

69 | # Test Set Calculations -------------------------------------

70 | # Test error rate

71 | test_error_rate_nn = 1 - accuracy_nn

72 |

73 | # Confusion Matrix

74 | test_crosstb = hf.create_conf_mat(test_class_set,

75 | predictions_nn)

76 |

77 | # Cross validation

78 | print("Cross Validation:")

79 |

80 | hf.cross_val_metrics(fit_nn,

81 | training_set_scaled,

82 | class_set,

83 | 'nn',

84 | print_results = True)

85 |

86 | print('Confusion Matrix:')

87 | print(test_crosstb, '\n')

88 |

89 | print("Here is our mean accuracy on the test set:\n {0: .3f}"\

90 | .format(accuracy_nn))

91 |

92 | print("The test error rate for our model is:\n {0: .3f}"\

93 | .format(test_error_rate_nn))

94 |

--------------------------------------------------------------------------------

/src/python/knn.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | #####################################################

4 | ## WISCONSIN BREAST CANCER MACHINE LEARNING ##

5 | #####################################################

6 | #

7 | # Project by Raul Eulogio

8 | #

9 | # Project found at: https://www.inertia7.com/projects/3

10 | #

11 |

12 | """

13 | Kth Nearest Neighbor Classification

14 | """

15 | # Import Packages -----------------------------------------------

16 | import sys, os

17 | import pandas as pd

18 | import helper_functions as hf

19 | from data_extraction import training_set, class_set

20 | from data_extraction import test_set, test_class_set

21 | from sklearn.neighbors import KNeighborsClassifier

22 | from sklearn.model_selection import cross_val_score

23 | from produce_model_metrics import produce_model_metrics

24 |

25 | # Fitting model

26 | fit_knn = KNeighborsClassifier(n_neighbors=3)

27 |

28 | # Training model

29 | fit_knn.fit(training_set,

30 | class_set)

31 | # ---------------------------------------------------------------

32 | if __name__ == '__main__':

33 | # Print model parameters ------------------------------------

34 | print(fit_knn, '\n')

35 |

36 | # Optimal K -------------------------------------------------

37 | # Inspired by:

38 | # https://kevinzakka.github.io/2016/07/13/k-nearest-neighbor/

39 |

40 | myKs = []

41 | for i in range(0, 50):

42 | if (i % 2 != 0):

43 | myKs.append(i)

44 |

45 | cross_vals = []

46 | for k in myKs:

47 | knn = KNeighborsClassifier(n_neighbors=k)

48 | scores = cross_val_score(knn,

49 | training_set,

50 | class_set,

51 | cv = 10,

52 | scoring='accuracy')

53 | cross_vals.append(scores.mean())

54 |

55 | MSE = [1 - x for x in cross_vals]

56 | optimal_k = myKs[MSE.index(min(MSE))]

57 | print("Optimal K is {0}".format(optimal_k), '\n')

58 |

59 | # Initialize function for metrics ---------------------------

60 | fit_dict_knn = produce_model_metrics(fit_knn,

61 | test_set,

62 | test_class_set,

63 | 'knn')

64 | # Extract each piece from dictionary

65 | predictions_knn = fit_dict_knn['predictions']

66 | accuracy_knn = fit_dict_knn['accuracy']

67 | auc_knn = fit_dict_knn['auc']

68 |

69 | # Test Set Calculations -------------------------------------

70 | # Test error rate

71 | test_error_rate_knn = 1 - accuracy_knn

72 |

73 | # Confusion Matrix

74 | test_crosstb = hf.create_conf_mat(test_class_set,

75 | predictions_knn)

76 |

77 | print('Cross Validation:')

78 | hf.cross_val_metrics(fit_knn,

79 | training_set,

80 | class_set,

81 | 'knn',

82 | print_results = True)

83 |

84 | print('Confusion Matrix:')

85 | print(test_crosstb, '\n')

86 |

87 | print("Here is our accuracy for our test set:\n {0: .3f}"\

88 | .format(accuracy_knn))

89 |

90 | print("The test error rate for our model is:\n {0: .3f}"\

91 | .format(test_error_rate_knn))

92 |

--------------------------------------------------------------------------------

/dash_dashboard/global_vars.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | import sys

3 | import pandas as pd

4 | from sklearn.externals import joblib

5 | from urllib.request import urlopen

6 | from io import StringIO

7 |

8 | # Importing src python scripts ----------------------

9 | sys.path.insert(0, '../src/python/')

10 | from knn import fit_knn

11 | from random_forest import fit_rf

12 | from neural_networks import fit_nn

13 | from data_extraction import test_set_scaled

14 | from data_extraction import test_set, test_class_set

15 | from helper_functions import create_conf_mat

16 | from produce_model_metrics import produce_model_metrics

17 | sys.path.pop(0)

18 |

19 | # Calling up metrics from the model scripts

20 | # KNN -----------------------------------------------

21 | metrics_knn = produce_model_metrics(fit_knn, test_set,

22 | test_class_set, 'knn')

23 | # Call each value from dictionary

24 | predictions_knn = metrics_knn['predictions']

25 | accuracy_knn = metrics_knn['accuracy']

26 | fpr = metrics_knn['fpr']

27 | tpr = metrics_knn['tpr']

28 | auc_knn = metrics_knn['auc']

29 |

30 | test_error_rate_knn = 1 - accuracy_knn

31 |

32 | # Confusion Matrix

33 | cross_tab_knn = create_conf_mat(test_class_set,

34 | predictions_knn)

35 |

36 | # RF ------------------------------------------------

37 | metrics_rf = produce_model_metrics(fit_rf, test_set,

38 | test_class_set, 'rf')

39 | # Call each value from dictionary

40 | predictions_rf = metrics_rf['predictions']

41 | accuracy_rf = metrics_rf['accuracy']

42 | fpr2 = metrics_rf['fpr']

43 | tpr2 = metrics_rf['tpr']

44 | auc_rf = metrics_rf['auc']

45 |

46 | test_error_rate_rf = 1 - accuracy_rf

47 |

48 | cross_tab_rf = create_conf_mat(test_class_set,

49 | predictions_rf)

50 |

51 | # NN ----------------------------------------

52 | metrics_nn = produce_model_metrics(fit_nn, test_set_scaled,

53 | test_class_set, 'nn')

54 |

55 | # Call each value from dictionary

56 | predictions_nn = metrics_nn['predictions']

57 | accuracy_nn = metrics_nn['accuracy']

58 | fpr3 = metrics_nn['fpr']

59 | tpr3 = metrics_nn['tpr']

60 | auc_nn = metrics_nn['auc']

61 |

62 | test_error_rate_nn = 1 - accuracy_nn

63 |

64 | cross_tab_nn = create_conf_mat(test_class_set,

65 | predictions_nn)

66 |

67 | # Classification Report Stuff

68 | def create_class_report(class_report_string):

69 | class_report_mod = StringIO(class_report_string)

70 | class_report = pd.read_csv(class_report_mod, ',')

71 | return class_report

72 |

73 |

74 | class_rep_knn_str = """

75 | Class, Precision, Recall, F1-score, Support

76 | Benign, 0.96, 0.93, 0.94, 73

77 | Malignant, 0.88, 0.93, 0.90, 41

78 | Avg/Total, 0.93, 0.93, 0.93, 114

79 | """

80 |

81 | class_rep_knn = create_class_report(class_rep_knn_str)

82 |

83 | class_rep_rf_str = """

84 | Class, Precision, Recall, F1-score, Support

85 | Benign, 0.99, 0.96, 0.97, 73

86 | Malignant, 0.93, 0.98, 0.95, 41

87 | Avg/Total, 0.97, 0.96, 0.97, 114

88 | """

89 |

90 | class_rep_rf = create_class_report(class_rep_rf_str)

91 |

92 | class_rep_nn_str = """

93 | Class, Precision, Recall, F1-score, Support

94 | Benign , 0.99, 0.97, 0.98, 73

95 | Malignant, 0.95, 0.98, 0.96, 41

96 | Avg/Total, 0.97, 0.97, 0.97, 114

97 | """

98 |

99 | class_rep_nn = create_class_report(class_rep_nn_str)

100 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Machine Learning Techniques on Breast Cancer Wisconsin Data Set

2 |

3 | **Contributor**:

4 | + Raul Eulogio

5 |

6 | I created this repo as a way to get better acquainted with **Python** as a language and as a tool for data analysis. But it eventually became in exercise in utilizing various programming languages for machine learning applications.

7 |

8 | I employed four **Machine Learning** techniques:

9 | + **Kth Nearest Neighbor**

10 | + **Random Forest**

11 | + **Neural Networks**:

12 |

13 | If you would like to see a walk through of the analysis on [inertia7](https://www.inertia7.com/projects/3) includes running code as well as explanations for exploratory analysis. This [project](https://www.inertia7.com/projects/95) contains an overview of *random forest*, explanations for other algorithms in the works.

14 |

15 | The repository includes the *scripts* folder which contains scripts for these programming languages (in order of most detailed):

16 | + *Python*

17 | + *R*

18 | + *PySpark*

19 |

20 | This repo is primarily concerned with the *python* iteration.

21 |

22 | The multiple *python* script is broken into 5 sections (done by creating a script for each section) in the following order:

23 | + **Exploratory Analysis**

24 | + **Kth Nearest Neighbors**

25 | + **Random Forest**

26 | + **Neural Networks**

27 | + **Comparing Models**

28 |

29 | **NOTE**: The files `data_extraction.py`, `helper_functions.py`, and `produce_model_metrics.py` are used to abstract functions to make code easier to read. These files do a lot of the work so if you are interested in how the scripts work definitely check them out.

30 |

31 | ## Running .py Script

32 | A `virtualenv` is needed where you will download the necessary packages from the `requirements.txt` using:

33 |

34 | pip3 install -r requirements.txt

35 |

36 | Once this is done you can run the scripts using the usual terminal command:

37 |

38 | $ python3 exploratory_analysis.py

39 |

40 | **NOTE**: You can also run it by making script executable as such:

41 |

42 | $ chmod +x exploratory_analysis.py

43 |

44 |

45 | **Remember**: You must have a *shebang* for this to run i.e. this must be at the very beginning of your script:

46 |

47 | #!/usr/bin/env python3

48 |

49 | then you would simply just run it (I'll use **Random Forest** as an example)

50 |

51 | $ ./random_forest.py

52 |

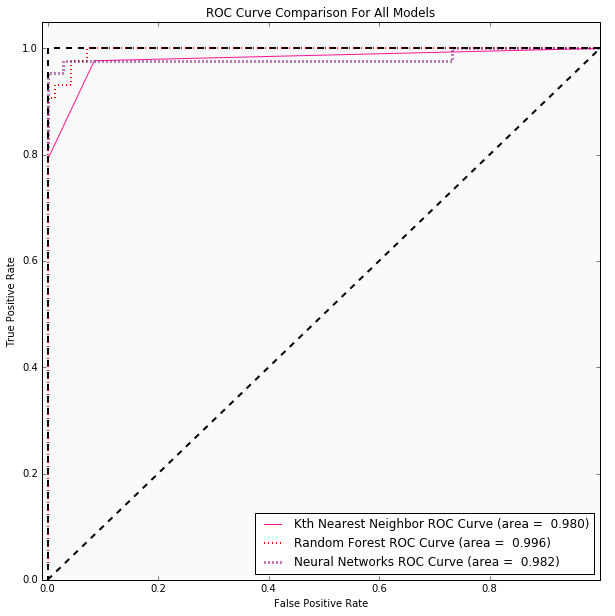

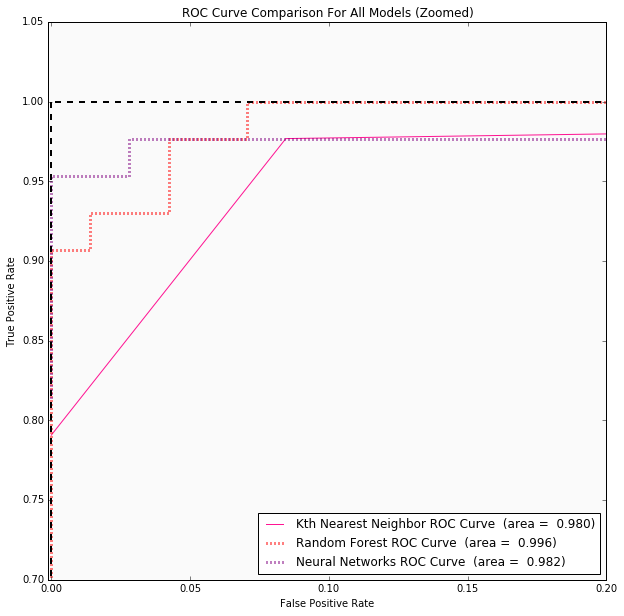

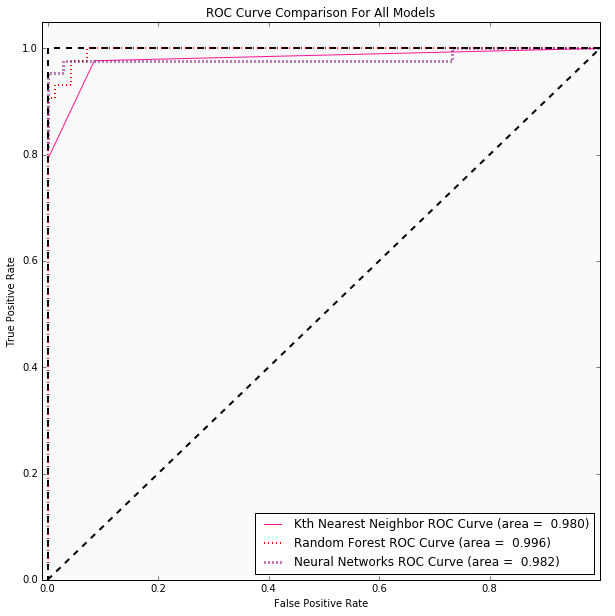

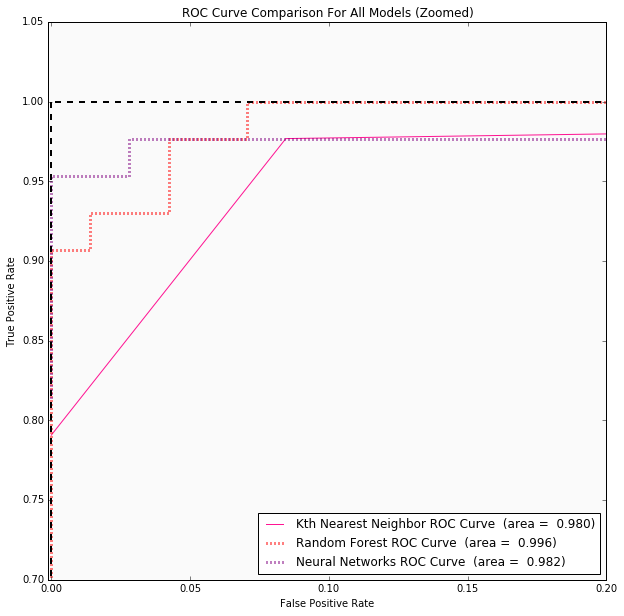

53 | ## Conclusions

54 | Once I employed all these methods, we were able to utilize various machine learning metrics. Each model provided valuable insight. *Kth Nearest Neighbor* helped create a baseline model to compare the more complex models. *Random forest* helps us see what variables were important in the bootstrapped decision trees. And *Neural Networks* provided minimal false negatives which results in false negatives. In this context it can mean death.

55 |

56 | ### Diagnostics for Data Set

57 |

58 |

59 | | Model/Algorithm | Test Error Rate | False Negative for Test Set | Area under the Curve for ROC | Cross Validation Score | Hyperparameter Optimization |

60 | |----------------------|-----------------|-----------------------------|------------------------------|-------------------------------|-----------------------|

61 | | Kth Nearest Neighbor | 0.07 | 5 | 0.980 | Accuracy: 0.925 (+/- 0.025) | Optimal *K* is 3 |

62 | | Random Forest | 0.035 | 3 | 0.996 | Accuracy: 0.963 (+/- 0.013) | {'max_features': 'log2', 'max_depth': 3, 'bootstrap': True, 'criterion': 'gini'} |

63 | | Neural Networks | 0.035 | 1 | 0.982 | Accuracy: 0.967 (+/- 0.011) | {'hidden_layer_sizes': 12, 'activation': 'tanh', 'learning_rate_init': 0.05} |

64 |

65 |

66 |

67 | #### ROC Curves for Data Set

68 |

23 |

24 | Any questions or suggestions, please let me know!

25 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | appdirs==1.4.3

2 | appnope==0.1.0

3 | bleach==3.1.1

4 | certifi==2018.1.18

5 | chardet==3.0.4

6 | click==6.7

7 | cycler==0.10.0

8 | dash==0.19.0

9 | dash-core-components==0.16.0

10 | dash-html-components==0.8.0

11 | dash-renderer==0.11.1

12 | decorator==4.2.1

13 | entrypoints==0.2.2

14 | Flask==1.0

15 | Flask-Compress==1.4.0

16 | html5lib==0.999999999

17 | idna==2.6

18 | ipykernel==4.6.1

19 | ipython==6.0.0

20 | ipython-genutils==0.2.0

21 | ipywidgets==6.0.0

22 | itsdangerous==0.24

23 | jedi==0.10.2

24 | Jinja2==2.9.6

25 | jsonschema==2.6.0

26 | jupyter==1.0.0

27 | jupyter-client==5.2.3

28 | jupyter-console==5.2.0

29 | jupyter-core==4.4.0

30 | kiwisolver==1.0.1

31 | MarkupSafe==1.0

32 | matplotlib==2.2.2

33 | mistune==0.8.1

34 | nbconvert==5.1.1

35 | nbformat==4.4.0

36 | notebook==5.7.8

37 | numpy==1.14.5

38 | packaging==16.8

39 | pandas==0.23.3

40 | pandocfilters==1.4.1

41 | pexpect==4.2.1

42 | pickleshare==0.7.4

43 | plotly==3.1.0

44 | prometheus-client==0.3.0

45 | prompt-toolkit==1.0.14

46 | ptyprocess==0.5.1

47 | Pygments==2.2.0

48 | pyparsing==2.1.4

49 | python-dateutil==2.6.1

50 | pytz==2017.3

51 | pyzmq==17.1.0

52 | qtconsole==4.3.1

53 | requests==2.20.0

54 | retrying==1.3.3

55 | scikit-learn==0.19.2

56 | scipy==1.1.0

57 | seaborn==0.9.0

58 | Send2Trash==1.5.0

59 | simplegeneric==0.8.1

60 | six==1.11.0

61 | sklearn==0.0

62 | terminado==0.8.1

63 | terminaltables==3.1.0

64 | testpath==0.3

65 | tornado==4.5.1

66 | traitlets==4.3.2

67 | urllib3==1.25.3

68 | virtualenv==15.1.0

69 | wcwidth==0.1.7

70 | webencodings==0.5.1

71 | Werkzeug==0.15.3

72 | widgetsnbextension==2.0.0

73 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_store

2 |

3 | # Rstuff

4 | src/r/packrat/lib*/

5 | src/r/packrat/src/*

6 |

7 | rsconnect/

8 |

9 | # Byte-compiled / optimized / DLL files

10 | __pycache__/

11 | *.py[cod]

12 | *.pyc

13 | *$py.class

14 |

15 | # C extensions

16 | *.so

17 |

18 | # Icebox stuff

19 | ice_box/

20 |

21 | # Distribution / packaging

22 | .Python

23 | build/

24 | develop-eggs/

25 | dist/

26 | downloads/

27 | eggs/

28 | .eggs/

29 | lib/

30 | lib64/

31 | parts/

32 | sdist/

33 | var/

34 | wheels/

35 | *.egg-info/

36 | .installed.cfg

37 | *.egg

38 | MANIFEST

39 |

40 | # PyInstaller

41 | # Usually these files are written by a python script from a template

42 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

43 | *.manifest

44 | *.spec

45 |

46 | # Installer logs

47 | pip-log.txt

48 | pip-delete-this-directory.txt

49 |

50 | # Unit test / coverage reports

51 | htmlcov/

52 | .tox/

53 | .coverage

54 | .coverage.*

55 | .cache

56 | nosetests.xml

57 | coverage.xml

58 | *.cover

59 | .hypothesis/

60 |

61 | # Translations

62 | *.mo

63 | *.pot

64 |

65 | # Django stuff:

66 | *.log

67 | .static_storage/

68 | .media/

69 | local_settings.py

70 |

71 | # Flask stuff:

72 | instance/

73 | .webassets-cache

74 |

75 | # Scrapy stuff:

76 | .scrapy

77 |

78 | # Sphinx documentation

79 | docs/_build/

80 |

81 | # PyBuilder

82 | target/

83 |

84 | # Jupyter Notebook

85 | .ipynb_checkpoints

86 |

87 | # pyenv

88 | .python-version

89 |

90 | # celery beat schedule file

91 | celerybeat-schedule

92 |

93 | # SageMath parsed files

94 | *.sage.py

95 |

96 | # Environments

97 | .env

98 | .venv

99 | env/

100 | venv/

101 | ENV/

102 | env.bak/

103 | venv.bak/

104 |

105 | # Spyder project settings

106 | .spyderproject

107 | .spyproject

108 |

109 | # Rope project settings

110 | .ropeproject

111 |

112 | # mkdocs documentation

113 | /site

114 |

115 | # mypy

116 | .mypy_cache/

117 | # R Stuff

118 | .Rhistory

119 | .Rproj.user

120 |

--------------------------------------------------------------------------------

/src/pyspark/breast_cancer_neural_networks.scala:

--------------------------------------------------------------------------------

1 | // Load appropriate packages

2 | // Neural Networks

3 | // Compatible with Apache Zeppelin

4 | import org.apache.spark.ml.classification.MultilayerPerceptronClassifier

5 | import org.apache.spark.ml.evaluation.MulticlassClassificationEvaluator

6 | import org.apache.spark.ml.feature.MinMaxScaler

7 | import org.apache.spark.mllib.evaluation.BinaryClassificationMetrics

8 | import org.apache.spark.mllib.stat.{MultivariateStatisticalSummary, Statistics}

9 |

10 | // Read in file

11 | val data = spark.read.format("libsvm")

12 | .load("data/data.txt")

13 |

14 | data.collect()

15 |

16 | // Pre-processing

17 | val scaler = new MinMaxScaler()

18 | .setInputCol("features")

19 | .setOutputCol("scaledFeatures")

20 |

21 | val scalerModel = scaler.fit(data)

22 |

23 | val scaledData = scalerModel.transform(data)

24 | println(s"Features scaled to range: [${scaler.getMin}, ${scaler.getMax}]")

25 | scaledData.select("features", "scaledFeatures").show()

26 |

27 | // Changing RDD files variable names to get accurate predictions

28 | val newNames = Seq("label", "oldFeatures", "features")

29 | val data2 = scaledData.toDF(newNames: _*)

30 |

31 | val splits = data2.randomSplit(Array(0.7, 0.3), seed = 1234L)

32 | val trainingSet = splits(0)

33 | val testSet = splits(1)

34 |

35 | trainingSet.select("label", "features").show(25)

36 |

37 | // Neural Networks

38 | val layers = Array[Int](30, 5, 4, 2)

39 |

40 | // Train the Network

41 | val trainer = new MultilayerPerceptronClassifier()

42 | .setLayers(layers)

43 | .setBlockSize(128)

44 | .setSeed(1234L)

45 | .setMaxIter(100)

46 |

47 | val fitNN = trainer.fit(trainingSet)

48 |

49 | // Predict the Test set

50 | val results = fitNN.transform(testSet)

51 | val predictionAndLabelsNN = results.select("prediction", "label")

52 | val evaluator = new MulticlassClassificationEvaluator()

53 | .setMetricName("accuracy")

54 |

55 | println("Test error rate = " + (1 - evaluator.evaluate(predictionAndLabelsNN)))

56 |

57 | println("Test set accuracy = " + evaluator.evaluate(predictionAndLabelsNN))

--------------------------------------------------------------------------------

/src/python/produce_model_metrics.py:

--------------------------------------------------------------------------------

1 | import sys

2 | from sklearn.metrics import roc_curve

3 | from sklearn.metrics import auc

4 |

5 | # Function for All Models to produce Metrics ---------------------

6 |

7 | def produce_model_metrics(fit, test_set, test_class_set, estimator):

8 | """

9 | Purpose

10 | ----------

11 | Function that will return predictions and probability metrics for said

12 | predictions.

13 |

14 | Parameters

15 | ----------

16 | * fit: Fitted model containing the attribute feature_importances_

17 | * test_set: dataframe/array containing the test set values

18 | * test_class_set: array containing the target values for the test set

19 | * estimator: String represenation of appropriate model, can only contain the

20 | following: ['knn', 'rf', 'nn']

21 |

22 | Returns

23 | ----------

24 | Box plot graph for all numeric data in data frame

25 | """

26 | my_estimators = {

27 | 'rf': 'estimators_',

28 | 'nn': 'out_activation_',

29 | 'knn': '_fit_method'

30 | }

31 | try:

32 | # Captures whether first parameter is a model

33 | if not hasattr(fit, 'fit'):

34 | return print("'{0}' is not an instantiated model from scikit-learn".format(fit))

35 |

36 | # Captures whether the model has been trained

37 | if not vars(fit)[my_estimators[estimator]]:

38 | return print("Model does not appear to be trained.")

39 |

40 | except KeyError as e:

41 | raise KeyError("'{0}' does not correspond with the appropriate key inside the estimators dictionary. \

42 | Please refer to function to check `my_estimators` dictionary.".format(estimator))

43 |

44 |

45 | # Outputting predictions and prediction probability

46 | # for test set

47 | predictions = fit.predict(test_set)

48 | accuracy = fit.score(test_set, test_class_set)

49 | # We grab the second array from the output which corresponds to

50 | # to the predicted probabilites of positive classes

51 | # Ordered wrt fit.classes_ in our case [0, 1] where 1 is our positive class

52 | predictions_prob = fit.predict_proba(test_set)[:, 1]

53 | # ROC Curve stuff

54 | fpr, tpr, _ = roc_curve(test_class_set,

55 | predictions_prob,

56 | pos_label = 1)

57 | auc_fit = auc(fpr, tpr)

58 | return {'predictions': predictions,

59 | 'accuracy': accuracy,

60 | 'fpr': fpr,

61 | 'tpr': tpr,

62 | 'auc': auc_fit}

63 |

--------------------------------------------------------------------------------

/src/pyspark/breast_cancer_rdd.py:

--------------------------------------------------------------------------------

1 | # LOAD APPROPRIATE PACKAGE

2 | import numpy as np

3 | from pyspark.context import SparkContext

4 | from pyspark.mllib.util import MLUtils

5 | from pyspark.mllib.tree import DecisionTree, DecisionTreeModel

6 | from pyspark.mllib.tree import RandomForest, RandomForestModel

7 | from pyspark.mllib.evaluation import BinaryClassificationMetrics

8 |

9 | sc = SparkContext.getOrCreate()

10 | data = MLUtils.loadLibSVMFile(sc, 'data/dataLibSVM.txt')

11 | print(data)

12 | # NEXT LET'S CREATE THE APPROPRIATE TRAINING AND TEST SETS

13 | # WE'LL BE SETTING THEM AS 70-30, ALONG WITH SETTING A

14 | # RANDOM SEED GENERATOR TO MAKE MY RESULTS REPRODUCIBLE

15 |

16 | (trainingSet, testSet) = data.randomSplit([0.7, 0.3], seed = 7)

17 |

18 | ##################

19 | # DECISION TREES #

20 | ##################

21 |

22 | fitDT = DecisionTree.trainClassifier(trainingSet,

23 | numClasses=2,

24 | categoricalFeaturesInfo={},

25 | impurity='gini',

26 | maxDepth=3,

27 | maxBins=32)

28 |

29 | print(fitDT.toDebugString())

30 |

31 | predictionsDT = fitDT.predict(testSet.map(lambda x: x.features))

32 |

33 | labelsAndPredictionsDT = testSet.map(lambda lp: lp.label).zip(predictionsDT)

34 |

35 | # Test Error Rate Evaluations

36 |

37 | testErrDT = labelsAndPredictionsDT.filter(lambda (v, p): v != p).count() / float(testSet.count())

38 |

39 | print('Test Error = {0}'.format(testErrDT))

40 |

41 | # Instantiate metrics object

42 | metricsDT = BinaryClassificationMetrics(labelsAndPredictionsDT)

43 |

44 | # Area under ROC curve

45 | print("Area under ROC = {0}".format(metricsDT.areaUnderROC))

46 |

47 | #################

48 | # RANDOM FOREST #

49 | #################

50 |

51 | fitRF = RandomForest.trainClassifier(trainingSet,

52 | numClasses = 2,

53 | categoricalFeaturesInfo = {},

54 | numTrees = 500,

55 | featureSubsetStrategy="auto",

56 | impurity = 'gini', # USING GINI INDEX FOR OUR RANDOM FOREST MODEL

57 | maxDepth = 4,

58 | maxBins = 100)

59 |

60 | predictionsRF = fitRF.predict(testSet.map(lambda x: x.features))

61 |

62 | labelsAndPredictions = testSet.map(lambda lp: lp.label).zip(predictionsRF)

63 |

64 |

65 | testErr = labelsAndPredictions.filter(lambda (v, p): v != p).count() / float(testSet.count())

66 |

67 | print('Test Error = {0}'.format(testErr))

68 | print('Learned classification forest model:')

69 | print(fitRF.toDebugString())

70 |

71 | # Instantiate metrics object

72 | metricsRF = BinaryClassificationMetrics(labelsAndPredictions)

73 |

74 | # Area under ROC curve

75 | print("Area under ROC = {0}".format(metricsRF.areaUnderROC))

76 |

77 | ###################

78 | # NEURAL NETWORKS #

79 | ###################

80 |

81 | # See Scala Script

--------------------------------------------------------------------------------

/src/python/exploratory_analysis.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | #####################################################

4 | ## WISCONSIN BREAST CANCER MACHINE LEARNING ##

5 | #####################################################

6 | #

7 | # Project by Raul Eulogio

8 | #

9 | # Project found at: https://www.inertia7.com/projects/3

10 | # NOTE: Better in jupyter notebook format

11 |

12 | """

13 | Exploratory Analysis

14 | """

15 | import helper_functions as hf

16 | from data_extraction import breast_cancer

17 | import matplotlib.pyplot as plt

18 | import seaborn as sns

19 |

20 | print('''

21 | ########################################

22 | ## DATA FRAME SHAPE AND DTYPES ##

23 | ########################################

24 | ''')

25 |

26 | print("Here's the dimensions of our data frame:\n",

27 | breast_cancer.shape)

28 |

29 | print("Here's the data types of our columns:\n",

30 | breast_cancer.dtypes)

31 |

32 | print("Some more statistics for our data frame: \n",

33 | breast_cancer.describe())

34 |

35 | print('''

36 | ##########################################

37 | ## STATISTICS RELATING TO DX ##

38 | ##########################################

39 | ''')

40 |

41 | # Next let's use the helper function to show distribution

42 | # of our data frame

43 | hf.print_target_perc(breast_cancer, 'diagnosis')

44 | import pdb

45 | pdb.set_trace()

46 | # Scatterplot Matrix

47 | # Variables chosen from Random Forest modeling.

48 |

49 | cols = ['concave_points_worst', 'concavity_mean',

50 | 'perimeter_worst', 'radius_worst',

51 | 'area_worst', 'diagnosis']

52 |

53 | sns.pairplot(breast_cancer,

54 | x_vars = cols,

55 | y_vars = cols,

56 | hue = 'diagnosis',

57 | palette = ('Red', '#875FDB'),

58 | markers=["o", "D"])

59 |

60 | plt.title('Scatterplot Matrix')

61 | plt.show()

62 | plt.close()

63 |

64 | # Pearson Correlation Matrix

65 | corr = breast_cancer.corr(method = 'pearson') # Correlation Matrix

66 | f, ax = plt.subplots(figsize=(11, 9))

67 |

68 | # Generate a custom diverging colormap

69 | cmap = sns.diverging_palette(10, 275, as_cmap=True)

70 |

71 | # Draw the heatmap with the mask and correct aspect ratio

72 | sns.heatmap(corr,

73 | cmap=cmap,

74 | square=True,

75 | xticklabels=True,

76 | yticklabels=True,

77 | linewidths=.5,

78 | cbar_kws={"shrink": .5},

79 | ax=ax)

80 |

81 | plt.title("Pearson Correlation Matrix")

82 | plt.yticks(rotation = 0)

83 | plt.xticks(rotation = 270)

84 | plt.show()

85 | plt.close()

86 |

87 | # BoxPlot

88 | hf.plot_box_plot(breast_cancer, 'Pre-Processed', (-.05, 50))

89 |

90 | # Normalizing data

91 | breast_cancer_norm = hf.normalize_data_frame(breast_cancer)

92 |

93 | # Visuals relating to normalized data to show significant difference

94 | print('''

95 | #################################

96 | ## Transformed Data Statistics ##

97 | #################################

98 | ''')

99 |

100 | print(breast_cancer_norm.describe())

101 |

102 | hf.plot_box_plot(breast_cancer_norm, 'Transformed', (-.05, 1.05))

103 |

--------------------------------------------------------------------------------

/src/python/data_extraction.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | #####################################################

4 | ## WISCONSIN BREAST CANCER MACHINE LEARNING ##

5 | #####################################################

6 | #

7 | # Project by Raul Eulogio

8 | #

9 | # Project found at: https://www.inertia7.com/projects/3

10 | #

11 |

12 | # Import Packages -----------------------------------------------

13 | import numpy as np

14 | import pandas as pd

15 | from sklearn.preprocessing import MinMaxScaler

16 | from sklearn.model_selection import train_test_split

17 | from urllib.request import urlopen

18 |

19 | # Loading data ------------------------------

20 | UCI_data_URL = 'https://archive.ics.uci.edu/ml/machine-learning-databases\

21 | /breast-cancer-wisconsin/wdbc.data'

22 |

23 | names = ['id_number', 'diagnosis', 'radius_mean',

24 | 'texture_mean', 'perimeter_mean', 'area_mean',

25 | 'smoothness_mean', 'compactness_mean',

26 | 'concavity_mean','concave_points_mean',

27 | 'symmetry_mean', 'fractal_dimension_mean',

28 | 'radius_se', 'texture_se', 'perimeter_se',

29 | 'area_se', 'smoothness_se', 'compactness_se',

30 | 'concavity_se', 'concave_points_se',

31 | 'symmetry_se', 'fractal_dimension_se',

32 | 'radius_worst', 'texture_worst',

33 | 'perimeter_worst', 'area_worst',

34 | 'smoothness_worst', 'compactness_worst',

35 | 'concavity_worst', 'concave_points_worst',

36 | 'symmetry_worst', 'fractal_dimension_worst']

37 |

38 | dx = ['Malignant', 'Benign']

39 |

40 | breast_cancer = pd.read_csv(urlopen(UCI_data_URL), names=names)

41 |

42 | # Setting 'id_number' as our index

43 | breast_cancer.set_index(['id_number'], inplace = True)

44 |

45 | # Converted to binary to help later on with models and plots

46 | breast_cancer['diagnosis'] = breast_cancer['diagnosis'].map({'M':1, 'B':0})

47 |

48 | for col in breast_cancer:

49 | pd.to_numeric(col, errors='coerce')

50 |

51 | # For later use in CART models

52 | names_index = names[2:]

53 |

54 | # Create Training and Test Set ----------------------------------

55 | feature_space = breast_cancer.iloc[:,

56 | breast_cancer.columns != 'diagnosis']

57 | feature_class = breast_cancer.iloc[:,

58 | breast_cancer.columns == 'diagnosis']

59 |

60 |

61 | training_set, test_set, class_set, test_class_set = train_test_split(feature_space,

62 | feature_class,

63 | test_size = 0.20,

64 | random_state = 42)

65 |

66 | # Cleaning test sets to avoid future warning messages

67 | class_set = class_set.values.ravel()

68 | test_class_set = test_class_set.values.ravel()

69 |

70 | # Scaling dataframe

71 | scaler = MinMaxScaler()

72 |

73 | scaler.fit(training_set)

74 |

75 | training_set_scaled = scaler.fit_transform(training_set)

76 | test_set_scaled = scaler.transform(test_set)

77 |

--------------------------------------------------------------------------------

/src/python/neural_networks.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | #####################################################

4 | ## WISCONSIN BREAST CANCER MACHINE LEARNING ##

5 | #####################################################

6 | #

7 | # Project by Raul Eulogio

8 | #

9 | # Project found at: https://www.inertia7.com/projects/3

10 | #

11 |

12 | """

13 | Neural Networks Classification

14 | """

15 | # Import Packages -----------------------------------------------

16 | import sys, os

17 | import pandas as pd

18 | import helper_functions as hf

19 | from data_extraction import training_set_scaled, class_set

20 | from data_extraction import test_set_scaled, test_class_set

21 | from sklearn.neural_network import MLPClassifier

22 | from produce_model_metrics import produce_model_metrics

23 |

24 | # Fitting Neural Network ----------------------------------------

25 | # Fit model

26 | fit_nn = MLPClassifier(solver='lbfgs',

27 | hidden_layer_sizes = (12, ),

28 | activation='tanh',

29 | learning_rate_init=0.05,

30 | random_state=42)

31 |

32 | # Train model on training set

33 | fit_nn.fit(training_set_scaled,

34 | class_set)

35 |

36 | if __name__ == '__main__':

37 | # Print model parameters ------------------------------------

38 | print(fit_nn, '\n')

39 |

40 | # Initialize function for metrics ---------------------------

41 | fit_dict_nn = produce_model_metrics(fit_nn, test_set_scaled,

42 | test_class_set, 'nn')

43 | # Extract each piece from dictionary

44 | predictions_nn = fit_dict_nn['predictions']

45 | accuracy_nn = fit_dict_nn['accuracy']

46 | auc_nn = fit_dict_nn['auc']

47 |

48 |

49 | print("Hyperparameter Optimization:")

50 | print("chosen parameters: \n \

51 | {'hidden_layer_sizes': 12, \n \

52 | 'activation': 'tanh', \n \

53 | 'learning_rate_init': 0.05}")

54 | print("Note: Remove commented code to see this section \n")

55 |

56 | # from sklearn.model_selection import GridSearchCV

57 | # import time

58 | # start = time.time()

59 | # gs = GridSearchCV(fit_nn, cv = 10,

60 | # param_grid={

61 | # 'learning_rate_init': [0.05, 0.01, 0.005, 0.001],

62 | # 'hidden_layer_sizes': [4, 8, 12],

63 | # 'activation': ["relu", "identity", "tanh", "logistic"]})

64 | # gs.fit(training_set_scaled, class_set)

65 | # print(gs.best_params_)

66 | # end = time.time()

67 | # print(end - start)

68 |

69 | # Test Set Calculations -------------------------------------

70 | # Test error rate

71 | test_error_rate_nn = 1 - accuracy_nn

72 |

73 | # Confusion Matrix

74 | test_crosstb = hf.create_conf_mat(test_class_set,

75 | predictions_nn)

76 |

77 | # Cross validation

78 | print("Cross Validation:")

79 |

80 | hf.cross_val_metrics(fit_nn,

81 | training_set_scaled,

82 | class_set,

83 | 'nn',

84 | print_results = True)

85 |

86 | print('Confusion Matrix:')

87 | print(test_crosstb, '\n')

88 |

89 | print("Here is our mean accuracy on the test set:\n {0: .3f}"\

90 | .format(accuracy_nn))

91 |

92 | print("The test error rate for our model is:\n {0: .3f}"\

93 | .format(test_error_rate_nn))

94 |

--------------------------------------------------------------------------------

/src/python/knn.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | #####################################################

4 | ## WISCONSIN BREAST CANCER MACHINE LEARNING ##

5 | #####################################################

6 | #

7 | # Project by Raul Eulogio

8 | #

9 | # Project found at: https://www.inertia7.com/projects/3

10 | #

11 |

12 | """

13 | Kth Nearest Neighbor Classification

14 | """

15 | # Import Packages -----------------------------------------------

16 | import sys, os

17 | import pandas as pd

18 | import helper_functions as hf

19 | from data_extraction import training_set, class_set

20 | from data_extraction import test_set, test_class_set

21 | from sklearn.neighbors import KNeighborsClassifier

22 | from sklearn.model_selection import cross_val_score

23 | from produce_model_metrics import produce_model_metrics

24 |

25 | # Fitting model

26 | fit_knn = KNeighborsClassifier(n_neighbors=3)

27 |

28 | # Training model

29 | fit_knn.fit(training_set,

30 | class_set)

31 | # ---------------------------------------------------------------

32 | if __name__ == '__main__':

33 | # Print model parameters ------------------------------------

34 | print(fit_knn, '\n')

35 |

36 | # Optimal K -------------------------------------------------

37 | # Inspired by:

38 | # https://kevinzakka.github.io/2016/07/13/k-nearest-neighbor/

39 |

40 | myKs = []

41 | for i in range(0, 50):

42 | if (i % 2 != 0):

43 | myKs.append(i)

44 |

45 | cross_vals = []

46 | for k in myKs:

47 | knn = KNeighborsClassifier(n_neighbors=k)

48 | scores = cross_val_score(knn,

49 | training_set,

50 | class_set,

51 | cv = 10,

52 | scoring='accuracy')

53 | cross_vals.append(scores.mean())

54 |

55 | MSE = [1 - x for x in cross_vals]

56 | optimal_k = myKs[MSE.index(min(MSE))]

57 | print("Optimal K is {0}".format(optimal_k), '\n')

58 |

59 | # Initialize function for metrics ---------------------------

60 | fit_dict_knn = produce_model_metrics(fit_knn,

61 | test_set,

62 | test_class_set,

63 | 'knn')

64 | # Extract each piece from dictionary

65 | predictions_knn = fit_dict_knn['predictions']

66 | accuracy_knn = fit_dict_knn['accuracy']

67 | auc_knn = fit_dict_knn['auc']

68 |

69 | # Test Set Calculations -------------------------------------

70 | # Test error rate

71 | test_error_rate_knn = 1 - accuracy_knn

72 |

73 | # Confusion Matrix

74 | test_crosstb = hf.create_conf_mat(test_class_set,

75 | predictions_knn)

76 |

77 | print('Cross Validation:')

78 | hf.cross_val_metrics(fit_knn,

79 | training_set,

80 | class_set,

81 | 'knn',

82 | print_results = True)

83 |

84 | print('Confusion Matrix:')

85 | print(test_crosstb, '\n')

86 |

87 | print("Here is our accuracy for our test set:\n {0: .3f}"\

88 | .format(accuracy_knn))

89 |

90 | print("The test error rate for our model is:\n {0: .3f}"\

91 | .format(test_error_rate_knn))

92 |

--------------------------------------------------------------------------------

/dash_dashboard/global_vars.py: