├── .github

└── workflows

│ └── update_index.yaml

├── .gitignore

├── .pre-commit-config.yaml

├── LICENSE

├── Makefile

├── README.md

├── datasets

├── data-still-to-label.jsonl

├── embedding_qa.json

├── eval-dataset-v1.jsonl

├── eval-dataset-v2-alpha.jsonl

├── reranker-corrections.csv

├── routing-dataset-test.jsonl

└── routing-dataset-train.jsonl

├── deploy

├── jobs

│ └── update_index.yaml

└── services

│ └── service.yaml

├── experiments

├── evaluations

│ ├── bge-large-en_gpt-4.json

│ ├── chunk-size-100_gpt-4.json

│ ├── chunk-size-300_gpt-4.json

│ ├── chunk-size-500_gpt-4.json

│ ├── chunk-size-700_gpt-4.json

│ ├── chunk-size-900_gpt-4.json

│ ├── codellama-34b-instruct-hf_gpt-4.json

│ ├── cross-encoder-reranker_gpt-4.json

│ ├── falcon-180b_gpt-4.json

│ ├── gpt-3.5-turbo_gpt-4.json

│ ├── gpt-4-1106-preview_gpt-4.json

│ ├── gpt-4_gpt-4.json

│ ├── gte-base-fine-tuned-linear-adapter_gpt-4.json

│ ├── gte-base_gpt-4.json

│ ├── gte-large-fine-tuned-el_gpt-4.json

│ ├── gte-large-fine-tuned-fp_gpt-4.json

│ ├── gte-large-fine-tuned_gpt-4.json

│ ├── gte-large_gpt-4.json

│ ├── lexical-search-bm25-1_gpt-4.json

│ ├── lexical-search-bm25-3_gpt-4.json

│ ├── lexical-search-bm25-5_gpt-4.json

│ ├── llama-2-13b-chat-hf_gpt-4.json

│ ├── llama-2-70b-chat-hf_gpt-4.json

│ ├── llama-2-7b-chat-hf_gpt-4.json

│ ├── mistral-7b-instruct-v0.1_gpt-4.json

│ ├── mixtral-8x7b-instruct-v0.1_gpt-4.json

│ ├── num-chunks-11_gpt-4.json

│ ├── num-chunks-13_gpt-4.json

│ ├── num-chunks-15_gpt-4.json

│ ├── num-chunks-1_gpt-4.json

│ ├── num-chunks-3_gpt-4.json

│ ├── num-chunks-5_gpt-4.json

│ ├── num-chunks-7_gpt-4.json

│ ├── num-chunks-9_gpt-4.json

│ ├── prompt-ignore-contexts_gpt-4.json

│ ├── rerank-0.3_gpt-4.json

│ ├── rerank-0.5_gpt-4.json

│ ├── rerank-0.7_gpt-4.json

│ ├── rerank-0.9_gpt-4.json

│ ├── rerank-0_gpt-4.json

│ ├── text-embedding-ada-002_gpt-4.json

│ ├── with-context_gpt-4.json

│ ├── with-sections_gpt-4.json

│ ├── without-context-gpt-4-1106-preview_gpt-4.json

│ ├── without-context-gpt-4_gpt-4.json

│ ├── without-context-mixtral-8x7b-instruct-v0.1_gpt-4.json

│ ├── without-context_gpt-4.json

│ └── without-sections_gpt-4.json

├── references

│ ├── gpt-4-turbo.json

│ ├── gpt-4.json

│ ├── llama-2-70b.json

│ └── mixtral.json

└── responses

│ ├── bge-large-en.json

│ ├── chunk-size-100.json

│ ├── chunk-size-300.json

│ ├── chunk-size-500.json

│ ├── chunk-size-600.json

│ ├── chunk-size-700.json

│ ├── chunk-size-900.json

│ ├── codellama-34b-instruct-hf.json

│ ├── cross-encoder-reranker.json

│ ├── gpt-3.5-turbo-16k.json

│ ├── gpt-3.5-turbo.json

│ ├── gpt-4-1106-preview.json

│ ├── gpt-4.json

│ ├── gte-base-fine-tuned-linear-adapter.json

│ ├── gte-base.json

│ ├── gte-large-fine-tuned-el.json

│ ├── gte-large-fine-tuned-fp.json

│ ├── gte-large-fine-tuned.json

│ ├── gte-large.json

│ ├── lexical-search-bm25-1.json

│ ├── lexical-search-bm25-3.json

│ ├── lexical-search-bm25-5.json

│ ├── llama-2-13b-chat-hf.json

│ ├── llama-2-70b-chat-hf.json

│ ├── llama-2-7b-chat-hf.json

│ ├── mistral-7b-instruct-v0.1.json

│ ├── mixtral-8x7b-instruct-v0.1.json

│ ├── num-chunks-1.json

│ ├── num-chunks-10.json

│ ├── num-chunks-11.json

│ ├── num-chunks-13.json

│ ├── num-chunks-15.json

│ ├── num-chunks-20.json

│ ├── num-chunks-3.json

│ ├── num-chunks-5.json

│ ├── num-chunks-6.json

│ ├── num-chunks-7.json

│ ├── num-chunks-9.json

│ ├── prompt-ignore-contexts.json

│ ├── rerank-0.3.json

│ ├── rerank-0.5.json

│ ├── rerank-0.7.json

│ ├── rerank-0.9.json

│ ├── rerank-0.json

│ ├── text-embedding-ada-002.json

│ ├── with-context.json

│ ├── with-sections.json

│ ├── without-context-gpt-4-1106-preview.json

│ ├── without-context-gpt-4.json

│ ├── without-context-mixtral-8x7b-instruct-v0.1.json

│ ├── without-context-small.json

│ ├── without-context.json

│ └── without-sections.json

├── migrations

├── vector-1024.sql

├── vector-1536.sql

└── vector-768.sql

├── notebooks

├── clear_cell_nums.py

└── rag.ipynb

├── pyproject.toml

├── rag

├── __init__.py

├── config.py

├── data.py

├── embed.py

├── evaluate.py

├── generate.py

├── index.py

├── rerank.py

├── search.py

├── serve.py

└── utils.py

├── requirements.txt

├── setup-pgvector.sh

├── test.py

└── update-index.sh

/.github/workflows/update_index.yaml:

--------------------------------------------------------------------------------

1 | name: update-index

2 | on:

3 | workflow_dispatch: # manual trigger

4 | permissions: write-all

5 |

6 | jobs:

7 | workloads:

8 | runs-on: ubuntu-22.04

9 | steps:

10 |

11 | # Set up dependencies

12 | - uses: actions/checkout@v3

13 | - uses: actions/setup-python@v4

14 | with:

15 | python-version: '3.10.11'

16 | cache: 'pip'

17 | - run: python3 -m pip install anyscale

18 |

19 | # Run workloads

20 | - name: Workloads

21 | run: |

22 | export ANYSCALE_HOST=${{ secrets.ANYSCALE_HOST }}

23 | export ANYSCALE_CLI_TOKEN=${{ secrets.ANYSCALE_CLI_TOKEN }}

24 | anyscale job submit deploy/jobs/update_index.yaml --wait

25 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Data

2 | ray/

3 |

4 | # VSCode

5 | .vscode/

6 | .idea

7 |

8 | # Byte-compiled / optimized / DLL files

9 | __pycache__/

10 | *.py[cod]

11 | *$py.class

12 |

13 | # C extensions

14 | *.so

15 |

16 | # Distribution / packaging

17 | .Python

18 | build/

19 | develop-eggs/

20 | dist/

21 | downloads/

22 | eggs/

23 | .eggs/

24 | lib/

25 | lib64/

26 | parts/

27 | sdist/

28 | var/

29 | wheels/

30 | pip-wheel-metadata/

31 | share/python-wheels/

32 | *.egg-info/

33 | .installed.cfg

34 | *.egg

35 | MANIFEST

36 |

37 | # PyInstaller

38 | *.manifest

39 | *.spec

40 |

41 | # Installer logs

42 | pip-log.txt

43 | pip-delete-this-directory.txt

44 |

45 | # Unit test / coverage reports

46 | htmlcov/

47 | .tox/

48 | .nox/

49 | .coverage

50 | .coverage.*

51 | .cache

52 | nosetests.xml

53 | coverage.xml

54 | *.cover

55 | *.py,cover

56 | .hypothesis/

57 | .pytest_cache/

58 |

59 | # Flask:

60 | instance/

61 | .webassets-cache

62 |

63 | # Scrapy:

64 | .scrapy

65 |

66 | # Sphinx

67 | docs/_build/

68 |

69 | # PyBuilder

70 | target/

71 |

72 | # IPython

73 | .ipynb_checkpoints

74 | profile_default/

75 | ipython_config.py

76 |

77 | # pyenv

78 | .python-version

79 |

80 | # PEP 582

81 | __pypackages__/

82 |

83 | # Celery

84 | celerybeat-schedule

85 | celerybeat.pid

86 |

87 | # Environment

88 | .env

89 | .venv

90 | env/

91 | venv/

92 | ENV/

93 | env.bak/

94 | venv.bak/

95 |

96 | # mkdocs

97 | site/

98 |

99 | # Airflow

100 | airflow/airflow.db

101 |

102 | # MacOS

103 | .DS_Store

104 |

105 | # Clean up

106 | .trash/

107 |

108 | # scraped folders

109 | docs.ray.io/

110 |

111 | # book and other source folders

112 | data/

113 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | # See https://pre-commit.com for more information

2 | # See https://pre-commit.com/hooks.html for more hooks

3 | repos:

4 | - repo: https://github.com/pre-commit/pre-commit-hooks

5 | rev: v4.5.0

6 | hooks:

7 | - id: trailing-whitespace

8 | - id: end-of-file-fixer

9 | - id: check-merge-conflict

10 | - id: check-yaml

11 | - id: check-added-large-files

12 | args: ['--maxkb=1000']

13 | exclude: "notebooks"

14 | - id: check-yaml

15 | exclude: "mkdocs.yml"

16 | - repo: https://github.com/Yelp/detect-secrets

17 | rev: v1.4.0

18 | hooks:

19 | - id: detect-secrets

20 | exclude: "notebooks|experiments|datasets"

21 | - repo: local

22 | hooks:

23 | - id: clean

24 | name: clean

25 | entry: make

26 | args: ["clean"]

27 | language: system

28 | pass_filenames: false

29 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Copyright (2023) Anyscale, Inc.

2 |

3 | Attribution 4.0 International

4 |

5 | =======================================================================

6 |

7 | Creative Commons Corporation ("Creative Commons") is not a law firm and

8 | does not provide legal services or legal advice. Distribution of

9 | Creative Commons public licenses does not create a lawyer-client or

10 | other relationship. Creative Commons makes its licenses and related

11 | information available on an "as-is" basis. Creative Commons gives no

12 | warranties regarding its licenses, any material licensed under their

13 | terms and conditions, or any related information. Creative Commons

14 | disclaims all liability for damages resulting from their use to the

15 | fullest extent possible.

16 |

17 | Using Creative Commons Public Licenses

18 |

19 | Creative Commons public licenses provide a standard set of terms and

20 | conditions that creators and other rights holders may use to share

21 | original works of authorship and other material subject to copyright

22 | and certain other rights specified in the public license below. The

23 | following considerations are for informational purposes only, are not

24 | exhaustive, and do not form part of our licenses.

25 |

26 | Considerations for licensors: Our public licenses are

27 | intended for use by those authorized to give the public

28 | permission to use material in ways otherwise restricted by

29 | copyright and certain other rights. Our licenses are

30 | irrevocable. Licensors should read and understand the terms

31 | and conditions of the license they choose before applying it.

32 | Licensors should also secure all rights necessary before

33 | applying our licenses so that the public can reuse the

34 | material as expected. Licensors should clearly mark any

35 | material not subject to the license. This includes other CC-

36 | licensed material, or material used under an exception or

37 | limitation to copyright. More considerations for licensors:

38 | wiki.creativecommons.org/Considerations_for_licensors

39 |

40 | Considerations for the public: By using one of our public

41 | licenses, a licensor grants the public permission to use the

42 | licensed material under specified terms and conditions. If

43 | the licensor's permission is not necessary for any reason--for

44 | example, because of any applicable exception or limitation to

45 | copyright--then that use is not regulated by the license. Our

46 | licenses grant only permissions under copyright and certain

47 | other rights that a licensor has authority to grant. Use of

48 | the licensed material may still be restricted for other

49 | reasons, including because others have copyright or other

50 | rights in the material. A licensor may make special requests,

51 | such as asking that all changes be marked or described.

52 | Although not required by our licenses, you are encouraged to

53 | respect those requests where reasonable. More_considerations

54 | for the public:

55 | wiki.creativecommons.org/Considerations_for_licensees

56 |

57 | =======================================================================

58 |

59 | Creative Commons Attribution 4.0 International Public License

60 |

61 | By exercising the Licensed Rights (defined below), You accept and agree

62 | to be bound by the terms and conditions of this Creative Commons

63 | Attribution 4.0 International Public License ("Public License"). To the

64 | extent this Public License may be interpreted as a contract, You are

65 | granted the Licensed Rights in consideration of Your acceptance of

66 | these terms and conditions, and the Licensor grants You such rights in

67 | consideration of benefits the Licensor receives from making the

68 | Licensed Material available under these terms and conditions.

69 |

70 |

71 | Section 1 -- Definitions.

72 |

73 | a. Adapted Material means material subject to Copyright and Similar

74 | Rights that is derived from or based upon the Licensed Material

75 | and in which the Licensed Material is translated, altered,

76 | arranged, transformed, or otherwise modified in a manner requiring

77 | permission under the Copyright and Similar Rights held by the

78 | Licensor. For purposes of this Public License, where the Licensed

79 | Material is a musical work, performance, or sound recording,

80 | Adapted Material is always produced where the Licensed Material is

81 | synched in timed relation with a moving image.

82 |

83 | b. Adapter's License means the license You apply to Your Copyright

84 | and Similar Rights in Your contributions to Adapted Material in

85 | accordance with the terms and conditions of this Public License.

86 |

87 | c. Copyright and Similar Rights means copyright and/or similar rights

88 | closely related to copyright including, without limitation,

89 | performance, broadcast, sound recording, and Sui Generis Database

90 | Rights, without regard to how the rights are labeled or

91 | categorized. For purposes of this Public License, the rights

92 | specified in Section 2(b)(1)-(2) are not Copyright and Similar

93 | Rights.

94 |

95 | d. Effective Technological Measures means those measures that, in the

96 | absence of proper authority, may not be circumvented under laws

97 | fulfilling obligations under Article 11 of the WIPO Copyright

98 | Treaty adopted on December 20, 1996, and/or similar international

99 | agreements.

100 |

101 | e. Exceptions and Limitations means fair use, fair dealing, and/or

102 | any other exception or limitation to Copyright and Similar Rights

103 | that applies to Your use of the Licensed Material.

104 |

105 | f. Licensed Material means the artistic or literary work, database,

106 | or other material to which the Licensor applied this Public

107 | License.

108 |

109 | g. Licensed Rights means the rights granted to You subject to the

110 | terms and conditions of this Public License, which are limited to

111 | all Copyright and Similar Rights that apply to Your use of the

112 | Licensed Material and that the Licensor has authority to license.

113 |

114 | h. Licensor means the individual(s) or entity(ies) granting rights

115 | under this Public License.

116 |

117 | i. Share means to provide material to the public by any means or

118 | process that requires permission under the Licensed Rights, such

119 | as reproduction, public display, public performance, distribution,

120 | dissemination, communication, or importation, and to make material

121 | available to the public including in ways that members of the

122 | public may access the material from a place and at a time

123 | individually chosen by them.

124 |

125 | j. Sui Generis Database Rights means rights other than copyright

126 | resulting from Directive 96/9/EC of the European Parliament and of

127 | the Council of 11 March 1996 on the legal protection of databases,

128 | as amended and/or succeeded, as well as other essentially

129 | equivalent rights anywhere in the world.

130 |

131 | k. You means the individual or entity exercising the Licensed Rights

132 | under this Public License. Your has a corresponding meaning.

133 |

134 |

135 | Section 2 -- Scope.

136 |

137 | a. License grant.

138 |

139 | 1. Subject to the terms and conditions of this Public License,

140 | the Licensor hereby grants You a worldwide, royalty-free,

141 | non-sublicensable, non-exclusive, irrevocable license to

142 | exercise the Licensed Rights in the Licensed Material to:

143 |

144 | a. reproduce and Share the Licensed Material, in whole or

145 | in part; and

146 |

147 | b. produce, reproduce, and Share Adapted Material.

148 |

149 | 2. Exceptions and Limitations. For the avoidance of doubt, where

150 | Exceptions and Limitations apply to Your use, this Public

151 | License does not apply, and You do not need to comply with

152 | its terms and conditions.

153 |

154 | 3. Term. The term of this Public License is specified in Section

155 | 6(a).

156 |

157 | 4. Media and formats; technical modifications allowed. The

158 | Licensor authorizes You to exercise the Licensed Rights in

159 | all media and formats whether now known or hereafter created,

160 | and to make technical modifications necessary to do so. The

161 | Licensor waives and/or agrees not to assert any right or

162 | authority to forbid You from making technical modifications

163 | necessary to exercise the Licensed Rights, including

164 | technical modifications necessary to circumvent Effective

165 | Technological Measures. For purposes of this Public License,

166 | simply making modifications authorized by this Section 2(a)

167 | (4) never produces Adapted Material.

168 |

169 | 5. Downstream recipients.

170 |

171 | a. Offer from the Licensor -- Licensed Material. Every

172 | recipient of the Licensed Material automatically

173 | receives an offer from the Licensor to exercise the

174 | Licensed Rights under the terms and conditions of this

175 | Public License.

176 |

177 | b. No downstream restrictions. You may not offer or impose

178 | any additional or different terms or conditions on, or

179 | apply any Effective Technological Measures to, the

180 | Licensed Material if doing so restricts exercise of the

181 | Licensed Rights by any recipient of the Licensed

182 | Material.

183 |

184 | 6. No endorsement. Nothing in this Public License constitutes or

185 | may be construed as permission to assert or imply that You

186 | are, or that Your use of the Licensed Material is, connected

187 | with, or sponsored, endorsed, or granted official status by,

188 | the Licensor or others designated to receive attribution as

189 | provided in Section 3(a)(1)(A)(i).

190 |

191 | b. Other rights.

192 |

193 | 1. Moral rights, such as the right of integrity, are not

194 | licensed under this Public License, nor are publicity,

195 | privacy, and/or other similar personality rights; however, to

196 | the extent possible, the Licensor waives and/or agrees not to

197 | assert any such rights held by the Licensor to the limited

198 | extent necessary to allow You to exercise the Licensed

199 | Rights, but not otherwise.

200 |

201 | 2. Patent and trademark rights are not licensed under this

202 | Public License.

203 |

204 | 3. To the extent possible, the Licensor waives any right to

205 | collect royalties from You for the exercise of the Licensed

206 | Rights, whether directly or through a collecting society

207 | under any voluntary or waivable statutory or compulsory

208 | licensing scheme. In all other cases the Licensor expressly

209 | reserves any right to collect such royalties.

210 |

211 |

212 | Section 3 -- License Conditions.

213 |

214 | Your exercise of the Licensed Rights is expressly made subject to the

215 | following conditions.

216 |

217 | a. Attribution.

218 |

219 | 1. If You Share the Licensed Material (including in modified

220 | form), You must:

221 |

222 | a. retain the following if it is supplied by the Licensor

223 | with the Licensed Material:

224 |

225 | i. identification of the creator(s) of the Licensed

226 | Material and any others designated to receive

227 | attribution, in any reasonable manner requested by

228 | the Licensor (including by pseudonym if

229 | designated);

230 |

231 | ii. a copyright notice;

232 |

233 | iii. a notice that refers to this Public License;

234 |

235 | iv. a notice that refers to the disclaimer of

236 | warranties;

237 |

238 | v. a URI or hyperlink to the Licensed Material to the

239 | extent reasonably practicable;

240 |

241 | b. indicate if You modified the Licensed Material and

242 | retain an indication of any previous modifications; and

243 |

244 | c. indicate the Licensed Material is licensed under this

245 | Public License, and include the text of, or the URI or

246 | hyperlink to, this Public License.

247 |

248 | 2. You may satisfy the conditions in Section 3(a)(1) in any

249 | reasonable manner based on the medium, means, and context in

250 | which You Share the Licensed Material. For example, it may be

251 | reasonable to satisfy the conditions by providing a URI or

252 | hyperlink to a resource that includes the required

253 | information.

254 |

255 | 3. If requested by the Licensor, You must remove any of the

256 | information required by Section 3(a)(1)(A) to the extent

257 | reasonably practicable.

258 |

259 | 4. If You Share Adapted Material You produce, the Adapter's

260 | License You apply must not prevent recipients of the Adapted

261 | Material from complying with this Public License.

262 |

263 |

264 | Section 4 -- Sui Generis Database Rights.

265 |

266 | Where the Licensed Rights include Sui Generis Database Rights that

267 | apply to Your use of the Licensed Material:

268 |

269 | a. for the avoidance of doubt, Section 2(a)(1) grants You the right

270 | to extract, reuse, reproduce, and Share all or a substantial

271 | portion of the contents of the database;

272 |

273 | b. if You include all or a substantial portion of the database

274 | contents in a database in which You have Sui Generis Database

275 | Rights, then the database in which You have Sui Generis Database

276 | Rights (but not its individual contents) is Adapted Material; and

277 |

278 | c. You must comply with the conditions in Section 3(a) if You Share

279 | all or a substantial portion of the contents of the database.

280 |

281 | For the avoidance of doubt, this Section 4 supplements and does not

282 | replace Your obligations under this Public License where the Licensed

283 | Rights include other Copyright and Similar Rights.

284 |

285 |

286 | Section 5 -- Disclaimer of Warranties and Limitation of Liability.

287 |

288 | a. UNLESS OTHERWISE SEPARATELY UNDERTAKEN BY THE LICENSOR, TO THE

289 | EXTENT POSSIBLE, THE LICENSOR OFFERS THE LICENSED MATERIAL AS-IS

290 | AND AS-AVAILABLE, AND MAKES NO REPRESENTATIONS OR WARRANTIES OF

291 | ANY KIND CONCERNING THE LICENSED MATERIAL, WHETHER EXPRESS,

292 | IMPLIED, STATUTORY, OR OTHER. THIS INCLUDES, WITHOUT LIMITATION,

293 | WARRANTIES OF TITLE, MERCHANTABILITY, FITNESS FOR A PARTICULAR

294 | PURPOSE, NON-INFRINGEMENT, ABSENCE OF LATENT OR OTHER DEFECTS,

295 | ACCURACY, OR THE PRESENCE OR ABSENCE OF ERRORS, WHETHER OR NOT

296 | KNOWN OR DISCOVERABLE. WHERE DISCLAIMERS OF WARRANTIES ARE NOT

297 | ALLOWED IN FULL OR IN PART, THIS DISCLAIMER MAY NOT APPLY TO YOU.

298 |

299 | b. TO THE EXTENT POSSIBLE, IN NO EVENT WILL THE LICENSOR BE LIABLE

300 | TO YOU ON ANY LEGAL THEORY (INCLUDING, WITHOUT LIMITATION,

301 | NEGLIGENCE) OR OTHERWISE FOR ANY DIRECT, SPECIAL, INDIRECT,

302 | INCIDENTAL, CONSEQUENTIAL, PUNITIVE, EXEMPLARY, OR OTHER LOSSES,

303 | COSTS, EXPENSES, OR DAMAGES ARISING OUT OF THIS PUBLIC LICENSE OR

304 | USE OF THE LICENSED MATERIAL, EVEN IF THE LICENSOR HAS BEEN

305 | ADVISED OF THE POSSIBILITY OF SUCH LOSSES, COSTS, EXPENSES, OR

306 | DAMAGES. WHERE A LIMITATION OF LIABILITY IS NOT ALLOWED IN FULL OR

307 | IN PART, THIS LIMITATION MAY NOT APPLY TO YOU.

308 |

309 | c. The disclaimer of warranties and limitation of liability provided

310 | above shall be interpreted in a manner that, to the extent

311 | possible, most closely approximates an absolute disclaimer and

312 | waiver of all liability.

313 |

314 |

315 | Section 6 -- Term and Termination.

316 |

317 | a. This Public License applies for the term of the Copyright and

318 | Similar Rights licensed here. However, if You fail to comply with

319 | this Public License, then Your rights under this Public License

320 | terminate automatically.

321 |

322 | b. Where Your right to use the Licensed Material has terminated under

323 | Section 6(a), it reinstates:

324 |

325 | 1. automatically as of the date the violation is cured, provided

326 | it is cured within 30 days of Your discovery of the

327 | violation; or

328 |

329 | 2. upon express reinstatement by the Licensor.

330 |

331 | For the avoidance of doubt, this Section 6(b) does not affect any

332 | right the Licensor may have to seek remedies for Your violations

333 | of this Public License.

334 |

335 | c. For the avoidance of doubt, the Licensor may also offer the

336 | Licensed Material under separate terms or conditions or stop

337 | distributing the Licensed Material at any time; however, doing so

338 | will not terminate this Public License.

339 |

340 | d. Sections 1, 5, 6, 7, and 8 survive termination of this Public

341 | License.

342 |

343 |

344 | Section 7 -- Other Terms and Conditions.

345 |

346 | a. The Licensor shall not be bound by any additional or different

347 | terms or conditions communicated by You unless expressly agreed.

348 |

349 | b. Any arrangements, understandings, or agreements regarding the

350 | Licensed Material not stated herein are separate from and

351 | independent of the terms and conditions of this Public License.

352 |

353 |

354 | Section 8 -- Interpretation.

355 |

356 | a. For the avoidance of doubt, this Public License does not, and

357 | shall not be interpreted to, reduce, limit, restrict, or impose

358 | conditions on any use of the Licensed Material that could lawfully

359 | be made without permission under this Public License.

360 |

361 | b. To the extent possible, if any provision of this Public License is

362 | deemed unenforceable, it shall be automatically reformed to the

363 | minimum extent necessary to make it enforceable. If the provision

364 | cannot be reformed, it shall be severed from this Public License

365 | without affecting the enforceability of the remaining terms and

366 | conditions.

367 |

368 | c. No term or condition of this Public License will be waived and no

369 | failure to comply consented to unless expressly agreed to by the

370 | Licensor.

371 |

372 | d. Nothing in this Public License constitutes or may be interpreted

373 | as a limitation upon, or waiver of, any privileges and immunities

374 | that apply to the Licensor or You, including from the legal

375 | processes of any jurisdiction or authority.

376 |

377 |

378 | =======================================================================

379 |

380 | Creative Commons is not a party to its public

381 | licenses. Notwithstanding, Creative Commons may elect to apply one of

382 | its public licenses to material it publishes and in those instances

383 | will be considered the “Licensor.” The text of the Creative Commons

384 | public licenses is dedicated to the public domain under the CC0 Public

385 | Domain Dedication. Except for the limited purpose of indicating that

386 | material is shared under a Creative Commons public license or as

387 | otherwise permitted by the Creative Commons policies published at

388 | creativecommons.org/policies, Creative Commons does not authorize the

389 | use of the trademark "Creative Commons" or any other trademark or logo

390 | of Creative Commons without its prior written consent including,

391 | without limitation, in connection with any unauthorized modifications

392 | to any of its public licenses or any other arrangements,

393 | understandings, or agreements concerning use of licensed material. For

394 | the avoidance of doubt, this paragraph does not form part of the

395 | public licenses.

396 |

397 | Creative Commons may be contacted at creativecommons.org.

398 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | # Makefile

2 | SHELL = /bin/bash

3 |

4 | # Styling

5 | .PHONY: style

6 | style:

7 | black .

8 | flake8

9 | python3 -m isort .

10 | pyupgrade

11 |

12 | # Cleaning

13 | .PHONY: clean

14 | clean: style

15 | python notebooks/clear_cell_nums.py

16 | find . -type f -name "*.DS_Store" -ls -delete

17 | find . | grep -E "(__pycache__|\.pyc|\.pyo)" | xargs rm -rf

18 | find . | grep -E ".pytest_cache" | xargs rm -rf

19 | find . | grep -E ".ipynb_checkpoints" | xargs rm -rf

20 | rm -rf .coverage*

21 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # LLM Applications

2 |

3 | A comprehensive guide to building RAG-based LLM applications for production.

4 |

5 | - **Blog post**: https://www.anyscale.com/blog/a-comprehensive-guide-for-building-rag-based-llm-applications-part-1

6 | - **GitHub repository**: https://github.com/ray-project/llm-applications

7 | - **Interactive notebook**: https://github.com/ray-project/llm-applications/blob/main/notebooks/rag.ipynb

8 | - **Anyscale Endpoints**: https://endpoints.anyscale.com/

9 | - **Ray documentation**: https://docs.ray.io/

10 |

11 | In this guide, we will learn how to:

12 |

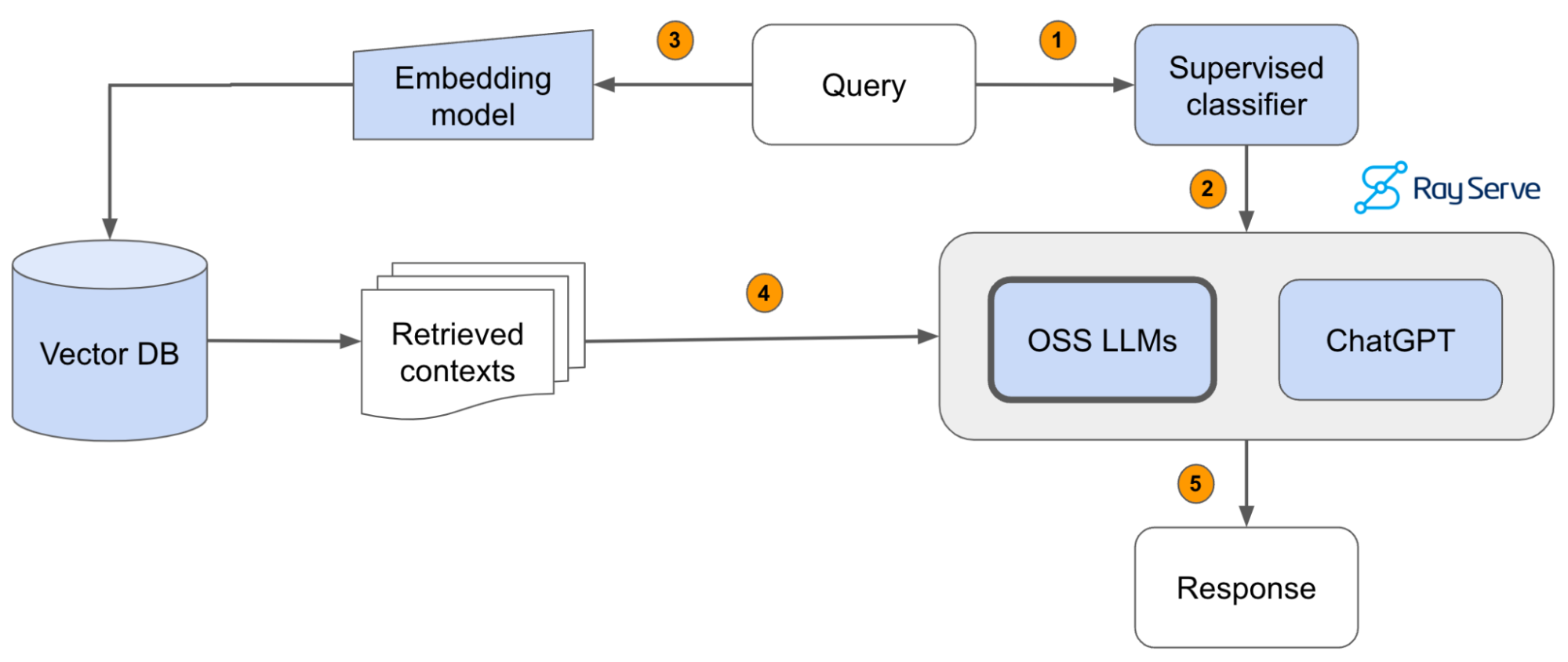

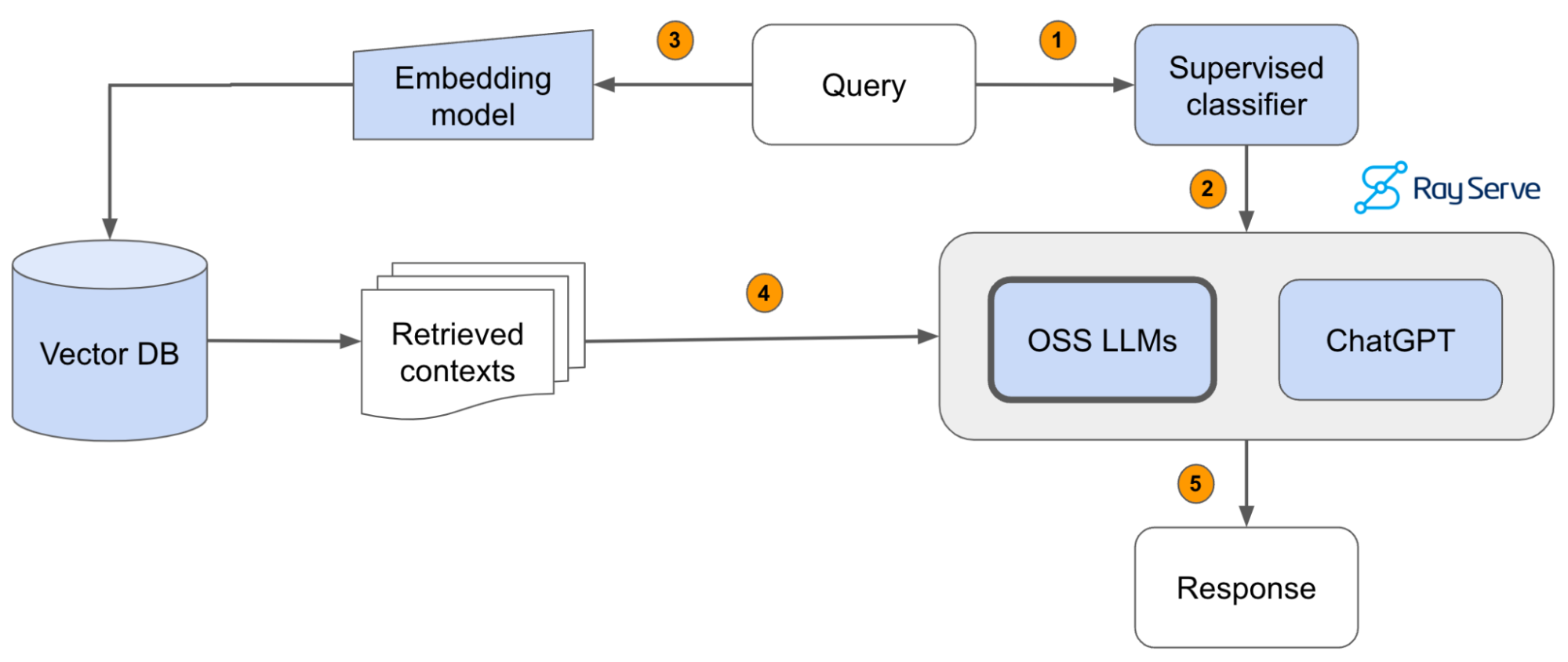

13 | - 💻 Develop a retrieval augmented generation (RAG) based LLM application from scratch.

14 | - 🚀 Scale the major components (load, chunk, embed, index, serve, etc.) in our application.

15 | - ✅ Evaluate different configurations of our application to optimize for both per-component (ex. retrieval_score) and overall performance (quality_score).

16 | - 🔀 Implement LLM hybrid routing approach to bridge the gap b/w OSS and closed LLMs.

17 | - 📦 Serve the application in a highly scalable and available manner.

18 | - 💥 Share the 1st order and 2nd order impacts LLM applications have had on our products.

19 |

20 |

21 |  22 |

23 | ## Setup

24 |

25 | ### API keys

26 | We'll be using [OpenAI](https://platform.openai.com/docs/models/) to access ChatGPT models like `gpt-3.5-turbo`, `gpt-4`, etc. and [Anyscale Endpoints](https://endpoints.anyscale.com/) to access OSS LLMs like `Llama-2-70b`. Be sure to create your accounts for both and have your credentials ready.

27 |

28 | ### Compute

29 |

22 |

23 | ## Setup

24 |

25 | ### API keys

26 | We'll be using [OpenAI](https://platform.openai.com/docs/models/) to access ChatGPT models like `gpt-3.5-turbo`, `gpt-4`, etc. and [Anyscale Endpoints](https://endpoints.anyscale.com/) to access OSS LLMs like `Llama-2-70b`. Be sure to create your accounts for both and have your credentials ready.

27 |

28 | ### Compute

29 |

30 | Local

31 | You could run this on your local laptop but a we highly recommend using a setup with access to GPUs. You can set this up on your own or on [Anyscale](http://anyscale.com/).

32 |

33 |

34 |

35 | Anyscale

36 |

37 | - Start a new Anyscale workspace on staging using an

g3.8xlarge head node, which has 2 GPUs and 32 CPUs. We can also add GPU worker nodes to run the workloads faster. If you're not on Anyscale, you can configure a similar instance on your cloud.

38 | - Use the

default_cluster_env_2.6.2_py39 cluster environment.

39 | - Use the

us-west-2 if you'd like to use the artifacts in our shared storage (source docs, vector DB dumps, etc.).

40 |

41 |

42 |

43 |

44 | ### Repository

45 | ```bash

46 | git clone https://github.com/ray-project/llm-applications.git .

47 | git config --global user.name

48 | git config --global user.email

49 | ```

50 |

51 | ### Data

52 | Our data is already ready at `/efs/shared_storage/goku/docs.ray.io/en/master/` (on Staging, `us-east-1`) but if you wanted to load it yourself, run this bash command (change `/desired/output/directory`, but make sure it's on the shared storage,

53 | so that it's accessible to the workers)

54 | ```bash

55 | git clone https://github.com/ray-project/llm-applications.git .

56 | ```

57 |

58 | ### Environment

59 |

60 | Then set up the environment correctly by specifying the values in your `.env` file,

61 | and installing the dependencies:

62 |

63 | ```bash

64 | pip install --user -r requirements.txt

65 | export PYTHONPATH=$PYTHONPATH:$PWD

66 | pre-commit install

67 | pre-commit autoupdate

68 | ```

69 |

70 | ### Credentials

71 | ```bash

72 | touch .env

73 | # Add environment variables to .env

74 | OPENAI_API_BASE="https://api.openai.com/v1"

75 | OPENAI_API_KEY="" # https://platform.openai.com/account/api-keys

76 | ANYSCALE_API_BASE="https://api.endpoints.anyscale.com/v1"

77 | ANYSCALE_API_KEY="" # https://app.endpoints.anyscale.com/credentials

78 | DB_CONNECTION_STRING="dbname=postgres user=postgres host=localhost password=postgres"

79 | source .env

80 | ```

81 |

82 | Now we're ready to go through the [rag.ipynb](notebooks/rag.ipynb) interactive notebook to develop and serve our LLM application!

83 |

84 | ### Learn more

85 | - If your team is investing heavily in developing LLM applications, [reach out](mailto:endpoints-help@anyscale.com) to us to learn more about how [Ray](https://github.com/ray-project/ray) and [Anyscale](http://anyscale.com/) can help you scale and productionize everything.

86 | - Start serving (+fine-tuning) OSS LLMs with [Anyscale Endpoints](https://endpoints.anyscale.com/) ($1/M tokens for `Llama-3-70b`) and private endpoints available upon request (1M free tokens trial).

87 | - Learn more about how companies like OpenAI, Netflix, Pinterest, Verizon, Instacart and others leverage Ray and Anyscale for their AI workloads at the [Ray Summit 2024](https://raysummit.anyscale.com/) this Sept 18-20 in San Francisco.

88 |

--------------------------------------------------------------------------------

/datasets/data-still-to-label.jsonl:

--------------------------------------------------------------------------------

1 | {'question': 'What is the rest api for getting the head node id?', 'source': 'https://docs.ray.io/en/latest/index.html'}

2 | {'question': 'how to rerun a canceled ray task', 'source': 'https://docs.ray.io/en/latest/ray-core/api/doc/ray.cancel.html#ray.cancel'}

3 | {'question': 'how to print ray version in notebook', 'source': 'https://docs.ray.io/en/latest/ray-core/handling-dependencies.html#runtime-environments-api-ref'}

4 | {'question': 'How do I set the max parallel concurrent scheduled tasks in map_batches?', 'source': 'https://docs.ray.io/en/latest/ray-core/examples/batch_prediction.html'}

5 | {'question': 'How do I get the number of cpus from ray cluster?', 'source': 'https://docs.ray.io/en/latest/ray-air/examples/huggingface_text_classification.html'}

6 | {'question': 'How to use the exclude option to the runtime_env', 'source': 'https://docs.ray.io/en/latest/ray-core/handling-dependencies.html#api-reference'}

7 | {'question': 'show a map batch example with batch_format', 'source': 'https://docs.ray.io/en/latest/data/transforming-data.html'}

8 | {'question': 'how to find local ray address', 'source': 'https://docs.ray.io/en/latest/ray-core/examples/gentle_walkthrough.html'}

9 | {'question': 'Why don’t I see any deprecation warnings from `warnings.warn` when running with Ray Tune?', 'source': 'https://docs.ray.io/en/latest/tune/tutorials/tune-output.html'}

10 | {'question': 'how can I set *num_heartbeats_timeout in `ray start --head`* command ?', 'source': 'https://docs.ray.io/en/latest/cluster/cli.html'}

11 | {'question': "ray crashing with AttributeError: module 'pydantic.fields' has no attribute 'ModelField", 'source': 'https://discuss.ray.io/'}

12 | {'question': 'How to start ray cluster on multiple node via CLI?', 'source': 'https://docs.ray.io/en/latest/cluster/vms/user-guides/launching-clusters/aws.html'}

13 | {'question': 'my ray tuner shows "running" but CPU usage is almost 0%. why ?', 'source': 'https://docs.ray.io/en/latest/tune/faq.html'}

14 | {'question': 'should the Ray head node and all workers have the same object store memory size allocated?', 'source': 'https://docs.ray.io/en/latest/ray-observability/user-guides/debug-apps/debug-memory.html'}

15 | {'question': 'I want to set up gcs health checks via REST API, what is the endpoint that I can hit to check health for gcs?', 'source': 'https://docs.ray.io'}

16 | {'question': 'In Ray Serve, how to specify whether to set up an httpproxy on each node, or just the head node?', 'source': 'https://docs.ray.io/en/latest/serve/architecture.html'}

17 | {'question': 'Want to embed Grafana into the Ray Dashboard, given that I am using KubeRay\n\nGiven the context that Prometheus and Grafana are not running on my Head node, and that I am using KubeRay, how should I be setting the following variables?\n• `RAY_GRAFANA_HOST`\n• `RAY_PROMETHEUS_HOST`\nAnd is there a way to set them more intelligently, given that head node IP is changing every time we reconfigure our cluster?', 'source': 'https://docs.ray.io/en/latest/cluster/configure-manage-dashboard.html'}

18 | {'question': 'How the GCS determines which Kubernetes pod to kill when using KubeRay autoscaling?', 'source': 'https://docs.ray.io/en/latest/cluster/kubernetes/user-guides/configuring-autoscaling.html'}

19 | {'question': 'How can I set the `request_timeout_s` in `http_options` section of a Ray Serve YAML config file?', 'source': 'https://docs.ray.io/en/latest/serve/index.html'}

20 | {'question': 'How do I make the GPU available on my M1 laptop to ray?', 'source': 'https://github.com/conda-forge/miniforge/releases/latest/download/Miniforge3-MacOSX-arm64.sh'}

21 | {'question': 'How can I add a timeout for the Ray job?', 'source': 'https://docs.ray.io/en/latest/serve/performance.html'}

22 | {'question': 'how do I set custom /tmp directory for remote cluster?', 'source': 'https://discuss.ray.io/t/8862'}

23 | {'question': 'if I set --temp-dir to a different directory than /tmp, will ray object spill to the custom directory ?', 'source': 'https://docs.ray.io/en/latest/ray-core/objects/object-spilling.html'}

24 | {'question': 'can you give me an example for *`--runtime-env-json`*', 'source': 'https://docs.ray.io/en/latest/serve/dev-workflow.html'}

25 | {'question': 'What is a default value for memory for rayActorOptions?', 'source': 'https://docs.ray.io/en/latest/serve/api/doc/ray.serve.schema.RayActorOptionsSchema.html'}

26 | {'question': 'What should be the value of `maxConcurrentReplicas` if autoscaling configuration is specified?', 'source': 'https://docs.ray.io/en/latest/serve/api/doc/ray.serve.schema.DeploymentSchema.html#ray.serve.schema.DeploymentSchema.num_replicas_and_autoscaling_config_mutually_exclusive'}

27 | {'question': 'Yes what should be the value of `max_concurrent_queries` when `target_num_ongoing_requests_per_replica` is specified?', 'source': 'https://docs.ray.io/en/latest/serve/performance.html'}

28 | {'question': 'what is a `smoothing_factor`', 'source': 'https://docs.ray.io/en/latest/serve/scaling-and-resource-allocation.html'}

29 | {'question': 'Why do we need to configure ray serve application such that it can run on one node?', 'source': 'https://www.anyscale.com/blog/simplify-your-mlops-with-ray-and-ray-serve'}

30 | {'question': 'What is the reason actors change their state to unhealthy?', 'source': 'https://docs.ray.io/en/latest/ray-core/fault_tolerance/actors.html'}

31 | {'question': 'How can I add `max_restarts` to serve deployment?', 'source': 'https://docs.ray.io/en/latest/serve/index.html'}

32 | {'question': 'How do I access logs for a dead node?', 'source': 'https://docs.ray.io/en/latest/ray-observability/user-guides/cli-sdk.html'}

33 | {'question': 'What are the reasons for a node to change it’s status to dead?', 'source': 'https://docs.ray.io/en/latest/ray-core/fault_tolerance/nodes.html'}

34 | {'question': 'What are the reasons for spikes in node CPU utilization', 'source': 'https://www.anyscale.com/blog/autoscaling-clusters-with-ray'}

35 | {'question': 'What AWS machine type is recommended to deploy a RayService on EKS?', 'source': 'https://docs.ray.io/en/latest/'}

36 | {'question': 'Can you write a function that runs exactly once on each node of a ray cluster?', 'source': 'https://docs.ray.io/en/latest/ray-air/examples/gptj_deepspeed_fine_tuning.html'}

37 | {'question': 'can you drain a node for maintenance?', 'source': 'https://docs.ray.io/en/latest/cluster/cli.html'}

38 | {'question': 'what env variable should I set to disable the heartbeat message displayed every 5 sec? I would like to turn it to every 1 minute for instance.', 'source': 'https://docs.ray.io/en/latest/'}

39 | {'question': 'Is there a way to configure the session name generated by ray?', 'source': 'https://docs.ray.io/en/latest/ray-core/configure.html'}

40 | {'question': 'How can I choose which worker group to use when submitting a ray job?', 'source': 'https://discuss.ray.io/t/9824'}

41 | {'question': 'can I use the Python SDK to get a link to Ray dashboard for a given job?', 'source': 'https://docs.ray.io/en/latest/ray-observability/getting-started.html'}

42 | {'question': 'I’d like to use the Ray Jobs Python SDK to get a link to a specific Job view in the dashboard', 'source': 'https://docs.ray.io/en/latest/cluster/running-applications/job-submission/sdk.html'}

43 | {'question': 'I am building a product on top of ray and would like to use ray name & logo for it :slightly_smiling_face: where can I find ray name usage guidelines?', 'source': 'https://forms.gle/9TSdDYUgxYs8SA9e8'}

44 | {'question': 'What may possible cause the node where this task was running crashed unexpectedly. This can happen if: (1) the instance where the node was running failed, (2) raylet crashes unexpectedly (OOM, preempted node, etc).', 'source': 'https://www.anyscale.com/blog/automatic-and-optimistic-memory-scheduling-for-ml-workloads-in-ray'}

45 | {'question': 'Do you know how to resolve (gcs_server) : Health check failed for node? I observed that the node is still up and running.', 'source': 'https://docs.ray.io/en/latest/ray-observability/user-guides/cli-sdk.html'}

46 | {'question': 'How to extend the health check threshold?', 'source': 'https://docs.ray.io/en/latest/serve/api/doc/ray.serve.schema.DeploymentSchema.html'}

47 | {'question': 'How to extend the GCS health check threshold for for a Ray job use case?', 'source': 'https://docs.ray.io/en/latest/ray-core/fault_tolerance/gcs.html'}

48 | {'question': 'What is the working of `PowerOfTwoChoicesReplicaScheduler` ?', 'source': 'https://github.com/ray-project/ray/pull/36501'}

49 | {'question': 'Do you need the DAGDriver to deploy a serve application using RayServe?', 'source': 'https://docs.ray.io/en/latest/serve/key-concepts.html'}

50 | {'question': 'What’s the import path that I need to provide to a simple RayServe deployment?', 'source': 'https://maxpumperla.com/learning_ray'}

51 | {'question': 'what’s the latest version of ray', 'source': 'https://github.com/ray-project/ray/releases/tag/ray-1.11.0'}

52 | {'question': 'do you know ray have been updated to version 2.6?', 'source': 'https://github.com/ray-project/ray'}

53 | {'question': 'do you have any documents / examples showing the usage of RayJob in Kuberay?', 'source': 'https://ray-project.github.io/kuberay/guidance/rayjob/'}

54 | {'question': 'Do you have any document/guide which shows how to setup the local development environment for kuberay on a arm64 processor based machine?', 'source': 'https://docs.ray.io/en/latest/ray-contribute/development.html#building-ray'}

55 | {'question': 'How can I configure min and max worker number of nodes when I’m using Ray on Databricks?', 'source': 'https://docs.ray.io/en/latest/cluster/vms/references/ray-cluster-configuration.html'}

56 | {'question': 'Does Ray metrics have to be exported via an actor?', 'source': 'https://docs.ray.io/en/latest/ray-core/ray-metrics.html'}

57 | {'question': 'How is object store memory calculated?', 'source': 'https://docs.ray.io/en/latest/ray-core/scheduling/memory-management.html'}

58 | {'question': 'how can I avoid objects not getting spilled?', 'source': 'https://docs.ray.io/en/latest/data/data-internals.html'}

59 | {'question': 'what’s ray core', 'source': 'https://docs.ray.io/en/latest/ray-core/tasks.html#ray-remote-functions'}

60 | {'question': 'Does ray support cron job', 'source': 'https://pillow.readthedocs.io/en/stable/handbook/concepts.html#modes'}

61 | {'question': 'can you give me the dependencies list for api read_images?', 'source': 'https://pillow.readthedocs.io/en/stable/handbook/concepts.html#modes'}

62 | {'question': 'how do I kill a specific serve replica', 'source': 'https://docs.ray.io/en/latest/serve/production-guide/fault-tolerance.html'}

63 | {'question': 'What exactly is rayjob? How is it handled in kuberay? Can you give an example of what a Rayjob will look like?', 'source': 'https://ray-project.github.io/kuberay/guidance/rayjob/'}

64 | {'question': 'do you have access to the CRD yaml file of RayJob for KubeRay?', 'source': 'https://github.com/ray-project/kuberay'}

65 | {'question': 'how do I adjust the episodes per iteration in Ray Tune?', 'source': 'https://docs.ray.io/en/latest/tune/index.html'}

66 | {'question': 'in Ray Tune, can you explain what episodes are?', 'source': 'https://docs.ray.io/en/latest/ray-references/glossary.html'}

67 | {'question': 'how do I know how many agents a Tune episode is spanning?', 'source': 'https://docs.ray.io/en/latest/index.html'}

68 | {'question': 'how can I limit the number of jobs in the history stored in the ray GCS?', 'source': 'https://docs.ray.io/en/latest/index.html'}

69 | {'question': 'I have a large csv file on S3. How do I use Ray to create another csv file with one column removed?', 'source': 'https://docs.ray.io/en/master/data/api/doc/ray.data.read_csv.html#ray-data-read-csv'}

70 | {'question': 'How to discover what node was used to run a given task', 'source': 'https://docs.ray.io/en/latest/ray-core/ray-dashboard.html#ray-dashboard'}

71 | {'question': 'it is possible to discover what node was used to execute a given task using its return future, object reference ?', 'source': 'https://docs.ray.io/en/latest/ray-core/walkthrough.html#running-a-task'}

72 | {'question': 'how to efficiently broadcast a large nested dictionary from a single actor to thousands of tasks', 'source': 'https://discuss.ray.io/t/6521'}

73 | {'question': 'How to mock remote calls of an Actor for Testcases?', 'source': 'https://docs.ray.io/en/latest/ray-core/handling-dependencies.html#runtime-environments'}

74 | {'question': 'How to use pytest mock to create a Actor', 'source': 'https://docs.ray.io/en/latest/ray-core/handling-dependencies.html#runtime-environments'}

75 | {'question': 'Can I initiate an Actor directly without remote()', 'source': 'https://docs.ray.io/en/latest/ray-core/handling-dependencies.html#runtime-environments'}

76 | {'question': 'Is there a timeout or retry setting for long a worker will wait / retry to make an initial connection to the head node?', 'source': 'https://docs.ray.io/en/latest/ray-core/handling-dependencies.html#runtime-environments'}

77 | {'question': 'im getting this error of ValueError: The base resource usage of this topology ExecutionResources but my worker and head node are both GPU nodes...oh is it expecting 2 GPUs on a single worker node is that why?', 'source': 'https://docs.ray.io/en/latest/train/faq.html'}

78 | {'question': 'how can I move airflow variables in ray task ?', 'source': 'https://docs.ray.io/en/latest/ray-observability/monitoring-debugging/gotchas.html#environment-variables-are-not-passed-from-the-driver-to-workers'}

79 | {'question': 'How to recompile Ray docker image using Ubuntu 22.04LTS as the base docker image?', 'source': 'https://github.com/ray-project/ray.git'}

80 | {'question': 'I am using TuneSearchCV with an XGBoost regressor. To test it out, I have set the n_trials to 3 and left the n_jobs at its default of -1 to use all available processors. From what I have observed, only one trial runs per CPU since 3 trials only uses 3 CPUs which is pretty time consuming. Is there a way to run a single trial across multiple CPUs to speed things up?', 'source': 'https://docs.ray.io/en/latest/ray-core/actors/async_api.html'}

81 | {'question': 'how do I make rolling mean column in ray dataset?', 'source': 'https://docs.ray.io/en/latest/ray-core/actors/terminating-actors.html#manual-termination-within-the-actor'}

82 | {'question': "Where is the execution limit coming from? I'm not sure where I set it", 'source': 'https://docs.ray.io/en/latest/data/dataset-internals.html#configuring-resources-and-locality'}

83 | {'question': 'The ray cluster spins up the workers, but then immediately kills them when it starts to process the data - is this expected behavior? If not, what could the issue be?', 'source': 'https://docs.ray.io/en/latest/data/examples/nyc_taxi_basic_processing.html'}

84 | {'question': 'Does Ray support numpy 1.24.2?', 'source': 'https://docs.ray.io/en/latest/index.html'}

85 | {'question': 'Can I have a super class of Actor?', 'source': 'https://docs.ray.io/en/latest/cluster/running-applications/job-submission/ray-client.html#client-arguments'}

86 | {'question': 'can I specify working directory in ray.client(base_url).namespace(namespsce).connect()', 'source': 'https://docs.ray.io/en/latest/cluster/running-applications/job-submission/ray-client.html#client-arguments'}

87 | {'question': 'can I monkey patch a ray function?', 'source': 'https://docs.ray.io/en/latest/ray-observability/monitoring-debugging/gotchas.html#outdated-function-definitions'}

88 | {'question': 'I get the following error using Ray Tune with Ray version 2.4.0 after a successful training epoch: “TypeError: can’t convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first.” According to the stack trace, the error seems to come from the __report_progress_ method. I’m using one GPU to train a pretrained ResNet18 model. Do you know what is causing this issue?', 'source': 'https://docs.ray.io/en/latest/index.html'}

89 | {'question': 'how to use ray.init to launch a multi-node cluster', 'source': 'https://docs.ray.io/en/latest/cluster/vms/references/ray-cluster-configuration.html'}

90 | {'question': 'why detauched Actor pointing to old working directory ?', 'source': 'https://docs.ray.io/en/latest/ray-core/actors/named-actors.html#actor-lifetimes'}

91 | {'question': 'If I spawn a process in a Ray Task, what happens to that process when the Ray Task completes?', 'source': 'https://docs.ray.io/en/latest/ray-core/tasks/using-ray-with-gpus.html'}

92 | {'question': 'how can I use torch.distributed.launch with Ray jobs?', 'source': 'https://www.anyscale.com/blog/large-scale-distributed-training-with-torchx-and-ray'}

93 | {'question': 'how to fix this issue: "WARNING sample.py:469 -- sample_from functions that take a spec dict are deprecated. Please update your function to work with the config dict directly."', 'source': 'https://docs.ray.io/en/latest/tune/api/doc/ray.tune.sample_from.html'}

94 | {'question': 'How does one define the number of timesteps and episodes when training a PPO algorithm with Rllib?', 'source': 'https://docs.ray.io/en/latest/rllib/rllib-algorithms.html#part-2'}

95 | {'question': "my serve endpoint doesn't seem to run my code when deployed onto our remote cluster. Only the endpoints that are using DAGDrivers are running into issues", 'source': 'https://docs.ray.io/en/latest/serve/production-guide/deploy-vm.html#adding-a-runtime-environment'}

96 | {'question': 'How to specify different preprocessors for train and evaluation ray datasets?', 'source': 'https://docs.ray.io/en/latest/'}

97 | {'question': 'Can I set the ray.init() in the worker code for ray serve?', 'source': 'https://docs.ray.io/en/latest/serve/api/index.html'}

98 | {'question': 'Can I use a ubuntu 22.04 image to install Ray as a python package and use it for Kubernetes cluster?', 'source': 'https://docs.ray.io/en/latest/ray-overview/installation.html#installation'}

99 |

--------------------------------------------------------------------------------

/datasets/eval-dataset-v1.jsonl:

--------------------------------------------------------------------------------

1 | {"question": "I’m struggling a bit with Ray Data type conversions when I do map_batches. Any advice?", "source": "https://docs.ray.io/en/master/data/transforming-data.html#configuring-batch-format"}

2 | {"question": "How does autoscaling work in a Ray Serve application?", "source": "https://docs.ray.io/en/master/serve/scaling-and-resource-allocation.html#autoscaling"}

3 | {"question": "how do I get the address of a ray node", "source": "https://docs.ray.io/en/master/ray-core/miscellaneous.html#node-information"}

4 | {"question": "Does Ray support NCCL?", "source": "https://docs.ray.io/en/master/ray-more-libs/ray-collective.html"}

5 | {"question": "Is Ray integrated with DeepSpeed?", "source": "https://docs.ray.io/en/master/ray-air/examples/gptj_deepspeed_fine_tuning.html#fine-tuning-the-model-with-ray-air-a-name-train-a"}

6 | {"question": "what will happen if I use AsyncIO's await to wait for a Ray future like `await x.remote()`", "source": "https://docs.ray.io/en/master/ray-core/actors/async_api.html#objectrefs-as-asyncio-futures"}

7 | {"question": "How would you compare Spark, Ray, Dask?", "source": "https://docs.ray.io/en/master/data/overview.html#how-does-ray-data-compare-to-x-for-offline-inference"}

8 | {"question": "why would ray overload a node w/ more task that the resources allow ?", "source": "https://docs.ray.io/en/master/ray-core/scheduling/resources.html#physical-resources-and-logical-resources"}

9 | {"question": "when should I use Ray Client?", "source": "https://docs.ray.io/en/master/cluster/running-applications/job-submission/ray-client.html#when-to-use-ray-client"}

10 | {"question": "how to scatter actors across the cluster?", "source": "https://docs.ray.io/en/master/ray-core/scheduling/index.html#spread"}

11 | {"question": "On remote ray cluster, when I do `ray debug` I'm getting connection refused error. Why ?", "source": "https://docs.ray.io/en/master/ray-observability/user-guides/debug-apps/ray-debugging.html#running-on-a-cluster"}

12 | {"question": "How does Ray AIR set up the model to communicate gradient updates across machines?", "source": "https://docs.ray.io/en/master/train/train.html#intro-to-ray-train"}

13 | {"question": "Why would I use Ray Serve instead of Modal or Seldon? Why can't I just do it via containers?", "source": "https://docs.ray.io/en/master/serve/index.html"}

14 | {"question": "How do I deploy an LLM workload on top of Ray Serve?", "source": "https://docs.ray.io/en/master/ray-air/examples/gptj_serving.html"}

15 | {"question": "what size of memory should I need for this if I am setting set the `model_id` to “EleutherAI/gpt-j-6B”?", "source": "https://docs.ray.io/en/master/ray-air/examples/gptj_serving.html"}

16 | {"question": "How do I log the results from multiple distributed workers into a single tensorboard?", "source": "https://docs.ray.io/en/master/tune/tutorials/tune-output.html#how-to-log-your-tune-runs-to-tensorboard"}

17 | {"question": "how do you config SyncConfig for a Ray AIR job?", "source": "https://docs.ray.io/en/master/tune/tutorials/tune-storage.html#on-a-multi-node-cluster-deprecated"}

18 | {"question": "how can I quickly narrow down the root case of a failed ray job, assuming I have access to all the logs", "source": "https://docs.ray.io/en/master/ray-observability/user-guides/configure-logging.html#log-files-in-logging-directory"}

19 | {"question": "How do I specify how many GPUs a serve deployment needs?", "source": "https://docs.ray.io/en/master/serve/scaling-and-resource-allocation.html#resource-management-cpus-gpus"}

20 | {"question": "One of my worker nodes keeps dying on using TensorflowTrainer with around 1500 workers, I observe SIGTERM has been received to the died node's raylet. How can I debug this?", "source": "https://docs.ray.io/en/master/ray-observability/user-guides/configure-logging.html#log-files-in-logging-directory"}

21 | {"question": "what are the possible reasons for nodes dying in a cluster?", "source": "https://docs.ray.io/en/master/ray-core/scheduling/ray-oom-prevention.html"}

22 | {"question": "how do I programatically get ray remote cluster to a target size immediately without scaling up through autoscaler ?", "source": "https://docs.ray.io/en/master/cluster/running-applications/autoscaling/reference.html#ray-autoscaler-sdk-request-resources"}

23 | {"question": "how do you disable async iter_batches with Ray Dataset?", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.Dataset.iter_batches.html#ray-data-dataset-iter-batches"}

24 | {"question": "what is the different between a batch and a block, for ray datasets?", "source": "https://docs.ray.io/en/master/data/data-internals.html#datasets-and-blocks"}

25 | {"question": "How to setup the development environments for ray project?", "source": "https://docs.ray.io/en/master/ray-contribute/development.html"}

26 | {"question": "how do I debug why ray rollout workers are deadlocking when using the sample API in `ray/rllib/evaluation/rollout_worker.py`", "source": "https://docs.ray.io/en/master/rllib/rllib-dev.html#troubleshooting"}

27 | {"question": "how do I join two ray datasets?", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.Dataset.zip.html"}

28 | {"question": "Is there a way to retrieve an object ref from its id?", "source": "https://docs.ray.io/en/master/ray-core/objects.html"}

29 | {"question": "how to create model Checkpoint from the model in memory?", "source": "https://docs.ray.io/en/master/train/api/doc/ray.train.torch.TorchCheckpoint.from_model.html#ray-train-torch-torchcheckpoint-from-model"}

30 | {"question": "what is Deployment in Ray Serve?", "source": "https://docs.ray.io/en/master/serve/key-concepts.html#deployment"}

31 | {"question": "What is user config in Ray Serve? how do I use it?", "source": "https://docs.ray.io/en/master/serve/configure-serve-deployment.html#configure-ray-serve-deployments"}

32 | {"question": "What is the difference between PACK and SPREAD strategy?", "source": "https://docs.ray.io/en/master/ray-core/scheduling/placement-group.html#placement-strategy"}

33 | {"question": "What’s the best way to run ray across multiple machines?", "source": "https://docs.ray.io/en/master/ray-core/cluster/index.html"}

34 | {"question": "how do I specify ScalingConfig for a Tuner run?", "source": "https://docs.ray.io/en/master/tune/api/doc/ray.tune.Tuner.html"}

35 | {"question": "how to utilize ‘zero-copy’ feature ray provide for numpy?", "source": "https://docs.ray.io/en/master/ray-core/objects/serialization.html#numpy-arrays"}

36 | {"question": "if there are O(millions) of keys that all have state, is it ok to spin up 1=1 actors? Or would it be advised to create ‘key pools’ where an actor can hold 1=many keys?", "source": "https://docs.ray.io/en/master/ray-core/patterns/too-fine-grained-tasks.html"}

37 | {"question": "How to find the best checkpoint from the trial directory?", "source": "https://docs.ray.io/en/master/tune/api/doc/ray.tune.ExperimentAnalysis.html"}

38 | {"question": "what are the advantage and disadvantage of using singleton Actor ?", "source": "https://docs.ray.io/en/master/ray-core/actors/named-actors.html"}

39 | {"question": "what are the advantages of using a named actor?", "source": "https://docs.ray.io/en/master/ray-core/actors/named-actors.html"}

40 | {"question": "How do I read a text file stored on S3 using Ray Data?", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.read_text.html"}

41 | {"question": "how do I get the IP of the head node for my Ray cluster?", "source": "https://docs.ray.io/en/master/ray-core/miscellaneous.html#node-information"}

42 | {"question": "How to write a map function that returns a list of object for `map_batches`?", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.Dataset.map_batches.html#ray-data-dataset-map-batches"}

43 | {"question": "How do I set a maximum episode length when training with Rllib?", "source": "https://docs.ray.io/en/master/rllib/key-concepts.html"}

44 | {"question": "how do I make a Ray Tune trial retry on failures?", "source": "https://docs.ray.io/en/master/tune/tutorials/tune-fault-tolerance.html"}

45 | {"question": "For the supervised actor pattern, can we keep the Worker Actor up if the Supervisor passes a reference to the Actor to another Actor, to allow the worker actor to remain even on Supervisor / Driver failure?", "source": "https://docs.ray.io/en/master/ray-core/patterns/tree-of-actors.html"}

46 | {"question": "How do I read a large text file in S3 with Ray?", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.read_text.html"}

47 | {"question": "how do I get a ray dataset from pandas", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.from_pandas.html"}

48 | {"question": "can you give me an example of using `ray.data.map` ?", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.Dataset.map.html"}

49 | {"question": "can you give me an example of using `ray.data.map` , with a callable class as input?", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.Dataset.map.html"}

50 | {"question": "How to set memory limit for each trial in Ray Tuner?", "source": "https://docs.ray.io/en/master/tune/tutorials/tune-resources.html"}

51 | {"question": "how do I get the actor id of an actor", "source": "https://docs.ray.io/en/master/ray-core/api/doc/ray.runtime_context.get_runtime_context.html"}

52 | {"question": "can ray.init() can check if ray is all-ready initiated ?", "source": "https://docs.ray.io/en/master/ray-core/api/doc/ray.init.html"}

53 | {"question": "What does the `compute=actor` argument do within `ray.data.map_batches` ?", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.Dataset.map_batches.html"}

54 | {"question": "how do I use wandb logger with accelerateTrainer?", "source": "https://docs.ray.io/en/master/tune/examples/tune-wandb.html"}

55 | {"question": "What will be implicitly put into object store?", "source": "https://docs.ray.io/en/master/ray-core/objects.html#objects"}

56 | {"question": "How do I kill or cancel a ray task that I already started?", "source": "https://docs.ray.io/en/master/ray-core/fault_tolerance/tasks.html#cancelling-misbehaving-tasks"}

57 | {"question": "how to send extra arguments in dataset.map_batches function?", "source": "https://docs.ray.io/en/master/data/api/doc/ray.data.Dataset.map_batches.html#ray-data-dataset-map-batches"}

58 | {"question": "where does ray GCS store the history of jobs run on a kuberay cluster? What type of database and format does it use for this?", "source": "https://docs.ray.io/en/master/cluster/kubernetes/user-guides/static-ray-cluster-without-kuberay.html#external-redis-integration-for-fault-tolerance"}

59 | {"question": "How to resolve ValueError: The actor ImplicitFunc is too large?", "source": "https://docs.ray.io/en/master/ray-core/patterns/closure-capture-large-objects.html"}

60 | {"question": "How do I use ray to distribute training for my custom neural net written using Keras in Databricks?", "source": "https://docs.ray.io/en/master/train/examples/tf/tensorflow_mnist_example.html"}

61 | {"question": "how to use ray.put and ray,get?", "source": "https://docs.ray.io/en/master/ray-core/objects.html#fetching-object-data"}

62 | {"question": "how do I use Ray Data to pre process many files?", "source": "https://docs.ray.io/en/master/data/transforming-data.html#transforming-batches-with-tasks"}

63 | {"question": "can’t pickle SSLContext objects", "source": "https://docs.ray.io/en/master/ray-core/objects/serialization.html#customized-serialization"}

64 | {"question": "How do I install CRDs in Kuberay?", "source": "https://docs.ray.io/en/master/cluster/kubernetes/getting-started.html#deploying-the-kuberay-operator"}

65 | {"question": "Why the function for Ray data batch inference has to be named as _`__call__()`_ ?", "source": "https://docs.ray.io/en/master/data/examples/nyc_taxi_basic_processing.html#parallel-batch-inference"}

66 | {"question": "How to disconnnect ray client?", "source": "https://docs.ray.io/en/master/cluster/running-applications/job-submission/ray-client.html#connect-to-multiple-ray-clusters-experimental"}

67 | {"question": "how to submit job with python with local files?", "source": "https://docs.ray.io/en/master/cluster/running-applications/job-submission/quickstart.html#submitting-a-job"}

68 | {"question": "How do I do inference from a model trained by Ray tune.fit()?", "source": "https://docs.ray.io/en/master/data/batch_inference.html#using-models-from-ray-train"}

69 | {"question": "is there a way to load and run inference without using pytorch or tensorflow directly?", "source": "https://docs.ray.io/en/master/serve/index.html"}

70 | {"question": "what does ray do", "source": "https://docs.ray.io/en/master/ray-overview/index.html#overview"}

71 | {"question": "If I specify a fractional GPU in the resource spec, what happens if I use more than that?", "source": "https://docs.ray.io/en/master/ray-core/tasks/using-ray-with-gpus.html#fractional-gpus"}

72 | {"question": "how to pickle a variable defined in actor’s init method", "source": "https://docs.ray.io/en/master/ray-core/objects/serialization.html#customized-serialization"}

73 | {"question": "how do I do an all_reduce operation among a list of actors", "source": "https://docs.ray.io/en/master/ray-core/examples/map_reduce.html#shuffling-and-reducing-data"}

74 | {"question": "What will happen if we specify a bundle with `{\"CPU\":0}` in the PlacementGroup?", "source": "https://docs.ray.io/en/master/ray-core/scheduling/placement-group.html#bundles"}

75 | {"question": "How to cancel job from UI?", "source": "https://docs.ray.io/en/master/cluster/running-applications/job-submission/cli.html#ray-job-stop"}

76 | {"question": "how do I get my project files on the cluster when using Ray Serve? My workflow is to call `serve deploy config.yaml --address `", "source": "https://docs.ray.io/en/master/serve/advanced-guides/dev-workflow.html#testing-on-a-remote-cluster"}

77 | {"question": "how do i install ray nightly wheel", "source": "https://docs.ray.io/en/master/ray-overview/installation.html#daily-releases-nightlies"}

78 | {"question": "how do i install the latest ray nightly wheel?", "source": "https://docs.ray.io/en/master/ray-overview/installation.html#daily-releases-nightlies"}

79 | {"question": "how can I write unit tests for Ray code?", "source": "https://docs.ray.io/en/master/ray-core/examples/testing-tips.html#tip-2-sharing-the-ray-cluster-across-tests-if-possible"}

80 | {"question": "How I stop Ray from spamming lots of Info updates on stdout?", "source": "https://docs.ray.io/en/master/ray-observability/user-guides/configure-logging.html#disable-logging-to-the-driver"}

81 | {"question": "how to deploy stable diffusion 2.1 with Ray Serve?", "source": "https://docs.ray.io/en/master/serve/tutorials/stable-diffusion.html#serving-a-stable-diffusion-model"}

82 | {"question": "what is actor_handle?", "source": "https://docs.ray.io/en/master/ray-core/actors.html#passing-around-actor-handles"}

83 | {"question": "how to kill a r detached actors?", "source": "https://docs.ray.io/en/master/ray-core/actors/named-actors.html#actor-lifetimes"}

84 | {"question": "How to force upgrade the pip package in the runtime environment if an old version exists?", "source": "https://docs.ray.io/en/master/ray-core/handling-dependencies.html#api-reference"}

85 | {"question": "How do I do global shuffle with Ray?", "source": "https://docs.ray.io/en/master/data/transforming-data.html#shuffling-rows"}

86 | {"question": "How to find namespace of an Actor?", "source": "https://docs.ray.io/en/master/ray-observability/reference/doc/ray.util.state.list_actors.html#ray-util-state-list-actors"}

87 | {"question": "How does Ray work with async.io ?", "source": "https://docs.ray.io/en/master/ray-core/actors/async_api.html#asyncio-for-actors"}

88 | {"question": "How do I debug a hanging `ray.get()` call? I have it reproduced locally.", "source": "https://docs.ray.io/en/master/ray-observability/user-guides/debug-apps/debug-hangs.html"}

89 | {"question": "can you show me an example of ray.actor.exit_actor()", "source": "https://docs.ray.io/en/master/ray-core/actors/terminating-actors.html#manual-termination-within-the-actor"}

90 | {"question": "how to add log inside actor?", "source": "https://docs.ray.io/en/master/ray-observability/user-guides/configure-logging.html#customizing-worker-process-loggers"}

91 | {"question": "can you write a script to do batch inference with GPT-2 on text data from an S3 bucket?", "source": "https://docs.ray.io/en/master/data/working-with-text.html#performing-inference-on-text"}

92 | {"question": "How do I enable Ray debug logs?", "source": "https://docs.ray.io/en/master/ray-observability/user-guides/configure-logging.html#using-rays-logger"}

93 | {"question": "How do I list the current Ray actors from python?", "source": "https://docs.ray.io/en/master/ray-observability/reference/doc/ray.util.state.list_actors.html#ray-util-state-list-actors"}

94 | {"question": "I want to kill the replica actor from Python. how do I do it?", "source": "https://docs.ray.io/en/master/ray-core/actors/terminating-actors.html#manual-termination-via-an-actor-handle"}

95 | {"question": "how do I specify in my remote function declaration that I want the task to run on a V100 GPU type?", "source": "https://docs.ray.io/en/master/ray-core/tasks/using-ray-with-gpus.html#accelerator-types"}

96 | {"question": "How do I get started?", "source": "https://docs.ray.io/en/master/ray-overview/getting-started.html#getting-started"}

97 | {"question": "How to specify python version in runtime_env?", "source": "https://docs.ray.io/en/master/ray-core/handling-dependencies.html#api-reference"}

98 | {"question": "how to create a Actor in a namespace?", "source": "https://docs.ray.io/en/master/ray-core/namespaces.html#using-namespaces"}

99 | {"question": "Can I specify multiple working directories?", "source": "https://docs.ray.io/en/master/ray-core/handling-dependencies.html#using-local-files"}

100 | {"question": "what if I set num_cpus=0 for tasks", "source": "https://docs.ray.io/en/master/ray-core/scheduling/resources.html#fractional-resource-requirements"}

101 | {"question": "is it possible to have ray on k8s without using kuberay? especially with the case that autoscaler is enabled.", "source": "https://docs.ray.io/en/master/cluster/kubernetes/user-guides/static-ray-cluster-without-kuberay.html"}

102 | {"question": "how to manually configure and manage Ray cluster on Kubernetes", "source": "https://docs.ray.io/en/master/cluster/kubernetes/user-guides/static-ray-cluster-without-kuberay.html#deploying-a-static-ray-cluster"}

103 | {"question": "If I shutdown a raylet, will the tasks and workers on that node also get killed?", "source": "https://docs.ray.io/en/master/ray-core/fault_tolerance/nodes.html#node-fault-tolerance"}

104 | {"question": "If I’d like to debug out of memory, how do I Do that, and which documentation should I look?", "source": "https://docs.ray.io/en/master/ray-observability/user-guides/debug-apps/debug-memory.html#detecting-out-of-memory-errors"}

105 | {"question": "How to use callback in Trainer?", "source": "https://docs.ray.io/en/master/tune/api/doc/ray.tune.Callback.html#ray-tune-callback"}

106 | {"question": "How to provide current working directory to ray?", "source": "https://docs.ray.io/en/master/ray-core/handling-dependencies.html#remote-uris"}

107 | {"question": "how to create an actor instance with parameter?", "source": "https://docs.ray.io/en/master/ray-core/actors.html#actors"}

108 | {"question": "how to push a custom module to ray which is using by Actor ?", "source": "https://docs.ray.io/en/master/ray-core/handling-dependencies.html#library-development"}

109 | {"question": "how to print ray working directory?", "source": "https://docs.ray.io/en/master/ray-core/handling-dependencies.html#runtime-environments"}

110 | {"question": "why I can not see log.info in ray log?", "source": "https://docs.ray.io/en/master/ray-observability/user-guides/configure-logging.html#customizing-worker-process-loggers"}

111 | {"question": "when you use ray dataset to read a file, can you make sure the order of the data is preserved?", "source": "https://docs.ray.io/en/master/data/performance-tips.html#deterministic-execution"}

112 | {"question": "Can you explain what \"Ray will *not* retry tasks upon exceptions thrown by application code\" means ?", "source": "https://docs.ray.io/en/master/ray-core/fault_tolerance/tasks.html#retrying-failed-tasks"}

113 | {"question": "how do I specify the log directory when starting Ray?", "source": "https://docs.ray.io/en/master/ray-core/configure.html#logging-and-debugging"}

114 | {"question": "how to launch a ray cluster with 10 nodes, without setting the min worker as 10", "source": "https://docs.ray.io/en/master/cluster/vms/user-guides/launching-clusters/on-premises.html#start-worker-nodes"}

115 | {"question": "how to use ray api to scale up a cluster", "source": "https://docs.ray.io/en/master/cluster/running-applications/autoscaling/reference.html#ray-autoscaler-sdk-request-resources"}

116 | {"question": "we plan to use Ray cloud launcher to start a cluster in AWS. How can we specify a subnet in the deployment file?", "source": "https://docs.ray.io/en/master/cluster/vms/references/ray-cluster-configuration.html#full-configuration"}

117 | {"question": "where I can find HTTP server error code log for Ray serve", "source": "https://docs.ray.io/en/master/serve/monitoring.html#ray-logging"}

118 | {"question": "I am running ray cluster on amazon and I have troubles displaying the dashboard. When a I tunnel the dashboard port from the headnode to my machine, the dashboard opens, and then it disappears (internal refresh fails). Is it a known problem? What am I doing wrong?", "source": "https://docs.ray.io/en/master/cluster/configure-manage-dashboard.html#viewing-ray-dashboard-in-browsers"}

119 | {"question": "In the Ray cluster launcher YAML, does `max_workers` include the head node, or only worker nodes?", "source": "https://docs.ray.io/en/master/cluster/vms/references/ray-cluster-configuration.html"}

120 | {"question": "How to update files in working directory ?", "source": "https://docs.ray.io/en/master/ray-core/handling-dependencies.html#using-local-files"}