├── .classpath
├── .gitignore
├── LICENSE
├── NOTICE
├── README.md
├── img
│   ├── IndexerV2Design.jpg
│   ├── IndexerV2Design.pptx
│   ├── Kafka_ES_Illustration.png
│   └── Kafka_ES_Illustration_New.png
├── pom.xml
├── run_indexer.sh
└── src
    ├── main
    │   ├── assemblies
    │   │   └── plugin.xml
    │   ├── java
    │   │   └── org
    │   │       └── elasticsearch
    │   │           └── kafka
    │   │               └── indexer
    │   │                   ├── BasicIndexHandler.java
    │   │                   ├── ConsumerConfig.java
    │   │                   ├── FailedEventsLogger.java
    │   │                   ├── IndexHandler.java
    │   │                   ├── IndexerESException.java
    │   │                   ├── KafkaClient.java
    │   │                   ├── KafkaIndexerDriver.java
    │   │                   ├── MessageHandler.java
    │   │                   ├── jmx
    │   │                   │   ├── IndexerJobStatusMBean.java
    │   │                   │   ├── KafkaEsIndexerStatus.java
    │   │                   │   └── KafkaEsIndexerStatusMXBean.java
    │   │                   ├── jobs
    │   │                   │   ├── IndexerJob.java
    │   │                   │   ├── IndexerJobManager.java
    │   │                   │   ├── IndexerJobStatus.java
    │   │                   │   └── IndexerJobStatusEnum.java
    │   │                   ├── mappers
    │   │                   │   ├── AccessLogMapper.java
    │   │                   │   └── KafkaMetaDataMapper.java
    │   │                   └── messageHandlers
    │   │                       ├── AccessLogMessageHandler.java
    │   │                       └── RawMessageStringHandler.java
    │   └── resources
    │       ├── kafka-es-indexer.properties.template
    │       └── logback.xml.template
    └── test
        └── org
            └── elasticsearch
                └── kafka
                    └── indexer
                        └── jmx
                            └── KafkaEsIndexerStatusTest.java

/.classpath:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | /bin/

2 | .project

3 | .settings/

4 | /logs/

5 | .idea

6 | .classpath

7 |

8 | *.iml

9 | kafkaESConsumerLocal.properties

10 | dependency-reduced-pom.xml

11 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

--------------------------------------------------------------------------------

/NOTICE:

--------------------------------------------------------------------------------

1 | kafka-elasticsearch-standalone-consumer

2 |

3 | Licensed under Apache License, Version 2.0

4 |

5 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

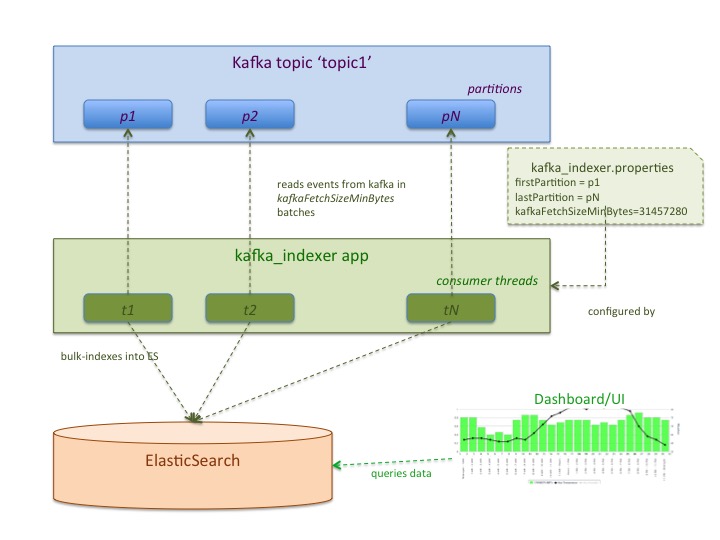

1 | # Welcome to the kafka-elasticsearch-standalone-consumer wiki!

2 |

3 | ## Architecture of the kafka-elasticsearch-standalone-consumer [indexer]

4 |

5 |

6 |

7 |

8 | # This project has moved to the repository below

9 | ### Please see https://github.com/BigDataDevs/kafka-elasticsearch-consumer

10 |

11 | # Introduction

12 |

13 | ### **Kafka Standalone Consumer [Indexer] reads messages from Kafka in batches, processes them, and bulk-indexes them into ElasticSearch.**

14 |

15 | ### _As described in the illustration above, here is how the indexer works:_

16 |

17 | * Kafka has a topic named, say, `Topic1`.

18 |

19 | * Let's say `Topic1` has 5 partitions.

20 |

21 | * In the configuration file, kafka-es-indexer.properties, set the `firstPartition=0` and `lastPartition=4` properties.

22 |

23 | * Start the indexer application as described below.

24 |

25 | * 5 threads will be started, one consumer per partition.

26 |

27 | * When a new partition is added to the Kafka topic, the configuration has to be updated and the indexer application restarted.
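The thread-per-partition model described above can be sketched in plain Java as follows. This is a minimal illustration, not the indexer's actual job manager: the class `PartitionWorkersSketch` and its methods are hypothetical, and each worker only records its partition id where the real indexer would run its fetch, transform, and bulk-index loop.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: one worker thread per partition in [firstPartition, lastPartition].
class PartitionWorkersSketch {

    // Partition ids covered by the inclusive range, e.g. 0..4 -> [0, 1, 2, 3, 4].
    static List<Integer> partitionsInRange(int firstPartition, int lastPartition) {
        if (firstPartition < 0 || lastPartition < firstPartition) {
            throw new IllegalArgumentException("invalid partition range");
        }
        List<Integer> partitions = new ArrayList<>();
        for (int p = firstPartition; p <= lastPartition; p++) {
            partitions.add(p);
        }
        return partitions;
    }

    // Starts one thread per partition, waits for all of them, and returns
    // the labels recorded by the placeholder worker bodies.
    static List<String> runOnePerPartition(int firstPartition, int lastPartition) {
        List<Integer> partitions = partitionsInRange(firstPartition, lastPartition);
        ExecutorService pool = Executors.newFixedThreadPool(partitions.size());
        List<String> processed = Collections.synchronizedList(new ArrayList<>());
        for (int partition : partitions) {
            // Placeholder: a real worker would fetch, transform, and bulk-index this partition.
            pool.execute(() -> processed.add("partition-" + partition));
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new ArrayList<>(processed);
    }
}
```

With `firstPartition=0` and `lastPartition=4`, this starts five workers, which is why adding a partition means editing the range and restarting.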

28 |

29 |

30 | # How to use?

31 |

32 | ### Running as a standard Jar

33 |

34 | **1.** Download the code into an `$INDEXER_HOME` directory.

35 |

36 | **2.** Copy `$INDEXER_HOME/src/main/resources/kafka-es-indexer.properties.template` to `/your/absolute/path/kafka-es-indexer.properties` and update all relevant properties as explained in the comments.

37 |

38 | **3.** Copy `$INDEXER_HOME/src/main/resources/logback.xml.template` to `/your/absolute/path/logback.xml`.

39 |

40 | Specify the directory you want to store logs in:

41 |

42 |

43 | Adjust the maximum log file sizes and the number of log files as needed.

44 |

45 | **4.** Build the app jar (make sure you have Maven installed):

46 |

47 | cd $INDEXER_HOME

48 | mvn clean package

49 |

50 | The kafka-es-indexer-2.0.jar will be created in $INDEXER_HOME/bin.

51 | All dependencies will be placed into $INDEXER_HOME/bin/lib.

52 | All JAR dependencies are linked via kafka-es-indexer-2.0.jar manifest.
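To check which dependency jars the built jar's manifest links to, a small sketch using the standard `java.util.jar` API can read the `Class-Path` attribute. The class name `ManifestClassPathSketch` is hypothetical, and the sketch assumes the build writes a `Class-Path` entry into the manifest as described above.

```java
import java.io.IOException;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.Attributes;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

// Hypothetical helper: lists the Class-Path entries of a jar manifest.
class ManifestClassPathSketch {

    // Splits the space-separated Class-Path attribute into individual entries.
    static List<String> classPathEntries(Manifest manifest) {
        List<String> entries = new ArrayList<>();
        String classPath = manifest.getMainAttributes().getValue(Attributes.Name.CLASS_PATH);
        if (classPath == null) {
            return entries;
        }
        for (String entry : classPath.trim().split("\\s+")) {
            if (!entry.isEmpty()) {
                entries.add(entry);
            }
        }
        return entries;
    }

    // Reads the manifest of a built jar, e.g. $INDEXER_HOME/bin/kafka-es-indexer-2.0.jar.
    static List<String> classPathEntries(Path jarPath) throws IOException {
        try (JarFile jar = new JarFile(jarPath.toFile())) {
            Manifest manifest = jar.getManifest();
            return manifest == null ? new ArrayList<String>() : classPathEntries(manifest);
        }
    }
}
```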

53 |

54 | **5.** Edit your $INDEXER_HOME/run_indexer.sh script:

55 | -- make it executable if needed (chmod a+x $INDEXER_HOME/run_indexer.sh)

56 | -- update the properties marked with "CHANGE FOR YOUR ENV" comments according to your environment

57 |

58 | **6.** Run the app (use JDK 1.8):

59 |

60 | ./run_indexer.sh

61 |

62 | # Versions

63 |

64 | ### Kafka Version: 0.8.2.1

65 |

66 | ### ElasticSearch: > 1.5.1

67 |

68 | ### Scala Version for Kafka Build: 2.10.0

69 |

70 | # Configuration

71 |

72 | Indexer app configuration is specified in the kafka-es-indexer.properties file, which should be created from the provided template, kafka-es-indexer.properties.template. All properties are described in the template:

73 |

74 | [kafka-es-indexer.properties.template](https://github.com/ppine7/kafka-elasticsearch-standalone-consumer/blob/master/src/main/resources/kafka-es-indexer.properties.template)

75 |

76 | Logging properties are specified in the logback.xml file, which should be created from a provided template, logback.xml.template:

77 |

78 | [logback.xml.template](https://github.com/ppine7/kafka-elasticsearch-standalone-consumer/blob/master/src/main/resources/logback.xml.template)
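A minimal sketch of how a few of the template's keys can be read with `java.util.Properties`, assuming the key names used by `ConsumerConfig` (`topic`, `firstPartition`, `lastPartition`, `startOffsetFrom`, `startOffset`) and assuming `LATEST` and `0` are the intended defaults for `startOffsetFrom` and `startOffset`. The class `IndexerPropertiesSketch` is hypothetical; the project's real loader is `ConsumerConfig`.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

// Hypothetical sketch of reading a few kafka-es-indexer.properties keys.
// Key names follow ConsumerConfig; this is not the project's loader.
class IndexerPropertiesSketch {
    // Allowed values listed in the ConsumerConfig comments.
    static final List<String> START_OFFSET_OPTIONS =
            Arrays.asList("CUSTOM", "EARLIEST", "LATEST", "RESTART");

    final String topic;
    final short firstPartition;
    final short lastPartition;
    final String startOffsetFrom;
    final int startOffset;

    IndexerPropertiesSketch(Properties prop) {
        topic = prop.getProperty("topic", "");
        firstPartition = Short.parseShort(prop.getProperty("firstPartition", "0"));
        lastPartition = Short.parseShort(prop.getProperty("lastPartition", "3"));
        // Assumed defaults: start from LATEST; startOffset only matters for CUSTOM.
        startOffsetFrom = prop.getProperty("startOffsetFrom", "LATEST");
        startOffset = Integer.parseInt(prop.getProperty("startOffset", "0"));
        if (!START_OFFSET_OPTIONS.contains(startOffsetFrom)) {
            throw new IllegalArgumentException("unknown startOffsetFrom: " + startOffsetFrom);
        }
    }

    // Parses properties text, e.g. the contents of kafka-es-indexer.properties.
    static IndexerPropertiesSketch fromText(String text) {
        Properties prop = new Properties();
        try {
            prop.load(new StringReader(text));
        } catch (IOException e) {
            throw new IllegalStateException("cannot parse properties", e);
        }
        return new IndexerPropertiesSketch(prop);
    }

    // One indexer thread is started per partition in the inclusive range.
    int partitionCount() {
        return lastPartition - firstPartition + 1;
    }
}
```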

79 |

80 |

81 | # Message Handler Class

82 |

83 | * `org.elasticsearch.kafka.indexer.MessageHandler` is an abstract class that already implements most of the functionality for reading data from Kafka and batch-indexing it into ElasticSearch. It has one abstract method, `transformMessage()`, that concrete subclasses implement to customize message transformation before posting into ES.

84 |

85 | * `org.elasticsearch.kafka.indexer.messageHandlers.RawMessageStringHandler` is a simple concrete subclass of MessageHandler that sends messages into ES as is, with no additional transformation, in the 'UTF-8' format.

86 |

87 | * Usually, it's effective to index the message in JSON format in ElasticSearch. This can be done using a Mapper class and transforming the message from Kafka by implementing the `transformMessage()` method. An example can be found here: `org.elasticsearch.kafka.indexer.messageHandlers.AccessLogMessageHandler`.

88 |

89 | * _**Do remember to set the newly created message handler class in the `messageHandlerClass` property in the kafka-es-indexer.properties file.**_
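The sketch below illustrates the subclassing pattern. The real `MessageHandler` signature is not reproduced here, so `MessageHandlerSketch` and its `transformMessage(byte[], long)` method are hypothetical stand-ins; the access-log line format (`<ip> <method> <path> <status>`) is also an assumption for illustration, not the format `AccessLogMessageHandler` actually parses.

```java
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Hypothetical stand-in for MessageHandler; the real transformMessage() signature may differ.
abstract class MessageHandlerSketch {
    // Turns one raw Kafka message into the document text sent to ElasticSearch.
    abstract String transformMessage(byte[] rawMessage, long offset);
}

// Pass-through handler in the spirit of RawMessageStringHandler: bytes decoded as UTF-8.
class RawStringHandlerSketch extends MessageHandlerSketch {
    @Override
    String transformMessage(byte[] rawMessage, long offset) {
        return new String(rawMessage, StandardCharsets.UTF_8);
    }
}

// JSON-producing handler; the "<ip> <method> <path> <status>" input format is assumed.
class AccessLogJsonHandlerSketch extends MessageHandlerSketch {
    @Override
    String transformMessage(byte[] rawMessage, long offset) {
        String[] f = new String(rawMessage, StandardCharsets.UTF_8).trim().split("\\s+");
        if (f.length < 4) {
            throw new IllegalArgumentException("unexpected access log line");
        }
        return String.format(Locale.ROOT,
                "{\"ip\":\"%s\",\"method\":\"%s\",\"path\":\"%s\",\"status\":%d,\"offset\":%d}",
                json(f[0]), json(f[1]), json(f[2]), Integer.parseInt(f[3]), offset);
    }

    // Minimal JSON string escaping for backslashes and double quotes.
    private static String json(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }
}
```

In the real project, the chosen subclass's fully qualified name is what goes into `messageHandlerClass`.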

90 |

91 | # IndexHandler Interface and basic implementation

92 |

93 | * `org.elasticsearch.kafka.indexer.IndexHandler` is an interface that defines two methods: getIndexName(params) and getIndexType(params).

94 |

95 | * `org.elasticsearch.kafka.indexer.BasicIndexHandler` is a simple implementation of this interface that returns the esIndex and esIndexType values configured in the kafka-es-indexer.properties file (falling back to defaults when they are blank).

96 |

97 | * One might want to create a custom implementation of IndexHandler if, for example, the index name and type are not static for all incoming messages but depend on the event data, such as customerId, orderId, etc. In that case, pass all the information required by the custom index-selection logic as a Map of parameters into the getIndexName(params) and getIndexType(params) methods (or pass `null` if no such data is required).

98 |

99 | * _**Do remember to set the index handler class in the `indexHandlerClass` property in the kafka-es-indexer.properties file. By default, BasicIndexHandler is used**_
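A minimal sketch of a custom index handler that routes events to per-customer indices. `IndexHandlerSketch` is a stand-in mirroring the two methods `BasicIndexHandler` implements (the real interface takes a raw `HashMap`); the `customerId` key and the `prefix-customer` naming scheme are hypothetical.

```java
import java.util.HashMap;
import java.util.Locale;

// Stand-in mirroring the two methods BasicIndexHandler implements.
interface IndexHandlerSketch {
    String getIndexName(HashMap<String, Object> indexLookupProperties);
    String getIndexType(HashMap<String, Object> indexLookupProperties);
}

// Hypothetical handler that routes each event to a per-customer index.
class CustomerIndexHandlerSketch implements IndexHandlerSketch {
    private final String indexPrefix;
    private final String defaultIndex;
    private final String indexType;

    CustomerIndexHandlerSketch(String indexPrefix, String defaultIndex, String indexType) {
        this.indexPrefix = indexPrefix;
        this.defaultIndex = defaultIndex;
        this.indexType = indexType;
    }

    @Override
    public String getIndexName(HashMap<String, Object> indexLookupProperties) {
        Object customerId = indexLookupProperties == null
                ? null : indexLookupProperties.get("customerId");
        if (customerId == null) {
            // No lookup data (or null passed): fall back to the static index name.
            return defaultIndex;
        }
        // ElasticSearch index names must be lowercase.
        return indexPrefix + "-" + customerId.toString().toLowerCase(Locale.ROOT);
    }

    @Override
    public String getIndexType(HashMap<String, Object> indexLookupProperties) {
        // The type stays static here; it could be derived from the parameters the same way.
        return indexType;
    }
}
```

Passing `null` falls back to the statically configured index, which matches the behavior the README describes for handlers that need no lookup data.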

100 |

101 | # License

102 |

103 | kafka-elasticsearch-standalone-consumer

104 |

105 | Licensed under the Apache License, Version 2.0 (the "License"); you may

106 | not use this file except in compliance with the License. You may obtain

107 | a copy of the License at

108 |

109 | http://www.apache.org/licenses/LICENSE-2.0

110 |

111 | Unless required by applicable law or agreed to in writing,

112 | software distributed under the License is distributed on an

113 | "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

114 | KIND, either express or implied. See the License for the

115 | specific language governing permissions and limitations

116 | under the License.

117 |

118 | # Contributors

119 | - [Krishna Raj](https://github.com/reachkrishnaraj)

120 | - [Marina Popova](https://github.com/ppine7)

121 | - [Dhyan](https://github.com/dhyan-yottaa)

122 |

--------------------------------------------------------------------------------

/img/IndexerV2Design.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/reachkrishnaraj/kafka-elasticsearch-standalone-consumer/20c8b3ca71b74bb8c636a744b53948318d0e1da7/img/IndexerV2Design.jpg

--------------------------------------------------------------------------------

/img/IndexerV2Design.pptx:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/reachkrishnaraj/kafka-elasticsearch-standalone-consumer/20c8b3ca71b74bb8c636a744b53948318d0e1da7/img/IndexerV2Design.pptx

--------------------------------------------------------------------------------

/img/Kafka_ES_Illustration.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/reachkrishnaraj/kafka-elasticsearch-standalone-consumer/20c8b3ca71b74bb8c636a744b53948318d0e1da7/img/Kafka_ES_Illustration.png

--------------------------------------------------------------------------------

/img/Kafka_ES_Illustration_New.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/reachkrishnaraj/kafka-elasticsearch-standalone-consumer/20c8b3ca71b74bb8c636a744b53948318d0e1da7/img/Kafka_ES_Illustration_New.png

--------------------------------------------------------------------------------

/pom.xml:

--------------------------------------------------------------------------------

1 |

2 |

4 | kafka-es-indexer

5 | 4.0.0

6 | org.elasticsearch.kafka.consumer

7 | kafka-es-indexer

8 | ${buildId}.${buildNumber}

9 | jar

10 | Kafka standalone indexer for elasticsearch

11 | 2014

12 |

13 |

14 | The Apache Software License, Version 2.0

15 | http://www.apache.org/licenses/LICENSE-2.0.txt

16 | repo

17 |

18 |

19 |

20 | 2

21 | 0

22 | 1.5.1

23 | UTF-8

24 |

25 |

26 |

27 |

28 | org.elasticsearch

29 | elasticsearch

30 | ${elasticsearch.version}

31 | compile

32 |

33 |

34 | org.apache.kafka

35 | kafka_2.10

36 | 0.8.2.1

37 |

38 |

39 | com.netflix.curator

40 | curator-framework

41 | 1.0.1

42 |

43 |

44 | org.codehaus.jackson

45 | jackson-mapper-asl

46 | 1.9.3

47 |

48 |

49 | org.scala-lang

50 | scala-library

51 | 2.10.0

52 |

53 |

54 | log4j

55 | log4j

56 | 1.2.16

57 |

58 |

59 | javax.mail

60 | mail

61 |

62 |

63 | javax.jms

64 | jms

65 |

66 |

67 | com.sun.jdmk

68 | jmxtools

69 |

70 |

71 | com.sun.jmx

72 | jmxri

73 |

74 |

75 |

76 |

77 | org.xerial.snappy

78 | snappy-java

79 | 1.0.4.1

80 |

81 |

82 | org.apache.zookeeper

83 | zookeeper

84 | 3.3.4

85 |

86 |

87 | log4j

88 | log4j

89 |

90 |

91 | jline

92 | jline

93 |

94 |

95 |

96 |

97 | ch.qos.logback

98 | logback-classic

99 | 1.1.3

100 |

101 |

102 | ch.qos.logback

103 | logback-core

104 | 1.1.3

105 |

106 |

107 | org.codehaus.janino

108 | janino

109 | 2.7.8

110 |

111 |

112 | junit

113 | junit

114 | 4.12

115 |

116 |

117 | org.mockito

118 | mockito-all

119 | 1.10.19

120 |

121 |

122 |

123 | bin

124 | ${project.build.directory}/classes

125 |

126 |

127 | org.apache.maven.plugins

128 | maven-dependency-plugin

129 | 2.8

130 |

131 |

132 | copy-dependencies

133 | package

134 |

135 | copy-dependencies

136 |

137 |

138 | ${project.build.directory}/lib/

139 |

140 |

141 |

142 |

143 |

144 | org.apache.maven.plugins

145 | maven-compiler-plugin

146 | 2.3.2

147 |

148 | 1.8

149 | 1.8

150 |

151 |

152 |

153 | org.apache.maven.plugins

154 | maven-source-plugin

155 |

156 |

157 | attach-sources

158 |

159 | jar

160 |

161 |

162 |

163 |

164 |

165 | org.apache.maven.plugins

166 | maven-jar-plugin

167 | 2.6

168 |

169 | ${project.build.directory}/lib/

170 |

171 |

172 |

173 |

174 |

175 |

--------------------------------------------------------------------------------

/run_indexer.sh:

--------------------------------------------------------------------------------

1 | #!/bin/sh

2 |

3 | # Setup variables

4 | # CHANGE FOR YOUR ENV: absolute path of the indexer installation dir

5 | INDEXER_HOME=

6 |

7 | # CHANGE FOR YOUR ENV: JDK 8 installation dir - you can skip it if your JAVA_HOME env variable is set

8 | JAVA_HOME=${JAVA_HOME:-/Library/Java/JavaVirtualMachines/jdk1.8.0_25.jdk/Contents/Home}

9 |

10 | # CHANGE FOR YOUR ENV: absolute path of the logback config file

11 | LOGBACK_CONFIG_FILE=

12 |

13 | # CHANGE FOR YOUR ENV: absolute path of the indexer properties file

14 | INDEXER_PROPERTIES_FILE=

15 |

16 | # DO NOT CHANGE ANYTHING BELOW THIS POINT (unless you know what you are doing :) )!

17 | echo "Starting Kafka ES Indexer app ..."

18 | echo "INDEXER_HOME=$INDEXER_HOME"

19 | echo "JAVA_HOME=$JAVA_HOME"

20 | echo "LOGBACK_CONFIG_FILE=$LOGBACK_CONFIG_FILE"

21 | echo "INDEXER_PROPERTIES_FILE=$INDEXER_PROPERTIES_FILE"

22 |

23 | # add the indexer jar (in bin/) and all dependent jars (in bin/lib/) to the classpath

24 | for file in $INDEXER_HOME/bin/*.jar $INDEXER_HOME/bin/lib/*.jar;

25 | do

26 | CLASS_PATH=$CLASS_PATH:$file

27 | done

28 | echo "CLASS_PATH=$CLASS_PATH"

29 |

30 | $JAVA_HOME/bin/java -Xmx1g -cp $CLASS_PATH -Dlogback.configurationFile=$LOGBACK_CONFIG_FILE org.elasticsearch.kafka.indexer.KafkaIndexerDriver $INDEXER_PROPERTIES_FILE

31 |

32 |

33 |

34 |

35 |

--------------------------------------------------------------------------------

/src/main/assemblies/plugin.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 | plugin

4 |

5 | zip

6 |

7 | false

8 |

9 |

10 | /

11 | true

12 | true

13 |

14 | org.elasticsearch:elasticsearch

15 |

16 |

17 |

18 | /

19 | true

20 | true

21 |

22 | org.apache.kafka:kafka

23 | com.netflix.curator:curator-framework

24 | org.scala-lang:scala-library

25 | org.apache.zookeeper:zookeeper

26 | net.sf.jopt-simple:jopt-simple

27 | com.github.sgroschupf:zkclient

28 | org.xerial.snappy:snappy-java

29 | org.codehaus.jackson:jackson-mapper-asl

30 | log4j:log4j

31 | com.timgroup:java-statsd-client

32 |

33 |

34 |

35 |

36 |

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/BasicIndexHandler.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer;

2 |

3 | import java.util.HashMap;

4 |

5 | /**

6 | * Basic Index handler that returns ElasticSearch index name defined

7 | * in the configuration file as is

8 | *

9 | * @author marinapopova

10 | *

11 | */

12 | public class BasicIndexHandler implements IndexHandler {

13 |

14 | private final ConsumerConfig config;

15 | private String indexName;

16 | private String indexType;

17 |

18 | public BasicIndexHandler(ConsumerConfig config) {

19 | this.config = config;

20 | indexName = config.esIndex;

21 | if (indexName == null || indexName.trim().length() < 1)

22 | indexName = DEFAULT_INDEX_NAME;

23 | indexType = config.esIndexType;

24 | if (indexType == null || indexType.trim().length() < 1)

25 | indexType = DEFAULT_INDEX_TYPE;

26 |

27 | }

28 |

29 | @Override

30 | public String getIndexName(HashMap indexLookupProperties) {

31 | return indexName;

32 | }

33 |

34 | @Override

35 | public String getIndexType(HashMap indexLookupProperties) {

36 | return indexType;

37 | }

38 |

39 | }

40 |

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/ConsumerConfig.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer;

2 |

3 | import java.io.FileInputStream;

4 | import java.util.Properties;

5 |

6 | import org.slf4j.Logger;

7 | import org.slf4j.LoggerFactory;

8 |

9 | public class ConsumerConfig {

10 |

11 | private static final Logger logger = LoggerFactory.getLogger(ConsumerConfig.class);

12 | private Properties prop = new Properties();

13 | private final int kafkaFetchSizeBytesDefault = 10 * 1024 * 1024;

14 |

15 | // Kafka ZooKeeper's IP Address/HostName : port list

16 | public final String kafkaZookeeperList;

17 | // Zookeeper session timeout in MS

18 | public final int zkSessionTimeoutMs;

19 | // Zookeeper connection timeout in MS

20 | public final int zkConnectionTimeoutMs;

21 | // Zookeeper number of retries when creating a curator client

22 | public final int zkCuratorRetryTimes;

23 | // Zookeeper: time in ms between retries when creating a Curator client

24 | public final int zkCuratorRetryDelayMs;

25 | // Full class path and name for the concrete message handler class

26 | public final String messageHandlerClass;

27 | // Full class name of a custom IndexHandler implementation class

28 | public final String indexHandlerClass;

29 | // Kafka Broker's IP Address/HostName : port list

30 | public final String kafkaBrokersList;

31 | // Kafka Topic from which the message has to be processed

32 | public final String topic;

33 | // the below two parameters define the range of partitions to be processed by this app

34 | // first partition in the Kafka Topic from which the messages have to be processed

35 | public final short firstPartition;

36 | // last partition in the Kafka Topic from which the messages have to be processed

37 | public final short lastPartition;

38 | // Option from where the message fetching should happen in Kafka

39 | // Values can be: CUSTOM/EARLIEST/LATEST/RESTART.

40 | // If 'CUSTOM' is set, then 'startOffset' has to be set as an int value

41 | public String startOffsetFrom;

42 | // int value of the offset from where the message processing should happen

43 | public final int startOffset;

44 | // Name of the Kafka Consumer Group

45 | public final String consumerGroupName;

46 | // SimpleConsumer socket bufferSize

47 | public final int kafkaSimpleConsumerBufferSizeBytes;

48 | // SimpleConsumer socket timeout in MS

49 | public final int kafkaSimpleConsumerSocketTimeoutMs;

50 | // FetchRequest's minBytes value

51 | public final int kafkaFetchSizeMinBytes;

52 | // Preferred Message Encoding to process the message before posting it to ElasticSearch

53 | public final String messageEncoding;

54 | // Name of the ElasticSearch Cluster

55 | public final String esClusterName;

56 | // Name of the ElasticSearch Host Port List

57 | public final String esHostPortList;

58 | // IndexName in ElasticSearch to which the processed Message has to be posted

59 | public final String esIndex;

60 | // IndexType in ElasticSearch to which the processed Message has to be posted

61 | public final String esIndexType;

62 | // flag to enable/disable performance metrics reporting

63 | public boolean isPerfReportingEnabled;

64 | // number of times to try to re-init Kafka connections/consumer if read/write to Kafka fails

65 | public final int numberOfReinitAttempts;

66 | // sleep time in ms between Kafka re-init attempts

67 | public final int kafkaReinitSleepTimeMs;

68 | // sleep time in ms between attempts to index data into ES again

69 | public final int esIndexingRetrySleepTimeMs;

70 | // number of times to try to index data into ES if ES cluster is not reachable

71 | public final int numberOfEsIndexingRetryAttempts;

72 |

73 | // Log property file for the consumer instance

74 | public final String logPropertyFile;

75 |

76 | // determines whether the consumer will post to ElasticSearch or not:

77 | // If set to true, the consumer will read events from Kafka and transform them,

78 | // but will not post to ElasticSearch

79 | public final String isDryRun;

80 |

81 | // Wait time in ms between consumer job rounds

82 | public final int consumerSleepBetweenFetchsMs;

83 |

84 | //wait time before force-stopping Consumer Job

85 | public final int timeLimitToStopConsumerJob = 10;

86 | //timeout in seconds before force-stopping Indexer app and all indexer jobs

87 | public final int appStopTimeoutSeconds;

88 |

89 | public String getStartOffsetFrom() {

90 | return startOffsetFrom;

91 | }

92 |

93 | public void setStartOffsetFrom(String startOffsetFrom) {

94 | this.startOffsetFrom = startOffsetFrom;

95 | }

96 |

97 | public ConsumerConfig(String configFile) throws Exception {

98 | logger.info("configFile : " + configFile);

99 | try (FileInputStream configStream = new FileInputStream(configFile)) {

100 | prop.load(configStream);

101 |

102 | logger.info("Properties : " + prop);

103 | } catch (Exception e) {

104 | logger.error("Error reading/loading configFile: " + e.getMessage(), e);

105 | throw e;

106 | }

107 |

108 | kafkaZookeeperList = (String) prop.getProperty("kafkaZookeeperList", "localhost:2181");

109 | zkSessionTimeoutMs = Integer.parseInt(prop.getProperty("zkSessionTimeoutMs", "10000"));

110 | zkConnectionTimeoutMs = Integer.parseInt(prop.getProperty("zkConnectionTimeoutMs", "15000"));

111 | zkCuratorRetryTimes = Integer.parseInt(prop.getProperty("zkCuratorRetryTimes", "3"));

112 | zkCuratorRetryDelayMs = Integer.parseInt(prop.getProperty("zkCuratorRetryDelayMs", "2000"));

113 |

114 | messageHandlerClass = prop.getProperty("messageHandlerClass",

115 | "org.elasticsearch.kafka.indexer.messageHandlers.RawMessageStringHandler");

116 | indexHandlerClass = prop.getProperty("indexHandlerClass",

117 | "org.elasticsearch.kafka.indexer.BasicIndexHandler");

118 | kafkaBrokersList = prop.getProperty("kafkaBrokersList", "localhost:9092");

119 | topic = prop.getProperty("topic", "");

120 | firstPartition = Short.parseShort(prop.getProperty("firstPartition", "0"));

121 | lastPartition = Short.parseShort(prop.getProperty("lastPartition", "3"));

122 | startOffsetFrom = prop.getProperty("startOffsetFrom", "LATEST");

123 | startOffset = Integer.parseInt(prop.getProperty("startOffset", "0"));

124 | consumerGroupName = prop.getProperty("consumerGroupName", "ESKafkaConsumerClient");

125 | kafkaFetchSizeMinBytes = Integer.parseInt(prop.getProperty(

126 | "kafkaFetchSizeMinBytes",

127 | String.valueOf(kafkaFetchSizeBytesDefault)));

128 | kafkaSimpleConsumerBufferSizeBytes = Integer.parseInt(prop.getProperty(

129 | "kafkaSimpleConsumerBufferSizeBytes",

130 | String.valueOf(kafkaFetchSizeBytesDefault)));

131 | kafkaSimpleConsumerSocketTimeoutMs = Integer.parseInt(prop.getProperty(

132 | "kafkaSimpleConsumerSocketTimeoutMs", "10000"));

133 | messageEncoding = prop.getProperty("messageEncoding", "UTF-8");

134 |

135 | esClusterName = prop.getProperty("esClusterName", "");

136 | esHostPortList = prop.getProperty("esHostPortList", "localhost:9300");

137 | esIndex = prop.getProperty("esIndex", "kafkaConsumerIndex");

138 | esIndexType = prop.getProperty("esIndexType", "kafka");

139 | isPerfReportingEnabled = Boolean.parseBoolean(prop.getProperty(

140 | "isPerfReportingEnabled", "false"));

141 | logPropertyFile = prop.getProperty("logPropertyFile",

142 | "log4j.properties");

143 | isDryRun = prop.getProperty("isDryRun", "false");

144 | consumerSleepBetweenFetchsMs = Integer.parseInt(prop.getProperty(

145 | "consumerSleepBetweenFetchsMs", "25"));

146 | appStopTimeoutSeconds = Integer.parseInt(prop.getProperty(

147 | "appStopTimeoutSeconds", "10"));

148 | numberOfReinitAttempts = Integer.parseInt(prop.getProperty(

149 | "numberOfReinitAttempts", "2"));

150 | kafkaReinitSleepTimeMs = Integer.parseInt(prop.getProperty(

151 | "kafkaReinitSleepTimeMs", "1000"));

152 | esIndexingRetrySleepTimeMs = Integer.parseInt(prop.getProperty(

153 | "esIndexingRetrySleepTimeMs", "1000"));

154 | numberOfEsIndexingRetryAttempts = Integer.parseInt(prop.getProperty(

155 | "numberOfEsIndexingRetryAttempts", "2"));

156 | logger.info("Config reading complete !");

157 | }

158 |

159 | public boolean isPerfReportingEnabled() {

160 | return isPerfReportingEnabled;

161 | }

162 |

163 | public Properties getProperties() {

164 | return prop;

165 | }

166 |

167 | public int getNumberOfReinitAttempts() {

168 | return numberOfReinitAttempts;

169 | }

170 |

171 | public int getKafkaReinitSleepTimeMs() {

172 | return kafkaReinitSleepTimeMs;

173 | }

174 |

175 | public String getEsIndexType() {

176 | return esIndexType;

177 | }

178 |

179 | public int getEsIndexingRetrySleepTimeMs() {

180 | return esIndexingRetrySleepTimeMs;

181 | }

182 |

183 | public int getNumberOfEsIndexingRetryAttempts() {

184 | return numberOfEsIndexingRetryAttempts;

185 | }

186 |

187 | }

188 |
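The constructor above reads every setting with `Properties.getProperty(key, default)` and then converts the string. The minimal sketch below follows the same pattern using only `java.util.Properties`, and it shows why the conversion call matters. `Boolean.getBoolean(String)` looks up a JVM *system property* with that name and does not parse its argument, so `Boolean.parseBoolean` is the call that fits values read from a config file. The same applies to numbers: a non-numeric default such as `"LATEST"` passed to `Integer.parseInt` fails at start-up. `ConfigParsingSketch`, `intProp` and `boolProp` are hypothetical names.

```java
import java.util.Properties;

// Hypothetical helper illustrating typed reads with defaults from a Properties file.
class ConfigParsingSketch {

    // Reads an int property, falling back to the default when the key is absent.
    // A non-numeric value still fails fast, but the error names the offending key.
    static int intProp(Properties props, String key, int defaultValue) {
        String raw = props.getProperty(key);
        if (raw == null) {
            return defaultValue;
        }
        try {
            return Integer.parseInt(raw.trim());
        } catch (NumberFormatException e) {
            throw new NumberFormatException("Property '" + key + "' is not an int: " + raw);
        }
    }

    // Parses the property value itself; Boolean.getBoolean(key) would instead
    // consult System.getProperty(key), which is not what a config file needs.
    static boolean boolProp(Properties props, String key, boolean defaultValue) {
        String raw = props.getProperty(key);
        return raw == null ? defaultValue : Boolean.parseBoolean(raw.trim());
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("isPerfReportingEnabled", "true");
        props.setProperty("consumerSleepBetweenFetchsMs", " 50 ");
        System.out.println(boolProp(props, "isPerfReportingEnabled", false));  // true
        System.out.println(intProp(props, "consumerSleepBetweenFetchsMs", 25)); // 50
        System.out.println(intProp(props, "appStopTimeoutSeconds", 10));        // 10 (default)
    }
}
```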

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/FailedEventsLogger.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer;

2 |

3 | import org.slf4j.Logger;

4 | import org.slf4j.LoggerFactory;

5 |

6 | public class FailedEventsLogger {

7 |

8 | private static final Logger logger = LoggerFactory.getLogger(FailedEventsLogger.class);

9 |

10 | public static void logFailedEvent(String errorMsg, String event){

11 | logger.error("General Error Processing Event: ERROR: {}, EVENT: {}", errorMsg, event);

12 | }

13 |

14 | public static void logFailedToPostToESEvent(String restResponse, String errorMsg){

15 | logger.error("Error posting event to ES: REST response: {}, ERROR: {}", restResponse, errorMsg);

16 | }

17 |

18 | public static void logFailedToTransformEvent(long offset, String errorMsg, String event){

19 | logger.error("Error transforming event: OFFSET: {}, ERROR: {}, EVENT: {}",

20 | offset, errorMsg, event);

21 | }

22 | public static void logFailedEvent(long startOffset, long endOffset, int partition, String errorMsg, String event){

23 | logger.error("Error transforming event: OFFSET: {} --> {}, PARTITION: {}, EVENT: {}, ERROR: {}",

24 | startOffset, endOffset, partition, event, errorMsg);

25 | }

26 |

27 | }

28 |
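Every method above relies on SLF4J's `{}` placeholders. These are filled left to right from the argument list, so the arguments must appear in the same order as the placeholders in the message. `PlaceholderSketch.format` is a hypothetical, deliberately simplified stand-in for that substitution. It is not SLF4J's `MessageFormatter` and ignores escaping and the special handling of a trailing `Throwable`. It only illustrates the ordering rule and what happens when arguments run out.

```java
// Simplified illustration of left-to-right "{}" substitution, as used in SLF4J messages.
class PlaceholderSketch {

    static String format(String pattern, Object... args) {
        StringBuilder out = new StringBuilder();
        int from = 0;
        int argIndex = 0;
        int at;
        // Replace each "{}" with the next argument until either runs out.
        while (argIndex < args.length && (at = pattern.indexOf("{}", from)) >= 0) {
            out.append(pattern, from, at).append(args[argIndex++]);
            from = at + 2;
        }
        // Copy the remainder verbatim; unmatched "{}" markers stay as literal text.
        out.append(pattern, from, pattern.length());
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(format("OFFSET: {} --> {}, PARTITION: {}", 100L, 250L, 3));
        // prints "OFFSET: 100 --> 250, PARTITION: 3"
    }
}
```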

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/IndexHandler.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer;

2 |

3 | import java.util.HashMap;

4 |

5 | /**

6 | * Basic interface for ElasticSearch Index name lookup

7 | *

8 | * getIndexName() and getIndexType() methods should be implemented as needed - to use custom logic

9 | * to determine which index to send the data to, based on business-specific

10 | * criteria

11 | *

12 | * @author marinapopova

13 | *

14 | */

15 | public interface IndexHandler {

16 |

17 | // default index name, if not specified/calculated otherwise

18 | public static final String DEFAULT_INDEX_NAME = "test_index";

19 | // default index type, if not specified/calculated otherwise

20 | public static final String DEFAULT_INDEX_TYPE = "test_index_type";

21 |

22 | public String getIndexName (HashMap indexLookupProperties);

23 | public String getIndexType (HashMap indexLookupProperties);

24 |

25 | }

26 |
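The interface comment invites custom index-name logic. The minimal sketch below shows one common choice, a date-suffixed (daily) index. It mirrors the two lookup methods in a hypothetical `IndexNameLookup` interface that takes a typed `Map` instead of the raw `HashMap`, so it compiles on its own. The class names, the `kafka` prefix and the `yyyy.MM.dd` pattern are all assumptions. A real implementation for this indexer would implement `IndexHandler` itself. Because `MessageHandler` creates the handler reflectively via `getConstructor(ConsumerConfig.class)`, it would also need a public constructor that takes a `ConsumerConfig`.

```java
import java.time.Clock;
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.Map;

// Hypothetical stand-in for IndexHandler, with a typed lookup map.
interface IndexNameLookup {
    String getIndexName(Map<String, Object> indexLookupProperties);
    String getIndexType(Map<String, Object> indexLookupProperties);
}

// Sketch: route every event to a per-day index such as "kafka-2015.09.25".
class DailyIndexNameLookup implements IndexNameLookup {
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy.MM.dd");
    private final String prefix;
    private final String type;
    private final Clock clock; // injectable so the date can be fixed in tests

    DailyIndexNameLookup(String prefix, String type, Clock clock) {
        this.prefix = prefix;
        this.type = type;
        this.clock = clock;
    }

    @Override
    public String getIndexName(Map<String, Object> indexLookupProperties) {
        // The lookup map is ignored here; MessageHandler in this listing passes null.
        return prefix + "-" + LocalDate.now(clock).format(DAY);
    }

    @Override
    public String getIndexType(Map<String, Object> indexLookupProperties) {
        return type;
    }
}
```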

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/IndexerESException.java:

--------------------------------------------------------------------------------

1 | /**

2 | * @author marinapopova

3 | * Sep 25, 2015

4 | */

5 | package org.elasticsearch.kafka.indexer;

6 |

7 | public class IndexerESException extends Exception {

8 |

9 | /**

10 | * Creates an IndexerESException with no detail message or cause.

11 | */

12 | public IndexerESException() {

13 | // TODO Auto-generated constructor stub

14 | }

15 |

16 | /**

17 | * @param message

18 | */

19 | public IndexerESException(String message) {

20 | super(message);

21 | // TODO Auto-generated constructor stub

22 | }

23 |

24 | /**

25 | * @param cause

26 | */

27 | public IndexerESException(Throwable cause) {

28 | super(cause);

29 | // TODO Auto-generated constructor stub

30 | }

31 |

32 | /**

33 | * @param message

34 | * @param cause

35 | */

36 | public IndexerESException(String message, Throwable cause) {

37 | super(message, cause);

38 | // TODO Auto-generated constructor stub

39 | }

40 |

41 | /**

42 | * @param message

43 | * @param cause

44 | * @param enableSuppression

45 | * @param writableStackTrace

46 | */

47 | public IndexerESException(String message, Throwable cause,

48 | boolean enableSuppression, boolean writableStackTrace) {

49 | super(message, cause, enableSuppression, writableStackTrace);

50 | // TODO Auto-generated constructor stub

51 | }

52 |

53 | }

54 |
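`IndexerESException` gives the indexer a checked exception type for Elasticsearch-side failures. The config also exposes `numberOfEsIndexingRetryAttempts` and `esIndexingRetrySleepTimeMs`. The job that consumes these settings is not part of this excerpt, so the loop below is only a sketch of how they could combine. It treats the attempt count as the total number of tries (an assumption), sleeps between failures, and attaches the last error as the cause. `RetrySketch` and `EsIndexingFailed` are hypothetical names; `EsIndexingFailed` stands in for `IndexerESException` so the block compiles alone.

```java
import java.util.concurrent.Callable;

// Hypothetical stand-in for IndexerESException.
class EsIndexingFailed extends Exception {
    EsIndexingFailed(String message, Throwable cause) {
        super(message, cause);
    }
}

class RetrySketch {

    // Runs action up to maxAttempts times, sleeping sleepMs between failed tries.
    // Assumes maxAttempts >= 1; the last failure is attached as the cause.
    static <T> T withRetries(Callable<T> action, int maxAttempts, long sleepMs)
            throws EsIndexingFailed, InterruptedException {
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.call();
            } catch (Exception e) {
                last = e;
                if (attempt < maxAttempts) {
                    Thread.sleep(sleepMs); // back off before the next try
                }
            }
        }
        throw new EsIndexingFailed("Giving up after " + maxAttempts + " attempt(s)", last);
    }
}
```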

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/KafkaClient.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer;

2 |

3 | import com.netflix.curator.framework.CuratorFramework;

4 | import com.netflix.curator.framework.CuratorFrameworkFactory;

5 | import com.netflix.curator.retry.RetryNTimes;

6 | import kafka.api.FetchRequest;

7 | import kafka.api.FetchRequestBuilder;

8 | import kafka.api.OffsetRequest;

9 | import kafka.api.PartitionOffsetRequestInfo;

10 | import kafka.common.OffsetAndMetadata;

11 | import kafka.common.TopicAndPartition;

12 | import kafka.javaapi.*;

13 | import kafka.javaapi.consumer.SimpleConsumer;

14 | import kafka.javaapi.message.ByteBufferMessageSet;

15 | import org.slf4j.Logger;

16 | import org.slf4j.LoggerFactory;

17 |

18 | import java.util.ArrayList;

19 | import java.util.HashMap;

20 | import java.util.List;

21 | import java.util.Map;

22 |

23 | public class KafkaClient {

24 |

25 |

26 | private static final Logger logger = LoggerFactory.getLogger(KafkaClient.class);

27 | private CuratorFramework curator;

28 | private SimpleConsumer simpleConsumer;

29 | private String kafkaClientId;

30 | private String topic;

31 | private final int partition;

32 | private String leaderBrokerHost;

33 | private int leaderBrokerPort;

34 | private String leaderBrokerURL;

35 | private final ConsumerConfig consumerConfig;

36 | private String[] kafkaBrokersArray;

37 |

38 |

39 | public KafkaClient(final ConsumerConfig config, String kafkaClientId, int partition) throws Exception {

40 | logger.info("Instantiating KafkaClient");

41 | this.consumerConfig = config;

42 |

43 | this.topic = config.topic;

44 | this.kafkaClientId = kafkaClientId;

45 | this.partition = partition;

46 | kafkaBrokersArray = config.kafkaBrokersList.trim().split(",");

47 | logger.info("### KafkaClient Config: ###");

48 | logger.info("kafkaZookeeperList: {}", config.kafkaZookeeperList);

49 | logger.info("kafkaBrokersList: {}", config.kafkaBrokersList);

50 | logger.info("kafkaClientId: {}", kafkaClientId);

51 | logger.info("topic: {}", topic);

52 | logger.info("partition: {}", partition);

53 | connectToZooKeeper();

54 | findLeader();

55 | initConsumer();

56 |

57 | }

58 |

59 | public void connectToZooKeeper() throws Exception {

60 | try {

61 | curator = CuratorFrameworkFactory.newClient(consumerConfig.kafkaZookeeperList,

62 | consumerConfig.zkSessionTimeoutMs, consumerConfig.zkConnectionTimeoutMs,

63 | new RetryNTimes(consumerConfig.zkCuratorRetryTimes, consumerConfig.zkCuratorRetryDelayMs));

64 | curator.start();

65 | logger.info("Connected to Kafka Zookeeper successfully");

66 | } catch (Exception e) {

67 | logger.error("Failed to connect to Zookeeper: " + e.getMessage(), e);

68 | throw e;

69 | }

70 | }

71 |

72 | public void initConsumer() throws Exception{

73 | try{

74 | this.simpleConsumer = new SimpleConsumer(

75 | leaderBrokerHost, leaderBrokerPort,

76 | consumerConfig.kafkaSimpleConsumerSocketTimeoutMs,

77 | consumerConfig.kafkaSimpleConsumerBufferSizeBytes,

78 | kafkaClientId);

79 | logger.info("Initialized Kafka Consumer successfully for partition {}",partition);

80 | }

81 | catch(Exception e){

82 | logger.error("Failed to initialize Kafka Consumer: " + e.getMessage(), e);

83 | throw e;

84 | }

85 | }

86 |

87 | public short saveOffsetInKafka(long offset, short errorCode) throws Exception{

88 | logger.debug("Starting to save the Offset value to Kafka: offset={}, errorCode={} for partition {}",

89 | offset, errorCode,partition);

90 | short versionID = 0;

91 | int correlationId = 0;

92 | try{

93 | TopicAndPartition tp = new TopicAndPartition(topic, partition);

94 | OffsetAndMetadata offsetMetaAndErr = new OffsetAndMetadata(

95 | offset, OffsetAndMetadata.NoMetadata(), errorCode);

96 | Map<TopicAndPartition, OffsetAndMetadata> mapForCommitOffset = new HashMap<>();

97 | mapForCommitOffset.put(tp, offsetMetaAndErr);

98 | kafka.javaapi.OffsetCommitRequest offsetCommitReq = new kafka.javaapi.OffsetCommitRequest(

99 | kafkaClientId, mapForCommitOffset, correlationId, kafkaClientId, versionID);

100 | OffsetCommitResponse offsetCommitResp = simpleConsumer.commitOffsets(offsetCommitReq);

101 | logger.debug("Completed offset commit for partition {}. OffsetCommitResponse ErrorCode = {}; returning to caller", partition, offsetCommitResp.errors().get(tp));

102 | return (Short) offsetCommitResp.errors().get(tp);

103 | }

104 | catch(Exception e){

105 | logger.error("Error when committing Offset to Kafka: " + e.getMessage(), e);

106 | throw e;

107 | }

108 | }

109 |

110 | public PartitionMetadata findLeader() throws Exception {

111 | logger.info("Looking for Kafka leader broker for partition {}...", partition);

112 | PartitionMetadata leaderPartitionMetaData = null;

113 | // try to find the leader's metadata, asking each broker in turn until the leader is found -

114 | // in case some of the brokers are down

115 | for (int i=0; i topics = new ArrayList();

160 | topics.add(this.topic);

161 | TopicMetadataRequest req = new TopicMetadataRequest(topics);

162 | kafka.javaapi.TopicMetadataResponse resp = leadFindConsumer.send(req);

163 |

164 | List<TopicMetadata> metaData = resp.topicsMetadata();

165 | for (TopicMetadata item : metaData) {

166 | for (PartitionMetadata part : item.partitionsMetadata()) {

167 | if (part.partitionId() == partition) {

168 | leaderPartitionMetadata = part;

169 | logger.info("Found leader for partition {} using Kafka Broker={}:{}, topic={}; leader broker URL: {}:{}",

170 | partition, kafkaBrokerHost, kafkaBrokerPortStr, topic,

171 | leaderPartitionMetadata.leader().host(), leaderPartitionMetadata.leader().port());

172 | break;

173 | }

174 | }

175 | // we found the leader - get out of this loop as well

176 | if (leaderPartitionMetadata != null)

177 | break;

178 | }

179 | } catch (Exception e) {

180 | logger.warn("Failed to find leader for partition {} using Kafka Broker={} , topic={}; Error: {}",

181 | partition, kafkaBrokerHost, topic, e.getMessage());

182 | } finally {

183 | if (leadFindConsumer != null){

184 | leadFindConsumer.close();

185 | logger.debug("Closed the leadFindConsumer connection");

186 | }

187 | }

188 | return leaderPartitionMetadata;

189 | }

190 |

191 | public long getLastestOffset() throws Exception {

192 | logger.debug("Getting LatestOffset for topic={}, partition={}, kafkaGroupId={}",

193 | topic, partition, kafkaClientId);

194 | long latestOffset = getOffset(topic, partition, OffsetRequest.LatestTime(), kafkaClientId);

195 | logger.debug("LatestOffset={} for partition {}", latestOffset ,partition);

196 | return latestOffset;

197 | }

198 |

199 | public long getEarliestOffset() throws Exception {

200 | logger.debug("Getting EarliestOffset for topic={}, partition={}, kafkaGroupId={}",

201 | topic, partition, kafkaClientId);

202 | long earliestOffset = this.getOffset(topic, partition, OffsetRequest.EarliestTime(), kafkaClientId);

203 | logger.debug("earliestOffset={} for partition {}", earliestOffset,partition);

204 | return earliestOffset;

205 | }

206 |

207 | private long getOffset(String topic, int partition, long whichTime, String clientName) throws Exception {

208 | try{

209 | TopicAndPartition topicAndPartition = new TopicAndPartition(topic,

210 | partition);

211 | Map<TopicAndPartition, PartitionOffsetRequestInfo> requestInfo = new HashMap<>();

212 | requestInfo.put(topicAndPartition, new PartitionOffsetRequestInfo(

213 | whichTime, 1));

214 | kafka.javaapi.OffsetRequest request = new kafka.javaapi.OffsetRequest(

215 | requestInfo, kafka.api.OffsetRequest.CurrentVersion(),

216 | clientName);

217 | OffsetResponse response = simpleConsumer.getOffsetsBefore(request);

218 |

219 | if (response.hasError()) {

220 | logger.error("Error fetching offsets from Kafka. Reason: {} for partition {}" , response.errorCode(topic, partition),partition);

221 | throw new Exception("Error fetching offsets from Kafka. Reason: " + response.errorCode(topic, partition) + " for partition " + partition);

222 | }

223 | long[] offsets = response.offsets(topic, partition);

224 | return offsets[0];

225 | }

226 | catch(Exception e){

227 | logger.error("Exception when trying to get the Offset. Throwing the exception for partition {}" ,partition,e);

228 | throw e;

229 | }

230 | }

231 |

232 | public long fetchCurrentOffsetFromKafka() throws Exception{

233 | short versionID = 0;

234 | int correlationId = 0;

235 | try{

236 | List<TopicAndPartition> topicPartitionList = new ArrayList<>();

237 | TopicAndPartition myTopicAndPartition = new TopicAndPartition(topic, partition);

238 | topicPartitionList.add(myTopicAndPartition);

239 | OffsetFetchRequest offsetFetchReq = new OffsetFetchRequest(

240 | kafkaClientId, topicPartitionList, versionID, correlationId, kafkaClientId);

241 | OffsetFetchResponse offsetFetchResponse = simpleConsumer.fetchOffsets(offsetFetchReq);

242 | long currentOffset = offsetFetchResponse.offsets().get(myTopicAndPartition).offset();

243 | //logger.info("Fetched Kafka's currentOffset = " + currentOffset);

244 | return currentOffset;

245 | }

246 | catch(Exception e){

247 | logger.error("Error when fetching current offset from kafka: for partition {}" ,partition,e);

248 | throw e;

249 | }

250 | }

251 |

252 |

253 | public FetchResponse getMessagesFromKafka(long offset) throws Exception {

254 | logger.debug("Starting getMessagesFromKafka() ...");

255 | try{

256 | FetchRequest req = new FetchRequestBuilder()

257 | .clientId(kafkaClientId)

258 | .addFetch(topic, partition, offset, consumerConfig.kafkaFetchSizeMinBytes)

259 | .build();

260 | FetchResponse fetchResponse = simpleConsumer.fetch(req);

261 | return fetchResponse;

262 | }

263 | catch(Exception e){

264 | logger.error("Exception fetching messages from Kafka for partition {}" ,partition,e);

265 | throw e;

266 | }

267 | }

268 |

269 | public void close() {

270 | curator.close();

271 | logger.info("Curator/Zookeeper connection closed");

272 | }

273 |

274 | }

275 |
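`findLeader()` asks the brokers from `kafkaBrokersList` one at a time until one returns metadata for the partition, so a single unreachable broker does not block start-up. The JDK-only sketch below illustrates the same failover idea in two steps. First it parses the comma-separated `host:port` list, trimming each entry; the constructor's `trim().split(",")` trims only the string as a whole. Then it returns the first successful probe. `BrokerFailoverSketch`, `HostPort` and the `probe` function are hypothetical. In this client a real probe would send a `TopicMetadataRequest` through a `SimpleConsumer`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.function.Function;

class BrokerFailoverSketch {

    // Minimal host/port pair parsed from "host:port".
    static final class HostPort {
        final String host;
        final int port;

        HostPort(String host, int port) {
            this.host = host;
            this.port = port;
        }

        @Override
        public String toString() {
            return host + ":" + port;
        }
    }

    // Parses "h1:9092, h2:9092" into HostPort entries, skipping blank items.
    static List<HostPort> parseBrokers(String brokersList) {
        List<HostPort> brokers = new ArrayList<>();
        for (String entry : brokersList.split(",")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue;
            }
            int colon = trimmed.lastIndexOf(':');
            if (colon <= 0 || colon == trimmed.length() - 1) {
                throw new IllegalArgumentException("Expected host:port but got '" + trimmed + "'");
            }
            brokers.add(new HostPort(trimmed.substring(0, colon),
                    Integer.parseInt(trimmed.substring(colon + 1))));
        }
        return brokers;
    }

    // Tries each broker in order; a probe returns empty (or throws) when that broker
    // cannot answer, and the first non-empty result wins.
    static <T> Optional<T> firstAnswer(List<HostPort> brokers, Function<HostPort, Optional<T>> probe) {
        for (HostPort broker : brokers) {
            try {
                Optional<T> answer = probe.apply(broker);
                if (answer.isPresent()) {
                    return answer;
                }
            } catch (RuntimeException e) {
                // Broker unreachable or returned bad data: move on to the next one.
            }
        }
        return Optional.empty();
    }
}
```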

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/KafkaIndexerDriver.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer;

2 |

3 | import org.elasticsearch.kafka.indexer.jmx.KafkaEsIndexerStatus;

4 | import org.elasticsearch.kafka.indexer.jmx.KafkaEsIndexerStatusMXBean;

5 | import org.elasticsearch.kafka.indexer.jobs.IndexerJobManager;

6 | import org.slf4j.Logger;

7 | import org.slf4j.LoggerFactory;

8 |

9 | import javax.management.MBeanServer;

10 | import javax.management.ObjectName;

11 | import java.lang.management.ManagementFactory;

12 |

13 | public class KafkaIndexerDriver {

14 |

15 | boolean stopped = false;

16 | public IndexerJobManager indexerJobManager = null;

17 | private boolean isConsumeJobInProgress = false;

18 | private ConsumerConfig kafkaConsumerConfig;

19 | private static final Logger logger = LoggerFactory.getLogger(KafkaIndexerDriver.class);

20 | private static final String KAFKA_CONSUMER_SHUTDOWN_THREAD = "kafka-indexer-shutdown-thread";

21 |

22 | public KafkaIndexerDriver(){

23 | }

24 |

25 | public void init(String[] args) throws Exception {

26 | logger.info("Initializing Kafka ES Indexer, arguments passed to the Driver: ");

27 | for(String arg : args){

28 | logger.info(arg);

29 | }

30 | kafkaConsumerConfig = new ConsumerConfig(args[0]);

31 | logger.info("Created kafka consumer config OK");

32 | indexerJobManager = new IndexerJobManager(kafkaConsumerConfig);

33 |

34 | logger.info("Registering KafkaEsIndexerStatus MBean: ");

35 | MBeanServer mbs = ManagementFactory.getPlatformMBeanServer();

36 | ObjectName name = new ObjectName("org.elasticsearch.kafka.indexer:type=KafkfaEsIndexerStatus");

37 | KafkaEsIndexerStatusMXBean hc = new KafkaEsIndexerStatus(indexerJobManager);

38 | mbs.registerMBean(hc, name);

39 |

40 |

41 | }

42 |

43 |

44 | public void start() throws Exception {

45 | indexerJobManager.startAll();

46 | }

47 |

48 | public void stop() throws Exception {

49 | logger.info("Received the stop signal, trying to stop all indexer jobs...");

50 | stopped = true;

51 |

52 | indexerJobManager.stop();

53 | // TODO check if we still need the forced/timed-out shutdown

54 | /*

55 | LocalDateTime stopTime= LocalDateTime.now();

56 | while(isConsumeJobInProgress){

57 | logger.info(".... Waiting for inprogress Consumer Job to complete ...");

58 | Thread.sleep(1000);

59 | LocalDateTime dateTime2= LocalDateTime.now();

60 | if (java.time.Duration.between(stopTime, dateTime2).getSeconds() > kafkaConsumerConfig.timeLimitToStopConsumerJob){

61 | logger.info(".... Consumer Job not responding for " + kafkaConsumerConfig.timeLimitToStopConsumerJob +" seconds - stopping the job");

62 | break;

63 | }

64 |

65 | }

66 | logger.info("Completed waiting for inprogess Consumer Job to finish - stopping the job");

67 | try{

68 | kafkaConsumerJob.stopKafkaClient();

69 | }

70 | catch(Exception e){

71 | logger.error("********** Exception when trying to stop the Consumer Job: " +

72 | e.getMessage(), e);

73 | e.printStackTrace();

74 | }

75 | /* */

76 |

77 | logger.info("Stopped all indexer jobs OK");

78 | }

79 |

80 | public static void main(String[] args) {

81 | KafkaIndexerDriver driver = new KafkaIndexerDriver();

82 |

83 | Runtime.getRuntime().addShutdownHook(new Thread(KAFKA_CONSUMER_SHUTDOWN_THREAD) {

84 | public void run() {

85 | logger.info("Running Shutdown Hook .... ");

86 | try {

87 | driver.stop();

88 | } catch (Exception e) {

89 | logger.error("Error stopping the Consumer from the ShutdownHook: " + e.getMessage(), e);

90 | }

91 | }

92 | });

93 |

94 | try {

95 | driver.init(args);

96 | driver.start();

97 | } catch (Exception e) {

98 | logger.error("Exception from main() - exiting: " + e.getMessage(), e);

99 | }

100 |

101 |

102 | }

103 | }

104 |
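`stop()` carries a TODO about a forced, timed-out shutdown, and the config defines `appStopTimeoutSeconds` for that purpose. `IndexerJobManager` is not part of this excerpt. Assuming the jobs run on an `ExecutorService`, the sketch below shows the standard idiom such a stop could follow:

1. `shutdown()` stops the executor from accepting new work.
2. `awaitTermination` waits out the grace period.
3. `shutdownNow()` interrupts whatever is still running.

`ShutdownSketch` and `stopWithTimeout` are hypothetical names.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.TimeUnit;

class ShutdownSketch {

    // Returns true if all jobs finished within the grace period, false if they had to be interrupted.
    static boolean stopWithTimeout(ExecutorService pool, long timeout, TimeUnit unit)
            throws InterruptedException {
        pool.shutdown(); // no new jobs; running jobs keep going
        if (pool.awaitTermination(timeout, unit)) {
            return true;
        }
        pool.shutdownNow(); // interrupt jobs that did not finish in time
        pool.awaitTermination(timeout, unit); // give interrupted jobs a moment to exit
        return false;
    }
}
```

From a shutdown hook this could be called as `stopWithTimeout(pool, config.appStopTimeoutSeconds, TimeUnit.SECONDS)`, where `pool` is hypothetical. `shutdownNow()` only helps if the jobs respond to interruption.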

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/MessageHandler.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer;

2 |

3 | import java.lang.reflect.InvocationTargetException;

4 | import java.nio.ByteBuffer;

5 | import java.util.Iterator;

6 |

7 | import kafka.message.Message;

8 | import kafka.message.MessageAndOffset;

9 |

10 | import org.elasticsearch.ElasticsearchException;

11 | import org.elasticsearch.action.bulk.BulkItemResponse;

12 | import org.elasticsearch.action.bulk.BulkRequestBuilder;

13 | import org.elasticsearch.action.bulk.BulkResponse;

14 | import org.elasticsearch.client.Client;

15 | import org.slf4j.Logger;

16 | import org.slf4j.LoggerFactory;

17 |

18 |

19 | public abstract class MessageHandler {

20 |

21 | private static final Logger logger = LoggerFactory.getLogger(MessageHandler.class);

22 | private Client esClient;

23 | private ConsumerConfig config;

24 | private BulkRequestBuilder bulkRequestBuilder;

25 | private IndexHandler indexHandler;

26 |

27 | public MessageHandler(Client client,ConsumerConfig config) throws Exception{

28 | this.esClient = client;

29 | this.config = config;

30 | this.bulkRequestBuilder = null;

31 | // instantiate the IndexHandler class specified in the config

32 | try {

33 | indexHandler = (IndexHandler) Class

34 | .forName(config.indexHandlerClass)

35 | .getConstructor(ConsumerConfig.class)

36 | .newInstance(config);

37 | logger.info("Created IndexHandler: {}", config.indexHandlerClass);

38 | } catch (InstantiationException | IllegalAccessException

39 | | IllegalArgumentException | InvocationTargetException

40 | | NoSuchMethodException | SecurityException

41 | | ClassNotFoundException e) {

42 | logger.error("Exception creating IndexHandler: " + e.getMessage(), e);

43 | throw e;

44 | }

45 | logger.info("Created Message Handler");

46 | }

47 |

48 | public Client getEsClient() {

49 | return esClient;

50 | }

51 |

52 | public ConsumerConfig getConfig() {

53 | return config;

54 | }

55 |

56 | public BulkRequestBuilder getBuildReqBuilder() {

57 | return bulkRequestBuilder;

58 | }

59 |

60 | public void setBuildReqBuilder(BulkRequestBuilder bulkReqBuilder) {

61 | this.bulkRequestBuilder = bulkReqBuilder;

62 | }

63 |

64 |

65 | public boolean postToElasticSearch() throws Exception {

66 | BulkResponse bulkResponse = null;

67 | BulkItemResponse bulkItemResp = null;

68 | // Nothing to post to ElasticSearch (no bulk request prepared, or an empty batch)

69 | if(bulkRequestBuilder == null || bulkRequestBuilder.numberOfActions() <= 0){

70 | logger.warn("No messages to post to ElasticSearch - returning");

71 | return true;

72 | }

73 | try{

74 | bulkResponse = bulkRequestBuilder.execute().actionGet();

75 | }

76 | catch(ElasticsearchException e){

77 | logger.error("Failed to post messages to ElasticSearch: " + e.getMessage(), e);

78 | throw e;

79 | }

80 | logger.debug("Time to post messages to ElasticSearch: {} ms", bulkResponse.getTookInMillis());

81 | if(bulkResponse.hasFailures()){

82 | logger.error("Bulk Message Post to ElasticSearch has errors: {}",

83 | bulkResponse.buildFailureMessage());

84 | int failedCount = 0;

85 | Iterator<BulkItemResponse> bulkRespItr = bulkResponse.iterator();

86 | //TODO research if there is a way to get all failed messages without iterating over

87 | // ALL messages in this bulk post request

88 | while (bulkRespItr.hasNext()){

89 | bulkItemResp = bulkRespItr.next();

90 | if (bulkItemResp.isFailed()) {

91 | failedCount++;

92 | String errorMessage = bulkItemResp.getFailure().getMessage();

93 | String restResponse = bulkItemResp.getFailure().getStatus().name();

94 | logger.error("Failed Message #{}, REST response:{}; errorMessage:{}",

95 | failedCount, restResponse, errorMessage);

96 | // TODO: there does not seem to be a way to get the actual failed event

97 | // until it is possible - do not log anything into the failed events log file

98 | //FailedEventsLogger.logFailedToPostToESEvent(restResponse, errorMessage);

99 | }

100 | }

101 | logger.info("# of failed to post messages to ElasticSearch: {} ", failedCount);

102 | return false;

103 | }

104 | logger.info("Bulk Post to ElasticSearch finished OK");

105 | bulkRequestBuilder = null;

106 | return true;

107 | }

108 |

109 | public abstract byte[] transformMessage(byte[] inputMessage, Long offset) throws Exception;

110 |

111 | public long prepareForPostToElasticSearch(Iterator<MessageAndOffset> messageAndOffsetIterator){

112 | bulkRequestBuilder = esClient.prepareBulk();

113 | int numProcessedMessages = 0;

114 | int numMessagesInBatch = 0;

115 | long offsetOfNextBatch = 0;

116 | while(messageAndOffsetIterator.hasNext()) {

117 | numMessagesInBatch++;

118 | MessageAndOffset messageAndOffset = messageAndOffsetIterator.next();

119 | offsetOfNextBatch = messageAndOffset.nextOffset();

120 | Message message = messageAndOffset.message();

121 | ByteBuffer payload = message.payload();

122 | byte[] bytesMessage = new byte[payload.limit()];

123 | payload.get(bytesMessage);

124 | byte[] transformedMessage;

125 | try {

126 | transformedMessage = this.transformMessage(bytesMessage, messageAndOffset.offset());

127 | } catch (Exception e) {

128 | String msgStr = new String(bytesMessage);

129 | logger.error("ERROR transforming message at offset={} - skipping it: {}",

130 | messageAndOffset.offset(), msgStr, e);

131 | FailedEventsLogger.logFailedToTransformEvent(

132 | messageAndOffset.offset(), e.getMessage(), msgStr);

133 | continue;

134 | }

135 | this.getBuildReqBuilder().add(

136 | esClient.prepareIndex(

137 | indexHandler.getIndexName(null), indexHandler.getIndexType(null))

138 | .setSource(transformedMessage)

139 | );

140 | numProcessedMessages++;

141 | }

142 | logger.info("Total # of messages in this batch: {}; " +

143 | "# of successfully transformed and added to Index messages: {}; offsetOfNextBatch: {}",

144 | numMessagesInBatch, numProcessedMessages, offsetOfNextBatch);

145 | return offsetOfNextBatch;

146 | }

147 |

148 | public IndexHandler getIndexHandler() {

149 | return indexHandler;

150 | }

151 |

152 |

153 | }

154 |
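`prepareForPostToElasticSearch` transforms each message, skips and logs any that fail, and returns the offset to fetch next. The sketch below isolates that bookkeeping with plain JDK types so it can be run without Kafka or Elasticsearch. `BatchSketch`, `Msg`, `Transformer` and `BatchResult` are hypothetical names. There is one deliberate difference from the method above: for an empty batch the sketch returns the starting offset instead of `0`. That way, a caller that feeds the result straight back in as its next fetch offset is not rewound.

```java
import java.util.ArrayList;
import java.util.List;

class BatchSketch {

    // One fetched message: its offset and raw payload.
    static final class Msg {
        final long offset;
        final byte[] payload;

        Msg(long offset, byte[] payload) {
            this.offset = offset;
            this.payload = payload;
        }
    }

    // Plays the role of MessageHandler.transformMessage(byte[], Long).
    interface Transformer {
        byte[] transform(byte[] input, long offset) throws Exception;
    }

    static final class BatchResult {
        final List<byte[]> documents = new ArrayList<>();
        final List<Long> failedOffsets = new ArrayList<>();
        long nextOffset;
    }

    // Transforms every message, skipping failures, and tracks the offset to fetch next.
    static BatchResult prepare(List<Msg> batch, long startOffset, Transformer transformer) {
        BatchResult result = new BatchResult();
        result.nextOffset = startOffset; // an empty batch leaves the position unchanged
        for (Msg msg : batch) {
            result.nextOffset = msg.offset + 1; // same value MessageAndOffset.nextOffset() reports
            try {
                result.documents.add(transformer.transform(msg.payload, msg.offset));
            } catch (Exception e) {
                result.failedOffsets.add(msg.offset); // a real handler would also log the event
            }
        }
        return result;
    }
}
```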

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/jmx/IndexerJobStatusMBean.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer.jmx;

2 |

3 | import org.elasticsearch.kafka.indexer.jobs.IndexerJobStatusEnum;

4 |

5 | public interface IndexerJobStatusMBean {

6 | long getLastCommittedOffset();

7 | IndexerJobStatusEnum getJobStatus();

8 | int getPartition();

9 | }

10 |

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/jmx/KafkaEsIndexerStatus.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer.jmx;

2 |

3 | import java.util.List;

4 |

5 | import org.elasticsearch.kafka.indexer.jobs.IndexerJobManager;

6 | import org.elasticsearch.kafka.indexer.jobs.IndexerJobStatus;

7 | import org.elasticsearch.kafka.indexer.jobs.IndexerJobStatusEnum;

8 |

9 | public class KafkaEsIndexerStatus implements KafkaEsIndexerStatusMXBean {

10 |

11 | protected IndexerJobManager indexerJobManager;

12 | private int failedJobs;

13 | private int cancelledJobs;

14 | private int stoppedJobs;

15 | private int hangingJobs;

16 |

17 | public KafkaEsIndexerStatus(IndexerJobManager indexerJobManager) {

18 | this.indexerJobManager = indexerJobManager;

19 | }

20 |

21 | public boolean isAlive() {

22 | return true;

23 | }

24 |

25 | public List<IndexerJobStatus> getStatuses() {

26 | return indexerJobManager.getJobStatuses();

27 | }

28 |

29 | public int getCountOfFailedJobs() {

30 | failedJobs = 0;

31 | for (IndexerJobStatus jobStatus : indexerJobManager.getJobStatuses()) {

32 | if (jobStatus.getJobStatus().equals(IndexerJobStatusEnum.Failed)){

33 | failedJobs++;

34 | }

35 | }

36 | return failedJobs;

37 | }

38 |

39 | public int getCountOfStoppedJobs() {

40 | stoppedJobs = 0;

41 | for (IndexerJobStatus jobStatus : indexerJobManager.getJobStatuses()) {

42 | if (jobStatus.getJobStatus().equals(IndexerJobStatusEnum.Stopped)){

43 | stoppedJobs++;

44 | }

45 | }

46 | return stoppedJobs;

47 | }

48 |

49 | public int getCountOfHangingJobs() {

50 | hangingJobs = 0;

51 | for (IndexerJobStatus jobStatus : indexerJobManager.getJobStatuses()) {

52 | if (jobStatus.getJobStatus().equals(IndexerJobStatusEnum.Hanging)){

53 | hangingJobs++;

54 | }

55 | }

56 | return hangingJobs;

57 | }

58 |

59 | public int getCountOfCancelledJobs() {

60 | cancelledJobs = 0;

61 | for (IndexerJobStatus jobStatus : indexerJobManager.getJobStatuses()) {

62 | if (jobStatus.getJobStatus().equals(IndexerJobStatusEnum.Cancelled)){

63 | cancelledJobs++;

64 | }

65 | }

66 | return cancelledJobs;

67 | }

68 |

69 | }

70 |
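Each `getCountOf...Jobs()` method above walks the full status list once per status. When a monitoring client reads all four counts, a single pass into an `EnumMap` yields the same numbers. The sketch uses a hypothetical `JobState` enum that contains only the `IndexerJobStatusEnum` constants visible in this listing; the real enum may define more. A plain `List` stands in for `IndexerJobManager.getJobStatuses()`.

```java
import java.util.EnumMap;
import java.util.List;
import java.util.Map;

class StatusCountSketch {

    // Hypothetical subset of IndexerJobStatusEnum.
    enum JobState { Created, Failed, Cancelled, Stopped, Hanging }

    // Counts jobs per state in one pass; states with no jobs map to 0.
    static Map<JobState, Integer> countByState(List<JobState> states) {
        Map<JobState, Integer> counts = new EnumMap<>(JobState.class);
        for (JobState s : JobState.values()) {
            counts.put(s, 0);
        }
        for (JobState s : states) {
            counts.merge(s, 1, Integer::sum);
        }
        return counts;
    }
}
```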

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/jmx/KafkaEsIndexerStatusMXBean.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer.jmx;

2 |

3 | import java.util.List;

4 |

5 | import org.elasticsearch.kafka.indexer.jobs.IndexerJobStatus;

6 |

7 | public interface KafkaEsIndexerStatusMXBean {

8 | boolean isAlive();

9 | List<IndexerJobStatus> getStatuses();

10 | int getCountOfFailedJobs();

11 | int getCountOfCancelledJobs();

12 | int getCountOfStoppedJobs();

13 | int getCountOfHangingJobs();

14 |

15 | }

16 |
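`KafkaEsIndexerStatusMXBean` follows the MXBean naming convention (a public interface whose name ends in `MXBean`), and the driver registers an instance on the platform `MBeanServer`. The self-contained sketch below repeats that registration round trip with a hypothetical `PartitionStatusMXBean`. It registers the bean, reads one attribute back by its getter-derived name (`getPartition()` becomes `Partition`), and then unregisters it. The class names and the `ObjectName` domain and keys are assumptions.

```java
import java.lang.management.ManagementFactory;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

class MxBeanSketch {

    // MXBean interfaces must be public; the "MXBean" suffix marks this one as such.
    public interface PartitionStatusMXBean {
        int getPartition();
        long getLastCommittedOffset();
    }

    public static class PartitionStatus implements PartitionStatusMXBean {
        private final int partition;
        private final long lastCommittedOffset;

        public PartitionStatus(int partition, long lastCommittedOffset) {
            this.partition = partition;
            this.lastCommittedOffset = lastCommittedOffset;
        }

        @Override
        public int getPartition() { return partition; }

        @Override
        public long getLastCommittedOffset() { return lastCommittedOffset; }
    }

    // Registers a status bean, reads one attribute through JMX, and always unregisters it.
    static Object registerAndRead(int partition, long offset, String attribute) throws JMException {
        MBeanServer mbs = ManagementFactory.getPlatformMBeanServer();
        ObjectName name = new ObjectName("org.example.sketch:type=PartitionStatus,partition=" + partition);
        mbs.registerMBean(new PartitionStatus(partition, offset), name);
        try {
            return mbs.getAttribute(name, attribute);
        } finally {
            mbs.unregisterMBean(name);
        }
    }
}
```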

--------------------------------------------------------------------------------

/src/main/java/org/elasticsearch/kafka/indexer/jobs/IndexerJob.java:

--------------------------------------------------------------------------------

1 | package org.elasticsearch.kafka.indexer.jobs;

2 |

3 | import java.util.concurrent.Callable;

4 |

5 | import org.elasticsearch.ElasticsearchException;

6 | import org.elasticsearch.client.transport.NoNodeAvailableException;

7 | import org.elasticsearch.client.transport.TransportClient;

8 | import org.elasticsearch.common.settings.ImmutableSettings;

9 | import org.elasticsearch.common.settings.Settings;

10 | import org.elasticsearch.common.transport.InetSocketTransportAddress;

11 | import org.elasticsearch.kafka.indexer.ConsumerConfig;

12 | import org.elasticsearch.kafka.indexer.FailedEventsLogger;

13 | import org.elasticsearch.kafka.indexer.IndexerESException;

14 | import org.elasticsearch.kafka.indexer.KafkaClient;

15 | import org.elasticsearch.kafka.indexer.MessageHandler;

16 | import org.slf4j.Logger;

17 | import org.slf4j.LoggerFactory;

18 |

19 | import kafka.common.ErrorMapping;

20 | import kafka.javaapi.FetchResponse;

21 | import kafka.javaapi.message.ByteBufferMessageSet;

22 |

23 | public class IndexerJob implements Callable {

24 |

25 | private static final Logger logger = LoggerFactory.getLogger(IndexerJob.class);

26 | private ConsumerConfig consumerConfig;

27 | private MessageHandler msgHandler;

28 | private TransportClient esClient;

29 | public KafkaClient kafkaConsumerClient;

30 | private long offsetForThisRound;

31 | private long nextOffsetToProcess;

32 | private boolean isStartingFirstTime;

33 | private ByteBufferMessageSet byteBufferMsgSet = null;

34 | private FetchResponse fetchResponse = null;

35 | private final String currentTopic;

36 | private final int currentPartition;

37 |

38 | private int kafkaReinitSleepTimeMs;

39 | private int numberOfReinitAttempts;

40 | private int esIndexingRetrySleepTimeMs;

41 | private int numberOfEsIndexingRetryAttempts;

42 | private IndexerJobStatus indexerJobStatus;

43 | private volatile boolean shutdownRequested = false;

44 | private boolean isDryRun = false;

45 |

46 | public IndexerJob(ConsumerConfig config, int partition) throws Exception {

47 | this.consumerConfig = config;

48 | this.currentPartition = partition;

49 | this.currentTopic = config.topic;

50 | indexerJobStatus = new IndexerJobStatus(-1L, IndexerJobStatusEnum.Created, partition);

51 | isStartingFirstTime = true;

52 | isDryRun = Boolean.parseBoolean(config.isDryRun);

53 | kafkaReinitSleepTimeMs = config.getKafkaReinitSleepTimeMs();

54 | numberOfReinitAttempts = config.getNumberOfReinitAttempts();

55 | esIndexingRetrySleepTimeMs = config.getEsIndexingRetrySleepTimeMs();

56 | numberOfEsIndexingRetryAttempts = config.getNumberOfEsIndexingRetryAttempts();

57 | initElasticSearch();

58 | initKafka();

59 | createMessageHandler();

60 | indexerJobStatus.setJobStatus(IndexerJobStatusEnum.Initialized);

61 | }

62 |

63 | void initKafka() throws Exception {

64 | logger.info("Initializing Kafka for partition {}...",currentPartition);

65 | String consumerGroupName = consumerConfig.consumerGroupName;

66 | if (consumerGroupName.isEmpty()) {

67 | consumerGroupName = "es_indexer_" + currentTopic + "_" + currentPartition;

68 | logger.info("ConsumerGroupName was empty, set it to {} for partition {}", consumerGroupName,currentPartition);

69 | }

70 | String kafkaClientId = consumerGroupName + "_" + currentPartition;

71 | logger.info("kafkaClientId={} for partition {}", kafkaClientId,currentPartition);

72 | kafkaConsumerClient = new KafkaClient(consumerConfig, kafkaClientId, currentPartition);

73 | logger.info("Kafka client created and initialized OK for partition {}",currentPartition);

74 | }

75 |

76 | private void initElasticSearch() throws Exception {

77 | String[] esHostPortList = consumerConfig.esHostPortList.trim().split(",");

78 | logger.info("Initializing ElasticSearch... hostPortList={}, esClusterName={} for partition {}",

79 | consumerConfig.esHostPortList, consumerConfig.esClusterName,currentPartition);

80 |

81 | // TODO add validation of host:port syntax - to avoid Runtime exceptions

82 | try {

83 | Settings settings = ImmutableSettings.settingsBuilder()

84 | .put("cluster.name", consumerConfig.esClusterName)

85 | .build();

86 | esClient = new TransportClient(settings);

87 | for (String eachHostPort : esHostPortList) {

88 | logger.info("adding [{}] to TransportClient for partition {}... ", eachHostPort,currentPartition);

89 | esClient.addTransportAddress(

90 | new InetSocketTransportAddress(

91 | eachHostPort.split(":")[0].trim(),

92 | Integer.parseInt(eachHostPort.split(":")[1].trim())

93 | )

94 | );

95 | }

96 | logger.info("ElasticSearch Client created and initialized OK for partition {}",currentPartition);

97 | } catch (Exception e) {

98 | logger.error("Exception when trying to connect and create ElasticSearch Client for partition {}; rethrowing it",

99 | currentPartition, e);

100 | throw e;

101 | }

102 | }

103 |

104 | void reInitKafka() throws Exception {

105 | for (int i = 0; i < numberOfReinitAttempts; i++) {

106 | try {

107 | logger.info("Re-initializing Kafka for partition {}, try # {}",

108 | currentPartition, i);

109 | kafkaConsumerClient.close();

110 | logger.info(

111 | "Kafka client closed for partition {}. Will sleep for {} ms to allow kafka to stabilize",

112 | currentPartition, kafkaReinitSleepTimeMs);

113 | Thread.sleep(kafkaReinitSleepTimeMs);

114 | logger.info("Connecting to zookeeper again for partition {}",

115 | currentPartition);

116 | kafkaConsumerClient.connectToZooKeeper();

117 | kafkaConsumerClient.findLeader();

118 | kafkaConsumerClient.initConsumer();

119 | logger.info(".. trying to get offsets info for partition {} ... ", currentPartition);

120 | this.checkKafkaOffsets();

121 | logger.info("Kafka re-initialization for partition {} finished OK",

122 | currentPartition);

123 | return;

124 | } catch (Exception e) {

125 | if (i < numberOfReinitAttempts - 1) {

126 | logger.warn("Re-initializing Kafka for partition {}, try # {} - still failing with Exception: {}",

127 | currentPartition, i, e.getMessage());

128 | } else {

129 | // last attempt failed: give up and propagate the exception to the caller

130 | logger.error("Kafka Re-initialization failed for partition {} after {} attempts - throwing exception: {}",

131 | currentPartition, numberOfReinitAttempts, e.getMessage());

132 | throw e;

133 | }

134 | }

135 | }

136 | }

137 |

138 | private void createMessageHandler() throws Exception {

139 | try {

140 | logger.info("MessageHandler Class given in config is {} for partition {}", consumerConfig.messageHandlerClass,currentPartition);

141 | msgHandler = (MessageHandler) Class

142 | .forName(consumerConfig.messageHandlerClass)