├── .editorconfig
├── .flake8
├── .github
│   ├── dependabot.yml
│   └── workflows
│       ├── automerge.yml
│       └── ci.yml
├── .gitignore
├── .pre-commit-config.yaml
├── LICENSE
├── Makefile
├── README.md
├── purger
│   ├── __init__.py
│   └── main.py
├── pyproject.toml
├── setup.cfg
├── setup.py
└── tests
    ├── __init__.py
    └── test_purger.py

/.editorconfig:

--------------------------------------------------------------------------------

1 | # https://editorconfig.org

2 |

3 | root = true

4 |

5 | [*]

6 | indent_style = space

7 | indent_size = 2

8 | trim_trailing_whitespace = true

9 | insert_final_newline = true

10 | charset = utf-8

11 | end_of_line = lf

12 |

13 | [*.{py,md}]

14 | indent_size = 4

15 |

16 | [Makefile]

17 | indent_size = 4

18 | indent_style = tab

19 |

--------------------------------------------------------------------------------

/.flake8:

--------------------------------------------------------------------------------

1 | [flake8]

2 | extend-exclude =

3 | .git,

4 | __pycache__,

5 | docs/source/conf.py,

6 | old,

7 | build,

8 | dist,

9 | .venv,

10 | venv

11 |

12 | extend-ignore = E203, E266, E501, W605

13 |

14 | # Black's default line length.

15 | max-line-length = 88

16 |

17 | max-complexity = 18

18 |

19 | # Specify the list of error codes you wish Flake8 to report.

20 | select = B,C,E,F,W,T4,B9

21 |

22 | # Parallelism

23 | jobs = 4

24 |

--------------------------------------------------------------------------------

/.github/dependabot.yml:

--------------------------------------------------------------------------------

1 | version: 2

2 | updates:

3 | - package-ecosystem: "pip" # See documentation for possible values.

4 | directory: "/" # Location of package manifests.

5 | schedule:

6 | interval: "weekly"

7 |

8 | # Maintain dependencies for GitHub Actions.

9 | - package-ecosystem: "github-actions"

10 | directory: "/"

11 | schedule:

12 | interval: "weekly"

13 |

--------------------------------------------------------------------------------

/.github/workflows/automerge.yml:

--------------------------------------------------------------------------------

1 | # .github/workflows/automerge.yml

2 |

3 | name: Dependabot auto-merge

4 |

5 | on: pull_request

6 |

7 | permissions:

8 | contents: write

9 |

10 | jobs:

11 | dependabot:

12 | runs-on: ubuntu-latest

13 | if: ${{ github.actor == 'dependabot[bot]' }}

14 | steps:

15 | - name: Enable auto-merge for Dependabot PRs

16 | run: gh pr merge --auto --merge "$PR_URL"

17 | env:

18 | PR_URL: ${{github.event.pull_request.html_url}}

19 | # GitHub provides this variable in the CI env. You don't

20 | # need to add anything to the secrets vault.

21 | GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

22 |

--------------------------------------------------------------------------------

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | name: CI

2 |

3 | on:

4 | push:

5 | branches:

6 | - main

7 | pull_request:

8 | branches:

9 | - main

10 |

11 | jobs:

12 | build:

13 | runs-on: ${{ matrix.os }}

14 | strategy:

15 | matrix:

16 | os: [ubuntu-latest, macos-latest]

17 | python-version: ["3.8", "3.9", "3.10", "3.11"]

18 |

19 | steps:

20 | - uses: actions/checkout@v3

21 |

22 | - name: Set up Python ${{ matrix.python-version }}

23 | uses: actions/setup-python@v4

24 | with:

25 | python-version: ${{ matrix.python-version }}

26 |

27 | - uses: actions/cache@v3

28 | with:

29 | path: ~/.cache/pip

30 | key: ${{ runner.os }}-pip-${{ hashFiles('**/requirements.txt') }}

31 | restore-keys: |

32 | ${{ runner.os }}-pip-

33 |

34 | - name: Install the dependencies

35 | run: |

36 | make install-deps

37 |

38 | - name: Check linter

39 | run: |

40 | make lint-check

41 |

42 | - name: Run the tests

43 | run: |

44 | make test

45 |

46 | pypi-upload:

47 | runs-on: ubuntu-latest

48 | needs: ["build"]

49 | if: github.event_name == 'release' && startsWith(github.ref, 'refs/tags')

50 | steps:

51 | - uses: actions/checkout@v3

52 |

53 | - name: Publish a Python distribution to PyPI

54 | uses: pypa/gh-action-pypi-publish@release/v1

55 | with:

56 | password: ${{ secrets.PYPI_API_TOKEN }}

57 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | share/python-wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 | MANIFEST

28 |

29 | # PyInstaller

30 | # Usually these files are written by a python script from a template

31 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

32 | *.manifest

33 | *.spec

34 |

35 | # Installer logs

36 | pip-log.txt

37 | pip-delete-this-directory.txt

38 |

39 | # Unit test / coverage reports

40 | htmlcov/

41 | .tox/

42 | .nox/

43 | .coverage

44 | .coverage.*

45 | .cache

46 | nosetests.xml

47 | coverage.xml

48 | *.cover

49 | *.py,cover

50 | .hypothesis/

51 | .pytest_cache/

52 | cover/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | .pybuilder/

76 | target/

77 |

78 | # Jupyter Notebook

79 | .ipynb_checkpoints

80 |

81 | # IPython

82 | profile_default/

83 | ipython_config.py

84 |

85 | # pyenv

86 | # For a library or package, you might want to ignore these files since the code is

87 | # intended to run in multiple environments; otherwise, check them in:

88 | # .python-version

89 |

90 | # pipenv

91 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

92 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

93 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

94 | # install all needed dependencies.

95 | #Pipfile.lock

96 |

97 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

98 | __pypackages__/

99 |

100 | # Celery stuff

101 | celerybeat-schedule

102 | celerybeat.pid

103 |

104 | # SageMath parsed files

105 | *.sage.py

106 |

107 | # Environments

108 | .env

109 | .venv

110 | env/

111 | venv/

112 | ENV/

113 | env.bak/

114 | venv.bak/

115 |

116 | # Spyder project settings

117 | .spyderproject

118 | .spyproject

119 |

120 | # Rope project settings

121 | .ropeproject

122 |

123 | # mkdocs documentation

124 | /site

125 |

126 | # mypy

127 | .mypy_cache/

128 | .dmypy.json

129 | dmypy.json

130 |

131 | # Pyre type checker

132 | .pyre/

133 |

134 | # pytype static type analyzer

135 | .pytype/

136 |

137 | # Cython debug symbols

138 | cython_debug/

139 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | # See https://pre-commit.com for more information

2 | # See https://pre-commit.com/hooks.html for more hooks

3 | repos:

4 | - repo: https://github.com/pre-commit/pre-commit-hooks

5 | rev: v4.0.1

6 | hooks:

7 | - id: trailing-whitespace

8 | - id: end-of-file-fixer

9 | - id: check-yaml

10 | - id: check-added-large-files

11 |

12 | - repo: local

13 | hooks:

14 | - id: lint

15 | name: Run Black Isort Mypy Flake8

16 | entry: make lint

17 | language: system

18 | types: [python]

19 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2021 Redowan Delowar

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | path := .

2 |

3 | define Comment

4 | - Run `make help` to see all the available options.

5 | - Run `make lint` to run the linter.

6 | - Run `make lint-check` to check linter conformity.

7 | - Run `make dep-lock` to lock the deps in 'requirements.txt' and 'requirements-dev.txt'.

8 | - Run `make dep-sync` to sync the current environment with the locked deps.

9 | endef

10 |

11 |

12 | .PHONY: lint

13 | lint: black isort flake mypy ## Apply all the linters.

14 |

15 |

16 | .PHONY: lint-check

17 | lint-check: ## Check whether the codebase satisfies the linter rules.

18 | @echo

19 | @echo "Checking linter rules..."

20 | @echo "========================"

21 | @echo

22 | @black --check $(path)

23 | @isort --check $(path)

24 | @flake8 $(path)

25 | @echo 'y' | mypy $(path) --install-types

26 |

27 |

28 | .PHONY: black

29 | black: ## Apply black.

30 | @echo

31 | @echo "Applying black..."

32 | @echo "================="

33 | @echo

34 | @black --fast $(path)

35 | @echo

36 |

37 |

38 | .PHONY: isort

39 | isort: ## Apply isort.

40 | @echo "Applying isort..."

41 | @echo "================="

42 | @echo

43 | @isort $(path)

44 |

45 |

46 | .PHONY: flake

47 | flake: ## Apply flake8.

48 | @echo

49 | @echo "Applying flake8..."

50 | @echo "================="

51 | @echo

52 | @flake8 $(path)

53 |

54 |

55 | .PHONY: mypy

56 | mypy: ## Apply mypy.

57 | @echo

58 | @echo "Applying mypy..."

59 | @echo "================="

60 | @echo

61 | @mypy $(path)

62 |

63 |

64 | .PHONY: help

65 | help: ## Show this help message.

66 | @grep -E '^[a-zA-Z_-]+:.*?## .*$$' $(MAKEFILE_LIST) | sort | awk 'BEGIN {FS = ":.*?## "}; {printf "\033[36m%-20s\033[0m %s\n", $$1, $$2}'

67 |

68 |

69 | .PHONY: dep-lock

70 | dep-lock: ## Freeze deps in 'requirements.txt' file.

71 | @pip-compile requirements.in -o requirements.txt --no-emit-options

72 | @pip-compile requirements-dev.in -o requirements-dev.txt --no-emit-options

73 |

74 |

75 | .PHONY: dep-sync

76 | dep-sync: ## Sync venv installation with 'requirements.txt' file.

77 | @pip-sync

78 |

79 |

80 | .PHONY: install-deps

81 | install-deps: ## Install all the dependencies.

82 | @pip install -e .[dev_deps]

83 |

84 |

85 | .PHONY: test

86 | test: ## Run the tests against the current version of Python.

87 | @pytest -s -v

88 |

89 |

90 | .PHONY: build

91 | build: ## Build the CLI.

92 | @rm -rf build/ dist/

93 | @python -m build

94 |

95 |

96 | .PHONY: upload

97 | upload: build ## Build and upload to PYPI.

98 | @twine upload dist/*

99 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | Fork Purger

2 |

3 |

4 | >> Delete all of your forked repositories on Github <<

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 | ## Installation

14 |

15 | * Install using pip:

16 |

17 | ```

18 | pip install fork-purger

19 | ```

20 |

21 | ## Exploration

22 |

23 | * Create a GitHub [personal access token](https://docs.github.com/en/authentication/keeping-your-account-and-data-secure/creating-a-personal-access-token) with delete permission.

24 |

25 | * Inspect the `--help` menu. Run:

26 |

27 | ```

28 | fork-purger --help

29 | ```

30 |

31 | This will print the following:

32 |

33 | ```

34 | +-+-+-+-+ +-+-+-+-+-+-+

35 | |F|o|r|k| |P|u|r|g|e|r|

36 | +-+-+-+-+ +-+-+-+-+-+-+

37 |

38 | Usage: fork-purger [OPTIONS]

39 |

40 | Options:

41 | --username TEXT Your Github username. [required]

42 | --token TEXT Your Github access token with delete permission.

43 | [required]

44 | --debug / --no-debug See full traceback in case of HTTP error.

45 | --delete Delete the forked repos.

46 | --help Show this message and exit.

47 | ```

48 |

49 | * By default, `fork-purger` runs in dry-run mode: it only lists the forks that would be deleted and removes nothing. Run:

50 |

51 | ```

52 | fork-purger --username <username> --token <token>

53 | ```

54 |

55 | You'll see the following output:

56 |

57 | ```

58 | +-+-+-+-+ +-+-+-+-+-+-+

59 | |F|o|r|k| |P|u|r|g|e|r|

60 | +-+-+-+-+ +-+-+-+-+-+-+

61 |

62 | These forks will be deleted:

63 | =============================

64 |

65 | https://api.github.com/repos/<username>/ddosify

66 | https://api.github.com/repos/<username>/delete-github-forks

67 | https://api.github.com/repos/<username>/dependabot-core

68 | https://api.github.com/repos/<username>/fork-purger

69 | ```

70 |

71 | * To delete the listed repositories, run the CLI with the `--delete` flag:

72 |

73 | ```

74 | fork-purger --username <username> --token <token> --delete

75 | ```

76 |

77 | The output should look similar to this:

78 | ```

79 | +-+-+-+-+ +-+-+-+-+-+-+

80 | |F|o|r|k| |P|u|r|g|e|r|

81 | +-+-+-+-+ +-+-+-+-+-+-+

82 |

83 | Deleting forked repos:

84 | =======================

85 |

86 | Deleting... https://api.github.com/repos/<username>/ddosify

87 | Deleting... https://api.github.com/repos/<username>/delete-github-forks

88 | Deleting... https://api.github.com/repos/<username>/dependabot-core

89 | Deleting... https://api.github.com/repos/<username>/fork-purger

90 | ```

91 |

92 | * If an error occurs and you need more detail, run the CLI with the `--debug` flag. This prints the full Python stack trace instead of only the error message.

93 |

94 | ```

95 | fork-purger --username <username> --token <token> --delete --debug

96 | ```
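
The effect of `--debug` can be illustrated in isolation. In `purger/main.py`, the CLI sets `sys.tracebacklimit = 0` when `--debug` is off, which hides the stack frames and leaves only the final error line. The sketch below reproduces that behaviour with the standard `traceback` module; the helper name `render_error` and the sample error message are hypothetical and exist only for this illustration.

```python
import sys
import traceback


def render_error(debug: bool) -> str:
    """Format a sample exception with or without stack frames.

    Hypothetical helper: mirrors the `sys.tracebacklimit = 0` switch that
    the CLI applies when `--debug` is off.
    """
    previous = getattr(sys, "tracebacklimit", None)
    if not debug:
        # A limit of 0 suppresses every stack frame in the formatted output.
        sys.tracebacklimit = 0
    try:
        try:
            raise Exception("HTTP error: 403.")
        except Exception as exc:
            lines = traceback.format_exception(type(exc), exc, exc.__traceback__)
            return "".join(lines)
    finally:
        # Restore the interpreter-wide setting so other code is unaffected.
        if previous is None:
            if hasattr(sys, "tracebacklimit"):
                del sys.tracebacklimit
        else:
            sys.tracebacklimit = previous


if __name__ == "__main__":
    print(render_error(debug=False))  # error line only, no stack frames
    print(render_error(debug=True))   # full traceback with file and line info
```

Because `sys.tracebacklimit` is a process-wide setting, the sketch restores the previous value before returning; the CLI itself does not need to, since it exits after the run.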

97 |

98 | ## Architecture

99 |

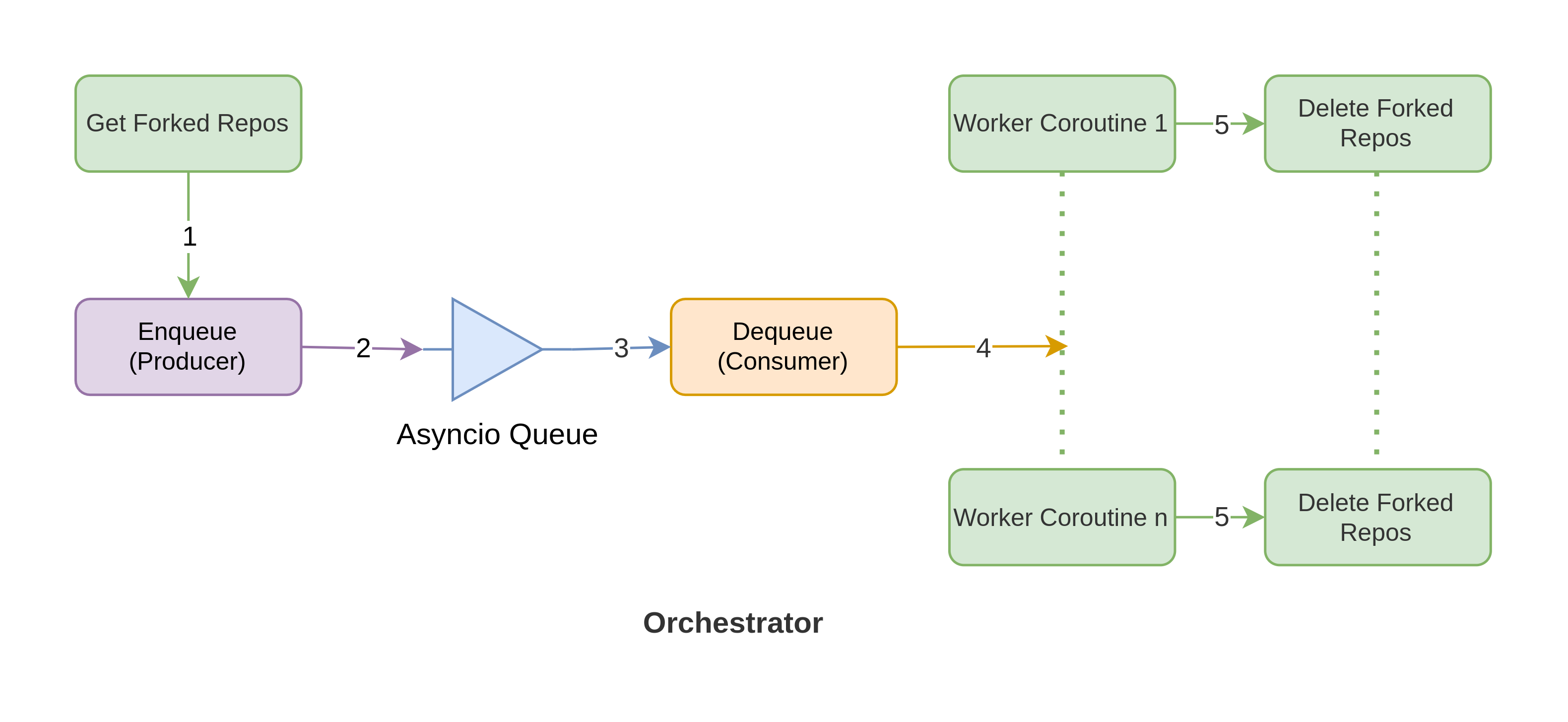

100 | Internally, `fork-purger` leverages Python's coroutine objects to collect the URLs of the forked repositories from GitHub and delete them asynchronously. Asyncio coordinates this workflow in a producer-consumer orientation which is choreographed in the `orchestrator` function. The following diagram can be helpful to understand how the entire workflow operates:

101 |

102 |

103 |

104 |

105 | Here, the square boxes are async functions and each one of them is dedicated to carrying out a single task.

106 |

107 | In the first step, an async function calls a GitHub GET API to collect the URLs of the forked repositories. The `enqueue` function then aggregates those URLs and puts them in a `queue`. The `dequeue` function pops the URLs from the `queue` and sends them to multiple worker functions to achieve concurrency. Finally, the worker functions leverage a DELETE API to purge the forked repositories.
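
The producer-consumer flow described above can be sketched in a few lines. This is a simplified illustration, not the project's `orchestrator`: the names `produce`, `worker`, and `run_pipeline` are hypothetical, the pages of URLs are passed in directly instead of being fetched from GitHub, and `asyncio.sleep(0)` stands in for the DELETE request.

```python
import asyncio


async def produce(queue, pages):
    """Producer: put every URL from every page onto the queue."""
    for page in pages:
        for url in page:
            await queue.put(url)


async def worker(queue, handled):
    """Consumer: take URLs off the queue and 'process' them one by one."""
    while True:
        url = await queue.get()
        try:
            # Stand-in for the network call; yields control to the event loop.
            await asyncio.sleep(0)
            handled.append(url)
        finally:
            # Mark the item as done so that queue.join() can return.
            queue.task_done()


async def run_pipeline(pages, concurrency=3):
    """Run one producer and `concurrency` workers until the queue is drained."""
    queue = asyncio.Queue()
    handled = []
    workers = [
        asyncio.create_task(worker(queue, handled)) for _ in range(concurrency)
    ]

    await produce(queue, pages)
    # Block until every queued URL has been marked as done.
    await queue.join()

    # The workers loop forever, so cancel them once the queue is empty.
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return handled


if __name__ == "__main__":
    urls = asyncio.run(run_pipeline([["url-1", "url-2"], ["url-3"]]))
    print(sorted(urls))
```

Running the producer to completion before calling `queue.join()` keeps the shutdown logic simple: once `join()` returns, every URL has been handled and the idle workers can be cancelled safely.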

108 |

109 |

110 | ✨ 🍰 ✨

111 |

112 |

--------------------------------------------------------------------------------

/purger/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rednafi/fork-purger/f90afdb1265bd7bff7a66684985f6a12c6b3e9c2/purger/__init__.py

--------------------------------------------------------------------------------

/purger/main.py:

--------------------------------------------------------------------------------

1 | from __future__ import annotations

2 |

3 | import asyncio

4 | import sys

5 | import textwrap

6 | from http import HTTPStatus

7 | from pprint import pformat

8 |

9 | import click

10 | import httpx

11 |

12 | MAX_CONCURRENCY = 5

13 |

14 |

15 | async def get_forked_repos(

16 | username: str,

17 | token: str,

18 | page: int = 1,

19 | per_page: int = 100,

20 | ) -> list[str]:

21 |

22 | """Get the URLs of forked repos in a page."""

23 |

24 | url = (

25 | f"https://api.github.com/users/{username}/repos"

26 | f"?page={page}&per_page={per_page}"

27 | )

28 |

29 | headers = {

30 | "Accept": "application/vnd.github.v3+json",

31 | "Authorization": f"Token {token}",

32 | }

33 |

34 | forked_urls = [] # type: list[str]

35 | async with httpx.AsyncClient(http2=True) as client:

36 | res = await client.get(url, headers=headers)

37 |

38 | if not res.status_code == HTTPStatus.OK:

39 | raise Exception(f"{pformat(res.json())}")

40 |

41 | results = res.json()

42 |

43 | if not results:

44 | return forked_urls

45 |

46 | for result in results:

47 | if result["fork"] is True:

48 | forked_urls.append(result["url"])

49 |

50 | return forked_urls

51 |

52 |

53 | async def delete_forked_repo(url: str, token: str, delete: bool = False) -> None:

54 | headers = {

55 | "Accept": "application/vnd.github.v3+json",

56 | "Authorization": f"Token {token}",

57 | }

58 | if not delete:

59 | print(url)

60 | return None

61 |

62 | client = httpx.AsyncClient(http2=True)

63 |

64 | async with client:

65 | print(f"Deleting... {url}")

66 |

67 | res = await client.delete(url, headers=headers)

68 |

69 | status_code = res.status_code

70 | if status_code not in (HTTPStatus.OK, HTTPStatus.NO_CONTENT):

71 | raise Exception(f"HTTP error: {res.status_code}.")

72 |

73 |

74 | async def enqueue(

75 | queue: asyncio.Queue[str],

76 | event: asyncio.Event,

77 | username: str,

78 | token: str,

79 | stop_after: int | None = None,

80 | ) -> None:

81 | """

82 | Collects the URLs of all the forked repos and injects them into an

83 | async queue.

84 |

85 | Parameters

86 | ----------

87 | queue : asyncio.Queue[str]

88 | Async queue where the forked repo URLs are injected.

89 | event : asyncio.Event

90 | Async event for coroutine synchronization.

91 | username : str

92 | Github username.

93 | token : str

94 | Github access token.

95 | stop_after : int | None

96 | Stop the while loop after `stop_after` iterations.

97 | """

98 |

99 | page = 1

100 | while True:

101 | forked_urls = await get_forked_repos(

102 | username=username,

103 | token=token,

104 | page=page,

105 | )

106 | if not forked_urls:

107 | break

108 |

109 | for forked_url in forked_urls:

110 | await queue.put(forked_url)

111 |

112 | if stop_after == page:

113 | break

114 |

115 | page += 1

116 | event.set()

117 | await asyncio.sleep(0.3)

118 |

119 |

120 | async def dequeue(

121 | queue: asyncio.Queue[str],

122 | event: asyncio.Event,

123 | token: str,

124 | delete: bool,

125 | stop_after: int | None = None,

126 | ) -> None:

127 | """

128 | Collects forked repo URLs from the async queue and deletes

129 | them concurrently.

130 |

131 | Parameters

132 | ----------

133 | queue : asyncio.Queue[str]

134 | Async queue where the forked repo URLs are popped off.

135 | event : asyncio.Event

136 | Async event for coroutine synchronization.

137 | token : str

138 | Github access token.

139 | delete : bool

140 | Whether to delete the forked repos or not.

141 | stop_after : int | None

142 | Stop the while loop after `stop_after` iterations.

143 | """

144 | cnt = 0

145 | while True:

146 | await event.wait()

147 |

148 | forked_url = await queue.get()

149 |

150 | await delete_forked_repo(forked_url, token, delete)

151 |

152 | # Yields control to the event loop.

153 | await asyncio.sleep(0)

154 |

155 | queue.task_done()

156 | cnt += 1

157 |

158 | if stop_after and stop_after == cnt:

159 | break

160 |

161 |

162 | async def orchestrator(username: str, token: str, delete: bool) -> None:

163 | """

164 | Coordinates the enqueue and dequeue functions in a

165 | producer-consumer setup.

166 | """

167 |

168 | queue = asyncio.Queue() # type: asyncio.Queue[str]

169 | event = asyncio.Event() # type: asyncio.Event

170 |

171 | enqueue_task = asyncio.create_task(enqueue(queue, event, username, token))

172 |

173 | dequeue_tasks = [

174 | asyncio.create_task(dequeue(queue, event, token, delete))

175 | for _ in range(MAX_CONCURRENCY)

176 | ]

177 |

178 | dequeue_tasks.append(enqueue_task)

179 |

180 | done, pending = await asyncio.wait(

181 | dequeue_tasks,

182 | return_when=asyncio.FIRST_COMPLETED,

183 | )

184 |

185 | for fut in done:

186 | try:

187 | exc = fut.exception()

188 | if exc:

189 | raise exc

190 | except asyncio.exceptions.InvalidStateError:

191 | pass

192 |

193 | for t in pending:

194 | t.cancel()

195 |

196 | # Wait until every queued URL has been marked as done.

197 | await queue.join()

198 |

199 |

200 | @click.command()

201 | @click.option(

202 | "--username",

203 | help="Your Github username.",

204 | required=True,

205 | )

206 | @click.option(

207 | "--token",

208 | help="Your Github access token with delete permission.",

209 | required=True,

210 | )

211 | @click.option(

212 | "--debug/--no-debug",

213 | default=False,

214 | help="See full traceback in case of HTTP error.",

215 | )

216 | @click.option(

217 | "--delete",

218 | is_flag=True,

219 | default=False,

220 | help="Delete the forked repos.",

221 | )

222 | def _cli(username: str, token: str, debug: bool, delete: bool) -> None:

223 | if debug is False:

224 | sys.tracebacklimit = 0

225 |

226 | if not delete:

227 | click.echo("These forks will be deleted:")

228 | click.echo("=============================\n")

229 |

230 | elif delete:

231 | click.echo("Deleting forked repos:")

232 | click.echo("=======================\n")

233 |

234 | asyncio.run(orchestrator(username, token, delete))

235 |

236 |

237 | def cli() -> None:

238 | greet_text = textwrap.dedent(

239 | """

240 | +-+-+-+-+ +-+-+-+-+-+-+

241 | |F|o|r|k| |P|u|r|g|e|r|

242 | +-+-+-+-+ +-+-+-+-+-+-+

243 | """

244 | )

245 | click.echo(greet_text)

246 | _cli()

247 |

248 |

249 | if __name__ == "__main__":

250 | cli()

251 |

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | # Linter configuration.

2 | [tool.isort]

3 | profile = "black"

4 | atomic = true

5 | extend_skip_glob = "migrations,scripts"

6 | line_length = 88

7 |

8 | [tool.black]

9 | extend-exclude = "migrations,scripts"

10 |

11 | [tool.mypy]

12 | follow_imports = "skip"

13 | ignore_missing_imports = true

14 | warn_no_return = false

15 | warn_unused_ignores = true

16 | allow_untyped_globals = true

17 | allow_redefinition = true

18 | pretty = true

19 |

20 | [[tool.mypy.overrides]]

21 | module = "tests.*"

22 | ignore_errors = true

23 |

24 | [tool.pytest.ini_options]

25 | asyncio_mode = "auto"

26 |

27 | [build-system]

28 | requires = [

29 | "setuptools >= 40.9.0",

30 | "wheel",

31 | ]

32 | build-backend = "setuptools.build_meta"

33 |

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | name = fork_purger

3 | version = 1.0.1

4 | description = Delete all of your forked repositories on Github.

5 | long_description = file: README.md

6 | long_description_content_type = text/markdown

7 | url = https://github.com/rednafi/fork-purger

8 | author = Redowan Delowar

9 | author_email = redowan.nafi@gmail.com

10 | license = MIT

11 | license_file = LICENSE

12 | classifiers =

13 | License :: OSI Approved :: MIT License

14 | Programming Language :: Python :: 3

15 | Programming Language :: Python :: 3 :: Only

16 | Programming Language :: Python :: 3.8

17 | Programming Language :: Python :: 3.9

18 | Programming Language :: Python :: 3.10

19 | Programming Language :: Python :: Implementation :: CPython

20 |

21 | [options]

22 | packages = find:

23 | install_requires =

24 | click>=7

25 | httpx[http2]>=0.16.0

26 | python_requires = >=3.8.0

27 |

28 | [options.packages.find]

29 | exclude =

30 | tests*

31 |

32 | [options.entry_points]

33 | console_scripts =

34 | fork-purger = purger.main:cli

35 |

36 | [options.extras_require]

37 | dev_deps =

38 | black

39 | flake8

40 | isort

41 | mypy

42 | pytest

43 | pytest-asyncio

44 | twine

45 | build

46 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | from setuptools import setup

2 |

3 | setup()

4 |

--------------------------------------------------------------------------------

/tests/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rednafi/fork-purger/f90afdb1265bd7bff7a66684985f6a12c6b3e9c2/tests/__init__.py

--------------------------------------------------------------------------------

/tests/test_purger.py:

--------------------------------------------------------------------------------

1 | import asyncio

2 | import importlib

3 | import textwrap

4 | from http import HTTPStatus

5 | from unittest.mock import patch # Requires Python 3.8+.

6 |

7 | import pytest

8 | from click.testing import CliRunner

9 | from httpx import Response

10 |

11 | import purger.main as purger

12 |

13 |

14 | @patch.object(purger.httpx.AsyncClient, "get", autospec=True)

15 | async def test_get_forked_repos_ok(mock_async_get):

16 | mock_async_get.return_value = Response(

17 | status_code=HTTPStatus.OK,

18 | )

19 | mock_async_get.return_value.json = lambda: [

20 | {"fork": True, "url": "https://dummy_url.com"}

21 | ]

22 |

23 | result = await purger.get_forked_repos(

24 | username="dummy_username",

25 | token="dummy_token",

26 | )

27 | assert result == ["https://dummy_url.com"]

28 |

29 |

30 | @patch.object(purger.httpx.AsyncClient, "get", autospec=True)

31 | async def test_get_forked_repos_empty(mock_async_get):

32 | mock_async_get.return_value = Response(

33 | status_code=HTTPStatus.OK,

34 | )

35 | mock_async_get.return_value.json = lambda: []

36 |

37 | result = await purger.get_forked_repos(

38 | username="dummy_username",

39 | token="dummy_token",

40 | )

41 | assert result == []

42 |

43 |

44 | @patch.object(purger.httpx.AsyncClient, "get", autospec=True)

45 | async def test_get_forked_repos_error(mock_async_get):

46 | mock_async_get.return_value = Response(

47 | status_code=HTTPStatus.FORBIDDEN,

48 | )

49 | mock_async_get.return_value.json = lambda: []

50 |

51 | with pytest.raises(Exception):

52 | result = await purger.get_forked_repos(

53 | username="dummy_username",

54 | token="dummy_token",

55 | )

56 | assert result == []

57 |

58 |

59 | @patch.object(purger.httpx.AsyncClient, "delete", autospec=True)

60 | async def test_delete_forked_repo_ok(mock_async_delete, capsys):

61 | # Test dry run ok.

62 | mock_async_delete.return_value = Response(

63 | status_code=HTTPStatus.OK,

64 | )

65 | mock_async_delete.return_value.json = lambda: []

66 |

67 | result = await purger.delete_forked_repo(

68 | url="https://dummy_url.com",

69 | token="dummy_token",

70 | )

71 | out, err = capsys.readouterr()

72 |

73 | assert result is None

74 | assert err == ""

75 | assert "https://dummy_url.com" in out

76 |

77 | # Test delete ok.

78 | result = await purger.delete_forked_repo(

79 | url="https://dummy_url.com",

80 | token="dummy_token",

81 | delete=True,

82 | )

83 | out, err = capsys.readouterr()

84 |

85 | assert result is None

86 | assert err == ""

87 | assert "Deleting..." in out

88 |

89 |

90 | @patch.object(purger.httpx.AsyncClient, "delete", autospec=True)

91 | async def test_delete_forked_repo_error(mock_async_delete, capsys):

92 | mock_async_delete.return_value = Response(

93 | status_code=HTTPStatus.FORBIDDEN,

94 | )

95 | mock_async_delete.return_value.json = lambda: []

96 |

97 | # Test delete error.

98 | with pytest.raises(Exception):

99 | await purger.delete_forked_repo(

100 | url="https://dummy_url.com",

101 | token="dummy_token",

102 | delete=True,

103 | )

104 | out, err = capsys.readouterr()

105 |

106 | assert err == ""

107 | assert "Deleting..." in out

108 |

109 |

110 | @patch.object(purger.httpx.AsyncClient, "get", autospec=True)

111 | async def test_enqueue_ok(mock_async_get):

112 | mock_async_get.return_value = Response(

113 | status_code=HTTPStatus.OK,

114 | )

115 | mock_async_get.return_value.json = lambda: [

116 | {"fork": True, "url": "https://dummy_url_1.com"},

117 | {"fork": True, "url": "https://dummy_url_2.com"},

118 | ]

119 |

120 | queue = asyncio.Queue()

121 | event = asyncio.Event()

122 |

123 | result = await purger.enqueue(

124 | queue=queue,

125 | event=event,

126 | username="dummy_username",

127 | token="dummy_token",

128 | stop_after=1,

129 | )

130 | assert result is None

131 | assert queue.qsize() == 2

132 | assert event.is_set() is False

133 |

134 |

135 | @patch("purger.main.delete_forked_repo", autospec=True)

136 | async def test_dequeue_ok(mock_delete_forked_repo):

137 | mock_delete_forked_repo.return_value = None

138 |

139 | queue = asyncio.Queue()

140 | event = asyncio.Event()

141 | queue.put_nowait("https://dummy_url_1.com")

142 | queue.put_nowait("https://dummy_url_2.com")

143 |

144 | event.set()

145 |

146 | result = await purger.dequeue(

147 | queue=queue,

148 | event=event,

149 | token="dummy_token",

150 | delete=False,

151 | stop_after=2,

152 | )

153 |

154 | assert result is None

155 | assert queue.qsize() == 0

156 | assert event.is_set() is True

157 |

158 |

159 | @patch("purger.main.MAX_CONCURRENCY", new=1)

160 | @patch("purger.main.enqueue", autospec=True)

161 | @patch("purger.main.dequeue", autospec=True)

162 | @patch("purger.main.asyncio.Queue", autospec=True)

163 | @patch("purger.main.asyncio.Event", autospec=True)

164 | @patch("purger.main.asyncio.create_task", autospec=True)

165 | @patch("purger.main.asyncio.wait", autospec=True)

166 | async def test_orchestrator_ok(

167 | mock_wait,

168 | mock_create_task,

169 | mock_event,

170 | mock_queue,

171 | mock_dequeue,

172 | mock_enqueue,

174 | ):

175 |

176 | # Mocked futures.

177 | done_future = asyncio.Future()

178 | done_future.set_result(42)

179 | await done_future

180 |

181 | pending_future = asyncio.Future()

182 | done_futures, pending_futures = [done_future], [pending_future]

183 |

184 | mock_wait.return_value = (done_futures, pending_futures)

185 |

186 | # Called the mocked function.

187 | await purger.orchestrator(

188 | username="dummy_username",

189 | token="dummy_token",

190 | delete=False,

191 | )

192 |

193 | # Assert.

194 | mock_queue.assert_called_once()

195 | mock_queue().join.assert_called_once()

196 | mock_event.assert_called_once()

197 | mock_create_task.assert_called()

198 | mock_wait.assert_awaited_once()

199 | mock_enqueue.assert_called_once()

200 | mock_dequeue.assert_called_once()

201 |

202 |

203 | @patch("purger.main.orchestrator", autospec=True)

204 | @patch("purger.main.asyncio.run", autospec=True)

205 | def test__cli(mock_asyncio_run, mock_orchestrator):

206 | runner = CliRunner()

207 |

208 | # Test cli without any arguments.

209 | result = runner.invoke(purger._cli, [])

210 | assert result.exit_code != 0

211 |

212 | result = runner.invoke(

213 | purger._cli,

214 | ["--username=dummy_username", "--token=dummy_token"],

215 | )

216 | assert result.exit_code == 0

217 | mock_orchestrator.assert_called_once()

218 | mock_asyncio_run.assert_called_once()

219 |

220 | result = runner.invoke(

221 | purger._cli,

222 | ["--username=dummy_username", "--token=dummy_token", "--no-debug"],

223 | )

224 | assert result.exit_code == 0

225 | mock_orchestrator.assert_called()

226 | mock_asyncio_run.assert_called()

227 |

228 | result = runner.invoke(

229 | purger._cli,

230 | ["--username=dummy_username", "--token=dummy_token", "--delete"],

231 | )

232 | assert result.exit_code == 0

233 | mock_orchestrator.assert_called()

234 | mock_asyncio_run.assert_called()

235 |

236 |

237 | @patch("purger.main.click.command", autospec=True)

238 | @patch("purger.main.click.option", autospec=True)

239 | @patch("purger.main._cli", autospec=True)

240 | def test_dummy_cli(mock_cli, mock_click_option, mock_click_command, capsys):

241 |

242 | # Decorators are executed during import time. So for the 'patch' to work,

243 | # the module needs to be reloaded.

244 | importlib.reload(purger)

245 |

246 | # Since we're invoking the cli function without any parameter, it should

247 | # exit with an error code > 0.

248 | purger.cli()

249 | out, err = capsys.readouterr()

250 |

251 | # Test greeting message.

252 | greet_msg = textwrap.dedent(

253 | """

254 | +-+-+-+-+ +-+-+-+-+-+-+

255 | |F|o|r|k| |P|u|r|g|e|r|

256 | +-+-+-+-+ +-+-+-+-+-+-+

257 | """

258 | )

259 |

260 | assert greet_msg in out

261 | assert err == ""

262 |

--------------------------------------------------------------------------------