├── .gitignore
├── CryptoTracker2-0.alfredworkflow
├── LICENSE
├── README.md
├── screenshot_no_arg.png
├── screenshot_with_arg.png
└── src
    ├── 4FD48167-2143-421F-AAFE-395A6C9E511F.png
    ├── crypto-tracker.py
    ├── icon.png
    ├── icon
        ├── AlertStopIcon.icns
        ├── BookmarkIcon.icns
        ├── bch.png
        ├── btc.png
        ├── eth.png
        └── ltc.png
    ├── info.plist
    ├── lib
        ├── bin
        │   └── normalizer
        ├── certifi-2021.10.8.dist-info
        │   ├── INSTALLER
        │   ├── LICENSE
        │   ├── METADATA
        │   ├── RECORD
        │   ├── WHEEL
        │   └── top_level.txt
        ├── certifi
        │   ├── __init__.py
        │   ├── __main__.py
        │   ├── cacert.pem
        │   └── core.py
        ├── charset_normalizer-2.0.12.dist-info
        │   ├── INSTALLER
        │   ├── LICENSE
        │   ├── METADATA
        │   ├── RECORD
        │   ├── WHEEL
        │   ├── entry_points.txt
        │   └── top_level.txt
        ├── charset_normalizer
        │   ├── __init__.py
        │   ├── api.py
        │   ├── assets
        │   │   └── __init__.py
        │   ├── cd.py
        │   ├── cli
        │   │   ├── __init__.py
        │   │   └── normalizer.py
        │   ├── constant.py
        │   ├── legacy.py
        │   ├── md.py
        │   ├── models.py
        │   ├── py.typed
        │   ├── utils.py
        │   └── version.py
        ├── idna-3.3.dist-info
        │   ├── INSTALLER
        │   ├── LICENSE.md
        │   ├── METADATA
        │   ├── RECORD
        │   ├── WHEEL
        │   └── top_level.txt
        ├── idna
        │   ├── __init__.py
        │   ├── codec.py
        │   ├── compat.py
        │   ├── core.py
        │   ├── idnadata.py
        │   ├── intranges.py
        │   ├── package_data.py
        │   ├── py.typed
        │   └── uts46data.py
        ├── requests-2.27.1.dist-info
        │   ├── INSTALLER
        │   ├── LICENSE
        │   ├── METADATA
        │   ├── RECORD
        │   ├── REQUESTED
        │   ├── WHEEL
        │   └── top_level.txt
        ├── requests
        │   ├── __init__.py
        │   ├── __version__.py
        │   ├── _internal_utils.py
        │   ├── adapters.py
        │   ├── api.py
        │   ├── auth.py
        │   ├── certs.py
        │   ├── compat.py
        │   ├── cookies.py
        │   ├── exceptions.py
        │   ├── help.py
        │   ├── hooks.py
        │   ├── models.py
        │   ├── packages.py
        │   ├── sessions.py
        │   ├── status_codes.py
        │   ├── structures.py
        │   └── utils.py
        ├── urllib3-1.26.9.dist-info
        │   ├── INSTALLER
        │   ├── LICENSE.txt
        │   ├── METADATA
        │   ├── RECORD
        │   ├── WHEEL
        │   └── top_level.txt
        └── urllib3
        │   ├── __init__.py
        │   ├── _collections.py
        │   ├── _version.py
        │   ├── connection.py
        │   ├── connectionpool.py
        │   ├── contrib
        │       ├── __init__.py
        │       ├── _appengine_environ.py
        │       ├── _securetransport
        │       │   ├── __init__.py
        │       │   ├── bindings.py
        │       │   └── low_level.py
        │       ├── appengine.py
        │       ├── ntlmpool.py
        │       ├── pyopenssl.py
        │       ├── securetransport.py
        │       └── socks.py
        │   ├── exceptions.py
        │   ├── fields.py
        │   ├── filepost.py
        │   ├── packages
        │       ├── __init__.py
        │       ├── backports
        │       │   ├── __init__.py
        │       │   └── makefile.py
        │       └── six.py
        │   ├── poolmanager.py
        │   ├── request.py
        │   ├── response.py
        │   └── util
        │       ├── __init__.py
        │       ├── connection.py
        │       ├── proxy.py
        │       ├── queue.py
        │       ├── request.py
        │       ├── response.py
        │       ├── retry.py
        │       ├── ssl_.py
        │       ├── ssl_match_hostname.py
        │       ├── ssltransport.py
        │       ├── timeout.py
        │       ├── url.py
        │       └── wait.py
    └── requirements.txt

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 | *.pyc

3 | __pycache__

4 |

--------------------------------------------------------------------------------

/CryptoTracker2-0.alfredworkflow:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/CryptoTracker2-0.alfredworkflow

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | The MIT License (MIT)

2 |

3 | Copyright (c) 2014 Fabio Niephaus

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

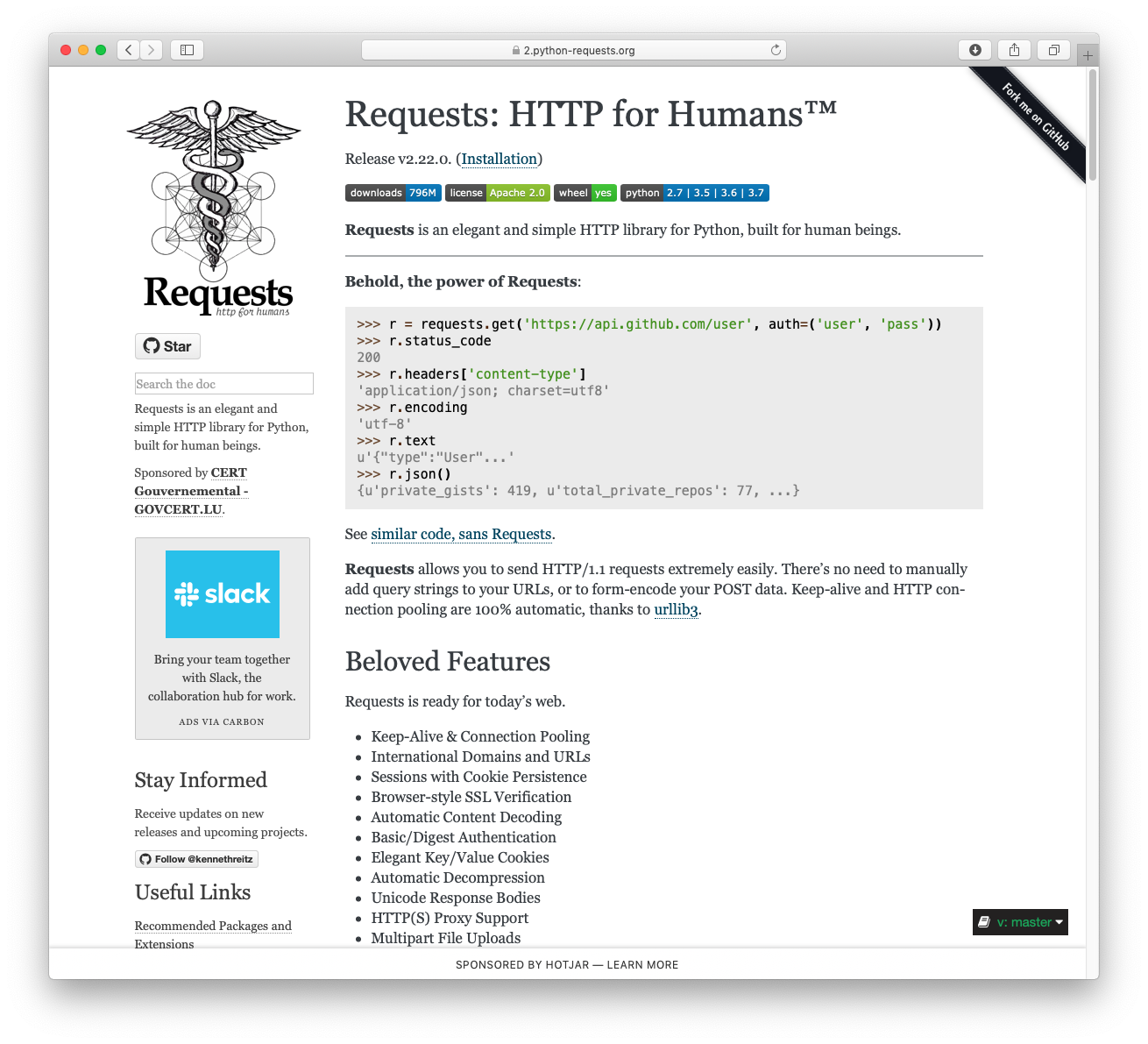

1 | # CryptoCurrency Tracker for Alfred

2 | Quickly check cryptocurrency prices with [Alfred](https://www.alfredapp.com/).

3 |

4 | ## March 2022

5 | Updated to Python 3.

6 |

7 |

8 |

9 |

11 |

12 |

13 |

14 | Use arguments to check many other cryptocurrency prices.

15 |

16 |

17 |

18 |

19 |

20 |

21 | # Features

22 | * Quickly check the ticker prices of the main cryptocurrencies (BTC, ETH, LTC, BCH) using CryptoCompare.

23 | * Optionally provide an argument to get a quote for another currency symbol.

24 | * All quotes use the USD currency pair.

25 |

26 | # Usage

27 | ## Packal

28 | The package is hosted on [Packal](http://www.packal.org/workflow/cryptocurrency-price-tracker).

29 |

30 | ## Compiled Package

31 | The compiled package is committed to the repo. This file can be opened to import the workflow into Alfred.

32 |

33 | ## Build from Source

34 | Use the makefile to build the workflow from source. The resulting workflow file can be imported into Alfred.

35 |

36 | # Contributing

37 | Pull requests welcome!

38 |

39 | # Credits

40 | This workflow uses [alfred-workflow](https://github.com/deanishe/alfred-workflow)

--------------------------------------------------------------------------------
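The Features list above says that an optional argument selects another currency symbol and that every quote is against USD. A minimal sketch of how such a request URL could be assembled is shown below. The endpoint is the one used in `src/crypto-tracker.py`; the helper name `build_quote_url` and its `currency` parameter are assumptions made for illustration and are not part of the workflow.

```python
from urllib.parse import urlencode

# Endpoint as it appears in src/crypto-tracker.py.
API_URL = "https://min-api.cryptocompare.com/data/pricemultifull"


def build_quote_url(symbols, currency="USD"):
    """Return the request URL for one or more ticker symbols (hypothetical helper)."""
    params = {
        "fsyms": ",".join(s.strip().upper() for s in symbols),
        "tsyms": currency.upper(),
    }
    # safe="," keeps the comma-separated symbol list unescaped in the URL.
    return API_URL + "?" + urlencode(params, safe=",")


# Default view: the four main tickers named in the README.
print(build_quote_url(["btc", "eth", "ltc", "bch"]))
# Single symbol passed as the workflow argument.
print(build_quote_url(["doge"]))
```

Building the query string with `urlencode` rather than string concatenation also escapes any unexpected characters a user might type into Alfred.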

/screenshot_no_arg.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/screenshot_no_arg.png

--------------------------------------------------------------------------------

/screenshot_with_arg.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/screenshot_with_arg.png

--------------------------------------------------------------------------------

/src/4FD48167-2143-421F-AAFE-395A6C9E511F.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/4FD48167-2143-421F-AAFE-395A6C9E511F.png

--------------------------------------------------------------------------------

/src/crypto-tracker.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3
2 | # -*- coding: utf-8 -*-
3 | # giovanni from Rahul Sethuram
4 | # Clear ☀️ 🌡️+32°F (feels +24°F, 37%) 🌬️↓12mph 🌑 Wed Mar 30 05:07:37 2022
5 |
6 |
7 | import sys
8 | import requests
9 | import json
10 |
11 |
12 | def log(s, *args):
13 |     if args:
14 |         s = s % args
15 |     print(s, file=sys.stderr)
16 |
17 |
18 | def format_strings_from_quote(ticker, quote_data):
19 |     data = quote_data['RAW'][ticker.upper()]['USD']
20 |     price = '{:,.2f}'.format(data['PRICE'])
21 |     high = '{:,.2f}'.format(data['HIGH24HOUR'])
22 |     low = '{:,.2f}'.format(data['LOW24HOUR'])
23 |     change = '{:,.2f}'.format(data['CHANGEPCT24HOUR'])
24 |     formatted = {}
25 |     formatted['title'] = '{}: ${} ({}%)'.format(ticker.upper(), price, change)
26 |     formatted['subtitle'] = '24hr high: ${} | 24hr low: ${}'.format(high, low)
27 |     return formatted
28 |
29 |
30 | def main():
31 |
32 |     if len(sys.argv) > 1:
33 |         query = sys.argv[1]
34 |     else:
35 |         query = None
36 |
37 |     result = {"items": []}
38 |
39 |
40 |     if query:
41 |         url = 'https://min-api.cryptocompare.com/data/pricemultifull?fsyms=' + \
42 |             query.upper() + '&tsyms=USD'
43 |
44 |         resp = requests.get(url)
45 |         myData = resp.json()
46 |
47 |
48 |         try:
49 |             formatted = format_strings_from_quote(query, myData)
50 |
51 |             result["items"].append({
52 |                 "title": formatted['title'],
53 |                 'subtitle': formatted['subtitle'],
54 |                 'valid': True,
55 |
56 |                 "icon": {
57 |                     "path": 'icon/BookmarkIcon.icns'
58 |                 },
59 |                 'arg': 'https://www.cryptocompare.com/coins/' + query + '/overview/USD'
60 |             })
61 |
62 |
63 |         except Exception:
64 |             result["items"].append({
65 |                 "title": 'Couldn\'t find a quote for that symbol 😞',
66 |                 'subtitle': 'Please try again.',
67 |
68 |
69 |                 "icon": {
70 |                     "path": 'icon/AlertStopIcon.icns'
71 |                 },
72 |             })
73 |
74 |     else:
75 |         url = 'https://min-api.cryptocompare.com/data/pricemultifull?fsyms=BTC,ETH,LTC,BCH&tsyms=USD'
76 |         resp = requests.get(url)
77 |         myData = resp.json()
78 |
79 |
80 |         formatted = format_strings_from_quote('BTC', myData)
81 |         result["items"].append({
82 |             "title": formatted['title'],
83 |             'subtitle': formatted['subtitle'],
84 |             'valid': True,
85 |
86 |             "icon": {
87 |                 "path": 'icon/btc.png'
88 |             },
89 |             'arg': 'https://www.cryptocompare.com/'
90 |         })
91 |
92 |
93 |
94 |         formatted = format_strings_from_quote('ETH', myData)
95 |
96 |         result["items"].append({
97 |             "title": formatted['title'],
98 |             'subtitle': formatted['subtitle'],
99 |             'valid': True,
100 |
101 |             "icon": {
102 |                 "path": 'icon/eth.png'
103 |             },
104 |             'arg': 'https://www.cryptocompare.com/'
105 |         })
106 |
107 |
108 |         formatted = format_strings_from_quote('LTC', myData)
109 |         result["items"].append({
110 |             "title": formatted['title'],
111 |             'subtitle': formatted['subtitle'],
112 |             'valid': True,
113 |
114 |             "icon": {
115 |                 "path": 'icon/ltc.png'
116 |             },
117 |             'arg': 'https://www.cryptocompare.com/'
118 |         })
119 |
120 |         formatted = format_strings_from_quote('BCH', myData)
121 |         result["items"].append({
122 |             "title": formatted['title'],
123 |             'subtitle': formatted['subtitle'],
124 |             'valid': True,
125 |
126 |             "icon": {
127 |                 "path": 'icon/bch.png'
128 |             },
129 |             'arg': 'https://www.cryptocompare.com/'
130 |         })
131 |
132 |
133 |     print(json.dumps(result))
134 |
135 |
136 | if __name__ == "__main__":
137 |     main()
138 |

--------------------------------------------------------------------------------
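The script above repeats the same item-building code for each ticker and relies on a broad `try`/`except` to detect unknown symbols. The following is a small offline sketch of one way that logic could be condensed into a single function. The function name `quote_item` and the `SAMPLE` payload are assumptions for illustration only: the numbers are invented, not real market data, and the payload contains only the fields that `format_strings_from_quote` reads. Marking the error item with `"valid": False` is also a choice of this sketch; the original omits the key.

```python
import json

# Invented sample shaped like the fields the script reads; not real market data.
SAMPLE = {
    "RAW": {
        "BTC": {
            "USD": {
                "PRICE": 47123.46,
                "HIGH24HOUR": 48000.0,
                "LOW24HOUR": 46500.5,
                "CHANGEPCT24HOUR": -1.2345,
            }
        }
    }
}


def quote_item(ticker, payload):
    """Build one Script Filter item, or an error item if the symbol is missing."""
    symbol = ticker.upper()
    try:
        data = payload["RAW"][symbol]["USD"]
    except (KeyError, TypeError):
        # Only the lookup failure is caught, so unrelated bugs still surface.
        return {
            "title": "Couldn't find a quote for that symbol",
            "subtitle": "Please try again.",
            "valid": False,
        }
    return {
        "title": "{}: ${:,.2f} ({:,.2f}%)".format(
            symbol, data["PRICE"], data["CHANGEPCT24HOUR"]
        ),
        "subtitle": "24hr high: ${:,.2f} | 24hr low: ${:,.2f}".format(
            data["HIGH24HOUR"], data["LOW24HOUR"]
        ),
        "valid": True,
        "arg": "https://www.cryptocompare.com/coins/{}/overview/USD".format(symbol),
    }


items = [quote_item("btc", SAMPLE), quote_item("xyz", SAMPLE)]
print(json.dumps({"items": items}, indent=2))
```

With a helper like this, the default view could loop over `('BTC', 'ETH', 'LTC', 'BCH')` instead of repeating four near-identical blocks.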

/src/icon.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/icon.png

--------------------------------------------------------------------------------

/src/icon/AlertStopIcon.icns:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/icon/AlertStopIcon.icns

--------------------------------------------------------------------------------

/src/icon/BookmarkIcon.icns:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/icon/BookmarkIcon.icns

--------------------------------------------------------------------------------

/src/icon/bch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/icon/bch.png

--------------------------------------------------------------------------------

/src/icon/btc.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/icon/btc.png

--------------------------------------------------------------------------------

/src/icon/eth.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/icon/eth.png

--------------------------------------------------------------------------------

/src/icon/ltc.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/icon/ltc.png

--------------------------------------------------------------------------------

/src/info.plist:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | bundleid

6 | com.cryprotracker

7 | connections

8 |

9 | CF35A24A-ED70-4124-9E31-D356993403FC

10 |

11 |

12 | destinationuid

13 | 203CE1F8-438D-407D-BB86-E46160867531

14 | modifiers

15 | 0

16 | modifiersubtext

17 |

18 | vitoclose

19 |

20 |

21 |

22 |

23 | createdby

24 | giovanni from Rahul Sethuram

25 | description

26 | Quickly get price information for cryptocurrencies.

27 | disabled

28 |

29 | name

30 | Crypto Tracker

31 | objects

32 |

33 |

34 | config

35 |

36 | browser

37 |

38 | spaces

39 |

40 | url

41 | {query}

42 | utf8

43 |

44 |

45 | type

46 | alfred.workflow.action.openurl

47 | uid

48 | 203CE1F8-438D-407D-BB86-E46160867531

49 | version

50 | 1

51 |

52 |

53 | config

54 |

55 | alfredfiltersresults

56 |

57 | alfredfiltersresultsmatchmode

58 | 0

59 | argumenttreatemptyqueryasnil

60 |

61 | argumenttrimmode

62 | 0

63 | argumenttype

64 | 1

65 | escaping

66 | 102

67 | keyword

68 | crypto

69 | queuedelaycustom

70 | 3

71 | queuedelayimmediatelyinitially

72 |

73 | queuedelaymode

74 | 1

75 | queuemode

76 | 1

77 | runningsubtext

78 | Retrieving quotes...

79 | script

80 | export PATH=/opt/homebrew/bin:/usr/local/bin:$PATH

81 | export PYTHONPATH="$PWD/lib"

82 | python3 crypto-tracker.py "$1"

83 | scriptargtype

84 | 1

85 | scriptfile

86 |

87 | subtext

88 | Enter a ticker or view main tickers

89 | title

90 | Track CryptoCurrency Prices

91 | type

92 | 0

93 | withspace

94 |

95 |

96 | type

97 | alfred.workflow.input.scriptfilter

98 | uid

99 | CF35A24A-ED70-4124-9E31-D356993403FC

100 | version

101 | 3

102 |

103 |

104 | readme

105 | Migrated to Python 3 from https://github.com/rhlsthrm/alfred-crypto-tracker

106 | uidata

107 |

108 | 203CE1F8-438D-407D-BB86-E46160867531

109 |

110 | xpos

111 | 400

112 | ypos

113 | 360

114 |

115 | CF35A24A-ED70-4124-9E31-D356993403FC

116 |

117 | xpos

118 | 165

119 | ypos

120 | 360

121 |

122 |

123 | variablesdontexport

124 |

125 | version

126 | 2.0

127 | webaddress

128 | github.com/rhlsthrm/alfred-crypto-tracker

129 |

130 |

131 |

--------------------------------------------------------------------------------

/src/lib/bin/normalizer:

--------------------------------------------------------------------------------

1 | #!/usr/local/opt/python@3.9/bin/python3.9

2 | # -*- coding: utf-8 -*-

3 | import re

4 | import sys

5 | from charset_normalizer.cli.normalizer import cli_detect

6 | if __name__ == '__main__':
7 |     sys.argv[0] = re.sub(r'(-script\.pyw|\.exe)?$', '', sys.argv[0])
8 |     sys.exit(cli_detect())

9 |

--------------------------------------------------------------------------------

/src/lib/certifi-2021.10.8.dist-info/INSTALLER:

--------------------------------------------------------------------------------

1 | pip

2 |

--------------------------------------------------------------------------------

/src/lib/certifi-2021.10.8.dist-info/LICENSE:

--------------------------------------------------------------------------------

1 | This package contains a modified version of ca-bundle.crt:

2 |

3 | ca-bundle.crt -- Bundle of CA Root Certificates

4 |

5 | Certificate data from Mozilla as of: Thu Nov 3 19:04:19 2011#

6 | This is a bundle of X.509 certificates of public Certificate Authorities

7 | (CA). These were automatically extracted from Mozilla's root certificates

8 | file (certdata.txt). This file can be found in the mozilla source tree:

9 | http://mxr.mozilla.org/mozilla/source/security/nss/lib/ckfw/builtins/certdata.txt?raw=1#

10 | It contains the certificates in PEM format and therefore

11 | can be directly used with curl / libcurl / php_curl, or with

12 | an Apache+mod_ssl webserver for SSL client authentication.

13 | Just configure this file as the SSLCACertificateFile.#

14 |

15 | ***** BEGIN LICENSE BLOCK *****

16 | This Source Code Form is subject to the terms of the Mozilla Public License,

17 | v. 2.0. If a copy of the MPL was not distributed with this file, You can obtain

18 | one at http://mozilla.org/MPL/2.0/.

19 |

20 | ***** END LICENSE BLOCK *****

21 | @(#) $RCSfile: certdata.txt,v $ $Revision: 1.80 $ $Date: 2011/11/03 15:11:58 $

22 |

--------------------------------------------------------------------------------

/src/lib/certifi-2021.10.8.dist-info/METADATA:

--------------------------------------------------------------------------------

1 | Metadata-Version: 2.1

2 | Name: certifi

3 | Version: 2021.10.8

4 | Summary: Python package for providing Mozilla's CA Bundle.

5 | Home-page: https://certifiio.readthedocs.io/en/latest/

6 | Author: Kenneth Reitz

7 | Author-email: me@kennethreitz.com

8 | License: MPL-2.0

9 | Project-URL: Documentation, https://certifiio.readthedocs.io/en/latest/

10 | Project-URL: Source, https://github.com/certifi/python-certifi

11 | Platform: UNKNOWN

12 | Classifier: Development Status :: 5 - Production/Stable

13 | Classifier: Intended Audience :: Developers

14 | Classifier: License :: OSI Approved :: Mozilla Public License 2.0 (MPL 2.0)

15 | Classifier: Natural Language :: English

16 | Classifier: Programming Language :: Python

17 | Classifier: Programming Language :: Python :: 3

18 | Classifier: Programming Language :: Python :: 3.3

19 | Classifier: Programming Language :: Python :: 3.4

20 | Classifier: Programming Language :: Python :: 3.5

21 | Classifier: Programming Language :: Python :: 3.6

22 | Classifier: Programming Language :: Python :: 3.7

23 | Classifier: Programming Language :: Python :: 3.8

24 | Classifier: Programming Language :: Python :: 3.9

25 |

26 | Certifi: Python SSL Certificates

27 | ================================

28 |

29 | `Certifi`_ provides Mozilla's carefully curated collection of Root Certificates for

30 | validating the trustworthiness of SSL certificates while verifying the identity

31 | of TLS hosts. It has been extracted from the `Requests`_ project.

32 |

33 | Installation

34 | ------------

35 |

36 | ``certifi`` is available on PyPI. Simply install it with ``pip``::

37 |

38 |     $ pip install certifi

39 |

40 | Usage

41 | -----

42 |

43 | To reference the installed certificate authority (CA) bundle, you can use the

44 | built-in function::

45 |

46 |     >>> import certifi
47 |
48 |     >>> certifi.where()
49 |     '/usr/local/lib/python3.7/site-packages/certifi/cacert.pem'

50 |

51 | Or from the command line::

52 |

53 |     $ python -m certifi
54 |     /usr/local/lib/python3.7/site-packages/certifi/cacert.pem

55 |

56 | Enjoy!

57 |

58 | 1024-bit Root Certificates

59 | ~~~~~~~~~~~~~~~~~~~~~~~~~~

60 |

61 | Browsers and certificate authorities have concluded that 1024-bit keys are

62 | unacceptably weak for certificates, particularly root certificates. For this

63 | reason, Mozilla has removed any weak (i.e. 1024-bit key) certificate from its

64 | bundle, replacing it with an equivalent strong (i.e. 2048-bit or greater key)

65 | certificate from the same CA. Because Mozilla removed these certificates from

66 | its bundle, ``certifi`` removed them as well.

67 |

68 | In previous versions, ``certifi`` provided the ``certifi.old_where()`` function

69 | to intentionally re-add the 1024-bit roots back into your bundle. This was not

70 | recommended in production and therefore was removed at the end of 2018.

71 |

72 | .. _`Certifi`: https://certifiio.readthedocs.io/en/latest/

73 | .. _`Requests`: https://requests.readthedocs.io/en/master/

74 |

75 | Addition/Removal of Certificates

76 | --------------------------------

77 |

78 | Certifi does not support any addition/removal or other modification of the

79 | CA trust store content. This project is intended to provide a reliable and

80 | highly portable root of trust to python deployments. Look to upstream projects

81 | for methods to use alternate trust.

82 |

83 |

84 |

--------------------------------------------------------------------------------

/src/lib/certifi-2021.10.8.dist-info/RECORD:

--------------------------------------------------------------------------------

1 | certifi-2021.10.8.dist-info/INSTALLER,sha256=zuuue4knoyJ-UwPPXg8fezS7VCrXJQrAP7zeNuwvFQg,4

2 | certifi-2021.10.8.dist-info/LICENSE,sha256=vp2C82ES-Hp_HXTs1Ih-FGe7roh4qEAEoAEXseR1o-I,1049

3 | certifi-2021.10.8.dist-info/METADATA,sha256=iB_zbT1uX_8_NC7iGv0YEB-9b3idhQwHrFTSq8R1kD8,2994

4 | certifi-2021.10.8.dist-info/RECORD,,

5 | certifi-2021.10.8.dist-info/WHEEL,sha256=ADKeyaGyKF5DwBNE0sRE5pvW-bSkFMJfBuhzZ3rceP4,110

6 | certifi-2021.10.8.dist-info/top_level.txt,sha256=KMu4vUCfsjLrkPbSNdgdekS-pVJzBAJFO__nI8NF6-U,8

7 | certifi/__init__.py,sha256=xWdRgntT3j1V95zkRipGOg_A1UfEju2FcpujhysZLRI,62

8 | certifi/__main__.py,sha256=xBBoj905TUWBLRGANOcf7oi6e-3dMP4cEoG9OyMs11g,243

9 | certifi/__pycache__/__init__.cpython-39.pyc,,

10 | certifi/__pycache__/__main__.cpython-39.pyc,,

11 | certifi/__pycache__/core.cpython-39.pyc,,

12 | certifi/cacert.pem,sha256=-og4Keu4zSpgL5shwfhd4kz0eUnVILzrGCi0zRy2kGw,265969

13 | certifi/core.py,sha256=V0uyxKOYdz6ulDSusclrLmjbPgOXsD0BnEf0SQ7OnoE,2303

14 |

--------------------------------------------------------------------------------

/src/lib/certifi-2021.10.8.dist-info/WHEEL:

--------------------------------------------------------------------------------

1 | Wheel-Version: 1.0

2 | Generator: bdist_wheel (0.35.1)

3 | Root-Is-Purelib: true

4 | Tag: py2-none-any

5 | Tag: py3-none-any

6 |

7 |

--------------------------------------------------------------------------------

/src/lib/certifi-2021.10.8.dist-info/top_level.txt:

--------------------------------------------------------------------------------

1 | certifi

2 |

--------------------------------------------------------------------------------

/src/lib/certifi/__init__.py:

--------------------------------------------------------------------------------

1 | from .core import contents, where

2 |

3 | __version__ = "2021.10.08"

4 |

--------------------------------------------------------------------------------

/src/lib/certifi/__main__.py:

--------------------------------------------------------------------------------

1 | import argparse

2 |

3 | from certifi import contents, where

4 |

5 | parser = argparse.ArgumentParser()

6 | parser.add_argument("-c", "--contents", action="store_true")

7 | args = parser.parse_args()

8 |

9 | if args.contents:
10 |     print(contents())
11 | else:
12 |     print(where())

13 |

--------------------------------------------------------------------------------

/src/lib/certifi/core.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-
2 |
3 | """
4 | certifi.py
5 | ~~~~~~~~~~
6 |
7 | This module returns the installation location of cacert.pem or its contents.
8 | """
9 | import os
10 |
11 | try:
12 |     from importlib.resources import path as get_path, read_text
13 |
14 |     _CACERT_CTX = None
15 |     _CACERT_PATH = None
16 |
17 |     def where():
18 |         # This is slightly terrible, but we want to delay extracting the file
19 |         # in cases where we're inside of a zipimport situation until someone
20 |         # actually calls where(), but we don't want to re-extract the file
21 |         # on every call of where(), so we'll do it once then store it in a
22 |         # global variable.
23 |         global _CACERT_CTX
24 |         global _CACERT_PATH
25 |         if _CACERT_PATH is None:
26 |             # This is slightly janky, the importlib.resources API wants you to
27 |             # manage the cleanup of this file, so it doesn't actually return a
28 |             # path, it returns a context manager that will give you the path
29 |             # when you enter it and will do any cleanup when you leave it. In
30 |             # the common case of not needing a temporary file, it will just
31 |             # return the file system location and the __exit__() is a no-op.
32 |             #
33 |             # We also have to hold onto the actual context manager, because
34 |             # it will do the cleanup whenever it gets garbage collected, so
35 |             # we will also store that at the global level as well.
36 |             _CACERT_CTX = get_path("certifi", "cacert.pem")
37 |             _CACERT_PATH = str(_CACERT_CTX.__enter__())
38 |
39 |         return _CACERT_PATH
40 |
41 |
42 | except ImportError:
43 |     # This fallback will work for Python versions prior to 3.7 that lack the
44 |     # importlib.resources module but relies on the existing `where` function
45 |     # so won't address issues with environments like PyOxidizer that don't set
46 |     # __file__ on modules.
47 |     def read_text(_module, _path, encoding="ascii"):
48 |         with open(where(), "r", encoding=encoding) as data:
49 |             return data.read()
50 |
51 |     # If we don't have importlib.resources, then we will just do the old logic
52 |     # of assuming we're on the filesystem and munge the path directly.
53 |     def where():
54 |         f = os.path.dirname(__file__)
55 |
56 |         return os.path.join(f, "cacert.pem")
57 |
58 |
59 | def contents():
60 |     return read_text("certifi", "cacert.pem", encoding="ascii")
61 |

--------------------------------------------------------------------------------

/src/lib/charset_normalizer-2.0.12.dist-info/INSTALLER:

--------------------------------------------------------------------------------

1 | pip

2 |

--------------------------------------------------------------------------------

/src/lib/charset_normalizer-2.0.12.dist-info/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2019 TAHRI Ahmed R.

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

--------------------------------------------------------------------------------

/src/lib/charset_normalizer-2.0.12.dist-info/RECORD:

--------------------------------------------------------------------------------

1 | ../../bin/normalizer,sha256=qMFuG1HUuJwppVf1MG5al1cGmYpw5_PXeOBZyfp2rIA,267

2 | charset_normalizer-2.0.12.dist-info/INSTALLER,sha256=zuuue4knoyJ-UwPPXg8fezS7VCrXJQrAP7zeNuwvFQg,4

3 | charset_normalizer-2.0.12.dist-info/LICENSE,sha256=6zGgxaT7Cbik4yBV0lweX5w1iidS_vPNcgIT0cz-4kE,1070

4 | charset_normalizer-2.0.12.dist-info/METADATA,sha256=eX-U3s7nb6wcvXZFyM1mdBf1yz4I0msVBgNvLEscAbo,11713

5 | charset_normalizer-2.0.12.dist-info/RECORD,,

6 | charset_normalizer-2.0.12.dist-info/WHEEL,sha256=G16H4A3IeoQmnOrYV4ueZGKSjhipXx8zc8nu9FGlvMA,92

7 | charset_normalizer-2.0.12.dist-info/entry_points.txt,sha256=5AJq_EPtGGUwJPgQLnBZfbVr-FYCIwT0xP7dIEZO3NI,77

8 | charset_normalizer-2.0.12.dist-info/top_level.txt,sha256=7ASyzePr8_xuZWJsnqJjIBtyV8vhEo0wBCv1MPRRi3Q,19

9 | charset_normalizer/__init__.py,sha256=x2A2OW29MBcqdxsvy6t1wzkUlH3ma0guxL6ZCfS8J94,1790

10 | charset_normalizer/__pycache__/__init__.cpython-39.pyc,,

11 | charset_normalizer/__pycache__/api.cpython-39.pyc,,

12 | charset_normalizer/__pycache__/cd.cpython-39.pyc,,

13 | charset_normalizer/__pycache__/constant.cpython-39.pyc,,

14 | charset_normalizer/__pycache__/legacy.cpython-39.pyc,,

15 | charset_normalizer/__pycache__/md.cpython-39.pyc,,

16 | charset_normalizer/__pycache__/models.cpython-39.pyc,,

17 | charset_normalizer/__pycache__/utils.cpython-39.pyc,,

18 | charset_normalizer/__pycache__/version.cpython-39.pyc,,

19 | charset_normalizer/api.py,sha256=r__Wz85F5pYOkRwEY5imXY_pCZ2Nil1DkdaAJY7T5o0,20303

20 | charset_normalizer/assets/__init__.py,sha256=FPnfk8limZRb8ZIUQcTvPEcbuM1eqOdWGw0vbWGycDs,25485

21 | charset_normalizer/assets/__pycache__/__init__.cpython-39.pyc,,

22 | charset_normalizer/cd.py,sha256=a9Kzzd9tHl_W08ExbCFMmRJqdo2k7EBQ8Z_3y9DmYsg,11076

23 | charset_normalizer/cli/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

24 | charset_normalizer/cli/__pycache__/__init__.cpython-39.pyc,,

25 | charset_normalizer/cli/__pycache__/normalizer.cpython-39.pyc,,

26 | charset_normalizer/cli/normalizer.py,sha256=LkeFIRc1l28rOgXpEby695x0bcKQv4D8z9FmA3Z2c3A,9364

27 | charset_normalizer/constant.py,sha256=51u_RS10I1vYVpBao__xHqf--HHNrR6me1A1se5r5Y0,19449

28 | charset_normalizer/legacy.py,sha256=XKeZOts_HdYQU_Jb3C9ZfOjY2CiUL132k9_nXer8gig,3384

29 | charset_normalizer/md.py,sha256=WEwnu2MyIiMeEaorRduqcTxGjIBclWIG3i-9_UL6LLs,18191

30 | charset_normalizer/models.py,sha256=XrGpVxfonhcilIWC1WeiP3-ZORGEe_RG3sgrfPLl9qM,13303

31 | charset_normalizer/py.typed,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

32 | charset_normalizer/utils.py,sha256=AWSL0z1B42IwdLfjX4ZMASA9cTUsTp0PweCdW98SI-4,9308

33 | charset_normalizer/version.py,sha256=uxO2cT0YIavQv4dQlNGmHPIOOwOa-exspxXi3IR7dck,80

34 |

--------------------------------------------------------------------------------

/src/lib/charset_normalizer-2.0.12.dist-info/WHEEL:

--------------------------------------------------------------------------------

1 | Wheel-Version: 1.0

2 | Generator: bdist_wheel (0.37.1)

3 | Root-Is-Purelib: true

4 | Tag: py3-none-any

5 |

6 |

--------------------------------------------------------------------------------

/src/lib/charset_normalizer-2.0.12.dist-info/entry_points.txt:

--------------------------------------------------------------------------------

1 | [console_scripts]

2 | normalizer = charset_normalizer.cli.normalizer:cli_detect

3 |

4 |

--------------------------------------------------------------------------------

/src/lib/charset_normalizer-2.0.12.dist-info/top_level.txt:

--------------------------------------------------------------------------------

1 | charset_normalizer

2 |

--------------------------------------------------------------------------------

/src/lib/charset_normalizer/__init__.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf_8 -*-

2 | """

3 | Charset-Normalizer

4 | ~~~~~~~~~~~~~~

5 | The Real First Universal Charset Detector.

6 | A library that helps you read text from an unknown charset encoding.

7 | Motivated by chardet, This package is trying to resolve the issue by taking a new approach.

8 | All IANA character set names for which the Python core library provides codecs are supported.

9 |

10 | Basic usage:

11 | >>> from charset_normalizer import from_bytes

12 | >>> results = from_bytes('Bсеки човек има право на образование. Oбразованието!'.encode('utf_8'))

13 | >>> best_guess = results.best()

14 | >>> str(best_guess)

15 | 'Bсеки човек има право на образование. Oбразованието!'

16 |

17 | Other methods and usages are available - see the full documentation

18 | at .

19 | :copyright: (c) 2021 by Ahmed TAHRI

20 | :license: MIT, see LICENSE for more details.

21 | """

22 | import logging

23 |

24 | from .api import from_bytes, from_fp, from_path, normalize

25 | from .legacy import (

26 | CharsetDetector,

27 | CharsetDoctor,

28 | CharsetNormalizerMatch,

29 | CharsetNormalizerMatches,

30 | detect,

31 | )

32 | from .models import CharsetMatch, CharsetMatches

33 | from .utils import set_logging_handler

34 | from .version import VERSION, __version__

35 |

36 | __all__ = (

37 | "from_fp",

38 | "from_path",

39 | "from_bytes",

40 | "normalize",

41 | "detect",

42 | "CharsetMatch",

43 | "CharsetMatches",

44 | "CharsetNormalizerMatch",

45 | "CharsetNormalizerMatches",

46 | "CharsetDetector",

47 | "CharsetDoctor",

48 | "__version__",

49 | "VERSION",

50 | "set_logging_handler",

51 | )

52 |

53 | # Attach a NullHandler to the top level logger by default

54 | # https://docs.python.org/3.3/howto/logging.html#configuring-logging-for-a-library

55 |

56 | logging.getLogger("charset_normalizer").addHandler(logging.NullHandler())

57 |
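
The `NullHandler` line above follows the usual convention for libraries: stay silent unless the application opts in to log output. A minimal sketch of that convention using only the standard library is shown here. The logger name `my_library` and the helper `enable_debug_logging` are hypothetical names chosen for illustration; they are not part of charset_normalizer.

```python
import logging

# Hypothetical library name, used only for this illustration.
LIBRARY_LOGGER_NAME = "my_library"

# Library side: attach a NullHandler so that importing the library never
# prints log records or "no handler" warnings on its own.
logging.getLogger(LIBRARY_LOGGER_NAME).addHandler(logging.NullHandler())


def enable_debug_logging(level: int = logging.DEBUG, stream=None) -> logging.Handler:
    """Application side: opt in to seeing the library's log records.

    Returns the handler so the caller can remove it again later.
    """
    logger = logging.getLogger(LIBRARY_LOGGER_NAME)
    handler = logging.StreamHandler(stream)  # stream=None means sys.stderr
    handler.setFormatter(
        logging.Formatter("%(asctime)s | %(levelname)s | %(message)s")
    )
    logger.addHandler(handler)
    logger.setLevel(level)
    return handler
```

An application would call `enable_debug_logging()` once at start-up and could later undo it with `logging.getLogger("my_library").removeHandler(handler)`.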

--------------------------------------------------------------------------------

/src/lib/charset_normalizer/cli/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/lib/charset_normalizer/cli/__init__.py

--------------------------------------------------------------------------------

/src/lib/charset_normalizer/cli/normalizer.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import sys

3 | from json import dumps

4 | from os.path import abspath

5 | from platform import python_version

6 | from typing import List

7 |

8 | from charset_normalizer import from_fp

9 | from charset_normalizer.models import CliDetectionResult

10 | from charset_normalizer.version import __version__

11 |

12 |

13 | def query_yes_no(question: str, default: str = "yes") -> bool:

14 | """Ask a yes/no question via input() and return their answer.

15 |

16 | "question" is a string that is presented to the user.

17 | "default" is the presumed answer if the user just hits <Enter>.

18 | It must be "yes" (the default), "no" or None (meaning

19 | an answer is required of the user).

20 |

21 | The "answer" return value is True for "yes" or False for "no".

22 |

23 | Credit goes to (c) https://stackoverflow.com/questions/3041986/apt-command-line-interface-like-yes-no-input

24 | """

25 | valid = {"yes": True, "y": True, "ye": True, "no": False, "n": False}

26 | if default is None:

27 | prompt = " [y/n] "

28 | elif default == "yes":

29 | prompt = " [Y/n] "

30 | elif default == "no":

31 | prompt = " [y/N] "

32 | else:

33 | raise ValueError("invalid default answer: '%s'" % default)

34 |

35 | while True:

36 | sys.stdout.write(question + prompt)

37 | choice = input().lower()

38 | if default is not None and choice == "":

39 | return valid[default]

40 | elif choice in valid:

41 | return valid[choice]

42 | else:

43 | sys.stdout.write("Please respond with 'yes' or 'no' " "(or 'y' or 'n').\n")

44 |

45 |

46 | def cli_detect(argv: List[str] = None) -> int:

47 | """

48 | CLI assistant using ARGV and ArgumentParser

49 | :param argv:

50 | :return: 0 if everything is fine, any other value signals trouble

51 | """

52 | parser = argparse.ArgumentParser(

53 | description="The Real First Universal Charset Detector. "

54 | "Discover originating encoding used on text file. "

55 | "Normalize text to unicode."

56 | )

57 |

58 | parser.add_argument(

59 | "files", type=argparse.FileType("rb"), nargs="+", help="File(s) to be analysed"

60 | )

61 | parser.add_argument(

62 | "-v",

63 | "--verbose",

64 | action="store_true",

65 | default=False,

66 | dest="verbose",

67 | help="Display complementary information about file if any. "

68 | "Stdout will contain logs about the detection process.",

69 | )

70 | parser.add_argument(

71 | "-a",

72 | "--with-alternative",

73 | action="store_true",

74 | default=False,

75 | dest="alternatives",

76 | help="Output complementary possibilities if any. Top-level JSON WILL be a list.",

77 | )

78 | parser.add_argument(

79 | "-n",

80 | "--normalize",

81 | action="store_true",

82 | default=False,

83 | dest="normalize",

84 | help="Permit to normalize input file. If not set, program does not write anything.",

85 | )

86 | parser.add_argument(

87 | "-m",

88 | "--minimal",

89 | action="store_true",

90 | default=False,

91 | dest="minimal",

92 | help="Only output the charset detected to STDOUT. Disabling JSON output.",

93 | )

94 | parser.add_argument(

95 | "-r",

96 | "--replace",

97 | action="store_true",

98 | default=False,

99 | dest="replace",

100 | help="Replace file when trying to normalize it instead of creating a new one.",

101 | )

102 | parser.add_argument(

103 | "-f",

104 | "--force",

105 | action="store_true",

106 | default=False,

107 | dest="force",

108 | help="Replace file without asking if you are sure, use this flag with caution.",

109 | )

110 | parser.add_argument(

111 | "-t",

112 | "--threshold",

113 | action="store",

114 | default=0.1,

115 | type=float,

116 | dest="threshold",

117 | help="Define a custom maximum amount of chaos allowed in decoded content. 0. <= chaos <= 1.",

118 | )

119 | parser.add_argument(

120 | "--version",

121 | action="version",

122 | version="Charset-Normalizer {} - Python {}".format(

123 | __version__, python_version()

124 | ),

125 | help="Show version information and exit.",

126 | )

127 |

128 | args = parser.parse_args(argv)

129 |

130 | if args.replace is True and args.normalize is False:

131 | print("Use --replace in addition of --normalize only.", file=sys.stderr)

132 | return 1

133 |

134 | if args.force is True and args.replace is False:

135 | print("Use --force in addition of --replace only.", file=sys.stderr)

136 | return 1

137 |

138 | if args.threshold < 0.0 or args.threshold > 1.0:

139 | print("--threshold VALUE should be between 0. AND 1.", file=sys.stderr)

140 | return 1

141 |

142 | x_ = []

143 |

144 | for my_file in args.files:

145 |

146 | matches = from_fp(my_file, threshold=args.threshold, explain=args.verbose)

147 |

148 | best_guess = matches.best()

149 |

150 | if best_guess is None:

151 | print(

152 | 'Unable to identify originating encoding for "{}". {}'.format(

153 | my_file.name,

154 | "Maybe try increasing maximum amount of chaos."

155 | if args.threshold < 1.0

156 | else "",

157 | ),

158 | file=sys.stderr,

159 | )

160 | x_.append(

161 | CliDetectionResult(

162 | abspath(my_file.name),

163 | None,

164 | [],

165 | [],

166 | "Unknown",

167 | [],

168 | False,

169 | 1.0,

170 | 0.0,

171 | None,

172 | True,

173 | )

174 | )

175 | else:

176 | x_.append(

177 | CliDetectionResult(

178 | abspath(my_file.name),

179 | best_guess.encoding,

180 | best_guess.encoding_aliases,

181 | [

182 | cp

183 | for cp in best_guess.could_be_from_charset

184 | if cp != best_guess.encoding

185 | ],

186 | best_guess.language,

187 | best_guess.alphabets,

188 | best_guess.bom,

189 | best_guess.percent_chaos,

190 | best_guess.percent_coherence,

191 | None,

192 | True,

193 | )

194 | )

195 |

196 | if len(matches) > 1 and args.alternatives:

197 | for el in matches:

198 | if el != best_guess:

199 | x_.append(

200 | CliDetectionResult(

201 | abspath(my_file.name),

202 | el.encoding,

203 | el.encoding_aliases,

204 | [

205 | cp

206 | for cp in el.could_be_from_charset

207 | if cp != el.encoding

208 | ],

209 | el.language,

210 | el.alphabets,

211 | el.bom,

212 | el.percent_chaos,

213 | el.percent_coherence,

214 | None,

215 | False,

216 | )

217 | )

218 |

219 | if args.normalize is True:

220 |

221 | if best_guess.encoding.startswith("utf") is True:

222 | print(

223 | '"{}" file does not need to be normalized, as it already came from unicode.'.format(

224 | my_file.name

225 | ),

226 | file=sys.stderr,

227 | )

228 | if my_file.closed is False:

229 | my_file.close()

230 | continue

231 |

232 | o_ = my_file.name.split(".") # type: List[str]

233 |

234 | if args.replace is False:

235 | o_.insert(-1, best_guess.encoding)

236 | if my_file.closed is False:

237 | my_file.close()

238 | elif (

239 | args.force is False

240 | and query_yes_no(

241 | 'Are you sure to normalize "{}" by replacing it ?'.format(

242 | my_file.name

243 | ),

244 | "no",

245 | )

246 | is False

247 | ):

248 | if my_file.closed is False:

249 | my_file.close()

250 | continue

251 |

252 | try:

253 | x_[0].unicode_path = abspath("./{}".format(".".join(o_)))

254 |

255 | with open(x_[0].unicode_path, "w", encoding="utf-8") as fp:

256 | fp.write(str(best_guess))

257 | except IOError as e:

258 | print(str(e), file=sys.stderr)

259 | if my_file.closed is False:

260 | my_file.close()

261 | return 2

262 |

263 | if my_file.closed is False:

264 | my_file.close()

265 |

266 | if args.minimal is False:

267 | print(

268 | dumps(

269 | [el.__dict__ for el in x_] if len(x_) > 1 else x_[0].__dict__,

270 | ensure_ascii=True,

271 | indent=4,

272 | )

273 | )

274 | else:

275 | for my_file in args.files:

276 | print(

277 | ", ".join(

278 | [

279 | el.encoding or "undefined"

280 | for el in x_

281 | if el.path == abspath(my_file.name)

282 | ]

283 | )

284 | )

285 |

286 | return 0

287 |

288 |

289 | if __name__ == "__main__":

290 | cli_detect()

291 |
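
The checks that `cli_detect` performs right after parsing (`--replace` needs `--normalize`, `--force` needs `--replace`, and the threshold must lie between 0 and 1) can be looked at in isolation. The following is a reduced sketch under stated assumptions, not the library's code: the function name `parse_flags` is hypothetical, and only the flags needed for the validation step are declared.

```python
import argparse
import sys
from typing import List, Optional


def parse_flags(argv: Optional[List[str]] = None) -> int:
    """Return 0 when the flag combination is valid, 1 otherwise."""
    parser = argparse.ArgumentParser(description="Flag dependency sketch")
    parser.add_argument("-n", "--normalize", action="store_true")
    parser.add_argument("-r", "--replace", action="store_true")
    parser.add_argument("-f", "--force", action="store_true")
    parser.add_argument("-t", "--threshold", type=float, default=0.1)
    args = parser.parse_args(argv)

    # --replace only makes sense when a normalized file is being written.
    if args.replace and not args.normalize:
        print("Use --replace together with --normalize only.", file=sys.stderr)
        return 1

    # --force only suppresses the confirmation that --replace would trigger.
    if args.force and not args.replace:
        print("Use --force together with --replace only.", file=sys.stderr)
        return 1

    # The threshold is a ratio, so it has to stay within [0, 1].
    if not 0.0 <= args.threshold <= 1.0:
        print("--threshold must be between 0 and 1.", file=sys.stderr)
        return 1

    return 0
```

Returning an exit code instead of calling `sys.exit` keeps the function easy to call from tests, which is the same reason `cli_detect` accepts an explicit `argv` list.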

--------------------------------------------------------------------------------

/src/lib/charset_normalizer/legacy.py:

--------------------------------------------------------------------------------

1 | import warnings

2 | from typing import Dict, Optional, Union

3 |

4 | from .api import from_bytes, from_fp, from_path, normalize

5 | from .constant import CHARDET_CORRESPONDENCE

6 | from .models import CharsetMatch, CharsetMatches

7 |

8 |

9 | def detect(byte_str: bytes) -> Dict[str, Optional[Union[str, float]]]:

10 | """

11 | chardet legacy method

12 | Detect the encoding of the given byte string. It should be mostly backward-compatible.

13 | Encoding names will match chardet's own spelling whenever possible (except for encoding names that chardet does not support).

14 | This function is deprecated and should be used to migrate your project easily, consult the documentation for

15 | further information. Not planned for removal.

16 |

17 | :param byte_str: The byte sequence to examine.

18 | """

19 | if not isinstance(byte_str, (bytearray, bytes)):

20 | raise TypeError( # pragma: nocover

21 | "Expected object of type bytes or bytearray, got: "

22 | "{0}".format(type(byte_str))

23 | )

24 |

25 | if isinstance(byte_str, bytearray):

26 | byte_str = bytes(byte_str)

27 |

28 | r = from_bytes(byte_str).best()

29 |

30 | encoding = r.encoding if r is not None else None

31 | language = r.language if r is not None and r.language != "Unknown" else ""

32 | confidence = 1.0 - r.chaos if r is not None else None

33 |

34 | # Note: CharsetNormalizer does not return 'UTF-8-SIG' as the sig gets stripped in the detection/normalization process

35 | # but chardet does return 'utf-8-sig' and it is a valid codec name.

36 | if r is not None and encoding == "utf_8" and r.bom:

37 | encoding += "_sig"

38 |

39 | return {

40 | "encoding": encoding

41 | if encoding not in CHARDET_CORRESPONDENCE

42 | else CHARDET_CORRESPONDENCE[encoding],

43 | "language": language,

44 | "confidence": confidence,

45 | }

46 |

47 |

48 | class CharsetNormalizerMatch(CharsetMatch):

49 | pass

50 |

51 |

52 | class CharsetNormalizerMatches(CharsetMatches):

53 | @staticmethod

54 | def from_fp(*args, **kwargs): # type: ignore

55 | warnings.warn( # pragma: nocover

56 | "staticmethod from_fp, from_bytes, from_path and normalize are deprecated "

57 | "and scheduled to be removed in 3.0",

58 | DeprecationWarning,

59 | )

60 | return from_fp(*args, **kwargs) # pragma: nocover

61 |

62 | @staticmethod

63 | def from_bytes(*args, **kwargs): # type: ignore

64 | warnings.warn( # pragma: nocover

65 | "staticmethod from_fp, from_bytes, from_path and normalize are deprecated "

66 | "and scheduled to be removed in 3.0",

67 | DeprecationWarning,

68 | )

69 | return from_bytes(*args, **kwargs) # pragma: nocover

70 |

71 | @staticmethod

72 | def from_path(*args, **kwargs): # type: ignore

73 | warnings.warn( # pragma: nocover

74 | "staticmethod from_fp, from_bytes, from_path and normalize are deprecated "

75 | "and scheduled to be removed in 3.0",

76 | DeprecationWarning,

77 | )

78 | return from_path(*args, **kwargs) # pragma: nocover

79 |

80 | @staticmethod

81 | def normalize(*args, **kwargs): # type: ignore

82 | warnings.warn( # pragma: nocover

83 | "staticmethod from_fp, from_bytes, from_path and normalize are deprecated "

84 | "and scheduled to be removed in 3.0",

85 | DeprecationWarning,

86 | )

87 | return normalize(*args, **kwargs) # pragma: nocover

88 |

89 |

90 | class CharsetDetector(CharsetNormalizerMatches):

91 | pass

92 |

93 |

94 | class CharsetDoctor(CharsetNormalizerMatches):

95 | pass

96 |
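
The four static methods in `CharsetNormalizerMatches` repeat one pattern: emit a `DeprecationWarning`, then delegate to the module-level function. A possible way to express that pattern once is sketched below. Every name here (`deprecated_alias`, `new_api`, `LegacyFacade`) is hypothetical and exists only for the illustration; the vendored module does not define them.

```python
import warnings
from typing import Any, Callable


def deprecated_alias(func: Callable[..., Any], message: str) -> Callable[..., Any]:
    """Wrap func so that every call emits a DeprecationWarning first."""

    def wrapper(*args: Any, **kwargs: Any) -> Any:
        # stacklevel=2 attributes the warning to the caller, not to this wrapper.
        warnings.warn(message, DeprecationWarning, stacklevel=2)
        return func(*args, **kwargs)

    return wrapper


def new_api(payload: bytes) -> int:
    """Hypothetical replacement function; it just returns the payload length."""
    return len(payload)


class LegacyFacade:
    """Hypothetical legacy class that keeps the old call style working."""

    from_bytes = staticmethod(
        deprecated_alias(
            new_api, "LegacyFacade.from_bytes is deprecated; call new_api directly"
        )
    )
```

Unlike the code above, this sketch passes `stacklevel=2`, so the warning points at the calling line; that is a design choice of the sketch, not something the original does.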

--------------------------------------------------------------------------------

/src/lib/charset_normalizer/py.typed:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/lib/charset_normalizer/py.typed

--------------------------------------------------------------------------------

/src/lib/charset_normalizer/version.py:

--------------------------------------------------------------------------------

1 | """

2 | Expose version

3 | """

4 |

5 | __version__ = "2.0.12"

6 | VERSION = __version__.split(".")

7 |

--------------------------------------------------------------------------------

/src/lib/idna-3.3.dist-info/INSTALLER:

--------------------------------------------------------------------------------

1 | pip

2 |

--------------------------------------------------------------------------------

/src/lib/idna-3.3.dist-info/LICENSE.md:

--------------------------------------------------------------------------------

1 | BSD 3-Clause License

2 |

3 | Copyright (c) 2013-2021, Kim Davies

4 | All rights reserved.

5 |

6 | Redistribution and use in source and binary forms, with or without

7 | modification, are permitted provided that the following conditions are met:

8 |

9 | 1. Redistributions of source code must retain the above copyright notice, this

10 | list of conditions and the following disclaimer.

11 |

12 | 2. Redistributions in binary form must reproduce the above copyright notice,

13 | this list of conditions and the following disclaimer in the documentation

14 | and/or other materials provided with the distribution.

15 |

16 | 3. Neither the name of the copyright holder nor the names of its

17 | contributors may be used to endorse or promote products derived from

18 | this software without specific prior written permission.

19 |

20 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

21 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

22 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

23 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

24 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

25 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

26 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

27 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

28 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

29 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

30 |

--------------------------------------------------------------------------------

/src/lib/idna-3.3.dist-info/METADATA:

--------------------------------------------------------------------------------

1 | Metadata-Version: 2.1

2 | Name: idna

3 | Version: 3.3

4 | Summary: Internationalized Domain Names in Applications (IDNA)

5 | Home-page: https://github.com/kjd/idna

6 | Author: Kim Davies

7 | Author-email: kim@cynosure.com.au

8 | License: BSD-3-Clause

9 | Platform: UNKNOWN

10 | Classifier: Development Status :: 5 - Production/Stable

11 | Classifier: Intended Audience :: Developers

12 | Classifier: Intended Audience :: System Administrators

13 | Classifier: License :: OSI Approved :: BSD License

14 | Classifier: Operating System :: OS Independent

15 | Classifier: Programming Language :: Python

16 | Classifier: Programming Language :: Python :: 3

17 | Classifier: Programming Language :: Python :: 3 :: Only

18 | Classifier: Programming Language :: Python :: 3.5

19 | Classifier: Programming Language :: Python :: 3.6

20 | Classifier: Programming Language :: Python :: 3.7

21 | Classifier: Programming Language :: Python :: 3.8

22 | Classifier: Programming Language :: Python :: 3.9

23 | Classifier: Programming Language :: Python :: 3.10

24 | Classifier: Programming Language :: Python :: Implementation :: CPython

25 | Classifier: Programming Language :: Python :: Implementation :: PyPy

26 | Classifier: Topic :: Internet :: Name Service (DNS)

27 | Classifier: Topic :: Software Development :: Libraries :: Python Modules

28 | Classifier: Topic :: Utilities

29 | Requires-Python: >=3.5

30 | License-File: LICENSE.md

31 |

32 | Internationalized Domain Names in Applications (IDNA)

33 | =====================================================

34 |

35 | Support for the Internationalised Domain Names in Applications

36 | (IDNA) protocol as specified in `RFC 5891 `_.

37 | This is the latest version of the protocol and is sometimes referred to as

38 | “IDNA 2008”.

39 |

40 | This library also provides support for Unicode Technical Standard 46,

41 | `Unicode IDNA Compatibility Processing `_.

42 |

43 | This acts as a suitable replacement for the “encodings.idna” module that

44 | comes with the Python standard library, but which only supports the

45 | older superseded IDNA specification (`RFC 3490 `_).

46 |

47 | Basic functions are simply executed:

48 |

49 | .. code-block:: pycon

50 |

51 | >>> import idna

52 | >>> idna.encode('ドメイン.テスト')

53 | b'xn--eckwd4c7c.xn--zckzah'

54 | >>> print(idna.decode('xn--eckwd4c7c.xn--zckzah'))

55 | ドメイン.テスト

56 |

57 |

58 | Installation

59 | ------------

60 |

61 | To install this library, you can use pip:

62 |

63 | .. code-block:: bash

64 |

65 | $ pip install idna

66 |

67 | Alternatively, you can install the package using the bundled setup script:

68 |

69 | .. code-block:: bash

70 |

71 | $ python setup.py install

72 |

73 |

74 | Usage

75 | -----

76 |

77 | For typical usage, the ``encode`` and ``decode`` functions will take a domain

78 | name argument and perform a conversion to A-labels or U-labels respectively.

79 |

80 | .. code-block:: pycon

81 |

82 | >>> import idna

83 | >>> idna.encode('ドメイン.テスト')

84 | b'xn--eckwd4c7c.xn--zckzah'

85 | >>> print(idna.decode('xn--eckwd4c7c.xn--zckzah'))

86 | ドメイン.テスト

87 |

88 | You may use the codec encoding and decoding methods using the

89 | ``idna.codec`` module:

90 |

91 | .. code-block:: pycon

92 |

93 | >>> import idna.codec

94 | >>> print('домен.испытание'.encode('idna'))

95 | b'xn--d1acufc.xn--80akhbyknj4f'

96 | >>> print(b'xn--d1acufc.xn--80akhbyknj4f'.decode('idna'))

97 | домен.испытание

98 |

99 | Conversions can be applied at a per-label basis using the ``ulabel`` or ``alabel``

100 | functions if necessary:

101 |

102 | .. code-block:: pycon

103 |

104 | >>> idna.alabel('测试')

105 | b'xn--0zwm56d'

106 |

107 | Compatibility Mapping (UTS #46)

108 | +++++++++++++++++++++++++++++++

109 |

110 | As described in `RFC 5895 `_, the IDNA

111 | specification does not normalize input from different potential ways a user

112 | may input a domain name. This functionality, known as a “mapping”, is

113 | considered by the specification to be a local user-interface issue distinct

114 | from IDNA conversion functionality.

115 |

116 | This library provides one such mapping, that was developed by the Unicode

117 | Consortium. Known as `Unicode IDNA Compatibility Processing `_,

118 | it provides for both a regular mapping for typical applications, as well as

119 | a transitional mapping to help migrate from older IDNA 2003 applications.

120 |

121 | For example, “Königsgäßchen” is not a permissible label as *LATIN CAPITAL

122 | LETTER K* is not allowed (nor are capital letters in general). UTS 46 will

123 | convert this into lower case prior to applying the IDNA conversion.

124 |

125 | .. code-block:: pycon

126 |

127 | >>> import idna

128 | >>> idna.encode('Königsgäßchen')

129 | ...

130 | idna.core.InvalidCodepoint: Codepoint U+004B at position 1 of 'Königsgäßchen' not allowed

131 | >>> idna.encode('Königsgäßchen', uts46=True)

132 | b'xn--knigsgchen-b4a3dun'

133 | >>> print(idna.decode('xn--knigsgchen-b4a3dun'))

134 | königsgäßchen

135 |

136 | Transitional processing provides conversions to help transition from the older

137 | 2003 standard to the current standard. For example, in the original IDNA

138 | specification, the *LATIN SMALL LETTER SHARP S* (ß) was converted into two

139 | *LATIN SMALL LETTER S* (ss), whereas in the current IDNA specification this

140 | conversion is not performed.

141 |

142 | .. code-block:: pycon

143 |

144 | >>> idna.encode('Königsgäßchen', uts46=True, transitional=True)

145 | b'xn--knigsgsschen-lcb0w'

146 |

147 | Implementors should use transitional processing with caution, only in rare

148 | cases where conversion from legacy labels to current labels must be performed

149 | (i.e. IDNA implementations that pre-date 2008). For typical applications

150 | that just need to convert labels, transitional processing is unlikely to be

151 | beneficial and could produce unexpected incompatible results.

152 |

153 | ``encodings.idna`` Compatibility

154 | ++++++++++++++++++++++++++++++++

155 |

156 | Function calls from the Python built-in ``encodings.idna`` module are

157 | mapped to their IDNA 2008 equivalents using the ``idna.compat`` module.

158 | Simply substitute the ``import`` clause in your code to refer to the

159 | new module name.

160 |

161 | Exceptions

162 | ----------

163 |

164 | All errors raised during the conversion following the specification should

165 | raise an exception derived from the ``idna.IDNAError`` base class.

166 |

167 | More specific exceptions that may be raised include ``idna.IDNABidiError``

168 | when the error reflects an illegal combination of left-to-right and

169 | right-to-left characters in a label; ``idna.InvalidCodepoint`` when

170 | a specific codepoint is an illegal character in an IDN label (i.e.

171 | INVALID); and ``idna.InvalidCodepointContext`` when the codepoint is

172 | illegal based on its positional context (i.e. it is CONTEXTO or CONTEXTJ

173 | but the contextual requirements are not satisfied.)

174 |

175 | Building and Diagnostics

176 | ------------------------

177 |

178 | The IDNA and UTS 46 functionality relies upon pre-calculated lookup

179 | tables for performance. These tables are derived from computing against

180 | eligibility criteria in the respective standards. These tables are

181 | computed using the command-line script ``tools/idna-data``.

182 |

183 | This tool will fetch relevant codepoint data from the Unicode repository

184 | and perform the required calculations to identify eligibility. There are

185 | three main modes:

186 |

187 | * ``idna-data make-libdata``. Generates ``idnadata.py`` and ``uts46data.py``,

188 | the pre-calculated lookup tables used for IDNA and UTS 46 conversions. Implementors

189 | who wish to track this library against a different Unicode version may use this tool

190 | to manually generate a different version of the ``idnadata.py`` and ``uts46data.py``

191 | files.

192 |

193 | * ``idna-data make-table``. Generate a table of the IDNA disposition

194 | (e.g. PVALID, CONTEXTJ, CONTEXTO) in the format found in Appendix B.1 of RFC

195 | 5892 and the pre-computed tables published by `IANA `_.

196 |

197 | * ``idna-data U+0061``. Prints debugging output on the various properties

198 | associated with an individual Unicode codepoint (in this case, U+0061), that are

199 | used to assess the IDNA and UTS 46 status of a codepoint. This is helpful in debugging

200 | or analysis.

201 |

202 | The tool accepts a number of arguments, described using ``idna-data -h``. Most notably,

203 | the ``--version`` argument allows the specification of the version of Unicode to use

204 | in computing the table data. For example, ``idna-data --version 9.0.0 make-libdata``

205 | will generate library data against Unicode 9.0.0.

206 |

207 |

208 | Additional Notes

209 | ----------------

210 |

211 | * **Packages**. The latest tagged release version is published in the

212 | `Python Package Index `_.

213 |

214 | * **Version support**. This library supports Python 3.5 and higher. As this library

215 | serves as a low-level toolkit for a variety of applications, many of which strive

216 | for broad compatibility with older Python versions, there is no rush to remove

217 | older interpreter support. Removing support for older versions should be well

218 | justified in that the maintenance burden has become too high.

219 |

220 | * **Python 2**. Python 2 is supported by version 2.x of this library. While active

221 | development of the version 2.x series has ended, notable issues being corrected

222 | may be backported to 2.x. Use "idna<3" in your requirements file if you need this

223 | library for a Python 2 application.

224 |

225 | * **Testing**. The library has a test suite based on each rule of the IDNA specification, as

226 | well as tests that are provided as part of the Unicode Technical Standard 46,

227 | `Unicode IDNA Compatibility Processing `_.

228 |

229 | * **Emoji**. It is an occasional request to support emoji domains in this library. Encoding

230 | of symbols like emoji is expressly prohibited by the technical standard IDNA 2008 and

231 | emoji domains are broadly phased out across the domain industry due to associated security

232 | risks. For now, applications that need to support these non-compliant labels may

233 | wish to consider trying the encode/decode operation in this library first, and then falling

234 | back to using `encodings.idna`. See `the Github project `_

235 | for more discussion.

236 |

237 |
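
The per-label conversion described in the Usage section (``alabel`` / ``ulabel``) rests on Punycode plus the ``xn--`` prefix. The sketch below shows only that encoding step, using the ``punycode`` codec from the Python standard library rather than this package. The helper names ``to_ace_label`` and ``from_ace_label`` are hypothetical, and none of the IDNA 2008 validity checks (codepoint eligibility, bidi rules, length limits) are performed, so it is not a substitute for ``idna.alabel``.

```python
ACE_PREFIX = b"xn--"


def to_ace_label(label: str) -> bytes:
    """Punycode-encode a single label and add the ACE prefix.

    Pure-ASCII labels are returned unchanged. No IDNA validation is done.
    """
    if label.isascii():
        return label.encode("ascii")
    return ACE_PREFIX + label.encode("punycode")


def from_ace_label(label: bytes) -> str:
    """Reverse of to_ace_label for a single label."""
    if label.startswith(ACE_PREFIX):
        return label[len(ACE_PREFIX):].decode("punycode")
    return label.decode("ascii")


# Same label as the alabel example in the Usage section.
print(to_ace_label("测试"))             # b'xn--0zwm56d'
print(from_ace_label(b"xn--0zwm56d"))   # 测试
```

A full domain name would be split on dots and converted label by label, which is also how the incremental codec in ``idna/codec.py`` proceeds.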

--------------------------------------------------------------------------------

/src/lib/idna-3.3.dist-info/RECORD:

--------------------------------------------------------------------------------

1 | idna-3.3.dist-info/INSTALLER,sha256=zuuue4knoyJ-UwPPXg8fezS7VCrXJQrAP7zeNuwvFQg,4

2 | idna-3.3.dist-info/LICENSE.md,sha256=otbk2UC9JNvnuWRc3hmpeSzFHbeuDVrNMBrIYMqj6DY,1523

3 | idna-3.3.dist-info/METADATA,sha256=BdqiAf8ou4x1nzIHp2_sDfXWjl7BrSUGpOeVzbYHQuQ,9765

4 | idna-3.3.dist-info/RECORD,,

5 | idna-3.3.dist-info/WHEEL,sha256=ewwEueio1C2XeHTvT17n8dZUJgOvyCWCt0WVNLClP9o,92

6 | idna-3.3.dist-info/top_level.txt,sha256=jSag9sEDqvSPftxOQy-ABfGV_RSy7oFh4zZJpODV8k0,5

7 | idna/__init__.py,sha256=KJQN1eQBr8iIK5SKrJ47lXvxG0BJ7Lm38W4zT0v_8lk,849

8 | idna/__pycache__/__init__.cpython-39.pyc,,

9 | idna/__pycache__/codec.cpython-39.pyc,,

10 | idna/__pycache__/compat.cpython-39.pyc,,

11 | idna/__pycache__/core.cpython-39.pyc,,

12 | idna/__pycache__/idnadata.cpython-39.pyc,,

13 | idna/__pycache__/intranges.cpython-39.pyc,,

14 | idna/__pycache__/package_data.cpython-39.pyc,,

15 | idna/__pycache__/uts46data.cpython-39.pyc,,

16 | idna/codec.py,sha256=6ly5odKfqrytKT9_7UrlGklHnf1DSK2r9C6cSM4sa28,3374

17 | idna/compat.py,sha256=0_sOEUMT4CVw9doD3vyRhX80X19PwqFoUBs7gWsFME4,321

18 | idna/core.py,sha256=RFIkY-HhFZaDoBEFjGwyGd_vWI04uOAQjnzueMWqwOU,12795

19 | idna/idnadata.py,sha256=fzMzkCea2xieVxcrjngJ-2pLsKQNejPCZFlBajIuQdw,44025

20 | idna/intranges.py,sha256=YBr4fRYuWH7kTKS2tXlFjM24ZF1Pdvcir-aywniInqg,1881

21 | idna/package_data.py,sha256=szxQhV0ZD0nKJ84Kuobw3l8q4_KeCyXjFRdpwIpKZmw,21

22 | idna/py.typed,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

23 | idna/uts46data.py,sha256=o-D7V-a0fOLZNd7tvxof6MYfUd0TBZzE2bLR5XO67xU,204400

24 |

--------------------------------------------------------------------------------

/src/lib/idna-3.3.dist-info/WHEEL:

--------------------------------------------------------------------------------

1 | Wheel-Version: 1.0

2 | Generator: bdist_wheel (0.37.0)

3 | Root-Is-Purelib: true

4 | Tag: py3-none-any

5 |

6 |

--------------------------------------------------------------------------------

/src/lib/idna-3.3.dist-info/top_level.txt:

--------------------------------------------------------------------------------

1 | idna

2 |

--------------------------------------------------------------------------------

/src/lib/idna/__init__.py:

--------------------------------------------------------------------------------

1 | from .package_data import __version__

2 | from .core import (

3 | IDNABidiError,

4 | IDNAError,

5 | InvalidCodepoint,

6 | InvalidCodepointContext,

7 | alabel,

8 | check_bidi,

9 | check_hyphen_ok,

10 | check_initial_combiner,

11 | check_label,

12 | check_nfc,

13 | decode,

14 | encode,

15 | ulabel,

16 | uts46_remap,

17 | valid_contextj,

18 | valid_contexto,

19 | valid_label_length,

20 | valid_string_length,

21 | )

22 | from .intranges import intranges_contain

23 |

24 | __all__ = [

25 | "IDNABidiError",

26 | "IDNAError",

27 | "InvalidCodepoint",

28 | "InvalidCodepointContext",

29 | "alabel",

30 | "check_bidi",

31 | "check_hyphen_ok",

32 | "check_initial_combiner",

33 | "check_label",

34 | "check_nfc",

35 | "decode",

36 | "encode",

37 | "intranges_contain",

38 | "ulabel",

39 | "uts46_remap",

40 | "valid_contextj",

41 | "valid_contexto",

42 | "valid_label_length",

43 | "valid_string_length",

44 | ]

45 |

--------------------------------------------------------------------------------

/src/lib/idna/codec.py:

--------------------------------------------------------------------------------

1 | from .core import encode, decode, alabel, ulabel, IDNAError

2 | import codecs

3 | import re

4 | from typing import Tuple, Optional

5 |

6 | _unicode_dots_re = re.compile('[\u002e\u3002\uff0e\uff61]')

7 |

8 | class Codec(codecs.Codec):

9 |

10 |     def encode(self, data: str, errors: str = 'strict') -> Tuple[bytes, int]:

11 |         if errors != 'strict':

12 |             raise IDNAError('Unsupported error handling \"{}\"'.format(errors))

13 |

14 |         if not data:

15 |             return b"", 0

16 |

17 |         return encode(data), len(data)

18 |

19 |     def decode(self, data: bytes, errors: str = 'strict') -> Tuple[str, int]:

20 |         if errors != 'strict':

21 |             raise IDNAError('Unsupported error handling \"{}\"'.format(errors))

22 |

23 |         if not data:

24 |             return '', 0

25 |

26 |         return decode(data), len(data)

27 |

28 | class IncrementalEncoder(codecs.BufferedIncrementalEncoder):

29 |     def _buffer_encode(self, data: str, errors: str, final: bool) -> Tuple[str, int]:  # type: ignore

30 |         if errors != 'strict':

31 |             raise IDNAError('Unsupported error handling \"{}\"'.format(errors))

32 |

33 |         if not data:

34 |             return "", 0

35 |

36 |         labels = _unicode_dots_re.split(data)

37 |         trailing_dot = ''

38 |         if labels:

39 |             if not labels[-1]:

40 |                 trailing_dot = '.'

41 |                 del labels[-1]

42 |             elif not final:

43 |                 # Keep potentially unfinished label until the next call

44 |                 del labels[-1]

45 |                 if labels:

46 |                     trailing_dot = '.'

47 |

48 |         result = []

49 |         size = 0

50 |         for label in labels:

51 |             result.append(alabel(label))

52 |             if size:

53 |                 size += 1

54 |             size += len(label)

55 |

56 |         # Join with U+002E

57 |         result_str = '.'.join(result) + trailing_dot  # type: ignore

58 |         size += len(trailing_dot)

59 |         return result_str, size

60 |

61 | class IncrementalDecoder(codecs.BufferedIncrementalDecoder):

62 |     def _buffer_decode(self, data: str, errors: str, final: bool) -> Tuple[str, int]:  # type: ignore

63 |         if errors != 'strict':

64 |             raise IDNAError('Unsupported error handling \"{}\"'.format(errors))

65 |

66 |         if not data:

67 |             return ('', 0)

68 |

69 |         labels = _unicode_dots_re.split(data)

70 |         trailing_dot = ''

71 |         if labels:

72 |             if not labels[-1]:

73 |                 trailing_dot = '.'

74 |                 del labels[-1]

75 |             elif not final:

76 |                 # Keep potentially unfinished label until the next call

77 |                 del labels[-1]

78 |                 if labels:

79 |                     trailing_dot = '.'

80 |

81 |         result = []

82 |         size = 0

83 |         for label in labels:

84 |             result.append(ulabel(label))

85 |             if size:

86 |                 size += 1

87 |             size += len(label)

88 |

89 |         result_str = '.'.join(result) + trailing_dot

90 |         size += len(trailing_dot)

91 |         return (result_str, size)

92 |

93 |

94 | class StreamWriter(Codec, codecs.StreamWriter):

95 |     pass

96 |

97 |

98 | class StreamReader(Codec, codecs.StreamReader):

99 |     pass

100 |

101 |

102 | def getregentry() -> codecs.CodecInfo:

103 |     # Compatibility as a search_function for codecs.register()

104 |     return codecs.CodecInfo(

105 |         name='idna',

106 |         encode=Codec().encode,  # type: ignore

107 |         decode=Codec().decode,  # type: ignore

108 |         incrementalencoder=IncrementalEncoder,

109 |         incrementaldecoder=IncrementalDecoder,

110 |         streamwriter=StreamWriter,

111 |         streamreader=StreamReader,

112 |     )

113 |
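The buffering rule shared by `_buffer_encode` and `_buffer_decode` above may be easier to follow in isolation. The following is a minimal sketch, not part of the idna package: `split_complete_labels` is a hypothetical helper that reproduces only the label splitting and the consumed-size accounting, and it leaves out the `alabel`/`ulabel` conversion entirely.

```python
import re
from typing import List, Tuple

# Same four dot characters the codec treats as label separators.
_dots_re = re.compile('[\u002e\u3002\uff0e\uff61]')


def split_complete_labels(data: str, final: bool) -> Tuple[List[str], str, int]:
    """Hypothetical helper: return (complete labels, trailing dot, chars consumed)."""
    labels = _dots_re.split(data)
    trailing_dot = ''
    if labels:
        if not labels[-1]:
            # Input ended on a separator, so every label is complete.
            trailing_dot = '.'
            del labels[-1]
        elif not final:
            # The last label may still grow; hold it back for the next call.
            del labels[-1]
            if labels:
                trailing_dot = '.'

    consumed = 0
    for label in labels:
        if consumed:
            consumed += 1  # the separator before this label
        consumed += len(label)
    consumed += len(trailing_dot)
    return labels, trailing_dot, consumed


# Mid-stream: "exam" is held back, only "www." counts as consumed.
print(split_complete_labels('www.exam', final=False))
# End of stream: both labels are emitted.
print(split_complete_labels('www.exam', final=True))
```

The consumed count is what lets the `codecs.BufferedIncrementalEncoder` base class keep the unconsumed tail in its buffer and prepend it to the next chunk.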

--------------------------------------------------------------------------------

/src/lib/idna/compat.py:

--------------------------------------------------------------------------------

1 | from .core import *

2 | from .codec import *

3 | from typing import Any, Union

4 |

5 | def ToASCII(label: str) -> bytes:

6 |     return encode(label)

7 |

8 | def ToUnicode(label: Union[bytes, bytearray]) -> str:

9 |     return decode(label)

10 |

11 | def nameprep(s: Any) -> None:

12 |     raise NotImplementedError('IDNA 2008 does not utilise nameprep protocol')

13 |

14 |

--------------------------------------------------------------------------------

/src/lib/idna/intranges.py:

--------------------------------------------------------------------------------

1 | """

2 | Given a list of integers, made up of (hopefully) a small number of long runs

3 | of consecutive integers, compute a representation of the form

4 | ((start1, end1), (start2, end2) ...). Then answer the question "was x present

5 | in the original list?" in time O(log(# runs)).

6 | """

7 |

8 | import bisect

9 | from typing import List, Tuple

10 |

11 | def intranges_from_list(list_: List[int]) -> Tuple[int, ...]:

12 |     """Represent a list of integers as a sequence of ranges:

13 |     ((start_0, end_0), (start_1, end_1), ...), such that the original

14 |     integers are exactly those x such that start_i <= x < end_i for some i.

15 |

16 |     Ranges are encoded as single integers (start << 32 | end), not as tuples.

17 |     """

18 |

19 |     sorted_list = sorted(list_)

20 |     ranges = []

21 |     last_write = -1

22 |     for i in range(len(sorted_list)):

23 |         if i+1 < len(sorted_list):

24 |             if sorted_list[i] == sorted_list[i+1]-1:

25 |                 continue

26 |         current_range = sorted_list[last_write+1:i+1]

27 |         ranges.append(_encode_range(current_range[0], current_range[-1] + 1))

28 |         last_write = i

29 |

30 |     return tuple(ranges)

31 |

32 | def _encode_range(start: int, end: int) -> int:

33 |     return (start << 32) | end

34 |

35 | def _decode_range(r: int) -> Tuple[int, int]:

36 |     return (r >> 32), (r & ((1 << 32) - 1))

37 |

38 |

39 | def intranges_contain(int_: int, ranges: Tuple[int, ...]) -> bool:

40 |     """Determine if `int_` falls into one of the ranges in `ranges`."""

41 |     tuple_ = _encode_range(int_, 0)

42 |     pos = bisect.bisect_left(ranges, tuple_)

43 |     # we could be immediately ahead of a tuple (start, end)

44 |     # with start < int_ < end (end is exclusive)

45 |     if pos > 0:

46 |         left, right = _decode_range(ranges[pos-1])

47 |         if left <= int_ < right:

48 |             return True

49 |     # or we could be immediately behind a tuple (int_, end)

50 |     if pos < len(ranges):

51 |         left, _ = _decode_range(ranges[pos])

52 |         if left == int_:

53 |             return True

54 |     return False

55 |
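The packed-range scheme described in the module docstring above can be tried out without the package installed. The sketch below is a standalone re-statement under the same assumptions (half-open ranges, `start << 32 | end` packing); the names `pack`, `unpack`, `ranges_from_list` and `contains` are illustrative and are not the module's own API.

```python
import bisect
from typing import Iterable, Tuple


def pack(start: int, end: int) -> int:
    # Pack a half-open range [start, end) into a single integer.
    return (start << 32) | end


def unpack(r: int) -> Tuple[int, int]:
    return r >> 32, r & ((1 << 32) - 1)


def ranges_from_list(values: Iterable[int]) -> Tuple[int, ...]:
    # Collapse each run of consecutive integers into one packed range.
    ordered = sorted(values)
    ranges = []
    run_start = 0
    for i, value in enumerate(ordered):
        if i + 1 < len(ordered) and ordered[i + 1] == value + 1:
            continue  # the run continues
        ranges.append(pack(ordered[run_start], value + 1))
        run_start = i + 1
    return tuple(ranges)


def contains(x: int, ranges: Tuple[int, ...]) -> bool:
    # Packed ranges sort by start, so one bisect narrows the search
    # to at most two candidate ranges.
    pos = bisect.bisect_left(ranges, pack(x, 0))
    if pos > 0:
        left, right = unpack(ranges[pos - 1])
        if left <= x < right:
            return True
    if pos < len(ranges):
        left, _ = unpack(ranges[pos])
        if left == x:
            return True
    return False


ranges = ranges_from_list([1, 2, 3, 7, 8, 20])
print([unpack(r) for r in ranges])
print(contains(8, ranges), contains(4, ranges))
```

Packing both bounds into one integer keeps the table a flat tuple of ints, so `bisect` can compare entries directly; the lookup cost then depends on the number of runs and not on the number of code points.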

--------------------------------------------------------------------------------

/src/lib/idna/package_data.py:

--------------------------------------------------------------------------------

1 | __version__ = '3.3'

2 |

3 |

--------------------------------------------------------------------------------

/src/lib/idna/py.typed:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rhlsthrm/alfred-crypto-tracker/c73071bc70c9c206bcb532c873b0e3a395e0412b/src/lib/idna/py.typed