├── requirements_keras.txt

├── requirements_tensorflow.txt

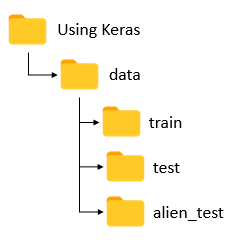

├── Using Keras

├── data

│ ├── alien_test

│ │ └── README.md

│ ├── train

│ │ └── README.md

│ └── test

│ │ └── README.md

├── Testing.py

└── Training.py

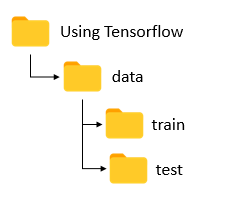

├── Using Tensorflow

├── data

│ ├── test

│ │ └── README.md

│ └── train

│ │ └── README.md

├── README.md

├── dataset.py

├── Prediction.py

└── Training.py

├── README.md

└── LICENSE

/requirements_keras.txt:

--------------------------------------------------------------------------------

1 | keras==2.2.0
2 | numpy==1.22.0
3 |

--------------------------------------------------------------------------------

/requirements_tensorflow.txt:

--------------------------------------------------------------------------------

1 | opencv-python
2 | tensorflow==2.11.1
3 | numpy==1.22.0
4 | scikit-learn==0.19.1
5 |

--------------------------------------------------------------------------------

/Using Keras/data/alien_test/README.md:

--------------------------------------------------------------------------------

1 | Put the images you want to classify here; Testing.py predicts a class for every image in this folder. Make sure they are not images you trained your system with.

2 |

--------------------------------------------------------------------------------
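Before running Testing.py, you may want to confirm that no image in data/alien_test is a byte-for-byte copy of a training image. The sketch below is an assumption-based helper: the function names `file_digests` and `find_reused_images` are hypothetical and not part of this repository. It only catches exact duplicates, so resized or re-encoded copies of a training image are not flagged.

```python
import hashlib
import os


def file_digests(folder):
    """Map SHA-256 digest -> path for every file under `folder` (recursively)."""
    digests = {}
    for root, _dirs, files in os.walk(folder):
        for name in files:
            if name.startswith(".") or name == "README.md":
                continue  # skip hidden files and the placeholder README
            path = os.path.join(root, name)
            with open(path, "rb") as fh:
                digests[hashlib.sha256(fh.read()).hexdigest()] = path
    return digests


def find_reused_images(train_dir, test_dir):
    """Return sorted (test_path, train_path) pairs of byte-identical files."""
    train = file_digests(train_dir)
    test = file_digests(test_dir)
    shared = test.keys() & train.keys()  # digests present in both folders
    return sorted((test[d], train[d]) for d in shared)
```

For example, run `find_reused_images("data/train", "data/alien_test")` from the Using Keras folder. An empty list means no exact copies were found.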

/Using Tensorflow/data/test/README.md:

--------------------------------------------------------------------------------

1 | Put all the images you want to classify here. Make sure they are not images you trained your system with.

2 |

--------------------------------------------------------------------------------

/Using Tensorflow/README.md:

--------------------------------------------------------------------------------

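1 | If Training.py stops with an AssertionError, reduce batch_size (line 14 of Training.py). The assertion in DataSet.next_batch() (dataset.py) fails when batch_size is larger than the number of images in a split. Training.py draws batches of the same size from the validation split, which holds only 20% (validation_size) of the images in data/train, so batch_size must not exceed the number of validation images.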

--------------------------------------------------------------------------------
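The limit described above can be computed before training starts. Below is a minimal sketch, assuming a float validation_size as in Training.py; an integer validation_size is used directly as a count by read_train_sets(). `max_batch_size` is a hypothetical helper name.

```python
def max_batch_size(num_images, validation_size=0.2):
    """Largest batch_size that DataSet.next_batch() accepts for both splits.

    Mirrors read_train_sets(): a float validation_size becomes a count via
    int(validation_size * num_images); the remaining images are for training.
    """
    num_valid = int(validation_size * num_images)
    num_train = num_images - num_valid
    # next_batch() asserts batch_size <= num_examples, and Training.py draws
    # batches of the same size from the training and the validation split.
    return min(num_train, num_valid)
```

With 90 images, for example, the validation split holds 18 images, so the default batch_size = 20 triggers the assertion.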

/Using Tensorflow/data/train/README.md:

--------------------------------------------------------------------------------

1 | In this data/train folder, create one sub-folder per image class. For example, if you want to train your system on 3 classes (Chair, Motorcycle and Soccer Ball), create the following sub-folders:
2 |
3 | ```
4 | data
5 | |
6 | |-train
7 |    |
8 |    |-Chair
9 |    |   |
10 |    |   |-ChairImg001.jpg
11 |    |   |-ChairImg002.jpg
12 |    |   |.....
13 |    |-Motorcycle
14 |    |   |
15 |    |   |-Motorcycle001.jpg
16 |    |   |-Motorcycle002.jpg
17 |    |   |.....
18 |    |-Soccer Ball
19 |        |
20 |        |-Soccer_Ball-001.jpg
21 |        |-Soccer_Ball-002.jpg
22 | ```
23 | If you have more classes, create a sub-folder for each of them in the same way.
24 |

--------------------------------------------------------------------------------
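You can check this layout before training by counting what dataset.py will actually load. This sketch reuses the `'*g'` glob pattern from load_train(), which matches names ending in "g" such as .jpg, .jpeg and .png. The helper name `count_training_images` is hypothetical. The sketch lists only sub-folders. Training.py, by contrast, builds `classes` from an unfiltered `os.listdir(train_path)`, so any file left directly in data/train (this README.md, for instance) also becomes a class there, with zero images.

```python
import glob
import os


def count_training_images(train_path):
    """Return {class_name: image_count} using dataset.py's '*g' pattern."""
    counts = {}
    for name in sorted(os.listdir(train_path)):
        class_dir = os.path.join(train_path, name)
        if os.path.isdir(class_dir):  # only sub-folders are treated as classes
            counts[name] = len(glob.glob(os.path.join(class_dir, "*g")))
    return counts
```

A class with a count of 0 usually means its images use an extension that does not end in "g".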

/Using Keras/data/train/README.md:

--------------------------------------------------------------------------------

1 | In this data/train folder, create one sub-folder per image class. For example, if you want to train your system on 3 classes (Chair, Motorcycle and Soccer Ball), create the following sub-folders:
2 |
3 | ```
4 | data
5 | |
6 | |-train
7 |    |
8 |    |-Chair
9 |    |   |
10 |    |   |-ChairImg001.jpg
11 |    |   |-ChairImg002.jpg
12 |    |   |.....
13 |    |-Motorcycle
14 |    |   |
15 |    |   |-Motorcycle001.jpg
16 |    |   |-Motorcycle002.jpg
17 |    |   |.....
18 |    |-Soccer Ball
19 |        |
20 |        |-Soccer_Ball-001.jpg
21 |        |-Soccer_Ball-002.jpg
22 | ```
23 | If you have more classes, create a sub-folder for each of them in the same way.
24 |

--------------------------------------------------------------------------------
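flow_from_directory() in Training.py assigns label indices to these sub-folders in alphanumeric order of their names, and Testing.py hard-codes that mapping. The sketch below reproduces the ordering with the standard library; the helper `class_indices` is hypothetical. After training, the `class_indices` attribute of the Keras generator holds the authoritative mapping.

```python
import os


def class_indices(directory):
    """Label index per class sub-folder, in sorted (alphanumeric) name order."""
    names = sorted(
        entry for entry in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, entry))  # files are ignored
    )
    return {name: index for index, name in enumerate(names)}
```

With Caltech101 folder names, uppercase letters sort first, giving Motorbikes = 0, chair = 1 and soccer_ball = 2. This matches the labels printed by Testing.py. With the folder names in the example above, the order is Chair = 0, Motorcycle = 1, Soccer Ball = 2, and the labels in Testing.py would need to be updated to match.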

/Using Keras/data/test/README.md:

--------------------------------------------------------------------------------

1 | NOTE: This folder holds the validation set. Training.py evaluates the model on these images at the end of every epoch.
2 |
3 | In this data/test folder, create the same class sub-folders as in data/train. For example, if you train your system on 3 classes (Chair, Motorcycle and Soccer Ball), create the following sub-folders:
4 |
5 | ```
6 | data
7 | |
8 | |-test
9 |    |
10 |    |-Chair
11 |    |   |
12 |    |   |-ChairImg001.jpg
13 |    |   |-ChairImg002.jpg
14 |    |   |.....
15 |    |-Motorcycle
16 |    |   |
17 |    |   |-Motorcycle001.jpg
18 |    |   |-Motorcycle002.jpg
19 |    |   |.....
20 |    |-Soccer Ball
21 |        |
22 |        |-Soccer_Ball-001.jpg
23 |        |-Soccer_Ball-002.jpg
24 | ```
25 |
26 | If you have more classes, create a sub-folder for each of them in the same way.
27 |

--------------------------------------------------------------------------------
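flow_from_directory() infers the classes of data/train and data/test independently. A folder that exists in only one of them therefore shifts the label indices (and can change the number of classes) of the validation data. Below is a minimal check; the helper names `class_folders` and `check_matching_classes` are hypothetical.

```python
import os


def class_folders(path):
    """Names of the sub-directories (classes) directly under `path`."""
    return {e for e in os.listdir(path) if os.path.isdir(os.path.join(path, e))}


def check_matching_classes(train_path="data/train", test_path="data/test"):
    """Return (missing_in_test, extra_in_test) as sorted lists of class names."""
    train, test = class_folders(train_path), class_folders(test_path)
    return sorted(train - test), sorted(test - train)
```

Two empty lists mean both folders define the same classes.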

/README.md:

--------------------------------------------------------------------------------

1 | # Image-Classification-by-Keras-and-Tensorflow
2 | Image classification using Keras as well as TensorFlow. A Convolutional Neural Network (CNN) is trained on an existing dataset of images from multiple classes. The resulting model predicts the class of similar images that were not seen during training.
3 |
4 | This repository contains two implementations of multiclass image classification, one using Keras and one using TensorFlow. The two implementations do not depend on each other.
5 |
6 | ## Convolutional Neural Network (CNN)
7 | To be updated soon.
8 |
9 | ## Modules Required
10 | Install the modules listed in the requirements file of the implementation you want to use (requirements_keras.txt or requirements_tensorflow.txt).
11 |
12 | ### Implementation using Keras
13 | - keras==2.2.0
14 | - numpy==1.22.0
15 | - Standard library: sys, os, time
16 |
17 | ### Implementation using Tensorflow
18 | - opencv-python (imported as cv2)
19 | - tensorflow==2.11.1
20 | - numpy==1.22.0
21 | - scikit-learn==0.19.1 (imported as sklearn)
22 | - Standard library: os, time, glob
23 | - dataset: the local module dataset.py in the Using Tensorflow folder, not a PyPI package
24 |
25 | ## Steps
26 | 1. Install the required modules for the implementation you want to use.
27 | 2. Download the dataset you want to train and test your system with. For sample data, you can download the [101_ObjectCategories (131Mbytes)].
28 | 3. Create the required folder structure; the README.md in each data folder describes what it should contain.
29 |
30 | ### Keras
31 |
32 | [Keras folder structure](https://postimg.cc/image/i2de6jd2j/)
33 |
34 | ### Tensorflow
35 |
36 | [Tensorflow folder structure](https://postimg.cc/image/ame4kuryz/)
37 |
38 | [101_ObjectCategories (131Mbytes)]: http://www.vision.caltech.edu/Image_Datasets/Caltech101/
39 |

--------------------------------------------------------------------------------
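Step 3 can be partly automated. The sketch below copies the images of one downloaded category folder into the Keras layout, data/train/<class> and data/test/<class>. It assumes the dataset has been extracted to one folder per category, as with 101_ObjectCategories. The 20% test fraction, the fixed seed and the function name `split_category` are illustrative choices, not values from this repository.

```python
import os
import random
import shutil


def split_category(src_dir, class_name, data_dir="data", test_fraction=0.2, seed=0):
    """Copy one category's images into data/train/<class> and data/test/<class>.

    Returns (number_copied_to_train, number_copied_to_test).
    """
    files = sorted(f for f in os.listdir(src_dir)
                   if os.path.isfile(os.path.join(src_dir, f)))
    random.Random(seed).shuffle(files)  # reproducible random split
    n_test = int(len(files) * test_fraction)
    for subset, chunk in (("test", files[:n_test]), ("train", files[n_test:])):
        target = os.path.join(data_dir, subset, class_name)
        os.makedirs(target, exist_ok=True)
        for name in chunk:
            shutil.copy2(os.path.join(src_dir, name), os.path.join(target, name))
    return len(files) - n_test, n_test
```

For example, `split_category("101_ObjectCategories/chair", "chair", data_dir="Using Keras/data")` fills the chair folders of the Keras implementation. Call it once per category you want to train on.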

/Using Keras/Testing.py:

--------------------------------------------------------------------------------

1 | import os
2 | import numpy as np
3 | from keras.preprocessing.image import load_img, img_to_array
4 | from keras.models import load_model
5 | import time
6 |
7 | start = time.time()
8 |
9 | # Define paths
10 | model_path = './models/model.h5'
11 | model_weights_path = './models/weights.h5'
12 | test_path = 'data/alien_test'
13 |
14 | # Load the pre-trained model (model.h5 already includes the weights saved by Training.py)
15 | model = load_model(model_path)
16 | model.load_weights(model_weights_path)
17 |
18 | # Image parameters (must match Training.py)
19 | img_width, img_height = 150, 150
20 |
21 | # Prediction function
22 | def predict(file):
23 |     x = load_img(file, target_size=(img_height, img_width))  # target_size is (height, width)
24 |     x = img_to_array(x) / 255.0  # same 1/255 rescaling as the generators in Training.py
25 |     x = np.expand_dims(x, axis=0)
26 |     array = model.predict(x)
27 |     result = array[0]
28 |     # print(result)
29 |     answer = np.argmax(result)
30 |     # Indices follow the alphanumeric order of the class folders in data/train
31 |     if answer == 0:
32 |         print("Predicted: Motorbikes")
33 |     elif answer == 1:
34 |         print("Predicted: chair")
35 |     elif answer == 2:
36 |         print("Predicted: soccer_ball")
37 |     return answer
38 |
39 | # Walk the directory for every image
40 | for root, dirs, files in os.walk(test_path):
41 |     for filename in files:
42 |         if filename.startswith(".") or not filename.lower().endswith((".jpg", ".jpeg", ".png")):
43 |             continue  # skip hidden files and non-images such as README.md
44 |
45 |         print(os.path.join(root, filename))
46 |         result = predict(os.path.join(root, filename))
47 |         print(" ")
48 |
49 | # Calculate execution time
50 | end = time.time()
51 | dur = end - start
52 |
53 | if dur < 60:
54 |     print("Execution Time:", dur, "seconds")
55 | elif dur < 3600:
56 |     dur = dur / 60
57 |     print("Execution Time:", dur, "minutes")
58 | else:
59 |     dur = dur / (60 * 60)
60 |     print("Execution Time:", dur, "hours")
61 |

--------------------------------------------------------------------------------
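The scripts in this repository repeat the same if/elif chain to report the run time. A small helper, here given the hypothetical name `format_duration`, could replace those copies. It treats every duration from 60 s up to 3600 s as minutes, so exactly 60 s is reported as 1.0 minutes. A `dur>60 and dur<3600` condition would instead let it fall through to the hours branch.

```python
def format_duration(seconds):
    """Return (value, unit) for an elapsed time given in seconds."""
    if seconds < 60:
        return seconds, "seconds"
    if seconds < 3600:  # 60 s up to (but excluding) one hour
        return seconds / 60, "minutes"
    return seconds / 3600, "hours"
```

Usage: `value, unit = format_duration(time.time() - start)` followed by `print("Execution Time:", value, unit)`.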

/Using Keras/Training.py:

--------------------------------------------------------------------------------

1 | import sys
2 | import os
3 | from keras.preprocessing.image import ImageDataGenerator
4 | from keras import optimizers
5 | from keras.models import Sequential
6 | from keras.layers import Dropout, Flatten, Dense, Activation
7 | from keras.layers import Conv2D, MaxPooling2D
8 | from keras import callbacks
9 | import time
10 |
11 | start = time.time()
12 |
13 | DEV = False
14 | argvs = sys.argv
15 | argc = len(argvs)
16 |
17 | if argc > 1 and (argvs[1] == "--development" or argvs[1] == "-d"):
18 |     DEV = True
19 |
20 | if DEV:
21 |     epochs = 2
22 | else:
23 |     epochs = 20
24 |
25 | train_data_path = 'data/train'
26 | validation_data_path = 'data/test'
27 |
28 | """
29 | Parameters
30 | """
31 | img_width, img_height = 150, 150
32 | batch_size = 32
33 | samples_per_epoch = 1000
34 | validation_steps = 300
35 | nb_filters1 = 32
36 | nb_filters2 = 64
37 | conv1_size = 3
38 | conv2_size = 2
39 | pool_size = 2
40 | classes_num = 3  # must equal the number of class sub-folders in data/train
41 | lr = 0.0004
42 |
43 | model = Sequential()
44 | model.add(Conv2D(nb_filters1, (conv1_size, conv1_size), padding="same", input_shape=(img_height, img_width, 3)))
45 | model.add(Activation("relu"))
46 | model.add(MaxPooling2D(pool_size=(pool_size, pool_size)))
47 |
48 | model.add(Conv2D(nb_filters2, (conv2_size, conv2_size), padding="same"))
49 | model.add(Activation("relu"))
50 | model.add(MaxPooling2D(pool_size=(pool_size, pool_size)))  # channels_last, like the first pooling layer
51 |
52 | model.add(Flatten())
53 | model.add(Dense(256))
54 | model.add(Activation("relu"))
55 | model.add(Dropout(0.5))
56 | model.add(Dense(classes_num, activation='softmax'))
57 |
58 | model.compile(loss='categorical_crossentropy',
59 |               optimizer=optimizers.RMSprop(lr=lr),
60 |               metrics=['accuracy'])
61 |
62 | train_datagen = ImageDataGenerator(
63 |     rescale=1. / 255,
64 |     shear_range=0.2,
65 |     zoom_range=0.2,
66 |     horizontal_flip=True)
67 |
68 | test_datagen = ImageDataGenerator(rescale=1. / 255)
69 |
70 | train_generator = train_datagen.flow_from_directory(
71 |     train_data_path,
72 |     target_size=(img_height, img_width),
73 |     batch_size=batch_size,
74 |     class_mode='categorical')
75 |
76 | validation_generator = test_datagen.flow_from_directory(
77 |     validation_data_path,
78 |     target_size=(img_height, img_width),
79 |     batch_size=batch_size,
80 |     class_mode='categorical')
81 |
82 | """
83 | Tensorboard log
84 | """
85 | log_dir = './tf-log/'
86 | tb_cb = callbacks.TensorBoard(log_dir=log_dir, histogram_freq=0)
87 | cbks = [tb_cb]
88 |
89 | model.fit_generator(
90 |     train_generator,
91 |     steps_per_epoch=samples_per_epoch // batch_size,  # Keras 2 counts batches per epoch, not samples
92 |     epochs=epochs,
93 |     validation_data=validation_generator,
94 |     callbacks=cbks,
95 |     validation_steps=validation_steps)
96 |
97 | target_dir = './models/'
98 | if not os.path.exists(target_dir):
99 |     os.mkdir(target_dir)
100 | model.save('./models/model.h5')
101 | model.save_weights('./models/weights.h5')
102 |
103 | # Calculate execution time
104 | end = time.time()
105 | dur = end - start
106 |
107 | if dur < 60:
108 |     print("Execution Time:", dur, "seconds")
109 | elif dur < 3600:
110 |     dur = dur / 60
111 |     print("Execution Time:", dur, "minutes")
112 | else:
113 |     dur = dur / (60 * 60)
114 |     print("Execution Time:", dur, "hours")
115 |

--------------------------------------------------------------------------------
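The length of the Flatten output follows from the layer settings above. Below is a small arithmetic sketch. It assumes both pooling layers operate on channels-last tensors (that is, without `dim_ordering='th'` on the second one) and Keras' default padding='valid' for MaxPooling2D. The function name `keras_cnn_shapes` is hypothetical.

```python
def keras_cnn_shapes(img_size=150, filters=(32, 64), pool=2, dense_units=256):
    """Return (flatten_length, dense_parameter_count) for the Training.py CNN.

    Conv layers use padding="same", so they keep the spatial size; each
    "valid" max-pooling layer divides it by `pool`, rounding down.
    """
    size = img_size
    for _ in filters:
        size = size // pool  # conv keeps the size, pooling floors the division
    flatten_length = size * size * filters[-1]
    dense_params = flatten_length * dense_units + dense_units  # weights + biases
    return flatten_length, dense_params
```

With the defaults, 150 pixels shrink to 75 and then to 37. The Flatten layer therefore outputs 37 * 37 * 64 = 87616 values, and the first Dense layer alone holds 22,429,952 parameters, nearly all of the model's weights.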

/Using Tensorflow/dataset.py:

--------------------------------------------------------------------------------

1 | import cv2
2 | import os
3 | import glob
4 | from sklearn.utils import shuffle
5 | import numpy as np
6 |
7 |
8 | def load_train(train_path, image_size, classes):
9 |     images = []
10 |     labels = []
11 |     img_names = []
12 |     cls = []
13 |
14 |     print('Going to read training images')
15 |     for index, fields in enumerate(classes):
16 |         # `fields` is the class (sub-folder) name; `index` is its position in `classes`
17 |         print('Now going to read {} files (Index: {})'.format(fields, index))
18 |         path = os.path.join(train_path, fields, '*g')  # names ending in "g": .jpg, .jpeg, .png
19 |         files = glob.glob(path)
20 |         for fl in files:
21 |             image = cv2.imread(fl)
22 |             image = cv2.resize(image, (image_size, image_size), interpolation=cv2.INTER_LINEAR)
23 |             image = image.astype(np.float32)
24 |             image = np.multiply(image, 1.0 / 255.0)  # scale pixel values to [0, 1]
25 |             images.append(image)
26 |             label = np.zeros(len(classes))  # one-hot label vector
27 |             label[index] = 1.0
28 |             labels.append(label)
29 |             flbase = os.path.basename(fl)
30 |             img_names.append(flbase)
31 |             cls.append(fields)
32 |     images = np.array(images)
33 |     labels = np.array(labels)
34 |     img_names = np.array(img_names)
35 |     cls = np.array(cls)
36 |
37 |     return images, labels, img_names, cls
38 |
39 |
40 | class DataSet(object):
41 |
42 |     def __init__(self, images, labels, img_names, cls):
43 |         self._num_examples = images.shape[0]
44 |
45 |         self._images = images
46 |         self._labels = labels
47 |         self._img_names = img_names
48 |         self._cls = cls
49 |         self._epochs_done = 0
50 |         self._index_in_epoch = 0
51 |
52 |     @property
53 |     def images(self):
54 |         return self._images
55 |
56 |     @property
57 |     def labels(self):
58 |         return self._labels
59 |
60 |     @property
61 |     def img_names(self):
62 |         return self._img_names
63 |
64 |     @property
65 |     def cls(self):
66 |         return self._cls
67 |
68 |     @property
69 |     def num_examples(self):
70 |         return self._num_examples
71 |
72 |     @property
73 |     def epochs_done(self):
74 |         return self._epochs_done
75 |
76 |     def next_batch(self, batch_size):
77 |         """Return the next `batch_size` examples from this data set."""
78 |         start = self._index_in_epoch
79 |         self._index_in_epoch += batch_size
80 |
81 |         if self._index_in_epoch > self._num_examples:
82 |             # End of epoch: count it and restart at index 0 (leftover examples are skipped)
83 |             self._epochs_done += 1
84 |             start = 0
85 |             self._index_in_epoch = batch_size
86 |             assert batch_size <= self._num_examples
87 |         end = self._index_in_epoch
88 |
89 |         return self._images[start:end], self._labels[start:end], self._img_names[start:end], self._cls[start:end]
90 |
91 |
92 | def read_train_sets(train_path, image_size, classes, validation_size):
93 |     class DataSets(object):
94 |         pass
95 |     data_sets = DataSets()
96 |
97 |     images, labels, img_names, cls = load_train(train_path, image_size, classes)
98 |     images, labels, img_names, cls = shuffle(images, labels, img_names, cls)
99 |
100 |     if isinstance(validation_size, float):
101 |         validation_size = int(validation_size * images.shape[0])  # fraction -> number of images
102 |
103 |     validation_images = images[:validation_size]
104 |     validation_labels = labels[:validation_size]
105 |     validation_img_names = img_names[:validation_size]
106 |     validation_cls = cls[:validation_size]
107 |
108 |     train_images = images[validation_size:]
109 |     train_labels = labels[validation_size:]
110 |     train_img_names = img_names[validation_size:]
111 |     train_cls = cls[validation_size:]
112 |
113 |     data_sets.train = DataSet(train_images, train_labels, train_img_names, train_cls)
114 |     data_sets.valid = DataSet(validation_images, validation_labels, validation_img_names, validation_cls)
115 |
116 |     return data_sets
117 |

--------------------------------------------------------------------------------
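The index bookkeeping in DataSet.next_batch() is easiest to follow in isolation. The pure-Python sketch below replays it without any image data; `batch_bounds` is a hypothetical helper, not part of dataset.py. Batches are taken in order and the data is not reshuffled between epochs. When a batch would run past the end, the remaining examples are skipped and the next batch starts again at index 0.

```python
def batch_bounds(num_examples, batch_size, calls):
    """Replay next_batch() index logic; return ([(start, end), ...], epochs_done)."""
    index_in_epoch, epochs_done, bounds = 0, 0, []
    for _ in range(calls):
        start = index_in_epoch
        index_in_epoch += batch_size
        if index_in_epoch > num_examples:
            # Same branch as next_batch(): new epoch, restart at index 0
            epochs_done += 1
            start = 0
            index_in_epoch = batch_size
            assert batch_size <= num_examples  # the AssertionError users may see
        bounds.append((start, index_in_epoch))
    return bounds, epochs_done
```

With 10 examples and batch_size = 4, examples 8 and 9 are never returned during that pass. With 3 examples and batch_size = 4, the first call already fails the assertion.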

/Using Tensorflow/Prediction.py:

--------------------------------------------------------------------------------

1 | import tensorflow.compat.v1 as tf  # TF1-style graph/session API; available in TF 1.15 and TF 2.x
2 | import numpy as np
3 | import os
4 | import cv2
5 | import time
6 | tf.disable_v2_behavior()  # needed on TF 2.x for sessions and meta-graph import
7 | start = time.time()
8 |
9 | try:
10 |
11 |     # Path of training images (relative to the "Using Tensorflow" folder)
12 |     train_path = 'data/train'
13 |     if not os.path.exists(train_path):
14 |         print("No such directory")
15 |         raise Exception
16 |     # Path of testing images
17 |     dir_path = 'data/test'
18 |     if not os.path.exists(dir_path):
19 |         print("No such directory")
20 |         raise Exception
21 |
22 |     # Walk through all testing images one by one
23 |     for root, dirs, files in os.walk(dir_path):
24 |         for name in files:
25 |
26 |             print("")
27 |             image_path = name
28 |             filename = os.path.join(root, image_path)
29 |             print(filename)
30 |             image_size = 128
31 |             num_channels = 3
32 |             images = []
33 |
34 |             if os.path.exists(filename) and name.lower().endswith((".jpg", ".jpeg", ".png")):
35 |
36 |                 # Read the image using OpenCV
37 |                 image = cv2.imread(filename)
38 |                 # Resize and preprocess exactly as during training (see dataset.py)
39 |                 image = cv2.resize(image, (image_size, image_size), interpolation=cv2.INTER_LINEAR)
40 |                 images.append(image)
41 |                 images = np.array(images, dtype=np.uint8)
42 |                 images = images.astype('float32')
43 |                 images = np.multiply(images, 1.0/255.0)
44 |
45 |                 # The input to the network has shape [None, image_size, image_size, num_channels], so reshape
46 |                 x_batch = images.reshape(1, image_size, image_size, num_channels)
47 |
48 |                 tf.reset_default_graph()  # start each image from an empty graph, then restore the saved model
49 |                 sess = tf.Session()
50 |                 # Step 1: recreate the network graph (only the graph is created here)
51 |                 saver = tf.train.import_meta_graph('models/trained_model.meta')
52 |                 # Step 2: load the weights saved by Training.py
53 |                 saver.restore(sess, tf.train.latest_checkpoint('./models/'))
54 |
55 |                 # Access the default graph that was restored
56 |                 graph = tf.get_default_graph()
57 |
58 |                 # Get the op that produces the output:
59 |                 # in the original network, y_pred is the tensor holding the prediction
60 |                 y_pred = graph.get_tensor_by_name("y_pred:0")
61 |
62 |                 ## Feed the image to the input placeholders
63 |                 x = graph.get_tensor_by_name("x:0")
64 |                 y_true = graph.get_tensor_by_name("y_true:0")
65 |                 y_test_images = np.zeros((1, len(os.listdir(train_path))))
66 |
67 |
68 |                 # Create the feed_dict required to compute y_pred
69 |                 feed_dict_testing = {x: x_batch, y_true: y_test_images}
70 |                 result = sess.run(y_pred, feed_dict=feed_dict_testing)
71 |                 # result has the format [[probability_of_classA probability_of_classB ....]]
72 |                 print(result)
73 |
74 |                 # Convert np.array to list
75 |                 a = result[0].tolist()
76 |                 r = 0
77 |
78 |                 # Find the maximum of all outputs
79 |                 max1 = max(a)
80 |                 index1 = a.index(max1)
81 |                 predicted_class = None
82 |
83 |                 # Map the index back to a class name. Training.py built `classes` with
84 |                 # os.listdir(train_path), so use the same order here (os.walk() would list
85 |                 # only sub-folders and shift the indices if data/train also holds README.md)
86 |                 class_names = os.listdir(train_path)
87 |                 predicted_class = class_names[index1]
88 |
89 |
90 |
91 |                 # If the maximum confidence output is largest of all by a big margin then
92 |                 # print the class or else print a warning
93 |                 for i in a:
94 |                     if i != max1:

95 | if max1-i60 and dur<3600:

117 |     dur = dur/60
118 |     print("Execution Time:", dur, "minutes")
119 | else:
120 |     dur = dur/(60*60)
121 |     print("Execution Time:", dur, "hours")

122 |

--------------------------------------------------------------------------------
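The idea described in the comments of the prediction loop can be sketched as a standalone function: report the class only when its probability beats every other class by a clear margin, and otherwise flag the result. The threshold `min_margin=0.5` and the name `predict_label` are hypothetical; no particular threshold from Prediction.py is assumed. `class_names` should be in the order that Training.py used for `classes`.

```python
def predict_label(probabilities, class_names, min_margin=0.5):
    """Return (label, confident) for one row of softmax probabilities."""
    # Index of the highest probability (first one wins on ties, like list.index)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    # Highest probability among the remaining classes (0.0 if there is only one)
    runner_up = max((p for i, p in enumerate(probabilities) if i != best), default=0.0)
    return class_names[best], probabilities[best] - runner_up >= min_margin
```

A result such as `("Motorcycle", False)` is the case where a warning would be printed instead of a confident prediction.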

/Using Tensorflow/Training.py:

--------------------------------------------------------------------------------

1 | import dataset  # local module (dataset.py in this folder), not the PyPI package
2 | import tensorflow.compat.v1 as tf  # TF1-style graph/session API; available in TF 1.15 and TF 2.x
3 | import time
4 | import numpy as np
5 | import os
6 | tf.disable_v2_behavior()  # needed on TF 2.x for placeholders and sessions
7 | start = time.time()
8 | try:
9 |
10 |     # Total iterations
11 |     final_iter = 1000
12 |
13 |     # Assign the batch value
14 |     batch_size = 20
15 |
16 |     # 20% of the data will automatically be used for validation
17 |     validation_size = 0.2
18 |     img_size = 128
19 |     num_channels = 3
20 |     train_path = 'data/train'  # relative to the "Using Tensorflow" folder
21 |
22 |     # Prepare input data
23 |     if not os.path.exists(train_path):
24 |         print("No such directory")
25 |         raise Exception
26 |     classes = os.listdir(train_path)
27 |     num_classes = len(classes)
28 |
29 |     # Load all training and validation images and labels into memory with OpenCV and use them during training
30 |     data = dataset.read_train_sets(train_path, img_size, classes, validation_size=validation_size)
31 |
32 |     # Display the stats
33 |     print("Completed reading input data. Will now print a snippet of it")
34 |     print("Number of files in Training-set:\t\t{}".format(len(data.train.labels)))
35 |     print("Number of files in Validation-set:\t{}".format(len(data.valid.labels)))
36 |     session = tf.Session()
37 |     x = tf.placeholder(tf.float32, shape=[None, img_size, img_size, num_channels], name='x')
38 |
39 |     ## labels
40 |     y_true = tf.placeholder(tf.float32, shape=[None, num_classes], name='y_true')
41 |     y_true_cls = tf.argmax(y_true, axis=1)
42 |
43 |
44 |     ## Network graph params
45 |     filter_size_conv1 = 3
46 |     num_filters_conv1 = 32
47 |
48 |     filter_size_conv2 = 3
49 |     num_filters_conv2 = 32
50 |
51 |     filter_size_conv3 = 3
52 |     num_filters_conv3 = 64
53 |
54 |     fc_layer_size = 128
55 |
56 |     def create_weights(shape):
57 |         return tf.Variable(tf.truncated_normal(shape, stddev=0.05))
58 |
59 |     def create_biases(size):
60 |         return tf.Variable(tf.constant(0.05, shape=[size]))
61 |
62 |     # Function to create a convolutional layer
63 |     def create_convolutional_layer(input,
64 |                                    num_input_channels,
65 |                                    conv_filter_size,
66 |                                    num_filters):
67 |
68 |         ## We shall define the weights that will be trained using create_weights function.
69 |         weights = create_weights(shape=[conv_filter_size, conv_filter_size, num_input_channels, num_filters])
70 |         ## We create biases using the create_biases function. These are also trained.
71 |         biases = create_biases(num_filters)
72 |
73 |         ## Creating the convolutional layer
74 |         layer = tf.nn.conv2d(input=input,
75 |                              filter=weights,
76 |                              strides=[1, 1, 1, 1],
77 |                              padding='SAME')
78 |
79 |         layer += biases
80 |
81 |         ## We shall be using max-pooling.
82 |         layer = tf.nn.max_pool(value=layer,
83 |                                ksize=[1, 2, 2, 1],
84 |                                strides=[1, 2, 2, 1],
85 |                                padding='SAME')
86 |         ## Output of pooling is fed to Relu which is the activation function for us.
87 |         layer = tf.nn.relu(layer)
88 |
89 |         return layer
90 |
91 |
92 |     # Function to create a Flatten Layer
93 |     def create_flatten_layer(layer):
94 |         # We know that the shape of the layer will be [batch_size img_size img_size num_channels]
95 |         # But let's get it from the previous layer.
96 |         layer_shape = layer.get_shape()
97 |
98 |         ## Number of features will be img_height * img_width * num_channels. But we shall calculate it in place of hard-coding it.
99 |         num_features = layer_shape[1:4].num_elements()
100 |
101 |         ## Now, we Flatten the layer so we shall have to reshape to num_features
102 |         layer = tf.reshape(layer, [-1, num_features])
103 |
104 |         return layer
105 |
106 |     # Function to create a Fully-Connected Layer
107 |     def create_fc_layer(input,
108 |                         num_inputs,
109 |                         num_outputs,
110 |                         use_relu=True):
111 |
112 |         # Let's define trainable weights and biases.
113 |         weights = create_weights(shape=[num_inputs, num_outputs])
114 |         biases = create_biases(num_outputs)
115 |
116 |         # Fully connected layer takes input x and produces wx+b. Since these are matrices, we use the matmul function in TensorFlow
117 |         layer = tf.matmul(input, weights) + biases
118 |         if use_relu:
119 |             layer = tf.nn.relu(layer)
120 |
121 |         return layer
122 |
123 |     # Create all the layers
124 |     layer_conv1 = create_convolutional_layer(input=x,
125 |                                              num_input_channels=num_channels,
126 |                                              conv_filter_size=filter_size_conv1,
127 |                                              num_filters=num_filters_conv1)
128 |     layer_conv2 = create_convolutional_layer(input=layer_conv1,
129 |                                              num_input_channels=num_filters_conv1,
130 |                                              conv_filter_size=filter_size_conv2,
131 |                                              num_filters=num_filters_conv2)
132 |
133 |     layer_conv3 = create_convolutional_layer(input=layer_conv2,
134 |                                              num_input_channels=num_filters_conv2,
135 |                                              conv_filter_size=filter_size_conv3,
136 |                                              num_filters=num_filters_conv3)
137 |
138 |     layer_flat = create_flatten_layer(layer_conv3)
139 |
140 |     layer_fc1 = create_fc_layer(input=layer_flat,
141 |                                 num_inputs=layer_flat.get_shape()[1:4].num_elements(),
142 |                                 num_outputs=fc_layer_size,
143 |                                 use_relu=True)
144 |
145 |     layer_fc2 = create_fc_layer(input=layer_fc1,
146 |                                 num_inputs=fc_layer_size,
147 |                                 num_outputs=num_classes,
148 |                                 use_relu=False)
149 |
150 |     y_pred = tf.nn.softmax(layer_fc2, name='y_pred')
151 |
152 |     y_pred_cls = tf.argmax(y_pred, axis=1)
153 |     session.run(tf.global_variables_initializer())
154 |     cross_entropy = tf.nn.softmax_cross_entropy_with_logits_v2(logits=layer_fc2,
155 |                                                                 labels=y_true)
156 |     cost = tf.reduce_mean(cross_entropy)
157 |     optimizer = tf.train.AdamOptimizer(learning_rate=1e-4).minimize(cost)
158 |     correct_prediction = tf.equal(y_pred_cls, y_true_cls)
159 |     accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
160 |
161 |
162 |     session.run(tf.global_variables_initializer())
163 |
164 |     # Display all stats for every epoch
165 |     def show_progress(epoch, feed_dict_train, feed_dict_validate, val_loss, total_epochs):
166 |         acc = session.run(accuracy, feed_dict=feed_dict_train)
167 |         val_acc = session.run(accuracy, feed_dict=feed_dict_validate)
168 |         msg = "Training Epoch {0}/{4} --- Training Accuracy: {1:>6.1%}, Validation Accuracy: {2:>6.1%}, Validation Loss: {3:.3f}"
169 |         print(msg.format(epoch + 1, acc, val_acc, val_loss, total_epochs))
170 |
171 |     total_iterations = 0
172 |
173 |     saver = tf.train.Saver()
174 |     os.makedirs('models', exist_ok=True)  # Saver.save() requires the target directory to exist
175 |     print("")
176 |
177 |     # Training Function
178 |     def train(num_iteration):
179 |         global total_iterations
180 |
181 |         for i in range(total_iterations,
182 |                        total_iterations + num_iteration):
183 |
184 |             x_batch, y_true_batch, _, cls_batch = data.train.next_batch(batch_size)
185 |             x_valid_batch, y_valid_batch, _, valid_cls_batch = data.valid.next_batch(batch_size)
186 |
187 |
188 |             feed_dict_tr = {x: x_batch,
189 |                             y_true: y_true_batch}
190 |             feed_dict_val = {x: x_valid_batch,
191 |                              y_true: y_valid_batch}
192 |
193 |             session.run(optimizer, feed_dict=feed_dict_tr)
194 |
195 |             if i % int(data.train.num_examples/batch_size) == 0:
196 |                 val_loss = session.run(cost, feed_dict=feed_dict_val)
197 |                 epoch = int(i / int(data.train.num_examples/batch_size))
198 |                 #print(data.train.num_examples)
199 |                 #print(batch_size)
200 |                 #print(int(data.train.num_examples/batch_size))
201 |                 #print(i)
202 |
203 |                 total_epochs = int(num_iteration/int(data.train.num_examples/batch_size)) + 1
204 |                 show_progress(epoch, feed_dict_tr, feed_dict_val, val_loss, total_epochs)
205 |                 saver.save(session, 'models/trained_model')  # read back by Prediction.py from ./models/
206 |
207 |         total_iterations += num_iteration
208 |
209 |     train(num_iteration=final_iter)
210 |
211 | except Exception as e:
212 |     print("Exception:", e)
213 |
214 | # Calculate execution time
215 | end = time.time()
216 | dur = end - start
217 | print("")
218 | if dur < 60:
219 |     print("Execution Time:", dur, "seconds")
220 | elif dur < 3600:
221 |     dur = dur / 60
222 |     print("Execution Time:", dur, "minutes")
223 | else:
224 |     dur = dur / (60 * 60)
225 |     print("Execution Time:", dur, "hours")
226 |

--------------------------------------------------------------------------------
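The progress output of train() follows from a few integer divisions. Below is a sketch of that arithmetic, assuming the default settings in Training.py (batch_size = 20, validation_size = 0.2, final_iter = 1000). `training_schedule` is a hypothetical helper.

```python
def training_schedule(num_images, batch_size=20, validation_size=0.2, final_iter=1000):
    """Return (iterations_per_epoch, total_epochs, progress_reports) for train()."""
    # read_train_sets() holds out int(validation_size * num_images) images
    num_train = num_images - int(validation_size * num_images)
    iters_per_epoch = int(num_train / batch_size)
    total_epochs = int(final_iter / iters_per_epoch) + 1  # value shown after the "/"
    # train() reports when i % iters_per_epoch == 0 for i in range(final_iter)
    progress_reports = len(range(0, final_iter, iters_per_epoch))
    return iters_per_epoch, total_epochs, progress_reports
```

For 100 images in data/train, 80 are used for training and one epoch is 4 iterations. The script prints 250 progress lines, the last one labelled "Training Epoch 250/251". If fewer than batch_size training images remain, iterations per epoch is 0. The modulo in train() then raises ZeroDivisionError, and so does this helper.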

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------