├── .gitignore

├── README.md

├── dev

├── babynames

│ ├── babynames-dplyr.Rmd

│ ├── babynames-dplyr.nb.html

│ └── derby.log

├── cloudera

│ ├── bigvis_tile.R

│ ├── livy-architecture.png

│ ├── livy.Rmd

│ ├── livy.sh

│ ├── livy_connection.Rmd

│ ├── nyct2010r.csv

│ ├── spark_ml_classification_titanic.Rmd

│ ├── spark_plot_boxbin.R

│ ├── spark_plot_hist.R

│ ├── spark_plot_point.R

│ ├── spark_toolchain.Rmd

│ ├── sqlvis_histogram.R

│ ├── sqlvis_raster.R

│ ├── taxiDemoCloudera.Rmd

│ ├── taxiDemoCloudera.nb.html

│ ├── taxiDemoCloudera2.Rmd

│ ├── taxiDemoCloudera3.Rmd

│ ├── taxiDemoCloudera_backup.Rmd

│ └── testCloudera.R

├── flights-cdh

│ ├── flights_pred_2008.RData

│ ├── images

│ │ └── clusterDemo

│ │ │ ├── data-analysis-1.png

│ │ │ ├── flex-1.png

│ │ │ ├── forecast-1.png

│ │ │ ├── hue-metastore-1.png

│ │ │ ├── manager-landing-page.png

│ │ │ ├── performance-1.png

│ │ │ ├── sign-in-1.png

│ │ │ ├── spark-history-server-1.png

│ │ │ ├── spark-pane-1.png

│ │ │ ├── spark-rdd-1.png

│ │ │ └── tables-1.png

│ ├── nycflights_flexdashboard.Rmd

│ ├── sparkClusterDemo-source.R

│ ├── sparkClusterDemo.Rmd

│ └── sparkClusterDemo.html

├── flights

│ ├── flightsAnalysis.Rmd

│ ├── flightsAnalysis.nb.html

│ ├── flightsApp

│ │ └── app.R

│ ├── flightsApp2

│ │ ├── global.R

│ │ ├── server.R

│ │ └── ui.R

│ ├── flights_pred_2008.RData

│ ├── images

│ │ └── clusterDemo

│ │ │ ├── awsClusterConnect.png

│ │ │ ├── awsCreateCluster.png

│ │ │ ├── awsCreateCluster2.png

│ │ │ ├── awsNewSecurityGroup.png

│ │ │ ├── awsSecurityGroup.png

│ │ │ ├── awsSecurityGroup2.png

│ │ │ ├── emrArchitecture.png

│ │ │ ├── emrConfigStep1.png

│ │ │ ├── emrConfigStep2.png

│ │ │ ├── emrConfigStep3.png

│ │ │ ├── emrConfigStep4.png

│ │ │ ├── emrLogin.png

│ │ │ ├── flightsDashboard.png

│ │ │ ├── flightsDeciles.png

│ │ │ ├── flightsDecilesDesc.png

│ │ │ ├── flightsPredicted.png

│ │ │ ├── rstudio.png

│ │ │ ├── rstudioData.png

│ │ │ ├── rstudioLogin.png

│ │ │ ├── rstudioModel.png

│ │ │ ├── rstudioModelDetail.png

│ │ │ ├── rstudioSparkPane.png

│ │ │ ├── workflow.png

│ │ │ ├── workflowCommands.png

│ │ │ ├── workflowRSC.png

│ │ │ └── workflowShare.png

│ ├── nycflights_flexdashboard.Rmd

│ ├── nycflights_flexdashboard_spark.Rmd

│ ├── recode_for_prediction.R

│ ├── sparkClusterDemo.Rmd

│ └── sparkClusterDemo.html

├── h2o-demo

│ ├── emr_h2o_setup.sh

│ ├── h2oHadoop.Rmd

│ ├── h2oModels.Rmd

│ ├── h2oSetup.R

│ ├── h2oSetup.Rmd

│ ├── h2oSetup.nb.html

│ ├── h2oSetup_2_0_0.R

│ ├── iris.csv

│ ├── livy.R

│ ├── livy.Rmd

│ ├── nyct2010.csv

│ ├── sqlvis_histogram.R

│ ├── sqlvis_raster.R

│ ├── taxiDemoH2O.Rmd

│ └── taxiDemoH2O.nb.html

├── h2o

│ ├── 01_h2o_setup.R

│ ├── 02_h2o_rsparkling.Rmd

│ ├── 02_h2o_rsparkling.nb.html

│ ├── 03_h2o_ml.Rmd

│ ├── 03_h2o_ml.nb.html

│ └── 04_h2o_grid.R

├── helloworld

│ ├── derby.log

│ ├── helloWorld.Rmd

│ ├── helloWorld.html

│ └── helloWorld.nb.html

├── hive

│ ├── hiveJDBC.R

│ ├── hiveMetastore.R

│ ├── hiveMetastore.Rmd

│ └── hiveMetastore.nb.html

├── nyc-taxi-data

│ ├── .gitignore

│ ├── taxiAnalysis.R

│ ├── taxiApp.R

│ ├── taxiApp

│ │ └── app.R

│ ├── taxiDashboard.Rmd

│ ├── taxiDemo.Rmd

│ └── taxiDemo.nb.html

├── nycflights13

│ ├── .gitignore

│ ├── dplyr.Rmd

│ ├── dplyr.nb.html

│ ├── nycflights13_flexdashboard_rdata.Rmd

│ └── nycflights13_flexdashboard_sparkdata.Rmd

├── performance

│ ├── collect.Rmd

│ └── collect.html

└── titanic

│ ├── .gitignore

│ ├── notebook-classification-rdata.Rmd

│ ├── notebook-classification-rdata.nb.html

│ ├── notebook-classification.Rmd

│ ├── notebook-classification.html

│ ├── notebook-classification.nb.html

│ ├── rmarkdown-classification.Rmd

│ ├── rmarkdown-classification_files

│ └── figure-html

│ │ ├── auc-1.png

│ │ ├── importance-1.png

│ │ └── lift-1.png

│ └── titanic-parquet

│ ├── ._SUCCESS.crc

│ ├── .part-r-00000-69484ddd-e601-45e0-bea0-eb7b5b8b23eb.snappy.parquet.crc

│ ├── _SUCCESS

│ └── part-r-00000-69484ddd-e601-45e0-bea0-eb7b5b8b23eb.snappy.parquet

├── img

├── sparklyr-illustration.png

├── sparklyr-presentation-demos.001.jpeg

├── sparklyr-presentation-demos.002.jpeg

├── sparklyr-presentation-demos.003.jpeg

├── sparklyr-presentation-demos.004.jpeg

├── sparklyr-presentation-demos.005.jpeg

├── sparklyr-presentation-demos.006.jpeg

├── sparklyr-presentation-demos.007.jpeg

├── sparklyr-presentation-demos.008.jpeg

├── sparklyr-presentation-demos.009.jpeg

├── sparklyr-presentation-demos.010.jpeg

├── sparklyr-presentation-demos.011.jpeg

├── sparklyr-presentation-demos.012.jpeg

├── sparklyr-presentation-demos.013.jpeg

├── sparklyr-presentation-demos.014.jpeg

├── sparklyr-presentation-demos.015.jpeg

├── sparklyr-presentation-demos.016.jpeg

├── sparklyr-presentation-demos.017.jpeg

├── sparklyr-presentation-demos.018.jpeg

├── sparklyr-presentation-demos.019.jpeg

├── sparklyr-presentation-demos.020.jpeg

└── sparklyr-presentation-demos.021.jpeg

└── prod

├── apps

├── iris-k-means

│ ├── DESCRIPTION

│ ├── app.R

│ ├── config.yml

│ └── iris-parquet

│ │ ├── ._SUCCESS.crc

│ │ ├── ._common_metadata.crc

│ │ ├── ._metadata.crc

│ │ ├── .part-r-00000-30bd49c2-226d-41ad-8ec3-ccf9ddc0ccf5.gz.parquet.crc

│ │ ├── .part-r-00001-30bd49c2-226d-41ad-8ec3-ccf9ddc0ccf5.gz.parquet.crc

│ │ ├── _SUCCESS

│ │ ├── _common_metadata

│ │ ├── _metadata

│ │ ├── part-r-00000-30bd49c2-226d-41ad-8ec3-ccf9ddc0ccf5.gz.parquet

│ │ └── part-r-00001-30bd49c2-226d-41ad-8ec3-ccf9ddc0ccf5.gz.parquet

├── nycflights13-app-spark

│ ├── DESCRIPTION

│ ├── Readme.md

│ ├── app.R

│ └── config.yml

└── titanic-classification

│ ├── .gitignore

│ ├── DESCRIPTION

│ ├── app.R

│ ├── helpers.R

│ └── titanic-parquet

│ ├── ._SUCCESS.crc

│ ├── .part-r-00000-69484ddd-e601-45e0-bea0-eb7b5b8b23eb.snappy.parquet.crc

│ ├── _SUCCESS

│ └── part-r-00000-69484ddd-e601-45e0-bea0-eb7b5b8b23eb.snappy.parquet

├── conf

├── config.yml

└── shiny-server.conf

├── dashboards

├── diamonds-explorer

│ ├── config.yml

│ ├── diamonds-parquet

│ │ ├── ._SUCCESS.crc

│ │ ├── ._common_metadata.crc

│ │ ├── ._metadata.crc

│ │ ├── .part-r-00000-d6c7e259-62a6-415e-a145-f1715444705a.gz.parquet.crc

│ │ ├── .part-r-00001-d6c7e259-62a6-415e-a145-f1715444705a.gz.parquet.crc

│ │ ├── _SUCCESS

│ │ ├── _common_metadata

│ │ ├── _metadata

│ │ ├── part-r-00000-d6c7e259-62a6-415e-a145-f1715444705a.gz.parquet

│ │ └── part-r-00001-d6c7e259-62a6-415e-a145-f1715444705a.gz.parquet

│ └── flexdashboard-shiny-diamonds.Rmd

├── ggplot2-brushing

│ └── ggplot2Brushing.Rmd

├── nycflights13-dash-spark

│ ├── config.yml

│ └── nycflights13-dash-spark.Rmd

└── tor-project

│ ├── .gitignore

│ ├── metricsgraphicsTorProject.Rmd

│ └── metricsgraphicsTorProject.html

├── notebooks

├── babynames

│ ├── .gitignore

│ ├── babynames-dplyr.Rmd

│ └── babynames-dplyr.nb.html

├── end-to-end-flights

│ ├── end-to-end-flights-flexdashboard.Rmd

│ ├── end-to-end-flights-htmldoc.html

│ ├── end-to-end-flights.Rmd

│ └── flights_pred_2008.RData

├── ml_classification_titanic

│ ├── spark_ml_classification_titanic.Rmd

│ ├── spark_ml_classification_titanic.html

│ ├── spark_ml_classification_titanic.nb.html

│ └── titanic-parquet

│ │ ├── ._SUCCESS.crc

│ │ ├── .part-r-00000-69484ddd-e601-45e0-bea0-eb7b5b8b23eb.snappy.parquet.crc

│ │ ├── _SUCCESS

│ │ └── part-r-00000-69484ddd-e601-45e0-bea0-eb7b5b8b23eb.snappy.parquet

└── taxi_demo

│ ├── readme.md

│ ├── taxiDemo.Rmd

│ └── taxiDemo.nb.html

└── presentations

├── cazena

├── 01_taxiR.Rmd

├── 02_taxiDemo.Rmd

├── 03_taxiGadget.Rmd

├── README.md

├── emr_setup.sh

├── kerberos.R

├── nyct2010.csv

├── sqlvis_histogram.R

└── sqlvis_raster.R

├── cloudera

├── livy-architecture.png

├── livy.Rmd

├── readme.html

├── readme.md

├── sqlvis_histogram.R

├── sqlvis_raster.R

└── taxiDemoCloudera.Rmd

├── sparkSummitEast

├── README.md

├── img

│ ├── img.001.jpeg

│ ├── img.002.jpeg

│ ├── img.003.jpeg

│ ├── img.004.jpeg

│ ├── img.005.jpeg

│ ├── img.006.jpeg

│ ├── img.007.jpeg

│ ├── img.008.jpeg

│ ├── img.009.jpeg

│ ├── img.010.jpeg

│ ├── img.011.jpeg

│ ├── img.012.jpeg

│ ├── img.013.jpeg

│ ├── img.014.jpeg

│ ├── img.015.jpeg

│ ├── img.016.jpeg

│ └── img.017.jpeg

├── livy.Rmd

├── nyct2010.csv

├── sqlvis_histogram.R

├── sqlvis_raster.R

└── taxiDemoH2O.Rmd

└── tidyverse

├── 01_taxiR.Rmd

├── 02_taxiDemo.Rmd

├── 03_taxiGadget.Rmd

├── README.md

├── emr_setup.sh

├── img

├── tidyverse.001.jpeg

├── tidyverse.002.jpeg

├── tidyverse.003.jpeg

├── tidyverse.004.jpeg

├── tidyverse.005.jpeg

├── tidyverse.006.jpeg

├── tidyverse.007.jpeg

├── tidyverse.008.jpeg

├── tidyverse.009.jpeg

├── tidyverse.010.jpeg

├── tidyverse.011.jpeg

├── tidyverse.012.jpeg

├── tidyverse.013.jpeg

├── tidyverse.014.jpeg

├── tidyverse.015.jpeg

└── tidyverse.016.jpeg

├── nyct2010.csv

├── sqlvis_histogram.R

├── sqlvis_raster.R

└── tidyverseAndSpark.pdf

/.gitignore:

--------------------------------------------------------------------------------

1 | .Rproj.user

2 | .Rhistory

3 | .RData

4 | .Ruserdata

5 | sparkDemos.Rproj

6 | rsconnect

7 | derby.log

8 | *.nb.html

9 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Sparklyr Demos"

3 | output: html_document

4 | ---

5 |

6 |

7 |

8 | ***

9 |

10 |

11 |

12 | ***

13 |

14 |

15 |

16 | ***

17 |

18 |

19 |

20 | ***

21 |

22 |

23 |

24 | ***

25 |

26 |

27 |

28 | ***

29 |

30 |

31 |

32 | ***

33 |

34 |

35 |

36 | ***

37 |

38 |

39 |

40 | ***

41 |

42 |

43 |

44 | ***

45 |

46 |

47 |

48 | ***

49 |

50 |

51 |

52 | ***

53 |

54 |

55 |

56 | ***

57 |

58 |

59 |

60 | ***

61 |

62 |

63 |

64 | ***

65 |

66 |

67 |

68 | ***

69 |

70 |

71 |

72 | ***

73 |

74 |

75 |

76 | ***

77 |

78 |

79 |

80 | ***

81 |

82 |

83 |

84 | ***

85 |

86 |

87 |

--------------------------------------------------------------------------------

/dev/babynames/babynames-dplyr.Rmd:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Analysis of babynames with dplyr"

3 | output: html_notebook

4 | ---

5 |

6 | Use dplyr syntax to write Apache Spark SQL queries. Use select, where, group by, joins, and window functions in Apache Spark SQL.

7 |

8 | ## Setup

9 |

10 | ```{r setup}

11 | knitr::opts_chunk$set(warning = FALSE, message = FALSE)

12 | library(sparklyr)

13 | library(dplyr)

14 | library(babynames)

15 | library(ggplot2)

16 | library(dygraphs)

17 | library(rbokeh)

18 | ```

19 |

20 | ## Connect to Spark

21 |

22 | Install and connect to a local Spark instance. Copy data into Spark DataFrames.

23 |

24 | ```{r}

25 | #spark_install("2.0.0")

26 | sc <- spark_connect(master = "local", version = "2.0.0")

27 | babynames_tbl <- copy_to(sc, babynames, "babynames")

28 | applicants_tbl <- copy_to(sc, applicants, "applicants")

29 | ```

30 |

31 | ## Total US births

32 |

33 | Plot total US births recorded by the Social Security Administration.

34 |

35 | ```{r}

36 | birthsYearly <- applicants_tbl %>%

37 | mutate(male = ifelse(sex == "M", n_all, 0), female = ifelse(sex == "F", n_all, 0)) %>%

38 | group_by(year) %>%

39 | summarize(Male = sum(male) / 1000000, Female = sum(female) / 1000000) %>%

40 | arrange(year) %>%

41 | collect

42 |

43 | birthsYearly %>%

44 | dygraph(main = "Total US Births (SSN)", ylab = "Millions") %>%

45 | dySeries("Female") %>%

46 | dySeries("Male") %>%

47 | dyOptions(stackedGraph = TRUE) %>%

48 | dyRangeSelector(height = 20)

49 | ```
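The chunk above turns a long table (one row per year and sex) into one column per sex by zeroing out the other sex and summing by year. A minimal local sketch of that idea in base R, assuming a made-up `applicants_toy` data frame (hypothetical numbers, not SSA data) and no Spark connection:

```{r}
# Toy stand-in for the `applicants` table (made-up numbers, not SSA data).
applicants_toy <- data.frame(
  year  = c(2000, 2000, 2001, 2001),
  sex   = c("F", "M", "F", "M"),
  n_all = c(1500000, 1600000, 1400000, 1700000),
  stringsAsFactors = FALSE
)

# One column per sex: the count where the row matches, zero elsewhere.
applicants_toy$male   <- ifelse(applicants_toy$sex == "M", applicants_toy$n_all, 0)
applicants_toy$female <- ifelse(applicants_toy$sex == "F", applicants_toy$n_all, 0)

# Sum both columns by year, then express the totals in millions.
births_toy <- aggregate(cbind(male, female) ~ year, data = applicants_toy, FUN = sum)
births_toy$Male   <- births_toy$male / 1e6
births_toy$Female <- births_toy$female / 1e6
births_toy[, c("year", "Male", "Female")]
```

The result has one row per year, which is the wide shape a time-series plot of two series expects.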

50 |

51 | ## Aggregate data by name

52 |

53 | Use Spark SQL to create a lookup table. Register and cache the lookup table in Spark for future queries.

54 |

55 | ```{r}

56 | topNames_tbl <- babynames_tbl %>%

57 | filter(year >= 1986) %>%

58 | group_by(name, sex) %>%

59 | summarize(count = as.numeric(sum(n))) %>%

60 | filter(count > 1000) %>%

61 | select(name, sex)

62 |

63 | filteredNames_tbl <- babynames_tbl %>%

64 | filter(year >= 1986) %>%

65 | inner_join(topNames_tbl)

66 |

67 | yearlyNames_tbl <- filteredNames_tbl %>%

68 | group_by(year, name, sex) %>%

69 | summarize(count = as.numeric(sum(n)))

70 |

71 | sdf_register(yearlyNames_tbl, "yearlyNames")

72 | tbl_cache(sc, "yearlyNames")

73 | ```
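The lookup-table pattern above (aggregate, keep the keys that pass a threshold, then join back to the detail rows) can be tried locally. This is a base R sketch under stated assumptions: `names_toy` and its values are hypothetical, and `merge()` stands in for the `inner_join()` that runs in Spark.

```{r}
# Toy detail rows (made-up counts).
names_toy <- data.frame(
  name = c("Ann", "Ann", "Bob", "Cy"),
  sex  = c("F", "F", "M", "M"),
  year = c(1990, 1991, 1990, 1990),
  n    = c(800, 700, 2000, 50),
  stringsAsFactors = FALSE
)

# Total per (name, sex), then keep only the keys above the threshold.
totals <- aggregate(n ~ name + sex, data = names_toy, FUN = sum)
lookup <- totals[totals$n > 1000, c("name", "sex")]

# Inner join: only detail rows whose key appears in the lookup survive.
filtered <- merge(names_toy, lookup, by = c("name", "sex"))
filtered
```

Rows for "Cy" drop out because the total of 50 is below the threshold, while both yearly rows for "Ann" are kept.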

74 |

75 | ## Most popular names (1986)

76 |

77 | Identify the top 5 male and female names from 1986. Visualize the popularity trend over time.

78 |

79 | ```{r}

80 | topNames1986_tbl <- yearlyNames_tbl %>%

81 | filter(year == 1986) %>%

82 | group_by(name, sex) %>%

83 | summarize(count = sum(count)) %>%

84 | group_by(sex) %>%

85 | mutate(rank = min_rank(desc(count))) %>%

86 | filter(rank <= 5) %>%

87 | arrange(sex, rank) %>%

88 | select(name, sex, rank) %>%

89 | sdf_register("topNames1986")

90 |

91 | tbl_cache(sc, "topNames1986")

92 |

93 | topNames1986Yearly <- yearlyNames_tbl %>%

94 | inner_join(topNames1986_tbl) %>%

95 | collect

96 |

97 | ggplot(topNames1986Yearly, aes(year, count, color=name)) +

98 | facet_grid(~sex) +

99 | geom_line() +

100 | ggtitle("Most Popular Names of 1986")

101 | ```
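The ranking step relies on `min_rank(desc(count))` evaluated within each `sex` group. A minimal base R sketch of the same ranking rule, assuming a hypothetical `toy` data frame with made-up counts and using `rank(..., ties.method = "min")` as the local equivalent:

```{r}
# Toy counts (made-up); names "A" and "B" tie within sex "F".
toy <- data.frame(
  name  = c("A", "B", "C", "D", "E", "F"),
  sex   = c("F", "F", "F", "M", "M", "M"),
  count = c(30, 30, 10, 50, 40, 20),
  stringsAsFactors = FALSE
)

# Rank within each sex, largest count first; tied rows share the smallest rank.
toy$rank <- ave(-toy$count, toy$sex,
                FUN = function(v) rank(v, ties.method = "min"))

# Keep the top 2 per sex and order the result for display.
top_toy <- toy[toy$rank <= 2, ]
top_toy[order(top_toy$sex, top_toy$rank), c("name", "sex", "rank")]
```

A filter of the form `rank <= k` keeps the top `k` rows per group, and may return more than `k` when rows tie at the cutoff.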

102 |

103 | ## Most popular names (2014)

104 |

105 | Identify the top 5 male and female names from 2014. Visualize the popularity trend over time.

106 |

107 | ```{r}

108 | topNames2014_tbl <- yearlyNames_tbl %>%

109 | filter(year == 2014) %>%

110 | group_by(name, sex) %>%

111 | summarize(count = sum(count)) %>%

112 | group_by(sex) %>%

113 | mutate(rank = min_rank(desc(count))) %>%

114 | filter(rank <= 5) %>%

115 | arrange(sex, rank) %>%

116 | select(name, sex, rank) %>%

117 | sdf_register("topNames2014")

118 |

119 | tbl_cache(sc, "topNames2014")

120 |

121 | topNames2014Yearly <- yearlyNames_tbl %>%

122 | inner_join(topNames2014_tbl) %>%

123 | collect

124 |

125 | ggplot(topNames2014Yearly, aes(year, count, color=name)) +

126 | facet_grid(~sex) +

127 | geom_line() +

128 | ggtitle("Most Popular Names of 2014")

129 | ```

130 |

131 | ## Shared names

132 |

133 | Visualize the most popular names that are shared by both males and females.

134 |

135 | ```{r}

136 | sharedName <- babynames_tbl %>%

137 | mutate(male = ifelse(sex == "M", n, 0), female = ifelse(sex == "F", n, 0)) %>%

138 | group_by(name) %>%

139 | summarize(Male = as.numeric(sum(male)),

140 | Female = as.numeric(sum(female)),

141 | count = as.numeric(sum(n)),

142 | AvgYear = round(as.numeric(sum(year * n) / sum(n)),0)) %>%

143 | filter(Male > 30000 & Female > 30000) %>%

144 | collect

145 |

146 | figure(width = NULL, height = NULL,

147 | xlab = "Log10 Number of Males",

148 | ylab = "Log10 Number of Females",

149 | title = "Top shared names (1880 - 2014)") %>%

150 | ly_points(log10(Male), log10(Female), data = sharedName,

151 | color = AvgYear, size = scale(sqrt(count)),

152 | hover = list(name, Male, Female, AvgYear), legend = FALSE)

153 | ```
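`AvgYear` in the chunk above is a count-weighted mean of `year`, so years with more births pull the average toward them. A small base R check of that formula, assuming a hypothetical single-name data frame with made-up counts:

```{r}
# Toy rows for one shared name (made-up counts).
toy <- data.frame(
  sex  = c("M", "F", "M", "F"),
  year = c(1990, 1990, 2010, 2010),
  n    = c(300, 100, 100, 500)
)

# Totals per sex, as in the Male / Female columns of the summary.
male_total   <- sum(ifelse(toy$sex == "M", toy$n, 0))
female_total <- sum(ifelse(toy$sex == "F", toy$n, 0))

# Weighted mean year: each year counts in proportion to its births.
avg_year <- round(sum(toy$year * toy$n) / sum(toy$n), 0)

# Unweighted mean of the years, for comparison.
plain_mean <- mean(unique(toy$year))
c(avg_year = avg_year, plain_mean = plain_mean)
```

Here the weighted value lands later than the plain mean because most of the toy births fall in 2010.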

--------------------------------------------------------------------------------

/dev/babynames/derby.log:

--------------------------------------------------------------------------------

1 | ----------------------------------------------------------------

2 | Wed Feb 15 12:46:01 UTC 2017:

3 | Booting Derby version The Apache Software Foundation - Apache Derby - 10.11.1.1 - (1616546): instance a816c00e-015a-41ce-df39-000016b90d28

4 | on database directory memory:/home/nathan/projects/spark/sparkDemos/dev/babynames/databaseName=metastore_db with class loader org.apache.spark.sql.hive.client.IsolatedClientLoader$$anon$1@53a3cfef

5 | Loaded from file:/home/nathan/.cache/spark/spark-2.0.0-bin-hadoop2.7/jars/derby-10.11.1.1.jar

6 | java.vendor=Oracle Corporation

7 | java.runtime.version=1.7.0_85-b01

8 | user.dir=/home/nathan/projects/spark/sparkDemos/dev/babynames

9 | os.name=Linux

10 | os.arch=amd64

11 | os.version=3.13.0-48-generic

12 | derby.system.home=null

13 | Database Class Loader started - derby.database.classpath=''

14 |

--------------------------------------------------------------------------------

/dev/cloudera/bigvis_tile.R:

--------------------------------------------------------------------------------

1 | ### Big data tile plot

2 |

3 | bigvis_compute_tiles <- function(data, x_field, y_field, resolution = 500){

4 |

5 | data_prep <- data %>%

6 | select_(x = x_field, y = y_field) %>%

7 | filter(!is.na(x), !is.na(y))

8 |

9 | s <- data_prep %>%

10 | summarise(max_x = max(x),

11 | max_y = max(y),

12 | min_x = min(x),

13 | min_y = min(y)) %>%

14 | mutate(rng_x = max_x - min_x,

15 | rng_y = max_y - min_y) %>%

16 | collect()

17 |

18 | image_frame_pre <- data_prep %>%

19 | mutate(res_x = round((x-s$min_x)/s$rng_x*resolution, 0),

20 | res_y = round((y-s$min_y)/s$rng_y*resolution, 0)) %>%

21 | count(res_x, res_y) %>%

22 | collect

23 |

24 | image_frame_pre %>%

25 | rename(freq = n) %>%

26 | mutate(alpha = round(freq / max(freq), 2)) %>%

27 | rename_(.dots=setNames(list("res_x", "res_y"), c(x_field, y_field)))

28 |

29 | }

30 |

31 | bigvis_ggplot_tiles <- function(data){

32 | data %>%

33 | select(x = 1, y = 2, Freq = 4) %>%

34 | ggplot(aes(x, y)) +

35 | geom_tile(aes(fill = Freq)) +

36 | xlab(colnames(data)[1]) +

37 | ylab(colnames(data)[2])

38 | }

39 |

--------------------------------------------------------------------------------

/dev/cloudera/livy-architecture.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/cloudera/livy-architecture.png

--------------------------------------------------------------------------------

/dev/cloudera/livy.Rmd:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Connecting to Spark through Livy"

3 | output: html_notebook

4 | ---

5 |

6 | With Livy you can analyze data in your Spark cluster from R on your desktop.

7 |

8 | ## Livy

9 |

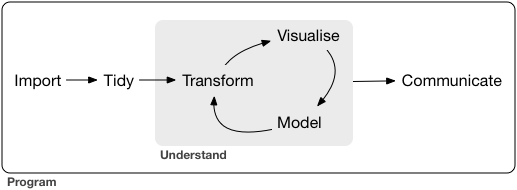

10 | Livy is a service that enables easy interaction with an Apache Spark cluster over a REST interface. It enables easy submission of Spark jobs or snippets of Spark code, synchronous or asynchronous result retrieval, and SparkContext management, all via a simple REST interface or an RPC client library. Livy also simplifies the interaction between Spark and application servers, thus enabling the use of Spark for interactive web/mobile applications.

11 |

12 |

13 |

14 |

15 |

16 | ## Start Livy

17 |

18 | Set the `JAVA_HOME` and `SPARK_HOME` environment variables, then start a Livy server to handle local requests.

19 |

20 | ```{bash}

21 | export JAVA_HOME=/usr/lib/jvm/java-7-oracle-cloudera

22 | export SPARK_HOME=/opt/cloudera/parcels/CDH/lib/spark

23 | /home/ubuntu/livy/livy-server-0.2.0/bin/livy-server

24 | ```

25 |

26 | ## Connect to Spark

27 |

28 | Use `method = "livy"` to connect to the cluster.

29 |

30 | ```{r}

31 | library(sparklyr)

32 | library(dplyr)

33 | sc <- spark_connect(

34 | master = "http://ec2-***.us-west-2.compute.amazonaws.com:8998",

35 | method = "livy")

36 | ```

37 |

38 | ## Analyze

39 |

40 | Use R code on your workstation as you normally would. Your R commands will be sent to the cluster via Livy for processing. Collect your results back to the desktop for further processing in R.

41 |

42 | ```{r}

43 | library(ggplot2)

44 | trips_model_data_tbl <- tbl(sc, "trips_model_data")

45 | pickup_dropoff_tbl <- trips_model_data_tbl %>%

46 | filter(pickup_nta == "Turtle Bay-East Midtown" & dropoff_nta == "Airport") %>%

47 | mutate(pickup_hour = hour(pickup_datetime)) %>%

48 | mutate(trip_time = unix_timestamp(dropoff_datetime) - unix_timestamp(pickup_datetime)) %>%

49 | group_by(pickup_hour) %>%

50 | summarize(n = n(),

51 | trip_time_mean = mean(trip_time),

52 | trip_time_p10 = percentile(trip_time, 0.10),

53 | trip_time_p25 = percentile(trip_time, 0.25),

54 | trip_time_p50 = percentile(trip_time, 0.50),

55 | trip_time_p75 = percentile(trip_time, 0.75),

56 | trip_time_p90 = percentile(trip_time, 0.90))

57 |

58 | # Collect results

59 | pickup_dropoff <- collect(pickup_dropoff_tbl)

60 |

61 | # Plot

62 | ggplot(pickup_dropoff, aes(x = pickup_hour)) +

63 | geom_line(aes(y = trip_time_p50, alpha = "Median")) +

64 | geom_ribbon(aes(ymin = trip_time_p25, ymax = trip_time_p75,

65 | alpha = "25–75th percentile")) +

66 | geom_ribbon(aes(ymin = trip_time_p10, ymax = trip_time_p90,

67 | alpha = "10–90th percentile")) +

68 | scale_y_continuous("trip duration in seconds")

69 | ```
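The `trip_time` column above is a difference of two `unix_timestamp()` values, so it is measured in seconds; dividing by 60 gives minutes. A minimal local sketch of the same calculation in base R, assuming a hypothetical `trips_toy` data frame with made-up timestamps and using `median()` as a stand-in for the 50th-percentile call:

```{r}
# Toy trips (made-up timestamps), all in UTC.
trips_toy <- data.frame(
  pickup_datetime  = as.POSIXct(c("2015-01-01 08:00:00", "2015-01-01 08:10:00",
                                  "2015-01-01 08:20:00", "2015-01-01 17:00:00"),
                                tz = "UTC"),
  dropoff_datetime = as.POSIXct(c("2015-01-01 08:20:00", "2015-01-01 08:40:00",
                                  "2015-01-01 09:00:00", "2015-01-01 17:45:00"),
                                tz = "UTC")
)

# Difference of epoch seconds between dropoff and pickup.
trips_toy$trip_time <- as.numeric(trips_toy$dropoff_datetime) -
  as.numeric(trips_toy$pickup_datetime)

# Hour of day of the pickup, as an integer.
trips_toy$pickup_hour <- as.integer(format(trips_toy$pickup_datetime, "%H", tz = "UTC"))

# Median trip time per pickup hour, in seconds and then in minutes.
median_sec <- tapply(trips_toy$trip_time, trips_toy$pickup_hour, median)
median_min <- median_sec / 60
median_min
```

Whichever unit is plotted, the axis label should name that unit.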

70 |

--------------------------------------------------------------------------------

/dev/cloudera/livy.sh:

--------------------------------------------------------------------------------

1 | export JAVA_HOME=/usr/lib/jvm/java-7-oracle-cloudera

2 | export SPARK_HOME=/opt/cloudera/parcels/CDH/lib/spark

3 | /home/ubuntu/livy/livy-server-0.2.0/bin/livy-server

4 |

--------------------------------------------------------------------------------

/dev/cloudera/livy_connection.Rmd:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Livy Connection"

3 | output: html_notebook

4 | ---

5 |

6 | ```{bash}

7 | export JAVA_HOME=/usr/lib/jvm/java-7-oracle-cloudera

8 | export SPARK_HOME=/opt/cloudera/parcels/CDH/lib/spark

9 | /home/ubuntu/livy/livy-server-0.2.0/bin/livy-server

10 | ```

11 |

--------------------------------------------------------------------------------

/dev/cloudera/spark_plot_hist.R:

--------------------------------------------------------------------------------

1 | spark_plot_hist <- function(data,

2 | x_field,

3 | breaks=30)

4 | {

5 | #----- Pre calculating the max x brings down the time considerably

6 | max_x <- data %>%

7 | select_(x=x_field) %>%

8 | summarise(xmax = max(x)) %>%

9 | collect()

10 | max_x <- max_x$xmax[1]

11 |

12 | #----- The entire function is one long pipe

13 | data %>%

14 | select_(x=x_field) %>%

15 | filter(!is.na(x)) %>%

16 | mutate(bucket = round(x/(max_x/(breaks-1)),0)) %>%

17 | group_by(bucket) %>%

18 | summarise(top=max(x),

19 | bottom=min(x),

20 | count=n()) %>%

21 | arrange(bucket) %>%

22 | collect %>%

23 | ggplot() +

24 | geom_bar(aes(x=((top-bottom)/2)+bottom, y=count), color="black", stat = "identity") +

25 | labs(x=x_field) +

26 | theme_minimal() +

27 | theme(legend.position="none")}

--------------------------------------------------------------------------------

/dev/cloudera/spark_plot_point.R:

--------------------------------------------------------------------------------

1 | spark_plot_point<- function(data,

2 | x_field=NULL,

3 | y_field=NULL,

4 | color_field=NULL)

5 | {

6 |

7 | data %>%

8 | select_(x=x_field, y=y_field) %>%

9 | group_by(x,y) %>%

10 | tally() %>%

11 | collect() %>%

12 | ggplot() +

13 | geom_point(aes(x=x, y=y, color=n)) +

14 | labs(x=x_field, y=y_field)

15 |

16 | }

--------------------------------------------------------------------------------

/dev/cloudera/spark_toolchain.Rmd:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Data Science Tool Chain with Spark"

3 | output: html_notebook

4 | ---

5 |

6 | ```{r}

7 | library(sparklyr)

8 | library(dplyr)

9 | library(ggplot2)

10 |

11 | Sys.setenv(JAVA_HOME="/usr/lib/jvm/java-7-oracle-cloudera/")

12 | Sys.setenv(SPARK_HOME = '/opt/cloudera/parcels/CDH/lib/spark')

13 |

14 | conf <- spark_config()

15 | conf$spark.executor.cores <- 16

16 | conf$spark.executor.memory <- "24G"

17 | conf$spark.yarn.am.cores <- 16

18 | conf$spark.yarn.am.memory <- "24G"

19 |

20 | sc <- spark_connect(master = "yarn-client", version="1.6.0", config = conf)

21 |

22 | nyct2010_tbl <- tbl(sc, "nyct2010")

23 | trips_par_tbl <- tbl(sc, "trips_par")

24 | trips_model_data_tbl <- tbl(sc, "trips_model_data")

25 | ```

26 |

27 | ### Histogram

28 |

29 | ```{r}

30 | source("sqlvis_histogram.R")

31 |

32 | sqlvis_compute_histogram(nyct2010_tbl, "ct2010") %>%

33 | sqlvis_ggplot_histogram

34 |

35 | ```
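The histogram helper in `sqlvis_histogram.R` builds equal-width split points from the column minimum and maximum, then assigns each value a zero-based bin index. The sketch below reproduces that arithmetic locally in base R. It is an illustration under assumptions: the values in `x` are made up, and `findInterval()` is used as a local stand-in for the Spark bucketizer, not as the code the helper runs.

```{r}
x    <- c(0, 1, 2, 5, 9, 10)   # toy values, not taxi data
bins <- 5

min_x     <- min(x)
max_x     <- max(x)
bin_value <- (max_x - min_x) / bins   # width of one bin

# bins + 1 split points: the lower edge of every bin, then the overall maximum.
new_bins <- c((0:(bins - 1)) * bin_value + min_x, max_x)

# Zero-based bin index per value; the last bin also includes max_x.
key_bin <- findInterval(x, new_bins, rightmost.closed = TRUE) - 1

# Count per bin, keeping empty bins at zero.
counts <- as.integer(table(factor(key_bin, levels = 0:(bins - 1))))
data.frame(bin = 0:(bins - 1), lower_edge = head(new_bins, -1), count = counts)
```

Only the minimum, the maximum, and one count per bin need to leave the cluster, which is what keeps this approach cheap on large tables.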

36 |

37 | ### Tile plot

38 |

39 | ```{r}

40 | source("bigvis_tile.R")

41 |

42 | trips_model_data_tbl %>%

43 | bigvis_compute_tiles("pickup_longitude", "pickup_latitude", 500) %>%

44 | bigvis_ggplot_tiles

45 |

46 | ```
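The tile helper rescales each coordinate to a grid index with `round((x - min) / range * resolution)` and then counts points per cell. A minimal base R sketch of that mapping, assuming made-up coordinates and a deliberately tiny `resolution` so the result is easy to inspect:

```{r}
# Toy coordinates (made-up); the first two points coincide.
x <- c(-74.00, -74.00, -73.95, -73.90)   # longitudes
y <- c(40.70, 40.70, 40.75, 40.80)       # latitudes
resolution <- 4

# Rescale each axis to 0..resolution and round to the nearest grid index.
res_x <- round((x - min(x)) / (max(x) - min(x)) * resolution, 0)
res_y <- round((y - min(y)) / (max(y) - min(y)) * resolution, 0)

# Count the points that fall into each occupied cell.
cells <- aggregate(n ~ res_x + res_y,
                   data = data.frame(res_x, res_y, n = 1),
                   FUN = sum)
cells
```

Because the indices run from 0 to `resolution` inclusive, each axis can take `resolution + 1` distinct values, and only occupied cells are returned.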

47 |

48 |

--------------------------------------------------------------------------------

/dev/cloudera/sqlvis_histogram.R:

--------------------------------------------------------------------------------

1 | ### Big data histogram

2 | sqlvis_compute_histogram <- function(data, x_name, bins = 30){

3 |

4 | data_prep <- data %>%

5 | select_(x_field = x_name) %>%

6 | filter(!is.na(x_field)) %>%

7 | mutate(x_field = as.double(x_field))

8 |

9 | s <- data_prep %>%

10 | summarise(max_x = max(x_field), min_x = min(x_field)) %>%

11 | mutate(bin_value = (max_x - min_x) / bins) %>%

12 | collect()

13 |

14 | new_bins <- as.numeric(c((0:(bins - 1) * s$bin_value) + s$min_x, s$max_x))

15 |

16 | plot_table <- data_prep %>%

17 | ft_bucketizer(input.col = "x_field", output.col = "key_bin", splits = new_bins) %>%

18 | group_by(key_bin) %>%

19 | tally() %>%

20 | collect()

21 |

22 | all_bins <- data.frame(

23 | key_bin = 0:(bins - 1),

24 | bin = 1:bins,

25 | bin_ceiling = head(new_bins, -1)

26 | )

27 |

28 | plot_table %>%

29 | full_join(all_bins, by="key_bin") %>%

30 | arrange(key_bin) %>%

31 | mutate(n = ifelse(!is.na(n), n, 0)) %>%

32 | select(bin = key_bin, count = n, bin_ceiling) %>%

33 | rename_(.dots = setNames(list("bin_ceiling"), x_name))

34 |

35 | }

36 |

37 | sqlvis_ggplot_histogram <- function(plot_table, ...){

38 | plot_table %>%

39 | select(x = 3, y = 2) %>%

40 | ggplot(aes(x, y)) +

41 | geom_bar(stat = "identity", fill = "cornflowerblue") +

42 | theme(legend.position = "none") +

43 | labs(x = colnames(plot_table)[3], y = colnames(plot_table)[2], ...)

44 | }

45 |

46 | sqlvis_ggvis_histogram <- function(plot_table, ...){

47 | plot_table %>%

48 | select(x = 3, y = 2) %>%

49 | ggvis(x = ~x, y = ~y) %>%

50 | layer_bars() %>%

51 | add_axis("x", title = colnames(plot_table)[3]) %>%

52 | add_axis("y", title = colnames(plot_table)[2])

53 | }

54 |

55 |

--------------------------------------------------------------------------------

/dev/cloudera/sqlvis_raster.R:

--------------------------------------------------------------------------------

1 | ### Big data tile plot

2 |

3 | # data <- tbl(sc, "trips_model_data")

4 | # x_field <- "pickup_longitude"

5 | # y_field <- "pickup_latitude"

6 | # resolution <- 50

7 |

8 | sqlvis_compute_raster <- function(data, x_field, y_field, resolution = 300){

9 |

10 | data_prep <- data %>%

11 | select_(x = x_field, y = y_field) %>%

12 | filter(!is.na(x), !is.na(y))

13 |

14 | s <- data_prep %>%

15 | summarise(max_x = max(x),

16 | max_y = max(y),

17 | min_x = min(x),

18 | min_y = min(y)) %>%

19 | mutate(rng_x = max_x - min_x,

20 | rng_y = max_y - min_y,

21 | resolution = resolution) %>%

22 | collect()

23 |

24 | counts <- data_prep %>%

25 | mutate(res_x = round((x - s$min_x) / s$rng_x * resolution, 0),

26 | res_y = round((y - s$min_y) / s$rng_y * resolution, 0)) %>%

27 | count(res_x, res_y) %>%

28 | collect

29 |

30 | list(counts = counts,

31 | limits = s,

32 | vnames = c(x_field, y_field)

33 | )

34 |

35 | }

36 |

37 | sqlvis_ggplot_raster <- function(data, ...) {

38 |

39 | d <- data$counts

40 | s <- data$limits

41 | v <- data$vnames

42 |

43 | xx <- setNames(seq(1, s$resolution, len = 6), round(seq(s$min_x, s$max_x, len = 6),2))

44 | yy <- setNames(seq(1, s$resolution, len = 6), round(seq(s$min_y, s$max_y, len = 6),2))

45 |

46 | ggplot(d, aes(res_x, res_y)) +

47 | geom_raster(aes(fill = n)) +

48 | coord_fixed() +

49 | scale_fill_distiller(palette = "Spectral", trans = "log", name = "Frequency") +

50 | scale_x_continuous(breaks = xx, labels = names(xx)) +

51 | scale_y_continuous(breaks = yy, labels = names(yy)) +

52 | labs(x = v[1], y = v[2], ...)

53 |

54 | }

55 |

56 | ### Facets

57 |

58 | sqlvis_compute_raster_g <- function(data, x_field, y_field, g_field, resolution = 300){

59 |

60 | data_prep <- data %>%

61 | mutate_(group = g_field) %>%

62 | select_(g = "group", x = x_field, y = y_field) %>%

63 | filter(!is.na(x), !is.na(y))

64 |

65 | s <- data_prep %>%

66 | summarise(max_x = max(x),

67 | max_y = max(y),

68 | min_x = min(x),

69 | min_y = min(y)) %>%

70 | mutate(rng_x = max_x - min_x,

71 | rng_y = max_y - min_y,

72 | resolution = resolution) %>%

73 | collect()

74 |

75 | counts <- data_prep %>%

76 | mutate(res_x = round((x-s$min_x)/s$rng_x*resolution, 0),

77 | res_y = round((y-s$min_y)/s$rng_y*resolution, 0)) %>%

78 | count(g, res_x, res_y) %>%

79 | collect

80 |

81 | list(counts = counts,

82 | limits = s,

83 | vnames = c(x_field, y_field)

84 | )

85 |

86 | }

87 |

88 | sqlvis_ggplot_raster_g <- function(data, ncol = 4, ...) {

89 |

90 | s <- data$limits

91 | d <- data$counts

92 | v <- data$vnames

93 |

94 | xx <- setNames(seq(1, s$resolution, len = 3), round(seq(s$min_x, s$max_x, len = 3), 1))

95 | yy <- setNames(seq(1, s$resolution, len = 3), round(seq(s$min_y, s$max_y, len = 3), 1))

96 |

97 | ggplot(d, aes(res_x, res_y)) +

98 | geom_raster(aes(fill = n)) +

99 | coord_fixed() +

100 | facet_wrap(~ g, ncol = ncol) +

101 | scale_fill_distiller(palette = "Spectral", trans = "log", name = "Frequency") +

102 | scale_x_continuous(breaks = xx, labels = names(xx)) +

103 | scale_y_continuous(breaks = yy, labels = names(yy)) +

104 | labs(x = v[1], y = v[2], ...)

105 |

106 | }

107 |

--------------------------------------------------------------------------------

/dev/flights-cdh/flights_pred_2008.RData:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/flights_pred_2008.RData

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/data-analysis-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/data-analysis-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/flex-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/flex-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/forecast-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/forecast-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/hue-metastore-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/hue-metastore-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/manager-landing-page.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/manager-landing-page.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/performance-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/performance-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/sign-in-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/sign-in-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/spark-history-server-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/spark-history-server-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/spark-pane-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/spark-pane-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/spark-rdd-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/spark-rdd-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/images/clusterDemo/tables-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights-cdh/images/clusterDemo/tables-1.png

--------------------------------------------------------------------------------

/dev/flights-cdh/nycflights_flexdashboard.Rmd:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Time Gained in Flight"

3 | output:

4 | flexdashboard::flex_dashboard:

5 | orientation: rows

6 | social: menu

7 | source_code: embed

8 | runtime: shiny

9 | ---

10 |

11 | ```{r setup, include=FALSE}

12 | # Attach packages

13 | library(dplyr)

14 | library(ggplot2)

15 | library(DT)

16 | library(leaflet)

17 | library(geosphere)

18 | load('flights_pred_2008.RData')

19 | airports <- mutate(airports, lat = as.numeric(lat), lon = as.numeric(lon))

20 | ```

21 |

22 |

23 | Summary

24 | ========================================================================

25 |

26 | Inputs {.sidebar}

27 | -----------------------------------------------------------------------

28 |

29 | ### Select Airports

30 |

31 | ```{r}

32 | # Shiny inputs for flight origin and destination

33 | carrier_origin <- ungroup(pred_data) %>% distinct(origin) %>% .[['origin']]

34 | carrier_dest <- ungroup(pred_data) %>% distinct(dest) %>% .[['dest']]

35 | selectInput("origin", "Flight origin", carrier_origin, selected = "JFK")

36 | selectInput("dest", "Flight destination", carrier_dest, selected = "LAS")

37 | ```

38 |

39 | ### Background

40 |

41 | Given that your flight was delayed by 15 minutes or more, what is the likelihood

42 | your airline carrier will make up time en route? Some of the most significant factors

43 | for making up time are flight distance and airline carrier. The data model behind

44 | this dashboard was fit on flights from 2003 to 2007 and scored on flights from 2008.

45 |

46 |

47 | Row

48 | -----------------------------------------------------------------------

49 |

50 | ### Observed versus predicted time gain

51 |

52 | ```{r}

53 | # Aggregate time gain by carrier and by route

54 | plot_data <- reactive({

55 | req(input$origin, input$dest)

56 | pred_data %>%

57 | filter(origin==input$origin & dest==input$dest) %>%

58 | ungroup() %>%

59 | select(airline, flights, distance, avg_dep_delay, avg_arr_delay, avg_gain, pred_gain)

60 | })

61 |

62 | # Plot observed versus predicted time gain for carriers and route

63 | renderPlot({

64 | ggplot(plot_data(), aes(factor(airline), pred_gain)) +

65 | geom_bar(stat = "identity", fill = '#2780E3') +

66 | geom_point(aes(factor(airline), avg_gain)) +

67 | coord_flip() +

68 | labs(x = "", y = "Time gained in flight (minutes)") +

69 | labs(title = "Observed gain (point) vs Predicted gain (bar)")

70 | })

71 | ```

72 |

73 | ### Route

74 |

75 | ```{r}

76 | # Identify origin lat and long

77 | origin <- reactive({

78 | req(input$origin)

79 | filter(airports, faa == input$origin)

80 | })

81 |

82 | # Identify destination lat and lon

83 | dest <- reactive({

84 | req(input$dest)

85 | filter(airports, faa == input$dest)

86 | })

87 |

88 | # Plot route

89 | renderLeaflet({

90 | gcIntermediate(

91 | select(origin(), lon, lat),

92 | select(dest(), lon, lat),

93 | n=100, addStartEnd=TRUE, sp=TRUE

94 | ) %>%

95 | leaflet() %>%

96 | addProviderTiles("CartoDB.Positron") %>%

97 | addPolylines()

98 | })

99 | ```

100 |

101 | Row

102 | -----------------------------------------------------------------------

103 |

104 | ### Data details

105 |

106 | ```{r}

107 | # Print table of observed and predicted gains by airline

108 | renderDataTable(

109 | datatable(plot_data()) %>%

110 | formatRound(c("flights", "distance"), 0) %>%

111 | formatRound(c("avg_arr_delay", "avg_dep_delay", "avg_gain", "pred_gain"), 1)

112 | )

113 | ```

114 |

115 | Model Details

116 | ========================================================================

117 |

118 | ```{r}

119 | renderPrint(ml1_summary)

120 | ```

121 |

--------------------------------------------------------------------------------

/dev/flights-cdh/sparkClusterDemo-source.R:

--------------------------------------------------------------------------------

1 |

2 | library(sparklyr)

3 | library(dplyr)

4 | library(ggplot2)

5 |

6 | Sys.setenv(HADOOP_CONF_DIR='/etc/hadoop/conf.cloudera.hdfs')

7 | Sys.setenv(YARN_CONF_DIR='/etc/hadoop/conf.cloudera.yarn')

8 | #Sys.setenv(SPARK_HOME="/home/ubuntu/spark-1.6.0")

9 | #Sys.setenv(SPARK_HOME_VERSION="1.6.0")

10 |

11 | sc <- spark_connect(master = "yarn-client", version="1.6.0", spark_home = '/opt/cloudera/parcels/CDH/lib/spark/')

12 |

13 | #---------------------------------------------------------

14 |

15 | # Cache flights Hive table into Spark

16 | tbl_cache(sc, 'flights')

17 | flights_tbl <- tbl(sc, 'flights')

18 |

19 | # Cache airlines Hive table into Spark

20 | tbl_cache(sc, 'airlines')

21 | airlines_tbl <- tbl(sc, 'airlines')

22 |

23 | # Cache airports Hive table into Spark

24 | tbl_cache(sc, 'airports')

25 | airports_tbl <- tbl(sc, 'airports')

26 |

27 | #---------------------------------------------------------

28 |

29 | # Filter records and create target variable 'gain'

30 | model_data <- flights_tbl %>%

31 | filter(!is.na(arrdelay) & !is.na(depdelay) & !is.na(distance)) %>%

32 | filter(depdelay > 15 & depdelay < 240) %>%

33 | filter(arrdelay > -60 & arrdelay < 360) %>%

34 | filter(year >= 2003 & year <= 2007) %>%

35 | left_join(airlines_tbl, by = c("uniquecarrier" = "code")) %>%

36 | mutate(gain = depdelay - arrdelay) %>%

37 | select(year, month, arrdelay, depdelay, distance, uniquecarrier, description, gain)

38 |

39 | # Summarize data by carrier

40 | model_data %>%

41 | group_by(uniquecarrier) %>%

42 | summarize(description = min(description), gain=mean(gain),

43 | distance=mean(distance), depdelay=mean(depdelay)) %>%

44 | select(description, gain, distance, depdelay) %>%

45 | arrange(gain)

46 |

47 | #---------------------------------------------------------

48 |

49 | # Partition the data into training and validation sets

50 | model_partition <- model_data %>%

51 | sdf_partition(train = 0.8, valid = 0.2, seed = 5555)

52 |

53 | # Fit a linear model

54 | ml1 <- model_partition$train %>%

55 | ml_linear_regression(gain ~ distance + depdelay + uniquecarrier)

56 |

57 | # Summarize the linear model

58 | summary(ml1)

59 |

60 | #---------------------------------------------------------

61 |

62 | # Calculate average gains by predicted decile

63 | model_deciles <- lapply(model_partition, function(x) {

64 | sdf_predict(ml1, x) %>%

65 | mutate(decile = ntile(desc(prediction), 10)) %>%

66 | group_by(decile) %>%

67 | summarize(gain = mean(gain)) %>%

68 | select(decile, gain) %>%

69 | collect()

70 | })

71 |

72 | # Create a summary dataset for plotting

73 | deciles <- rbind(

74 | data.frame(data = 'train', model_deciles$train),

75 | data.frame(data = 'valid', model_deciles$valid),

76 | make.row.names = FALSE

77 | )

78 |

79 | # Plot average gains by predicted decile

80 | deciles %>%

81 | ggplot(aes(factor(decile), gain, fill = data)) +

82 | geom_bar(stat = 'identity', position = 'dodge') +

83 | labs(title = 'Average gain by predicted decile', x = 'Decile', y = 'Minutes')

84 |

85 | #---------------------------------------------------------

86 |

87 | # Select data from an out of time sample

88 | data_2008 <- flights_tbl %>%

89 | filter(!is.na(arrdelay) & !is.na(depdelay) & !is.na(distance)) %>%

90 | filter(depdelay > 15 & depdelay < 240) %>%

91 | filter(arrdelay > -60 & arrdelay < 360) %>%

92 | filter(year == 2008) %>%

93 | left_join(airlines_tbl, by = c("uniquecarrier" = "code")) %>%

94 | mutate(gain = depdelay - arrdelay) %>%

95 | select(year, month, arrdelay, depdelay, distance, uniquecarrier, description, gain, origin, dest)

96 |

97 | # Summarize data by carrier

98 | carrier <- sdf_predict(ml1, data_2008) %>%

99 | group_by(description) %>%

100 | summarize(gain = mean(gain), prediction = mean(prediction), freq = n()) %>%

101 | filter(freq > 10000) %>%

102 | collect()

103 |

104 | # Plot actual gains and predicted gains by airline carrier

105 | ggplot(carrier, aes(gain, prediction)) +

106 | geom_point(alpha = 0.75, color = 'red', shape = 3) +

107 | geom_abline(intercept = 0, slope = 1, alpha = 0.15, color = 'blue') +

108 | geom_text(aes(label = substr(description, 1, 20)), size = 3, alpha = 0.75, vjust = -1) +

109 | labs(title='Average Gains Forecast', x = 'Actual', y = 'Predicted')

110 |

111 | #---------------------------------------------------------

112 |

113 | # Summarize by origin, destination, and carrier

114 | summary_2008 <- sdf_predict(ml1, data_2008) %>%

115 | rename(carrier = uniquecarrier, airline = description) %>%

116 | group_by(origin, dest, carrier, airline) %>%

117 | summarize(

118 | flights = n(),

119 | distance = mean(distance),

120 | avg_dep_delay = mean(depdelay),

121 | avg_arr_delay = mean(arrdelay),

122 | avg_gain = mean(gain),

123 | pred_gain = mean(prediction)

124 | )

125 |

126 | # Collect and save objects

127 | pred_data <- collect(summary_2008)

128 | airports <- collect(select(airports_tbl, name, faa, lat, lon))

129 | ml1_summary <- capture.output(summary(ml1))

130 | save(pred_data, airports, ml1_summary, file = 'flights_pred_2008.RData')

131 |

132 |

133 |
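The target variable and the decile summary in the script above can be tried without a cluster. What follows is only a rough local sketch under stated assumptions: the rows in `flights_local` are made up for illustration, `lm()` stands in for `ml_linear_regression()`, a base R ranking stands in for `ntile(desc(prediction), 10)`, and five bins are used instead of ten because the toy data has only ten rows. None of the names below (`flights_local`, `model_local`, `bin_gain`) come from the repository.

```r
# Made-up rows standing in for the Spark `flights` table (illustration only)
flights_local <- data.frame(
  depdelay = c(20, 45, 90, 30, 120, 16, 60, 200, 25, 75),
  arrdelay = c(10, 50, 70, 35, 100, 5, 65, 180, 20, 60),
  distance = c(500, 1200, 2400, 300, 2500, 800, 950, 1800, 400, 2100)
)

# Same delay filters as the script
keep <- with(flights_local,
             depdelay > 15 & depdelay < 240 & arrdelay > -60 & arrdelay < 360)
model_local <- flights_local[keep, ]

# Target variable: minutes made up between departure and arrival
model_local$gain <- model_local$depdelay - model_local$arrdelay

# lm() is a local stand-in for ml_linear_regression()
fit <- lm(gain ~ distance + depdelay, data = model_local)
model_local$prediction <- predict(fit, newdata = model_local)

# Rank predictions from highest to lowest and cut the ranks into equal bins
n_bins <- 5
pred_rank <- rank(-model_local$prediction, ties.method = "first")
model_local$bin <- ceiling(pred_rank * n_bins / nrow(model_local))

# Average observed gain per predicted bin
bin_gain <- aggregate(gain ~ bin, data = model_local, FUN = mean)
print(bin_gain)
```

If the model ranks flights sensibly, the average observed gain should tend to fall from the first bin to the last, which is the pattern the decile bar chart in the script is meant to reveal.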

--------------------------------------------------------------------------------

/dev/flights/flightsApp/app.R:

--------------------------------------------------------------------------------

1 | library(shiny)

2 |

3 | ui <- fluidPage(

4 |

5 | # Application title

6 | titlePanel("Old Faithful Geyser Data"),

7 |

8 | # Sidebar with a slider input for number of bins

9 | sidebarLayout(

10 | sidebarPanel(

11 | sliderInput("bins",

12 | "Number of bins:",

13 | min = 1,

14 | max = 50,

15 | value = 30)

16 | ),

17 |

18 | # Show a plot of the generated distribution

19 | mainPanel(

20 | plotOutput("distPlot")

21 | )

22 | )

23 | )

24 |

25 | server <- function(input, output) {

26 |

27 | output$distPlot <- renderPlot({

28 | # generate bins based on input$bins from the slider input

29 | x <- faithful[, 2]

30 | bins <- seq(min(x), max(x), length.out = input$bins + 1)

31 |

32 | # draw the histogram with the specified number of bins

33 | hist(x, breaks = bins, col = 'darkgray', border = 'white')

34 | })

35 | }

36 |

37 | shinyApp(ui = ui, server = server)

38 |

39 |

--------------------------------------------------------------------------------

/dev/flights/flightsApp2/global.R:

--------------------------------------------------------------------------------

1 | library(nycflights13)

2 | library(tibble)

3 | library(ggplot2)

4 | library(dplyr)

5 | library(sparklyr)

6 | library(lubridate)

7 | library(MASS)

8 |

9 | Sys.setenv(SPARK_HOME="/usr/lib/spark")

10 | system.time(sc <- spark_connect(master = "yarn-client", version = '2.0.0'))

11 |

12 | # Cache airlines Hive table into Spark

13 | #system.time(tbl_cache(sc, 'airlines'))

14 |

15 | # We use a small subset of airlines in this application

16 | #system.time(airlines_tbl <- tbl(sc, 'airlines'))

17 | #system.time(airlines_tbl <- spark_read_csv(sc, "airlines", "hdfs:///airlines/airlines.csv", memory=TRUE))

18 | #airlines_r <- airlines_tbl %>% arrange(description) %>% collect

19 | airlines_r <- tibble::tibble(

20 | code = c("B6", "UA", "AA", "DL", "WN", "US"),

21 | description = c("JetBlue Airways","United Air Lines Inc.",

22 | "American Airlines Inc." , "Delta Air Lines Inc.",

23 | "Southwest Airlines Co.","US Airways Inc.")

24 | )

25 |

26 | # We use the airports from nycflights13 package in this application

27 | # airports_tbl <- copy_to(sc, nycflights13::airports, "airports", overwrite = TRUE)

28 | # airports <- airports_tbl %>% collect

29 | airports <- nycflights13::airports

30 |

31 | # Cache flights Hive table into Spark

32 | #system.time(tbl_cache(sc, 'flights'))

33 | #system.time(flights_tbl <- tbl(sc, 'flights'))

34 |

35 | #Instead of caching the flights data (which takes very long), we load the data in Parquet

36 | #format from HDFS. First the following 2 commented lines must be run to save the data.

37 | #system.time(flights_tbl <- tbl(sc, 'flights'))

38 | #system.time(spark_write_parquet(flights_tbl, "hdfs:///flights-parquet-all"))

39 | system.time(flights_tbl <- spark_read_parquet(sc, "flights_s", "hdfs:///flights-parquet-all", memory=FALSE))

40 |

41 | years <- tibble::tibble(year = c(1987:2008))

42 | years_sub <- tibble::tibble(year = c(1999:2008))

43 | dests <- c("LAX","ORD","ATL","HNL")

44 |

45 | delay <- flights_tbl %>%

46 | group_by(tailnum) %>%

47 | summarise(count = n(),

48 | dist = mean(distance),

49 | delay = mean(arrdelay),

50 | arrdelay_mean = mean(arrdelay),

51 | depdelay_mean = mean(depdelay)) %>%

52 | filter(count > 20,

53 | dist < 2000,

54 | !is.na(delay)) %>%

55 | collect

56 |

57 |

--------------------------------------------------------------------------------

/dev/flights/flightsApp2/server.R:

--------------------------------------------------------------------------------

1 | library(shinydashboard)

2 | library(dplyr)

3 | library(maps)

4 | library(geosphere)

5 | library(lubridate)

6 | library(MASS)

7 |

8 | source("global.R")

9 |

10 | function(input, output, session) {

11 |

12 | selected_carriers <- reactive(input$airline_selections)

13 | selected_density <- reactive(input$density_selection)

14 | selected_year <- reactive(input$years_selection)

15 | selected_airline <- reactive(filter(airlines_r, description==input$carrier_selection))

16 | selected_carrier <- reactive(selected_airline()$code)

17 | selected_dest_year <- reactive(input$years_dest_selection)

18 | selected_cancel_year <- reactive(input$years_cancel_selection)

19 | selected_day_year <- reactive(input$day_selection)

20 |

21 | output$yearsPlot <- renderPlot ({

22 | xlim <- c(-171.738281, -56.601563)

23 | ylim <- c(12.039321, 71.856229)

24 | pal <- colorRampPalette(c("#f2f2f2", "red"))

25 | colors <- pal(100)

26 | map("world", col="#f2f2f2", fill=TRUE, bg="black", lwd=0.05, xlim=xlim, ylim=ylim)

27 | #map("world", col="#191919", fill=TRUE, bg="#000000", lwd=0.05, xlim=xlim, ylim=ylim)

28 | year_selected = selected_year()

29 | flights_count <- flights_tbl %>% filter(year == year_selected) %>%

30 | group_by(uniquecarrier, origin, dest) %>%

31 | summarize( count = n()) %>%

32 | collect

33 | flights_count$count <- unlist(flights_count$count)

34 | fsub <- filter(flights_count, uniquecarrier == selected_carrier(), count > 200)

35 | fsub <- fsub[order(fsub$count),]

36 | maxcnt <- max(fsub$count)

37 | for (j in seq_len(nrow(fsub))) {

38 | air1 <- airports[airports$faa == fsub[j,]$origin,]

39 | air2 <- airports[airports$faa == fsub[j,]$dest,]

40 | if (dim(air1)[1] != 0 && dim(air2)[1] != 0) {

41 | inter <- gcIntermediate(c(air1[1,]$lon, air1[1,]$lat), c(air2[1,]$lon, air2[1,]$lat), n=100, addStartEnd=TRUE)

42 | colindex <- max(1, round( (fsub[j,]$count / maxcnt) * length(colors) )) # clamp to 1 so colors[0] is never used

43 |

44 | lines(inter, col=colors[colindex], lwd=0.8)

45 | lines(inter, col="black", lwd=0.8)

46 | }

47 | }

48 |

49 | })

50 |

51 | output$densityPlot <- renderPlot ({

52 | r <- ggplot(delay, aes_string("dist", selected_density())) +

53 | geom_point(aes(size = count), alpha = 1/2) +

54 | geom_smooth() +

55 | scale_size_area(max_size = 2)

56 | print(r)

57 | })

58 |

59 | output$destPlot <- renderPlot ({

60 | year_selected <- selected_dest_year()

61 | flights_by_dest <- flights_tbl %>% filter(year == year_selected) %>%

62 | filter(dest %in% dests) %>%

63 | group_by(dest, dayofweek, month, uniquecarrier) %>%

64 | select(dest, dayofweek, month, uniquecarrier) %>%

65 | collect

66 | d <- ggplot(data = flights_by_dest, aes(x = month, fill=dest)) + stat_density()

67 | r <- ggplot(data = flights_by_dest) +

68 | geom_bar(mapping = aes(x = month, fill = dest), position = "dodge")

69 | print(d)

70 | })

71 |

72 | output$cancelPlot <- renderPlot ({

73 | c_year_selected <- selected_cancel_year()

74 | flights_cancelled <- flights_tbl %>%

75 | filter(year == c_year_selected) %>%

76 | group_by(dest, month, cancelled) %>%

77 | summarise(

78 | count = n(),

79 | delay = mean(arrdelay, na.rm = TRUE),

80 | arrdelay_mean = mean(arrdelay, na.rm = TRUE),

81 | depdelay_mean = mean(depdelay, na.rm = TRUE)

82 | ) %>%

83 | filter(count > 20, dest != "HNL", cancelled == 1) %>%

84 | collect

85 |

86 | c <- ggplot(flights_cancelled, aes_string("month", "count")) +

87 | geom_point(alpha = 1/2, position = "jitter") +

88 | geom_smooth() +

89 | scale_size_area(max_size = 2)

90 | print(c)

91 | })

92 |

93 | output$dayPlot <- renderPlot ({

94 | year_day_selected <- selected_day_year()

95 | flights_by_year <- flights_tbl %>%

96 | filter(year == year_day_selected, dest %in% dests) %>%

97 | group_by(year, month, dayofmonth, dest) %>%

98 | summarise(n = n()) %>%

99 | collect

100 |

101 | daily <- flights_by_year %>%

102 | mutate(date = make_datetime(year, month, dayofmonth)) %>%

103 | group_by(date)

104 |

105 | daily <- daily %>%

106 | mutate(wday = wday(date, label = TRUE))

107 |

108 | d <- ggplot(daily, aes(wday, n, color=dest)) +

109 | geom_boxplot()

110 | print(d)

111 | })

112 | }
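The colour lookup in `yearsPlot` scales each route's flight count onto a 100-step palette. The sketch below isolates that step under stated assumptions: the counts are hypothetical, and the `pmax(1, ...)` clamp is one possible way to keep a very small share from rounding to index 0, which would select no colour at all. It uses only base R, so it runs without Spark or the `maps` package.

```r
# Hypothetical route counts; the first is tiny relative to the maximum
counts <- c(250, 1200, 15000, 60000)
maxcnt <- max(counts)

# Same 100-step palette as yearsPlot
pal <- colorRampPalette(c("#f2f2f2", "red"))
colors <- pal(100)

# Scale each count onto 1..length(colors); pmax() keeps small shares off index 0
colindex <- pmax(1, round(counts / maxcnt * length(colors)))
route_colors <- colors[colindex]

print(data.frame(count = counts, colindex = colindex, color = route_colors))
```

The vectorised form also avoids recomputing the index inside the plotting loop; the colours could be looked up once and then indexed by `j`.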

--------------------------------------------------------------------------------

/dev/flights/flightsApp2/ui.R:

--------------------------------------------------------------------------------

1 | library(shinydashboard)

2 |

3 | header <- dashboardHeader(

4 | title = "Flights Data Analysis"

5 | )

6 | sidebar <- dashboardSidebar(

7 | sidebarMenu(

8 | menuItem("Flights by year and airline", tabName = "years"),

9 | menuItem("Delay Density", tabName = "delay_density"),

10 | menuItem("Cancelled flights", tabName = "cancelled"),

11 | menuItem("Flights by day of week", tabName = "dayofweek")

12 | )

13 | )

14 |

15 |

16 | body <- dashboardBody(

17 | tabItems(

18 | tabItem("years",

19 | fluidRow(

20 | column(width = 8,

21 | box(width = NULL, solidHeader = TRUE,

22 | plotOutput('yearsPlot')

23 | )

24 | ),

25 | column(width = 3,

26 | box(width = NULL, status = "warning",

27 | uiOutput("years_selection"),

28 | radioButtons("years_selection", label = h3("Select a year"),

29 | years_sub$year, selected = 2000)

30 | )

31 | ),

32 | column(width = 3,

33 | box(width = NULL, status = "warning",

34 | uiOutput("carrier_selection"),

35 | radioButtons("carrier_selection", label = h3("Select an airline"),

36 | airlines_r$description, selected = "American Airlines Inc.")

37 | )

38 | )

39 |

40 | )

41 | ),

42 | tabItem("delay_density",

43 | fluidRow(

44 | column(width = 9,

45 | box(width = NULL, solidHeader = TRUE,

46 | plotOutput('densityPlot')

47 | )

48 | ),

49 | column(width = 3,

50 | box(width = NULL, status = "warning",

51 | uiOutput("density_selection"),

52 | radioButtons("density_selection", label = h3("Select arrival or departure"),

53 | choices = c(

54 | Departure = "depdelay_mean",

55 | Arrival = "arrdelay_mean"

56 | ),

57 | selected = "arrdelay_mean")

58 | )

59 | )

60 |

61 | )

62 | ),

63 | tabItem("cancelled",

64 | fluidRow(

65 | column(width = 9,

66 | box(width = NULL, solidHeader = TRUE,

67 | plotOutput('cancelPlot')

68 | )

69 | ),

70 | column(width = 3,

71 | box(width = NULL, status = "warning",

72 | uiOutput("years_cancel_selection"),

73 | radioButtons("years_cancel_selection", label = h3("Select a year"),

74 | years_sub$year, selected = 2008)

75 | )

76 | )

77 | )

78 | ),

79 | tabItem("dayofweek",

80 | fluidRow(

81 | column(width = 9,

82 | box(width = NULL, solidHeader = TRUE,

83 | plotOutput('dayPlot')

84 | )

85 | ),

86 | column(width = 3,

87 | box(width = NULL, status = "warning",

88 | uiOutput("day_selection"),

89 | radioButtons("day_selection", label = h3("Select a year"),

90 | years_sub$year, selected = 2008)

91 | )

92 | )

93 | )

94 | )

95 |

96 | )

97 | )

98 |

99 | dashboardPage(

100 | header,

101 | sidebar,

102 | body

103 | )

--------------------------------------------------------------------------------

/dev/flights/flights_pred_2008.RData:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/flights_pred_2008.RData

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/awsClusterConnect.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/awsClusterConnect.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/awsCreateCluster.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/awsCreateCluster.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/awsCreateCluster2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/awsCreateCluster2.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/awsNewSecurityGroup.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/awsNewSecurityGroup.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/awsSecurityGroup.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/awsSecurityGroup.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/awsSecurityGroup2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/awsSecurityGroup2.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/emrArchitecture.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/emrArchitecture.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/emrConfigStep1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/emrConfigStep1.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/emrConfigStep2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/emrConfigStep2.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/emrConfigStep3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/emrConfigStep3.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/emrConfigStep4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/emrConfigStep4.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/emrLogin.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/emrLogin.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/flightsDashboard.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/flightsDashboard.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/flightsDeciles.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/flightsDeciles.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/flightsDecilesDesc.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/flightsDecilesDesc.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/flightsPredicted.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/flightsPredicted.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/rstudio.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/rstudio.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/rstudioData.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/rstudioData.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/rstudioLogin.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/rstudioLogin.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/rstudioModel.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/rstudioModel.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/rstudioModelDetail.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/rstudioModelDetail.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/rstudioSparkPane.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/rstudioSparkPane.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/workflow.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/workflow.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/workflowCommands.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/workflowCommands.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/workflowRSC.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/workflowRSC.png

--------------------------------------------------------------------------------

/dev/flights/images/clusterDemo/workflowShare.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/rstudio/sparkDemos/7b5c55154ef6513a49889c52c961359e8ab2cf49/dev/flights/images/clusterDemo/workflowShare.png

--------------------------------------------------------------------------------

/dev/flights/nycflights_flexdashboard.Rmd:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Time Gained in Flight"

3 | output:

4 |   flexdashboard::flex_dashboard:

5 |     orientation: rows

6 |     social: menu

7 |     source_code: embed

8 | runtime: shiny

9 | ---

10 |

11 | ```{r setup, include=FALSE}

12 | # Attach packages

13 | library(dplyr)

14 | library(ggplot2)

15 | library(DT)

16 | library(leaflet)

17 | library(geosphere)

18 | load('flights_pred_2008.RData')

19 | airports <- mutate(airports, lat = as.numeric(lat), lon = as.numeric(lon))

20 | ```

21 |

22 |

23 | Summary

24 | ========================================================================

25 |

26 | Inputs {.sidebar}

27 | -----------------------------------------------------------------------

28 |

29 | ### Select Airports

30 |

31 | ```{r}

32 | # Shiny inputs for flight origin and destination

33 | carrier_origin <- ungroup(pred_data) %>% distinct(origin) %>% .[['origin']]

34 | carrier_dest <- ungroup(pred_data) %>% distinct(dest) %>% .[['dest']]

35 | selectInput("origin", "Flight origin", carrier_origin, selected = "JFK")

36 | selectInput("dest", "Flight destination", carrier_dest, selected = "LAS")

37 | ```

38 |

39 | ### Background

40 |

41 | Given that your flight was delayed by 15 minutes or more, what is the likelihood

42 | your airline carrier will make up time en route? Some of the most significant factors

43 | for making up time are flight distance and airline carrier. The data model behind

44 | this dashboard is based on flights from NYC airports in 2013.

45 |

46 |

47 | Row

48 | -----------------------------------------------------------------------

49 |

50 | ### Observed versus predicted time gain

51 |

52 | ```{r}

53 | # Aggregate time gain by carrier and by route

54 | plot_data <- reactive({

55 | req(input$origin, input$dest)

56 | pred_data %>%

57 | filter(origin==input$origin & dest==input$dest) %>%

58 | ungroup() %>%

59 | select(airline, flights, distance, avg_dep_delay, avg_arr_delay, avg_gain, pred_gain)

60 | })

61 |

62 | # Plot observed versus predicted time gain for carriers and route

63 | renderPlot({

64 | ggplot(plot_data(), aes(factor(airline), pred_gain)) +

65 | geom_bar(stat = "identity", fill = '#2780E3') +

66 | geom_point(aes(factor(airline), avg_gain)) +

67 | coord_flip() +

68 | labs(x = "", y = "Time gained in flight (minutes)") +

69 | labs(title = "Observed gain (point) vs Predicted gain (bar)")

70 | })

71 | ```

72 |

73 | ### Route

74 |

75 | ```{r}

76 | # Identify origin lat and long

77 | origin <- reactive({

78 | req(input$origin)

79 | filter(airports, faa == input$origin)

80 | })

81 |

82 | # Identify destination lat and long

83 | dest <- reactive({

84 | req(input$dest)

85 | filter(airports, faa == input$dest)

86 | })

87 |

88 | # Plot route

89 | renderLeaflet({

90 | gcIntermediate(

91 | select(origin(), lon, lat),

92 | select(dest(), lon, lat),

93 | n=100, addStartEnd=TRUE, sp=TRUE

94 | ) %>%

95 | leaflet() %>%

96 | addProviderTiles("CartoDB.Positron") %>%

97 | addPolylines()

98 | })

99 | ```

100 |

101 | Row

102 | -----------------------------------------------------------------------

103 |

104 | ### Data details

105 |

106 | ```{r}

107 | # Print table of observed and predicted gains by airline

108 | DT::renderDataTable(

109 | datatable(plot_data()) %>%

110 | formatRound(c("flights", "distance"), 0) %>%

111 | formatRound(c("avg_arr_delay", "avg_dep_delay", "avg_gain", "pred_gain"), 1)

112 | )

113 | ```

114 |

115 | Model Details

116 | ========================================================================

117 |

118 | ```{r}

119 | renderPrint(ml1_summary)

120 | ```

121 |

--------------------------------------------------------------------------------

/dev/flights/nycflights_flexdashboard_spark.Rmd:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Time Gained in Flight"

3 | output:

4 |   flexdashboard::flex_dashboard:

5 |     orientation: rows

6 |     social: menu

7 |     source_code: embed

8 | runtime: shiny

9 | ---

10 |

11 | ```{r setup, include=FALSE}

12 | # Attach packages

13 | library(dplyr)

14 | library(ggplot2)

15 | library(DT)

16 | library(leaflet)

17 | library(geosphere)

18 | library(sparklyr)

19 | library(dplyr)

20 |

21 | #Sys.setenv(SPARK_HOME = "/home/sean/.cache/spark/spark-1.6.2-bin-hadoop2.6")

22 | #sc <- spark_connect(master = "local", version = "1.6.2")

23 | #spark_read_csv(sc, "nyc_taxi_sample", path = "../../nathan/sol-eng-nyc-taxi-data/csv/trips/nyc_taxi_trips_2015-11.csv")

24 |

25 | # Connect to Spark

26 | Sys.setenv(SPARK_HOME="/usr/lib/spark")

27 | config <- spark_config()

28 | sc <- spark_connect(master = "yarn-client", config = config, version = '1.6.2')

29 | pred_data_tbl <- tbl(sc, 'summary_2008')

30 |

31 | #load('flights_pred_2008.RData')

32 | #airports <- mutate(airports, lat = as.numeric(lat), lon = as.numeric(lon))

33 |

34 | # Load summary data from flights forecast

35 | #pred_data_tbl <- tbl(sc, 'summary_2008')

36 | #pred_data <- collect(pred_data_tbl)

37 |

38 | # Load airports data

39 | #airports <- tbl(sc, 'airports') %>%

40 | # mutate(lat = as.numeric(lat), lon = as.numeric(lon)) %>%

41 | # collect

42 | ```

43 |

44 |

45 | Summary

46 | ========================================================================

47 |

48 | Inputs {.sidebar}