├── .ci.rosinstall
├── .github
│   └── workflows
│       └── industrial_ci.yml
├── .gitignore
├── CMakeLists.txt
├── LICENSE
├── README.en.md
├── README.md
├── config
│   ├── joy_dualshock3.yml
│   ├── joy_dualshock4.yml
│   └── joy_f710.yml
├── launch
│   ├── direction_control.launch
│   ├── line_follower.launch
│   ├── mouse_with_lidar.launch
│   ├── object_tracking.launch
│   ├── slam_gmapping.launch
│   └── teleop.launch
├── package.xml
├── rviz
│   └── slam.rviz
└── scripts
    ├── camera.bash
    ├── direction_control.py
    ├── joystick_control.py
    ├── line_follower.py
    └── object_tracking.py

/.ci.rosinstall:

--------------------------------------------------------------------------------

- git:
    local-name: raspimouse_ros_2
    uri: https://github.com/ryuichiueda/raspimouse_ros_2
    version: master
- git:
    local-name: rt_usb_9axisimu_driver
    uri: https://github.com/rt-net/rt_usb_9axisimu_driver
    version: master

--------------------------------------------------------------------------------

/.github/workflows/industrial_ci.yml:

--------------------------------------------------------------------------------

name: industrial_ci

on:
  push:
    paths-ignore:
      - '**.md'
  pull_request:
    paths-ignore:
      - '**.md'
  schedule:
    - cron: "0 2 * * 0" # Weekly on Sundays at 02:00 UTC

jobs:
  industrial_ci:
    continue-on-error: ${{ matrix.experimental }}
    strategy:
      matrix:
        env:
          - { ROS_DISTRO: noetic, ROS_REPO: main }
        experimental: [false]
        include:
          - env: { ROS_DISTRO: noetic, ROS_REPO: testing }
            experimental: true
    env:
      UPSTREAM_WORKSPACE: .ci.rosinstall
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: "ros-industrial/industrial_ci@master"
        env: ${{ matrix.env }}

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

build/
bin/
lib/
msg_gen/
srv_gen/
msg/*Action.msg
msg/*ActionFeedback.msg
msg/*ActionGoal.msg
msg/*ActionResult.msg
msg/*Feedback.msg
msg/*Goal.msg
msg/*Result.msg
msg/_*.py

# Generated by dynamic reconfigure
*.cfgc
/cfg/cpp/
/cfg/*.py

# Ignore generated docs
*.dox
*.wikidoc

# eclipse stuff
.project
.cproject

# qcreator stuff
CMakeLists.txt.user

srv/_*.py
*.pcd
*.pyc
qtcreator-*
*.user

/planning/cfg
/planning/docs
/planning/src

*~

# Emacs
.#*

# Catkin custom files
CATKIN_IGNORE

# SketchUp backup files
*~.skp

# Swap files
*.swp

# MacOS metadata files
.DS_store

--------------------------------------------------------------------------------

/CMakeLists.txt:

--------------------------------------------------------------------------------

cmake_minimum_required(VERSION 2.8.3)
project(raspimouse_ros_examples)

find_package(catkin REQUIRED COMPONENTS
  roslint
)

catkin_package()

include_directories(
  ${catkin_INCLUDE_DIRS}
)

file(GLOB python_scripts scripts/*.py)
catkin_install_python(
  PROGRAMS ${python_scripts}
  DESTINATION ${CATKIN_PACKAGE_BIN_DESTINATION}
)

roslint_python()
roslint_add_test()

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "[]"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright [yyyy] [name of copyright owner]

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.

--------------------------------------------------------------------------------

/README.en.md:

--------------------------------------------------------------------------------

[English](README.en.md) | [日本語](README.md)

# raspimouse_ros_examples

[industrial_ci](https://github.com/rt-net/raspimouse_ros_examples/actions?query=workflow%3Aindustrial_ci+branch%3Amaster)

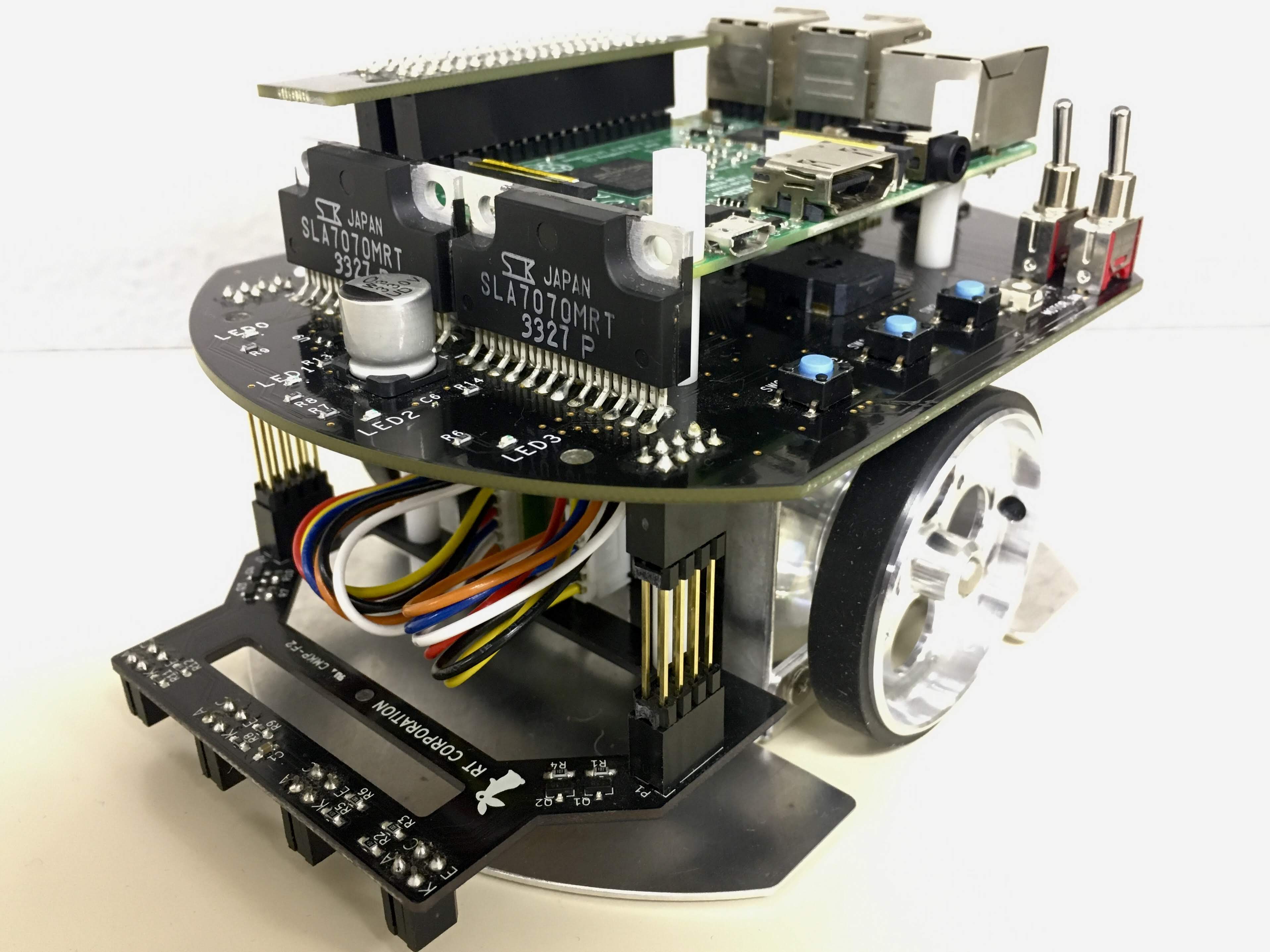

ROS examples for Raspberry Pi Mouse.

Samples for navigation are [here](https://github.com/rt-net/raspimouse_slam_navigation_ros).
ROS 2 examples are [here](https://github.com/rt-net/raspimouse_ros2_examples).

## Requirements

- Raspberry Pi Mouse
  - https://rt-net.jp/products/raspberrypimousev3/
- Linux OS
  - Ubuntu server 16.04
  - Ubuntu server 18.04
  - Ubuntu server 20.04
  - https://ubuntu.com/download/raspberry-pi
- Device Driver
  - [rt-net/RaspberryPiMouse](https://github.com/rt-net/RaspberryPiMouse)
- ROS
  - [Kinetic Kame](http://wiki.ros.org/kinetic/Installation/Ubuntu)
  - [Melodic Morenia](http://wiki.ros.org/melodic/Installation/Ubuntu)
  - [Noetic Ninjemys](http://wiki.ros.org/noetic/Installation/Ubuntu)
- Raspberry Pi Mouse ROS package
  - https://github.com/ryuichiueda/raspimouse_ros_2
- Remote Computer (Optional)
  - ROS
    - [Kinetic Kame](http://wiki.ros.org/kinetic/Installation/Ubuntu)
    - [Melodic Morenia](http://wiki.ros.org/melodic/Installation/Ubuntu)
    - [Noetic Ninjemys](http://wiki.ros.org/noetic/Installation/Ubuntu)
  - Raspberry Pi Mouse ROS package
    - https://github.com/ryuichiueda/raspimouse_ros_2

## Installation

```sh
cd ~/catkin_ws/src
# Clone ROS packages
git clone https://github.com/ryuichiueda/raspimouse_ros_2
git clone -b $ROS_DISTRO-devel https://github.com/rt-net/raspimouse_ros_examples
# For the direction control example
git clone https://github.com/rt-net/rt_usb_9axisimu_driver

# Install dependencies
rosdep install -r -y --from-paths . --ignore-src

# Build the workspace
cd ~/catkin_ws && catkin_make
source devel/setup.bash
```

## License

This repository is licensed under the Apache License 2.0. See [LICENSE](./LICENSE) for details.

## How To Use Examples

- [keyboard_control](#keyboard_control)
- [joystick_control](#joystick_control)
- [object_tracking](#object_tracking)
- [line_follower](#line_follower)
- [SLAM](#slam)
- [direction_control](#direction_control)

---

### keyboard_control

This example uses the [teleop_twist_keyboard](http://wiki.ros.org/teleop_twist_keyboard) package to send velocity commands to Raspberry Pi Mouse.

#### Requirements

- Keyboard

#### How to use

Launch nodes with the following command:

```sh
roslaunch raspimouse_ros_examples teleop.launch key:=true

# Control from a remote computer
roslaunch raspimouse_ros_examples teleop.launch key:=true mouse:=false
```

Then, call the `/motor_on` service to enable the motors:

```sh
rosservice call /motor_on
```

[back to example list](#how-to-use-examples)

---

### joystick_control

This example uses a joystick controller to control Raspberry Pi Mouse.

#### Requirements

- Joystick Controller
  - [Logicool Wireless Gamepad F710](https://gaming.logicool.co.jp/ja-jp/products/gamepads/f710-wireless-gamepad.html#940-0001440)
  - [SONY DUALSHOCK 3](https://www.jp.playstation.com/ps3/peripheral/cechzc2j.html)

#### How to use

Launch nodes with the following command:

```sh
roslaunch raspimouse_ros_examples teleop.launch joy:=true

# Use DUALSHOCK 3
roslaunch raspimouse_ros_examples teleop.launch joy:=true joyconfig:="dualshock3"

# Control from a remote computer
roslaunch raspimouse_ros_examples teleop.launch joy:=true mouse:=false
```

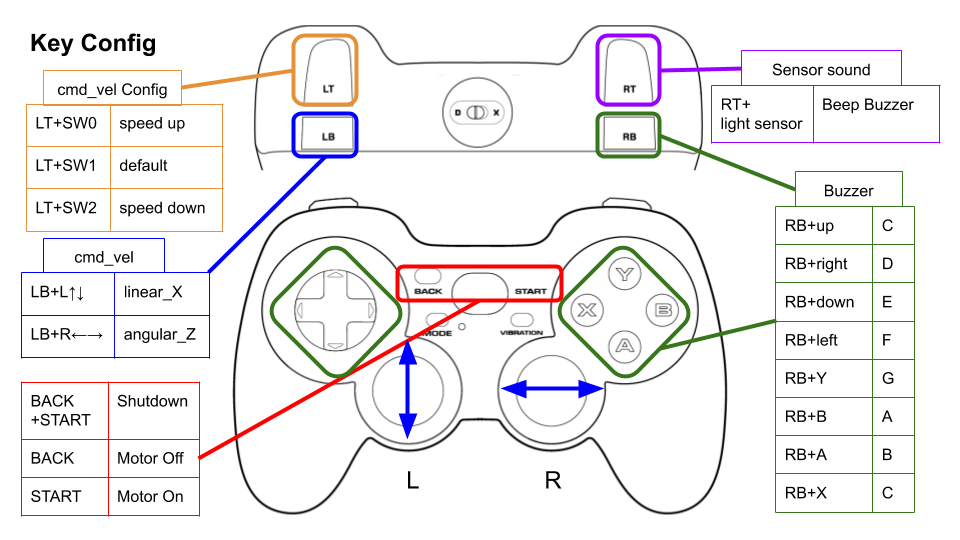

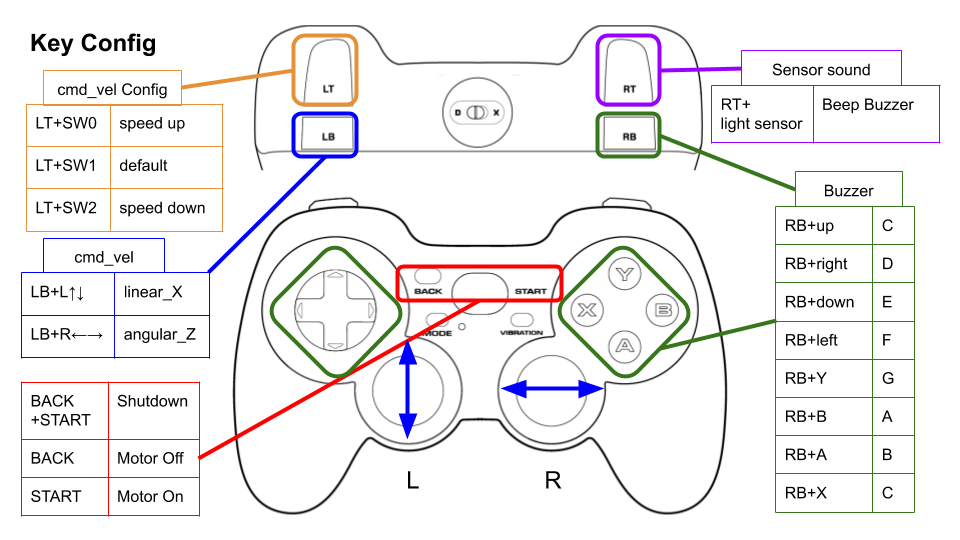

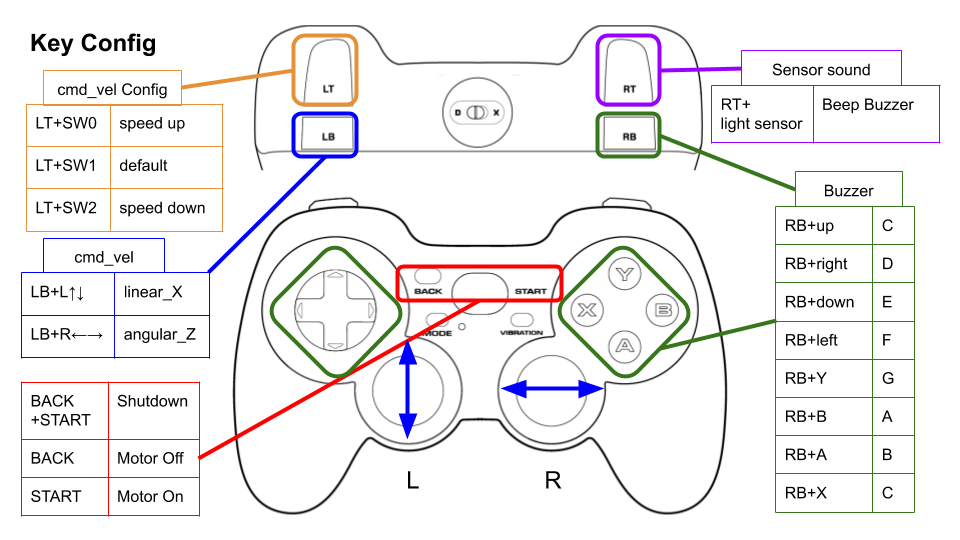

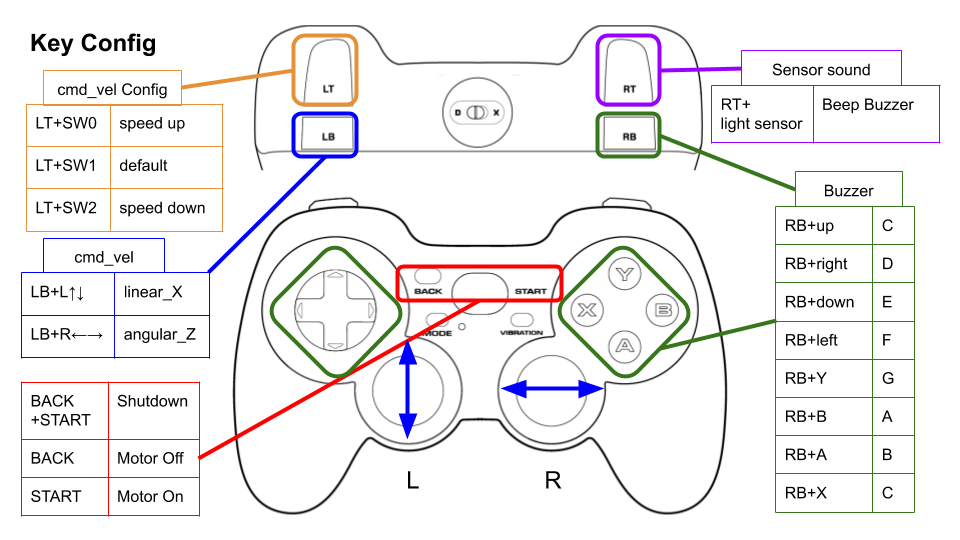

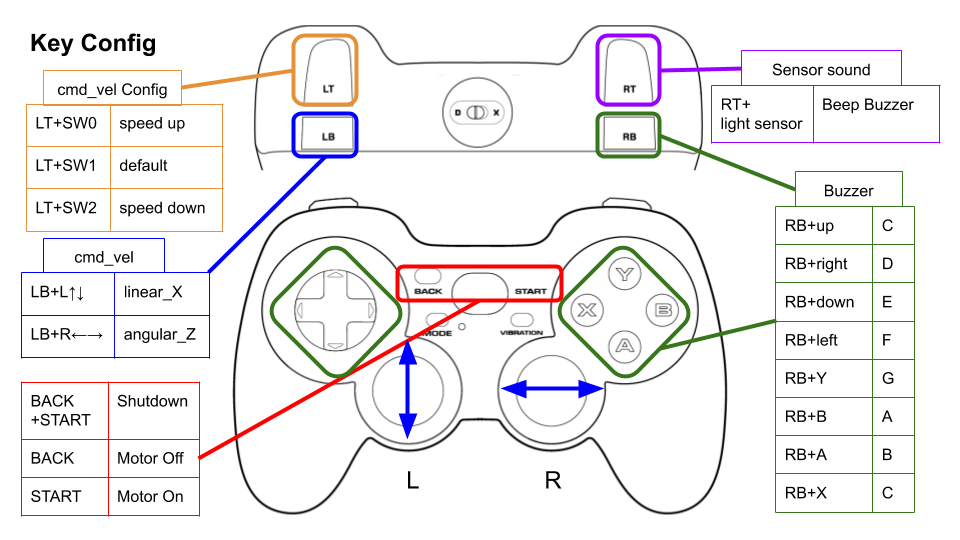

This picture shows the default key configuration.

#### Configure

Key assignments can be changed by editing the button numbers in [./config/joy_f710.yml](./config/joy_f710.yml) or [./config/joy_dualshock3.yml](./config/joy_dualshock3.yml).

```yaml
button_shutdown_1 : 8
button_shutdown_2 : 9

button_motor_off : 8
button_motor_on : 9

button_cmd_enable : 4
```
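The values above are button indices. With the standard ROS `joy` driver, each `sensor_msgs/Joy` message carries a `buttons` array in which element *i* is 1 while button *i* is held, so `button_cmd_enable : 4` presumably refers to `buttons[4]`. The minimal sketch below resolves such a mapping against one `buttons` array. It is not the code of `joystick_control.py`; the helper `pressed_actions` and the in-code copy of the YAML values are hypothetical, chosen so the example runs without ROS or a YAML parser.

```python
# Minimal sketch (hypothetical helper, not the code of joystick_control.py):
# resolve which configured actions are active for one sensor_msgs/Joy "buttons" list.

# Copy of the default assignments shown above (normally loaded from the YAML file).
KEY_CONFIG = {
    "button_shutdown_1": 8,
    "button_shutdown_2": 9,
    "button_motor_off": 8,
    "button_motor_on": 9,
    "button_cmd_enable": 4,
}


def pressed_actions(buttons, config=KEY_CONFIG):
    """Return the sorted names of all actions whose button index is pressed.

    `buttons` is a sequence of 0/1 integers indexed by button number,
    like the `buttons` field of a sensor_msgs/Joy message.
    """
    return sorted(
        name
        for name, index in config.items()
        if index < len(buttons) and buttons[index] == 1
    )


if __name__ == "__main__":
    buttons = [0] * 12
    buttons[4] = 1  # hold the command-enable button
    print(pressed_actions(buttons))  # ['button_cmd_enable']
```

In this sketch, pressing button 8 alone reports both `button_motor_off` and `button_shutdown_1`, so any real handler needs an extra rule (for example, treating shutdown as a two-button combination) to tell shared assignments apart.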

#### Videos

[Watch the video on YouTube](https://youtu.be/GswxdB8Ia0Y)

[back to example list](#how-to-use-examples)

---

### object_tracking


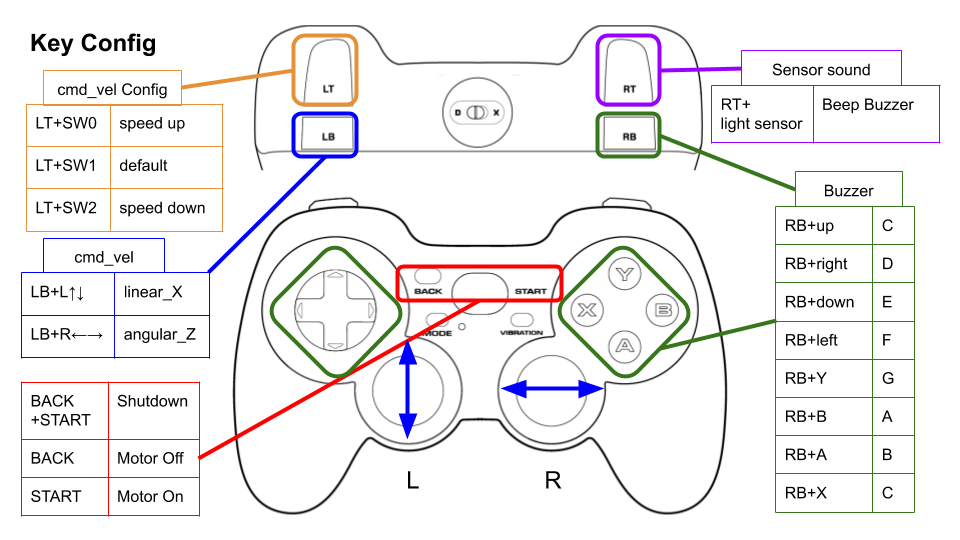


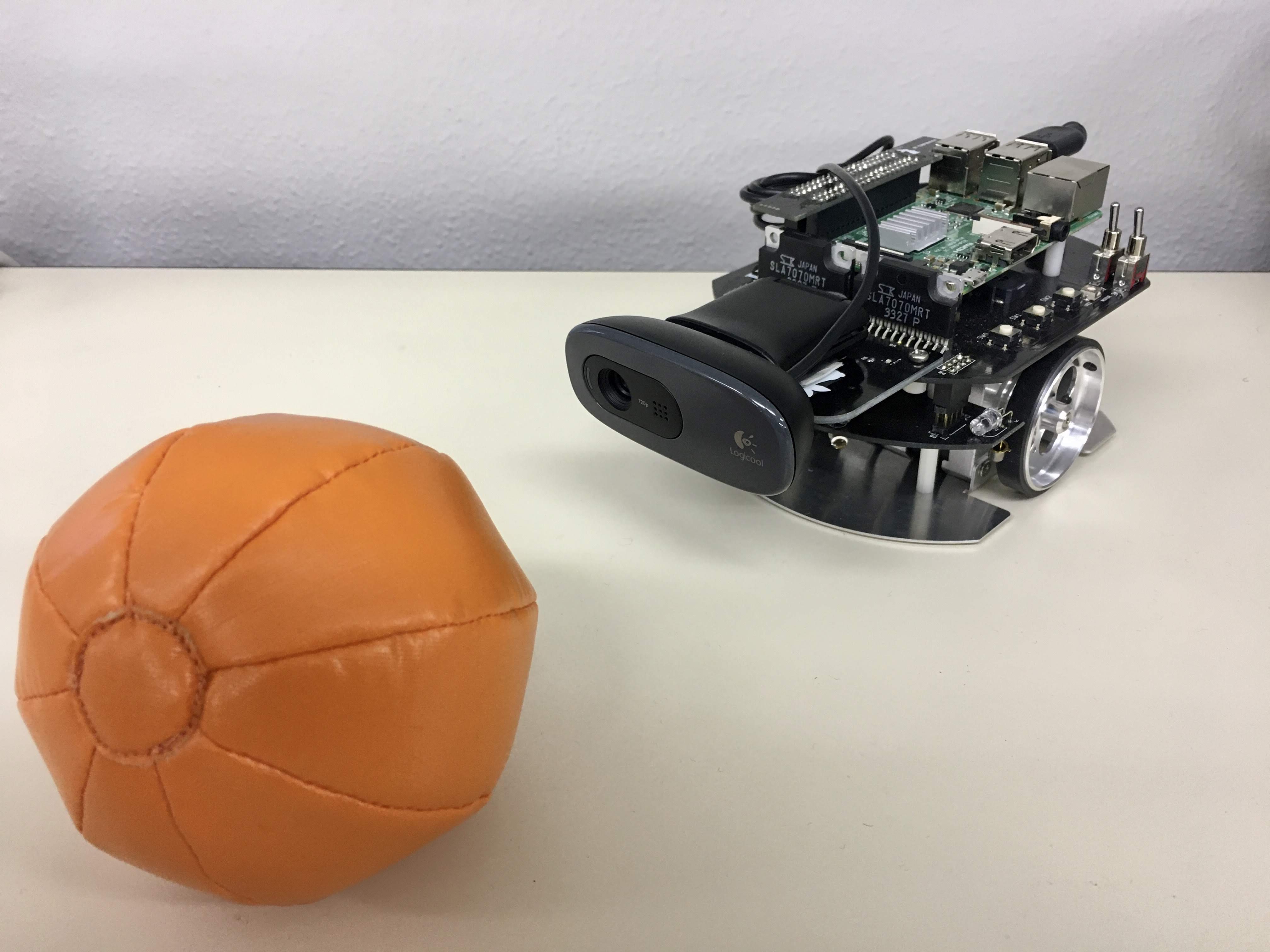

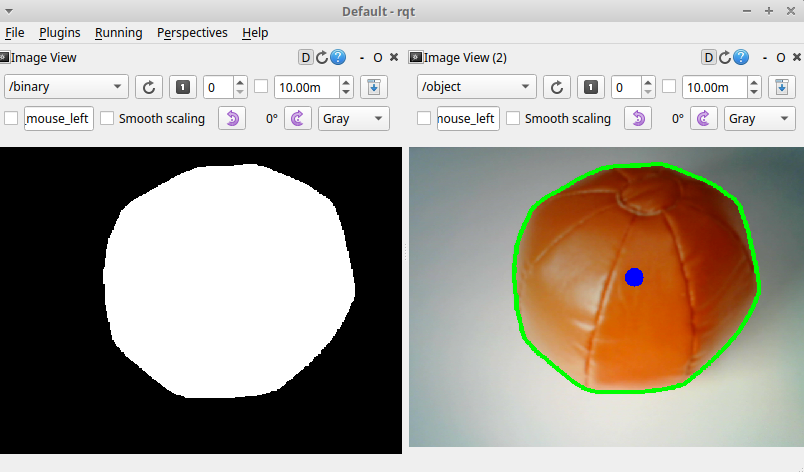

This example uses RGB camera images and the OpenCV library to track an object.

#### Requirements

- Web camera
  - [Logicool HD WEBCAM C310N](https://www.logicool.co.jp/ja-jp/product/hd-webcam-c310n)
- Camera mount
  - [Raspberry Pi Mouse Option kit No.4 \[Webcam mount\]](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1395&products_id=3584&language=en)
- Orange ball (Optional)
  - [Soft Ball (Orange)](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1307&products_id=3701&language=en)
- Software
  - python
  - opencv
  - numpy
  - v4l-utils

#### Installation

Attach the camera mount and the web camera to Raspberry Pi Mouse, then connect the camera to the Raspberry Pi.

Next, install the v4l-utils package with the following command:

```sh
sudo apt install v4l-utils
```

#### How to use

Turn off the camera's automatic adjustments (auto focus, auto white balance, etc.) with the following command:

```sh
rosrun raspimouse_ros_examples camera.bash
```

Then, launch nodes with the following command:

```sh
roslaunch raspimouse_ros_examples object_tracking.launch
```

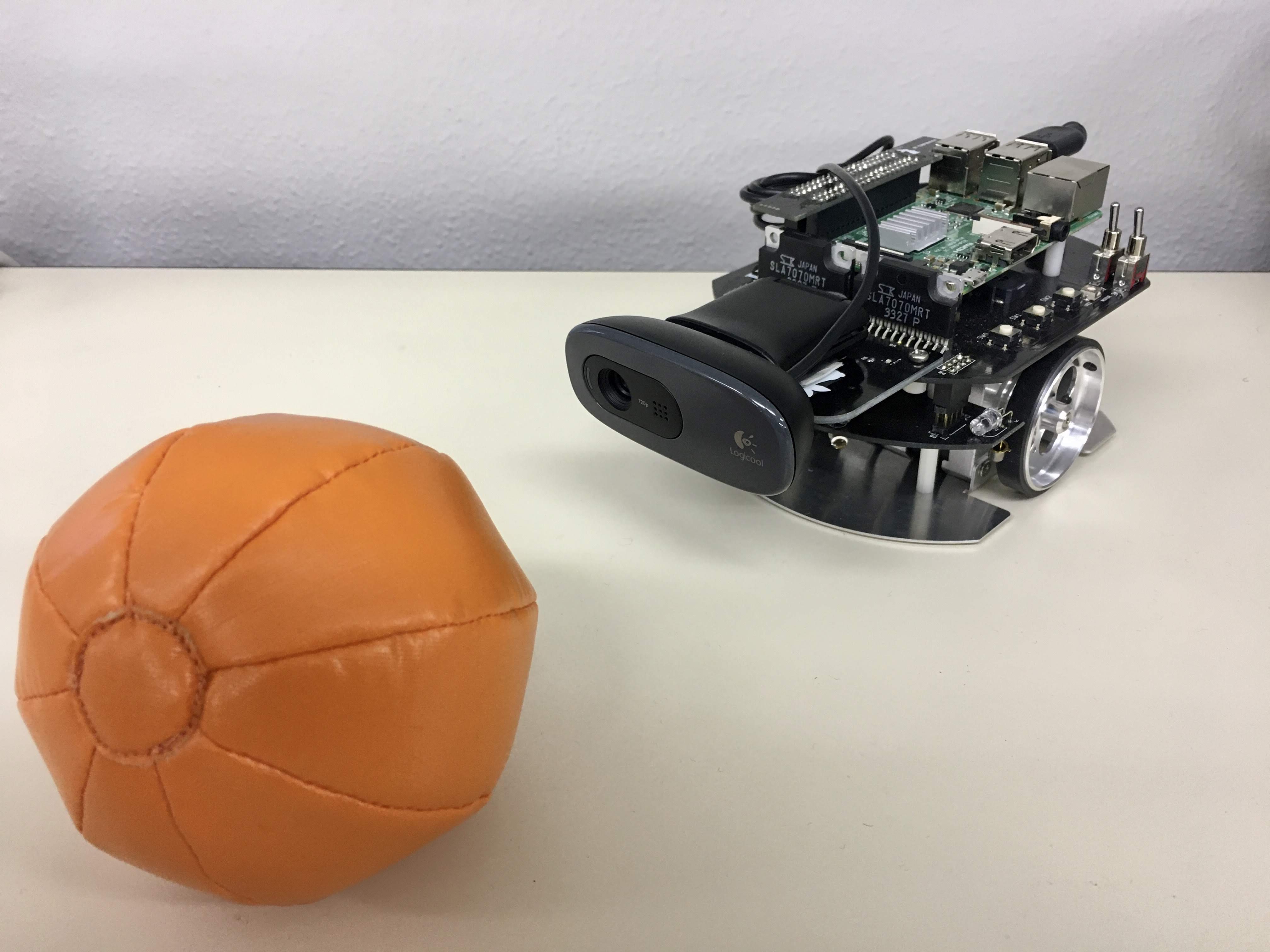

This sample publishes the object detection images on the `binary` and `object` topics.
These images can be viewed with [RViz](http://wiki.ros.org/ja/rviz)
or [rqt_image_view](http://wiki.ros.org/rqt_image_view).
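The `binary` image is presumably the mask of pixels whose HSV values fall inside the configured color range. As a rough, pure-Python illustration of that idea, the sketch below reproduces the inclusive-bounds behavior of OpenCV's `cv2.inRange` on a tiny hand-made HSV image and then takes the centroid of the mask, which is one common way to turn a detection into a target position. The function names `in_range` and `mask_centroid` and the centroid step are assumptions for illustration, not the contents of `object_tracking.py`.

```python
# Pure-Python sketch of HSV thresholding and centroid computation.
# in_range() mimics cv2.inRange: a pixel is kept when every channel lies
# within [lower, upper] (both bounds inclusive). Names are hypothetical.


def in_range(hsv_image, lower, upper):
    """Return a binary mask (255 = inside range, 0 = outside) for a 2-D list of (H, S, V) tuples."""
    return [
        [255 if all(lo <= c <= hi for c, lo, hi in zip(px, lower, upper)) else 0
         for px in row]
        for row in hsv_image
    ]


def mask_centroid(mask):
    """Return the (x, y) centroid of non-zero mask pixels, or None if the mask is empty."""
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        return None
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


if __name__ == "__main__":
    orange = (17, 200, 200)
    blue = (120, 200, 200)
    image = [
        [blue, blue, blue],
        [blue, orange, orange],
        [blue, orange, orange],
    ]
    # Orange range taken from set_color_orange() in object_tracking.py
    mask = in_range(image, (15, 150, 40), (20, 255, 255))
    print(mask)                 # [[0, 0, 0], [0, 255, 255], [0, 255, 255]]
    print(mask_centroid(mask))  # (1.5, 1.5)
```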


#### Configure

Edit [`./scripts/object_tracking.py`](./scripts/object_tracking.py) to change the color of the tracking target. Uncomment the line for the color you want and comment out the others.

```python
def detect_ball(self):
    # ~~~ omitted ~~~
    min_hsv, max_hsv = self.set_color_orange()
    # min_hsv, max_hsv = self.set_color_green()
    # min_hsv, max_hsv = self.set_color_blue()
```

If object tracking is unstable, adjust the HSV thresholds in the following lines.

```python
def set_color_orange(self):
    # [H(0~180), S(0~255), V(0~255)]
    # min_hsv_orange = np.array([15, 200, 80])
    min_hsv_orange = np.array([15, 150, 40])
    max_hsv_orange = np.array([20, 255, 255])
    return min_hsv_orange, max_hsv_orange
```
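When tuning these bounds, keep the ranges in the comment in mind: for 8-bit images OpenCV stores hue as degrees divided by two (0 to 179), while saturation and value span 0 to 255. The configured hue range of 15 to 20 therefore corresponds to roughly 30° to 40° on the usual color wheel. The sketch below uses Python's standard `colorsys` module to convert an RGB color you have measured into that scale and checks it against the orange bounds. The helper names and the rounding step are assumptions; OpenCV's own conversion (`cv2.cvtColor` with `cv2.COLOR_BGR2HSV`) may differ by one unit because of rounding.

```python
import colorsys

# Orange range from set_color_orange() above: [H, S, V] in OpenCV 8-bit units.
MIN_HSV_ORANGE = (15, 150, 40)
MAX_HSV_ORANGE = (20, 255, 255)


def rgb_to_opencv_hsv(r, g, b):
    """Convert 8-bit RGB to OpenCV-style 8-bit HSV (H: 0-179, S and V: 0-255).

    Hypothetical helper: colorsys works on floats in [0, 1], so hue is
    scaled by 180 (degrees / 2) and saturation/value by 255, then rounded.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return (round(h * 180) % 180, round(s * 255), round(v * 255))


def in_orange_range(hsv):
    """True if every channel lies within the configured bounds (inclusive)."""
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, MIN_HSV_ORANGE, MAX_HSV_ORANGE))


if __name__ == "__main__":
    for name, rgb in [("orange", (255, 128, 0)), ("blue", (0, 0, 255))]:
        hsv = rgb_to_opencv_hsv(*rgb)
        print(name, hsv, in_orange_range(hsv))
    # orange (15, 255, 255) True
    # blue (120, 255, 255) False
```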

#### Videos

[Watch the video on YouTube](https://youtu.be/U6_BuvrjyFc)

[back to example list](#how-to-use-examples)

---

### line_follower


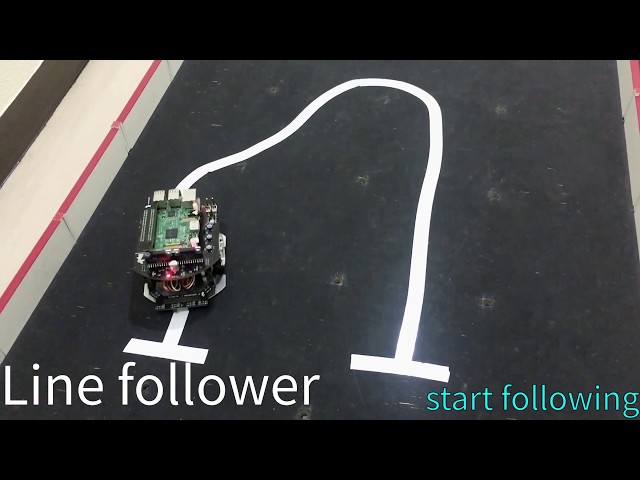


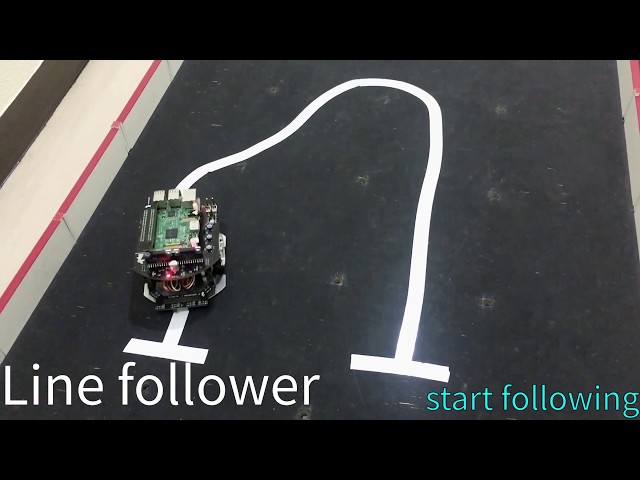

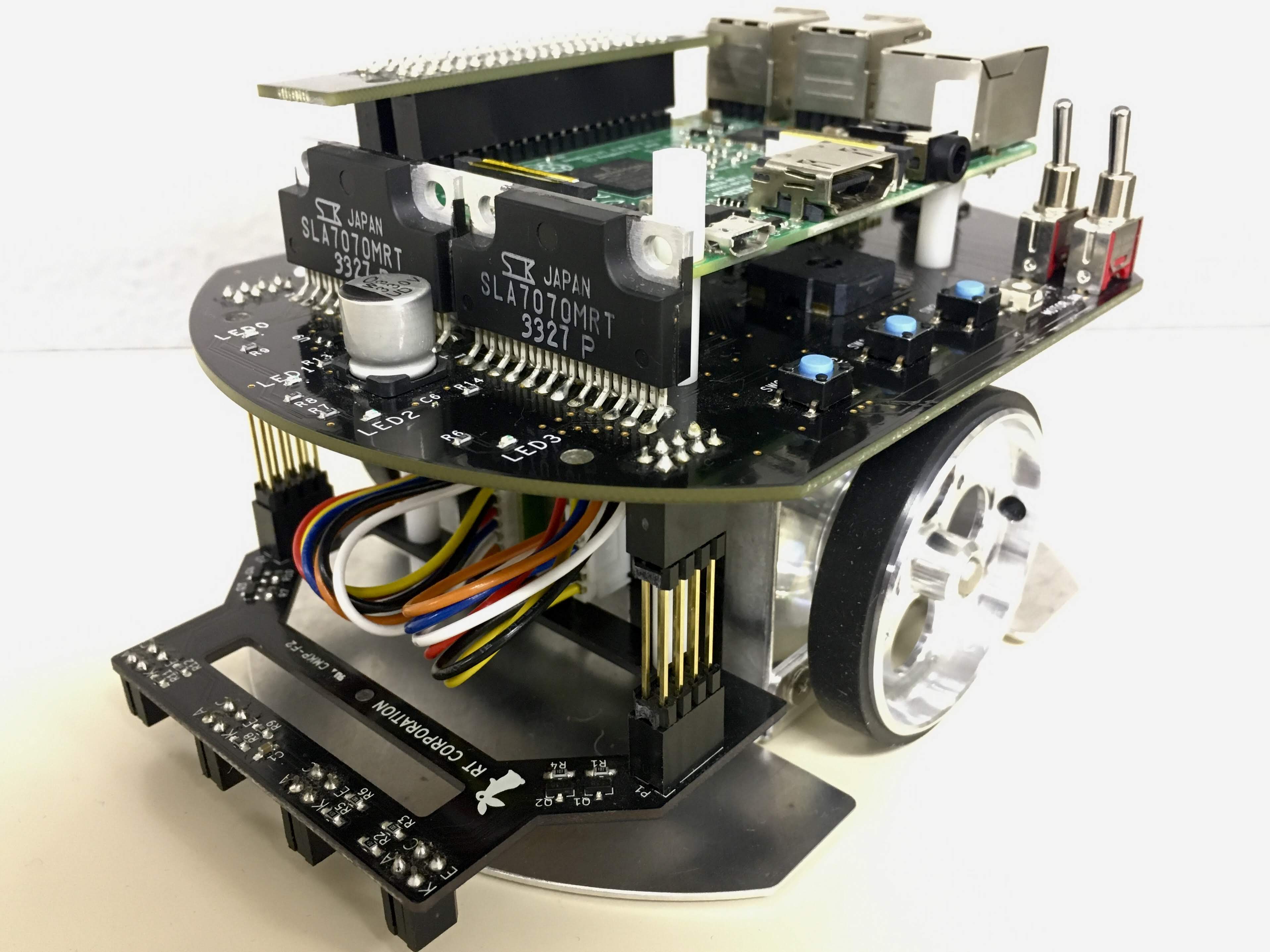

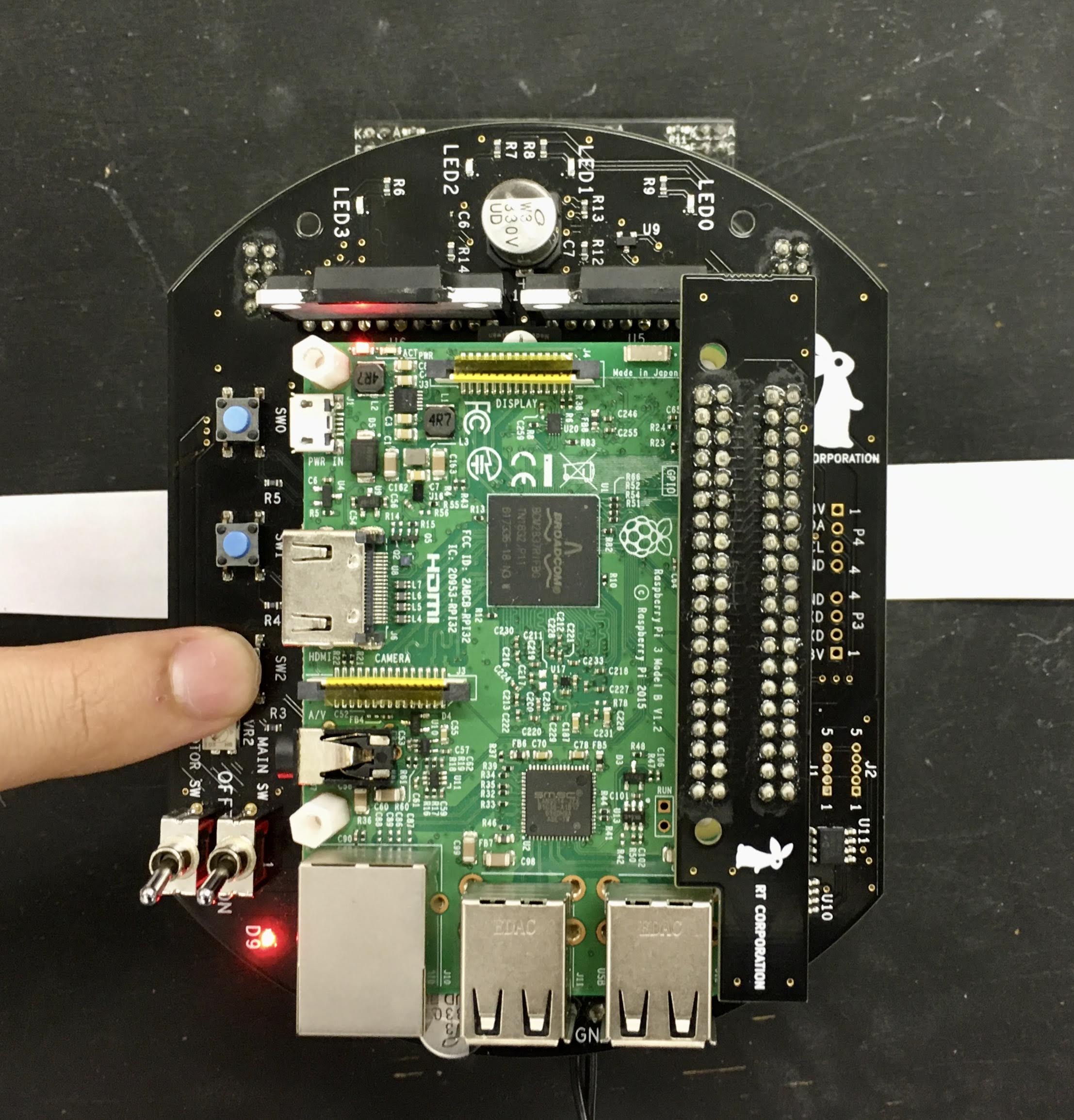

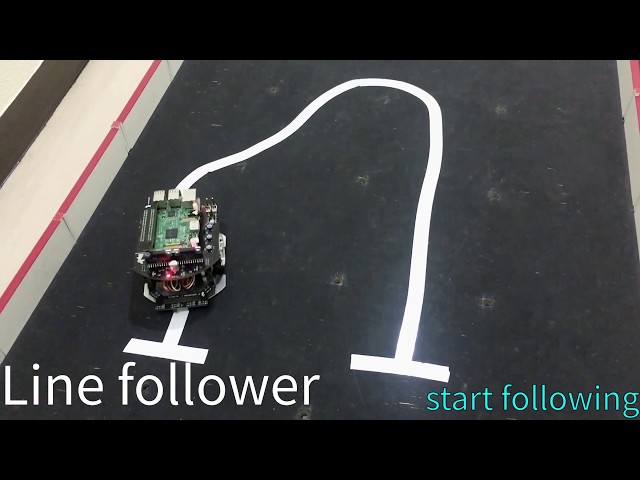

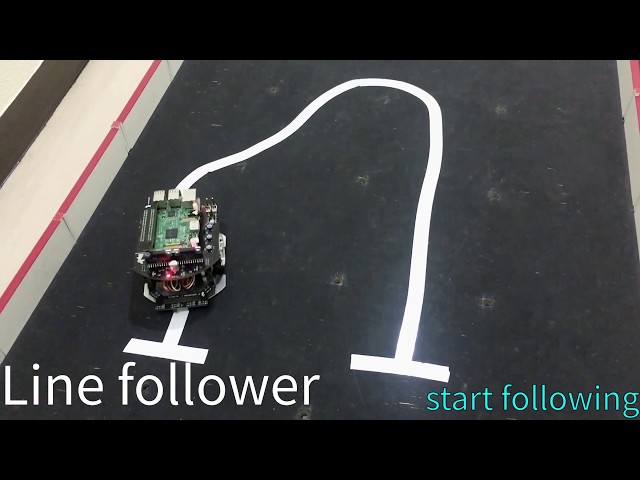

This example makes Raspberry Pi Mouse follow a line.

#### Requirements

- Line following sensor
  - [Raspberry Pi Mouse Option kit No.3 \[Line follower\]](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1395&products_id=3591&language=en)
- Field and lines for following (Optional)

#### Installation

Mount the line following sensor unit on Raspberry Pi Mouse.

#### How to use

Launch nodes with the following command:

```sh
roslaunch raspimouse_ros_examples line_follower.launch

# Control from a remote computer
roslaunch raspimouse_ros_examples line_follower.launch mouse:=false
```

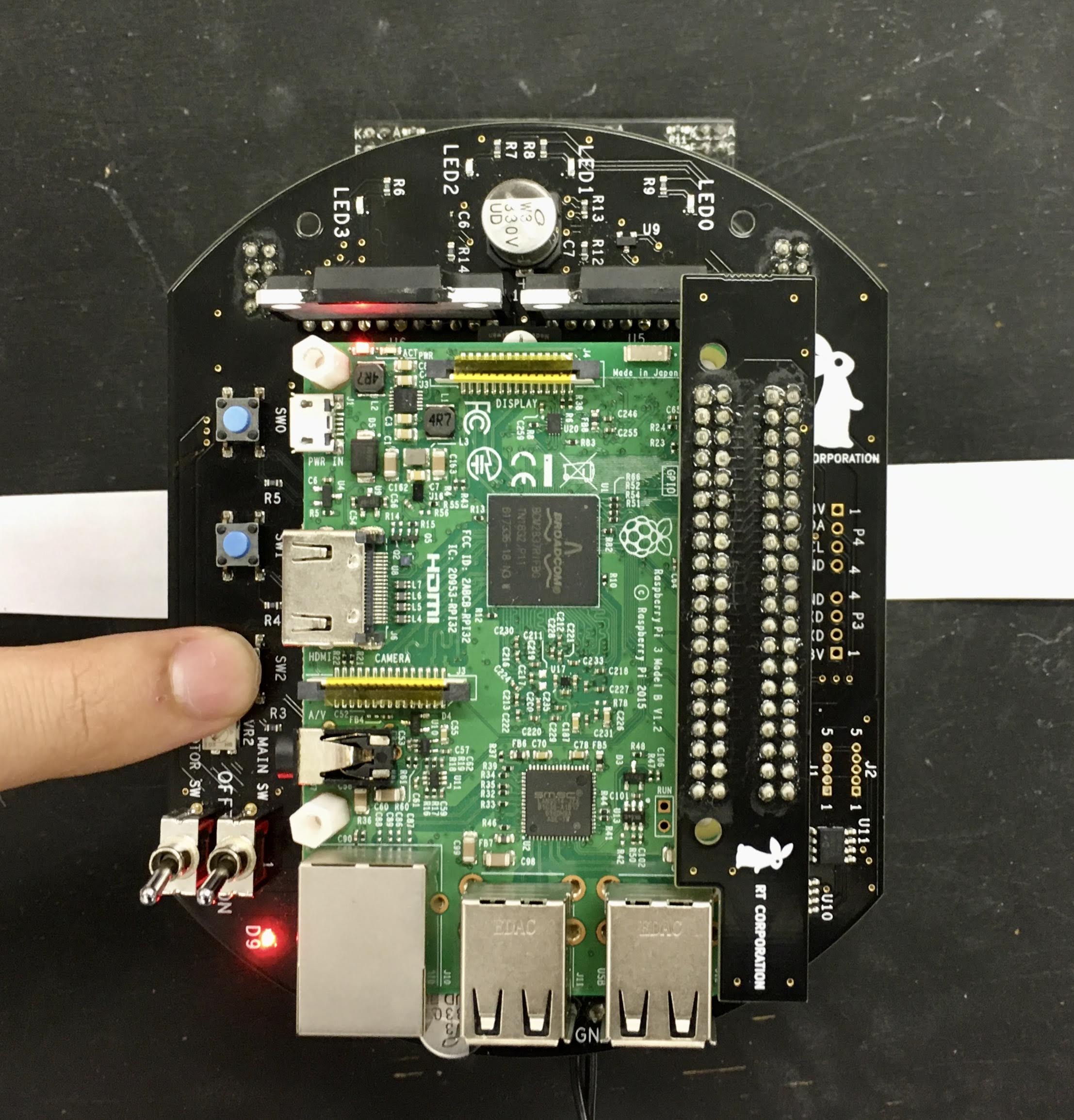

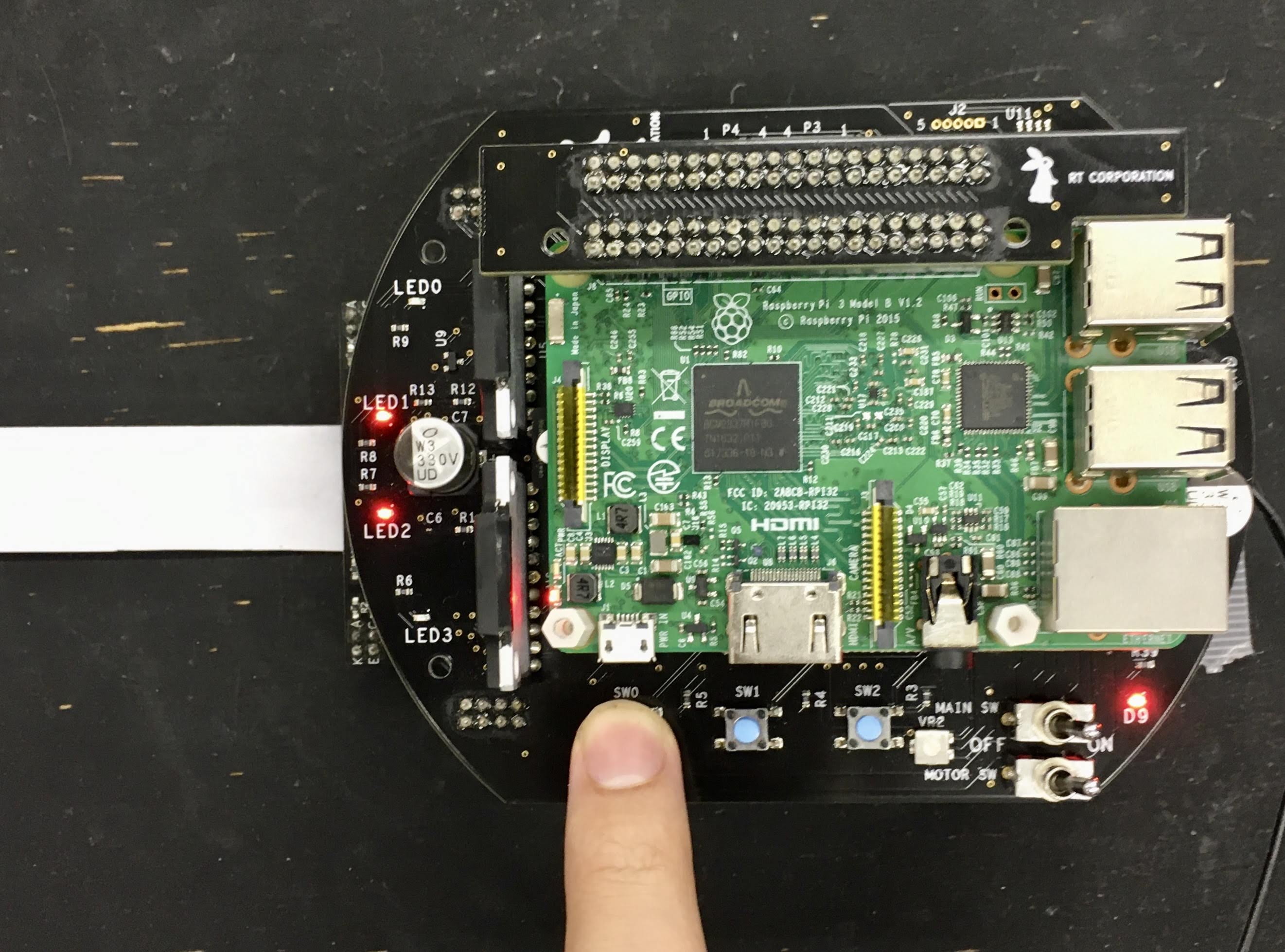

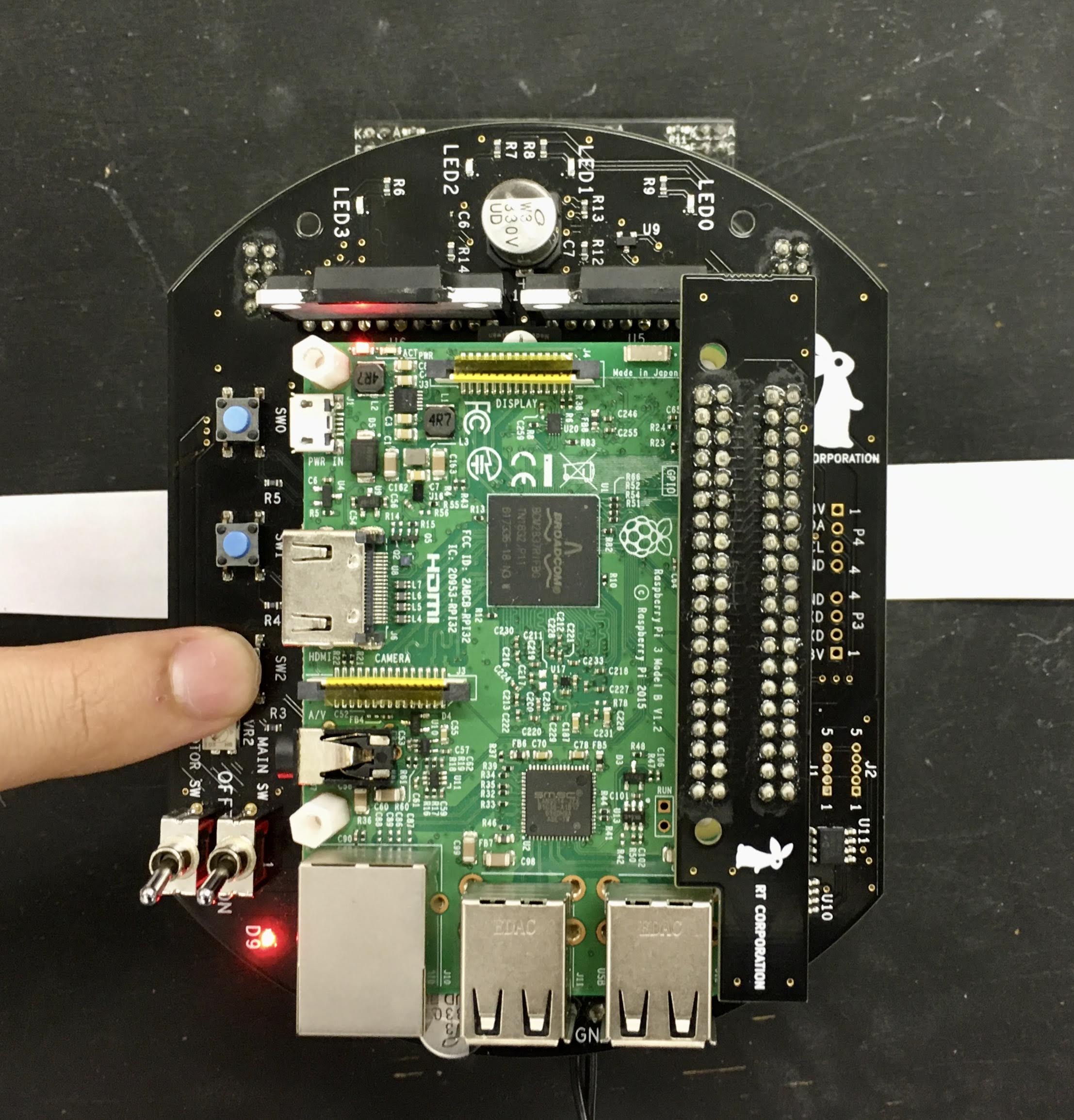

Next, place Raspberry Pi Mouse on the field and press SW2 to sample the sensor values of the field.


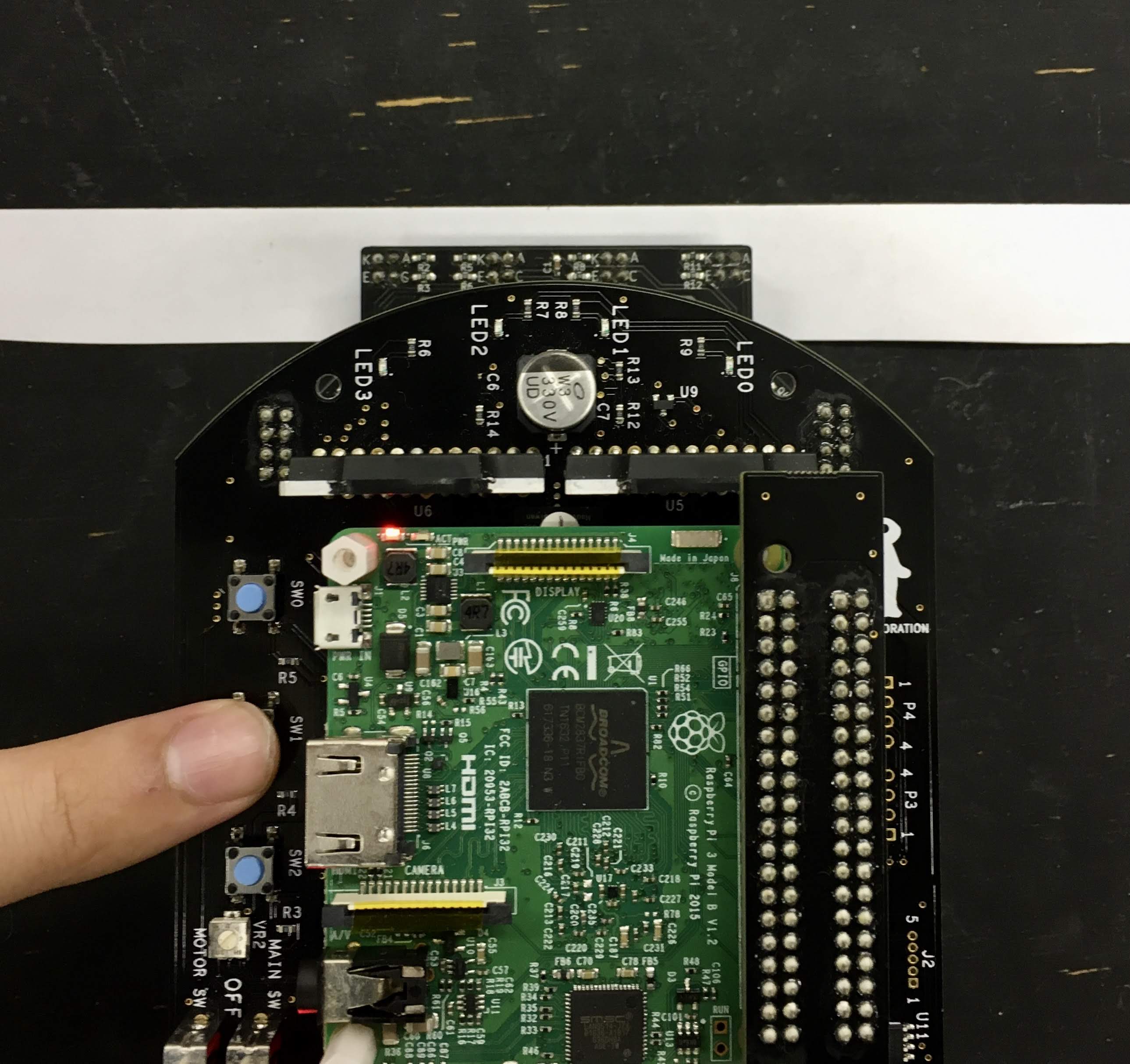

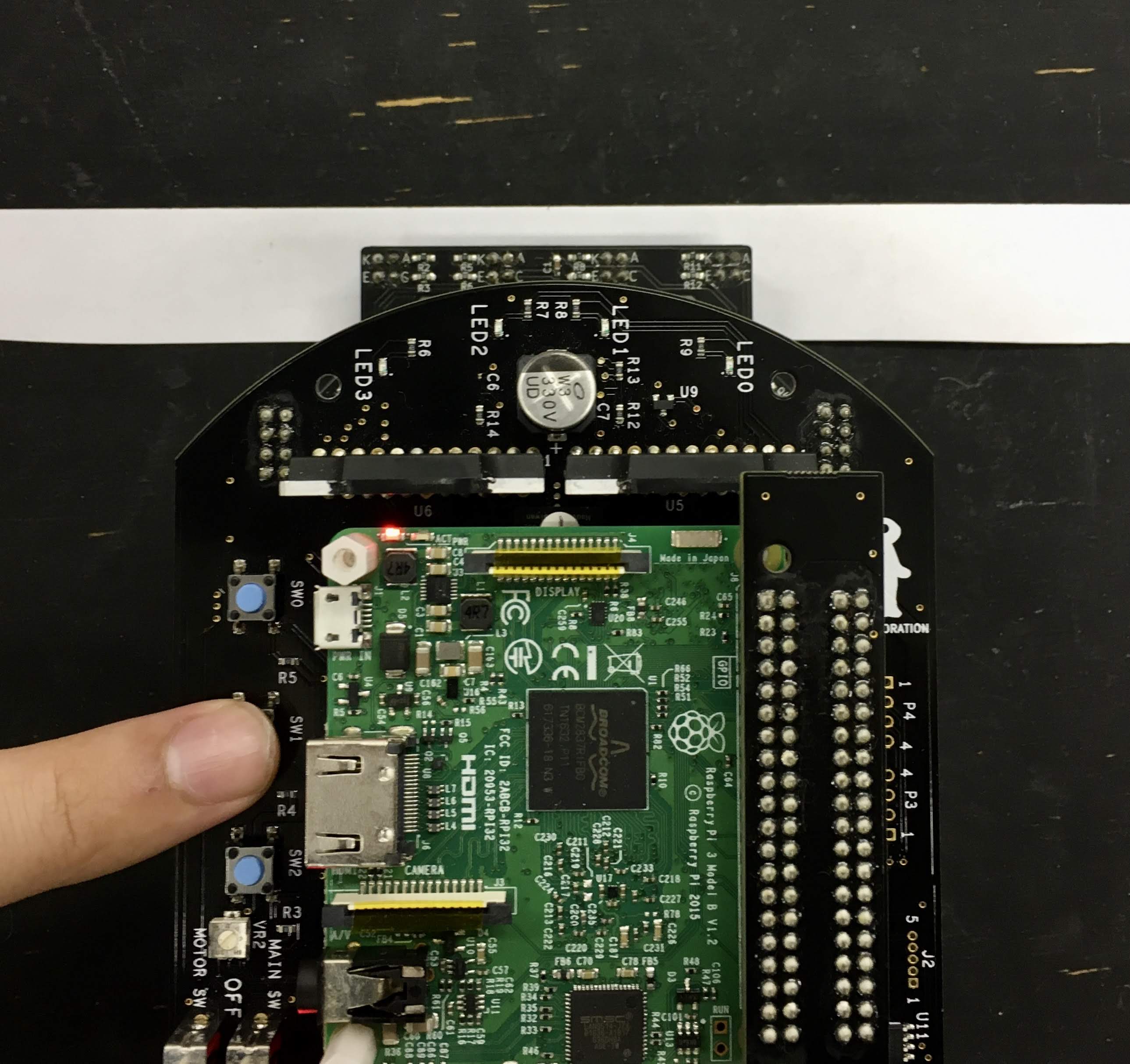

Then, place Raspberry Pi Mouse so that its sensors are over the line, and press SW1 to sample the sensor values on the line.

261 |

262 |


263 |

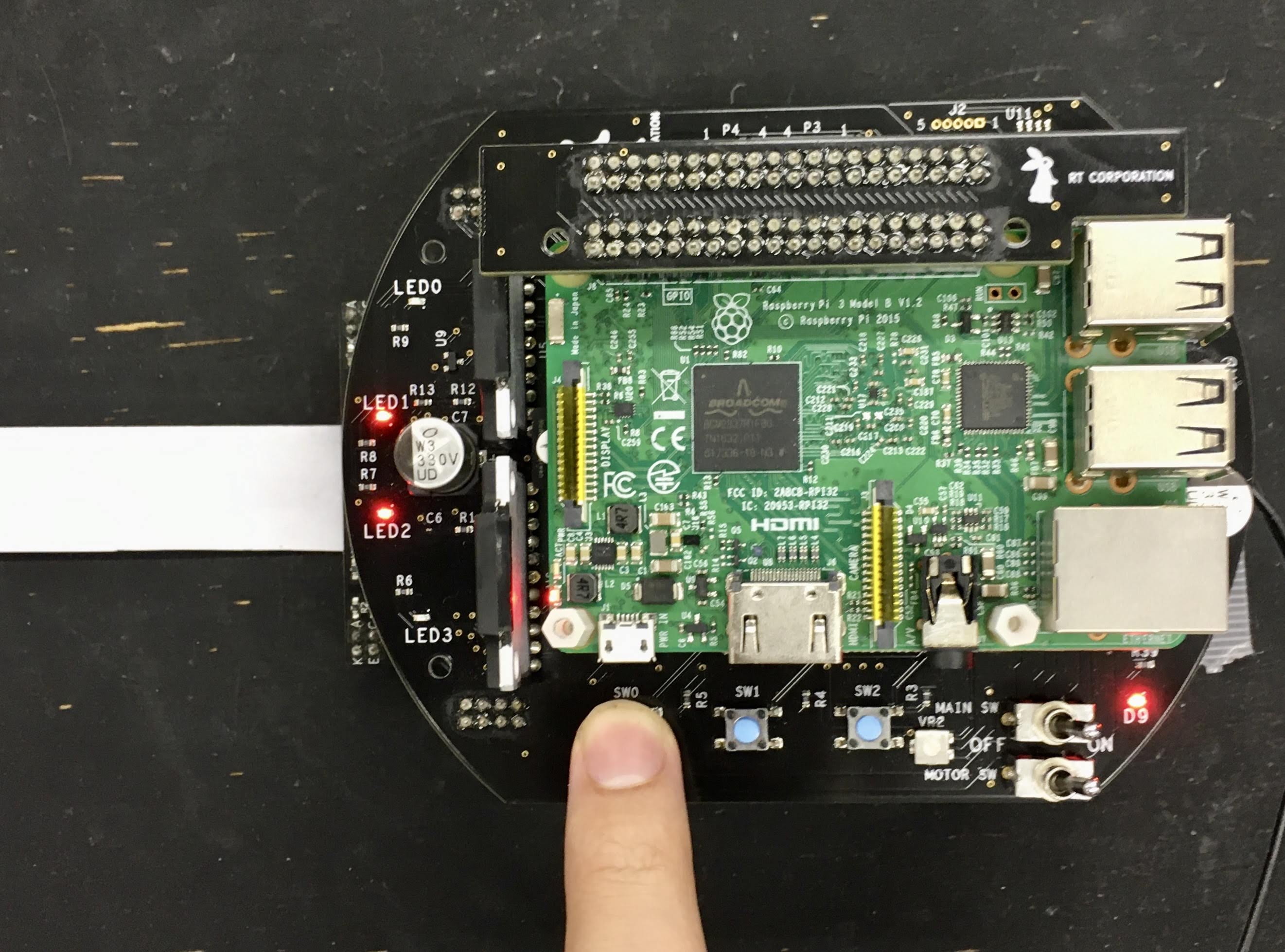

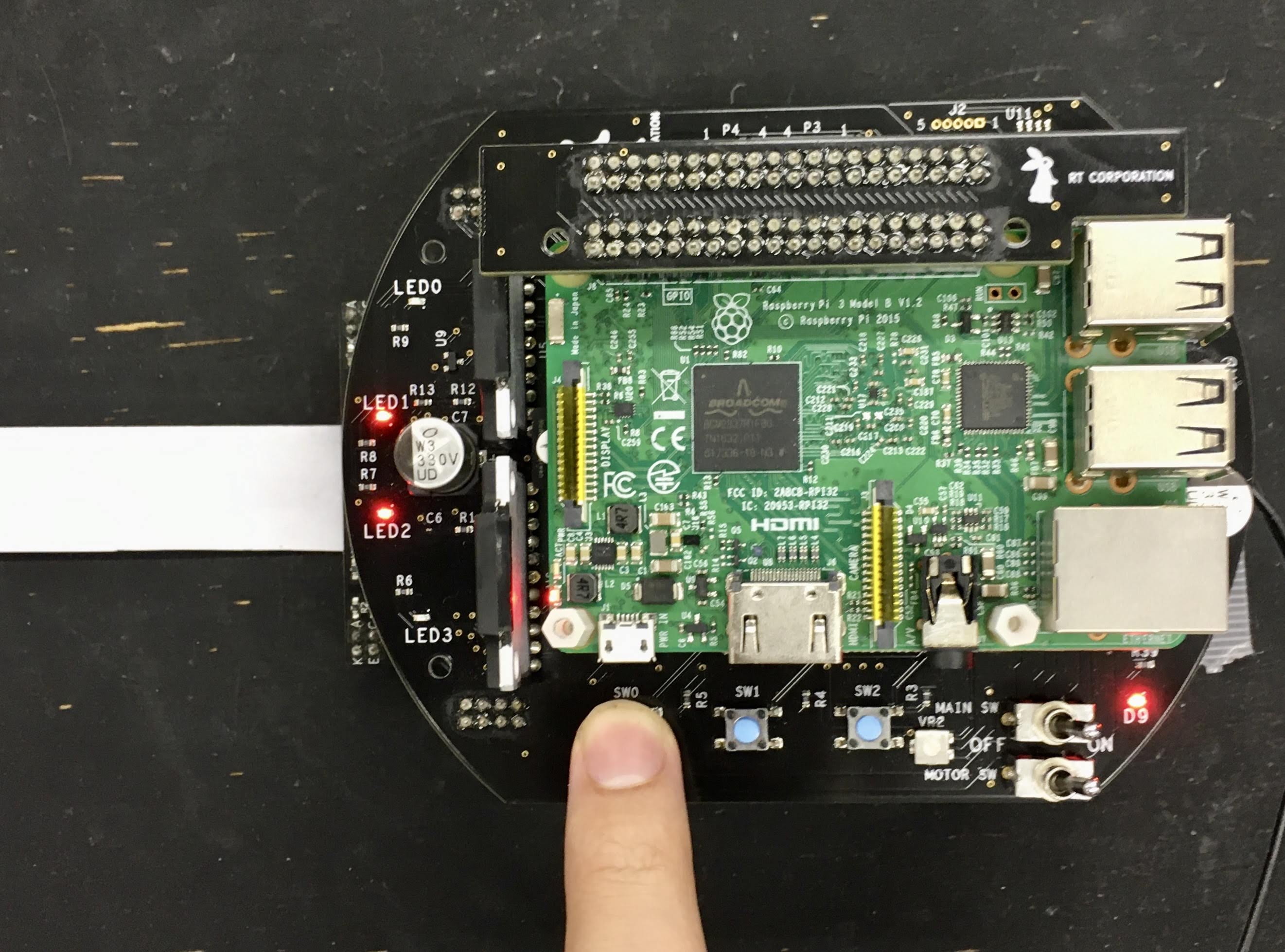

264 | Finally, place the Raspberry Pi Mouse on the line and press SW0 to start line following.

265 |

266 |


267 |

268 | Press SW0 again to stop line following.
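The calibration sequence above has three steps: sample the field with SW2, sample the line with SW1, then start with SW0. It can be sketched in plain Python. This is a minimal illustration and not the code in `line_follower.py`. The `LineCalibrator` class is hypothetical. It assumes each reading is a list with one value per light sensor. It treats a sensor as seeing the line when its reading is closer to that sensor's mean line sample than to its mean field sample.

```python
from statistics import mean


class LineCalibrator:
    """Hypothetical helper: stores field/line samples and classifies new readings."""

    def __init__(self):
        self.field_means = None  # filled by the SW2 step (sensors over the field)
        self.line_means = None   # filled by the SW1 step (sensors over the line)

    @staticmethod
    def _per_sensor_mean(samples):
        # samples: list of readings; each reading holds one value per sensor
        return [mean(values) for values in zip(*samples)]

    def sample_field(self, samples):
        self.field_means = self._per_sensor_mean(samples)

    def sample_line(self, samples):
        self.line_means = self._per_sensor_mean(samples)

    def detect(self, reading):
        """Return one bool per sensor: True if that sensor appears to be over the line."""
        if self.field_means is None or self.line_means is None:
            raise RuntimeError("sample the field (SW2) and the line (SW1) first")
        return [
            abs(value - on_line) < abs(value - on_field)
            for value, on_field, on_line in zip(reading, self.field_means, self.line_means)
        ]


# Usage with made-up sensor values (four sensors, two samples per step)
cal = LineCalibrator()
cal.sample_field([[900, 880, 910, 890], [920, 900, 890, 910]])
cal.sample_line([[200, 220, 210, 190], [180, 200, 230, 210]])
print(cal.detect([850, 300, 250, 870]))  # [False, True, True, False]
```

This rule compares distances to the two sampled means. That way it does not need to assume whether the line reads brighter or darker than the field.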

269 |

270 | #### Configure

271 |

272 | Edit [`./scripts/line_follower.py`](./scripts/line_follower.py) to change the velocity commands.

273 |

274 | ```python

275 | def _publish_cmdvel_for_line_following(self):

276 |     VEL_LINER_X = 0.08  # m/s

277 |     VEL_ANGULAR_Z = 0.8  # rad/s

278 |     LOW_VEL_ANGULAR_Z = 0.5  # rad/s

279 |

280 |     cmd_vel = Twist()

281 | ```
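The three constants above suggest a forward speed plus a strong and a gentle turn rate. The sketch below shows one plausible steering rule under stated assumptions. It is not the logic of `_publish_cmdvel_for_line_following`.

- It assumes four sensors (left, mid-left, mid-right, right) reported as booleans.
- It uses a small dataclass instead of `geometry_msgs/Twist` so it runs without ROS.
- It follows the ROS convention that a positive `angular.z` turns the robot left (counterclockwise).

```python
from dataclasses import dataclass

VEL_LINER_X = 0.08  # m/s (name kept as spelled in line_follower.py)
VEL_ANGULAR_Z = 0.8  # rad/s, strong turn
LOW_VEL_ANGULAR_Z = 0.5  # rad/s, gentle turn


@dataclass
class Velocity:
    """Stand-in for the linear.x / angular.z fields of geometry_msgs/Twist."""
    linear_x: float = 0.0
    angular_z: float = 0.0


def cmdvel_for_line_following(left, mid_left, mid_right, right):
    """Hypothetical rule; each argument is True if that sensor sees the line."""
    cmd = Velocity(linear_x=VEL_LINER_X)
    if left and not right:
        cmd.angular_z = VEL_ANGULAR_Z        # line far to the left: turn hard left
    elif right and not left:
        cmd.angular_z = -VEL_ANGULAR_Z       # line far to the right: turn hard right
    elif mid_left and not mid_right:
        cmd.angular_z = LOW_VEL_ANGULAR_Z    # line slightly left: gentle left turn
    elif mid_right and not mid_left:
        cmd.angular_z = -LOW_VEL_ANGULAR_Z   # line slightly right: gentle right turn
    return cmd                               # centered or ambiguous: go straight


print(cmdvel_for_line_following(False, True, False, False))
# Velocity(linear_x=0.08, angular_z=0.5)
```

Raising `VEL_LINER_X` without raising the turn rates makes it more likely that the robot overshoots curves, so these values are usually tuned together.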

282 |

283 | #### Videos

284 |

285 | [line_follower video](https://youtu.be/oPm0sW2V_tY)

286 |

287 | [back to example list](#how-to-use-examples)

288 |

289 | ---

290 |

291 | ### SLAM

292 |

293 |


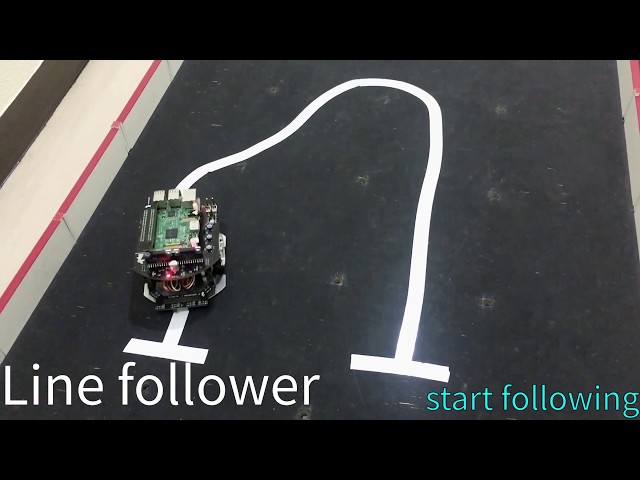

294 |

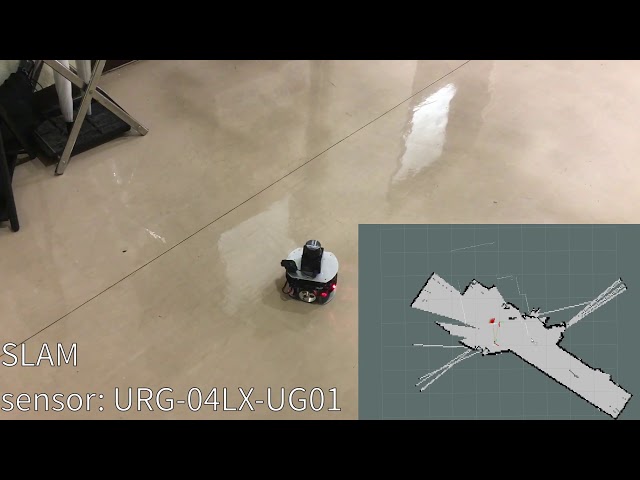

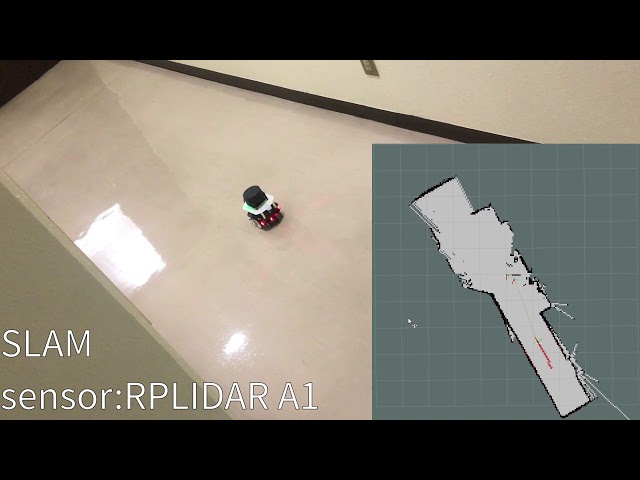

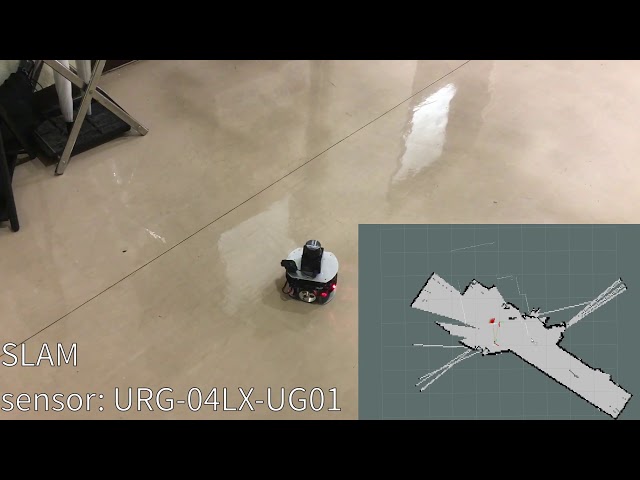

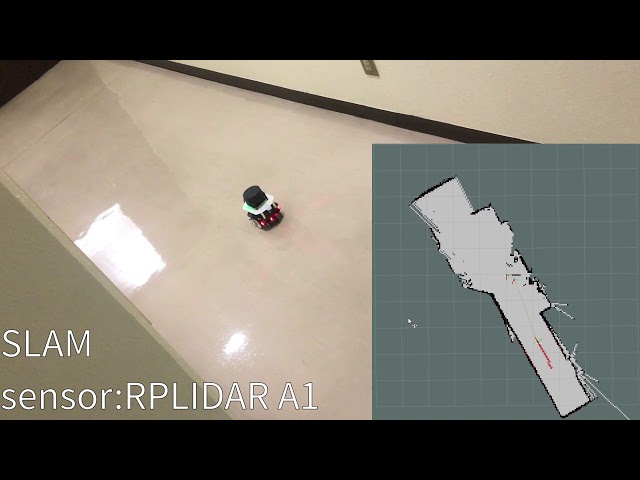

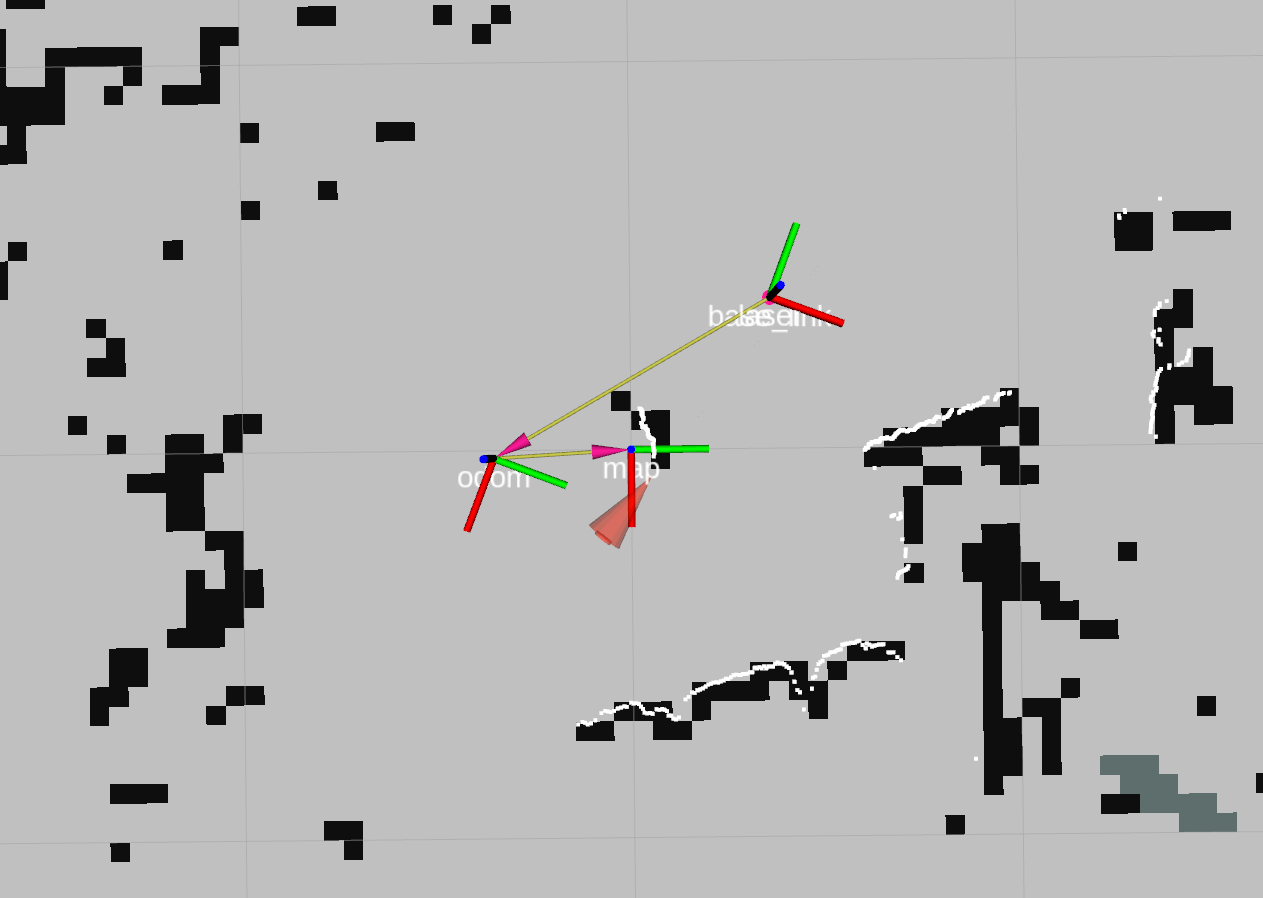

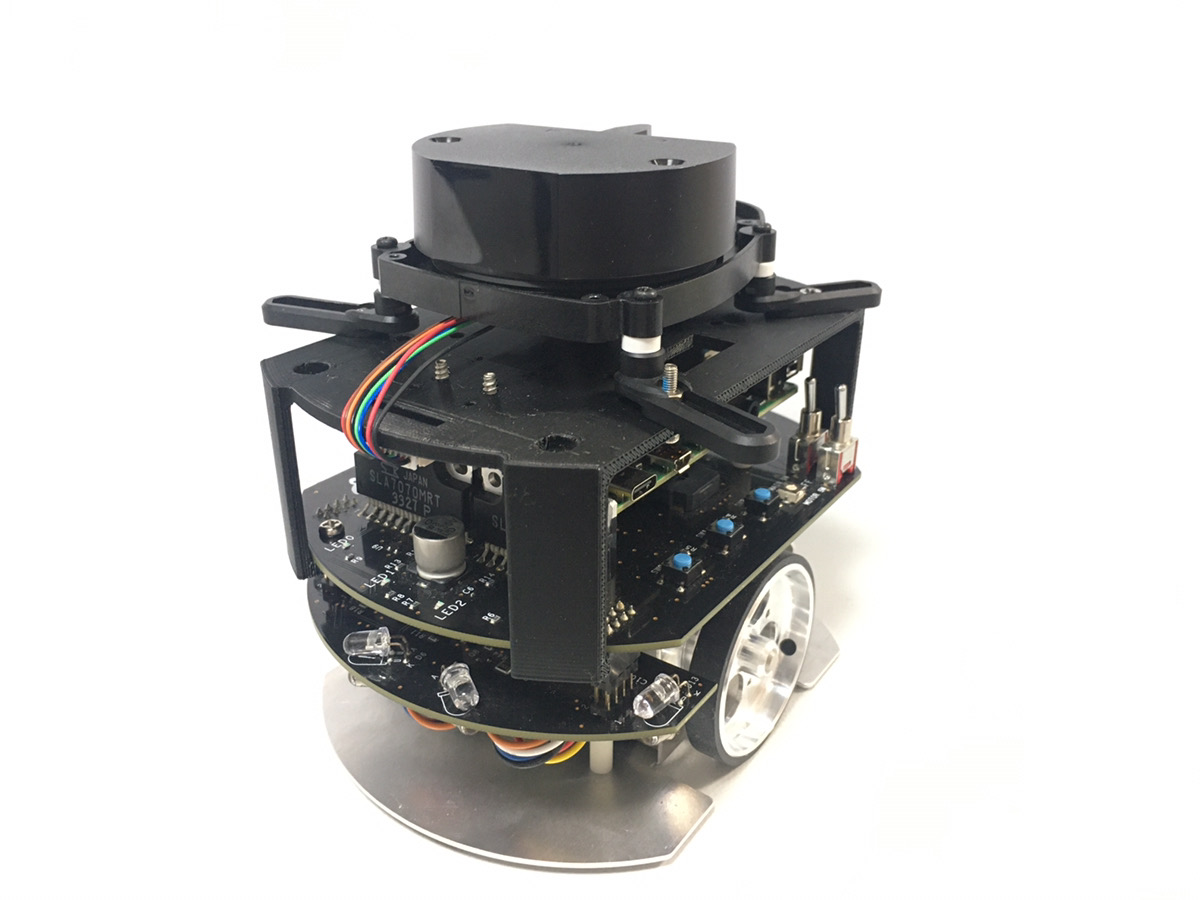

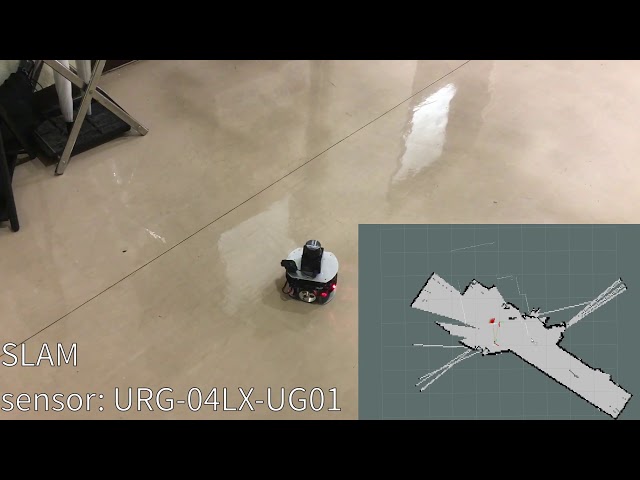

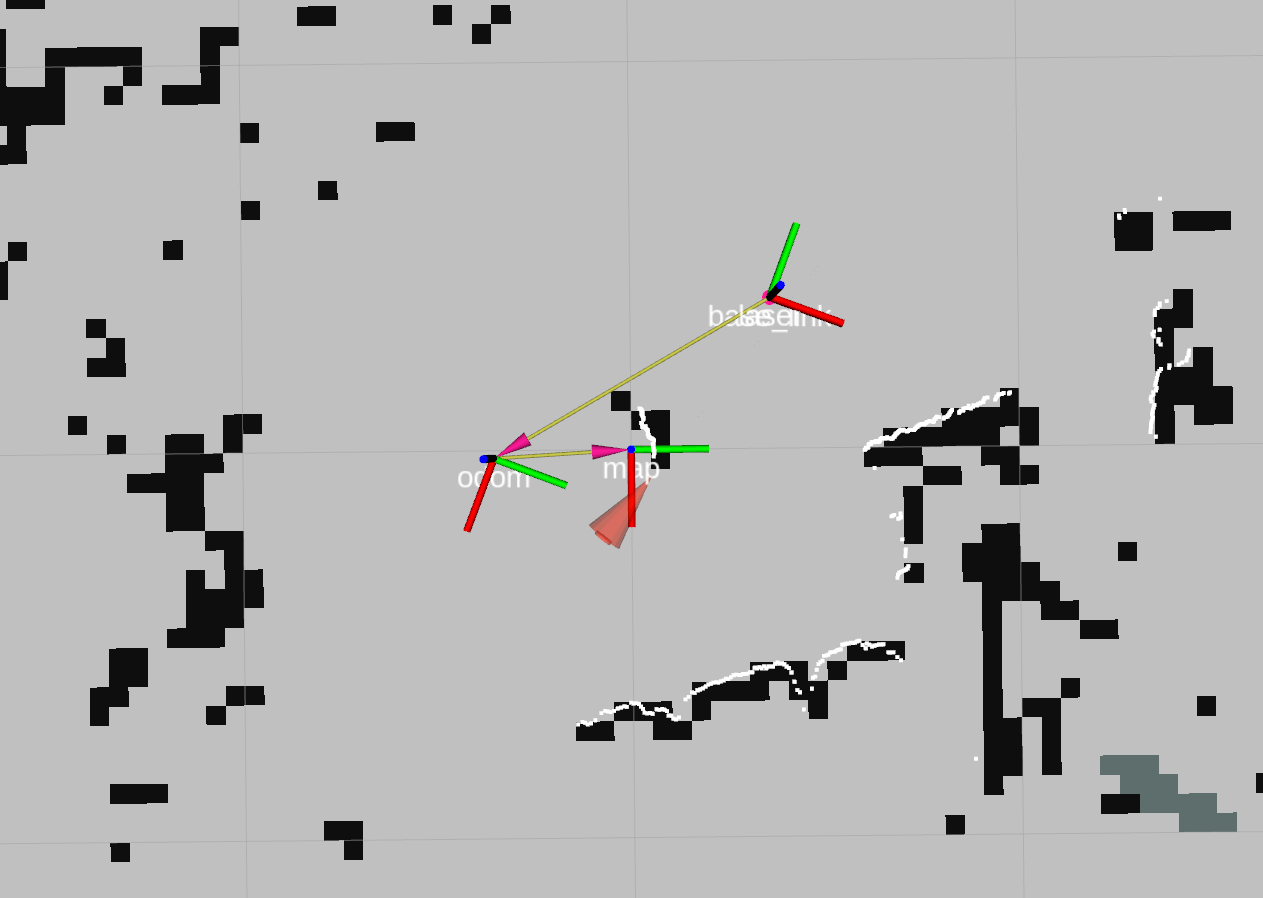

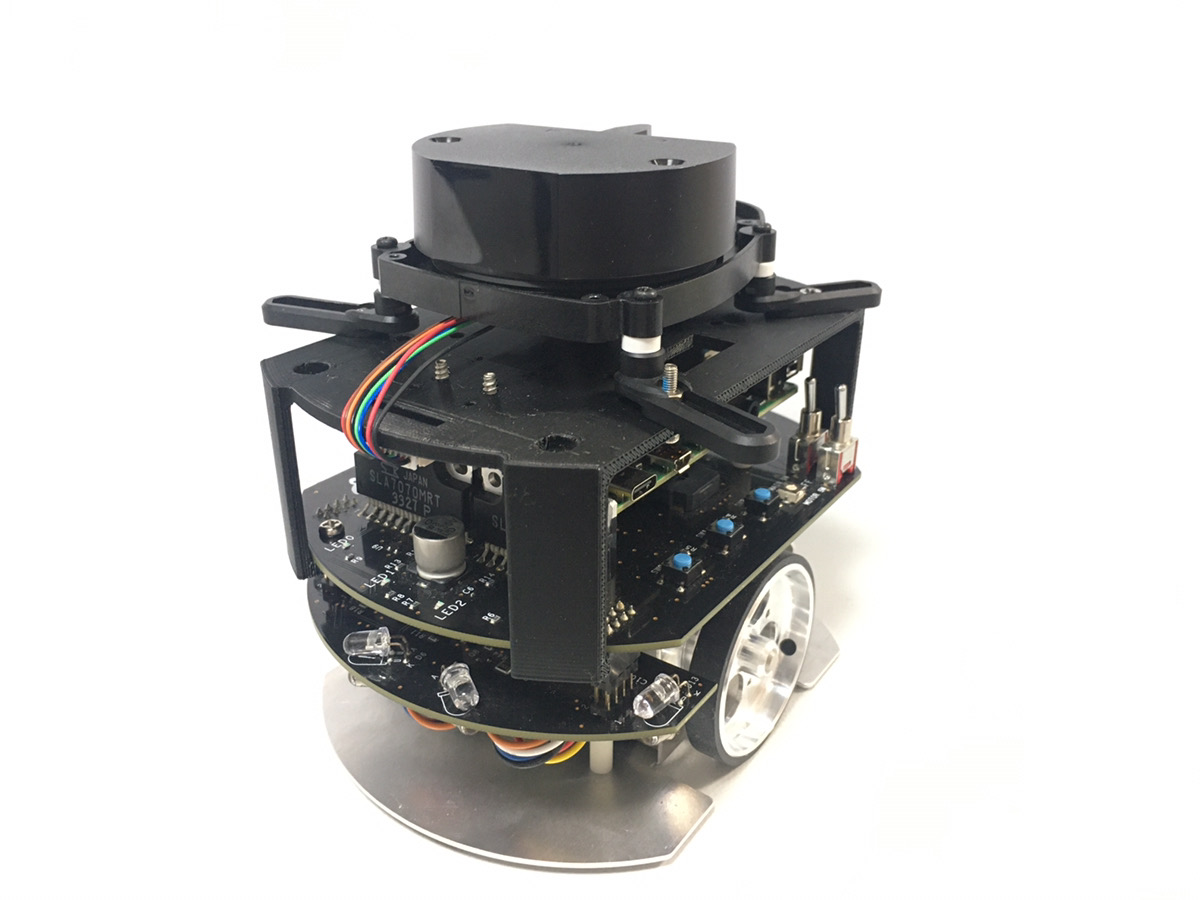

295 | This example uses a LiDAR for SLAM (Simultaneous Localization and Mapping).

296 |

297 | #### Requirements

298 |

299 | - LiDAR

300 |   - [URG-04LX-UG01](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1348_1296&products_id=2816&language=en)

301 |

302 |   - [LDS-01](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1348_5&products_id=3676&language=en)

303 | - [LiDAR Mount](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1395&products_id=3867&language=en)

304 | - Joystick Controller (Optional)

305 |

306 | This sample does not support RPLIDAR because its package [rplidar_ros](https://github.com/Slamtec/rplidar_ros) has not yet been released for ROS Noetic.

307 |

308 | #### Installation

309 |

310 | Mount a LiDAR on the Raspberry Pi Mouse.

311 |

312 | - URG-04LX-UG01

313 | -


314 |

316 | - LDS-01

317 | -


318 |

319 | #### How to use

320 |

321 | Launch the nodes on the Raspberry Pi Mouse with the following command:

322 |

323 | ```sh

324 | # URG

325 | roslaunch raspimouse_ros_examples mouse_with_lidar.launch urg:=true port:=/dev/ttyACM0

326 |

327 | # LDS

328 | roslaunch raspimouse_ros_examples mouse_with_lidar.launch lds:=true port:=/dev/ttyUSB0

329 | ```

330 |

331 | Next, launch `teleop.launch` to drive the Raspberry Pi Mouse with the following command:

332 |

333 | ```sh

334 | # joystick control

335 | roslaunch raspimouse_ros_examples teleop.launch mouse:=false joy:=true joyconfig:=dualshock3

336 | ```

337 |

338 | Then, launch the SLAM packages with the following command (running them on a remote computer is recommended):

339 |

340 | ```sh

341 | # URG

342 | roslaunch raspimouse_ros_examples slam_gmapping.launch urg:=true

343 |

344 | # LDS

345 | roslaunch raspimouse_ros_examples slam_gmapping.launch lds:=true

346 | ```

347 |

348 | After driving the Raspberry Pi Mouse around to build a map, save the map by running the `map_saver` node with the following command:

349 |

350 | ```sh

351 | mkdir -p ~/maps

352 | rosrun map_server map_saver -f ~/maps/mymap

353 | ```
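`map_saver -f ~/maps/mymap` writes an image (`mymap.pgm`) and a metadata file (`mymap.yaml`). As far as we know, map_saver stores occupied cells as pixel 0, free cells as 254 and unknown cells as 205. It also writes `negate: 0`, `occupied_thresh: 0.65` and `free_thresh: 0.196` into the YAML; check your own `mymap.yaml` if you changed these. The sketch below mirrors map_server's trinary interpretation when the map is loaded again. The function name `pixel_to_occupancy` is hypothetical.

```python
OCCUPIED_THRESH = 0.65  # assumed default written by map_saver into mymap.yaml
FREE_THRESH = 0.196     # assumed default written by map_saver into mymap.yaml


def pixel_to_occupancy(pixel, negate=False):
    """Map a grayscale PGM pixel (0-255) to an occupancy value: 100, 0 or -1."""
    if negate:
        occ = pixel / 255.0
    else:
        occ = (255 - pixel) / 255.0  # darker pixel -> more likely occupied
    if occ > OCCUPIED_THRESH:
        return 100  # occupied
    if occ < FREE_THRESH:
        return 0    # free
    return -1       # unknown


for pixel in (0, 254, 205):
    print(pixel, pixel_to_occupancy(pixel))
# 0 100
# 254 0
# 205 -1
```

The unknown value 205 maps to (255 - 205) / 255 ≈ 0.19608. That lands just above `free_thresh`, which is presumably why 0.196 is used as the free threshold.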

354 |

355 | #### Configure

356 |

357 | Edit [./launch/slam_gmapping.launch](./launch/slam_gmapping.launch) to configure the parameters of the [gmapping](http://wiki.ros.org/gmapping) package.

358 |

359 | ```xml

360 |

361 |

362 |

363 |

364 |

365 |

366 |

367 |

368 |

369 |

370 |

371 |

372 | ```

373 |

374 | #### Videos

375 |

376 | [SLAM video 1](https://youtu.be/gWozU47UqVE)

377 |

378 | [SLAM video 2](https://youtu.be/hV68UqAntfo)

379 |

380 | [back to example list](#how-to-use-examples)

381 |

382 | ---

383 |

384 | ### direction_control

385 |

386 |


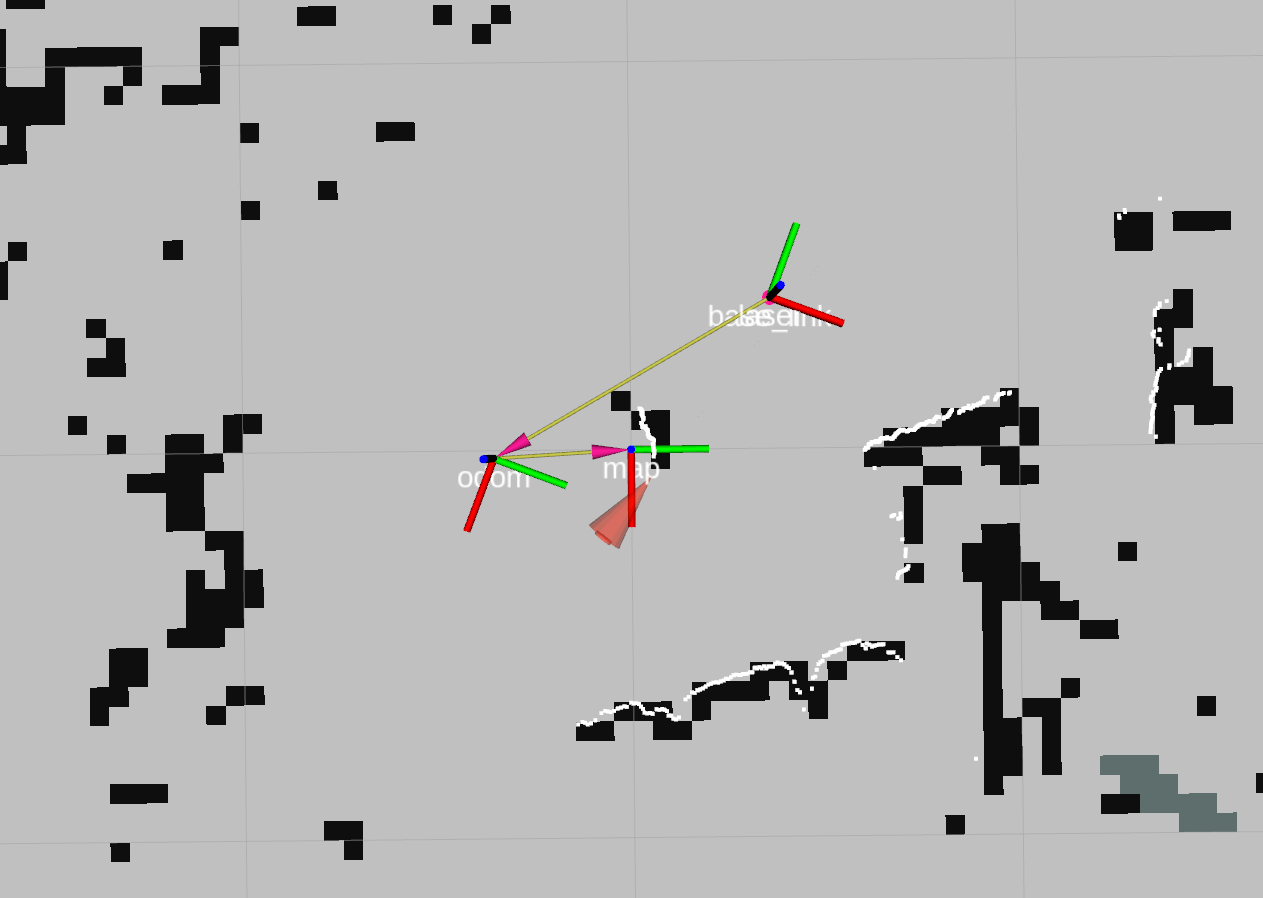

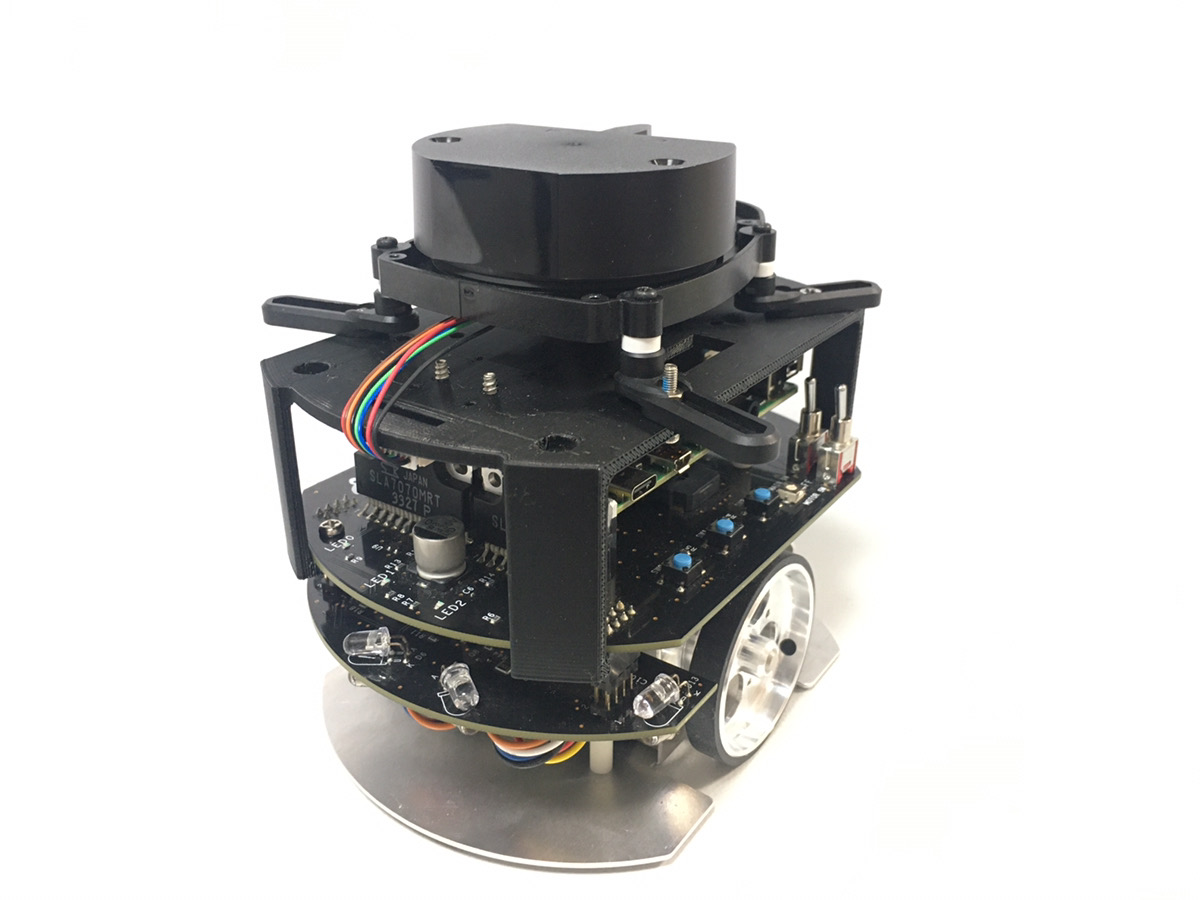

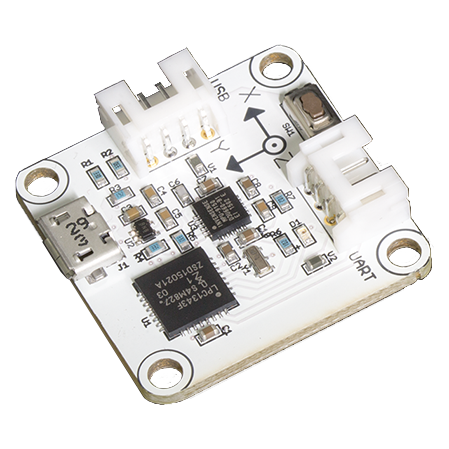

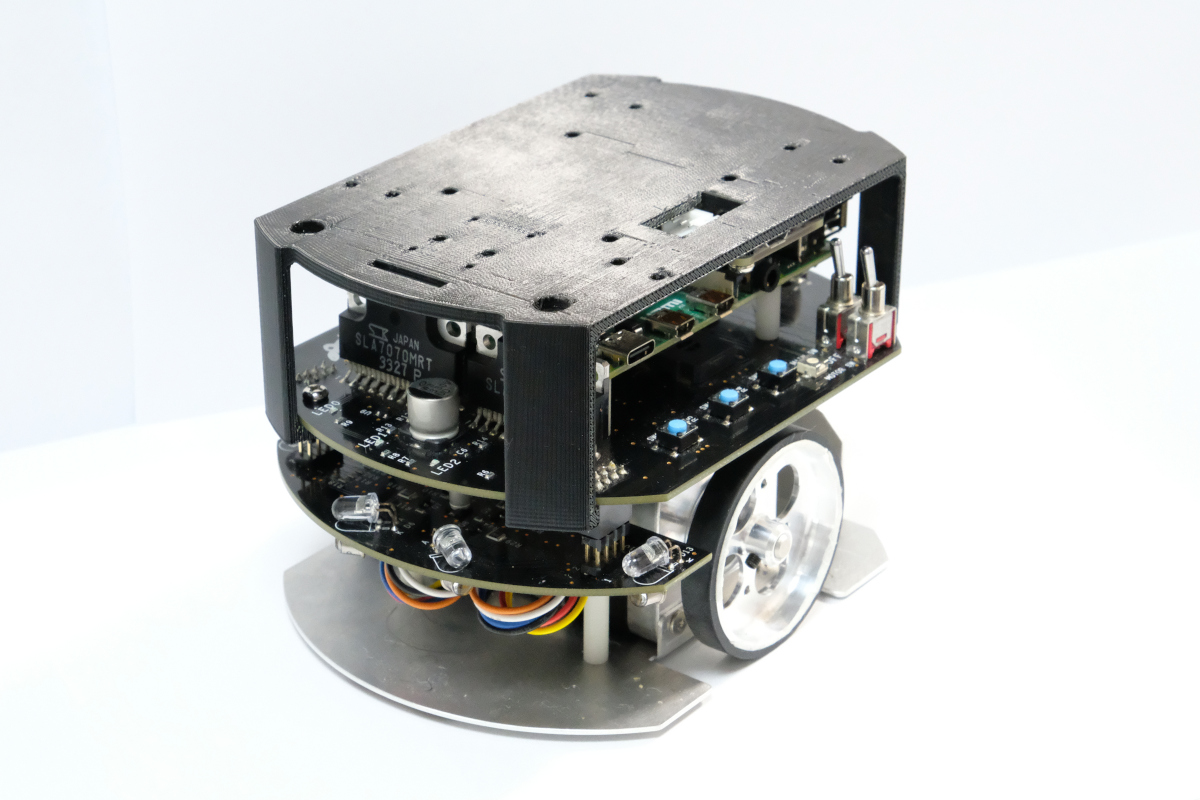

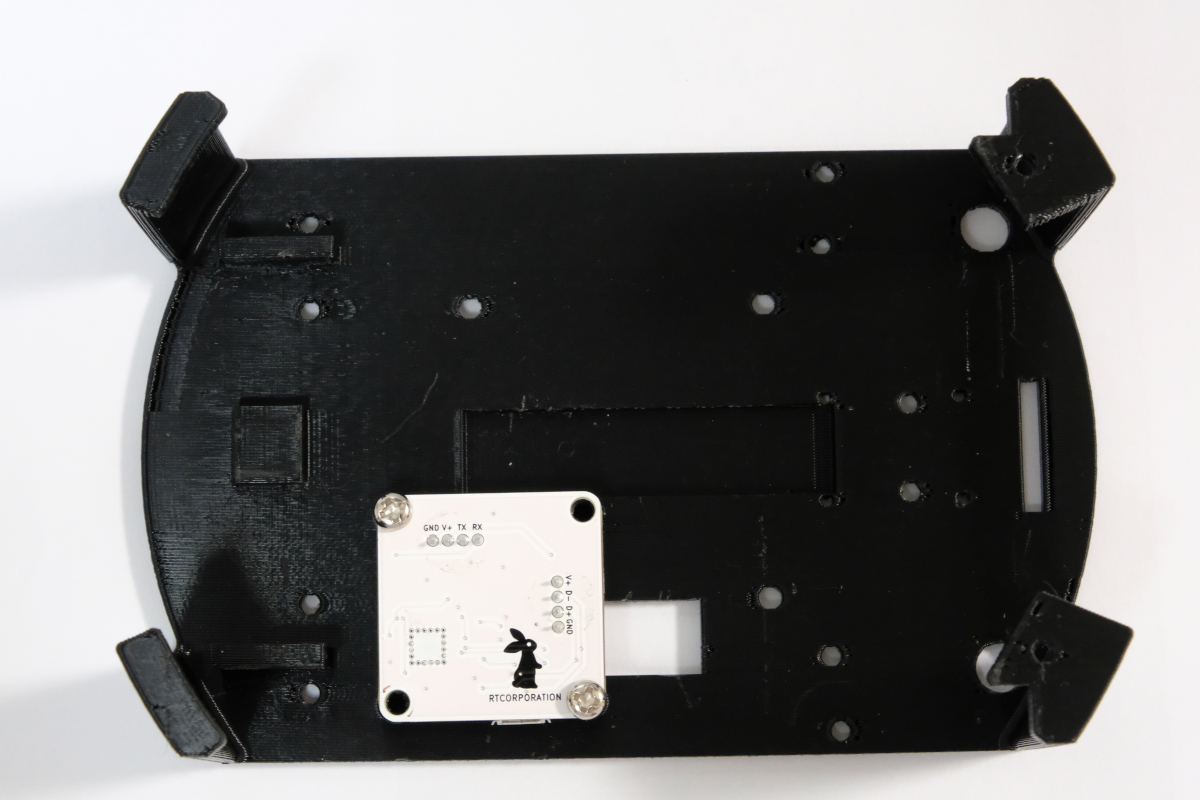

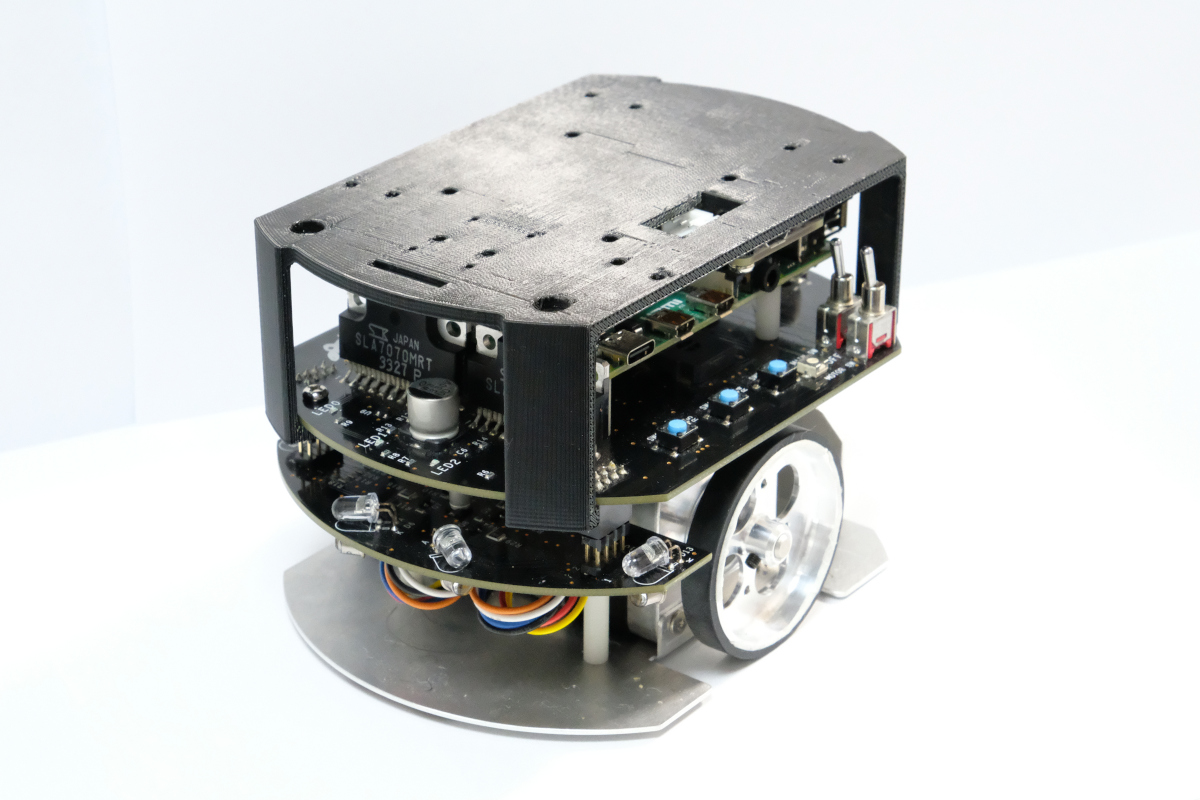

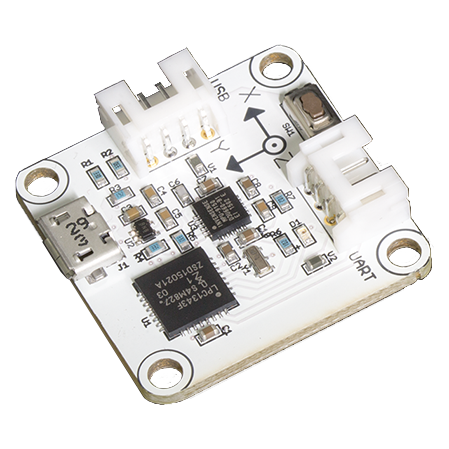

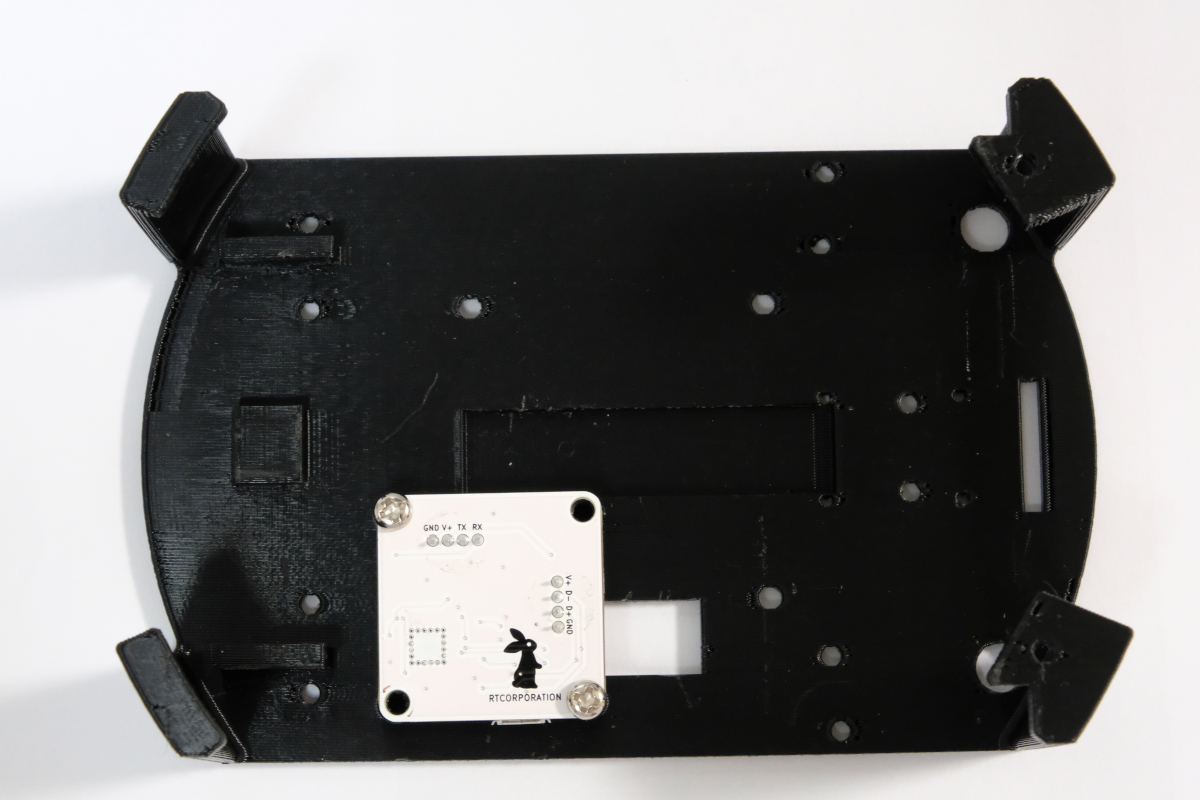

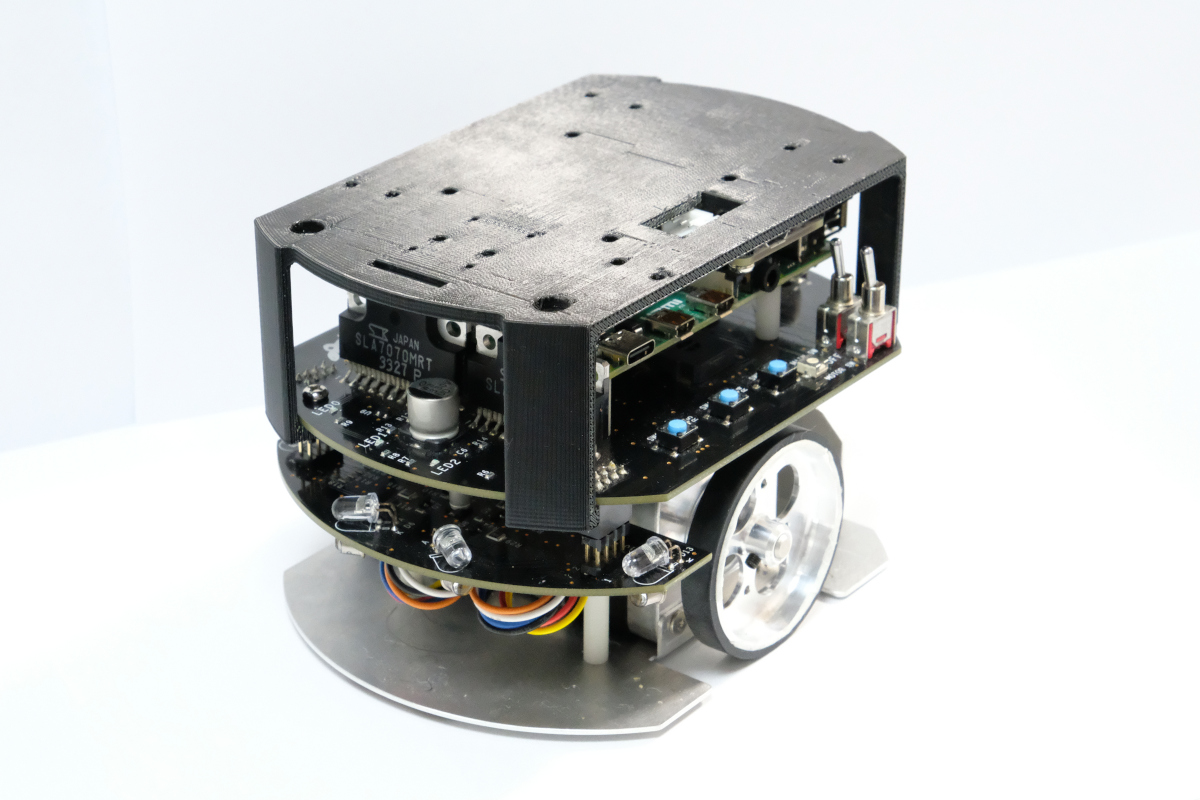

387 |

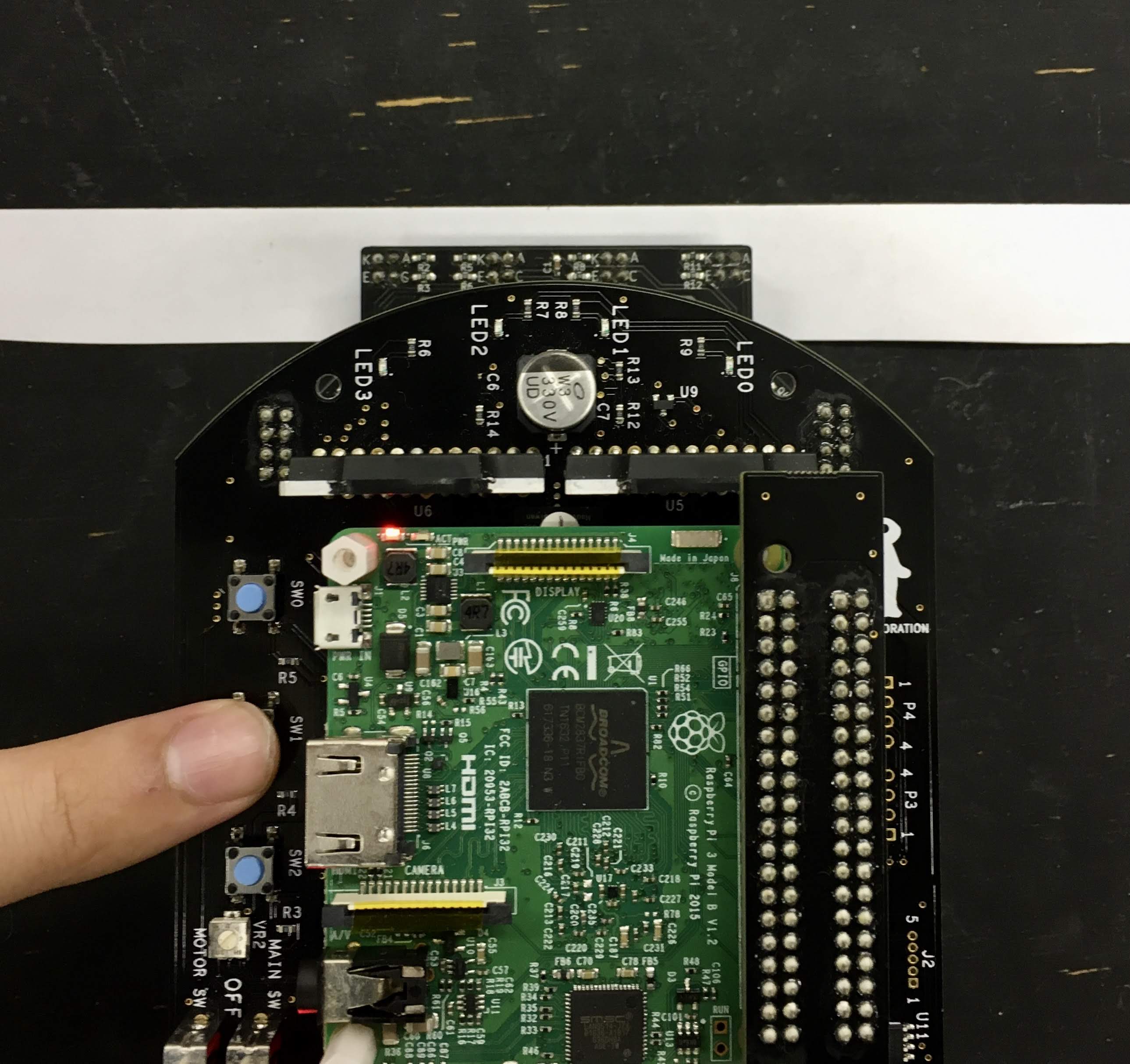

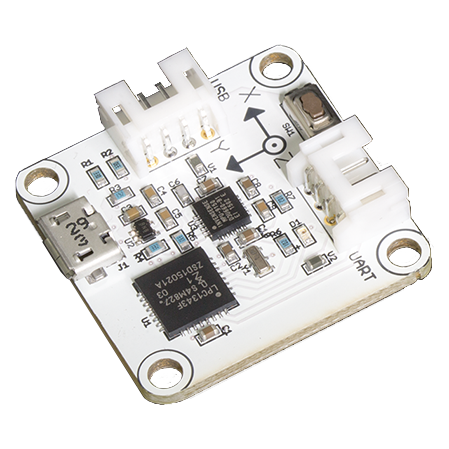

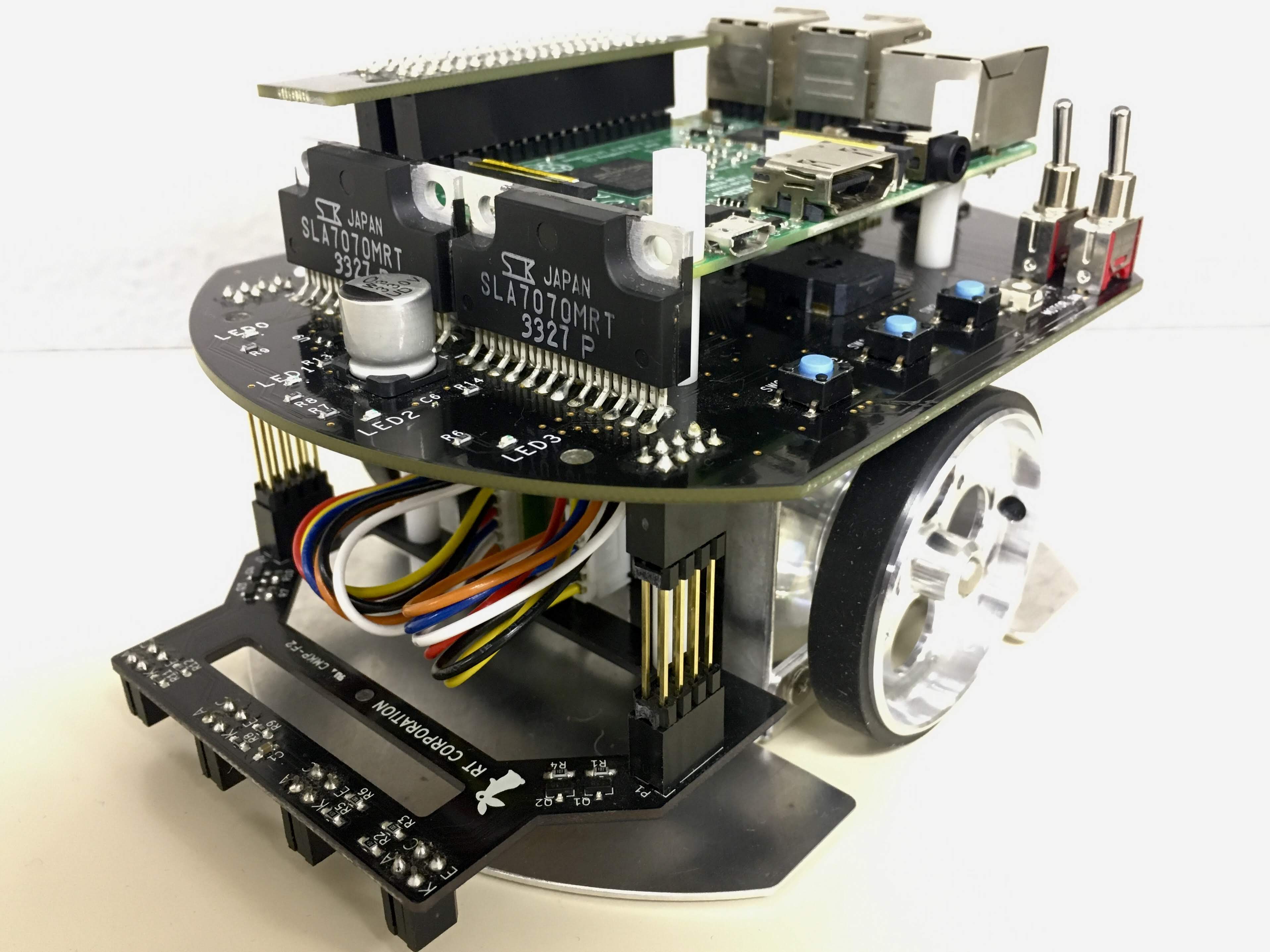

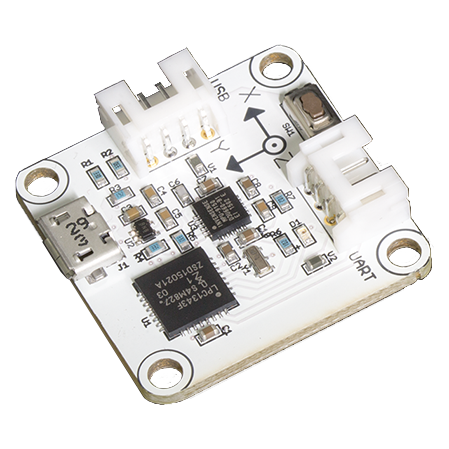

388 | This example uses an IMU sensor to control the heading direction of the Raspberry Pi Mouse.

389 |

390 | #### Requirements

391 |

392 | - [USB output 9-axis IMU sensor module](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1348_1&products_id=3416&language=en)

393 | - [LiDAR Mount](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1395&products_id=3867)

394 | - RT-USB-9axisIMU ROS Package.

395 |   - https://github.com/rt-net/rt_usb_9axisimu_driver

396 |

397 | #### Installation

398 |

399 | Attach the IMU sensor module to the LiDAR mount.

400 |

401 |

387 |

388 | This is an example to use an IMU sensor for direction control.

389 |

390 | #### Requirements

391 |

392 | - [USB output 9 degrees IMU sensor module](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1348_1&products_id=3416&language=en)

393 | - [LiDAR Mount](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1395&products_id=3867)

394 | - RT-USB-9axisIMU ROS Package.

395 | - https://github.com/rt-net/rt_usb_9axisimu_driver

396 |

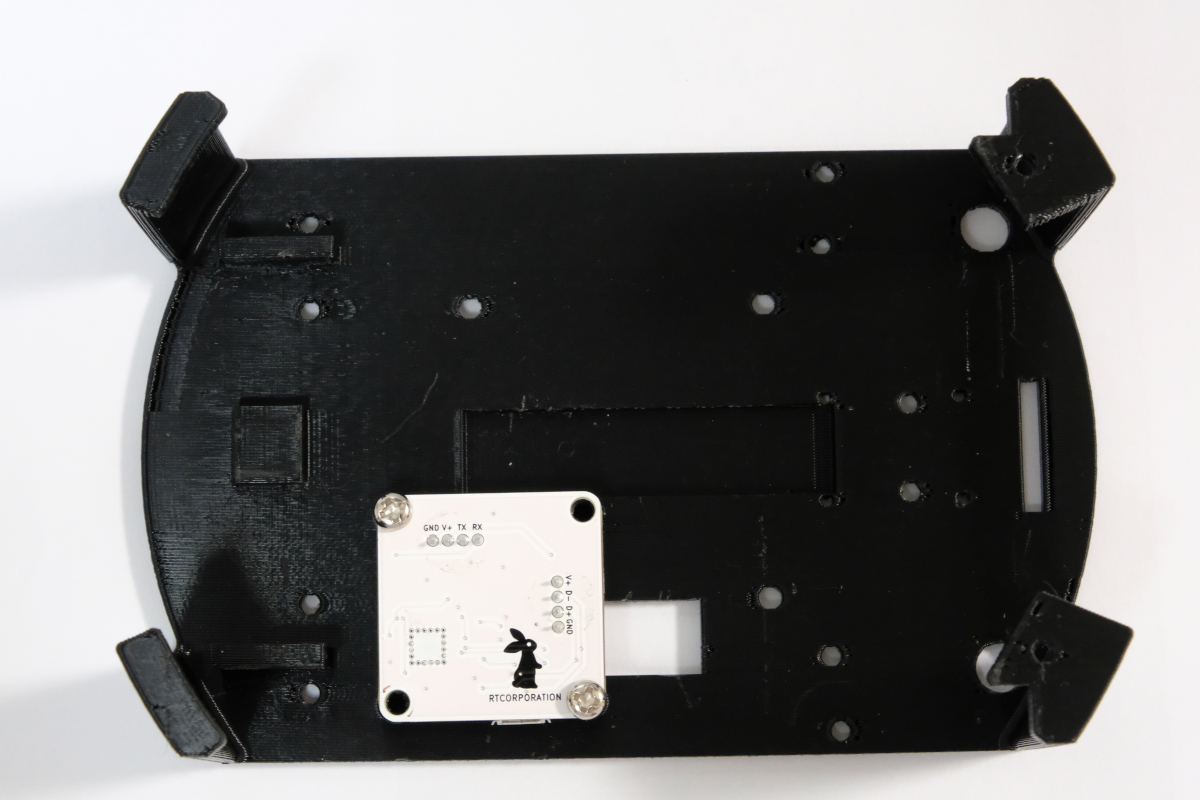

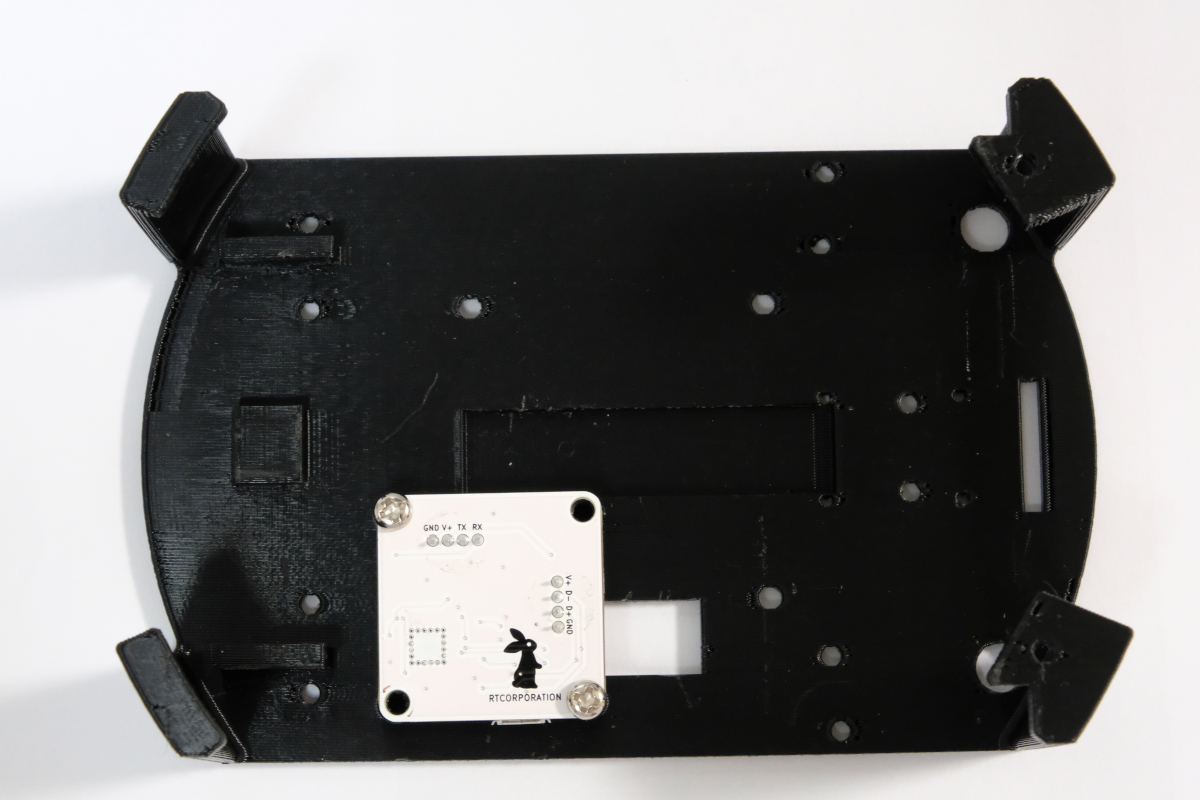

397 | #### Installation

398 |

399 | Install the IMU sensor module to the LiDAR mount.

400 |

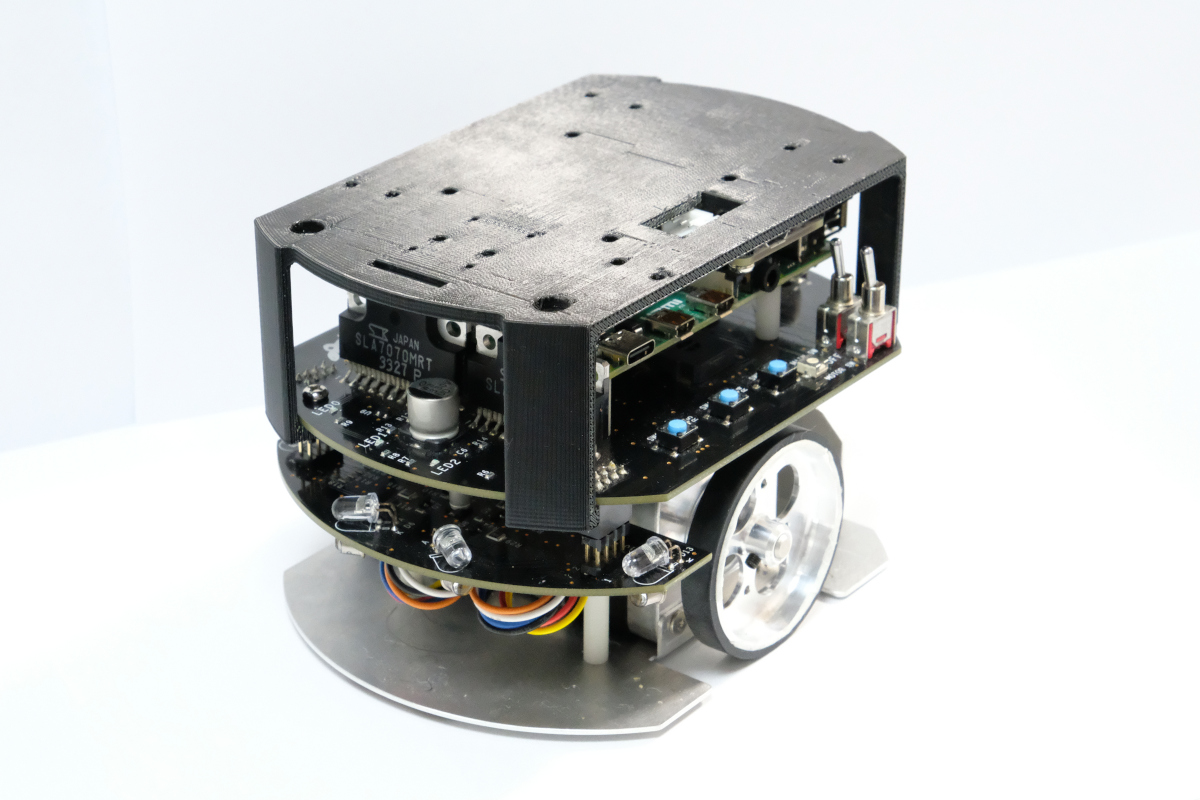

402 |

403 | Attach the LiDAR mount to the Raspberry Pi Mouse.

404 |

405 |


406 |

407 | #### How to use

408 |

409 | Launch the nodes on the Raspberry Pi Mouse with the following command:

410 |

411 | ```sh

412 | roslaunch raspimouse_ros_examples direction_control.launch

413 | ```

414 |

415 | Then, press SW0, SW1 or SW2 to switch between the following control modes:

416 |

417 | - SW0: Calibrate the gyroscope bias and reset the heading angle of the Raspberry Pi Mouse to 0 rad.

418 | - SW1: Start direction control that holds the heading angle at 0 rad.

419 |   - Press any of SW0 to SW2, or tilt the body sideways, to stop the control.

420 | - SW2: Start direction control that changes the heading angle over the range `-π ~ π rad`.

421 |   - Press any of SW0 to SW2, or tilt the body sideways, to stop the control.
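The SW0 calibration, and the heading angle that SW1 and SW2 control, can be illustrated with a small sketch. It makes three assumptions:

- The robot is stationary while z-axis gyro rates are sampled, so the bias is the mean of those samples.
- The bias-corrected rate is integrated over time into a heading.
- That heading is wrapped to [-π, π).

`HeadingEstimator` and `wrap_angle` are hypothetical names, and `direction_control.py` may do this differently.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the range [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


class HeadingEstimator:
    """Hypothetical gyro-based heading estimator (z-axis angular velocity in rad/s)."""

    def __init__(self):
        self.bias = 0.0
        self.heading = 0.0

    def calibrate(self, stationary_rates):
        # While the robot is still the true rate is zero, so the mean reading is the bias.
        self.bias = sum(stationary_rates) / len(stationary_rates)
        self.heading = 0.0  # reset the heading angle to 0 rad

    def update(self, rate, dt):
        # Integrate the bias-corrected angular velocity over the time step dt (seconds).
        self.heading = wrap_angle(self.heading + (rate - self.bias) * dt)
        return self.heading


est = HeadingEstimator()
est.calibrate([0.011, 0.009, 0.010])      # bias is about 0.01 rad/s
for _ in range(100):
    est.update(0.01 + math.pi / 2, 0.01)  # turn at pi/2 rad/s for 1 s
print(round(est.heading, 3))  # 1.571
```

Without the bias subtraction, the 0.01 rad/s offset would add about 0.6 rad of drift per minute. That is why calibrating while the robot is stationary matters before starting control.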

422 |

423 | #### Configure

424 |

425 | Edit [`./scripts/direction_control.py`](./scripts/direction_control.py)

426 | to configure the gains of the PID controller used for direction control.

427 |

428 | ```python

429 | class DirectionController(object):

430 |     # ---

431 |     def __init__(self):

432 |         # ---

433 |         # for angle control

434 |         self._omega_pid_controller = PIDController(10, 0, 20)

435 | ```
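`PIDController(10, 0, 20)` suggests proportional, integral and derivative gains of 10, 0 and 20. The class below is a hypothetical stand-in that assumes a (p, i, d) argument order and a fixed control period `dt`. It is not the implementation in `direction_control.py`. It maps a heading error (target minus current, wrapped with `atan2`) to an angular velocity command.

```python
import math


class PIDController:
    """Minimal discrete PID controller (hypothetical stand-in, argument order p, i, d)."""

    def __init__(self, p_gain, i_gain, d_gain):
        self._p = p_gain
        self._i = i_gain
        self._d = d_gain
        self._error_sum = 0.0
        self._prev_error = None

    def update(self, target, current, dt):
        # Wrap the heading error to [-pi, pi] so the robot turns the short way round.
        error = math.atan2(math.sin(target - current), math.cos(target - current))
        self._error_sum += error * dt
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        return self._p * error + self._i * self._error_sum + self._d * derivative


pid = PIDController(10, 0, 20)
omega = pid.update(target=0.0, current=0.1, dt=0.01)  # heading is 0.1 rad to the left
print(round(omega, 3))  # -1.0: first call has no derivative term, so 10 * -0.1
```

With an integral gain of 0, a steady disturbance can leave a small constant heading offset. A large derivative gain damps overshoot but amplifies gyro noise.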

436 |

437 | #### Videos

438 |

439 | [direction_control video](https://youtu.be/LDpC2wqIoU4)

440 |

441 | [back to example list](#how-to-use-examples)

442 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | [English](README.en.md) | [日本語](README.md)

2 |

3 | # raspimouse_ros_examples

4 |

5 | [industrial_ci status](https://github.com/rt-net/raspimouse_ros_examples/actions?query=workflow%3Aindustrial_ci+branch%3Amaster)

6 |

7 | A collection of ROS sample code for the Raspberry Pi Mouse.

8 |

9 | Navigation samples are available [here](https://github.com/rt-net/raspimouse_slam_navigation_ros).

10 | ROS 2 sample code is available [here](https://github.com/rt-net/raspimouse_ros2_examples).

11 |

12 |


13 |

14 | ## Requirements

15 |

16 | - Raspberry Pi Mouse

17 |   - https://rt-net.jp/products/raspberrypimousev3/

18 |   - Linux OS

19 |     - Ubuntu server 16.04

20 |     - Ubuntu server 18.04

21 |     - Ubuntu server 20.04

22 |     - https://ubuntu.com/download/raspberry-pi

23 |   - Device Driver

24 |     - [rt-net/RaspberryPiMouse](https://github.com/rt-net/RaspberryPiMouse)

25 |   - ROS

26 |     - [Kinetic Kame](http://wiki.ros.org/kinetic/Installation/Ubuntu)

27 |     - [Melodic Morenia](http://wiki.ros.org/melodic/Installation/Ubuntu)

28 |     - [Noetic Ninjemys](http://wiki.ros.org/noetic/Installation/Ubuntu)

29 |   - Raspberry Pi Mouse ROS package

30 |     - https://github.com/ryuichiueda/raspimouse_ros_2

31 | - Remote Computer (Optional)

32 |   - ROS

33 |     - [Kinetic Kame](http://wiki.ros.org/kinetic/Installation/Ubuntu)

34 |     - [Melodic Morenia](http://wiki.ros.org/melodic/Installation/Ubuntu)

35 |     - [Noetic Ninjemys](http://wiki.ros.org/noetic/Installation/Ubuntu)

36 |   - Raspberry Pi Mouse ROS package

37 |     - https://github.com/ryuichiueda/raspimouse_ros_2

38 |

39 | ## Installation

40 |

41 | ```sh

42 | cd ~/catkin_ws/src

43 | # Clone ROS packages

44 | git clone https://github.com/ryuichiueda/raspimouse_ros_2

45 | git clone -b $ROS_DISTRO-devel https://github.com/rt-net/raspimouse_ros_examples

46 | # For direction control example

47 | git clone https://github.com/rt-net/rt_usb_9axisimu_driver

48 |

49 | # Install dependencies

50 | rosdep install -r -y --from-paths . --ignore-src

51 |

52 | # make & install

53 | cd ~/catkin_ws && catkin_make

54 | source devel/setup.bash

55 | ```

56 |

57 | ## License

58 |

59 | This repository is released under the Apache 2.0 license.

60 | See [LICENSE](./LICENSE) for details.

61 |

62 | ## How To Use Examples

63 |

64 | - [keyboard_control](#keyboard_control)

65 | - [joystick_control](#joystick_control)

66 | - [object_tracking](#object_tracking)

67 | - [line_follower](#line_follower)

68 | - [SLAM](#SLAM)

69 | - [direction_control](#direction_control)

70 |

71 | ---

72 |

73 | ### keyboard_control

74 |

75 | This example drives the Raspberry Pi Mouse with [teleop_twist_keyboard](http://wiki.ros.org/teleop_twist_keyboard).

76 |

77 | #### Requirements

78 |

79 | - Keyboard

80 |

81 | #### How to use

82 |

83 | Launch the nodes with the following command:

84 |

85 | ```sh

86 | roslaunch raspimouse_ros_examples teleop.launch key:=true

87 |

88 | # Control from remote computer

89 | roslaunch raspimouse_ros_examples teleop.launch key:=true mouse:=false

90 | ```

91 |

92 | Once the nodes are running, call the `/motor_on` service.

93 |

94 | ```sh

95 | rosservice call /motor_on

96 | ```

97 | [back to example list](#how-to-use-examples)

98 |

99 | ---

100 |

101 | ### joystick_control

102 |

103 | This is an example of driving the Raspberry Pi Mouse with a joystick controller.

104 |

105 | #### Requirements

106 |

107 | - Joystick Controller

108 |   - [Logicool Wireless Gamepad F710](https://gaming.logicool.co.jp/ja-jp/products/gamepads/f710-wireless-gamepad.html#940-0001440)

109 |   - [SONY DUALSHOCK 3](https://www.jp.playstation.com/ps3/peripheral/cechzc2j.html)

110 |

111 | #### How to use

112 |

113 | Launch the nodes with the following command:

114 |

115 | ```sh

116 | roslaunch raspimouse_ros_examples teleop.launch joy:=true

117 |

118 | # Use DUALSHOCK 3

119 | roslaunch raspimouse_ros_examples teleop.launch joy:=true joyconfig:="dualshock3"

120 |

121 | # Control from remote computer

122 | roslaunch raspimouse_ros_examples teleop.launch joy:=true mouse:=false

123 | ```

124 |

125 | The default key assignments are as follows.

126 |

127 |

128 |

129 | #### Configure

130 |

131 | To change the key assignments, edit the button numbers in [./config/joy_f710.yml](./config/joy_f710.yml) or

132 | [./config/joy_dualshock3.yml](./config/joy_dualshock3.yml).

133 |

134 | ```yaml

135 | button_shutdown_1 : 8

136 | button_shutdown_2 : 9

137 |

138 | button_motor_off : 8

139 | button_motor_on : 9

140 |

141 | button_cmd_enable : 4

142 | ```
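The numbers above are presumably indices into the `buttons` array of a `sensor_msgs/Joy` message. The sketch below shows one way such a mapping could be evaluated. The shared indices (8 and 9 appear twice) suggest that shutdown is triggered by holding both buttons together, and that velocity commands are sent only while the enable button is held. Both rules are assumptions for illustration, not necessarily what `joystick_control.py` does. `interpret` is a hypothetical function.

```python
BUTTONS = {  # values copied from the YAML above
    "button_shutdown_1": 8,
    "button_shutdown_2": 9,
    "button_motor_off": 8,
    "button_motor_on": 9,
    "button_cmd_enable": 4,
}


def interpret(buttons, config=BUTTONS):
    """Return the action for one Joy.buttons array (list of 0/1), under assumed rules."""

    def pressed(name):
        index = config[name]
        return index < len(buttons) and buttons[index] == 1

    if pressed("button_shutdown_1") and pressed("button_shutdown_2"):
        return "shutdown"      # both buttons held together
    if pressed("button_motor_off"):
        return "motor_off"
    if pressed("button_motor_on"):
        return "motor_on"
    if pressed("button_cmd_enable"):
        return "send_cmd_vel"  # deadman switch: only move while held
    return "idle"


state = [0] * 12
state[4] = 1
print(interpret(state))  # send_cmd_vel
```

Checking the two-button combination first keeps it from being shadowed by the single-button actions that share the same indices.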

143 |

144 | #### Videos

145 |

146 | [joystick_control video](https://youtu.be/GswxdB8Ia0Y)

147 |

148 | [back to example list](#how-to-use-examples)

149 |

150 | ---

151 |

152 | ### object_tracking

153 |

154 |


155 |

156 | This is an example of tracking an orange ball based on color information.

157 | It tracks the ball using a USB webcam and OpenCV.

158 |

159 | #### Requirements

160 |

161 | - Webcam

162 |   - [Logicool HD WEBCAM C310N](https://www.logicool.co.jp/ja-jp/product/hd-webcam-c310n)

163 | - Camera mount

164 |   - [Raspberry Pi Mouse Option kit No.4 \[Webcam mount\]](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1395&products_id=3584)

165 | - Ball (Optional)

166 |   - [Soft ball (orange)](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1307&products_id=3701)

167 | - Software

168 |   - python

169 |   - opencv

170 |   - numpy

171 |   - v4l-utils

172 |

173 | #### Installation

174 |

175 | Attach the camera mount to the Raspberry Pi Mouse and connect the webcam to the Raspberry Pi.

176 |

177 | Install the camera control package (v4l-utils) with the following command:

178 |

179 | ```sh

180 | sudo apt install v4l-utils

181 | ```

182 | #### How to use

183 |

184 | Run the following script to disable the camera's automatic adjustments (auto exposure, auto white balance, etc.):

185 |

186 | ```sh

187 | rosrun raspimouse_ros_examples camera.bash

188 | ```

189 |

190 | Launch the nodes with the following command:

191 |

192 | ```sh

193 | roslaunch raspimouse_ros_examples object_tracking.launch

194 | ```

195 |

196 | The object detection images are published on the `binary` and `object` topics.

197 | These images can be viewed

198 | with [RViz](http://wiki.ros.org/ja/rviz) or

199 | [rqt_image_view](http://wiki.ros.org/rqt_image_view).

200 |

201 |


202 |

203 | #### Configure

204 |

205 | To change the color of the tracked object, edit

206 | [`./scripts/object_tracking.py`](./scripts/object_tracking.py).

207 |

208 | ```python

209 | def detect_ball(self):

210 |     # ~~~ (omitted) ~~~

211 |     min_hsv, max_hsv = self.set_color_orange()

212 |     # min_hsv, max_hsv = self.set_color_green()

213 |     # min_hsv, max_hsv = self.set_color_blue()

214 | ```

215 |

216 | If detection is unreliable, adjust the camera exposure or the parameters in the function below.

217 |

218 | ```python

219 | def set_color_orange(self):

220 |     # [H(0~180), S(0~255), V(0~255)]

221 |     # min_hsv_orange = np.array([15, 200, 80])

222 |     min_hsv_orange = np.array([15, 150, 40])

223 |     max_hsv_orange = np.array([20, 255, 255])

224 |     return min_hsv_orange, max_hsv_orange

225 | ```
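For 8-bit images, OpenCV stores HSV with H in 0-180 (degrees halved) and S and V in 0-255. That is why the comment above gives those ranges. The standard-library-only sketch below converts an RGB color to that scale and checks it against the orange thresholds. It does per pixel roughly what `cv2.inRange` does on a whole image, and is meant only to build intuition when tuning. OpenCV's own conversion rounds values, so results may differ slightly near the bounds. The helper names are hypothetical.

```python
import colorsys

MIN_HSV_ORANGE = (15, 150, 40)   # same values as set_color_orange()
MAX_HSV_ORANGE = (20, 255, 255)


def rgb_to_opencv_hsv(r, g, b):
    """Convert 8-bit RGB to OpenCV's 8-bit HSV scale: H 0-180, S 0-255, V 0-255."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 180.0, s * 255.0, v * 255.0


def in_range(hsv, lower, upper):
    """Per-pixel analogue of cv2.inRange: True if every channel is within its bounds."""
    return all(lo <= value <= hi for value, lo, hi in zip(hsv, lower, upper))


for name, rgb in [("dark orange", (255, 140, 0)), ("green", (0, 200, 0))]:
    hsv = rgb_to_opencv_hsv(*rgb)
    print(name, [round(c) for c in hsv], in_range(hsv, MIN_HSV_ORANGE, MAX_HSV_ORANGE))
# dark orange [16, 255, 255] True
# green [60, 255, 200] False
```

Because the orange hue window is only 5 units wide (15 to 20), small white-balance shifts can push a ball out of range. This is one reason the camera's automatic adjustments are disabled beforehand.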

226 |

227 | #### Videos

228 |

229 | [object_tracking video](https://youtu.be/U6_BuvrjyFc)

230 |

231 | [back to example list](#how-to-use-examples)

232 |

233 | ---

234 |

235 | ### line_follower

236 |

237 |


238 |

239 | This is an example of line following.

240 |

241 | #### Requirements

242 |

243 | - Line following sensor

244 |   - [Raspberry Pi Mouse Option kit No.3 \[Line follower\]](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1395&products_id=3591)

245 | - Field and lines (Optional)

246 |

247 | #### Installation

248 |

249 | Attach the line following sensor to the Raspberry Pi Mouse.

250 |

251 |

252 | #### How to use

253 |

254 | Launch the nodes with the following command:

255 |

256 | ```sh

257 | roslaunch raspimouse_ros_examples line_follower.launch

258 |

259 | # Control from remote computer

260 | roslaunch raspimouse_ros_examples line_follower.launch mouse:=false

261 | ```

262 |

263 | Place the Raspberry Pi Mouse on the field and press SW2 to sample the sensor values on the field.

264 |

265 |


266 |

267 | Next, place the Raspberry Pi Mouse so that the sensors are over the line and press SW1 to sample the sensor values on the line.

268 |

269 |


270 |

271 | Finally, place the Raspberry Pi Mouse on the line and press SW0 to start line following.

272 |

273 |


274 |

275 | Press SW0 again to stop line following.

276 |

277 | #### Configure

278 |

279 | To change the driving speed, edit [`./scripts/line_follower.py`](./scripts/line_follower.py).

280 |

281 | ```python

282 | def _publish_cmdvel_for_line_following(self):

283 |     VEL_LINER_X = 0.08  # m/s

284 |     VEL_ANGULAR_Z = 0.8  # rad/s

285 |     LOW_VEL_ANGULAR_Z = 0.5  # rad/s

286 |

287 |     cmd_vel = Twist()

288 | ```

289 |

290 | #### Videos

291 |

292 | [line_follower video](https://youtu.be/oPm0sW2V_tY)

293 |

294 | [back to example list](#how-to-use-examples)

295 |

296 | ---

297 |

298 | ### SLAM

299 |

300 |


301 |

302 | This sample performs SLAM (simultaneous localization and mapping) using a LiDAR.

303 |

304 | #### Requirements

305 |

306 | - LiDAR

307 |   - [URG-04LX-UG01](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1348_1296&products_id=2816)

308 |

309 |   - [LDS-01](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1348_5&products_id=3676)

310 | - [LiDAR Mount](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1395&products_id=3867)

311 | - Joystick Controller (Optional)

312 |

313 | RPLIDAR has not been tested, because its package [rplidar_ros](https://github.com/Slamtec/rplidar_ros)

314 | has not been released for ROS Noetic.

315 |

316 | #### Installation

317 |

318 | Mount the LiDAR on the Raspberry Pi Mouse.

319 |

320 | - URG-04LX-UG01

321 | -


322 |

324 | - LDS-01

325 | -


326 |

327 | #### How to use

328 |

329 | Launch the nodes on the Raspberry Pi Mouse with the following command:

330 |

331 | ```sh

332 | # URG

333 | roslaunch raspimouse_ros_examples mouse_with_lidar.launch urg:=true port:=/dev/ttyACM0

334 |

335 | # LDS

336 | roslaunch raspimouse_ros_examples mouse_with_lidar.launch lds:=true port:=/dev/ttyUSB0

337 |

338 | ```

339 |

340 | Launch `teleop.launch` to drive the Raspberry Pi Mouse:

341 |

342 | ```sh

343 | # joystick control

344 | roslaunch raspimouse_ros_examples teleop.launch mouse:=false joy:=true joyconfig:=dualshock3

345 | ```

346 |

347 | Launch the SLAM packages with the following command (running them on a remote computer is recommended):

348 |

349 | ```sh

350 | # URG

351 | roslaunch raspimouse_ros_examples slam_gmapping.launch urg:=true

352 |

353 | # LDS

354 | roslaunch raspimouse_ros_examples slam_gmapping.launch lds:=true

355 | ```

356 |

357 | Drive the Raspberry Pi Mouse around to build a map.

358 |

359 | Save the map you built with the following command:

360 |

361 | ```sh

362 | mkdir -p ~/maps

363 | rosrun map_server map_saver -f ~/maps/mymap

364 | ```

365 |

366 | #### Configure

367 |

368 | Tune the parameters of the [gmapping](http://wiki.ros.org/gmapping) package in [./launch/slam_gmapping.launch](./launch/slam_gmapping.launch).

369 |

370 | ```xml

371 |

372 |

373 |

374 |

375 |

376 |

377 |

378 |

379 |

380 |

381 |

382 |

383 | ```

384 |

385 | #### Videos

386 |

387 | [SLAM video](https://youtu.be/gWozU47UqVE)

388 |

389 |

390 |

391 | [back to example list](#how-to-use-examples)

392 |

393 | ---

394 |

395 | ### direction_control

396 |

397 |


398 |

399 | This is an example of heading (angle) control using an IMU sensor.

400 |

401 | #### Requirements

402 |

403 | - [USB Output 9-axis IMU Sensor Module](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1348_1&products_id=3416&language=ja)

404 | - [LiDAR Mount](https://www.rt-shop.jp/index.php?main_page=product_info&cPath=1299_1395&products_id=3867)

405 | - RT-USB-9axisIMU ROS Package.

406 |   - https://github.com/rt-net/rt_usb_9axisimu_driver

407 |

408 | #### Installation

409 |

410 | Attach the IMU sensor module to the LiDAR Mount.

411 |

412 |

413 |

414 | Attach the LiDAR Mount to the Raspberry Pi Mouse.

415 |

416 |

417 |

418 | #### How to use

419 |

420 | Launch the nodes with the following command.

421 |

422 | ```sh

423 | roslaunch raspimouse_ros_examples direction_control.launch

424 | ```

425 |

426 | Press SW0 to SW2 to switch the operating mode.

427 |

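428 | - SW0: Calibrates the gyro sensor bias and resets the Raspberry Pi Mouse's heading to `0 rad`.

429 | - SW1: Starts heading control that holds the heading at `0 rad`.

430 |   - Press any of SW0 to SW2, or tilt the Raspberry Pi Mouse sideways, to stop.

431 | - SW2: Starts heading control that varies the heading between `-π` and `π rad`.

432 |   - Press any of SW0 to SW2, or tilt the Raspberry Pi Mouse sideways, to stop.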
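SW0 calibrates the gyro bias before the heading is reset. One common way to do this, sketched below under stated assumptions, is to average the yaw-rate readings while the robot is stationary and subtract that mean before integrating the yaw rate into a heading. This is not necessarily what `direction_control.py` does; the class `HeadingEstimator` and the sample values are hypothetical.

```python
import math


class HeadingEstimator(object):
    """Hypothetical sketch: gyro bias calibration plus yaw-rate integration."""

    def __init__(self):
        self.bias = 0.0     # rad/s, estimated offset of the gyro z axis
        self.heading = 0.0  # rad

    def calibrate(self, stationary_samples):
        # With the robot standing still, the mean measured yaw rate is the bias.
        self.bias = sum(stationary_samples) / len(stationary_samples)
        self.heading = 0.0  # reset the heading to 0 rad, as SW0 does

    def update(self, yaw_rate, dt):
        # Integrate the bias-corrected yaw rate, then wrap to [-pi, pi).
        self.heading += (yaw_rate - self.bias) * dt
        self.heading = (self.heading + math.pi) % (2.0 * math.pi) - math.pi
        return self.heading


if __name__ == "__main__":
    est = HeadingEstimator()
    est.calibrate([0.25, 0.75, 0.5])  # hypothetical stationary readings
    print(est.bias)                   # 0.5
    print(est.update(1.5, 0.5))       # roughly 0.5 rad
```

Collecting more stationary samples gives a better bias estimate, at the cost of a longer wait after pressing the switch.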

433 |

434 | #### Configure

435 |

436 | To change the gains of the PID controller used for heading control, edit [`./scripts/direction_control.py`](./scripts/direction_control.py).

437 |

438 | ```python

439 | class DirectionController(object):

440 |     # ---

441 |     def __init__(self):

442 |         # ---

443 |         # for angle control

444 |         self._omega_pid_controller = PIDController(10, 0, 20)

445 | ```
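The excerpt constructs `PIDController(10, 0, 20)`. Assuming the arguments are the proportional, integral and derivative gains in that order (an assumption; check the class in `direction_control.py`), a minimal discrete PID controller with the same constructor could look like the stand-in below. The `update(target, current, dt)` method and the `wrap_angle` helper are hypothetical. The error is wrapped to `[-π, π)` so that, for example, a target of `π - 0.1` and a heading of `-π + 0.1` give an error of about `-0.2 rad` instead of almost `2π`.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the range [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


class PIDController(object):
    """Hypothetical minimal discrete PID; gain order (p, i, d) is assumed."""

    def __init__(self, p_gain, i_gain, d_gain):
        self._p_gain = p_gain
        self._i_gain = i_gain
        self._d_gain = d_gain
        self._error_sum = 0.0
        self._last_error = 0.0

    def update(self, target, current, dt):
        # Heading error, wrapped so the robot turns the short way round.
        error = wrap_angle(target - current)
        self._error_sum += error * dt
        error_diff = (error - self._last_error) / dt
        self._last_error = error
        return (self._p_gain * error
                + self._i_gain * self._error_sum
                + self._d_gain * error_diff)


if __name__ == "__main__":
    pid = PIDController(10, 0, 20)
    # The output would be used as an angular-velocity command (assumption).
    print(pid.update(0.0, 0.1, 0.1))  # about -21.0 (P: -1.0, D: -20.0)
```

Because the previous error starts at 0, the first call produces a large derivative term; a real controller may initialize or filter it differently.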

446 |

447 | #### Videos

448 |

449 | [YouTube video](https://youtu.be/LDpC2wqIoU4)

450 |

451 | [back to example list](#how-to-use-examples)

452 |

--------------------------------------------------------------------------------

/config/joy_dualshock3.yml:

--------------------------------------------------------------------------------

1 | button_shutdown_1 : 8

2 | button_shutdown_2 : 9

3 |

4 | button_motor_off : 8

5 | button_motor_on : 9

6 |

7 | button_cmd_enable : 4

8 | axis_cmd_linear_x : 1

9 | axis_cmd_angular_z : 3

10 |

11 | analog_d_pad : false

12 | d_pad_up : 13

13 | d_pad_down : 14

14 | d_pad_left : 15

15 | d_pad_right : 16

16 | # for analog_d_pad

17 | d_pad_up_is_positive : true

18 | d_pad_right_is_positive : false

19 |

20 | button_buzzer_enable : 5

21 | dpad_buzzer0 : "up"

22 | dpad_buzzer1 : "right"

23 | dpad_buzzer2 : "down"

24 | dpad_buzzer3 : "left"

25 | button_buzzer4 : 2

26 | button_buzzer5 : 1

27 | button_buzzer6 : 0

28 | button_buzzer7 : 3

29 |

30 | button_sensor_sound_en : 7

31 | button_config_enable : 6

32 |

--------------------------------------------------------------------------------

/config/joy_dualshock4.yml:

--------------------------------------------------------------------------------

1 | button_shutdown_1 : 8

2 | button_shutdown_2 : 9

3 |

4 | button_motor_off : 8

5 | button_motor_on : 9

6 |

7 | button_cmd_enable : 4

8 | axis_cmd_linear_x : 1

9 | axis_cmd_angular_z : 3

10 |

11 | analog_d_pad : true

12 | d_pad_up : 7

13 | d_pad_down : 7

14 | d_pad_left : 6

15 | d_pad_right : 6

16 | # for analog_d_pad

17 | d_pad_up_is_positive : true

18 | d_pad_right_is_positive : false

19 |

20 | button_buzzer_enable : 5

21 | dpad_buzzer0 : "up"

22 | dpad_buzzer1 : "right"

23 | dpad_buzzer2 : "down"

24 | dpad_buzzer3 : "left"

25 | button_buzzer4 : 2

26 | button_buzzer5 : 1

27 | button_buzzer6 : 0

28 | button_buzzer7 : 3

29 |

30 | button_sensor_sound_en : 7

31 | button_config_enable : 6

32 |

--------------------------------------------------------------------------------

/config/joy_f710.yml:

--------------------------------------------------------------------------------

1 | button_shutdown_1 : 8

2 | button_shutdown_2 : 9

3 |

4 | button_motor_off : 8

5 | button_motor_on : 9

6 |

7 | button_cmd_enable : 4

8 | axis_cmd_linear_x : 1

9 | axis_cmd_angular_z : 2

10 |

11 | analog_d_pad : true

12 | d_pad_up : 5

13 | d_pad_down : 5

14 | d_pad_left : 4

15 | d_pad_right : 4

16 | # for analog_d_pad

17 | d_pad_up_is_positive : true

18 | d_pad_right_is_positive : false

19 |

20 | button_buzzer_enable : 5