├── .gitignore
├── LICENSE
├── README.md
├── detection
│   ├── Romain - vessel recognition.ipynb
│   ├── attention_CAM.py
│   ├── model_vessel_detection_classifier.py
│   └── superseded
│       ├── basic_image_label_generator.py
│       └── prepare_image_set_on_disk.py
├── learning_rate_utils.py
├── preprocessing.py
├── requirements.txt
├── scratch_pad.py
├── scratch_tensorflow.py
├── segmentation
│   └── segmentation_preprocessing.py
├── segmentation_model.py
└── visualisation.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | pip-wheel-metadata/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 |

30 | # PyInstaller

31 | # Usually these files are written by a python script from a template

32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .nox/

44 | .coverage

45 | .coverage.*

46 | .cache

47 | nosetests.xml

48 | coverage.xml

49 | *.cover

50 | *.py,cover

51 | .hypothesis/

52 | .pytest_cache/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | target/

76 |

77 | # Jupyter Notebook

78 | .ipynb_checkpoints

79 |

80 | # IPython

81 | profile_default/

82 | ipython_config.py

83 |

84 | # pyenv

85 | .python-version

86 |

87 | # pipenv

88 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

89 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

90 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

91 | # install all needed dependencies.

92 | #Pipfile.lock

93 |

94 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

95 | __pypackages__/

96 |

97 | # Celery stuff

98 | celerybeat-schedule

99 | celerybeat.pid

100 |

101 | # SageMath parsed files

102 | *.sage.py

103 |

104 | # Environments

105 | .env

106 | .venv

107 | env/

108 | venv/

109 | ENV/

110 | env.bak/

111 | venv.bak/

112 |

113 | # Spyder project settings

114 | .spyderproject

115 | .spyproject

116 |

117 | # Rope project settings

118 | .ropeproject

119 |

120 | # mkdocs documentation

121 | /site

122 |

123 | # mypy

124 | .mypy_cache/

125 | .dmypy.json

126 | dmypy.json

127 |

128 | # Pyre type checker

129 | .pyre/

130 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | GNU GENERAL PUBLIC LICENSE

2 | Version 3, 29 June 2007

3 |

4 | Copyright (C) 2007 Free Software Foundation, Inc.

5 | Everyone is permitted to copy and distribute verbatim copies

6 | of this license document, but changing it is not allowed.

7 |

8 | Preamble

9 |

10 | The GNU General Public License is a free, copyleft license for

11 | software and other kinds of works.

12 |

13 | The licenses for most software and other practical works are designed

14 | to take away your freedom to share and change the works. By contrast,

15 | the GNU General Public License is intended to guarantee your freedom to

16 | share and change all versions of a program--to make sure it remains free

17 | software for all its users. We, the Free Software Foundation, use the

18 | GNU General Public License for most of our software; it applies also to

19 | any other work released this way by its authors. You can apply it to

20 | your programs, too.

21 |

22 | When we speak of free software, we are referring to freedom, not

23 | price. Our General Public Licenses are designed to make sure that you

24 | have the freedom to distribute copies of free software (and charge for

25 | them if you wish), that you receive source code or can get it if you

26 | want it, that you can change the software or use pieces of it in new

27 | free programs, and that you know you can do these things.

28 |

29 | To protect your rights, we need to prevent others from denying you

30 | these rights or asking you to surrender the rights. Therefore, you have

31 | certain responsibilities if you distribute copies of the software, or if

32 | you modify it: responsibilities to respect the freedom of others.

33 |

34 | For example, if you distribute copies of such a program, whether

35 | gratis or for a fee, you must pass on to the recipients the same

36 | freedoms that you received. You must make sure that they, too, receive

37 | or can get the source code. And you must show them these terms so they

38 | know their rights.

39 |

40 | Developers that use the GNU GPL protect your rights with two steps:

41 | (1) assert copyright on the software, and (2) offer you this License

42 | giving you legal permission to copy, distribute and/or modify it.

43 |

44 | For the developers' and authors' protection, the GPL clearly explains

45 | that there is no warranty for this free software. For both users' and

46 | authors' sake, the GPL requires that modified versions be marked as

47 | changed, so that their problems will not be attributed erroneously to

48 | authors of previous versions.

49 |

50 | Some devices are designed to deny users access to install or run

51 | modified versions of the software inside them, although the manufacturer

52 | can do so. This is fundamentally incompatible with the aim of

53 | protecting users' freedom to change the software. The systematic

54 | pattern of such abuse occurs in the area of products for individuals to

55 | use, which is precisely where it is most unacceptable. Therefore, we

56 | have designed this version of the GPL to prohibit the practice for those

57 | products. If such problems arise substantially in other domains, we

58 | stand ready to extend this provision to those domains in future versions

59 | of the GPL, as needed to protect the freedom of users.

60 |

61 | Finally, every program is threatened constantly by software patents.

62 | States should not allow patents to restrict development and use of

63 | software on general-purpose computers, but in those that do, we wish to

64 | avoid the special danger that patents applied to a free program could

65 | make it effectively proprietary. To prevent this, the GPL assures that

66 | patents cannot be used to render the program non-free.

67 |

68 | The precise terms and conditions for copying, distribution and

69 | modification follow.

70 |

71 | TERMS AND CONDITIONS

72 |

73 | 0. Definitions.

74 |

75 | "This License" refers to version 3 of the GNU General Public License.

76 |

77 | "Copyright" also means copyright-like laws that apply to other kinds of

78 | works, such as semiconductor masks.

79 |

80 | "The Program" refers to any copyrightable work licensed under this

81 | License. Each licensee is addressed as "you". "Licensees" and

82 | "recipients" may be individuals or organizations.

83 |

84 | To "modify" a work means to copy from or adapt all or part of the work

85 | in a fashion requiring copyright permission, other than the making of an

86 | exact copy. The resulting work is called a "modified version" of the

87 | earlier work or a work "based on" the earlier work.

88 |

89 | A "covered work" means either the unmodified Program or a work based

90 | on the Program.

91 |

92 | To "propagate" a work means to do anything with it that, without

93 | permission, would make you directly or secondarily liable for

94 | infringement under applicable copyright law, except executing it on a

95 | computer or modifying a private copy. Propagation includes copying,

96 | distribution (with or without modification), making available to the

97 | public, and in some countries other activities as well.

98 |

99 | To "convey" a work means any kind of propagation that enables other

100 | parties to make or receive copies. Mere interaction with a user through

101 | a computer network, with no transfer of a copy, is not conveying.

102 |

103 | An interactive user interface displays "Appropriate Legal Notices"

104 | to the extent that it includes a convenient and prominently visible

105 | feature that (1) displays an appropriate copyright notice, and (2)

106 | tells the user that there is no warranty for the work (except to the

107 | extent that warranties are provided), that licensees may convey the

108 | work under this License, and how to view a copy of this License. If

109 | the interface presents a list of user commands or options, such as a

110 | menu, a prominent item in the list meets this criterion.

111 |

112 | 1. Source Code.

113 |

114 | The "source code" for a work means the preferred form of the work

115 | for making modifications to it. "Object code" means any non-source

116 | form of a work.

117 |

118 | A "Standard Interface" means an interface that either is an official

119 | standard defined by a recognized standards body, or, in the case of

120 | interfaces specified for a particular programming language, one that

121 | is widely used among developers working in that language.

122 |

123 | The "System Libraries" of an executable work include anything, other

124 | than the work as a whole, that (a) is included in the normal form of

125 | packaging a Major Component, but which is not part of that Major

126 | Component, and (b) serves only to enable use of the work with that

127 | Major Component, or to implement a Standard Interface for which an

128 | implementation is available to the public in source code form. A

129 | "Major Component", in this context, means a major essential component

130 | (kernel, window system, and so on) of the specific operating system

131 | (if any) on which the executable work runs, or a compiler used to

132 | produce the work, or an object code interpreter used to run it.

133 |

134 | The "Corresponding Source" for a work in object code form means all

135 | the source code needed to generate, install, and (for an executable

136 | work) run the object code and to modify the work, including scripts to

137 | control those activities. However, it does not include the work's

138 | System Libraries, or general-purpose tools or generally available free

139 | programs which are used unmodified in performing those activities but

140 | which are not part of the work. For example, Corresponding Source

141 | includes interface definition files associated with source files for

142 | the work, and the source code for shared libraries and dynamically

143 | linked subprograms that the work is specifically designed to require,

144 | such as by intimate data communication or control flow between those

145 | subprograms and other parts of the work.

146 |

147 | The Corresponding Source need not include anything that users

148 | can regenerate automatically from other parts of the Corresponding

149 | Source.

150 |

151 | The Corresponding Source for a work in source code form is that

152 | same work.

153 |

154 | 2. Basic Permissions.

155 |

156 | All rights granted under this License are granted for the term of

157 | copyright on the Program, and are irrevocable provided the stated

158 | conditions are met. This License explicitly affirms your unlimited

159 | permission to run the unmodified Program. The output from running a

160 | covered work is covered by this License only if the output, given its

161 | content, constitutes a covered work. This License acknowledges your

162 | rights of fair use or other equivalent, as provided by copyright law.

163 |

164 | You may make, run and propagate covered works that you do not

165 | convey, without conditions so long as your license otherwise remains

166 | in force. You may convey covered works to others for the sole purpose

167 | of having them make modifications exclusively for you, or provide you

168 | with facilities for running those works, provided that you comply with

169 | the terms of this License in conveying all material for which you do

170 | not control copyright. Those thus making or running the covered works

171 | for you must do so exclusively on your behalf, under your direction

172 | and control, on terms that prohibit them from making any copies of

173 | your copyrighted material outside their relationship with you.

174 |

175 | Conveying under any other circumstances is permitted solely under

176 | the conditions stated below. Sublicensing is not allowed; section 10

177 | makes it unnecessary.

178 |

179 | 3. Protecting Users' Legal Rights From Anti-Circumvention Law.

180 |

181 | No covered work shall be deemed part of an effective technological

182 | measure under any applicable law fulfilling obligations under article

183 | 11 of the WIPO copyright treaty adopted on 20 December 1996, or

184 | similar laws prohibiting or restricting circumvention of such

185 | measures.

186 |

187 | When you convey a covered work, you waive any legal power to forbid

188 | circumvention of technological measures to the extent such circumvention

189 | is effected by exercising rights under this License with respect to

190 | the covered work, and you disclaim any intention to limit operation or

191 | modification of the work as a means of enforcing, against the work's

192 | users, your or third parties' legal rights to forbid circumvention of

193 | technological measures.

194 |

195 | 4. Conveying Verbatim Copies.

196 |

197 | You may convey verbatim copies of the Program's source code as you

198 | receive it, in any medium, provided that you conspicuously and

199 | appropriately publish on each copy an appropriate copyright notice;

200 | keep intact all notices stating that this License and any

201 | non-permissive terms added in accord with section 7 apply to the code;

202 | keep intact all notices of the absence of any warranty; and give all

203 | recipients a copy of this License along with the Program.

204 |

205 | You may charge any price or no price for each copy that you convey,

206 | and you may offer support or warranty protection for a fee.

207 |

208 | 5. Conveying Modified Source Versions.

209 |

210 | You may convey a work based on the Program, or the modifications to

211 | produce it from the Program, in the form of source code under the

212 | terms of section 4, provided that you also meet all of these conditions:

213 |

214 | a) The work must carry prominent notices stating that you modified

215 | it, and giving a relevant date.

216 |

217 | b) The work must carry prominent notices stating that it is

218 | released under this License and any conditions added under section

219 | 7. This requirement modifies the requirement in section 4 to

220 | "keep intact all notices".

221 |

222 | c) You must license the entire work, as a whole, under this

223 | License to anyone who comes into possession of a copy. This

224 | License will therefore apply, along with any applicable section 7

225 | additional terms, to the whole of the work, and all its parts,

226 | regardless of how they are packaged. This License gives no

227 | permission to license the work in any other way, but it does not

228 | invalidate such permission if you have separately received it.

229 |

230 | d) If the work has interactive user interfaces, each must display

231 | Appropriate Legal Notices; however, if the Program has interactive

232 | interfaces that do not display Appropriate Legal Notices, your

233 | work need not make them do so.

234 |

235 | A compilation of a covered work with other separate and independent

236 | works, which are not by their nature extensions of the covered work,

237 | and which are not combined with it such as to form a larger program,

238 | in or on a volume of a storage or distribution medium, is called an

239 | "aggregate" if the compilation and its resulting copyright are not

240 | used to limit the access or legal rights of the compilation's users

241 | beyond what the individual works permit. Inclusion of a covered work

242 | in an aggregate does not cause this License to apply to the other

243 | parts of the aggregate.

244 |

245 | 6. Conveying Non-Source Forms.

246 |

247 | You may convey a covered work in object code form under the terms

248 | of sections 4 and 5, provided that you also convey the

249 | machine-readable Corresponding Source under the terms of this License,

250 | in one of these ways:

251 |

252 | a) Convey the object code in, or embodied in, a physical product

253 | (including a physical distribution medium), accompanied by the

254 | Corresponding Source fixed on a durable physical medium

255 | customarily used for software interchange.

256 |

257 | b) Convey the object code in, or embodied in, a physical product

258 | (including a physical distribution medium), accompanied by a

259 | written offer, valid for at least three years and valid for as

260 | long as you offer spare parts or customer support for that product

261 | model, to give anyone who possesses the object code either (1) a

262 | copy of the Corresponding Source for all the software in the

263 | product that is covered by this License, on a durable physical

264 | medium customarily used for software interchange, for a price no

265 | more than your reasonable cost of physically performing this

266 | conveying of source, or (2) access to copy the

267 | Corresponding Source from a network server at no charge.

268 |

269 | c) Convey individual copies of the object code with a copy of the

270 | written offer to provide the Corresponding Source. This

271 | alternative is allowed only occasionally and noncommercially, and

272 | only if you received the object code with such an offer, in accord

273 | with subsection 6b.

274 |

275 | d) Convey the object code by offering access from a designated

276 | place (gratis or for a charge), and offer equivalent access to the

277 | Corresponding Source in the same way through the same place at no

278 | further charge. You need not require recipients to copy the

279 | Corresponding Source along with the object code. If the place to

280 | copy the object code is a network server, the Corresponding Source

281 | may be on a different server (operated by you or a third party)

282 | that supports equivalent copying facilities, provided you maintain

283 | clear directions next to the object code saying where to find the

284 | Corresponding Source. Regardless of what server hosts the

285 | Corresponding Source, you remain obligated to ensure that it is

286 | available for as long as needed to satisfy these requirements.

287 |

288 | e) Convey the object code using peer-to-peer transmission, provided

289 | you inform other peers where the object code and Corresponding

290 | Source of the work are being offered to the general public at no

291 | charge under subsection 6d.

292 |

293 | A separable portion of the object code, whose source code is excluded

294 | from the Corresponding Source as a System Library, need not be

295 | included in conveying the object code work.

296 |

297 | A "User Product" is either (1) a "consumer product", which means any

298 | tangible personal property which is normally used for personal, family,

299 | or household purposes, or (2) anything designed or sold for incorporation

300 | into a dwelling. In determining whether a product is a consumer product,

301 | doubtful cases shall be resolved in favor of coverage. For a particular

302 | product received by a particular user, "normally used" refers to a

303 | typical or common use of that class of product, regardless of the status

304 | of the particular user or of the way in which the particular user

305 | actually uses, or expects or is expected to use, the product. A product

306 | is a consumer product regardless of whether the product has substantial

307 | commercial, industrial or non-consumer uses, unless such uses represent

308 | the only significant mode of use of the product.

309 |

310 | "Installation Information" for a User Product means any methods,

311 | procedures, authorization keys, or other information required to install

312 | and execute modified versions of a covered work in that User Product from

313 | a modified version of its Corresponding Source. The information must

314 | suffice to ensure that the continued functioning of the modified object

315 | code is in no case prevented or interfered with solely because

316 | modification has been made.

317 |

318 | If you convey an object code work under this section in, or with, or

319 | specifically for use in, a User Product, and the conveying occurs as

320 | part of a transaction in which the right of possession and use of the

321 | User Product is transferred to the recipient in perpetuity or for a

322 | fixed term (regardless of how the transaction is characterized), the

323 | Corresponding Source conveyed under this section must be accompanied

324 | by the Installation Information. But this requirement does not apply

325 | if neither you nor any third party retains the ability to install

326 | modified object code on the User Product (for example, the work has

327 | been installed in ROM).

328 |

329 | The requirement to provide Installation Information does not include a

330 | requirement to continue to provide support service, warranty, or updates

331 | for a work that has been modified or installed by the recipient, or for

332 | the User Product in which it has been modified or installed. Access to a

333 | network may be denied when the modification itself materially and

334 | adversely affects the operation of the network or violates the rules and

335 | protocols for communication across the network.

336 |

337 | Corresponding Source conveyed, and Installation Information provided,

338 | in accord with this section must be in a format that is publicly

339 | documented (and with an implementation available to the public in

340 | source code form), and must require no special password or key for

341 | unpacking, reading or copying.

342 |

343 | 7. Additional Terms.

344 |

345 | "Additional permissions" are terms that supplement the terms of this

346 | License by making exceptions from one or more of its conditions.

347 | Additional permissions that are applicable to the entire Program shall

348 | be treated as though they were included in this License, to the extent

349 | that they are valid under applicable law. If additional permissions

350 | apply only to part of the Program, that part may be used separately

351 | under those permissions, but the entire Program remains governed by

352 | this License without regard to the additional permissions.

353 |

354 | When you convey a copy of a covered work, you may at your option

355 | remove any additional permissions from that copy, or from any part of

356 | it. (Additional permissions may be written to require their own

357 | removal in certain cases when you modify the work.) You may place

358 | additional permissions on material, added by you to a covered work,

359 | for which you have or can give appropriate copyright permission.

360 |

361 | Notwithstanding any other provision of this License, for material you

362 | add to a covered work, you may (if authorized by the copyright holders of

363 | that material) supplement the terms of this License with terms:

364 |

365 | a) Disclaiming warranty or limiting liability differently from the

366 | terms of sections 15 and 16 of this License; or

367 |

368 | b) Requiring preservation of specified reasonable legal notices or

369 | author attributions in that material or in the Appropriate Legal

370 | Notices displayed by works containing it; or

371 |

372 | c) Prohibiting misrepresentation of the origin of that material, or

373 | requiring that modified versions of such material be marked in

374 | reasonable ways as different from the original version; or

375 |

376 | d) Limiting the use for publicity purposes of names of licensors or

377 | authors of the material; or

378 |

379 | e) Declining to grant rights under trademark law for use of some

380 | trade names, trademarks, or service marks; or

381 |

382 | f) Requiring indemnification of licensors and authors of that

383 | material by anyone who conveys the material (or modified versions of

384 | it) with contractual assumptions of liability to the recipient, for

385 | any liability that these contractual assumptions directly impose on

386 | those licensors and authors.

387 |

388 | All other non-permissive additional terms are considered "further

389 | restrictions" within the meaning of section 10. If the Program as you

390 | received it, or any part of it, contains a notice stating that it is

391 | governed by this License along with a term that is a further

392 | restriction, you may remove that term. If a license document contains

393 | a further restriction but permits relicensing or conveying under this

394 | License, you may add to a covered work material governed by the terms

395 | of that license document, provided that the further restriction does

396 | not survive such relicensing or conveying.

397 |

398 | If you add terms to a covered work in accord with this section, you

399 | must place, in the relevant source files, a statement of the

400 | additional terms that apply to those files, or a notice indicating

401 | where to find the applicable terms.

402 |

403 | Additional terms, permissive or non-permissive, may be stated in the

404 | form of a separately written license, or stated as exceptions;

405 | the above requirements apply either way.

406 |

407 | 8. Termination.

408 |

409 | You may not propagate or modify a covered work except as expressly

410 | provided under this License. Any attempt otherwise to propagate or

411 | modify it is void, and will automatically terminate your rights under

412 | this License (including any patent licenses granted under the third

413 | paragraph of section 11).

414 |

415 | However, if you cease all violation of this License, then your

416 | license from a particular copyright holder is reinstated (a)

417 | provisionally, unless and until the copyright holder explicitly and

418 | finally terminates your license, and (b) permanently, if the copyright

419 | holder fails to notify you of the violation by some reasonable means

420 | prior to 60 days after the cessation.

421 |

422 | Moreover, your license from a particular copyright holder is

423 | reinstated permanently if the copyright holder notifies you of the

424 | violation by some reasonable means, this is the first time you have

425 | received notice of violation of this License (for any work) from that

426 | copyright holder, and you cure the violation prior to 30 days after

427 | your receipt of the notice.

428 |

429 | Termination of your rights under this section does not terminate the

430 | licenses of parties who have received copies or rights from you under

431 | this License. If your rights have been terminated and not permanently

432 | reinstated, you do not qualify to receive new licenses for the same

433 | material under section 10.

434 |

435 | 9. Acceptance Not Required for Having Copies.

436 |

437 | You are not required to accept this License in order to receive or

438 | run a copy of the Program. Ancillary propagation of a covered work

439 | occurring solely as a consequence of using peer-to-peer transmission

440 | to receive a copy likewise does not require acceptance. However,

441 | nothing other than this License grants you permission to propagate or

442 | modify any covered work. These actions infringe copyright if you do

443 | not accept this License. Therefore, by modifying or propagating a

444 | covered work, you indicate your acceptance of this License to do so.

445 |

446 | 10. Automatic Licensing of Downstream Recipients.

447 |

448 | Each time you convey a covered work, the recipient automatically

449 | receives a license from the original licensors, to run, modify and

450 | propagate that work, subject to this License. You are not responsible

451 | for enforcing compliance by third parties with this License.

452 |

453 | An "entity transaction" is a transaction transferring control of an

454 | organization, or substantially all assets of one, or subdividing an

455 | organization, or merging organizations. If propagation of a covered

456 | work results from an entity transaction, each party to that

457 | transaction who receives a copy of the work also receives whatever

458 | licenses to the work the party's predecessor in interest had or could

459 | give under the previous paragraph, plus a right to possession of the

460 | Corresponding Source of the work from the predecessor in interest, if

461 | the predecessor has it or can get it with reasonable efforts.

462 |

463 | You may not impose any further restrictions on the exercise of the

464 | rights granted or affirmed under this License. For example, you may

465 | not impose a license fee, royalty, or other charge for exercise of

466 | rights granted under this License, and you may not initiate litigation

467 | (including a cross-claim or counterclaim in a lawsuit) alleging that

468 | any patent claim is infringed by making, using, selling, offering for

469 | sale, or importing the Program or any portion of it.

470 |

471 | 11. Patents.

472 |

473 | A "contributor" is a copyright holder who authorizes use under this

474 | License of the Program or a work on which the Program is based. The

475 | work thus licensed is called the contributor's "contributor version".

476 |

477 | A contributor's "essential patent claims" are all patent claims

478 | owned or controlled by the contributor, whether already acquired or

479 | hereafter acquired, that would be infringed by some manner, permitted

480 | by this License, of making, using, or selling its contributor version,

481 | but do not include claims that would be infringed only as a

482 | consequence of further modification of the contributor version. For

483 | purposes of this definition, "control" includes the right to grant

484 | patent sublicenses in a manner consistent with the requirements of

485 | this License.

486 |

487 | Each contributor grants you a non-exclusive, worldwide, royalty-free

488 | patent license under the contributor's essential patent claims, to

489 | make, use, sell, offer for sale, import and otherwise run, modify and

490 | propagate the contents of its contributor version.

491 |

492 | In the following three paragraphs, a "patent license" is any express

493 | agreement or commitment, however denominated, not to enforce a patent

494 | (such as an express permission to practice a patent or covenant not to

495 | sue for patent infringement). To "grant" such a patent license to a

496 | party means to make such an agreement or commitment not to enforce a

497 | patent against the party.

498 |

499 | If you convey a covered work, knowingly relying on a patent license,

500 | and the Corresponding Source of the work is not available for anyone

501 | to copy, free of charge and under the terms of this License, through a

502 | publicly available network server or other readily accessible means,

503 | then you must either (1) cause the Corresponding Source to be so

504 | available, or (2) arrange to deprive yourself of the benefit of the

505 | patent license for this particular work, or (3) arrange, in a manner

506 | consistent with the requirements of this License, to extend the patent

507 | license to downstream recipients. "Knowingly relying" means you have

508 | actual knowledge that, but for the patent license, your conveying the

509 | covered work in a country, or your recipient's use of the covered work

510 | in a country, would infringe one or more identifiable patents in that

511 | country that you have reason to believe are valid.

512 |

513 | If, pursuant to or in connection with a single transaction or

514 | arrangement, you convey, or propagate by procuring conveyance of, a

515 | covered work, and grant a patent license to some of the parties

516 | receiving the covered work authorizing them to use, propagate, modify

517 | or convey a specific copy of the covered work, then the patent license

518 | you grant is automatically extended to all recipients of the covered

519 | work and works based on it.

520 |

521 | A patent license is "discriminatory" if it does not include within

522 | the scope of its coverage, prohibits the exercise of, or is

523 | conditioned on the non-exercise of one or more of the rights that are

524 | specifically granted under this License. You may not convey a covered

525 | work if you are a party to an arrangement with a third party that is

526 | in the business of distributing software, under which you make payment

527 | to the third party based on the extent of your activity of conveying

528 | the work, and under which the third party grants, to any of the

529 | parties who would receive the covered work from you, a discriminatory

530 | patent license (a) in connection with copies of the covered work

531 | conveyed by you (or copies made from those copies), or (b) primarily

532 | for and in connection with specific products or compilations that

533 | contain the covered work, unless you entered into that arrangement,

534 | or that patent license was granted, prior to 28 March 2007.

535 |

536 | Nothing in this License shall be construed as excluding or limiting

537 | any implied license or other defenses to infringement that may

538 | otherwise be available to you under applicable patent law.

539 |

540 | 12. No Surrender of Others' Freedom.

541 |

542 | If conditions are imposed on you (whether by court order, agreement or

543 | otherwise) that contradict the conditions of this License, they do not

544 | excuse you from the conditions of this License. If you cannot convey a

545 | covered work so as to satisfy simultaneously your obligations under this

546 | License and any other pertinent obligations, then as a consequence you may

547 | not convey it at all. For example, if you agree to terms that obligate you

548 | to collect a royalty for further conveying from those to whom you convey

549 | the Program, the only way you could satisfy both those terms and this

550 | License would be to refrain entirely from conveying the Program.

551 |

552 | 13. Use with the GNU Affero General Public License.

553 |

554 | Notwithstanding any other provision of this License, you have

555 | permission to link or combine any covered work with a work licensed

556 | under version 3 of the GNU Affero General Public License into a single

557 | combined work, and to convey the resulting work. The terms of this

558 | License will continue to apply to the part which is the covered work,

559 | but the special requirements of the GNU Affero General Public License,

560 | section 13, concerning interaction through a network will apply to the

561 | combination as such.

562 |

563 | 14. Revised Versions of this License.

564 |

565 | The Free Software Foundation may publish revised and/or new versions of

566 | the GNU General Public License from time to time. Such new versions will

567 | be similar in spirit to the present version, but may differ in detail to

568 | address new problems or concerns.

569 |

570 | Each version is given a distinguishing version number. If the

571 | Program specifies that a certain numbered version of the GNU General

572 | Public License "or any later version" applies to it, you have the

573 | option of following the terms and conditions either of that numbered

574 | version or of any later version published by the Free Software

575 | Foundation. If the Program does not specify a version number of the

576 | GNU General Public License, you may choose any version ever published

577 | by the Free Software Foundation.

578 |

579 | If the Program specifies that a proxy can decide which future

580 | versions of the GNU General Public License can be used, that proxy's

581 | public statement of acceptance of a version permanently authorizes you

582 | to choose that version for the Program.

583 |

584 | Later license versions may give you additional or different

585 | permissions. However, no additional obligations are imposed on any

586 | author or copyright holder as a result of your choosing to follow a

587 | later version.

588 |

589 | 15. Disclaimer of Warranty.

590 |

591 | THERE IS NO WARRANTY FOR THE PROGRAM, TO THE EXTENT PERMITTED BY

592 | APPLICABLE LAW. EXCEPT WHEN OTHERWISE STATED IN WRITING THE COPYRIGHT

593 | HOLDERS AND/OR OTHER PARTIES PROVIDE THE PROGRAM "AS IS" WITHOUT WARRANTY

594 | OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, BUT NOT LIMITED TO,

595 | THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

596 | PURPOSE. THE ENTIRE RISK AS TO THE QUALITY AND PERFORMANCE OF THE PROGRAM

597 | IS WITH YOU. SHOULD THE PROGRAM PROVE DEFECTIVE, YOU ASSUME THE COST OF

598 | ALL NECESSARY SERVICING, REPAIR OR CORRECTION.

599 |

600 | 16. Limitation of Liability.

601 |

602 | IN NO EVENT UNLESS REQUIRED BY APPLICABLE LAW OR AGREED TO IN WRITING

603 | WILL ANY COPYRIGHT HOLDER, OR ANY OTHER PARTY WHO MODIFIES AND/OR CONVEYS

604 | THE PROGRAM AS PERMITTED ABOVE, BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY

605 | GENERAL, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE

606 | USE OR INABILITY TO USE THE PROGRAM (INCLUDING BUT NOT LIMITED TO LOSS OF

607 | DATA OR DATA BEING RENDERED INACCURATE OR LOSSES SUSTAINED BY YOU OR THIRD

608 | PARTIES OR A FAILURE OF THE PROGRAM TO OPERATE WITH ANY OTHER PROGRAMS),

609 | EVEN IF SUCH HOLDER OR OTHER PARTY HAS BEEN ADVISED OF THE POSSIBILITY OF

610 | SUCH DAMAGES.

611 |

612 | 17. Interpretation of Sections 15 and 16.

613 |

614 | If the disclaimer of warranty and limitation of liability provided

615 | above cannot be given local legal effect according to their terms,

616 | reviewing courts shall apply local law that most closely approximates

617 | an absolute waiver of all civil liability in connection with the

618 | Program, unless a warranty or assumption of liability accompanies a

619 | copy of the Program in return for a fee.

620 |

621 | END OF TERMS AND CONDITIONS

622 |

623 | How to Apply These Terms to Your New Programs

624 |

625 | If you develop a new program, and you want it to be of the greatest

626 | possible use to the public, the best way to achieve this is to make it

627 | free software which everyone can redistribute and change under these terms.

628 |

629 | To do so, attach the following notices to the program. It is safest

630 | to attach them to the start of each source file to most effectively

631 | state the exclusion of warranty; and each file should have at least

632 | the "copyright" line and a pointer to where the full notice is found.

633 |

634 |

635 | Copyright (C)

636 |

637 | This program is free software: you can redistribute it and/or modify

638 | it under the terms of the GNU General Public License as published by

639 | the Free Software Foundation, either version 3 of the License, or

640 | (at your option) any later version.

641 |

642 | This program is distributed in the hope that it will be useful,

643 | but WITHOUT ANY WARRANTY; without even the implied warranty of

644 | MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

645 | GNU General Public License for more details.

646 |

647 | You should have received a copy of the GNU General Public License

648 | along with this program. If not, see .

649 |

650 | Also add information on how to contact you by electronic and paper mail.

651 |

652 | If the program does terminal interaction, make it output a short

653 | notice like this when it starts in an interactive mode:

654 |

655 | Copyright (C)

656 | This program comes with ABSOLUTELY NO WARRANTY; for details type `show w'.

657 | This is free software, and you are welcome to redistribute it

658 | under certain conditions; type `show c' for details.

659 |

660 | The hypothetical commands `show w' and `show c' should show the appropriate

661 | parts of the General Public License. Of course, your program's commands

662 | might be different; for a GUI interface, you would use an "about box".

663 |

664 | You should also get your employer (if you work as a programmer) or school,

665 | if any, to sign a "copyright disclaimer" for the program, if necessary.

666 | For more information on this, and how to apply and follow the GNU GPL, see

667 | .

668 |

669 | The GNU General Public License does not permit incorporating your program

670 | into proprietary programs. If your program is a subroutine library, you

671 | may consider it more useful to permit linking proprietary applications with

672 | the library. If this is what you want to do, use the GNU Lesser General

673 | Public License instead of this License. But first, please read

674 | .

675 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # ship detection & localisation

2 | Ship detection and localisation from satellite images.

3 |

4 | **Blog posts:**

5 | - Ship detection - Part 1: Ship detection, i.e. binary prediction of whether there is at least 1 ship, or not. Part 1 is a simple solution showing great results in a few lines of code

6 | - Ship detection - Part 2: ship detection with transfer learning and decision interpretability through GAP/GMP's implicit localisation properties

7 | - Ship localisation / image segmentation - Part 3: identify where ships are within the image, and highlight them pixel by pixel

8 |

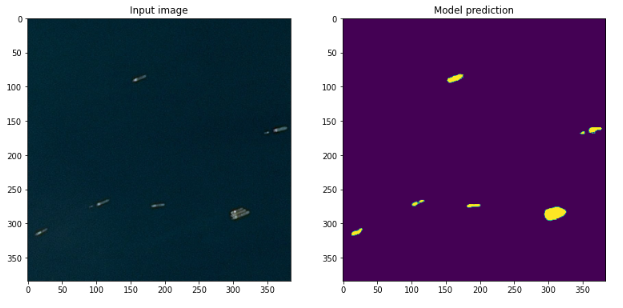

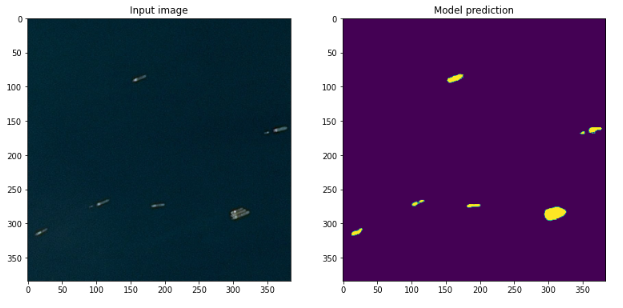

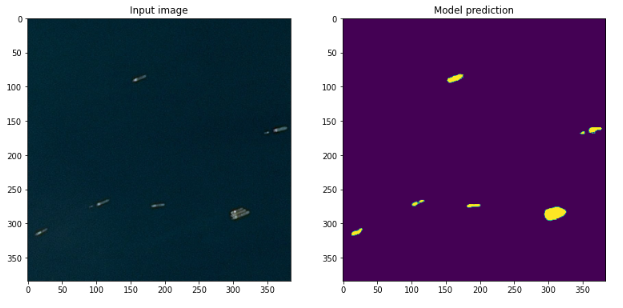

9 | Part 3 highlight: Image segmentation with a U-Net

10 |

11 |  12 |

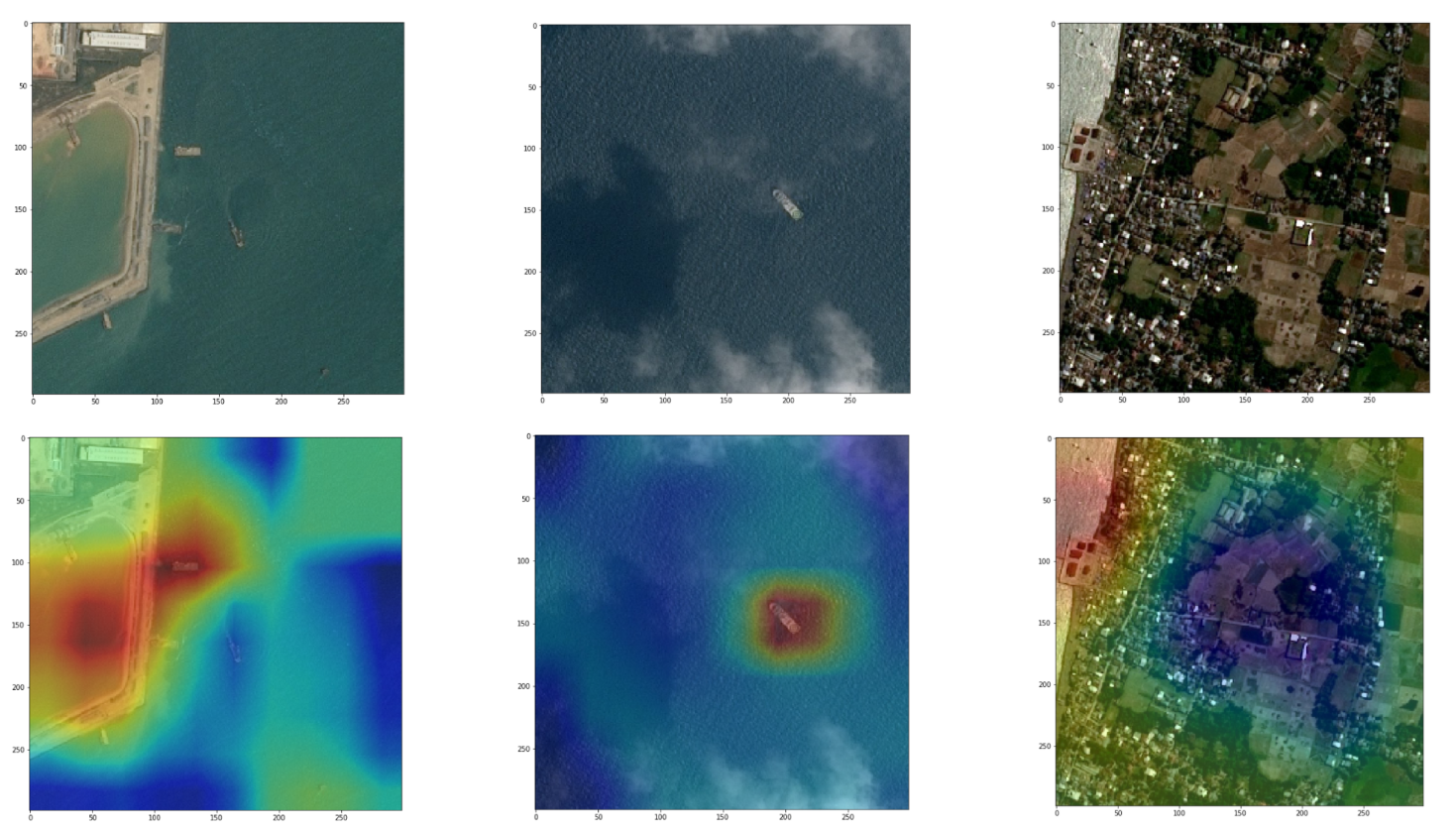

13 | Part 2 highlight: Class activation mapping on vessel detection classifier ConvNet - convnet learned where ships are without supervision!

14 |

15 |

16 | Steps taken:

17 | - **find data sets:**

18 | - planet API:

19 | - needs subscription, but there is a free trial

20 | - Example usage here: https://medium.com/dataseries/satellite-imagery-analysis-with-python-a06eea5465ea

21 | - airbus kaggle set (selected for first iteration)

22 | - https://www.kaggle.com/c/airbus-ship-detection/data

23 | - to download locally, make sure your connection is stable (29 GB)

24 | - get an API key through your Kaggle profile (free), and either save the key file or set your username and key as environment variables

25 | - nohup kaggle competitions download -c airbus-ship-detection & disown %1

26 | - other data providers: Airbus, Digital Globe

27 | - free sources: these include EOS's Sentinel 1 (SAR - active/radar) and 2 (optical), with coverage periods ranging from 2 to 7 days

28 | https://eos.com/blog/7-top-free-satellite-imagery-sources-in-2019/

29 | - **simple EDA on data used in this repo and blog post:**

30 | - 200k+ images of size 768 x 768 x 3

31 | - 78% of images have no vessel

32 | - some images have up to 15 vessels

33 | - Ships within and across images differ in size, and are located in open sea, at docks, marinas, etc.

34 | - **modelling broken down into two steps**, with subfolders in the repo

35 | - ship detection: binary prediction of whether there is at least 1 ship, or not

36 | - ship localisation / image segmentation: identify where ships are within the image, and classify each pixel as ship or no ship (an alternative would be to predict bounding boxes, with a different kind of model)

37 | - **other articles on the topic:**

38 | - https://www.kaggle.com/iafoss/unet34-dice-0-87/data

39 | - https://www.kaggle.com/uysimty/ship-detection-using-keras-u-net

40 | - https://medium.com/dataseries/detecting-ships-in-satellite-imagery-7f0ca04e7964

41 | - https://github.com/davidtvs/kaggle-airbus-ship-detection

42 | - https://towardsdatascience.com/deep-learning-for-ship-detection-and-segmentation-71d223aca649

43 | - https://towardsdatascience.com/u-net-b229b32b4a71

44 | - https://www.tensorflow.org/tutorials/images/segmentation

45 | - https://lmb.informatik.uni-freiburg.de/people/ronneber/u-net/

46 | - more classic ship detection algorithms in skimage.segmentation: https://developers.planet.com/tutorials/detect-ships-in-planet-data/

47 |
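The EDA figures listed above (share of images without a vessel, maximum number of ships per image) and the image-level label used for detection can be derived from the segmentation CSV that the notebook in this repo reads, which has an `ImageId` and an `EncodedPixels` column. The following is a minimal sketch using only the standard library, assuming one row per ship and an empty `EncodedPixels` field for images without a ship. The inline rows and file names are made up for illustration and are not taken from the real data set.

```python
import csv
import io
from collections import Counter

# Toy stand-in for train_ship_segmentations_v2.csv (hypothetical rows):
# one row per ship, and a single row with an empty EncodedPixels field
# for an image that contains no ship.
TOY_CSV = """ImageId,EncodedPixels
a.jpg,
b.jpg,1 3 10 2
b.jpg,20 4
c.jpg,
d.jpg,5 2
"""


def ships_per_image(csv_file):
    """Count annotated ships for every ImageId (0 for empty images)."""
    counts = Counter()
    for row in csv.DictReader(csv_file):
        # Adding 0 still registers the image, so empty images are counted too.
        counts[row["ImageId"]] += 1 if row["EncodedPixels"].strip() else 0
    return counts


counts = ships_per_image(io.StringIO(TOY_CSV))
# Image-level label for the detection step: at least one ship, or not.
has_vessel = {image_id: n > 0 for image_id, n in counts.items()}
share_empty = sum(not flag for flag in has_vessel.values()) / len(has_vessel)

print(dict(counts))
print(f"share of images without a vessel: {share_empty:.0%}")
print(f"max ships in one image: {max(counts.values())}")
```

On the real CSV, the same function could be called on an opened file handle instead of the in-memory string.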

--------------------------------------------------------------------------------

/detection/Romain - vessel recognition.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "metadata": {

7 | "_cell_guid": "b1076dfc-b9ad-4769-8c92-a6c4dae69d19",

8 | "_uuid": "8f2839f25d086af736a60e9eeb907d3b93b6e0e5"

9 | },

10 | "outputs": [],

11 | "source": [

12 | "# This Python 3 environment comes with many helpful analytics libraries installed\n",

13 | "# It is defined by the kaggle/python docker image: https://github.com/kaggle/docker-python\n",

14 | "# For example, here's several helpful packages to load in \n",

15 | "\n",

16 | "import numpy as np # linear algebra\n",

17 | "import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)\n",

18 | "\n",

19 | "# Input data files are available in the \"../input/\" directory.\n",

20 | "# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory\n",

21 | "\n",

22 | "import os\n",

23 | "\n",

24 | "# for dirname, _, filenames in os.walk('/kaggle/input'):\n",

25 | "# for filename in filenames:\n",

26 | "# print(os.path.join(dirname, filename))\n",

27 | "\n",

28 | "# Any results you write to the current directory are saved as output.\n",

29 | "\n",

30 | "# additional imports \n",

31 | "import matplotlib.pyplot as plt"

32 | ]

33 | },

34 | {

35 | "cell_type": "markdown",

36 | "metadata": {},

37 | "source": [

38 | "# Image metadata"

39 | ]

40 | },

41 | {

42 | "cell_type": "code",

43 | "execution_count": null,

44 | "metadata": {},

45 | "outputs": [],

46 | "source": [

47 | "df_csv = pd.read_csv('../input/airbus-ship-detection/train_ship_segmentations_v2.csv')\n",

48 | "df_csv.head()"

49 | ]

50 | },

51 | {

52 | "cell_type": "code",

53 | "execution_count": null,

54 | "metadata": {},

55 | "outputs": [],

56 | "source": [

57 | "df_csv['has_vessel'] = df_csv['EncodedPixels'].notnull()\n",

58 | "df_csv['has_vessel'].head()"

59 | ]

60 | },

61 | {

62 | "cell_type": "code",

63 | "execution_count": null,

64 | "metadata": {},

65 | "outputs": [],

66 | "source": [

67 | "def rle_to_pixels(rle_code):\n",

68 | " '''\n",

69 | " Transforms a RLE code string into a list of pixels of a (768, 768) canvas\n",

70 | " \n",

71 | " Source: https://www.kaggle.com/julian3833/2-understanding-and-plotting-rle-bounding-boxes\n",

72 | " '''\n",

73 | " rle_code = [int(i) for i in rle_code.split()]\n",

74 | " pixels = [(pixel_position % 768, pixel_position // 768) \n",

75 | " for start, length in list(zip(rle_code[0:-1:2], rle_code[1::2])) \n",

76 | " for pixel_position in range(start, start + length)]\n",

77 | " return pixels"

78 | ]

79 | },

80 | {

81 | "cell_type": "markdown",

82 | "metadata": {},

83 | "source": [

84 | "# small training set"

85 | ]

86 | },

87 | {

88 | "cell_type": "code",

89 | "execution_count": 1,

90 | "metadata": {

91 | "scrolled": false

92 | },

93 | "outputs": [],

106 | "source": [

107 | "mask_has_vessel = df_csv['has_vessel']"

108 | ]

109 | },

110 | {

111 | "cell_type": "code",

112 | "execution_count": null,

113 | "metadata": {},

114 | "outputs": [],

115 | "source": [

116 | "from __future__ import absolute_import, division, print_function, unicode_literals\n",

117 | "\n",

118 | "import tensorflow as tf\n",

119 | "\n",

120 | "AUTOTUNE = tf.data.experimental.AUTOTUNE\n",

121 | "\n",

122 | "import IPython.display as display\n",

123 | "from PIL import Image\n",

124 | "import numpy as np\n",

125 | "import matplotlib.pyplot as plt"

126 | ]

127 | },

128 | {

129 | "cell_type": "code",

130 | "execution_count": null,

131 | "metadata": {},

132 | "outputs": [],

133 | "source": [

134 | "df_labels = df_csv.groupby('ImageId')['has_vessel'].max()\n",

135 | "df_labels = df_labels.astype(str)\n",

136 | "df_labels.head(3)"

137 | ]

138 | },

139 | {

140 | "cell_type": "code",

141 | "execution_count": null,

142 | "metadata": {},

143 | "outputs": [],

144 | "source": [

145 | "import pathlib\n",

146 | "# data_dir = pathlib.Path('../input/airbus-ship-detection/train_v2\n",

147 | "data_dir_raw = pathlib.Path('../input/airbus-ship-detection/train_v2')\n",

148 | "data_dir_raw"

149 | ]

150 | },

151 | {

152 | "cell_type": "code",

153 | "execution_count": null,

154 | "metadata": {},

155 | "outputs": [],

156 | "source": [

157 | "mkdir training_small"

158 | ]

159 | },

160 | {

161 | "cell_type": "code",

162 | "execution_count": null,

163 | "metadata": {},

164 | "outputs": [],

165 | "source": [

166 | "mkdir training_small/ship/"

167 | ]

168 | },

169 | {

170 | "cell_type": "code",

171 | "execution_count": null,

172 | "metadata": {},

173 | "outputs": [],

174 | "source": [

175 | "mkdir training_small/no_ship/"

176 | ]

177 | },

178 | {

179 | "cell_type": "code",

180 | "execution_count": null,

181 | "metadata": {},

182 | "outputs": [],

183 | "source": [

184 | "mkdir test_small"

185 | ]

186 | },

187 | {

188 | "cell_type": "code",

189 | "execution_count": null,

190 | "metadata": {},

191 | "outputs": [],

192 | "source": [

193 | "mkdir test_small/ship/"

194 | ]

195 | },

196 | {

197 | "cell_type": "code",

198 | "execution_count": null,

199 | "metadata": {},

200 | "outputs": [],

201 | "source": [

202 | "mkdir test_small/no_ship/"

203 | ]

204 | },

205 | {

206 | "cell_type": "code",

207 | "execution_count": null,

208 | "metadata": {},

209 | "outputs": [],

210 | "source": [

211 | "import shutil\n",

212 | "\n",

213 | "training_size = 50\n",

214 | "test_size = 50\n",

215 | "\n",

216 | "counter_ship = 0\n",

217 | "counter_no_ship = 0\n",

218 | "\n",

219 | "\n",

220 | "for item in data_dir_raw.glob('*.jpg'):\n",

221 | " item_str = str(item)\n",

222 | " if df_labels.loc[item.name] == 'True':\n",

223 | " if counter_ship < training_size:\n",

224 | " shutil.copy(item_str, 'training_small/ship/')\n",

225 | " counter_ship += 1\n",

226 | " elif counter_ship < training_size + test_size:\n",

227 | " shutil.copy(item_str, 'test_small/ship/')\n",

228 | " counter_ship += 1\n",

229 | " else:\n",

230 | " if counter_no_ship < training_size:\n",

231 | " shutil.copy(item_str, 'training_small/no_ship/') \n",

232 | " counter_no_ship += 1\n",

233 | " elif counter_no_ship < training_size + test_size:\n",

234 | " shutil.copy(item_str, 'test_small/no_ship/')\n",

235 | " counter_no_ship += 1\n",

236 | " "

237 | ]

238 | },

239 | {

240 | "cell_type": "code",

241 | "execution_count": null,

242 | "metadata": {},

243 | "outputs": [],

244 | "source": [

245 | "ls training_small/no_ship/ -1 | wc -l"

246 | ]

247 | },

248 | {

249 | "cell_type": "code",

250 | "execution_count": null,

251 | "metadata": {},

252 | "outputs": [],

253 | "source": [

254 | "ls test_small/ship/ -1 | wc -l"

255 | ]

256 | },

257 | {

258 | "cell_type": "code",

259 | "execution_count": null,

260 | "metadata": {},

261 | "outputs": [],

262 | "source": [

263 | "ls training_small/ship"

264 | ]

265 | },

266 | {

267 | "cell_type": "code",

268 | "execution_count": null,

269 | "metadata": {},

270 | "outputs": [],

271 | "source": [

272 | "import pathlib\n",

273 | "# data_dir = pathlib.Path('../input/airbus-ship-detection/train_v2\n",

274 | "data_dir = pathlib.Path('training_small/')\n",

275 | "data_dir"

276 | ]

277 | },

278 | {

279 | "cell_type": "code",

280 | "execution_count": null,

281 | "metadata": {},

282 | "outputs": [],

283 | "source": [

284 | "image_count = len(list(data_dir.glob('*/*.jpg')))\n",

285 | "image_count\n",

286 | "# image_count = df_csv_small_training.shape[0]"

287 | ]

288 | },

289 | {

290 | "cell_type": "code",

291 | "execution_count": null,

292 | "metadata": {},

293 | "outputs": [],

294 | "source": [

295 | "BATCH_SIZE = 10\n",

296 | "IMG_HEIGHT = 768\n",

297 | "IMG_WIDTH = 768\n",

298 | "# STEPS_PER_EPOCH = np.ceil(image_count/BATCH_SIZE)\n",

299 | "epochs = 10"

300 | ]

301 | },

302 | {

303 | "cell_type": "code",

304 | "execution_count": null,

305 | "metadata": {},

306 | "outputs": [],

307 | "source": [

308 | "# CLASS_NAMES = np.array([df_labels.loc[item.name] for item in data_dir.glob('*.jpg')])\n",

309 | "# CLASS_NAMES\n",

310 | "\n",

311 | "CLASS_NAMES = np.array([item.name for item in data_dir.glob('*')])\n",

312 | "CLASS_NAMES"

313 | ]

314 | },

315 | {

316 | "cell_type": "code",

317 | "execution_count": null,

318 | "metadata": {},

319 | "outputs": [],

320 | "source": [

321 | "ls training_small/"

322 | ]

323 | },

324 | {

325 | "cell_type": "code",

326 | "execution_count": null,

327 | "metadata": {},

328 | "outputs": [],

329 | "source": []

330 | },

331 | {

332 | "cell_type": "code",

333 | "execution_count": null,

334 | "metadata": {},

335 | "outputs": [],

336 | "source": [

337 | "train = os.listdir('training_small/no_ship')\n",

338 | "print(len(train))"

339 | ]

340 | },

341 | {

342 | "cell_type": "code",

343 | "execution_count": null,

344 | "metadata": {},

345 | "outputs": [],

346 | "source": [

347 | "# ls training_small/no_ship"

348 | ]

349 | },

350 | {

351 | "cell_type": "code",

352 | "execution_count": null,

353 | "metadata": {},

354 | "outputs": [],

355 | "source": [

356 | "# The 1./255 is to convert from uint8 to float32 in range [0,1].\n",

357 | "image_generator = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1./255)\n",

358 | "validation_image_generator = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1./255) # Generator for our validation data"

359 | ]

360 | },

361 | {

362 | "cell_type": "code",

363 | "execution_count": null,

364 | "metadata": {},

365 | "outputs": [],

366 | "source": [

367 | "str(data_dir)"

368 | ]

369 | },

370 | {

371 | "cell_type": "code",

372 | "execution_count": null,

373 | "metadata": {},

374 | "outputs": [],

375 | "source": [

376 | "train_data_gen = image_generator.flow_from_directory(directory=str(data_dir), #training_dir\n",

377 | " batch_size=BATCH_SIZE,\n",

378 | " shuffle=True,\n",

379 | " target_size=(IMG_HEIGHT, IMG_WIDTH),\n",

380 | " class_mode = 'sparse'\n",

381 | "# classes = list(CLASS_NAMES),\n",

382 | "# color_mode='grayscale',\n",

383 | "# data_format='channels_last'\n",

384 | " )\n",

385 | "\n",

386 | "val_data_gen = validation_image_generator.flow_from_directory(directory='test_small',\n",

387 | " batch_size=BATCH_SIZE,\n",

388 | " shuffle=True,\n",

389 | " target_size=(IMG_HEIGHT, IMG_WIDTH),\n",

390 | " class_mode = 'sparse')\n"

391 | ]

392 | },

393 | {

394 | "cell_type": "code",

395 | "execution_count": null,

396 | "metadata": {},

397 | "outputs": [],

398 | "source": [

399 | "from __future__ import absolute_import, division, print_function, unicode_literals\n",

400 | "\n",

401 | "# TensorFlow and tf.keras\n",

402 | "import tensorflow as tf\n",

403 | "from tensorflow import keras\n",

404 | "\n",

405 | "# Helper libraries\n",

406 | "import numpy as np\n",

407 | "import matplotlib.pyplot as plt\n",

408 | "\n",

409 | "print(tf.__version__)"

410 | ]

411 | },

412 | {

413 | "cell_type": "code",

414 | "execution_count": null,

415 | "metadata": {},

416 | "outputs": [],

417 | "source": [

418 | "model = keras.Sequential([\n",

419 | " keras.layers.Conv2D(16, 3, padding='same', activation='relu', input_shape=(IMG_HEIGHT, IMG_WIDTH ,3)),\n",

420 | " keras.layers.MaxPooling2D(),\n",

421 | " keras.layers.Conv2D(32, 3, padding='same', activation='relu'),\n",

422 | " keras.layers.MaxPooling2D(),\n",

423 | " keras.layers.Conv2D(64, 3, padding='same', activation='relu'),\n",

424 | " keras.layers.MaxPooling2D(),\n",

425 | " keras.layers.Dropout(0.2),\n",

426 | " keras.layers.Flatten(),\n",

427 | " keras.layers.Dense(128, activation='relu'),\n",

428 | " keras.layers.Dense(2, activation='softmax')\n",

429 | "# keras.layers.Dense(1, activation = 'sigmoid')\n",

430 | "])"

431 | ]

432 | },

433 | {

434 | "cell_type": "code",

435 | "execution_count": null,

436 | "metadata": {},

437 | "outputs": [],

438 | "source": [

439 | "model.compile(optimizer='adam',\n",

440 | " loss='sparse_categorical_crossentropy',\n",

441 | " metrics=['accuracy'])"

442 | ]

443 | },

444 | {

445 | "cell_type": "code",

446 | "execution_count": null,

447 | "metadata": {},

448 | "outputs": [],

449 | "source": [

450 | "model.summary()"

451 | ]

452 | },

453 | {

454 | "cell_type": "code",

455 | "execution_count": null,

456 | "metadata": {},

457 | "outputs": [],

458 | "source": [

459 | "# model.fit(next(train_data_gen)[0], next(train_data_gen)[1], epochs=20)"

460 | ]

461 | },

462 | {

463 | "cell_type": "code",

464 | "execution_count": null,

465 | "metadata": {},

466 | "outputs": [],

467 | "source": [

468 | "history = model.fit(\n",

469 | " train_data_gen,\n",

470 | "# steps_per_epoch=total_train // batch_size,\n",

471 | " epochs=epochs,\n",

472 | " validation_data=val_data_gen,\n",

473 | "# validation_steps=total_val // batch_size\n",

474 | ")"

475 | ]

476 | },

477 | {

478 | "cell_type": "code",

479 | "execution_count": null,

480 | "metadata": {},

481 | "outputs": [],

482 | "source": [

483 | "acc = history.history['accuracy']\n",

484 | "val_acc = history.history['val_accuracy']\n",

485 | "\n",

486 | "loss = history.history['loss']\n",

487 | "val_loss = history.history['val_loss']\n",

488 | "\n",

489 | "epochs_range = range(epochs)\n",

490 | "\n",

491 | "plt.figure(figsize=(8, 8))\n",

492 | "plt.subplot(1, 2, 1)\n",

493 | "plt.plot(epochs_range, acc, label='Training Accuracy')\n",

494 | "plt.plot(epochs_range, val_acc, label='Validation Accuracy')\n",

495 | "plt.legend(loc='lower right')\n",

496 | "plt.title('Training and Validation Accuracy')\n",

497 | "\n",

498 | "plt.subplot(1, 2, 2)\n",

499 | "plt.plot(epochs_range, loss, label='Training Loss')\n",

500 | "plt.plot(epochs_range, val_loss, label='Validation Loss')\n",

501 | "plt.legend(loc='upper right')\n",

502 | "plt.title('Training and Validation Loss')\n",

503 | "plt.show()"

504 | ]

505 | },

506 | {

507 | "cell_type": "code",

508 | "execution_count": null,

509 | "metadata": {},

510 | "outputs": [],

511 | "source": [

512 | "model.predict(val_data_gen)"

513 | ]

514 | },

515 | {

516 | "cell_type": "code",

517 | "execution_count": null,

518 | "metadata": {},

519 | "outputs": [],

520 | "source": []

521 | }

522 | ],

523 | "metadata": {

524 | "kernelspec": {

525 | "display_name": "Python 3",

526 | "language": "python",

527 | "name": "python3"

528 | },

529 | "language_info": {

530 | "codemirror_mode": {

531 | "name": "ipython",

532 | "version": 3

533 | },

534 | "file_extension": ".py",

535 | "mimetype": "text/x-python",

536 | "name": "python",

537 | "nbconvert_exporter": "python",

538 | "pygments_lexer": "ipython3",

539 | "version": "3.7.5"

540 | }

541 | },

542 | "nbformat": 4,

543 | "nbformat_minor": 1

544 | }
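The notebook builds its small training and test folders with several `mkdir` cells and a loop that keeps scanning every file after both classes are full. A possible variant is sketched below, under these assumptions: the helper name `copy_small_split` is hypothetical, labels are passed as a plain dict of booleans (the notebook stores them as the strings `'True'` / `'False'`), and the demonstration runs on empty placeholder files in a temporary directory, not on real images.

```python
import shutil
import tempfile
from pathlib import Path


def copy_small_split(src_dir, labels, out_root, train_size, test_size):
    """Copy up to train_size + test_size images per class into class folders.

    labels maps a file name to True (ship) or False (no ship).
    """
    out_root = Path(out_root)
    quota = train_size + test_size
    copied = {"ship": 0, "no_ship": 0}
    for split in ("training_small", "test_small"):
        for class_name in copied:
            # Replaces the separate `mkdir` cells; safe to re-run.
            (out_root / split / class_name).mkdir(parents=True, exist_ok=True)

    for item in sorted(Path(src_dir).glob("*.jpg")):
        class_name = "ship" if labels[item.name] else "no_ship"
        if copied[class_name] < quota:
            # The first train_size files of a class go to training, the rest to test.
            split = "training_small" if copied[class_name] < train_size else "test_small"
            shutil.copy(item, out_root / split / class_name)
            copied[class_name] += 1
        if all(n >= quota for n in copied.values()):
            break  # both classes are full: skip the remaining files
    return copied


# Tiny demonstration on empty placeholder files (not real images).
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "raw"
    src.mkdir()
    labels = {}
    for i in range(10):
        name = f"img_{i:02d}.jpg"
        (src / name).touch()
        labels[name] = i % 2 == 0  # made-up labels for the demo
    copied = copy_small_split(src, labels, Path(tmp) / "out", train_size=2, test_size=1)
    n_train_ship = len(list((Path(tmp) / "out" / "training_small" / "ship").iterdir()))
    n_test_ship = len(list((Path(tmp) / "out" / "test_small" / "ship").iterdir()))
    print(copied, n_train_ship, n_test_ship)
```

The early `break` matters mostly on the full data set, where the source folder holds far more files than the small split needs.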

545 |
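The notebook's `rle_to_pixels` turns a run-length string into a list of pixel coordinates. For the segmentation step, a binary mask is often more convenient. The sketch below is one way to decode it in plain Python, assuming that run starts are 1-indexed and that pixels are numbered down each column first (top to bottom, then left to right). That convention is an assumption here and should be checked against the competition's data description; the function name `rle_to_mask` and the example string are hypothetical.

```python
def rle_to_mask(rle_code, height, width):
    """Decode a run-length string into a height x width mask of 0/1 values."""
    values = [int(v) for v in rle_code.split()]
    mask = [[0] * width for _ in range(height)]
    # Even positions hold run starts, odd positions hold run lengths.
    for start, length in zip(values[0::2], values[1::2]):
        # Assumed convention: starts are 1-indexed, so shift to 0-indexed.
        for position in range(start - 1, start - 1 + length):
            # Assumed convention: column-major numbering.
            col, row = divmod(position, height)
            mask[row][col] = 1
    return mask


mask = rle_to_mask("1 2 6 3", height=4, width=3)
for row in mask:
    print(row)
```

If the 1-indexed convention holds, a decoder that uses `start` directly (without subtracting 1) would place every run one pixel too far along its column.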

--------------------------------------------------------------------------------

/detection/attention_CAM.py:

--------------------------------------------------------------------------------

1 | # Network attention: Class Activation Mapping (CAM)

2 | # inspired by the paper https://arxiv.org/pdf/1512.04150.pdf

3 |

4 | import tensorflow as tf

5 | import matplotlib.pyplot as plt

6 |

7 |

8 | def extract_relevant_layers(model, image_batch, global_pooling_layer_nbr=1):

9 | # define a new variable for the layer just before the Global Pooling layer (GAP/GMP)

10 | # in our example that's the input to layer 1, or the output of layer 0

11 | model_pre_gp_layer = tf.keras.models.Model(

12 | inputs=model.input, outputs=model.layers[global_pooling_layer_nbr].input

13 | )

14 |

15 | # image_batch is an (images, labels) tuple; run the images through the truncated model

16 | pre_gp_activation_example = model_pre_gp_layer.predict(image_batch[0])

17 |

18 | # classification weights, last layer (here we are working on binary classification - slight variation for multiclass)

19 | classification_weights = model.layers[-1].weights[0].numpy()

20 |

21 | return pre_gp_activation_example, classification_weights

22 |

23 |

24 | def class_activation_mapping(pre_gp_activation_oneimage, classification_weights):

25 | """

26 | Calculate weighted sum showing class activation mapping

27 |

28 | Input

29 | - pre_gp_activation_oneimage: pre global pooling activations, shaped (height, width, channels)

30 | - classification_weights: weights for each channel, shaped (channels, 1) for a single-unit dense classifier

31 | """

32 |

33 | # this dot product relies on broadcasting on the spatial dimensions

34 | dot_prod = pre_gp_activation_oneimage.dot(classification_weights)

35 | return dot_prod

36 |

37 |

38 | # ------------------------- EXAMPLE USAGE -------------------------

39 | """

40 | Simplified architecture in the example:

41 | - Image inputs: (batch size=40, height=299, width=299, channels=3)

42 | - Xception output: (batch size=40, height at this layer=10, width at this layer=10, channels at this layer=2048)

43 | - Global Max Pooling output: (batch size=40, 1, 1, channels at this layer=2048)

44 | - Classifier (dense) output: (batch size=40, classification dim=1)

45 |

46 | The quantity we show on the heatmap is

47 | the Xception output (pre_gp_activation)

48 | summed over the channel dimension

49 | with the Classifier's weights for each channel (classification_weights).

50 | """

51 |

52 | # --------- INPUT DATA ---------

53 | # get model (here we had pretrained it)

54 | model_loaded = tf.keras.models.load_model(

55 | "../input/vessel-detection-transferlearning-xception/model_xception_gmp_cycling_20200112_7_40.h5"

56 | )

57 |

58 | # get a batch of pictures

59 | # assumes validation_generator is already set up; any other source of (images, labels) batches works too

60 | batch_test = next(validation_generator)

61 |

62 | # --------- PROCESS ---------

63 | # prediction on relevant layers for that batch

64 | pre_gp_activation_batch, classification_weights = extract_relevant_layers(

65 | model=model_loaded, image_batch=batch_test

66 | )

67 |

68 | # select an image and work with this

69 | selected_image_index = 5

70 |

71 | dot_prod_oneimage = class_activation_mapping(

72 | pre_gp_activation_oneimage=pre_gp_activation_batch[selected_image_index],

73 | classification_weights=classification_weights,

74 | )

75 |

76 | # --------- VISUALISATION ---------

77 | # can plot what we have at this stage

78 | plt.imshow(dot_prod_oneimage.reshape(10, 10))

79 |

80 | # for a more readable overlay, upsample the map to the input image size

81 | resized_dot_prod = tf.image.resize(

82 | dot_prod_oneimage, (299, 299), antialias=True

83 | ).numpy()

84 |

85 | plt.figure(figsize=(10, 10))

86 | plt.imshow(batch_test[0][selected_image_index])

87 | plt.imshow(

88 | resized_dot_prod.reshape(299, 299), cmap="jet", alpha=0.3, interpolation="nearest"

89 | )

90 | plt.show()

91 |

92 |
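Note: the weighted sum in class_activation_mapping can be checked without a trained model. The sketch below is not part of the original module; it uses random arrays in place of the Xception activations and dense-layer weights (the shapes follow the example above, the values are made up), and the helper name class_activation_map is hypothetical.

```python
import numpy as np


def class_activation_map(activations, weights):
    """Weighted sum over channels.

    activations: (height, width, channels)
    weights: (channels,) or (channels, 1)
    returns: (height, width)
    """
    # Flatten the weights so both (channels,) and (channels, 1) are accepted.
    weights = np.asarray(weights).reshape(-1)
    # Contract the channel axis; the spatial axes are preserved.
    return np.tensordot(activations, weights, axes=([-1], [0]))


# Random stand-ins for the real activations and classifier weights.
rng = np.random.default_rng(0)
activations = rng.random((10, 10, 2048))
weights = rng.normal(size=(2048, 1))

cam = class_activation_map(activations, weights)

# Rescale to [0, 1] so the map can be overlaid with a fixed colour range.
cam_norm = (cam - cam.min()) / (cam.max() - cam.min())
```

The result has shape (10, 10), so no reshape is needed before plotting; it matches activations.dot(weights) with the trailing singleton axis dropped.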

--------------------------------------------------------------------------------

/detection/model_vessel_detection_classifier.py:

--------------------------------------------------------------------------------

1 | from __future__ import absolute_import, division, print_function, unicode_literals

2 |

3 | import tensorflow as tf

4 |

5 | # from tensorflow import keras

6 |

7 | import numpy as np

8 | import matplotlib.pyplot as plt

9 |

10 | print(tf.__version__)

11 |

12 |

13 | def define_model_supersimple_convnet(IMG_HEIGHT=256, IMG_WIDTH=256):

14 | model = tf.keras.Sequential(

15 | [

16 | tf.keras.layers.Conv2D(

17 | 16,

18 | 3,

19 | padding="same",

20 | activation="relu",

21 | input_shape=(IMG_HEIGHT, IMG_WIDTH, 3),

22 | ),

23 | tf.keras.layers.MaxPooling2D(),

24 | tf.keras.layers.Conv2D(32, 3, padding="same", activation="relu"),

25 | tf.keras.layers.MaxPooling2D(),

26 | tf.keras.layers.Conv2D(64, 3, padding="same", activation="relu"),

27 | tf.keras.layers.MaxPooling2D(),

28 | tf.keras.layers.Dropout(0.2),

29 | tf.keras.layers.Flatten(),

30 | tf.keras.layers.Dense(128, activation="relu"),

31 | tf.keras.layers.Dense(2, activation="softmax")

32 | # tf.keras.layers.Dense(1, activation = 'sigmoid')

33 | ]

34 | )

35 |

36 | model.compile(

37 | optimizer=tf.keras.optimizers.Adam(

38 | learning_rate=3e-4

39 | ), # this LR is overridden by the base cycle LR if the CyclicLR callback is used

40 | loss="sparse_categorical_crossentropy",

41 | # loss="binary_crossentropy" (to pair with the single sigmoid output commented out above)

42 | metrics=["accuracy"],

43 | )

44 |

45 | model.summary() # prints the summary itself; wrapping it in print() would also print "None"

46 |

47 | return model

48 |

49 |

50 | # >>>>>>

51 | # ------------------ notes for model training ------------------

52 | # >>>>>>

53 | def elements_for_model_training(model, train_generator, validation_generator):

54 | """

55 | Note to the reader:

56 | In practice this step often requires quite a bit of babysitting, so I tend to run these elements in a notebook

57 |

58 | I have included some simple code snippets here for completeness only,

59 | but this is by no means exhaustive or representative of the actual training process

60 | - training is compatible with production code if the model is serialised as a one-off

61 | - if the model had to be trained in production, I'd recommend documenting the process

62 | """

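63 | import os # must come before the first use of os below, otherwise the later local import raises UnboundLocalError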

64 | # ------- checkpoint callback -------

65 | checkpoint_path = "training_1/cp.ckpt"

66 | checkpoint_dir = os.path.dirname(checkpoint_path)

67 |

68 | cp_callback = tf.keras.callbacks.ModelCheckpoint(

69 | filepath=checkpoint_path,

70 | # save_weights_only=True,

71 | save_best_only=True,

72 | verbose=1,

73 | )

74 |

75 | # ------- tensorboard callback -------

76 | import datetime

77 | import os

78 |

79 | log_dir = "logs/fit/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")

80 | tensorboard_callback = tf.keras.callbacks.TensorBoard(

81 | log_dir=log_dir, histogram_freq=1

82 | )

83 |

84 | # ------- learning rate finder -------

85 | from learning_rate_utils import LRFinder

86 |

87 | lr_finder = LRFinder(start_lr=1e-7, end_lr=1, max_steps=1000)

88 |

89 | # ------- cycling learning rate -------

90 | from learning_rate_utils import CyclicLR

91 |

92 | # step_size is the number of iterations per half cycle

93 | # authors suggest setting step_size to 2-8x the number of training iterations per epoch

94 | cyclic_learning_rate = CyclicLR(

95 | base_lr=5e-5, max_lr=1e-2, step_size=5000, mode="triangular2"

96 | )

97 |

98 | # ------- actual_training -------

99 | # model.fit(next(train_data_gen)[0], next(train_data_gen)[1], epochs=20)

100 |

101 | # fit_generator is deprecated since TensorFlow 2.1: model.fit accepts generators directly

102 | history = model.fit(

103 | train_generator,

104 | # train_example_gen,

105 | # 40 img/batch * 1000 steps per epoch * 20 epochs = 800k = 200k*4 --> see all data points + their 3 flipped versions once on average

106 | steps_per_epoch=1000,

107 | epochs=35,

108 | validation_data=validation_generator,

109 | validation_steps=100,

110 | # initial_epoch=25,

111 | callbacks=[

112 | cp_callback,

113 | # lr_finder,

114 | # cyclic_learning_rate,

115 | # tensorboard_callback

116 | ],

117 | )

118 |

119 | # ------- save -------

120 | # TODO: save history variable: often pretty useful retrospectively

121 |

122 | # TODO: save model when results meet expectations

123 |

124 | # ------- plot learning curves -------

125 | acc = history.history["accuracy"]

126 | val_acc = history.history["val_accuracy"]

127 |

128 | loss = history.history["loss"]

129 | val_loss = history.history["val_loss"]

130 |

131 | epochs_range = range(len(acc)) # one point per completed epoch

132 |

133 | plt.figure(figsize=(8, 8))

134 | plt.subplot(1, 2, 1)

135 | plt.plot(epochs_range, acc, label="Training Accuracy")

136 | plt.plot(epochs_range, val_acc, label="Validation Accuracy")

137 | plt.legend(loc="lower right")

138 | plt.title("Training and Validation Accuracy")

139 |

140 | plt.subplot(1, 2, 2)

141 | plt.plot(epochs_range, loss, label="Training Loss")

142 | plt.plot(epochs_range, val_loss, label="Validation Loss")

143 | plt.legend(loc="upper right")

144 | plt.title("Training and Validation Loss")

145 | plt.show()

146 |
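Note: one possible way to address the "save history" TODO above is to dump the history dictionary to JSON. This is only a sketch, not the author's method: save_history, load_history and the file name are hypothetical, and the values below are made up to stand in for history.history.

```python
import json
import os
import tempfile


def save_history(history_dict, path):
    """Write a Keras-style history dict ({metric: [value per epoch]}) to JSON."""
    # Cast to float: the values may be NumPy scalars, which json cannot serialise.
    serialisable = {
        metric: [float(value) for value in values]
        for metric, values in history_dict.items()
    }
    with open(path, "w") as handle:
        json.dump(serialisable, handle, indent=2)


def load_history(path):
    """Read back a history dict written by save_history."""
    with open(path) as handle:
        return json.load(handle)


# Made-up values standing in for history.history after a two-epoch run.
example_history = {"loss": [0.7, 0.5], "val_loss": [0.8, 0.6]}
history_path = os.path.join(tempfile.mkdtemp(), "history.json")
save_history(example_history, history_path)
reloaded = load_history(history_path)
```

In the training function this would be called as save_history(history.history, some_path) once model.fit returns, which keeps the learning curves available after the notebook session ends.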

--------------------------------------------------------------------------------

/detection/superseded/basic_image_label_generator.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import tensorflow as tf

3 | import matplotlib.pyplot as plt

4 |

5 | # magic numbers

6 | input_dir = "../../../datasets/satellite_ships"

7 | IMG_WIDTH = 768

8 | IMG_HEIGHT = 768

9 | IMG_CHANNELS = 3

10 | TARGET_WIDTH = 256

11 | TARGET_HEIGHT = 256

12 |

13 |

14 | def get_image(image_name):