├── tool

├── __init__.py

├── __pycache__

│ ├── utils.cpython-37.pyc

│ ├── __init__.cpython-37.pyc

│ ├── torch_utils.cpython-37.pyc

│ └── yolo_layer.cpython-37.pyc

├── torch_utils.py

├── utils_iou.py

├── utils.py

├── region_loss.py

├── config.py

└── yolo_layer.py

├── train.sh

├── requirements.txt

├── cfg.py

├── DATA_analysis.md

├── demo.py

├── README.md

├── models.py

├── cfg

└── yolov4.cfg

├── dataset.py

└── train.py

/tool/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/train.sh:

--------------------------------------------------------------------------------

1 | python train.py -g gpu_id -classes number of classes -dir 'data_dir' -pretrained 'pretrained_model.pth

2 |

--------------------------------------------------------------------------------

/tool/__pycache__/utils.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ry-eon/Bubble-Detector-YOLOv4/HEAD/tool/__pycache__/utils.cpython-37.pyc

--------------------------------------------------------------------------------

/tool/__pycache__/__init__.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ry-eon/Bubble-Detector-YOLOv4/HEAD/tool/__pycache__/__init__.cpython-37.pyc

--------------------------------------------------------------------------------

/tool/__pycache__/torch_utils.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ry-eon/Bubble-Detector-YOLOv4/HEAD/tool/__pycache__/torch_utils.cpython-37.pyc

--------------------------------------------------------------------------------

/tool/__pycache__/yolo_layer.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ry-eon/Bubble-Detector-YOLOv4/HEAD/tool/__pycache__/yolo_layer.cpython-37.pyc

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy==1.18.2

2 | torch==1.4.0

3 | tensorboardX==2.0

4 | scikit_image==0.16.2

5 | matplotlib==2.2.3

6 | tqdm==4.43.0

7 | easydict==1.9

8 | Pillow==7.1.2

9 | skimage

10 | opencv_python

11 | pycocotools

--------------------------------------------------------------------------------

/cfg.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | '''

3 | @Time : 2020/05/06 21:05

4 | @Author : Tianxiaomo

5 | @File : Cfg.py

6 | @Noice :

7 | @Modificattion :

8 | @Author :

9 | @Time :

10 | @Detail :

11 |

12 | '''

13 | import os

14 | from easydict import EasyDict

15 |

16 |

17 | _BASE_DIR = os.path.dirname(os.path.abspath(__file__))

18 |

19 | Cfg = EasyDict()

20 |

21 | Cfg.use_darknet_cfg = False

22 | Cfg.cfgfile = os.path.join(_BASE_DIR, 'cfg', 'yolov4.cfg')

23 | Cfg.train_dir ='/home/ic-ai2/ry/datasets/bubble/'

24 |

25 | Cfg.batch = 16

26 | Cfg.subdivisions = 8

27 | Cfg.width = 608

28 | Cfg.height = 608

29 | Cfg.channels = 3

30 | Cfg.momentum = 0.949

31 | Cfg.decay = 0.0005

32 | Cfg.angle = 0

33 | Cfg.saturation = 1.5

34 | Cfg.exposure = 1.5

35 | Cfg.hue = .1

36 |

37 | Cfg.learning_rate = 0.00261

38 | Cfg.burn_in = 1000

39 | Cfg.max_batches = 4000

40 | Cfg.steps = [3200, 3600]

41 | Cfg.policy = Cfg.steps

42 | Cfg.scales = .1, .1

43 |

44 | Cfg.cutmix = 0

45 | Cfg.mosaic = 1

46 |

47 | Cfg.letter_box = 0

48 | Cfg.jitter = 0.2

49 | Cfg.classes = 1

50 | Cfg.track = 0

51 | Cfg.w = Cfg.width

52 | Cfg.h = Cfg.height

53 | Cfg.flip = 1

54 | Cfg.blur = 0

55 | Cfg.gaussian = 0

56 | Cfg.boxes = 60 # box num

57 | Cfg.TRAIN_EPOCHS = 300

58 | Cfg.train_label = os.path.join(_BASE_DIR, 'data', 'train.txt')

59 | Cfg.val_label = os.path.join(_BASE_DIR, 'data' ,'val.txt')

60 | Cfg.TRAIN_OPTIMIZER = 'adam'

61 | '''

62 | image_path1 x1,y1,x2,y2,id x1,y1,x2,y2,id x1,y1,x2,y2,id ...

63 | image_path2 x1,y1,x2,y2,id x1,y1,x2,y2,id x1,y1,x2,y2,id ...

64 | ...

65 | '''

66 |

67 | if Cfg.mosaic and Cfg.cutmix:

68 | Cfg.mixup = 4

69 | elif Cfg.cutmix:

70 | Cfg.mixup = 2

71 | elif Cfg.mosaic:

72 | Cfg.mixup = 3

73 |

74 | Cfg.checkpoints = os.path.join(_BASE_DIR, 'checkpoints')

75 | Cfg.TRAIN_TENSORBOARD_DIR = os.path.join(_BASE_DIR, 'log')

76 |

77 | Cfg.iou_type = 'iou' # 'giou', 'diou', 'ciou'

78 |

79 | Cfg.keep_checkpoint_max = 10

80 |

--------------------------------------------------------------------------------

/DATA_analysis.md:

--------------------------------------------------------------------------------

1 | # Data Distribution Update

2 |

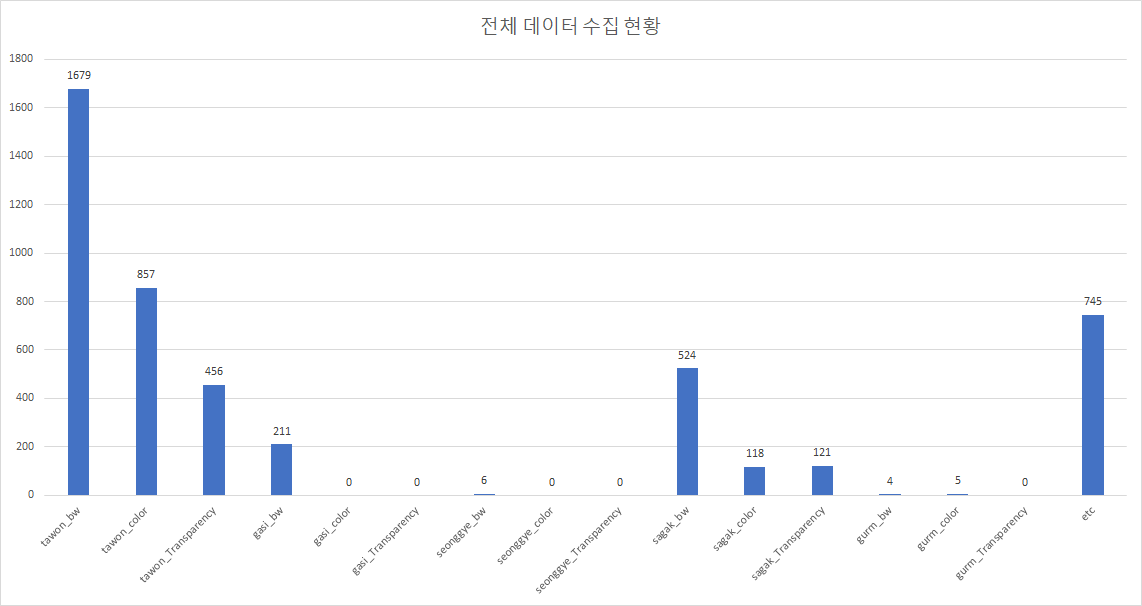

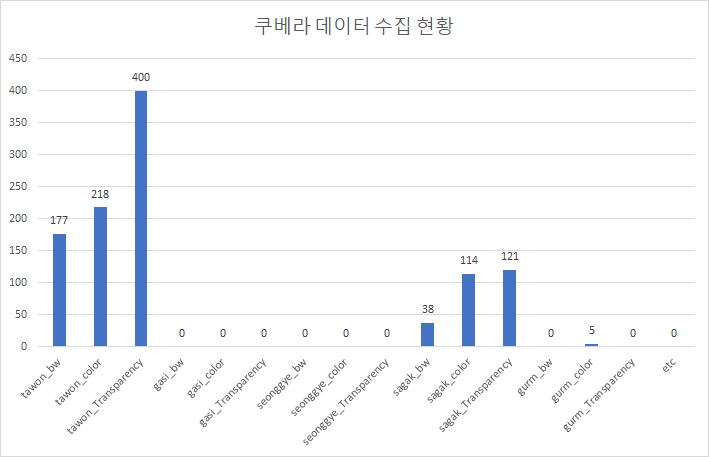

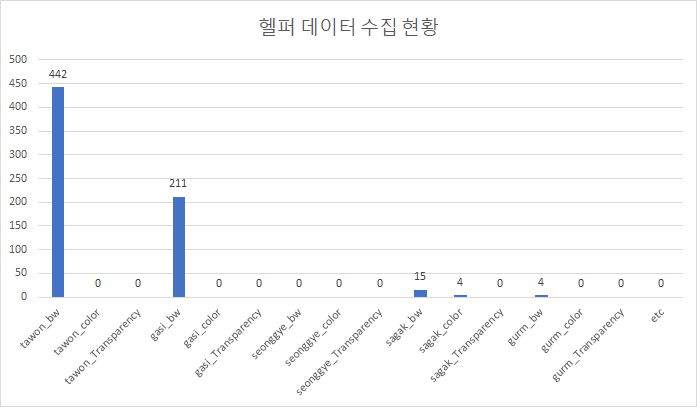

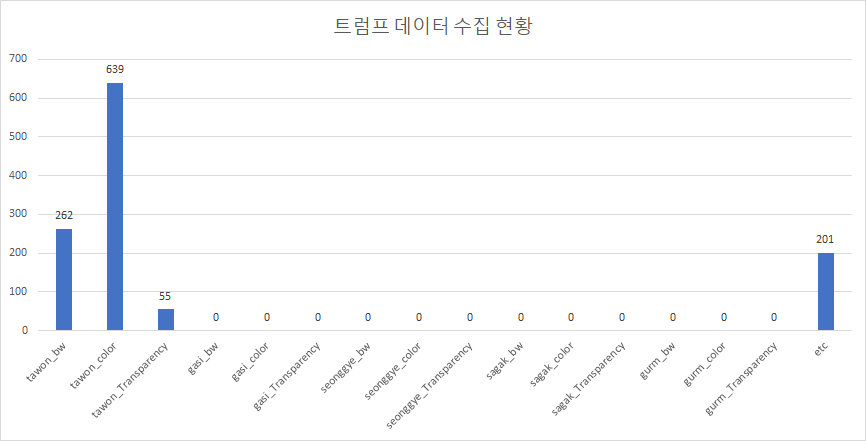

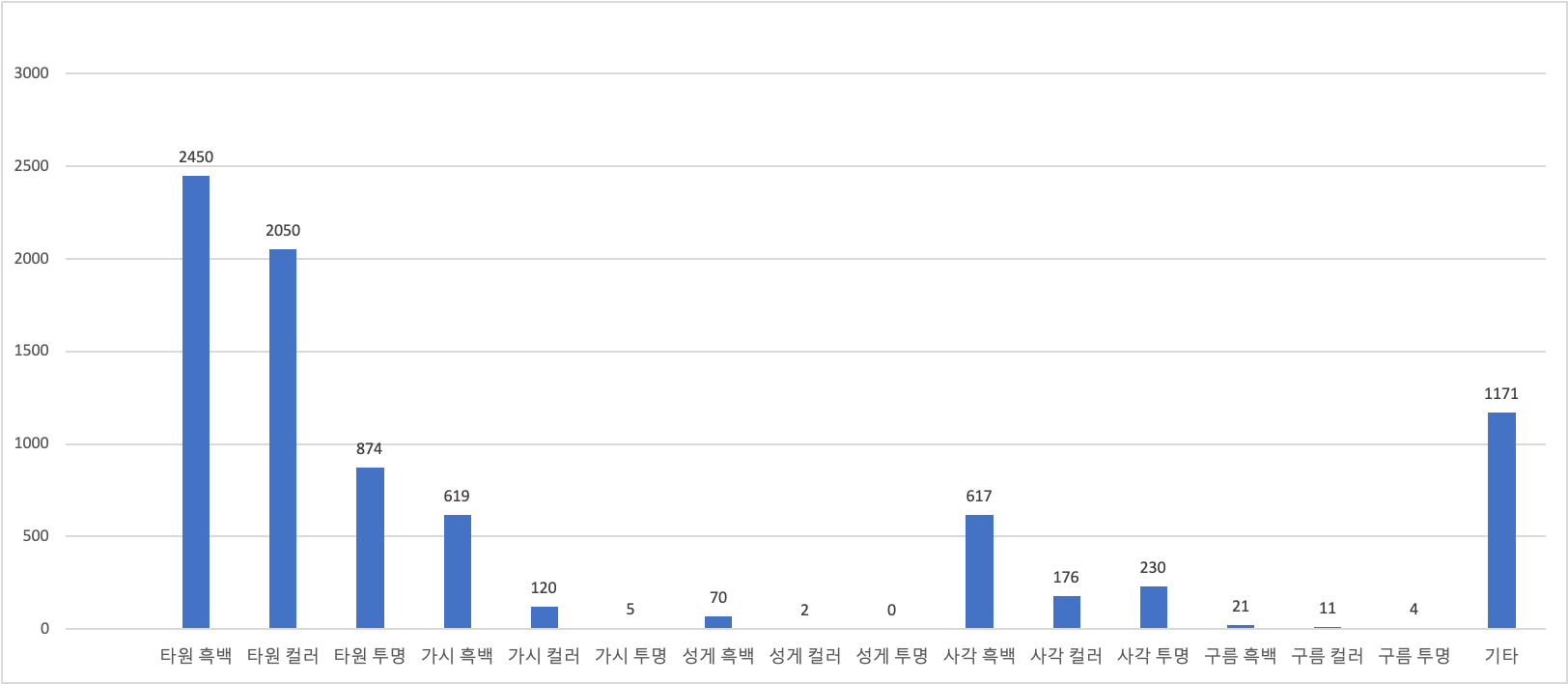

3 | ### Data Distribution(2020.07.30)-4726

4 |

5 |

6 | Detail

7 | |Webtoon|Distribution|Explain|

8 | |------------------|------------|-------|

9 | |쿠베라(kubera)-1073||Kubera adds color to speech balloons to reveal the characteristics of the characters.|

10 | |헬퍼(Helper)-676||Although there is a slight frequency of use of gasi speech bubbles, most of them are black and white due to the nature of the helper.|

11 | |트럼프(Trump)-1157||Trump adds color to speech balloons to reveal the characteristics of the characters.|

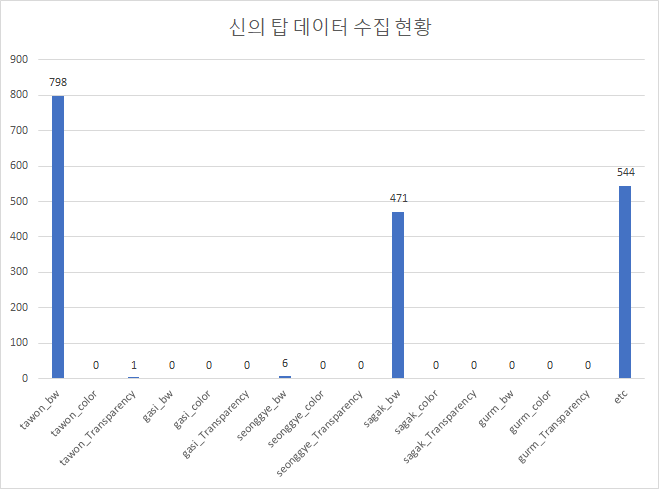

12 | |신의탑(tower of god)-1820||The Tower of God has many action scenes, so it uses a lot of dynamic speech bubbles. Therefore, there are many types of speech bubbles that are difficult to classify.|

13 |

14 |

15 |

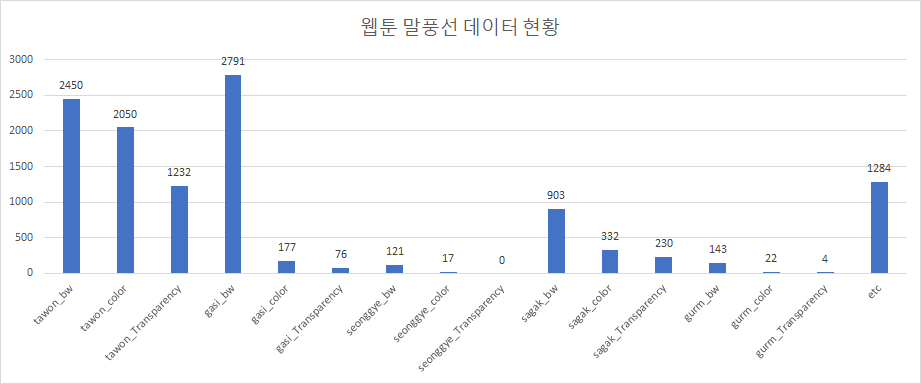

16 | ### Data Distribution(2020.08.07)-8420

17 |

18 |

19 | **Webtoons used are Kubera, Trump, God of High School, The magic scroll merchant Gio, Golden Change, Underprin, Level up hard warrior, Empress remarried, and Wind breaker.**

20 |

21 | |Webtoon|Explain|

22 | |-------|-------|

23 | |God of High School|Many tawon-shaped transparency speech bubbles exist.|

24 | |The magic scroll merchant Gio|Among black and white, Many tawon-shaped transparency speech bubbles exist.|

25 | |Golden Change|Some tawon-shaped color speech bubbles exist.|

26 | |Underprin|Among the tawon-shaped speech bubbles, there is a speech bubble with white letters on a black background.|

27 | |Level up hard warrior| There are speech bubbles with patterns on the outer line.|

28 | |Empress remarried|There are speech bubbles with patterns on the outer line.|

29 | |Wind Breaker|Some tawon-shaped transparency speech bubbles exist.|

30 |

31 |

32 |

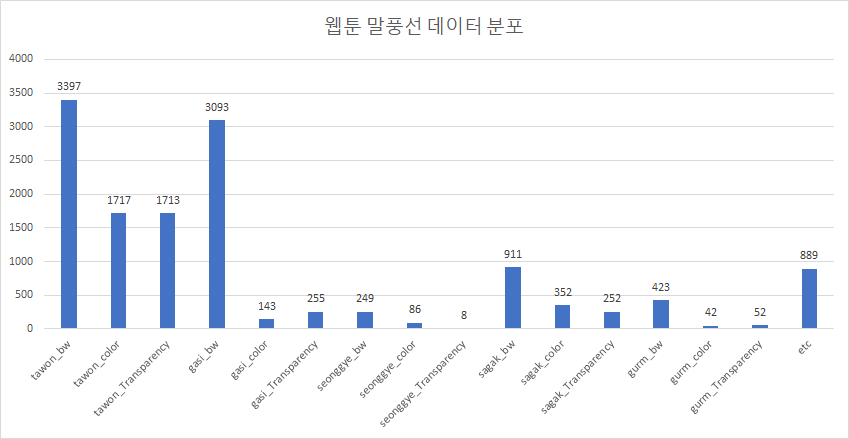

33 | ### Data Distribution(2020.08.11)-11832

34 |

35 |

36 |

37 | ### Data Distribution(2020.08.19)-13582

38 |

39 |

40 |

--------------------------------------------------------------------------------

/tool/torch_utils.py:

--------------------------------------------------------------------------------

1 | import sys

2 | import os

3 | import time

4 | import math

5 | import torch

6 | import numpy as np

7 | from torch.autograd import Variable

8 |

9 | import itertools

10 | import struct # get_image_size

11 | import imghdr # get_image_size

12 |

13 | from tool import utils

14 |

15 |

16 | def bbox_ious(boxes1, boxes2, x1y1x2y2=True):

17 | if x1y1x2y2:

18 | mx = torch.min(boxes1[0], boxes2[0])

19 | Mx = torch.max(boxes1[2], boxes2[2])

20 | my = torch.min(boxes1[1], boxes2[1])

21 | My = torch.max(boxes1[3], boxes2[3])

22 | w1 = boxes1[2] - boxes1[0]

23 | h1 = boxes1[3] - boxes1[1]

24 | w2 = boxes2[2] - boxes2[0]

25 | h2 = boxes2[3] - boxes2[1]

26 | else:

27 | mx = torch.min(boxes1[0] - boxes1[2] / 2.0, boxes2[0] - boxes2[2] / 2.0)

28 | Mx = torch.max(boxes1[0] + boxes1[2] / 2.0, boxes2[0] + boxes2[2] / 2.0)

29 | my = torch.min(boxes1[1] - boxes1[3] / 2.0, boxes2[1] - boxes2[3] / 2.0)

30 | My = torch.max(boxes1[1] + boxes1[3] / 2.0, boxes2[1] + boxes2[3] / 2.0)

31 | w1 = boxes1[2]

32 | h1 = boxes1[3]

33 | w2 = boxes2[2]

34 | h2 = boxes2[3]

35 | uw = Mx - mx

36 | uh = My - my

37 | cw = w1 + w2 - uw

38 | ch = h1 + h2 - uh

39 | mask = ((cw <= 0) + (ch <= 0) > 0)

40 | area1 = w1 * h1

41 | area2 = w2 * h2

42 | carea = cw * ch

43 | carea[mask] = 0

44 | uarea = area1 + area2 - carea

45 | return carea / uarea

46 |

47 |

48 | def get_region_boxes(boxes_and_confs):

49 |

50 | # print('Getting boxes from boxes and confs ...')

51 |

52 | boxes_list = []

53 | confs_list = []

54 |

55 | for item in boxes_and_confs:

56 | boxes_list.append(item[0])

57 | confs_list.append(item[1])

58 |

59 | # boxes: [batch, num1 + num2 + num3, 1, 4]

60 | # confs: [batch, num1 + num2 + num3, num_classes]

61 | boxes = torch.cat(boxes_list, dim=1)

62 | confs = torch.cat(confs_list, dim=1)

63 |

64 | return [boxes, confs]

65 |

66 |

67 | def convert2cpu(gpu_matrix):

68 | return torch.FloatTensor(gpu_matrix.size()).copy_(gpu_matrix)

69 |

70 |

71 | def convert2cpu_long(gpu_matrix):

72 | return torch.LongTensor(gpu_matrix.size()).copy_(gpu_matrix)

73 |

74 |

75 |

76 | def do_detect(model, img, conf_thresh, nms_thresh, use_cuda=1):

77 | model.eval()

78 | t0 = time.time()

79 |

80 | if type(img) == np.ndarray and len(img.shape) == 3: # cv2 image

81 | img = torch.from_numpy(img.transpose(2, 0, 1)).float().div(255.0).unsqueeze(0)

82 | elif type(img) == np.ndarray and len(img.shape) == 4:

83 | img = torch.from_numpy(img.transpose(0, 3, 1, 2)).float().div(255.0)

84 | else:

85 | print("unknow image type")

86 | exit(-1)

87 |

88 | if use_cuda:

89 | img = img.cuda()

90 | img = torch.autograd.Variable(img)

91 |

92 | t1 = time.time()

93 |

94 | with torch.no_grad():

95 | output =model (img)

96 | t2 = time.time()

97 |

98 | # print('-----------------------------------')

99 | # print(' Preprocess : %f' % (t1 - t0))

100 | # print(' Model Inference : %f' % (t2 - t1))

101 | # print('-----------------------------------')

102 |

103 | return utils.post_processing(img, conf_thresh, nms_thresh, output)

104 |

105 |

--------------------------------------------------------------------------------

/demo.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | '''

3 | @Time : 20/04/25 15:49

4 | @Author : huguanghao

5 | @File : demo.py

6 | @Noice :

7 | @Modificattion :

8 | @Author :

9 | @Time :

10 | @Detail :

11 | '''

12 |

13 | # import sys

14 | # import time

15 | # from PIL import Image, ImageDraw

16 | # from models.tiny_yolo import TinyYoloNet

17 | from tool.utils import *

18 | from tool.torch_utils import *

19 | from tool.darknet2pytorch import Darknet

20 | import argparse

21 |

22 | """hyper parameters"""

23 | use_cuda = True

24 |

25 | def detect_cv2(cfgfile, weightfile, imgfile):

26 | import cv2

27 | m = Darknet(cfgfile)

28 |

29 | m.print_network()

30 | m.load_weights(weightfile)

31 | print('Loading weights from %s... Done!' % (weightfile))

32 |

33 | if use_cuda:

34 | m.cuda()

35 |

36 | num_classes = m.num_classes

37 | if num_classes == 20:

38 | namesfile = 'data/voc.names'

39 | elif num_classes == 80:

40 | namesfile = 'data/coco.names'

41 | else:

42 | namesfile = 'data/x.names'

43 | class_names = load_class_names(namesfile)

44 |

45 | img = cv2.imread(imgfile)

46 | sized = cv2.resize(img, (m.width, m.height))

47 | sized = cv2.cvtColor(sized, cv2.COLOR_BGR2RGB)

48 |

49 | for i in range(2):

50 | start = time.time()

51 | boxes = do_detect(m, sized, 0.4, 0.6, use_cuda)

52 | finish = time.time()

53 | if i == 1:

54 | print('%s: Predicted in %f seconds.' % (imgfile, (finish - start)))

55 |

56 | plot_boxes_cv2(img, boxes[0], savename='predictions.jpg', class_names=class_names)

57 |

58 |

59 | def detect_cv2_camera(cfgfile, weightfile):

60 | import cv2

61 | m = Darknet(cfgfile)

62 |

63 | m.print_network()

64 | m.load_weights(weightfile)

65 | print('Loading weights from %s... Done!' % (weightfile))

66 |

67 | if use_cuda:

68 | m.cuda()

69 |

70 | cap = cv2.VideoCapture(0)

71 | # cap = cv2.VideoCapture("./test.mp4")

72 | cap.set(3, 1280)

73 | cap.set(4, 720)

74 | print("Starting the YOLO loop...")

75 |

76 | num_classes = m.num_classes

77 | if num_classes == 20:

78 | namesfile = 'data/voc.names'

79 | elif num_classes == 80:

80 | namesfile = 'data/coco.names'

81 | else:

82 | namesfile = 'data/x.names'

83 | class_names = load_class_names(namesfile)

84 |

85 | while True:

86 | ret, img = cap.read()

87 | sized = cv2.resize(img, (m.width, m.height))

88 | sized = cv2.cvtColor(sized, cv2.COLOR_BGR2RGB)

89 |

90 | start = time.time()

91 | boxes = do_detect(m, sized, 0.4, 0.6, use_cuda)

92 | finish = time.time()

93 | print('Predicted in %f seconds.' % (finish - start))

94 |

95 | result_img = plot_boxes_cv2(img, boxes[0], savename=None, class_names=class_names)

96 |

97 | cv2.imshow('Yolo demo', result_img)

98 | cv2.waitKey(1)

99 |

100 | cap.release()

101 |

102 |

103 | def detect_skimage(cfgfile, weightfile, imgfile):

104 | from skimage import io

105 | from skimage.transform import resize

106 | m = Darknet(cfgfile)

107 |

108 | m.print_network()

109 | m.load_weights(weightfile)

110 | print('Loading weights from %s... Done!' % (weightfile))

111 |

112 | if use_cuda:

113 | m.cuda()

114 |

115 | num_classes = m.num_classes

116 | if num_classes == 20:

117 | namesfile = 'data/voc.names'

118 | elif num_classes == 80:

119 | namesfile = 'data/coco.names'

120 | else:

121 | namesfile = 'data/x.names'

122 | class_names = load_class_names(namesfile)

123 |

124 | img = io.imread(imgfile)

125 | sized = resize(img, (m.width, m.height)) * 255

126 |

127 | for i in range(2):

128 | start = time.time()

129 | boxes = do_detect(m, sized, 0.4, 0.4, use_cuda)

130 | finish = time.time()

131 | if i == 1:

132 | print('%s: Predicted in %f seconds.' % (imgfile, (finish - start)))

133 |

134 | plot_boxes_cv2(img, boxes, savename='predictions.jpg', class_names=class_names)

135 |

136 |

137 | def get_args():

138 | parser = argparse.ArgumentParser('Test your image or video by trained model.')

139 | parser.add_argument('-cfgfile', type=str, default='./cfg/yolov4.cfg',

140 | help='path of cfg file', dest='cfgfile')

141 | parser.add_argument('-weightfile', type=str,

142 | default='./checkpoints/Yolov4_epoch1.pth',

143 | help='path of trained model.', dest='weightfile')

144 | parser.add_argument('-imgfile', type=str,

145 | default='./data/mscoco2017/train2017/190109_180343_00154162.jpg',

146 | help='path of your image file.', dest='imgfile')

147 | args = parser.parse_args()

148 |

149 | return args

150 |

151 |

152 | if __name__ == '__main__':

153 | args = get_args()

154 | if args.imgfile:

155 | detect_cv2(args.cfgfile, args.weightfile, args.imgfile)

156 | # detect_imges(args.cfgfile, args.weightfile)

157 | # detect_cv2(args.cfgfile, args.weightfile, args.imgfile)

158 | # detect_skimage(args.cfgfile, args.weightfile, args.imgfile)

159 | else:

160 | detect_cv2_camera(args.cfgfile, args.weightfile)

161 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # bubble detector using YOLOv4

2 | ~~~

3 | Note : It's not the final version code. I will the refine and update the code.

4 | ~~~

5 |

6 | ## Overview

7 | Models detection speech bubble in webtoons or cartoons. I have referenced and implemented [pytorch-YOLOv4](https://github.com/Tianxiaomo/pytorch-YOLOv4) to detect speech bubble. The key point for improving performance is data analysis. In the case of speech bubbles, there are various forms. Therefore, I define the form of speech bubbles and present the results of training by considering the distribution of data.

8 |

9 |

10 |

11 | ### Definition of Speech Bubble

12 |

13 |

29 |

30 | #### Various speech bubble forms of real webtoons

31 |

32 |

33 | + **In fact, there are various colors and various shapes of speech bubbles in webtoons.**

34 |

35 |

36 |

37 | ### New Definition

38 | **Key standard for Data Definition: Shape, Color, Form**

39 |

40 | `standard`

41 | + shape : Ellipse(tawon), Thorn(gasi), Sea_urchin(seonggye), Rectangle(sagak), Cloud(gurm)

42 | + Color : Black/white(bw), Colorful(color), Transparency(tran), Gradation

43 | + Form : Basic, Double Speech bubble, Multi-External, Scatter-type

44 | + example image

45 |

46 | + **In this project, two categories are applied, shape and color, and form and Gradation are classified as ect.**

47 |

48 |

49 |

50 | ### classes

51 | **This class is not about detection, but about speech bubble data distribution.**

52 |

53 |

54 |

55 |

56 |

61 |

62 |

63 | ### Install dependencies

64 |

65 | + **Pytorch Version**

66 | + Pytorch 1.4.0 for TensorRT 7.0 and higher

67 | + Pytorch 1.5.0 and 1.6.0 for TensorRT 7.1.2 and higher

68 |

69 | + **Install Dependencies Code**

70 | ~~~

71 | pip install onnxruntime numpy torch tensorboardX scikit_image tqdm easydict Pillow skimage opencv_python pycocotools

72 | ~~~

73 | or

74 | ~~~

75 | pip install -r requirements.txt

76 | ~~~

77 |

78 |

79 | ### Pretrained model

80 |

81 | |**Model**|**Link**|

82 | |---------|--------|

83 | |YOLOv4|[Link](https://drive.google.com/open?id=1fcbR0bWzYfIEdLJPzOsn4R5mlvR6IQyA)|

84 | |YOLOv4-bubble|[Link](https://drive.google.com/drive/u/2/folders/1hYGU8hPY1VH8P0DkKDnAfV4AOtRjKYhC)|

85 |

86 |

87 | ### Train

88 |

89 | + **1. Download weight**

90 |

91 | + **2. Train**

92 | ~~~

93 | python train.py -g gpu_id -classes number of classes -dir 'data_dir' -pretrained 'pretrained_model.pth'

94 | ~~~

95 | or

96 | ~~~

97 | Train.sh

98 | ~~~

99 |

100 | + **3. Config setting**

101 | + cfg.py

102 | + class = 1

103 | + learning_rate = 0.001

104 | + max_batches = 2000 (class * 2000)

105 | + steps = [1600, 1800], (max_batches * 0.8 , max_batches * 0.9)

106 | + train_dir = your dataset root

107 | + root tree

The image folder contains .jpg or .png image files. The XML folder contains .XML files(label).

108 |

109 | + cfg/yolov4.cfg

110 | + class 1

111 | + filter 18 (4 + 1 + class) * 3 (line: 961, 1049, 1137)

112 |

113 | **If you want to train custom dataset, use the information above.**

114 |

115 |

116 |

117 | ### Demo

118 |

119 | + **1. Download weight**

120 | + **2. Demo**

121 | ~~~

122 | python demp.py -cfgfile cfgfile -weightfile pretrained_model.pth -imgfile image_dir

123 | ~~~

124 | + defualt cfgfile is `./cfg/yolov4.cfg`

125 |

126 |

127 |

128 | ### Metric

129 |

130 | + **1. validation dataset**

131 |

132 |

133 | |tawon_bw|tawon_color|tawon_Transparency|gasi_bw|gasi_color|gasi_Transparency|seonggye_bw|seonggye_color|seonggye_Transparency|sagak_bw|sagak_color|sagak_Transparency|gurm_bw|gurm_color|gurm_Transparency|total|

134 | |----|----|-----|-----|-----|-----|-----|-----|-----|------|-----|-----|-----|-----|------|----|

135 | |116|70|68|65|29|59|51|43|44|42|33|69|47|2|12|750|

136 |

137 |

138 | + The above distribution is based on speech bubbles, not cuts.

139 | + The distribution is not constant because there are a number of speech bubbles inside a single cut. In addition, for some classes, examples are difficult to find, resulting in an unbalanced distribution as shown above.

140 |

--------------------------------------------------------------------------------

/tool/utils_iou.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | '''

3 |

4 | '''

5 | import torch

6 | import os, sys

7 | from torch.nn import functional as F

8 |

9 | import numpy as np

10 | from packaging import version

11 |

12 |

13 | __all__ = [

14 | "bboxes_iou",

15 | "bboxes_giou",

16 | "bboxes_diou",

17 | "bboxes_ciou",

18 | ]

19 |

20 |

21 | if version.parse(torch.__version__) >= version.parse('1.5.0'):

22 | def _true_divide(dividend, divisor):

23 | return torch.true_divide(dividend, divisor)

24 | else:

25 | def _true_divide(dividend, divisor):

26 | return dividend / divisor

27 |

28 | def bboxes_iou(bboxes_a, bboxes_b, fmt='voc', iou_type='iou'):

29 | """Calculate the Intersection of Unions (IoUs) between bounding boxes.

30 | IoU is calculated as a ratio of area of the intersection

31 | and area of the union.

32 |

33 | Args:

34 | bbox_a (array): An array whose shape is :math:`(N, 4)`.

35 | :math:`N` is the number of bounding boxes.

36 | The dtype should be :obj:`numpy.float32`.

37 | bbox_b (array): An array similar to :obj:`bbox_a`,

38 | whose shape is :math:`(K, 4)`.

39 | The dtype should be :obj:`numpy.float32`.

40 | Returns:

41 | array:

42 | An array whose shape is :math:`(N, K)`. \

43 | An element at index :math:`(n, k)` contains IoUs between \

44 | :math:`n` th bounding box in :obj:`bbox_a` and :math:`k` th bounding \

45 | box in :obj:`bbox_b`.

46 |

47 | from: https://github.com/chainer/chainercv

48 | """

49 | if bboxes_a.shape[1] != 4 or bboxes_b.shape[1] != 4:

50 | raise IndexError

51 |

52 | N, K = bboxes_a.shape[0], bboxes_b.shape[0]

53 |

54 | if fmt.lower() == 'voc': # xmin, ymin, xmax, ymax

55 | # top left

56 | tl_intersect = torch.max(

57 | bboxes_a[:, np.newaxis, :2],

58 | bboxes_b[:, :2]

59 | ) # of shape `(N,K,2)`

60 | # bottom right

61 | br_intersect = torch.min(

62 | bboxes_a[:, np.newaxis, 2:],

63 | bboxes_b[:, 2:]

64 | )

65 | bb_a = bboxes_a[:, 2:] - bboxes_a[:, :2]

66 | bb_b = bboxes_b[:, 2:] - bboxes_b[:, :2]

67 | # bb_* can also be seen vectors representing box_width, box_height

68 | elif fmt.lower() == 'yolo': # xcen, ycen, w, h

69 | # top left

70 | tl_intersect = torch.max(

71 | bboxes_a[:, np.newaxis, :2] - bboxes_a[:, np.newaxis, 2:] / 2,

72 | bboxes_b[:, :2] - bboxes_b[:, 2:] / 2

73 | )

74 | # bottom right

75 | br_intersect = torch.min(

76 | bboxes_a[:, np.newaxis, :2] + bboxes_a[:, np.newaxis, 2:] / 2,

77 | bboxes_b[:, :2] + bboxes_b[:, 2:] / 2

78 | )

79 | bb_a = bboxes_a[:, 2:]

80 | bb_b = bboxes_b[:, 2:]

81 | elif fmt.lower() == 'coco': # xmin, ymin, w, h

82 | # top left

83 | tl_intersect = torch.max(

84 | bboxes_a[:, np.newaxis, :2],

85 | bboxes_b[:, :2]

86 | )

87 | # bottom right

88 | br_intersect = torch.min(

89 | bboxes_a[:, np.newaxis, :2] + bboxes_a[:, np.newaxis, 2:],

90 | bboxes_b[:, :2] + bboxes_b[:, 2:]

91 | )

92 | bb_a = bboxes_a[:, 2:]

93 | bb_b = bboxes_b[:, 2:]

94 |

95 | area_a = torch.prod(bb_a, 1)

96 | area_b = torch.prod(bb_b, 1)

97 |

98 | # torch.prod(input, dim, keepdim=False, dtype=None) → Tensor

99 | # Returns the product of each row of the input tensor in the given dimension dim

100 | # if tl, br does not form a nondegenerate squre, then the corr. element in the `prod` would be 0

101 | en = (tl_intersect < br_intersect).type(tl_intersect.type()).prod(dim=2) # shape `(N,K,2)` ---> shape `(N,K)`

102 |

103 | area_intersect = torch.prod(br_intersect - tl_intersect, 2) * en # * ((tl < br).all())

104 | area_union = (area_a[:, np.newaxis] + area_b - area_intersect)

105 |

106 | iou = _true_divide(area_intersect, area_union)

107 |

108 | if iou_type.lower() == 'iou':

109 | return iou

110 |

111 | if fmt.lower() == 'voc': # xmin, ymin, xmax, ymax

112 | # top left

113 | tl_union = torch.min(

114 | bboxes_a[:, np.newaxis, :2],

115 | bboxes_b[:, :2]

116 | ) # of shape `(N,K,2)`

117 | # bottom right

118 | br_union = torch.max(

119 | bboxes_a[:, np.newaxis, 2:],

120 | bboxes_b[:, 2:]

121 | )

122 | elif fmt.lower() == 'yolo': # xcen, ycen, w, h

123 | # top left

124 | tl_union = torch.min(

125 | bboxes_a[:, np.newaxis, :2] - bboxes_a[:, np.newaxis, 2:] / 2,

126 | bboxes_b[:, :2] - bboxes_b[:, 2:] / 2

127 | )

128 | # bottom right

129 | br_union = torch.max(

130 | bboxes_a[:, np.newaxis, :2] + bboxes_a[:, np.newaxis, 2:] / 2,

131 | bboxes_b[:, :2] + bboxes_b[:, 2:] / 2

132 | )

133 | elif fmt.lower() == 'coco': # xmin, ymin, w, h

134 | # top left

135 | tl_union = torch.min(

136 | bboxes_a[:, np.newaxis, :2],

137 | bboxes_b[:, :2]

138 | )

139 | # bottom right

140 | br_union = torch.max(

141 | bboxes_a[:, np.newaxis, :2] + bboxes_a[:, np.newaxis, 2:],

142 | bboxes_b[:, :2] + bboxes_b[:, 2:]

143 | )

144 |

145 | # c for covering, of shape `(N,K,2)`

146 | # the last dim is box width, box hight

147 | bboxes_c = br_union - tl_union

148 |

149 | area_covering = torch.prod(bboxes_c, 2) # shape `(N,K)`

150 |

151 | giou = iou - _true_divide(area_covering - area_union, area_covering)

152 |

153 | if iou_type.lower() == 'giou':

154 | return giou

155 |

156 | if fmt.lower() == 'voc': # xmin, ymin, xmax, ymax

157 | centre_a = (bboxes_a[..., 2 :] + bboxes_a[..., : 2]) / 2

158 | centre_b = (bboxes_b[..., 2 :] + bboxes_b[..., : 2]) / 2

159 | elif fmt.lower() == 'yolo': # xcen, ycen, w, h

160 | centre_a = bboxes_a[..., : 2]

161 | centre_b = bboxes_b[..., : 2]

162 | elif fmt.lower() == 'coco': # xmin, ymin, w, h

163 | centre_a = bboxes_a[..., 2 :] + bboxes_a[..., : 2]/2

164 | centre_b = bboxes_b[..., 2 :] + bboxes_b[..., : 2]/2

165 |

166 | centre_dist = torch.norm(centre_a[:, np.newaxis] - centre_b, p='fro', dim=2)

167 | diag_len = torch.norm(bboxes_c, p='fro', dim=2)

168 |

169 | diou = iou - _true_divide(centre_dist.pow(2), diag_len.pow(2))

170 |

171 | if iou_type.lower() == 'diou':

172 | return diou

173 |

174 | """ the legacy custom cosine similarity:

175 |

176 | # bb_a of shape `(N,2)`, bb_b of shape `(K,2)`

177 | v = torch.einsum('nm,km->nk', bb_a, bb_b)

178 | v = _true_divide(v, (torch.norm(bb_a, p='fro', dim=1)[:,np.newaxis] * torch.norm(bb_b, p='fro', dim=1)))

179 | # avoid nan for torch.acos near \pm 1

180 | # https://github.com/pytorch/pytorch/issues/8069

181 | eps = 1e-7

182 | v = torch.clamp(v, -1+eps, 1-eps)

183 | """

184 | v = F.cosine_similarity(bb_a[:,np.newaxis,:], bb_b, dim=-1)

185 | v = (_true_divide(2*torch.acos(v), np.pi)).pow(2)

186 | with torch.no_grad():

187 | alpha = (_true_divide(v, 1-iou+v)) * ((iou>=0.5).type(iou.type()))

188 |

189 | ciou = diou - alpha * v

190 |

191 | if iou_type.lower() == 'ciou':

192 | return ciou

193 |

194 |

195 | def bboxes_giou(bboxes_a, bboxes_b, fmt='voc'):

196 | return bboxes_iou(bboxes_a, bboxes_b, fmt, 'giou')

197 |

198 |

199 | def bboxes_diou(bboxes_a, bboxes_b, fmt='voc'):

200 | return bboxes_iou(bboxes_a, bboxes_b, fmt, 'diou')

201 |

202 |

203 | def bboxes_ciou(bboxes_a, bboxes_b, fmt='voc'):

204 | return bboxes_iou(bboxes_a, bboxes_b, fmt, 'ciou')

205 |

--------------------------------------------------------------------------------

/tool/utils.py:

--------------------------------------------------------------------------------

1 | import sys

2 | import os

3 | import time

4 | import math

5 | import numpy as np

6 |

7 | import itertools

8 | import struct # get_image_size

9 | import imghdr # get_image_size

10 |

11 |

12 | def sigmoid(x):

13 | return 1.0 / (np.exp(-x) + 1.)

14 |

15 |

16 | def softmax(x):

17 | x = np.exp(x - np.expand_dims(np.max(x, axis=1), axis=1))

18 | x = x / np.expand_dims(x.sum(axis=1), axis=1)

19 | return x

20 |

21 |

22 | def bbox_iou(box1, box2, x1y1x2y2=True):

23 |

24 | # print('iou box1:', box1)

25 | # print('iou box2:', box2)

26 |

27 | if x1y1x2y2:

28 | mx = min(box1[0], box2[0])

29 | Mx = max(box1[2], box2[2])

30 | my = min(box1[1], box2[1])

31 | My = max(box1[3], box2[3])

32 | w1 = box1[2] - box1[0]

33 | h1 = box1[3] - box1[1]

34 | w2 = box2[2] - box2[0]

35 | h2 = box2[3] - box2[1]

36 | else:

37 | w1 = box1[2]

38 | h1 = box1[3]

39 | w2 = box2[2]

40 | h2 = box2[3]

41 |

42 | mx = min(box1[0], box2[0])

43 | Mx = max(box1[0] + w1, box2[0] + w2)

44 | my = min(box1[1], box2[1])

45 | My = max(box1[1] + h1, box2[1] + h2)

46 | uw = Mx - mx

47 | uh = My - my

48 | cw = w1 + w2 - uw

49 | ch = h1 + h2 - uh

50 | carea = 0

51 | if cw <= 0 or ch <= 0:

52 | return 0.0

53 |

54 | area1 = w1 * h1

55 | area2 = w2 * h2

56 | carea = cw * ch

57 | uarea = area1 + area2 - carea

58 | return carea / uarea

59 |

60 |

61 | def nms_cpu(boxes, confs, nms_thresh=0.5, min_mode=False):

62 | # print(boxes.shape)

63 | x1 = boxes[:, 0]

64 | y1 = boxes[:, 1]

65 | x2 = boxes[:, 2]

66 | y2 = boxes[:, 3]

67 |

68 | areas = (x2 - x1) * (y2 - y1)

69 | order = confs.argsort()[::-1]

70 |

71 | keep = []

72 | while order.size > 0:

73 | idx_self = order[0]

74 | idx_other = order[1:]

75 |

76 | keep.append(idx_self)

77 |

78 | xx1 = np.maximum(x1[idx_self], x1[idx_other])

79 | yy1 = np.maximum(y1[idx_self], y1[idx_other])

80 | xx2 = np.minimum(x2[idx_self], x2[idx_other])

81 | yy2 = np.minimum(y2[idx_self], y2[idx_other])

82 |

83 | w = np.maximum(0.0, xx2 - xx1)

84 | h = np.maximum(0.0, yy2 - yy1)

85 | inter = w * h

86 |

87 | if min_mode:

88 | over = inter / np.minimum(areas[order[0]], areas[order[1:]])

89 | else:

90 | over = inter / (areas[order[0]] + areas[order[1:]] - inter)

91 |

92 | inds = np.where(over <= nms_thresh)[0]

93 | order = order[inds + 1]

94 |

95 | return np.array(keep)

96 |

97 |

98 |

99 | def plot_boxes_cv2(img, boxes, savename=None, class_names=None, color=None):

100 | import cv2

101 | img = np.copy(img)

102 | colors = np.array([[1, 0, 1], [0, 0, 1], [0, 1, 1], [0, 1, 0], [1, 1, 0], [1, 0, 0]], dtype=np.float32)

103 | imgs_cropped =[]

104 | bboxes_pts= []

105 |

106 | def get_color(c, x, max_val):

107 | ratio = float(x) / max_val * 5

108 | i = int(math.floor(ratio))

109 | j = int(math.ceil(ratio))

110 | ratio = ratio - i

111 | r = (1 - ratio) * colors[i][c] + ratio * colors[j][c]

112 | return int(r * 255)

113 |

114 | width = img.shape[1]

115 | height = img.shape[0]

116 | for i in range(len(boxes)):

117 | box = boxes[i]

118 | x1 = int(box[0] * width)

119 | y1 = int(box[1] * height)

120 | x2 = int(box[2] * width)

121 | y2 = int(box[3] * height)

122 |

123 | if color:

124 | rgb = color

125 | else:

126 | rgb = (255, 0, 0)

127 | if len(box) >= 7 and class_names:

128 | cls_conf = box[5]

129 | cls_id = box[6]

130 | print('%s: %f' % (class_names[cls_id], cls_conf))

131 | classes = len(class_names)

132 | offset = cls_id * 123457 % classes

133 | red = get_color(2, offset, classes)

134 | green = get_color(1, offset, classes)

135 | blue = get_color(0, offset, classes)

136 | if color is None:

137 | rgb = (red, green, blue)

138 | img = cv2.putText(img, class_names[cls_id], (x1, y1), cv2.FONT_HERSHEY_SIMPLEX, 1.2, rgb, 1)

139 |

140 | extend_w = int((x2 - x1) * 0.1)

141 | extend_h = int((y2 - y1) * 0.1)

142 | x1 = max(x1-extend_w , 0 )

143 | x2 = min(x2+extend_w, width-1)

144 | y1 = max(y1-extend_h , 0 )

145 | y2 = min(y2+extend_h, height-1)

146 | bbox_pts = []

147 | bbox_pts.append(x1)

148 | bbox_pts.append(y1)

149 | bbox_pts.append(x2)

150 | bbox_pts.append(y2)

151 | #print("x1 {} y1 {} x2 {} y2 {} ".format(x1,y1,x2,y2 ) )

152 | img_cropped = img[y1:y2, x1:x2]

153 | imgs_cropped.append(img_cropped)

154 | bboxes_pts.append(bbox_pts )

155 |

156 |

157 | img = cv2.rectangle(img, (x1, y1), (x2, y2), rgb, 1)

158 |

159 |

160 |

161 |

162 | '''

163 | if savename:

164 | print("save plot results to %s" % savename)

165 | cv2.imwrite(savename, img)

166 | '''

167 | return imgs_cropped, bboxes_pts , img

168 |

169 |

170 | def read_truths(lab_path):

171 | if not os.path.exists(lab_path):

172 | return np.array([])

173 | if os.path.getsize(lab_path):

174 | truths = np.loadtxt(lab_path)

175 | truths = truths.reshape(truths.size / 5, 5) # to avoid single truth problem

176 | return truths

177 | else:

178 | return np.array([])

179 |

180 |

181 | def load_class_names(namesfile):

182 | class_names = []

183 | with open(namesfile, 'r') as fp:

184 | lines = fp.readlines()

185 | for line in lines:

186 | line = line.rstrip()

187 | class_names.append(line)

188 | return class_names

189 |

190 |

191 |

192 | def post_processing(img, conf_thresh, nms_thresh, output):

193 |

194 | # anchors = [12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401]

195 | # num_anchors = 9

196 | # anchor_masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]

197 | # strides = [8, 16, 32]

198 | # anchor_step = len(anchors) // num_anchors

199 |

200 | # [batch, num, 1, 4]

201 | box_array = output[0]

202 | # [batch, num, num_classes]

203 | confs = output[1]

204 |

205 | t1 = time.time()

206 |

207 | if type(box_array).__name__ != 'ndarray':

208 | box_array = box_array.cpu().detach().numpy()

209 | confs = confs.cpu().detach().numpy()

210 |

211 | num_classes = confs.shape[2]

212 |

213 | # [batch, num, 4]

214 | box_array = box_array[:, :, 0]

215 |

216 | # [batch, num, num_classes] --> [batch, num]

217 | max_conf = np.max(confs, axis=2)

218 | max_id = np.argmax(confs, axis=2)

219 |

220 | t2 = time.time()

221 |

222 | bboxes_batch = []

223 | for i in range(box_array.shape[0]):

224 |

225 | argwhere = max_conf[i] > conf_thresh

226 | l_box_array = box_array[i, argwhere, :]

227 | l_max_conf = max_conf[i, argwhere]

228 | l_max_id = max_id[i, argwhere]

229 |

230 | bboxes = []

231 | # nms for each class

232 | for j in range(num_classes):

233 |

234 | cls_argwhere = l_max_id == j

235 | ll_box_array = l_box_array[cls_argwhere, :]

236 | ll_max_conf = l_max_conf[cls_argwhere]

237 | ll_max_id = l_max_id[cls_argwhere]

238 |

239 | keep = nms_cpu(ll_box_array, ll_max_conf, nms_thresh)

240 |

241 | if (keep.size > 0):

242 | ll_box_array = ll_box_array[keep, :]

243 | ll_max_conf = ll_max_conf[keep]

244 | ll_max_id = ll_max_id[keep]

245 |

246 | for k in range(ll_box_array.shape[0]):

247 | bboxes.append([ll_box_array[k, 0], ll_box_array[k, 1], ll_box_array[k, 2], ll_box_array[k, 3], ll_max_conf[k], ll_max_conf[k], ll_max_id[k]])

248 |

249 | bboxes_batch.append(bboxes)

250 |

251 | t3 = time.time()

252 |

253 | #print('-----------------------------------')

254 | #print(' max and argmax : %f' % (t2 - t1))

255 | #print(' nms : %f' % (t3 - t2))

256 | #print('Post processing total : %f' % (t3 - t1))

257 | #print('-----------------------------------')

258 |

259 | return bboxes_batch

260 |

--------------------------------------------------------------------------------

/tool/region_loss.py:

--------------------------------------------------------------------------------

1 | import torch.nn as nn

2 | import torch.nn.functional as F

3 | from tool.torch_utils import *

4 |

5 |

6 | def build_targets(pred_boxes, target, anchors, num_anchors, num_classes, nH, nW, noobject_scale, object_scale,

7 | sil_thresh, seen):

8 | nB = target.size(0)

9 | nA = num_anchors

10 | nC = num_classes

11 | anchor_step = len(anchors) / num_anchors

12 | conf_mask = torch.ones(nB, nA, nH, nW) * noobject_scale

13 | coord_mask = torch.zeros(nB, nA, nH, nW)

14 | cls_mask = torch.zeros(nB, nA, nH, nW)

15 | tx = torch.zeros(nB, nA, nH, nW)

16 | ty = torch.zeros(nB, nA, nH, nW)

17 | tw = torch.zeros(nB, nA, nH, nW)

18 | th = torch.zeros(nB, nA, nH, nW)

19 | tconf = torch.zeros(nB, nA, nH, nW)

20 | tcls = torch.zeros(nB, nA, nH, nW)

21 |

22 | nAnchors = nA * nH * nW

23 | nPixels = nH * nW

24 | for b in range(nB):

25 | cur_pred_boxes = pred_boxes[b * nAnchors:(b + 1) * nAnchors].t()

26 | cur_ious = torch.zeros(nAnchors)

27 | for t in range(50):

28 | if target[b][t * 5 + 1] == 0:

29 | break

30 | gx = target[b][t * 5 + 1] * nW

31 | gy = target[b][t * 5 + 2] * nH

32 | gw = target[b][t * 5 + 3] * nW

33 | gh = target[b][t * 5 + 4] * nH

34 | cur_gt_boxes = torch.FloatTensor([gx, gy, gw, gh]).repeat(nAnchors, 1).t()

35 | cur_ious = torch.max(cur_ious, bbox_ious(cur_pred_boxes, cur_gt_boxes, x1y1x2y2=False))

36 | conf_mask[b][cur_ious > sil_thresh] = 0

37 | if seen < 12800:

38 | if anchor_step == 4:

39 | tx = torch.FloatTensor(anchors).view(nA, anchor_step).index_select(1, torch.LongTensor([2])).view(1, nA, 1,

40 | 1).repeat(

41 | nB, 1, nH, nW)

42 | ty = torch.FloatTensor(anchors).view(num_anchors, anchor_step).index_select(1, torch.LongTensor([2])).view(

43 | 1, nA, 1, 1).repeat(nB, 1, nH, nW)

44 | else:

45 | tx.fill_(0.5)

46 | ty.fill_(0.5)

47 | tw.zero_()

48 | th.zero_()

49 | coord_mask.fill_(1)

50 |

51 | nGT = 0

52 | nCorrect = 0

53 | for b in range(nB):

54 | for t in range(50):

55 | if target[b][t * 5 + 1] == 0:

56 | break

57 | nGT = nGT + 1

58 | best_iou = 0.0

59 | best_n = -1

60 | min_dist = 10000

61 | gx = target[b][t * 5 + 1] * nW

62 | gy = target[b][t * 5 + 2] * nH

63 | gi = int(gx)

64 | gj = int(gy)

65 | gw = target[b][t * 5 + 3] * nW

66 | gh = target[b][t * 5 + 4] * nH

67 | gt_box = [0, 0, gw, gh]

68 | for n in range(nA):

69 | aw = anchors[anchor_step * n]

70 | ah = anchors[anchor_step * n + 1]

71 | anchor_box = [0, 0, aw, ah]

72 | iou = bbox_iou(anchor_box, gt_box, x1y1x2y2=False)

73 | if anchor_step == 4:

74 | ax = anchors[anchor_step * n + 2]

75 | ay = anchors[anchor_step * n + 3]

76 | dist = pow(((gi + ax) - gx), 2) + pow(((gj + ay) - gy), 2)

77 | if iou > best_iou:

78 | best_iou = iou

79 | best_n = n

80 | elif anchor_step == 4 and iou == best_iou and dist < min_dist:

81 | best_iou = iou

82 | best_n = n

83 | min_dist = dist

84 |

85 | gt_box = [gx, gy, gw, gh]

86 | pred_box = pred_boxes[b * nAnchors + best_n * nPixels + gj * nW + gi]

87 |

88 | coord_mask[b][best_n][gj][gi] = 1

89 | cls_mask[b][best_n][gj][gi] = 1

90 | conf_mask[b][best_n][gj][gi] = object_scale

91 | tx[b][best_n][gj][gi] = target[b][t * 5 + 1] * nW - gi

92 | ty[b][best_n][gj][gi] = target[b][t * 5 + 2] * nH - gj

93 | tw[b][best_n][gj][gi] = math.log(gw / anchors[anchor_step * best_n])

94 | th[b][best_n][gj][gi] = math.log(gh / anchors[anchor_step * best_n + 1])

95 | iou = bbox_iou(gt_box, pred_box, x1y1x2y2=False) # best_iou

96 | tconf[b][best_n][gj][gi] = iou

97 | tcls[b][best_n][gj][gi] = target[b][t * 5]

98 | if iou > 0.5:

99 | nCorrect = nCorrect + 1

100 |

101 | return nGT, nCorrect, coord_mask, conf_mask, cls_mask, tx, ty, tw, th, tconf, tcls

102 |

103 |

104 | class RegionLoss(nn.Module):

105 | def __init__(self, num_classes=0, anchors=[], num_anchors=1):

106 | super(RegionLoss, self).__init__()

107 | self.num_classes = num_classes

108 | self.anchors = anchors

109 | self.num_anchors = num_anchors

110 | self.anchor_step = len(anchors) / num_anchors

111 | self.coord_scale = 1

112 | self.noobject_scale = 1

113 | self.object_scale = 5

114 | self.class_scale = 1

115 | self.thresh = 0.6

116 | self.seen = 0

117 |

118 | def forward(self, output, target):

119 | # output : BxAs*(4+1+num_classes)*H*W

120 | t0 = time.time()

121 | nB = output.data.size(0)

122 | nA = self.num_anchors

123 | nC = self.num_classes

124 | nH = output.data.size(2)

125 | nW = output.data.size(3)

126 |

127 | output = output.view(nB, nA, (5 + nC), nH, nW)

128 | x = F.sigmoid(output.index_select(2, Variable(torch.cuda.LongTensor([0]))).view(nB, nA, nH, nW))

129 | y = F.sigmoid(output.index_select(2, Variable(torch.cuda.LongTensor([1]))).view(nB, nA, nH, nW))

130 | w = output.index_select(2, Variable(torch.cuda.LongTensor([2]))).view(nB, nA, nH, nW)

131 | h = output.index_select(2, Variable(torch.cuda.LongTensor([3]))).view(nB, nA, nH, nW)

132 | conf = F.sigmoid(output.index_select(2, Variable(torch.cuda.LongTensor([4]))).view(nB, nA, nH, nW))

133 | cls = output.index_select(2, Variable(torch.linspace(5, 5 + nC - 1, nC).long().cuda()))

134 | cls = cls.view(nB * nA, nC, nH * nW).transpose(1, 2).contiguous().view(nB * nA * nH * nW, nC)

135 | t1 = time.time()

136 |

137 | pred_boxes = torch.cuda.FloatTensor(4, nB * nA * nH * nW)

138 | grid_x = torch.linspace(0, nW - 1, nW).repeat(nH, 1).repeat(nB * nA, 1, 1).view(nB * nA * nH * nW).cuda()

139 | grid_y = torch.linspace(0, nH - 1, nH).repeat(nW, 1).t().repeat(nB * nA, 1, 1).view(nB * nA * nH * nW).cuda()

140 | anchor_w = torch.Tensor(self.anchors).view(nA, self.anchor_step).index_select(1, torch.LongTensor([0])).cuda()

141 | anchor_h = torch.Tensor(self.anchors).view(nA, self.anchor_step).index_select(1, torch.LongTensor([1])).cuda()

142 | anchor_w = anchor_w.repeat(nB, 1).repeat(1, 1, nH * nW).view(nB * nA * nH * nW)

143 | anchor_h = anchor_h.repeat(nB, 1).repeat(1, 1, nH * nW).view(nB * nA * nH * nW)

144 | pred_boxes[0] = x.data + grid_x

145 | pred_boxes[1] = y.data + grid_y

146 | pred_boxes[2] = torch.exp(w.data) * anchor_w

147 | pred_boxes[3] = torch.exp(h.data) * anchor_h

148 | pred_boxes = convert2cpu(pred_boxes.transpose(0, 1).contiguous().view(-1, 4))

149 | t2 = time.time()

150 |

151 | nGT, nCorrect, coord_mask, conf_mask, cls_mask, tx, ty, tw, th, tconf, tcls = build_targets(pred_boxes,

152 | target.data,

153 | self.anchors, nA,

154 | nC, \

155 | nH, nW,

156 | self.noobject_scale,

157 | self.object_scale,

158 | self.thresh,

159 | self.seen)

160 | cls_mask = (cls_mask == 1)

161 | nProposals = int((conf > 0.25).sum().data[0])

162 |

163 | tx = Variable(tx.cuda())

164 | ty = Variable(ty.cuda())

165 | tw = Variable(tw.cuda())

166 | th = Variable(th.cuda())

167 | tconf = Variable(tconf.cuda())

168 | tcls = Variable(tcls.view(-1)[cls_mask].long().cuda())

169 |

170 | coord_mask = Variable(coord_mask.cuda())

171 | conf_mask = Variable(conf_mask.cuda().sqrt())

172 | cls_mask = Variable(cls_mask.view(-1, 1).repeat(1, nC).cuda())

173 | cls = cls[cls_mask].view(-1, nC)

174 |

175 | t3 = time.time()

176 |

177 | loss_x = self.coord_scale * nn.MSELoss(reduction='sum')(x * coord_mask, tx * coord_mask) / 2.0

178 | loss_y = self.coord_scale * nn.MSELoss(reduction='sum')(y * coord_mask, ty * coord_mask) / 2.0

179 | loss_w = self.coord_scale * nn.MSELoss(reduction='sum')(w * coord_mask, tw * coord_mask) / 2.0

180 | loss_h = self.coord_scale * nn.MSELoss(reduction='sum')(h * coord_mask, th * coord_mask) / 2.0

181 | loss_conf = nn.MSELoss(reduction='sum')(conf * conf_mask, tconf * conf_mask) / 2.0

182 | loss_cls = self.class_scale * nn.CrossEntropyLoss(reduction='sum')(cls, tcls)

183 | loss = loss_x + loss_y + loss_w + loss_h + loss_conf + loss_cls

184 | t4 = time.time()

185 | if False:

186 | print('-----------------------------------')

187 | print(' activation : %f' % (t1 - t0))

188 | print(' create pred_boxes : %f' % (t2 - t1))

189 | print(' build targets : %f' % (t3 - t2))

190 | print(' create loss : %f' % (t4 - t3))

191 | print(' total : %f' % (t4 - t0))

192 | print('%d: nGT %d, recall %d, proposals %d, loss: x %f, y %f, w %f, h %f, conf %f, cls %f, total %f' % (

193 | self.seen, nGT, nCorrect, nProposals, loss_x.data[0], loss_y.data[0], loss_w.data[0], loss_h.data[0],

194 | loss_conf.data[0], loss_cls.data[0], loss.data[0]))

195 | return loss

196 |

--------------------------------------------------------------------------------

/tool/config.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from tool.torch_utils import convert2cpu

3 |

4 |

5 | def parse_cfg(cfgfile):

6 | blocks = []

7 | fp = open(cfgfile, 'r')

8 | block = None

9 | line = fp.readline()

10 | while line != '':

11 | line = line.rstrip()

12 | if line == '' or line[0] == '#':

13 | line = fp.readline()

14 | continue

15 | elif line[0] == '[':

16 | if block:

17 | blocks.append(block)

18 | block = dict()

19 | block['type'] = line.lstrip('[').rstrip(']')

20 | # set default value

21 | if block['type'] == 'convolutional':

22 | block['batch_normalize'] = 0

23 | else:

24 | key, value = line.split('=')

25 | key = key.strip()

26 | if key == 'type':

27 | key = '_type'

28 | value = value.strip()

29 | block[key] = value

30 | line = fp.readline()

31 |

32 | if block:

33 | blocks.append(block)

34 | fp.close()

35 | return blocks

36 |

37 |

38 | def print_cfg(blocks):

39 | print('layer filters size input output');

40 | prev_width = 416

41 | prev_height = 416

42 | prev_filters = 3

43 | out_filters = []

44 | out_widths = []

45 | out_heights = []

46 | ind = -2

47 | for block in blocks:

48 | ind = ind + 1

49 | if block['type'] == 'net':

50 | prev_width = int(block['width'])

51 | prev_height = int(block['height'])

52 | continue

53 | elif block['type'] == 'convolutional':

54 | filters = int(block['filters'])

55 | kernel_size = int(block['size'])

56 | stride = int(block['stride'])

57 | is_pad = int(block['pad'])

58 | pad = (kernel_size - 1) // 2 if is_pad else 0

59 | width = (prev_width + 2 * pad - kernel_size) // stride + 1

60 | height = (prev_height + 2 * pad - kernel_size) // stride + 1

61 | print('%5d %-6s %4d %d x %d / %d %3d x %3d x%4d -> %3d x %3d x%4d' % (

62 | ind, 'conv', filters, kernel_size, kernel_size, stride, prev_width, prev_height, prev_filters, width,

63 | height, filters))

64 | prev_width = width

65 | prev_height = height

66 | prev_filters = filters

67 | out_widths.append(prev_width)

68 | out_heights.append(prev_height)

69 | out_filters.append(prev_filters)

70 | elif block['type'] == 'maxpool':

71 | pool_size = int(block['size'])

72 | stride = int(block['stride'])

73 | width = prev_width // stride

74 | height = prev_height // stride

75 | print('%5d %-6s %d x %d / %d %3d x %3d x%4d -> %3d x %3d x%4d' % (

76 | ind, 'max', pool_size, pool_size, stride, prev_width, prev_height, prev_filters, width, height,

77 | filters))

78 | prev_width = width

79 | prev_height = height

80 | prev_filters = filters

81 | out_widths.append(prev_width)

82 | out_heights.append(prev_height)

83 | out_filters.append(prev_filters)

84 | elif block['type'] == 'avgpool':

85 | width = 1

86 | height = 1

87 | print('%5d %-6s %3d x %3d x%4d -> %3d' % (

88 | ind, 'avg', prev_width, prev_height, prev_filters, prev_filters))

89 | prev_width = width

90 | prev_height = height

91 | prev_filters = filters

92 | out_widths.append(prev_width)

93 | out_heights.append(prev_height)

94 | out_filters.append(prev_filters)

95 | elif block['type'] == 'softmax':

96 | print('%5d %-6s -> %3d' % (ind, 'softmax', prev_filters))

97 | out_widths.append(prev_width)

98 | out_heights.append(prev_height)

99 | out_filters.append(prev_filters)

100 | elif block['type'] == 'cost':

101 | print('%5d %-6s -> %3d' % (ind, 'cost', prev_filters))

102 | out_widths.append(prev_width)

103 | out_heights.append(prev_height)

104 | out_filters.append(prev_filters)

105 | elif block['type'] == 'reorg':

106 | stride = int(block['stride'])

107 | filters = stride * stride * prev_filters

108 | width = prev_width // stride

109 | height = prev_height // stride

110 | print('%5d %-6s / %d %3d x %3d x%4d -> %3d x %3d x%4d' % (

111 | ind, 'reorg', stride, prev_width, prev_height, prev_filters, width, height, filters))

112 | prev_width = width

113 | prev_height = height

114 | prev_filters = filters

115 | out_widths.append(prev_width)

116 | out_heights.append(prev_height)

117 | out_filters.append(prev_filters)

118 | elif block['type'] == 'upsample':

119 | stride = int(block['stride'])

120 | filters = prev_filters

121 | width = prev_width * stride

122 | height = prev_height * stride

123 | print('%5d %-6s * %d %3d x %3d x%4d -> %3d x %3d x%4d' % (

124 | ind, 'upsample', stride, prev_width, prev_height, prev_filters, width, height, filters))

125 | prev_width = width

126 | prev_height = height

127 | prev_filters = filters

128 | out_widths.append(prev_width)

129 | out_heights.append(prev_height)

130 | out_filters.append(prev_filters)

131 | elif block['type'] == 'route':

132 | layers = block['layers'].split(',')

133 | layers = [int(i) if int(i) > 0 else int(i) + ind for i in layers]

134 | if len(layers) == 1:

135 | print('%5d %-6s %d' % (ind, 'route', layers[0]))

136 | prev_width = out_widths[layers[0]]

137 | prev_height = out_heights[layers[0]]

138 | prev_filters = out_filters[layers[0]]

139 | elif len(layers) == 2:

140 | print('%5d %-6s %d %d' % (ind, 'route', layers[0], layers[1]))

141 | prev_width = out_widths[layers[0]]

142 | prev_height = out_heights[layers[0]]

143 | assert (prev_width == out_widths[layers[1]])

144 | assert (prev_height == out_heights[layers[1]])

145 | prev_filters = out_filters[layers[0]] + out_filters[layers[1]]

146 | elif len(layers) == 4:

147 | print('%5d %-6s %d %d %d %d' % (ind, 'route', layers[0], layers[1], layers[2], layers[3]))

148 | prev_width = out_widths[layers[0]]

149 | prev_height = out_heights[layers[0]]

150 | assert (prev_width == out_widths[layers[1]] == out_widths[layers[2]] == out_widths[layers[3]])

151 | assert (prev_height == out_heights[layers[1]] == out_heights[layers[2]] == out_heights[layers[3]])

152 | prev_filters = out_filters[layers[0]] + out_filters[layers[1]] + out_filters[layers[2]] + out_filters[

153 | layers[3]]

154 | else:

155 | print("route error !!! {} {} {}".format(sys._getframe().f_code.co_filename,

156 | sys._getframe().f_code.co_name, sys._getframe().f_lineno))

157 |

158 | out_widths.append(prev_width)

159 | out_heights.append(prev_height)

160 | out_filters.append(prev_filters)

161 | elif block['type'] in ['region', 'yolo']:

162 | print('%5d %-6s' % (ind, 'detection'))

163 | out_widths.append(prev_width)

164 | out_heights.append(prev_height)

165 | out_filters.append(prev_filters)

166 | elif block['type'] == 'shortcut':

167 | from_id = int(block['from'])

168 | from_id = from_id if from_id > 0 else from_id + ind

169 | print('%5d %-6s %d' % (ind, 'shortcut', from_id))

170 | prev_width = out_widths[from_id]

171 | prev_height = out_heights[from_id]

172 | prev_filters = out_filters[from_id]

173 | out_widths.append(prev_width)

174 | out_heights.append(prev_height)

175 | out_filters.append(prev_filters)

176 | elif block['type'] == 'connected':

177 | filters = int(block['output'])

178 | print('%5d %-6s %d -> %3d' % (ind, 'connected', prev_filters, filters))

179 | prev_filters = filters

180 | out_widths.append(1)

181 | out_heights.append(1)

182 | out_filters.append(prev_filters)

183 | else:

184 | print('unknown type %s' % (block['type']))

185 |

186 |

187 | def load_conv(buf, start, conv_model):

188 | num_w = conv_model.weight.numel()

189 | num_b = conv_model.bias.numel()

190 | conv_model.bias.data.copy_(torch.from_numpy(buf[start:start + num_b]));

191 | start = start + num_b

192 | conv_model.weight.data.copy_(torch.from_numpy(buf[start:start + num_w]).reshape(conv_model.weight.data.shape));

193 | start = start + num_w

194 | return start

195 |

196 |

197 | def save_conv(fp, conv_model):

198 | if conv_model.bias.is_cuda:

199 | convert2cpu(conv_model.bias.data).numpy().tofile(fp)

200 | convert2cpu(conv_model.weight.data).numpy().tofile(fp)

201 | else:

202 | conv_model.bias.data.numpy().tofile(fp)

203 | conv_model.weight.data.numpy().tofile(fp)

204 |

205 |

206 | def load_conv_bn(buf, start, conv_model, bn_model):

207 | num_w = conv_model.weight.numel()

208 | num_b = bn_model.bias.numel()

209 | bn_model.bias.data.copy_(torch.from_numpy(buf[start:start + num_b]));

210 | start = start + num_b

211 | bn_model.weight.data.copy_(torch.from_numpy(buf[start:start + num_b]));

212 | start = start + num_b

213 | bn_model.running_mean.copy_(torch.from_numpy(buf[start:start + num_b]));

214 | start = start + num_b

215 | bn_model.running_var.copy_(torch.from_numpy(buf[start:start + num_b]));

216 | start = start + num_b

217 | conv_model.weight.data.copy_(torch.from_numpy(buf[start:start + num_w]).reshape(conv_model.weight.data.shape));

218 | start = start + num_w

219 | return start

220 |

221 |

222 | def save_conv_bn(fp, conv_model, bn_model):

223 | if bn_model.bias.is_cuda:

224 | convert2cpu(bn_model.bias.data).numpy().tofile(fp)

225 | convert2cpu(bn_model.weight.data).numpy().tofile(fp)

226 | convert2cpu(bn_model.running_mean).numpy().tofile(fp)

227 | convert2cpu(bn_model.running_var).numpy().tofile(fp)

228 | convert2cpu(conv_model.weight.data).numpy().tofile(fp)

229 | else:

230 | bn_model.bias.data.numpy().tofile(fp)

231 | bn_model.weight.data.numpy().tofile(fp)

232 | bn_model.running_mean.numpy().tofile(fp)

233 | bn_model.running_var.numpy().tofile(fp)

234 | conv_model.weight.data.numpy().tofile(fp)

235 |

236 |

237 | def load_fc(buf, start, fc_model):

238 | num_w = fc_model.weight.numel()

239 | num_b = fc_model.bias.numel()

240 | fc_model.bias.data.copy_(torch.from_numpy(buf[start:start + num_b]));

241 | start = start + num_b

242 | fc_model.weight.data.copy_(torch.from_numpy(buf[start:start + num_w]));

243 | start = start + num_w

244 | return start

245 |

246 |

247 | def save_fc(fp, fc_model):

248 | fc_model.bias.data.numpy().tofile(fp)

249 | fc_model.weight.data.numpy().tofile(fp)

250 |

251 |

252 | if __name__ == '__main__':

253 | import sys

254 |

255 | blocks = parse_cfg('cfg/yolo.cfg')

256 | if len(sys.argv) == 2:

257 | blocks = parse_cfg(sys.argv[1])

258 | print_cfg(blocks)

259 |

--------------------------------------------------------------------------------

/tool/yolo_layer.py:

--------------------------------------------------------------------------------

1 | import torch.nn as nn

2 | import torch.nn.functional as F

3 | from tool.torch_utils import *

4 |

5 | def yolo_forward(output, conf_thresh, num_classes, anchors, num_anchors, scale_x_y, only_objectness=1,

6 | validation=False):

7 | # Output would be invalid if it does not satisfy this assert

8 | # assert (output.size(1) == (5 + num_classes) * num_anchors)

9 |

10 | # print(output.size())

11 |

12 | # Slice the second dimension (channel) of output into:

13 | # [ 2, 2, 1, num_classes, 2, 2, 1, num_classes, 2, 2, 1, num_classes ]

14 | # And then into

15 | # bxy = [ 6 ] bwh = [ 6 ] det_conf = [ 3 ] cls_conf = [ num_classes * 3 ]

16 | batch = output.size(0)

17 | H = output.size(2)

18 | W = output.size(3)

19 |

20 | bxy_list = []

21 | bwh_list = []

22 | det_confs_list = []

23 | cls_confs_list = []

24 |

25 | for i in range(num_anchors):

26 | begin = i * (5 + num_classes)

27 | end = (i + 1) * (5 + num_classes)

28 |

29 | bxy_list.append(output[:, begin : begin + 2])

30 | bwh_list.append(output[:, begin + 2 : begin + 4])

31 | det_confs_list.append(output[:, begin + 4 : begin + 5])

32 | cls_confs_list.append(output[:, begin + 5 : end])

33 |

34 | # Shape: [batch, num_anchors * 2, H, W]

35 | bxy = torch.cat(bxy_list, dim=1)

36 | # Shape: [batch, num_anchors * 2, H, W]

37 | bwh = torch.cat(bwh_list, dim=1)

38 |

39 | # Shape: [batch, num_anchors, H, W]

40 | det_confs = torch.cat(det_confs_list, dim=1)

41 | # Shape: [batch, num_anchors * H * W]

42 | det_confs = det_confs.view(batch, num_anchors * H * W)

43 |

44 | # Shape: [batch, num_anchors * num_classes, H, W]

45 | cls_confs = torch.cat(cls_confs_list, dim=1)

46 | # Shape: [batch, num_anchors, num_classes, H * W]

47 | cls_confs = cls_confs.view(batch, num_anchors, num_classes, H * W)

48 | # Shape: [batch, num_anchors, num_classes, H * W] --> [batch, num_anchors * H * W, num_classes]

49 | cls_confs = cls_confs.permute(0, 1, 3, 2).reshape(batch, num_anchors * H * W, num_classes)

50 |

51 | # Apply sigmoid(), exp() and softmax() to slices

52 | #

53 | bxy = torch.sigmoid(bxy) * scale_x_y - 0.5 * (scale_x_y - 1)

54 | bwh = torch.exp(bwh)

55 | det_confs = torch.sigmoid(det_confs)

56 | cls_confs = torch.sigmoid(cls_confs)

57 |

58 | # Prepare C-x, C-y, P-w, P-h (None of them are torch related)

59 | grid_x = np.expand_dims(np.expand_dims(np.expand_dims(np.linspace(0, W - 1, W), axis=0).repeat(H, 0), axis=0), axis=0)

60 | grid_y = np.expand_dims(np.expand_dims(np.expand_dims(np.linspace(0, H - 1, H), axis=1).repeat(W, 1), axis=0), axis=0)

61 | # grid_x = torch.linspace(0, W - 1, W).reshape(1, 1, 1, W).repeat(1, 1, H, 1)

62 | # grid_y = torch.linspace(0, H - 1, H).reshape(1, 1, H, 1).repeat(1, 1, 1, W)

63 |

64 | anchor_w = []

65 | anchor_h = []

66 | for i in range(num_anchors):

67 | anchor_w.append(anchors[i * 2])

68 | anchor_h.append(anchors[i * 2 + 1])

69 |

70 | device = None

71 | cuda_check = output.is_cuda

72 | if cuda_check:

73 | device = output.get_device()

74 |

75 | bx_list = []

76 | by_list = []

77 | bw_list = []

78 | bh_list = []

79 |

80 | # Apply C-x, C-y, P-w, P-h

81 | for i in range(num_anchors):

82 | ii = i * 2

83 | # Shape: [batch, 1, H, W]

84 | bx = bxy[:, ii : ii + 1] + torch.tensor(grid_x, device=device, dtype=torch.float32) # grid_x.to(device=device, dtype=torch.float32)

85 | # Shape: [batch, 1, H, W]

86 | by = bxy[:, ii + 1 : ii + 2] + torch.tensor(grid_y, device=device, dtype=torch.float32) # grid_y.to(device=device, dtype=torch.float32)

87 | # Shape: [batch, 1, H, W]

88 | bw = bwh[:, ii : ii + 1] * anchor_w[i]

89 | # Shape: [batch, 1, H, W]

90 | bh = bwh[:, ii + 1 : ii + 2] * anchor_h[i]

91 |

92 | bx_list.append(bx)

93 | by_list.append(by)

94 | bw_list.append(bw)

95 | bh_list.append(bh)

96 |

97 |

98 | ########################################

99 | # Figure out bboxes from slices #

100 | ########################################

101 |

102 | # Shape: [batch, num_anchors, H, W]

103 | bx = torch.cat(bx_list, dim=1)

104 | # Shape: [batch, num_anchors, H, W]

105 | by = torch.cat(by_list, dim=1)

106 | # Shape: [batch, num_anchors, H, W]

107 | bw = torch.cat(bw_list, dim=1)

108 | # Shape: [batch, num_anchors, H, W]

109 | bh = torch.cat(bh_list, dim=1)

110 |

111 | # Shape: [batch, 2 * num_anchors, H, W]

112 | bx_bw = torch.cat((bx, bw), dim=1)

113 | # Shape: [batch, 2 * num_anchors, H, W]

114 | by_bh = torch.cat((by, bh), dim=1)

115 |

116 | # normalize coordinates to [0, 1]

117 | bx_bw /= W

118 | by_bh /= H

119 |

120 | # Shape: [batch, num_anchors * H * W, 1]

121 | bx = bx_bw[:, :num_anchors].view(batch, num_anchors * H * W, 1)

122 | by = by_bh[:, :num_anchors].view(batch, num_anchors * H * W, 1)

123 | bw = bx_bw[:, num_anchors:].view(batch, num_anchors * H * W, 1)

124 | bh = by_bh[:, num_anchors:].view(batch, num_anchors * H * W, 1)

125 |

126 | bx1 = bx - bw * 0.5

127 | by1 = by - bh * 0.5

128 | bx2 = bx1 + bw

129 | by2 = by1 + bh

130 |

131 | # Shape: [batch, num_anchors * h * w, 4] -> [batch, num_anchors * h * w, 1, 4]

132 | boxes = torch.cat((bx1, by1, bx2, by2), dim=2).view(batch, num_anchors * H * W, 1, 4)

133 | # boxes = boxes.repeat(1, 1, num_classes, 1)

134 |

135 | # boxes: [batch, num_anchors * H * W, 1, 4]

136 | # cls_confs: [batch, num_anchors * H * W, num_classes]

137 | # det_confs: [batch, num_anchors * H * W]

138 |

139 | det_confs = det_confs.view(batch, num_anchors * H * W, 1)

140 | confs = cls_confs * det_confs

141 |

142 | # boxes: [batch, num_anchors * H * W, 1, 4]

143 | # confs: [batch, num_anchors * H * W, num_classes]

144 |

145 | return boxes, confs

146 |

147 |

148 | def yolo_forward_dynamic(output, conf_thresh, num_classes, anchors, num_anchors, scale_x_y, only_objectness=1,

149 | validation=False):

150 | # Output would be invalid if it does not satisfy this assert

151 | # assert (output.size(1) == (5 + num_classes) * num_anchors)

152 |

153 | # print(output.size())

154 |

155 | # Slice the second dimension (channel) of output into:

156 | # [ 2, 2, 1, num_classes, 2, 2, 1, num_classes, 2, 2, 1, num_classes ]

157 | # And then into

158 | # bxy = [ 6 ] bwh = [ 6 ] det_conf = [ 3 ] cls_conf = [ num_classes * 3 ]

159 | # batch = output.size(0)

160 | # H = output.size(2)

161 | # W = output.size(3)

162 |

163 | bxy_list = []

164 | bwh_list = []

165 | det_confs_list = []

166 | cls_confs_list = []

167 |

168 | for i in range(num_anchors):

169 | begin = i * (5 + num_classes)

170 | end = (i + 1) * (5 + num_classes)

171 |

172 | bxy_list.append(output[:, begin : begin + 2])

173 | bwh_list.append(output[:, begin + 2 : begin + 4])

174 | det_confs_list.append(output[:, begin + 4 : begin + 5])

175 | cls_confs_list.append(output[:, begin + 5 : end])

176 |

177 | # Shape: [batch, num_anchors * 2, H, W]

178 | bxy = torch.cat(bxy_list, dim=1)

179 | # Shape: [batch, num_anchors * 2, H, W]

180 | bwh = torch.cat(bwh_list, dim=1)

181 |

182 | # Shape: [batch, num_anchors, H, W]

183 | det_confs = torch.cat(det_confs_list, dim=1)

184 | # Shape: [batch, num_anchors * H * W]

185 | det_confs = det_confs.view(output.size(0), num_anchors * output.size(2) * output.size(3))

186 |

187 | # Shape: [batch, num_anchors * num_classes, H, W]

188 | cls_confs = torch.cat(cls_confs_list, dim=1)

189 | # Shape: [batch, num_anchors, num_classes, H * W]

190 | cls_confs = cls_confs.view(output.size(0), num_anchors, num_classes, output.size(2) * output.size(3))

191 | # Shape: [batch, num_anchors, num_classes, H * W] --> [batch, num_anchors * H * W, num_classes]

192 | cls_confs = cls_confs.permute(0, 1, 3, 2).reshape(output.size(0), num_anchors * output.size(2) * output.size(3), num_classes)

193 |

194 | # Apply sigmoid(), exp() and softmax() to slices

195 | #

196 | bxy = torch.sigmoid(bxy) * scale_x_y - 0.5 * (scale_x_y - 1)

197 | bwh = torch.exp(bwh)

198 | det_confs = torch.sigmoid(det_confs)

199 | cls_confs = torch.sigmoid(cls_confs)

200 |

201 | # Prepare C-x, C-y, P-w, P-h (None of them are torch related)

202 | grid_x = np.expand_dims(np.expand_dims(np.expand_dims(np.linspace(0, output.size(3) - 1, output.size(3)), axis=0).repeat(output.size(2), 0), axis=0), axis=0)

203 | grid_y = np.expand_dims(np.expand_dims(np.expand_dims(np.linspace(0, output.size(2) - 1, output.size(2)), axis=1).repeat(output.size(3), 1), axis=0), axis=0)

204 | # grid_x = torch.linspace(0, W - 1, W).reshape(1, 1, 1, W).repeat(1, 1, H, 1)

205 | # grid_y = torch.linspace(0, H - 1, H).reshape(1, 1, H, 1).repeat(1, 1, 1, W)

206 |

207 | anchor_w = []

208 | anchor_h = []

209 | for i in range(num_anchors):

210 | anchor_w.append(anchors[i * 2])

211 | anchor_h.append(anchors[i * 2 + 1])

212 |

213 | device = None

214 | cuda_check = output.is_cuda

215 | if cuda_check:

216 | device = output.get_device()

217 |

218 | bx_list = []

219 | by_list = []

220 | bw_list = []

221 | bh_list = []

222 |

223 | # Apply C-x, C-y, P-w, P-h

224 | for i in range(num_anchors):

225 | ii = i * 2

226 | # Shape: [batch, 1, H, W]

227 | bx = bxy[:, ii : ii + 1] + torch.tensor(grid_x, device=device, dtype=torch.float32) # grid_x.to(device=device, dtype=torch.float32)

228 | # Shape: [batch, 1, H, W]

229 | by = bxy[:, ii + 1 : ii + 2] + torch.tensor(grid_y, device=device, dtype=torch.float32) # grid_y.to(device=device, dtype=torch.float32)

230 | # Shape: [batch, 1, H, W]

231 | bw = bwh[:, ii : ii + 1] * anchor_w[i]

232 | # Shape: [batch, 1, H, W]

233 | bh = bwh[:, ii + 1 : ii + 2] * anchor_h[i]

234 |

235 | bx_list.append(bx)

236 | by_list.append(by)

237 | bw_list.append(bw)

238 | bh_list.append(bh)

239 |

240 |

241 | ########################################

242 | # Figure out bboxes from slices #

243 | ########################################

244 |

245 | # Shape: [batch, num_anchors, H, W]

246 | bx = torch.cat(bx_list, dim=1)

247 | # Shape: [batch, num_anchors, H, W]

248 | by = torch.cat(by_list, dim=1)

249 | # Shape: [batch, num_anchors, H, W]

250 | bw = torch.cat(bw_list, dim=1)

251 | # Shape: [batch, num_anchors, H, W]

252 | bh = torch.cat(bh_list, dim=1)

253 |

254 | # Shape: [batch, 2 * num_anchors, H, W]

255 | bx_bw = torch.cat((bx, bw), dim=1)

256 | # Shape: [batch, 2 * num_anchors, H, W]

257 | by_bh = torch.cat((by, bh), dim=1)

258 |

259 | # normalize coordinates to [0, 1]

260 | bx_bw /= output.size(3)

261 | by_bh /= output.size(2)

262 |

263 | # Shape: [batch, num_anchors * H * W, 1]

264 | bx = bx_bw[:, :num_anchors].view(output.size(0), num_anchors * output.size(2) * output.size(3), 1)

265 | by = by_bh[:, :num_anchors].view(output.size(0), num_anchors * output.size(2) * output.size(3), 1)

266 | bw = bx_bw[:, num_anchors:].view(output.size(0), num_anchors * output.size(2) * output.size(3), 1)

267 | bh = by_bh[:, num_anchors:].view(output.size(0), num_anchors * output.size(2) * output.size(3), 1)

268 |

269 | bx1 = bx - bw * 0.5

270 | by1 = by - bh * 0.5

271 | bx2 = bx1 + bw

272 | by2 = by1 + bh

273 |

274 | # Shape: [batch, num_anchors * h * w, 4] -> [batch, num_anchors * h * w, 1, 4]

275 | boxes = torch.cat((bx1, by1, bx2, by2), dim=2).view(output.size(0), num_anchors * output.size(2) * output.size(3), 1, 4)

276 | # boxes = boxes.repeat(1, 1, num_classes, 1)

277 |

278 | # boxes: [batch, num_anchors * H * W, 1, 4]

279 | # cls_confs: [batch, num_anchors * H * W, num_classes]

280 | # det_confs: [batch, num_anchors * H * W]

281 |

282 | det_confs = det_confs.view(output.size(0), num_anchors * output.size(2) * output.size(3), 1)

283 | confs = cls_confs * det_confs

284 |

285 | # boxes: [batch, num_anchors * H * W, 1, 4]

286 | # confs: [batch, num_anchors * H * W, num_classes]

287 |

288 | return boxes, confs

289 |

290 | class YoloLayer(nn.Module):

291 | ''' Yolo layer

292 | model_out: while inference,is post-processing inside or outside the model

293 | true:outside

294 | '''

295 | def __init__(self, anchor_mask=[], num_classes=0, anchors=[], num_anchors=1, stride=32, model_out=False):

296 | super(YoloLayer, self).__init__()

297 | self.anchor_mask = anchor_mask

298 | self.num_classes = num_classes

299 | self.anchors = anchors

300 | self.num_anchors = num_anchors

301 | self.anchor_step = len(anchors) // num_anchors

302 | self.coord_scale = 1

303 | self.noobject_scale = 1

304 | self.object_scale = 5

305 | self.class_scale = 1

306 | self.thresh = 0.6

307 | self.stride = stride

308 | self.seen = 0

309 | self.scale_x_y = 1

310 |

311 | self.model_out = model_out

312 |

313 | def forward(self, output, target=None):

314 | if self.training:

315 | return output

316 | masked_anchors = []

317 | for m in self.anchor_mask:

318 | masked_anchors += self.anchors[m * self.anchor_step:(m + 1) * self.anchor_step]

319 | masked_anchors = [anchor / self.stride for anchor in masked_anchors]

320 |

321 | return yolo_forward_dynamic(output, self.thresh, self.num_classes, masked_anchors, len(self.anchor_mask),scale_x_y=self.scale_x_y)

322 |

323 |

--------------------------------------------------------------------------------

/models.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from torch import nn

3 | import torch.nn.functional as F

4 | from tool.torch_utils import *

5 | from tool.yolo_layer import YoloLayer

6 |

7 |

8 | class Mish(torch.nn.Module):

9 | def __init__(self):

10 | super().__init__()

11 |

12 | def forward(self, x):

13 | x = x * (torch.tanh(torch.nn.functional.softplus(x)))

14 | return x

15 |

16 |

17 | class Upsample(nn.Module):

18 | def __init__(self):

19 | super(Upsample, self).__init__()

20 |

21 | def forward(self, x, target_size, inference=False):

22 | assert (x.data.dim() == 4)

23 | # _, _, tH, tW = target_size

24 |

25 | if inference:

26 |

27 | #B = x.data.size(0)

28 | #C = x.data.size(1)

29 | #H = x.data.size(2)

30 | #W = x.data.size(3)

31 |

32 | return x.view(x.size(0), x.size(1), x.size(2), 1, x.size(3), 1).\

33 | expand(x.size(0), x.size(1), x.size(2), target_size[2] // x.size(2), x.size(3), target_size[3] // x.size(3)).\

34 | contiguous().view(x.size(0), x.size(1), target_size[2], target_size[3])

35 | else:

36 | return F.interpolate(x, size=(target_size[2], target_size[3]), mode='nearest')

37 |

38 |

39 | class Conv_Bn_Activation(nn.Module):

40 | def __init__(self, in_channels, out_channels, kernel_size, stride, activation, bn=True, bias=False):

41 | super().__init__()

42 | pad = (kernel_size - 1) // 2

43 |

44 | self.conv = nn.ModuleList()

45 | if bias:

46 | self.conv.append(nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad))

47 | else:

48 | self.conv.append(nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad, bias=False))

49 | if bn:

50 | self.conv.append(nn.BatchNorm2d(out_channels))

51 | if activation == "mish":

52 | self.conv.append(Mish())

53 | elif activation == "relu":

54 | self.conv.append(nn.ReLU(inplace=True))

55 | elif activation == "leaky":

56 | self.conv.append(nn.LeakyReLU(0.1, inplace=True))

57 | elif activation == "linear":

58 | pass

59 | else:

60 | print("activate error !!! {} {} {}".format(sys._getframe().f_code.co_filename,

61 | sys._getframe().f_code.co_name, sys._getframe().f_lineno))

62 |

63 | def forward(self, x):

64 | for l in self.conv:

65 | x = l(x)

66 | return x

67 |

68 |

69 | class ResBlock(nn.Module):

70 | """

71 | Sequential residual blocks each of which consists of \

72 | two convolution layers.

73 | Args:

74 | ch (int): number of input and output channels.

75 | nblocks (int): number of residual blocks.

76 | shortcut (bool): if True, residual tensor addition is enabled.

77 | """

78 |

79 | def __init__(self, ch, nblocks=1, shortcut=True):

80 | super().__init__()

81 | self.shortcut = shortcut

82 | self.module_list = nn.ModuleList()

83 | for i in range(nblocks):

84 | resblock_one = nn.ModuleList()

85 | resblock_one.append(Conv_Bn_Activation(ch, ch, 1, 1, 'mish'))

86 | resblock_one.append(Conv_Bn_Activation(ch, ch, 3, 1, 'mish'))

87 | self.module_list.append(resblock_one)

88 |

89 | def forward(self, x):

90 | for module in self.module_list:

91 | h = x

92 | for res in module:

93 | h = res(h)

94 | x = x + h if self.shortcut else h

95 | return x

96 |

97 |

98 | class DownSample1(nn.Module):

99 | def __init__(self):