├── .env.example
├── .gitignore
├── .project-root
├── LICENSE
├── README.md
├── assets
│   └── GotenNet_framework.png
├── gotennet
│   ├── __init__.py
│   ├── configs
│   │   ├── __init__.py
│   │   ├── callbacks
│   │   │   ├── default.yaml
│   │   │   └── none.yaml
│   │   ├── datamodule
│   │   │   └── qm9.yaml
│   │   ├── experiment
│   │   │   ├── qm9.yaml
│   │   │   └── qm9_u0.yaml
│   │   ├── hydra
│   │   │   ├── default.yaml
│   │   │   └── job_logging
│   │   │       └── logger.yaml
│   │   ├── local
│   │   │   └── .gitkeep
│   │   ├── logger
│   │   │   ├── comet.yaml
│   │   │   ├── csv.yaml
│   │   │   ├── default.yaml
│   │   │   ├── many_loggers.yaml
│   │   │   ├── mlflow.yaml
│   │   │   ├── neptune.yaml
│   │   │   ├── tensorboard.yaml
│   │   │   └── wandb.yaml
│   │   ├── model
│   │   │   └── gotennet.yaml
│   │   ├── paths
│   │   │   └── default.yaml
│   │   ├── test.yaml
│   │   ├── train.yaml
│   │   └── trainer
│   │       └── default.yaml
│   ├── datamodules
│   │   ├── __init__.py
│   │   ├── components
│   │   │   ├── __init__.py
│   │   │   ├── qm9.py
│   │   │   └── utils.py
│   │   └── datamodule.py
│   ├── models
│   │   ├── __init__.py
│   │   ├── components
│   │   │   ├── __init__.py
│   │   │   ├── layers.py
│   │   │   └── outputs.py
│   │   ├── goten_model.py
│   │   ├── representation
│   │   │   └── gotennet.py
│   │   └── tasks
│   │       ├── QM9Task.py
│   │       ├── Task.py
│   │       └── __init__.py
│   ├── scripts
│   │   ├── __init__.py
│   │   ├── test.py
│   │   └── train.py
│   ├── testing_pipeline.py
│   ├── training_pipeline.py
│   ├── utils
│   │   ├── __init__.py
│   │   ├── file.py
│   │   ├── logging_utils.py
│   │   └── utils.py
│   └── vendor
│       └── __init__.py
├── pyproject.toml
└── requirements.txt

/.env.example:

--------------------------------------------------------------------------------

1 | # example of file for storing private and user specific environment variables, like keys or system paths

2 | # rename it to ".env" (excluded from version control by default)

3 | # .env is loaded by train.py automatically

4 | # hydra allows you to reference variables in .yaml configs with special syntax: ${oc.env:MY_VAR}

5 |

6 | MY_VAR="/home/user/my/system/path"

7 |

--------------------------------------------------------------------------------
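
The comments in `.env.example` describe two steps: values from `.env` end up in the process environment, and configs then reference them as `${oc.env:MY_VAR}`. The sketch below imitates both steps with the standard library only. `parse_env_line` and `resolve_env_refs` are hypothetical helpers written for illustration; they are not part of Hydra, OmegaConf, or this repository, and real `.env` loaders and resolvers handle more syntax (for example default values).

```python
import os
import re


def parse_env_line(line: str):
    """Parse one KEY=VALUE line; return None for blank lines and comments."""
    line = line.strip()
    if not line or line.startswith("#") or "=" not in line:
        return None
    key, value = line.split("=", 1)
    # Drop surrounding quotes, as in MY_VAR="/home/user/my/system/path"
    return key.strip(), value.strip().strip('"').strip("'")


# Matches ${oc.env:NAME}; the form with a default value is not handled in this sketch.
_ENV_REF = re.compile(r"\$\{oc\.env:([A-Za-z_][A-Za-z0-9_]*)\}")


def resolve_env_refs(text: str, env=os.environ) -> str:
    """Replace each ${oc.env:NAME} with env[NAME]; raises KeyError if NAME is unset."""
    return _ENV_REF.sub(lambda match: env[match.group(1)], text)


pair = parse_env_line('MY_VAR="/home/user/my/system/path"')
env = dict([pair])  # stand-in for the process environment
resolved = resolve_env_refs("${oc.env:MY_VAR}/data", env)
print(resolved)
```

Passing `env` explicitly keeps the demo independent of the machine it runs on; with the default argument the lookup goes to `os.environ` instead.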

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # OS

7 | .DS_Store

8 | .DS_Store?

9 |

10 | # C extensions

11 | *.so

12 |

13 | # Distribution / packaging

14 | .Python

15 | build/

16 | develop-eggs/

17 | dist/

18 | downloads/

19 | eggs/

20 | .eggs/

21 | lib/

22 | lib64/

23 | parts/

24 | sdist/

25 | var/

26 | wheels/

27 | pip-wheel-metadata/

28 | share/python-wheels/

29 | *.egg-info/

30 | .installed.cfg

31 | *.egg

32 | MANIFEST

33 |

34 | # PyInstaller

35 | # Usually these files are written by a python script from a template

36 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

37 | *.manifest

38 | *.spec

39 |

40 | # Installer logs

41 | pip-log.txt

42 | pip-delete-this-directory.txt

43 |

44 | # Unit test / coverage reports

45 | htmlcov/

46 | .tox/

47 | .nox/

48 | .coverage

49 | .coverage.*

50 | .cache

51 | nosetests.xml

52 | coverage.xml

53 | *.cover

54 | *.py,cover

55 | .hypothesis/

56 | .pytest_cache/

57 |

58 | # Translations

59 | *.mo

60 | *.pot

61 |

62 | # Django stuff:

63 | *.log

64 | local_settings.py

65 | db.sqlite3

66 | db.sqlite3-journal

67 |

68 | # Flask stuff:

69 | instance/

70 | .webassets-cache

71 |

72 | # Scrapy stuff:

73 | .scrapy

74 |

75 | # Sphinx documentation

76 | docs/_build/

77 |

78 | # PyBuilder

79 | target/

80 |

81 | # Jupyter Notebook

82 | .ipynb_checkpoints

83 |

84 | # IPython

85 | profile_default/

86 | ipython_config.py

87 |

88 | # pyenv

89 | .python-version

90 |

91 | # pipenv

92 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

93 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

94 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

95 | # install all needed dependencies.

96 | #Pipfile.lock

97 |

98 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

99 | __pypackages__/

100 |

101 | # Celery stuff

102 | celerybeat-schedule

103 | celerybeat.pid

104 |

105 | # SageMath parsed files

106 | *.sage.py

107 |

108 | # Environments

109 | .venv

110 | env/

111 | venv/

112 | ENV/

113 | env.bak/

114 | venv.bak/

115 |

116 | # Spyder project settings

117 | .spyderproject

118 | .spyproject

119 |

120 | # Rope project settings

121 | .ropeproject

122 |

123 | # mkdocs documentation

124 | /site

125 |

126 | # mypy

127 | .mypy_cache/

128 | .dmypy.json

129 | dmypy.json

130 |

131 | # Pyre type checker

132 | .pyre/

133 |

134 | ### VisualStudioCode

135 | .vscode/*

136 | !.vscode/settings.json

137 | !.vscode/tasks.json

138 | !.vscode/launch.json

139 | !.vscode/extensions.json

140 | *.code-workspace

141 | **/.vscode

142 |

143 | # JetBrains

144 | .idea/

145 |

146 | # Data & Models

147 | *.h5

148 | *.tar

149 | *.tar.gz

150 |

151 | # Lightning-Hydra-Template

152 | configs/local/default.yaml

153 | /data/

154 | /logs/

155 | .env

156 |

157 | # Aim logging

158 | .aim

159 |

--------------------------------------------------------------------------------

/.project-root:

--------------------------------------------------------------------------------

1 | # this file is required for inferring the project root directory

2 | # do not delete

3 |

--------------------------------------------------------------------------------
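
A marker file like `.project-root` is typically used by walking up from a script's directory until the marker is found. The sketch below shows that idea with `pathlib`; `find_project_root` is a hypothetical helper, not the function this repository or any root-finding package actually uses.

```python
import tempfile
from pathlib import Path


def find_project_root(start: Path, marker: str = ".project-root") -> Path:
    """Walk upward from `start` until a directory containing `marker` is found."""
    start = start.resolve()
    for candidate in (start, *start.parents):
        if (candidate / marker).exists():
            return candidate
    raise FileNotFoundError(f"No {marker} found at or above {start}")


# Self-contained demo: build a tiny fake project in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp).resolve()
    (root / ".project-root").touch()
    nested = root / "gotennet" / "scripts"
    nested.mkdir(parents=True)
    found = find_project_root(nested)
    print(found == root)
```

Deleting the marker makes the search fail, which is why the file carries a "do not delete" note.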

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2025 Sarp Aykent

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # GotenNet: Rethinking Efficient 3D Equivariant Graph Neural Networks

2 |

3 |

4 |

5 | [Paper](https://openreview.net/pdf?id=5wxCQDtbMo)
6 | [Project Page](https://www.sarpaykent.com/publications/gotennet/)
7 | [License](LICENSE)
8 | [PyPI](https://pypi.org/project/gotennet/)
9 | [PyTorch](https://pytorch.org/)

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 | ## Overview

18 |

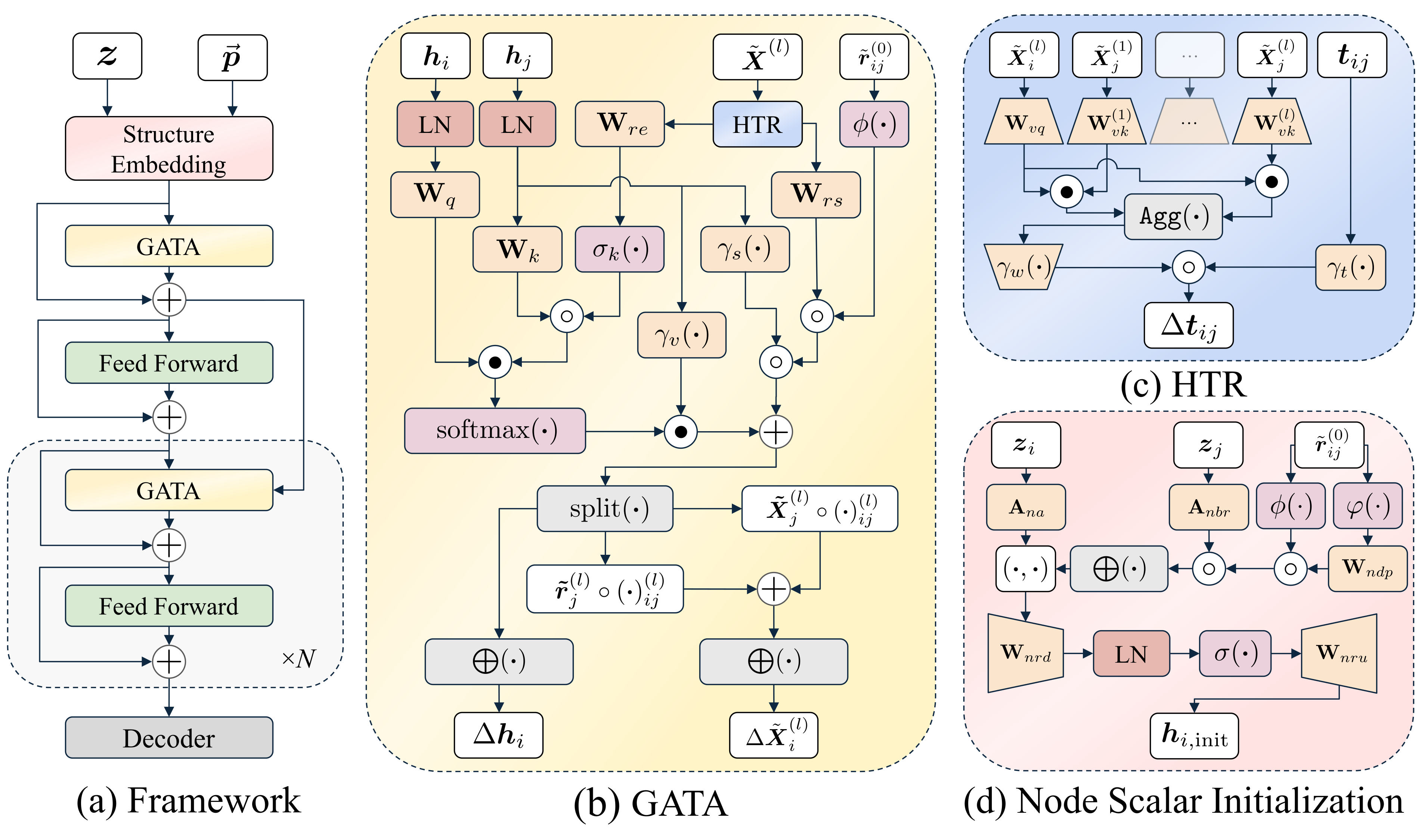

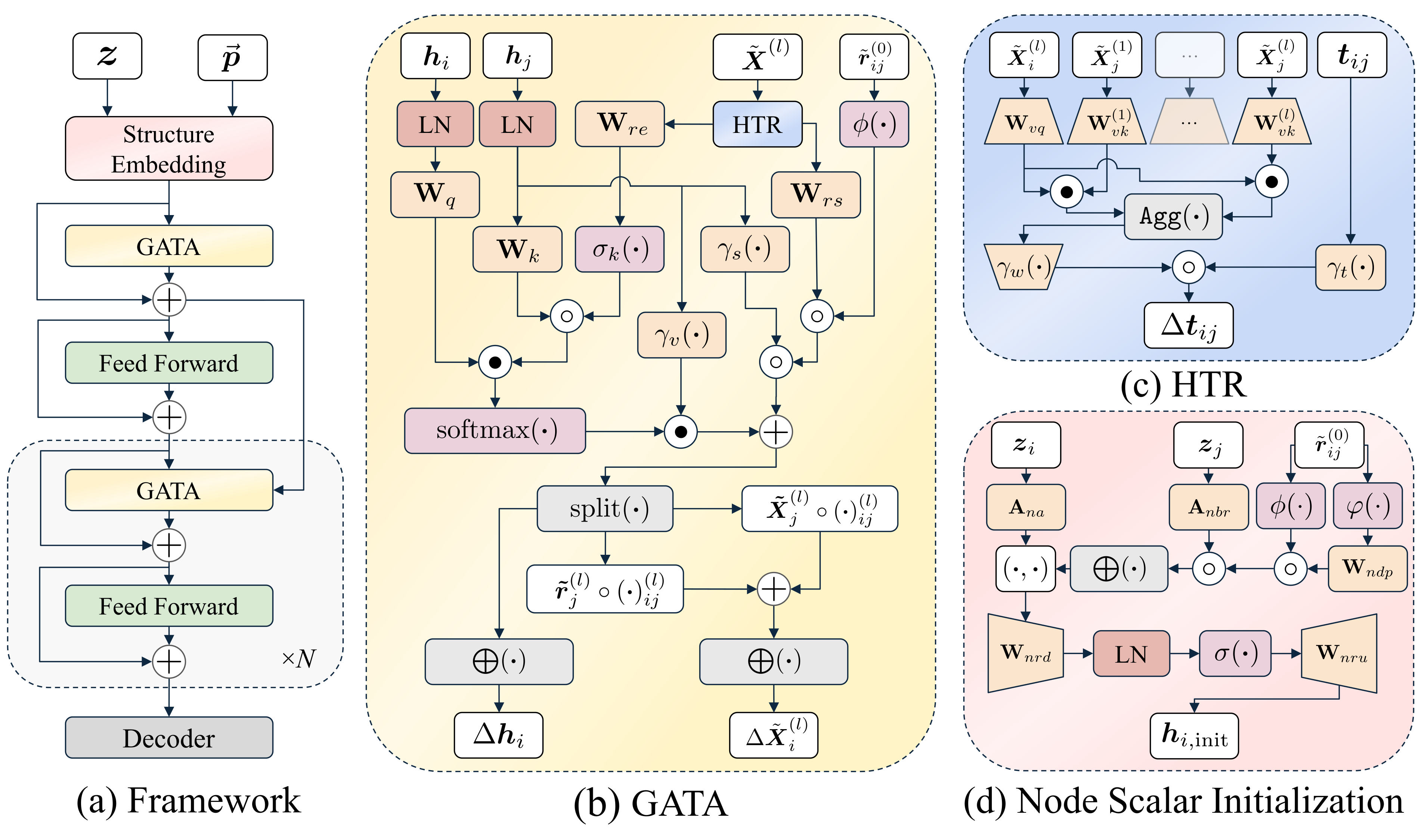

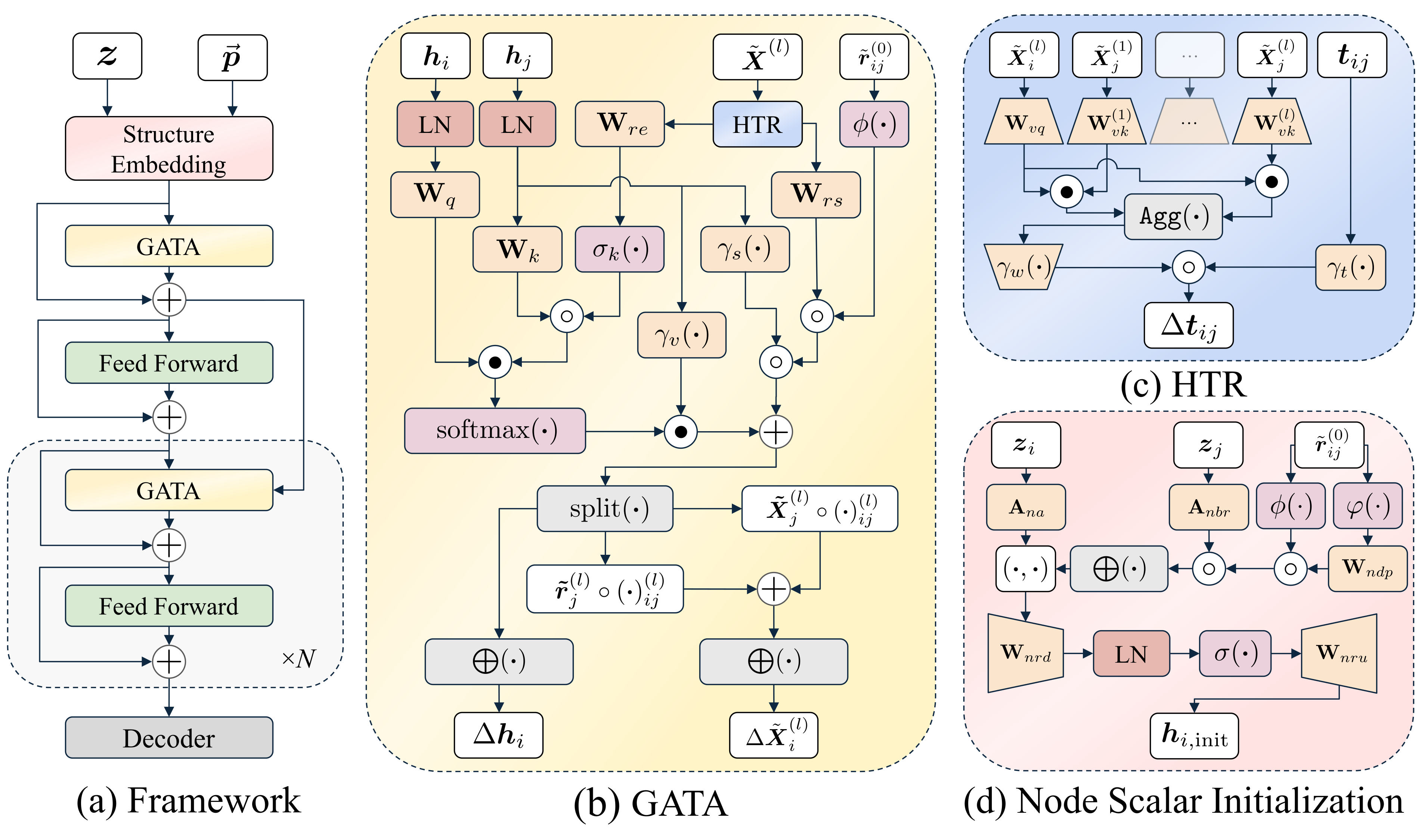

19 | This is the official implementation of **"GotenNet: Rethinking Efficient 3D Equivariant Graph Neural Networks"** published at ICLR 2025.

20 |

21 | GotenNet introduces a novel framework for modeling 3D molecular structures that achieves state-of-the-art performance while maintaining computational efficiency. Our approach balances expressiveness and efficiency through innovative tensor-based representations and attention mechanisms.

22 |

23 | ## Table of Contents

24 | - [✨ Key Features](#-key-features)
25 | - [🚀 Installation](#-installation)
26 |   - [📦 From PyPI (Recommended)](#-from-pypi-recommended)
27 |   - [🔧 From Source](#-from-source)
28 | - [🔬 Usage](#-usage)
29 |   - [Using the Model](#using-the-model)
30 |   - [Loading Pre-trained Models Programmatically](#loading-pre-trained-models-programmatically)
31 |   - [Training a Model](#training-a-model)
32 |   - [Testing a Model](#testing-a-model)
33 |   - [Configuration](#configuration)
34 | - [🤝 Contributing](#-contributing)
35 | - [📚 Citation](#-citation)
36 | - [📄 License](#-license)
37 | - [Acknowledgements](#acknowledgements)

38 |

39 | ## ✨ Key Features

40 |

41 | - 🔄 **Effective Geometric Tensor Representations**: Leverages geometric tensors without relying on irreducible representations or Clebsch-Gordan transforms

42 | - 🧩 **Unified Structural Embedding**: Introduces geometry-aware tensor attention for improved molecular representation

43 | - 📊 **Hierarchical Tensor Refinement**: Implements a flexible and efficient representation scheme

44 | - 🏆 **State-of-the-Art Performance**: Achieves superior results on QM9, rMD17, MD22, and Molecule3D datasets

45 | - 📈 **Load Pre-trained Models**: Easily load and use pre-trained model checkpoints by name, URL, or local path, with automatic download capabilities.

46 |

47 | ## 🚀 Installation

48 |

49 | ### 📦 From PyPI (Recommended)

50 |

51 | You can install it using pip:

52 |

53 | * **Core Model Only:** Installs only the essential dependencies required to use the `GotenNet` model.

54 | ```bash

55 | pip install gotennet

56 | ```

57 |

58 | * **Full Installation (Core + Training/Utilities):** Installs core dependencies plus libraries needed for training, data handling, logging, etc.

59 | ```bash

60 | pip install gotennet[full]

61 | ```

62 |

63 | ### 🔧 From Source

64 |

65 | 1. **Clone the repository:**

66 | ```bash

67 | git clone https://github.com/sarpaykent/gotennet.git

68 | cd gotennet

69 | ```

70 |

71 | 2. **Create and activate a virtual environment** (using conda or venv/uv):

72 | ```bash

73 | # Using conda

74 | conda create -n gotennet python=3.10

75 | conda activate gotennet

76 |

77 | # Or using venv/uv

78 | uv venv --python 3.10

79 | source .venv/bin/activate

80 | ```

81 |

82 | 3. **Install the package:**

83 | Choose the installation type based on your needs:

84 |

85 | * **Core Model Only:** Installs only the essential dependencies required to use the `GotenNet` model.

86 | ```bash

87 | pip install .

88 | ```

89 |

90 | * **Full Installation (Core + Training/Utilities):** Installs core dependencies plus libraries needed for training, data handling, logging, etc.

91 | ```bash

92 | pip install .[full]

93 | # Or for editable install:

94 | # pip install -e .[full]

95 | ```

96 | *(Note: `uv` can be used as a faster alternative to `pip` for installation, e.g., `uv pip install .[full]`)*

97 |

98 | ## 🔬 Usage

99 |

100 | ### Using the Model

101 |

102 | Once installed, you can import and use the `GotenNet` model directly in your Python code:

103 |

104 | ```python

105 | from gotennet import GotenNet

106 |

107 | # --- Using the base GotenNet model ---

108 | # Requires manual calculation of edge_index, edge_diff, edge_vec

109 |

110 | # Example instantiation

111 | model = GotenNet(

112 | n_atom_basis=256,

113 | n_interactions=4,

114 | # rest of the parameters

115 | )

116 |

117 | # Encoded representations can be computed with

118 | h, X = model(atomic_numbers, edge_index, edge_diff, edge_vec)

119 |

120 | # --- Using GotenNetWrapper (handles distance calculation) ---

121 | # Expects a PyTorch Geometric Data object or similar dict

122 | # with keys like 'z' (atomic_numbers), 'pos' (positions), 'batch'

123 |

124 | # Example instantiation

125 | from gotennet import GotenNetWrapper

126 | wrapped_model = GotenNetWrapper(

127 | n_atom_basis=256,

128 | n_interactions=4,

129 | # rest of the parameters

130 | )

131 |

132 | # Encoded representations can be computed with

133 | h, X = wrapped_model(data)

134 |

135 | ```
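
The base model above expects `edge_index`, `edge_diff`, and `edge_vec` to be computed by the caller. As a rough illustration of what such inputs can look like, the sketch below builds a cutoff-based neighbor list in plain Python. It assumes that `edge_diff` holds pairwise distances and `edge_vec` holds displacement vectors; the sign convention, the exact shapes, and the `build_edges` helper are assumptions for illustration, so check `GotenNetWrapper` for the conventions the model actually uses.

```python
import math


def build_edges(positions, cutoff):
    """Return (edge_index, edge_diff, edge_vec) for all ordered pairs closer than `cutoff`."""
    sources, targets, edge_diff, edge_vec = [], [], [], []
    for i, pos_i in enumerate(positions):
        for j, pos_j in enumerate(positions):
            if i == j:
                continue  # no self-loops
            # Assumed convention: displacement is pos[i] - pos[j]
            vec = [a - b for a, b in zip(pos_i, pos_j)]
            dist = math.sqrt(sum(c * c for c in vec))
            if dist < cutoff:
                sources.append(i)
                targets.append(j)
                edge_diff.append(dist)
                edge_vec.append(vec)
    return [sources, targets], edge_diff, edge_vec


# Three atoms on a line; the third is farther than the cutoff from both others.
positions = [(0.0, 0.0, 0.0), (0.96, 0.0, 0.0), (10.0, 0.0, 0.0)]
edge_index, edge_diff, edge_vec = build_edges(positions, cutoff=5.0)
print(edge_index, edge_diff)
```

The nested loop is quadratic in the number of atoms, which is fine for a small molecule; the lists would still need to be converted to tensors before being passed to the model.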

136 |

137 | ### Loading Pre-trained Models Programmatically

138 |

139 | You can easily load pre-trained `GotenModel` instances programmatically using the `from_pretrained` class method. This method can accept a model alias (which will be resolved to a download URL), a direct HTTPS URL to a checkpoint file, or a local file path. It handles automatic downloading and caching of checkpoints. Pre-trained model weights and aliases are hosted on the [GotenNet Hugging Face Model Hub](https://huggingface.co/sarpaykent/GotenNet).

140 |

141 | ```python

142 | from gotennet.models import GotenModel

143 |

144 | # Example 1: Load by model alias

145 | # This will automatically download from a known location if not found locally.

146 | # The format is {dataset}_{size}_{target}

147 | model_by_alias = GotenModel.from_pretrained("QM9_small_homo")

148 |

149 | # Example 2: Load from a direct URL

150 | model_url = "https://huggingface.co/sarpaykent/GotenNet/resolve/main/pretrained/qm9/small/gotennet_homo.ckpt" # Replace with an actual URL

151 | model_by_url = GotenModel.from_pretrained(model_url)

152 |

153 | # Example 3: Load from a local file path

154 | local_model_path = "/path/to/your/local_model.ckpt"

155 | model_by_path = GotenModel.from_pretrained(local_model_path)

156 |

157 | # After loading, the model is ready for inference:

158 | predictions = model_by_alias(data_input)

159 | ```
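
To make the three accepted inputs concrete, here is a small sketch of how a reference string could be classified and how an alias of the form `{dataset}_{size}_{target}` could map to a download URL. The URL pattern is extrapolated from the single example URL above, and `classify_checkpoint`, `alias_to_url`, and `BASE_URL` are hypothetical names; the resolution logic inside `from_pretrained` may differ.

```python
import os
from urllib.parse import urlparse

# Assumption: base location taken from the example URL in this README.
BASE_URL = "https://huggingface.co/sarpaykent/GotenNet/resolve/main/pretrained"


def classify_checkpoint(ref: str) -> str:
    """Classify a checkpoint reference as 'url', 'path', or 'alias'."""
    if urlparse(ref).scheme in ("http", "https"):
        return "url"
    if ref.endswith(".ckpt") or "/" in ref or os.path.exists(ref):
        return "path"
    return "alias"


def alias_to_url(alias: str) -> str:
    """Map e.g. 'QM9_small_homo' to a URL, assuming the pattern seen in the example."""
    dataset, size, target = alias.split("_", 2)
    return f"{BASE_URL}/{dataset.lower()}/{size.lower()}/gotennet_{target}.ckpt"


print(classify_checkpoint("QM9_small_homo"))
print(alias_to_url("QM9_small_homo"))
```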

160 |

161 | For more advanced scenarios, if you only need to load the base `GotenNet` representation module from a local checkpoint (e.g., a checkpoint that only contains representation weights), you can use:

162 |

163 | ```python

164 | from gotennet.models.representation import GotenNet, GotenNetWrapper

165 |

166 | # Example: Load a GotenNet representation from a local file

167 | representation_checkpoint_path = "/path/to/your/local_model.ckpt"

168 | gotennet_model = GotenNet.load_from_checkpoint(representation_checkpoint_path)

169 | # or

170 | gotennet_wrapped = GotenNetWrapper.load_from_checkpoint(representation_checkpoint_path)

171 | ```

172 |

173 | ### Training a Model

174 |

175 | After installation, you can use the `train_gotennet` command:

176 |

177 | ```bash

178 | train_gotennet

179 | ```

180 |

181 | Or you can run the training script directly:

182 |

183 | ```bash

184 | python gotennet/scripts/train.py

185 | ```

186 |

187 | Both methods use Hydra for configuration. You can reproduce U0 target prediction on the QM9 dataset with the following command:

188 |

189 | ```bash

190 | train_gotennet experiment=qm9_u0.yaml

191 | ```
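
Selecting an experiment layers that file's values over the default configuration. The sketch below imitates this with a recursive dictionary merge, using a few values from `model/gotennet.yaml` and `experiment/qm9_u0.yaml`. It is a simplified stand-in for Hydra's config composition, and it assumes `representation` is nested under `model`; `deep_merge` is a hypothetical helper.

```python
import copy


def deep_merge(base: dict, override: dict) -> dict:
    """Return a new dict where values in `override` win, merging nested dicts recursively."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# A few defaults from the model config
defaults = {
    "model": {
        "lr_patience": 5,
        "weight_decay": 0.01,
        "representation": {"n_rbf": 32, "lmax": 2},
    }
}
# The corresponding overrides from the QM9 U0 experiment
experiment = {
    "model": {
        "lr_patience": 15,
        "weight_decay": 0.0,
        "representation": {"n_rbf": 64},
    },
    "label": "U0",
}

cfg = deep_merge(defaults, experiment)
print(cfg["model"]["representation"])
```

Keys that the experiment does not mention, such as `lmax`, keep their default values.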

192 |

193 | ### Testing a Model

194 |

195 | To evaluate a trained model, you can use the `test_gotennet` script. When you provide a checkpoint, the script can infer necessary configurations (like dataset and task details) directly from the checkpoint file. This script leverages the `GotenModel.from_pretrained` capabilities, allowing you to specify the model to test by its alias, a direct URL, or a local file path, handling automatic downloads.

196 |

197 | Here's how you can use it:

198 |

199 | ```bash

200 | # Option 1: Test by model alias (e.g., QM9_small_homo)

201 | # The script will automatically download the checkpoint and infer configurations.

202 | test_gotennet checkpoint=QM9_small_homo

203 |

204 | # Option 2: Test with a direct checkpoint URL

205 | # The script will automatically download the checkpoint and infer configurations.

206 | test_gotennet checkpoint=https://huggingface.co/sarpaykent/GotenNet/resolve/main/pretrained/qm9/small/gotennet_homo.ckpt

207 |

208 | # Option 3: Test with a local checkpoint file path

209 | test_gotennet checkpoint=/path/to/your/local_model.ckpt

210 | ```

211 |

212 | The script uses [Hydra](https://hydra.cc/) for any additional or overriding configurations if needed, but for straightforward evaluation of a checkpoint, only the `checkpoint` argument is typically required.

213 |

214 | ### Configuration

215 |

216 | The project uses [Hydra](https://hydra.cc/) for configuration management. Configuration files are located in the `gotennet/configs/` directory.

217 |

218 | Main configuration categories:

219 | - `datamodule`: Dataset configurations (md17, qm9, etc.)

220 | - `model`: Model configurations

221 | - `trainer`: Training parameters

222 | - `callbacks`: Callback configurations

223 | - `logger`: Logging configurations

224 |

225 | ## 🤝 Contributing

226 |

227 | We welcome contributions to GotenNet! Please feel free to submit a Pull Request.

228 |

229 |

230 | ## 📚 Citation

231 |

232 | Please consider citing our work below if this project is helpful:

233 |

234 |

235 | ```bibtex

236 | @inproceedings{aykent2025gotennet,

237 | author = {Aykent, Sarp and Xia, Tian},

238 | booktitle = {The Thirteenth International Conference on Learning Representations},

239 | year = {2025},

240 | title = {{GotenNet: Rethinking Efficient 3D Equivariant Graph Neural Networks}},

241 | url = {https://openreview.net/forum?id=5wxCQDtbMo},

242 | howpublished = {https://openreview.net/forum?id=5wxCQDtbMo},

243 | }

244 | ```

245 |

246 | ## 📄 License

247 |

248 | This project is licensed under the MIT License - see the [LICENSE](LICENSE) file for details.

249 |

250 | ## Acknowledgements

251 |

252 | GotenNet is proudly built on the innovative foundations provided by the projects below.

253 | - [e3nn](https://github.com/e3nn/e3nn)

254 | - [PyG](https://github.com/pyg-team/pytorch_geometric)

255 | - [PyTorch Lightning](https://github.com/Lightning-AI/pytorch-lightning)

256 |

--------------------------------------------------------------------------------

/assets/GotenNet_framework.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/sarpaykent/GotenNet/c561c05a1120118004912b248c944f74022b30cc/assets/GotenNet_framework.png

--------------------------------------------------------------------------------

/gotennet/__init__.py:

--------------------------------------------------------------------------------

1 | """GotenNet: A machine learning model for molecular property prediction."""

2 |

3 | __version__ = "1.1.2"

4 |

5 | from gotennet.models.representation.gotennet import (

6 | EQFF, # noqa: F401

7 | GATA, # noqa: F401

8 | GotenNet, # noqa: F401

9 | GotenNetWrapper, # noqa: F401

10 | )

11 |

12 |

13 |

--------------------------------------------------------------------------------

/gotennet/configs/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/sarpaykent/GotenNet/c561c05a1120118004912b248c944f74022b30cc/gotennet/configs/__init__.py

--------------------------------------------------------------------------------

/gotennet/configs/callbacks/default.yaml:

--------------------------------------------------------------------------------

1 | model_checkpoint:

2 | _target_: pytorch_lightning.callbacks.ModelCheckpoint

3 | monitor: "validation/val_loss" # name of the logged metric which determines when model is improving

4 | mode: "min" # "min" means a lower metric value is better; use "max" when higher is better

5 | save_top_k: 1 # save k best models (determined by above metric)

6 | save_last: True # additionally always save model from last epoch

7 | verbose: False

8 | dirpath: ${paths.output_dir}/checkpoints

9 | filename: "epoch_{epoch:03d}"

10 | auto_insert_metric_name: False

11 |

12 | early_stopping:

13 | _target_: pytorch_lightning.callbacks.EarlyStopping

14 | monitor: "validation/ema_loss" # name of the logged metric which determines when model is improving

15 | mode: "min" # "min" means a lower metric value is better; use "max" when higher is better

16 | patience: 25 # how many validation epochs of not improving until training stops

17 | min_delta: 1e-6 # minimum change in the monitored metric needed to qualify as an improvement

18 |

19 | model_summary:

20 | _target_: pytorch_lightning.callbacks.RichModelSummary

21 | max_depth: 5

22 |

23 | rich_progress_bar:

24 | _target_: pytorch_lightning.callbacks.RichProgressBar

25 | refresh_rate: 1

26 |

27 | learning_rate_monitor:

28 | _target_: pytorch_lightning.callbacks.LearningRateMonitor

29 |

--------------------------------------------------------------------------------
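
The `early_stopping` entry combines `mode: "min"`, `patience`, and `min_delta`. The sketch below shows how these three settings interact in a minimal stopper: a value counts as an improvement only if it beats the best value by more than `min_delta`, and training stops after `patience` consecutive checks without improvement. `EarlyStopper` is a hypothetical class for illustration, not the PyTorch Lightning callback, which has additional options.

```python
class EarlyStopper:
    """Minimal 'min'-mode early stopping with patience and min_delta."""

    def __init__(self, patience: int = 25, min_delta: float = 1e-6):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.wait = 0  # consecutive checks without improvement

    def step(self, value: float) -> bool:
        """Record one validation value; return True when training should stop."""
        if value < self.best - self.min_delta:
            self.best = value
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


# Small patience so the demo is short; the config above uses 25.
stopper = EarlyStopper(patience=3, min_delta=1e-6)
losses = [1.0, 0.9, 0.9, 0.9000001, 0.95, 0.8]
stopped_at = None
for epoch, loss in enumerate(losses):
    if stopper.step(loss):
        stopped_at = epoch
        break
print(stopped_at, stopper.best)
```

In this run the final value `0.8` is never seen, because three checks in a row failed to improve on `0.9` by more than `min_delta`.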

/gotennet/configs/callbacks/none.yaml:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/gotennet/configs/datamodule/qm9.yaml:

--------------------------------------------------------------------------------

1 | _target_: gotennet.datamodules.datamodule.DataModule

2 |

3 | hparams:

4 | dataset: QM9

5 | dataset_arg:

6 | dataset_root: ${paths.data_dir} # data_path is specified in config.yaml

7 | derivative: false

8 | split_mode: null

9 | reload: 0

10 | batch_size: 32

11 | inference_batch_size: 128

12 | standardize: false

13 | splits: null

14 | train_size: 110000

15 | val_size: 10000

16 | test_size: null

17 | num_workers: 12

18 | seed: 1

19 | output_dir: ${paths.output_dir}

20 | ngpus: 1

21 | num_nodes: 1

22 | precision: 32

23 | task: train

24 | distributed_backend: ddp

25 | redirect: false

26 | accelerator: gpu

27 | test_interval: 10

28 | save_interval: 1

29 | prior_model: Atomref

30 | normalize_positions: false

31 |

--------------------------------------------------------------------------------
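
The datamodule config fixes `train_size` and `val_size` and leaves `test_size` as `null`. One plausible reading, sketched below, is that the test set receives whatever remains after the other two splits are drawn from a seeded shuffle. This interpretation, the `split_indices` helper, and the dataset size of 130,000 used in the demo are assumptions for illustration; the actual logic lives in `gotennet/datamodules`.

```python
import random


def split_indices(n_total, train_size, val_size, test_size=None, seed=1):
    """Shuffle indices with a fixed seed and cut them into train/val/test lists."""
    if test_size is None:
        # Assumption: a null test size means "use the remainder"
        test_size = n_total - train_size - val_size
    if test_size < 0 or train_size + val_size + test_size > n_total:
        raise ValueError("split sizes exceed dataset size")
    indices = list(range(n_total))
    random.Random(seed).shuffle(indices)
    train = indices[:train_size]
    val = indices[train_size:train_size + val_size]
    test = indices[train_size + val_size:train_size + val_size + test_size]
    return train, val, test


# n_total is a hypothetical dataset size chosen for the demo
train, val, test = split_indices(n_total=130_000, train_size=110_000, val_size=10_000)
print(len(train), len(val), len(test))
```

Using a dedicated `random.Random(seed)` instance keeps the split reproducible without touching the global random state.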

/gotennet/configs/experiment/qm9.yaml:

--------------------------------------------------------------------------------

1 | # @package _global_

2 |

3 | defaults:

4 | - override /datamodule: qm9.yaml

5 | - override /model: gotennet.yaml

6 | - override /callbacks: default.yaml

7 | - override /logger: wandb.yaml # set logger here or use command line (e.g. `python train.py logger=tensorboard`)

8 | - override /trainer: default.yaml

9 |

10 | datamodule:

11 | hparams:

12 | batch_size: 32

13 | seed: 1

14 | standardize: false

15 |

16 | model:

17 | lr: 0.0001

18 | lr_warmup_steps: 10000

19 | lr_monitor: "validation/val_loss"

20 | lr_minlr: 1.e-07

21 | lr_patience: 15

22 | weight_decay: 0.0

23 | task_config:

24 | task_loss: "MSELoss"

25 | representation:

26 | n_interactions: 4

27 | n_atom_basis: 256

28 | radial_basis: "expnorm"

29 | n_rbf: 64

30 | output:

31 | n_hidden: 256

32 |

33 | callbacks:

34 | early_stopping:

35 | monitor: "validation/val_loss" # name of the logged metric which determines when model is improving

36 | patience: 150 # how many validation epochs of not improving until training stops

37 | model_checkpoint:

38 | _target_: pytorch_lightning.callbacks.ModelCheckpoint

39 | monitor: "validation/MeanAbsoluteError_${label}" # name of the logged metric which determines when model is improving

40 |

--------------------------------------------------------------------------------

/gotennet/configs/experiment/qm9_u0.yaml:

--------------------------------------------------------------------------------

1 | # @package _global_

2 |

3 | defaults:

4 | - override /datamodule: qm9.yaml

5 | - override /model: gotennet.yaml

6 | - override /callbacks: default.yaml

7 | - override /logger: wandb.yaml # set logger here or use command line (e.g. `python train.py logger=tensorboard`)

8 | - override /trainer: default.yaml

9 |

10 | datamodule:

11 | hparams:

12 | batch_size: 32

13 | seed: 1

14 | standardize: false

15 |

16 | model:

17 | lr: 0.0001

18 | lr_warmup_steps: 10000

19 | lr_monitor: "validation/val_loss"

20 | lr_minlr: 1.e-07

21 | lr_patience: 15

22 | weight_decay: 0.0

23 | task_config:

24 | task_loss: "MSELoss"

25 | representation:

26 | n_interactions: 4

27 | n_atom_basis: 256

28 | radial_basis: "expnorm"

29 | n_rbf: 64

30 | output:

31 | n_hidden: 256

32 |

33 | callbacks:

34 | early_stopping:

35 | monitor: "validation/val_loss" # name of the logged metric which determines when model is improving

36 | patience: 150 # how many validation epochs of not improving until training stops

37 | model_checkpoint:

38 | _target_: pytorch_lightning.callbacks.ModelCheckpoint

39 | monitor: "validation/MeanAbsoluteError_${label}" # name of the logged metric which determines when model is improving

40 |

41 | label: "U0"

42 |

--------------------------------------------------------------------------------

/gotennet/configs/hydra/default.yaml:

--------------------------------------------------------------------------------

1 | # https://hydra.cc/docs/configure_hydra/intro/

2 |

3 | # enable color logging

4 | defaults:

5 | - override hydra_logging: colorlog

6 | - override job_logging: colorlog

7 | - override sweeper: optuna

8 | - override sweeper/sampler: grid

9 | # - override launcher: joblib

10 |

11 | # output directory, generated dynamically on each run

12 | run:

13 | dir: ${paths.log_dir}/${label}_${name}/runs/${now:%Y-%m-%d}_${now:%H-%M-%S}

14 | sweep:

15 | dir: ${paths.log_dir}/${name}/multiruns/${now:%Y-%m-%d}_${now:%H-%M-%S}

16 | subdir: 0

17 |

--------------------------------------------------------------------------------
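
The `run.dir` pattern combines the log directory, the label, the experiment name, and a timestamp. The sketch below reproduces the resulting directory layout with `datetime` formatting so the pattern is easier to read. `run_dir` is a hypothetical helper, and the example values for `log_dir`, `label`, and `name` are placeholders.

```python
from datetime import datetime
from pathlib import PurePosixPath


def run_dir(log_dir: str, label: str, name: str, now: datetime) -> str:
    """Build <log_dir>/<label>_<name>/runs/<date>_<time> like the run.dir pattern."""
    stamp = f"{now:%Y-%m-%d}_{now:%H-%M-%S}"
    return str(PurePosixPath(log_dir) / f"{label}_{name}" / "runs" / stamp)


example = run_dir("/project/logs/", "U0", "default", datetime(2025, 1, 2, 3, 4, 5))
print(example)
```

Each run gets its own timestamped folder, so repeated runs of the same experiment do not overwrite each other's outputs.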

/gotennet/configs/hydra/job_logging/logger.yaml:

--------------------------------------------------------------------------------

1 | # @package hydra.job_logging

2 | # python logging configuration for tasks

3 | version: 1

4 | formatters:

5 | simple:

6 | format: "[%(asctime)s][%(name)s][%(levelname)s] - %(message)s"

7 | colorlog:

8 | "()": "colorlog.ColoredFormatter"

9 | format: "[%(cyan)s%(asctime)s%(reset)s][%(blue)s%(name)s%(reset)s][%(log_color)s%(levelname)s%(reset)s] - %(message)s"

10 | log_colors:

11 | DEBUG: purple

12 | INFO: green

13 | WARNING: yellow

14 | ERROR: red

15 | CRITICAL: red

16 | handlers:

17 | console:

18 | class: rich.logging.RichHandler

19 | formatter: colorlog

20 | file:

21 | class: logging.FileHandler

22 | formatter: simple

23 | # relative to the job log directory

24 | filename: ${hydra.job.name}.log

25 | root:

26 | level: INFO

27 | handlers: [console, file]

28 |

29 | disable_existing_loggers: false

30 |

--------------------------------------------------------------------------------
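
The job logging file follows the schema of Python's `logging.config.dictConfig`. The sketch below applies an equivalent dictionary directly, which can help when debugging handler or formatter settings outside Hydra. It swaps the `rich` console handler and `colorlog` formatter for standard-library equivalents and writes to a temporary file; those substitutions and the logger name are assumptions made to keep the example dependency-free.

```python
import logging
import logging.config
import os
import tempfile

# Temporary location standing in for ${hydra.job.name}.log
log_path = os.path.join(tempfile.mkdtemp(), "train.log")

LOGGING = {
    "version": 1,
    "formatters": {
        "simple": {"format": "[%(asctime)s][%(name)s][%(levelname)s] - %(message)s"},
    },
    "handlers": {
        # Plain StreamHandler instead of rich.logging.RichHandler
        "console": {"class": "logging.StreamHandler", "formatter": "simple"},
        "file": {"class": "logging.FileHandler", "formatter": "simple", "filename": log_path},
    },
    "root": {"level": "INFO", "handlers": ["console", "file"]},
    "disable_existing_loggers": False,
}

logging.config.dictConfig(LOGGING)
log = logging.getLogger("gotennet.demo")
log.info("configured")
log.debug("hidden at INFO level")  # filtered out by the root level

with open(log_path) as fh:
    contents = fh.read()
print(contents.strip())
```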

/gotennet/configs/local/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/sarpaykent/GotenNet/c561c05a1120118004912b248c944f74022b30cc/gotennet/configs/local/.gitkeep

--------------------------------------------------------------------------------

/gotennet/configs/logger/comet.yaml:

--------------------------------------------------------------------------------

1 | # https://www.comet.ml

2 |

3 | comet:

4 | _target_: pytorch_lightning.loggers.comet.CometLogger

5 | api_key: ${oc.env:COMET_API_TOKEN} # api key is loaded from environment variable

6 | project_name: "template-tests"

7 | experiment_name: ${name}

8 |

--------------------------------------------------------------------------------

/gotennet/configs/logger/csv.yaml:

--------------------------------------------------------------------------------

1 | # csv logger built in lightning

2 |

3 | csv:

4 | _target_: pytorch_lightning.loggers.csv_logs.CSVLogger

5 | save_dir: "."

6 | name: "csv/"

7 | prefix: ""

8 |

--------------------------------------------------------------------------------

/gotennet/configs/logger/default.yaml:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/gotennet/configs/logger/many_loggers.yaml:

--------------------------------------------------------------------------------

1 | # train with many loggers at once

2 |

3 | defaults:

4 | # - comet.yaml

5 | - csv.yaml

6 | # - mlflow.yaml

7 | # - neptune.yaml

8 | - tensorboard.yaml

9 | - wandb.yaml

10 |

--------------------------------------------------------------------------------

/gotennet/configs/logger/mlflow.yaml:

--------------------------------------------------------------------------------

1 | # https://mlflow.org

2 |

3 | mlflow:

4 | _target_: pytorch_lightning.loggers.mlflow.MLFlowLogger

5 | experiment_name: ${name}

6 | tracking_uri: ${original_work_dir}/logs/mlflow/mlruns # run `mlflow ui` command inside the `logs/mlflow/` dir to open the UI

7 | tags: null

8 | prefix: ""

9 | artifact_location: null

10 |

--------------------------------------------------------------------------------

/gotennet/configs/logger/neptune.yaml:

--------------------------------------------------------------------------------

1 | # https://neptune.ai

2 |

3 | neptune:

4 | _target_: pytorch_lightning.loggers.neptune.NeptuneLogger

5 | api_key: ${oc.env:NEPTUNE_API_TOKEN} # api key is loaded from environment variable

6 | project_name: your_name/template-tests

7 | close_after_fit: True

8 | offline_mode: False

9 | experiment_name: ${name}

10 | experiment_id: null

11 | prefix: ""

12 |

--------------------------------------------------------------------------------

/gotennet/configs/logger/tensorboard.yaml:

--------------------------------------------------------------------------------

1 | # https://www.tensorflow.org/tensorboard/

2 |

3 | tensorboard:

4 | _target_: pytorch_lightning.loggers.tensorboard.TensorBoardLogger

5 | save_dir: "tensorboard/"

6 | name: null

7 | version: ${name}

8 | log_graph: False

9 | default_hp_metric: True

10 | prefix: ""

11 |

--------------------------------------------------------------------------------

/gotennet/configs/logger/wandb.yaml:

--------------------------------------------------------------------------------

1 | # https://wandb.ai

2 |

3 | wandb:

4 | _target_: pytorch_lightning.loggers.wandb.WandbLogger

5 | project: ${project}

6 | name: ${name}

7 | save_dir: "."

8 | offline: False # set True to store all logs only locally

9 | id: null # pass correct id to resume experiment!

10 | entity: "" # set to name of your wandb team

11 | log_model: False

12 | prefix: ""

13 | job_type: "train"

14 | group: ""

15 | tags: []

16 |

--------------------------------------------------------------------------------

/gotennet/configs/model/gotennet.yaml:

--------------------------------------------------------------------------------

1 | _target_: gotennet.models.goten_model.GotenModel

2 | label: ${label}

3 | task: ${task}

4 |

5 | cutoff: 5.0

6 | lr: 0.0001

7 | lr_decay: 0.8

8 | lr_patience: 5

9 | lr_monitor: "validation/ema_loss"

10 | ema_decay: 0.9

11 | weight_decay: 0.01

12 |

13 | output:

14 | n_hidden: 256

15 |

16 | representation:

17 | __target__: gotennet.models.representation.gotennet.GotenNetWrapper

18 | n_atom_basis: 256

19 | n_interactions: 4

20 | n_rbf: 32

21 | cutoff_fn:

22 | __target__: gotennet.models.components.layers.CosineCutoff

23 | cutoff: 5.0

24 | radial_basis: "expnorm"

25 | activation: "swish"

26 | max_z: 100

27 | weight_init: "xavier_uniform"

28 | bias_init: "zeros"

29 | num_heads: 8

30 | attn_dropout: 0.1

31 | edge_updates: True

32 | lmax: 2

33 | aggr: "add"

34 | scale_edge: False

35 | evec_dim:

36 | emlp_dim:

37 | sep_htr: True

38 | sep_dir: True

39 | sep_tensor: True

40 | edge_ln: ""

41 |

42 | #task_config:

43 | # name: "Test"

44 |

--------------------------------------------------------------------------------
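
The model config sets `cutoff: 5.0` and selects a `CosineCutoff` function. The sketch below implements the commonly used cosine cutoff, which decays smoothly from 1 at zero distance to 0 at the cutoff radius. It assumes the repository's `CosineCutoff` follows this standard definition; the implementation in `gotennet/models/components/layers.py` is the authority.

```python
import math


def cosine_cutoff(distance: float, cutoff: float = 5.0) -> float:
    """Standard cosine cutoff: 0.5 * (cos(pi * d / cutoff) + 1) inside the cutoff, else 0."""
    if distance >= cutoff:
        return 0.0
    return 0.5 * (math.cos(math.pi * distance / cutoff) + 1.0)


for d in (0.0, 2.5, 5.0, 6.0):
    print(d, cosine_cutoff(d))
```

Multiplying edge features by this weight makes contributions vanish continuously as neighbors approach the cutoff, which avoids discontinuities when an atom crosses the radius.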

/gotennet/configs/paths/default.yaml:

--------------------------------------------------------------------------------

1 | # path to root directory

2 | # this requires PROJECT_ROOT environment variable to exist

3 | # PROJECT_ROOT is inferred and set by pyrootutils package in `train.py` and `test.py`

4 | root_dir: ${oc.env:PROJECT_ROOT}

5 |

6 | # path to data directory

7 | data_dir: ${paths.root_dir}/data/

8 |

9 | # path to logging directory

10 | log_dir: ${paths.root_dir}/logs/

11 |

12 | # path to output directory, created dynamically by hydra

13 | # path generation pattern is specified in `configs/hydra/default.yaml`

14 | # use it to store all files generated during the run, like ckpts and metrics

15 | output_dir: ${hydra:runtime.output_dir}

16 |

--------------------------------------------------------------------------------

/gotennet/configs/test.yaml:

--------------------------------------------------------------------------------

1 | # @package _global_

2 |

3 | # specify here default evaluation configuration

4 | defaults:

5 | - _self_

6 | - datamodule: qm9.yaml

7 | - model: gotennet.yaml

8 | - callbacks: default.yaml

9 | - logger: default.yaml

10 | - trainer: default.yaml

11 |

12 | # experiment configs allow for version control of specific configurations

13 | # e.g. best hyperparameters for each combination of model and datamodule

14 | - experiment: null

15 |

16 | # config for hyperparameter optimization

17 | - hparams_search: null

18 |

19 | # optional local config for machine/user specific settings

20 | # it's optional since it doesn't need to exist and is excluded from version control

21 | - optional local: default.yaml

22 |

23 | # enable color logging

24 | - paths: default.yaml

25 | - hydra: default.yaml

26 | # - override /hydra/launcher: joblib

27 |

28 | original_work_dir: ${hydra:runtime.cwd}

29 |

30 | data_dir: ${original_work_dir}/data/

31 |

32 | print_config: True

33 |

34 | ignore_warnings: True

35 |

36 | seed: 42

37 | # default name for the experiment, determines logging folder path

38 | # (you can overwrite this name in experiment configs)

39 | name: "default"

40 | task: "QM9"

41 | exp: False

42 | project: "gotennet"

43 | label: -1

44 | label_str: -1

45 | # passing checkpoint path is necessary

46 | ckpt_path: null

47 | checkpoint: null

48 |

--------------------------------------------------------------------------------

/gotennet/configs/train.yaml:

--------------------------------------------------------------------------------

1 | # @package _global_

2 |

3 | # specify here default training configuration

4 | defaults:

5 | - _self_

6 | - datamodule: qm9.yaml

7 | - model: gotennet.yaml

8 | - callbacks: default.yaml

9 | - logger: default.yaml

10 | - trainer: default.yaml

11 |

12 | # experiment configs allow for version control of specific configurations

13 | # e.g. best hyperparameters for each combination of model and datamodule

14 | - experiment: null

15 |

16 | # config for hyperparameter optimization

17 | - hparams_search: null

18 |

19 | # optional local config for machine/user specific settings

20 | # it's optional since it doesn't need to exist and is excluded from version control

21 | - optional local: default.yaml

22 |

23 | # enable color logging

24 | - paths: default.yaml

25 | - hydra: default.yaml

26 |

27 | # pretty print config at the start of the run using Rich library

28 | print_config: True

29 |

30 | # disable python warnings if they annoy you

31 | ignore_warnings: True

32 |

33 | # set False to skip model training

34 | train: True

35 |

36 | # evaluate on test set, using best model weights achieved during training

37 | # lightning chooses best weights based on the metric specified in checkpoint callback

38 | test: True

39 |

40 | # seed for random number generators in pytorch, numpy and python.random

41 | seed: 42

42 |

43 | # default name for the experiment, determines logging folder path

44 | # (you can overwrite this name in experiment configs)

45 | name: "default"

46 | task: "QM9"

47 | exp: False

48 | project: "gotennet"

49 | label: -1

50 | label_str: -1

51 | ckpt_path: null

52 |

--------------------------------------------------------------------------------

/gotennet/configs/trainer/default.yaml:

--------------------------------------------------------------------------------

1 | _target_: pytorch_lightning.Trainer

2 |

3 | devices: 1

4 |

5 | min_epochs: 1

6 | max_epochs: 1000

7 | strategy: ddp_find_unused_parameters_false

8 | # number of validation steps to execute at the beginning of the training

9 | num_sanity_val_steps: 0

10 | gradient_clip_val: 5.0

11 |

--------------------------------------------------------------------------------

/gotennet/datamodules/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/sarpaykent/GotenNet/c561c05a1120118004912b248c944f74022b30cc/gotennet/datamodules/__init__.py

--------------------------------------------------------------------------------

/gotennet/datamodules/components/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/sarpaykent/GotenNet/c561c05a1120118004912b248c944f74022b30cc/gotennet/datamodules/components/__init__.py

--------------------------------------------------------------------------------

/gotennet/datamodules/components/qm9.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from torch_geometric.datasets import QM9 as QM9_geometric

3 | from torch_geometric.transforms import Compose

4 |

5 | qm9_target_dict = {

6 | 0: "mu",

7 | 1: "alpha",

8 | 2: "homo",

9 | 3: "lumo",

10 | 4: "gap",

11 | 5: "r2",

12 | 6: "zpve",

13 | 7: "U0",

14 | 8: "U",

15 | 9: "H",

16 | 10: "G",

17 | 11: "Cv",

18 | }

19 |

20 |

21 | class QM9(QM9_geometric):

22 | """

23 | QM9 dataset wrapper for PyTorch Geometric QM9 dataset.

24 |

25 | This class extends the PyTorch Geometric QM9 dataset to provide additional

26 | functionality for working with specific molecular properties.

27 | """

28 |

29 | mu = "mu"

30 | alpha = "alpha"

31 | homo = "homo"

32 | lumo = "lumo"

33 | gap = "gap"

34 | r2 = "r2"

35 | zpve = "zpve"

36 | U0 = "U0"

37 | U = "U"

38 | H = "H"

39 | G = "G"

40 | Cv = "Cv"

41 |

42 | available_properties = [

43 | mu,

44 | alpha,

45 | homo,

46 | lumo,

47 | gap,

48 | r2,

49 | zpve,

50 | U0,

51 | U,

52 | H,

53 | G,

54 | Cv,

55 | ]

56 |

57 | def __init__(

58 | self,

59 | root: str,

60 | transform=None,

61 | pre_transform=None,

62 | pre_filter=None,

63 | dataset_arg=None,

64 | ):

65 | """

66 | Initialize the QM9 dataset.

67 |

68 | Args:

69 | root (str): Root directory where the dataset should be saved.

70 | transform: Transform to be applied to each data object. If None,

71 | defaults to _filter_label.

72 | pre_transform: Transform to be applied to each data object before saving.

73 | pre_filter: Function that takes in a data object and returns a boolean,

74 | indicating whether the item should be included.

75 | dataset_arg (str): The property to train on. Must be one of the available

76 | properties defined in qm9_target_dict.

77 |

78 | Raises:

79 | AssertionError: If dataset_arg is None.

80 | """

81 | assert dataset_arg is not None, (

82 | "Please pass the desired property to "

83 | 'train on via "dataset_arg". Available '

84 | f'properties are {", ".join(qm9_target_dict.values())}.'

85 | )

86 |

87 | self.label = dataset_arg

88 | label2idx = dict(zip(qm9_target_dict.values(), qm9_target_dict.keys(), strict=False))

89 | self.label_idx = label2idx[self.label]

90 |

91 | if transform is None:

92 | transform = self._filter_label

93 | else:

94 | transform = Compose([transform, self._filter_label])

95 |

96 | super(QM9, self).__init__(

97 | root,

98 | transform=transform,

99 | pre_transform=pre_transform,

100 | pre_filter=pre_filter,

101 | )

102 |

103 |

104 | @staticmethod

105 | def label_to_idx(label: str) -> int:

106 | """

107 | Convert a property label to its corresponding index.

108 |

109 | Args:

110 | label (str): The property label to convert.

111 |

112 | Returns:

113 | int: The index corresponding to the property label.

114 | """

115 | label2idx = dict(zip(qm9_target_dict.values(), qm9_target_dict.keys(), strict=False))

116 | return label2idx[label]

117 |

118 | def mean(self, divide_by_atoms: bool = True) -> torch.Tensor:

119 | """

120 | Calculate the mean of the target property across the dataset.

121 |

122 | Args:

123 | divide_by_atoms (bool): Whether to normalize the property by the number

124 | of atoms in each molecule.

125 |

126 | Returns:

127 | torch.Tensor: Scalar tensor holding the mean value of the target property.

128 | """

129 | if not divide_by_atoms:

130 | get_labels = lambda i: self.get(i).y

131 | else:

132 | get_labels = lambda i: self.get(i).y/self.get(i).pos.shape[0]

133 |

134 | y = torch.cat([get_labels(i) for i in range(len(self))], dim=0)

135 | assert len(y.shape) == 2

136 | if y.shape[1] != 1:

137 | y = y[:, self.label_idx]

138 | else:

139 | y = y[:, 0]

140 | return y.mean(axis=0)

141 | def min(self, divide_by_atoms: bool = True) -> torch.Tensor:

142 | """

143 | Calculate the minimum of the target property across the dataset.

144 |

145 | Args:

146 | divide_by_atoms (bool): Whether to normalize the property by the number

147 | of atoms in each molecule.

148 |

149 | Returns:

150 | torch.Tensor: Scalar tensor holding the minimum value of the target property.

151 | """

152 | if not divide_by_atoms:

153 | get_labels = lambda i: self.get(i).y

154 | else:

155 | get_labels = lambda i: self.get(i).y/self.get(i).pos.shape[0]

156 |

157 | y = torch.cat([get_labels(i) for i in range(len(self))], dim=0)

158 | assert len(y.shape) == 2

159 | if y.shape[1] != 1:

160 | y = y[:, self.label_idx]

161 | else:

162 | y = y[:, 0]

163 | return y.min(axis=0).values  # min over a dim returns (values, indices); keep only the values

164 |

165 | def std(self, divide_by_atoms: bool = True) -> torch.Tensor:

166 | """

167 | Calculate the standard deviation of the target property across the dataset.

168 |

169 | Args:

170 | divide_by_atoms (bool): Whether to normalize the property by the number

171 | of atoms in each molecule.

172 |

173 | Returns:

174 | torch.Tensor: Scalar tensor holding the standard deviation of the target property.

175 | """

176 | if not divide_by_atoms:

177 | get_labels = lambda i: self.get(i).y

178 | else:

179 | get_labels = lambda i: self.get(i).y/self.get(i).pos.shape[0]

180 |

181 | y = torch.cat([get_labels(i) for i in range(len(self))], dim=0)

182 | assert len(y.shape) == 2

183 | if y.shape[1] != 1:

184 | y = y[:, self.label_idx]

185 | else:

186 | y = y[:, 0]

187 | return y.std(axis=0)

188 |

189 | def get_atomref(self, max_z: int = 100) -> torch.Tensor:

190 | """

191 | Get atomic reference values for the target property.

192 |

193 | Args:

194 | max_z (int): Maximum atomic number to consider.

195 |

196 | Returns:

197 | torch.Tensor: Tensor of atomic reference values, or None if not available.

198 | """

199 | atomref = self.atomref(self.label_idx)

200 | if atomref is None:

201 | return None

202 | if atomref.size(0) != max_z:

203 | tmp = torch.zeros(max_z).unsqueeze(1)

204 | idx = min(max_z, atomref.size(0))

205 | tmp[:idx] = atomref[:idx]

206 | return tmp

207 | return atomref

208 |

209 | def _filter_label(self, batch):

210 | """

211 | Filter the batch to only include the target property.

212 |

213 | Args:

214 | batch: A batch of data from the dataset.

215 |

216 | Returns:

217 | The same batch object, with `y` reduced to the target property column.

218 | """

219 | batch.y = batch.y[:, self.label_idx].unsqueeze(1)

220 | return batch

221 |

--------------------------------------------------------------------------------
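
The label handling in `qm9.py` above comes down to inverting `qm9_target_dict` and keeping a single target column. The following is a minimal sketch in plain Python, using nested lists in place of tensors; `select_target` is a hypothetical helper for illustration and is not part of the repository.

```python
# Target index -> property name, copied from qm9.py above.
qm9_target_dict = {
    0: "mu", 1: "alpha", 2: "homo", 3: "lumo", 4: "gap", 5: "r2",
    6: "zpve", 7: "U0", 8: "U", 9: "H", 10: "G", 11: "Cv",
}

# Invert the mapping, as QM9.label_to_idx does with dict(zip(...)).
label2idx = {name: idx for idx, name in qm9_target_dict.items()}


def select_target(y_rows, label):
    """Hypothetical stand-in for QM9._filter_label on plain lists.

    Keeps only the column for `label`, preserving an [N, 1] layout.
    """
    idx = label2idx[label]
    return [[row[idx]] for row in y_rows]


# One molecule with 12 placeholder target values (not real QM9 data).
y = [[float(i) for i in range(12)]]
u0_only = select_target(y, "U0")
print(label2idx["U0"], u0_only)
```

An unknown label raises `KeyError` in this sketch, which matches what the dictionary lookup in `QM9.__init__` would do.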

/gotennet/datamodules/components/utils.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 | from pytorch_lightning.utilities import rank_zero_warn

4 |

5 |

6 | def train_val_test_split(

7 | dset_len: int,

8 | train_size: float | int | None,

9 | val_size: float | int | None,

10 | test_size: float | int | None,

11 | seed: int,

12 | ) -> tuple:

13 | """

14 | Split dataset indices into training, validation, and test sets.

15 |

16 | This function splits a dataset of length dset_len into training, validation,

17 | and test sets according to the specified sizes. The sizes can be specified as

18 | fractions of the dataset (float) or as absolute counts (int).

19 |

20 | Args:

21 | dset_len (int): Total length of the dataset.

22 | train_size (float or int or None): Size of the training set. If float, interpreted

23 | as a fraction of the dataset. If int, interpreted as an absolute count.

24 | If None, calculated as the remainder after val_size and test_size.

25 | val_size (float or int or None): Size of the validation set. If float, interpreted

26 | as a fraction of the dataset. If int, interpreted as an absolute count.

27 | If None, calculated as the remainder after train_size and test_size.

28 | test_size (float or int or None): Size of the test set. If float, interpreted

29 | as a fraction of the dataset. If int, interpreted as an absolute count.

30 | If None, calculated as the remainder after train_size and val_size.

31 | seed (int): Random seed for reproducibility.

32 |

33 | Returns:

34 | tuple: A tuple containing three numpy arrays (idx_train, idx_val, idx_test)

35 | with the indices for each split.

36 |

37 | Raises:

38 | AssertionError: If more than one of train_size, val_size, test_size is None,

39 | or if any split size is negative, or if the total split size exceeds

40 | the dataset length.

41 | """

42 | assert (train_size is None) + (val_size is None) + (test_size is None) <= 1, "Only one of train_size, val_size, test_size is allowed to be None."

43 |

44 | is_float = (isinstance(train_size, float), isinstance(val_size, float), isinstance(test_size, float))

45 |

46 | train_size = round(dset_len * train_size) if is_float[0] else train_size

47 | val_size = round(dset_len * val_size) if is_float[1] else val_size

48 | test_size = round(dset_len * test_size) if is_float[2] else test_size

49 |

50 | if train_size is None:

51 | train_size = dset_len - val_size - test_size

52 | elif val_size is None:

53 | val_size = dset_len - train_size - test_size

54 | elif test_size is None:

55 | test_size = dset_len - train_size - val_size

56 |

57 | # Adjust split sizes if they exceed the dataset length

58 | if train_size + val_size + test_size > dset_len:

59 | if is_float[2]:

60 | test_size -= 1

61 | elif is_float[1]:

62 | val_size -= 1

63 | elif is_float[0]:

64 | train_size -= 1

65 |

66 | assert train_size >= 0 and val_size >= 0 and test_size >= 0, (

67 | f"One of training ({train_size}), validation ({val_size}) or "

68 | f"testing ({test_size}) splits ended up with a negative size."

69 | )

70 |

71 | total = train_size + val_size + test_size

72 | assert dset_len >= total, f"The dataset ({dset_len}) is smaller than the combined split sizes ({total})."

73 |

74 | if total < dset_len:

75 | rank_zero_warn(f"{dset_len - total} samples were excluded from the dataset")

76 |

77 | # Generate random indices

78 | idxs = np.arange(dset_len, dtype=np.int64)

79 | idxs = np.random.default_rng(seed).permutation(idxs)

80 |

81 | # Split indices into train, validation, and test sets

82 | idx_train = idxs[:train_size]

83 | idx_val = idxs[train_size: train_size + val_size]

84 | idx_test = idxs[train_size + val_size: total]

85 |

86 | return np.array(idx_train), np.array(idx_val), np.array(idx_test)

87 |

88 |

89 | def make_splits(

90 | dataset_len: int,

91 | train_size: float | int | None,

92 | val_size: float | int | None,

93 | test_size: float | int | None,

94 | seed: int,

95 | filename: str | None = None,

96 | splits: str | None = None,

97 | ) -> tuple:

98 | """

99 | Create or load dataset splits and optionally save them to a file.

100 |

101 | This function either loads existing splits from a file or creates new splits

102 | using train_val_test_split. The resulting splits can be saved to a file.

103 |

104 | Args:

105 | dataset_len (int): Total length of the dataset.

106 | train_size (float or int or None): Size of the training set. See train_val_test_split.

107 | val_size (float or int or None): Size of the validation set. See train_val_test_split.

108 | test_size (float or int or None): Size of the test set. See train_val_test_split.

109 | seed (int): Random seed for reproducibility.

110 | filename (str, optional): If provided, the splits will be saved to this file.

111 | splits (str, optional): If provided, splits will be loaded from this file

112 | instead of being generated.

113 |

114 | Returns:

115 | tuple: A tuple containing three torch tensors (idx_train, idx_val, idx_test)

116 | with the indices for each split.

117 | """

118 | if splits is not None:

119 | splits = np.load(splits)

120 | idx_train = splits["idx_train"]

121 | idx_val = splits["idx_val"]

122 | idx_test = splits["idx_test"]

123 | else:

124 | idx_train, idx_val, idx_test = train_val_test_split(

125 | dataset_len,

126 | train_size,

127 | val_size,

128 | test_size,

129 | seed,

130 | )

131 |

132 | if filename is not None:

133 | np.savez(filename, idx_train=idx_train, idx_val=idx_val, idx_test=idx_test)

134 |

135 | return torch.from_numpy(idx_train), torch.from_numpy(idx_val), torch.from_numpy(idx_test)

136 |

137 |

138 | class MissingLabelException(Exception):

139 | """

140 | Exception raised when a required label is missing from the dataset.

141 |

142 | This exception is used to indicate that a required label or property

143 | is not available in the dataset being processed.

144 | """

145 | pass

146 |

--------------------------------------------------------------------------------
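
The size resolution in `train_val_test_split` above can be sketched with the standard library alone. This is an illustration under assumptions, not the repository's implementation: `resolve_split_sizes` and `split_indices` are hypothetical names, the one-sample rounding adjustment is omitted, and `random.Random` replaces `np.random.default_rng`, so the resulting indices differ from those the repository would produce for the same seed.

```python
import random


def resolve_split_sizes(dset_len, train_size, val_size, test_size):
    """Hypothetical helper mirroring the size logic of train_val_test_split."""
    sizes = [train_size, val_size, test_size]
    if sum(s is None for s in sizes) > 1:
        raise ValueError("At most one split size may be None.")
    # Floats are fractions of the dataset; ints are absolute counts.
    sizes = [round(dset_len * s) if isinstance(s, float) else s for s in sizes]
    # The single None entry, if any, receives the remainder.
    if None in sizes:
        missing = sizes.index(None)
        sizes[missing] = dset_len - sum(s for s in sizes if s is not None)
    if min(sizes) < 0 or sum(sizes) > dset_len:
        raise ValueError(f"Invalid split sizes {sizes} for {dset_len} samples.")
    return tuple(sizes)


def split_indices(dset_len, sizes, seed):
    """Shuffle indices with a seeded RNG and slice them into three lists."""
    idxs = list(range(dset_len))
    random.Random(seed).shuffle(idxs)
    n_train, n_val, n_test = sizes
    return (
        idxs[:n_train],
        idxs[n_train:n_train + n_val],
        idxs[n_train + n_val:n_train + n_val + n_test],
    )


sizes = resolve_split_sizes(10, 0.8, 0.1, None)
train, val, test = split_indices(10, sizes, seed=42)
print(sizes)
```

Passing `None` for exactly one size, as `_prepare_QM9` does for the test split, assigns that split whatever remains after the other two.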

/gotennet/datamodules/datamodule.py:

--------------------------------------------------------------------------------

1 | from os.path import join

2 | from typing import Any, Dict, Optional, Union

3 |

4 | import torch

5 | from pytorch_lightning import LightningDataModule

6 | from pytorch_lightning.utilities import rank_zero_only, rank_zero_warn

7 | from torch_geometric.loader import DataLoader

8 | from torch_scatter import scatter

9 | from tqdm import tqdm

10 |

11 | from gotennet import utils

12 |

13 | from .components.qm9 import QM9

14 | from .components.utils import MissingLabelException, make_splits

15 |

16 | log = utils.get_logger(__name__)

17 |

18 |

19 | def normalize_positions(batch):

20 | """

21 | Normalize positions by subtracting center of mass.

22 |

23 | Args:

24 | batch: Data batch with position information.

25 |

26 | Returns:

27 | batch: Batch with normalized positions.

28 | """

29 | center = batch.center_of_mass

30 | batch.pos = batch.pos - center

31 | return batch

32 |

33 |

34 | class DataModule(LightningDataModule):

35 | """

36 | DataModule for handling various molecular datasets.

37 |

38 | This class provides a unified interface for loading, splitting, and

39 | standardizing different types of molecular datasets.

40 | """

41 |

42 | def __init__(self, hparams: Union[Dict, Any]):

43 | """

44 | Initialize the DataModule with configuration parameters.

45 |

46 | Args:

47 | hparams: Hyperparameters for the datamodule.

48 | """

49 | # Convert an omegaconf DictConfig to a plain dict (type() returns a class, so compare by qualified name)

50 | if f"{type(hparams).__module__}.{type(hparams).__qualname__}" == "omegaconf.dictconfig.DictConfig":

51 | hparams = dict(hparams)

52 | super(DataModule, self).__init__()

53 | hparams = dict(hparams)

54 |

55 | # Update hyperparameters

56 | if hasattr(hparams, "__dict__"):

57 | self.hparams.update(hparams.__dict__)

58 | else:

59 | self.hparams.update(hparams)

60 |

61 | # Initialize attributes

62 | self._mean, self._std = None, None

63 | self._saved_dataloaders = dict()

64 | self.dataset = None

65 | self.loaded = False

66 |

67 | def get_metadata(self, label: Optional[str] = None) -> Dict:

68 | """

69 | Get metadata about the dataset.

70 |

71 | Args:

72 | label: Optional label to set as dataset_arg.

73 |

74 | Returns:

75 | Dict containing dataset metadata.

76 | """

77 | if label is not None:

78 | self.hparams["dataset_arg"] = label

79 |

80 | if not self.loaded:

81 | self.prepare_dataset()

82 | self.loaded = True

83 |

84 | return {

85 | 'atomref': self.atomref,

86 | 'dataset': self.dataset,

87 | 'mean': self.mean,

88 | 'std': self.std

89 | }

90 |

91 | def prepare_dataset(self):

92 | """

93 | Prepare the dataset for training, validation, and testing.

94 |

95 | Loads the appropriate dataset based on the configuration and

96 | creates the train/val/test splits.

97 |

98 | Raises:

99 | AssertionError: If the specified dataset type is not supported.

100 | """

101 | dataset_type = self.hparams['dataset']

102 |

103 | # Validate dataset type is supported

104 | assert hasattr(self, f"_prepare_{dataset_type}"), \

105 | f"Dataset {dataset_type} not defined"

106 |

107 | # Call the appropriate dataset preparation method

108 | dataset_preparer = lambda t: getattr(self, f"_prepare_{t}")()

109 | self.idx_train, self.idx_val, self.idx_test = dataset_preparer(dataset_type)

110 |

111 | log.info(f"train {len(self.idx_train)}, val {len(self.idx_val)}, test {len(self.idx_test)}")

112 |

113 | # Set up dataset subsets

114 | self.train_dataset = self.dataset[self.idx_train]

115 | self.val_dataset = self.dataset[self.idx_val]

116 | self.test_dataset = self.dataset[self.idx_test]

117 |

118 | # Standardize if requested

119 | if self.hparams["standardize"]:

120 | self._standardize()

121 |

122 | def train_dataloader(self):

123 | """

124 | Get the training dataloader.

125 |

126 | Returns:

127 | DataLoader for training data.

128 | """

129 | return self._get_dataloader(self.train_dataset, "train")

130 |

131 | def val_dataloader(self):

132 | """

133 | Get the validation dataloader.

134 |

135 | Returns:

136 | DataLoader for validation data.

137 | """

138 | return self._get_dataloader(self.val_dataset, "val")

139 |

140 | def test_dataloader(self):

141 | """

142 | Get the test dataloader.

143 |

144 | Returns:

145 | DataLoader for test data.

146 | """

147 | return self._get_dataloader(self.test_dataset, "test")

148 |

149 | @property

150 | def atomref(self):

151 | """

152 | Get atom reference values if available.

153 |

154 | Returns:

155 | Atom reference values or None.

156 | """

157 | if hasattr(self.dataset, "get_atomref"):

158 | return self.dataset.get_atomref()

159 | return None

160 |

161 | @property

162 | def mean(self):

163 | """

164 | Get mean value for standardization.

165 |

166 | Returns:

167 | Mean value.

168 | """

169 | return self._mean

170 |

171 | @property

172 | def std(self):

173 | """

174 | Get standard deviation value for standardization.

175 |

176 | Returns:

177 | Standard deviation value.

178 | """

179 | return self._std

180 |

181 | def _get_dataloader(

182 | self,

183 | dataset,

184 | stage: str,

185 | store_dataloader: bool = True

186 | ):

187 | """

188 | Create a dataloader for the given dataset and stage.

189 |

190 | Args:

191 | dataset: The dataset to create a dataloader for.

192 | stage: The stage ('train', 'val', or 'test').

193 | store_dataloader: Whether to store the dataloader for reuse.

194 |

195 | Returns:

196 | DataLoader for the dataset.

197 | """

198 | store_dataloader = (store_dataloader and not self.hparams["reload"])

199 | if stage in self._saved_dataloaders and store_dataloader:

200 | return self._saved_dataloaders[stage]

201 |

202 | if stage == "train":

203 | batch_size = self.hparams["batch_size"]

204 | shuffle = True

205 | elif stage in ["val", "test"]:

206 | batch_size = self.hparams["inference_batch_size"]

207 | shuffle = False

208 |

209 | dl = DataLoader(

210 | dataset=dataset,

211 | batch_size=batch_size,

212 | shuffle=shuffle,

213 | num_workers=self.hparams["num_workers"],

214 | pin_memory=True,

215 | )

216 |

217 | if store_dataloader:

218 | self._saved_dataloaders[stage] = dl

219 | return dl

220 |

221 | @rank_zero_only

222 | def _standardize(self):

223 | """

224 | Standardize the dataset by computing mean and standard deviation.

225 |

226 | This method computes the mean and standard deviation of the dataset

227 | for standardization purposes. It handles different standardization

228 | approaches based on the configuration.

229 | """

230 | def get_label(batch, atomref):

231 | """

232 | Extract label from batch, accounting for atom references if provided.

233 | """

234 | if batch.y is None:

235 | raise MissingLabelException()

236 |

237 | dy = None

238 | if 'dy' in batch:

239 | dy = batch.dy.squeeze().clone()

240 |

241 | if atomref is None:

242 | return batch.y.clone(), dy

243 |

244 | atomref_energy = scatter(atomref[batch.z], batch.batch, dim=0)

245 | return (batch.y.squeeze() - atomref_energy.squeeze()).clone(), dy

246 |

247 | # Standard approach: compute mean and std from data

248 | data = tqdm(

249 | self._get_dataloader(self.train_dataset, "val", store_dataloader=False),

250 | desc="computing mean and std",

251 | )

252 | try:

253 | atomref = self.atomref if self.hparams.get("prior_model") == "Atomref" else None

254 | ys = [get_label(batch, atomref) for batch in data]

255 | # Transpose the list of n (y, dy) pairs

256 | # into two tuples of n values each

257 | ys, dys = zip(*ys, strict=False)

258 | ys = torch.cat(ys)

259 | except MissingLabelException:

260 | rank_zero_warn(

261 | "Standardize is true but failed to compute dataset mean and "

262 | "standard deviation. Maybe the dataset only contains forces."

263 | )

264 | return None

265 |

266 | self._mean = ys.mean(dim=0)[0].item()

267 | self._std = ys.std(dim=0)[0].item()

268 | log.info(f"mean: {self._mean}, std: {self._std}")

269 |

270 | def _prepare_QM9(self):

271 | """

272 | Load and prepare the QM9 dataset with appropriate splits.

273 |

274 | Returns:

275 | Tuple[torch.Tensor, torch.Tensor, torch.Tensor]:

276 | Indices for train, validation, and test splits.

277 | """

278 | # Apply position normalization if requested

279 | transform = normalize_positions if self.hparams["normalize_positions"] else None

280 | if transform:

281 | log.warning("Normalizing positions.")

282 |

283 | self.dataset = QM9(

284 | root=self.hparams["dataset_root"],

285 | dataset_arg=self.hparams["dataset_arg"],

286 | transform=transform

287 | )

288 |

289 | train_size = self.hparams["train_size"]

290 | val_size = self.hparams["val_size"]

291 |

292 | idx_train, idx_val, idx_test = make_splits(

293 | len(self.dataset),

294 | train_size,

295 | val_size,

296 | None,

297 | self.hparams["seed"],

298 | join(self.hparams["output_dir"], "splits.npz"),

299 | self.hparams["splits"],

300 | )

301 |

302 | return idx_train, idx_val, idx_test

303 |

--------------------------------------------------------------------------------
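
The statistics computed in `_standardize` above can be sketched without tensors. In this illustration the scatter-add over atoms is a plain loop, `atomref_residuals` is a hypothetical helper, and all numbers are made up. The datamodule itself subtracts atom references only when `prior_model` is `"Atomref"`.

```python
import statistics


def atomref_residuals(y, z, batch, atomref):
    """Subtract the summed per-atom reference values from each molecule's label.

    y:       one label per molecule
    z:       atomic number per atom
    batch:   molecule index per atom (same length as z)
    atomref: mapping from atomic number to reference value
    """
    ref_sum = [0.0] * len(y)
    for atomic_number, mol in zip(z, batch):
        ref_sum[mol] += atomref[atomic_number]  # scatter-add over atoms
    return [label - ref for label, ref in zip(y, ref_sum)]


# Made-up reference values and labels, for illustration only.
atomref = {1: -0.5, 6: -38.0, 8: -75.0}
z = [8, 1, 1, 6, 1, 1, 1, 1]      # one 3-atom and one 5-atom molecule
batch = [0, 0, 0, 1, 1, 1, 1, 1]
y = [-76.5, -40.25]

residuals = atomref_residuals(y, z, batch, atomref)
mean = statistics.mean(residuals)
std = statistics.stdev(residuals)  # sample std (n - 1), like torch.std's default
print(residuals, mean, std)
```

Subtracting the references first leaves the model to fit only the residual, which is typically much smaller in magnitude than the raw label.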

/gotennet/models/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/sarpaykent/GotenNet/c561c05a1120118004912b248c944f74022b30cc/gotennet/models/__init__.py

--------------------------------------------------------------------------------

/gotennet/models/components/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/sarpaykent/GotenNet/c561c05a1120118004912b248c944f74022b30cc/gotennet/models/components/__init__.py

--------------------------------------------------------------------------------

/gotennet/models/components/outputs.py:

--------------------------------------------------------------------------------

1 | from typing import Optional, Union

2 |

3 | import ase

4 | import torch

5 | import torch.nn.functional as F

6 | import torch_scatter

7 | from torch import nn

8 | from torch.autograd import grad

9 | from torch_geometric.utils import scatter

10 |

11 | from gotennet.models.components.layers import (

12 | Dense,

13 | GetItem,

14 | ScaleShift,

15 | SchnetMLP,

16 | shifted_softplus,

17 | str2act,

18 | )

19 | from gotennet.utils import get_logger

20 |

21 | log = get_logger(__name__)

22 |

23 |

24 | class GatedEquivariantBlock(nn.Module):

25 | """

26 | The gated equivariant block is used to obtain rotationally invariant and equivariant features to be used

27 | for predicting tensorial properties.

28 | """

29 |

30 | def __init__(

31 | self,

32 | n_sin: int,

33 | n_vin: int,

34 | n_sout: int,

35 | n_vout: int,

36 | n_hidden: int,

37 | activation=F.silu,

38 | sactivation=None,

39 | ):

40 | """

41 | Initialize the GatedEquivariantBlock.

42 |

43 | Args:

44 | n_sin (int): Input dimension of scalar features.

45 | n_vin (int): Input dimension of vectorial features.

46 | n_sout (int): Output dimension of scalar features.

47 | n_vout (int): Output dimension of vectorial features.

48 | n_hidden (int): Size of hidden layers.

49 | activation: Activation of hidden layers.

50 | sactivation: Final activation to scalar features.

51 | """

52 | super().__init__()

53 | self.n_sin = n_sin

54 | self.n_vin = n_vin

55 | self.n_sout = n_sout

56 | self.n_vout = n_vout

57 | self.n_hidden = n_hidden

58 | self.mix_vectors = Dense(n_vin, 2 * n_vout, activation=None, bias=False)

59 | self.scalar_net = nn.Sequential(

60 | Dense(

61 | n_sin + n_vout, n_hidden, activation=activation

62 | ),

63 | Dense(n_hidden, n_sout + n_vout, activation=None),

64 | )

65 | self.sactivation = sactivation

66 |

67 | def forward(self, scalars: torch.Tensor, vectors: torch.Tensor):

68 | """

69 | Forward pass of the GatedEquivariantBlock.

70 |

71 | Args:

72 | scalars (torch.Tensor): Scalar input features.

73 | vectors (torch.Tensor): Vector input features.

74 |

75 | Returns:

76 | tuple: Tuple containing:

77 | - torch.Tensor: Output scalar features.

78 | - torch.Tensor: Output vector features.

79 | """

80 | vmix = self.mix_vectors(vectors)

81 | vectors_V, vectors_W = torch.split(vmix, self.n_vout, dim=-1)

82 | vectors_Vn = torch.norm(vectors_V, dim=-2)

83 |

84 | ctx = torch.cat([scalars, vectors_Vn], dim=-1)

85 | x = self.scalar_net(ctx)

86 | s_out, x = torch.split(x, [self.n_sout, self.n_vout], dim=-1)

87 | v_out = x.unsqueeze(-2) * vectors_W

88 |

89 | if self.sactivation:

90 | s_out = self.sactivation(s_out)

91 |

92 | return s_out, v_out

93 |

94 |

95 |

96 | class AtomwiseV3(nn.Module):

97 | """

98 | Atomwise prediction module V3 for predicting atomic properties.

99 | """

100 |

101 | def __init__(

102 | self,

103 | n_in: int,

104 | n_out: int = 1,

105 | aggregation_mode: Optional[str] = "sum",

106 | n_layers: int = 2,

107 | n_hidden: Optional[int] = None,

108 | activation = shifted_softplus,

109 | property: str = "y",

110 | contributions: Optional[str] = None,

111 | derivative: Optional[str] = None,

112 | negative_dr: bool = True,

113 | create_graph: bool = True,

114 | mean: Optional[Union[float, torch.Tensor]] = None,

115 | stddev: Optional[Union[float, torch.Tensor]] = None,

116 | atomref: Optional[torch.Tensor] = None,

117 | outnet: Optional[nn.Module] = None,

118 | return_vector: Optional[str] = None,

119 | standardize: bool = True,

120 | ):

121 | """

122 | Initialize the AtomwiseV3 module.

123 |

124 | Args:

125 | n_in (int): Input dimension of atomwise features.

126 | n_out (int): Output dimension of target property.

127 | aggregation_mode (Optional[str]): Aggregation method for atomic contributions.

128 | n_layers (int): Number of layers in the output network.

129 | n_hidden (Optional[int]): Size of hidden layers.

130 | activation: Activation function.

131 | property (str): Name of the target property.

132 | contributions (Optional[str]): Name of the atomic contributions.

133 | derivative (Optional[str]): Name of the property derivative.

134 | negative_dr (bool): If True, multiply the derivative by -1 (e.g. forces as the negative energy gradient).

135 | create_graph (bool): If True, create computational graph for derivatives.

136 | mean (Optional[Union[float, torch.Tensor]]): Mean of the property for standardization.

137 | stddev (Optional[Union[float, torch.Tensor]]): Standard deviation for standardization.

138 | atomref (Optional[torch.Tensor]): Reference single-atom properties.

139 | outnet (Optional[nn.Module]): Network for property prediction.

140 | return_vector (Optional[str]): Name of the vector property to return.

141 | standardize (bool): If True, standardize the output property.

142 | """

143 | super(AtomwiseV3, self).__init__()

144 |

145 | self.return_vector = return_vector

146 | self.n_layers = n_layers

147 | self.create_graph = create_graph

148 | self.property = property

149 | self.contributions = contributions

150 | self.derivative = derivative

151 | self.negative_dr = negative_dr

152 | self.standardize = standardize

153 |

154 |

155 | mean = 0.0 if mean is None else mean

156 | stddev = 1.0 if stddev is None else stddev

157 | self.mean = mean

158 | self.stddev = stddev

159 |

160 | if type(activation) is str:

161 | activation = str2act(activation)

162 |

163 | if atomref is not None:

164 | self.atomref = nn.Embedding.from_pretrained(

165 | atomref.type(torch.float32)

166 | )

167 | else:

168 | self.atomref = None

169 |

170 | if outnet is None:

171 | self.out_net = nn.Sequential(

172 | GetItem("representation"),

173 | SchnetMLP(n_in, n_out, n_hidden, n_layers, activation),

174 | )

175 | else:

176 | self.out_net = outnet

177 |

178 | # build standardization layer

179 | if self.standardize and (mean is not None and stddev is not None):

180 | self.standardize = ScaleShift(mean, stddev)

181 | else:

182 | self.standardize = nn.Identity()

183 |

184 | self.aggregation_mode = aggregation_mode

185 |

186 |     def forward(self, inputs):
187 |         """
188 |         Predict the target property from atomwise contributions.
189 |
190 |         Args:
191 |             inputs: Batch with atomic numbers (z), batch indices (batch), positions (pos), and the atomic representation.
192 |
193 |         Returns:
194 |             dict: Predicted property keyed by self.property, plus optional contributions and derivative.
195 |         """
196 |         atomic_numbers = inputs.z
197 |         result = {}
198 |         yi = self.out_net(inputs)
199 |         yi = yi * self.stddev
200 |
201 |         if self.atomref is not None:
202 |             y0 = self.atomref(atomic_numbers)
203 |             yi = yi + y0
204 |
205 |         if self.aggregation_mode is not None:
206 |             y = torch_scatter.scatter(yi, inputs.batch, dim=0, reduce=self.aggregation_mode)
207 |         else:
208 |             y = yi
209 |
210 |         y = y + self.mean
211 |
212 |         # collect results
213 |         result[self.property] = y
214 |
215 |         if self.contributions:
216 |             result[self.contributions] = yi
217 |         if self.derivative:
218 |             sign = -1.0 if self.negative_dr else 1.0
219 |             dy = grad(
220 |                 outputs=result[self.property],
221 |                 inputs=[inputs.pos],
222 |                 grad_outputs=torch.ones_like(result[self.property]),
223 |                 create_graph=self.create_graph,
224 |                 retain_graph=True,
225 |             )[0]
226 |
227 |             dy = sign * dy
228 |             result[self.derivative] = dy
229 |         return result
230 |
231 |

232 | class Atomwise(nn.Module):
233 |     """
234 |     Atomwise prediction module for predicting atomic properties.
235 |     """
236 |
237 |     def __init__(
238 |         self,
239 |         n_in: int,
240 |         n_out: int = 1,
241 |         aggregation_mode: Optional[str] = "sum",
242 |         n_layers: int = 2,
243 |         n_hidden: Optional[int] = None,
244 |         activation=shifted_softplus,
245 |         property: str = "y",
246 |         contributions: Optional[str] = None,
247 |         derivative: Optional[str] = None,
248 |         negative_dr: bool = True,
249 |         create_graph: bool = True,
250 |         mean: Optional[torch.Tensor] = None,
251 |         stddev: Optional[torch.Tensor] = None,
252 |         atomref: Optional[torch.Tensor] = None,
253 |         outnet: Optional[nn.Module] = None,
254 |         return_vector: Optional[str] = None,
255 |         standardize: bool = True,
256 |     ):
257 |         """
258 |         Initialize the Atomwise module.
259 |
260 |         Args:
261 |             n_in (int): Input dimension of atomwise features.
262 |             n_out (int): Output dimension of target property.
263 |             aggregation_mode (Optional[str]): Aggregation method for atomic contributions.
264 |             n_layers (int): Number of layers in the output network.
265 |             n_hidden (Optional[int]): Size of hidden layers.
266 |             activation: Activation function.
267 |             property (str): Name of the target property.
268 |             contributions (Optional[str]): Name of the atomic contributions.
269 |             derivative (Optional[str]): Name of the property derivative.
270 |             negative_dr (bool): If True, take the negative of the derivative.
271 |             create_graph (bool): If True, create computational graph for derivatives.
272 |             mean (Optional[torch.Tensor]): Mean of the property for standardization.
273 |             stddev (Optional[torch.Tensor]): Standard deviation for standardization.
274 |             atomref (Optional[torch.Tensor]): Reference single-atom properties.
275 |             outnet (Optional[nn.Module]): Network for property prediction.
276 |             return_vector (Optional[str]): Name of the vector property to return.
277 |             standardize (bool): If True, standardize the output property.
278 |         """
279 |         super(Atomwise, self).__init__()
280 |
281 |         self.return_vector = return_vector
282 |         self.n_layers = n_layers