├── .actor
│   ├── Dockerfile
│   ├── README.md
│   ├── actor.json
│   ├── actor.sh
│   ├── dataset_schema.json
│   └── input_schema.json
├── .dockerignore
├── .editorconfig
├── .github
│   ├── CODEOWNERS
│   ├── FUNDING.yml
│   ├── ISSUE_TEMPLATE
│   │   ├── bug-report.yml
│   │   ├── config.yml
│   │   ├── false-negative.yml
│   │   ├── false-positive.yml
│   │   ├── feature-request.yml
│   │   └── site-request.yml
│   ├── SECURITY.md
│   └── workflows
│       ├── regression.yml
│       └── update-site-list.yml
├── .gitignore
├── Dockerfile
├── LICENSE
├── devel
│   └── site-list.py
├── docs
│   ├── CODE_OF_CONDUCT.md
│   ├── README.md
│   ├── images
│   │   ├── demo.png
│   │   └── sherlock-logo.png
│   ├── pyproject
│   │   └── README.md
│   └── removed-sites.md
├── pyproject.toml
├── pytest.ini
├── sherlock_project
│   ├── __init__.py
│   ├── __main__.py
│   ├── notify.py
│   ├── py.typed
│   ├── resources
│   │   ├── data.json
│   │   └── data.schema.json
│   ├── result.py
│   ├── sherlock.py
│   └── sites.py
├── tests
│   ├── conftest.py
│   ├── few_test_basic.py
│   ├── sherlock_interactives.py
│   ├── test_manifest.py
│   ├── test_probes.py
│   ├── test_ux.py
│   └── test_version.py
└── tox.ini

/.actor/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM sherlock/sherlock as sherlock

2 |

3 | # Install Node.js

4 | RUN apt-get update && apt-get install -y curl gpg

5 | RUN mkdir -p /etc/apt/keyrings

6 | RUN curl -fsSL https://deb.nodesource.com/gpgkey/nodesource-repo.gpg.key | gpg --dearmor -o /etc/apt/keyrings/nodesource.gpg

7 | RUN echo "deb [signed-by=/etc/apt/keyrings/nodesource.gpg] https://deb.nodesource.com/node_20.x nodistro main" | tee /etc/apt/sources.list.d/nodesource.list

8 | RUN apt-get update && apt-get install -y curl bash git jq jo xz-utils nodejs

9 |

10 | # Install Apify CLI (node.js) for the Actor Runtime

11 | RUN npm -g install apify-cli

12 |

13 | # Install Dependencies for the Actor Shell Script

14 | RUN apt-get update && apt-get install -y bash jq jo xz-utils nodejs

15 |

16 | # Copy Actor dir with the actorization shell script

17 | COPY .actor/ .actor

18 |

19 | ENTRYPOINT [".actor/actor.sh"]

20 |

--------------------------------------------------------------------------------

/.actor/README.md:

--------------------------------------------------------------------------------

1 | # Sherlock Actor on Apify

2 |

3 | [Sherlock Actor on Apify](https://apify.com/netmilk/sherlock?fpr=sherlock)

4 |

5 | This Actor wraps the [Sherlock Project](https://sherlockproject.xyz/) to run username reconnaissance across social networks as a serverless cloud service. It finds accounts matching a username on many social media platforms without requiring you to install and run the tool locally.

6 |

7 | ## What are Actors?

8 | [Actors](https://docs.apify.com/platform/actors?fpr=sherlock) are serverless microservices running on the [Apify Platform](https://apify.com/?fpr=sherlock). They are based on the [Actor SDK](https://docs.apify.com/sdk/js?fpr=sherlock) and can be found in the [Apify Store](https://apify.com/store?fpr=sherlock). Learn more about Actors in the [Apify Whitepaper](https://whitepaper.actor?fpr=sherlock).

9 |

10 | ## Usage

11 |

12 | ### Apify Console

13 |

14 | 1. Go to the Apify Actor page

15 | 2. Click "Run"

16 | 3. In the input form, fill in **Username(s)** to search for

17 | 4. The Actor will run and write its results to the default dataset

18 |

19 |

20 | ### Apify CLI

21 |

22 | ```bash

23 | apify call YOUR_USERNAME/sherlock --input='{

24 | "usernames": ["johndoe", "janedoe"]

25 | }'

26 | ```

27 |

28 | ### Using Apify API

29 |

30 | ```bash

31 | curl --request POST \

32 | --url "https://api.apify.com/v2/acts/YOUR_USERNAME~sherlock/runs" \

33 | --header 'Content-Type: application/json' \

34 | --header 'Authorization: Bearer YOUR_API_TOKEN' \

35 | --data '{

36 | "usernames": ["johndoe", "janedoe"]

37 | }'

38 |

39 | ```

40 |

41 | ## Input Parameters

42 |

43 | The Actor accepts JSON input with the following structure:

44 |

45 | | Field | Type | Required | Default | Description |

46 | |-------|------|----------|---------|-------------|

47 | | `usernames` | array | Yes | - | List of usernames to search for |

48 | | `usernames[]` | string | Yes | - | A single username to search for |

49 |

50 |

51 | ### Example Input

52 |

53 | ```json

54 | {

55 | "usernames": ["techuser", "designuser"]

56 | }

57 | ```

58 |

59 | ## Output

60 |

61 | The Actor writes one record per username to its default dataset:

62 |

63 | ### Dataset Record

64 |

65 | | Field | Type | Required | Description |

66 | |-------|------|----------|-------------|

67 | | `username` | string | Yes | Username the search was conducted for |

68 | | `links` | array | Yes | Array of links to the accounts found on social media |

69 | | `links[]` | string | No | URL of a single account |

70 |

71 | ### Example Dataset Item (JSON)

72 |

73 | ```json

74 | {

75 | "username": "johndoe",

76 | "links": [

77 | "https://github.com/johndoe"

78 | ]

79 | }

80 | ```

81 |

82 | ## Performance & Resources

83 |

84 | - **Memory Requirements**:

85 |   - Minimum: 512 MB RAM

86 |   - Recommended: 1 GB RAM for multiple usernames

87 | - **Processing Time**:

88 |   - Single username: ~1-2 minutes

89 |   - Multiple usernames: 2-5 minutes

90 |   - Varies based on number of sites checked and response times

91 |

92 |

93 | For more help, check the [Sherlock Project documentation](https://github.com/sherlock-project/sherlock) or raise an issue in the Actor's repository.

94 |

--------------------------------------------------------------------------------
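The README's curl call can also be made from Python. The sketch below only builds the request with the standard library's `urllib.request`, so it can be inspected without touching the network. It assumes the Apify endpoint `POST /v2/acts/{actorId}/runs`, the `owner~name` form of the Actor ID, and bearer-token auth as in the curl example; `build_run_request` is a hypothetical helper, not part of any Apify SDK.

```python
import json
import urllib.request


def build_run_request(actor_id: str, token: str, usernames: list[str]) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a POST that starts an Actor run."""
    # Actor IDs written "owner/name" are assumed to appear as "owner~name" in API URLs.
    url = f"https://api.apify.com/v2/acts/{actor_id.replace('/', '~')}/runs"
    body = json.dumps({"usernames": usernames}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )


if __name__ == "__main__":
    req = build_run_request("YOUR_USERNAME/sherlock", "YOUR_API_TOKEN", ["johndoe", "janedoe"])
    print(req.get_method(), req.full_url)
    # Sending it would be: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the URL and payload easy to check before spending API credits.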

/.actor/actor.json:

--------------------------------------------------------------------------------

1 | {

2 | "actorSpecification": 1,

3 | "name": "sherlock",

4 | "version": "0.0",

5 | "buildTag": "latest",

6 | "environmentVariables": {},

7 | "dockerFile": "./Dockerfile",

8 | "dockerContext": "../",

9 | "input": "./input_schema.json",

10 | "storages": {

11 | "dataset": "./dataset_schema.json"

12 | }

13 | }

14 |

--------------------------------------------------------------------------------
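The paths in `actor.json` appear to be resolved relative to the `.actor/` directory. That would explain why `dockerContext: "../"` makes the repository root the build context, which in turn lets the Actor Dockerfile run `COPY .actor/ .actor`. A minimal sketch of that resolution, assuming plain relative-path semantics; `resolve_actor_path` is a hypothetical helper, not part of the Apify tooling.

```python
import json
import posixpath

ACTOR_JSON = """
{
  "actorSpecification": 1,
  "name": "sherlock",
  "dockerFile": "./Dockerfile",
  "dockerContext": "../",
  "input": "./input_schema.json",
  "storages": {"dataset": "./dataset_schema.json"}
}
"""


def resolve_actor_path(actor_dir: str, relative: str) -> str:
    """Hypothetical helper: resolve an actor.json path against the directory holding actor.json."""
    return posixpath.normpath(posixpath.join(actor_dir, relative))


config = json.loads(ACTOR_JSON)
paths = {
    "dockerFile": resolve_actor_path(".actor", config["dockerFile"]),
    "dockerContext": resolve_actor_path(".actor", config["dockerContext"]),
    "input": resolve_actor_path(".actor", config["input"]),
    "dataset": resolve_actor_path(".actor", config["storages"]["dataset"]),
}
print(paths)
```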

/.actor/actor.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | INPUT=$(apify actor:get-input | jq -r '.usernames[]' | xargs echo)

3 | echo "INPUT: $INPUT"

4 |

5 | sherlock $INPUT

6 |

7 | for username in $INPUT; do

8 | # escape the special meaning leading characters

9 | # https://github.com/jpmens/jo/blob/master/jo.md#description

10 | safe_username=$(printf '%s\n' "$username" | sed -e 's/^@/\\@/' -e 's/^:/\\:/' -e 's/^%/\\%/')

11 | echo "pushing results for username: $username, content:"

12 | cat "$username.txt"

13 | sed '$d' "$username.txt" | jo -a | jo username="$safe_username" links:=- | apify actor:push-data

14 | done

15 |

--------------------------------------------------------------------------------
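The loop in `actor.sh` turns each `<username>.txt` report written by Sherlock into one dataset record. `sed '$d'` drops the last line, which the script treats as a summary rather than a URL, and `jo` wraps the remaining lines into `{"username": ..., "links": [...]}`. The same transformation in Python, as a sketch that assumes the report holds one URL per line followed by a single summary line. `to_dataset_record` is a hypothetical name, and the sample summary text is illustrative only.

```python
import json


def to_dataset_record(username: str, report_text: str) -> dict:
    """Hypothetical helper mirroring `sed '$d' file | jo -a | jo username=... links:=-`."""
    lines = report_text.splitlines()
    # Drop the final line, as `sed '$d'` does; everything before it is treated as a link.
    links = lines[:-1]
    return {"username": username, "links": links}


report = (
    "https://github.com/johndoe\n"
    "https://gitlab.com/johndoe\n"
    "Total Websites Username Detected On : 2\n"  # illustrative summary line
)
record = to_dataset_record("johndoe", report)
print(json.dumps(record))
```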

/.actor/dataset_schema.json:

--------------------------------------------------------------------------------

1 | {

2 | "actorSpecification": 1,

3 | "fields":{

4 | "title": "Sherlock actor dataset",

5 | "description": "Schema of a single dataset item produced by the actor",

6 | "type": "object",

7 | "schemaVersion": 1,

8 | "properties": {

9 | "links": {

10 | "title": "Links to accounts",

11 | "type": "array",

12 | "description": "A list of social media accounts found for the username"

13 | },

14 | "username": {

15 | "title": "Lookup username",

16 | "type": "string",

17 | "description": "Username the lookup was performed for"

18 | }

19 | },

20 | "required": [

21 | "username",

22 | "links"

23 | ]

24 | },

25 | "views": {

26 | "overview": {

27 | "title": "Overview",

28 | "transformation": {

29 | "fields": [

30 | "username",

31 | "links"

32 | ]

33 | },

34 | "display": {

35 | "component": "table",

36 | "properties": {

37 | "links": {

38 | "label": "Links"

39 | },

40 | "username": {

41 | "label": "Username"

42 | }

43 | }

44 | }

45 | }

46 | }

47 | }

48 |

--------------------------------------------------------------------------------
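Each dataset item must carry a string `username` and an array `links`, and both are required. A small hand-written check of those constraints, as a sketch. It covers only the two properties declared in `dataset_schema.json`, assumes each link is a string as the Actor README's `links[]` row describes, and is not a general JSON Schema validator. `check_dataset_item` is hypothetical.

```python
def check_dataset_item(item: dict) -> list[str]:
    """Hypothetical checker: return a list of problems; empty means the item looks valid."""
    problems = []
    # Both fields are listed under "required" in the dataset schema.
    for field in ("username", "links"):
        if field not in item:
            problems.append(f"missing required field: {field}")
    if "username" in item and not isinstance(item["username"], str):
        problems.append("username must be a string")
    if "links" in item:
        if not isinstance(item["links"], list):
            problems.append("links must be an array")
        elif not all(isinstance(link, str) for link in item["links"]):
            problems.append("every entry in links must be a string")
    return problems


print(check_dataset_item({"username": "johndoe", "links": ["https://github.com/johndoe"]}))
```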

/.actor/input_schema.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Sherlock actor input",

3 | "description": "This is actor input schema",

4 | "type": "object",

5 | "schemaVersion": 1,

6 | "properties": {

7 | "usernames": {

8 | "title": "Usernames to hunt down",

9 | "type": "array",

10 | "description": "A list of usernames to be checked for existence across social media",

11 | "editor": "stringList",

12 | "prefill": ["johndoe"]

13 | }

14 | },

15 | "required": [

16 | "usernames"

17 | ]

18 | }

19 |

--------------------------------------------------------------------------------
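`actor.sh` reads `usernames` with `jq` and then relies on shell word splitting, so a username containing whitespace would be split into several searches. A Python sketch of reading the input while enforcing the schema's `required` and `array` constraints. The whitespace rejection is an extra rule assumed here, not something the schema states, and `parse_usernames` is a hypothetical helper.

```python
import json


def parse_usernames(raw_input: str) -> list[str]:
    """Hypothetical helper: extract usernames from the Actor input JSON."""
    data = json.loads(raw_input)
    usernames = data.get("usernames")
    if usernames is None:
        raise ValueError("input is missing required field 'usernames'")
    if not isinstance(usernames, list) or not all(isinstance(u, str) for u in usernames):
        raise ValueError("'usernames' must be an array of strings")
    # Extra rule (assumption): reject names that shell word splitting would break apart.
    for name in usernames:
        if not name or any(ch.isspace() for ch in name):
            raise ValueError(f"invalid username: {name!r}")
    return usernames


print(parse_usernames('{"usernames": ["johndoe", "janedoe"]}'))
```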

/.dockerignore:

--------------------------------------------------------------------------------

1 | .git/

2 | .vscode/

3 | screenshot/

4 | tests/

5 | *.txt

6 | !/requirements.txt

7 | venv/

8 | devel/

--------------------------------------------------------------------------------
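`.dockerignore` rules are evaluated top to bottom and the last matching rule decides, so `!/requirements.txt` re-includes that one file after `*.txt` excluded it. A simplified Python model of that behaviour. It assumes Docker's conventions that a pattern also excludes everything beneath a matching directory and that `*` does not cross `/`. It does not handle `**` or escape sequences, and `is_ignored` is a hypothetical helper.

```python
from fnmatch import fnmatchcase

# Rules copied from .dockerignore, in file order.
RULES = (".git/", ".vscode/", "screenshot/", "tests/", "*.txt", "!/requirements.txt", "venv/", "devel/")


def _matches(pattern: str, path: str) -> bool:
    """True if the pattern matches the path itself or one of its parent directories."""
    pattern_parts = pattern.strip("/").split("/")
    path_parts = path.strip("/").split("/")
    if len(pattern_parts) > len(path_parts):
        return False
    # Compare segment by segment so that "*" never matches across "/".
    return all(fnmatchcase(part, pat) for part, pat in zip(path_parts, pattern_parts))


def is_ignored(path: str, rules: tuple[str, ...] = RULES) -> bool:
    """Hypothetical helper: apply the rules in order; the last matching rule wins."""
    ignored = False
    for rule in rules:
        negated = rule.startswith("!")
        pattern = rule[1:] if negated else rule
        if _matches(pattern, path):
            ignored = not negated
    return ignored


for p in ("notes.txt", "requirements.txt", "tests/test_ux.py", "docs/notes.txt"):
    print(p, is_ignored(p))
```

Under this model `*.txt` only excludes text files at the context root, because the pattern has a single path segment.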

/.editorconfig:

--------------------------------------------------------------------------------

1 | root = true

2 |

3 | [*]

4 | indent_style = space

5 | indent_size = 2

6 | end_of_line = lf

7 | charset = utf-8

8 | trim_trailing_whitespace = true

9 | insert_final_newline = true

10 | curly_bracket_next_line = false

11 | spaces_around_operators = true

12 |

13 | [*.{markdown,md}]

14 | trim_trailing_whitespace = false

15 |

16 | [*.py]

17 | indent_size = 4

18 | quote_type = double

19 |

--------------------------------------------------------------------------------
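EditorConfig applies every section whose glob matches the file, and later sections override earlier ones. A Python file therefore ends up with `indent_size = 4` while keeping `end_of_line = lf` from `[*]`. A sketch of that lookup for this file, assuming only the features used here: a single `{a,b}` brace group and patterns without `/`, which match the file name in any directory. Real EditorConfig also reads `.editorconfig` files from parent directories until it finds `root = true`, which this sketch skips. `effective_properties` is hypothetical.

```python
import posixpath
import re
from fnmatch import fnmatchcase

# Sections from .editorconfig, in file order.
SECTIONS = [
    ("*", {"indent_style": "space", "indent_size": "2", "end_of_line": "lf",
           "charset": "utf-8", "trim_trailing_whitespace": "true",
           "insert_final_newline": "true", "curly_bracket_next_line": "false",
           "spaces_around_operators": "true"}),
    ("*.{markdown,md}", {"trim_trailing_whitespace": "false"}),
    ("*.py", {"indent_size": "4", "quote_type": "double"}),
]


def expand_braces(pattern: str) -> list[str]:
    """Expand a single {a,b,...} group into separate glob patterns."""
    match = re.search(r"\{([^{}]*)\}", pattern)
    if not match:
        return [pattern]
    head, tail = pattern[:match.start()], pattern[match.end():]
    return [head + option + tail for option in match.group(1).split(",")]


def effective_properties(path: str) -> dict:
    """Hypothetical helper: merge the properties of every matching section."""
    name = posixpath.basename(path)  # patterns without "/" match the file name only
    result = {}
    for pattern, props in SECTIONS:
        if any(fnmatchcase(name, p) for p in expand_braces(pattern)):
            result.update(props)  # later sections override earlier ones
    return result


print(effective_properties("sherlock_project/sherlock.py"))
```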

/.github/CODEOWNERS:

--------------------------------------------------------------------------------

1 | ### REPOSITORY

2 | /.github/CODEOWNERS @sdushantha

3 | /.github/FUNDING.yml @sdushantha

4 | /LICENSE @sdushantha

5 |

6 | ### PACKAGING

7 | # Changes made to these items without code owner approval may negatively

8 | # impact packaging pipelines.

9 | /pyproject.toml @ppfeister @sdushantha

10 |

11 | ### REGRESSION

12 | /.github/workflows/regression.yml @ppfeister

13 | /tox.ini @ppfeister

14 | /pytest.ini @ppfeister

15 | /tests/ @ppfeister

16 |

--------------------------------------------------------------------------------
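In a CODEOWNERS file the last matching pattern takes precedence. A pattern starting with `/` is anchored at the repository root, and a trailing `/` covers everything inside that directory. A Python sketch limited to the two pattern shapes used here, exact anchored paths and anchored directories. It treats a path like `/LICENSE` as an exact file, and `owners_for` is a hypothetical helper rather than GitHub's implementation.

```python
CODEOWNERS = """
### REPOSITORY
/.github/CODEOWNERS @sdushantha
/.github/FUNDING.yml @sdushantha
/LICENSE @sdushantha
/pyproject.toml @ppfeister @sdushantha
/.github/workflows/regression.yml @ppfeister
/tox.ini @ppfeister
/pytest.ini @ppfeister
/tests/ @ppfeister
"""


def parse_rules(text: str) -> list[tuple[str, list[str]]]:
    """Split each non-comment line into a pattern and its owners."""
    rules = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        pattern, *owners = line.split()
        rules.append((pattern, owners))
    return rules


def owners_for(path: str, rules: list[tuple[str, list[str]]]) -> list[str]:
    """Hypothetical helper: return the owners of the last rule that matches."""
    path = "/" + path.lstrip("/")
    owners: list[str] = []
    for pattern, rule_owners in rules:
        if pattern.endswith("/"):
            hit = path.startswith(pattern)  # anchored directory: everything below it
        else:
            hit = path == pattern  # anchored exact file
        if hit:
            owners = rule_owners  # later matches override earlier ones
    return owners


OWNER_RULES = parse_rules(CODEOWNERS)
print(owners_for("tests/test_ux.py", OWNER_RULES))
```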

/.github/FUNDING.yml:

--------------------------------------------------------------------------------

1 | github: [ sdushantha, ppfeister, matheusfelipeog ]

2 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/bug-report.yml:

--------------------------------------------------------------------------------

1 | name: Bug report

2 | description: File a bug report

3 | labels: ["bug"]

4 | body:

5 |   - type: dropdown

6 |     id: package

7 |     attributes:

8 |       label: Installation method

9 |       description: |

10 |         Some packages are maintained by the community, rather than by the Sherlock Project.

11 |         Knowing which packages are affected helps us diagnose package-specific bugs.

12 |       options:

13 |         - Select one

14 |         - PyPI (via pip)

15 |         - Homebrew

16 |         - Docker

17 |         - Kali repository (via apt)

18 |         - Built from source

19 |         - Other (indicate below)

20 |     validations:

21 |       required: true

22 |   - type: input

23 |     id: package-version

24 |     attributes:

25 |       label: Package version

26 |       description: |

27 |         Knowing the version of the package you are using can help us diagnose your issue more quickly.

28 |         You can find the version by running `sherlock --version`.

29 |     validations:

30 |       required: true

31 |   - type: textarea

32 |     id: description

33 |     attributes:

34 |       label: Description

35 |       description: |

36 |         Detailed descriptions that help contributors understand and reproduce your bug are much more likely to lead to a fix.

37 |         Please include the following information:

38 |         - What you were trying to do

39 |         - What you expected to happen

40 |         - What actually happened

41 |       placeholder: |

42 |         When doing {action}, the expected result should be {expected result}.

43 |         When doing {action}, however, the actual result was {actual result}.

44 |         This is undesirable because {reason}.

45 |     validations:

46 |       required: true

47 |   - type: textarea

48 |     id: steps-to-reproduce

49 |     attributes:

50 |       label: Steps to reproduce

51 |       description: Write a step-by-step list that will allow us to reproduce this bug.

52 |       placeholder: |

53 |         1. Do something

54 |         2. Then do something else

55 |     validations:

56 |       required: true

57 |   - type: textarea

58 |     id: additional-info

59 |     attributes:

60 |       label: Additional information

61 |       description: If you have some additional information, please write it here.

62 |     validations:

63 |       required: false

64 |   - type: checkboxes

65 |     id: terms

66 |     attributes:

67 |       label: Code of Conduct

68 |       description: By submitting this issue, you agree to follow our [Code of Conduct](https://github.com/sherlock-project/sherlock/blob/master/docs/CODE_OF_CONDUCT.md).

69 |       options:

70 |         - label: I agree to follow this project's Code of Conduct

71 |           required: true

72 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/config.yml:

--------------------------------------------------------------------------------

1 | blank_issues_enabled: false

2 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/false-negative.yml:

--------------------------------------------------------------------------------

1 | name: False negative

2 | description: Report a site that is returning false negative results

3 | title: "False negative for: "

4 | labels: ["false negative"]

5 | body:

6 |   - type: markdown

7 |     attributes:

8 |       value: |

9 |         Please include the site name in the title of your issue.

10 |         Submit **one site per report** for faster resolution. If you have multiple sites in the same report, it often takes longer to fix.

11 |   - type: textarea

12 |     id: additional-info

13 |     attributes:

14 |       label: Additional info

15 |       description: If you know why the site is returning false negatives, or noticed any patterns, please explain.

16 |       placeholder: |

17 |         Reddit is returning false negatives because...

18 |     validations:

19 |       required: false

20 |   - type: checkboxes

21 |     id: terms

22 |     attributes:

23 |       label: Code of Conduct

24 |       description: By submitting this issue, you agree to follow our [Code of Conduct](https://github.com/sherlock-project/sherlock/blob/master/docs/CODE_OF_CONDUCT.md).

25 |       options:

26 |         - label: I agree to follow this project's Code of Conduct

27 |           required: true

28 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/false-positive.yml:

--------------------------------------------------------------------------------

1 | name: False positive

2 | description: Report a site that is returning false positive results

3 | title: "False positive for: "

4 | labels: ["false positive"]

5 | body:

6 |   - type: markdown

7 |     attributes:

8 |       value: |

9 |         Please include the site name in the title of your issue.

10 |         Submit **one site per report** for faster resolution. If you have multiple sites in the same report, it often takes longer to fix.

11 |   - type: textarea

12 |     id: additional-info

13 |     attributes:

14 |       label: Additional info

15 |       description: If you know why the site is returning false positives, or noticed any patterns, please explain.

16 |       placeholder: |

17 |         Reddit is returning false positives because...

18 |         False positives only occur after x searches...

19 |     validations:

20 |       required: false

21 |   - type: checkboxes

22 |     id: terms

23 |     attributes:

24 |       label: Code of Conduct

25 |       description: By submitting this issue, you agree to follow our [Code of Conduct](https://github.com/sherlock-project/sherlock/blob/master/docs/CODE_OF_CONDUCT.md).

26 |       options:

27 |         - label: I agree to follow this project's Code of Conduct

28 |           required: true

29 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature-request.yml:

--------------------------------------------------------------------------------

1 | name: Feature request

2 | description: Request a feature or enhancement

3 | labels: ["enhancement"]

4 | body:

5 |   - type: markdown

6 |     attributes:

7 |       value: |

8 |         Concise and thoughtful titles help other contributors find and add your requested feature.

9 |   - type: textarea

10 |     id: description

11 |     attributes:

12 |       label: Description

13 |       description: Describe the feature you are requesting

14 |       placeholder: I'd like Sherlock to be able to do xyz

15 |     validations:

16 |       required: true

17 |   - type: checkboxes

18 |     id: terms

19 |     attributes:

20 |       label: Code of Conduct

21 |       description: By submitting this issue, you agree to follow our [Code of Conduct](https://github.com/sherlock-project/sherlock/blob/master/docs/CODE_OF_CONDUCT.md).

22 |       options:

23 |         - label: I agree to follow this project's Code of Conduct

24 |           required: true

25 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/site-request.yml:

--------------------------------------------------------------------------------

1 | name: Request a new website

2 | description: Request that Sherlock add support for a new website

3 | title: "Requesting support for: "

4 | labels: ["site support request"]

5 | body:

6 |   - type: markdown

7 |     attributes:

8 |       value: |

9 |         Ensure that the site name is in the title of your request. Requests without this information will be **closed**.

10 |   - type: input

11 |     id: site-url

12 |     attributes:

13 |       label: Site URL

14 |       description: |

15 |         What is the URL of the website indicated in your title?

16 |         Websites sometimes have similar names. This helps contributors find the correct site.

17 |       placeholder: https://reddit.com

18 |     validations:

19 |       required: true

20 |   - type: textarea

21 |     id: additional-info

22 |     attributes:

23 |       label: Additional info

24 |       description: If you have suggestions on how Sherlock should detect usernames, please explain below.

25 |       placeholder: Sherlock can detect if a username exists on Reddit by checking for...

26 |     validations:

27 |       required: false

28 |   - type: checkboxes

29 |     id: terms

30 |     attributes:

31 |       label: Code of Conduct

32 |       description: By submitting this issue, you agree to follow our [Code of Conduct](https://github.com/sherlock-project/sherlock/blob/master/docs/CODE_OF_CONDUCT.md).

33 |       options:

34 |         - label: I agree to follow this project's Code of Conduct

35 |           required: true

36 |

--------------------------------------------------------------------------------

/.github/SECURITY.md:

--------------------------------------------------------------------------------

1 | ## Security Policy

2 |

3 | ### Supported Versions

4 |

5 | Sherlock is a forward-looking project. Only the latest version is supported.

6 |

7 | ### Reporting a Vulnerability

8 |

9 | Security concerns can be submitted [__here__][report-url] without risk of exposing sensitive information. For issues that are low severity or unlikely to see exploitation, public issues are often acceptable.

10 |

11 | [report-url]: https://github.com/sherlock-project/sherlock/security/advisories/new

12 |

--------------------------------------------------------------------------------

/.github/workflows/regression.yml:

--------------------------------------------------------------------------------

1 | name: Regression Testing

2 |

3 | on:

4 |   pull_request:

5 |     branches:

6 |       - master

7 |       - release/**

8 |     paths:

9 |       - '.github/workflows/regression.yml'

10 |       - '**/*.json'

11 |       - '**/*.py'

12 |       - '**/*.ini'

13 |       - '**/*.toml'

14 |   push:

15 |     branches:

16 |       - master

17 |       - release/**

18 |     paths:

19 |       - '.github/workflows/regression.yml'

20 |       - '**/*.json'

21 |       - '**/*.py'

22 |       - '**/*.ini'

23 |       - '**/*.toml'

24 |

25 | jobs:

26 |   tox-lint:

27 |     # Linting is run through tox to ensure that CI uses the same linter as local runs

28 |     runs-on: ubuntu-latest

29 |     steps:

30 |       - uses: actions/checkout@v4

31 |       - name: Set up linting environment

32 |         uses: actions/setup-python@v5

33 |         with:

34 |           python-version: '3.x'

35 |       - name: Install tox and related dependencies

36 |         run: |

37 |           python -m pip install --upgrade pip

38 |           pip install tox

39 |       - name: Run tox linting environment

40 |         run: tox -e lint

41 |   tox-matrix:

42 |     runs-on: ${{ matrix.os }}

43 |     strategy:

44 |       fail-fast: false # We want to know which specific versions it fails on

45 |       matrix:

46 |         os: [

47 |           ubuntu-latest,

48 |           windows-latest,

49 |           macos-latest,

50 |         ]

51 |         python-version: [

52 |           '3.9',

53 |           '3.10',

54 |           '3.11',

55 |           '3.12',

56 |         ]

57 |     steps:

58 |       - uses: actions/checkout@v4

59 |       - name: Set up environment ${{ matrix.python-version }}

60 |         uses: actions/setup-python@v5

61 |         with:

62 |           python-version: ${{ matrix.python-version }}

63 |       - name: Install tox and related dependencies

64 |         run: |

65 |           python -m pip install --upgrade pip

66 |           pip install tox

67 |           pip install tox-gh-actions

68 |       - name: Run tox

69 |         run: tox

70 |

--------------------------------------------------------------------------------
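The `tox-matrix` job fans out over every combination of `os` and `python-version`, so the matrix above yields 3 × 4 = 12 jobs. With `fail-fast: false`, a failure in one job does not cancel the others. A sketch of that expansion with `itertools.product`. It models only the plain matrix, and ignores GitHub Actions' `include`/`exclude` keys, which this workflow does not use.

```python
from itertools import product

MATRIX = {
    "os": ["ubuntu-latest", "windows-latest", "macos-latest"],
    "python-version": ["3.9", "3.10", "3.11", "3.12"],
}


def expand_matrix(matrix: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one dict per job: the cartesian product of all matrix axes."""
    keys = list(matrix)
    return [dict(zip(keys, combo)) for combo in product(*(matrix[k] for k in keys))]


jobs = expand_matrix(MATRIX)
print(len(jobs))
for job in jobs[:2]:
    print(job)
```

The workflow quotes the Python versions because YAML would otherwise read an unquoted `3.10` as the number 3.1.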

/.github/workflows/update-site-list.yml:

--------------------------------------------------------------------------------

1 | name: Update Site List

2 |

3 | # Trigger the workflow when changes are pushed to the master branch

4 | # and the changes include the sherlock_project/resources/data.json file

5 | on:

6 |   push:

7 |     branches:

8 |       - master

9 |     paths:

10 |       - sherlock_project/resources/data.json

11 |

12 | jobs:

13 |   sync-json-data:

14 |     # Use the latest version of Ubuntu as the runner environment

15 |     runs-on: ubuntu-latest

16 |

17 |     steps:

18 |       # Check out the pushed commit (on push events the pull_request SHA below is empty, so checkout uses its default ref)

19 |       - name: Checkout code

20 |         uses: actions/checkout@v4

21 |         with:

22 |           ref: ${{ github.event.pull_request.head.sha }}

23 |           fetch-depth: 0

24 |

25 |       # Install Python 3

26 |       - name: Install Python

27 |         uses: actions/setup-python@v5

28 |         with:

29 |           python-version: '3.x'

30 |

31 |       # Execute the site-list.py Python script

32 |       - name: Execute site-list.py

33 |         run: python devel/site-list.py

34 |

35 |       - name: Push to another repository

36 |         uses: sdushantha/github-action-push-to-another-repository@main

37 |         env:

38 |           SSH_DEPLOY_KEY: ${{ secrets.SSH_DEPLOY_KEY }}

39 |           API_TOKEN_GITHUB: ${{ secrets.API_TOKEN_GITHUB }}

40 |         with:

41 |           source-directory: 'output'

42 |           destination-github-username: 'sherlock-project'

43 |           commit-message: 'Updated site list'

44 |           destination-repository-name: 'sherlockproject.xyz'

45 |           user-email: siddharth.dushantha@gmail.com

46 |           target-branch: master

47 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Virtual Environments

2 | venv/

3 | bin/

4 | lib/

5 | pyvenv.cfg

6 | poetry.lock

7 |

8 | # Regression Testing

9 | .coverage

10 | .tox/

11 |

12 | # Editor Configurations

13 | .vscode/

14 | .idea/

15 |

16 | # Python

17 | __pycache__/

18 |

19 | # Pip

20 | src/

21 |

22 | # Devel, Build, and Installation

23 | *.egg-info/

24 | dist/**

25 |

26 | # Jupyter Notebook

27 | .ipynb_checkpoints

28 | *.ipynb

29 |

30 | # Output files, except requirements.txt

31 | *.txt

32 | !requirements.txt

33 |

34 | # Comma-Separated Values (CSV) Reports

35 | *.csv

36 |

37 | # XLSX Reports

38 | *.xlsx

39 |

40 | # Excluded sites list

41 | tests/.excluded_sites

42 |

43 | # MacOS Folder Metadata File

44 | .DS_Store

45 |

46 | # Vim swap files

47 | *.swp

48 |

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

1 | # Release instructions:

2 | # 1. Update the version tag in the Dockerfile to match the version in sherlock_project/__init__.py

3 | # 2. Update the VCS_REF tag to match the tagged version's FULL commit hash

4 | # 3. Build image with BOTH latest and version tags

5 | # e.g. `docker build -t sherlock/sherlock:0.15.0 -t sherlock/sherlock:latest .`

6 |

7 | FROM python:3.12-slim-bullseye as build

8 | WORKDIR /sherlock

9 |

10 | RUN pip3 install --no-cache-dir --upgrade pip

11 |

12 | FROM python:3.12-slim-bullseye

13 | WORKDIR /sherlock

14 |

15 | ARG VCS_REF= # CHANGE ME ON UPDATE

16 | ARG VCS_URL="https://github.com/sherlock-project/sherlock"

17 | ARG VERSION_TAG= # CHANGE ME ON UPDATE

18 |

19 | ENV SHERLOCK_ENV=docker

20 |

21 | LABEL org.label-schema.vcs-ref=$VCS_REF \

22 | org.label-schema.vcs-url=$VCS_URL \

23 | org.label-schema.name="Sherlock" \

24 | org.label-schema.version=$VERSION_TAG \

25 | website="https://sherlockproject.xyz"

26 |

27 | RUN pip3 install --no-cache-dir sherlock-project==$VERSION_TAG

28 |

29 | WORKDIR /sherlock

30 |

31 | ENTRYPOINT ["sherlock"]

32 |

--------------------------------------------------------------------------------
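Release step 3 can be scripted. The sketch below assembles the `docker build` invocation with both tags. Passing `VERSION_TAG` and `VCS_REF` through `--build-arg` instead of editing the `ARG` defaults is an alternative assumed here, not the documented release procedure. `build_command` is a hypothetical helper, and `FULL_COMMIT_SHA` is a placeholder.

```python
import shlex


def build_command(version: str, vcs_ref: str, image: str = "sherlock/sherlock") -> list[str]:
    """Hypothetical helper: a docker build command tagging the image with the version and latest."""
    return [
        "docker", "build",
        "-t", f"{image}:{version}",
        "-t", f"{image}:latest",
        # Assumption: override the ARG defaults instead of editing the Dockerfile.
        "--build-arg", f"VERSION_TAG={version}",
        "--build-arg", f"VCS_REF={vcs_ref}",
        ".",
    ]


cmd = build_command("0.15.0", "FULL_COMMIT_SHA")
print(shlex.join(cmd))
```

The function only builds the argument list. To execute it, pass the list to `subprocess.run(cmd, check=True)`.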

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2019 Sherlock Project

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/devel/site-list.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # This module generates the listing of supported sites, written to

3 | # output/sites.mdx. It also sorts data.json in alphanumeric order.

4 | import json

5 | import os

6 |

7 |

8 | DATA_REL_URI: str = "sherlock_project/resources/data.json"

9 |

10 | # Read the data.json file

11 | with open(DATA_REL_URI, "r", encoding="utf-8") as data_file:

12 |     data: dict = json.load(data_file)

13 |

14 | # Remove schema-specific keywords for proper processing

15 | social_networks: dict = dict(data)

16 | social_networks.pop('$schema', None)

17 |

18 | # Sort the social networks in alphanumeric order

19 | social_networks: list = sorted(social_networks.items())

20 |

21 | # Make the output dir where the site list will be written (no error if it already exists)

22 | os.makedirs("output", exist_ok=True)

23 |

24 | # Write the list of supported sites to output/sites.mdx

25 | with open("output/sites.mdx", "w", encoding="utf-8") as site_file:

26 |     site_file.write("---\ntitle: 'List of supported sites'\nsidebarTitle: 'Supported sites'\nicon: 'globe'\ndescription: 'Sherlock currently supports **400+** sites'\n---\n\n")

27 |     for social_network, info in social_networks:

28 |         url_main = info["urlMain"]

29 |         is_nsfw = "**(NSFW)**" if info.get("isNSFW") else ""

30 |         site_file.write(f"1. [{social_network}]({url_main}) {is_nsfw}\n")

31 |

32 | # Overwrite the data.json file with sorted data

33 | with open(DATA_REL_URI, "w", encoding="utf-8") as data_file:

34 |     sorted_data = json.dumps(data, indent=2, sort_keys=True)

35 |     data_file.write(sorted_data)

36 |     data_file.write("\n")

37 |

38 | print("Finished updating supported site listing!")

39 |

40 |

--------------------------------------------------------------------------------
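The core of `site-list.py` is a pure transformation from `data.json` entries to Markdown list items, which is easy to test in isolation once it is pulled into a function. A sketch of that step, assuming each entry has `urlMain` and an optional boolean `isNSFW` as the script expects. `render_site_list` is a hypothetical name, the sample entries are illustrative, and unlike the script it does not leave a trailing space on non-NSFW lines.

```python
def render_site_list(data: dict) -> list[str]:
    """Hypothetical helper: one Markdown list item per site, sorted by site name."""
    sites = {name: info for name, info in data.items() if name != "$schema"}
    lines = []
    for name, info in sorted(sites.items()):
        suffix = " **(NSFW)**" if info.get("isNSFW") else ""
        lines.append(f"1. [{name}]({info['urlMain']}){suffix}")
    return lines


# Illustrative sample, not taken from the real data.json.
sample = {
    "$schema": "data.schema.json",
    "GitHub": {"urlMain": "https://www.github.com/"},
    "7Cups": {"urlMain": "https://www.7cups.com/"},
    "ExampleAdult": {"urlMain": "https://example.com/", "isNSFW": True},
}
for line in render_site_list(sample):
    print(line)
```

Every item starts with `1.` on purpose: Markdown renderers number consecutive ordered-list items automatically, so inserting a site never requires renumbering.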

/docs/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

1 | # Contributor Covenant Code of Conduct

2 |

3 | ## Our Pledge

4 |

5 | We as members, contributors, and leaders pledge to make participation in our

6 | community a harassment-free experience for everyone, regardless of age, body

7 | size, visible or invisible disability, ethnicity, sex characteristics, gender

8 | identity and expression, level of experience, education, socio-economic status,

9 | nationality, personal appearance, race, caste, color, religion, or sexual

10 | identity and orientation.

11 |

12 | We pledge to act and interact in ways that contribute to an open, welcoming,

13 | diverse, inclusive, and healthy community.

14 | ## Our Standards

15 |

16 | Examples of behavior that contributes to a positive environment for our

17 | community include:

18 |

19 | * Demonstrating empathy and kindness toward other people

20 | * Being respectful of differing opinions, viewpoints, and experiences

21 | * Giving and gracefully accepting constructive feedback

22 | * Accepting responsibility and apologizing to those affected by our mistakes,

23 | and learning from the experience

24 | * Focusing on what is best not just for us as individuals, but for the overall

25 | community

26 |

27 | Examples of unacceptable behavior include:

28 |

29 | * The use of sexualized language or imagery, and sexual attention or advances of

30 | any kind

31 | * Trolling, insulting or derogatory comments, and personal or political attacks

32 | * Public or private harassment

33 | * Publishing others' private information, such as a physical or email address,

34 | without their explicit permission

35 | * Other conduct which could reasonably be considered inappropriate in a

36 | professional setting

37 |

38 | ## Enforcement Responsibilities

39 |

40 | Community leaders are responsible for clarifying and enforcing our standards of

41 | acceptable behavior and will take appropriate and fair corrective action in

42 | response to any behavior that they deem inappropriate, threatening, offensive,

43 | or harmful.

44 |

45 | Community leaders have the right and responsibility to remove, edit, or reject

46 | comments, commits, code, wiki edits, issues, and other contributions that are

47 | not aligned to this Code of Conduct, and will communicate reasons for moderation

48 | decisions when appropriate.

49 |

50 | ## Scope

51 |

52 | This Code of Conduct applies within all community spaces, and also applies when

53 | an individual is officially representing the community in public spaces.

54 | Examples of representing our community include using an official e-mail address,

55 | posting via an official social media account, or acting as an appointed

56 | representative at an online or offline event.

57 |

58 | ## Enforcement

59 |

60 | Instances of abusive, harassing, or otherwise unacceptable behavior may be

61 | reported to the community leaders responsible for enforcement at yahya.arbabi@gmail.com.

62 | All complaints will be reviewed and investigated promptly and fairly.

63 |

64 | All community leaders are obligated to respect the privacy and security of the

65 | reporter of any incident.

66 |

67 | ## Enforcement Guidelines

68 |

69 | Community leaders will follow these Community Impact Guidelines in determining

70 | the consequences for any action they deem in violation of this Code of Conduct:

71 |

72 | ### 1. Correction

73 |

74 | **Community Impact**: Use of inappropriate language or other behavior deemed

75 | unprofessional or unwelcome in the community.

76 |

77 | **Consequence**: A private, written warning from community leaders, providing

78 | clarity around the nature of the violation and an explanation of why the

79 | behavior was inappropriate. A public apology may be requested.

80 |

81 | ### 2. Warning

82 |

83 | **Community Impact**: A violation through a single incident or series of

84 | actions.

85 |

86 | **Consequence**: A warning with consequences for continued behavior. No

87 | interaction with the people involved, including unsolicited interaction with

88 | those enforcing the Code of Conduct, for a specified period of time. This

89 | includes avoiding interactions in community spaces as well as external channels

90 | like social media. Violating these terms may lead to a temporary or permanent

91 | ban.

92 |

93 | ### 3. Temporary Ban

94 |

95 | **Community Impact**: A serious violation of community standards, including

96 | sustained inappropriate behavior.

97 |

98 | **Consequence**: A temporary ban from any sort of interaction or public

99 | communication with the community for a specified period of time. No public or

100 | private interaction with the people involved, including unsolicited interaction

101 | with those enforcing the Code of Conduct, is allowed during this period.

102 | Violating these terms may lead to a permanent ban.

103 |

104 | ### 4. Permanent Ban

105 |

106 | **Community Impact**: Demonstrating a pattern of violation of community

107 | standards, including sustained inappropriate behavior, harassment of an

108 | individual, or aggression toward or disparagement of classes of individuals.

109 |

110 | **Consequence**: A permanent ban from any sort of public interaction within the

111 | community.

112 |

113 | ## Attribution

114 |

115 | This Code of Conduct is adapted from the [Contributor Covenant][homepage],

116 | version 2.1, available at

117 | [https://www.contributor-covenant.org/version/2/1/code_of_conduct.html][v2.1].

118 |

119 | Community Impact Guidelines were inspired by

120 | [Mozilla's code of conduct enforcement ladder][Mozilla CoC].

121 |

122 | For answers to common questions about this code of conduct, see the FAQ at

123 | [https://www.contributor-covenant.org/faq][FAQ]. Translations are available at

124 | [https://www.contributor-covenant.org/translations][translations].

125 |

126 | [homepage]: https://www.contributor-covenant.org

127 | [v2.1]: https://www.contributor-covenant.org/version/2/1/code_of_conduct.html

128 | [Mozilla CoC]: https://github.com/mozilla/diversity

129 | [FAQ]: https://www.contributor-covenant.org/faq

130 | [translations]: https://www.contributor-covenant.org/translations

--------------------------------------------------------------------------------

/docs/README.md:

--------------------------------------------------------------------------------

1 |

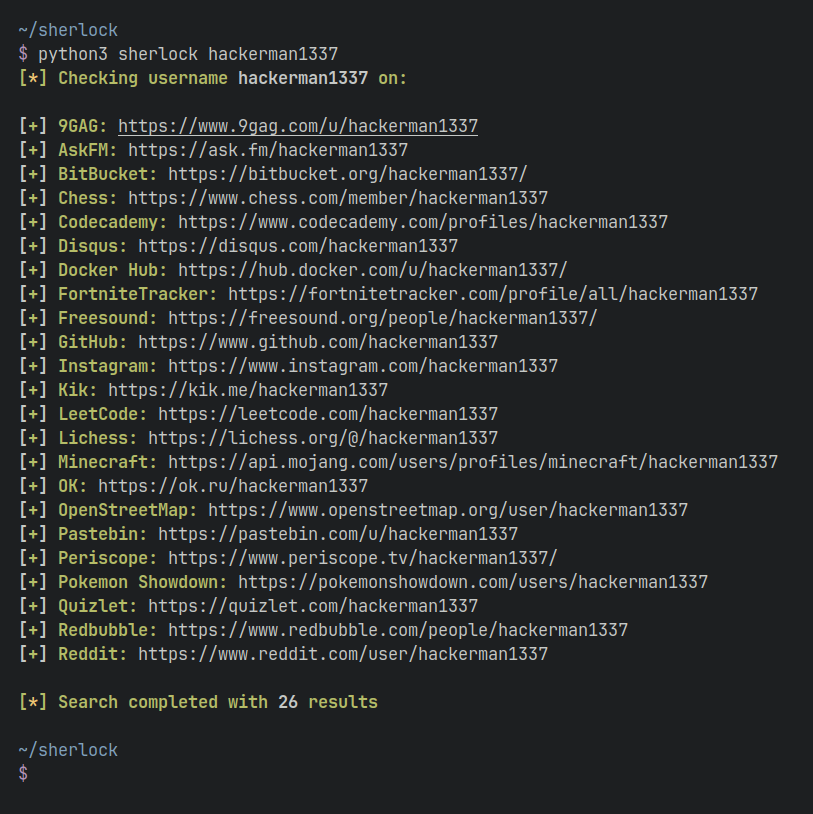

2 | Hunt down social media accounts by username across 400+ social networks

6 |

8 |

9 |

10 | Installation

11 | •

12 | Usage

13 | •

14 | Contributing

15 |

16 |

17 |

18 |

21 |

22 |

23 | ## Installation

24 |

25 | > [!WARNING]

26 | > Packages for ParrotOS and Ubuntu 24.04, maintained by a third party, appear to be __broken__.

27 | > Users of these systems should defer to pipx/pip or Docker.

28 |

29 | | Method | Notes |

30 | | - | - |

31 | | `pipx install sherlock-project` | `pip` may be used in place of `pipx` |

32 | | `docker run -it --rm sherlock/sherlock` | |

33 | | `dnf install sherlock-project` | |

34 |

35 | Community-maintained packages are available for Debian (>= 13), Ubuntu (>= 22.10), Homebrew, Kali, and BlackArch. These packages are not directly supported or maintained by the Sherlock Project.

36 |

37 | See all alternative installation methods [on the Sherlock Project website](https://sherlockproject.xyz/installation).

38 |

39 | ## General usage

40 |

41 | To search for only one user:

42 | ```bash

43 | sherlock user123

44 | ```

45 |

46 | To search for more than one user:

47 | ```bash

48 | sherlock user1 user2 user3

49 | ```

50 |

51 | Accounts found will be stored in an individual text file named after the corresponding username (e.g. `user123.txt`).

52 |

53 | ```console

54 | $ sherlock --help

55 | usage: sherlock [-h] [--version] [--verbose] [--folderoutput FOLDEROUTPUT]

56 | [--output OUTPUT] [--tor] [--unique-tor] [--csv] [--xlsx]

57 | [--site SITE_NAME] [--proxy PROXY_URL] [--json JSON_FILE]

58 | [--timeout TIMEOUT] [--print-all] [--print-found] [--no-color]

59 | [--browse] [--local] [--nsfw]

60 | USERNAMES [USERNAMES ...]

61 |

62 | Sherlock: Find Usernames Across Social Networks (Version 0.14.3)

63 |

64 | positional arguments:

65 | USERNAMES One or more usernames to check with social networks.

66 | Check similar usernames using {?} (replace to '_', '-', '.').

67 |

68 | optional arguments:

69 | -h, --help show this help message and exit

70 | --version Display version information and dependencies.

71 | --verbose, -v, -d, --debug

72 | Display extra debugging information and metrics.

73 | --folderoutput FOLDEROUTPUT, -fo FOLDEROUTPUT

74 | If using multiple usernames, the output of the results will be

75 | saved to this folder.

76 | --output OUTPUT, -o OUTPUT

77 | If using single username, the output of the result will be saved

78 | to this file.

79 | --tor, -t Make requests over Tor; increases runtime; requires Tor to be

80 | installed and in system path.

81 | --unique-tor, -u Make requests over Tor with new Tor circuit after each request;

82 | increases runtime; requires Tor to be installed and in system

83 | path.

84 | --csv Create Comma-Separated Values (CSV) File.

85 | --xlsx Create the standard file for the modern Microsoft Excel

86 | spreadsheet (xlsx).

87 | --site SITE_NAME Limit analysis to just the listed sites. Add multiple options to

88 | specify more than one site.

89 | --proxy PROXY_URL, -p PROXY_URL

90 | Make requests over a proxy. e.g. socks5://127.0.0.1:1080

91 | --json JSON_FILE, -j JSON_FILE

92 | Load data from a JSON file or an online, valid, JSON file.

93 | --timeout TIMEOUT Time (in seconds) to wait for response to requests (Default: 60)

94 | --print-all Output sites where the username was not found.

95 | --print-found Output sites where the username was found.

96 | --no-color Don't color terminal output

97 | --browse, -b Browse to all results on default browser.

98 | --local, -l Force the use of the local data.json file.

99 | --nsfw Include checking of NSFW sites from default list.

100 | ```
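The help text says that a `{?}` in a username is replaced by `_`, `-` and `.` so that similar names are checked too. Below is a minimal Python sketch of that expansion. It assumes that every `{?}` in a single name receives the same replacement character. `expand_username` is a hypothetical helper, not Sherlock's own function.

```python
# Replacement characters listed in the `sherlock --help` output.
SYMBOLS = ("_", "-", ".")
PLACEHOLDER = "{?}"


def expand_username(username: str) -> list[str]:
    """Expand a username containing {?} into one variant per symbol.

    Assumption: every {?} in the name gets the same symbol, so
    "a{?}b{?}c" yields "a_b_c", "a-b-c" and "a.b.c".
    Names without the placeholder are returned unchanged.
    """
    if PLACEHOLDER not in username:
        return [username]
    return [username.replace(PLACEHOLDER, symbol) for symbol in SYMBOLS]


if __name__ == "__main__":
    for name in expand_username("john{?}doe"):
        print(name)
```

On the command line, quote the argument (for example `sherlock 'john{?}doe'`). This keeps the shell from treating `?` as a glob character.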

101 | ## Apify Actor Usage [](https://apify.com/netmilk/sherlock?fpr=sherlock)

102 |

103 |

125 |

127 |

128 | ## Star history

129 |

130 |

131 |

135 |

136 | ## License

137 |

138 | MIT © Sherlock Project

4 | Hunt down social media accounts by username across 400+ social networks. Additional documentation can be found at our GitHub repository.

10 |

12 |

13 | ## Usage

14 |

15 | ```console

16 | $ sherlock --help

17 | usage: sherlock [-h] [--version] [--verbose] [--folderoutput FOLDEROUTPUT]

18 | [--output OUTPUT] [--tor] [--unique-tor] [--csv] [--xlsx]

19 | [--site SITE_NAME] [--proxy PROXY_URL] [--json JSON_FILE]

20 | [--timeout TIMEOUT] [--print-all] [--print-found] [--no-color]

21 | [--browse] [--local] [--nsfw]

22 | USERNAMES [USERNAMES ...]

23 | ```

24 |

25 | To search for only one user:

26 | ```bash

27 | $ sherlock user123

28 | ```

29 |

30 | To search for more than one user:

31 | ```bash

32 | $ sherlock user1 user2 user3

33 | ```

34 |

40 |

43 |

--------------------------------------------------------------------------------

/docs/removed-sites.md:

--------------------------------------------------------------------------------

1 | # List Of Sites Removed From Sherlock

2 |

3 | This is a list of sites implemented in such a way that the current design of

4 | Sherlock is not capable of determining if a given username exists or not.

5 | They are listed here in the hope that things may change in the future

6 | so they may be re-included.
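Each entry below records the site's `errorType`. This field decides how a response is read as "claimed" or "available". The sketch below is a simplified illustration of the three types that appear in this list: `status_code`, `message` and `response_url`. It assumes that redirects are not followed for `response_url` sites, so a redirect shows up as a 3xx status. `Status`, `Response` and `classify` are hypothetical names, and this is not the code in `sherlock_project/sherlock.py`.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    CLAIMED = "claimed"      # the username exists on the site
    AVAILABLE = "available"  # the username does not exist


@dataclass
class Response:
    """Hypothetical stand-in for an HTTP response."""
    status_code: int
    text: str = ""


def classify(site: dict, response: Response) -> Status:
    """Map one response to a Status using the entry's errorType (simplified)."""
    error_type = site["errorType"]
    is_success = 200 <= response.status_code < 300

    if error_type == "status_code":
        # Any non-2xx status (404, 403, 500, ...) is read as "no such user".
        return Status.CLAIMED if is_success else Status.AVAILABLE

    if error_type == "message":
        # errorMsg may be one string or a list; any match means "no such user".
        messages = site["errorMsg"]
        if isinstance(messages, str):
            messages = [messages]
        found = any(msg in response.text for msg in messages)
        return Status.AVAILABLE if found else Status.CLAIMED

    if error_type == "response_url":
        # Assumes redirects were not followed: a 3xx towards errorUrl
        # (or any other non-2xx status) is read as "no such user".
        return Status.CLAIMED if is_success else Status.AVAILABLE

    raise ValueError(f"unknown errorType: {error_type!r}")


if __name__ == "__main__":
    basecamp = {
        "errorType": "message",
        "errorMsg": "The account you were looking for doesn't exist",
    }
    page = Response(200, "The account you were looking for doesn't exist")
    print(classify(basecamp, page))  # Status.AVAILABLE
```

Seen through this sketch, a site that returns the same status or the same page for every username makes `classify` give the same answer for every name. That is exactly what makes such a site uncheckable.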

7 |

8 |

9 | ## gpodder.net

10 |

11 | As of 2020-05-25, all usernames are reported as available.

12 |

13 | The server returns an HTTP 500 (Internal Server Error) status

14 | for all queries.

15 |

16 | ```json

17 | "gpodder.net": {

18 | "errorType": "status_code",

19 | "rank": 2013984,

20 | "url": "https://gpodder.net/user/{}",

21 | "urlMain": "https://gpodder.net/",

22 | "username_claimed": "blue",

23 | "username_unclaimed": "noonewouldeverusethis7"

24 | },

25 | ```
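Every entry carries a `username_claimed` and a `username_unclaimed` value. Together they give a simple test of whether a site can be checked at all: the first name must be reported as existing and the second as missing. The sketch below applies that test to a simulated version of the gpodder.net behaviour described above, where every query returns HTTP 500. `site_is_reliable` and `gpodder_check` are hypothetical names, and no real request is made.

```python
from typing import Callable

# A check answers one question: does this username exist on the site?
Check = Callable[[str], bool]


def site_is_reliable(site: dict, check: Check) -> bool:
    """True only if the check tells the entry's two reference names apart."""
    claimed_found = check(site["username_claimed"])
    unclaimed_found = check(site["username_unclaimed"])
    return claimed_found and not unclaimed_found


GPODDER = {
    "errorType": "status_code",
    "url": "https://gpodder.net/user/{}",
    "username_claimed": "blue",
    "username_unclaimed": "noonewouldeverusethis7",
}


def gpodder_check(username: str) -> bool:
    """Simulated 2020-05-25 behaviour: every request returns HTTP 500."""
    status = 500  # same answer whatever the username is
    # status_code detection: only a 2xx status counts as "exists".
    return 200 <= status < 300


if __name__ == "__main__":
    print(site_is_reliable(GPODDER, gpodder_check))  # False
```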

26 |

27 |

28 | ## Investing.com

29 |

30 | As of 2020-05-25, all usernames are reported as claimed.

31 |

32 | Any query against a user seems to be redirecting to a general

33 | information page at https://www.investing.com/brokers/. A login is probably

34 | required before access.

35 |

36 | ```json

37 | "Investing.com": {

38 | "errorType": "status_code",

39 | "rank": 196,

40 | "url": "https://www.investing.com/traders/{}",

41 | "urlMain": "https://www.investing.com/",

42 | "username_claimed": "jenny",

43 | "username_unclaimed": "noonewouldeverusethis7"

44 | },

45 | ```

46 |

47 | ## AdobeForums

48 |

49 | As of 2020-04-12, all usernames are reported as available.

50 |

51 | When I went to the site to see what was going on, usernames that I know

52 | existed were redirecting to the main page.

53 |

54 | I was able to see user profiles without logging in, but the URL was not

55 | related to their user name. For example, user "tomke" went to

56 | https://community.adobe.com/t5/user/viewprofilepage/user-id/10882613.

57 | This can be detected, but it requires a different detection method.

58 |

59 | ```json

60 | "AdobeForums": {

61 | "errorType": "status_code",

62 | "rank": 59,

63 | "url": "https://forums.adobe.com/people/{}",

64 | "urlMain": "https://forums.adobe.com/",

65 | "username_claimed": "jack",

66 | "username_unclaimed": "noonewouldeverusethis77777"

67 | },

68 | ```

69 |

70 | ## Basecamp

71 |

72 | As of 2020-02-23, all usernames are reported as not existing.

73 |

74 |

75 | ```json

76 | "Basecamp": {

77 | "errorMsg": "The account you were looking for doesn't exist",

78 | "errorType": "message",

79 | "rank": 4914,

80 | "url": "https://{}.basecamphq.com",

81 | "urlMain": "https://basecamp.com/",

82 | "username_claimed": "blue",

83 | "username_unclaimed": "noonewouldeverusethis7"

84 | },

85 | ```

86 |

87 | ## Canva

88 |

89 | As of 2020-02-23, all usernames are reported as not existing.

90 |

91 | ```json

92 | "Canva": {

93 | "errorType": "response_url",

94 | "errorUrl": "https://www.canva.com/{}",

95 | "rank": 128,

96 | "url": "https://www.canva.com/{}",

97 | "urlMain": "https://www.canva.com/",

98 | "username_claimed": "jenny",

99 | "username_unclaimed": "xgtrq"

100 | },

101 | ```

102 |

103 | ## Pixabay

104 |

105 | As of 2020-01-21, all usernames are reported as not existing.

106 |

107 | ```json

108 | "Pixabay": {

109 | "errorType": "status_code",

110 | "rank": 378,

111 | "url": "https://pixabay.com/en/users/{}",

112 | "urlMain": "https://pixabay.com/",

113 | "username_claimed": "blue",

114 | "username_unclaimed": "noonewouldeverusethis7"

115 | },

116 | ```

117 |

118 | ## NPM-Packages

119 |

120 | NPM-Packages are not users.

121 |

122 | ```json

123 | "NPM-Package": {

124 | "errorType": "status_code",

125 | "url": "https://www.npmjs.com/package/{}",

126 | "urlMain": "https://www.npmjs.com/",

127 | "username_claimed": "blue",

128 | "username_unclaimed": "noonewouldeverusethis7"

129 | },

130 | ```

131 |

132 | ## Pexels

133 |

134 | As of 2020-01-21, all usernames are reported as not existing.

135 |

136 | ```json

137 | "Pexels": {

138 | "errorType": "status_code",

139 | "rank": 745,

140 | "url": "https://www.pexels.com/@{}",

141 | "urlMain": "https://www.pexels.com/",

142 | "username_claimed": "bruno",

143 | "username_unclaimed": "noonewouldeverusethis7"

144 | },

145 | ```

146 |

147 | ## RamblerDating

148 |

149 | As of 2019-12-31, the site always times out.

150 |

151 | ```json

152 | "RamblerDating": {

153 | "errorType": "response_url",

154 | "errorUrl": "https://dating.rambler.ru/page/{}",

155 | "rank": 322,

156 | "url": "https://dating.rambler.ru/page/{}",

157 | "urlMain": "https://dating.rambler.ru/",

158 | "username_claimed": "blue",

159 | "username_unclaimed": "noonewouldeverusethis7"

160 | },

161 | ```

162 |

163 | ## YandexMarket

164 |

165 | As of 2019-12-31, all usernames are reported as existing.

166 |

167 | ```json

168 | "YandexMarket": {

169 | "errorMsg": "\u0422\u0443\u0442 \u043d\u0438\u0447\u0435\u0433\u043e \u043d\u0435\u0442",

170 | "errorType": "message",

171 | "rank": 47,

172 | "url": "https://market.yandex.ru/user/{}/achievements",

173 | "urlMain": "https://market.yandex.ru/",

174 | "username_claimed": "blue",

175 | "username_unclaimed": "noonewouldeverusethis7"

176 | },

177 | ```
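The `errorMsg` values in this entry and in several Russian-language entries below are stored as JSON `\uXXXX` escapes. A JSON parser decodes them back to the original text. The YandexMarket string is "Тут ничего нет" ("There is nothing here"). The string shared by elwoRU, ingvarr.net.ru, easyen, pedsovet and radioskot is "Пользователь не найден" ("User not found"). The short sketch below uses only the standard `json` module; `decoded_error_msg` and `page_says_missing` are hypothetical helper names.

```python
import json

# The errorMsg value exactly as stored in the YandexMarket entry above
# (a raw string keeps the \uXXXX escapes as literal JSON text).
RAW_ENTRY = r'{"errorMsg": "\u0422\u0443\u0442 \u043d\u0438\u0447\u0435\u0433\u043e \u043d\u0435\u0442"}'


def decoded_error_msg(raw_json: str) -> str:
    """Parse the entry and return errorMsg with the escapes decoded."""
    return json.loads(raw_json)["errorMsg"]


def page_says_missing(page_body: str, error_msg: str) -> bool:
    """Message-type detection: the decoded text must occur in the page."""
    return error_msg in page_body


if __name__ == "__main__":
    msg = decoded_error_msg(RAW_ENTRY)
    # Compare against the literal Russian text ("There is nothing here").
    print(msg == "Тут ничего нет")  # True
    print(page_says_missing("<h1>Тут ничего нет</h1>", msg))  # True
```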

178 |

179 | ## Codementor

180 |

181 | As of 2019-12-31, usernames that exist are not detected.

182 |

183 | ```json

184 | "Codementor": {

185 | "errorType": "status_code",

186 | "rank": 10252,

187 | "url": "https://www.codementor.io/@{}",

188 | "urlMain": "https://www.codementor.io/",

189 | "username_claimed": "blue",

190 | "username_unclaimed": "noonewouldeverusethis7"

191 | },

192 | ```

193 |

194 | ## KiwiFarms

195 |

196 | As of 2019-12-31, the site gives a 403 for all usernames. You have to

197 | be logged in to see a profile.

198 |

199 | ```json

200 | "KiwiFarms": {

201 | "errorMsg": "The specified member cannot be found",

202 | "errorType": "message",

203 | "rank": 38737,

204 | "url": "https://kiwifarms.net/members/?username={}",

205 | "urlMain": "https://kiwifarms.net/",

206 | "username_claimed": "blue",

207 | "username_unclaimed": "noonewouldeverusethis"

208 | },

209 | ```

210 |

211 | ## Teknik

212 |

213 | As of 2019-11-30, the site causes Sherlock to just hang.

214 |

215 | ```json

216 | "Teknik": {

217 | "errorMsg": "The user does not exist",

218 | "errorType": "message",

219 | "rank": 357163,

220 | "url": "https://user.teknik.io/{}",

221 | "urlMain": "https://teknik.io/",

222 | "username_claimed": "red",

223 | "username_unclaimed": "noonewouldeverusethis7"

224 | }

225 | ```
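A site that never answers, like Teknik here (or StreamMe and RamblerDating elsewhere in this list), is only a problem if one stuck request can hold up the whole run. The sketch below bounds each probe with a deadline using the standard `concurrent.futures` module. The probes are plain callables standing in for HTTP requests, and `run_probes` is a hypothetical helper, not how Sherlock schedules its requests.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as ProbeTimeout
from typing import Callable, Dict

# A probe stands in for one site request and returns its result label.
Probe = Callable[[], str]


def run_probes(probes: Dict[str, Probe], timeout: float) -> Dict[str, str]:
    """Run all probes at once; any probe still running after `timeout`
    seconds is reported as "timed out" instead of blocking the run."""
    pool = ThreadPoolExecutor(max_workers=max(1, len(probes)))
    # One worker per probe, so every probe starts right away and a
    # single shared deadline acts as a per-probe time limit.
    futures = {name: pool.submit(probe) for name, probe in probes.items()}
    deadline = time.monotonic() + timeout
    results: Dict[str, str] = {}
    for name, future in futures.items():
        remaining = max(0.0, deadline - time.monotonic())
        try:
            results[name] = future.result(timeout=remaining)
        except ProbeTimeout:
            results[name] = "timed out"
    # Return without waiting for stuck probes to finish.
    pool.shutdown(wait=False)
    return results


if __name__ == "__main__":
    def responsive() -> str:
        return "claimed"

    def hanging() -> str:
        time.sleep(0.5)  # simulates a site that does not answer in time
        return "claimed"

    print(run_probes({"responsive": responsive, "Teknik": hanging}, timeout=0.1))
```

Threads stuck in a probe keep running in the background, and the interpreter waits for them at exit. In practice, the timeout therefore also belongs on the request itself. The `--timeout` option in the README's help output is the user-facing control for this.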

226 |

227 | ## Shockwave

228 |

229 | As of 2019-11-28, usernames that exist give a 503 "Service Unavailable"

230 | HTTP Status.

231 |

232 | ```json

233 | "Shockwave": {

234 | "errorMsg": "Oh no! You just finished all of the games on the internet!",

235 | "errorType": "message",

236 | "rank": 35916,

237 | "url": "http://www.shockwave.com/member/profiles/{}.jsp",

238 | "urlMain": "http://www.shockwave.com/",

239 | "username_claimed": "blue",

240 | "username_unclaimed": "noonewouldeverusethis"

241 | },

242 | ```

243 |

244 | ## Foursquare

245 |

246 | When usage of an automated tool is detected, the whole IP address is banned from future requests.

247 | The site shows this error message:

248 |

249 | > Please verify you are a human

250 | > Access to this page has been denied because we believe you are using automation tools to browse the website.

251 |

252 | ```json

253 | "Foursquare": {

254 | "errorType": "status_code",

255 | "rank": 1843,

256 | "url": "https://foursquare.com/{}",

257 | "urlMain": "https://foursquare.com/",

258 | "username_claimed": "dens",

259 | "username_unclaimed": "noonewouldeverusethis7"

260 | },

261 | ```

262 |

263 | ## Khan Academy

264 |

265 | Usernames that don't exist are detected as existing. First noticed 2019-10-25.

266 |

267 | ```json

268 | "Khan Academy": {

269 | "errorType": "status_code",

270 | "rank": 377,

271 | "url": "https://www.khanacademy.org/profile/{}",

272 | "urlMain": "https://www.khanacademy.org/",

273 | "username_claimed": "blue",

274 | "username_unclaimed": "noonewouldeverusethis7"

275 | },

276 | ```

277 |

278 |

279 | ## EVE Online

280 |

281 | Usernames that exist are not detected.

282 |

283 | ```json

284 | "EVE Online": {

285 | "errorType": "response_url",

286 | "errorUrl": "https://eveonline.com",

287 | "rank": 15347,

288 | "url": "https://evewho.com/pilot/{}/",

289 | "urlMain": "https://eveonline.com",

290 | "username_claimed": "blue",

291 | "username_unclaimed": "noonewouldeverusethis7"

292 | },

293 | ```

294 |

295 | ## AngelList

296 |

297 | Usernames that exist are not detected. The site returns a 403 Forbidden error.

298 |

299 | ```json

300 | "AngelList": {

301 | "errorType": "status_code",

302 | "rank": 5767,

303 | "url": "https://angel.co/u/{}",

304 | "urlMain": "https://angel.co/",

305 | "username_claimed": "blue",

306 | "username_unclaimed": "noonewouldeverusethis7"

307 | },

308 | ```

309 |

310 | ## PowerShell Gallery

311 |

312 | Accidentally merged even though the original pull request showed that all

313 | user names were available.

314 |

315 | ```json

316 | "PowerShell Gallery": {

317 | "errorType": "status_code",

318 | "rank": 163562,

319 | "url": "https://www.powershellgallery.com/profiles/{}",

320 | "urlMain": "https://www.powershellgallery.com",

321 | "username_claimed": "powershellteam",

322 | "username_unclaimed": "noonewouldeverusethis7"

323 | },

324 | ```

325 |

326 | ## StreamMe

327 |

328 | On 2019-04-07, I got a Timed Out message from the website. It had not

329 | been working for some weeks before that either. It takes about 21s before

330 | the site finally times out, so it really makes getting the results from

331 | Sherlock a pain.

332 |

333 | If the site becomes available in the future, we can put it back in.

334 |

335 | ```json

336 | "StreamMe": {

337 | "errorType": "status_code",

338 | "rank": 31702,

339 | "url": "https://www.stream.me/{}",

340 | "urlMain": "https://www.stream.me/",

341 | "username_claimed": "blue",

342 | "username_unclaimed": "noonewouldeverusethis7"

343 | },

344 | ```

345 |

346 | ## BlackPlanet

347 |

348 | This site has always returned a false positive. The site returns the exact

349 | same text for a claimed or an unclaimed username. The site must be rendering

350 | all of the different content using Javascript in the browser. So, there is

351 | no way to distinguish between the results with the current design of Sherlock.

352 |

353 | ```json

354 | "BlackPlanet": {

355 | "errorMsg": "My Hits",

356 | "errorType": "message",

357 | "rank": 110021,

358 | "url": "http://blackplanet.com/{}",

359 | "urlMain": "http://blackplanet.com/"

360 | },

361 | ```

362 |

363 | ## Fotolog

364 |

365 | Around 2019-02-09, I got a 502 HTTP error (bad gateway) for any access. On

366 | 2019-03-10, the site was up, but it was in maintenance mode.

367 |

368 | It does not seem to be working, so there is no sense in including it in

369 | Sherlock.

370 |

371 | ```json

372 | "Fotolog": {

373 | "errorType": "status_code",

374 | "rank": 47777,

375 | "url": "https://fotolog.com/{}",

376 | "urlMain": "https://fotolog.com/"

377 | },

378 | ```

379 |

380 | ## Google Plus

381 |

382 | On 2019-04-02, Google shut down Google Plus. While the content for some

383 | users is available after that point, it is going away. And, no one will

384 | be able to create a new account. So, there is no value in keeping it in

385 | Sherlock.

386 |

387 | Good-bye [Google Plus](https://en.wikipedia.org/wiki/Google%2B)...

388 |

389 | ```json

390 | "Google Plus": {

391 | "errorType": "status_code",

392 | "rank": 1,

393 | "url": "https://plus.google.com/+{}",

394 | "urlMain": "https://plus.google.com/",

395 | "username_claimed": "davidbrin1",

396 | "username_unclaimed": "noonewouldeverusethis7"

397 | },

398 | ```

399 |

400 |

401 | ## InsaneJournal

402 |

403 | As of 2020-02-23, InsaneJournal returns false positives when given a username that contains a period.

404 | Since we were not able to find the criteria for a valid username, the best thing to do now is to remove it.

405 |

406 | ```json

407 | "InsaneJournal": {

408 | "errorMsg": "Unknown user",

409 | "errorType": "message",

410 | "rank": 29728,

411 | "url": "http://{}.insanejournal.com/profile",

412 | "urlMain": "insanejournal.com",

413 | "username_claimed": "blue",

414 | "username_unclaimed": "dlyr6cd"

415 | },

416 | ```
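InsaneJournal only misbehaves for usernames containing a period, and so do easyen, pedsovet, radioskot, Coderwall and TamTam later in this list. Another entry in this file carries a `regexCheck` field, which suggests one way to handle such sites: skip any username the pattern rejects instead of probing it. The sketch assumes that `regexCheck` holds a Python-compatible regular expression. The no-period pattern and `should_probe` are hypothetical, since the sites' real username rules were never found.

```python
import re

# Hypothetical pattern: letters, digits and underscores only, so any
# username containing a period is rejected before a request is made.
NO_PERIOD = r"^[A-Za-z0-9_]+$"

INSANEJOURNAL = {
    "errorMsg": "Unknown user",
    "errorType": "message",
    "regexCheck": NO_PERIOD,  # hypothetical: not in the original entry
    "url": "http://{}.insanejournal.com/profile",
}


def should_probe(site: dict, username: str) -> bool:
    """Return False when the entry's regexCheck rules the username out."""
    pattern = site.get("regexCheck")
    if pattern is None:
        return True  # no rule recorded: probe every username
    return re.search(pattern, username) is not None


if __name__ == "__main__":
    for name in ("blue", "john.doe"):
        print(name, should_probe(INSANEJOURNAL, name))
```

The trade-off is that, on a site which does allow periods, usernames containing them would never be checked. A pattern like this is only safe once the real rules are known.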

417 |

418 | ## Sports Tracker

419 |

420 | As of 2020-04-02, Sports Tracker returns false positives. Checking with `errorMsg` and `response_url`

421 | did not seem to work.

422 |

423 | ```json

424 | "SportsTracker": {

425 | "errorUrl": "https://www.sports-tracker.com/page-not-found",

426 | "errorType": "response_url",

427 | "rank": 93950,

428 | "url": "https://www.sports-tracker.com/view_profile/{}",

429 | "urlMain": "https://www.sports-tracker.com/",

430 | "username_claimed": "blue",

431 | "username_unclaimed": "noonewouldeveruse"

432 | },

433 | ```

434 |

435 | ## Trip

436 |

437 | As of 2020-04-02, Trip by Skyscanner does not seem to work because it keeps on

438 | redirecting to skyscanner.com whether the username exists or not.

439 |

440 | ```json

441 | "Trip": {

442 | "errorType": "status_code",

443 | "rank": 2847,

444 | "url": "https://www.trip.skyscanner.com/user/{}",

445 | "urlMain": "https://www.trip.skyscanner.com/",

446 | "username_claimed": "blue",

447 | "username_unclaimed": "noonewouldeverusethis7"

448 | },

449 |

450 | ```

451 |

452 | ## boingboing.net

453 |

454 | As of 2020-04-02, boingboing.net requires a login to check if a user exists or not.

455 |

456 | ```json

457 | "boingboing.net": {

458 | "errorType": "status_code",

459 | "rank": 5821,

460 | "url": "https://bbs.boingboing.net/u/{}",

461 | "urlMain": "https://boingboing.net/",

462 | "username_claimed": "admin",

463 | "username_unclaimed": "noonewouldeverusethis7"

464 | },

465 | ```

466 |

467 | ## elwoRU

468 | As of 2020-04-04, elwoRU does not exist anymore. I confirmed using

469 | downforeveryoneorjustme.com that the website is down.

470 |

471 | ```json

472 | "elwoRU": {

473 | "errorMsg": "\u041f\u043e\u043b\u044c\u0437\u043e\u0432\u0430\u0442\u0435\u043b\u044c \u043d\u0435 \u043d\u0430\u0439\u0434\u0435\u043d",

474 | "errorType": "message",

475 | "rank": 254810,

476 | "url": "https://elwo.ru/index/8-0-{}",

477 | "urlMain": "https://elwo.ru/",

478 | "username_claimed": "red",

479 | "username_unclaimed": "noonewouldeverusethis7"

480 | },

481 | ```

482 |

483 | ## ingvarr.net.ru

484 |

485 | As of 2020-04-04, ingvarr.net.ru does not exist anymore. I confirmed using

486 | downforeveryoneorjustme.com that the website is down.

487 |

488 | ```json

489 | "ingvarr.net.ru": {

490 | "errorMsg": "\u041f\u043e\u043b\u044c\u0437\u043e\u0432\u0430\u0442\u0435\u043b\u044c \u043d\u0435 \u043d\u0430\u0439\u0434\u0435\u043d",

491 | "errorType": "message",

492 | "rank": 107721,

493 | "url": "http://ingvarr.net.ru/index/8-0-{}",

494 | "urlMain": "http://ingvarr.net.ru/",

495 | "username_claimed": "red",

496 | "username_unclaimed": "noonewouldeverusethis7"

497 | },

498 | ```

499 |

500 | ## Redsun.tf

501 |

502 | As of 2020-06-20, Redsun.tf appears to append random digits to the end of usernames, which makes it practically impossible

503 | for Sherlock to check for usernames on this particular website.

504 |

505 | ```json

506 | "Redsun.tf": {

507 | "errorMsg": "The specified member cannot be found",

508 | "errorType": "message",

509 | "rank": 3796657,

510 | "url": "https://forum.redsun.tf/members/?username={}",

511 | "urlMain": "https://redsun.tf/",

512 | "username_claimed": "dan",

513 | "username_unclaimed": "noonewouldeverusethis"

514 | },

515 | ```

516 |

517 | ## Creative Market

518 |

519 | As of 2020-06-20, Creative Market shows a captcha to prove that you are a human. Because of this,

520 | Sherlock is unable to check for usernames on this site: we always get a page that asks

521 | us to prove that we are not a robot.

522 |

523 | ```json

524 | "CreativeMarket": {

525 | "errorType": "status_code",

526 | "rank": 1896,

527 | "url": "https://creativemarket.com/users/{}",

528 | "urlMain": "https://creativemarket.com/",

529 | "username_claimed": "blue",

530 | "username_unclaimed": "noonewouldeverusethis7"

531 | },

532 | ```

533 |

534 | ## pvpru

535 |

536 | As of 2020-06-20, pvpru uses Cloudflare, and because of this we get an "Access denied" error whenever

537 | we try to check for a username.

538 |

539 | ```json

540 | "pvpru": {

541 | "errorType": "status_code",

542 | "rank": 405547,

543 | "url": "https://pvpru.com/board/member.php?username={}&tab=aboutme#aboutme",

544 | "urlMain": "https://pvpru.com/",

545 | "username_claimed": "blue",

546 | "username_unclaimed": "noonewouldeverusethis7"

547 | },

548 | ```

549 |

550 | ## easyen

551 | As of 2020-06-21, easyen returns false positives when given a username that contains

552 | a period. Since we could not find the criteria for valid usernames on this site, it has been

553 | removed.

554 |

555 | ```json

556 | "easyen": {

557 | "errorMsg": "\u041f\u043e\u043b\u044c\u0437\u043e\u0432\u0430\u0442\u0435\u043b\u044c \u043d\u0435 \u043d\u0430\u0439\u0434\u0435\u043d",

558 | "errorType": "message",

559 | "rank": 11564,

560 | "url": "https://easyen.ru/index/8-0-{}",

561 | "urlMain": "https://easyen.ru/",

562 | "username_claimed": "wd",

563 | "username_unclaimed": "noonewouldeverusethis7"

564 | },

565 | ```

566 |

567 | ## pedsovet

568 | As of 2020-06-21, pedsovet returns false positives when given a username that contains

569 | a period. Since we could not find the criteria for valid usernames on this site, it has been

570 | removed.

571 |

572 | ```json

573 | "pedsovet": {

574 | "errorMsg": "\u041f\u043e\u043b\u044c\u0437\u043e\u0432\u0430\u0442\u0435\u043b\u044c \u043d\u0435 \u043d\u0430\u0439\u0434\u0435\u043d",

575 | "errorType": "message",

576 | "rank": 6776,

577 | "url": "http://pedsovet.su/index/8-0-{}",

578 | "urlMain": "http://pedsovet.su/",

579 | "username_claimed": "blue",

580 | "username_unclaimed": "noonewouldeverusethis7"

581 | },

582 | ```

583 |

584 |

585 | ## radioskot

586 | As of 2020-06-21, radioskot returns false positives when given a username that contains

587 | a period. Since we could not find the criteria for valid usernames on this site, it has been

588 | removed.

589 | ```json

590 | "radioskot": {

591 | "errorMsg": "\u041f\u043e\u043b\u044c\u0437\u043e\u0432\u0430\u0442\u0435\u043b\u044c \u043d\u0435 \u043d\u0430\u0439\u0434\u0435\u043d",

592 | "errorType": "message",

593 | "rank": 105878,

594 | "url": "https://radioskot.ru/index/8-0-{}",

595 | "urlMain": "https://radioskot.ru/",

596 | "username_claimed": "red",

597 | "username_unclaimed": "noonewouldeverusethis7"

598 | },

599 | ```

600 |

601 |

602 |

603 | ## Coderwall

604 | As of 2020-07-06, Coderwall returns false positives when checking a username that contains a period.

605 | I have tried to find out what Coderwall's criteria are for a valid username, but unfortunately I have not been able to

606 | find them, and because of this, the best thing we can do now is to remove it.

607 | ```json

608 | "Coderwall": {

609 | "errorMsg": "404! Our feels when that url is used",

610 | "errorType": "message",

611 | "rank": 11256,

612 | "url": "https://coderwall.com/{}",

613 | "urlMain": "https://coderwall.com/",

614 | "username_claimed": "jenny",

615 | "username_unclaimed": "noonewouldeverusethis7"

616 | }

617 | ```

618 |

619 |

620 | ## TamTam

621 | As of 2020-07-06, TamTam returns false positives when given a username that contains a period.

622 | ```json

623 | "TamTam": {

624 | "errorType": "response_url",

625 | "errorUrl": "https://tamtam.chat/",

626 | "rank": 87903,

627 | "url": "https://tamtam.chat/{}",

628 | "urlMain": "https://tamtam.chat/",

629 | "username_claimed": "blue",

630 | "username_unclaimed": "noonewouldeverusethis7"

631 | },

632 | ```

633 |

634 | ## Zomato

635 | As of 2020-07-24, Zomato seems to be unstable. Most of the time, Zomato takes a very long time to respond.

636 | ```json

637 | "Zomato": {

638 | "errorType": "status_code",

639 | "headers": {

640 | "Accept-Language": "en-US,en;q=0.9"

641 | },

642 | "rank": 1920,

643 | "url": "https://www.zomato.com/pl/{}/foodjourney",

644 | "urlMain": "https://www.zomato.com/",

645 | "username_claimed": "deepigoyal",

646 | "username_unclaimed": "noonewouldeverusethis7"

647 | },

648 | ```

649 |

650 | ## Mixer

651 | As of 2020-07-22, the Mixer service has closed down.

652 | ```json

653 | "mixer.com": {

654 | "errorType": "status_code",

655 | "rank": 1544,

656 | "url": "https://mixer.com/{}",

657 | "urlMain": "https://mixer.com/",

658 | "urlProbe": "https://mixer.com/api/v1/channels/{}",

659 | "username_claimed": "blue",

660 | "username_unclaimed": "noonewouldeverusethis7"

661 | },

662 | ```
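Two fields in the entries above go beyond the basic `url` template. Zomato's entry has `headers` (extra request headers), and Mixer's has `urlProbe` (an API URL that is queried instead of the profile page). The sketch below turns an entry into a request under three assumptions: `{}` is replaced with the username, `urlProbe` takes precedence over `url` for the request, and `url` remains the link shown to the user. `build_probe` is a hypothetical helper.

```python
from typing import Dict, Tuple


def build_probe(site: dict, username: str) -> Tuple[str, str, Dict[str, str]]:
    """Return (profile_url, probe_url, headers) for one username.

    Assumptions: "{}" in a template is replaced by the username, urlProbe
    (when present) is the URL actually requested, and url is the profile
    link reported to the user.
    """
    profile_url = site["url"].replace("{}", username)
    probe_url = site.get("urlProbe", site["url"]).replace("{}", username)
    headers = dict(site.get("headers", {}))  # copy so callers can't mutate the entry
    return profile_url, probe_url, headers


MIXER = {
    "errorType": "status_code",
    "url": "https://mixer.com/{}",
    "urlProbe": "https://mixer.com/api/v1/channels/{}",
}
ZOMATO = {
    "errorType": "status_code",
    "headers": {"Accept-Language": "en-US,en;q=0.9"},
    "url": "https://www.zomato.com/pl/{}/foodjourney",
}

if __name__ == "__main__":
    print(build_probe(MIXER, "blue"))
    print(build_probe(ZOMATO, "deepigoyal"))
```

The resulting `probe_url` and `headers` could then be passed to an HTTP client, for example `requests.get(probe_url, headers=headers, timeout=60)`.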

663 |

664 |

665 | ## KanoWorld

666 | As of 2020-07-22, KanoWorld's api.kano.me subdomain no longer exists, which makes it impossible for us to check for usernames.

667 | If an alternative way to check for usernames is found, it will be added.

668 | ```json

669 | "KanoWorld": {

670 | "errorType": "status_code",

671 | "rank": 181933,

672 | "url": "https://api.kano.me/progress/user/{}",

673 | "urlMain": "https://world.kano.me/",

674 | "username_claimed": "blue",

675 | "username_unclaimed": "noonewouldeverusethis7"

676 | },

677 | ```

678 |

679 | ## YandexCollection

680 | As of 2020-08-11, YandexCollection presents us with a reCAPTCHA, which prevents us from checking for usernames.

681 | ```json

682 | "YandexCollection": {

683 | "errorType": "status_code",

684 | "url": "https://yandex.ru/collections/user/{}/",

685 | "urlMain": "https://yandex.ru/collections/",

686 | "username_claimed": "blue",

687 | "username_unclaimed": "noonewouldeverusethis7"

688 | },

689 | ```

690 |

691 | ## PayPal

692 |

693 | As of 2020-08-24, PayPal returns false positives, which was found when running the tests. It will most likely be added again in the near

694 | future once we find a better error detection method.

695 | ```json

696 | "PayPal": {

697 | "errorMsg": "Cent ",

1023 | "errorType": "message",

1024 | "url": "https://beta.cent.co/@{}",

1025 | "urlMain": "https://cent.co/",

1026 | "username_claimed": "blue",

1027 | "username_unclaimed": "noonewouldeverusethis7"

1028 | },

1029 | ```

1030 |

1031 | ## Anobii

1032 |

1033 | As of 2021-06-27, Anobii returns false positives and there is no stable way of checking usernames.

1034 | ```json

1035 |

1036 | "Anobii": {

1037 | "errorType": "response_url",

1038 | "url": "https://www.anobii.com/{}/profile",

1039 | "urlMain": "https://www.anobii.com/",

1040 | "username_claimed": "blue",

1041 | "username_unclaimed": "noonewouldeverusethis7"

1042 | }

1043 | ```
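The Anobii entry declares `"errorType": "response_url"` but has no `errorUrl`. The other `response_url` entries in this list (Canva, RamblerDating, EVE Online, Sports Tracker, TamTam) all include one. A small consistency check can flag this kind of gap. The rule table below is an assumption inferred from the entries in this file, not the project's schema; `sherlock_project/resources/data.schema.json` is the authoritative definition. `missing_keys` is a hypothetical helper.

```python
# Hypothetical rule table inferred from the entries in this file:
# the extra key each errorType appears to need.
REQUIRED_BY_TYPE = {
    "status_code": (),
    "message": ("errorMsg",),
    "response_url": ("errorUrl",),
}
COMMON_KEYS = ("errorType", "url", "urlMain", "username_claimed", "username_unclaimed")


def missing_keys(entry: dict) -> list[str]:
    """List keys the entry lacks, given its declared errorType."""
    missing = [key for key in COMMON_KEYS if key not in entry]
    error_type = entry.get("errorType")
    if error_type not in REQUIRED_BY_TYPE:
        missing.append(f"known errorType (got {error_type!r})")
        return missing
    missing += [key for key in REQUIRED_BY_TYPE[error_type] if key not in entry]
    return missing


ANOBII = {
    "errorType": "response_url",
    "url": "https://www.anobii.com/{}/profile",
    "urlMain": "https://www.anobii.com/",
    "username_claimed": "blue",
    "username_unclaimed": "noonewouldeverusethis7",
}

if __name__ == "__main__":
    print(missing_keys(ANOBII))  # ['errorUrl']
```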

1044 |

1045 | ## Kali Community

1046 |

1047 | As of 2021-06-27, Kali Community requires us to be logged in to check if a user exists on their forum.

1048 |

1049 | ```json

1050 | "Kali community": {

1051 | "errorMsg": "This user has not registered and therefore does not have a profile to view.",

1052 | "errorType": "message",

1053 | "url": "https://forums.kali.org/member.php?username={}",

1054 | "urlMain": "https://forums.kali.org/",

1055 | "username_claimed": "blue",

1056 | "username_unclaimed": "noonewouldeverusethis7"

1057 | }

1058 | ```

1059 |

1060 | ## NameMC

1061 |

1062 | As of 2021-06-27, NameMC uses a captcha through Cloudflare, which prevents us from checking whether usernames exist on the site.

1063 |

1064 | ```json

1065 | "NameMC (Minecraft.net skins)": {

1066 | "errorMsg": "Profiles: 0 results",

1067 | "errorType": "message",

1068 | "url": "https://namemc.com/profile/{}",

1069 | "urlMain": "https://namemc.com/",

1070 | "username_claimed": "blue",

1071 | "username_unclaimed": "noonewouldeverusethis7"

1072 | },

1073 | ```

1074 |

1075 | ## SteamID

1076 |

1077 | As of 2021-06-27, Steam uses a captcha through Cloudflare, which prevents us from checking whether usernames exist on the site.

1078 | ```json

1079 | "Steamid": {

1080 | "errorMsg": "No results found",

1222 | "errorType": "message",

1223 | "regexCheck": "^[a-zA-z][a-zA-Z0-9_]{2,79}$",

1224 | "url": "https://www.capfriendly.com/users/{}",

1225 | "urlMain": "https://www.capfriendly.com/",

1226 | "username_claimed": "thisactuallyexists",

1227 | "username_unclaimed": "noonewouldeverusethis7"

1228 | },

1229 | ```
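The entry above restricts usernames with a `regexCheck` pattern. The following is a minimal sketch of applying such a pattern before probing, using Python's `re.search`. The helper `passes_regex_check` is hypothetical. The first character class is written `[a-zA-z]`. Because `A-z` is an ASCII range (65 to 122), it also admits `[`, `\`, `]`, `^`, `_` and the backtick, so a leading underscore passes.

```python
import re

# regexCheck value copied from the entry above.
REGEX_CHECK = r"^[a-zA-z][a-zA-Z0-9_]{2,79}$"


def passes_regex_check(username: str, pattern: str = REGEX_CHECK) -> bool:
    """Hypothetical helper: True if the username is allowed by the regexCheck pattern."""
    return re.search(pattern, username) is not None


print(passes_regex_check("thisactuallyexists"))  # True
print(passes_regex_check("ab"))                  # False: needs at least 3 characters
print(passes_regex_check("1abc"))                # False: must not start with a digit
print(passes_regex_check("_abc"))                # True, because A-z also spans "_"
```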

1230 |

1231 | ## Gab

1232 |

1233 | As of 2022-05-01, Gab returns false positives because it now uses Cloudflare.

1234 | ```json

1235 | "Gab": {

1236 | "errorMsg": "The page you are looking for isn't here.",

1237 | "errorType": "message",

1238 | "url": "https://gab.com/{}",

1239 | "urlMain": "https://gab.com",

1240 | "username_claimed": "a",

1241 | "username_unclaimed": "noonewouldeverusethis"

1242 | },

1243 | ```

1244 |

1245 | ## FanCentro

1246 |

1247 | As of 2022-05-01, FanCentro returns false positives. It will be revisited in a later version of Sherlock.

1248 |

1249 | ```json

1250 | "FanCentro": {

1251 | "errorMsg": "var environment",

1252 | "errorType": "message",

1253 | "url": "https://fancentro.com/{}",

1254 | "urlMain": "https://fancentro.com/",

1255 | "username_claimed": "nielsrosanna",

1256 | "username_unclaimed": "noonewouldeverusethis7"

1257 | },

1258 | ```

1259 |

1260 | ## Smashcast

1261 | As of 2022-05-01, Smashcast is down.

1262 | ```json

1263 | "Smashcast": {

1264 | "errorType": "status_code",

1265 | "url": "https://www.smashcast.tv/api/media/live/{}",

1266 | "urlMain": "https://www.smashcast.tv/",

1267 | "username_claimed": "hello",

1268 | "username_unclaimed": "noonewouldeverusethis7"

1269 | },

1270 | ```

1271 |

1272 | ## Countable

1273 |

1274 | As of 2022-05-01, Countable returns false positives.

1275 | ```json

1276 | "Countable": {

1277 | "errorType": "status_code",

1278 | "url": "https://www.countable.us/{}",

1279 | "urlMain": "https://www.countable.us/",

1280 | "username_claimed": "blue",

1281 | "username_unclaimed": "noonewouldeverusethis7"

1282 | },

1283 | ```
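Countable and several other entries use `"errorType": "status_code"`. The following is a simplified sketch, assuming that a 2xx response means the profile exists and any other status means it does not. It is not Sherlock's actual code. The helper `classify_status_code` and the observed status codes are hypothetical.

```python
def classify_status_code(status_code: int) -> str:
    """Hypothetical helper for a "status_code"-type check.

    A 2xx response is treated as "claimed"; any other status (e.g. 404)
    is treated as "available".
    """
    if 200 <= status_code < 300:
        return "claimed"
    return "available"


# Hypothetical observations: the site answers 200 for both probe usernames.
observed = {"blue": 200, "noonewouldeverusethis7": 200}
for username, status in observed.items():
    print(username, classify_status_code(status))
# blue claimed
# noonewouldeverusethis7 claimed   <- false positive
```

If a site returns 200 even for the `username_unclaimed` probe, a status-code check cannot tell the two cases apart. That is the kind of false positive that leads to an entry's removal.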

1284 |

1285 | ## Raidforums

1286 |

1287 | Raidforums is [now run by the FBI](https://twitter.com/janomine/status/1499453777648234501?s=21)

1288 | ```json

1289 | "Raidforums": {

1290 | "errorType": "status_code",

1291 | "url": "https://raidforums.com/User-{}",

1292 | "urlMain": "https://raidforums.com/",

1293 | "username_claimed": "red",

1294 | "username_unclaimed": "noonewouldeverusethis7"

1295 | },

1296 | ```

1297 |

1298 | ## Pinterest

1299 | Removed due to false positives.

1300 |

1301 | ```json

1302 | "Pinterest": {

1303 | "errorType": "status_code",

1304 | "url": "https://www.pinterest.com/{}/",

1305 | "urlMain": "https://www.pinterest.com/",

1306 | "username_claimed": "blue",

1307 | "username_unclaimed": "noonewouldeverusethis76543"

1308 | }

1309 | ```

1310 |

1311 | ## PCPartPicker

1312 | As of 2022-07-17, PCPartPicker requires us to log in in order to check if a user exists.

1313 |

1314 | ```json

1315 | "PCPartPicker": {

1316 | "errorType": "status_code",

1317 | "url": "https://pcpartpicker.com/user/{}",

1318 | "urlMain": "https://pcpartpicker.com",

1319 | "username_claimed": "blue",

1320 | "username_unclaimed": "noonewouldeverusethis7"

1321 | },

1322 | ```

1323 |

1324 | ## Ebay

1325 | As of 2022-07-17, eBay is very slow to respond. It was also reported to return false positives, so this needs further investigation.

1326 |

1327 | ```json

1328 | "eBay.com": {

1329 | "errorMsg": "The User ID you entered was not found. Please check the User ID and try again.",

1330 | "errorType": "message",

1331 | "url": "https://www.ebay.com/usr/{}",

1332 | "urlMain": "https://www.ebay.com/",

1333 | "username_claimed": "blue",

1334 | "username_unclaimed": "noonewouldeverusethis7"

1335 | },

1336 | "eBay.de": {

1337 | "errorMsg": "Der eingegebene Nutzername wurde nicht gefunden. Bitte pr\u00fcfen Sie den Nutzernamen und versuchen Sie es erneut.",

1338 | "errorType": "message",

1339 | "url": "https://www.ebay.de/usr/{}",

1340 | "urlMain": "https://www.ebay.de/",

1341 | "username_claimed": "blue",

1342 | "username_unclaimed": "noonewouldeverusethis7"

1343 | },

1344 | ```
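The `eBay.de` `errorMsg` contains the JSON escape `\u00fc`. After the data file is parsed, that escape becomes the literal character `ü`, so the text matched against the page is the decoded German sentence. The following is a small sketch using Python's standard `json` module. The variable names are illustrative only.

```python
import json

# The errorMsg value as it appears in the JSON source, escape included.
raw = r'{"errorMsg": "Der eingegebene Nutzername wurde nicht gefunden. Bitte pr\u00fcfen Sie den Nutzernamen und versuchen Sie es erneut."}'

entry = json.loads(raw)
print(entry["errorMsg"])
# Der eingegebene Nutzername wurde nicht gefunden. Bitte prüfen Sie den Nutzernamen und versuchen Sie es erneut.
```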

1345 |

1346 | ## Ghost

1347 | As of 2022-07-17, Ghost returns false positives.

1348 |

1349 | ```json

1350 | "Ghost": {

1351 | "errorMsg": "Domain Error",

1352 | "errorType": "message",

1353 | "url": "https://{}.ghost.io/",

1354 | "urlMain": "https://ghost.org/",

1355 | "username_claimed": "troyhunt",

1356 | "username_unclaimed": "noonewouldeverusethis7"

1357 | }

1358 | ```
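Ghost's `url` puts the `{}` placeholder in the hostname rather than the path, while eBay's puts it in the path. The following is a minimal sketch of filling such a template, assuming simple placeholder substitution. The helper `build_profile_url` is hypothetical and is not taken from Sherlock's code.

```python
def build_profile_url(template: str, username: str) -> str:
    """Hypothetical helper: substitute the username for every "{}" placeholder."""
    # str.replace is used instead of str.format so that any other literal
    # braces in a template would be left untouched.
    return template.replace("{}", username)


print(build_profile_url("https://{}.ghost.io/", "troyhunt"))     # https://troyhunt.ghost.io/
print(build_profile_url("https://www.ebay.com/usr/{}", "blue"))  # https://www.ebay.com/usr/blue
```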

1359 |

1360 | ## Atom Discussions

1361 | As of 2022-07-25, Atom Discussions does not seem to work because it keeps

1362 | redirecting to the GitHub Discussions tab, which does not exist and is not specific to a username.

1363 |

1364 | ```json

1365 | "Atom Discussions": {

1366 | "errorMsg": "Oops! That page doesn\u2019t exist or is private.",