├── .gitattributes
├── .gitignore
├── Pipfile
├── Pipfile.lock
├── README.md
├── arcgis_integration
│   ├── Script.hlk
│   ├── __pycache__
│   │   ├── class_measure.cpython-36.pyc
│   │   └── class_measure.cpython-37.pyc
│   ├── class_measure.py
│   ├── command.py
│   └── exe_measure.py
├── camera
│   ├── data_capture_no_gui.py
│   └── qt gui
│       ├── cmd_class.py
│       ├── img
│       │   ├── 1.jpg
│       │   ├── intel.ico
│       │   └── intel.png
│       ├── qtcam.exe
│       ├── qtcam.py
│       └── temp.py
├── examples
│   ├── bag
│   │   ├── 0917_006.bag
│   │   ├── 0917_007.bag
│   │   ├── 0917_008.bag
│   │   ├── 0917_009.bag
│   │   └── 0917_010.bag
│   ├── foto_log
│   │   ├── 0917_006.txt
│   │   ├── 0917_007.txt
│   │   ├── 0917_008.txt
│   │   ├── 0917_009.txt
│   │   └── 0917_010.txt
│   └── google earth.PNG
├── processor
│   ├── data_process_tkinter.py
│   ├── gui2.py
│   └── proccessor.py
├── requirements.txt
└── separate functions
    ├── 1.PNG
    ├── background_remove.py
    ├── check_bag_valid.py
    ├── cvcanvas.py
    ├── data_process0812.py
    ├── datacapture_mp_kml.py
    ├── geotag.py
    ├── jpgcreator.py
    ├── live_gps.py
    ├── measure_new.py
    ├── opencv_find_key.py
    ├── phase 1
    │   ├── Hello World.py
    │   ├── Ruler.py
    │   ├── color_to_png.py
    │   ├── depth to png.py
    │   ├── depth_to_png.py
    │   ├── manual_photo.py
    │   ├── matchframelist.py
    │   ├── measure.py
    │   ├── measure_loop.py
    │   ├── measure_loop_temp.py
    │   ├── measure_loop_temp_0405.py
    │   ├── measure_read_per_frame.py
    │   ├── measure_search.py
    │   ├── measure_stream.py
    │   ├── measure_synced.py
    │   ├── measure_with_mpl.py
    │   ├── read_bag.py
    │   ├── record_loop.py
    │   ├── save-translate.py
    │   ├── to_frame_script.py
    │   └── widgets2.py
    ├── photo_capture.py
    ├── single_frameset.py
    └── widgets2.py

/.gitattributes:

--------------------------------------------------------------------------------

1 | *.bag filter=lfs diff=lfs merge=lfs -text

2 | .idea

3 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 | location.csv

3 | foto_location.csv

4 | .idea

--------------------------------------------------------------------------------

/Pipfile:

--------------------------------------------------------------------------------

1 | [[source]]

2 | name = "pypi"

3 | url = "https://pypi.org/simple"

4 | verify_ssl = true

5 |

6 | [dev-packages]

7 |

8 | [packages]

9 | pyrealsense2 = "*"

10 | pyserial = "*"

11 | pyqt5 = "*"

12 | numpy = "*"

13 | opencv-python = "*"

14 | piexif = "*"

15 | fraction = "*"

16 |

17 | [requires]

18 | python_version = "3.7"

19 |

--------------------------------------------------------------------------------

/Pipfile.lock:

--------------------------------------------------------------------------------

1 | {

2 | "_meta": {

3 | "hash": {

4 | "sha256": "bd01a48eee3da1bb553ff512153f4918d0be814b99c439c84d737273ebe1703b"

5 | },

6 | "pipfile-spec": 6,

7 | "requires": {

8 | "python_version": "3.7"

9 | },

10 | "sources": [

11 | {

12 | "name": "pypi",

13 | "url": "https://pypi.org/simple",

14 | "verify_ssl": true

15 | }

16 | ]

17 | },

18 | "default": {

19 | "fraction": {

20 | "hashes": [

21 | "sha256:242dcff2c097e45467533d985125ac6ec2b1590972a22592449fbab8fae82188"

22 | ],

23 | "index": "pypi",

24 | "version": "==1.1.0"

25 | },

26 | "numpy": {

27 | "hashes": [

28 | "sha256:0a7a1dd123aecc9f0076934288ceed7fd9a81ba3919f11a855a7887cbe82a02f",

29 | "sha256:0c0763787133dfeec19904c22c7e358b231c87ba3206b211652f8cbe1241deb6",

30 | "sha256:3d52298d0be333583739f1aec9026f3b09fdfe3ddf7c7028cb16d9d2af1cca7e",

31 | "sha256:43bb4b70585f1c2d153e45323a886839f98af8bfa810f7014b20be714c37c447",

32 | "sha256:475963c5b9e116c38ad7347e154e5651d05a2286d86455671f5b1eebba5feb76",

33 | "sha256:64874913367f18eb3013b16123c9fed113962e75d809fca5b78ebfbb73ed93ba",

34 | "sha256:683828e50c339fc9e68720396f2de14253992c495fdddef77a1e17de55f1decc",

35 | "sha256:6ca4000c4a6f95a78c33c7dadbb9495c10880be9c89316aa536eac359ab820ae",

36 | "sha256:75fd817b7061f6378e4659dd792c84c0b60533e867f83e0d1e52d5d8e53df88c",

37 | "sha256:7d81d784bdbed30137aca242ab307f3e65c8d93f4c7b7d8f322110b2e90177f9",

38 | "sha256:8d0af8d3664f142414fd5b15cabfd3b6cc3ef242a3c7a7493257025be5a6955f",

39 | "sha256:9679831005fb16c6df3dd35d17aa31dc0d4d7573d84f0b44cc481490a65c7725",

40 | "sha256:a8f67ebfae9f575d85fa859b54d3bdecaeece74e3274b0b5c5f804d7ca789fe1",

41 | "sha256:acbf5c52db4adb366c064d0b7c7899e3e778d89db585feadd23b06b587d64761",

42 | "sha256:ada4805ed51f5bcaa3a06d3dd94939351869c095e30a2b54264f5a5004b52170",

43 | "sha256:c7354e8f0eca5c110b7e978034cd86ed98a7a5ffcf69ca97535445a595e07b8e",

44 | "sha256:e2e9d8c87120ba2c591f60e32736b82b67f72c37ba88a4c23c81b5b8fa49c018",

45 | "sha256:e467c57121fe1b78a8f68dd9255fbb3bb3f4f7547c6b9e109f31d14569f490c3",

46 | "sha256:ede47b98de79565fcd7f2decb475e2dcc85ee4097743e551fe26cfc7eb3ff143",

47 | "sha256:f58913e9227400f1395c7b800503ebfdb0772f1c33ff8cb4d6451c06cabdf316",

48 | "sha256:fe39f5fd4103ec4ca3cb8600b19216cd1ff316b4990f4c0b6057ad982c0a34d5"

49 | ],

50 | "index": "pypi",

51 | "version": "==1.17.4"

52 | },

53 | "opencv-python": {

54 | "hashes": [

55 | "sha256:01505b131dc35f60e99a5da98b77156e37f872ae0ff5596e5e68d526bb572d3c",

56 | "sha256:0478a1037505ddde312806c960a5e8958d2cf7a2885e8f2f5dde74c4028e0b04",

57 | "sha256:17810b89f9ef8e8537e75332acf533e619e26ccadbf1b73f24bf338f2d327ddd",

58 | "sha256:19ad2ea9fb32946761b47b9d6eed51876a8329da127f27788263fecd66651ba0",

59 | "sha256:1a250edb739baf3e7c25d99a2ee252aac4f59a97e0bee39237eaa490fd0281d3",

60 | "sha256:3505468970448f66cd776cb9e179570c87988f94b5cf9bcbc4c2d88bd88bbdf1",

61 | "sha256:4e04a91da157885359f487534433340b2d709927559c80acf62c28167e59be02",

62 | "sha256:5a49cffcdec5e37217672579c3343565926d999642844efa9c6a031ed5f32318",

63 | "sha256:604b2ce3d4a86480ced0813da7fba269b4605ad9fea26cd2144d8077928d4b49",

64 | "sha256:61cbb8fa9565a0480c46028599431ad8f19181a7fac8070a700515fd54cd7377",

65 | "sha256:62d7c6e511c9454f099616315c695d02a584048e1affe034b39160db7a2ae34d",

66 | "sha256:6555272dd9efd412d17cdc1a4f4c2da5753c099d95d9ff01aca54bb9782fb5cf",

67 | "sha256:67d994c6b2b14cb9239e85dc7dfa6c08ef7cf6eb4def80c0af6141dfacc8cbb9",

68 | "sha256:68c9cbe538666c4667523821cc56caee49389bea06bae4c0fc2cd68bd264226a",

69 | "sha256:822ad8f628a9498f569c57d30865f5ef9ee17824cee0a1d456211f742028c135",

70 | "sha256:82d972429eb4fee22c1dc4204af2a2e981f010e5e4f66daea2a6c68381b79184",

71 | "sha256:9128924f5b58269ee221b8cf2d736f31bd3bb0391b92ee8504caadd68c8176a2",

72 | "sha256:9172cf8270572c494d8b2ae12ef87c0f6eed9d132927e614099f76843b0c91d7",

73 | "sha256:952bce4d30a8287b17721ddaad7f115dab268efee8576249ddfede80ec2ce404",

74 | "sha256:a8147718e70b1f170a3d26518e992160137365a4db0ed82a9efd3040f9f660d4",

75 | "sha256:bfdb636a3796ff223460ea0fcfda906b3b54f4bef22ae433a5b67e66fab00b25",

76 | "sha256:c9c3f27867153634e1083390920067008ebaaab78aeb09c4e0274e69746cb2c8",

77 | "sha256:d69be21973d450a4662ae6bd1b3df6b1af030e448d7276380b0d1adf7c8c2ae6",

78 | "sha256:db1479636812a6579a3753b72a6fefaa73190f32bf7b19e483f8bc750cebe1a5",

79 | "sha256:db8313d755962a7dd61e5c22a651e0743208adfdb255c6ec8904ce9cb02940c6",

80 | "sha256:e4625a6b032e7797958aeb630d6e3e91e3896d285020aae612e6d7b342d6dfea",

81 | "sha256:e8397a26966a1290836a52c34b362aabc65a422b9ffabcbbdec1862f023ccab8"

82 | ],

83 | "index": "pypi",

84 | "version": "==4.1.1.26"

85 | },

86 | "piexif": {

87 | "hashes": [

88 | "sha256:3bc435d171720150b81b15d27e05e54b8abbde7b4242cddd81ef160d283108b6",

89 | "sha256:83cb35c606bf3a1ea1a8f0a25cb42cf17e24353fd82e87ae3884e74a302a5f1b"

90 | ],

91 | "index": "pypi",

92 | "version": "==1.1.3"

93 | },

94 | "pyqt5": {

95 | "hashes": [

96 | "sha256:14737bb4673868d15fa91dad79fe293d7a93d76c56d01b3757b350b8dcb32b2d",

97 | "sha256:1936c321301f678d4e6703d52860e1955e5c4964e6fd00a1f86725ce5c29083c",

98 | "sha256:3f79de6e9f29e858516cc36ffc2b992e262af841f3799246aec282b76a3eccdf",

99 | "sha256:509daab1c5aca22e3cf9508128abf38e6e5ae311d7426b21f4189ffd66b196e9"

100 | ],

101 | "index": "pypi",

102 | "version": "==5.13.2"

103 | },

104 | "pyqt5-sip": {

105 | "hashes": [

106 | "sha256:02d94786bada670ab17a2b62ce95b3cf8e3b40c99d36007593a6334d551840bb",

107 | "sha256:06bc66b50556fb949f14875a4c224423dbf03f972497ccb883fb19b7b7c3b346",

108 | "sha256:091fbbe10a7aebadc0e8897a9449cda08d3c3f663460d812eca3001ca1ed3526",

109 | "sha256:0a067ade558befe4d46335b13d8b602b5044363bfd601419b556d4ec659bca18",

110 | "sha256:1910c1cb5a388d4e59ebb2895d7015f360f3f6eeb1700e7e33e866c53137eb9e",

111 | "sha256:1c7ad791ec86247f35243bbbdd29cd59989afbe0ab678e0a41211f4407f21dd8",

112 | "sha256:3c330ff1f70b3eaa6f63dce9274df996dffea82ad9726aa8e3d6cbe38e986b2f",

113 | "sha256:482a910fa73ee0e36c258d7646ef38f8061774bbc1765a7da68c65056b573341",

114 | "sha256:7695dfafb4f5549ce1290ae643d6508dfc2646a9003c989218be3ce42a1aa422",

115 | "sha256:8274ed50f4ffbe91d0f4cc5454394631edfecd75dc327aa01be8bc5818a57e88",

116 | "sha256:9047d887d97663790d811ac4e0d2e895f1bf2ecac4041691487de40c30239480",

117 | "sha256:9f6ab1417ecfa6c1ce6ce941e0cebc03e3ec9cd9925058043229a5f003ae5e40",

118 | "sha256:b43ba2f18999d41c3df72f590348152e14cd4f6dcea2058c734d688dfb1ec61f",

119 | "sha256:c3ab9ea1bc3f4ce8c57ebc66fb25cd044ef92ed1ca2afa3729854ecc59658905",

120 | "sha256:da69ba17f6ece9a85617743cb19de689f2d63025bf8001e2facee2ec9bcff18f",

121 | "sha256:ef3c7a0bf78674b0dda86ff5809d8495019903a096c128e1f160984b37848f73",

122 | "sha256:fabff832046643cdb93920ddaa8f77344df90768930fbe6bb33d211c4dcd0b5e"

123 | ],

124 | "version": "==12.7.0"

125 | },

126 | "pyrealsense2": {

127 | "hashes": [

128 | "sha256:0417e2cda00a29cc95e4da0ee2b42cafcc06f61b5ce12563fb0a533e522c1cd9",

129 | "sha256:08fd9806ff48bf923c05c4a9014652f27c3db318477334ce115340a511c5d212",

130 | "sha256:135e7df4bf22421e90566288fb5bfa52a160a680c24dabdc0f7f51bbbfbd7f42",

131 | "sha256:4c8a975c711f423750ab8cf30cecba9df17757616118e2c8b7423426f03f2903",

132 | "sha256:5799894abad6210541435d186001c6ca2690cf3fe0e5c1f9803079b81eca3264",

133 | "sha256:65fd76d42190c4820e18933503745a7b843852e096d4b1fc3f557e7e01549fa6",

134 | "sha256:7109b53c247b392c4870e6545b07fc5a629839883da9536a56fbe401c3563cd6",

135 | "sha256:712cdbb951e1b3c45a99502ecd0bbc7f979af55f39c93d0d514deb6f7d06f479",

136 | "sha256:7bfa5c212d28607da0a03d3a71ec492795105da942184ee6b4d865c6f09e1de2",

137 | "sha256:8323bcb2338f62824f78f710f7a810ec315bc8f4343c39f9a94e46f4d0ef4b07"

138 | ],

139 | "index": "pypi",

140 | "version": "==2.30.0.1184"

141 | },

142 | "pyserial": {

143 | "hashes": [

144 | "sha256:6e2d401fdee0eab996cf734e67773a0143b932772ca8b42451440cfed942c627",

145 | "sha256:e0770fadba80c31013896c7e6ef703f72e7834965954a78e71a3049488d4d7d8"

146 | ],

147 | "index": "pypi",

148 | "version": "==3.4"

149 | }

150 | },

151 | "develop": {}

152 | }

153 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # PyRealsense for D435

2 |

3 | A ready-to-use exe version is available at https://github.com/soarwing52/Realsense_exe

4 |

5 | This project records bag files with the RealSense D435 camera and lets you measure in pictures taken from those bags. The scripts are separated into two parts: the collector and the processor.

6 |

7 | The collector lets the driver collect data effortlessly with a single remote controller.

8 |

9 | The processor creates data from the bag files and the recorded txt files, matches frames to locations, and uses the arcpy API to create a shapefile for users.

10 |

11 | A further use is running matplotlib within ArcGIS 10, letting users read ROSbag files through the hyperlink script function.

12 |

13 | ## Getting Started

14 |

15 | The scripts are mainly based on RealSense, using ROSbag files for the recordings and txt files to record the GPS location.

16 |

17 | *The save_single_frame function can't get depth data and always shows 0,0,0, so I can only use rs.recorder.

18 |

19 |

20 | ### Prerequisites

21 |

22 | Hardware: GPS receiver (BU353S4 in my case)

23 |

24 |

25 | RealSense camera

26 |

27 |

28 |

29 |

30 | ### Data Preparation

31 |

32 | Data collector: generates the folders bag and log, and two csv files: live location and foto_location.

33 |

34 | Data processor: from the collected bag and log files, creates the jpg files and matcher.txt.

35 |

36 |

37 | ### Installing

38 |

39 | A step by step series of examples that tell you how to get a development env running

40 |

41 | A requirements.txt will be generated in the future.

42 | Python packages

43 | ```

44 | pip install --upgrade pip

45 | pip install --user pyrealsense2

46 | pip install --user opencv-python  # install with --user, otherwise it overwrites the ArcGIS Python package

47 | # also needed: argparse, py-getch, pyserial, fractions

48 | ```

49 |

50 | ### Data Capture

51 |

52 | The data capture script automatically generates its output folders, including bag and foto_log.

53 |

54 | The recordings are stored in bag format.

55 |

56 | The index, color frame number, depth frame number, longitude, latitude, day, month, year, and time are saved in txt format in foto_log.
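A minimal sketch of reading one such record, assuming the fields are comma-separated and appear in the order listed above. The field names, the `parse_log_line` helper, and the sample values are hypothetical and not taken from the repository:

```python
from collections import namedtuple

# Field order follows the list above; the names themselves are assumptions.
LogRecord = namedtuple(
    "LogRecord",
    "index color_frame depth_frame longitude latitude day month year time",
)


def parse_log_line(line):
    """Split one comma-separated foto_log line into a typed record."""
    parts = [p.strip() for p in line.split(",")]
    if len(parts) != 9:
        raise ValueError("expected 9 fields, got {}".format(len(parts)))
    return LogRecord(
        index=int(parts[0]),
        color_frame=int(parts[1]),
        depth_frame=int(parts[2]),
        longitude=float(parts[3]),
        latitude=float(parts[4]),
        day=int(parts[5]),
        month=int(parts[6]),
        year=int(parts[7]),
        time=parts[8],
    )


# Made-up sample line, for illustration only.
record = parse_log_line("1, 100, 98, 8.0012, 51.5501, 17, 9, 2019, 10:15:30")
print(record.color_frame, record.latitude)
```

Keeping the color and depth frame numbers as separate integer fields makes the later frame-matching step a plain numeric comparison.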

57 |

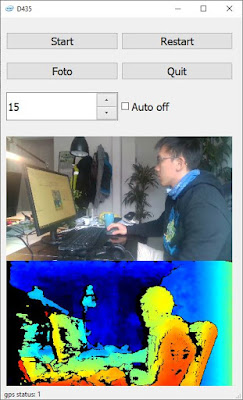

58 | QtCam:

59 |

60 |

61 |

62 |

63 | The new version:

64 | It now writes two csv files that are meant to be opened in QGIS: add each csv as a layer and set the rendering frequency to 1-5 seconds,

65 | depending on the performance of your hardware.

66 | The reason is that QGIS can render the huge amount of point data better than Google Earth.

67 |

68 | in QGIS:

69 | Add Delimited Text Layer and set the render frequency to 1 second for live.csv and foto_location.csv
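As a rough illustration of the producer side, the sketch below appends points to a csv stream that a delimited-text layer could re-read at the chosen interval. The column names `x` and `y` and the `append_location` helper are assumptions; the actual layout of live.csv and foto_location.csv is not shown in this README:

```python
import csv
import io


def append_location(stream, lon, lat, write_header=False):
    """Append one point as a csv row, optionally preceded by a header row."""
    writer = csv.writer(stream, lineterminator="\n")
    if write_header:
        writer.writerow(["x", "y"])  # hypothetical column names
    writer.writerow([lon, lat])


buffer = io.StringIO()  # stands in for an open csv file
append_location(buffer, 8.0012, 51.5501, write_header=True)
append_location(buffer, 8.0013, 51.5502)
print(buffer.getvalue())
```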

70 |

71 | ------------------------------------------------------------------------------------------------------------------

72 | The kml output contains the live location as live, and the locations of the recorded places in Foto.kml.

73 | In Google Earth: Temporary Places/Add/Network Link, then link to folder/kml/, using live for the location and Foto.kml for the photo points.

74 |

75 |

76 |

77 | ### Data process

78 |

79 | To prepare the data, use data_prepare.py.

80 |

81 | Click the three buttons in order from left to right.

82 |

83 | 1.generate jpg

84 | OpenCV is used to generate the JPEG files, with one sharpening filter applied:

85 | ```

86 | kernel = np.array([[-1, -1, -1],

87 |                    [-1,  9, -1],

88 |                    [-1, -1, -1]])

89 | ```

90 | *Due to disk writing speed, the RealSense API sometimes misses frames, so this script loops through the bag files twice. It can be run multiple times if needed.

91 |

92 | *This script skips files that already exist.
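To show what this kernel does, here is a NumPy-only sketch that applies it to a grayscale image. In OpenCV the same filtering is typically a single `cv2.filter2D(image, -1, kernel)` call; whether the repository calls it exactly that way is an assumption, and the `sharpen` helper and border handling below are illustrative only:

```python
import numpy as np

kernel = np.array([[-1, -1, -1],
                   [-1,  9, -1],
                   [-1, -1, -1]])


def sharpen(gray):
    """Apply the 3x3 kernel to a 2-D uint8 image with replicated borders."""
    padded = np.pad(gray.astype(np.int32), 1, mode="edge")
    out = np.zeros(gray.shape, dtype=np.int32)
    rows, cols = gray.shape
    # Sum the nine shifted copies of the image, each weighted by one kernel entry.
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return np.clip(out, 0, 255).astype(np.uint8)


flat = np.full((4, 4), 100, dtype=np.uint8)
print(sharpen(flat))  # the kernel sums to 1, so a flat image is unchanged

step = np.array([[50, 50, 150, 150]] * 4, dtype=np.uint8)
print(sharpen(step))  # contrast across the edge is exaggerated
```

Because the kernel entries sum to 1, uniform regions keep their brightness while differences between neighbouring pixels are amplified, which is what makes edges look sharper.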

93 |

94 | 2.generate shp

95 | Because of latency, the frame numbers actually recorded and the frame numbers in foto_log can differ by about ±2. For example, the log may say frame no. 100 while the recorded frame is somewhere in 99-102, so one extra step is needed to match the real frame number to the log file.

96 |

97 | Once matcher.txt is generated, it holds the complete list of points taken. MakeXYEventLayer and FeatureClassToShapefile_conversion are then used to save a shapefile.

98 |

99 | *An earlier version matched the Color and Depth frames within a 25 ms timestamp window to make sure the frames belong together. The current version removed this, since the latency is acceptable.
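The matching idea can be sketched as a nearest-neighbour lookup within a small tolerance. This is an illustration under stated assumptions, not the repository's implementation: the `match_frame` helper and the default tolerance of 2 are hypothetical.

```python
def match_frame(logged, recorded, tolerance=2):
    """Return the recorded frame number closest to `logged`, or None."""
    candidates = [n for n in recorded if abs(n - logged) <= tolerance]
    if not candidates:
        return None
    return min(candidates, key=lambda n: abs(n - logged))


recorded_frames = [95, 96, 99, 102, 103]
print(match_frame(100, recorded_frames))
print(match_frame(110, recorded_frames))
```

Returning `None` for an unmatched log entry lets the caller skip that point rather than attach a photo to the wrong location.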

100 |

101 | 3.geotag

102 |

103 | According to matcher.txt, the longitude and latitude are written into the EXIF data of the photos.
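EXIF stores GPS coordinates as degrees, minutes, and seconds, each as a rational number, plus a reference letter for the hemisphere. The sketch below shows only that conversion. The helper names are hypothetical, and passing the result to an EXIF library such as piexif (listed in the Pipfile) is left out:

```python
def to_dms_rationals(decimal_degrees):
    """Convert decimal degrees to EXIF-style (deg, min, sec) rationals."""
    # Work in hundredths of an arc second so rounding can never yield 60 s.
    total = int(round(abs(decimal_degrees) * 3600 * 100))
    degrees, rest = divmod(total, 360000)
    minutes, sec_hundredths = divmod(rest, 6000)
    return ((degrees, 1), (minutes, 1), (sec_hundredths, 100))


def hemisphere(decimal_degrees, positive, negative):
    """Pick the EXIF reference letter, e.g. 'N'/'S' or 'E'/'W'."""
    return positive if decimal_degrees >= 0 else negative


lat, lon = 51.5, -8.25  # made-up coordinates
print(to_dms_rationals(lat), hemisphere(lat, "N", "S"))
print(to_dms_rationals(lon), hemisphere(lon, "E", "W"))
```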

104 |

105 | ### in ArcGIS

106 |

107 | In ArcGIS, use the hyperlink option with a script, select Python,

108 | and put in:

109 |

110 | ```

111 | import subprocess

112 | def OpenLink ( [jpg_path] ):

113 |     bag = [jpg_path]

114 |     comnd = 'measure.exe -p {}'.format(bag)

115 |     subprocess.call(comnd)

116 |     return

117 | ```

118 |

119 |

120 | The method I suggest here is to use the hlk file: opening the hlk from the arcgis_integration folder automatically changes the working directory to that folder.

121 | Then the video and photo functions can be opened automatically.

122 |

123 | End with an example of getting some data out of the system or using it for a little demo

124 |

125 | Here we use subprocess because the current RealSense library freezes after set_real_time(False),

126 | and ArcMap supports neither multithreading nor multiple cores,

127 | so we can't simply import the module but have to call the command line and run Python from there.
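A small sketch of launching the viewer as a separate process. Passing the command as a list, rather than one formatted string, keeps paths that contain spaces intact. The `build_measure_command` helper and the sample path are hypothetical, and the current Python interpreter stands in for measure.exe so the example can run anywhere:

```python
import subprocess
import sys


def build_measure_command(exe_path, jpg_path):
    """Build the argument list; a list keeps paths with spaces intact."""
    return [exe_path, "-p", jpg_path]


cmd = build_measure_command("measure.exe", r"X:\Real Sense\jpg\0917_012-1916.jpg")
print(cmd)

# Demonstration only: the interpreter stands in for measure.exe here.
exit_code = subprocess.call([sys.executable, "-c", "print('viewer would start here')"])
print(exit_code)
```

`subprocess.call` blocks until the child process exits and returns its exit code, so the calling script regains control only after the viewer window is closed.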

128 |

129 | The running program looks like this:

130 |

131 | Use the spacebar to switch between modes; video mode lets you browse through the frames faster.

132 | Left click and hold to measure, right click to erase all.

133 |

134 |

135 |

136 | ## Authors

137 |

138 | * **Che-Yi Hung** - *Initial work* - [soarwing52](https://github.com/soarwing52)

139 |

140 |

--------------------------------------------------------------------------------

/arcgis_integration/Script.hlk:

--------------------------------------------------------------------------------

1 | import subprocess

2 | def OpenLink ( [Path]  ):

3 |   bag = [Path]

4 |   comnd = r'Z:\Vorlagen\Real Sense\ArcGIS\measure.exe -p {}'.format(bag)

5 |   subprocess.call(comnd)

6 |   return

--------------------------------------------------------------------------------

/arcgis_integration/__pycache__/class_measure.cpython-36.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/soarwing52/RealsensePython/d4f5cf5c0be9ec7d84e9d759886144c8127d445d/arcgis_integration/__pycache__/class_measure.cpython-36.pyc

--------------------------------------------------------------------------------

/arcgis_integration/__pycache__/class_measure.cpython-37.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/soarwing52/RealsensePython/d4f5cf5c0be9ec7d84e9d759886144c8127d445d/arcgis_integration/__pycache__/class_measure.cpython-37.pyc

--------------------------------------------------------------------------------

/arcgis_integration/class_measure.py:

--------------------------------------------------------------------------------

1 | import pyrealsense2 as rs

2 | import numpy as np

3 | import cv2

4 | import os

5 | import copy, math

6 | import Tkinter

7 |

8 |

9 | class Arc_Real:

10 |     def __init__(self, jpg_path):

11 |         # Basic setting

12 |         self.width, self.height = self.screen_size()

13 |         self.x_ratio, self.y_ratio = self.screen_ratio()

14 |

15 |         # Data from txt log file

16 |         BagFilePath = os.path.abspath(jpg_path)

17 |         file_dir = os.path.dirname(BagFilePath)

18 |         Pro_Dir = os.path.dirname(file_dir)

19 |         self.weg_id, target_color = os.path.splitext(os.path.basename(BagFilePath))[0].split('-')

20 |

21 |         with open('{}/shp/matcher.txt'.format(Pro_Dir), 'r') as txtfile:

22 |             self.title = [elt.strip() for elt in txtfile.readline().split(',')]

23 |             self.frame_list = [[elt.strip() for elt in line.split(',')] for line in txtfile if line.split(',')[0] == self.weg_id]

24 |

25 |         self.frame_dict, self.i = self.get_attribute(color=target_color, weg_id=self.weg_id)

26 |         file_name = '{}\\bag\\{}.bag'.format(Pro_Dir, self.weg_id)

27 |         # Start Pipeline

28 |         self.pipeline = rs.pipeline()

29 |         config = rs.config()

30 |         config.enable_device_from_file(file_name)

31 |         config.enable_all_streams()

32 |         profile = self.pipeline.start(config)

33 |         device = profile.get_device()

34 |         playback = device.as_playback()

35 |         playback.set_real_time(False)

36 |

37 |         self.frame_getter('Color') # Get Color Frame with the matching frame number from self.frame_dict

38 |         mode = 'Video Mode'

39 |         direction = 1

40 |         while True:

41 |             img = self.frame_to_image()

42 |             self.img_work(mode=mode, img=img)

43 |             cv2.namedWindow("Color Stream", cv2.WINDOW_FULLSCREEN)

44 |             cv2.imshow("Color Stream", img)

45 |

46 |             if mode == 'Measure Mode':

47 |                 self.img_origin = self.img_work(mode='Measure Mode', img=img)

48 |                 self.img_copy = copy.copy(self.img_origin)

49 |                 cv2.setMouseCallback("Color Stream", self.draw)

50 |                 cv2.imshow("Color Stream", self.img_copy)

51 |

52 |             key = cv2.waitKeyEx(0)

53 |

54 |             # if pressed escape exit program

55 |             if key == 27 or key == 113 or cv2.getWindowProperty('Color Stream', cv2.WND_PROP_VISIBLE) < 1:

56 |                 break

57 |

58 |             elif key == 32: # press space

59 |                 if mode == 'Measure Mode':

60 |                     mode = 'Video Mode'

61 |                 else:

62 |                     self.frame_dict, self.i = self.get_attribute(color=self.color_frame_num, weg_id=self.weg_id)

63 |                     self.img_work(mode='Searching', img=img)

64 |                     cv2.imshow("Color Stream", img)

65 |                     cv2.waitKey(1)

66 |                     item = self.get_attribute(color=self.color_frame_num, weg_id=self.weg_id)

67 |                     if item is not None:

68 |                         print(item)

69 |                         mode = 'Measure Mode'

70 |

71 |             elif key == 2555904: # press right

72 |                 self.i += 1

73 |                 direction = 1

74 |             elif key == 2424832: # press left

75 |                 self.i -= 1

76 |                 direction = -1

77 |

78 |             if mode == 'Measure Mode':

79 |                 self.img_work(mode='Searching', img=img)

80 |                 cv2.imshow("Color Stream", img)

81 |                 cv2.waitKey(1)

82 |                 while True:

83 |                     Color, Depth = self.frame_getter('Color'), self.frame_getter('Depth')

84 |                     if Color and Depth:

85 |                         break

86 |                     else:

87 |                         self.i += direction

88 |

89 |             if mode != 'Measure Mode':

90 |                 frames = self.pipeline.wait_for_frames()

91 |                 self.color_frame = frames.get_color_frame()

92 |                 self.color_frame_num = self.color_frame.get_frame_number()

93 |

94 |         print('finish')

95 |         cv2.destroyAllWindows()

96 |         os._exit(0)

97 |         self.pipeline.stop()

98 |

99 |     def screen_size(self):

100 |         root = Tkinter.Tk()

101 |         width = root.winfo_screenwidth()

102 |         height = root.winfo_screenheight()

103 |         return int(width * 0.8), int(height * 0.8)

104 |

105 |     def screen_ratio(self):

106 |         img_size = (1920.0, 1080.0)

107 |         screen = self.screen_size()

108 |         width_ratio, height_ratio = screen[0]/img_size[0],screen[1]/img_size[1]

109 |         return width_ratio, height_ratio

110 |

111 |     def get_attribute(self, color, weg_id):

112 |         for obj in self.frame_list:

113 |             if obj[0] == weg_id and abs(int(obj[2]) - int(color)) < 5:

114 |                 i = self.frame_list.index(obj)

115 |                 obj = dict(zip(self.title, obj))

116 |                 return obj, i

117 |

118 |     def index_to_obj(self):

119 |         if self.i >= len(self.frame_list):

120 |             self.i = self.i % len(self.frame_list)  # wrap around to the first entry; the former "+1" could index past the end

121 |         content = self.frame_list[self.i]

122 |         self.frame_dict = dict(zip(self.title, content))

123 |

124 |     def draw(self, event, x, y, flags, params):

125 |         img = copy.copy(self.img_copy)

126 |         if event == 1:

127 |             self.ix = x

128 |             self.iy = y

129 |             cv2.imshow("Color Stream", self.img_copy)

130 |         elif event == 4:

131 |             img = self.img_copy

132 |             self.img_work(img=img, mode='calc', x=x, y=y)

133 |             cv2.imshow("Color Stream", img)

134 |         elif event == 2:

135 |             self.img_copy = copy.copy(self.img_origin)

136 |             cv2.imshow("Color Stream", self.img_copy)

137 |         elif flags == 1:

138 |             self.img_work(img=img, mode='calc', x=x, y=y)

139 |             cv2.imshow("Color Stream", img)

140 |

141 |     def img_work(self, mode, img, x=0, y=0):

142 |         if 'Measure' in mode: # show black at NAN

143 |             depth_image = np.asanyarray(self.depth_frame.get_data())

144 |             grey_color = 0

145 |             depth_image_3d = np.dstack(

146 |                 (depth_image, depth_image, depth_image)) # depth image is 1 channel, color is 3 channels

147 |             depth_image_3d= cv2.resize(depth_image_3d, self.screen_size())

148 |             img = np.where((depth_image_3d <= 0), grey_color, img)

149 |

150 |         font = cv2.FONT_ITALIC

151 |         fontScale = 1

152 |         fontColor = (0, 0, 0)

153 |         lineType = 2

154 |

155 |         # Add frame number

156 |         mid_line_frame_num = int(self.width/4)

157 |         rec1, rec2 = (mid_line_frame_num - 50, 20), (mid_line_frame_num + 50, 60)

158 |         text = str(self.color_frame_num)

159 |         bottomLeftCornerOfText = (mid_line_frame_num - 45, 50)

160 |         cv2.rectangle(img, rec1, rec2, (255, 255, 255), -1)

161 |         cv2.putText(img, text, bottomLeftCornerOfText, font, fontScale, fontColor, lineType)

162 |

163 |         if mode == 'calc':

164 |             pt1, pt2 = (self.ix, self.iy), (x, y)

165 |             ans = self.calculate_distance(x, y)

166 |             text = '{0:.3}'.format(ans)

167 |             bottomLeftCornerOfText = (self.ix + 10, (self.iy - 10))

168 |             rec1, rec2 = (self.ix + 10, (self.iy - 5)), (self.ix + 80, self.iy - 35)

169 |

170 |             cv2.line(img, pt1=pt1, pt2=pt2, color=(0, 0, 230), thickness=3)

171 |

172 |         else:

173 |             mid_line_screen = int(self.width/2)

174 |             rec1, rec2 = (mid_line_screen-100, 20), (mid_line_screen + 130, 60)

175 |             text = mode

176 |             bottomLeftCornerOfText = (mid_line_screen - 95, 50)

177 |

178 |         cv2.rectangle(img, rec1, rec2, (255, 255, 255), -1)

179 |         cv2.putText(img, text, bottomLeftCornerOfText, font, fontScale, fontColor, lineType)

180 |         return img

181 |

182 |     def calculate_distance(self, x, y):

183 |         color_intrin = self.color_intrin

184 |         x_ratio, y_ratio = self.x_ratio, self.y_ratio

185 |         ix, iy = int(self.ix/x_ratio), int(self.iy/y_ratio)

186 |         x, y = int(x/x_ratio), int(y/y_ratio)

187 |         udist = self.depth_frame.get_distance(ix, iy)

188 |         vdist = self.depth_frame.get_distance(x, y)

189 |         if udist ==0.00 or vdist ==0.00:

190 |             dist = 'NaN'

191 |         else:

192 |             point1 = rs.rs2_deproject_pixel_to_point(color_intrin, [ix, iy], udist)

193 |             point2 = rs.rs2_deproject_pixel_to_point(color_intrin, [x, y], vdist)

194 |             dist = math.sqrt(

195 |                 math.pow(point1[0] - point2[0], 2) + math.pow(point1[1] - point2[1], 2) + math.pow(

196 |                     point1[2] - point2[2], 2))

197 |         return dist

198 |

199 |     def frame_getter(self, mode):

200 |         align_to = rs.stream.color

201 |         align = rs.align(align_to)

202 |         count = 0

203 |         while True:

204 |             self.index_to_obj()

205 |             num = self.frame_dict[mode]

206 |             frames = self.pipeline.wait_for_frames()

207 |             aligned_frames = align.process(frames)

208 |

209 |             if mode == 'Color':

210 |                 frame = aligned_frames.get_color_frame()

211 |                 self.color_frame_num = frame.get_frame_number()

212 |                 self.color_intrin = frame.profile.as_video_stream_profile().intrinsics

213 |                 self.color_frame = frame

214 |             else:

215 |                 frame = aligned_frames.get_depth_frame()

216 |                 self.depth_frame_num = frame.get_frame_number()

217 |                 self.depth_frame = frame

218 |

219 |             frame_num = frame.get_frame_number()

220 |

221 |             count = self.count_search(count, frame_num, int(num))

222 |             print('Searching {}: {}, now: {}'.format(mode, num, frame_num))

223 |

224 |             if abs(int(frame_num) - int(num)) < 5:

225 |                 print('match {} {}'.format(mode, frame_num))

226 |                 return frame

227 |             elif count > 10:

228 |                 print(num + ' not found, searching for next frame')

229 |                 return None

230 |

231 |

232 |     def frame_to_image(self):

233 |         color_image = np.asanyarray(self.color_frame.get_data())

234 |         color_cvt = cv2.cvtColor(color_image, cv2.COLOR_BGR2RGB)

235 |         color_final = cv2.resize(color_cvt, (self.width, self.height))

236 |         return color_final

237 |

238 |     def count_search(self, count, now, target):

239 |         if abs(now - target) < 100:

240 |             count += 1

241 |             print('search count:{}'.format(count))

242 |         return count

243 |

244 |

245 | def main():

246 |     Arc_Real(r'X:/WER_Werl/Realsense/jpg/0917_012-1916.jpg')

247 |

248 | if __name__ == '__main__':

249 |     main()

250 |

--------------------------------------------------------------------------------
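The measurement in `calculate_distance` above deprojects two pixels to 3-D points and takes their Euclidean distance. As a rough sketch of that geometry, the code below uses a plain pinhole model without lens distortion, which is a simplification of what `rs.rs2_deproject_pixel_to_point` does with real camera intrinsics. The intrinsics, pixel positions, and depths are made-up values:

```python
import math


def deproject(pixel, depth, fx, fy, ppx, ppy):
    """Pinhole deprojection without lens distortion: pixel + depth -> XYZ."""
    x = (pixel[0] - ppx) / fx
    y = (pixel[1] - ppy) / fy
    return (depth * x, depth * y, depth)


def distance(p1, p2):
    """Euclidean distance between two 3-D points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p1, p2)))


# Hypothetical intrinsics (focal lengths and principal point in pixels).
fx = fy = 1000.0
ppx, ppy = 960.0, 540.0

a = deproject((960, 540), 2.0, fx, fy, ppx, ppy)   # image centre, 2 m away
b = deproject((1460, 540), 2.0, fx, fy, ppx, ppy)  # 500 px to the right, 2 m away
print(distance(a, b))
```

With these numbers a horizontal offset of 500 pixels at 2 m depth corresponds to 1 m in the scene, which illustrates why the click coordinates must first be scaled back from screen size to the original image resolution.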

/arcgis_integration/command.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import class_measure

3 | import os

4 |

5 | ap = argparse.ArgumentParser()

6 | ap.add_argument("-p","--path", required=True)  # the path is used unconditionally below

7 |

8 | args = vars(ap.parse_args())

9 |

10 | jpg_path = args['path']

11 |

12 | if 'Kamera' in jpg_path:

13 |     os.startfile(jpg_path)

14 | else:

15 |     class_measure.Arc_Real(jpg_path)

16 |

17 |

--------------------------------------------------------------------------------

/arcgis_integration/exe_measure.py:

--------------------------------------------------------------------------------

1 | import pyrealsense2 as rs

2 | import numpy as np

3 | import cv2

4 | import os

5 | import copy, math

6 | import tkinter as Tkinter

7 | import argparse

8 |

9 |

10 | class Arc_Real:

11 |     def __init__(self, jpg_path):

12 |         # Basic setting

13 |         self.width, self.height = self.screen_size()

14 |         self.x_ratio, self.y_ratio = self.screen_ratio()

15 |

16 |         # Data from txt log file

17 |         BagFilePath = os.path.abspath(jpg_path)

18 |         file_dir = os.path.dirname(BagFilePath)

19 |         Pro_Dir = os.path.dirname(file_dir)

20 |         self.weg_id, target_color = os.path.splitext(os.path.basename(BagFilePath))[0].split('-')

21 |

22 |         with open('{}/shp/matcher.txt'.format(Pro_Dir), 'r') as txtfile:

23 |             self.title = [elt.strip() for elt in txtfile.readline().split(',')]

24 |             self.frame_list = [[elt.strip() for elt in line.split(',')] for line in txtfile if line.split(',')[0] == self.weg_id]

25 |

26 |         self.frame_dict, self.i = self.get_attribute(color=target_color, weg_id=self.weg_id)

27 |         file_name = '{}\\bag\\{}.bag'.format(Pro_Dir, self.weg_id)

28 |         # Start Pipeline

29 |         self.pipeline = rs.pipeline()

30 |         config = rs.config()

31 |         config.enable_device_from_file(file_name)

32 |         config.enable_all_streams()

33 |         profile = self.pipeline.start(config)

34 |         device = profile.get_device()

35 |         playback = device.as_playback()

36 |         playback.set_real_time(False)

37 |

38 |         self.frame_getter('Color') # Get Color Frame with the matching frame number from self.frame_dict

39 |         mode = 'Video Mode'

40 |         direction = 1

41 |         while True:

42 |             img = self.frame_to_image()

43 |             self.img_work(mode=mode, img=img)

44 |             cv2.namedWindow("Color Stream", cv2.WINDOW_FULLSCREEN)

45 |             cv2.imshow("Color Stream", img)

46 |

47 |             if mode == 'Measure Mode':

48 |                 self.img_origin = self.img_work(mode='Measure Mode', img=img)

49 |                 self.img_copy = copy.copy(self.img_origin)

50 |                 cv2.setMouseCallback("Color Stream", self.draw)

51 |                 cv2.imshow("Color Stream", self.img_copy)

52 |

53 |             key = cv2.waitKeyEx(0)

54 |

55 |             # if pressed escape exit program

56 |             if key == 27 or key == 113 or cv2.getWindowProperty('Color Stream', cv2.WND_PROP_VISIBLE) < 1:

57 |                 break

58 |

59 |             elif key == 32: # press space

60 |                 if mode == 'Measure Mode':

61 |                     mode = 'Video Mode'

62 |                 else:

63 |                     self.frame_dict, self.i = self.get_attribute(color=self.color_frame_num, weg_id=self.weg_id)

64 |                     self.img_work(mode='Searching', img=img)

65 |                     cv2.imshow("Color Stream", img)

66 |                     cv2.waitKey(1)

67 |                     item = self.get_attribute(color=self.color_frame_num, weg_id=self.weg_id)

68 |                     if item is not None:

69 |                         print(item)

70 |                         mode = 'Measure Mode'

71 |

72 |             elif key == 2555904: # press right

73 |                 self.i += 1

74 |                 direction = 1

75 |             elif key == 2424832: # press left

76 |                 self.i -= 1

77 |                 direction = -1

78 |

79 |             if mode == 'Measure Mode':

80 |                 self.img_work(mode='Searching', img=img)

81 |                 cv2.imshow("Color Stream", img)

82 |                 cv2.waitKey(1)

83 |                 while True:

84 |                     Color, Depth = self.frame_getter('Color'), self.frame_getter('Depth')

85 |                     if Color and Depth:

86 |                         break

87 |                     else:

88 |                         self.i += direction

89 |

90 |             if mode != 'Measure Mode':

91 |                 frames = self.pipeline.wait_for_frames()

92 |                 self.color_frame = frames.get_color_frame()

93 |                 self.color_frame_num = self.color_frame.get_frame_number()

94 |

95 |         print('finish')

96 |         cv2.destroyAllWindows()

97 |         os._exit(0)

98 |         self.pipeline.stop()

99 |

100 |     def screen_size(self):

101 |         root = Tkinter.Tk()

102 |         width = root.winfo_screenwidth()

103 |         height = root.winfo_screenheight()

104 |         return int(width * 0.8), int(height * 0.8)

105 |

106 |     def screen_ratio(self):

107 |         img_size = (1920.0, 1080.0)

108 |         screen = self.screen_size()

109 |         width_ratio, height_ratio = screen[0]/img_size[0],screen[1]/img_size[1]

110 |         return width_ratio, height_ratio

111 |

112 |     def get_attribute(self, color, weg_id):

113 |         for obj in self.frame_list:

114 |             if obj[0] == weg_id and abs(int(obj[2]) - int(color)) < 5:

115 |                 i = self.frame_list.index(obj)

116 |                 obj = dict(zip(self.title, obj))

117 |                 return obj, i

118 |

119 |     def index_to_obj(self):

120 |         if self.i >= len(self.frame_list):

121 |             self.i = self.i % len(self.frame_list)  # wrap around to the first entry; the former "+1" could index past the end

122 |         content = self.frame_list[self.i]

123 |         self.frame_dict = dict(zip(self.title, content))

124 |

125 | def draw(self, event, x, y, flags, params):

126 | img = copy.copy(self.img_copy)

127 | if event == 1:

128 | self.ix = x

129 | self.iy = y

130 | cv2.imshow("Color Stream", self.img_copy)

131 | elif event == 4:

132 | img = self.img_copy

133 | self.img_work(img=img, mode='calc', x=x, y=y)

134 | cv2.imshow("Color Stream", img)

135 | elif event == 2:

136 | self.img_copy = copy.copy(self.img_origin)

137 | cv2.imshow("Color Stream", self.img_copy)

138 | elif flags == 1:

139 | self.img_work(img=img, mode='calc', x=x, y=y)

140 | cv2.imshow("Color Stream", img)

141 |

142 | def img_work(self, mode, img, x=0, y=0):

143 | if 'Measure' in mode: # show black at NAN

144 | depth_image = np.asanyarray(self.depth_frame.get_data())

145 | grey_color = 0

146 | depth_image_3d = np.dstack(

147 | (depth_image, depth_image, depth_image)) # depth image is 1 channel, color is 3 channels

148 | depth_image_3d= cv2.resize(depth_image_3d, self.screen_size())

149 | img = np.where((depth_image_3d <= 0), grey_color, img)

150 |

151 | font = cv2.FONT_ITALIC

152 | fontScale = 1

153 | fontColor = (0, 0, 0)

154 | lineType = 2

155 |

156 | # Add frame number

157 | mid_line_frame_num = int(self.width/4)

158 | rec1, rec2 = (mid_line_frame_num - 50, 20), (mid_line_frame_num + 50, 60)

159 | text = str(self.color_frame_num)

160 | bottomLeftCornerOfText = (mid_line_frame_num - 45, 50)

161 | cv2.rectangle(img, rec1, rec2, (255, 255, 255), -1)

162 | cv2.putText(img, text, bottomLeftCornerOfText, font, fontScale, fontColor, lineType)

163 |

164 | if mode == 'calc':

165 | pt1, pt2 = (self.ix, self.iy), (x, y)

166 | ans = self.calculate_distance(x, y)

167 | text = '{0:.3}'.format(ans)

168 | bottomLeftCornerOfText = (self.ix + 10, (self.iy - 10))

169 | rec1, rec2 = (self.ix + 10, (self.iy - 5)), (self.ix + 80, self.iy - 35)

170 |

171 | cv2.line(img, pt1=pt1, pt2=pt2, color=(0, 0, 230), thickness=3)

172 |

173 | else:

174 | mid_line_screen = int(self.width/2)

175 | rec1, rec2 = (mid_line_screen-100, 20), (mid_line_screen + 130, 60)

176 | text = mode

177 | bottomLeftCornerOfText = (mid_line_screen - 95, 50)

178 |

179 | cv2.rectangle(img, rec1, rec2, (255, 255, 255), -1)

180 | cv2.putText(img, text, bottomLeftCornerOfText, font, fontScale, fontColor, lineType)

181 | return img

182 |

183 | def calculate_distance(self, x, y):

184 | color_intrin = self.color_intrin

185 | x_ratio, y_ratio = self.x_ratio, self.y_ratio

186 | ix, iy = int(self.ix/x_ratio), int(self.iy/y_ratio)

187 | x, y = int(x/x_ratio), int(y/y_ratio)

188 | udist = self.depth_frame.get_distance(ix, iy)

189 | vdist = self.depth_frame.get_distance(x, y)

190 | if udist ==0.00 or vdist ==0.00:

191 | dist = 'NaN'

192 | else:

193 | point1 = rs.rs2_deproject_pixel_to_point(color_intrin, [ix, iy], udist)

194 | point2 = rs.rs2_deproject_pixel_to_point(color_intrin, [x, y], vdist)

195 | dist = math.sqrt(

196 | math.pow(point1[0] - point2[0], 2) + math.pow(point1[1] - point2[1], 2) + math.pow(

197 | point1[2] - point2[2], 2))

198 | return dist

199 |

200 | def frame_getter(self, mode):

201 | align_to = rs.stream.color

202 | align = rs.align(align_to)

203 | count = 0

204 | while True:

205 | self.index_to_obj()

206 | num = self.frame_dict[mode]

207 | frames = self.pipeline.wait_for_frames()

208 | aligned_frames = align.process(frames)

209 |

210 | if mode == 'Color':

211 | frame = aligned_frames.get_color_frame()

212 | self.color_frame_num = frame.get_frame_number()

213 | self.color_intrin = frame.profile.as_video_stream_profile().intrinsics

214 | self.color_frame = frame

215 | else:

216 | frame = aligned_frames.get_depth_frame()

217 | self.depth_frame_num = frame.get_frame_number()

218 | self.depth_frame = frame

219 |

220 | frame_num = frame.get_frame_number()

221 |

222 | count = self.count_search(count, frame_num, int(num))

223 | print('Searching {}: {}, current: {}'.format(mode, num, frame_num))

224 |

225 | if abs(int(frame_num) - int(num)) < 5:

226 | print('match {} {}'.format(mode, frame_num))

227 | return frame

228 | elif count > 10:

229 | print('{} not found, searching for the next frame'.format(num))

230 | return None

231 |

232 |

233 | def frame_to_image(self):

234 | color_image = np.asanyarray(self.color_frame.get_data())

235 | color_cvt = cv2.cvtColor(color_image, cv2.COLOR_BGR2RGB)

236 | color_final = cv2.resize(color_cvt, (self.width, self.height))

237 | return color_final

238 |

239 | def count_search(self, count, now, target):

240 | if abs(now - target) < 100:

241 | count += 1

242 | print('search count:{}'.format(count))

243 | return count

244 |

245 |

246 | def main():

247 | ap = argparse.ArgumentParser()

248 | ap.add_argument("-p", "--path", required=True)  # main() dereferences the path, so it cannot be omitted

249 |

250 | args = vars(ap.parse_args())

251 |

252 | jpg_path = args['path']

253 |

254 | if 'Kamera' in jpg_path:

255 | os.startfile(jpg_path)

256 | else:

257 | Arc_Real(jpg_path)

258 |

259 | if __name__ == '__main__':

260 | main()

261 |

--------------------------------------------------------------------------------
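The `calculate_distance` method in the listing above back-projects two pixels with `rs.rs2_deproject_pixel_to_point` and takes the Euclidean distance between the resulting 3D points. The following is a minimal sketch of that idea without the RealSense SDK, assuming an ideal pinhole camera with no lens distortion. The names `deproject`, `distance_between` and the intrinsic values in the usage note are hypothetical and chosen only for illustration.

```python
import math


def deproject(fx, fy, ppx, ppy, pixel, depth_m):
    """Back-project a pixel to a 3D point (metres) with a pinhole model.

    Assumption: no lens distortion, unlike the SDK call, which can also
    apply the distortion model stored in the stream intrinsics.
    """
    u, v = pixel
    x = (u - ppx) / fx * depth_m
    y = (v - ppy) / fy * depth_m
    return (x, y, depth_m)


def distance_between(point1, point2):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(point1, point2)))


def measure(fx, fy, ppx, ppy, pixel1, depth1, pixel2, depth2):
    """Return the distance in metres, or None if either depth is missing."""
    if depth1 == 0 or depth2 == 0:
        # A depth of 0 means the sensor has no measurement at that pixel.
        return None
    p1 = deproject(fx, fy, ppx, ppy, pixel1, depth1)
    p2 = deproject(fx, fy, ppx, ppy, pixel2, depth2)
    return distance_between(p1, p2)
```

With made-up intrinsics `fx = fy = 1000`, `ppx = 960`, `ppy = 540`, two pixels 500 columns apart at a depth of 2 m come out 1 m apart: `measure(1000, 1000, 960, 540, (960, 540), 2.0, (1460, 540), 2.0)`. Returning `None` for missing depth keeps the result type numeric, whereas the listing returns the string `'NaN'`.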

/camera/data_capture_no_gui.py:

--------------------------------------------------------------------------------

1 | import pyrealsense2 as rs

2 | import numpy as np

3 | import cv2

4 | import serial

5 | import datetime

6 | import time

7 | import os

8 | from math import sin, cos, sqrt, atan2, radians

9 | from getch import pause_exit

10 | from multiprocessing import Process,Value, Array, Pipe

11 |

12 |

13 | def dir_generate(dir_name):

14 | """

15 | :param dir_name: input complete path of the desired directory

16 | :return: None

17 | """

18 | dir_name = str(dir_name)

19 | if not os.path.exists(dir_name):

20 | try:

21 | os.mkdir(dir_name)

22 | finally:

23 | pass

24 |

25 |

26 | def port_check(gps_on):

27 | """

28 | :param gps_on: mp.Value holding the GPS status; set to 3 when no port can be opened

29 | :return: name of the last COM port that opened successfully; if none opens, the program exits

30 | """

31 | serialPort = serial.Serial()

32 | serialPort.baudrate = 4800

33 | serialPort.bytesize = serial.EIGHTBITS

34 | serialPort.parity = serial.PARITY_NONE

35 | serialPort.timeout = 2

36 | exist_port = None

37 | for x in range(1, 10):

38 | portnum = 'COM{}'.format(x)

39 | serialPort.port = portnum

40 | try:

41 | serialPort.open()

42 | serialPort.close()

43 | exist_port = portnum

44 | except serial.SerialException:

45 | pass

46 | finally:

47 | pass

48 | if exist_port:

49 | return exist_port

50 | else:

51 | print ('close other programs using gps or check if the gps is correctly connected')

52 | gps_on.value = 3

53 | os._exit(0)

54 |

55 |

56 |

57 | def gps_dis(location_1,location_2):

58 | """

59 | this is the calculation of the distance between two long/lat locations

60 | input tuple/list

61 | :param location_1: [Lon, Lat]

62 | :param location_2: [Lon, Lat]

63 | :return: distance in meter

64 | """

65 | R = 6373.0

66 |

67 | lat1 = radians(location_1[1])

68 | lon1 = radians(location_1[0])

69 | lat2 = radians(location_2[1])

70 | lon2 = radians(location_2[0])

71 |

72 | dlon = lon2 - lon1

73 | dlat = lat2 - lat1

74 |

75 | a = sin(dlat / 2)**2 + cos(lat1) * cos(lat2) * sin(dlon / 2)**2

76 | c = 2 * atan2(sqrt(a), sqrt(1 - a))

77 |

78 | distance = R * c

79 | distance = distance*1000

80 | #print("Result:", distance)

81 | return distance

82 |

83 |

84 | def min2decimal(in_data):

85 | """

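86 | convert an NMEA coordinate (ddmm.mmmm) to decimal degrees

87 | :param in_data: lon / lat as reported in the NMEA sentence

88 | :return: the coordinate in decimal degrees (the hemisphere sign is not applied)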

89 | """

90 | latgps = float(in_data)

91 | latdeg = int(latgps / 100)

92 | latmin = latgps - latdeg * 100

93 | lat = latdeg + (latmin / 60)

94 | return lat

95 |

96 |

97 | def GPS(Location,gps_on):

98 | """

99 | the main function of starting the GPS

100 | :param Location: mp.Array

101 | :param gps_on: mp.Value

102 | :return:

103 | """

104 |

105 | print('GPS thread start')

106 |

107 | # Set port

108 | serialPort = serial.Serial()

109 | serialPort.port = port_check(gps_on) # Check the available ports, return the valid one

110 | serialPort.baudrate = 4800

111 | serialPort.bytesize = serial.EIGHTBITS

112 | serialPort.parity = serial.PARITY_NONE

113 | serialPort.timeout = 2

114 | serialPort.open()

115 | print ('GPS opened successfully')

116 | lon, lat = 0, 0

117 | try:

118 | while True:

119 | line = serialPort.readline()

120 | data = line.split(b',')

121 | data = [x.decode("UTF-8") for x in data]

122 | if data[0] == '$GPRMC':

123 | if data[2] == "A":

124 | lat = min2decimal(data[3])

125 | lon = min2decimal(data[5])

126 | elif data[0] == '$GPGGA':

127 | if data[6] == '1':

128 | lon = min2decimal(data[4])

129 | lat = min2decimal(data[2])

130 |

131 |

132 | if lon ==0 or lat == 0:

133 | time.sleep(1)

134 |

135 | else:

136 | #print ('gps ready, current location:{},{}'.format(lon,lat))

137 | gps_on.value = True

138 | Location[:] = [lon,lat]

139 | with open('location.csv', 'w') as gps:

140 | gps.write('Lat,Lon\n')

141 | gps.write('{},{}'.format(lat,lon))

142 |

143 |

144 | except serial.SerialException:

145 | print ('Error opening GPS')

146 | gps_on.value = 3

147 | finally:

148 | serialPort.close()

149 | print('GPS finish')

150 |

151 |

152 | def Camera(child_conn, take_pic, Frame_num, camera_on, bag):

153 | """

154 | Main camera running

155 | :param child_conn: source of image, sending to openCV

156 | :param take_pic: mp.Value; when set to True one picture is taken, and it is reset to False when done

157 | :param Frame_num: mp.Array, frame number of the picture taken

158 | :param camera_on: mp.Value, the status of camera

159 | :param bag: the number of the current recorded file

160 | :return:

161 | """

162 | print('camera start')

163 | bag_name = './bag/{}.bag'.format(bag)

164 | camera_on.value = True

165 | pipeline = rs.pipeline()

166 | config = rs.config()

167 | config.enable_stream(rs.stream.depth, 1280, 720, rs.format.z16, 15)

168 | config.enable_stream(rs.stream.color, 1920, 1080, rs.format.rgb8, 15)

169 | config.enable_record_to_file(bag_name)

170 | profile = pipeline.start(config)

171 |

172 | device = profile.get_device() # get record device

173 | recorder = device.as_recorder()

174 | recorder.pause() # and pause it

175 |

176 | # get sensor and set to high density

177 | depth_sensor = device.first_depth_sensor()

178 | #depth_sensor.set_option(rs.option.visual_preset, 4)

179 | # dev_range = depth_sensor.get_option_range(rs.option.visual_preset)

180 | #preset_name = depth_sensor.get_option_value_description(rs.option.visual_preset, 4)

181 | #print (preset_name)

182 | # set frame queue size to max

183 | sensor = profile.get_device().query_sensors()

184 | for x in sensor:

185 | x.set_option(rs.option.frames_queue_size, 32)

186 | # set auto exposure but process data first

187 | color_sensor = profile.get_device().query_sensors()[1]

188 | color_sensor.set_option(rs.option.auto_exposure_priority, True)

189 |

190 | try:

191 | while True:

192 | frames = pipeline.wait_for_frames()

193 | depth_frame = frames.get_depth_frame()

194 | color_frame = frames.get_color_frame()

195 | depth_color_frame = rs.colorizer().colorize(depth_frame)

196 | depth_image = np.asanyarray(depth_color_frame.get_data())

197 | color_image = np.asanyarray(color_frame.get_data())

198 | child_conn.send((color_image, depth_image))

199 |

200 | if take_pic.value == 1:

201 | recorder.resume()

202 | frames = pipeline.wait_for_frames()

203 | depth_frame = frames.get_depth_frame()

204 | color_frame = frames.get_color_frame()

205 | var = rs.frame.get_frame_number(color_frame)

206 | vard = rs.frame.get_frame_number(depth_frame)

207 | Frame_num[:] = [var,vard]

208 | time.sleep(0.05)

209 | recorder.pause()

210 | take_pic.value = False

211 |

212 | elif camera_on.value == 0:

213 | child_conn.close()

214 | break

215 |

216 | except RuntimeError:

217 | print ('RuntimeError in camera loop')

218 |

219 | finally:

220 | print('pipeline closed')

221 | pipeline.stop()

222 |

223 |

224 | def Show_Image(bag, parent_conn, take_pic, Frame_num, camera_on, camera_repeat, gps_on, Location):

225 | """

226 | The GUI for the program, using openCV to send and receive instructions

227 | :param bag: number of the recorded file

228 | :param parent_conn: receiving end of the image pipe, used for display in OpenCV; recording to the bag stays in Camera

229 | :param take_pic: send True to let Camera take picture

230 | :param Frame_num: receive frame number

231 | :param camera_on: receive camera status

232 | :param camera_repeat: mp.Value, determines whether the next recording starts automatically

233 | :param gps_on: receive the status of gps

234 | :param Location: the current Lon, Lat

235 | :return:

236 | """

237 | Pause = False

238 | i = 1

239 | foto_location = (0, 0)

240 | foto_frame = Frame_num[0]

241 |

242 | try:

243 | while True:

244 | (lon, lat) = Location[:]

245 | current_location = (lon, lat)

246 | present = datetime.datetime.now()

247 | date = '{},{},{},{}'.format(present.day, present.month, present.year, present.time())

248 | local_take_pic = False

249 |

250 | color_image, depth_image = parent_conn.recv()

251 | depth_colormap_resize = cv2.resize(depth_image, (424, 240))

252 | color_cvt = cv2.cvtColor(color_image, cv2.COLOR_RGB2BGR)

253 | color_cvt_2 = cv2.resize(color_cvt, (424, 318))

254 | images = np.vstack((color_cvt_2, depth_colormap_resize))

255 | cv2.namedWindow('Color', cv2.WINDOW_AUTOSIZE)

256 | cv2.setWindowProperty('Color', cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

257 | cv2.setWindowProperty('Color', cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_NORMAL)

258 |

259 | if Pause is True:

260 | cv2.rectangle(images, (111,219), (339,311), (0, 0, 255), -1)

261 | font = cv2.FONT_HERSHEY_SIMPLEX

262 | bottomLeftCornerOfText = (125,290)

263 | fontScale = 2

264 | fontColor = (0, 0, 0)

265 | lineType = 4

266 | cv2.putText(images, 'Pause', bottomLeftCornerOfText, font, fontScale, fontColor, lineType)

267 |

268 | cv2.imshow('Color', images)

269 | key = cv2.waitKeyEx(1)

270 | if take_pic.value == 1 or current_location == foto_location:

271 | continue

272 |

273 | if Pause is False:

274 | if gps_dis(current_location, foto_location) > 15:

275 | local_take_pic = True

276 |

277 | if key == 98 or key == 32: # 'b' or space

278 | local_take_pic = True

279 |

280 | if local_take_pic == True:

281 | take_pic.value = True

282 | time.sleep(0.1)

283 | (color_frame_num, depth_frame_num) = Frame_num[:]

284 | logmsg = '{},{},{},{},{},{}\n'.format(i, color_frame_num, depth_frame_num, lon, lat,date)

285 | print('Photo {} taken at {:.03},{:.04}'.format(i,lon,lat))

286 | with open('./foto_log/{}.txt'.format(bag), 'a') as logfile:

287 | logfile.write(logmsg)

288 | with open('foto_location.csv', 'a') as record:

289 | record.write(logmsg)

290 | foto_location = (lon, lat)

291 | i += 1

292 | if key & 0xFF == ord('q') or key == 27:

293 | camera_on.value = False

294 | gps_on.value = False

295 | camera_repeat.value = False

296 | print('Camera finish\n')

297 | elif key == 114 or key == 2228224: # 'r' or Page Down (waitKeyEx code on Windows)

298 | camera_on.value = False

299 | camera_repeat.value = True

300 | print ('Camera restart')

301 | elif not gps_on.value: # gps_on is an mp.Value, so test its .value

302 | camera_repeat.value = False

303 | elif key & 0xFF == ord('p') or key == 2162688: # 'p' or Page Up; reuse the key read above instead of polling again

304 | if Pause is False:

305 | print ('pause pressed')

306 | Pause = True

307 | elif Pause is True:

308 | print ('restart')

309 | Pause = False

310 | except EOFError:

311 | pass

312 | finally:

313 | print ('Image thread ended')

314 |

315 |

316 | def bag_num():

317 | """

318 | Generate the name of the next recording in the form MMDD_NNN, skipping names that already exist in ./bag

319 | :return:

320 | """

321 | num = 1

322 | now = datetime.datetime.now()

323 | time.sleep(1)

324 |

325 | try:

326 | while True:

327 | file_name = '{:02d}{:02d}_{:03d}'.format(now.month, now.day, num)

328 | bag_name = './bag/{}.bag'.format(file_name)

329 | exist = os.path.isfile(bag_name)

330 | if exist:

331 | num+=1

332 | else:

333 | print ('current filename:{}'.format(file_name))

334 | break

335 | return file_name

336 | finally:

337 | pass

338 |

339 |

340 | def main():

341 | # Create Folders for Data

342 | folder_list = ('bag','foto_log')

343 | for folder in folder_list:

344 | dir_generate(folder)

345 | # Create Variables between Processes

346 | Location = Array('d',[0,0])

347 | Frame_num = Array('i',[0,0])

348 |

349 | take_pic = Value('i',False)

350 | camera_on = Value('i',True)

351 | camera_repeat = Value('i',True)

352 | gps_on = Value('i',False)

353 |

354 | # Start GPS process

355 | gps_process = Process(target=GPS, args=(Location,gps_on,))

356 | gps_process.start()

357 |

358 | print('Program Start')

359 | while camera_repeat.value:

360 | if gps_on.value == 0:

361 | time.sleep(1)

362 | continue

363 | elif gps_on.value == 3:

364 | pause_exit()

365 | break

366 |

367 | else:

368 | parent_conn, child_conn = Pipe()

369 | bag = bag_num()

370 | img_process = Process(target=Show_Image,

371 | args=(bag,parent_conn, take_pic, Frame_num, camera_on, camera_repeat, gps_on, Location,))

372 | img_process.start()

373 | Camera(child_conn,take_pic,Frame_num,camera_on,bag)

374 |

375 | gps_process.terminate()

376 |

377 | if __name__ == '__main__':

378 | main()

--------------------------------------------------------------------------------
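The `min2decimal` and `gps_dis` functions in the listing above convert NMEA `ddmm.mmmm` coordinates to decimal degrees and compute a great-circle distance with the haversine formula. The sketch below restates both steps in a self-contained form. It makes two assumptions that are not in the listing: it applies the hemisphere letter (`N`/`S`/`E`/`W`) as a sign, and it uses a mean Earth radius of 6371 km rather than the 6373 km constant used there. The function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6371000.0  # assumed mean Earth radius in metres


def nmea_to_decimal(value, hemisphere):
    """Convert an NMEA ddmm.mmmm (or dddmm.mmmm) field to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)        # leading digits are whole degrees
    minutes = raw - degrees * 100    # remainder is minutes with decimals
    decimal = degrees + minutes / 60.0
    # South and west are negative by convention.
    return -decimal if hemisphere in ('S', 'W') else decimal


def haversine_m(lon1, lat1, lon2, lat2):
    """Great-circle distance in metres between two lon/lat points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.atan2(math.sqrt(a), math.sqrt(1 - a))
```

For a `$GPRMC` sentence the latitude and its hemisphere are fields 3 and 4, and the longitude and its hemisphere are fields 5 and 6, so a call might look like `nmea_to_decimal(data[3], data[4])`. Without the sign, positions in the southern or western hemisphere would be mirrored.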

/camera/qt gui/cmd_class.py:

--------------------------------------------------------------------------------

1 | import multiprocessing as mp

2 | import pyrealsense2 as rs

3 | import numpy as np

4 | import cv2

5 | import serial

6 | import datetime

7 | import time

8 | import os, sys

9 | from math import sin, cos, sqrt, atan2, radians

10 | import threading

11 |

12 |

13 | def dir_generate(dir_name):

14 | """

15 | :param dir_name: input complete path of the desired directory

16 | :return: None

17 | """

18 | dir_name = str(dir_name)

19 | if not os.path.exists(dir_name):

20 | try:

21 | os.mkdir(dir_name)

22 | finally:

23 | pass

24 |

25 |

26 | def port_check(gps_on):

27 | """

28 | :param gps_on: mp.Value holding the GPS status; set to 3 when no port can be opened

29 | :return: name of the last port that opened successfully, or None if no port could be opened

30 | """

31 | serialPort = serial.Serial()

32 | serialPort.baudrate = 4800

33 | serialPort.bytesize = serial.EIGHTBITS

34 | serialPort.parity = serial.PARITY_NONE

35 | serialPort.timeout = 2

36 | exist_port = None

37 | win = ['COM{}'.format(x) for x in range(10)]

38 | linux = ['/dev/ttyUSB{}'.format(x) for x in range(5)]

39 | for x in (win + linux):

40 | serialPort.port = x

41 | try:

42 | serialPort.open()

43 | serialPort.close()

44 | exist_port = x

45 | except serial.SerialException:

46 | pass

47 | finally:

48 | pass

49 | if exist_port:

50 | return exist_port

51 | else:

52 | print('close other programs using gps or check if the gps is correctly connected')

53 | gps_on.value = 3

54 |

55 |

56 | def gps_dis(location_1, location_2):

57 | """

58 | this is the calculation of the distance between two long/lat locations

59 | input tuple/list

60 | :param location_1: [Lon, Lat]

61 | :param location_2: [Lon, Lat]

62 | :return: distance in meter

63 | """

64 | R = 6373.0

65 |

66 | lat1 = radians(location_1[1])

67 | lon1 = radians(location_1[0])

68 | lat2 = radians(location_2[1])

69 | lon2 = radians(location_2[0])

70 |

71 | dlon = lon2 - lon1

72 | dlat = lat2 - lat1

73 |

74 | a = sin(dlat / 2) ** 2 + cos(lat1) * cos(lat2) * sin(dlon / 2) ** 2

75 | c = 2 * atan2(sqrt(a), sqrt(1 - a))

76 |

77 | distance = R * c

78 | distance = distance * 1000

79 | # print("Result:", distance)

80 | return distance

81 |

82 |

83 | def min2decimal(in_data):

84 | """

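85 | convert an NMEA coordinate (ddmm.mmmm) to decimal degrees

86 | :param in_data: lon / lat as reported in the NMEA sentence

87 | :return: the coordinate in decimal degrees (the hemisphere sign is not applied)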

88 | """

89 | latgps = float(in_data)

90 | latdeg = int(latgps / 100)

91 | latmin = latgps - latdeg * 100

92 | lat = latdeg + (latmin / 60)

93 | return lat

94 |

95 |

96 | def gps_information(port):

97 | lon, lat = 0, 0

98 | try:

99 | while lon == 0 or lat == 0:

100 | line = port.readline()

101 | data = line.split(b',')

102 | data = [x.decode("UTF-8") for x in data]

103 | if data[0] == '$GPRMC':

104 | if data[2] == "A":

105 | lat = min2decimal(data[3])

106 | lon = min2decimal(data[5])

107 | elif data[0] == '$GPGGA':

108 | if data[6] == '1':

109 | lon = min2decimal(data[4])

110 | lat = min2decimal(data[2])

111 | time.sleep(1)

112 | #import random

113 | #if lon == 0 or lat == 0:

114 | # lon, lat = random.random(), random.random()

115 | # print("return", lon, lat)

116 | except:

117 | print('decode error')

118 |

119 | return lon, lat

120 |

121 |

122 | def GPS(Location, gps_on, root):

123 | """

124 |

125 | :param Location: mp.Array for longitude, latitude

126 | :param gps_on: gps status: 0 for resting, 2 for port open and waiting for a fix, 1 for fix acquired, 3 for error, 99 to request shutdown

127 | :param root: root dir for linux at /home/pi

128 | :return:

129 | """

130 | print('GPS thread start')

131 | # Set port

132 | serialPort = serial.Serial()

133 | serialPort.port = port_check(gps_on) # Check the available ports, return the valid one

134 | serialPort.baudrate = 4800

135 | serialPort.bytesize = serial.EIGHTBITS

136 | serialPort.parity = serial.PARITY_NONE

137 | serialPort.timeout = 2

138 | serialPort.open()

139 | print('GPS opened successfully')

140 | gps_on.value = 2

141 | lon, lat = gps_information(serialPort)

142 | gps_on.value = 1

143 | try:

144 | while gps_on.value != 99:

145 | lon, lat = gps_information(serialPort)

146 | Location[:] = [lon, lat]

147 | with open('{}location.csv'.format(root), 'w') as gps:

148 | gps.write('Lat,Lon\n')

149 | gps.write('{},{}'.format(lat, lon))

150 | # print(lon, lat)

151 |

152 | except serial.SerialException:

153 | print('Error opening GPS')

154 | gps_on.value = 3

155 | finally:

156 | serialPort.close()

157 | print('GPS finish')

158 | gps_on.value = 0

159 |

160 |

161 | def Camera(child_conn, take_pic, frame_num, camera_status, bag):

162 | """

163 |

164 | :param child_conn: mp.Pipe for image

165 | :param take_pic: take-picture command: 0 for idle, 1 to take one picture, 2 once it is taken; the logger then resets it to 0

166 | :param frame_num: mp.Array for frame number

167 | :param camera_status: 0 for idle, 1 for running, 99 to request shutdown, 98 once the pipeline has closed

168 | :param bag: full path of the .bag file to record to

169 | :return:

170 | """

171 | print('camera start')

172 | try:

173 | pipeline = rs.pipeline()

174 | config = rs.config()

175 | config.enable_stream(rs.stream.depth, 1280, 720, rs.format.z16, 15)

176 | config.enable_stream(rs.stream.color, 1920, 1080, rs.format.rgb8, 15)

177 | config.enable_record_to_file(bag)

178 | profile = pipeline.start(config)

179 |

180 | device = profile.get_device() # get record device

181 | recorder = device.as_recorder()

182 | recorder.pause() # and pause it

183 |

184 | # set frame queue size to max

185 | sensor = profile.get_device().query_sensors()

186 | for x in sensor:

187 | x.set_option(rs.option.frames_queue_size, 32)

188 | # set auto exposure but process data first

189 | color_sensor = profile.get_device().query_sensors()[1]

190 | color_sensor.set_option(rs.option.auto_exposure_priority, True)

191 | camera_status.value = 1

192 | while camera_status.value != 99:

193 | frames = pipeline.wait_for_frames()

194 | depth_frame = frames.get_depth_frame()

195 | color_frame = frames.get_color_frame()

196 | depth_color_frame = rs.colorizer().colorize(depth_frame)

197 | depth_image = np.asanyarray(depth_color_frame.get_data())

198 | color_image = np.asanyarray(color_frame.get_data())

199 | depth_colormap_resize = cv2.resize(depth_image, (400, 250))

200 | color_cvt = cv2.cvtColor(color_image, cv2.COLOR_RGB2BGR)

201 | color_cvt_2 = cv2.resize(color_cvt, (400, 250))

202 | img = np.vstack((color_cvt_2, depth_colormap_resize))

203 | child_conn.send(img)

204 |

205 | if take_pic.value == 1:

206 | recorder.resume()

207 | frames = pipeline.wait_for_frames()

208 | depth_frame = frames.get_depth_frame()

209 | color_frame = frames.get_color_frame()

210 | var = rs.frame.get_frame_number(color_frame)

211 | vard = rs.frame.get_frame_number(depth_frame)

212 | frame_num[:] = [var, vard]

213 | time.sleep(0.05)

214 | recorder.pause()

215 | print('taken', frame_num[:])

216 | take_pic.value = 2

217 |

218 | #child_conn.close()

219 | pipeline.stop()

220 |

221 | except RuntimeError:

222 | print('run')

223 |

224 | finally:

225 | print('pipeline closed')

226 | camera_status.value = 98

227 | print('camera value', camera_status.value)

228 |

229 |

230 | def bag_num():

231 | """

232 | Generate the name of the next recording in the form MMDD_NNN, skipping names that already exist

233 | :return:

234 | """

235 | num = 1

236 | now = datetime.datetime.now()

237 | time.sleep(1)

238 |

239 | try:

240 | while True:

241 | file_name = '{:02d}{:02d}_{:03d}'.format(now.month, now.day, num)

242 | bag_name = 'bag/{}.bag'.format(file_name)

243 | if sys.platform == 'linux':

244 | bag_name = "/home/pi/RR/" + bag_name

245 | exist = os.path.isfile(bag_name)

246 | if exist:

247 | num += 1

248 | else:

249 | print('current filename:{}'.format(file_name))

250 | break

251 | return file_name

252 | finally:

253 | pass

254 |

255 |

256 | class RScam:

257 | def __init__(self):

258 | # Create Folders for Data

259 | if sys.platform == "linux":

260 | self.root_dir = '/home/pi/RR/'

261 | else:

262 | self.root_dir = ''

263 |

264 | folder_list = ('bag', 'foto_log')

265 |

266 | for folder in folder_list:

267 | dir_generate(self.root_dir + folder)

268 | # Create Variables between Processes

269 | self.Location = mp.Array('d', [0, 0])

270 | self.Frame_num = mp.Array('i', [0, 0])

271 |

272 | self.take_pic = mp.Value('i', 0)

273 | self.camera_command = mp.Value('i', 0)

274 | self.gps_status = mp.Value('i', 0)

275 | jpg_path = "/home/pi/RR/jpg.jpeg"

276 | if os.path.isfile(jpg_path):

277 | self.img = cv2.imread(jpg_path)

278 | else:

279 | self.img = cv2.imread('img/1.jpg')

280 |

281 | self.auto = False

282 | self.restart = True

283 | self.command = None

284 | self.distance = 15

285 | self.msg = 'waiting'

286 |

287 | def start_gps(self):

288 | # Start GPS process

289 | gps_process = mp.Process(target=GPS, args=(self.Location, self.gps_status, self.root_dir,))

290 | gps_process.start()

291 |

292 | def main_loop(self):

293 | parent_conn, child_conn = mp.Pipe()

294 | self.img_thread_status = True

295 | image_thread = threading.Thread(target=self.image_receiver, args=(parent_conn,))

296 | image_thread.start()

297 | while self.restart:

298 | self.msg = 'gps status: {}'.format(self.gps_status.value)

299 | if self.gps_status.value == 3:

300 | self.msg = 'error with gps'

301 | break

302 | elif self.gps_status.value == 2:

303 | time.sleep(1)

304 | elif self.gps_status.value == 1 and self.camera_command.value == 0:

305 | bag = bag_num()

306 | bag_name = "{}bag/{}.bag".format(self.root_dir, bag)

307 | cam_process = mp.Process(target=Camera, args=(child_conn, self.take_pic,

308 | self.Frame_num, self.camera_command, bag_name))

309 | cam_process.start()

310 | self.command_receiver(bag)

311 | self.msg = 'end one round'

312 | #print('end one round')

313 | self.camera_command.value = 0

314 | self.gps_status.value = 99

315 | self.img_thread_status = False

316 | self.msg = "waiting"

317 |

318 | def image_receiver(self, parent_conn):

319 | while self.img_thread_status:

320 | try:

321 | self.img = parent_conn.recv()

322 | except EOFError:

323 | break  # the sending end was closed, so stop receiving instead of spinning

324 |

325 | self.img = cv2.imread("img/1.jpg")

326 | print("img thread closed")

327 |

328 | def command_receiver(self, bag):

329 | i = 1

330 | foto_location = (0, 0)

331 | while self.camera_command.value != 98:

332 | #print('msg', self.msg)

333 | (lon, lat) = self.Location[:]

334 | current_location = (lon, lat)

335 | present = datetime.datetime.now()

336 | date = '{},{},{},{}'.format(present.day, present.month, present.year, present.time())

337 | local_take_pic = False

338 |

339 | if self.take_pic.value == 2:

340 | color_frame_num, depth_frame_num = self.Frame_num[:]

341 | print(color_frame_num, depth_frame_num)

342 | logmsg = '{},{},{},{},{},{}\n'.format(i, color_frame_num, depth_frame_num, lon, lat, date)

343 | #print('Photo {} taken at {:.03},{:.04}'.format(i, lon, lat))

344 | self.msg = 'Photo {} taken at {:.03},{:.04}'.format(i, lon, lat)

345 | with open('{}foto_log/{}.txt'.format(self.root_dir, bag), 'a') as logfile:

346 | logfile.write(logmsg)

347 | with open('{}foto_location.csv'.format(self.root_dir), 'a') as record:

348 | record.write(logmsg)

349 | foto_location = (lon, lat)

350 | i += 1

351 | self.take_pic.value = 0

352 |

353 | if self.take_pic.value in (1, 2) or current_location == foto_location:

354 | continue

355 |

356 | cmd = self.command

357 |

358 | if cmd == 'auto':

359 | self.auto = True

360 | elif cmd == "pause":

361 | self.auto = False

362 | elif cmd == "shot":

363 | print('take manual')

364 | local_take_pic = True

365 | elif cmd == "restart" or cmd == "quit":

366 | self.camera_command.value = 99

367 | self.msg = cmd

368 | print("close main", self.msg)

369 |

370 | self.command = None

371 |

372 | if self.auto and gps_dis(current_location, foto_location) > self.distance:

373 | local_take_pic = True

374 |

375 | if local_take_pic:

376 | self.take_pic.value = 1

377 |

378 | self.msg = 'main closed'

379 | print("main closed")

380 | self.camera_command.value = 0

381 |

382 |

383 | if __name__ == '__main__':

384 | pass

--------------------------------------------------------------------------------
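The listing above coordinates its processes through bare integers stored in `mp.Value('i', ...)` objects (`gps_status`, `camera_command`, `take_pic`). As a reading aid, the values that the code sets and tests can be given names. This is only a sketch: the enum and member names are hypothetical and do not appear in the repository, while the numeric values are taken from the listing.

```python
from enum import IntEnum


class GpsStatus(IntEnum):
    IDLE = 0              # set when the GPS process finishes
    FIX_ACQUIRED = 1      # set after the first position has been read
    WAITING_FOR_FIX = 2   # set right after the serial port is opened
    ERROR = 3             # set when no port can be opened or on a serial error
    STOP_REQUESTED = 99   # set by main_loop to end the GPS loop


class CameraStatus(IntEnum):
    IDLE = 0              # set by the controller before a new round
    RUNNING = 1           # set by Camera once the pipeline has started
    CLOSED = 98           # set in Camera's finally block
    STOP_REQUESTED = 99   # set on "restart" or "quit"


class TakePic(IntEnum):
    IDLE = 0              # nothing pending
    REQUESTED = 1         # controller asks Camera for one picture
    TAKEN = 2             # Camera has stored the frame numbers


def ready_to_record(gps_value, camera_value):
    """Mirror the condition main_loop uses before starting a camera process."""
    return gps_value == GpsStatus.FIX_ACQUIRED and camera_value == CameraStatus.IDLE
```

Because `IntEnum` members compare equal to plain integers, they can be assigned to and compared with the `.value` of an `mp.Value('i', ...)` without other changes, for example `gps_status.value == GpsStatus.ERROR`.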

/camera/qt gui/img/1.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/soarwing52/RealsensePython/d4f5cf5c0be9ec7d84e9d759886144c8127d445d/camera/qt gui/img/1.jpg

--------------------------------------------------------------------------------

/camera/qt gui/img/intel.ico:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/soarwing52/RealsensePython/d4f5cf5c0be9ec7d84e9d759886144c8127d445d/camera/qt gui/img/intel.ico

--------------------------------------------------------------------------------

/camera/qt gui/img/intel.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/soarwing52/RealsensePython/d4f5cf5c0be9ec7d84e9d759886144c8127d445d/camera/qt gui/img/intel.png

--------------------------------------------------------------------------------

/camera/qt gui/qtcam.exe:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/soarwing52/RealsensePython/d4f5cf5c0be9ec7d84e9d759886144c8127d445d/camera/qt gui/qtcam.exe

--------------------------------------------------------------------------------

/camera/qt gui/qtcam.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | import sys

3 | import multiprocessing as mp

4 | from PyQt5 import QtWidgets, QtCore, QtGui

5 | from PyQt5.QtCore import QThread, pyqtSignal

6 | from PyQt5.QtGui import QImage, QPixmap

7 | from temp import Ui_MainWindow

8 | from cmd_class import RScam

9 | import cv2

10 | import threading

11 |

12 | a = RScam()

13 |

14 |

15 | class MainWindow(QtWidgets.QMainWindow, Ui_MainWindow):

16 | def __init__(self, parent=None):

17 | super(MainWindow, self).__init__(parent=parent)

18 | self.setupUi(self)

19 | self.setWindowIcon(QtGui.QIcon('img/intel.png'))

20 |

21 | self.timer_camera = QtCore.QTimer()

22 | self.timer_camera.timeout.connect(self.show_image)

23 | self.timer_camera.start(int(1000 / 15))

24 |

25 | self.timer_msg = QtCore.QTimer()

26 | self.timer_msg.timeout.connect(self.get_msg)

27 | self.timer_msg.start(1000)

28 |

29 | self.btn_start.clicked.connect(lambda : self.start())

30 | self.btn_restart.clicked.connect(lambda: self.restart())

31 | self.btn_photo.clicked.connect(lambda: self.foto())

32 | self.btn_quit.clicked.connect(lambda: self.quit_btn())

33 |

34 | self.spinBox.setValue(a.distance)

35 | self.checkBox.stateChanged.connect(lambda : self.auto_change())

36 | self.spinBox.valueChanged.connect(lambda : self.dis_change())

37 |

38 |

39 | def keyPressEvent(self, event):

40 | key = event.key()

41 | if key == QtCore.Qt.Key_PageUp:  # 16777238: toggle auto mode

42 | if a.auto is True:

43 | self.checkBox.setChecked(False)

44 | else:

45 | self.checkBox.setChecked(True)

46 | print(a.auto)

47 | elif key == QtCore.Qt.Key_PageDown:  # 16777239: restart the camera

48 | self.restart()

49 | elif key == QtCore.Qt.Key_B:  # 66: take a photo

50 | self.foto()

51 |

52 | def start(self):

53 | if a.gps_status.value == 0:

54 | a.start_gps()

55 | a.restart = True

56 | t = threading.Thread(target=a.main_loop)

57 | t.start()

58 | print("start loop")

59 | return "started camera"

60 | elif a.gps_status.value == 2:

61 | print("wait for signal")

62 | return "waiting for signal"

63 | else:

64 | print(a.gps_status.value, a.camera_command.value)

65 | return "start but waiting GPS"

66 |

67 | def show_image(self):

68 | try:

69 | self.img = a.img

70 | self.img = cv2.resize(self.img, (400, 500))

71 | height, width, channel = self.img.shape

72 | except Exception:  # no frame from the camera yet, or the frame could not be resized

73 | print('no image, get default')

74 | self.img = cv2.imread('img/1.jpg')  # placeholder image lives in img/, like the window icon

75 | self.img = cv2.resize(self.img, (400, 500))

76 | height, width, channel = self.img.shape

77 |

78 | bytesPerline = 3 * width

79 | self.qImg = QImage(self.img.data, width, height, bytesPerline, QImage.Format_RGB888).rgbSwapped()

80 | # Display the QImage

81 | self.label.setPixmap(QPixmap.fromImage(self.qImg))

82 |

83 | def restart(self):

84 | print("restart")

85 | a.command = "restart"

86 |

87 | def foto(self):

88 | print("foto")

89 | a.command = 'shot'

90 |

91 | def quit_btn(self):

92 | print("quit")

93 | a.restart = False

94 | a.command = 'quit'

95 |

96 | def auto_change(self):

97 | if self.checkBox.isChecked() is False:

98 | print('no')

99 | self.checkBox.setText('Auto off')

100 | self.checkBox.setChecked(False)

101 | a.auto = False

102 | else:

103 | print('checked')

104 | self.checkBox.setText('Auto on')

105 | self.checkBox.setChecked(True)

106 | a.auto = True

107 |

108 | def dis_change(self):

109 | a.distance = self.spinBox.value()

110 | print(a.distance)

111 |

112 | def get_msg(self):

113 | self.statusbar.showMessage(a.msg)

114 |

115 |

116 |

117 | class msgThread(QThread):

118 | msg = pyqtSignal(str)

119 |

120 | def __init__(self, parent=None):

121 | super(msgThread, self).__init__(parent)

122 |

123 | def run(self):

124 | while True:

125 | # print('running')

126 | self.msg.emit(a.msg)

127 |

128 | if __name__ == "__main__":

129 | mp.freeze_support()

130 | app = QtWidgets.QApplication(sys.argv)

131 | w = MainWindow()

132 | w.show()

133 | sys.exit(app.exec_())

--------------------------------------------------------------------------------

/camera/qt gui/temp.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | # Form implementation generated from reading ui file 'cam.ui'

4 | #

5 | # Created by: PyQt5 UI code generator 5.13.1

6 | #

7 | # WARNING! All changes made in this file will be lost!

8 |

9 |

10 | from PyQt5 import QtCore, QtGui, QtWidgets

11 |

12 |

13 | class Ui_MainWindow(object):

14 | def setupUi(self, MainWindow):

15 | MainWindow.setObjectName("MainWindow")

16 | MainWindow.resize(428, 675)

17 | font = QtGui.QFont()

18 | font.setPointSize(14)

19 | MainWindow.setFont(font)

20 | self.centralwidget = QtWidgets.QWidget(MainWindow)

21 | self.centralwidget.setObjectName("centralwidget")

22 | self.gridLayoutWidget = QtWidgets.QWidget(self.centralwidget)

23 | self.gridLayoutWidget.setGeometry(QtCore.QRect(10, 10, 401, 191))

24 | self.gridLayoutWidget.setObjectName("gridLayoutWidget")

25 | self.gridLayout = QtWidgets.QGridLayout(self.gridLayoutWidget)

26 | self.gridLayout.setContentsMargins(0, 0, 0, 0)

27 | self.gridLayout.setObjectName("gridLayout")

28 | self.btn_start = QtWidgets.QPushButton(self.gridLayoutWidget)

29 | self.btn_start.setObjectName("btn_start")

30 | self.gridLayout.addWidget(self.btn_start, 0, 0, 1, 1)

31 | self.btn_photo = QtWidgets.QPushButton(self.gridLayoutWidget)

32 | self.btn_photo.setObjectName("btn_photo")

33 | self.gridLayout.addWidget(self.btn_photo, 1, 0, 1, 1)

34 | self.btn_restart = QtWidgets.QPushButton(self.gridLayoutWidget)

35 | self.btn_restart.setObjectName("btn_restart")

36 | self.gridLayout.addWidget(self.btn_restart, 0, 1, 1, 1)

37 | self.btn_quit = QtWidgets.QPushButton(self.gridLayoutWidget)

38 | self.btn_quit.setObjectName("btn_quit")

39 | self.gridLayout.addWidget(self.btn_quit, 1, 1, 1, 1)

40 | self.spinBox = QtWidgets.QSpinBox(self.gridLayoutWidget)

41 | sizePolicy = QtWidgets.QSizePolicy(QtWidgets.QSizePolicy.Minimum, QtWidgets.QSizePolicy.Fixed)

42 | sizePolicy.setHorizontalStretch(0)