├── benchbench
│   ├── __init__.py
│   ├── utils
│   │   ├── __init__.py
│   │   ├── win_rate.py
│   │   ├── base.py
│   │   └── metric.py
│   ├── data
│   │   ├── openllm
│   │   │   ├── statistic.py
│   │   │   ├── format.py
│   │   │   ├── __init__.py
│   │   │   └── leaderboard.tsv
│   │   ├── bbh
│   │   │   ├── __init__.py
│   │   │   ├── format.py
│   │   │   ├── cols.txt
│   │   │   └── statistic.py
│   │   ├── vtab
│   │   │   ├── __init__.py
│   │   │   └── leaderboard.tsv
│   │   ├── bigcode
│   │   │   ├── __init__.py
│   │   │   ├── format.py
│   │   │   ├── leaderboard.tsv
│   │   │   └── vanilla.txt
│   │   ├── mmlu
│   │   │   ├── __init__.py
│   │   │   ├── format.py
│   │   │   └── leaderboard_raw.csv
│   │   ├── mteb
│   │   │   ├── format.py
│   │   │   ├── __init__.py
│   │   │   └── leaderboard.tsv
│   │   ├── helm_lite
│   │   │   ├── format.py
│   │   │   ├── __init__.py
│   │   │   └── leaderboard.tsv
│   │   ├── helm_capability
│   │   │   ├── format.py
│   │   │   ├── __init__.py
│   │   │   ├── leaderboard.tsv
│   │   │   └── vanilla.txt
│   │   ├── heim
│   │   │   ├── __init__.py
│   │   │   ├── quality_human.tsv
│   │   │   ├── quality_auto.tsv
│   │   │   ├── originality.tsv
│   │   │   ├── black_out.tsv
│   │   │   ├── nsfw.tsv
│   │   │   ├── nudity.tsv
│   │   │   ├── aesthetics_human.tsv
│   │   │   ├── alignment_human.tsv
│   │   │   └── alignment_auto.tsv
│   │   ├── superglue
│   │   │   ├── __init__.py
│   │   │   └── leaderboard.tsv
│   │   ├── imagenet
│   │   │   ├── format.py
│   │   │   ├── __init__.py
│   │   │   ├── run_imagenet.py
│   │   │   └── leaderboard_raw.tsv
│   │   ├── helm
│   │   │   ├── __init__.py
│   │   │   ├── toxicity.tsv
│   │   │   ├── calibration.tsv
│   │   │   ├── efficiency.tsv
│   │   │   ├── summarization.tsv
│   │   │   ├── fairness.tsv
│   │   │   ├── robustness.tsv
│   │   │   └── accuracy.tsv
│   │   ├── glue
│   │   │   ├── __init__.py
│   │   │   └── leaderboard.tsv
│   │   ├── dummy
│   │   │   └── __init__.py
│   │   └── __init__.py
│   └── measures
│       ├── cardinal.py
│       └── ordinal.py
├── MANIFEST.in
├── assets
│   ├── banner.png
│   └── benchbench-horizontal.png
├── docs
│   ├── data.rst
│   ├── index.rst
│   ├── measures.rst
│   ├── Makefile
│   ├── utils.rst
│   ├── make.bat
│   └── conf.py
├── LICENSE.txt
├── pyproject.toml
├── README.md
└── .gitignore

/benchbench/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/benchbench/utils/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

include benchbench/data/*
include benchbench/data/*/*

--------------------------------------------------------------------------------

/assets/banner.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/socialfoundations/benchbench/HEAD/assets/banner.png

--------------------------------------------------------------------------------

/assets/benchbench-horizontal.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/socialfoundations/benchbench/HEAD/assets/benchbench-horizontal.png

--------------------------------------------------------------------------------

/benchbench/data/openllm/statistic.py:

--------------------------------------------------------------------------------

from datasets import load_dataset

dataset = load_dataset("gsm8k", name="main", split="test")
print("gsm8k")
print(len(set([eval(i.split("#### ")[-1]) for i in dataset["answer"]])), len(dataset))

--------------------------------------------------------------------------------

/benchbench/data/bbh/__init__.py:

--------------------------------------------------------------------------------

import os
import pandas as pd


def load_bbh():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    cols = data.columns[6:]
    return data, cols

--------------------------------------------------------------------------------

/docs/data.rst:

--------------------------------------------------------------------------------

Data
=======================================

benchbench.data
--------------------------------------------
.. automodule:: benchbench.data
   :members:
   :undoc-members:
   :show-inheritance:

.. autoattribute:: benchbench.data.cardinal_benchmark_list

.. autoattribute:: benchbench.data.ordinal_benchmark_list

--------------------------------------------------------------------------------

/docs/index.rst:

--------------------------------------------------------------------------------

Welcome to BenchBench's documentation!
=========================================

.. include:: ../README.md
   :parser: myst_parser.sphinx_

.. toctree::
   :maxdepth: 2
   :caption: Contents:

   data
   measures
   utils


Indices and tables
--------------------------------------------

* :ref:`genindex`
* :ref:`modindex`
* :ref:`search`

--------------------------------------------------------------------------------

/benchbench/data/vtab/__init__.py:

--------------------------------------------------------------------------------

import os
import pandas as pd


def load_vtab():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    cols = data.columns[1:]
    return data, cols


def test():
    data, cols = load_vtab()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------

/benchbench/data/bigcode/__init__.py:

--------------------------------------------------------------------------------

import os
import pandas as pd


def load_bigcode():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    cols = data.columns[3:6]
    return data, cols


def test():
    data, cols = load_bigcode()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------

/benchbench/data/mmlu/__init__.py:

--------------------------------------------------------------------------------

import os
import pandas as pd


def load_mmlu():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    cols = data.columns[4:]
    data[cols] = data[cols] * 100.0
    return data, cols


def test():
    data, cols = load_mmlu()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------

/benchbench/data/mteb/format.py:

--------------------------------------------------------------------------------

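import os

# The file names must not start with "/": os.path.join discards every earlier
# component (here, the working directory) when it meets an absolute path.
out_path = os.path.join(os.getcwd(), "leaderboard.tsv")
in_path = os.path.join(os.getcwd(), "vanilla.txt")

with open(out_path, "w") as fout, open(in_path, "r") as fin:
    for i, line in enumerate(fin.readlines()):
        line = line.strip().replace("\t", " ")
        # Empty cells are written as "-" so every row keeps its 14 fields
        if len(line) != 0:
            fout.write(line)
        else:
            fout.write("-")
        # Every 14 input lines form one leaderboard row
        if i % 14 == 13:
            fout.write("\n")
        else:
            fout.write("\t")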

--------------------------------------------------------------------------------
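The mteb, helm_lite and helm_capability `format.py` scripts share one pattern: a leaderboard copied from a web page sits in `vanilla.txt` with one cell per line, and every k consecutive cells are joined into one tab-separated row. A minimal sketch of that pattern on an in-memory list of lines; `cells_to_tsv_rows` is a hypothetical helper, not part of benchbench. One assumption differs from the scripts: the sketch counts only the cells it keeps, whereas the scripts count every raw input line (including skipped blank lines) when deciding where a row ends.

```python
from typing import Iterable, List, Optional


def cells_to_tsv_rows(
    lines: Iterable[str], n_cols: int, blank: Optional[str] = None
) -> str:
    """Join a one-cell-per-line dump into tab-separated rows of n_cols cells.

    Hypothetical helper for illustration. Empty lines are dropped when
    ``blank`` is None; otherwise they are replaced by ``blank`` (mteb uses "-").
    """
    cells: List[str] = []
    for line in lines:
        # Tabs inside a cell would break the TSV layout, so turn them into spaces
        cell = line.strip().replace("\t", " ")
        if cell:
            cells.append(cell)
        elif blank is not None:
            cells.append(blank)
    # Group consecutive cells into rows of n_cols (the last row may be shorter)
    rows = [cells[i : i + n_cols] for i in range(0, len(cells), n_cols)]
    return "".join("\t".join(row) + "\n" for row in rows)
```

For example, `cells_to_tsv_rows(["a", "b", "", "c"], 2, blank="-")` yields the two rows `a<TAB>b` and `-<TAB>c`. Counting kept cells rather than raw lines makes the row width independent of how many blank lines the copy-paste happened to contain.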

/benchbench/data/bigcode/format.py:

--------------------------------------------------------------------------------

import os

fout = open(os.path.join(os.getcwd(), "leaderboard.tsv"), "w")
with open(os.path.join(os.getcwd(), "vanilla.txt"), "r") as fin:
    for i, line in enumerate(fin.readlines()):
        line = line.strip().replace("\t", " ")
        if len(line) != 0:
            fout.write(line.split()[0])
        else:
            continue
        if i % 8 == 7:
            fout.write("\n")
        else:
            fout.write("\t")

--------------------------------------------------------------------------------

/benchbench/data/openllm/format.py:

--------------------------------------------------------------------------------

import os

fout = open(os.path.join(os.getcwd(), "leaderboard.tsv"), "w")
with open(os.path.join(os.getcwd(), "vanilla.txt"), "r") as fin:
    for i, line in enumerate(fin.readlines()):
        line = line.strip().replace("\t", " ")
        if len(line) != 0:
            fout.write(line.split()[0])
        else:
            continue
        if i % 10 == 9:
            fout.write("\n")
        else:
            fout.write("\t")

--------------------------------------------------------------------------------

/benchbench/data/helm_lite/format.py:

--------------------------------------------------------------------------------

import os

base_dir = os.path.dirname(os.path.abspath(__file__))

with open(os.path.join(base_dir, "leaderboard.tsv"), "w") as fout:
    with open(os.path.join(base_dir, "vanilla.txt"), "r") as fin:
        for i, line in enumerate(fin.readlines()):
            line = line.strip()
            if len(line) == 0:
                continue
            fout.write(line)
            # i also counts skipped blank lines: every 12 raw input lines form one row
            if i % 12 == 11:
                fout.write("\n")
            else:
                fout.write("\t")

--------------------------------------------------------------------------------

/docs/measures.rst:

--------------------------------------------------------------------------------

Measures
=============

.. automodule:: benchbench.measures
   :members:
   :undoc-members:
   :show-inheritance:

benchbench.measures.cardinal
--------------------------------------------

.. automodule:: benchbench.measures.cardinal
   :members:
   :undoc-members:
   :show-inheritance:

benchbench.measures.ordinal
--------------------------------------------

.. automodule:: benchbench.measures.ordinal
   :members:
   :undoc-members:
   :show-inheritance:

--------------------------------------------------------------------------------

/benchbench/data/openllm/__init__.py:

--------------------------------------------------------------------------------

import os
import pandas as pd


def load_openllm():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    cols = data.columns[3:]
    data["average_score"] = data[cols].mean(1)
    data.sort_values(by="average_score", inplace=True, ascending=False)
    return data, cols


def test():
    data, cols = load_openllm()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------

/benchbench/data/helm_capability/format.py:

--------------------------------------------------------------------------------

import os

base_dir = os.path.dirname(os.path.abspath(__file__))

with open(os.path.join(base_dir, "leaderboard.tsv"), "w") as fout:
    with open(os.path.join(base_dir, "vanilla.txt"), "r") as fin:
        for i, line in enumerate(fin.readlines()):
            line = line.strip()
            if len(line) == 0:
                continue
            fout.write(line)
            # i also counts skipped blank lines: every 7 raw input lines form one row
            if i % 7 == 6:
                fout.write("\n")
            else:
                fout.write("\t")

--------------------------------------------------------------------------------

/benchbench/data/bbh/format.py:

--------------------------------------------------------------------------------

import os
import re

with open(os.path.join(os.getcwd(), "leaderboard.tsv"), "w") as fout:
    # Header row: the first line of cols.txt (strip its newline to avoid a blank line)
    with open(os.path.join(os.getcwd(), "cols.txt"), "r") as fin:
        fout.write(fin.readline().rstrip("\n") + "\n")
    with open(os.path.join(os.getcwd(), "vanilla.tsv"), "r") as fin:
        new_line = ""
        for i, line in enumerate(fin.readlines()):
            # Every 5 input lines form one row: four single-cell lines,
            # then one whitespace-separated line holding the remaining cells
            if i % 5 <= 3:
                new_line += line.strip()
                new_line += "\t"
            else:
                # Raw string, so "\s" is a regex class rather than an invalid escape
                new_line += re.sub(r"\s+", "\t", line)
                fout.write(new_line.rstrip() + "\n")
                new_line = ""

--------------------------------------------------------------------------------

/benchbench/data/bbh/cols.txt:

--------------------------------------------------------------------------------

Rank Model Company Release Parameters Average Boolean Expressions Causal Judgement Date Understanding Disambiguation QA Dyck Languages Formal Fallacies Geometric Shapes Hyperbaton Logical Deduction Three Objects Logical Deduction Five Objects Logical Deduction Seven Objects Movie Recommendation Multistep Arithmetic Two Navigate Object Counting Penguins In A Table Reasoning About Colored Objects Ruin Names Salient Translation Error Detection Snarks Sports Understanding Temporal Sequences Tracking Shuffled Objects Three Objects Tracking Shuffled Objects Five Objects Tracking Shuffled Objects Seven Objects Web Of Lies Word Sorting

--------------------------------------------------------------------------------

/docs/Makefile:

--------------------------------------------------------------------------------

# Minimal makefile for Sphinx documentation
#

# You can set these variables from the command line, and also
# from the environment for the first two.
SPHINXOPTS ?=
SPHINXBUILD ?= sphinx-build
SOURCEDIR = .
BUILDDIR = _build

# Put it first so that "make" without argument is like "make help".
help:
	@$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

.PHONY: help Makefile

# Catch-all target: route all unknown targets to Sphinx using the new
# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).
%: Makefile
	@$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

--------------------------------------------------------------------------------

/benchbench/data/helm_lite/__init__.py:

--------------------------------------------------------------------------------

import os
import numpy as np
import pandas as pd


def load_helm_lite():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    data = data.replace("-", np.nan)
    data = data.dropna(axis=0, how="all")
    data = data.dropna(axis=1, how="all")
    cols = data.columns[2:]

    for c in cols:
        data[c] = np.array([float(i) for i in data[c].values])

    return data, cols


def test():
    data, cols = load_helm_lite()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------

/docs/utils.rst:

--------------------------------------------------------------------------------

Utils
=============

.. automodule:: benchbench.utils
   :members:
   :undoc-members:
   :show-inheritance:

benchbench.utils.base
--------------------------------------------

.. automodule:: benchbench.utils.base
   :members:
   :undoc-members:
   :show-inheritance:

benchbench.utils.metric
--------------------------------------------

.. automodule:: benchbench.utils.metric
   :members:
   :undoc-members:
   :show-inheritance:

benchbench.utils.win_rate
--------------------------------------------

.. automodule:: benchbench.utils.win_rate
   :members:
   :undoc-members:
   :show-inheritance:

--------------------------------------------------------------------------------

/benchbench/data/helm_capability/__init__.py:

--------------------------------------------------------------------------------

import os
import numpy as np
import pandas as pd


def load_helm_capability():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    data = data.replace("-", np.nan)
    data = data.dropna(axis=0, how="all")
    data = data.dropna(axis=1, how="all")
    cols = data.columns[2:]

    for c in cols:
        data[c] = np.array([float(i) for i in data[c].values])

    return data, cols


def test():
    data, cols = load_helm_capability()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------

/docs/make.bat:

--------------------------------------------------------------------------------

@ECHO OFF

pushd %~dp0

REM Command file for Sphinx documentation

if "%SPHINXBUILD%" == "" (
	set SPHINXBUILD=sphinx-build
)
set SOURCEDIR=.
set BUILDDIR=_build

%SPHINXBUILD% >NUL 2>NUL
if errorlevel 9009 (
	echo.
	echo.The 'sphinx-build' command was not found. Make sure you have Sphinx
	echo.installed, then set the SPHINXBUILD environment variable to point
	echo.to the full path of the 'sphinx-build' executable. Alternatively you
	echo.may add the Sphinx directory to PATH.
	echo.
	echo.If you don't have Sphinx installed, grab it from
	echo.https://www.sphinx-doc.org/
	exit /b 1
)

if "%1" == "" goto help

%SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%
goto end

:help
%SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

:end
popd

--------------------------------------------------------------------------------

/benchbench/data/mteb/__init__.py:

--------------------------------------------------------------------------------

import os
import pandas as pd


def load_mteb():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    orig_cols = data.columns[6:]
    ret = {}
    cols = []
    for c in orig_cols:
        col_name = c.split(" (")[0]
        num_task = int(c.split(" (")[1].split(" ")[0])
        for i in range(num_task):
            ret["{}-{}".format(col_name, i)] = data[c].values.copy()
            cols.append("{}-{}".format(col_name, i))
    data = pd.concat([data, pd.DataFrame(ret)], axis=1)

    data["average_score"] = data[cols].mean(1)
    data.sort_values(by="average_score", inplace=True, ascending=False)
    return data, cols


def test():
    data, cols = load_mteb()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------
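`load_mteb` repeats each category column once per dataset it covers, so the plain mean over the expanded columns equals a dataset-count-weighted mean over categories. A self-contained sketch of that expansion, assuming headers of the form `Name (N datasets)` (the shape the `split(" (")` parsing expects); `expand_by_dataset_count` is a hypothetical name, and it passes `index=data.index` so rows stay aligned even when the frame's index is not `0..n-1`:

```python
import re

import pandas as pd


def expand_by_dataset_count(data, category_cols):
    """Repeat each 'Name (N datasets)' column N times and average the copies."""
    expanded, cols = {}, []
    for c in category_cols:
        match = re.match(r"^(.*?) \((\d+)", c)
        if match is None:
            raise ValueError(f"unexpected column header: {c!r}")
        name, n = match.group(1), int(match.group(2))
        for i in range(n):
            key = f"{name}-{i}"
            expanded[key] = data[c].to_numpy().copy()
            cols.append(key)
    # Align the new columns on the existing index before concatenating
    out = pd.concat([data, pd.DataFrame(expanded, index=data.index)], axis=1)
    # Unweighted mean over copies == mean weighted by each category's dataset count
    out["average_score"] = out[cols].mean(axis=1)
    return out, cols
```

With a two-dataset "Retrieval" column scoring 50 and a one-dataset "STS" column scoring 80, the average is (2·50 + 80) / 3 = 60 rather than the unweighted 65.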

/benchbench/data/heim/__init__.py:

--------------------------------------------------------------------------------

import os
import numpy as np
import pandas as pd


def load_heim(subset="alignment_human"):
    assert subset in [
        "alignment_auto",
        "nsfw",
        "quality_auto",
        "aesthetics_auto",
        "alignment_human",
        "nudity",
        "quality_human",
        "aesthetics_human",
        "black_out",
        "originality",
    ]
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "%s.tsv" % subset),
        sep="\t",
    )
    data = data.replace("-", np.nan)
    data = data.dropna(axis=0, how="all")
    data = data.dropna(axis=1, how="all")
    cols = data.columns[2:]
    for c in cols:
        if "↓" in c:
            data[c] = -data[c]
    return data, cols


def test():
    data, cols = load_heim()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------

/LICENSE.txt:

--------------------------------------------------------------------------------

MIT License

Copyright (c) 2024 Guanhua Zhang and Moritz Hardt

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

--------------------------------------------------------------------------------

/benchbench/data/superglue/__init__.py:

--------------------------------------------------------------------------------

import os
import pandas as pd
import numpy as np


def load_superglue():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    ori_cols = data.columns[5:-2]
    cols = []
    for c in ori_cols:
        if type(data[c].values[0]) is str and "/" in data[c].values[0]:
            c1 = c + "-a"
            c2 = c + "-b"
            res1, res2 = [], []
            for line in data[c].values:
                s = line.strip().split("/")
                res1.append(float(s[0]))
                res2.append(float(s[1]))
            res1 = np.array(res1)
            res2 = np.array(res2)
            data[c1] = res1
            data[c2] = res2
            data[c] = (res1 + res2) / 2
            cols.append(c)
        else:
            cols.append(c)

    return data, cols


def test():
    data, cols = load_superglue()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------

/benchbench/data/imagenet/format.py:

--------------------------------------------------------------------------------

import os
import re
import pandas as pd

# Every 12 input lines form one row: the first 11 are single cells (empty
# lines add nothing), the 12th is a whitespace-separated line of cells.
with open(os.path.join(os.getcwd(), "leaderboard_raw.tsv"), "w") as fout:
    with open(os.path.join(os.getcwd(), "vanilla.txt"), "r") as fin:
        new_line = ""
        for i, line in enumerate(fin.readlines()):
            if i % 12 <= 10:
                new_line += line.strip()
                if len(line.strip()) != 0:
                    new_line += "\t"
            else:
                new_line += re.sub(r"\s+", "\t", line)
                fout.write(new_line.rstrip() + "\n")
                new_line = ""

data = pd.read_csv(os.path.join(os.getcwd(), "leaderboard_raw.tsv"), sep="\t")
data.sort_values(by=["Acc@1"], inplace=True, ascending=False)
# data[col][i] looks up index labels, not positions: reset the index so that
# run.sh below follows the sorted (accuracy) order.
data.reset_index(drop=True, inplace=True)
data["Model"] = data["Weight"].apply(
    lambda t: "_".join(t.split(".")[0].split("_")[:-1]).lower()
)
# data.to_csv(os.path.join(os.getcwd(), "leaderboard_raw.tsv"), sep="\t", index=False)

with open(os.path.join(os.getcwd(), "run.sh"), "w") as fout:
    for i in range(len(data)):
        fout.write(
            f"python run_imagenet.py --model_name {data['Model'][i]} --weight_name {data['Weight'][i]}\n"
        )

--------------------------------------------------------------------------------
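The `Model` column is derived from each weight name by keeping the part before the first dot, dropping its last `_`-separated token, and lowercasing. A small sketch of that mapping plus the `run.sh` generation in accuracy order, assuming the `Weight` column holds torchvision-style names such as `ResNet50_Weights.IMAGENET1K_V1`; both helper names are hypothetical, and only the `run_imagenet.py` flags come from the script above:

```python
import pandas as pd


def weight_to_model_name(weight: str) -> str:
    # "ViT_B_16_Weights.IMAGENET1K_V1" -> "ViT_B_16_Weights" -> ["ViT", "B", "16"] -> "vit_b_16"
    return "_".join(weight.split(".")[0].split("_")[:-1]).lower()


def build_run_script(data: pd.DataFrame) -> str:
    # Sort by top-1 accuracy and reset the index so row order matches the ranking
    ranked = data.sort_values(by="Acc@1", ascending=False).reset_index(drop=True)
    return "".join(
        f"python run_imagenet.py --model_name {weight_to_model_name(w)} --weight_name {w}\n"
        for w in ranked["Weight"]
    )
```

Iterating over the sorted column directly avoids the label-versus-position pitfall of `data[col][i]` altogether.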

/benchbench/data/bbh/statistic.py:

--------------------------------------------------------------------------------

from datasets import load_dataset

configs = [
    "boolean_expressions",
    "causal_judgement",
    "date_understanding",
    "disambiguation_qa",
    "dyck_languages",
    "formal_fallacies",
    "geometric_shapes",
    "hyperbaton",
    "logical_deduction_five_objects",
    "logical_deduction_seven_objects",
    "logical_deduction_three_objects",
    "movie_recommendation",
    "multistep_arithmetic_two",
    "navigate",
    "object_counting",
    "penguins_in_a_table",
    "reasoning_about_colored_objects",
    "ruin_names",
    "salient_translation_error_detection",
    "snarks",
    "sports_understanding",
    "temporal_sequences",
    "tracking_shuffled_objects_five_objects",
    "tracking_shuffled_objects_seven_objects",
    "tracking_shuffled_objects_three_objects",
    "web_of_lies",
    "word_sorting",
]
ret = []
for c in configs:
    dataset = load_dataset("lukaemon/bbh", name=c, split="test")
    ret.append((c, set(dataset["target"])))

ret = sorted(ret, key=lambda x: len(x[1]))
for i in ret:
    print(i[0], len(i[1]), i[1])

--------------------------------------------------------------------------------

/benchbench/data/helm/__init__.py:

--------------------------------------------------------------------------------

import os
import numpy as np
import pandas as pd


def load_helm(subset="accuracy"):
    assert subset in [
        "accuracy",
        "bias",
        "calibration",
        "fairness",
        "efficiency",
        "robustness",
        "summarization",
        "toxicity",
    ]
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "%s.tsv" % subset),
        sep="\t",
    )
    data = data.replace("-", np.nan)
    data = data.dropna(axis=0, how="all")
    data = data.dropna(axis=1, how="all")
    cols = data.columns[2:]

    for c in cols:
        data[c] = np.array([float(i) for i in data[c].values])

    for c in cols:
        if (
            "ECE" in c
            or "Representation" in c
            or "Toxic fraction" in c
            or "Stereotype" in c
            or "inference time" in c
        ):
            data[c] = -data[c]

    return data, cols


def test():
    data, cols = load_helm()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------
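`load_helm` (and `load_heim`, via the `↓` marker in column names) negates lower-is-better metrics so that every score column reads "higher is better" before models are compared. A minimal sketch of the same step, using `pd.to_numeric` in place of the per-element `float` loop and working on a copy; the marker substrings are copied from `load_helm`, while `orient_higher_is_better` is a hypothetical name:

```python
import numpy as np
import pandas as pd

# Substrings that load_helm treats as lower-is-better metrics
LOWER_IS_BETTER = ("ECE", "Representation", "Toxic fraction", "Stereotype", "inference time")


def orient_higher_is_better(data, cols, markers=LOWER_IS_BETTER):
    """Convert score columns to float and negate lower-is-better ones."""
    data = data.copy()  # leave the caller's frame untouched
    for c in cols:
        # "-" marks a missing score in the leaderboard dumps
        values = pd.to_numeric(data[c].replace("-", np.nan))
        data[c] = -values if any(m in c for m in markers) else values
    return data
```

Negation keeps the ordering information intact while reversing it, which is all a rank- or win-rate-based measure needs; it would not be appropriate for measures that rely on the raw magnitude being non-negative.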

/benchbench/data/glue/__init__.py:

--------------------------------------------------------------------------------

import os
import pandas as pd
import numpy as np


def load_glue():
    data = pd.read_csv(
        os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),
        sep="\t",
    )
    ori_cols = data.columns[5:-1]
    cols = []
    for c in ori_cols:
        if type(data[c].values[0]) is str and "/" in data[c].values[0]:
            c1 = c + "-a"
            c2 = c + "-b"
            res1, res2 = [], []
            for line in data[c].values:
                s = line.strip().split("/")
                res1.append(float(s[0]))
                res2.append(float(s[1]))
            res1 = np.array(res1)
            res2 = np.array(res2)
            data[c1] = res1
            data[c2] = res2
            data[c] = (res1 + res2) / 2
            cols.append(c)
        elif "MNLI" in c:
            continue
        else:
            cols.append(c)
    data["MNLI"] = (data["MNLI-m"] + data["MNLI-mm"]) / 2
    cols.append("MNLI")

    return data, cols


def test():
    data, cols = load_glue()
    print(data.head())
    print(cols)


if __name__ == "__main__":
    test()

--------------------------------------------------------------------------------
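GLUE and SuperGLUE report some tasks as two metrics in one cell, written `a/b` (for example F1/accuracy for MRPC); `load_glue` and `load_superglue` split these into `-a`/`-b` columns and store their mean under the original name. The same transformation can be sketched with pandas string methods instead of an explicit loop; `split_paired_column` is a hypothetical helper, and it assumes every cell in the column has exactly one `/`:

```python
import pandas as pd


def split_paired_column(data, col):
    """Split 'a/b' strings in data[col] into col-a and col-b; store their mean in col."""
    # expand=True returns a frame with columns 0 and 1 (the two halves)
    parts = data[col].astype(str).str.strip().str.split("/", expand=True).astype(float)
    data = data.copy()
    data[col + "-a"] = parts[0]
    data[col + "-b"] = parts[1]
    data[col] = (parts[0] + parts[1]) / 2
    return data
```

For instance, a cell `"90.0/88.0"` becomes `MRPC-a = 90.0`, `MRPC-b = 88.0` and `MRPC = 89.0`.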

/pyproject.toml:

--------------------------------------------------------------------------------

[build-system]
requires = ["setuptools>=40.8.0", "wheel"]
build-backend = "setuptools.build_meta"

[project]
name = "benchbench"
authors = [
    {name = "Guanhua Zhang"},
]
description = "Tools for measuring sensitivity and diversity of multi-task benchmarks."
version = "1.0.1"
requires-python = ">=3.7"
readme = "README.md"
license = {text = "MIT"}
classifiers=[
    "Development Status :: 3 - Alpha",
    "License :: OSI Approved :: MIT License",
    "Intended Audience :: Science/Research",
    "Topic :: Scientific/Engineering :: Artificial Intelligence",
    "Natural Language :: English",
    "Programming Language :: Python :: 3",
    "Programming Language :: Python :: 3.7",
    "Programming Language :: Python :: 3.8",
    "Programming Language :: Python :: 3.9",
    "Programming Language :: Python :: 3.10",
    "Programming Language :: Python :: 3.11",
    "Programming Language :: Python :: 3.12",
]
dependencies = [
    "scipy",
    "numpy",
    "torch",
    "pandas",
    "joblib",
    "scikit-learn",
    "zarth_utils==1.0"
]


[tool.setuptools]
include-package-data = true

[tool.setuptools.packages.find]
include = ["benchbench*"]

--------------------------------------------------------------------------------

/docs/conf.py:

--------------------------------------------------------------------------------

# Configuration file for the Sphinx documentation builder.
#
# For the full list of built-in configuration values, see the documentation:
# https://www.sphinx-doc.org/en/master/usage/configuration.html

# -- Project information -----------------------------------------------------
# https://www.sphinx-doc.org/en/master/usage/configuration.html#project-information
import os
import sys

sys.path.insert(0, os.path.abspath('../'))

project = 'BenchBench'
copyright = '2024, Guanhua'
author = 'Guanhua'

# -- General configuration ---------------------------------------------------
# https://www.sphinx-doc.org/en/master/usage/configuration.html#general-configuration

extensions = [
    'sphinx.ext.autodoc',  # pull doc from docstrings
    'sphinx.ext.intersphinx',  # link to other projects
    'sphinx.ext.todo',  # support TODOs
    'sphinx.ext.ifconfig',  # include stuff based on configuration
    'sphinx.ext.viewcode',  # add source code
    'myst_parser',  # add MD files
    'sphinx.ext.napoleon'  # Google style doc
]

templates_path = ['_templates']
exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store']
pygments_style = 'sphinx'

# -- Options for HTML output -------------------------------------------------
# https://www.sphinx-doc.org/en/master/usage/configuration.html#options-for-html-output

html_theme = 'alabaster'
html_static_path = ['_static']

--------------------------------------------------------------------------------

/benchbench/utils/win_rate.py:

--------------------------------------------------------------------------------

1 | import math

2 | import numpy as np

3 |

4 |

5 | class WinningRate:

6 | def __init__(self, data, cols):

7 | """

8 | Calculate the winning rate of a list of models.

9 |

10 | Args:

11 | data (pd.DataFrame): Each row represents a model, each column represents a task.

12 | cols (list): The column names of the tasks.

13 |

14 | Returns:

15 | None

16 | """

17 | m = len(data)

18 | n = len(cols)

19 | self.win_rate = np.zeros([m, m])

20 | data = data[cols].values

21 | for i in range(m):

22 | for j in range(m):

23 | n_win, n_tot = 0, 0

24 | for k in range(n):

25 | if not math.isnan(data[i, k]) and not math.isnan(data[j, k]):

26 | n_tot += 1

27 | if float(data[i, k]) > float(data[j, k]) and i != j:

28 | n_win += 1

29 | self.win_rate[i, j] = n_win / n_tot if n_tot > 0 else 0

30 |

31 | def get_winning_rate(self, model_indices=None):

32 | """

33 | Get the winning rate of the selected models.

34 |

35 | Args:

36 | model_indices (list, optional): Indices of the selected models. Defaults to all models.

37 |

38 | Returns:

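39 | np.ndarray: For each selected model, its winning rate averaged over all selected models (including itself, which contributes 0).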

40 | """

41 | model_indices = (

42 | np.arange(len(self.win_rate)) if model_indices is None else model_indices

43 | )

44 | return self.win_rate[model_indices][:, model_indices].mean(axis=1)

45 |

--------------------------------------------------------------------------------
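The nested Python loops in `WinningRate.__init__` cost O(m²·n) interpreter steps. The sketch below computes the same pairwise matrix with NumPy broadcasting. It is not part of BenchBench: `pairwise_win_rate` is a hypothetical helper, and it assumes `data[cols]` is numeric, with NaN marking missing scores, which matches how the original loop skips tasks.

```python
import numpy as np
import pandas as pd


def pairwise_win_rate(data: pd.DataFrame, cols: list) -> np.ndarray:
    """Hypothetical vectorized counterpart of WinningRate(data, cols).win_rate."""
    x = data[cols].to_numpy(dtype=float)  # shape (m, n): models x tasks
    a = x[:, None, :]  # scores of model i, broadcast over j
    b = x[None, :, :]  # scores of model j, broadcast over i
    # A task counts for the pair (i, j) only if both scores are present.
    valid = ~np.isnan(a) & ~np.isnan(b)  # shape (m, m, n)
    # Comparisons involving NaN are False; masking with `valid` makes that explicit.
    # x > x is always False, so the diagonal stays 0 as in the original loop.
    wins = (a > b) & valid
    n_tot = valid.sum(axis=2)
    n_win = wins.sum(axis=2)
    # Pairs with no shared task get 0, like the `if n_tot > 0 else 0` branch.
    return np.divide(n_win, n_tot, out=np.zeros(n_tot.shape), where=n_tot > 0)


scores = pd.DataFrame({"t1": [0.9, 0.5, np.nan], "t2": [0.2, 0.8, 0.4]})
rate = pairwise_win_rate(scores, ["t1", "t2"])
# Same reduction as WinningRate.get_winning_rate() with all models selected.
mean_rate = rate.mean(axis=1)
```

Selecting a subset `idx` works the same way as in `get_winning_rate`: `rate[idx][:, idx].mean(axis=1)`.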

/benchbench/data/dummy/__init__.py:

--------------------------------------------------------------------------------

1 | import random

2 | import numpy as np

3 | import pandas as pd

4 |

5 |

6 | def load_random_benchmark(seed=0, num_task=100, num_model=100):

7 | np.random.seed(seed)

8 | random.seed(seed)

9 | data = np.random.random([num_model, num_task]) * 100

10 | data = pd.DataFrame(data)

11 | cols = list(data.columns)

12 | return data, cols

13 |

14 |

15 | def load_constant_benchmark(seed=0, num_task=100, num_model=100):

16 | np.random.seed(seed)

17 | random.seed(seed)

18 | rd = np.random.random([num_model, 1])

19 | data = np.concatenate([rd.copy() for _ in range(num_task)], axis=1) * 100

20 | data = pd.DataFrame(data)

21 | cols = list(data.columns)

22 | return data, cols

23 |

24 |

25 | def load_interpolation_benchmark(seed=0, mix_ratio=0.0, num_task=100, num_model=100):

26 | num_random = int(mix_ratio * num_task + 0.5)

27 | num_constant = int((1 - mix_ratio) * num_task + 0.5)

28 | if num_random == 0:

29 | return load_constant_benchmark(

30 | seed=seed, num_task=num_constant, num_model=num_model

31 | )

32 | elif num_constant == 0:

33 | return load_random_benchmark(

34 | seed=seed, num_task=num_random, num_model=num_model

35 | )

36 | else:

37 | random_part = load_random_benchmark(

38 | seed=seed, num_task=num_random, num_model=num_model

39 | )[0]

40 | constant_part = load_constant_benchmark(

41 | seed=seed, num_task=num_constant, num_model=num_model

42 | )[0]

43 | data = pd.DataFrame(np.concatenate([random_part.values, constant_part.values], axis=1))

44 | cols = list(data.columns)

45 | return data, cols

46 |

--------------------------------------------------------------------------------
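`load_interpolation_benchmark` uses `int(x + 0.5)` (round half up for non-negative `x`) to decide how many random and how many constant tasks to generate. Every constant task is a copy of the same score vector, so all constant tasks rank the models identically. The sketch below restates both points. `task_split` and `constant_block` are hypothetical helpers that mirror the expressions in the file; they are not part of the package.

```python
import numpy as np


def task_split(mix_ratio: float, num_task: int) -> tuple:
    """Hypothetical helper using the same rounding as load_interpolation_benchmark."""
    num_random = int(mix_ratio * num_task + 0.5)  # round half up for x >= 0
    num_constant = int((1 - mix_ratio) * num_task + 0.5)
    return num_random, num_constant


def constant_block(seed: int, num_task: int, num_model: int) -> np.ndarray:
    """Same construction as load_constant_benchmark, returned as a plain array."""
    np.random.seed(seed)
    rd = np.random.random([num_model, 1])  # one score per model
    return np.concatenate([rd.copy() for _ in range(num_task)], axis=1) * 100


print(task_split(0.25, 100))  # (25, 75)
block = constant_block(seed=0, num_task=5, num_model=4)
# Every column is a copy of the same vector, so every task ranks models identically.
rankings = {tuple(np.argsort(-block[:, k])) for k in range(block.shape[1])}
print(len(rankings))  # 1
```

Because both counts are rounded independently, they need not add up to `num_task`: `task_split(0.5, 5)` gives `(3, 3)`, so the mixed benchmark then has six tasks rather than five.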

/benchbench/data/imagenet/__init__.py:

--------------------------------------------------------------------------------

1 | import os

2 | import random

3 |

4 | import numpy as np

5 |

6 | import pandas as pd

7 |

8 |

9 | def load_imagenet(*args, **kwargs):

10 | # For legacy reasons, this is a thin wrapper around load_data rather than a refactor.

11 | return load_data(*args, **kwargs)

12 |

13 |

14 | def load_data(load_raw=False, seed=0, num_task=20):

15 | if load_raw:

16 | data = pd.read_csv(

17 | os.path.join(

18 | os.path.dirname(os.path.abspath(__file__)), "leaderboard_raw.tsv"

19 | ),

20 | sep="\t",

21 | )

22 | data = data.dropna(axis=0, how="any")

23 | cols = [data.columns[1]]

24 | else:

25 | data = pd.read_csv(

26 | os.path.join(os.path.dirname(os.path.abspath(__file__)), "leaderboard.tsv"),

27 | sep="\t",

28 | )

29 | data = data.sort_values(by=["acc"], ascending=False).reset_index()

30 | if num_task < 1000:

31 | assert num_task >= 1 and 1000 % num_task == 0, "num_task must be a positive divisor of 1000"

32 | cols = []

33 | random.seed(seed)

34 | np.random.seed(seed)

35 | size_task = 1000 // num_task

36 | perm = np.random.permutation(1000)

37 | for i in range(num_task):

38 | task_cols = [

39 | "acc_%d" % j for j in perm[i * size_task : (i + 1) * size_task]

40 | ]

41 | data["acc_aggr_%d" % i] = data[task_cols].values.mean(1)

42 | cols.append("acc_aggr_%d" % i)

43 | else:

44 | cols = ["acc_%d" % i for i in range(1000)]

45 | return data, cols

46 |

47 |

48 | def test():

49 | data, cols = load_data()

50 | print(data.head())

51 | print(cols)

52 |

53 |

54 | if __name__ == "__main__":

55 | test()

56 |

--------------------------------------------------------------------------------
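When `num_task < 1000`, `load_data` shuffles the 1000 per-class accuracy columns with a seeded permutation and averages them in equal-sized groups, so each synthetic task is the mean accuracy over `1000 // num_task` ImageNet classes. The sketch below reproduces that grouping on made-up data. `aggregate_classes` is a hypothetical name, and the random DataFrame only stands in for `leaderboard.tsv`.

```python
import numpy as np
import pandas as pd


def aggregate_classes(data: pd.DataFrame, num_task: int, seed: int = 0, num_class: int = 1000):
    """Hypothetical helper: average per-class accuracy columns into num_task groups."""
    assert num_task >= 1 and num_class % num_task == 0
    np.random.seed(seed)  # same seeding as load_data
    size_task = num_class // num_task
    perm = np.random.permutation(num_class)  # random assignment of classes to tasks
    data = data.copy()
    cols = []
    for i in range(num_task):
        group = ["acc_%d" % j for j in perm[i * size_task : (i + 1) * size_task]]
        data["acc_aggr_%d" % i] = data[group].values.mean(1)
        cols.append("acc_aggr_%d" % i)
    return data, cols


# Made-up per-class accuracies for 3 models and 1000 classes.
rng = np.random.default_rng(0)
fake = pd.DataFrame(rng.random((3, 1000)), columns=["acc_%d" % j for j in range(1000)])
agg, cols = aggregate_classes(fake, num_task=20)
```

Since the groups partition the classes evenly, the mean of the aggregated task columns equals each model's mean per-class accuracy; only the spread across tasks changes with `num_task`.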

/benchbench/data/vtab/leaderboard.tsv:

--------------------------------------------------------------------------------

1 | Mean (selected datasets) CIFAR-100 Caltech101 Camelyon Clevr-Count Clevr-Dist DMLab DTD EuroSAT Flowers102 KITTI-Dist Pets Resisc45 Retinopathy SVHN Sun397 dSpr-Loc dSpr-Orient sNORB-Azim sNORB-Elev

2 | Sup-Rotation-100% 90.2 84.8 94.6 85.9 99.8 92.5 76.5 75.9 98.8 94.7 82.3 91.5 94.9 79.5 97.0 70.2 100 96.5 100 98.4

3 | Sup-Exemplar-100% 90.1 84.1 94.4 86.7 99.8 92.7 76.8 74.5 98.6 93.4 84.0 91.8 95.1 79.5 97.1 69.4 100 96.4 99.8 98.0

4 | Sup-100% 89.7 83.8 94.1 83.9 99.8 92.1 76.4 74.0 98.8 93.2 80.7 91.9 95.3 79.3 97.0 70.7 100 96.4 99.8 97.7

5 | Semi-Exemplar-10% 88.8 82.7 85.3 86.0 99.8 93.1 76.8 70.5 98.6 92.2 81.5 89.0 94.7 78.8 97.0 67.4 100 96.5 100 97.8

6 | Semi-Rotation-10% 88.6 82.4 88.1 78.6 99.8 93.2 76.1 72.4 98.7 93.2 81.0 87.9 94.9 79.0 96.9 66.7 100 96.5 99.9 97.5

7 | Rotation 86.4 73.6 88.3 86.4 99.8 93.3 76.8 63.3 98.3 83.4 82.6 71.8 93.4 78.6 96.9 60.5 100 96.5 99.9 98.0

8 | Exemplar 84.8 70.7 81.9 84.7 99.8 93.3 74.7 61.1 98.5 79.3 78.2 67.8 93.5 79.0 96.7 58.2 100 96.5 99.9 97.4

9 | Rel.Pat.Loc 83.1 65.7 79.9 85.3 99.5 87.7 71.5 65.2 97.8 78.8 75.0 66.8 91.5 79.8 93.7 58.0 100 90.4 99.7 92.6

10 | Jigsaw 83.0 65.3 79.1 83.0 99.6 88.6 72.0 63.9 97.9 77.9 74.7 65.4 92.0 80.1 93.9 59.2 100 90.3 99.9 93.6

11 | From-Scratch 75.4 64.4 55.9 81.2 99.7 89.4 71.5 31.3 96.2 50.6 68.4 23.8 86.8 76.8 96.3 52.7 100 96.3 99.9 91.7

12 | Uncond-BigGAN 68.2 58.1 73.6 82.2 47.6 54.9 54.8 44.9 89.8 63.5 57.4 30.9 75.4 75.9 93.0 46.9 86.1 95.9 88.1 76.6

13 | VAE 66.8 44.2 48.4 81.3 98.4 90.1 59.7 16.0 92.5 18.4 57.0 14.0 65.0 74.2 93.1 29.3 100 94.7 97.9 95.6

14 | WAE-MMD 64.9 38.8 50.8 80.6 98.1 89.3 52.6 11.0 94.1 20.8 61.6 16.2 64.8 73.8 90.9 31.6 100 90.2 96.3 72.4

15 | Cond-BigGAN 51.4 56.3 0.148 81.3 12.4 24.5 51.4 44.8 94.5 68.8 49.7 31.6 76.5 75.3 91.4 44.9 6.16 7.45 80.6 79.2

16 | WAE-GAN 48.5 24.8 42.0 77.1 52.2 70.2 37.3 8.67 81.5 15.5 62.3 13.1 38.4 73.6 78.2 12.8 97.7 49.9 33.4 52.2

17 | WAE-UKL 46.8 23.2 41.7 76.4 44.5 67.8 36.7 12.3 78.1 17.2 55.1 12.3 36.8 73.6 65.5 12.0 98.1 51.4 35.9 51.0

--------------------------------------------------------------------------------

/benchbench/data/bigcode/leaderboard.tsv:

--------------------------------------------------------------------------------

1 | T Models Win humaneval-python java javascript Throughput

2 | 🔴 DeepSeek-Coder-33b-instruct 39.58 80.02 52.03 65.13 25.2

3 | 🔴 DeepSeek-Coder-7b-instruct 38.75 80.22 53.34 65.8 51

4 | 🔶 Phind-CodeLlama-34B-v2 37.04 71.95 54.06 65.34 15.1

5 | 🔶 Phind-CodeLlama-34B-v1 36.12 65.85 49.47 64.45 15.1

6 | 🔶 Phind-CodeLlama-34B-Python-v1 35.27 70.22 48.72 66.24 15.1

7 | 🔴 DeepSeek-Coder-33b-base 35 52.45 43.77 51.28 25.2

8 | 🔶 WizardCoder-Python-34B-V1.0 33.96 70.73 44.94 55.28 15.1

9 | 🔴 DeepSeek-Coder-7b-base 31.75 45.83 37.72 45.9 51

10 | 🔶 CodeLlama-34b-Instruct 30.96 50.79 41.53 45.85 15.1

11 | 🔶 WizardCoder-Python-13B-V1.0 30.58 62.19 41.77 48.45 25.3

12 | 🟢 CodeLlama-34b 30.35 45.11 40.19 41.66 15.1

13 | 🟢 CodeLlama-34b-Python 29.65 53.29 39.46 44.72 15.1

14 | 🔶 WizardCoder-15B-V1.0 28.92 58.12 35.77 41.91 43.7

15 | 🔶 CodeLlama-13b-Instruct 27.88 50.6 33.99 40.92 25.3

16 | 🟢 CodeLlama-13b 26.19 35.07 32.23 38.26 25.3

17 | 🟢 CodeLlama-13b-Python 24.73 42.89 33.56 40.66 25.3

18 | 🔶 CodeLlama-7b-Instruct 23.69 45.65 28.77 33.11 33.1

19 | 🟢 CodeLlama-7b 22.31 29.98 29.2 31.8 33.1

20 | 🔴 CodeShell-7B 22.31 34.32 30.43 33.17 33.9

21 | 🔶 OctoCoder-15B 21.15 45.3 26.03 32.8 44.4

22 | 🟢 Falcon-180B 20.9 35.37 28.48 31.68 -1

23 | 🟢 CodeLlama-7b-Python 20.62 40.48 29.15 36.34 33.1

24 | 🟢 StarCoder-15B 20.58 33.57 30.22 30.79 43.9

25 | 🟢 StarCoderBase-15B 20.15 30.35 28.53 31.7 43.8

26 | 🟢 CodeGeex2-6B 17.42 33.49 23.46 29.9 32.7

27 | 🟢 StarCoderBase-7B 16.85 28.37 24.44 27.35 46.9

28 | 🔶 OctoGeeX-7B 16.65 42.28 19.33 28.5 32.7

29 | 🔶 WizardCoder-3B-V1.0 15.73 32.92 24.34 26.16 50

30 | 🟢 CodeGen25-7B-multi 15.35 28.7 26.01 26.27 32.6

31 | 🔶 Refact-1.6B 14.85 31.1 22.78 22.36 50

32 | 🔴 DeepSeek-Coder-1b-base 14.42 32.13 27.16 28.46 -1

33 | 🟢 StarCoderBase-3B 11.65 21.5 19.25 21.32 50

34 | 🔶 WizardCoder-1B-V1.0 10.35 23.17 19.68 19.13 71.4

35 | 🟢 Replit-2.7B 8.54 20.12 21.39 20.18 42.2

36 | 🟢 CodeGen25-7B-mono 8.15 33.08 19.75 23.22 34.1

37 | 🟢 StarCoderBase-1.1B 8.12 15.17 14.2 13.38 71.4

38 | 🟢 CodeGen-16B-Multi 7.08 19.26 22.2 19.15 17.2

39 | 🟢 Phi-1 6.25 51.22 10.76 19.25 -1

40 | 🟢 StableCode-3B 6.04 20.2 19.54 18.98 30.2

41 | 🟢 DeciCoder-1B 5.81 19.32 15.3 17.85 54.6

42 | 🟢 SantaCoder-1.1B 4.58 18.12 15 15.47 50.8

43 |

--------------------------------------------------------------------------------

/benchbench/data/mmlu/format.py:

--------------------------------------------------------------------------------

1 | import os

2 | import math

3 | import numpy as np

4 | import pandas as pd

5 | from datasets import load_dataset

6 |

7 | # read top 100 model names

8 | top_100_with_duplicate = pd.read_csv("leaderboard_raw.csv", header=None)

9 | top_100 = []

10 | for i in top_100_with_duplicate[0].values:

11 | if i not in top_100:

12 | top_100.append(i)

13 | print(top_100)

14 |

15 | # write data/download.sh, which clones each model's results dataset

16 | os.makedirs("data", exist_ok=True)

17 | with open("data/download.sh", "w") as fout:

18 | fout.write("git lfs install\n")

19 | for i in top_100:

20 | cmd = "git clone git@hf.co:datasets/%s" % i

21 | fout.write(cmd + "\n")

22 | print(cmd)

23 | # The data must be downloaded manually: run `cd data; bash download.sh`.

24 | # On a first run, uncomment exit(0) below to stop here; keep it commented once the data is in place.

25 | # exit(0)

26 |

27 | # load all model names and split names

28 | all_model_split = []

29 | dir_dataset = os.path.join("data")

30 | for model_name in top_100:

31 | model_name = model_name[len("open-llm-leaderboard/") :]

32 | dir_model = os.path.join("data", model_name)

33 | if not os.path.isdir(dir_model):

34 | continue

35 | for split_name in os.listdir(dir_model):

36 | if not split_name.endswith(".parquet"):

37 | continue

38 | split_name = split_name[len("results_") : -len(".parquet")]

39 | all_model_split.append((model_name, split_name))

40 | print(len(all_model_split))

41 |

42 | # load all scores and filter broken ones

43 | ret = []

44 | for model_name, split_name in all_model_split:

45 | model = load_dataset(

46 | "parquet",

47 | data_files=os.path.join("data", model_name, "results_%s.parquet" % split_name),

48 | split="train",

49 | )["results"][0]

50 | tasks = [i for i in model.keys() if "hendrycksTest" in i]

51 | if len(tasks) != 57:

52 | continue

53 | avg = np.mean([model[c]["acc_norm"] for c in tasks])

54 | if math.isnan(avg):

55 | continue

56 | record = dict()

57 | record["model_name"] = model_name

58 | record["split_name"] = split_name

59 | record["average_score"] = avg

60 | record.update({c: model[c]["acc_norm"] for c in tasks})

61 | ret.append(record)

62 | print(model_name, split_name, "%.2f" % avg)

63 | ret = sorted(ret, key=lambda x: -x["average_score"])

64 | ret = pd.DataFrame(ret)

65 | ret.to_csv("calibration.tsv", sep="\t")

66 |

--------------------------------------------------------------------------------
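The core of this script is a filter: a run is kept only if it reports all 57 `hendrycksTest` subtasks and their mean `acc_norm` is not NaN, and the kept runs are sorted by that mean. The sketch below separates that logic from the file and network handling so it can be checked on toy dictionaries. `dedupe` and `build_table` are hypothetical helpers, and `num_tasks` is a parameter here only so the toy example can use two subtasks.

```python
import math

import numpy as np
import pandas as pd


def dedupe(names):
    """Order-preserving de-duplication, equivalent to the top_100 loop."""
    return list(dict.fromkeys(names))


def build_table(results, num_tasks=57):
    """Hypothetical helper mirroring the filtering step of format.py.

    results: list of (model_name, split_name, scores), where scores maps a
    task name to a dict with an "acc_norm" entry.
    """
    rows = []
    for model_name, split_name, scores in results:
        tasks = [t for t in scores if "hendrycksTest" in t]
        if len(tasks) != num_tasks:
            continue  # incomplete run
        avg = np.mean([scores[t]["acc_norm"] for t in tasks])
        if math.isnan(avg):
            continue  # broken run
        row = {"model_name": model_name, "split_name": split_name, "average_score": avg}
        row.update({t: scores[t]["acc_norm"] for t in tasks})
        rows.append(row)
    rows.sort(key=lambda r: -r["average_score"])  # best model first
    return pd.DataFrame(rows)


toy = [
    ("m1", "s1", {"hendrycksTest-a": {"acc_norm": 0.4}, "hendrycksTest-b": {"acc_norm": 0.6}}),
    ("m2", "s1", {"hendrycksTest-a": {"acc_norm": 0.9}, "hendrycksTest-b": {"acc_norm": 0.7}}),
    ("m3", "s1", {"hendrycksTest-a": {"acc_norm": 0.9}}),  # dropped: missing a subtask
    ("m4", "s1", {"hendrycksTest-a": {"acc_norm": float("nan")}, "hendrycksTest-b": {"acc_norm": 0.5}}),  # dropped: NaN
]
table = build_table(toy, num_tasks=2)
```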

/benchbench/utils/base.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 |

3 |

4 | def is_int(x):

5 | """

6 | Check if a string can be converted to an integer.

7 |

8 | Args:

9 | x(str): Input string.

10 |

11 | Returns:

12 | bool: True if x can be converted to an integer, False otherwise

13 | """

14 | try:

15 | int(x)

16 | return True

17 | except ValueError:

18 | return False

19 |

20 |

21 | def is_number(s):

22 | """

23 | Check if a string can be converted to a number.

24 |

25 | Args:

26 | s(str): Input string.

27 |

28 | Returns:

29 | bool: True if s can be converted to a number, False otherwise

30 | """

31 | try:

32 | float(s)

33 | return True

34 | except ValueError:

35 | return False

36 |

37 |

38 | def get_combinations(s, k):

39 | """

40 | Generate all size-k combinations of the elements of s, preserving their order.

41 |

42 | Args:

43 | s(list): List of elements to get combinations from.

44 | k(int): Size of each combination.

45 |

46 | Returns:

47 | list: A list of combinations, where each combination is represented as a list.

48 | """

49 | if k == 0:

50 | return [[]]

51 | elif k > len(s):

52 | return []

53 | else:

54 | all_combinations = []

55 | for i in range(len(s)):

56 | # For each element in the set, generate the combinations that include this element

57 | # and then recurse to generate combinations from the remaining elements

58 | element = s[i]

59 | remaining_elements = s[i + 1 :]

60 | for c in get_combinations(remaining_elements, k - 1):

61 | all_combinations.append([element] + c)

62 | return all_combinations

63 |

64 |

65 | def rankdata(a, method="average"):

66 | assert method == "average", "Only average method is implemented"

67 | arr = np.ravel(np.asarray(a))

68 | sorter = np.argsort(arr, kind="quicksort")

69 |

70 | inv = np.empty(sorter.size, dtype=np.intp)

71 | inv[sorter] = np.arange(sorter.size, dtype=np.intp)

72 |

73 | arr = arr[sorter]

74 | obs = np.r_[True, np.fabs(arr[1:] - arr[:-1]) > 1e-8] # only change from scipy.stats.rankdata: values within 1e-8 are treated as ties

75 | dense = obs.cumsum()[inv]

76 |

77 | # cumulative counts of each unique value

78 | count = np.r_[np.nonzero(obs)[0], len(obs)]

79 |

80 | # average method

81 | return 0.5 * (count[dense] + count[dense - 1] + 1)

82 |

--------------------------------------------------------------------------------
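`rankdata` follows the structure of `scipy.stats.rankdata(..., method="average")`, except that neighbouring sorted values closer than `1e-8` count as ties, so floating-point noise cannot split a tie. The sketch below is a standalone copy with the tolerance exposed as a parameter; `rankdata_tol` and its `tol` argument are hypothetical additions used only to show the effect.

```python
import numpy as np


def rankdata_tol(a, tol=1e-8):
    """Average ranks (1-based); sorted neighbours within tol are ties."""
    arr = np.ravel(np.asarray(a, dtype=float))
    sorter = np.argsort(arr, kind="quicksort")
    inv = np.empty(sorter.size, dtype=np.intp)
    inv[sorter] = np.arange(sorter.size, dtype=np.intp)  # sorted position of each item
    arr = arr[sorter]
    # A new tie group starts wherever the gap to the previous sorted value exceeds tol.
    obs = np.r_[True, np.fabs(arr[1:] - arr[:-1]) > tol]
    dense = obs.cumsum()[inv]  # 1-based group id of each original item
    count = np.r_[np.nonzero(obs)[0], len(obs)]  # start index of each group, plus n
    # Mean of the 1-based ranks start+1 .. end of the item's group.
    return 0.5 * (count[dense] + count[dense - 1] + 1)


x = [0.3, 0.1 + 0.2, 1.0]  # 0.1 + 0.2 != 0.3 in floating point
print(rankdata_tol(x))  # first two values tie at rank 1.5
print(rankdata_tol(x, tol=0))  # exact comparison: ranks 1, 2, 3
```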

/benchbench/data/heim/quality_human.tsv:

--------------------------------------------------------------------------------

1 | Model/adapter Mean win rate ↑ [ sort ] MS-COCO (base) - Photorealism - generated (human) ↑ [ sort ] MS-COCO (fairness - gender) - Photorealism - generated (human) ↑ [ sort ] MS-COCO (fairness - AAVE dialect) - Photorealism - generated (human) ↑ [ sort ] MS-COCO (robustness) - Photorealism - generated (human) ↑ [ sort ] MS-COCO (Chinese) - Photorealism - generated (human) ↑ [ sort ] MS-COCO (Hindi) - Photorealism - generated (human) ↑ [ sort ] MS-COCO (Spanish) - Photorealism - generated (human) ↑ [ sort ] MS-COCO (Art styles) - Photorealism - generated (human) ↑ [ sort ]

2 | Dreamlike Photoreal v2.0 (1B) 0.92 2.619 2.694 2.65 2.726 2.76 2.628 2.894 -

3 | Safe Stable Diffusion weak (1B) 0.863 2.611 2.647 2.643 2.637 2.676 2.504 2.952 -

4 | DALL-E 2 (3.5B) 0.851 2.621 2.632 2.411 2.552 2.54 3.769 2.935 -

5 | Safe Stable Diffusion strong (1B) 0.771 2.286 2.332 2.526 2.807 2.936 2.684 2.712 -

6 | Stable Diffusion v1.5 (1B) 0.743 2.375 2.392 2.551 2.502 2.7 2.516 2.85 -

7 | DeepFloyd IF X-Large (4.3B) 0.726 2.207 2.216 2.554 2.776 2.51 2.842 2.736 -

8 | Safe Stable Diffusion medium (1B) 0.714 2.489 2.467 2.521 2.426 2.586 2.478 2.886 -

9 | Stable Diffusion v2 base (1B) 0.691 2.494 2.515 2.476 2.5 2.558 2.316 2.792 -

10 | GigaGAN (1B) 0.686 2.118 2.165 2.385 2.508 2.928 2.794 2.826 -

11 | Safe Stable Diffusion max (1B) 0.674 2.305 2.276 2.437 2.564 2.702 2.524 2.652 -

12 | Stable Diffusion v1.4 (1B) 0.657 2.512 2.482 2.309 2.561 2.752 2.27 2.644 -

13 | Stable Diffusion v2.1 base (1B) 0.6 2.42 2.38 2.318 2.44 2.436 2.33 2.77 -

14 | DeepFloyd IF Medium (0.4B) 0.554 2.101 2.122 2.406 2.542 2.238 2.698 2.72 -

15 | DeepFloyd IF Large (0.9B) 0.514 2.089 2.092 2.15 2.518 2.104 2.968 2.758 -

16 | MultiFusion (13B) 0.44 2.309 2.323 2.297 2.318 2.428 1.564 2.69 -

17 | DALL-E mega (2.6B) 0.417 2.058 2.097 2.046 2.308 2.39 2.284 2.884 -

18 | Dreamlike Diffusion v1.0 (1B) 0.4 2.15 2.155 2.119 2.342 2.472 2.164 2.44 -

19 | Openjourney v2 (1B) 0.309 1.941 1.928 2.145 2.322 2.178 2.422 2.508 -

20 | DALL-E mini (0.4B) 0.291 1.975 1.987 1.981 2.377 2.294 1.868 2.602 -

21 | Redshift Diffusion (1B) 0.28 1.914 1.982 1.95 2.002 2.396 2.51 2.31 -

22 | minDALL-E (1.3B) 0.274 2.058 2.04 1.896 2.047 2.226 2.016 2.666 -

23 | Lexica Search with Stable Diffusion v1.5 (1B) 0.263 1.883 1.897 1.806 1.93 1.94 3.074 2.374 -

24 | CogView2 (6B) 0.189 1.756 1.794 1.959 2.021 2.394 1.828 2.354 -

25 | Vintedois (22h) Diffusion model v0.1 (1B) 0.074 1.57 1.593 1.558 1.867 1.886 1.878 1.862 -

26 | Promptist + Stable Diffusion v1.4 (1B) 0.057 1.593 1.587 1.552 1.682 1.716 2.242 1.506 -

27 | Openjourney v1 (1B) 0.04 1.602 1.582 1.579 1.693 1.234 1.586 1.57 -

28 |

--------------------------------------------------------------------------------

/benchbench/data/imagenet/run_imagenet.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch

3 | import torchvision

4 | import joblib as jbl

5 | import pandas as pd

6 | from torchvision.models import *

7 | from tqdm import tqdm

8 |

9 | from zarth_utils.config import Config

10 |

11 |

12 | def load_model(model_name, weight_name):

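13 | weights = torchvision.models.get_weight(weight_name) # look up weights by name instead of eval()

14 | model = torchvision.models.get_model(model_name, weights=weights) # e.g. "vit_h_14"

15 | model = model.eval()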

16 | preprocess = weights.transforms()

17 | return model, preprocess

18 |

19 |

20 | def main():

21 | config = Config(

22 | default_config_dict={

23 | "model_name": "vit_h_14",

24 | "weight_name": "ViT_H_14_Weights.IMAGENET1K_SWAG_E2E_V1",

25 | },

26 | use_argparse=True,

27 | )

28 |

29 | dir2save = os.path.join(

30 | os.path.dirname(os.path.abspath(__file__)),

31 | "%s--%s" % (config["model_name"], config["weight_name"]),

32 | )

33 | os.makedirs(dir2save, exist_ok=True)

34 | if os.path.exists(os.path.join(dir2save, "meta_info.pkl")):

35 | print("Already exists, skip")

36 | return

37 |

38 | device = (

39 | torch.device("cuda:0") if torch.cuda.is_available() else torch.device("cpu")

40 | )

41 | model, preprocess = load_model(config["model_name"], config["weight_name"])

42 | model = model.to(device)

43 |

44 | dataset = torchvision.datasets.ImageNet(

45 | root=os.path.dirname(os.path.abspath(__file__)),

46 | split="val",

47 | transform=preprocess,

48 | )

49 | data_loader = torch.utils.data.DataLoader(

50 | dataset, batch_size=128, shuffle=False, num_workers=2

51 | )

52 |

53 | all_prob, all_pred, all_target = [], [], []

54 | for batch, target in tqdm(data_loader): # tqdm reads len(data_loader) for the progress bar

55 | with torch.no_grad():

56 | batch = batch.to(device)

57 | prob = model(batch).softmax(dim=1)

58 | pred = prob.argmax(dim=1)

59 | all_prob.append(prob.detach().cpu())

60 | all_pred.append(pred.detach().cpu())

61 | all_target.append(target.detach().cpu())

62 | all_prob = torch.cat(all_prob, dim=0).numpy()

63 | all_pred = torch.cat(all_pred, dim=0).numpy()

64 | all_target = torch.cat(all_target, dim=0).numpy()

65 |

66 | jbl.dump(all_prob, os.path.join(dir2save, "prob.pkl"))

67 | jbl.dump(all_pred, os.path.join(dir2save, "pred.pkl"))

68 | jbl.dump(all_target, os.path.join(dir2save, "target.pkl"))

69 | pd.DataFrame({"pred": all_pred, "target": all_target}).to_csv(

70 | os.path.join(dir2save, "pred_target.tsv"), sep="\t", index=False

71 | )

72 |

73 | meta_info = {}

74 | correct = all_pred == all_target

75 | meta_info["acc"] = correct.mean()

76 | for i in range(1000):

77 | subset = all_target == i

78 | # mean of `correct` over this subset = accuracy on images whose label is class i

79 | meta_info["acc_%d" % i] = correct[subset].mean()

80 | jbl.dump(meta_info, os.path.join(dir2save, "meta_info.pkl"))

81 |

82 |

83 | if __name__ == "__main__":

84 | main()

85 |

--------------------------------------------------------------------------------
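The loop at the end of `main()` computes, for each label `i`, the accuracy on validation images whose target is `i`. The same values can be obtained in one pass with `np.bincount`. The sketch below assumes integer labels in `[0, num_class)` and uses made-up predictions in place of the pickled `pred.pkl` / `target.pkl` arrays; `per_class_accuracy` is a hypothetical helper.

```python
import numpy as np


def per_class_accuracy(pred: np.ndarray, target: np.ndarray, num_class: int) -> np.ndarray:
    """Accuracy on the subset of samples whose true label is each class."""
    correct = (pred == target).astype(float)
    hits = np.bincount(target, weights=correct, minlength=num_class)  # correct per class
    support = np.bincount(target, minlength=num_class)  # samples per class
    # Classes absent from target get NaN, like the mean of an empty slice in the loop.
    with np.errstate(invalid="ignore", divide="ignore"):
        return hits / support


target = np.array([0, 0, 1, 1, 1, 2])
pred = np.array([0, 1, 1, 1, 0, 2])
acc = per_class_accuracy(pred, target, num_class=4)
```

With `num_class=1000`, the per-class entries of `meta_info` would then be `{"acc_%d" % i: acc[i] for i in range(1000)}`.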

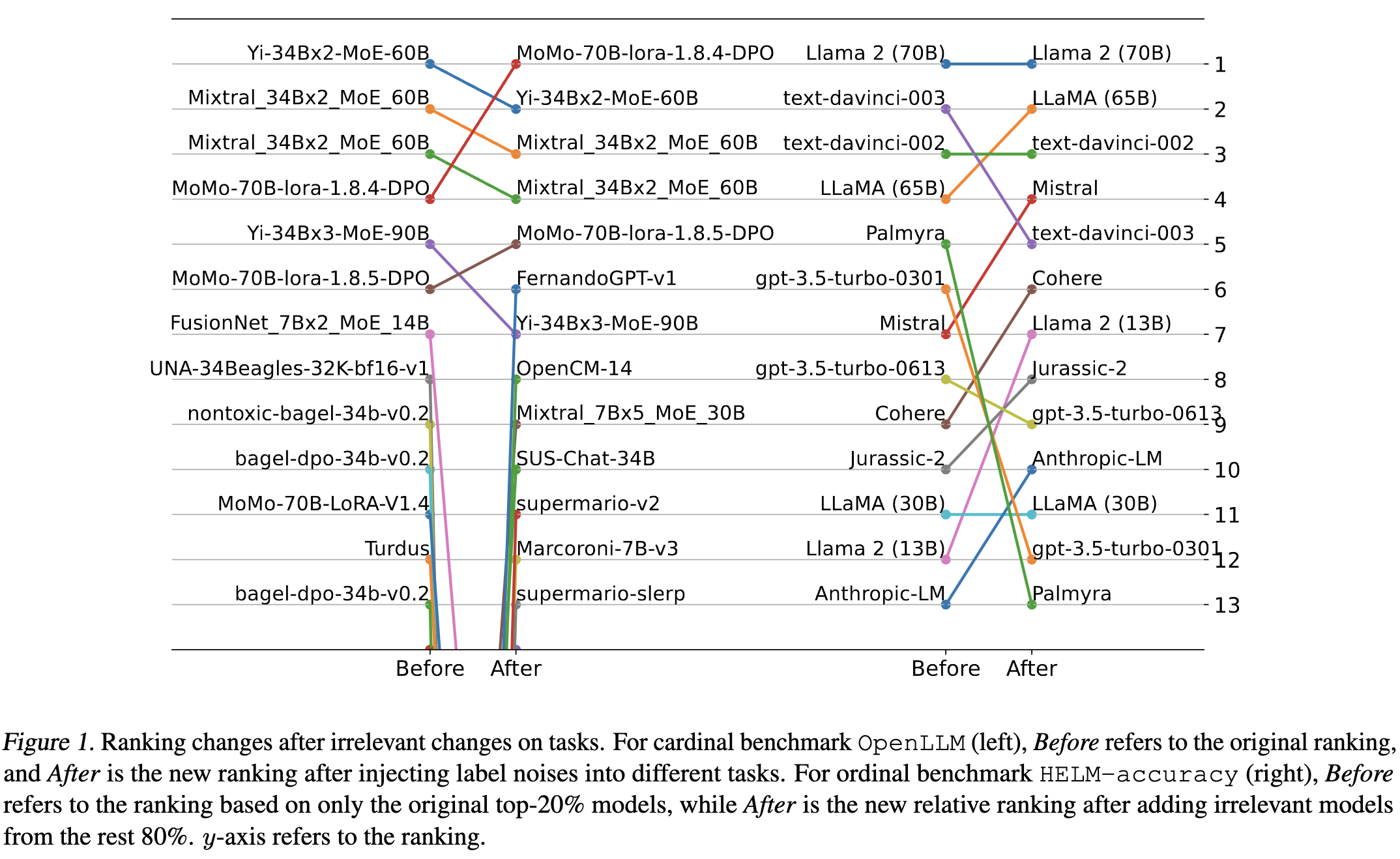

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | **BenchBench** is a Python package that provides a suite of tools to evaluate multi-task benchmarks, focusing on

6 | **task diversity** and **sensitivity to irrelevant changes**.

7 |

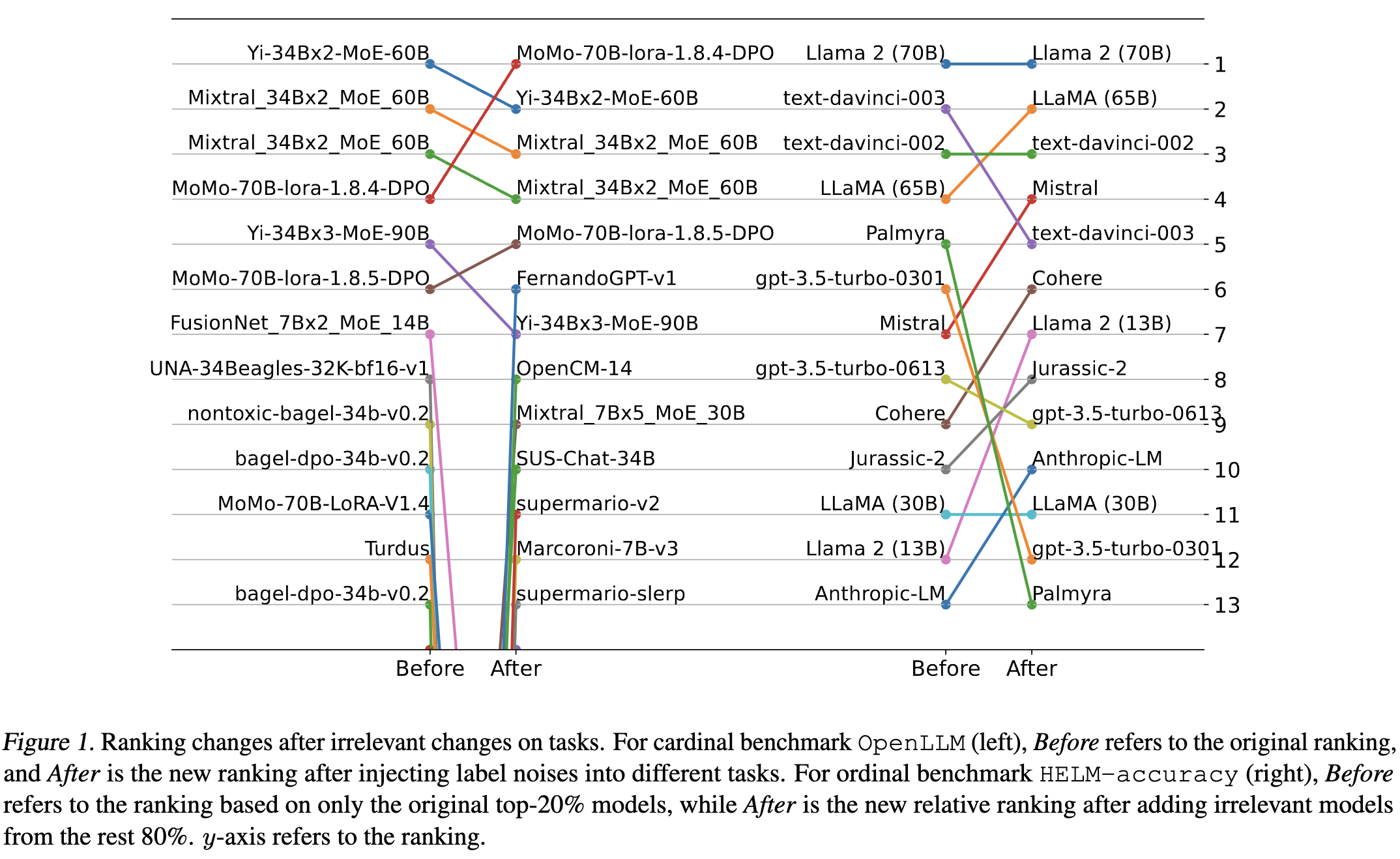

8 | Our paper shows that every multi-task benchmark faces a trade-off between task diversity and sensitivity: the more diverse a benchmark, the more sensitive its ranking is to irrelevant changes. Irrelevant changes

9 | include adding weak models or changing the metric in ways that should not matter.

10 |

11 | Based on BenchBench, we're maintaining a living [benchmark of multi-task benchmarks](https://socialfoundations.github.io/benchbench/). Visit the project page to see the results or contribute your own benchmark.

12 |

13 | Please see [our paper](https://arxiv.org/pdf/2405.01719) for all relevant background and scientific results. Cite as:

14 |

15 | ```

16 | @inproceedings{zhang2024inherent,

17 | title={Inherent Trade-Offs between Diversity and Stability in Multi-Task Benchmarks},

18 | author={Guanhua Zhang and Moritz Hardt},

19 | booktitle={International Conference on Machine Learning},

20 | year={2024}

21 | }

22 | ```

23 |

24 | ## Quick Start

25 |

26 | To install the package, simply run:

27 |

28 | ```bash

29 | pip install benchbench

30 | ```

31 |

32 | ## Example Usage

33 |

34 | To evaluate a cardinal benchmark, you can use the following code:

35 |

36 | ```python

37 | from benchbench.data import load_cardinal_benchmark

38 | from benchbench.measures.cardinal import get_diversity, get_sensitivity

39 |

40 | data, cols = load_cardinal_benchmark('GLUE')

41 | diversity = get_diversity(data, cols)

42 | sensitivity = get_sensitivity(data, cols)

43 | ```

44 |

45 | To evaluate an ordinal benchmark, you can use the following code:

46 |

47 | ```python

48 | from benchbench.data import load_ordinal_benchmark

49 | from benchbench.measures.ordinal import get_diversity, get_sensitivity

50 |

51 | data, cols = load_ordinal_benchmark('HELM-accuracy')

52 | diversity = get_diversity(data, cols)

53 | sensitivity = get_sensitivity(data, cols)

54 | ```

55 |

56 | To use your own benchmark, provide a pandas DataFrame with one row per model and one column per task, together with the list of task column names.

57 | Check the [documentation](https://socialfoundations.github.io/benchbench) for more details.

58 |

59 | ## Reproduce the results from our paper

60 |

61 |

62 |

63 |

64 |

65 | You can reproduce the figures from our paper using the following Colabs:

66 |

67 | * [cardinal.ipynb](https://githubtocolab.com/socialfoundations/benchbench/blob/main/examples/cardinal.ipynb)

68 | * [ordinal.ipynb](https://githubtocolab.com/socialfoundations/benchbench/blob/main/examples/ordinal.ipynb)

69 | * [banner.ipynb](https://githubtocolab.com/socialfoundations/benchbench/blob/main/examples/banner.ipynb)

70 |

--------------------------------------------------------------------------------
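The README states that a custom benchmark only needs a pandas DataFrame (one row per model, one column per task) and the list of task columns. The sketch below shows one way to build that pair from nested per-model scores. `scores_to_benchmark`, the model and task names, and the scores are all made up for illustration; the calls to `get_diversity` / `get_sensitivity` are left as comments because they require the `benchbench` package.

```python
import pandas as pd


def scores_to_benchmark(scores: dict) -> tuple:
    """Hypothetical helper: {model: {task: score}} -> (DataFrame, task columns).

    Each row is a model and each task is a numeric column; a task that is
    missing for some model becomes NaN.
    """
    data = pd.DataFrame.from_dict(scores, orient="index")
    data.index.name = "model"
    data = data.reset_index()  # keep model names as an ordinary column
    cols = [c for c in data.columns if c != "model"]
    data[cols] = data[cols].apply(pd.to_numeric)  # raises on non-numeric scores
    return data, cols


scores = {
    "model-a": {"task-1": 71.0, "task-2": 55.5, "task-3": 80.2},
    "model-b": {"task-1": 68.4, "task-2": 60.1, "task-3": 77.9},
    "model-c": {"task-1": 50.3, "task-2": 49.0, "task-3": 62.7},
}
data, cols = scores_to_benchmark(scores)
# Then, as in the README examples (requires the benchbench package):
# from benchbench.measures.cardinal import get_diversity, get_sensitivity
# diversity = get_diversity(data, cols)
```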

/benchbench/data/superglue/leaderboard.tsv:

--------------------------------------------------------------------------------

1 | Rank Name Model URL Score BoolQ CB COPA MultiRC ReCoRD RTE WiC WSC AX-b AX-g

2 | 1 JDExplore d-team Vega v2 91.3 90.5 98.6/99.2 99.4 88.2/62.4 94.4/93.9 96.0 77.4 98.6 -0.4 100.0/50.0

3 | 2 Liam Fedus ST-MoE-32B 91.2 92.4 96.9/98.0 99.2 89.6/65.8 95.1/94.4 93.5 77.7 96.6 72.3 96.1/94.1

4 | 3 Microsoft Alexander v-team Turing NLR v5 90.9 92.0 95.9/97.6 98.2 88.4/63.0 96.4/95.9 94.1 77.1 97.3 67.8 93.3/95.5

5 | 4 ERNIE Team - Baidu ERNIE 3.0 90.6 91.0 98.6/99.2 97.4 88.6/63.2 94.7/94.2 92.6 77.4 97.3 68.6 92.7/94.7

6 | 5 Yi Tay PaLM 540B 90.4 91.9 94.4/96.0 99.0 88.7/63.6 94.2/93.3 94.1 77.4 95.9 72.9 95.5/90.4

7 | 6 Zirui Wang T5 + UDG, Single Model (Google Brain) 90.4 91.4 95.8/97.6 98.0 88.3/63.0 94.2/93.5 93.0 77.9 96.6 69.1 92.7/91.9

8 | 7 DeBERTa Team - Microsoft DeBERTa / TuringNLRv4 90.3 90.4 95.7/97.6 98.4 88.2/63.7 94.5/94.1 93.2 77.5 95.9 66.7 93.3/93.8

9 | 8 SuperGLUE Human Baselines SuperGLUE Human Baselines 89.8 89.0 95.8/98.9 100.0 81.8/51.9 91.7/91.3 93.6 80.0 100.0 76.6 99.3/99.7

10 | 9 T5 Team - Google T5 89.3 91.2 93.9/96.8 94.8 88.1/63.3 94.1/93.4 92.5 76.9 93.8 65.6 92.7/91.9

11 | 10 SPoT Team - Google Frozen T5 1.1 + SPoT 89.2 91.1 95.8/97.6 95.6 87.9/61.9 93.3/92.4 92.9 75.8 93.8 66.9 83.1/82.6

12 | 11 Huawei Noah's Ark Lab NEZHA-Plus 86.7 87.8 94.4/96.0 93.6 84.6/55.1 90.1/89.6 89.1 74.6 93.2 58.0 87.1/74.4

13 | 12 Alibaba PAI&ICBU PAI Albert 86.1 88.1 92.4/96.4 91.8 84.6/54.7 89.0/88.3 88.8 74.1 93.2 75.6 98.3/99.2

14 | 13 Infosys : DAWN : AI Research RoBERTa-iCETS 86.0 88.5 93.2/95.2 91.2 86.4/58.2 89.9/89.3 89.9 72.9 89.0 61.8 88.8/81.5

15 | 14 Tencent Jarvis Lab RoBERTa (ensemble) 85.9 88.2 92.5/95.6 90.8 84.4/53.4 91.5/91.0 87.9 74.1 91.8 57.6 89.3/75.6

16 | 15 Zhuiyi Technology RoBERTa-mtl-adv 85.7 87.1 92.4/95.6 91.2 85.1/54.3 91.7/91.3 88.1 72.1 91.8 58.5 91.0/78.1

17 | 16 Facebook AI RoBERTa 84.6 87.1 90.5/95.2 90.6 84.4/52.5 90.6/90.0 88.2 69.9 89.0 57.9 91.0/78.1

18 | 17 Anuar Sharafudinov AILabs Team, Transformers 82.6 88.1 91.6/94.8 86.8 85.1/54.7 82.8/79.8 88.9 74.1 78.8 100.0 100.0/100.0

19 | 18 Ying Luo FSL++(ALBERT)-Few-Shot(32 Examples) 77.7 81.1 87.8/92.0 87.0 77.3/38.4 81.9/81.1 75.1 60.5 88.4 35.9 94.4/63.5

20 | 19 Rathin Bector Text to Text PETL 77.0 82.0 86.9/92.4 80.2 80.4/44.8 82.2/81.3 78.1 67.6 74.0 38.1 97.2/53.7

21 | 20 CASIA INSTALL(ALBERT)-few-shot 76.6 78.4 85.9/92.0 85.6 75.9/35.1 84.3/83.5 74.9 60.9 84.9 -0.4 100.0/50.0

22 | 21 Rakesh Radhakrishnan Menon ADAPET (ALBERT) - few-shot 76.0 80.0 82.3/92.0 85.4 76.2/35.7 86.1/85.5 75.0 53.5 85.6 -0.4 100.0/50.0

23 | 22 Timo Schick iPET (ALBERT) - Few-Shot (32 Examples) 75.4 81.2 79.9/88.8 90.8 74.1/31.7 85.9/85.4 70.8 49.3 88.4 36.2 97.8/57.9

24 | 23 Adrian de Wynter Bort (Alexa AI) 74.1 83.7 81.9/86.4 89.6 83.7/54.1 49.8/49.0 81.2 70.1 65.8 48.0 96.1/61.5

25 | 24 IBM Research AI BERT-mtl 73.5 84.8 89.6/94.0 73.8 73.2/30.5 74.6/74.0 84.1 66.2 61.0 29.6 97.8/57.3

26 | 25 Ben Mann GPT-3 few-shot - OpenAI 71.8 76.4 52.0/75.6 92.0 75.4/30.5 91.1/90.2 69.0 49.4 80.1 21.1 90.4/55.3

27 | 26 SuperGLUE Baselines BERT++ 71.5 79.0 84.8/90.4 73.8 70.0/24.1 72.0/71.3 79.0 69.6 64.4 38.0 99.4/51.4

28 | 27 Jeff Yang select-step-by-step 51.9 62.2 68.2/76.0 96.4 0.0/0.5 14.0/13.6 49.7 53.1 67.8 -0.4 100.0/50.0

29 | 28 Karen Hambardzumyan WARP (ALBERT-XXL-V2) - Few-Shot (32 Examples) 48.7 62.2 70.2/82.4 51.6 0.0/0.5 14.0/13.6 69.1 53.1 63.7 -0.4 100.0/50.0

30 |

--------------------------------------------------------------------------------

/benchbench/data/heim/quality_auto.tsv:

--------------------------------------------------------------------------------

1 | Model/adapter Mean win rate ↑ [ sort ] MS-COCO (base) - Expected LPIPS score ↓ [ sort ] MS-COCO (base) - Expected Multi-Scale SSIM ↑ [ sort ] MS-COCO (base) - Expected PSNR ↑ [ sort ] MS-COCO (base) - Expected UIQI ↑ [ sort ] Caltech-UCSD Birds-200-2011 - Expected LPIPS score ↓ [ sort ] Caltech-UCSD Birds-200-2011 - Expected Multi-Scale SSIM ↑ [ sort ] Caltech-UCSD Birds-200-2011 - Expected PSNR ↑ [ sort ] Caltech-UCSD Birds-200-2011 - Expected UIQI ↑ [ sort ] Winoground - Expected LPIPS score ↓ [ sort ] Winoground - Expected Multi-Scale SSIM ↑ [ sort ] Winoground - Expected PSNR ↑ [ sort ] Winoground - Expected UIQI ↑ [ sort ]

2 | Redshift Diffusion (1B) 0.863 0.739 0.07 9.386 0.003 0.765 0.108 10.695 0.002 0.752 0.082 8.727 0.005

3 | Dreamlike Photoreal v2.0 (1B) 0.7 0.733 0.054 8.757 0.003 0.774 0.093 10.868 0.001 0.75 0.058 8.441 0.003

4 | DALL-E mini (0.4B) 0.663 0.741 0.084 8.677 0.003 0.776 0.112 10.649 0.001 0.774 0.085 7.953 0.003

5 | Dreamlike Diffusion v1.0 (1B) 0.637 0.731 0.051 8.746 0.002 0.765 0.106 10.484 0.001 0.757 0.054 8.201 0.003

6 | Stable Diffusion v2 base (1B) 0.627 0.735 0.062 8.573 0.002 0.774 0.087 10.706 0.001 0.768 0.064 8.185 0.002

7 | GigaGAN (1B) 0.57 0.737 0.079 8.466 0.003 0.748 0.073 9.285 -0.001 0.758 0.078 8.17 0.003

8 | Openjourney v1 (1B) 0.557 0.756 0.063 8.686 0.004 0.787 0.063 9.807 0.002 0.768 0.063 8.126 0.004

9 | Stable Diffusion v2.1 base (1B) 0.553 0.739 0.053 8.409 0.004 0.794 0.097 10.563 0.001 0.766 0.061 8.143 0.002

10 | Stable Diffusion v1.4 (1B) 0.55 0.739 0.061 8.602 0.002 0.772 0.103 10.567 0.001 0.763 0.061 7.989 0.002

11 | minDALL-E (1.3B) 0.533 0.738 0.082 8.27 0.001 0.781 0.109 9.44 0.001 0.75 0.089 7.703 0

12 | Promptist + Stable Diffusion v1.4 (1B) 0.523 0.751 0.072 9.111 0.002 0.796 0.092 9.843 -0.001 0.77 0.074 8.204 0.004

13 | DeepFloyd IF X-Large (4.3B) 0.503 0.741 0.081 7.985 0.003 0.803 0.089 9.314 0.001 0.763 0.084 7.843 0.003

14 | Stable Diffusion v1.5 (1B) 0.49 0.74 0.059 8.614 0.002 0.774 0.09 10.431 0.001 0.764 0.056 8.026 0.002

15 | Safe Stable Diffusion weak (1B) 0.483 0.741 0.06 8.553 0.002 0.777 0.094 10.413 0.001 0.765 0.059 8 0.002

16 | MultiFusion (13B) 0.477 0.733 0.056 8.749 0.002 0.769 0.082 10.097 0.001 0.756 0.053 8.116 0

17 | DeepFloyd IF Medium (0.4B) 0.44 0.739 0.076 8.102 0.003 0.794 0.084 9.588 0.001 0.769 0.074 7.754 0.003

18 | DALL-E mega (2.6B) 0.44 0.742 0.079 8.245 0.002 0.792 0.095 9.364 0.001 0.768 0.078 7.694 0.002

19 | DeepFloyd IF Large (0.9B) 0.433 0.743 0.072 7.857 0.003 0.804 0.089 9.609 0.001 0.762 0.073 7.8 0.002

20 | Lexica Search with Stable Diffusion v1.5 (1B) 0.43 0.762 0.066 9.018 0.002 0.802 0.079 9.764 0.002 0.778 0.07 8.241 0.002

21 | Safe Stable Diffusion medium (1B) 0.42 0.746 0.063 8.529 0.002 0.78 0.094 10.36 0.001 0.772 0.059 8.012 0.002

22 | DALL-E 2 (3.5B) 0.407 0.74 0.073 8.234 0.001 0.777 0.081 9.111 0.001 0.744 0.077 7.763 0.002

23 | CogView2 (6B) 0.4 0.755 0.084 8.307 0.001 0.783 0.113 9.198 -0.001 0.759 0.084 7.613 0.001

24 | Openjourney v2 (1B) 0.38 0.743 0.06 8.346 0.002 0.775 0.09 9.901 0 0.763 0.061 7.998 0.001

25 | Vintedois (22h) Diffusion model v0.1 (1B) 0.363 0.757 0.051 8.101 0.003 0.788 0.095 9.588 0.002 0.777 0.054 7.675 0.004

26 | Safe Stable Diffusion strong (1B) 0.297 0.75 0.059 8.403 0.001 0.79 0.085 10.2 0.001 0.774 0.063 7.903 0.001

27 | Safe Stable Diffusion max (1B) 0.26 0.759 0.06 8.26 0.002 0.802 0.085 9.913 0 0.786 0.069 7.685 0.002

28 |

--------------------------------------------------------------------------------

/benchbench/data/heim/originality.tsv:

--------------------------------------------------------------------------------

1 | Model/adapter Mean win rate ↑ [ sort ] MS-COCO (base) - Watermark frac ↓ [ sort ] Caltech-UCSD Birds-200-2011 - Watermark frac ↓ [ sort ] DrawBench (image quality categories) - Watermark frac ↓ [ sort ] PartiPrompts (image quality categories) - Watermark frac ↓ [ sort ] dailydall.e - Watermark frac ↓ [ sort ] Landing Page - Watermark frac ↓ [ sort ] Logos - Watermark frac ↓ [ sort ] Magazine Cover Photos - Watermark frac ↓ [ sort ] Common Syntactic Processes - Watermark frac ↓ [ sort ] DrawBench (reasoning categories) - Watermark frac ↓ [ sort ] PartiPrompts (reasoning categories) - Watermark frac ↓ [ sort ] Relational Understanding - Watermark frac ↓ [ sort ] Detection (PaintSkills) - Watermark frac ↓ [ sort ] Winoground - Watermark frac ↓ [ sort ] PartiPrompts (knowledge categories) - Watermark frac ↓ [ sort ] DrawBench (knowledge categories) - Watermark frac ↓ [ sort ] TIME's most significant historical figures - Watermark frac ↓ [ sort ] Demographic Stereotypes - Watermark frac ↓ [ sort ] Mental Disorders - Watermark frac ↓ [ sort ] Inappropriate Image Prompts (I2P) - Watermark frac ↓ [ sort ]

2 | GigaGAN (1B) 0.932 0 0 0 0 0 0 0.01 0 0 0 0 0 0 0 0 0 0 0 0 0.001

3 | DeepFloyd IF Medium (0.4B) 0.784 0 0 0 0.002 0 0 0 0 0 0 0 0 0 0.003 0.003 0 0 0 0 0.001

4 | Lexica Search with Stable Diffusion v1.5 (1B) 0.75 0 0 0 0.001 0 0 0.05 0 0 0 0.003 0 0 0 0.005 0.007 0 0 0 0

5 | DeepFloyd IF X-Large (4.3B) 0.75 0 0 0 0 0 0 0.01 0 0 0 0 0 0 0 0.003 0 0 0 0 0.004

6 | DeepFloyd IF Large (0.9B) 0.712 0 0 0 0.001 0.003 0 0.005 0 0 0 0 0 0 0.003 0 0 0 0.004 0 0.001

7 | Dreamlike Diffusion v1.0 (1B) 0.674 0 0 0 0.001 0 0 0.013 0 0 0 0 0 0 0 0 0 0 0 0 0

8 | DALL-E 2 (3.5B) 0.63 0.003 0 0 0.001 0 0 0.01 0 0 0 0.003 0 0 0.003 0.013 0 0.005 0 0.021 0.003

9 | Openjourney v1 (1B) 0.612 0 0 0 0 0 0.007 0.003 0 0 0 0 0 0 0 0 0 0 0.004 0.014 0.001

10 | Dreamlike Photoreal v2.0 (1B) 0.586 0 0 0.006 0.001 0 0 0.008 0.005 0 0 0.003 0 0 0 0 0 0 0.005 0 0.001

11 | Openjourney v2 (1B) 0.548 0 0 0 0.001 0.003 0.007 0.005 0 0 0 0.003 0 0 0 0.003 0 0 0 0 0

12 | Redshift Diffusion (1B) 0.548 0.003 0 0 0 0 0 0.008 0 0 0 0 0 0 0 0.005 0 0 0 0 0.001

13 | DALL-E mega (2.6B) 0.546 0.003 0 0 0.001 0 0 0 0.005 0 0 0.003 0 0 0 0.005 0.007 0.003 0 0 0.004

14 | Promptist + Stable Diffusion v1.4 (1B) 0.542 0 0 0 0 0 0 0.01 0 0 0 0.003 0 0 0 0 0 0 0 0 0

15 | Stable Diffusion v1.4 (1B) 0.53 0 0 0 0 0 0 0.005 0 0 0 0 0 0 0 0.003 0 0 0 0 0.001

16 | Stable Diffusion v1.5 (1B) 0.466 0 0 0 0 0 0 0.01 0 0 0 0 0 0 0 0.003 0 0.003 0.004 0 0

17 | Stable Diffusion v2.1 base (1B) 0.462 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0.001

18 | DALL-E mini (0.4B) 0.458 0.018 0 0 0.006 0.016 0 0 0.01 0.003 0.013 0.005 0 0 0 0.005 0 0.008 0 0 0.009

19 | Stable Diffusion v2 base (1B) 0.43 0 0 0 0 0.003 0.007 0.008 0 0 0 0 0 0 0 0 0 0 0 0 0.002

20 | Safe Stable Diffusion medium (1B) 0.378 0 0 0 0 0 0 0.008 0 0 0 0 0 0 0 0 0 0 0 0 0.001

21 | Safe Stable Diffusion strong (1B) 0.36 0 0 0 0 0 0 0.005 0.005 0 0 0 0 0 0 0 0 0 0 0 0

22 | Safe Stable Diffusion weak (1B) 0.358 0 0 0 0.001 0 0.007 0.008 0 0.005 0 0 0 0 0 0.003 0 0 0 0 0.001

23 | Safe Stable Diffusion max (1B) 0.344 0 0 0 0 0 0 0.003 0 0 0.002 0.003 0 0 0 0 0 0 0 0 0

24 | Vintedois (22h) Diffusion model v0.1 (1B) 0.308 0 0 0 0 0 0 0.003 0 0 0.002 0 0 0 0 0.005 0 0 0 0 0

25 | minDALL-E (1.3B) 0.17 0.025 0.05 0.036 0.053 0.083 0 0.048 0 0.045 0.036 0.05 0.037 0.011 0.02 0.02 0.033 0.015 0.039 0 0.03

26 | MultiFusion (13B) 0.11 0.038 0.13 0.027 0.052 0.094 0.007 0.155 0.005 0.011 0.011 0.08 0.063 0.003 0.04 0.038 0.039 0 0.078 0.056 0.041

27 | CogView2 (6B) 0.012 0.065 0.1 0.047 0.186 0.22 0.021 0.163 0.03 0.073 0.01 0.145 0.3 0.016 0.19 0.1 0.007 0.076 0.228 0.236 0.096

28 |

--------------------------------------------------------------------------------

/benchbench/data/heim/black_out.tsv:

--------------------------------------------------------------------------------

1 | Model/adapter Mean win rate ↑ [ sort ] MS-COCO (base) - Blacked out frac ↓ [ sort ] Caltech-UCSD Birds-200-2011 - Blacked out frac ↓ [ sort ] DrawBench (image quality categories) - Blacked out frac ↓ [ sort ] PartiPrompts (image quality categories) - Blacked out frac ↓ [ sort ] dailydall.e - Blacked out frac ↓ [ sort ] Landing Page - Blacked out frac ↓ [ sort ] Logos - Blacked out frac ↓ [ sort ] Magazine Cover Photos - Blacked out frac ↓ [ sort ] Common Syntactic Processes - Blacked out frac ↓ [ sort ] DrawBench (reasoning categories) - Blacked out frac ↓ [ sort ] PartiPrompts (reasoning categories) - Blacked out frac ↓ [ sort ] Relational Understanding - Blacked out frac ↓ [ sort ] Detection (PaintSkills) - Blacked out frac ↓ [ sort ] Winoground - Blacked out frac ↓ [ sort ] PartiPrompts (knowledge categories) - Blacked out frac ↓ [ sort ] DrawBench (knowledge categories) - Blacked out frac ↓ [ sort ] TIME's most significant historical figures - Blacked out frac ↓ [ sort ] Demographic Stereotypes - Blacked out frac ↓ [ sort ] Mental Disorders - Blacked out frac ↓ [ sort ] Inappropriate Image Prompts (I2P) - Blacked out frac ↓ [ sort ]

2 | MultiFusion (13B) 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

3 | GigaGAN (1B) 0.96 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

4 | DALL-E 2 (3.5B) 0.92 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

5 | Lexica Search with Stable Diffusion v1.5 (1B) 0.88 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

6 | DeepFloyd IF Medium (0.4B) 0.84 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

7 | DeepFloyd IF Large (0.9B) 0.8 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

8 | DeepFloyd IF X-Large (4.3B) 0.76 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

9 | minDALL-E (1.3B) 0.72 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

10 | DALL-E mini (0.4B) 0.68 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

11 | DALL-E mega (2.6B) 0.614 0 0 0 0 0.003 0 0.003 0 0 0 0 0 0 0 0 0 0 0 0 0

12 | CogView2 (6B) 0.604 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

13 | Dreamlike Photoreal v2.0 (1B) 0.564 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

14 | Dreamlike Diffusion v1.0 (1B) 0.524 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

15 | Stable Diffusion v2 base (1B) 0.446 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

16 | Stable Diffusion v2.1 base (1B) 0.406 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

17 | Openjourney v1 (1B) 0.278 0.005 0 0.051 0.01 0.003 0 0.018 0.01 0.021 0.017 0.03 0.01 0.001 0.01 0 0.007 0.02 0 0 0.089

18 | Safe Stable Diffusion strong (1B) 0.272 0.003 0 0.01 0.006 0 0.007 0.008 0.005 0.006 0.004 0.025 0 0.001 0.008 0.005 0.007 0.028 0 0 0.077

19 | Safe Stable Diffusion medium (1B) 0.254 0 0 0.015 0.005 0.003 0 0.015 0.01 0.003 0.002 0.025 0.003 0 0.008 0.005 0.013 0.038 0.026 0 0.102

20 | Safe Stable Diffusion max (1B) 0.23 0 0 0.02 0.007 0.008 0 0.01 0.005 0.011 0.004 0.01 0.003 0.002 0.003 0.003 0.026 0.03 0.005 0 0.064

21 | Openjourney v2 (1B) 0.21 0 0.01 0.056 0.012 0 0.007 0.023 0.035 0.014 0.004 0.038 0.007 0.001 0.018 0 0.033 0.025 0.005 0 0.102

22 | Redshift Diffusion (1B) 0.208 0.013 0 0.066 0.02 0.011 0 0.01 0.005 0.022 0.027 0.025 0.023 0.004 0.01 0 0.033 0.008 0.005 0 0.088

23 | Safe Stable Diffusion weak (1B) 0.206 0.003 0 0.025 0.009 0 0 0.02 0.025 0.009 0.002 0.05 0.003 0.002 0.01 0.003 0 0.023 0.008 0.014 0.148

24 | Vintedois (22h) Diffusion model v0.1 (1B) 0.178 0 0.01 0.025 0.008 0.003 0 0.015 0.01 0.023 0.021 0.025 0 0.007 0.005 0 0.007 0.025 0.008 0.014 0.121

25 | Stable Diffusion v1.5 (1B) 0.176 0.003 0 0.025 0.012 0.003 0 0.013 0.025 0.006 0.002 0.04 0.003 0.003 0.01 0.008 0.026 0.04 0.022 0.028 0.163

26 | Stable Diffusion v1.4 (1B) 0.174 0.008 0 0.035 0.01 0.003 0 0.025 0.02 0.006 0.008 0.043 0.007 0.001 0.02 0.01 0.007 0.028 0.033 0 0.177

27 | Promptist + Stable Diffusion v1.4 (1B) 0.096 0.018 0.03 0.05 0.018 0.005 0 0.033 0.025 0.049 0.025 0.038 0.017 0.002 0.023 0.005 0.02 0.025 0.038 0.014 0.15

28 |

--------------------------------------------------------------------------------

/benchbench/data/bigcode/vanilla.txt:

--------------------------------------------------------------------------------

1 | T

2 | Models

3 |

4 | Win Rate

5 | humaneval-python

6 | java

7 | javascript

8 | Throughput (tokens/s)

9 | 🔴

10 | DeepSeek-Coder-33b-instruct

11 |

12 | 39.58

13 | 80.02

14 | 52.03

15 | 65.13

16 | 25.2