├── .git-blame-ignore-revs
├── .github
│   ├── release-drafter.yml
│   └── workflows
│       ├── ci.yml
│       └── scala-steward.yml
├── .gitignore
├── .mergify.yml
├── .scala-steward.conf
├── .scalafmt.conf
├── LICENSE
├── README.md
├── build.sbt
├── core
│   └── src
│       ├── main
│       │   ├── java
│       │   │   └── com
│       │   │       └── softwaremill
│       │   │           └── kmq
│       │   │               ├── EndMarker.java
│       │   │               ├── KafkaClients.java
│       │   │               ├── KmqClient.java
│       │   │               ├── KmqConfig.java
│       │   │               ├── MarkerKey.java
│       │   │               ├── MarkerValue.java
│       │   │               ├── PartitionFromMarkerKey.java
│       │   │               ├── RedeliveryTracker.java
│       │   │               └── StartMarker.java
│       │   ├── resources
│       │   │   └── reference.conf
│       │   └── scala
│       │       └── com.softwaremill.kmq
│       │           └── redelivery
│       │               ├── CommitMarkerOffsetsActor.scala
│       │               ├── ConsumeMarkersActor.scala
│       │               ├── KafkaClientsResourceHelpers.scala
│       │               ├── MarkersQueue.scala
│       │               ├── RedeliverActor.scala
│       │               ├── Redeliverer.scala
│       │               ├── RedeliveryActors.scala
│       │               └── package.scala
│       └── test
│           ├── resources
│           │   └── logback-test.xml
│           └── scala
│               └── com
│                   └── softwaremill
│                       └── kmq
│                           └── redelivery
│                               ├── IntegrationTest.scala
│                               ├── MarkersQueueTest.scala
│                               └── infrastructure
│                                   └── KafkaSpec.scala
├── example-java
│   └── src
│       └── main
│           ├── java
│           │   └── com
│           │       └── softwaremill
│           │           └── kmq
│           │               └── example
│           │                   ├── UncaughtExceptionHandling.java
│           │                   ├── embedded
│           │                   │   └── EmbeddedExample.java
│           │                   └── standalone
│           │                       ├── StandaloneConfig.java
│           │                       ├── StandaloneProcessor.java
│           │                       ├── StandaloneRedeliveryTracker.java
│           │                       └── StandaloneSender.java
│           └── resources
│               └── log4j.xml
├── example-scala
│   └── src
│       └── main
│           ├── resources
│           │   └── logback.xml
│           └── scala
│               └── com
│                   └── softwaremill
│                       └── kmq
│                           └── example
│                               └── Standalone.scala
├── kmq.png
└── project
    ├── build.properties
    └── plugins.sbt

/.git-blame-ignore-revs:

--------------------------------------------------------------------------------

1 | # Scala Steward: Reformat with scalafmt 3.5.9

2 | c744d4468606060325ca3aae78c748610b6006bb

3 |

4 | # Scala Steward: Reformat with scalafmt 3.7.3

5 | d799494c991e2f1aa272f1564555c02983e7cd17

6 |

--------------------------------------------------------------------------------

/.github/release-drafter.yml:

--------------------------------------------------------------------------------

1 | template: |

2 | ## What’s Changed

3 |

4 | $CHANGES

--------------------------------------------------------------------------------

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | name: CI

2 | on:

3 | pull_request:

4 | branches: ['**']

5 | push:

6 | branches: ['**']

7 | tags: [v*]

8 | jobs:

9 | build:

10 | uses: softwaremill/github-actions-workflows/.github/workflows/build-scala.yml@main

11 | # Run on external PRs, but not on internal PRs since those will be run by push to branch

12 | if: github.event_name == 'push' || github.event.pull_request.head.repo.full_name != github.repository

13 | with:

14 | java-opts: '-Xmx4G'

15 |

16 | mima:

17 | uses: softwaremill/github-actions-workflows/.github/workflows/mima.yml@main

18 | # run on external PRs, but not on internal PRs since those will be run by push to branch

19 | if: github.event_name == 'push' || github.event.pull_request.head.repo.full_name != github.repository

20 | with:

21 | java-opts: '-Xmx4G'

22 |

23 | publish:

24 | uses: softwaremill/github-actions-workflows/.github/workflows/publish-release.yml@main

25 | needs: [build]

26 | if: github.event_name != 'pull_request' && (startsWith(github.ref, 'refs/tags/v'))

27 | secrets: inherit

28 | with:

29 | java-opts: '-Xmx4G'

--------------------------------------------------------------------------------

/.github/workflows/scala-steward.yml:

--------------------------------------------------------------------------------

1 | name: Scala Steward

2 |

3 | # This workflow will launch at 00:00 UTC every day

4 | on:

5 | schedule:

6 | - cron: '0 0 * * *'

7 | workflow_dispatch:

8 |

9 | jobs:

10 | scala-steward:

11 | uses: softwaremill/github-actions-workflows/.github/workflows/scala-steward.yml@main

12 | secrets:

13 | repo-github-token: ${{secrets.REPO_GITHUB_TOKEN}}

14 | with:

15 | java-opts: '-Xmx3500M'

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | target

2 | .idea

3 | .bsp

4 | *.iml

5 | # virtual machine crash logs, see http://www.java.com/en/download/help/error_hotspot.xml

6 | hs_err_pid*

--------------------------------------------------------------------------------

/.mergify.yml:

--------------------------------------------------------------------------------

1 | pull_request_rules:

2 | - name: delete head branch after merge

3 | conditions: []

4 | actions:

5 | delete_head_branch: {}

6 | - name: automatic merge for softwaremill-ci pull requests affecting build.sbt

7 | conditions:

8 | - author=softwaremill-ci

9 | - check-success=ci

10 | - check-success=mima

11 | - "#files=1"

12 | - files=build.sbt

13 | actions:

14 | merge:

15 | method: merge

16 | - name: automatic merge for softwaremill-ci pull requests affecting project plugins.sbt

17 | conditions:

18 | - author=softwaremill-ci

19 | - check-success=ci

20 | - check-success=mima

21 | - "#files=1"

22 | - files=project/plugins.sbt

23 | actions:

24 | merge:

25 | method: merge

26 | - name: semi-automatic merge for softwaremill-ci pull requests

27 | conditions:

28 | - author=softwaremill-ci

29 | - check-success=ci

30 | - check-success=mima

31 | - "#approved-reviews-by>=1"

32 | actions:

33 | merge:

34 | method: merge

35 | - name: automatic merge for softwaremill-ci pull requests affecting project build.properties

36 | conditions:

37 | - author=softwaremill-ci

38 | - check-success=ci

39 | - check-success=mima

40 | - "#files=1"

41 | - files=project/build.properties

42 | actions:

43 | merge:

44 | method: merge

45 | - name: automatic merge for softwaremill-ci pull requests affecting .scalafmt.conf

46 | conditions:

47 | - author=softwaremill-ci

48 | - check-success=ci

49 | - check-success=mima

50 | - "#files=1"

51 | - files=.scalafmt.conf

52 | actions:

53 | merge:

54 | method: merge

55 | - name: add label to scala steward PRs

56 | conditions:

57 | - author=softwaremill-ci

58 | actions:

59 | label:

60 | add:

61 | - dependency

--------------------------------------------------------------------------------

/.scala-steward.conf:

--------------------------------------------------------------------------------

1 | updates.ignore = [

2 | {groupId = "org.scala-lang", artifactId = "scala-compiler", version = "2.12."},

3 | {groupId = "org.scala-lang", artifactId = "scala-compiler", version = "2.13."},

4 | {groupId = "org.scala-lang", artifactId = "scala-compiler", version = "3."}

5 | ]

6 |

--------------------------------------------------------------------------------

/.scalafmt.conf:

--------------------------------------------------------------------------------

1 | runner.dialect = scala3

2 | version = 3.7.3

3 | maxColumn = 120

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "{}"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright 2017 SoftwareMill

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

203 |

--------------------------------------------------------------------------------

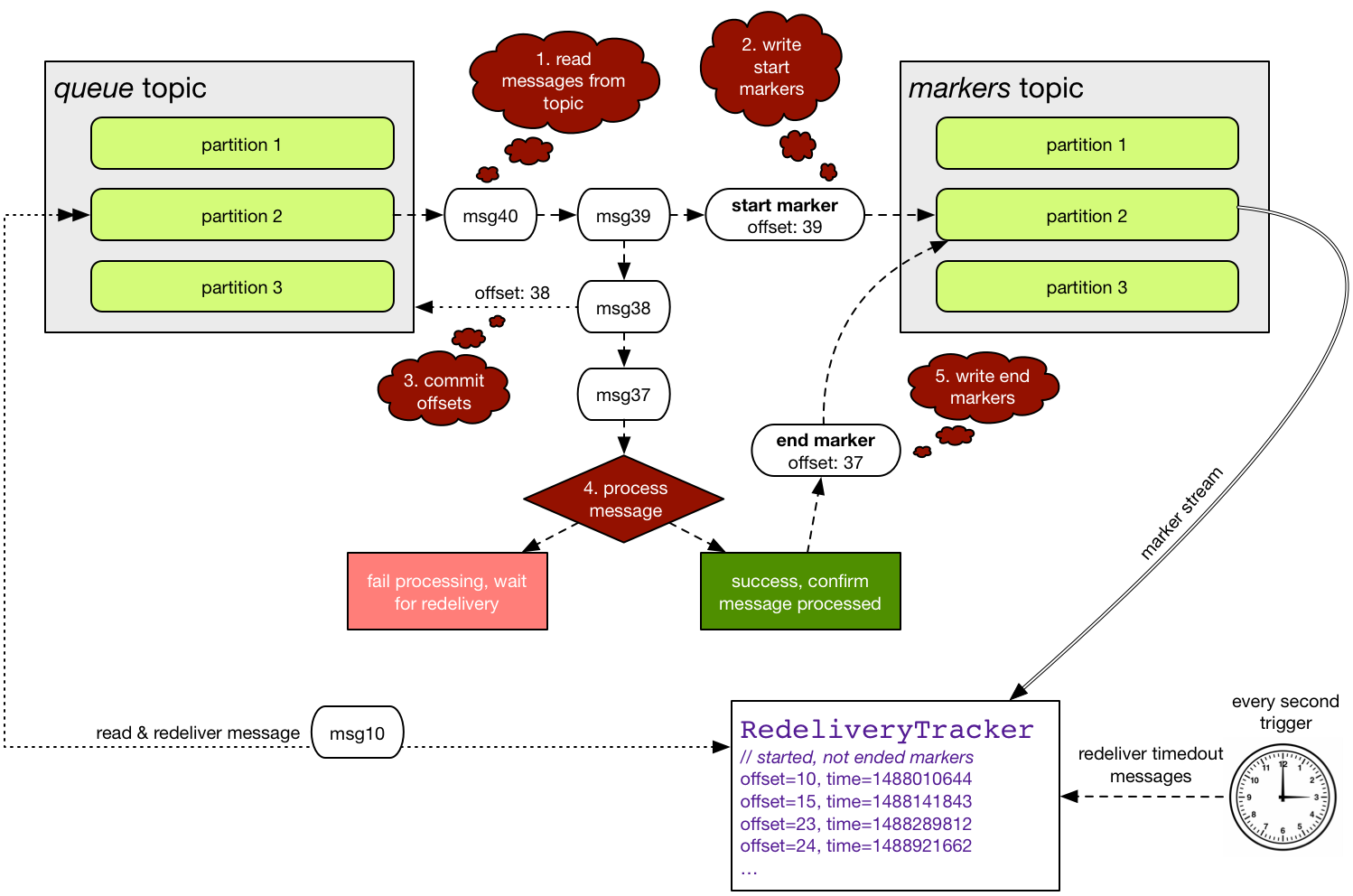

/README.md:

--------------------------------------------------------------------------------

1 | # Kafka Message Queue

2 |

3 | [Join the chat on Gitter](https://gitter.im/softwaremill/kmq?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge&utm_content=badge)

4 | [Maven Central](https://maven-badges.herokuapp.com/maven-central/com.softwaremill.kmq/core_2.12)

5 |

6 | Using `kmq` you can acknowledge processing of individual messages in Kafka, and have unacknowledged messages

7 | re-delivered after a timeout.

8 |

9 | This is in contrast to the usual Kafka offset-committing mechanism, with which you can only acknowledge all messages

10 | up to a given offset.

11 |

12 | If you are familiar with [Amazon SQS](https://aws.amazon.com/sqs/), `kmq` implements a similar message processing

13 | model.

14 |

15 | # How does this work?

16 |

17 | For a more in-depth overview, see the blog post [Using Kafka as a message queue](https://softwaremill.com/using-kafka-as-a-message-queue/),

18 | and for performance benchmarks: [Kafka with selective acknowledgments (kmq) performance & latency benchmark](https://softwaremill.com/kafka-with-selective-acknowledgments-performance/)

19 |

20 | The acknowledgment mechanism uses a `marker` topic, which should have the same number of partitions as the "main"

21 | data topic (called the `queue` topic). The marker topic is used to track which messages have been processed, by

22 | writing start/end markers for every message.

23 |

24 |

25 |

26 | # Using kmq

27 |

28 | An application using `kmq` should consist of the following components:

29 |

30 | * a number of `RedeliveryTracker`s. This component consumes the `marker` topic and redelivers messages if appropriate.

31 | Multiple copies should be started in a cluster for fail-over. The trackers use automatic partition assignment.

32 | * components which send data to the `queue` topic to be processed

33 | * queue clients, either custom or using the `KmqClient`

34 |

35 | # Maven/SBT dependency

36 |

37 | SBT:

38 |

39 | "com.softwaremill.kmq" %% "core" % "0.3.1"

40 |

41 | Maven:

42 |

43 |     <dependency>

44 |         <groupId>com.softwaremill.kmq</groupId>

45 |         <artifactId>core_2.13</artifactId>

46 |         <version>0.3.1</version>

47 |     </dependency>

48 |

49 | Note: The supported Scala versions are: 2.12, 2.13.

50 |

51 | # Client flow

52 |

53 | The flow of processing a message is as follows:

54 |

55 | 1. read messages from the `queue` topic, in batches

56 | 2. write a `start` marker to the `markers` topic for each message, wait until the markers are written

57 | 3. commit the biggest message offset to the `queue` topic

58 | 4. process messages

59 | 5. for each message, write an `end` marker. No need to wait until the markers are written.

60 |

61 | This ensures at-least-once processing of each message. Note that the acknowledgment of each message (writing the

62 | `end` marker) can be done for each message separately, out-of-order, from a different thread, server or application.

63 |

64 | # Example code

65 |

66 | There are three example applications:

67 |

68 | * `example-java/embedded`: a single java application that starts all three components (sender, client, redelivery tracker)

69 | * `example-java/standalone`: three separate runnable classes to start the different components

70 | * `example-scala`: an implementation of the client using [reactive-kafka](https://github.com/akka/reactive-kafka)

71 |

72 | # Time & timestamps

73 |

74 | How time is handled is crucial for message redelivery, as messages are redelivered after a given amount of time passes

75 | since the `start` marker was sent.

76 |

77 | To track what was sent when, `kmq` uses Kafka's message timestamp. By default, this is the message's creation time

78 | (`message.timestamp.type=CreateTime`), but for the `markers` topic, it is advisable to switch this to `LogAppendTime`.

79 | That way, the timestamps more closely reflect when the markers are really written to the log, and are guaranteed to be

80 | monotonic in each partition (which is important for redelivery - see below).

81 |

82 | To calculate which messages should be redelivered, we need to know the value of "now", to check which `start` markers

83 | were sent longer ago than the configured timeout. When a marker has been received from a partition recently, the

84 | maximum such timestamp is used as the value of "now" - as it indicates exactly how far we are in processing the

85 | partition. What "recently" means depends on the `useNowForRedeliverDespiteNoMarkerSeenForMs` config setting. Otherwise,

86 | the current system time is used, as we assume that all markers from the partition have been processed.

87 |

88 | # Dead letter queue (DLQ)

89 | The redelivery of the message is attempted only a configured number of times. By default, it's 3. You can change that number by setting `maxRedeliveryCount` value in `KmqConfig`.

90 | After that number is exceeded, messages are forwarded to a topic working as a *dead letter queue*. By default, the name of that topic is the name of the message topic with the suffix `__undelivered` appended. You can configure the name by setting `deadLetterTopic` in `KmqConfig`.

91 | The number of redeliveries is tracked by `kmq` with a special header. By default, the name of that header is `kmq-redelivery-count`. You can change it by setting `redeliveryCountHeader` in `KmqConfig`.

--------------------------------------------------------------------------------
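The README's client flow, its choice of "now" in *Time & timestamps*, and its dead-letter routing can be tied together in a small in-memory model of one marker-topic partition. This is a sketch under stated assumptions, not kmq's implementation: the class `MarkerPartitionModel`, its methods, and the `>=` comparison against the timeout are hypothetical. Only the setting name `useNowForRedeliverDespiteNoMarkerSeenForMs`, the `__undelivered` suffix, and the idea of a redelivery-count limit come from the README. All times are plain milliseconds.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory model of ONE marker-topic partition; not kmq's actual classes.
class MarkerPartitionModel {
    private final long messageTimeoutMs;
    private final long useNowForRedeliverDespiteNoMarkerSeenForMs;
    // queue offset -> timestamp of its start marker; an end marker removes the entry
    private final Map<Long, Long> openStartMarkers = new TreeMap<>();
    private long maxMarkerTimestamp = Long.MIN_VALUE;
    private long lastMarkerSeenAt = Long.MIN_VALUE; // system time when the latest marker arrived

    MarkerPartitionModel(long messageTimeoutMs, long useNowForRedeliverDespiteNoMarkerSeenForMs) {
        this.messageTimeoutMs = messageTimeoutMs;
        this.useNowForRedeliverDespiteNoMarkerSeenForMs = useNowForRedeliverDespiteNoMarkerSeenForMs;
    }

    // Step 2 of the client flow: a start marker is written before processing.
    void onStartMarker(long offset, long markerTimestamp, long systemTime) {
        openStartMarkers.put(offset, markerTimestamp);
        markerSeen(markerTimestamp, systemTime);
    }

    // Step 5: the end marker acknowledges the message, so it is no longer tracked.
    void onEndMarker(long offset, long markerTimestamp, long systemTime) {
        openStartMarkers.remove(offset);
        markerSeen(markerTimestamp, systemTime);
    }

    private void markerSeen(long markerTimestamp, long systemTime) {
        maxMarkerTimestamp = Math.max(maxMarkerTimestamp, markerTimestamp);
        lastMarkerSeenAt = systemTime;
    }

    // "now": the max marker timestamp if a marker arrived recently, else the system time.
    long now(long systemTime) {
        boolean seenRecently = lastMarkerSeenAt != Long.MIN_VALUE
                && systemTime - lastMarkerSeenAt < useNowForRedeliverDespiteNoMarkerSeenForMs;
        return seenRecently ? maxMarkerTimestamp : systemTime;
    }

    // Offsets whose start marker is at least messageTimeoutMs older than "now".
    List<Long> dueForRedelivery(long systemTime) {
        long now = now(systemTime);
        List<Long> due = new ArrayList<>();
        for (Map.Entry<Long, Long> entry : openStartMarkers.entrySet()) {
            if (now - entry.getValue() >= messageTimeoutMs) {
                due.add(entry.getKey());
            }
        }
        return due;
    }

    // Dead letter routing based on the redelivery count carried in the message header.
    static String targetTopic(String queueTopic, int redeliveryCount, int maxRedeliveryCount) {
        return redeliveryCount >= maxRedeliveryCount ? queueTopic + "__undelivered" : queueTopic;
    }

    public static void main(String[] args) {
        MarkerPartitionModel model = new MarkerPartitionModel(1_000, 5_000);
        model.onStartMarker(0, 100, 100);
        model.onStartMarker(1, 200, 200);
        model.onEndMarker(0, 300, 300);
        System.out.println(model.dueForRedelivery(400));    // [] ("now" = 300, offset 1 is 100 ms old)
        System.out.println(model.dueForRedelivery(10_000)); // [1] (no recent marker, system time used)
        System.out.println(targetTopic("queue", 3, 3));     // queue__undelivered
    }
}
```

Using the maximum marker timestamp as "now" while markers are still arriving means a tracker that is catching up on a backlog does not redeliver messages just because the wall clock has moved on; this is also why monotonic `LogAppendTime` timestamps on the marker topic matter.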

/build.sbt:

--------------------------------------------------------------------------------

1 | import com.softwaremill.Publish.ossPublishSettings

2 | import com.softwaremill.SbtSoftwareMillCommon.commonSmlBuildSettings

3 | import sbt.Keys._

4 | import sbt._

5 |

6 | val scala2_13 = "2.13.10"

7 |

8 | val kafkaVersion = "3.4.0"

9 | val logbackVersion = "1.4.7"

10 | val akkaVersion = "2.6.19"

11 | val akkaStreamKafkaVersion = "2.1.1"

12 | val scalaLoggingVersion = "3.9.5"

13 | val scalaTestVersion = "3.2.15"

14 | val catsEffectVersion = "3.4.9"

15 | val fs2Version = "3.6.1"

16 | val logs4CatsVersion = "2.6.0"

17 | val fs2KafkaVersion = "2.6.0"

18 |

19 | // slow down Tests for CI

20 | Global / parallelExecution := false

21 | Global / concurrentRestrictions += Tags.limit(Tags.Test, 1)

22 | // disable mima checks globally

23 | Global / mimaPreviousArtifacts := Set.empty

24 |

25 | lazy val commonSettings = commonSmlBuildSettings ++ ossPublishSettings ++ Seq(

26 | organization := "com.softwaremill.kmq",

27 | mimaPreviousArtifacts := Set.empty,

28 | versionScheme := Some("semver-spec"),

29 | scalacOptions ++= Seq("-unchecked", "-deprecation"),

30 | evictionErrorLevel := Level.Info,

31 | addCompilerPlugin("com.olegpy" %% "better-monadic-for" % "0.3.1"),

32 | ideSkipProject := (scalaVersion.value != scala2_13),

33 | mimaPreviousArtifacts := previousStableVersion.value.map(organization.value %% moduleName.value % _).toSet,

34 | mimaReportBinaryIssues := {

35 | if ((publish / skip).value) {} else mimaReportBinaryIssues.value

36 | }

37 | )

38 |

39 | lazy val kmq = (project in file("."))

40 | .settings(commonSettings)

41 | .settings(

42 | publishArtifact := false,

43 | name := "kmq",

44 | scalaVersion := scala2_13

45 | )

46 | .aggregate((core.projectRefs ++ exampleJava.projectRefs ++ exampleScala.projectRefs): _*)

47 |

48 | lazy val core = (projectMatrix in file("core"))

49 | .settings(commonSettings)

50 | .settings(

51 | libraryDependencies ++= List(

52 | "org.apache.kafka" % "kafka-clients" % kafkaVersion exclude ("org.scala-lang.modules", "scala-java8-compat"),

53 | "com.typesafe.scala-logging" %% "scala-logging" % scalaLoggingVersion,

54 | "org.typelevel" %% "cats-effect" % catsEffectVersion,

55 | "co.fs2" %% "fs2-core" % fs2Version,

56 | "org.typelevel" %% "log4cats-core" % logs4CatsVersion,

57 | "org.typelevel" %% "log4cats-slf4j" % logs4CatsVersion,

58 | "org.scalatest" %% "scalatest" % scalaTestVersion % Test,

59 | "org.scalatest" %% "scalatest-flatspec" % scalaTestVersion % Test,

60 | "io.github.embeddedkafka" %% "embedded-kafka" % kafkaVersion % Test exclude ("javax.jms", "jms"),

61 | "ch.qos.logback" % "logback-classic" % logbackVersion % Test,

62 | "com.github.fd4s" %% "fs2-kafka" % fs2KafkaVersion % Test

63 | )

64 | )

65 | .jvmPlatform(scalaVersions = Seq(scala2_13))

66 |

67 | lazy val exampleJava = (projectMatrix in file("example-java"))

68 | .settings(commonSettings)

69 | .settings(

70 | publishArtifact := false,

71 | libraryDependencies ++= List(

72 | "org.apache.kafka" %% "kafka" % kafkaVersion,

73 | "io.github.embeddedkafka" %% "embedded-kafka" % kafkaVersion,

74 | "ch.qos.logback" % "logback-classic" % logbackVersion % Runtime

75 | )

76 | )

77 | .jvmPlatform(scalaVersions = Seq(scala2_13))

78 | .dependsOn(core)

79 |

80 | lazy val exampleScala = (projectMatrix in file("example-scala"))

81 | .settings(commonSettings)

82 | .settings(

83 | publishArtifact := false,

84 | libraryDependencies ++= List(

85 | "com.typesafe.akka" %% "akka-stream-kafka" % akkaStreamKafkaVersion,

86 | "ch.qos.logback" % "logback-classic" % logbackVersion % Runtime

87 | )

88 | )

89 | .jvmPlatform(scalaVersions = Seq(scala2_13))

90 | .dependsOn(core)

91 |

--------------------------------------------------------------------------------

/core/src/main/java/com/softwaremill/kmq/EndMarker.java:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq;

2 |

3 | import java.nio.ByteBuffer;

4 |

5 | public class EndMarker implements MarkerValue {

6 | private EndMarker() {}

7 |

8 | public byte[] serialize() {

9 | return ByteBuffer.allocate(1)

10 | .put((byte) 0)

11 | .array();

12 | }

13 |

14 | @Override

15 | public String toString() {

16 | return "EndMarker{}";

17 | }

18 |

19 | @Override

20 | public boolean equals(Object o) {

21 | if (this == o) return true;

22 | if (o == null || getClass() != o.getClass()) return false;

23 | return true;

24 | }

25 |

26 | @Override

27 | public int hashCode() {

28 | return 0;

29 | }

30 |

31 | public static final EndMarker INSTANCE = new EndMarker();

32 | }

33 |

--------------------------------------------------------------------------------
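`EndMarker` serializes to a single zero byte, which behaves like a type tag in front of an empty payload. The sketch below shows how such a leading tag lets a reader tell marker kinds apart. It is a hypothetical illustration: `MarkerBytesSketch`, the start tag value `1`, and the 8-byte start-marker payload are assumptions, since `StartMarker.java` is not shown here. Only the end-marker encoding matches `EndMarker.serialize()`.

```java
import java.nio.ByteBuffer;

// Hypothetical tag-byte encoding; only END_TAG matches EndMarker.serialize().
class MarkerBytesSketch {
    static final byte END_TAG = 0;   // the byte EndMarker.serialize() writes
    static final byte START_TAG = 1; // ASSUMPTION: illustrative tag, not kmq's format

    static byte[] endMarker() {
        return ByteBuffer.allocate(1).put(END_TAG).array();
    }

    // ASSUMPTION: a start marker carrying one long payload after its tag.
    static byte[] startMarker(long payload) {
        return ByteBuffer.allocate(1 + Long.BYTES).put(START_TAG).putLong(payload).array();
    }

    // Reads the tag byte first, then whatever payload that tag implies.
    static String describe(byte[] bytes) {
        ByteBuffer buf = ByteBuffer.wrap(bytes);
        byte tag = buf.get();
        if (tag == END_TAG) {
            return "EndMarker{}";
        } else if (tag == START_TAG) {
            return "start(" + buf.getLong() + ")";
        }
        throw new IllegalArgumentException("unknown marker tag: " + tag);
    }

    public static void main(String[] args) {
        System.out.println(describe(endMarker()));         // EndMarker{}
        System.out.println(describe(startMarker(30_000))); // start(30000)
    }
}
```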

/core/src/main/java/com/softwaremill/kmq/KafkaClients.java:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq;

2 |

3 | import org.apache.kafka.clients.consumer.ConsumerConfig;

4 | import org.apache.kafka.clients.consumer.KafkaConsumer;

5 | import org.apache.kafka.clients.producer.KafkaProducer;

6 | import org.apache.kafka.common.serialization.Deserializer;

7 | import org.apache.kafka.common.serialization.Serializer;

8 |

9 | import java.util.Collections;

10 | import java.util.Map;

11 | import java.util.Properties;

12 |

13 | public class KafkaClients {

14 | private final String bootstrapServers;

15 | private final Map extraGlobalConfig;

16 |

17 | public KafkaClients(String bootstrapServers) {

18 | this(bootstrapServers, Collections.emptyMap());

19 | }

20 |

21 | /**

22 | * @param extraGlobalConfig Extra Kafka parameter configuration, e.g. SSL

23 | */

24 | public KafkaClients(String bootstrapServers, Map extraGlobalConfig) {

25 | this.bootstrapServers = bootstrapServers;

26 | this.extraGlobalConfig = extraGlobalConfig;

27 | }

28 |

29 | public KafkaProducer createProducer(Class> keySerializer,

30 | Class> valueSerializer) {

31 | return createProducer(keySerializer, valueSerializer, Collections.emptyMap());

32 | }

33 |

34 | public KafkaProducer createProducer(Class> keySerializer,

35 | Class> valueSerializer,

36 | Map extraConfig) {

37 | Properties props = new Properties();

38 | props.put("bootstrap.servers", bootstrapServers);

39 | props.put("acks", "all");

40 | props.put("retries", 0);

41 | props.put("batch.size", 16384);

42 | props.put("linger.ms", 1);

43 | props.put("buffer.memory", 33554432);

44 | props.put("key.serializer", keySerializer.getName());

45 | props.put("value.serializer", valueSerializer.getName());

46 | for (Map.Entry extraCfgEntry : extraConfig.entrySet()) {

47 | props.put(extraCfgEntry.getKey(), extraCfgEntry.getValue());

48 | }

49 | for (Map.Entry extraCfgEntry : extraGlobalConfig.entrySet()) {

50 | props.put(extraCfgEntry.getKey(), extraCfgEntry.getValue());

51 | }

52 |

53 | return new KafkaProducer<>(props);

54 | }

55 |

56 | public KafkaConsumer createConsumer(String groupId,

57 | Class> keyDeserializer,

58 | Class> valueDeserializer) {

59 | return createConsumer(groupId, keyDeserializer, valueDeserializer, Collections.emptyMap());

60 | }

61 |

62 | public KafkaConsumer createConsumer(String groupId,

63 | Class> keyDeserializer,

64 | Class> valueDeserializer,

65 | Map extraConfig) {

66 | Properties props = new Properties();

67 | props.put("bootstrap.servers", bootstrapServers);

68 | props.put("enable.auto.commit", "false");

69 | props.put("key.deserializer", keyDeserializer.getName());

70 | props.put("value.deserializer", valueDeserializer.getName());

71 | props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");

72 | if (groupId != null) {

73 | props.put(ConsumerConfig.GROUP_ID_CONFIG, groupId);

74 | }

75 | for (Map.Entry<String, Object> extraCfgEntry : extraConfig.entrySet()) {

76 | props.put(extraCfgEntry.getKey(), extraCfgEntry.getValue());

77 | }

78 | for (Map.Entry<String, Object> extraCfgEntry : extraGlobalConfig.entrySet()) {

79 | props.put(extraCfgEntry.getKey(), extraCfgEntry.getValue());

80 | }

81 |

82 | return new KafkaConsumer<>(props);

83 | }

84 | }

85 |
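In both `createProducer` and `createConsumer`, the built-in defaults are written first, then the per-call `extraConfig`, then `extraGlobalConfig`. Because `Properties.put` overwrites existing keys, a key present in `extraGlobalConfig` (for example SSL settings) wins over the same key passed per call. The sketch below reproduces only that merge order with plain `java.util.Properties`; the class `ConfigMergeSketch` is hypothetical and creates no Kafka client.

```java
import java.util.Map;
import java.util.Properties;

// Hypothetical helper: mirrors the order in which KafkaClients fills its Properties.
class ConfigMergeSketch {
    static Properties merge(Map<String, Object> defaults,
                            Map<String, Object> extraConfig,
                            Map<String, Object> extraGlobalConfig) {
        Properties props = new Properties();
        // 1. built-in defaults (e.g. "acks" = "all" for producers)
        props.putAll(defaults);
        // 2. per-call overrides
        props.putAll(extraConfig);
        // 3. global overrides are applied last, so they take precedence
        props.putAll(extraGlobalConfig);
        return props;
    }
}
```

With defaults `linger.ms=1`, a per-call `linger.ms=5` and a global `linger.ms=10`, the merged value is `10`.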

--------------------------------------------------------------------------------

/core/src/main/java/com/softwaremill/kmq/KmqClient.java:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq;

2 |

3 | import org.apache.kafka.clients.consumer.ConsumerRecord;

4 | import org.apache.kafka.clients.consumer.ConsumerRecords;

5 | import org.apache.kafka.clients.consumer.KafkaConsumer;

6 | import org.apache.kafka.clients.producer.KafkaProducer;

7 | import org.apache.kafka.clients.producer.ProducerConfig;

8 | import org.apache.kafka.clients.producer.ProducerRecord;

9 | import org.apache.kafka.clients.producer.RecordMetadata;

10 | import org.apache.kafka.common.serialization.Deserializer;

11 | import org.slf4j.Logger;

12 | import org.slf4j.LoggerFactory;

13 |

14 | import java.io.Closeable;

15 | import java.io.IOException;

16 | import java.time.Duration;

17 | import java.util.*;

18 | import java.util.concurrent.Future;

19 |

20 | /**

21 | * Kafka-based MQ client. A call to `nextBatch()` will:

22 | * 1. poll the `msgTopic` for at most `msgPollTimeout`

23 | * 2. send the start markers to the `markerTopic`, wait until they are written

24 | * 3. commit read offsets of the messages from `msgTopic`

25 | *

26 | * The next step of the message flow - 4. processing the messages - should be done by the client.

27 | *

28 | * After each message is processed, the `processed()` method should be called, which will:

29 | * 5. send an end marker to the `markerTopic`

30 | *

31 | * Note that `processed()` can be called at any time for any message, and out of order. If processing fails, it shouldn't

32 | * be called at all.

33 | */

34 | public class KmqClient<K, V> implements Closeable {

35 | private final static Logger LOG = LoggerFactory.getLogger(KmqClient.class);

36 |

37 | private final KmqConfig config;

38 | private final Duration msgPollTimeout;

39 |

40 | private final KafkaConsumer<K, V> msgConsumer;

41 | private final KafkaProducer<MarkerKey, MarkerValue> markerProducer;

42 |

43 | public KmqClient(KmqConfig config, KafkaClients clients,

44 | Class<? extends Deserializer<K>> keyDeserializer,

45 | Class<? extends Deserializer<V>> valueDeserializer,

46 | Duration msgPollTimeout) {

47 |

48 | this.config = config;

49 | this.msgPollTimeout = msgPollTimeout;

50 |

51 | this.msgConsumer = clients.createConsumer(config.getMsgConsumerGroupId(), keyDeserializer, valueDeserializer);

52 | // Using the custom partitioner, each offset-partition will contain markers only from a single queue-partition.

53 | this.markerProducer = clients.createProducer(

54 | MarkerKey.MarkerKeySerializer.class, MarkerValue.MarkerValueSerializer.class,

55 | Collections.singletonMap(ProducerConfig.PARTITIONER_CLASS_CONFIG, PartitionFromMarkerKey.class));

56 |

57 | LOG.info(String.format("Subscribing to topic: %s, using group id: %s", config.getMsgTopic(), config.getMsgConsumerGroupId()));

58 | msgConsumer.subscribe(Collections.singletonList(config.getMsgTopic()));

59 | }

60 |

61 | public ConsumerRecords<K, V> nextBatch() {

62 | List<Future<RecordMetadata>> markerSends = new ArrayList<>();

63 |

64 | // 1. Get messages from topic, in batches

65 | ConsumerRecords<K, V> records = msgConsumer.poll(msgPollTimeout);

66 | for (ConsumerRecord<K, V> record : records) {

67 | // 2. Write a "start" marker. Collecting the future responses.

68 | markerSends.add(markerProducer.send(

69 | new ProducerRecord<>(config.getMarkerTopic(),

70 | MarkerKey.fromRecord(record),

71 | new StartMarker(config.getMsgTimeoutMs()))));

72 | }

73 |

74 | // Waiting for a confirmation that each start marker has been sent

75 | markerSends.forEach(f -> {

76 | try { f.get(); } catch (Exception e) { throw new RuntimeException(e); }

77 | });

78 |

79 | // 3. After all start markers are sent, commit offsets. This needs to be done as close to writing the

80 | // start markers as possible, to minimize the number of messages processed twice in case of failure.

81 | msgConsumer.commitSync();

82 |

83 | return records;

84 | }

85 |

86 | // client-side: 4. process the messages

87 |

88 | /**

89 | * @param record The message which should be acknowledged as processed; an end marker will be sent to the

90 | * markers topic.

91 | * @return Result of the marker send. Usually it can be ignored: we don't need a guarantee that the marker has been sent;

92 | * in the worst case, the message will be reprocessed.

93 | */

94 | public Future<RecordMetadata> processed(ConsumerRecord<K, V> record) {

95 | // 5. writing an "end" marker. No need to wait for confirmation that it has been sent. It would be

96 | // nice, though, not to ignore that output completely.

97 | return markerProducer.send(new ProducerRecord<>(config.getMarkerTopic(),

98 | MarkerKey.fromRecord(record),

99 | EndMarker.INSTANCE));

100 | }

101 |

102 | @Override

103 | public void close() throws IOException {

104 | msgConsumer.close();

105 | markerProducer.close();

106 | }

107 | }

108 |
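The class Javadoc describes a small protocol: one start marker per polled message, one end marker per processed message, and redelivery of anything that is still unended after its timeout. The following sketch models only that bookkeeping in memory. `MarkerFlowSketch` is a hypothetical class, not part of kmq; it skips Kafka, offset commits and the actual redelivery, and it approximates the deadline as `now + msgTimeoutMs`, whereas the real tracker adds the start marker's `redeliverAfter` to the marker record's timestamp.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory stand-in for the marker topic: tracks which message
// offsets have a start marker but no end marker yet.
class MarkerFlowSketch {
    // message offset -> redelivery deadline
    private final Map<Long, Long> inProgress = new LinkedHashMap<>();
    private final long msgTimeoutMs;

    MarkerFlowSketch(long msgTimeoutMs) {
        this.msgTimeoutMs = msgTimeoutMs;
    }

    // Step 2 of nextBatch(): a start marker for every polled offset.
    void startBatch(List<Long> offsets, long now) {
        for (long offset : offsets) {
            inProgress.put(offset, now + msgTimeoutMs);
        }
    }

    // Step 5, processed(): an end marker clears exactly one entry, in any order.
    void processed(long offset) {
        inProgress.remove(offset);
    }

    // Offsets that were started, never ended, and whose deadline is before `now`.
    List<Long> dueForRedelivery(long now) {
        List<Long> due = new ArrayList<>();
        for (Map.Entry<Long, Long> e : inProgress.entrySet()) {
            if (e.getValue() < now) {
                due.add(e.getKey());
            }
        }
        return due;
    }
}
```

Calling `processed()` out of order is harmless in this model for the same reason it is in the client: each end marker refers to a single message by its key.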

--------------------------------------------------------------------------------

/core/src/main/java/com/softwaremill/kmq/KmqConfig.java:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq;

2 |

3 | /**

4 | * Configuration for the Kafka-based MQ.

5 | */

6 | public class KmqConfig {

7 | private static final int DEFAULT_MAX_REDELIVERY_COUNT = 3;

8 | private static final String DEFAULT_REDELIVERY_COUNT_HEADER = "kmq-redelivery-count";

9 | private static final String DEFAULT_DEAD_LETTER_TOPIC_SUFFIX = "__undelivered";

10 |

11 | private final String msgTopic;

12 | private final String markerTopic;

13 | private final String msgConsumerGroupId;

14 | private final String markerConsumerGroupId;

15 | private final String markerConsumerOffsetGroupId;

16 | private final long msgTimeoutMs;

17 | private final long useNowForRedeliverDespiteNoMarkerSeenForMs;

18 | private final String deadLetterTopic;

19 | private final String redeliveryCountHeader;

20 | private final int maxRedeliveryCount;

21 |

22 | /**

23 | * @param msgTopic Name of the Kafka topic containing the messages.

24 | * @param markerTopic Name of the Kafka topic containing the markers.

25 | * @param msgConsumerGroupId Consumer group id for reading messages from `msgTopic`.

26 | * @param markerConsumerGroupId Consumer group id for reading messages from `markerTopic`.

27 | * @param markerConsumerOffsetGroupId Consumer group id used only to commit `markerTopic` offsets directly, via the consumer API.

28 | * @param msgTimeoutMs Timeout after which messages that have not been processed are redelivered.

29 | * @param useNowForRedeliverDespiteNoMarkerSeenForMs If no marker has been seen in a partition for this long, "now" is used to

30 | * calculate redelivery instead of the maximum marker timestamp seen in that partition.

31 | * @param deadLetterTopic Name of the Kafka topic containing all undelivered messages.

32 | * @param redeliveryCountHeader Name of the redelivery count header.

33 | * @param maxRedeliveryCount Max number of message redeliveries.

34 | */

35 | public KmqConfig(

36 | String msgTopic, String markerTopic, String msgConsumerGroupId, String markerConsumerGroupId,

37 | String markerConsumerOffsetGroupId, long msgTimeoutMs, long useNowForRedeliverDespiteNoMarkerSeenForMs,

38 | String deadLetterTopic, String redeliveryCountHeader, int maxRedeliveryCount) {

39 |

40 | this.msgTopic = msgTopic;

41 | this.markerTopic = markerTopic;

42 | this.msgConsumerGroupId = msgConsumerGroupId;

43 | this.markerConsumerGroupId = markerConsumerGroupId;

44 | this.markerConsumerOffsetGroupId = markerConsumerOffsetGroupId;

45 | this.msgTimeoutMs = msgTimeoutMs;

46 | this.useNowForRedeliverDespiteNoMarkerSeenForMs = useNowForRedeliverDespiteNoMarkerSeenForMs;

47 | this.deadLetterTopic = deadLetterTopic;

48 | this.redeliveryCountHeader = redeliveryCountHeader;

49 | this.maxRedeliveryCount = maxRedeliveryCount;

50 | }

51 |

52 | public KmqConfig(

53 | String msgTopic, String markerTopic, String msgConsumerGroupId, String markerConsumerGroupId,

54 | String markerConsumerOffsetGroupId,

55 | long msgTimeoutMs, long useNowForRedeliverDespiteNoMarkerSeenForMs) {

56 |

57 | this(msgTopic, markerTopic, msgConsumerGroupId, markerConsumerGroupId,

58 | markerConsumerOffsetGroupId, msgTimeoutMs, useNowForRedeliverDespiteNoMarkerSeenForMs,

59 | msgTopic + DEFAULT_DEAD_LETTER_TOPIC_SUFFIX, DEFAULT_REDELIVERY_COUNT_HEADER, DEFAULT_MAX_REDELIVERY_COUNT);

60 | }

61 |

62 | public String getMsgTopic() {

63 | return msgTopic;

64 | }

65 |

66 | public String getMarkerTopic() {

67 | return markerTopic;

68 | }

69 |

70 | public String getMsgConsumerGroupId() {

71 | return msgConsumerGroupId;

72 | }

73 |

74 | public String getMarkerConsumerGroupId() {

75 | return markerConsumerGroupId;

76 | }

77 |

78 | public String getMarkerConsumerOffsetGroupId() {

79 | return markerConsumerOffsetGroupId;

80 | }

81 |

82 | public long getMsgTimeoutMs() {

83 | return msgTimeoutMs;

84 | }

85 |

86 | public long getUseNowForRedeliverDespiteNoMarkerSeenForMs() {

87 | return useNowForRedeliverDespiteNoMarkerSeenForMs;

88 | }

89 |

90 | public String getDeadLetterTopic() {

91 | return deadLetterTopic;

92 | }

93 |

94 | public String getRedeliveryCountHeader() {

95 | return redeliveryCountHeader;

96 | }

97 |

98 | public int getMaxRedeliveryCount() {

99 | return maxRedeliveryCount;

100 | }

101 | }

102 |
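With the shorter, seven-argument constructor, the last three settings are derived from the constants in `KmqConfig`. For example, `new KmqConfig("queue", "queue-markers", "kmq_client", "kmq_redelivery", "kmq_marker_offset", 10000, 1000)` (illustrative topic and group names) would use `queue__undelivered` as the dead-letter topic, `kmq-redelivery-count` as the header name and at most 3 redeliveries. The stand-alone sketch below, with the hypothetical class `KmqConfigDefaultsSketch`, just reproduces that derivation so it can be checked without Kafka on the classpath.

```java
// Hypothetical helper mirroring the defaults applied by the 7-argument KmqConfig constructor.
class KmqConfigDefaultsSketch {
    static final int DEFAULT_MAX_REDELIVERY_COUNT = 3;
    static final String DEFAULT_REDELIVERY_COUNT_HEADER = "kmq-redelivery-count";
    static final String DEFAULT_DEAD_LETTER_TOPIC_SUFFIX = "__undelivered";

    // The dead-letter topic name is the message topic plus a fixed suffix.
    static String deadLetterTopicFor(String msgTopic) {
        return msgTopic + DEFAULT_DEAD_LETTER_TOPIC_SUFFIX;
    }
}
```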

--------------------------------------------------------------------------------

/core/src/main/java/com/softwaremill/kmq/MarkerKey.java:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq;

2 |

3 | import org.apache.kafka.clients.consumer.ConsumerRecord;

4 | import org.apache.kafka.common.serialization.Deserializer;

5 | import org.apache.kafka.common.serialization.Serializer;

6 |

7 | import java.nio.ByteBuffer;

8 | import java.util.Map;

9 | import java.util.Objects;

10 |

11 | public class MarkerKey {

12 | private final int partition;

13 | private final long messageOffset;

14 |

15 | public MarkerKey(int partition, long messageOffset) {

16 | this.partition = partition;

17 | this.messageOffset = messageOffset;

18 | }

19 |

20 | public int getPartition() {

21 | return partition;

22 | }

23 |

24 | public long getMessageOffset() {

25 | return messageOffset;

26 | }

27 |

28 | public byte[] serialize() {

29 | return ByteBuffer.allocate(4+8)

30 | .putInt(partition)

31 | .putLong(messageOffset)

32 | .array();

33 | }

34 |

35 | @Override

36 | public String toString() {

37 | return "MarkerKey{" +

38 | "partition=" + partition +

39 | ", messageOffset=" + messageOffset +

40 | '}';

41 | }

42 |

43 | @Override

44 | public boolean equals(Object o) {

45 | if (this == o) return true;

46 | if (o == null || getClass() != o.getClass()) return false;

47 | MarkerKey markerKey = (MarkerKey) o;

48 | return partition == markerKey.partition &&

49 | messageOffset == markerKey.messageOffset;

50 | }

51 |

52 | @Override

53 | public int hashCode() {

54 | return Objects.hash(partition, messageOffset);

55 | }

56 |

57 | public static class MarkerKeySerializer implements Serializer<MarkerKey> {

58 | @Override

59 | public void configure(Map<String, ?> configs, boolean isKey) {}

60 |

61 | @Override

62 | public byte[] serialize(String topic, MarkerKey data) {

63 | return data.serialize();

64 | }

65 |

66 | @Override

67 | public void close() {}

68 | }

69 |

70 | public static class MarkerKeyDeserializer implements Deserializer<MarkerKey> {

71 | @Override

72 | public void configure(Map<String, ?> configs, boolean isKey) {}

73 |

74 | @Override

75 | public MarkerKey deserialize(String topic, byte[] data) {

76 | ByteBuffer bb = ByteBuffer.wrap(data);

77 | return new MarkerKey(bb.getInt(), bb.getLong());

78 | }

79 |

80 | @Override

81 | public void close() {}

82 | }

83 |

84 | public static MarkerKey fromRecord(ConsumerRecord r) {

85 | return new MarkerKey(r.partition(), r.offset());

86 | }

87 | }

88 |
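A `MarkerKey` is serialized as a fixed 12-byte value: a 4-byte partition followed by an 8-byte message offset, in `ByteBuffer`'s default big-endian order. Java evaluates constructor arguments left to right, so `new MarkerKey(bb.getInt(), bb.getLong())` reads the fields in the order they were written. The sketch below (hypothetical class `MarkerKeyCodecSketch`, no Kafka dependency) round-trips that layout.

```java
import java.nio.ByteBuffer;

// Stand-alone copy of the MarkerKey wire format: int partition, then long offset (big-endian).
class MarkerKeyCodecSketch {
    static byte[] encode(int partition, long messageOffset) {
        return ByteBuffer.allocate(4 + 8)
                .putInt(partition)
                .putLong(messageOffset)
                .array();
    }

    // Returns {partition, messageOffset}, reading fields in the order they were written.
    static long[] decode(byte[] data) {
        ByteBuffer bb = ByteBuffer.wrap(data);
        int partition = bb.getInt();
        long offset = bb.getLong();
        return new long[] {partition, offset};
    }
}
```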

--------------------------------------------------------------------------------

/core/src/main/java/com/softwaremill/kmq/MarkerValue.java:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq;

2 |

3 | import org.apache.kafka.common.serialization.Deserializer;

4 | import org.apache.kafka.common.serialization.Serializer;

5 |

6 | import java.nio.ByteBuffer;

7 | import java.util.Map;

8 |

9 | public interface MarkerValue {

10 | byte[] serialize();

11 |

12 | class MarkerValueSerializer implements Serializer<MarkerValue> {

13 | @Override

14 | public void configure(Map<String, ?> configs, boolean isKey) {}

15 |

16 | @Override

17 | public byte[] serialize(String topic, MarkerValue data) {

18 | if (data == null) {

19 | return new byte[0];

20 | } else {

21 | return data.serialize();

22 | }

23 | }

24 |

25 | @Override

26 | public void close() {}

27 | }

28 |

29 | class MarkerValueDeserializer implements Deserializer<MarkerValue> {

30 | @Override

31 | public void configure(Map<String, ?> configs, boolean isKey) {}

32 |

33 | @Override

34 | public MarkerValue deserialize(String topic, byte[] data) {

35 | if (data.length == 0) {

36 | return null;

37 | } else {

38 | ByteBuffer bb = ByteBuffer.wrap(data);

39 | if (bb.get() == 1) {

40 | return new StartMarker(bb.getLong());

41 | } else {

42 | return EndMarker.INSTANCE;

43 | }

44 | }

45 | }

46 |

47 | @Override

48 | public void close() {}

49 | }

50 | }

51 |
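The value format is tagged by its first byte: a `null` value is written as an empty array and read back as `null`, a first byte of `1` denotes a start marker followed by an 8-byte `redeliverAfter` (as written by `StartMarker.serialize()`), and any other first byte is decoded as `EndMarker.INSTANCE`. `EndMarker`'s own encoding is not shown in this listing. The hypothetical `MarkerValueDecodeSketch` below replays that decision logic, returning strings instead of marker objects.

```java
import java.nio.ByteBuffer;

// Hypothetical stand-alone model of MarkerValueDeserializer's decision logic.
class MarkerValueDecodeSketch {
    // Same layout as StartMarker.serialize(): tag byte 1, then 8-byte redeliverAfter.
    static byte[] encodeStart(long redeliverAfter) {
        return ByteBuffer.allocate(1 + 8).put((byte) 1).putLong(redeliverAfter).array();
    }

    // "null" for an empty payload, "start:<redeliverAfter>" when the tag byte is 1,
    // "end" for any other tag byte (the real deserializer returns EndMarker.INSTANCE).
    static String describe(byte[] data) {
        if (data.length == 0) {
            return "null";
        }
        ByteBuffer bb = ByteBuffer.wrap(data);
        if (bb.get() == 1) {
            return "start:" + bb.getLong();
        }
        return "end";
    }
}
```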

--------------------------------------------------------------------------------

/core/src/main/java/com/softwaremill/kmq/PartitionFromMarkerKey.java:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq;

2 |

3 | import org.apache.kafka.clients.producer.Partitioner;

4 | import org.apache.kafka.common.Cluster;

5 |

6 | import java.util.Map;

7 |

8 | /**

9 | * Assigns partitions based on the partition contained in the key, which must be a `MarkerKey`.

10 | */

11 | public class PartitionFromMarkerKey implements Partitioner {

12 | @Override

13 | public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster) {

14 | return ((MarkerKey) key).getPartition();

15 | }

16 |

17 | @Override

18 | public void close() {}

19 |

20 | @Override

21 | public void configure(Map<String, ?> configs) {}

22 | }

23 |

--------------------------------------------------------------------------------

/core/src/main/java/com/softwaremill/kmq/RedeliveryTracker.java:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq;

2 |

3 | import com.softwaremill.kmq.redelivery.RedeliveryActors;

4 |

5 | import scala.Option;

6 |

7 | import java.io.Closeable;

8 | import java.util.Collections;

9 | import java.util.Map;

10 |

11 | /**

12 | * Tracks which messages have been processed, and redelivers those that have not been processed by their redelivery

13 | * time.

14 | */

15 | public class RedeliveryTracker {

16 | public static Closeable start(KafkaClients clients, KmqConfig config) {

17 | return RedeliveryActors.start(clients, config);

18 | }

19 | }

20 |

--------------------------------------------------------------------------------

/core/src/main/java/com/softwaremill/kmq/StartMarker.java:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq;

2 |

3 | import java.nio.ByteBuffer;

4 | import java.util.Objects;

5 |

6 | public class StartMarker implements MarkerValue {

7 | private final long redeliverAfter;

8 |

9 | public StartMarker(long redeliverAfter) {

10 | this.redeliverAfter = redeliverAfter;

11 | }

12 |

13 | public long getRedeliverAfter() {

14 | return redeliverAfter;

15 | }

16 |

17 | public byte[] serialize() {

18 | return ByteBuffer.allocate(1 + 8)

19 | .put((byte) 1)

20 | .putLong(redeliverAfter)

21 | .array();

22 | }

23 |

24 | @Override

25 | public String toString() {

26 | return "StartMarker{" +

27 | "redeliverAfter=" + redeliverAfter +

28 | '}';

29 | }

30 |

31 | @Override

32 | public boolean equals(Object o) {

33 | if (this == o) return true;

34 | if (o == null || getClass() != o.getClass()) return false;

35 | StartMarker that = (StartMarker) o;

36 | return redeliverAfter == that.redeliverAfter;

37 | }

38 |

39 | @Override

40 | public int hashCode() {

41 | return Objects.hash(redeliverAfter);

42 | }

43 | }

44 |

--------------------------------------------------------------------------------

/core/src/main/resources/reference.conf:

--------------------------------------------------------------------------------

1 | kmq.redeliver-dispatcher {

2 | executor = "thread-pool-executor"

3 | }

--------------------------------------------------------------------------------

/core/src/main/scala/com.softwaremill.kmq/redelivery/CommitMarkerOffsetsActor.scala:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq.redelivery

2 |

3 | import cats.effect.{IO, Resource}

4 | import org.apache.kafka.clients.consumer.{KafkaConsumer, OffsetAndMetadata}

5 | import org.apache.kafka.common.TopicPartition

6 | import org.apache.kafka.common.serialization.ByteArrayDeserializer

7 |

8 | import scala.jdk.CollectionConverters._

9 | import scala.concurrent.duration._

10 | import fs2.Stream

11 | import cats.effect.std.Queue

12 |

13 | import org.typelevel.log4cats.slf4j.Slf4jLogger

14 |

15 | class CommitMarkerOffsetsActor private (

16 | mailbox: Queue[IO, CommitMarkerOffsetsActorMessage]

17 | ) {

18 | def tell(commitMessage: CommitMarkerOffsetsActorMessage): IO[Unit] = mailbox.offer(commitMessage)

19 | }

20 |

21 | object CommitMarkerOffsetsActor {

22 |

23 | private val logger = Slf4jLogger.getLogger[IO]

24 | private def stream(

25 | consumer: KafkaConsumer[Array[Byte], Array[Byte]],

26 | markerTopic: String,

27 | mailbox: Queue[IO, CommitMarkerOffsetsActorMessage]

28 | ) = {

29 |

30 | def commitOffsets(toCommit: Map[Partition, Offset]): IO[Map[Partition, Offset]] = for {

31 | _ <-

32 | if (toCommit.nonEmpty) {

33 | IO.blocking {

34 | consumer.commitSync(

35 | toCommit.map { case (partition, offset) =>

36 | (new TopicPartition(markerTopic, partition), new OffsetAndMetadata(offset))

37 | }.asJava

38 | )

39 | } >> logger.info(s"Committed marker offsets: $toCommit")

40 | } else IO.unit

41 | } yield Map.empty

42 |

43 | val receive: (Map[Partition, Offset], CommitMarkerOffsetsActorMessage) => IO[(Map[Partition, Offset], Unit)] = {

44 | case (toCommit, CommitOffset(p, o)) =>

45 | // only updating if the current offset is smaller

46 | if (toCommit.get(p).fold(true)(_ < o))

47 | IO.pure((toCommit.updated(p, o), ()))

48 | else

49 | IO.pure((toCommit, ()))

50 | case (state, DoCommit) => commitOffsets(state).map((_, ()))

51 | }

52 |

53 | Stream

54 | .awakeEvery[IO](1.second)

55 | .as(DoCommit)

56 | .merge(Stream.fromQueueUnterminated(mailbox))

57 | .evalMapAccumulate(Map.empty[Partition, Offset])(receive)

58 | }

59 |

60 | def create(

61 | markerTopic: String,

62 | markerOffsetGroupId: String,

63 | clients: KafkaClientsResourceHelpers

64 | ): Resource[IO, CommitMarkerOffsetsActor] = for {

65 | consumer <- clients.createConsumer(

66 | markerOffsetGroupId,

67 | classOf[ByteArrayDeserializer],

68 | classOf[ByteArrayDeserializer]

69 | )

70 | mailbox <- Resource.eval(Queue.unbounded[IO, CommitMarkerOffsetsActorMessage])

71 | _ <- stream(consumer, markerTopic, mailbox).compile.drain.background

72 | } yield new CommitMarkerOffsetsActor(mailbox)

73 |

74 | }

75 |

76 | sealed trait CommitMarkerOffsetsActorMessage

77 | case class CommitOffset(p: Partition, o: Offset) extends CommitMarkerOffsetsActorMessage

78 | case object DoCommit extends CommitMarkerOffsetsActorMessage

79 |
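The `receive` function in `CommitMarkerOffsetsActor` keeps, per marker partition, only the largest offset it has been asked to commit; every `DoCommit` (emitted once per second by `awakeEvery`) commits that map with `commitSync` and replaces it with an empty one. A minimal Java sketch of that state machine follows; `OffsetAccumulatorSketch` is hypothetical and returns the pending map instead of calling a Kafka consumer.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical rendering of CommitMarkerOffsetsActor's state: highest pending offset per partition.
class OffsetAccumulatorSketch {
    private Map<Integer, Long> toCommit = new HashMap<>();

    // CommitOffset(p, o): keep o only if it is larger than what is already pending for p.
    void commitOffset(int partition, long offset) {
        toCommit.merge(partition, offset, Long::max);
    }

    // DoCommit: hand over everything pending (the actor calls commitSync here)
    // and start again from an empty map.
    Map<Integer, Long> doCommit() {
        Map<Integer, Long> pending = toCommit;
        toCommit = new HashMap<>();
        return pending;
    }
}
```

Keeping only the maximum is safe because the offsets passed in are smallest-unfinished-marker offsets, which should only move forward within a partition.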

--------------------------------------------------------------------------------

/core/src/main/scala/com.softwaremill.kmq/redelivery/ConsumeMarkersActor.scala:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq.redelivery

2 |

3 | import java.time.Duration

4 | import java.util.Collections

5 | import cats.effect.IO

6 | import cats.effect.kernel.Resource

7 | import cats.effect.std.{Dispatcher, Queue}

8 | import com.softwaremill.kmq.{KmqConfig, MarkerKey, MarkerValue}

9 | import org.apache.kafka.clients.consumer.{ConsumerRebalanceListener, ConsumerRecord, KafkaConsumer}

10 | import org.apache.kafka.clients.producer.KafkaProducer

11 | import org.apache.kafka.common.TopicPartition

12 | import org.apache.kafka.common.serialization.ByteArraySerializer

13 | import cats.syntax.all._

14 | import org.typelevel.log4cats.slf4j.Slf4jLogger

15 | import fs2.Stream

16 |

17 | import scala.jdk.CollectionConverters._

18 | object ConsumeMarkersActor {

19 |

20 | private val logger = Slf4jLogger.getLogger[IO]

21 |

22 | private val OneSecond = Duration.ofSeconds(1)

23 |

24 | def create(config: KmqConfig, helpers: KafkaClientsResourceHelpers): Resource[IO, ConsumeMarkersActor] = {

25 |

26 | def setupMarkerConsumer(

27 | markerConsumer: KafkaConsumer[MarkerKey, MarkerValue],

28 | mailbox: Queue[IO, ConsumeMarkersActorMessage],

29 | dispatcher: Dispatcher[IO]

30 | ): Resource[IO, Unit] = Resource.eval(

31 | IO(

32 | markerConsumer.subscribe(

33 | Collections.singleton(config.getMarkerTopic),

34 | new ConsumerRebalanceListener() {

35 | def onPartitionsRevoked(partitions: java.util.Collection[TopicPartition]): Unit =

36 | dispatcher.unsafeRunSync(mailbox.offer(PartitionRevoked(partitions.asScala.toList)))

37 |

38 | def onPartitionsAssigned(partitions: java.util.Collection[TopicPartition]): Unit = ()

39 | }

40 | )

41 | )

42 | )

43 |

44 | def setupOffsetCommitting = for {

45 | commitMarkerOffsetsActor <- CommitMarkerOffsetsActor

46 | .create(

47 | config.getMarkerTopic,

48 | config.getMarkerConsumerOffsetGroupId,

49 | helpers

50 | )

51 | _ <- Resource.eval(commitMarkerOffsetsActor.tell(DoCommit))

52 | } yield commitMarkerOffsetsActor

53 |

54 | for {

55 | producer <- helpers.createProducer(classOf[ByteArraySerializer], classOf[ByteArraySerializer])

56 | dispatcher <- Dispatcher.sequential[IO]

57 | mailbox <- Resource.eval(Queue.unbounded[IO, ConsumeMarkersActorMessage])

58 | markerConsumer <- helpers.createConsumer(

59 | config.getMarkerConsumerGroupId,

60 | classOf[MarkerKey.MarkerKeyDeserializer],

61 | classOf[MarkerValue.MarkerValueDeserializer]

62 | )

63 | _ <- setupMarkerConsumer(markerConsumer, mailbox, dispatcher)

64 | commitMarkerOffsetsActor <- setupOffsetCommitting

65 | _ <- stream(

66 | mailbox,

67 | commitMarkerOffsetsActor,

68 | markerConsumer,

69 | dispatcher,

70 | producer,

71 | config,

72 | helpers

73 | ).compile.drain.background

74 | _ <- Resource.eval(logger.info("Consume markers actor setup complete"))

75 | } yield new ConsumeMarkersActor(mailbox)

76 | }

77 |

78 | private def stream(

79 | mailbox: Queue[IO, ConsumeMarkersActorMessage],

80 | commitMarkerOffsetsActor: CommitMarkerOffsetsActor,

81 | markerConsumer: KafkaConsumer[MarkerKey, MarkerValue],

82 | dispatcher: Dispatcher[IO],

83 | producer: KafkaProducer[Array[Byte], Array[Byte]],

84 | config: KmqConfig,

85 | helpers: KafkaClientsResourceHelpers

86 | ) = {

87 |

88 | def partitionAssigned(

89 | producer: KafkaProducer[Array[Byte], Array[Byte]],

90 | p: Partition,

91 | endOffset: Offset,

92 | dispatcher: Dispatcher[IO]

93 | ) = for {

94 | (redeliverActor, shutdown) <- RedeliverActor.create(p, producer, config, helpers.clients).allocated

95 | _ <- redeliverActor.tell(DoRedeliver)

96 | } yield AssignedPartition(new MarkersQueue(endOffset - 1), redeliverActor, shutdown, config, None, None, dispatcher)

97 |

98 | def handleRecords(

99 | assignedPartition: AssignedPartition,

100 | now: Long,

101 | partition: Partition,

102 | records: Iterable[ConsumerRecord[MarkerKey, MarkerValue]]

103 | ) =

104 | IO(assignedPartition.handleRecords(records, now)) >>

105 | assignedPartition.markersQueue

106 | .smallestMarkerOffset()

107 | .traverse { offset =>

108 | commitMarkerOffsetsActor.tell(CommitOffset(partition, offset))

109 | }

110 | .void

111 |

112 | val receive: (Map[Partition, AssignedPartition], ConsumeMarkersActorMessage) => IO[

113 | (Map[Partition, AssignedPartition], Unit)

114 | ] = {

115 | case (assignedPartitions, PartitionRevoked(partitions)) =>

116 | val revokedPartitions = partitions.map(_.partition())

117 |

118 | logger.info(s"Revoked marker partitions: $revokedPartitions") >>

119 | revokedPartitions

120 | .flatMap(assignedPartitions.get)

121 | .traverseTap(_.shutdown)

122 | .as((assignedPartitions.removedAll(revokedPartitions), ()))

123 | case (assignedPartitions, DoConsume) =>

124 | val process = for {

125 | markers <- IO.blocking(markerConsumer.poll(OneSecond).asScala)

126 | now <- IO.realTime.map(_.toMillis)

127 | newlyAssignedPartitions <- markers.groupBy(_.partition()).toList.flatTraverse { case (partition, records) =>

128 | assignedPartitions.get(partition) match {

129 | case None =>

130 | for {

131 | endOffsets <- IO(

132 | markerConsumer

133 | .endOffsets(Collections.singleton(new TopicPartition(config.getMarkerTopic, partition)))

134 | )

135 | _ <- logger.info(s"Assigned marker partition: $partition")

136 | ap <- partitionAssigned(

137 | producer,

138 | partition,

139 | endOffsets.get(new TopicPartition(config.getMarkerTopic, partition)) - 1,

140 | dispatcher

141 | )

142 | _ <- handleRecords(ap, now, partition, records)

143 | } yield List((partition, ap))

144 | case Some(ap) => handleRecords(ap, now, partition, records).as(Nil)

145 | }

146 | }

147 | updatedAssignedPartitions = assignedPartitions ++ newlyAssignedPartitions.toMap

148 | _ <- updatedAssignedPartitions.values.toList.traverse(_.sendRedeliverMarkers(now))

149 | } yield updatedAssignedPartitions

150 |

151 | process.guarantee(mailbox.offer(DoConsume)).map((_, ()))

152 | }

153 |

154 | Stream

155 | .fromQueueUnterminated(mailbox)

156 | .evalMapAccumulate(Map.empty[Partition, AssignedPartition])(receive)

157 | .onFinalize(logger.info("Stopped consume markers actor"))

158 | }

159 |

160 | }

161 | class ConsumeMarkersActor private (

162 | mailbox: Queue[IO, ConsumeMarkersActorMessage]

163 | ) {

164 | def tell(message: ConsumeMarkersActorMessage): IO[Unit] = mailbox.offer(message)

165 |

166 | }

167 |

168 | private case class AssignedPartition(

169 | markersQueue: MarkersQueue,

170 | redeliverActor: RedeliverActor,

171 | shutdown: IO[Unit],

172 | config: KmqConfig,

173 | var latestSeenMarkerTimestamp: Option[Timestamp],

174 | var latestMarkerSeenAt: Option[Timestamp],

175 | dispatcher: Dispatcher[IO]

176 | ) {

177 |

178 | private def updateLatestSeenMarkerTimestamp(markerTimestamp: Timestamp, now: Timestamp): Unit = {

179 | latestSeenMarkerTimestamp = Some(markerTimestamp)

180 | latestMarkerSeenAt = Some(now)

181 | }

182 |

183 | def handleRecords(records: Iterable[ConsumerRecord[MarkerKey, MarkerValue]], now: Timestamp): Unit = {

184 | records.toVector.foreach { record =>

185 | markersQueue.handleMarker(record.offset(), record.key(), record.value(), record.timestamp())

186 | }

187 |

188 | updateLatestSeenMarkerTimestamp(records.maxBy(_.timestamp()).timestamp(), now)

189 | }

190 |

191 | def sendRedeliverMarkers(now: Timestamp): IO[Unit] =

192 | redeliverTimestamp(now).traverse { rt =>

193 | for {

194 | toRedeliver <- IO(markersQueue.markersToRedeliver(rt))

195 | _ <- redeliverActor.tell(RedeliverMarkers(toRedeliver)).whenA(toRedeliver.nonEmpty)

196 | } yield ()

197 | }.void

198 |

199 | private def redeliverTimestamp(now: Timestamp): Option[Timestamp] = {

200 | // No markers seen at all -> no sense to check for redelivery

201 | latestMarkerSeenAt.flatMap { lm =>

202 | if (now - lm < config.getUseNowForRedeliverDespiteNoMarkerSeenForMs) {

203 | /* If we've seen a marker recently, then we use the latest seen marker (the one with the highest offset seen so far)

204 | for computing redelivery. This guarantees that we won't redeliver a message whose end marker was sent but is

205 | still waiting in the topic to be observed, even if the difference between the wall-clock time and the start

206 | marker timestamp exceeds the message timeout. */

207 | latestSeenMarkerTimestamp

208 | } else {

209 | /* If we haven't seen a marker recently, we assume that it's because all available markers have been observed. Hence

210 | there are no delays in processing the markers, so we can use the current time for computing which messages

211 | should be redelivered. */

212 | Some(now)

213 | }

214 | }

215 | }

216 | }

217 |

218 | sealed trait ConsumeMarkersActorMessage

219 | case object DoConsume extends ConsumeMarkersActorMessage

220 |

221 | case class PartitionRevoked(partitions: List[TopicPartition]) extends ConsumeMarkersActorMessage

222 |
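`AssignedPartition.redeliverTimestamp` chooses the reference time against which start-marker deadlines are compared: nothing if no marker has been seen yet, the latest seen marker timestamp if a marker arrived within `useNowForRedeliverDespiteNoMarkerSeenForMs`, and the wall clock otherwise. The Java sketch below restates that three-way decision so it can be exercised with plain numbers; the class name and the use of `OptionalLong` in place of `Option[Timestamp]` are assumptions of the sketch.

```java
import java.util.OptionalLong;

// Hypothetical Java version of AssignedPartition.redeliverTimestamp.
class RedeliverTimestampSketch {
    static OptionalLong redeliverTimestamp(long now,
                                           OptionalLong latestMarkerSeenAt,
                                           OptionalLong latestSeenMarkerTimestamp,
                                           long useNowForRedeliverDespiteNoMarkerSeenForMs) {
        // No marker seen at all: nothing to compute redelivery against.
        if (!latestMarkerSeenAt.isPresent()) {
            return OptionalLong.empty();
        }
        if (now - latestMarkerSeenAt.getAsLong() < useNowForRedeliverDespiteNoMarkerSeenForMs) {
            // A marker arrived recently: use the newest marker timestamp, so end markers
            // still waiting in the topic are not overtaken by the wall clock.
            return latestSeenMarkerTimestamp;
        }
        // No recent markers: assume the topic has been fully read and use the wall clock.
        return OptionalLong.of(now);
    }
}
```

For example, with a 1000 ms threshold and `now = 5000`, a marker last seen at 4500 with timestamp 4200 gives 4200, while a marker last seen at 3000 gives 5000.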

--------------------------------------------------------------------------------

/core/src/main/scala/com.softwaremill.kmq/redelivery/KafkaClientsResourceHelpers.scala:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq.redelivery

2 |

3 | import cats.effect.{IO, Resource}

4 | import com.softwaremill.kmq.KafkaClients

5 | import org.apache.kafka.clients.consumer.KafkaConsumer

6 | import org.apache.kafka.clients.producer.KafkaProducer

7 | import org.apache.kafka.common.serialization.{Deserializer, Serializer}

8 |

9 | import scala.jdk.CollectionConverters._

10 |

11 | private[redelivery] final class KafkaClientsResourceHelpers(val clients: KafkaClients) {

12 |

13 | def createProducer[K, V](

14 | keySerializer: Class[_ <: Serializer[K]],

15 | valueSerializer: Class[_ <: Serializer[V]],

16 | extraConfig: Map[String, AnyRef] = Map.empty

17 | ): Resource[IO, KafkaProducer[K, V]] =

18 | Resource.make(

19 | IO(clients.createProducer(keySerializer, valueSerializer, extraConfig.asJava))

20 | )(p => IO(p.close()))

21 |

22 | def createConsumer[K, V](

23 | groupId: String,

24 | keyDeserializer: Class[_ <: Deserializer[K]],

25 | valueDeserializer: Class[_ <: Deserializer[V]],

26 | extraConfig: Map[String, AnyRef] = Map.empty

27 | ): Resource[IO, KafkaConsumer[K, V]] = Resource.make(

28 | IO(clients.createConsumer(groupId, keyDeserializer, valueDeserializer, extraConfig.asJava))

29 | )(p => IO(p.close()))

30 |

31 | }

32 |

--------------------------------------------------------------------------------

/core/src/main/scala/com.softwaremill.kmq/redelivery/MarkersQueue.scala:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq.redelivery

2 |

3 | import com.softwaremill.kmq.{EndMarker, MarkerKey, MarkerValue, StartMarker}

4 |

5 | import scala.collection.mutable

6 |

7 | class MarkersQueue(disableRedeliveryBefore: Offset) {

8 | private val markersInProgress = mutable.Set[MarkerKey]()

9 | private val markersByTimestamp =

10 | new mutable.PriorityQueue[AttributedMarkerKey[Timestamp]]()(bySmallestAttributeOrdering)

11 | private val markersByOffset = new mutable.PriorityQueue[AttributedMarkerKey[Offset]]()(bySmallestAttributeOrdering)

12 | private var redeliveryEnabled = false

13 |

14 | def handleMarker(markerOffset: Offset, k: MarkerKey, v: MarkerValue, t: Timestamp): Unit = {

15 | if (markerOffset >= disableRedeliveryBefore) {

16 | redeliveryEnabled = true

17 | }

18 |

19 | v match {

20 | case s: StartMarker =>

21 | markersByOffset.enqueue(AttributedMarkerKey(k, markerOffset))

22 | markersByTimestamp.enqueue(AttributedMarkerKey(k, t + s.getRedeliverAfter))

23 | markersInProgress += k

24 |

25 | case _: EndMarker =>

26 | markersInProgress -= k

27 |

28 | case x => throw new IllegalArgumentException(s"Unknown marker type: ${x.getClass}")

29 | }

30 | }

31 |

32 | def markersToRedeliver(now: Timestamp): List[MarkerKey] = {

33 | removeEndedMarkers(markersByTimestamp)

34 |

35 | var toRedeliver = List.empty[MarkerKey]

36 |

37 | if (redeliveryEnabled) {

38 | while (shouldRedeliverMarkersQueueHead(now)) {

39 | val queueHead = markersByTimestamp.dequeue()

40 | // the first marker, if any, is definitely not ended (because of the cleanup done at the beginning),

41 | // but subsequent markers may already have ended.

42 | if (markersInProgress.contains(queueHead.key)) {

43 | toRedeliver ::= queueHead.key

44 | }

45 |

46 | // not removing from markersInProgress: until the message is known to be redelivered (the redeliverer

47 | // sends an end marker once this is done), the marker must stay so that the smallest-offset calculation

48 | // remains correct

49 | }

50 | }

51 |

52 | toRedeliver

53 | }

54 |

55 | def smallestMarkerOffset(): Option[Offset] = {

56 | removeEndedMarkers(markersByOffset)

57 | markersByOffset.headOption.map(_.attr)

58 | }

59 |

60 | private def removeEndedMarkers[T](queue: mutable.PriorityQueue[AttributedMarkerKey[T]]): Unit = {

61 | while (isHeadEnded(queue)) {

62 | queue.dequeue()

63 | }

64 | }

65 |

66 | private def isHeadEnded[T](queue: mutable.PriorityQueue[AttributedMarkerKey[T]]): Boolean = {

67 | queue.headOption.exists(e => !markersInProgress.contains(e.key))

68 | }

69 |

70 | private def shouldRedeliverMarkersQueueHead(now: Timestamp): Boolean = {

71 | markersByTimestamp.headOption match {

72 | case None => false

73 | case Some(m) => now >= m.attr

74 | }

75 | }

76 |

77 | private case class AttributedMarkerKey[T](key: MarkerKey, attr: T)

78 |

79 | private def bySmallestAttributeOrdering[T](implicit O: Ordering[T]): Ordering[AttributedMarkerKey[T]] =

80 | (x, y) => O.compare(y.attr, x.attr) // reversed: Scala's PriorityQueue dequeues the largest element first

81 | }

82 |
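`markersToRedeliver` relies on lazy deletion. Ending a marker only removes its key from `markersInProgress`, and stale heap entries are discarded once they reach the head of a queue. The Java sketch below models that pattern outside of kmq. The class `LazyMarkerQueue`, its `String` keys, and its `long` timestamps are hypothetical simplifications, and the sketch leaves out the `redeliveryEnabled` flag and the offset-ordered queue. Scala's `mutable.PriorityQueue` dequeues its largest element first, which is why `bySmallestAttributeOrdering` reverses the comparison. `java.util.PriorityQueue` is already a min-heap, so no reversal is needed here.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.PriorityQueue;
import java.util.Set;

// Hypothetical illustration of the lazy-deletion pattern used by MarkersQueue.
// Marker keys are simplified to Strings and timestamps to longs.
class LazyMarkerQueue {
    // A queued start marker together with its redelivery deadline.
    private record Entry(String key, long deadline) {}

    private final Set<String> inProgress = new HashSet<>();
    // java.util.PriorityQueue is a min-heap: the earliest deadline sits at the head.
    private final PriorityQueue<Entry> byDeadline =
        new PriorityQueue<>((a, b) -> Long.compare(a.deadline(), b.deadline()));

    void start(String key, long timestamp, long redeliverAfter) {
        byDeadline.add(new Entry(key, timestamp + redeliverAfter));
        inProgress.add(key);
    }

    // Ending a marker only touches the set; its heap entry is dropped lazily later.
    void end(String key) {
        inProgress.remove(key);
    }

    // Pops every entry whose deadline has passed and keeps only those still in progress.
    // Like MarkersQueue, it does not remove redelivered keys from inProgress.
    List<String> toRedeliver(long now) {
        List<String> result = new ArrayList<>();
        while (!byDeadline.isEmpty() && byDeadline.peek().deadline() <= now) {
            Entry head = byDeadline.poll();
            if (inProgress.contains(head.key())) {
                result.add(head.key());
            }
        }
        return result;
    }
}
```

With deadlines of 100, 110, and 120 for `a`, `b`, and `c`, ending `b` and then calling `toRedeliver(115)` yields `[a]`. The entry for `b` is popped and discarded, and `c` is not yet due.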

--------------------------------------------------------------------------------

/core/src/main/scala/com.softwaremill.kmq/redelivery/RedeliverActor.scala:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq.redelivery

2 |

3 | import cats.effect.std.Queue

4 | import cats.effect.{IO, Resource}

5 | import com.softwaremill.kmq.{KafkaClients, KmqConfig, MarkerKey}

6 |

7 | import scala.concurrent.duration._

8 | import fs2.Stream

9 | import org.apache.kafka.clients.producer.KafkaProducer

10 | import org.typelevel.log4cats.slf4j.Slf4jLogger

11 |

12 | object RedeliverActor {

13 |

14 | private val logger = Slf4jLogger.getLogger[IO]

15 | def create(

16 | p: Partition,

17 | producer: KafkaProducer[Array[Byte], Array[Byte]],

18 | config: KmqConfig,

19 | clients: KafkaClients

20 | ): Resource[IO, RedeliverActor] = {

21 | val resource = for {

22 | redeliverer <- Resource.make(

23 | IO(new RetryingRedeliverer(new DefaultRedeliverer(p, producer, config, clients)))

24 | )(r => IO(r.close()))

25 | mailbox <- Resource.eval(Queue.unbounded[IO, RedeliverActorMessage])

26 | _ <- Resource.eval(logger.info(s"Started redeliver actor for partition $p"))

27 | _ <- stream(redeliverer, mailbox).compile.drain.background

28 | } yield new RedeliverActor(mailbox)

29 |

30 | resource.onFinalize(

31 | logger.info(s"Stopped redeliver actor for partition $p")

32 | )

33 | }

34 |

35 | private def stream(redeliverer: Redeliverer, mailbox: Queue[IO, RedeliverActorMessage]) = {

36 |

37 | val receive: (List[MarkerKey], RedeliverActorMessage) => IO[(List[MarkerKey], Unit)] = {

38 | case (toRedeliver, RedeliverMarkers(m)) =>

39 | IO.pure((toRedeliver ++ m, ()))

40 | case (toRedeliver, DoRedeliver) =>

41 | val hadRedeliveries = toRedeliver.nonEmpty

42 | IO(redeliverer.redeliver(toRedeliver))

43 | .as((Nil, ()))

44 | .guarantee {

45 | if (hadRedeliveries)

46 | mailbox.offer(DoRedeliver)

47 | else

48 | mailbox.offer(DoRedeliver).delayBy(1.second).start.void

49 | }

50 | }

51 |

52 | Stream

53 | .fromQueueUnterminated(mailbox)

54 | .evalMapAccumulate(List.empty[MarkerKey])(receive)

55 | }

56 |

57 | }

58 | final class RedeliverActor private (mailbox: Queue[IO, RedeliverActorMessage]) {

59 | def tell(message: RedeliverActorMessage): IO[Unit] = mailbox.offer(message)

60 |

61 | }

62 |

63 | sealed trait RedeliverActorMessage

64 |

65 | case class RedeliverMarkers(markers: List[MarkerKey]) extends RedeliverActorMessage

66 |

67 | case object DoRedeliver extends RedeliverActorMessage

68 |
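`RedeliverActor` passes a `List[MarkerKey]` through `evalMapAccumulate` as its state. `RedeliverMarkers` appends keys to that list. `DoRedeliver` hands the accumulated keys to the redeliverer, clears the list, and re-enqueues `DoRedeliver`. The re-enqueue happens immediately if anything was redelivered and after one second otherwise. The Java sketch below models only that state machine, synchronously and without Kafka or cats-effect. The names `Enqueue`, `Flush`, and `RedeliverLoopModel` are hypothetical stand-ins for `RedeliverMarkers`, `DoRedeliver`, and the actor. The sketch does not model failure handling (the `guarantee` block).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, single-threaded model of RedeliverActor's receive loop.
// Marker keys are simplified to Strings; no Kafka or cats-effect involved.
interface ActorMsg {}

record Enqueue(List<String> markers) implements ActorMsg {} // stands in for RedeliverMarkers

record Flush() implements ActorMsg {} // stands in for DoRedeliver

class RedeliverLoopModel {
    static final long IDLE_DELAY_MS = 1000L; // pause used when a flush redelivered nothing

    final List<List<String>> batches = new ArrayList<>(); // what each flush handed to the redeliverer
    final List<Long> nextFlushDelaysMs = new ArrayList<>(); // delay before the re-enqueued flush

    // Accumulated state, playing the role of evalMapAccumulate's List[MarkerKey].
    private List<String> pending = new ArrayList<>();

    void receive(ActorMsg msg) {
        if (msg instanceof Enqueue e) {
            pending.addAll(e.markers());
        } else if (msg instanceof Flush) {
            boolean hadRedeliveries = !pending.isEmpty();
            batches.add(List.copyOf(pending));
            pending = new ArrayList<>();
            // Reschedule right away if there was work, otherwise after an idle pause.
            nextFlushDelaysMs.add(hadRedeliveries ? 0L : IDLE_DELAY_MS);
        }
    }
}
```

Sending `Enqueue(["a", "b"])` followed by two `Flush` messages records the batches `[a, b]` and `[]` with follow-up delays of 0 ms and 1000 ms. This mirrors how the actor polls tightly while there is work and backs off while idle.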

--------------------------------------------------------------------------------

/core/src/main/scala/com.softwaremill.kmq/redelivery/Redeliverer.scala:

--------------------------------------------------------------------------------

1 | package com.softwaremill.kmq.redelivery

2 |

3 | import com.softwaremill.kmq.{EndMarker, KafkaClients, KmqConfig, MarkerKey}

4 | import com.typesafe.scalalogging.StrictLogging

5 | import org.apache.kafka.clients.consumer.{ConsumerRecord, ConsumerRecords, KafkaConsumer}

6 | import org.apache.kafka.clients.producer.{KafkaProducer, ProducerRecord, RecordMetadata}

7 | import org.apache.kafka.common.TopicPartition

8 | import org.apache.kafka.common.header.Header

9 | import org.apache.kafka.common.header.internals.RecordHeader

10 | import org.apache.kafka.common.serialization.ByteArrayDeserializer

11 |

12 | import java.nio.ByteBuffer

13 | import java.time.Duration

14 | import java.util.Collections

15 | import java.util.concurrent.{Future, TimeUnit}

16 | import scala.annotation.tailrec

17 | import scala.jdk.CollectionConverters._

18 |

19 | trait Redeliverer {

20 | def redeliver(toRedeliver: List[MarkerKey]): Unit

21 |

22 | def close(): Unit

23 | }

24 |

25 | class DefaultRedeliverer(

26 | partition: Partition,

27 | producer: KafkaProducer[Array[Byte], Array[Byte]],

28 | config: KmqConfig,

29 | clients: KafkaClients

30 | ) extends Redeliverer

31 | with StrictLogging {

32 |

33 | private val SendTimeoutSeconds = 60L

34 |