├── .gitignore

├── README.md

├── experiments

├── __init__.py

├── classification_ensembles.py

├── classification_imagenet.py

├── classification_ue.py

├── classification_ue_ood.py

├── configs.py

├── data

│ ├── .gitignore

│ ├── al

│ │ └── .gitkeep

│ ├── detector

│ │ └── .gitkeep

│ ├── models

│ │ └── .gitkeep

│ ├── ood

│ │ └── .gitkeep

│ ├── regression

│ │ └── .gitkeep

│ └── xor

│ │ └── .gitkeep

├── deprecated

│ ├── __init__.py

│ ├── active_learning_debug.py

│ ├── active_learning_mnist.py

│ ├── active_learning_svhn.py

│ ├── al_rosenbrock_experiment.py

│ ├── classification_active_learning.py

│ ├── classification_active_learning_fasetai.py

│ ├── classification_bench_masks.ipynb

│ ├── classification_error_detection.py

│ ├── classification_error_detection_fastai.py

│ ├── classification_image.py

│ ├── classification_ood_detection.py

│ ├── classification_visual.ipynb

│ ├── classification_xor.ipynb

│ ├── dpp.ipynb

│ ├── ensemble-datasets.ipynb

│ ├── ensemble-masks.ipynb

│ ├── ensemble_debug.py

│ ├── ensembles.ipynb

│ ├── ensembles_2.ipynb

│ ├── ensembles_3.ipynb

│ ├── ensembles_4.ipynb

│ ├── ensembles_5.ipynb

│ ├── move.py

│ ├── plot_df.py

│ ├── plot_df_al.py

│ ├── print_confidence_accuracy_multi_ack.py

│ ├── print_histogram.py

│ ├── print_it.py

│ ├── print_ll.py

│ ├── regression_2_prfm2.ipynb

│ ├── regression_3_dolan-more.ipynb

│ ├── regression_active_learning.ipynb

│ ├── regression_bench_ue.ipynb

│ ├── regression_dm_big_exper_train.ipynb

│ ├── regression_dm_dolan-more.ipynb

│ ├── regression_dm_produce_results_from_models.ipynb

│ ├── regression_masks.ipynb

│ ├── regression_visual-circles.ipynb

│ ├── regression_visual.ipynb

│ ├── small_eigen_debug.ipynb

│ ├── uq_proc (2).ipynb

│ └── utils

│ │ ├── __init__.py

│ │ ├── data.py

│ │ └── fastai.py

├── logs

│ └── .gitignore

├── models.py

├── move_chest.py

├── print_confidence_accuracy.py

├── print_ood.py

├── regression_1_big_exper_train-clean.ipynb

├── regression_2_ll_on_trained_models.ipynb

├── regression_3_ood_w_training.ipynb

└── visual_datasets.py

├── figures

├── 2d_toy.png

├── active_learning_mnist.png

├── benchmark.png

├── convergence.png

├── dolan_acc_ens.png

├── dolan_acc_single.png

├── dpp_ring_contour.png

├── error_detector_cifar.png

├── error_detector_mnist.png

├── error_detector_svhn.png

├── ood_mnist.png

└── ring_results.png

└── requirements.txt

/.gitignore:

--------------------------------------------------------------------------------

1 | .idea

2 | .ipynb_checkpoints

3 | *.pyc

4 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Uncertainty estimation via decorrelation and DPP

2 | Code for paper "Dropout Strikes Back: Improved Uncertainty

3 | Estimation via Diversity Sampling" by Kirill Fedyanin, Evgenii Tsymbalov, Maxim Panov published in [Recent Trends in Analysis of Images, Social Networks and Texts](https://link.springer.com/chapter/10.1007/978-3-031-15168-2_11) by Springer. You can cite this paper by bibtex provided below.

4 |

5 | Main code with implemented methods (DPP, k-DPP, leverages masks for dropout) are in our [alpaca library](https://github.com/stat-ml/alpaca)

6 |

7 |

8 | ### Motivation

9 |

10 |

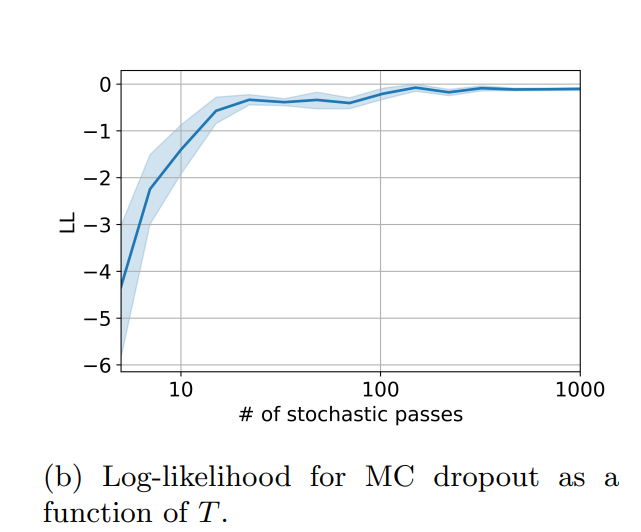

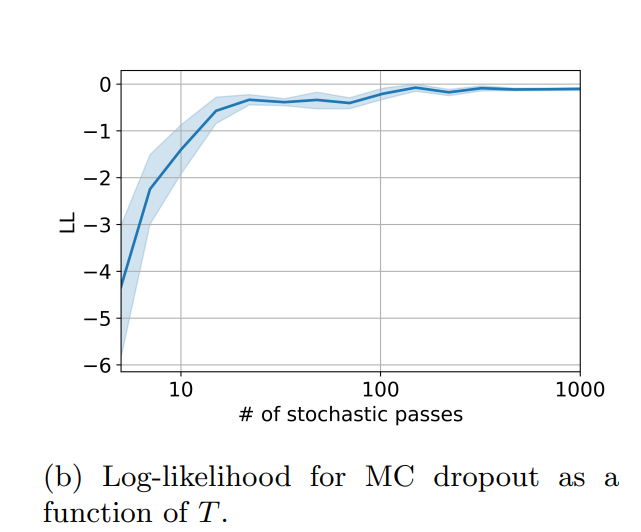

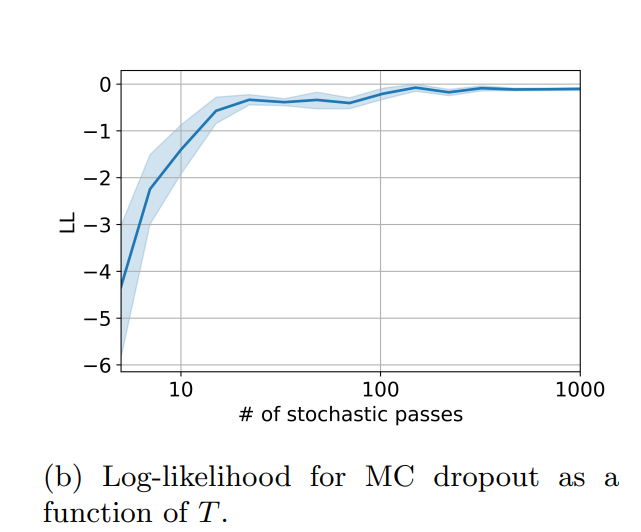

11 | For regression tasks, it could be useful to know not just a prediction but also a confidence interval. It's hard to do it in the close form for deep learning, but to estimate, you can you so-called [ensembles](https://arxiv.org/abs/1612.01474) of several models. To avoid training and keeping several models, you could use [monte-carlo dropout](https://arxiv.org/abs/1506.02142) on inference.

12 |

13 | To use MC dropout, you need multiple forward passes; converging requires tens or even hundreds of forward passes.

14 |

15 |

16 |  17 |

17 |

18 |

19 | We propose to force the diversity of forward passes by hiring determinantal point processes. See how it improves the log-likelihood metric across various UCI datasets for the different numbers of stochastic passes T = 10, 30, 100.

20 |

21 |

22 |

23 |

24 | ### Paper

25 |

26 | You can read full paper here [https://link.springer.com/chapter/10.1007/978-3-031-15168-2_11](https://link.springer.com/chapter/10.1007/978-3-031-15168-2_11)

27 |

28 | For the citation, please use

29 |

30 | ```bibtex

31 | @InProceedings{Fedyanin2021DropoutSB,

32 | author="Fedyanin, Kirill

33 | and Tsymbalov, Evgenii

34 | and Panov, Maxim",

35 | title="Dropout Strikes Back: Improved Uncertainty Estimation via Diversity Sampling",

36 | booktitle="Recent Trends in Analysis of Images, Social Networks and Texts",

37 | year="2022",

38 | publisher="Springer International Publishing",

39 | pages="125--137",

40 | isbn="978-3-031-15168-2"

41 | }

42 | ```

43 |

44 |

45 | ## Install dependency

46 | ```

47 | pip install -r requirements.txt

48 | ```

49 |

50 | ## Regression

51 | To get the experiment results from the paper, run the following notebooks

52 | - `experiments/regression_1_big_exper_train-clean.ipynb` to train the models

53 | - `experiments/regression_2_ll_on_trained_models.ipynb` to get the ll values for different datasets

54 | - `experiments/regression_3_ood_w_training.ipynb` for the OOD experiments

55 |

56 | ## Classification

57 |

58 | From the experiment folder run the following scripts. They goes in pairs, first script trains models and estimate the uncertainty, second just print the results.

59 |

60 | #### Accuracy experiment on MNIST

61 | ```bash

62 | python classification_ue.py mnist

63 | python print_confidence_accuracy.py mnist

64 | ```

65 | #### Accuracy experiment on CIFAR

66 | ```bash

67 | python classification_ue.py cifar

68 | python print_confidence_accuracy.py cifar

69 | ```

70 | #### Accuracy experiment on ImageNet

71 | For the imagenet you need to manually download validation dataset (version ILSVRC2012) and put images to the `experiments/data/imagenet/valid` folder

72 | ```bash

73 | python classification_imagenet.py

74 | python print_confidence_accuracy.py imagenet

75 | ```

76 | #### OOD experiment on MNIST

77 | ```bash

78 | python classification_ue_ood.py mnist

79 | python print_ood.py mnist

80 | ```

81 | #### OOD experiment on CIFAR

82 | ```bash

83 | python classification_ue_ood.py cifar

84 | python print_ood.py cifar

85 | ```

86 | #### OOD experiment on ImageNet

87 | ```bash

88 | python classification_imagenet.py --ood

89 | python print_ood.py imagenet

90 | ```

91 |

92 | You can change the uncertainty estimation function for mnist/cifar by adding `-a=var_ratio` or `-a=max_prob` keys to the scripts.

93 |

--------------------------------------------------------------------------------

/experiments/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/stat-ml/dpp-dropout-uncertainty/3e0ac89c6717be0ce1dc322ccd3cfb1f06e0e952/experiments/__init__.py

--------------------------------------------------------------------------------

/experiments/classification_ensembles.py:

--------------------------------------------------------------------------------

1 | import os

2 | import pickle

3 | from argparse import ArgumentParser

4 | from collections import OrderedDict

5 | from copy import deepcopy

6 | from pathlib import Path

7 |

8 | import numpy as np

9 | import torch

10 | from sklearn.model_selection import train_test_split

11 | from catalyst.dl import SupervisedRunner

12 | from catalyst.dl.callbacks import AccuracyCallback, EarlyStoppingCallback

13 | from catalyst.utils import set_global_seed

14 |

15 | from alpaca.uncertainty_estimator.bald import bald as bald_score

16 |

17 | from configs import base_config, experiment_config

18 | from deprecated.classification_active_learning import loader

19 | from classification_ue_ood import get_data as get_data_ood

20 | from classification_ue import get_data, train

21 |

22 |

23 | def parse_arguments():

24 | parser = ArgumentParser()

25 | parser.add_argument('name')

26 | parser.add_argument('--acquisition', '-a', type=str, default='bald')

27 | parser.add_argument('--ood', dest='ood', action='store_true')

28 | args = parser.parse_args()

29 |

30 | config = deepcopy(base_config)

31 | config.update(experiment_config[args.name])

32 | config['name'] = args.name

33 | config['acquisition'] = args.acquisition

34 | config['ood'] = args.ood

35 |

36 | return config

37 |

38 |

39 | def bench_uncertainty(ensemble, x_val_tensor, y_val, config):

40 | probabilities, max_prob, bald, var_ratio_ue = ensemble.estimate(x_val_tensor)

41 | uncertainties = {

42 | 'ensemble_max_prob': np.array(max_prob),

43 | 'ensemble_bald': np.array(bald),

44 | 'ensemble_var_ratio': np.array(var_ratio_ue)

45 | }

46 | record = {

47 | 'y_val': y_val,

48 | 'uncertainties': uncertainties,

49 | 'probabilities': probabilities,

50 | 'estimators': list(uncertainties.keys()),

51 | }

52 |

53 | ood_str = '_ood' if config['ood'] else ''

54 | with open(ensemble.logdir / f"ue_ensemble{ood_str}.pickle", 'wb') as f:

55 | pickle.dump(record, f)

56 |

57 |

58 | class Ensemble:

59 | def __init__(self, logbase, n_models, config, loaders, start_i=0):

60 | self.models = []

61 | self.n_models = n_models

62 | self.logdir = Path(f"{logbase}/{config['name']}_{start_i}")

63 |

64 | for i in range(start_i, start_i + n_models):

65 | set_global_seed(i + 42)

66 | logdir = Path(f"{logbase}/{config['name']}_{i}")

67 | print(logdir)

68 |

69 | possible_checkpoint = logdir / 'checkpoints' / 'best.pth'

70 | if os.path.exists(possible_checkpoint):

71 | checkpoint = possible_checkpoint

72 | else:

73 | checkpoint = None

74 |

75 | self.models.append(train(config, loaders, logdir, checkpoint))

76 |

77 | def estimate(self, X_pool):

78 | mcd_realizations = torch.zeros((len(X_pool), self.n_models, 10))

79 |

80 | with torch.no_grad():

81 | for i, model in enumerate(self.models):

82 | model.cuda()

83 | prediction = model(X_pool.cuda())

84 | prediction = prediction.to('cpu')

85 | # mcd_realizations.append(prediction)

86 | mcd_realizations[:, i, :] = torch.softmax(prediction, dim=-1)

87 |

88 | # mcd_realizations = torch.cat(mcd_realizations, dim=0)

89 | probabilities = mcd_realizations.mean(dim=1)

90 | max_class = torch.argmax(probabilities, dim=-1)

91 | max_prob_ens = mcd_realizations[np.arange(len(X_pool)), :, max_class]

92 | max_prob_ue = -max_prob_ens.mean(dim=-1) + 1

93 | bald = bald_score(mcd_realizations.numpy())

94 | var_ratio_ue = var_ratio_score(mcd_realizations, max_class, self.n_models)

95 | return probabilities, max_prob_ue, bald, var_ratio_ue

96 |

97 |

98 | def var_ratio_score(mcd_realizations, max_class, n_models):

99 | reps = max_class.unsqueeze(dim=-1).repeat(1, n_models)

100 | tops = torch.argmax(mcd_realizations, axis=-1)

101 | incorrects = (reps != tops).float()

102 | ue = torch.sum(incorrects, dim=-1) / n_models

103 | return ue

104 |

105 |

106 | if __name__ == '__main__':

107 | config = parse_arguments()

108 | set_global_seed(42)

109 | logbase = 'logs/classification_ensembles'

110 | n_models = config['n_models']

111 |

112 | if config['ood']:

113 | loaders, ood_loader, x_train, y_train, x_val, y_val, x_ood, y_ood = get_data_ood(config)

114 | x_ood_tensor = torch.cat([batch[0] for batch in ood_loader])

115 | for j in range(config['repeats']):

116 | ensemble = Ensemble(logbase, n_models, config, loaders, j * n_models)

117 | bench_uncertainty(ensemble, x_ood_tensor, y_val, config)

118 | else:

119 | loaders, x_train, y_train, x_val, y_val = get_data(config)

120 | x_val_tensor = torch.cat([batch[0] for batch in loaders['valid']])

121 |

122 | for j in range(config['repeats']):

123 | ensemble = Ensemble(logbase, n_models, config, loaders, j * n_models)

124 | bench_uncertainty(ensemble, x_val_tensor, y_val, config)

125 |

126 |

--------------------------------------------------------------------------------

/experiments/classification_imagenet.py:

--------------------------------------------------------------------------------

1 | from time import time

2 | from collections import defaultdict

3 | import os

4 | import pickle

5 | from argparse import ArgumentParser

6 | from copy import deepcopy

7 | from pathlib import Path

8 |

9 | from torch.utils.data import Dataset, DataLoader

10 | from torchvision import transforms

11 | from PIL import Image

12 |

13 | import numpy as np

14 | import torch

15 | from catalyst.utils import set_global_seed

16 |

17 | from alpaca.active_learning.simple_update import entropy

18 | from alpaca.uncertainty_estimator import build_estimator

19 |

20 | from configs import base_config, experiment_config

21 | from models import resnet_dropout

22 |

23 |

24 | def parse_arguments():

25 | parser = ArgumentParser()

26 | parser.add_argument('--acquisition', '-a', type=str, default='bald')

27 | parser.add_argument('--dataset-folder', type=str, default='data/imagenet')

28 | parser.add_argument('--bs', type=int, default=250)

29 | parser.add_argument('--ood', dest='ood', action='store_true')

30 | args = parser.parse_args()

31 | args.name ='imagenet'

32 |

33 | config = deepcopy(base_config)

34 | config.update(experiment_config[args.name])

35 |

36 |

37 | if args.ood:

38 | args.dataset_folder = 'data/chest'

39 |

40 | for param in ['name', 'acquisition', 'bs', 'dataset_folder', 'ood']:

41 | config[param] = getattr(args, param)

42 |

43 |

44 | return config

45 |

46 |

47 | def bench_uncertainty(model, val_loader, y_val, acquisition, config, logdir):

48 | ood_str = '_ood' if config['ood'] else ''

49 | logfile = logdir / f"ue{ood_str}_{config['acquisition']}.pickle"

50 | print(logfile)

51 |

52 | probabilities = get_probabilities(model, val_loader).astype(np.single)

53 |

54 | estimators = ['max_prob', 'mc_dropout', 'ht_dpp', 'cov_k_dpp']

55 | # estimators = ['mc_dropout']

56 |

57 | print(estimators)

58 |

59 | uncertainties = {}

60 | times = defaultdict(list)

61 | for estimator_name in estimators:

62 | print(estimator_name)

63 | t0 = time()

64 | ue = calc_ue(model, val_loader, probabilities, config['dropout_rate'], estimator_name, nn_runs=config['nn_runs'], acquisition=acquisition)

65 | times[estimator_name].append(time() - t0)

66 | print('time', time() - t0)

67 | uncertainties[estimator_name] = ue

68 |

69 | record = {

70 | 'y_val': y_val,

71 | 'uncertainties': uncertainties,

72 | 'probabilities': probabilities,

73 | 'estimators': estimators,

74 | 'times': times

75 | }

76 | with open(logfile, 'wb') as f:

77 | pickle.dump(record, f)

78 |

79 | return probabilities, uncertainties, estimators

80 |

81 |

82 | def calc_ue(model, val_loader, probabilities, dropout_rate, estimator_type='max_prob', nn_runs=150, acquisition='bald'):

83 | if estimator_type == 'max_prob':

84 | ue = 1 - np.max(probabilities, axis=-1)

85 | elif estimator_type == 'max_entropy':

86 | ue = entropy(probabilities)

87 | else:

88 | acquisition_param = 'var_ratio' if acquisition == 'max_prob' else acquisition

89 |

90 | estimator = build_estimator(

91 | 'bald_masked', model, dropout_mask=estimator_type, num_classes=1000,

92 | nn_runs=nn_runs, keep_runs=False, acquisition=acquisition_param,

93 | dropout_rate=dropout_rate

94 | )

95 | ue = estimator.estimate(val_loader)

96 |

97 | return ue

98 |

99 |

100 | def get_probabilities(model, loader):

101 | model.eval().cuda()

102 |

103 | results = []

104 |

105 | with torch.no_grad():

106 | for i, batch in enumerate(loader):

107 | print(i)

108 | probabilities = torch.softmax(model(batch.cuda()), dim=-1)

109 | results.extend(list(probabilities.cpu().numpy()))

110 | return np.array(results)

111 |

112 |

113 |

114 | image_transforms = transforms.Compose([

115 | transforms.Resize(256),

116 | transforms.CenterCrop(224),

117 | transforms.ToTensor(),

118 | transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

119 | ])

120 |

121 |

122 | class ImageDataset(Dataset):

123 | def __init__(self, folder):

124 | self.folder = Path(folder)

125 | files = sorted(os.listdir(folder))

126 | self.files = files[:downsample]

127 |

128 | def __len__(self):

129 | return len(self.files)

130 |

131 | def __getitem__(self, idx):

132 | img_path = self.folder / self.files[idx]

133 | image = Image.open(img_path).convert('RGB')

134 |

135 | image = image_transforms(image).double()

136 |

137 | return image

138 |

139 |

140 | def get_data(config):

141 | label_file = Path(config['dataset_folder'])/'val.txt'

142 |

143 | with open(label_file, 'r') as f:

144 | y_val = np.array([int(line.split()[1]) for line in f.readlines()])[:downsample]

145 |

146 | valid_folder = Path(config['dataset_folder']) / 'valid'

147 |

148 | dataset = ImageDataset(valid_folder)

149 | val_loader = DataLoader(dataset, batch_size=config['bs'])

150 | val_loader.shape = (downsample, 3, 224, 224)

151 |

152 | return val_loader, y_val

153 |

154 |

155 | if __name__ == '__main__':

156 | downsample = 50_000

157 |

158 | config = parse_arguments()

159 | print(config)

160 | set_global_seed(42)

161 | val_loader, y_val = get_data(config)

162 |

163 | for i in range(config['repeats']):

164 | set_global_seed(i + 42)

165 | logdir = Path(f"logs/classification/{config['name']}_{i}")

166 |

167 | model = resnet_dropout(dropout_rate=config['dropout_rate']).double()

168 |

169 | bench_uncertainty(model, val_loader, y_val, config['acquisition'], config, logdir)

170 |

--------------------------------------------------------------------------------

/experiments/classification_ue.py:

--------------------------------------------------------------------------------

1 | import os

2 | import pickle

3 | from argparse import ArgumentParser

4 | from collections import OrderedDict

5 | from copy import deepcopy

6 | from pathlib import Path

7 |

8 | import numpy as np

9 | import torch

10 | from sklearn.model_selection import train_test_split

11 | from sklearn.metrics import roc_curve, roc_auc_score

12 | from scipy.special import softmax

13 | import matplotlib.pyplot as plt

14 | import seaborn as sns

15 | import pandas as pd

16 | from catalyst.dl import SupervisedRunner

17 | from catalyst.dl.callbacks import AccuracyCallback, EarlyStoppingCallback

18 | from catalyst.utils import set_global_seed

19 |

20 | from alpaca.active_learning.simple_update import entropy

21 | from alpaca.uncertainty_estimator import build_estimator

22 | from alpaca.uncertainty_estimator.masks import DEFAULT_MASKS

23 |

24 | from configs import base_config, experiment_config

25 | from visual_datasets import loader

26 |

27 |

28 | def parse_arguments():

29 | parser = ArgumentParser()

30 | parser.add_argument('name')

31 | parser.add_argument('--acquisition', '-a', type=str, default='bald')

32 | args = parser.parse_args()

33 |

34 | config = deepcopy(base_config)

35 | config.update(experiment_config[args.name])

36 | config['name'] = args.name

37 | config['acquisition'] = args.acquisition

38 |

39 | return config

40 |

41 |

42 | def train(config, loaders, logdir, checkpoint=None):

43 | model = config['model_class'](dropout_rate=config['dropout_rate']).double()

44 |

45 | if checkpoint is not None:

46 | model.load_state_dict(torch.load(checkpoint)['model_state_dict'])

47 | model.eval()

48 | else:

49 | criterion = torch.nn.CrossEntropyLoss()

50 | optimizer = torch.optim.Adam(model.parameters())

51 | callbacks = [AccuracyCallback(num_classes=10), EarlyStoppingCallback(config['patience'])]

52 |

53 | runner = SupervisedRunner()

54 | runner.train(

55 | model, criterion, optimizer, loaders,

56 | logdir=logdir, num_epochs=config['epochs'], verbose=True,

57 | callbacks=callbacks

58 | )

59 |

60 | return model

61 |

62 |

63 | def bench_uncertainty(model, model_checkpoint, loaders, x_val, y_val, acquisition, config):

64 | runner = SupervisedRunner()

65 | logits = runner.predict_loader(model, loaders['valid'])

66 | probabilities = softmax(logits, axis=-1)

67 |

68 | if config['acquisition'] in ['bald', 'var_ratio']:

69 | estimators = ['mc_dropout', 'ht_dpp', 'ht_k_dpp', 'cov_dpp', 'cov_k_dpp']

70 | elif config['acquisition'] == 'max_prob':

71 | estimators = ['max_prob', 'mc_dropout', 'ht_dpp', 'ht_k_dpp', 'cov_dpp', 'cov_k_dpp']

72 | else:

73 | raise ValueError

74 |

75 | print(estimators)

76 |

77 | uncertainties = {}

78 | lls = {}

79 | for estimator_name in estimators:

80 | # try:

81 | print(estimator_name)

82 | ue, ll = calc_ue(model, x_val, y_val, probabilities, estimator_name, nn_runs=config['nn_runs'], acquisition=acquisition)

83 | uncertainties[estimator_name] = ue

84 | lls[estimator_name] = ll

85 | # except Exception as e:

86 | # print(e)

87 |

88 | record = {

89 | 'checkpoint': model_checkpoint,

90 | 'y_val': y_val,

91 | 'uncertainties': uncertainties,

92 | 'probabilities': probabilities,

93 | 'logits': logits,

94 | 'estimators': estimators,

95 | 'lls': lls

96 | }

97 | with open(logdir / f"ue_{config['acquisition']}.pickle", 'wb') as f:

98 | pickle.dump(record, f)

99 |

100 | return probabilities, uncertainties, estimators

101 |

102 |

103 | def log_likelihood(probabilities, y):

104 | try:

105 | ll = np.mean(np.log(probabilities[np.arange(len(probabilities)), y]))

106 | except FloatingPointError:

107 | import ipdb; ipdb.set_trace()

108 | return ll

109 |

110 |

111 | def calc_ue(model, datapoints, y_val, probabilities, estimator_type='max_prob', nn_runs=100, acquisition='bald'):

112 | if estimator_type == 'max_prob':

113 | ue = 1 - np.max(probabilities, axis=-1)

114 | ll = log_likelihood(probabilities, y_val)

115 | elif estimator_type == 'max_entropy':

116 | ue = entropy(probabilities)

117 | ll = log_likelihood(probabilities, y_val)

118 | else:

119 | acquisition_param = 'var_ratio' if acquisition == 'max_prob' else acquisition

120 |

121 | estimator = build_estimator(

122 | 'bald_masked', model, dropout_mask=estimator_type, num_classes=10,

123 | nn_runs=nn_runs, keep_runs=True, acquisition=acquisition_param)

124 | ue = estimator.estimate(torch.DoubleTensor(datapoints).cuda())

125 | probs = softmax(estimator.last_mcd_runs(), axis=-1)

126 | probs = np.mean(probs, axis=-2)

127 |

128 | ll = log_likelihood(probs, y_val)

129 |

130 | if acquisition == 'max_prob':

131 | ue = 1 - np.max(probs, axis=-1)

132 |

133 | return ue, ll

134 |

135 |

136 | def misclassfication_detection(y_val, probabilities, uncertainties, estimators):

137 | results = []

138 | predictions = np.argmax(probabilities, axis=-1)

139 | errors = (predictions != y_val)

140 | for estimator_name in estimators:

141 | fpr, tpr, _ = roc_curve(errors, uncertainties[estimator_name])

142 | roc_auc = roc_auc_score(errors, uncertainties[estimator_name])

143 | results.append((estimator_name, roc_auc))

144 |

145 | return results

146 |

147 |

148 | def get_data(config):

149 | x_train, y_train, x_val, y_val, train_tfms = config['prepare_dataset'](config)

150 |

151 | if len(x_train) > config['train_size']:

152 | x_train, _, y_train, _ = train_test_split(

153 | x_train, y_train, train_size=config['train_size'], stratify=y_train

154 | )

155 |

156 | loaders = OrderedDict({

157 | 'train': loader(x_train, y_train, config['batch_size'], tfms=train_tfms, train=True),

158 | 'valid': loader(x_val, y_val, config['batch_size'])

159 | })

160 | return loaders, x_train, y_train, x_val, y_val

161 |

162 |

163 | if __name__ == '__main__':

164 | config = parse_arguments()

165 | set_global_seed(42)

166 | loaders, x_train, y_train, x_val, y_val = get_data(config)

167 | print(y_train[:5])

168 |

169 | rocaucs = []

170 | for i in range(config['repeats']):

171 | set_global_seed(i + 42)

172 | logdir = Path(f"logs/classification/{config['name']}_{i}")

173 | print(logdir)

174 |

175 | possible_checkpoint = logdir / 'checkpoints' / 'best.pth'

176 | if os.path.exists(possible_checkpoint):

177 | checkpoint = possible_checkpoint

178 | else:

179 | checkpoint = None

180 |

181 | model = train(config, loaders, logdir, checkpoint)

182 | x_val_tensor = torch.cat([batch[0] for batch in loaders['valid']])

183 |

184 | bench_uncertainty(

185 | model, checkpoint, loaders, x_val_tensor, y_val, config['acquisition'], config)

186 |

187 |

188 |

--------------------------------------------------------------------------------

/experiments/classification_ue_ood.py:

--------------------------------------------------------------------------------

1 | import os

2 | import pickle

3 | from argparse import ArgumentParser

4 | from collections import OrderedDict

5 | from copy import deepcopy

6 | from pathlib import Path

7 |

8 | import numpy as np

9 | import torch

10 | from sklearn.model_selection import train_test_split

11 | from scipy.special import softmax

12 | from catalyst.dl import SupervisedRunner

13 | from catalyst.utils import set_global_seed

14 |

15 | from alpaca.active_learning.simple_update import entropy

16 | from alpaca.uncertainty_estimator import build_estimator

17 | from alpaca.uncertainty_estimator.masks import DEFAULT_MASKS

18 |

19 | from configs import base_config, experiment_ood_config

20 | from visual_datasets import loader

21 |

22 | from classification_ue import train

23 |

24 |

25 | def parse_arguments():

26 | parser = ArgumentParser()

27 | parser.add_argument('name')

28 | parser.add_argument('--acquisition', '-a', type=str, default='bald')

29 | args = parser.parse_args()

30 |

31 | config = deepcopy(base_config)

32 | config.update(experiment_ood_config[args.name])

33 | config['name'] = args.name

34 | config['acquisition'] = args.acquisition

35 |

36 | return config

37 |

38 |

39 | def bench_uncertainty(model, model_checkpoint, ood_loader, x_ood, acquisition, nn_runs):

40 | runner = SupervisedRunner()

41 | logits = runner.predict_loader(model, ood_loader)

42 | probabilities = softmax(logits, axis=-1)

43 |

44 | if config['acquisition'] in ['bald', 'var_ratio']:

45 | estimators = ['mc_dropout', 'ht_dpp', 'ht_k_dpp', 'cov_dpp', 'cov_k_dpp']

46 | elif config['acquisition'] == 'max_prob':

47 | estimators = ['max_prob', 'mc_dropout', 'ht_dpp', 'ht_k_dpp', 'cov_dpp', 'cov_k_dpp']

48 | else:

49 | raise ValueError

50 |

51 |

52 | uncertainties = {}

53 | for estimator_name in estimators:

54 | print(estimator_name)

55 | ue = calc_ue(model, x_ood, probabilities, estimator_name, nn_runs=nn_runs, acquisition=acquisition)

56 | uncertainties[estimator_name] = ue

57 | print(ue)

58 |

59 | record = {

60 | 'checkpoint': model_checkpoint,

61 | 'uncertainties': uncertainties,

62 | 'probabilities': probabilities,

63 | 'logits': logits,

64 | 'estimators': estimators

65 | }

66 |

67 | file_name = logdir / f"ue_ood_{config['acquisition']}.pickle"

68 | with open(file_name, 'wb') as f:

69 | pickle.dump(record, f)

70 |

71 | return probabilities, uncertainties, estimators

72 |

73 |

74 | def calc_ue(model, datapoints, probabilities, estimator_type='max_prob', nn_runs=100, acquisition='bald'):

75 | if estimator_type == 'max_prob':

76 | ue = 1 - np.max(probabilities, axis=-1)

77 | elif estimator_type == 'max_entropy':

78 | ue = entropy(probabilities)

79 | else:

80 | acquisition_param = 'var_ratio' if acquisition == 'max_prob' else acquisition

81 |

82 | estimator = build_estimator(

83 | 'bald_masked', model, dropout_mask=estimator_type, num_classes=10,

84 | nn_runs=nn_runs, keep_runs=True, acquisition=acquisition_param)

85 | ue = estimator.estimate(torch.DoubleTensor(datapoints).cuda())

86 |

87 | if acquisition == 'max_prob':

88 | probs = softmax(estimator.last_mcd_runs(), axis=-1)

89 | probs = np.mean(probs, axis=-2)

90 | ue = 1 - np.max(probs, axis=-1)

91 |

92 | return ue

93 |

94 |

95 | def get_data(config):

96 | x_train, y_train, x_val, y_val, train_tfms = config['prepare_dataset'](config)

97 |

98 | if len(x_train) > config['train_size']:

99 | x_train, _, y_train, _ = train_test_split(

100 | x_train, y_train, train_size=config['train_size'], stratify=y_train

101 | )

102 |

103 | _, _, x_ood, y_ood, _ = config['prepare_dataset'](config)

104 | ood_loader = loader(x_ood, y_ood, config['batch_size'])

105 |

106 | loaders = OrderedDict({

107 | 'train': loader(x_train, y_train, config['batch_size'], tfms=train_tfms, train=True),

108 | 'valid': loader(x_val, y_val, config['batch_size']),

109 | })

110 | return loaders, ood_loader, x_train, y_train, x_val, y_val, x_ood, y_ood

111 |

112 |

113 | if __name__ == '__main__':

114 | config = parse_arguments()

115 | print(config)

116 | set_global_seed(42)

117 | loaders, ood_loader, x_train, y_train, x_val, y_val, x_ood, y_ood = get_data(config)

118 | print(y_train[:5])

119 |

120 | for i in range(config['repeats']):

121 | set_global_seed(i + 42)

122 | logdir = Path(f"logs/classification/{config['name']}_{i}")

123 | print(logdir)

124 |

125 | possible_checkpoint = logdir / 'checkpoints' / 'best.pth'

126 | if os.path.exists(possible_checkpoint):

127 | checkpoint = possible_checkpoint

128 | else:

129 | checkpoint = None

130 |

131 | model = train(config, loaders, logdir, checkpoint)

132 | print(model)

133 | x_ood_tensor = torch.cat([batch[0] for batch in ood_loader])

134 |

135 | probabilities, uncertainties, estimators = bench_uncertainty(

136 | model, checkpoint, ood_loader, x_ood_tensor, config['acquisition'], config['nn_runs'])

137 |

138 |

--------------------------------------------------------------------------------

/experiments/configs.py:

--------------------------------------------------------------------------------

1 | from copy import deepcopy

2 |

3 | from visual_datasets import prepare_cifar, prepare_mnist, prepare_svhn

4 | from models import SimpleConv, StrongConv

5 |

6 | base_config = {

7 | 'train_size': 50_000,

8 | 'val_size': 10_000,

9 | 'prepare_dataset': prepare_mnist,

10 | 'model_class': SimpleConv,

11 | 'epochs': 50,

12 | 'patience': 3,

13 | 'batch_size': 128,

14 | 'repeats': 3,

15 | 'dropout_rate': 0.5,

16 | 'nn_runs': 100

17 | }

18 |

19 |

20 | experiment_config = {

21 | 'mnist': {

22 | 'train_size': 500,

23 | 'dropout_rate': 0.5,

24 | 'n_models': 20

25 | },

26 | 'cifar': {

27 | 'prepare_dataset': prepare_cifar,

28 | 'model_class': StrongConv,

29 | 'n_models': 20,

30 | },

31 | 'imagenet': {

32 | 'dropout_rate': 0.5,

33 | 'repeats': 1,

34 | }

35 | }

36 |

37 | experiment_ood_config = {

38 | 'mnist': {

39 | 'train_size': 500,

40 | 'ood_dataset': prepare_mnist

41 | },

42 | 'svhn': {

43 | 'prepare_dataset': prepare_svhn,

44 | 'model_class': StrongConv,

45 | 'repeats': 3,

46 | },

47 | 'cifar': {

48 | 'prepare_dataset': prepare_cifar,

49 | 'model_class': StrongConv,

50 | 'repeats': 3

51 | }

52 | }

53 |

54 |

55 | al_config = deepcopy(base_config)

56 | al_config.update({

57 | 'pool_size': 10_000,

58 | 'val_size': 10_000,

59 | 'repeats': 5,

60 | 'methods': ['random', 'mc_dropout', 'decorrelating_sc', 'dpp', 'ht_dpp', 'k_dpp', 'ht_k_dpp'],

61 | 'steps': 50

62 | })

63 |

64 | al_experiments = {

65 | 'mnist': {

66 | 'start_size': 200,

67 | 'step_size': 10,

68 | },

69 | 'cifar': {

70 | 'start_size': 2000,

71 | 'step_size': 30,

72 | 'prepare_dataset': prepare_cifar,

73 | 'model_class': StrongConv,

74 | 'repeats': 3

75 | },

76 | 'svhn': {

77 | 'start_size': 2000,

78 | 'step_size': 30,

79 | 'prepare_dataset': prepare_svhn,

80 | 'model_class': StrongConv,

81 | 'repeats': 3

82 | }

83 | }

84 |

--------------------------------------------------------------------------------

/experiments/data/.gitignore:

--------------------------------------------------------------------------------

1 | *.csv

2 | *.png

3 | *.pkl

4 |

--------------------------------------------------------------------------------

/experiments/data/al/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/stat-ml/dpp-dropout-uncertainty/3e0ac89c6717be0ce1dc322ccd3cfb1f06e0e952/experiments/data/al/.gitkeep

--------------------------------------------------------------------------------

/experiments/data/detector/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/stat-ml/dpp-dropout-uncertainty/3e0ac89c6717be0ce1dc322ccd3cfb1f06e0e952/experiments/data/detector/.gitkeep

--------------------------------------------------------------------------------

/experiments/data/models/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/stat-ml/dpp-dropout-uncertainty/3e0ac89c6717be0ce1dc322ccd3cfb1f06e0e952/experiments/data/models/.gitkeep

--------------------------------------------------------------------------------

/experiments/data/ood/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/stat-ml/dpp-dropout-uncertainty/3e0ac89c6717be0ce1dc322ccd3cfb1f06e0e952/experiments/data/ood/.gitkeep

--------------------------------------------------------------------------------

/experiments/data/regression/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/stat-ml/dpp-dropout-uncertainty/3e0ac89c6717be0ce1dc322ccd3cfb1f06e0e952/experiments/data/regression/.gitkeep

--------------------------------------------------------------------------------

/experiments/data/xor/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/stat-ml/dpp-dropout-uncertainty/3e0ac89c6717be0ce1dc322ccd3cfb1f06e0e952/experiments/data/xor/.gitkeep

--------------------------------------------------------------------------------

/experiments/deprecated/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/stat-ml/dpp-dropout-uncertainty/3e0ac89c6717be0ce1dc322ccd3cfb1f06e0e952/experiments/deprecated/__init__.py

--------------------------------------------------------------------------------

/experiments/deprecated/active_learning_debug.py:

--------------------------------------------------------------------------------

1 | import sys

2 | sys.path.append('..')

3 |

4 | import torch

5 | import numpy as np

6 | import matplotlib.pyplot as plt

7 |

8 | from fastai.vision import rand_pad, flip_lr, ImageDataBunch

9 |

10 | from model.cnn import AnotherConv

11 | from dataloader.builder import build_dataset

12 | from deprecated.utils import ImageArrayDS

13 |

14 | # == Place to develop, experiment and debug new methods of active learning == #

15 |

16 | # torch.cuda.set_device(1)

17 |

18 |

19 | total_size = 60_000

20 | val_size = 10_000

21 | start_size = 1_000

22 | step_size = 10

23 | steps = 8

24 | reload = True

25 | nn_runs = 100

26 |

27 | pool_size = 200

28 |

29 |

30 | # Load data

31 | dataset = build_dataset('cifar_10', val_size=val_size)

32 | x_set, y_set = dataset.dataset('train')

33 | x_val, y_val = dataset.dataset('val')

34 |

35 | shape = (-1, 3, 32, 32)

36 | x_set = ((x_set - 128)/128).reshape(shape)

37 | x_val = ((x_val - 128)/128).reshape(shape)

38 |

39 | # x_pool, x_train, y_pool, y_train = train_test_split(x_set, y_set, test_size=start_size, stratify=y_set)

40 | x_train, y_train = x_set, y_set

41 |

42 | train_tfms = [*rand_pad(4, 32), flip_lr(p=0.5)]

43 | train_ds = ImageArrayDS(x_train, y_train, train_tfms)

44 | val_ds = ImageArrayDS(x_val, y_val)

45 | data = ImageDataBunch.create(train_ds, val_ds, bs=256)

46 |

47 |

48 | loss_func = torch.nn.CrossEntropyLoss()

49 |

50 | np.set_printoptions(threshold=sys.maxsize, suppress=True)

51 |

52 | model = AnotherConv()

53 | # model = resnet_masked(pretrained=True)

54 | # model = resnet_linear(pretrained=True, dropout_rate=0.5, freeze=False)

55 |

56 | # learner = Learner(data, model, metrics=accuracy, loss_func=loss_func)

57 | #

58 | # model_path = "experiments/data/model.pt"

59 | # if reload and os.path.exists(model_path):

60 | # model.load_state_dict(torch.load(model_path))

61 | # else:

62 | # learner.fit(10, 1e-3, wd=0.02)

63 | # torch.save(model.state_dict(), model_path)

64 | #

65 | # images = torch.FloatTensor(x_val)# .to('cuda')

66 | #

67 | # inferencer = Inferencer(model)

68 | # predictions = F.softmax(inferencer(images), dim=1).detach().cpu().numpy()[:10]

69 |

70 |

71 | repeats = 3

72 | methods = ['goy', 'mus', 'cosher']

73 | from random import random

74 |

75 | results = []

76 | for _ in range(repeats):

77 | start = 0.1 * random()

78 | for method in methods:

79 | accuracies = [start]

80 | current = start

81 | for i in range(10):

82 | current += 0.1*random()

83 | accuracies.append(current)

84 | records = list(zip(accuracies, range(len(accuracies)), [method] * len(accuracies)))

85 | results.extend(records)

86 |

87 | import pandas as pd

88 | import seaborn as sns

89 | df = pd.DataFrame(results, columns=['accuracy', 'step', 'method'])

90 | sns.lineplot('step', 'accuracy', hue='method', data=df)

91 | plt.show()

92 |

93 |

94 | # idxs = np.argsort(entropies)[::-1][:10]

95 | # print(idxs)

96 |

97 | # mask = build_mask('k_dpp')

98 | # estimator = BaldMasked(inferencer, dropout_mask=mask, num_classes=10, nn_runs=nn_runs)

99 | # estimations = estimator.estimate(images)

100 | # print(estimations)

101 |

102 |

103 |

104 |

105 |

106 |

107 |

108 |

109 |

110 |

--------------------------------------------------------------------------------

/experiments/deprecated/active_learning_mnist.py:

--------------------------------------------------------------------------------

1 | import sys

2 | import torch

3 |

4 | sys.path.append('..')

5 | from dataloader.builder import build_dataset

6 | from uncertainty_estimator.masks import DEFAULT_MASKS

7 | from active_learning import main

8 |

9 | torch.cuda.set_device(1)

10 | torch.backends.cudnn.benchmark = True

11 |

12 |

13 | def prepare_mnist(config):

14 | dataset = build_dataset('mnist', val_size=config['val_size'])

15 | x_set, y_set = dataset.dataset('train')

16 | x_val, y_val = dataset.dataset('val')

17 |

18 | shape = (-1, 1, 28, 28)

19 | x_set = ((x_set - 128) / 128).reshape(shape)

20 | x_val = ((x_val - 128) / 128).reshape(shape)

21 |

22 | train_tfms = []

23 |

24 | return x_set, y_set, x_val, y_val, train_tfms

25 |

26 |

27 | mnist_config = {

28 | 'repeats': 5,

29 | 'start_size': 100,

30 | 'step_size': 20,

31 | 'val_size': 10_000,

32 | 'pool_size': 10_000,

33 | 'steps': 30,

34 | 'methods': ['random', 'error_oracle', 'max_entropy', *DEFAULT_MASKS],

35 | 'epochs': 30,

36 | 'patience': 2,

37 | 'model_type': 'simple_conv',

38 | 'nn_runs': 100,

39 | 'batch_size': 32,

40 | 'start_lr': 5e-4,

41 | 'weight_decay': 0.2,

42 | 'prepare_dataset': prepare_mnist,

43 | 'name': 'mnist_beauty_2'

44 | }

45 |

46 |

47 | if __name__ == '__main__':

48 | main(mnist_config)

49 |

--------------------------------------------------------------------------------

/experiments/deprecated/active_learning_svhn.py:

--------------------------------------------------------------------------------

1 | import sys

2 | import torch

3 | import numpy as np

4 | from fastai.vision import (rand_pad, flip_lr)

5 |

6 | sys.path.append('..')

7 | from dataloader.builder import build_dataset

8 | from uncertainty_estimator.masks import DEFAULT_MASKS

9 | from active_learning import main

10 |

11 | torch.cuda.set_device(1)

12 | torch.backends.cudnn.benchmark = True

13 |

14 |

15 | def prepare_svhn(config):

16 | dataset = build_dataset('svhn', val_size=config['val_size'])

17 | x_set, y_set = dataset.dataset('train')

18 | x_val, y_val = dataset.dataset('val')

19 | y_set[y_set == 10] = 0

20 | y_val[y_val == 10] = 0

21 |

22 | shape = (-1, 32, 32, 3)

23 | x_set = ((x_set - 128) / 128).reshape(shape)

24 | x_val = ((x_val - 128) / 128).reshape(shape)

25 | x_set = np.rollaxis(x_set, 3, 1)

26 | x_val = np.rollaxis(x_val, 3, 1)

27 |

28 | train_tfms = [*rand_pad(4, 32), flip_lr(p=0.5)] # Transformation to augment images

29 |

30 | return x_set, y_set, x_val, y_val, train_tfms

31 |

32 |

33 | svhn_config = {

34 | 'repeats': 3,

35 | 'start_size': 5_000,

36 | 'step_size': 50,

37 | 'val_size': 10_000,

38 | 'pool_size': 12_000,

39 | 'steps': 30,

40 | 'methods': ['random', 'error_oracle', 'max_entropy', *DEFAULT_MASKS],

41 | 'epochs': 30,

42 | 'patience': 2,

43 | 'model_type': 'resnet',

44 | 'nn_runs': 100,

45 | 'batch_size': 256,

46 | 'start_lr': 5e-4,

47 | 'weight_decay': 0.2,

48 | 'prepare_dataset': prepare_svhn,

49 | 'name': 'svhn'

50 | }

51 |

52 | experiment = 4

53 |

54 | if experiment == 2:

55 | svhn_config['pool_size'] = 5000

56 | svhn_config['start_size'] = 2000

57 | svhn_config['step_size'] = 20

58 | svhn_config['model'] = 'conv'

59 | elif experiment == 3:

60 | svhn_config['pool_size'] = 20_000

61 | svhn_config['start_size'] = 10_000

62 | svhn_config['step_size'] = 100

63 | svhn_config['model'] = 'resent'

64 | elif experiment == 4:

65 | svhn_config['pool_size'] = 5_000

66 | svhn_config['start_size'] = 1_000

67 | svhn_config['step_size'] = 30

68 | svhn_config['model'] = 'resent'

69 | svhn_config['repeats'] = 10

70 |

71 |

72 | if __name__ == '__main__':

73 | main(svhn_config)

74 |

--------------------------------------------------------------------------------

/experiments/deprecated/al_rosenbrock_experiment.py:

--------------------------------------------------------------------------------

1 | import random

2 | import argparse

3 |

4 | import numpy as np

5 | import matplotlib.pyplot as plt

6 | import torch

7 |

8 | from model.mlp import MLP

9 | from dataloader.rosen import RosenData

10 | from uncertainty_estimator.nngp import NNGPRegression

11 | from uncertainty_estimator.mcdue import MCDUE

12 | from uncertainty_estimator.random_estimator import RandomEstimator

13 | from sample_selector.eager import EagerSampleSelector

14 | from oracle.identity import IdentityOracle

15 | from active_learning.al_trainer import ALTrainer

16 |

17 |

18 | def run_experiment(config):

19 | """

20 | Run active learning for the 10D rosenbrock function data

21 | It starts from small train dataset and then extends it with points from pool

22 |

23 | We compare three sampling methods:

24 | - Random datapoints

25 | - Points with highest uncertainty by MCDUE

26 | - Points with highest uncertainty by NNGP (proposed method)

27 | """

28 | rmses = {}

29 |

30 | for estimator_name in config['estimators']:

31 | print("\nEstimator:", estimator_name)

32 |

33 | # load data

34 |

35 | rosen = RosenData(

36 | config['n_dim'], config['data_size'], config['data_split'],

37 | use_cache=config['use_cache'])

38 | x_train, y_train = rosen.dataset('train')

39 | x_val, y_val = rosen.dataset('train')

40 | x_pool, y_pool = rosen.dataset('pool')

41 |

42 | # Build neural net and set random seed

43 | set_random(config['random_seed'])

44 | model = MLP(config['layers'])

45 |

46 | estimator = build_estimator(estimator_name, model) # to estimate uncertainties

47 | oracle = IdentityOracle(y_pool) # generate y for X from pool

48 | sampler = EagerSampleSelector() # sample X and y from pool by uncertainty estimations

49 |

50 | # Active learning training

51 | trainer = ALTrainer(

52 | model, estimator, sampler, oracle, config['al_iterations'],

53 | config['update_size'], verbose=config['verbose'])

54 | rmses[estimator_name] = trainer.train(x_train, y_train, x_val, y_val, x_pool)

55 |

56 | visualize(rmses)

57 |

58 |

59 | def set_random(random_seed):

60 | # Setting seeds for reproducibility

61 | if random_seed is not None:

62 | torch.manual_seed(random_seed)

63 | np.random.seed(random_seed)

64 | random.seed(random_seed)

65 |

66 |

67 | def build_estimator(name, model):

68 | if name == 'nngp':

69 | estimator = NNGPRegression(model)

70 | elif name == 'random':

71 | estimator = RandomEstimator()

72 | elif name == 'mcdue':

73 | estimator = MCDUE(model)

74 | else:

75 | raise ValueError("Wrong estimator name")

76 | return estimator

77 |

78 |

79 | def visualize(rmses):

80 | print(rmses)

81 | plt.figure(figsize=(12, 9))

82 | plt.xlabel('Active learning iteration')

83 | plt.ylabel('Validation RMSE')

84 | for estimator_name, rmse in rmses.items():

85 | plt.plot(rmse, label=estimator_name, marker='.')

86 |

87 | plt.title('RMS Error by active learning iterations')

88 | plt.legend()

89 |

90 | plt.show()

91 |

92 |

93 | def parse_arguments():

94 | parser = argparse.ArgumentParser(description='Change experiment parameters')

95 | parser.add_argument(

96 | '--estimators', choices=['nngp', 'mcdue', 'random'], default=['nngp', 'mcdue', 'random'],

97 | nargs='+', help='Estimator types for the experiment')

98 | parser.add_argument(

99 | '--random-seed', type=int, default=None,

100 | help='Set the seed to make result reproducible')

101 | parser.add_argument(

102 | '--n-dim', type=int, default=10, help='Rosenbrock function dimentions')

103 | parser.add_argument(

104 | '--data-size', type=int, default=2000, help='Size of dataset')

105 | parser.add_argument(

106 | '--data-split', type=int, default=[0.1, 0.1, 0.1, 0.7], help='Size of dataset')

107 | parser.add_argument(

108 | '--update-size', type=int, default=100,

109 | help='Amount of samples to take from pool per iteration')

110 | parser.add_argument(

111 | '--al-iterations', '-i', type=int, default=10, help='Number of learning iterations')

112 | parser.add_argument('--verbose', action='store_true')

113 | parser.add_argument(

114 | '--no-use-cache', dest='use_cache', action='store_false',

115 | help='To generate new sample points for rosenbrock function')

116 | parser.add_argument(

117 | '--layers', type=int, nargs='+', default=[10, 128, 64, 32, 1],

118 | help='Size of the layers in neural net')

119 |

120 | return vars(parser.parse_args())

121 |

122 |

123 | if __name__ == '__main__':

124 | config = parse_arguments()

125 | run_experiment(config)

126 |

127 |

128 | config = {

129 | 'estimators': ['nngp', 'mcdue', 'random'],

130 | 'random_seed': None,

131 | 'n_dim': 9,

132 | 'data_size': 2400,

133 | 'data_split': [0.2, 0.1, 0.1, 0.6],

134 | 'update_size': 99,

135 | 'al_iterations': 9,

136 | 'verbose': False,

137 | 'use_cache': True,

138 | 'layers': [9, 128, 64, 32, 1]

139 | }

140 |

--------------------------------------------------------------------------------

/experiments/deprecated/classification_active_learning.py:

--------------------------------------------------------------------------------

1 | from argparse import ArgumentParser

2 | from collections import OrderedDict

3 | from copy import deepcopy

4 | from pathlib import Path

5 |

6 | import numpy as np

7 | import torch

8 | from torch.utils.data import DataLoader

9 | from sklearn.model_selection import train_test_split

10 | import matplotlib.pyplot as plt

11 | import seaborn as sns

12 | import pandas as pd

13 | from catalyst.dl import SupervisedRunner

14 | from catalyst.dl.callbacks import AccuracyCallback, EarlyStoppingCallback

15 | from catalyst.utils import set_global_seed

16 |

17 | from alpaca.active_learning.simple_update import update_set

18 |

19 | from configs import al_config, al_experiments

20 | from visual_datasets import ImageDataset

21 |

22 |

23 | def parse_arguments():

24 | parser = ArgumentParser()

25 | parser.add_argument('name')

26 | name = parser.parse_args().name

27 | config = deepcopy(al_config)

28 | config.update(al_experiments[name])

29 | config['name'] = name

30 |

31 | return config

32 |

33 |

34 | def active_train(config, i):

35 | set_global_seed(i + 42)

36 | # Load data

37 | x_set, y_set, x_val, y_val, train_tfms = config['prepare_dataset'](config)

38 | print(x_set.shape)

39 |

40 | # Initial data split

41 | x_set, x_train_init, y_set, y_train_init = train_test_split(x_set, y_set, test_size=config['start_size'], stratify=y_set)

42 | _, x_pool_init, _, y_pool_init = train_test_split(x_set, y_set, test_size=config['pool_size'], stratify=y_set)

43 |

44 | model_init = config['model_class']().double()

45 | val_accuracy = []

46 | # Active learning

47 | for method in config['methods']:

48 | model = deepcopy(model_init)

49 | print(f"== {method} ==")

50 | logdir = f"logs/al/{config['name']}_{method}_{i}"

51 | x_pool, y_pool = np.copy(x_pool_init), np.copy(y_pool_init)

52 | x_train, y_train = np.copy(x_train_init), np.copy(y_train_init)

53 |

54 | criterion = torch.nn.CrossEntropyLoss()

55 | optimizer = torch.optim.Adam(model.parameters())

56 |

57 | accuracies = []

58 |

59 | for j in range(config['steps']):

60 | print(f"Step {j+1}, train size: {len(x_train)}")

61 |

62 | loaders = get_loaders(x_train, y_train, x_val, y_val, config['batch_size'], train_tfms)

63 |

64 | runner = SupervisedRunner()

65 | callbacks = [AccuracyCallback(num_classes=10), EarlyStoppingCallback(config['patience'])]

66 | runner.train(

67 | model, criterion, optimizer, loaders,

68 | logdir=logdir, num_epochs=config['epochs'], verbose=False,

69 | callbacks=callbacks

70 | )

71 |

72 | accuracies.append(runner.state.best_valid_metrics['accuracy01'])

73 |

74 | if i != config['steps'] - 1:

75 | samples = next(iter(loader(x_pool, y_pool, batch_size=len(x_pool))))[0].cuda()

76 | print('Samples!', samples.shape)

77 | x_pool, x_train, y_pool, y_train = update_set(

78 | x_pool, x_train, y_pool, y_train, config['step_size'],

79 | method=method, model=model, samples=samples)

80 | print('Metric', accuracies)

81 |

82 | records = list(zip(accuracies, range(len(accuracies)), [method] * len(accuracies)))

83 | val_accuracy.extend(records)

84 |

85 | return val_accuracy

86 |

87 |

88 | def get_loaders(x_train, y_train, x_val, y_val, batch_size, tfms):

89 | loaders = OrderedDict({

90 | 'train': loader(x_train, y_train, batch_size, tfms=tfms, train=True),

91 | 'valid': loader(x_val, y_val, batch_size)

92 | })

93 | return loaders

94 |

95 |

96 | # Set initial datas

97 | def loader(x, y, batch_size=128, tfms=None, train=False):

98 | # ds = TensorDataset(torch.DoubleTensor(x), torch.LongTensor(y))

99 | ds = ImageDataset(x, y, train=train, tfms=tfms)

100 | _loader = DataLoader(ds, batch_size=batch_size, num_workers=4, shuffle=train)

101 | return _loader

102 |

103 |

104 | def plot_metric(metrics, config, title=None):

105 | df = pd.DataFrame(metrics, columns=['Accuracy', 'Step', 'Method'])

106 |

107 | filename = f"ht_{config['name']}_{config['start_size']}_{config['step_size']}"

108 | dir = Path(__file__).parent.absolute() / 'data' / 'al'

109 | df.to_csv(dir / (filename + '.csv'))

110 |

111 | try:

112 | sns.lineplot('Step', 'Accuracy', hue='Method', data=df)

113 | plt.legend(loc='upper left')

114 | plt.figure(figsize=(8, 6))

115 | default_title = f"Validation accuracy, start size {config['start_size']}, "

116 | default_title += f"step size {config['step_size']}"

117 | title = title or default_title

118 | plt.title(title)

119 | file = dir / filename

120 | plt.savefig(file)

121 | except Exception as e:

122 | print(e)

123 |

124 |

125 | if __name__ == '__main__':

126 | config = parse_arguments()

127 | results = []

128 | for i in range(config['repeats']):

129 | print(f"======{i}======")

130 | accuracies = active_train(config, i)

131 | results.extend(accuracies)

132 |

133 | print(results)

134 |

135 | plot_metric(results, config)

136 |

137 |

--------------------------------------------------------------------------------

/experiments/deprecated/classification_active_learning_fasetai.py:

--------------------------------------------------------------------------------

1 | import random

2 |

3 | import numpy as np

4 | import matplotlib.pyplot as plt

5 | from sklearn.model_selection import train_test_split

6 | import seaborn as sns

7 | import pandas as pd

8 |

9 | import torch

10 | from torch import nn

11 |

12 | from fastai.vision import (ImageDataBunch, Learner, accuracy)

13 | from fastai.callbacks import EarlyStoppingCallback

14 |

15 | from alpaca.model.cnn import AnotherConv, SimpleConv

16 | from alpaca.model.resnet import resnet_masked

17 | from alpaca.active_learning.simple_update import update_set

18 | from deprecated.utils import ImageArrayDS

19 | from visual_datasets import prepare_mnist, prepare_cifar, prepare_svhn

20 |

21 |

22 | """

23 | Active learning experiment for computer vision tasks (MNIST, CIFAR, SVHN)

24 | """

25 |

26 |

27 | SEED = 43

28 | torch.manual_seed(SEED)

29 | np.random.seed(SEED)

30 | random.seed(SEED)

31 |

32 | if torch.cuda.is_available():

33 | torch.cuda.set_device(0)

34 | torch.backends.cudnn.deterministic = True

35 | torch.backends.cudnn.benchmark = False

36 | device = 'cuda'

37 | else:

38 | device = 'cpu'

39 |

40 | experiment_label = 'ht'

41 |

42 |

43 | def main(config):

44 | # Load data

45 | x_set, y_set, x_val, y_val, train_tfms = config['prepare_dataset'](config)

46 |

47 | val_accuracy = []

48 | for _ in range(config['repeats']): # more repЕсли кнопочный, то есть вот прямо бабушкофон. Ещё его можно забрать из пассажа на фрунзе.eats for robust results

49 | # Initial data split

50 | x_set, x_train_init, y_set, y_train_init = train_test_split(x_set, y_set, test_size=config['start_size'], stratify=y_set)

51 | _, x_pool_init, _, y_pool_init = train_test_split(x_set, y_set, test_size=config['pool_size'], stratify=y_set)

52 |

53 | # Active learning

54 | for method in config['methods']:

55 | print(f"== {method} ==")

56 | x_pool, y_pool = np.copy(x_pool_init), np.copy(y_pool_init)

57 | x_train, y_train = np.copy(x_train_init), np.copy(y_train_init)

58 |

59 | model = build_model(config['model_type'])

60 | accuracies = []

61 |

62 | for i in range(config['steps']):

63 | print(f"Step {i+1}, train size: {len(x_train)}")

64 |

65 | learner = train_classifier(model, config, x_train, y_train, x_val, y_val, train_tfms)

66 | accuracies.append(learner.recorder.metrics[-1][0].item())

67 |

68 | if i != config['steps'] - 1:

69 | x_pool, x_train, y_pool, y_train = update_set(

70 | x_pool, x_train, y_pool, y_train, config['step_size'], method=method, model=model)

71 |

72 | records = list(zip(accuracies, range(len(accuracies)), [method] * len(accuracies)))

73 | val_accuracy.extend(records)

74 |

75 | # Display results

76 | try:

77 | plot_metric(val_accuracy, config)

78 | except:

79 | import ipdb; ipdb.set_trace()

80 |

81 |

82 | def train_classifier(model, config, x_train, y_train, x_val, y_val, train_tfms=None):

83 | loss_func = torch.nn.CrossEntropyLoss()

84 |

85 | if train_tfms is None:

86 | train_tfms = []

87 | train_ds = ImageArrayDS(x_train, y_train, train_tfms)

88 | val_ds = ImageArrayDS(x_val, y_val)

89 | data = ImageDataBunch.create(train_ds, val_ds, bs=config['batch_size'])

90 |

91 | callbacks = [partial(EarlyStoppingCallback, min_delta=1e-3, patience=config['patience'])]

92 | learner = Learner(data, model, metrics=accuracy, loss_func=loss_func, callback_fns=callbacks)

93 | learner.fit(config['epochs'], config['start_lr'], wd=config['weight_decay'])

94 |

95 | return learner

96 |

97 |

98 | def plot_metric(metrics, config, title=None):

99 | plt.figure(figsize=(8, 6))

100 | default_title = f"Validation accuracy, start size {config['start_size']}, "

101 | default_title += f"step size {config['step_size']}, model {config['model_type']}"

102 | title = title or default_title

103 | plt.title(title)

104 |

105 | df = pd.DataFrame(metrics, columns=['Accuracy', 'Step', 'Method'])

106 | sns.lineplot('Step', 'Accuracy', hue='Method', data=df)

107 | # plt.legend(loc='upper left')

108 |

109 | filename = f"{experiment_label}_{config['name']}_{config['model_type']}_{config['start_size']}_{config['step_size']}"

110 | dir = Path(__file__).parent.absolute() / 'data' / 'al'

111 | file = dir / filename

112 | plt.savefig(file)

113 | df.to_csv(dir / (filename + '.csv'))

114 | # plt.show()

115 |

116 |

117 | class Model21(nn.Module):

118 | def __init__(self):

119 | super().__init__()

120 | base = 16

121 | self.conv = nn.Sequential(

122 | nn.Conv2d(3, base, 3, padding=1, bias=False),

123 | nn.BatchNorm2d(base),

124 | nn.CELU(),

125 | nn.Conv2d(base, base, 3, padding=1, bias=False),

126 | nn.CELU(),

127 | nn.MaxPool2d(2, 2),

128 | nn.Dropout2d(0.2),

129 | nn.Conv2d(base, 2*base, 3, padding=1, bias=False),

130 | nn.BatchNorm2d(2*base),

131 | nn.CELU(),

132 | nn.Conv2d(2 * base, 2 * base, 3, padding=1, bias=False),

133 | nn.CELU(),

134 | nn.Dropout2d(0.3),

135 | nn.MaxPool2d(2, 2),

136 | nn.Conv2d(2*base, 4*base, 3, padding=1, bias=False),

137 | nn.BatchNorm2d(4*base),

138 | nn.CELU(),

139 | nn.Conv2d(4*base, 4*base, 3, padding=1, bias=False),

140 | nn.CELU(),

141 | nn.Dropout2d(0.4),

142 | nn.MaxPool2d(2, 2),

143 | )

144 | self.linear_size = 8 * 8 * base

145 | self.linear = nn.Sequential(

146 | nn.Linear(self.linear_size, 8*base),

147 | nn.CELU(),

148 | )

149 | self.dropout = nn.Dropout(0.3)

150 | self.fc = nn.Linear(8*base, 10)

151 |

152 | def forward(self, x, dropout_rate=0.5, dropout_mask=None):

153 | x = self.conv(x)

154 | x = x.reshape(-1, self.linear_size)

155 | x = self.linear(x)

156 | if dropout_mask is None:

157 | x = self.dropout(x)

158 | else:

159 | x = x * dropout_mask(x, 0.3, 0)

160 | return self.fc(x)

161 |

162 |

163 | def build_model(model_type):

164 | if model_type == 'conv':

165 | model = AnotherConv()

166 | elif model_type == 'resnet':

167 | model = resnet_masked(pretrained=True)

168 | elif model_type == 'simple_conv':

169 | model = SimpleConv()

170 | elif model_type == 'strong_conv':

171 | print('piu!')

172 | model = Model21()

173 | return model

174 |

175 |

176 | config_cifar = {

177 | 'val_size': 10_000,

178 | 'pool_size': 15_000,

179 | 'start_size': 7_000,

180 | 'step_size': 50,

181 | 'steps': 50,

182 | # 'methods': ['random', 'error_oracle', 'max_entropy', *DEFAULT_MASKS, 'ht_dpp'],

183 | 'methods': ['random', 'error_oracle', 'max_entropy', 'mc_dropout', 'ht_dpp'],

184 | 'epochs': 30,

185 | 'patience': 2,

186 | 'model_type': 'strong_conv',

187 | 'repeats': 3,

188 | 'nn_runs': 100,

189 | 'batch_size': 128,

190 | 'start_lr': 5e-4,

191 | 'weight_decay': 0.0002,

192 | 'prepare_dataset': prepare_cifar,

193 | 'name': 'cifar'

194 | }

195 |

196 | config_svhn = deepcopy(config_cifar)

197 | config_svhn.update({

198 | 'prepare_dataset': prepare_svhn,

199 | 'name': 'svhn',

200 | 'model_type': 'strong_conv',

201 | 'repeats': 3,

202 | 'epochs': 30,

203 | 'methods': ['random', 'error_oracle', 'max_entropy', 'mc_dropout', 'ht_dpp']

204 | })

205 |

206 | config_mnist = deepcopy(config_cifar)

207 | config_mnist.update({

208 | 'start_size': 100,

209 | 'step_size': 20,

210 | 'model_type': 'simple_conv',

211 | 'methods': ['ht_dpp'],

212 | 'prepare_dataset': prepare_mnist,

213 | 'batch_size': 32,

214 | 'name': 'mnist',

215 | 'steps': 50

216 | })

217 |

218 | # configs = [config_mnist, config_cifar, config_svhn]

219 |

220 | configs = [config_mnist]

221 |

222 | if __name__ == '__main__':

223 | for config in configs:

224 | print(config)

225 | main(config)

--------------------------------------------------------------------------------

/experiments/deprecated/classification_error_detection.py:

--------------------------------------------------------------------------------

1 | from argparse import ArgumentParser

2 | from collections import OrderedDict, defaultdict

3 | from copy import deepcopy

4 | from pathlib import Path

5 |

6 | import numpy as np

7 | import torch

8 | from torch.utils.data import TensorDataset, DataLoader

9 | from sklearn.model_selection import train_test_split

10 | from sklearn.preprocessing import StandardScaler

11 | from sklearn.metrics import roc_curve, roc_auc_score

12 | from scipy.special import softmax

13 | import matplotlib.pyplot as plt

14 | import seaborn as sns

15 | import pandas as pd

16 | from catalyst.dl import SupervisedRunner

17 | from catalyst.dl.callbacks import AccuracyCallback, EarlyStoppingCallback

18 | from catalyst.utils import set_global_seed

19 |

20 | from alpaca.active_learning.simple_update import entropy

21 | from alpaca.uncertainty_estimator import build_estimator

22 |

23 | from configs import base_config, experiment_config

24 |

25 |

26 | def parse_arguments():

27 | parser = ArgumentParser()

28 | parser.add_argument('name')

29 | name = parser.parse_args().name

30 |

31 | config = deepcopy(base_config)

32 | config.update(experiment_config[name])

33 | config['name'] = name

34 |

35 | return config

36 |

37 |

38 | def train(config, loaders, logdir, checkpoint=None):

39 | model = config['model_class']().double()

40 |

41 | if checkpoint is not None:

42 | model.load_state_dict(torch.load(checkpoint)['model_state_dict'])

43 | model.eval()

44 | else:

45 | criterion = torch.nn.CrossEntropyLoss()

46 | optimizer = torch.optim.Adam(model.parameters())

47 | callbacks = [AccuracyCallback(num_classes=10), EarlyStoppingCallback(config['patience'])]

48 |

49 | runner = SupervisedRunner()

50 | runner.train(

51 | model, criterion, optimizer, loaders,

52 | logdir=logdir, num_epochs=config['epochs'], verbose=False,

53 | callbacks=callbacks

54 | )

55 |

56 | return model

57 |

58 |

59 | def bench_error_detection(model, estimators, loaders, x_val, y_val):

60 | runner = SupervisedRunner()

61 | logits = runner.predict_loader(model, loaders['valid'])

62 | probabilities = softmax(logits, axis=-1)

63 |

64 | estimators = [

65 | # 'max_entropy', 'max_prob',

66 | 'mc_dropout', 'decorrelating_sc',

67 | 'dpp', 'ht_dpp', 'k_dpp', 'ht_k_dpp']

68 |

69 | uncertainties = {}

70 | for estimator_name in estimators:

71 | print(estimator_name)

72 | ue = calc_ue(model, x_val, probabilities, estimator_name, nn_runs=150)

73 | uncertainties[estimator_name] = ue

74 |

75 | predictions = np.argmax(probabilities, axis=-1)

76 | errors = (predictions != y_val).astype(np.int)

77 |

78 | results = []

79 | for estimator_name in estimators:

80 | fpr, tpr, _ = roc_curve(errors, uncertainties[estimator_name])

81 | roc_auc = roc_auc_score(errors, uncertainties[estimator_name])

82 | results.append((estimator_name, roc_auc))

83 |

84 | return results

85 |

86 |

87 | def get_data(config):

88 | x_train, y_train, x_val, y_val, train_tfms = config['prepare_dataset'](config)

89 |

90 | if len(x_train) > config['train_size']:

91 | x_train, _, y_train, _ = train_test_split(

92 | x_train, y_train, train_size=config['train_size'], stratify=y_train

93 | )

94 |

95 | loaders = OrderedDict({

96 | 'train': loader(x_train, y_train, config['batch_size'], shuffle=True),

97 | 'valid': loader(x_val, y_val, config['batch_size'])

98 | })

99 | return loaders, x_train, y_train, x_val, y_val

100 |

101 |

102 | def calc_ue(model, datapoints, probabilities, estimator_type='max_prob', nn_runs=150):

103 | if estimator_type == 'max_prob':

104 | ue = 1 - probabilities[np.arange(len(probabilities)), np.argmax(probabilities, axis=-1)]

105 | elif estimator_type == 'max_entropy':

106 | ue = entropy(probabilities)

107 | else:

108 | estimator = build_estimator(

109 | 'bald_masked', model, dropout_mask=estimator_type, num_classes=10,

110 | nn_runs=nn_runs, keep_runs=True, acquisition='var_ratio')

111 | ue = estimator.estimate(torch.DoubleTensor(datapoints).cuda())

112 | return ue

113 |

114 |

115 | # Set initial datas

116 | def loader(x, y, batch_size=128, shuffle=False):

117 | ds = TensorDataset(torch.DoubleTensor(x), torch.LongTensor(y))

118 | _loader = DataLoader(ds, batch_size=batch_size, num_workers=4, shuffle=shuffle)

119 | return _loader

120 |

121 |

122 | if __name__ == '__main__':

123 | config = parse_arguments()

124 | print(config)

125 | loaders, x_train, y_train, x_val, y_val = get_data(config)

126 |

127 | rocaucs = []

128 | for i in range(config['repeats']):

129 | set_global_seed(i + 42)

130 | logdir = f"logs/ht/{config['name']}_{i}"

131 | model = train(config, loaders, logdir)

132 | rocaucs.extend(bench_error_detection(model, config, loaders, x_val, y_val))

133 |

134 | df = pd.DataFrame(rocaucs, columns=['Estimator', 'ROC-AUCs'])

135 | plt.figure(figsize=(9, 6))

136 | plt.title(f"Error detection for {config['name']}")

137 | sns.boxplot('Estimator', 'ROC-AUCs', data=df)

138 | plt.savefig(f"data/ed/{config['name']}.png", dpi=150)

139 | plt.show()

140 |

141 | df.to_csv(f"logs/{config['name']}_ed.csv")

142 |

--------------------------------------------------------------------------------

/experiments/deprecated/classification_error_detection_fastai.py:

--------------------------------------------------------------------------------

1 | from copy import deepcopy

2 | import sys

3 | from functools import partial

4 | import random

5 |

6 | import numpy as np

7 | import matplotlib.pyplot as plt

8 | from sklearn.model_selection import train_test_split

9 |

10 | import torch

11 | import torch.nn.functional as F

12 | from fastai.vision import (ImageDataBunch, Learner, accuracy)

13 | from fastai.callbacks import EarlyStoppingCallback

14 |

15 | from alpaca.uncertainty_estimator import build_estimator

16 | from alpaca.active_learning.simple_update import entropy

17 |

18 | from deprecated.utils import ImageArrayDS

19 | from deprecated.classification_active_learning import build_model

20 | from visual_datasets import prepare_cifar, prepare_mnist, prepare_svhn

21 |

22 |

23 | """

24 | Experiment to detect errors by uncertainty estimation quantification

25 | It provided on MNIST, CIFAR and SVHN datasets (see config below)

26 | We report results as a boxplot ROC-AUC figure on multiple runs

27 | """

28 |

29 | label = 'ue_accuracy'

30 |

31 | SEED = 42

32 | torch.manual_seed(SEED)

33 | np.random.seed(SEED)

34 | random.seed(SEED)

35 |

36 | if torch.cuda.is_available():

37 | torch.cuda.set_device(1)

38 | torch.backends.cudnn.deterministic = True

39 | torch.backends.cudnn.benchmark = False

40 | device = 'cuda'

41 | else:

42 | device = 'cpu'

43 |

44 |

45 | def accuracy_curve(mistake, ue):

46 | accuracy_ = lambda x: 1 - sum(x) / len(x)

47 | thresholds = np.arange(0.1, 1.1, 0.1)

48 | accuracy_by_ue = [accuracy_(mistake[ue < t]) for t in thresholds]

49 | return thresholds, accuracy_by_ue

50 |

51 |

52 | def benchmark_uncertainty(config):

53 | results = []

54 | plt.figure(figsize=(10, 8))

55 | for i in range(config['repeats']):

56 | # Load data

57 | x_set, y_set, x_val, y_val, train_tfms = config['prepare_dataset'](config)

58 |

59 | if len(x_set) > config['train_size']:

60 | _, x_train, _, y_train = train_test_split(

61 | x_set, y_set, test_size=config['train_size'], stratify=y_set)

62 | else:

63 | x_train, y_train = x_set, y_set

64 |

65 | train_ds = ImageArrayDS(x_train, y_train, train_tfms)

66 | val_ds = ImageArrayDS(x_val, y_val)

67 | data = ImageDataBunch.create(train_ds, val_ds, bs=config['batch_size'])

68 |

69 | # Train model

70 | loss_func = torch.nn.CrossEntropyLoss()

71 | np.set_printoptions(threshold=sys.maxsize, suppress=True)

72 |

73 | model = build_model(config['model_type'])

74 | callbacks = [partial(EarlyStoppingCallback, min_delta=1e-3, patience=config['patience'])]

75 | learner = Learner(data, model, metrics=accuracy, loss_func=loss_func, callback_fns=callbacks)

76 | learner.fit(config['epochs'], config['start_lr'], wd=config['weight_decay'])

77 |

78 | # Evaluate different estimators

79 | images = torch.FloatTensor(x_val).to(device)

80 | logits = model(images)

81 | probabilities = F.softmax(logits, dim=1).detach().cpu().numpy()

82 | predictions = np.argmax(probabilities, axis=-1)

83 | print('Logits average', np.average(logits))

84 |

85 | for estimator_name in config['estimators']:

86 | print(estimator_name)

87 | ue = calc_ue(model, images, probabilities, estimator_name, config['nn_runs'])

88 | mistake = 1 - (predictions == y_val).astype(np.int)

89 |

90 | # roc_auc = roc_auc_score(mistake, ue)

91 | # print(estimator_name, roc_auc)

92 | # results.append((estimator_name, roc_auc))

93 |

94 | # ue_thresholds, ue_accuracy = accuracy_curve(mistake, ue)

95 | # results.extend([(t, accu, estimator_name) for t, accu in zip(ue_thresholds, ue_accuracy)])

96 | # plt.plot(ue_thresholds, ue_accuracy, label=estimator_name, alpha=0.8)

97 |

98 | # if i == config['repeats'] - 1:

99 | # fpr, tpr, thresholds = roc_curve(mistake, ue, pos_label=1)

100 | # plt.plot(fpr, tpr, label=name, alpha=0.8)

101 | # plt.xlabel('FPR')

102 | # plt.ylabel('TPR')

103 |

104 | # if i == config['repeats'] - 1:

105 | # ue_thresholds, ue_accuracy = accuracy_curve(mistake, ue)

106 | # plt.plot(ue_thresholds, ue_accuracy, label=name, alpha=0.8)

107 | # Plot the results and generate figures

108 | # plt.figure(dpi=150)