├── logs
│   └── encode.log
├── .gitignore
├── _config.yml
├── kill.sh
├── logo
│   ├── streamline_dark_mode.png
│   └── streamline_light_mode.png
├── caddyFile
├── LICENSE
├── launch.sh
└── README.md

/logs/encode.log:
--------------------------------------------------------------------------------

--------------------------------------------------------------------------------

/.gitignore:
--------------------------------------------------------------------------------
logs/
--------------------------------------------------------------------------------

/_config.yml:
--------------------------------------------------------------------------------
theme: jekyll-theme-hacker
--------------------------------------------------------------------------------

/kill.sh:
--------------------------------------------------------------------------------
#!/bin/bash

# Stop the encoder by killing every running ffmpeg process

sudo pkill ffmpeg
--------------------------------------------------------------------------------

/logo/streamline_dark_mode.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/streamlinevideo/streamline/HEAD/logo/streamline_dark_mode.png
--------------------------------------------------------------------------------

/logo/streamline_light_mode.png:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/streamlinevideo/streamline/HEAD/logo/streamline_light_mode.png
--------------------------------------------------------------------------------

/caddyFile:
--------------------------------------------------------------------------------
:80

root /home/ubuntu/streamline/www

webdav {
    scope /home/ubuntu/streamline/www
    modify true
    allow /home/ubuntu/streamline/www
}
--------------------------------------------------------------------------------

/LICENSE:
--------------------------------------------------------------------------------
MIT License

Copyright (c) 2018 Colleen K. Henry, Et al.

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
--------------------------------------------------------------------------------

/launch.sh:
--------------------------------------------------------------------------------
#!/bin/bash

# Create a video ID from the time the script is executed (minutes and seconds)

vid=$(date '+%M%S')

# Print help if requested

if [ "$1" == "-h" ]; then
    echo "Usage: $(basename "$0") originserverurl urltocdn"
    exit 0
fi

command -v ffmpeg >/dev/null 2>&1 || { echo "ffmpeg is required for launching the encoder. Aborting." >&2; exit 1; }

# Get the name of the capture card

ffmpeg -hide_banner -f decklink -list_devices 1 \
    -i dummy &> .tmp.txt
sed -i '1d' .tmp.txt
output=$(<.tmp.txt)

IFS="'" read _ device _ <<< "$output"

# Get the input format from the capture card

echo -ne '\n' | StatusMonitor > .format.txt
output=$(<.format.txt)
while read -r line
do
    IFS=':' read -r -a array <<< "$line"
    key=$(echo ${array[0]} | tr -d ' ')
    value=$(echo ${array[1]} | tr -d ' ')

    if [ "$key" == "DetectedVideoInputMode" ]
    then
        video_format=$value
        #echo $video_format
        break
    fi
done <<< "$output"

# Split a progressive mode such as 1080p59.94 into resolution (1080) and
# frame rate (59.94). Interlaced modes such as 1080i50 contain no "p", so
# they stay whole in res and are matched as-is further down.

IFS='p' read -r -a array <<< "$video_format"
res=${array[0]}
fps=${array[1]}

# Get video format list from the input card

ffmpeg -f decklink -list_formats 1 -i "$device" &> .tmp.txt

output=$(<.tmp.txt)
min_fps=1000
while read -r line
do
    IFS=' ' read -ra array <<< "$line"
    identify=$(echo ${array[3]} | tr -d ' ')
    if [ "$identify" != "fps" ]
    then
        continue
    fi

    IFS='x' read -r -a array2 <<< "${array[0]}"
    if [ "${array2[1]}" != "$res" ]
    then
        continue
    fi

    # Calculate fps and keep the format whose rate is closest to the input's

    IFS='/' read -r -a array3 <<< "${array[2]}"
    DIV=$(echo "scale=2; ${array3[0]}/${array3[1]}" | bc)

    rownum=$(echo $DIV-$fps | bc)
    less_than=$(echo $rownum'<'0 | bc)
    if (( $less_than > 0 ))
    then
        rownum=$(echo $rownum*-1 | bc)
    fi
    less_than=$(echo $rownum'<'$min_fps | bc)

    if (( $less_than > 0 ))
    then
        format_code=${array[0]}
        min_fps=$rownum
    fi

done <<< "$output"

IFS=$'\t' read -r -a array4 <<< "$format_code"

f_code=$(echo ${array4[0]}| cut -d' ' -f 1)

# Round off drop frame inputs to whole numbers

roundedfps=$(echo ${fps} | awk '{printf("%d\n",$1 + 0.99)}')

# HLS parameters that create 2 second segments, delete old segments, transmit over a persistent connection using HTTP PUT, and re-send the variant playlist every 15 segments (about 30 seconds).

hlsargs="-f hls -hls_time 2 -hls_flags delete_segments -method PUT -http_persistent 1 -master_pl_publish_rate 15 -master_pl_name ${vid}.m3u8"

# Encoding settings for x264 (CPU based encoder)

x264enc='libx264 -profile:v high -bf 4 -refs 3 -sc_threshold 0'

# Encoding settings for nvenc (GPU based encoder)

nvenc='h264_nvenc -profile:v high -bf 4 -refs 3 -rc vbr_hq'

# If the input is 2160p29.97 or 2160p30, then encode with 2160p30 ABR encoding settings.

if [ "${res}" == "2160" ] && [ "${roundedfps}" == "30" ]
then
    ffmpeg \
        -hide_banner \
        -queue_size 4294967296 \
        -f decklink \
        -i "$device" \
        -filter_complex \
        "[0:v]format=yuv420p,fps=30,split=8[1][2][3][4][5][6][7][8]; \
        [1]hwupload_cuda,scale_npp=-1:288:interp_algo=super,hwdownload[1out]; \
        [2]hwupload_cuda,scale_npp=-1:360:interp_algo=super,hwdownload[2out]; \
        [3]hwupload_cuda,scale_npp=-1:432:interp_algo=super,hwdownload[3out]; \
        [4]hwupload_cuda,scale_npp=-1:540:interp_algo=super,hwdownload[4out]; \
        [5]hwupload_cuda,scale_npp=-1:720:interp_algo=super[5out]; \
        [6]hwupload_cuda,scale_npp=-1:1080:interp_algo=super[6out]; \
        [7]hwupload_cuda,scale_npp=-1:1440:interp_algo=super[7out]; \
        [8]null[8out]" \
        -map '[1out]' -c:v:0 ${x264enc} -g 60 -b:v:0 400k \
        -map '[2out]' -c:v:1 ${x264enc} -g 60 -b:v:1 800k \
        -map '[3out]' -c:v:2 ${x264enc} -g 60 -b:v:2 1100k \
        -map '[4out]' -c:v:3 ${nvenc} -g 60 -b:v:3 2200k \
        -map '[5out]' -c:v:4 ${nvenc} -g 60 -b:v:4 3300k \
        -map '[6out]' -c:v:5 ${nvenc} -g 60 -b:v:5 6000k \
        -map '[7out]' -c:v:6 ${nvenc} -g 60 -b:v:6 12000k \
        -map '[8out]' -c:v:7 ${nvenc} -g 60 -b:v:7 20000k \
        -c:a:0 aac -b:a 128k -map 0:a \
        ${hlsargs} \
        -var_stream_map "a:0,agroup:teh_audio \
        v:0,agroup:teh_audio \
        v:1,agroup:teh_audio \
        v:2,agroup:teh_audio \
        v:3,agroup:teh_audio \
        v:4,agroup:teh_audio \
        v:5,agroup:teh_audio \
        v:6,agroup:teh_audio \
        v:7,agroup:teh_audio" \
        http://${1}/${vid}_%v.m3u8 >/dev/null 2>~/streamline/logs/encode.log &

# If the input is 2160p25, then encode with 2160p25 ABR encoding settings.

elif [ "${res}" == "2160" ] && [ "${roundedfps}" == "25" ]
then
    ffmpeg \
        -hide_banner \
        -queue_size 4294967296 \
        -f decklink \
        -i "$device" \
        -filter_complex \
        "[0:v]format=yuv420p,split=8[1][2][3][4][5][6][7][8]; \
        [1]hwupload_cuda,scale_npp=-1:288:interp_algo=super,hwdownload[1out]; \
        [2]hwupload_cuda,scale_npp=-1:360:interp_algo=super,hwdownload[2out]; \
        [3]hwupload_cuda,scale_npp=-1:432:interp_algo=super,hwdownload[3out]; \
        [4]hwupload_cuda,scale_npp=-1:540:interp_algo=super,hwdownload[4out]; \
        [5]hwupload_cuda,scale_npp=-1:720:interp_algo=super[5out]; \
        [6]hwupload_cuda,scale_npp=-1:1080:interp_algo=super[6out]; \
        [7]hwupload_cuda,scale_npp=-1:1440:interp_algo=super[7out]; \
        [8]null[8out]" \
        -map '[1out]' -c:v:0 ${x264enc} -g 50 -b:v:0 400k \
        -map '[2out]' -c:v:1 ${x264enc} -g 50 -b:v:1 800k \
        -map '[3out]' -c:v:2 ${x264enc} -g 50 -b:v:2 1100k \
        -map '[4out]' -c:v:3 ${x264enc} -g 50 -b:v:3 2200k \
        -map '[5out]' -c:v:4 ${nvenc} -g 50 -b:v:4 3300k \
        -map '[6out]' -c:v:5 ${nvenc} -g 50 -b:v:5 6000k \
        -map '[7out]' -c:v:6 ${nvenc} -g 50 -b:v:6 12000k \
        -map '[8out]' -c:v:7 ${nvenc} -g 50 -b:v:7 20000k \
        -c:a:0 aac -b:a 128k -map 0:a \
        ${hlsargs} \
        -var_stream_map "a:0,agroup:teh_audio \
        v:0,agroup:teh_audio \
        v:1,agroup:teh_audio \
        v:2,agroup:teh_audio \
        v:3,agroup:teh_audio \
        v:4,agroup:teh_audio \
        v:5,agroup:teh_audio \
        v:6,agroup:teh_audio \
        v:7,agroup:teh_audio" \
        http://${1}/${vid}_%v.m3u8 >/dev/null 2>~/streamline/logs/encode.log &

# If the input is 1080p59.94 or 1080p60, then encode with 1080p60 ABR encoding settings.

elif [ "${res}" == "1080" ] && [ "${roundedfps}" == "60" ]
then
    ffmpeg \
        -hide_banner \
        -f decklink \
        -i "$device" \
        -filter_complex \
        "[0:v]format=yuv420p,fps=60,split=6[1][2][3][4][5][6]; \
        [1]hwupload_cuda,scale_npp=-1:288:interp_algo=super,hwdownload[1out]; \
        [2]hwupload_cuda,scale_npp=-1:360:interp_algo=super,hwdownload[2out]; \
        [3]hwupload_cuda,scale_npp=-1:432:interp_algo=super,hwdownload[3out]; \
        [4]hwupload_cuda,scale_npp=-1:540:interp_algo=super,hwdownload[4out]; \
        [5]hwupload_cuda,scale_npp=-1:720:interp_algo=super[5out]; \
        [6]null[6out]" \
        -map '[1out]' -c:v:0 ${x264enc} -g 120 -b:v:0 800k \
        -map '[2out]' -c:v:1 ${x264enc} -g 120 -b:v:1 1600k \
        -map '[3out]' -c:v:2 ${x264enc} -g 120 -b:v:2 2200k \
        -map '[4out]' -c:v:3 ${x264enc} -g 120 -b:v:3 4400k \
        -map '[5out]' -c:v:4 ${nvenc} -g 120 -b:v:4 6600k \
        -map '[6out]' -c:v:5 ${nvenc} -g 120 -b:v:5 12000k \
        -c:a:0 aac -b:a 128k -map 0:a \
        ${hlsargs} \
        -var_stream_map "a:0,agroup:teh_audio \
        v:0,agroup:teh_audio \
        v:1,agroup:teh_audio \
        v:2,agroup:teh_audio \
        v:3,agroup:teh_audio \
        v:4,agroup:teh_audio \
        v:5,agroup:teh_audio" \
        http://${1}/${vid}_%v.m3u8 >/dev/null 2>~/streamline/logs/encode.log &

# If the input is 1080p50, then encode with 1080p50 ABR encoding settings.

elif [ "${res}" == "1080" ] && [ "${roundedfps}" == "50" ]
then
    ffmpeg \
        -hide_banner \
        -f decklink \
        -i "$device" \
        -filter_complex \
        "[0:v]format=yuv420p,split=6[1][2][3][4][5][6]; \
        [1]hwupload_cuda,scale_npp=-1:288:interp_algo=super,hwdownload[1out]; \
        [2]hwupload_cuda,scale_npp=-1:360:interp_algo=super,hwdownload[2out]; \
        [3]hwupload_cuda,scale_npp=-1:432:interp_algo=super,hwdownload[3out]; \
        [4]hwupload_cuda,scale_npp=-1:540:interp_algo=super,hwdownload[4out]; \
        [5]hwupload_cuda,scale_npp=-1:720:interp_algo=super[5out]; \
        [6]null[6out]" \
        -map '[1out]' -c:v:0 ${x264enc} -g 100 -b:v:0 800k \
        -map '[2out]' -c:v:1 ${x264enc} -g 100 -b:v:1 1600k \
        -map '[3out]' -c:v:2 ${x264enc} -g 100 -b:v:2 2200k \
        -map '[4out]' -c:v:3 ${x264enc} -g 100 -b:v:3 4400k \
        -map '[5out]' -c:v:4 ${nvenc} -g 100 -b:v:4 6600k \
        -map '[6out]' -c:v:5 ${nvenc} -g 100 -b:v:5 12000k \
        -c:a:0 aac -b:a 128k -map 0:a \
        ${hlsargs} \
        -var_stream_map "a:0,agroup:teh_audio \
        v:0,agroup:teh_audio \
        v:1,agroup:teh_audio \
        v:2,agroup:teh_audio \
        v:3,agroup:teh_audio \
        v:4,agroup:teh_audio \
        v:5,agroup:teh_audio" \
        http://${1}/${vid}_%v.m3u8 >/dev/null 2>~/streamline/logs/encode.log &

# If the input is 1080i59.94, deinterlace it, then encode with 1080p30 ABR encoding settings.

elif [[ "${res}" == "1080i59.94" ]]
then
    ffmpeg \
        -hide_banner \
        -f decklink \
        -i "$device" \
        -filter_complex \
        "[0:v]yadif=0:-1:1,fps=30,format=yuv420p,split=6[1][2][3][4][5][6]; \
        [1]hwupload_cuda,scale_npp=-1:288:interp_algo=super,hwdownload[1out]; \
        [2]hwupload_cuda,scale_npp=-1:360:interp_algo=super,hwdownload[2out]; \
        [3]hwupload_cuda,scale_npp=-1:432:interp_algo=super,hwdownload[3out]; \
        [4]hwupload_cuda,scale_npp=-1:540:interp_algo=super,hwdownload[4out]; \
        [5]hwupload_cuda,scale_npp=-1:720:interp_algo=super[5out]; \
        [6]null[6out]" \
        -map '[1out]' -c:v:0 ${x264enc} -g 60 -b:v:0 800k \
        -map '[2out]' -c:v:1 ${x264enc} -g 60 -b:v:1 1600k \
        -map '[3out]' -c:v:2 ${x264enc} -g 60 -b:v:2 2200k \
        -map '[4out]' -c:v:3 ${x264enc} -g 60 -b:v:3 4400k \
        -map '[5out]' -c:v:4 ${nvenc} -g 60 -b:v:4 6600k \
        -map '[6out]' -c:v:5 ${nvenc} -g 60 -b:v:5 12000k \
        -c:a:0 aac -b:a 128k -map 0:a \
        ${hlsargs} \
        -var_stream_map "a:0,agroup:teh_audio \
        v:0,agroup:teh_audio \
        v:1,agroup:teh_audio \
        v:2,agroup:teh_audio \
        v:3,agroup:teh_audio \
        v:4,agroup:teh_audio \
        v:5,agroup:teh_audio" \
        http://${1}/${vid}_%v.m3u8 >/dev/null 2>~/streamline/logs/encode.log &

# If the input is 1080i50, then deinterlace and encode with 1080p25 ABR encoding settings.

elif [[ "${res}" == "1080i50" ]]
then
    ffmpeg \
        -hide_banner \
        -f decklink \
        -i "$device" \
        -filter_complex \
        "[0:v]yadif=0:-1:1,format=yuv420p,split=6[1][2][3][4][5][6]; \
        [1]hwupload_cuda,scale_npp=-1:288:interp_algo=super,hwdownload[1out]; \
        [2]hwupload_cuda,scale_npp=-1:360:interp_algo=super,hwdownload[2out]; \
        [3]hwupload_cuda,scale_npp=-1:432:interp_algo=super,hwdownload[3out]; \
        [4]hwupload_cuda,scale_npp=-1:540:interp_algo=super,hwdownload[4out]; \
        [5]hwupload_cuda,scale_npp=-1:720:interp_algo=super[5out]; \
        [6]null[6out]" \
        -map '[1out]' -c:v:0 ${x264enc} -g 50 -b:v:0 800k \
        -map '[2out]' -c:v:1 ${x264enc} -g 50 -b:v:1 1600k \
        -map '[3out]' -c:v:2 ${x264enc} -g 50 -b:v:2 2200k \
        -map '[4out]' -c:v:3 ${x264enc} -g 50 -b:v:3 4400k \
        -map '[5out]' -c:v:4 ${nvenc} -g 50 -b:v:4 6600k \
        -map '[6out]' -c:v:5 ${nvenc} -g 50 -b:v:5 12000k \
        -c:a:0 aac -b:a 128k -map 0:a \
        ${hlsargs} \
        -var_stream_map "a:0,agroup:teh_audio \
        v:0,agroup:teh_audio \
        v:1,agroup:teh_audio \
        v:2,agroup:teh_audio \
        v:3,agroup:teh_audio \
        v:4,agroup:teh_audio \
        v:5,agroup:teh_audio" \
        http://${1}/${vid}_%v.m3u8 >/dev/null 2>~/streamline/logs/encode.log &

# If the input is 720p59.94 or 720p60, then encode with 720p60 ABR encoding settings.

elif [ "${res}" == "720" ] && [ "${roundedfps}" == "60" ]
then
    ffmpeg \
        -hide_banner \
        -f decklink \
        -i "$device" \
        -filter_complex \
        "[0:v]format=yuv420p,fps=60,split=5[1][2][3][4][5]; \
        [1]hwupload_cuda,scale_npp=-1:288:interp_algo=super,hwdownload[1out]; \
        [2]hwupload_cuda,scale_npp=-1:360:interp_algo=super,hwdownload[2out]; \
        [3]hwupload_cuda,scale_npp=-1:432:interp_algo=super,hwdownload[3out]; \
        [4]hwupload_cuda,scale_npp=-1:540:interp_algo=super,hwdownload[4out]; \
        [5]null[5out]" \
        -map '[1out]' -c:v:0 ${x264enc} -g 120 -b:v:0 800k \
        -map '[2out]' -c:v:1 ${x264enc} -g 120 -b:v:1 1600k \
        -map '[3out]' -c:v:2 ${x264enc} -g 120 -b:v:2 2200k \
        -map '[4out]' -c:v:3 ${x264enc} -g 120 -b:v:3 4400k \
        -map '[5out]' -c:v:4 ${nvenc} -g 120 -b:v:4 6600k \
        -c:a:0 aac -b:a 128k -map 0:a \
        ${hlsargs} \
        -var_stream_map "a:0,agroup:teh_audio \
        v:0,agroup:teh_audio \
        v:1,agroup:teh_audio \
        v:2,agroup:teh_audio \
        v:3,agroup:teh_audio \
        v:4,agroup:teh_audio" \
        http://${1}/${vid}_%v.m3u8 >/dev/null 2>~/streamline/logs/encode.log &

# If the input is 720p50, then encode with 720p50 ABR encoding settings.

elif [ "${res}" == "720" ] && [ "${roundedfps}" == "50" ]
then
    ffmpeg \
        -hide_banner \
        -f decklink \
        -i "$device" \
        -filter_complex \
        "[0:v]format=yuv420p,split=5[1][2][3][4][5]; \
        [1]hwupload_cuda,scale_npp=-1:288:interp_algo=super,hwdownload[1out]; \
        [2]hwupload_cuda,scale_npp=-1:360:interp_algo=super,hwdownload[2out]; \
        [3]hwupload_cuda,scale_npp=-1:432:interp_algo=super,hwdownload[3out]; \
        [4]hwupload_cuda,scale_npp=-1:540:interp_algo=super,hwdownload[4out]; \
        [5]null[5out]" \
        -map '[1out]' -c:v:0 ${x264enc} -g 100 -b:v:0 800k \
        -map '[2out]' -c:v:1 ${x264enc} -g 100 -b:v:1 1600k \
        -map '[3out]' -c:v:2 ${x264enc} -g 100 -b:v:2 2200k \
        -map '[4out]' -c:v:3 ${x264enc} -g 100 -b:v:3 4400k \
        -map '[5out]' -c:v:4 ${nvenc} -g 100 -b:v:4 6600k \
        -c:a:0 aac -b:a 128k -map 0:a \
        ${hlsargs} \
        -var_stream_map "a:0,agroup:teh_audio \
        v:0,agroup:teh_audio \
        v:1,agroup:teh_audio \
        v:2,agroup:teh_audio \
        v:3,agroup:teh_audio \
        v:4,agroup:teh_audio" \
        http://${1}/${vid}_%v.m3u8 >/dev/null 2>~/streamline/logs/encode.log &

# Any other input format has no encoding ladder, so stop instead of publishing a page with no stream.

else
    echo "Unsupported input format: ${video_format:-none detected}. Aborting." >&2
    exit 1
fi

# Create a web page with embedded hls.js player.

cat > /tmp/${vid}.html <<_PAGE_

_PAGE_

# Upload the player over HTTP PUT to the origin server

curl -X PUT --upload-file /tmp/${vid}.html http://${1}/${vid}.html -H "Content-Type: text/html; charset=utf-8"

echo ...and awaaaaayyyyy we go! 🚀🚀🚀🚀

echo Input detected on ${device} as ${res} ${fps}

echo Currently streaming to: https://${2}/${vid}.html
--------------------------------------------------------------------------------

/README.md:
--------------------------------------------------------------------------------
## Streamline

Streamline is a reference system design for a premium-quality, white-label, end-to-end live streaming system, from HDMI / HD-SDI capture all the way to a player on a CDN that works on web, iOS, and Android devices.

Using commodity computer hardware, free software, and AWS, it’s an affordable way to learn how to build a very high quality live streaming system.

This project is primarily designed as a tool for learning how live video works end to end. It is meant to be as simple to understand as possible while still providing great visual quality and streaming performance. It’s not meant for production use, as it hasn’t been heavily tested over long periods of time and many parameters are hard-coded. It may also simply stop working at any time, since I’m using the master branch of FFmpeg. Maybe in a future iteration I’ll pin it to the next FFmpeg release, which will help, but that release isn’t out yet.

Processing on this system is a hybrid of GPU and CPU.

## What is this not?

Streamline is not meant for mobile streaming; it’s meant for streaming a more professional production with dedicated cameras, microphones, and video switching equipment. Think more “Apple Product Launch” live streaming, not Periscope or Twitch. In fact, it’s not currently designed to feed services like YouTube Live or Facebook Live; it is meant to be its own end-to-end platform. The video will play in web browsers on a computer, iOS, or Android.

It's also not a production-ready tool. I wouldn't, like, use this in production. It might explode. I make no promises that it won't explode on you. Go check out AWS Elemental, Momento, Wowza, Bitmovin, Haivision, etc. if you want production-ready products with support available.

## What is included?

- Hardware and OS specs for an encoder.
- A script to build the software on the encoder.
- Scripts that use FFmpeg to capture, process, and encode the video, and then create a web page with a player that lets you watch the live video.
- Amazon Web Services configuration directions for an origin web server and a CDN.

## Projects leveraged

- [HLS](https://developer.apple.com/streaming/)
- [Caddy server](https://caddyserver.com/)
- [FFmpeg](https://ffmpeg.org/ffmpeg-all.html)
- [Blackmagic DeckLink cards and drivers](https://www.blackmagicdesign.com/products/decklink)
- [HLS.js](https://github.com/video-dev/hls.js)

## Services leveraged

- [cdnjs](https://cdnjs.com/)
- [AWS EC2](https://aws.amazon.com/ec2/)
- [AWS CloudFront](https://aws.amazon.com/cloudfront/)

## Project approach

Since this is meant primarily as a teaching tool, we're going to be "showing our work" a lot, favoring simple-to-understand implementations over absolute ease of use through automation.

There are many architectures that can be used to build an end-to-end live streaming system. We have chosen one that provides better quality, is easy to understand, and has low server-side costs. The tradeoff is that, because every bitrate rendition is encoded on site and uploaded to the origin, it requires a faster connection from the encoder to the internet and more powerful hardware on site.

Additionally, this is not a production tool like OBS or Wirecast: no video switching or compositing is provided. It is designed for higher-end productions where you would use dedicated hardware for that purpose, like an ATEM switcher from Blackmagic.

We have not tried to support all possible hardware or to coexist with other software on a multi-function system. We are targeting specific hardware with a dedicated software stack, which gives us the most control over reliability as the project matures.

## Parts of the system

**Source -** Camera with HDMI or HD-SDI output (in a larger production it could be a video switcher or a matrix router). We will be taking in raw, uncompressed, full-quality video from a camera or some other video production system. HDMI and HD-SDI are two different standards for connecting raw video between devices. Our goal is to take this source, compress it, and deliver it across the internet, while making it look as close as possible to this original “golden master” source.

**Encoder -** A desktop PC with an NVIDIA Quadro GPU and a Blackmagic capture card. The encoder runs FFmpeg, which takes the raw video and audio from the capture card and compresses them. It then multiplexes them into MPEG-TS segments and sends them via HTTP PUT to a web server, along with a manifest. It continues to send new manifests as they are needed, and it sends HTTP DELETE requests for the old segments. I’ll provide a parts list for this, but you can vary the hardware to some extent, such as building it as a rack-mount server.
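
To pick a bitrate ladder, launch.sh reads the capture card's detected input mode and compares its resolution and frame rate against a fixed set of formats. The bash sketch below condenses that decision into one hypothetical function, `select_ladder`. It assumes mode strings shaped like `1080p59.94` or `1080i50` (resolution, `p` or `i`, then the rate), which is what the script's string comparisons suggest; it has not been checked against real StatusMonitor output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a capture-card mode string to one of the
# encoding ladders defined in launch.sh. The mode format is an assumption.
select_ladder() {
  local re='^([0-9]+)([pi])([0-9]+(\.[0-9]+)?)$'
  if [[ ! $1 =~ $re ]]; then
    echo "unsupported"
    return 1
  fi
  local res=${BASH_REMATCH[1]} scan=${BASH_REMATCH[2]} rate=${BASH_REMATCH[3]}
  local rounded
  # Round drop-frame rates (29.97, 59.94) up to whole numbers with the same
  # "add 0.99, then truncate" trick launch.sh uses in awk.
  rounded=$(awk -v f="$rate" 'BEGIN { printf "%d", f + 0.99 }')
  case "${res}${scan}${rounded}" in
    2160p30 | 2160p25 | 1080p60 | 1080p50 | 720p60 | 720p50)
      echo "${res}p${rounded}" ;;
    1080i60) echo "1080p30 (deinterlaced)" ;;  # a 1080i59.94 input
    1080i50) echo "1080p25 (deinterlaced)" ;;
    *) echo "unsupported"; return 1 ;;
  esac
}

select_ladder 1080p59.94   # prints 1080p60
select_ladder 1080i50      # prints 1080p25 (deinterlaced)
```

Matching on the combined resolution, scan type, and rounded rate means each input lands on exactly one ladder; a 720p60 source, for instance, cannot fall into the 1080p60 case.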

**Origin server -** The web server that hosts the incoming video segments so that clients can pull them. We will be using the software “Caddy Server” for this. It receives HTTP PUTs of segments from the encoder.

**CDN -** Between the clients and the origin server sits a network of caching servers all over the world, called edge servers, which scales out how many people can watch at once and improves their viewing experience. We will use Amazon’s CloudFront CDN for this.

**Web Page -** The web page that viewers load, which contains the video player.

**Player -** The software running on the web page that repeatedly pulls down the manifest, decides which bitrate to play, then requests the segments and feeds them into the web page's Media Source Extensions API for decoding and display. We will be using HLS.js for this.
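
The player's job of re-fetching the manifest and spotting new segments can be illustrated in a few lines of bash. This is a hypothetical helper, `new_segments`, not how HLS.js is implemented: it compares two saved copies of a media playlist and prints the segment URIs that appear only in the newer one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print segment URIs present in the newer copy of a
# media playlist ($2) but not in the older copy ($1).
# Lines starting with "#" are tags, so only non-empty, non-tag lines count.
new_segments() {
  awk '
    FILENAME == ARGV[1] { if ($0 !~ /^#/ && NF) seen[$0] = 1; next }
    $0 !~ /^#/ && NF && !($0 in seen)
  ' "$1" "$2"
}
```

A polling player would re-download the playlist roughly once per target duration, run a comparison like this against the previous copy, and fetch whatever it prints, in order.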

## How does streaming work?

Let's start off by talking about how live streaming works. As a disclaimer, I’m going to be explaining HLS. HLS is a very common live streaming protocol that allows for high quality and adaptive bitrate, at scale, on publicly available content delivery networks. Is HLS the best protocol? No: it has issues such as very high latency, often around 15 seconds, and it is controlled by Apple. However, it is common, simple to understand, has many tools, and with the right approach can work pretty much everywhere. There are many ways to implement HLS, and I’m going to describe a very basic one. I can’t cover every rule of HLS here, so if you want to know more, check out the HLS spec on Apple’s developer website. I’ll cover things like live and ABR, but I will skip things like DVR, going live to VOD, etc. If you want to know more about the lower-level details of how video works, check out [this link](https://developer.apple.com/streaming/).

While we would typically imagine a more complex production, the simplest source to imagine is a camera with HDMI or SDI output. You plug this camera into your “encoder,” which takes the raw uncompressed video and audio, compresses each source, packages them into segments, and sends them to a server hosted in AWS EC2. Each segment is typically one to ten seconds long, depending on your goals and use cases. Contained within each segment is a stream of H.264-compressed video data and a stream of AAC-compressed audio data. These two streams are multiplexed together in a “container,” which in this use case is MPEG-TS (transport stream).
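
Segments can only be cut on keyframes, so the segment length and the encoder's keyframe interval have to agree. launch.sh uses 2-second segments (`-hls_time 2`) and sets `-g` to the output frame rate times two (60 for 30 fps, 120 for 60 fps, and so on), with `-sc_threshold 0` stopping x264 from inserting extra scene-cut keyframes. The hypothetical `gop_for` helper below only reproduces that arithmetic.

```shell
#!/usr/bin/env bash
# Hypothetical helper: keyframe interval (in frames) that lines up with a
# given segment length. Drop-frame rates are rounded up the way launch.sh
# rounds them (add 0.99, then truncate).
#   $1 output frame rate, $2 segment length in seconds (default 2)
gop_for() {
  local fps=$1 seconds=${2:-2}
  awk -v f="$fps" -v s="$seconds" 'BEGIN { printf "%d\n", int(f + 0.99) * s }'
}

gop_for 59.94   # prints 120, the -g used by the 1080p60 and 720p60 ladders
gop_for 25      # prints 50, the -g used by the 2160p25 and 1080i50 ladders
```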

When a segment is created, it is added to a manifest. A manifest in HLS is an .m3u8 file: a plain text file that contains the URLs of all of the segments. Its file name would be something like “playlist.m3u8”, and if you open it in a text editor, it’s fully human-readable.

If you have only one segment (because you just started), your manifest would look like...

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:2
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:2.000000,
1435_10.ts
```

If you have three segments, it would look like...

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:2
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:2.000000,
1435_10.ts
#EXTINF:2.000000,
1435_11.ts
#EXTINF:2.000000,
1435_12.ts
```

Eventually you will reach the maximum number of segments in your manifest (which is configurable). At that point you start removing old segments as you add new ones, giving you a rolling window. It’s like a caterpillar track: as you lay down new segments, you pick up old ones by deleting them. Note that segment 0 (1435_10.ts) is now gone and that #EXT-X-MEDIA-SEQUENCE has advanced to 1.

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:2
#EXT-X-MEDIA-SEQUENCE:1
#EXTINF:2.000000,
1435_11.ts
#EXTINF:2.000000,
1435_12.ts
#EXTINF:2.000000,
1435_13.ts
#EXTINF:2.000000,
1435_14.ts
#EXTINF:2.000000,
1435_15.ts
```
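
The rolling window is easy to reproduce. The hypothetical bash function below prints a live media playlist for segments numbered 0 through N of one stream, keeping only the newest W of them. It assumes the `<prefix><number>.ts` naming seen in the examples; FFmpeg's HLS muxer names segments this way by default (playlist `out.m3u8` gives `out0.ts`, `out1.ts`, ...), which would explain why stream `1435_1` produces `1435_10.ts`, `1435_11.ts`, and so on. Five entries is also FFmpeg's default `-hls_list_size`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a live HLS media playlist that keeps only the
# newest $3 segments of a stream whose segments are numbered 0..$2.
#   $1 segment name prefix (e.g. 1435_1 -> 1435_10.ts, 1435_11.ts, ...)
#   $2 number of the newest segment
#   $3 window size (maximum entries in the playlist)
#   $4 segment duration in seconds (default 2)
live_playlist() {
  local prefix=$1 last=$2 window=$3 dur=${4:-2}
  local first=$(( last - window + 1 ))
  if (( first < 0 )); then first=0; fi
  printf '#EXTM3U\n#EXT-X-VERSION:3\n'
  printf '#EXT-X-TARGETDURATION:%d\n' "$dur"
  # The media sequence is the number of the first segment still listed,
  # so it grows by one each time an old segment drops out of the window.
  printf '#EXT-X-MEDIA-SEQUENCE:%d\n' "$first"
  local n
  for (( n = first; n <= last; n++ )); do
    printf '#EXTINF:%d.000000,\n%s%d.ts\n' "$dur" "$prefix" "$n"
  done
}

live_playlist 1435_1 5 5   # same playlist as the five-segment example above
```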

On the client, there will be what's called a “player.” This is software that reads the manifest and keeps pulling it down regularly, looking for new segments. As it sees new segments, it pulls them down too and plays them one after another, like a playlist of songs, moving seamlessly from one to the next as they arrive. It also makes decisions such as which bitrate to select so that it does not rebuffer.

One important part of streaming is bitrate adaptation. Some people will be on a cell phone, while others will be on a laptop with a fast home WiFi connection. It’s important to provide the best quality experience given the variation in the speeds of different connections. To that end, we create multiple streams at different bitrates simultaneously, plus a “variant playlist”: a manifest that lists the location of the manifest for each bitrate stream. If you give this variant playlist to a player, it can decide in real time which is the highest quality its connection can sustain and adapt to that.
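
A heavily simplified version of that decision can be sketched in bash: read a variant playlist, pair each `#EXT-X-STREAM-INF` tag's `BANDWIDTH` with the URI on the following line, and pick the highest variant that fits the measured throughput. Real players such as HLS.js use smoothed bandwidth estimates, buffer levels, and safety margins, so treat this hypothetical `pick_variant` function as an illustration only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a variant playlist on stdin and print the URI of
# the highest-BANDWIDTH variant that fits within $1 kilobits per second.
# Falls back to the lowest variant when nothing fits.
pick_variant() {
  local budget=$(( $1 * 1000 ))
  # BANDWIDTH must follow the tag's ":" or a ",", so AVERAGE-BANDWIDTH is ignored.
  local re='^#EXT-X-STREAM-INF:(.*,)?BANDWIDTH=([0-9]+)'
  local line bw="" best_bw=-1 best_uri="" low_bw=-1 low_uri=""
  while IFS= read -r line || [[ -n $line ]]; do
    line=${line%$'\r'}
    if [[ $line =~ $re ]]; then
      bw=${BASH_REMATCH[2]}
    elif [[ -n $bw && -n $line && $line != '#'* ]]; then
      # This line is the URI belonging to the preceding STREAM-INF tag.
      if (( low_bw < 0 || bw < low_bw )); then low_bw=$bw; low_uri=$line; fi
      if (( bw <= budget && bw > best_bw )); then best_bw=$bw; best_uri=$line; fi
      bw=""
    fi
  done
  echo "${best_uri:-$low_uri}"
}
```

For example, saving the variant playlist shown in the next example as `5906.m3u8` and running `pick_variant 3000 < 5906.m3u8` should print `5906_3.m3u8`, since 2,560,800 bits/s is the largest BANDWIDTH at or under 3,000,000.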

If you were to pull down a variant playlist like...

```
curl http://ec2-54-183-60-162.us-west-1.compute.amazonaws.com/5906.m3u8
```

You would get...

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="group_teh_audio",NAME="audio_0",DEFAULT=YES,URI="5906_0.m3u8"
#EXT-X-STREAM-INF:BANDWIDTH=140800,CODECS="mp4a.40.2",AUDIO="group_teh_audio"
5906_0.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=1020800,RESOLUTION=512x288,CODECS="avc1.640015,mp4a.40.2",AUDIO="group_teh_audio"
5906_1.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=1900800,RESOLUTION=640x360,CODECS="avc1.64001e,mp4a.40.2",AUDIO="group_teh_audio"
5906_2.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=2560800,RESOLUTION=768x432,CODECS="avc1.64001e,mp4a.40.2",AUDIO="group_teh_audio"
5906_3.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=4980800,RESOLUTION=960x540,CODECS="avc1.64001f,mp4a.40.2",AUDIO="group_teh_audio"
5906_4.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=7400800,RESOLUTION=1280x720,CODECS="avc1.64001f,mp4a.40.2",AUDIO="group_teh_audio"
5906_5.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=13340800,RESOLUTION=1920x1080,CODECS="avc1.640028,mp4a.40.2",AUDIO="group_teh_audio"
5906_6.m3u8
```

Notice how this calls out the resolution, bitrate, and codecs for each stream level. This allows us to share one audio stream between all of the video streams without having to copy it into every single video. It also lets the player know what is available, so it can adapt up and down depending on the speed of the client’s internet connection.
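
The BANDWIDTH values in this playlist are consistent with each rendition's video bitrate plus the 128 kbit/s AAC audio, with 10% added on top: (800 + 128) × 1.1 = 1020.8 kbit/s for the 288p stream, for example. Those video bitrates (800k through 12000k) match the 1080-line ladders in launch.sh. The hypothetical `variant_bandwidth` function below checks that arithmetic with integer math; the 10% factor is inferred from the numbers, not quoted from FFmpeg documentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reproduce a variant's BANDWIDTH attribute (bits/s)
# as (video kbit/s + audio kbit/s) * 1.1. The 1.1 factor is an inference.
variant_bandwidth() {
  local video_kbps=$1 audio_kbps=${2:-128}
  local total=$(( (video_kbps + audio_kbps) * 1000 ))
  echo $(( total + total / 10 ))
}

for v in 0 800 1600 2200 4400 6600 12000; do   # 0 = the audio-only entry
  echo "${v}k video -> BANDWIDTH=$(variant_bandwidth "$v")"
done
```

Run as-is, the loop prints 140800, 1020800, 1900800, 2560800, 4980800, 7400800, and 13340800, the same values as the playlist.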

Cool, we got that? Great. Let’s actually build this thing now.

## Getting the hardware

[Here is a parts list on Amazon of what I used to build my encoder.](http://a.co/68LVXQe) It’s a bit overkill, but it leaves room to grow.

With the current setup, an NVIDIA GPU is required. I used a Quadro, which is not artificially limited by NVIDIA to two encodes at a time. I’m thinking of trying to get away with only two GPU encodes so you can use a GeForce, but I haven’t tested that yet. I am, however, doing all the scaling on the GPU, which frees up CPU cycles, but I don’t know whether NVIDIA limits scaling on a GeForce. In the future I might get a slower GPU and a Threadripper CPU to do more encoding on the CPU for better quality. There are tons of configurations you can do; this is just a reference for something that worked and has room to grow. You can put it in a smaller form factor, use an Intel chip, whatever you want.

158 | ## Building the encoder

159 |

160 | After ordering your parts, put them together like a normal DIY PC.

161 |

162 | Does the PC have a WiFi module in it? Rip it out! If it’s there, someone might be stupid enough to try using WiFi for high bitrate live video streaming. Obviously, this would not be a good idea. ;) Ethernet cables are your friend.

163 |

164 | Next, install [Ubuntu Desktop 18.04 LTS 64-bit.](http://releases.ubuntu.com/18.04/) To do this, you will create a bootable USB stick and use it to install the OS on your newly assembled encoder. You can follow the directions [here.](https://tutorials.ubuntu.com/tutorial/tutorial-create-a-usb-stick-on-ubuntu) During installation, feel free to skip the options that ask whether you want to install drivers or download updates.

165 |

166 | Note that the screenshots in this README are from 16.04, but everything should look roughly the same, and 18.04 has been tested. I'll update the screenshots in the future.

167 |

168 | Once you have installed and rebooted, log in, then open the “Terminal” application. Now run the command...

169 |

170 | sudo apt-get -y update && sudo apt-get -y upgrade && sudo apt-get -y install git

171 |

172 | Now you can clone the repository to get the scripts for this project.

173 |

174 | cd ~/ && git clone https://github.com/streamlinevideo/streamline.git

175 |

176 | Now let’s execute the build script. This script will download all of the drivers you need for the NVIDIA GPU and the Blackmagic DeckLink capture card, and it will compile FFmpeg for you with support for all of the codecs and hardware that we need.

177 |

178 | cd ~/streamline && ./buildEncoder.sh

179 |

180 | Let it run until it tells you to reboot, then do so. Simply type...

181 |

182 | reboot
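After rebooting, you can sanity-check the FFmpeg build before moving on. This is a hypothetical helper, not part of the repository. It assumes the stream is encoded with NVENC H.264 (`h264_nvenc`) and captured through FFmpeg's `decklink` input device, and it only confirms that both were compiled in, not that the GPU and capture card are working.

```shell
# check_ffmpeg_build (hypothetical helper): report components missing from an FFmpeg build.
#   $1 = output of `ffmpeg -hide_banner -encoders`
#   $2 = output of `ffmpeg -hide_banner -devices`
# Assumption: the encoder needs h264_nvenc and the decklink input device.
check_ffmpeg_build() {
  status=0
  if ! printf '%s\n' "$1" | grep -qw h264_nvenc; then
    echo "missing encoder: h264_nvenc"
    status=1
  fi
  if ! printf '%s\n' "$2" | grep -qw decklink; then
    echo "missing input device: decklink"
    status=1
  fi
  return "$status"
}

# Usage on the encoder once the build script has finished:
#   check_ffmpeg_build "$(ffmpeg -hide_banner -encoders)" "$(ffmpeg -hide_banner -devices)" && echo "build looks good"
```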

183 |

184 | Once you’ve rebooted and are back at the desktop, open the Blackmagic Desktop Video software, which the build script installed. If this is your first time using a brand-new Blackmagic capture card, you may need to update its firmware. You can do this with the “Blackmagic Firmware Updater” application. Do that before proceeding.

185 |

186 |

187 |

188 | Select HDMI or HD-SDI, whichever you will use to ingest your raw video. The default is HD-SDI, so you will need to select the input manually if you want to use HDMI. Now plug in your video source: the HDMI or HD-SDI cable from your camera.

189 |

190 |

191 |

192 | You should see that it detected the resolution and frame rate of your incoming video if you have the right input selected and everything is working correctly.

193 |

194 |

195 |

196 | Next, open Media Express (also installed with the Blackmagic software). Click “Log and Capture” and check that you see your video. Then close Media Express. You must close it if you intend to stream, because two programs cannot use the capture card at the same time.

197 |

198 |

199 |

200 | ## Building the origin server

201 |

202 | To proceed you will need an Amazon Web Services account.

203 |

204 | Once you’ve made one, go to the EC2 console. Use the region selector in the top right to pick the region nearest to you.

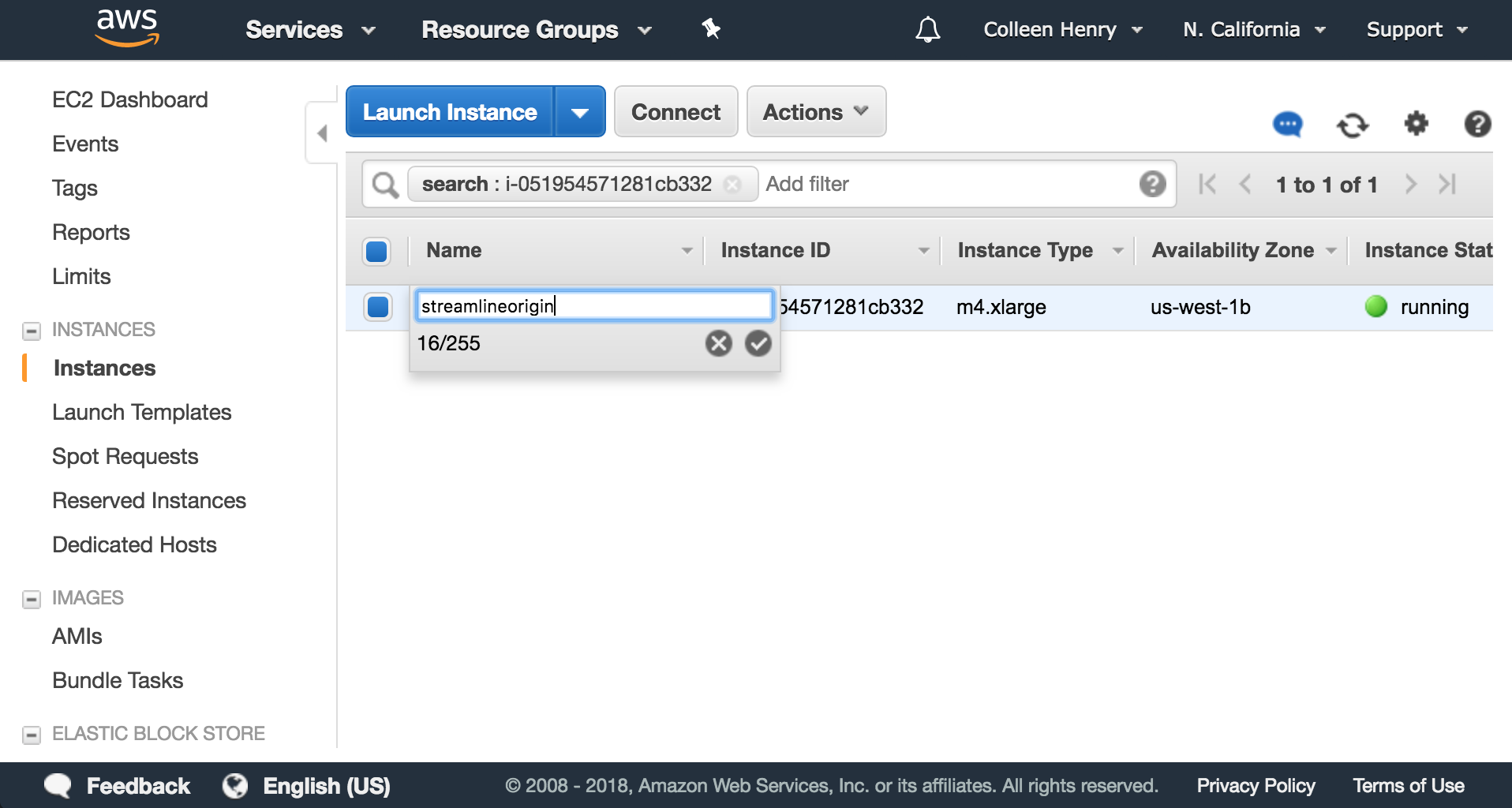

205 |

206 |

207 |

208 | Now hit the blue "Launch Instance" button.

209 |

210 |

211 |

212 | On the "Choose AMI" screen, please select Ubuntu 16.04 by hitting the blue "Select" button next to it.

213 |

214 |

215 |

216 | Now select your instance type. This determines how fast your instance is and what resources it has. I’m going to choose an m4.xlarge for my personal testing. It’s a quad-core instance with pretty good network throughput. In a Northern California data center, at the time of writing, it costs $0.20 per hour, which is about 5 bucks a day. You can find AWS instance pricing [here](https://aws.amazon.com/ec2/pricing/on-demand). The larger your origin server, the more edge servers can pull from it at the same time before it gets overwhelmed. Small streams can use small origins; large streams will need bigger ones. It's actually somewhat difficult to predict the capacity you will need, so I'd recommend using larger instance types only for the duration of large events. Don't forget to watch your AWS bill: it can add up fast, and it can sometimes be wrong! Keep a close eye on how much you're being charged. After you've clicked on m4.xlarge (or whatever you choose), click "Next: Configure Instance Details".
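The "5 bucks a day" figure is just the hourly rate times 24. As a sketch, assuming the $0.20/hour rate quoted above (it varies by region and changes over time, so check the pricing page), this hypothetical `estimate_cost` function does the multiplication for any duration:

```shell
# estimate_cost (hypothetical helper): on-demand cost in USD.
#   $1 = hourly price in USD, $2 = number of hours the instance runs
# Covers instance hours only; data transfer and other charges are extra.
estimate_cost() {
  awk -v price="$1" -v hours="$2" 'BEGIN { printf "%.2f\n", price * hours }'
}

estimate_cost 0.20 24    # one day: 4.80
estimate_cost 0.20 720   # 30 days left running: 144.00
```

The second line is a good reminder of why it pays to stop the origin between events.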

217 |

218 |

219 |

220 | Now click “6. Configure Security Group” in the upper right. From here you can open port 80 to the world; this is the port our CDN will pull our HTTP streams from. Hit “Add Rule,” set the type to "HTTP" (port 80), and set the source to “Anywhere.” Then hit "Review and Launch" in the lower right-hand corner.

221 |

222 |

223 |

224 | On the next screen where it says "Step 7: Review Instance Launch" go ahead and hit the blue "Launch" button on the lower right.

225 |

226 |

227 |

228 | Next, a dialog will ask you to “Select an existing key pair or create a new key pair.” Choose “Create a new key pair” and name it. I’ll call it “myawesomekey.” Hit "Download Key Pair" to download the key.

229 |

230 |

231 |

232 | Now hit the blue "Launch Instances" button, then click “View Instances.”

233 |

234 | You will notice the name of your instance is blank on this screen. Hover over the blank area and click on the edit pencil and give your instance a name. I’ll call it “streamlineorigin.”

235 |

236 |

237 |

238 | Now click “Connect,” up near the “Launch Instance” button. This will show you how to SSH into the server (connect remotely to its command-line shell). This step differs depending on your operating system. If you are on Windows, hit “connect using PuTTY” on this screen for directions.

239 |

240 |

241 |

242 | On Linux or macOS, find where you downloaded your key, copy it to your ~/.ssh directory, and then restrict its permissions so that only you can read it (ssh refuses to use a private key that other users can read). In your terminal (assuming you're in the folder it was downloaded to), that would be...

243 |

244 | mkdir -p ~/.ssh && cp myawesomekey.pem ~/.ssh/myawesomekey.pem && chmod 400 ~/.ssh/myawesomekey.pem
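If you set up keys often, the same steps can be wrapped in a small function. This is a sketch with a hypothetical name, `install_key`; it also makes sure the SSH directory exists with mode 700.

```shell
# install_key (hypothetical helper): copy an EC2 key pair into an SSH directory
# (default ~/.ssh) and make it readable only by you.
install_key() {
  key="$1"
  ssh_dir="${2:-$HOME/.ssh}"
  # The SSH directory should exist and be private (mode 700).
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  dest="$ssh_dir/$(basename "$key")"
  # cp may fail if a read-only key with the same name is already there.
  cp "$key" "$dest" && chmod 400 "$dest"
}

# Usage, from the folder the key was downloaded to:
#   install_key myawesomekey.pem
```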

245 |

246 | Cool, now you can SSH into your server. Again, follow the PuTTY directions if you’re on Windows. For macOS and Linux, go ahead and copy the example command line from this window.

247 |

248 | An example looks like this:

249 |

250 | ssh -i ~/.ssh/myawesomekey.pem ubuntu@ec2-54-183-60-162.us-west-1.compute.amazonaws.com

251 |

252 | Cool, now you are SSHed into your origin server.

253 |

254 | Now clone this repository and run the server build script...

255 |

256 | cd ~/ && git clone https://github.com/streamlinevideo/streamline.git && cd ~/streamline && ./buildServer.sh

257 |

258 | At some point a prompt will pop up. Just hit Enter.

259 |

260 |

261 |

262 | After the script is done, download the Caddy web server. Go to caddyserver.com/download, set the “Platform” to “Linux 64-bit,” and add the plugins hook.service, http.cors, and http.webdav (the installer command below includes all three). I’m going to assume that you are using this for personal use, so please select the personal license. If you are using this for commercial purposes, please use the commercial license workflow instead. If you want a server that is free for commercial use but slightly harder to configure, check out NGINX or Apache; you will need to look up how to allow HTTP PUT uploads on them. Caddy is nice because it’s so simple and, if you need it, it configures HTTPS for you super quickly.

263 |

264 | After you’ve selected your options, copy the “One-step installer script (bash):” command line into the terminal where you are SSHed into your server. It will look like this:

265 |

266 | curl https://getcaddy.com | bash -s personal hook.service,http.cors,http.webdav

267 |

268 | Paste this command into your terminal and hit Enter. Note that this is a personal license, so make sure it fits your use case before using it. Then install and start Caddy as a service, using the caddyFile from this repository:

269 |

270 | sudo caddy -service install -conf /home/ubuntu/streamline/caddyFile && sudo caddy -service start

271 |

272 | Your origin server is now running.

273 |

274 | ## Building the CDN

275 |

276 | Go to https://console.aws.amazon.com/cloudfront/home

277 |

278 |

279 |

280 | Click “Create Distribution”

281 |

282 | Then click “Get Started” under “Web.”

283 |

284 |

285 |

286 | Under “Origin Domain Name,” put the hostname of your origin server. My earlier example was “ec2-54-183-60-162.us-west-1.compute.amazonaws.com.” If you want to embed the player on a third-party website, you will also want to enable CORS for any domain (or your specific one). To do that, add a header with the "Header Name" "Access-Control-Allow-Origin" and the value "*".
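Later, once the stream is live, it is worth confirming which CORS header the browser actually receives from the CDN. This is a minimal sketch: `allows_origin` is a hypothetical helper that reads response headers (for example from `curl -sI`) and succeeds if `Access-Control-Allow-Origin` is `*` or exactly the origin you pass in.

```shell
# allows_origin (hypothetical helper): read HTTP response headers on stdin and
# succeed if Access-Control-Allow-Origin is "*" or exactly the given origin.
allows_origin() {
  tr -d '\r' | awk -v origin="$1" '
    # Header names are case-insensitive (HTTP/2 sends them in lowercase).
    tolower($1) == "access-control-allow-origin:" && ($2 == "*" || $2 == origin) { found = 1 }
    END { exit !found }
  '
}

# Usage once the stream is live (distribution and file names are placeholders):
#   curl -sI -H "Origin: https://example.com" "https://<your-distribution>.cloudfront.net/<stream>.m3u8" \
#     | allows_origin https://example.com && echo "CORS OK"
```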

287 |

288 |

289 |

290 | Then scroll all the way down...

291 |

292 |

293 |

294 | ...and click the blue “Create Distribution” button.

295 |

296 | Now click on your distribution and click “Distribution Settings”

297 |

298 |

299 |

300 | Now click on “Behaviors,” then click the blue "Create Behavior" button.

301 |

302 |

303 |

304 | Make the “Path Pattern” *.m3u8. Hit “Customize” next to “Object Caching,” then change the "Maximum TTL" and "Default TTL" to 1. This means that CloudFront won’t cache the manifest for longer than 1 second. If we don’t do this, we will get stale manifests. Long caching would be fine for on-demand video, but not for live HLS, where the player has to keep pulling down the updated manifest. Therefore, we don't want the manifest to be cached for long. Scroll to the bottom and hit the blue “Create” button.
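Once the stream is running, one rough way to check that the short TTL is working is to fetch a variant playlist twice and compare the newest segment it lists. This sketch uses a hypothetical helper, `last_segment`, which treats the last non-empty, non-tag line of a media playlist as the newest segment.

```shell
# last_segment (hypothetical helper): print the URI of the newest segment in a
# live HLS media playlist read on stdin (the last non-empty, non-tag line).
last_segment() {
  tr -d '\r' | awk 'NF && !/^#/ { last = $0 } END { print last }'
}

# Usage once the stream is live (URL is a placeholder; use one of your variant playlists).
# Assumes segments are shorter than the 10-second wait, so a fresh manifest
# should end with a different segment the second time.
#   url="https://<your-distribution>.cloudfront.net/<variant>.m3u8"
#   first=$(curl -s "$url" | last_segment); sleep 10
#   second=$(curl -s "$url" | last_segment)
#   [ "$first" != "$second" ] && echo "manifest is updating" || echo "manifest looks stale"
```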

305 |

306 | If you go back to your distributions you can see the status of your distribution, and you can see the domain name. Please copy that domain name.

307 |

308 | You will have to wait for the Status of the distribution to change from “In Progress” to “Deployed” before you can use it. Once it’s deployed, you are just about ready to stream!

309 |

310 | Now take that domain name back to your encoder. An example I had was d1043ohasfxsrx.cloudfront.net; yours will look similar.

311 |

312 | Go to the terminal of your encoder. To make copying and pasting easier, you can also SSH into the encoder from the computer you are using to control AWS, since an OpenSSH server is now running on it. Once you are there, go into the streamline directory...

313 |

314 | cd ~/streamline

315 |

316 | You are now ready to launch your stream! Run the launch.sh script with the hostname of your origin server as the first argument and the hostname of your CloudFront distribution as the second argument. Make sure not to add http:// or https:// to the hostnames.

317 |

318 | Run...

319 |

320 | ./launch.sh ec2-54-183-60-162.us-west-1.compute.amazonaws.com d1043ohasfxsrx.cloudfront.net
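Because both hostnames must be passed without a scheme, a small guard can strip an accidental prefix before you call the script. This `normalize_host` function is a hypothetical helper, not part of launch.sh; it removes a leading `http://` or `https://` and a trailing slash.

```shell
# normalize_host (hypothetical helper): turn "https://host/" into "host".
normalize_host() {
  host="${1#http://}"
  host="${host#https://}"
  printf '%s\n' "${host%/}"
}

# Example with hypothetical ORIGIN and CDN variables holding the two hostnames:
#   ./launch.sh "$(normalize_host "$ORIGIN")" "$(normalize_host "$CDN")"
```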

321 |

322 |

323 | The command line will return something like this:

324 |

325 | ...and awaaaaayyyyy we go! 🚀🚀🚀🚀

326 | Input detected on DeckLink Mini Recorder 4K as 1080 59.94

327 | Currently streaming to: https://d1043ohasfxsrx.cloudfront.net/1014.html

328 |

329 | If you go to this URL in your web browser, you can now see your live video. 🔥🔥🔥 The encode script also creates an HTML page with an embedded hls.js player and uploads it along with the video stream. The hls.js player pulls down the segments as they are added to the manifest and feeds them, in real time, into the Media Source Extensions API of the client's web browser. For iOS, we will use the