├── .gitignore

├── README.md

├── magic.head

├── pandoc.css

├── types_1.md

├── intro.md

├── intro.html

└── types_1.html

/.gitignore:

--------------------------------------------------------------------------------

1 | .ninja_*

2 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Writing a Concatenative Programming Language

2 |

3 | This is the source code of the [WCPL](https://suhr.github.io/wcpl/intro.html) post series.

4 |

--------------------------------------------------------------------------------

/magic.head:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/pandoc.css:

--------------------------------------------------------------------------------

1 | /*

2 | * I add this to html files generated with pandoc.

3 | */

4 |

5 | html {

6 | font-size: 100%;

7 | overflow-y: scroll;

8 | -webkit-text-size-adjust: 100%;

9 | -ms-text-size-adjust: 100%;

10 | text-rendering: optimizeLegibility;

11 | }

12 |

13 | body {

14 | color: #080808;

15 | font-family: 'PT Serif', Georgia, Palatino, 'Palatino Linotype', Times, 'Times New Roman', serif;

16 | font-size: 14px;

17 | line-height: 1.7;

18 | padding: 1em;

19 | margin: auto;

20 | max-width: 48em;

21 | background: #fefefe;

22 | }

23 |

24 | .abstract {

25 | font-size: 0.9em;

26 | text-align: center;

27 | width: inherit;

28 | padding-left: 5.7em;

29 | padding-right: 5.8em;

30 | margin-bottom: 2em;

31 | }

32 |

33 | .proposition {

34 | margin-top: 1em;

35 | margin-bottom: 1em;

36 | }

37 |

38 | a {

39 | color: #0645ad;

40 | text-decoration: none;

41 | }

42 |

43 | a:visited {

44 | color: #0b0080;

45 | }

46 |

47 | a:hover {

48 | color: #06e;

49 | }

50 |

51 | a:active {

52 | color: #faa700;

53 | }

54 |

55 | a:focus {

56 | outline: thin dotted;

57 | }

58 |

59 | *::-moz-selection {

60 | background: rgba(255, 255, 0, 0.3);

61 | color: #000;

62 | }

63 |

64 | *::selection {

65 | background: rgba(255, 255, 0, 0.3);

66 | color: #000;

67 | }

68 |

69 | a::-moz-selection {

70 | background: rgba(255, 255, 0, 0.3);

71 | color: #0645ad;

72 | }

73 |

74 | a::selection {

75 | background: rgba(255, 255, 0, 0.3);

76 | color: #0645ad;

77 | }

78 |

79 | p {

80 | margin: 0em 0;

81 | }

82 |

83 | p {

84 | margin: 0.7em 0;

85 | hyphens: auto;

86 | }

87 |

88 | img {

89 | max-width: 100%;

90 | margin: 1em;

91 | }

92 |

93 | h1, h2, h3, h4, h5, h6 {

94 | color: #111;

95 | line-height: 125%;

96 | margin-top: 1.7em;

97 | font-weight: normal;

98 | }

99 |

100 | h4, h5, h6 {

101 | font-weight: bold;

102 | }

103 |

104 | h1 {

105 | font-size: 2.5em;

106 | }

107 |

108 | h2 {

109 | font-size: 2em;

110 | }

111 |

112 | h3 {

113 | font-size: 1.5em;

114 | }

115 |

116 | h4 {

117 | font-size: 1.2em;

118 | }

119 |

120 | h5 {

121 | font-size: 1em;

122 | }

123 |

124 | h6 {

125 | font-size: 0.9em;

126 | }

127 |

128 | blockquote {

129 | color: #666666;

130 | margin: 0;

131 | padding-left: 3em;

132 | border-left: 0.5em #EEE solid;

133 | }

134 |

135 | hr {

136 | display: block;

137 | height: 2px;

138 | border: 0;

139 | border-top: 1px solid #aaa;

140 | border-bottom: 1px solid #eee;

141 | margin: 1em 0;

142 | padding: 0;

143 | }

144 |

145 | pre, code, kbd, samp {

146 | color: #000;

147 | font-family: 'Consolas', monospace;

148 | _font-family: 'courier new', monospace;

149 | font-size: 0.98em;

150 | background: #ededed;

151 | }

152 |

153 | pre {

154 | white-space: pre;

155 | white-space: pre-wrap;

156 | word-wrap: break-word;

157 | }

158 |

159 | b, strong {

160 | font-weight: bold;

161 | }

162 |

163 | dfn {

164 | font-style: italic;

165 | }

166 |

167 | ins {

168 | background: #ff9;

169 | color: #000;

170 | text-decoration: none;

171 | }

172 |

173 | mark {

174 | background: #ff0;

175 | color: #000;

176 | font-style: italic;

177 | font-weight: bold;

178 | }

179 |

180 | sub, sup {

181 | font-size: 75%;

182 | line-height: 0;

183 | position: relative;

184 | vertical-align: baseline;

185 | }

186 |

187 | sup {

188 | top: -0.5em;

189 | }

190 |

191 | sub {

192 | bottom: -0.25em;

193 | }

194 |

195 | ul, ol {

196 | margin: 1em 0;

197 | padding: 0 0 0 2em;

198 | }

199 |

200 | li p:last-child {

201 | margin-bottom: 0;

202 | }

203 |

204 | ul ul, ol ol {

205 | margin: .3em 0;

206 | }

207 |

208 | dl {

209 | margin-bottom: 1em;

210 | }

211 |

212 | dt {

213 | font-weight: bold;

214 | margin-bottom: .8em;

215 | }

216 |

217 | dd {

218 | margin: 0 0 .8em 2em;

219 | }

220 |

221 | dd:last-child {

222 | margin-bottom: 0;

223 | }

224 |

225 | img {

226 | border: 0;

227 | -ms-interpolation-mode: bicubic;

228 | vertical-align: middle;

229 | }

230 |

231 | figure {

232 | display: block;

233 | text-align: center;

234 | margin: 1em 0;

235 | }

236 |

237 | figure img {

238 | border: none;

239 | margin: 0 auto;

240 | }

241 |

242 | figcaption {

243 | font-size: 0.8em;

244 | font-style: italic;

245 | margin: 0 0 .8em;

246 | }

247 |

248 | table {

249 | margin-top: 1em;

250 | margin-bottom: 2em;

251 | border-bottom: 1px solid #ddd;

252 | border-right: 1px solid #ddd;

253 | border-spacing: 0;

254 | border-collapse: collapse;

255 | }

256 |

257 | table th {

258 | padding: .2em 1em;

259 | background-color: #eee;

260 | border-top: 1px solid #ddd;

261 | border-left: 1px solid #ddd;

262 | }

263 |

264 | table td {

265 | padding: .2em 1em;

266 | border-top: 1px solid #ddd;

267 | border-left: 1px solid #ddd;

268 | vertical-align: top;

269 | }

270 |

271 | table.flow {

272 | border: none;

273 | }

274 |

275 | table.flow td {

276 | border: 1px solid #ddd;

277 | padding: 0.5em 1.5em;

278 | margin: 0;

279 | text-align: center;

280 | }

281 |

282 | table.flow td.open {

283 | border: none;

284 | border-right: none;

285 | }

286 |

287 | .author {

288 | font-size: 1.2em;

289 | text-align: center;

290 | }

291 |

292 | @media only screen and (min-width: 480px) {

293 | body {

294 | font-size: 14px;

295 | }

296 | }

297 |

298 | @media only screen and (min-width: 768px) {

299 | body {

300 | font-size: 16px;

301 | }

302 | }

303 |

304 | @media only screen and (min-width: 960px) {

305 | body {

306 | font-size: 18px;

307 | }

308 | }

309 |

310 | @media print {

311 | * {

312 | background: transparent !important;

313 | color: black !important;

314 | filter: none !important;

315 | -ms-filter: none !important;

316 | }

317 |

318 | body {

319 | font-size: 12pt;

320 | max-width: 100%;

321 | }

322 |

323 | a, a:visited {

324 | text-decoration: underline;

325 | }

326 |

327 | hr {

328 | height: 1px;

329 | border: 0;

330 | border-bottom: 1px solid black;

331 | }

332 |

333 | a[href]:after {

334 | content: " (" attr(href) ")";

335 | }

336 |

337 | abbr[title]:after {

338 | content: " (" attr(title) ")";

339 | }

340 |

341 | .ir a:after, a[href^="javascript:"]:after, a[href^="#"]:after {

342 | content: "";

343 | }

344 |

345 | pre, blockquote {

346 | border: 1px solid #999;

347 | padding-right: 1em;

348 | page-break-inside: avoid;

349 | }

350 |

351 | tr, img {

352 | page-break-inside: avoid;

353 | }

354 |

355 | img {

356 | max-width: 100% !important;

357 | }

358 |

359 | @page :left {

360 | margin: 15mm 20mm 15mm 10mm;

361 | }

362 |

363 | @page :right {

364 | margin: 15mm 10mm 15mm 20mm;

365 | }

366 |

367 | p, h2, h3 {

368 | orphans: 3;

369 | widows: 3;

370 | }

371 |

372 | h2, h3 {

373 | page-break-after: avoid;

374 | }

375 | }

376 |

--------------------------------------------------------------------------------

/types_1.md:

--------------------------------------------------------------------------------

1 | title: Writing a Concatenative Programming Language: Type System, Part 1

2 |

3 | This post should have come *after* the post about parsing. But there's no post about parsing yet. You may wonder why.

4 |

5 | Well, the reason is simple. I already wrote the parser and started to implement the analysis. But almost immediately I got stuck: I don't quite know how to do the things I want to have in my language, and nobody has done anything like this before. And though a lot of things can be thrown away and implemented later, some things you just can't omit. The type system is such a thing.

6 |

7 | This post is my attempt to solve this problem. I figure out what the type system should look like, and try to *explain* it. And explaining it helps me to *understand* it. And once I understand it well, I will be able to actually implement it.

8 |

9 | ## Logic, formal and computational

10 |

11 | Type systems are defined by a set of inference rules. An inference rule is a rule that takes *premises* and returns a *conclusion*. An example of such a rule:

12 |

13 | $$\frac{A, A → B}{B}$$

14 |

15 | This is *modus ponens*, the equivalent of function application in logic. This rule derives “streets are wet” from “it's raining” and “if it's raining, then streets are wet”.

16 |

17 | Inference rules have one significant drawback: they are not directly computable. This is fine when everything is already set in stone, but if things are fluid, you really want to be able to run code to experiment.

18 |

19 | So instead of inference rules we will use Prolog.

20 |

21 | Prolog is a solver of clauses like $u ← p \land q \land … \land t$, which are the first-order logic (FOL) analog of inference rules. When an inference rule looks like this:

22 |

23 | $$\frac{A}{B}$$

24 |

25 | We will write something like this:

26 |

27 | ```

28 | B :- A

29 | ```

30 |

31 | Of course, it's not that simple. Prolog is more of a programming language than a theorem prover, so the code inevitably contains workarounds.

32 |

33 | ## Tier 0: typed concatenative algebra

34 |

35 | We start with typing the concatenative algebra, described in [[calg](https://suhr.github.io/papers/calg.html)].

36 |

37 | The proper composition is straightforward:

38 |

39 | $$\frac{Γ \vdash e_1 : α^{*} → β^{*}\quad Γ \vdash e_2 : β^{*} → γ^{*} }{Γ \vdash (e_1 ∘ e_2) : α^{*} → γ^{*}}( \mathtt{Comp} )$$

40 |

41 | The concatenation is simple as well:

42 |

43 | $$\frac{Γ \vdash e_1 : α^{*} → β^{*}\quad Γ \vdash e_2 : ξ^{*} → η^{*} }{Γ \vdash (e_1 \mathbin , e_2) : α^{*} \mathbin , ξ^{*} → β^{*} \mathbin , η^{*}}( \mathtt{Conc} )$$

44 |

45 | Note that $α^{ * }$, $β^{ * }$, etc. are *type lists*, not individual types.

46 |

47 | Primitive rewiring functions have these types:

48 |

49 | $$

50 | \begin{align}

51 | \mathtt{id} &: α → α \\

52 | \mathtt{dup} &: α → α\mathbin{,}α \\

53 | \mathtt{drop} &: α → \\

54 | \mathtt{swap} &: α\mathbin{,}β → β\mathbin{,}α

55 | \end{align}

56 | $$

57 |

58 | Overall, the Prolog code for typed concatenative algebra looks like this:

59 |

60 | ```prolog

61 | :- use_module(library(lists)).

62 |

63 | % Some notes:

64 | % - `comp` is composition, lists are concatenation

65 | % - We do not use any context, so `ty_chk` takes none

66 |

67 | % Rule for composition

68 | ty_chk( ty(comp(E1, E2), fn(A, C)) ) :-

69 | ty_chk( ty(E1, fn(A, B)) ), ty_chk( ty(E2, fn(B, C)) ).

70 |

71 | % Rule for concatenation

72 | ty_chk( ty([ ], fn([ ], [ ])) ).

73 | ty_chk( ty([E1 | E2], fn(AX, BY)) ) :-

74 | ty_chk( ty(E1, fn(A, B)) ), ty_chk( ty(E2, fn(X, Y)) ),

75 | append(A, X, AX), append(B, Y, BY).

76 |

77 | % Primitive functions

78 | ty_chk( ty(id, fn(X, X)) ).

79 | ty_chk( ty(dup, fn([X], [X, X])) ).

80 | ty_chk( ty(drop, fn([_], [ ])) ).

81 | ty_chk( ty(swap, fn([X, Y], [Y, X])) ).

82 | ```

83 |

84 | Quite straightforward! Note that this code can both check and infer the type of an expression.

85 |

86 | ## Tier ½: specifying the typechecking

87 |

88 | Instead of relying on Prolog unification, let's detail the process of type checking and type inference.

89 |

90 | We need a way to specify type variables. To do so, we'll use indexes. So $\mathtt{swap} : α\mathbin{,}β → β\mathbin{,}α$ becomes $\mathtt{swap} : τ_0\mathbin{,}τ_1 → τ_1\mathbin{,}τ_0$. In Prolog, we write $τ_n$ as `tau(N)`.

91 |

92 | When we concatenate types, we need to increment the indices:

93 |

94 | $$

95 | \frac{

96 | \mathtt{drop} : τ_0 →\ \quad \mathtt{id} : τ_0 → τ_0

97 | } {

98 | (\mathtt{drop} \mathbin{,} \mathtt{id}) : τ_0\mathbin{,}τ_1 → τ_1

99 | }

100 | $$

101 |

102 | This is type concatenation with reindexing:

103 |

104 | ```prolog

105 | % Type concatenation with reindexing

106 | alpha_conc(fn(AX, BY), fn(A, B), fn(X, Y)) :-

107 | append(A, B, AB), upper_bound(N, AB),

108 | inc_vars(Xi, X, N), inc_vars(Yi, Y, N),

109 | append(A, Xi, AX), append(B, Yi, BY).

110 |

111 | % Upper bound: N < P for all tau(N)

112 | upper_bound(0, [ ]).

113 | upper_bound(P, [V|X]) :-

114 | upper_bound(Q, X),

115 | (

116 | V = tau(N) ->

117 | P is max(Q, N + 1)

118 | ;

119 | P = Q

120 | ).

121 |

122 | % Increment all tau by N

123 | inc_vars([ ], [ ], _).

124 | inc_vars([Vi|Xi], [V|X], N) :-

125 | inc_vars(Xi, X, N),

126 | (

127 | V = tau(P) ->

128 | Vi = tau(Q), Q is P + N

129 | ;

130 | Vi = V

131 | ).

132 | ```

133 |

134 | `X -> Y ; Z` is the Prolog version of “If X, then Y, otherwise Z”.
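
For readers who prefer to experiment outside Prolog, the reindexing step can also be sketched in Python. This is only a rough equivalent of `alpha_conc`, not part of the original code: the tuple encoding `("tau", n)` and the function names are assumptions made for this sketch.

```python
# Assumed encoding (not from the post): a type variable tau_n is ("tau", n),
# any other value (e.g. the string "Nat") is a concrete type, and a function
# type is a pair (inputs, outputs) of lists.

def tau(n):
    return ("tau", n)

def is_tau(t):
    return isinstance(t, tuple) and len(t) == 2 and t[0] == "tau"

def upper_bound(types):
    """Smallest P such that N < P for every tau(N) in `types`."""
    return max((t[1] + 1 for t in types if is_tau(t)), default=0)

def inc_vars(types, n):
    """Increment the index of every type variable by n."""
    return [tau(t[1] + n) if is_tau(t) else t for t in types]

def alpha_conc(f, g):
    """Type of `f , g`: rename g's variables apart, then concatenate."""
    (a, b), (x, y) = f, g
    n = upper_bound(a + b)
    return (a + inc_vars(x, n), b + inc_vars(y, n))

drop = ([tau(0)], [])
ident = ([tau(0)], [tau(0)])
print(alpha_conc(drop, ident))
# prints ([('tau', 0), ('tau', 1)], [('tau', 1)])
```

The result matches the rule for `drop , id` shown earlier: the variable of `id` is shifted past the single variable used by `drop`.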

135 |

136 | Function composition requires a more sophisticated transformation:

137 |

138 | $$

139 | \frac{

140 | 42 : \mathrm{Nat} \quad

141 | \mathtt{id} : τ_0 → τ_0 \quad

142 | \mathtt{swap} : τ_0\mathbin{,}τ_1 → τ_1\mathbin{,}τ_0

143 | } {

144 | (42 \mathbin{,} \mathtt{id}) ∘ \mathtt{swap} : τ_0 → τ_0\mathbin{,} \mathrm{Nat}

145 | }

146 | $$

147 |

148 | In `f g`, we first unify the output type of `f` with the input type of `g`. Then we replace the type variables of `g` with the corresponding type variables of `f`. We also need to replace some type variables with concrete types.

149 |

150 | This way:

151 |

152 | ```prolog

153 | % Function composition with unification

154 | unify_comp(fn(Au, Cu), fn(A, B1), fn(B2, C)) :-

155 | unify(S, B1, B2), backward_s(Sb, S),

156 | subst(Cu, S, C), subst(Au, Sb, A).

157 |

158 | % Correspond variables to values

159 | unify([], [], []).

160 | unify(S, [X|Xs], [Y|Ys]) :-

161 | X = Y ->

162 | unify(S, Xs, Ys);

163 | S = [u(X, Y)|Sx], X = tau(_) ->

164 | unify(Sx, Xs, Ys);

165 | S = [u(X, Y)|Sx], Y = tau(_) ->

166 | unify(Sx, Xs, Ys).

167 |

168 | backward_s([], []).

169 | backward_s(Sb, [u(X, Y)|Sx]) :-

170 | X = tau(_), Y \= tau(_) ->

171 | Sb = [u(X, Y)|Sbx],

172 | backward_s(Sbx, Sx)

173 | ;

174 | backward_s(Sb, Sx).

175 |

176 | subst([], _, []).

177 | subst([Y|Ys], S, [X|Xs]) :-

178 | replace(Y, S, X), subst(Ys, S, Xs).

179 |

180 | replace(X, [], X).

181 | replace(Y, [E|S], X) :-

182 | X = tau(_), E = u(Y, X), Y \= tau(_) ->

183 | true;

184 | E = u(X, Y) ->

185 | true

186 | ;

187 | replace(Y, S, X).

188 | ```
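
The same idea can be sketched in Python to check the $(42 \mathbin{,} \mathtt{id}) ∘ \mathtt{swap}$ example by hand. This is not a translation of the Prolog above but a simplified variant under stated assumptions: types are flat lists, type variables are encoded as `("tau", n)`, the variables of the second function are renamed apart before unification, and the result is renumbered from zero. All names are made up for this sketch.

```python
def tau(n):
    return ("tau", n)

def is_tau(t):
    return isinstance(t, tuple) and len(t) == 2 and t[0] == "tau"

def upper_bound(types):
    return max((t[1] + 1 for t in types if is_tau(t)), default=0)

def shift(types, n):
    return [tau(t[1] + n) if is_tau(t) else t for t in types]

def resolve(t, subst):
    """Follow variable bindings until an unbound variable or a concrete type."""
    while is_tau(t) and t in subst:
        t = subst[t]
    return t

def unify(xs, ys):
    """Positionally unify two flat type lists; return a substitution dict."""
    if len(xs) != len(ys):
        raise TypeError("arity mismatch")
    subst = {}
    for x, y in zip(xs, ys):
        x, y = resolve(x, subst), resolve(y, subst)
        if x == y:
            continue
        if is_tau(x):
            subst[x] = y
        elif is_tau(y):
            subst[y] = x
        else:
            raise TypeError(f"cannot unify {x} with {y}")
    return subst

def normalize(fn):
    """Renumber type variables in order of first appearance."""
    names = {}
    def rename(t):
        return names.setdefault(t, tau(len(names))) if is_tau(t) else t
    a, b = fn
    return ([rename(t) for t in a], [rename(t) for t in b])

def compose(f, g):
    """Type of `f g` when the output of f lines up exactly with the input of g."""
    (a, b1), (b2, c) = f, g
    n = upper_bound(a + b1)
    b2, c = shift(b2, n), shift(c, n)
    subst = unify(b1, b2)
    return normalize(([resolve(t, subst) for t in a],
                      [resolve(t, subst) for t in c]))

forty_two_id = ([tau(0)], ["Nat", tau(0)])       # type of (42 , id)
swap = ([tau(0), tau(1)], [tau(1), tau(0)])
print(compose(forty_two_id, swap))
# prints ([('tau', 0)], [('tau', 0), 'Nat'])
```

Renaming the variables of the second function apart is a design choice of this sketch; it keeps `τ_0` of `swap` from being confused with `τ_0` of `(42 , id)`.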

189 |

190 | Now we can write the typechecking rules:

191 |

192 | ```prolog

193 | ty_chk(ty(E, T)) :- primal_ty(E, T).

194 |

195 | % Rule for composition

196 | ty_chk( ty(comp(E1, E2), F) ) :-

197 | ty_chk( ty(E1, F1) ),

198 | ty_chk( ty(E2, F2) ),

199 | unify_comp(F, F1, F2).

200 |

201 | % Rule for concatenation

202 | ty_chk( ty([ ], fn([ ], [ ])) ).

203 | ty_chk( ty([E1 | E2], F) ) :-

204 | ty_chk( ty(E1, fn(A, B)) ),

205 | ty_chk( ty(E2, fn(X, Y)) ),

206 | alpha_conc(F, fn(A, B), fn(X, Y)).

207 | ```

208 |

209 | ## Tier 1: inferring the unknown

210 |

211 | Sometimes you cannot infer the type of an expression immediately. For example, consider this code:

212 |

213 | ```

214 | fact (n ~ fact) =

215 | if dup <= 1:

216 | drop 1

217 | else:

218 | dup id * (id - 1 fact)

219 | ```

220 |

221 | Here, function `fact` refers to itself, so inferring its type recursively will never terminate.

222 |

223 | Instead of trying to infer the type, let's assume that

224 |

225 | $$\mathtt{fact} : α → β$$

226 |

227 | From `id - 1`, we know that $α = \mathrm{Integer}$. From `drop 1`, we know that $β = \mathrm{Integer}$. Thus, we know that

228 |

229 | $$\mathtt{fact} : \mathrm{Integer} → \mathrm{Integer}$$

230 |

231 | Formally, this is the algorithm:

232 |

233 | 1. Assume that $\mathtt{f} : χ_1 → χ_2$, where $χ_n$ is a *hole*. In Prolog, it is written as `hole(N)`.

234 | 2. The typechecker returns not only types but also a unification list.

235 | 3. Propagate holes:

236 | - When unifying $χ_n$ and $χ_m$, add $χ_n = χ_m$ to the unification list

237 | - When unifying a hole with a type variable, replace the type variable with the hole

238 | - When unifying $χ_n$ with a type $η$, add $χ_n = η$ to the unification list and replace $χ_n$ with $η$

239 |

240 | The implementation of this algorithm is straightforward.
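
As a rough illustration, the three propagation rules from step 3 can be sketched in Python. This covers only the unification of a single pair of types, not the whole typechecker. The encodings `("hole", n)` and `("tau", n)` and the name `propagate` are assumptions made for this sketch.

```python
def hole(n):
    return ("hole", n)

def tau(n):
    return ("tau", n)

def is_hole(t):
    return isinstance(t, tuple) and len(t) == 2 and t[0] == "hole"

def is_tau(t):
    return isinstance(t, tuple) and len(t) == 2 and t[0] == "tau"

def propagate(x, y, equations):
    """Unify x with y, record facts about holes, return the type to keep."""
    if x == y:
        return x
    if is_hole(x) and is_hole(y):
        equations.append((x, y))      # hole = hole goes to the unification list
        return x
    if is_hole(x) and is_tau(y):
        return x                      # the type variable is replaced by the hole
    if is_tau(x) and is_hole(y):
        return y
    if is_hole(x):
        equations.append((x, y))      # hole = concrete type; keep the type
        return y
    if is_hole(y):
        equations.append((y, x))
        return x
    if is_tau(x):
        return y                      # ordinary variable binding
    if is_tau(y):
        return x
    raise TypeError(f"cannot unify {x} with {y}")

# fact : hole(1) -> hole(2)
equations = []
propagate(hole(1), "Integer", equations)   # from `id - 1`
propagate(hole(2), "Integer", equations)   # from `drop 1`
print(equations)
# prints [(('hole', 1), 'Integer'), (('hole', 2), 'Integer')]
```

Solving the collected equations then gives $\mathtt{fact} : \mathrm{Integer} → \mathrm{Integer}$, as derived above.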

241 |

242 | ## Tier 2: typed generalized composition

243 |

244 | TODO

245 |

246 | ## Tier 3: lol, generics

247 |

248 | TODO

249 |

--------------------------------------------------------------------------------

/intro.md:

--------------------------------------------------------------------------------

1 | # Writing a Concatenative Programming Language: Introduction

2 |

3 | Yes, this is yet another “write you a language”. But this one is a little bit different. First, the language I'm going to implement is rather different from the languages you are used to seeing in such tutorials. Second, I have little experience in creating programming languages, so a lot of things will be new to me as well.

4 |

5 | ## Philosophy

6 |

7 | What's the point in doing the thing everybody else is doing? What's the point in solving a problem someone else has already solved? Guides show you how to make yet another Haskell or Python, but if you like these languages so much, why don't you just use the existing implementations?

8 |

9 | I do not like Python. And I have always looked for something that could make programming less annoying. The language I'd like to use doesn't exist, and probably never will. But a more pleasant language, in fact, could.

10 |

11 | But the language will not write itself. Also, nobody will write such a language: most people prefer to do things everybody else does, and anyway nobody cares about things I care about.

12 |

13 | So I build a toy language and hope it will grow into something more useful.

14 |

15 | ## Freedom from variables

16 |

17 | There's more than one way to do it. There should be a way to do it without using any variables.

18 |

19 | Most programming languages are extremely bad at programming without variables. For example, in C it is usually just impossible. In Haskell you have to use kludges like `(.).(.)` and end up with unreadable code. In APL and J you end up with character soup.

20 |

21 | But in some languages, programming without variables can result in concise and clean code. Such languages are known as [concatenative](https://en.wikipedia.org/wiki/Concatenative_programming_language).

22 |

23 | For example, in [Factor](http://factorcode.org/) you can write something like this:

24 |

25 | ```factor

26 | "http://factorcode.org" http-get nip string>xml

27 | "a" deep-tags-named

28 | [ "href" attr ] map

29 | [ print ] each

30 | ```

31 |

32 | Clean [pipeline-like](http://concatenative.org/wiki/view/Pipeline%20style) style.

33 |

34 | But concatenative languages are not perfect either. As shown in [Why Concatenative Programming Matters](https://evincarofautumn.blogspot.com/2012/02/why-concatenative-programming-matters.html), a simple expression `f(x, y, z) = x^2 + y^2 - abs(y)` becomes

35 |

36 | `drop dup dup × swap abs rot3 dup × swap − +`

37 |

38 | or

39 |

40 | `drop [square] [abs] bi − [square] dip +`

41 |

42 | But why can't you just write `drop dup (^2) + (^2) - abs` instead?

43 |

44 | In Conc, the language I'm going to implement, you can do that. Also, in Conc you can do things you can't do in other languages, like pattern matching without variables. This is achieved with a very simple extension of the concatenative model.

45 |

46 | ## Motivation

47 |

48 | Now you probably have one big question.

49 |

50 | {title="Why"}

51 |

52 | A good question indeed. Well, there are many reasons. I'll show some of them.

53 |

54 | Suppose you have some kind of programming notebook, like Jupyter Notebook. There, you write expressions in a frame and get the results below.

55 |

56 | So you can type

57 |

58 | ```

59 | 1200.0 * (3.0/2.0 log2)

60 | ```

61 |

62 | and get

63 |

64 | ```

65 | 701.9550008653874

66 | ```

67 |

68 | (a pure perfect fifth in [cents]( https://en.wikipedia.org/wiki/Cent_(music) )). Suppose you want to see a chain of pure fifths. Then you write

69 |

70 | ```

71 | 1200.0 * (3.0/2.0 log2) -> fifth

72 |

73 | 1..inf {* fifth} map

74 | ```

75 |

76 | ```

77 | [701.9550008653874, 1403.9100017307749, ...]

78 | ```

79 |

80 | How many pure fifths do you need to approximate an octave (using octave equivalence)? It isn't hard to find out:

81 |

82 | ```

83 | 1200.0 * (3.0/2.0 log2) -> fifth

84 |

85 | 1..inf {* fifth} map

86 | {(round `mod` 1200) < 10} take_while

87 | ```

88 |

89 | (there should be a chain of 53 fifths)
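
Since Conc does not exist yet, the claim can be checked with a short Python sketch. It assumes the intended meaning of the pipeline is "keep adding fifths until the rounded value is within 10 cents above a whole number of octaves"; the function name and the `tolerance` parameter are made up for this sketch.

```python
import math
from itertools import count

# A pure perfect fifth (frequency ratio 3/2) in cents.
fifth = 1200.0 * math.log2(3.0 / 2.0)

def fifths_until_octave(tolerance=10):
    """Stack pure fifths until the chain lands close to a whole number of octaves."""
    chain = []
    for n in count(1):
        cents = n * fifth
        chain.append(cents)
        if round(cents) % 1200 < tolerance:
            return chain

chain = fifths_until_octave()
print(len(chain))   # prints 53
```

The chain stops at 53 fifths, which overshoot 31 octaves by roughly 3.6 cents.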

90 |

91 | You may consider a tuning made from this chain

92 |

93 | ```

94 | 1200.0 * (3.0/2.0 log2) -> fifth

95 |

96 | 1..inf {* fifth} map

97 | {(round `mod` 1200) < 10} take_while

98 | sort

99 | ```

100 |

101 | Maybe it has a great approximation of a pure major third as well?

102 |

103 | ```

104 | 1200.0 * (3.0/2.0 log2) -> fifth

105 |

106 | 1..inf {* fifth} map

107 | {(round `mod` 1200) < 10} take_while

108 | sort

109 | enumerate {second (id - 386 abs) < 5} find

110 | ```

111 |

112 | Now you can see a very nice feature of concatenative languages: they are good for interactive coding. There's no need to make unnecessary variables. There's no need to put everything in dozens of parentheses or write code backwards. You just add more code to get new results.

113 |

114 | Everything is an expression. Every expression is a function. The code is concise yet readable. There's no special syntax and the basic syntax is very simple and consistent.

115 |

116 | Also, Conc functions are pure. The programming notebook could execute the code every time you move to a new line. It could memoize results to avoid expensive computations. It could use a hot-swapping technique, like [in Elm](http://elm-lang.org/blog/interactive-programming), allowing you to modify your program while it's running.

117 |

118 | The second reason to create a new language is that the concatenative notation makes some things cleaner. Consider the famous Functor and Monad rules:

119 |

120 | ```

121 | fmap id = id

122 | fmap (p . q) = (fmap p) . (fmap q)

123 |

124 | return a >>= k = k a

125 | m >>= return = m

126 | m >>= (\x -> k x >>= h) = (m >>= k) >>= h

127 | ```

128 |

129 | In Conc they become:

130 |

131 | ```

132 | {id} map ≡ id

133 | {f g} map ≡ {f} map {g} map

134 |

135 | return >>= {k} ≡ k

136 | >>= {return} ≡ id

137 | >>= {k >>= {h}} ≡ (>>= {k}) >>= {h}

138 | ```

139 |

140 | Not only is the Conc version shorter, it also allows you to *see* why the third Monad rule is called “associativity”.

141 |

142 | By the way, the concatenative style plays nicely with an effect system:

143 |

144 | ```

145 | name_do : -> +IO

146 | name_do =

147 | "What is your first name? " print

148 | get_line -> first

149 | "And your last name? " print

150 | get_line -> last

151 |

152 | first ++ " " ++ last

153 | "Pleased to meet you, " ++ id ++ "!" print_ln

154 | ```

155 |

156 | And affine types:

157 |

158 | ```

159 | Array.new

160 | 1 push

161 | 2 push

162 | 3 push

163 | id !! 1

164 | ⇒ Array 2

165 | ```

166 |

167 | Yet another reason to make a new language is to avoid this:

168 |

169 |

170 |

171 | It is much easier if you have `,` and functions that can return multiple values.

172 |

173 | Yet another reason is the associativity of concatenation. While [arrows](https://www.haskell.org/arrows/syntax.html) in Haskell look nice with *two* outputs, I wonder how they play with *three* outputs.

174 |

175 | Consider a simple example:

176 |

177 | ```

178 | f : a -> b c

179 | g : a -> b

180 | p : a b -> c

181 | q : a -> b

182 | ```

183 |

184 | You can write:

185 |

186 | ```

187 | f,g

188 | p,q

189 | ```

190 |

191 | But you also can write:

192 |

193 | ```

194 | f,g

195 | q,p

196 | ```

197 |

198 | You can't reproduce this using only pairs, because

199 |

200 | ```

201 | T[T[a, b], c] ≠ T[a, T[b, c]]

202 | ```

203 |

204 | But in Conc `((a, b), c) ≡ (a, (b, c)) ≡ a, b, c`.

205 |

206 | ## The language

207 |

208 | ```

209 | data a Maybe = a Some | None

210 |

211 | map : a Maybe (a -> b) -> b Maybe

212 | map (mb f -- mb) = case 2id:

213 | Some,id -> apply Some

214 | None,_ -> None

215 |

216 | fizzbuzz (n -- str) =

217 | -> n

218 | case (n fizz),(n buzz):

219 | True, False -> "Fizz"

220 | False, True -> "Buzz"

221 | True, True -> "FizzBuzz"

222 | _, _ -> n to_string

223 | where

224 | fizz = (3 mod) == 0

225 | buzz = (5 mod) == 0

226 |

227 | main : -> +IO

228 | main = 1 101 range {fizzbuzz println} each

229 | ```

230 |

231 | Ok, I have to explain some things. The first thing you have to know is that Conc is an algebra of two operations on functions. The first operation is *generalized composition*. It works like ordinary composition in concatenative languages:

232 |

233 | ```

234 | add : Int Int -> Int

235 | mul : Int Int -> Int

236 | add mul : Int Int Int -> Int

237 |

238 | 2 3 5 add mul ⇒ 2 (3 5 add) mul ⇒ 2 8 mul ⇒ 16

239 | ```

240 |

241 | The second operation is *parallel concatenation*:

242 |

243 | ```

244 | add,mul : Int Int Int Int -> Int Int

245 |

246 | 2 2 3 3 add,mul ⇒ (2 2 add),(3 3 mul) ⇒ 4 9

247 | ```

248 |

249 | Both operations are monoids:

250 |

251 | ```

252 | ( ) f ≡ f ( ) ≡ f

253 | ( ),f ≡ f,( ) ≡ f

254 |

255 | (f g) h ≡ f (g h)

256 | (f,g),h ≡ f,(g,h)

257 | ```

258 |

259 | Now notice that if `c` is a constant (a function that doesn't take any arguments), then composition is equivalent to concatenation:

260 |

261 | ```

262 | f c ≡ f,c

263 | ```

264 |

265 | Also, if the output arity of `f` is less than the input arity of `g`,

266 |

267 | ```

268 | f g ≡ idN,f g

269 | ```

270 |

271 | where N is the difference between arities.
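
The two operations and the padding rule can be modelled in Python as a minimal sketch. It assumes functions are values that carry their input and output arity and act on tuples; the `Fn` class and the helper names are made up for illustration and say nothing about how Conc will actually be implemented.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Fn:
    n_in: int                       # input arity
    n_out: int                      # output arity
    run: Callable[[tuple], tuple]   # maps n_in values to n_out values

def ident(n):
    """idN: pass n values through unchanged."""
    return Fn(n, n, lambda xs: xs)

def const(value):
    """A constant: takes nothing, returns one value."""
    return Fn(0, 1, lambda xs: (value,))

def conc(f, g):
    """Parallel concatenation `f,g`: f gets the first inputs, g gets the rest."""
    def run(xs):
        return f.run(xs[:f.n_in]) + g.run(xs[f.n_in:])
    return Fn(f.n_in + g.n_in, f.n_out + g.n_out, run)

def comp(f, g):
    """Generalized composition `f g`, padded with idN when arities differ."""
    if f.n_out < g.n_in:
        f = conc(ident(g.n_in - f.n_out), f)    # f g ≡ idN,f g
    elif f.n_out > g.n_in:
        g = conc(ident(f.n_out - g.n_in), g)    # covers f c ≡ f,c
    return Fn(f.n_in, g.n_out, lambda xs: g.run(f.run(xs)))

add = Fn(2, 1, lambda xs: (xs[0] + xs[1],))
mul = Fn(2, 1, lambda xs: (xs[0] * xs[1],))

print(comp(add, mul).run((2, 3, 5)))     # prints (16,)
print(conc(add, mul).run((2, 2, 3, 3)))  # prints (4, 9)
```

Both results agree with the `2 3 5 add mul` and `2 2 3 3 add,mul` examples above.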

272 |

273 | This implies that the generalized composition is probably too general. This leads us to the concept of the *canonical concatenative form*.

274 |

275 | An expression `e1 e2` is in CCF if both `e1` and `e2` are in CCF and the output arity of `e1` equals the input arity of `e2`.

276 |

277 | Here are our old examples in CCF:

278 |

279 | ```

280 | 2,3,5 id,add mul

281 | 2,2,3,3 add,mul

282 | ```

283 |

284 | Now let's define an infix notation:

285 |

286 | ```

287 | f `h` g ⇒ f,g h

288 | ```

289 |

290 | This allows you to write expressions in the usual way:

291 |

292 | ```

293 | 2*2 + 3*3 ⇒ (2,2 (*)),(3,3 (*)) (+) ⇒ 4,9 + ⇒ 13

294 | ```

295 |

296 | But it also allows you to write expressions in an unusual way:

297 |

298 | ```

299 | 2 2 3 3 (*) + (*) ⇒ 2,2,3,3 (*),(*) (+) ⇒ ... ⇒ 13

300 | ```

301 |

302 | It is also nice to have some sugar:

303 |

304 | ```

305 | (`h` g) ⇒ idN,g h

306 | (f `h`) ⇒ f,idM h

307 | ```

308 |

309 | Where `N = max(arity_in(h) - arity_out(g), 0)`, and `M` is defined the same way with `f` in place of `g`.

310 |

311 | Now the meaning of `drop dup (^2) + (^2) - abs` should be crystal clear to you:

312 |

313 | ```

314 | x y z drop dup (^2) + (^2) - abs ⇒

315 | x,y,y (^2) + (^2) - abs ⇒

316 | ((x,2 (^)),(y,2 (^)) (+)),(y abs) (-) ⇒ ...

317 | ```

318 |

319 | Visually it is more like

320 |

321 | ```

322 | x,y,y (^2) + (^2) - abs ⇒ (x^2) + (y^2) - (y abs)

323 | ```

324 |

325 | Simple and intuitive.

326 |

327 | ### Concatenative pattern matching

328 |

329 | The `,` operation is not only about writing nice expressions. It also allows us to introduce a concatenative version of pattern matching without variables.

330 |

331 | Consider an expression:

332 |

333 | ```

334 | case foo of

335 | Some a -> ...

336 | None -> ...

337 | ```

338 |

339 | What does `Some a ->` mean? Well, assuming that `foo = Some bar`, it defines `a = bar` and evaluates the expression after `->`. `Some` is a function that constructs a value, and pattern matching *inverts* this function.

340 |

341 | In the concatenative world, `Some bar` becomes `bar Some` (postfix notation!). If there were an `unSome: a Maybe -> a`, you could write

342 |

343 | ```

344 | bar Some unSome ⇒ bar

345 | ```

346 |

347 | But `unSome` doesn't exist. What if we reproduce the behaviour of `unSome` in the place where it makes sense?

348 |

349 | ```

350 | case (bar Some):

351 | Some -> ⇒ bar

352 | None -> ...

353 | ```

354 |

355 | That's basically the essence of concatenative pattern matching. Together with `id`, type constructors and composition form a [right-cancellative monoid](https://en.wikipedia.org/wiki/Cancellation_property).

356 |

357 | What does it have to do with `,`? Well, consider another example:

358 |

359 | ```

360 | case foo of

361 | Cons(Some(x), xs) -> ...

362 | ```

363 |

364 | How do we express this in concatenative pattern matching? Let's rewrite `Cons(Some(x), xs)` in concatenative form:

365 |

366 | ```

367 | (x Some),xs Cons ⇒ x,xs Some,id Cons

368 | ```

369 |

370 | The `case` statement becomes:

371 |

372 | ```

373 | case foo:

374 | Some,id Cons -> ⇒ x,xs

375 | ```

376 |

377 | So `,` allows us to do advanced pattern matching without any variables.

378 |

379 | ### Miscellaneous

380 |

381 | #### Cross-cutting statements

382 | Consider a branching statement:

383 |

384 | ```

385 | if foo:

386 | bar

387 | else:

388 | baz

389 | ```

390 |

391 | If `foo` is a constant, the meaning of this statement is clear. But what if `foo` is a function?

392 |

393 | In Conc, statements are cross-cutting. That means that the function inside uses values from outside:

394 |

395 | ```

396 | a b if (==):

397 | ...

398 | ⇒

399 | if a == b:

400 | ...

401 | ```

402 |

403 | #### Stack effect comments

404 |

405 | Concatenative notation has many advantages. But it also has some drawbacks.

406 |

407 | ```

408 | magic = abra cadabra

409 | ```

410 |

411 | What does this function do?

412 |

413 | Raw types are not helpful either:

414 |

415 | ```

416 | mysterious: Int Int -> Int

417 | ```

418 |

419 | Clearly, we need something else.

420 |

421 | In Forth, people use *stack effect comments* to show what a word does:

422 |

423 | ```

424 | : SQUARED ( n -- nsquared ) DUP * ;

425 | ```

426 |

427 | Conc has a similar thing too:

428 |

429 | ```

430 | map (mb f -- mb) = ...

431 | ```

432 |

433 | But unlike Forth, `(mb f -- mb)` is more than just a comment. The compiler can use it to check the arity, and report an error if the arity of the stack effect doesn't match the arity of the function.
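
As a rough illustration of such a check (not Conc's actual compiler), a stack effect can be reduced to a pair of arities and compared with the arity inferred for the function body. The function names here are made up for this sketch, and the inferred arity is simply passed in as two numbers.

```python
def stack_effect_arity(effect):
    """Return (input arity, output arity) of a stack effect like "(n -- nsquared)"."""
    inputs, outputs = effect.strip("() ").split("--")
    return len(inputs.split()), len(outputs.split())

def check_arity(effect, n_in, n_out):
    """Raise if the declared stack effect disagrees with the inferred arity."""
    declared = stack_effect_arity(effect)
    if declared != (n_in, n_out):
        raise TypeError(
            f"stack effect {effect} declares {declared}, "
            f"but the function has arity {(n_in, n_out)}"
        )

print(stack_effect_arity("(mb f -- mb)"))   # prints (2, 1)
check_arity("(mb f -- mb)", 2, 1)           # passes silently
```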

434 |

435 | ## A puzzle

436 |

437 | Enough about Conc, let's talk about something more abstract. Specifically: concatenative combinators.

438 |

439 | [The Theory of Concatenative Combinators](http://tunes.org/~iepos/joy.html) gives a good introduction to the subject. My set of combinators is a little bit different though:

440 |

441 | ```

442 | A dup ⇒ A A

443 | A B swap ⇒ B A

444 | A B drop ⇒ A

445 |

446 | {f} {g} comp ⇒ {f g}

447 | … {f} apply ⇒ … f

448 | ```

449 |

450 | This surely isn't a minimal set. But it is a very convenient one. Each combinator corresponds to some fundamental concept.

451 |

452 | `dup`, `drop` and `swap` correspond to structural rules. Indeed:

453 |

454 | * The contraction rule allows using a variable twice. And `dup` allows using a value twice.

455 | * The weakening rule allows not using a variable. And `drop` allows not using a value.

456 | * The exchange rule allows using variables in a different order. And `swap` allows using values in a different order.

457 |

458 | `comp` and `apply` are, of course, composition and application.
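
To experiment with these five rules, here is a minimal stack interpreter, sketched in Python. It is an illustration under a few assumptions that are not part of Conc: a program is a Python list, a quotation `{f g}` is written as the tuple `("f", "g")`, and any unknown word is simply pushed as an opaque value.

```python
def run(program, stack=None):
    """Evaluate a sequence of terms against a stack and return the new stack."""
    stack = [] if stack is None else list(stack)
    for term in program:
        if isinstance(term, tuple):        # {f}: a quotation is pushed as a value
            stack.append(term)
        elif term == "dup":                # A dup => A A
            stack.append(stack[-1])
        elif term == "swap":               # A B swap => B A
            stack[-1], stack[-2] = stack[-2], stack[-1]
        elif term == "drop":               # A B drop => A
            stack.pop()
        elif term == "comp":               # {f} {g} comp => {f g}
            g = stack.pop()
            f = stack.pop()
            stack.append(f + g)
        elif term == "apply":              # ... {f} apply => ... f
            quotation = stack.pop()
            stack = run(quotation, stack)
        else:                              # assumption: unknown words are opaque values
            stack.append(term)
    return stack


run(["a", "b", "swap"])                    # ['b', 'a']
run([("f",), ("g",), "comp"])              # [('f', 'g')]
run(["a", ("dup",), "apply"])              # ['a', 'a']
```

Because `apply` recurses into the quotation, a self-applying program such as `{dup apply} dup apply` never terminates in this interpreter.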

459 |

460 | What can you do with this set of combinators? Well, a lot of things. Let's build the famous fixed-point combinator `y`.

461 |

462 | By definition:

463 |

464 | ```

465 | {f} y ⇒ {f} y f ⇒ {f} y f f ⇒ ...

466 | ```

467 |

468 | Let's try `rec = dup apply`:

469 |

470 | ```

471 | {f} rec ⇒ {f} {f} apply ⇒ {f} f

472 | ```

473 |

474 | Not very interesting. But:

475 |

476 | ```

477 | {rec} rec ⇒ {rec} rec ⇒ ...

478 | ```

479 |

480 | It recurses. And if we add some `f`:

481 |

482 | ```

483 | {rec f} rec ⇒ {rec f} rec f ⇒ {rec f} rec f f ⇒ ...

484 | ```

485 |

486 | Here we go. Now we only have to construct `{rec f}` from `{f}`:

487 |

488 | ```

489 | {f} {rec} swap comp ⇒ {rec f}

490 | ```

491 |

492 | So:

493 |

494 | ```

495 | y = {dup apply} swap comp dup apply

496 | ```

497 |

498 | Nice.
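
Since `{f} y` never stops unfolding, it is easier to watch it one rewrite at a time. The sketch below is a small-step rewriter in Python, written only as an illustration: programs are tuples, quotations are nested tuples, `DEFS`, `step`, `unfold` and `show` are hypothetical names, and `f` is an opaque word that is never reduced.

```python
# The definition derived above: y = {dup apply} swap comp dup apply
DEFS = {"y": (("dup", "apply"), "swap", "comp", "dup", "apply")}


def step(prog):
    """Apply one rewrite at the leftmost word; return None if nothing applies."""
    for i, t in enumerate(prog):
        if isinstance(t, tuple):
            continue                                   # quotations are values
        if t in DEFS:                                  # unfold a definition
            return prog[:i] + DEFS[t] + prog[i + 1:]
        if t == "dup" and i >= 1:
            return prog[:i] + (prog[i - 1],) + prog[i + 1:]
        if t == "swap" and i >= 2:
            return prog[:i - 2] + (prog[i - 1], prog[i - 2]) + prog[i + 1:]
        if t == "drop" and i >= 1:
            return prog[:i - 1] + prog[i + 1:]
        if t == "comp" and i >= 2:
            return prog[:i - 2] + (prog[i - 2] + prog[i - 1],) + prog[i + 1:]
        if t == "apply" and i >= 1:
            return prog[:i - 1] + prog[i - 1] + prog[i + 1:]
        return None                                    # stuck on an opaque word
    return None


def unfold(prog, steps):
    """Rewrite at most `steps` times."""
    for _ in range(steps):
        nxt = step(prog)
        if nxt is None:
            break
        prog = nxt
    return prog


def show(prog):
    """Render a program in the brace notation used in this post."""
    return " ".join("{" + show(t) + "}" if isinstance(t, tuple) else t for t in prog)


print(show(unfold((("f",), "y"), 5)))   # {dup apply f} dup apply f
print(show(unfold((("f",), "y"), 7)))   # {dup apply f} dup apply f f
```

With `rec = dup apply` inlined, these two lines are exactly `{rec f} rec f` and `{rec f} rec f f`.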

499 |

500 | But remember that Conc is an algebra of *two* operations. This suggests an additional combinator:

501 |

502 | ```

503 | {f} {g} conc ⇒ {f,g}

504 | ```

505 |

506 | But this immediately leads to a question...

507 |

508 | **Problem #1:** How do you define `,` in an untyped context?

509 |

510 | Suppose you defined it somehow. Now let `f: A B → C` be a function that takes two arguments and returns only one. You can nest it:

511 |

512 | ```

513 | f,f f : A B C D → E

514 | f,f,f,f f,f f : A … H → K

515 | ```

516 |

517 | By analogy, let `g: A → B C`:

518 |

519 | ```

520 | g g,g : A → B C D E

521 | g g,g g,g,g,g → ...

522 | ```
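
The arities in these nested expressions can be checked mechanically. Here is a small Python sketch, given only as an illustration: a function is reduced to a hypothetical `(inputs, outputs)` pair, `par` stands for `,` and `seq` for composition. When `f` produces fewer values than `g` consumes, the missing ones are taken from the outside, as in `f g ≡ idN,f g`. The opposite case, where surplus outputs of `f` pass through untouched, is an assumption of this sketch.

```python
def par(f, g):
    """Arity of f,g: the inputs and the outputs are simply concatenated."""
    return (f[0] + g[0], f[1] + g[1])


def seq(f, g):
    """Arity of the generalized composition f g."""
    (f_in, f_out), (g_in, g_out) = f, g
    missing = max(0, g_in - f_out)   # extra inputs g needs: f g = idN,f g
    surplus = max(0, f_out - g_in)   # assumption: unused outputs of f pass through
    return (f_in + missing, g_out + surplus)


f = (2, 1)   # f : A B -> C
g = (1, 2)   # g : A -> B C

print(seq(par(f, f), f))   # (4, 1), i.e. f,f f : A B C D -> E
print(seq(g, par(g, g)))   # (1, 4), i.e. g g,g : A -> B C D E
```

The same functions give `(3, 1)` for `add mul`, matching `Int Int Int -> Int`.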

523 |

524 | **Problem #2**: define `2y` and `y2` such that

525 |

526 | `{f} 2y ⇒ {f,f} 2y f ⇒ {f,f,f,f} 2y f,f f ⇒ ...`

527 |

528 | and

529 |

530 | `{g} y2 ⇒ g {g,g} y2 ⇒ g g,g {g,g,g,g} y2 ⇒ ...`

531 |

532 | ## Follow up

533 |

534 | In the next post I'll describe and implement the Conc parser.

535 |

--------------------------------------------------------------------------------

/intro.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

13 |

22 |

23 |

24 |

Writing a Concatenative Programming Language: Introduction

25 |

Yes, this is yet another “write you a language”. But this one is a little bit different. First, the language I'm going to implement is rather different from the languages you are used to seeing in such tutorials. Second, I have little experience in creating programming languages, so a lot of things will be new to me as well.

26 |

Philosophy

27 |

What's the point in doing the thing everybody else is doing? What's the point in solving a problem someone else has already solved? Guides show you how to make yet another Haskell or Python, but if you like these languages so much, why don't you just use existing implementations?

28 |

I do not like Python. And I have always looked for something that could make programming less annoying. The language I'd like to use doesn't exist, and probably will never exist. But a more pleasant language, in fact, could.

29 |

But the language will not write itself. Also, nobody will write such a language: most people prefer to do things everybody else does, and anyway nobody cares about things I care about.

30 |

So I build a toy language and hope it will grow into something more useful.

31 |

Freedom from variables

32 |

There's more than one way to do it. There should be a way to do it without using any variables.

33 |

Most programming languages are extremely bad at programming without variables. For example, in C it is usually just impossible. In Haskell you have to use kludges like (.).(.) and end up with unreadable code. In APL and J you end up with character soup.

34 |

But in some languages, programming without variables can result in concise and clean code. Such languages are known as concatenative.

35 |

For example, in Factor you can write something like this:

But concatenative languages are not perfect either. As shown in Why Concatenative Programming Matters, a simple expression f(x, y, z) = x^2 + y^2 - abs(y) becomes

42 |

drop dup dup × swap abs rot3 dup × swap − +

43 |

or

44 |

drop [square] [abs] bi − [square] dip +

45 |

But why can't you just write drop dup (^2) + (^2) - abs instead?

46 |

In Conc, the language I'm going to implement, you can do that. Also, in Conc you can do things you can't do in other languages, like pattern matching without variables. This is achieved with a very simple extension of the concatenative model.

47 |

Motivation

48 |

Now you probably have one big question.

49 |

50 |

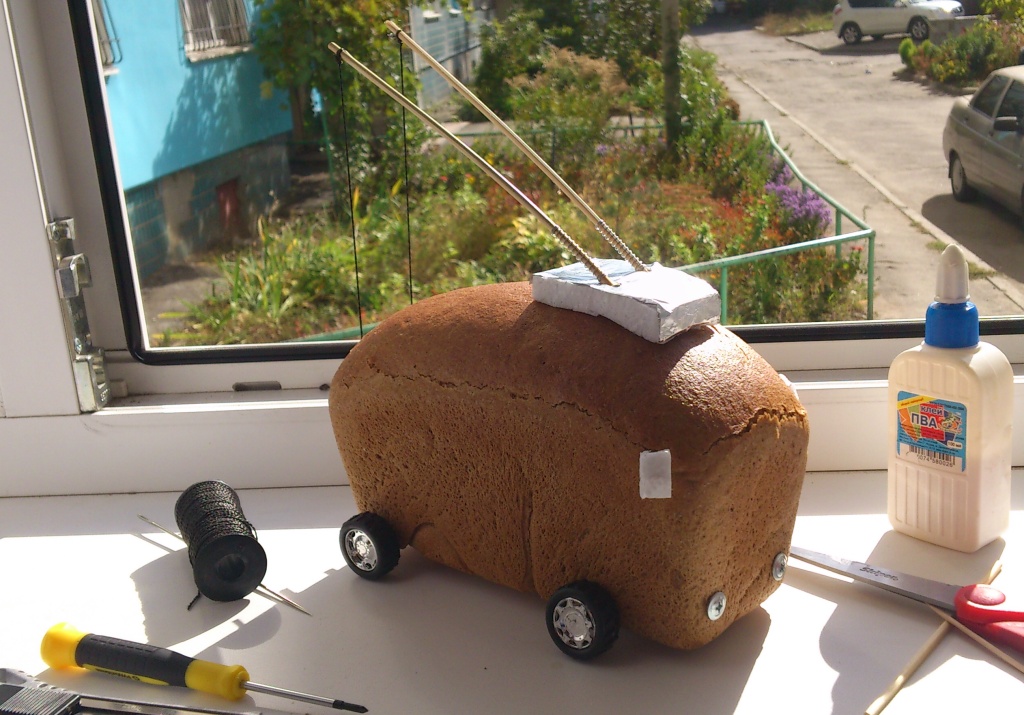

A good question indeed. Well, there are many reasons. I'll show a few of them.

51 |

Suppose you have some kind of programming notebook, like Jupyter Notebook. In there, you write expressions in a frame to get results below.

52 |

So you can type

53 |

1200.0 * (3.0/2.0 log2)

54 |

and get

55 |

701.9550008653874

56 |

(a pure perfect fifth in cents). Suppose you want to see a chain of pure fifths. Then you write

Now you can see a very nice feature of concatenative languages: they are good for interactive coding. There's no need to make unnecessary variables. There's no need to put everything in dozens of parentheses or write code backwards. You just add more code to get new results.

81 |

Everything is an expression. Every expression is a function. The code is concise yet readable. There's no special syntax and the basic syntax is very simple and consistent.

82 |

Also, Conc functions are pure. The programming notebook could execute the code every time you move to a new line. It could memoize results to avoid expensive computations. It could use a hot-swapping technique, like in Elm, allowing you to modify your program while it's running.

83 |

The second reason to create a new language is because the concatenative notation makes some things cleaner. Consider the famous Functor and Monad rules:

84 |

fmap id = id

85 | fmap (p . q) = (fmap p) . (fmap q)

86 |

87 | return a >>= k = k a

88 | m >>= return = m

89 | m >>= (\x -> k x >>= h) = (m >>= k) >>= h

Yet another reason to make a new language is to avoid this:

116 |

117 |

It is much easier if you have , and functions that can return multiple values.

118 |

Yet another reason is the associativity of concatenation. While arrows in Haskell look nice with two outputs, I wonder how they play with three outputs.

119 |

Consider a simple example:

120 |

f : a -> b c

121 | g : a -> b

122 | p : a b -> c

123 | q : a -> b

124 |

You can write:

125 |

f,g

126 | p,q

127 |

But you also can write:

128 |

f,g

129 | q,p

130 |

You can't reproduce this using only pairs, because

131 |

T[T[a, b], c] ≠ T[a, T[b, c]]

132 |

But in Conc ((a, b), c) ≡ (a, (b, c)) ≡ a, b, c.

133 |

The language

134 |

data a Maybe = a Some | None

135 |

136 | map : a Some (a -> b) -> b Some

137 | map (mb f -- mb) = case 2id:

138 | Some,id -> apply Some

139 | None,_ -> None

140 |

141 | fizzbuzz (n -- str) =

142 | -> n

143 | case (n fizz),(n buzz):

144 | True, False -> "Fizz"

145 | False, True -> "Buzz"

146 | True, True -> "FizzBuzz"

147 | _, _ -> n to_string

148 | where

149 | fizz = (3 mod) == 0

150 | buzz = (5 mod) == 0

151 |

152 | main : -> +IO

153 | main = 1 101 range {fizzbuzz println} each

154 |

Ok, I have to explain some things. The first thing you have to know is that Conc is an algebra of two operations on functions. The first operation is generalized composition. It works like ordinary composition in concatenative languages:

155 |

add : Int Int -> Int

156 | mul : Int Int -> Int

157 | add mul : Int Int Int -> Int

158 |

159 | 2 3 5 add mul ⇒ 2 (3 5 add) mul ⇒ 2 8 mul ⇒ 16

160 |

The second operation is parallel concatenation:

161 |

add,mul : Int Int Int Int -> Int Int

162 |

163 | 2 2 3 3 add,mul ⇒ (2 2 add),(3 3 mul) ⇒ 4 9

164 |

Both operations form monoids:

165 |

( ) f ≡ f ( ) ≡ f

166 | ( ),f ≡ f,( ) ≡ f

167 |

168 | (f g) h ≡ f (g h)

169 | (f,g),h ≡ f,(g,h)

170 |

Now notice that if c is a constant (a function that doesn't take any arguments), then composition is equivalent to concatenation:

171 |

f c ≡ f,c

172 |

Also, if the output arity of f is less than the input arity of g,

173 |

f g ≡ idN,f g

174 |

where N is the difference between arities.

175 |

This implies that the generalized composition is probably too general. This leads us to the concept of the canonical concatenative form.

176 |

An expression e1 e2 is in CCF if both e1 and e2 are in CCF and the output arity of e1 equals the input arity of e2.

177 |

Here are our old examples in CCF:

178 |

2,3,5 id,add mul

179 | 2,2,3,3 add,mul

180 |

Now let's define an infix notation:

181 |

f `h` g ⇒ f,g h

182 |

This allows you to write expressions in a usual way:

183 |

2*2 + 3*3 ⇒ (2,2 (*)),(3,3 (*)) (+) ⇒ 4,9 + ⇒ 13

184 |

But it also allows you to write expressions in an unusual way:

Now the meaning of drop dup (^2) + (^2) - abs should be crystal clear to you:

191 |

x y z drop dup (^2) + (^2) - abs ⇒

192 | x,y,y (^2) + (^2) - abs ⇒

193 | ((x,2 (^)),(y,2 (^)) (+)),(y abs) (-) ⇒ ...

194 |

Visually it is more like

195 |

x,y,y (^2) + (^2) - abs ⇒ (x^2) + (y^2) - (y abs)

196 |

Simple and intuitive.

197 |

Concatenative pattern matching

198 |

The operation , is not only about writing nice expressions. It also makes it possible to introduce a concatenative version of pattern matching without variables.

199 |

Consider an expression:

200 |

case foo of

201 | Some a -> ...

202 | None -> ...

203 |

What does Some a -> mean? Well, assuming that foo = Some bar, it defines a = bar and evaluates expression after ->. Some is a function that constructs a value, and pattern matching inverts this function.

204 |

In concatenative world, Some bar becomes bar Some (postfix notation!). If there'd be unSome: a Maybe -> a, you could write

205 |

bar Some unSome ⇒ bar

206 |

But unSome doesn't exist. What if we reproduce the behaviour of unSome in the place where it makes sense?

207 |

case (bar Some):

208 | Some -> ⇒ bar

209 | None -> ...

210 |

That's basically the essence of concatenative pattern matching. Together with id, type constructors and composition form a right-cancellative monoid.

211 |

What does it have to do with ,? Well, consider another example:

212 |

case foo of

213 | Cons(Some(x), xs) -> ...

214 |

How do we express this with concatenative pattern matching? Let's rewrite Cons(Some(x), xs) in concatenative notation:

215 |

(x Some),xs Cons ⇒ x,xs Some,id Cons

216 |

The case statement becomes:

217 |

case foo:

218 | Some,id Cons -> ⇒ x,xs

219 |

So , allows us to do advanced pattern matching without any variables.

220 |

Miscellaneous

221 |

Cross-cutting statements

222 |

Consider a branching statement:

223 |

if foo:

224 | bar

225 | else:

226 | baz

227 |

If foo is a constant, the meaning of this statement is clear. But what if foo is a function?

228 |

In Conc, statements are cross-cutting. That means that the function inside a statement uses values from outside:

229 |

a b if (==):

230 | ...

231 | ⇒

232 | if a == b:

233 | ...

234 |

Stack effect comments

235 |

Concatenative notation has many advantages. But it also has some drawbacks.

236 |

magic = abra cadabra

237 |

What does this function do?

238 |

Raw types are not helpful either:

239 |

mysterious: Int Int -> Int

240 |

Clearly, we need something else.

241 |

In Forth, people use stack effect comments to show what a word does:

242 |

: SQUARED ( n -- nsquared ) DUP * ;

243 |

Conc has a similar thing too:

244 |

map (mb f -- mb) = ...

245 |

But unlike Forth, (mb f -- mb) is more than just a comment. The compiler can use it to check the arity, and error if the arity of the stack effect doesn't match the arity of the function.

246 |

A puzzle

247 |

Enough about Conc, let's talk about something more abstract. Specifically: concatenative combinators.

Writing a Concatenative Programming Language: Type System, Part 1

97 |

98 |

This post should have come after the post about parsing. But there's no post about parsing yet. You may wonder why.

99 |

Well, the reason is simple. I already wrote the parser and started to implement the analysis. But almost immediately I got stuck: I don't quite know how to do the things I want to have in my language, and nobody has done something like this before. And though a lot of things can be thrown away and then implemented later, some things you can't just omit. The type system is such a thing.

100 |

This post is my attempt to solve this problem. I figure out what the type system should look like and try to explain it. Explaining it helps me to understand it. And once I understand it well, I will be able to actually implement it.

101 |

Logic, formal and computational

102 |

Type systems are defined by a set of inference rules. An inference rule is a rule that takes premises and returns a conclusion. An example of such a rule:

103 |

\[\frac{A, A → B}{B}\]

104 |

This is modus ponens, the equivalent of function application in logic. This rule derives “streets are wet” from “it's raining” and “If it's raining, then streets are wet”.

105 |

Inference rules have one significant drawback: they are not directly computable. This is fine when everything is already set in stone, but if things are fluid, you really want to be able to run code to experiment.

106 |

So instead of inference rules we will use Prolog.

107 |

Prolog is a solver of clauses like \(u ← p \land q \land … \land t\), which are the FOL analog of inference rules. When an inference rule looks like this:

108 |

\[\frac{A}{B}\]

109 |

We will write something like this:

110 |

B :- A

111 |

Of course, it's not that simple. Prolog is more a programming language than a theorem solver, so the code inevitably contains workarounds.

112 |

Tier 0: typed concatenative algebra

113 |

We start with typing the concatenative algebra, described in [calg].

Quite straightforward! Note that this code can both check and infer the type of an expression.

151 |

Tier ½: specifying the typechecking

152 |

Instead of relying on Prolog unification, let's detail the process of type checking and type inference.

153 |

We need a way to specify type variables. To do so, we'll use indexes. So \(\mathtt{swap} : α\mathbin{,}β → β\mathbin{,}α\) becomes \(\mathtt{swap} : τ_0\mathbin{,}τ_1 → τ_1\mathbin{,}τ_0\). In Prolog, we write \(τ_n\) as tau(N).

154 |

When we concatenate types, we need to increment the indices:

In f g, first we unify the output type of f with the input type of g. Then we replace the type variables of g with the corresponding type variables of f. We also need to replace some type variables with concrete types.