├── FKD_train.py

├── README.md

├── extensions

├── __init__.py

├── data_parallel.py

├── kd_loss.py

└── teacher_wrapper.py

├── hubconf.py

├── imagenet.py

├── images

├── MEAL-V2_more_tricks_top1.png

├── MEAL-V2_more_tricks_top5.png

└── comparison.png

├── inference.py

├── loss.py

├── models

├── __init__.py

├── blocks.py

├── discriminator.py

└── model_factory.py

├── opts.py

├── script

├── resume_train.sh

└── train.sh

├── test.py

├── train.py

├── utils.py

└── utils_FKD.py

/FKD_train.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | """Script to train a model through soft labels on ImageNet's train set."""

3 |

4 | import argparse

5 | import logging

6 | import pprint

7 | import os

8 | import sys

9 | import time

10 | import math

11 | import numpy as np

12 |

13 | import torch

14 | from torch import nn

15 |

16 | from loss import discriminatorLoss

17 |

18 | import imagenet

19 | from models import model_factory

20 | from models import discriminator

21 | import opts

22 | import test

23 | import utils

24 | from utils_FKD import Recover_soft_label

25 |

26 | def parse_args(argv):

27 | """Parse arguments @argv and return the flags needed for training."""

28 | parser = argparse.ArgumentParser(description=__doc__, allow_abbrev=False)

29 |

30 | group = parser.add_argument_group('General Options')

31 | opts.add_general_flags(group)

32 |

33 | group = parser.add_argument_group('Dataset Options')

34 | opts.add_dataset_flags(group)

35 |

36 | group = parser.add_argument_group('Model Options')

37 | opts.add_model_flags(group)

38 |

39 | group = parser.add_argument_group('Soft Label Options')

40 | opts.add_teacher_flags(group)

41 |

42 | group = parser.add_argument_group('Training Options')

43 | opts.add_training_flags(group)

44 |

45 | group = parser.add_argument_group('CutMix Training Options')

46 | opts.add_cutmix_training_flags(group)

47 |

48 | args = parser.parse_args(argv)

49 |

50 | return args

51 |

52 |

53 | class LearningRateRegime:

54 | """Encapsulates the learning rate regime for training a model.

55 |

56 | Args:

57 | @intervals (list): A list of triples (start, end, lr). The intervals

58 | are inclusive (for start <= epoch <= end, lr will be used). The

59 | start of each interval must be right after the end of its previous

60 | interval.

61 | """

62 |

63 | def __init__(self, regime):

64 | if len(regime) % 3 != 0:

65 | raise ValueError("Regime length should be devisible by 3.")

66 | intervals = list(zip(regime[0::3], regime[1::3], regime[2::3]))

67 | self._validate_intervals(intervals)

68 | self.intervals = intervals

69 | self.num_epochs = intervals[-1][1]

70 |

71 | @classmethod

72 | def _validate_intervals(cls, intervals):

73 | if type(intervals) is not list:

74 | raise TypeError("Intervals must be a list of triples.")

75 | elif len(intervals) == 0:

76 | raise ValueError("Intervals must be a non empty list.")

77 | elif intervals[0][0] != 1:

78 | raise ValueError("Intervals must start from 1: {}".format(intervals))

79 | elif any(end < start for (start, end, lr) in intervals):

80 | raise ValueError("End of intervals must be greater or equal than their"

81 | " start: {}".format(intervals))

82 | elif any(intervals[i][1] + 1 != intervals[i + 1][0]

83 | for i in range(len(intervals) - 1)):

84 | raise ValueError("Start of each each interval must be the end of its "

85 | "previous interval plus one: {}".format(intervals))

86 |

87 | def get_lr(self, epoch):

88 | for (start, end, lr) in self.intervals:

89 | if start <= epoch <= end:

90 | return lr

91 | raise ValueError("Invalid epoch {} for regime {!r}".format(

92 | epoch, self.intervals))

93 |

94 |

95 | def _set_learning_rate(optimizer, lr):

96 | for param_group in optimizer.param_groups:

97 | param_group['lr'] = lr

98 |

99 | def adjust_learning_rate(optimizer, epoch, args):

100 | """Decay the learning rate based on schedule"""

101 | lr = args.lr

102 | if args.cos: # cosine lr schedule

103 | lr *= 0.5 * (1. + math.cos(math.pi * epoch / args.epochs))

104 | else: # stepwise lr schedule

105 | for milestone in args.schedule:

106 | lr *= 0.1 if epoch >= milestone else 1.

107 | for param_group in optimizer.param_groups:

108 | param_group['lr'] = lr

109 |

110 | def _get_learning_rate(optimizer):

111 | return max(param_group['lr'] for param_group in optimizer.param_groups)

112 |

113 |

114 | def train_for_one_epoch(model, g_loss, discriminator_loss, train_loader, optimizer, epoch_number, args):

115 | model.train()

116 | g_loss.train()

117 |

118 | data_time_meter = utils.AverageMeter()

119 | batch_time_meter = utils.AverageMeter()

120 | g_loss_meter = utils.AverageMeter(recent=100)

121 | d_loss_meter = utils.AverageMeter(recent=100)

122 | top1_meter = utils.AverageMeter(recent=100)

123 | top5_meter = utils.AverageMeter(recent=100)

124 |

125 | timestamp = time.time()

126 | for i, (images, labels, soft_labels) in enumerate(train_loader):

127 | batch_size = args.batch_size

128 |

129 | # Record data time

130 | data_time_meter.update(time.time() - timestamp)

131 |

132 | images = torch.cat(images, dim=0)

133 | soft_labels = torch.cat(soft_labels, dim=0)

134 | labels = torch.cat(labels, dim=0)

135 |

136 | if args.soft_label_type == 'ori':

137 | soft_labels = soft_labels.cuda()

138 | else:

139 | soft_labels = Recover_soft_label(soft_labels, args.soft_label_type, args.num_classes)

140 | soft_labels = soft_labels.cuda()

141 |

142 | if utils.is_model_cuda(model):

143 | images = images.cuda()

144 | labels = labels.cuda()

145 |

146 | if args.w_cutmix == True:

147 | r = np.random.rand(1)

148 | if args.beta > 0 and r < args.cutmix_prob:

149 | # generate mixed sample

150 | lam = np.random.beta(args.beta, args.beta)

151 | rand_index = torch.randperm(images.size()[0]).cuda()

152 | target_a = soft_labels

153 | target_b = soft_labels[rand_index]

154 | bbx1, bby1, bbx2, bby2 = utils.rand_bbox(images.size(), lam)

155 | images[:, :, bbx1:bbx2, bby1:bby2] = images[rand_index, :, bbx1:bbx2, bby1:bby2]

156 | lam = 1 - ((bbx2 - bbx1) * (bby2 - bby1) / (images.size()[-1] * images.size()[-2]))

157 |

158 | # Forward pass, backward pass, and update parameters.

159 | output = model(images)

160 | # output, soft_label, soft_no_softmax = outputs

161 | if args.w_cutmix == True:

162 | g_loss_output1 = g_loss((output, target_a), labels)

163 | g_loss_output2 = g_loss((output, target_b), labels)

164 | else:

165 | g_loss_output = g_loss((output, soft_labels), labels)

166 | if args.use_discriminator_loss:

167 | # Our stored label is "after softmax", this is slightly different from original MEAL V2

168 | # that used probibilaties "before softmax" for the discriminator.

169 | output_softmax = nn.functional.softmax(output)

170 | if args.w_cutmix == True:

171 | d_loss_value = discriminator_loss([output_softmax], [target_a]) * lam + discriminator_loss([output_softmax], [target_b]) * (1 - lam)

172 | else:

173 | d_loss_value = discriminator_loss([output_softmax], [soft_labels])

174 |

175 | # Sometimes loss function returns a modified version of the output,

176 | # which must be used to compute the model accuracy.

177 | if args.w_cutmix == True:

178 | if isinstance(g_loss_output1, tuple):

179 | g_loss_value1, output1 = g_loss_output1

180 | g_loss_value2, output2 = g_loss_output2

181 | g_loss_value = g_loss_value1 * lam + g_loss_value2 * (1 - lam)

182 | else:

183 | g_loss_value = g_loss_output1 * lam + g_loss_output2 * (1 - lam)

184 | else:

185 | if isinstance(g_loss_output, tuple):

186 | g_loss_value, output = g_loss_output

187 | else:

188 | g_loss_value = g_loss_output

189 |

190 | if args.use_discriminator_loss:

191 | loss_value = g_loss_value + d_loss_value

192 | else:

193 | loss_value = g_loss_value

194 |

195 | loss_value.backward()

196 |

197 | # Update parameters and reset gradients.

198 | optimizer.step()

199 | optimizer.zero_grad()

200 |

201 | # Record loss and model accuracy.

202 | g_loss_meter.update(g_loss_value.item(), batch_size)

203 | d_loss_meter.update(d_loss_value.item(), batch_size)

204 |

205 | top1, top5 = utils.topk_accuracy(output, labels, recalls=(1, 5))

206 | top1_meter.update(top1, batch_size)

207 | top5_meter.update(top5, batch_size)

208 |

209 | # Record batch time

210 | batch_time_meter.update(time.time() - timestamp)

211 | timestamp = time.time()

212 |

213 | if i%20 == 0:

214 | logging.info(

215 | 'Epoch: [{epoch}][{batch}/{epoch_size}]\t'

216 | 'Time {batch_time.value:.2f} ({batch_time.average:.2f}) '

217 | 'Data {data_time.value:.2f} ({data_time.average:.2f}) '

218 | 'G_Loss {g_loss.value:.3f} {{{g_loss.average:.3f}, {g_loss.average_recent:.3f}}} '

219 | 'D_Loss {d_loss.value:.3f} {{{d_loss.average:.3f}, {d_loss.average_recent:.3f}}} '

220 | 'Top-1 {top1.value:.2f} {{{top1.average:.2f}, {top1.average_recent:.2f}}} '

221 | 'Top-5 {top5.value:.2f} {{{top5.average:.2f}, {top5.average_recent:.2f}}} '

222 | 'LR {lr:.5f}'.format(

223 | epoch=epoch_number, batch=i + 1, epoch_size=len(train_loader),

224 | batch_time=batch_time_meter, data_time=data_time_meter,

225 | g_loss=g_loss_meter, d_loss=d_loss_meter, top1=top1_meter, top5=top5_meter,

226 | lr=_get_learning_rate(optimizer)))

227 | # Log the overall train stats

228 | logging.info(

229 | 'Epoch: [{epoch}] -- TRAINING SUMMARY\t'

230 | 'Time {batch_time.sum:.2f} '

231 | 'Data {data_time.sum:.2f} '

232 | 'G_Loss {g_loss.average:.3f} '

233 | 'D_Loss {d_loss.average:.3f} '

234 | 'Top-1 {top1.average:.2f} '

235 | 'Top-5 {top5.average:.2f} '.format(

236 | epoch=epoch_number, batch_time=batch_time_meter, data_time=data_time_meter,

237 | g_loss=g_loss_meter, d_loss=d_loss_meter, top1=top1_meter, top5=top5_meter))

238 |

239 |

240 | def save_checkpoint(checkpoints_dir, model, optimizer, epoch):

241 | model_state_file = os.path.join(checkpoints_dir, 'model_state_{:02}.pytar'.format(epoch))

242 | optim_state_file = os.path.join(checkpoints_dir, 'optim_state_{:02}.pytar'.format(epoch))

243 | torch.save(model.state_dict(), model_state_file)

244 | torch.save(optimizer.state_dict(), optim_state_file)

245 |

246 |

247 | def create_optimizer(model, discriminator_parameters, momentum=0.9, weight_decay=0):

248 | # Get model parameters that require a gradient.

249 | parameters = [{'params': model.parameters()}, discriminator_parameters]

250 | optimizer = torch.optim.SGD(parameters, lr=0,

251 | momentum=momentum, weight_decay=weight_decay)

252 | return optimizer

253 |

254 | def create_discriminator_criterion(args):

255 | d = discriminator.Discriminator(outputs_size=1000, K=8).cuda()

256 | d = torch.nn.DataParallel(d)

257 | update_parameters = {'params': d.parameters(), "lr": args.d_lr}

258 | discriminators_criterion = discriminatorLoss(d).cuda()

259 | if len(args.gpus) > 1:

260 | discriminators_criterion = torch.nn.DataParallel(discriminators_criterion, device_ids=args.gpus)

261 | return discriminators_criterion, update_parameters

262 |

263 | def main(argv):

264 | """Run the training script with command line arguments @argv."""

265 | args = parse_args(argv)

266 | utils.general_setup(args.save, args.gpus)

267 |

268 | logging.info("Arguments parsed.\n{}".format(pprint.pformat(vars(args))))

269 |

270 | # convert to TRUE number of loading-images since we use multiple crops from the same image within a minbatch

271 | args.batch_size = math.ceil(args.batch_size / args.num_crops)

272 |

273 | # Create the train and the validation data loaders.

274 | train_loader = imagenet.get_train_loader_FKD(args.imagenet, args.batch_size,

275 | args.num_workers, args.image_size, args.num_crops, args.softlabel_path)

276 | val_loader = imagenet.get_val_loader(args.imagenet, args.batch_size,

277 | args.num_workers, args.image_size)

278 | # Create model with optional teachers.

279 | model, loss = model_factory.create_model(

280 | args.model, args.student_state_file, args.gpus, args.teacher_model,

281 | args.teacher_state_file, True)

282 | logging.info("Model:\n{}".format(model))

283 |

284 | discriminator_loss, update_parameters = create_discriminator_criterion(args)

285 |

286 | optimizer = create_optimizer(model, update_parameters, args.momentum, args.weight_decay)

287 |

288 | for epoch in range(args.start_epoch, args.epochs, args.num_crops):

289 | adjust_learning_rate(optimizer, epoch, args)

290 | train_for_one_epoch(model, loss, discriminator_loss, train_loader, optimizer, epoch, args)

291 | test.test_for_one_epoch(model, loss, val_loader, epoch)

292 | save_checkpoint(args.save, model, optimizer, epoch)

293 |

294 |

295 | if __name__ == '__main__':

296 | main(sys.argv[1:])

297 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # MEAL-V2

2 |

3 | This is the official pytorch implementation of our paper:

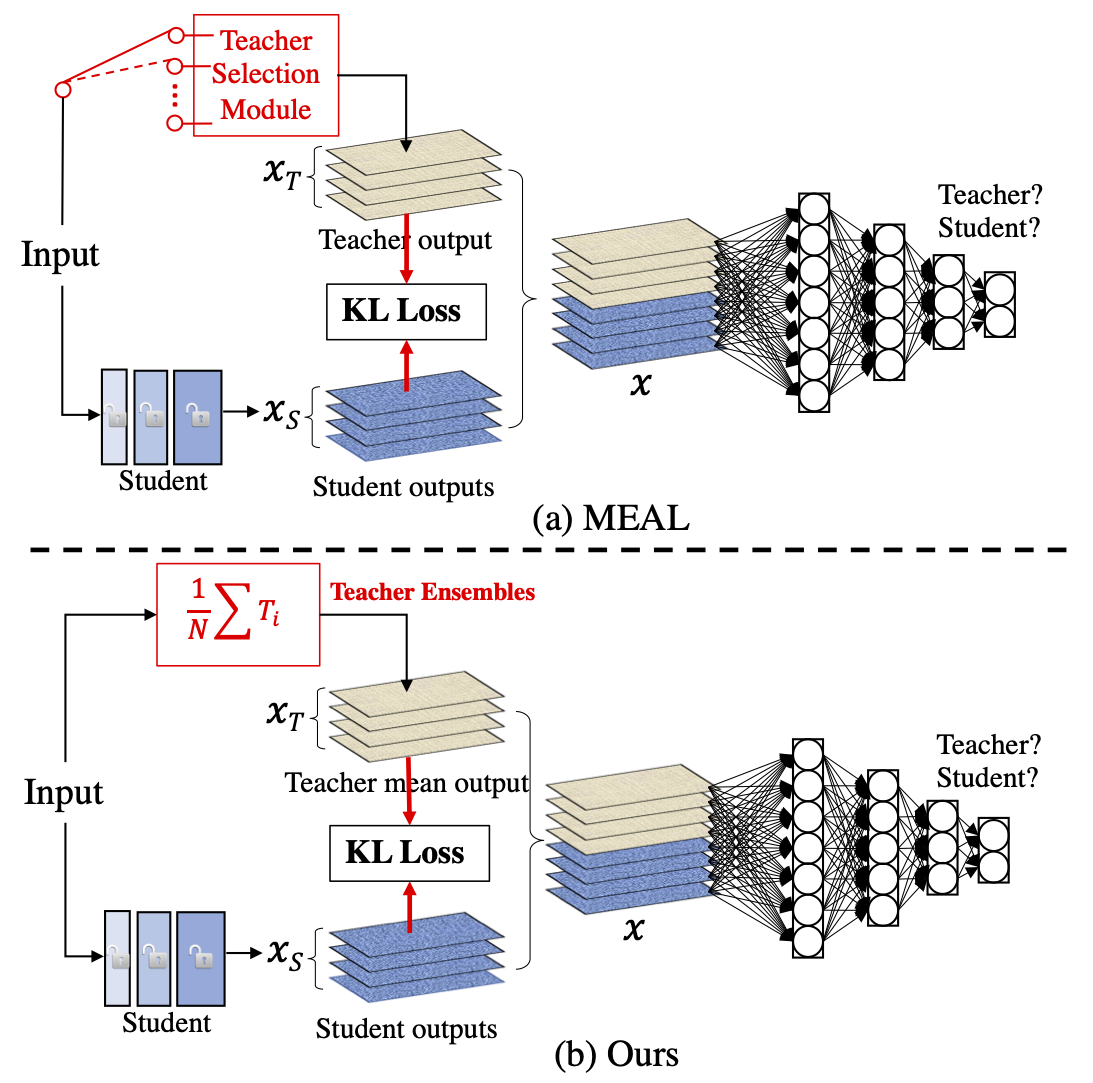

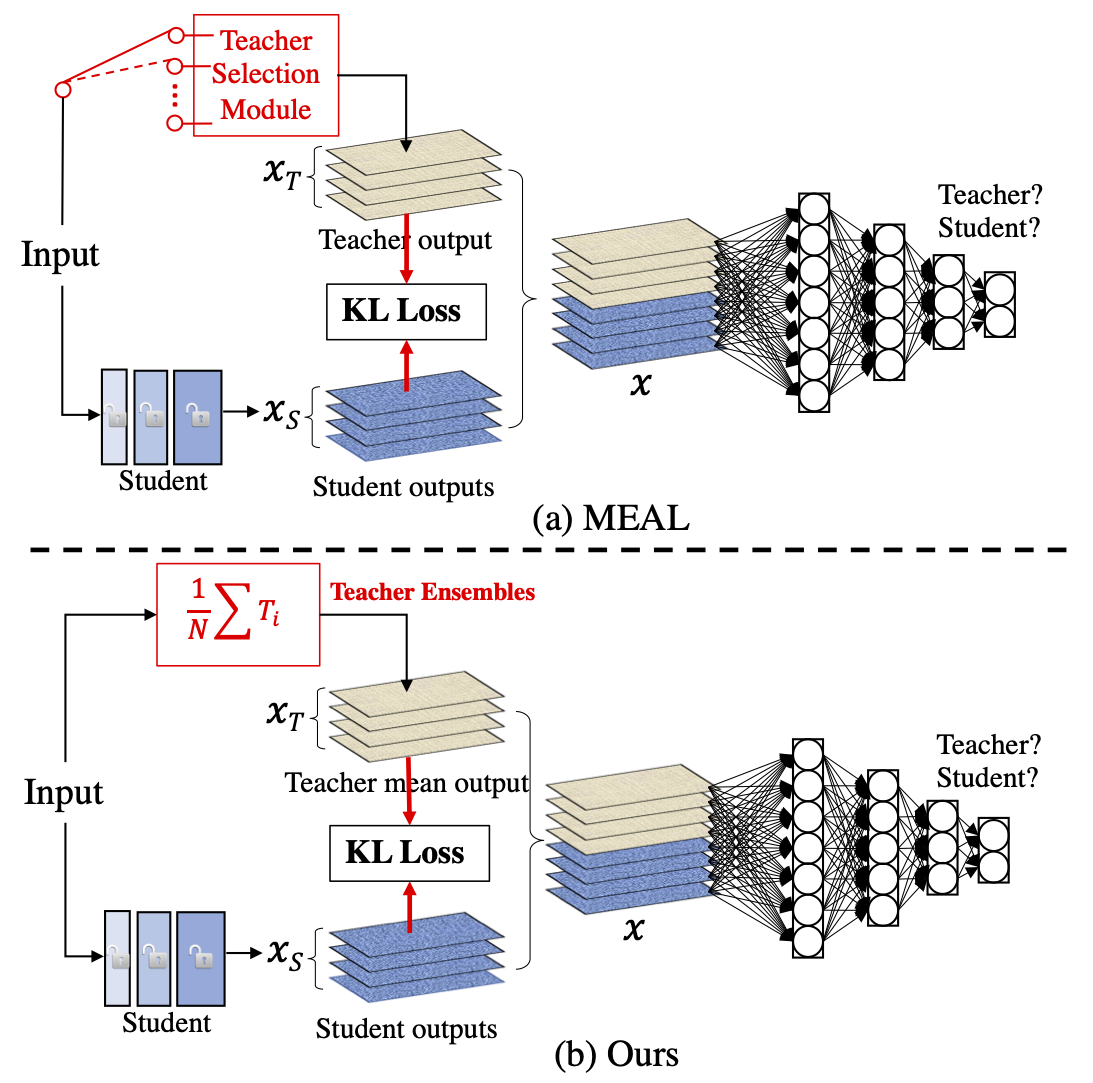

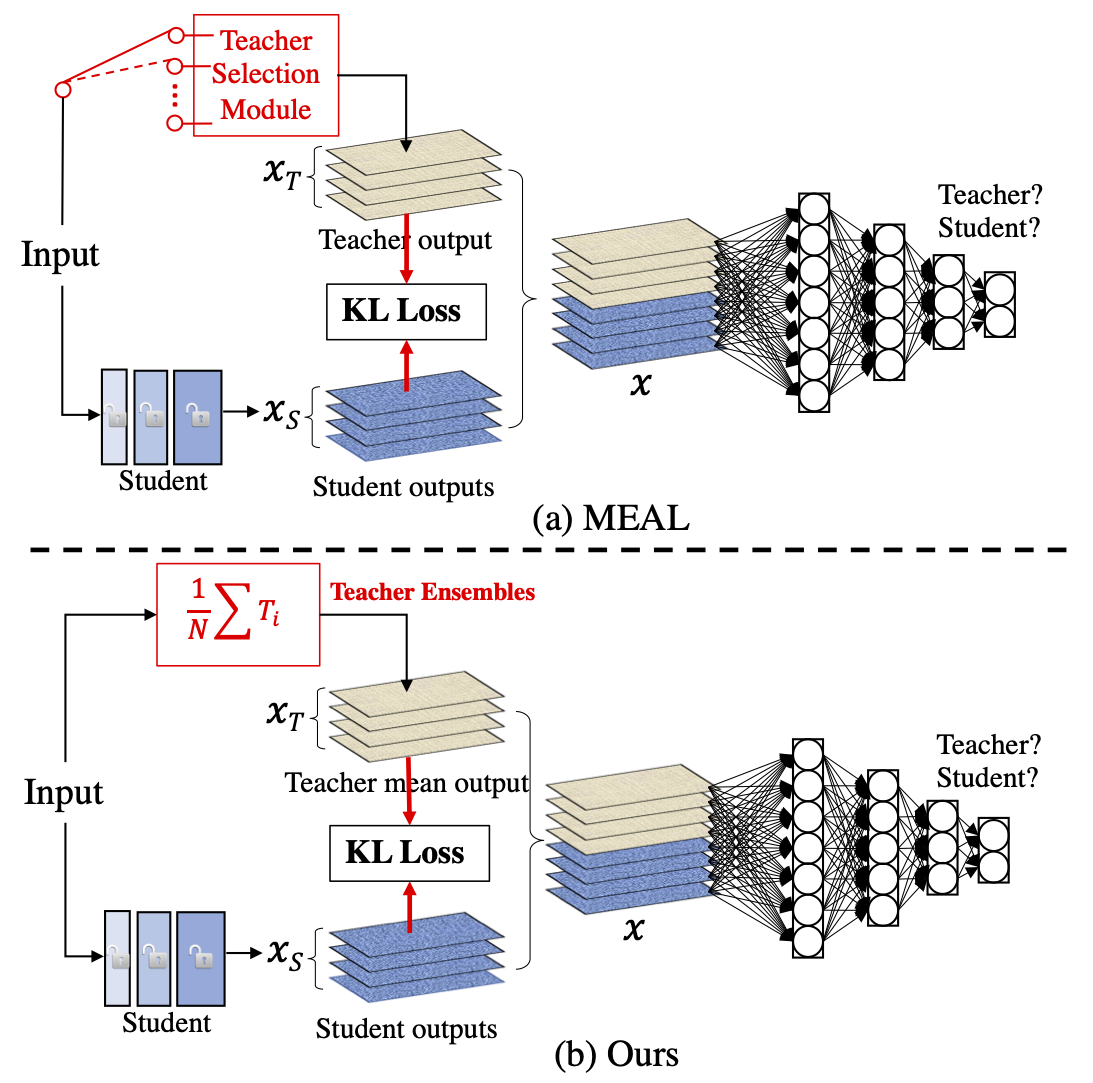

4 | ["MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks"](https://arxiv.org/abs/2009.08453) by

5 | [Zhiqiang Shen](http://zhiqiangshen.com/) and [Marios Savvides](https://www.ece.cmu.edu/directory/bios/savvides-marios.html) from Carnegie Mellon University.

6 |

7 |

8 |

9 |

47 |

48 |

49 |

9 |

9 |  9 |

9 |  48 |

48 |  49 |

49 |