├── Classification_3DSAMPLE.ipynb
├── README.md
├── config.py
├── figure
│   ├── 3D_SAMPLE
│   │   └── cell32
│   │       ├── Normalized_confusion_matrix.svg
│   │       ├── acc_1.svg
│   │       ├── model.png
│   │       ├── model.svg
│   │       ├── model3.png
│   │       ├── test_asp.jpg
│   │       └── train_asp.jpg
│   ├── ModelNet10
│   │   └── cell32
│   │       ├── Normalized_confusion_matrix.svg
│   │       ├── acc.svg
│   │       ├── acc_1.svg
│   │       ├── loss.svg
│   │       ├── model.png
│   │       ├── model.svg
│   │       ├── model2.png
│   │       ├── model3.png
│   │       ├── test_asp.jpg
│   │       ├── test_asp.svg
│   │       ├── train_asp.jpg
│   │       └── train_asp.svg
│   ├── ModelNet40
│   │   └── cell32
│   │       ├── Normalized_confusion_matrix.svg
│   │       ├── acc.svg
│   │       ├── acc_1.svg
│   │       ├── loss.svg
│   │       ├── model.png
│   │       ├── model2.png
│   │       ├── model3.png
│   │       ├── test_asp.jpg
│   │       └── train_asp.jpg
│   ├── fig1.png
│   ├── fig2.png
│   ├── fig3.png
│   └── fig4.png
├── get_modelnet.sh
├── make_npy_file.ipynb
├── poster_takitani2.pdf
├── sample.txt
└── viz.ipynb

/README.md:

--------------------------------------------------------------------------------

1 | # Classification3dmodel

2 |

3 |  4 |

5 |

6 | This repository contains Jupyter notebooks for classifying 3D CAD models with a convolutional neural network (CNN).

7 | The models are built with Keras.

8 |

9 | # Requirements

10 | ```

11 | Keras 2.2.4

12 | tensorboard 1.9.0

13 | tensorflow 1.9.0

14 | tensorflow-gpu 1.10.0

15 | numpy 1.14.5

16 | matplotlib 3.0.0

17 | scikit-learn 0.20.0

18 | tqdm 4.26.0

19 | ```

20 |

21 | # Supported file formats

22 | - OFF

23 | - STL

24 |
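As a rough illustration of the OFF format listed above, the following sketch parses OFF text into vertices and faces using only the standard library. It is not the repository's loader: `parse_off` and `SAMPLE` are hypothetical names, and the sketch assumes a file without comment lines. It accepts both a plain `OFF` header and a header where the counts follow `OFF` on the same line, which is the case the notebook's `load_off` also handles.

```python
def parse_off(text):
    """Parse OFF text into (vertices, faces).

    Assumes no comment lines. Accepts a header of "OFF" followed by a
    counts line, or a fused header such as "OFF4 4 0".
    """
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    header = lines[0]
    if not header.startswith("OFF"):
        raise ValueError("not an OFF file")
    if header == "OFF":
        counts = lines[1].split()
        body = lines[2:]
    else:
        # Counts are fused onto the header line: drop the "OFF" prefix.
        counts = header[3:].split()
        body = lines[1:]
    n_vertices, n_faces = int(counts[0]), int(counts[1])

    # One "x y z" line per vertex.
    vertices = [tuple(map(float, ln.split()[:3])) for ln in body[:n_vertices]]

    # One "k i0 i1 ... i(k-1)" line per face.
    faces = []
    for ln in body[n_vertices:n_vertices + n_faces]:
        fields = ln.split()
        k = int(fields[0])
        faces.append(tuple(int(i) for i in fields[1:1 + k]))
    return vertices, faces


# A tetrahedron written by hand for this example (hypothetical data).
SAMPLE = """OFF
4 4 0
0 0 0
1 0 0
0 1 0
0 0 1
3 0 1 2
3 0 1 3
3 0 2 3
3 1 2 3
"""

vertices, faces = parse_off(SAMPLE)
fused_vertices, fused_faces = parse_off(SAMPLE.replace("OFF\n4 4 0", "OFF4 4 0"))
```

Both calls return the same four vertices and four triangular faces, so downstream code does not need to care which header style a file uses.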

25 | # How to use

26 | - Download the 3D CAD model data

27 | Download ModelNet10 and ModelNet40:

28 | ```

29 | $ git clone https://github.com/tacky0612/classification3dmodel.git

30 | $ cd classification3dmodel

31 | $ bash get_modelnet.sh

32 | ```

33 |

34 | - Convert the 3D CAD models to voxels

35 | Please run "make_npy_file.ipynb" in Jupyter Notebook.

36 | It converts the 3D data to voxels.

37 | The voxels are then saved as NumPy (.npy) files.

38 |
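The conversion step above can be sketched in plain Python. This is a simplified illustration, not the notebook's NumPy implementation: the function names are hypothetical, and the test mesh is made up. It follows the same idea as the notebook: pick faces with probability proportional to their area, draw a uniform point on each picked triangle, then scale the points into the inner part of a `cell`-sized grid so that a one-voxel border stays empty.

```python
import math
import random


def triangle_area(a, b, c):
    """Area of triangle (a, b, c) from the cross product of two edges."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cx = (u[1] * v[2] - u[2] * v[1],
          u[2] * v[0] - u[0] * v[2],
          u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(x * x for x in cx))


def sample_on_triangle(a, b, c, rng=random):
    """Draw one point uniformly from triangle (a, b, c)."""
    r1, r2 = rng.random(), rng.random()
    s = math.sqrt(r1)
    w = (1 - s, s * (1 - r2), s * r2)  # barycentric weights, sum to 1
    return tuple(w[0] * a[i] + w[1] * b[i] + w[2] * c[i] for i in range(3))


def sample_surface(vertices, faces, n, rng=random):
    """Sample n surface points; faces are chosen in proportion to area."""
    areas = [triangle_area(*(vertices[i] for i in f)) for f in faces]
    picks = rng.choices(faces, weights=areas, k=n)
    return [sample_on_triangle(*(vertices[i] for i in f), rng=rng) for f in picks]


def voxelize(points, cell=32):
    """Return a cell x cell x cell nested list of 0/1 occupancy values."""
    mins = [min(p[i] for p in points) for i in range(3)]
    maxs = [max(p[i] for p in points) for i in range(3)]
    longest = max(hi - lo for lo, hi in zip(mins, maxs)) or 1.0
    steps = cell - 4  # 28 intervals when cell = 32
    grid = [[[0] * cell for _ in range(cell)] for _ in range(cell)]
    for p in points:
        idx = []
        for i in range(3):
            centre = (mins[i] + maxs[i]) / 2
            scaled = (p[i] - centre) / longest * steps + steps / 2
            idx.append(int(round(scaled)) + 1)  # +1 keeps the border empty
        x, y, z = idx
        grid[x][y][z] = 1
    return grid


# A unit square in the z = 0 plane, split into two triangles (made-up data).
rng = random.Random(0)
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
faces = [(0, 1, 2), (0, 2, 3)]
points = sample_surface(vertices, faces, 2000, rng=rng)
grid = voxelize(points, cell=32)
occupied = sum(v for plane in grid for row in plane for v in row)
```

Scaling every axis by the longest bounding-box side keeps the aspect ratio of the model, at the cost of leaving part of the grid unused for elongated shapes.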

39 | - Voxel visualization

40 | Please run "viz.ipynb" in Jupyter Notebook.

41 | It visualizes the voxelized 3D models.

42 | ↓ Like this ↓

43 |

46 | - Classify the 3D models

47 | Please run "Classification_3DSAMPLE.ipynb" in Jupyter Notebook.

48 | It classifies the 3D models.

49 |

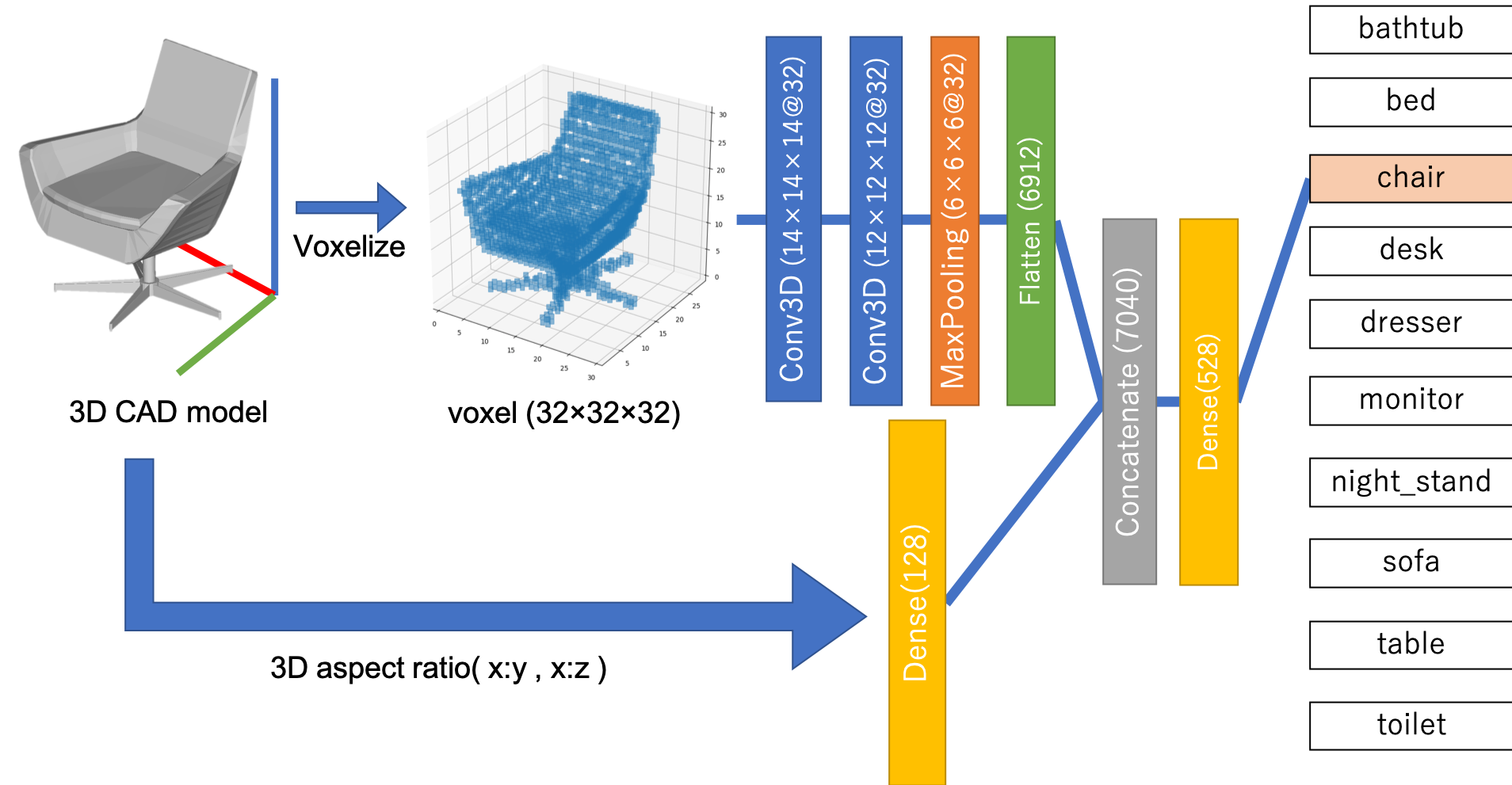

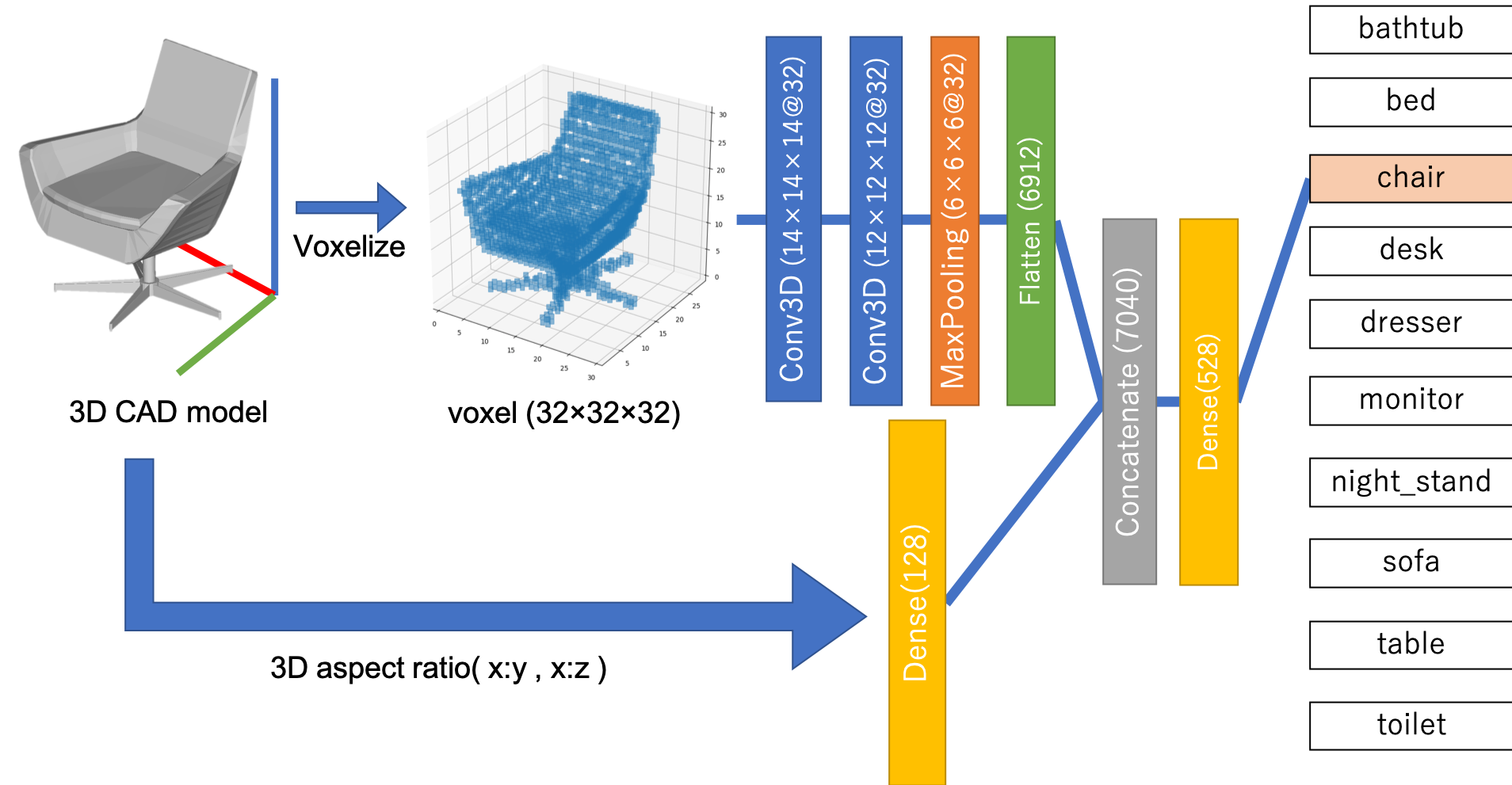

50 | Using this architecture.

51 |  52 |

53 | ↓ Accuracy ↓

54 |

55 | | ModelNet10 | ModelNet40 | My dataset (3D_SAMPLE) |

56 | | :---: | :---: | :---: |

57 | | 90.1% | 86.8% | 97.6% |

58 |
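The evaluation code behind these numbers is not shown in this README. As a hedged sketch of how an overall accuracy and a row-normalized confusion matrix (like the `Normalized_confusion_matrix.svg` figures) are commonly derived from predictions, the following uses only the standard library. The function names and the label lists are hypothetical and are not results from this repository.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Count predictions: rows are true classes, columns are predicted classes."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def normalize_rows(matrix):
    """Divide each row by its sum so every row shows per-class rates."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([c / total if total else 0.0 for c in row])
    return normalized


def accuracy(matrix):
    """Fraction of samples on the diagonal (correctly classified)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


# Made-up labels for three classes, only to exercise the functions.
y_true = [0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 1, 1, 1, 2, 2, 0, 2]

cm = confusion_matrix(y_true, y_pred, num_classes=3)
cm_normalized = normalize_rows(cm)
overall_accuracy = accuracy(cm)
```

Row normalization makes classes with few test samples comparable to classes with many, which matters for datasets with uneven class sizes.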

59 |

60 | # When this does not work

61 | Please check ./config.py.

62 | It contains the lines `DATASET = "ModelNet10"` and `DATASET = "ModelNet40"`.

63 | Uncomment the line for the dataset you want to use and comment out the others.

64 | 3D_SAMPLE is our own dataset. I cannot publish it at this time.

65 |
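To make the effect of the `DATASET` setting concrete, here is a small standalone sketch of how the dataset name and cell size determine the directories used by the notebooks. The values mirror those in `config.py`, but `DATASETS` and `resolve_paths` are hypothetical names introduced for this example and do not exist in the repository.

```python
# Per-dataset settings, copied from the values in config.py.
DATASETS = {
    "ModelNet10": {"data_dir": "ModelNet10/", "num_classes": 10, "extension": ".off"},
    "ModelNet40": {"data_dir": "ModelNet40/", "num_classes": 40, "extension": ".off"},
    "3D_SAMPLE": {"data_dir": "3D_SAMPLE/", "num_classes": 34, "extension": ".stl"},
}


def resolve_paths(dataset, cell=32):
    """Return the settings and output directories for one dataset."""
    if dataset not in DATASETS:
        # The name is case-sensitive: "Modelnet40" is not "ModelNet40".
        raise ValueError("unknown dataset: {!r}".format(dataset))
    settings = dict(DATASETS[dataset])
    suffix = "{}/cell{}/".format(dataset, cell)
    for name in ("np_vox", "figure", "weights", "model", "dist"):
        settings[name + "_dir"] = name + "/" + suffix
    return settings


settings = resolve_paths("ModelNet40")
```

With `DATASET = "ModelNet40"` and `CELL = 32`, the voxel files therefore live under `np_vox/ModelNet40/cell32/`. If that directory is empty, the conversion notebook has not yet been run for this dataset.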

66 | # P.S.

67 | Forgive me for my poor English... XD

68 |

69 | [My twitter](https://twitter.com/tacky0612) ← Please contact me if you run into any trouble.

70 |

--------------------------------------------------------------------------------

/config.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 | import os

3 | import keras

4 | from keras.optimizers import Adam

5 | from keras.callbacks import LearningRateScheduler, ModelCheckpoint , TensorBoard

6 |

7 | # BASE_DIR = os.path.dirname(os.path.abspath(__file__))

8 |

9 | class Config():

10 |

11 |     def make_tensorboard(set_dir_name='TensorBoard'):

12 |         log_dir = set_dir_name

13 |         if not os.path.exists(log_dir):

14 |             os.makedirs(log_dir)

15 |         tensorboard = TensorBoard(log_dir=log_dir, write_graph=True)

16 |         return tensorboard

17 |

18 |     # DATASET = "ModelNet10"

19 |     DATASET = "ModelNet40"

20 |     # DATASET = "3D_SAMPLE"

21 |

22 |

23 |     N_POINTS = 10000

24 |     CELL = 32

25 |     BATCH_SIZE = 128

26 |     EPOCHS = 40

27 |     LEARNIG_RATE = 0.001

28 |

29 |

30 |     OPTIMIZER = Adam(lr=LEARNIG_RATE)

31 |

32 |

33 |     if DATASET == "ModelNet10":

34 |         DATA_DIR = "ModelNet10/"

35 |         NUM_CLASSES = 10

36 |         EXTENSION = ".off"

37 |         CLASS_NAME = [

38 |             'bathtub',

39 |             'chair',

40 |             'dresser',

41 |             'night_stand',

42 |             'table',

43 |             'bed',

44 |             'desk',

45 |             'monitor',

46 |             'sofa',

47 |             'toilet',

48 |         ]

49 |

50 |     if DATASET == "ModelNet40":

51 |         DATA_DIR = "ModelNet40/"

52 |         NUM_CLASSES = 40

53 |         EXTENSION = ".off"

54 |         CLASS_NAME = [

55 |             'airplane',

56 |             'bookshelf',

57 |             'chair',

58 |             'desk',

59 |             'glass_box',

60 |             'laptop',

61 |             'person',

62 |             'range_hood',

63 |             'stool',

64 |             'tv_stand',

65 |             'bathtub',

66 |             'bottle',

67 |             'cone',

68 |             'door',

69 |             'guitar',

70 |             'mantel',

71 |             'piano',

72 |             'sink',

73 |             'table',

74 |             'vase',

75 |             'bed',

76 |             'bowl',

77 |             'cup',

78 |             'dresser',

79 |             'keyboard',

80 |             'monitor',

81 |             'plant',

82 |             'sofa',

83 |             'tent',

84 |             'wardrobe',

85 |             'bench',

86 |             'car',

87 |             'curtain',

88 |             'flower_pot',

89 |             'lamp',

90 |             'night_stand',

91 |             'radio',

92 |             'stairs',

93 |             'toilet',

94 |             'xbox',

95 |         ]

96 |

97 |     if DATASET == "3D_SAMPLE":

98 |         DATA_DIR = "3D_SAMPLE/"

99 |         NUM_CLASSES = 34

100 |         EXTENSION = ".stl"

101 |         CLASS_NAME = [

102 |             'auto_valve1',

103 |             'auto_valve2',

104 |             'auto_valve3',

105 |             'bearing',

106 |             'bevel_gear',

107 |             'block',

108 |             'bracket1',

109 |             'bracket2',

110 |             'bushing',

111 |             'cylinder',

112 |             'etc',

113 |             'frame',

114 |             'gear',

115 |             'handle_valve1',

116 |             'handle_valve2',

117 |             'handle_valve3',

118 |             'hinge1',

119 |             'hinge2',

120 |             'nut',

121 |             'pipe',

122 |             'plate',

123 |             'pully1',

124 |             'pully2',

125 |             'robot1',

126 |             'robot2',

127 |             'robot3',

128 |             'robot4',

129 |             'rod',

130 |             'shaft1',

131 |             'shaft2',

132 |             'spring',

133 |             'trigeminal_valve',

134 |             'valve_connector',

135 |             'washer'

136 |         ]

137 |

138 |

139 |     VOX_DIR = "np_vox/" + DATASET + "/cell" + str(CELL) + "/"

140 |     FIG_DIR = "figure/"+ DATASET + "/cell" + str(CELL) + "/"

141 |     WEIGHTS_DIR = "weights/" + DATASET + "/cell" + str(CELL) + "/"

142 |     MODEL_DIR = "model/"+ DATASET + "/cell" + str(CELL) + "/"

143 |     DIST_DIR = "dist/"+ DATASET + "/cell" + str(CELL) + "/"

144 |

145 |     # early_stopping

146 |     early_stopping = keras.callbacks.EarlyStopping(monitor='val_loss', patience=0, verbose=0, mode='auto')

147 |

148 |     # save best model

149 |     checkpoint = ModelCheckpoint(filepath = os.path.join(WEIGHTS_DIR, "model-{epoch:02d}.h5"), save_best_only=True)

150 |

151 |     # TensorBoard

152 |     CALLBACKS = [make_tensorboard(),checkpoint]

153 |

154 |

155 |

156 |

157 | config = Config()

158 |

159 |

160 |

--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/model.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/3D_SAMPLE/cell32/model.png

--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/model.svg:

--------------------------------------------------------------------------------

1 |

2 |

4 |

6 |

7 |

279 |

--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/model3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/3D_SAMPLE/cell32/model3.png

--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/test_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/3D_SAMPLE/cell32/test_asp.jpg

--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/train_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/3D_SAMPLE/cell32/train_asp.jpg

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/acc.svg:

--------------------------------------------------------------------------------

1 |

2 |

4 |

5 |

1166 |

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/loss.svg:

--------------------------------------------------------------------------------

1 |

2 |

4 |

5 |

1047 |

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/model.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/model.png

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/model.svg:

--------------------------------------------------------------------------------

1 |

2 |

4 |

6 |

7 |

279 |

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/model2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/model2.png

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/model3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/model3.png

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/test_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/test_asp.jpg

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/train_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/train_asp.jpg

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/loss.svg:

--------------------------------------------------------------------------------

1 |

2 |

4 |

5 |

1106 |

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/model.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/model.png

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/model2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/model2.png

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/model3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/model3.png

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/test_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/test_asp.jpg

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/train_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/train_asp.jpg

--------------------------------------------------------------------------------

/figure/fig1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/fig1.png

--------------------------------------------------------------------------------

/figure/fig2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/fig2.png

--------------------------------------------------------------------------------

/figure/fig3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/fig3.png

--------------------------------------------------------------------------------

/figure/fig4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/fig4.png

--------------------------------------------------------------------------------

/get_modelnet.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 |

4 | # download zip files

5 | wget http://3dvision.princeton.edu/projects/2014/3DShapeNets/ModelNet10.zip

6 | wget http://modelnet.cs.princeton.edu/ModelNet40.zip

7 |

8 | # extract zip files

9 | unzip ModelNet10.zip

10 | unzip ModelNet40.zip

11 |

--------------------------------------------------------------------------------

/make_npy_file.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# Head"

8 | ]

9 | },

10 | {

11 | "cell_type": "markdown",

12 | "metadata": {},

13 | "source": [

14 | "## imports"

15 | ]

16 | },

17 | {

18 | "cell_type": "code",

19 | "execution_count": 1,

20 | "metadata": {},

21 | "outputs": [],

22 | "source": [

23 | "# -*- coding: utf-8 -*-"

24 | ]

25 | },

26 | {

27 | "cell_type": "code",

28 | "execution_count": 2,

29 | "metadata": {},

30 | "outputs": [],

31 | "source": [

32 | "\n",

33 | "import os\n",

34 | "import sys\n",

35 | "import numpy as np\n",

36 | "import glob\n",

37 | "import math\n",

38 | "import random\n",

39 | "import shutil\n",

40 | "import keras\n",

41 | "\n",

42 | "from config import config"

43 | ]

44 | },

45 | {

46 | "cell_type": "markdown",

47 | "metadata": {},

48 | "source": [

49 | "## Tuning"

50 | ]

51 | },

52 | {

53 | "cell_type": "code",

54 | "execution_count": 3,

55 | "metadata": {},

56 | "outputs": [],

57 | "source": [

58 | "#Tuning\n",

59 | "dataset = config.DATASET\n",

60 | "n_points = config.N_POINTS\n",

61 | "cell = config.CELL\n",

62 | "num_classes = config.NUM_CLASSES\n",

63 | "extension = config.EXTENSION\n",

64 | "class_name = config.CLASS_NAME"

65 | ]

66 | },

67 | {

68 | "cell_type": "code",

69 | "execution_count": 4,

70 | "metadata": {},

71 | "outputs": [],

72 | "source": [

73 | "train_or_test = [\"train\",\"test\"]"

74 | ]

75 | },

76 | {

77 | "cell_type": "code",

78 | "execution_count": 5,

79 | "metadata": {},

80 | "outputs": [],

81 | "source": [

82 | "# Directories for reading and writing\n",

83 | "data_dir = config.DATA_DIR\n",

84 | "vox_dir = config.VOX_DIR\n",

85 | "fig_dir = config.FIG_DIR\n",

86 | "dist_dir = config.DIST_DIR"

87 | ]

88 | },

89 | {

90 | "cell_type": "code",

91 | "execution_count": 6,

92 | "metadata": {},

93 | "outputs": [],

94 | "source": [

95 | "dir_list = [data_dir , vox_dir , fig_dir , dist_dir]\n",

96 | "for directory in dir_list:\n",

97 | "    if not os.path.exists(directory):\n",

98 | "        os.makedirs(directory)"

99 | ]

100 | },

101 | {

102 | "cell_type": "code",

103 | "execution_count": 7,

104 | "metadata": {},

105 | "outputs": [],

106 | "source": [

107 | "# Create the train and test data directories\n",

108 | "for t in train_or_test:\n",

109 | "    for cl in class_name:\n",

110 | "        if not os.path.exists(data_dir + cl + \"/\" + t + \"/\"):\n",

111 | "            os.makedirs(data_dir + cl + \"/\" + t + \"/\")"

112 | ]

113 | },

114 | {

115 | "cell_type": "markdown",

116 | "metadata": {

117 | "heading_collapsed": true

118 | },

119 | "source": [

120 | "# Function"

121 | ]

122 | },

123 | {

124 | "cell_type": "code",

125 | "execution_count": 8,

126 | "metadata": {

127 | "hidden": true

128 | },

129 | "outputs": [],

130 | "source": [

131 | "def load_off(filename):\n",

132 | "    # read OFF file\n",

133 | "    with open(filename,\"r\") as handle:\n",

134 | "        off = handle.read().rstrip().split(\"\\n\")\n",

135 | "    \n",

136 | "    # Check whether the OFF header is malformed (counts fused onto the first line)\n",

137 | "    if off[0] != \"OFF\":\n",

138 | "#         print(\"{} is broken!!\".format(filename))\n",

139 | "        params = list(off[0].split(\" \"))\n",

140 | "        n_vertices = int(params[0].strip(\"OFF\"))\n",

141 | "        n_faces = int(params[1])\n",

142 | "        off.insert(0, \"OFF\")\n",

143 | "    \n",

144 | "    else:\n",

145 | "        # get the vertex and face counts\n",

146 | "        params = list(map(int, off[1].split(\" \")))\n",

147 | "        n_vertices = params[0]\n",

148 | "        n_faces = params[1]\n",

149 | "\n",

150 | "    # read vertex coordinates\n",

151 | "    vertices = []\n",

152 | "    for n in range(n_vertices):\n",

153 | "        coords = list(map(float, off[2+n].split()))\n",

154 | "        vertices.append(coords)\n",

155 | "\n",

156 | "    # read the vertex indices of each face\n",

157 | "    faces = []\n",

158 | "    for n in range(n_faces):\n",

159 | "        connects = list(map(int, off[2 + n_vertices + n].split(\" \")[1:4]))\n",

160 | "        faces.append(connects)\n",

161 | "\n",

162 | "    return vertices, faces"

163 | ]

164 | },

165 | {

166 | "cell_type": "code",

167 | "execution_count": 9,

168 | "metadata": {

169 | "hidden": true

170 | },

171 | "outputs": [],

172 | "source": [

173 | "def load_stl(filename):\n",

174 | "    # read STL file (ASCII format)\n",

175 | "    with open(filename,\"r\") as handle:\n",

176 | "        stl = handle.read().rstrip().split(\"\\n\")\n",

177 | "    \n",

178 | "    # get vertices (skip blank lines)\n",

179 | "    vertice = []\n",

180 | "    for i in range(len(stl)):\n",

181 | "        pool = stl[i].split()\n",

182 | "        if pool and pool[0] == \"vertex\":\n",

183 | "            vertex = list(map(float, pool[1:]))\n",

184 | "            vertice.append(vertex)\n",

185 | "    \n",

186 | "    seen = []\n",

187 | "    unique_vertice = [x for x in vertice if x not in seen and not seen.append(x)]\n",

188 | "    \n",

189 | "    # get faces\n",

190 | "    faces = []\n",

191 | "    for n in range(len(stl)):\n",

192 | "        if stl[n].split() == ['outer', 'loop']:\n",

193 | "            indexes = []\n",

194 | "            for i in range(3):\n",

195 | "                index = get_index_2d_list(unique_vertice, list(map(float,stl[n+i+1].split()[1:])))\n",

196 | "                indexes.append(index)\n",

197 | "            faces.append(indexes)\n",

198 | "\n",

199 | "    return unique_vertice, faces"

200 | ]

201 | },

202 | {

203 | "cell_type": "code",

204 | "execution_count": 10,

205 | "metadata": {

206 | "hidden": true

207 | },

208 | "outputs": [],

209 | "source": [

210 | "def generate_points(points, face_ids, num_generated_point=1000):\n",

211 | " \n",

212 | " points = np.array(points)\n",

213 | " face_ids = np.array(face_ids)\n",

214 | " \n",

215 | " # compute area\n",

216 | " vec1 = points[face_ids[:,0]] - points[face_ids[:,1]]\n",

217 | " vec2 = points[face_ids[:,1]] - points[face_ids[:,2]]\n",

218 | " cross_product = np.cross(vec1, vec2)\n",

219 | " area = np.sqrt(np.power(cross_product, 2).sum(axis=1))\n",

220 | "\n",

221 | " # cumsum area\n",

222 | " cum_area = np.cumsum(area)\n",

223 | "\n",

224 | " # generate random \n",

225 | " random = np.random.rand(num_generated_point) * cum_area[-1]\n",

226 | "\n",

227 | " # convert random to index\n",

228 | " #random_idx = [bisect.bisect_left(cum_area, x) for x in random]\n",

229 | " random_idx = np.random.choice(np.arange(len(face_ids)), size=num_generated_point, p=area/cum_area[-1])\n",

230 | "\n",

231 | " #import pdb; pdb.set_trace()\n",

232 | "\n",

233 | " # generate points\n",

234 | " r1 = np.tile(np.random.rand(num_generated_point), (3, 1)).T\n",

235 | " r2 = np.tile(np.random.rand(num_generated_point), (3, 1)).T\n",

236 | "\n",

237 | " generated_points = (1 - np.sqrt(r1))*points[face_ids[random_idx, 0]] + np.sqrt(r1)*(1-r2)*points[face_ids[random_idx, 1]] + np.sqrt(r1)*r2*points[face_ids[random_idx, 2]]\n",

238 | "\n",

239 | " return generated_points, random_idx"

240 | ]

241 | },

242 | {

243 | "cell_type": "code",

244 | "execution_count": 11,

245 | "metadata": {

246 | "hidden": true

247 | },

248 | "outputs": [],

249 | "source": [

250 | "def voxilize(np_pc,cell):\n",

251 | "    # Returns the voxelized array\n",

252 | "    max_dist = 0.0\n",

253 | "    for it in range(0,3):\n",

254 | "        # Distance between the maximum and minimum along this axis\n",

255 | "        min_ = np.amin(np_pc[:,it])\n",

256 | "        max_ = np.amax(np_pc[:,it])\n",

257 | "        dist = max_-min_\n",

258 | "\n",

259 | "        # Keep the largest extent among x, y and z\n",

260 | "        if dist > max_dist:\n",

261 | "            max_dist = dist\n",

262 | "    \n",

263 | "    for it in range(0,3):\n",

264 | "\n",

265 | "        # Distance between the maximum and minimum along this axis\n",

266 | "        min_ = np.amin(np_pc[:,it])\n",

267 | "        max_ = np.amax(np_pc[:,it])\n",

268 | "        dist = max_-min_\n",

269 | "        \n",

270 | "        # Move the center to (0,0,0) so the origin sits in the middle\n",

271 | "        np_pc[:,it] = np_pc[:,it] - dist/2 - min_\n",

272 | "\n",

273 | "        # covered cell\n",

274 | "        cls = cell - 3\n",

275 | "\n",

276 | "        # Size of a single voxel\n",

277 | "        vox_sz = max_dist/(cls-1)\n",

278 | "\n",

279 | "        # Divide each point by the voxel size; with cell = 32 the coordinates lie in [-14, 14]\n",

280 | "        np_pc[:,it] = np_pc[:,it]/vox_sz\n",

281 | "\n",

282 | "        # Shift so that every coordinate is non-negative; with cell = 32 they lie in [0, 28]\n",

283 | "        np_pc[:,it] = np_pc[:,it] + (cls-1)/2\n",

284 | "\n",

285 | "\n",

286 | "    # Round to integers\n",

287 | "    np_pc = np.rint(np_pc).astype(np.uint32)\n",

288 | "\n",

289 | "\n",

290 | "    # Build a (cell-2)^3 array (30*30*30 for cell = 32): 1 where a point exists, 0 elsewhere\n",

291 | "    vox = np.zeros([cell-2,cell-2,cell-2])\n",

292 | "\n",

293 | "    # Unpack each row of np_pc into (pc_x, pc_y, pc_z)\n",

294 | "    for (pc_x, pc_y, pc_z) in np_pc:\n",

295 | "\n",

296 | "#         # Even where a point exists, set 0 with 20% probability to add noise and improve generalization\n",

297 | "#         # For noisy data, a value smaller than 80 might work better here\n",

298 | "#         if random.randint(0,100) < 80:\n",

299 | "        vox[pc_x, pc_y, pc_z] = 1\n",

300 | "\n",

301 | "    np_vox = np.zeros([1,cell,cell,cell,1])\n",

302 | "    np_vox[0, 1:-1, 1:-1, 1:-1,0] = vox\n",

303 | "\n",

304 | "    return np_vox"

305 | ]

306 | },

307 | {

308 | "cell_type": "code",

309 | "execution_count": 12,

310 | "metadata": {

311 | "hidden": true

312 | },

313 | "outputs": [],

314 | "source": [

315 | "def voxel_scatter(np_vox):\n",

316 | "    # Collect the coordinates of all occupied voxels\n",

317 | "    # Start with an empty array\n",

318 | "    vox_scat = np.zeros([0,3], dtype= np.uint32)\n",

319 | "\n",

320 | "    # Loop over x (32 iterations when cell = 32)\n",

321 | "    for x in range(0,np_vox.shape[1]):\n",

322 | "        # Loop over y\n",

323 | "        for y in range(0,np_vox.shape[2]):\n",

324 | "            # Loop over z\n",

325 | "            for z in range(0,np_vox.shape[3]):\n",

326 | "                # If (x,y,z) holds 1, record that coordinate\n",

327 | "                if np_vox[0,x,y,z,0] == 1.0:\n",

328 | "                    arr_tmp = np.zeros([1,3],dtype=np.uint32)\n",

329 | "                    arr_tmp[0,:] = (x,y,z)\n",

330 | "                    vox_scat = np.concatenate((vox_scat,arr_tmp))\n",

331 | "    return vox_scat"

332 | ]

333 | },

334 | {

335 | "cell_type": "code",

336 | "execution_count": 13,

337 | "metadata": {

338 | "hidden": true

339 | },

340 | "outputs": [],

341 | "source": [

342 | "def load_vox_stl(filename, n_points = n_points):\n",

343 | " # Returns an array of shape (1, cell, cell, cell, 1)\n",

344 | " vertices, faces = load_stl(filename)\n",

345 | " points,faces = generate_points(vertices, faces, n_points)\n",

346 | " # Workaround for \"ValueError: sequence too large; cannot be greater than 32\"\n",

347 | " # list 2 numpy.ndarray\n",

348 | " pc = np.empty((len(points), len(points[0])))\n",

349 | " pc[:] = points\n",

350 | " vox = voxilize(pc,cell)\n",

351 | "\n",

352 | " return vox"

353 | ]

354 | },

355 | {

356 | "cell_type": "code",

357 | "execution_count": 14,

358 | "metadata": {

359 | "hidden": true

360 | },

361 | "outputs": [],

362 | "source": [

363 | "def load_vox_off(filename, n_points = n_points):\n",

364 | " # Returns an array of shape (1, cell, cell, cell, 1)\n",

365 | " vertices, faces = load_off(filename)\n",

366 | " points,faces = generate_points(vertices, faces, n_points)\n",

367 | " # Workaround for \"ValueError: sequence too large; cannot be greater than 32\"\n",

368 | " # list 2 numpy.ndarray\n",

369 | " pc = np.empty((len(points), len(points[0])))\n",

370 | " pc[:] = points\n",

371 | " vox = voxilize(pc,cell)\n",

372 | "\n",

373 | " return vox"

374 | ]

375 | },

376 | {

377 | "cell_type": "code",

378 | "execution_count": 15,

379 | "metadata": {

380 | "hidden": true

381 | },

382 | "outputs": [],

383 | "source": [

384 | "# Get the extent along the X, Y and Z axes\n",

385 | "def calc_dist_off(filename):\n",

386 | "    vertices, faces = load_off(filename)\n",

387 | "    point = np.array(vertices)\n",

388 | "    dist = np.zeros(3)\n",

389 | "    for i in range(0,3):\n",

390 | "        min_ = np.amin(point[:,i])\n",

391 | "        max_ = np.amax(point[:,i])\n",

392 | "        dist_ = max_ - min_\n",

393 | "        if dist_ < 0.001:\n",

394 | "            dist_ = 0.001\n",

395 | "        dist[i] = dist_\n",

396 | "    return dist"

397 | ]

398 | },

399 | {

400 | "cell_type": "code",

401 | "execution_count": 16,

402 | "metadata": {},

403 | "outputs": [],

404 | "source": [

405 | "# Get the extent along the X, Y and Z axes\n",

406 | "def calc_dist_stl(filename):\n",

407 | "    vertices, faces = load_stl(filename)\n",

408 | "    point = np.array(vertices)\n",

409 | "    dist = np.zeros(3)\n",

410 | "    for i in range(0,3):\n",

411 | "        min_ = np.amin(point[:,i])\n",

412 | "        max_ = np.amax(point[:,i])\n",

413 | "        dist_ = max_ - min_\n",

414 | "        if dist_ < 0.001:\n",

415 | "            dist_ = 0.001\n",

416 | "        dist[i] = dist_\n",

417 | "    return dist"

418 | ]

419 | },

420 | {

421 | "cell_type": "markdown",

422 | "metadata": {},

423 | "source": [

424 | "# Directory operations"

425 | ]

426 | },

427 | {

428 | "cell_type": "code",

429 | "execution_count": null,

430 | "metadata": {},

431 | "outputs": [],

432 | "source": [

433 | "\n",

434 | " "

435 | ]

436 | },

437 | {

438 | "cell_type": "code",

439 | "execution_count": 17,

440 | "metadata": {

441 | "scrolled": true

442 | },

443 | "outputs": [],

444 | "source": [

445 | "\n",

446 | "if dataset == \"3D_SAMPLE\":\n",

447 | "    # Split the files into train and test (every fifth file goes to test)\n",

448 | "    for cl in class_name:\n",

449 | "        namelist = glob.glob(data_dir+ cl +\"/*\")\n",

450 | "        namelist.remove(data_dir+ cl + \"/train\")\n",

451 | "        namelist.remove(data_dir+ cl + \"/test\")\n",

452 | "        for i in range(len(namelist)):\n",

453 | "            if i%5 == 0 :\n",

454 | "                shutil.move(namelist[i], data_dir + cl + \"/test/.\")\n",

455 | "                # print(namelist[i])\n",

456 | "            else:\n",

457 | "                shutil.move(namelist[i], data_dir + cl + \"/train/.\")\n",

458 | "                # print(namelist[i])\n",

459 | "# Collect the file names\n",

460 | "namelist_all = []\n",

461 | "\n",

462 | "for cl in class_name:\n",

463 | "    \n",

464 | "    for t in train_or_test:\n",

465 | "        i = 0\n",

466 | "        # Get the file names\n",

467 | "        namelist_class = glob.glob(data_dir+ cl +\"/\" + t + \"/*\")\n",

468 | "        namelist_class.sort()\n",

469 | "        namelist_all.append(namelist_class)\n",

470 | "\n"

471 | ]

472 | },

473 | {

474 | "cell_type": "markdown",

475 | "metadata": {},

476 | "source": [

477 | "# Saving the voxels as NumPy files"

478 | ]

479 | },

480 | {

481 | "cell_type": "code",

482 | "execution_count": 23,

483 | "metadata": {},

484 | "outputs": [

485 | {

486 | "name": "stdout",

487 | "output_type": "stream",

488 | "text": [

489 | "airplane\n",

490 | "bookshelf\n",

491 | "chair\n",

492 | "desk\n",

493 | "glass_box\n",

494 | "laptop\n",

495 | "person\n",

496 | "range_hood\n",

497 | "stool\n",

498 | "tv_stand\n",

499 | "bathtub\n",

500 | "bottle\n",

501 | "cone\n",

502 | "door\n",

503 | "guitar\n",

504 | "mantel\n",

505 | "piano\n",

506 | "sink\n",

507 | "table\n",

508 | "vase\n",

509 | "bed\n",

510 | "bowl\n",

511 | "cup\n",

512 | "dresser\n",

513 | "keyboard\n",

514 | "monitor\n",

515 | "plant\n",

516 | "sofa\n",

517 | "tent\n",

518 | "wardrobe\n",

519 | "bench\n",

520 | "car\n",

521 | "curtain\n",

522 | "flower_pot\n",

523 | "lamp\n",

524 | "night_stand\n",

525 | "radio\n",

526 | "stairs\n",

527 | "toilet\n",

528 | "xbox\n",

529 | "airplane\n",

530 | "bookshelf\n",

531 | "chair\n",

532 | "desk\n",

533 | "glass_box\n",

534 | "laptop\n",

535 | "person\n",

536 | "range_hood\n",

537 | "stool\n",

538 | "tv_stand\n",

539 | "bathtub\n",

540 | "bottle\n",

541 | "cone\n",

542 | "door\n",

543 | "guitar\n",

544 | "mantel\n",

545 | "piano\n",

546 | "sink\n",

547 | "table\n",

548 | "vase\n",

549 | "bed\n",

550 | "bowl\n",

551 | "cup\n",

552 | "dresser\n",

553 | "keyboard\n",

554 | "monitor\n",

555 | "plant\n",

556 | "sofa\n",

557 | "tent\n",

558 | "wardrobe\n",

559 | "bench\n",

560 | "car\n",

561 | "curtain\n",

562 | "flower_pot\n",

563 | "lamp\n",

564 | "night_stand\n",

565 | "radio\n",

566 | "stairs\n",

567 | "toilet\n",

568 | "xbox\n"

569 | ]

570 | }

571 | ],

572 | "source": [

573 | "off_voxilize = False\n",

574 | "\n",

575 | "for t in train_or_test:\n",

576 | " for cl in class_name:\n",

577 | " print(cl)\n",

578 | " \n",

579 | " #すでにボクセル化が済んていればスキップ\n",

580 | " if os.path.exists(vox_dir + \"x_train.npy\") == True:\n",

581 | "# print(\"{} is exist.\".format(vox_dir + cl + t + \".npy\"))\n",

582 | " off_voxilize = True\n",

583 | " continue\n",

584 | " \n",

585 | " #すでに存在してるnpyファイルはスキップ\n",

586 | " if os.path.exists(vox_dir + cl + t + \".npy\") == True:\n",

587 | " print(\"{} is exist.\".format(data_dir + cl + t + \".npy\"))\n",

588 | " continue\n",

589 | " \n",

590 | " data_3d = glob.glob(data_dir+ cl +\"/\" + t + \"/*\")\n",

591 | " data_3d.sort()\n",

592 | " flag0 = True\n",

593 | " \n",

594 | " for d in data_3d:\n",

595 | " if flag0 :\n",

596 | " #stl,off判定\n",

597 | " if extension == \".off\":\n",

598 | " vox_data = load_vox_off(d)\n",

599 | " elif extension == \".stl\":\n",

600 | " vox_data = load_vox_stl(d)\n",

601 | " flag0 = False\n",

602 | " continue\n",

603 | " if extension == \".off\":\n",

604 | " vox_data_ = load_vox_off(d)\n",

605 | " elif extension == \".stl\":\n",

606 | " vox_data_ = load_vox_stl(d) \n",

607 | " \n",

608 | " vox_data = np.append(vox_data, vox_data_, axis=0)\n",

609 | " np.save(vox_dir + cl + t + \".npy\", vox_data)\n",

610 | "# A voxelized npy file of shape (N, 32, 32, 32, 1) is generated for each class and each train/test split"

611 | ]

612 | },

613 | {

614 | "cell_type": "code",

615 | "execution_count": 19,

616 | "metadata": {},

617 | "outputs": [

618 | {

619 | "data": {

620 | "text/plain": [

621 | "'.off'"

622 | ]

623 | },

624 | "execution_count": 19,

625 | "metadata": {},

626 | "output_type": "execute_result"

627 | }

628 | ],

629 | "source": [

630 | "extension"

631 | ]

632 | },

633 | {

634 | "cell_type": "code",

635 | "execution_count": 22,

636 | "metadata": {},

637 | "outputs": [

638 | {

639 | "data": {

640 | "text/plain": [

641 | "[]"

642 | ]

643 | },

644 | "execution_count": 22,

645 | "metadata": {},

646 | "output_type": "execute_result"

647 | }

648 | ],

649 | "source": [

650 | "data_3d"

651 | ]

652 | },

653 | {

654 | "cell_type": "code",

655 | "execution_count": null,

656 | "metadata": {},

657 | "outputs": [],

658 | "source": []

659 | },

660 | {

661 | "cell_type": "code",

662 | "execution_count": 37,

663 | "metadata": {},

664 | "outputs": [

665 | {

666 | "ename": "FileNotFoundError",

667 | "evalue": "[Errno 2] No such file or directory: 'np_vox/ModelNet40/cell32/airplanetrain.npy'",

668 | "output_type": "error",

669 | "traceback": [

670 | "\u001b[0;31m---------------------------------------------------------------------------\u001b[0m",

671 | "\u001b[0;31mFileNotFoundError\u001b[0m Traceback (most recent call last)",

672 | "\u001b[0;32m\u001b[0m in \u001b[0;36m\u001b[0;34m\u001b[0m\n\u001b[1;32m 8\u001b[0m \u001b[0;32mfor\u001b[0m \u001b[0mcl\u001b[0m \u001b[0;32min\u001b[0m \u001b[0mclass_name\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 9\u001b[0m \u001b[0;32mif\u001b[0m \u001b[0mswich_npy\u001b[0m \u001b[0;34m==\u001b[0m \u001b[0;32mTrue\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m---> 10\u001b[0;31m \u001b[0mnpy\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0mnp\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mload\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mvox_dir\u001b[0m \u001b[0;34m+\u001b[0m \u001b[0mcl\u001b[0m \u001b[0;34m+\u001b[0m \u001b[0mt\u001b[0m \u001b[0;34m+\u001b[0m \u001b[0;34m\".npy\"\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m\u001b[1;32m 11\u001b[0m \u001b[0mnum_of_data\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mappend\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mnpy\u001b[0m\u001b[0;34m.\u001b[0m\u001b[0mshape\u001b[0m\u001b[0;34m[\u001b[0m\u001b[0;36m0\u001b[0m\u001b[0;34m]\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 12\u001b[0m \u001b[0mswich_npy\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0;32mFalse\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n",

673 | "\u001b[0;32m~/anaconda3/lib/python3.6/site-packages/numpy/lib/npyio.py\u001b[0m in \u001b[0;36mload\u001b[0;34m(file, mmap_mode, allow_pickle, fix_imports, encoding)\u001b[0m\n\u001b[1;32m 370\u001b[0m \u001b[0mown_fid\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0;32mFalse\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 371\u001b[0m \u001b[0;32mif\u001b[0m \u001b[0misinstance\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mfile\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0mbasestring\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0;32m--> 372\u001b[0;31m \u001b[0mfid\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0mopen\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mfile\u001b[0m\u001b[0;34m,\u001b[0m \u001b[0;34m\"rb\"\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m\u001b[1;32m 373\u001b[0m \u001b[0mown_fid\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0;32mTrue\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 374\u001b[0m \u001b[0;32melif\u001b[0m \u001b[0mis_pathlib_path\u001b[0m\u001b[0;34m(\u001b[0m\u001b[0mfile\u001b[0m\u001b[0;34m)\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n",

674 | "\u001b[0;31mFileNotFoundError\u001b[0m: [Errno 2] No such file or directory: 'np_vox/ModelNet40/cell32/airplanetrain.npy'"

675 | ]

676 | }

677 | ],

678 | "source": [

679 | "\n",

680 | "# The readability around here is very poor\n",

681 | "if not off_voxilize:\n",

682 | " # Shape the data\n",

683 | " for t in train_or_test:\n",

684 | " swich_npy = True \n",

685 | " num_of_data = []#init\n",

686 | " for cl in class_name:\n",

687 | " if swich_npy == True:\n",

688 | " npy = np.load(vox_dir + cl + t + \".npy\")\n",

689 | " num_of_data.append(npy.shape[0])\n",

690 | " swich_npy = False\n",

691 | " else:\n",

692 | " npy_ = np.load(vox_dir + cl + t + \".npy\")\n",

693 | " npy = np.append(npy,npy_,axis=0)\n",

694 | " num_of_data.append(npy_.shape[0])\n",

695 | " if t == \"train\":\n",

696 | " x_train = npy\n",

697 | " y_train = num_of_data\n",

698 | " if t == \"test\":\n",

699 | " x_test = npy\n",

700 | " y_test = num_of_data\n",

701 | " \n",

702 | " \n",

703 | " # Generate the labels\n",

704 | " for t in train_or_test:\n",

705 | " if t == \"train\":\n",

706 | " for i in range(num_classes):\n",

707 | " if i == 0:\n",

708 | " label = np.full(y_train[i] , i ) \n",

709 | " else:\n",

710 | " label_ = np.full(y_train[i] , i ) \n",

711 | " label = np.append(label, label_, axis=0)\n",

712 | " y_train = label\n",

713 | "\n",

714 | " if t == \"test\":\n",

715 | " for i in range(num_classes):\n",

716 | " if i == 0:\n",

717 | " label = np.full(y_test[i] , i ) \n",

718 | " else:\n",

719 | " label_ = np.full(y_test[i] , i ) \n",

720 | " label = np.append(label, label_, axis=0)\n",

721 | " y_test = label\n",

722 | "\n",

723 | "\n",

724 | "# convert class vectors to binary class matrices\n",

725 | " y_train = keras.utils.to_categorical(y_train, num_classes)\n",

726 | " y_test = keras.utils.to_categorical(y_test, num_classes)\n",

727 | " \n",

728 | " # Save\n",

729 | " npy = [\"x_train.npy\" , \"x_test.npy\" , \"y_train.npy\" , \"y_test.npy\"]\n",

730 | " data = [x_train , x_test , y_train , y_test]\n",

731 | " for i in range(len(npy)):\n",

732 | " np.save(vox_dir + npy[i],data[i])\n",

733 | " \n",

734 | " # Remove files that are no longer needed\n",

735 | " for i in os.listdir(vox_dir):\n",

736 | " if not i in npy:\n",

737 | " os.remove(vox_dir + i)"

738 | ]

739 | },

740 | {

741 | "cell_type": "markdown",

742 | "metadata": {},

743 | "source": [

744 | "# Distance calculation"

745 | ]

746 | },

747 | {

748 | "cell_type": "code",

749 | "execution_count": null,

750 | "metadata": {},

751 | "outputs": [],

752 | "source": [

753 | "dist_calcrated = False\n",

754 | "\n",

755 | "for t in train_or_test:\n",

756 | " for cl in class_name:\n",

757 | " print(cl)\n",

758 | " \n",

759 | " # Skip if the distance calculation has already been completed\n",

760 | " if os.path.exists(dist_dir + \"x_train.npy\") == True:\n",

761 | "# print(\"{} is exist.\".format(vox_dir + cl + t + \".npy\"))\n",

762 | " dist_calcrated = True\n",

763 | " continue\n",

764 | " \n",

765 | " # Skip npy files that already exist\n",

766 | " if os.path.exists(dist_dir + cl + t + \".npy\") == True:\n",

767 | "# print(\"{} is exist.\".format(data_dir + cl + t + \".npy\"))\n",

768 | " continue\n",

769 | " \n",

770 | " data_3d = glob.glob(data_dir+ cl +\"/\" + t + \"/*\")\n",

771 | " data_3d.sort()\n",

772 | " flag0 = True\n",

773 | " for d in data_3d:\n",

774 | " if flag0:\n",

775 | " if extension == \".off\":\n",

776 | " dist = calc_dist_off(d)\n",

777 | " elif extension == \".stl\":\n",

778 | " dist = calc_dist_stl(d)\n",

779 | " dist = [dist]\n",

780 | " flag0 = False\n",

781 | " continue\n",

782 | " if extension == \".off\":\n",

783 | " dist_ = calc_dist_off(d)\n",

784 | " elif extension == \".stl\":\n",

785 | " dist_ = calc_dist_stl(d)\n",

786 | " \n",

787 | " dist = np.append(dist, [dist_], axis=0)\n",

788 | "# print(dist.shape)\n",

789 | " np.save(dist_dir + cl + t + \".npy\", dist)"

790 | ]

791 | },

792 | {

793 | "cell_type": "code",

794 | "execution_count": 19,

795 | "metadata": {},

796 | "outputs": [

797 | {

798 | "ename": "NameError",

799 | "evalue": "name 'dist_calcrated' is not defined",

800 | "output_type": "error",

801 | "traceback": [

802 | "\u001b[0;31m---------------------------------------------------------------------------\u001b[0m",

803 | "\u001b[0;31mNameError\u001b[0m Traceback (most recent call last)",

804 | "\u001b[0;32m\u001b[0m in \u001b[0;36m\u001b[0;34m\u001b[0m\n\u001b[0;32m----> 1\u001b[0;31m \u001b[0;32mif\u001b[0m \u001b[0;32mnot\u001b[0m \u001b[0mdist_calcrated\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[0m\u001b[1;32m 2\u001b[0m \u001b[0;31m# データ整形\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 3\u001b[0m \u001b[0;32mfor\u001b[0m \u001b[0mt\u001b[0m \u001b[0;32min\u001b[0m \u001b[0mtrain_or_test\u001b[0m\u001b[0;34m:\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 4\u001b[0m \u001b[0mswich_npy\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0;32mTrue\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n\u001b[1;32m 5\u001b[0m \u001b[0mnum_of_data\u001b[0m \u001b[0;34m=\u001b[0m \u001b[0;34m[\u001b[0m\u001b[0;34m]\u001b[0m\u001b[0;31m#init\u001b[0m\u001b[0;34m\u001b[0m\u001b[0;34m\u001b[0m\u001b[0m\n",

805 | "\u001b[0;31mNameError\u001b[0m: name 'dist_calcrated' is not defined"

806 | ]

807 | }

808 | ],

809 | "source": [

810 | "if not dist_calcrated:\n",

811 | " # Shape the data\n",

812 | " for t in train_or_test:\n",

813 | " swich_npy = True \n",

814 | " num_of_data = []#init\n",

815 | " for cl in class_name:\n",

816 | " if swich_npy == True:\n",

817 | " npy = np.load(dist_dir + cl + t + \".npy\")\n",

818 | " num_of_data.append(npy.shape[0])\n",

819 | " swich_npy = False\n",

820 | " else:\n",

821 | " npy_ = np.load(dist_dir + cl + t + \".npy\")\n",

822 | " npy = np.append(npy,npy_,axis=0)\n",

823 | " num_of_data.append(npy_.shape[0])\n",

824 | " if t == \"train\":\n",

825 | " x_train = npy\n",

826 | " y_train = num_of_data\n",

827 | " if t == \"test\":\n",

828 | " x_test = npy\n",

829 | " y_test = num_of_data\n",

830 | " \n",

831 | " \n",

832 | " # Generate the labels\n",

833 | " for t in train_or_test:\n",

834 | " if t == \"train\":\n",

835 | " for i in range(num_classes):\n",

836 | " if i == 0:\n",

837 | " label = np.full(y_train[i] , i ) \n",

838 | " else:\n",

839 | " label_ = np.full(y_train[i] , i ) \n",

840 | " label = np.append(label, label_, axis=0)\n",

841 | " y_train = label\n",

842 | "\n",

843 | " if t == \"test\":\n",

844 | " for i in range(num_classes):\n",

845 | " if i == 0:\n",

846 | " label = np.full(y_test[i] , i ) \n",

847 | " else:\n",

848 | " label_ = np.full(y_test[i] , i ) \n",

849 | " label = np.append(label, label_, axis=0)\n",

850 | " y_test = label\n",

851 | "\n",

852 | "\n",

853 | "# convert class vectors to binary class matrices\n",

854 | " y_train = keras.utils.to_categorical(y_train, num_classes)\n",

855 | " y_test = keras.utils.to_categorical(y_test, num_classes)\n",

856 | " \n",

857 | " # Save\n",

858 | " npy = [\"x_train.npy\" , \"x_test.npy\" , \"y_train.npy\" , \"y_test.npy\"]\n",

859 | " data = [x_train , x_test , y_train , y_test]\n",

860 | " for i in range(len(npy)):\n",

861 | " np.save(dist_dir + npy[i],data[i])\n",

862 | " \n",

863 | " # Remove files that are no longer needed\n",

864 | "# for i in os.listdir(dist_dir):\n",

865 | "# if not i in npy:\n",

866 | "# os.remove(dist_dir + i)"

867 | ]

868 | },

869 | {

870 | "cell_type": "code",

871 | "execution_count": null,

872 | "metadata": {},

873 | "outputs": [],

874 | "source": []

875 | },

876 | {

877 | "cell_type": "code",

878 | "execution_count": null,

879 | "metadata": {},

880 | "outputs": [],

881 | "source": []

882 | },

883 | {

884 | "cell_type": "code",

885 | "execution_count": null,

886 | "metadata": {},

887 | "outputs": [],

888 | "source": []

889 | },

890 | {

891 | "cell_type": "code",

892 | "execution_count": null,

893 | "metadata": {},

894 | "outputs": [],

895 | "source": []

896 | },

897 | {

898 | "cell_type": "code",

899 | "execution_count": null,

900 | "metadata": {},

901 | "outputs": [],

902 | "source": []

903 | },

904 | {

905 | "cell_type": "code",

906 | "execution_count": null,

907 | "metadata": {},

908 | "outputs": [],

909 | "source": []

910 | },

911 | {

912 | "cell_type": "code",

913 | "execution_count": null,

914 | "metadata": {},

915 | "outputs": [],

916 | "source": []

917 | },

918 | {

919 | "cell_type": "code",

920 | "execution_count": null,

921 | "metadata": {},

922 | "outputs": [],

923 | "source": []

924 | },

925 | {

926 | "cell_type": "code",

927 | "execution_count": null,

928 | "metadata": {},

929 | "outputs": [],

930 | "source": []

931 | },

932 | {

933 | "cell_type": "code",

934 | "execution_count": null,

935 | "metadata": {},

936 | "outputs": [],

937 | "source": []

938 | },

939 | {

940 | "cell_type": "code",

941 | "execution_count": null,

942 | "metadata": {},

943 | "outputs": [],

944 | "source": []

945 | },

946 | {

947 | "cell_type": "code",

948 | "execution_count": null,

949 | "metadata": {},

950 | "outputs": [],

951 | "source": []

952 | },

953 | {

954 | "cell_type": "code",

955 | "execution_count": null,

956 | "metadata": {},

957 | "outputs": [],

958 | "source": []

959 | }

960 | ],

961 | "metadata": {

962 | "kernelspec": {

963 | "display_name": "Python 3",

964 | "language": "python",

965 | "name": "python3"

966 | },

967 | "language_info": {

968 | "codemirror_mode": {

969 | "name": "ipython",

970 | "version": 3

971 | },

972 | "file_extension": ".py",

973 | "mimetype": "text/x-python",

974 | "name": "python",

975 | "nbconvert_exporter": "python",

976 | "pygments_lexer": "ipython3",

977 | "version": "3.6.8"

978 | },

979 | "toc": {

980 | "base_numbering": 1,

981 | "nav_menu": {},

982 | "number_sections": true,

983 | "sideBar": true,

984 | "skip_h1_title": false,

985 | "title_cell": "Table of Contents",

986 | "title_sidebar": "Contents",

987 | "toc_cell": false,

988 | "toc_position": {},

989 | "toc_section_display": true,

990 | "toc_window_display": false

991 | }

992 | },

993 | "nbformat": 4,

994 | "nbformat_minor": 2

995 | }

996 |

--------------------------------------------------------------------------------

/poster_takitani2.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/poster_takitani2.pdf

--------------------------------------------------------------------------------

/sample.txt:

--------------------------------------------------------------------------------

1 | hoge.

2 |

--------------------------------------------------------------------------------

52 |

53 | ↓ Accuracy ↓

54 |

55 | | ModelNet10 | ModelNet40 | My dataset(3D_SAMPLE) |

56 | | :---: | :---: | :---: |

57 | | 90.1% | 86.8% | 97.6% |

58 |

59 |

60 | # When this does not work

61 | Please check ./config.py

62 | 'DATASET = "ModelNet10"' or 'DATASET = "ModelNet40"'

63 | Uncomment the line for the dataset you want to use and comment out the others.

64 | 3D_SAMPLE is our own dataset. I cannot publish it at this time.

65 |
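The check above can be sketched in Python. This is only a rough sketch, not code from this repository: `expected_dirs` and `missing_dirs` are hypothetical helper names, and the paths assume the same string concatenation that config.py uses for `DATA_DIR`, `VOX_DIR`, `FIG_DIR`, `WEIGHTS_DIR`, `MODEL_DIR` and `DIST_DIR` with `CELL = 32`.

```python
import os


# Hypothetical helper, not part of this repository. It mirrors the string
# concatenation config.py uses to derive its directories from DATASET and CELL.
def expected_dirs(dataset, cell=32):
    suffix = dataset + "/cell" + str(cell) + "/"
    return {
        "DATA_DIR": dataset + "/",
        "VOX_DIR": "np_vox/" + suffix,
        "FIG_DIR": "figure/" + suffix,
        "WEIGHTS_DIR": "weights/" + suffix,
        "MODEL_DIR": "model/" + suffix,
        "DIST_DIR": "dist/" + suffix,
    }


# Hypothetical helper: list the expected directories that do not exist yet.
def missing_dirs(dataset, cell=32, root="."):
    return [
        name
        for name, path in expected_dirs(dataset, cell).items()
        if not os.path.isdir(os.path.join(root, path))
    ]
```

For example, `missing_dirs("ModelNet40")` run from the repository root lists which of these directories are absent. If `DATA_DIR` is reported as missing, the dataset has probably not been downloaded yet with get_modelnet.sh.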

66 | # P.S.

67 | Forgive me for my poor English... XD

68 |

69 | [My twitter](https://twitter.com/tacky0612) ← Please contact me if you have any trouble.

70 |

--------------------------------------------------------------------------------

/config.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 | import os

3 | import keras

4 | from keras.optimizers import Adam

5 | from keras.callbacks import LearningRateScheduler, ModelCheckpoint , TensorBoard

6 |

7 | # BASE_DIR = os.path.dirname(os.path.abspath(__file__))

8 |

9 | class Config():

10 |

11 | def make_tensorboard(set_dir_name='TensorBoard'):

12 | log_dir = set_dir_name

13 | if os.path.exists(log_dir) == False:

14 | os.makedirs(log_dir)

15 | tensorboard = TensorBoard(log_dir=log_dir, write_graph=True, )

16 | return tensorboard

17 |

18 | # DATASET = "ModelNet10"

19 | DATASET = "ModelNet40"

20 | # DATASET = "3D_SAMPLE"

21 |

22 |

23 | N_POINTS = 10000

24 | CELL = 32

25 | BATCH_SIZE = 128

26 | EPOCHS = 40

27 | LEARNIG_RATE = 0.001

28 |

29 |

30 | OPTIMIZER = Adam(lr=LEARNIG_RATE)

31 |

32 |

33 | if DATASET == "ModelNet10":

34 | DATA_DIR = "ModelNet10/"

35 | NUM_CLASSES = 10

36 | EXTENSION = ".off"

37 | CLASS_NAME = [

38 | 'bathtub',

39 | 'chair',

40 | 'dresser',

41 | 'night_stand',

42 | 'table',

43 | 'bed',

44 | 'desk',

45 | 'monitor',

46 | 'sofa',

47 | 'toilet',

48 | ]

49 |

50 | if DATASET == "ModelNet40":

51 | DATA_DIR = "ModelNet40/"

52 | NUM_CLASSES = 40

53 | EXTENSION = ".off"

54 | CLASS_NAME = [

55 | 'airplane',

56 | 'bookshelf',

57 | 'chair',

58 | 'desk',

59 | 'glass_box',

60 | 'laptop',

61 | 'person',

62 | 'range_hood',

63 | 'stool',

64 | 'tv_stand',

65 | 'bathtub',

66 | 'bottle',

67 | 'cone',

68 | 'door',

69 | 'guitar',

70 | 'mantel',

71 | 'piano',

72 | 'sink',

73 | 'table',

74 | 'vase',

75 | 'bed',

76 | 'bowl',

77 | 'cup',

78 | 'dresser',

79 | 'keyboard',

80 | 'monitor',

81 | 'plant',

82 | 'sofa',

83 | 'tent',

84 | 'wardrobe',

85 | 'bench',

86 | 'car',

87 | 'curtain',

88 | 'flower_pot',

89 | 'lamp',

90 | 'night_stand',

91 | 'radio',

92 | 'stairs',

93 | 'toilet',

94 | 'xbox',

95 | ]

96 |

97 | if DATASET == "3D_SAMPLE":

98 | DATA_DIR = "3D_SAMPLE/"

99 | NUM_CLASSES = 34

100 | EXTENSION = ".stl"

101 | CLASS_NAME = [

102 | 'auto_valve1',

103 | 'auto_valve2',

104 | 'auto_valve3',

105 | 'bearing',

106 | 'bevel_gear',

107 | 'block',

108 | 'bracket1',

109 | 'bracket2',

110 | 'bushing',

111 | 'cylinder',

112 | 'etc',

113 | 'frame',

114 | 'gear',

115 | 'handle_valve1',

116 | 'handle_valve2',

117 | 'handle_valve3',

118 | 'hinge1',

119 | 'hinge2',

120 | 'nut',

121 | 'pipe',

122 | 'plate',

123 | 'pully1',

124 | 'pully2',

125 | 'robot1',

126 | 'robot2',

127 | 'robot3',

128 | 'robot4',

129 | 'rod',

130 | 'shaft1',

131 | 'shaft2',

132 | 'spring',

133 | 'trigeminal_valve',

134 | 'valve_connector',

135 | 'washer'

136 | ]

137 |

138 |

139 | VOX_DIR = "np_vox/" + DATASET + "/cell" + str(CELL) + "/"

140 | FIG_DIR = "figure/"+ DATASET + "/cell" + str(CELL) + "/"

141 | WEIGHTS_DIR = "weights/" + DATASET + "/cell" + str(CELL) + "/"

142 | MODEL_DIR = "model/"+ DATASET + "/cell" + str(CELL) + "/"

143 | DIST_DIR = "dist/"+ DATASET + "/cell" + str(CELL) + "/"

144 |

145 | # early_stopping

146 | early_stopping = keras.callbacks.EarlyStopping(monitor='val_loss', patience=0, verbose=0, mode='auto')

147 |

148 | #save best model

149 | checkpoint = ModelCheckpoint(filepath = os.path.join(WEIGHTS_DIR, "model-{epoch:02d}.h5"), save_best_only=True)

150 |

151 | # TensorBoard

152 | CALLBACKS = [make_tensorboard(),checkpoint]

153 |

154 |

155 |

156 |

157 | config = Config()

158 |

159 |

160 |

--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/model.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/3D_SAMPLE/cell32/model.png

--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/model.svg:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/model3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/3D_SAMPLE/cell32/model3.png

--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/test_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/3D_SAMPLE/cell32/test_asp.jpg

--------------------------------------------------------------------------------

/figure/3D_SAMPLE/cell32/train_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/3D_SAMPLE/cell32/train_asp.jpg

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/acc.svg:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/loss.svg:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/model.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/model.png

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/model.svg:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/model2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/model2.png

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/model3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/model3.png

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/test_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/test_asp.jpg

--------------------------------------------------------------------------------

/figure/ModelNet10/cell32/train_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet10/cell32/train_asp.jpg

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/loss.svg:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/model.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/model.png

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/model2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/model2.png

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/model3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/model3.png

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/test_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/test_asp.jpg

--------------------------------------------------------------------------------

/figure/ModelNet40/cell32/train_asp.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/ModelNet40/cell32/train_asp.jpg

--------------------------------------------------------------------------------

/figure/fig1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/fig1.png

--------------------------------------------------------------------------------

/figure/fig2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/fig2.png

--------------------------------------------------------------------------------

/figure/fig3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/fig3.png

--------------------------------------------------------------------------------

/figure/fig4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tacky0612/classification3dmodel/4e4e4c37d305703a4afdd445a24ac11a81dd9f6c/figure/fig4.png

--------------------------------------------------------------------------------

/get_modelnet.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 |

4 | # download zip files

5 | wget http://3dvision.princeton.edu/projects/2014/3DShapeNets/ModelNet10.zip

6 | wget http://modelnet.cs.princeton.edu/ModelNet40.zip

7 |

8 | # extract zip files

9 | unzip ModelNet10.zip

10 | unzip ModelNet40.zip

11 |

--------------------------------------------------------------------------------

/make_npy_file.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# Head"

8 | ]

9 | },

10 | {

11 | "cell_type": "markdown",

12 | "metadata": {},

13 | "source": [

14 | "## imports"

15 | ]

16 | },

17 | {

18 | "cell_type": "code",

19 | "execution_count": 1,

20 | "metadata": {},

21 | "outputs": [],

22 | "source": [

23 | "# -*- coding: utf-8 -*-"

24 | ]

25 | },

26 | {

27 | "cell_type": "code",

28 | "execution_count": 2,

29 | "metadata": {},

30 | "outputs": [],

31 | "source": [

32 | "\n",

33 | "import os\n",

34 | "import sys\n",

35 | "import numpy as np\n",

36 | "import glob\n",

37 | "import math\n",

38 | "import random\n",

39 | "import shutil\n",

40 | "import keras\n",

41 | "\n",

42 | "from config import config"

43 | ]

44 | },

45 | {

46 | "cell_type": "markdown",

47 | "metadata": {},

48 | "source": [

49 | "## Tuning"

50 | ]

51 | },

52 | {

53 | "cell_type": "code",

54 | "execution_count": 3,

55 | "metadata": {},

56 | "outputs": [],

57 | "source": [

58 | "#Tuning\n",

59 | "dataset = config.DATASET\n",

60 | "n_points = config.N_POINTS\n",

61 | "cell = config.CELL\n",

62 | "num_classes = config.NUM_CLASSES\n",

63 | "extension = config.EXTENSION\n",

64 | "class_name = config.CLASS_NAME"

65 | ]

66 | },

67 | {

68 | "cell_type": "code",

69 | "execution_count": 4,

70 | "metadata": {},

71 | "outputs": [],

72 | "source": [

73 | "train_or_test = [\"train\",\"test\"]"

74 | ]

75 | },

76 | {

77 | "cell_type": "code",

78 | "execution_count": 5,

79 | "metadata": {},

80 | "outputs": [],

81 | "source": [

82 | "# Directories for reading and writing\n",

83 | "data_dir = config.DATA_DIR\n",

84 | "vox_dir = config.VOX_DIR\n",

85 | "fig_dir = config.FIG_DIR\n",

86 | "dist_dir = config.DIST_DIR"

87 | ]

88 | },

89 | {

90 | "cell_type": "code",

91 | "execution_count": 6,

92 | "metadata": {},

93 | "outputs": [],

94 | "source": [

95 | "dir_list = [data_dir , vox_dir , fig_dir , dist_dir]\n",

96 | "for directory in dir_list:\n",

97 | " if os.path.exists(directory) == False:\n",

98 | " os.makedirs(directory)"

99 | ]

100 | },

101 | {

102 | "cell_type": "code",

103 | "execution_count": 7,

104 | "metadata": {},

105 | "outputs": [],

106 | "source": [

107 | "# Create the train and test data directories\n",

108 | "for t in train_or_test:\n",

109 | " for cl in class_name:\n",

110 | " if os.path.exists(data_dir + cl + \"/\" + t + \"/\") == False:\n",

111 | " os.makedirs(data_dir + cl + \"/\" + t + \"/\")"

112 | ]

113 | },

114 | {

115 | "cell_type": "markdown",

116 | "metadata": {

117 | "heading_collapsed": true

118 | },

119 | "source": [

120 | "# Function"

121 | ]

122 | },

123 | {

124 | "cell_type": "code",

125 | "execution_count": 8,

126 | "metadata": {

127 | "hidden": true

128 | },

129 | "outputs": [],

130 | "source": [

131 | "def load_off(filename):\n",

132 | " # read OFF file\n",

133 | " with open(filename,\"r\") as handle:\n",

134 | " off = handle.read().rstrip().split(\"\\n\")\n",

135 | " \n",

136 | " # Check whether the OFF header is malformed\n",

137 | " if off[0] != \"OFF\":\n",

138 | "# print(\"{} is broken!!\".format(filename))\n",

139 | " params = list(off[0].split(\" \"))\n",

140 | " n_vertices = int(params[0].strip(\"OFF\"))\n",

141 | " n_faces = int(params[1])\n",

142 | " off.insert(0, \"OFF\")\n",

143 | " \n",

144 | " else:\n",

145 | " #get params and faces\n",

146 | " params = list(map(int, off[1].split(\" \")))\n",

147 | " n_vertices = params[0]\n",

148 | " n_faces = params[1]\n",

149 | "\n",

150 | " # read Vertex coordinates\n",

151 | " vertices = []\n",

152 | " for n in range(n_vertices):\n",

153 | " coords = list(map(float, off[2+n].split()))\n",

154 | " vertices.append(coords)\n",

155 | "\n",

156 | " # read information of faces\n",

157 | " faces = []\n",

158 | " for n in range(n_faces):\n",

159 | " connects = list(map(int, off[2 + n_vertices + n].split(\" \")[1:4]))\n",

160 | " faces.append(connects)\n",

161 | "\n",

162 | " return vertices, faces"

163 | ]

164 | },

165 | {

166 | "cell_type": "code",

167 | "execution_count": 9,

168 | "metadata": {

169 | "hidden": true

170 | },

171 | "outputs": [],

172 | "source": [

173 | "def load_stl(filename):\n",

174 | " # read STL file\n",

175 | " with open(filename,\"r\") as handle:\n",

176 | " stl = handle.read().rstrip().split(\"\\n\")\n",

177 | " \n",

178 | " #get vertice\n",

179 | " vertice = []\n",

180 | " for i in range(len(stl)):\n",

181 | " pool = stl[i].split()\n",

182 | " if pool and pool[0] == \"vertex\":\n",

183 | " vertex = list(map(float, pool[1:]))\n",

184 | " vertice.append(vertex)\n",

185 | " \n",

186 | " seen = []\n",

187 | " unique_vertice = [x for x in vertice if x not in seen and not seen.append(x)]\n",

188 | " \n",

189 | " #get faces\n",

190 | " faces = []\n",

191 | " for n in range(len(stl)):\n",

192 | " if stl[n].split() == ['outer', 'loop']:\n",

193 | " indexes = []\n",

194 | " for i in range(3):\n",

195 | " index = get_index_2d_list(unique_vertice, list(map(float,stl[n+i+1].split()[1:])))\n",

196 | " indexes.append(index)\n",

197 | " faces.append(indexes)\n",

198 | "\n",

199 | " return unique_vertice, faces"

200 | ]

201 | },

202 | {

203 | "cell_type": "code",

204 | "execution_count": 10,

205 | "metadata": {

206 | "hidden": true

207 | },

208 | "outputs": [],

209 | "source": [

210 | "def generate_points(points, face_ids, num_generated_point=1000):\n",

211 | " \n",

212 | " points = np.array(points)\n",

213 | " face_ids = np.array(face_ids)\n",

214 | " \n",

215 | " # compute area\n",

216 | " vec1 = points[face_ids[:,0]] - points[face_ids[:,1]]\n",

217 | " vec2 = points[face_ids[:,1]] - points[face_ids[:,2]]\n",

218 | " cross_product = np.cross(vec1, vec2)\n",

219 | " area = np.sqrt(np.power(cross_product, 2).sum(axis=1))\n",

220 | "\n",

221 | " # cumsum area\n",

222 | " cum_area = np.cumsum(area)\n",

223 | "\n",

224 | " # generate random \n",

225 | " random = np.random.rand(num_generated_point) * cum_area[-1]\n",

226 | "\n",

227 | " # convert random to index\n",

228 | " #random_idx = [bisect.bisect_left(cum_area, x) for x in random]\n",

229 | " random_idx = np.random.choice(np.arange(len(face_ids)), size=num_generated_point, p=area/cum_area[-1])\n",

230 | "\n",

231 | " #import pdb; pdb.set_trace()\n",

232 | "\n",

233 | " # generate points\n",

234 | " r1 = np.tile(np.random.rand(num_generated_point), (3, 1)).T\n",

235 | " r2 = np.tile(np.random.rand(num_generated_point), (3, 1)).T\n",

236 | "\n",

237 | " generated_points = (1 - np.sqrt(r1))*points[face_ids[random_idx, 0]] + np.sqrt(r1)*(1-r2)*points[face_ids[random_idx, 1]] + np.sqrt(r1)*r2*points[face_ids[random_idx, 2]]\n",

238 | "\n",

239 | " return generated_points, random_idx"

240 | ]

241 | },

242 | {

243 | "cell_type": "code",

244 | "execution_count": 11,

245 | "metadata": {

246 | "hidden": true

247 | },

248 | "outputs": [],

249 | "source": [

250 | "def voxilize(np_pc,cell):\n",

251 | "# return the voxelized array\n",

252 | " max_dist = 0.0\n",

253 | " for it in range(0,3):\n",

254 | " # compute the distance between the maximum and minimum values\n",

255 | " min_ = np.amin(np_pc[:,it])\n",

256 | " max_ = np.amax(np_pc[:,it])\n",

257 | " dist = max_-min_\n",

258 | "\n",

259 | " # keep the largest extent among the x, y, and z axes\n",

260 | " if dist > max_dist:\n",

261 | " max_dist = dist\n",

262 | " \n",

263 | " for it in range(0,3):\n",

264 | "\n",

265 | " # compute the distance between the maximum and minimum values\n",

266 | " min_ = np.amin(np_pc[:,it])\n",

267 | " max_ = np.amax(np_pc[:,it])\n",

268 | " dist = max_-min_\n",

269 | " \n",

270 | " # shift the center to (0, 0, 0) so that the origin sits at the center\n",

271 | " np_pc[:,it] = np_pc[:,it] - dist/2 - min_\n",

272 | "\n",

273 | " #covered cell\n",

274 | " cls = cell - 3\n",

275 | "\n",

276 | " # compute the size of a single voxel\n",

277 | " vox_sz = max_dist/(cls-1)\n",

278 | "\n",

279 | " # divide each point by the value computed above; with cell = 32 the points now lie in [-14, 14]\n",

280 | " np_pc[:,it] = np_pc[:,it]/vox_sz\n",

281 | "\n",

282 | " # shift so that every coordinate is non-negative; with cell = 32 the points now lie in [0, 28]\n",

283 | " np_pc[:,it] = np_pc[:,it] + (cls-1)/2\n",

284 | "\n",

285 | "\n",

286 | " # round to integers\n",

287 | " np_pc = np.rint(np_pc).astype(np.uint32)\n",

288 | "\n",

289 | "\n",

290 | " # create a (cell-2)^3 array (30*30*30 for cell = 32); set 1 where a point exists and 0 where none does\n",

291 | " vox = np.zeros([cell-2,cell-2,cell-2])\n",

292 | "\n",

293 | " # assign the coordinates in np_pc to (pc_x, pc_y, pc_z)\n",

294 | " for (pc_x, pc_y, pc_z) in np_pc:\n",

295 | "\n",

296 | "# # even where a point exists, set it to 0 with 20% probability to add noise to the data and improve generalization\n",

297 | "# # for noisy data, a number smaller than 80 might be better here?\n",

298 | "# if random.randint(0,100) < 80:\n",

299 | " vox[pc_x, pc_y, pc_z] = 1\n",

300 | "\n",

301 | " np_vox = np.zeros([1,cell,cell,cell,1])\n",

302 | " np_vox[0, 1:-1, 1:-1, 1:-1,0] = vox\n",

303 | "\n",

304 | " return np_vox"

305 | ]

306 | },

307 | {

308 | "cell_type": "code",

309 | "execution_count": 12,

310 | "metadata": {

311 | "hidden": true

312 | },

313 | "outputs": [],

314 | "source": [

315 | "def voxel_scatter(np_vox):\n",

316 | "# helper that formats the voxel data neatly\n",

317 | " # create an empty array\n",

318 | " vox_scat = np.zeros([0,3], dtype= np.uint32)\n",

319 | "\n",

320 | " # 32 iterations\n",

321 | " for x in range(0,np_vox.shape[1]):\n",

322 | " # 32 iterations\n",

323 | " for y in range(0,np_vox.shape[2]):\n",

324 | " # 32 iterations\n",